Best Practices for Catalyst 6500/6000 Series and Catalyst 4500/4000 Series Switches Running Cisco IOS Software

Available Languages

Contents

Introduction

This document provides best practices for Catalyst 6500/6000 and 4500/4000 series switches that run Cisco IOS® Software on the Supervisor Engine.

The Catalyst 6500/6000 and Catalyst 4500/4000 series switches support one of these two operating systems that run on the Supervisor Engine:

-

Catalyst OS (CatOS)

-

Cisco IOS Software

With CatOS, there is the option to run Cisco IOS Software on router daughter cards or modules such as:

-

The Multilayer Switch Feature Card (MSFC) in the Catalyst 6500/6000

-

The 4232 Layer 3 (L3) module in the Catalyst 4500/4000

In this mode, there are two command lines for configuration:

-

The CatOS command line for switching

-

The Cisco IOS Software command line for routing

CatOS is the system software, which runs on the Supervisor Engine. Cisco IOS Software that runs on the routing module is an option that requires CatOS system software.

For Cisco IOS Software, there is only one command line for configuration. In this mode, the functionality of CatOS has been integrated into Cisco IOS Software. The integration results in a single command line for both switching and routing configuration. In this mode, Cisco IOS Software is the system software, and it replaces CatOS.

Both CatOS and Cisco IOS Software operating systems are deployed in critical networks. CatOS, with the Cisco IOS Software option for router daughter cards and modules, is supported in these switch series:

-

Catalyst 6500/6000

-

Catalyst 5500/5000

-

Catalyst 4500/4000

Cisco IOS system software is supported in these switch series:

-

Catalyst 6500/6000

-

Catalyst 4500/4000

Refer to the document Best Practices for Catalyst 4500/4000, 5500/5000, and 6500/6000 Series Switches Running CatOS Configuration and Management for information on CatOS because this document covers Cisco IOS system software.

Cisco IOS system software provides users with some of these advantages:

-

A single user interface

-

A unified network management platform

-

Enhanced QoS features

-

Distributed switching support

This document provides modular configuration guidance. Therefore, you can read each section independently and make changes in a phased approach. This document assumes a basic comprehension and familiarity with the Cisco IOS Software user interface. The document does not cover overall campus network design.

Before You Begin

Background

The solutions that this document offers represent years of field experience from Cisco engineers who work with complex networks and many of the largest customers. Consequently, this document emphasizes real-world configurations that make networks successful. This document offers these solutions:

-

Solutions that have, statistically, the broadest field exposure and, thus, the lowest risk

-

Solutions that are simple, which trade some flexibility for deterministic results

-

Solutions that are easy to manage and that network operations teams configure

-

Solutions that promote high availability and high stability

References

There are many reference sites for the Catalyst 6500/6000 and Catalyst 4500/4000 product lines on Cisco.com. The references that this section lists provide additional depth into the topics that this document discusses.

Refer to the LAN Switching Technology Support for more information on any of the topics that this document covers. The support page provides product documentation as well as troubleshooting and configuration documents.

This document provides references to public online material so that you can read further. But, other good foundational and educational references are:

Basic Configuration

This section discusses features that are deployed when you use the majority of Catalyst networks.

Catalyst Control Plane Protocols

This section introduces the protocols that run between switches under normal operation. A basic comprehension of the protocols is helpful when you tackle each section.

Supervisor Engine Traffic

Most features that are enabled in a Catalyst network require two or more switches to cooperate. Therefore, there must be a controlled exchange of keepalive messages, configuration parameters, and management changes. Whether these protocols are Cisco proprietary, such as Cisco Discovery Protocol (CDP), or standards-based, such as IEEE 802.1D (Spanning Tree Protocol [STP]), all have certain elements in common when the protocols are implemented on the Catalyst series.

In basic frame forwarding, user data frames originate from end systems. The source address (SA) and destination address (DA) of the data frames are not changed throughout Layer 2 (L2)-switched domains. Content-addressable memory (CAM) lookup tables on each switch Supervisor Engine are populated by an SA learning process. The tables indicate which egress port forwards each frame that is received. If the destination is unknown or the frame is destined to a broadcast or multicast address, the address learning process is incomplete. When the process is incomplete, the frame is forwarded (flooded) out to all ports in that VLAN. The switch must also recognize which frames are to be switched through the system and which frames are to be directed to the switch CPU itself. The switch CPU is also known as the Network Management Processor (NMP).

Special entries in the CAM table are used in order to create the Catalyst control plane. These special entries are called system entries. The control plane receives and directs traffic to the NMP on an internal switch port. Thus, with the use of protocols with well-known destination MAC addresses, control plane traffic can be separated from the data traffic.

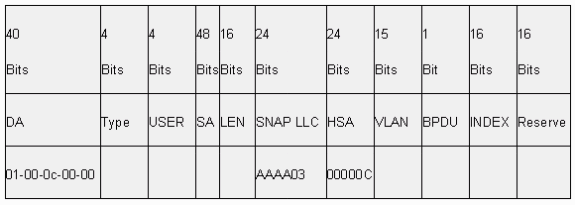

Cisco has a reserved range of Ethernet MAC and protocol addresses, as the table in this section shows. This document covers each reserved address in detail, but this table provides a summary, for convenience:

| Feature | SNAP1 HDLC2 Protocol Type | Destination Multicast MAC |

|---|---|---|

| PAgP3 | 0x0104 | 01-00-0c-cc-cc-cc |

| PVST+, RPVST+4 | 0x010b | 01-00-0c-cc-cc-cd |

| VLAN bridge | 0x010c | 01-00-0c-cd-cd-ce |

| UDLD5 | 0x0111 | 01-00-0c-cc-cc-cc |

| CDP | 0x2000 | 01-00-0c-cc-cc-cc |

| DTP6 | 0x2004 | 01-00-0c-cc-cc-cc |

| STP UplinkFast | 0x200a | 01-00-0c-cd-cd-cd |

| IEEE spanning tree 802.1D | N/A—DSAP7 42 SSAP8 42 | 01-80-c2-00-00-00 |

| ISL9 | N/A | 01-00-0c-00-00-00 |

| VTP10 | 0x2003 | 01-00-0c-cc-cc-cc |

| IEEE Pause 802.3x | N/A—DSAP 81 SSAP 80 | 01-80-C2-00-00-00>0F |

1 SNAP = Subnetwork Access Protocol.

2 HDLC = High-Level Data Link Control.

3 PAgP = Port Aggregation Protocol.

4 PVST+ = Per VLAN Spanning Tree+ and RPVST+ = Rapid PVST+.

5 UDLD = UniDirectional Link Detection.

6 DTP = Dynamic Trunking Protocol.

7 DSAP = destination service access point.

8 SSAP = source service access point.

9 ISL = Inter-Switch Link.

10 VTP = VLAN Trunk Protocol.

The majority of Cisco control protocols use an IEEE 802.3 SNAP encapsulation, which includes Logical Link Control (LLC) 0xAAAA03 and Organizational Unique Identifier (OUI) 0x00000C. You can see this on a LAN analyzer trace.

These protocols assume point-to-point connectivity. Note that the deliberate use of multicast destination addresses enables two Catalyst switches to transparently communicate over non-Cisco switches. Devices that do not understand and intercept the frames simply flood them. However, point-to-multipoint connections through multivendor environments can result in inconsistent behavior. In general, avoid point-to-multipoint connections through multivendor environments. These protocols terminate at Layer 3 routers and function only within a switch domain. These protocols receive prioritization over user data by ingress application-specific integrated circuit (ASIC) processing and scheduling.

Now the discussion turns to the SA. Switch protocols use a MAC address that is taken from a bank of available addresses. An EPROM on the chassis provides the bank of available addresses. Issue the show module command in order to display the address ranges that are available to each module for the sourcing of traffic such as STP bridge protocol data units (BPDUs) or ISL frames. This is a sample command output:

>show module

…

Mod MAC-Address(es) Hw Fw Sw

--- -------------------------------------- ------ ---------- -----------------

1 00-01-c9-da-0c-1e to 00-01-c9-da-0c-1f 2.2 6.1(3) 6.1(1d)

00-01-c9-da-0c-1c to 00-01-c9-da-0c-1

00-d0-ff-88-c8-00 to 00-d0-ff-88-cb-ff

!--- These are the MACs for sourcing traffic.

VLAN 1

VLAN 1 has a special significance in Catalyst networks.

When trunking, the Catalyst Supervisor Engine always uses the default VLAN, VLAN 1, in order to tag a number of control and management protocols. Such protocols include CDP, VTP, and PAgP. All switch ports, which includes the internal sc0 interface, are configured by default to be members of VLAN 1. All trunks carry VLAN 1 by default.

These definitions are necessary in order to help clarify some well-used terms in Catalyst networking:

-

The management VLAN is where sc0 resides for CatOS and low-end switches. You can change this VLAN. Bear this in mind when you are interworking both CatOS and Cisco IOS switches.

-

The native VLAN is the VLAN to which a port returns when it is not trunking. Also, the native VLAN is the untagged VLAN on an IEEE 802.1Q trunk.

There are several good reasons to tune a network and alter the behavior of ports in VLAN 1:

-

When the diameter of VLAN 1, like any other VLAN, gets large enough to be a risk to stability, particularly from an STP perspective, you need to prune back the VLAN. See the Switch Management Interface and Native VLAN section for details.

-

You need to keep the control plane data on VLAN 1 separate from the user data in order to simplify troubleshooting and maximize the available CPU cycles. Avoid Layer 2 loops in VLAN 1 when you design multilayer campus networks without STP. In order to avoid the Layer 2 loops, manually clear VLAN 1 from trunk ports.

In summary, note this information about trunks:

-

CDP, VTP, and PAgP updates are always forwarded on trunks with a VLAN 1 tag. This is the case even if VLAN 1 has been cleared from the trunks and is not the native VLAN. If you clear VLAN 1 for user data, the action has no impact on control plane traffic that is still sent with the use of VLAN 1.

-

On an ISL trunk, DTP packets are sent on VLAN1. This is the case even if VLAN 1 has been cleared from the trunk and is no longer the native VLAN. On an 802.1Q trunk, DTP packets are sent on the native VLAN. This is the case even if the native VLAN has been cleared from the trunk.

-

In PVST+, the 802.1Q IEEE BPDUs are forwarded untagged on the common Spanning Tree VLAN 1 for interoperability with other vendors, unless VLAN 1 has been cleared from the trunk. This is the case regardless of the native VLAN configuration. Cisco PVST+ BPDUs are sent and tagged for all other VLANs. See the Spanning Tree Protocol section for more details.

-

802.1s Multiple Spanning Tree (MST) BPDUs are always sent on VLAN 1 on both ISL and 802.1Q trunks. This applies even when VLAN 1 has been cleared from the trunks.

-

Do not clear or disable VLAN 1 on trunks between MST bridges and PVST+ bridges. But, in the case that VLAN 1 is disabled, the MST bridge must become root in order for all VLANs to avoid the MST bridge placement of its boundary ports in the root-inconsistent state. Refer to Understanding Multiple Spanning Tree Protocol (802.1s) for details.

Standard Features

This section of the document focuses on basic switching features that are common to any environment. Configure these features on all Cisco IOS Software Catalyst switching devices in the customer network.

VLAN Trunk Protocol

Purpose

A VTP domain, which is also called a VLAN management domain, is made up of one or more interconnected switches via a trunk that share the same VTP domain name. VTP is designed to allow users to make VLAN configuration changes centrally on one or more switches. VTP automatically communicates the changes to all the other switches in the (network) VTP domain. You can configure a switch to be in only one VTP domain. Before you create VLANs, determine the VTP mode that is to be used in the network.

Operational Overview

VTP is a Layer 2 messaging protocol. VTP manages the addition, deletion, and rename of VLANs on a network-wide basis in order to maintain VLAN configuration consistency. VTP minimizes misconfigurations and configuration inconsistencies that can result in a number of problems. The problems include duplicate VLAN names, incorrect VLAN-type specifications, and security violations.

By default, the switch is in VTP server mode and is in the no-management domain state. These default settings change when the switch receives an advertisement for a domain over a trunk link or when a management domain is configured.

VTP protocol communicates between switches with the use of a well-known Ethernet destination multicast MAC (01-00-0c-cc-cc-cc) and SNAP HDLC protocol type 0x2003. Similar to other intrinsic protocols, VTP also uses an IEEE 802.3 SNAP encapsulation, which includes LLC 0xAAAA03 and OUI 0x00000C. You can see this on a LAN analyzer trace. VTP does not work over nontrunk ports. Therefore, messages cannot be sent until DTP has brought the trunk up. In other words, VTP is a payload of ISL or 802.1Q.

Message types include:

-

Summary advertisements every 300 seconds (sec)

-

Subset advertisements and request advertisements when there are changes

-

Joins when VTP pruning is enabled

The VTP configuration revision number is incremented by one with every change on a server, and that table propagates across the domain.

At the deletion of a VLAN, ports that were once a member of the VLAN enter an inactive state. Similarly, if a switch in client mode is unable to receive the VTP VLAN table at bootup, either from a VTP server or another VTP client, all ports in VLANs other than the default VLAN 1 are deactivated.

You can configure most Catalyst switches to operate in any one of these VTP modes:

-

Server—In VTP server mode, you can:

-

Create VLANs

-

Modify VLANs

-

Delete VLANs

-

Specify other configuration parameters, such as VTP version and VTP pruning, for the entire VTP domain

VTP servers advertise their VLAN configuration to other switches in the same VTP domain. VTP servers also synchronize their VLAN configuration with other switches on the basis of advertisements that are received over trunk links. VTP server is the default mode.

-

-

Client—VTP clients behave in the same way as VTP servers. But you cannot create, change, or delete VLANs on a VTP client. Moreover, the client does not remember the VLAN after a reboot because no VLAN information is written in NVRAM.

-

Transparent—VTP transparent switches do not participate in VTP. A VTP transparent switch does not advertise its VLAN configuration and does not synchronize its VLAN configuration on the basis of received advertisements. But, in VTP version 2, transparent switches do forward VTP advertisements that the switches receive out their trunk interfaces.

| Feature | Server | Client | Transparent | Off1 |

|---|---|---|---|---|

| Source VTP messages | Yes | Yes | No | — |

| Listen to VTP messages | Yes | Yes | No | — |

| Create VLANs | Yes | No | Yes (locally significant only) | — |

| Remember VLANs | Yes | No | Yes (locally significant only) | — |

1 Cisco IOS Software does not have the option to disable VTP with use of the off mode.

This table is a summary of the initial configuration:

| Feature | Default Value |

|---|---|

| VTP Domain Name | Null |

| VTP Mode | Server |

| VTP Version | Version 1 is Enabled |

| VTP Pruning | Disabled |

In VTP transparent mode, VTP updates are simply ignored. The well-known VTP multicast MAC address is removed from the system CAM that is normally used to pick up control frames and direct them to the Supervisor Engine. Because the protocol uses a multicast address, the switch in transparent mode or another vendor switch simply floods the frame to other Cisco switches in the domain.

VTP version 2 (VTPv2) includes the functional flexibility that this list describes. But, VTPv2 is not interoperable with VTP version 1 (VTPv1):

-

Token Ring support

-

Unrecognized VTP information support—Switches now propagate values that they cannot parse.

-

Version-dependent transparent mode—Transparent mode no longer checks the domain name. This enables support of more than one domain across a transparent domain.

-

Version number propagation—If VTPv2 is possible on all switches, all switches can be enabled with the configuration of a single switch.

Refer to Understanding VLAN Trunk Protocol (VTP) for more information.

VTP Operation in Cisco IOS Software

Configuration changes in CatOS are written to NVRAM immediately after a change is made. In contrast, Cisco IOS Software does not save configuration changes to NVRAM unless you issue the copy run start command. VTP client and server systems require VTP updates from other VTP servers to be immediately saved in NVRAM without user intervention. The VTP update requirements are met by the default CatOS operation, but the Cisco IOS Software update model requires an alternative update operation.

For this alteration, a VLAN database was introduced into Cisco IOS Software for the Catalyst 6500 as a method to immediately save VTP updates for VTP clients and servers. In some versions of software, this VLAN database is in the form of a separate file in NVRAM, called the vlan.dat file. Check your version of software in order to determine if a backup of the VLAN database is required. You can view VTP/VLAN information that is stored in the vlan.dat file for the VTP client or VTP server if you issue the show vtp status command.

The entire VTP/VLAN configuration is not saved to the startup config file in NVRAM when you issue the copy run start command on these systems. This does not apply to systems that run as VTP transparent. VTP transparent systems save the entire VTP/VLAN configuration to the startup config file in NVRAM when you issue the copy run start command.

In Cisco IOS Software releases that are earlier than Cisco IOS Software Release 12.1(11b)E, you can only configure VTP and VLANs via the VLAN database mode. VLAN database mode is a separate mode from the global configuration mode. The reason for this configuration requirement is that, when you configure the device in VTP mode server or VTP mode client, VTP neighbors can update the VLAN database dynamically via VTP advertisements. You do not want these updates to automatically propagate to the configuration. Therefore, the VLAN database and the VTP information are not stored in the main configuration, but are stored in NVRAM in a file with the name vlan.dat.

This example shows how to create an Ethernet VLAN in VLAN database mode:

Switch#vlan database Switch(vlan)#vlan 3 VLAN 3 added: Name: VLAN0003 Switch(vlan)#exit APPLY completed. Exiting....

In Cisco IOS Software Release 12.1(11b)E and later, you can configure VTP and VLANs via VLAN database mode or via the global configuration mode. In VTP mode server or VTP mode transparent, the configuration of VLANs still updates the vlan.dat file in the NVRAM. However, these commands are not saved in the configuration. Therefore, the commands do not show in the running configuration.

Refer to the VLAN Configuration in Global Configuration Mode section of the document Configuring VLANs for more information.

This example shows how to create an Ethernet VLAN in global configuration mode and how to verify the configuration:

Switch#configure terminal Switch(config#vtp mode transparent Setting device to VTP TRANSPARENT mode. Switch(config#vlan 3 Switch(config-vlan)#end Switch# OR Switch#vlan database Switch(vlan#vtp server Switch device to VTP SERVER mode. Switch(vlan#vlan 3 Switch(vlan#exit APPLY completed. Exiting.... Switch#

Note: The VLAN configuration is stored in the vlan.dat file, which is stored in nonvolatile memory. In order to perform a complete backup of your configuration, include the vlan.dat file in the backup along with the configuration. Then, if the entire switch or the Supervisor Engine module requires replacement, the network administrator must upload both of these files in order to restore the complete configuration:

-

The vlan.dat file

-

The configuration file

VTP and Extended VLANs

The Extended System ID feature is used to enable extended-range VLAN identification. When Extended System ID is enabled, it disables the pool of MAC addresses used for the VLAN spanning tree, and leaves a single MAC address that identifies the switch. The Catalyst IOS Software Release 12.1(11b)EX and 12.1(13)E introduce Extended System ID support for Catalyst 6000/6500 to support 4096 VLANs in compliance with the IEEE 802.1Q standard. This feature is introduced in Cisco IOS Software Release12.1(12c)EW for Catalyst 4000/4500 switches. These VLANs are organized into several ranges, each of which can be used differently. Some of these VLANs are propagated to other switches in the network when you use the VTP. The extended-range VLANs are not propagated, so you must configure extended-range VLANs manually on each network device. This Extended System ID feature is equivalent to MAC Address Reduction feature in Catalyst OS.

This table describes the VLAN ranges:

| VLANs | Range | Usage | Propagated by VTP? |

|---|---|---|---|

| 0, 4095 | Reserved | For system use only. You cannot see or use these VLANs. | — |

| 1 | Normal | Cisco default. You can use this VLAN, but you cannot delete it. | Yes |

| 2–1001 | Normal | For Ethernet VLANs. You can create, use, and delete these VLANs. | Yes |

| 1002–1005 | Normal | Cisco defaults for FDDI and Token Ring. You cannot delete VLANs 1002–1005. | Yes |

| 1006–4094 | Reserved | For Ethernet VLANs only. | No |

Switch protocols use a MAC address taken from a bank of available addresses that an EPROM provides on the chassis as part of bridge identifiers for VLANs that run under PVST+ and RPVST+. Catalyst 6000/6500 and Catalyst 4000/4500 switches support either 1024 or 64 MAC addresses that depend on the chassis type.

Catalyst switches with 1024 MAC addresses do not enable Extended System ID by default. MAC addresses are allocated sequentially, with the first MAC address in the range assigned to VLAN 1, the second MAC address in the range assigned to VLAN 2, and so on. This enables the switches to support 1024 VLANs and each VLAN uses a unique bridge identifier.

| Chassis Type | Chassis Address |

|---|---|

| WS-C4003-S1, WS-C4006-S2 | 1024 |

| WS-C4503, WS-C4506 | 641 |

| WS-C6509-E,WS-C6509, WS-C6509-NEB, WS-C6506-E, WS-C6506, WS-C6009, WS-C6006, OSR-7609-AC, OSR-7609-DC | 1024 |

| WS-C6513, WS-C6509-NEB-A, WS-C6504-E, WS-C6503-E, WS-C6503, CISCO7603, CISCO7606, CISCO7609, CISCO7613 | 641 |

1 Chassis with 64 MAC addresses enables Extended System ID by default, and the feature can not be disabled.

Refer to the Understanding the Bridge ID section of Configuring STP and IEEE 802.1s MST for more information.

For Catalyst series switches with 1024 MAC addresses, to enable Extended System ID allows support of 4096 VLANs that run under PVST+ or 16 MISTP instances to have unique identifiers without the increase of the number of MAC addresses that are required on the switch. Extended System ID reduces the number of MAC addresses that are required by the STP from one per VLAN or MISTP instance to one per switch.

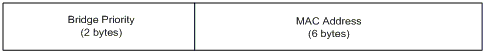

This figure shows the bridge identifier when Extended System ID is not enabled. The bridge identifier consists of a 2-byte bridge priority and a 6-byte MAC address.

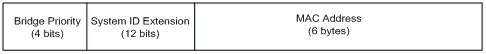

Extended System ID modifies the Spanning Tree Protocol (STP) Bridge Identifier portion of the Bridge Protocol Data Units (BPDU). The original 2-byte priority field is split into 2-fields; A 4-bit bridge priority field and a 12-bit system-ID extension that allows for Vlan numbering of 0-4095.

When Extended System ID is enabled on Catalyst switches to leverage extended range VLANs, it needs to be enabled on all switches within the same STP domain. This is necessary to keep the STP root calculations on all switches consistent. Once Extended System ID is enabled, the root bridge priority becomes a multiple of 4096 plus the VLAN ID. The switches without Extended System ID can possibly claim root inadvertently as they have a finer granularity in the selection of its bridge ID.

While it is recommended to maintain consistent Extended System ID configuration within the same STP domain, it is not practical to enforce Extended System ID on all network devices when you introduce new chassis with 64 MAC address to the STP domain. But, it is important to understand when two systems are configured with the same Spanning-tree priority, the system without Extended System ID has a better Spanning-tree priority. Issue this command in order to enable Extended System ID configuration:

spanning-tree extend system-id

The internal VLANs are allocated in ascending order, starting at VLAN 1006. It is recommended to assign the user VLANs as close to VLAN 4094 as possible in order to avoid conflicts between the user VLANs and the internal VLANs. Issue the command show vlan internal usage on a switch in order to display the internally assigned VLANs.

Switch#show vlan internal usage VLAN Usage ---- -------------------- 1006 online diag vlan0 1007 online diag vlan1 1008 online diag vlan2 1009 online diag vlan3 1010 online diag vlan4 1011 online diag vlan5 1012 PM vlan process (trunk tagging) 1013 Port-channel100 1014 Control Plane Protection 1015 L3 multicast partial shortcuts for VPN 0 1016 vrf_0_vlan0 1017 Egress internal vlan 1018 Multicast VPN 0 QOS vlan 1019 IPv6 Multicast Egress multicast 1020 GigabitEthernet5/1 1021 ATM7/0/0 1022 ATM7/0/0.1 1023 FastEthernet3/1 1024 FastEthernet3/2 ------deleted------

In Native IOS, vlan internal allocation policy descending can be configured so the internal VLANs are allocated in descending order. The CLI equivalent for CatOS software is not officially supported.

vlan internal allocation policy descending

Cisco Configuration Recommendation

VLANs can be created when a Catalyst 6500/6000 is in VTP server mode, even without VTP domain name. Configure the VTP domain name first, before you configure VLANs on Catalyst 6500/6000 switches that run Cisco IOS system software. Configuration in this order maintains consistency with other Catalyst switches that run CatOS.

There is no specific recommendation on whether to use VTP client/server modes or VTP transparent mode. Some customers prefer the ease of management of VTP client/server mode, despite some considerations that this section notes. The recommendation is to have two server mode switches in each domain for redundancy, typically the two distribution-layer switches. Set the rest of the switches in the domain to client mode. When you implement client/server mode with the use of VTPv2, remember that a higher revision number is always accepted in the same VTP domain. If a switch that is configured in either VTP client or server mode is introduced into the VTP domain and has a higher revision number than the VTP servers that exists, this overwrites the VLAN database within the VTP domain. If the configuration change is unintentional and VLANs are deleted, this overwrite can cause a major outage in the network. In order to ensure that client or server switches always have a configuration revision number that is lower than that of the server, change the client VTP domain name to something other than the standard name, and then revert back to the standard. This action sets the configuration revision on the client to 0.

There are pros and cons to the VTP ability to make changes easily on a network. Many enterprises prefer a cautious approach and use VTP transparent mode for these reasons:

-

This practice encourages good change control because the requirement to modify a VLAN on a switch or trunk port must be considered one switch at a time.

-

VTP transparent mode limits the risk of an administrator error, such as accidental deletion of a VLAN. Such errors can impact the entire domain.

-

VLANs can be pruned from trunks down to switches that do not have ports in the VLAN. This results in frame flooding to be more bandwidth-efficient. Manual pruning also has a reduced spanning-tree diameter. See the Dynamic Trunking Protocol section for more information. A per-switch VLAN configuration also encourages this practice.

-

There is no risk of the introduction into the network of a new switch with a higher VTP revision number that overwrites the entire domain VLAN configuration.

-

Cisco IOS Software VTP transparent mode is supported in Campus Manager 3.2, which is part of CiscoWorks2000. The earlier restriction that requires you to have at least one server in a VTP domain has been removed.

| VTP Commands | Comments |

|---|---|

| vtp domain name | CDP checks the name in order to help prevent miscabling between the domains. Domain names are case sensitive. |

| vtp mode {server | client | transparent} | VTP operates in one of the three modes. |

| vlan vlan_number | This creates a VLAN with the ID provided. |

| switchport trunk allowed vlan_range | This is an interface command that enables trunks to carry VLANs where needed. The default is all VLANs. |

| switchport trunk pruning vlan_range | This is an interface command that limits the STP diameter by manual pruning, such as on trunks from the distribution layer to access layer, where the VLAN does not exist. By default, all VLANs are prune-eligible. |

Other Options

VTPv2 is a requirement in Token Ring environments, where client/server mode is highly recommended.

The Cisco Configuration Recommendation section of this document advocates the benefits of pruning VLANs in order to reduce unnecessary frame flooding. The vtp pruning command prunes VLANs automatically, which stops the inefficient flooding of frames where they are not needed.

Note: Unlike manual VLAN pruning, automatic pruning does not limit the spanning-tree diameter.

The IEEE has produced a standards-based architecture in order to accomplish VTP-similar results. As a member of the 802.1Q Generic Attribute Registration Protocol (GARP), the Generic VLAN Registration Protocol (GVRP) allows VLAN management interoperability between vendors. However, GVRP is outside the scope of this document.

Note: Cisco IOS Software does not have VTP off mode capability, and it only supports VTPv1 and VTPv2 with pruning.

Fast Ethernet Autonegotiation

Purpose

Autonegotiation is an optional function of the IEEE 802.3u Fast Ethernet (FE) standard. Autonegotiation enables devices to automatically exchange information about speed and duplex abilities over a link. Autonegotiation operates at Layer 1 (L1). The function is targeted at ports that are allocated to areas where transient users or devices connect to a network. Examples include access layer switches and hubs.

Operational Overview

Autonegotiation uses a modified version of the link integrity test for 10BASE-T devices to negotiate speed and exchange other autonegotiation parameters. The original 10BASE-T link integrity test is referred to as Normal Link Pulse (NLP). The modified version of the link integrity test for 10/100-Mbps autonegotiation is referred to as Fast Link Pulse (FLP). The 10BASE-T devices expect a burst pulse every 16 (+/-8) milliseconds (ms) as part of the link integrity test. FLP for 10/100-Mbps autonegotiation sends these bursts every 16 (+/-8) ms with the additional pulses every 62.5 (+/-7) microseconds. The pulses within the burst sequence generate code words that are used for compatibility exchanges between link partners.

In 10BASE-T, a link pulse is sent out whenever a station comes up. This is a single pulse that is sent every 16 ms. The 10BASE-T devices also send a link pulse every 16 ms when the link is idle. These link pulses are also called heartbeat or NLP.

A 100BASE-T device sends out FLP. This pulse is sent out as a burst instead of one pulse. The burst is completed within 2 ms and is again repeated every 16 ms. Upon initialization, the device transmits a 16-bit FLP message to the link partner for the negotiation of speed, duplex, and flow control. This 16-bit message is sent repeatedly until the message is acknowledged by the partner.

Note: As per the IEEE 802.3u specification, you cannot manually configure one link partner for 100-Mbps full duplex and still autonegotiate to full duplex with the other link partner. An attempt to configure one link partner for 100-Mbps full duplex and the other link partner for autonegotiation results in a duplex mismatch. Duplex mismatch results because one link partner autonegotiates and does not see any autonegotiation parameters from the other link partner. The first link partner then defaults to half duplex.

All the Catalyst 6500 Ethernet switching modules support 10/100 Mbps and half duplex or full duplex. Issue the show interface capabilities command in order to verify this functionality on other Catalyst switches.

One of the most common causes of performance issues on 10/100-Mbps Ethernet links occurs when one port on the link operates at half duplex while the other port operates at full duplex. This situation occasionally happens when you reset one or both ports on a link and the autonegotiation process does not result in the same configuration for both link partners. The situation also happens when you reconfigure one side of a link and forget to reconfigure the other side. You can avoid the need to place performance-related support calls if you:

-

Create a policy that requires the configuration of ports for the required behavior for all nontransient devices

-

Enforce the policy with adequate change control measures

Typical symptoms of the performance issue increase frame check sequence (FCS), cyclic redundancy check (CRC), alignment, or runt counters on the switch.

In half duplex mode, you have one pair of receive and one pair of transmit wires. Both of the wires cannot be used at the same time. The device cannot transmit when there is a packet on the receive side.

In full duplex mode, you have the same pair of receive and transmit wires. However, both can be used at the same time because the Carrier Sense and Collision Detect functions have been disabled. The device can transmit and receive at the same time.

Therefore, a half-duplex to full-duplex connection works, but there is a large number of collisions at the half-duplex side that result in poor performance. The collisions occur becuse the device that is configured as full duplex can transmit at the same time that the device receives data.

The documents in this list discuss autonegotiation in detail. These documents explain how autonegotiation works and discuss various configuration options:

-

Configuring and Troubleshooting Ethernet 10/100/1000Mb Half/Full Duplex Auto-Negotiation

-

Troubleshooting Cisco Catalyst Switches to NIC Compatibility Issues

A common misconception about autonegotiation is that it is possible to manually configure one link partner for 100-Mbps full duplex and autonegotiate to full duplex with the other link partner. In fact, an attempt to do this results in a duplex mismatch. This is a consequence because one link partner autonegotiates, does not see any autonegotiation parameters from the other link partner, and defaults to half duplex.

Most Catalyst Ethernet modules support 10/100 Mbps and half/full duplex. However, you can confirm this if you issue the show interface mod/port capabilities command.

FEFI

Far end fault indication (FEFI) protects 100BASE-FX (fiber) and Gigabit interfaces, while autonegotiation protects 100BASE-TX (copper) against physical layer/signaling-related faults.

A far end fault is an error in the link that one station can detect while the other station cannot. A disconnected transmit wire is an example. In this example, the sending station still receives valid data and detects that the link is good via the link integrity monitor. The sending station cannot, however, detect that the other station does not receive the transmission. A 100BASE-FX station that detects such a remote fault can modify its transmitted IDLE stream in order to send a special bit pattern in order to inform the neighbor of the remote fault. The special bit pattern is referred to as the FEFI-IDLE pattern. The FEFI-IDLE pattern subsequently triggers a shutdown of the remote port (errDisable). See the UniDirectional Link Detection section of this document for further information on fault protection.

These modules/hardware support FEFI:

-

Catalyst 6500/6000 and 4500/4000:

-

All 100BASE-FX modules and GE modules

-

Cisco Infrastructure Port Recommendation

Whether to configure autonegotiation on 10/100-Mbps links or to hard code speed and duplex ultimately depends on the type of link partner or end device that you have connected to a Catalyst switch port. Autonegotiation between end devices and Catalyst switches generally works well, and Catalyst switches are compliant with the IEEE 802.3u specification. However, when network interface card (NIC) or vendor switches do not conform exactly, problems can result. In addition, vendor-specific advanced features that are not described in the IEEE 802.3u specification for 10/100-Mbps autonegotiation can cause hardware incompatibility and other issues. These types of advanced features include autopolarity and cabling integrity. This document provides an example:

In some situations, you need to set host, port speed, and duplex. In general, complete these basic troubleshooting steps:

-

Make sure that autonegotiation is configured on both sides of the link or that hard coding is configured on both sides.

-

Check the release notes for common caveats.

-

Verify the version of the NIC driver or operating system that you run. The latest driver or patch is often required.

As a rule, first use autonegotiation for any type of link partner. There are obvious benefits to the configuration of autonegotiation for transient devices such as laptops. Autonegotiation also works well with other devices, for example:

-

With nontransient devices such as servers and fixed workstations

-

From switch to switch

-

From switch to router

But, for some of the reasons that this section mentions, negotiation issues can arise. Refer to Configuring and Troubleshooting Ethernet 10/100/1000Mb Half/Full Duplex Auto-Negotiation for basic troubleshooting steps in these cases.

Disable autonegotiation for:

-

Ports that support network infrastructure devices such as switches and routers

-

Other nontransient end systems such as servers and printers

Always hard code the speed and duplex settings for these ports.

Manually configure these 10/100-Mbps link configurations for speed and duplex, which are usually 100-Mbps full duplex:

-

Switch-to-switch

-

Switch-to-server

-

Switch-to-router

If the port speed is set to auto on a 10/100-Mbps Ethernet port, both the speed and duplex are autonegotiated. Issue this interface command in order to set the port to auto:

Switch(config)#interface fastethernet slot/port Switch(config-if)#speed auto !--- This is the default.

Issue these interface commands in order to configure speed and duplex:

Switch(config)#interface fastethernet slot/port Switch(config-if)#speed {10 | 100 | auto} Switch(config-if)#duplex {full | half}

Cisco Access Port Recommendations

End users, mobile workers, and transient hosts need autonegotiation in order to minimize management of these hosts. You can make autonegotiation work with Catalyst switches as well. The latest NIC drivers are often required.

Issue these global commands in order to enable the autonegotiation of speed for the port:

Switch(config)#interface fastethernet slot/port Switch(config-if)#speed auto

Note: If you set the port speed to auto on a 10/100-Mbps Ethernet port, both speed and duplex are autonegotiated. You cannot change the duplex mode of autonegotiation ports.

When NICs or vendor switches do not conform exactly to the IEEE specification 802.3u, problems can result. In addition, vendor-specific advanced features that are not described in the IEEE 802.3u specification for 10/100-Mbps autonegotiation can cause hardware incompatibility and other issues. Such advanced features include autopolarity and cabling integrity.

Other Options

When autonegotiation is disabled between switches, Layer 1 fault indication can also be lost for certain problems. Use Layer 2 protocols to augment failure detection such as aggressive UDLD.

Autonegotiation does not detect these situations, even when autonegotiation is enabled:

-

The ports get stuck and do not receive or transmit

-

One side of the line is up but the other side has gone down

-

Fiber cables are miswired

Autonegotiation does not detect these problems because they are not at the physical layer. The problems can lead to STP loops or traffic black holes.

UDLD can detect all these cases and errdisable both the ports on the link, if UDLD is configured on both ends. In this way, UDLD prevents STP loops and traffic black holes.

Gigabit Ethernet Autonegotiation

Purpose

Gigabit Ethernet (GE) has an autonegotiation procedure that is more extensive than the procedure that is used for 10/100-Mbps Ethernet (IEEE 802.3z). With GE ports, autonegotiation is used to exchange:

-

Flow-control parameters

-

Remote fault information

-

Duplex information

Note: Catalyst series GE ports only support full duplex mode.

IEEE 802.3z has been superseded by IEEE 802.3:2000 specs. Refer to the Local and Metropolitan Area Networks + Drafts (LAN/MAN 802s) Standards Subscription ![]() for more information.

for more information.

Operational Overview

Unlike autonegotiation with 10/100-Mbps FE, GE autonegotiation does not involve the negotiation of port speed. Also, you cannot issue the set port speed command in order to disable autonegotiation. GE port negotiation is enabled by default, and the ports on both ends of a GE link must have the same setting. The link does not come up if the ports at each end of the link are set inconsistently, which means that the exchanged parameters are different.

For example, assume that there are two devices, A and B. Each device can have autonegotiation enabled or disabled. This is a table that has possible configurations and their respective link states:

| Negotiation | B Enabled | B Disabled |

|---|---|---|

| A Enabled | up on both sides | A down, B up |

| A Disabled | A up, B down | up on both sides |

In GE, synchronization and autonegotiation (if they are enabled) are performed upon link startup through the use of a special sequence of reserved link code words.

Note: There is a dictionary of valid words, and not all possible words are valid in GE.

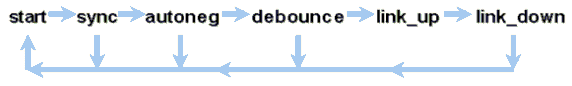

The life of a GE connection can be characterized in this way:

A loss of synchronization means that the MAC detects a link down. Loss of synchronization applies whether autonegotiation is enabled or disabled. Synchronization is lost under certain failed conditions, such as the receipt of three invalid words in succession. If this condition persists for 10 ms, a sync fail condition is asserted and the link is changed to the link_down state. After synchronization is lost, another three consecutive valid idles are necessary in order to resynchronize. Other catastrophic events, such as a loss of receive (Rx) signal, causes a link-down event.

Autonegotiation is a part of the linkup process. When the link is up, autonegotiation is over. However, the switch still monitors the status of the link. If autonegotiation is disabled on a port, the autoneg phase is no longer an option.

The GE copper specification (1000BASE-T) does support autonegotiation via a Next Page Exchange. Next Page Exchange allows autonegotiation for 10/100/1000-Mbps speeds on copper ports.

Note: However, the GE fiber specification only makes provisions for the negotiation of duplex, flow control, and remote fault detection. GE fiber ports do not negotiate port speed. Refer to sections 28 and 37 of the IEEE 802.3-2002 ![]() specification for more information on autonegotiation.

specification for more information on autonegotiation.

Synchronization restart delay is a software feature that controls the total autonegotiation time. If autonegotiation is not successful within this time, the firmware restarts autonegotiation in case there is a deadlock. The sync-restart-delay command only has an effect when autonegotiation is set to enable.

Cisco Infrastructure Port Recommendation

The configuration of autonegotiation is much more critical in a GE environment than in a 10/100 Mbps environment. Only disable autonegotiation in these situations:

-

On switch ports that attach to devices that are not able to support negotiation

-

Where connectivity issues arise from interoperability issues

Enable Gigabit negotiation on all switch-to-switch links and, generally, on all GE devices. The default value on Gigabit interfaces is autonegotiation. Still, issue this command in order to ensure that autonegotiation is enabled:

switch(config)#interface type slot/port switch(config-If)#no speed !--- This command sets the port to autonegotiate Gigabit parameters.

One known exception is when you connect to a Gigabit Switch Router (GSR) that runs Cisco IOS Software that is earlier than Cisco IOS Software Release 12.0(10)S, the release that added flow control and autonegotiation. In this case, turn off those two features. If you do not turn off those features, the switch port reports not connected and the GSR reports errors. This is a sample interface command sequence:

flowcontrol receive off flowcontrol send off speed nonegotiate

Cisco Access Port Recommendations

Since FLPs can vary between vendors, you must look at switch-to-server connections on a case-by-case basis. Cisco customers have encountered some issues with Gigabit negotiation on Sun, HP, and IBM servers. Have all devices use the Gigabit autonegotiation unless the NIC vendor specifically states otherwise.

Other Options

Flow control is an optional part of the 802.3x specification. Flow control must be negotiated if you use it. Devices can or cannot possibly be able to send and/or respond to a PAUSE frame (well-known MAC 01-80-C2-00-00-00 0F). And devices can possibly not agree to the flow-control request of the far-end neighbor. A port with an input buffer that begins to fill up sends a PAUSE frame to the link partner. The link partner stops the transmission and holds any additional frames in the link partner output buffers. This function does not solve any steady-state oversubscription problem. But, the function effectively makes the input buffer larger by some fraction of the partner output buffer throughout bursts.

The PAUSE function is designed to prevent the unnecessary discard of received frames by devices (switches, routers, or end stations) because of buffer overflow conditions that short-term transient traffic overload causes. A device under traffic overload prevents internal buffer overflow when the device sends a PAUSE frame. The PAUSE frame contains a parameter that indicates the length of time for the full duplex partner to wait before the partner sends more data frames. The partner that receives the PAUSE frame ceases to send data for the specified period. When this timer expires, the station begins to send data frames again, from where the station left off.

A station that issues a PAUSE can issue another PAUSE frame that contains a parameter of zero time. This action cancels the remainder of the pause period. So, a newly received PAUSE frame overrides any PAUSE operation that is currently in progress. Also, the station that issues the PAUSE frame can extend the PAUSE period. The station issues another PAUSE frame that contains a nonzero time parameter before the expiration of the first PAUSE period.

This PAUSE operation is not rate-based flow control. The operation is a simple start-stop mechanism that allows the device under traffic, the one that sent the PAUSE frame, a chance to reduce its buffer congestion.

The best use of this feature is on links between access ports and end hosts, where the host output buffer is potentially as large as the virtual memory. Switch-to-switch use has limited benefits.

Issue these interface commands in order to control this on the switch ports:

flowcontrol {receive | send} {off | on | desired}

>show port flowcontrol

Port Send FlowControl Receive FlowControl RxPause TxPause

admin oper admin oper

----- -------- -------- -------- -------- ------- -------

6/1 off off on on 0 0

6/2 off off on on 0 0

6/3 off off on on 0 0

Note: All Catalyst modules respond to a PAUSE frame if negotiated. Some modules (for example, WS-X5410 and WS-X4306) never send pause frames, even if they negotiate to do so, because they are nonblocking.

Dynamic Trunking Protocol

Purpose

In order to extend VLANs between devices, trunks temporarily identify and mark (link local) the original Ethernet frames. This action enables the frames to be multiplexed over a single link. The action also ensures that separate VLAN broadcast and security domains are maintained between switches. CAM tables maintain the frame to VLAN mapping inside the switches.

Operational Overview

DTP is the second generation of Dynamic ISL (DISL). DISL only supported ISL. DTP supports both ISL and 802.1Q. This support ensures that the switches at either end of a trunk agree on the different parameters of trunking frames. Such parameters include:

-

Configured encapsulation type

-

Native VLAN

-

Hardware capability

The DTP support also helps protect against the flooding of tagged frames by nontrunk ports, which is a potentially serious security risk. DTP protects against such flooding because it ensures that ports and their neighbors are in consistent states.

Trunking Mode

DTP is an Layer 2 protocol that negotiates configuration parameters between a switch port and its neighbor. DTP uses another well-known multicast MAC address of 01-00-0c-cc-cc-cc and a SNAP protocol type of 0x2004. This table describes the function on each of the possible DTP negotiation modes:

| Mode | Function | DTP Frames Transmitted? | Final State (Local Port) |

|---|---|---|---|

| Dynamic Auto (equivalent to the mode Auto in CatOS) | Makes the port willing to convert the link to a trunk. The port becomes a trunk port if the neighboring port is set to on or desirable mode. | Yes, periodic | Trunking |

| Trunk (equivalent to the mode ON in CatOS) | Puts the port into permanent trunking mode and negotiates to convert the link into a trunk. The port becomes a trunk port even if the neighboring port does not agree to the change. | Yes, periodic | Trunking, unconditionally |

| Nonegotiate | Puts the port into permanent trunking mode but does not allow the port to generate DTP frames. You must manually configure the neighboring port as a trunk port in order to establish a trunk link. This is useful for devices that do not support DTP. | No | Trunking, unconditionally |

| Dynamic desirable (CatOS comparable command is desirable) | Makes the port actively attempt to convert the link to a trunk link. The port becomes a trunk port if the neighboring port is set to on, desirable, or auto mode. | Yes, periodic | It ends up in trunking state only if the remote mode is on, auto, or desirable. |

| Access | Puts the port into permanent non-trunking mode and negotiates to convert the link into a nontrunk link. The port becomes a nontrunk port even if the neighboring port does not agree to the change. | No, in steady state, but transmits informs in order to speed up remote-end detection after a change from on. | Non-trunking |

Note: The ISL and 802.1Q encapsulation type can be set or negotiated.

In the default configuration, DTP assumes these characteristics on the link:

-

Point-to-point connections and Cisco devices support 802.1Q trunk ports that are only point-to-point.

-

Throughout DTP negotiation, the ports do not participate in STP. The port is added to STP only after the port type becomes one of these three types:

-

Access

-

ISL

-

802.1Q

PAgP is the next process to run before the port participates in STP. PAgP is used for EtherChannel autonegotation.

-

-

VLAN 1 is always present on the trunk port. If the port is trunking in ISL mode, DTP packets are sent out on VLAN 1. If the port is not trunking in ISL mode, the DTP packets are sent on the native VLAN (for 802.1Q trunking or nontrunking ports).

-

DTP packets transfer the VTP domain name, plus the trunk configuration and admin status. The VTP domain name must match in order to get a negotiated trunk to come up. These packets are sent every second throughout negotiation and every 30 seconds after negotiation. If a port in auto or desirable mode does not detect a DTP packet within 5 minutes (min), the port is set as nontrunk.

Caution: You must understand that the modes trunk, nonegotiate, and access explicitly specify in which state the port ends up. A bad configuration can lead to a dangerous/inconsistent state in which one side is trunking and the other is not trunking.

Caution: You must understand that the modes trunk, nonegotiate, and access explicitly specify in which state the port ends up. A bad configuration can lead to a dangerous/inconsistent state in which one side is trunking and the other is not trunking.

Refer to Configuring ISL Trunking on Catalyst 5500/5000 and 6500/6000 Family Switches for more ISL details. Refer to Trunking Between Catalyst 4500/4000, 5500/5000, and 6500/6000 Series Switches Using 802.1Q Encapsulation with Cisco CatOS System Software for more 802.1Q details.

Encapsulation Type

ISL Operational Overview

ISL is a Cisco proprietary trunking protocol (VLAN tagging scheme). ISL has been in use for many years. In contrast, 802.1Q is much newer, but 802.1Q is the IEEE standard.

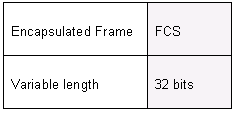

ISL completely encapsulates the original frame in a two-level tagging scheme. In this way, ISL is effectively a tunneling protocol and, as an additional benefit, carries non-Ethernet frames. ISL adds a 26-byte header and a 4-byte FCS to the standard Ethernet frame. Ports that are configured to be trunks expect and handle the larger Ethernet frames. ISL supports 1024 VLANs.

Frame Format – ISL Tag Is Shaded

Refer to InterSwitch Link and IEEE 802.1Q Frame Format for more information.

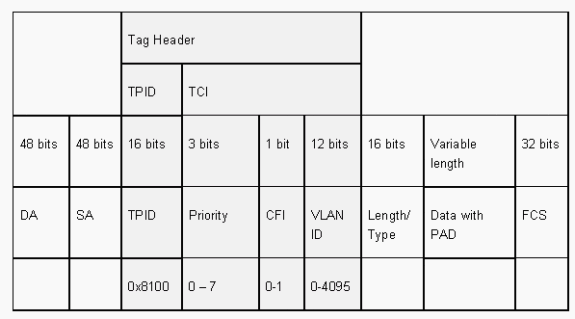

802.1Q Operational Overview

Although the IEEE 802.1Q standard only pertains to Ethernet, the standard specifies much more than encapsulation types. 802.1Q includes, among other Generic Attribute Registration Protocols (GARPs), spanning-tree enhancements and 802.1p QoS tagging. Refer to IEEE Standards Online ![]() for more information

for more information

The 802.1Q frame format preserves the original Ethernet SA and DA. However, switches must now expect to receive baby-giant frames, even on access ports where hosts can use tagging to express 802.1p user priority for QoS signaling. The tag is 4 bytes. The 802.1Q Ethernet v2 frames are 1522 bytes, which is an IEEE 802.3ac working group achievement. Also, 802.1Q supports numbering space for 4096 VLANs.

All data frames that are transmitted and received are 802.1Q tagged, except for those data frames that are on the native VLAN. In this case, there is an implicit tag that is based on the ingress switch port configuration. Frames on the native VLAN are always transmitted untagged and are normally received untagged. However, these frames can also be received tagged.

Refer to these documents for more information:

Cisco Configuration Recommendation

One primary Cisco design principal is to strive for consistency in the network where consistency is possible. All newer Catalyst products support 802.1Q and some only support 802.1Q, such as earlier modules in the Catalyst 4500/4000 and Catalyst 6500 series. Therefore, all new implementations need to follow this IEEE 802.1Q standard and older networks need to gradually migrate from ISL.

Issue this interface commands in order to enable 802.1Q trunking on a particular port:

Switch(config)#interface type slot#/port# Switch(config-if)#switchport !--- Configure the interface as a Layer 2 port. Switch(config-if)#switchport trunk encapsulation dot1q

The IEEE standard allows vendor interoperability. Vendor interoperability is advantageous in all Cisco environments as new host 802.1p-capable NICs and devices become available. Although both ISL and 802.1Q implementations are solid, the IEEE standard ultimately has greater field exposure and greater third-party support, which includes support for network analyzers. Also, a minor consideration is that the 802.1Q standard also has a lower encapsulation overhead than ISL.

For completeness, the implicit tagging on native VLANs creates a security consideration. The transmission of frames from one VLAN, VLAN X, to another VLAN, VLAN Y, without a router is possible. The transmission can occur without a router if the source port (VLAN X) is in the same VLAN as the native VLAN of an 802.1Q trunk on the same switch. The workaround is to use a dummy VLAN for the native VLAN of the trunk.

Issue these interface commands in order to establish a VLAN as native (the default) for 802.1Q trunking on a particular port:

Switch(config)#interface type slot#/port# Switch(config-If)#switchport trunk native vlan 999

Because all newer hardware supports 802.1Q, have all new implementations follow the IEEE 802.1Q standard and gradually migrate earlier networks from ISL. Until recently, many Catalyst 4500/4000 modules did not support ISL. Therefore, 802.1Q is the only option for Ethernet trunking. Refer to the output of the show interface capabilities command, or the show port capabilities command for CatOS. Because trunking support requires the appropriate hardware, a module that does not support 802.1Q can never support 802.1Q. A software upgrade does not confer support for 802.1Q. Most new hardware for the Catalyst 6500/6000 and Catalyst 4500/4000 switches supports both ISL and 802.1Q.

If VLAN 1 is cleared from a trunk, as the Switch Management Interface and Native VLAN section discusses, although no user data are transmitted or received, the NMP continues to pass control protocols on VLAN 1. Examples of control protocols include CDP and VTP.

Also, as the VLAN 1 section discusses, CDP, VTP, and PAgP packets are always sent on VLAN 1 when trunking. With the use of dot1q (802.1Q) encapsulation, these control frames are tagged with VLAN 1 if the switch native VLAN is changed. If dot1q trunking to a router and the native VLAN is changed on the switch, a subinterface in VLAN 1 is necessary in order to receive the tagged CDP frames and provide the CDP neighbor visibility on the router.

Note: There is a potential security consideration with dot1q that the implicit tagging of the native VLAN causes. The transmission of frames from one VLAN to another without a router can be possible. Refer to the Intrusion Detection FAQ ![]() for further details. The workaround is to use a VLAN ID for the native VLAN of the trunk that is not used for end-user access. In order to achieve this, the majority of Cisco customers simply leave VLAN 1 as the native VLAN on a trunk and assign access ports to VLANs other than VLAN 1.

for further details. The workaround is to use a VLAN ID for the native VLAN of the trunk that is not used for end-user access. In order to achieve this, the majority of Cisco customers simply leave VLAN 1 as the native VLAN on a trunk and assign access ports to VLANs other than VLAN 1.

Cisco recommends an explicit trunk mode configuration of dynamic desirable at both ends. This mode is the default mode. In this mode, network operators can trust syslog and command-line status messages that a port is up and trunking. This mode is different from on mode, which can make a port appear up even though the neighbor is misconfigured. In addition, desirable mode trunks provide stability in situations in which one side of the link cannot become a trunk or drops the trunk state.

If the encapsulation type is negotiated between switches with the use of DTP, and ISL is chosen as the winner by default if both ends support it, you must issue this interface command in order to specify dot1q1:

switchport trunk encapsulation dot1q

1 Certain modules that include WS-X6548-GE-TX and WS-X6148-GE-TX do not support ISL trunking. These modules do not accept command switchport trunk encapsulation dot1q .

Note: Issue the switchport mode access command in order to disable trunks on a port. This disablement helps to eliminate wasted negotiation time when host ports are brought up.

Switch(config-if)#switchport host

Other Options

Another common customer configuration uses dynamic desirable mode at the distribution layer and the simplest default configuration (dynamic auto mode) at the access layer. Some switches, such as the Catalyst 2900XL, Cisco IOS routers, or other vendor devices, do not currently support trunk negotiation via DTP. You can use nonegotiate mode in order to set a port to trunk unconditionally with these devices. This mode can help standardize on a common setting across the campus.

Cisco recommends nonegotiate when you connect to a Cisco IOS router. Throughout bridging, some DTP frames that are received from a port that is configured with switchport mode trunk can return to the trunk port. Upon reception of the DTP frame, the switch port tries to renegotiate unnecessarily. In order to renegotiate, the switch port brings the trunk down and then up. If nonegotiate is enabled, the switch does not send DTP frames.

switch(config)#interface type slot#/port# switch(config-if)#switchport mode dynamic desirable !--- Configure the interface as trunking in desirable !--- mode for switch-to-switch links with multiple VLANs. !--- And... switch(config-if)#switchport mode trunk !--- Force the interface into trunk mode without negotiation of the trunk connection. !--- Or... switch(config-if)#switchport nonegotiate !--- Set trunking mode to not send DTP negotiation packets !--- for trunks to routers. switch(config-if)#switchport access vlan vlan_number !--- Configure a fallback VLAN for the interface. switch(config-if)#switchport trunk native vlan 999 !--- Set the native VLAN. switch(config-if)#switchport trunk allowed vlan vlan_number_or_range !--- Configure the VLANs that are allowed on the trunk.

Spanning Tree Protocol

Purpose

Spanning tree maintains a loop-free Layer 2 environment in redundant switched and bridges networks. Without STP, frames loop and/or multiply indefinitely. This occurrence causes a network meltdown because high traffic interrupts all devices in the broadcast domain.

In some respects, STP is an early protocol that was initially developed for slow software-based bridge specifications (IEEE 802.1D). However, STP can be complicated in order to implement it successfully in large switched networks that have:

-

Many VLANs

-

Many switches in a domain

-

Multivendor support

-

Newer IEEE enhancements

Cisco IOS System Software has taken on new STP developments. New IEEE standards that include 802.1w Rapid STP and 802.1s Multiple Spanning Tree protocols provide rapid convergence, load sharing and control plane scaling. Additionally, STP enhancement features like RootGuard, BPDU filtering, Portfast BPDU guard and Loopguard provide additional protection against Layer 2 forwarding loops.

PVST+ Operational Overview

The root bridge election per VLAN is won by the switch with the lowest root Bridge Identifier (BID). The BID is the bridge priority combined with the switch MAC address.

Initially, BPDUs are sent from all switches and contain the BID of each switch and the path cost to reach that switch. This enables the determination of the root bridge and the lowest-cost path to the root. Additional configuration parameters that are carried in BPDUs from the root override those parameters that are locally configured so that the whole network uses consistent timers. For every BPDU that a switch receives from the root, the Catalyst central NMP processes a new BPDU and sends it out with the root information.

The topology then converges through these steps:

-

A single root bridge is elected for the entire spanning tree domain.

-

One root port (that faces the root bridge) is elected on every nonroot bridge.

-

A designated port is elected for BPDU forwarding on every segment.

-

Nondesignated ports become blocking.

Refer to these documents for more information:

| Basic Timers Default | Name | Function |

|---|---|---|

| 2 sec | hello | Controls the departure of BPDUs. |

| 15 sec | forward delay (Fwddelay) | Controls the length of time that a port spends in listening state and learning state and influences the topology change process. |

| 20 sec | maxage | Controls the length of time that the switch maintains the current topology before the switch looks for an alternative path. After the maximum aging (maxage) time, a BPDU is considered stale and the switch looks for a new root port from the pool of blocking ports. If no blocked port is available, the switch claims to be the root itself on the designated ports. |

Cisco recommends that you do not change timers because this can adversely affect stability. The majority of networks that are deployed are not tuned. The simple STP timers that are accessible via the command line (such as hello-interval, maxage, and so on) are themselves comprised of a complex set of other assumed and intrinsic timers. Therefore, it is difficult to tune timers and consider all the ramifications. Moreover, you can undermine UDLD protection. See the UniDirectional Link Detection section for more details.

Note on STP Timers:

The default STP timer values are based on a computation that considers a network diameter of seven switches (seven switch hops from the root to the edge of the network), and the time that is necessary for a BPDU to travel from the root bridge to the edge switches in the network, which are seven hops away. This assumption computes timer values that are acceptable for most networks. But, you can change these timers to more optimal values in order to speed up convergence times throughout network topology changes.

You can configure the root bridge with the network diameter for a specific VLAN, and the timer values are computed accordingly. Cisco recommends that, if you must make changes, only configure the diameter and optional hello time parameters on the root bridge for the VLAN.

spanning-tree vlan vlan-id [root {primary | secondary}] [diameter diameter-value [hello hello-time]] !--- This command needs to be on one line.

This macro makes the switch root for the specified VLAN, computes new timer values on the basis of the diameter and hello time specified, and propagates this information in configuration BPDUs to all other switches in the topology.

The section New Port States and Port Roles describes 802.1D STP and compares and contrasts 802.1D STP with Rapid STP (RSTP). Refer to Understanding Rapid Spanning Tree Protocol (802.1w) for more information on RSTP.

New Port States and Port Roles

802.1D is defined in four different port states:

-

Listening

-

Learning

-

Blocking

-

Forwarding

See the table in the Port States section for more information. The state of the port is mixed (whether it blocks or forwards traffic), as is the role that the port plays in the active topology (root port, designated port, and so on). For example, from an operational point of view, there is no difference between a port in blocking state and a port in listening state. They both discard frames and do not learn MAC addresses. The real difference lies in the role that the spanning tree assigns to the port. You can safely assume that a listening port is either designated or root and is on its way to the forwarding state. Unfortunately, once the port is in forwarding state, there is no way to infer from the port state whether the port is root or designated. This demonstrates the failure of this state-based terminology. RSTP addresses this failure because RSTP decouples the role and the state of a port.

Port States

Port States in STP 802.1D| Ports States | Means | Default Timings to Next State |

|---|---|---|

| Disabled | Administratively down. | |

| Blocking | Receives BPDUs and stops user data. | Monitors reception of BPDUs. 20 second wait for maxage expiration or immediate change if direct/local link failure is detected. |

| Listening | Sends or receives BPDUs in order to check if return to blocking is necessary. | Wait 15 seconds Fwddelay. |

| Learning | Builds topology/CAM table. | Wait 15 seconds Fwddelay. |

| Forwarding | Sends/receives data. |

The total basic topology change is:

-

20 + 2 (15) = 50 sec, if waiting for maxage to expire

-

30 seconds for direct link failure

There are only three port states that are left in RSTP, which correspond to the three possible operational states. The 802.1D states disabled, blocking, and listening have been merged into a unique 802.1w discarding state.

| STP (802.1D) Port State | RSTP (802.1w) Port State | Is Port Included in Active Topology? | Is Port Learning MAC Addresses? |

|---|---|---|---|

| Disabled | Discarding | No | No |

| Blocking | Discarding | No | No |

| Listening | Discarding | Yes | No |

| Learning | Learning | Yes | Yes |

| Forwarding | Forwarding | Yes | Yes |

Port Roles

The role is now a variable that is assigned to a given port. The root port and designated port roles remain, but the blocking port role is now split into the backup and alternate port roles. The spanning tree algorithm (STA) determines the role of a port on the basis of BPDUs. Remember this about BPDUs in order to keep things simple: there is always a way to compare any two BPDUs and decide if one is more useful than the other. The basis of the decision is the value that is stored in the BPDU and, occasionally, the port on which the BPDU is received. The remainder of this section explains very practical approaches to port roles.

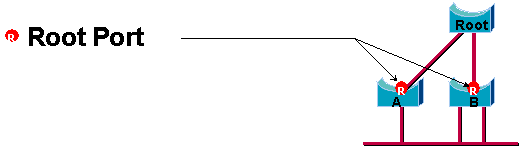

Root Port Role

The port that receives the best BPDU on a bridge is the root port. This is the port that is the closest to the root bridge in terms of path cost. The STA elects a single root bridge in the whole bridged network (per-VLAN). The root bridge sends BPDUs that are more useful than the ones that any other bridge can send. The root bridge is the only bridge in the network that does not have a root port. All other bridges receive BPDUs on at least one port.

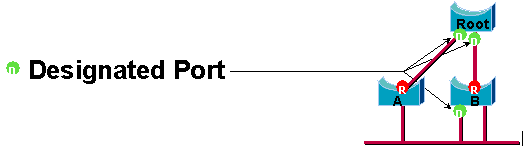

Designated Port Role

A port is designated if it can send the best BPDU on the segment to which the port is connected. 802.1D bridges link together different segments (Ethernet segments, for example) in order to create a bridged domain. On a given segment, there can be only one path toward the root bridge. If there are two paths, there is a bridging loop in the network. All bridges that are connected to a given segment listen to the BPDUs of the others and agree on the bridge that sends the best BPDU as the designated bridge for the segment. The corresponding port on that bridge is designated.

Alternate and Backup Port Roles

These two port roles correspond to the blocking state of 802.1D. The definition of a blocked port is a port that is not the designated or root port. A blocked port receives a more useful BPDU than the BPDU that it sends out on its segment. Remember that a port absolutely needs to receive BPDUs in order to stay blocked. RSTP introduces these two roles for this purpose.

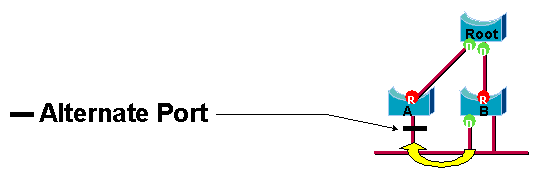

An alternate port is a port that is blocked by receiving more useful BPDUs from another bridge. This diagram illustrates:

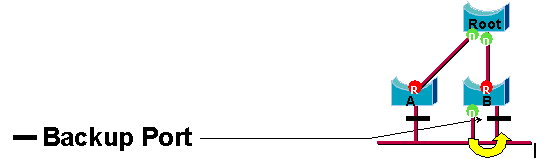

A backup port is a port that is blocked by receiving more useful BPDUs from the same bridge that the port is on. This diagram illustrates:

This distinction was already made internally within 802.1D. This is essentially how Cisco UplinkFast functions. The rationale behind this is that an alternate port provides an alternate path to the root bridge. Therefore, this port can replace the root port if it fails. Of course, a backup port provides redundant connectivity to the same segment and cannot guarantee an alternate connectivity to the root bridge. Therefore, the backup port was excluded from the uplink group.

As a result, RSTP calculates the final topology for the spanning tree with use of exactly the same criteria as 802.1D. There is no change in the way that the different bridge and port priorities are used. The name blocking is used for the discarding state in Cisco implementation. CatOS release 7.1 and later releases still display the listening and learning states, which gives even more information about a port than the IEEE standard requires. But, the new feature is that there is now a difference between the role that the protocol has determined for a port and its current state. For example, it is now perfectly valid for a port to be designated and blocking at the same time. While this typically happens for very short periods of time, it simply means that this port is in a transitory state toward designated forwarding.

STP Interactions with VLANs

There are three different ways to correlate VLANs with Spanning Tree:

-

A single Spanning Tree for all VLANs, or Common Spanning Tree Protocol (CST), such as IEEE 802.1D

-

A Spanning Tree per VLAN, or shared Spanning Tree, such as Cisco PVST

-

A Spanning Tree per set of VLANs, or Multiple Spanning Tree (MST), such as IEEE 802.1s

From a configuration standpoint, these three types of spanning tree modes as they relate to interaction with VLANs can be configured in one of three types of modes:

-

pvst—Per-VLAN Spanning Tree. This actually implements PVST+, but is noted in Cisco IOS Software as simply PVST.

-

rapid-pvst—The evolution of the 802.1D standard enhances convergence times and incorporates the standards-based (802.1w) properties of UplinkFast and BackboneFast.

-

mst—This is the 802.1s standard for a spanning tree per set of VLANs or MSTs. This also incorporates the 802.1w rapid component within the standard.

A mono Spanning Tree for all VLANs allows only one active topology and therefore no load balancing. A STP blocked port blocks for all VLANs and carries no data.

One Spanning Tree per VLAN or PVST+ allows load balancing but requires more BPDU CPU processing as the number of VLANs increases.

The new 802.1s standard (MST) allow the definition of up to 16 active STP instances/topologies, and the mapping of all VLANs to these instances. In a typical campus environment, only two instances need to be defined. This technique allows STP scale to many thousands of VLANs while it enables load balancing.

The support for Rapid-PVST and pre-standard MST is introduced in Cisco IOS Software Release 12.1(11b)EX and 12.1(13)E for Catalyst 6500. Catalyst 4500with Cisco IOS Software Release 12.1(12c)EW and later releases support pre-standard MST. Rapid PVST support is added in Cisco IOS Software Release12.1(19)EW for Catalyst 4500 platform. The standard compliant MST is supported in Cisco IOS Software Release 12.2(18)SXF for Catalyst 6500 and Cisco IOS Software Release12.2(25)SG for Catalyst 4500 series switches.

Refer to Understanding Rapid Spanning-Tree Protocol (802.1w) and Understanding Multiple Spanning-Tree Protocol (802.1s) for more information.

Spanning Tree Logical Ports

The Catalyst 4500 and 6500 release notes provide guidance on the number of logical ports in the Spanning Tree per switch. The sum of all logical ports equals the number of trunks on the switch times the number of active VLANs on the trunks, plus the number of non-trunking interfaces on the switch. Cisco IOS software generates a system log message if the maximum number of logical interfaces exceed the limitation. It is recommended to not exceed the recommended guidance.

This table compares the number of logical ports supported with various STP mode and supervisor type:

| Supervisor | PVST+ | RPVST+ | MST |

|---|---|---|---|

| Catalyst 6500 Supervisor 1 | 6,0001 total 1,200 per switching module | 6,000 total 1,200 per switching module | 25,000 total 3,0002 per switching module |

| Catalyst 6500 Supervisor 2 | 13,0001 total 1,8002 per switching module | 10,000 total 1,8002 per switching module | 50,000 total 6,0002 per switching module |

| Catalyst 6500 Supervisor 720 | 13,000 total 1,8002 per switching module | 10,000 total 1,8002 per switching module | 50,0003 total 6,0002 per switching module |

| Catalyst 4500 Supervisor II plus | 1,500 total | 1,500 total | 25,000 total |