Cisco-Nimble Solution on Cisco UCS and Nimble AF5000 with Citrix XenDesktop 7.11 VDI 5000 Seat Deployment with Graphics Support


Last Updated: March 22, 2017

About Cisco Validated Designs (CVD)

The CVD program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, visit http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series. Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2017 Cisco Systems, Inc. All rights reserved.

Cisco-Nimble Solution Benefits

Cisco Unified Computing System

Cisco UCS 5108 Blade Server Chassis

Cisco UCS 6200 Series Fabric Interconnects

Cisco Nexus 9000 Series Platform Switches

Cisco MDS 9100 Series Fabric Switches

Nimble Storage – All Flash Array

Citrix XenApp and XenDesktop 7.11

Citrix Provisioning Services 7.11

Citrix Desktop Studio for XenApp 7.11

Benefits for Desktop Administrators

Citrix Provisioning Services Solution

Citrix Provisioning Services Infrastructure

Cisco UCS Server – vSphere Configuration

Cisco UCS Server – Virtual Switching using Cisco Nexus 1000V

Cisco Nexus 9000 Series – vPC Best Practices

Architecture and Design Considerations for Desktop Virtualization

Understanding Applications and Data

Project Planning and Solution Sizing Sample Questions

Citrix XenDesktop Design Fundamentals

Example XenDesktop Deployments

Designing a XenDesktop Environment for a Mixed Workload

High-Level Storage Architecture Design

Solution Hardware and Software

Configuration Topology for Scalable Citrix XenDesktop Mixed Workload

Cisco Unified Computing System Configuration

Base Cisco UCS System Configuration

Set Jumbo Frames in Cisco UCS Fabric

Create Network Control Policy for Cisco Discovery Protocol

Cisco UCS System Configuration for Cisco UCS B-Series

Configure Update Default Maintenance Policy

Create vNIC Templates for Cisco UCS B-Series

Create vHBA Templates for Cisco UCS B-Series

Create Service Profile Templates for Cisco UCS B-Series

Nimble AF5000 Adaptive Array System Configuration

Nimble Management Tools: InfoSight

Register and Login to InfoSight

Configure Arrays to Send Data to InfoSight

Nimble Management Tools: vCenter Plugin

Configure Arrays to Monitor your Virtual Environment

Configure Setup Email Notifications for Alerts

SAN Configuration on the Cisco MDS 9148 Switches

Install and Configure ESXi 6 U2b

Download Cisco Custom Image for ESXi 6 Update 2b

Set Up Management Networking for ESXi Hosts

Download VMware vSphere Client

Log in to VMware ESXi Hosts by Using VMware vSphere Client

Install and Configure vCenter 6

Install the Nimble Connection Manager

Building the Virtual Machines and Environment

Install and Configure Cisco Nexus 1000v VSUM and VEM

Install Cisco Virtual Switch Update Manager

Install Cisco Nexus 1000V using Cisco VSUM

Perform Base Configuration of the Primary VSM

Add VMware ESXi Hosts to Cisco Nexus 1000V

Migrate ESXi Host Redundant Network Ports to Cisco Nexus 1000V

Cisco Nexus 1000V Configuration

Installing and Configuring Infrastructure, XenDesktop and XenApp

XenDesktop and XenApp Prerequisites

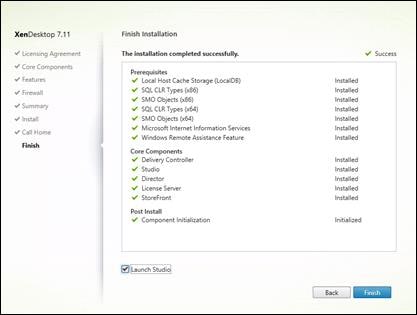

Install XenDesktop Delivery Controller, Citrix Licensing and StoreFront

Additional XenDesktop Controller Configuration

Add the Second Delivery Controller to the XenDesktop Site

Create Host Connections with Citrix Studio

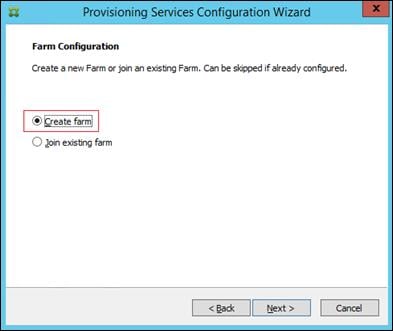

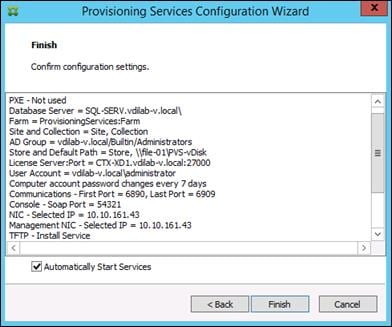

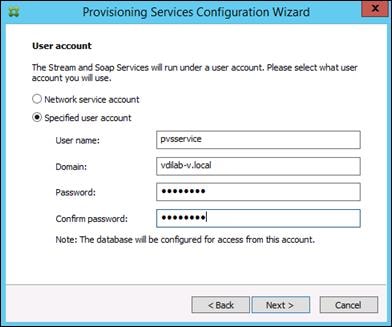

Installing and Configuring Citrix Provisioning Server 7.11

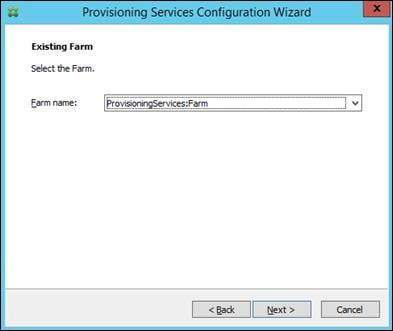

Install Additional PVS Servers

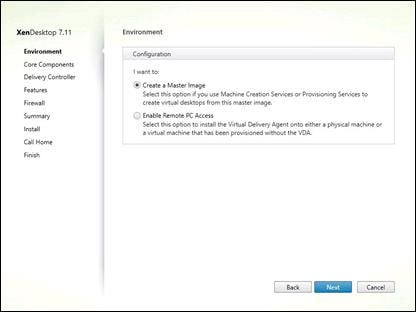

Install XenDesktop Virtual Desktop Agents

Install the Citrix Provisioning Services Target Device Software

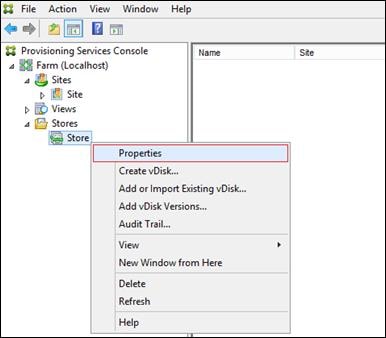

Create Citrix Provisioning Services vDisks

Configuring User Profile Management

Test Setup, Configuration, and Load Recommendation

Testing Methodology and Success Criteria

Pre-Test Setup for Single and Multi-Blade Testing

Server-Side Response Time Measurements

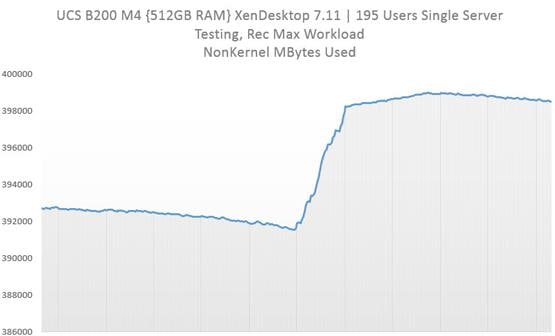

Test I: PVS Single Server on B200-M4 with 195 Users Test Results

Test II: PVS Non-Persistent Windows 10 VDI Cluster with 2500 Users Test Results

HSD Single-Server Recommended Maximum Workload for Cisco UCS B-Series

Test V: HSD Single Server on Cisco UCS B200-M4 with 290 Users Test Results

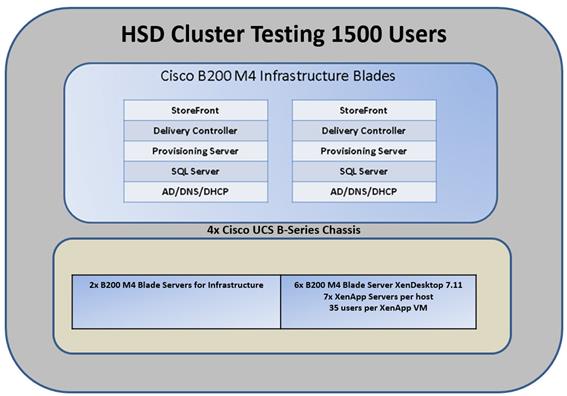

Test VI: HSD Scaling with 1500 Users Test Results

Solution Design Considerations – Nimble Storage

Storage Test Case I – 2500 XenDesktop Session Boot Storm

Nimble Storage – Absolute Resiliency and Non-Stop Availability

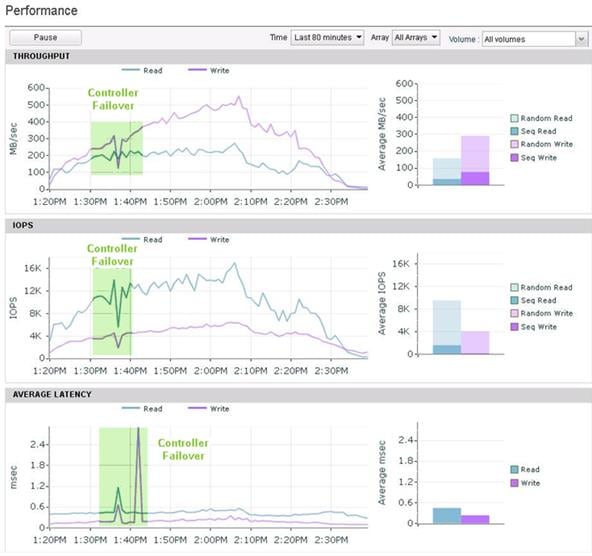

Storage Test Case II – Nimble Storage Controller Failover During an Active Workload

Storage Test Case III – Multiple Disk Drive Failures During an Active workload

Nimble Storage – Transparent Application Migration

Nimble Storage Monitoring and Predictive Analytics

Nimble Storage – Infosight VMVision

Single Server Testing Utilizing the NVIDIA M6 Card and vGPU

Cisco UCS B200 M4 Blade Server with NVIDIA M6 GRID Card

Install and Configure NVIDIA M6 Card

Physical Installation of the NVIDIA M6 Card into the Cisco UCS B200 M4 Server

Install the NVIDIA VMware VIB Driver

Install the GPU Drivers into Windows VM

Install and Configure NVIDIA Grid License Server

Testing Methodology and Results for the NVIDIA M6 Cards

Internet Explorer 11 Configuration

Validated Hardware and Software

Appendix A – Cisco Nexus 9372 Switch Configuration

Appendix B – Cisco MDS 9148 Switch Configuration

Cisco® Validated Designs include systems and solutions that are designed, tested, and documented to facilitate and improve customer deployments. These designs incorporate a wide range of technologies and products into a portfolio of solutions that have been developed to address the business needs of customers. Cisco and Nimble Storage have partnered to deliver this document, which serves as a step-by-step guide for implementing this solution. This Cisco Validated Design provides an efficient architectural design that is based on customer requirements. The solution that follows is a validated approach for deploying Cisco, Citrix, and Nimble Storage technologies as a shared, high-performance, resilient virtual desktop infrastructure.

This document provides a reference architecture and design guide for a mixed workload of up to 5000 seats on Cisco UCS and the Nimble Storage AF5000 array, with Citrix XenApp server-based sessions, XenDesktop persistent Windows 10 virtual desktops, and XenDesktop pooled Windows 10 virtual desktops on VMware vSphere 6. The solution is a predesigned, best-practice data center architecture built on the Cisco Unified Computing System (UCS), the Cisco Nexus® 9000 family of Ethernet switches, the Cisco MDS 9000 family of Fibre Channel switches, and a Nimble Storage all-flash array.

This solution is 100 percent virtualized on Cisco UCS B200 M4 blade servers booting VMware vSphere 6.0 Update 2 via Fibre Channel SAN from the Nimble Storage AF5000 storage array. The pooled virtual desktops are powered by Citrix Provisioning Services 7.11 with a mix of Citrix XenDesktop 7.11, which supports both persistent and non-persistent virtual Windows 7/8/10 desktops and hosted shared Server 2008 R2, Server 2012 R2, or Server 2016 server desktops, providing unparalleled scale and management simplicity. Citrix XenDesktop pooled Windows 10 desktops (2500), persistent Windows 10 desktops (1000), and Citrix XenApp Server 2012 R2 RDS server-based desktop sessions (1500) were provisioned on the Nimble Storage array. Where applicable, the document provides best-practice recommendations and sizing guidelines for customer deployment of this solution.

The Cisco-Nimble Solution outlined in this document delivers a converged infrastructure platform designed for Enterprise and Cloud datacenters.

The solution provides outstanding virtual desktop end user experience as measured by the Login VSI 4.1 Knowledge Worker workload running in benchmark mode.

The 5000 seat solution provides a large scale building block that can be replicated to confidently scale out to tens of thousands of users.

Introduction

Cisco, Nimble Storage, and Citrix have partnered to deliver a Cisco-Nimble Solution that combines Cisco Unified Computing System servers, the Cisco Nexus Ethernet and Cisco MDS Fibre Channel families of switches, and a Nimble Storage all-flash array into a large-scale enterprise desktop virtualization solution, including graphics support.

Customers looking to implement a desktop virtualization solution using shared data center infrastructure face a number of challenges. One key challenge is achieving the levels of IT agility and efficiency necessary to meet business objectives. Addressing these challenges requires having an optimal solution with the following characteristics:

· Availability: Helps ensure applications and services are accessible at all times, with no single point of failure.

· Flexibility: Ability to support new services without requiring infrastructure modifications.

· Efficiency: Facilitates efficient operation of the infrastructure through reusable policies and API management.

· Speed: Ease of deployment and management to minimize operating costs.

· Scalability: Ability to expand and grow with some degree of investment protection.

· Low Risk: Minimal risk by ensuring optimal design and compatibility of integrated components.

Nimble Storage prescribes a data center platform with these characteristics by delivering an integrated architecture that incorporates compute, storage and network in a best practice design. The Cisco-Nimble Solution minimizes risk by testing the integrated architecture to ensure compatibility between its components. The Cisco-Nimble Solution addresses IT pain points by providing documented design, deployment and support that can be used in all stages (planning, design and implementation) of a deployment.

This document outlines the deployment procedures for implementing a virtual desktop infrastructure (VDI) on the Cisco-Nimble Solution platform using Citrix desktop and session virtualization technologies. This guide is based on the Cisco-Nimble Solution validation that was done using VMware vSphere 6.0 U2a, Cisco UCS B-Series servers, Cisco Nexus Ethernet switches, Cisco MDS Fibre Channel switches, a Nimble AF5000 All Flash Array, and Citrix XenDesktop, XenApp, and Provisioning Services technologies with graphics support.

The Cisco-Nimble Solution is designed for high availability, with no single points of failure while maintaining cost-effectiveness and flexibility in design to support a variety of workloads in enterprise and cloud datacenters. The Cisco-Nimble Solution design can support different hypervisor options, and also be sized and optimized to support different use cases and requirements.

Audience

The intended audience of this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who want to take advantage of an infrastructure built to deliver IT efficiency and enable IT innovation.

Cisco-Nimble Solution Benefits

The Cisco-Nimble Solution is the result of a joint partnership between Cisco and Nimble Storage to deliver a series of infrastructure and application solutions optimized and validated on Cisco UCS, Nimble Storage, and Cisco Nexus switches. To qualify as a valid Cisco-Nimble Solution, a deployment must use Cisco UCS, Nimble Storage, and one of the approved application stacks, and the customer must have valid support contracts with Cisco and Nimble Storage.

Cisco and Nimble Storage have a solid, joint support program focused on the Cisco-Nimble Solution, from customer account and technical sales representatives to professional services and technical support engineers. The support alliance between Cisco and Nimble Storage gives customers and channel partners direct access to technical experts who can collaborate across vendors and who have access to shared lab resources to resolve potential issues.

Solution Summary

This Cisco Validated Design provides a complete virtual desktop solution utilizing the Cisco-Nimble Solution architecture. The solution provides test cases and reference architectures for system scalability, a self-contained functioning environment, and multiple workloads on Cisco and Nimble Storage hardware. The following scenarios and test cases were demonstrated in this solution:

1. Single Blade scalability for Citrix XenApp 7.11 RDS HSD, VDI Hosted Virtual Desktop (Persistent, non-persistent)

2. Multiple Workload Cluster scalability for Citrix XenApp 7.11 RDS HSD, VDI Hosted Virtual Desktop (Persistent, non-persistent)

3. Full Scale 5000 User Mixed Workload scalability for Citrix XenDesktop 7.11 Pooled VDI (50%), Persistent VDI (20%), and RDSH (30%)

4. Demonstrate full-scale, mixed-workload scalability over a soak test period of at least 24 hours, during which Login VSI runs in a steady-state manner.

5. Demonstrate Nimble Storage features such as Transparent Application Migration, InfoSight and VMVision

6. Demonstrate 5000-user mixed-workload performance, showcasing Nimble Storage metrics such as bandwidth, IOPS, and sub-millisecond latency during peak workload.

7. Demonstrate Nimble Storage’s Absolute resiliency and Non-Stop Availability through Controller failover and Drive failure tests during an active workload.

8. Demonstrate NVIDIA M6 GPU integration with Cisco UCS blades and provide implementation guidance on the Cisco-Nimble Solution.
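The percentages in test case 3 follow directly from the seat counts given earlier in this document (2500 pooled, 1000 persistent, 1500 RDS sessions). The short Python sketch below reproduces that arithmetic and estimates how many 5000-seat building blocks a larger deployment would need. The dictionary keys and function names are illustrative only, and the block count is a simple ceiling division that ignores any site-specific sizing factors.

```python
from fractions import Fraction

# Seat counts as stated in this document for the 5000-seat validation.
SEATS = {
    "pooled_vdi": 2500,
    "persistent_vdi": 1000,
    "rdsh_sessions": 1500,
}

BLOCK_SIZE = sum(SEATS.values())  # one validated building block


def workload_mix(seats):
    """Return each workload's exact share of the total seat count."""
    total = sum(seats.values())
    return {name: Fraction(count, total) for name, count in seats.items()}


def blocks_needed(users, block_size=BLOCK_SIZE):
    """Ceiling division: building blocks required for a given user count."""
    return -(-users // block_size)


mix = workload_mix(SEATS)
for name, share in mix.items():
    print(f"{name}: {SEATS[name]} seats ({float(share * 100):.0f}%)")
print("blocks for 12000 users:", blocks_needed(12000))
```

Using exact fractions avoids rounding surprises when the shares are compared against the stated 50/20/30 split.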

The Cisco-Nimble Solution is a data center architecture for Enterprise or Cloud deployments and uses the following infrastructure components for compute, network and storage:

· Cisco Unified Computing System (Cisco UCS)

· Cisco Nexus and MDS Switches

· Nimble Storage arrays

The validated Cisco-Nimble Solution design covered in this document uses the following models of the above infrastructure components.

· Cisco UCS 5100 Series Blade Server Chassis with 2200 Series Fabric Extenders (FEX)

· Cisco UCS B-Series Blade Servers

· Cisco UCS 6200 Series Fabric Interconnects (FI)

· Cisco Nexus 9300 Series Ethernet switches

· Cisco MDS 9100 Series 16-Gbps Fibre Channel switches

· Nimble AF5000 All Flash Array

· NVIDIA M6 Graphics Cards for Blade Servers

The above components are integrated using design and component best practices to deliver a converged infrastructure for Enterprise and cloud data centers.

The next section provides a technical overview of the compute, network, storage and management components of the Cisco-Nimble Solution.

Cisco Unified Computing System

The Cisco Unified Computing System™ (Cisco UCS) is a next-generation data center platform that integrates computing, networking, storage access, and virtualization resources into a cohesive system designed to reduce total cost of ownership (TCO) and increase business agility. The system integrates a low-latency, lossless 10 Gigabit Ethernet unified network fabric with enterprise-class, x86-architecture servers. The system is an integrated, scalable, multi-chassis platform where all resources are managed through a unified management domain.

The main components of the Cisco UCS are:

· Compute: The system is based on an entirely new class of computing system that incorporates blade servers and modular servers based on Intel processors.

· Network: The system is integrated onto a low-latency, lossless, 10-Gbps unified network fabric. This network foundation consolidates LANs, SANs, and high-performance computing networks, which are separate networks today. The unified fabric lowers costs by reducing the number of network adapters, switches, and cables, and by decreasing the power and cooling requirements.

· Virtualization: The system unleashes the full potential of virtualization by enhancing the scalability, performance, and operational control of virtual environments. Cisco security, policy enforcement, and diagnostic features are now extended into virtualized environments to better support changing business and IT requirements.

· Storage access: The Cisco UCS system provides consolidated access to both SAN storage and Network Attached Storage over the unified fabric. This provides customers with storage choices and investment protection. Server administrators can also pre-assign storage-access policies to storage resources, which simplifies storage connectivity and management and increases productivity. The Cisco-Nimble Solution can support either iSCSI or Fibre Channel based access. This design covers only Fibre Channel connectivity.

· Management: The system uniquely integrates all the system components, enabling the entire solution to be managed as a single entity through Cisco UCS Manager software. Cisco UCS Manager provides an intuitive graphical user interface (GUI), a command-line interface (CLI), and a robust application-programming interface (API) to manage all system configuration and operations. Cisco UCS Manager helps increase IT staff productivity, enabling storage, network, and server administrators to collaborate on defining service profiles for applications. Service profiles are logical representations of desired physical configurations and infrastructure policies. They help automate provisioning and increase business agility, allowing data center managers to provision resources in minutes instead of days.

The Cisco Unified Computing System in the Cisco-Nimble Solution architecture consists of the following components:

· Cisco UCS Manager provides unified management of all software and hardware components in the Cisco Unified Computing System and manages servers, networking, and storage from a single interface.

· Cisco UCS 6200 Series Fabric Interconnects are a family of line-rate, low-latency, lossless, 10-Gbps Ethernet and Fibre Channel over Ethernet interconnect switches providing the management and communication backbone for the Cisco Unified Computing System.

· Cisco UCS 5100 Series Blade Server Chassis supports up to eight blade servers and up to two fabric extenders in a six-rack-unit (6RU) enclosure.

· Cisco UCS B-Series Blade Servers are Intel-based blade servers that increase performance, efficiency, versatility, and productivity.

· Cisco UCS Adapters, with a wire-once architecture, offer a range of options to converge the fabric, optimize virtualization, and simplify management.

· Cisco Nexus 1000V Series Switches are virtual machine access switches for VMware vSphere environments that provide full switching capabilities and Layer 4 through Layer 7 services to virtual machines.

· Cisco UCS Blade Server M6 GPU (GRID 2.0 software required for VDI) lets you expand your virtualization footprint without compromising performance or user experience, while also increasing security. This means you can empower your workforce to work from any location with ease and flexibility.

The optional Cisco UCS components of the Cisco-Nimble Solution are:

· Cisco UCS Central provides a scalable management platform for managing multiple, globally distributed Cisco UCS domains with consistency by integrating with Cisco UCS Manager to provide global configuration capabilities for pools, policies, and firmware.

· Cisco UCS Performance Manager is a purpose-built data center management solution that provides single-pane-of-glass visibility into a converged, heterogeneous data center infrastructure for performance monitoring and capacity planning.

Cisco UCS Differentiators

· Embedded Management: Servers in the system are managed by embedded software in the Fabric Interconnects, eliminating the need for any external physical or virtual devices to manage the servers.

· Unified Fabric: There is a single Ethernet cable to the FI from the server chassis (blade, modular, or rack) for LAN, SAN, and management traffic. This converged I/O reduces the number of cables, SFPs, and adapters, which lowers the capital and operational expenses of the overall solution.

· Auto Discovery: By simply inserting a blade server in the chassis or connecting a rack server to the FI, discovery and inventory of the compute resource occurs automatically without any intervention. Auto-discovery combined with unified fabric enables the wire-once architecture of Cisco UCS, where the compute capability of Cisco UCS can be extended easily without additional connections to the external LAN, SAN, and management networks.

· Policy Based Resource Classification: When a compute resource is discovered by Cisco UCS Manager, it can be automatically classified to a resource pool based on defined policies. This capability is useful in multi-tenant cloud computing. This CVD showcases the policy based resource classification of Cisco UCS Manager.

· Combined Rack, Blade and Modular Server Management: Cisco UCS Manager can manage B-Series blade servers and C-Series rack servers under the same Cisco UCS domain. Along with stateless computing, this feature makes compute resources truly agnostic to the hardware form factor.

· Model based Management Architecture: The Cisco UCS Manager architecture and management database are model based and data driven. An open XML API is provided to operate on the management model. This enables easy and scalable integration of Cisco UCS Manager with other management systems.

· Policies, Pools, Templates: The management approach in Cisco UCS Manager is based on defining policies, pools, and templates instead of cluttered configuration, which enables a simple, loosely coupled, data driven approach to managing compute, network, and storage resources.

· Loose Referential Integrity: In Cisco UCS Manager, a service profile, port profile, or policy can refer to other policies or logical resources with loose referential integrity. A referred policy need not exist at the time the referring policy is authored, and a referred policy can be deleted even though other policies refer to it. This gives subject matter experts from different domains, such as network, storage, security, server, and virtualization, the flexibility to work independently to accomplish a complex task.

· Policy Resolution: In Cisco UCS Manager, a tree structure of organizational unit hierarchy can be created that mimics real-life tenants and/or organization relationships. Various policies, pools, and templates can be defined at different levels of the organization hierarchy. A policy referring to another policy by name is resolved in the organization hierarchy with the closest policy match. If no policy with the specific name is found in the hierarchy up to the root organization, then a special policy named “default” is searched for. This policy resolution logic enables automation-friendly management APIs and provides great flexibility to owners of different organizations.

· Service Profiles and Stateless Computing: A service profile is a logical representation of a server, carrying its various identities and policies. This logical server can be assigned to any physical compute resource as long as it meets the resource requirements. Stateless computing enables procurement of a server within minutes, which used to take days in legacy server management systems.

· Built-in Multi-Tenancy Support: The combination of policies, pools, and templates, loose referential integrity, policy resolution in the organization hierarchy, and a service-profile-based approach to compute resources makes Cisco UCS Manager inherently friendly to the multi-tenant environments typically observed in private and public clouds.

· Extended Memory: The enterprise-class Cisco UCS B200 M4 blade server extends the capabilities of Cisco’s Unified Computing System portfolio in a half-width blade form factor. The Cisco UCS B200 M4 harnesses the power of the latest Intel® Xeon® E5-2600 v4 Series processor family CPUs with up to 1536 GB of RAM (using 64 GB DIMMs), allowing the high VM-to-physical-server ratio required in many deployments, or the large memory operations required by certain architectures such as big data.

· Virtualization Aware Network: Cisco VM-FEX technology makes the access network layer aware of host virtualization. This prevents virtualization from polluting the compute and network domains when the virtual network is managed by port profiles defined by the network administration team. VM-FEX also offloads the hypervisor CPU by performing switching in hardware, thus allowing the hypervisor CPU to do more virtualization-related tasks. VM-FEX technology is well integrated with VMware vCenter, Linux KVM, and Hyper-V SR-IOV to simplify cloud management.

· Simplified QoS: Even though Fibre Channel and Ethernet are converged in the Cisco UCS fabric, built-in support for QoS and lossless Ethernet makes the convergence seamless. Network Quality of Service (QoS) is simplified in Cisco UCS Manager by representing all system classes in one GUI panel.
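The policy resolution and loose referential integrity behavior described in the list above can be illustrated with a small model. The Python sketch below is not Cisco UCS Manager code: the `Org` class, the policy names, and the assumption that the “default” policy is searched along the same path from the referring organization up to the root are all hypothetical and serve only to make the lookup order concrete.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class Org:
    """One node in a hypothetical organization tree (not a UCS Manager class)."""
    name: str
    parent: Optional["Org"] = None
    policies: Dict[str, str] = field(default_factory=dict)


def _search_up(org: Org, policy_name: str) -> Optional[Tuple[str, str]]:
    """Walk from org toward the root and return the closest match, if any."""
    node = org
    while node is not None:
        if policy_name in node.policies:
            return node.name, node.policies[policy_name]
        node = node.parent
    return None


def resolve_policy(org: Org, policy_name: str) -> Optional[Tuple[str, str]]:
    """Resolve by name first, then fall back to the policy named 'default'.

    Returning None models loose referential integrity: a reference may
    point at a policy that does not exist (yet) without being an error.
    """
    return _search_up(org, policy_name) or _search_up(org, "default")


# Illustrative hierarchy: root -> tenant-a -> team-1
root = Org("root", policies={"default": "root-default", "vdi-bios": "root-vdi-bios"})
tenant = Org("tenant-a", parent=root, policies={"vdi-bios": "tenant-a-vdi-bios"})
team = Org("team-1", parent=tenant)

print(resolve_policy(team, "vdi-bios"))   # closest match is in tenant-a
print(resolve_policy(team, "no-such"))    # falls back to root's default
```

Because the lookup always starts at the referring organization, a tenant can override a root-level policy simply by defining one with the same name lower in the tree.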

Cisco UCS 5108 Blade Server Chassis

The Cisco UCS 5100 Series Blade Server Chassis is a fundamental building block of the Cisco Unified Computing System, delivering a scalable and flexible blade server architecture. A Cisco UCS 5108 Blade Server chassis is six rack units (6RU) high and can house up to eight half-width or four full-width Cisco UCS B-series blade servers.

For a complete list of blade servers supported, see: http://www.cisco.com/c/en/us/products/servers-unified-computing/ucs-b-series-blade-servers/index.html.

There are four hot-swappable power supplies that are accessible from the front of the chassis. These power supplies are 94 percent efficient and can be configured to support non-redundant, N+1, and grid-redundant configurations. The rear of the chassis contains eight hot-swappable fans, four power connectors (one per power supply), and two I/O bays that can support Cisco UCS 2000 Series Fabric Extenders. The two fabric extenders can be used for both redundancy and bandwidth aggregation. A passive mid-plane provides up to 80 Gbps of I/O bandwidth per server slot and up to 160 Gbps of I/O bandwidth for two slots. The chassis is capable of supporting future 40 Gigabit Ethernet standards.

Cisco UCS 5108 blade server chassis uses a unified fabric and fabric-extender technology to simplify and reduce cabling by eliminating the need for dedicated chassis management and blade switches. The unified fabric also reduces TCO by reducing the number of network interface cards (NICs), host bus adapters (HBAs), switches, and cables that need to be managed, cooled, and powered. This architecture enables a single Cisco UCS domain to scale up to 20 chassis with minimal complexity.

For more information, see: http://www.cisco.com/c/en/us/products/servers-unified-computing/ucs-5100-series-blade-server-chassis/index.html.

Figure 1 Cisco UCS 5108 Blade Server Chassis (Front View and Back View)

Cisco UCS 6200 Series Fabric Interconnects

Cisco UCS Fabric Interconnects are a family of line-rate, low-latency, lossless 1/10 Gigabit Ethernet and Fibre Channel over Ethernet (FCoE), and 4/2/1 and 8/4/2-Gbps native Fibre Channel switches. Cisco UCS Fabric Interconnects are the management and connectivity backbone of the Cisco Unified Computing System. Each chassis or rack server connects to the FI using a single Ethernet cable for carrying all network, storage, and management traffic. Cisco UCS Fabric Interconnects provide uniform network and storage access to servers and are typically deployed in redundant pairs.

Cisco UCS Manager provides unified, embedded management of all software and hardware components of the Cisco Unified Computing System™ (Cisco UCS) across multiple chassis with blade servers and thousands of virtual machines. The Cisco UCS Management software (Cisco UCS Manager) runs as an embedded device manager in a clustered pair of fabric interconnects and manages the resources connected to it. An instance of Cisco UCS Manager with all Cisco UCS components managed by it forms a Cisco UCS domain, which can include up to 160 servers.
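The 160-server domain limit is consistent with figures stated elsewhere in this document: a Cisco UCS 5108 chassis holds up to eight half-width blades, and a single domain scales to 20 chassis. The Python sketch below checks that arithmetic and shows how many chassis a given blade count would occupy. The function names are illustrative, and the calculation assumes half-width blades only.

```python
# Figures taken from this document; names are illustrative only.
HALF_WIDTH_BLADES_PER_CHASSIS = 8   # Cisco UCS 5108 chassis capacity
MAX_CHASSIS_PER_DOMAIN = 20         # single Cisco UCS domain


def max_servers_per_domain(chassis=MAX_CHASSIS_PER_DOMAIN,
                           blades_per_chassis=HALF_WIDTH_BLADES_PER_CHASSIS):
    """Upper bound on half-width blade servers in one UCS domain."""
    return chassis * blades_per_chassis


def chassis_needed(blade_count, blades_per_chassis=HALF_WIDTH_BLADES_PER_CHASSIS):
    """Ceiling division: chassis required to house a number of blades."""
    return -(-blade_count // blades_per_chassis)


print("servers per domain:", max_servers_per_domain())
print("chassis for 30 blades:", chassis_needed(30))
```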

The Cisco UCS Fabric Interconnect family currently comprises the Cisco 6100 Series, Cisco 6200 Series, and Cisco 6300 Series Fabric Interconnects.

Cisco UCS 6248UP Fabric Interconnects were used for this CVD.

For more information, see: http://www.cisco.com/c/en/us/products/servers-unified-computing/ucs-6200-series-fabric-interconnects/index.html

Figure 2 Cisco UCS 6248UP Fabric Interconnect

Cisco UCS Fabric Extenders

Cisco UCS Fabric Extenders multiplex and forward all traffic from servers in a chassis to a parent Cisco UCS Fabric Interconnect over 10-Gbps unified fabric links. All traffic, including traffic between servers on the same chassis or between virtual machines on the same server, is forwarded to the parent fabric interconnect, where network profiles and policies are maintained and managed by Cisco UCS Manager. The fabric extender technology was developed by Cisco. Up to two fabric extenders can be deployed in a Cisco UCS chassis.

The Cisco UCS Fabric Extender family currently comprises of Cisco UCS 2200 and Cisco Nexus 2000 Series of Fabric Extenders. The Cisco UCS 2200 Series Fabric Extenders come in two flavors as outlined below.

· The Cisco UCS 2204XP Fabric Extender has four 10 Gigabit Ethernet, FCoE-capable, SFP+ ports that connect the blade chassis to the fabric interconnect. Each Cisco UCS 2204XP has sixteen 10 Gigabit Ethernet ports connected through the mid-plane to each half-width slot in the chassis. Typically configured in pairs for redundancy, two fabric extenders provide up to 80 Gbps of I/O to the chassis.

· The Cisco UCS 2208XP Fabric Extender has eight 10 Gigabit Ethernet, FCoE-capable, SFP+ ports that connect the blade chassis to the fabric interconnect. Each Cisco UCS 2208XP has thirty-two 10 Gigabit Ethernet ports connected through the mid-plane to each half-width slot in the chassis. Typically configured in pairs for redundancy, two fabric extenders provide up to 160 Gbps of I/O to the chassis.
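The chassis I/O figures quoted for the two models follow directly from the uplink port counts. The short Python sketch below reproduces that arithmetic; the function and dictionary names are illustrative only, and the port counts and speeds are taken from the bullets above.

```python
# Uplink (fabric) port counts per fabric extender, as stated in the text above.
PORT_SPEED_GBPS = 10
FABRIC_PORTS = {"2204XP": 4, "2208XP": 8}


def chassis_uplink_gbps(model: str, extenders: int = 2) -> int:
    """Aggregate uplink bandwidth from one chassis to the fabric interconnects.

    `extenders` defaults to 2 because the fabric extenders are typically
    deployed in pairs for redundancy.
    """
    return FABRIC_PORTS[model] * PORT_SPEED_GBPS * extenders


for model in FABRIC_PORTS:
    print(f"{model}: {chassis_uplink_gbps(model)} Gbps per chassis")
```

With a single fabric extender, the same calculation gives half of the quoted figure, which is one way to estimate the bandwidth that remains when one fabric path is unavailable.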

Cisco UCS 2208 Fabric Extenders were used for this CVD.

For more information, see: http://www.cisco.com/c/en/us/products/collateral/servers-unified-computing/ucs-6200-series-fabric-interconnects/data_sheet_c78-675243.html

Figure 3 Cisco UCS 2208 Series Fabric Extenders

Cisco UCS Manager

Cisco Unified Computing System (UCS) Manager provides unified, embedded management for all software and hardware components in the Cisco UCS. Using Cisco Single Connect technology, it manages, controls, and administers multiple chassis for thousands of virtual machines. Administrators use the software to manage the entire Cisco Unified Computing System as a single logical entity through an intuitive GUI, a command-line interface (CLI), or an XML API. The Cisco UCS Manager resides on a pair of Cisco UCS 6200 Series Fabric Interconnects using a clustered, active-standby configuration for high availability.

Cisco UCS Manager offers a unified embedded management interface that integrates server, network, and storage. Cisco UCS Manager performs auto-discovery to detect, inventory, manage, and provision system components that are added or changed. It offers a comprehensive XML API for third-party integration, exposes 9000 points of integration, and facilitates custom development for automation, orchestration, and new levels of system visibility and control.
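As an illustration of what third-party integration through the XML API can look like, the following Python sketch builds two request documents and parses a login response. It performs no network I/O. The method names (`aaaLogin`, `configResolveClass`), the `computeBlade` class, the attribute names, and the `/nuova` endpoint path reflect our understanding of the public Cisco UCS Manager XML API and should be verified against the Cisco UCS Manager XML API Programmer's Guide for the release in use. The helper function names are hypothetical.

```python
import xml.etree.ElementTree as ET

# Assumption: requests are POSTed over HTTPS to https://<ucsm-address>/nuova


def login_request(user: str, password: str) -> str:
    """Build an aaaLogin request body (assumed attribute names)."""
    elem = ET.Element("aaaLogin", {"inName": user, "inPassword": password})
    return ET.tostring(elem, encoding="unicode")


def extract_cookie(response_xml: str) -> str:
    """Return the session cookie carried in an aaaLogin response."""
    return ET.fromstring(response_xml).attrib["outCookie"]


def resolve_class_request(cookie: str, class_id: str) -> str:
    """Build a query for all managed objects of one class, e.g. blades."""
    elem = ET.Element(
        "configResolveClass",
        {"cookie": cookie, "inHierarchical": "false", "classId": class_id},
    )
    return ET.tostring(elem, encoding="unicode")


# Example with a made-up response; a real cookie comes from UCS Manager.
sample_response = '<aaaLogin cookie="" response="yes" outCookie="example-cookie"/>'
cookie = extract_cookie(sample_response)
print(login_request("admin", "password"))
print(resolve_class_request(cookie, "computeBlade"))
```

Building the documents with an XML library rather than string concatenation keeps attribute values correctly escaped, which matters when passwords contain characters such as `&` or `"`.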

Service profiles benefit both virtualized and non-virtualized environments and increase the mobility of non-virtualized servers, such as when moving workloads from server to server or taking a server offline for service or upgrade. Profiles can also be used in conjunction with virtualization clusters to bring new resources online easily, complementing existing virtual machine mobility.

For more information on Cisco UCS Manager, see: http://www.cisco.com/c/en/us/products/servers-unified-computing/ucs-manager/index.html

Cisco UCS B-Series M4 Servers

Cisco UCS B200 M4 blade servers with Cisco Virtual Interface Card 1340 were used for this CVD.

The enterprise-class Cisco UCS B200 M4 Blade Server extends the capabilities of Cisco’s Unified Computing System portfolio in a half-width blade form factor. The Cisco UCS B200 M4 uses the power of the latest Intel® Xeon® E5-2600 v3 Series processor family CPUs with up to 768 GB of RAM (using 32 GB DIMMs), two solid-state drives (SSDs) or hard disk drives (HDDs), and up to 80 Gbps throughput connectivity. The Cisco UCS B200 M4 Blade Server mounts in a Cisco UCS 5100 Series blade server chassis or Cisco UCS Mini blade server chassis. It has 24 total slots for registered ECC DIMMs (RDIMMs) or load-reduced DIMMs (LRDIMMs) for up to 768 GB total memory capacity (Cisco UCS B200 M4 configured with two CPUs using 32 GB DIMMs). It supports one connector for the Cisco VIC 1340 or 1240 adapter, which provides Ethernet and FCoE. A second mezzanine card slot can be used for NVIDIA M6 graphics cards.

For more information, see: http://www.cisco.com/c/en/us/products/servers-unified-computing/ucs-b200-m4-blade-server/index.html

Figure 4 Cisco UCS B200 M4 Blade Server

Cisco VIC 1340

The Cisco UCS Virtual Interface Card (VIC) 1340 is a 2-port 40-Gbps Ethernet or dual 4 x 10-Gbps Ethernet, FCoE-capable modular LAN on motherboard (mLOM) designed exclusively for the M4 generation of Cisco UCS B-Series Blade Servers. When used in combination with an optional port expander, the Cisco UCS VIC 1340 is enabled for two ports of 40-Gbps Ethernet. The Cisco UCS VIC 1340 enables a policy-based, stateless, agile server infrastructure that can present over 256 PCIe standards-compliant interfaces to the host that can be dynamically configured as either network interface cards (NICs) or host bus adapters (HBAs). In addition, the Cisco UCS VIC 1340 supports Cisco® Virtual Machine Fabric Extender (VM-FEX) technology, which extends the Cisco UCS Fabric Interconnect ports to virtual machines, simplifying server virtualization deployment and management.

For more information, see: http://www.cisco.com/c/en/us/products/interfaces-modules/ucs-virtual-interface-card-1340/index.html

Figure 5 Cisco VIC 1340

Cisco Nexus 9000 Series Platform Switches

The Cisco Nexus 9000 family of switches offers both modular (9500 switches) and fixed (9300 switches) 1/10/40/100 Gigabit Ethernet switch configurations designed to operate in one of two modes:

· Application Centric Infrastructure (ACI) mode that uses an application centric policy model with simplified automation and centralized management

· Cisco NX-OS mode for traditional architectures – the Cisco-Nimble Solution design in this document uses this mode

Architectural Flexibility

· Delivers high-performance, high-density, and energy-efficient traditional three-tier or leaf-spine architectures

· Provides a foundation for Cisco ACI, automating application deployment and delivering simplicity, agility, and flexibility

Scalability

· Up to 60-Tbps of non-blocking performance with less than 5-microsecond latency

· Up to 2304 10-Gbps or 576 40-Gbps non-blocking layer 2 and layer 3 Ethernet ports

· Wire-speed virtual extensible LAN (VXLAN) gateway, bridging, and routing

High Availability

· Full Cisco In-Service Software Upgrade (ISSU) and patching without any interruption in operation

· Fully redundant and hot-swappable components

· A mix of third-party and Cisco ASICs provides improved reliability and performance

Energy Efficiency

· The chassis is designed without a mid-plane to optimize airflow and reduce energy requirements

· The optimized design runs with fewer ASICs, resulting in lower energy use

· Efficient power supplies included in the switches are rated at 80 Plus Platinum

Investment Protection

· Cisco 40-Gb bidirectional transceiver allows for reuse of an existing 10 Gigabit Ethernet cabling plant for 40 Gigabit Ethernet

· Designed to support future ASIC generations

· Easy migration from NX-OS mode to ACI mode

A pair of Cisco Nexus 9372PX Platform switches were used in this CVD.

For more information, refer to: http://www.cisco.com/c/en/us/products/switches/nexus-9000-series-switches/index.html

Cisco Nexus 1000V

Cisco Nexus 1000V Series Switches provide a comprehensive and extensible architectural platform for virtual machine (VM) and cloud networking. Integrated into the VMware vSphere hypervisor and fully compatible with VMware vCloud® Director, the Cisco Nexus 1000V Series provides:

· Advanced virtual machine networking using the Cisco NX-OS operating system. Capabilities include PortChannels (LACP), IEEE 802.1Q VLAN trunking, jumbo frame support, and Virtual Extensible Local Area Network (VXLAN) for cloud deployments

· Cisco vPath technology for efficient and optimized integration of Layer 4-7 virtual networking services (for example, a firewall)

· Mobile virtual machine security and network policy. Advanced security capabilities include Storm Control, BPDU Guard, Dynamic Host Configuration Protocol (DHCP) snooping, IP source guard, Dynamic Address Resolution Protocol (ARP) Inspection, and Cisco TrustSec® security group access (SGA), Security Group Tagging (SGT), and Security Group ACL (SGACL) support

· Non-disruptive operational model for your server virtualization and networking teams

· Policy-based virtual machine connectivity

· Quality of service (QoS)

· Monitoring: NetFlow, Switch Port Analyzer (SPAN), and Encapsulated Remote SPAN (ERSPAN)

· Easy deployment using Cisco Virtual Switch Update Manager (VSUM) which allows you to install, upgrade, monitor and also migrate hosts to Cisco Nexus 1000V using the VMware vSphere web client.

· Starting with Cisco Nexus 1000V Release 4.2(1)SV2(1.1), a plug-in for the VMware vCenter Server, known as the vCenter plug-in (VC plug-in), is supported on the Cisco Nexus 1000V virtual switch. It gives server administrators a view of the virtual network and visibility into the networking aspects of the Cisco Nexus 1000V virtual switch. The server administrator can thus monitor and manage networking resources effectively with the details provided by the vCenter plug-in. The vCenter plug-in is supported only on VMware vSphere Web Clients, where you connect to VMware vCenter through a browser. The vCenter plug-in is installed as a new tab in the Cisco Nexus 1000V as part of the user interface in vSphere Web Client.

For more information, refer to:

· http://www.cisco.com/en/US/products/ps9902/index.html

· http://www.cisco.com/en/US/products/ps10785/index.html

Cisco MDS 9100 Series Fabric Switches

The Cisco® MDS 9148S 16G Multilayer Fabric Switch is the next generation of the highly reliable, flexible, and low-cost Cisco MDS 9100 Series switches. It combines high performance with exceptional flexibility and cost effectiveness. This powerful, compact one rack-unit (1RU) switch scales from 12 to 48 line-rate 16 Gbps Fibre Channel ports. Cisco MDS 9148S is powered by Cisco NX-OS and delivers advanced storage networking features and functions with ease of management and compatibility with the entire Cisco MDS 9000 Family portfolio for reliable end-to-end connectivity.

Cisco MDS 9148S is well suited as the following:

· Top-of-rack switch in medium-sized deployments

· Edge switch in a two-tiered (core-edge) data center topology

· Standalone SAN in smaller departmental deployments

The main features and benefits of Cisco MDS 9148S are summarized in the table below.

Table 1 Cisco MDS 9148S Features and Benefits

| Feature | Benefit |
| --- | --- |
| Up to 48 autosensing Fibre Channel ports are capable of speeds of 2, 4, 8, and 16 Gbps, with 16 Gbps of dedicated bandwidth for each port. Cisco MDS 9148S scales from 12 to 48 high-performance Fibre Channel ports in a single 1RU form factor. | High Performance and Flexibility at Low Cost |
| Supports dual redundant hot-swappable power supplies and fan trays, PortChannels for Inter-Switch Link (ISL) resiliency, and F-port channeling for resiliency on uplinks from a Cisco MDS 9148S operating in NPV mode. | High-Availability Platform for Mission-Critical Deployments |
| Intelligent diagnostics/Hardware based slow port detection and Cisco Switched Port Analyzer (SPAN) and Remote SPAN (RSPAN) and Cisco Fabric Analyzer to capture and analyze network traffic. Fibre Channel ping and traceroute to identify exact path and timing of flows. Cisco Call Home for added reliability. | Enhanced performance and monitoring capability. Increase reliability, faster problem resolution, and reduce service costs |
| In-Service Software Upgrades | Reduce downtime for planned maintenance and software upgrades |
| Aggregate up to 16 physical ISLs into a single logical PortChannel bundle with multipath load balancing. | High performance ISLs and optimized bandwidth utilization |
| Virtual output queuing on each port by eliminating head-of-line blocking | Helps ensure line-rate performance |
| PowerOn Auto Provisioning to automate deployment and upgrade of software images. | Reduces administrative costs |
| Smart zoning for creating and managing zones | Reduces consumption of hardware resources and administrative time |
| SAN management through a command-line interface (CLI) or Cisco Prime DCNM for SAN Essentials Edition, a centralized management tool. Cisco DCNM task-based wizards simplify management of single or multiple switches and fabrics including management of virtual resources end-to-end, from the virtual machine and switch to the physical storage. | Simplified Storage Management with built-in storage network management and SAN plug-and-play capabilities. Sophisticated Diagnostics |
| Fabric-wide per-VSAN role-based authentication, authorization, and accounting (AAA) services using RADIUS, Lightweight Directory Access Protocol (LDAP), Microsoft Active Directory (AD), and TACACS+. Also provides VSAN fabric isolation, intelligent, port-level packet inspection, Fibre Channel Security Protocol (FC-SP) host-to-switch and switch-to-switch authentication, Secure File Transfer Protocol (SFTP), Secure Shell Version 2 (SSHv2), and Simple Network Management Protocol Version 3 (SNMPv3) implementing Advanced Encryption Standard (AES). Other security features include control-plane security, hardware-enforced zoning, broadcast zones, and management access. | Comprehensive Network Security Framework |
| Virtual SAN (VSAN) technology for hardware-enforced, isolated environments within a physical fabric. Access control lists (ACLs) for hardware-based, intelligent frame processing. Advanced traffic management features, such as fabric-wide quality of service (QoS) and Inter-VSAN Routing (IVR) for resource sharing across vSANs. Zone-based QoS simplifies configuration and administration by using the familiar zoning concept. | Intelligent Network Services and Advanced Traffic Management for better and predictable network service without compromising scalability, reliability, availability, and network security |
| Common software across all platforms by using Cisco NX-OS and Cisco Prime DCNM across the fabric. | Reduce total cost of ownership (TCO) through consistent provisioning, management, and diagnostic capabilities across the fabric |

Nimble Storage – All Flash Array

The AF series array family starts at the entry level with the AF1000, expands through the AF3000, AF5000, and AF7000, and tops out at the high-end AF9000. All flash arrays can be nondisruptively upgraded from the entry-level model all the way to the high-end model.

This CVD specifically uses the Nimble Storage AF5000 all flash array. The AF5000 is designed to deliver up to 120,000 IOPS at sub-millisecond response times. The AF5000 delivers the best price for performance value within the Nimble Storage all flash array family.

Each 4U chassis supports 48 SSDs, with additional flash capacity available through expansion shelves. The storage subsystem uses SAS 3.0 compliant 12Gbps SAS connectivity, with an aggregated bandwidth of 48Gbps across 4 channels per port. The AF5000 all flash array can scale flash capacity up to 184TB raw, or up to 680TB effective capacity assuming a 5:1 data reduction through deduplication and compression.
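The bandwidth and capacity figures above can be cross-checked with a few lines of Python. Note that 680TB is less than 5 x 184TB, so the effective figure presumably applies the reduction ratio to usable capacity rather than to raw capacity. The usable value computed below is inferred from the stated numbers under that assumption and is not a published specification; the function names are illustrative.

```python
SAS_LANE_GBPS = 12        # SAS 3.0 lane speed quoted above
LANES_PER_PORT = 4        # channels per port quoted above
RAW_TB = 184              # maximum raw flash capacity quoted above
EFFECTIVE_TB = 680        # effective capacity quoted above
REDUCTION_RATIO = 5.0     # 5:1 deduplication plus compression


def sas_port_bandwidth_gbps(lane_gbps: int = SAS_LANE_GBPS,
                            lanes: int = LANES_PER_PORT) -> int:
    """Aggregate bandwidth of one wide SAS port."""
    return lane_gbps * lanes


def implied_usable_tb(effective_tb: float, ratio: float) -> float:
    """Usable capacity implied by an effective capacity and a reduction ratio."""
    return effective_tb / ratio


usable = implied_usable_tb(EFFECTIVE_TB, REDUCTION_RATIO)
print(f"SAS port bandwidth: {sas_port_bandwidth_gbps()} Gbps")
print(f"Implied usable capacity: {usable:.0f} TB "
      f"({usable / RAW_TB:.0%} of raw, inferred)")
```

Actual effective capacity depends on how well a given data set deduplicates and compresses, so the 5:1 ratio should be treated as a planning assumption rather than a guarantee.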

For additional information, refer to: https://www.nimblestorage.com/technology-products/all-flash-arrays.

Figure 6 Nimble AF5000 All Flash Array

The Nimble Storage Predictive Flash Platform

The Nimble Storage Predictive Flash platform enables enterprise IT organizations to implement a single architectural approach to dynamically cater to the needs of varying workloads, driven by the power of predictive analytics. Predictive Flash is the only storage platform that optimizes across performance, capacity, data protection, and reliability within a dramatically smaller footprint.

Predictive Flash is built upon Nimble’s CASL™ architecture, NimbleOS and InfoSight™, the company’s cloud-connected predictive analytics and management system. CASL scales performance and capacity seamlessly and independently. InfoSight leverages the power of deep data analytics to provide customers with precise guidance on the optimal approach to scaling flash, CPU, and capacity around changing application needs, while ensuring peak storage health.

NimbleOS Architecture

The Nimble Storage operating system, NimbleOS, is based on its patented Cache Accelerated Sequential Layout (CASL™) architecture. CASL leverages the unique properties of flash and disk to deliver high performance and capacity, all within a dramatically small footprint. CASL and InfoSight™ form the foundation of the Predictive Flash platform, which allows for the dynamic and intelligent deployment of storage resources to meet the growing demands of business-critical applications.

Universal Hardware Architecture

All Nimble Storage arrays are built upon a universal hardware platform. All array components, including controllers, are modular and can be nondisruptively upgraded by the customer or a Nimble Storage representative. The universal hardware architecture spans both all flash and adaptive flash arrays, giving Nimble Storage and customers maximum flexibility and reuse of array hardware.

Figure 7 Nimble Storage Universal Hardware Architecture

Nimble Storage InfoSight

Using systems modeling, predictive algorithms, and statistical analysis, InfoSight™ solves storage administrators’ most difficult problems. InfoSight also ensures storage resources are dynamically and intelligently deployed to satisfy the changing needs of business-critical applications, a key facet of Nimble Storage’s Predictive Flash platform. At the heart of InfoSight is a powerful engine comprised of deep data analytics applied to telemetry data gathered from Nimble arrays deployed across the globe. More than 30 million sensor values are collected per day per Nimble Storage array. The InfoSight Engine transforms the millions of gathered data points into actionable information that allows customers to realize significant operational efficiency through:

· Maintaining optimal storage performance

· Projecting storage capacity needs

· Proactively monitoring storage health and getting granular alerts

· Proactively diagnosing and automatically resolving complex issues, freeing up IT resources for value-creating projects

· Ensuring a reliable data protection strategy with detailed alerts and monitoring

· Expertly guiding storage resource planning by determining the optimal approach to scaling cache and IOPS to meet changing SLAs

· Identifying latency and performance bottlenecks through the entire virtualization stack

· Delivering a transformed support experience from level 3 support

For more information, refer to: https://www.nimblestorage.com/infosight/architecture/

VMVision – Hypervisor and VMware Monitoring

VMVision provides a granular view of the resources used by each virtual machine connected to a Nimble array. VMVision correlates the performance of VMs in the same datastore with insights into hypervisor and host resource constraints such as vCPU, memory, and network. It also helps determine whether VM latency originates in storage, the host, or the network, take corrective action on noisy-neighbor VMs, and reclaim space from underutilized VMs. Every hour, the correlated statistics are sent to InfoSight through the heartbeat mechanism for processing. No additional host-side agents, tools, or licenses are required for this feature to work.

Figure 8 Nimble Storage VMVision

In-Line Compression

CASL uses fast, in-line compression for variable application block sizes to decrease the footprint of inbound write data by as much as 75 percent. Once there are enough variable-sized blocks to form a full write stripe, CASL writes the data to disk. If the data being written is active data, it is also copied to SSD cache for faster reads. Written data is protected with triple-parity RAID.
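The idea of compressing variable-sized blocks in-line and writing only full stripes can be illustrated with a small Python sketch. This is a conceptual model, not the NimbleOS implementation: the `StripeWriter` class, the use of `zlib`, and the stripe size are all assumptions chosen for illustration, and a real layout would also record block boundaries and parity.

```python
import zlib


class StripeWriter:
    """Coalesce compressed, variable-sized blocks into full write stripes."""

    def __init__(self, stripe_bytes: int):
        self.stripe_bytes = stripe_bytes
        self.pending = []        # compressed blocks waiting for a full stripe
        self.pending_size = 0
        self.stripes = []        # stands in for sequential writes to media

    def write(self, block: bytes) -> None:
        compressed = zlib.compress(block)   # in-line compression
        self.pending.append(compressed)
        self.pending_size += len(compressed)
        if self.pending_size >= self.stripe_bytes:
            self.flush()

    def flush(self) -> None:
        """Write everything pending as one sequential stripe."""
        if self.pending:
            self.stripes.append(b"".join(self.pending))
            self.pending = []
            self.pending_size = 0


writer = StripeWriter(stripe_bytes=256)
for i in range(50):
    writer.write(bytes([i]) * 4096)   # highly compressible 4 KB blocks
writer.flush()
print(f"{len(writer.stripes)} stripes, "
      f"{sum(len(s) for s in writer.stripes)} bytes on media")
```

Because many small random writes are turned into a few large sequential ones, the backing media sees far fewer write operations than the host issued.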

In-Line Deduplication

Nimble Storage all flash arrays include in-line data deduplication in addition to in-line compression. The combination of in-line deduplication and in-line compression delivers the most comprehensive data reduction capability that allows NimbleOS to minimize the data footprint on SSD, maximize usable space, and greatly minimize write amplification.
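In-line deduplication in general can be sketched as a content-addressed block store: each incoming block is fingerprinted, and a block whose fingerprint is already known is stored only as a reference. The Python sketch below uses SHA-256 fingerprints, which is a common technique but is an assumption here; the source does not describe how NimbleOS fingerprints or indexes blocks, and the `DedupStore` class is hypothetical.

```python
import hashlib


class DedupStore:
    """Store each unique block once and count logical references to it."""

    def __init__(self):
        self.blocks = {}      # fingerprint -> block data (stored once)
        self.refcount = {}    # fingerprint -> number of logical writes

    def write(self, data: bytes) -> str:
        fingerprint = hashlib.sha256(data).hexdigest()
        if fingerprint not in self.blocks:
            self.blocks[fingerprint] = data   # first copy: consume space
        self.refcount[fingerprint] = self.refcount.get(fingerprint, 0) + 1
        return fingerprint

    def read(self, fingerprint: str) -> bytes:
        return self.blocks[fingerprint]

    def reduction_ratio(self) -> float:
        logical = sum(len(self.blocks[fp]) * n
                      for fp, n in self.refcount.items())
        physical = sum(len(b) for b in self.blocks.values())
        return logical / physical


store = DedupStore()
os_block = b"A" * 4096
for _ in range(4):                 # e.g. the same OS block in four desktops
    store.write(os_block)
store.write(b"B" * 4096)           # one unique user-data block
print(f"unique blocks: {len(store.blocks)}, "
      f"reduction: {store.reduction_ratio():.1f}:1")
```

VDI workloads tend to benefit from this approach because many desktops share identical operating system and application blocks.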

Common Data Services and Transparent Application Migration

Enterprises can deploy All Flash, Adaptive Flash or a combination of both to meet the varying needs of all applications. Since both arrays run the same NimbleOS, management and functionality are identical, and arrays can be clustered together and managed as one.

Figure 9 Transparent Application Migration

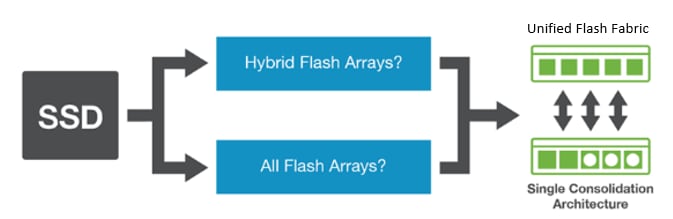

Unified Flash Fabric

The Nimble Unified Flash Fabric is a single consolidated architecture that enables flash for all Enterprise applications. Until now, enterprises have been forced to choose between Hybrid Flash and All Flash arrays. This is no longer the case with the Nimble Unified Flash Fabric. The Unified Flash Fabric enables flash for all Enterprise Applications by unifying All Flash and Adaptive Flash arrays into a single consolidated architecture with common data services.

Figure 10 Nimble Storage Unified Flash Fabric

Thin-Provisioning and Efficient Capacity Utilization

Capacity is only consumed as data is written. CASL efficiently reclaims free space on an ongoing basis, preserving write performance with higher levels of capacity utilization. This avoids fragmentation issues that hamper other architectures.
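A minimal Python sketch of thin provisioning follows, assuming a hypothetical `ThinVolume` class with a fixed block size. It shows only the allocate-on-write and space-reclaim behavior described above and does not model how CASL lays out or reclaims space internally.

```python
BLOCK_SIZE = 4096


class ThinVolume:
    """A volume that consumes capacity only for blocks that were written."""

    def __init__(self, provisioned_blocks: int):
        self.provisioned_blocks = provisioned_blocks
        self.allocated = {}   # block index -> data, populated on write only

    def write(self, index: int, data: bytes) -> None:
        if not 0 <= index < self.provisioned_blocks:
            raise IndexError("block index outside the provisioned size")
        self.allocated[index] = data

    def read(self, index: int) -> bytes:
        # Blocks that were never written read back as zeros.
        return self.allocated.get(index, b"\x00" * BLOCK_SIZE)

    def unmap(self, index: int) -> None:
        """Release a block so that its space can be reclaimed."""
        self.allocated.pop(index, None)

    def used_blocks(self) -> int:
        return len(self.allocated)


volume = ThinVolume(provisioned_blocks=1_000_000)
volume.write(10, b"x" * BLOCK_SIZE)
print(f"provisioned: {volume.provisioned_blocks} blocks, "
      f"used: {volume.used_blocks()} block(s)")
```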

Accelerated Write Performance

Once writes are placed in NVDIMM (made persistent and mirrored to the passive partner controller), they are acknowledged back to the host and sent to SSD at a later time (generally when there is a full stripe to be written). As a result, writes to a Nimble Storage array are acknowledged at memory speeds.
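The ordering described above (persist, mirror, acknowledge, then destage full stripes later) can be modeled in a few lines of Python. The `Controller` class, its attribute names, and the stripe size are hypothetical; the sketch only demonstrates that the acknowledgement does not wait for any SSD write.

```python
class Controller:
    """Model of an active controller with a mirrored NVDIMM write buffer."""

    def __init__(self, stripe_blocks: int):
        self.stripe_blocks = stripe_blocks
        self.nvdimm = []        # persistent write buffer, active controller
        self.mirror = []        # copy held by the passive partner controller
        self.ssd_stripes = []   # full stripes already written to SSD

    def write(self, block: bytes) -> bool:
        self.nvdimm.append(block)   # 1. made persistent in NVDIMM
        self.mirror.append(block)   # 2. mirrored to the partner
        return True                 # 3. acknowledged; no SSD I/O so far

    def destage(self) -> int:
        """Background step: move full stripes from NVDIMM to SSD."""
        written = 0
        while len(self.nvdimm) >= self.stripe_blocks:
            self.ssd_stripes.append(self.nvdimm[:self.stripe_blocks])
            del self.nvdimm[:self.stripe_blocks]
            del self.mirror[:self.stripe_blocks]
            written += 1
        return written


controller = Controller(stripe_blocks=4)
acks = [controller.write(bytes([i])) for i in range(6)]
print("all acknowledged before destage:", all(acks),
      "| stripes on SSD:", len(controller.ssd_stripes))
controller.destage()
print("stripes on SSD after destage:", len(controller.ssd_stripes),
      "| blocks still buffered:", len(controller.nvdimm))
```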

Maximizing Flash Write Cycles

By sequencing random write data, NimbleOS sends full stripes of data to SSD. By compressing and deduplicating the data in-line, the data footprint on disk is minimized. Additionally, the data being sent to disk is of variable block size, which means NimbleOS does not have to break up data into smaller, fixed-sized chunks to be placed on SSD. As a result, data is efficiently sent to SSD. This allows Nimble Storage arrays to maximize the deployable life of a flash drive by minimizing write wear on the flash cells.

Read Performance

NimbleOS and all flash arrays deliver sub-millisecond read latency and high throughput across a wide variety of demanding enterprise applications. This is because all reads come from SSD.

All Flash

Nimble Storage all flash arrays only use SSDs to store data. As a result, all read operations come directly from the SSDs themselves, providing for extremely fast read operations. All writes are also sent to SSD, but as a result of the NimbleOS architecture and the usage of NVDIMMs to store and organize write operations, all writes are acknowledged at memory speeds (just as with the adaptive flash arrays).

Nimble Storage all flash arrays use TLC (Triple Level Cell) SSD, which allows for maximum flash storage density. Traditional SSD issues revolving around write wear, write amplification, and so on are not an issue within the variable block NimbleOS architecture, which minimizes write amplification greatly due to intelligent data layout and management within the file system.

Efficient, Fully Integrated Data Protection

All-inclusive snapshot-based data protection is built into the Adaptive Flash platform. Snapshots and production data reside on the same array, eliminating the inefficiencies inherent to running primary and backup storage silos. InfoSight ensures that customers’ data protection strategies work as expected through intuitive dashboards and proactive notifications in case of potential issues.

SmartSnap: Thin, Redirect-on-Write Snapshots

Nimble snapshots are point-in-time copies capturing just changed data, allowing three months of frequent snapshots to be easily stored on a single array. Data can be instantly restored, as snapshots reside on the same array as primary data.

SmartReplicate: Efficient Replication

Only compressed, changed data blocks are sent over the network for simple and WAN-efficient disaster recovery.

Zero-Copy Clones

Nimble’s snapshots allow fully functioning copies, or clones of volumes, to be quickly created. Instant clones deliver the same performance and functionality as the source volume, an advantage for virtualization, VDI, and test/development workloads.
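The snapshot and clone behavior described in the SmartSnap and Zero-Copy Clones paragraphs can be sketched with a block map that points at immutable data. In this simplified Python model, a snapshot or clone copies only the map (metadata), never the data blocks, and a later write redirects the volume's map entry to new data while the snapshot keeps the old reference. The `Volume` class is hypothetical and does not represent how NimbleOS stores its metadata.

```python
class Volume:
    """Volume whose snapshots and clones share unchanged data blocks."""

    def __init__(self, block_map=None):
        # logical block index -> immutable bytes; only the map is copied
        self.block_map = dict(block_map or {})
        self.snapshots = {}

    def write(self, index: int, data: bytes) -> None:
        # Redirect-on-write: the map entry now points at new data;
        # any snapshot still references the previous block.
        self.block_map[index] = data

    def snapshot(self, name: str) -> None:
        self.snapshots[name] = dict(self.block_map)

    def restore(self, name: str) -> None:
        self.block_map = dict(self.snapshots[name])

    def clone(self, name: str) -> "Volume":
        # A clone starts from the snapshot's map and diverges on write.
        return Volume(self.snapshots[name])


golden = Volume()
golden.write(0, b"base image")
golden.snapshot("gold")
golden.write(0, b"patched image")

desktop = golden.clone("gold")
desktop.write(1, b"user data")
print(desktop.block_map[0], golden.block_map[0], 1 in golden.block_map)
```

The clone reads the original block through the shared reference and only consumes new space for the data it writes itself, which is why clones suit VDI and test/development workloads.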

Application-Consistent Snapshots

Nimble enables instant application/VM-consistent backups using the VSS framework and VMware integration, with application templates that carry pre-tuned storage parameters.

SmartSecure: Flexible Data Encryption

NimbleOS enables individual volume level encryption with little to no performance impact. Encrypted volumes can be replicated to another Nimble target, and data can be securely shredded at the volume level of granularity.

Citrix XenApp and XenDesktop 7.11

Citrix XenApp and XenDesktop are application and desktop virtualization solutions built on a unified architecture so they're simple to manage and flexible enough to meet the needs of all your organization's users. XenApp and XenDesktop have a common set of management tools that simplify and automate IT tasks. You use the same architecture and management tools to manage public, private, and hybrid cloud deployments as you do for on-premises deployments.

Citrix XenApp delivers the following:

· XenApp published apps, also known as server-based hosted applications: These are applications hosted from Microsoft Windows servers to any type of device, including Windows PCs, Macs, smartphones, and tablets. Some XenApp editions include technologies that further optimize the experience of using Windows applications on a mobile device by automatically translating native mobile-device display, navigation, and controls to Windows applications; enhancing performance over mobile networks; and enabling developers to optimize any custom Windows application for any mobile environment.

· XenApp published desktops, also known as server-hosted desktops: These are inexpensive, locked-down Windows virtual desktops hosted from Windows server operating systems. They are well suited for users, such as call center employees, who perform a standard set of tasks.

· Virtual machine–hosted apps: These are applications hosted from machines running Windows desktop operating systems for applications that can’t be hosted in a server environment.

· Windows applications delivered with Microsoft App-V: These applications use the same management tools that you use for the rest of your XenApp deployment.

Citrix XenDesktop 7.11 includes significant enhancements to help customers deliver Windows apps and desktops as mobile services while addressing management complexity and associated costs. Enhancements in this release include:

· Unified product architecture for XenApp and XenDesktop—the FlexCast Management Architecture (FMA). This release supplies a single set of administrative interfaces to deliver both hosted-shared applications (RDS) and complete virtual desktops (VDI). Unlike earlier releases that separately provisioned Citrix XenApp and XenDesktop farms, the XenDesktop 7.11 release allows administrators to deploy a single infrastructure and use a consistent set of tools to manage mixed application and desktop workloads.

· Support for extending deployments to the cloud. This release provides the ability for hybrid cloud provisioning from Amazon Web Services (AWS) or any Cloud Platform-powered public or private cloud. Cloud deployments are configured, managed, and monitored through the same administrative consoles as deployments on traditional on-premises infrastructure.

· Enhanced HDX technologies. Since mobile technologies and devices are increasingly prevalent, Citrix has engineered new and improved HDX technologies to improve the user experience for hosted Windows apps and desktops.

· A new version of StoreFront. The StoreFront 3.0 release provides a single, simple, and consistent aggregation point for all user services. Administrators can publish apps, desktops, and data services to StoreFront, from which users can search and subscribe to services.

· Remote power control for physical PCs. Remote PC access supports “Wake on LAN” that adds the ability to power on physical PCs remotely. This allows users to keep PCs powered off when not in use to conserve energy and reduce costs.

· Full AppDNA support. AppDNA provides automated analysis of applications for Windows platforms and suitability for application virtualization through App-V, XenApp, or XenDesktop. Full AppDNA functionality is available in some editions.

· Additional virtualization resource support. As in this Cisco Validated Design, administrators can configure connections to VMware vSphere 6 hypervisors.

Citrix XenDesktop delivers:

· VDI desktops: These virtual desktops each run a Microsoft Windows desktop operating system rather than running in a shared, server-based environment. They can provide users with their own desktops that they can fully personalize.

· Hosted physical desktops: This solution is well suited for providing secure access to powerful physical machines, such as blade servers, from within your data center.

· Remote PC access: This solution allows users to log in to their physical Windows PC from anywhere over a secure XenDesktop connection.

· Server VDI: This solution is designed to provide hosted desktops in multitenant, cloud environments.

· Capabilities that allow users to continue to use their virtual desktops: These capabilities let users continue to work while not connected to your network.

Some XenDesktop editions include the features available in XenApp.

Release 7.11 of XenDesktop includes new features that make it easier for users to access applications and desktops and for Citrix administrators to manage applications:

· The session prelaunch and session linger features help users quickly access server-based hosted applications by starting sessions before they are requested (session prelaunch) and keeping application sessions active after a user closes all applications (session linger).

· Support for unauthenticated (anonymous) users means that users can access server-based hosted applications and server-hosted desktops without presenting credentials to Citrix StoreFront or Receiver.

· Connection leasing makes recently used applications and desktops available even when the site database is unavailable.

· Application folders in Citrix Studio make it easier to administer large numbers of applications.

Other new features in this release allow you to improve performance by specifying the number of actions that can occur on a site's host connection; display enhanced data when you manage and monitor your site; and anonymously and automatically contribute data that Citrix can use to improve product quality, reliability, and performance.

For more information about the features new in this release, see Citrix XenDesktop Release 7.11.

Figure 11 Logical Architecture of Citrix XenDesktop

Citrix Provisioning Services 7.11

Most enterprises struggle to keep up with the proliferation and management of computers in their environments. Each computer, whether it is a desktop PC, a server in a data center, or a kiosk-type device, must be managed as an individual entity. The benefits of distributed processing come at the cost of distributed management. It costs time and money to set up, update, support, and ultimately decommission each computer. The initial cost of the machine is often dwarfed by operating costs.

Citrix PVS takes a very different approach from traditional imaging solutions by fundamentally changing the relationship between hardware and the software that runs on it. By streaming a single shared disk image (vDisk) rather than copying images to individual machines, PVS enables organizations to reduce the number of disk images that they manage, even as the number of machines continues to grow, simultaneously providing the efficiency of centralized management and the benefits of distributed processing.

In addition, because machines are streaming disk data dynamically and in real time from a single shared image, machine image consistency is essentially ensured. At the same time, the configuration, applications, and even the OS of large pools of machines can be completely changed in the time it takes the machines to reboot.

Using PVS, any vDisk can be configured in standard-image mode. A vDisk in standard-image mode allows many computers to boot from it simultaneously, greatly reducing the number of images that must be maintained and the amount of storage that is required. The vDisk is in read-only format, and the image cannot be changed by target devices.

These same benefits apply to vDisks that are streamed to bare metal servers, which is the way we utilized PVS in this study.

Citrix Desktop Studio for XenApp 7.11

If you manage a pool of servers that work as a farm, such as Citrix XenApp servers or web servers, maintaining a uniform patch level on your servers can be difficult and time consuming. With traditional imaging solutions, you start with a clean golden master image, but as soon as a server is built with the master image, you must patch that individual server along with all the other individual servers. Rolling out patches to individual servers in your farm is not only inefficient, but the results can also be unreliable. Patches often fail on an individual server, and you may not realize you have a problem until users start complaining or the server has an outage. After that happens, getting the server resynchronized with the rest of the farm can be challenging, and sometimes a full reimaging of the machine is required.

With Citrix PVS, patch management for server farms is simple and reliable. You start by managing your golden image, and you continue to manage that single golden image. All patching is performed in one place and then streamed to your servers when they boot. Server build consistency is assured because all your servers use a single shared copy of the disk image. If a server becomes corrupted, simply reboot it, and it is instantly back to the known good state of your master image. Upgrades are extremely fast to implement. After you have your updated image ready for production, you simply assign the new image version to the servers and reboot them. You can deploy the new image to any number of servers in the time it takes them to reboot. Just as important, rollback can be performed in the same way, so problems with new images do not need to take your servers or your users out of commission for an extended period of time.

Benefits for Desktop Administrators

Because Citrix PVS is part of Citrix XenApp, desktop administrators can use PVS’s streaming technology to simplify, consolidate, and reduce the costs of both physical and virtual desktop delivery. Many organizations are beginning to explore desktop virtualization. Although virtualization addresses many of IT’s needs for consolidation and simplified management, deploying it also requires deployment of supporting infrastructure. Without PVS, storage costs can make desktop virtualization too costly for the IT budget. However, with PVS, IT can reduce the amount of storage required for VDI by as much as 90 percent. And with a single image to manage instead of hundreds or thousands of desktops, PVS significantly reduces the cost, effort, and complexity for desktop administration.
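The storage claim above can be made concrete with a rough sizing sketch. The desktop count, image size, and per-desktop write cache size below are hypothetical planning inputs chosen only for illustration, not values measured in this study, and the function names are likewise illustrative.

```python
# Rough storage comparison: one full image copy per desktop versus a single
# shared vDisk plus a small per-desktop write cache.
# All sizes and counts are hypothetical inputs, not measured values.


def full_clone_storage_gb(desktops: int, image_gb: float) -> float:
    """Storage needed when every desktop keeps its own full copy of the image."""
    return desktops * image_gb


def streamed_storage_gb(desktops: int, image_gb: float,
                        write_cache_gb: float, vdisk_copies: int = 1) -> float:
    """Storage needed for shared vDisk copies plus one write cache per desktop."""
    return vdisk_copies * image_gb + desktops * write_cache_gb


def reduction(before_gb: float, after_gb: float) -> float:
    """Fractional storage reduction, for example 0.5 means half the storage."""
    return 1 - after_gb / before_gb


full = full_clone_storage_gb(desktops=5000, image_gb=40)
streamed = streamed_storage_gb(desktops=5000, image_gb=40, write_cache_gb=4)
print(f"full clones: {full:.0f} GB")
print(f"streamed:    {streamed:.0f} GB")
print(f"reduction:   {reduction(full, streamed):.1%}")
```

The result is dominated by the ratio of write cache size to image size, so the actual saving in a given deployment depends on how much each desktop writes between reboots.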

Different types of workers across the enterprise need different types of desktops. Some require simplicity and standardization, and others require high performance and personalization. XenApp can meet these requirements in a single solution using Citrix FlexCast delivery technology. With FlexCast, IT can deliver every type of virtual desktop, each specifically tailored to meet the performance, security, and flexibility requirements of each individual user.

Not all desktop applications can be supported by virtual desktops. For these scenarios, IT can still reap the benefits of consolidation and single-image management. Desktop images are stored and managed centrally in the data center and streamed to physical desktops on demand. This model works particularly well for standardized desktops such as those in lab and training environments and call centers, and for thin-client devices used to access virtual desktops.

Citrix Provisioning Services Solution

Citrix PVS streaming technology allows computers to be provisioned and re-provisioned in real time from a single shared disk image. With this approach, administrators can completely eliminate the need to manage and patch individual systems. Instead, all image management is performed on the master image. The local hard drive of each system can be used for runtime data caching or, in some scenarios, removed from the system entirely, which reduces power use, system failure rate, and security risk.

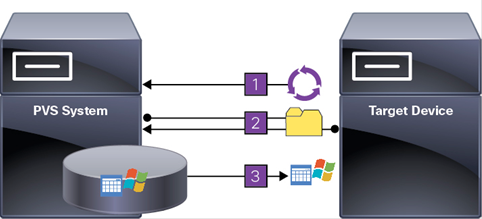

The PVS solution’s infrastructure is based on software-streaming technology. After PVS components are installed and configured, a vDisk is created from a device’s hard drive by taking a snapshot of the OS and application image and then storing that image as a vDisk file on the network. The device used for this process is referred to as a master target device. The devices that use the vDisks are called target devices. vDisks can reside on a PVS server, on a file share, or, in larger deployments, on a storage system with which PVS can communicate (iSCSI, SAN, network-attached storage [NAS], and Common Internet File System [CIFS]). vDisks can be assigned to a single target device in private-image mode, or to multiple target devices in standard-image mode.
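The difference between the two vDisk modes can be illustrated with a small model. This is a conceptual sketch only: the `VDisk` class, the `AccessMode` enum, and the device names are hypothetical and do not correspond to any PVS API. It encodes the two rules stated in this document: a private-image vDisk serves a single target device, and a standard-image vDisk is shared by many targets and is read-only to them.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class AccessMode(Enum):
    PRIVATE = "private-image"    # assigned to a single target device
    STANDARD = "standard-image"  # shared by many target devices, read-only


@dataclass
class VDisk:
    """Hypothetical model of a vDisk and its assigned target devices."""
    name: str
    mode: AccessMode
    targets: List[str] = field(default_factory=list)

    def assign(self, target: str) -> None:
        """Assign a target device, enforcing the single-target rule for private mode."""
        if self.mode is AccessMode.PRIVATE and self.targets:
            raise ValueError(
                f"{self.name} is in private-image mode and is already "
                f"assigned to {self.targets[0]}"
            )
        self.targets.append(target)

    @property
    def read_only_for_targets(self) -> bool:
        """Standard-image vDisks cannot be changed by target devices."""
        return self.mode is AccessMode.STANDARD


golden = VDisk("win-golden", AccessMode.STANDARD)
for device in ("vdi-001", "vdi-002", "vdi-003"):
    golden.assign(device)
print(len(golden.targets), golden.read_only_for_targets)

maintenance = VDisk("win-maint", AccessMode.PRIVATE)
maintenance.assign("master-target")
try:
    maintenance.assign("vdi-004")
except ValueError as err:
    print("rejected:", err)
```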

Citrix Provisioning Services Infrastructure

The Citrix PVS infrastructure design directly relates to administrative roles within a PVS farm. The PVS administrator role determines which components that administrator can manage or view in the console.

A PVS farm contains several components. Figure 12 provides a high-level view of a basic PVS infrastructure and shows how PVS components might appear within that implementation.

Figure 12 Logical Architecture of Citrix Provisioning Services

Solution Architecture

The Cisco-Nimble Solution is a converged infrastructure platform that incorporates compute, network, and storage best practices from Cisco and Nimble to deliver a resilient, scalable, and flexible data center architecture for enterprise and cloud deployments.

The following platforms are integrated in this Cisco-Nimble Solution architecture:

· Cisco Unified Computing System (UCS) – B-Series blade servers

· Cisco UCS 6200 Series Fabric Interconnects (FI) – unified access to storage and LAN networks

· Cisco Nexus 9300 series switches – connectivity to users, other LAN networks and Cisco UCS domains

· Cisco MDS 9100 fabric switches – SAN fabric providing Fibre Channel (FC) connectivity to storage

· Nimble AF5000 array – Flash storage array with SSDs, also used for SAN boot

· Cisco Nexus 1000V – access layer switch for virtual machines

· VMware vSphere 6.0 U2b – Hypervisor

· NVIDIA M6 GPU – graphics card for Cisco UCS blade servers

This Cisco-Nimble Solution architecture uses Cisco UCS B-series servers for compute. Cisco UCS B-series servers are housed in a Cisco UCS 5108 blade server chassis that can support up to eight half-width blades or four full-width blades. Each server supports a number of converged network adapters (CNAs) that converge LAN and SAN traffic onto a single interface rather than requiring multiple network interface cards (NICs) and host bus adapters (HBAs) per server. Cisco Virtual Interface Cards (VICs) support 256 virtual interfaces and Cisco’s VM-FEX technology (see the link below). Two CNAs are typically deployed on a server for redundant connections to the fabric. The Cisco VIC is available as a Modular LAN on Motherboard (mLOM) card and as a mezzanine slot card. For more information on the different models of Cisco UCS VIC adapters available, see: http://www.cisco.com/c/en/us/products/interfaces-modules/unified-computing-system-adapters/index.html

All compute resources in the data center connect into a redundant pair of Cisco UCS fabric interconnects that provide unified access to storage and other parts of the network. Each blade server chassis requires 2 Fabric Extender (FEX) modules that extend the unified fabric to the blade server chassis and connect into the FIs using 2, 4 or 8 10GbE links. Each Cisco UCS 5100 Series chassis can support up to two fabric extenders that provide active-active data plane forwarding with failover for higher throughput and availability. FEX is a consolidation point for all blade server I/O traffic, which simplifies operations by providing a single point of management and policy enforcement. For detailed information about FEX see:

Two second-generation FEX models are currently available for the Cisco UCS blade server chassis. The Cisco UCS 2204XP and 2208XP connect to the unified fabric using multiple 10GbE links. The table below shows the number of 10GbE links that each model supports for connecting to an external fabric interconnect and to the blade servers within the chassis, along with the maximum I/O throughput possible through each FEX.

Table 2 Blade Server Fabric Extender Models

| Blade Server FEX Model | Internal Facing Links to Blade Servers | External Facing Links to FI | Aggregate I/O Bandwidth |
| --- | --- | --- | --- |
| Cisco UCS 2204XP | 16 x 10GbE | Up to 4 x 10GbE | 40Gbps per FEX |
| Cisco UCS 2208XP | 32 x 10GbE | Up to 8 x 10GbE | 80Gbps per FEX |

By deploying a pair of Cisco 2208XP FEX, a Cisco UCS 5100 series chassis with blade servers can get up to 160Gbps of I/O throughput for the servers on that chassis. For additional details on the FEX, see:

http://www.cisco.com/en/US/prod/collateral/ps10265/ps10276/data_sheet_c78-675243.html
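The 160Gbps figure follows directly from the uplink counts in Table 2: two FEX modules, each with up to eight 10GbE links. The short sketch below reproduces that arithmetic; the function and dictionary names are illustrative, and the range check on the number of links in use is an assumption made for the example.

```python
from typing import Optional

LINK_SPEED_GBPS = 10  # each FEX uplink is a 10GbE link

# Maximum external facing links to the FI, per FEX model (from Table 2).
MAX_UPLINKS = {
    "2204XP": 4,
    "2208XP": 8,
}


def fex_bandwidth_gbps(model: str, links_in_use: Optional[int] = None) -> int:
    """Aggregate uplink bandwidth of one FEX; defaults to all supported links."""
    max_links = MAX_UPLINKS[model]
    links = max_links if links_in_use is None else links_in_use
    if not 1 <= links <= max_links:
        raise ValueError(f"{model} supports at most {max_links} uplinks, got {links}")
    return links * LINK_SPEED_GBPS


def chassis_bandwidth_gbps(model: str, fex_count: int = 2,
                           links_in_use: Optional[int] = None) -> int:
    """Aggregate uplink bandwidth of a chassis with fex_count identical FEX modules."""
    return fex_count * fex_bandwidth_gbps(model, links_in_use)


print(chassis_bandwidth_gbps("2208XP"))                  # 2 x 8 x 10 = 160
print(chassis_bandwidth_gbps("2204XP"))                  # 2 x 4 x 10 = 80
print(chassis_bandwidth_gbps("2208XP", links_in_use=4))  # 2 x 4 x 10 = 80
```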

Rack mount servers can also benefit from a FEX architecture that aggregates I/O traffic from several servers. Unlike the blade servers, where the FEX modules fit into the back of the chassis, rack mount servers require a standalone FEX chassis. The Cisco 2200 Series FEX model is functionally equivalent to the blade server FEX models above. Other than the physical cabling required to connect ports on the Cisco Nexus 2300 FEX to servers and FIs, discovery and configuration are the same as for blade server to FI connectivity. For data centers migrating their access-switching layer to 40GbE speeds, the Cisco Nexus 2300 series FEX is the newest model. For additional details on the Cisco Nexus 2000 Series FEX, see:

http://www.cisco.com/c/en/us/products/switches/nexus-2000-series-fabric-extenders/index.html

Rack mount servers can also be connected directly to the FIs, without using a Cisco UCS FEX chassis. This can add operational overhead because server policies must be managed individually, but it remains a valid option in smaller deployments.

The modular server chassis connect directly into the FIs using breakout cables that go from each 40G QSFP+ port on the server side to 4 x 10GbE ports on each FI. A second QSFP+ port is used to connect to the secondary FI.

The fabric extenders in a blade server chassis connect externally to the unified fabric using multiple 10GbE links; the number of links depends on the aggregate I/O bandwidth required. Each FEX connects into a Cisco UCS 6200 series fabric interconnect using up to 4 or 8 10GbE links, depending on the FEX model. The links can be deployed as independent links (discrete mode) or grouped together using link aggregation (port channel mode). In discrete mode, each server is pinned to a FEX link going to a port on the fabric interconnect, and if that link goes down, the server’s connection through that FEX link also goes down. In port channel mode, if a link goes down, the flows from the server are redistributed across the remaining port channel members. This is less disruptive overall, so port channel mode is preferable.
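The behavioral difference between the two modes on a link failure can be sketched with a small simulation. This is a conceptual model, not Cisco UCS code: the round-robin slot-to-link pinning and the CRC-based flow hash are simplifying assumptions, and the link and flow names are hypothetical.

```python
import zlib
from typing import Dict, Iterable, List


def discrete_pinning(slots: Iterable[int], uplinks: List[str]) -> Dict[int, str]:
    """Pin each server slot to exactly one uplink (assumed round-robin by slot)."""
    return {slot: uplinks[(slot - 1) % len(uplinks)] for slot in slots}


def slots_down(pinning: Dict[int, str], failed_link: str) -> List[int]:
    """Discrete mode: slots that lose their path through this FEX when a link fails."""
    return sorted(slot for slot, link in pinning.items() if link == failed_link)


def port_channel_member(flow_id: str, members: List[str]) -> str:
    """Port channel mode: choose a member link for a flow with a deterministic hash."""
    return members[zlib.crc32(flow_id.encode()) % len(members)]


uplinks = ["link1", "link2", "link3", "link4"]
failed = "link2"

# Discrete mode: every slot pinned to the failed link loses that path.
pinning = discrete_pinning(range(1, 9), uplinks)
print("discrete mode, slots affected:", slots_down(pinning, failed))  # [2, 6]

# Port channel mode: flows are re-hashed across the surviving members.
surviving = [link for link in uplinks if link != failed]
for flow in ("slot2-flowA", "slot2-flowB", "slot6-flowA"):
    print(flow, "->", port_channel_member(flow, surviving))
```

In the discrete case the affected servers stay down on that fabric until the link is restored or the pinning is changed, while in the port channel case every flow still lands on a working member, at reduced aggregate bandwidth.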

The Cisco UCS system provides the flexibility to individually select the components that meet the performance and scale requirements of a customer deployment. There are several options for the blade and rack servers, network adapters, FEX, and fabric interconnects that make up the Cisco UCS system.

Compute resources are grouped into an infrastructure layer and application data layer. Servers in the infrastructure layer are dedicated for hosting virtualized infrastructure services that are necessary for deploying and managing the entire data center. Servers in the application data layer are for hosting business applications and supporting services that Enterprise users will access to fulfill their business function.

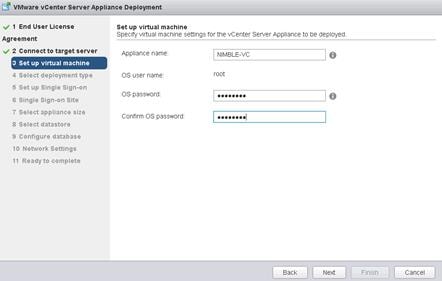

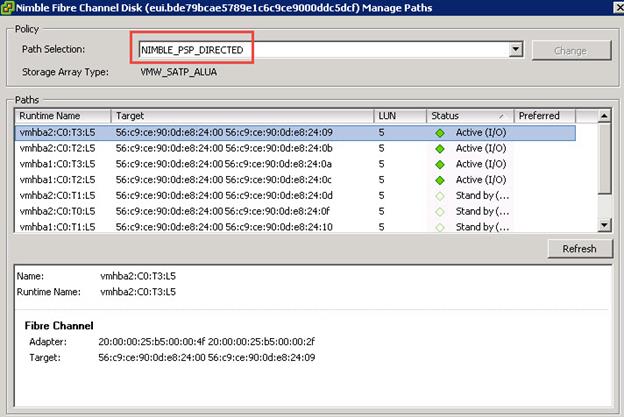

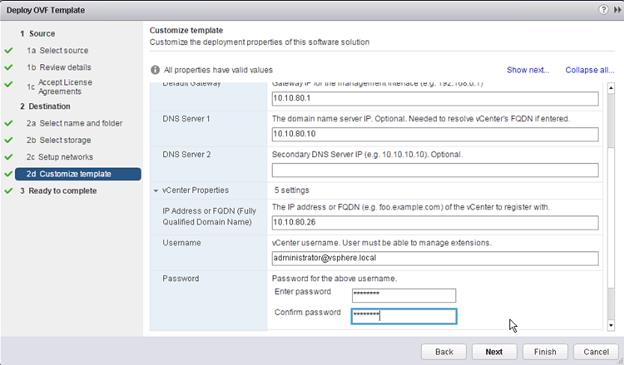

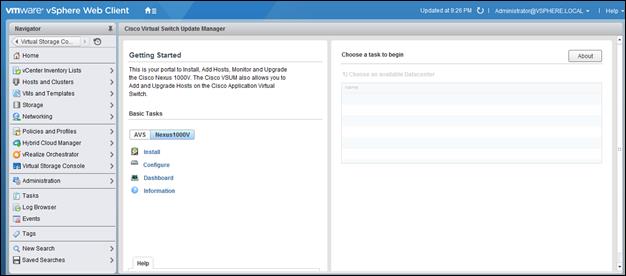

The architecture can support any hypervisor but VMware vSphere will be used to validate the architectures. High availability features available in the hypervisor will be leveraged to provide resiliency at the virtualization layer of the stack.