Key features

Simplified operations for powerful computing systems

Choose the right tier for your business

Intersight Infrastructure Service

Essentials

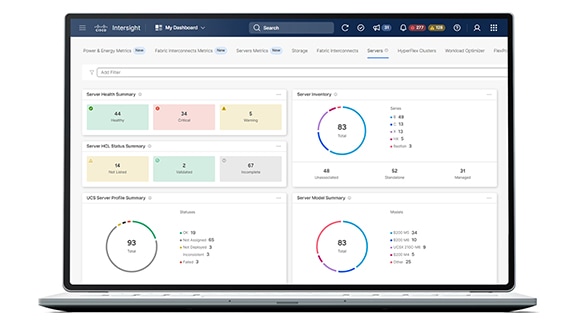

Server management with global health monitoring, inventory, proactive support through Cisco TAC integration, multi-factor authentication, and SDK and API access.

Features:

- Server policies and profiles

- Firmware management

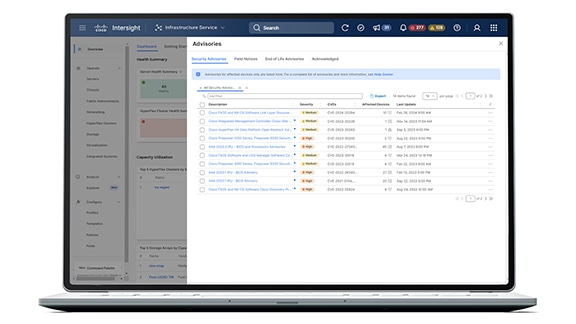

- Security advisories

- Energy and power dashboard

- Third-party servers: HPE, Dell

- Nutanix cluster lifecycle management

- Hardware compatibility compliance

- Proactive RMA

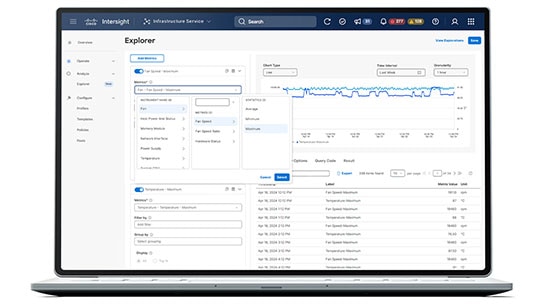

- Custom explorations with Metrics Explorer

Intersight Infrastructure Service

Advantage

Advanced server management with extended visibility, ecosystem integration, automation of Cisco and third-party hardware and software, and multi-domain solutions.

Includes everything in Essentials, plus:

- Advanced server operations

- Drag-and-drop automation and workflow designer

- Multi-domain automation and orchestration

- Tunneled vKVM

- OS installation

- ServiceNow ITSM/ITOM integration

Services and support