Cisco ACI Multi-Site Architecture White Paper


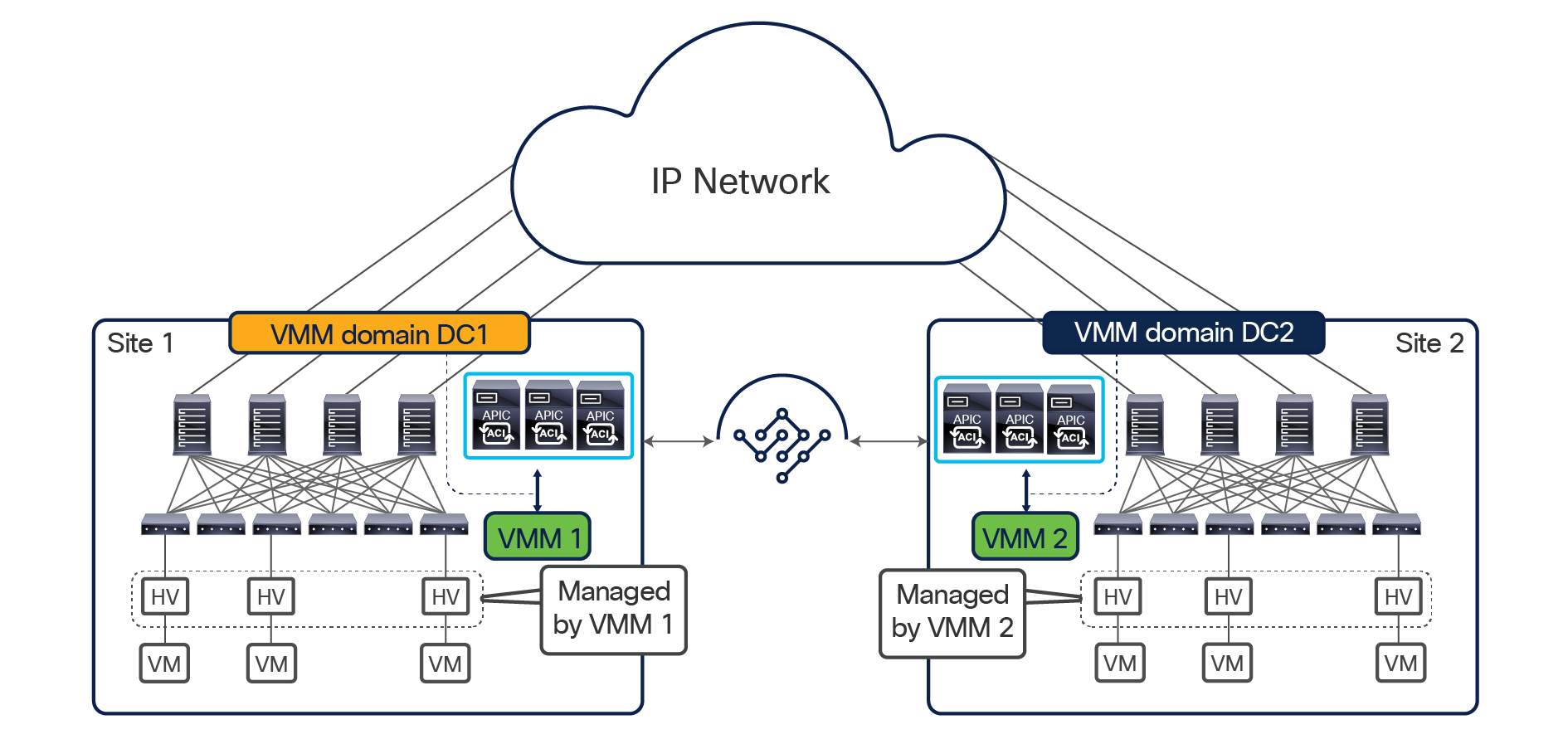

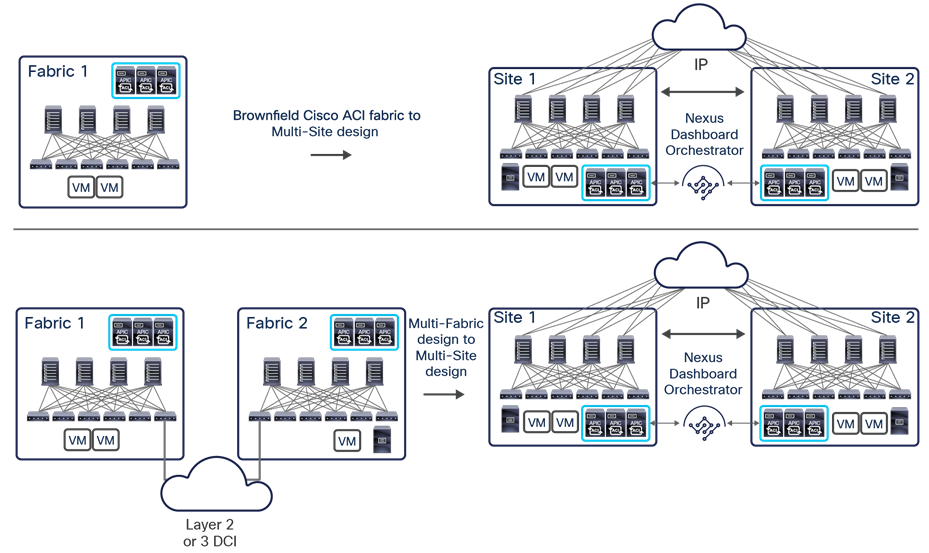

With the increasing adoption of Cisco Application Centric Infrastructure (Cisco ACI) as a pervasive fabric technology, enterprises and service providers commonly need to interconnect separate Cisco ACI fabrics. Business requirements (business continuance, disaster avoidance, etc.) lead to the deployment of separate data center fabrics, and these need to be interconnected with each other. Depending on the deployment option used (and as explained in this document), these fabrics may be called pods or fabrics and sites.

Note: To best understand the design presented in this document, readers should have at least a basic understanding of Cisco ACI and how it works and is designed for operation in a single site or pod. For more information, see the Cisco ACI white papers available at the following link: https://www.cisco.com/c/en/us/solutions/data-center-virtualization/application-centric-infrastructure/white-paper-listing.html.

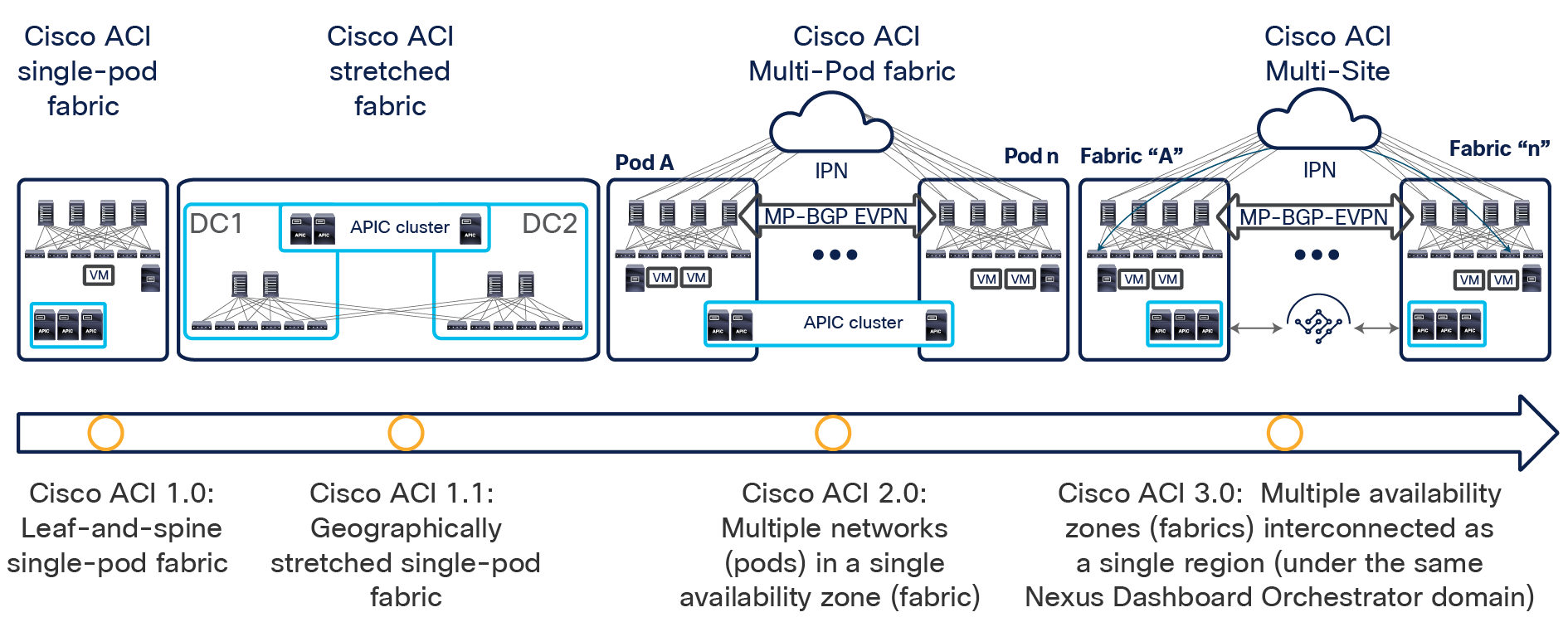

Figure 1 shows the architectural options to extend connectivity and policy enforcement between different ACI networks that have been offered from the launch of Cisco ACI up to today.

Cisco ACI connectivity options and policy domain evolution

● The first option, available from Cisco ACI Release 1.0, consists of a classic leaf-and-spine two-tier fabric (a single pod) in which all the deployed leaf nodes are fully meshed with all the deployed spine nodes. A single instance of Cisco ACI control-plane protocols runs between all the network devices within the pod. The entire pod is under the management of a single Cisco Application Policy Infrastructure Controller (APIC) cluster, which also represents the single point of policy definition.

● The next step in the evolution of Cisco ACI geographically stretches a pod across separate physical data center locations, usually deployed in the same metropolitan area. Because fiber connections between those locations are commonly in limited supply, the stretched fabric uses a partial mesh topology, in which some leaf nodes (called transit leaf nodes) connect to both the local and remote spine nodes, and the rest of the leaf nodes connect only to the local spine nodes. Despite the use of partial mesh connectivity, functionally the stretched fabric still represents a single-pod deployment, in which a single instance of all the Cisco ACI control-plane protocols runs across all the interconnected data center sites and so creates a single failure domain.

Note: For more information about the Cisco ACI stretched-fabric deployment option, refer to the following link: https://www.cisco.com/c/en/us/td/docs/switches/datacenter/aci/apic/sw/kb/b_kb-aci-stretched-fabric.html.

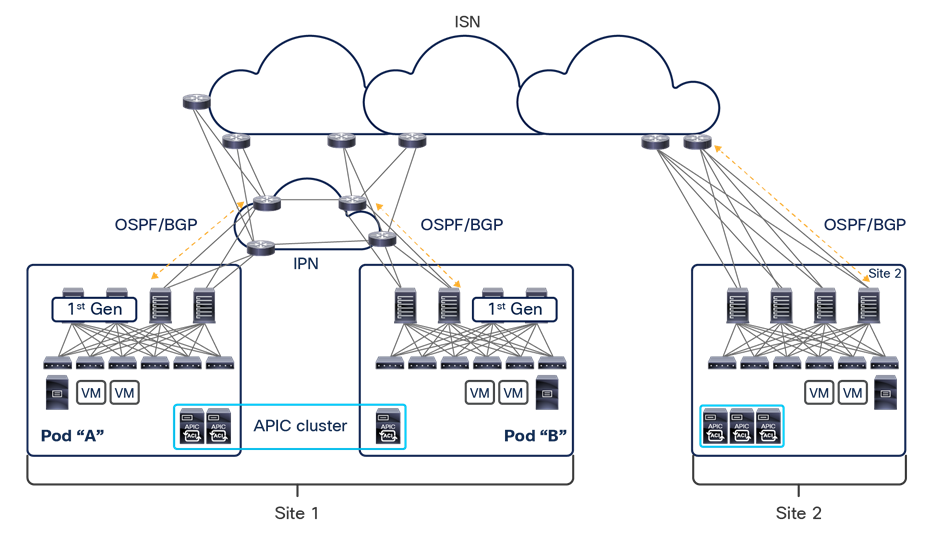

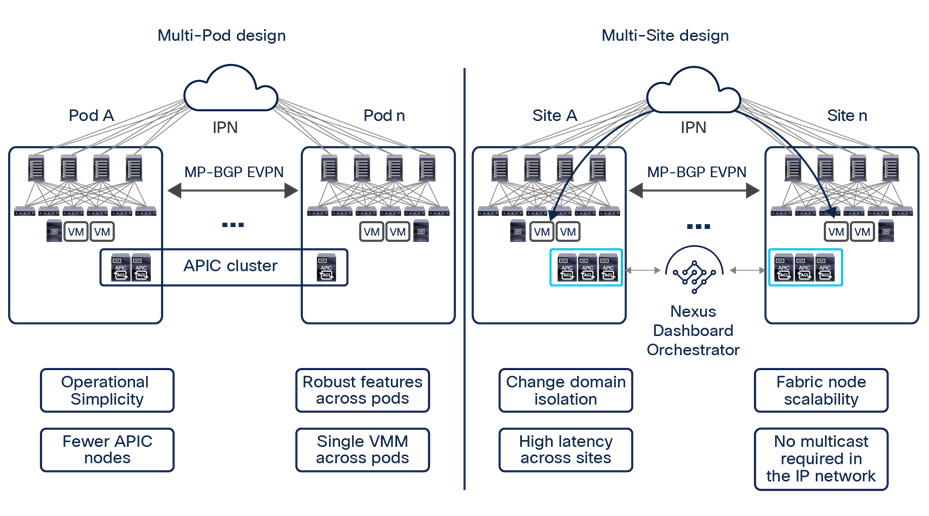

● To address the concerns about extending a single network fault domain across the entire stretched-fabric topology, Cisco ACI Release 2.0 introduced the Cisco ACI Multi-Pod architecture. This model calls for the deployment of separate Cisco ACI pods, each running separate instances of control-plane protocols and interconnected through an external IP routed network (or Interpod Network [IPN]). The Cisco ACI Multi-Pod design offers full resiliency at the network level across pods, even if the deployment remains functionally a single fabric, with all the nodes deployed across the pods under the control of the same APIC cluster. Each pod can hence be considered as a separate availability zone; all the pods under the control of the same APIC cluster are part of the same fabric (region).

The main advantage of the Cisco ACI Multi-Pod design is hence operational simplicity, with separate pods managed as if they were logically a single entity. This approach implies that all the Cisco ACI functions available in single-pod deployments (network service chaining, microsegmentation, Virtual Machine Manager [VMM] domain integration, etc.) can be deployed seamlessly across pods: a unique value provided by this architecture. Note, though, that because a Cisco ACI Multi-Pod architecture is managed as a single fabric (APIC domain), it represents a single tenant change domain, in which any configuration and policy changes applied in the context of a given tenant are immediately applied across all the pods. Although this behavior contributes to the operational simplicity of a Multi-Pod design, it also raises concerns about the propagation of configuration errors.

Note: Changes are applied immediately across all the pods, but only in the context of a given tenant. The implicit multitenant nature of a Cisco ACI fabric helps ensure complete isolation for all the resources deployed in separate tenants, shielding them from errors and disruptive events. For more information about the Cisco ACI Multi-Pod design, refer to the following link: https://www.cisco.com/c/en/us/solutions/collateral/data-center-virtualization/application-centric-infrastructure/white-paper-c11-737855.html.

Additionally, a maximum latency of 50 msec RTT can be supported between pods starting from Cisco ACI Release 2.3(1). In previous Cisco ACI releases, this limit is 10 msec RTT instead.
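The latency limits above can be expressed as a small check. The following is a minimal sketch, not a Cisco tool: the function names are hypothetical, and the release-string parsing assumes the usual `major.minor(patch)` format.

```python
import re
from typing import Tuple


def parse_release(release: str) -> Tuple[int, int, int]:
    """Turn a release string such as '2.3(1)' or '6.0(4c)' into (2, 3, 1) or (6, 0, 4)."""
    match = re.match(r"(\d+)\.(\d+)\((\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized release string: {release!r}")
    major, minor, patch = match.groups()
    return int(major), int(minor), int(patch)


def max_interpod_rtt_ms(release: str) -> int:
    """Maximum supported RTT between pods: 50 ms from Release 2.3(1), 10 ms before."""
    return 50 if parse_release(release) >= (2, 3, 1) else 10


def rtt_supported(release: str, measured_rtt_ms: float) -> bool:
    """True if the measured inter-pod RTT is within the limit for this release."""
    return measured_rtt_ms <= max_interpod_rtt_ms(release)
```

For example, `rtt_supported("2.2(4)", 25)` is false, while the same 25-msec RTT is within the limit on Release 2.3(1) and later.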

● The need for complete isolation (both at the network and tenant change-domain levels) across separate Cisco ACI networks led to the Cisco ACI Multi-Site architecture, introduced in Cisco ACI Release 3.0(1). This architecture is the main focus of this document and will be discussed in detail in the following sections.

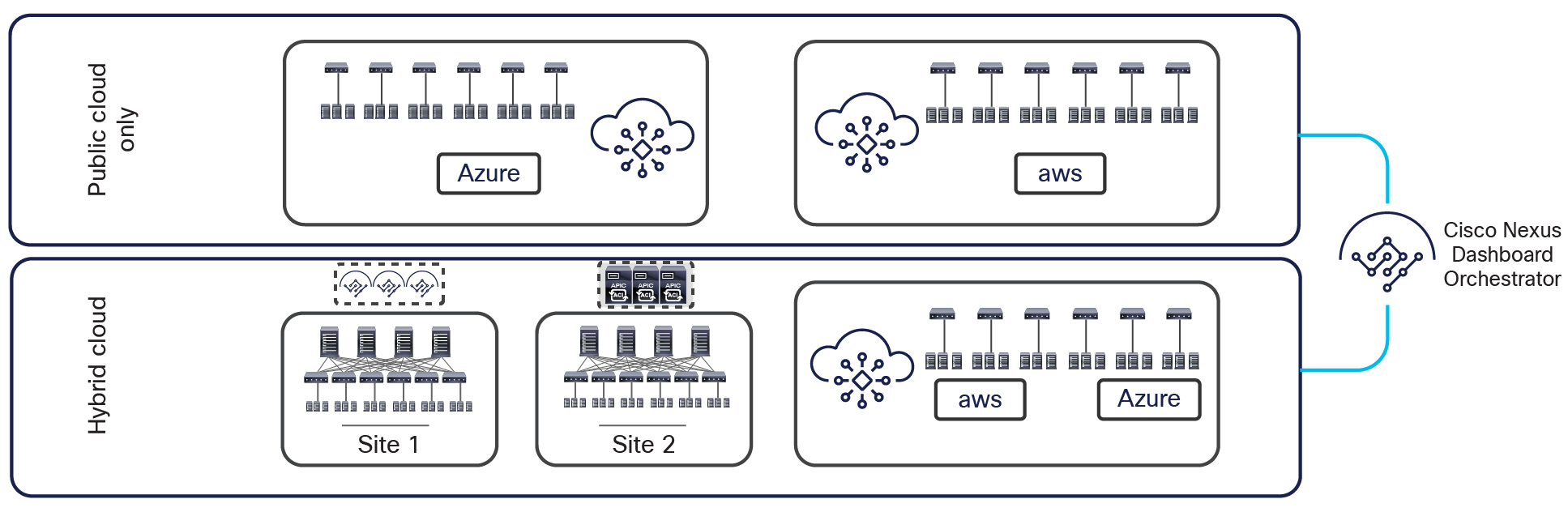

● The same architectural approach taken for ACI Multi-Site has also been extended to provide connectivity and policy extension between on-premises ACI fabrics and public cloud resources (integration with AWS and Azure is supported at the time of writing of this document).

Support for hybrid-cloud and public cloud only deployment options

As shown above, both hybrid-cloud (that is, on-premises ACI fabrics connecting to public cloud resources) and public-cloud-only scenarios are currently supported. Describing those deployment options in more detail is outside the scope of this paper. For more information, please refer to the white papers below:

Before exploring the details of the Cisco ACI Multi-Site design, you should understand why Cisco uses both Multi-Pod and Multi-Site architectures and how you can position them to complement each other to meet different business requirements. To start, you should understand the main terminology used in this document, which leverages some naming conventions heavily used in AWS public cloud deployments.

● Pod: A pod is a leaf-and-spine network sharing a common control plane (Intermediate System–to–Intermediate System [ISIS], Border Gateway Protocol [BGP], Council of Oracle Protocol [COOP], etc.). A pod is hence a single network fault domain that can be compared with an AWS availability zone.

● Fabric: A fabric is the set of leaf and spine nodes under the control of the same APIC domain. Each fabric represents a separate tenant change domain, because every configuration and policy change applied in the APIC is applied to a given tenant across the fabric. A fabric can hence be compared to an AWS region.

● Multi-Pod: A Multi-Pod design consists of a single APIC domain with multiple leaf-and-spine networks (pods) interconnected. As a consequence, a Multi-Pod design is functionally a fabric (an interconnection of availability zones), but it does not represent a single network failure domain, because each pod runs a separate instance of control-plane protocols. A Multi-Pod fabric can hence be compared to an AWS region interconnecting different AWS availability zones.

● Multi-Site: A Multi-Site design is the architecture interconnecting multiple APIC cluster domains with their associated pods. A Multi-Site design could also be called a Multi-Fabric design, because it interconnects separate regions (fabrics) each deployed as either a single pod or multiple pods (a Multi-Pod design).
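The terminology above can be summarized as a small data model. This is only an illustrative sketch: the class and method names are hypothetical and do not correspond to any APIC or NDO object model.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Pod:
    """One leaf-and-spine network: a single network fault domain (availability zone)."""
    name: str


@dataclass
class Fabric:
    """All pods under one APIC domain: a single tenant change domain (region)."""
    apic_cluster: str
    pods: List[Pod] = field(default_factory=list)

    @property
    def is_multi_pod(self) -> bool:
        return len(self.pods) > 1


@dataclass
class MultiSiteDomain:
    """Several fabrics (APIC domains) interconnected by Multi-Site."""
    fabrics: List[Fabric] = field(default_factory=list)

    def network_fault_domains(self) -> int:
        # Each pod runs its own instance of the control-plane protocols.
        return sum(len(fabric.pods) for fabric in self.fabrics)

    def tenant_change_domains(self) -> int:
        # Each APIC domain is a separate tenant change domain.
        return len(self.fabrics)


domain = MultiSiteDomain([
    Fabric("apic-region-1", [Pod("pod-1"), Pod("pod-2")]),  # Multi-Pod fabric
    Fabric("apic-region-2", [Pod("pod-1")]),                # single-pod fabric
])
```

In this example the domain contains three network fault domains (pods) but only two tenant change domains (fabrics).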

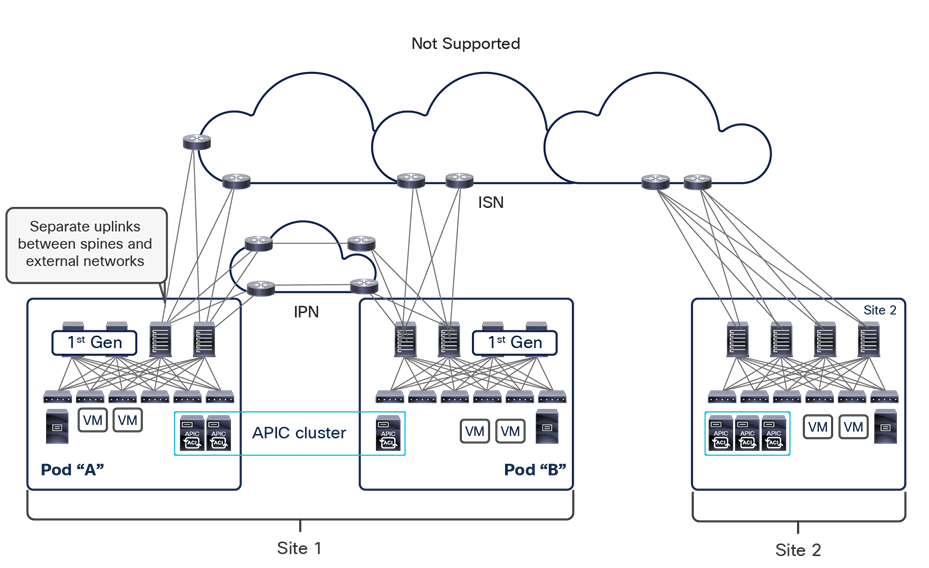

Note: Do not confuse the term “Multi-Fabric design,” used here to identify the Multi-Site architecture discussed in this document, with the term “dual-fabric design,” which refers to a precursor of the Multi-Site design. When operating more than one ACI fabric, it is highly recommended to deploy Multi-Site instead of interconnecting multiple individual ACI fabrics to each other via leaf switches (dual-fabric design). Although the latter option may have been one of the only choices before Multi-Site was available, it is currently not officially supported, because no validation or quality-assurance tests are performed on such topologies, specifically when they are deployed in conjunction with a separate Data Center Interconnect (DCI) technology (such as OTV, VPLS, etc.) that extends Layer 2 domains across sites. Hence, although ACI has a feature called Common Pervasive Gateway for interconnecting ACI fabrics prior to Multi-Site, it is highly recommended to design a new ACI Multi-Fabric deployment with Multi-Site when there is a requirement to extend Layer 2 between separate APIC domains.

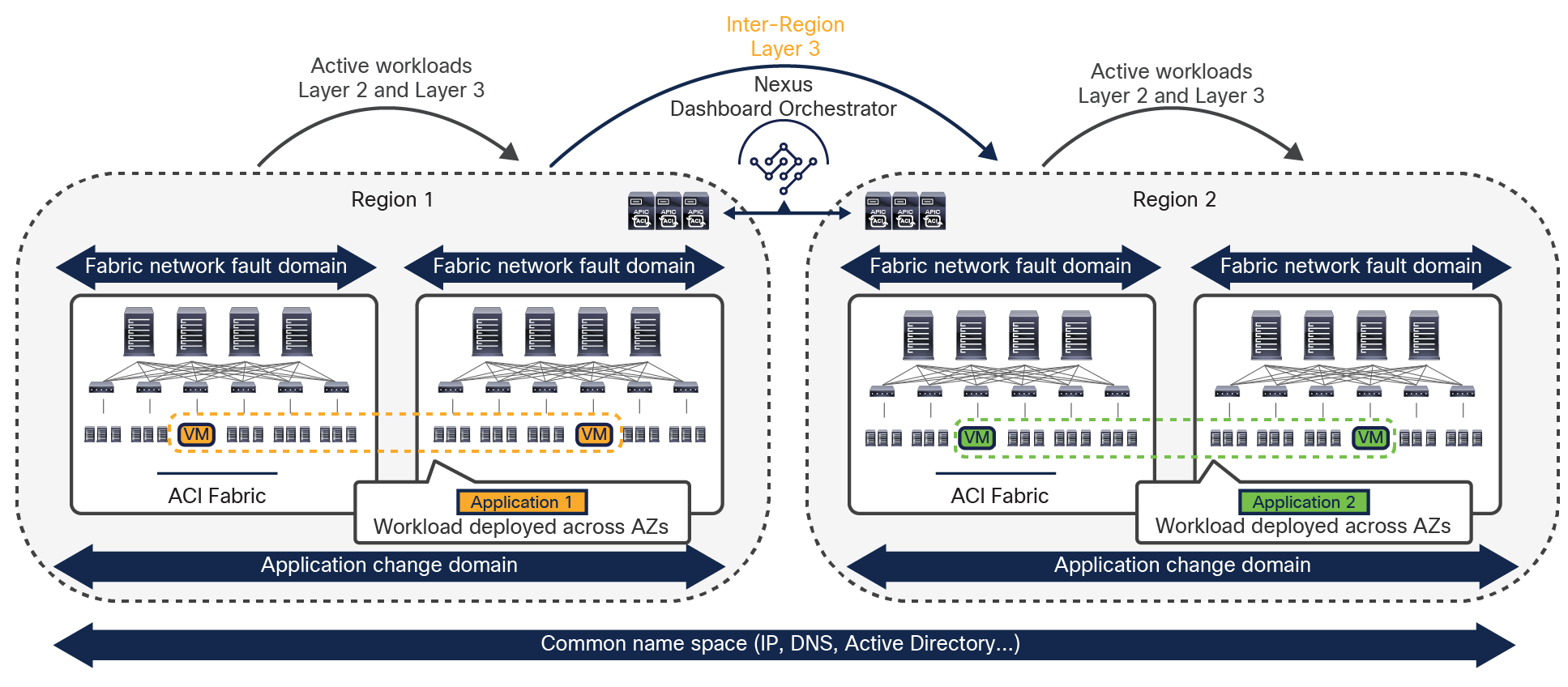

An understanding of AWS constructs such as availability zones and regions is essential to understanding why Cisco decided to invest in a Multi-Site architecture after having already delivered the Cisco ACI Multi-Pod design. Organizations typically need to deploy different instances of applications across data center fabrics representing separate regions. This setup is critical to help ensure that any network-level failures or configuration or policy definition errors that occur in one region will not be propagated to the application’s workloads running in a separate region, thus reinforcing both disaster-avoidance and disaster-recovery capabilities.

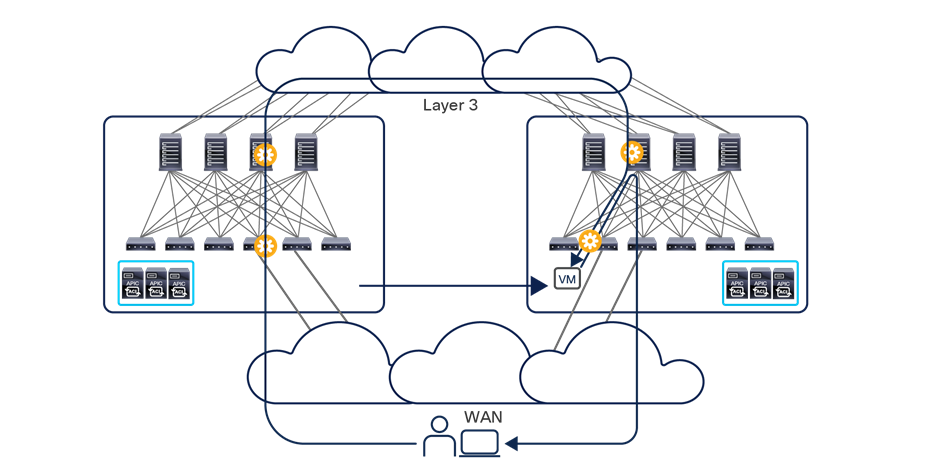

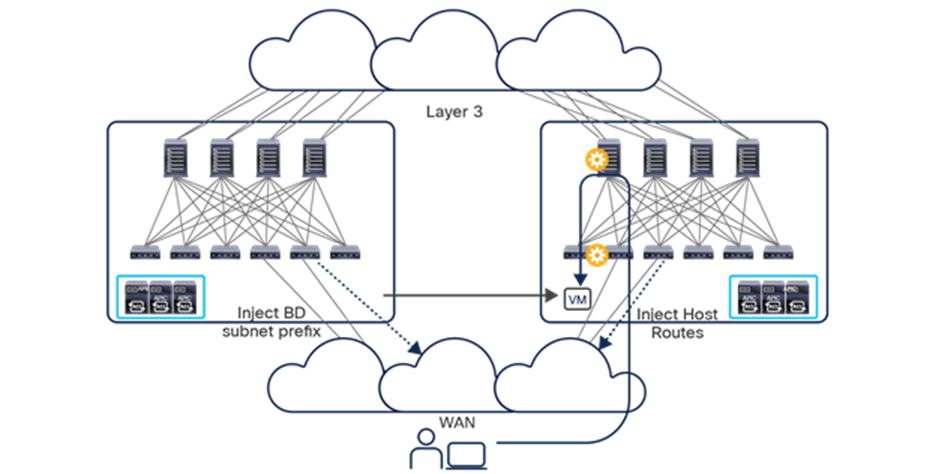

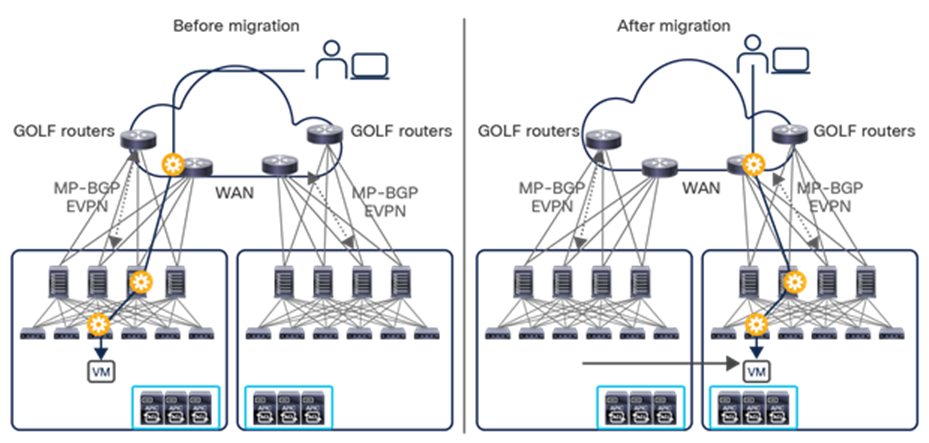

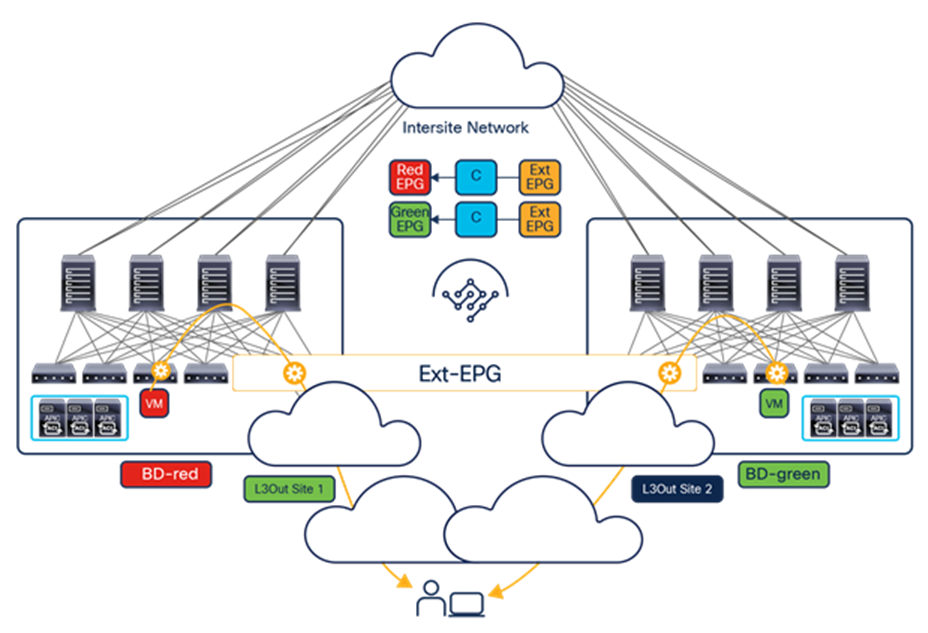

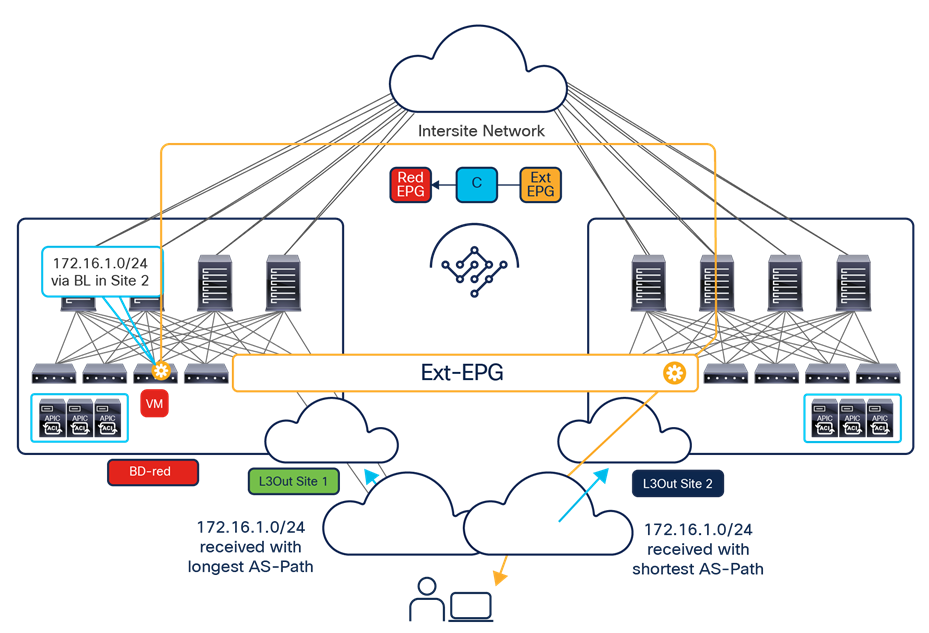

The deployment of Cisco ACI Multi-Pod and Multi-Site architectures thus can be combined to meet two different requirements. You can create a group of flexible Cisco ACI islands that can be seen and operated as a single logical entity (fabric or region) and used to deploy the functional components of a given application in a classic active/active model (that is, different endpoints building the application tiers can be freely deployed across availability zones that are part of the same fabric). You then can also reliably interconnect and scale those fabrics to be able to deploy different application instances in separate regions (a per-application active/active deployment model that is used for disaster-avoidance requirements) or to provide full application-recovery capabilities across them (a disaster recovery use case). See Figure 3.

Change and network fault domains isolation

Note: The combined deployment of a Cisco ACI Multi-Pod and Multi-Site architecture shown above is supported in Cisco ACI Release 3.2(1) and later.

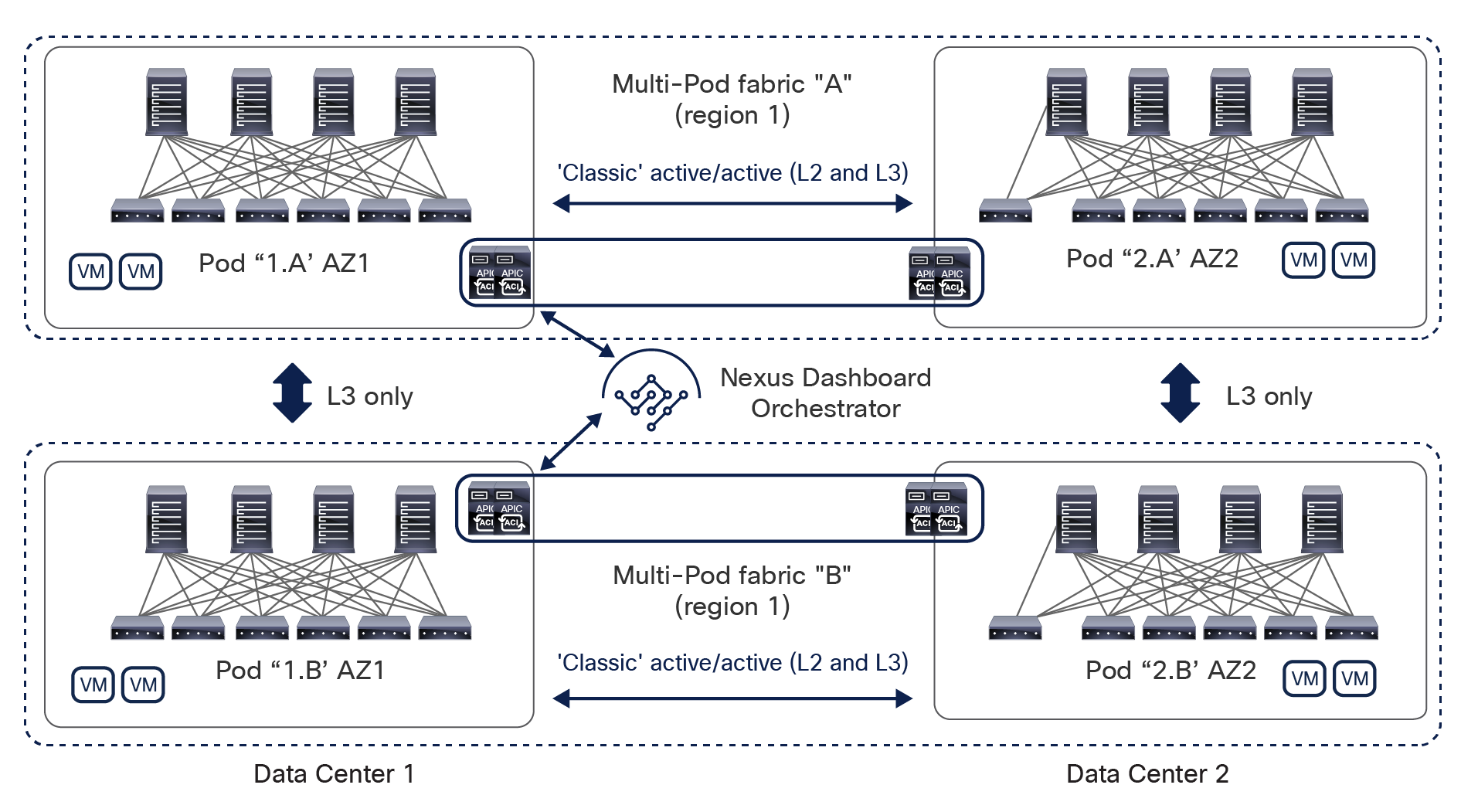

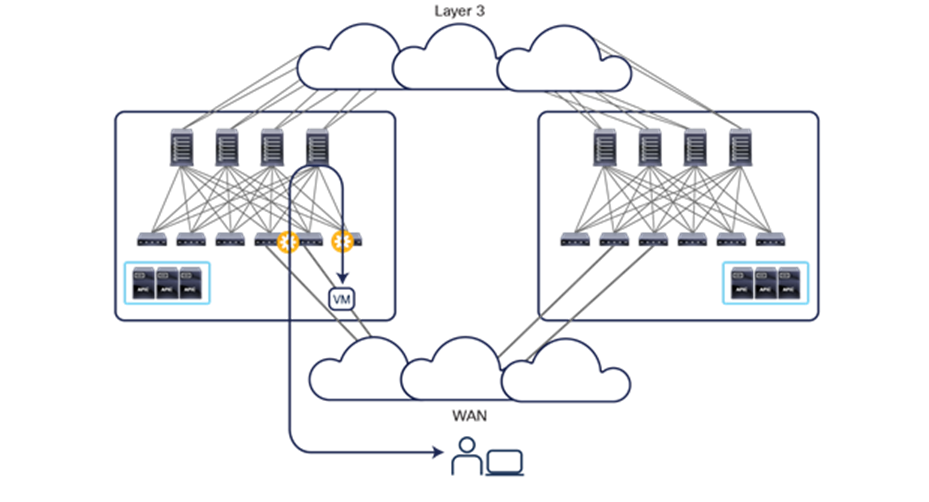

In lower-scale deployments, it is also quite common for customers to use the same two data center locations for addressing disaster-avoidance and disaster-recovery requirements. Combining ACI Multi-Pod with ACI Multi-Site allows you to handle those specific requirements as well, providing support at the same time for a classic active/active application deployment across sites and a typical application recovery mechanism required in disaster recovery scenarios (Figure 4).

Combined deployment of Cisco ACI Multi-Pod and Multi-Site architectures

The deployment model shown in the previous two figures, in which Multi-Site interconnects separate fabrics (regions) at Layer 3 and Multi-Pod provides the Layer 2 extension services, should be the preferred and recommended model. That said, and as will be made clear in the rest of this paper, Multi-Site also offers native Layer 2 extension capabilities, which allow this architecture to address some of the specific use cases for which Multi-Pod would usually be considered a better fit. When doing so, it is important to take into consideration some of the functional restrictions that may be encountered (for example, when integrating FW or SLB clusters into Multi-Site).

The remainder of this document focuses on the Cisco ACI Multi-Site architecture, starting with an overview of its functional components.

Cisco ACI Multi-Site architecture

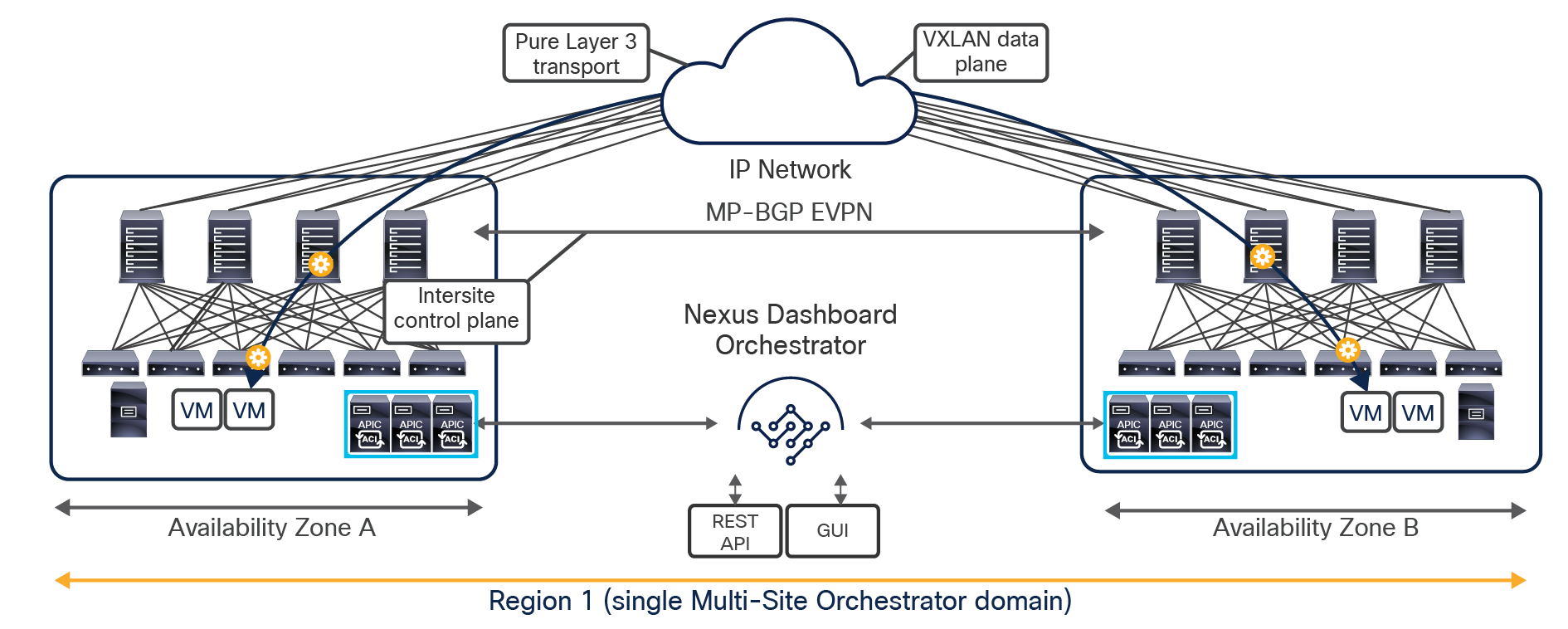

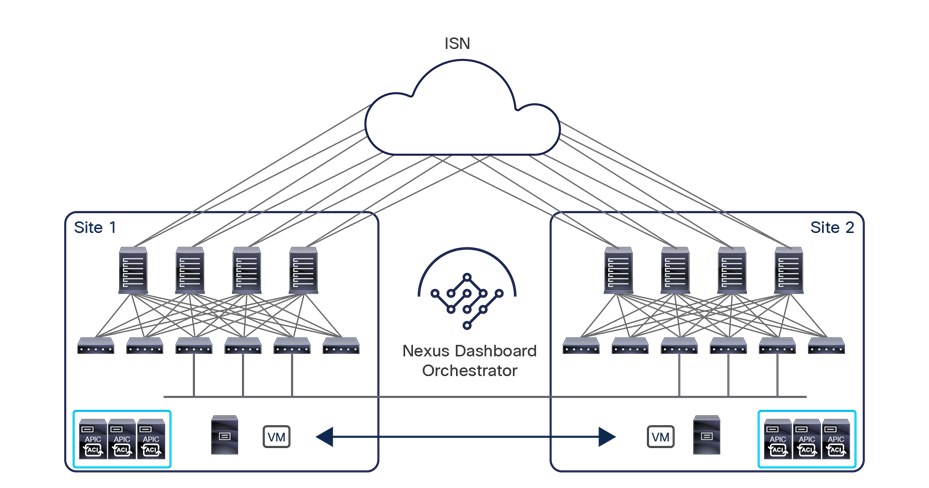

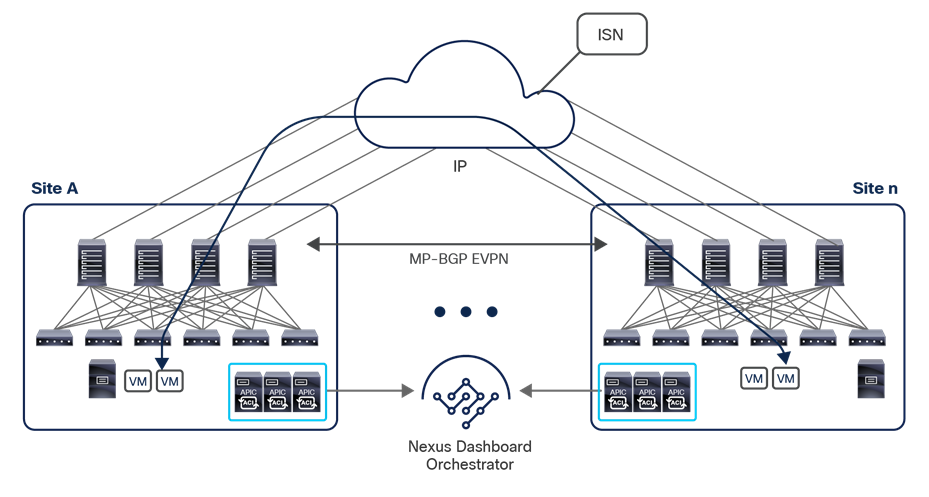

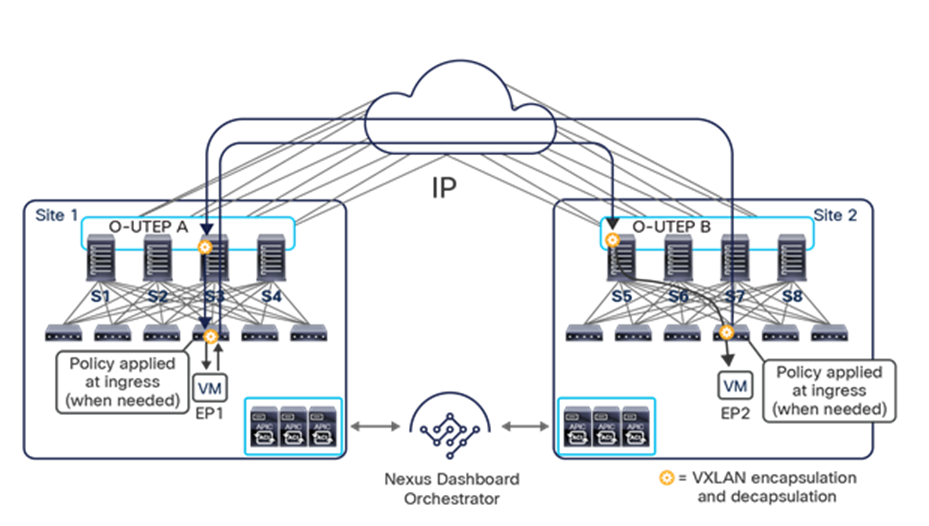

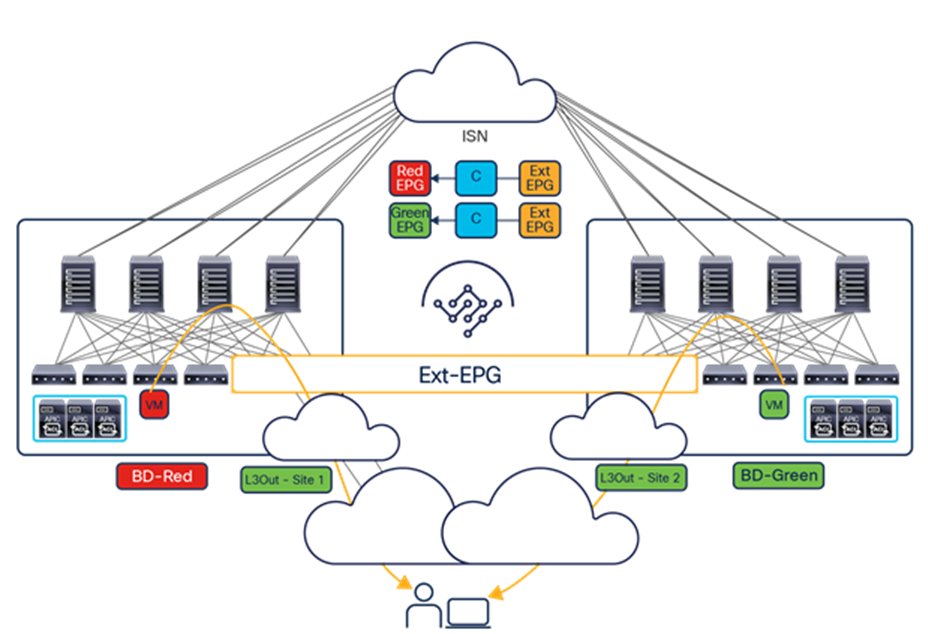

The overall Cisco ACI Multi-Site architecture is shown in Figure 5.

Cisco ACI Multi-Site architecture


This design is achieved by using the following functional components:

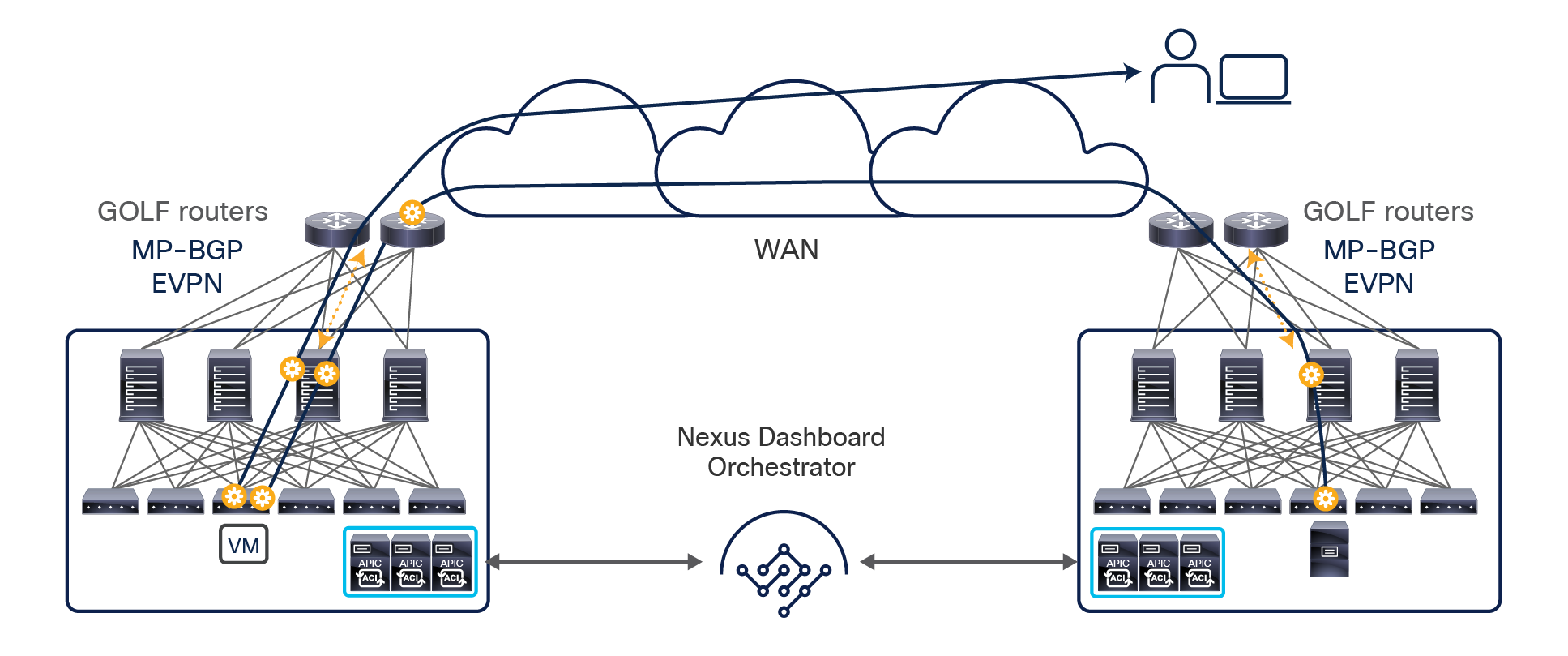

● Cisco Nexus Dashboard Orchestrator (NDO): This component is the intersite policy manager. It provides single-pane management, enabling you to monitor the health-score state for all the interconnected sites. It also allows you to define, in a centralized place, all the intersite policies that can then be pushed to the different APIC domains for rendering them on the physical switches building those fabrics. It thus provides a high degree of control over when and where to push those policies, hence allowing the change domain separation that uniquely characterizes the Cisco ACI Multi-Site architecture.

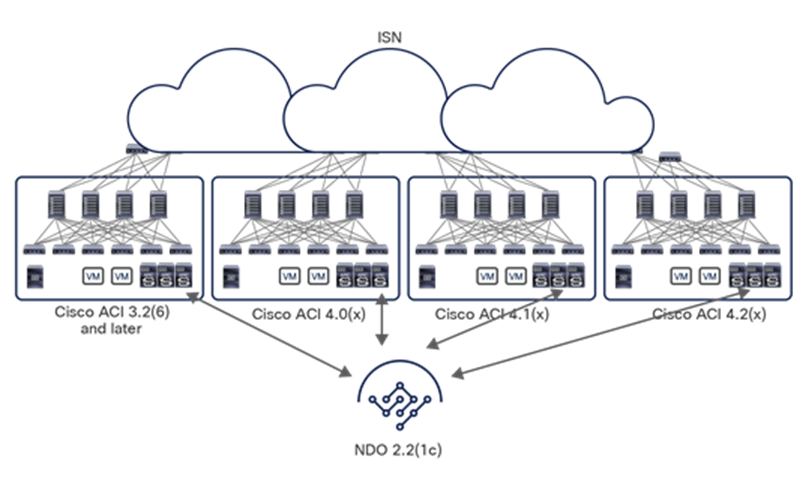

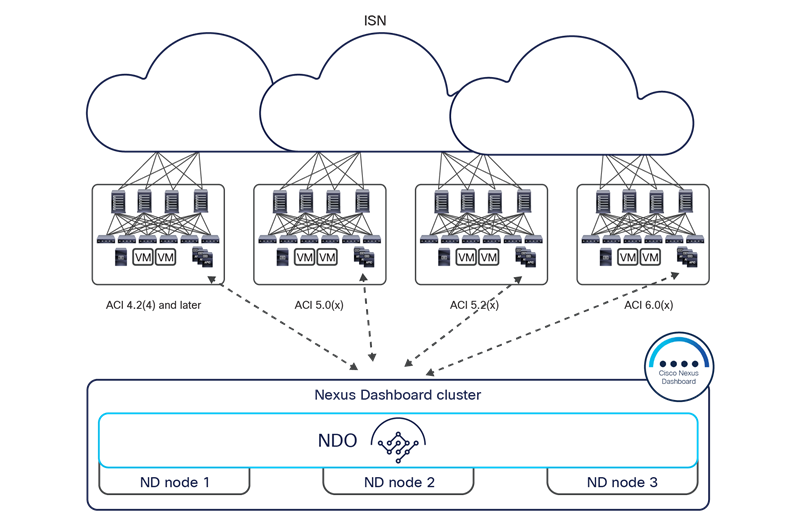

Prior to Orchestrator Software Release 3.2(1), this component was named Multi-Site Orchestrator (MSO), whereas in newer releases it is supported only as an application running on the Cisco Nexus Dashboard (ND) compute platform, hence the new name Nexus Dashboard Orchestrator (NDO). However, in Cisco documentation you may find references to “Multi-Site Orchestrator (MSO),” “Nexus Dashboard Orchestrator (NDO),” or simply “Orchestrator service” used interchangeably. All these names refer to the same functional component of the Cisco Multi-Site architecture.

For more information about Cisco Nexus Dashboard Orchestrator, see the section “Cisco Nexus Dashboard Orchestrator.”

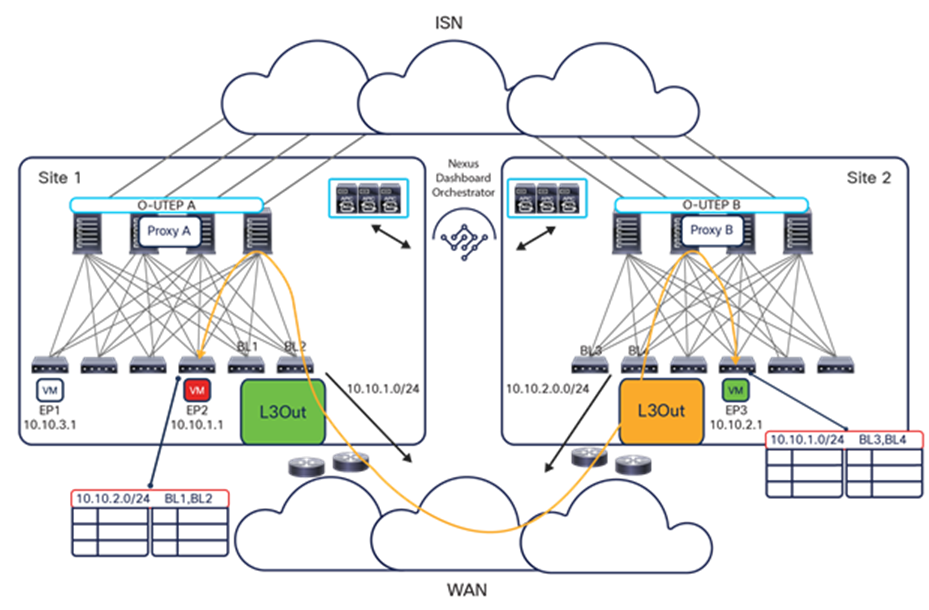

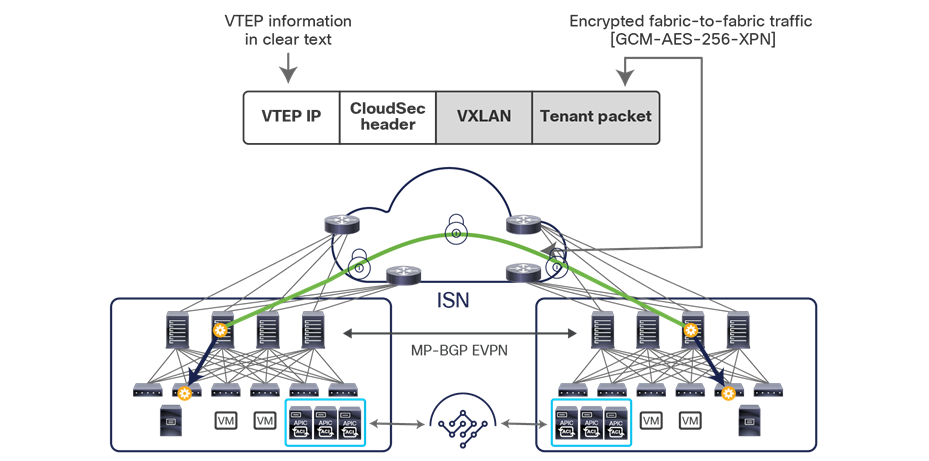

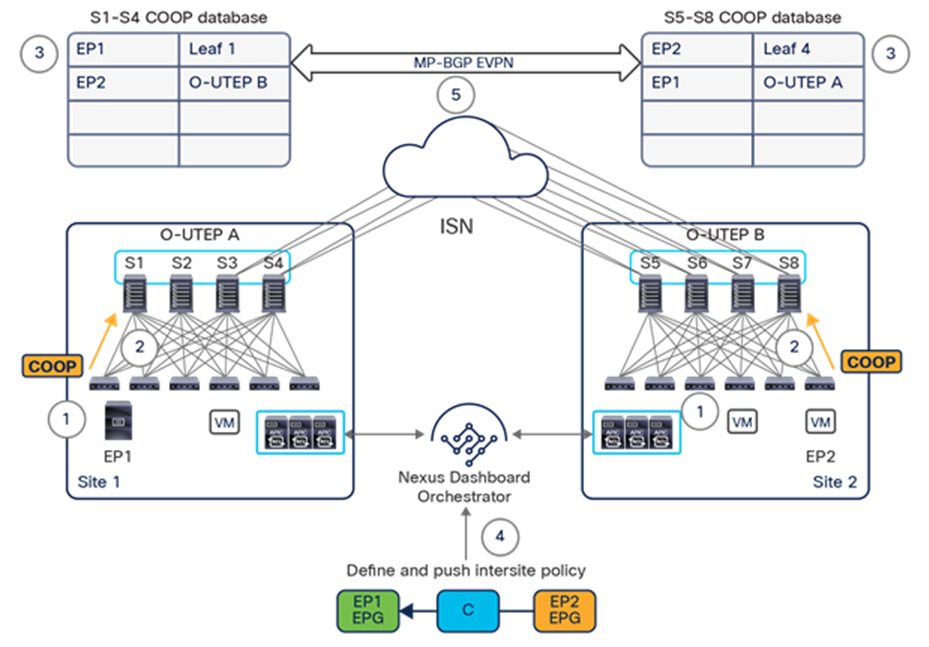

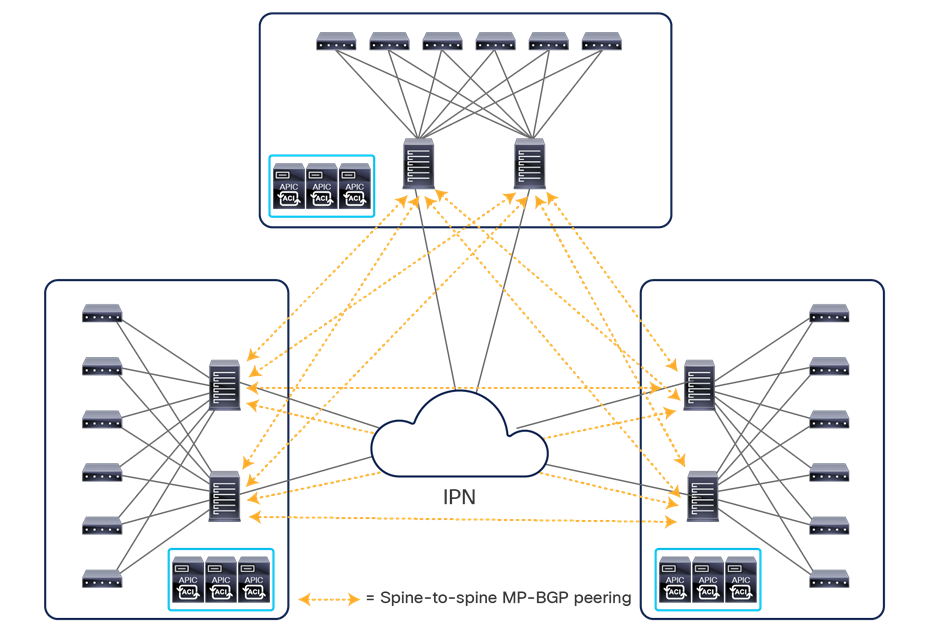

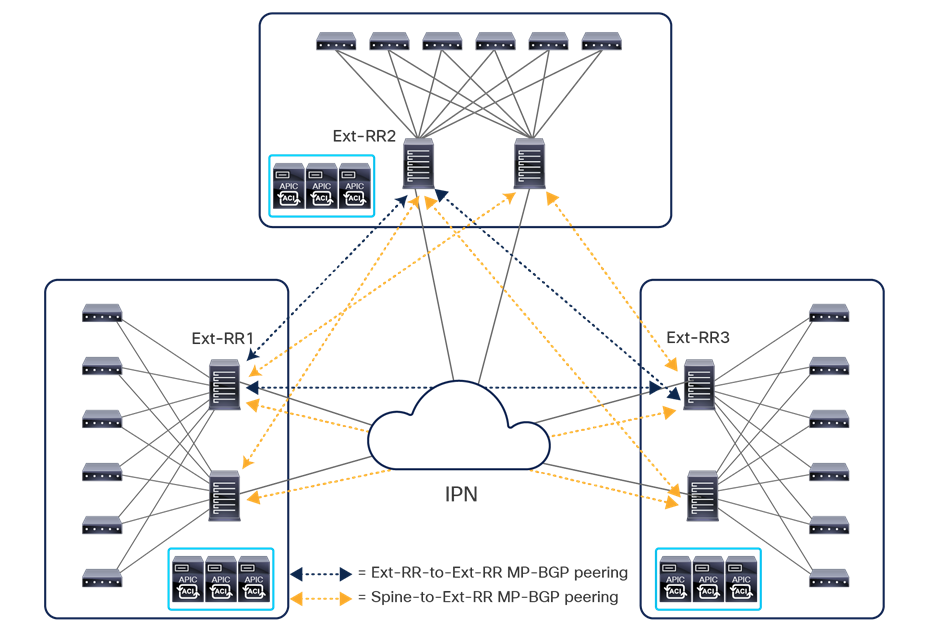

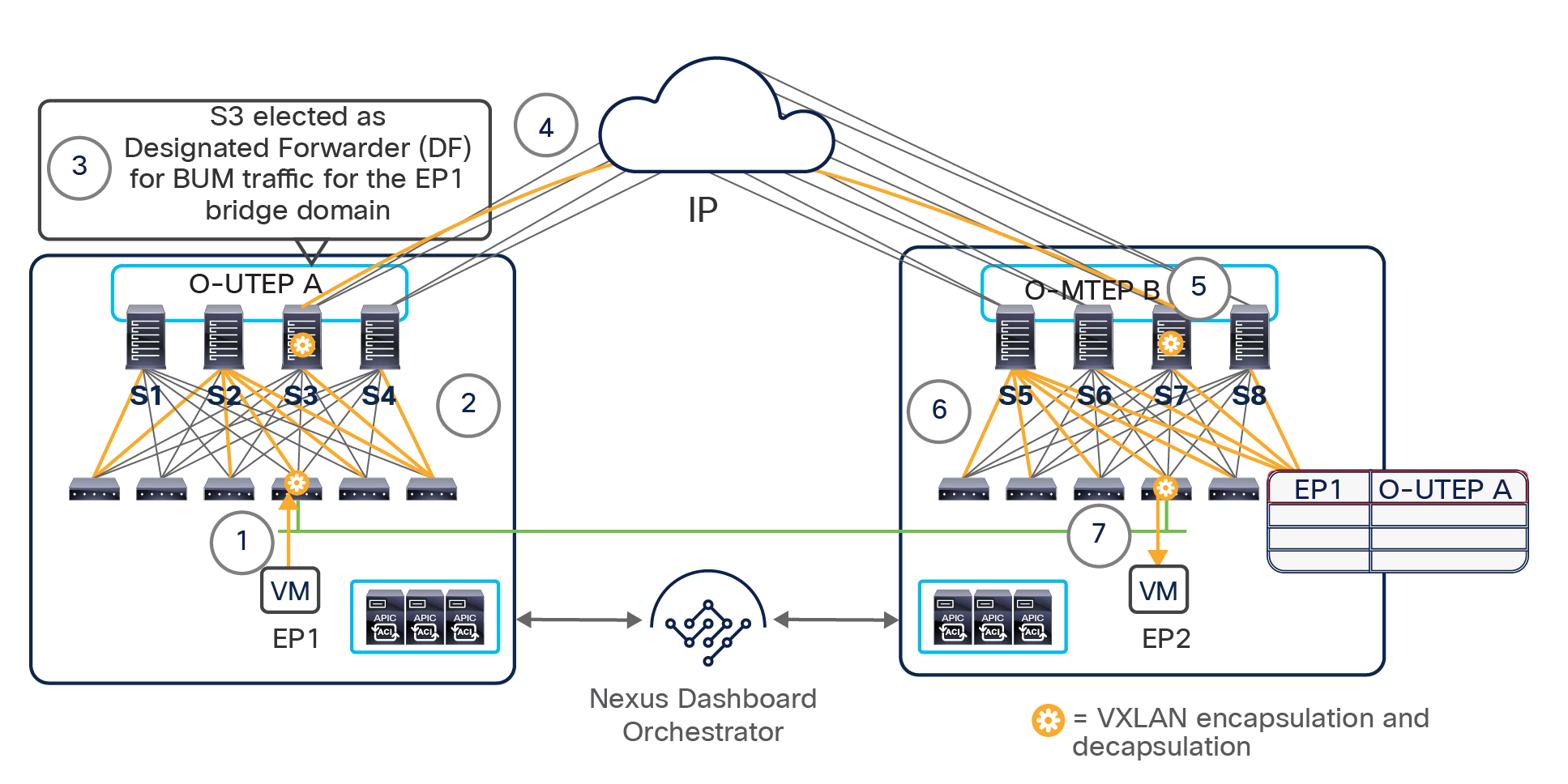

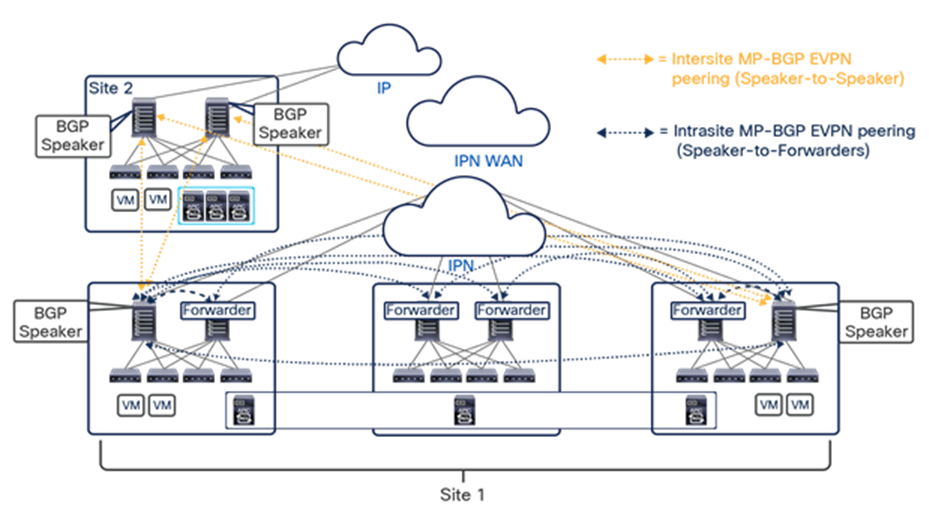

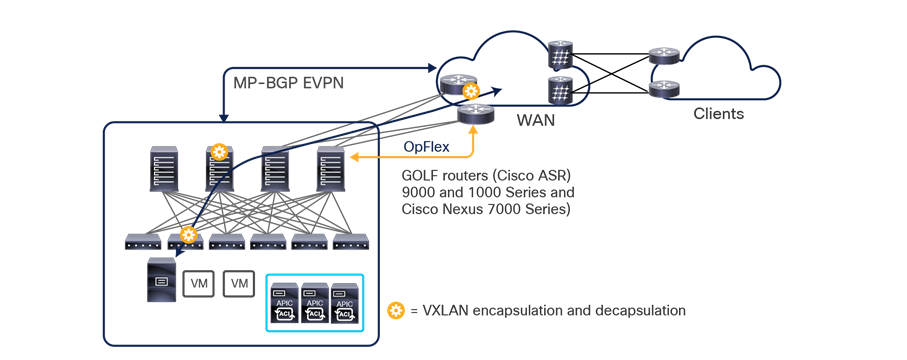

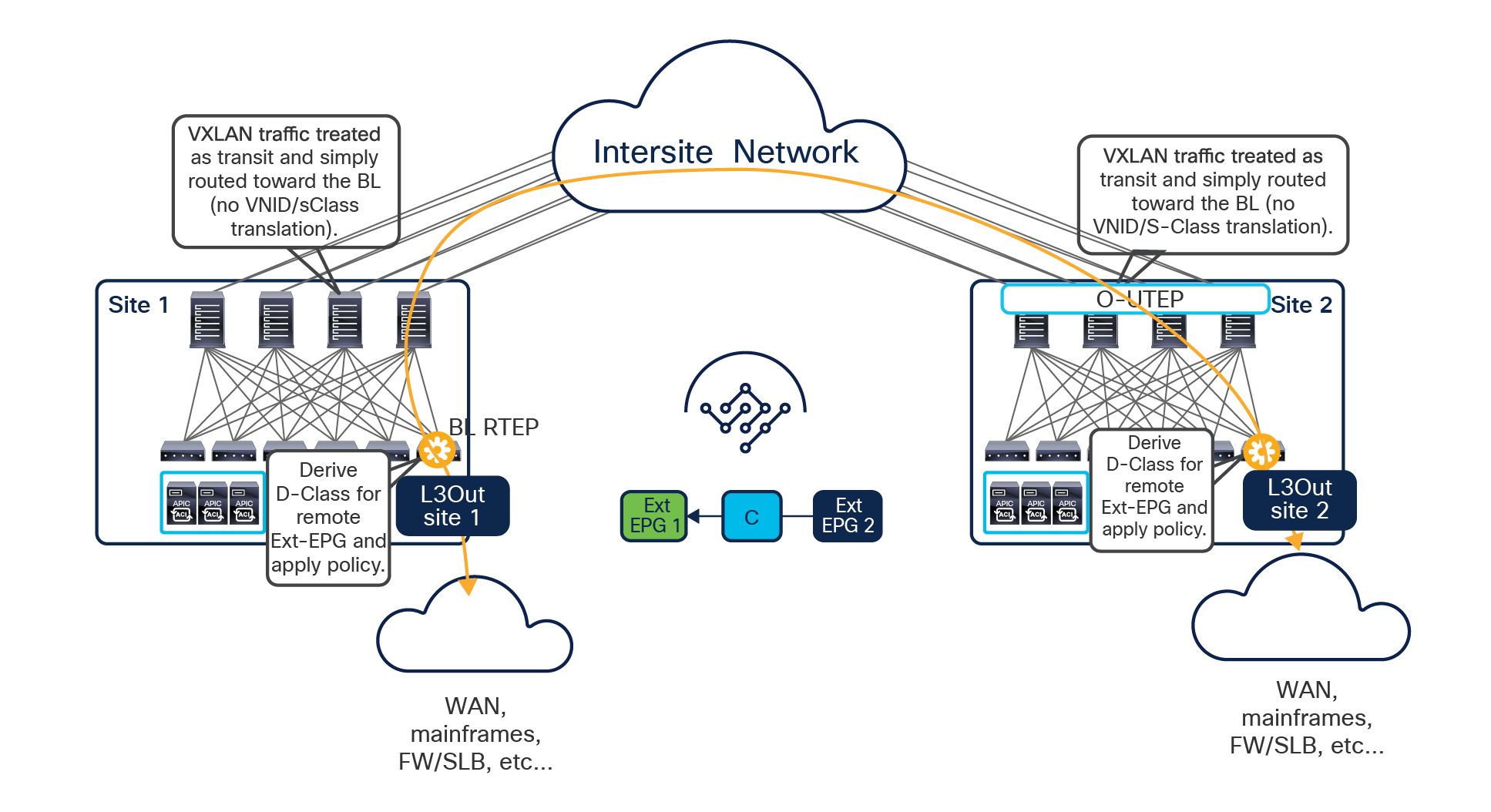

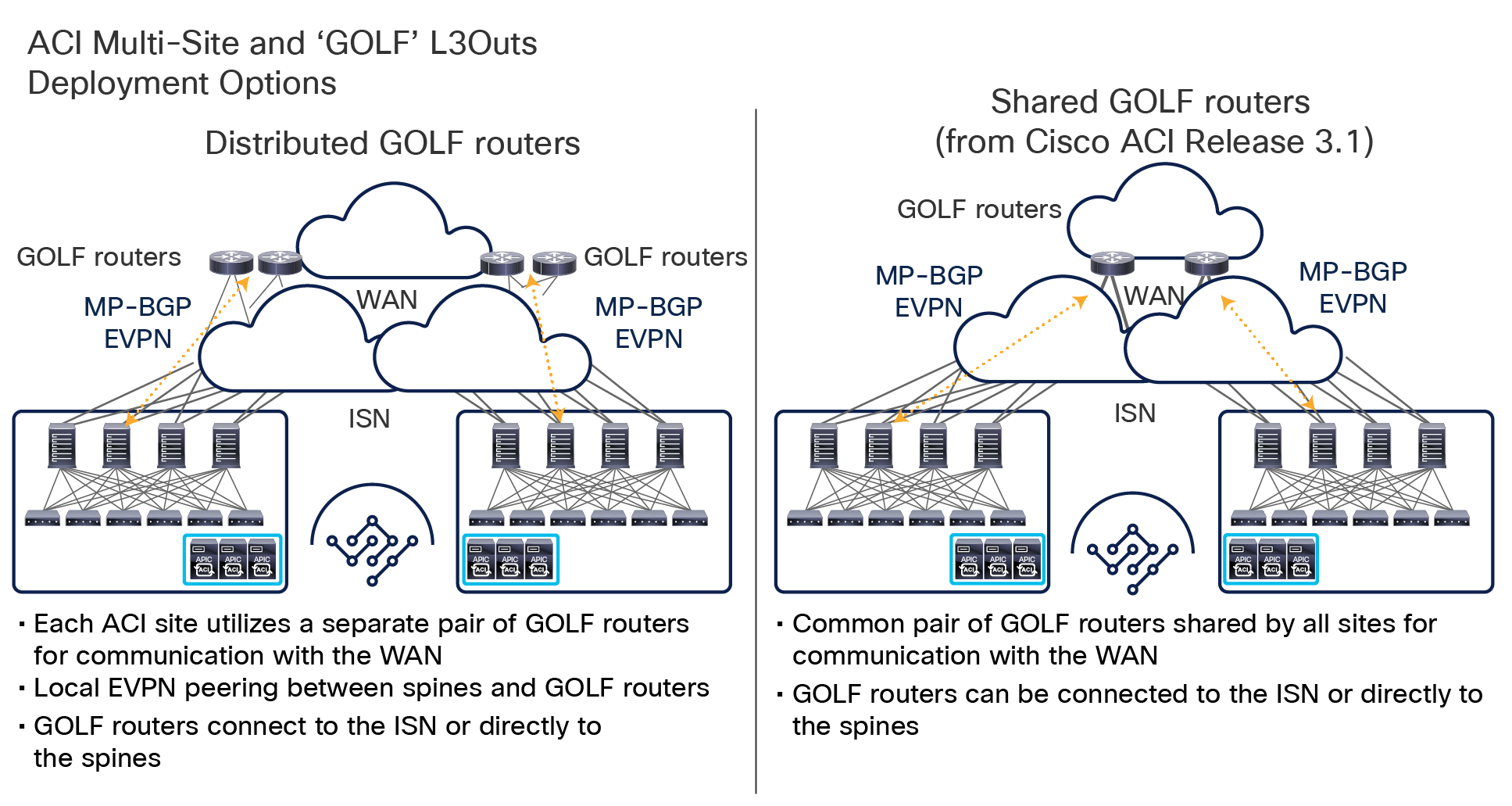

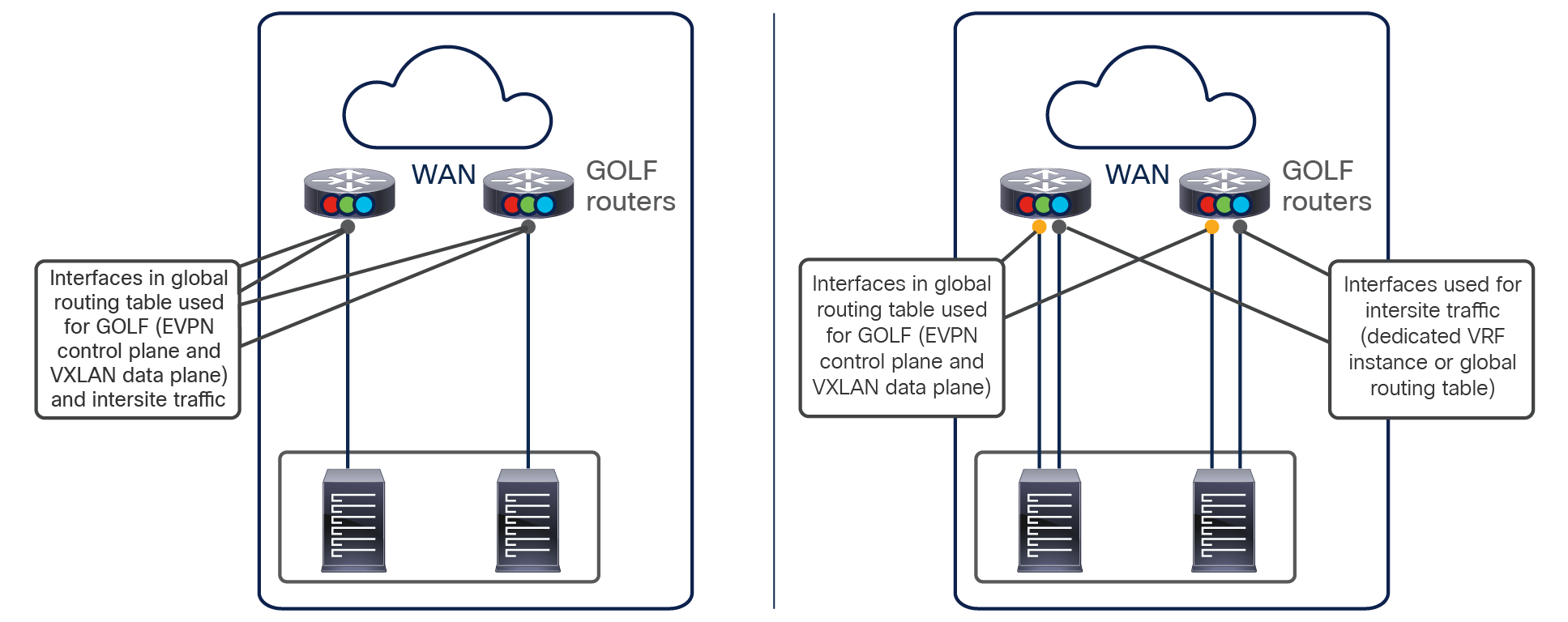

● Intersite control plane: Endpoint reachability information is exchanged across sites using a Multiprotocol-BGP (MP-BGP) Ethernet VPN (EVPN) control plane. This approach allows the exchange of MAC and IP address information for the endpoints that communicate across sites. MP-BGP EVPN sessions are established between the spine nodes deployed in separate fabrics that are managed by the same instance of Cisco Nexus Dashboard Orchestrator, as discussed in more detail in the section “Cisco ACI Multi-Site overlay control plane.”

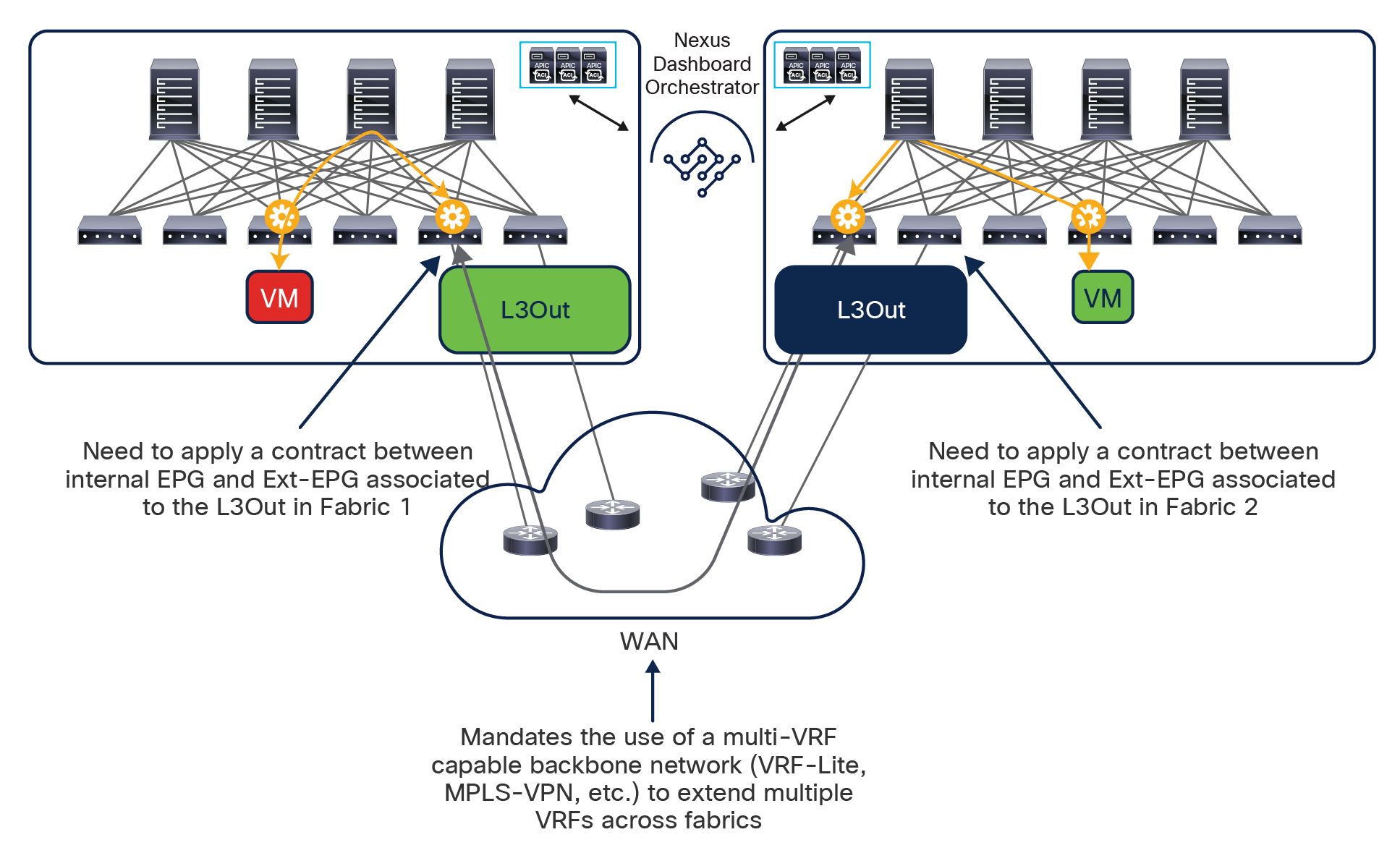

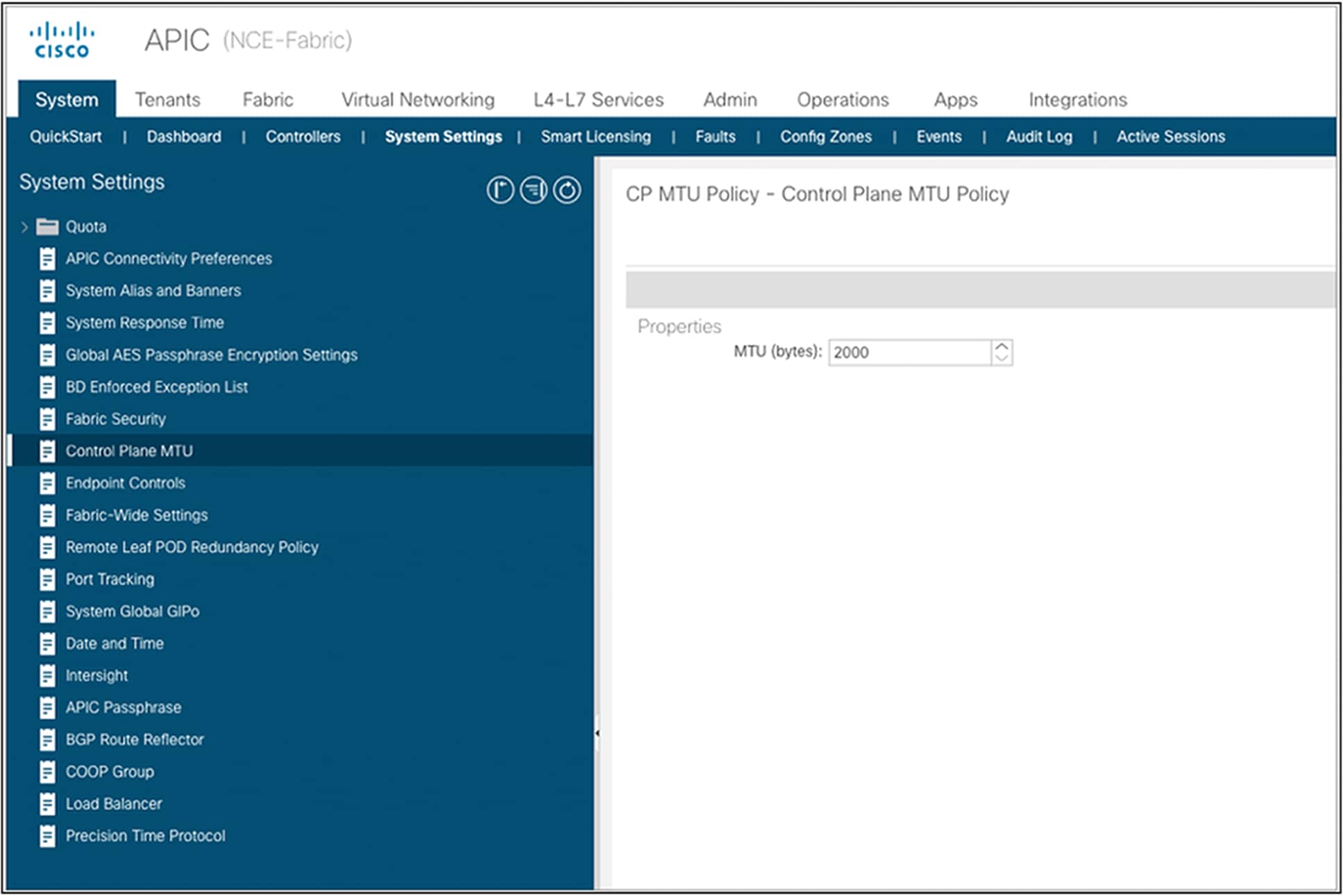

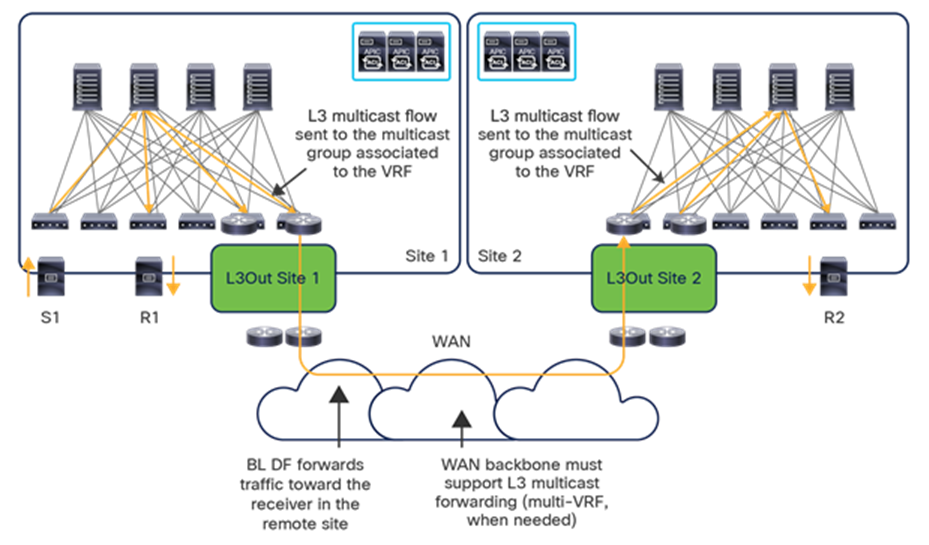

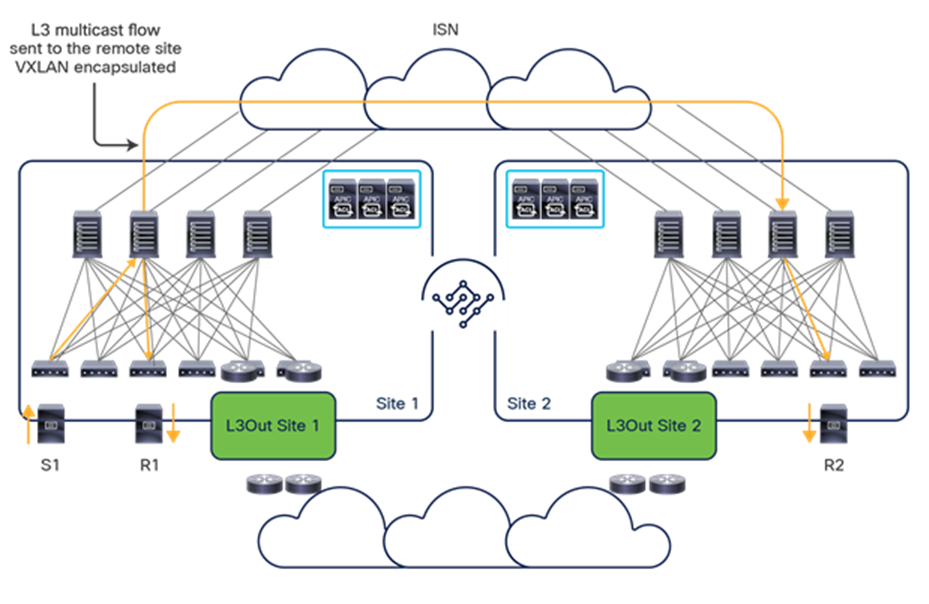

● Intersite data plane: All communication (Layer 2 or Layer 3) between endpoints connected to different sites is achieved by establishing site-to-site Virtual Extensible LAN (VXLAN) tunnels across a generic IP network that interconnects the various sites. As discussed in the section “Intersite connectivity deployment considerations”, this IP network has no specific functional requirements other than the capability to support routing and increased Maximum Transmission Unit (MTU) size (given the overhead from the VXLAN encapsulation).
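The MTU requirement can be estimated with simple arithmetic. The sketch below assumes the commonly cited 50-byte VXLAN overhead (IPv4 outer header, no additional VLAN tags); this figure is an assumption and is not stated in this section, so verify it against the intersite connectivity guidelines for your release.

```python
# Assumed per-packet overhead added by VXLAN encapsulation, in bytes:
# inner Ethernet header + VXLAN header + UDP header + outer IPv4 header.
INNER_ETHERNET = 14
VXLAN_HEADER = 8
UDP_HEADER = 8
OUTER_IPV4_HEADER = 20
VXLAN_OVERHEAD_BYTES = INNER_ETHERNET + VXLAN_HEADER + UDP_HEADER + OUTER_IPV4_HEADER


def min_intersite_mtu(endpoint_mtu: int) -> int:
    """Smallest IP MTU the intersite network must carry for a given endpoint MTU."""
    return endpoint_mtu + VXLAN_OVERHEAD_BYTES
```

Under this assumption, endpoints using a 1500-byte MTU need the intersite network to carry at least 1550 bytes, and endpoints using 9000-byte jumbo frames need at least 9050 bytes.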

Note: Starting from Nexus Dashboard Orchestrator Software Release 4.0(1), a new deployment model is supported, allowing you to deploy NDO to manage up to 100 “autonomous fabrics.” In that specific use case, there is no VXLAN EVPN intersite connectivity between the fabrics that are part of the Multi-Site domain, and the Orchestrator essentially becomes a single pane of glass from which to provision configurations to all those sites. Layer-3-only communication is possible between the fabrics, leveraging the L3Out data path. For more information on the deployment of NDO with “autonomous fabrics,” please refer to the “Layer-3-only connectivity across sites” section.

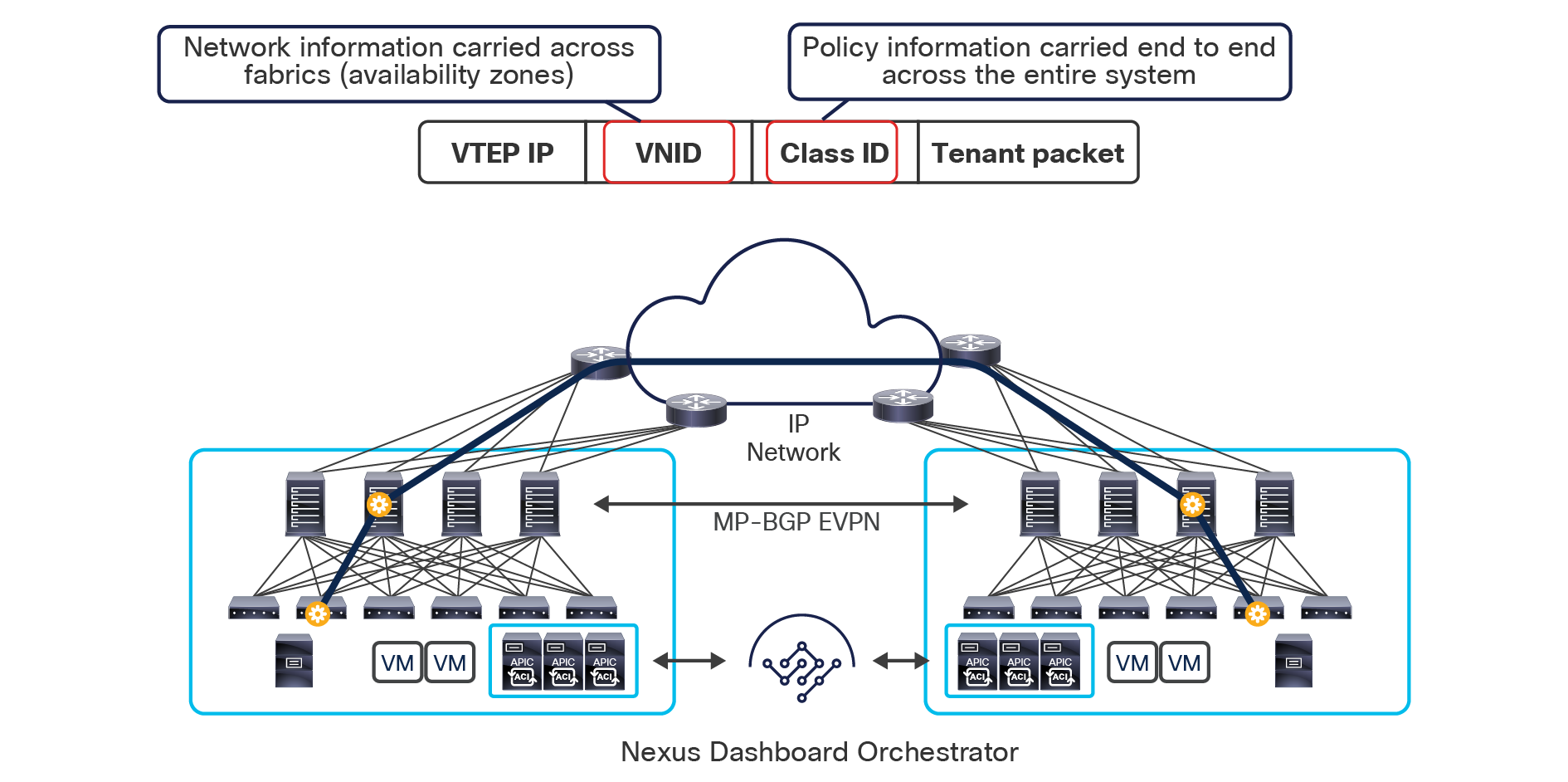

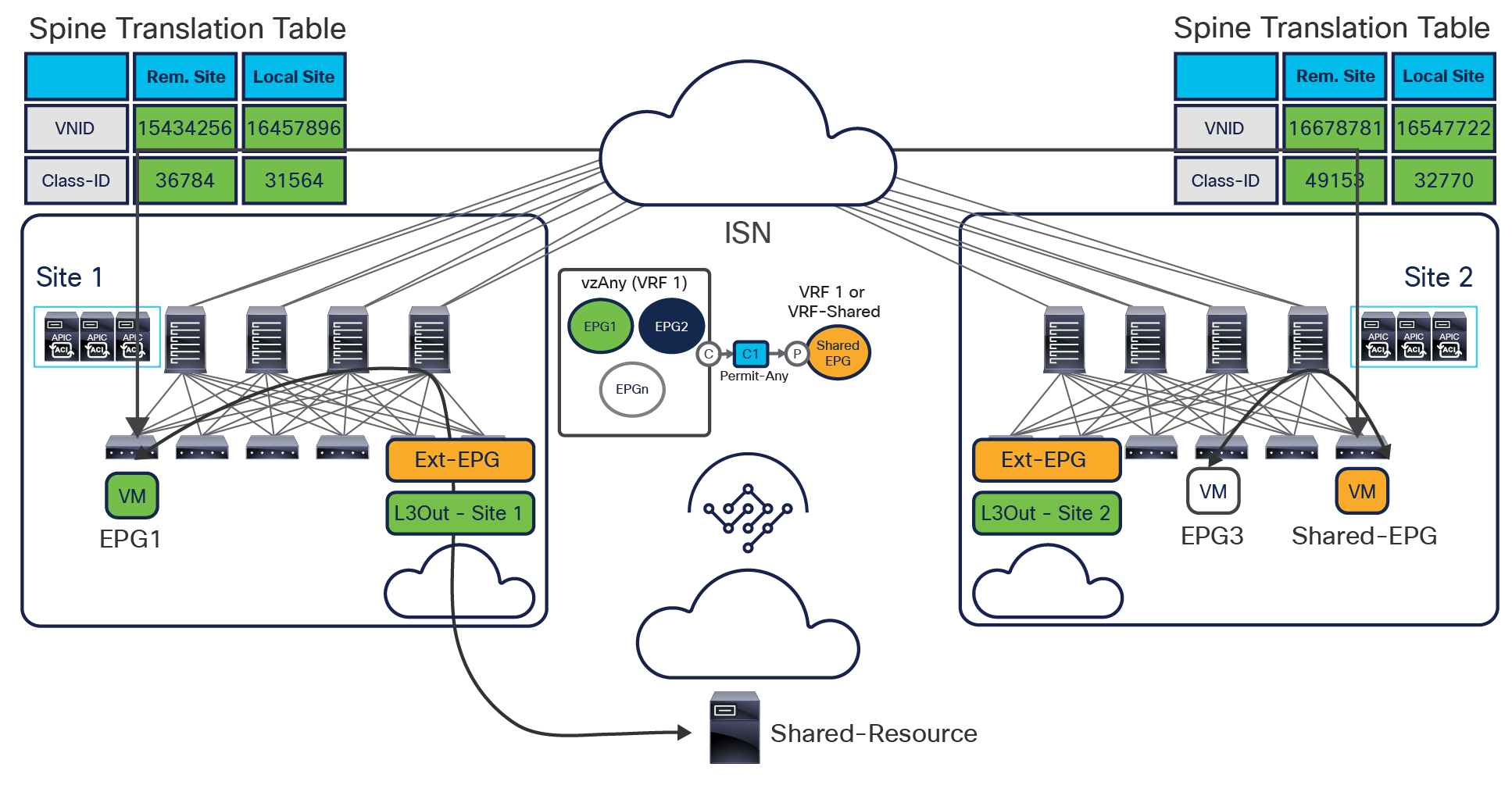

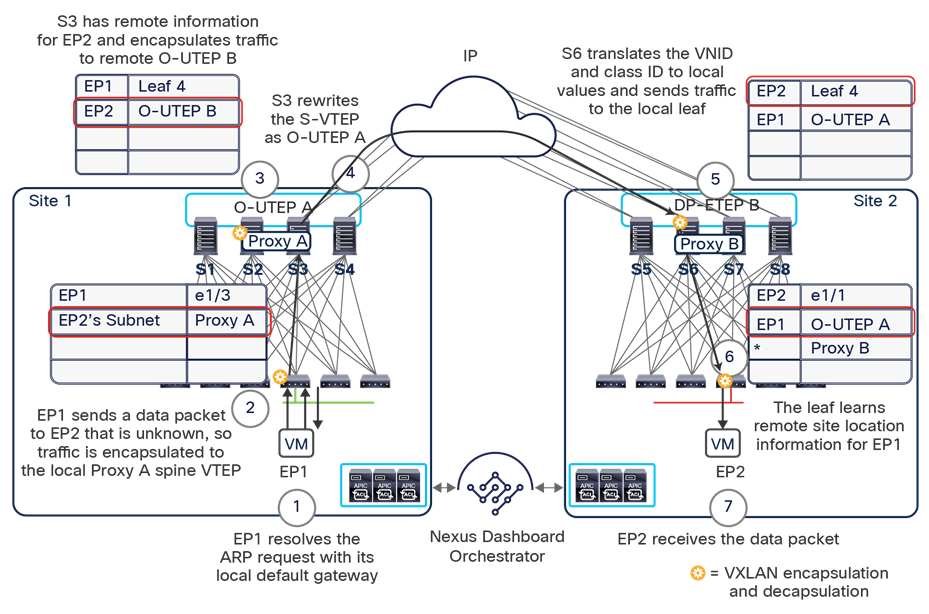

The use of site-to-site VXLAN encapsulation greatly simplifies the configuration and functions required for the intersite IP network. It also allows network and policy information (metadata) to be carried across sites, as shown in Figure 6.

Carrying network and policy information across sites

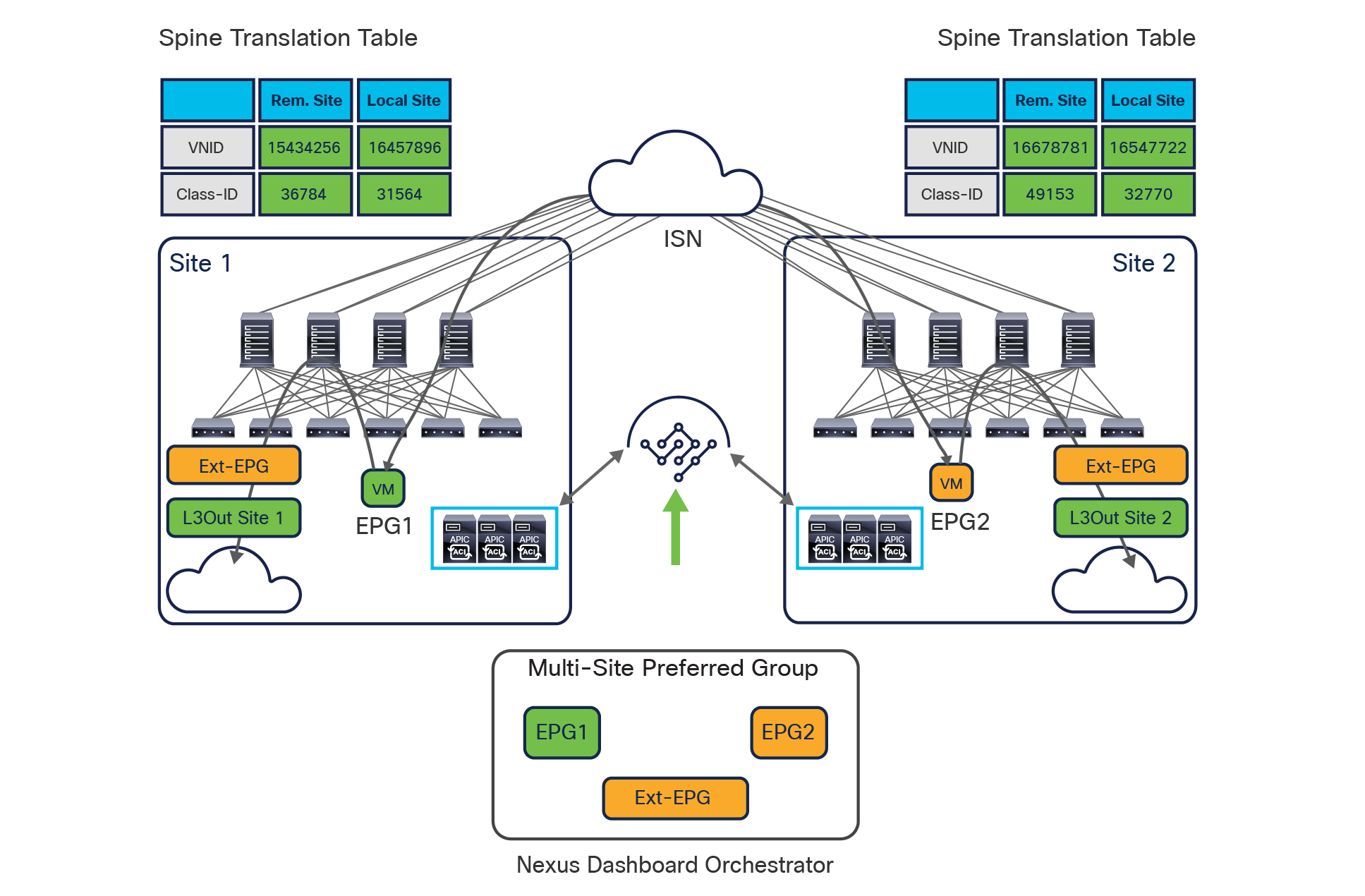

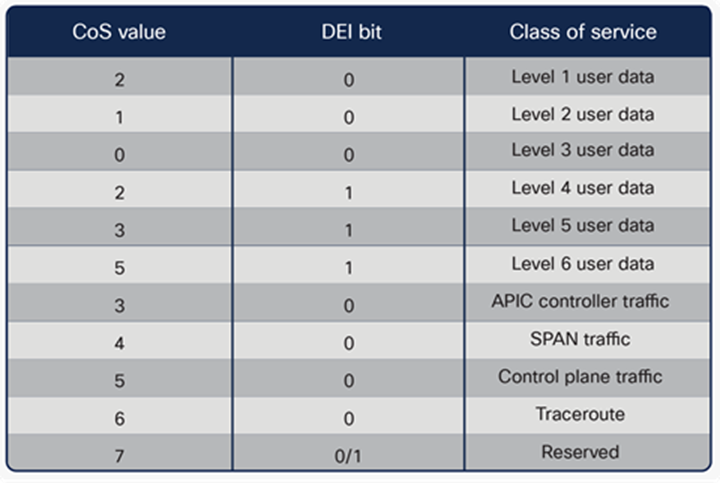

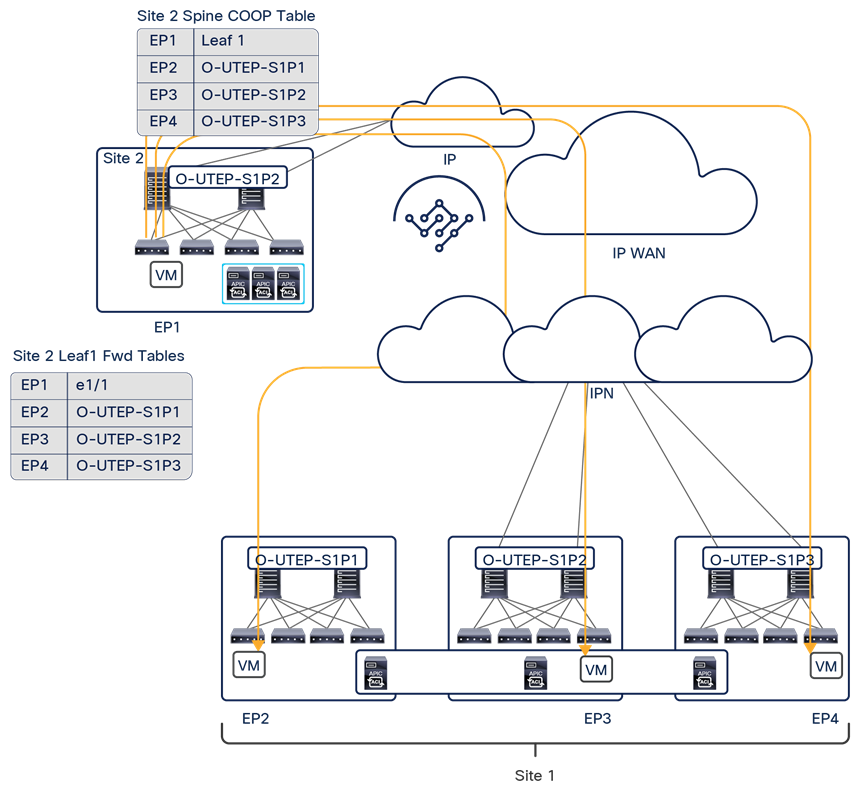

The VXLAN Network Identifier (VNID) identifies the bridge domain (BD) (for Layer 2 communication) or the Virtual Routing and Forwarding (VRF) instance (for Layer 3 traffic) of the endpoint sourcing the traffic (for intra-VRF communication). The class ID is the unique identifier of the source Endpoint Group (EPG). However, these values are locally significant within a fabric. Because a completely separate and independent APIC domain and fabric are deployed at the destination site, a translation function (also referred to as “name-space normalization”) must be applied before the traffic is forwarded inside the receiving site, to help ensure that locally significant values identifying that same source EPG, bridge domain, and VRF instance are used.

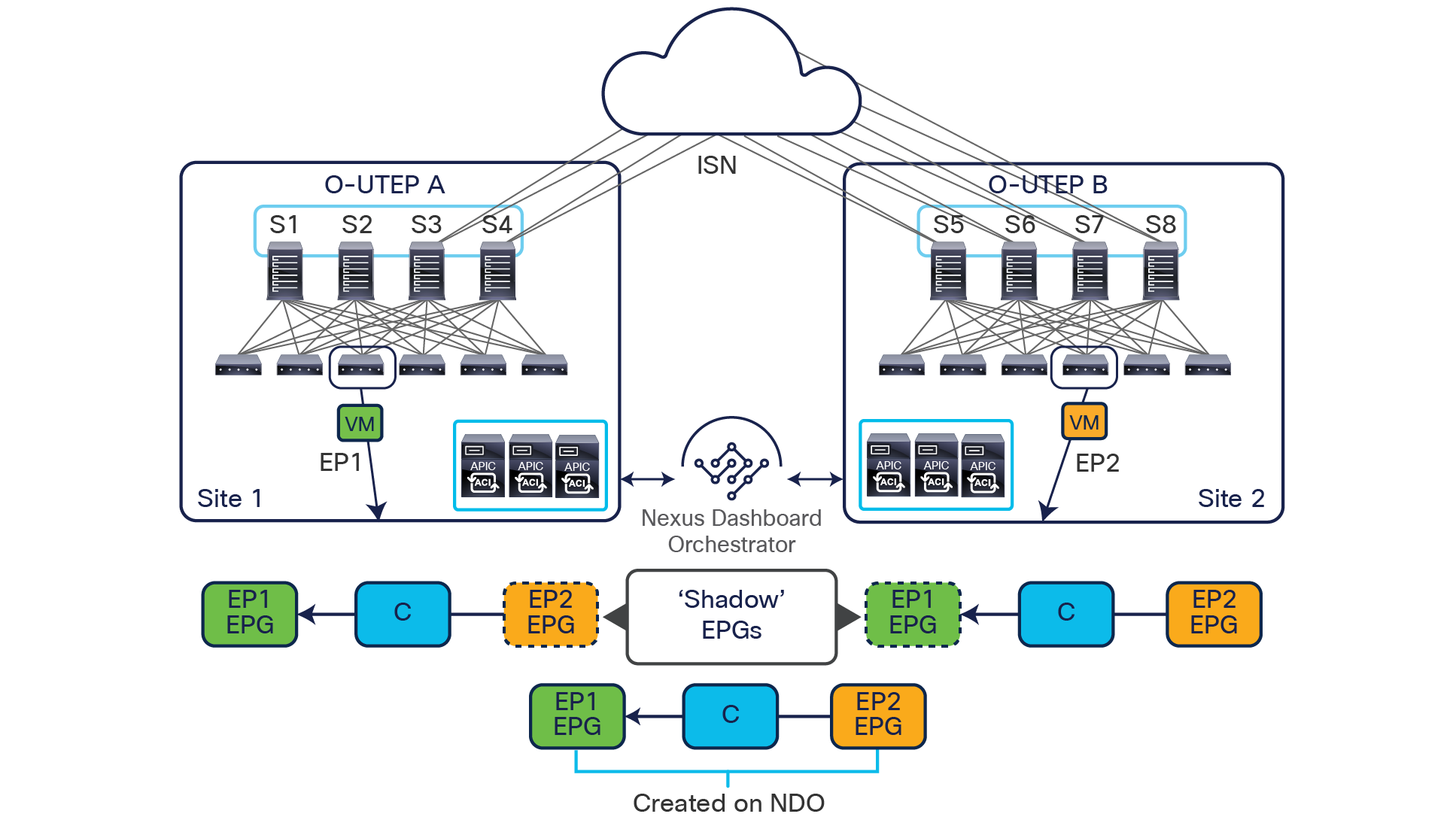

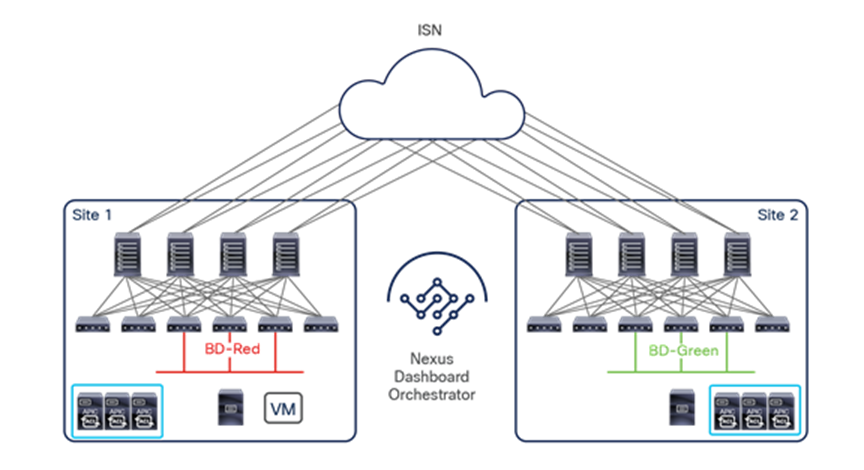

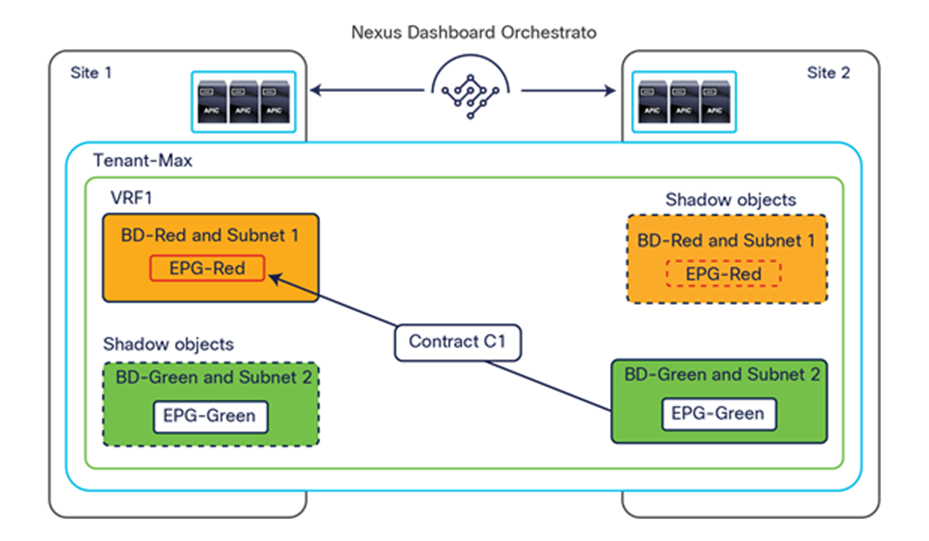

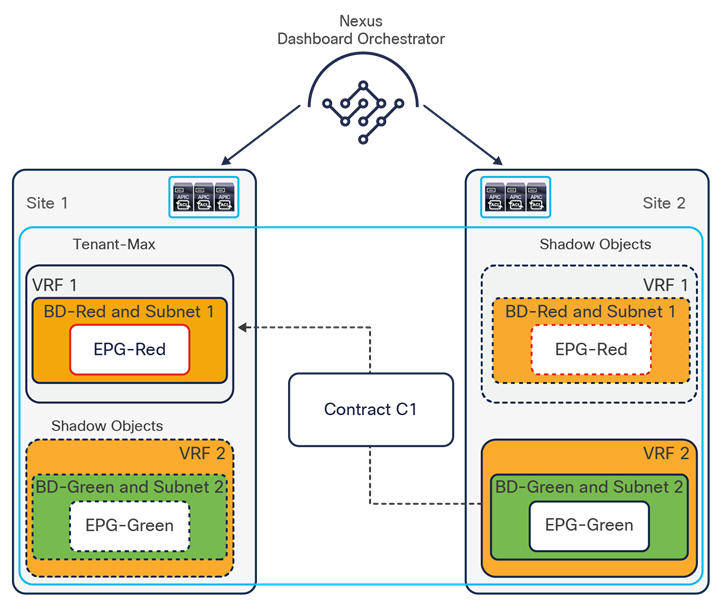

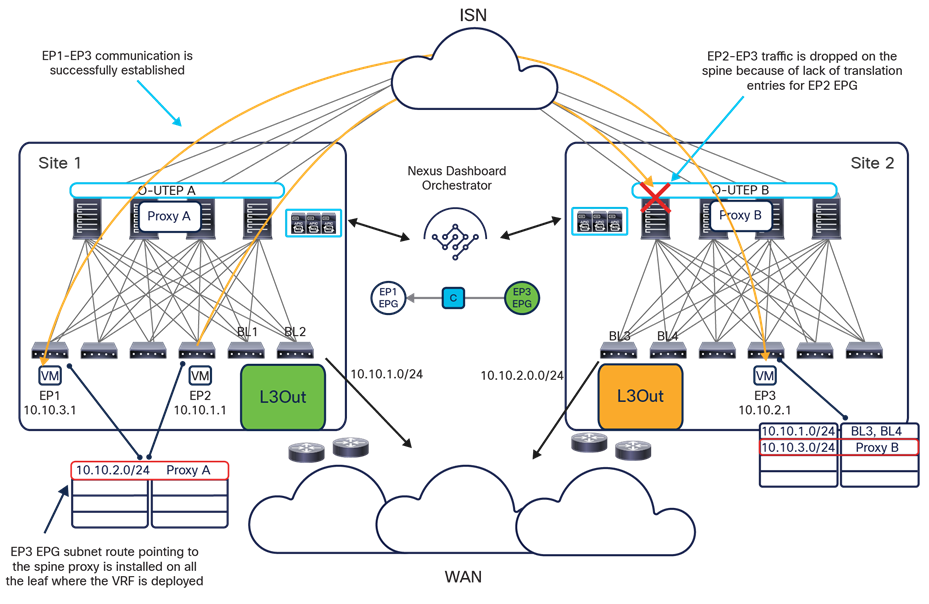

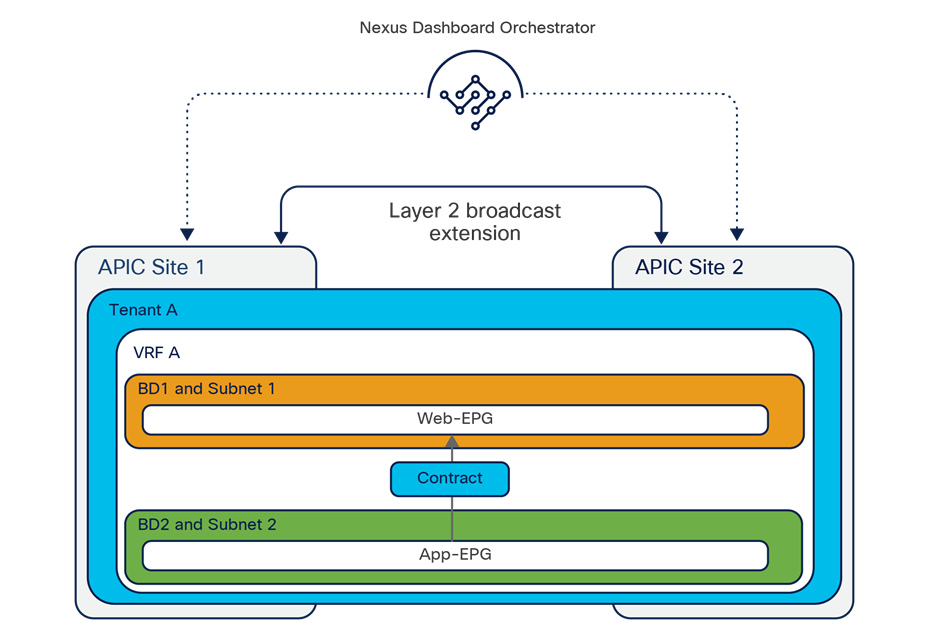

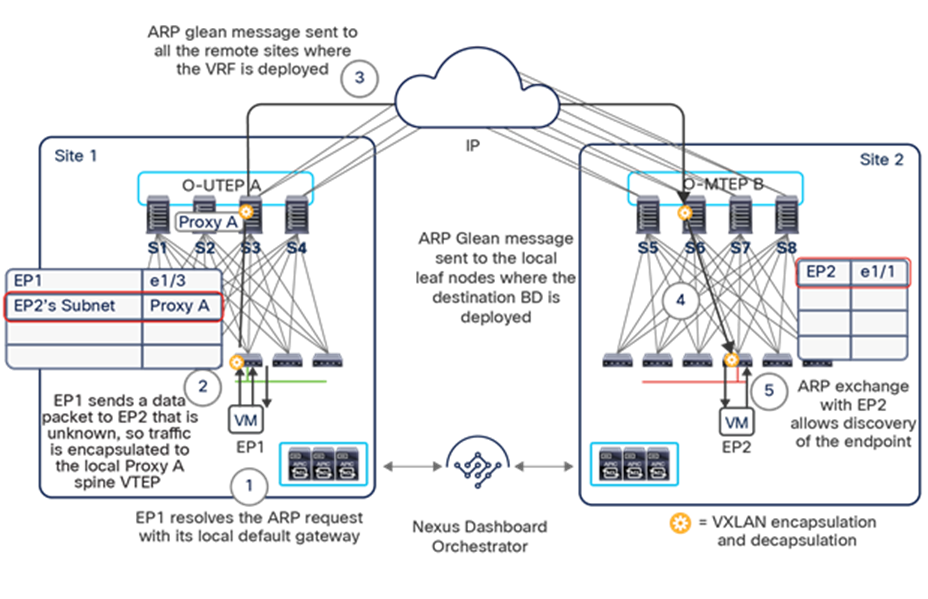

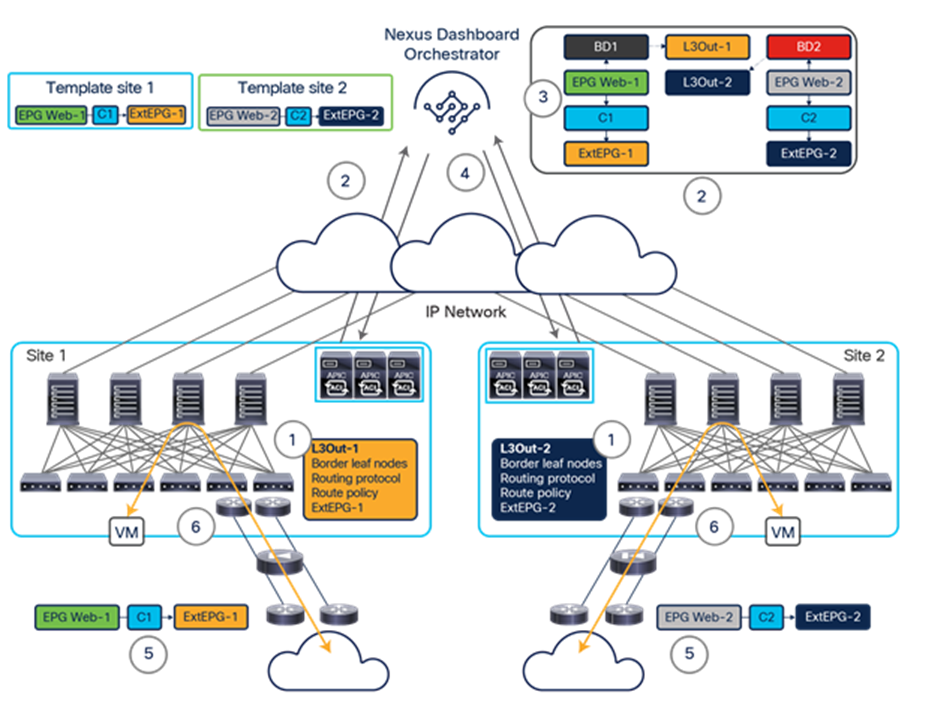

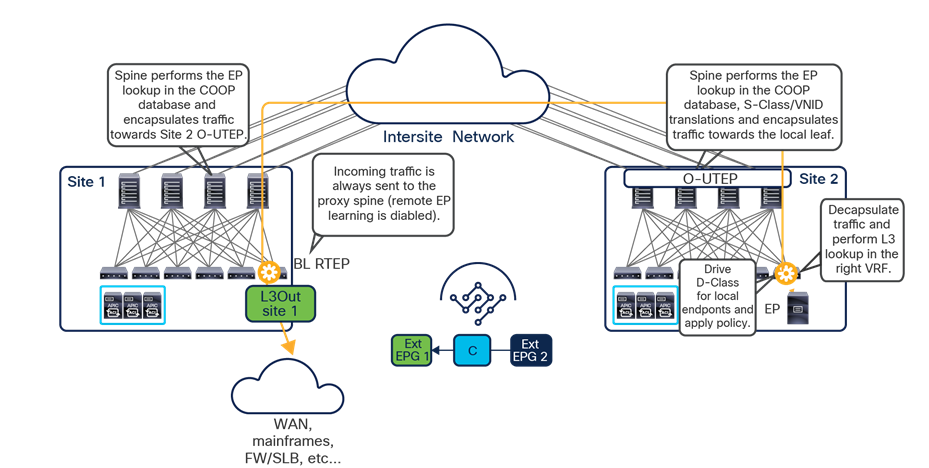

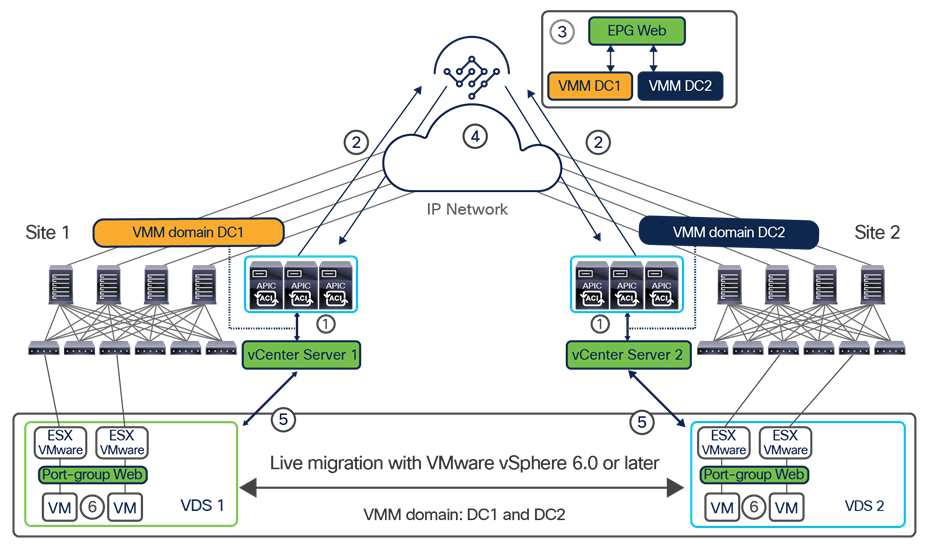

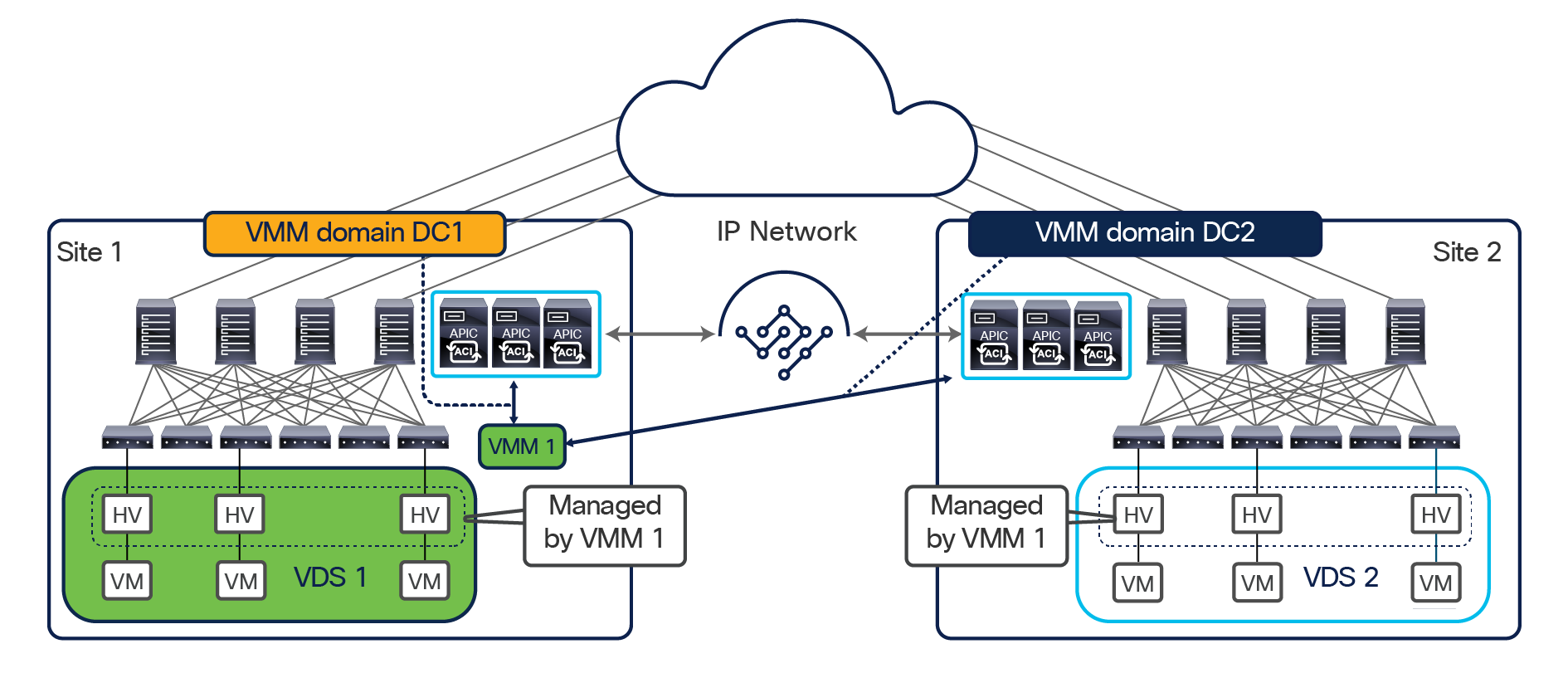

To better understand the need for this name-space normalization function, it is important to clarify what happens when a specific contract is defined between two EPGs deployed in separate sites in order to allow intersite communications between endpoints that are part of those EPGs. As shown in Figure 7, when the desired configuration (intent) is defined in Cisco Nexus Dashboard Orchestrator and then pushed to the different APIC domains, specific copies of EPGs, named shadow EPGs, are automatically created in each APIC domain. This ensures that the whole configuration centrally defined in NDO can be locally instantiated in each site and the security policy properly enforced, even when each EPG is only locally defined and not stretched across sites (specific VNIDs and class IDs are assigned to the shadow objects in each APIC domain).

In the example above, the yellow EP2 EPG (and its associated BD) is created as a “shadow” EPG in APIC domain 1, whereas the green EP1 EPG (and its associated BD) is a “shadow” EPG in APIC domain 2. Up to Cisco ACI Release 5.0(1), the shadow EPGs and BDs are not easily distinguishable in the APIC GUI, so it is quite important to be aware of their existence and role.

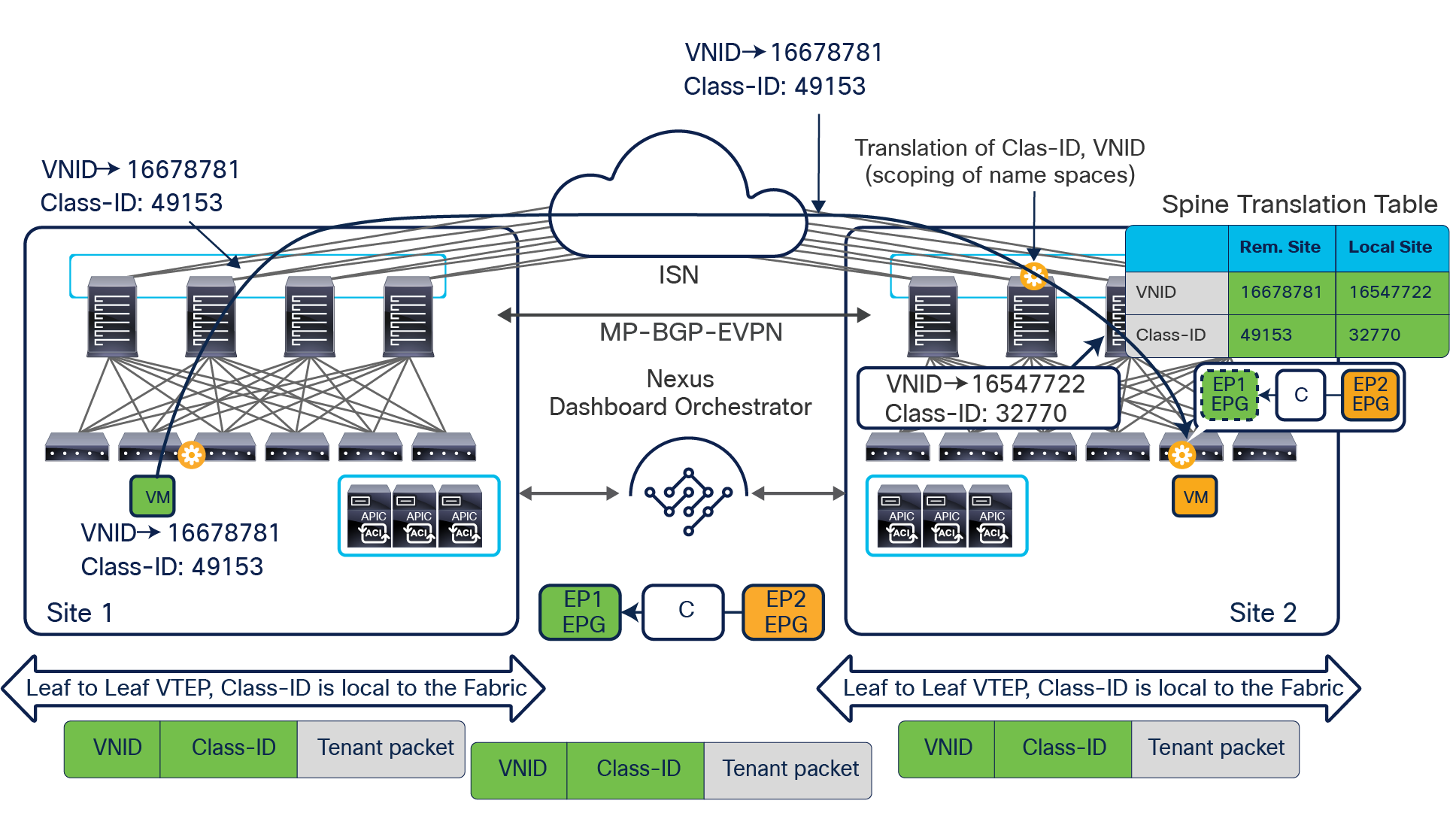

Since both APIC domains are completely independent from each other, it is logical to expect that different VNID and class-ID values would be assigned to a given EPG (the “real” and the “shadow” copy) across sites. This implies that a translation of those values is required on the spines receiving data-plane traffic from a remote site before injecting the traffic into the local site, as highlighted in Figure 8, below.

Name-space translation function on the receiving spine

When the policy is created on Cisco Nexus Dashboard Orchestrator stating that “EP1 EPG” must communicate with “EP2 EPG,” the Nexus Dashboard Orchestrator receives from each APIC controller the specific identifiers (pcTag, L2VNI, L3VNI) assigned to the local and shadow objects, and instructs the APIC controllers to program the proper translation rules in the local spines. The end result is that the configured policy can then be correctly applied on the leaf node before the traffic is sent to the destination endpoint. Additionally, the creation of a contract between EPGs locally deployed in separate sites usually also results in the configuration of the BD subnets associated with the EPGs on the leaf nodes of remote sites (in order to enable the proxy path via the local spine nodes). More details about this can be found in the “Cisco ACI Multi-Site overlay data plane” section.
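The translation step performed on the receiving spine can be pictured as a pair of lookup tables. The following is a conceptual sketch only: the class names, table layout, and all VNID and class-ID values are made up for illustration, and the behavior when no entry exists (returning `None`) is an assumption of the sketch, not a description of the spine hardware.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class Identifiers:
    """Locally significant values carried with intersite traffic."""
    vnid: int      # identifies the bridge domain or VRF
    class_id: int  # identifies the source EPG


class SpineTranslationTable:
    """Hypothetical per-site table keyed by (remote site, remote value)."""

    def __init__(self) -> None:
        self._vnid_map: Dict[Tuple[str, int], int] = {}
        self._class_map: Dict[Tuple[str, int], int] = {}

    def program(self, remote_site: str, remote: Identifiers, local: Identifiers) -> None:
        """Install the mapping from a remote site's values to the local ones."""
        self._vnid_map[(remote_site, remote.vnid)] = local.vnid
        self._class_map[(remote_site, remote.class_id)] = local.class_id

    def translate(self, remote_site: str, received: Identifiers) -> Optional[Identifiers]:
        """Rewrite received identifiers into local values, or None if unmapped."""
        vnid = self._vnid_map.get((remote_site, received.vnid))
        class_id = self._class_map.get((remote_site, received.class_id))
        if vnid is None or class_id is None:
            return None
        return Identifiers(vnid, class_id)


# Made-up values: EP1 EPG as known in site 1, and its shadow copy in site 2.
ep1_in_site1 = Identifiers(vnid=16154555, class_id=49153)
ep1_shadow_in_site2 = Identifiers(vnid=16351138, class_id=32770)

site2_spine = SpineTranslationTable()
site2_spine.program("site1", remote=ep1_in_site1, local=ep1_shadow_in_site2)
translated = site2_spine.translate("site1", ep1_in_site1)
```

After translation, the leaf nodes in site 2 see the values of the local shadow EPG, which is what allows the locally rendered policy to match the traffic.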

Note: The example in Figure 8 shows the policy being applied on the leaf node in the receiving site. This is normally the case until the leaf node in the source site learns, via the data plane, the location information of the remote endpoint. From that moment on, the policy can be applied directly on the ingress leaf. The use of a service graph with Policy-Based Redirection (PBR) is a specific scenario in which this behavior may not always apply, as discussed in more detail in the “Network services integration” section.

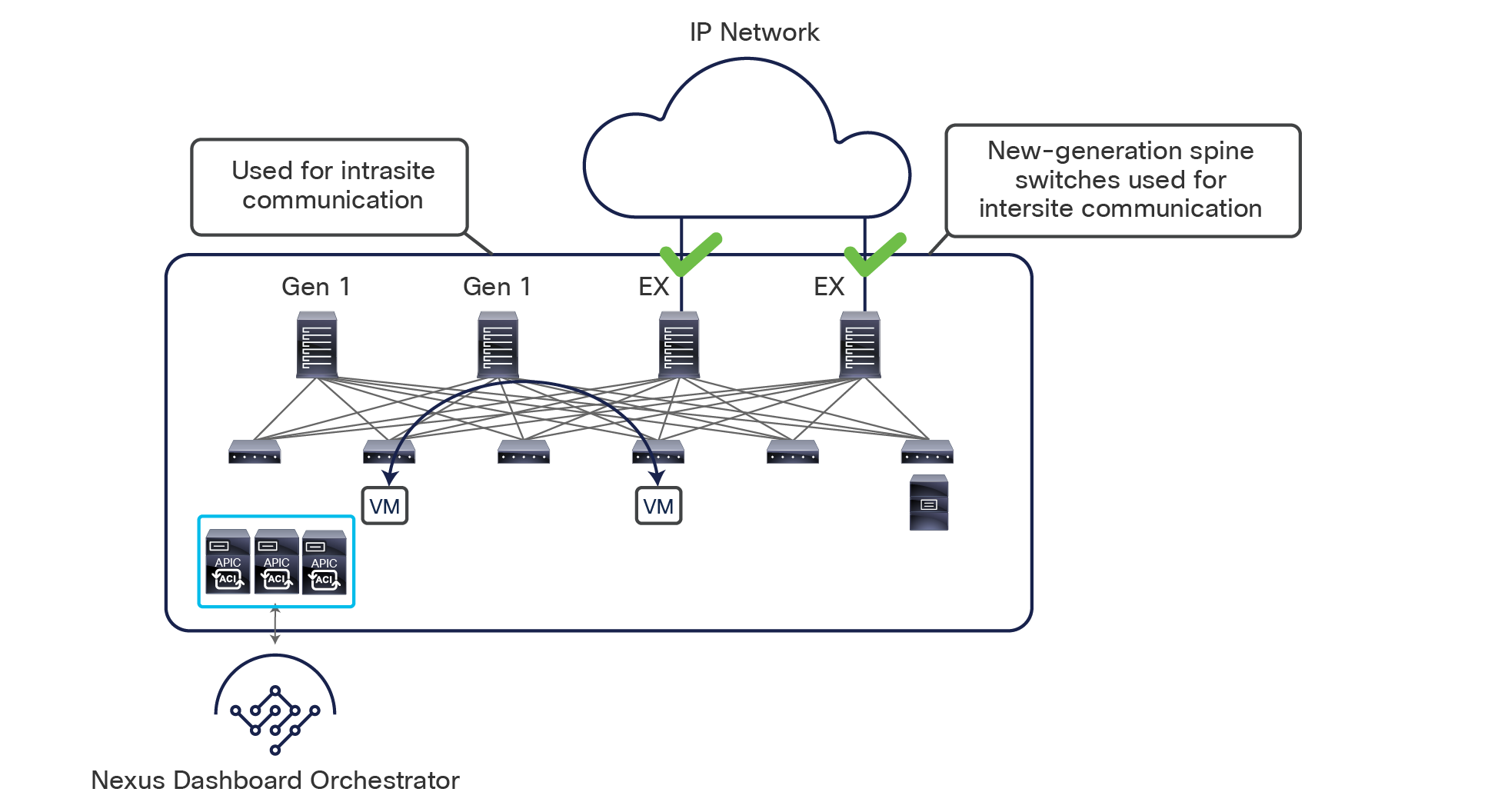

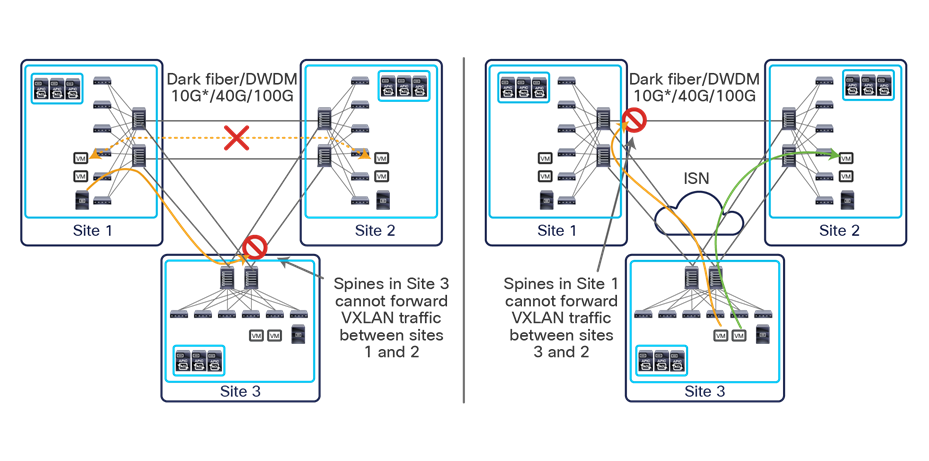

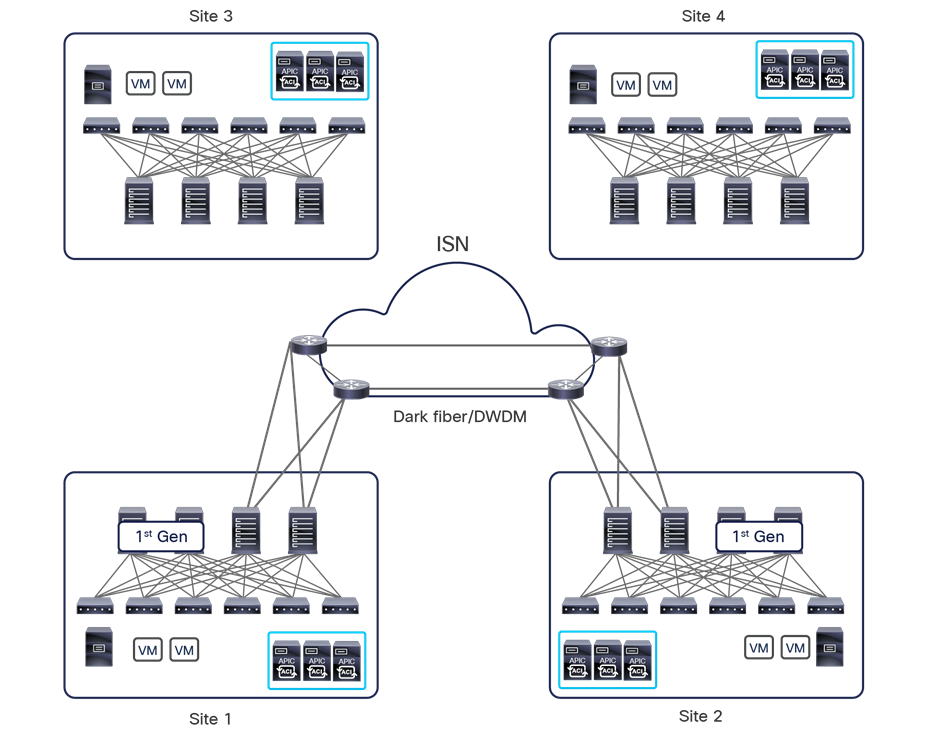

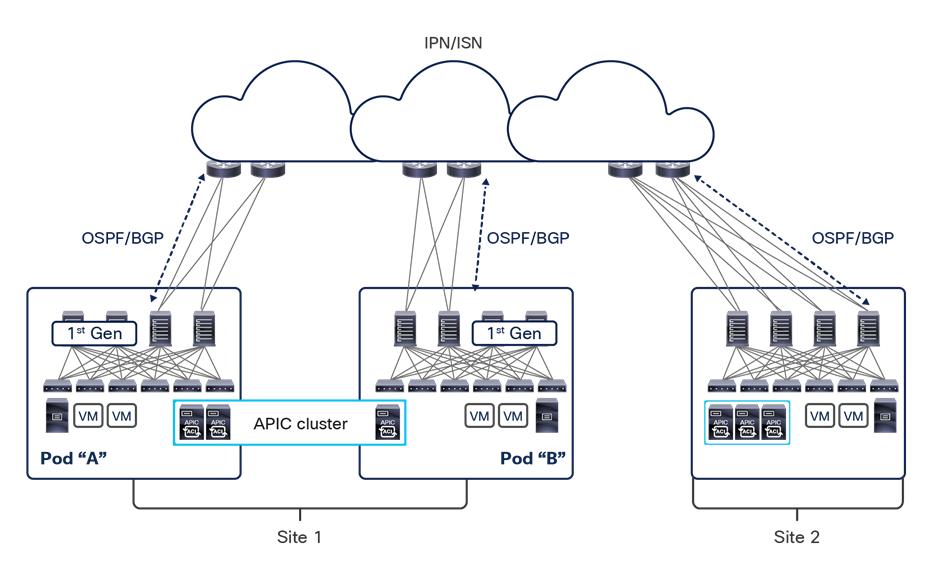

This name-space translation function should be performed at line rate to avoid negatively affecting the performance of intersite communication. To achieve this, you must use specific hardware for the spine nodes deployed in the Cisco ACI Multi-Site architecture: only the Cisco Nexus EX-platform (and newer) generations of spine switches are supported in a Multi-Site deployment. Note that first-generation spine switches can coexist with the new spine-switch models, as long as the latter are the only ones connected to the external IP network and used for intersite communication, as shown in Figure 9.

Coexistence of first-generation spine switches with EX-platform (and newer) spine switches

The specific coexistence scenario displayed in Figure 9 also shows that not every deployed spine needs to be connected to the external Layer 3 domain. You determine the number of spines and links used to connect to the external IP network based on the specific hardware available and your desired level of resiliency and throughput.

Note: For specific nonmodular spine models, such as the 9332C and 9364C platforms, it is also possible to use the native 10G interfaces (SFP based) to connect to Inter-Site Network (ISN) devices.

The introduction of the Cisco ACI Multi-Site architecture also allows you to scale up the total number of leaf and spine nodes deployed across the interconnected fabrics, as well as the total number of endpoints. This capability is one of the main points of differentiation between Cisco ACI Multi-Site and Multi-Pod designs, because the latter option is still bound by the scalability restrictions of a single fabric design.

Note: When planning a Cisco ACI deployment, you should always refer to the scalability guides available at Cisco.com. See, for example, the following link for the scalability guide valid for Cisco ACI Release 4.0(1): https://www.cisco.com/c/en/us/td/docs/switches/datacenter/aci/apic/sw/4-x/verified-scalability/Cisco-ACI-Verified-Scalability-Guide-401.html.

The translation table entries on the spine nodes are always populated when an EPG is stretched across sites; this is required to allow intra-EPG communication that is permitted by default. If instead there is a requirement to establish intersite communication between endpoints that are part of EPGs locally defined in separate sites, the definition of a contract between the EPGs is required to trigger the proper population of those translation tables (this is the example shown in figures 7 and 8, above). It is therefore mandatory to define the contract and the EPGs and apply the contract between them directly on NDO when there is a requirement to establish intersite communication via the intersite network (VXLAN data-path). Notice that this contract could be very simple (the equivalent of a “permit all”) and could be applied between all the different EPG pairs if the goal is to allow any-to-any communication.

Support for two additional functionalities – preferred group and vzAny – has been introduced on Nexus Dashboard Orchestrator to simplify the definition of policies between EPGs and allow the proper programming of the translation tables on the spines for intersite connectivity. Those functionalities will be discussed in the following two sections.

Note: The content of the translation tables can be verified by connecting to the spine nodes and issuing the CLI command “show dcimgr repo {eteps | sclass-maps | vnid-maps}”.
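The braces in the command above denote three alternative keywords, one per table. As a small convenience sketch (the helper name is hypothetical), the three resulting command strings can be generated as follows; command output is not shown because it depends on the deployment.

```python
from typing import List

# The three keywords listed in the note above.
TRANSLATION_TABLES = ("eteps", "sclass-maps", "vnid-maps")


def translation_table_commands() -> List[str]:
    """Expand 'show dcimgr repo {eteps | sclass-maps | vnid-maps}' into full commands."""
    return [f"show dcimgr repo {table}" for table in TRANSLATION_TABLES]
```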

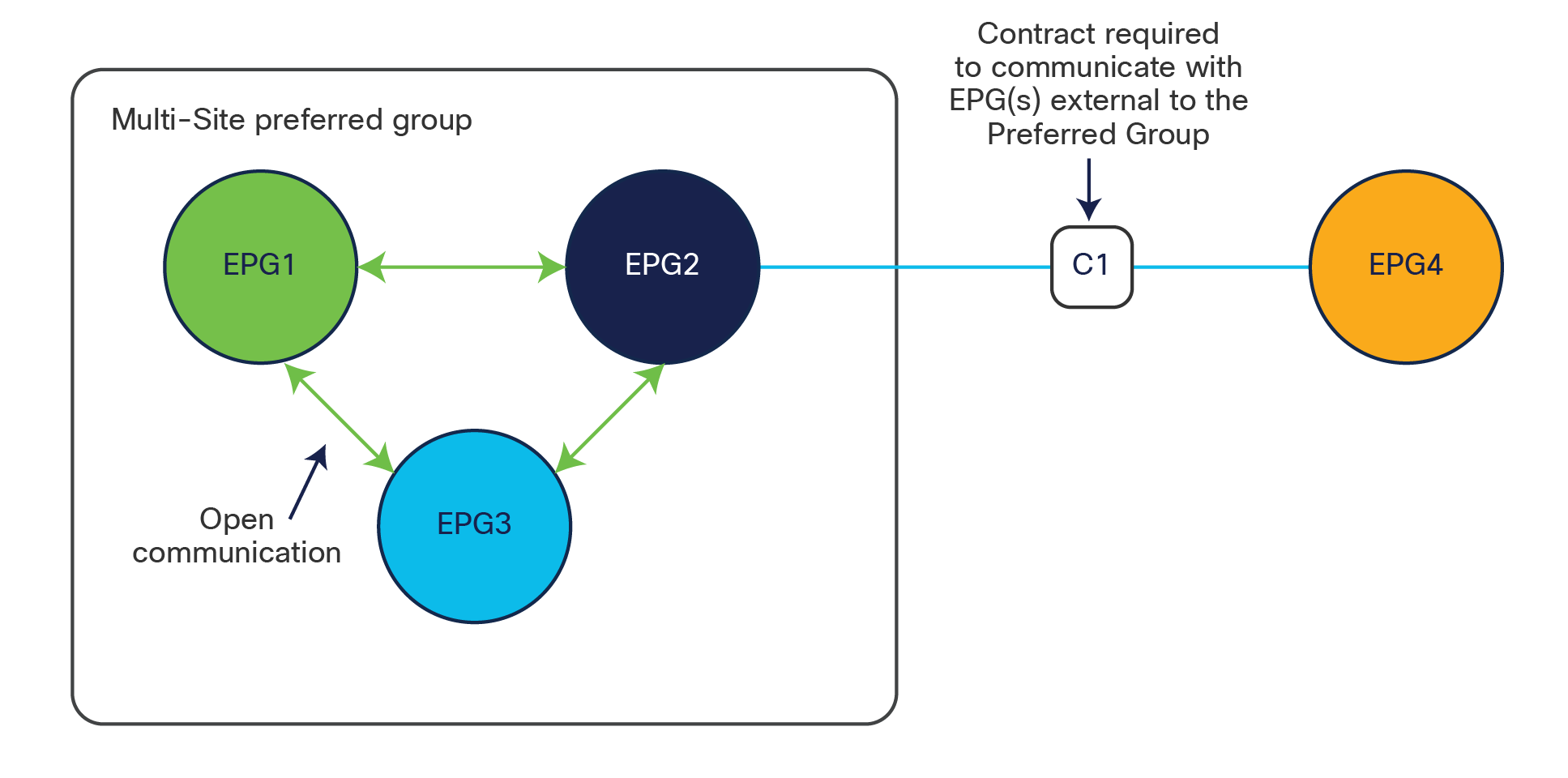

Cisco ACI Multi-Site and preferred group support

Cisco ACI Release 4.0(2) introduces support for EPG preferred groups with Cisco ACI Multi-Site. The preferred group construct is enabled at the VRF level and allows grouping together of all (or a subset of) the EPGs defined in that VRF. As shown in Figure 10, EPGs that are part of the preferred group can communicate with each other without requiring the creation of a contract. EPGs that are excluded from the preferred group still require the definition of a contract to communicate between them and with any of the EPGs included in the preferred group.

Note: A contract must be applied to each individual EPG that is part of the preferred group in order for it to communicate with EPGs external to the preferred group.
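The preferred-group rules described above can be sketched as a simple decision function. This is a simplified model for illustration: the function name is hypothetical, contracts are treated as unordered EPG pairs (ignoring provider and consumer direction and filters), and all EPGs are assumed to belong to the same VRF.

```python
from typing import FrozenSet, Set


def can_communicate(
    epg_a: str,
    epg_b: str,
    preferred_group: Set[str],
    contracts: Set[FrozenSet[str]],
) -> bool:
    """Return True if traffic between the two EPGs is allowed in this simplified model."""
    if epg_a == epg_b:
        return True  # intra-EPG communication is permitted by default
    if epg_a in preferred_group and epg_b in preferred_group:
        return True  # members of the preferred group need no contract
    # Otherwise an explicit contract between the two EPGs is required.
    return frozenset((epg_a, epg_b)) in contracts


preferred = {"web", "app"}
contracts = {frozenset(("app", "db"))}
```

With these example values, `web` and `app` communicate freely, `app` reaches `db` through its contract, and `web` cannot reach `db` because membership in the preferred group does not extend to EPGs outside it.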

For the specific Cisco ACI Multi-Site scenario, the inclusion of EPGs as part of the preferred group must be done directly on NDO and causes the automatic creation of the proper translation entries on the spines to enable intersite communication between endpoints that are part of those EPGs and also north-south communication with the external network domain (Figure 11).

Use of preferred groups to enable north-south and east-west communication

As such, it is important to consider the overall scalability figures for the number of EPGs supported in a preferred group. As previously mentioned, this information is available in the Verified Scalability Guide on the Cisco.com website.

One other important consideration is for the configuration of a preferred group for an external EPG (Ext-EPG) associated to an L3Out. When doing this, it is not possible to configure on an Ext-EPG the prefix 0.0.0.0/0 for classification purposes. This is because when traffic is received on an L3Out and classified based on such a prefix, the Class-ID of the VRF is assigned to the incoming packets and not the specific Class-ID of the Ext-EPG. As a consequence, communication with the other EPGs that are part of the preferred group for that VRF would not be allowed, since no security rule has been created to allow traffic from the VRF Class-ID to the specific EPG Class-IDs. As a workaround, it is instead possible to create for classification two separate prefixes (0.0.0.0/1 and 128.0.0.0/1), allowing you to cover the whole address space.
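That the two /1 prefixes together cover the same address space as 0.0.0.0/0 can be checked with Python's standard `ipaddress` module. The function name below is only illustrative.

```python
import ipaddress
from typing import List


def split_default_prefix() -> List[str]:
    """Split 0.0.0.0/0 into its two halves, one prefix length longer."""
    default = ipaddress.ip_network("0.0.0.0/0")
    return [str(net) for net in default.subnets(prefixlen_diff=1)]


halves = split_default_prefix()

# Collapsing the two halves yields the full IPv4 address space again,
# confirming that nothing is left uncovered.
collapsed = list(ipaddress.collapse_addresses(ipaddress.ip_network(h) for h in halves))
```

Here `halves` holds `0.0.0.0/1` and `128.0.0.0/1`, and `collapsed` contains the single network `0.0.0.0/0`.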

Finally, as of release 3.4(1) of the Orchestrator and 5.2(1) of the APIC, it is not possible to use preferred-group to enable Intersite L3Out connectivity. For more information about Intersite L3Out, refer to the “Introducing the Intersite L3Out Functionality (ACI 4.2(1) Release and onward)” section.

Cisco ACI Multi-Site and vzAny support

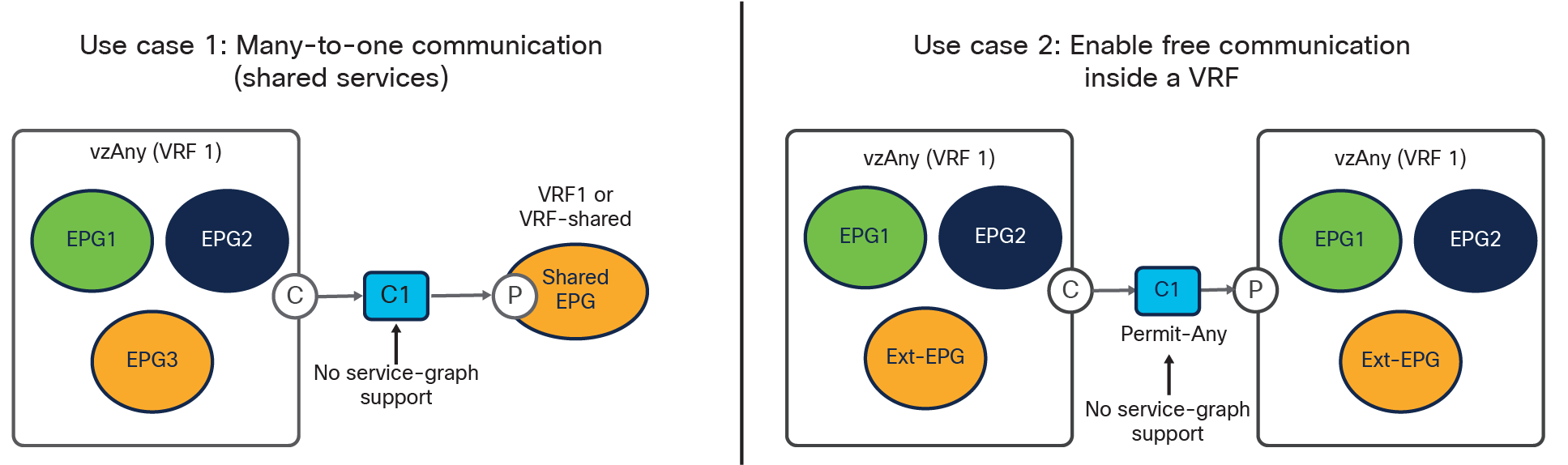

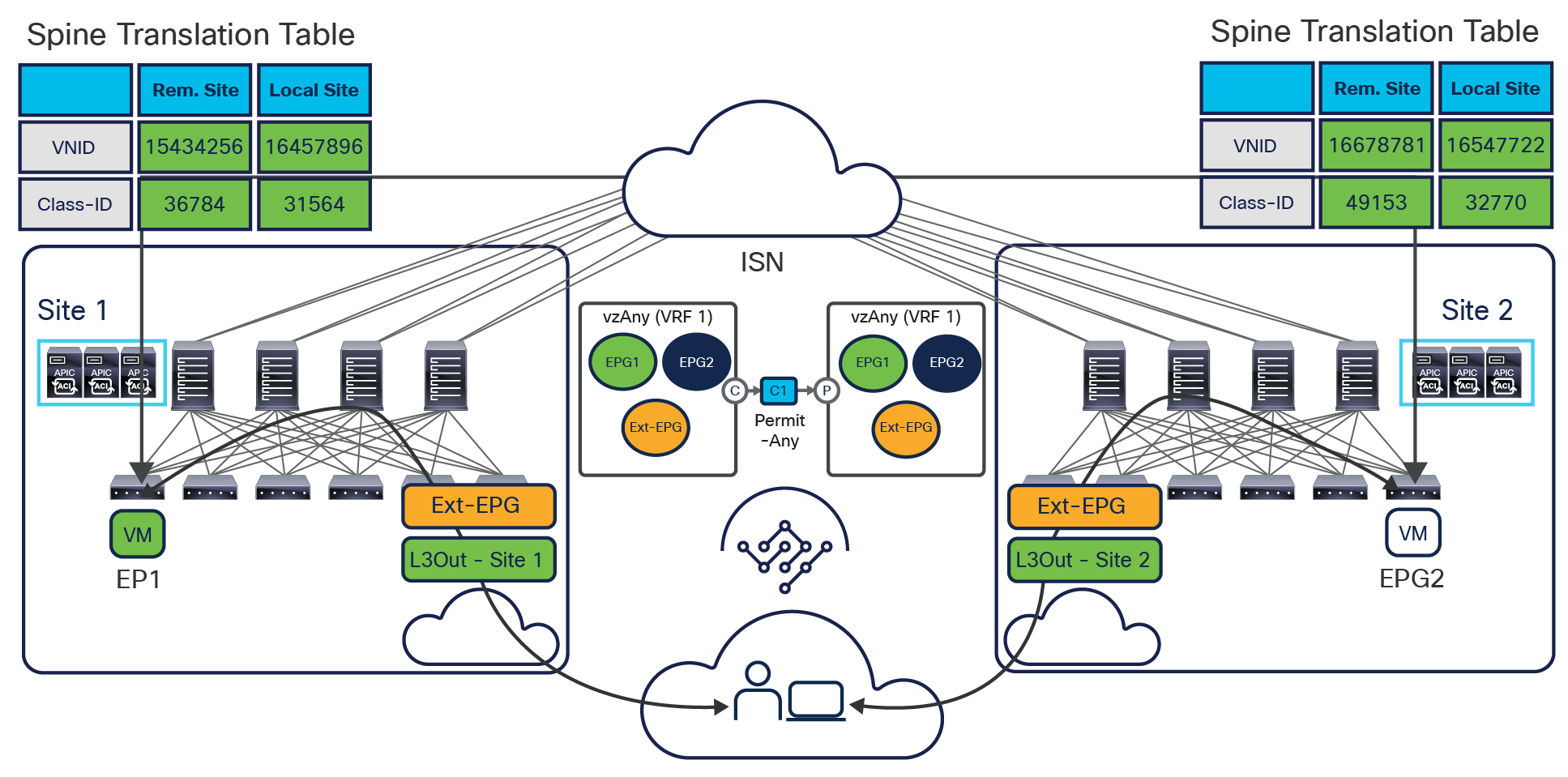

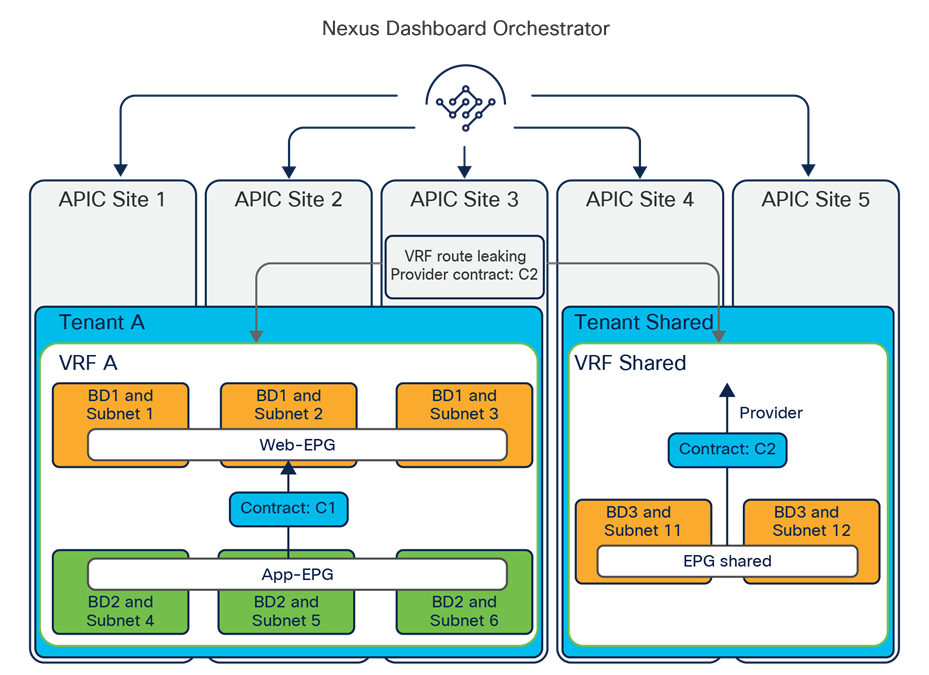

Cisco Multi-Site Orchestrator Release 2.2(4) introduces support in Multi-Site for the vzAny functionality. vzAny is a logical construct representing all the EPGs (internal and external) that are part of a given VRF. Being able to represent all those items with a single object simplifies the deployment of the two main cases shown in Figure 12.

Main use cases supported with vzAny

● The first use case consists of applying a “many-to-one” security policy between all the EPGs that are part of a given VRF and a shared resource that can be part of the same VRF or of a separate VRF. Instead of having to apply the contract between each individual EPG and the shared EPG, it is possible to configure “vzAny” as the consumer of the contract provided by the shared EPG. Notice that doing so only ensures the application of the security policy between each EPG and the shared EPG, but not between EPGs that are part of the same VRF.

The exposure of the vzAny construct on the Orchestrator from Release 2.2(4) ensures that this “many-to-one” security policy enforcement paradigm can be deployed independently from the fact that the endpoints are part of the same ACI fabric or deployed across different sites, allowing also access to a shared resource in the external network domain (Figure 13).

Many-to-one communication across sites and with the external network

● If instead the goal is to open up communication between all the EPGs in the same VRF (an alternative configuration option to the use of Preferred Group), it is possible to configure vzAny to consume and provide a single contract defined with a “permit any” filter rule. Functionally, this allows you to achieve the same goal as the “VRF unenforced” option (not supported on NDO) removing the security policy from the equation and allowing you to use the ACI Multi-Site deployment only for network connectivity. As highlighted in Figure 14, this configuration option allows you to establish both east-west and north-south communication and results in the proper programming of the required translation entries in the spines.

Establishment of any-to-any intra-VRF communication

The use of vzAny in this scenario not only simplifies the configuration, removing the need for the creation of full mesh contracts between the EPGs, but also drastically reduces the TCAM utilization.
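To give a sense of the configuration savings, the sketch below compares the number of EPG-to-EPG contract relationships needed for a full mesh with the single relationship used by vzAny. It counts contract relationships only; actual TCAM consumption depends on filters and hardware and is not modeled here. The function names are hypothetical.

```python
def full_mesh_contract_relationships(num_epgs: int) -> int:
    """Number of EPG pairs that each need a contract in a full mesh: n * (n - 1) / 2."""
    return num_epgs * (num_epgs - 1) // 2


def vzany_contract_relationships(num_epgs: int) -> int:
    """With vzAny providing and consuming one contract, the count does not grow with n."""
    return 1 if num_epgs > 0 else 0
```

For a VRF with 10 EPGs, a full mesh requires 45 pairwise relationships, and with 100 EPGs it requires 4950, whereas the vzAny approach uses one contract in both cases.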

It is important to point out that the only requirement to support vzAny in a Multi-Site architecture is to run Cisco Multi-Site Orchestrator Release 2.2(4) (or any later MSO or NDO release); there is no dependency on the specific ACI software versions deployed in the different fabrics that are part of the same Multi-Site domain. More considerations about dependencies between NDO and APIC software releases can be found in the “Inter-version support (Cisco Multi-Site Orchestrator Release 2.2(1) and beyond)” section.

Also, with Cisco ACI Release 6.0(4c) or later and Cisco Nexus Dashboard Orchestrator (NDO) Release 4.2(3e), it is possible to associate a service graph with the contract used by vzAny (for both the many-to-one and any-to-any use cases). For more information, please refer to the following white paper: https://www.cisco.com/c/en/us/solutions/collateral/data-center-virtualization/application-centric-infrastructure/white-paper-c11-743107.html

Cisco Nexus Dashboard Orchestrator

Cisco Nexus Dashboard Orchestrator (NDO) is the product responsible for provisioning, health monitoring, and managing the full lifecycle of Cisco ACI networking, fabric, and tenant policies across Cisco ACI sites around the world. It essentially represents the single source of truth for all the tenant policies required to establish intersite (that is, east-west) communication between endpoints deployed in separate ACI fabrics.

In its latest implementation, Cisco Nexus Dashboard Orchestrator is enabled as a service running on top of a Cisco compute cluster named Nexus Dashboard. In previous implementations, the product was named “Cisco Multi-Site Orchestrator (MSO),” so in the rest of this document you may still find references to the term “MSO,” especially when talking about functionalities that were originally introduced in that previous type of Orchestrator. For more details on the previous implementations of the Multi-Site Orchestrator, please refer to Appendix B.

Cisco Nexus Dashboard Orchestrator provides several main functions. It is worth noting that the creation and management of user profiles and RBAC rules, together with the process of site onboarding, have been taken out of the Orchestrator and moved to the Nexus Dashboard compute platform, because they represent services common to all the different applications that can be run on the ND compute cluster.

The following are the specific functionalities still offered by NDO:

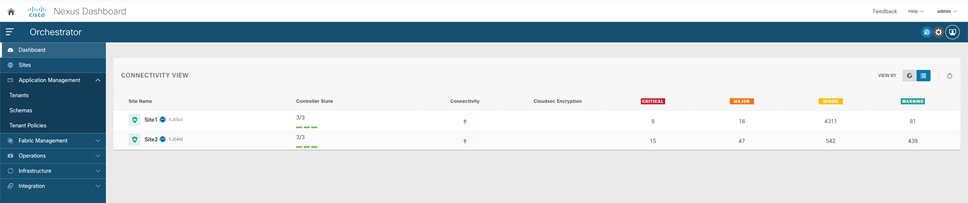

● Use the health dashboard to monitor the health, faults, and logs of intersite policies for all the Cisco ACI fabrics that are part of the Cisco Multi-Site domain. The health-score information is retrieved from each APIC domain and presented in a unified way, as shown in Figure 15.

Cisco Nexus Dashboard Orchestrator dashboard

● Provision day-0 infrastructure to allow the spine switches at all Cisco ACI sites to peer with the directly connected Inter-Site Network (ISN) devices. Once the peering with the ISN is completed for each fabric that is part of the Multi-Site domain, the MP-BGP EVPN configuration automatically provided by NDO on all the spines ensures that they can connect with each other. This allows the system to establish MP-BGP EVPN control-plane reachability and exchange endpoint host information (MAC and IPv4/IPv6 addresses) across sites.

● Create new tenants and deploy them in all the connected sites (or a subset of them).

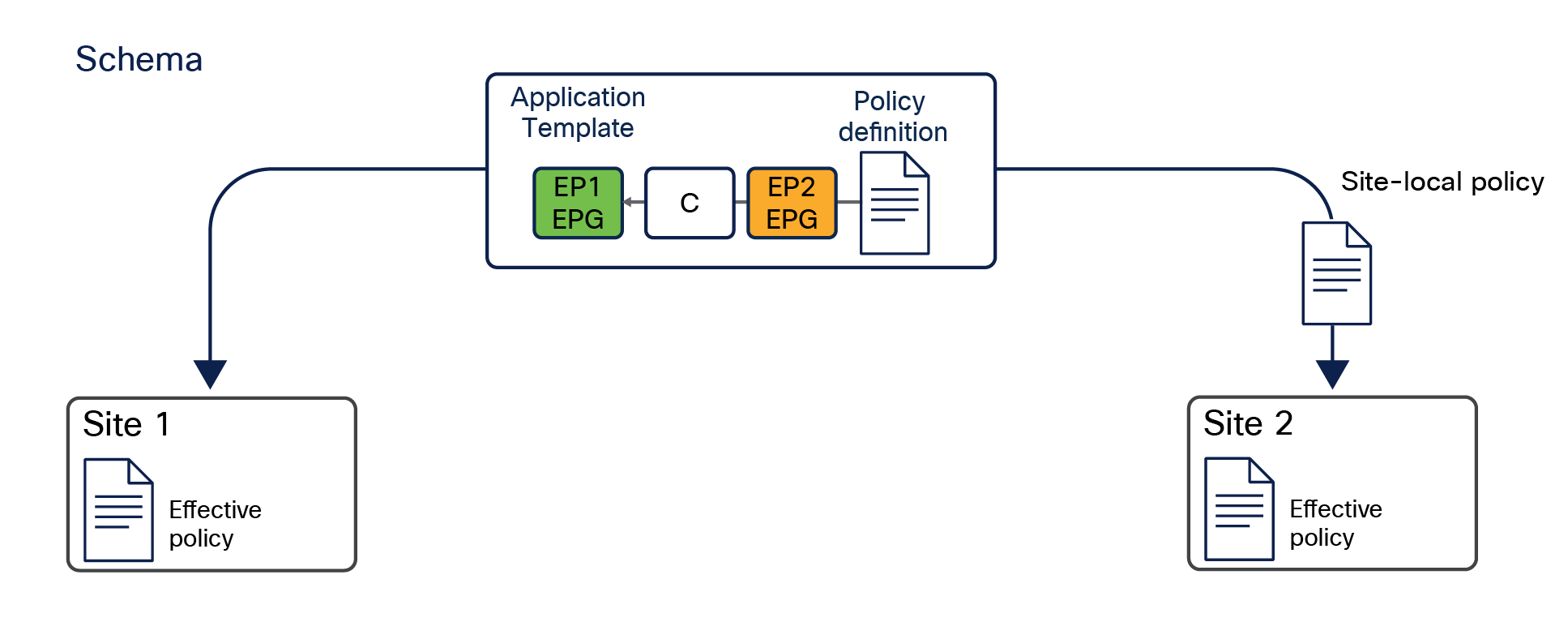

● Define application templates. Each application template can be associated with and pushed to a specific set of fabrics, as shown in Figure 16.

Note: One or more templates can be grouped together as part of a schema, which can be considered as a “container” of policies. However, the association of policies to a given tenant is always done at the template level (not at the schema level).

This feature is one of the most important that the Cisco Nexus Dashboard Orchestrator offers, together with the capability to define and provision scoped policies for change management. When you define intersite policies, Cisco Nexus Dashboard Orchestrator also properly programs the required name-space translation rules on the Multi-Site-capable spine switches across sites. As mentioned in the previous section, every intersite communication requires the creation of translation entries on the spine nodes of each fabric that is part of the Multi-Site domain. This happens only when the policy to allow intersite communication is defined on the Nexus Dashboard Orchestrator and then pushed to the different APIC clusters managing the fabrics. As a consequence, the best-practice recommendation is to manage the configuration of all the tenant objects (EPGs, BDs, etc.) directly on NDO, regardless of whether those objects are stretched across multiple sites or locally defined in a specific site. For more information on how to deploy NDO schemas and application templates, please refer to the “Deploying NDO schemas and templates” section of this paper.

● From Software Release 4.0(1), other types of templates have been added to NDO (in addition to the Application templates mentioned above), allowing customers to provision specific tenant policies (tenant policies templates), fabric policies (fabric policies and fabric resource policies templates), and SPAN monitoring policies (monitoring policies templates). For more information on these new types of templates, please refer to the “Autonomous application templates (NDO Release 4.0(1))” section of this paper.

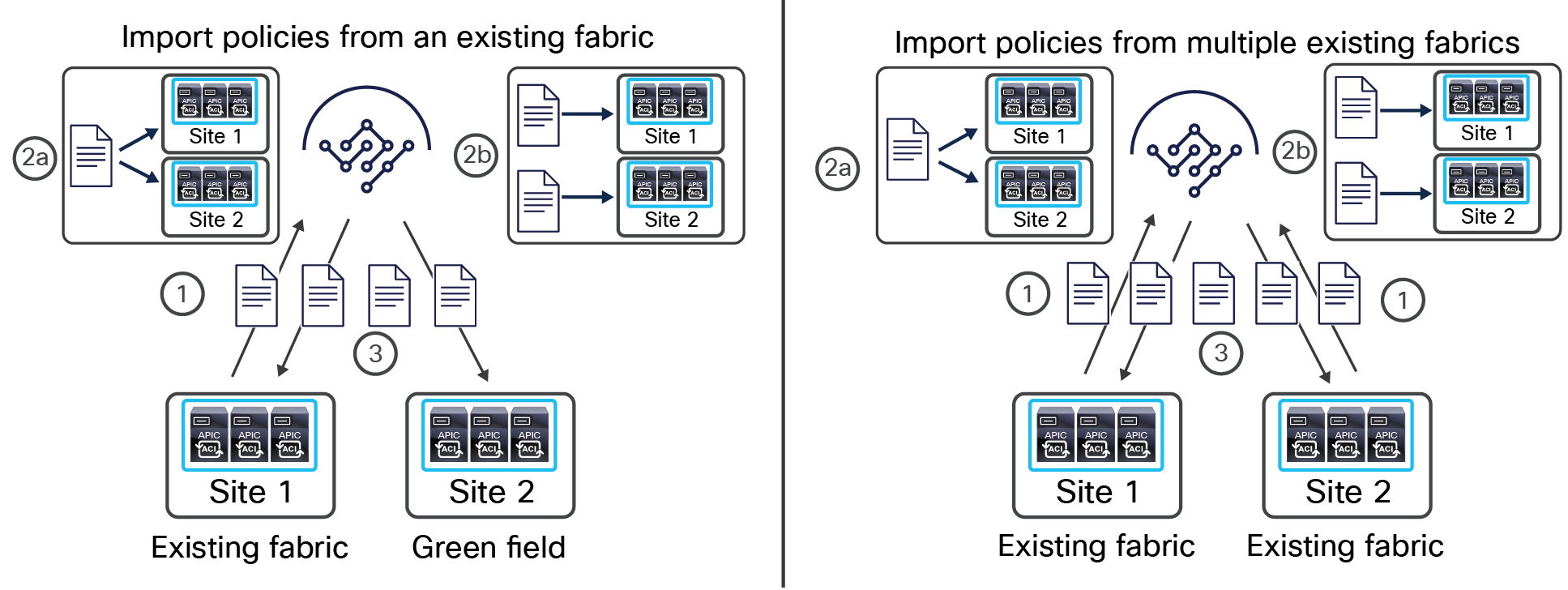

● Import policies from an already deployed Cisco ACI fabric (a brownfield deployment) and stretch them to another, newly deployed, site (a greenfield deployment). For more information, see the section “Brownfield integration scenarios.”

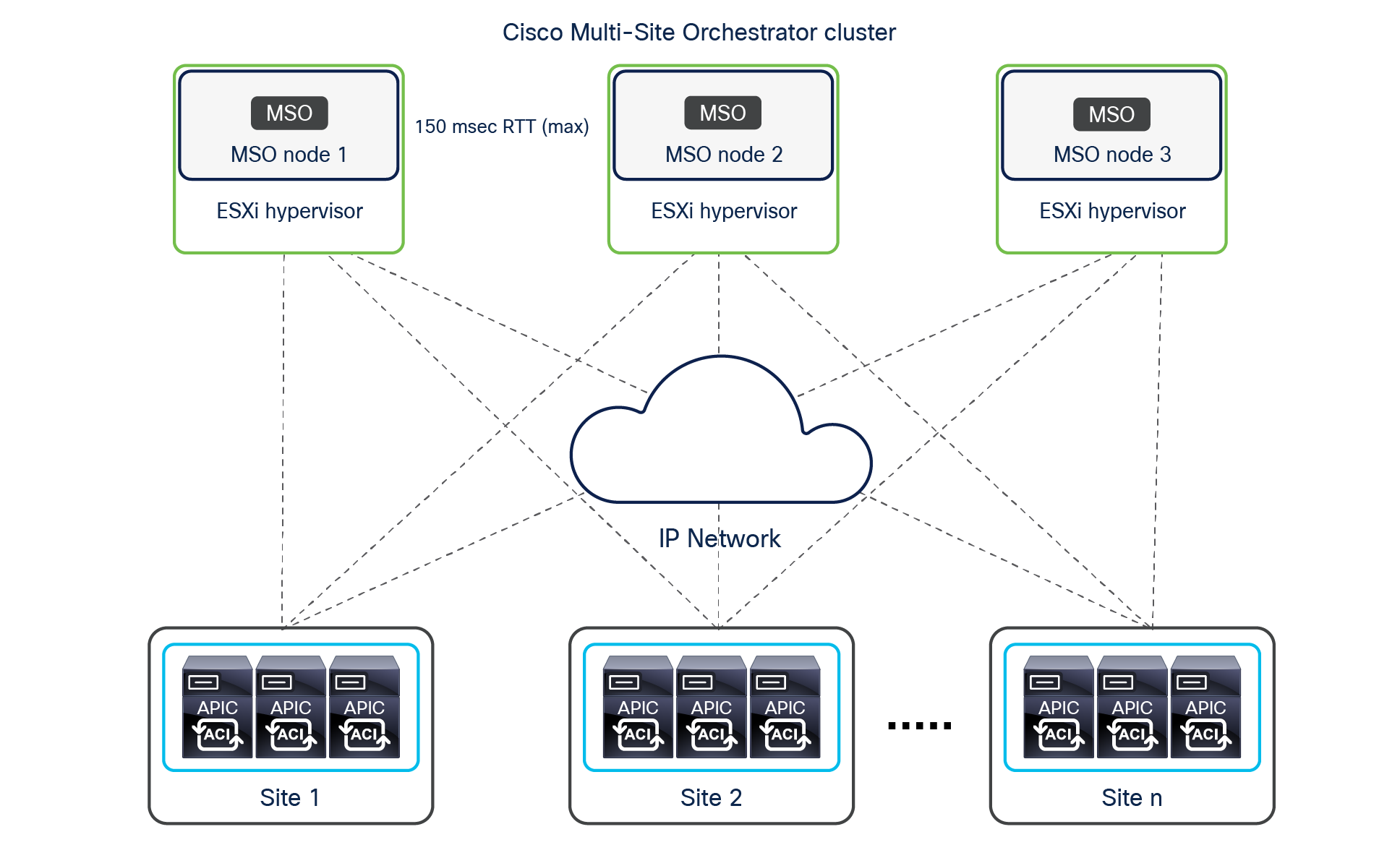

The Cisco Nexus Dashboard Orchestrator design is based on a microservices architecture in which the NDO services are deployed across Nexus Dashboard clustered nodes working together in an active/active fashion. The Cisco Nexus Dashboard Orchestrator services must communicate with the APIC nodes deployed in different sites. The communication between NDO and the APIC clusters can be established to the out-of-band (OOB) interface, the Inband (IB) interface, or both (more specific deployment information can be found in the “Cisco Nexus Dashboard deployment considerations” section). The Orchestrator also provides northbound access through representational state transfer (REST) APIs or the GUI (HTTPS), which allows you to manage the full lifecycle of networking and tenant policies that need to be deployed across sites (Figure 17).

Cisco Nexus Dashboard Orchestrator running on Nexus Dashboard cluster

Security hardening is built into the Cisco Nexus Dashboard Orchestrator cluster. Note that the Cisco Nexus Dashboard Orchestrator cluster design has passed all leading industry benchmark vulnerability tests, such as Nessus, WhiteHat, Chaos Corona, and Norad resulting in no security vulnerabilities discovered.

In addition, all traffic between Orchestrator services running on different physical (or virtual) nodes is always secured. For NDO services running on top of different Nexus Dashboard cluster nodes, the encryption of traffic is handled by each specific service. For example, TLS is used for distributing the information in MongoDB, and connections to APIC are through HTTPS; Kafka also uses TLS. Hence, the Orchestrator services can be deployed securely over whatever network infrastructure is available to interconnect them.

Typical use cases for Cisco Nexus Dashboard Orchestrator

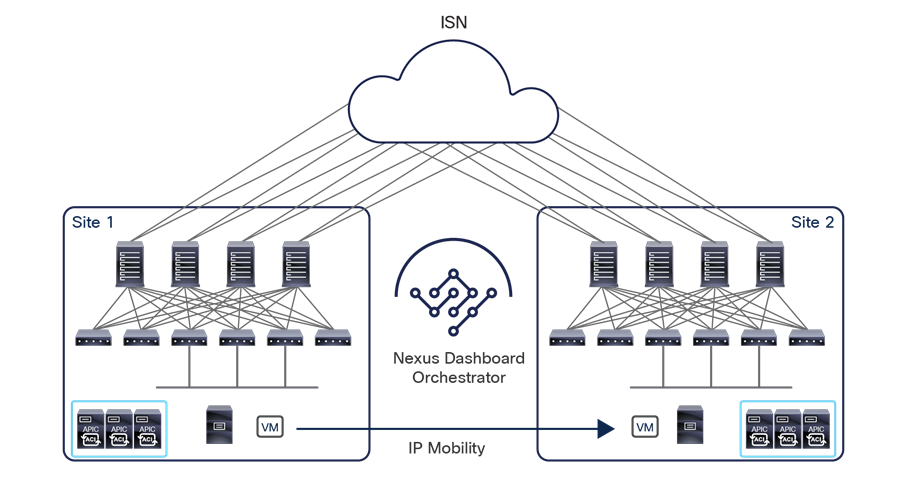

As previously discussed in the introduction, there are two popular use cases for the deployment of the Cisco ACI Multi-Site architecture managed by the Cisco Nexus Dashboard Orchestrator:

● Centralized (local) data center deployments, which require the creation of separate fabrics in the same DC location for scalability or fault domain isolation requirements

● Geographically distributed data centers across cities, countries, or continents, in which each data center is treated as a “region” and requires a single pane of glass for provisioning, monitoring, and management to deploy stretched policies across regions

The following two sections provide more details about these deployment models. As will be clarified in a following section, it is also possible to deploy the ND cluster hosting the Orchestrator in the public cloud (that is, in a specific AWS or Microsoft Azure region) to manage from the cloud all of the ACI fabrics that are part of the Multi-Site domain. This approach can be applied to both of the use cases that are discussed below.

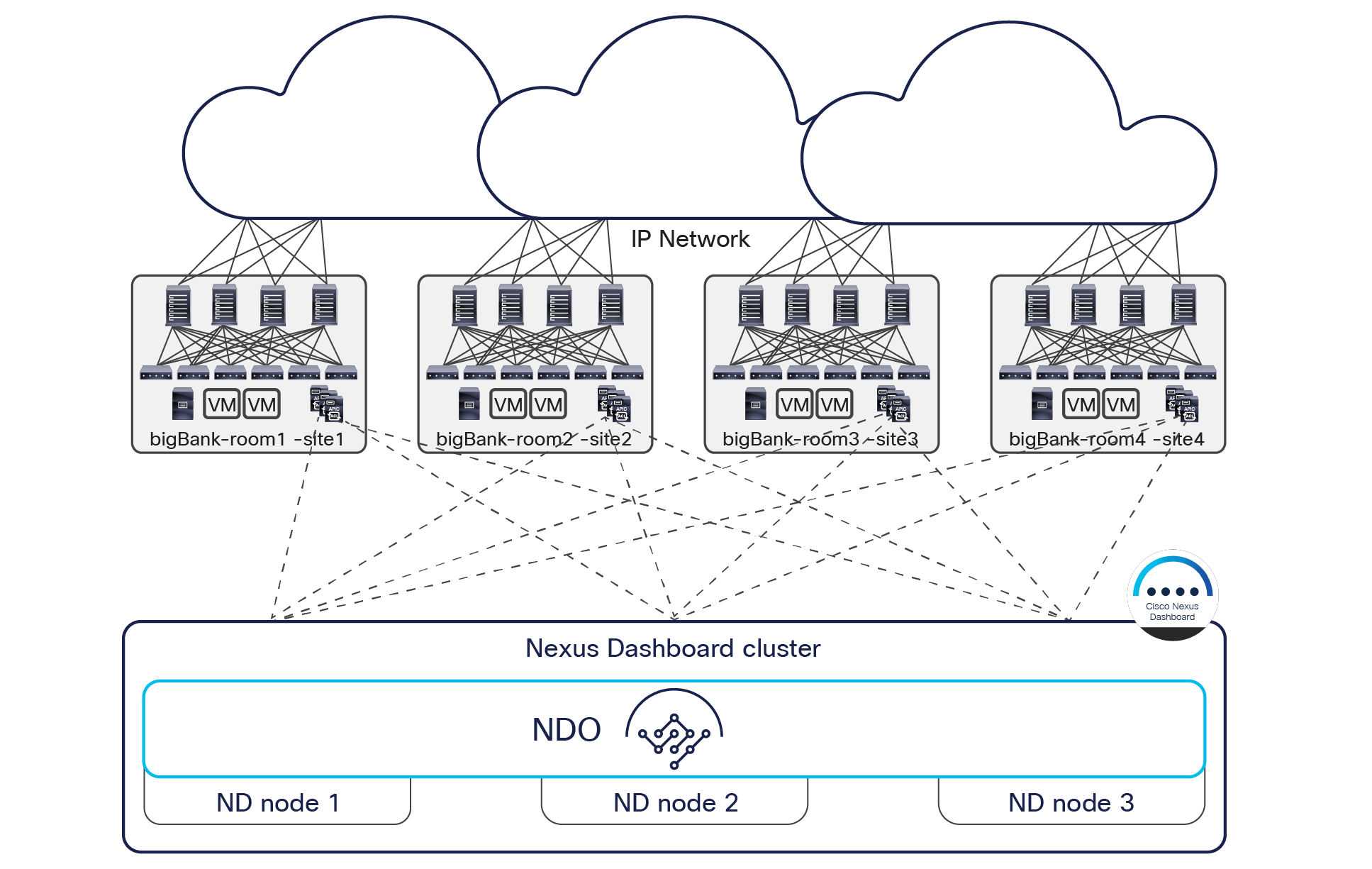

Cisco ACI Multi-Site deployment in a local data center for high leaf-node scale

The centralized deployment use case is popular in the financial and government sectors or with large service providers. In these scenarios, a Cisco ACI Multi-Site design is deployed in a building or a local campus with an ultra-high port count for bare-metal server, virtual machine, or container connectivity. A high number of leaf nodes are deployed across separate Cisco ACI fabrics to scale out the deployment and yet limit the scope of the failure domain and manage everything through a single pane of glass.

In the example shown in Figure 18, four Cisco ACI fabrics are deployed in one hall in four rooms, with each Cisco ACI fabric consisting of up to 500 leaf switches (when deploying a Multi-Pod fabric). The Cisco Nexus Dashboard Orchestrator service is deployed on top of a Nexus Dashboard cluster (three virtual machines in this example, but they could also be three physical nodes). Each ND virtual node can be deployed on its own separate hypervisor (ESXi or KVM) so that there is no single point of failure. All tenant policies can be stretched across all four Cisco ACI sites through the Cisco Nexus Dashboard Orchestrator interface. An additional ND standby primary node can be deployed to replace a failed ND active primary node.

Cisco Nexus Dashboard cluster deployed within a data center

It is not required (and usually not recommended) to connect the bare-metal or virtual servers hosting the Orchestrator services directly to the ACI fabrics; this is to avoid specific ACI fabric connectivity issues that may prevent NDO from communicating with the APIC clusters. Since, as will be clarified in a following section, communication to the OOB, IB, or both interfaces of the APIC clusters is possible (depending on the specific NDO deployment model), one has full flexibility when it comes to where those clusters should be connected (for example, they could also be deployed outside the data center).

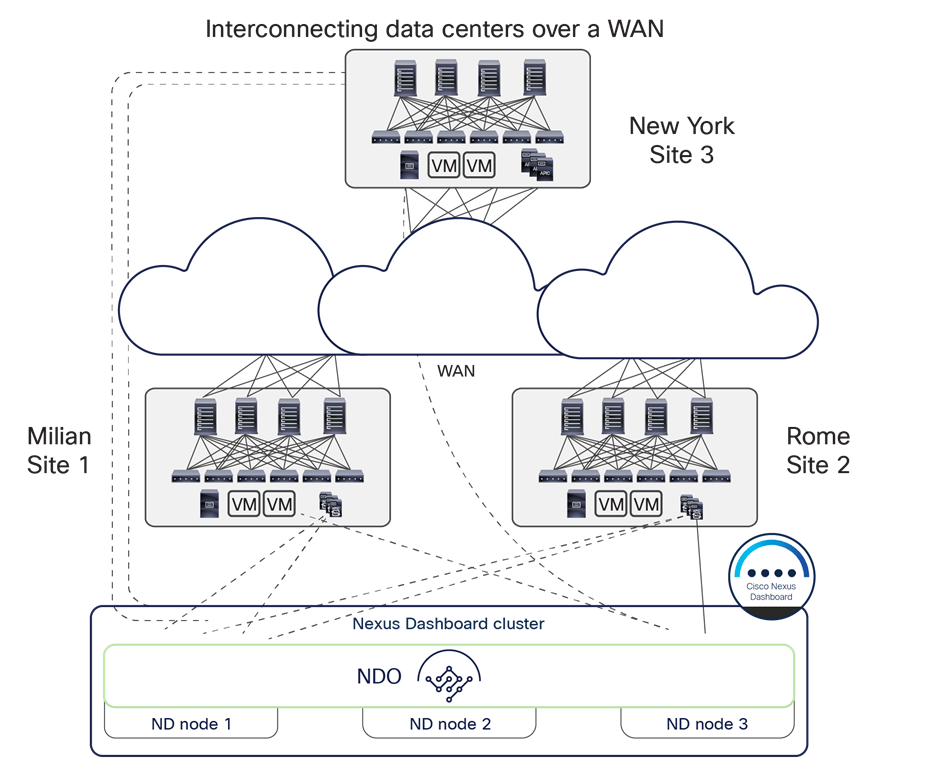

Cisco Nexus Dashboard Orchestrator deployment for data centers interconnected over WAN

The WAN use case is common among enterprises and service providers. In this scenario, geographically separated data centers are interconnected across cities in different countries or continents.

In the example shown in Figure 19, three Cisco ACI fabrics are deployed—in Rome, Milan, and New York—and all of them are managed from the Cisco Nexus Dashboard Orchestrator service running on the virtual (or physical) ND cluster stretched between Rome and Milan. An interesting point to note is that the New York site can be managed remotely by the NDO running on the Nexus Dashboard cluster deployed in Italy (due to the support for up to 500 msec RTT latency between an ND node and the APIC controller cluster it manages).

Cisco Nexus Dashboard cluster deployed across data centers interconnected over WAN

As a best practice, you should always deploy the Nexus Dashboard nodes hosting the Orchestrator within the same geographical region (United States, Europe, Asia, etc.), even when managing Cisco ACI fabrics that span the world. This is because of the 50 msec RTT latency limit supported for communication between the ND nodes that are part of the same ND cluster.
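The two latency limits quoted in this section (50 msec RTT between ND nodes of the same cluster, 500 msec RTT between an ND node and the APIC cluster it manages) can be checked against a proposed design with a few lines of code. The following is only a minimal sketch: the node names, site names, and RTT values are hypothetical measurements, not data taken from Figure 19.

```python
# RTT limits quoted in this paper, in milliseconds.
MAX_RTT_ND_TO_ND_MS = 50      # between nodes of the same ND cluster
MAX_RTT_ND_TO_APIC_MS = 500   # between an ND node and an APIC cluster


def check_placement(nd_rtt_ms, apic_rtt_ms):
    """Return a list of limit violations (empty if the design fits)."""
    violations = []
    for (node_a, node_b), rtt in nd_rtt_ms.items():
        if rtt > MAX_RTT_ND_TO_ND_MS:
            violations.append(
                f"ND {node_a} <-> ND {node_b}: {rtt} ms > {MAX_RTT_ND_TO_ND_MS} ms"
            )
    for (node, site), rtt in apic_rtt_ms.items():
        if rtt > MAX_RTT_ND_TO_APIC_MS:
            violations.append(
                f"ND {node} <-> APIC {site}: {rtt} ms > {MAX_RTT_ND_TO_APIC_MS} ms"
            )
    return violations


# Hypothetical measurements: ND cluster stretched between Rome and Milan,
# managing fabrics in Rome, Milan, and New York.
nd_rtt = {("rome-1", "rome-2"): 1, ("rome-1", "milan-1"): 12, ("rome-2", "milan-1"): 12}
apic_rtt = {("rome-1", "Rome"): 1, ("rome-1", "Milan"): 12, ("rome-1", "New York"): 110}

stretched_in_italy = check_placement(nd_rtt, apic_rtt)

# Moving one ND node to New York would break the intra-cluster limit.
stretched_to_ny = check_placement({("rome-1", "ny-1"): 110}, apic_rtt)
```

With these assumed values, the Italy-only cluster passes both checks while still managing the New York fabric, whereas placing an ND node in New York violates the 50 msec intra-cluster limit.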

A stable data-plane connection must exist between the Cisco Nexus Dashboard nodes that are part of the same cluster when they are deployed over a WAN. The nodes in a Cisco Nexus Dashboard cluster communicate with each other over a TCP connection, so if any drops occur in the WAN, dropped packets will be retransmitted. Each ND cluster node is assigned a unique IP address; there is no requirement for those IP addresses to be part of the same IP subnet, as communication between the nodes can be routed.

The recommended connection bandwidth between nodes in a Cisco Nexus Dashboard Orchestrator cluster is from 300 Mbps to 1 Gbps. These numbers are based on internal stress testing while adding very large configurations and deleting them at high frequency.

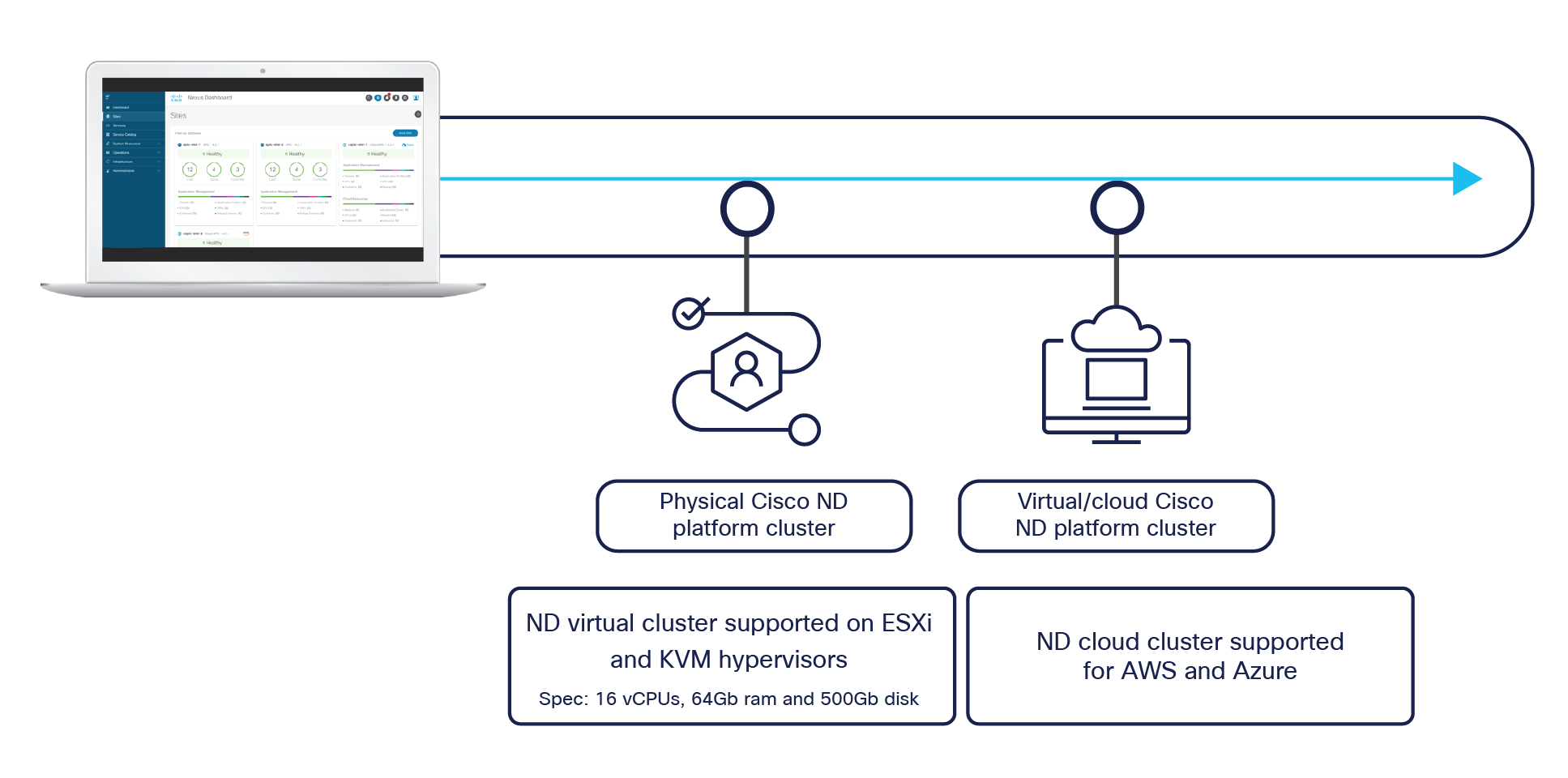

Cisco Nexus Dashboard deployment considerations

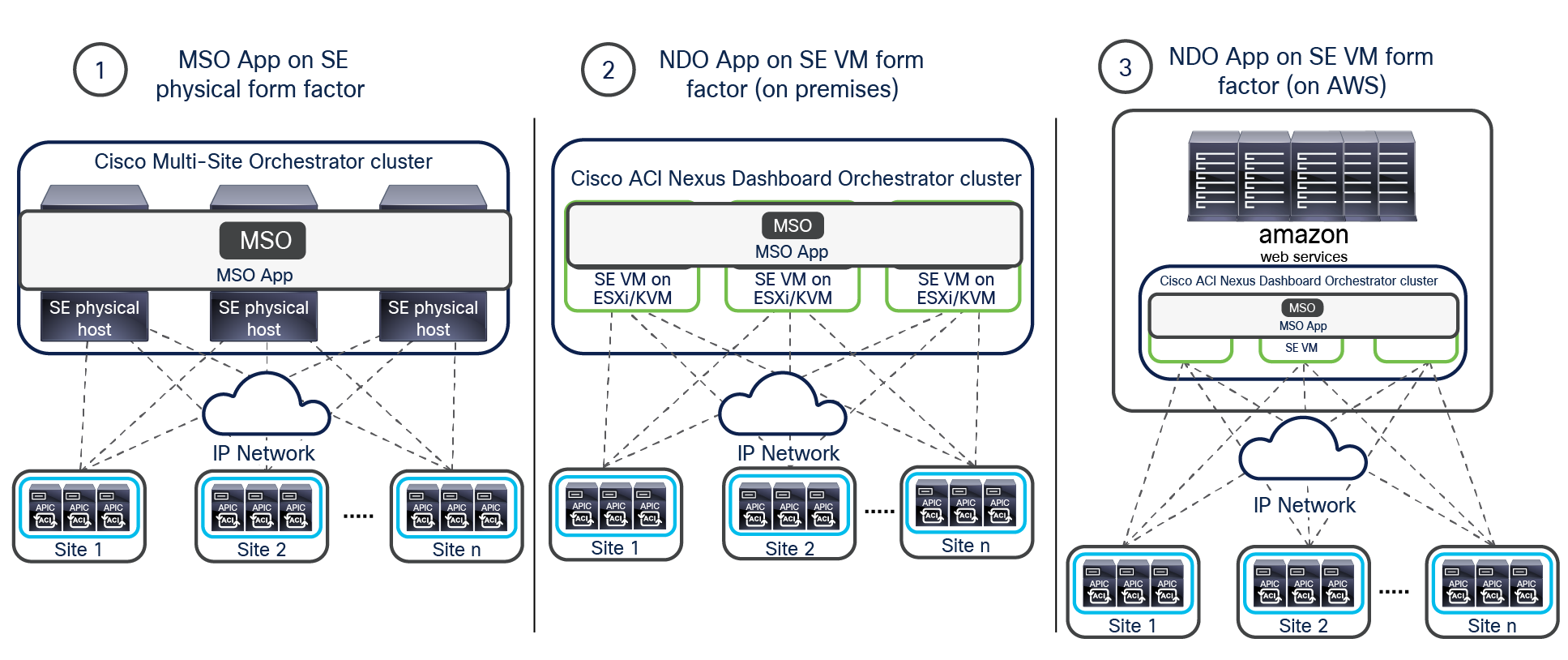

The Orchestrator (NDO) is deployed as an application running on a cluster of compute resources represented by the Cisco Nexus Dashboard (ND). The first release 2.0(1) of Nexus Dashboard only supported a cluster of three physical ND compute nodes. From Nexus Dashboard release 2.0(2) onward, it is available as virtual form factors to be deployed on premises or in a public cloud (Figure 20).

Nexus Dashboard cluster form factors supporting NDO

Note: To “mix and match” those form factors in the same cluster is not supported; only homogeneous deployment models are supported (that is, all physical nodes, all virtual nodes deployed on premises on the same hypervisor flavor, and all virtual nodes deployed in the cloud in the same cloud-service provider).

There are three differences when running NDO as a service on Nexus Dashboard when compared with the previous MSO deployment models described in Appendix B:

● The maximum latency between an ND node and the APIC cluster is reduced to 500 msec RTT (instead of the 1 sec RTT value previously supported with MSO).

● The maximum RTT latency supported for communication between the ND nodes part of the same ND cluster is 50 msec (instead of the 150 msec RTT value previously supported with MSO).

● The ND nodes can communicate with the APIC controllers using their OOB address, IB address, or both (whereas only OOB connectivity was supported with the previous MSO options).

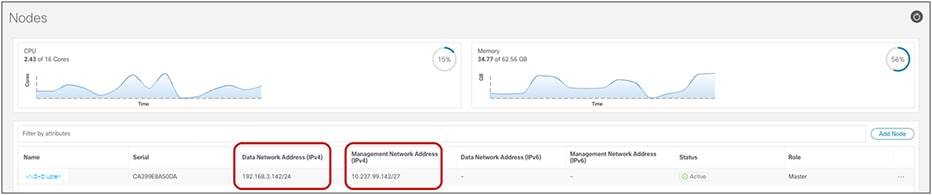

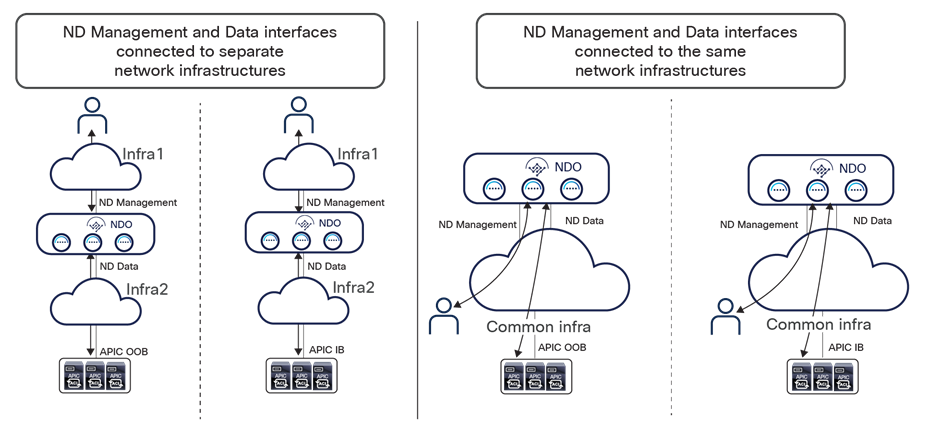

Some important considerations are required for this last specific point. Each Nexus Dashboard compute node, independently from its form factor, has two types of interfaces: a management interface and a data interface (Figure 21).

Nexus Dashboard compute node interfaces

Note: The figure above is for a single-node virtual ND cluster used for lab purposes. In real-life ND deployments, there would be three nodes that are part of the same cluster, each with its own interfaces.

A different routing table is associated with each of these interfaces, and a default route is added to each table pointing to the next-hop device. You can view the routing tables associated with the two interfaces by using the commands below (after connecting via SSH to a Nexus Dashboard node as “rescue-user”):

Management Interface (bond1br)

rescue-user@vND-Cluster:~$ ip route show

default via 10.237.99.129 dev bond1br

10.237.99.128/27 dev bond1br proto kernel scope link src 10.237.99.142

100.80.0.0/16 dev bond0br scope link

169.254.0.0/25 dev k8br1 proto kernel scope link src 169.254.0.44

169.254.0.0/16 dev bond0br scope link metric 1006

169.254.0.0/16 dev bond1br scope link metric 1009

172.17.0.0/16 dev k8br0 proto kernel scope link src 172.17.222.0

192.168.3.0/24 dev bond0br proto kernel scope link src 192.168.3.142

Data Interface (bond0br)

rescue-user@vND-Cluster:~$ ip route show table 100

default via 192.168.3.150 dev bond0br

10.237.99.128/27 dev bond1br scope link

172.17.0.0/16 dev k8br0 scope link

192.168.3.0/24 dev bond0br scope link

To decide how each ND cluster node should be connected to the network infrastructure, it is critical to understand that the different functions and services running on ND have been designed to leverage (by default) the reachability information contained in one of the two routing tables described above when communicating with various external services. For example, when ND tries to connect to an NTP server or to a proxy server, the lookup to reach that destination is done in the first routing table. On the other hand, when onboarding APIC controllers on ND, by specifying either the APIC's OOB or IB IP address, the lookup to reach that destination is done in the second routing table.

This specific ND implementation leads to the following considerations:

● The ND Management interface is dedicated to the management of the ND cluster, since its associated routing table is used by ND for connecting to NTP and DC proxy servers, Cisco Intersight clusters, and DNS servers, to provide UI access to ND (and ND Apps) and to perform firmware upgrades for ND and the applications running on it. Assuming all the services mentioned above are deployed in IP subnets different from the one assigned to the ND Management interface, the default route defined in that routing table would be used to communicate with all of them (in the example above, pointing to the next-hop device 10.237.99.129).

● The ND Data interface is used to bring up the ND cluster (node-to-node communication) and by specific services (NDO, NDI, NDFC, etc.) running on ND for communication to controllers and switches. If the controllers and the switches are part of any IP subnet other than the one assigned to the ND Data interfaces, the default route in the routing table of the ND Data interface would be used to communicate with those devices (in the example above, pointing to the next-hop device 192.168.3.150).

● The default behavior described above could be changed by assigning to the ND Management or ND Data interface the same IP subnet as the external services and devices ND needs to communicate with. For example, if the ND Management interface was deployed in the same IP subnet as the APIC controllers, the entry 10.237.99.128/27 associated to the second routing table in the example above would ensure that the management interface would always be used to connect to the APICs. Alternatively, it is also possible to force the use of a specific interface by adding static routes associated to that ND interface. For example, if the APIC were part of IP subnet 192.168.1.0/24, associating that static route to the ND Management interface would ensure that the 192.168.1.0/24 entry would be installed in the second routing table pointing to the ND Management interface.

● Despite the flexibility offered by the ND platform in terms of connectivity (and described in the previous bullet point), the following are the best-practice recommendations when running NDO on the ND compute cluster:

◦ If running only the NDO service on the ND cluster, you can decide to assign both ND Management and Data interfaces to the same IP subnet. However, since this may create problems when enabling additional services (such as, for example, NDI) on the same cluster, it is strongly recommended to assign those two interfaces to different IP subnets.

◦ Also, it is strongly recommended to keep the default behavior of using the two ND interfaces for the specific default communications described in the first two points above. This implies assigning the ND Management and Data interfaces to IP subnets that are different from the ones used by the external entities ND needs to connect. The default routes in each routing table (and their associated interfaces) will hence be used depending on the specific communication that is required.

◦ The ND management interfaces of the node part of the same ND cluster can be part of the same IP subnet or be assigned to different IP subnets. The former is usually the case when the ND cluster is deployed in a specific DC location, the latter when the ND cluster is stretched across different DC locations. The same considerations apply for the ND data interfaces of the ND cluster nodes.
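The interface-selection behavior described in the considerations above can be reproduced with a longest-prefix-match lookup over the two routing tables. The sketch below uses only a subset of the routes from the “ip route show” outputs shown earlier; the NTP and APIC addresses are hypothetical, and the function is an illustration of the lookup logic rather than the actual ND implementation.

```python
import ipaddress

# Subset of the routes shown earlier, as (prefix, outgoing interface).
MGMT_TABLE = [                       # "ip route show"
    ("0.0.0.0/0", "bond1br"),        # default via 10.237.99.129
    ("10.237.99.128/27", "bond1br"),
    ("192.168.3.0/24", "bond0br"),
]
DATA_TABLE = [                       # "ip route show table 100"
    ("0.0.0.0/0", "bond0br"),        # default via 192.168.3.150
    ("10.237.99.128/27", "bond1br"),
    ("192.168.3.0/24", "bond0br"),
]


def egress_interface(table, destination):
    """Longest-prefix match: interface of the most specific matching route."""
    addr = ipaddress.ip_address(destination)
    candidates = [
        (ipaddress.ip_network(prefix), dev)
        for prefix, dev in table
        if addr in ipaddress.ip_network(prefix)
    ]
    _, dev = max(candidates, key=lambda item: item[0].prefixlen)
    return dev


ntp_server = "10.50.0.10"    # hypothetical address, outside both ND subnets
apic_oob = "172.31.10.11"    # hypothetical address, outside both ND subnets

ntp_dev = egress_interface(MGMT_TABLE, ntp_server)    # management default route
apic_dev = egress_interface(DATA_TABLE, apic_oob)     # data default route

# APIC in the same subnet as the ND Management interface: the connected
# 10.237.99.128/27 entry in the second table wins over the default route.
apic_same_subnet_dev = egress_interface(DATA_TABLE, "10.237.99.140")

# Static route for 192.168.1.0/24 associated to the ND Management interface.
data_with_static = DATA_TABLE + [("192.168.1.0/24", "bond1br")]
apic_static_dev = egress_interface(data_with_static, "192.168.1.11")
```

Under these assumptions, the NTP lookup resolves to bond1br and the APIC lookup to bond0br, which is the default split between the two interfaces. The last two lookups show how a shared subnet or a static route moves APIC traffic to the management interface, which is the behavior the best-practice recommendations advise against.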

Figure 22 shows some typical deployment scenarios for a Nexus Dashboard cluster with NDO running on top.

Nexus Dashboard compute node interfaces

The two scenarios on the left show the ND Management and Data interfaces being connected to separate network infrastructures. This makes it clearer how each interface is dedicated to the specific connectivity duties described above.

The two use cases on the right show, on the contrary, the use of a common network infrastructure to connect both ND Management and Data interfaces. In such scenarios, it would probably be very common to use different VRFs inside that network infrastructure to keep the different types of required communications isolated.

Note: For all of the use cases shown in Figure 22, when running only NDO on the Nexus Dashboard cluster, it is fully supported to establish connectivity with the APIC out-of-band (OOB) or inband (IB) interfaces, or both. The onboarding of a site on Nexus Dashboard (that is, the onboarding of the APIC cluster managing that ACI fabric) can be done by specifying one of the IP addresses (OOB or IB) of one of the APIC nodes. Assuming ND can connect to the specified address (using the data interface when following the best-practice recommendations previously described), the IP addresses for all the other APIC nodes that are part of the same cluster will also be automatically discovered.

For more information on Cisco Nexus Dashboard and its capabilities of hosting applications, please refer to the documentation available at the links below: https://www.cisco.com/c/en/us/support/data-center-analytics/nexus-dashboard/products-installation-guides-list.html

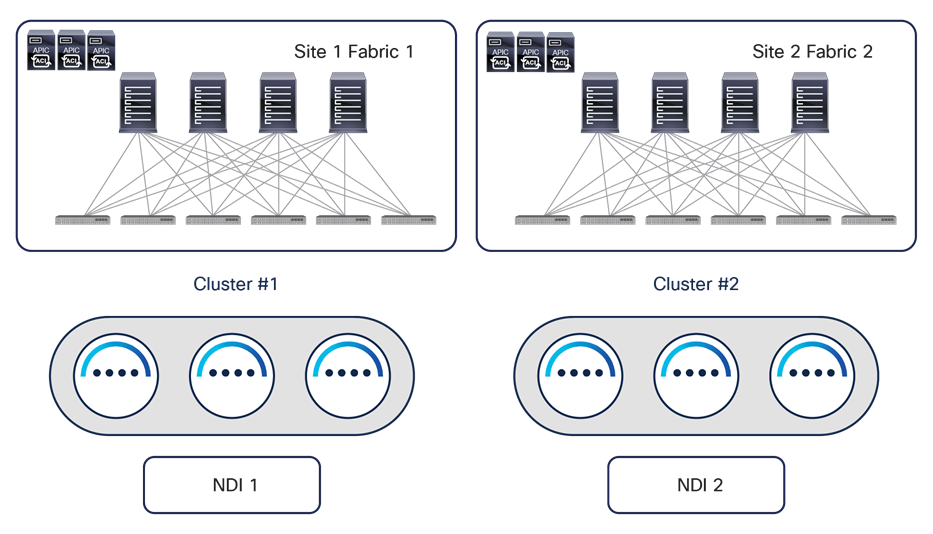

While a physical Nexus Dashboard compute cluster can host multiple applications and services, it is currently not possible to install the services associated to a single instance of NDO across separate Nexus Dashboard clusters, but only across the nodes of the same Nexus Dashboard cluster. This brings some interesting considerations for customers who are interested in leveraging both Nexus Dashboard Insights (NDI) and Nexus Dashboard Orchestrator services. While NDI deployment considerations are out of the scope of this paper, a basic rule of thumb is never to spread an ND cluster hosting NDI across geographically separated locations (mostly because of the telemetry data ingestion requirements of NDI). This means that, for example, for a two-site deployment scenario, a separate physical ND cluster hosting NDI should be deployed in each DC location, as shown in Figure 23.

Typical NDI deployment for geographically dispersed data centers

When NDO also needs to be deployed in such scenarios, the restriction that a single NDO instance currently cannot be deployed across different ND compute clusters implies that a couple of different NDO deployment options are possible:

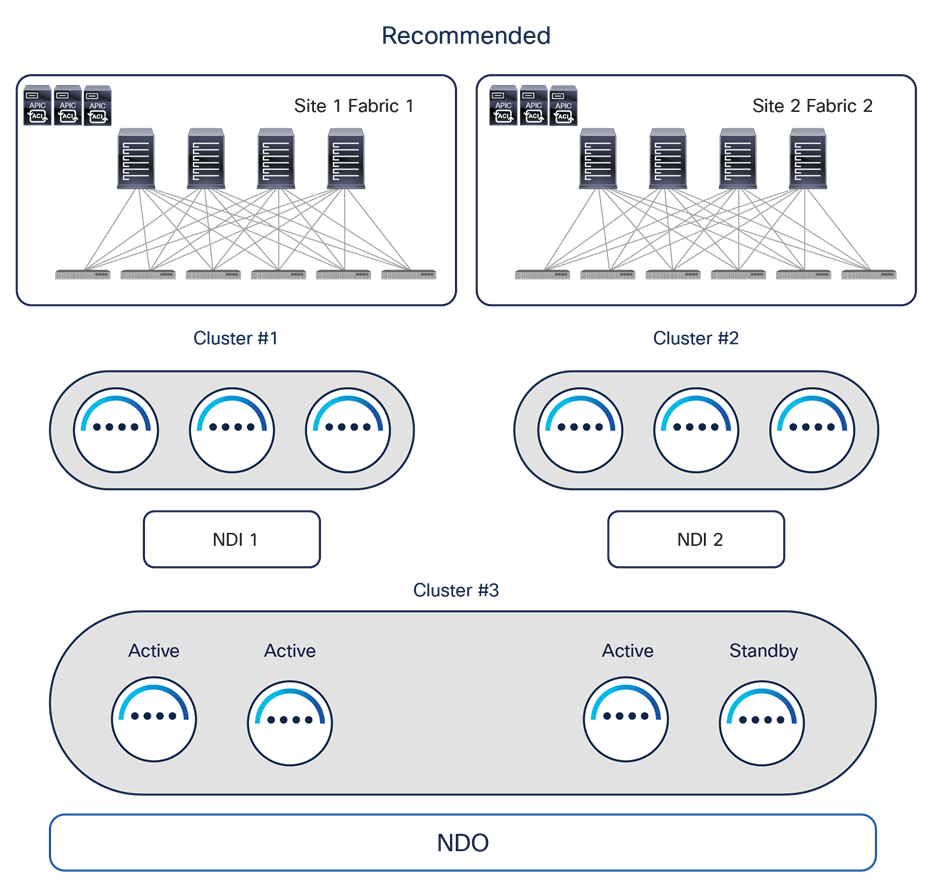

1. Deploy the Orchestrator service on a dedicated ND cluster (usually a virtual one) that can be spread across DC locations. As shown in Figure 24, in this recommended deployment model a vND cluster dedicated to NDO can be built using three virtual machines (ND primary nodes) hosted on premises and deployed in different sites. A fourth virtual ND primary node can be deployed as a standby to be able to take over and replace any failed active primary node.

Recommended NDO and NDI deployment option for geographically dispersed data centers

The main advantages of running the Orchestrator service on a dedicated virtual ND cluster are:

● It represents the most flexible deployment option; the vND nodes hosting the Orchestrator service can be geographically dispersed up to 50 msec RTT of latency between them. This is ideal for scenarios where geographically dispersed fabrics are part of the same Multi-Site domain because it allows customers to deploy the vND nodes in those distributed data center locations (reducing the chances of losing multiple vND nodes at the same time). In the scenario shown in Figure 24, if DC1 were to completely fail, the vND standby node in DC2 could be promoted to active to bring the vND cluster in majority state and be able to provision policies to the remaining data center(s).

● It allows you to choose how to communicate with the APIC clusters, since IB, OOB, or both communication channels are possible between the vND nodes and the APICs for the Orchestration service (this may not be the case when other services are co-hosted on the ND cluster).

● You can run with just 3 vND primary nodes, independently from the number of sites and leaf nodes per site (based on the maximum supported values). An ND cluster hosting other services may require the deployment of additional “worker” nodes based on the site/leaf scalability requirements. For more information on the ND resources required to support different combinations of services, please refer to the Nexus Dashboard Capacity Planning tool at the link: https://www.cisco.com/c/dam/en/us/td/docs/dcn/tools/nd-sizing/index.html

● It allows you to run the Orchestrator services on a vND cluster that can be directly hosted in the AWS/Azure public clouds.
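The DC1 failure scenario mentioned in the first advantage above can be reasoned about as a simple majority calculation. The sketch below assumes that the cluster is in a majority state when more than half of its three primary nodes are active, and it assumes a placement of two primary nodes in DC1 and one primary plus the standby in DC2. Both the majority rule and the placement are assumptions made for illustration and are not taken from Figure 24.

```python
def has_majority(active_primaries, cluster_size=3):
    """True when more than half of the primary nodes are active (assumed rule)."""
    return active_primaries > cluster_size // 2


def surviving_primaries(placement, failed_site, standby_site=None, promote_standby=False):
    """Count active primary nodes after a site failure, optionally promoting the standby."""
    count = sum(len(nodes) for site, nodes in placement.items() if site != failed_site)
    if promote_standby and standby_site is not None and standby_site != failed_site:
        count += 1
    return count


# Hypothetical placement of the three vND primary nodes.
placement = {"DC1": ["primary-1", "primary-2"], "DC2": ["primary-3"]}

before_promotion = has_majority(surviving_primaries(placement, "DC1"))
after_promotion = has_majority(
    surviving_primaries(placement, "DC1", standby_site="DC2", promote_standby=True)
)
```

With this assumed placement, losing DC1 leaves a single primary node (no majority), and promoting the standby in DC2 brings the count back to two of three.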

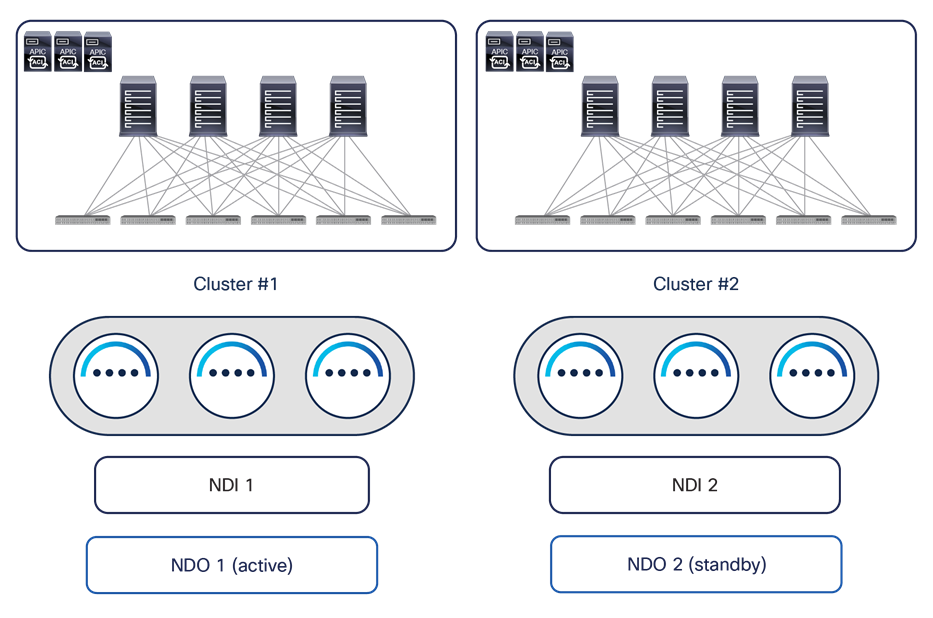

2. Install two separate NDO instances on the two ND clusters deployed in each data center and hosting the NDI instances, as shown in Figure 25.

Alternative NDO and NDI deployment options for geographically dispersed data centers

One NDO instance is run as “active,” meaning it is used to manage all the fabrics part of the Multi-Site domain (in the example above, the two fabrics in DC1 and DC2). The second NDO instance is, instead, installed but not actively used (in a sort of “standby” mode). Periodic backups of NDO configurations can be taken on the NDO active instance and saved in a safe remote location. If, for whatever reason, the NDO service running on the ND Cluster 1 becomes unusable, it is possible to import the latest available backup into the second NDO instance running in DC2 and roll back to that configuration. At that point the second NDO instance becomes effectively “active” and can be used to start managing all the fabrics that are part of the Multi-Site domain.

Note: As of NDO release 4.4 (part of the ND unified image 3.2), the NDO roll back procedure mentioned above can be successfully completed only if all the APIC clusters of the fabrics part of Multi-Site domain are reachable. The specific DR use case where both the NDO cluster and the APIC cluster deployed in a given DC location are lost at the same time will be addressed in a future ND/NDO release.

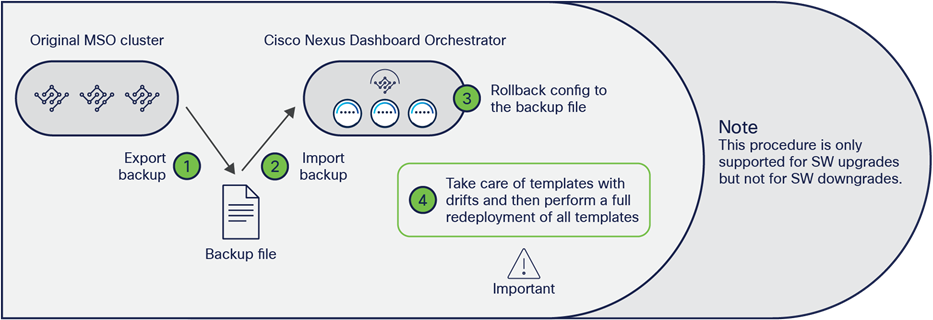

Another strong recommendation is to always deploy the latest available version of the NDO software. Upgrading between different NDO software releases is a fairly straightforward process that can be handled directly from the Nexus Dashboard UI. Different considerations apply to the migration from a VM-based or a CASE-based MSO cluster deployment to NDO. In that case, it is possible to perform the procedure shown in Figure 26.

Upgrading and/or migrating procedure between MSO and NDO

Note: The steps described below apply to migrating to any 3.x NDO release. Different considerations apply to migrating to NDO 4.0(1); please refer to the documents below for more information: https://www.cisco.com/c/en/us/td/docs/dcn/ndo/4x/deployment/cisco-nexus-dashboard-orchestrator-deployment-guide-401/ndo-deploy-migrate-40x.html

● Install the NDO application on a new ND cluster. You can connect the cluster to the network at this point, or alternatively after the step below, where the old MSO cluster is disconnected (this may be required if the same IP addresses need to be used for the old and new clusters).

● Create a backup configuration file on the old VM-based MSO cluster and download the file to a location from where it can be easily retrieved.

● Shut down (or simply disconnect) the old VM-based MSO cluster.

● Import the file into the new NDO application running on the ND cluster (after connecting it to the network, in case this was not already done in the initial step).

● Roll back the configuration of the new NDO application running on the ND cluster to the one contained in the configuration file just imported. This allows you to import both the NDO infrastructure configuration and tenant-specific policies in the new cluster.

Note: Since the onboarding of ACI sites is managed directly on the Nexus Dashboard, in order to be able to successfully roll back the NDO configuration, it is mandatory to ensure that the names assigned to the ACI fabrics onboarded on ND match the names of the ACI fabrics originally onboarded on the MSO cluster.

● Once the rollback of the configuration is completed, some of the templates may show some drift. A drift means that there is a discrepancy between the APIC and NDO configuration of one (or more) objects. A drift may appear after a configuration rollback between two Orchestrator releases that can manage different ACI objects (or objects’ properties). This is the case, for example, when migrating from MSO 2.2 to NDO 3.7, since NDO 3.7 can manage more objects than MSO 2.2. Therefore, once the rollback is completed, NDO 3.7 would assign default values to all the objects it manages but that were not previously managed by MSO 2.2. Those default values may be different from the actual values those objects have on the APICs managing the fabrics that are part of the Multi-Site domain; this would be, for example, the case if those values were changed directly on the APIC given that MSO 2.2 could not manage them. Those drifts can be resolved leveraging a drift-reconciliation workflow that has been introduced since NDO Software Release 3.4(1). For more information on that, please refer to the “NDO operational enhancements” section.

● Once all the drifts have been resolved, one last step is required when migrating to an NDO release before 3.8(1). This step consists of redeploying all the defined application templates; it is required to ensure the configuration information for all those templates is properly saved in the NDO database (since this database implements a new format in NDO compared to the format used in MSO). From NDO Release 3.8(1), this “redeployment” is instead automatically handled as part of the migration procedure, so the only thing you need to take care of after the rollback is to resolve configuration drifts (if present).

Note: For more information on this MSO-to-NDO migration procedure, please refer to the document at the link below: https://www.cisco.com/c/en/us/td/docs/dcn/ndo/3x/deployment/cisco-nexus-dashboard-orchestrator-deployment-guide-371/ndo-deploy-migrate-37x.html
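The site-name requirement noted in the migration steps above lends itself to a simple pre-check before the rollback is attempted. The sketch below compares two lists of fabric names and assumes an exact, case-sensitive match. The names are hypothetical, and how the lists are obtained (for example, from the MSO and ND user interfaces) is left outside the sketch.

```python
def missing_site_names(mso_site_names, nd_site_names):
    """Return MSO fabric names that have no identically named fabric onboarded on ND."""
    return sorted(set(mso_site_names) - set(nd_site_names))


# Hypothetical names: "Milan" was onboarded on ND with a different spelling.
mso_sites = ["Rome", "Milan", "NewYork"]
nd_sites = ["Rome", "milan", "NewYork"]

mismatches = missing_site_names(mso_sites, nd_sites)
```

A non-empty result indicates fabrics that should be renamed or re-onboarded on ND before rolling back the NDO configuration.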

Deploying NDO schemas and templates

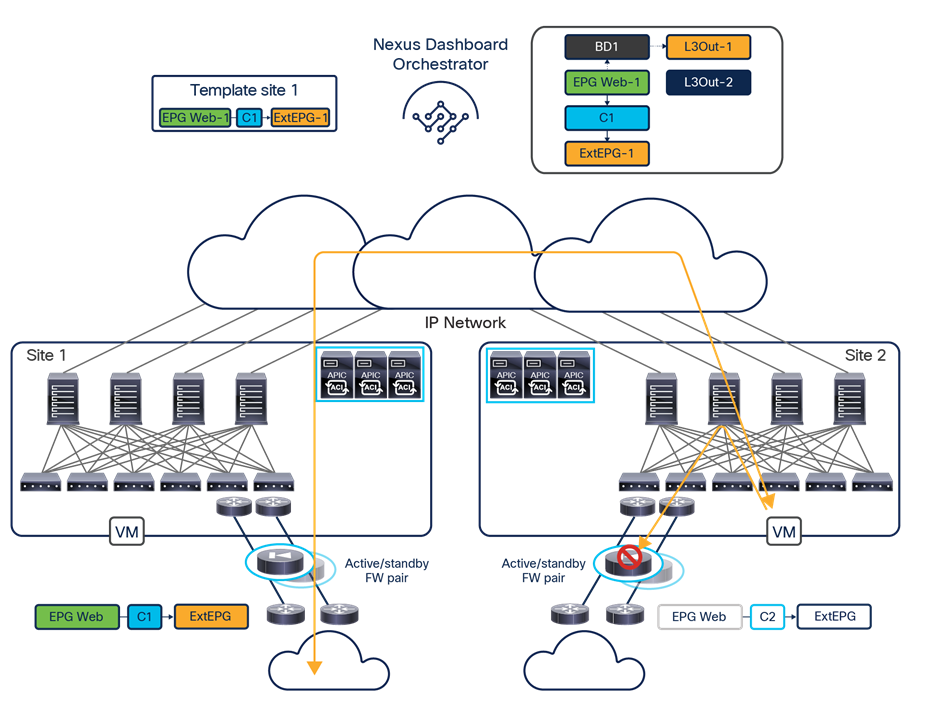

Multi-Site application templates

The creation of tenant-specific policies (EPGs, BDs, VRFs, contracts, etc.) is always done in NDO within a given application template, because each template is always associated to one (and only one) tenant. Multiple application templates can then be grouped together inside a schema, which essentially represents a container of application templates.

Despite the fact that a schema is not directly associated to a specific tenant, it is a quite common, and also a best-practice, deployment option to group together inside a schema all of the application templates associated to a given tenant, in order to have an easy way to visualize and modify specific tenant policies from the NDO GUI.

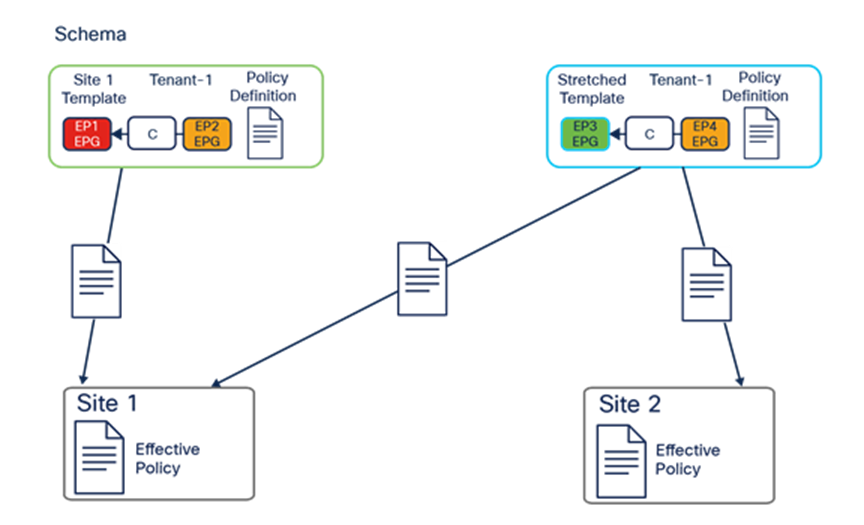

Each defined application template must then be mapped to one (or more) sites that are part of the same Multi-Site domain.

Mapping application templates to ACI sites

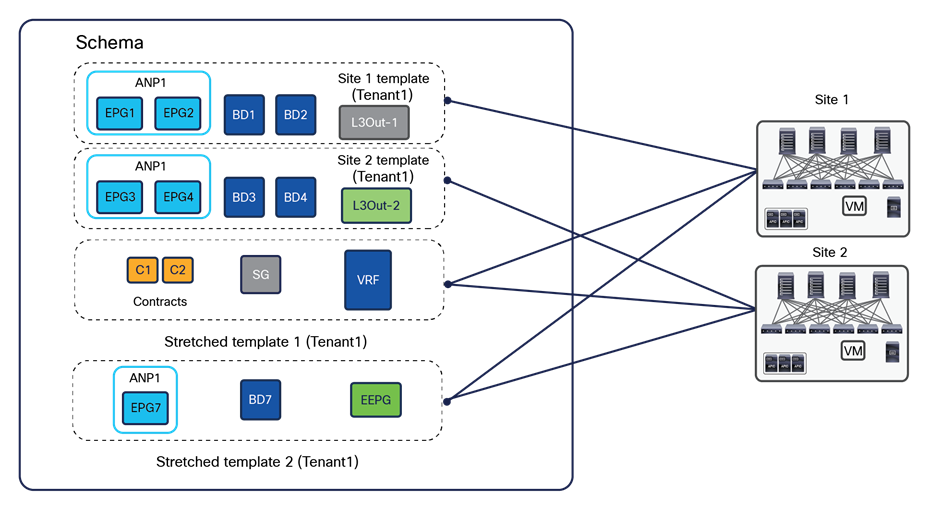

Figure 27 shows the creation of two application templates associated to the same Tenant-1:

● “Site 1 template” is mapped to the specific ACI site 1: This implies that all the specific tenant policies created in that application template can be pushed and deployed (that is, “rendered”) only to the APIC cluster managing that specific ACI fabric.

● “Stretched template” is mapped to both ACI sites 1 and 2: As a consequence, all the tenant policies defined in it are deployed to both sites, leading to the creation of “stretched” objects (that is, objects that are rendered in multiple sites). As an example, and as will be clarified in the “ACI Multi-Site use cases” section, the use of a stretched application template is required to be able to extend a BD across sites.

Based on the current NDO implementation, an application template therefore represents the atomic unit of change for policies: this means that all the changes applied to that template are always pushed immediately to all the site(s) mapped to the template. Specific changes can be pushed only to a specific site when applied to an application template that is solely mapped to that site.
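The relationship between application templates, sites, and the scope of a change can be modeled in a few lines. This is a conceptual sketch only: the class, field names, and object names are hypothetical and do not reflect the NDO data model or API.

```python
from dataclasses import dataclass, field


@dataclass
class ApplicationTemplate:
    name: str
    tenant: str                      # each template belongs to exactly one tenant
    sites: frozenset                 # sites the template is mapped to
    objects: list = field(default_factory=list)


def deploy(template):
    """Return the (site, object) pairs rendered by one deployment of the template."""
    return [(site, obj) for site in sorted(template.sites) for obj in template.objects]


site1_template = ApplicationTemplate(
    "Site 1 template", "Tenant-1", frozenset({"Site1"}), ["EPG-Local"]
)
stretched_template = ApplicationTemplate(
    "Stretched template", "Tenant-1", frozenset({"Site1", "Site2"}), ["VRF1", "BD-Stretched"]
)

local_rendering = deploy(site1_template)
stretched_rendering = deploy(stretched_template)
```

Because the template is the unit of change in this model, every object in the stretched template is rendered on both sites, while a change that must reach only one site has to live in a template mapped solely to that site.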

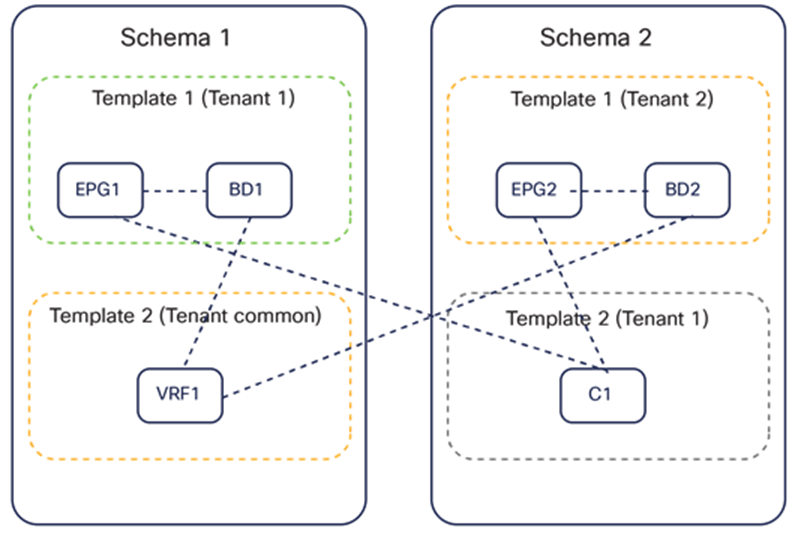

The organization of policy objects in application templates and schemas is quite flexible. In some cases, it may be handy to place network-specific objects (BDs, VRFs) and policy-specific objects (EPGs, contracts, etc.) in separate application templates or even schemas, under the assumption that policy-specific objects are modified more frequently than networking ones.

Figure 28 highlights how it can be possible to reference objects that are defined inside the same application template, across application templates that are part of the same schema or even across application templates contained in separate schemas. All those references can be created between application templates associated to the same tenant or even to separate tenants (as typically would be the case when defining network-specific objects in the “common” tenant).

Referencing objects across application templates and across schemas

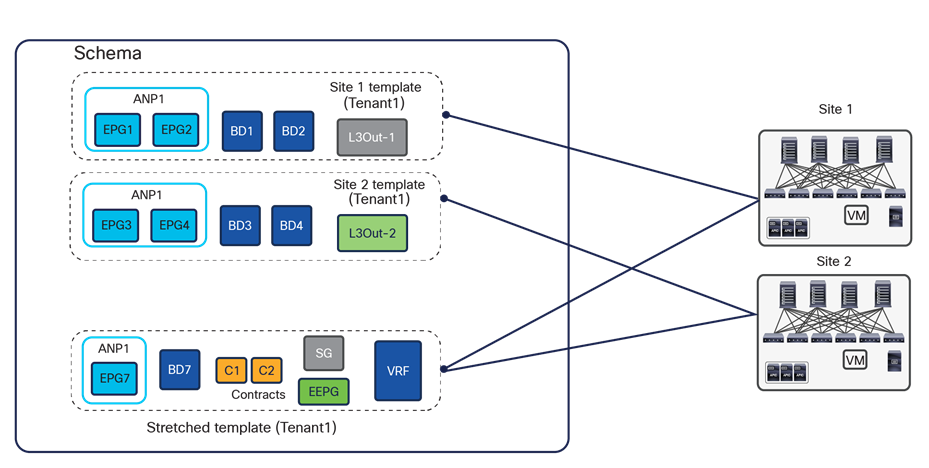

While it is technically possible to spread configuration objects for the same application tenant across multiple templates and schemas, it is strongly recommended to consolidate all the application templates associated to the same tenant in a specific schema (the “tenant schema”), as shown in Figure 29.

Best practices to define application templates inside a schema with NDO 3.x releases

A dedicated application template is mapped 1:1 to each site in the Multi-Site domain; in addition, a stretched application template is mapped to all the sites. VRFs and contracts are typically defined in the stretched application template, as they normally must be available on all the sites. BDs/EPGs are defined either on the stretched application template or on the site-specific application templates, depending on whether they are local or extended across sites.

When following this approach, it is important to check the ACI Verified Scalability Guide (VSG) for the specific software release that is deployed, for the maximum number of application templates and overall objects that can be supported in a given schema. In large-scale deployments, it may be required to deploy templates associated to the same tenant across multiple schemas in order to stay within the validated and supported scalability boundaries for each schema.

The approach shown in Figure 29 represents the best-practice deployment model for any NDO 3.x software release. Beginning with Release 4.0(1), Nexus Dashboard Orchestrator will validate and enforce several best practices when it comes to application template design and deployment:

● All policy objects must be deployed in order according to their dependencies.

For example, when creating a bridge domain (BD), you must associate it with a VRF. In this case, the BD has a VRF dependency, so the VRF must be deployed to the fabric before or together with the BD. If these two objects are defined in the same template, the Orchestrator ensures that, during deployment, the VRF is created first and then associated with the bridge domain. However, if you define these two objects in separate templates and attempt to deploy the template with the BD first, the Orchestrator returns a validation error because the associated VRF is not yet deployed. In this case, you must deploy the VRF template first, followed by the BD template.

● All policy objects must be undeployed in order according to their dependencies, that is, in the reverse of the order in which they were deployed.

As a corollary to the point above, when you undeploy templates, you must not undeploy objects on which other objects depend. For example, it is not possible to undeploy a VRF before undeploying the BD with which the VRF is associated.

● No cyclical dependencies are allowed across multiple templates.

Consider a case of a VRF1 associated with a bridge domain BD1, which is in turn associated with EPG1. If you create VRF1 in template1 and deploy that template, then create BD1 in template2 and deploy that template, there will be no validation errors since the objects are deployed in the correct order.

However, if you then attempt to create EPG1 in template1, it will create a circular dependency between the two templates, so the Orchestrator will not allow you to save template1 with the newly added EPG.
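The ordering and cyclical-dependency rules above can be sketched as a dependency graph between templates. The following Python example is an illustration only, not the validation logic implemented by the Orchestrator: the `template_graph` and `deploy_order` functions and the data layout are assumptions, and the check relies on the standard-library `graphlib` module (Python 3.9 or later).

```python
from graphlib import CycleError, TopologicalSorter


def template_graph(objects):
    """Build {template: set of templates it depends on} from object references.

    `objects` maps an object name to (template, [names of referenced objects]).
    This data layout is an assumption made for the sketch.
    """
    graph = {}
    for template, refs in objects.values():
        deps = graph.setdefault(template, set())
        for ref in refs:
            ref_template = objects[ref][0]
            if ref_template != template:
                deps.add(ref_template)
    return graph


def deploy_order(objects):
    """Return templates in a valid deployment order, or raise CycleError."""
    return list(TopologicalSorter(template_graph(objects)).static_order())


# VRF1 in template1, BD1 (which references VRF1) in template2.
objects = {
    "VRF1": ("template1", []),
    "BD1": ("template2", ["VRF1"]),
}
print(deploy_order(objects))  # template1 is listed before template2

# Adding EPG1 (which references BD1) to template1 closes the loop.
objects["EPG1"] = ("template1", ["BD1"])
try:
    deploy_order(objects)
except CycleError:
    print("cyclical dependency between template1 and template2")
```

In this model, a valid undeployment order is simply the reverse of the list returned by `deploy_order`.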

The introduction of these additional rules and requirements has two main implications:

1. For greenfield configurations created directly on NDO 4.0(1), the best-practice recommendation previously shown in Figure 29 is slightly modified to the version shown in Figure 30.

Best practices to define application templates inside a schema, beginning with NDO 4.x releases

The main difference is that two stretched templates are required with NDO 4.0(x) and later, to be able, for example, to define the VRF and the external EPG (referencing that VRF) in two separate templates and to avoid the creation of a cyclical dependency between those objects; this would cause an error when trying to deploy the single stretched template previously used.

Note: NDO Release 4.1 introduces support for “L3Out Templates” that can be used to fully manage the provisioning of a Tenant L3Out directly from NDO. NDO Release 4.2 instead introduces “Service Device” templates that can be used to define service nodes and PBR policies directly on NDO and consequently deploy the service graph functionality. As a consequence, when running NDO Release 4.2 or later, it is likely that the L3Out and Service Graph objects will no longer be defined as part of the application templates shown in Figure 30.

2. Performing an upgrade to Release 4.0(1) or later from an earlier 3.x release requires an analysis of all existing templates and conversion of any template that does not satisfy the new requirements previously described. This is done automatically by the system during the migration process, and you will receive a detailed report of all the changes that must be applied to your existing templates to make them compliant with the new best practices. If starting with the best-practices deployment model shown in Figure 29, the system will automatically reorganize the objects to mimic the new best-practice deployment model shown in Figure 30.

Note: For more information on the specific procedure to follow to upgrade from NDO 3.x to NDO 4.x releases, please refer to the document at the link below: https://www.cisco.com/c/en/us/td/docs/dcn/ndo/4x/deployment/cisco-nexus-dashboard-orchestrator-deployment-guide-401/ndo-deploy-migrate-40x.html

To provide even more flexibility when organizing policy objects, Cisco Nexus Dashboard Orchestrator supports the migration of EPGs and BDs across application templates (in the same schema or even across schemas) that are associated to the same tenant. A typical use case for this functionality is to start stretching across sites an EPG/BD pair that was originally defined locally in a site, or, vice versa, to make local to a site an EPG/BD pair that was originally stretched.

Autonomous application templates (NDO Release 4.0(1))

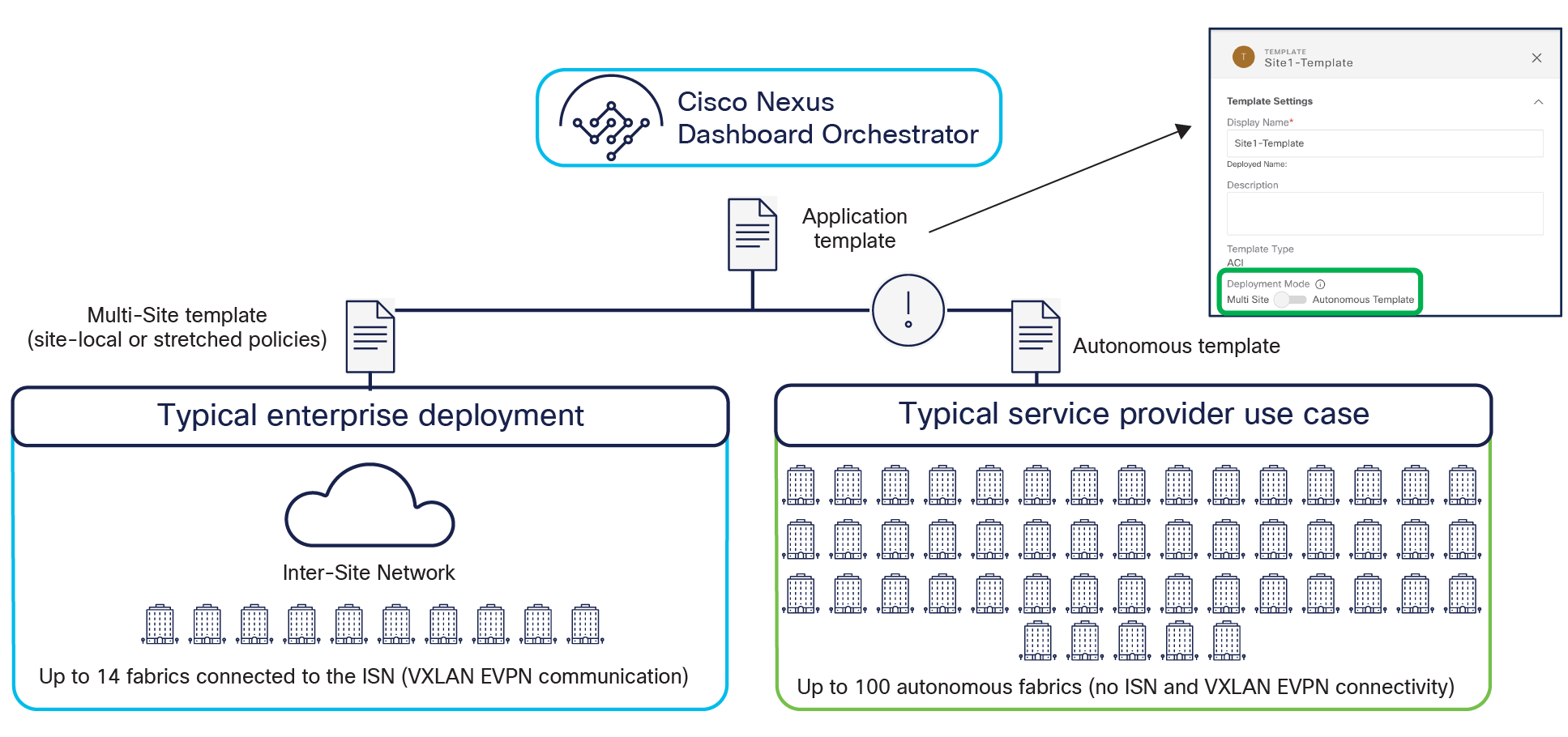

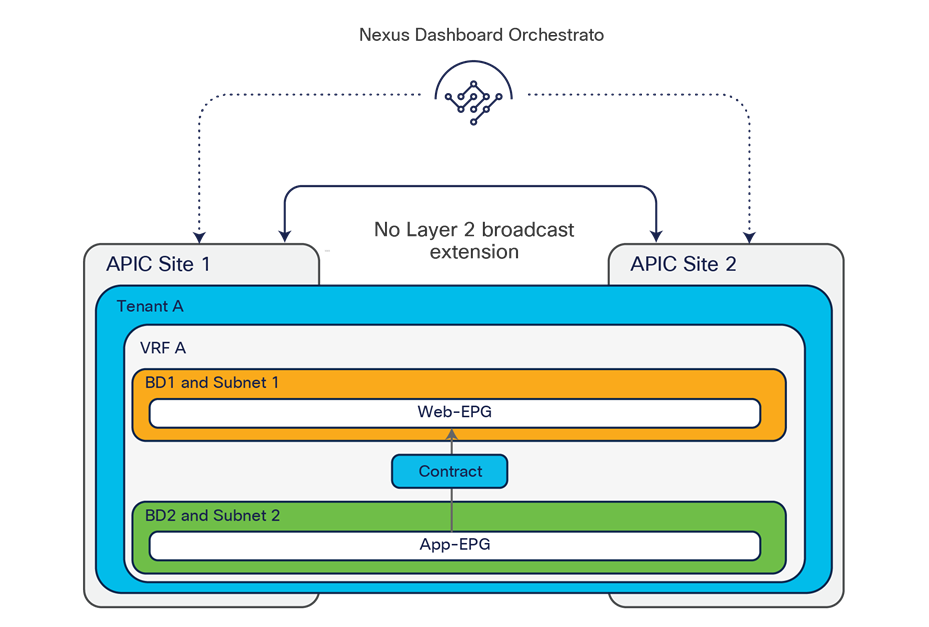

NDO Software Release 4.0(1) introduces a new type of application template, called an “autonomous template.” This type of application template allows you to provision the same objects (EPGs, BDs, VRFs, etc.) of a traditional application template (renamed “Multi-Site templates”); it can also be associated to a single site or to multiple sites. However, the fundamental difference between these two types of application templates is the fact that deploying an “autonomous template” to multiple sites does not result in the creation of “stretched” objects (and their associated translation entries, whose use will be discussed in a later section of this document).

As shown in Figure 31, the use case for the deployment of “autonomous application templates” is when there is a requirement to replicate the same configuration across multiple fabrics that are deployed and operated independently of each other (that is, there is no ISN infrastructure connecting the spines of the different ACI fabrics).

Multi-Site and autonomous application templates

It is important to reiterate that associating an autonomous template to multiple sites does not create “stretched objects,” but instead creates independent objects that simply share the same name. For example, if VRF1 is defined in an autonomous application template associated to sites 1 and 2, a VRF1 object is created on both APIC domains. However, from a functional perspective, these are two completely independent VRFs that can be interconnected only through the L3Out data-path. Similar considerations apply to the creation of EPGs with the same name, etc.

Given the considerations made above, it is important to follow some specific guidelines for deploying Multi-Site and autonomous application templates:

● A Multi-Site template must only be associated to multiple sites that are also “Multi-Site”–enabled. This implies that the “Multi-Site” knob associated to each site is turned on and the infrastructure configuration for each site has been fully provisioned to ensure connectivity to the Inter-Site Network (ISN). This is because, as already mentioned, the deployment of the Multi-Site template causes the provisioning of stretched objects that mandate VXLAN intersite communication through the ISN.

Note: Starting with NDO Release 4.0(3), the Orchestrator will enforce this guideline and prevent the association of a Multi-Site template to multiple “autonomous” sites.

● An autonomous template can also be associated to sites that are Multi-Site enabled (that is, the corresponding “Multi-Site” flag is checked for those sites) and that are interconnected through the ISN. It is important to understand that no stretched objects are created across sites when doing so.
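The two guidelines above can be expressed as a simple association check. The sketch below is a hypothetical illustration and not the Orchestrator's implementation: the `Site` class, the `"multi-site"` and `"autonomous"` labels, and both functions are assumptions. It also assumes that a Multi-Site template mapped to a single site needs no ISN connectivity, which is one reading of the guideline rather than a statement from the product documentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    name: str
    multi_site_enabled: bool   # state of the "Multi-Site" knob for the site


def can_associate(template_type, sites):
    """Return True if the association follows the guidelines above.

    template_type is "multi-site" or "autonomous" (labels chosen for this sketch).
    """
    if template_type == "autonomous":
        return True  # allowed on any set of sites; objects are never stretched
    if template_type == "multi-site":
        # Stretched objects require VXLAN connectivity through the ISN, so all
        # sites must be Multi-Site enabled when more than one site is targeted.
        return len(sites) <= 1 or all(s.multi_site_enabled for s in sites)
    raise ValueError(f"unknown template type: {template_type}")


def creates_stretched_objects(template_type, sites):
    """Only a Multi-Site template mapped to several sites stretches objects."""
    return template_type == "multi-site" and len(sites) > 1


site1 = Site("site1", multi_site_enabled=True)
site2 = Site("site2", multi_site_enabled=True)
site3 = Site("site3", multi_site_enabled=False)   # an "autonomous" site

print(can_associate("multi-site", [site1, site3]))
print(creates_stretched_objects("autonomous", [site1, site2]))
```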

New template types introduced in NDO Release 4.0(1)

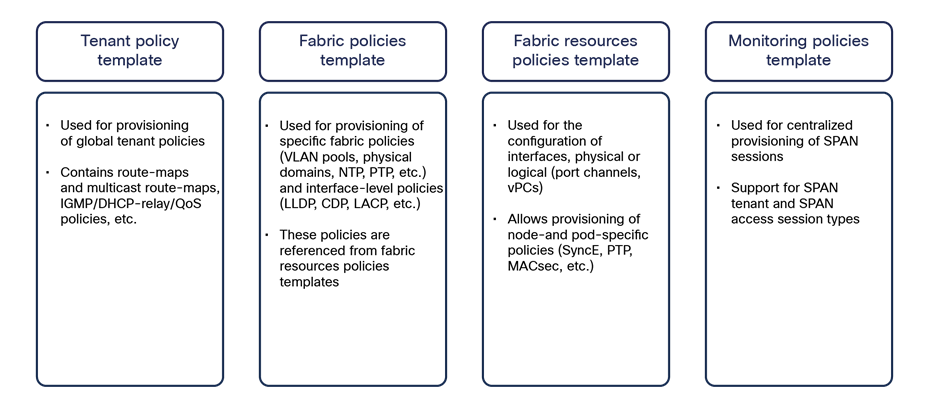

Starting from NDO Software Release 4.0(1), new template types have been introduced to expand the provisioning capabilities of the Nexus Dashboard Orchestrator. These new templates are shown in Figure 32 and briefly described below.

Template types introduced in NDO 4.0(1)

● Tenant policy template: This template is used to provision specific policies for a given tenant that can be used for different purposes. Examples include route-maps to be used for tenant-routed multicast configurations or to control the routes advertised on SR-MPLS L3Outs, custom QoS policies, etc. Each defined tenant policy template can be associated to one or more sites, depending on whether the policies must be unique per site or can be commonly applied to a group of sites.

● Fabric policies template and fabric resources policies template: These two types of templates are examples of “fabric management” templates that can be used to provision fabric-specific policies (such as interfaces and interfaces’ properties, physical domains and associated VLAN pools, etc.). Before NDO 4.0(1), such configurations could only be provisioned independently at each specific APIC level. As is the case for tenant policy templates, those fabric management templates can also be associated to one or more sites, depending on whether the policies must be unique per site or can be commonly applied to a group of sites.

● Monitoring policies template: This type of template can be used to provision SPAN sessions for replicating traffic to be monitored toward an external collector. Two types of SPAN configurations are supported: tenant and access SPAN.

Note: Providing configuration details for those new templates is out of the scope of this paper. For more detailed provisioning information, please refer to the document at the following link: https://www.cisco.com/c/en/us/td/docs/dcn/ndo/4x/configuration/cisco-nexus-dashboard-orchestrator-configuration-guide-aci-401/ndo-configuration-aci-fabric-management-40x.html

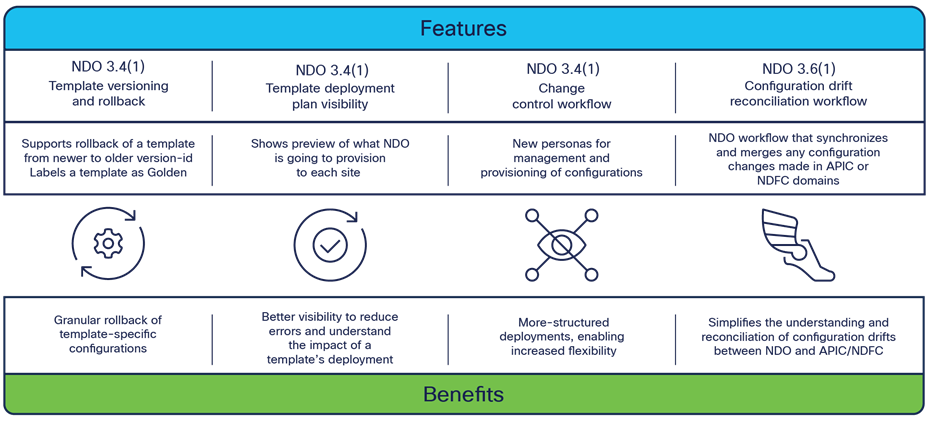

Different NDO software releases have introduced several critical enhancements to simplify and improve the operational aspects of the Cisco ACI Multi-Site architecture. Figure 33 highlights those template-level enhancements.

Note: Specific configuration information for all those functionalities can be found in the NDO configuration guide below: https://www.cisco.com/c/en/us/td/docs/dcn/ndo/3x/configuration/cisco-nexus-dashboard-orchestrator-configuration-guide-aci-371/ndo-configuration-aci-managing-schemas-37x.html?bookSearch=true

● Template versioning and rollback: In earlier releases of the Orchestrator, configuration backups and rollbacks could be performed only at the global-system level. While this functionality is quite useful and still available in the latest versions of the software, a more granular approach was required. Since the atomic unit of provisioning from NDO is the template (whatever type it is), this requirement led to the introduction of backup and rollback capabilities at the template level.

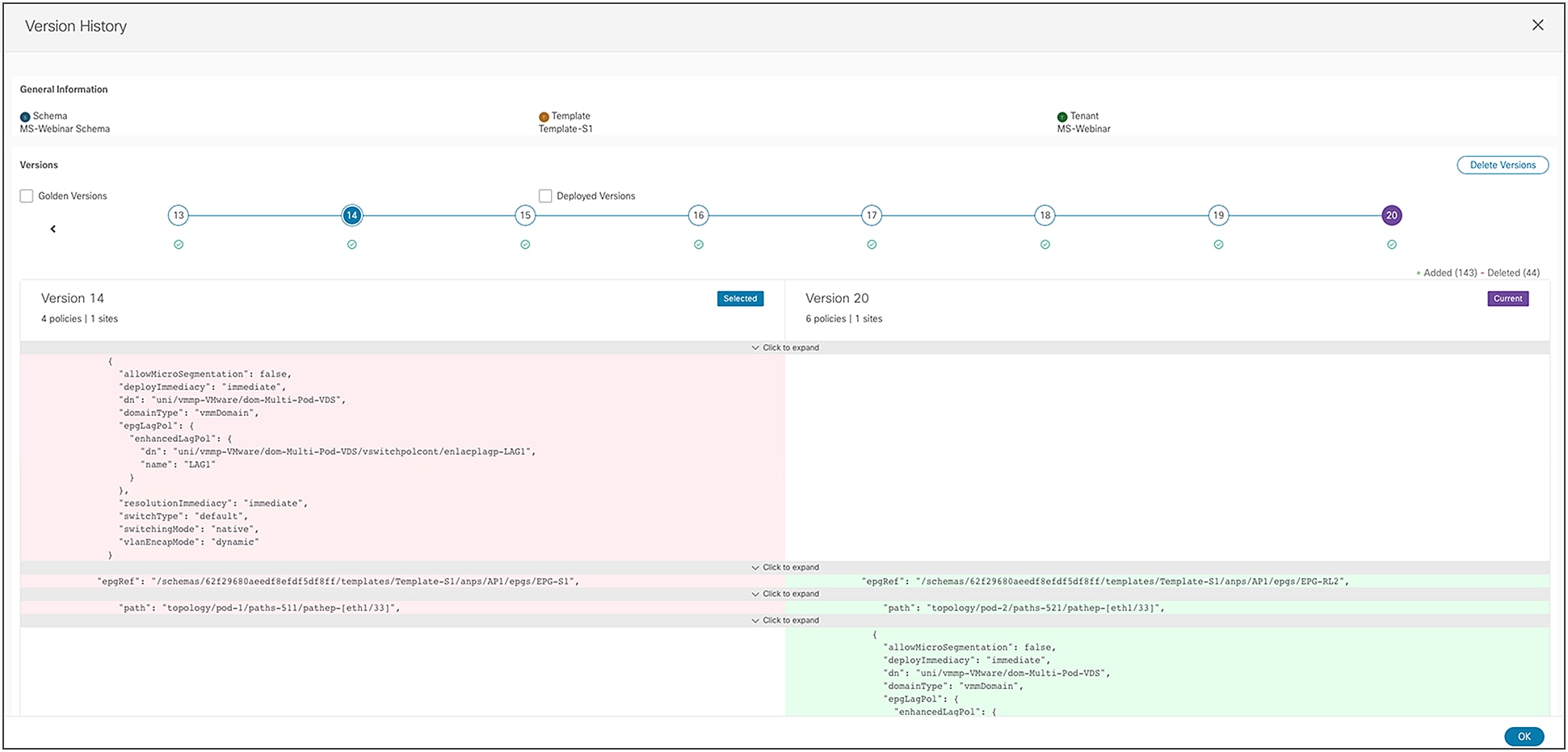

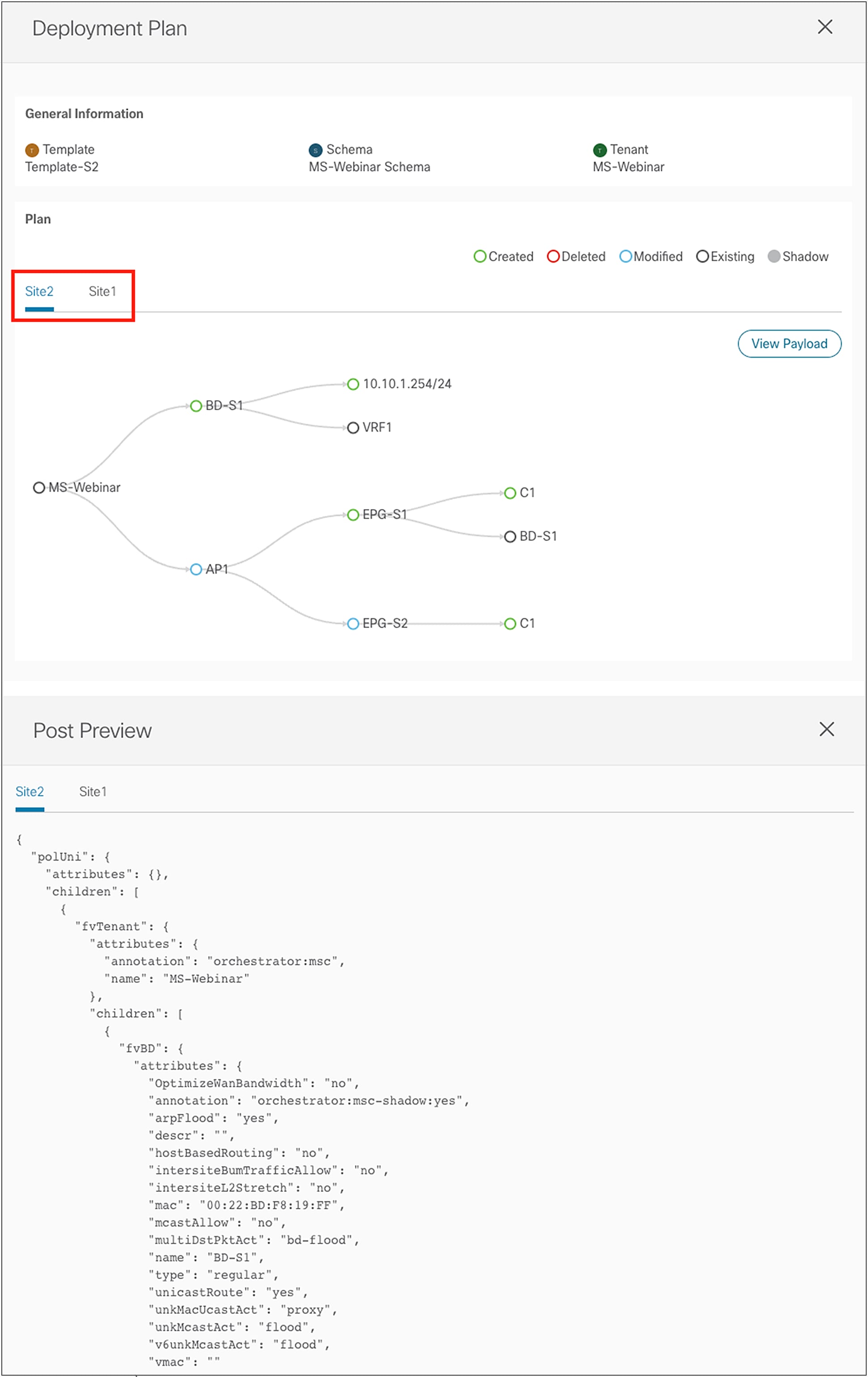

NDO can now keep track of different versions of a template (up to 20), set a specific version as “golden” (this is the version that will never be automatically deleted from the system), allow graphic display of detailed differences between the latest version of a template and any selected older version (see Figure 34), and roll back at any time the configuration of the template to any selected older version.

This last capability is particularly interesting because it provides a sort of “undo” function built into the system: if the deployment of a template at any given point in time causes any sort of functional or connectivity issue, it is possible to quickly roll back the configuration to an earlier deployment that was known to be working correctly.
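The versioning behavior described above can be pictured with a small version store. The Python sketch below is not NDO code. The `TemplateHistory` class is hypothetical, and the pruning policy (dropping the oldest non-golden version once more than 20 versions exist, with golden versions counting toward the limit) is an assumption, since the text states only the limit and that golden versions are never automatically deleted.

```python
MAX_VERSIONS = 20  # limit stated in the text


class TemplateHistory:
    """Hypothetical version store for a single template (not NDO code)."""

    def __init__(self):
        self.versions = []    # list of (version_number, config), oldest first
        self.golden = set()   # version numbers protected from automatic deletion
        self._next = 1

    def save(self, config):
        """Store a copy of the configuration as the new latest version."""
        number = self._next
        self._next += 1
        self.versions.append((number, dict(config)))
        self._prune()
        return number

    def mark_golden(self, number):
        self.golden.add(number)

    def _prune(self):
        # Assumed policy: drop the oldest non-golden version, never the latest.
        while len(self.versions) > MAX_VERSIONS:
            for i, (number, _) in enumerate(self.versions[:-1]):
                if number not in self.golden:
                    del self.versions[i]
                    break
            else:
                break  # every older version is golden; nothing can be pruned

    def rollback(self, number):
        """Re-save the configuration of an older version as the latest one."""
        for n, config in self.versions:
            if n == number:
                return self.save(config)
        raise KeyError(number)


history = TemplateHistory()
known_good = history.save({"bd": "BD1", "subnet": "10.1.1.1/24"})
history.mark_golden(known_good)
history.save({"bd": "BD1", "subnet": "10.1.2.1/24"})   # a change that misbehaves
history.rollback(known_good)                            # the "undo"
print(history.versions[-1][1])
```

Modeling the rollback as a new version, rather than as a deletion of the later ones, keeps the full history available; whether NDO does the same is not stated in this document.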

For more information and a live demonstration of template versioning and rollback, please refer to the link below: https://video.cisco.com/video/6277140235001

● Template deployment plan visibility: A concern frequently raised by customers adopting NDO is the need for better visibility into what configuration NDO pushes, and where (that is, to which APIC domains), when they deploy a specific template. Depending on the specific configuration created in a template, its deployment may in fact end up provisioning objects to sites different from the ones the template is associated to. This, for example, is the case when shadow objects are created to enable the VXLAN data-path between sites (the use of shadow objects will be discussed in detail later in this document). Template deployment plan visibility has been introduced to clearly display to the user, in both graphical and XML formats, what changes will be applied (and to which sites) as a result of the template’s deployment. This allows the user to catch upfront any unexpected behavior (due to a misconfiguration or a bug in the system), halt the template’s deployment, and thus prevent a possible outage. Figure 35 shows the graphical and XML output of the template deployment plan.

Graphical and XML views of a template deployment plan

For more information and a live demonstration of template deployment plan visibility, please refer to the link below: https://video.cisco.com/video/6277137504001

● Change control workflow: Different customers operate NDO in different ways. Very often, they require different types of users to perform different parts of a specific template’s configuration provisioning, and they may have specific and strict rules to adhere to before any change can be applied to the system. The change-control workflow has been introduced into NDO to allow the definition of three different user roles:

◦ The designer, who is responsible for creating or modifying a template’s configuration.

◦ The approver, whose tasks are to review and approve or deny the configuration changes proposed by the designer. Note that it is possible to require approval from multiple approvers before a template may be deployed. If the approver(s) reject the deployment of the template, a message is sent back to the designer explaining the reasons behind that rejection.

◦ The deployer, who is responsible for deploying the template. The deployer can also reject deployment of the template and send back a message to the designer, who can then take any needed corrective action.

The definition of the roles described above is flexible, and, depending on specific requirements, different roles can be combined. Also, while this change-control workflow is embedded into NDO, it has been designed from the outset to be extensible and will be able, in the future, to integrate with external change-management systems.
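The designer, approver, and deployer roles can be viewed as transitions of a small state machine. The sketch below is an illustration under stated assumptions and not the NDO implementation: the `State` names, the `ChangeRequest` class, and the rule that a rejection returns the change to the designer as a draft are all hypothetical modeling choices.

```python
from enum import Enum


class State(Enum):
    DRAFT = "draft"
    PENDING_APPROVAL = "pending approval"
    APPROVED = "approved"
    DEPLOYED = "deployed"


class ChangeRequest:
    """Hypothetical model of one template change moving through the workflow."""

    def __init__(self, required_approvals=1):
        self.state = State.DRAFT
        self.required_approvals = required_approvals
        self.approvers = set()
        self.feedback = []   # rejection messages sent back to the designer

    def submit(self):                      # performed by the designer
        self._expect(State.DRAFT)
        self.approvers.clear()
        self.state = State.PENDING_APPROVAL

    def approve(self, approver):           # performed by an approver
        self._expect(State.PENDING_APPROVAL)
        self.approvers.add(approver)
        if len(self.approvers) >= self.required_approvals:
            self.state = State.APPROVED

    def reject(self, reason):              # performed by an approver or deployer
        if self.state not in (State.PENDING_APPROVAL, State.APPROVED):
            raise RuntimeError(f"cannot reject in state {self.state.value}")
        self.feedback.append(reason)
        self.state = State.DRAFT

    def deploy(self):                      # performed by the deployer
        self._expect(State.APPROVED)
        self.state = State.DEPLOYED

    def _expect(self, state):
        if self.state is not state:
            raise RuntimeError(f"expected {state.value}, got {self.state.value}")


change = ChangeRequest(required_approvals=2)
change.submit()
change.approve("approver-a")
change.approve("approver-b")
change.deploy()
print(change.state.value)
```

Combining roles, as the text allows, would simply mean that the same user calls more than one of these methods.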

For more information and a live demonstration of change control workflow, please refer to the link below: https://video.cisco.com/video/6277140011001

● Configuration drift reconciliation workflow: While the recommendation for ACI Multi-Site deployment is to always (and only) provision configurations from NDO, the objects pushed by NDO to APIC (and rendered by APIC on the physical network devices) are not locked and can be modified and/or deleted directly from APIC.

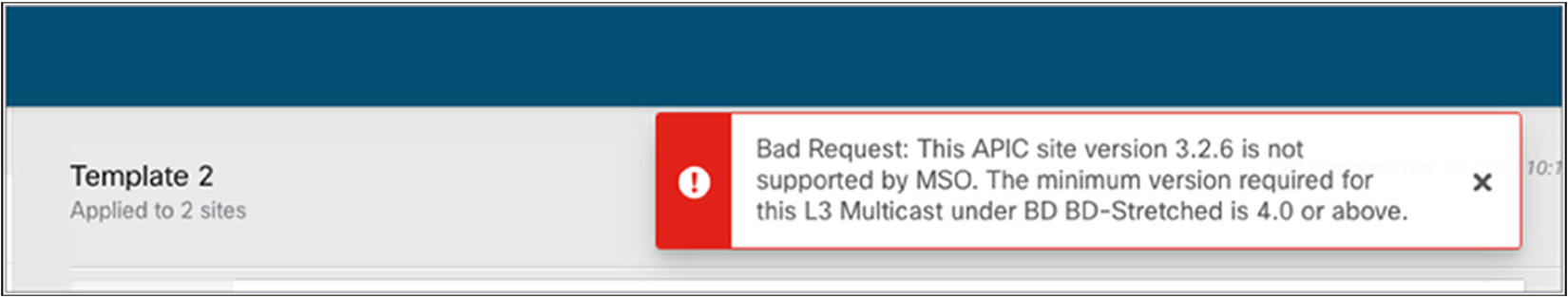

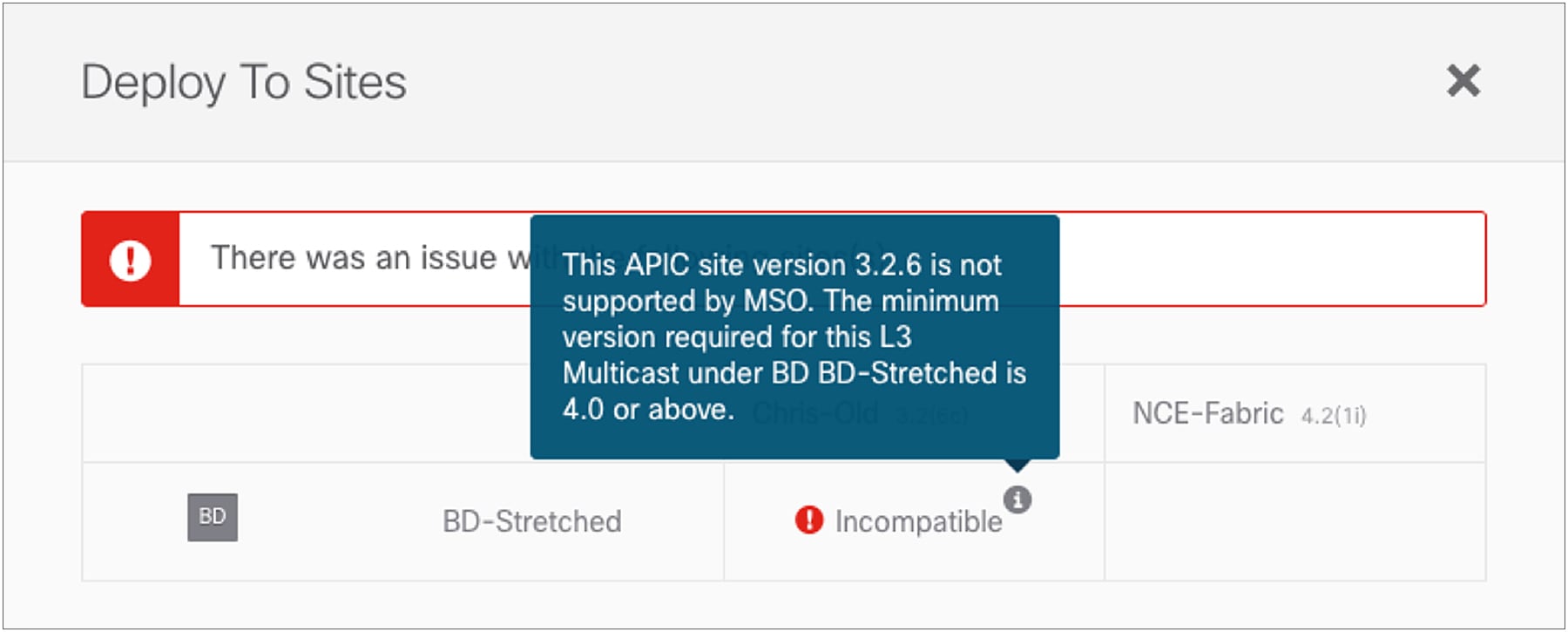

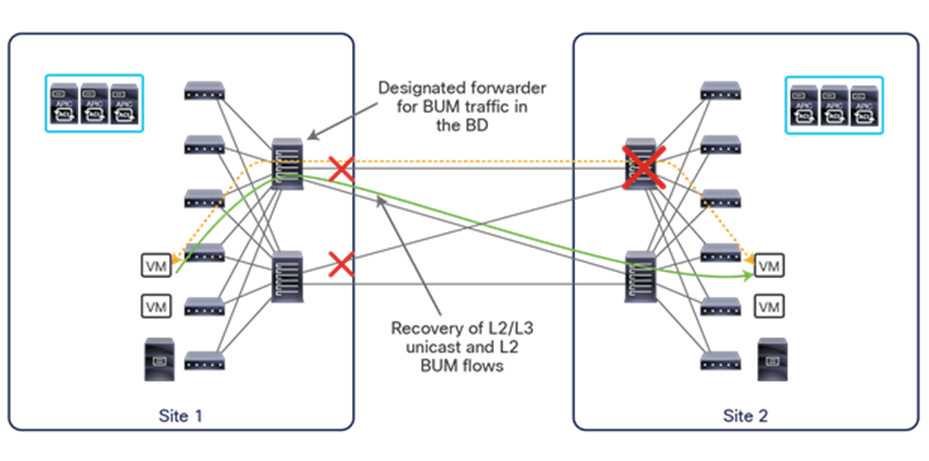

It is therefore important, at any given point in time, to have a clear understanding of whether or not the APIC and NDO “views” of a user’s intent are in sync. A difference between specific configurations of an object on APIC and on NDO results in “drift.” A notification channel exists between APIC and NDO that ensures that NDO can always compare the views of the two systems and notify users of detected drift conditions so that the user can take proper corrective actions.
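Conceptually, drift detection amounts to comparing the intended view of each NDO-managed object with the view held by APIC. The following Python sketch illustrates that comparison only. It does not represent the notification channel or the NDO reconciliation workflow, and the `detect_drift` function, the dictionary layout, and the attribute names are assumptions.

```python
def detect_drift(ndo_view, apic_view):
    """Report drift for NDO-managed objects.

    Both views map an object name to {attribute: value}. Only objects and
    attributes known to NDO are checked, since drift is defined here relative
    to what NDO pushed.
    """
    drift = {}
    for name, intended in sorted(ndo_view.items()):
        actual = apic_view.get(name)
        if actual is None:
            drift[name] = "missing on APIC"      # deleted directly on APIC
            continue
        changed = sorted(a for a in intended if intended[a] != actual.get(a))
        if changed:
            drift[name] = "differs in: " + ", ".join(changed)
    return drift


# Illustrative views; object and attribute names are hypothetical.
ndo = {
    "BD1": {"vrf": "VRF1", "arp_flood": True},
    "EPG1": {"bd": "BD1"},
}
apic = {
    "BD1": {"vrf": "VRF1", "arp_flood": False},   # modified directly on APIC
}
print(detect_drift(ndo, apic))
```

A reconciliation step would then let the user decide, per object, whether the NDO view or the APIC view should win.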