Cisco ACI Multi-Site and Service Node Integration White Paper


This document describes the deployment considerations for integrating Layer-4 through Layer-7 (L4–L7) network services in a Cisco® Application Centric Infrastructure (Cisco ACI®) Multi-Site fabric. The document specifically focuses on stateful firewalls (FWs) and load balancers. The following use cases are considered:

● Layer-3 firewall design

● Layer-3 load-balancer design

● Layer-3 firewall and load-balancer service chain

● North-south and east-west service insertion design

● Independent clustered service nodes in each site

To best understand the design presented in this document, you should have basic knowledge of the Cisco ACI Multi-Site solution, the deployment of L3Out connectivity between the Multi-Site fabric and the external Layer-3 domain, and the functionality of service graphs with Policy-Based Redirect (PBR).

Starting from release 3.0(1) of the Cisco ACI software, Cisco offers the Cisco ACI Multi-Site solution, which allows you to interconnect multiple Cisco ACI sites, or fabrics, each under the control of a different Cisco Application Policy Infrastructure Controller (APIC) cluster. This solution provides an operationally simple way to interconnect and manage different Cisco ACI fabrics that may be either physically collocated or geographically dispersed. For more information about the Cisco Multi-Site architecture, please refer to the following white paper: https://www.cisco.com/c/en/us/solutions/collateral/data-center-virtualization/application-centric-infrastructure/white-paper-c11-739609.html. In this document, we use the terms “site” and “fabric” interchangeably to refer to a single “APIC domain,” which could represent a single pod or a Cisco ACI Multi-Pod fabric deployment.

Cisco ACI offers the capability to insert L4–L7 services, such as firewalls, load balancers, and Intrusion Prevention Systems (IPSs), using a feature called a service graph. For more information, please refer to the Cisco ACI service-graph-design white paper: https://www.cisco.com/c/en/us/solutions/collateral/data-center-virtualization/application-centric-infrastructure/white-paper-c11-2491213.html.

The service-graph functionality can then be enhanced by associating it with one or more Policy-Based Redirect (PBR) policies. For more detailed information on Cisco ACI contracts and PBR, please refer to the Cisco ACI contract guide white paper and to the Cisco ACI PBR white paper: https://www.cisco.com/c/en/us/solutions/collateral/data-center-virtualization/application-centric-infrastructure/white-paper-c11-743951.html.

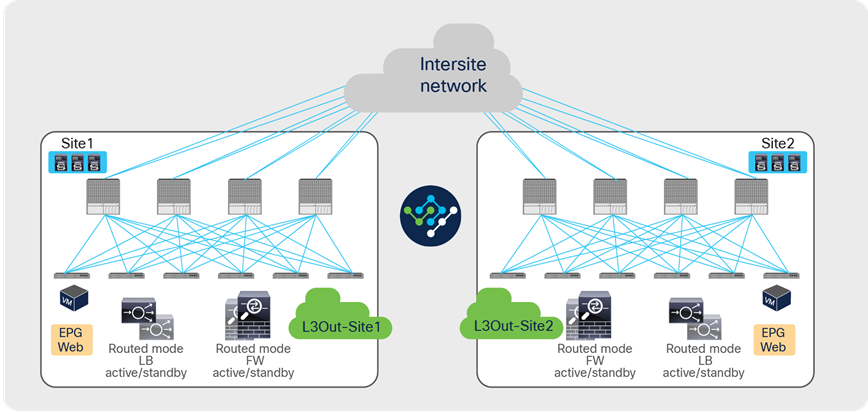

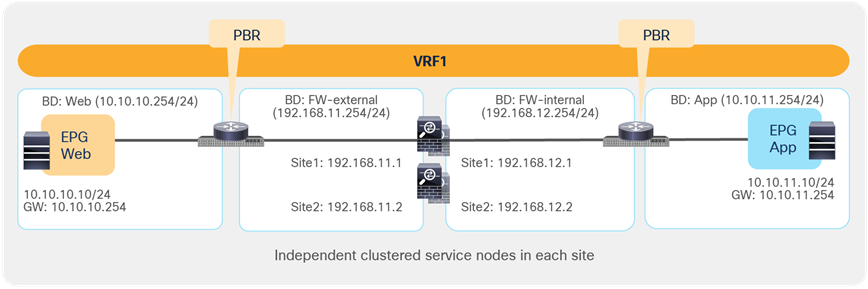

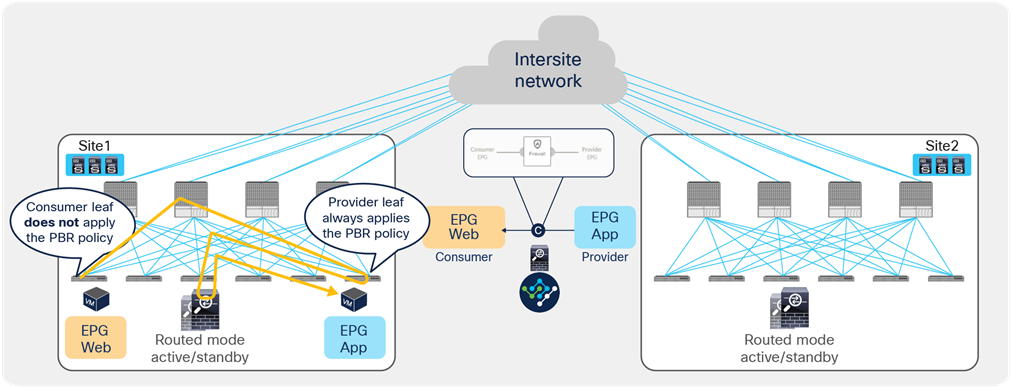

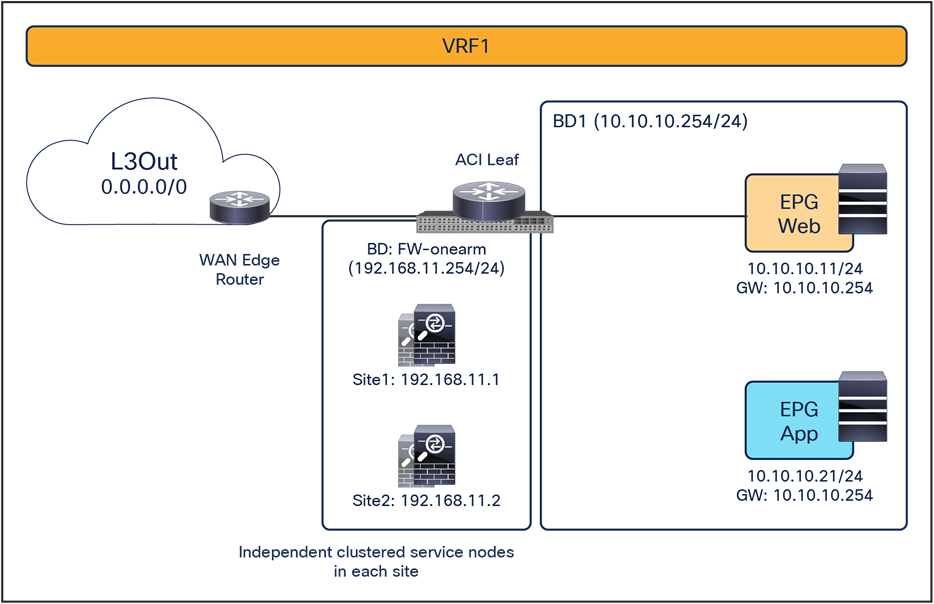

As of Cisco ACI Release 6.0(5), the recommended option for integrating L4–L7 services into a Cisco ACI Multi-Site architecture calls for the deployment of independent service nodes in each site (Figure 1).

This is the logical consequence of the fact that the ACI Multi-Site architecture has been designed to interconnect separate ACI fabrics, at both the network fault domain and management levels. The focus in this document, therefore, will be exclusively on this deployment model.

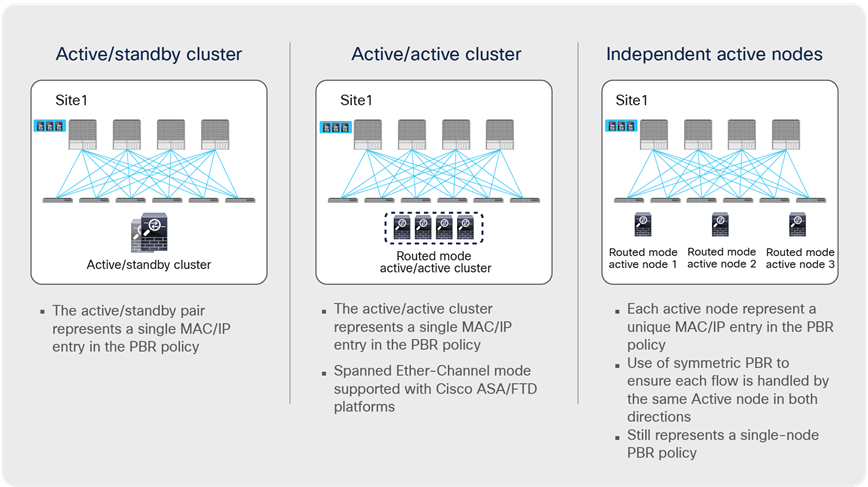

The service-node high-availability (HA) options considered in this paper are the following:

● Active/standby service-node pair in each site

● Active/active cluster in each site

● Independent active service nodes in each site

It is possible to mix and match the HA options across the different fabrics that are part of the Multi-Site domain.

Figure 1. Recommended network services deployment options with the Cisco ACI Multi-Site solution

Note: The figures in this white paper mainly show an active/standby service-node pair in each site, though the other HA options shown in Figure 1 are also supported.

This model mandates that symmetric traffic flows through the service nodes be maintained, because the connection state is not synchronized between independent service nodes deployed in different sites. This requirement can be achieved with the following approaches:

● Use of host-route advertisement for north-south communication with stateful firewall nodes connected through L3Out: this allows connecting independent firewall nodes deployed between the border leaf nodes and the external WAN edge routers because inbound traffic is always optimally steered toward the site where the destination endpoint resides, whereas outbound traffic usually goes back through the same local L3Out connection. This approach, while fully supported and useful in many cases, relies on a more traditional routing design and only applies to north-south communication; this document therefore focuses on the second approach, described below, which leverages the advanced service insertion capabilities offered by an ACI network infrastructure.

● Use of service graph with PBR for both north-south and east-west communication: you can deploy a service graph with Policy-Based Redirect (PBR) for both north-south and east-west security policy enforcement. This approach is the most flexible and is the recommended solution. It consists of defining a PBR policy in each site that specifies at least one local active service node; it is also possible to deploy multiple active service nodes in the same site by leveraging symmetric PBR. The Cisco Nexus® 9000 Series Switches, used as leaf nodes, then apply the PBR policy, selecting (based on hashing) one of the available service nodes for the two directions of each given traffic flow. Different deployment models are supported for the service nodes: L3 routed mode (which has been supported from the beginning) as well as L1 inline and L2 transparent modes.

● Starting from ACI Release 6.0(4c), support for new PBR use cases has been added, allowing you to associate a service graph (with redirection enabled) to vzAny or to use it for intersite transit routing communication. More details about those new use cases are provided in later sections of this paper.

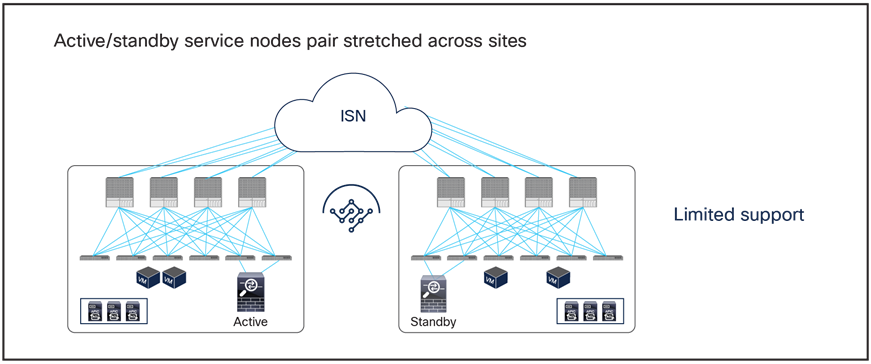

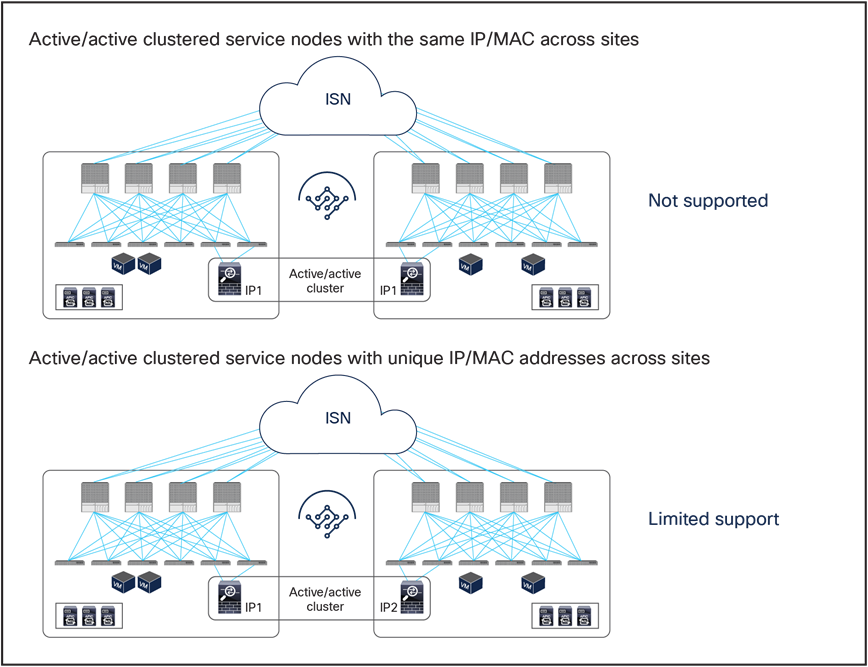

Figure 2 and Figure 3 illustrate the other two models for the deployment of clustered service nodes between sites.

Figure 2. Limited support network services deployment options with the Cisco ACI Multi-Site solution

● Active/standby service-node pair stretched across sites: this model can be applied to both north-south and east-west traffic flows. This fail-safe model does not allow the creation of an asymmetric traffic path that could lead to communication drops. At the same time, because only a single active service node is connected to the Multi-Site fabric, this option has certain traffic-path inefficiencies: by design, some traffic flows will hair-pin across the Intersite Network (ISN). Therefore, you should properly dimension the bandwidth available across sites and consider the possible latency impact on application components connected to separate sites. Also, this approach is supported only if ACI performs only Layer-2 forwarding (firewall as the default gateway for the endpoints or firewall in transparent mode) or if the active/standby firewall pair is connected to the fabrics through L3Out connections.

Figure 3. Active-active service node cluster deployment options with the Cisco ACI Multi-Site solution

● Active/active clustered service nodes that use the same virtual MAC and virtual IP addresses stretched across sites: this model cannot be applied to a Multi-Site environment as of ACI Release 6.0(4c), though an active/active firewall cluster can be stretched across pods in a Cisco ACI Multi-Pod environment. In the Cisco firewall implementation, this deployment model takes the name of Split Spanned EtherChannel cluster: all the firewall nodes that are part of the same cluster are seen as a logical distributed firewall reachable through a single virtual MAC and virtual IP. ACI Multi-Site does not currently support the capability of discovering the same virtual MAC and virtual IP pair across different sites. This situation, where the same endpoint is continuously learned in different locations, is considered to be an endpoint flapping scenario.

● Active/active clustered service nodes that use unique MAC and IP addresses across sites: this represents a second implementation option of an active/active firewall clustering, where each firewall node that is part of the same cluster owns its unique MAC and IP addresses. This option, supported by Cisco firewalls and some third-party implementations, can work today with an ACI Multi-Site architecture for some use cases[1]. Although this option works, the deployment models illustrated in Figure 1 are still primarily recommended, because clustering across sites potentially consumes more firewall resources, and connection sync across firewalls in different sites is not really required when deploying Cisco ACI PBR functionalities. The Cisco ACI fabric forwarding behavior is the same as the one used for independent clustered service nodes in each site, which is explained as part of the “Multi-Site service graph with PBR use cases” section in this paper.

Note: Cisco ACI Multi-Pod remains the recommended architectural approach for the deployment of active/standby service-node pairs across data centers and active/active clustered service nodes with the same virtual IP and virtual MAC addresses across data centers. For more information, please refer to the following white paper: https://www.cisco.com/c/en/us/solutions/collateral/data-center-virtualization/application-centric-infrastructure/white-paper-c11-739571.html.

Service node integration with Cisco ACI Multi-Site architecture

Design options and considerations

Several deployment models are available for integrating network services in a Cisco ACI Multi-Site architecture. To determine the best options to choose, you should consider all the specific requirements and characteristics of the design:

● Service-node insertion use case

◦ North-south service node (or perimeter service node), for controlling communications between the data center and the external Layer-3 network domain.

◦ East-west service node, for applying policies for traffic flows within the data center and across sites. For east-west enforcement, there are two cases to consider. In the first one, the service node is used to apply policies between endpoint groups (EPGs) that are part of the same Virtual Routing and Forwarding (VRF) instance. In the second scenario, which is very commonly deployed, a service node (or its virtual context) front-ends each tenant/VRF, so that security policies can be applied to all of the inter-VRF traffic.

● Service-node appliance form factor

◦ Physical appliance

◦ Virtual appliance

● Service-node type

◦ Inline (Layer 1 [L1]), transparent (Layer 2 [L2]), or routed (Layer 3 [L3]) mode firewall/IPS with PBR

◦ Routed (Layer 3) mode load balancer with SNAT or without SNAT

● Service-node high-availability model

◦ Active/standby HA pair in each site

◦ Active/active cluster in each site

◦ Independent active nodes in each site

● Connectivity to the external Layer-3 network domain

◦ Traditional L3Outs deployed on the border leaf nodes

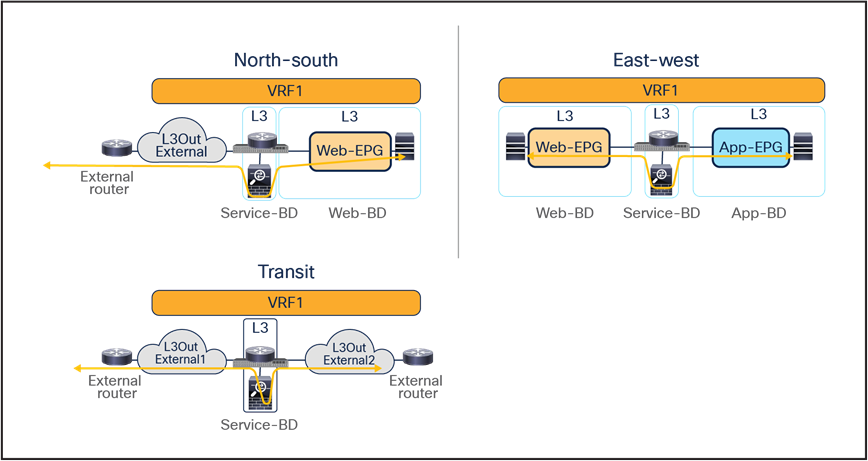

This document focuses on the service-node insertion use cases discussed below, describing traffic flows and associated deployment considerations for each option in detail:

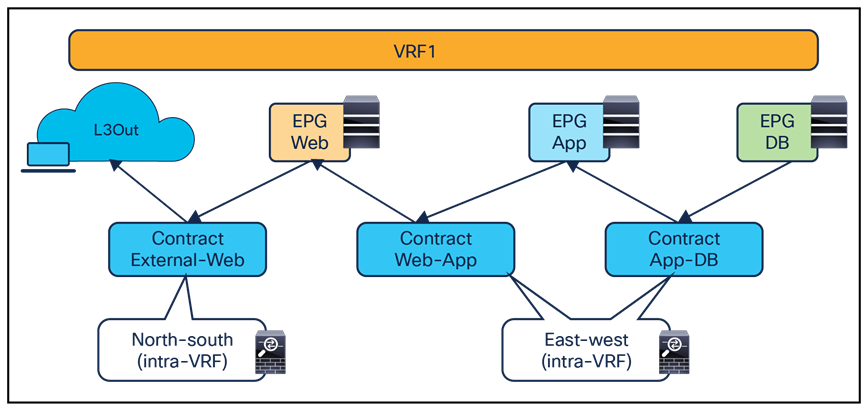

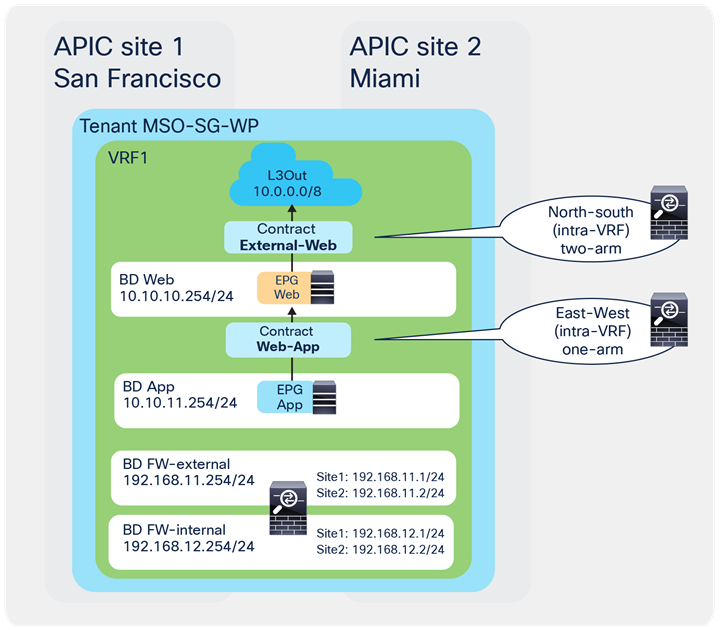

● North-south (intra-VRF): traffic flows between the external Layer-3 network domain and the web endpoint group (EPG) that is part of the same VRF.

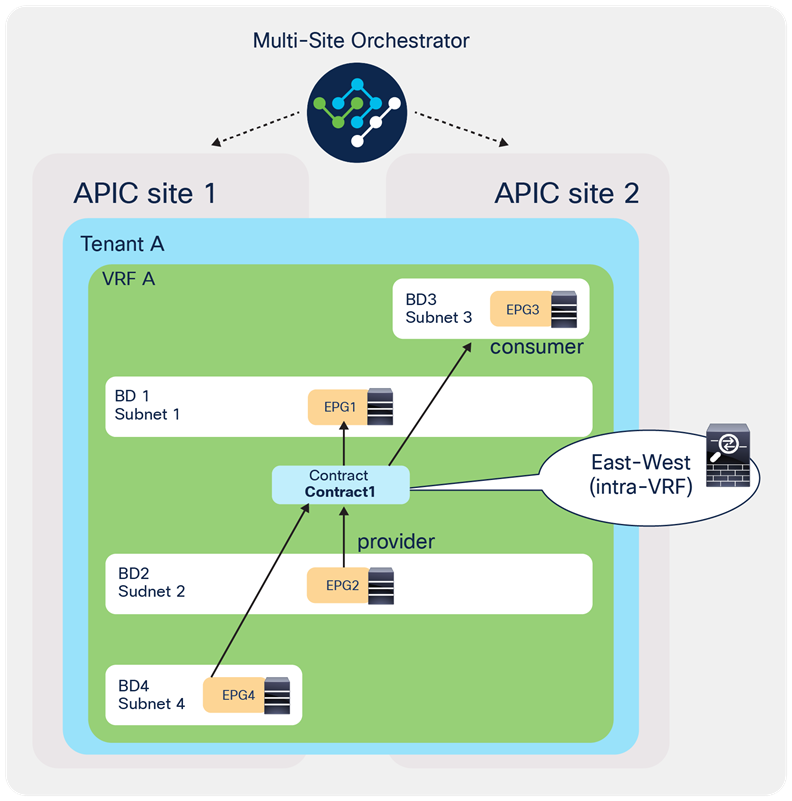

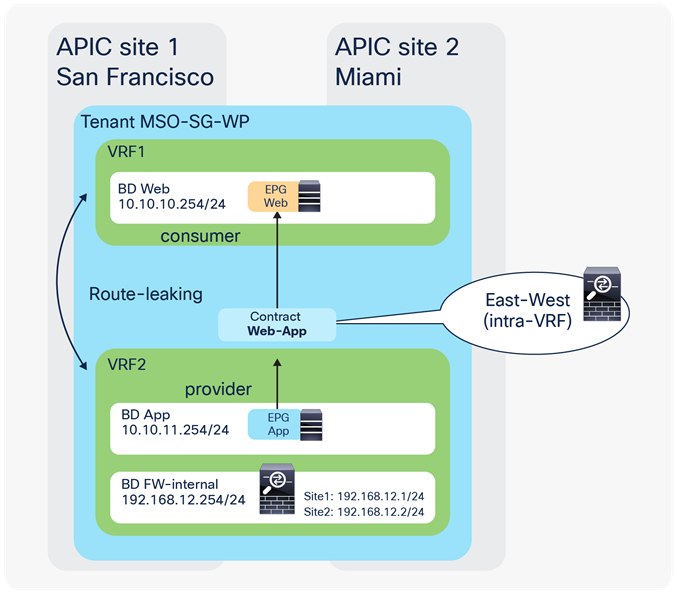

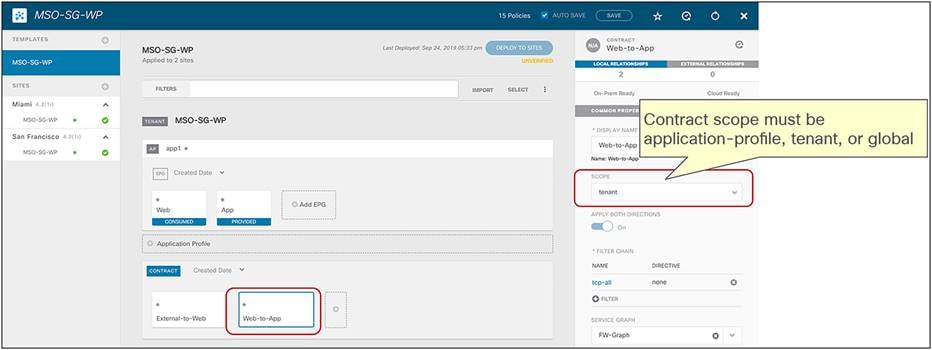

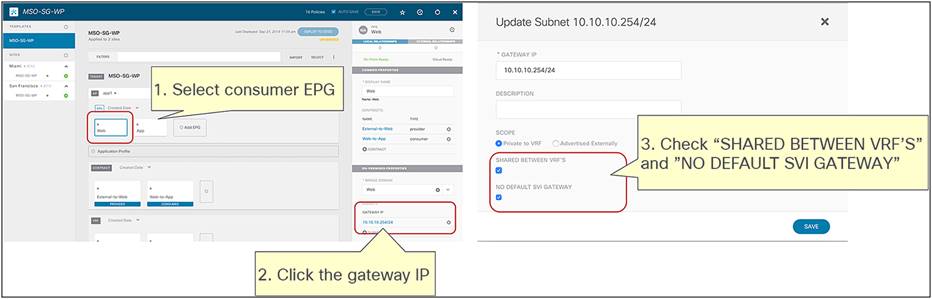

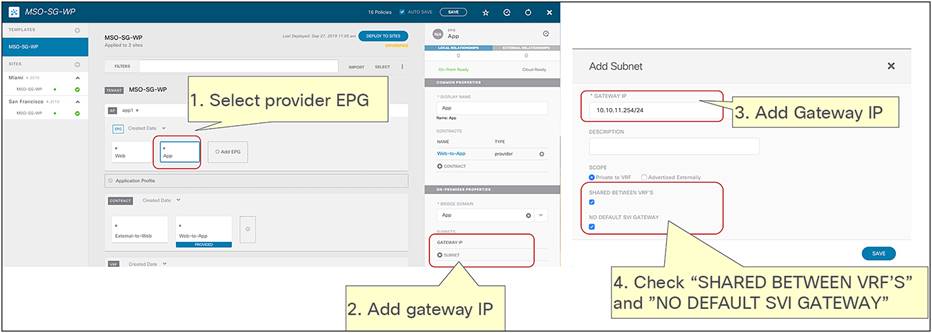

● East-west (intra-VRF): traffic flows between EPGs that are in the same VRF.

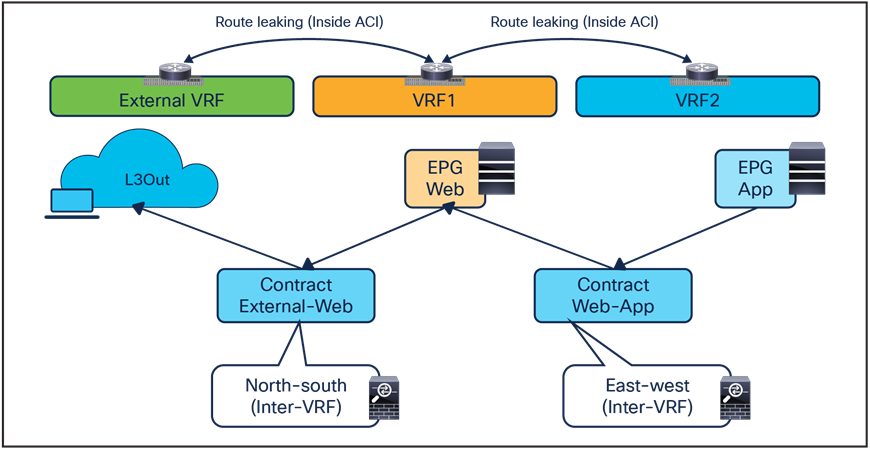

● East-west (inter-VRF): traffic flows between EPGs that are in different VRFs.

● Intersite transit routing (intra-VRF and inter-VRF): intersite traffic flows between external Layer-3 network domains that are in the same VRF or in different VRFs.

Note: All the use cases listed above are discussed in the section “Multi-Site service graph with PBR use cases.” In each of them, the internal endpoints or the L3Out connections with the external network domain can be either part of the same fabric or spread across different fabrics.

The figures below show the use cases covered in this document.

Figure 4. North-south and east-west service nodes (intra-VRF)

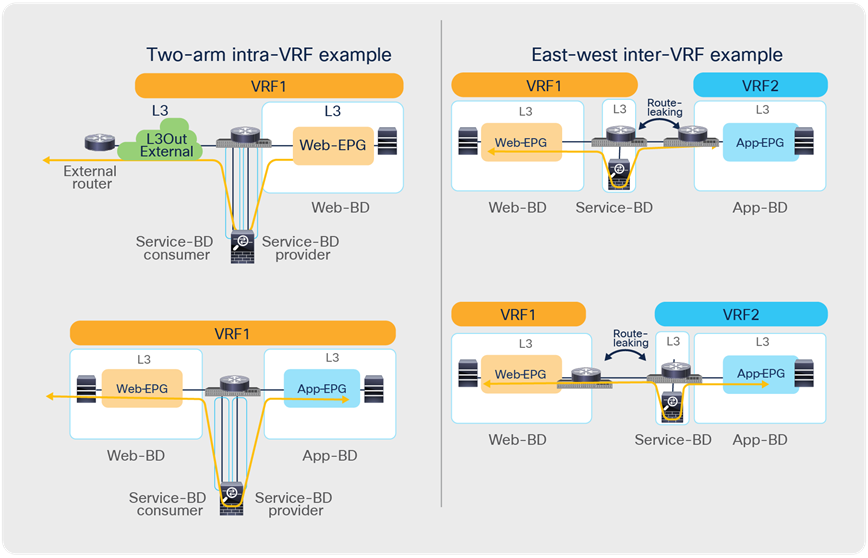

Figure 5. North-south and east-west service nodes (inter-VRF)

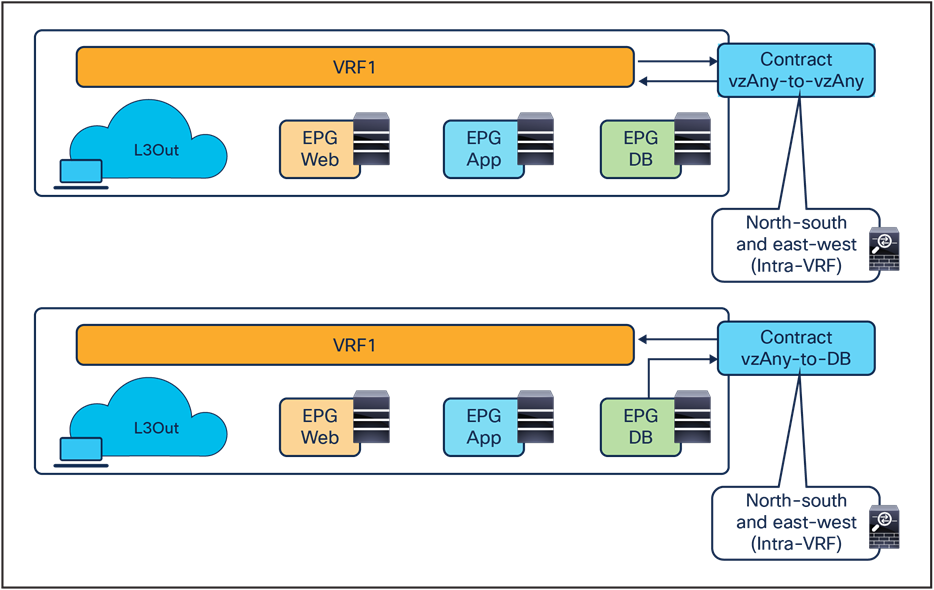

Figure 6. North-south and east-west service nodes with vzAny (intra-VRF)

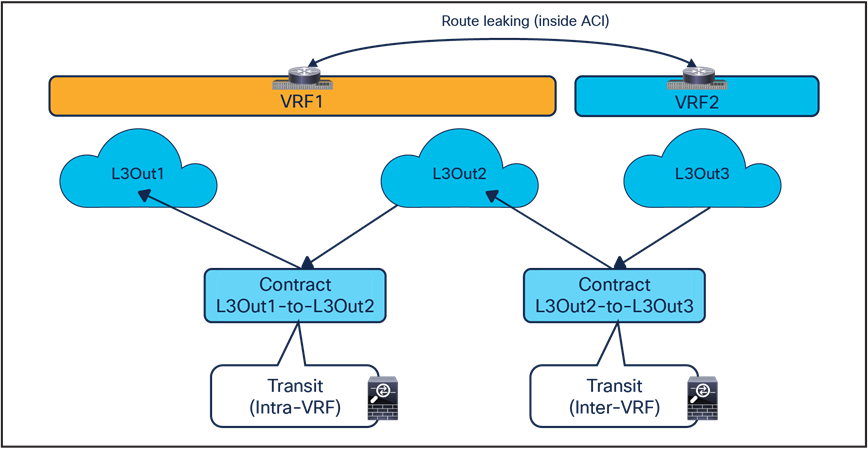

Figure 7. Transit service nodes (intra-VRF and inter-VRF)

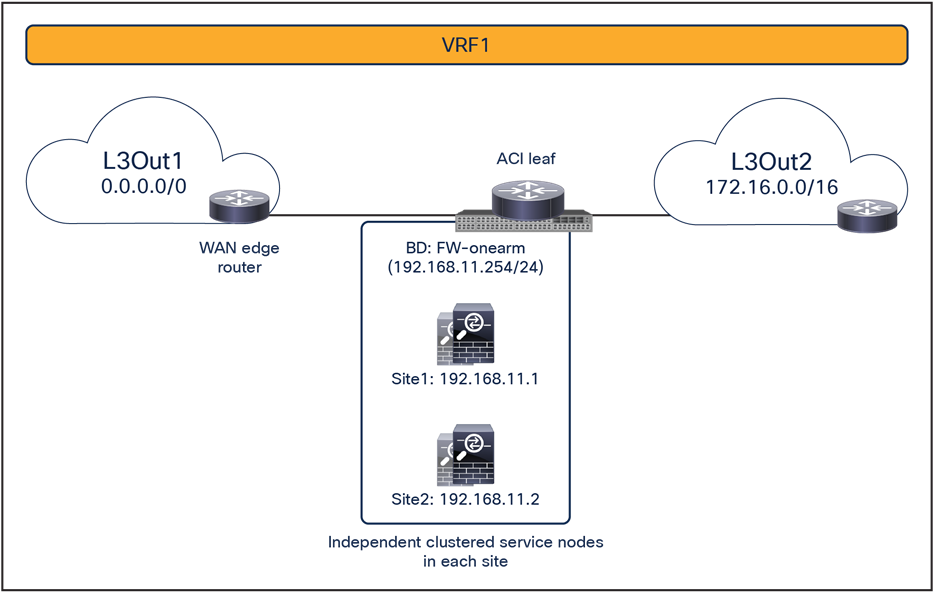

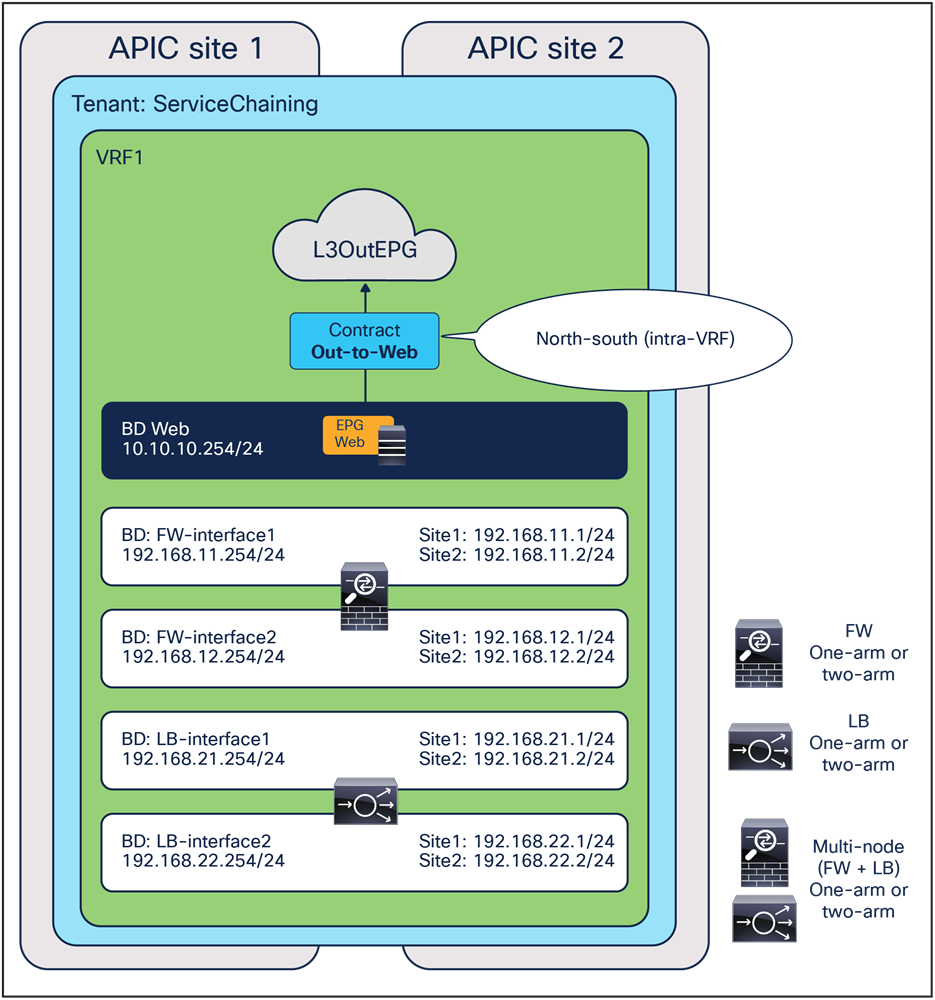

Overview of the recommended design

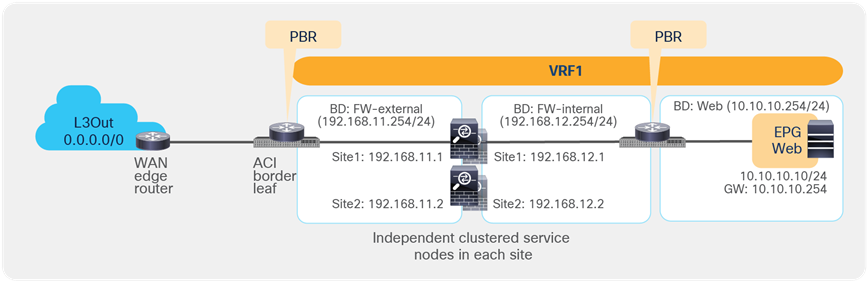

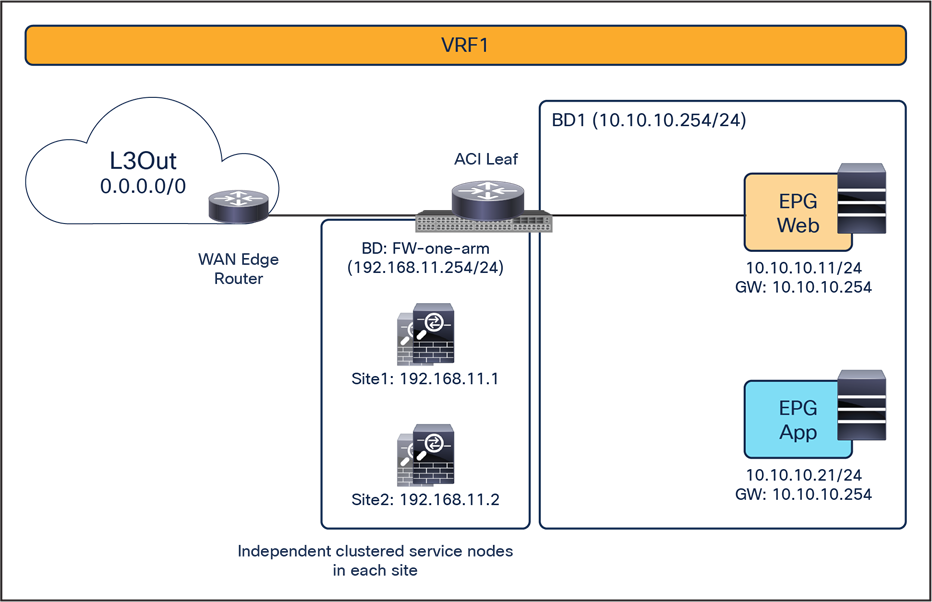

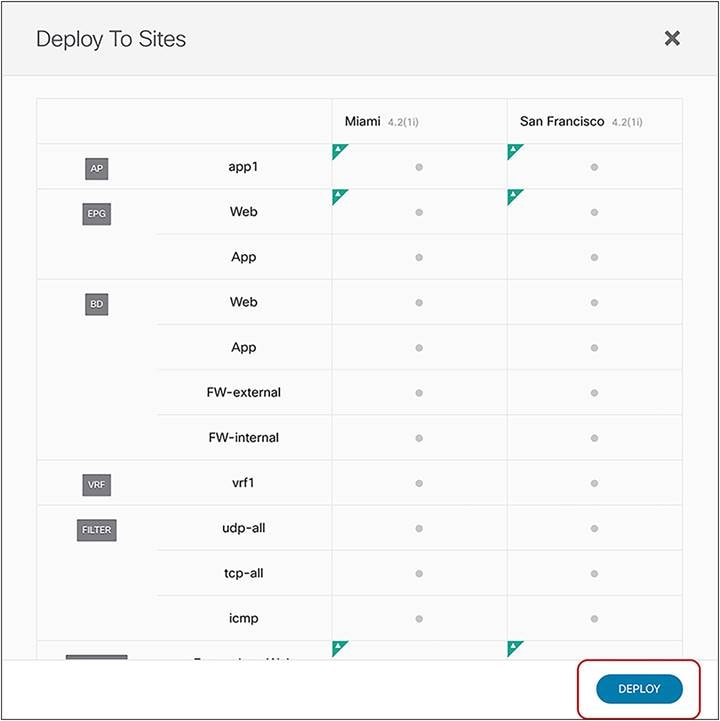

Figure 8 shows a high-level view of the topology representing the recommended deployment option with independent clustered service nodes in each site. This document uses a routed-mode firewall, a routed-mode load balancer, and traditional L3Outs as examples.

Figure 8. Independent clustered service nodes in each site

The deployment of independent service nodes across sites raises an operational concern about how to maintain policy configuration consistency across them. In the specific example of Cisco® firewalls, some options are available:

● Cisco Security Manager for Adaptive Security Appliances (ASAs):

For more information, see https://www.cisco.com/c/en/us/products/security/security-manager/index.html.

● Cisco Firepower® Management Center (FMC) for Cisco Firepower Next-Generation Firewall (NGFW) devices:

For more information, see https://www.cisco.com/c/en/us/products/security/firesight-management-center/index.html.

When planning for the deployment of this model, keep in mind a few important design requirements:

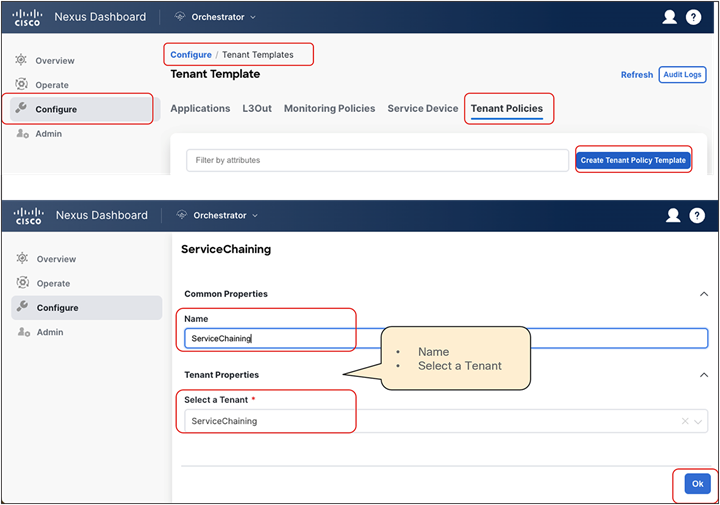

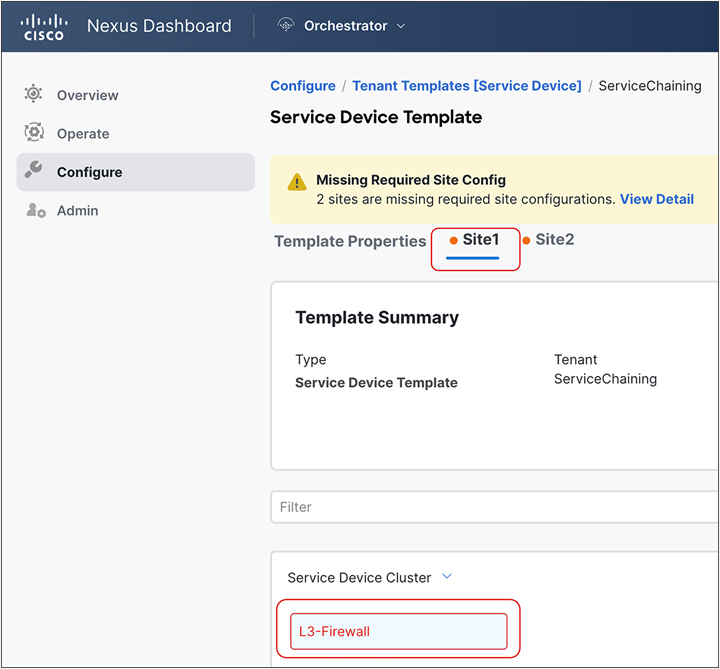

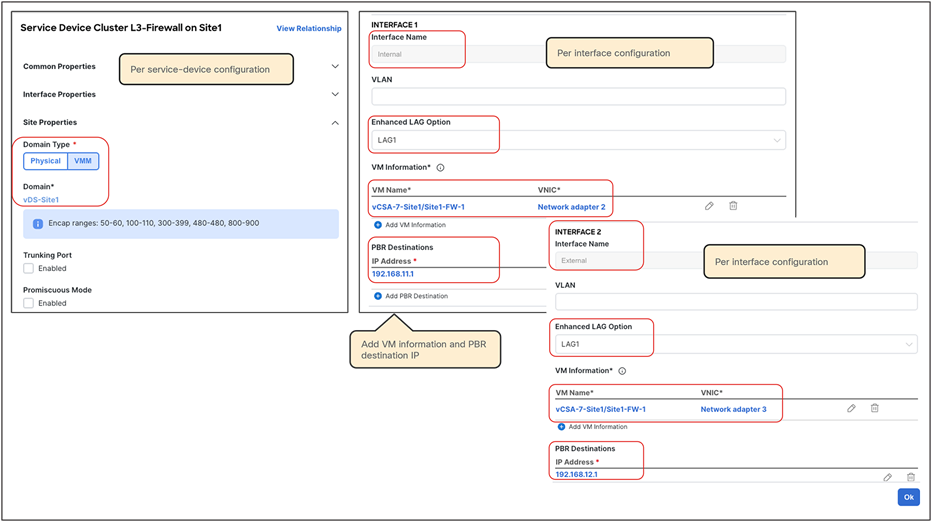

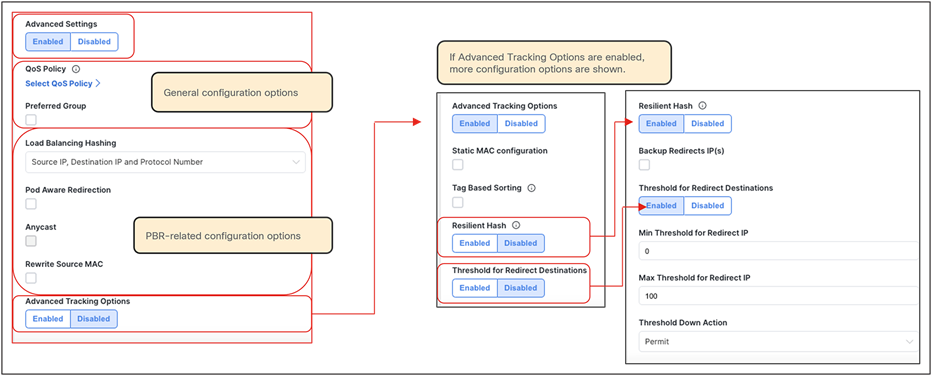

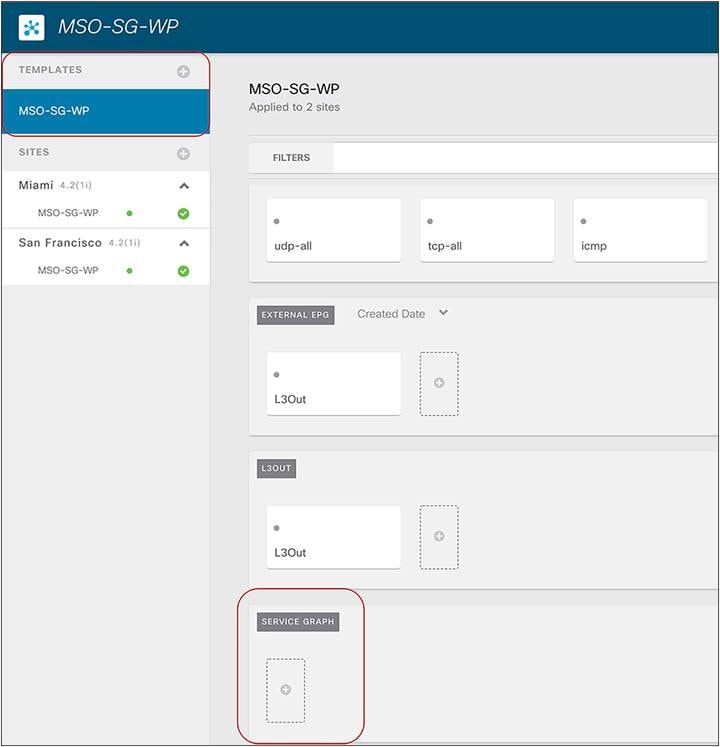

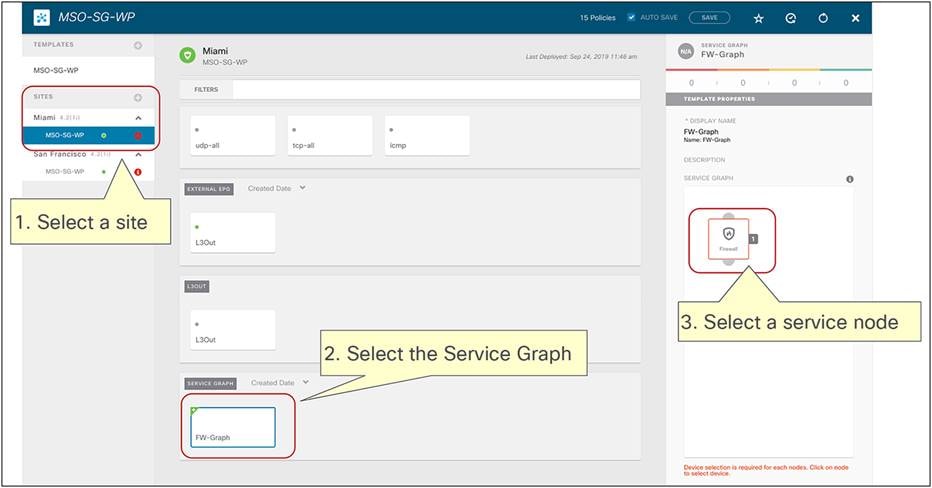

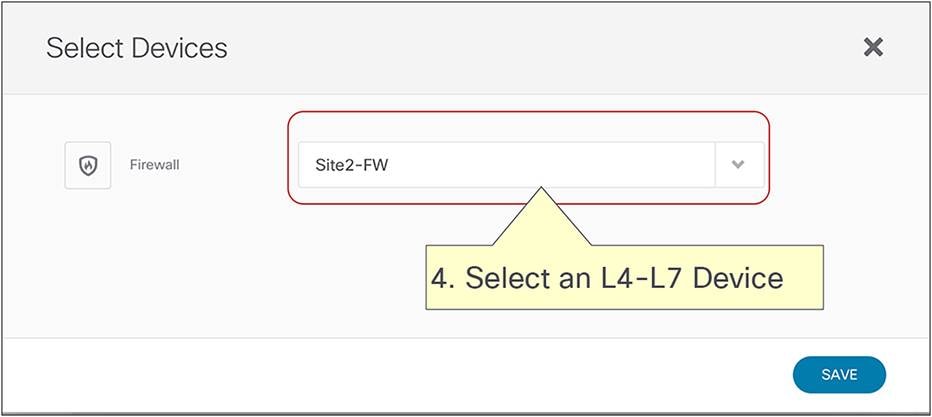

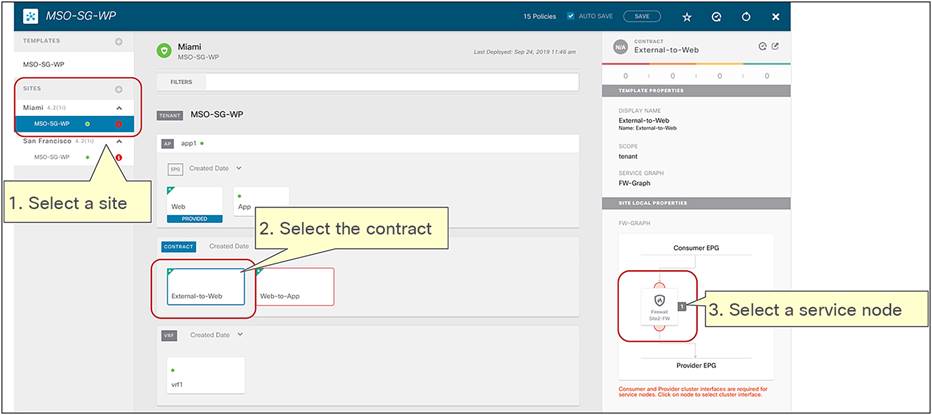

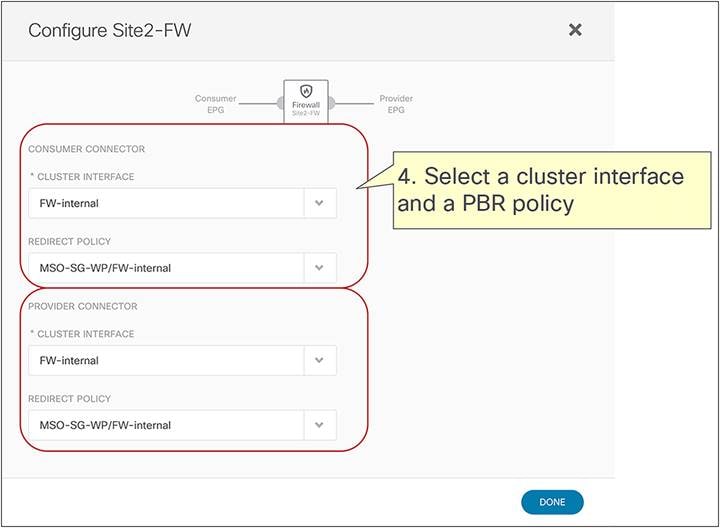

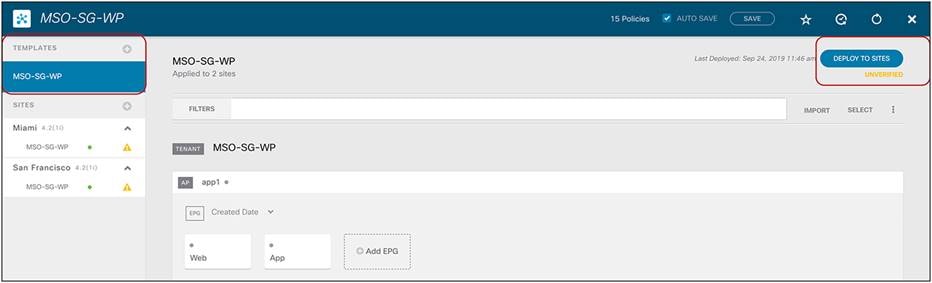

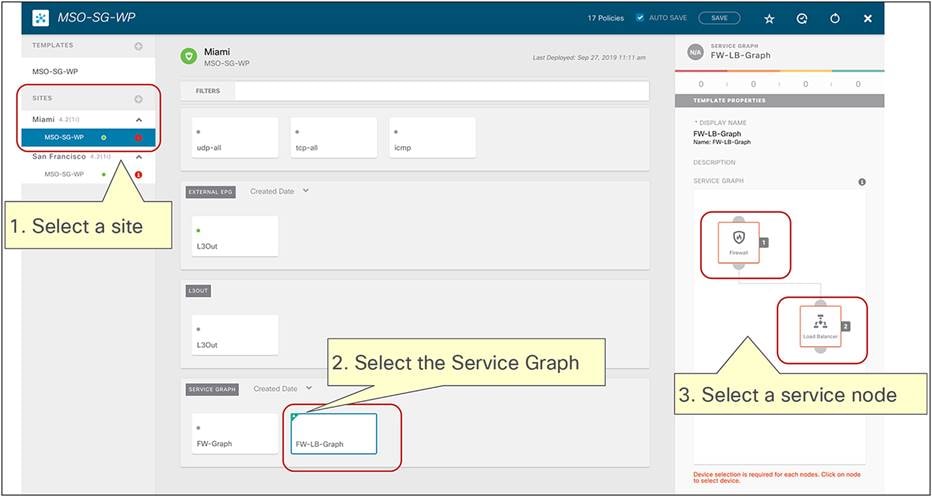

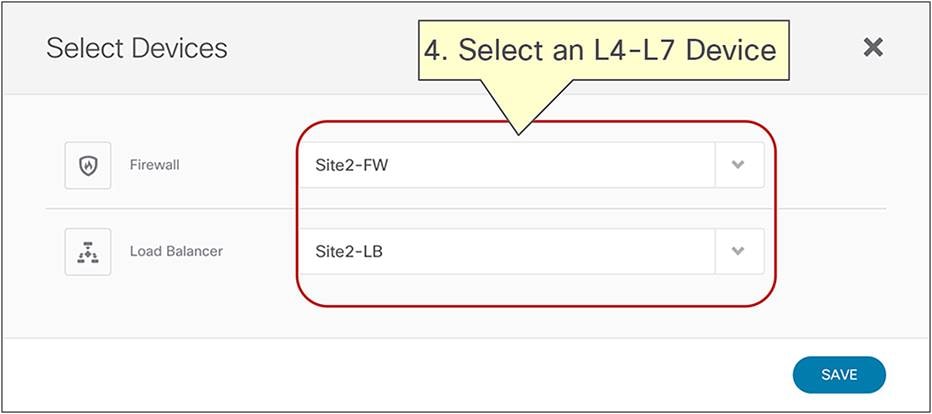

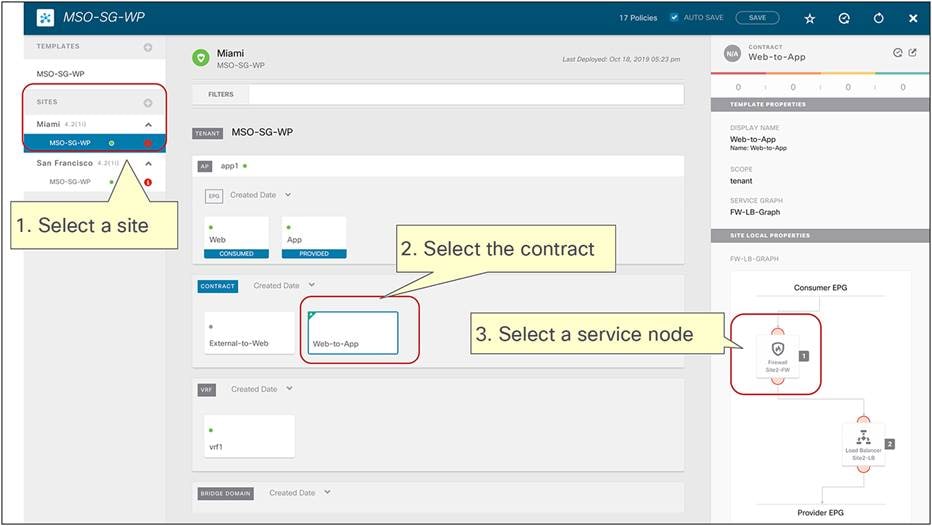

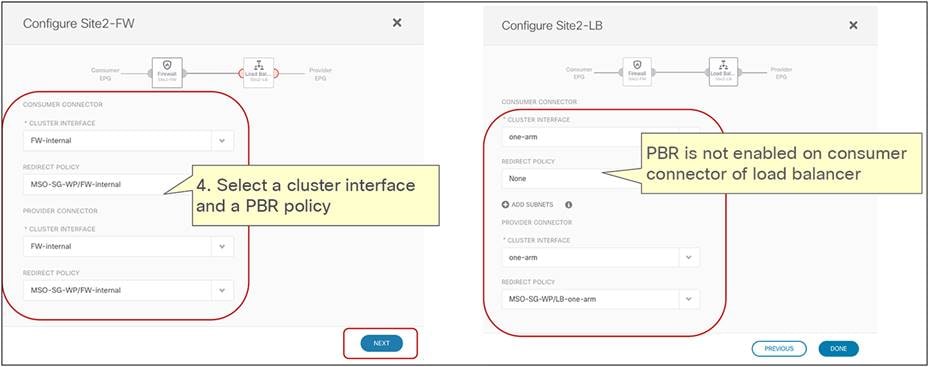

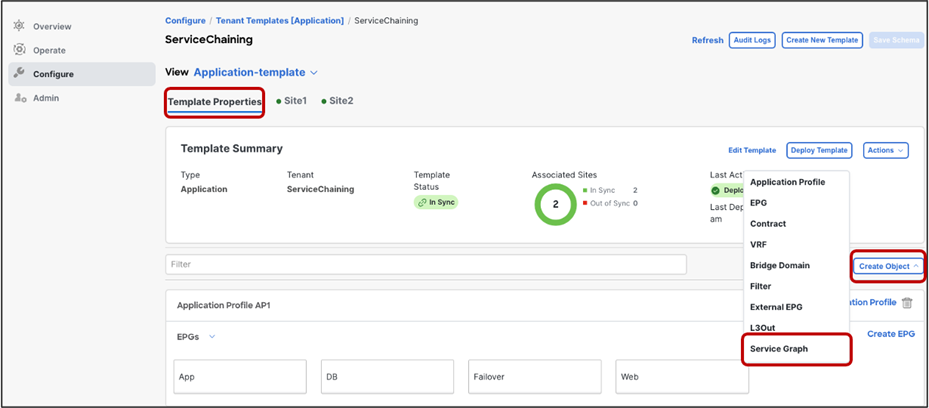

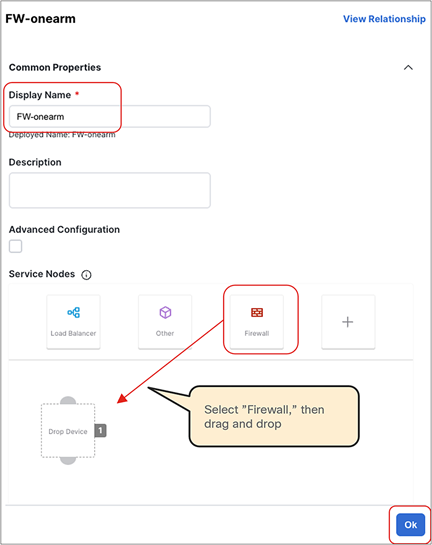

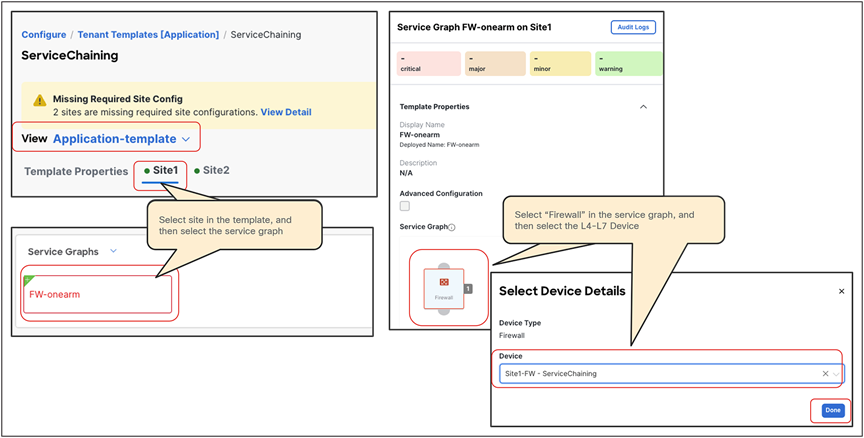

● The policy to be applied (the “intent”) is defined directly on Cisco Nexus Dashboard Orchestrator (NDO) and could, for example, specify that any communication between the external EPG (modeling the external Layer-3 network domain) and the internal Web EPG must be sent through a service node (or a chain of service nodes). Each specific service node is then mapped, at the site level, to the specific physical or virtual service appliances locally deployed.

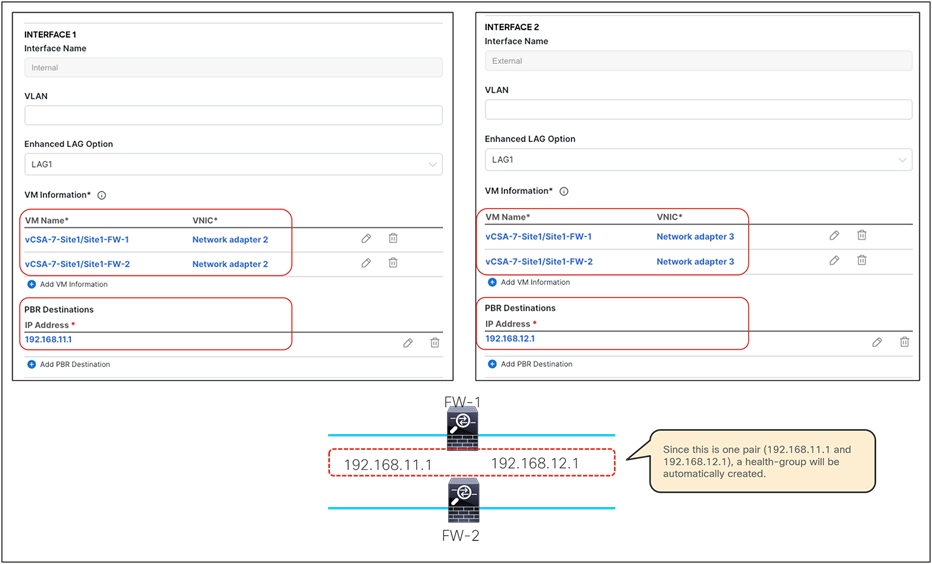

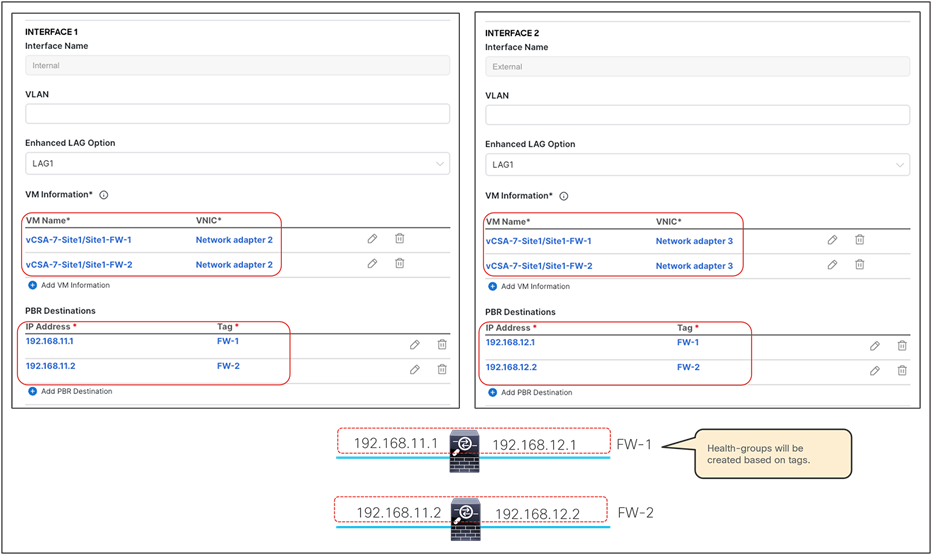

● In the current implementation, the PBR policy applied on a leaf switch can only redirect traffic to a service node deployed in the local site. As a consequence, it becomes paramount to improve the resiliency of the local service nodes. This can be achieved with the different options shown in Figure 9.

Figure 9. Deployment options to increase the resiliency of service nodes in a single site

The first two models are straightforward: both ensure that the fabric sees the service node as a single entity, so the PBR policy contains only a single MAC/IP pair. With the third option, multiple MAC/IP pairs are instead specified in the same PBR policy, so that a given traffic flow can be redirected to one of the available service nodes. Use of symmetric PBR ensures that both the incoming and return directions of the same flow are steered through the same service node.
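The effect of symmetric PBR on a policy that lists several MAC/IP pairs can be illustrated with a minimal Python sketch. It assumes a hash computed over the sorted source and destination IP addresses; the actual hashing performed by the leaf switches is not described in this document. The `PbrDestination` class, the `select_destination` function, and all addresses are hypothetical names introduced only for illustration.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class PbrDestination:
    """One service-node interface listed in a PBR policy (hypothetical model)."""
    ip: str
    mac: str


def select_destination(src_ip, dst_ip, destinations):
    """Pick a PBR destination so that both directions of a flow get the same node.

    Assumption: SHA-256 over the sorted IP pair stands in for the real
    hardware hash, which is not documented here.
    """
    if not destinations:
        raise ValueError("PBR policy has no destinations")
    # Sorting makes the key identical for (A, B) and (B, A).
    key = "|".join(sorted((src_ip, dst_ip)))
    digest = hashlib.sha256(key.encode("ascii")).digest()
    index = int.from_bytes(digest[:4], "big") % len(destinations)
    return destinations[index]


# Third option: several independent active nodes in the same PBR policy.
policy = [
    PbrDestination("192.168.1.1", "00:00:00:00:00:01"),
    PbrDestination("192.168.1.2", "00:00:00:00:00:02"),
    PbrDestination("192.168.1.3", "00:00:00:00:00:03"),
]
forward = select_destination("10.0.0.10", "10.0.1.20", policy)
reverse = select_destination("10.0.1.20", "10.0.0.10", policy)
```

With the first two options, the policy list would contain a single entry, and every flow would resolve to that one MAC/IP pair.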

The definition and behavior of an active/active cluster differ depending on the vendor. In the case of a Cisco ASA or Cisco Firepower Threat Defense (FTD) active/active cluster, service nodes in the same cluster can use the same MAC and IP addresses, which corresponds to the second option in the figure above, whereas service nodes in the same Palo Alto Networks active/active HA deployment use unique IP addresses, which corresponds to an enhanced version of the third option.

Note: Cisco ASA can also support an active/active cluster where each firewall node owns a unique MAC/IP address pair.

As previously mentioned, service graph with PBR can be used to handle service-node insertion for north-south, east-west, and transit traffic flows, as illustrated in Figure 10.

Figure 10. Service-node insertion for north-south, east-west, and transit traffic flows (one-arm example)

Several considerations apply when deploying service graph with PBR in an ACI Multi-Site architecture:

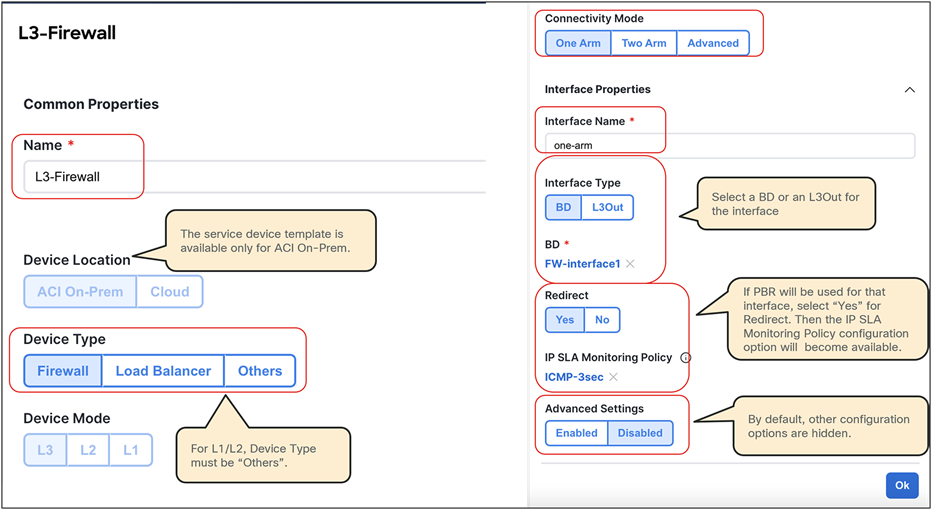

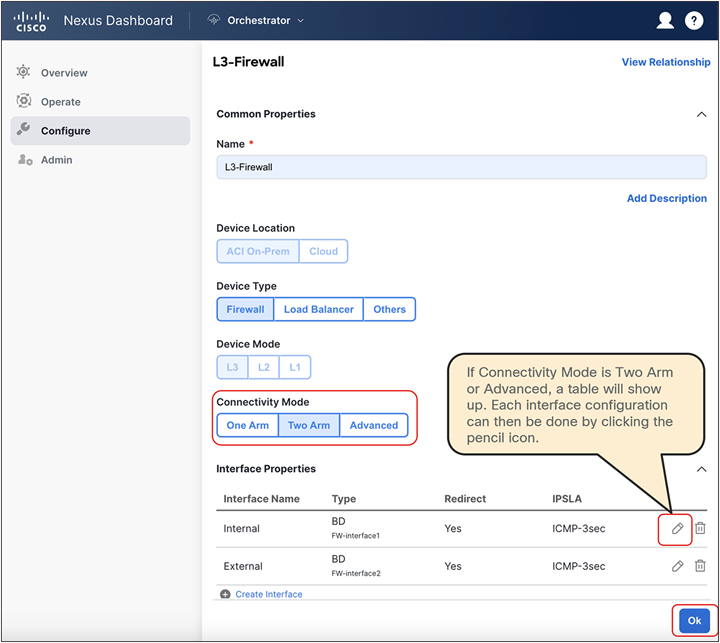

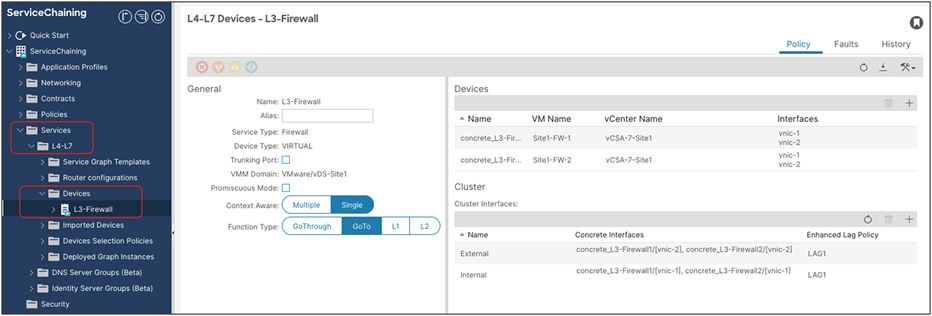

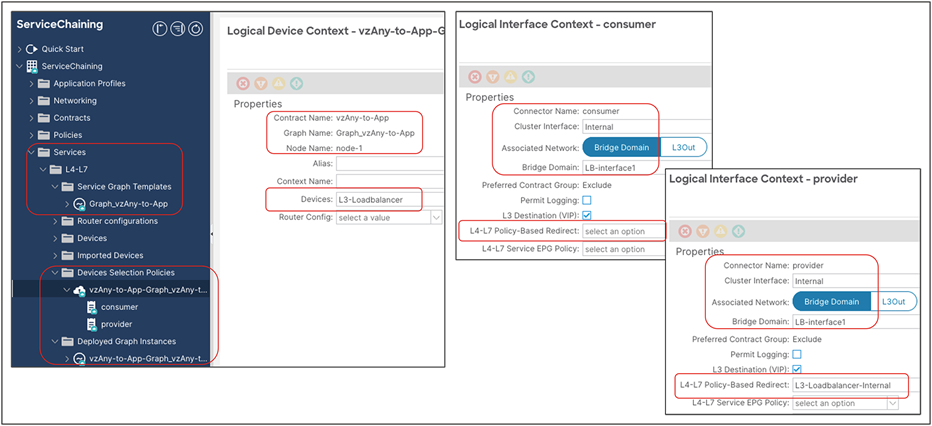

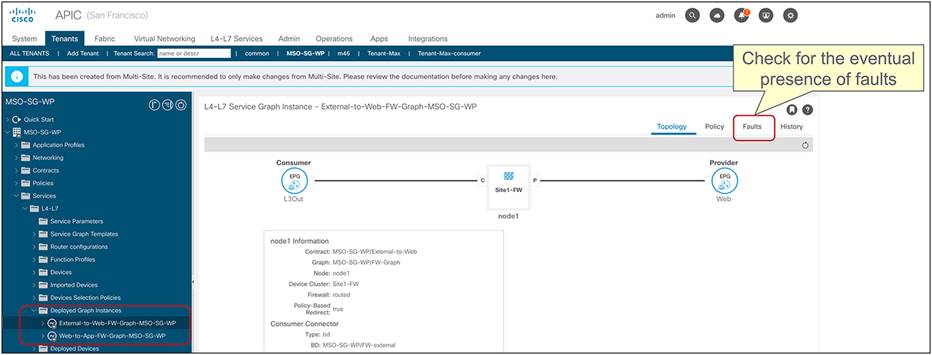

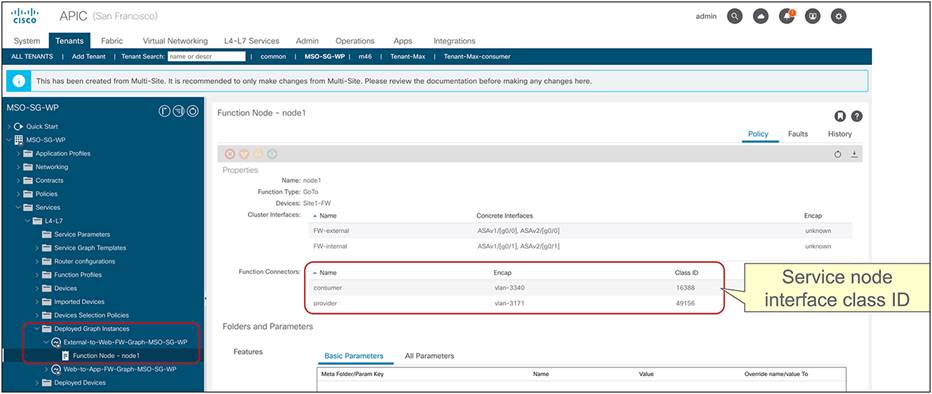

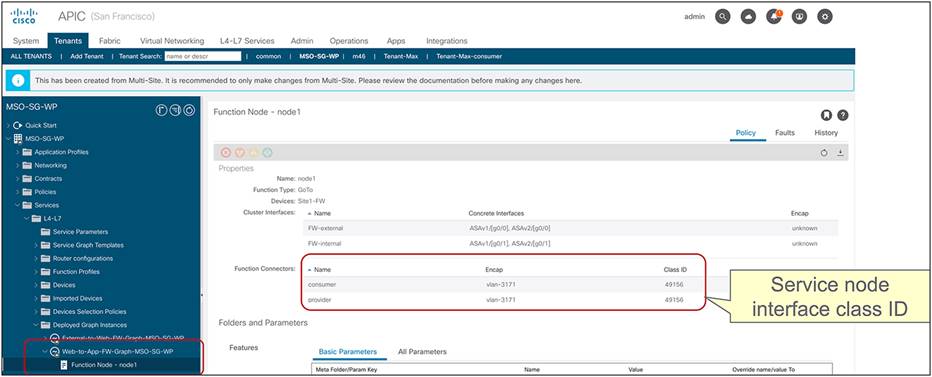

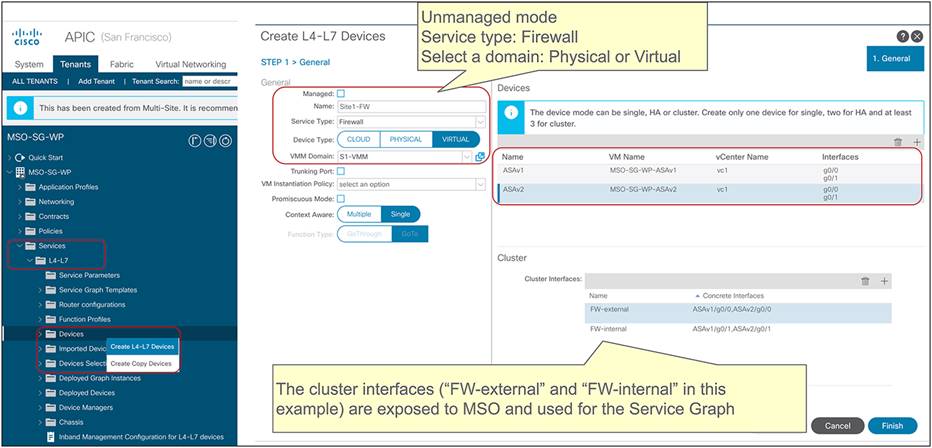

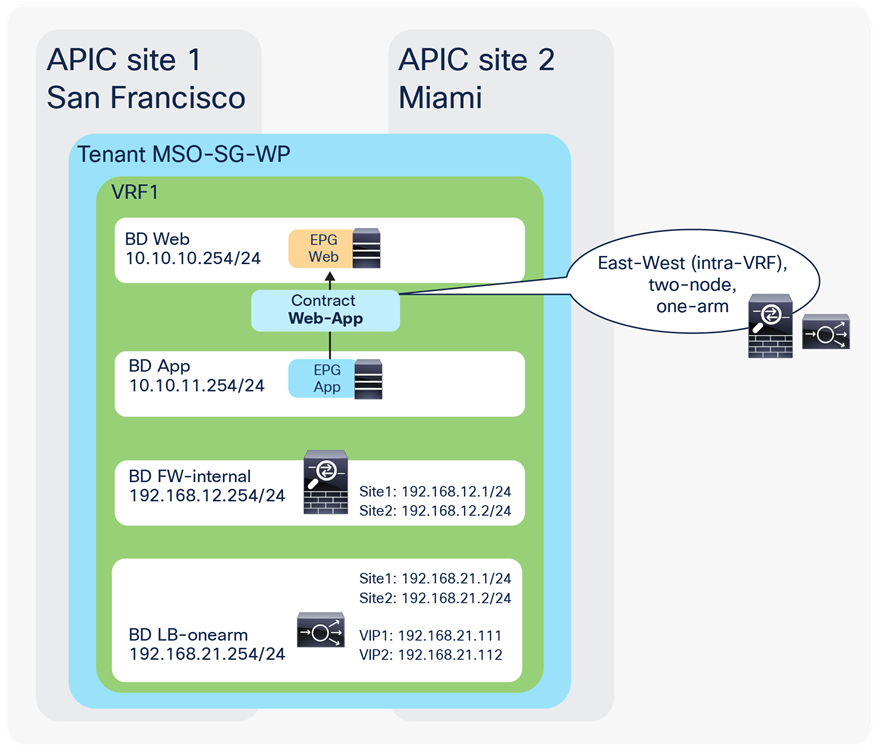

● Service graph with PBR integration with Multi-Site is only supported when the service node is deployed in unmanaged mode. This implies that ACI only takes care of steering the traffic through the service node; the configuration of the service node is, instead, not handled by the APIC. As such, there is no requirement to support any device package, and any service node (from Cisco or a third-party vendor) can be integrated with this approach.

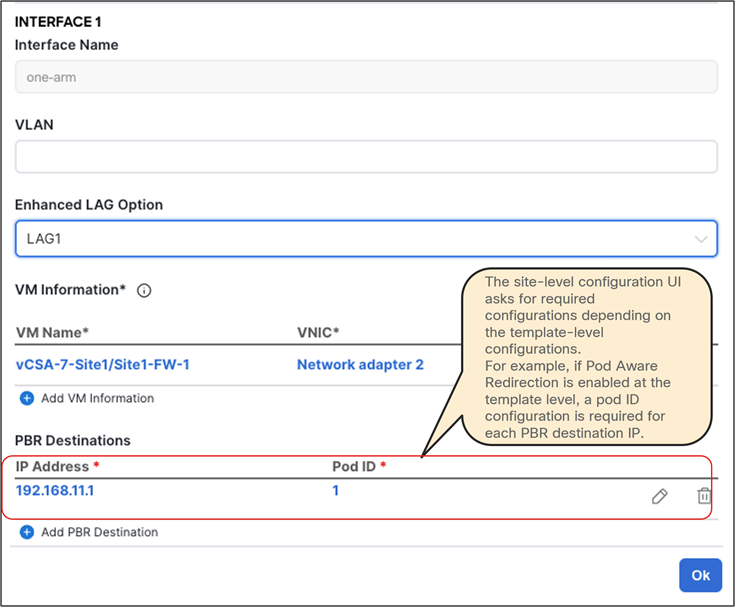

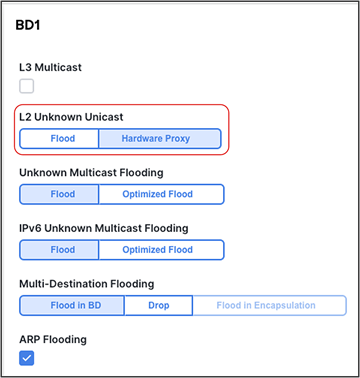

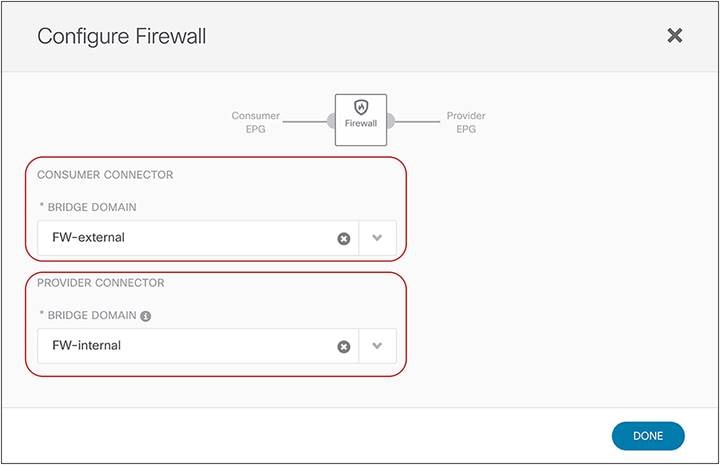

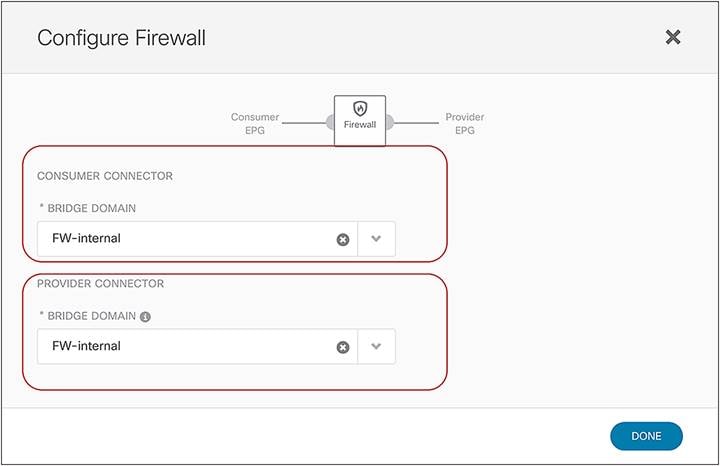

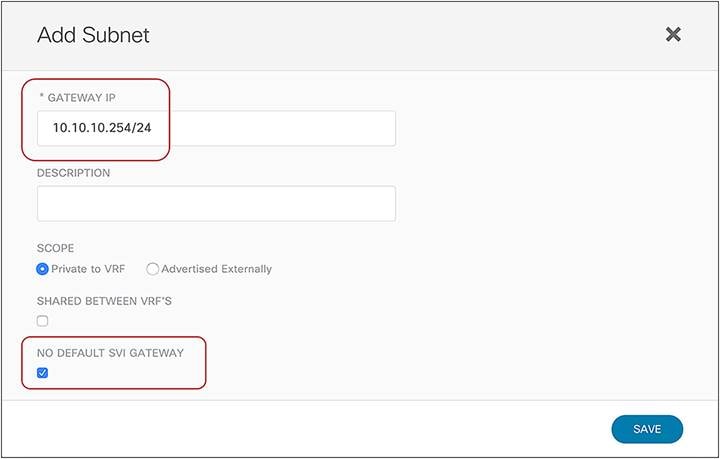

● In the example in Figure 10, the service node is deployed in one-arm mode, leveraging a single interface to connect to a dedicated service Bridge Domain (BD) defined in the ACI fabric. It is worth remembering that, in order to leverage service graph with PBR with ACI Multi-Site, the service node must be connected to a BD and not to an L3Out logical connection, which essentially means that no dynamic routing protocol can be used between the service node and the ACI fabric. The deployment of one-arm mode is therefore advantageous, because it simplifies the routing configuration of the service node, which requires only the definition of a default route pointing to the service BD IP address as next-hop. That said, two-arm deployment models (with inside and outside interfaces connected to separate BDs) are also fully supported, as shown in Figure 11.

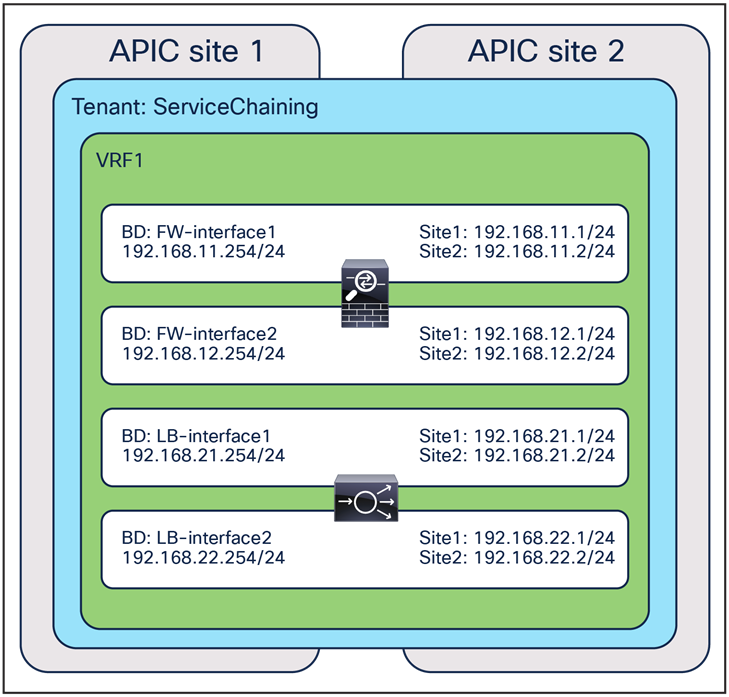

● The service BD(s) must be L2-stretched across sites. This means that the interfaces of the service nodes in different sites must be in the same service BD. The recommendation is to do this without extending BUM flooding, to avoid spreading broadcast storms outside a single fabric.

● For the north-south use case, the regular EPGs such as Web EPG and App EPG can be stretched across sites or locally confined in a site. As of Cisco ACI Release 6.0(5), the external EPG (L3Out EPG) can be a local object or a stretched object (that is, defined on the Cisco Nexus Dashboard Orchestrator as part of a template mapped to all the deployed sites), depending on the external connectivity design.

● For the east-west use case, the regular EPGs such as Web EPG and App EPG can be stretched across sites or locally confined in a site (or a combination of the two). For specific EPG-to-EPG contracts with PBR, an IP prefix must be configured under the consumer EPG, covering all the endpoints that are part of that EPG, which is easy to do if each EPG gets assigned to its own BD (and IP subnet) but may become more challenging if multiple EPGs are configured as part of the same BD (and IP subnet). Cisco ACI Release 6.0(3d) introduces support for the definition of /32 (IPv4) and /128 (IPv6) prefixes under the consumer EPG (this was not possible in previous releases because of CSCwa08796). While operationally complex, this approach offers a possible option for the deployment of east-west PBR in ACI “application-centric” Multi-Site deployments where multiple EPGs are configured as part of the same BD (and IP subnet).

● For the east-west use case (EPG-to-EPG contract with PBR), features that rely on specific IP endpoints or host prefixes (/32 for IPv4 and /128 for IPv6), such as a static route on a bridge domain, Anycast MAC/IP, and Microsoft NLB, are not supported.

● Prior to Cisco ACI Release 4.2(5) or 5.1(1), the north-south use case using an EPG-to-L3Out contract with PBR is supported only intra-VRF. The east-west use case is supported both intra-VRF and inter-VRF (and inter-tenant). Support for the north-south inter-VRF (and inter-tenant) use case requires Cisco ACI Release 4.2(5), 5.1(1), or later.

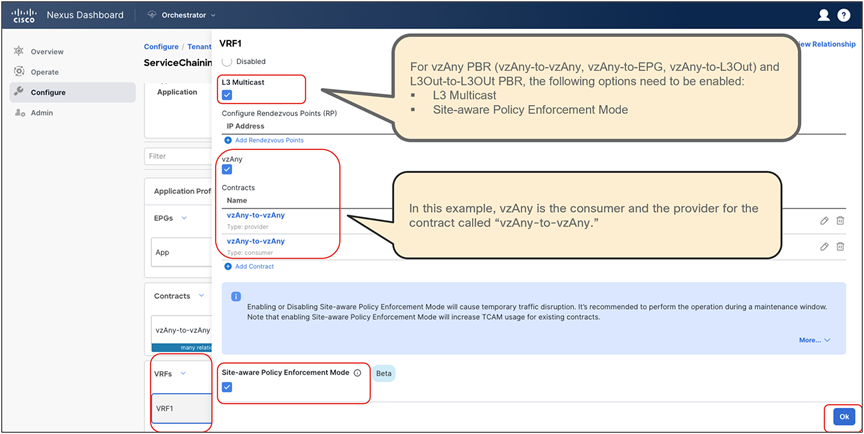

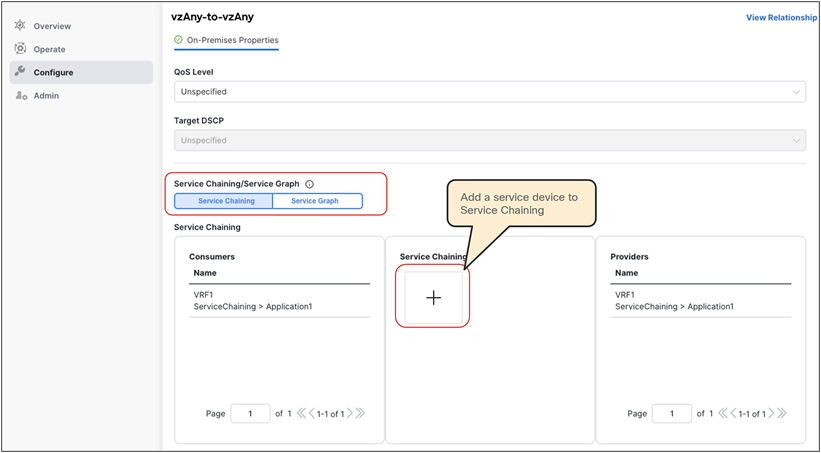

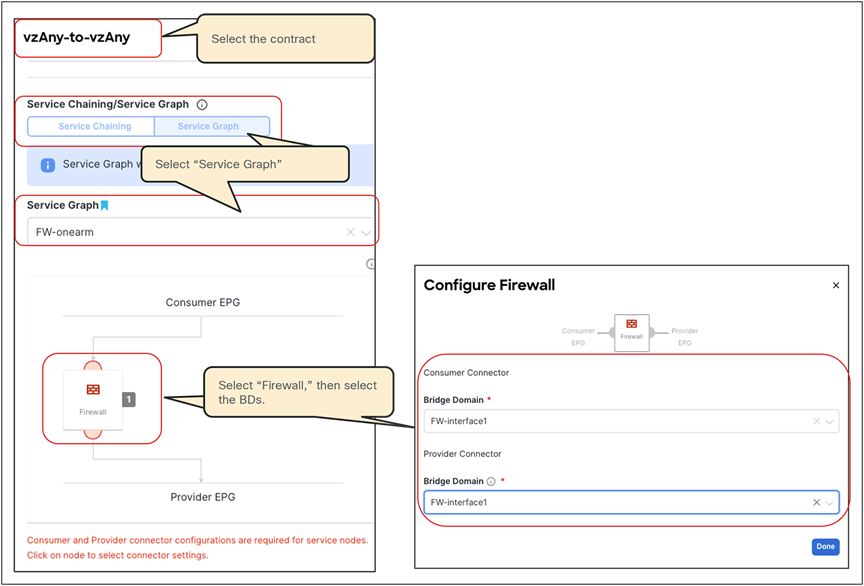

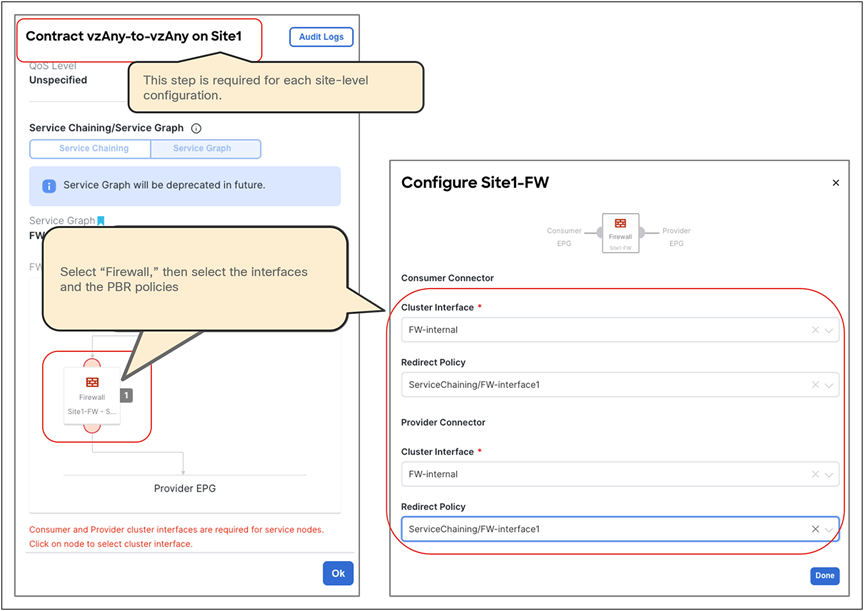

● vzAny PBR (vzAny-to-vzAny, vzAny-to-EPG, and vzAny-to-L3Out) and L3Out-to-L3Out PBR use cases require Cisco ACI Release 6.0(4c) or later, and Cisco Nexus Dashboard Orchestrator (NDO) Release 4.2(3e) or later. vzAny-to-vzAny, vzAny-to-L3Out, and L3Out-to-L3Out PBR must use one-arm service node instead of two-arm. For more guidelines and deployment considerations for those new cases, please refer to the section “Multi-Site service graph with PBR use cases.”

● Consumer endpoints of an east-west contract with PBR must not be connected under the border leaf node where an intersite L3Out resides.

Figure 11. Other service-insertion examples (one-arm and two-arm examples)

Though this document uses mainly a two-arm mode design in its examples, both one-arm and two-arm are valid options, except for the vzAny PBR (vzAny-to-vzAny, vzAny-to-EPG, and vzAny-to-L3Out) and L3Out-to-L3Out PBR use cases, which mandate one-arm mode service nodes.
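The consumer-EPG prefix requirement for east-west EPG-to-EPG contracts with PBR, listed among the considerations above, can be checked ahead of deployment with a short script. The sketch below is only an illustration based on Python's standard `ipaddress` module; the function name `uncovered_endpoints` and all addresses are hypothetical, and the script does not interact with APIC or Nexus Dashboard Orchestrator in any way.

```python
import ipaddress


def uncovered_endpoints(consumer_prefixes, endpoint_ips):
    """Return the endpoint IPs not covered by any prefix under the consumer EPG."""
    networks = [ipaddress.ip_network(prefix) for prefix in consumer_prefixes]
    missing = []
    for ip_text in endpoint_ips:
        ip = ipaddress.ip_address(ip_text)
        covered = any(ip.version == net.version and ip in net for net in networks)
        if not covered:
            missing.append(ip_text)
    return missing


# Case 1: the EPG has its own BD and subnet, so one prefix covers everything.
own_bd = uncovered_endpoints(["10.1.1.0/24"], ["10.1.1.10", "10.1.1.20"])

# Case 2: several EPGs share one BD, so host prefixes (/32, /128) are listed
# per endpoint; any endpoint without a matching host prefix is reported.
shared_bd = uncovered_endpoints(
    ["10.1.1.10/32", "2001:db8::10/128"],
    ["10.1.1.10", "10.1.1.11", "2001:db8::10"],
)
```

In the second case the script reports `10.1.1.11`, which shows why the host-prefix approach is operationally complex: every new endpoint in the consumer EPG needs its own entry.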

The following general L3 PBR design considerations also apply to PBR in Multi-Site:

● In a Multi-Site design, redirection to a PBR node is only supported to an interface that is connected to a bridge domain (that is, the interface cannot be connected to an L3Out). However, the same physical interface connecting a PBR node to the fabric could be used for both types of connectivity when leveraging different logical interfaces (each associated to a separate VLAN tag). For example:

◦ The PBR node is connected to Leaf1 Ethernet 1/1 and uses VLAN 10 to connect to the service bridge domain. This is the logical interface used for PBR for east-west communication between EPGs.

◦ The PBR node uses, instead, VLAN 20 on the same interface Ethernet 1/1 on Leaf1 to connect to an L3Out (the L3Out must use SVIs in that case). This is the logical interface that could be used for north-south traffic, using the service node as a perimeter firewall (FW).

● The PBR node interfaces can be part of the same bridge domain used by the consumer/provider EPG, or you can define different dedicated service bridge domains.

● The PBR node can be deployed in two-arm mode or in one-arm mode with a single interface connected to a service bridge domain. As already mentioned, this is not valid for the vzAny PBR and L3Out-to-L3Out use cases, which, as of Cisco ACI Release 6.0(5), still mandate the deployment of one-arm mode service nodes.

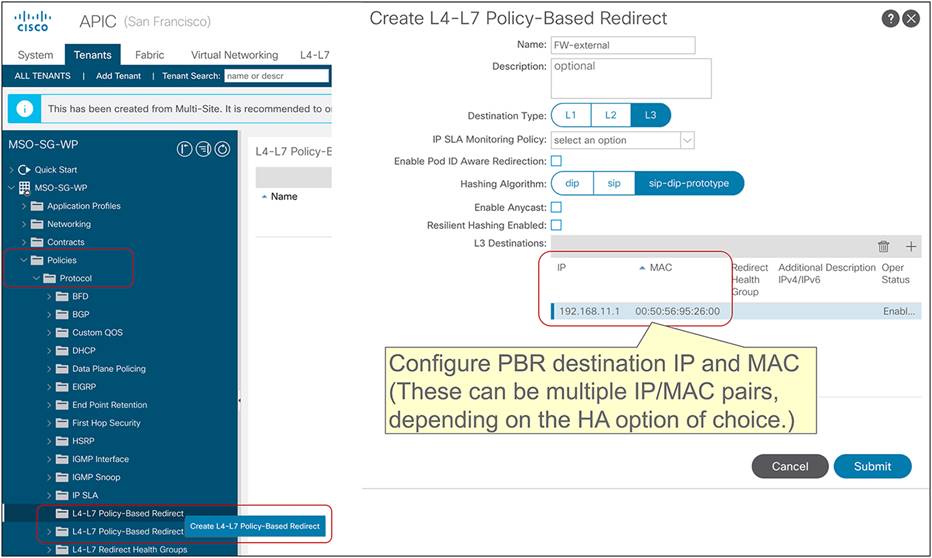

● Prior to Cisco ACI Release 5.2(1), the deployment of an active/standby service-node pair is supported only if the active device always uses the same virtual MAC (vMAC) address. This is because those older ACI releases do not support dynamic PBR destination MAC address detection, and traffic redirection is performed by statically configuring the MAC address associated to the active service-node Virtual IP (VIP). This requirement implies that when a service-node failover occurs, the standby unit that is activated must inherit both the VIP and vMAC addresses of the failed active unit (this is, for example, the case with Cisco ASA and Cisco Firepower models). Depending on the service-node vendor, this might not be the default behavior, although a vMAC address might be available as a configuration option. Starting from Cisco ACI Release 5.2(1), this consideration no longer applies if dynamic PBR destination MAC detection is used instead of static PBR destination MAC configuration.

While not the main focus of this document, the following general L1/L2 PBR design considerations also apply to PBR in Multi-Site:

● Cisco ACI Release 4.1(1) or later is required.

● The PBR node interfaces must be part of dedicated bridge domains.

● The PBR node must be deployed in two-arm mode; one-arm mode is not supported.

Note: The term “PBR node” refers to the network services node (firewall, load balancer, etc.) specified in the PBR policy.

In addition to this, the deployment of the vzAny PBR use cases (vzAny-to-vzAny, vzAny-to-EPG, and vzAny-to-L3Out) and the L3Out-to-L3Out PBR use case in an ACI Multi-Site architecture is subject to the following considerations:

● Cisco ACI Release 6.0(4c) or later is required.

● Cisco Nexus Dashboard Orchestrator Release 4.2(3e) or later is required.

● Only a single-node service graph is supported; a multi-node service graph is not.

● Only one-arm mode is supported for the service device.
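The four requirements listed above lend themselves to a simple pre-deployment check. The following Python sketch is a hypothetical helper, not a Cisco tool: the function names are invented for illustration, and the release comparison assumes that release strings follow the `major.minor(maintenance+letters)` pattern and that letter suffixes sort alphabetically.

```python
import re

_RELEASE = re.compile(r"^(\d+)\.(\d+)\((\d+)([a-z]*)\)$")

NEW_USE_CASES = {"vzAny-to-vzAny", "vzAny-to-EPG", "vzAny-to-L3Out", "L3Out-to-L3Out"}


def parse_release(text):
    """Turn a release string such as '6.0(4c)' into a sortable tuple."""
    match = _RELEASE.match(text)
    if match is None:
        raise ValueError(f"unrecognized release string: {text!r}")
    major, minor, maintenance, suffix = match.groups()
    return (int(major), int(minor), int(maintenance), suffix)


def check_new_use_case(use_case, aci_release, ndo_release, arm_mode, node_count):
    """Return the list of requirements violated by a vzAny or L3Out-to-L3Out PBR design."""
    if use_case not in NEW_USE_CASES:
        raise ValueError(f"not a vzAny or L3Out-to-L3Out PBR use case: {use_case}")
    problems = []
    if parse_release(aci_release) < parse_release("6.0(4c)"):
        problems.append("Cisco ACI Release 6.0(4c) or later is required")
    if parse_release(ndo_release) < parse_release("4.2(3e)"):
        problems.append("NDO Release 4.2(3e) or later is required")
    if node_count != 1:
        problems.append("only a single-node service graph is supported")
    if arm_mode != "one-arm":
        problems.append("only one-arm mode is supported")
    return problems


ok = check_new_use_case("vzAny-to-vzAny", "6.0(5)", "4.2(3e)", "one-arm", 1)
bad = check_new_use_case("L3Out-to-L3Out", "6.0(3d)", "4.2(1)", "two-arm", 2)
```

Here `ok` is an empty list, while `bad` collects one message for each of the four violated requirements.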

For more information about generic PBR design considerations and configurations, please refer to the document below:

https://www.cisco.com/c/en/us/solutions/data-center-virtualization/application-centric-infrastructure/white-paper-c11-739971.html.

Multi-Site service graph with PBR use cases

The following sections describe different use cases where service graph with PBR can be used to redirect north-south and east-west traffic flows to a service node (or to a chain of service nodes). The specific scenarios that will be considered are:

● The deployment of a single-node service graph, for redirecting traffic flows either to a firewall service or to a load-balancer service.

● The deployment of a multi-node service graph, for redirecting traffic flows to a service chain built with firewall and load-balancer services.

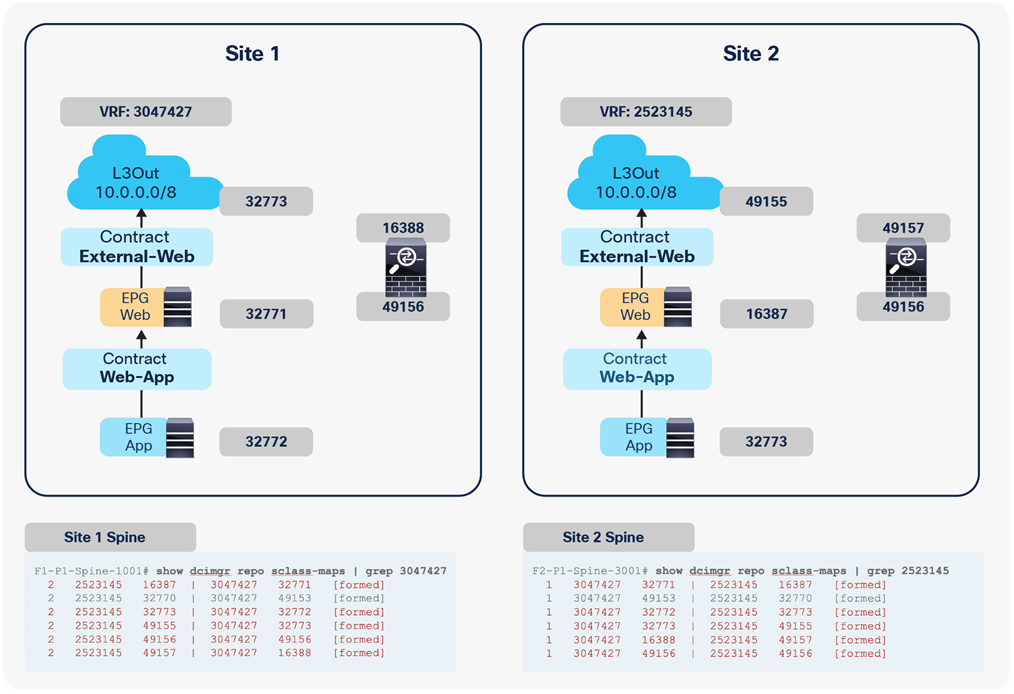

As previously discussed in the “Overview of the recommended design” section, in an ACI Multi-Site architecture each specific type of service is implemented by leveraging a distributed model, with a separate set of service nodes in each fabric. For example, a firewall service can be represented by the deployment in each site of an active/standby pair of firewalls or of one of the other redundancy options previously shown in Figure 1. The same considerations apply to the deployment of a load-balancer service.

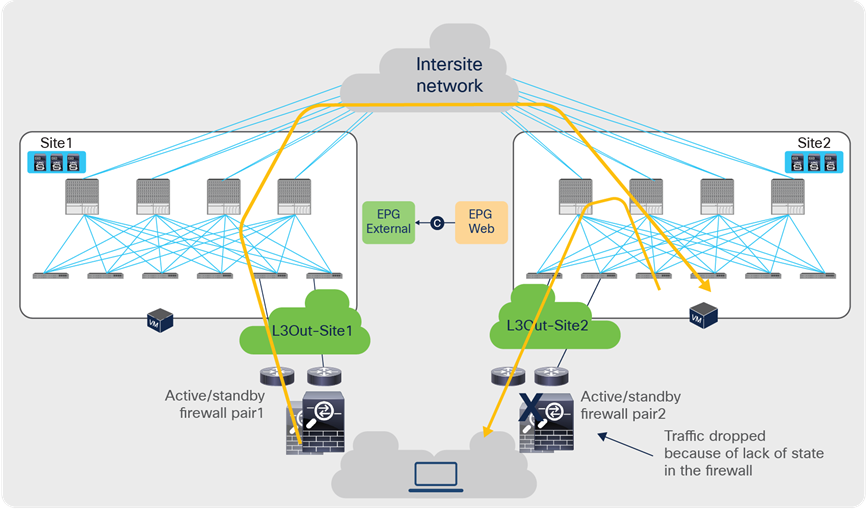

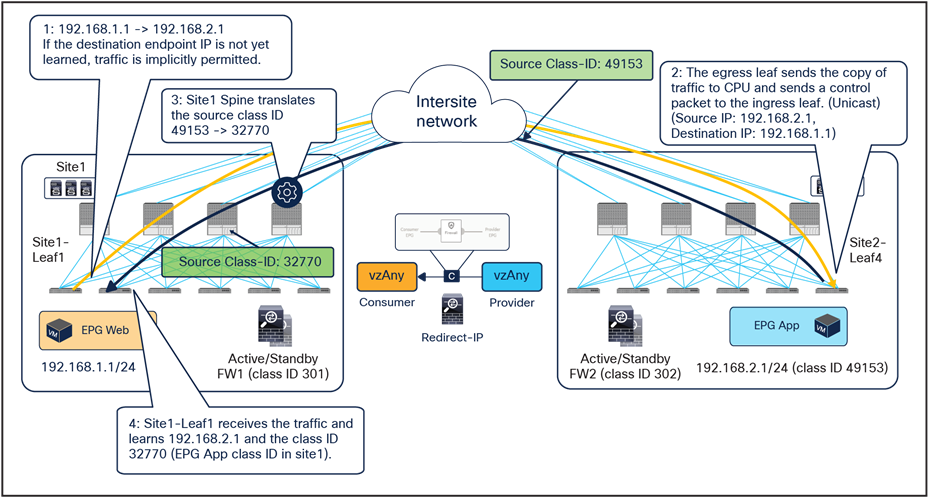

The critical requirement for integrating distributed stateful service nodes into an ACI Multi-Site architecture is avoiding the creation of asymmetric traffic paths for the incoming and return directions of flows, because doing so would cause communication drops due to the stateful nature of those service nodes. Figure 12 illustrates an example. For incoming traffic from an external client to an internal endpoint in site2, traffic may be steered toward the L3Out in site1, depending on the routing design. However, the outbound traffic from the internal endpoint goes out (by default) through the local L3Out in site2. The return traffic would, hence, be dropped by the external firewall connected to site2 since the firewall does not have the connection state information for the traffic flow that was created earlier on the external firewall connected to site1.

Figure 12. Why traffic symmetricity is important in multilocation data centers

Even if the external firewall connected to site2 has an Access Control List (ACL) to permit outgoing traffic, the external firewall connected to site2 will drop the asymmetric outgoing traffic because firewalls are generally stateful regardless of traffic direction. For example, Cisco ASA and FTD firewalls only match the first packet of a connection to an ACL. For Transmission Control Protocol (TCP), any new connection initiation segment that is not a SYN will be dropped by an implicit stateful check and will never be matched against an ACL permit-rule by default. Only User Datagram Protocol (UDP) connections may be permitted in an asymmetrical fashion with bidirectional ACLs.
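The drop described above can be reproduced with a small simulation of two independent stateful firewalls. The sketch below is a deliberately simplified model, not the behavior of any specific product: flows are identified only by their two endpoints (ports are ignored), the ACL is assumed to permit every new connection, and the `StatefulFirewall` class and all addresses are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class StatefulFirewall:
    """Minimal TCP state tracker with no state sync to any other firewall."""
    name: str
    connections: set = field(default_factory=set)

    def process_tcp(self, src, dst, flags):
        """Return True if the segment is forwarded, False if it is dropped."""
        flow = frozenset((src, dst))
        if flow in self.connections:
            return True  # matches an existing connection in either direction
        if set(flags) == {"SYN"}:
            # Only a pure SYN may create state (the ACL is assumed to permit it).
            self.connections.add(flow)
            return True
        return False  # no state and not a connection initiation: dropped


fw_site1 = StatefulFirewall("external-fw-site1")
fw_site2 = StatefulFirewall("external-fw-site2")
client, server = "198.51.100.10", "10.2.2.20"

# Inbound SYN is steered toward the L3Out in site1 and creates state there.
syn_forwarded = fw_site1.process_tcp(client, server, {"SYN"})
# The SYN-ACK leaves through the local L3Out in site2, which has no state.
synack_via_site2 = fw_site2.process_tcp(server, client, {"SYN", "ACK"})
# The same SYN-ACK would be accepted if it returned through site1.
synack_via_site1 = fw_site1.process_tcp(server, client, {"SYN", "ACK"})
```

Only the path that returns through the firewall holding the connection state succeeds, which is the symmetry requirement discussed in this section.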

A solution is therefore required to keep both directions of traffic flowing through the same service node. For traffic destined to endpoints that are part of bridge domains stretched across sites, the asymmetric traffic path shown in the previous figure can be avoided by leveraging host-route advertisement to optimize the traffic path for ingress communication, but this approach can be used only for north-south traffic paths.

Advanced logic has been built into the ACI Multi-Site implementation to provide an elegant answer to such a requirement. As a result, the PBR policy will be enforced on specific fabric-leaf nodes that may be different depending on the specific traffic flow considered (north-south vs. east-west) and on the type of contract defined between the endpoint groups (EPG-to-L3Out, EPG-to-EPG, use of vzAny, etc.). For example, we’ll see how the compute leaf node is always used to enforce the PBR policy for north-south communication when using a specific EPG-to-L3Out contract.

Upcoming sections will detail the use of those advanced functionalities for all those different use cases. Table 1 and Table 2 summarize where the PBR policy must be applied in Multi-Site for each supported use case to prevent the creation of asymmetric traffic across independent stateful service devices.

Table 1. PBR policy enforcement in different use cases in Multi-Site (after Cisco ACI Release 4.0(1))

| VRF design | North-south (L3Out-to-EPG) | East-west (EPG-to-EPG) |
|---|---|---|
| Intra-VRF | Non-border leaf (ingress-mode enforcement) | Provider leaf |
| Inter-VRF | Consumer leaf (The L3Out EPG must be the provider.)* | Provider leaf |

Table 2. PBR policy enforcement in different use cases in Multi-Site (Cisco ACI Release 6.0(4c) or later is required.)

| VRF design | East-west (vzAny-to-EPG) | North-south (vzAny-to-L3Out) | East-west and North-south (vzAny-to-vzAny) | Transit (L3Out-to-L3Out) |
|---|---|---|---|---|
| Intra-VRF | Provider leaf | Intrasite traffic: either source or destination leaf node; intersite traffic: both source and destination leaf nodes | Intrasite traffic: either source or destination leaf node; intersite traffic: both source and destination leaf nodes | Intrasite traffic: either source or destination leaf node; intersite traffic: both source and destination leaf nodes |
| Inter-VRF | Not supported | Not supported | Not supported | Intrasite traffic: either source or destination leaf node; intersite traffic: both source and destination leaf nodes |
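For quick reference during design reviews, the content of Table 1 and Table 2 can be encoded as a lookup. The following Python sketch only restates the two tables; the `enforcement_point` function and its string values are hypothetical conveniences and carry no information beyond what the tables state.

```python
SOURCE_OR_DEST = "either source or destination leaf node"
BOTH = "both source and destination leaf nodes"

# Use cases whose enforcement point is the same for all traffic.
_FIXED = {
    ("L3Out-to-EPG", "intra-VRF"): "non-border leaf (ingress-mode enforcement)",
    ("L3Out-to-EPG", "inter-VRF"): "consumer leaf (the L3Out EPG must be the provider)",
    ("EPG-to-EPG", "intra-VRF"): "provider leaf",
    ("EPG-to-EPG", "inter-VRF"): "provider leaf",
    ("vzAny-to-EPG", "intra-VRF"): "provider leaf",
}

# Use cases whose enforcement point depends on intrasite vs. intersite traffic.
_PATH_DEPENDENT = {
    ("vzAny-to-L3Out", "intra-VRF"),
    ("vzAny-to-vzAny", "intra-VRF"),
    ("L3Out-to-L3Out", "intra-VRF"),
    ("L3Out-to-L3Out", "inter-VRF"),
}


def enforcement_point(use_case, vrf_design, intersite=False):
    """Return where the PBR policy is applied, per Table 1 and Table 2."""
    key = (use_case, vrf_design)
    if key in _FIXED:
        return _FIXED[key]
    if key in _PATH_DEPENDENT:
        return BOTH if intersite else SOURCE_OR_DEST
    raise ValueError(f"{use_case} ({vrf_design}) is not listed as supported")


example = enforcement_point("vzAny-to-vzAny", "intra-VRF", intersite=True)
```

Combinations marked "Not supported" in Table 2, such as inter-VRF vzAny-to-EPG, raise a `ValueError` rather than returning a value.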

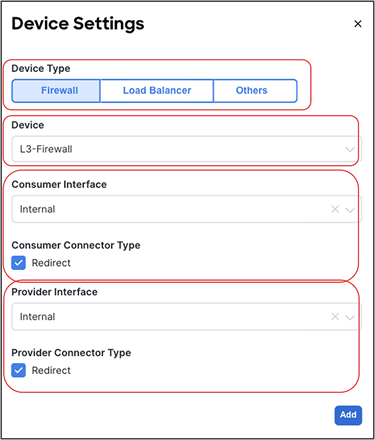

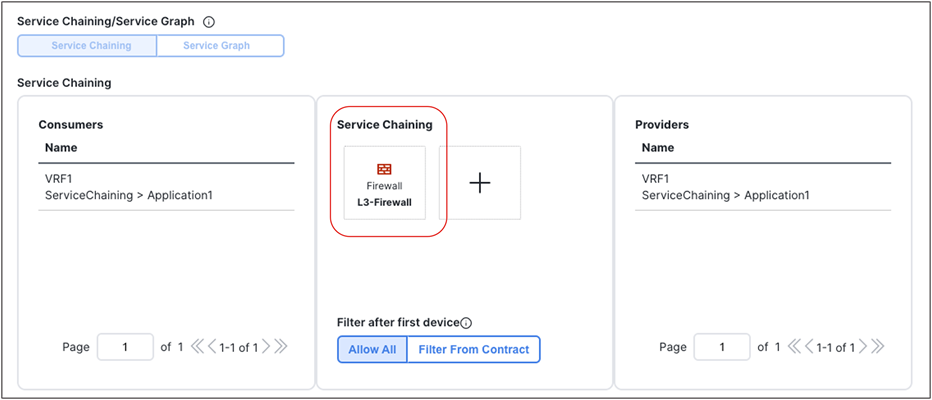

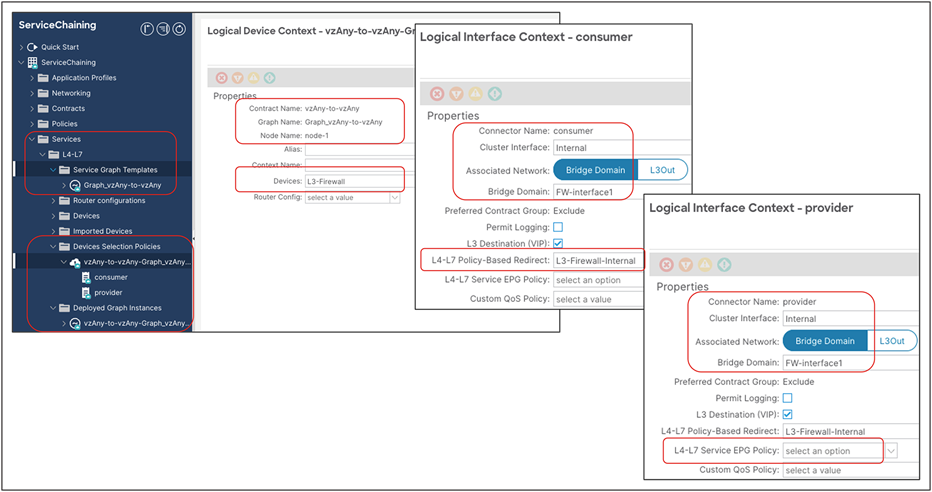

This section explains firewall insertion with PBR for north-south and east-west traffic flows for the following PBR use cases:

● North-south traffic use case (EPG-to-L3Out)

● East-west traffic use case (EPG-to-EPG)

● East-west and north-south traffic use case (vzAny-to-vzAny)

● East-west and north-south traffic use case (vzAny-to-EPG)

● North-south traffic use case (vzAny-to-L3Out)

● Transit traffic use case (L3Out-to-L3Out)

North-south traffic use case (EPG-to-L3Out)

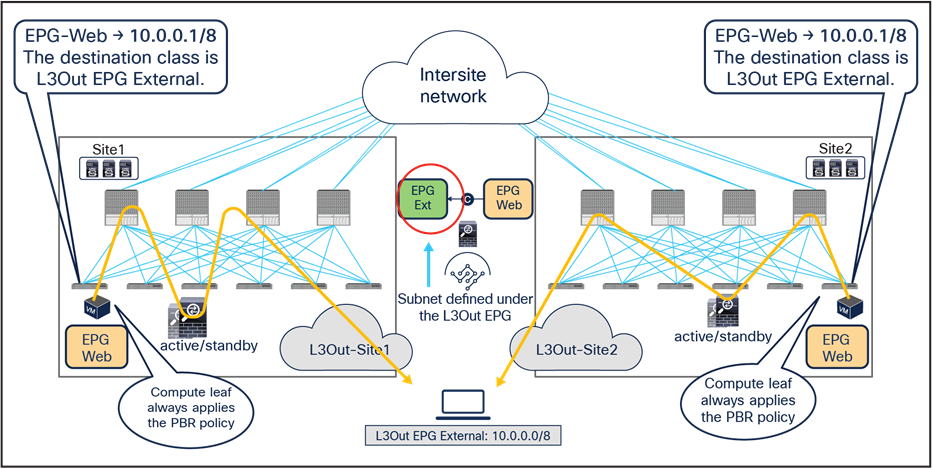

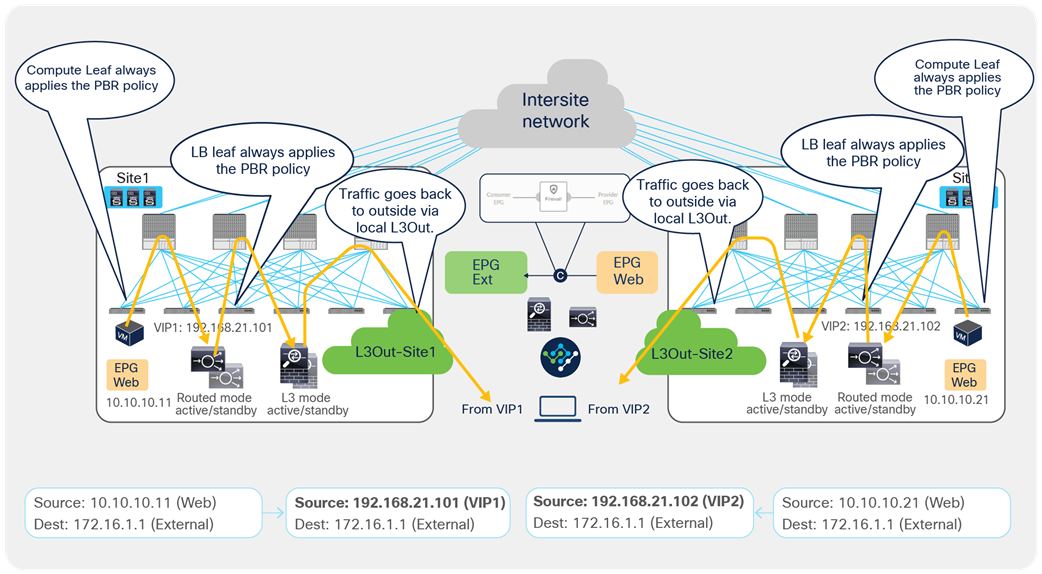

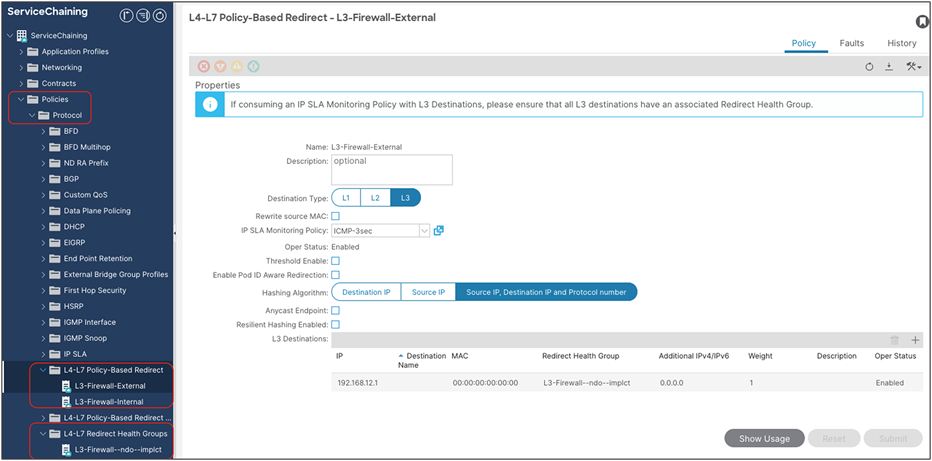

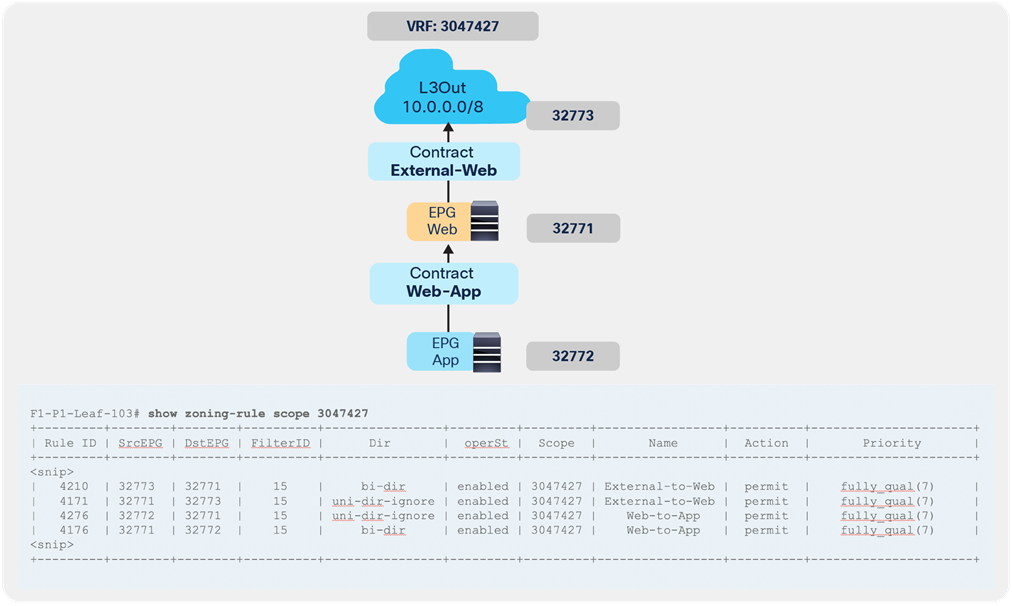

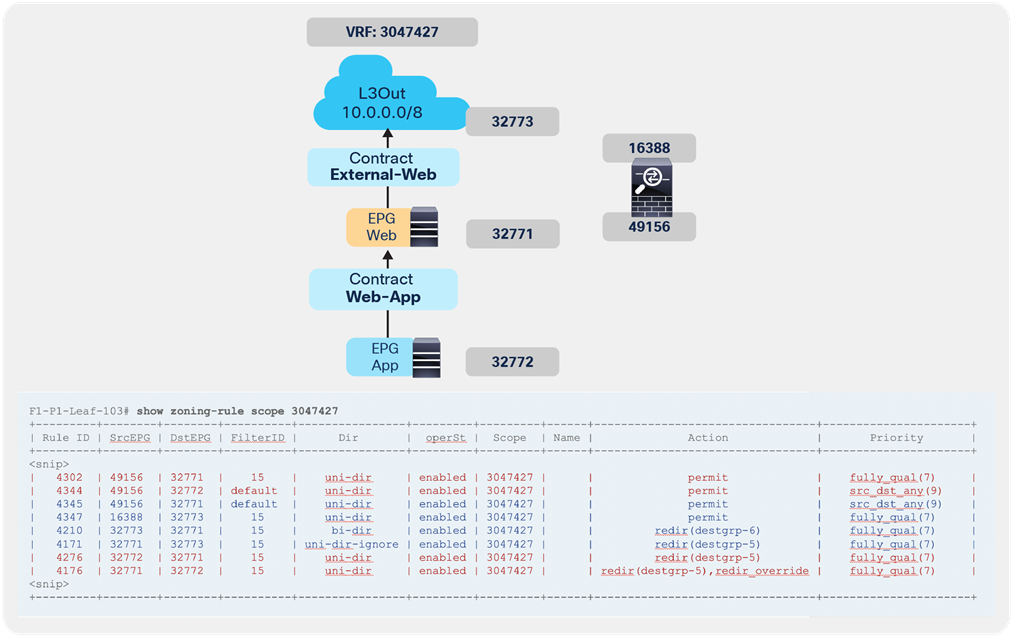

Figure 13 shows a Cisco ACI network design for a north-south-routed firewall insertion with an intra-VRF EPG-to-L3Out contract with PBR. A contract with a service graph attached is applied between an L3Out EPG and a Web EPG. The service graph is configured with PBR enabled in both directions to steer the traffic to a firewall service device.

Example of a north-south firewall with PBR design (intra-VRF)

For this EPG-to-L3Out use case, traffic symmetry through the independent firewall service deployed in each fabric is guaranteed by ensuring that, for each north-south flow, the PBR policy is always (and only) applied on the compute leaf nodes. As a consequence, regardless of the data path taken by the inbound traffic flow, the redirection always steers the traffic to the firewall service deployed in the fabric where the internal EPG endpoint is connected.

For intra-VRF communication, assuming the VRF enforcement mode configuration is ingress (which is the default setting), the enforcement of the PBR policy always happens on the compute leaf, regardless of which EPG is the consumer or the provider of the contract (note that this is the case also for non-Multi-Site deployments). For inter-VRF communication, in order to continue to enforce the PBR policy only on the compute leaf node, it is instead mandatory to ensure that the External EPG associated to the L3Out is the provider of the contract.

In order to implement the behavior described above, two things need to happen:

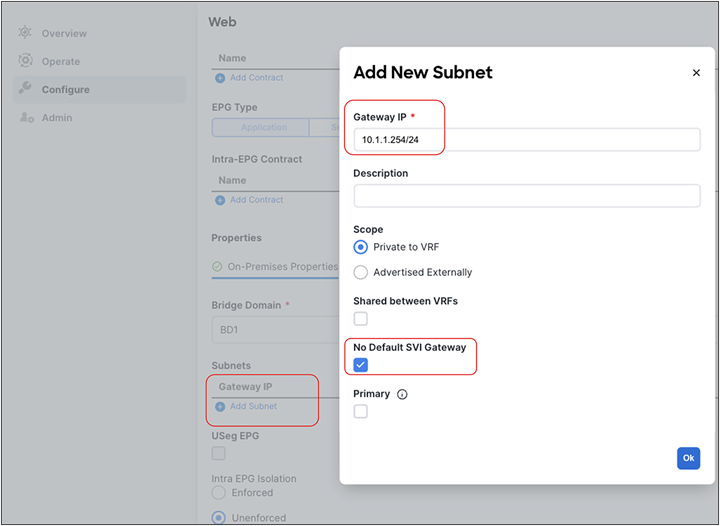

1. The compute leaf must always know the class ID information associated to the external destination, so as to be able to apply the PBR policy. This is possible because, as shown in Figure 14, the external prefix must be associated to the L3Out EPG to be able to properly classify inbound traffic. This information is therefore statically configured on the compute leaf as a result of the creation of the EPG-to-L3Out contract.

2. The border leaf nodes must be configured not to apply the PBR policy to inbound and outbound traffic flows, which is always the case for an EPG-to-L3Out contract in Cisco ACI Multi-Site (the Policy Control Enforcement Direction option on the VRF is always set to “ingress” if the VRF is created through Cisco Nexus Dashboard Orchestrator [NDO]).

Destination IP subnet‒based classification for L3Out EPG

Figure 15 illustrates the service-graph PBR deployment for steering to the firewall the inbound flows between an external network and an internal Web EPG, in the example where the internal Web EPG and the L3Out are defined in the same VRF and with the default VRF configuration (that is, ingress policy enforcement).

This is the sequence of events needed for establishing inbound connectivity:

● The traffic is received from the external network on the border leaf nodes, and it is simply forwarded to the compute leaf nodes connected to the internal destination endpoints without applying any security policy (the policy-applied bit in the VXLAN header is set to 0 by the border leaf node). Figure 15, below, shows the deployment of a stretched internal EPG (and associated subnet), and how all the inbound flows are steered from the external network toward the L3Out defined in site1, even if the destination internal endpoint is connected in site2.

● Once the compute leaf nodes receive the traffic, they can apply the PBR policy (because the leaf nodes know both the source and destination class IDs), and the traffic is redirected to the local active firewall node specified in the PBR policy (or to one of the local nodes, based on the hashing decision, when deploying multiple active nodes per site).

● After the service nodes apply the configured security policy, the traffic is then sent back into the fabric and to the destination endpoints.

Use of PBR for inbound traffic flows (north-south)

The outbound flows are characterized by the following sequence of events:

● The destination endpoints send traffic back to the external destination, and the PBR policy is again applied on the same compute leaf nodes where it was applied for the inbound direction. This means that the return flows are steered toward the same service nodes that already saw the incoming connection (and hence created the connection state).

● Once the firewalls have applied the locally configured security policies, the traffic is then sent back to the fabric and forwarded to the external client through the local L3Out connection. This is the default behavior, unless specific routing policies are configured to ensure the outbound flow is sent through an L3Out connection deployed in a remote site.

Use of PBR for outbound traffic flows (north-south)

Comparing the two previous figures shows that, for the endpoint in site2, the L3Out connections may be used asymmetrically (that is, inbound traffic uses L3Out-Site1, whereas outbound traffic is sent through L3Out-Site2), while the same service node is used symmetrically for both directions of the communication. This is always the case for north-south intra-VRF communication, regardless of whether the EPG or the L3Out EPG is the consumer or the provider of the contract with the associated service graph (with PBR enabled).
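The inbound and outbound sequences described above can be sketched as a small hop tracer. This is only an illustrative model of the intra-VRF EPG-to-L3Out case with ingress enforcement; the function name `trace_north_south`, the site names, and the hop labels are assumptions made for this example.

```python
def trace_north_south(direction: str, endpoint_site: str, l3out_site: str) -> list:
    """Hop list for an intra-VRF EPG-to-L3Out flow (ingress enforcement).

    Site names and hop labels are hypothetical strings used for illustration.
    """
    border_leaf = f"border-leaf-{l3out_site}"       # never applies the PBR policy
    compute_leaf = f"compute-leaf-{endpoint_site}"  # always applies the PBR policy
    firewall = f"fw-{endpoint_site}"                # firewall local to the compute leaf
    if direction == "inbound":
        return ["external", border_leaf, compute_leaf, firewall, "endpoint"]
    if direction == "outbound":
        return ["endpoint", compute_leaf, firewall, border_leaf, "external"]
    raise ValueError(f"unknown direction: {direction}")


# Endpoint in site2: inbound enters through site1, outbound leaves through site2.
inbound = trace_north_south("inbound", "site2", "site1")
outbound = trace_north_south("outbound", "site2", "site2")
```

In this model, `inbound` and `outbound` use different border leaf nodes, yet both contain `fw-site2`, because the firewall is selected by the compute leaf where the internal endpoint is connected.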

In the scenario where the internal EPG and the L3Out EPG are part of separate VRFs, it instead becomes important to define which EPG is the consumer and which is the provider of the contract, because that determines whether the PBR policy is applied on the compute leaf or on the border leaf. Thus, north-south inter-VRF service insertion with PBR in Multi-Site is supported only when the L3Out EPG is the provider, because that ensures that the PBR policy is always (and only) applied on the compute leaf node (as is the case for the intra-VRF north-south use case described above). This inter-VRF north-south use case is supported with Multi-Site only with Cisco ACI Release 4.2(5), 5.1(1), or later. Table 1 and Table 2 summarize the policy enforcement in the different use cases.

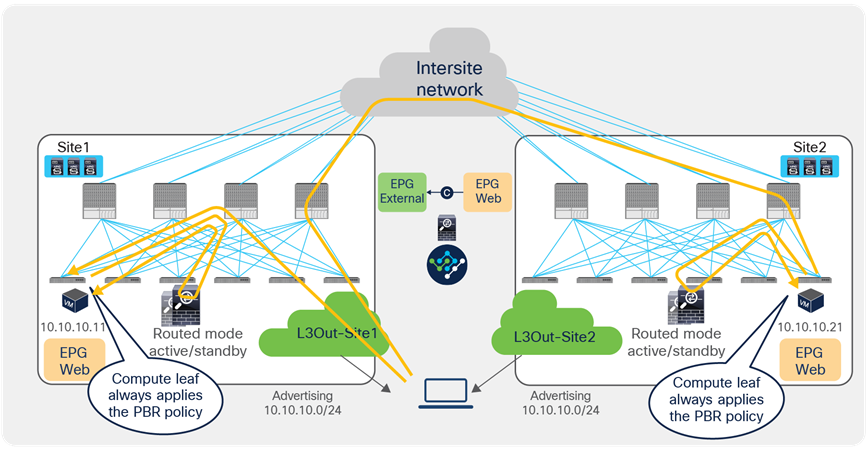

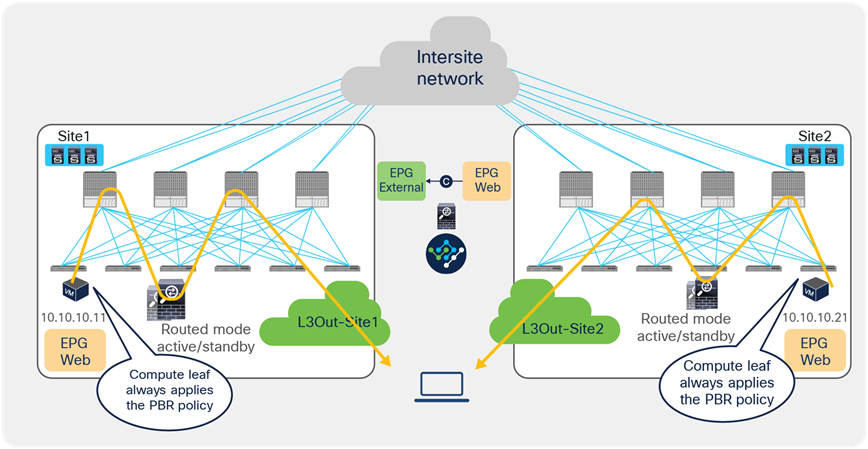

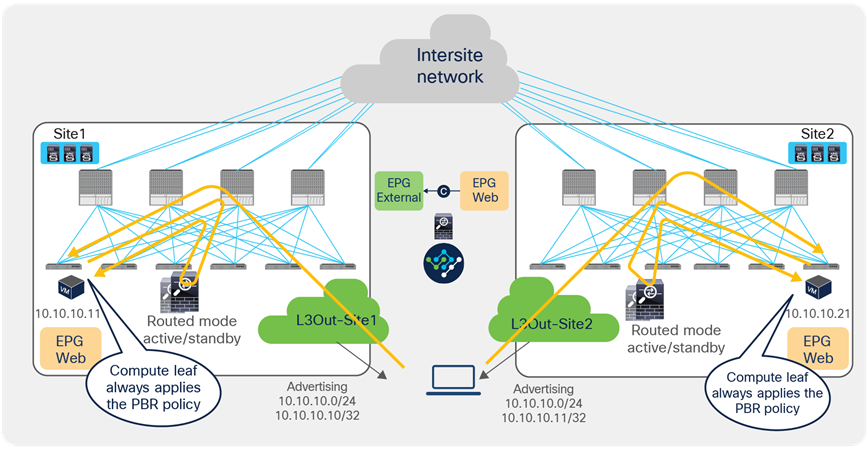

When you have available L3Out connections in both fabrics, the web server subnet stretched across sites is advertised through the border leaf nodes in both sites. As previously discussed, depending on the specific routing metric design, incoming traffic may be steered to the border leaf nodes of one of the sites. This suboptimal inbound traffic can be avoided by leveraging host-route advertisement to optimize the traffic path for ingress communication. With the use of service graph and PBR, such an approach represents only an optimization, but it is not necessary to avoid the establishment of asymmetric traffic across stateful services (as the previous example in Figure 15 and Figure 16 describes). Figure 17 illustrates how to optimize the inbound traffic flows: the destination IP address is the endpoint 10.10.10.11 located in Site1, and, because of the host route advertisement function, traffic originating from an external client can be selectively steered to Site1 and reach the destination leaf where the 10.10.10.11 endpoint is located. The destination leaf in Site1 then selects the local active PBR node, which sends traffic back to the destination. Similar behavior is achieved for traffic destined for the endpoint 10.10.10.21 in Site2.

Use of host route advertisement for ingress traffic optimization (north-south)
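The effect of host-route advertisement on the ingress path can be illustrated with a longest-prefix-match lookup as performed by an external router. The sketch below is a simplified model: the /24 mask for the stretched subnet, the `ROUTES` table, and the `ingress_sites` function are assumptions for this example; only the endpoint addresses 10.10.10.11 and 10.10.10.21 come from the text.

```python
import ipaddress

# Routes as seen by a hypothetical external router: (prefix, advertising site).
# The /24 mask of the stretched subnet is an assumption for this sketch.
ROUTES = [
    (ipaddress.ip_network("10.10.10.0/24"), "site1"),
    (ipaddress.ip_network("10.10.10.0/24"), "site2"),
    (ipaddress.ip_network("10.10.10.11/32"), "site1"),  # host route for the Site1 endpoint
    (ipaddress.ip_network("10.10.10.21/32"), "site2"),  # host route for the Site2 endpoint
]


def ingress_sites(dst: str, routes=ROUTES) -> set:
    """Return the sites that advertise the longest matching prefix for dst."""
    addr = ipaddress.ip_address(dst)
    candidates = [(net, site) for net, site in routes if addr in net]
    if not candidates:
        return set()
    longest = max(net.prefixlen for net, _ in candidates)
    return {site for net, site in candidates if net.prefixlen == longest}
```

With the host routes present, traffic to 10.10.10.11 can only enter through site1 and traffic to 10.10.10.21 only through site2. An address covered only by the subnet route can still enter through either site, depending on the routing metrics.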

Note: In order to keep the configuration simple and to be able to apply a single EPG-to-L3Out contract, the External EPG associated to the L3Out (that is, the L3Out EPG) must be deployed as a “stretched” object associated to each site-specific L3Out. For more information, please refer to the ACI Multi-Site paper below: https://www.cisco.com/c/en/us/solutions/collateral/data-center-virtualization/application-centric-infrastructure/white-paper-c11-739609.html.

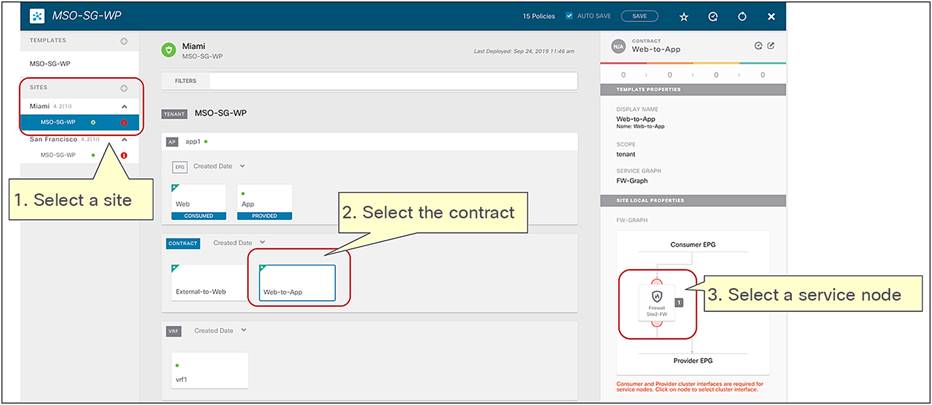

East-west traffic use case (EPG-to-EPG)

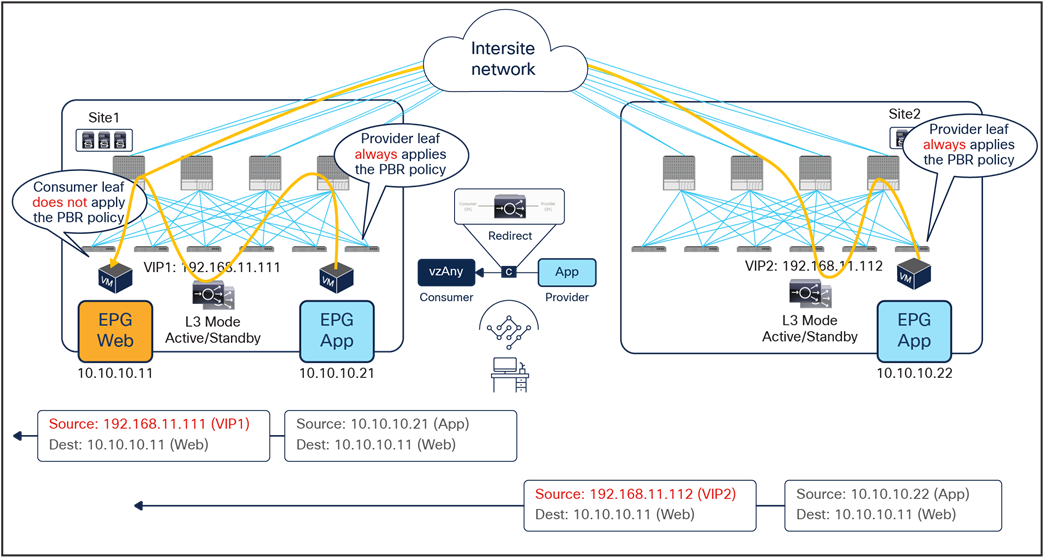

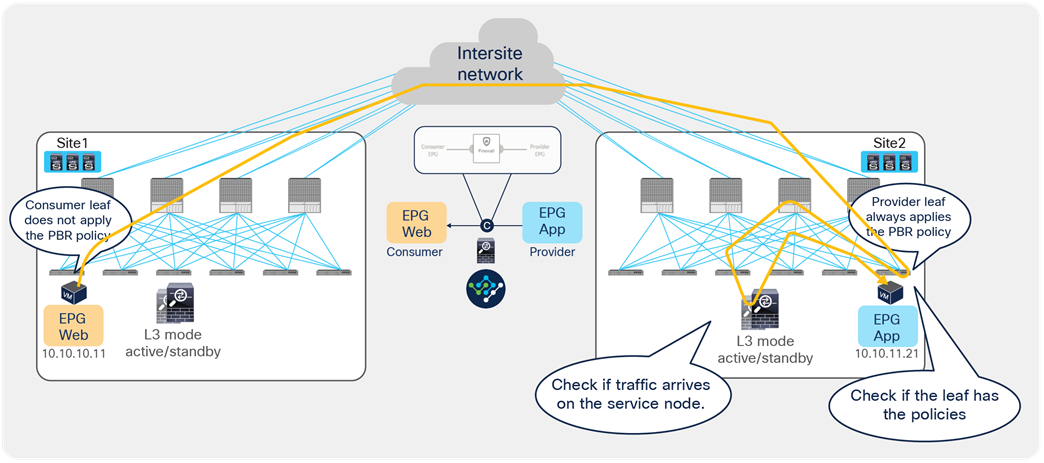

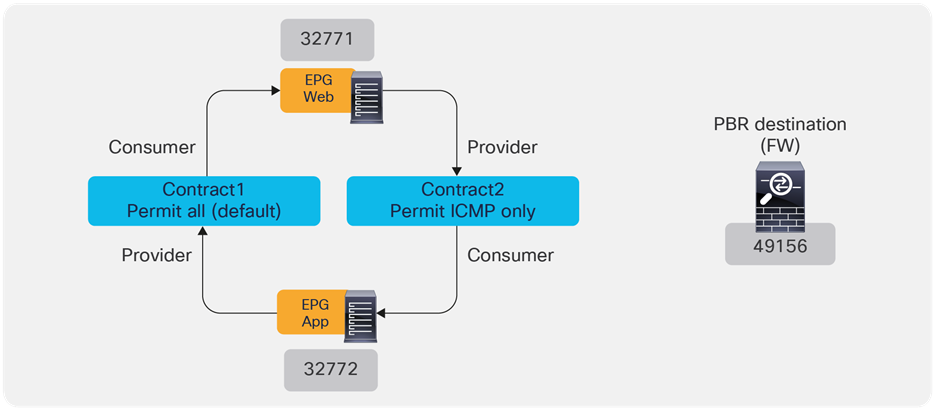

Figure 18 shows a typical Cisco ACI network design for east-west firewall insertion with PBR. This design is similar to that for the north-south firewall use case. The consumer Web EPG and the provider App EPG have a contract with an associated firewall service graph with PBR enabled in both directions.

East-west firewall with PBR design example (intra-VRF)

Note: Though this example is for an intra-VRF contract, an inter-VRF contract for east-west communication is also supported.

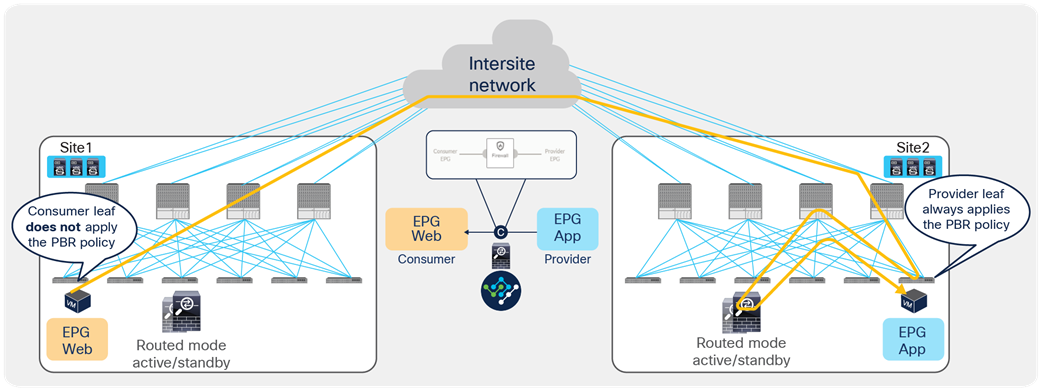

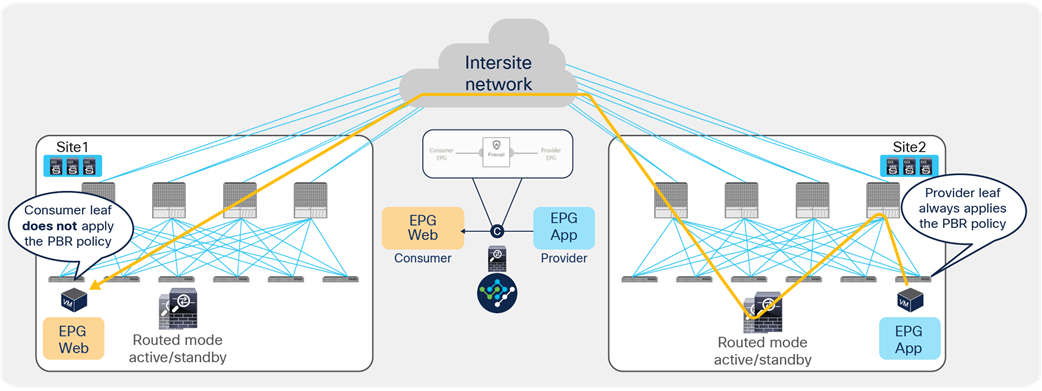

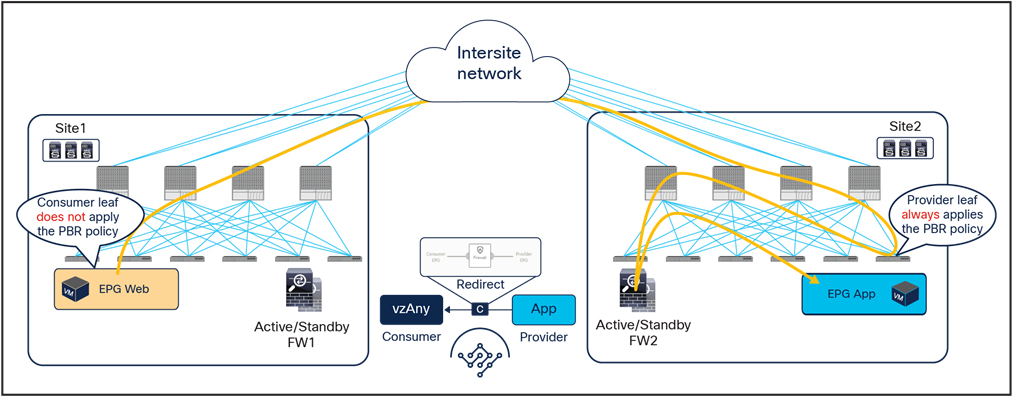

In this case, in order to avoid the creation of an asymmetric path across separate firewall nodes, we can leverage the fact that a contract between two EPGs always has a “consumer” and a “provider” side. It is hence possible to “anchor” the application of the PBR policy on only one side of the contract relationship, to be able to use the same firewall node for both directions of traffic. Starting from Cisco ACI Release 4.0(1), the “anchoring” of the application of the PBR policy is done on the provider leaf node, which is the leaf node where the endpoint providing the contract is locally connected.

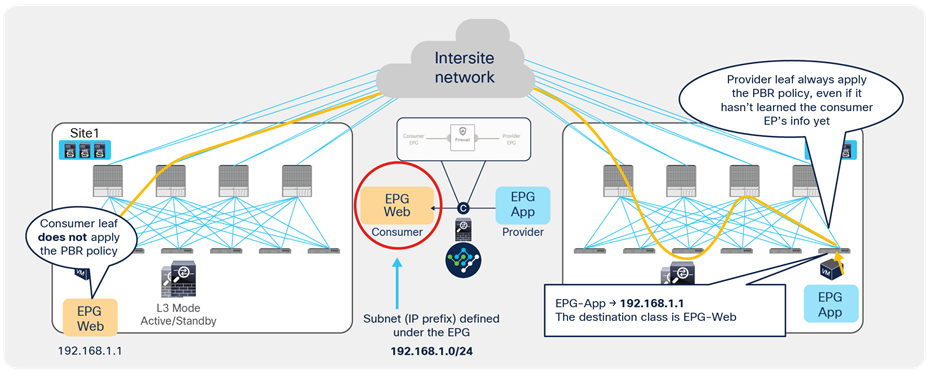

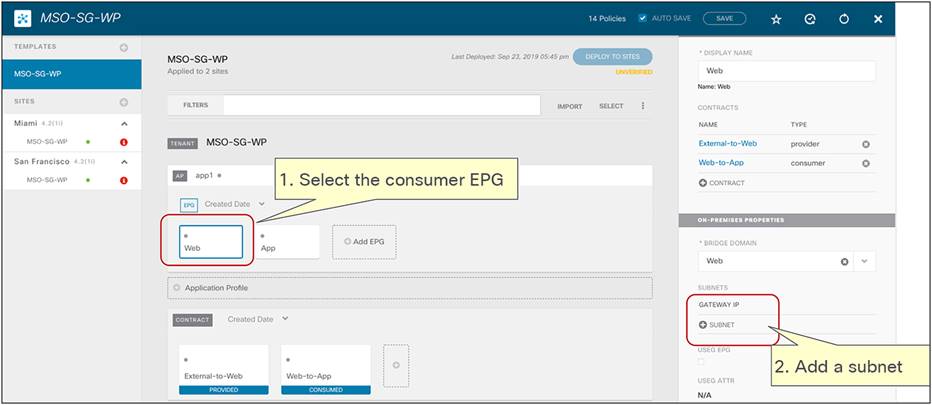

This mandates that the provider leaf node always knows the class ID information for the consumer EPG, even if the specific endpoint information were not yet learned based on data-plane traffic activity (or if, for example, data-plane learning was disabled). Figure 19 highlights how this is achieved by configuring an IP prefix under the consumer EPG matching all the endpoints that are part of that EPG. This configuration could be easily applied in a “network-centric” deployment where a single EPG is associated to a BD (and its subnet), whereas the provisioning of specific /32 prefixes (or /128 for IPv6) would likely be required for “application-centric” scenarios.

Note: Prior to Cisco ACI Release 6.0(3d), /32 for IPv4 or /128 for IPv6 prefixes could not be provisioned under the consumer EPG because of CSCwa08796.

Destination IP prefix‒based classification for consumer EPG

In the example above, the IP prefix 192.168.1.0/24 provisioned under the consumer EPG is statically configured on the provider leaf node, together with the class ID of the consumer EPG. This ensures that the PBR policy can always be applied on the provider leaf to redirect the traffic to the local firewall, even if the provider endpoint was the one initiating a traffic flow toward the consumer endpoint.

The examples in Figure 20 and Figure 21 show the specific behavior implemented starting from Cisco ACI Release 4.0(1), where the PBR policy is always applied on the provider leaf node.

● When the consumer Web endpoint sends traffic toward the App endpoints, the consumer leaf just forwards the traffic toward the provider leaf where the App endpoint has been discovered. The consumer leaf must not apply the PBR policy even if the consumer leaf can resolve the destination class ID of the provider EPG. In the case of an EPG-to-EPG contract with PBR, the zoning-rule for consumer-to-provider traffic has a special flag called “redirect override,” based on which the leaf avoids applying the policy unless the destination endpoint is locally learned. Thus, the use of the “redirect override” zoning-rule ensures that the provider leaf always applies the PBR policy even for the consumer-to-provider traffic.

● The traffic is received on the provider leaf node, where the PBR policy is applied and the traffic is redirected through the local active firewall node.

● Once the firewall has applied the locally configured security policies, the traffic is sent back toward the fabric and forwarded to the App endpoint.

Use of PBR for consumer-to-provider traffic flows (east-west)

When the App endpoint replies back:

● The PBR policy is applied on the same provider leaf (otherwise the traffic could be steered to a different firewall node than the one used for the incoming direction). The traffic is therefore steered through the same firewall node that built the connection state by receiving the incoming traffic.

● Once the firewall has applied the security policy, the traffic is sent back toward the remote site and forwarded to the Web endpoint.

● The consumer leaf does not apply the policy, because this was already done on the provider leaf (the policy-applied bit in the VXLAN header is set to 1).

Use of PBR for provider-to-consumer traffic flows (east-west)
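The per-leaf decision described for the two directions of the EPG-to-EPG flow can be sketched as a single function. This is a deliberately simplified model, not the actual zoning-rule implementation; the function name `applies_pbr` and its parameters are hypothetical.

```python
def applies_pbr(direction: str, at_leaf: str, dst_local: bool = False, pa_bit: int = 0) -> bool:
    """Simplified decision: does this leaf apply the EPG-to-EPG PBR policy?

    direction: "consumer-to-provider" or "provider-to-consumer"
    at_leaf:   "consumer" or "provider" (role of the EPG attached to this leaf)
    dst_local: True if the destination endpoint is learned locally on this leaf
    pa_bit:    policy-applied bit carried by the received packet (0 or 1)
    """
    if pa_bit == 1:
        return False  # policy already applied by an upstream leaf
    if direction == "consumer-to-provider":
        if at_leaf == "consumer":
            return dst_local  # "redirect override": skip unless destination is local
        return True           # provider leaf redirects to its local firewall
    if direction == "provider-to-consumer":
        # The provider leaf knows the consumer class ID from the configured prefix.
        return at_leaf == "provider"
    raise ValueError(f"unknown direction: {direction}")
```

In both directions of an intersite flow, the only leaf for which this function returns `True` is the provider leaf, which is why the same firewall node sees both legs of the connection.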

If the source endpoint and the destination endpoints are located in the same site, the traffic is always redirected to a local firewall node, and there is no traffic hair-pinning across sites (Figure 22).

East-west traffic within a site

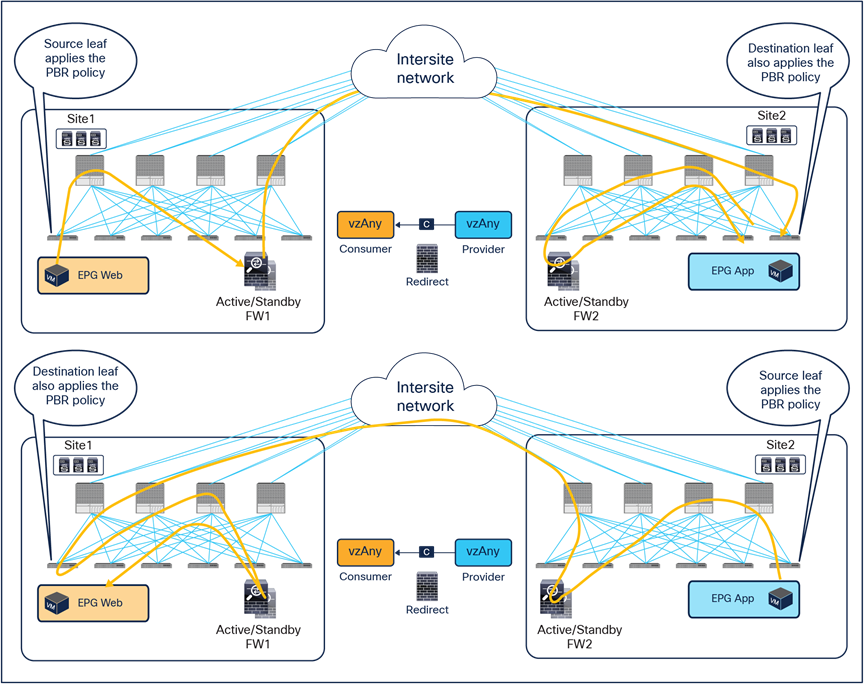

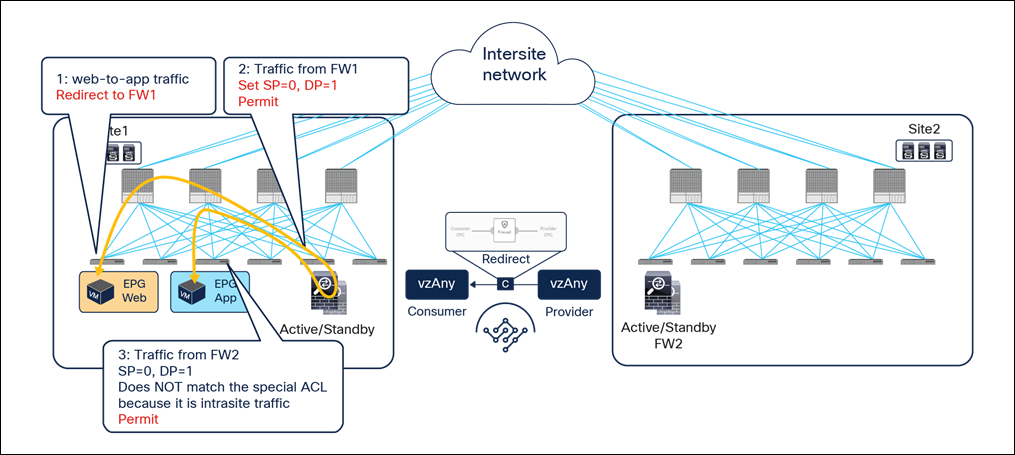

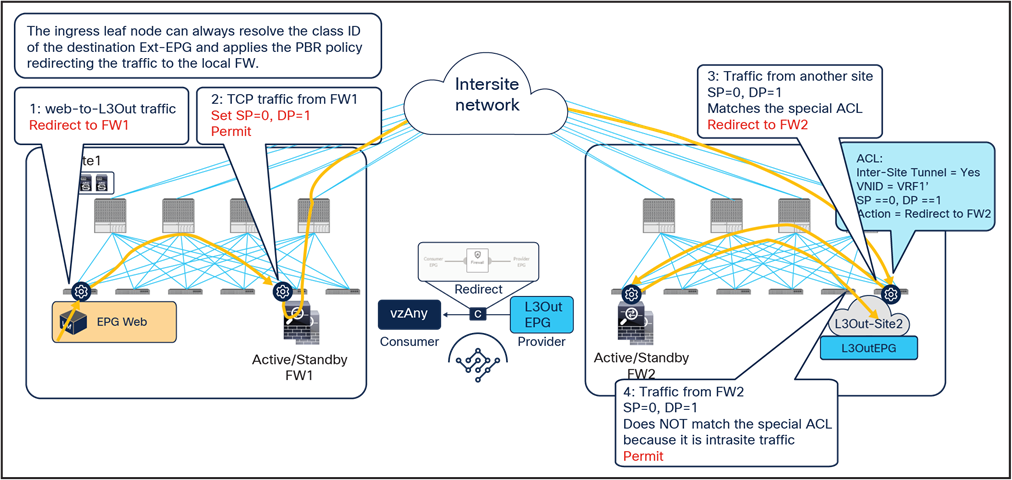

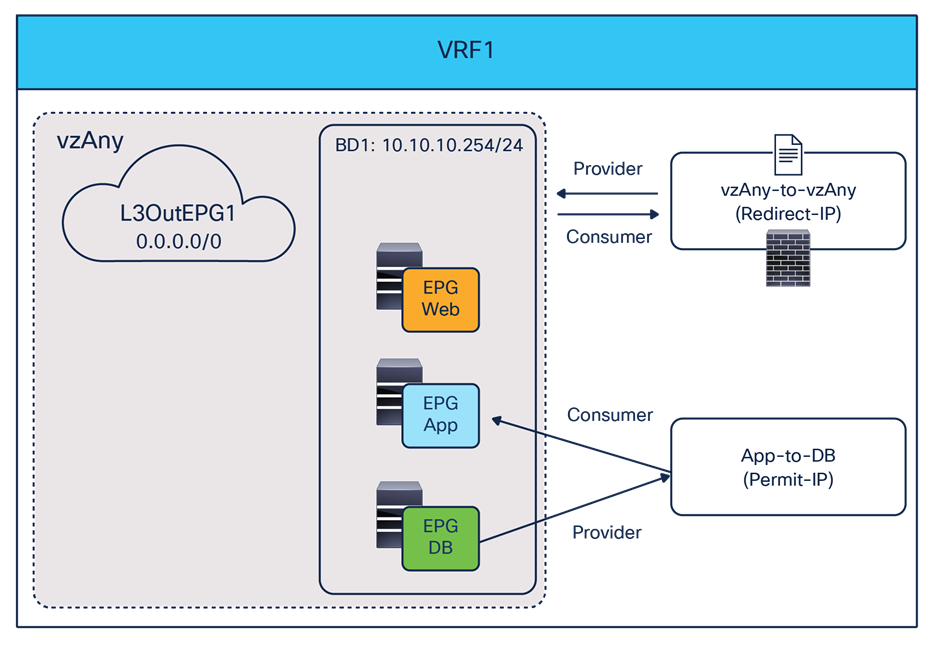

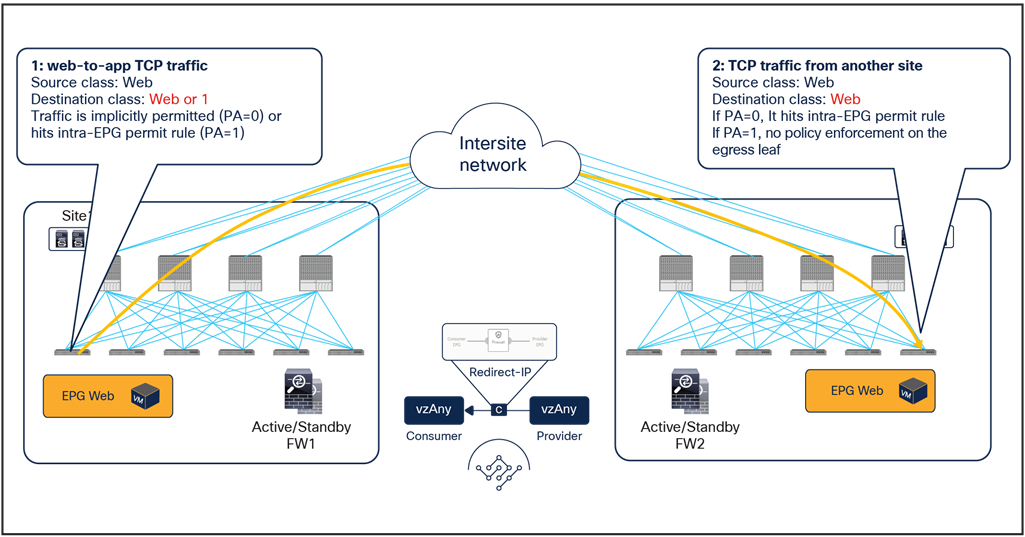

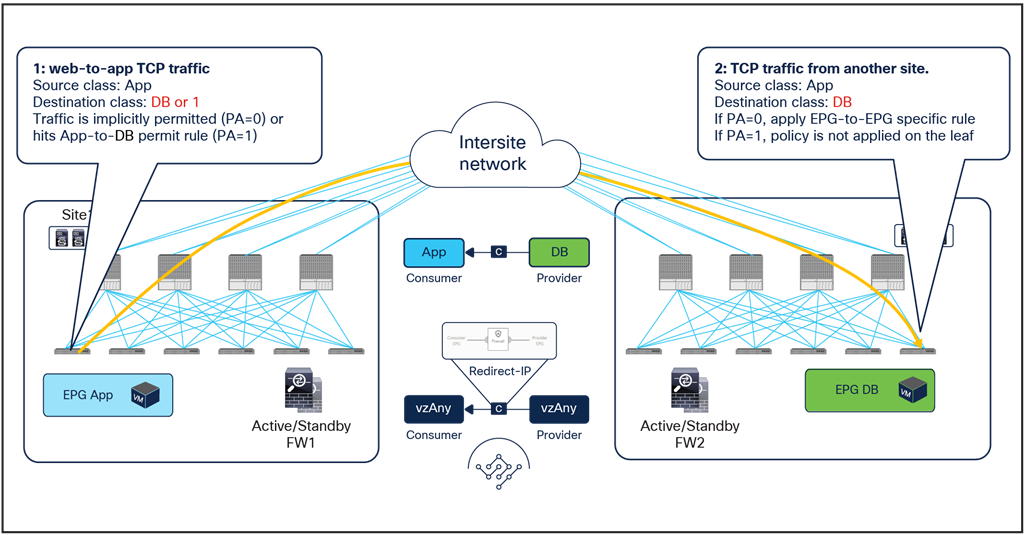

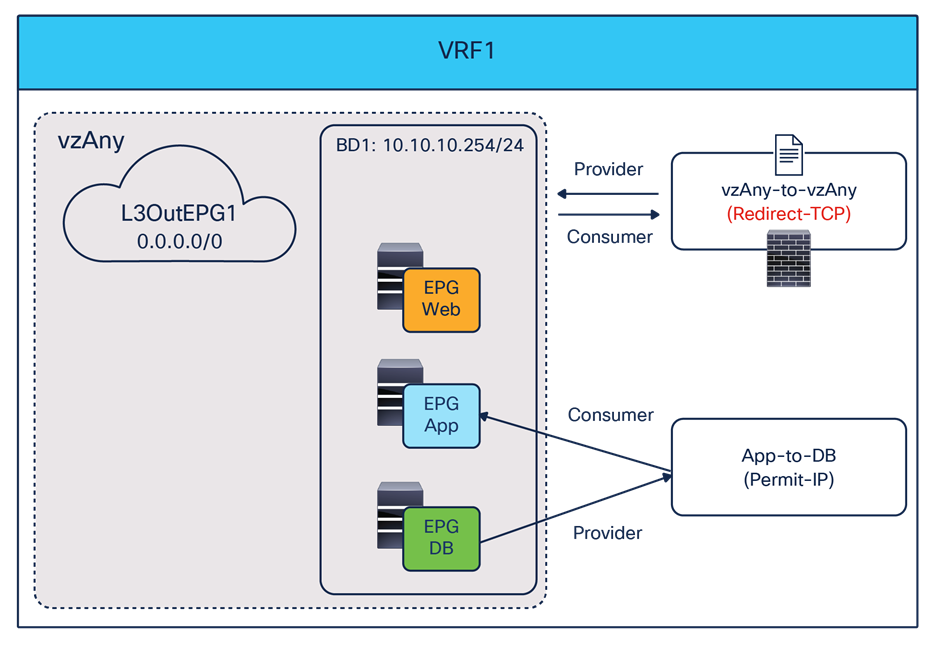

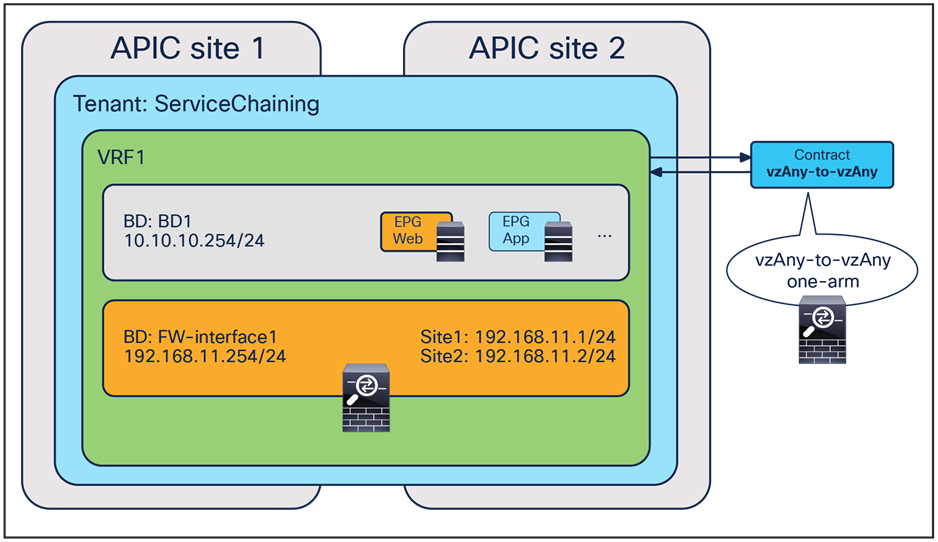

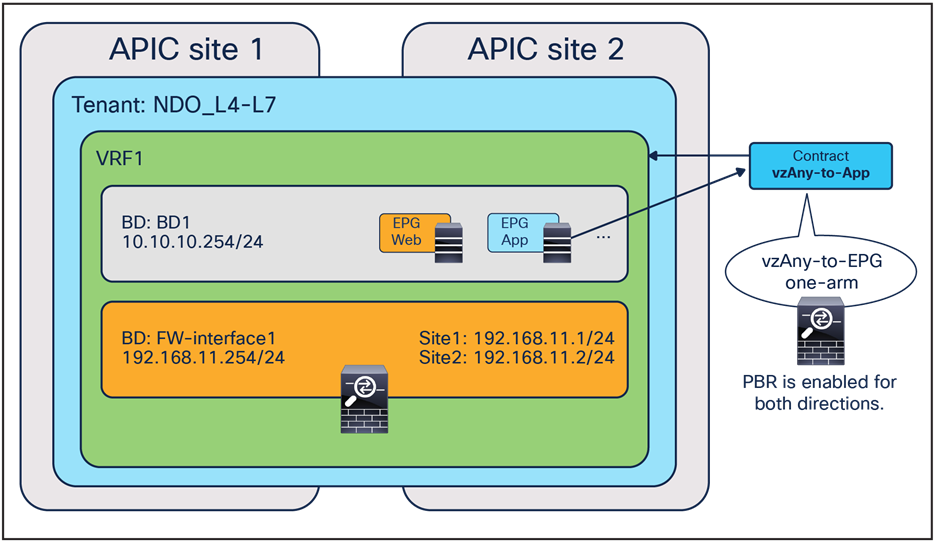

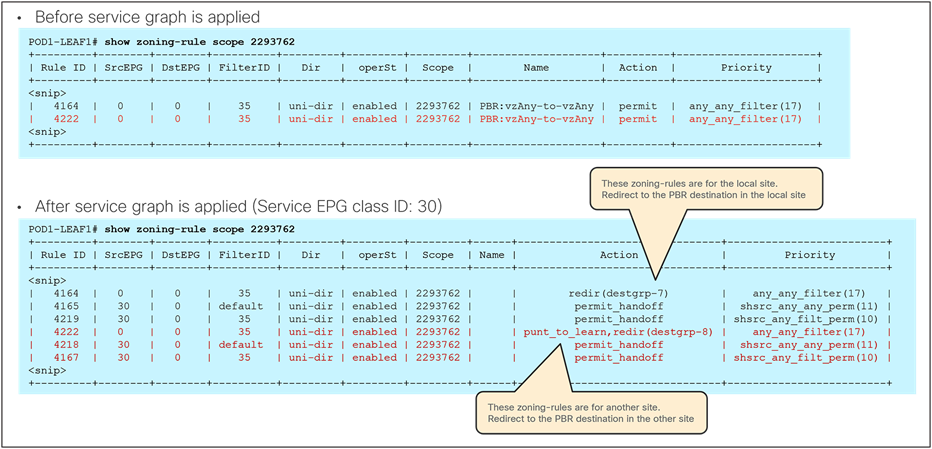

East-west and north-south traffic use case (vzAny-to-vzAny)

The deployment of this service graph with PBR use case requires the use of Cisco ACI Release 6.0(4c) or later and Cisco Nexus Dashboard Orchestrator Release 4.2(3e) or later. Figure 23 shows a sample Cisco ACI network design with vzAny-to-vzAny PBR. vzAny is both the consumer and the provider of a contract with a firewall service graph attached to it with PBR enabled in both directions.

Although this example shows just three EPGs (L3Out EPG, Web EPG, and App EPG), the VRF could have more EPGs in the same or different BDs, and the firewall could be inserted for all inter-EPG communication because of the vzAny-to-vzAny contract with PBR.

North-south and east-west firewall with PBR design example (vzAny-to-vzAny)

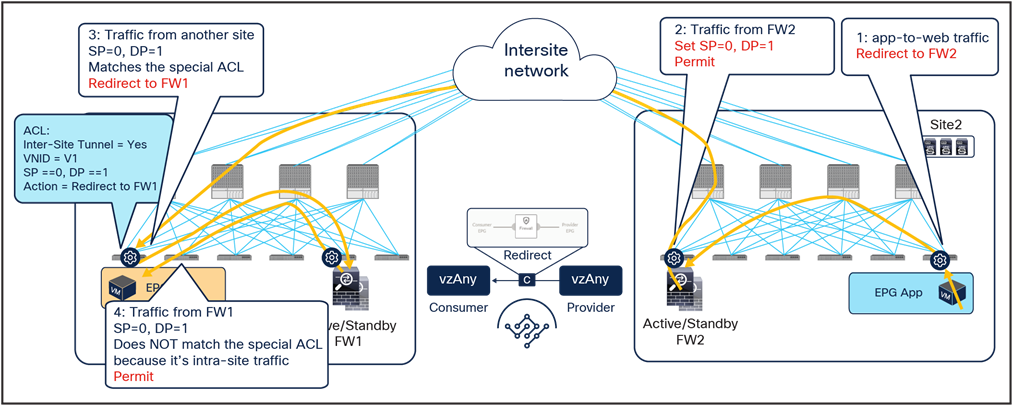

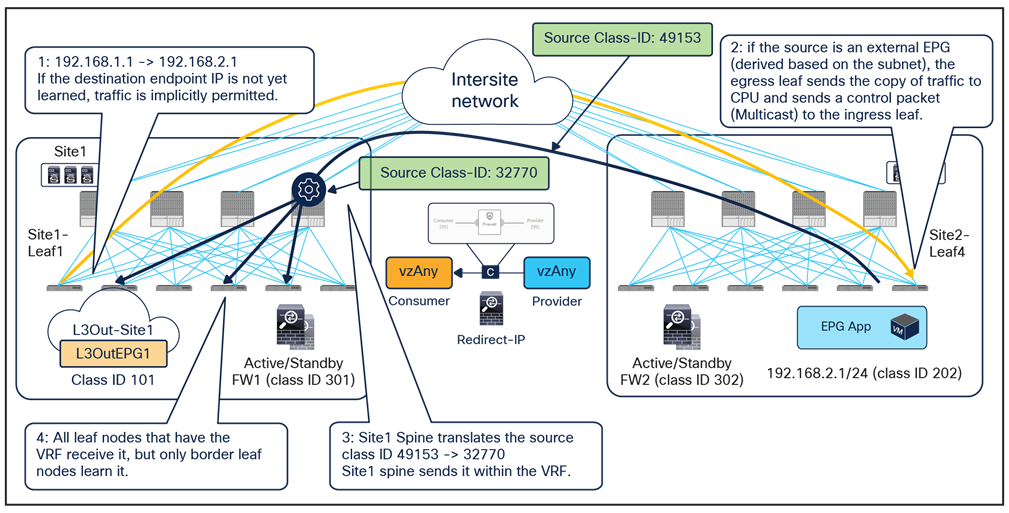

When considering the use of vzAny-to-vzAny PBR for redirecting intersite east-west traffic between internal EPGs to the firewall, the difficulty of avoiding an asymmetric traffic path through the independent firewall services deployed across fabrics becomes immediately clear. Unlike the previously discussed EPG-to-EPG PBR use case, a vzAny-to-vzAny PBR contract no longer makes it possible to distinguish the consumer from the provider of the contract.

A different approach is therefore required in this case, and the chosen solution has been to redirect all east-west traffic flows to both firewall services deployed in the source and in the destination fabric (Figure 24).

Use of ACI PBR to keep intersite traffic symmetric for the vzAny-to-vzAny PBR use case

The behavior shown above can be achieved if the PBR policy is applied on both the source and the destination leaf nodes for both directions of the same traffic flow. For this to be possible, those leaf nodes must always know the class ID of the destination endpoint. Because this cannot always be guaranteed under normal circumstances, additional functionalities have been introduced into ACI Multi-Site to achieve it.

Figure 25 illustrates an example of the ideal behavior with a vzAny-to-vzAny PBR contract where intersite communication between an endpoint in Web EPG and an endpoint in App EPG is steered through the firewall services in both the source and the destination sites.

● When the Web endpoint sends traffic toward the App endpoint, the ingress leaf in site1 redirects the traffic to the local active firewall node. As mentioned, for this to be possible we are assuming here that the source leaf node has all the required information (that is, the source and destination class IDs) to enforce the PBR policy.

● Once the firewall in the source site has applied the locally configured security policies, the traffic is sent to the destination leaf. The service leaf in site1 sets special flags[2] in the VXLAN header, to indicate that the local firewall has been inserted and has applied its security policy.

● When the traffic arrives at the destination leaf in site2, the special flags setting and the specific source VTEP address indicate to the leaf that the firewall in the remote source site has already seen the traffic. The leaf can therefore just apply the PBR policy to redirect the traffic through the local active firewall node.

● After the local firewall has applied its security policy, the traffic is sent to the destination leaf node again. The service leaf in site2 also sets the special flags in the VXLAN header (as was done by the service leaf nodes in the source site) to indicate that the local firewall has seen the traffic. However, this information is now ignored by the destination leaf because this is intrasite VXLAN traffic (that is, it originated from a local service leaf node), so the destination leaf simply forwards it to the destination endpoint.

Use of PBR for Web-to-App traffic flows (vzAny-to-vzAny)

The insertion of the firewall services in both sites must also be done for the return traffic flow (Figure 26).

● When the App endpoint sends traffic toward the Web endpoint, the ingress leaf in site2 redirects traffic to the local active firewall node. Again, we are assuming that the ingress leaf knows the destination class ID information to be able to locally enforce the PBR policy.

● Once the firewall has applied its locally configured security policy, the traffic is sent to the destination leaf. The service leaf in site2 encapsulates the traffic with special flags properly set in the VXLAN header.

● When the traffic arrives at the leaf in site1, it is again redirected through the local active firewall node because of the special flags setting in the traffic received from the remote site.

● After the firewall has applied the locally configured security policies, the traffic is sent back to the destination leaf. The service leaf in site1 also sets the special flags in the VXLAN header, but the destination leaf does not redirect traffic again because it is intrasite traffic.

Use of PBR for App-to-Web traffic flows (vzAny-to-vzAny)
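The double redirection described for the two directions can be modeled with a small packet simulation. This is a sketch under the assumption that the ingress leaf already knows the destination class ID; the `Packet` class, the `fw_flags` and `sender_site` fields (which stand in for the special VXLAN flags and the source VTEP information), and the function names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Packet:
    src_site: str
    dst_site: str
    fw_flags: bool = False   # stands in for the special VXLAN flags
    sender_site: str = ""    # site of the leaf that last encapsulated the packet
    firewalls: list = field(default_factory=list)


def redirect_to_local_firewall(pkt: Packet, site: str) -> None:
    """Firewall in `site` inspects the packet; the service leaf then sets the flags."""
    pkt.firewalls.append(f"fw-{site}")
    pkt.fw_flags = True
    pkt.sender_site = site


def forward(pkt: Packet) -> list:
    # Ingress leaf: assumed to know the destination class ID, so it redirects.
    redirect_to_local_firewall(pkt, pkt.src_site)
    # Destination leaf: redirect again only if the flagged packet came from another site.
    if pkt.fw_flags and pkt.sender_site != pkt.dst_site:
        redirect_to_local_firewall(pkt, pkt.dst_site)
    # Traffic now arrives from a local service leaf (intrasite): just deliver it.
    return pkt.firewalls


web_to_app = forward(Packet("site1", "site2"))
app_to_web = forward(Packet("site2", "site1"))
```

In this model, both directions traverse the same two firewall services (in opposite order), while a flow whose endpoints are in the same site traverses only the local firewall.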

For both directions of the flow, the redirection on the ingress leaf node is predicated on the leaf’s knowledge of the class ID of the destination endpoint. If, for whatever reason, that is not the case, the ingress leaf cannot apply the policy, and a different mechanism is required to ensure redirection of traffic to the firewall services in both the source and the destination site.

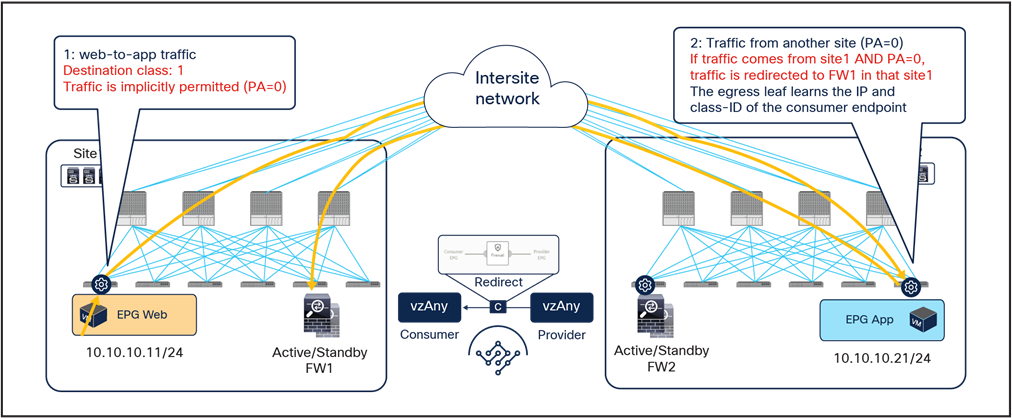

As shown in Figure 27, if the ingress leaf cannot apply the PBR policy, the traffic is implicitly permitted, and the Policy-Applied (PA) bit in the VXLAN header is not set (PA = 0). The traffic is forwarded across sites and received by the destination leaf in the remote site. Because PA = 0 indicates that the policy was not applied by the ingress leaf (and, consequently, that the traffic was not sent to the firewall in that site), the destination leaf redirects the flow back to the active firewall node in the source site. After the firewall in site1 has applied its locally configured policy, the traffic is sent back to the destination leaf. The remaining flow (Figure 28) is then the same as already shown in Figure 25 (the traffic is redirected to the local firewall in site2 before reaching the destination endpoint).

Hair-pinning of traffic when the consumer leaf cannot apply the PBR policy

Traffic forwarded back to the destination site

The traffic hair-pinning shown above, while not creating asymmetry through the different firewall nodes, represents suboptimal data-path behavior. To eliminate it, an additional functionality named “conversational learning” has been implemented in the Cisco ACI fabric for this vzAny-to-vzAny PBR use case.

Figure 29 shows how the reception of traffic with the PA bit set to 0 on the destination leaf triggers (in parallel to the data-plane traffic redirection shown in Figure 27) the origination of a control packet containing the destination endpoint IP address and class ID. This control packet is sent to the source leaf in site1, which installs the destination endpoint information locally.

After the ingress leaf has learned the destination endpoint information, traffic is forwarded optimally from the source to the destination site, as previously shown in Figure 24.
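The interplay between hair-pinning and conversational learning can be sketched as follows. This is only an illustrative model of the behavior described above: the `send_packet` function, the event labels, and the IP address and class ID values are all hypothetical.

```python
def send_packet(src_leaf_cache: dict, dst_leaf_endpoints: dict, dst_ip: str) -> list:
    """Return the events for one intersite packet and update the source leaf cache.

    src_leaf_cache:     destination IP -> class ID known on the source leaf (site1)
    dst_leaf_endpoints: destination IP -> class ID learned on the destination leaf (site2)
    """
    if dst_ip in src_leaf_cache:
        # Source leaf can apply the PBR policy itself.
        return ["redirect at source leaf", "fw-site1", "fw-site2", "delivered"]
    # Source leaf cannot apply the policy: traffic leaves with PA = 0.
    events = ["forwarded with PA=0", "hairpin to source site",
              "fw-site1", "fw-site2", "delivered"]
    # In parallel, the destination leaf sends a control packet to the source leaf.
    src_leaf_cache[dst_ip] = dst_leaf_endpoints[dst_ip]
    return events


cache = {}
app_endpoints = {"10.10.20.21": 16388}  # hypothetical IP address and class ID
first = send_packet(cache, app_endpoints, "10.10.20.21")
second = send_packet(cache, app_endpoints, "10.10.20.21")
```

In this sketch, only the first packet is hair-pinned. Once the source leaf has installed the destination endpoint information, later packets are redirected directly at the source leaf, and both cases traverse the firewalls in site1 and site2.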

It is worth noticing that if the east-west flow is between two endpoints connected to the same fabric, the traffic is redirected by either the source or the destination leaf. The endpoint IP learning status does not matter, because the local PBR destination is always used regardless of which leaf applies the PBR policy.

Intrasite traffic (vzAny-to-vzAny)

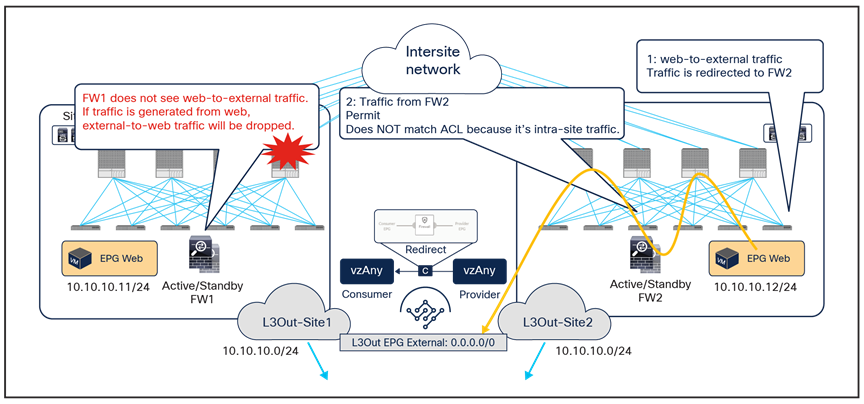

Applying the policy on the ingress leaf node, which is a requirement for east-west communication in the vzAny-to-vzAny PBR use case, may represent a problem for the redirection of north-south traffic flows without inbound traffic path optimization. To better understand this issue, let’s consider the scenario depicted in Figure 31.

Inbound flow redirected to both firewall services

Inbound traffic is received in site1 even if the destination is the Web EPG endpoint connected to site2. The border leaf node in site1 applies the PBR policy (because it is the ingress leaf), and traffic is redirected first to the firewall in site1 and then to the firewall in site2, as expected for the vzAny-to-vzAny use case.

Figure 32 shows instead the return traffic flow, from the Web EPG endpoint in site2 to the external destination.

Outbound flow redirected only to the firewall service in site2

The outbound flow can only be redirected to the firewall service in site2. This asymmetric behavior causes traffic to be dropped when the north-south communication is initiated by the Web endpoint.

The solution to this problem, highlighted in Figure 33, consists of enabling host-based routing advertisement so that inbound traffic paths are optimized. This means that, for north-south traffic with the vzAny-to-vzAny PBR use case, redirection should happen only to the firewall located in the site where the internal endpoint is connected.
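The asymmetry problem and its resolution can be checked with a short model of the vzAny-to-vzAny redirection rule (one firewall for intrasite traffic, both firewalls for intersite traffic). The function names and site labels below are assumptions made for this sketch.

```python
def firewalls_on_path(src_site: str, dst_site: str) -> list:
    """vzAny-to-vzAny: one firewall for intrasite traffic, both for intersite traffic."""
    if src_site == dst_site:
        return [f"fw-{src_site}"]
    return [f"fw-{src_site}", f"fw-{dst_site}"]


def north_south_is_symmetric(endpoint_site: str, inbound_l3out_site: str,
                             outbound_l3out_site: str) -> bool:
    """Compare the firewall services seen by the inbound and outbound flows."""
    inbound = firewalls_on_path(inbound_l3out_site, endpoint_site)
    outbound = firewalls_on_path(endpoint_site, outbound_l3out_site)
    return set(inbound) == set(outbound)


# Endpoint in site2, inbound through site1, outbound through the local L3Out in site2.
without_host_routes = north_south_is_symmetric("site2", "site1", "site2")
# With host routes, inbound traffic also enters through site2.
with_host_routes = north_south_is_symmetric("site2", "site2", "site2")
```

Without inbound path optimization the two directions see different sets of firewalls, whereas with host routes both directions use only the firewall in the site where the endpoint is connected.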

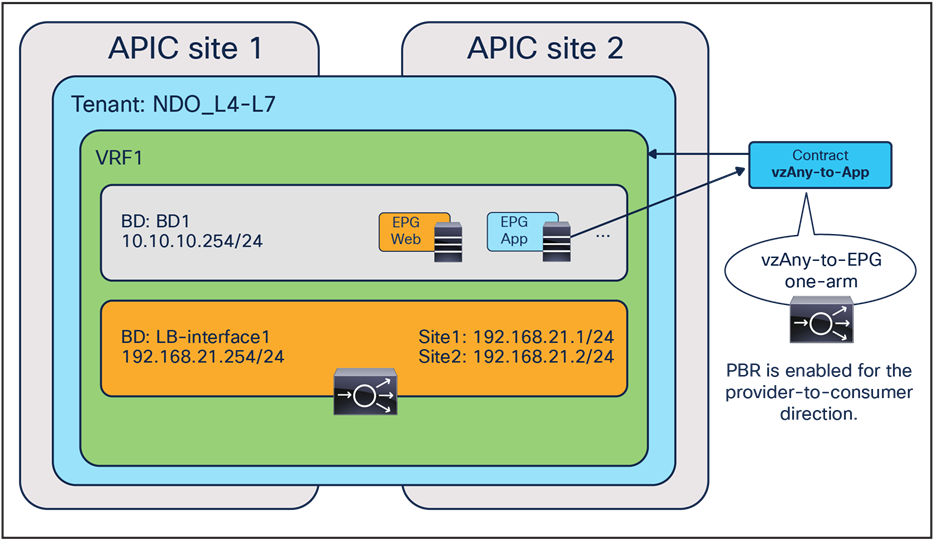

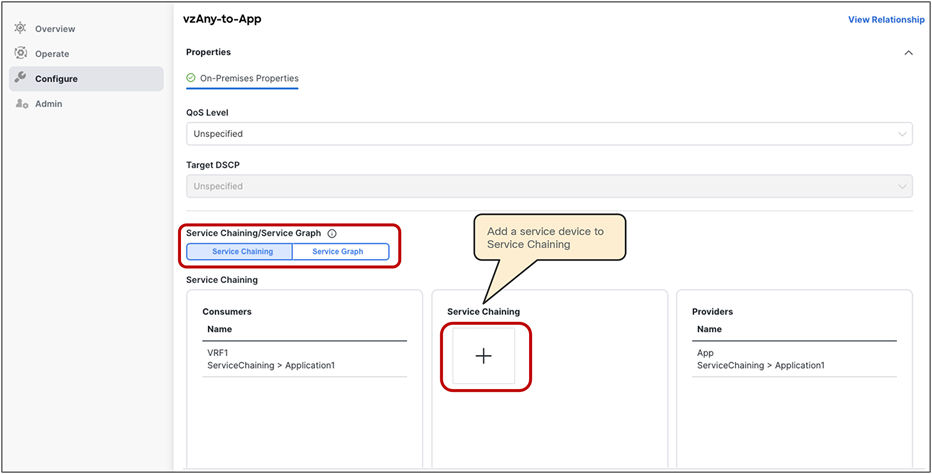

East-west and north-south traffic use case (vzAny-to-EPG)

As in the case for the previous vzAny-to-vzAny scenario, this service graph PBR option also requires Cisco ACI Release 6.0(4c) or later and Cisco Nexus Dashboard Orchestrator Release 4.2(3e) or later. Figure 34 shows a sample Cisco ACI network design for east-west and north-south firewall insertion with vzAny-to-EPG PBR. App EPG and vzAny have a contract with a firewall service graph attached to it, with PBR enabled in both directions.

Although the figure below shows just three EPGs (L3Out EPG, Web EPG, and App EPG), the VRF could have more EPGs in the same or in different BDs, and the firewall is inserted for the communication between all the EPGs in the VRF and the App EPG, as a result of the vzAny-to-App contract with PBR.

East-west firewall with PBR design example

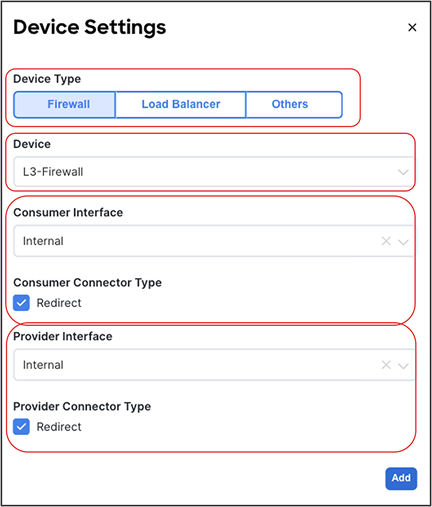

Figure 35 illustrates an example of a service-graph PBR deployment for steering to the firewall the intersite communications between an endpoint in Web EPG and an endpoint in App EPG. In this case, in order to avoid the creation of an asymmetric path across separate firewall nodes, the policy must be applied on the provider leaf node for both directions of the same flow, similarly to the east-west traffic use case with EPG-to-EPG PBR. However, for this use case this requirement can be satisfied without provisioning the IP prefix under the consumer EPGs.

● When the Web endpoint (representing a consumer EPG that is part of vzAny) sends traffic toward an App endpoint, the consumer leaf just forwards the traffic toward the provider leaf where the App endpoint has been discovered. As previously mentioned for the EPG-to-EPG PBR use case, the consumer leaf is programmed not to apply the PBR policy by means of the “redirect override” flag.

● The PBR policy kicks in on the provider leaf, and the traffic gets redirected through the local active firewall node.

● Once the firewall has applied its locally configured security policy, the traffic is sent back toward the fabric and forwarded to the App endpoint.

Use of PBR for consumer-to-provider traffic flows (vzAny-to-EPG)

The same firewall is inserted for the return traffic flow (Figure 36).

● The PBR policy is applied on the provider leaf, and the traffic is steered through the same firewall node that built the connection state by receiving the incoming traffic. This is under the assumption that the provider leaf knows the class ID for the consumer endpoint. If that were not the case, the traffic would be sent directly to the consumer leaf in site1, which would redirect the flow to the remote firewall in site2 and generate the control-plane packet required for conversational learning, similar to the example shown in Figure 29 (the same behavior already discussed for the previous vzAny-to-vzAny PBR use case).

● Once the firewall has applied its local security policy, the traffic is sent back toward the remote site and forwarded to the Web endpoint.

● The consumer leaf does not apply the policy, because this was already done on the provider leaf.

Use of PBR for provider-to-consumer traffic flows (vzAny-to-EPG)

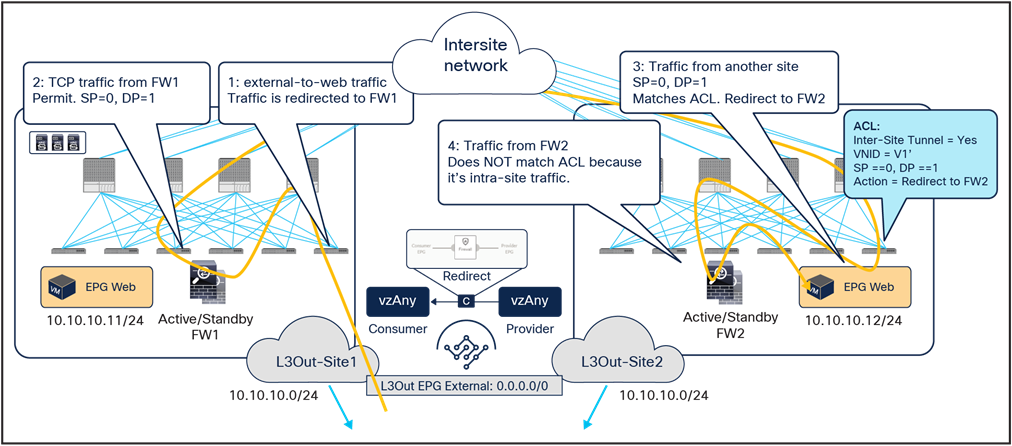

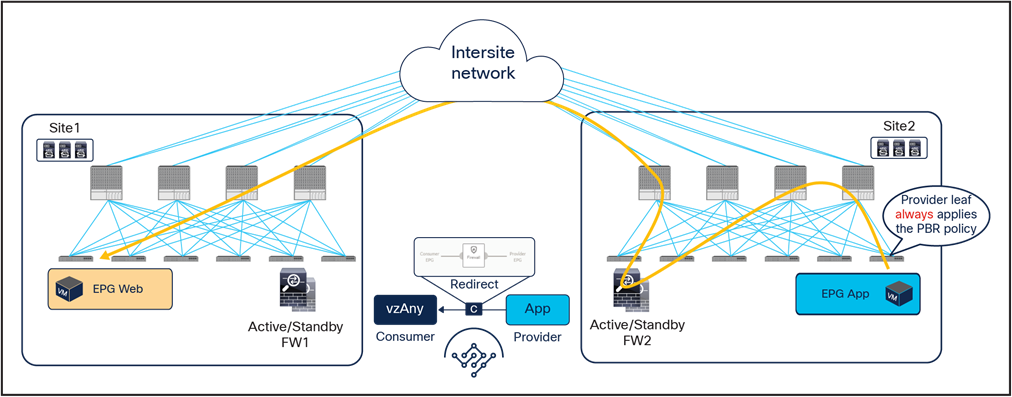

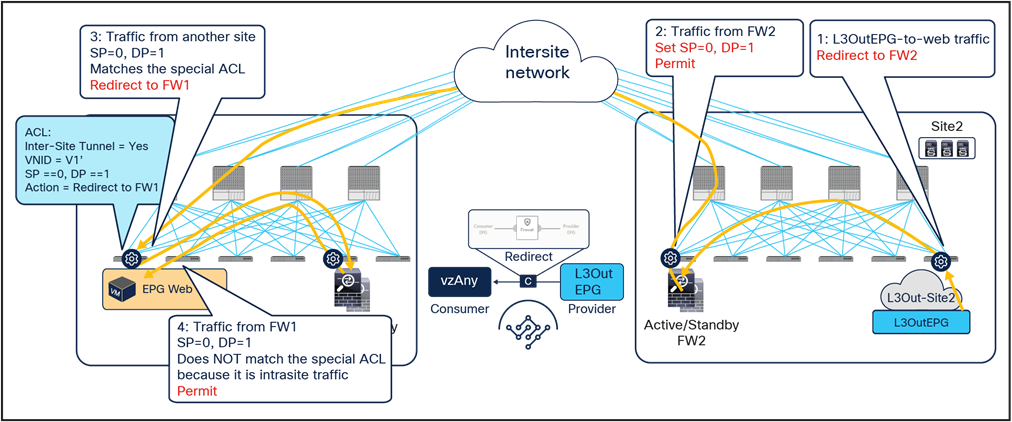

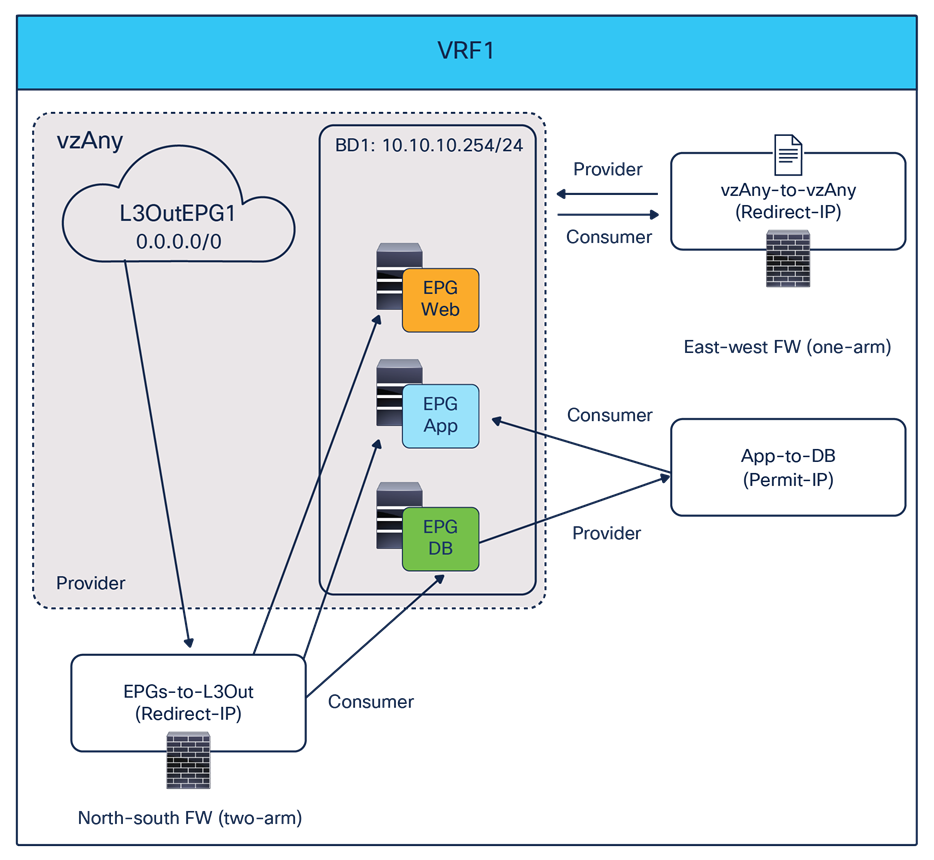

North-south traffic use case (vzAny-to-L3Out)

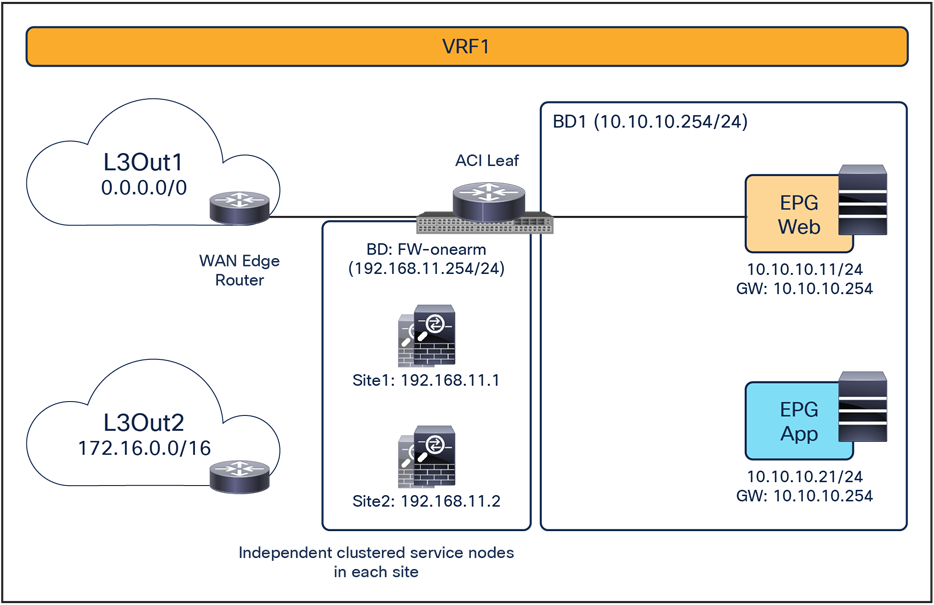

This service-graph option also requires Cisco ACI Release 6.0(4c) or later and Cisco Nexus Dashboard Orchestrator Release 4.2(3e) or later. Figure 37 shows a sample Cisco ACI network design for north-south firewall insertion with vzAny-to-L3Out PBR. L3Out1 EPG and vzAny have a contract with a firewall service graph attached to it, with PBR enabled in both directions.

Note: If the VRF has another L3Out EPG (L3Out2 EPG in this example), that L3Out EPG is also part of vzAny, thus a vzAny-to-L3Out PBR contract can also be used for L3Out-to-L3Out firewall insertion.

North-south firewall with PBR design example (vzAny-to-L3Out)

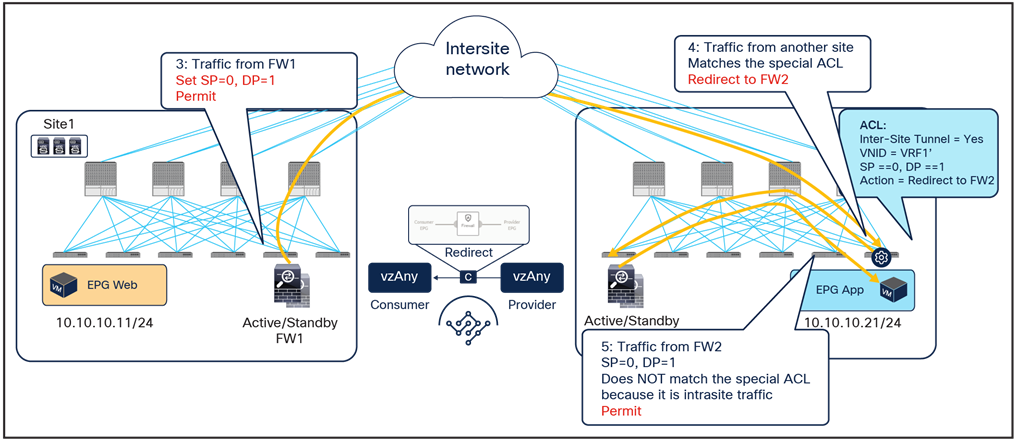

Figure 38 illustrates an example of a service-graph PBR deployment for steering to the firewall the intersite communication between an endpoint in Web EPG and an external endpoint in the L3Out EPG. In this case, in order to avoid the creation of asymmetric firewall insertion, the traffic is redirected to the firewall services in both source and destination sites, which is similar to the behavior described for the vzAny-to-vzAny PBR use case.

● When a Web endpoint sends traffic toward an external endpoint in the L3Out EPG, the ingress leaf in site1 redirects traffic through the local active firewall node. Because an L3Out EPG classification is based on the IP prefix, it should always be possible for the ingress leaf to resolve the destination L3Out EPG class ID.

● Once the firewall has applied its locally configured security policy, the traffic is sent to the destination leaf. The service leaf in site1 permits traffic with special flags in the VXLAN header, which indicates that the firewall was inserted. Please refer to the footnote on page 29 for more information on the use of those flags.

● When the traffic arrives at the destination leaf in site2, it is again redirected through the local active firewall node because the special flags are set and the traffic is received from another site.

● After the firewall has applied its locally configured security policy, the traffic is sent to the destination leaf. The service leaf in site2 also sets the special flags in the VXLAN header, but the destination leaf does not redirect traffic again because the traffic is intrasite.

Use of PBR for consumer-to-provider traffic flows (vzAny-to-L3Out)

The same firewall services in both sites must be inserted for the return flow (Figure 39).

● When the external endpoint sends traffic toward the Web endpoint, the ingress leaf in site2 redirects traffic through the local active firewall node. This is under the assumption that the provider leaf knows the class ID information for the consumer endpoint. If that were not the case, the traffic would be sent directly to the consumer leaf in site1, which would redirect the flow to the remote firewall in site2 and generate the control-plane packet required for conversational learning, similar to the example shown in Figure 29 (the same behavior was already discussed for the previous vzAny-to-vzAny PBR use case).

● Once the firewall has applied its locally configured security policy, the traffic is sent to the destination leaf. The service leaf in site2 permits the traffic with special flags set in the VXLAN header.

● When the traffic arrives at the destination leaf in site1, traffic is again redirected through the local active firewall node because of the special flags set in the traffic received from another site.

● After the firewall has applied its locally configured security policy, the traffic is sent to the destination leaf. The service leaf in site1 also sets the special flags in the VXLAN header, but the destination leaf does not redirect traffic again because the traffic is intrasite.

Use of PBR for provider-to-consumer traffic flows (vzAny-to-L3Out)

If the traffic is intrasite, it is redirected by either the source or the destination leaf. The endpoint IP learning status does not matter because the local PBR destination is always used regardless of which leaf applies the PBR policy, which is similar to the scenario shown in Figure 30.

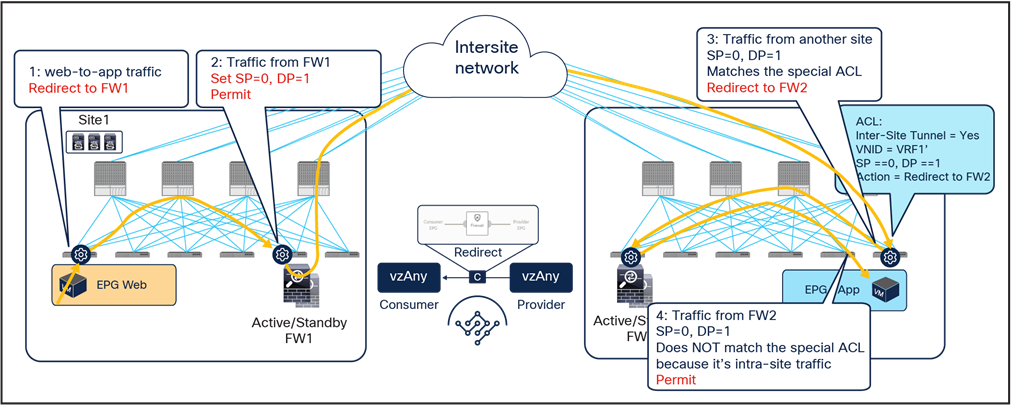

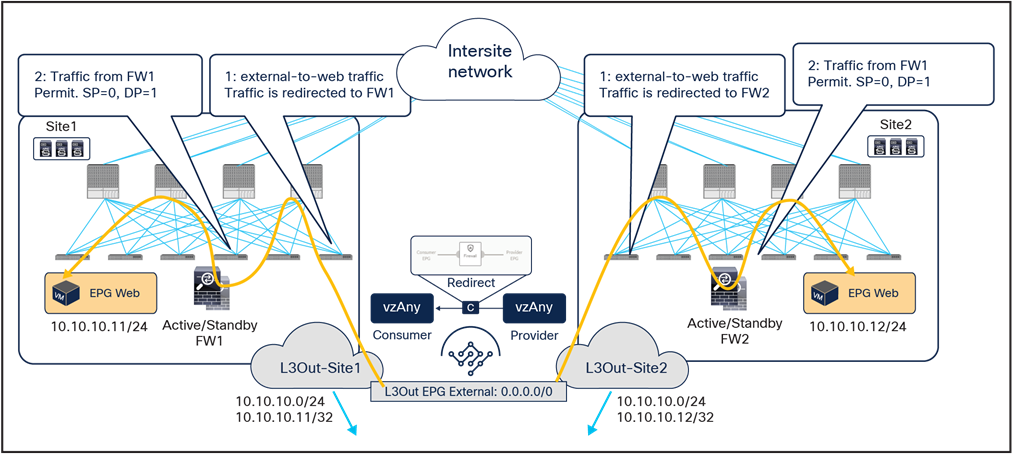

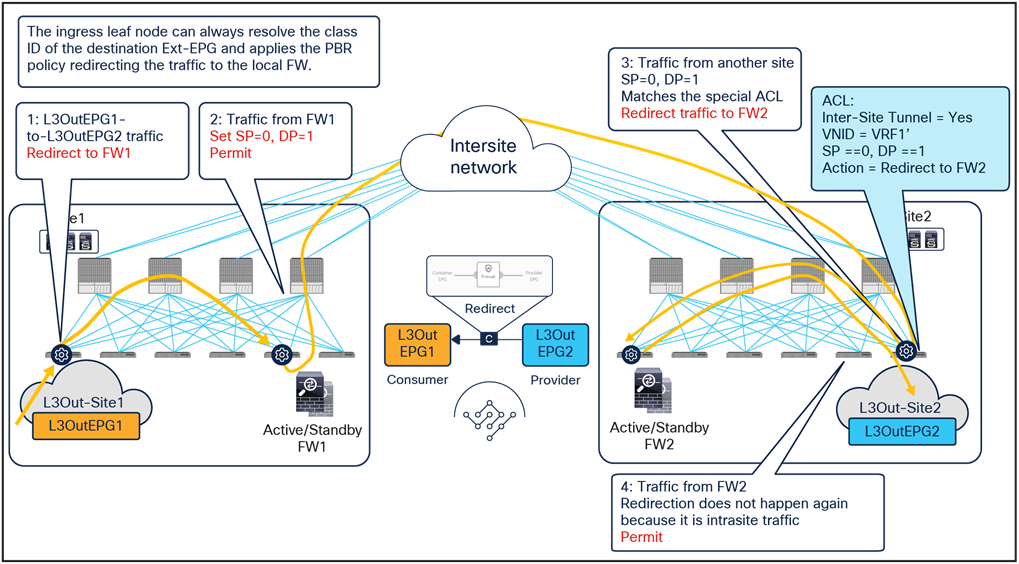

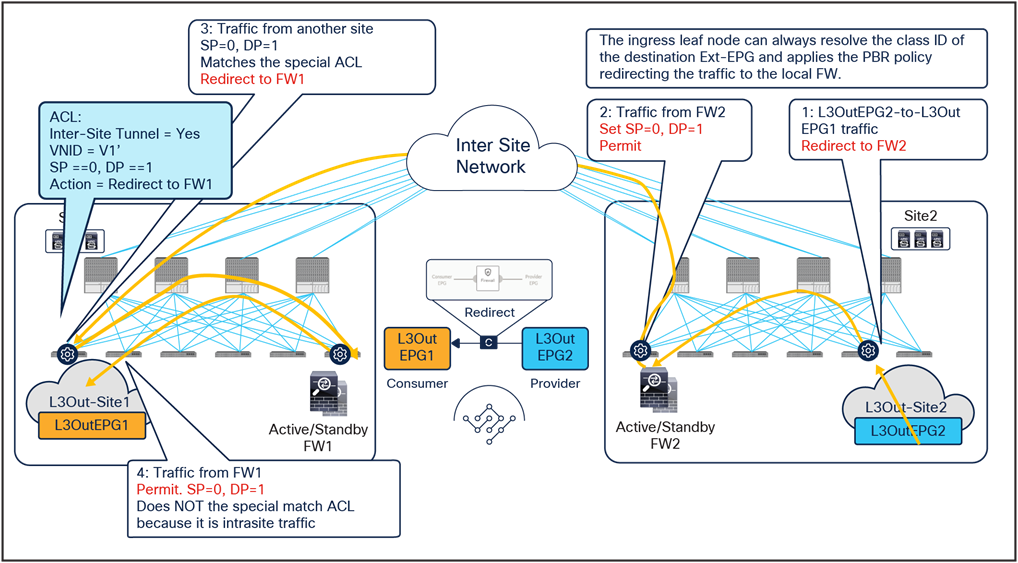

Transit traffic use case (L3Out-to-L3Out)

This service-graph option also requires Cisco ACI Release 6.0(4c) or later and Cisco Nexus Dashboard Orchestrator Release 4.2(3e) or later. The figure below shows a sample Cisco ACI network design with L3Out-to-L3Out PBR. L3Out EPG1 and L3Out EPG2 have a contract with a firewall service graph attached to it, with PBR enabled in both directions.

Transit traffic with PBR design (L3Out-to-L3Out)

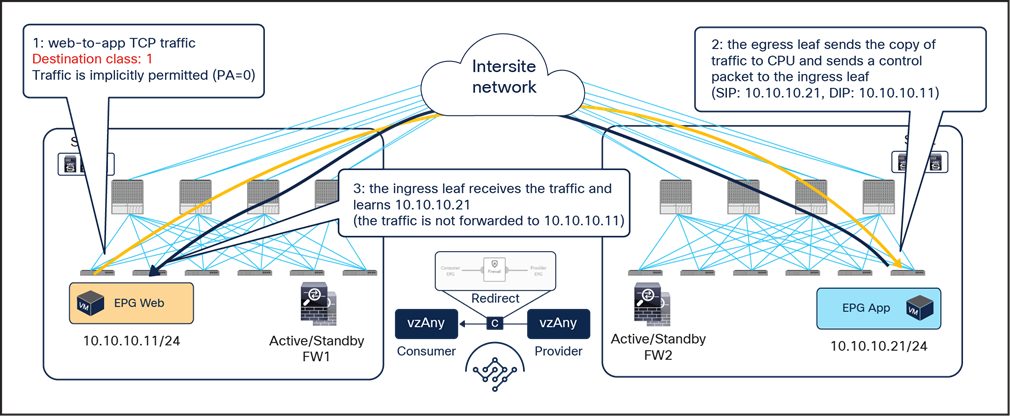

Figure 41 illustrates an example of a service-graph PBR deployment for steering to the firewall the intersite communications between external endpoints in different L3Outs. In this case, in order to avoid the creation of an asymmetric firewall insertion, traffic is redirected to the firewall nodes in both source and destination sites, which is similar to the vzAny-to-vzAny PBR use case described in Figure 24.

● When an external endpoint sends traffic toward another external endpoint in a different L3Out EPG, the ingress border leaf in site1 redirects traffic through the local active firewall node. Because an L3Out EPG classification is based on the IP prefix, it should always be possible for the ingress leaf to resolve the destination L3Out EPG class ID.

● Once the firewall has applied its locally configured security policy, the traffic is sent to the destination leaf. The service leaf in site1 permits traffic with special flags in the VXLAN header, which indicates that the firewall was inserted (see the footnote on page 29 for more information).

● When the traffic arrives at the destination leaf in site2, traffic is again redirected through the local active firewall node because the special flags are set for traffic received from another site.

● After the firewall has applied its locally configured security policy, the traffic is sent to the destination leaf. The service leaf in site2 also sets the special flags in the VXLAN header, but the destination leaf does not redirect traffic again because the traffic is intrasite.

Use of PBR for L3Out1-to-L3Out2 traffic flows (L3Out-to-L3Out)

The same firewall services in both sites are inserted for the return flow (Figure 42).

● When the external endpoint sends traffic toward the other external endpoint, the ingress border leaf in site2 redirects traffic through the local active firewall node. Because an L3Out EPG classification is based on the IP prefix, it should always be possible for the ingress leaf to resolve the destination L3Out EPG class ID.

● Once the firewall has applied its locally configured security policy, the traffic is sent to the destination leaf. The service leaf in site2 permits traffic with special flags in the VXLAN header.

● When traffic arrives at the destination leaf in site1, traffic is again redirected through the local active firewall node because the special flags are set for traffic received from another site.

● After the firewall has applied its locally configured security policy, the traffic is sent to the destination leaf. The service leaf in site1 also sets the special flags in the VXLAN header, but the destination leaf does not redirect traffic again because the traffic is intrasite.

Use of PBR for L3Out2-to-L3Out1 traffic flows (L3Out-to-L3Out)

If the traffic is intrasite, it is redirected by either the source or the destination leaf, which is similar to the scenario shown in Figure 30.
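The redirection behavior described above can be summarized with a small Python sketch. It is a simplified model rather than a representation of the actual leaf logic: the function names and hop labels are hypothetical, and for intrasite traffic the model does not distinguish whether the source or the destination leaf applies the policy.

```python
from typing import List


def transit_path(src_site: str, dst_site: str) -> List[str]:
    """Ordered hops for an L3Out-to-L3Out flow (simplified, hypothetical model)."""
    # The ingress border leaf resolves the destination L3Out EPG from the
    # IP prefix and redirects traffic to the local active firewall.
    path = [f"border-leaf@{src_site}", f"firewall@{src_site}"]
    if src_site != dst_site:
        # Traffic received from another site with the special flags set
        # is redirected again through the firewall of the receiving site.
        path += [f"border-leaf@{dst_site}", f"firewall@{dst_site}"]
    # After the last firewall the traffic is intrasite, so it is not
    # redirected again before leaving through the destination L3Out.
    path.append(f"border-leaf@{dst_site}")
    return path


def firewalls(path: List[str]) -> List[str]:
    """Sorted list of the firewall hops that appear in a path."""
    return sorted(hop for hop in path if hop.startswith("firewall@"))


forward = transit_path("site1", "site2")
reverse = transit_path("site2", "site1")
print(forward)
# Both directions traverse the same pair of firewalls.
print(firewalls(forward) == firewalls(reverse))
```

In this model, the forward and return flows visit the firewalls of both sites, which is the property that avoids asymmetric firewall insertion.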

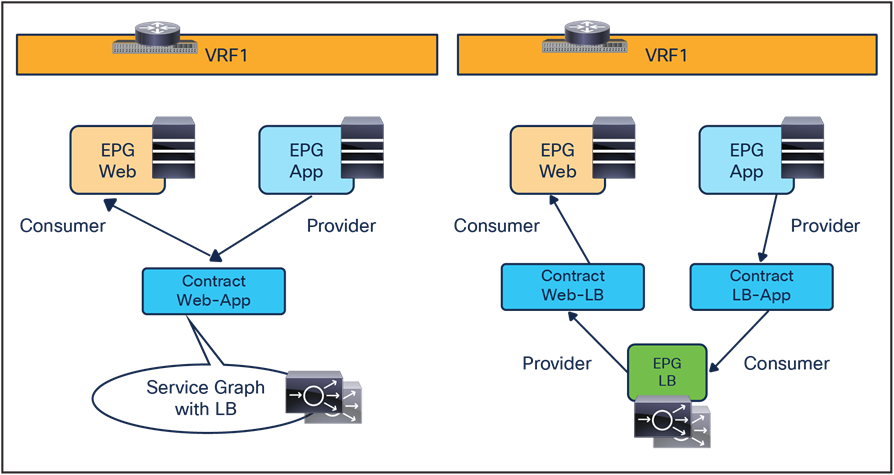

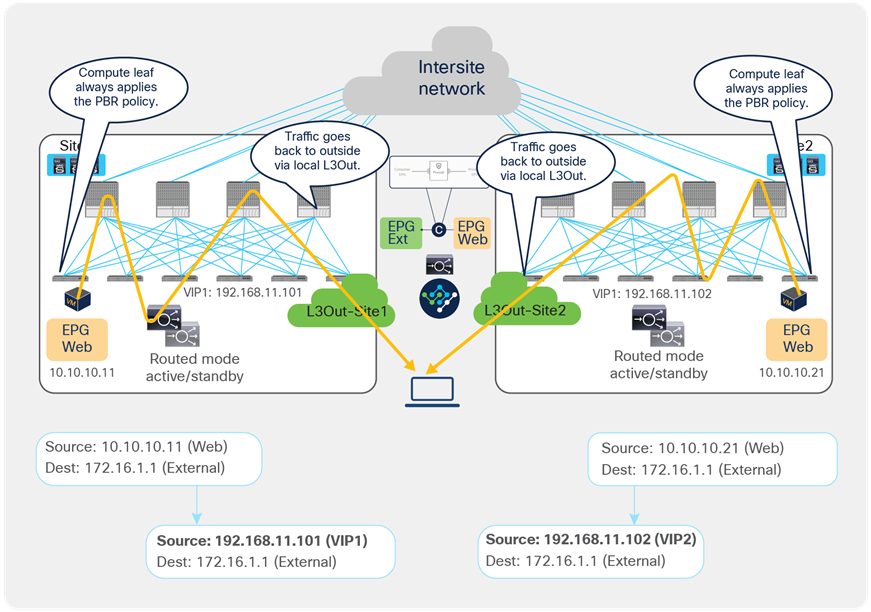

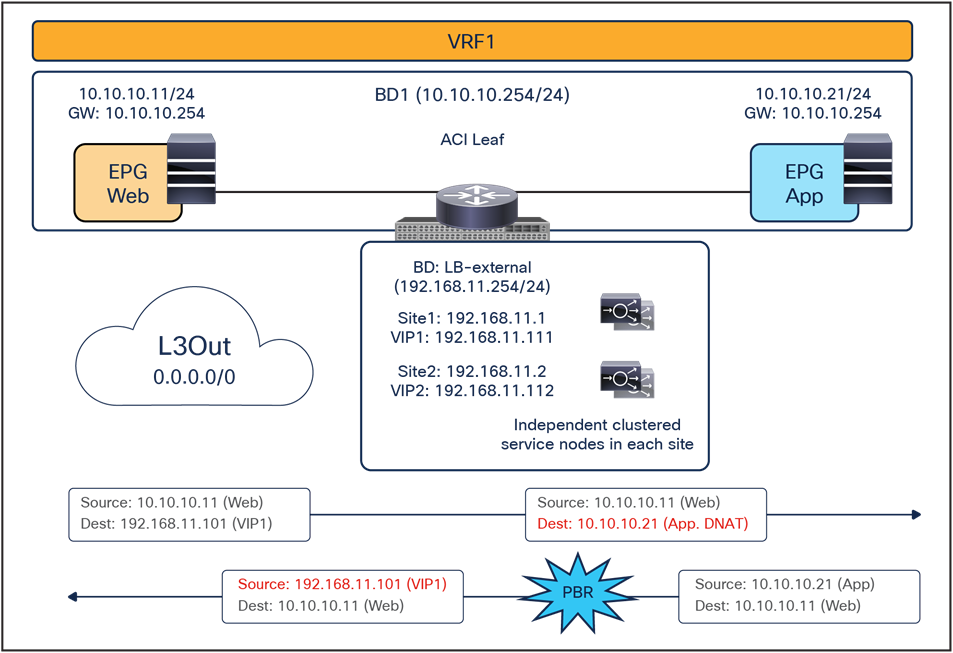

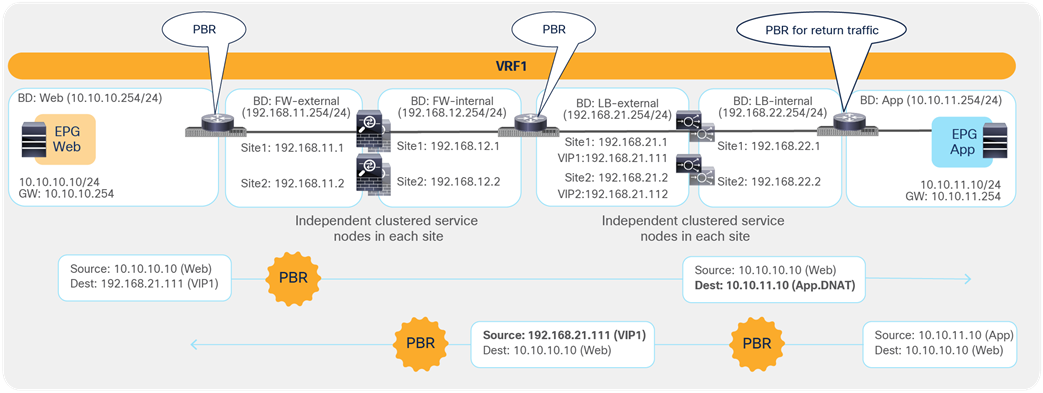

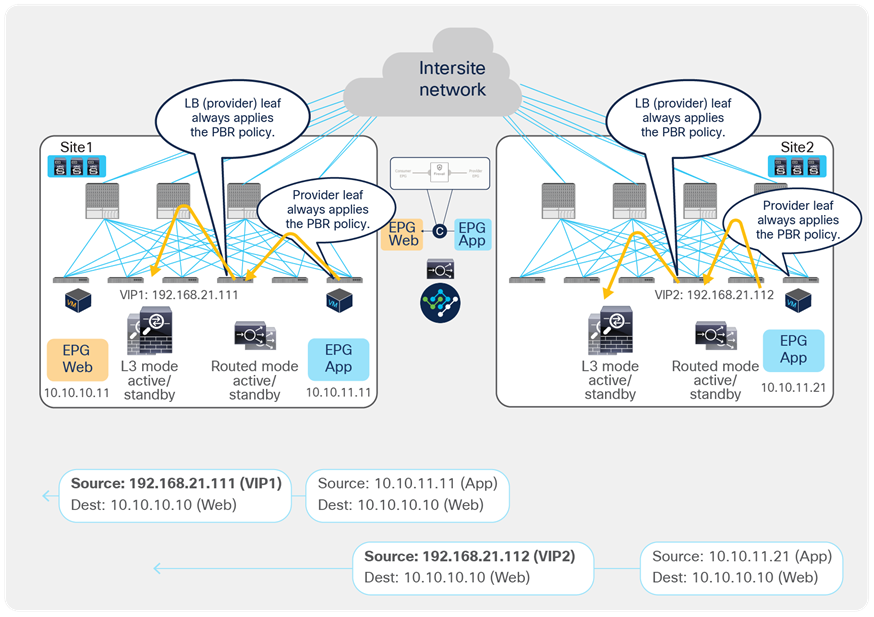

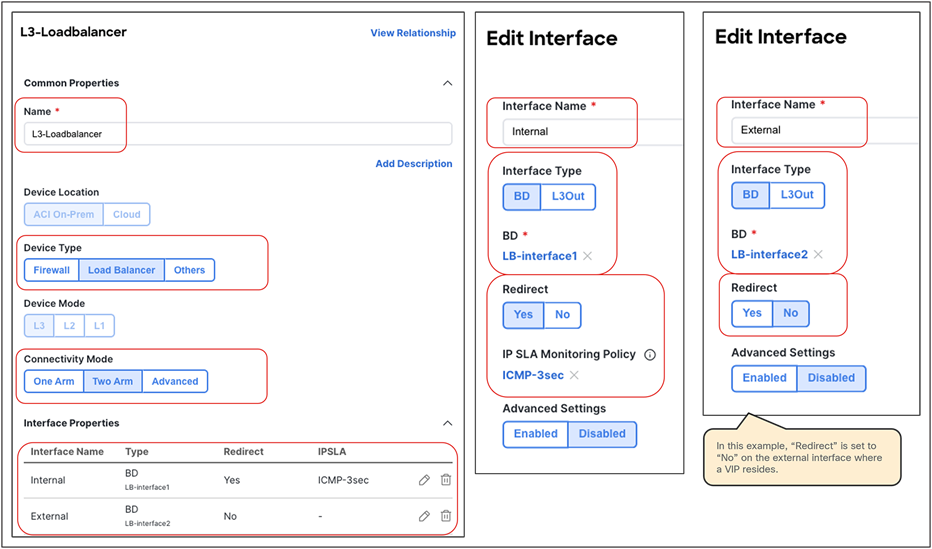

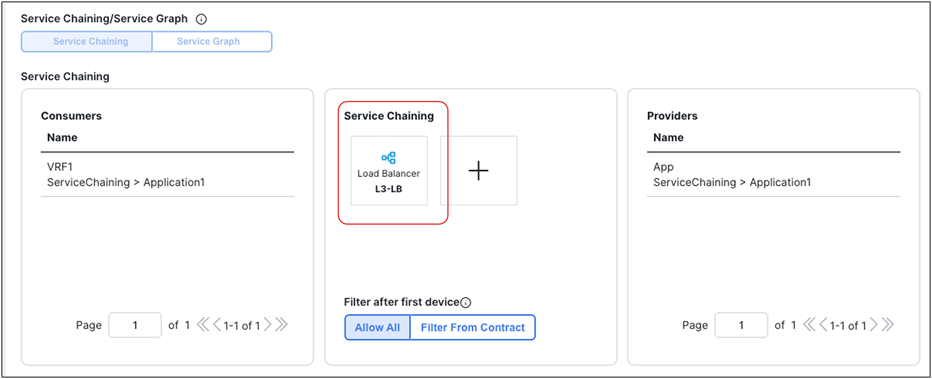

Load balancer with Source Network Address Translation (SNAT)

This section explains load-balancer insertion with Source Network Address Translation (SNAT) for north-south and east-west traffic use cases. In this deployment model, applying a PBR policy is not required because both incoming traffic flows (toward the VIP address) and return traffic flows (toward the IP address translated by SNAT) are destined to the load balancer and do not need redirection services.

Though this document uses a contract with a load-balancer service graph as an example, a service graph is not mandatory for this design. The main differences between the use of a service graph without PBR and the non-use of a service graph are the following:

● Use of service graph without PBR

As shown on the left side of Figure 43, using the service graph without PBR brings the advantage of being able to simply define a contract between the consumer (clients) and the provider (server farm). The EPGs for the load-balancer interfaces are automatically created through the service graph, together with the required contracts to ensure traffic can flow in both directions.

● Non-use of service graph

In this case, two different contracts are required. The first one is between the consumer EPG (clients) and the EPG for the interface of the load balancer facing the clients. The second is between the EPG for the interface of the load balancer performing SNAT and the provider EPG (server farm) associated to the Virtual IP (VIP). If there is no contract security requirement, another option is to place the clients and the client-facing load-balancer interface in the same EPG, and the servers and the server-facing load-balancer interface in the same EPG.
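The difference between the two options can be sketched as a list of (consumer, provider) contract relationships that the administrator has to define. This is only an illustration: the EPG names are hypothetical, and with a service graph the EPGs for the load-balancer interfaces and their contracts are created automatically, so they do not appear in the list.

```python
from typing import List, Tuple


def contracts_to_define(use_service_graph: bool) -> List[Tuple[str, str]]:
    """Contracts the administrator defines, as (consumer EPG, provider EPG)."""
    if use_service_graph:
        # One contract between clients and server farm; the service graph
        # (without PBR) is attached to it.
        return [("Clients", "ServerFarm")]
    # Without a service graph, the load-balancer interfaces are regular EPGs
    # and each leg of the communication needs its own contract.
    return [
        ("Clients", "LB-ClientSide"),     # frontend: clients to the VIP
        ("LB-ServerSide", "ServerFarm"),  # backend: SNAT IP to the real servers
    ]


print(contracts_to_define(use_service_graph=True))
print(contracts_to_define(use_service_graph=False))
```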

Load-balancer insertion with (left) and without (right) service graph

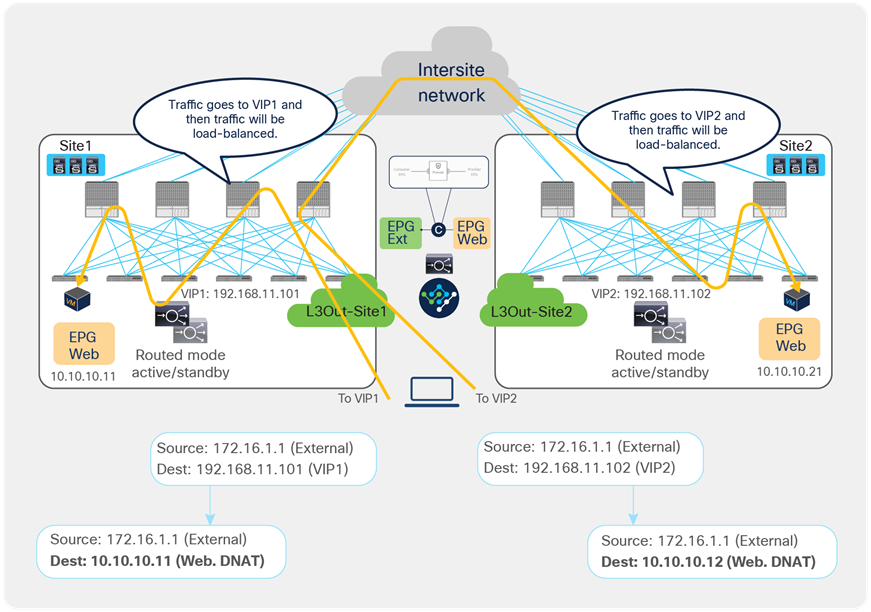

North-south traffic use case (EPG-to-L3Out)

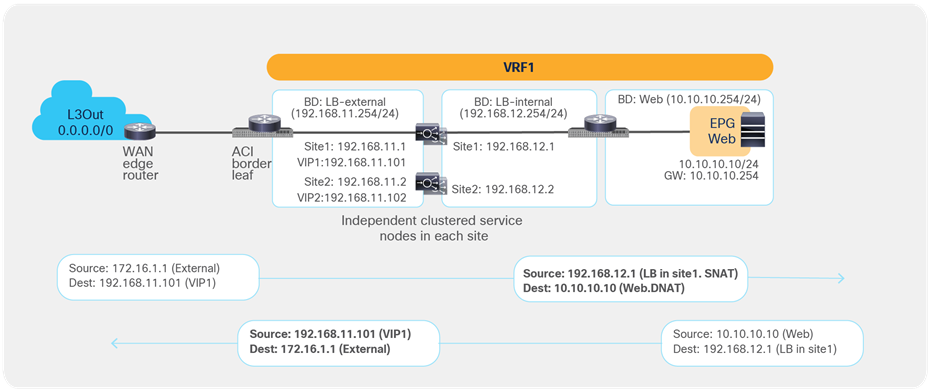

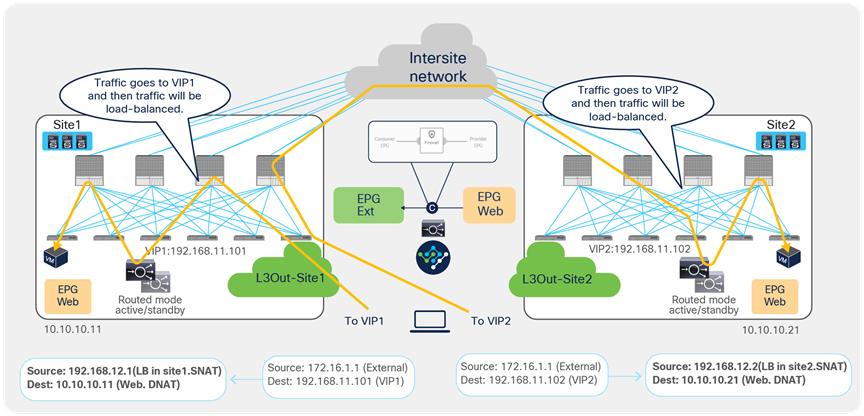

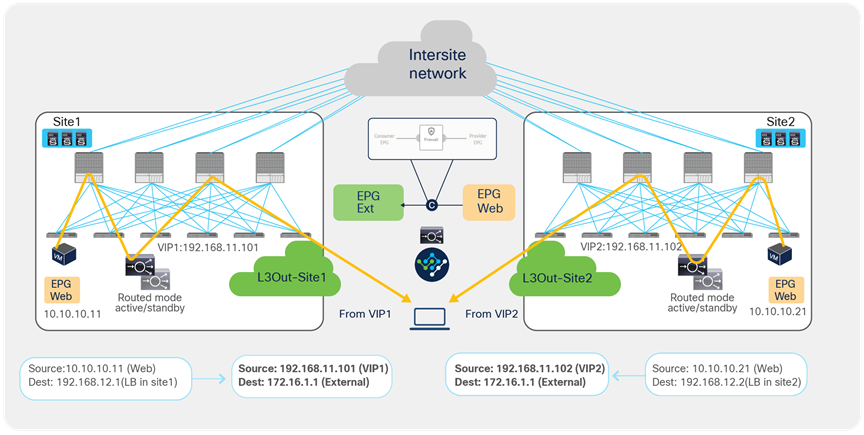

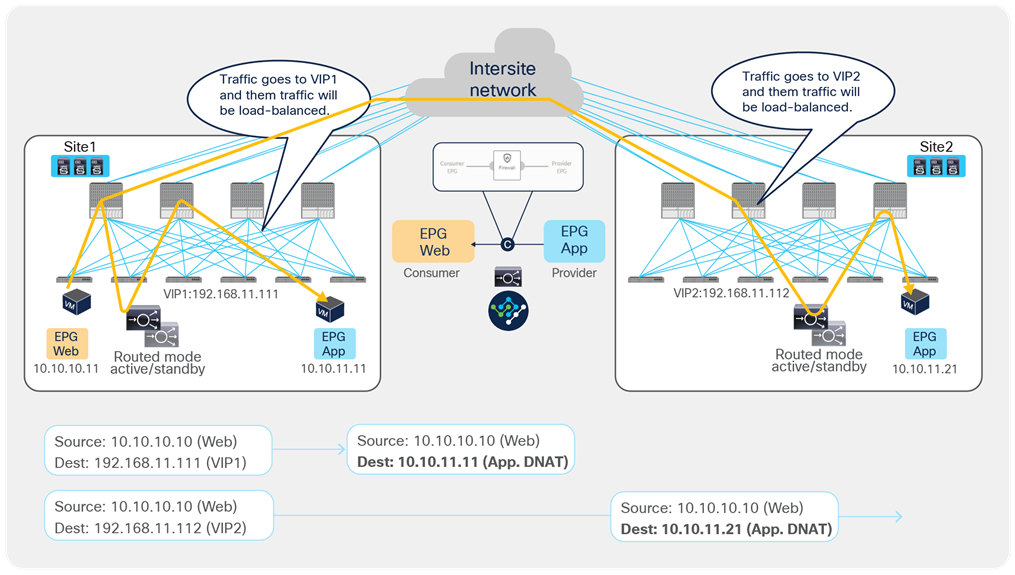

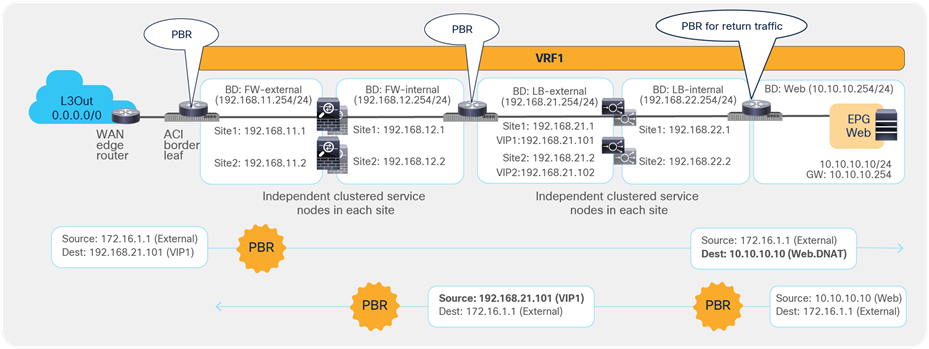

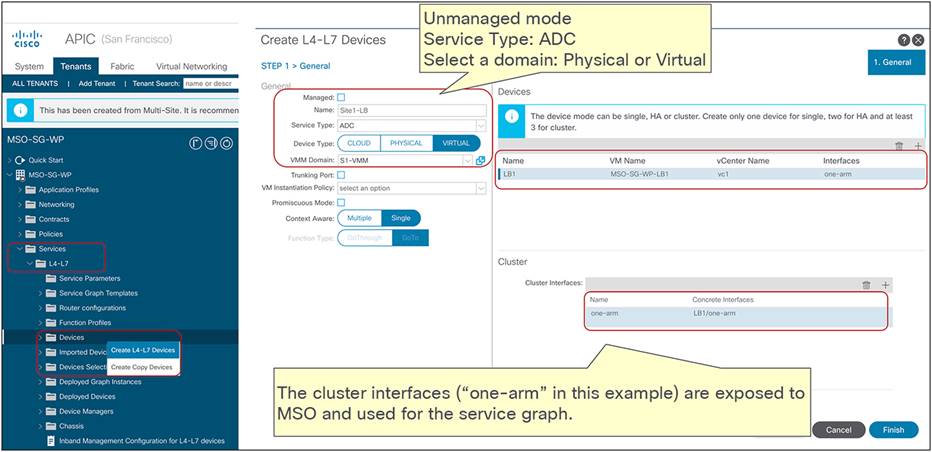

Figure 44 shows a sample Cisco ACI network design for north-south-routed load-balancer insertion with SNAT. The consumer L3Out EPG and the provider Web EPG have a contract with a load-balancer service graph (without PBR). The endpoints in the Web EPG are the real servers that are part of the server farm associated to the VIP of the load balancer. You can have multiple load balancers, which can be represented by multiple high-availability pairs deployed in separate sites.

The assumption here is that each load balancer pair is assigned a unique VIP address that is part of the same service BD, as shown in the example below. In this scenario, Global Server Load Balancing (GSLB) can be used for load balancing access to a specific application through multiple VIPs.

Note: If a service graph is not defined, using the same service BD for each load balancer pair is not mandatory. Each load-balancer pair can use a unique VIP address in different service BDs. In addition, an inter-VRF design is possible without a service graph.

Example of a north-south load balancer with a SNAT design

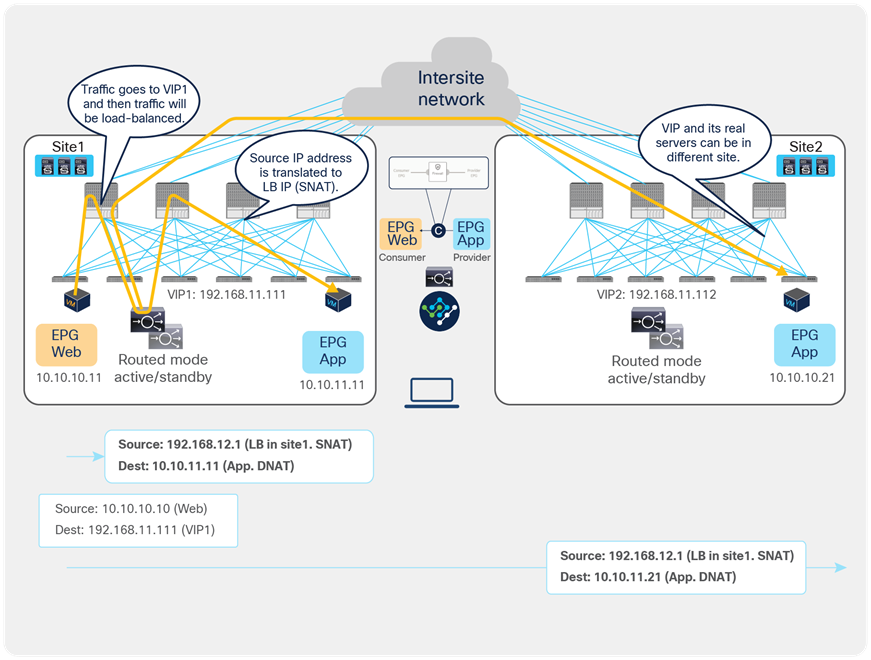

Figure 45 illustrates an example of communication between the external network and an internal Web EPG in an ACI Multi-Site deployment where we have two connections: one is between the external network and the VIP (the frontend connection), and the other one is between the load-balancer internal IP address and the real servers in the Web EPG (the backend connection). In this example, the internal Web EPG and the L3Out are defined in the same VRF.

● The incoming traffic originating from the external client is destined to the VIP, so it will be received on the L3Out connection of one of the connected sites and will then reach the load balancer without PBR as long as the VIP is reachable (this is basic forwarding behavior).

● The load balancer changes the destination IP to one of the real servers associated to the VIP. In this example, the load balancer also translates the source IP to the SNAT IP owned by the load balancer.

● After that, the traffic is forwarded to the real server.

Note: The suboptimal inbound traffic can be avoided by leveraging host-route advertising to optimize the ingress traffic path, if the VIP of the load balancer belongs to a stretched subnet. Alternatively, it is possible to use VIP addresses in separate IP subnets for load balancers deployed in different sites. When a service graph is used, the separate IP subnets need to be configured under the same service BD because a service graph requires an L2-stretched service BD.
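The effect of these two options on the ingress path can be illustrated with a longest-prefix-match sketch. The prefixes, the VIP addresses, and the function name are hypothetical, and the sketch assumes that the external network simply prefers the most specific route and that, in the separate-subnet case, each subnet is advertised only from the site that hosts the corresponding VIP.

```python
import ipaddress
from typing import List, Tuple


def candidate_ingress_sites(routes: List[Tuple[str, str]], vip: str) -> List[str]:
    """Sites advertising the longest prefix that matches the VIP."""
    addr = ipaddress.ip_address(vip)
    matches = [
        (ipaddress.ip_network(prefix), site)
        for prefix, site in routes
        if addr in ipaddress.ip_network(prefix)
    ]
    if not matches:
        return []
    best = max(net.prefixlen for net, _ in matches)
    return sorted({site for net, site in matches if net.prefixlen == best})


# Stretched subnet advertised from both sites; VIP2 lives in site2.
stretched = [("192.0.2.0/24", "site1"), ("192.0.2.0/24", "site2")]
print(candidate_ingress_sites(stretched, "192.0.2.20"))

# Same subnet, plus a host route for VIP2 advertised only from site2.
with_host_route = stretched + [("192.0.2.20/32", "site2")]
print(candidate_ingress_sites(with_host_route, "192.0.2.20"))

# Separate VIP subnets per site (both configured under the same service BD).
separate = [("192.0.2.0/24", "site1"), ("198.51.100.0/24", "site2")]
print(candidate_ingress_sites(separate, "198.51.100.20"))
```

With only the stretched subnet, either site is a valid entry point, so inbound traffic to VIP2 may enter through site1. With a host route or a dedicated subnet, only site2 remains as a candidate.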

Load balancer with SNAT inbound traffic flows (north-south)

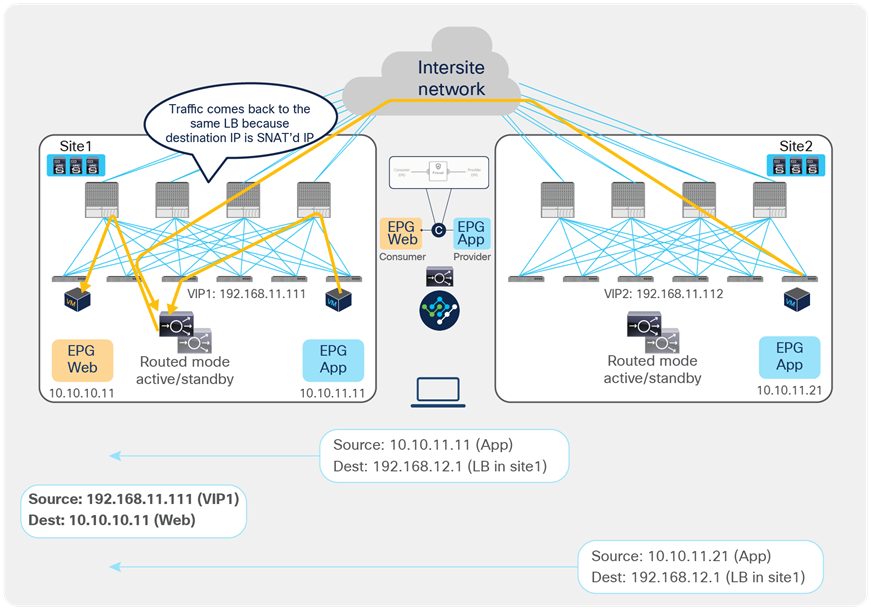

Because the return traffic is destined to the SNAT IP owned by the load balancer that handled the incoming traffic flow, PBR is not required for the return traffic either.

● The load balancer receives the traffic from the Web real server endpoint and changes the source and destination IP addresses (the source becomes the VIP, the destination becomes the external client).

● The traffic is sent back to the fabric and forwarded to the external client through a local L3Out connection (unless a specific configuration is provisioned to prefer a remote L3Out connection to communicate with the external client).
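The address rewriting on both legs can be sketched as follows. This is a minimal model, not a load-balancer implementation: the class and method names and all IP addresses are hypothetical, server selection always picks the first real server, and sessions are tracked per real server only (a real device would also track Layer-4 ports).

```python
from typing import Dict, List, NamedTuple


class Packet(NamedTuple):
    src: str
    dst: str


class SnatLoadBalancer:
    """Toy load balancer that rewrites source and destination IP addresses."""

    def __init__(self, vip: str, snat_ip: str, real_servers: List[str]) -> None:
        self.vip = vip
        self.snat_ip = snat_ip
        self.real_servers = real_servers
        self.sessions: Dict[str, str] = {}  # real server -> original client

    def inbound(self, pkt: Packet) -> Packet:
        if pkt.dst != self.vip:
            raise ValueError("inbound traffic must be destined to the VIP")
        server = self.real_servers[0]  # simplistic server selection
        self.sessions[server] = pkt.src
        # Destination becomes the real server, source becomes the SNAT IP.
        return Packet(src=self.snat_ip, dst=server)

    def outbound(self, pkt: Packet) -> Packet:
        if pkt.dst != self.snat_ip:
            raise ValueError("return traffic must be destined to the SNAT IP")
        client = self.sessions[pkt.src]
        # Source becomes the VIP, destination becomes the original client.
        return Packet(src=self.vip, dst=client)


lb = SnatLoadBalancer(vip="192.0.2.10", snat_ip="192.0.2.11",
                      real_servers=["10.10.10.11"])
to_server = lb.inbound(Packet(src="203.0.113.5", dst="192.0.2.10"))
to_client = lb.outbound(Packet(src=to_server.dst, dst=to_server.src))
print(to_server)
print(to_client)
```

In both directions, the packet that reaches the load balancer is addressed to an IP that the load balancer owns (the VIP or the SNAT IP), which is why regular forwarding is sufficient and no redirection is needed.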

Though there may be an “asymmetric” use of the L3Out connection (for example, for VIP2, inbound traffic uses L3Out-Site1, whereas outbound traffic is sent through L3Out-Site2), there is always a “fully symmetric” use of the same service node for both legs of the communication.

Load balancer with SNAT outbound traffic flows (north-south)

The examples above show the load balancer and the real server as part of the same site, but in this use case they could also be deployed in different sites (Figure 47 and Figure 48). This is because the VIP, the SNAT IP, and the real servers’ addresses are always reachable through regular forwarding from different sites. That said, the use of a local real-server farm is ideal in terms of traffic path optimization.

Load balancer with SNAT inbound traffic flows (with VIP and real server in different sites)

Load balancer with SNAT outbound traffic flows (with VIP and real server in different sites)

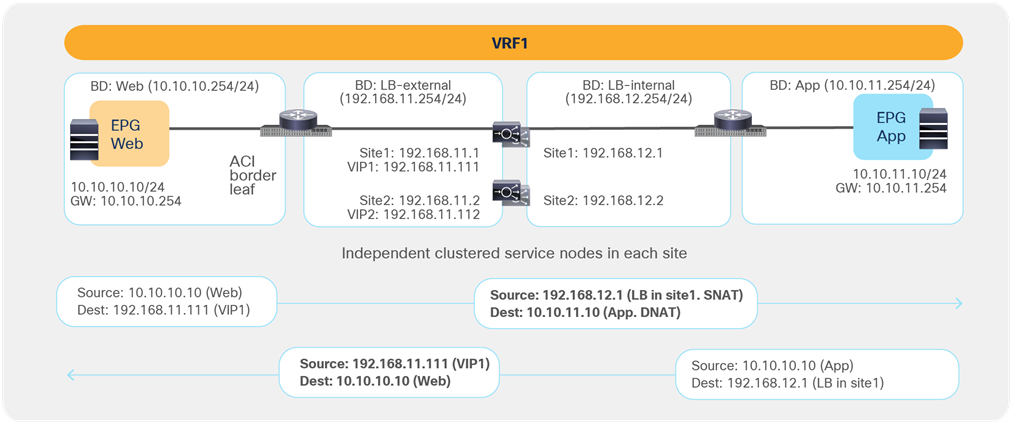

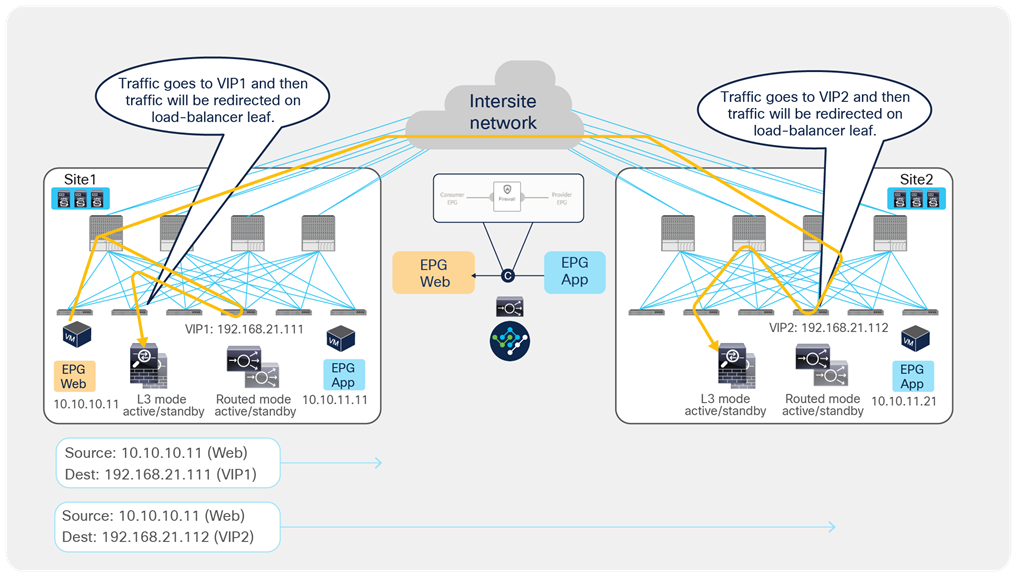

East-west traffic use case (EPG-to-EPG)

Figure 49 shows a typical Cisco ACI network design for east-west-routed load-balancer insertion with SNAT. This design is similar to that for the north-south-routed load-balancer use case. In this example, the consumer Web EPG and the provider App EPG have a contract with a load-balancer service graph. Endpoints in the App EPG are real servers associated to the VIP on the load balancer.

As previously discussed for the north-south use case, the assumption is that each load balancer pair is assigned a unique VIP address that is part of the same service BD. If a service graph is not defined, each load balancer pair can use a unique VIP address in a different service BD. Also, although this example focuses on an intra-VRF contract, an inter-VRF contract for east-west communication is also supported.

Example of an east-west load balancer with a SNAT design

Figure 50 illustrates an example of east-west communication between a consumer EPG Web and a provider EPG App in a Multi-Site scenario where we have two connections: one is between the Web endpoint and the VIP (the frontend connection) and the other is between the load balancer and the real servers in the App EPG (the backend connection).

● The traffic originating from the Web endpoint is destined to the VIP, so it will reach the load balancer without requiring PBR as long as the VIP is reachable.

● The load balancer changes the destination IP to one of the real servers associated to the VIP. At the same time, the load balancer translates the source IP to the SNAT IP owned by the load balancer.

● The traffic is then sent back to the fabric and forwarded to the real server.

Load balancer with SNAT incoming traffic flows (east-west)

For the provider-to-consumer traffic direction:

● The return traffic originated by the App real server is destined to the SNAT IP owned by the load balancer that took care of the incoming traffic; therefore, applying the PBR policy is not required for the return traffic either.

● The load balancer changes the source and destination IPs and sends the traffic back to the fabric.

● The traffic is forwarded back to the consumer endpoint.

Load balancer with SNAT return traffic flows (east-west)

Note: Although the load balancer and the real server are in the same site in this example, they can be in different sites, as in the north-south-routed load-balancer insertion example earlier.

The use of SNAT ensures that the return traffic goes back to the same load balancer that handled the incoming flow, which simplifies the design. However, a possibly undesirable consequence is that real servers lose visibility into the client’s source IP address. When such visibility is a design requirement, you should avoid using SNAT on the load balancer so that the client’s source IP is preserved. This mandates the introduction of PBR to properly steer the return traffic through the same load balancer that handled the first leg of the communication, as discussed in the next section.

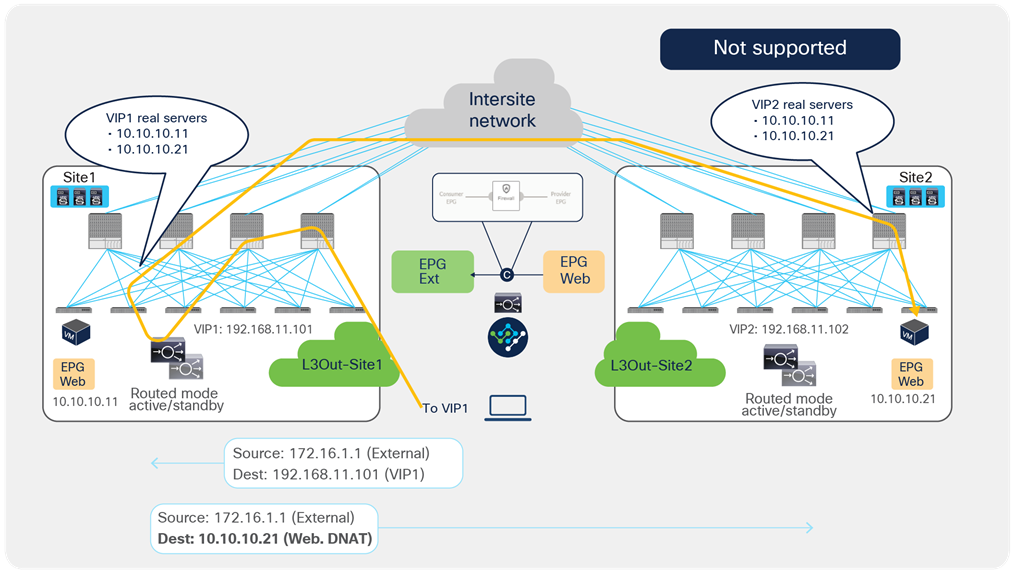

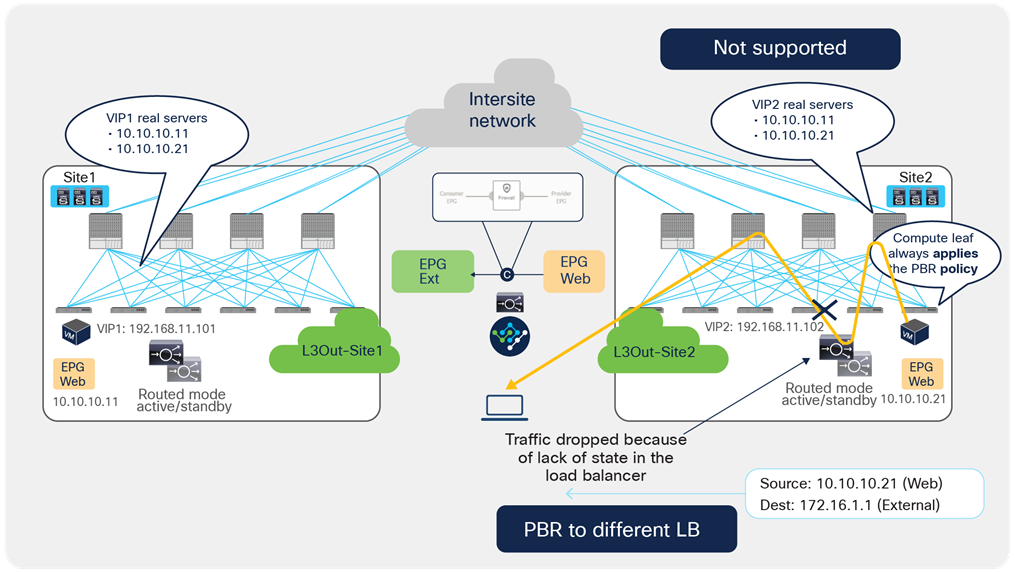

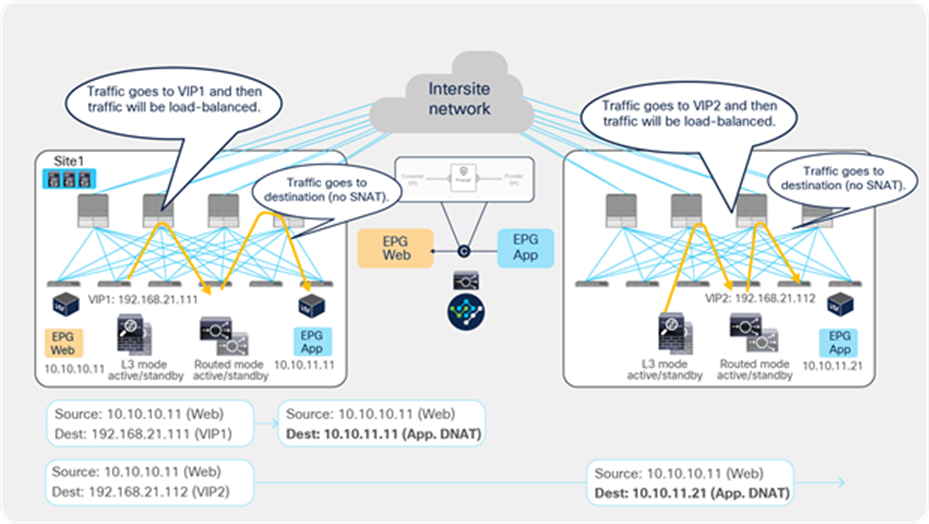

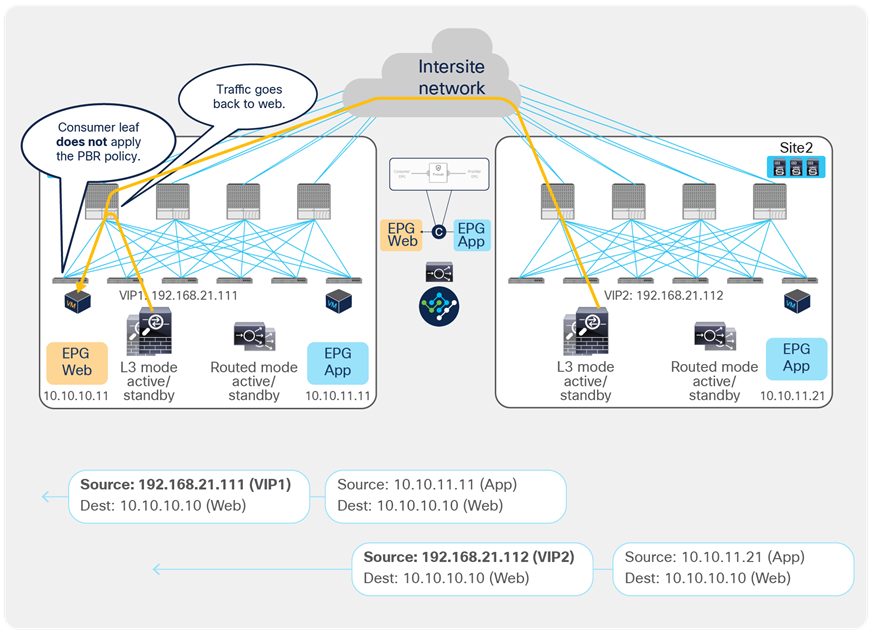

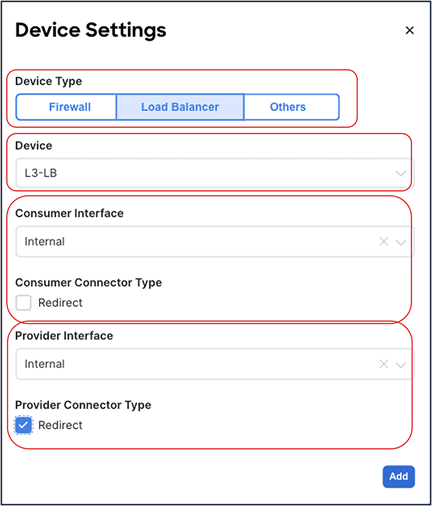

Load balancer without SNAT (use of PBR for the return traffic)

In this deployment model, PBR is required for the return traffic between the real servers and the clients, because the load balancer does not perform SNAT for incoming traffic. The incoming traffic flow destined to the VIP still does not require PBR and leverages basic forwarding.

There are two important considerations for deploying this design option with ACI Multi-Site:

● The load balancer and the real-server farm where traffic is load balanced must be deployed in the same site.

● Cisco ACI Release 4.0(1) or later is required for the EPG-to-EPG and EPG-to-L3Out use cases. For the vzAny-to-EPG use case, Cisco ACI Release 6.0(4c) and Cisco Nexus Dashboard Orchestrator Release 4.2(3e) or later are needed instead.
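The release requirements above can be captured in a small pre-deployment check. The sketch below is illustrative only: the function names, the use-case keys, and the product keys (`aci`, `ndo`) are hypothetical, and it assumes that the trailing letter of a release string such as 6.0(4c) can be compared alphabetically.

```python
import re
from typing import Dict, Tuple

_RELEASE = re.compile(r"^(\d+)\.(\d+)\((\d+)([a-z]*)\)$")

# Minimum releases stated in this section, keyed by use case.
MINIMUMS: Dict[str, Dict[str, str]] = {
    "epg-to-epg": {"aci": "4.0(1)"},
    "epg-to-l3out": {"aci": "4.0(1)"},
    "vzany-to-epg": {"aci": "6.0(4c)", "ndo": "4.2(3e)"},
}


def parse_release(text: str) -> Tuple[int, int, int, str]:
    """Turn a string such as '6.0(4c)' into (6, 0, 4, 'c')."""
    match = _RELEASE.match(text.strip())
    if match is None:
        raise ValueError(f"unrecognized release string: {text!r}")
    major, minor, maintenance, letter = match.groups()
    return int(major), int(minor), int(maintenance), letter


def meets_minimum(use_case: str, running: Dict[str, str]) -> bool:
    """True if every product required for the use case is at or above its minimum."""
    return all(
        product in running
        and parse_release(running[product]) >= parse_release(minimum)
        for product, minimum in MINIMUMS[use_case].items()
    )


print(meets_minimum("epg-to-l3out", {"aci": "4.0(1)"}))
print(meets_minimum("vzany-to-epg", {"aci": "6.0(4c)", "ndo": "4.2(3e)"}))
print(meets_minimum("vzany-to-epg", {"aci": "6.0(3e)", "ndo": "4.2(3e)"}))
```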

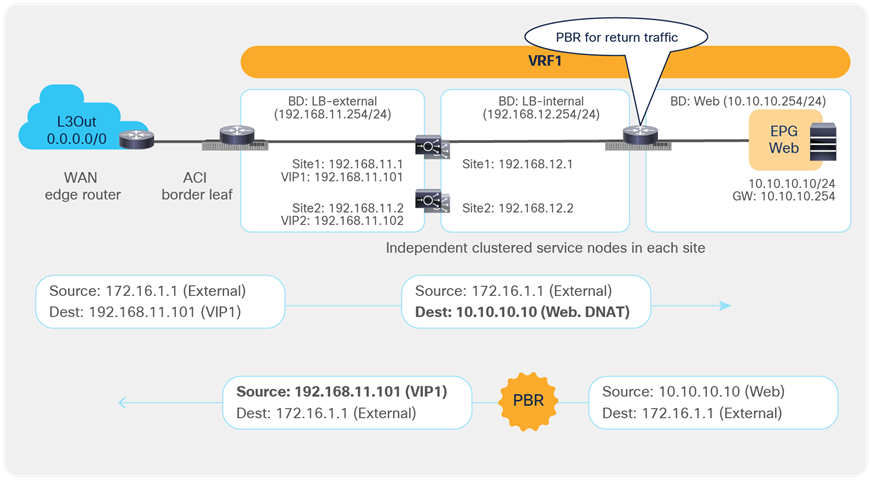

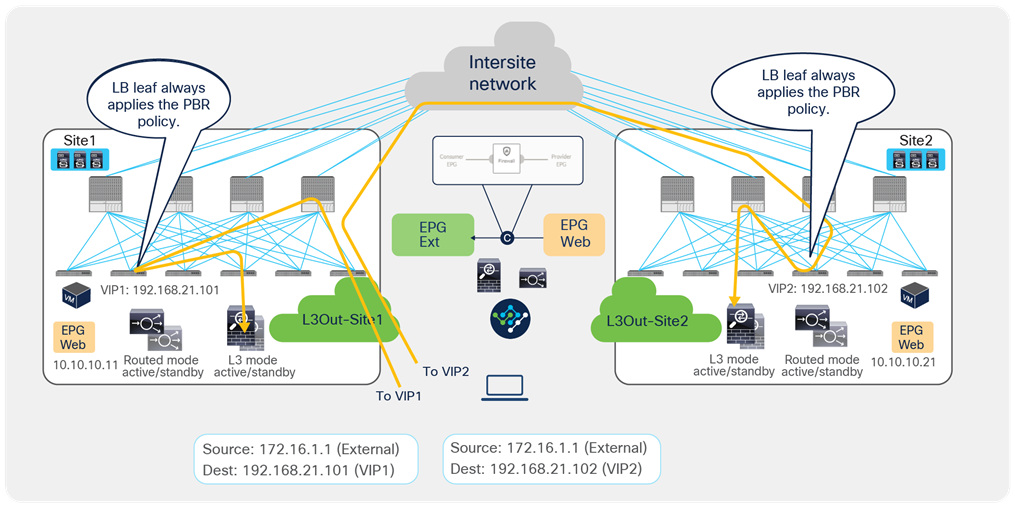

North-south traffic use case (EPG-to-L3Out)

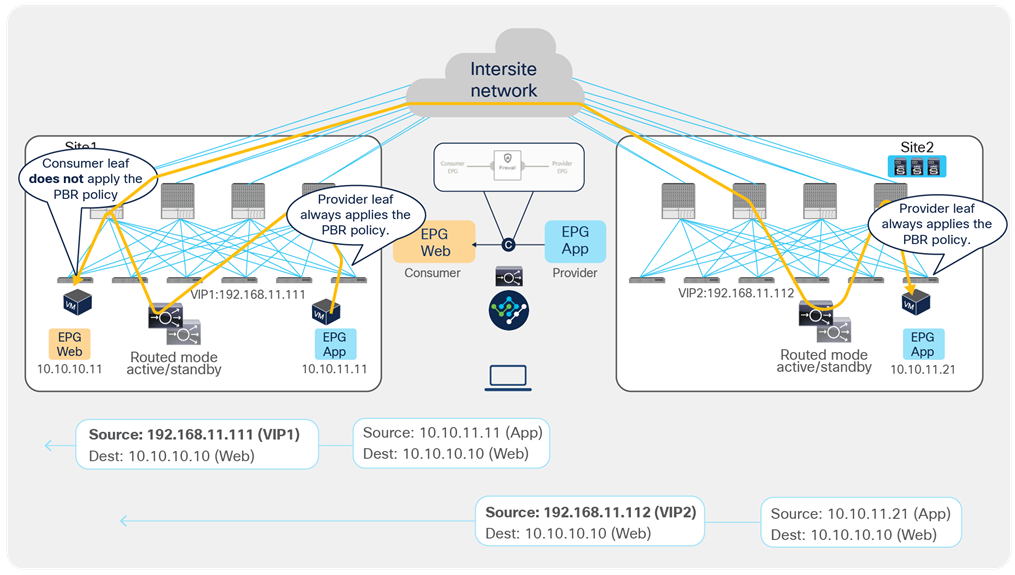

Figure 52 shows a sample Cisco ACI network design for north-south-routed load-balancer insertion without SNAT. The consumer L3Out EPG and the provider Web EPG have a contract with an associated service graph that uses PBR for the return traffic flow. Endpoints in the Web EPG are the real servers associated to the VIP of the load balancer. There can be multiple load balancers, which can be represented by multiple high-availability pairs deployed in separate sites.

The usual assumption here is that each load balancer gets assigned a unique VIP address that is part of the same BD and that Global Server Load Balancing (GSLB) is then used for load balancing traffic for a given application to multiple VIPs. Although the figure below illustrates an intra-VRF design, the definition of Web EPG and L3Out EPG in different VRFs is also a valid design. In this multi-VRF scenario, the service BD where the load balancer is connected must be in either the consumer or the provider VRF.

Example of a north-south load-balancer design without SNAT

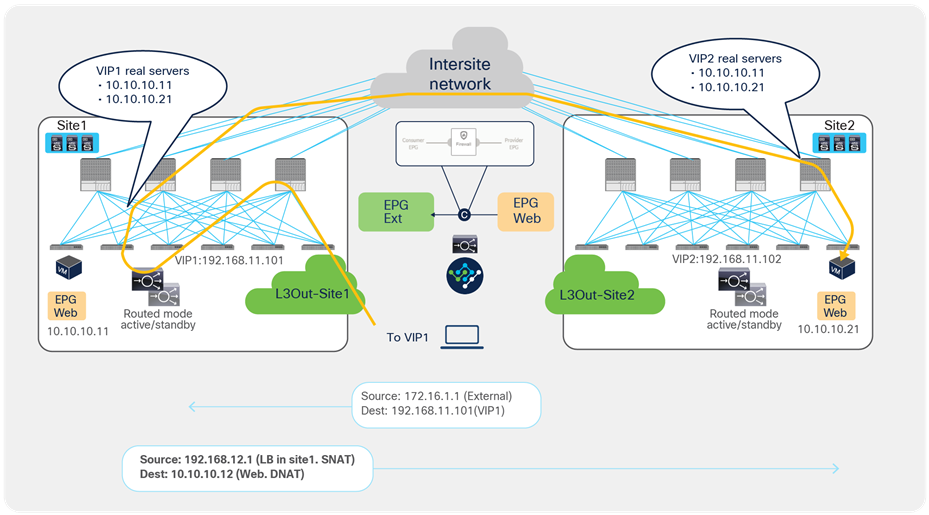

Figure 53 illustrates an example of an inbound traffic flow between the external network and an internal Web EPG in a Multi-Site deployment where we have two connections: one is between the external client and the VIP (the frontend connection) and the other is between the load balancer and the real servers that are part of the Web EPG (the backend connection).

● The incoming traffic originated from the external client and destined to the VIP is received on the L3Out connection of a given site, and reaches the load balancer without requiring PBR as long as the VIP is reachable (this is basic intrasite or intersite forwarding).

● The load balancer changes the destination IP to one of the real servers associated to the VIP, but leaves the source IP address (representing the external client) unaltered, and forwards the traffic back to the fabric.

● The traffic is then forwarded to the real server, which must be deployed in the local site. As clarified below, this is needed to ensure that PBR can steer the return flow to the same load balancer that handled the incoming traffic.

As usual, the suboptimal inbound traffic shown for communicating with VIP2 could be avoided by leveraging host-route advertisement to optimize the traffic path for ingress communication or by taking the VIP addresses of the load balancers deployed in separate sites from different IP subnets. Note that the service BD must be L2-stretched when using a service graph. Thus, multiple IP subnets must be provisioned for the same service BD to use VIP addresses from different IP subnets.

Load balancer without SNAT inbound traffic flows (north-south)

For the outbound direction, the traffic is destined to the original client’s IP address; therefore, PBR is required to steer the return traffic back to the load balancer. Otherwise the external client would receive the traffic with the source IP being the real server’s IP instead of the VIP. Such traffic will be dropped because the external client did not initiate traffic to the real server IP.

● The Web EPG sends traffic back to the external client. The PBR policy is always applied on the compute leaf node (provider leaf) where the Web endpoint is connected, so it can only redirect the traffic to a local load balancer. This is the reason why the VIP and the real servers must be in the same site in this deployment model. The provider leaf always applies the PBR policy, because the IP prefix identifying the external clients and associated to the L3Out EPG is statically configured (with its class ID) on that leaf node.

● The load balancer changes only the source IP address to match the locally defined VIP and sends the traffic back to the fabric.

● The traffic is forwarded toward the external client leveraging, by default, a local L3Out connection.
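The reason PBR is needed for the return flow can be shown with a short sketch. It is a simplified model with hypothetical names and IP addresses: the client is assumed to accept a reply only if its source address is the VIP it originally contacted.

```python
from typing import NamedTuple


class Packet(NamedTuple):
    src: str
    dst: str


def deliver_reply(reply: Packet, vip: str, pbr_enabled: bool) -> Packet:
    """Return the packet as it arrives at the external client."""
    if pbr_enabled:
        # The provider leaf redirects the reply to the local load balancer,
        # which changes only the source IP address to the VIP.
        return reply._replace(src=vip)
    # Without PBR the reply is routed directly toward the client.
    return reply


def client_accepts(pkt: Packet, contacted_vip: str) -> bool:
    """The client expects replies from the address it sent traffic to."""
    return pkt.src == contacted_vip


vip = "192.0.2.10"
reply = Packet(src="10.10.10.11", dst="203.0.113.5")  # real server to client
print(client_accepts(deliver_reply(reply, vip, pbr_enabled=True), vip))
print(client_accepts(deliver_reply(reply, vip, pbr_enabled=False), vip))
```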

Load balancer without SNAT outbound traffic flows (north-south)

Though there may be an “asymmetric” use of the L3Out connections (that is, for VIP2, inbound traffic uses L3Out in site1, whereas outbound traffic is sent through L3Out in site2), there is always a “fully symmetric” use of the same service node for both legs of the communication as long as the load balancer and the real servers are deployed in the same site. Otherwise the return traffic would be redirected to the load balancer in a different site, and traffic symmetry would be lost.

Figure 55 and Figure 56 illustrate an example of this problem: the load balancer in site1 has both local site endpoint 10.10.10.11 and remote site endpoint 10.10.10.21 as real servers associated to VIP1. If the incoming traffic to VIP1 is load balanced to 10.10.10.21 in site2, the PBR policy for the return traffic enforced on the provider leaf in site2 would redirect the traffic to the local load balancer, creating traffic asymmetry.
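This constraint lends itself to a simple design-time check. The sketch below uses the real-server addresses from the example above; the function names and the site labels are hypothetical, and the model assumes, as described in this section, that the provider leaf always redirects return traffic to the load balancer in its own site.

```python
from typing import Dict, List


def return_lb_site(real_server_site: str) -> str:
    """PBR on the provider leaf can only redirect to a load balancer in the local site."""
    return real_server_site


def asymmetric_real_servers(vip_site: str, real_servers: Dict[str, str]) -> List[str]:
    """Real servers whose return traffic would hit a load balancer in another site."""
    return [
        ip
        for ip, site in real_servers.items()
        if return_lb_site(site) != vip_site
    ]


# VIP1 is active on the load balancer in site1.
vip1_real_servers = {"10.10.10.11": "site1", "10.10.10.21": "site2"}
print(asymmetric_real_servers("site1", vip1_real_servers))  # ['10.10.10.21']
```

An empty result indicates that every real server associated to the VIP is in the same site as the load balancer, which is the supported layout for this deployment model.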

Load balancer without SNAT inbound traffic flows (Having the VIP and real server in different sites is not supported).

Load balancer without SNAT outbound traffic flows (Having the VIP and real server in different sites is not supported).

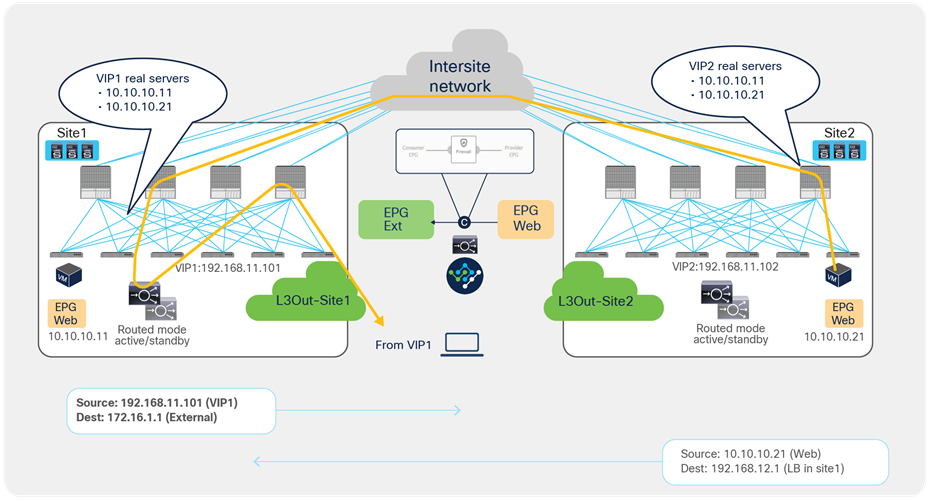

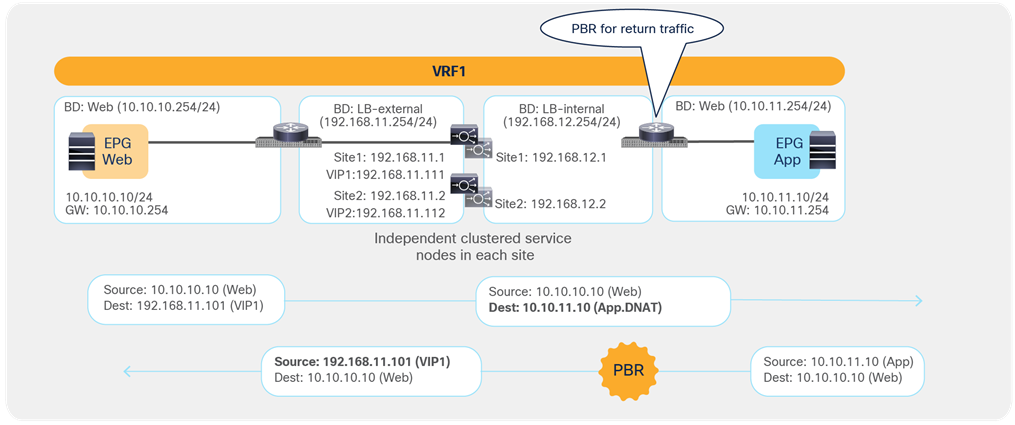

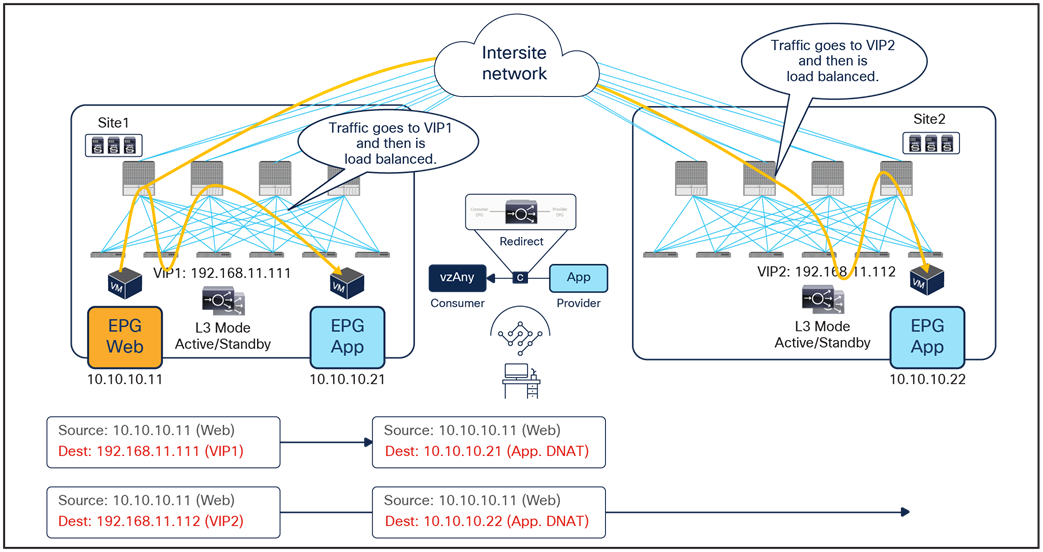

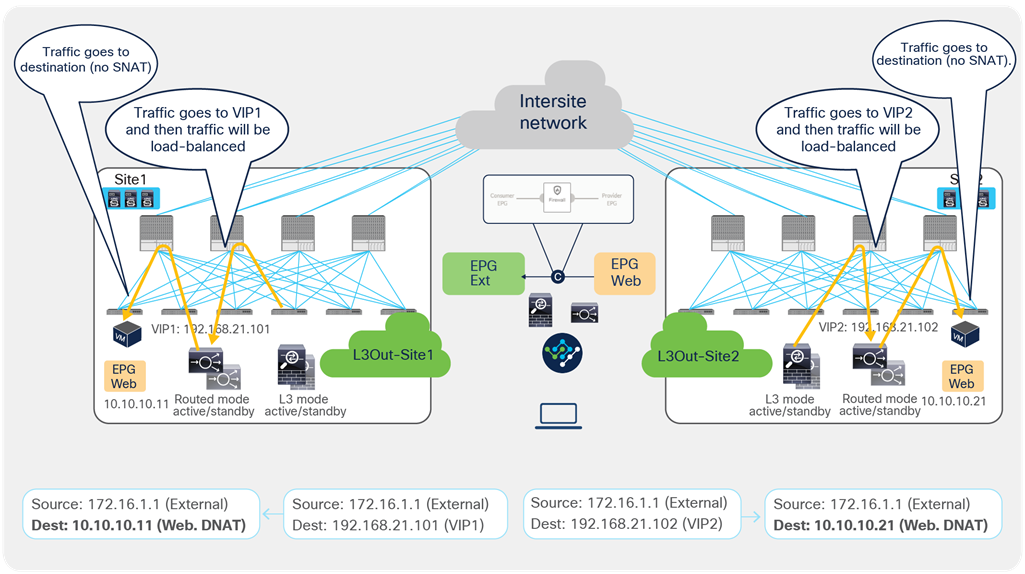

East-west traffic use case (EPG-to-EPG)

Figure 57 shows a typical Cisco ACI network design for east-west-routed load-balancer insertion without SNAT. This design is similar to that for the north-south load-balancer use case previously discussed. The consumer Web EPG and the provider App EPG have a contract with a load-balancer service graph. The endpoints in App EPG are real servers associated to the VIP on the load balancer and must be connected in the same site where the VIP is active.

The assumption here is that the VIP is in the same BD, each load balancer pair has a unique VIP address, and Global Server Load Balancing (GSLB) is used for load balancing to multiple VIPs.

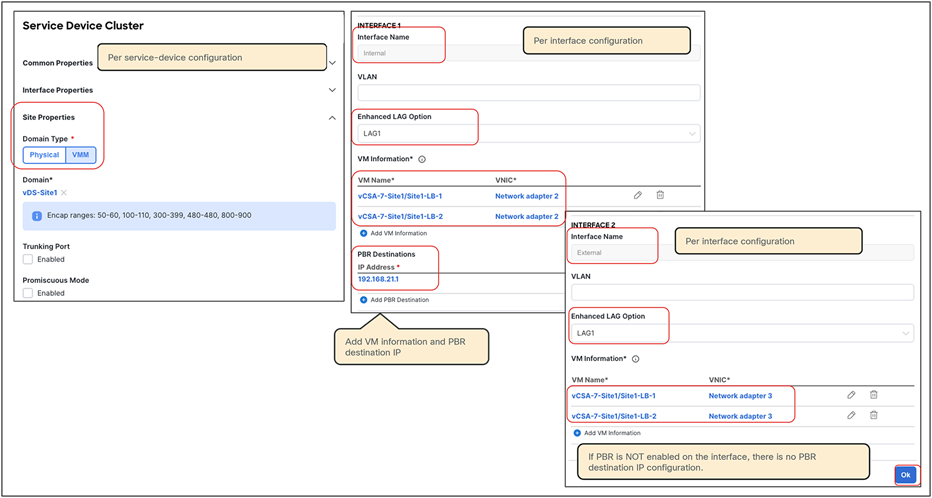

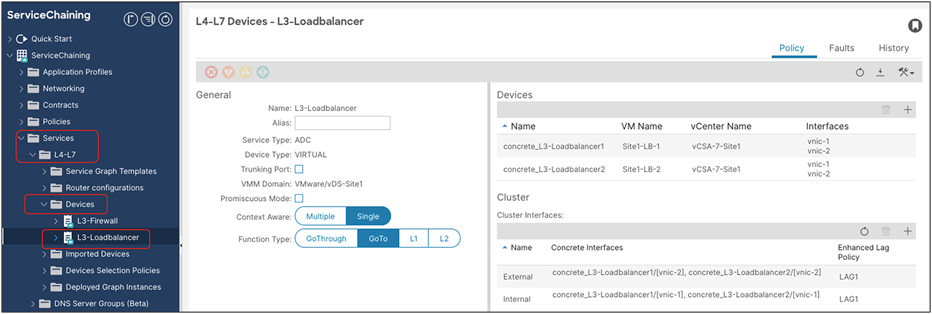

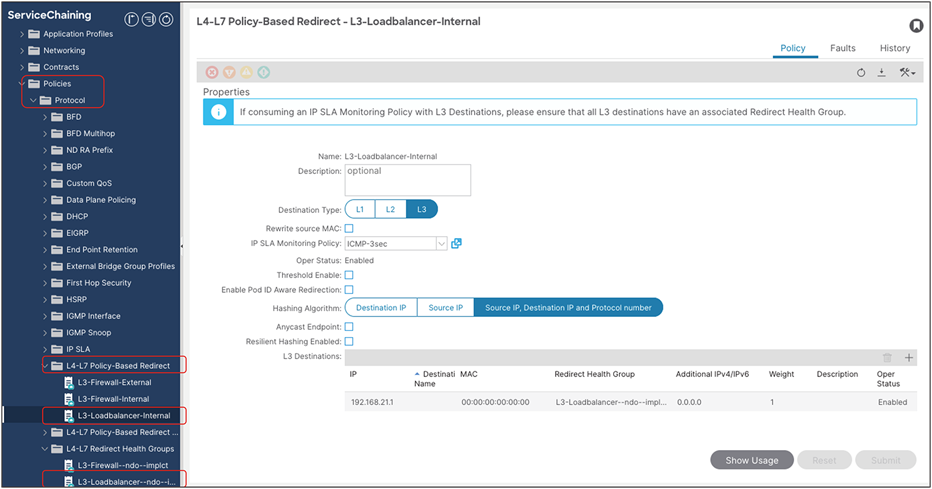

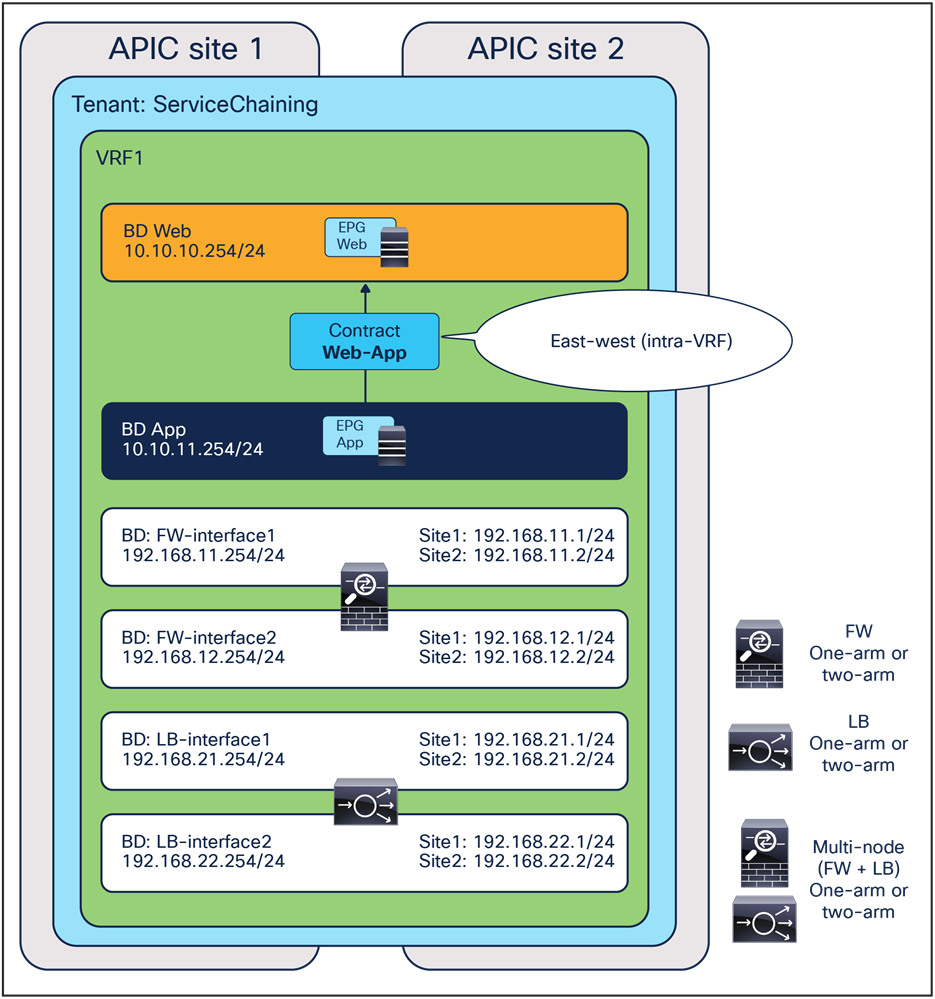

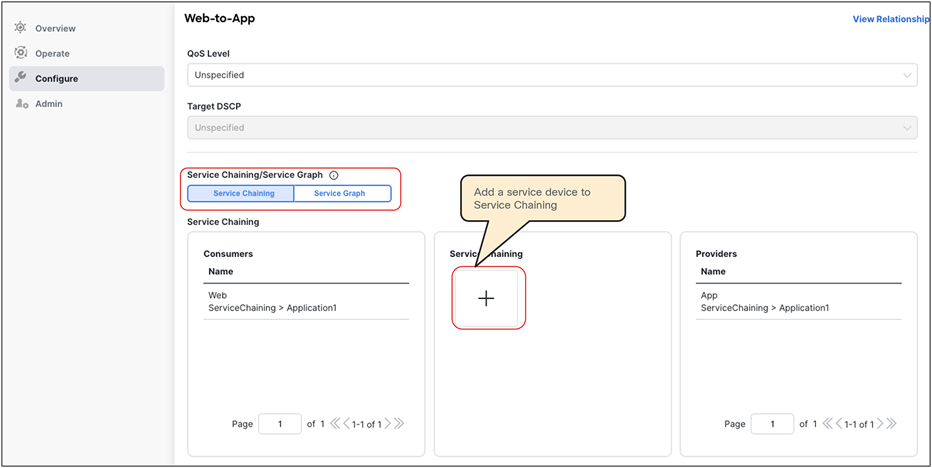

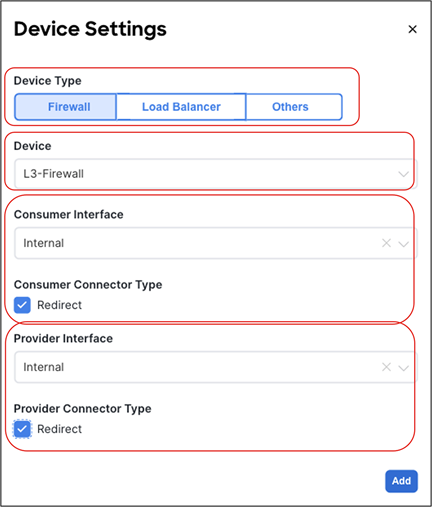

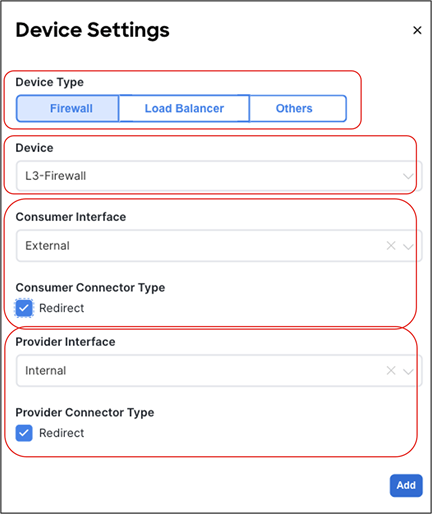

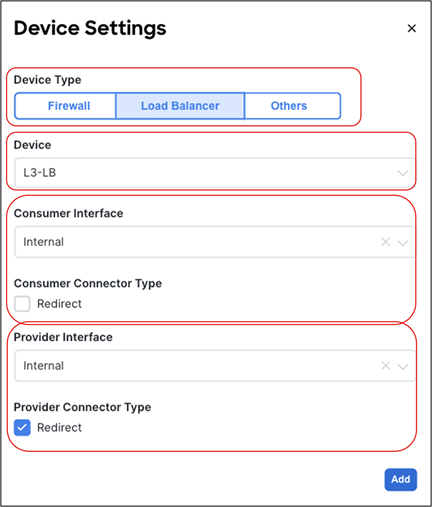

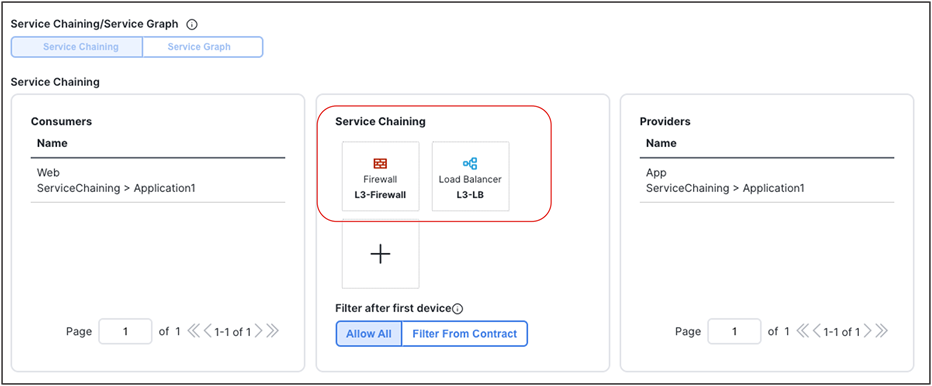

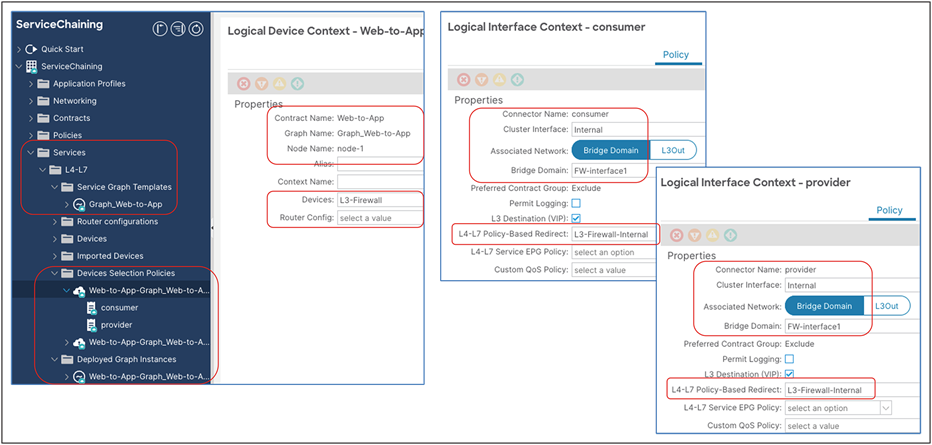

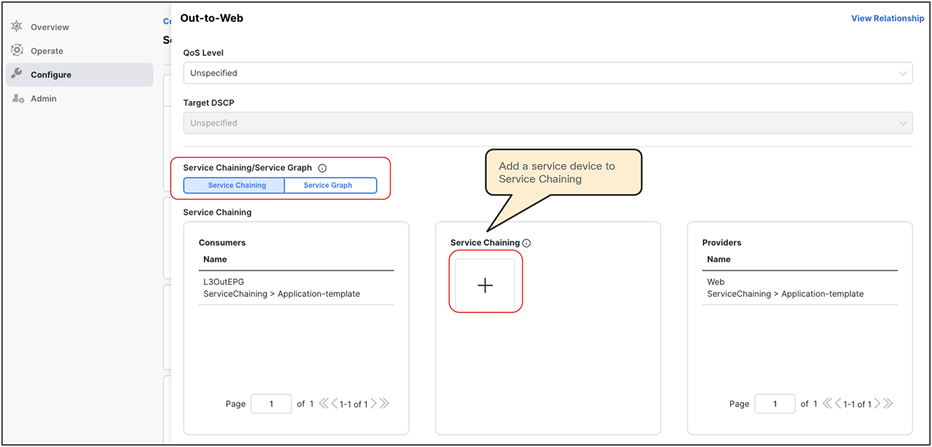

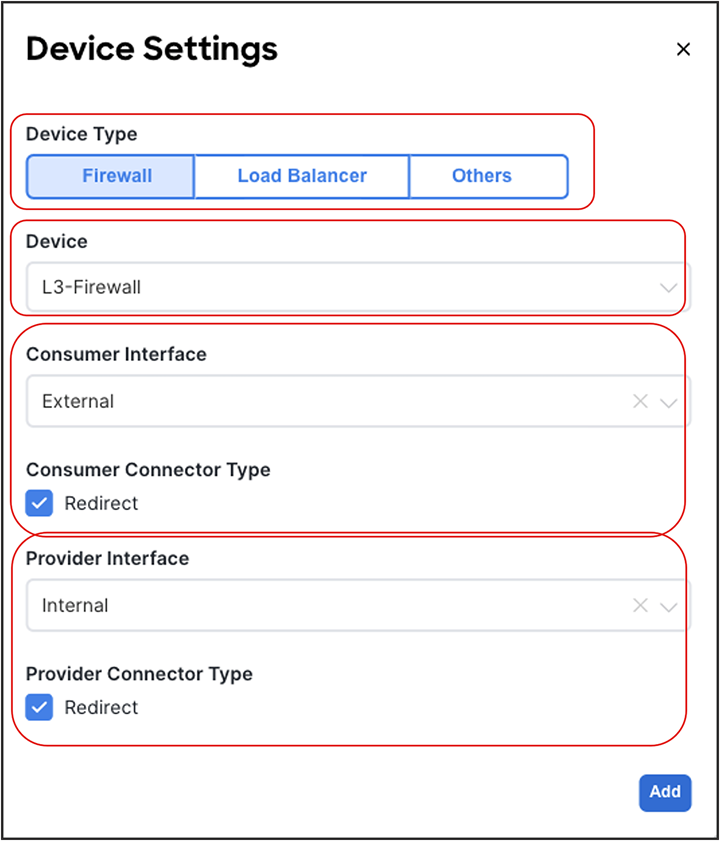

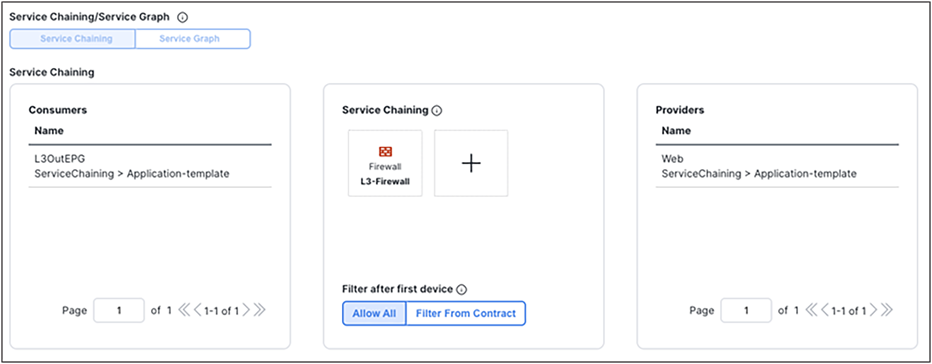

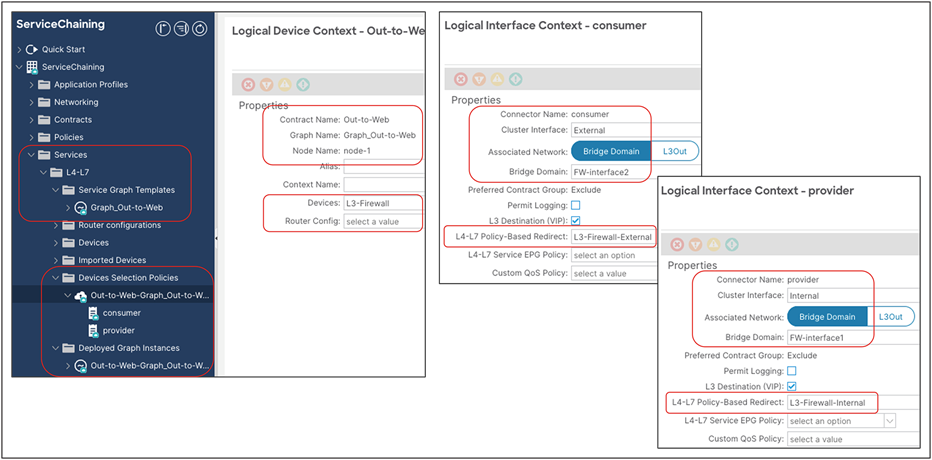

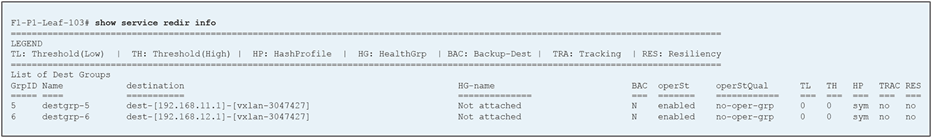

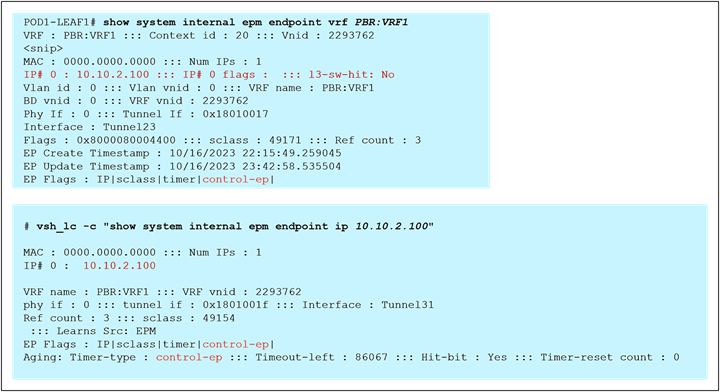

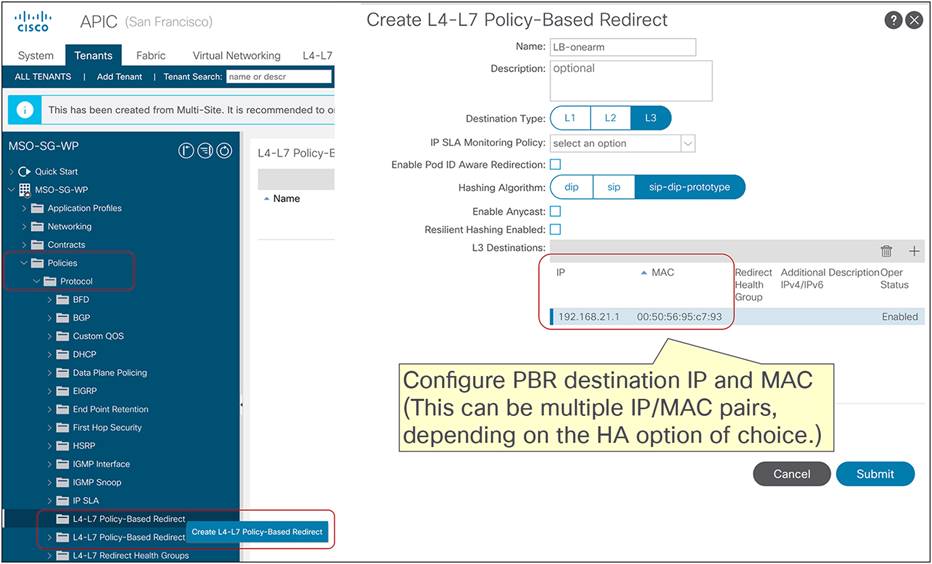

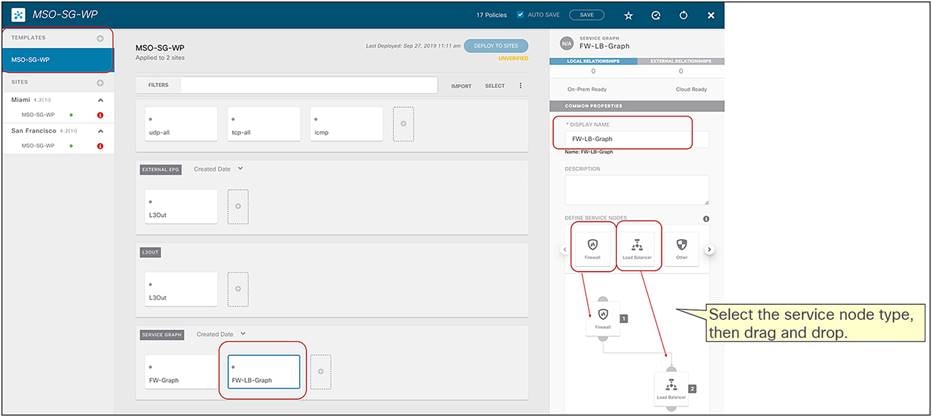

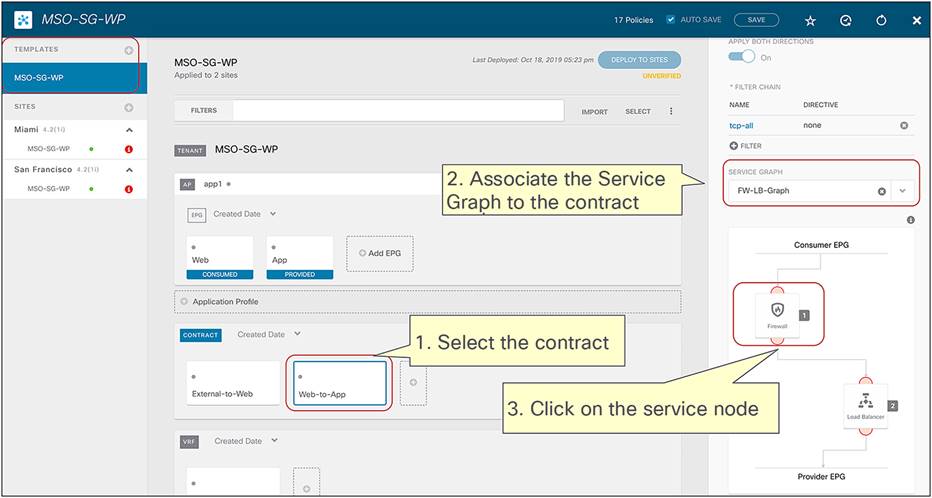

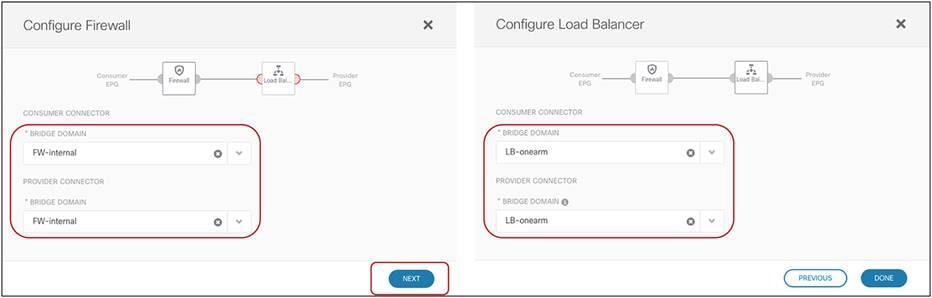

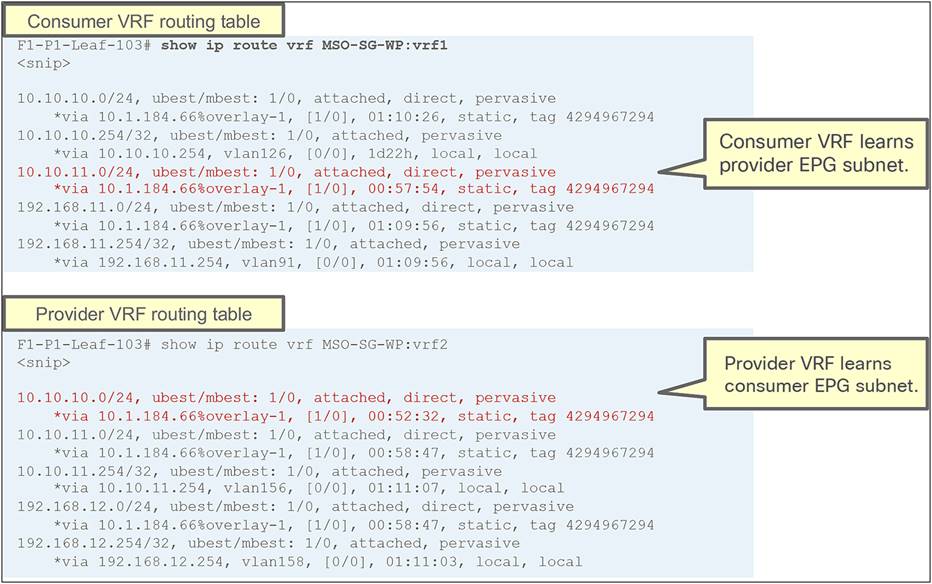

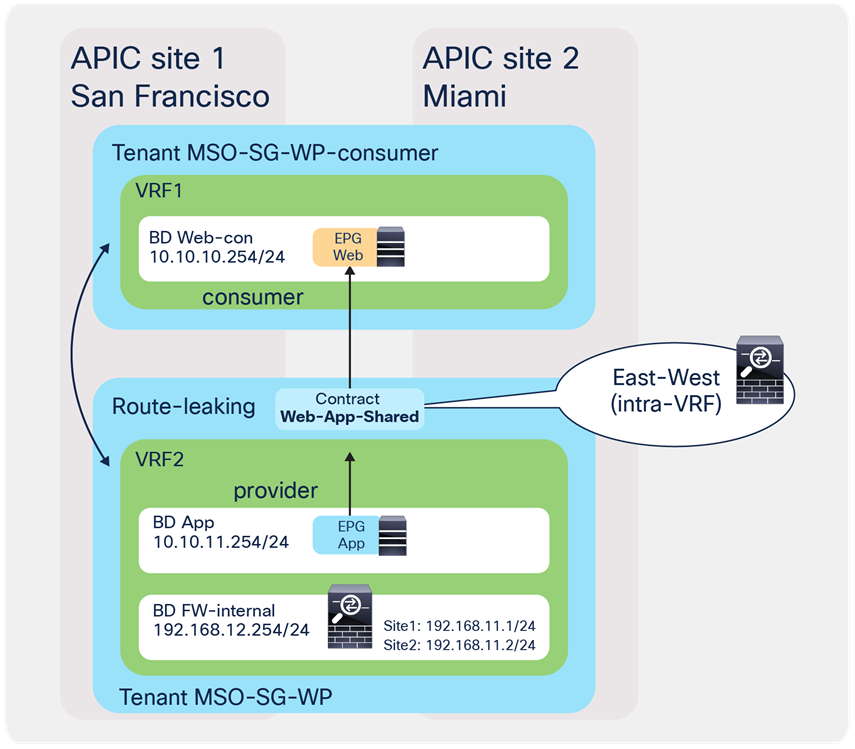

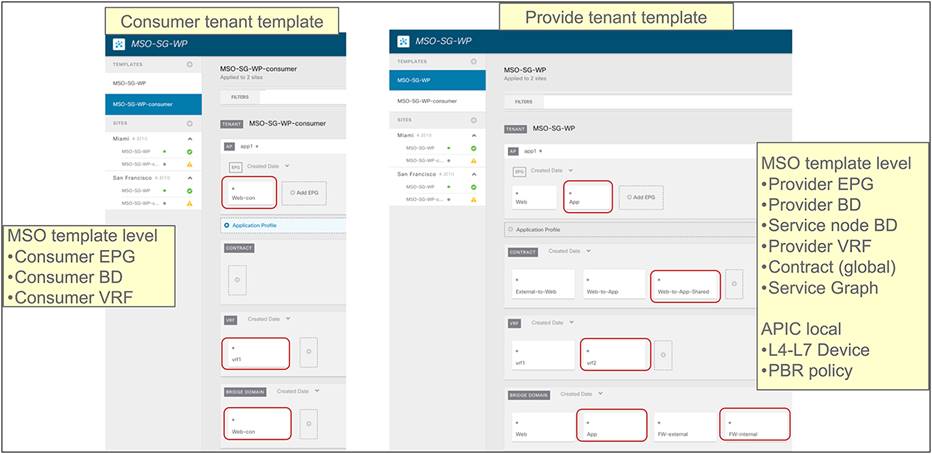

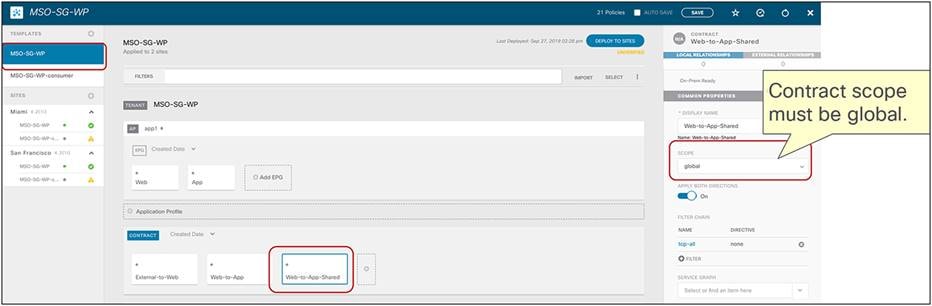

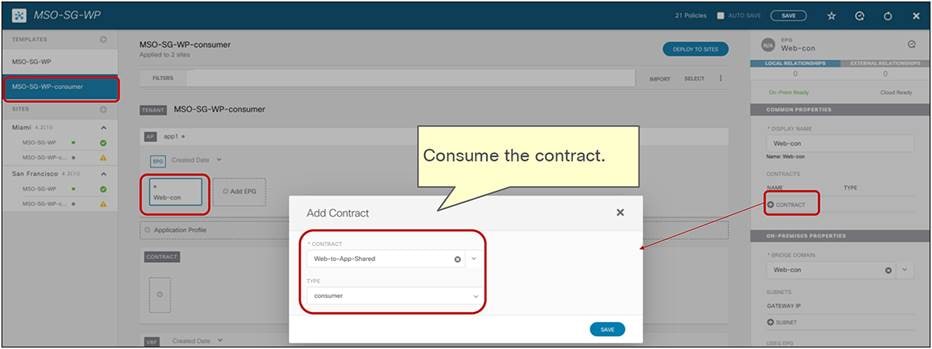

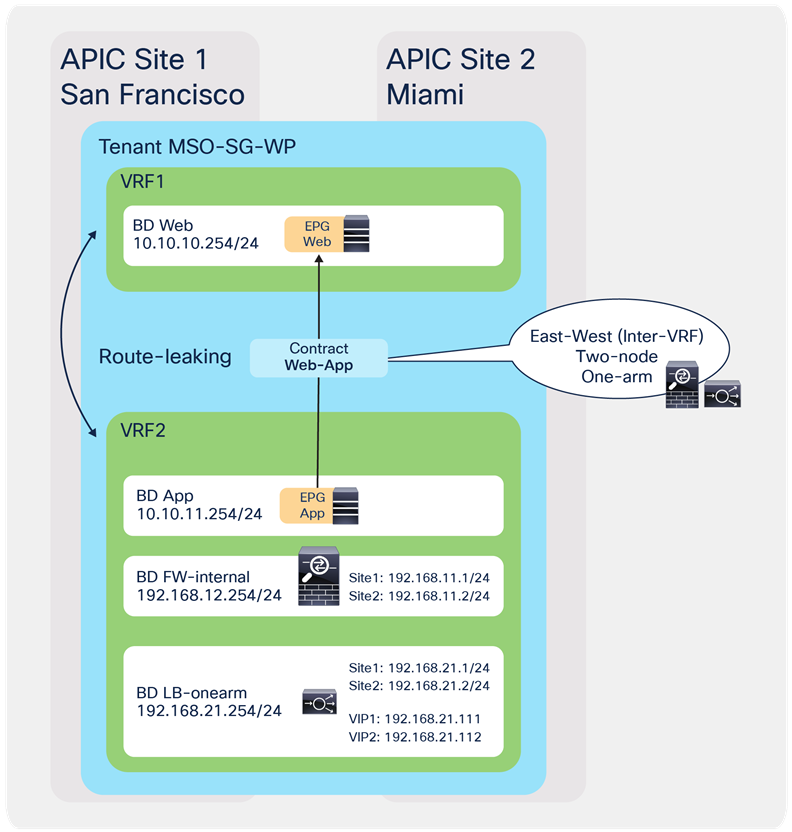

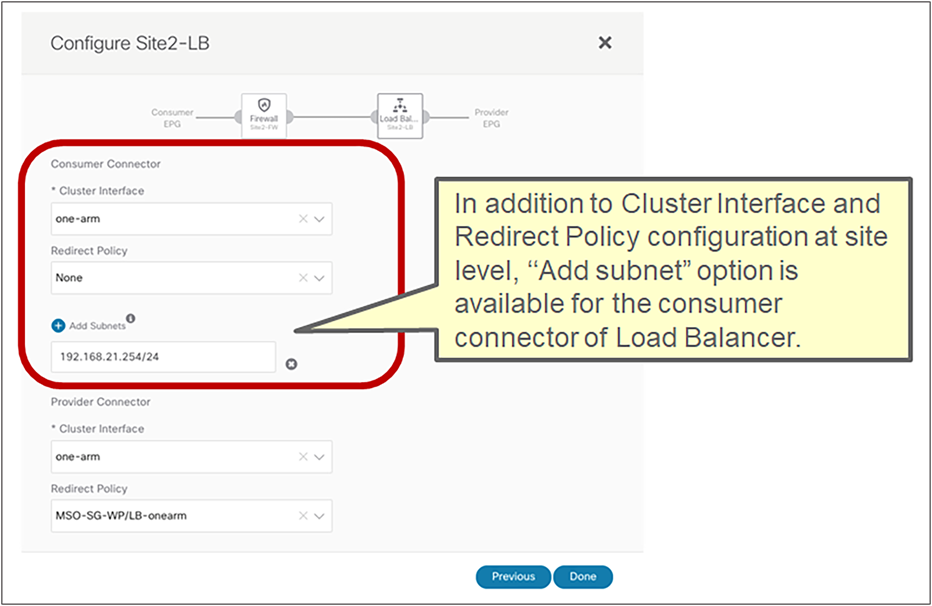

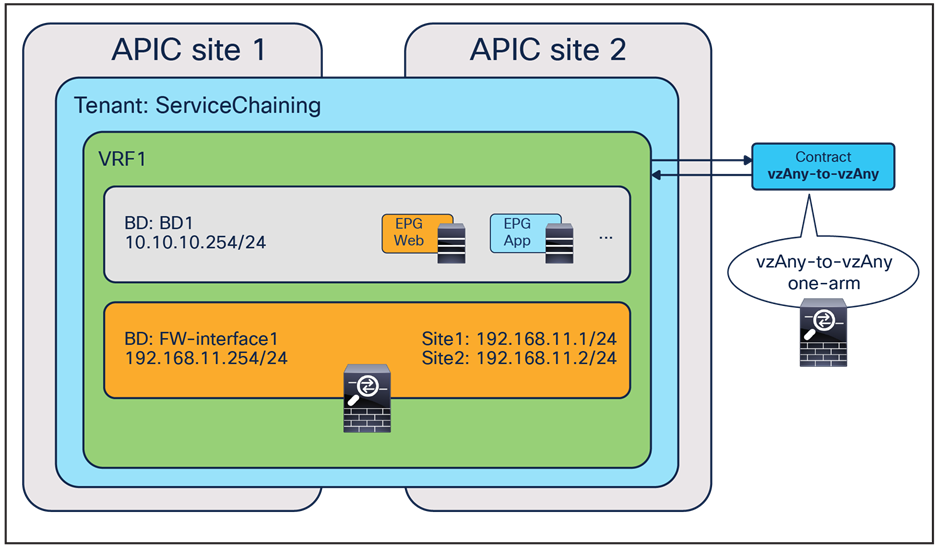

Example of an east-west load-balancer design without SNAT