Introduction

This document describes how to analyze network connectivity (the life of a packet) for a Unified Computing System (UCS) domain in Intersight Managed Mode, and how to identify the internal connections for servers with API Explorer and NXOS commands.

Contributed by Luis Uribe, Cisco TAC engineer.

Prerequisites

Requirements

Cisco recommends that you have knowledge of these topics:

- Intersight

- Physical Network Connectivity

- Application Programming Interface (API)

Components Used

The information in this document is based on these software and hardware versions:

- Cisco UCS 6454 Fabric Interconnect, firmware 4.2(1e)

- UCSB-B200-M5 blade server, firmware 4.2(1a)

- Intersight software as a service (SaaS)

The information in this document was created from the devices in a specific lab environment. All of the devices used in this document started with a cleared (default) configuration. If your network is live, ensure that you understand the potential impact of any command.

Background Information

The connection between the Fabric Interconnects and the virtual Network Interface Cards (vNICs) is established through virtual circuits called Virtual Interfaces (VIFs). These VIFs are pinned to uplinks and allow communication with the upstream network.

In Intersight Managed Mode, there is no command, such as show service-profile circuit, that maps the virtual interfaces to each server. Instead, API Explorer and NXOS commands can be used to determine the relationship of the internal circuits created within the UCS domain.

API Explorer

API Explorer is available from the Graphical User Interface (GUI) of either Fabric Interconnect (primary or subordinate). After you log in to the console, navigate to Inventory, select the server, and then click Launch API Explorer.

The API Explorer contains an API Reference, which lists the available calls. It also includes a representational state transfer (REST) Client interface to test API calls.

Identify VIF through API calls

You can use a set of API calls to determine which VIF corresponds to each vNIC. This makes troubleshooting on NXOS more effective.

For the purpose of this document, navigation with API calls is done through these items: Chassis, Server, Network Adapter, vNIC/vHBA.

| API Call | Syntax |
|---|---|
| GET Chassis ID | /redfish/v1/Chassis |
| GET Adapter ID | /redfish/v1/Chassis/{ChassisId}/NetworkAdapters |
| GET Network details (list of vNICs/vHBAs) | /redfish/v1/Chassis/{ChassisId}/NetworkAdapters/{NetworkAdapterId} |
| GET Network device functions (vNIC configuration) | /redfish/v1/Chassis/{ChassisId}/NetworkAdapters/{NetworkAdapterId}/NetworkDeviceFunctions |
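The navigation order in the table (Chassis, Network Adapter, vNIC/vHBA) can be sketched as a set of small path builders. This is only an illustration of how the URLs nest; the function names are hypothetical, and no request is sent.

```python
from urllib.parse import quote

REDFISH_ROOT = "/redfish/v1"


def chassis_collection() -> str:
    """Path that lists the chassis IDs."""
    return f"{REDFISH_ROOT}/Chassis"


def network_adapters(chassis_id: str) -> str:
    """Path that lists the network adapter IDs of one chassis."""
    return f"{chassis_collection()}/{quote(chassis_id, safe='')}/NetworkAdapters"


def network_adapter(chassis_id: str, adapter_id: str) -> str:
    """Path with the details of one adapter (list of vNICs/vHBAs)."""
    return f"{network_adapters(chassis_id)}/{quote(adapter_id, safe='')}"


def network_device_functions(chassis_id: str, adapter_id: str) -> str:
    """Path that lists the vNIC/vHBA entries of one adapter."""
    return f"{network_adapter(chassis_id, adapter_id)}/NetworkDeviceFunctions"


def network_device_function(chassis_id: str, adapter_id: str, vnic_id: str) -> str:
    """Path with the configuration of a single vNIC or vHBA."""
    return f"{network_device_functions(chassis_id, adapter_id)}/{quote(vnic_id, safe='')}"


if __name__ == "__main__":
    # IDs taken from the lab example in this document.
    print(network_device_function(
        "FLM2402001F", "UCSB-MLOM-40G-04_FCH23527C67", "Vnic-A"))
```

Each builder reuses the previous one, which mirrors the way every call in the table needs the ID returned by the call before it.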

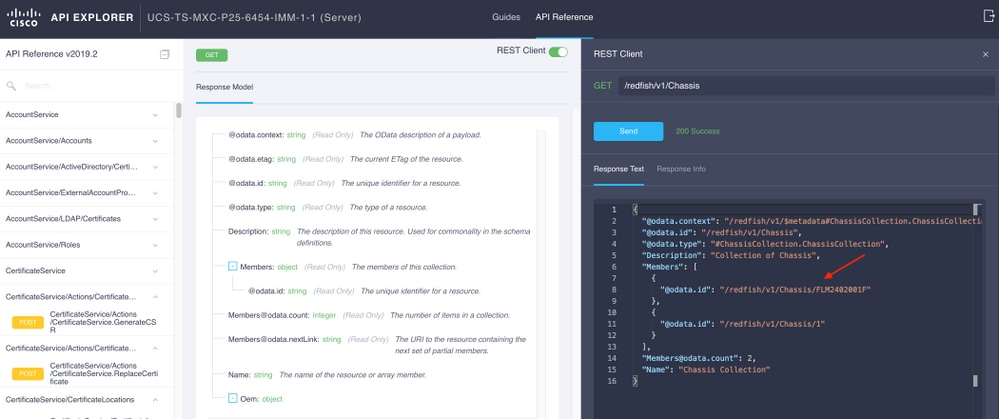

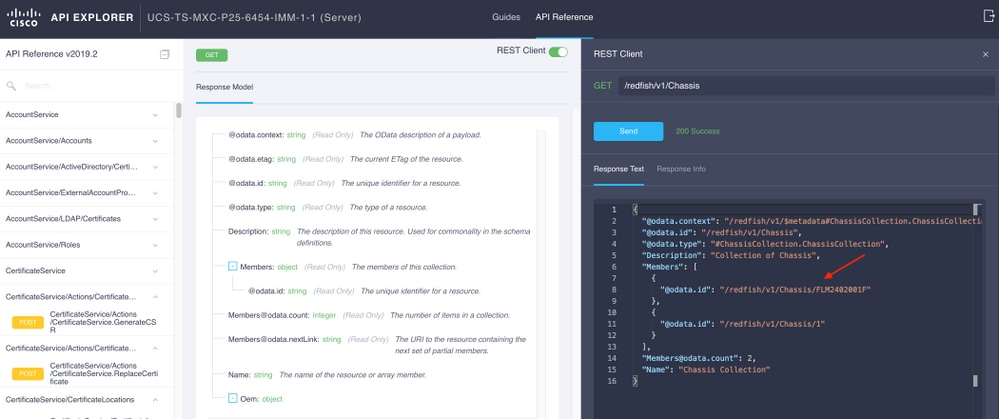

Retrieve Chassis ID

Copy the Chassis ID for the API call.

/redfish/v1/Chassis/FLM2402001F

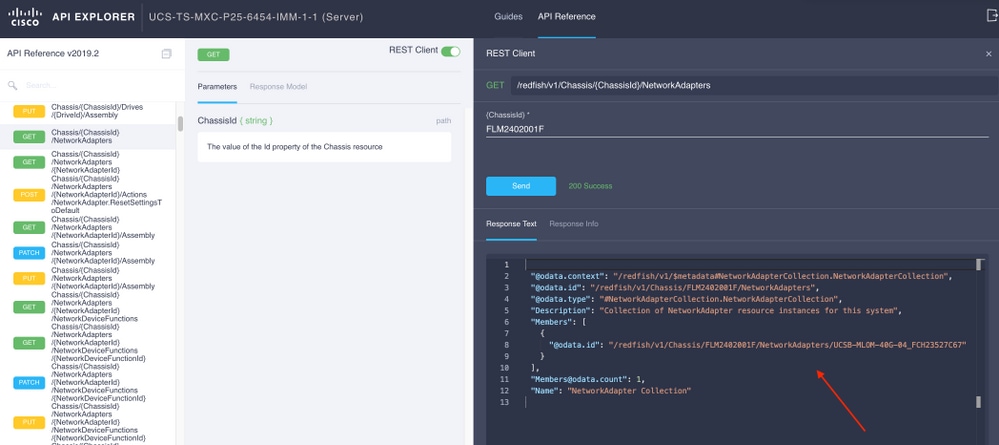

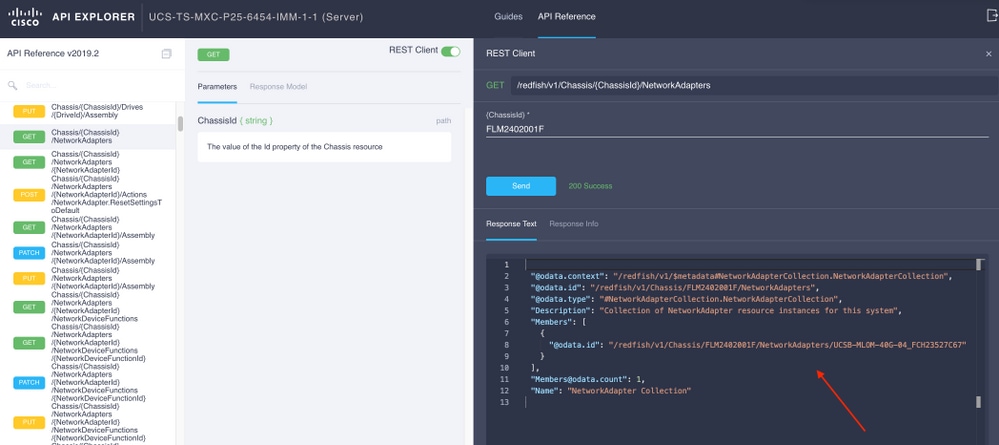

Retrieve the network adapter ID

Copy the network adapter ID for the next API call.

/redfish/v1/Chassis/FLM2402001F/NetworkAdapters/UCSB-MLOM-40G-04_FCH23527C67

Retrieve vNIC ID

Copy the vNIC ID(s) for the next API call.

/redfish/v1/Chassis/FLM2402001F/NetworkAdapters/UCSB-MLOM-40G-04_FCH23527C67/NetworkDeviceFunctions/Vnic-A

/redfish/v1/Chassis/FLM2402001F/NetworkAdapters/UCSB-MLOM-40G-04_FCH23527C67/NetworkDeviceFunctions/Vnic-B

Retrieve the VIF ID of the corresponding vNIC

In this case, Vnic-A is mapped to VIF 800. From here on, NXOS commands refer to this virtual interface as Vethernet800.
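If the response of the last call is saved as JSON, the VIF ID can be pulled out with a generic search instead of reading the whole body by eye. The sketch below is a minimal example under stated assumptions: the key name "VifId" and the nesting of the sample body are hypothetical, so check the actual response in API Explorer and adjust the key accordingly.

```python
from typing import Any, Iterator


def find_key(node: Any, key: str) -> Iterator[Any]:
    """Yield every value stored under `key` anywhere in a nested JSON structure."""
    if isinstance(node, dict):
        for name, value in node.items():
            if name == key:
                yield value
            # Keep descending: the key may appear deeper in the tree as well.
            yield from find_key(value, key)
    elif isinstance(node, list):
        for item in node:
            yield from find_key(item, key)


# Hypothetical, trimmed response body. The "VifId" key and its location
# are assumptions made for illustration only.
sample_response = {
    "Id": "Vnic-A",
    "Oem": {"Cisco": {"VnicConfiguration": {"VifId": 800}}},
}

vif_ids = list(find_key(sample_response, "VifId"))
print(vif_ids)
```

A recursive search is used on purpose so that the example does not depend on the exact layout of the vendor-specific section of the response.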

Identifying VIF with NXOS and Grep Filters

If API Explorer is not available, or you have no access to the GUI, CLI commands can be used to retrieve VIF information.

Note: You must know the Server Profile name in order to use these commands.

UCS-TS-MXC-P25-6454-IMM-A(nx-os)# show run interface | grep prev 1 IMM-Server-1

switchport trunk allowed vsan 1

switchport description SP IMM-Server-1, vHBA vhba-a, Blade:FLM2402001F

--

interface Vethernet800

description SP IMM-Server-1, vNIC Vnic-A, Blade:FLM2402001F

--

interface Vethernet803

description SP IMM-Server-1, vNIC Vnic-b, Blade:FLM2402001F

--

interface Vethernet804

description SP IMM-Server-1, vHBA vhba-a, Blade:FLM2402001F

| Command Syntax | Use |
|---|---|
| show run interface \| grep prev 1 <server profile name> | Lists Vethernets associated with each vNIC/vHBA |
| show run interface \| grep prev 1 next 10 <server profile name> | Lists detailed Vethernet configuration |
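When the filtered output is captured to a file or a variable, the vNIC-to-Vethernet mapping can be extracted with a short script. This is a minimal sketch that assumes the description format shown in the sample output above (SP <profile>, vNIC|vHBA <name>, ...); the function name is hypothetical.

```python
import re

# Matches "description SP IMM-Server-1, vNIC Vnic-A, Blade:..." style lines.
DESC = re.compile(r"description SP (?P<profile>[^,]+), (?P<kind>vNIC|vHBA) (?P<name>[^,]+),")


def map_vethernets(output: str) -> dict[str, str]:
    """Return {"vNIC Vnic-A": "Vethernet800", ...} from the grep output."""
    mapping: dict[str, str] = {}
    current = None  # interface named in the current "--" separated group
    for raw in output.splitlines():
        line = raw.strip()
        if line == "--":
            current = None
        elif line.startswith("interface "):
            current = line.split(None, 1)[1]
        elif current:
            match = DESC.search(line)
            if match:
                mapping[f"{match['kind']} {match['name']}"] = current
    return mapping


SAMPLE = """\
switchport trunk allowed vsan 1
switchport description SP IMM-Server-1, vHBA vhba-a, Blade:FLM2402001F
--
interface Vethernet800
description SP IMM-Server-1, vNIC Vnic-A, Blade:FLM2402001F
--
interface Vethernet803
description SP IMM-Server-1, vNIC Vnic-b, Blade:FLM2402001F
--
interface Vethernet804
description SP IMM-Server-1, vHBA vhba-a, Blade:FLM2402001F
"""

if __name__ == "__main__":
    for vnic, veth in map_vethernets(SAMPLE).items():
        print(f"{vnic} -> {veth}")
```

Groups whose interface line falls outside the one line of context, such as the first group in the sample, are skipped rather than guessed.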

NXOS Troubleshoot

Once the vNIC has been mapped to the corresponding Vethernet, analysis can be done on NXOS with the same commands used to troubleshoot physical interfaces.

On NXOS, a vNIC appears as a Vethernet interface, abbreviated Veth.

The show interface brief command shows Veth800 in a down state, with ENM Source Pin Fail as the reason.

UCS-TS-MXC-P25-6454-IMM-A# connect nxos

UCS-TS-MXC-P25-6454-IMM-A(nx-os)# show interface brief | grep -i Veth800

Veth800 1 virt trunk down ENM Source Pin Fail auto
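To check many Vethernets at once, each row of this output can be split into fields. The sketch below assumes the column order interface, VLAN, type, mode, status, reason, speed, and treats everything between the status and the last column as the reason, since the reason can contain spaces. The class and function names are hypothetical.

```python
from typing import NamedTuple


class BriefRow(NamedTuple):
    interface: str
    vlan: str
    type: str
    mode: str
    status: str
    reason: str
    speed: str


def parse_brief_row(line: str) -> BriefRow:
    """Split one Vethernet row of 'show interface brief' into named fields."""
    tokens = line.split()
    if len(tokens) < 7:
        raise ValueError(f"unexpected row format: {line!r}")
    interface, vlan, if_type, mode, status = tokens[:5]
    # The reason may span several words; the speed is always the last token.
    reason = " ".join(tokens[5:-1])
    return BriefRow(interface, vlan, if_type, mode, status, reason, tokens[-1])


if __name__ == "__main__":
    row = parse_brief_row("Veth800 1 virt trunk down ENM Source Pin Fail auto")
    if row.status != "up":
        print(f"{row.interface} is {row.status}: {row.reason}")
```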

The show interface command shows that Vethernet800 is in an initializing state.

UCS-TS-MXC-P25-6454-IMM-A(nx-os)# show interface Vethernet 800

Vethernet800 is down (initializing)

Port description is SP IMM-Server-1, vNIC Vnic-A, Blade:FLM2402001F

Hardware is Virtual, address is 0000.abcd.dcba

Port mode is trunk

Speed is auto-speed

Duplex mode is auto

300 seconds input rate 0 bits/sec, 0 packets/sec

300 seconds output rate 0 bits/sec, 0 packets/sec

Rx

0 unicast packets 0 multicast packets 0 broadcast packets

0 input packets 0 bytes

0 input packet drops

Tx

0 unicast packets 0 multicast packets 0 broadcast packets

0 output packets 0 bytes

0 flood packets

0 output packet drops

UCS-TS-MXC-P25-6454-IMM-A(nx-os)# show running-config interface Vethernet 800

!Command: show running-config interface Vethernet800

!Running configuration last done at: Mon Sep 27 16:03:46 2021

!Time: Tue Sep 28 14:35:22 2021

version 9.3(5)I42(1e) Bios:version 05.42

interface Vethernet800

description SP IMM-Server-1, vNIC Vnic-A, Blade:FLM2402001F

no lldp transmit

no lldp receive

no pinning server sticky

pinning server pinning-failure link-down

no cdp enable

switchport mode trunk

switchport trunk allowed vlan 1,470

hardware vethernet mac filtering per-vlan

bind interface port-channel1280 channel 800

service-policy type qos input default-IMM-QOS

no shutdown

A VIF must be pinned to an uplink interface. In this scenario, show pinning border-interfaces does not display the Vethernet pinned to any uplink.

UCS-TS-MXC-P25-6454-IMM-A(nx-os)# show pinning border-interfaces

--------------------+---------+----------------------------------------

Border Interface Status SIFs

--------------------+---------+----------------------------------------

Eth1/45 Active sup-eth1

Eth1/46 Active Eth1/1/33

This indicates that the uplinks require additional configuration. This output shows the running configuration of Ethernet uplink 1/45.

UCS-TS-MXC-P25-6454-IMM-B(nx-os)# show running-config interface ethernet 1/45

!Command: show running-config interface Ethernet1/45

!No configuration change since last restart

!Time: Wed Sep 29 05:15:21 2021

version 9.3(5)I42(1e) Bios:version 05.42

interface Ethernet1/45

description Uplink

pinning border

switchport mode trunk

switchport trunk allowed vlan 69,470

no shutdown

The show mac address-table command shows that Veth800 uses VLAN 1, which is not present on the uplinks.

UCS-TS-MXC-P25-6454-IMM-A(nx-os)# show mac address-table

Legend:

* - primary entry, G - Gateway MAC, (R) - Routed MAC, O - Overlay MAC

age - seconds since last seen,+ - primary entry using vPC Peer-Link,

(T) - True, (F) - False, C - ControlPlane MAC, ~ - vsan

VLAN MAC Address Type age Secure NTFY Ports

---------+-----------------+--------+---------+------+----+------------------

* 1 0025.b501.0036 static - F F Veth800

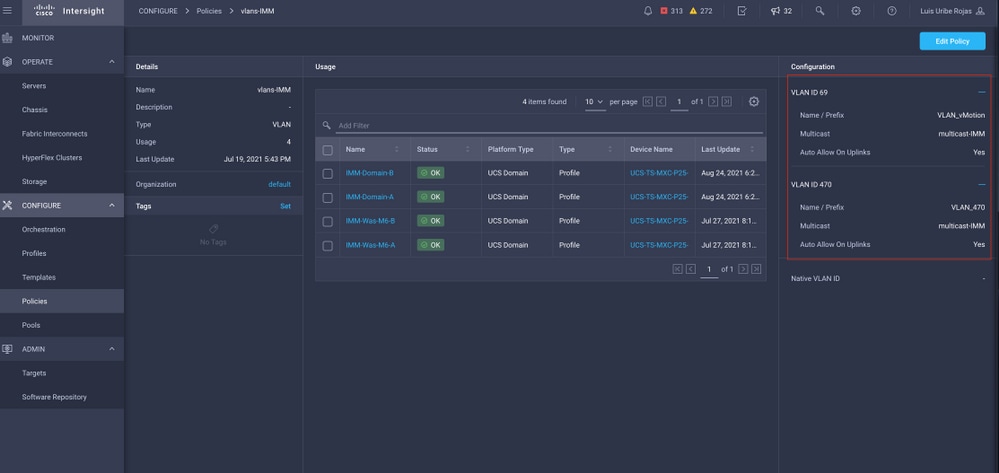

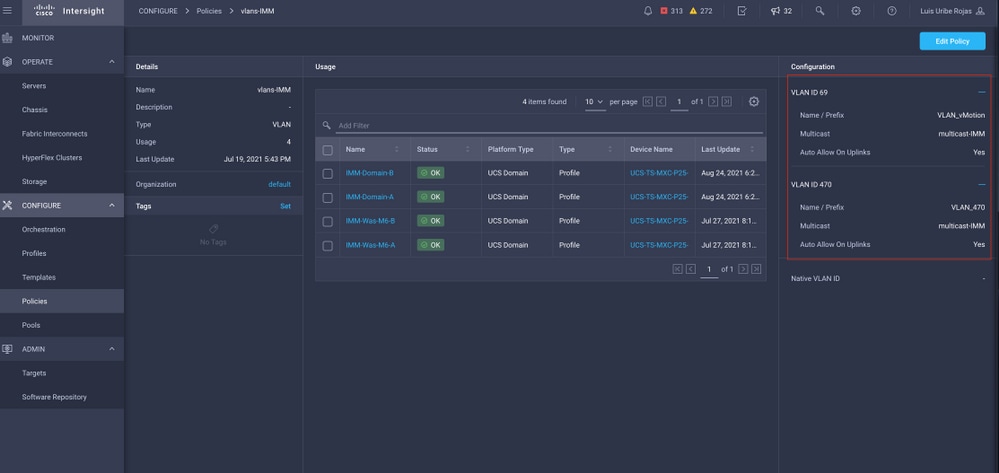

In a UCS domain, the VLAN in use must be included on both the vNIC and the uplinks. The VLAN policy configures the VLANs on the Fabric Interconnects. The image shows the configuration of this UCS domain.

VLAN 1 is not present in the policy, so it must be added.
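The comparison between the VLANs allowed on the Vethernet and on the uplink can be expressed as a set difference. This is a minimal sketch that takes the two switchport trunk allowed vlan lines from the running configurations shown earlier; the function name is hypothetical, and range notation such as 470-472 is expanded as well.

```python
def parse_vlans(line: str) -> set[int]:
    """Expand 'switchport trunk allowed vlan 1,69,470-472' into a set of VLAN IDs."""
    spec = line.split("vlan", 1)[1].strip()
    vlans: set[int] = set()
    for part in spec.split(","):
        low, _, high = part.partition("-")
        # A single ID has no upper bound, so the range collapses to one value.
        vlans.update(range(int(low), int(high or low) + 1))
    return vlans


veth_vlans = parse_vlans("switchport trunk allowed vlan 1,470")
uplink_vlans = parse_vlans("switchport trunk allowed vlan 69,470")

# VLANs the vNIC carries that no uplink can forward.
missing_on_uplink = veth_vlans - uplink_vlans
print(sorted(missing_on_uplink))
```

With the values from this lab, the difference contains only VLAN 1, which matches the VLAN seen in the MAC address table for Veth800.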

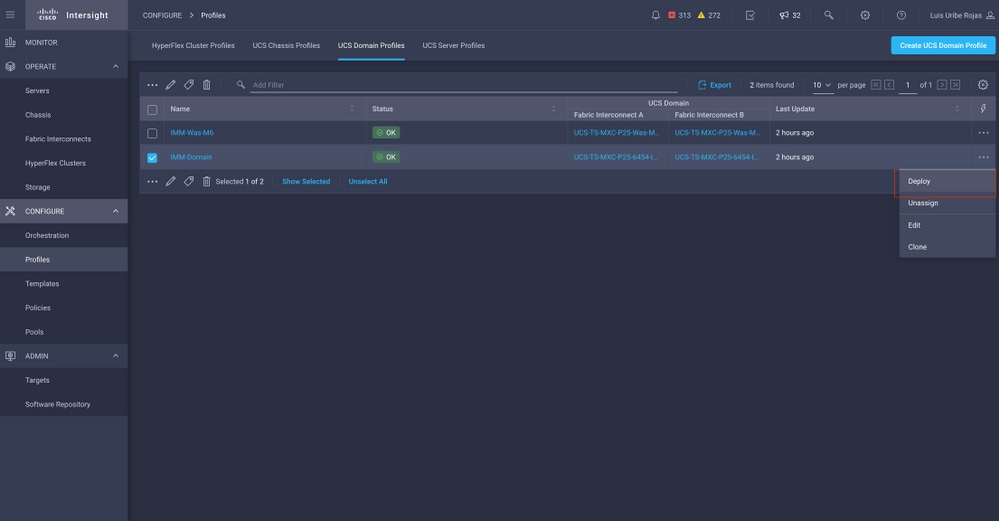

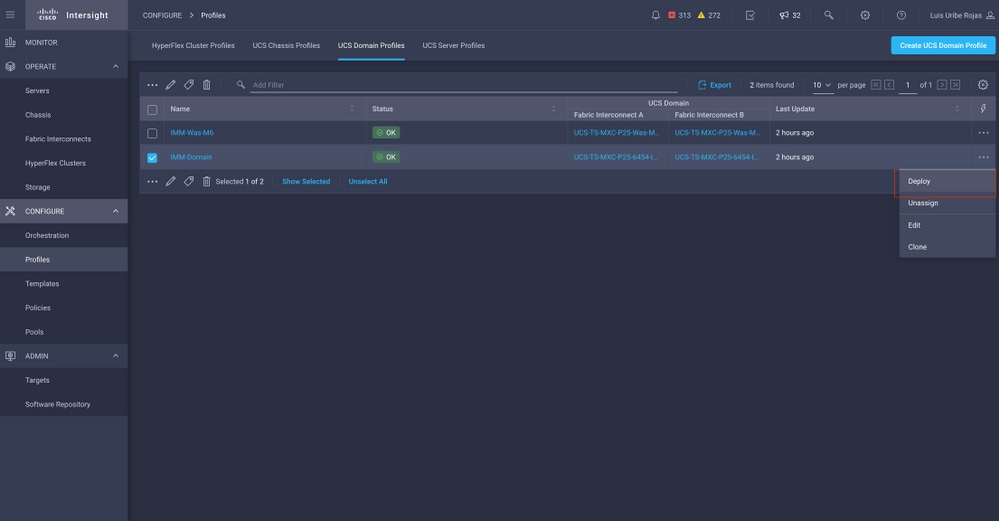

Select Edit Policy to add the VLAN and allow connectivity. This change requires the UCS Domain Profile to be deployed.

VLAN assignment can be verified from the CLI:

UCS-TS-MXC-P25-6454-IMM-A(nx-os)# show running-config interface ethernet 1/45

!Command: show running-config interface Ethernet1/45

!Running configuration last done at: Wed Sep 29 07:50:43 2021

!Time: Wed Sep 29 07:59:31 2021

version 9.3(5)I42(1e) Bios:version 05.42

interface Ethernet1/45

description Uplink

pinning border

switchport mode trunk

switchport trunk allowed vlan 1,69,470

udld disable

no shutdown

UCS-TS-MXC-P25-6454-IMM-A(nx-os)#

Now that the necessary VLAN has been added, the same set of commands can be used to verify connectivity on Vethernet800:

UCS-TS-MXC-P25-6454-IMM-A(nx-os)# show interface brief | grep -i Veth800

Veth800 1 virt trunk up none auto

UCS-TS-MXC-P25-6454-IMM-A(nx-os)# show interface Vethernet 800

Vethernet800 is up

Port description is SP IMM-Server-1, vNIC Vnic-A, Blade:FLM2402001F

Hardware is Virtual, address is 0000.abcd.dcba

Port mode is trunk

Speed is auto-speed

Duplex mode is auto

300 seconds input rate 0 bits/sec, 0 packets/sec

300 seconds output rate 0 bits/sec, 0 packets/sec

Rx

0 unicast packets 1 multicast packets 6 broadcast packets

7 input packets 438 bytes

0 input packet drops

Tx

0 unicast packets 25123 multicast packets 137089 broadcast packets

162212 output packets 11013203 bytes

0 flood packets

0 output packet drops

UCS-TS-MXC-P25-6454-IMM-A(nx-os)# show running-config interface Vethernet 800

!Command: show running-config interface Vethernet800

!Running configuration last done at: Wed Sep 29 07:50:43 2021

!Time: Wed Sep 29 07:55:51 2021

version 9.3(5)I42(1e) Bios:version 05.42

interface Vethernet800

description SP IMM-Server-1, vNIC Vnic-A, Blade:FLM2402001F

no lldp transmit

no lldp receive

no pinning server sticky

pinning server pinning-failure link-down

switchport mode trunk

switchport trunk allowed vlan 1,69,470

hardware vethernet mac filtering per-vlan

bind interface port-channel1280 channel 800

service-policy type qos input default-IMM-QOS

no shutdown

Veth800 is now listed among the interfaces pinned to the uplink Ethernet interfaces:

UCS-TS-MXC-P25-6454-IMM-A(nx-os)# show pinning border-interfaces

--------------------+---------+----------------------------------------

Border Interface Status SIFs

--------------------+---------+----------------------------------------

Eth1/45 Active sup-eth1 Veth800 Veth803

Eth1/46 Active Eth1/1/33

Total Interfaces : 2

UCS-TS-MXC-P25-6454-IMM-A(nx-os)#
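The pinning check can also be scripted against captured output. The sketch below assumes the three-column layout shown above (border interface, status, server interfaces) and that border interfaces are named Eth... or Po...; the function names are hypothetical.

```python
import re
from typing import Optional

# Border interface, status, then zero or more server interfaces (SIFs).
ROW = re.compile(r"^(?P<border>(?:Eth|Po)\d\S*)\s+(?P<status>\S+)(?P<sifs>.*)$")


def parse_pinning(output: str) -> dict[str, list[str]]:
    """Return {border interface: [pinned SIFs]}; header and footer lines are ignored."""
    pinned: dict[str, list[str]] = {}
    for raw in output.splitlines():
        match = ROW.match(raw.strip())
        if match:
            pinned[match["border"]] = match["sifs"].split()
    return pinned


def uplink_for(output: str, sif: str) -> Optional[str]:
    """Return the border interface a SIF is pinned to, or None if it is not pinned."""
    for border, sifs in parse_pinning(output).items():
        if sif in sifs:
            return border
    return None


BEFORE = """\
Eth1/45 Active sup-eth1
Eth1/46 Active Eth1/1/33
"""

AFTER = """\
Border Interface Status SIFs
Eth1/45 Active sup-eth1 Veth800 Veth803
Eth1/46 Active Eth1/1/33
Total Interfaces : 2
"""

if __name__ == "__main__":
    print(uplink_for(BEFORE, "Veth800"))  # not pinned before the VLAN fix
    print(uplink_for(AFTER, "Veth800"))   # pinned after the VLAN fix
```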

VIFs are now ready to transmit traffic to the upstream network.
