Multicast Support for MPLS VPNs Configuration Example

Introduction

This document provides a sample configuration and general guidelines to configure multicast support for Multiprotocol Label Switching (MPLS) VPNs. This feature was introduced in Cisco IOS® Software Release 12.0(23)S and 12.2(13)T.

Prerequisites

Requirements

Before attempting this configuration, ensure that you meet these requirements:

- Service providers must have a multicast-enabled core in order to use the Cisco Multicast VPN feature.

Components Used

The information in this document is based on Cisco IOS Software Release 12.2(13)T.

Note: To obtain updated information about platform support for this feature, use the Software Advisor (registered customers only). The Software Advisor dynamically updates the list of supported platforms as new platform support is added for the feature.

The information in this document was created from the devices in a specific lab environment. All of the devices used in this document started with a cleared (default) configuration. If your network is live, make sure that you understand the potential impact of any command.

Conventions

For more information on document conventions, refer to Cisco Technical Tips Conventions.

Background Information

For background information, refer to the Cisco IOS Software Release 12.2(13)T new feature documentation for IP Multicast Support for MPLS VPNs.

Configure

In this section, you are presented with the information to configure the features described in this document.

Network Diagram

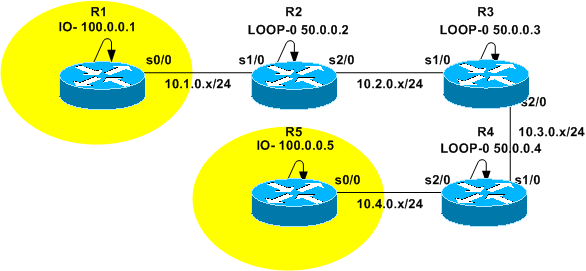

This document uses the network setup shown in this diagram.

Configurations

The network diagram represents a service provider's backbone. This consists of routers R2, R3, and R4. The backbone is configured to support MPLS VPN. R2 and R4 are Provider Edge (PE) routers while R3 is a Provider (P) router. R1 and R5 represent Customer Edge (CE) routers that belong to the same VPN Routing and Forwarding (VRF) instance, yellow.

In order to provide multicast services, the backbone must be configured to run multicast routing. The multicast protocol selected for this purpose is Protocol Independent Multicast (PIM), and R3 is configured as the Rendezvous Point (RP). R2 and R4 are also configured to run multicast routing into VRF yellow. PIM sparse-dense mode is configured as the multicast routing protocol between the PEs and the CEs. R2 has been configured to be the RP for VRF yellow.

In order to test the multicast connectivity, the s0/0 interface of R5 is configured to join multicast group 224.2.2.2. Pings are sent from R1's loopback address to 224.2.2.2. The Internet Control Message Protocol (ICMP) echo is a multicast packet, while the ICMP reply is a unicast packet since the IP destination address is the R1 loopback address.
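The addressing logic behind this test can be illustrated with Python's standard `ipaddress` module. This is only a minimal sketch: the helper name `delivery_mode` is hypothetical, and the addresses are the group address and the R1 loopback address used in this document.

```python
import ipaddress


def delivery_mode(destination: str) -> str:
    """Classify an IPv4 destination address as 'multicast' or 'unicast'."""
    addr = ipaddress.IPv4Address(destination)
    return "multicast" if addr.is_multicast else "unicast"


# The ICMP echo from R1 is addressed to the group joined by R5.
echo_mode = delivery_mode("224.2.2.2")
# The ICMP reply from R5 is addressed to the R1 loopback.
reply_mode = delivery_mode("100.0.0.1")
print(f"echo: {echo_mode}, reply: {reply_mode}")
```

The echo therefore depends on the multicast path through the MVPN, while the reply depends only on unicast reachability inside VRF yellow.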

The configurations presented in this document include these:

R1 (CE)

    version 12.2
    service timestamps debug datetime msec
    service timestamps log datetime msec
    no service password-encryption
    !
    hostname R1
    !
    !
    clock timezone CET 1
    ip subnet-zero
    no ip domain lookup
    !
    ip multicast-routing
    !--- Enable multicast routing.
    !
    !
    interface Loopback0
     ip address 100.0.0.1 255.255.255.255
    !
    interface Serial0/0
     ip address 10.1.0.1 255.255.255.0
     ip pim sparse-dense-mode
    !--- PIM sparse-dense mode is used between the PE and CE.
    !--- PIM sparse-dense mode is the multicast routing protocol.
    !
    router rip
     version 2
     network 10.0.0.0
     network 100.0.0.0
     no auto-summary
    !
    ip classless
    no ip http server
    ip pim bidir-enable
    !
    !
    !
    !
    line con 0
     exec-timeout 0 0
    line aux 0
    line vty 0 4
     login
    !
    end

R2 (PE)

    version 12.2
    service timestamps debug datetime msec
    service timestamps log datetime msec
    no service password-encryption
    !
    hostname R2
    !
    !
    clock timezone CET 1
    ip subnet-zero
    no ip domain lookup
    !
    ip vrf yellow
     rd 2:200
     route-target export 2:200
     route-target import 2:200
     mdt default 239.1.1.1
    !--- Configure the default Multicast Distribution Tree (MDT)
    !--- for VRF yellow.
     mdt data 239.2.2.0 0.0.0.255 threshold 1
    !--- Configure the range of global addresses for
    !--- data MDTs and the threshold.
    ip multicast-routing
    !--- Enable global multicast routing.
    ip multicast-routing vrf yellow
    !--- Enable multicast routing in VRF yellow.
    ip cef
    mpls label protocol ldp
    tag-switching tdp router-id Loopback0
    !
    !
    !
    interface Loopback0
     ip address 50.0.0.2 255.255.255.255
     ip pim sparse-dense-mode
    !--- Multicast needs to be enabled on the loopback
    !--- interface. This is used as a source
    !--- for MP-BGP sessions between PE routers that participate in MVPN.
    !
    interface Loopback100
     ip vrf forwarding yellow
     ip address 100.0.0.2 255.255.255.255
     ip pim sparse-dense-mode
    !
    !--- This router needs to be the RP for
    !--- multicast in VRF yellow. Therefore, multicast
    !--- needs to be enabled on the interface that is used as the RP.
    !
    interface Serial1/0
     ip vrf forwarding yellow
     ip address 10.1.0.2 255.255.255.0
     ip pim sparse-dense-mode
    !--- Multicast is enabled on PE-CE interfaces in the VRF.
    !
    interface Serial2/0
     ip address 10.2.0.2 255.255.255.0
     ip pim sparse-dense-mode
    !--- The service provider core needs to run multicast
    !--- to support MVPN services,
    !--- so multicast is enabled on PE-P links.
     tag-switching ip
    !
    router ospf 1
     router-id 50.0.0.2
     log-adjacency-changes
     network 10.0.0.0 0.255.255.255 area 0
     network 50.0.0.0 0.0.0.255 area 0
    !
    router rip
     version 2
     no auto-summary
     !
     address-family ipv4 vrf yellow
     version 2
     redistribute bgp 1
     network 10.0.0.0
     network 100.0.0.0
     default-metric 5
     no auto-summary
     exit-address-family
    !
    router bgp 1
     no synchronization
     no bgp default ipv4-unicast
     bgp log-neighbor-changes
     redistribute rip
     neighbor 50.0.0.4 remote-as 1
     neighbor 50.0.0.4 update-source Loopback0
     neighbor 50.0.0.4 activate
     neighbor 50.0.0.6 remote-as 1
     neighbor 50.0.0.6 update-source Loopback0
     neighbor 50.0.0.6 activate
     no auto-summary
     !
     address-family ipv4 vrf yellow
     redistribute connected
     redistribute rip
     no auto-summary
     no synchronization
     exit-address-family
     !
     address-family vpnv4
     neighbor 50.0.0.4 activate
     neighbor 50.0.0.4 send-community extended
     neighbor 50.0.0.6 activate
     neighbor 50.0.0.6 send-community extended
     no auto-summary
     exit-address-family
    !
    ip classless
    no ip http server
    ip pim bidir-enable
    ip pim vrf yellow send-rp-announce Loopback100 scope 100
    ip pim vrf yellow send-rp-discovery Loopback100 scope 100
    !--- Configure auto-RP. Loopback100 of R2
    !--- is the RP in VRF yellow.
    !
    !
    !
    line con 0
     exec-timeout 0 0
    line aux 0
    line vty 0 4
     login
    !
    end

R3 (P)

    version 12.2
    service timestamps debug datetime msec
    service timestamps log datetime msec
    no service password-encryption
    !
    hostname R3
    !
    !
    clock timezone CET 1
    ip subnet-zero
    !
    ip multicast-routing
    !--- Enable global multicast routing.
    ip cef
    mpls label protocol ldp
    tag-switching tdp router-id Loopback0
    !
    !
    !
    interface Loopback0
     ip address 50.0.0.3 255.255.255.255
     ip pim sparse-dense-mode
    !
    !
    interface Serial1/0
     ip address 10.2.0.3 255.255.255.0
     ip pim sparse-dense-mode
    !--- Enable multicast on links to PE routers
    !--- which have MVPNs configured.
     tag-switching ip
    !
    interface Serial2/0
     ip address 10.3.0.3 255.255.255.0
     ip pim sparse-dense-mode
     tag-switching ip
    !
    router ospf 1
     router-id 50.0.0.3
     log-adjacency-changes
     network 10.0.0.0 0.255.255.255 area 0
     network 50.0.0.0 0.0.0.255 area 0
    !
    ip classless
    no ip http server
    ip pim bidir-enable
    ip pim send-rp-announce Loopback0 scope 100
    ip pim send-rp-discovery Loopback0 scope 100
    !--- R3 is configured to announce itself as
    !--- the RP through auto-RP.
    !
    !
    !
    !
    line con 0
     exec-timeout 0 0
    line aux 0
    line vty 0 4
     login
    !
    end

R4 (PE)

    version 12.2
    service timestamps debug datetime msec
    service timestamps log datetime msec
    no service password-encryption
    !
    hostname R4
    !
    !
    clock timezone CET 1
    ip subnet-zero
    no ip domain lookup
    !
    ip vrf yellow
     rd 2:200
     route-target export 2:200
     route-target import 2:200
     mdt default 239.1.1.1
    !--- Configure the default MDT address.
     mdt data 238.2.2.0 0.0.0.255 threshold 1
    !--- Configure the data MDT range and threshold.
    !
    ip multicast-routing
    !--- Enable global multicast routing.
    ip multicast-routing vrf yellow
    !--- Enable multicast routing in VRF yellow.
    ip cef
    mpls label protocol ldp
    tag-switching tdp router-id Loopback0
    !
    !
    !
    interface Loopback0
     ip address 50.0.0.4 255.255.255.255
     ip pim sparse-dense-mode
    !
    interface Loopback100
     ip vrf forwarding yellow
     ip address 100.0.0.4 255.255.255.255
     ip pim sparse-dense-mode
    !
    interface Serial1/0
     ip address 10.3.0.4 255.255.255.0
     ip pim sparse-dense-mode
     tag-switching ip
    !
    interface Serial2/0
     ip vrf forwarding yellow
     ip address 10.4.0.4 255.255.255.0
     ip pim sparse-dense-mode
    !--- Enable PIM toward the CE.
    !
    router ospf 1
     router-id 50.0.0.4
     log-adjacency-changes
     network 10.0.0.0 0.255.255.255 area 0
     network 50.0.0.0 0.0.0.255 area 0
    !
    router rip
     version 2
     no auto-summary
     !
     address-family ipv4 vrf yellow
     version 2
     redistribute bgp 1
     network 10.0.0.0
     network 100.0.0.0
     default-metric 5
     no auto-summary
     exit-address-family
    !
    router bgp 1
     no synchronization
     no bgp default ipv4-unicast
     bgp log-neighbor-changes
     redistribute rip
     neighbor 50.0.0.2 remote-as 1
     neighbor 50.0.0.2 update-source Loopback0
     neighbor 50.0.0.2 activate
     no auto-summary
     !
     address-family ipv4 vrf yellow
     redistribute connected
     redistribute rip
     no auto-summary
     no synchronization
     exit-address-family
     !
     address-family vpnv4
     neighbor 50.0.0.2 activate
     neighbor 50.0.0.2 send-community extended
     no auto-summary
     exit-address-family
    !
    ip classless
    no ip http server
    ip pim bidir-enable
    !
    !
    !
    !
    !
    line con 0
     exec-timeout 0 0
    line aux 0
    line vty 0 4
     login
    !
    end

R5 (CE)

    version 12.2
    service timestamps debug datetime msec
    service timestamps log datetime msec
    no service password-encryption
    !
    hostname R5
    !
    !
    clock timezone CET 1
    ip subnet-zero
    no ip domain lookup
    !
    ip multicast-routing
    !--- Enable global multicast routing in the CE.
    !
    !
    interface Loopback0
     ip address 100.0.0.5 255.255.255.255
    !
    interface Serial0/0
     ip address 10.4.0.5 255.255.255.0
     ip pim sparse-dense-mode
     ip igmp join-group 224.2.2.2
    !
    router rip
     version 2
     network 10.0.0.0
     network 100.0.0.0
     no auto-summary
    !
    ip classless
    no ip http server
    ip pim bidir-enable
    !
    !
    !
    !
    !
    line con 0
     exec-timeout 0 0
    line aux 0
    line vty 0 4
     login
    !
    end

Design Tips

- Multicast for MPLS VPNs (MVPN) is configured on top of the VPN configuration. Design the MPLS VPN network carefully first, and observe all recommendations for MPLS VPN networks.

- The service provider core must be configured for native multicast service. The core must run PIM Sparse mode (PIM-SM), Source Specific Multicast (PIM-SSM), or Bidirectional PIM (PIM-BIDIR). Dense mode PIM (PIM-DM) is not supported as a core protocol in MVPN configurations. A mix of supported protocols can be configured in the provider's core, so that some multicast groups are handled by one PIM mode and other groups are handled by another supported PIM mode.

- All multicast protocols are supported within a multicast VRF. That is, within a multicast VRF you can use MSDP and PIM-DM in addition to PIM-SM, PIM-SSM, and PIM-BIDIR.

- The MVPN service can be added separately on a VRF-by-VRF basis. That is, one PE router can have both multicast-enabled VRFs and unicast-only VRFs configured.

- Not all sites of a single unicast VRF must be configured for multicast. It is possible to have some sites (and even interfaces of an MVPN PE router) where multicast is not enabled. You must ensure that routes are never calculated to point to interfaces that are not multicast-enabled. Otherwise, multicast forwarding breaks.

- More than one VRF can belong to the same MVPN multicast domain. IP addressing must be unique within a multicast domain. Leaking of routes or packets between multicast domains, or into the global multicast routing table, is currently not possible.

- A default MDT configuration is mandatory for MVPN to work. Configuring a data MDT is optional. If you choose to configure one, it is highly recommended that you set the threshold for the data MDT.

- The IP address of the default MDT determines which multicast domain a VRF belongs to. Therefore, it is possible to have the same default MDT address for more than one VRF. However, those VRFs share multicast packets between them and must observe the other requirements on multicast domains (such as a unique IP addressing scheme).

- Data MDT might or might not be configured with the same range of IP addresses on different PE routers, depending on which PIM mode is used in the provider's core. If the service provider core uses PIM-SM, each PE router must use a unique range of IP addresses for data MDT groups. If the core uses PIM-SSM, all PE routers can be configured with the same range of IP addresses for the data MDT of each multicast domain.
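The uniqueness rule for data MDT ranges in a PIM-SM core can be checked offline before the ranges are deployed. The following Python sketch is illustrative only: the function names `wildcard_to_network` and `overlapping_ranges` are hypothetical, and it assumes contiguous wildcard masks such as the `0.0.0.255` used in the `mdt data` commands of this document.

```python
import ipaddress
from itertools import combinations


def wildcard_to_network(base: str, wildcard: str) -> ipaddress.IPv4Network:
    """Convert an IOS-style base address and wildcard mask to a network.

    Assumption: the wildcard is contiguous (for example 0.0.0.255), so the
    ipaddress module can interpret it as a host mask.
    """
    return ipaddress.IPv4Network(f"{base}/{wildcard}")


def overlapping_ranges(ranges: dict) -> list:
    """Return the pairs of PE names whose data MDT ranges overlap."""
    nets = {pe: wildcard_to_network(base, wc) for pe, (base, wc) in ranges.items()}
    return [
        (a, b)
        for a, b in combinations(sorted(nets), 2)
        if nets[a].overlaps(nets[b])
    ]


# Data MDT ranges taken from the R2 and R4 configurations in this document.
data_mdt_ranges = {
    "R2": ("239.2.2.0", "0.0.0.255"),
    "R4": ("238.2.2.0", "0.0.0.255"),
}
print(overlapping_ranges(data_mdt_ranges))
```

An empty result means that no two PE routers share data MDT group addresses, which is the condition required when the core runs PIM-SM.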

Verify

This section provides information you can use to confirm your configuration is working properly.

Certain show commands are supported by the Output Interpreter Tool (registered customers only), which allows you to view an analysis of show command output.

- show ip igmp groups: Displays the multicast groups with receivers that are directly connected to the router and that were learned through Internet Group Management Protocol (IGMP).

- show ip pim mdt bgp: Displays detailed Border Gateway Protocol (BGP) advertisement of the Route Distinguisher (RD) for the MDT default group.

- show ip pim vrf <vrf-name> mdt send: Displays the data MDT advertisements that the router has made in the specified VRF.

- show ip pim vrf <vrf-name> mdt receive: Displays the data MDT advertisements received by the router in the specified VRF.

- show ip mroute: Displays the contents of the IP multicast routing table in the provider's core.

- show ip mroute vrf <vrf-name>: Displays the multicast routing table in the client's VRF.

Complete these steps to verify that your configuration is working properly.

- Check that the PEs have joined the IGMP group for the default MDT tunnel.

  If the loopback interface used as the MP-BGP update source is configured after the mdt default command is issued under the VRF configuration, the PE might fail to join the default MDT group. Once the loopback is configured, remove the mdt default command from the VRF and enter it again to solve the problem.

- For PE-R2, issue the show ip igmp groups command.

      IGMP Connected Group Membership
      Group Address    Interface    Uptime    Expires   Last Reporter
      224.0.1.40       Serial2/0    02:21:23  stopped   10.2.0.2
      239.1.1.1        Loopback0    02:36:59  stopped   0.0.0.0

- For PE-R4, issue the show ip igmp groups command.

      IGMP Connected Group Membership
      Group Address    Interface    Uptime    Expires   Last Reporter
      224.0.1.40       Loopback0    02:51:48  00:02:39  50.0.0.4
      239.1.1.2        Loopback0    02:51:45  stopped   0.0.0.0
      239.1.1.1        Loopback0    02:51:45  stopped   0.0.0.0
      239.2.2.0        Loopback0    01:40:03  stopped   0.0.0.0
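When this check has to be repeated on several PEs, the captured output can be parsed with a short script. The following Python sketch is a hedged illustration, not a Cisco tool: the function name `joined_groups` is hypothetical, the sample text is the PE-R2 output from this document, and the parser assumes the five-column layout shown there.

```python
import ipaddress

# Sample text: the PE-R2 output of "show ip igmp groups" from this document.
SAMPLE = """\
IGMP Connected Group Membership
Group Address    Interface    Uptime    Expires   Last Reporter
224.0.1.40       Serial2/0    02:21:23  stopped   10.2.0.2
239.1.1.1        Loopback0    02:36:59  stopped   0.0.0.0
"""


def joined_groups(show_output: str) -> dict:
    """Map each group address to the list of interfaces that joined it."""
    groups = {}
    for line in show_output.splitlines():
        fields = line.split()
        if len(fields) < 2:
            continue
        try:
            ipaddress.IPv4Address(fields[0])
        except ValueError:
            continue  # Skip the title and column header lines.
        groups.setdefault(fields[0], []).append(fields[1])
    return groups


default_mdt = "239.1.1.1"
interfaces = joined_groups(SAMPLE).get(default_mdt, [])
print(f"{default_mdt} joined on: {interfaces}")
```

If the default MDT group is missing from the result, the PE has not joined it, and the loopback ordering issue described in this step is a likely cause.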

- Check the BGP advertisement received for each PE.

Note: For this example, check the MDTs sourced from the peer PEs PE-R2 and PE-R4.

- For PE-R2, issue the show ip pim mdt bgp command.

      MDT-default group 239.1.1.1
       rid: 50.0.0.4 next_hop: 50.0.0.4

      WAVL tree nodes

      MDT-default: 239.1.1.1 Tunnel0 source-interface: Loopback0

- For PE-R4, issue the show ip pim mdt bgp command.

      MDT-default group 239.1.1.1
       rid: 50.0.0.2 next_hop: 50.0.0.2

      WAVL tree nodes

      MDT-default: 239.1.1.1 Tunnel0 source-interface: Loopback0
      MDT-data : 239.2.2.0 Tunnel0 source-interface: Loopback0

- Check the data MDTs.

Note: For this example, check the data MDT sourced or joined by PE-R2 and PE-R4.

- For PE-R2, issue the show ip pim vrf yellow mdt send command.

      MDT-data send list for VRF: yellow
        (source, group)            MDT-data group    ref_count
        (100.0.0.1, 224.2.2.2)     239.2.2.0         1

- For PE-R2, issue the show ip pim vrf yellow mdt receive command.

      Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,
             L - Local, P - Pruned, R - RP-bit set, F - Register flag,
             T - SPT-bit set, J - Join SPT, M - MSDP created entry,
             X - Proxy Join Timer Running, A - Candidate MSDP Advertisement,
             U - URD, I - Received Source Specific Host Report, Z - Multicast Tunnel
             Y - Joined MDT-data group, y - Sending to MDT-data group

      Joined MDT-data groups for VRF: yellow
       group: 239.2.2.0 source: 0.0.0.0 ref_count: 1

- Check the global multicast routing table for the default MDT.

Note: Notice this information:

- The outgoing interface list is MVRF yellow on the PEs.

- The P router sees the group as a regular Multicast group.

- Each PE is a source for the default MDT, and is only in the PE routers.

- A new flag, Z, indicates this is a multicast tunnel.

- For PE-R2, issue the show ip mroute 239.1.1.1 command.

      IP Multicast Routing Table
      Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,
             L - Local, P - Pruned, R - RP-bit set, F - Register flag,
             T - SPT-bit set, J - Join SPT, M - MSDP created entry,
             X - Proxy Join Timer Running, A - Candidate MSDP Advertisement,
             U - URD, I - Received Source Specific Host Report, Z - Multicast Tunnel
             Y - Joined MDT-data group, y - Sending to MDT-data group
      Outgoing interface flags: H - Hardware switched
      Timers: Uptime/Expires
      Interface state: Interface, Next-Hop or VCD, State/Mode

      (*, 239.1.1.1), 02:37:16/stopped, RP 50.0.0.3, flags: SJCFZ
        Incoming interface: Serial2/0, RPF nbr 10.2.0.3
        Outgoing interface list:
          MVRF yellow, Forward/Sparse-Dense, 02:21:26/00:00:28

      (50.0.0.2, 239.1.1.1), 02:37:12/00:03:29, flags: FTZ
        Incoming interface: Loopback0, RPF nbr 0.0.0.0
        Outgoing interface list:
          Serial2/0, Forward/Sparse-Dense, 02:36:09/00:02:33

      (50.0.0.4, 239.1.1.1), 02:36:02/00:02:59, flags: JTZ
        Incoming interface: Serial2/0, RPF nbr 10.2.0.3
        Outgoing interface list:
          MVRF yellow, Forward/Sparse-Dense, 02:21:26/00:00:28

- For P-R3, issue the show ip mroute 239.1.1.1 command.

      IP Multicast Routing Table
      Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,
             L - Local, P - Pruned, R - RP-bit set, F - Register flag,
             T - SPT-bit set, J - Join SPT, M - MSDP created entry,
             X - Proxy Join Timer Running, A - Candidate MSDP Advertisement,
             U - URD, I - Received Source Specific Host Report, Z - Multicast Tunnel
             Y - Joined MDT-data group, y - Sending to MDT-data group
      Outgoing interface flags: H - Hardware switched
      Timers: Uptime/Expires
      Interface state: Interface, Next-Hop or VCD, State/Mode

      (*, 239.1.1.1), 02:50:24/stopped, RP 50.0.0.3, flags: S
        Incoming interface: Null, RPF nbr 0.0.0.0
        Outgoing interface list:
          Serial1/0, Forward/Sparse-Dense, 02:34:41/00:03:16
          Serial2/0, Forward/Sparse-Dense, 02:49:24/00:02:37

      (50.0.0.2, 239.1.1.1), 02:49:56/00:03:23, flags: T
        Incoming interface: Serial1/0, RPF nbr 10.2.0.2
        Outgoing interface list:
          Serial2/0, Forward/Sparse-Dense, 02:49:24/00:02:37

      (50.0.0.4, 239.1.1.1), 02:49:47/00:03:23, flags: T
        Incoming interface: Serial2/0, RPF nbr 10.3.0.4
        Outgoing interface list:
          Serial1/0, Forward/Sparse-Dense, 02:34:41/00:03:16

- For PE-R4, issue the show ip mroute 239.1.1.1 command.

      IP Multicast Routing Table
      Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,
             L - Local, P - Pruned, R - RP-bit set, F - Register flag,
             T - SPT-bit set, J - Join SPT, M - MSDP created entry,
             X - Proxy Join Timer Running, A - Candidate MSDP Advertisement,
             U - URD, I - Received Source Specific Host Report, Z - Multicast Tunnel
             Y - Joined MDT-data group, y - Sending to MDT-data group
      Outgoing interface flags: H - Hardware switched
      Timers: Uptime/Expires
      Interface state: Interface, Next-Hop or VCD, State/Mode

      (*, 239.1.1.1), 02:51:06/stopped, RP 50.0.0.3, flags: SJCFZ
        Incoming interface: Serial1/0, RPF nbr 10.3.0.3
        Outgoing interface list:
          MVRF yellow, Forward/Sparse-Dense, 02:51:06/00:00:48

      (50.0.0.2, 239.1.1.1), 02:50:06/00:02:58, flags: JTZ
        Incoming interface: Serial1/0, RPF nbr 10.3.0.3
        Outgoing interface list:
          MVRF yellow, Forward/Sparse-Dense, 02:50:06/00:00:48

      (50.0.0.4, 239.1.1.1), 02:51:00/00:03:10, flags: FTZ
        Incoming interface: Loopback0, RPF nbr 0.0.0.0
        Outgoing interface list:
          Serial1/0, Forward/Sparse-Dense, 02:35:24/00:03:00
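The flag strings in these entries, such as SJCFZ on the PEs and T on the P router, can be expanded with the legend that the router prints at the top of the output. The following Python sketch copies that legend into a dictionary; the names `FLAG_LEGEND` and `decode_flags` are hypothetical and exist only for this illustration.

```python
# Legend copied from the "Flags:" header of the show ip mroute output.
FLAG_LEGEND = {
    "D": "Dense", "S": "Sparse", "B": "Bidir Group", "s": "SSM Group",
    "C": "Connected", "L": "Local", "P": "Pruned", "R": "RP-bit set",
    "F": "Register flag", "T": "SPT-bit set", "J": "Join SPT",
    "M": "MSDP created entry", "X": "Proxy Join Timer Running",
    "A": "Candidate MSDP Advertisement", "U": "URD",
    "I": "Received Source Specific Host Report", "Z": "Multicast Tunnel",
    "Y": "Joined MDT-data group", "y": "Sending to MDT-data group",
}


def decode_flags(flags: str) -> list:
    """Expand a flag string into the descriptions from the legend."""
    return [FLAG_LEGEND.get(ch, f"unknown flag {ch!r}") for ch in flags]


# Flag strings taken from the PE and P entries for group 239.1.1.1.
for entry_flags in ("SJCFZ", "FTZ", "JTZ", "T"):
    print(entry_flags, "->", ", ".join(decode_flags(entry_flags)))
```

Decoding the entries side by side shows that Multicast Tunnel appears only in the PE entries, while the P router entries carry only ordinary flags.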

- Check the global multicast routing table for data MDTs.

Note: For PE-R2, notice that the outgoing interface is Tunnel0.

- For PE-R2, where the source is located (VRF side), issue the show ip mroute vrf yellow 224.2.2.2 command.

      IP Multicast Routing Table
      Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,
             L - Local, P - Pruned, R - RP-bit set, F - Register flag,
             T - SPT-bit set, J - Join SPT, M - MSDP created entry,
             X - Proxy Join Timer Running, A - Candidate MSDP Advertisement,
             U - URD, I - Received Source Specific Host Report, Z - Multicast Tunnel
             Y - Joined MDT-data group, y - Sending to MDT-data group
      Outgoing interface flags: H - Hardware switched
      Timers: Uptime/Expires
      Interface state: Interface, Next-Hop or VCD, State/Mode

      (*, 224.2.2.2), 2d01h/stopped, RP 100.0.0.2, flags: S
        Incoming interface: Null, RPF nbr 0.0.0.0
        Outgoing interface list:
          Tunnel0, Forward/Sparse-Dense, 2d01h/00:02:34

      (100.0.0.1, 224.2.2.2), 00:05:32/00:03:26, flags: Ty
        Incoming interface: Serial1/0, RPF nbr 10.1.0.1
        Outgoing interface list:
          Tunnel0, Forward/Sparse-Dense, 00:05:37/00:02:34

- For PE-R2, where the source is located (global multicast route), issue the show ip mroute 239.2.2.0 command.

      IP Multicast Routing Table
      Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,
             L - Local, P - Pruned, R - RP-bit set, F - Register flag,
             T - SPT-bit set, J - Join SPT, M - MSDP created entry,
             X - Proxy Join Timer Running, A - Candidate MSDP Advertisement,
             U - URD, I - Received Source Specific Host Report, Z - Multicast Tunnel
             Y - Joined MDT-data group, y - Sending to MDT-data group
      Outgoing interface flags: H - Hardware switched
      Timers: Uptime/Expires
      Interface state: Interface, Next-Hop or VCD, State/Mode

      (*, 239.2.2.0), 02:13:27/stopped, RP 50.0.0.3, flags: SJPFZ
        Incoming interface: Serial2/0, RPF nbr 10.2.0.3
        Outgoing interface list: Null

      (50.0.0.2, 239.2.2.0), 02:13:27/00:03:22, flags: FTZ
        Incoming interface: Loopback0, RPF nbr 0.0.0.0
        Outgoing interface list:
          Serial2/0, Forward/Sparse-Dense, 02:13:27/00:03:26

Note: Only the PE router that has the multicast source attached to it appears as the source for multicast traffic of the data MDT group address.

Troubleshoot

- Issue the show ip pim vrf <vrf-name> neighbor command to check that the PE routers established a PIM neighbor relationship through the dynamic Tunnel interface. If they did, the default MDT operates properly.

- If the default MDT does not function, issue the show ip pim mdt bgp command to check that the loopbacks of the remote PE routers that participate in MVPN are known by the local router. If they are not, verify that PIM is enabled on the loopback interfaces used as the source of MP-BGP sessions.

- Check that the SP core is properly configured to deliver multicast between PE routers. For test purposes, you can configure ip igmp join-group on the loopback interface of one PE router and send a multicast ping sourced from the loopback of another PE router.

Revision History

| Revision | Publish Date | Comments |
|---|---|---|
| 1.0 | 10-Aug-2005 | Initial Release |
