FlexPod Nexus 5k in vPC behavior during disruption


Introduction

This document outlines the behavior of the network in reaction to different disruptions, concentrating on Virtual Port-Channel (vPC).

A typical disruption would be a reload, a link loss, or a loss of connectivity.

The aim of this document is to demonstrate packet loss during common scenarios.

Topology

Unless otherwise stated, the following topology is used during testing.

Green and blue lines indicate a vPC port channel from each of the Fabric Interconnects to both Nexus switches.

The out-of-band management network is not shown.

This is a simplified version of the topology commonly recommended in FlexPod deployments, as seen, for example, in:

http://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flexpod_esxi51_ucsm2.html

Components used

Two Nexus 5548P switches.

Two Unified Computing System (UCS) 6120 Fabric Interconnects running 2.2(4b) software.

One 5108 UCS chassis.

Two B200M3 blades with VIC 1240 adapters running 2.2(4) software.

To perform and verify the connectivity tests, Red Hat Enterprise Linux 7.1 was installed on both blades.

Configuration

Both the vPC and the port-channel configurations use mostly default settings. The relevant configuration is:

feature vpc

vpc domain 75
  role priority 3000
  peer-keepalive destination 10.48.43.79 source 10.48.43.78
  delay restore 150
  peer-gateway

interface port-channel1
  description vPC Peer-Link
  switchport mode trunk
  spanning-tree port type network
  vpc peer-link

Example vPC leading to a UCS Fabric Interconnect (FI), in this case bdsol-6120-05-A:

interface port-channel101
  description bdsol-6120-05-A
  switchport mode trunk
  spanning-tree port type edge trunk
  vpc 101

Tests

The following tests were performed:

- Data link loss

- Disruptive upgrade

- In-Service Software Upgrade (ISSU)

- Loss of the peer keepalive link (the mgmt0 interface in this topology and configuration)

- Loss of the peer link (port-channel 1 in this configuration)

- Disabling the vPC feature

Basic traffic flow

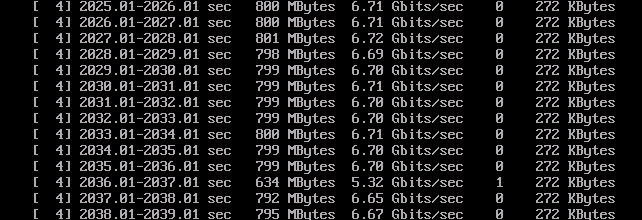

A single iperf3 session is used to generate 6.5 gigabits per second of test TCP traffic to verify frame loss during transitions.

RedHat2 is pinned to Fabric Interconnect B, while RedHat1 is pinned to Fabric Interconnect A. As a result, the traffic between them must cross the Nexus switches.

Iperf3 parameters:

- Server: iperf3 -s -i 1

- Client: iperf3 -c 10.37.9.131 -t 0 -i 1 -w 1M -V

These parameters were chosen to produce a high traffic rate and to make packet loss easy to spot.

The TCP window is clamped (-w 1M) to avoid the data bursts iperf is known for. Running iperf unclamped could result in occasional drops in ingress buffers along the path, depending on the QoS configuration. With these parameters, a sustained rate of 6-7 Gbps is achieved without frame loss.

To verify the rate, check the traffic counters on the Nexus interfaces:
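The clamp works because TCP can have at most one window of unacknowledged data in flight, which caps a single flow at roughly the window size divided by the round-trip time (RTT). A minimal Python sketch of that arithmetic follows. The RTT values are hypothetical, "1M" is assumed to mean 1 MiB, and on Linux the kernel may adjust the socket buffer that iperf3 -w requests, so the effective window can differ.

```python
def window_limited_gbps(window_bytes: int, rtt_seconds: float) -> float:
    """Upper bound on single-flow TCP throughput, in Gbps: window / RTT."""
    if rtt_seconds <= 0:
        raise ValueError("RTT must be positive")
    return window_bytes * 8 / rtt_seconds / 1e9


def max_rtt_for_rate(window_bytes: int, target_gbps: float) -> float:
    """Largest RTT, in seconds, at which the window can still sustain target_gbps."""
    if target_gbps <= 0:
        raise ValueError("target rate must be positive")
    return window_bytes * 8 / (target_gbps * 1e9)


if __name__ == "__main__":
    window = 1024 * 1024  # -w 1M, assumed here to mean 1 MiB
    for rtt_ms in (0.5, 1.0, 2.0):  # hypothetical round-trip times, not measured in the lab
        ceiling = window_limited_gbps(window, rtt_ms / 1000)
        print(f"RTT {rtt_ms} ms -> at most {ceiling:.2f} Gbps")
    print(f"6.5 Gbps requires an RTT of at most {max_rtt_for_rate(window, 6.5) * 1000:.2f} ms")
```

In other words, the clamped window only permits the observed 6-7 Gbps if the path RTT stays in the low-millisecond range, which is plausible inside a single data center.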

bdsol-n5548-07# show interface ethernet 1/1-2 | i rate

30 seconds input rate 5612504 bits/sec, 9473 packets/sec

30 seconds output rate 7037817832 bits/sec, 578016 packets/sec

input rate 5.60 Mbps, 9.38 Kpps; output rate 7.01 Gbps, 576.10 Kpps

30 seconds input rate 7037805336 bits/sec, 578001 packets/sec

30 seconds output rate 5626064 bits/sec, 9489 packets/sec

input rate 7.01 Gbps, 575.71 Kpps; output rate 6.56 Mbps, 9.79 Kpps

The above output shows 7 Gbps of traffic entering on interface Ethernet 1/2 and leaving on interface Ethernet 1/1.
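Because the "| i rate" filter strips the interface names, the lines appear in interface order (Ethernet 1/1 first, then Ethernet 1/2). The following Python sketch, which assumes exactly the line format shown above, extracts the "30 seconds" counters, converts them to Gbps and derives the average packet size (bits per second divided by packets per second). The function name parse_rates is hypothetical; it is a helper for reading the output, not a Cisco tool.

```python
import re

# Matches lines such as:
#   30 seconds output rate 7037817832 bits/sec, 578016 packets/sec
RATE_RE = re.compile(
    r"(?P<interval>\d+) seconds (?P<direction>input|output) rate "
    r"(?P<bps>\d+) bits/sec, (?P<pps>\d+) packets/sec"
)


def parse_rates(cli_output: str) -> list[dict]:
    """Return one dict per 'N seconds input/output rate' line, in output order."""
    results = []
    for match in RATE_RE.finditer(cli_output):
        bps = int(match["bps"])
        pps = int(match["pps"])
        results.append({
            "interval_s": int(match["interval"]),
            "direction": match["direction"],
            "gbps": bps / 1e9,
            "pps": pps,
            # Average bytes per packet; 0.0 when the interface is idle.
            "avg_bytes": bps / pps / 8 if pps else 0.0,
        })
    return results


# Output from bdsol-n5548-07 shown above; the summary lines without
# "N seconds" do not match the pattern and are ignored.
SAMPLE = """\
30 seconds input rate 5612504 bits/sec, 9473 packets/sec
30 seconds output rate 7037817832 bits/sec, 578016 packets/sec
input rate 5.60 Mbps, 9.38 Kpps; output rate 7.01 Gbps, 576.10 Kpps
30 seconds input rate 7037805336 bits/sec, 578001 packets/sec
30 seconds output rate 5626064 bits/sec, 9489 packets/sec
input rate 7.01 Gbps, 575.71 Kpps; output rate 6.56 Mbps, 9.79 Kpps
"""

if __name__ == "__main__":
    for entry in parse_rates(SAMPLE):
        print(f"{entry['direction']:>6}: {entry['gbps']:.2f} Gbps, "
              f"~{entry['avg_bytes']:.0f} bytes/packet")
```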

Data link loss

This test shows how traffic behaves when a link that is a member of a vPC is shut down.

In this example, Ethernet 1/1, the output interface for the data traffic, is shut down from the command line:

bdsol-n5548-07# conf t

Enter configuration commands, one per line. End with CNTL/Z.

bdsol-n5548-07(config)# int et1/1

bdsol-n5548-07(config-if)# shut

In this case, only a single packet was lost out of the 6.5 Gbps stream.

Traffic is almost immediately rebalanced among the remaining links of the port channel on the UCS side. In this case it uses UCS FI B's Ethernet 1/8 (the only remaining port) going up to Nexus 5548 B, which forwards it to UCS FI A over Ethernet 1/1.

bdsol-n5548-08# show interface ethernet 1/1-2 | i rate

30 seconds input rate 5575896 bits/sec, 9413 packets/sec

30 seconds output rate 6995947064 bits/sec, 574567 packets/sec

input rate 2.21 Mbps, 3.70 Kpps; output rate 2.78 Gbps, 227.99 Kpps

30 seconds input rate 6995940736 bits/sec, 574562 packets/sec

30 seconds output rate 5581920 bits/sec, 9418 packets/sec

input rate 2.78 Gbps, 227.99 Kpps; output rate 2.22 Mbps, 3.71 Kpps
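Port-channel member links are typically selected per flow by hashing packet header fields, so a single iperf3 TCP session uses one member link at a time, and when a member goes down its flows are redistributed over the remaining members. The Python sketch below illustrates only that idea, using a hypothetical hash (CRC32 over the 5-tuple, modulo the number of active members). The real Nexus and UCS load-balancing algorithms and hash inputs differ and are configurable, and the addresses, ports and link labels below are hypothetical.

```python
import zlib
from typing import NamedTuple


class Flow(NamedTuple):
    src_ip: str
    dst_ip: str
    proto: int
    src_port: int
    dst_port: int


def pick_member(flow: Flow, active_links: list[str]) -> str:
    """Hypothetical per-flow hash: CRC32 of the 5-tuple modulo active member count."""
    if not active_links:
        raise RuntimeError("port channel has no active members")
    key = f"{flow.src_ip}|{flow.dst_ip}|{flow.proto}|{flow.src_port}|{flow.dst_port}"
    return active_links[zlib.crc32(key.encode()) % len(active_links)]


if __name__ == "__main__":
    # Hypothetical client address and source port; 5201 is the iperf3 default port.
    flow = Flow("10.37.9.130", "10.37.9.131", 6, 40000, 5201)
    members = ["uplink to Nexus A", "Eth1/8 to Nexus B"]  # hypothetical labels
    print("before:", pick_member(flow, members))
    # Simulate the member toward Nexus A going down: the same flow is rehashed
    # onto the only remaining member.
    print("after: ", pick_member(flow, members[1:]))
```

Because the hash is deterministic, a flow stays on one link until the member set changes, which is consistent with a failover that costs only the packets in flight on the failed link.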

Disruptive upgrade or reload

A combined data and control plane disruption can be emulated by performing a disruptive upgrade of bdsol-n5548-07 (the vPC primary).

Traffic loss is expected.

Functionally, this test is the same as reloading a vPC peer.

bdsol-n5548-07# install all kickstart bootflash:n5000-uk9-kickstart.7.1.0.N1.1a.bin system bootflash:n5000-uk9.7.1.0.N1.1a.bin

(...)

Compatibility check is done:

Module  bootable      Impact  Install-type  Reason
------  --------  ----------  ------------  ------
     1       yes  disruptive         reset  Incompatible image

(...)

Switch will be reloaded for disruptive upgrade.

Do you want to continue with the installation (y/n)? [n] y

Install is in progress, please wait.

Performing runtime checks.

[####################] 100% -- SUCCESS

Setting boot variables.

[####################] 100% -- SUCCESS

Performing configuration copy.

[####################] 100% -- SUCCESS

Finishing the upgrade, switch will reboot in 10 seconds.

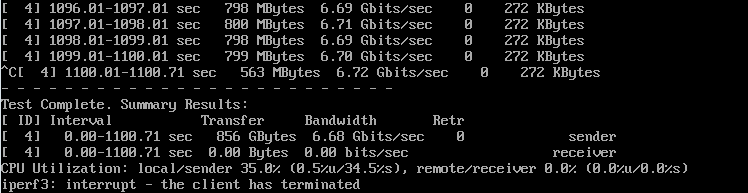

Packet loss occurs once the 10-second countdown expires.

Only 55 packets were lost during that time, out of the 6.6 Gbps stream.

If iperf3 is restarted immediately, the operator can verify that the traffic has indeed switched over to bdsol-n5548-08:

bdsol-n5548-08# show interface ethernet 1/1-2 | i rate

30 seconds input rate 5601392 bits/sec, 9455 packets/sec

30 seconds output rate 7015307760 bits/sec, 576159 packets/sec

input rate 2.25 Mbps, 3.77 Kpps; output rate 2.81 Gbps, 231.14 Kpps

30 seconds input rate 7015303696 bits/sec, 576152 packets/sec

30 seconds output rate 5605280 bits/sec, 9462 packets/sec

input rate 2.81 Gbps, 231.14 Kpps; output rate 2.25 Mbps, 3.77 Kpps

Some of the rate counters show less than 6 Gbps because they are averaged over time and still include the period before the switchover.
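A time-averaged counter lags behind a step change in traffic. The Python sketch below uses a simple sliding-window average over hypothetical 1-second samples to show how a counter on the surviving switch climbs gradually after a switchover. NX-OS computes its rates with its own method, which is not described here, so the numbers are illustrative only.

```python
from collections import deque


def sliding_average(samples: list[float], window: int) -> list[float]:
    """Average of the most recent `window` samples at each step."""
    if window < 1:
        raise ValueError("window must be at least 1")
    buf: deque = deque(maxlen=window)
    averages = []
    for value in samples:
        buf.append(value)  # the oldest sample drops out once the deque is full
        averages.append(sum(buf) / len(buf))
    return averages


if __name__ == "__main__":
    # Hypothetical traffic on the surviving switch: idle for 60 s, then 7 Gbps.
    samples = [0.0] * 60 + [7.0] * 60
    averages = sliding_average(samples, window=30)
    for seconds_after in (1, 10, 20, 30):
        print(f"{seconds_after:>2} s after switchover: {averages[59 + seconds_after]:.2f} Gbps")
```

With a 30-sample window, the average reaches the full rate only once the whole window covers post-switchover samples; a longer averaging interval lags proportionally longer.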

vPC peer link going down

In this example the vPC peer link goes down, triggered by a configuration change.

At that time, traffic is handled by bdsol-n5548-07, which is the acting vPC secondary.

The sequence of events is as follows.

Port-channel 1 goes down:

2015 Jul 10 15:00:25 bdsol-n5548-07 %ETHPORT-5-IF_DOWN_CFG_CHANGE: Interface port-channel1 is down(Config change)

Because bdsol-n5548-07 is the acting secondary, it suspends its vPCs, since it can no longer guarantee a loop-free topology:

2015 Jul 10 15:00:28 bdsol-n5548-07 %VPC-2-VPC_SUSP_ALL_VPC: Peer-link going down, suspending all vPCs on secondary

2015 Jul 10 15:00:28 bdsol-n5548-07 %ETHPORT-5-IF_DOWN_INITIALIZING: Interface port-channel928 is down (Initializing)

2015 Jul 10 15:00:28 bdsol-n5548-07 %ETHPORT-5-IF_DOWN_INITIALIZING: Interface port-channel102 is down (Initializing)

2015 Jul 10 15:00:28 bdsol-n5548-07 %ETHPORT-5-IF_DOWN_INITIALIZING: Interface port-channel101 is down (Initializing)

During this time, iperf3 lost 90 packets but recovered quickly.

Since the vPCs are suspended on bdsol-n5548-07, all traffic is handled by bdsol-n5548-08:

bdsol-n5548-08# show int ethernet 1/1-2 | i rate

30 seconds input rate 5623248 bits/sec, 9489 packets/sec

30 seconds output rate 7036030160 bits/sec, 577861 packets/sec

input rate 2.83 Mbps, 4.74 Kpps; output rate 3.54 Gbps, 290.64 Kpps

30 seconds input rate 7036025712 bits/sec, 577854 packets/sec

30 seconds output rate 5627216 bits/sec, 9498 packets/sec

input rate 3.54 Gbps, 290.64 Kpps; output rate 2.83 Mbps, 4.75 Kpps

Again, not every rate counter shows 6.5 Gbps immediately, because the rates are averaged over time.
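How each peer reacts depends on which vPC control path fails. The Python sketch below encodes the behavior observed in these tests as a simplified decision function: with the peer link down and the keepalive still up, only the secondary suspends its vPCs; with only the keepalive down, the vPCs keep forwarding. The branch where both paths fail reflects the commonly documented dual-active (split-brain) outcome and was not tested here. The function and value names are hypothetical, and the real vPC state machine has many more states (for example, delay restore handling).

```python
from enum import Enum


class Role(Enum):
    PRIMARY = "primary"
    SECONDARY = "secondary"


def vpc_action(role: Role, peer_link_up: bool, keepalive_up: bool) -> str:
    """Simplified, hypothetical model of a vPC peer's reaction to control-path failures."""
    if peer_link_up:
        # Keepalive loss alone (as in the mgmt0 test): the peers still
        # synchronize over the peer link, so the vPCs keep forwarding.
        return "keep vPCs up"
    if keepalive_up:
        # Peer link lost, but the keepalive proves the peer is alive: only the
        # secondary suspends its vPCs to avoid a loop (as seen on bdsol-n5548-07).
        return "suspend vPCs" if role is Role.SECONDARY else "keep vPCs up"
    # Both paths lost: each peer assumes the other is dead (dual-active risk).
    return "keep vPCs up (dual-active)"


if __name__ == "__main__":
    for role in Role:
        for peer_link_up in (True, False):
            for keepalive_up in (True, False):
                print(f"{role.value:>9} peer_link={peer_link_up!s:<5} "
                      f"keepalive={keepalive_up!s:<5} -> "
                      f"{vpc_action(role, peer_link_up, keepalive_up)}")
```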

Recovery from vPC peer link down

When the vPC peer link comes back up, traffic may be rebalanced between links, and short-lived packet loss due to the topology change can be expected.

In this lab test, one packet was lost.

In-Service Software Upgrade (ISSU)

In this test, an ISSU was performed to measure the traffic disruption.

The vPC roles during this test are as follows:

- bdsol-n5548-07: primary

- bdsol-n5548-08: secondary

To perform an ISSU, a defined set of criteria must be met.

The commands used to check these criteria and to perform the ISSU were taken from the following guide:

After performing an ISSU first on the primary and then on the secondary vPC peer, no packets were lost.

This is because during an ISSU all data plane functionality remains undisrupted, and only the control plane is affected.
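Whether an install will be disruptive is visible in the compatibility check table printed by "install all", such as the "Impact" column in the disruptive upgrade output earlier in this document. The Python sketch below parses rows of that table and lists modules whose impact is anything other than non-disruptive. It assumes the whitespace-separated column layout shown in this document, the non-disruptive row used in the example is hypothetical, and the parser does not replace the documented ISSU checks.

```python
def parse_impact_table(text: str) -> list[dict]:
    """Parse 'Module bootable Impact Install-type Reason' rows into dicts."""
    rows = []
    for line in text.splitlines():
        fields = line.split(None, 4)  # Reason may contain spaces, so split at most 4 times
        if len(fields) < 4 or not fields[0].isdigit():
            continue  # skip the header, the separator and unrelated lines
        rows.append({
            "module": int(fields[0]),
            "bootable": fields[1] == "yes",
            "impact": fields[2],
            "install_type": fields[3],
            "reason": fields[4] if len(fields) > 4 else "",
        })
    return rows


def disruptive_modules(rows: list[dict]) -> list[int]:
    """Module numbers whose impact is anything other than 'non-disruptive'."""
    return [row["module"] for row in rows if row["impact"] != "non-disruptive"]


# Table from the disruptive upgrade of bdsol-n5548-07 shown earlier.
OBSERVED = """\
Module  bootable      Impact  Install-type  Reason
------  --------  ----------  ------------  ------
     1       yes  disruptive         reset  Incompatible image
"""

if __name__ == "__main__":
    print("disruptive modules:", disruptive_modules(parse_impact_table(OBSERVED)))
    # Hypothetical row, for illustration only.
    print("disruptive modules:", disruptive_modules(parse_impact_table("1 yes non-disruptive reset")))
```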

Known Issues with ISSU

Layer 3 features and licenses

During the ISSU testing, a number of issues had to be resolved. The "show install all impact ..." command may report that ISSU cannot be performed, with the following explanation: "Non-disruptive install not supported if L3 was enabled." In the testing environment, this was caused by the LAN_BASE_SERVICES_PKG being in use in the installed license file.

LAN_BASE_SERVICES_PKG includes L3 functionality. To perform the ISSU, this package must be unused, and the license file has to be cleared from the device with the "clear license LICENSEFILE" command. The license file may currently be in use by the device. To clear such a license file, first check which packages are in use with the "show license usage" command, and then disable the features of those packages.

Non-edge STP ports

During testing, it was also necessary to shut down the northbound port channel, because it failed the non-edge check (Criteria 3) of the "show spanning-tree issu-impact" command, which would have led to a disruptive upgrade. In the "show spanning-tree vlan 1" output, this northbound port channel was not listed as a vPC edge port.

Loss of peer keepalive link

No traffic disruption was recorded after the loss of the peer keepalive link (mgmt0). In this topology, the management interface (mgmt0) is used as the keepalive link, so its loss does not affect the data traffic generated during testing.

The devices notice the mgmt0 interface going down and the peer keepalives failing, but since the peer link is up, data plane communication can continue.

2015 Jul 14 12:11:28 bdsol-n5548-07 %IM-5-IM_INTF_STATE: mgmt0 is DOWN in vdc 1

2015 Jul 14 12:11:32 bdsol-n5548-07 %VPC-2-PEER_KEEP_ALIVE_RECV_FAIL: In domain 75, VPC peer keep-alive receive has failed

2015 Jul 14 12:12:07 bdsol-n5548-07 %IM-5-IM_INTF_STATE: mgmt0 is UP in vdc 1

Disabling vPC feature

This test describes what happens when vPC is disabled on one of the switches during a live data transfer.

The vPC feature can be disabled with the following command in global configuration mode:

bdsol-n5548-07(config)# no feature vpc

Disabling the vPC feature on either the primary or the secondary vPC peer leads to an immediate loss of data connectivity. This is due to the peer-based nature of vPC: as soon as the feature is disabled, all vPC functionality on the switch stops, the peer link goes down, the vPC keepalive status becomes Suspended, and port-channel 101 of the testing environment goes down. This is evident in the "show vpc" output of the peer switch, which still has the vPC feature enabled:

bdsol-n5548-07# show vpc

Legend:

(*) - local vPC is down, forwarding via vPC peer-link

vPC domain id : 75

Peer status : peer link is down

vPC keep-alive status : Suspended (Destination IP not reachable)

...

vPC status

----------------------------------------------------------------------------

id      Port        Status Consistency Reason                     Active vlans
------  ----------- ------ ----------- -------------------------- -----------
101     Po101       down   success     success                    -

As in the previous tests, the traffic interruption is only short-lived.

Under the testing conditions described above, 50 to 80 packets were lost from a single session.

Removing the "feature vpc" command also removes the vPC configuration from the port channels.

This configuration must be re-added after the feature is enabled again.

Conclusion

The vPC feature is intended to improve resiliency and performance by distributing the data traffic of a port channel across multiple devices.

This simple idea requires a complex control plane implementation.

The above tests were meant to show disruptions to both the control plane and the data plane that may occur during the life cycle of the feature.

As expected, data plane disruptions were detected and corrected almost immediately, with only single packets lost in the tests.

The control plane disruptions tested show that vPC still maintains sub-second convergence even when the control plane is affected.

The most disruptive test performed, shutting down the vPC peer link, potentially combines both data and control plane failures. Even so, fast convergence was demonstrated.

Contributed by Cisco Engineers
