New and Changed Information

The following table provides an overview of the significant changes up to the current release. The table does not provide an exhaustive list of all changes or of the new features up to this release.

| Release Version | Feature | Description |
|---|---|---|
| NDFC release 12.2.1 | Support for Layer 3 VNI without VLAN feature | Beginning with NDFC release 12.2.1, support is available for configuring a Layer 3 VNI without a VLAN per VRF when creating or editing the following fabric types: A new field is available when creating or editing these fabric types to enable this feature. For more information, see Layer 3 VNI Without VLAN. |
| NDFC release 12.2.1 | Support for AI/ML QoS and queuing policies | Beginning with NDFC release 12.2.1, support is available for configuring artificial intelligence (AI) and machine learning (ML) QoS and queuing policies when creating or editing the following fabric types: A new field is available when creating or editing these fabric types to enable this feature. For more information, see AI/ML QoS Classification and Queuing Policies. |

VXLAN EVPN Fabrics Provisioning

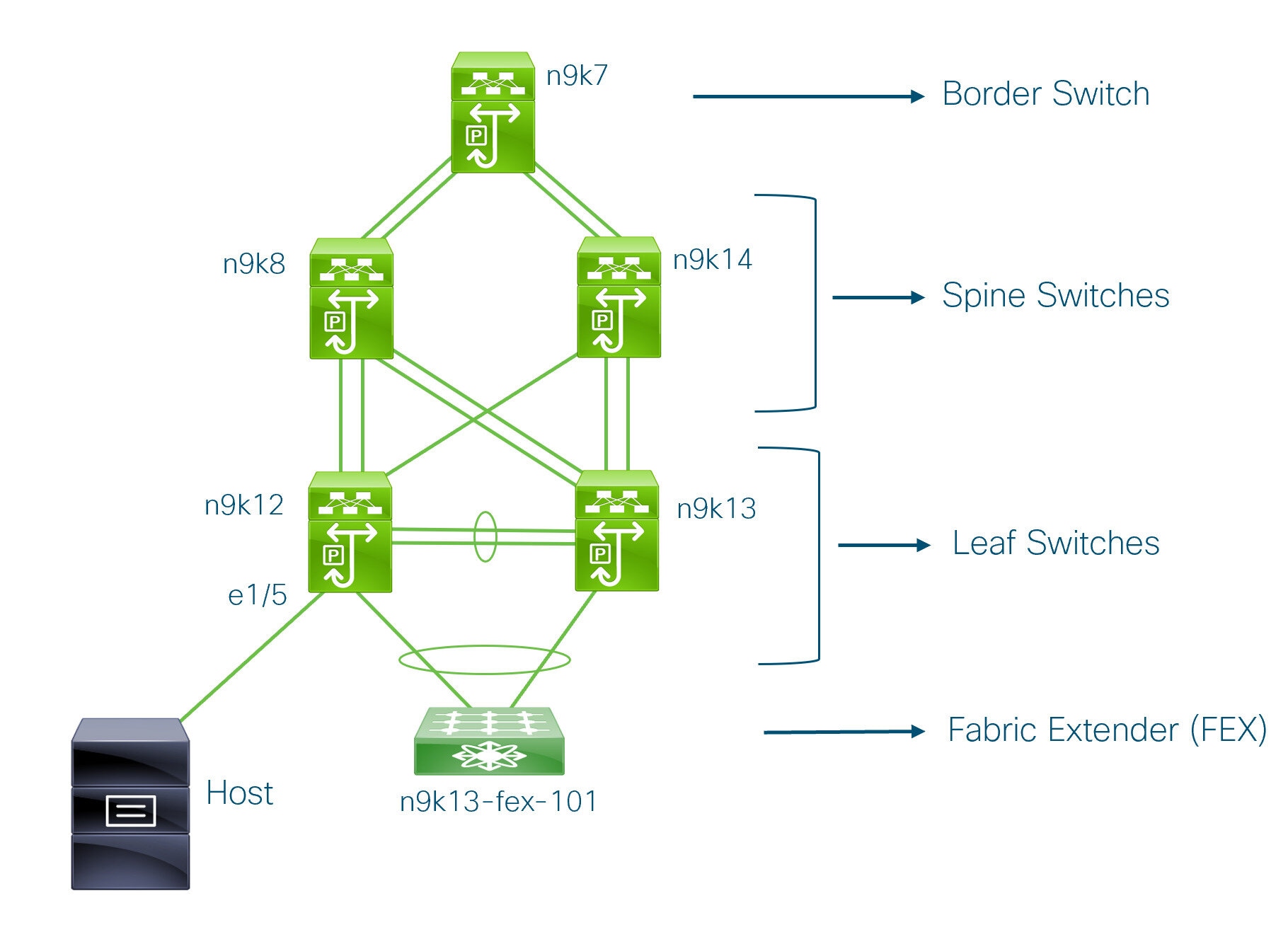

Cisco Nexus Dashboard Fabric Controller provides an enhanced "Easy" fabric workflow for unified underlay and overlay provisioning of the VXLAN BGP EVPN configuration on Nexus 9000 and 3000 Series switches. The fabric configuration is achieved through a powerful, flexible, and customizable template-based framework. With minimal user input, an entire fabric can be brought up with Cisco-recommended best-practice configurations in a short period of time. The set of parameters exposed in the Fabric Settings allows you to tailor the fabric to your preferred underlay provisioning options.

Border devices in a fabric typically provide external connectivity via peering with appropriate edge/core/WAN routers. These edge/core routers may either be managed or monitored by Nexus Dashboard Fabric Controller. These devices are placed in a special fabric called the External Fabric. The same Nexus Dashboard Fabric Controller can manage multiple VXLAN BGP EVPN fabrics while also offering easy provisioning and management of Layer-2 and Layer-3 DCI underlay and overlay configuration among these fabrics using a special construct called a Multi-Site Domain (MSD) fabric.

The Nexus Dashboard Fabric Controller GUI functions for creating and deploying VXLAN BGP EVPN fabrics are as follows:

Manage > Fabrics > Create Fabric under Actions drop-down list.

Create, edit, and delete a fabric:

- Create new VXLAN, MSD, and external VXLAN fabrics.
- View the VXLAN and MSD fabric topologies, including connections between fabrics.
- Update fabric settings.
- Save and deploy updated changes.
- Delete a fabric (if devices are removed).

Device discovery and provisioning start-up configurations on new switches:

- Add switch instances to the fabric.
- Provision start-up configurations and an IP address to a new switch through POAP configuration.
- Update switch policies, save, and deploy updated changes.
- Create intra-fabric and inter-fabric links (also called Inter-Fabric Connections [IFCs]).

Manage > Inventory > Interfaces > Create New Interface under Actions drop-down list.

Underlay provisioning:

- Create, deploy, view, edit, and delete a port-channel, vPC switch pair, Straight Through FEX (ST-FEX), Active-Active FEX (AA-FEX), loopback, subinterface, etc.
- Create breakout and unbreakout ports.
- Shut down and bring up interfaces.
- Rediscover ports and view interface configuration history.

Manage > Inventory > Switches > Add Switches under Actions drop-down list.

Overlay network provisioning:

- Create new overlay networks and VRFs (from the range specified in fabric creation).
- Provision the overlay networks and VRFs on the switches of the fabric.
- Undeploy the networks and VRFs from the switches.
- Remove the provisioning from the fabric in Nexus Dashboard Fabric Controller.

Manage > Inventory > Switches > Switch Overview > Services menu option.

Provisioning of configuration on service leafs to which L4-7 service appliances may be attached. For more information, see L4-L7 Service Basic Workflow.

This chapter mostly covers configuration provisioning for a single VXLAN BGP EVPN fabric. EVPN Multi-Site provisioning for Layer-2/Layer-3 DCI across multiple fabrics using the MSD fabric is documented in a separate chapter. The deployment details of how overlay Networks and VRFs can be provisioned from the Fabric Controller are covered in the "Networks" and "VRFs" sections in About Fabric Overview for LAN Operational Mode Setups.

Guidelines for VXLAN BGP EVPN Fabrics Provisioning

- For any switch to be successfully imported into Nexus Dashboard Fabric Controller, the user specified for discovery/import should have the following permissions:
  - SSH access to the switch
  - Ability to perform SNMPv3 queries
  - Ability to run show commands, such as show run and show interfaces
  - Ability to execute the guestshell commands, which are prefixed by run guestshell, for the Nexus Dashboard Fabric Controller tracker
- The switch discovery user does not need the ability to make any configuration changes on the switches. It is primarily used for read access.

- When Nexus Dashboard Fabric Controller deploys an invalid command to a device (for example, a command with an invalid key chain caused by an invalid entry in the fabric settings), an error is generated that displays this issue. This error is not cleared after you correct the invalid fabric entry. You need to manually clean up or delete the invalid commands to clear the error.

Note that fabric errors related to command execution are automatically cleared only when the same failed command succeeds in a subsequent deployment.

- LAN credentials must be set for any user that needs write access to the device. LAN credentials are set in Nexus Dashboard Fabric Controller on a per-user, per-device basis. When a user imports a device into the Easy Fabric and LAN credentials are not set for that device, Nexus Dashboard Fabric Controller moves the device to migration mode. After the user sets the appropriate LAN credentials for that device, a subsequent Save & Deploy retriggers the device import process.

- The Save & Deploy button triggers intent regeneration for the entire fabric as well as a configuration compliance check for all the switches within the fabric. This operation is required in cases that include, but are not limited to, the following:
  - A switch or a link is added, or any other change is made to the topology
  - A change in the fabric settings that must be shared across the fabric
  - A switch is removed or deleted
  - A new vPC pairing or unpairing is done
  - A change in the role for a device

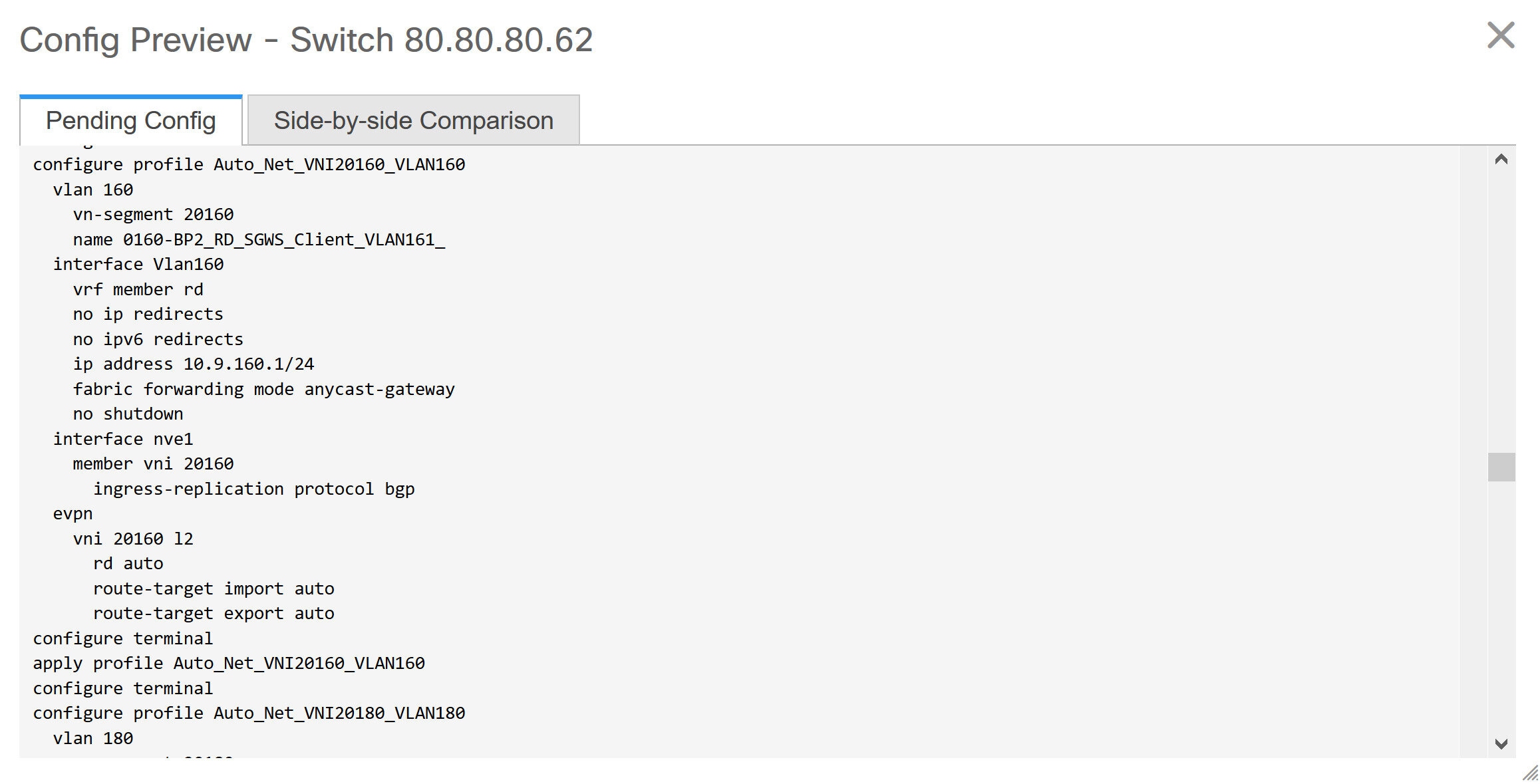

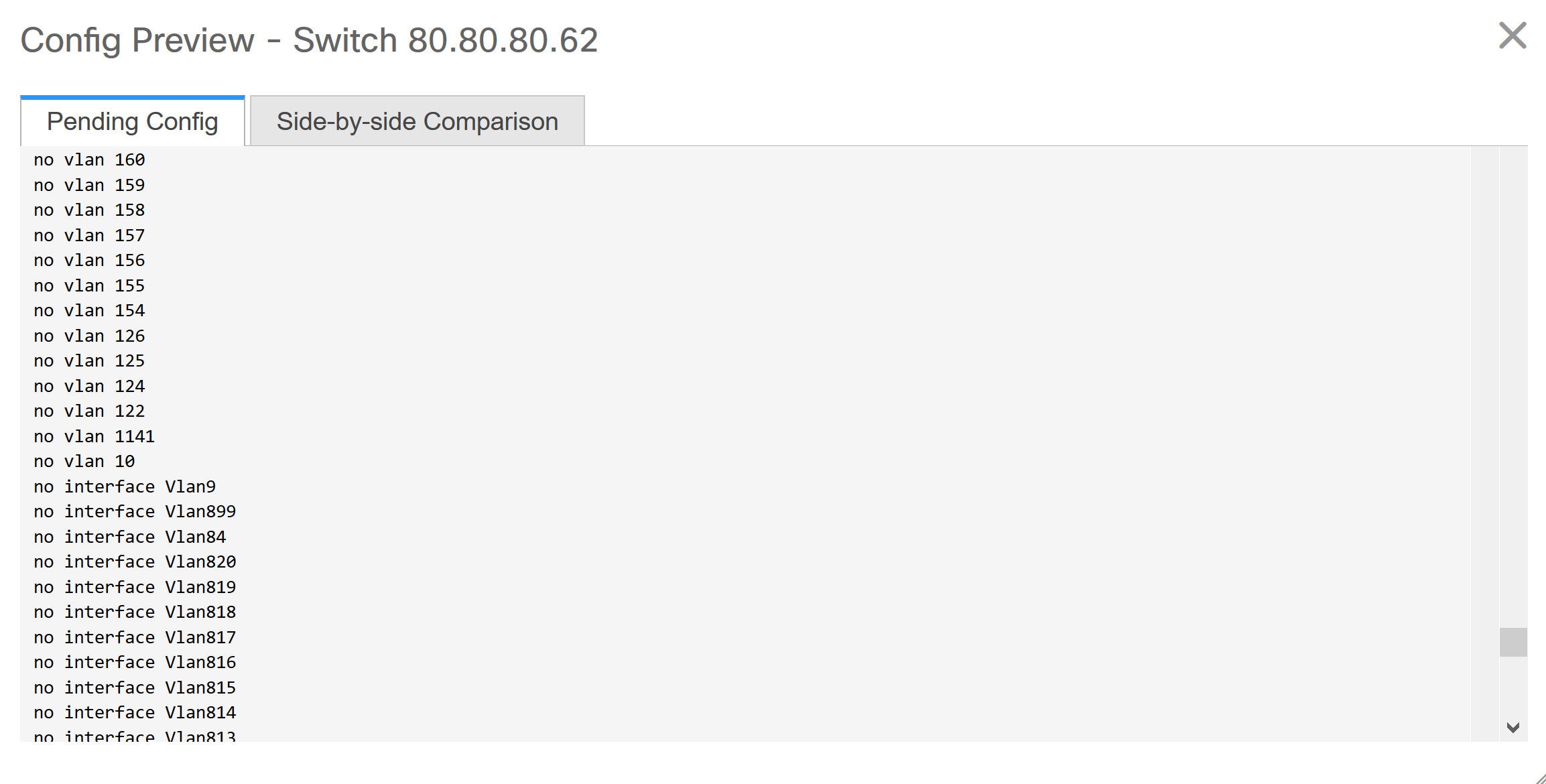

When you click Recalculate Config, the changes in the fabric are evaluated, and the configuration for the entire fabric is generated. Click Preview Config to preview the generated configuration, and then deploy it at the fabric level. Because this operation covers the entire fabric, Deploy Config can take more time depending on the size of the fabric.

When you right-click a switch icon, you can use the Deploy config to switches option to deploy per-switch configurations. This option is a local operation for a switch: the expected configuration, or intent, for the switch is evaluated against its current running configuration, and a config compliance check is performed for the switch to get the In-Sync or Out-of-Sync status. If the switch is out of sync, you are provided with a preview of all the configurations running on that switch that differ from the intent defined for it.

- A persistent configuration diff is seen for the command line system nve infra-vlan int force. The persistent diff occurs if you have deployed this command to the switch via the freeform configuration. Although the switch requires the force keyword during deployment, the running configuration that Nexus Dashboard Fabric Controller obtains from the switch doesn't display the force keyword. Therefore, the system nve infra-vlan int force command always shows up as a diff.

The intent in Nexus Dashboard Fabric Controller contains the line:

system nve infra-vlan int force

The running config contains the line:

system nve infra-vlan int

As a workaround to fix the persistent diff, edit the freeform config after the first deployment to remove the force keyword, so that it reads system nve infra-vlan int.

The force keyword is required for the initial deploy and must be removed after a successful deploy. You can confirm the diff by using the Side-by-side Comparison tab in the Config Preview window.

The persistent diff is also seen after a write erase and reload of a switch. Update the intent on Nexus Dashboard Fabric Controller to include the force keyword, and then remove the force keyword again after the first deployment.

- When the switch contains the hardware access-list tcam region arp-ether 256 command, which is deprecated without the double-wide keyword, the following warning is displayed:

Configuring the arp-ether region without "double-wide" is deprecated and can result in silent non-vxlan packet drops. Use the "double-wide" keyword when carving TCAM space for the arp-ether region.

Because the original hardware access-list tcam region arp-ether 256 command doesn't match the policies in Nexus Dashboard Fabric Controller, this config is captured in the switch_freeform policy. After the hardware access-list tcam region arp-ether 256 double-wide command is pushed to the switch, the original tcam command that does not contain the double-wide keyword is removed from the running configuration.

You must manually remove the hardware access-list tcam region arp-ether 256 command from the switch_freeform policy. Otherwise, config compliance shows a persistent diff.

Here is an example of the hardware access-list command on the switch:

switch(config)# show run | inc arp-ether
switch(config)# hardware access-list tcam region arp-ether 256
Warning: Please save config and reload the system for the configuration to take effect
switch(config)# show run | inc arp-ether
hardware access-list tcam region arp-ether 256
switch(config)#
switch(config)# hardware access-list tcam region arp-ether 256 double-wide
Warning: Please save config and reload the system for the configuration to take effect
switch(config)# show run | inc arp-ether
hardware access-list tcam region arp-ether 256 double-wide

You can see that the original tcam command is overwritten.
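Both persistent diffs above come from the same mechanism. Config compliance compares the intent with the running configuration that Nexus Dashboard Fabric Controller reads back from the switch. Any intent line that the switch never echoes back therefore stays flagged.

The following Python sketch models that behavior with exact line matching and applies the two manual workarounds to the intent. It is an illustration under stated assumptions, not the product's compliance engine:

- The function names are hypothetical.
- A real compliance check is hierarchy-aware rather than a flat line comparison.
- VLAN 10 is an arbitrary stand-in for int.
- The strict flag only mimics the bi-directional check that the Enable Strict Config Compliance option describes.

```python
def out_of_sync_lines(intent: list[str], running: list[str], strict: bool = False) -> list[str]:
    """Hypothetical flat compliance check using exact line matching.

    Returns intent lines missing from the running config. With strict=True it
    also returns running lines that are absent from the intent.
    """
    intent_set = {line.strip() for line in intent if line.strip()}
    running_set = {line.strip() for line in running if line.strip()}
    diff = sorted(intent_set - running_set)
    if strict:
        diff += sorted(running_set - intent_set)
    return diff


def drop_force_keyword(freeform: list[str]) -> list[str]:
    """Workaround 1 (after the first successful deploy): strip a trailing
    'force' from 'system nve infra-vlan' lines."""
    cleaned = []
    for line in freeform:
        tokens = line.split()
        if tokens[:3] == ["system", "nve", "infra-vlan"] and tokens[-1:] == ["force"]:
            line = " ".join(tokens[:-1])
        cleaned.append(line)
    return cleaned


def drop_superseded_lines(freeform: list[str], running: list[str]) -> list[str]:
    """Workaround 2: drop lines that the switch now holds only in an extended
    form, such as the arp-ether TCAM line once double-wide is applied.
    The prefix test is a heuristic chosen for this example."""
    running_lines = [line.strip() for line in running]
    kept = []
    for line in freeform:
        base = line.strip()
        superseded = base not in running_lines and any(
            r.startswith(base + " ") for r in running_lines
        )
        if not superseded:
            kept.append(line)
    return kept


# Hypothetical example data: intent lines versus what the switch reports back.
running = [
    "system nve infra-vlan 10",
    "hardware access-list tcam region arp-ether 256 double-wide",
]
intent = [
    "system nve infra-vlan 10 force",                              # freeform line
    "hardware access-list tcam region arp-ether 256",              # freeform line
    "hardware access-list tcam region arp-ether 256 double-wide",  # generated policy
]

before = out_of_sync_lines(intent, running)
after = out_of_sync_lines(drop_superseded_lines(drop_force_keyword(intent), running), running)
print(before)
print(after)
```

With this data, the first list holds the two lines that never match the running configuration. The second list is empty once both workarounds are applied.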

Creating a VXLAN EVPN Fabric Using the Data Center VXLAN EVPN Template

This topic describes how to create a new VXLAN EVPN fabric using the Data Center VXLAN EVPN template and contains descriptions for the IPv4 underlay.

You can create a Data Center VXLAN EVPN fabric with an IPv6-only underlay. The IPv6 underlay is supported only for the Data Center VXLAN EVPN template. For information about the IPv6 underlay, see Configuring a VXLANv6 Fabric.

- Navigate to the LAN Fabrics page:

Manage > Fabrics

- Click Actions > Create Fabric.

The Create Fabric window appears.

- Enter a unique name for the fabric in the Fabric Name field, then click Choose Fabric.

A list of all available fabric templates is displayed.

- From the available list of fabric templates, choose the Data Center VXLAN EVPN template, then click Select.

- Enter the necessary field values to create a fabric.

The tabs and their fields on the screen are explained in the following sections. The overlay and underlay network parameters are included in these tabs.

If you're creating a standalone fabric as a potential member fabric of an MSD fabric (used for provisioning overlay networks for fabrics that are connected through EVPN Multi-Site technology), see VXLAN EVPN Multi-Site before creating the member fabric.

- When you have completed the necessary configurations, click Save.

- Click the fabric to display a summary in the slide-in pane.

- Click the Launch icon to display the Fabric Overview.

General Parameters

The General Parameters tab is displayed by default. The fields in this tab are described in the following table.

| Field | Description |
|---|---|
| BGP ASN | Enter the BGP AS number the fabric is associated with. This must be the same as the existing fabric. |
| Enable IPv6 Underlay | Enable the IPv6 underlay feature. For information, see the section "Configuring a VXLANv6 Fabric" in Data Center VXLAN EVPN. |
| Enable IPv6 Link-Local Address | Enables the IPv6 Link-Local address. |
| Fabric Interface Numbering | Specifies whether you want to use point-to-point (p2p) or unnumbered networks. |
| Underlay Subnet IP Mask | Specifies the subnet mask for the fabric interface IP addresses. |
| Underlay Subnet IPv6 Mask | Specifies the subnet mask for the fabric interface IPv6 addresses. |
| Underlay Routing Protocol | The IGP used in the fabric: OSPF or IS-IS. |
| Route-Reflectors (RRs) | The number of spine switches that are used as route reflectors for transporting BGP traffic. Choose 2 or 4 from the drop-down list. The default value is 2. To deploy spine devices as RRs, Nexus Dashboard Fabric Controller sorts the spine devices based on their serial numbers and designates two or four spine devices as RRs. If you add more spine devices, the existing RR configuration doesn't change. Increasing the count: You can increase the route reflectors from two to four at any point in time. Configurations are automatically generated on the other two spine devices designated as RRs. Decreasing the count: When you reduce four route reflectors to two, remove the unneeded route reflector devices from the fabric. Follow these steps to reduce the count from 4 to 2. You can preselect RRs and RPs before performing the first Save & Deploy operation. For more information, see Preselecting Switches as Route-Reflectors and Rendezvous-Points. |
| Anycast Gateway MAC | Specifies the anycast gateway MAC address. |
| Enable Performance Monitoring | Check the check box to enable performance monitoring. Ensure that you do not clear interface counters from the Command Line Interface of the switches. Clearing interface counters can cause the Performance Monitor to display incorrect data for traffic utilization. If you must clear the counters and the switch has both |
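The Route-Reflectors (RRs) row in the table above states three things:

- Nexus Dashboard Fabric Controller sorts spine devices by serial number.
- It designates two or four of them as RRs.
- Adding spines does not change the existing RR configuration.

The Python sketch below models that selection rule. It assumes an ascending lexicographic sort that picks the lowest serial numbers first, because the actual sort order is not stated here. The function name and the serial numbers are hypothetical.

```python
def select_route_reflectors(
    spine_serials: list[str], count: int = 2, existing: tuple[str, ...] = ()
) -> list[str]:
    """Hypothetical model of RR selection by spine serial number.

    Existing RRs that are still in the fabric are kept, so adding spines does
    not move the RR role. Remaining slots are filled in ascending serial order
    (an assumed ordering).
    """
    if count not in (2, 4):
        raise ValueError("Route-Reflectors must be 2 or 4")
    chosen = [s for s in existing if s in spine_serials][:count]
    for serial in sorted(spine_serials):
        if len(chosen) == count:
            break
        if serial not in chosen:
            chosen.append(serial)
    if len(chosen) < count:
        raise ValueError(f"need {count} spines, found {len(spine_serials)}")
    return chosen


spines = ["FDO3", "FDO1", "FDO4", "FDO2"]
initial = select_route_reflectors(spines)  # first deployment
grown = select_route_reflectors(spines + ["FDO0"], existing=tuple(initial))
four = select_route_reflectors(spines, count=4, existing=tuple(initial))
```

In the grown case, FDO1 and FDO2 stay as RRs even though FDO0 would sort first. This matches the statement that adding spine devices leaves the existing RR configuration unchanged. Increasing the count to four only adds the next two spines.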

What’s next: Complete the configurations in another tab if necessary, or click Save when you have completed the necessary configurations for this fabric.

Replication

The fields in the Replication tab are described in the following table. Most of the fields are automatically generated based on Cisco-recommended best practice configurations, but you can update the fields if needed.

| Field | Description |
|---|---|
| Replication Mode | The mode of replication that is used in the fabric for BUM (Broadcast, Unknown Unicast, Multicast) traffic. The choices are Ingress Replication or Multicast. When you choose Ingress Replication, the multicast-related fields are disabled. You can change the fabric setting from one mode to the other if no overlay profile exists for the fabric. |
| Multicast Group Subnet | IP address prefix used for multicast communication. A unique IP address is allocated from this group for each overlay network. The replication mode change isn't allowed if a policy template instance is created for the current mode. For example, if a multicast-related policy is created and deployed, you can't change the mode to Ingress. |
| Enable Tenant Routed Multicast (TRM) | Check the check box to enable Tenant Routed Multicast (TRM), which allows overlay multicast traffic to be supported over EVPN/MVPN in the VXLAN BGP EVPN fabric. |
| Default MDT Address for TRM VRFs | The multicast address for Tenant Routed Multicast traffic is populated. By default, this address is from the IP prefix specified in the Multicast Group Subnet field. When you update either field, ensure that the TRM address is chosen from the IP prefix specified in Multicast Group Subnet. For more information, see the section "Overview of Tenant Routed Multicast" in Configuring Tenant Routed Multicast. |
| Rendezvous-Points | Enter the number of spine switches acting as rendezvous points. |
| RP mode | Choose from the two supported multicast modes of replication: ASM (for Any-Source Multicast [ASM]) or BiDir (for Bidirectional PIM [BIDIR-PIM]). When you choose ASM, the BiDir-related fields aren't enabled. When you choose BiDir, the BiDir-related fields are enabled. BIDIR-PIM is supported on Cisco's Cloud Scale Family platforms 9300-EX and 9300-FX/FX2, with software release 9.2(1) onwards. When you create a new VRF for the fabric overlay, this address is populated in the Underlay Multicast Address field, in the Advanced tab. |
| Underlay RP Loopback ID | The loopback ID used for the rendezvous point (RP), for multicast protocol peering purposes in the fabric underlay. |
| Underlay Primary RP Loopback ID | Enabled if you choose BIDIR-PIM as the multicast mode of replication. The primary loopback ID used for the phantom RP, for multicast protocol peering purposes in the fabric underlay. |
| Underlay Backup RP Loopback ID | Enabled if you choose BIDIR-PIM as the multicast mode of replication. The secondary loopback ID used for the phantom RP, for multicast protocol peering purposes in the fabric underlay. |
| Underlay Second Backup RP Loopback Id | Used for the second fallback Bidir-PIM Phantom RP. |
| Underlay Third Backup RP Loopback Id | Used for the third fallback Bidir-PIM Phantom RP. |
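The Multicast Group Subnet and Default MDT Address for TRM VRFs rows describe two address rules:

- Each overlay network receives a unique group address from the subnet.
- The TRM default MDT address must fall within that same prefix.

The Python sketch below checks both rules with the standard ipaddress module. The following are assumptions for illustration, because Nexus Dashboard Fabric Controller's own allocation order is not described here:

- Sequential allocation starting from the first host address.
- The example prefix 239.1.1.0/25.
- The network names.

```python
import ipaddress


def allocate_groups(subnet: str, networks: list[str]) -> dict[str, str]:
    """Hypothetical sequential allocator: one unique group address per network."""
    prefix = ipaddress.ip_network(subnet)
    if not prefix.is_multicast:
        raise ValueError(f"{subnet} is not a multicast prefix")
    # iter() because hosts() may return a list for very small prefixes.
    pool = iter(prefix.hosts())
    allocation = {}
    for name in networks:
        try:
            allocation[name] = str(next(pool))
        except StopIteration:
            raise ValueError(f"{subnet} has no free group for {name}") from None
    return allocation


def mdt_in_group_subnet(mdt_address: str, subnet: str) -> bool:
    """Check that the default MDT address is taken from the group subnet."""
    return ipaddress.ip_address(mdt_address) in ipaddress.ip_network(subnet)


groups = allocate_groups("239.1.1.0/25", ["web", "app", "db"])
```

Drawing addresses from hosts() skips the first and last address of the prefix. That is a simplification chosen for the sketch, not a multicast requirement.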

What’s next: Complete the configurations in another tab if necessary, or click Save when you have completed the necessary configurations for this fabric.

VPC

The fields in the VPC tab are described in the following table. Most of the fields are automatically generated based on Cisco-recommended best practice configurations, but you can update the fields if needed.

| Field | Description |
|---|---|
| vPC Peer Link VLAN | VLAN used for the vPC peer link SVI. |
| Make vPC Peer Link VLAN as Native VLAN | Enables the vPC peer link VLAN as the native VLAN. |
| vPC Peer Keep Alive option | Choose the management or loopback option. If you want to use IP addresses assigned to the management port and the management VRF, choose management. If you use IP addresses assigned to loopback interfaces (and a non-management VRF), choose loopback. If you use IPv6 addresses, you must use loopback IDs. |
| vPC Auto Recovery Time | Specifies the vPC auto recovery time-out period in seconds. |
| vPC Delay Restore Time | Specifies the vPC delay restore period in seconds. |
| vPC Peer Link Port Channel ID | Specifies the Port Channel ID for a vPC Peer Link. By default, the value in this field is 500. |
| vPC IPv6 ND Synchronize | Enables IPv6 Neighbor Discovery synchronization between vPC switches. The check box is enabled by default. Uncheck the check box to disable the function. |
| vPC advertise-pip | Select the check box to enable the Advertise PIP feature. You can enable the advertise PIP feature on a specific vPC as well. |
| Enable the same vPC Domain Id for all vPC Pairs | Enable the same vPC Domain ID for all vPC pairs. When you select this field, the vPC Domain Id field is editable. |
| vPC Domain Id | Specifies the vPC domain ID to be used on all vPC pairs. |
| vPC Domain Id Range | Specifies the vPC Domain Id range to use for new pairings. |
| Enable QoS for Fabric vPC-Peering | Enable QoS on spines for guaranteed delivery of vPC Fabric Peering communication. QoS for vPC fabric peering and the queuing policies options in fabric settings are mutually exclusive. |
| QoS Policy Name | Specifies the QoS policy name, which should be the same on all fabric vPC peering spines. The default name is spine_qos_for_fabric_vpc_peering. |
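Several vPC rows above constrain each other:

- IPv6 addressing requires the loopback keep-alive option.
- QoS for fabric vPC peering cannot be combined with the fabric queuing policies option.
- New pairings draw their domain ID from the vPC Domain Id Range.

The following Python sketch gathers those rules into one validation helper. The class, field, and function names are hypothetical. The "1-1000" range syntax and the lowest-free-ID choice are also assumptions, not documented Nexus Dashboard Fabric Controller behavior.

```python
from dataclasses import dataclass


@dataclass
class VpcSettings:
    """Hypothetical subset of the vPC tab plus the queuing policy option."""
    keepalive_option: str   # "management" or "loopback"
    uses_ipv6: bool
    fabric_vpc_qos: bool    # Enable QoS for Fabric vPC-Peering
    queuing_policies: bool  # queuing policy option in fabric settings


def validate(settings: VpcSettings) -> list[str]:
    """Return a list of rule violations; an empty list means the settings pass."""
    errors = []
    if settings.keepalive_option not in ("management", "loopback"):
        errors.append("keep-alive option must be management or loopback")
    if settings.uses_ipv6 and settings.keepalive_option != "loopback":
        errors.append("IPv6 addressing requires the loopback keep-alive option")
    if settings.fabric_vpc_qos and settings.queuing_policies:
        errors.append("fabric vPC-peering QoS and queuing policies are mutually exclusive")
    return errors


def next_domain_id(domain_id_range: str, in_use: set[int]) -> int:
    """Return the lowest domain ID in an assumed 'low-high' range not yet used."""
    low, high = (int(part) for part in domain_id_range.split("-"))
    for candidate in range(low, high + 1):
        if candidate not in in_use:
            return candidate
    raise ValueError(f"no free vPC domain ID in {domain_id_range}")
```

Returning a list of messages rather than raising on the first failure mirrors how a settings form can report every conflicting field at once.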

What’s next: Complete the configurations in another tab if necessary, or click Save when you have completed the necessary configurations for this fabric.

Protocols

The fields in the Protocols tab are described in the following table. Most of the fields are automatically generated based on Cisco-recommended best practice configurations, but you can update the fields if needed.

| Field | Description |
|---|---|
| Underlay Routing Loopback Id | The loopback interface ID is populated as 0 since loopback0 is usually used for fabric underlay IGP peering purposes. |
| Underlay VTEP Loopback Id | The loopback interface ID is populated as 1 since loopback1 is used for VTEP peering purposes. |
| Underlay Anycast Loopback Id | The loopback interface ID is grayed out and is used for vPC peering in VXLANv6 fabrics only. |
| Underlay Routing Protocol Tag | The tag defining the type of network. |
| OSPF Area ID | The OSPF area ID, if OSPF is used as the IGP within the fabric. The OSPF or IS-IS authentication fields are enabled based on your selection in the Underlay Routing Protocol field in the General Parameters tab. |
| Enable OSPF Authentication | Select the check box to enable OSPF authentication. Deselect the check box to disable it. If you enable this field, the OSPF Authentication Key ID and OSPF Authentication Key fields are enabled. |
| OSPF Authentication Key ID | The Key ID is populated. |
| OSPF Authentication Key | The OSPF authentication key must be the 3DES key from the switch. Plain text passwords are not supported. Log in to the switch, retrieve the encrypted key, and enter it in this field. Refer to the Retrieving the Authentication Key section for details. |
| IS-IS Level | Select the IS-IS level from this drop-down list. |
| Enable IS-IS Network Point-to-Point | Enables network point-to-point on fabric interfaces that are numbered. |
| Enable IS-IS Authentication | Select the check box to enable IS-IS authentication. Deselect the check box to disable it. If you enable this field, the IS-IS authentication fields are enabled. |
| IS-IS Authentication Keychain Name | Enter the keychain name, such as CiscoisisAuth. |
| IS-IS Authentication Key ID | The Key ID is populated. |
| IS-IS Authentication Key | Enter the Cisco Type 7 encrypted key. Plain text passwords are not supported. Log in to the switch, retrieve the encrypted key, and enter it in this field. Refer to the Retrieving the Authentication Key section for details. |
| Set IS-IS Overload Bit | When enabled, sets the overload bit for an elapsed time after a reload. |
| IS-IS Overload Bit Elapsed Time | Allows you to clear the overload bit after an elapsed time in seconds. |
| Enable BGP Authentication | Select the check box to enable BGP authentication. Deselect the check box to disable it. If you enable this field, the BGP Authentication Key Encryption Type and BGP Authentication Key fields are enabled. If you enable BGP authentication using this field, leave the iBGP Peer-Template Config field blank to avoid duplicate configuration. |
| BGP Authentication Key Encryption Type | Choose 3 for the 3DES encryption type or 7 for the Cisco encryption type. |
| BGP Authentication Key | Enter the encrypted key based on the encryption type. Plain text passwords are not supported. Log in to the switch, retrieve the encrypted key, and enter it in the BGP Authentication Key field. Refer to the Retrieving the Authentication Key section for details. |
| Enable PIM Hello Authentication | Select this check box to enable PIM hello authentication on all the intra-fabric interfaces of the switches in a fabric. This check box is editable only for the Multicast replication mode. Note that this check box is valid only for the IPv4 underlay. |
| PIM Hello Authentication Key | Specifies the PIM hello authentication key. For more information, see Retrieving PIM Hello Authentication Key. To retrieve the PIM Hello Authentication Key, perform the following steps: |
| Enable BFD | Check the check box to enable feature bfd on all switches in the fabric. This feature is valid only on the IPv4 underlay, and the scope is within a fabric. BFD within a fabric is supported natively. The BFD feature is disabled by default in the Fabric Settings. If enabled, BFD is enabled for the underlay protocols with the default settings. Any custom BFD configurations must be deployed via the per-switch freeform or per-interface freeform policies. The following config is pushed after you select the Enable BFD check box: For information about BFD feature compatibility, refer to your respective platform documentation, and for information about the supported software images, see Compatibility Matrix for Cisco. |
| Enable BFD for iBGP | Check the check box to enable BFD for the iBGP neighbor. This option is disabled by default. |
| Enable BFD for OSPF | Check the check box to enable BFD for the OSPF underlay instance. This option is disabled by default, and it is grayed out if the link state protocol is ISIS. |
| Enable BFD for ISIS | Check the check box to enable BFD for the ISIS underlay instance. This option is disabled by default, and it is grayed out if the link state protocol is OSPF. |
| Enable BFD for PIM | Check the check box to enable BFD for PIM. This option is disabled by default, and it is grayed out if the replication mode is Ingress. Following are examples of the BFD global policies: |
| Enable BFD Authentication | Check the check box to enable BFD authentication. If you enable this field, the BFD Authentication Key ID and BFD Authentication Key fields are editable. BFD authentication is not supported when the Fabric Interface Numbering field under the General Parameters tab is set to unnumbered; in that case, the BFD authentication fields are grayed out automatically. BFD authentication is valid only for P2P interfaces. |
| BFD Authentication Key ID | Specifies the BFD authentication key ID for the interface authentication. The default value is 100. |
| BFD Authentication Key | Specifies the BFD authentication key. For information about how to retrieve the BFD authentication parameters. |
| iBGP Peer-Template Config | Add iBGP peer template configurations on the leaf switches to establish an iBGP session between the leaf switch and the route reflector. If you use BGP templates, add the authentication configuration within the template and uncheck the Enable BGP Authentication check box to avoid duplicate configuration. In the sample configuration, the 3DES password is displayed after password 3. The following fields can be used to specify different configurations: In a brownfield migration, if the spine and leaf use different peer template names, both the iBGP Peer-Template Config and Leaf/Border/Border Gateway iBGP Peer-Template Config fields need to be set according to the switch config. If the spine and leaf use the same peer template name and content (except for the "route-reflector-client" CLI), only the iBGP Peer-Template Config field in the fabric settings needs to be set. If the fabric settings on iBGP peer templates do not match the existing switch configuration, an error message is generated and the migration does not proceed. |
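Many Protocols fields are enabled or grayed out depending on other settings:

- The underlay routing protocol.
- The replication mode.
- The fabric interface numbering.
- Whether the underlay is IPv4.

The Python sketch below encodes the dependencies stated in the table to show which toggles a given combination leaves editable. The function name and string values are hypothetical. It also assumes that the per-protocol BFD options become available only after Enable BFD is checked.

```python
def editable_protocol_fields(
    underlay_protocol: str,    # "ospf" or "is-is"
    replication_mode: str,     # "multicast" or "ingress"
    interface_numbering: str,  # "p2p" or "unnumbered"
    ipv6_underlay: bool = False,
    bfd_enabled: bool = False,
) -> set[str]:
    """Hypothetical model of which Protocols tab toggles are editable."""
    if underlay_protocol == "ospf":
        fields = {"OSPF Area ID", "Enable OSPF Authentication"}
    elif underlay_protocol == "is-is":
        fields = {"IS-IS Level", "Enable IS-IS Authentication"}
    else:
        raise ValueError("underlay protocol must be 'ospf' or 'is-is'")

    multicast = replication_mode == "multicast"
    # PIM hello authentication: Multicast replication mode, IPv4 underlay only.
    if multicast and not ipv6_underlay:
        fields.add("Enable PIM Hello Authentication")

    # BFD is valid only on the IPv4 underlay.
    if not ipv6_underlay:
        fields.add("Enable BFD")
        if bfd_enabled:  # assumption: sub-options follow the Enable BFD check box
            fields.add("Enable BFD for iBGP")
            # BFD for OSPF is grayed out under IS-IS, and vice versa.
            fields.add("Enable BFD for OSPF" if underlay_protocol == "ospf" else "Enable BFD for ISIS")
            # BFD for PIM is grayed out when the replication mode is Ingress.
            if multicast:
                fields.add("Enable BFD for PIM")
            # BFD authentication is valid only for P2P (numbered) interfaces.
            if interface_numbering == "p2p":
                fields.add("Enable BFD Authentication")
    return fields
```

For example, an OSPF fabric with Ingress replication and unnumbered interfaces leaves Enable BFD for OSPF editable. In the same fabric, BFD for ISIS, BFD for PIM, and BFD Authentication stay grayed out.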

What’s next: Complete the configurations in another tab if necessary, or click Save when you have completed the necessary configurations for this fabric.

Advanced

The fields in the Advanced tab are described in the following table. Most of the fields are automatically generated based on Cisco-recommended best practice configurations, but you can update the fields if needed.

| Field | Description |

|---|---|

|

VRF Template |

Specifies the VRF template for creating VRFs. |

|

Network Template |

Specifies the network template for creating networks. |

|

VRF Extension Template |

Specifies the VRF extension template for enabling VRF extension to other fabrics. |

|

Network Extension Template |

Specifies the network extension template for extending networks to other fabrics. |

|

Overlay Mode |

VRF/Network configuration using config-profile or CLI, default is config-profile. For more information, see Overlay Mode. |

|

Enable L3VNI w/o VLAN |

Beginning with NDFC release 12.2.1, check the box to enable the Layer 3 VNI without VLAN feature. The setting at this fabric-level field affects the related field at the VRF level. For more information, see:

|

|

Site ID |

The ID for this fabric if you are moving this fabric within an MSD. The site ID is mandatory for a member fabric to be a part of an MSD. Each member fabric of an MSD has a unique site ID for identification. |

|

Intra Fabric Interface MTU |

Specifies the MTU for the intra fabric interface. This value should be an even number. |

|

Layer 2 Host Interface MTU |

Specifies the MTU for the layer 2 host interface. This value should be an even number. |

|

Unshut Host Interfaces by Default |

Check this check box to unshut the host interfaces by default. |

|

Power Supply Mode |

Choose the appropriate power supply mode. |

|

CoPP Profile |

Choose the appropriate Control Plane Policing (CoPP) profile policy for the fabric. By default, the strict option is populated. |

|

VTEP HoldDown Time |

Specifies the NVE source interface hold down time. |

|

Brownfield Overlay Network Name Format |

Enter the format to be used to build the overlay network name during a brownfield import or migration. The network name should not contain any white spaces or special characters except underscore () and hyphen (-). The network name must not be changed once the brownfield migration has been initiated. See the _Creating Networks for the Standalone Fabric section for the naming convention of the network name. The syntax is [<string> | $$VLAN_ID$$] $$VNI$$ [<string>| $$VLAN_ID$$] and the default value is Auto_Net_VNI$$VNI$$_VLAN$$VLAN_ID$$. When you create networks, the name is generated according to the syntax you specify. The following list describes the variables in the syntax:

An example overlay network name: Site_VNI12345_VLAN1234 Ignore this field for greenfield deployments. The Brownfield Overlay Network Name Format applies for the following brownfield imports:

|

|

Enable CDP for Bootstrapped Switch |

Enables CDP on management (mgmt0) interface for bootstrapped switch. By default, for bootstrapped switches, CDP is disabled on the mgmt0 interface. |

|

Enable VXLAN OAM |

Enables the VXLAM OAM functionality for devices in the fabric. This is enabled by default. Uncheck the check box to disable VXLAN OAM function. If you want to enable the VXLAN OAM function on specific switches and disable on other switches in the fabric, you can use freeform configurations to enable OAM and disable OAM in the fabric settings. The VXLAN OAM feature in Cisco Nexus Dashboard Fabric Controller is only supported on a single fabric or site. |

|

Enable Tenant DHCP |

Check the check box to enable feature dhcp and associated configurations globally on all switches in the fabric. This is a pre-requisite for support of DHCP for overlay networks that are part of the tenant VRFs. Ensure that Enable Tenant DHCP is enabled before enabling DHCP-related parameters in the overlay profiles. |

|

Enable NX-API |

Specifies enabling of NX-API on HTTPS. This check box is checked by default. |

|

Enable NX-API on HTTP Port |

Specifies enabling of NX-API on HTTP. Enable this check box and the Enable NX-API check box to use HTTP. This check box is checked by default. If you uncheck this check box, the applications that use NX-API and supported by Cisco Nexus Dashboard Fabric Controller, such as Endpoint Locator (EPL), Layer 4-Layer 7 services (L4-L7 services), VXLAN OAM, and so on, start using the HTTPS instead of HTTP. If you check the Enable NX-API check box and the Enable NX-API on HTTP check box, applications use HTTP. |

|

Enable Policy-Based Routing (PBR) |

Check this check box to enable routing of packets based on the specified policy. Starting with Cisco NX-OS Release 7.0(3)I7(1) and later releases, this feature works on Cisco Nexus 9000 Series switches with Nexus 9000 Cloud Scale (Tahoe) ASICs. This feature is used along with the Layer 4-Layer 7 service workflow. For information on Layer 4-Layer 7 service, refer the Layer 4-Layer 7 Service chapter. |

|

Enable Strict Config Compliance |

Check this check box to enable the Strict Config Compliance feature. It enables bidirectional compliance checks that flag additional configurations in the running configuration that are not in the intended (expected) configuration. By default, this feature is disabled. |

|

Enable AAA IP Authorization |

Enables AAA IP authorization when IP authorization is enabled on the remote authentication server. This is required to support Nexus Dashboard Fabric Controller in scenarios where customers strictly control which IP addresses can access the switches. |

|

Enable NDFC as Trap Host |

Select this check box to enable Nexus Dashboard Fabric Controller as an SNMP trap destination. Typically, for a native HA Nexus Dashboard Fabric Controller deployment, the eth1 VIP address is configured as the SNMP trap destination on the switches. By default, this check box is enabled. |

|

Anycast Border Gateway advertise-pip |

Enables advertising the Anycast Border Gateway PIP as the VTEP. This setting takes effect when you perform Recalculate Config on the MSD fabric. |

|

Greenfield Cleanup Option |

Enables the switch cleanup option, without a switch reload, for switches imported into Nexus Dashboard Fabric Controller with Preserve-Config=No. This option is typically recommended only for fabric environments with Cisco Nexus 9000v Switches, to reduce switch cleanup time. The recommended option for greenfield deployments is to use Bootstrap or switch cleanup with a reboot; in other words, leave this option unchecked. |

|

Enable Precision Time Protocol (PTP) |

Enables PTP across a fabric. When you check this check box, PTP is enabled globally and on core-facing interfaces. Additionally, the PTP Source Loopback Id and PTP Domain Id fields are editable. For more information, see the section "Precision Time Protocol for Data Center VXLAN EVPN Fabrics" in Precision Time Protocol for Data Center VXLAN EVPN Fabrics. |

|

PTP Source Loopback Id |

Specifies the ID of the loopback interface whose IP address is used as the source IP address for all PTP packets. Valid values range from 0 to 1023. The PTP loopback ID cannot be the same as the RP, Phantom RP, NVE, or MPLS loopback ID; otherwise, an error is generated. The PTP loopback ID can be the same as the BGP loopback ID or a user-defined loopback ID created from Nexus Dashboard Fabric Controller. If the PTP loopback ID is not found during Deploy Config, the following error is generated: Loopback interface to use for PTP source IP is not found. Create PTP loopback interface on all the devices to enable PTP feature. |

|

PTP Domain Id |

Specifies the PTP domain ID on a single network. The valid values range from 0 to 127. |

|

Enable MPLS Handoff |

Check the check box to enable the MPLS Handoff feature. For more information, see MPLS SR and LDP Handoff. |

|

Underlay MPLS Loopback Id |

Specifies the underlay MPLS loopback ID. The default value is 101. |

|

Enable TCAM Allocation |

When this check box is checked, TCAM commands are automatically generated for VXLAN and vPC Fabric Peering. |

|

Enable Default Queuing Policies |

Check this check box to apply QoS policies on all the switches in this fabric. To remove the QoS policies that you applied on all the switches, uncheck this check box, update all the configurations to remove the references to the policies, and then save and deploy. Predefined QoS configurations are included that can be used for various Cisco Nexus 9000 Series Switches. When you check this check box, the appropriate QoS configurations are pushed to the switches in the fabric. The system queuing is updated when configurations are deployed to the switches. If required, you can perform interface marking with the defined queuing policies by adding the required configuration to the per-interface freeform block. To review the actual queuing policies, open the policy file in the template editor: from the Cisco Nexus Dashboard Fabric Controller Web UI, choose Manage > Templates, search for the queuing policy by its policy file name (for example, queuing_policy_default_8q_cloudscale), choose the file, and from the Actions drop-down list, select Edit template content to edit the policy. See the Cisco Nexus 9000 Series NX-OS Quality of Service Configuration Guide for platform-specific details. |

|

N9K Cloud Scale Platform Queuing Policy |

From the drop-down list, choose the queuing policy to apply to all Cisco Nexus 9200 Series Switches in the fabric and to all Cisco Nexus 9000 Series Switches in the fabric whose model names end with EX, FX, or FX2. The valid values are queuing_policy_default_4q_cloudscale and queuing_policy_default_8q_cloudscale. Use the queuing_policy_default_4q_cloudscale policy for FEXes. You can change from the queuing_policy_default_4q_cloudscale policy to the queuing_policy_default_8q_cloudscale policy only when FEXes are offline. |

|

N9K R-Series Platform Queuing Policy |

From the drop-down list, choose the queuing policy to apply to all Cisco Nexus switches in the fabric whose model names end with R. The valid value is queuing_policy_default_r_series. |

|

Other N9K Platform Queuing Policy |

From the drop-down list, choose the queuing policy to apply to all switches in the fabric other than those covered by the previous two fields. The valid value is queuing_policy_default_other. |

|

Enable AI/ML QOS and Queuing Policies |

Beginning with NDFC release 12.2.1, check the box to enable AI/ML QoS and queuing policies in the BGP fabric. For more information, see AI/ML QoS Classification and Queuing Policies. This option is not available if you also enabled either of the following options:

|

|

AI / ML QOS & Queuing Policy |

This field is available if you checked the Enable AI/ML QOS and Queuing Policies option above. Beginning with NDFC release 12.2.1, choose the queuing policy from the drop-down list based on the predominant fabric link speed for certain switches in the fabric. For more information, see AI/ML QoS Classification and Queuing Policies. Options are:

|

|

Enable MACsec |

Enables MACsec for the fabric. For more information, see Enabling MACsec. Freeform CLIs: Fabric-level freeform CLIs can be added while creating or editing a fabric. They are applicable to switches across the fabric. You must add the configurations as displayed in the running configuration, without indentation. Switch-level freeform configurations should be added via the switch freeform on NDFC. For more information, see Enabling Freeform Configurations on Fabric Switches. |

|

Leaf Freeform Config |

Enter the CLIs to add to switches that have the Leaf, Border, or Border Gateway role. |

|

Spine Freeform Config |

Enter the CLIs to add to switches that have the Spine, Border Spine, Border Gateway Spine, or Super Spine role. |

|

Intra-fabric Links Additional Config |

Enter the CLIs to add to the intra-fabric links. |
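As an illustration of how the PTP and queuing-policy fields in the table above constrain input, the following Python sketch checks a proposed PTP Source Loopback Id and PTP Domain Id and lists the default queuing policies that would apply to a given switch model. It is a minimal sketch under stated assumptions, not NDFC code: the function names are hypothetical, the model-name matching (including treating models that start with N9K-C92 as Cisco Nexus 9200 switches) only approximates the EX/FX/FX2/R suffix rules, and the loopback IDs you pass in, other than the documented default MPLS loopback ID of 101, are example values.

```python
from typing import Dict, List

# Policy names come from the queuing-policy field descriptions above.
CLOUDSCALE_POLICIES = [
    "queuing_policy_default_4q_cloudscale",
    "queuing_policy_default_8q_cloudscale",
]
R_SERIES_POLICIES = ["queuing_policy_default_r_series"]
OTHER_POLICIES = ["queuing_policy_default_other"]


def queuing_policy_choices(model: str) -> List[str]:
    """Hypothetical helper: queuing policies offered for a switch model string."""
    m = model.strip().upper()
    # Assumption: Nexus 9200 models start with "N9K-C92".
    if m.startswith("N9K-C92") or m.endswith(("EX", "FX", "FX2")):
        return CLOUDSCALE_POLICIES
    if m.endswith("R"):
        return R_SERIES_POLICIES
    return OTHER_POLICIES


def validate_ptp(loopback_id: int, domain_id: int, reserved: Dict[str, int]) -> List[str]:
    """Hypothetical check of the PTP fields.

    reserved maps loopback roles that PTP must not reuse (RP, Phantom RP,
    NVE, MPLS) to their configured IDs. The BGP loopback may be shared
    with PTP, so it is not passed in here.
    """
    errors: List[str] = []
    if not 0 <= loopback_id <= 1023:
        errors.append(f"PTP loopback ID {loopback_id} is outside 0-1023")
    for role, used_id in reserved.items():
        if used_id == loopback_id:
            errors.append(f"PTP loopback ID {loopback_id} is already the {role} loopback")
    if not 0 <= domain_id <= 127:
        errors.append(f"PTP domain ID {domain_id} is outside 0-127")
    return errors
```

For example, `validate_ptp(0, 0, {"NVE": 1, "MPLS": 101})` returns an empty list, while passing 101 as the PTP loopback ID reports a conflict with the MPLS loopback. The helper follows the suffix list in the table literally, so a model ending in FX3 falls through to queuing_policy_default_other; confirm the actual mapping in the template editor.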

What’s next: Complete the configurations in another tab if necessary, or click Save when you have completed the necessary configurations for this fabric.

Resources

The fields in the Resources tab are described in the following table. Most of the fields are automatically generated based on Cisco-recommended best practice configurations, but you can update the fields if needed.

| Field | Description |

|---|---|

|

Manual Underlay IP Address Allocation |

Do not check this check box if you are transitioning your VXLAN fabric management to Nexus Dashboard Fabric Controller.

|

|

Underlay Routing Loopback IP Range |

Specifies loopback IP addresses for the protocol peering. |

|

Underlay VTEP Loopback IP Range |

Specifies loopback IP addresses for VTEPs. |

|

Underlay RP Loopback IP Range |

Specifies the anycast or phantom RP IP address range. |

|

Underlay Subnet IP Range |

Specifies the IP address range for underlay point-to-point (P2P) routing traffic between interfaces. |

|

Underlay MPLS Loopback IP Range |

Specifies the underlay MPLS loopback IP address range. For eBGP between the border devices of two Easy fabrics (for example, Easy A and Easy B), the underlay routing loopback and underlay MPLS loopback IP ranges must be unique ranges that do not overlap with the IP ranges of the other fabric; otherwise, VPNv4 peering will not come up. |

|

Underlay Routing Loopback IPv6 Range |

Specifies the Loopback0 IPv6 address range. |

|

Underlay VTEP Loopback IPv6 Range |

Specifies the Loopback1 and anycast loopback IPv6 address range. |

|

Underlay Subnet IPv6 Range |

Specifies the IPv6 address range used to assign numbered interface and peer-link SVI IP addresses. |

|

BGP Router ID Range for IPv6 Underlay |

Specifies BGP router ID range for IPv6 underlay. |

|

Layer 2 VXLAN VNI Range |

Specifies the overlay VXLAN VNI range for the fabric (min:1, max:16777214). |

|

Layer 3 VXLAN VNI Range |

Specifies the overlay VRF VNI range for the fabric (min:1, max:16777214). |

|

Network VLAN Range |

Specifies the per-switch VLAN range for overlay networks (min:2, max:4094). |

|

VRF VLAN Range |

Specifies the per-switch VLAN range for overlay Layer 3 VRFs (min:2, max:4094). |

|

Subinterface Dot1q Range |

Specifies the subinterface dot1q range when Layer 3 subinterfaces are used. |

|

VRF Lite Deployment |

Specifies the VRF Lite method for extending inter-fabric connections (IFCs). The VRF Lite Subnet IP Range field specifies the resources reserved for IP addresses used for VRF Lite when VRF Lite IFCs are auto-created. If you select Back2Back&ToExternal, VRF Lite IFCs are auto-created. |

|

Auto Deploy for Peer |

This check box is applicable for VRF Lite deployment. When you check this check box, auto-created VRF Lite IFCs have the Auto Generate Configuration for Peer field in the VRF Lite tab set. To access the VRF Lite IFC configuration, navigate to the Links tab, select the particular link, and then choose Actions > Edit. You can check or uncheck this check box when the VRF Lite Deployment field is not set to Manual. This configuration affects only new auto-created IFCs and does not affect existing IFCs. You can edit an auto-created IFC and check or uncheck its Auto Generate Configuration for Peer field; this per-IFC setting always takes priority. |

|

Auto Deploy Default VRF |

When you check this check box, the Auto Generate Configuration on default VRF field is automatically enabled for auto-created VRF Lite IFCs. You can check or uncheck this check box when the VRF Lite Deployment field is not set to Manual. When set, the Auto Generate Configuration on default VRF field automatically configures the physical interface for the border device and establishes an eBGP connection between the border device and the edge device or another border device in a different VXLAN EVPN fabric. |

|

Auto Deploy Default VRF for Peer |

When you check this check box, the Auto Generate Configuration for NX-OS Peer on default VRF field is automatically enabled for auto-created VRF Lite IFCs. You can check or uncheck this check box when the VRF Lite Deployment field is not set to Manual. When set, the Auto Generate Configuration for NX-OS Peer on default VRF field automatically configures the physical interface and the eBGP commands for the peer NX-OS switch. To access the Auto Generate Configuration on default VRF and Auto Generate Configuration for NX-OS Peer on default VRF fields for an IFC link, navigate to the Links tab, select the particular link, and choose Actions > Edit. |

|

Redistribute BGP Route-map Name |

Defines the route map for redistributing the BGP routes in default VRF. |

|

VRF Lite Subnet IP Range and VRF Lite Subnet Mask |

These fields are populated with the DCI subnet details. Update the fields as needed. The values shown on your screen are automatically generated. If you want to update the IP address ranges, VXLAN Layer 2/Layer 3 network ID ranges, or the VRF/Network VLAN ranges, ensure the following: When you update a range of values, ensure that it does not overlap with other ranges. Update only one range of values at a time; if you want to update more than one range, do so in separate instances. For example, if you want to update the L2 and L3 ranges, you should do the following.

|

|

Service Network VLAN Range |

Specifies the per-switch overlay service network VLAN range. The minimum allowed value is 2 and the maximum allowed value is 3967. |

|

Auto Allocation of Unique IP on VRF Extension over VRF Lite IFC |

Automatically allocates a unique IPv4 address with subnet for the source and destination interfaces of VRF extensions over VRF Lite IFCs. When enabled, the system auto-populates a unique IP address for the source and destination interfaces for each extension in the VRF attachment. When disabled, the system auto-populates the same IP address for the source and destination interfaces of the VRF extensions, and these IP addresses are allocated in the resource manager with the VRFs attached. The resource manager ensures that they are not used for any other purpose on the same VRF. |

|

Per VRF Per VTEP Loopback Auto-Provisioning |

Automatically provisions an IPv4 loopback address on a VTEP, which the system uses for VRF attachment. This option is not enabled by default. When enabled, the system allocates an IPv4 address from the IP pool that you have assigned for the VTEP loopback interface. |

|

Per VRF Per VTEP IP Pool for Loopback |

A pool of IP addresses assigned to the loopback interfaces on VTEPs for each VRF. |

|

Route Map Sequence Number Range |

Specifies the route map sequence number range. The minimum allowed value is 1 and the maximum allowed value is 65534. |
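The overlap and one-range-at-a-time guidance in the VRF Lite Subnet IP Range row can be expressed as a pre-save check. The following Python sketch is hypothetical: the setting keys (l2_vni, network_vlan, and so on), the "start-end" string format, and the grouping of ranges that share a number space are assumptions made for illustration; only the numeric bounds come from the field descriptions above.

```python
from typing import Dict, List, Tuple

# Bounds taken from the field descriptions in the table above.
BOUNDS: Dict[str, Tuple[int, int]] = {
    "l2_vni": (1, 16777214),
    "l3_vni": (1, 16777214),
    "network_vlan": (2, 4094),
    "vrf_vlan": (2, 4094),
    "service_vlan": (2, 3967),
    "route_map_seq": (1, 65534),
}

# Assumption: ranges in the same group draw from one number space,
# so they must not overlap each other.
SHARED_SPACES = [
    ("l2_vni", "l3_vni"),
    ("network_vlan", "vrf_vlan", "service_vlan"),
]


def parse_range(text: str) -> Tuple[int, int]:
    """Parse an assumed "start-end" string such as "2300-2999"."""
    start, end = (int(part) for part in text.split("-"))
    if start > end:
        raise ValueError(f"range {text!r} starts after it ends")
    return start, end


def check_ranges(settings: Dict[str, str]) -> List[str]:
    """Return problems found in the given ranges; an empty list means none."""
    errors: List[str] = []
    parsed: Dict[str, Tuple[int, int]] = {}
    for name, text in settings.items():
        low, high = BOUNDS[name]
        start, end = parse_range(text)
        if start < low or end > high:
            errors.append(f"{name} {text} is outside {low}-{high}")
        parsed[name] = (start, end)
    for group in SHARED_SPACES:
        present = [name for name in group if name in parsed]
        for i, first in enumerate(present):
            for second in present[i + 1:]:
                (s1, e1), (s2, e2) = parsed[first], parsed[second]
                if s1 <= e2 and s2 <= e1:  # closed intervals intersect
                    errors.append(f"{first} overlaps {second}")
    return errors


def changed_ranges(old: Dict[str, str], new: Dict[str, str]) -> List[str]:
    """Names of ranges whose value differs between two settings snapshots."""
    return [name for name in new if old.get(name) != new[name]]
```

Before saving, a caller could also require `len(changed_ranges(current, proposed)) <= 1` so that each edit touches a single range.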

What’s next: Complete the configurations in another tab if necessary, or click Save when you have completed the necessary configurations for this fabric.

Manageability

The fields in the Manageability tab are described in the following table. Most of the fields are automatically generated based on Cisco-recommended best practice configurations, but you can update the fields if needed.

| Field | Description |

|---|---|

|

Inband Management |

Enabling this allows the management of the switches over their front-panel interfaces. The Underlay Routing Loopback interface is used for discovery. If enabled, switches cannot be added to the fabric over their out-of-band (OOB) mgmt0 interface. To manage Easy fabrics through inband management, ensure that you have chosen Data in the NDFC Web UI under Admin > System Settings > Server Settings > Admin. Both inband management and out-of-band connectivity (mgmt0) are supported for this setting. For more information, see the section "Inband Management and Inband POAP in Easy Fabrics" in Configuring Inband Management, Inband POAP Management, and Secure POAP. |

|

DNS Server IPs |

Specifies a comma-separated list of IP addresses (IPv4/IPv6) of the DNS servers. |

|

DNS Server VRFs |

Specifies one VRF for all DNS servers or a comma-separated list of VRFs, one per DNS server. |

|

NTP Server IPs |

Specifies a comma-separated list of IP addresses (IPv4/IPv6) of the NTP servers. |

|

NTP Server VRFs |

Specifies one VRF for all NTP servers or a comma-separated list of VRFs, one per NTP server. |

|

Syslog Server IPs |

Specifies a comma-separated list of IP addresses (IPv4/IPv6) of the syslog servers, if used. |

|

Syslog Server Severity |

Specifies a comma-separated list of syslog severity values, one per syslog server. The minimum value is 0 and the maximum value is 7. Severity values follow standard syslog numbering, in which 0 is the most severe level and 7 the least severe, so entering a higher number sends more, less-severe messages to the server. |

|

Syslog Server VRFs |

Specifies one VRF for all syslog servers or a comma-separated list of VRFs, one per syslog server. |

|

AAA Freeform Config |

Specifies the AAA freeform configurations. If AAA configurations are specified in the fabric settings, a switch_freeform PTI with the source UNDERLAY_AAA and the description AAA Configurations is created. |
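Several Manageability fields share the same convention: a comma-separated list of servers plus either one VRF for all servers or one VRF per server. The following Python sketch, which uses only the standard-library ipaddress module, shows one way to pair servers with VRFs and to validate syslog severities. The helper names are hypothetical, and NDFC's own validation may differ.

```python
import ipaddress
from typing import List, Tuple


def split_csv(text: str) -> List[str]:
    """Split a comma-separated field value, dropping blanks and whitespace."""
    return [item.strip() for item in text.split(",") if item.strip()]


def pair_servers_with_vrfs(ips: str, vrfs: str) -> List[Tuple[str, str]]:
    """Pair each server IP with its VRF: one VRF for all servers, or one per server."""
    servers = split_csv(ips)
    for ip in servers:
        ipaddress.ip_address(ip)  # raises ValueError if not a valid IPv4/IPv6 address
    vrf_list = split_csv(vrfs)
    if len(vrf_list) == 1:
        vrf_list = vrf_list * len(servers)  # a single VRF applies to every server
    if len(vrf_list) != len(servers):
        raise ValueError("enter one VRF for all servers or one VRF per server")
    return list(zip(servers, vrf_list))


def parse_syslog_severities(text: str, server_count: int) -> List[int]:
    """Parse one syslog severity (0-7) per syslog server."""
    levels = [int(value) for value in split_csv(text)]
    if len(levels) != server_count:
        raise ValueError("enter one severity value per syslog server")
    if any(not 0 <= level <= 7 for level in levels):
        raise ValueError("syslog severity must be between 0 and 7")
    return levels
```

The same pairing helper can be reused for the DNS, NTP, and syslog fields, because all three accept either a single VRF or one VRF per server.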

What’s next: Complete the configurations in another tab if necessary, or click Save when you have completed the necessary configurations for this fabric.

Bootstrap

The fields in the Bootstrap tab are described in the following table. Most of the fields are automatically generated based on Cisco-recommended best practice configurations, but you can update the fields if needed.

| Field | Description |

|---|---|

|

Enable Bootstrap |

Select this check box to enable the bootstrap feature. Bootstrap allows easy day-0 import and bring-up of new devices into an existing fabric. Bootstrap leverages the NX-OS POAP functionality. Starting from Cisco NDFC Release 12.1.1e, to add more switches with POAP capability, check both the Enable Bootstrap and Enable Local DHCP Server check boxes. For more information, see the section "Inband Management and Inband POAP in Easy Fabrics" in Configuring Inband Management, Inband POAP Management, and Secure POAP. After you enable bootstrap, you can enable the DHCP server for automatic IP address assignment using one of the following methods:

|

|

Enable Local DHCP Server |

Select this check box to initiate enabling of automatic IP address assignment through the local DHCP server. When you select this check box, the DHCP Scope Start Address and DHCP Scope End Address fields become editable. If you do not select this check box, Nexus Dashboard Fabric Controller uses the remote or external DHCP server for automatic IP address assignment. |

|

DHCP Version |

Select DHCPv4 or DHCPv6 from this drop-down list. When you select DHCPv4, the Switch Mgmt IPv6 Subnet Prefix field is disabled. When you select DHCPv6, the Switch Mgmt IP Subnet Prefix field is disabled. Cisco Nexus 9000 and 3000 Series Switches support IPv6 POAP only when the switches are either Layer 2 adjacent (the eth1 or out-of-band subnet must be a /64) or Layer 3 adjacent and residing in an IPv6 /64 subnet. Subnet prefixes other than /64 are not supported. |

|

DHCP Scope Start Address and DHCP Scope End Address |

Specifies the first and last IP addresses of the IP address range to be used for switch out-of-band POAP. |

|

Switch Mgmt Default Gateway |

Specifies the default gateway for the management VRF on the switch. |

|

Switch Mgmt IP Subnet Prefix |

Specifies the prefix for the Mgmt0 interface on the switch. The prefix should be between 8 and 30. DHCP scope and management default gateway IP address specification: if you specify the management default gateway IP address 10.0.1.1 and subnet mask 24, ensure that the DHCP scope is within the specified subnet, between 10.0.1.2 and 10.0.1.254. |

|

Switch Mgmt IPv6 Subnet Prefix |

Specifies the IPv6 prefix for the Mgmt0 interface on the switch. The prefix should be between 112 and 126. This field is editable if you select DHCPv6 in the DHCP Version field. |

|

Enable AAA Config |

Select this check box to include AAA configurations from the Manageability tab as part of the device start-up config post bootstrap. |

|

DHCPv4/DHCPv6 Multi Subnet Scope |

Enter one subnet scope per line. This field is editable after you check the Enable Local DHCP Server check box. Define each scope in the following format: DHCP Scope Start Address, DHCP Scope End Address, Switch Management Default Gateway, Switch Management Subnet Prefix. For example: 10.6.0.2, 10.6.0.9, 10.6.0.1, 24 |

|

Bootstrap Freeform Config |

(Optional) Enter additional commands as needed. For example, if you require some additional configurations to be pushed to the device and be available post device bootstrap, they can be captured in this field, to save the desired intent. After the devices boot up, they will contain the configuration defined in the Bootstrap Freeform Config field. Copy-paste the running-config to a freeform config field with correct indentation, as seen in the running configuration on the NX-OS switches. The freeform config must match the running config. For more information, see Enabling Freeform Configurations on Fabric Switches. |
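The DHCP scope rules above (a prefix between 8 and 30, a scope inside the gateway's subnet, and the one-scope-per-line format of the DHCPv4/DHCPv6 Multi Subnet Scope field) can be checked with Python's standard ipaddress module. This is a hypothetical, IPv4-only sketch; the rule that the gateway must lie outside the scope is an assumption drawn from the 10.0.1.1/24 example, not a documented requirement.

```python
import ipaddress
from typing import NamedTuple


class DhcpScope(NamedTuple):
    start: ipaddress.IPv4Address
    end: ipaddress.IPv4Address
    gateway: ipaddress.IPv4Address
    prefix: int


def parse_scope_line(line: str) -> DhcpScope:
    """Parse one multi-subnet scope line: start, end, gateway, prefix."""
    start, end, gateway, prefix = (part.strip() for part in line.split(","))
    return DhcpScope(
        ipaddress.IPv4Address(start),
        ipaddress.IPv4Address(end),
        ipaddress.IPv4Address(gateway),
        int(prefix),
    )


def validate_scope(scope: DhcpScope) -> None:
    """Raise ValueError if the scope does not fit the gateway's subnet."""
    if not 8 <= scope.prefix <= 30:
        raise ValueError("Switch Mgmt IP Subnet Prefix must be between 8 and 30")
    # strict=False lets us derive the subnet from the gateway's host address.
    subnet = ipaddress.IPv4Network(f"{scope.gateway}/{scope.prefix}", strict=False)
    reserved = (subnet.network_address, subnet.broadcast_address)
    for address in (scope.start, scope.end):
        if address not in subnet or address in reserved:
            raise ValueError(f"{address} is not a usable host address in {subnet}")
    if scope.start > scope.end:
        raise ValueError("scope start address is after the end address")
    # Assumption, consistent with the 10.0.1.1/24 example: the gateway lies outside the scope.
    if scope.start <= scope.gateway <= scope.end:
        raise ValueError("the default gateway falls inside the DHCP scope")
```

Both documented examples pass: `validate_scope(parse_scope_line("10.6.0.2, 10.6.0.9, 10.6.0.1, 24"))` and the 10.0.1.2 to 10.0.1.254 scope with gateway 10.0.1.1/24 return without raising. DHCPv6 scopes would need the IPv6 equivalents of these classes.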

What’s next: Complete the configurations in another tab if necessary, or click Save when you have completed the necessary configurations for this fabric.

Configuration Backup

The fields in the Configuration Backup tab are described in the following table. Most of the fields are automatically generated based on Cisco-recommended best practice configurations, but you can update the fields if needed.

| Field | Description |

|---|---|

|

Hourly Fabric Backup |

Select the check box to enable an hourly backup of fabric configurations and the intent. The hourly backups are triggered during the first 10 minutes of the hour. |

|

Scheduled Fabric Backup |

Check the check box to enable a daily backup. This backup tracks changes in running configurations on the fabric devices that are not tracked by configuration compliance. |

|

Scheduled Time |

Specify the scheduled backup time in 24-hour format. This field is enabled if you check the Scheduled Fabric Backup check box. Check both check boxes to enable both backup processes. The backup process is initiated after you click Save. The scheduled backups are triggered at the time you specify, with a delay of up to two minutes. The scheduled backups are triggered regardless of the configuration deployment status. The number of fabric backups that will be retained on NDFC is set in Admin > System Settings > Server Settings > LAN Fabric > Maximum Backups per Fabric. The number of archived files that can be retained is set in the # Number of archived files per device to be retained: field in the Server Properties window. To trigger an immediate backup, do the following:

You can also initiate the fabric backup in the fabric topology window. Click Backup Now in the Actions pane. |

What’s next: Complete the configurations in another tab if necessary, or click Save when you have completed the necessary configurations for this fabric.

Flow Monitor

The fields in the Flow Monitor tab are described in the following table. Most of the fields are automatically generated based on Cisco-recommended best practice configurations, but you can update the fields if needed.

| Field | Description |

|---|---|

|

Enable Netflow |

Check this check box to enable NetFlow on VTEPs for this fabric. By default, NetFlow is disabled. When enabled, the NetFlow configuration is applied to all VTEPs that support NetFlow. When NetFlow is enabled on the fabric, you can exclude a particular switch from NetFlow by applying a dummy no_netflow PTI to it. If NetFlow is not enabled at the fabric level, an error message is generated when you enable NetFlow at the interface, network, or VRF level. For information about NetFlow support for Cisco NDFC, see section "Netflow Support" in Understanding LAN Fabrics. |

In the Netflow Exporter area, choose Actions > Add to add one or more Netflow exporters. This exporter is the receiver of the netflow data. The fields on this screen are:

-

Exporter Name - Specifies the name of the exporter.

-

IP - Specifies the IP address of the exporter.

-

VRF - Specifies the VRF over which the exporter is routed.

-

Source Interface - Specifies the source interface name.

-

UDP Port - Specifies the UDP port over which the netflow data is exported.

Click Save to configure the exporter. Click Cancel to discard. You can also choose an existing exporter and choose Actions > Edit or Actions > Delete to perform relevant actions.

In the Netflow Record area, click Actions > Add to add one or more Netflow records. The fields on this screen are:

-

Record Name - Specifies the name of the record.

-

Record Template - Specifies the template for the record. Enter one of the record template names. In Release 12.0.2, the following two record templates are available for use. You can also create custom NetFlow record templates; custom record templates saved in the template library are available for use here.

-

netflow_ipv4_record - Uses the IPv4 record template.

-

netflow_l2_record - Uses the Layer 2 record template.

-

-

Is Layer2 Record - Check this check box if the record is for Layer2 netflow.

Click Save to configure the record. Click Cancel to discard. You can also choose an existing record and select Actions > Edit or Actions > Delete to perform relevant actions.

In the Netflow Monitor area, click Actions > Add to add one or more Netflow monitors. The fields on this screen are:

-

Monitor Name - Specifies the name of the monitor.

-

Record Name - Specifies the name of the record for the monitor.

-

Exporter1 Name - Specifies the name of the exporter for the netflow monitor.

-

Exporter2 Name - (optional) Specifies the name of the secondary exporter for the netflow monitor.

The record name and exporters referred to in each netflow monitor must be defined in "Netflow Record" and "Netflow Exporter".

Click Save to configure the monitor. Click Cancel to discard. You can also choose an existing monitor and select Actions > Edit or Actions > Delete to perform relevant actions.
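Because every monitor must refer to a record and exporters that are already defined, a configuration can be checked for dangling references before it is saved. The dataclasses and function below are a hypothetical Python model of the three Netflow areas described above; the field names mirror the UI labels, but the structure is an assumption, not an NDFC data model.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Exporter:
    name: str
    ip: str
    vrf: str
    source_interface: str
    udp_port: int


@dataclass
class Record:
    name: str
    template: str  # for example, netflow_ipv4_record or netflow_l2_record
    is_layer2: bool


@dataclass
class Monitor:
    name: str
    record_name: str
    exporter1: str
    exporter2: Optional[str] = None  # the secondary exporter is optional


def undefined_references(
    exporters: List[Exporter], records: List[Record], monitors: List[Monitor]
) -> List[str]:
    """List monitor references to records or exporters that are not defined."""
    exporter_names = {e.name for e in exporters}
    record_names = {r.name for r in records}
    problems: List[str] = []
    for monitor in monitors:
        if monitor.record_name not in record_names:
            problems.append(f"{monitor.name}: record {monitor.record_name!r} is not defined")
        for exporter in (monitor.exporter1, monitor.exporter2):
            if exporter is not None and exporter not in exporter_names:
                problems.append(f"{monitor.name}: exporter {exporter!r} is not defined")
    return problems
```

An empty result means every monitor can be saved without first adding another record or exporter.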

What’s next: Complete the configurations in another tab if necessary, or click Save when you have completed the necessary configurations for this fabric.

Layer 3 VNI Without VLAN

Beginning with NDFC release 12.2.1, Nexus Dashboard Fabric Controller supports the Layer 3 VNI without VLAN feature. With this feature, Layer 3 VNI configurations no longer require a VLAN per VRF.

The following is the high-level process to enable the Layer 3 VNI without VLAN feature in a fabric:

-

(Optional) When configuring a new fabric, check the Enable L3VNI w/o VLAN field to enable the Layer 3 VNI without VLAN feature at the fabric level. The setting at this fabric-level field affects the related field at the VRF level, as described below.

-

When creating or editing a VRF, check the Enable L3VNI w/o VLAN field to enable the Layer 3 VNI without VLAN feature at the VRF level. The default setting for this field varies depending on the following factors:

-

For existing VRFs, the default setting is disabled (the Enable L3VNI w/o VLAN box is unchecked).

-

For newly-created VRFs, the default setting is inherited from the fabric settings, as described above.

-

This field is a per-VXLAN fabric variable. For VRFs that are created from a VXLAN EVPN Multi-Site fabric, the value of this field will be inherited from the fabric setting in the child fabric. You can edit the VRF in the child fabric to change the value, if desired.

See the "Creating a VRF" section in About Fabric Overview for LAN Operational Mode Setups for more information.

-

The VRF attachment (new or edited) then uses the new Layer 3 VNI without VLAN mode if the following conditions are met:

-

The Enable L3VNI w/o VLAN field is enabled at the VRF level.

-

The switch supports this feature and is running a supported release (see Guidelines and Limitations: Layer 3 VNI Without VLAN).

The VLAN is ignored in the VRF attachment when these conditions are met.
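The default-value and attachment rules above reduce to two small Boolean functions. The following Python sketch is illustrative only: the function and parameter names are hypothetical, and whether a switch is supported is passed in as a flag rather than computed.

```python
def default_l3vni_without_vlan(vrf_existed_before: bool, fabric_setting: bool) -> bool:
    """Initial state of the VRF-level Enable L3VNI w/o VLAN check box.

    vrf_existed_before: True for an existing VRF; such VRFs default to disabled.
    fabric_setting: the fabric-level Enable L3VNI w/o VLAN value. For a VRF
    created from a VXLAN EVPN Multi-Site fabric, pass the child fabric's setting.
    """
    if vrf_existed_before:
        return False
    return fabric_setting


def attachment_uses_l3vni_without_vlan(vrf_setting: bool, switch_supported: bool) -> bool:
    """The VRF attachment ignores the VLAN only when both conditions hold."""
    return vrf_setting and switch_supported
```

Keeping the two functions separate mirrors the process above: the first decides what the check box shows, and the second decides what a given attachment actually deploys.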

Guidelines and Limitations: Layer 3 VNI Without VLAN

The following are the guidelines and limitations for the Layer 3 VNI without VLAN feature:

-

The Layer 3 VNI without VLAN feature is supported on the -EX, -FX, and -GX versions of the Nexus 9000 switches. When you enable this feature at the VRF level, the feature setting on the VRF will be ignored on switch models that do not support this feature.

-

When used in a Campus VXLAN EVPN fabric, this feature is only supported on Cisco Nexus 9000 series switches in that type of fabric. This feature is not supported on Cisco Catalyst 9000 series switches in the Campus VXLAN EVPN fabric; those switches require VLANs for Layer 3 VNI configurations.

-

This feature is supported on switches running on NX-OS release 10.3.1 or later. If you enable this feature at the VRF level, the feature setting on the VRF will be ignored on switches running an NX-OS image earlier than 10.3.1.

-

When you perform a brownfield import in a Data Center VXLAN EVPN fabric, if one switch configuration is set with the Enable L3VNI w/o VLAN configuration at the VRF level, then you should also configure this same setting for the rest of the switches in the same fabric that are associated with this VRF, if the switch models and images support this feature.

-

If you upgrade from an earlier release to NDFC 12.2.1, already-configured VRFs and fabrics will retain their existing pre-12.2.1 settings where the Layer 3 VNI without VLAN feature is disabled (the Enable L3VNI w/o VLAN box is unchecked). Once you are fully upgraded to NDFC release 12.2.1, you can manually change these settings to enable the Layer 3 VNI without VLAN feature, if desired.
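The hardware and software guidelines above can be approximated by a per-switch eligibility check. In this hypothetical Python sketch, the model-string parsing (reading the family from the last dash-separated token, and counting variants such as FX2, FX3, or GX2 as members of the -FX and -GX families) and the accepted NX-OS version formats ("10.3.1" or "10.3(1)") are assumptions; verify actual platform support in the NX-OS release notes.

```python
import re
from typing import Optional, Tuple

MIN_NXOS: Tuple[int, int, int] = (10, 3, 1)


def parse_nxos_version(version: str) -> Optional[Tuple[int, int, int]]:
    """Parse '10.3.1' or '10.3(1)'-style strings (assumed formats) into a tuple."""
    match = re.match(r"\s*(\d+)\.(\d+)[.(](\d+)", version)
    if match is None:
        return None
    major, minor, maint = (int(group) for group in match.groups())
    return major, minor, maint


def supports_l3vni_without_vlan(model: str, nxos_version: str) -> bool:
    """Rough eligibility check for one switch, following the guidelines above."""
    m = model.strip().upper()
    if not m.startswith("N9K-"):
        # Only Nexus 9000 switches qualify; for example, Catalyst 9000 switches
        # in a Campus VXLAN EVPN fabric still need VLANs.
        return False
    # Assumption: the -EX/-FX/-GX family is the last dash-separated token.
    family = m.rsplit("-", 1)[-1]
    if not family.startswith(("EX", "FX", "GX")):
        return False
    parsed = parse_nxos_version(nxos_version)
    # Tuples compare element by element, so (10, 4, 2) >= (10, 3, 1).
    return parsed is not None and parsed >= MIN_NXOS
```

Comparing version tuples rather than strings avoids the trap where "9.3" would sort after "10.3" as text.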

AI/ML QoS Classification and Queuing Policies

The following sections provide information about the AI/ML QoS classification and queuing policies feature, introduced in NDFC release 12.2.1:

About AI/ML QoS Classification and Queuing Policies

Beginning with NDFC release 12.2.1, support is available for configuring a low latency, high throughput and lossless fabric configuration that can be used for artificial intelligence (AI) and machine learning (ML) traffic.

This feature allows you to:

-

Easily configure a network with homogeneous interface speeds, where most or all of the links run at 400Gb, 100Gb, or 25Gb speeds.

-

Provide customizations to override the predominant queuing policy for a host interface.

When you apply the AI/ML QoS policy, NDFC automatically preconfigures any inter-fabric links with QoS and system queuing policies and also enables Priority Flow Control (PFC). If you enable the AI/ML QoS feature on a VXLAN EVPN fabric, the AI/ML QoS policies are also attached to the Network Virtualization Edge (NVE) interface.
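Both the fabric-level policy choice and the homogeneous-speed assumption hinge on the predominant link speed. The following Python sketch counts the 400, 100, and 25 Gbps links in a fabric and returns the most common speed. It is a hypothetical helper: it ignores other speeds, breaks ties by first occurrence (the behavior of collections.Counter.most_common), and stops short of mapping the speed to a policy name, because the drop-down options are not listed in this section.

```python
from collections import Counter
from typing import Iterable

# Speeds named in this section as homogeneous-fabric targets.
AIML_SPEEDS_GBPS = (400, 100, 25)


def predominant_link_speed(link_speeds_gbps: Iterable[int]) -> int:
    """Return the most common of the supported link speeds in a fabric."""
    counts = Counter(speed for speed in link_speeds_gbps if speed in AIML_SPEEDS_GBPS)
    if not counts:
        raise ValueError("no 400G, 100G, or 25G links found")
    speed, _count = counts.most_common(1)[0]
    return speed
```

If the result is close to a tie, the fabric is not really homogeneous, and per-interface overrides with the custom templates may be the better fit.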

Use the following areas to enable this feature:

-

When configuring a BGP fabric, new fields are available to enable the feature and to set the queuing policy parameters based on the interface speed. For more information, see Advanced.

-

You can also use the following AI/ML-specific switch templates to create custom device policies, which can be used on host interfaces:

-

AI_Fabric_QOS_Classification_Custom: An interface template that is available for applying a custom queuing policy to an interface.

-

AI_Fabric_QOS_Queuing_Custom: A switch template that is available for user-defined queuing policy configurations.

Policies defined with these custom Classification and Queuing templates can be used in various host interface policies. For more information, see Using the Custom QoS Templates to Create a Policy.

-

Guidelines and Limitations: AI/ML QoS Classification and Queuing Policies

Following are the guidelines and limitations for the AI/ML QoS and queuing policy feature:

-

This feature does not automate any per-interface speed settings.

-

This feature is supported only on Nexus devices with Cisco Cloud Scale technology, such as the Cisco Nexus 9300-FX2, 9300-FX3, 9300-GX, and 9300-GX2 series switches.

-

This feature is not supported in fabrics with devices that are assigned the ToR role.
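Before enabling the feature, a fabric inventory can be screened against these guidelines. In the following hypothetical Python sketch, the role strings and the family list are assumptions; the guidelines name FX2, FX3, GX, and GX2 only as examples of Cloud Scale platforms, so any other model is flagged for manual confirmation rather than rejected.

```python
from dataclasses import dataclass
from typing import List

# Families the guidelines give as examples; the real supported set may be larger.
EXAMPLE_CLOUD_SCALE_FAMILIES = ("FX2", "FX3", "GX", "GX2")


@dataclass
class Switch:
    name: str
    model: str  # for example, "N9K-C93600CD-GX"
    role: str   # for example, "leaf", "spine", or "tor"


def aiml_qos_precheck(switches: List[Switch]) -> List[str]:
    """Flag guideline problems before enabling AI/ML QoS on a fabric."""
    findings: List[str] = []
    for switch in switches:
        if switch.role.strip().lower() == "tor":
            findings.append(f"{switch.name}: fabrics with ToR-role devices are not supported")
        family = switch.model.strip().upper().rsplit("-", 1)[-1]
        if family not in EXAMPLE_CLOUD_SCALE_FAMILIES:
            findings.append(f"{switch.name}: confirm that {switch.model} is a Cloud Scale platform")
    return findings
```

Because the check does not automate interface speeds either, it only reports findings; it never changes the inventory.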

Configuring AI/ML QoS Classification and Queuing Policies

Follow these steps to configure AI/ML QoS and queuing policies:

-

Enable AI/ML QoS and queuing policies at the fabric level.

-

Create a fabric as you normally would.

-

In the Advanced tab in those instructions, make the necessary selections to configure AI/ML QoS and queuing policies at the fabric level.

-

Configure any remaining fabric level settings as necessary in the remaining tabs.

-

When you have completed all the necessary fabric level configurations, click Save, then click Recalculate and Deploy.

At this point in the process, the network QoS and queuing policies are configured on each device, the classification policy is configured on NVE interfaces (if applicable), and the priority flow control and classification policies are configured on all intra-fabric link interfaces.

-

-

For host interfaces, selectively enable priority flow control, QoS, and queuing by editing the policy associated with that host interface.

See Add Interfaces for LAN Operational Mode for more information.

  - Within a fabric where you enabled AI/ML QoS and queuing policies in the previous step, click the Interfaces tab.

    The configured interfaces within this fabric are displayed.

  - Locate the host interface where you want to enable AI/ML QoS and queuing policies, select the check box next to that host interface, and click Actions > Edit.

    The Edit Interfaces page is displayed.

  - In the Policy field, verify that the policy associated with this interface contains the fields needed to enable AI/ML QoS and queuing policies on this host interface.

    For example, the following policy templates contain the necessary AI/ML QoS and queuing policy fields:

    - int_access_host
    - int_dot1q_tunnel_host
    - int_pvlan_host
    - int_routed_host
    - int_trunk_host

  - Locate the Enable priority flow control field and select its check box to enable priority flow control for this host interface.

  - In the Enable QoS Configuration field, select the check box to enable AI/ML QoS for this host interface.

    This enables QoS classification on this interface if AI/ML queuing is enabled at the fabric level.

  - If you selected the Enable QoS Configuration check box in the previous step and you created a custom QoS policy using the procedures provided in Using the Custom QoS Templates to Create a Policy, enter that custom QoS classification policy in the Custom QoS Policy for this interface field to associate it with this host interface, if necessary.

    If this field is left blank, NDFC uses the default QOS_CLASSIFICATION policy, if available.

  - If you created a custom queuing policy using the procedures provided in Using the Custom QoS Templates to Create a Policy, enter that custom queuing policy in the Custom Queuing Policy for this interface field to associate it with this host interface, if desired.

  - Click Save when you have completed the AI/ML QoS and queuing policy configurations for this host interface.
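The host interface steps above apply QoS classification only when both a fabric-level setting and an interface-level setting are enabled, and they let a custom policy override the default. The following Python sketch models that decision. The function names and parameters are hypothetical, and the template set holds only the example templates listed in the procedure; this is an illustration of the documented behavior, not how NDFC implements it.

```python
from typing import Optional

# Example templates listed in the procedure as exposing the AI/ML QoS and
# queuing fields; the text gives them as examples, so others may also qualify.
LISTED_AI_ML_TEMPLATES = {
    "int_access_host",
    "int_dot1q_tunnel_host",
    "int_pvlan_host",
    "int_routed_host",
    "int_trunk_host",
}

DEFAULT_CLASSIFICATION = "QOS_CLASSIFICATION"


def is_listed_ai_ml_template(template: str) -> bool:
    """True if the interface policy template is one of the listed examples."""
    return template in LISTED_AI_ML_TEMPLATES


def effective_classification_policy(
    fabric_ai_ml_queuing: bool,
    enable_qos: bool,
    custom_qos_policy: Optional[str] = None,
    default_available: bool = True,
) -> Optional[str]:
    """Return the classification policy that would apply to the host interface, or None."""
    # Classification applies only when Enable QoS Configuration is checked
    # and AI/ML queuing is enabled at the fabric level.
    if not (enable_qos and fabric_ai_ml_queuing):
        return None
    # A non-empty Custom QoS Policy for this interface field takes precedence.
    if custom_qos_policy:
        return custom_qos_policy
    # Blank field: fall back to the default policy, if it is available.
    return DEFAULT_CLASSIFICATION if default_available else None


if __name__ == "__main__":
    print(effective_classification_policy(True, True))
    print(effective_classification_policy(True, True, "MY_AI_CLASSIFICATION"))
```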

Using the Custom QoS Templates to Create a Policy

Follow these procedures to use the custom QoS templates to create a policy, if desired. See Templates for general information on templates.

- Within a fabric where you enabled AI/ML QoS and queuing policies, click Switches, then double-click the switch that has the host interface where you enabled AI/ML QoS and queuing policies.

  The Switch Overview page for that switch appears.

- Click the Policies tab.

- Click Actions > Add Policy.

  The Create Policy page appears.

- Set the priority and enter a description for the new policy.

  Note that the priority value for this policy must be lower than the priority value set for the host interface policy, so that this policy comes before it.

- In the Select Template field, click the No Policy Selected text.

  The Select Policy Template page appears.

- Select the appropriate custom Classification or Queuing template from the list, then click Select.

  The following templates are specific to the AI/ML QoS and queuing policies feature introduced in NDFC release 12.2.1. Use these templates to create policies that can be used on one or more host interfaces:

  - AI_Fabric_QOS_Classification_Custom: An interface template that is available for applying a custom QoS classification policy to an interface.

  - AI_Fabric_QOS_Queuing_Custom: A switch template that is available for user-defined queuing policy configurations.

- Make the necessary QoS classification or queuing configurations in the template that you selected, then click Save.

  Any custom QoS policies created using these procedures are now available to use when you configure QoS and queuing policies for the host interface.
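The priority rule for custom policies reduces to a simple comparison. In this Python sketch, the `Policy` class, the helper names, and the priority values are hypothetical; the only rule taken from the procedure is that the custom policy's priority value must be lower than the host interface policy's, so that it comes first.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:  # hypothetical model of a switch policy entry
    name: str
    priority: int  # lower value comes first, as described in the procedure


def deployment_order(policies: list[Policy]) -> list[str]:
    """Return policy names ordered so that lower priority values come first."""
    return [p.name for p in sorted(policies, key=lambda p: p.priority)]


def custom_policy_comes_first(custom: Policy, host_interface: Policy) -> bool:
    """True if the custom QoS policy's priority value is lower than the host interface policy's."""
    return custom.priority < host_interface.priority


if __name__ == "__main__":
    # Hypothetical names and priority values, chosen only for illustration.
    host = Policy("int_trunk_host", 450)
    custom = Policy("my_ai_classification", 200)
    print(custom_policy_comes_first(custom, host))
    print(deployment_order([host, custom]))
```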

Precision Time Protocol for Data Center VXLAN EVPN Fabrics

In the fabric settings for the Data Center VXLAN EVPN template, select the Enable Precision Time Protocol (PTP) check box to enable PTP across a fabric. When you select this check box, PTP is enabled globally and on core-facing interfaces. Additionally, the PTP Loopback Id and PTP Domain Id fields are editable.

The PTP feature works only when all the devices in a fabric are cloud-scale devices. If the fabric contains any non-cloud-scale devices, a warning is displayed and PTP is not enabled. Examples of cloud-scale devices are the Cisco Nexus 93180YC-EX, Cisco Nexus 93180YC-FX, Cisco Nexus 93240YC-FX2, and Cisco Nexus 93360YC-FX2 switches.

For more information, see the Configuring PTP chapter in Cisco Nexus 9000 Series NX-OS System Management Configuration Guide and Cisco Nexus Dashboard Insights User Guide.

In Nexus Dashboard Fabric Controller deployments, specifically VXLAN EVPN fabric deployments, you must enable PTP globally and also on core-facing interfaces. An interface can also connect to an external PTP server, such as a VM or a Linux-based machine; edit such an interface so that it has a connection with the grandmaster clock.

We recommend that the grandmaster clock be configured outside of the Easy Fabric and be IP reachable. Enable PTP on the interfaces toward the grandmaster clock using the interface freeform config.

All core-facing interfaces are automatically enabled with the PTP configuration after you click Deploy Config. This ensures that all devices are PTP-synchronized to the grandmaster clock. Additionally, for any interfaces that are not core-facing, such as interfaces on border devices and leaf switches that are connected to hosts, firewalls, service nodes, or other routers, you must add the TTAG-related CLI. The TTAG is added to all traffic entering the VXLAN EVPN fabric and must be stripped from traffic exiting the fabric.
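The split between core-facing interfaces (PTP) and non-core-facing interfaces (TTAG) can be sketched as a small renderer that emits CLI lines in the same shape as the sample PTP configuration in this section. The `render_ptp_config` function and the `core` and `edge` role labels are hypothetical; in practice NDFC deploys the core-facing PTP configuration itself, and you add the TTAG lines.

```python
import ipaddress


# Hypothetical role labels: "core" for core-facing (intra-fabric) interfaces,
# "edge" for interfaces toward hosts, firewalls, service nodes, or other routers.
def render_ptp_config(source_ip: str, domain_id: int, interfaces: dict[str, str]) -> str:
    """Render PTP and TTAG CLI lines in the shape of the sample configuration."""
    ipaddress.ip_address(source_ip)  # raises ValueError for a malformed address
    lines = ["feature ptp", f"ptp source {source_ip}", f"ptp domain {domain_id}"]
    for name, role in interfaces.items():
        if role == "core":
            # Core-facing interfaces run PTP so devices sync to the grandmaster clock.
            lines += [f"interface {name}", "  ptp"]
        elif role == "edge":
            # Edge interfaces tag traffic entering the fabric and strip the tag on exit.
            lines += [f"interface {name}", "  ttag", "  ttag-strip"]
        else:
            raise ValueError(f"unknown interface role for {name}: {role!r}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_ptp_config("100.100.100.10", 1, {"Ethernet1/59": "core", "Ethernet1/50": "edge"}))
```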

Here is the sample PTP configuration:

```
feature ptp
! IP address of the loopback interface (loopback0) that is already created, or of the user-created loopback interface specified in the fabric settings
ptp source 100.100.100.10
! PTP domain ID specified in the fabric settings
ptp domain 1
! Core-facing interface
interface Ethernet1/59
  ptp
! Host-facing interface
interface Ethernet1/50
  ttag
  ttag-strip
```

The following guidelines are applicable for PTP:

- The PTP feature can be enabled in a fabric only when all the switches in the fabric run Cisco NX-OS Release 7.0(3)I7(1) or higher. Otherwise, the following error message is displayed:

  PTP feature can be enabled in the fabric, when all the switches have NX-OS Release 7.0(3)I7(1) or higher version. Please upgrade switches to NX-OS Release 7.0(3)I7(1) or higher version to enable PTP in this fabric.

- For hardware telemetry support in NIR, the PTP configuration is a prerequisite.

- If you add a non-cloud-scale device to an existing fabric that contains PTP configuration, the following warning is displayed:

  TTAG is enabled fabric wide, when all devices are cloud scale switches so it cannot be enabled for newly added non cloud scale device(s).

- If a fabric contains both cloud-scale and non-cloud-scale devices, the following warning is displayed when you try to enable PTP:

  TTAG is enabled fabric wide, when all devices are cloud scale switches and is not enabled due to non cloud scale device(s).
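The PTP guidelines above can be summarized as a pre-check over the switches in a fabric. The following Python sketch assumes a simplified NX-OS version key that compares only the numeric fields (for example, 7.0(3)I7(1) becomes (7, 0, 3, 7, 1)); real NX-OS version ordering may include cases this key does not capture. The message strings are copied from the guidelines, while `FabricSwitch`, `nxos_key`, and `ptp_precheck` are hypothetical names. The warning for a newly added non-cloud-scale device is not modeled.

```python
import re
from dataclasses import dataclass

# Simplified version key: "7.0(3)I7(1)" -> (7, 0, 3, 7, 1); "9.3(5)" -> (9, 3, 5, 0, 0).
# Assumption: comparing these numeric fields is enough for the threshold used here.
_NXOS_RE = re.compile(r"(\d+)\.(\d+)\((\d+)\)(?:[A-Za-z]+(\d+)\((\d+)\))?")

PTP_MIN_VERSION = "7.0(3)I7(1)"
PTP_VERSION_ERROR = (
    "PTP feature can be enabled in the fabric, when all the switches have NX-OS Release "
    "7.0(3)I7(1) or higher version. Please upgrade switches to NX-OS Release 7.0(3)I7(1) "
    "or higher version to enable PTP in this fabric."
)
MIXED_FABRIC_WARNING = (
    "TTAG is enabled fabric wide, when all devices are cloud scale switches and is not "
    "enabled due to non cloud scale device(s)."
)


def nxos_key(version: str) -> tuple[int, ...]:
    m = _NXOS_RE.match(version.strip())
    if m is None:
        raise ValueError(f"unrecognized NX-OS version: {version!r}")
    # Missing train fields (as in "9.3(5)") become 0.
    return tuple(int(g) if g else 0 for g in m.groups())


@dataclass
class FabricSwitch:  # hypothetical model of a fabric member
    name: str
    nxos_version: str
    cloud_scale: bool  # hypothetical flag: is this a cloud-scale device?


def ptp_precheck(switches: list[FabricSwitch]) -> list[str]:
    """Return the messages the guidelines describe for enabling PTP on these switches."""
    messages = []
    floor = nxos_key(PTP_MIN_VERSION)
    if any(nxos_key(sw.nxos_version) < floor for sw in switches):
        messages.append(PTP_VERSION_ERROR)
    if not all(sw.cloud_scale for sw in switches):
        messages.append(MIXED_FABRIC_WARNING)
    return messages
```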

MACsec Support in Data Center VXLAN EVPN and BGP Fabrics

MACsec is supported in the Data Center VXLAN EVPN and BGP fabrics on intra-fabric links. To configure MACsec, you must enable it on the fabric and on each required intra-fabric link. Unlike CloudSec, auto-configuration of MACsec is not supported.

MACsec is supported on switches with minimum Cisco NX-OS Releases 7.0(3)I7(8) and 9.3(5).

Guidelines

- If MACsec cannot be configured on the physical interfaces of the link, an error is displayed when you click Save. MACsec cannot be configured on the device and link for the following reasons:

  - The minimum NX-OS version is not met.

  - The interface is not MACsec capable.

- MACsec global parameters in the fabric settings can be changed at any time.

- MACsec and CloudSec can coexist on a BGW device.

- The MACsec status of a link with MACsec enabled is displayed in the Links window.

- Brownfield migration of devices with MACsec configured is supported using switch and interface freeform configs.
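The two save-time failure reasons in the guidelines can be sketched as a per-link check. This Python sketch assumes that 7.0(3)I7(8) is the minimum for the 7.x release train and 9.3(5) is the minimum for later trains, which is one reading of the minimum releases stated for MACsec; confirm the exact support matrix in the Cisco Nexus 9000 Series NX-OS Security Configuration Guide. `Endpoint`, `macsec_version_ok`, and `macsec_link_errors` are hypothetical names, and the version key compares only numeric fields.

```python
import re
from dataclasses import dataclass

# Simplified version key: "7.0(3)I7(8)" -> (7, 0, 3, 7, 8); "9.3(5)" -> (9, 3, 5, 0, 0).
_NXOS_RE = re.compile(r"(\d+)\.(\d+)\((\d+)\)(?:[A-Za-z]+(\d+)\((\d+)\))?")


def nxos_key(version: str) -> tuple[int, ...]:
    m = _NXOS_RE.match(version.strip())
    if m is None:
        raise ValueError(f"unrecognized NX-OS version: {version!r}")
    return tuple(int(g) if g else 0 for g in m.groups())


def macsec_version_ok(version: str) -> bool:
    # Assumption: 7.0(3)I7(8) is the floor for the 7.x train, 9.3(5) for other trains.
    key = nxos_key(version)
    floor = "7.0(3)I7(8)" if key[0] == 7 else "9.3(5)"
    return key >= nxos_key(floor)


@dataclass
class Endpoint:  # hypothetical model of one end of an intra-fabric link
    switch: str
    interface: str
    nxos_version: str
    macsec_capable: bool


def macsec_link_errors(a: Endpoint, b: Endpoint) -> list[str]:
    """Return reasons MACsec cannot be configured on the link (empty list = none found)."""
    errors = []
    for ep in (a, b):
        if not macsec_version_ok(ep.nxos_version):
            errors.append(f"{ep.switch}: minimum NX-OS version not met ({ep.nxos_version})")
        if not ep.macsec_capable:
            errors.append(f"{ep.switch} {ep.interface}: interface is not MACsec capable")
    return errors
```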

For more information about MACsec configuration, which includes supported platforms and releases, see the Configuring MACsec chapter in Cisco Nexus 9000 Series NX-OS Security Configuration Guide.

The following sections show how to enable and disable MACsec in Nexus Dashboard Fabric Controller.

Enabling MACsec

- Navigate to Manage > Fabrics.

- Click Actions > Create to create a new fabric, or click Actions > Edit Fabric on an existing Easy or eBGP fabric.

- Click the Advanced tab and specify the MACsec details.

  Enable MACsec - Select the check box to enable MACsec for the fabric.

  MACsec Primary Key String - Specify a Cisco Type 7 encrypted octet string that is used for establishing the primary MACsec session. For AES_256_CMAC, the key string length must be 130, and for AES_128_CMAC, the key string length must be 66. If these values are not specified correctly, an error is displayed when you save the fabric.

  The default key lifetime is infinite.

  MACsec Primary Cryptographic Algorithm - Choose the cryptographic algorithm used for the primary key string: AES_128_CMAC or AES_256_CMAC. The default value is AES_128_CMAC.

  You can configure a fallback key on the device to initiate a backup session if the primary session fails.

  MACsec Fallback Key String - Specify a Cisco Type 7 encrypted octet string that is used for establishing a fallback MACsec session. For AES_256_CMAC, the key string length must be 130, and for AES_128_CMAC, the key string length must be 66. If these values are not specified correctly, an error is displayed when you save the fabric.

  MACsec Fallback Cryptographic Algorithm - Choose the cryptographic algorithm used for the fallback key string: AES_128_CMAC or AES_256_CMAC. The default value is AES_128_CMAC.

  MACsec Cipher Suite - Choose one of the following MACsec cipher suites for the MACsec policy:

  - GCM-AES-128
  - GCM-AES-256
  - GCM-AES-XPN-128
  - GCM-AES-XPN-256

  The default value is GCM-AES-XPN-256.

  The MACsec configuration is not deployed on the switches when the fabric deployment is complete. You must enable MACsec on intra-fabric links to deploy the MACsec configuration on the switches.

  MACsec Status Report Timer - Specify the MACsec operational status periodic report timer, in minutes.

- Click a fabric to view its Summary in the side kick. Click the side kick to expand it, then click the Links tab.

- Choose an intra-fabric link on which you want to enable MACsec and click Actions > Edit.

- In the Link Management - Edit Link window, click Advanced in the Link Profile section, and select the Enable MACsec check box.

  If MACsec is enabled on the intra-fabric link but not in the fabric settings, an error is displayed when you click Save.

  When MACsec is configured on the link, the following configurations are generated:

  - MACsec global policies are created if this is the first link that enables MACsec.

  - MACsec interface policies are created for the link.
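The field rules and link behavior described in these steps can be sketched as follows. The key string lengths, algorithm and cipher suite names, and defaults come from the field descriptions; `MacsecFabricSettings`, `settings_errors`, and `policies_created` are hypothetical names. The sketch checks only the key string length, not whether the string is a valid Cisco Type 7 encrypted value.

```python
from dataclasses import dataclass

# Required key string lengths from the MACsec fabric settings description.
KEY_STRING_LENGTHS = {"AES_128_CMAC": 66, "AES_256_CMAC": 130}
CIPHER_SUITES = {"GCM-AES-128", "GCM-AES-256", "GCM-AES-XPN-128", "GCM-AES-XPN-256"}


@dataclass
class MacsecFabricSettings:  # hypothetical model of the Advanced tab fields
    enabled: bool
    primary_key: str
    primary_algorithm: str = "AES_128_CMAC"   # documented default
    fallback_key: str = ""                     # the fallback key is optional
    fallback_algorithm: str = "AES_128_CMAC"  # documented default
    cipher_suite: str = "GCM-AES-XPN-256"     # documented default


def settings_errors(s: MacsecFabricSettings) -> list[str]:
    """Return save-time problems with the MACsec fabric settings (empty list = valid)."""
    errors = []
    keys = [("primary", s.primary_key, s.primary_algorithm)]
    if s.fallback_key:
        keys.append(("fallback", s.fallback_key, s.fallback_algorithm))
    for label, key, algorithm in keys:
        expected = KEY_STRING_LENGTHS.get(algorithm)
        if expected is None:
            errors.append(f"{label}: unsupported algorithm {algorithm}")
        elif len(key) != expected:
            errors.append(f"{label}: key string length {len(key)}, expected {expected} for {algorithm}")
    if s.cipher_suite not in CIPHER_SUITES:
        errors.append(f"unsupported cipher suite {s.cipher_suite}")
    return errors


def policies_created(s: MacsecFabricSettings, links_already_enabled: int, link: str) -> list[str]:
    """Describe what enabling MACsec on one more intra-fabric link would generate."""
    if not s.enabled:
        raise ValueError("MACsec is enabled on the link but not in the fabric settings")
    created = []
    if links_already_enabled == 0:  # first MACsec link in the fabric
        created.append("MACsec global policies")
    created.append(f"MACsec interface policies for {link}")
    return created
```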