New and Changed Information

The following table provides an overview of the significant changes up to the current release. The table is not an exhaustive list of all changes or new features up to this release.

| Cisco APIC Release Version | Feature | Description |
|---|---|---|
| N/A | N/A | Added Hardware Requirements section. |
| 3.1(2) | -- | This document was made available to describe the network connectivity models supported for the Cisco ACI integration of Cloud Foundry, Kubernetes, OpenShift, and OpenStack. |

OpFlex Connectivity for Orchestrators

Cisco ACI supports integration with the following orchestrators through the OpFlex protocol:

- Cloud Foundry
- Kubernetes
- OpenShift
- OpenStack

This section describes the network connectivity models supported for the Cisco ACI integration of these orchestrators.

Hardware Requirements

This section lists the hardware requirements:

- Connecting the servers to Gen1 hardware or to Cisco Fabric Extenders (FEXes) is not supported and results in a nonworking cluster.
- The symmetric policy-based routing (PBR) feature, which is used to load balance external services, requires Cisco Nexus 9300-EX or -FX leaf switches. For this reason, the Cisco ACI CNI Plug-in is supported only for clusters that are connected to switches of those models.

Note: UCS-B is supported as long as the UCS Fabric Interconnects are connected to Cisco Nexus 9300-EX or -FX leaf switches.
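The hardware rules above can be turned into a simple pre-deployment check. The sketch below is hypothetical: `leaf_supported` and `uplink_supported` are illustrative names, and it assumes Cisco product IDs of the form `N9K-C93180YC-EX`, where the 9300 family starts with `N9K-C93` and the trailing suffix identifies the generation. It accepts only the two suffixes this section names (`-EX` and `-FX`). For any other model, confirm support against Cisco's current compatibility information instead of relying on this check.

```python
# Hypothetical pre-deployment check that encodes the hardware requirements above.
SUPPORTED_SUFFIXES = ("-EX", "-FX")  # the only leaf generations named in this section


def leaf_supported(model: str) -> bool:
    """Return True if the leaf is a Cisco Nexus 9300-EX or -FX switch.

    Assumes product IDs such as "N9K-C93180YC-EX": the 9300 family starts with
    "N9K-C93" and the generation is the trailing suffix. Gen1 models such as
    "N9K-C9372PX" have neither suffix and are rejected.
    """
    model = model.strip().upper()
    return model.startswith("N9K-C93") and model.endswith(SUPPORTED_SUFFIXES)


def uplink_supported(leaf_model: str, via_fex: bool = False) -> bool:
    """A server uplink (or, for UCS-B, a Fabric Interconnect uplink) is usable
    only if it lands directly on a supported leaf and never on a FEX."""
    return not via_fex and leaf_supported(leaf_model)


if __name__ == "__main__":
    for model, fex in [("N9K-C93180YC-EX", False), ("N9K-C9372PX", False), ("N9K-C93180YC-FX", True)]:
        print(model, "via FEX" if fex else "direct", uplink_supported(model, fex))
```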

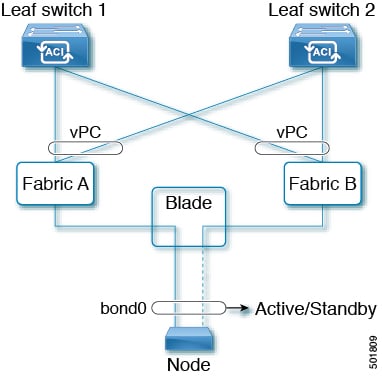

OpFlex Connectivity for Blade Systems

Follow the guidelines and illustration in this section when integrating blade systems into the Cisco ACI fabric.

- Configure port channels on the interfaces in Fabric A and Fabric B for uplink connectivity to the Cisco ACI leaf switches. Each fabric connects to both leaf switches in a vPC.
- On the blade server, configure two vNICs, one pinned to Fabric A and one pinned to Fabric B.
- On the Linux server, configure the two vNICs as an active/standby bond.
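On Linux, active/standby bonding corresponds to the bonding driver's `active-backup` mode. As an illustration, the sketch below builds a netplan-style configuration for that bond. Several details are assumptions rather than requirements of this guide: that the host uses netplan at all, the interface names `eno1`/`eno2`, the bond name `bond0`, and the 100 ms link-monitoring interval. The function name is hypothetical.

```python
import json


def active_standby_bond(vnic_a: str, vnic_b: str, bond: str = "bond0") -> dict:
    """Build a netplan-style config dict that bonds the Fabric A and Fabric B
    vNICs in active/standby (Linux bonding mode "active-backup")."""
    return {
        "network": {
            "version": 2,
            "ethernets": {vnic_a: {}, vnic_b: {}},
            "bonds": {
                bond: {
                    "interfaces": [vnic_a, vnic_b],
                    "parameters": {
                        "mode": "active-backup",      # one vNIC forwards, the other stands by
                        "mii-monitor-interval": 100,  # assumed link check interval (ms)
                    },
                }
            },
        }
    }


if __name__ == "__main__":
    # Printed as JSON to avoid a YAML dependency; write it out as YAML for netplan.
    print(json.dumps(active_standby_bond("eno1", "eno2"), indent=2))
```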

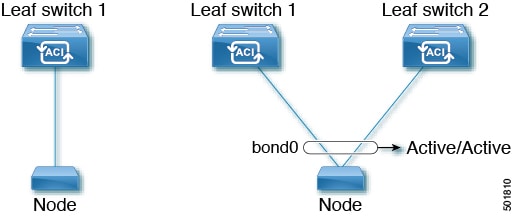

OpFlex Connectivity for Rack-mount Servers

Follow the guidelines and illustration in this section when integrating rack-mount servers into the Cisco ACI fabric.

- For a host connected to a single leaf switch:
  - Do not make the leaf switch part of a vPC pair.
  - Configure a single uplink; port channels are not supported.
- For a host connected to two leaf switches:
  - Configure a vPC with LACP Active/ON.
  - Configure active/active Linux bonding.
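The rules above can be sketched as a function that returns a netplan-style host configuration based on the number of leaf switches. This sketch assumes that "LACP Active/ON" means LACP runs on the vPC. Under that assumption, the matching active/active Linux bonding mode is `802.3ad`, which is the bonding driver's LACP mode. As in the blade example, the use of netplan, the function name, the interface names, and the monitoring interval are all illustrative assumptions.

```python
def rack_mount_netplan(uplinks: list[str], leaf_count: int, bond: str = "bond0") -> dict:
    """Build a netplan-style config dict for a rack-mount host.

    One leaf: exactly one uplink and no bond, because port channels are not supported.
    Two leaves (vPC pair): an LACP bond in active/active (Linux mode "802.3ad").
    """
    if leaf_count == 1:
        if len(uplinks) != 1:
            raise ValueError("single-leaf hosts must use exactly one uplink")
        return {"network": {"version": 2, "ethernets": {uplinks[0]: {}}}}
    if leaf_count == 2:
        if len(uplinks) < 2:
            raise ValueError("dual-leaf hosts need at least two uplinks")
        return {
            "network": {
                "version": 2,
                "ethernets": {nic: {} for nic in uplinks},
                "bonds": {
                    bond: {
                        "interfaces": list(uplinks),
                        "parameters": {
                            "mode": "802.3ad",            # LACP; all members forward traffic
                            "mii-monitor-interval": 100,  # assumed link check interval (ms)
                        },
                    }
                },
            }
        }
    raise ValueError("expected a host connected to one or two leaf switches")
```

Rejecting two uplinks on a single leaf mirrors the "port channels are not supported" rule, so a misconfigured host is caught before any configuration is written.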

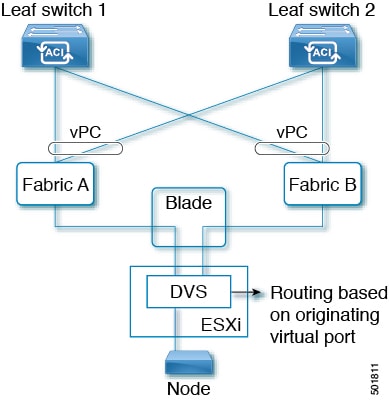

OpFlex Connectivity for Nested Blade Systems

Follow the guidelines and illustration in this section when integrating nested blade systems into the Cisco ACI fabric.

- Configure port channels on the interfaces in Fabric A and Fabric B for uplink connectivity to the Cisco ACI leaf switches. Each fabric connects to both leaf switches in a vPC.
- On the blade server, configure two vNICs, one pinned to Fabric A and one pinned to Fabric B.
- Set the VDS load-balancing policy to Route based on originating virtual port.

Note: When Kubernetes hosts run as virtual machines on ESXi, we recommend that you provision only one Kubernetes host per Kubernetes cluster on each ESXi server. That way, if an ESXi host fails, only a single Kubernetes node in each Kubernetes cluster is affected.
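The placement recommendation in the note works like an anti-affinity rule: no ESXi server should host two nodes of the same Kubernetes cluster. The following sketch checks an existing placement against that rule. The input format, which maps each node VM name to its cluster and ESXi host, is a hypothetical format chosen for illustration. In practice, the data would come from your vCenter inventory.

```python
from collections import defaultdict


def placement_violations(
    placement: dict[str, tuple[str, str]],
) -> list[tuple[str, str, list[str]]]:
    """Find ESXi servers that host more than one node of the same Kubernetes cluster.

    placement maps a Kubernetes node VM name to (cluster name, ESXi host name).
    Returns (ESXi host, cluster, sorted node names) for each violation.
    """
    nodes_by_host_and_cluster = defaultdict(list)
    for node, (cluster, esxi_host) in placement.items():
        nodes_by_host_and_cluster[(esxi_host, cluster)].append(node)
    return [
        (esxi_host, cluster, sorted(nodes))
        for (esxi_host, cluster), nodes in sorted(nodes_by_host_and_cluster.items())
        if len(nodes) > 1
    ]


if __name__ == "__main__":
    placement = {
        "k8s-a-1": ("cluster-a", "esxi-1"),
        "k8s-a-2": ("cluster-a", "esxi-1"),  # second cluster-a node on esxi-1
        "k8s-b-1": ("cluster-b", "esxi-1"),
        "k8s-a-3": ("cluster-a", "esxi-2"),
    }
    print(placement_violations(placement))
    # → [('esxi-1', 'cluster-a', ['k8s-a-1', 'k8s-a-2'])]
```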

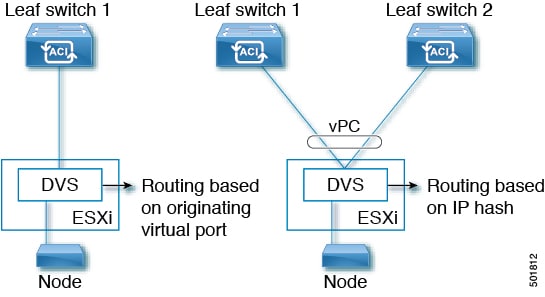

OpFlex Connectivity for Nested Rack-mount Servers

Follow the guidelines and illustration in this section when integrating nested rack-mount servers into the Cisco ACI fabric.

- For a host connected to a single leaf switch:
  - Do not make the leaf switch part of a vPC pair.
  - Configure a single uplink; port channels are not supported.
  - Set the VDS load-balancing policy to Route based on IP hash.
- For a host connected to two leaf switches:
  - Configure a vPC with LACP Active/ON.
  - Set the VDS load-balancing policy to Route based on IP hash.
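The VDS load-balancing choices for the two nested models can be summarized in a small lookup. The strings are the vSphere API identifiers for the distributed switch NIC teaming policies: `loadbalance_srcid` is "Route based on originating virtual port" and `loadbalance_ip` is "Route based on IP hash". The function name and the `"blade"`/`"rack"` labels are hypothetical. This sketch only selects the value; it does not connect to vCenter.

```python
# vSphere API values for the distributed switch NIC teaming policy.
ORIGINATING_VIRTUAL_PORT = "loadbalance_srcid"  # Route based on originating virtual port
IP_HASH = "loadbalance_ip"                      # Route based on IP hash


def vds_teaming_policy(system: str, leaf_count: int = 2) -> str:
    """Return the VDS teaming policy for a nested host.

    system: "blade" (nested blade systems) or "rack" (nested rack-mount servers).
    leaf_count: number of leaf switches a rack-mount host connects to (1 or 2).
    """
    if system == "blade":
        # Nested blade systems use originating virtual port.
        return ORIGINATING_VIRTUAL_PORT
    if system == "rack" and leaf_count in (1, 2):
        # Nested rack-mount servers use IP hash with one leaf or with two.
        return IP_HASH
    raise ValueError(f"unsupported nested model: {system!r} with {leaf_count} leaf switch(es)")
```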

Note: When Kubernetes hosts run as virtual machines on ESXi, we recommend that you provision only one Kubernetes host per Kubernetes cluster on each ESXi server. That way, if an ESXi host fails, only a single Kubernetes node in each Kubernetes cluster is affected.
