Setting Up an ACI Fabric: Initial Setup Configuration Example

Setting Up a Cisco ACI Fabric:

Initial Deployment Cookbook

Cisco Systems, Inc.

Corporate Headquarters

170 West Tasman Drive

San Jose, CA 95134-1706 USA

http://www.cisco.com

Tel: 408 526-4000 Toll Free: 800 553-NETS (6387)

Fax: 408 526-4100

Hardware Installation Overview

Configure Each APIC's Integrated Management Controller

Logging into the IMC Web Interface

Check APIC Firmware & Software

Check Image Type (NXOS vs. ACI) & Software Version of Your Switches

Setup Remainder of APIC Cluster

Setting Up Day 1 Fabric Policies

Creating & Applying a Pod Policy

Create Fabric Level Syslog Source

Creating Access Level Syslog Policy

Creating Tenant Level Syslog Policies

Adding Software Images to the APIC

Daily Configuration Export Policy

Introduction into access policies

Attachable Access Entity Profile

Blade Chassis Connectivity with VMM

Connecting the First UCS Fabric Interconnect (FI-A)

Connecting the Second UCS Fabric Interconnect (FI-B)

Bare Metal Connectivity with Existing VMM Domain

Connecting the Bare Metal VMM Server

Bare Metal Connectivity with Physical Domain

L3out to router2 – Asymmetric policies

Create Application Profile and End Point Groups (EPGs)

Create the Application Profile

Create EPGs Under the Application Profile

Associate EPGs with Physical or VMM Domains

Associate EPGs with Physical Domains

Add Static Binding for EPGs in Physical Domain

Create and Apply Contracts to EPGs

Alternative Options for Security Policy Configuration

North-South L3out Configuration

Configure a BD to advertise BD subnet

Configure a contract between the L3out and an EPG

Transit L3out Configuration step

Configure Export Route Control Subnet

Configure a contract between the L3outs

This section provides an overview of the goals and prerequisites for this document.

Note: The documentation set for this product strives to use bias-free language. For the purposes of this documentation set, bias-free is defined as language that does not imply discrimination based on age, disability, gender, racial identity, ethnic identity, sexual orientation, socioeconomic status, and intersectionality. Exceptions may be present in the documentation due to language that is hardcoded in the user interfaces of the product software, language used based on RFP documentation, or language that is used by a referenced third-party product.

This document describes step-by-step Cisco ACI configuration based on common design use cases.

To best understand the design presented in this document, the reader must have a basic working knowledge of Cisco ACI technology. For more information, see the Cisco ACI white papers available at Cisco.com: https://www.cisco.com/c/en/us/solutions/data-center-virtualization/application-centric-infrastructure/white-paper-listing.html.

This document uses the following terms with which you must be familiar:

■ VRF: Virtual Routing and Forwarding

■ BD: Bridge domain

■ EPG: Endpoint group

■ Class ID: Tag that identifies an EPG

■ Policy: In Cisco ACI, "policy" can mean configuration in general or contract action. In the context of forwarding action, "policy" refers specifically to the Access Control List (ACL)–like Ternary Content-Addressable Memory (TCAM) lookup used to decide whether a packet sourced from one security zone (EPG) and destined for another security zone (EPG) is permitted, redirected, or dropped

This document covers multiple features up to Cisco ACI Release 4.0(1h). It walks through step-by-step configuration using the topology and IP addressing in Figure 1, Table 1, and Figure 2. Details are provided in each section.

| Device | Out of band Management | Out of band | Version |
|---|---|---|---|
| APIC1 | 10.48.22.69/24 | 10.48.22.100 | Release 4.1(1h) |
| APIC2 | 10.48.22.70/24 | 10.48.22.100 | Release 4.1(1h) |
| APIC3 | 10.48.22.61/24 | 10.48.22.100 | Release 4.1(1h) |
| vCenter | 10.48.22.68 | - | - |
| NTP server | 10.48.35.151 | - | - |
| DNS server | 10.48.35.150 | - | - |

| BD | BD Subnet | EPG | VM-Name | VM IP Address |
|---|---|---|---|---|
| BD-Web | 192.168.21.254/24 | EPG-Web | VM-Web | 192.168.21.11 |
| BD-App | 192.168.22.254/24 | EPG-App | VM-App | 192.168.22.11 |
| BD-DB | 192.168.23.254/24 | EPG-DB | VM-DB | 192.168.23.11 |
| L3Out1 | External-Client1 | 172.16.10.1 | | |
| L3Out2 | External-Client2 | 172.16.20.1 | | |

Hardware Installation Overview

Setting up your first ACI fabric can be a bit of a challenge if you're new to Cisco server appliances and Nexus 9000 switches. The goal of this chapter is to help you navigate the process of setting up your ACI fabric from scratch. More detail on the installation process can be found in the Installation Guides and Getting Started Guides for Cisco ACI on Cisco.com. To begin, let's review the various components of a Cisco ACI fabric:

■ Cisco Application Policy Infrastructure Controllers (APICs) – One or more, typically three.

■ Nexus 9000 switches running a Cisco ACI software image (spine and leaf switches)

■ Out-of-Band Management Network connectivity for Cisco APICs and switches

■ Fabric connectivity (Cisco APICs and switches)

■ Cisco APIC integrated management controller (IMC)

The following tasks will be covered:

1. Rack and cable the hardware

2. Configure each Cisco APIC's integrated management controller

3. Check APIC firmware and software

4. Check the image type (NX-OS vs. Cisco ACI) and software version of your switches

5. APIC1 initial setup

6. Fabric discovery

7. Set up the remainder of APIC cluster

This section provides detailed configuration procedures.

In this step you configure APIC and switch connectivity.

The first task for setting up your physical fabric devices will be racking & cabling. Depending on your rack space, you may prefer to spread leaf pairs across racks. Note that all Leaf switches need to connect to Spine switches, so you will want to locate Leaf & Spine switches in relative proximity to each other to limit cabling requirements. The APICs connect to Leaf switches. When using multiple APICs, we recommend connecting them to separate Leaf switches for redundancy.

From the diagram above at minimum you'll want to connect the following:

■ #2 – 1G/10G LAN connections for the APIC OS Management Interfaces

■ #3 – VGA Video Port. Temporarily needed for initial setup of the IMC to configure remote management (Virtual KVM access)

■ #4 – Integrated Management Controller (IMC) for remote platform management. Similar to HP iLO, Dell iDRAC & IBM RAS platforms. This will serve your virtual Keyboard, Video & Mouse (vKVM) services and provide the ability to mount virtual ISO (vMedia) images for manual APIC software upgrades.

■ #7 – Power Supply Unit 1 & 2. These should be connected to separate power sources (i.e., Blue & Red circuits)

■ #9 – Fabric Connectivity Ports. These can operate at 10G or 25G speeds (depending on the model of APIC) when connected to Leaf host interfaces. We recommend connecting two fabric uplinks, each to a separate leaf and/or VPC leaf pairs.

On the APIC M3/L3, the VIC 1445 has four ports (port-1, port-2, port-3, and port-4 from left to right). Port-1 and port-2 form a single pair corresponding to eth2-1 on the APIC; port-3 and port-4 form another pair corresponding to eth2-2 on the APIC. Only a single connection is allowed for each pair. For example, you can connect one cable to either port-1 or port-2 and another cable to either port-3 or port-4, but you cannot cable both ports of the same pair. All ports must be configured for the same speed, either 10G or 25G.
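The VIC 1445 cabling rules above are easy to get wrong when planning a rack, so it can help to check a cabling plan before anyone plugs in a cable. The following is a minimal sketch, not a Cisco tool: the function name `check_vic1445_cabling` and the input format (a mapping of port number to speed in Gbps) are assumptions made for illustration.

```python
# Hypothetical helper (not part of any Cisco software): checks a planned
# APIC M3/L3 VIC 1445 cabling layout against the rules described above.

# Port pairs as described in the text: port-1/port-2 -> eth2-1, port-3/port-4 -> eth2-2
PORT_PAIRS = {"eth2-1": (1, 2), "eth2-2": (3, 4)}


def check_vic1445_cabling(cabled_ports):
    """cabled_ports maps a VIC port number (1-4) to its speed in Gbps (10 or 25).

    Returns a list of problem descriptions. An empty list means the plan
    satisfies the rules stated in this section.
    """
    problems = []
    for port, speed in cabled_ports.items():
        if port not in (1, 2, 3, 4):
            problems.append(f"port-{port} does not exist on the VIC 1445")
        if speed not in (10, 25):
            problems.append(f"port-{port}: unsupported speed {speed}G")
    # Only one cable is allowed per pair.
    for apic_if, pair in PORT_PAIRS.items():
        used = [p for p in pair if p in cabled_ports]
        if len(used) > 1:
            problems.append(
                f"{apic_if}: only one of port-{pair[0]}/port-{pair[1]} may be cabled"
            )
    # All cabled ports must run at the same speed.
    if len(set(cabled_ports.values())) > 1:
        problems.append("all ports must use the same speed")
    return problems


if __name__ == "__main__":
    print(check_vic1445_cabling({1: 25, 3: 25}))  # one cable per pair, same speed
    print(check_vic1445_cabling({1: 10, 2: 10}))  # both ports of the eth2-1 pair
```

An empty result only means the plan matches the rules in this section; it says nothing about leaf port compatibility or optics.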

Switch connectivity is pretty straightforward. Leaf switches only connect to Spine switches and vice-versa. This provides your fabric with a fully redundant switching fabric. In addition to the fabric network connections, you'll also connect redundant PSUs to separate power sources, the Management Interface to your 1G out-of-band management network, and a console connection to a terminal server (optional, but highly recommended). Make sure your switches are mounted so that the fan airflow matches the hot/cold aisle layout of your datacenter. Fans can be ordered with airflow in either direction, so check this prior to ordering. Switches have two operational port types – Host Interfaces & Fabric Uplinks. Leaf switches connect to Spines using Fabric Uplinks. APICs and other host devices connect to Leaf Host Interfaces. Refer to your specific switch model documentation to determine the interface ranges & default port modes.

Configure Each APIC's Integrated Management Controller

When you first connect your APIC's IMC port, marked "mgmt" on the rear of the appliance, it will be configured for DHCP by default. Cisco recommends that you assign a static address for this purpose to avoid any loss of connectivity or changes to address leases. You can modify the IMC details by connecting a crash cart (physical monitor, USB keyboard and mouse) to the server and powering it on. During the boot sequence, it will prompt you to press "F8" to configure the IMC. From here you will be presented with a screen similar to the one below, depending on your firmware version.

For the "NIC mode" we recommend using Dedicated, which utilizes the dedicated "mgmt" interface in the rear of the APIC appliance for IMC platform management traffic. "Shared LOM" mode sends your IMC traffic over the LAN on Motherboard (LOM) port along with the APIC's OS management traffic. This can cause issues with fabric discovery if not properly configured and is not recommended by Cisco. Aside from the IP address details, the rest of the options can be left alone unless there's a specific reason to modify them. Once a static address has been configured you will need to Save the settings & reboot. After a few minutes you should be able to reach the IMC Web Interface using the newly assigned IP along with the default IMC credentials of admin and password. It's recommended that you change the IMC default admin password after first use.

Logging into the IMC Web Interface

To log into the IMC, open a web browser to https://<IMC_IP>. You'll need to ensure you have Flash installed & permitted for the URL. Once you've logged in with the default credentials you'll be able to manage all the IMC features, including launching the KVM console.

Launching the KVM console will require that you have Java version 1.6 or later installed. Depending on your client security settings, you may need to whitelist the IMC address within your local Java settings in order for the KVM applet to load. Open the KVM console and you should be at the Setup Dialog for the APIC assuming the server is powered on. If not powered up, you can do so from the IMC Web utility.

Note: The IMC will remain accessible assuming the appliance has at least one power source connected. This allows independent power up/down of the APIC appliance operating system.

Check APIC Firmware & Software

About ACI Software Versions: It's important to know which version of ACI software you wish to deploy. ACI software releases include the concept of "Long Lived Release (LLR)" versions, which are maintained and patched longer than non-LLR versions. As of this writing, the current LLRs are versions 2.2 and 3.2. Unless you have specific feature requirements available in later releases, you may be best served by deploying the latest LLR, as it will have a larger installation base and reduce the likelihood of having to perform major software version upgrades. Typically, a new LLR is added for each major version (i.e., 2.x, 3.x etc). Equally important to note is that all your APICs must run the same version when joining a cluster. This may require manually upgrading or downgrading your APICs prior to joining them to the fabric. Instructions on upgrading standalone APICs using KVM vMedia can be found in the "Cisco APIC Management, Installation, Upgrade, and Downgrade Guide" for your respective version.

Switch nodes can be running any version of ACI switch image and can be upgraded/downgraded once joined to the fabric via firmware policy.

Check Image Type (NXOS vs. ACI) & Software Version of Your Switches

For a Nexus 9000 series switch to be added to an ACI fabric, it needs to be running an ACI image. Switches that are ordered as "ACI Switches" will typically be shipped with an ACI image. If you have existing standalone Nexus 9000 switches running traditional NXOS, then you may need to install the appropriate image (for example, aci-n9000-dk9.14.0.1h.bin). For detailed instructions on converting a standalone NXOS switch to ACI mode, please see the "Cisco Nexus 9000 Series NX-OS Software Upgrade and Downgrade Guide" on CCO for your respective version of NXOS.

Sample Nexus 9000 running NXOS mode, with n9000-dk9.7.0.3.I1.1a.bin being the NXOS image:

Switch1# show version | grep image

NXOS image file is: bootflash:///n9000-dk9.7.0.3.I1.1a.bin

Sample Nexus 9000 running ACI mode, with aci-n9000-dk9.14.0.1h.bin being the ACI image:

Switch2# show version | grep image

kickstart image file is: /bootflash/aci-n9000-dk9.14.0.1h.bin

system image file is: /bootflash/auto-s
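If you have many switches to check, the comparison above can be scripted against captured "show version" output. The following is a minimal sketch that relies only on the two file-name patterns shown in the samples above (an "aci-n9000" prefix for ACI mode, an "n9000" prefix for NXOS mode). The function name `image_mode` is hypothetical, and images that follow a different naming scheme are reported as "unknown" rather than guessed.

```python
import re


def image_mode(show_version_output):
    """Classify captured 'show version | grep image' output.

    Returns 'aci', 'nxos', or 'unknown'. The classification is a heuristic
    based on the image file names shown in the samples in this section.
    """
    for line in show_version_output.splitlines():
        match = re.search(r"image file is:\s*(\S+)", line)
        if not match:
            continue
        # Keep only the file name, dropping any bootflash path prefix.
        filename = match.group(1).rsplit("/", 1)[-1]
        if filename.startswith("aci-n9000"):
            return "aci"
        if filename.startswith("n9000"):
            return "nxos"
    return "unknown"


if __name__ == "__main__":
    nxos_sample = "NXOS image file is: bootflash:///n9000-dk9.7.0.3.I1.1a.bin"
    aci_sample = "kickstart image file is: /bootflash/aci-n9000-dk9.14.0.1h.bin"
    print(image_mode(nxos_sample))
    print(image_mode(aci_sample))
```

Treat an "unknown" result as a prompt to inspect the switch manually, not as evidence of either mode.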

Now that you have basic remote connectivity, you can complete the setup of your ACI fabric from any workstation with network access to the APIC. If the server is not powered on, do so now from the IMC interface. The APIC will take 3-4 minutes to fully boot. Next, open a console session via the IMC KVM console using the procedure detailed previously. Assuming the APIC has completed the boot process, it should be sitting at a prompt "Press any key to continue…". Doing so will begin the setup utility.

From here, the APIC will guide you through the initial setup dialogue. Carefully answer each question. Some of the items configured can't be changed after initial setup, so review your configuration before submitting it.

Some of the fields are self-explanatory. Select fields are highlighted below for explanation:

■ Fabric Name: User defined, will be the logical friendly name of your fabric.

■ Fabric ID: Leave this ID as the default 1.

■ # of Controllers in fabric: Set this to the # of APICs you plan to configure. This can be increased/decreased later.

■ Pod ID: The ID of the Pod to which this APIC is connected. If this is your first APIC or you don't have more than a single Pod installed, this will always be 1. If you are locating additional APICs across multiple Pods, assign the Pod ID of the Pod where each APIC is connected.

■ Standby Controller: Beyond your active controllers (typically 3) you can designate additional APICs as standby. In the event you have an APIC failure, you can promote a standby to assume the identity of the failed APIC.

■ APIC-X: A special-use APIC model used for telemetry and other heavy ACI App purposes. For your initial setup this typically would not be applicable. Note: In a future release this feature may be referenced as "ACI Services Engine".

■ TEP Pool: This is a subnet of addresses used for internal fabric communication. This subnet will NOT be exposed to your legacy network unless you're deploying the Cisco AVS or Cisco ACI Virtual Edge. Regardless, our recommendation is to assign an unused subnet sized between /16 and /21. The size of the subnet will impact the scale of your Pod. Most customers allocate an unused /16 and move on. This value can NOT be changed once configured. Having to modify this value requires a wipe of the fabric.

The 172.17.0.0/16 subnet is not supported for the infra TEP pool due to a conflict of address space with the docker0 interface. If you must use the 172.17.0.0/16 subnet for the infra TEP pool, you must manually configure the docker0 IP address to be in a different address space in each Cisco APIC before you attempt to put the Cisco APICs in a cluster.

■ Infra VLAN: This is another important item. This is the VLAN ID for all fabric connectivity. This VLAN ID should be allocated solely to ACI, and not used by any other legacy device in your network. Though this VLAN is used for fabric communication, there are certain instances where this VLAN ID may need to be extended outside of the fabric such as the deployment of the Cisco AVS/AVE. Due to this, we also recommend you ensure the Infra VLAN ID selected does not overlap with any "reserved" VLANs found on your networks. Cisco recommends a VLAN smaller than VLAN 3915 as being a safe option as it is not a reserved VLAN on Cisco DC platforms as of today. This value can NOT be changed once configured. Having to modify this value requires a wipe of the fabric.

■ BD Multicast Pool (GIPO): Used for internal connectivity. We recommend leaving this as the default or assigning a unique range not used elsewhere in your infrastructure. This value can NOT be changed once configured. Having to modify this value requires a wipe of the fabric.

Once the Setup Dialogue has been completed, it will allow you to review your entries before submitting. If you need to make any changes enter "y", otherwise enter "n" to apply the configuration. After applying the configuration, allow the APIC 4-5 minutes to bring all services online and initialize the REST login services before attempting to log in through a web browser.

With our first APIC fully configured, now we will login to the GUI and complete the discovery process for our switch nodes.

1. When logging in for the first time, you may have to accept the Cert warnings and/or add your APIC to the exception list.

2. Go ahead and login with the admin account and password you assigned during the setup procedure.

On first login you will be presented with a "What's New" window, which highlights some of the new features and videos included with this version of APIC. You can optionally click "Do not show me this again" if you wish to prevent this from popping up at each login. If you want to re-enable this pop-up, you can do so from the Settings menu for your user account, which is covered next.

3. Before we get too far into configuration there's a few settings that will make navigation and using the APIC UI easier. You can access UI settings from the top right menu. These settings will be maintained as long as the cookie for this URL managed by your browser exists.

4. Some of the options you may want to enable are:

■ Remember Tree Selection: Maintains the folder expansion & location when navigating back & forth between tabs in the APIC UI

■ Preserve Tree Divider Position: Makes navigation pane changes persistent

■ Default Page Size for Tables: Default is 10 items, but you can increase this to avoid having to click "Next page" on tables.

5. Now we'll proceed with the fabric discovery procedure. We'll need to navigate to Fabric tab > Inventory sub-tab > Fabric Membership folder.

From this view you are presented with your registered fabric nodes. Click on the Nodes Pending Registration tab in the work pane and you should see your first Leaf switch awaiting discovery. Note this will be one of the Leaf switches to which the APIC is directly connected.

To register our first node, click on the first row, then from the Actions menu (Tool Icon) select Register.

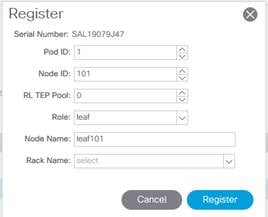

6. The Register wizard will pop up and require some details to be entered including the Node ID you wish to assign, and the Node Name (hostname).

Hostnames can be modified, but the Node ID will remain assigned until the switch is decommissioned and removed from the APIC. This information is provided to the APIC via LLDP TLVs. If a switch was previously registered to another fabric without being erased, it will never appear as an unregistered node. It's important that all switches have been wiped clean prior to discovery. It's a common practice for Leaf switches to be assigned Node IDs from 100+, and Spine switches to be assigned IDs from 200+. To accommodate your own numbering convention or larger fabrics you can implement your own scheme. RL TEP Pool is reserved for Remote Leaf usage only and doesn't apply to local fabric-connected Leaf switches. Rack Name is an optional field.

7. Once the registration details have been submitted, the entry for this leaf node will move from the Nodes Pending Registration tab to the Registered Nodes tab under Fabric Membership. The node will take 3 to 4 minutes to complete the discovery, which includes the bootstrap process and bringing the switch to an "Active" state. During the process, you will notice a tunnel endpoint (TEP) address gets assigned. This will be pulled from the available addresses in your Infra TEP pool (such as 10.0.0.0/16).

8. After the first Leaf has been discovered and moved to an Active state, it will then discover every Spine switch it's connected to. Go ahead and register each Spine switch in the same manner.

9. Since each Leaf switch connects to every Spine switch, once the first Spine completes the discovery process, you should see all remaining Leaf switches pending registration. Go ahead and register all remaining nodes, then wait for all switches to transition to an Active state.

10. With all the switches online & active, our next step is to finish the APIC cluster configuration for the remaining nodes. Navigate to System > Controllers sub menu > Controllers Folder > apic1 > Clusters as Seen by this Node folder.

From here you will see your single APIC along with other important details such as the Target Cluster Size and Current Cluster Size. Assuming you configured apic1 with a cluster size of 3, we'll have two more APICs to setup.
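The node registration in steps 6 through 9 above can also be prepared as REST payloads if you prefer to script it. The following is a sketch only: it builds JSON bodies for the `fabricNodeIdentP` class without sending anything, and the serial numbers, node names, and helper name `registration_payloads` are placeholders invented for this example. Verify the class attributes and the POST target against the APIC REST API documentation for your release before using it.

```python
import json


def registration_payloads(nodes):
    """Build one fabricNodeIdentP payload per (serial, node_id, name) tuple.

    Raises ValueError if a Node ID is used twice, since a Node ID stays
    assigned until the switch is decommissioned.
    """
    seen_ids = set()
    payloads = []
    for serial, node_id, name in nodes:
        if node_id in seen_ids:
            raise ValueError(f"Node ID {node_id} is assigned more than once")
        seen_ids.add(node_id)
        payloads.append({
            "fabricNodeIdentP": {
                "attributes": {
                    # Assumed DN layout; confirm for your APIC release.
                    "dn": f"uni/controller/nodeidentpol/nodep-{serial}",
                    "serial": serial,
                    "nodeId": str(node_id),
                    "name": name,
                }
            }
        })
    return payloads


if __name__ == "__main__":
    # Placeholder serial numbers; real values appear under Nodes Pending Registration.
    plan = [("SERIAL-LEAF-1", 101, "leaf101"), ("SERIAL-SPINE-1", 201, "spine201")]
    for body in registration_payloads(plan):
        print(json.dumps(body))
```

Each body would typically be POSTed to the node identity policy on an authenticated APIC session; the sketch stops short of that so you can review the plan first.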

Setup Remainder of APIC Cluster

At this point we would want to now open the KVM console for APIC2 and begin running through the setup Dialogue just as we did for APIC1 previously. When joining additional APICs to an existing cluster it's imperative that you configure the same Fabric Name, Infra VLAN and TEP Pool. The controller ID should be set to ID 2. You'll notice that you will not be prompted to configure Admin credentials. This is expected as they will be inherited from APIC1 once you join the cluster.

Allow APIC2 to fully boot and bring its services online. You can confirm everything was successfully configured as soon as you see the entry for APIC2 in the Active Controllers view. During this time, it will also begin syncing with APIC1's config. Allow 4-5 minutes for this process to complete. During this time you may see the State of the APICs transition back & forth between Fully Fit and Data Layer Synchronization in Progress. Continue through the same process for APIC3, ensuring you assign the correct controller ID.

This concludes the entire fabric discovery process. All your switches & controllers will now be in sync and under a single pane of management. Your ACI fabric can be managed from any APIC IP. All APICs are active and maintain a consistent operational view of your fabric.

Setting Up Day 1 Fabric Policies

With our fabric up & running, we can now continue configuring some basic policies based on our best-practice recommendations. These policies will include:

■ Out-of-Band Node Management IPs

■ NTP

■ System Settings (Connectivity Preferences, Route Reflectors etc)

■ DNS

■ SNMP

■ Syslog

■ Firmware Policies

■ Exports (Configuration & Techsupport)

In order to be able to connect to your switches directly and to enable some external services such as NTP, you're going to need to configure Management IPs for your switches. During the Initial fabric setup, you would have assigned Management IPs to your APICs, now we'll do the same for your Spine & Leaf nodes.

1. Navigate to Tenants > mgmt > Node Management Addresses > Static Node Management Addresses.

2. Right click on the Static Node Management Addresses folder and select Create Static Node Management Addresses. A window will appear and you will start by defining a range of nodes. If the address block you intend to assign to your nodes is sequential, it simplifies the config. Enter the range of nodes that corresponds to the sequential range of addresses you plan on assigning. For example, if you had only 2 sequential addresses, you could create the first block for your first two nodes. Additional nodes can be added independently if your address blocks are non-sequential. Next, check the Out-Of-Band Addresses box. For the Management EPG, leave it as default. For the OOB IPv4 Address, enter the starting IP & subnet mask, for example 10.48.22.77/24. The APIC increments this address by 1 for each node in the range. Lastly enter the Gateway IP and click Submit. If you need to add additional blocks for a different IP or node range, you can repeat the steps accordingly. It's also recommended to create static addresses for each of your APICs. This will be required later on for external services such as SNMP.
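To see which address each node will receive from a sequential block, you can reproduce the increment-by-one behavior described above before submitting the form. This is a minimal sketch using Python's standard `ipaddress` module; the function name `oob_addresses` and the node IDs 101 and 102 are assumptions for illustration.

```python
import ipaddress


def oob_addresses(first_node, last_node, start_cidr):
    """Map each Node ID in the range to an OOB address.

    The first node gets the starting address and each following node gets
    the next address, mirroring the behavior described in the step above.
    """
    start = ipaddress.ip_interface(start_cidr)
    network = start.network
    assigned = {}
    for offset, node_id in enumerate(range(first_node, last_node + 1)):
        address = start.ip + offset
        # Stop if the block would run past the usable range of the subnet.
        if address not in network or address == network.broadcast_address:
            raise ValueError(f"node {node_id}: address block exceeds {network}")
        assigned[node_id] = f"{address}/{network.prefixlen}"
    return assigned


if __name__ == "__main__":
    for node_id, address in oob_addresses(101, 102, "10.48.22.77/24").items():
        print(node_id, address)
```

With a starting address of 10.48.22.77/24 and nodes 101 through 102, node 101 receives 10.48.22.77/24 and node 102 receives 10.48.22.78/24. Check the result against addresses already in use, such as the APIC and vCenter addresses in Table 1.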

Time synchronization plays a critical role in the ACI fabric. From validating certificates, to keeping log files across devices consistent it's strongly encouraged to sync your ACI fabric to 1 or more reliable time sources. Setting up NTP is simple.

Note: Simply creating an NTP policy does not apply it to your fabric. You will also need to assign this policy to a "Pod Policy" which will be covered later in this chapter.

1. Navigate to Fabric > Quickstart, and click on the "Create an NTP Policy" link.

2. A new window will pop up and ask for various information. Provide a name for your policy and set the State to Enabled. If you'd like your Leaf switches to serve time requests to downstream endpoints, you can enable the Server option. Downstream endpoints can then use the Management Interface IP of the nodes as an NTP server source. When done, click Next to define the NTP Sources.

Note: Using a Bridge Domain SVI (Subnet IP) as an NTP Source for downstream clients is not recommended. When a Leaf switch is enabled as NTP server, it will respond on any Interface. Issues can arise when attempting to use the SVI address of a Leaf, rather than the management IP.

3. Next you will define one or more NTP providers. These are the upstream devices the nodes will poll for time synchronization. You can optionally mark one of the sources as Preferred, which forces the switches to always attempt time sync with this source first, then retry against alternate sources. We recommend at least 2 different NTP sources to ensure availability. Leave all the default options and click OK.

4. The last step for Time configuration is to set the Display Format and/or Time Zone for your fabric. Navigate to Fabric > Fabric Policies > Pod > Date and Time > default.

5. Configure the Display time to be either local time or UTC and assign the appropriate Time Zone where your APICs are located.
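The NTP policy from steps 1 through 3 above can be expressed as a REST payload as well. The following is a sketch under assumptions: it uses the `datetimePol`, `datetimeNtpProv`, and `datetimeRsNtpProvToEpg` classes with the default out-of-band management EPG, and the attribute names (in particular `serverState`) should be checked against the object model of your APIC release. The helper name `ntp_policy_payload` and the second provider `ntp2.example.com` are hypothetical. Nothing is sent to the APIC.

```python
import json

# Assumed DN of the default out-of-band management EPG.
OOB_EPG_DN = "uni/tn-mgmt/mgmtp-default/oob-default"


def ntp_policy_payload(name, providers, preferred=None, server=False):
    """Build a datetimePol payload with one datetimeNtpProv child per provider."""
    if preferred is not None and preferred not in providers:
        raise ValueError("preferred provider must be one of the providers")
    children = []
    for host in providers:
        children.append({
            "datetimeNtpProv": {
                "attributes": {
                    "name": host,
                    "preferred": "yes" if host == preferred else "no",
                },
                # Reach the provider through the out-of-band management EPG.
                "children": [
                    {"datetimeRsNtpProvToEpg": {"attributes": {"tDn": OOB_EPG_DN}}}
                ],
            }
        })
    return {
        "datetimePol": {
            "attributes": {
                "dn": f"uni/fabric/time-{name}",
                "name": name,
                "adminSt": "enabled",
                "serverState": "enabled" if server else "disabled",
            },
            "children": children,
        }
    }


if __name__ == "__main__":
    body = ntp_policy_payload(
        "NTP_Servers", ["10.48.35.151", "ntp2.example.com"], preferred="10.48.35.151"
    )
    print(json.dumps(body, indent=2))
```

As with the GUI procedure, creating this policy does not apply it; it still has to be referenced from a Pod Policy Group.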

The next section covers a number of unrelated fabric settings that are grouped in the same UI panel. Navigate to System > System Settings sub menu. Below is a list of settings that we recommend changing, each with a brief explanation. These suggestions suit most customers but can be modified for specific needs. You may wonder why some of these options aren't enabled by default. Some settings were made configurable after the original behavior had shipped, so to avoid changing default behavior, the optimal values sometimes need to be set manually.

■ APIC Connectivity Preference: Change to ooband. This option selects which Management EPG profile should be used by default if both oob & inband management are configured. Out-of-band is far simpler to understand and fine for most use cases.

■ Global AES Passphrase Encryption Settings: Assign Passphrase and Enable (two steps). This is used to export secure fields when generating a Config Export task for your configuration. Without this enabled, all secure fields including passwords and certificates are omitted from your Config Export, requiring you to have to manually re-apply them upon config import. This makes your import/export tasks fast & secure.

■ Fabric Wide Settings: Enable Force Subnet Check and Enforce Domain Validation.

· Enabling the subnet check applies to Gen2 or later switches. This feature limits the local learning of endpoint MAC & IPs to only those belonging to a Bridge Domain defined subnet. This feature is explained in greater detail in the ACI Endpoint Learning Whitepaper.

· Enforcing Domain Validation restricts EPG VLAN usage by ensuring that the respective Domain & VLAN Pool are bound to the EPG. This prevents accidental or malicious programming of EPGs to use VLAN IDs they may not be permitted to use. Tenant Admins (responsible for Application level policies) typically have different permissions than the Infrastructure Admin (responsible for networking & external connectivity) in ACI – and with this separation of roles you can have one user role responsible for allowing certain VLAN Ranges per Domains using RBAC & Security Domains. Then this limits your Tenant admins access to only specific Domains and in-turn the VLANs they're permitted to assign to EPGs via Static Path Bindings etc.

■ BGP Route Reflector: The ACI fabric uses multiprotocol BGP (MP-BGP) route reflectors to distribute external routes within the fabric. To enable route reflectors, you select the spine switches that will act as route reflectors and provide the autonomous system (AS) number. Once route reflectors are enabled in the ACI fabric you can configure connectivity to external routers. Assign an Autonomous System Number (ASN) to your fabric and configure up to eight Spines as Route Reflectors. In a multipod environment, we recommend spreading them across Pods.

Note: Simply creating a Route Reflector policy does not apply it to your fabric. You will also need to assign this policy to a "Pod Policy" which will be covered later in this chapter.

Creating & Applying a Pod Policy

Some of the fabric policies you've configured must be assigned to your nodes via a Fabric Pod Policy. You can use the default policy or create a new one. The Pod Policy Group is a collection of previously created policies that is applied to one or more Pods in your fabric. This granularity allows you to apply different policies to different Pods if required. In our use case we're going to create a single Pod Policy Group named "PolGrp1".

1. Navigate to Fabric > Fabric Policies sub menu > Pods > Policy Groups folder

2. Right click on the Policy Groups folder and select Create Pod Policy Group

3. Select the policies you previously created. If you configured the default policies, you can leave the default policy name selected; otherwise select the appropriate policy name.

Note: In our example we configured one policy for NTP with a named policy "NTP_Servers" whereas all other policies are using the "default" policy. This is to show the two valid ways to configure various Pod Policies.

4. Navigate to Fabric > Fabric Policies sub menu > Pods > Profiles > Pod Profile > default. With the default Pod Selector selected in the navigation pane, change the Fabric Policy Group to the one created in the previous step.

To allow your APIC to resolve the hostnames of external resources, such as Virtual Machine Managers (VMMs), Remote Locations etc, you'll need to configure the DNS profile.

1. Navigate to Fabric > Fabric Policies sub menu > Policies > Global > DNS Profiles > default

2. In the work pane, click the + sign to add one or more DNS providers. You can assign a Preferred provider if you wish.

3. In the DNS Domains, you can click the + sign to assign a domain suffix that should be used by your APIC when resolving DNS hostnames.

By default, the APIC allows a few protocols such as HTTPS & SSH to access the out-of-band (oob) management interfaces. When you start adding services such as SNMP and other monitoring services, you will need to explicitly add all "allowed" protocols to the Management Contracts/Filters, including both HTTPS & SSH. Telnet is disabled by default and Cisco recommends using only SSH for Management CLI access as it's a more secure protocol. It's a best practice to configure your Management Contracts on Day 1 to enforce a complete whitelist model for access to your management interfaces. The next procedure walks you through this configuration.

1. Navigate to Tenants > mgmt > Contracts > Filters

2. Right-click on the Filters folder and select Create Filter.

3. In the pop-up, provide a name. Ex. allow-ssh

4. Under Entries, click the + sign and add a filter entry with the following values (all other columns can be left blank):

a. Name: allow-ssh

b. EtherType: IP

c. IP Protocol: TCP

d. Source Port/Range: unspecified

e. Dest. Port/Range: 22-22

5. Click Submit. The next step is to create the Contract and attach the newly created Filter.

6. Repeat Steps 1 – 5 to allow Web Access using HTTPS (TCP 443)

7. Navigate to Tenants > mgmt > Contracts > Out-Of-Band Contracts

8. Right Click on the Out-of-Band Contracts folder and select Create Out-Of-Band Contracts

9. Provide a name for the contract. Ex. allow-management

10. Click the + sign to add a subject. Provide a name for the Contract Subject. Ex. allow-management

11. Click the + sign, select each filter then click Update to add both filter chains previously created. Ex. allow-ssh & allow-https

12. Click Ok then click Submit. With the security rules created, we'll create a Management Instance Profile and attach these rules.

13. Navigate to Tenants > mgmt > External Management Network Instance Profile

14. Right-click on the folder and select Create External Management Network Instance Profile or select an existing Profile.

15. Provide a name for the Profile. Ex. 'default' if not already created

16. Under Consumed Out-of-Band Contracts click the + sign and add your previously created allow-management contract then click Update

17. Under Subnets, enter the subnet that should be allowed to access the APIC, then click Update. In our example we are using a 0.0.0.0/0 entry to allow unrestricted access.

18. Click Submit.
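The effect of the Subnets field in step 17 can be pictured with a short Python sketch. It only illustrates the matching logic and is not APIC code: the function name is_source_allowed and the sample addresses are hypothetical, and it relies only on Python's standard ipaddress module.

```python
import ipaddress

def is_source_allowed(client_ip, allowed_subnets):
    """Return True if client_ip falls inside any of the allowed subnets."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in ipaddress.ip_network(subnet) for subnet in allowed_subnets)

# 0.0.0.0/0, as used in this guide's example, matches every IPv4 source.
print(is_source_allowed("192.0.2.10", ["0.0.0.0/0"]))

# A narrower (hypothetical) management subnet only matches hosts inside it.
print(is_source_allowed("192.0.2.10", ["10.1.1.0/24"]))
```

In a production fabric you would typically replace 0.0.0.0/0 with the subnets your administrators actually connect from.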

SNMP monitoring is an important aspect of ensuring your ACI administrators are quickly made aware of any potential problems. It's just one of the many monitoring options you can take advantage of, and it is a widely adopted standard. Assuming you have an SNMP monitoring system already set up, this section will guide you through setting up the ACI portion of SNMP monitoring. Configuring SNMP requires that you have already configured your Static Management Node Addresses and have In-band or Out-of-Band network connections to your switches. SNMP is configured through two different scopes: Global & VRF Context. We will start with the Global scope configuration, as the VRF Context requires your User tenant to be created.

Global Scope configuration will allow you to monitor the physical status of the fabric including Interfaces, Interface States, Interface Stats and environmental information.

1. Navigate to Fabric > Fabric Policies sub menu > Policies > Pod > SNMP folder

2. Right-Click on the SNMP folder and select Create SNMP Policy.

3. Give your policy a name. Ex. SNMP_Pol

4. Set the admin state to Enabled

5. Optional items include the Contact & Location details.

6. Under Community Policies click the + sign.

To allow SNMP via your Management Interfaces on your switches, you'll need to create and apply the respective Filter & Contracts.

1. Navigate to Tenants > mgmt > Contracts > Filters

2. Right-click on the Filters folder and select Create Filter.

3. In the pop-up, provide a name. Ex. allow-snmp

4. Under Entries, click the + sign and add a filter entry with the following values (all other columns can be left blank):

a. Name: allow-snmp

b. EtherType: IP

c. IP Protocol: UDP

d. Source Port/Range: 161 – 162

e. Dest. Port/Range: 161 – 162

5. Click Submit. The next step is to attach the newly created Filter to the existing Contract.

6. Navigate to Tenants > mgmt > Contracts > Out-Of-Band Contracts > allow-management

7. Click on the Contract Subject previously created named allow-management

8. Click the + sign to add a filter chain and select the previously created SNMP filter. Ex. allow-snmp

9. Click Ok then click Submit. With the security rules created, we'll create a Management Instance Profile and attach these rules.

Another useful tool for monitoring the fabric is Syslog, which aggregates faults and alerts. Syslog configuration consists of first defining one or more Syslog Destination targets, then defining Syslog policies in various locations within the UI to accommodate Fabric, Access Policy and Tenant level syslog messages. Enabling all these syslog sources will ensure the greatest amount of detail is captured, but will increase the amount of data & storage requirements depending on the logging level you set.

1. Navigate to Admin > External Data Collectors > Monitoring Destinations > Syslog

2. From the Actions Menu select Create Syslog Monitoring Destination Group

3. Provide a name for the Syslog Group. Ex. syslog_servers

4. Leave all other options default and click Next

5. Under Create Remote Destinations, click the "+" icon

a. Enter hostname or IP address.

b. If necessary, modify any additional details as you wish such as severity & port number

c. Set the Management EPG as default (Out-of-band)

d. Click OK.

6. If necessary, add additional Remote Destinations

7. Click Finish

Create Fabric Level Syslog Source

The fabric Syslog policy will export alerts for monitoring details including physical ports, switch components (fans, memory, PSUs etc) and linecards

1. Navigate to Fabric > Fabric Policies sub menu > Policies > Monitoring > Common Policy > Callhome/Smart Callhome/SNMP/Syslog/TACACs.

2. From the Actions Menu select Create Syslog Source

a. Provide a name for the source. Ex fabric_common_syslog

b. Leave the severity as warnings unless desired to increase logging details

c. Check any additional Log types such as Audit Logs (optional)

d. Set the Dest Group to the Syslog Destination Group previously created.

e. Click Submit

3. Navigate to Fabric > Fabric Policies sub menu > Policies > Monitoring > default > Callhome/Smart Callhome/SNMP/Syslog/TACACs.

4. In the work pane, set the Source Type to Syslog

5. Click the "+" icon to add a Syslog Source

a. Provide a name for the source. Ex fabric_default_syslog

b. Leave the severity as warnings unless desired to increase logging details

c. Check any additional Log types such as Audit Logs (optional)

d. Set the Dest Group to the Syslog Destination Group previously created.

e. Click Submit
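To see why raising the logging level increases the volume of exported messages, the following Python sketch lists which severities would be forwarded for a given setting. It assumes the usual syslog convention that a configured severity also includes every more severe level; the keyword spellings and the function name forwarded_severities are illustrative assumptions, not values read from the APIC.

```python
# Standard syslog severities, ordered from most severe (0) to least severe (7).
# The keyword spellings are an assumption; check the names shown in your APIC UI.
SEVERITIES = [
    "emergencies", "alerts", "critical", "errors",
    "warnings", "notifications", "information", "debugging",
]

def forwarded_severities(threshold):
    """Return the severities exported when the source is set to `threshold`."""
    return SEVERITIES[: SEVERITIES.index(threshold) + 1]

# The "warnings" setting used in this guide covers the five most severe levels.
print(forwarded_severities("warnings"))

# "debugging" forwards everything, which is why it needs the most storage.
print(len(forwarded_severities("debugging")))
```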

Creating Access Level Syslog Policy

The Access Syslog policy will export alerts for monitoring details including VLAN Pools, Domains, Interface Policy Groups, and Interface & Switch Selector Policies.

1. Navigate to Fabric > Access Policies sub menu > Policies > Monitoring > default > Callhome/Smart Callhome/SNMP/Syslog/TACACs.

2. In the work pane, set the Source Type to Syslog

3. Click the "+" icon to add a Syslog Source

a. Provide a name for the source. Ex access_default_syslog

b. Leave the severity as warnings unless desired to increase logging details

c. Check any additional Log types such as Audit Logs (optional)

d. Set the Dest Group to the Syslog Destination Group previously created.

e. Click Submit

Creating Tenant Level Syslog Policies

Tenant level syslogging covers all tenant related policies, including Application Profiles, EPGs, Bridge domains, VRFs, external networking etc. To simplify the syslog configuration across multiple tenants, you can leverage the Common Tenant syslog configuration and share it across other tenants. This provides a consistent level of logging for all tenants. Alternatively, if you want varied levels of logging/severities for different Tenants, you can create the respective Syslog policy within each tenant. For our purposes we will deploy a single consistent syslog policy using the Common Tenant.

1. Navigate to Tenants > common > Policies > Monitoring > default > Callhome/Smart Callhome/SNMP/Syslog/TACACs.

2. In the work pane, set the Source Type to Syslog

3. Click the "+" icon to add a Syslog Source

a. Provide a name for the source. Ex tenant_default_syslog

b. Leave the severity as warnings unless desired to increase logging details

c. Check any additional Log types such as Audit Logs (optional)

d. Set the Dest Group to the Syslog Destination Group previously created.

e. Click Submit

4. Navigate to Tenants > Your_Tenant > Policy tab

5. Set the Monitoring Policy drop down box to be the default policy from the common tenant.


Firmware upgrades may not be thought of as a Day 1 task, but setting up the appropriate policies is. By default, when you install APIC from scratch, it is deployed only with its running firmware. The APIC's firmware repository will be empty at this point. Our first task is to load the "current" firmware images for the version you plan on running on your fabric today. In our example we deployed the APIC with 4.0(1h), and therefore we'll need to download the APIC & Switch images for 4.0(1h) / 14.0(1h) respectively to the APIC.
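As a quick aid when collecting images, the Python sketch below derives the switch release that pairs with an APIC release. It assumes the switch release number is simply the APIC release number prefixed with "1", as the 4.0(1h) / 14.0(1h) pair in this example suggests; the function name switch_version_for is hypothetical, and you should always confirm the exact image names on Cisco.com.

```python
import re

def switch_version_for(apic_version):
    """Map an APIC release such as '4.0(1h)' to its switch release '14.0(1h)'."""
    match = re.fullmatch(r"(\d+)\.(\d+)\((\w+)\)", apic_version)
    if match is None:
        raise ValueError(f"unrecognized APIC version format: {apic_version!r}")
    major, minor, patch = match.groups()
    # Assumption: the switch image uses the same release with a leading "1".
    return f"1{major}.{minor}({patch})"

print(switch_version_for("4.0(1h)"))
```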

Adding Software Images to the APIC

*It's assumed by this point you've already downloaded the appropriate APIC & Switch images from Cisco.com and stored them locally.

1. Navigate to Admin > Firmware > Images tab

2. From the Actions drop down, select Add Firmware to APIC

3. From here you can select a Local Upload via your browser from your workstation, or you can use a Remote location. Remote locations can be HTTP or SCP sources. Select the appropriate option.

4. Enter the appropriate details and click submit. If the details are valid, the download status should progress.

5. Repeat the process for the corresponding second image (switch or APIC).

6. Once completed, you should see both images in the repository.

When performing ACI fabric upgrades, there are typically two categories of upgrade tasks: one task for the controllers and one or more subsequent tasks for the switch nodes. The controller upgrade task is completely automated. Once initiated, the controller nodes upgrade serially. This ensures that the next controller begins its turn only once the previous controller has come back online and synced with the cluster. Any standby controllers will also be upgraded at this time.

Once all controllers have been upgraded, the switches are next. A common practice is to group nodes by odd or even node IDs. Typically switch nodes are grouped together in redundant pairs by function, such as Border Leafs, Compute Leafs, Service Leafs etc. Assuming all functional node pairs contain at least one odd & one even node, the impact will be minimal. This does assume that most if not all workloads are dual connected to Leaf nodes with an odd & an even ID. The odd/even group upgrades are simply a guideline. You may wish to further divide your switch upgrade groups based on physical pods, remote leafs, and Virtual Leafs (vLeafs). Each additional group reduces the risk in the event any issues manifest with switches running the new software version, but it also lengthens the overall upgrade window.

A standard upgrade for a Leaf switch typically takes 15-30 minutes, but this can vary based on the underlying software activities required. Depending on whether the upgrade is a major or minor version change, additional components may be upgraded during this time, for example Erasable Programmable Logic Devices (EPLDs), SSD firmware and other switch components. Switches within the same Upgrade Group will be upgraded simultaneously, except for VPC Peer switches. VPC Peer Switches will always be upgraded serially regardless of the upgrade group membership to prevent unexpected network outages.

When considering an upgrade, always review the Release Notes & Firmware Management Guide for the new version to confirm the upgrade path, compatibility and known caveats. An Upgrade Matrix tool is available to help you determine the correct upgrade path: https://www.cisco.com/c/dam/en/us/td/docs/Website/datacenter/apicmatrix/index.html
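The odd/even grouping guideline can be checked before you build your upgrade groups. The following Python sketch splits a list of node IDs into odd and even groups and verifies that each redundant pair has one member in each group. The node IDs and function names are illustrative assumptions, not values read from a fabric.

```python
def split_odd_even(node_ids):
    """Return (odd_nodes, even_nodes), each sorted by node ID."""
    odd = sorted(n for n in node_ids if n % 2 == 1)
    even = sorted(n for n in node_ids if n % 2 == 0)
    return odd, even

def pairs_split_across_groups(redundant_pairs):
    """True if every redundant pair has one odd and one even node ID."""
    return all((a % 2) != (b % 2) for a, b in redundant_pairs)

# Hypothetical fabric: leafs 101-104 and spines 201-202.
odd_nodes, even_nodes = split_odd_even([101, 102, 103, 104, 201, 202])
print("Odd_Nodes:", odd_nodes)
print("Even_Nodes:", even_nodes)

# Pairs such as (101, 102) are safe; a pair such as (101, 103) would have
# both members in the same group and is worth renumbering or regrouping.
print(pairs_split_across_groups([(101, 102), (103, 104)]))
```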

We strongly recommend that you connect, configure & test console access to all controllers and switches prior to attempting any upgrade. In the event a controller or switch upgrade encounters issues, this may be the only method to access the device to troubleshoot and/or recover.

Assuming you've uploaded the respective Software images to the APIC, you can now initiate the upgrade process.

1. Navigate to Admin > Firmware > Infrastructure tab > Controllers

2. From the Actions Menu, select Schedule Controller Upgrade.

3. Set the target firmware version

4. Set the Upgrade start time

5. [Optional] Ignore Compatibility Check. This will allow you to perform an upgrade that might not follow a supported upgrade path, which could potentially cause disruptions. For pre-Prod/Lab this is safe to enable.

6. Click Submit.

Allow the controller upgrade process to complete, which could take anywhere from 30 minutes to more than 1 hour depending on the number of controllers in the cluster.

Once all controllers have been successfully upgraded, and the cluster shows "fully fit", you can proceed with upgrading the switches. The procedure below assumes you intend on performing the upgrade immediately. Alternately, you can schedule the upgrade to start during a scheduled date/time.

1. Navigate to Admin > Firmware > Infrastructure tab > Nodes

2. From the Actions menu, select Schedule Node Upgrade

3. Select the group type (Switch or vPod). Always perform the Switch upgrade prior to upgrading vPods

4. Provide a name for the Upgrade group. Ex. "Odd_Nodes"

5. Set the target firmware

6. Check Enable Graceful Maintenance

7. From the Node Selection, choose Manual

8. Check the Switch nodes that correspond to your group. Ex. 101, 103, 201

9. Click Submit

Based on your Start Time option, the upgrade will begin immediately. You can remain on this screen to monitor progress. During the process the switches will download the appropriate software image from the APIC, perform the software upgrade, then reload with the new image. During this time, you may see the Switches disappear from the Fabric Inventory. Barring any issues, each switch will reappear once the upgrade has been completed. Be patient, as some switches may take slightly longer than others.

Export policies encompass a wide range of options, which include Configuration Backup and troubleshooting logs. Some of these policies can and should be set up during your deployment phase. A few common policies to configure include a Weekly (or even Daily) configuration backup task and a Core Export policy. Both of these options will come in handy if/when needed. The next section will walk you through setting up a few of these recommended policies.

Setting up a Remote Location

1. Navigate to Admin > Import/Export sub-tab > Export Policies > Remote Locations folder

2. From the Actions menu, select Create Remote Location

3. Enter a friendly name for the Remote Location. Ex. lab_ftp

4. Provide the Hostname / IP for the remote device

5. Select the respective protocol: ftp / scp / sftp

6. Enter the Remote path. Ex. /home/files

7. Provide your username & password

8. Click Submit

With the Remote Location created, you will be able to reference it in subsequent export policies. Note: The remote location path & credentials will not be validated until an Export policy attempts to send data to it.

In the event a service or Data Management Engine (DME) fails, it's helpful to provide the core dump file to Cisco TAC for analysis. Configuring a Core Export policy ensures a copy of this file is maintained in the event of a device failure. Along with the Core Export, you can optionally include a techsupport bundle when the failure occurs. This prevents log rollover if an issue occurs and goes unnoticed for an extended period of time.

1. Navigate to Admin > Import/Export > Export Policies > Core > default

2. In the work pane, change the collection type to be Core and TechSupport.

3. Uncheck the Export to Controller check box

4. Set the Export Destination to the Remote Location previously created.

Daily Configuration Export Policy

Since the configuration file for ACI is relatively small, it's a good practice to configure a Daily Configuration export task.

1. Navigate to Admin > Import/Export > Export Policies > Configuration

2. From the Actions Menu select Create Configuration Export Policy

3. Provide a name for your policy. Ex. DailyConfigExport

4. Choose the desired format JSON/XML.

5. From the Scheduler drop down box, select Create Trigger Scheduler

a) Enter a name for the Scheduler. Ex. daily_12am

b) Under the Schedule Window click the "+" icon to add

c) Change the Window Type to Recurring

d) Enter a Window Name. Ex. daily_12am

e) Click Ok

f) Click Submit

6. Change the Export Destination to the one created Previously.

7. Click Submit

Note: The Global AES Encryption Setting should already be enabled. If not, please refer to earlier in this guide to assign an AES Passphrase and enable Encryption. Configuring a Configuration Export Policy without this enabled will remove all Secure Fields & Passwords from your exported file, which makes the Import process more involved because you must manually re-assign the passwords for VMM domains, Remote Locations and other policies.

Smart Licensing for Cisco Products provides customers with a single pane of glass to manage all their software subscriptions & licenses for purchased & entitled products. Smart Licensing within ACI is currently not enforced. Though Cisco highly encourages the use of Smart Licensing, failing to register your fabric with the Cisco Smart Software Manager (CSSM) has no impact on functionality. Registering your fabric with CSSM requires that the APIC has reachability to tools.cisco.com and that DNS is properly configured. There is a Technote that fully explains the Registration & licensing process for ACI, so it will not be covered further in this guide. Please see the following link for details on configuring Smart Licensing for ACI: https://www.cisco.com/c/en/us/td/docs/switches/datacenter/aci/apic/sw/3-x/smart_licensing/b_Smart_Licensing.html

This section describes the fabric configuration.

Introduction to Access Policies

How do you configure a VLAN on a port in an ACI fabric? In this section we will explain how these specific policies are configured and how you can achieve this goal. To begin with it's important to understand that we do not configure a VLAN directly on a port but use policies which will allow us to scale configuration and apply similar behavior to a group of objects such as switches or ports.

Let's focus on a sample use case. We have a server connected to our ACI fabric. This server has 2 NICs and these adapters are configured in an LACP port-channel. The server is connected on port 1/9 on ACI leafs 101 and 102. On these ports we would like to configure the EPG named "EPG-Web", which will result in the server being able to provide Web services in the network. In order to do so we will use an external VLAN 1501, which will allow us to extend the EPG out of the ACI fabric. For more information regarding Tenant / APP / EPG configuration and extension please consult the "Tenant" chapter.

The first thing we need to do is create a policy in ACI which defines which switches need to be used. For this we use a "Switch profile" in which we define through a "Switch Selector" which switches are part of it.

In most ACI deployments we recommend configuring 1 switch profile per switch, and 1 switch profile per vPC domain using a naming scheme which indicates the nodes which are part of the profile.

The wizard deploys a naming scheme which can easily be followed. The name scheme consists of Switch{node-id}_Profile. As an example "Switch101_Profile" will be for a switch profile containing node-101 and Switch101-102_Profile for a switch profile containing switches 101-102 which are part of a vPC domain.

Once we have defined which switches need the configuration we can define the ports on these switches which require the configuration. We do this by creating an "Interface Profile" which consists of 1 or more "Access Port Selectors".

Interface profiles can be used in many different ways. Similar to switch profiles, a single interface profile can be created per physical switch along with an interface profile for each vPC domain. These policies should have a 1 to 1 mapping to their matching switch profile. Following this logic, the fabric access policies are greatly simplified, and easy for other users to follow.

The default naming schemes employed by the wizard can also be used here. It consists of the switch profile name + ifselector to indicate this profile is used to select interfaces. An example would be Switch101_Profile_ifselector. This Interface profile would be used to configure non vPC interfaces on switch 101 and it would map directly to the Switch101_Profile switch profile.
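The naming scheme described above is easy to generate programmatically, which helps keep manually created profiles consistent with the ones the wizard produces. The Python sketch below reproduces the Switch{node-id}_Profile and _ifselector patterns from this section; the function names are hypothetical helpers, not part of any Cisco tool.

```python
def switch_profile_name(node_ids):
    """Build a switch profile name such as 'Switch101-102_Profile'."""
    ids = "-".join(str(n) for n in sorted(node_ids))
    return f"Switch{ids}_Profile"

def interface_profile_name(node_ids):
    """Build the matching interface profile name (switch profile + _ifselector)."""
    return switch_profile_name(node_ids) + "_ifselector"

print(switch_profile_name([101]))          # single switch
print(switch_profile_name([101, 102]))     # vPC domain
print(interface_profile_name([101]))       # 1 to 1 match with the switch profile
```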

Notice that because we have linked an "Interface Profile" with 1 port to the "Switch Profile" which includes 2 leaves, a single link has now associated port Eth1/9 with both switches.

At this point we have linked ports to switches, but we have not defined the characteristics of these ports. In order to do so we need to define an "Interface Policy Group" which will define the properties of this port. In our case, because we need to create an LACP port-channel, we will create a "VPC Interface Policy Group".

To form the relationship between this "VPC Interface Policy Group" and the involved interface we link towards this "Interface Policy Group" from the "Access Port Selector".

As we are creating an LACP port-channel over 2 switches, we need to define a VPC between Leaf 101 and Leaf 102. In order to do so we create the following objects linked to the leaves.

As a next step we will create the VLAN 1501 in our fabric. In order to do so we need to create a "VLAN Pool" with "Encap Blocks".

When considering the size of your VLAN pool ranges, keep in mind that most deployments only need a single VLAN pool, plus one additional pool when using VMM integration. If you plan on bringing VLANs from your legacy network into ACI, define the range of legacy VLANs as your static VLAN pool.

As an example, let's assume VLANs 1-2000 are used in our legacy environment. Create one Static VLAN pool which contains VLANs 1-2000. This will allow you to trunk ACI Bridge Domains & EPGs towards your legacy fabric. If you plan on deploying VMM, a second dynamic pool can be created using a range of free VLAN IDs.
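When choosing a range of free VLAN IDs for a dynamic pool, it can help to confirm that the candidate range does not collide with the static pool. The Python sketch below shows one way to do that check. The static range 1-2000 comes from the example above; the dynamic range 2001-2100 and the function name blocks_overlap are assumptions made purely for illustration.

```python
def blocks_overlap(block_a, block_b):
    """Return True if two inclusive (first_vlan, last_vlan) ranges share any ID."""
    first_a, last_a = block_a
    first_b, last_b = block_b
    return first_a <= last_b and first_b <= last_a

static_block = (1, 2000)        # legacy VLANs from the example above
dynamic_block = (2001, 2100)    # hypothetical free range for a VMM domain

print(blocks_overlap(static_block, dynamic_block))   # no shared VLAN IDs
print(blocks_overlap(static_block, (1990, 2010)))    # collides with the static pool
```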

When deploying VLANs on a switch, ACI will encapsulate Spanning-tree BPDUs with a unique VXLAN ID which is based on the pool the VLAN came from. Due to this, it is important to use the same VLAN pool whenever connecting devices which require STP communication with other bridges.

VLAN VXLAN IDs are also used to allow vPC switches to synchronize vPC learned MAC and IP addresses. Due to this, the simplest design for VLAN pools is to use a single pool for static deployments and create a second one for dynamic deployments.

The next step is to create a domain. A domain defines the 'scope' of a VLAN pool, i.e. where that pool will be applied. A domain can be physical, virtual or external (either bridged or routed). In our example we will use a physical domain, as we need to connect a bare metal server into the fabric. We will link this domain to our "VLAN Pool", giving access to the required VLAN(s).

For most deployments, a single physical domain is sufficient for static path deployments, along with a single routed domain to allow the creation of L3Outs. Both of these can map to the same static VLAN pool. If the fabric is deployed in a multi-tenancy environment, or more granular control is required to restrict which users can deploy specific EPGs & VLANs on a port, a more strategic deployment needs to be considered. Domains also provide the ability to restrict user access with Security Domains using Role-Based Access Control (RBAC).

Attachable Access Entity Profile

We now have built two blocks of configuration, on one side we have the switch and interface configurations and on the other side we have the Domain/VLAN(s). We will use an object called "Attachable Access Entity Profile" or AEP to glue these two blocks together.

A "Policy Group" is linked towards an AEP in a "1:n" relationship which means that an AEP's goal is to group Interfaces on Switches together which share similar policy requirements. This means that you can further on in the fabric refer to one AEP which is representing a group of interfaces on specific switches.

In most deployments, a single AEP should be used for static paths and one additional AEP per VMM domain.

The most important consideration is that VLANs can be deployed on interfaces through the AEP. This can be done by mapping EPGs to an AEP directly or by configuring a VMM domain for pre-provision. Both these configurations make the associated interface a Trunk port ('switchport mode trunk').

Due to this, it is important to create a separate AEP for L3Out when using routed ports or routed sub-interfaces. If SVIs are used in the L3Out, it is not necessary to create an additional AEP.
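The way these objects chain together can be sketched as a small Python data model. This is only a conceptual illustration of the relationships described in this section (switch profile, interface profile, policy group, AEP, domain, VLAN pool), not the APIC object model. All class, field and object names are hypothetical, except for the node IDs, port and VLAN taken from the sample use case.

```python
from dataclasses import dataclass, field

@dataclass
class VlanPool:
    name: str
    blocks: list  # inclusive (first_vlan, last_vlan) tuples

    def contains(self, vlan):
        return any(first <= vlan <= last for first, last in self.blocks)

@dataclass
class Domain:
    name: str
    vlan_pool: VlanPool

@dataclass
class AEP:
    name: str
    domains: list = field(default_factory=list)

@dataclass
class PolicyGroup:
    name: str
    aep: AEP

@dataclass
class InterfaceProfile:
    name: str
    port_selectors: dict  # port name -> PolicyGroup

@dataclass
class SwitchProfile:
    name: str
    nodes: list
    interface_profiles: list

def vlan_allowed(switch_profiles, node, port, vlan):
    """Follow switch -> interface -> policy group -> AEP -> domain -> pool."""
    for sp in switch_profiles:
        if node not in sp.nodes:
            continue
        for ifp in sp.interface_profiles:
            pg = ifp.port_selectors.get(port)
            if pg and any(d.vlan_pool.contains(vlan) for d in pg.aep.domains):
                return True
    return False

# Sample use case: port 1/9 on leafs 101 and 102, VLAN 1501.
pool = VlanPool("static_pool", [(1, 2000)])
aep = AEP("static_aep", [Domain("phys_dom", pool)])
pg = PolicyGroup("server1_vpc", aep)
ifp = InterfaceProfile("Switch101-102_Profile_ifselector", {"eth1/9": pg})
swp = SwitchProfile("Switch101-102_Profile", [101, 102], [ifp])

print(vlan_allowed([swp], 101, "eth1/9", 1501))   # resolvable through the chain
print(vlan_allowed([swp], 101, "eth1/10", 1501))  # no port selector for eth1/10
```

The second call fails because nothing selects port eth1/10 yet, which mirrors the fact that a VLAN can only be deployed on an interface that is reachable through an AEP.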

ACI uses a different means of defining connectivity using a policy-based approach.

The lowest object we have available is called an "Endpoint Group" or EPG. We use the construct of an EPG to define a group of VMs or servers with similar policy requirements. To group these EPG's together we use a logical construct called "Application Profiles" which are part of a specific tenant.

The next step is to link the EPG to the domain, hence, making the link between the logical object representing our workload (the EPG) and the physical switches / interfaces.

The last step, because we are using a "Physical" domain, is to tell the EPG exactly where to program which VLAN out of the "VLAN Pool". This will allow the EPG to be stretched externally out of the fabric and it will allow us to connect the bare metal server into the EPG.

The referenced "Port Encap" of course needs to be resolvable against the "VLAN Pool".

With all the previous policies created, what would it mean to connect one more server on port Eth1/10 on leaf switches 101 and 102 with a port-channel?

Looking at the previous image "Bare metal ACI connectivity", it means we have to create:

■ An extra "Access Port Selector" + "Port Block"

■ An extra "VPC Interface Policy Group"

■ An extra "Static Binding" with "Port Encap"

Notice that due to the fact that we are using an LACP port channel, we need to use a dedicated "Interface Policy Group", being a "VPC Interface Policy Group", because we need to have a single VPC per port-channel / "Access Port Selector".

In the case we would have been using individual links, we could re-use the "Interface Policy Group" we previously created also for the extra server.

The resulting policies would look like the following image.

Blade Chassis Connectivity with VMM

In this section we will connect a UCS Fabric Interconnect running a VMware workload into the ACI fabric. As we are starting with a new clean fabric, this means we must create:

■ Access policies to connect FI-A

■ Access policies to connect FI-B

■ VMM Domain

Also keep in mind that UCS FI connectivity in combination with VMware has the following characteristics:

■ From UCS FI towards the ACI leaf switches we will run a port-channel. We will create 1 for each FI

■ From the ESXi server running on the blade towards the FIs we cannot create a port-channel, as we are connecting to 2 individual switches. This means we cannot use a load-balancing algorithm that uses IP-hashing. Make sure to use a load-balancing algorithm such as "Virtual Port ID" on the VMware side, which is switch independent and hence supports this topology.

See also the below diagram:

Our connectivity diagram for this UCS Fabric Interconnect looks like the following:

Notice that the FIs are connected symmetrically to the ACI fabric, meaning FI-A is connected to Eth1/41 on both leaf101 and leaf102, and FI-B is connected to port Eth1/42 on leaf101 and leaf102. Using symmetric connectivity will greatly simplify the configuration.
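If you keep a cabling plan, a symmetric layout is simple to verify before you start the wizard. The Python sketch below checks that a Fabric Interconnect lands on the same port number on every leaf it connects to. The dictionary layout and the function name is_symmetric are assumptions for illustration; the port values mirror the connectivity described above.

```python
def is_symmetric(uplinks):
    """uplinks maps leaf node ID -> leaf port used by one Fabric Interconnect."""
    return len(set(uplinks.values())) == 1

fi_a = {101: "eth1/41", 102: "eth1/41"}
fi_b = {101: "eth1/42", 102: "eth1/42"}

print(is_symmetric(fi_a))
print(is_symmetric(fi_b))

# A hypothetical miscabled FI that uses different ports on each leaf.
print(is_symmetric({101: "eth1/41", 102: "eth1/42"}))
```

With symmetric cabling, one interface selector per FI can be reused for both leaf switches in the vPC pair.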

Connecting the First UCS Fabric Interconnect (FI-A)

The first step we will do is launch the wizard to create the access policies involved.

1. You can do this by navigating to Fabric > Access Policies > Quick Start

2. This will bring us to the following screen where we will first create a VPC pair on leaf switches 101 and 102.

3. Fill in the fields as per the below screen

4. Notice we must fill in a unique ID for the "VPC Domain ID". In our case we have chosen 101 referring to the first leaf in our VPC port-channel. When done make sure to click "Save".

After saving you can see the VPC appearing on the left in the wizard showing that you have correctly created this.

5. You can verify in the "Access Policies" that this policy has been correctly created:

6. Next we will start the wizard again and on the left select the VPC pair where we need to create the UCS FI-A connectivity.

7. After doing this we click on the + sign on the top right to start the wizard.

8. The first step we need to do is create a "Switch Policy".

9. After filling in the required information, click "Save".

10. This will launch the "Interface Policy" creation wizard.

We will first fill in the interface where FI-A will connect, and we will also give it the name "ucs-FI-A".

11. After this we will create a CDP policy.

Give the "CDP Interface Policy" a name, select "Admin State" to "Disabled" and select "Submit".

12. We will now create the LLDP policy.

13. Give the policy a name and click "Submit".

14. In the next step we will create a "Port Channel Policy".

15. Give this policy a name, select the correct "Mode" and click "Submit".

We will first select "Associated Device Type" as "ESX Hosts" and then fill in a "Domain Name" and "VLAN Range". We will then configure the "vCenter Login Name" and "Password".

17. After having filled in the required information click "OK" to continue.

18. Now as a last step we will fill in the required vSwitch configuration.

Notice we are using "Mac Pinning-Physical-NIC-load" which is a switch-independent protocol due to the use of FI's. We also enable LLDP at vSwitch level.

19. Now click "Save"

20. Now click "Save"

21. And last click "Submit"

22. You will now notice if you relaunch the wizard all the configuration has been created:

Make sure to select the created "Interface Profile" on the left, which will provide the information you can see on the right.

23. In order to match the above configuration, make sure to add the following config in UCS Manager:

■ VLAN 1001-1100 in the ACI connected vNICs through a vNIC template (make sure to use the vNIC templates referenced for the NICs in the service profile)

■ Enable LLDP in the Network Control Policy used in your vNIC template

Connecting the Second UCS Fabric Interconnect (FI-B)

Now we will connect the second fabric interconnect. Because we already created some policies, you will now notice we will reuse a lot of them.

1. In order to do so we will start the wizard again under Fabric > Access Policies.

2. After launching the wizard, the following screen appears.

3. On the left select the switch pair (the "Switch Policy") and then on the right click "+". The following screen appears:

4. Fill in the correct interface under "Interfaces" and give the "Interface Policy" a new name; in our case we will choose ucs-FI-B. In the next step we will define our "Interface Policy Group" and create a new one.

5. Notice we are reusing the earlier created CDPoff, LLDPon and LACPactive policies and we move to the Domain section.

6. In the domain section we choose the existing VMM Domain named ACI_VDS and choose again "MAC Pinning-Physical-NIC-load" and LLDP.

After doing this we select "Save".

Then click "Save" again.

7. And finally click "Submit".

9. You will now notice under Virtual Networking Inventory that the servers have been discovered by ACI and that the connectivity towards the leaves is active.

So, what happened when we ran this wizard? The following image shows all policies that have been created.

This means we now have a VMM domain that is fully equipped with access policies, which can be linked to the EPGs that we will create later.

The following is an overview of the created policies in detail.

Bare Metal Connectivity with Existing VMM Domain

The following sections describe bare metal connectivity with VMM domains.

Connecting the Bare Metal VMM Server

In this section we will connect server2 to the fabric and prepare it for VMM workload. The connectivity is as per the below diagram.

1. We will first launch the Access Policy wizard to configure this connectivity by going to Fabric > Access Policies > Quick Start

2. The following screen will appear. Select the 101-102 "Switch Policy" on the left and click on the right "+" to provide the access port details.

3. As per our diagram we will be using "MAC pinning"; hence we need to use individual links and create a normal "Access Policy".

4. As in the previous section, we select the CDPoff and LLDPon policies and leave all other policies at their defaults.

Notice that we have to configure the "Port Channel Mode". Because the blades behind our previously connected Fabric Interconnects have been configured with "MAC Pinning-Physical-NIC-load", we will use a similar load-balancing algorithm here. We will also enable LLDP to enable dynamic VMM learning. After configuring this, we click "Save".

7. And finally we click "Submit"

So, what happened when we ran this wizard? The following image shows all policies that have been created or linked to (already existing).

This means we now have all policies in place to connect our server "server2" to the existing VMM domain, and later on we can use the "VMM Domain" to run virtual workloads on our server.

The following is an overview of the created or re-used policies in detail.

Bare Metal Connectivity with Physical Domain

The following sections describe bare metal connectivity with a physical domain.

In this section we will connect server1 to the fabric and prepare it for bare metal workload. The connectivity is as per the below diagram.

1. We will first launch the Access Policy wizard to configure this connectivity by going to Fabric > Access Policies > Quick Start

2. The following screen will appear. Select the correct "Switch Policy" on the left and click "+" on the right.

3. Select "VPC" Interface Type and fill in the "Interfaces" and "Interface Selector Name".

4. Select the existing CDP, LLDP and Port Channel Policy and move to the "Domain" section.

So, what happened when we ran this wizard? The following image shows all policies that have been created or linked to (already existing).

This means we now have a Physical domain that is fully equipped with access policies, which can be linked to the EPGs that we will create later.

The following is an overview of the created or re-used policies in detail.

The following sections describe connecting Router1 to the fabric.

In this section we will connect router1 to the fabric, which will later be used as part of our L3out1 domain. This domain will also include router2, which we will configure in the next section. The connectivity diagram is as follows:

Notice that we will connect router1 with two individual links, and notice the symmetry, which allows us to use one "Switch Policy" with two leaves.

1. To start we will first launch the wizard.

2. On the left select the "Switch Policy" for leaves 101 and 102 and click "+" on the right.

4. Now we select our existing "CDPoff" and "LLDPon" policies and, as a last step, fill in the Domain section.

5. Here we select "External Routed Devices" and provide a name for this Routed Domain and create a new "VLAN Pool".

6. We click "Save" again.

7. And finally, we click "Submit" to push all the policies.

So, what happened when we ran this wizard? The following image shows all policies that have been created or linked to (already existing).

Because we have symmetric interfaces in place, we only created one "Switch Policy", and we have created an "External Routed Domain" called "RoutedDomain1", which is ready to be used in the L3out configuration at tenant level later in this document. The following is an overview of the created or re-used policies in detail.

L3out to router2 – Asymmetric policies

The following sections describe connecting Router2.

In this section we will connect router2 to the fabric, which will later be used as part of our L3out1 domain. In the previous section we already created the "External Routed Domain" called "RoutedDomain1", so we will re-use that for this connectivity. The connectivity diagram is as follows:

Notice that we will connect router2 with two individual links, and that this router is asymmetrically connected to leaf103 and leaf104. This means we have some more work to do when creating the policies through the wizard, as we will need to create two individual "Switch Policies", each with its respective configuration linked to it.
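
The difference between the symmetric and the asymmetric case can be sketched as a small grouping exercise: leaves that use the same port towards the router can share one "Switch Policy", while each distinct port needs its own. The Python below is an illustration only. The function name `plan_switch_policies` and all port numbers are assumptions and are not taken from this deployment.

```python
from collections import defaultdict


def plan_switch_policies(links):
    """Group (leaf_id, port) links by port.

    Leaves that share the same port can be covered by one switch policy;
    every distinct port results in a separate policy.
    """
    leaves_by_port = defaultdict(list)
    for leaf_id, port in links:
        leaves_by_port[port].append(leaf_id)
    return [
        {"leaves": sorted(leaves), "port": port}
        for port, leaves in sorted(leaves_by_port.items())
    ]


# Hypothetical port numbers, chosen only to illustrate the two cases.
router1_links = [(101, "1/10"), (102, "1/10")]  # same port on both leaves
router2_links = [(103, "1/2"), (104, "1/1")]    # different port on each leaf

print(plan_switch_policies(router1_links))  # one policy covering leaves 101 and 102
print(plan_switch_policies(router2_links))  # two policies, one per leaf
```

This is why the wizard has to be run twice for router2, once per leaf.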

1. To start we will first launch the wizard.

2. Select "+" on the right.

3. Now fill in the fields to create the "Switch Policy"

Notice that we are only selecting Leaf 103 because, due to the asymmetric connectivity in place, we have to use a dedicated "Switch Policy" for each connected interface. Make sure to select Leaf 103 and click "+" to continue.

We will now provide the interface details. Make sure to select "Individual" as the "Interface Type" and give the interface a human-readable "Interface Selector Name".

4. Next, we will link to the CDP and LLDP policies and continue to the Domain section.

7. And click "Submit". Now relaunch the wizard.

8. And click "+" to add a new "Switch Policy".

9. Make sure to fill in the correct switch ID. Select "Individual" as the "Interface Type" and fill in the "Interfaces" field with port 1/1. Make sure to enter a human-readable name for "Interface Selector Name" for easy reference in the future.

10. Make sure to select the CDPoff and LLDPon policy and continue to the Domain section.

11. We will link to an existing domain called "RoutedDomain1" and click "Save".

So, what happened when we ran this wizard? The following image shows all policies that have been created or linked to (already existing).

Because we have asymmetric interfaces in place, two "Interface Profiles" have been created with their respective policies. We are linking to an existing "External Routed Domain", which can later be used at tenant level. The following is an overview of the created or re-used policies in detail.

The following sections describe connecting Router3.

In this section we will connect router3 to the fabric, which will later be used as part of our L3out2 domain. This router will be connected to the fabric with an LACP port-channel, which means we also need to create a VPC pair for leaf 105 and leaf 106. The connectivity diagram is as follows:

Notice that we will connect router3 with two links, and notice the symmetry, which allows us to use one "Switch Policy" with two leaves.

1. To start we will first launch the wizard.

2. The first thing we will do is create a VPC policy for switch 105 and 106.

3. Select the "+" sign and launch the VPC add wizard.

We will use "VPC Domain ID" 105 and select both leaf 105 and 106. Now click "Save".

4. Now click "+" to create a new "Switch Policy"

5. And select both leaf 105 and 106. Click "Save" to continue.

7. Now we select our existing "CDPoff", "LLDPon" and "Port Channel" policies and last, we fill in the Domain section.

10. And finally, we click "Submit" to push all the policies.

So, what happened when we ran this wizard? The following image shows all policies that have been created or linked to (already existing).

Because we have symmetric interfaces in place, we only created one "Switch Policy", and we have created an "External Routed Domain" called "RoutedDomain1", which is ready to be used in the L3out configuration at tenant level later in this document. The following is an overview of the created or re-used policies in detail.
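
For readers who prefer the REST API to the wizard, the VPC pair created in the steps above (VPC Domain ID 105 with leaf 105 and leaf 106) could be expressed roughly as follows. This is a sketch under assumptions: the class names `fabricProtPol`, `fabricExplicitGEp` and `fabricNodePEp` reflect our understanding of the ACI object model and should be checked with the API Inspector on your APIC, and the group name `vpc-105-106` is hypothetical. The code only builds and prints the JSON body; it does not contact an APIC.

```python
import json


def vpc_protection_group(name, domain_id, leaf_ids):
    """Build a JSON-ready dict describing an explicit VPC protection group."""
    if len(leaf_ids) != 2:
        raise ValueError("a VPC pair needs exactly two leaf switches")
    return {
        "fabricProtPol": {
            "attributes": {},
            "children": [
                {
                    "fabricExplicitGEp": {
                        # name is hypothetical; id is the VPC Domain ID
                        "attributes": {"name": name, "id": str(domain_id)},
                        "children": [
                            {"fabricNodePEp": {"attributes": {"id": str(leaf)}}}
                            for leaf in leaf_ids
                        ],
                    }
                }
            ],
        }
    }


payload = vpc_protection_group("vpc-105-106", 105, [105, 106])
print(json.dumps(payload, indent=2))
```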

This section describes the step-by-step configuration of an ACI tenant network with detailed examples. The logical construct of a typical ACI tenant network consists of tenants, VRFs, bridge domains (BDs), endpoint groups (EPGs), L3outs, L4-L7 service insertion, as well as contracts that are applied between EPGs for white-list-based communication policy control.

Mirroring the logical construct, the ACI tenant network configuration intuitively includes the following steps:

■ Create a tenant space

■ In the tenant space, create VRFs

■ Create and configure bridge domains (BD) in VRFs

■ Create and configure an Application Profile with end point groups (EPGs). Each EPG is associated with a BD in the overlay network construct.

■ Create contracts and apply contracts between EPGs to enable white-list-based communication between EPGs.

■ Create and configure L3outs

This document uses the 3-tier application shown in the following figure as an example to illustrate the tenant configuration steps.

The example uses the following network design, which represents a typical practice, but some other design options are discussed as alternatives throughout this section. Their configuration steps are shown as well where needed.

■ One tenant named Prod

■ One VRF in tenant Prod named VRF1

■ Three bridge domains in VRF1, named BD-Web, BD-App and BD-DB

■ Three EPGs named EPG-Web, EPG-App and EPG-DB, each in the bridge domain that has the corresponding name

■ A contract named External-Web to allow the communication between the L3out external EPG and the internal EPG-Web

■ A contract named Web-App to allow the communication between EPG-Web and EPG-App

■ A contract named App-DB to allow the communication between EPG-App and EPG-DB

■ Two L3outs, named L3Out1 and L3Out2
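
The design above can also be written down as plain data and checked for internal consistency before anything is configured. The following Python is a minimal sketch: the dictionary layout, the function name `check_design` and the label "L3out external EPG" are our own conventions for illustration and have nothing to do with the APIC object model.

```python
DESIGN = {
    "tenant": "Prod",
    "vrf": "VRF1",
    "bridge_domains": ["BD-Web", "BD-App", "BD-DB"],
    # EPG name -> bridge domain it belongs to
    "epgs": {"EPG-Web": "BD-Web", "EPG-App": "BD-App", "EPG-DB": "BD-DB"},
    # contract name -> the two sides it connects
    "contracts": {
        "External-Web": ("L3out external EPG", "EPG-Web"),
        "Web-App": ("EPG-Web", "EPG-App"),
        "App-DB": ("EPG-App", "EPG-DB"),
    },
    "l3outs": ["L3Out1", "L3Out2"],
}


def check_design(design):
    """Return a list of human-readable problems; an empty list means consistent."""
    problems = []
    for epg, bd in design["epgs"].items():
        if bd not in design["bridge_domains"]:
            problems.append(f"{epg} references unknown bridge domain {bd}")
    known_sides = set(design["epgs"]) | {"L3out external EPG"}
    for contract, sides in design["contracts"].items():
        for side in sides:
            if side not in known_sides:
                problems.append(f"{contract} references unknown EPG {side}")
    return problems


print(check_design(DESIGN))
```

A check like this is cheap to extend, for example when a fourth tier or an additional contract is added to the design.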

The following table shows the IP addressing we use in this example.

Table 3. IP Addressing used in the example

| BD | BD Subnet | EPG | VM-Name | VM IP Address |
|---|---|---|---|---|
| BD-Web | 192.168.21.254/24 | EPG-Web | VM-Web | 192.168.21.11 |
| BD-App | 192.168.22.254/24 | EPG-App | VM-App | 192.168.22.11 |
| BD-DB | 192.168.23.254/24 | EPG-DB | VM-DB | 192.168.23.11 |
| L3Out1 | | | External-Client1 | 172.16.10.1 |
| L3Out2 | | | External-Client2 | 172.16.20.1 |
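
As a quick sanity check of the addressing in Table 3, each VM address should fall inside the subnet of its bridge domain. The sketch below uses Python's standard `ipaddress` module; the helper name `vm_in_bd_subnet` and the `ADDRESSING` list are our own, and only the three bridge domain rows of the table are covered.

```python
import ipaddress

# (bridge domain, BD subnet as gateway/prefix, VM name, VM IP) from Table 3
ADDRESSING = [
    ("BD-Web", "192.168.21.254/24", "VM-Web", "192.168.21.11"),
    ("BD-App", "192.168.22.254/24", "VM-App", "192.168.22.11"),
    ("BD-DB", "192.168.23.254/24", "VM-DB", "192.168.23.11"),
]


def vm_in_bd_subnet(bd_subnet, vm_ip):
    """Return True if vm_ip lies in the network that the BD gateway address belongs to."""
    network = ipaddress.ip_interface(bd_subnet).network
    return ipaddress.ip_address(vm_ip) in network


for bd, subnet, vm, ip in ADDRESSING:
    status = "ok" if vm_in_bd_subnet(subnet, ip) else "MISMATCH"
    print(f"{vm} ({ip}) in {bd} ({subnet}): {status}")
```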

The rest of this section details the step-by-step configuration of the tenant network for the 3-tier application example.

The following sections describe tenant configuration.

You can create a tenant in the APIC GUI by using the "Create a tenant and VRF" wizard from the Tenant Quick Start menu or by using the "Create Tenant" option under Tenant/All Tenants.

With either of the two methods, you see a pop-up window to create a new tenant and VRF (optional for this step), as shown below.

You need to provide the name of the tenant, and the name of the VRF if choosing to create a VRF in this step. Put in "Prod" as the tenant name, and "VRF1" for the VRF, then click on the "Submit" button. The tenant "Prod" and the VRF "VRF1" are then created.
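
The same tenant and VRF can, as far as we understand the APIC REST API, also be created with a single POST. The sketch below builds that request without sending it. The APIC address `apic.example.com` and the token value are placeholders. `fvTenant` and `fvCtx` are the object classes we assume for a tenant and a VRF, and the `APIC-cookie` session cookie is assumed to come from a prior login. Verify all of these with the API Inspector before relying on them.

```python
import json
import urllib.request

APIC_URL = "https://apic.example.com"  # hypothetical APIC address


def tenant_payload(tenant, vrf):
    """Describe a tenant with one VRF as a nested dict (assumed class names)."""
    return {
        "fvTenant": {
            "attributes": {"name": tenant},
            "children": [{"fvCtx": {"attributes": {"name": vrf}}}],
        }
    }


def build_request(payload, token):
    """Build, but do not send, the POST request that would carry the payload."""
    return urllib.request.Request(
        url=f"{APIC_URL}/api/mo/uni.json",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Cookie": f"APIC-cookie={token}",  # token from a prior login (assumption)
        },
        method="POST",
    )


request = build_request(tenant_payload("Prod", "VRF1"), token="<session-token>")
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

Sending the request (for example with `urllib.request.urlopen`) is deliberately left out, because certificate handling and login differ between environments.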

In this example, we use only one VRF, which was created in the previous step together with the tenant Prod. If needed, more VRFs can be created in the tenant space under Networking > VRFs by right-clicking on "VRFs" in the left panel to bring up the pop-up option for "Create VRF". Alternatively, you can use the drop-down menu in the right panel to start creating a new VRF.

Bridge domains (BD) are created under Tenant > Networking > Bridge Domains.

1. You can right-click on "Bridge Domains" and choose the pop-up option "Create Bridge Domain", or use the drop-down menu in the right panel to select "Create Bridge Domain".

2. In the window that opens, provide the BD information, such as the name of the BD and the VRF that it belongs to. The following figure shows the example to create the BD "BD-Web" in VRF1 of the tenant Prod.

Once you provide the BD name and select the VRF you want it to belong to, you can click on the "Next" button in the window. It will proceed to the next step of BD configuration, the Layer3 Configurations, as shown below. In this example, you can use the default settings for the BD-Web.
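
As a rough REST equivalent of this step, the bridge domains and their VRF association could be described as below. This is an assumption-laden sketch: `fvBD` and `fvRsCtx` (with its `tnFvCtxName` attribute) are the classes we believe the APIC uses for a BD and its VRF relation, and the helper name `bd_payload` is our own. No subnet is included, matching the default Layer 3 settings used in this example. The code only prints the JSON body.

```python
import json


def bd_payload(bd_name, vrf_name):
    """Describe one bridge domain and its VRF association (assumed class names)."""
    return {
        "fvBD": {
            "attributes": {"name": bd_name},
            "children": [
                {"fvRsCtx": {"attributes": {"tnFvCtxName": vrf_name}}}
            ],
        }
    }


# The three bridge domains of the example design, all in VRF1 of tenant Prod.
bds = [bd_payload(name, "VRF1") for name in ("BD-Web", "BD-App", "BD-DB")]
tenant_update = {"fvTenant": {"attributes": {"name": "Prod"}, "children": bds}}
print(json.dumps(tenant_update, indent=2))
```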