FlashStack for Microsoft SQL Server 2022 with Cisco UCS X-Series and Pure Storage

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to: http://www.cisco.com/go/designzone.

Ever-changing business needs require organizations to adopt modern delivery technologies, tools, platforms, and systems that enable them to develop, test, and deploy their applications quickly and continuously. Consistent performance, scalability, high availability, disaster recovery capabilities, and life cycle management are a few of the other challenges organizations face today.

This FlashStack® solution is a validated, converged infrastructure developed jointly by Cisco and Pure Storage. The solution offers a predesigned data center architecture that incorporates computing, storage, and network design best practices to reduce IT risk by validating the architecture and helping to ensure compatibility among the components. The solution also addresses IT pain points by providing documented design and deployment guidance and support that can be used in the various stages of a deployment (planning, design, and implementation). The FlashStack solution provides a scalable and agile system with consistent performance by combining best-of-breed software technologies and modern hardware from both partners.

This document describes a FlashStack reference architecture built on the latest hardware and software products and provides design and deployment recommendations for hosting Microsoft SQL Server 2022 databases in VMware ESXi virtualized environments. It covers the hardware and software configurations of the components involved, implementation best practices for deploying the solution, and the results of various performance tests. It also covers the benefits of the hardware accelerators built into the latest 4th Gen Intel Xeon Scalable processors, Pure Storage integrations with vSphere vVols for snapshots and clones, and a Disaster Recovery (DR) use case that replicates databases to the Azure cloud using Pure Cloud Block Store (CBS).

This chapter contains the following:

● Audience

The IT industry is experiencing a variety of transformations in datacenter solutions. In recent years, interest in pre-validated and engineered datacenter solutions has grown tremendously. IT management can no longer have staff spend months testing and determining the best practices for setting up new infrastructure to support Microsoft SQL Server 2022 deployments. Tested and documented architectures that combine leading server, storage, management software, and data replication tools help IT teams implement new solutions quickly and deliver on the promised return on investment (ROI).

The introduction of virtualization technology adds a layer of complexity that has significantly affected the design principles and architectures of these solutions, as customers look to consolidate workloads to gain higher system efficiency from their investment in SQL Server processor core-based database licenses.

Microsoft SQL Server is the most widely installed database management system in the world today and supports many critical applications that impact a company’s bottom line. “Database Sprawl” is one of the challenges that many customers are facing today. Some of the challenges this represents include underutilized servers, incorrect licensing, security concerns, management concerns, huge operational costs, and so on. To overcome these challenges, many customers are moving towards migrating and consolidating databases into robust, flexible, and resilient platforms such as FlashStack. This document describes a FlashStack reference architecture for deploying and consolidating SQL Server 2022 databases.

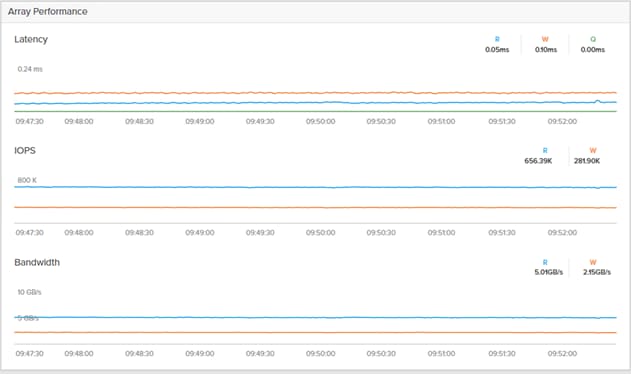

Hosting SQL Server databases on Pure Storage FlashArray is an extremely popular use case. FlashArray’s DirectFlash Modules (DFMs) offer sub-millisecond latency for your business’s most demanding OLTP workloads. The Purity Operating Environment brings numerous benefits, including global data deduplication, encryption at rest, and a UI focused on simplicity and efficiency. Purity also offers multiple replication solutions, including snapshots and near-real-time asynchronous and synchronous streaming replication, enabling simple and fast data mobility on-premises, in the cloud, or both. It is this combination of hardware and software that makes Pure Storage’s FlashArray a true data platform solution for SQL Server databases.

Because Pure Storage’s FlashArray storage technology is incorporated into the FlashStack design, this converged infrastructure is uniquely positioned for relational databases such as SQL Server 2022. FlashStack is pre-tested and pre-validated to ensure documented, balanced performance that is easy to implement. The Cisco Intersight Service Profile capability assigns the basic setup or “personality” to each server. This ensures a unified, error-free setup, and the setup can be quickly changed so that a server can run alternate workloads, helping businesses adjust to seasonal trends such as the Christmas shopping season. Profiles also enable database administrators to perform “rolling” upgrades to ease migration to a new version of the database and to test infrastructure limitations by moving the database to servers that have, for example, more memory or more processor cores. The data obtained can help justify future investments to the finance team. Pure Storage Purity features such as snapshots, clones, and replication enable faster data recoverability, higher availability, and mobility, thereby improving return on investment (ROI).

The ability of FlashStack to combine server, storage, and networking technologies helps it easily support current IT initiatives such as Cisco ACI and cloud-based solutions, as well as unforeseen future challenges.

By implementing the solution documented in this CVD, your IT team will save time and money and realize the benefits of FlashStack’s ability to rapidly reduce risk and improve ROI. Customers who have implemented FlashStack over the years have realized these benefits and enjoyed the “safety net” of having Cisco TAC to call should they run into any issues while following the recommendations specified in this document.

The intended audience for this document includes, but is not limited to, sales engineers, field consultants, database administrators, professional services, IT managers, partner engineers, and customers who want to take advantage of an infrastructure built to deliver IT efficiency and enable IT innovation. The reader is expected to have prior knowledge of FlashStack systems and their components.

This document describes a FlashStack reference architecture and step-by-step implementation guidelines for deploying Microsoft SQL Server 2022 databases on the FlashStack system.

The following software and hardware products distinguish this reference architecture from previous releases:

● Microsoft SQL Server 2022 deployment on Windows Server 2022 Guest VMs running on VMware vSphere 8.0 Cluster.

● Tested and validated using Cisco UCS X-Series X210c M7 compute nodes powered by Intel 4th Generation Intel Xeon Scalable processors, Cisco UCS 5th Generation Fabric Interconnects, Cisco Nexus 9000 Series Switches enabling end-to-end 100 Gbps connectivity.

● Pure Storage FlashArray//XL170 with Purity//FA 6.3.3, which provides a set of enterprise-grade storage platform features.

● Accelerated SQL Server database backups using 4th Gen Intel Xeon Scalable processors, which offload compression to their built-in hardware accelerators.

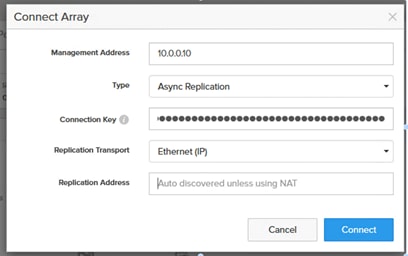

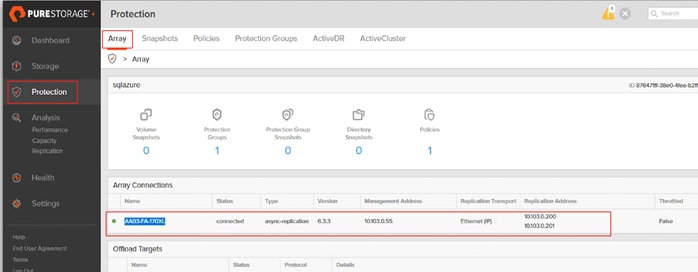

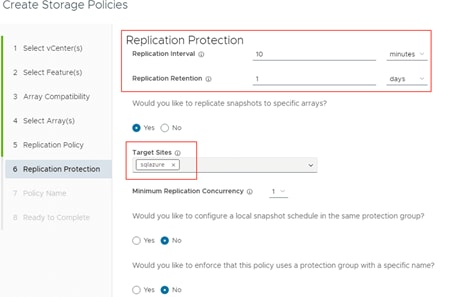

● Pure Storage Cloud Block Store (CBS) for asynchronous snapshot replication to the Azure cloud for the database Disaster Recovery use case.

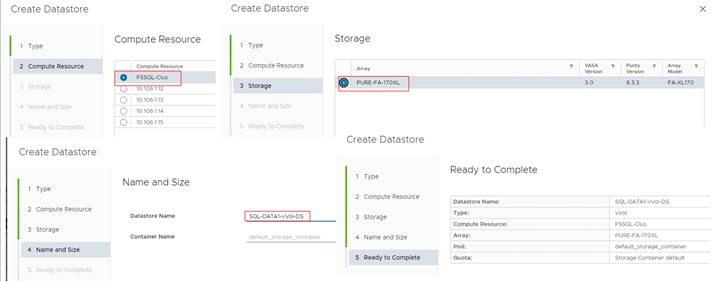

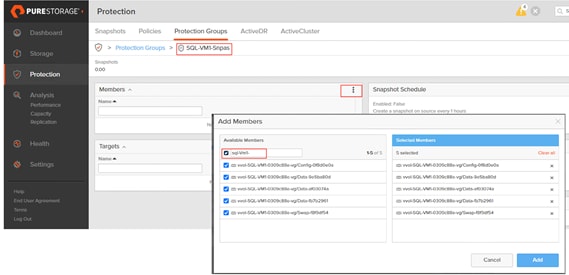

● Pure integration with vSphere Virtual Volumes (vVols) in vCenter for seamless recovery of VMs using Pure Protection Groups and Snapshots.

● Pure Storage plugin for vSphere client for provisioning and managing storage objects like vVols, snapshots, and so on.

● The Cisco Intersight platform is a software-as-a-service (SaaS) infrastructure lifecycle management platform that delivers simplified configuration, deployment, maintenance, and support. Cisco Intersight works with certain third-party infrastructure, including Pure Storage’s FlashArray and VMware vCenter, using third-party device connectors.

This FlashStack solution, which is built for confidently running enterprise-grade Microsoft SQL Server databases, consists of the following components:

● Compute and networking components from Cisco.

● Storage Systems from Pure Storage.

● Server virtualization using VMware vSphere.

● Windows Server and Microsoft SQL Server databases.

The solution brings together a carefully validated architecture built on superior compute, world-class networking, and the leading innovations in all-flash storage. These components are integrated and validated, and the entire stack is automated so that customers can deploy the solution quickly and efficiently while eliminating many of the risks associated with researching, designing, building, and deploying similar solutions from the ground up.

This FlashStack solution, designed with Cisco UCS X-Series, Cisco UCS 5th Generation Fabric Technology, and VMware vSphere 8.0 U1, is configurable according to demand and usage. Customers can purchase exactly the infrastructure they need for their current application requirements and can then scale up by adding more resources to the FlashStack system or scale out by adding more FlashStack instances. By moving the management from the fabric interconnects into the cloud, the solution can respond to the speed and scale of customer deployments with a constant stream of new capabilities delivered from the Cisco Intersight SaaS model at cloud scale.

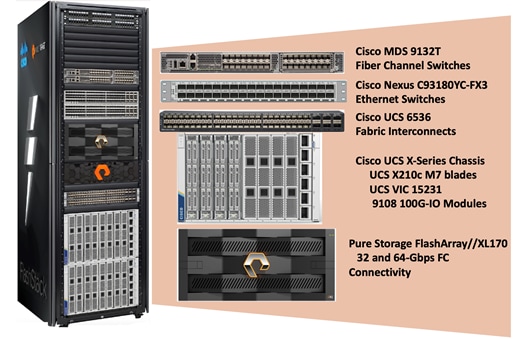

Figure 1 illustrates the major physical components involved in the FlashStack solution.

As shown in Figure 1, the reference architecture described in this document uses the Pure Storage FlashArray//XL170 for shared storage, Cisco UCS X210c M7 Compute Nodes for compute, Cisco MDS 9000 Series switches for storage connectivity using the Fibre Channel protocol, Cisco Nexus 9000 Series Ethernet switches for networking, and Cisco UCS 6500 Series Fabric Interconnects for system management.

The FlashStack architecture components are connected and configured according to both Cisco and Pure best practices and provide the ideal platform for running a variety of enterprise workloads (including databases) with confidence. FlashStack can scale up for greater performance and capacity (adding compute, network, or storage resources independently and non-disruptively as needed), or it can scale out for environments that require multiple consistent deployments. The architecture brings together a simple, wire once solution that is SAN booted from FC and is highly resilient at each layer of the design.

Cisco and Pure Storage have also built a robust and experienced support team focused on FlashStack solutions, from customer accounts and technical sales representatives to professional services and technical support engineers. The support alliance between Pure Storage and Cisco gives customers and channel services partners direct access to technical experts who collaborate across vendors and have access to shared lab resources to resolve potential issues.

For more details and specifications of individual components, go to the References section where all the necessary links are provided.

This chapter contains the following:

● Cisco Unified Computing System X-Series

● Cisco UCS 9108-100G Intelligent Fabric Module (IFM)

● Cisco UCS X210c M7 Compute Node

● Cisco UCS 6536 Fabric Interconnects

● Cisco UCS and Intersight Security

● Cisco Nexus Switching Fabric

● Cisco MDS 9132T 32G Multilayer Fabric Switch

● Pure Cloud Block Store for Azure VMware Solution

● Microsoft Windows Server 2022

FlashStack architecture is built using the following infrastructure components for compute, network, and storage:

● Cisco Unified Computing System (Cisco UCS)

● Cisco Nexus 9000 Series Switches

● Cisco MDS 9000 Series Switches

● Pure Storage FlashArray

All the FlashStack components are integrated, so customers can deploy the solution quickly and economically while eliminating many of the risks associated with researching, designing, building, and deploying similar solutions from the foundation. One of the main benefits of FlashStack is its ability to maintain consistency at scale. Each of the component families shown in Figure 2 offers platform and resource options to scale up performance or scale out capacity while supporting the same features and functions.

The FlashStack solution described in this document comprises the following hardware and software components:

● Cisco 5th Generation Cisco UCS 6500 Fabric Interconnects to support 1/10/25 and 100 Gigabit Ethernet connectivity from various components.

● Cisco UCS X9508 Chassis with Cisco UCS X210c M7 compute nodes installed with 5th Generation Cisco VIC 15231 and with Cisco UCS 9108 100G Intelligent Fabric Module to connect the I/O fabric between the 6500 Fabric Interconnect and the Cisco UCS X9508 Chassis.

● High-Speed Cisco NX-OS based Nexus 93180YC-FX3 switching design to support up to 25/40/100GbE connectivity.

● High-Speed Cisco NX-OS based MDS 9132T switching design providing up to 32Gb end-to-end connectivity for SCSI and NVMe over Fibre Channel.

● Pure Storage FlashArray//XL170 All Flash Storage, designed to support up to 64Gb connectivity for traditional Fibre Channel (FC) and NVMe over Fibre Channel, and up to 100GbE Ethernet for iSCSI, NVMe/RoCE, and NVMe/TCP connectivity, for unified block and file protocols including SMB and NFS.

The software components consist of:

● Cisco Intersight Assist virtual appliance to help connect the Pure Storage FlashArray and VMware vCenter with the Cisco Intersight platform.

● VMware vCenter 8.0 to manage the VMware vSphere 8.0 virtual environment.

● Pure Storage Plugin for vSphere Client for managing vSphere Virtual Volumes (vVols).

● Pure Storage Purity 6.3.3.

● Microsoft Windows Server 2022 and SQL Server 2022 databases.

Cisco Unified Computing System X-Series

The Cisco Unified Computing System (Cisco UCS) X-Series is a modular, next-generation data center platform that builds upon the unique architecture and advantages of the previous Cisco UCS 5108 system but with the following key enhancements that simplify IT operations:

● Cloud-managed infrastructure: With Cisco UCS X-Series, the management of the network infrastructure is moved to the cloud, making it easier and simpler for IT teams to respond quickly and at scale to meet the needs of your business. The Cisco Intersight cloud-operations platform allows you to adapt the resources of the Cisco UCS X-Series Modular System to meet the specific requirements of a workload. Additionally, you can seamlessly integrate third-party devices such as Pure Storage and VMware vCenter. This integration also enables global visibility, monitoring, optimization, and orchestration for all your applications and infrastructure.

● Adaptable system designed for modern applications: Today's cloud-native and hybrid applications are dynamic and unpredictable. Application and DevOps teams frequently deploy and redeploy resources to meet evolving requirements. To address this, the Cisco UCS X-Series provides an adaptable system that doesn't lock you into a fixed set of resources. It combines the density, manageability, and efficiency of blade servers with the expandability of rack servers, allowing you to consolidate multiple workloads onto a single platform. This consolidation results in improved performance, automation, and efficiency for both hybrid and traditional data center applications.

● Platform engineered for the future: The Cisco UCS X-Series is designed to adapt to emerging technologies with minimal risk. It is a modular system that can support future generations of processors, storage, nonvolatile memory, accelerators, and interconnects. This eliminates the need to purchase, configure, maintain, power, and cool separate management modules and servers. Cloud-based management through Intersight ensures automatic updates and access to new capabilities delivered through a software-as-a-service model.

● Broad support for diverse workloads: The Cisco UCS X-Series supports a broad range of workloads, reducing the need for different products. This lowers support and training costs and gives you more flexibility in your data center environment.

The Cisco UCS X-Series chassis is engineered to be adaptable and flexible. As shown in Figure 3, the Cisco UCS X9508 chassis has only a power-distribution midplane. This midplane-free design provides fewer obstructions for better airflow. For I/O connectivity, vertically oriented compute nodes intersect with horizontally oriented fabric modules, allowing the chassis to support future fabric innovations. Cisco UCS X9508 Chassis’ superior packaging enables larger compute nodes, thereby providing more space for actual compute components, such as memory, GPU, drives, and accelerators. Improved airflow through the chassis enables support for higher power components, and more space allows for future thermal solutions (such as liquid cooling) without limitations.

The Cisco UCS X-Series modular system is designed to take the current generation of the Cisco UCS platform to the next level with its design that will support future innovations and management in the cloud. Decoupling and moving platform management to the cloud allows the Cisco UCS platform to respond to features and scalability requirements much faster and more efficiently. Cisco UCS X-Series state-of-the-art hardware simplifies the datacenter design by providing flexible server options. A single server type that supports a broader range of workloads results in fewer different datacenter products to manage and maintain. The Cisco Intersight cloud management platform manages the Cisco UCS X-Series as well as integrates with third-party devices. These devices include VMware vCenter and Pure Storage to provide visibility, optimization, and orchestration from a single platform, thereby enhancing agility and deployment consistency.

Cisco UCS 9108-100G Intelligent Fabric Module (IFM)

In the end-to-end 100 Gbps Ethernet design, network connectivity for the Cisco UCS X9508 Chassis is provided by a pair of Cisco UCSX-I-9108-100G Intelligent Fabric Modules (IFMs). Like the fabric extenders used in the Cisco UCS 5108 Blade Server Chassis, these modules carry all network traffic to a pair of Cisco UCS 6536 Fabric Interconnects (FIs). IFMs also host the Chassis Management Controller (CMC) for chassis management. In contrast to systems with fixed networking components, the midplane-free design of the Cisco UCS X9508 enables easy upgrades, making it straightforward to accommodate new network speeds or technologies as they emerge.

Each IFM supports eight 100Gb uplink ports for connecting the Cisco UCS X9508 Chassis to the FIs and 8 100Gb or 32 25Gb server ports for the eight compute nodes. IFM server ports can provide up to 200 Gbps of unified fabric connectivity per compute node across the two IFMs. The uplink ports connect the chassis to the Cisco UCS FIs, providing up to 1600Gbps connectivity across the two IFMs. The unified fabric carries management, VM, and Fibre Channel over Ethernet (FCoE) traffic to the FIs, where server management traffic is routed to the Cisco Intersight cloud operations platform, FCoE traffic is forwarded to either native Fibre Channel interfaces through unified ports on the FI (to Cisco MDS switches) or to FCoE uplinks (to Cisco Nexus switches supporting SAN switching), and data Ethernet traffic is forwarded upstream to the data center network (using Cisco Nexus switches).
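The bandwidth figures in the preceding paragraph follow from simple port arithmetic. The short sketch below reproduces them; it assumes that all eight uplink ports on both IFMs are cabled to the FIs and that each compute node uses one 100 Gbps server link per IFM. The constant and function names are illustrative only.

```python
# Illustrative arithmetic only; port counts and speeds come from the text above.
IFMS_PER_CHASSIS = 2
UPLINK_PORTS_PER_IFM = 8
UPLINK_SPEED_GBPS = 100
SERVER_LINK_SPEED_GBPS = 100   # assumed: one 100G server link per node per IFM
COMPUTE_NODES_PER_CHASSIS = 8


def chassis_uplink_gbps() -> int:
    """Total chassis-to-FI bandwidth with every uplink port cabled (assumption)."""
    return IFMS_PER_CHASSIS * UPLINK_PORTS_PER_IFM * UPLINK_SPEED_GBPS


def per_node_gbps() -> int:
    """Unified fabric bandwidth available to one compute node across both IFMs."""
    return IFMS_PER_CHASSIS * SERVER_LINK_SPEED_GBPS


def oversubscription_ratio() -> float:
    """Aggregate server-side bandwidth divided by aggregate uplink bandwidth."""
    server_side = COMPUTE_NODES_PER_CHASSIS * per_node_gbps()
    return server_side / chassis_uplink_gbps()


if __name__ == "__main__":
    print(chassis_uplink_gbps(), per_node_gbps(), oversubscription_ratio())
```

With fewer uplinks cabled per IFM, the uplink total drops proportionally and the ratio rises above 1, which is worth checking when sizing a chassis for I/O-heavy database workloads.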

Cisco UCS X210c M7 Compute Node

The Cisco UCS X9508 Chassis is designed to host up to 8 Cisco UCS X210c M7 or X210c M6 Compute Nodes. The hardware details of the Cisco UCS X210c M7 Compute Nodes are shown in Figure 6.

The Cisco UCS X210c M7 features:

● CPU: Up to 2x 4th Gen Intel Xeon Scalable Processors with up to 60 cores per processor, up to 2.625 MB Level 3 cache per core, and up to 112.5 MB of Level 3 cache per CPU.

● Memory: Up to 32 x 256 GB DDR5-4800 DIMMs for a maximum of 8 TB of main memory.

● Disk storage: Up to 6 SAS or SATA drives or NVMe drives can be configured with the choice of an internal RAID controller or passthrough controllers. Two M.2 memory cards can be added to the Compute Node with optional hardware RAID.

● GPUs: The optional front mezzanine GPU module allows support for up to two HHHL GPUs. Adding a mezzanine card and a Cisco UCS X440p PCIe Node allows up to four more GPUs to be supported with a Cisco UCS X210c M7.

● Virtual Interface Card (VIC): Up to 2 VICs including an mLOM Cisco UCS VIC 15231 or an mLOM Cisco UCS VIC 15420 and a mezzanine Cisco UCS VIC card 15422 can be installed in a Compute Node.

● Security: The server supports an optional Trusted Platform Module (TPM). Additional security features include a secure boot FPGA and ACT2 anticounterfeit provisions.
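The "up to" figures in the CPU and memory bullets can be sanity-checked with a few lines of arithmetic. The sketch below uses only the numbers quoted above; the function names are illustrative. It also shows that the per-core and per-CPU cache maxima cannot both apply to a 60-core processor, so the 2.625 MB per-core figure presumably applies to processor models with fewer cores (an assumption, not something this document states).

```python
# Figures quoted in the feature list above.
DIMM_SLOTS = 32
MAX_DIMM_GB = 256
MAX_CORES_PER_CPU = 60
MAX_L3_PER_CPU_MB = 112.5
MAX_L3_PER_CORE_MB = 2.625


def max_memory_tb() -> float:
    """Maximum main memory with every DIMM slot populated at the largest size."""
    return DIMM_SLOTS * MAX_DIMM_GB / 1024


def l3_per_core_at_max_cores_mb() -> float:
    """Per-core L3 share when the largest cache is spread over 60 cores."""
    return MAX_L3_PER_CPU_MB / MAX_CORES_PER_CPU


def maxima_are_independent() -> bool:
    """True if 60 cores at 2.625 MB each would exceed the 112.5 MB per-CPU cap."""
    return MAX_CORES_PER_CPU * MAX_L3_PER_CORE_MB > MAX_L3_PER_CPU_MB


if __name__ == "__main__":
    print(max_memory_tb(), l3_per_core_at_max_cores_mb(), maxima_are_independent())
```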

Intel Xeon Scalable processors feature the broadest and widest set of built-in accelerator engines for today’s most demanding workloads. Whether on-prem, in the cloud, or at the edge, Intel Accelerator Engines can help take your business to new heights, increasing application performance, reducing costs, and improving power efficiency.

4th Gen Intel Xeon Scalable processors ship with integrated hardware accelerators that offload common tasks, such as compression and encryption, from applications.

For more details on the various built-in accelerator engines supported by Intel, go to: https://www.intel.in/content/www/in/en/now/xeon-accelerated/accelerators-eguide.html

Microsoft and Intel worked together to enable offloading of backup compression to the Intel QuickAssist Technology (Intel QAT) hardware accelerator. Microsoft SQL Server 2022 supports this offload, which improves backup performance. See System Validation and Testing for the tests and results.
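As a rough illustration of what a QAT-offloaded backup looks like from the database side, the Python sketch below composes the T-SQL statement that SQL Server 2022 accepts for choosing a backup compression algorithm. The helper name, database name, and backup path are hypothetical, and the sketch assumes hardware offload has already been enabled at the server level as described in the Microsoft documentation; it is not the procedure used in this validation.

```python
# Compression algorithms accepted by BACKUP ... WITH COMPRESSION in SQL Server 2022.
ALLOWED_ALGORITHMS = {"MS_XPRESS", "QAT_DEFLATE"}


def build_backup_statement(database: str, backup_path: str,
                           algorithm: str = "QAT_DEFLATE") -> str:
    """Return a T-SQL BACKUP statement using the requested compression algorithm.

    Hypothetical helper: it only builds the statement text and does not
    connect to or execute anything against a SQL Server instance.
    """
    if algorithm not in ALLOWED_ALGORITHMS:
        raise ValueError(f"unsupported compression algorithm: {algorithm}")
    safe_db = database.replace("]", "]]")      # escape for a bracketed identifier
    safe_path = backup_path.replace("'", "''")  # escape for a string literal
    return (
        f"BACKUP DATABASE [{safe_db}] TO DISK = N'{safe_path}' "
        f"WITH COMPRESSION (ALGORITHM = {algorithm});"
    )


if __name__ == "__main__":
    # Database name and path below are placeholders, not values from this CVD.
    print(build_backup_statement("SalesDB", r"D:\Backups\SalesDB.bak"))
```

Switching the `algorithm` argument to `MS_XPRESS` produces the equivalent statement for the default software compression, which makes it easy to compare the two approaches on the same database.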

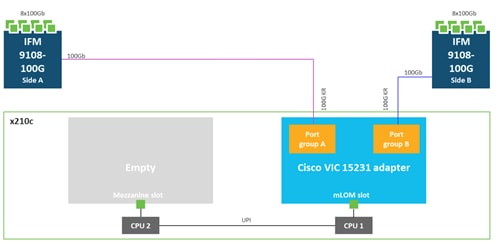

Cisco UCS Virtual Interface Card 15231

Cisco UCS X210c M7 Compute Nodes support multiple Cisco UCS VIC cards. In this solution, Cisco UCS VIC 15231 is used for the validation.

Cisco UCS VIC 15231 fits the mLOM slot in the Cisco UCS X210c Compute Node and enables up to 100 Gbps of unified fabric connectivity to each of the chassis IFMs for a total of 200 Gbps of connectivity per server. Connectivity from the Cisco UCS VIC 15231 to the IFM and up to the fabric interconnects is delivered at 100 Gbps. Cisco UCS VIC 15231 supports 512 virtual interfaces (both FCoE and Ethernet) along with the latest networking innovations such as NVMe over Fabrics (NVMe-oF) over FC or TCP and VxLAN/NVGRE offload.

Cisco UCS 6536 Fabric Interconnects

The Cisco UCS Fabric Interconnects (FIs) provide a single point for connectivity and management for the entire Cisco Unified Computing System. Typically deployed as an active/active pair, the system’s FIs integrate all components into a single, highly available management domain controlled by Cisco Intersight. Cisco UCS FIs provide a single unified fabric for the system, with low-latency, lossless, cut-through switching that supports LAN, SAN, and management traffic using a single set of cables.

The Cisco UCS 6536 used in the current design is a 36-port Fabric Interconnect. This single-RU device provides up to thirty-six 10/25/40/100 Gbps Ethernet ports. Ports 33-36 are unified ports that can also operate as 8/16/32-Gbps Fibre Channel ports, with each port supporting a 4x32 Gbps breakout. All 36 ports support breakout cables or QSA interfaces.

The Cisco Intersight platform is a Software-as-a-Service (SaaS) infrastructure lifecycle management platform that delivers simplified configuration, deployment, maintenance, and support. The Cisco Intersight platform is designed to be modular, so you can adopt services based on your individual requirements. The platform significantly simplifies IT operations by bridging applications with infrastructure, providing visibility and management from bare-metal servers and hypervisors to serverless applications, thereby reducing costs and mitigating risk. This unified SaaS platform uses a unified Open API design that natively integrates with third-party platforms and tools.

The main advantages of the Cisco Intersight infrastructure services are as follows:

● Simplify daily operations by automating many daily manual tasks.

● Combine the convenience of a SaaS platform with the capability to connect from anywhere and manage infrastructure through a browser or mobile application.

● Stay ahead of problems and accelerate trouble resolution through advanced support capabilities.

● Gain global visibility of infrastructure health and status along with advanced management and support capabilities.

● Upgrade to add workload optimization and other services when needed.

Cisco Intersight is a powerful management platform that enables centralized control of diverse infrastructure components, including third-party infrastructure such as Pure Storage and VMware vCenter as well as non-Cisco UCS infrastructure such as Cisco MDS and Cisco Nexus switches. With Cisco Intersight, you can have a comprehensive, global, and consolidated view of your entire infrastructure from one place.

A data center could have multiple devices that do not connect directly with Cisco Intersight. Any device that is supported by Cisco Intersight but does not connect directly with it needs a connection mechanism. Cisco Intersight Assist provides that mechanism. It serves as a proxy, enabling the connection and management of third-party and non-Cisco UCS infrastructure components. For each supported endpoint, Intersight Assist ships with an endpoint connector (for example, the VMware vCenter or Pure Storage connector) that communicates with the endpoint using REST APIs. The claiming process involves first claiming Intersight Assist as a target endpoint in Intersight and then using it to claim other third-party endpoint devices with the Claim Through Intersight Assist option.

In the context of the FlashStack solution, the following third-party and non-Cisco UCS infrastructure components can be claimed and managed from Intersight through the assistance of Cisco Intersight Assist:

● VMware vCenter

● Pure Storage FlashArray (including Cloud Block Store)

● Cisco MDS SAN switches

● Cisco Nexus switches

For more information on Cisco Intersight Assist, see: Cisco Intersight Virtual Appliance and Intersight Assist Getting Started Guide, 1.0.9

The Cisco Intersight platform uses a subscription-based license model with two tiers. You can purchase a subscription duration of one, three, or five years and choose the required Cisco UCS server volume tier for the selected subscription duration. For Cisco UCS M6 and earlier servers, each Cisco endpoint can be claimed into Intersight at no additional cost (no license) and can access the base-level features listed on the Intersight Licensing page referenced below. All Cisco UCS M7 servers require either an Essentials or an Advantage license. You can purchase either of the following Cisco Intersight licenses using the Cisco ordering tool:

● Cisco Intersight Essentials: The Essentials includes Lifecycle Operations features, including Cisco UCS Central and Cisco UCS-Manager entitlements, policy-based configuration with server profiles (IMM), firmware management, Global Monitoring and Inventory, Custom Dashboards, and evaluation of compatibility with the Cisco Hardware Compatibility List (HCL). Also, Essentials includes Proactive Support features, including Proactive RMA, Connected TAC, Advisories, and Sustainability.

● Cisco Intersight Advantage: Advantage offers all the features of the Essentials tier plus In-Platform Automation features such as Tunneled KVM, Operating System Install Automation, Storage/Virtualization/Network Automation, and Workflow Designer. It also includes Ecosystem Integrations for Ecosystem Visibility, Operations, Automation, and ServiceNow Integration.

Servers in the Cisco Intersight Managed Mode require at least the Essentials license. For more information about the features provided in the various licensing tiers, see https://intersight.com/help/saas/getting_started/licensing_requirements/lic_infra.
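The licensing rules stated above can be summarized as a small decision function. This is a minimal sketch of those rules only: the function name, the integer encoding of the server generation (6 for M6, 7 for M7), and the returned strings are assumptions made for illustration, and the function ignores every other licensing consideration covered on the Intersight Licensing page.

```python
def minimum_intersight_license(server_generation: int,
                               intersight_managed_mode: bool) -> str:
    """Return the lowest license tier implied by the rules in the text above.

    server_generation: hypothetical integer encoding, for example 6 for an
    M6 server and 7 for an M7 server.
    intersight_managed_mode: True if the server runs in Intersight Managed Mode.
    """
    # M7 servers always need a paid tier; IMM needs at least Essentials.
    if server_generation >= 7 or intersight_managed_mode:
        return "Essentials"
    # M6 and earlier servers outside IMM can be claimed without a license.
    return "No license (base-level features)"


if __name__ == "__main__":
    # The X210c M7 compute nodes in this design run in Intersight Managed Mode.
    print(minimum_intersight_license(7, True))
```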

Cisco UCS and Intersight Security

From a security perspective, all Cisco UCS user interfaces are hardened with the latest security ciphers and protocols, including redirection of HTTP to HTTPS, password and password expiry policies, and integration with secure authentication systems. Additionally, Cisco UCS servers support confidential computing (both Intel SGX and AMD based), although confidential computing is not addressed in this CVD. Finally, almost all Cisco UCS servers now sold come with Trusted Platform Modules (TPMs), which in VMware allow attestation of Unified Extensible Firmware Interface (UEFI) secure boot, which in turn allows only securely signed code to be loaded. Many of the latest operating systems, such as Microsoft Windows 11, require a TPM. The latest versions of VMware allow the assignment of a virtual TPM to VMs running operating systems that require a TPM.

The Cisco Nexus 9000 Series Switches offer both modular and fixed 1/10/25/40/100 Gigabit Ethernet switch configurations with scalability up to 60 Tbps of nonblocking performance with less than five-microsecond latency, wire speed VXLAN gateway, bridging, and routing support.

The Cisco Nexus 93180YC-FX3 Switch (Figure 10) is a 1RU switch that supports 3.6 Tbps of bandwidth and 1.2 Bpps. The 48 downlink ports on the 93180YC-FX3 can support 1/10/25-Gbps Ethernet, offering deployment flexibility and investment protection. The 6 uplink ports can be configured as 40 or 100-Gbps Ethernet, offering flexible migration options. The Cisco Nexus 93180YC-FX3 switch supports standard PTP telecom profiles with SyncE and PTP boundary clock functionality for telco datacenter edge environments.
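The 3.6 Tbps figure can be reconstructed from the port counts in the paragraph above. The sketch below assumes that the quoted bandwidth counts both directions of every port at its maximum speed (full duplex), which is a common datasheet convention but is not stated in this document; the names are illustrative.

```python
# Port counts and maximum speeds quoted for the Nexus 93180YC-FX3 above.
DOWNLINK_PORTS = 48
DOWNLINK_MAX_GBPS = 25
UPLINK_PORTS = 6
UPLINK_MAX_GBPS = 100


def one_way_capacity_gbps() -> int:
    """Sum of all port speeds in a single direction."""
    return DOWNLINK_PORTS * DOWNLINK_MAX_GBPS + UPLINK_PORTS * UPLINK_MAX_GBPS


def full_duplex_capacity_tbps() -> float:
    """Both directions counted, converted to Tbps (assumed datasheet convention)."""
    return 2 * one_way_capacity_gbps() / 1000


def downlink_to_uplink_ratio() -> float:
    """Oversubscription if every downlink and uplink runs at its maximum speed."""
    return (DOWNLINK_PORTS * DOWNLINK_MAX_GBPS) / (UPLINK_PORTS * UPLINK_MAX_GBPS)


if __name__ == "__main__":
    print(one_way_capacity_gbps(), full_duplex_capacity_tbps(), downlink_to_uplink_ratio())
```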

Cisco MDS 9132T 32G Multilayer Fabric Switch

The Cisco MDS 9132T 32G Multilayer Fabric Switch is the next generation of the highly reliable, flexible, and low-cost Cisco MDS 9100 Series switches. It combines high performance with exceptional flexibility and cost-effectiveness. This powerful, compact one rack-unit (1RU) switch scales from 8 to 32 line-rate 32 Gbps Fibre Channel ports.

The Cisco MDS 9132T delivers advanced storage networking features and functions with ease of management and compatibility with the entire Cisco MDS 9000 family portfolio for reliable end-to-end connectivity. This switch also offers state-of-the-art SAN analytics and telemetry capabilities that have been built into this next-generation hardware platform. This new state-of-the-art technology couples the next-generation port ASIC with a fully dedicated network processing unit designed to complete analytics calculations in real time. The telemetry data extracted from the inspection of the frame headers are calculated on board (within the switch) and, using an industry-leading open format, can be streamed to any analytics-visualization platform. This switch also includes a dedicated 10/100/1000BASE-T telemetry port to maximize data delivery to any telemetry receiver, including Cisco Data Center Network Manager.

The Pure Storage FlashArray Family delivers software-defined all-flash power and reliability for businesses of every size. FlashArray is all-flash enterprise storage that is up to 10X faster, space and power efficient, reliable, and far simpler than other available solutions. Compared to traditional performance disk arrays, FlashArray costs less with total cost of ownership (TCO) savings of up to 50%. At the top of the FlashArray line is the new FlashArray//XL; this new platform is designed for today’s higher-powered multi core CPUs, allowing //XL to increase performance even over our FlashArray//X models, with more power to take apps to the next level. //XL represents next-level scale and performance for high-demand enterprise applications. The //XL platform enhancements give higher performance, higher capacity density per RU, and higher scale with better resiliency. By being engineered for next-gen CPU and flash technologies to future-proof your investment, you can achieve workload consolidation with room to grow in place, with less frequent servicing by IT staff.

Pure Cloud Block Store with Azure VMware Solution

Pure Storage's Cloud Block Store (CBS) is a software defined storage solution powered by the Purity//FA Operating Environment (OE), which uses the native resources of either Amazon Web Services or Microsoft Azure to enhance storage services with enterprise features, ensuring consistent management without any customer tuning.

Pure Storage provides an external block storage for Microsoft’s Azure VMware Solution to improve TCO for VMware workloads in Azure. For customers with storage-intensive VMware workloads, Pure Cloud Block Store™ provides the flexibility to match their Azure VMware Solution compute needs with the storage capacity needs—optimizing the cost in the cloud.

Architecture

Cloud Block Store's high-level architecture consists of two Azure virtual machines acting as controllers and Azure managed disks for the NVRAM and persistent storage (PremiumV2 or Ultra SSDs). The Cloud Block Store controllers process data and provide enterprise data services such as data reduction, snapshots, encryption, and replication. Two managed disks are deployed for NVRAM and 14 managed disks for persistent storage. Cloud Block Store instances can be non-disruptively upgraded (NDU) with additional capacity by upgrading the managed disk sizes. All Cloud Block Store NDUs are performed by Pure Storage Support in an automated fashion, which removes any additional effort from the customer.

Highlights

● Data Efficiency - industry leading data reduction, space saving snapshots and cloning reduces cloud storage costs, enables fast and easy data portability, and increases data processing agility

● Cloud Data Protection - built in replication, disaster recovery and business continuity between public and private cloud targets along with higher availability protects your business from downtime in the cloud or on-premises.

● Evergreen//One™ - unifies experience and storage between on-premises and cloud to deliver a flexible hybrid cloud experience

● Application Migration - When migrating data to the public cloud, the requirement to redesign existing applications deployed on-premises is a common challenge. The enterprise data storage services of Cloud Block Store allow customers to easily migrate and run their existing mission critical data in the public cloud without redesigning their applications. Cloud Block Store shares a common abstraction layer as the Pure Storage Array for both management and data access which allows customers to preserve the same operational workflows, scripts, and orchestration tools. Cloud Block Store's built-in resiliency allows for the most mission critical data to run without requiring the application layer to manage data availability

● Reduce Storage Costs - Once customers successfully make the transition to Azure with Cloud Block Store, they can realize the benefits of Cloud Block Store enterprise features. Customers can consolidate data onto Cloud Block Store to take advantage of Purity's data reduction capabilities. Existing FlashArray customers can expect the same data reduction ratio already observed on their physical FlashArray. Thin Provisioning with Cloud Block Store further improves a customer's total efficiency. Developers can confidently provision volumes as large as needed without the cost concern or the need to constantly resize their disks.

● Increase Availability - In production environments, data availability and protection is the number one priority. Customers can rely on the highly efficient snapshot capabilities of Cloud Block Store to provide periodic point-in-time volume snapshots to protect from unintentional data changes. Additionally, customers who require the highest form of data availability can replicate their data between availability zones or regions. ActiveCluster allows data to be synchronously replicated between Cloud Block Store instances in different availability zones. This feature allows for automatic and transparent application failovers in the event of a complete outage of an availability zone. For larger-scale regional outage protection, data can also be replicated asynchronously between Cloud Block Store instances residing in different regions.

Disaster Recovery and Migration using Replication

The Purity Operating Environment enables both the FlashArray and Cloud Block Store to use the same replication technology despite running on two different environments. This feature opens the door to new use cases including disaster recovery, data migration, and back-up to the public cloud.

For disaster recovery (DR) solutions, many customers search for ways to incorporate the public cloud. Leveraging the public cloud alleviates the need to manage remote secondary or tertiary physical data centers. In a disaster recovery solution, customers can use Cloud Block Store as a replication target. During a DR failover event, customers can use a replicated snapshot volume on Cloud Block Store to instantaneously clone and attach to the respective application hosts in the public cloud. For protection against a single AZ or regional failure, Cloud Block Store can replicate its own source volumes to other Cloud Block Store instances.

Customers looking to migrate data from their on-premises data center to the public cloud can rely on Cloud Block Store to not only provide the vehicle to move their data, but also provide ongoing enhanced data services. Once data volumes are replicated to Cloud Block Store, customers can easily attach the volumes to the application compute instances over iSCSI with the same simple steps as on a FlashArray.

Hybrid Cloud

Cloud Block Store provides an abstraction layer that allows applications to be agnostic to the private or public cloud that it runs on. Pure Storage's vision is to enable customers to seamlessly move their data between all the major public cloud vendors using the native replication capabilities of Cloud Block Store. Furthermore, Cloud Block Store's data reduction is preserved when data is replicated, thus reducing the required bandwidth, time, and potential data egress costs. Customers are protected from being locked into any single environment and are afforded the flexibility to migrate or replicate data with minimal effort.

Purity for FlashArray (Purity//FA)

Every FlashArray is driven by Purity Operating Environment software. Purity//FA implements advanced data reduction, storage management, and flash management features, enabling customers to enjoy tier 1 data services for all workloads. Purity software provides proven 99.9999-percent availability over 2 years, completely non-disruptive operations, 2X better data reduction, and the power and efficiency of DirectFlash™. Purity also includes enterprise-grade data security, comprehensive data-protection options, and complete business continuity with an ActiveCluster multi-site stretch cluster. All these features are included with every Pure Storage array.
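To put the 99.9999-percent availability figure in concrete terms, the following minimal sketch converts an availability percentage into a downtime budget. It assumes a 365-day year; the function name is illustrative and not part of any Pure Storage tooling.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # assumption: 365-day year


def max_downtime_seconds(availability_percent: float,
                         period_seconds: float = SECONDS_PER_YEAR) -> float:
    """Return the downtime budget implied by an availability percentage."""
    if not 0.0 <= availability_percent <= 100.0:
        raise ValueError("availability must be between 0 and 100 percent")
    unavailable_fraction = 1.0 - availability_percent / 100.0
    return unavailable_fraction * period_seconds


six_nines = max_downtime_seconds(99.9999)
print(f"{six_nines:.1f} seconds of downtime per year")
```

Under these assumptions, six nines corresponds to roughly half a minute of downtime per year, or about one minute over the two-year window quoted above.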

The Pure Storage FlashArray product line includes FlashArray//C, FlashArray//X, FlashArray//XL, and FlashArray//E.

FlashArray//XL Specification

Table 1 lists both the capacity and physical aspects of various FlashArray systems.

Table 1. FlashArray//XL170 Specifications

| | Capacity | Physical |
|---|---|---|
| //XL170 | Up to 5.5 PB/5.13 PiB effective capacity**; up to 1.4 PB/1.31 PiB raw capacity* | 5RU; 1850-2355 watts (normal - peak); 167.0 lb. (75.7 kg) fully loaded; 8.72 x 18.94 x 29.72 in |
| //XL130 | Up to 3.8 PB/3.5 PiB effective capacity; up to 1024 TB/932.1 TiB raw capacity | 5-11U; 1550-2000 watts (normal - peak); 167.0 lb. (75.7 kg) fully loaded; 8.72 x 18.94 x 29.72 in |
| DirectFlash Shelf | Up to 1.9 PB effective capacity; up to 512 TB/448.2 TiB raw capacity | 3U; 460-500 watts (nominal - peak); 87.7 lb. (39.8 kg) fully loaded; 5.12 x 18.94 x 29.72 in |

** Effective capacity assumes high availability, RAID, and metadata overhead, GB-to-GiB conversion, and includes the benefit of data reduction with always-on inline deduplication, compression, and pattern removal. Average data reduction is calculated at 5-to-1 and does not include thin provisioning.

* FlashArray//XL will only support NVMe DirectFlash Modules.

Table 2 lists the various connectivity options using onboard and host I/O cards.

Table 2. FlashArray//XL170 Connectivity

| Chassis | Onboard ports (per controller) | Host I/O cards (3 slots/controller) |
|---|---|---|
| //XL170 & //XL130 | Two 1-/10-/25-GE iSCSI/RoCE | 2-port 10-/25- or 100-Gb NVMe/RoCE |
| //XL170 & //XL130 | Four 10/25-GE replication | 2-port 32-/64-Gb Fibre Channel (NVMe-oF Ready) |
| //XL170 & //XL130 | Two 1-Gb management ports | 4-port 32-/64-Gb Fibre Channel (NVMe-oF Ready) |

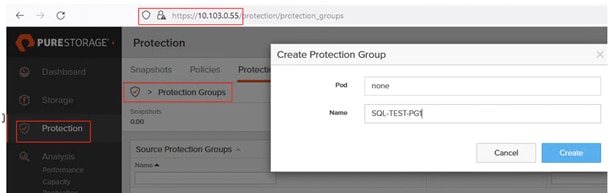

Protection Groups

Protection Groups are designed to protect a volume, set of volumes, Host or Host Group from human and software errors using techniques like Snapshot (point-in-time copies) and Remote Replication (to a remote on-prem or cloud Pure Storage).

Snapshots

A snapshot is a point-in-time image of a volume. Pure Storage FlashArray supports the following two types of snapshots:

● Crash-consistent Snapshots: These snapshots capture a point-in-time representation of a volume without creating a second discrete copy. They do not handle in-flight updates (no I/O quiescing, flushing, and so on), so they are much faster and have zero impact on application performance. A crash-consistent snapshot works with SQL Server’s write-ahead logging protocol to provide a recoverable database, but not point-in-time recovery with transaction logs. The point of recovery is the time of the snapshot plus SQL Server crash recovery.

● Application-Consistent: These snapshots ensure consistency of the application by freezing ongoing updates and flushing updated memory pages to disk before taking a snapshot. For SQL Server 2019 and prior, the VSS framework is required to take application-consistent snapshots. While freezing I/O on the SQL Server using VSS may take longer than a few seconds, the subsequent snapshot operation on FlashArray is nearly instantaneous. Starting in SQL Server 2022, VSS is no longer required, and application-consistent snapshots can be orchestrated with the new T-SQL Snapshot feature. The freeze on SQL Server is also greatly reduced: read traffic is no longer halted, only write traffic.

On FlashArray, because volumes are logical constructs, snapshots merely copy the “state” of the volume at time of snapshot. This means snapshots do not consume additional capacity and can be manipulated nearly instantaneously. This is extremely beneficial for SQL Server databases, as it enables one to snapshot Very Large Databases (VLDBs) and clone “copies” to other SQL Servers nearly instantaneously, regardless of the size of data. Because snapshots take place at the storage volume level, using vSphere Virtual Volumes (vVols) for storing SQL Server virtual machine disks brings more granular control and seamless data management and recovery of volumes.
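The T-SQL snapshot flow introduced in SQL Server 2022 can be sketched as an ordered list of statements that bracket the array-side snapshot. The sketch below only builds the statement text; it does not connect to SQL Server or to the FlashArray. The database name `tpcc` and the metadata file path are hypothetical, and the array snapshot step is shown as a placeholder comment because the tooling used to trigger it is not specified here.

```python
from typing import List


def tsql_snapshot_statements(database: str, metadata_file: str) -> List[str]:
    """Return the T-SQL steps that bracket a storage-level snapshot.

    Step 1 suspends writes to the database, step 2 is a placeholder for the
    array-side snapshot, and step 3 records the backup metadata, which also
    releases the suspension.
    """
    # Reject characters that would break the simple quoting used below.
    if "]" in database or "'" in metadata_file:
        raise ValueError("unsupported character in database name or path")
    return [
        f"ALTER DATABASE [{database}] SET SUSPEND_FOR_SNAPSHOT_BACKUP = ON;",
        "-- take the FlashArray volume snapshot here (array-side step, not T-SQL)",
        f"BACKUP DATABASE [{database}] TO DISK = N'{metadata_file}' WITH METADATA_ONLY;",
    ]


# Hypothetical database name and path, for illustration only.
for statement in tsql_snapshot_statements("tpcc", r"D:\Backup\tpcc.bkm"):
    print(statement)
```

Keeping the window between the first and last statement as short as possible limits how long write traffic is held.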

Pure1

Pure1, a cloud-based management, analytics, and support platform, expands the self-managing, plug-n-play design of Pure all-flash arrays with the machine learning predictive analytics and continuous scanning of Pure1 Meta to enable an effortless, worry-free data platform.

Pure1 Manage

Pure1 Manage is a SaaS-based offering that allows customers to manage their array from any browser or from the Pure1 Mobile App with nothing extra to purchase, deploy, or maintain. From a single dashboard, customers can manage all their arrays and have full storage health and performance visibility.

Pure1 Analyze

Pure1 Analyze delivers true performance forecasting, giving customers complete visibility into the performance and capacity needs of their arrays, now and in the future. Performance forecasting enables intelligent consolidation and workload optimization.

Pure1 Support

The Pure Storage support team, with the predictive intelligence of Pure1 Meta, delivers unrivaled support that’s a key component in FlashArray 99.9999% availability. Some customer issues are identified and fixed without any customer intervention.

Pure1 META

The foundation of Pure1 services, Pure1 Meta is global intelligence built from a massive collection of storage array health and performance data. By continuously scanning call-home telemetry from Pure’s installed base, Pure1 Meta uses machine learning predictive analytics to help resolve potential issues, optimize workloads, and provide accurate forecasting. Meta is always expanding and refining what it knows about array performance and health.

Pure1 VM Analytics

Pure1 helps you narrow down the troubleshooting steps in your virtualized environment. VM Analytics provides you with a visual representation of the IO path from the VM all the way through to the FlashArray. Other tools and features guide you through identifying where an issue might be occurring to help eliminate potential candidates for a problem.

VM Analytics doesn’t only help when there’s a problem. The visualization allows you to identify which volumes and arrays particular applications are running on. This brings the whole environment into a more manageable domain.

VMware vSphere 8.0

VMware vSphere is a virtualization platform for holistically managing large collections of infrastructures (resources including CPUs, storage, and networking) as a seamless, versatile, and dynamic operating environment. Unlike traditional operating systems that manage an individual machine, VMware vSphere aggregates the infrastructure of an entire datacenter to create a single powerhouse with resources that can be allocated quickly and dynamically to any application in need.

VMware vSphere 8.0 has several improvements and simplifications including, but not limited to:

● Limits with VMware vSphere 8.0 have been increased including the number of GPU devices increased to 8, the number of ESXi hosts that can be managed by Lifecycle Manager is increased from 400 to 1000, the maximum number of VMs per cluster is increased from 8,000 to 10,000, and the number of VM DirectPath I/O devices per host is increased from 8 to 32.

● Security improvements include adding an SSH timeout on ESXi hosts, a TPM Provisioning policy allowing a vTPM to be replaced when cloning VMs, and TLS 1.2 as the minimum supported TLS version.

● Implementation of VMware vMotion Unified Data Transport (UDT) to significantly reduce the time to storage migrate powered off virtual machines.

● Lifecycle Management improvements including VMware vSphere Configuration Profiles as a new alternative to VMware Host Profiles, staging cluster images and remediating up to 10 ESXi hosts in parallel instead of one at a time.

● New Virtual Hardware in VM hardware version 20 supporting the latest guest operating systems, including Windows 11.

● Distributed Resource Scheduler and vMotion improvements.

● Implementation of the VMware Balanced Power Management Policy on each server, which reduces energy consumption with minimal performance compromise.

● Implementation of VMware Distributed Power Management, which along with configuration of the Intelligent Platform Management Interface (IPMI) on each Cisco UCS server allows a VMware host cluster to reduce its power consumption by powering hosts on and off based on cluster resource utilization.

For more information about VMware vSphere and its components, go to: https://www.vmware.com/products/vsphere.html

The FlashStack solution supports VMware Virtual Volumes (vVols), which provide more granular control of your shared storage environment. VMware vVols is a storage technology that provides policy-based, granular storage configuration for VM storage. When a VM is created, the administrator selects a VM storage policy to associate with it. On the back-end storage array, the storage is provisioned for the VM on a per-VM basis (with 1 config vVol per VM, 1 data vVol per disk) without the VMware administrator being aware of the array-specific configuration. Instead, VMware administrators provision storage using native VMware interfaces. Through API-based interaction with an underlying array, VMware administrators can maintain storage configuration compliance using only native VMware interfaces. The Pure Storage FlashArray Plugin for the vSphere Web Client provides the APIs necessary to create, manage, and use vVols from VMware vCenter.
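The per-VM accounting described above (one config vVol per VM and one data vVol per virtual disk) can be turned into a quick estimate of how many array volumes a VM consumes. This is a rough sketch: the swap vVol and the `powered_on` flag are assumptions based on general vVols behavior, and snapshot or other auxiliary vVols are not counted.

```python
def vvol_count(virtual_disks: int, powered_on: bool = True) -> int:
    """Estimate the number of array volumes backing a single vVol-based VM."""
    if virtual_disks < 0:
        raise ValueError("virtual_disks must not be negative")
    config_vvols = 1                      # one config vVol per VM
    data_vvols = virtual_disks            # one data vVol per virtual disk
    swap_vvols = 1 if powered_on else 0   # assumption: swap exists only while powered on
    return config_vvols + data_vvols + swap_vvols


# Example: a SQL Server VM with separate OS, data, log, and tempdb disks.
print(vvol_count(virtual_disks=4))
```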

With SCSI or NFS transports, ESXi hosts use a logical I/O proxy, called the protocol endpoint, to communicate with virtual volumes. ESXi uses protocol endpoints to establish a data path on demand from virtual machines to their respective virtual volumes. Each virtual volume is bound to a specific protocol endpoint. When a virtual machine on the host performs an I/O operation, the protocol endpoint directs the I/O to the appropriate virtual volume. Typically, a storage system requires just a few protocol endpoints. A single protocol endpoint can connect to hundreds or thousands of virtual volumes.

The VMware vSphere APIs for Storage Awareness (VASA) is a VMware interface for out-of-band communication between VMware ESXi, vCenter and storage arrays. The arrays’ VASA providers are instances of the VASA service which are registered with the vCenter Server. Pure Storage hosts the VASA provider as redundant instances of the VASA service running on each controller; there is no separate installation or configuration. VASA allows for advanced management functionality and reporting at both VMs and per-disk level of granularity.

Figure 14 illustrates high level architecture of the vVols.

Some of the benefits of VMware vVols are:

● Virtual Disk Granularity: Each virtual disk is a separate volume on the array with its own unique properties.

● Automatic Provisioning: When a VMware administrator requests a new virtual disk for a VM, VMware automatically directs the array to create a volume and present it to the VM. Similarly, when a VMware administrator resizes or deletes a virtual disk, VMware directs the array to resize or remove the volume.

● Array-level VM Visibility: Because arrays recognize both VMs and their virtual disks, they can manage and report on performance and space utilization with both VM and individual virtual disk granularity.

● Storage Policy Based Management: With visibility to individual virtual disks, arrays can take snapshots and replicate volumes at the precise granularity required. VMware can discover an array’s virtual disks and allow VMware administrators to manage each vVol’s capabilities either ad hoc or by specifying policies. If a storage administrator overrides a vVol capability configured by a VMware administrator, the VMware administrator is alerted to the non-compliance.

Virtual Volume Best Practices and Other Considerations:

● A VM's Config vVol stores the files required to build and manage the VM. Ensure that the Config vVol is part of an existing Pure Storage FlashArray Protection Group. Alternately, if you are using a storage policy that includes snapshot or if you prefer manual snapshots, Config vVol should be part of these snapshots. This will help with the VM recovery process if the VM is deleted.

● Create a local array admin user to register the storage provider instead of using the local “pureuser” account.

● vCenter Server should not reside on vVols.

● ESXi Hosts, vCenter Server and FlashArray should synchronize time to the same NTP Server.

● Use the Pure Storage Plugin for the vSphere Client to register the Pure Storage FlashArray storage provider and mount the vVols datastore.

● A single PE utilizing the default device queue depth is sufficient in the design.

● vVol Virtual Disk resizing should be completed from the vSphere Client and not from the FlashArray GUI.

● TCP port 8084 must be open and accessible from vCenter Servers and ESXi hosts to the FlashArray that will be used for vVol.
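The port requirement in the last item can be verified before registering the storage provider. The following minimal sketch attempts a plain TCP connection using only the Python standard library. It confirms reachability of the port from the machine running the script, not that the VASA service behind it is healthy, and any hostname passed to it is the caller's own.

```python
import socket


def tcp_port_reachable(host: str, port: int = 8084, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False
```

For example, `tcp_port_reachable("flasharray-ct0.example.com")` (a hypothetical controller hostname) returns `False` if a firewall blocks the path. Running the check from a host on the same network as vCenter Server and the ESXi management interfaces gives the most representative result.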

Windows Server 2022 is the latest OS platform release from Microsoft and an excellent platform for running Microsoft SQL Server 2022 databases. It offers new features and enhancements related to security, patching, domains, clusters, and storage, as well as support for various new hardware features. These enable Windows Server to provide best-in-class performance and a highly scalable platform for deploying SQL Server databases.

SQL Server 2022 (16.x) is the latest relational database from Microsoft and builds on previous releases to grow SQL Server as a platform that gives you choices of development languages, data types, on-premises or cloud environments, and operating systems. It offers various enhancements and new features that enable SQL Server deployments to be more reliable, highly available, performant, and secure than ever. SQL Server 2022 can leverage new hardware capabilities from partners like Intel to provide extended capabilities. For example, it can now leverage Intel Quick Assist Technology (QAT) to offload backup compression, thereby improving backup and restore performance.

For more details about the new capabilities of SQL Server 2022, go to: https://learn.microsoft.com/en-us/sql/sql-server/what-s-new-in-sql-server-2022?view=sql-server-ver16

This chapter contains the following:

● VMware vSphere – ESXi Networking Design

The FlashStack solution in this CVD was designed to address the following key goals:

● Resilient design across all layers of the infrastructure with no single point of failure.

● Scalable design with the ability to independently add compute, storage, and network bandwidth as needed.

● Modular design where sub-system components and resources can be changed, expanded, or upgraded as needed. Also, the design can be replicated as a unit to meet growth and expansion requirements.

● Flexible design with design options for the different subsystems in the solution, including the individual components used, storage configuration and connectivity options.

● Best-practices based design, incorporating design, technology, and product best practices.

● Simplify deployments through automation and make the solution available as Infrastructure as Code (IaC).

● Simplify operations using SaaS management where possible.

The high-level design of the FlashStack solution is shown in Figure 15.

The FlashStack solution is an FC-based solution with storage access to the Pure Storage FlashArray//XL170 array over traditional Fibre Channel connections. The solution includes the latest generation of Cisco UCS hardware running VMware vSphere 8.0. The solution incorporates design, technology, and product best practices to deliver a highly scalable and available architecture with no single point of failure. The compute, storage, network, and virtualization layers of the end-to-end design are built using the following components:

● Cisco UCS X9508 server chassis with 2 x Cisco UCS X9108-100G Intelligent Fabric Modules (IFMs) where 4 x 100GbE ports on each IFM connect to a pair of Cisco UCS Fabric Interconnects to provide upstream connectivity and all networks within and outside the Enterprise data center, including external networks.

● Cisco UCS X210c M7 compute nodes using 2 x 4th generation Intel Xeon Scalable processors with 512GB of DDR5 memory that can be increased up to a max of 8TB. The server is equipped with a Cisco UCS VIC 15231 network adaptor in the modular LAN On Motherboard (mLOM) slot and provides up to 200Gbps (2x100Gbps) of unified fabric connectivity from each compute node to the 100G Intelligent Fabric Modules (IFMs) on the Cisco UCS X-Series chassis.

● Pair of Cisco UCS 6536 Fabric Interconnects (FIs) provides line-rate, low-latency, lossless connectivity for LAN, SAN and management traffic from the Cisco UCS X-Series and Cisco UCS C-Series servers to Pure Storage and other upstream and external networks. The Cisco Fabric Interconnects provide:

◦ 4 x 32Gb FC connectivity to a Pure Storage FlashArray//XL170 through a pair of Cisco MDS switches.

◦ 2x100GbE uplink network connectivity to a pair of Cisco Nexus switches deployed in a vPC configuration and provide uplink connectivity to other internal and external networks.

● Pair of Cisco Nexus 93180YC-FX3 switches in NX-OS mode provide upstream connectivity to the Cisco UCS 6536FIs, enabling 100Gbps or higher speeds for connecting the FlashStack compute and storage infrastructure to other parts of an Enterprise’s internal and external networks as needed.

● Pair of Cisco MDS 9132T FC switches provides 32Gbps Fibre Channel connectivity to a SAN fabric with consistent low-latency performance using a chip-integrated non-blocking arbitration. MDS can operate in either switch mode or NPV mode and includes a dedicated Network Processing Unit (NPU) per port for real-time analytics calculations. The switch can inspect FC and SCSI headers at wire speed on every flow and analyze the flows on the switch itself. By using an industry-leading open format, the telemetry data can then be streamed to any analytics-visualization platform. This switch also includes a dedicated 10/100/1000BASE-T telemetry port to maximize data delivery to any telemetry receiver including Cisco Data Center Network Manager. Note that since this solution uses Cisco UCS 6536FIs running in NPV mode, the MDS switches will not be used in NPV mode in this CVD. Instead, the MDS switches will be deployed in Fibre Channel switching mode with NPIV mode.

● Pure Storage FlashArray//XL170 connects to the Cisco MDS 9132T switches using 32-Gbps Fibre Channel connections for Fibre Channel SAN connectivity.

● VMware vSphere 8.0 is deployed on the Cisco UCS X210c M7 compute nodes to host virtualized workloads.

● Cisco Intersight in Intersight Managed Mode (IMM) will manage the infrastructure from the cloud.
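The link counts in the component list above allow a rough check of how server-facing bandwidth compares with chassis uplink bandwidth. The sketch below uses the nominal figures from the list (200 Gbps per compute node, two IFMs, four 100GbE uplinks per IFM). The node counts of 4 and 8 are illustrative assumptions, and the result is a nominal ratio rather than a measured throughput.

```python
def chassis_oversubscription(nodes: int,
                             node_gbps: int = 200,
                             ifms: int = 2,
                             uplinks_per_ifm: int = 4,
                             uplink_gbps: int = 100) -> float:
    """Ratio of nominal server-side bandwidth to nominal IFM uplink bandwidth."""
    if nodes < 0:
        raise ValueError("nodes must not be negative")
    server_side_gbps = nodes * node_gbps
    fabric_side_gbps = ifms * uplinks_per_ifm * uplink_gbps
    return server_side_gbps / fabric_side_gbps


# Illustrative node counts; adjust to the chassis population in use.
for node_count in (4, 8):
    print(node_count, "nodes ->", chassis_oversubscription(node_count), ": 1")
```

A ratio of 1.0 means the nominal uplink capacity matches the nominal adapter capacity; higher values indicate oversubscription at the chassis uplinks.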

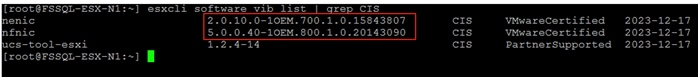

In this solution, a VMware ESXi 8.0 virtual environment is tested and validated for deploying SQL Server 2022 databases on virtual machines running the Windows Server 2022 guest operating system. The ESXi hosts are configured to boot from the Pure Storage FlashArray using Fibre Channel. SQL Server virtual machine OS and database files are stored on vSphere vVols accessed over the Fibre Channel protocol. Table 3 lists the software and hardware components along with the image versions used in this solution.

Table 3. Software Components and Hardware

| Layer | Device | Image | Quantity |
|---|---|---|---|
| Network | Cisco Nexus 9000 C93180YC-FX3; Cisco MDS 9132T | 9.3(7); 9.2(2) | 2; 2 |
| Compute | Cisco UCS Fabric Interconnects 6536; Cisco UCS 9108-100G IFM; X210c M7 compute nodes BIOS; Cisco VIC 15231 nenic Drivers; Cisco VIC 15231 nfnic Drivers | 4.3(2.230117); 4.3(2a); 5.2(0.230041); 1.0.6.0; 5.0.0.43 | 2; 2; 4; 1 |
| VMware | VMware vSphere vCenter Appliance; VMware vSphere ESXi | 8.0.1; 8.0.1 | 1 |
| Cisco Intersight Assist Appliance | Cisco Intersight Assist Appliance | 1.0.9 | |
| Storage | Pure Storage FlashArray Purity Version; Pure Storage VMware vSphere Plugin; Cloud Block Store Purity//FA Version; VASA provider | FlashArray//XL170 Purity 6.3.3; 5.3.5; CBS on Azure Cloud 6.4.10; 3.5 | 1 HA Pair with 30x 3.9TB NVMe SSDs and each controller equipped with 1x 324-port 32Gbps FC Adapter |
| Guest OS | Microsoft Windows Server 2022 Datacenter Eval | 20348.587 | |
| Database | Microsoft SQL Server 2022 | 16.0.1000.6 | |
| Workload Testing Tool | HammerDB | 4.9 | |

Figure 16 details the cabling used for this validation. As shown, the Pure Storage FlashArray is connected to the Cisco UCS Fabric Interconnects through Cisco MDS switches using 32Gb FC links. The Cisco UCS X9508 chassis is connected to the Cisco UCS Fabric Interconnects through the 9108-100G IFMs using 2x 100Gbps Ethernet connections on each side of the fabric. The Cisco UCS Fabric Interconnects are connected to the Cisco MDS switches over 100G to 4x 32Gb FC breakout cables for FC storage traffic.

The Cisco UCS Fabric Interconnects are connected to Cisco Nexus switches through 100Gbps connections. Finally, the Nexus switches are connected to customer network. Each Cisco UCS fabric interconnect and Cisco Nexus switch is connected to the out-of-band network switch, and each Pure controller has a connection to the out-of-band network switch.

IMPORTANT! For this FlashStack with SQL Server database validation, on each storage controller only two FC ports, with scsi-fc services enabled, are used.

VLAN Configuration

Table 4 lists the VLANs configured for setting up the FC-based FlashStack environment along with their usage.

| VLAN ID | Name | Usage |
|---|---|---|
| 2 | Native-VLAN | Use VLAN 2 as native VLAN instead of default VLAN (1) |
| 1060 | OOB-MGMT-VLAN | Out-of-band management VLAN to connect management ports for various devices |
| 1061 | MGMT-VLAN | In-band management VLAN utilized for all in-band management connectivity - for example, ESXi hosts, VM management, and so on |
| 1062 | SQL-MGMT | For SQL Client connectivity |
| 3000 | vMotion | VMware vMotion traffic |
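The VLAN plan above lends itself to being kept as data and rendered into switch configuration, in line with the Infrastructure as Code goal of this design. The following minimal sketch renders NX-OS style `vlan` and `name` lines from the table. It is illustrative only: it does not push anything to a switch, and it is not the automation used for this validation.

```python
# VLAN IDs and names taken from the VLAN table above.
VLANS = {
    2: "Native-VLAN",
    1060: "OOB-MGMT-VLAN",
    1061: "MGMT-VLAN",
    1062: "SQL-MGMT",
    3000: "vMotion",
}


def render_vlan_config(vlans: dict) -> str:
    """Render 'vlan <id>' / 'name <name>' stanzas in ascending VLAN ID order."""
    lines = []
    for vlan_id in sorted(vlans):
        if not 1 <= vlan_id <= 4094:
            raise ValueError(f"VLAN ID {vlan_id} is outside the range 1-4094")
        lines.append(f"vlan {vlan_id}")
        lines.append(f"  name {vlans[vlan_id]}")
    return "\n".join(lines)


print(render_vlan_config(VLANS))
```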

VSAN Configuration

Table 5 lists the VSANs configured for setting up the FC-based FlashStack environment along with their usage.

| VSAN ID | Name | Usage |
|---|---|---|
| 103 | VSAN-A | For storage traffic through MDS Fabric-A |
| 104 | VSAN-B | For storage traffic through MDS Fabric-B |
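Because each VSAN belongs to a single fabric, the VSAN definition can be rendered per MDS switch. The sketch below produces the `vsan database` stanza for one VSAN. It is a hedged illustration that only builds text, and the assumption that VSAN 103 is defined on the Fabric-A switch and VSAN 104 on the other fabric's switch follows from the VSAN names in the table.

```python
def render_vsan_config(vsan_id: int, name: str) -> str:
    """Render an MDS 'vsan database' stanza that creates and names one VSAN."""
    if not 1 <= vsan_id <= 4093:
        raise ValueError(f"VSAN ID {vsan_id} is outside the range 1-4093")
    return "\n".join([
        "vsan database",
        f"  vsan {vsan_id} name {name}",
    ])


# One stanza per fabric switch, using the IDs and names from the VSAN table.
print(render_vsan_config(103, "VSAN-A"))
print(render_vsan_config(104, "VSAN-B"))
```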

Pure Storage FlashArray – Storage Design

To set up Pure Storage FlashArray, you must configure the following items:

● Volumes

◦ ESXi boot LUNs: These LUNs enable ESXi host boot from SAN functionality using Fibre Channel.

◦ The vSphere environment: vSphere uses the infrastructure datastore(s) to store the virtual machines. These volumes can be exposed to the ESXi hosts using traditional Fibre Channel protocol.

◦ vSphere Virtual Volumes for application data storage: vVols are used for storing the SQL Server VMs’ virtual disks. For each virtual disk you add to a VM, a data volume is created on the Pure Storage FlashArray. In addition, two more volumes are created (one for config and one for swap).

● Hosts

◦ All FlashArray ESXi hosts are defined using the FC WWNs (scsi-fc based initiators).

◦ Add every active initiator for a given ESXi host.

● Host groups

◦ All ESXi hosts in a VMware cluster are part of the host group.

◦ Host groups are used to mount the VM infrastructure and application storage datastores in the VMware environment so that all the ESXi hosts that are part of the host group get access to the storage volumes.

The ESXi host is configured with two vHBAs for storage access. vHBA-A will be connected to Pure storage via Fabric-A and vHBA-B will be connected to storage via Fabric-B. These HBA adapters will be used for ESXi boot traffic as well as SQL Server data traffic. Figure 17 depicts the storage layout used for this validation.
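The vVols item in the list above implies a simple per-VM volume count on the array: one data volume per virtual disk, plus one config volume and one swap volume. The following Python sketch only illustrates that arithmetic. The function name and the example disk count are hypothetical and are not part of the validated design.

```python
CONFIG_VOLUMES = 1  # one config vVol per VM, as described above
SWAP_VOLUMES = 1    # one swap vVol per VM, as described above


def vvol_volume_count(virtual_disks: int) -> int:
    """Return the number of array volumes backing one vVol-based VM."""
    if virtual_disks < 0:
        raise ValueError("virtual_disks must not be negative")
    return virtual_disks + CONFIG_VOLUMES + SWAP_VOLUMES


# Hypothetical example: a SQL Server VM with four virtual disks.
example_count = vvol_volume_count(4)
print(example_count)
```

Multiplying this count by the number of SQL Server VMs gives a rough estimate of how many volumes to expect on the array for planning purposes.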

VMware vSphere – ESXi Networking Design

Multiple vNICs and vHBAs are created for the ESXi hosts using the Cisco Intersight server profile and are then assigned to specific virtual and distributed switches. The vNIC and vHBA distribution for the ESXi hosts is as follows:

● Two vNICs (one for each fabric) for vSwitch0 to support core services such as management traffic.

● Two vNICs (one for each fabric) for the vSphere Distributed Switch (vDS) to support the customer application traffic and vMotion traffic.

● One vHBA each for Fabric-A and Fabric-B for FC stateless boot and SQL Server data traffic.

Figure 18 shows the ESXi vNIC and vHBAs configurations in detail.

Install and Configure Solution Deployment

This chapter contains the following:

● Hardware and Software Revisions

● Cisco Nexus Switch Configuration

● Cisco MDS Switch Configuration

● Pure Storage FlashArray//XL170 Configuration

● Cisco UCS Server Configuration using Cisco Intersight

● VMware vSphere ESXi Host Configuration

● VMware vSphere Virtual Volumes (vVols) Provisioning

● SQL Server Virtual Machine Configuration

● Intel Quick Assist Technology Drivers Installation and Configuration

● Cloud Block Storage Deployment on Azure Cloud

● System Validation and Testing

This chapter describes the specific configurations and recommendations that are important for running FlashStack Datacenter for SQL Server workloads. For a detailed step-by-step deployment guide to configure the network, compute, and storage stacks of the FlashStack solutions, refer to the base infrastructure CVDs here:

https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flashstack_m7_vmware_8_ufs_fc.html

https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flashstack_vsi_vmware70_ucsx.html

Hardware and Software Revisions

Table 3 lists the hardware and software versions used during solution validation. The validated FlashStack solution explained in this document adheres to the Cisco, Pure Storage, and VMware interoperability matrices to determine support for various software and driver versions. You should use the same interoperability matrices to determine support for components that are different from the current validated design.

Refer to the following links for more information:

● Pure Storage Interoperability Matrix. Note this interoperability list will require a support login from Pure: https://support.purestorage.com/FlashArray/Getting_Started/Compatibility_Matrix

● Pure Storage FlashStack Compatibility Matrix. Note, this interoperability list will require a support login from Pure: https://support.purestorage.com/FlashStack/Product_Information/FlashStack_Compatibility_Matrix

● Cisco UCS Hardware and Software Interoperability Tool: http://www.cisco.com/web/techdoc/ucs/interoperability/matrix/matrix.html

● VMware Compatibility Guide: http://www.vmware.com/resources/compatibility/search.php

Cisco Nexus Switch Configuration

On each Cisco Nexus switch, the required VLANs, port channels, vPC domain, and vPC peer links need to be configured. These configurations, except for the Ethernet interface numbers and VLAN numbers, are standard and no different from what is explained in the base infrastructure CVD. Refer to the Cisco Nexus switch configuration in the base infrastructure CVD here: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flashstack_vsi_vmware70_ucsx.html#NetworkSwitchConfiguration

Cisco MDS Switch Configuration

The Pure Storage FlashArray//XL170 array is connected to a pair of Cisco MDS switches to provide storage access to the Cisco UCS servers over a Fibre Channel network. The Cisco UCS fabric interconnects were in Fibre Channel end-host mode (NPV mode) and uplinked through a SAN port channel to the Cisco MDS 9132T switches in NPV mode. Zoning in the Cisco MDS 9132T switches connected the vHBAs to storage targets for both FC-NVMe and Fibre Channel. Single-initiator, multiple-target zones were used for both FCP and FC-NVMe. These configurations, except for the Fibre Channel interface numbers and VSAN numbers, are standard and no different from what is covered in the base infrastructure CVD. Refer to the Cisco MDS switch configuration in the base infrastructure CVDs here: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flashstack_vsi_vmware70_ucsx.html#SANSwitchConfiguration
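As an illustration of the single-initiator, multiple-target zoning described above, the following Python sketch builds one zone per host vHBA, each containing every array target port on the same fabric. It is a minimal model and not the MDS configuration used in the validation. The `Zone` class, the `build_zones` helper, the host names, and all WWPNs are hypothetical placeholders.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    name: str
    initiator: str            # exactly one host vHBA WWPN
    targets: tuple[str, ...]  # every array target port on this fabric


def build_zones(initiators: dict[str, str], targets: list[str], fabric: str) -> list[Zone]:
    """Create one zone per initiator, each containing all fabric targets."""
    return [
        Zone(name=f"{host}-Fabric-{fabric}", initiator=wwpn, targets=tuple(targets))
        for host, wwpn in sorted(initiators.items())
    ]


# Placeholder WWPNs for illustration only; real values come from the
# server profiles and from the storage array.
fabric_a_initiators = {
    "esxi-host-1": "20:00:00:00:00:00:0a:01",
    "esxi-host-2": "20:00:00:00:00:00:0a:02",
}
fabric_a_targets = ["50:00:00:00:00:00:00:a0", "50:00:00:00:00:00:00:a1"]

zones = build_zones(fabric_a_initiators, fabric_a_targets, "A")
for zone in zones:
    print(zone.name, zone.initiator, zone.targets)
```

Keeping a single initiator in each zone means a change to one host's vHBA does not disturb the zones of other hosts, while every host still sees all target ports on its fabric.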

Pure Storage FlashArray//XL170 Configuration

The Pure Storage configuration includes the following steps.

● Initial Pure Storage FlashArray Configuration

● Host port identification and host registration using WWNs for FC initiators and NQNs for FC-NVMe initiators

● Host group creation

● Mapping boot volumes to the ESXi hosts registered using WWNs

The Pure Storage FlashArray//XL170 configuration is a standard activity. For detailed steps to configure a Pure Storage FlashArray, go to:

For this solution, each controller of the Pure Storage FlashArray//XL170 array is connected redundantly to a pair of Cisco MDS switches. On each controller, two FC interfaces with traditional scsi-fc capabilities provide access to the ESXi boot volumes, other infrastructure-related volumes, and the volumes that store the database files.

Cisco UCS Server Configuration using Cisco Intersight

This section provides more details on the specific Cisco UCS policies and settings used for configuring Cisco UCS X210c M7 blade server for hosting critical Microsoft SQL Server 2022 database virtual machine workloads in vSphere cluster. The Cisco UCS X210c blades are installed in the Cisco UCS X9508 chassis and are connected to a pair of Cisco UCS 6536 Fabric Interconnects. The Cisco UCS fabric interconnects are managed by Intersight (IMM mode).

It is important to use the right network and storage adapter policies for low latency and better storage bandwidth, because the underlying Cisco VIC resources are shared by various types of traffic, such as management traffic for applications hosted on the vSphere cluster, application storage access, ESXi host management, vMotion, and so on.

Network and LAN Connectivity Policies

The following vNICs are defined in a LAN connectivity policy to derive the vNICs for the ESXi host networking. The LAN connectivity policy is then used in the server profile template to derive the server profile.

● 00-vSwitch0-A: Used for ESXi host management traffic via Fabric A

● 01-vSwitch0-B: Used for ESXi host management traffic via Fabric B

● 02-vDS0-A: Used for application management traffic and vMotion traffic via Fabric A

● 03-vDS0-B: Used for application management traffic and vMotion traffic via Fabric B

Table 6 lists the additional configuration details of the vNICs used in this reference architecture.

| vNICs | 00-vSwitch-A | 01-vSwitch-B | 02-vDS0-A | 03-vDS0-B |
|---|---|---|---|---|
| Slot ID and PCI Link | Auto for Slot and PCI Link | Auto for Slot and PCI Link | Auto for Slot and PCI Link | Auto for Slot and PCI Link |
| PCI Order | 0 | 1 | 2 | 3 |
| Switch ID | A | B | A | B |
| Fabric Failover | Disabled | Disabled | Disabled | Disabled |
| Network Group Policy for list of Allowed VLANs and Native VLAN | 1060, 1061 and 2 | 1060, 1061 and 2 | 1061, 1062, 3000 and 2 | 1061, 1062, 3000 and 2 |
| Network Control Policy for CDP and LLDP | CDP and LLDP Enabled | CDP and LLDP Enabled | CDP and LLDP Enabled | CDP and LLDP Enabled |
| QoS & MTU | Best Effort and 9000 | Best Effort and 9000 | Best Effort and 9000 | Best Effort and 9000 |
| Ethernet Adapter Policy | EthAdapter-VMware-Policy | EthAdapter-VMware-Policy | EthAdapter- vDS-VMW-EthAdapter | EthAdapter- vDS-VMW-EthAdapter |

Note: Ensure the ports on the upstream Nexus switches are configured with the matching MTU and VLANs so that the configuration is consistent end to end.

For the 00-vSwitch-A and 01-vSwitch-B vNICs, the default Ethernet adapter policy “VMWare” is used.
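The VLAN assignments in Table 6 can be cross-checked against the VLAN plan in Table 4 before the policies are deployed. The following Python sketch shows one way to do that, using the values from the two tables. The helper names are hypothetical, and the check is an illustration rather than part of the validated procedure.

```python
# VLAN IDs defined in Table 4.
DEFINED_VLANS = {2, 1060, 1061, 1062, 3000}

# Allowed VLANs (including native VLAN 2) per vNIC, from Table 6.
VNIC_VLANS = {
    "00-vSwitch-A": {1060, 1061, 2},
    "01-vSwitch-B": {1060, 1061, 2},
    "02-vDS0-A": {1061, 1062, 3000, 2},
    "03-vDS0-B": {1061, 1062, 3000, 2},
}


def undefined_vlans(vnic_vlans, defined):
    """Map each vNIC to the VLAN IDs it allows that are not in the plan."""
    return {
        vnic: sorted(vlans - defined)
        for vnic, vlans in vnic_vlans.items()
        if vlans - defined
    }


def mismatched_pairs(vnic_vlans):
    """Return switch names whose fabric A and B vNICs allow different VLANs."""
    by_switch = {}
    for vnic, vlans in vnic_vlans.items():
        # "02-vDS0-A" -> "vDS0" (PCI order prefix and fabric suffix dropped)
        switch = vnic.split("-", 1)[1].rsplit("-", 1)[0]
        by_switch.setdefault(switch, []).append(vlans)
    return sorted(
        switch
        for switch, vlan_sets in by_switch.items()
        if any(vlans != vlan_sets[0] for vlans in vlan_sets)
    )


print(undefined_vlans(VNIC_VLANS, DEFINED_VLANS))
print(mismatched_pairs(VNIC_VLANS))
```

Both checks return empty results for the values listed in the tables: every allowed VLAN is defined, and the fabric A and fabric B vNICs of each switch carry the same VLANs.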

Ethernet Adapter Policy used for vDS0 vNICs

The adapter policy allows the administrator to declare the capabilities of the vNIC, such as the number of rings, ring sizes, and offload enablement and disablement. The transmit and receive queues defined in the default VMware adapter policy may not be sufficient as more SQL Server databases are consolidated, because consolidation generates a large amount of VM management traffic on the FlashStack system.

Figure 19 shows the adapter policy used for the vNICs 02-vDS0-A and 03-vDS0-B.

BIOS Policy

The BIOS settings to apply to a host depend on the workload it runs. The default BIOS settings promote power savings by reducing processor operating speeds and moving cores into deeper sleep states. These states need to be disabled for sustained high performance of database queries. The server BIOS settings recommended for enterprise workloads are discussed in more detail here:

For this solution, the following BIOS settings are used and validated. The rest of the settings were set to Platform Default.

| Category | Setting | Value Used |
|---|---|---|
| PCI | SR-IOV Enabled | Enabled |
| Processor | Adjacent Cache Line Prefetcher | Enabled |
| Processor | Intel HyperThreading Technology | Enabled |
| Processor | Intel TurboBoost Tech | Enabled |
| Processor | LLC Prefetch | Enabled |
| Processor | Patrol Scrub | Enabled |
| Processor | Processor C1E | Disabled |
| Processor | Processor C3 Report | Disabled |
| Processor | Processor C6 Report | Disabled |
| Processor | P-STATE Coordination | HW-ALL |
| Processor | Power Performance Tuning | OS |
| Processor | XPT Prefetch | Enabled |

Note: Before changing any BIOS setting in a production environment, test and analyze the change in a test environment first.
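When testing BIOS changes, it can help to compare the settings a host reports against the validated values listed above. The following Python sketch shows such a comparison. The `bios_drift` helper and the sample `reported` values are hypothetical; how the reported settings are collected from a server is outside the scope of this sketch.

```python
# Validated processor values from the BIOS settings table above.
VALIDATED_BIOS = {
    "Adjacent Cache Line Prefetcher": "Enabled",
    "Intel HyperThreading Technology": "Enabled",
    "Intel TurboBoost Tech": "Enabled",
    "LLC Prefetch": "Enabled",
    "Patrol Scrub": "Enabled",
    "Processor C1E": "Disabled",
    "Processor C3 Report": "Disabled",
    "Processor C6 Report": "Disabled",
    "P-STATE Coordination": "HW-ALL",
    "Power Performance Tuning": "OS",
    "XPT Prefetch": "Enabled",
}


def bios_drift(validated, reported):
    """Return {setting: (expected, found)} for every setting that differs."""
    return {
        name: (expected, reported.get(name))
        for name, expected in validated.items()
        if reported.get(name) != expected
    }


# Hypothetical host report in which one C-state setting was left enabled.
reported = {**VALIDATED_BIOS, "Processor C6 Report": "Enabled"}
drift = bios_drift(VALIDATED_BIOS, reported)
print(drift)
```

A setting that is missing from the report appears in the result with `None` as the found value, so omissions are flagged as well as mismatches.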

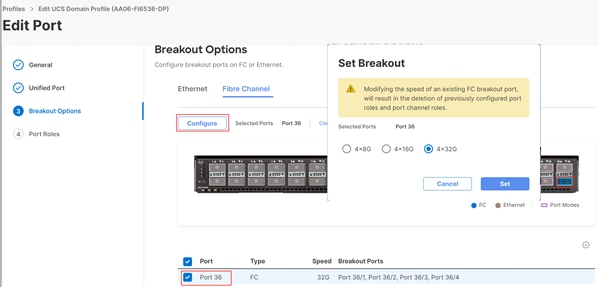

FC SAN Configuration on Fabric Interconnects

Cisco UCS 6536 Fabric Interconnects allow the last four ports (33 to 36) to be configured as unified ports to carry Fibre Channel traffic. Because these unified ports on the FI 6536 are 100G ports, they need to be configured as 4x 32G breakout ports when they are connected to Cisco MDS 9132T Fibre Channel switches.

For this validation, only the last port (port 36) is configured as a unified port on each Fabric Interconnect. Table 8 lists how the unified ports on the 6536 Fabric Interconnects are configured.

Table 8. FC SAN Unified Ports Configuration on FI 6536

| Fabric Interconnect | Unified Port Number | Break Out Option and Ports | Connected to MDS | VSAN ID |
|---|---|---|---|---|
| A | Port 36 | 1x 100G to 4x 32G (36/1, 36/2, 36/3, 36/4) | MDS-A (Ports 3,4,5,6) | 103 |
| B | Port 36 | 1x 100G to 4x 32G (36/1, 36/2, 36/3, 36/4) | MDS-B (Ports 3,4,5,6) | 104 |

Note: You can always increase the unified port count and connect them to Cisco MDS for additional storage bandwidth and High Availability (HA) purposes.

Figure 20 shows how to set port 36 as 4x 32G breakout ports.

The four break out cables, which connect port 36 on each FI to MDS switch, will form a Fibre Channel Port Channel and will have aggregated bandwidth of 128G on MDS switches.
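The breakout naming and the aggregate bandwidth figure above follow from simple arithmetic, sketched here in Python. The function names are hypothetical. The port number, lane count, and 32G member speed come from Table 8.

```python
def breakout_ports(port: int, lanes: int = 4) -> list[str]:
    """Return the breakout interface names for one unified port, e.g. 36/1."""
    return [f"{port}/{lane}" for lane in range(1, lanes + 1)]


def port_channel_bandwidth_gbps(members: int, member_speed_gbps: int = 32) -> int:
    """Return the nominal aggregate bandwidth of an FC port channel in Gbps."""
    return members * member_speed_gbps


members = breakout_ports(36)
print(members)
print(port_channel_bandwidth_gbps(len(members)))
```

Configuring a second unified port per Fabric Interconnect in the same way would double the member count and therefore the nominal aggregate bandwidth of the port channel.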

The following vHBAs are defined in a SAN connectivity policy for the Pure Storage connectivity of the ESXi host. The SAN connectivity policy is used in the Server profile template to derive the Server profile and the server profile is associated to a Cisco UCS X210c M7 blade:

● vHBA-A: This HBA is connected to the fabric A side of the MDS switches and is used for ESXi SAN boot traffic as well as database traffic.

● vHBA-B: This HBA is connected to the fabric B side of the MDS switches and is used for ESXi SAN boot traffic as well as database traffic.

Table 9 lists all the settings used for the above vHBAs.

| vHBA Name | vHBA-A | vHBA-B |
|---|---|---|
| vHBA Type | fc-initiator | fc-initiator |
| Slot ID | MLOM | MLOM |
| PCI Link | 0 | 0 |
| Switch ID | A | B |
| PCI Order | 4 | 5 |
| Persistent LUN Binding | Enabled | Enabled |
| Fibre Channel Network (VSAN ID) | 103 | 104 |
| QoS | Rate Limit=0 Class of Service=3 | Rate Limit=0 Class of Service=3 |
| Fibre Channel Adapter Policy | Default “VMware” Adapter policy with “IO Throttle” set to 1024 | Default “VMware” Adapter policy with “IO Throttle” set to 1024 |

After the LAN and SAN connectivity policies are added to a server profile template, four server profiles are derived from it and associated with four Cisco UCS X210c M7 blades.

Figure 21 shows the four derived server profiles associated with the blades (slots 3 to 7).

Figure 22 shows the vNIC and vHBA interfaces after successfully associating a server profile to a Cisco UCS X210c M7 blade server.

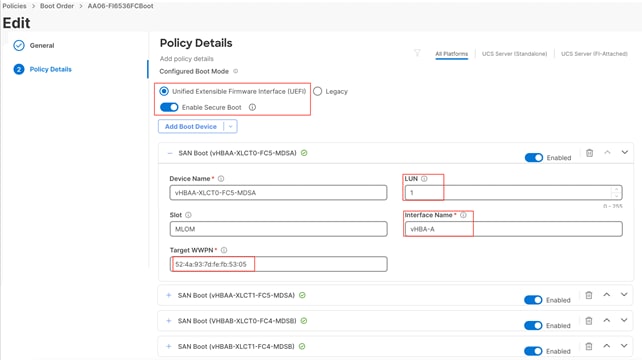

In this solution, the Cisco UCS X210c M7 blade servers are configured to boot from the Pure Storage volumes. For the ESXi hosts to establish connections to the Pure Storage array over Fibre Channel, the WWNs of the Pure Storage FlashArray target ports must be configured in the boot policy. Gather the WWNs of the scsi-fc ports of the Pure Storage array by running the “pureport list” command in a Pure Storage SSH session.