VersaStack for Data Center with All Flash Storage Design Guide


Design Guide for Cisco Unified Computing System and IBM FlashSystem V9000 with VMware vSphere 5.5 Update 2

Last Updated: November 24, 2015

The CVD program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, visit http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, IronPort, the IronPort logo, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2015 Cisco Systems, Inc. All rights reserved.

Table of Contents

Fabric Infrastructure Resilience

VersaStack and Cisco Nexus 9000 Modes of Operation

VersaStack: Cisco Nexus 9000 Design Options

Design Focus: VersaStack All Flash Block Only

VersaStack All Flash Design Direct Attached

Validated System Hardware Components

Cisco Unified Computing System

Cisco Nexus 2232PP 10GE Fabric Extender

Cisco Nexus 9000 Series Switch

Cisco MDS 9100 Multilayer Fabric Switch

Cisco Unified Computing System Manager

IBM FlashSystem V9000 Easy-to-Use Management GUI

VMware vCenter Server Plug-Ins

Cisco Nexus 1000v vCenter Plug-In

IBM Tivoli Storage FlashCopy Manager

Hardware and Software Revisions

Hardware and Software Options for VersaStack

Cisco Unified Computing System

IBM FlashSystem V9000 High Availability

Cisco® Validated Designs include systems and solutions that are designed, tested, and documented to facilitate and improve customer deployments. These designs incorporate a wide range of technologies and products into a portfolio of solutions that have been developed to address the business needs of customers.

This document describes the VersaStack® solution with Cisco and IBM® components and VMware vSphere 5.5 Update 2, using Cisco Nexus 9000 switches. A VersaStack solution is a validated approach for deploying Cisco and IBM technologies as a shared cloud infrastructure.

VersaStack™ is a predesigned, integrated platform architecture for the data center that is built on the Cisco Unified Computing System (Cisco UCS), the Cisco Nexus® family of switches, and IBM Storwize V7000 Unified and FlashSystem V9000 all-flash enterprise storage. VersaStack is designed with no single point of failure and a focus on simplicity, efficiency, and versatility. VersaStack is a suitable platform for running a variety of virtualization hypervisors as well as bare-metal operating systems to support enterprise workloads.

VersaStack delivers a baseline configuration and also has the flexibility to be sized and optimized to accommodate many different use cases and requirements. The system designs discussed in this document have been validated for resiliency by subjecting them to multiple failure conditions while under load. Fault tolerance during operational tasks such as firmware and operating system upgrades, and during switch, cable, and hardware failures and loss of power, has also been verified. This Cisco and IBM document describes a solution with VMware vSphere 5.5 Update 2 built on VersaStack and discusses the design choices made and best practices followed in deploying the shared infrastructure platform.

Introduction

Industry trends indicate a vast data center transformation toward converged solutions and cloud computing. Enterprise customers are moving away from disparate layers of compute, network and storage to integrated stacks providing the basis for a more cost-effective virtualized environment that can lead to cloud computing for increased agility and reduced cost.

To accelerate this process and simplify the evolution to a shared cloud infrastructure, Cisco and IBM have developed the VersaStack™ solution for VMware vSphere®. Enhancing this solution with automation and self-service functionality, and developing other solutions on VersaStack™, are envisioned under this partnership.

Audience

The intended audience of this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who want to take advantage of an infrastructure built to deliver IT efficiency and enable IT innovation.

Purpose of this Document

Customers looking to transition toward shared infrastructure, with or without cloud functionality, face a number of challenges. Most of these challenges stem from not knowing where to start and how to proceed to achieve the expected levels of efficiency and reach business objectives. Even for customers experienced in hosting multi-tenant integrated platforms, critical areas must be addressed on the way to an optimal solution. These areas include compatibility of the selected components, scalability with investment protection, and efficient, easy management of the integrated stack. All these factors are major considerations in realizing the expected return on investment (ROI) and in meeting recovery time objectives (RTO) and recovery point objectives (RPO).

By integrating standards-based components that are compatible, scalable, and easy to use, VersaStack addresses customer issues during the planning, design, and implementation stages. Once deployed, efficient and intuitive front-end tools provide the means to manage the platform in an easy and agile manner. The VersaStack architecture thus mitigates customer risk and eliminates critical pain points while providing necessary guidance and measurable value. The result is a consistent platform that can adapt to the changing workloads of any customer.

What’s New?

The following design elements distinguish this version of VersaStack from previous models:

· Validation of the Cisco Nexus 9000 switches with an IBM FlashSystem V9000 storage array

· Validation of Cisco MDS 9148S Switches with 8G and 16G ports

· Support for the Cisco UCS 2.2(3) release or later and Cisco UCS B200-M4 servers

· Support for IBM FlashSystem software releases 7.4.1.2 and 7.5.1.0

· A Fibre Channel-based storage design with block storage-based datastores

· Support for iSCSI and FCoE access to LUNs

Cisco and IBM have thoroughly validated and verified the VersaStack solution architecture and its many use cases while creating a portfolio of detailed documentation, information, and references to assist customers in transforming their data centers to this shared infrastructure model. This portfolio includes, but is not limited to, the following items:

· Best practice architectural design

· Workload sizing and scaling guidance

· Implementation and deployment instructions

· Technical specifications (rules for what is, and what is not, a VersaStack configuration)

· Frequently asked questions (FAQs)

· Cisco Validated Designs (CVDs) and IBM Redbooks focused on a variety of use cases

Cisco and IBM have also built a robust and experienced support team focused on VersaStack solutions, from customer account and technical sales representatives to professional services and technical support engineers. The support alliance between IBM and Cisco gives customers and channel services partners direct access to technical experts who collaborate across vendors and have access to shared lab resources to resolve potential issues.

VersaStack supports tight integration with hypervisors leading to virtualized environments and cloud infrastructures, making it the logical choice for long-term investment. Table 1 lists the features in VersaStack:

Table 1 VersaStack Component Features

| IBM FlashSystem V9000 All-Flash Storage | Cisco UCS and Cisco Nexus 9000 Switches |
|---|---|
| Real-time compression | Unified Fabric |
| Enhanced IP Replication | Virtualized IO |
| Form factor scaling capability | Extended Memory |
| Application-agnostic tiering | Stateless Servers through policy-based management |
| Flash optimization | Centralized Management |
| Big data and analytics enablement | Application Centric Infrastructure (ACI) |
| | Investment Protection |
| | Scalability |
| | Automation |

Integrated System

VersaStack is a pre-validated infrastructure that brings together compute, storage, and network to simplify, accelerate, and minimize the risk associated with data center builds and application rollouts. These integrated systems provide a standardized approach in the data center that facilitates staff expertise, application onboarding, and automation as well as operational efficiencies relating to compliance and certification.

Fabric Infrastructure Resilience

VersaStack is a highly available and scalable infrastructure that IT can evolve over time to support multiple physical and virtual application workloads. VersaStack has no single point of failure at any level, from the server through the network, to the storage. The fabric is fully redundant and scalable and provides seamless traffic failover should any individual component fail at the physical or virtual layer.
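The "no single point of failure" property can be checked mechanically on a topology model. The following Python sketch is a minimal illustration under stated assumptions: it models a hypothetical, simplified block-storage path (one blade, two fabric extenders, the two fabric interconnects, the two MDS switches, two V9000 control enclosures, and the flash enclosure) as a list of links, then fails each physical component in turn and tests whether the blade can still reach storage. Each MDS switch appears once per VSAN so that host traffic cannot bypass the control enclosures. All names and links are illustrative, not the validated cabling.

```python
from collections import deque

# Hypothetical logical links for the block-storage path. Each MDS switch is
# split into a host-VSAN node and a private-VSAN node (illustrative names).
LINKS = [
    ("ucs-blade", "fex-a"), ("ucs-blade", "fex-b"),
    ("fex-a", "fi-a"), ("fex-b", "fi-b"),
    ("fi-a", "mds-a/host"), ("fi-b", "mds-b/host"),
    ("mds-a/host", "ctrl-1"), ("mds-a/host", "ctrl-2"),
    ("mds-b/host", "ctrl-1"), ("mds-b/host", "ctrl-2"),
    ("ctrl-1", "mds-a/private"), ("ctrl-2", "mds-a/private"),
    ("ctrl-1", "mds-b/private"), ("ctrl-2", "mds-b/private"),
    ("mds-a/private", "flash-enclosure"), ("mds-b/private", "flash-enclosure"),
]

# A physical component failure takes down every logical node it hosts.
COMPONENTS = {
    "fex-a": {"fex-a"}, "fex-b": {"fex-b"},
    "fi-a": {"fi-a"}, "fi-b": {"fi-b"},
    "mds-a": {"mds-a/host", "mds-a/private"},
    "mds-b": {"mds-b/host", "mds-b/private"},
    "ctrl-1": {"ctrl-1"}, "ctrl-2": {"ctrl-2"},
}


def build_graph(links):
    """Turn an undirected link list into an adjacency map."""
    graph = {}
    for a, b in links:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def reachable(graph, src, dst, down):
    """Breadth-first search from src to dst that avoids nodes in `down`."""
    if src in down or dst in down:
        return False
    seen = {src}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            return True
        for peer in graph.get(node, ()):
            if peer not in down and peer not in seen:
                seen.add(peer)
                queue.append(peer)
    return False


def single_points_of_failure(links, components, src, dst):
    """Return the components whose individual failure disconnects src from dst."""
    graph = build_graph(links)
    return sorted(
        name for name, nodes in components.items()
        if not reachable(graph, src, dst, nodes)
    )


print(single_points_of_failure(LINKS, COMPONENTS, "ucs-blade", "flash-enclosure"))  # []
```

Dropping every fabric B link from `LINKS` and rerunning the check would instead report the fabric A components on the only surviving path, which is the kind of gap the dual-fabric design is meant to close.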

Network Virtualization

VersaStack delivers the capability to securely connect virtual machines into the network. This solution allows network policies and services to be uniformly applied within the integrated compute stack using technologies such as virtual LANs (VLANs), quality of service (QoS), and the Cisco Nexus 1000v virtual distributed switch. This capability enables the full utilization of VersaStack while maintaining consistent application and security policy enforcement across the stack even with workload mobility.
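VLAN-based segmentation relies on IEEE 802.1Q tags, and Layer 2 QoS markings travel in the same tag. As a minimal sketch of that tag format (not of any Cisco Nexus 1000v interface), the following Python code packs and unpacks the 4-byte 802.1Q header: the 0x8100 TPID followed by a 3-bit priority code point (PCP), a 1-bit drop eligible indicator (DEI), and a 12-bit VLAN ID. The VLAN ID and priority in the example are hypothetical; this document does not specify VLAN numbering.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks an IEEE 802.1Q tag


def build_vlan_tag(vlan_id: int, priority: int = 0, dei: int = 0) -> bytes:
    """Pack a 4-byte 802.1Q tag: 16-bit TPID followed by the 16-bit TCI."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError("usable VLAN IDs are 1-4094 (0 and 4095 are reserved)")
    if not 0 <= priority <= 7:
        raise ValueError("the priority code point is 3 bits (0-7)")
    if dei not in (0, 1):
        raise ValueError("DEI is a single bit")
    # TCI layout: PCP (bits 15-13) | DEI (bit 12) | VLAN ID (bits 11-0)
    tci = (priority << 13) | (dei << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)


def parse_vlan_tag(tag: bytes) -> dict:
    """Unpack a 4-byte 802.1Q tag into its priority, DEI and VLAN ID fields."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError(f"not an 802.1Q tag (TPID 0x{tpid:04x})")
    return {"priority": tci >> 13, "dei": (tci >> 12) & 0x1, "vlan_id": tci & 0x0FFF}


# Hypothetical example: VLAN 100 carrying traffic marked with priority 5.
tag = build_vlan_tag(100, priority=5)
print(tag.hex())            # 8100a064
print(parse_vlan_tag(tag))  # {'priority': 5, 'dei': 0, 'vlan_id': 100}
```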

VersaStack provides a uniform approach to IT architecture, offering a well-characterized and documented shared pool of resources for application workloads. VersaStack delivers operational efficiency and consistency with the versatility to meet a variety of SLAs and IT initiatives, including:

· Application rollouts or application migrations

· Business continuity/disaster recovery

· Desktop virtualization

· Cloud delivery models (public, private, hybrid) and service models (IaaS, PaaS, SaaS)

· Asset consolidation and virtualization

VersaStack is a best practice data center architecture that includes the following components:

· Cisco Unified Computing System (Cisco UCS)

· Cisco Nexus and MDS switches

· IBM FlashSystem and IBM Storwize family storage

Figure 1 VersaStack Components

These components are connected and configured according to best practices of both Cisco and IBM and provide the ideal platform for running a variety of enterprise workloads with confidence. The reference architecture covered in this document leverages the Cisco Nexus 9000 for the switching element. VersaStack can scale up for greater performance and capacity (adding compute, network, or storage resources individually as needed), or it can scale out for environments that need multiple consistent deployments (rolling out additional VersaStack stacks).


One of the key benefits of VersaStack is the ability to maintain consistency at scale. Each of the component families shown in Figure 1 (Cisco Unified Computing System, Cisco Nexus, and IBM Storwize and FlashSystem) offers platform and resource options to scale the infrastructure up or down while supporting the same features and functionality required under the VersaStack configuration and connectivity best practices.

VersaStack Design Principles

Design principles are guidelines that help ensure the architectural implementation fulfills its mission. They provide a means to tie components and methods to business objectives.

VersaStack addresses five primary design objectives:

· Availability: Help ensure applications and services are accessible and ready to use.

· Scalability: Provide for capacity needs while staying within the architectural framework.

· Versatility: Quickly support new services without requiring infrastructure modifications.

· Efficiency: Facilitate efficient operation of the infrastructure through policy and API management.

· Simplicity: Focus strongly on ease of use to help reduce deployment and operating costs.

The VersaStack architecture prioritizes scalability, availability, and simplicity by using modular, redundant components managed through centralized, easy-to-use interfaces.

Some architectural principles and rationale for inclusion are described below:

· Least common mechanism: This principle highlights the need to centralize common or shared modules, which reduces duplication and can lead to higher efficiency and easier maintenance. VersaStack implements centralized element managers such as Cisco UCS Manager and the IBM FlashSystem V9000 and Storwize V7000 software for fully scaled-out deployments of compute and storage.

· Efficient Mediated Access: In VersaStack, Application Centric Infrastructure (ACI) functionality consists of hardware and software components that provide performance with flexibility.

Inclusion of Cisco UCS Director has the potential for further consolidation at the management layer, leading to an even more agile and manageable integrated stack.


Performance and comprehensive security are key design criteria that are not directly addressed in this solution but will be addressed in other collateral, benchmarking, and solution testing efforts. This Design Guide validates the functionality and basic security elements.

VersaStack Design Benefits

The VersaStack design benefits are listed below. The current VersaStack design uses IBM FlashSystem V9000 for storage.

· VersaStack All Flash leverages hardware and software features of the IBM FlashSystem V9000 and the full capabilities of the IBM FlashCore technology plus a rich set of storage virtualization features.

· VersaStack All Flash harnesses IBM FlashSystem V9000 hardware to support up to 57 TB of internal storage attached to a single set of V9000 control enclosures, and up to 2.2 PB of all flash protected effective capacity for a fully-scaled system. Hardware-assisted Real-time compression and flash optimization with Easy Tier further boost storage efficiency and performance.

· VersaStack Unified system leverages hardware and software features of IBM Storwize V7000 Unified to transform data center efficiency while simplifying administrative tasks.

· VersaStack Unified includes IBM Storwize V7000 Generation 2 hardware to support up to 1.5 PB of internal storage attached to a single V7000 control enclosure, and up to 4 PB for a fully scaled system. Hardware-assisted Real-time compression and flash optimization with Easy Tier further boost storage efficiency and performance.

· A key benefit of VersaStack is the virtualization capability of FlashSystem V9000 and Storwize software, which enables administrators to centrally manage external storage volumes from a single point. Virtualization simplifies management tasks through the easy-to-use FlashSystem and Storwize GUI while extending the full feature set to virtualized storage to boost utilization and capability. Administrators can migrate data without disrupting applications, avoiding downtime for backups, maintenance, and upgrades.

· VersaStack Unified uses IBM Storwize V7000 Unified to integrate support for file and block data through one interface.

VersaStack and Cisco Nexus 9000 Modes of Operation

The Cisco Nexus 9000 family of switches supports two modes of operation: NX-OS standalone mode and Application Centric Infrastructure (ACI) fabric mode. In standalone mode, the switch performs as a typical Cisco Nexus switch with increased port density, low latency, and 40G connectivity. In fabric mode, the administrator can take advantage of Cisco ACI.

The Cisco Nexus 9000 stand-alone mode VersaStack design consists of a single pair of Cisco Nexus 9000 top of rack switches. When in ACI fabric mode, the Cisco Nexus 9500 and 9300 switches are deployed in a spine-leaf architecture. Although the reference architecture covered in this document does not leverage ACI, it lays the foundation for customer migration to ACI by integrating the Cisco Nexus 9000 switches.

Application Centric Infrastructure (ACI) is a holistic architecture with centralized automation and policy-driven application profiles. ACI delivers software flexibility with the scalability of hardware performance. Key characteristics of ACI include:

· Simplified automation by an application-driven policy model

· Centralized visibility with real-time, application health monitoring

· Open software flexibility for DevOps teams and ecosystem partner integration

· Scalable performance and multi-tenancy in hardware

The future of networking with ACI is about providing a network that is deployed, monitored, and managed in a fashion that supports DevOps and rapid application change. ACI does so through the reduction of complexity and a common policy framework that can automate provisioning and managing of resources.

VersaStack: Cisco Nexus 9000 Design Options

The compute, network and storage components within VersaStack provide interfaces and protocol options for block (iSCSI and Fibre Channel) and file (NFS/CIFS) leading to flexibility and multiple design options. Some of the relevant options that utilize available interfaces and supported protocols are discussed below.

The current design with IBM FlashSystem V9000 provides only block storage and does not support file storage such as NFS/CIFS. The design does, however, allow NFS connectivity to existing storage in the customer's data center. The VersaStack All Flash system provides fast Fibre Channel connections for block storage access to the IBM FlashSystem V9000.

Design Focus: VersaStack All Flash Block Only

The VersaStack All Flash deployment uses all-flash block storage on IBM FlashSystem V9000. As Figure 2 illustrates, the design is fully redundant in the compute, network, and storage layers, with no single point of failure from a device or traffic path perspective. The FlashSystem V9000 shown consists of two control enclosures and a flash storage enclosure that contains 12 MicroLatency flash modules and two canisters, providing redundancy at the appropriate levels.

Figure 2 All Flash VersaStack Design

As illustrated, link aggregation technologies play an important role, providing improved aggregate bandwidth and link resiliency across the solution stack. Cisco UCS and Cisco Nexus 9000 platforms support active port channeling using the IEEE 802.3ad standard Link Aggregation Control Protocol (LACP). Port channeling is a link aggregation technique that offers link fault tolerance and distributes traffic (load balancing) across member ports for improved aggregate bandwidth. In addition, the Cisco Nexus 9000 Series features virtual PortChannel (vPC) capabilities. vPC allows links that are physically connected to two different Cisco Nexus 9000 Series devices to appear as a single logical port channel to a third device, which adds device-level fault tolerance. vPC therefore addresses aggregate bandwidth, link resiliency, and device resiliency.
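Port channels generally distribute traffic by hashing header fields of each flow onto one member link, so a single flow stays on one link (preserving frame order) while many flows spread across all members. The Python sketch below illustrates that idea with a hypothetical CRC32 hash over a source/destination tuple; it is not the Cisco load-balancing algorithm, whose hash inputs are configurable and platform-specific, and the addresses and interface names are invented. Because a vPC appears to the downstream device as one port channel, the same concept applies when member links terminate on two vPC peers.

```python
import zlib


def select_member(flow, members):
    """Pick one active member link for a flow by hashing its header fields."""
    if not members:
        raise RuntimeError("port channel is down: no active member links")
    key = "|".join(str(field) for field in flow).encode()
    return members[zlib.crc32(key) % len(members)]


def distribute(flows, members):
    """Count how many flows land on each active member link."""
    load = {member: 0 for member in members}
    for flow in flows:
        load[select_member(flow, members)] += 1
    return load


# Hypothetical flows: (source IP, destination IP, source port, destination port).
flows = [(f"10.1.1.{i}", "10.1.2.10", 49152 + i, 443) for i in range(1, 201)]
links = ["Eth1/1", "Eth1/2", "Eth1/3", "Eth1/4"]

print(distribute(flows, links))
# A member fails: it leaves the bundle and its flows rehash onto the survivors.
survivors = [link for link in links if link != "Eth1/3"]
print(distribute(flows, survivors))
```

This simple modulo scheme also moves some flows that were on healthy links when the member count changes; production switches may handle rehashing differently.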

The Cisco Nexus design incorporates an 8Gb-enabled, 16Gb-capable FC fabric for block storage access, defined by FC uplinks from the IBM FlashSystem V9000 control enclosures to the Cisco MDS switches and from the Cisco MDS switches to the Cisco UCS fabric interconnects.

The Cisco Nexus 9000 design can be deployed either with a dedicated SAN switching environment or without direct Fibre Channel connectivity, using iSCSI or FCoE as the SAN protocol; FCoE requires lossless Ethernet at 10 Gb or faster. The current validation covers a dedicated SAN switching environment built on Cisco MDS 9148S switches to meet the high-performance and high-availability requirements of the All Flash VersaStack system.

Figure 3 details the SAN connectivity within the VersaStack All Flash system. Redundant host VSANs carry 8-Gb host (server) traffic to the V9000 controllers through independent fabrics, and a second pair of redundant VSANs provides controller-to-storage-enclosure connectivity using 16-Gb ports on the MDS switches.

Figure 3 SAN Connectivity – All Flash Block Storage

The SAN infrastructure allows storage enclosures or additional building blocks to be added non-disruptively. A pair of MDS 9148S switches provides redundant Fibre Channel connectivity. Creating separate fabrics with VSANs on the MDS switches provides dedicated host-side (server-side) storage area networks (SANs) and a private fabric to support the cluster interconnect.

The logical fabric isolation provides:

1. No host or server can accidentally access the storage enclosure.

2. Congestion on the host-side (server-side) SAN cannot spill over into the private fabric, avoiding performance implications for both the host-side SAN and the FlashSystem V9000.
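The isolation rules above can be expressed as a simple check on port-to-VSAN assignments. The Python sketch below models one MDS fabric (fabric A) with a hypothetical host VSAN and a hypothetical private VSAN, assuming no Inter-VSAN Routing is configured, so ports in different VSANs cannot communicate. It reports any VSAN shared by a host-facing port and a storage-enclosure port, and confirms that the V9000 control enclosures have ports in both. Port names and VSAN numbers are illustrative; fabric B would be modeled the same way with its own pair of VSANs.

```python
# Hypothetical port-to-VSAN map for MDS fabric A: port -> (role, VSAN).
# Port names and VSAN numbers are illustrative, not the validated values.
FABRIC_A = {
    "fc1/1": ("host", 101),         # uplink from UCS fabric interconnect A
    "fc1/2": ("host", 101),
    "fc1/13": ("controller", 101),  # V9000 control enclosure 1, host-facing
    "fc1/14": ("controller", 101),  # V9000 control enclosure 2, host-facing
    "fc1/25": ("controller", 201),  # control enclosure 1, private fabric
    "fc1/26": ("controller", 201),  # control enclosure 2, private fabric
    "fc1/37": ("enclosure", 201),   # flash storage enclosure, canister 1
    "fc1/38": ("enclosure", 201),   # flash storage enclosure, canister 2
}


def vsans_for(ports, role):
    """Set of VSANs that contain at least one port with the given role."""
    return {vsan for port_role, vsan in ports.values() if port_role == role}


def isolation_violations(ports):
    """VSANs in which a host port could see a storage-enclosure port."""
    return sorted(vsans_for(ports, "host") & vsans_for(ports, "enclosure"))


def controllers_bridge_fabrics(ports):
    """True if controllers have ports in every host VSAN and every enclosure VSAN."""
    needed = vsans_for(ports, "host") | vsans_for(ports, "enclosure")
    return needed <= vsans_for(ports, "controller")


print(isolation_violations(FABRIC_A))        # []
print(controllers_bridge_fabrics(FABRIC_A))  # True
```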

The SAN infrastructure also enables scaling by adding control enclosures and storage enclosures, expanding the VersaStack system from a single building block to multiple building blocks to meet data center requirements.

VersaStack All Flash Design Direct Attached

The other design option for All Flash VersaStack, which this CVD does not cover, is a direct-attached configuration using a V9000 fixed building block. For smaller deployments, VersaStack All Flash can be deployed in a direct-attached configuration that eliminates the need for separate Fibre Channel switches.

A fixed building block contains one FlashSystem V9000. The storage enclosure is cabled directly to each control enclosure using 8-Gb links, and each control enclosure is connected directly to the Cisco UCS fabric interconnects. The control enclosures are also connected directly to each other, without switches or a SAN fabric, to form the cluster links. A fixed building block can be upgraded to a scalable building block, but the upgrade process disrupts operations.

In this type of deployment, the flexibility of the fabric interconnects is used by changing them to FC switching mode. FC zoning is automated through Cisco UCS SAN Connectivity Policies applied on the fabric interconnects, which simplifies the deployment of new servers.
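As an illustration of the kind of zoning such automation produces, the following Python sketch generates single-initiator zones: one zone per server vHBA, containing that initiator's WWPN plus the V9000 target WWPNs on the same fabric. Treating this as exactly what Cisco UCS Manager generates is an assumption; the zone naming scheme, the helper names, and all WWPNs below are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    """One FC zone: a name plus the WWPNs allowed to see each other."""
    name: str
    members: tuple


def single_initiator_zones(initiators, targets, fabric):
    """Create one zone per initiator containing that initiator and all targets."""
    if not targets:
        raise ValueError("at least one storage target WWPN is required")
    return [
        Zone(name=f"{server}-fabric-{fabric}", members=(wwpn, *targets))
        for server, wwpn in sorted(initiators.items())
    ]


# Hypothetical fabric A values: server vHBA WWPNs and V9000 host-facing
# target WWPNs. None of these come from the validated configuration.
FABRIC_A_INITIATORS = {
    "esxi-01": "20:00:00:25:b5:0a:00:01",
    "esxi-02": "20:00:00:25:b5:0a:00:02",
}
FABRIC_A_TARGETS = ["50:05:07:68:0c:11:00:01", "50:05:07:68:0c:21:00:01"]

for zone in single_initiator_zones(FABRIC_A_INITIATORS, FABRIC_A_TARGETS, "a"):
    print(zone.name, zone.members)
```

Keeping exactly one initiator per zone means adding a server only adds a zone, without changing the zones of existing servers.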

Figure 4 VersaStack All Flash Direct Attached Design

VersaStack Unified Design

This design is included for reference to demonstrate the flexibility of VersaStack designs in supporting both file-based and block-based storage. It is not covered in this CVD, because the current design uses IBM FlashSystem V9000, which offers block-based storage only.

The VersaStack design described below depicts a unified infrastructure that uses IBM Storwize V7000 Unified to provide both NFS access for VMware datastores and FC for SAN boot and additional VMFS datastores.

Figure 5 details the VersaStack Unified system with both file and block storage using IBM Storwize V7000 Unified. Like the All Flash design, the VersaStack Unified design is fully redundant in the compute, network, and storage layers, with no single point of failure from a device or traffic path perspective. The Storwize V7000 controller shown consists of one enclosure that contains two canisters, one for each of the two cluster nodes, as well as 24 disk drives.

Figure 5 Scalable Multi-Protocol VersaStack Design

The VersaStack Unified design incorporates an IP- and FC-based storage solution that supports file access using NFS and block access using FC. The solution provides a 10GbE-enabled, 40GbE-capable Ethernet fabric defined by Ethernet uplinks from the Cisco UCS fabric interconnects, and from the IBM Storwize V7000 storage through the V7000 file modules, to the Cisco Nexus switches.

The Cisco Nexus design also incorporates an 8Gb-enabled, 16Gb-capable FC fabric for block access, defined by FC uplinks from the IBM Storwize V7000 control enclosure to the Cisco Nexus switches through Cisco MDS switches.

The Cisco Nexus 9000 design can be deployed with a dedicated SAN switching environment, or requiring no direct Fibre Channel connectivity using iSCSI for the SAN protocol.


For more information about VersaStack designs, refer to: http://www.cisco.com/c/en/us/solutions/enterprise/data-center-designs-cloud-computing/versastack-designs.html

Validated System Hardware Components

The following components are required to deploy this VersaStack All Flash design:

· Cisco Unified Computing System

· Cisco Nexus 9372PX Series Switch

· Cisco MDS 9148S Series Switch

· IBM FlashSystem V9000 with 16-Gb Fibre Channel adapters for cluster connectivity and 8-Gb Fibre Channel adapters for host connectivity

· VMware vSphere 5.5 U2

Cisco Unified Computing System

The Cisco Unified Computing System is a next-generation solution for blade and rack server computing. The system integrates a low-latency, lossless 10 Gigabit Ethernet unified network fabric with enterprise-class, x86-architecture servers. The system is an integrated, scalable, multi-chassis platform in which all resources participate in a unified management domain. The Cisco Unified Computing System accelerates the delivery of new services simply, reliably, and securely through end-to-end provisioning and migration support for both virtualized and non-virtualized systems.

The Cisco Unified Computing System consists of the following components:

· Cisco UCS Manager (http://www.cisco.com/en/US/products/ps10281/index.html) provides unified, embedded management of all software and hardware components in the Cisco Unified Computing System.

· Cisco UCS 6200 Series Fabric Interconnects (http://www.cisco.com/en/US/products/ps11544/index.html) is a family of line-rate, low-latency, lossless, 10-Gbps Ethernet and Fibre Channel over Ethernet interconnect switches providing the management and communication backbone for the Cisco Unified Computing System.

· Cisco UCS 5100 Series Blade Server Chassis (http://www.cisco.com/en/US/products/ps10279/index.html) supports up to eight blade servers and up to two fabric extenders in a six-rack unit (RU) enclosure.

· Cisco UCS B-Series Blade Servers (http://www.cisco.com/c/en/us/products/servers-unified-computing/ucs-b-series-blade-servers/index.html) are Intel-based blade servers that increase performance, efficiency, versatility, and productivity.

· Cisco UCS C-Series Rack-Mount Servers (http://www.cisco.com/en/US/products/ps10493/index.html) deliver unified computing in an industry-standard form factor to reduce total cost of ownership and increase agility.

· Cisco UCS Adapters (http://www.cisco.com/en/US/products/ps10277/prod_module_series_home.html) use a wire-once architecture that offers a range of options to converge the fabric, optimize virtualization, and simplify management.

Cisco Nexus 2232PP 10GE Fabric Extender

The Cisco Nexus 2232PP 10GE Fabric Extender provides 32 10-Gb Ethernet and Fibre Channel over Ethernet (FCoE) Small Form-Factor Pluggable Plus (SFP+) server ports and eight 10-Gb Ethernet and FCoE SFP+ uplink ports in a compact one-rack-unit (1RU) form factor.
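These port counts determine the fabric extender's worst-case oversubscription, that is, the ratio of server-facing bandwidth to uplink bandwidth when every server port transmits at line rate. The Python sketch below computes it: with all eight 10-Gb uplinks cabled, 32 × 10 Gb over 8 × 10 Gb gives 4:1. The partially cabled case is a hypothetical illustration, not a recommendation from this design.

```python
from fractions import Fraction


def oversubscription(down_ports, down_gbps, up_ports, up_gbps):
    """Ratio of total server-facing bandwidth to total uplink bandwidth."""
    return Fraction(down_ports * down_gbps, up_ports * up_gbps)


def format_ratio(ratio):
    """Render a Fraction as an 'N:M' oversubscription string."""
    return f"{ratio.numerator}:{ratio.denominator}"


# Nexus 2232PP port counts from the text: 32 x 10 Gb server ports, 8 x 10 Gb uplinks.
print(format_ratio(oversubscription(32, 10, 8, 10)))  # 4:1
# Hypothetical partial cabling: only 4 of the 8 uplinks connected.
print(format_ratio(oversubscription(32, 10, 4, 10)))  # 8:1
```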

When a Cisco UCS C-Series Rack-Mount Server is integrated with Cisco UCS Manager through the Cisco Nexus 2232 platform, the server is managed using the Cisco UCS Manager GUI or CLI. The Cisco Nexus 2232 provides data and control traffic support for the integrated Cisco UCS C-Series server.

Cisco Nexus 9000 Series Switch

The Cisco Nexus 9000 Series Switches offer both modular (9500 switches) and fixed (9300 switches) 1/10/40/100 Gigabit Ethernet switch configurations designed to operate in one of two modes:

· Cisco NX-OS mode for traditional architectures

· ACI mode to take full advantage of the policy-driven services and infrastructure automation features of ACI

Architectural Flexibility

· Delivers high-performance, high-density, energy-efficient traditional three-tier or leaf-spine architectures

· Provides a foundation for Cisco ACI, automating application deployment and delivering simplicity, agility, and flexibility

Scalability

· Up to 60 Tbps of non-blocking performance with less than 5-microsecond latency

· Up to 2304 10-Gbps or 576 40-Gbps non-blocking layer 2 and layer 3 Ethernet ports

· Wire-speed virtual extensible LAN (VXLAN) gateway, bridging, and routing support

High Availability

· Full Cisco In-Service Software Upgrade (ISSU) and patching without any interruption in operation

· Fully redundant and hot-swappable components

· A mix of third-party and Cisco ASICs provides improved reliability and performance

Energy Efficiency

· The chassis is designed without a midplane to optimize airflow and reduce energy requirements

· The optimized design runs with fewer ASICs, resulting in lower energy use

· Efficient power supplies included in the switches are rated at 80 Plus Platinum

Investment Protection

· Cisco 40-Gb bidirectional transceiver allows for reuse of an existing 10 Gigabit Ethernet cabling plant for 40 Gigabit Ethernet

· Designed to support future ASIC generations

· Support for Cisco Nexus 2000 Series Fabric Extenders in both NX-OS and ACI modes

· Easy migration from NX-OS mode to ACI mode

When leveraging ACI fabric mode, the Cisco Nexus 9500 and 9300 switches are deployed in a spine-leaf architecture. Although the reference architecture covered in this document does not leverage ACI, it lays the foundation for customer migration to ACI by introducing the Cisco Nexus 9000 switches. Application Centric Infrastructure (ACI) is a comprehensive architecture with centralized automation and policy-driven application profiles. ACI delivers software flexibility with the scalability of hardware performance.

For more information, refer to:

http://www.cisco.com/c/en/us/products/switches/nexus-9000-series-switches/index.html

Cisco Nexus 1000v

Cisco Nexus 1000V Series Switches provide a comprehensive and extensible architectural platform for virtual machine (VM) and cloud networking. Integrated into the VMware vSphere hypervisor and fully compatible with VMware vCloud® Director, the Cisco Nexus 1000V Series provides:

· Advanced virtual machine networking based on Cisco NX-OS operating system and IEEE 802.1Q switching technology

· Cisco vPath technology for efficient and optimized integration of virtual network services

· Virtual Extensible Local Area Network (VXLAN), supporting cloud networking

· Policy-based virtual machine connectivity

· Mobile virtual machine security and network policy

· Non-disruptive operational model for your server virtualization and networking teams

· Virtualized network services with Cisco vPath, providing a single architecture for Layer 4 through Layer 7 (L4-L7) network services such as load balancing, firewalling, and WAN acceleration

For more information, refer to:

— http://www.cisco.com/en/US/products/ps9902/index.html

— http://www.cisco.com/en/US/products/ps10785/index.html

Cisco MDS 9100 Multilayer Fabric Switch

The Cisco® MDS 9148S 16G Multilayer Fabric Switch is the next generation of the highly reliable, flexible, and low-cost Cisco MDS 9100 Series switches. It combines high performance with exceptional flexibility and cost effectiveness. This powerful, compact one rack-unit (1RU) switch scales from 12 to 48 line-rate 16 Gbps Fibre Channel ports. The Cisco MDS 9148S delivers advanced storage networking features and functions with ease of management and compatibility with the entire Cisco MDS 9000 Family portfolio for reliable end-to-end connectivity.

Table 2 summarizes the main features and benefits of the Cisco MDS 9148S.

| Feature | Benefit |
|---|---|
| Common software across all platforms | Reduce total cost of ownership (TCO) by using Cisco NX-OS and Cisco Prime DCNM for consistent provisioning, management, and diagnostic capabilities across the fabric. |
| PowerOn Auto Provisioning | Automate deployment and upgrade of software images. |
| Smart zoning | Reduce consumption of hardware resources and administrative time needed to create and manage zones. |
| Intelligent diagnostics/hardware-based slow port detection | Enhance reliability, speed problem resolution, and reduce service costs by using Fibre Channel ping and traceroute to identify the exact path and timing of flows, as well as Cisco Switched Port Analyzer (SPAN), Remote SPAN (RSPAN), and Cisco Fabric Analyzer to capture and analyze network traffic. |
| Virtual output queuing | Help ensure line-rate performance on each port by eliminating head-of-line blocking. |
| High-performance ISLs | Optimize bandwidth utilization by aggregating up to 16 physical ISLs into a single logical PortChannel bundle with multipath load balancing. |
| In-Service Software Upgrades | Reduce downtime for planned maintenance and software upgrades. |
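To see why smart zoning reduces hardware consumption, it helps to count member pairs. A conventional zone permits every member to talk to every other member, while smart zoning, as Cisco describes it, enforces only initiator-to-target pairs. The sketch below only counts pairs; it does not model the switch's actual hardware programming, and the member names are hypothetical.

```python
# Count the member pairs a zone would need to enforce with and without
# smart zoning. This is an illustration, not a model of MDS TCAM usage.
from itertools import combinations


def standard_zone_pairs(initiators, targets):
    """Every unordered member pair, including initiator-initiator and target-target."""
    members = sorted(set(initiators) | set(targets))
    return list(combinations(members, 2))


def smart_zone_pairs(initiators, targets):
    """Only the initiator-to-target pairs."""
    return [(i, t) for i in sorted(initiators) for t in sorted(targets)]


# Hypothetical member names: 4 ESXi HBA ports zoned with 8 storage ports.
hosts = [f"esxi-{n:02d}-hba0" for n in range(1, 5)]
v9000_ports = [f"v9000-port{n}" for n in range(1, 9)]

if __name__ == "__main__":
    print("standard zoning pairs:", len(standard_zone_pairs(hosts, v9000_ports)))
    print("smart zoning pairs:   ", len(smart_zone_pairs(hosts, v9000_ports)))
```

For 12 members, a conventional zone implies C(12, 2) = 66 pairs, whereas only 4 × 8 = 32 are initiator-to-target; the gap widens as zones grow.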

For more information, refer to:

IBM FlashSystem V9000

The storage controller leveraged for this validated design, IBM FlashSystem V9000, is engineered to satisfy the most demanding of workloads. IBM FlashSystem V9000 is a virtualized, flash-optimized, enterprise-class storage system that provides the foundation for implementing an effective storage infrastructure with simplicity, and transforming the economics of data storage. Designed to complement virtual server environments, these modular storage systems deliver the flexibility and responsiveness required for changing business needs.

IBM FlashSystem V9000 has the following host interfaces:

· SAN-attached 16 Gbps Fibre Channel

· SAN-attached 8 Gbps Fibre Channel

· 1 Gbps iSCSI

· Optional 10 Gbps iSCSI/FCoE

Each IBM FlashSystem V9000 node canister has up to 128GB internal cache to accelerate and optimize writes, and hardware acceleration to boost the performance of Real-time Compression.

IBM FlashSystem V9000 can deploy the full range of Storwize software features, including:

· IBM Real-time Compression

· IBM Easy Tier for automated storage tiering

· External storage virtualization and data migration

· Synchronous data replication with Metro Mirror

· Asynchronous data replication with Global Mirror

· FlashCopy for near-instant data backups

IBM FlashSystem V9000 with Real-time Compression

A key differentiator in the storage industry is IBM's Real-time Compression. Unlike other approaches to compression, Real-time Compression is designed to work on active primary data, by harnessing dedicated hardware acceleration. This achieves extraordinary efficiency on a wide range of candidate data, such as production databases and email systems, enabling storage of up to five times as much active data in the same physical disk space.
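The "up to five times" figure translates into capacity and space savings as follows. This is a worked-arithmetic sketch only: 5:1 is the best case quoted above, real ratios depend on how compressible the data is, and the 10 TB physical capacity is a hypothetical value, not a V9000 configuration.

```python
# Worked arithmetic for compression ratios. The 10 TB figure is hypothetical.

def effective_capacity_tb(physical_tb: float, ratio: float) -> float:
    """Logical data that fits in `physical_tb` at a given compression ratio."""
    return physical_tb * ratio


def space_saving(ratio: float) -> float:
    """Fraction of physical space saved, e.g. 5:1 -> 0.8."""
    return 1 - 1 / ratio


if __name__ == "__main__":
    for ratio in (2.0, 5.0):
        print(f"{ratio:g}:1 -> {effective_capacity_tb(10, ratio):g} TB logical "
              f"in 10 TB physical, {space_saving(ratio):.0%} saved")
```

A 5:1 ratio means 80% of the physical space is saved; even a modest 2:1 ratio halves the capacity required.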

IBM Easy Tier

IBM Easy Tier further improves performance while increasing efficiency by automatically identifying active data and moving it to faster storage, such as flash. As a result, flash capacity is used for the data that benefits most, so even small amounts of flash can deliver better performance. Even in systems without flash, hot spots are automatically detected and data is redistributed to optimize performance.
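The basic idea of heat-based placement can be illustrated with a greedy ranking: count accesses per extent, then place the hottest extents on flash until it is full. This is a deliberately simplified sketch and is not IBM Easy Tier's algorithm, whose internals are not described here; the `Extent` class, access counts, and capacity are hypothetical.

```python
# Simplified illustration of hot-extent tiering (NOT IBM Easy Tier's algorithm).
from dataclasses import dataclass


@dataclass(frozen=True)
class Extent:
    extent_id: int
    io_count: int  # accesses observed during a monitoring window


def plan_placement(extents, flash_slots):
    """Place the hottest extents on flash until its capacity is used up.

    Returns (flash_ids, other_ids) as sets of extent IDs.
    """
    ranked = sorted(extents, key=lambda e: e.io_count, reverse=True)
    flash_ids = {e.extent_id for e in ranked[:flash_slots]}
    other_ids = {e.extent_id for e in ranked[flash_slots:]}
    return flash_ids, other_ids


if __name__ == "__main__":
    sample = [Extent(0, 120), Extent(1, 5), Extent(2, 900),
              Extent(3, 40), Extent(4, 610)]
    flash, other = plan_placement(sample, flash_slots=2)
    print("flash:", sorted(flash), "other:", sorted(other))
```

Only two of the five extents fit on flash, but they are the two that receive most of the I/O, which is why a small amount of flash can have an outsized effect.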

For more information, refer to: http://www-03.ibm.com/systems/storage/flash/v9000/

VMware vSphere

VMware vSphere is the leading virtualization platform for managing pools of IT resources consisting of processing, memory, network and storage. Virtualization allows for the creation of multiple virtual machines to run in isolation, side-by-side and on the same physical host. Unlike traditional operating systems that dedicate all server resources to one instance of an application, vSphere provides a means to manage server hardware resources with greater granularity and in a dynamic manner to support multiple instances.

For more information, refer to: http://www.vmware.com/products/datacenter-virtualization/vsphere/overview.html

Domain and Element Management

This section of the document provides general descriptions of the domain and element managers relevant to the VersaStack:

· Cisco UCS Manager

· Cisco UCS Central

· IBM FlashSystem V9000 Unified management GUI

· VMware vCenter™ Server

· Cisco UCS Performance Manager

Cisco Unified Computing System Manager

Cisco UCS Manager provides unified, centralized, embedded management of Cisco Unified Computing System (UCS) software and hardware components across multiple chassis for up to 160 servers supporting thousands of virtual machines. Administrators use the software to manage the entire Cisco Unified Computing System as a single logical entity, called a domain, through an intuitive GUI, a command-line interface (CLI) or an XML API.

Cisco UCS Manager resides on a pair of Cisco UCS 6200 Series Fabric Interconnects in a clustered, active-standby configuration for high availability. The software, along with the programmability of the Cisco VIC, provides statelessness to servers and gives administrators a single interface for server provisioning, device discovery, inventory, configuration, diagnostics, monitoring, fault detection, auditing, and statistics collection. Statelessness refers to the capability to separate server identity from the underlying hardware, so that a server can assume a new identity as needed.

Cisco UCS provides this functionality by storing server identifiers, such as MAC addresses, UUIDs, and WWNs, in pools, and firmware and BIOS settings in policies, within Cisco UCS Manager, which resides on the redundant fabric interconnects. Cisco UCS Manager service profiles and templates support versatile role- and policy-based management, and system configuration information can be exported to configuration management databases (CMDBs) to facilitate processes based on IT Infrastructure Library (ITIL) concepts.

Compute nodes are deployed in a Cisco UCS environment by leveraging Cisco UCS service profiles. Service profiles let server, network, and storage administrators treat Cisco UCS servers as raw computing capacity to be allocated and reallocated as needed. The profiles define server I/O properties, personalities, and firmware revisions, and are stored in the Cisco UCS 6200 Series Fabric Interconnects. Using service profiles, administrators can provision infrastructure resources in minutes instead of days, creating a more dynamic environment and more efficient use of server capacity.

Each service profile consists of a server software definition and the server's LAN and SAN connectivity requirements. When a service profile is deployed to a server, Cisco UCS Manager automatically configures the server, adapters, fabric extenders, and fabric interconnects to match the configuration specified in the profile. The automatic configuration of servers, network interface cards (NICs), host bus adapters (HBAs), and LAN and SAN switches lowers the risk of human error, improves consistency, and decreases server deployment times.
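The statelessness described above can be pictured as identities that belong to the profile rather than to the blade. The sketch below is a hypothetical model: `IdentityPool` and `ServiceProfile` are illustrative classes, not the Cisco UCS Manager object model or XML API, and the MAC and WWPN prefixes are example values.

```python
# Hypothetical model of stateless identity: the service profile, not the
# blade, owns the MAC address and WWPN. Illustrative only.
from dataclasses import dataclass
from typing import Optional


@dataclass
class IdentityPool:
    prefix: str          # example prefix, e.g. "00:25:B5:00:00:"
    next_index: int = 0

    def allocate(self) -> str:
        """Hand out the next unused identifier from the pool."""
        value = f"{self.prefix}{self.next_index:02X}"
        self.next_index += 1
        return value


@dataclass
class ServiceProfile:
    name: str
    mac: str
    wwpn: str
    blade: Optional[str] = None  # physical server currently associated, if any

    def associate(self, blade: str) -> None:
        # Only the hardware binding changes; MAC and WWPN travel with the
        # profile, so SAN zoning and LUN masking that reference the WWPN
        # keep working after the move.
        self.blade = blade


if __name__ == "__main__":
    mac_pool = IdentityPool("00:25:B5:00:00:")
    wwpn_pool = IdentityPool("20:00:00:25:B5:00:00:")
    esxi01 = ServiceProfile("esxi-01", mac_pool.allocate(), wwpn_pool.allocate())
    esxi01.associate("chassis-1/blade-3")
    esxi01.associate("chassis-1/blade-5")  # e.g. blade 3 taken offline for service
    print(esxi01)
```

Moving the profile from one blade to another changes only the `blade` binding; the identities the rest of the network and SAN depend on stay the same.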

Service profiles benefit both virtualized and non-virtualized environments. The profiles increase the mobility of non-virtualized servers, such as when moving workloads from server to server or taking a server offline for service or upgrade. Profiles can also be used in conjunction with virtualization clusters to bring new resources online easily, complementing virtual machine capacity and mobility.

For more Cisco UCS Manager information, refer to: http://www.cisco.com/en/US/products/ps10281/index.html

Cisco UCS Central

For Cisco UCS customers managing growth within a single data center, growth across multiple sites, or both, Cisco UCS Central software centrally manages multiple Cisco UCS domains using the same concepts that Cisco UCS Manager uses to support a single domain. Cisco UCS Central Software manages global resources (including identifiers and policies) that can be consumed by instances of Cisco UCS Manager (Figure 6). It can delegate the application of policies (embodied in global service profiles) to individual domains, where Cisco UCS Manager puts the policies into effect. In its first release, Cisco UCS Central Software can support up to 10,000 servers in a single data center or distributed around the world in as many domains as are used for the servers.

Figure 6 Cisco UCS Management Stack

Cisco UCS Performance Manager

Cisco UCS Performance Manager can help unify the monitoring of critical infrastructure components, network connections, applications, and business services across dynamic heterogeneous physical and virtual data centers powered by Cisco UCS.

Cisco UCS Performance Manager delivers detailed monitoring from a single customizable console. The software uses APIs from Cisco UCS Manager and other Cisco® and third-party components to collect data and display comprehensive, relevant information about your Cisco UCS integrated infrastructure.

Cisco UCS Performance Manager does the following:

· Unifies performance monitoring and management of Cisco UCS integrated infrastructure

· Delivers real-time views of fabric and data center switch bandwidth use and capacity thresholds

· Discovers and creates relationship models of each system, giving your staff a single, accurate view of all components

· Provides coverage for Cisco UCS servers, Cisco networking, storage, hypervisors, and operating systems

· Allows you to easily navigate to individual components for rapid problem resolution

An Express version of Cisco UCS Performance Manager is also available for Cisco UCS-based compute platform coverage only (physical and virtual). The Express version covers Cisco UCS servers, hypervisors, and operating systems.

Figure 7 Cisco UCS Performance Manager View of Converged Infrastructure Components

Figure 8 Cisco UCS Performance Manager Topology

IBM FlashSystem V9000 Easy-to-Use Management GUI

The IBM FlashSystem V9000 built-in user interface (Figure 9) hides complexity and makes it possible for administrators to quickly and easily complete common block storage tasks from the same interface, such as creating and deploying volumes and host mappings. Users can also monitor performance in real-time (Figure 10).

The IBM FlashSystem V9000 management interface can check for the latest updates and, through an upgrade wizard, keep the system on the latest software release with just a few mouse clicks. Auto-discovery and presets help administrators greatly reduce setup time and implement a successful deployment. The interface is web-accessible and built into the product, removing the need for the administrator to download and update management software.

Figure 9 IBM FlashSystem V9000 Management GUI Example

Figure 10 Real-time Performance Monitoring on the IBM FlashSystem V9000 Management GUI

VMware vCenter Server

VMware vCenter is a virtualization management application for managing large collections of IT infrastructure resources such as processing, storage and networking in a seamless, versatile and dynamic manner. It is the simplest and most efficient way to manage VMware vSphere hosts at scale. It provides unified management of all hosts and virtual machines from a single console and aggregates performance monitoring of clusters, hosts, and virtual machines. VMware vCenter Server gives administrators deep insight into the status and configuration of compute clusters, hosts, virtual machines, storage, the guest OS, and other critical components of a virtual infrastructure. A single administrator can manage 100 or more virtualization environment workloads using VMware vCenter Server, more than doubling typical productivity in managing physical infrastructure. VMware vCenter manages the rich set of features available in a VMware vSphere environment.

For more information, refer to: http://www.vmware.com/products/vcenter-server/overview.html

VMware vCenter Server Plug-Ins

vCenter Server plug-ins extend the capabilities of vCenter Server by providing more features and functionality. Some plug-ins are installed as part of the base vCenter Server product, for example, vCenter Hardware Status and vCenter Service Status, while other plug-ins are packaged separately from the base product and require separate installation. The following plug-ins were used during the VersaStack validation process.

Cisco Nexus 1000v vCenter Plug-In

The Cisco Nexus 1000V virtual switch is a software-based Layer 2 switch for VMware ESX virtualized server environments. The Cisco Nexus 1000V provides a consistent networking experience across both physical and virtual environments. It consists of two components: the Virtual Ethernet Module (VEM), a software switch embedded in the hypervisor, and the Virtual Supervisor Module (VSM), which manages the networking policies and the quality of service for the virtual machines.

Starting with Cisco Nexus 1000V Release 4.2(1)SV2(1.1), a plug-in for VMware vCenter Server, known as the vCenter plug-in (VC plug-in), is supported on the Cisco Nexus 1000V virtual switch. It gives server administrators a view of the virtual network and visibility into the networking aspects of the Cisco Nexus 1000V virtual switch. The vCenter plug-in is supported on the VMware vSphere Web Client only; the vSphere Web Client connects to a VMware vCenter Server system to manage a Cisco Nexus 1000V through a browser. The vCenter plug-in is installed as a new tab in the Cisco Nexus 1000V as part of the user interface in the vSphere Web Client.

The vCenter plug-in allows the administrators to view the configuration aspects of the VSM. With the vCenter plug-in, the server administrator can export necessary networking details for further analysis. The server administrator can thus monitor and manage networking resources effectively with the details provided by the vCenter plug-in.

IBM Tivoli Storage FlashCopy Manager

IBM Tivoli Storage FlashCopy Manager delivers high levels of protection for key applications and databases using advanced integrated application snapshot backup and restore capabilities.

It lets you perform and manage frequent, near-instant, non-disruptive, application-aware backups and restores using integrated application and VM-aware snapshot technologies.

For more information, refer to: http://www-03.ibm.com/software/products/en/tivoli-storage-flashcopy-manager

Hardware and Software Revisions

Table 3 below describes the hardware and software versions used during solution validation. It is important to note that Cisco, IBM, and VMware have interoperability matrices that should be referenced to determine support for any specific implementation of VersaStack. Please refer to the following links for more information:

· IBM System Storage Interoperation Center

· Cisco UCS Hardware and Software Interoperability Tool

Table 3 Validated Software Versions

| Layer | Device | Image | Comments |
|---|---|---|---|
| Compute | Cisco UCS Fabric Interconnects 6200 Series | 2.2(5a) | Embedded management |
| | Cisco UCS C220 M3/M4 | 2.2(3g) | Software bundle release |
| | Cisco UCS B200 M3/M4 | 2.2(3g) | Software bundle release |
| | Cisco ESXi eNIC | 2.1.2.59 | Ethernet driver for Cisco VIC |
| | Cisco ESXi fnic Driver | 1.6.0.12 | FCoE driver for Cisco VIC |
| Network | Cisco Nexus 9372 | 6.1(2)I3(4b) | Operating system version |
| | Cisco MDS 9148S | 6.2(9c) | FC switch firmware version |
| Storage | IBM FlashSystem V9000 | 7.4.1.2 | Software version |
| Software | VMware vSphere ESXi | 5.5u2 | |
| | VMware vCenter | 5.5u2 | |
| | Cisco Nexus 1000v | 5.2(1)SV3(1.4) | Software version |

Hardware and Software Options for VersaStack

While VersaStack deployment CVDs are configured with specific hardware and software, the components used to deploy a VersaStack can be customized to suit the specific needs of the environment, as long as all the components and operating systems are on the hardware compatibility lists (HCLs) referenced in this document. VersaStack can be deployed with all advanced software features, such as replication and storage virtualization, on any component running supported levels of code. It is recommended to use the software versions specified in the deployment CVD when possible. Other operating systems such as Linux and Windows are also supported on VersaStack, either as a hypervisor, as a guest OS within the hypervisor environment, or installed directly on bare-metal servers. Other protocols, iSCSI for example, are supported as well. Note that basic networking components such as IP-only switches are typically not on the IBM HCL. Please refer to Table 4 for examples of additional hardware options.

Interoperability links:

http://www-03.ibm.com/systems/support/storage/ssic/interoperability.wss

http://www.cisco.com/web/techdoc/ucs/interoperability/matrix/matrix.html

Table 4 Examples of other VersaStack Hardware and Software Options

| Layer | Hardware | Software |
|---|---|---|
| Compute | Rack | 2.2(3b) or later |
| | 5108 chassis | 2.2(3b) or later |
| | Blade: | 2.2(3b) or later |
| Network | 93XX, 55XX, 56XX, 35XX series | 6.1(2)I3(1) or later |
| | Cisco Nexus 1000v | 5.2(1)SV3(1.1) or later |
| | UCS VIC 12XX series, 13XX series | |
| Storage | Cisco MDS 91XX, 92XX, 95XX, 97XX series | 6.2(9) or later |
| | IBM FlashSystem V9000 | Version 7.4.1.2 or later |
| | IBM Storwize V7000 | Version 7.3.0.9 or later |
| | LFF Expansion (2076-12F), SFF Expansion (2076-24F) | |
| | IBM Storwize V7000 Unified File Modules (2073-720) | Version 1.5.0.5-1 or later |
| Software | VMware vSphere ESXi | 5.5u1 or later |
| | VMware vCenter | 5.5u1 or later |
| | Windows | 2008R2, 2012R2 |
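Comparing the validated versions in Table 3 against the "or later" minimums in Table 4 can be scripted. The ordering rule below (compare numeric and letter tokens left to right) is an assumption that happens to fit the version strings in these two tables; it is not a vendor-defined ordering, so the interoperability matrices remain the authority.

```python
# Heuristic check of installed versions against Table 4 minimums.
import re


def version_key(version: str):
    """Split e.g. "6.1(2)I3(4b)" into comparable numeric and letter tokens."""
    tokens = re.findall(r"\d+|[A-Za-z]+", version)
    # Tag each token so an int is never compared directly with a str.
    return tuple((0, int(t)) if t.isdigit() else (1, t.lower()) for t in tokens)


def meets_minimum(installed: str, minimum: str) -> bool:
    return version_key(installed) >= version_key(minimum)


# (validated version from Table 3, minimum from Table 4)
CHECKS = {
    "Cisco UCS Fabric Interconnects": ("2.2(5a)", "2.2(3b)"),
    "Cisco Nexus 9372": ("6.1(2)I3(4b)", "6.1(2)I3(1)"),
    "Cisco MDS 9148S": ("6.2(9c)", "6.2(9)"),
    "IBM FlashSystem V9000": ("7.4.1.2", "7.4.1.2"),
    "Cisco Nexus 1000v": ("5.2(1)SV3(1.4)", "5.2(1)SV3(1.1)"),
    "VMware vSphere ESXi": ("5.5u2", "5.5u1"),
}

if __name__ == "__main__":
    for device, (installed, minimum) in CHECKS.items():
        status = "OK" if meets_minimum(installed, minimum) else "BELOW MINIMUM"
        print(f"{device}: {installed} (min {minimum}) -> {status}")
```

Every validated version in Table 3 meets its Table 4 minimum under this rule; a string such as 2.2(1a) would be flagged as below 2.2(3b).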

Physical Topology

Figure 11 illustrates the new All Flash VersaStack design, covering the compute, network, and storage components. The infrastructure is physically redundant across the stack, addressing Layer 1 high-availability requirements so that the integrated stack can withstand the failure of a link or a device. The solution also incorporates additional Cisco and IBM technologies and features to further increase design efficiency. The individual components are covered in detail in the following sections.

Figure 11 Cisco Nexus 9000 Design for VersaStack All Flash

Logical Topology

Figure 2 above in the technology overview section illustrates the VersaStack All Flash System logical topology with Cisco Nexus 9000 and Cisco MDS switches. The design is physically redundant at every level and connected for high-availability to provide a reliable platform. The solution also incorporates additional Cisco and IBM technologies and features for a differentiated and effective design. This section of the document discusses the logical configuration validated for VersaStack All Flash System.

Cisco Unified Computing System

The VersaStack design supports both Cisco UCS B-Series and C-Series deployments. This section of the document discusses the integration of each deployment into VersaStack. The Cisco Unified Computing System supports the virtual server environment by providing robust, highly available, and extremely manageable compute resources. The components of the Cisco Unified Computing System offer physical redundancy and a set of logical structures to deliver a very resilient VersaStack compute domain. In this validation effort, multiple Cisco UCS B-Series ESXi servers are booted from SAN using Fibre Channel. The ESXi nodes consisted of Cisco UCS B200-M3 and B200-M4 series blades with Cisco 1240 and 1340 VIC adapters. These nodes were allocated to a VMware DRS and HA enabled cluster supporting infrastructure services such as vSphere Virtual Center, Microsoft Active Directory and database services.

Cisco Unified Computing System I/O Component Selection

VersaStack allows customers to adjust the individual components of the system to meet their particular scale or performance requirements. Selection of I/O components has a direct impact on scale and performance characteristics when ordering the Cisco UCS components. Figure 12 illustrates the available backplane connections in the Cisco UCS 5100 series chassis. As shown, each of the two Fabric Extenders (I/O modules) has four 10GBASE-KR (802.3ap) standardized Ethernet backplane paths available for connection to the half-width blade slot. This means that each half-width slot has the potential to support up to 80Gb of aggregate traffic, depending on the selection of the following:

· Fabric Extender model (2204XP or 2208XP)

· Modular LAN on Motherboard (mLOM) card

· Mezzanine Slot card
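The 80Gb ceiling follows directly from the counts above. Writing N_FEX for the fabric extenders per chassis, L_KR for the KR lanes each FEX provides to a half-width slot, and R_lane for the 10 Gb/s rate of each lane (symbols introduced here for illustration):

```latex
B_{\text{slot}} = N_{\text{FEX}} \times L_{\text{KR}} \times R_{\text{lane}}
  = 2 \times 4 \times 10~\text{Gb/s} = 80~\text{Gb/s}
```

With the 2204XP, which provides two lanes per slot, the same product gives 2 × 2 × 10 = 40 Gb/s. How much of this ceiling a blade actually uses depends on the mLOM and mezzanine cards installed.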

Figure 12 Cisco UCS B-Series M3 Server Chassis Backplane Connections

Fabric Extender Modules (FEX)

Each Cisco UCS chassis is equipped with a pair of Cisco UCS Fabric Extenders. Two fabric extender models are available: the 2208XP and the 2204XP. The Cisco UCS 2208XP has eight 10 Gigabit Ethernet, FCoE-capable ports that connect the blade chassis to the fabric interconnect, and the Cisco UCS 2204XP has four external ports with identical characteristics. Internally, each Cisco UCS 2208XP has thirty-two 10 Gigabit Ethernet ports connected through the midplane to the eight half-width slots (4 per slot) in the chassis, while the 2204XP has 16 such ports (2 per slot).

Table 5 Fabric Extenders

| Fabric Extender | Network Facing Interface | Host Facing Interface |
|---|---|---|
| UCS 2204XP | 4 | 16 |
| UCS 2208XP | 8 | 32 |

MLOM Virtual Interface Card (VIC)

The VersaStack solution is typically validated using the Cisco VIC 1240 or Cisco VIC 1280; with the addition of the Cisco UCS B200 M4 servers to the VersaStack All Flash system, the VIC 1340 and VIC 1380 are also validated on these new blade servers. The Cisco VIC 1240 is a 4-port 10 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE)-capable modular LAN on motherboard (mLOM) designed exclusively for the M3 generation of Cisco UCS B-Series Blade Servers. The Cisco VIC 1340 is the next-generation 2-port 40 Gigabit Ethernet, FCoE-capable mLOM adapter, designed for both the B200 M3 and M4 generations of Cisco UCS B-Series Blade Servers. When used in combination with an optional Port Expander and the Cisco UCS 2208 fabric extender, the Cisco UCS VIC 1240 and VIC 1340 can be expanded to eight ports of 10 Gigabit Ethernet.

Mezzanine Slot Card

The Cisco VIC 1280 and VIC 1380 are eight-port 10 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE)-capable mezzanine cards designed exclusively for Cisco UCS B-Series Blade Servers.

Server Traffic Aggregation

Selection of the FEX, VIC, and mezzanine cards plays a major role in determining the aggregate traffic throughput to and from a server. Figure 13 shows an overview of backplane connectivity for both the I/O Modules and Cisco VICs. The number of KR lanes indicates the 10GbE paths available to the chassis and therefore to the blades. As shown in Figure 12, traffic aggregation differs depending on the I/O module and VIC models: the 2204XP enables 2 KR lanes per half-width blade slot, while the 2208XP enables all four. Similarly, the number of KR lanes varies with the selection of VIC 1240/1340, VIC 1240/1340 with Port Expander, or VIC 1280/1380.

Validated I/O Component Configurations

The most commonly validated I/O component configurations in VersaStack designs are:

· Cisco UCS B200M3 with VIC 1240 and FEX 2204

· Cisco UCS B200M3 with VIC 1240 and FEX 2208

· Cisco UCS B200M4 with VIC 1340 and FEX 2208 *


* Cisco UCS B200M4 with VIC 1340 and FEX 2208 configuration is very similar to B200M3 with VIC 1240 and FEX 2208 configuration as shown in Figure 12 and is therefore not covered separately

Figure 13 and Figure 14 show the connectivity for the first two configurations.

Figure 13 Validated Backplane Configuration—VIC 1240 with FEX 2204

In Figure 13, the FEX 2204XP enables 2 KR lanes to the half-width blade, while the global discovery policy dictates the formation of a fabric port channel. This results in a 20GbE connection to the blade server.

Figure 14 Validated Backplane Configuration—VIC 1240 with FEX 2208

In Figure 14, the FEX 2208XP enables 8 KR lanes to the half-width blade, while the global discovery policy dictates the formation of a fabric port channel. Because the VIC 1240 is not using a Port Expander module, this configuration results in a 40GbE connection to the blade server.
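The 20GbE and 40GbE results can be reproduced with a small model. The lane counts per FEX come from the text; the even split of each FEX's per-slot lanes between the mLOM and mezzanine positions is an assumption that is consistent with the results quoted above, and the function name is hypothetical.

```python
# Per-blade bandwidth for the validated I/O combinations.
LANE_GBPS = 10          # each 10GBASE-KR backplane lane
FEXES_PER_CHASSIS = 2   # fabric A and fabric B
FEX_LANES_PER_SLOT = {"2204XP": 2, "2208XP": 4}


def blade_bandwidth_gbps(fex_model: str, uses_mezz_lanes: bool) -> int:
    """Aggregate bandwidth to one half-width blade across both fabrics.

    Assumption: each FEX splits its per-slot lanes evenly between the mLOM and
    mezzanine positions. An mLOM VIC on its own (VIC 1240/1340 without a Port
    Expander) therefore uses only half of them; adding a Port Expander or a
    mezzanine VIC (for example VIC 1280/1380) lights up the other half.
    """
    lanes = FEX_LANES_PER_SLOT[fex_model]
    usable = lanes if uses_mezz_lanes else lanes // 2
    return usable * LANE_GBPS * FEXES_PER_CHASSIS


if __name__ == "__main__":
    print("VIC 1240, FEX 2204XP:", blade_bandwidth_gbps("2204XP", False), "Gb")
    print("VIC 1240, FEX 2208XP:", blade_bandwidth_gbps("2208XP", False), "Gb")
    print("VIC 1240 + Port Expander, FEX 2208XP:",
          blade_bandwidth_gbps("2208XP", True), "Gb")
```

The model yields 20 and 40 Gb for the two validated configurations and reaches the 80Gb slot ceiling only when the mezzanine lanes are also in use with the 2208XP.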

Cisco Unified Computing System Chassis/FEX Discovery Policy

Cisco Unified Computing System can be configured to discover a chassis using Discrete Mode or Port-Channel Mode (Figure 15). In Discrete Mode, each FEX KR connection, and therefore each server connection, is tied or pinned to a network fabric connection homed to a port on the Fabric Interconnect. If that external link fails, the KR connections pinned to it are disabled within the FEX I/O module. In Port-Channel Mode, the failure of a network fabric link allows flows to be redistributed across the remaining port channel members. Port-Channel Mode is therefore less disruptive to the fabric and is recommended in VersaStack designs.

Figure 15 Cisco UCS Chassis Discovery Policy—Discrete Mode vs. Port Channel Mode
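The difference between the two modes shows up when a single FEX uplink fails. The sketch below compares which half-width slots keep a path through one FEX. The round-robin pinning used for Discrete Mode is a hypothetical simplification (actual UCS pinning depends on the number of links cabled), and the port names are made up.

```python
# Discrete vs Port-Channel chassis discovery behavior for one FEX.
# Assumption: in Discrete mode, slot s is pinned to uplink (s - 1) % len(uplinks).

def surviving_slots(mode: str, uplinks: list, failed: set, slots: int = 8) -> set:
    """Return the half-width slots that still reach this fabric after `failed` links go down."""
    all_slots = set(range(1, slots + 1))
    alive = [u for u in uplinks if u not in failed]
    if mode == "port-channel":
        # Flows are redistributed across the remaining members, so every slot
        # survives as long as at least one member link is up.
        return all_slots if alive else set()
    if mode == "discrete":
        # Each slot depends on the single uplink it is pinned to.
        return {s for s in all_slots if uplinks[(s - 1) % len(uplinks)] not in failed}
    raise ValueError(f"unknown discovery mode: {mode!r}")


if __name__ == "__main__":
    links = ["fi-a/1/1", "fi-a/1/2", "fi-a/1/3", "fi-a/1/4"]  # hypothetical port names
    down = {"fi-a/1/2"}
    for mode in ("discrete", "port-channel"):
        lost = set(range(1, 9)) - surviving_slots(mode, links, down)
        print(f"{mode}: slots losing this fabric -> {sorted(lost)}")
```

In Discrete Mode the blades pinned to the failed link must rely on the other fabric until it recovers; in Port-Channel Mode every blade keeps its path, with somewhat less aggregate uplink bandwidth.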

Cisco Unified Computing System—QoS and Jumbo Frames

VersaStack accommodates a myriad of traffic types (vMotion, NFS, FCoE, control traffic, etc.) and is capable of absorbing traffic spikes and protecting against traffic loss. Cisco UCS and Cisco Nexus QoS system classes and policies deliver this functionality. In this validation effort, VersaStack was configured to support jumbo frames with an MTU size of 9000. Enabling jumbo frames allows the VersaStack environment to optimize throughput between devices while simultaneously reducing the consumption of CPU resources.


When configuring jumbo frames, make sure MTU settings are applied uniformly across the stack to prevent fragmentation and the resulting performance degradation.
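Because the largest frame that can cross a path is bounded by the smallest MTU along it, one overlooked hop undoes the jumbo-frame configuration. The sketch below finds that hop and computes the ICMP payload for a don't-fragment test. Device names and MTU values are hypothetical; 9216 is shown as a common switch-side setting that leaves headroom above a 9000-byte host MTU.

```python
# Find the hop that breaks an end-to-end jumbo-frame path.

def path_mtu(hops):
    """The largest frame that survives the whole path is bounded by the smallest MTU."""
    return min(mtu for _, mtu in hops)


def mismatched_hops(hops, expected=9000):
    """Hops configured below the MTU the hosts are using."""
    return [device for device, mtu in hops if mtu < expected]


def vmkping_payload(mtu):
    """ICMP payload for a don't-fragment test: MTU minus 20-byte IP and 8-byte ICMP headers."""
    return mtu - 28


hops = [
    ("esxi-01 vmk1", 9000),
    ("vSwitch uplink", 1500),       # hypothetical hop missed during the rollout
    ("ucs-fi-a QoS class", 9216),
    ("nexus-9372-a", 9216),
    ("esxi-02 vmk1", 9000),
]

if __name__ == "__main__":
    print("path MTU:", path_mtu(hops))
    print("fix these hops:", mismatched_hops(hops))
    # On ESXi the equivalent test would be: vmkping -d -s 8972 <peer-vmk-ip>
    print("vmkping -d -s", vmkping_payload(9000))
```

A don't-fragment ping with an 8972-byte payload succeeds only if every hop accepts 9000-byte packets, which makes it a quick end-to-end check after the MTU changes.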

Cisco Unified Computing System—Cisco UCS C-Series Server Design

Fabric Interconnect—Direct Attached Design

Cisco UCS Manager 2.2 and later allows customers to connect Cisco UCS C-Series servers directly to Cisco UCS Fabric Interconnects without requiring a Fabric Extender (FEX). While Cisco UCS C-Series connectivity using the Cisco Nexus 2232 FEX is still supported and recommended for large-scale Cisco UCS C-Series deployments, the direct-attached design allows customers to connect and manage Cisco UCS C-Series servers on a smaller scale without buying additional hardware.


For detailed connectivity requirements, refer to: http://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/c-series_integration/ucsm2-2/b_C-Series-Integration_UCSM2-2/b_C-Series-Integration_UCSM2-2_chapter_0110.html#reference_EF9772524CF3442EBA65813C2140EBE6

Fabric Interconnect—Fabric Extender Attached Design

Figure 16 illustrates the connectivity of the Cisco UCS C-Series server into the Cisco UCS domain using a Fabric Extender. Functionally, the 1RU Cisco Nexus 2232PP FEX replaces the Cisco UCS 2204 or 2208 IOM (located within the Cisco UCS 5108 blade chassis). Each 10GbE VIC port connects to Fabric A or B through the FEX. The FEX and Fabric Interconnects form port channels automatically based on the chassis discovery policy, providing link resiliency to the Cisco UCS C-Series server. This is identical to the behavior of the IOM-to-Fabric Interconnect connectivity. Logically, the virtual circuits formed within the Cisco UCS domain are consistent between the B-Series and C-Series deployment models, and the virtual constructs formed at the vSphere layer are unaware of the platform in use.

Figure 16 Cisco UCS C-Series with VIC 1225

Cisco Nexus 9000

Cisco Nexus 9000 provides Ethernet switching fabric for communications between the Cisco UCS domain and the enterprise network. In the VersaStack All Flash design, Cisco UCS Fabric Interconnects are connected to the Cisco Nexus 9000 switches using virtual PortChannels (vPC).

Virtual Port Channel (vPC)

A virtual PortChannel (vPC) allows links that are physically connected to two different Cisco Nexus 9000 Series devices to appear as a single PortChannel. In a switching environment, vPC provides the following benefits:

· Allows a single device to use a PortChannel across two upstream devices

· Eliminates Spanning Tree Protocol blocked ports and uses all available uplink bandwidth

· Provides a loop-free topology

· Provides fast convergence if either one of the physical links or a device fails

· Helps ensure high availability of the overall VersaStack system

Figure 17 Cisco Nexus 9000 Connections to IBM FlashSystem V9000

Figure 17 illustrates the connections between the Cisco Nexus 9000 switches and the Fabric Interconnects. Cisco Nexus 9000 switches connected through a vPC require a "peer link", which is shown as port channel 10 in this diagram. In addition to the vPC peer link, a vPC peer keepalive link is a required component of a vPC configuration. The peer keepalive link allows each vPC-enabled switch to monitor the health of its peer. This link accelerates convergence and reduces the occurrence of split-brain scenarios. In this validated solution, the vPC peer keepalive link uses the out-of-band management network.
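The peer link and the peer keepalive link have different jobs, and a small decision table captures how they divide the work. The following Python sketch is a simplified, hypothetical model of the commonly documented NX-OS behavior, not Cisco's implementation. The `Role` and `Outcome` names are illustrative only, and details such as auto-recovery timers are deliberately left out.

```python
from enum import Enum


class Role(Enum):
    PRIMARY = "primary"
    SECONDARY = "secondary"


class Outcome(Enum):
    NORMAL = "both peers forward on their vPC member ports"
    SECONDARY_SUSPENDS = "secondary suspends its vPC member ports"
    DUAL_ACTIVE = "both peers forward independently (split brain)"


def vpc_outcome(peer_link_up: bool, keepalive_up: bool) -> Outcome:
    """Simplified reaction of a vPC pair to peer link and keepalive failures.

    The keepalive carries no data traffic; it only tells each switch
    whether its peer is still alive when the peer link is down.
    """
    if peer_link_up:
        # A keepalive failure on its own does not change forwarding.
        return Outcome.NORMAL
    if keepalive_up:
        # Peer link down but the peer is known to be alive: the secondary
        # suspends its vPC member ports so only the primary forwards.
        return Outcome.SECONDARY_SUSPENDS
    # Neither path works, so neither switch can confirm the other is alive.
    return Outcome.DUAL_ACTIVE


def forwards_on_vpc_ports(role: Role, peer_link_up: bool, keepalive_up: bool) -> bool:
    """Return True if a switch in the given role keeps its vPC member ports up."""
    if vpc_outcome(peer_link_up, keepalive_up) is Outcome.SECONDARY_SUSPENDS:
        return role is Role.PRIMARY
    return True


for link_up, ka_up in [(True, True), (True, False), (False, True), (False, False)]:
    print(f"peer link up={link_up}, keepalive up={ka_up}: {vpc_outcome(link_up, ka_up).value}")
```

The out-of-band keepalive exists to make the last case unlikely. It travels over a separate path (mgmt0 in this design), so a single failure rarely takes down both links at once.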

Cisco Nexus 9000 Best Practices

Cisco Nexus 9000 related best practices used in the validation of the VersaStack architecture are summarized below:

· Cisco Nexus 9000 features enabled

— Link Aggregation Control Protocol (LACP, part of IEEE 802.3ad)

— Cisco Virtual Port Channeling (vPC) for link and device resiliency

— Cisco Discovery Protocol (CDP) for infrastructure visibility and troubleshooting

· vPC considerations

— Define a unique domain ID

— Set the priority of the intended vPC primary switch lower than the secondary (default priority is 32768)

— Establish peer keepalive connectivity. It is recommended to use the out-of-band management network (mgmt0) or a dedicated switched virtual interface (SVI)

— Enable vPC auto-recovery feature

— Enable peer-gateway. Peer-gateway allows a vPC switch to act as the active gateway for packets that are addressed to the router MAC address of the vPC peer, so that each vPC peer can forward this traffic locally instead of sending it across the peer link.

— Enable IP ARP synchronization to optimize convergence across the vPC peer link.

Cisco Fabric Services over Ethernet (CFSoE) is responsible for synchronization of configuration, Spanning Tree, MAC and VLAN information, which removes the requirement for explicit configuration. The service is enabled by default.

— A minimum of two 10 Gigabit Ethernet connections are required for vPC

— All port channels should be configured in LACP active mode

· Spanning tree considerations

— The spanning tree priority was not modified. Peer-switch (part of vPC configuration) is enabled which allows both switches to act as root for the VLANs

— Loopguard is disabled by default

— BPDU guard and filtering are enabled by default

— Bridge assurance is only enabled on the vPC Peer Link.
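As a rough illustration of how these practices map to switch configuration, the following Python sketch renders an NX-OS vPC domain and peer-link snippet for one Nexus 9000 peer. The hostnames, domain ID, role priorities, mgmt0 addresses, and interface names are hypothetical. Treat the output as a starting point to check against the NX-OS configuration guide for the release in use; it is not the validated configuration.

```python
from dataclasses import dataclass, field
from typing import List

DEFAULT_ROLE_PRIORITY = 32768  # NX-OS default; the lower value becomes vPC primary


@dataclass
class VpcPeer:
    """Hypothetical parameters for one Nexus 9000 vPC peer."""
    hostname: str
    mgmt0_ip: str        # keepalive source (out-of-band management)
    peer_mgmt0_ip: str   # keepalive destination
    role_priority: int = DEFAULT_ROLE_PRIORITY
    peer_link_ports: List[str] = field(default_factory=list)


def check_roles(primary: VpcPeer, secondary: VpcPeer) -> None:
    """The intended primary needs a strictly lower role priority than the secondary."""
    if primary.role_priority >= secondary.role_priority:
        raise ValueError("intended primary must have the lower role priority")


def render_vpc_config(peer: VpcPeer, domain_id: int, peer_link_pc: int = 10) -> str:
    """Render a vPC snippet that follows the best practices listed above."""
    if not 1 <= domain_id <= 1000:
        raise ValueError("vPC domain ID must be between 1 and 1000")
    if len(peer.peer_link_ports) < 2:
        raise ValueError("use at least two 10 Gigabit Ethernet links for the peer link")
    lines = [
        f"hostname {peer.hostname}",
        "feature lacp",
        "feature vpc",
        "cdp enable",
        f"vpc domain {domain_id}",
        f"  role priority {peer.role_priority}",
        f"  peer-keepalive destination {peer.peer_mgmt0_ip} source {peer.mgmt0_ip} vrf management",
        "  peer-switch",
        "  peer-gateway",
        "  auto-recovery",
        "  ip arp synchronize",
        f"interface port-channel{peer_link_pc}",
        "  switchport mode trunk",
        "  spanning-tree port type network",  # network port type: Bridge Assurance on the peer link
        "  vpc peer-link",
    ]
    for port in peer.peer_link_ports:
        lines += [
            f"interface {port}",
            "  switchport mode trunk",
            f"  channel-group {peer_link_pc} mode active",  # LACP active mode
        ]
    return "\n".join(lines)


switch_a = VpcPeer("n9k-a", "192.168.100.11", "192.168.100.12", role_priority=10,
                   peer_link_ports=["Ethernet1/49", "Ethernet1/50"])
switch_b = VpcPeer("n9k-b", "192.168.100.12", "192.168.100.11",
                   peer_link_ports=["Ethernet1/49", "Ethernet1/50"])  # keeps default 32768
check_roles(switch_a, switch_b)
print(render_vpc_config(switch_a, domain_id=10))
```

Both peers share the same domain ID, and only the role priority and the keepalive source and destination differ between them.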

Cisco MDS 9000

Cisco MDS 9000 provides the Fibre Channel switching fabric for communications between the Cisco UCS domain and the IBM FlashSystem V9000. In the VersaStack design, Cisco UCS Fabric Interconnects are connected to the Cisco MDS 9000 switches using PortChannels, and virtual storage area networks (VSANs) segregate and secure the Fibre Channel traffic.

Virtual SAN

A virtual storage area network (VSAN) is a collection of ports from a set of connected Fibre Channel switches that form a virtual fabric. Ports within a single switch can be partitioned into multiple VSANs, despite sharing hardware resources. Conversely, multiple switches can join a number of ports to form a single VSAN. Each VSAN is a separate, self-contained fabric with its own security policies, zones, events, memberships, and name services, and traffic is kept separate between VSANs.

Unlike a typical fabric that is resized switch-by-switch, a VSAN can be resized port-by-port.

The use of VSANs allows the isolation of traffic within specific portions of the network. If a problem occurs in one VSAN, that problem can be handled with a minimum of disruption to the rest of the network. VSANs can also be configured separately and independently.

Isolated fabric topologies are created on the Cisco MDS switches using VSANs to support host and cluster interconnect traffic.

Table 6 VSAN Usage

| | Cisco MDS A | Cisco MDS B |
|---|---|---|
| Host VSANs | 101 | 102 |
| Cluster Interconnect VSANs | 201 | 202 |
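The VSAN plan in Table 6 and the port-by-port membership described above can be modeled in a few lines. This Python sketch is illustrative only. The switch labels come from Table 6, while the `fc1/x` interface names are hypothetical and the class is not an MDS API.

```python
from typing import Dict, List, Tuple

# VSAN plan from Table 6: each MDS switch carries one host VSAN and one
# cluster interconnect VSAN, and no VSAN ID is reused across fabrics or roles.
VSAN_PLAN: Dict[str, Dict[str, int]] = {
    "Cisco MDS A": {"host": 101, "cluster_interconnect": 201},
    "Cisco MDS B": {"host": 102, "cluster_interconnect": 202},
}


def validate_plan(plan: Dict[str, Dict[str, int]]) -> None:
    """Raise if a VSAN ID is shared between roles or between fabrics."""
    ids = [vsan for roles in plan.values() for vsan in roles.values()]
    if len(ids) != len(set(ids)):
        raise ValueError("each VSAN ID should serve one role on one fabric")


class VsanMembership:
    """Port-level VSAN membership on a set of switches."""

    def __init__(self) -> None:
        self._vsan_of: Dict[Tuple[str, str], int] = {}

    def assign(self, switch: str, interface: str, vsan: int) -> None:
        # Membership is per port, so a VSAN grows or shrinks one port at a time.
        self._vsan_of[(switch, interface)] = vsan

    def members(self, switch: str, vsan: int) -> List[str]:
        return sorted(i for (s, i), v in self._vsan_of.items() if s == switch and v == vsan)

    def same_vsan(self, switch: str, a: str, b: str) -> bool:
        # Ports on one physical switch share a fabric only if they share a VSAN.
        return self._vsan_of[(switch, a)] == self._vsan_of[(switch, b)]


validate_plan(VSAN_PLAN)
m = VsanMembership()
m.assign("Cisco MDS A", "fc1/1", VSAN_PLAN["Cisco MDS A"]["host"])
m.assign("Cisco MDS A", "fc1/9", VSAN_PLAN["Cisco MDS A"]["cluster_interconnect"])
print(m.same_vsan("Cisco MDS A", "fc1/1", "fc1/9"))  # False: different VSANs
```

Reassigning a single interface with `assign` is the model's equivalent of resizing a VSAN port-by-port.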

PortChannels

PortChannels aggregate multiple physical Fibre Channel interfaces into one logical interface to provide higher aggregate bandwidth, load balancing, and link redundancy. A PortChannel can include interfaces on different switching modules, so the failure of a single switching module cannot bring down the PortChannel link.

Figure 18 illustrates the connections between the Cisco MDS 9000 switches and the Fabric Interconnects through PortChannels. Uplinks between the Cisco UCS Fabric Interconnects and the Cisco MDS 9000 Family SAN switches are aggregated into PortChannels, which provide 32 Gbps of uplink bandwidth from each Fabric Interconnect to the Cisco MDS switches.
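The 32 Gbps figure is the sum of the member link speeds, and a member failure reduces capacity rather than breaking the link. The Python sketch below illustrates both points under stated assumptions. It assumes four 8-Gbps Fibre Channel members per Fabric Interconnect, which is consistent with 32 Gbps but not stated in this document. It spreads exchanges across members with a CRC32 hash of source FCID, destination FCID, and exchange ID. This hash only illustrates exchange-based distribution and is not the MDS load-balancing algorithm. The member interface names are hypothetical.

```python
import zlib
from typing import List


def aggregate_gbps(member_speeds_gbps: List[int], failed: int = 0) -> int:
    """Usable PortChannel bandwidth after `failed` members go down (equal speeds assumed)."""
    if not 0 <= failed <= len(member_speeds_gbps):
        raise ValueError("failed member count out of range")
    return sum(member_speeds_gbps[failed:])


def member_for_exchange(src_fcid: int, dst_fcid: int, ox_id: int, members: List[str]) -> str:
    """Pick one member link per exchange so frames of an exchange stay in order."""
    if not members:
        raise ValueError("PortChannel has no active members")
    key = f"{src_fcid:06x}:{dst_fcid:06x}:{ox_id:04x}".encode()
    return members[zlib.crc32(key) % len(members)]


members = ["fc1/1", "fc1/2", "fc1/3", "fc1/4"]  # hypothetical member interfaces
print(aggregate_gbps([8, 8, 8, 8]))            # 32
print(aggregate_gbps([8, 8, 8, 8], failed=1))  # 24
```

When a member fails, new exchanges simply hash across the surviving members, which is how the PortChannel keeps running at reduced capacity.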

The FlashSystem V9000 controllers have 8-Gbps host-facing ports and 16-Gbps storage-facing ports connected to the Cisco MDS switches, with two ports per fabric for redundancy. Dedicated VSANs provide isolated host connectivity on each MDS switch.

Figure 18 Cisco MDS connections to FlashSystem V9000 for Host Connectivity

Figure 19 illustrates connections between FlashSystem V9000 Controllers and the Storage Enclosure using Cisco MDS 9000 switches. All connectivity between the Controllers and the Storage Enclosure is 16 Gbps.

This design scales by adding V9000 building blocks connected to the same fabrics. If the ports on the existing switches are fully used, adding MDS switches to the existing fabrics increases the number of available Fibre Channel ports.

Figure 19 Cisco MDS connections to FlashSystem V9000 for Cluster Interconnect

Cisco Nexus 1000v

The Cisco Nexus 1000v is a distributed virtual switch that fully integrates into a vSphere-enabled environment. The Cisco Nexus 1000v operationally emulates a physical modular switch, where:

· Virtual Supervisor Module (VSM) provides control and management functionality to multiple modules

· Cisco Virtual Ethernet Module (VEM) is installed on ESXi nodes and each ESXi node acts as a module in the virtual switch

Figure 20 Cisco Nexus 1000v Architecture

The VEM takes configuration information from the VSM and performs Layer 2 switching and advanced networking functions, as follows:

· PortChannels

· Quality of service (QoS)

· Security: Private VLAN, access control lists (ACLs), and port security

· Monitoring: NetFlow, Switch Port Analyzer (SPAN), and Encapsulated Remote SPAN (ERSPAN)

· vPath providing efficient traffic redirection to one or more chained services such as the Cisco Virtual Security Gateway and Cisco ASA 1000v

The VersaStack architecture fully supports other intelligent network services offered through the Cisco Nexus 1000v, such as Cisco VSG, Cisco ASA 1000v, and vNAM.

Figure 21 illustrates a single ESXi node with a VEM registered to the Cisco Nexus 1000v VSM. The ESXi vmnics are presented as Ethernet interfaces in the Cisco Nexus 1000v.
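A toy model makes the modular-switch analogy concrete. In the Python sketch below, the two VSMs occupy modules 1 and 2, and each registered ESXi host becomes a VEM module numbered from 3. In the naming convention assumed here, vmnicN appears as Ethernet<module>/<N+1>. The lowest-free-number assignment policy and the host names are simplifying assumptions for illustration. They do not describe how the VSM actually allocates module numbers.

```python
from dataclasses import dataclass, field
from typing import Dict

FIRST_VEM_MODULE = 3  # modules 1 and 2 are the active and standby VSMs


@dataclass
class Nexus1000vModel:
    """Toy view of the Cisco Nexus 1000v as one modular switch."""
    vems: Dict[int, str] = field(default_factory=dict)  # module number -> ESXi host

    def register_vem(self, esxi_host: str) -> int:
        """Return the module number for a host, assigning the lowest free one if new."""
        for module, host in self.vems.items():
            if host == esxi_host:
                return module
        module = FIRST_VEM_MODULE
        while module in self.vems:
            module += 1
        self.vems[module] = esxi_host
        return module

    def uplink_interface(self, esxi_host: str, vmnic: int) -> str:
        """Name of the Ethernet interface that represents vmnic<N> on a registered host."""
        for module, host in self.vems.items():
            if host == esxi_host:
                return f"Ethernet{module}/{vmnic + 1}"
        raise KeyError(f"{esxi_host} has no registered VEM")


dvs = Nexus1000vModel()
dvs.register_vem("esxi-01")               # module 3
dvs.register_vem("esxi-02")               # module 4
print(dvs.uplink_interface("esxi-02", 0))  # Ethernet4/1
```

The VSM plays the role of the supervisor, so configuration applied once through it reaches every VEM "line card".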

Figure 21 Cisco Nexus 1000v VEM in an ESXi Environment

Cisco Nexus 1000v Interfaces and Port Profiles

Port profiles are logical templates that can be applied to the Ethernet and virtual Ethernet interfaces available on the Cisco Nexus 1000v. The Cisco Nexus 1000v aggregates the Ethernet uplinks into a single port channel, defined by the "System-Uplink" port profile, for fault tolerance and improved throughput (Figure 21).

Since the Cisco Nexus 1000v provides link failure detection, disabling Cisco UCS Fabric Failover within the vNIC template is recommended.
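A "System-Uplink" Ethernet port profile of the kind described above might be rendered as follows. This Python sketch emits Nexus 1000v port-profile commands, and the VLAN IDs are hypothetical. It uses `channel-group auto mode on mac-pinning`, which pins each virtual interface to one uplink so the upstream Fabric Interconnects need no port channel toward the host. That choice is an assumption; this document does not specify it.

```python
from typing import Iterable


def _vlan_list(vlans: Iterable[int]) -> str:
    return ",".join(str(v) for v in sorted(set(vlans)))


def render_uplink_profile(name: str, trunk_vlans: Iterable[int],
                          system_vlans: Iterable[int], mac_pinning: bool = True) -> str:
    """Render an Ethernet (uplink) port profile for the Cisco Nexus 1000v."""
    trunk, system = set(trunk_vlans), set(system_vlans)
    if not system <= trunk:
        raise ValueError(f"system VLANs not trunked: {sorted(system - trunk)}")
    lines = [
        f"port-profile type ethernet {name}",
        "  vmware port-group",
        "  switchport mode trunk",
        f"  switchport trunk allowed vlan {_vlan_list(trunk)}",
    ]
    if mac_pinning:
        # Bundles the vmnics into one port channel without upstream LACP.
        lines.append("  channel-group auto mode on mac-pinning")
    lines += [
        "  no shutdown",
        # System VLANs forward even before the VEM has been programmed by the VSM.
        f"  system vlan {_vlan_list(system)}",
        "  state enabled",
    ]
    return "\n".join(lines)


print(render_uplink_profile("System-Uplink", [3170, 3173, 3174, 3175], [3170, 3174]))
```

The validation step rejects a system VLAN that is not also allowed on the trunk, which would otherwise leave that traffic with no uplink.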

The virtual machine-facing virtual Ethernet ports employ port profiles customized for each virtual machine's network, security, and service level requirements. The VersaStack architecture employs four core VMkernel NICs (vmknics), each with its own port profile:

· vmk0 - ESXi management

· vmk1 - vMotion interface

· vmk2 - NFS interface

· vmk3 - Infrastructure management

The NFS and vMotion interfaces reside on private subnets that support data access and VM migration across the VersaStack infrastructure. The management interface supports remote vCenter access and, if necessary, ESXi shell access.
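The four VMkernel interfaces and their port profiles can be captured as data and checked before deployment. In this Python sketch, the vmk-to-role mapping follows the list above. The profile names, VLAN IDs, subnets, and the choice of which profiles carry a system VLAN are hypothetical. The check only confirms that the NFS and vMotion subnets fall in private address space, as the paragraph above requires.

```python
import ipaddress
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class VmkProfile:
    vmk: str
    profile: str      # vEthernet port profile name (hypothetical)
    vlan: int         # hypothetical VLAN ID
    subnet: str       # hypothetical subnet
    system_vlan: bool = False


VMK_PROFILES: List[VmkProfile] = [
    VmkProfile("vmk0", "ESXi-Mgmt", 3170, "10.10.70.0/24", system_vlan=True),
    VmkProfile("vmk1", "vMotion", 3173, "192.168.173.0/24"),
    VmkProfile("vmk2", "NFS", 3174, "192.168.174.0/24", system_vlan=True),
    VmkProfile("vmk3", "Infra-Mgmt", 3175, "10.10.75.0/24"),
]

PRIVATE_ONLY = {"vMotion", "NFS"}


def check_private_subnets(profiles: List[VmkProfile]) -> None:
    """Raise if an NFS or vMotion interface is placed on a non-private subnet."""
    for p in profiles:
        if p.profile in PRIVATE_ONLY and not ipaddress.ip_network(p.subnet).is_private:
            raise ValueError(f"{p.vmk} ({p.profile}) should use a private subnet")


def render_vethernet_profile(p: VmkProfile) -> str:
    """Render an access-mode vEthernet port profile for one vmknic."""
    lines = [
        f"port-profile type vethernet {p.profile}",
        "  vmware port-group",
        "  switchport mode access",
        f"  switchport access vlan {p.vlan}",
        "  no shutdown",
    ]
    if p.system_vlan:
        lines.append(f"  system vlan {p.vlan}")
    lines.append("  state enabled")
    return "\n".join(lines)


check_private_subnets(VMK_PROFILES)
for profile in VMK_PROFILES:
    print(render_vethernet_profile(profile))
```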

The Cisco Nexus 1000v also supports the Cisco Modular QoS CLI (MQC) to assist in uniform operation and enforcement of QoS policies across the infrastructure. The Cisco Nexus 1000v supports marking at the edge and policing of VM-to-VM traffic.
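Policing limits a traffic class to a committed rate. Traffic within that rate, plus an allowed burst, is transmitted, and in the simplest configuration the excess is dropped. The Python sketch below is a generic single-rate token-bucket policer that shows the technique. It is a conceptual model, not the Nexus 1000v data-plane implementation, and the rate and burst values in the example are hypothetical.

```python
class TokenBucketPolicer:
    """Single-rate policer: conforming packets are transmitted, excess packets dropped."""

    def __init__(self, cir_bps: float, burst_bytes: int) -> None:
        self.cir_bytes_per_s = cir_bps / 8      # committed information rate in bytes/s
        self.burst_bytes = burst_bytes          # bucket depth (committed burst)
        self.tokens = float(burst_bytes)        # start with a full bucket
        self.last_time = None

    def offer(self, size_bytes: int, now: float) -> bool:
        """Return True if a packet of size_bytes arriving at time `now` conforms."""
        if self.last_time is not None:
            elapsed = max(0.0, now - self.last_time)
            # Refill at the committed rate, never beyond the bucket depth.
            self.tokens = min(self.burst_bytes, self.tokens + elapsed * self.cir_bytes_per_s)
        self.last_time = now
        if size_bytes <= self.tokens:
            self.tokens -= size_bytes
            return True
        return False


# Hypothetical 8 kbit/s (1000 bytes/s) rate with a 1500-byte burst.
policer = TokenBucketPolicer(cir_bps=8000, burst_bytes=1500)
print([policer.offer(1500, 0.0), policer.offer(100, 0.0), policer.offer(1000, 1.0)])
# [True, False, True]
```

The bucket depth sets how large a burst a VM can send at line rate before policing takes effect. The committed rate then bounds its sustained throughput.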

For more information about "Best Practices in Deploying Cisco Nexus 1000V Series Switches on Cisco UCS B and C Series Cisco UCS Manager Servers" refer to:

http://www.cisco.com/en/US/prod/collateral/switches/ps9441/ps9902/white_paper_c11-558242.html

IBM FlashSystem V9000 High Availability

IBM FlashSystem V9000 can be used to deploy a high availability storage solution with no single point of failure (Figure 22 and Figure 23).

The control enclosures within each IBM FlashSystem V9000 system form an active-active pair of I/O paths. If a control enclosure fails, the other control enclosure seamlessly assumes the I/O responsibilities of the failed control enclosure. Control enclosures communicate over the FC SAN for increased redundancy and performance.

Each FlashSystem V9000 control enclosure has up to eight Fibre Channel ports that can attach to multiple SAN fabrics. For high availability, attach node canisters to at least two fabrics.
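This paragraph sets two cabling rules: at most eight Fibre Channel ports per control enclosure, and attachment to at least two fabrics. Both lend themselves to a simple pre-deployment check. The Python sketch below is a hypothetical helper. The enclosure names, port labels, and fabric labels are illustrative and do not correspond to an IBM tool.

```python
from typing import Dict, List

MAX_FC_PORTS = 8   # per control enclosure, as stated above
MIN_FABRICS = 2    # attach to at least two fabrics for high availability


def check_cabling(cabling: Dict[str, Dict[str, str]]) -> List[str]:
    """cabling maps control enclosure -> {FC port: fabric}; returns a list of problems."""
    problems = []
    for enclosure, ports in sorted(cabling.items()):
        if len(ports) > MAX_FC_PORTS:
            problems.append(f"{enclosure}: {len(ports)} FC ports exceeds {MAX_FC_PORTS}")
        fabrics = set(ports.values())
        if len(fabrics) < MIN_FABRICS:
            problems.append(f"{enclosure}: attached only to fabric(s) {sorted(fabrics)}")
    return problems


# Two ports per fabric on each control enclosure, as in this design.
good = {
    "control-1": {"p1": "A", "p2": "A", "p3": "B", "p4": "B"},
    "control-2": {"p1": "A", "p2": "A", "p3": "B", "p4": "B"},
}
print(check_cabling(good))  # []
```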

Figure 22 IBM FlashSystem V9000 building block HA Cabling: Host Connectivity

IBM FlashSystem V9000 supports fully redundant connections for communication between control enclosures, external storage, and host systems. If a SAN fabric fault disrupts communication or I/O operations, the system recovers and retries the operation through an alternative communication path. Host systems should be configured to use multi-pathing, so that if a SAN fabric fault or node canister failure occurs, the host can retry I/O operations.
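The host-side behavior described above, retrying I/O on an alternative path after a fabric or control enclosure fault, can be sketched as follows. This is a generic failover model in Python. It is not VMware's Native Multipathing Plug-in or any specific driver. The `Path` fields, the `send` callback, and the enclosure names are hypothetical. Real multipathing software also handles path probing, recovery, and load balancing.

```python
from dataclasses import dataclass
from typing import Any, Callable, List


@dataclass
class Path:
    fabric: str             # SAN fabric the path traverses ("A" or "B")
    enclosure: str          # control enclosure that serves the path
    healthy: bool = True


class MultipathDevice:
    """Host view of one volume reachable over several SAN paths."""

    def __init__(self, paths: List[Path]) -> None:
        self.paths = paths

    def submit(self, io: Any, send: Callable[[Path, Any], Any]) -> Any:
        """Send I/O on the first healthy path; on failure, mark it and retry the next."""
        last_error = None
        for path in self.paths:
            if not path.healthy:
                continue
            try:
                return send(path, io)
            except OSError as exc:
                path.healthy = False   # stop using this path until it is restored
                last_error = exc
        raise OSError("no healthy path to the volume") from last_error


paths = [Path("A", "control-1"), Path("B", "control-1"),
         Path("A", "control-2"), Path("B", "control-2")]
failed_enclosures = {"control-1"}   # simulate a control enclosure failure


def send(path: Path, io: str) -> str:
    if path.enclosure in failed_enclosures:
        raise OSError(f"{path.enclosure} unreachable")
    return f"{io} completed via fabric {path.fabric}, {path.enclosure}"


device = MultipathDevice(paths)
print(device.submit("read block 0", send))
# read block 0 completed via fabric A, control-2
```

Because paths exist through both fabrics and both control enclosures, losing either a fabric or an enclosure still leaves at least one healthy path.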

Figure 23 IBM FlashSystem V9000 building block HA Cabling: Cluster Connectivity

IBM FlashSystem V9000 supports up to four building blocks, for a maximum of eight control enclosures, plus up to four additional flash storage expansion enclosures, for a total of up to eight storage enclosures. Systems containing multiple building blocks or any expansion flash storage enclosures must connect node canisters through the FC SAN to allow communication between them. A single building block in a fixed configuration can be directly cabled between its enclosures; configurations that are not direct-attached are routed over the FC SAN (Figure 24 and Figure 25).
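The scaling limits in this paragraph reduce to simple arithmetic. Each building block contributes two control enclosures and one storage enclosure. Four building blocks therefore give eight control enclosures and four storage enclosures, and up to four expansion enclosures bring the storage total to eight. The Python sketch below encodes those limits and the FC SAN rule. It treats the expansion limit as independent of the number of building blocks, which is an assumption based only on the wording above.

```python
from dataclasses import dataclass

MAX_BUILDING_BLOCKS = 4
MAX_EXPANSION_ENCLOSURES = 4
CONTROL_PER_BLOCK = 2   # 4 blocks -> maximum of 8 control enclosures
STORAGE_PER_BLOCK = 1   # 4 blocks + 4 expansions -> 8 storage enclosures


@dataclass(frozen=True)
class V9000System:
    building_blocks: int
    expansion_enclosures: int = 0

    def __post_init__(self) -> None:
        if not 1 <= self.building_blocks <= MAX_BUILDING_BLOCKS:
            raise ValueError(f"building blocks must be 1-{MAX_BUILDING_BLOCKS}")
        if not 0 <= self.expansion_enclosures <= MAX_EXPANSION_ENCLOSURES:
            raise ValueError(f"expansion enclosures must be 0-{MAX_EXPANSION_ENCLOSURES}")

    @property
    def control_enclosures(self) -> int:
        return self.building_blocks * CONTROL_PER_BLOCK

    @property
    def storage_enclosures(self) -> int:
        return self.building_blocks * STORAGE_PER_BLOCK + self.expansion_enclosures

    @property
    def needs_fc_san_between_enclosures(self) -> bool:
        # Only a single building block with no expansion may be direct-cabled.
        return self.building_blocks > 1 or self.expansion_enclosures > 0


full = V9000System(building_blocks=4, expansion_enclosures=4)
print(full.control_enclosures, full.storage_enclosures, full.needs_fc_san_between_enclosures)
# 8 8 True
```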

The fully scaled All Flash VersaStack architecture is not validated as part of the VersaStack program, but it is a supported configuration, and building blocks can be added based on the customer's requirements.

Figure 24 IBM FlashSystem V9000 Fully-Scaled HA Communication

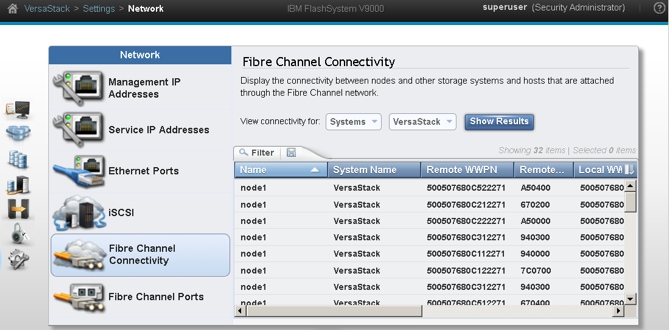

Figure 25 Fibre Channel Connectivity Details

VMware vCenter and vSphere

VMware vSphere 5.5 Update 2 provides a platform for virtualization that includes multiple components and features. In this validation effort, the following key components and features were utilized:

· VMware ESXi

· VMware vCenter Server

· VMware vSphere SDKs

· VMware vSphere Virtual Machine File System (VMFS)

· VMware vSphere High Availability (HA)

· VMware vSphere Distributed Resource Scheduler (DRS)

The VersaStack solution combines the innovation of Cisco UCS Integrated Infrastructure with the efficiency of the IBM storage systems. The Cisco UCS Integrated Infrastructure includes the Cisco Unified Computing System (Cisco UCS), Cisco Nexus, Cisco MDS switches, and Cisco UCS Director.

IBM storage systems enhance virtual environments with data virtualization, Real-time Compression, and Easy Tier features.