Cisco and Hitachi Adaptive Solution VDI for VMware Horizon 8 VMware vSphere 8.0 U2


Bias-Free Language

The documentation set for this product strives to use bias-free language. For the purposes of this documentation set, bias-free is defined as language that does not imply discrimination based on age, disability, gender, racial identity, ethnic identity, sexual orientation, socioeconomic status, and intersectionality. Exceptions may be present in the documentation due to language that is hardcoded in the user interfaces of the product software, language used based on RFP documentation, or language that is used by a referenced third-party product. Learn more about how Cisco is using Inclusive Language.


In partnership with Hitachi.

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to: http://www.cisco.com/go/designzone.

Cisco Validated Designs (CVDs) consist of systems and solutions that are designed, tested, and documented to facilitate and improve customer deployments. These designs incorporate a wide range of technologies and products into a portfolio of solutions that have been developed to address the business needs of our customers.

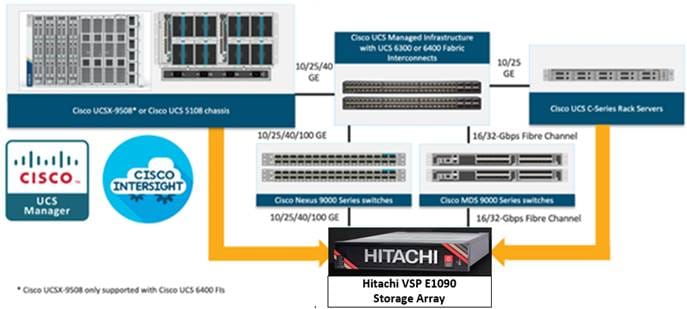

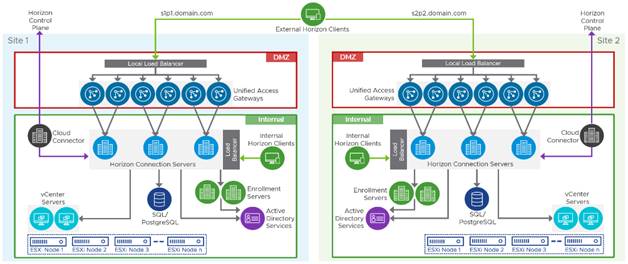

This document is the design guide for the Cisco and Hitachi Adaptive Solution Virtual Desktop Infrastructure for VMware Horizon 8 and VMware vSphere 8.0 U2. It describes a validated Converged Infrastructure (CI) jointly developed by Cisco and Hitachi.

This solution covers the deployment of a predesigned, best-practice data center architecture with:

● VMware Horizon and VMware vSphere.

● Cisco Unified Computing System (Cisco UCS) incorporating the Cisco UCS X-Series modular platform.

● Cisco Nexus 9000 family of switches.

● Cisco MDS 9000 family of Fibre Channel switches.

● Hitachi Virtual Storage Platform E1090 (VSP E1090) supporting Fibre Channel storage access.

The Cisco Intersight cloud platform delivers monitoring, orchestration, workload optimization, and lifecycle management capabilities for the solution.

When deployed, the architecture presents a robust infrastructure viable for a wide range of application workloads implemented as a Virtual Desktop Infrastructure (VDI).

Additional Cisco Validated Designs created in a partnership between Cisco and Hitachi can be found here: https://www.cisco.com/c/en/us/solutions/design-zone/data-center-design-guides/data-center-design-guides-all.html#Hitachi

Solution Overview

This chapter contains the following:

● Audience

The current industry trend in data center design is toward shared infrastructure. By using virtualization along with pre-validated IT platforms, enterprise customers have embarked on the journey to the cloud by moving away from application silos and toward shared infrastructure that can be quickly deployed, thereby increasing agility and reducing costs. Cisco, Hitachi, and VMware have partnered to deliver this Cisco Validated Design, which uses best-of-breed storage, server, and network components to serve as the foundation for desktop virtualization workloads, enabling efficient architectural designs that can be quickly and confidently deployed.

The intended audience for this document includes, but is not limited to, IT architects, sales engineers, field consultants, professional services, IT managers, IT engineers, partners, and customers who are interested in learning about and deploying Virtual Desktop Infrastructure (VDI).

This document provides a step-by-step design, configuration, and implementation guide for the Cisco Validated Design as follows:

● Large-scale VMware Horizon 8 VDI

● Hitachi VSP E1090 Storage System

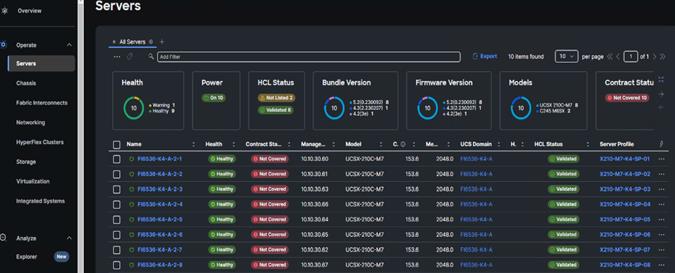

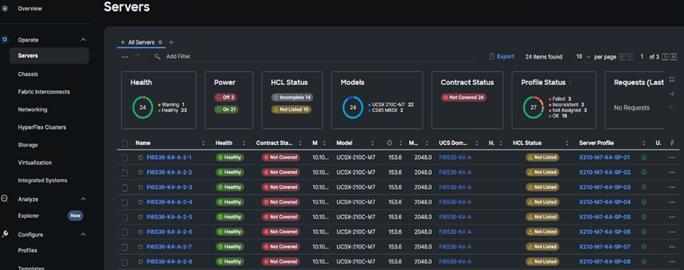

● Cisco UCS X210c M7 Blade Servers running VMware vSphere 8.0 U2

● Cisco Nexus 9000 Series Ethernet Switches

● Cisco MDS 9100 Series Multilayer Fibre Channel Switches

Highlights for this design include:

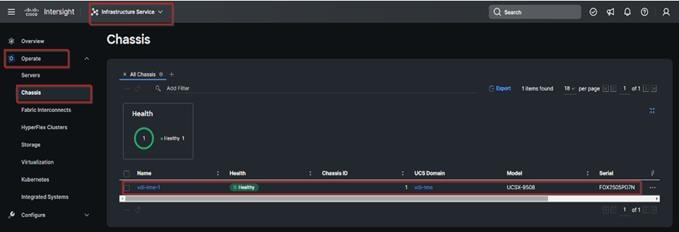

● Support for Cisco UCS 9508 blade server chassis with Cisco UCS X210c M7 compute nodes

● Support for Hitachi Virtual Storage Platform E1090 (VSP E1090) storage system with Hitachi code version 93-07-21-80/00

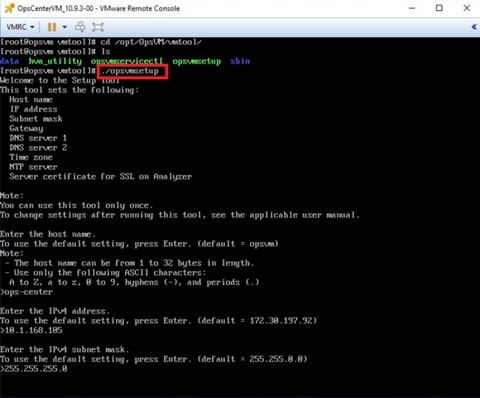

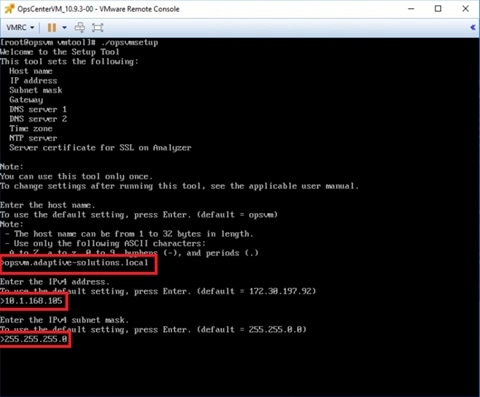

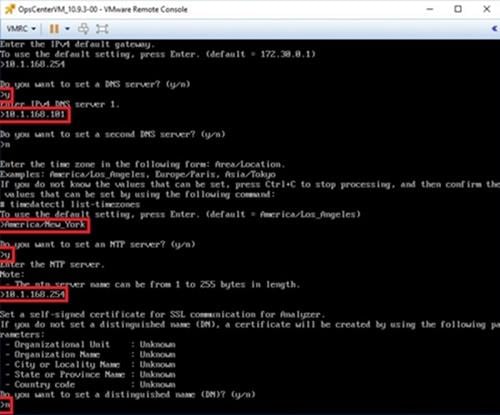

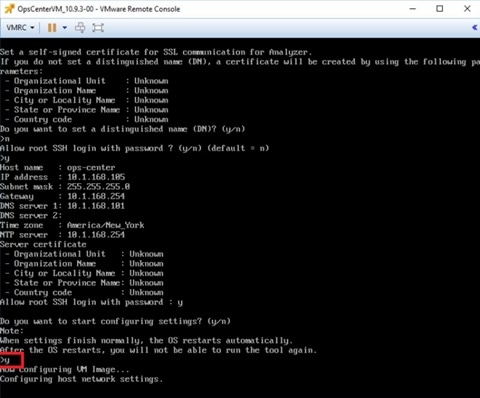

● Hitachi Ops Center 10.9.3

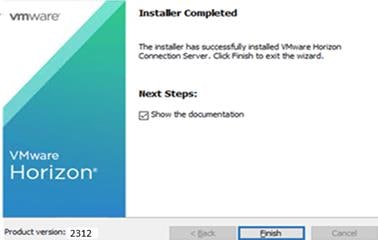

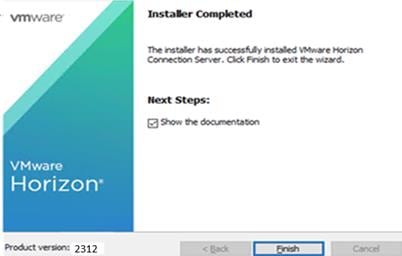

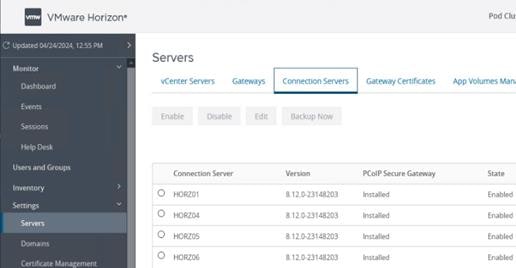

● VMware Horizon 8 2312 (Horizon 8 version 8.12)

● Support for VMware vSphere 8.0 U2

● Support for the Cisco UCS Manager 4.2

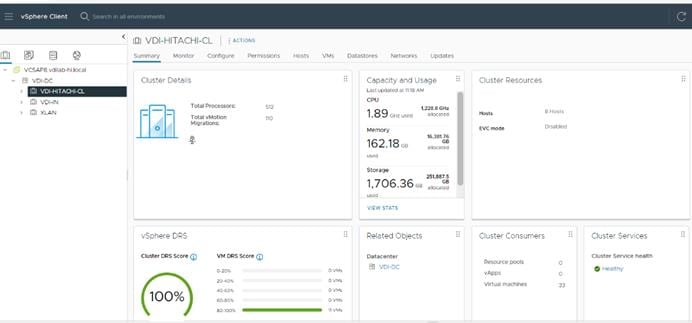

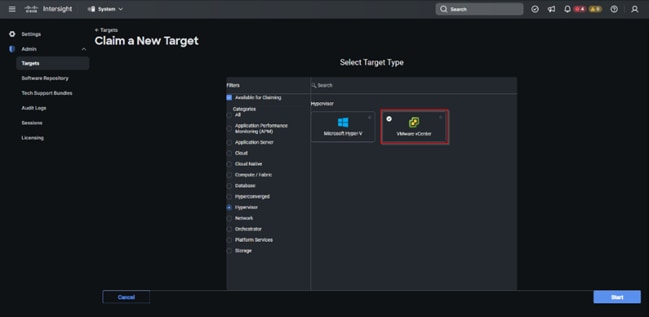

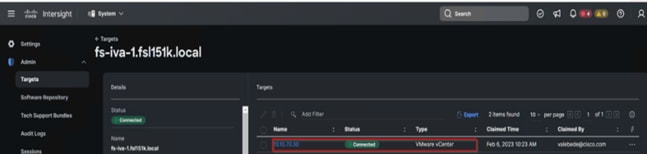

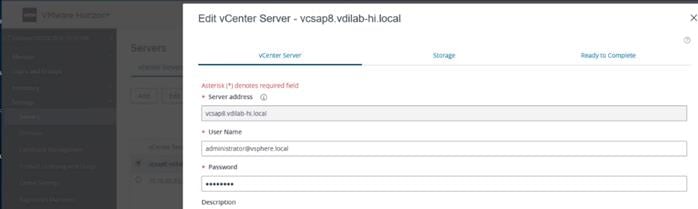

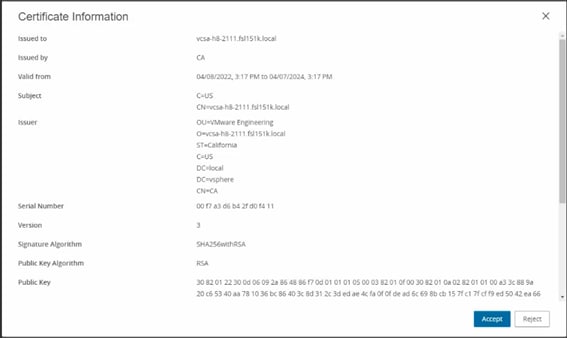

● Support for VMware vCenter 8.0 U2 to set up and manage the virtual infrastructure as well as integration of the virtual environment with Cisco Intersight software

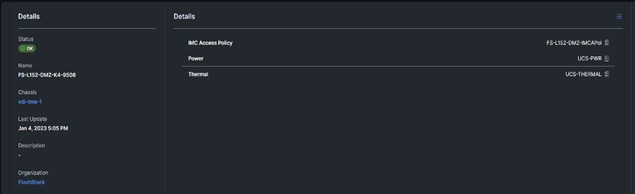

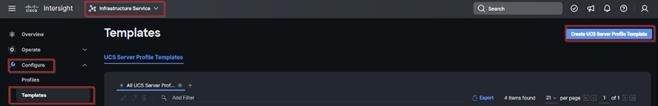

● Support for Cisco Intersight platform to deploy, maintain, and support the Cisco and Hitachi Adaptive Solution components

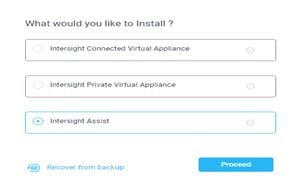

● Support for Cisco Intersight Assist virtual appliance to help connect the Hitachi Storage and VMware vCenter with the Cisco Intersight platform

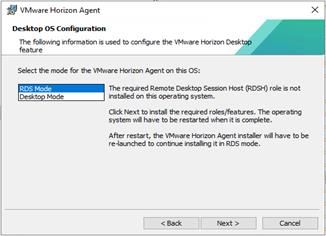

● Support for Microsoft Windows Server 2022 for Remote Desktop Session Host (RDSH) multi-session deployment

● Support for Microsoft Windows 11 for instant-clone (non-persistent) and full-clone (persistent) VDI virtual machine deployment

These factors have led to the need for predesigned computing, networking, and storage building blocks optimized to lower the initial design cost, simplify management, and enable horizontal scalability and high levels of utilization.

The use cases include:

● Enterprise Data Center

● Service Provider Data Center

● Large Commercial Data Center

This chapter contains the following:

● Cisco and Hitachi Adaptive Solutions

● Cisco Unified Computing System

● Cisco UCS Fabric Interconnect

● Cisco UCS Virtual Interface Cards (VICs)

● VMware Horizon Remote Desktop Session Host (RDSH) Sessions and Windows 11 Desktops

● Cisco Intersight Assist Device Connector for VMware vCenter and Hitachi Storage

● Hitachi Virtual Storage Platform

● Hitachi Storage Virtualization Operating System RF

● Hitachi Virtual Storage Platform Sustainability

Cisco and Hitachi have partnered to deliver several Cisco Validated Designs, which use best-in-class storage, server, and network components to serve as the foundation for virtualized workloads such as Virtual Desktop Infrastructure (VDI), enabling efficient architectural designs that you can deploy quickly and confidently.

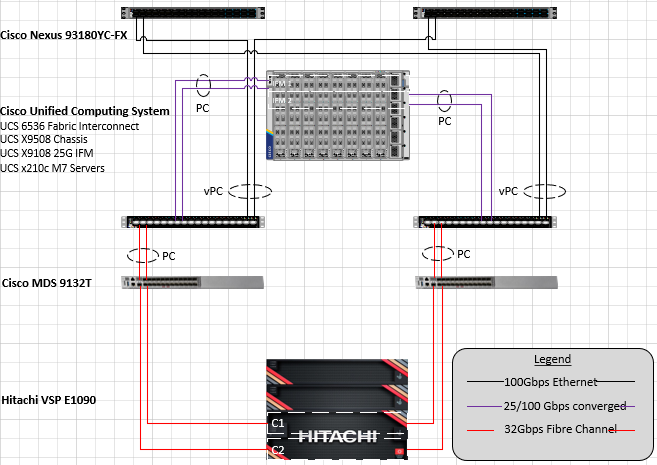

Cisco and Hitachi Adaptive Solutions

Cisco and Hitachi jointly developed the Cisco and Hitachi Adaptive Solution architecture. All components are integrated, allowing customers to deploy the solution quickly and economically while eliminating many of the risks associated with researching, designing, building, and deploying similar solutions from the foundation. One of the main benefits of Cisco and Hitachi Adaptive Solution is its ability to maintain consistency at scale. Each of the component families shown in Figure 1 (Cisco UCS, Cisco Nexus, Cisco MDS, and Hitachi VSP) offers platform and resource options to scale up or scale out the infrastructure while supporting the same features and functions.

Cisco Unified Computing System

Cisco Unified Computing System (Cisco UCS) is a next-generation data center platform that integrates computing, networking, storage access, and virtualization resources into a cohesive system designed to reduce total cost of ownership and increase business agility. The system integrates a low-latency, lossless 10-100 Gigabit Ethernet unified network fabric with enterprise-class, x86-architecture servers. The system is an integrated, scalable, multi-chassis platform with a unified management domain for managing all resources.

Cisco Unified Computing System consists of the following subsystems:

● Compute: The compute piece of the system incorporates servers based on fourth- and fifth-generation Intel Xeon Scalable processors. Servers are available in blade and rack form factors, managed by Cisco UCS Manager.

● Network: The integrated network fabric in the system provides a low-latency, lossless, 10/25/40/100 Gbps Ethernet fabric. Networks for LAN, SAN and management access are consolidated within the fabric. The unified fabric uses the innovative Single Connect technology to lower costs by reducing the number of network adapters, switches, and cables. This in turn lowers the power and cooling needs of the system.

● Virtualization: The system unleashes the full potential of virtualization by enhancing the scalability, performance, and operational control of virtual environments. Cisco security, policy enforcement, and diagnostic features are now extended into virtual environments to support evolving business needs.

● Storage access: Cisco UCS provides consolidated access to both SAN storage and Network Attached Storage (NAS) over the unified fabric. This gives customers storage choices and investment protection. Server administrators can also pre-assign storage-access policies to storage resources, simplifying storage connectivity and management and increasing productivity.

● Management: The system uniquely integrates compute, network, and storage access subsystems, enabling it to be managed as a single entity through Cisco UCS Manager software. Cisco UCS Manager increases IT staff productivity by enabling storage, network, and server administrators to collaborate on Service Profiles that define the desired physical configurations and infrastructure policies for applications. Service Profiles increase business agility by enabling IT to automate and provision resources in minutes instead of days.

Cisco UCS Differentiators

Cisco Unified Computing System is revolutionizing the way servers are managed in the datacenter. The following are the unique differentiators of Cisco Unified Computing System and Cisco UCS Manager:

● Embedded Management: In Cisco UCS, the servers are managed by the embedded firmware in the Fabric Interconnects, eliminating the need for any external physical or virtual devices to manage the servers.

● Unified Fabric: In Cisco UCS, from blade server chassis or rack servers to FI, there is a single Ethernet cable used for LAN, SAN, and management traffic. This converged I/O results in reduced cables, SFPs and adapters – reducing capital and operational expenses of the overall solution.

● Auto Discovery: By simply inserting the blade server in the chassis or connecting the rack server to the fabric interconnect, discovery and inventory of compute resources occurs automatically without any management intervention. The combination of unified fabric and auto-discovery enables the wire-once architecture of Cisco UCS, where compute capability of Cisco UCS can be extended easily while keeping the existing external connectivity to LAN, SAN, and management networks.

● Policy Based Resource Classification: Once Cisco UCS Manager discovers a compute resource, it can be automatically classified to a given resource pool based on policies defined. This capability is useful in multi-tenant cloud computing. This CVD showcases the policy-based resource classification of Cisco UCS Manager.

● Combined Rack and Blade Server Management: Cisco UCS Manager can manage Cisco UCS B-series blade servers and Cisco UCS C-series rack servers under the same Cisco UCS domain. This feature, along with stateless computing makes compute resources truly hardware form factor agnostic.

● Model based Management Architecture: The Cisco UCS Manager architecture and management database is model based, and data driven. An open XML API is provided to operate on the management model. This enables easy and scalable integration of Cisco UCS Manager with other management systems.

● Policies, Pools, Templates: The management approach in Cisco UCS Manager is based on defining policies, pools, and templates, instead of cluttered configuration, which enables a simple, loosely coupled, data driven approach in managing compute, network, and storage resources.

● Loose Referential Integrity: In Cisco UCS Manager, a service profile, port profile, or policy can refer to other policies or logical resources with loose referential integrity. A referred policy need not exist when the referring policy is authored, and a referred policy can be deleted even while other policies refer to it. This lets subject matter experts from different domains, such as network, storage, security, server, and virtualization, work independently of each other while together accomplishing a complex task.

● Policy Resolution: In Cisco UCS Manager, you can create a tree-structured organizational hierarchy that mimics real-life tenant and/or organization relationships. Various policies, pools, and templates can be defined at different levels of the organization hierarchy. A policy that refers to another policy by name is resolved to the closest matching policy in the organizational hierarchy. If no policy with that name is found in the hierarchy up to the root organization, the special policy named “default” is searched for. This policy resolution practice enables automation-friendly management APIs and provides great flexibility to owners of different organizations.

● Service Profiles and Stateless Computing: A service profile is a logical representation of a server, carrying its various identities and policies. This logical server can be assigned to any physical compute resource as long as it meets the resource requirements. Stateless computing enables a server to be provisioned within minutes, a task that used to take days in legacy server management systems.

● Built-in Multi-Tenancy Support: The combination of policies, pools and templates, loose referential integrity, policy resolution in the organizational hierarchy and a service profiles-based approach to compute resources makes Cisco UCS Manager inherently friendly to multi-tenant environments typically observed in private and public clouds.

● Extended Memory: The enterprise-class Cisco UCS Blade server extends the capabilities of the Cisco Unified Computing System portfolio in a half-width blade form factor. It harnesses the power of the latest Intel Xeon Scalable Series processor family CPUs and Intel Optane DC Persistent Memory (DCPMM) with up to 18TB of RAM (using 256GB DDR4 DIMMs and 512GB DCPMM).

● Simplified QoS: Even though Fibre Channel and Ethernet are converged in the Cisco UCS fabric, built-in support for QoS and lossless Ethernet makes it seamless. Network Quality of Service (QoS) is simplified in Cisco UCS Manager by representing all system classes in one GUI panel.
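The policy resolution and loose referential integrity behavior described above can be illustrated with a minimal Python sketch. It is not Cisco UCS Manager code: the `Org` class, the `resolve()` function, and the policy names are hypothetical, and the sketch assumes that the fallback to the “default” policy also walks up the hierarchy toward the root.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Iterator, Optional


@dataclass
class Org:
    """Hypothetical organization node holding policies keyed by name."""
    name: str
    parent: Optional[Org] = None
    policies: dict[str, str] = field(default_factory=dict)

    def lineage(self) -> Iterator[Org]:
        """Yield this org, then each ancestor up to the root org."""
        node: Optional[Org] = self
        while node is not None:
            yield node
            node = node.parent


def resolve(org: Org, policy_name: str) -> Optional[str]:
    """Return the closest policy named policy_name, else the closest "default"."""
    for candidate in (policy_name, "default"):
        for node in org.lineage():
            if candidate in node.policies:
                return node.policies[candidate]
    # Loose referential integrity: an unresolved reference is not an error.
    return None


if __name__ == "__main__":
    root = Org("root", policies={"default": "root/default", "bios-vdi": "root/bios-vdi"})
    vdi = Org("vdi", parent=root, policies={"bios-vdi": "vdi/bios-vdi"})
    print(resolve(vdi, "bios-vdi"))  # vdi/bios-vdi (closest match wins)
    print(resolve(vdi, "bios-db"))   # root/default (fallback to "default")
```

Returning `None` instead of raising an error mirrors loose referential integrity: a reference to a policy that does not exist yet is tolerated until a matching policy is defined.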

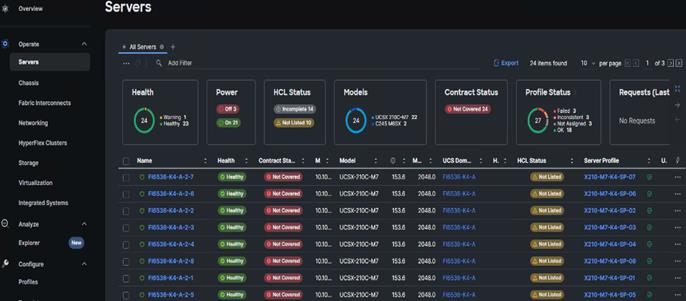

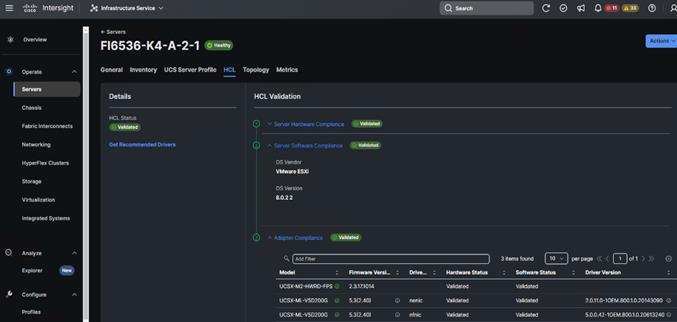

Cisco Intersight

Cisco Intersight is a lifecycle management platform for your Cisco UCS ecosystem as well as the Hitachi VSP, regardless of where it resides. In your enterprise data center, at the edge, in remote and branch offices, at retail and industrial sites—all these locations present unique management challenges and have typically required separate tools. Cisco Intersight Software as a Service (SaaS) unifies and simplifies your experience of the Cisco Unified Computing System (Cisco UCS).

Cisco Intersight software delivers a new level of cloud-powered intelligence that supports lifecycle management with continuous improvement. It is tightly integrated with the Cisco Technical Assistance Center (TAC).

Expertise and information flow seamlessly between Cisco Intersight and IT teams, providing global management of Cisco infrastructure, anywhere. Remediation and problem resolution are supported with automated upload of error logs for rapid root-cause analysis.


● Automate your infrastructure.

Cisco has a strong track record for management solutions that deliver policy-based automation to daily operations. Intersight SaaS is a natural evolution of our strategies. Cisco designed Cisco UCS to be 100 percent programmable. Cisco Intersight simply moves the control plane from the network into the cloud. Now you can manage your Cisco UCS and infrastructure wherever it resides through a single interface.

● Deploy your way.

If you need to control how your management data is handled, comply with data locality regulations, or consolidate the number of outbound connections from servers, you can use the Cisco Intersight Virtual Appliance for an on-premises experience. Cisco Intersight Virtual Appliance is continuously updated just like the SaaS version, so regardless of which approach you implement, you never have to worry about whether your management software is up to date.

● DevOps ready.

If you are implementing DevOps practices, you can use the Cisco Intersight API with either the cloud-based or virtual appliance offering. Through the API you can configure and manage infrastructure as code: you are not merely configuring an abstraction layer; you are managing the real thing. With the cloud-based RESTful API and support for Terraform providers, Microsoft PowerShell scripts, and Python software, you can automate the deployment of settings and software for both physical and virtual layers. Using the API, you can simplify infrastructure lifecycle operations and increase the speed of continuous application delivery.

● Pervasive simplicity.

Simplify the user experience by managing your infrastructure regardless of where it is installed.

● Actionable intelligence.

◦ Use best practices to enable faster, proactive IT operations.

◦ Gain actionable insight for ongoing improvement and problem avoidance.

● Manage anywhere.

◦ Deploy in the data center and at the edge with massive scale.

◦ Get visibility into the health and inventory detail for your Intersight Managed environment on the go with the Cisco Intersight Mobile App.
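As a small illustration of the API-driven approach, the following Python sketch builds a query URL for the Cisco Intersight REST API using OData-style query options (`$filter`, `$select`, `$top`). It assumes the SaaS endpoint `https://intersight.com/api/v1` and the `compute/PhysicalSummaries` resource; the filter value is a hypothetical example. The sketch deliberately omits authentication: real requests must be signed with an Intersight API key, which the official SDKs handle for you.

```python
from typing import Optional, Sequence
from urllib.parse import quote, urlencode

# Assumed SaaS base URL; an on-premises Intersight appliance uses its own hostname.
INTERSIGHT_BASE = "https://intersight.com/api/v1"


def build_query_url(
    resource: str,
    filter_expr: Optional[str] = None,
    select: Optional[Sequence[str]] = None,
    top: Optional[int] = None,
) -> str:
    """Build a GET URL with OData-style query options (authentication not included)."""
    params: dict[str, str] = {}
    if filter_expr:
        params["$filter"] = filter_expr
    if select:
        params["$select"] = ",".join(select)
    if top is not None:
        params["$top"] = str(top)
    # quote (not quote_plus) encodes spaces as %20; "$" and "," stay literal.
    query = urlencode(params, quote_via=quote, safe="$,")
    url = f"{INTERSIGHT_BASE}/{resource}"
    return f"{url}?{query}" if query else url


if __name__ == "__main__":
    print(build_query_url(
        "compute/PhysicalSummaries",
        filter_expr="Model eq 'UCSX-210C-M7'",  # hypothetical filter value
        select=["Name", "Model", "Serial"],
        top=10,
    ))
```

Keeping URL construction separate from request signing makes the query logic easy to test offline before wiring it into an authenticated client.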

For more information about Cisco Intersight and the different deployment options, go to: Cisco Intersight – Manage your systems anywhere.

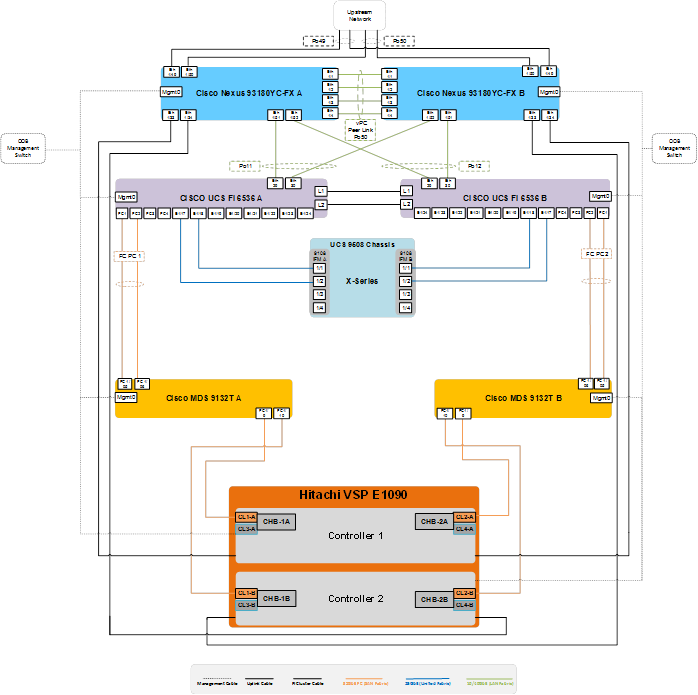

The Cisco UCS Fabric Interconnect (FI) is a core part of the Cisco Unified Computing System, providing both network connectivity and management capabilities for the system. Depending on the model chosen, the Cisco UCS Fabric Interconnect offers line-rate, low-latency, lossless 10 Gigabit, 25 Gigabit, 40 Gigabit, or 100 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE) and Fibre Channel connectivity. Cisco UCS Fabric Interconnects provide the management and communication backbone for the Cisco UCS C-Series, S-Series and HX-Series Rack-Mount Servers, Cisco UCS B-Series Blade Servers, and Cisco UCS 9500 Series Blade Server Chassis. All servers and chassis, and therefore all blades, attached to the Cisco UCS Fabric Interconnects become part of a single, highly available management domain. In addition, by supporting unified fabrics, the Cisco UCS Fabric Interconnects provide both the LAN and SAN connectivity for all servers within their domain.

For networking performance, the Cisco UCS 6536 uses a cut-through architecture, supporting deterministic, low-latency, line-rate 10/25/40/100 Gigabit Ethernet ports and 7.42 Tbps of switching capacity. The Cisco UCS X9508 chassis connects to the fabric interconnects through Cisco UCS 9108 Intelligent Fabric Modules (IFMs). The product family supports Cisco low-latency, lossless 10/25/40/100 Gigabit Ethernet unified network fabric capabilities, which increase the reliability, efficiency, and scalability of Ethernet networks. The Fabric Interconnect supports multiple traffic classes over the Ethernet fabric from the servers to the uplinks. Significant TCO savings come from an FCoE-optimized server design in which network interface cards (NICs), host bus adapters (HBAs), cables, and switches can be consolidated.

Cisco UCS 6536 Fabric Interconnects

The Cisco UCS Fabric Interconnects (FIs) provide a single point for connectivity and management for the entire Cisco Unified Computing System. Typically deployed as an active/active pair, the system’s FIs integrate all components into a single, highly available management domain controlled by Cisco Intersight. Cisco UCS FIs provide a single unified fabric for the system, with low-latency, lossless, cut-through switching that supports LAN, SAN, and management traffic using a single set of cables.

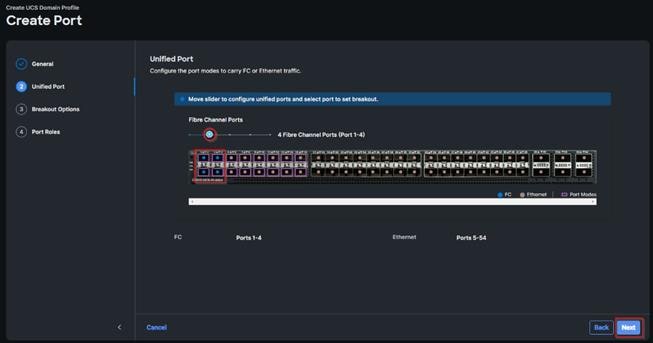

The Cisco UCS 6536 used in this design is a 36-port fabric interconnect. This single-RU device includes up to 36 10/25/40/100-Gbps Ethernet ports and up to 16 8/16/32-Gbps Fibre Channel ports, obtained by breaking out each of ports 33-36 from 128 Gbps into 4x 32-Gbps ports. All 36 ports support breakout cables or QSA interfaces.
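The Fibre Channel port count above follows from simple arithmetic: four breakout ports, each split into four 32-Gbps lanes. A minimal Python sketch that enumerates them; the `port/lane` labels are illustrative only and are not necessarily the interface names the fabric interconnect reports.

```python
BREAKOUT_PORTS = range(33, 37)  # physical ports 33-36 inclusive
LANES_PER_PORT = 4              # each 128-Gbps port splits into 4 lanes
LANE_SPEED_GBPS = 32            # each lane runs as a 32-Gbps FC port


def fc_breakout_ports() -> list[str]:
    """Return illustrative 'port/lane' labels for every FC breakout lane."""
    return [
        f"{port}/{lane}"
        for port in BREAKOUT_PORTS
        for lane in range(1, LANES_PER_PORT + 1)
    ]


if __name__ == "__main__":
    ports = fc_breakout_ports()
    print(f"{len(ports)} FC ports, {len(ports) * LANE_SPEED_GBPS} Gbps total")
    print(ports)
```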

The Cisco UCS X210c M7 features:

● CPU: Up to 2x 4th Gen Intel Xeon Scalable Processors with up to 60 cores per processor and up to 2.625 MB Level 3 cache per core and up to 112.5 MB per CPU.

● Memory: Up to 8TB of main memory with 32x 256 GB DDR5-4800 DIMMs.

● Disk storage: Up to six hot-pluggable, solid-state drives (SSDs), or non-volatile memory express (NVMe) 2.5-inch drives with a choice of enterprise-class redundant array of independent disks (RAIDs) or passthrough controllers, up to two M.2 SATA drives with optional hardware RAID.

● Optional front mezzanine GPU module: The Cisco UCS front mezzanine GPU module is a passive PCIe Gen 4.0 front mezzanine option with support for up to two U.2 NVMe drives and two HHHL GPUs.

● mLOM virtual interface cards:

◦ Cisco UCS Virtual Interface Card (VIC) 15420 occupies the server's modular LAN on motherboard (mLOM) slot, enabling up to 50 Gbps of unified fabric connectivity to each of the chassis intelligent fabric modules (IFMs) for 100 Gbps connectivity per server.

◦ Cisco UCS Virtual Interface Card (VIC) 15231 occupies the server's modular LAN on motherboard (mLOM) slot, enabling up to 100 Gbps of unified fabric connectivity to each of the chassis intelligent fabric modules (IFMs) for 200 Gbps connectivity per server.

● Optional mezzanine card:

◦ Cisco UCS 5th Gen Virtual Interface Card (VIC) 15422 can occupy the server's mezzanine slot at the bottom rear of the chassis. This card's I/O connectors link to Cisco UCS X-Fabric technology. An included bridge card extends this VIC's 2x 50 Gbps of network connections through IFM connectors, bringing the total bandwidth to 100 Gbps per fabric (for a total of 200 Gbps per server).

◦ Cisco UCS PCI Mezz card for X-Fabric can occupy the server's mezzanine slot at the bottom rear of the chassis. This card's I/O connectors link to Cisco UCS X-Fabric modules and enable connectivity to the Cisco UCS X440p PCIe Node.

● All VIC mezzanine cards also provide I/O connections from the Cisco UCS X210c M7 compute node to the X440p PCIe Node.

● Security: The server supports an optional Trusted Platform Module (TPM). Additional security features include a secure boot FPGA and ACT2 anticounterfeit provisions.
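The per-server bandwidth figures in the list above follow from multiplying the per-fabric bandwidth by the two IFMs (fabric A and fabric B) that each compute node connects to. A minimal Python sketch of that calculation, using the per-fabric values stated above; the `VicConfig` type is hypothetical.

```python
from dataclasses import dataclass

IFMS_PER_CHASSIS = 2  # one IFM per fabric (A and B)


@dataclass(frozen=True)
class VicConfig:
    """Hypothetical record of one VIC configuration on an X210c M7 node."""
    name: str
    gbps_per_fabric: int  # unified fabric bandwidth to each IFM


CONFIGS = [
    VicConfig("VIC 15420 (mLOM)", 50),
    VicConfig("VIC 15231 (mLOM)", 100),
    VicConfig("VIC 15420 (mLOM) + VIC 15422 (mezzanine)", 50 + 50),
]


def per_server_gbps(cfg: VicConfig) -> int:
    """Total per-server bandwidth across both fabrics."""
    return cfg.gbps_per_fabric * IFMS_PER_CHASSIS


if __name__ == "__main__":
    for cfg in CONFIGS:
        print(f"{cfg.name}: {cfg.gbps_per_fabric} Gbps per fabric, "
              f"{per_server_gbps(cfg)} Gbps per server")
```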

Cisco UCS Virtual Interface Cards (VICs)

The Cisco UCS VIC 15000 series is designed for Cisco UCS X-Series M6/M7 Blade Servers, Cisco UCS B-Series M6 Blade Servers, and Cisco UCS C-Series M6/M7 Rack Servers. The adapters are capable of supporting 10/25/40/50/100/200-Gigabit Ethernet and Fibre Channel over Ethernet (FCoE). They incorporate Cisco’s next-generation Converged Network Adapter (CNA) technology and offer a comprehensive feature set, providing investment protection for future feature software releases.

Cisco UCS VIC 15231

The Cisco UCS VIC 15231 (Figure 5) is a 2x100-Gbps Ethernet/FCoE-capable modular LAN on motherboard (mLOM) designed exclusively for the Cisco UCS X210 Compute Node. The Cisco UCS VIC 15231 enables a policy-based, stateless, agile server infrastructure that can present to the host PCIe standards-compliant interfaces that can be dynamically configured as either NICs or HBAs.

Cisco Nexus 93180YC-FX Switches

The Cisco Nexus 93180YC-FX Switch provides a flexible line-rate Layer 2 and Layer 3 feature set in a compact form factor. Designed with Cisco Cloud Scale technology, it supports highly scalable cloud architectures. With the option to operate in Cisco NX-OS or Application Centric Infrastructure (ACI) mode, it can be deployed across enterprise, service provider, and Web 2.0 data centers.

● Architectural Flexibility

◦ Includes top-of-rack or middle-of-row fiber-based server access connectivity for traditional and leaf-spine architectures.

◦ Leaf node support for Cisco ACI architecture is provided in the roadmap.

◦ Increase scale and simplify management through Cisco Nexus 2000 Fabric Extender support.

● Feature Rich

◦ Enhanced Cisco NX-OS Software is designed for performance, resiliency, scalability, manageability, and programmability.

◦ ACI-ready infrastructure helps users take advantage of automated policy-based systems management.

◦ Virtual Extensible LAN (VXLAN) routing provides network services.

◦ Rich traffic flow telemetry with line-rate data collection.

◦ Real-time buffer utilization per port and per queue, for monitoring traffic micro-bursts and application traffic patterns.

● Highly Available and Efficient Design

◦ High-density, non-blocking architecture.

◦ Easily deployed into either a hot-aisle or cold-aisle configuration.

◦ Redundant, hot-swappable power supplies and fan trays.

● Simplified Operations

◦ Power-On Auto Provisioning (POAP) support allows for simplified software upgrades and configuration file installation.

◦ An intelligent API offers switch management through remote procedure calls (RPCs) using JSON or XML over an HTTP/HTTPS infrastructure.

◦ Python Scripting for programmatic access to the switch command-line interface (CLI).

◦ Hot and cold patching, and online diagnostics.

● Investment Protection

A Cisco 40-Gbps bidirectional transceiver allows reuse of an existing 10 Gigabit Ethernet multimode cabling plant for 40 Gigabit Ethernet, and support for 1-Gbps and 10-Gbps access connectivity helps data centers migrate access switching infrastructure to faster speeds. The following is supported:

● 1.8 Tbps of bandwidth in a 1 RU form factor.

● 48 fixed 1/10/25-Gbps SFP28 ports.

● 6 fixed 40/100-Gbps QSFP28 ports for uplink connectivity.

● Latency of less than 2 microseconds.

● Front-to-back or back-to-front airflow configurations.

● 1+1 redundant hot-swappable 80 Plus Platinum-certified power supplies.

● Hot swappable 3+1 redundant fan trays.
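The intelligent API mentioned under Simplified Operations is Cisco NX-API. The following Python sketch builds, but does not send, an NX-API JSON-RPC request that runs CLI show commands. It assumes NX-API has been enabled on the switch (`feature nxapi`), that the endpoint is `/ins` over HTTPS, and that HTTP basic authentication is used; the host address and credentials are hypothetical placeholders.

```python
import base64
import json
import urllib.request
from typing import Sequence


def build_nxapi_request(
    host: str, username: str, password: str, commands: Sequence[str]
) -> urllib.request.Request:
    """Build (but do not send) an NX-API JSON-RPC request for CLI show commands."""
    # One JSON-RPC object per command; "id" lets you match each response entry.
    payload = [
        {
            "jsonrpc": "2.0",
            "method": "cli",
            "params": {"cmd": cmd, "version": 1},
            "id": index,
        }
        for index, cmd in enumerate(commands, start=1)
    ]
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        url=f"https://{host}/ins",
        data=json.dumps(payload).encode(),
        headers={
            "Content-Type": "application/json-rpc",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical management address (documentation range) and credentials.
    req = build_nxapi_request("192.0.2.10", "admin", "example-password",
                              ["show version", "show interface brief"])
    print(req.full_url, req.get_method())
    print(req.data.decode())
```

To execute the request against a real switch, pass it to `urllib.request.urlopen()`; each element of the JSON-RPC response carries the `id` of the command it answers.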

Cisco MDS 9132T 32-Gb Fibre Channel Switch

The next-generation Cisco MDS 9132T 32-Gb 32-Port Fibre Channel Switch (Figure 8) provides high-speed Fibre Channel connectivity from the server rack to the SAN core. It empowers small, midsize, and large enterprises that are rapidly deploying cloud-scale applications using extremely dense virtualized servers, providing the dual benefits of greater bandwidth and consolidation.

Small-scale SAN architectures can be built from the foundation using this low-cost, low-power, non-blocking, line-rate, and low-latency, bi-directional airflow capable, fixed standalone SAN switch connecting both storage and host ports.

Medium-size to large-scale SAN architectures built with SAN core directors can expand 32-Gb connectivity to the server rack using these switches either in switch mode or Network Port Virtualization (NPV) mode.

Additionally, investing in this switch for a lower-speed (4-, 8-, or 16-Gb) server rack gives you the option to upgrade to 32-Gb server connectivity in the future using the 32-Gb Host Bus Adapters (HBAs) that are available today. The Cisco MDS 9132T 32-Gb 32-Port Fibre Channel switch also provides unmatched flexibility through a unique port expansion module (Figure 9) that provides a robust, cost-effective, field-swappable port upgrade option.

This switch also offers state-of-the-art SAN analytics and telemetry capabilities that have been built into this next-generation hardware platform. This technology couples the next-generation port ASIC with a fully dedicated Network Processing Unit designed to complete analytics calculations in real time. The telemetry data extracted from the inspection of the frame headers is calculated on board (within the switch) and, using an industry-leading open format, can be streamed to any analytics-visualization platform. This switch also includes a dedicated 10/100/1000BASE-T telemetry port to maximize data delivery to any telemetry receiver, including Cisco Data Center Network Manager.

● Features

◦ High performance: Cisco MDS 9132T architecture, with chip-integrated nonblocking arbitration, provides consistent 32-Gb low-latency performance across all traffic conditions for every Fibre Channel port on the switch.

◦ Capital Expenditure (CapEx) savings: Users can deploy the 32-Gb ports with existing 16- or 8-Gb transceivers, reducing initial CapEx, with an option to upgrade to 32-Gb transceivers and adapters in the future.

◦ High availability: Cisco MDS 9132T switches continue to provide the same outstanding availability and reliability as the previous-generation Cisco MDS 9000 Family switches by providing optional redundancy on all major components such as the power supply and fan. Dual power supplies also facilitate redundant power grids.

◦ Pay-as-you-grow: The Cisco MDS 9132T Fibre Channel switch can be deployed with as few as eight 32-Gb Fibre Channel ports in the entry-level variant, which can grow by 8 ports to 16 ports and then, with a port expansion module of sixteen 32-Gb ports, up to 32 ports. This approach results in lower initial investment and power consumption for entry-level configurations of up to 16 ports compared to a fully loaded switch. Upgrading through an expansion module also reduces the overhead of managing multiple instances of port activation licenses on the switch. This combination of port upgrade options allows four possible configurations: 8, 16, 24, and 32 ports.

◦ Next-generation Application-Specific Integrated Circuit (ASIC): The Cisco MDS 9132T Fibre Channel switch is powered by the same high-performance 32-Gb Cisco ASIC with an integrated network processor that powers the Cisco MDS 9700 48-Port 32-Gb Fibre Channel Switching Module. Among all the advanced features that this ASIC enables, one of the most notable is inspection of Fibre Channel and Small Computer System Interface (SCSI) headers at wire speed on every flow in the smallest form-factor Fibre Channel switch without the need for any external taps or appliances. The recorded flows can be analyzed on the switch and also exported using a dedicated 10/100/1000BASE-T port for telemetry and analytics purposes.

◦ Intelligent network services: Slow-drain detection and isolation, VSAN technology, Access Control Lists (ACLs) for hardware-based intelligent frame processing, smart zoning, and fabric-wide Quality of Service (QoS) enable migration from SAN islands to enterprise-wide storage networks. Traffic encryption is optionally available to meet stringent security requirements.

◦ Sophisticated diagnostics: The Cisco MDS 9132T provides intelligent diagnostics tools such as Inter-Switch Link (ISL) diagnostics, read diagnostic parameters, protocol decoding, network analysis tools, and integrated Cisco Call Home capability for greater reliability, faster problem resolution, and reduced service costs.

◦ Virtual machine awareness: The Cisco MDS 9132T provides visibility into all virtual machines logged into the fabric. This feature is available through HBAs capable of priority tagging the Virtual Machine Identifier (VMID) on every FC frame. Virtual machine awareness can be extended to intelligent fabric services such as analytics[1] to visualize performance of every flow originating from each virtual machine in the fabric.

◦ Programmable fabric: The Cisco MDS 9132T provides powerful Representational State Transfer (REST) and Cisco NX-API capabilities to enable flexible and rapid programming of utilities for the SAN as well as polling point-in-time telemetry data from any external tool.

◦ Single-pane management: The Cisco MDS 9132T can be provisioned, managed, monitored, and troubleshot using Cisco Data Center Network Manager (DCNM), which currently manages the entire suite of Cisco data center products.

◦ Self-contained advanced anticounterfeiting technology: The Cisco MDS 9132T uses on-board hardware that protects the entire system from malicious attacks by securing access to critical components such as the bootloader, system image loader and Joint Test Action Group (JTAG) interface.
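The programmable-fabric capability can be illustrated with NX-API. The following is a minimal Python sketch, assuming NX-API has been enabled on the switch (`feature nxapi`) and that the switch accepts the standard NX-API JSON `ins_api` payload at the `/ins` endpoint over HTTPS. The switch name, credentials, and the helper name `build_nxapi_request` are hypothetical. The sketch only builds the request for a read-only `cli_show` command; it does not contact a switch.

```python
import base64
import json
import urllib.request


def build_nxapi_request(switch: str, username: str, password: str,
                        command: str) -> urllib.request.Request:
    """Build (but do not send) an NX-API 'cli_show' request for one CLI command.

    Assumption: NX-API is enabled and reachable at https://<switch>/ins.
    """
    payload = {
        "ins_api": {
            "version": "1.0",
            "type": "cli_show",        # read-only show command
            "chunk": "0",              # no chunked output
            "sid": "1",
            "input": command,
            "output_format": "json",   # structured output instead of CLI text
        }
    }
    # HTTP Basic authentication with the switch credentials.
    credentials = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        url=f"https://{switch}/ins",
        data=json.dumps(payload).encode(),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {credentials}",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical switch name and credentials; replace with real values.
    req = build_nxapi_request("mds-a.example.com", "admin", "secret",
                              "show interface brief")
    print(req.get_method(), req.full_url)
    # To actually poll the switch, an external tool would send the request:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp))
```

Returning JSON rather than CLI text is what makes point-in-time polling from an external tool practical, because the response can be parsed directly instead of screen-scraped.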

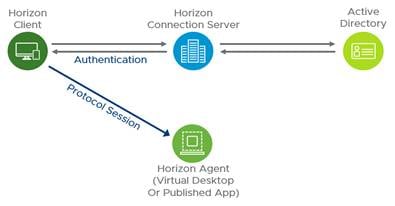

VMware Horizon is a modern platform for running and delivering virtual desktops and apps across the hybrid cloud. For administrators, this means simple, automated, and secure desktop and app management. For users, it provides a consistent experience across devices and locations.

For more information, go to: VMware Horizon.

VMware Horizon Remote Desktop Session Host (RDSH) Sessions and Windows 11 Desktops

The virtual app and desktop solution is designed for an exceptional experience.

Today's employees spend more time than ever working remotely, causing companies to rethink how IT services should be delivered. To modernize infrastructure and maximize efficiency, many are turning to desktop as a service (DaaS) to enhance their physical desktop strategy, or they are updating on-premises virtual desktop infrastructure (VDI) deployments. Managed in the cloud, these deployments are high-performance virtual instances of desktops and apps that can be delivered from any datacenter or public cloud provider.

DaaS and VDI capabilities provide corporate data protection as well as an easily accessible hybrid work solution for employees. Because all data is stored securely in the cloud or datacenter, rather than on devices, end users can work securely from anywhere, on any device, and over any network, all with a fully IT-provided experience. IT also gains the benefit of centralized management, so administrators can scale their environments quickly and easily. By separating endpoints and corporate data, resources stay protected even if the devices are compromised.

As a leading VDI and DaaS provider, VMware provides the capabilities organizations need for deploying virtual apps and desktops to reduce downtime, increase security, and alleviate the many challenges associated with traditional desktop management.

For more information, go to:

https://docs.vmware.com/en/VMware-Horizon/8-2312/rn/vmware-horizon-8-2312-release-notes/index.html

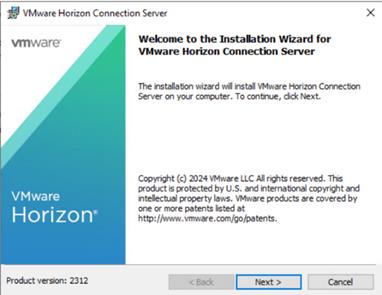

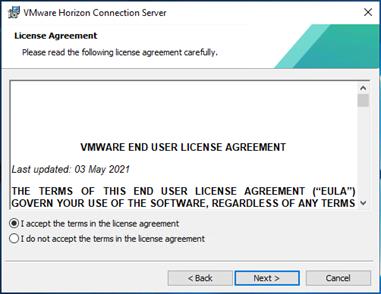

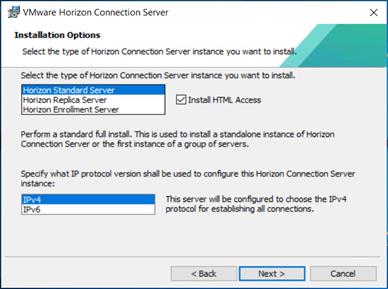

VMware Horizon 8 2312


Horizon 8 2312 is an Extended Service Branch (ESB). Approximately once a year, VMware designates one VMware Horizon release as an Extended Service Branch (ESB). An ESB is a parallel release branch to the existing Current Releases (CR) of the product. By choosing to deploy an ESB, customers receive periodic service pack (SP) updates, which include cumulative critical bug fixes and security fixes. Most importantly, there are no new features in the SP updates, so customers can rely on a stable Horizon platform for their critical deployments. For more information on the ESB and the Horizon versions that have been designated an ESB, see VMware Knowledge Base (KB) article 86477.

VMware Horizon 8 version 2312 includes the following new features and enhancements. This information is grouped by installable component.

● This release adds support for the following Linux distributions:

◦ Red Hat Enterprise Linux (RHEL) Workstation 8.8 and 9.3

◦ Red Hat Enterprise Linux (RHEL) Server 8.9 and 9.3

◦ Rocky Linux 8.9 and 9.3

◦ Debian 12.2

● This release drops support for the following Linux distributions:

◦ RHEL Workstation 8.4

◦ RHEL Server 8.4

● This release adds support for VMware Horizon Recording. This feature allows administrators to record desktop and application sessions to monitor user behavior for Linux remote desktops and applications.

● VMware Integrated Printing now supports adding a watermark to print jobs. Administrators can enable this feature using the printSvc.watermarkEnabled property in /etc/vmware/config.

● Administrators can store the VMwareBlastServer CA-signed certificate and private key in a BCFKS keystore. Two new configuration options in /etc/vmware/viewagent-custom.conf, SSLCertName and SSLKeyName, can be used to customize the names of the certificate and private key.

● Horizon Client

◦ For client certificates, Horizon 8 administrators can set the Connection Server enforcement state for Windows Client sessions and specify the minimum desired security setting to restrict client connections.

◦ The Unlock a Desktop with True SSO and Workspace ONE feature is now supported on Horizon Mac and Linux clients.

● Horizon RESTful APIs

◦ New REST APIs for Application pool, Application icon, Virtual Center summary statistics, UserOrGroupsSummary, Datastore usage, Session statistics, Machines, and more are available, along with updates to existing APIs for building robust automation. For more details, refer to the API documentation.

◦ Horizon Agent for Linux now supports Real-Time Audio-Video redirection, which includes both audio-in and webcam redirection of locally connected devices from the client system to the remote session.

◦ VMware Integrated Printing now supports printer redirection from Linux remote desktops to client devices running Horizon Client for Chrome or HTML Access.

◦ Session Collaboration is now supported on the MATE desktop environment.

◦ A vGPU desktop can support a maximum resolution of 3840x2160 on one, two, three, or four monitors configured in any arrangement.

◦ A non-vGPU desktop can support a maximum resolution of 3840x2160 on a single monitor only.

◦ Agent and Agent RDS levels are now supported for Horizon Agent for Linux.

● Virtual Desktops

◦ Option to create multiple custom compute profiles (CPU, RAM, Cores per Socket) for a single golden image snapshot during desktop pool creation.

◦ VMware Blast now detects the presence of a vGPU system and applies higher quality default settings.

◦ VMware vSphere Distributed Resource Scheduler (DRS) in vSphere 7.0 U3f and later can now be configured to automatically migrate vGPU desktops when entering ESXi host maintenance mode. For more information, see https://kb.vmware.com/s/article/87277

◦ Forensic quarantine feature that archives the virtual disks of selected dedicated or floating Instant Clone desktops for forensic purposes.

◦ The VMware Blast CPU controller feature is now enabled by default in scale environments.

◦ Removed pop-up for suggesting best practices during creation of an instant clone. The information now appears on the first tab/screen of the creation wizard.

◦ Help Desk administrator roles are now applicable to all access groups, not just to the root access group as with previous releases.

◦ Administrators can encrypt a session recording file into a .bin format so that it cannot be played from the file system.

◦ If using an older Web browser, a message appears when launching the Horizon Console indicating that using a modern browser will provide a better user experience.

◦ Added support for hardware encoding on Intel GPUs for Windows:

- Supports Intel 11th Generation Integrated Graphics and above (Tigerlake+) with a minimum required driver version of 30.0.101.1660. See https://www.intel.com/content/www/us/en/download/19344/727284/intel-graphics-windows-dch-drivers.html.

◦ VVC-based USB-R is enabled by default for non-desktop (Chrome/HTML Access/Android) Blast clients.

◦ Physical PC (as Unmanaged Pool) now supports Windows 10 Education and Pro (20H2 and later) and Windows 11 Education and Pro (21H2) with the VMware Blast protocol.

◦ Storage Drive Redirection (SDR) is now available as an alternative option to USB or CDR redirection for better I/O performance.

● Horizon Connection Server

◦ Horizon Connection Server now enables you to configure a customized timeout warning and set the timer for the warning to appear before a user is forcibly disconnected from a remote desktop or published application session. This warning is supported with Horizon Client for Windows 2312 and Horizon Client for Mac 2312 or later.

◦ Windows Hello for Business with certificate authentication is now supported when you Log in as Current User on the Horizon Client for Windows.

◦ Added limited support for Hybrid Azure AD.

● Horizon Agent

◦ The Horizon Agent Installer now includes a pre-check to confirm that .NET 4.6.2 is installed if Horizon Performance Tracker is selected.

● Horizon Client

◦ For information about new features in a Horizon client, including HTML Access, review the release notes for that Horizon client.

● General

◦ The View Agent Direct-Connection Plug-In product name was changed to Horizon Agent Direct-Connection Plug-In in the documentation set.

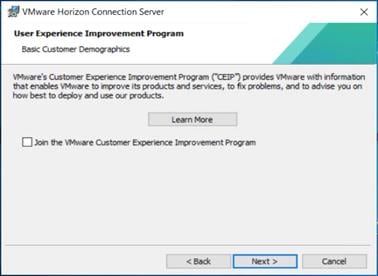

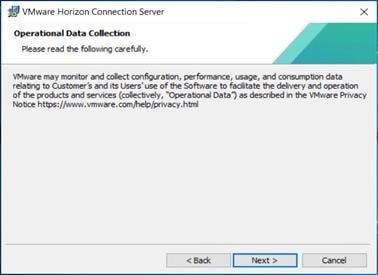

◦ Customers are automatically enrolled in the VMware Customer Experience Improvement Program (CEIP) during installation.
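The Horizon RESTful APIs listed above follow a token-based pattern: a client logs in to a Connection Server and then presents the returned access token as a bearer token on later calls. The following minimal Python sketch assumes the `/rest/login` endpoint with JSON fields `domain`, `username`, and `password`, and a response containing `access_token`. The server name, domain, helper names, and the `/rest/inventory/v1/application-pools` path are illustrative assumptions and should be checked against the API reference for your Connection Server version. The sketch builds requests and parses a sample response; it does not contact a server.

```python
import json
import urllib.request


def build_login_request(server: str, domain: str, username: str,
                        password: str) -> urllib.request.Request:
    """Build (but do not send) a Horizon REST login request (assumed /rest/login)."""
    body = {"domain": domain, "username": username, "password": password}
    return urllib.request.Request(
        url=f"https://{server}/rest/login",
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_access_token(login_response_body: bytes) -> str:
    """Pull the access token out of a login response body (assumed JSON field)."""
    return json.loads(login_response_body)["access_token"]


def build_get_request(server: str, path: str,
                      access_token: str) -> urllib.request.Request:
    """Build an authenticated GET request using the bearer token from login."""
    return urllib.request.Request(
        url=f"https://{server}{path}",
        headers={"Authorization": f"Bearer {access_token}"},
        method="GET",
    )
```

In an automation script, the login request would be sent once with `urllib.request.urlopen`, the token extracted from the response, and the same token reused for inventory or monitoring calls until it expires.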

For more information about VMware vSphere and its components, see: https://www.vmware.com/products/vsphere.html.

VMware vSphere is an enterprise workload platform for holistically managing large collections of infrastructures (resources including CPUs, storage, and networking) as a seamless, versatile, and dynamic operating environment. Unlike traditional operating systems that manage an individual machine, VMware vSphere aggregates the infrastructure of an entire data center to create a single powerhouse with resources that can be allocated quickly and dynamically to any application in need.

Note: VMware vSphere 8 became available in November of 2022.

The VMware vSphere 8 Update 2 release delivered enhanced value in operational efficiency for admins, supercharged performance for higher-end AI/ML workloads, and elevated security across the environment. VMware vSphere 8 Update 2 has now achieved general availability.

For more information about the three key areas of enhancement in VMware vSphere 8 Update 2, go to: VMware vSphere

VMware vSphere vCenter

VMware vCenter Server provides unified management of all hosts and VMs from a single console and aggregates performance monitoring of clusters, hosts, and VMs. VMware vCenter Server gives administrators deep insight into the status and configuration of compute clusters, hosts, VMs, storage, the guest OS, and other critical components of a virtual infrastructure. VMware vCenter manages the rich set of features available in a VMware vSphere environment.

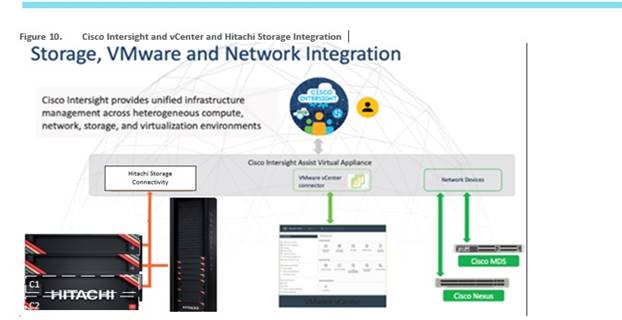

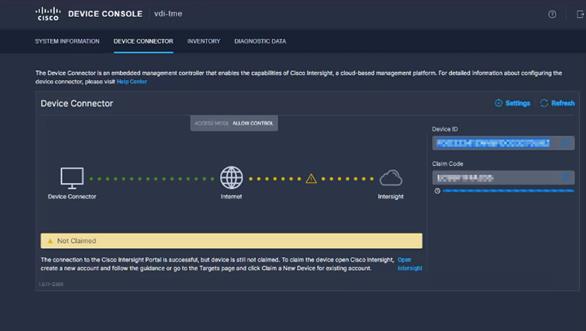

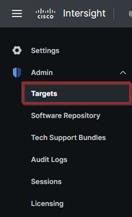

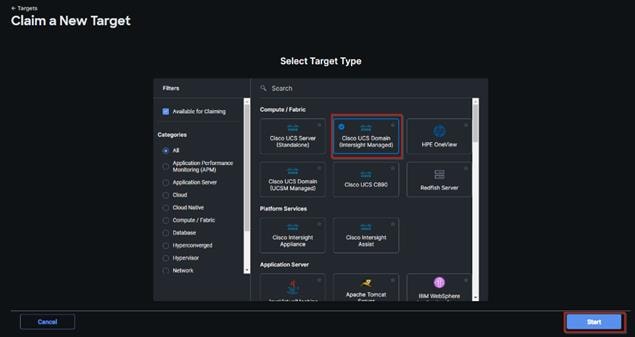

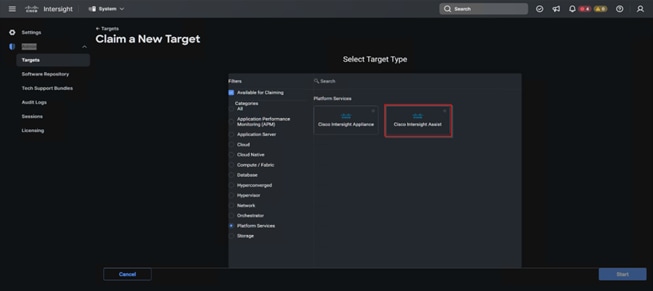

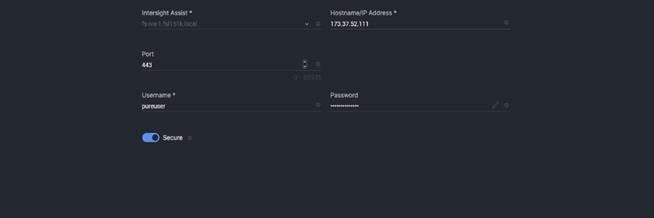

Cisco Intersight Assist Device Connector for VMware vCenter and Hitachi Virtual Storage Platform

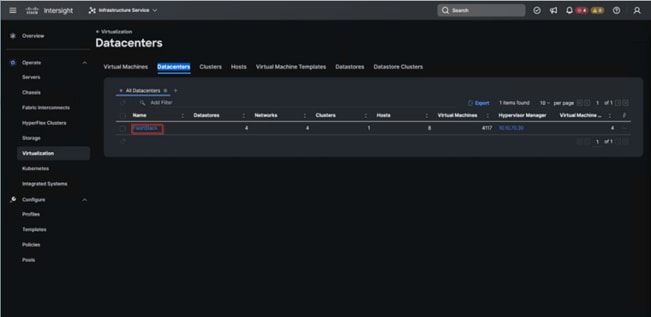

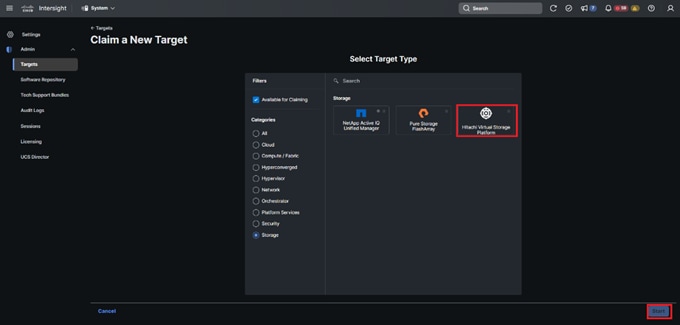

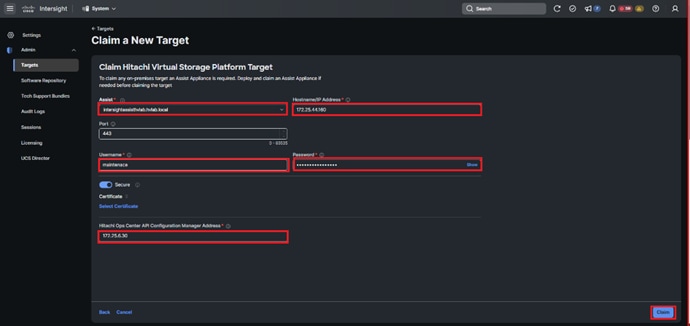

Cisco Intersight integrates with VMware vCenter and Hitachi Virtual Storage Platform (VSP) as follows:

● Cisco Intersight uses the device connector running within Cisco Intersight Assist virtual appliance to communicate with VMware vCenter.

● Cisco Intersight uses the device connector running within a Cisco Intersight Assist virtual appliance to integrate the Hitachi VSP.

For further information and supported VSP models, go to:

The device connector provides a safe way for connected targets to send information and receive control instructions from the Cisco Intersight portal using a secure Internet connection. The integration brings the full value and simplicity of Cisco Intersight infrastructure management service to VMware hypervisor and Hitachi VSP environments. The integration architecture enables you to use new management capabilities without compromise in your existing VMware or Hitachi VSP operations. IT users will be able to manage heterogeneous infrastructure from a centralized Cisco Intersight portal. At the same time, the IT staff can continue to use VMware vCenter and the Hitachi VSP dashboard for comprehensive analysis, diagnostics, and reporting of virtual and storage environments. The next section addresses the functions that this integration provides.

Hitachi Virtual Storage Platform

The Hitachi Virtual Storage Platform (VSP) is a highly scalable, true enterprise-class storage system that can virtualize external storage and provide virtual partitioning and quality of service for diverse workload consolidation. With the industry’s only 100 percent data availability guarantee, VSP delivers the highest uptime and flexibility for your block-level storage needs.

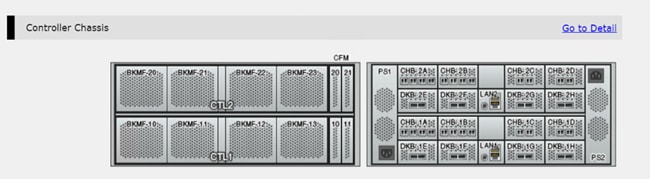

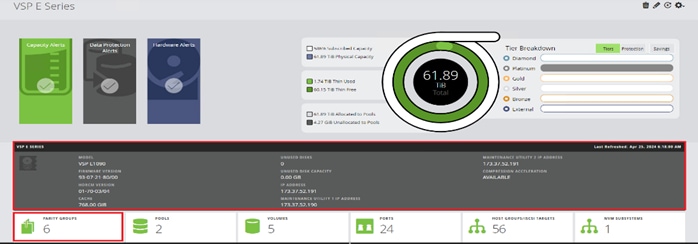

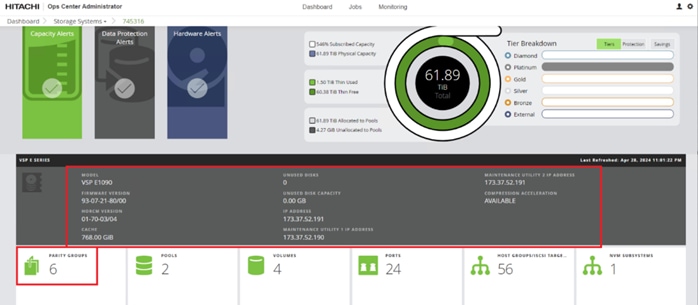

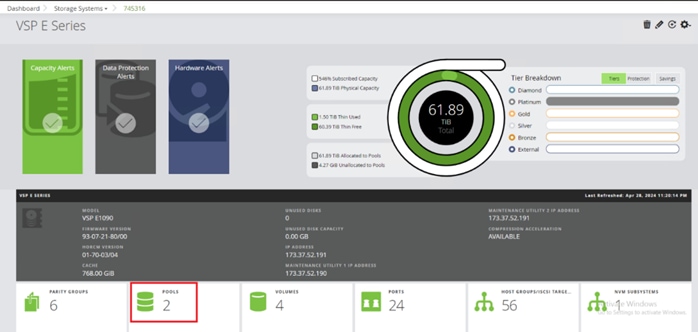

Hitachi Virtual Storage Platform E1090

The Hitachi Virtual Storage Platform E series builds on over 50 years of proven Hitachi engineering experience, offering you a superior range of business continuity options that provide the best reliability in the industry. As a result, 85 percent of Fortune 100 financial services companies trust Hitachi storage systems with their mission-critical data.

The Hitachi Virtual Storage Platform E1090 (VSP E1090) storage system is a high-performance, large-capacity data storage system. The VSP E1090 all-flash arrays (AFAs) support NVMe and SAS solid-state drives (SSDs). The VSP E1090H hybrid models can be configured with both SSDs and hard disk drives (HDDs).

The NVMe flash architecture delivers consistent, low-microsecond latency, which reduces the transaction costs of latency-critical applications and delivers predictable performance to optimize storage resources.

The hybrid architecture allows for greater scalability and provides data-in-place migration support.

The storage systems offer superior performance, resiliency, and agility, featuring response times as low as 41 μs, all backed by the industry’s first and most comprehensive 100 percent data availability guarantee.

The innovative active-active controller architecture of the Hitachi Virtual Storage Platform E series protects your business against local faults while mitigating performance issues, and it provides all the enterprise features of the VSP 5000 series in a lower-cost form factor to satisfy midrange customer needs and business requirements.

Hitachi VSP E1090 Key Features

● High performance

◦ Multiple controller configuration distributes processing across controllers

◦ High-speed processing facilitated by up to 1,024 GiB of cache

◦ I/O processing speed increased by NVMe flash drives

◦ High-speed front-end data transfer up to 32 Gbps for FC and 10 Gbps for iSCSI

◦ I/O response times as low as 41 μs

◦ Integrated with Hitachi Ops Center to improve IT operational efficiencies.

● High reliability

◦ Service continuity for all main components through redundant configuration

◦ RAID 1, RAID 5, and RAID 6 support (RAID 6 including 14D+2P)

◦ Data security by transferring data to cache flash memory in case of a power outage.

● Scalability and versatility

◦ Scalable capacity up to 25.9 PB (internal) and 287 PB (external), with up to 8.4M IOPS

◦ The hybrid architecture allows for greater scalability and provides data-in-place migration support.

● Performance and Resiliency Enhancements

◦ Upgraded controllers with 14 percent more processing power than VSP E990 and 53 percent more processing power than VSP F900

◦ Significantly improved adaptive data reduction (ADR) performance through Compression Accelerator Modules

◦ An 80 percent reduction in drive rebuild time compared to earlier midsized enterprise platforms.

◦ Smaller access size for ADR metadata reduces overhead.

◦ Support for NVMe allows extremely low latency with up to 5 times higher cache miss IOPS per drive.

● Reliability and serviceability

The Virtual Storage Platform E1090 (VSP E1090) storage system is designed to deliver industry-leading performance and availability. The VSP E1090 features a single, flash-optimized Storage Virtualization Operating System (SVOS) image running on 64 processor cores, sharing a global cache of 1 TiB. The VSP E1090 offers higher performance with fewer hardware resources than competitors. The VSP E1090 was upgraded to an advanced Cascade Lake CPU, permitting read response times as low as 41 microseconds, and the new Compression Accelerator Module improves data reduction throughput by up to 2x. Improvements in reliability and serviceability allow the VSP E1090 to claim an industry-leading 99.9999% availability (on average, about 31.5 seconds of downtime per year).

● Advanced AIOps and easy-to-use management

The Hitachi Virtual Storage Platform E series achieves greater efficiency and agility with Hitachi Ops Center’s advanced AIOps, which provides real-time monitoring for VSP E series systems located on-premises or in a colocation facility. Advanced AIOps provides unique integration of IT analytics and automation that identifies issues and, through automation, quickly resolves them before they impact your critical workloads. Ops Center uses the latest AI and machine learning (ML) capabilities to improve IT operations and simplifies day-to-day administration, optimization, and management orchestration for VSP E-Series, freeing you to focus on innovation and strategic initiatives.
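Availability percentages translate directly into expected downtime, which is often the more useful number when setting service-level objectives for VDI. The following minimal Python sketch converts an availability percentage into average downtime per year, assuming a 365-day year and treating availability as the fraction of time the system is up. For example, six nines (99.9999%) corresponds to roughly 31.5 seconds per year, while eight nines (99.999999%) corresponds to roughly 0.3 seconds per year. The function name is hypothetical.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 s; leap years ignored


def downtime_seconds_per_year(availability_percent: float) -> float:
    """Average yearly downtime implied by an availability percentage."""
    unavailable_fraction = 1 - availability_percent / 100
    return unavailable_fraction * SECONDS_PER_YEAR


if __name__ == "__main__":
    # Compare several "nines" levels side by side.
    for pct in (99.999, 99.9999, 99.99999, 99.999999):
        print(f"{pct}% -> {downtime_seconds_per_year(pct):.2f} s/year")
```

Each additional nine divides the expected downtime by ten, so small differences in the quoted percentage correspond to large differences in downtime.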

For more information about Hitachi Virtual Storage Platform E-Series, go to: https://www.hitachivantara.com/en-us/products/storage-platforms/primary-block-storage/vsp-e-series.html

Hitachi Storage Virtualization Operating System RF

Hitachi Storage Virtualization Operating System (SVOS) RF (Resilient Flash) delivers best-in-class business continuity and data availability and simplifies storage management of all Hitachi VSPs by sharing a common operating system. Flash performance is optimized with a patented flash-aware I/O stack to further accelerate data access. Adaptive inline data reduction increases storage efficiency while enabling a balance of data efficiency and application performance. Industry-leading storage virtualization allows Hitachi Storage Virtualization Operating System RF to use third-party all-flash and hybrid arrays as storage capacity, consolidating resources and extending the life of storage investments.

Hitachi Storage Virtualization Operating System RF works with the virtualization capabilities of Hitachi VSP storage systems to provide the foundation for global storage virtualization. SVOS RF delivers software-defined storage by abstracting and managing heterogeneous storage to provide a unified virtual storage layer, resource pooling, and automation. Hitachi Storage Virtualization Operating System RF also offers self-optimization, automation, centralized management, and increased operational efficiency for improved performance and storage utilization. Optimized for flash storage, Hitachi Storage Virtualization Operating System RF provides adaptive inline data reduction to keep response times low as data levels grow, and selectable services enable data-reduction technologies to be activated based on workload benefit.

Hitachi Storage Virtualization Operating System RF integrates with Hitachi’s base and advanced software packages to deliver superior availability and operational efficiency. You gain active-active clustering, data-at-rest encryption, insights from machine learning, and policy-defined data protection with local and remote replication.

For more information about Hitachi Storage Virtualization Operating System RF, go to: https://www.hitachivantara.com/en-us/products/storage-platforms/primary-block-storage/virtualization-operating-system.html

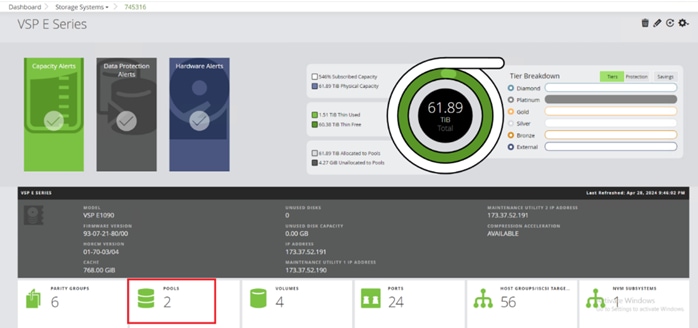

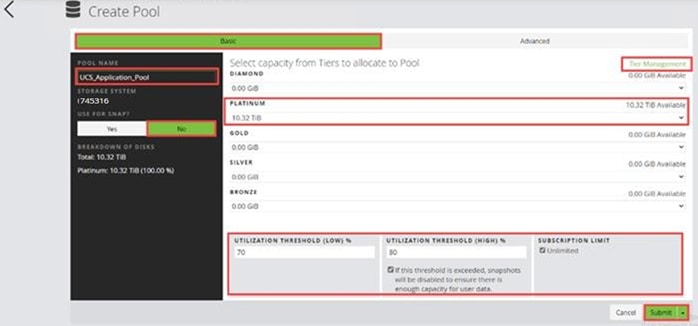

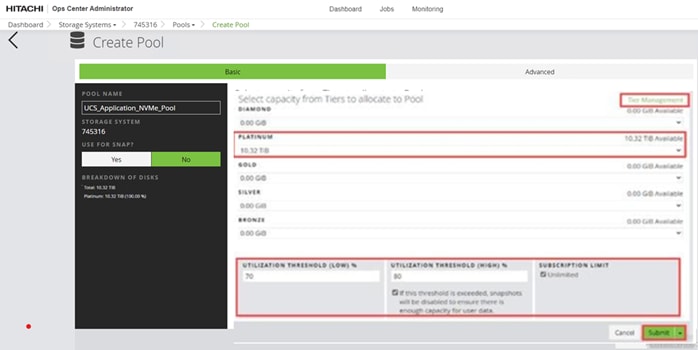

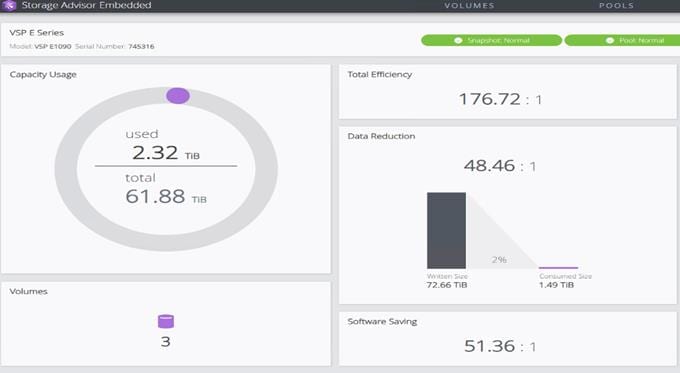

Capacity Saving

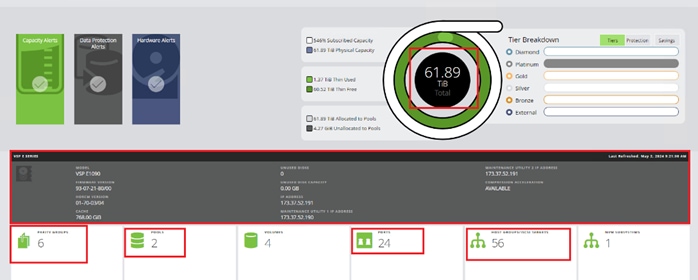

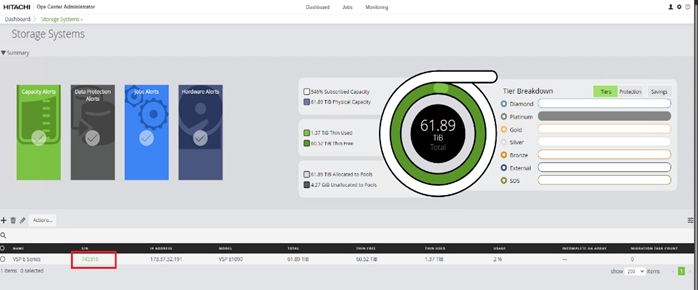

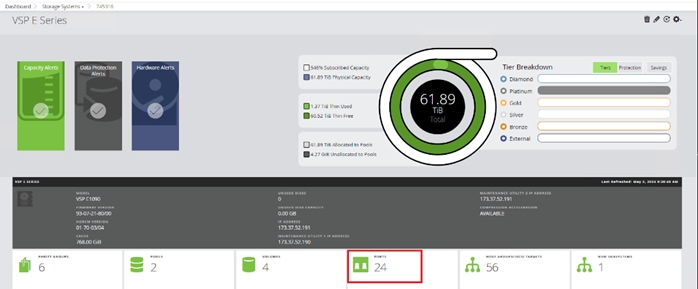

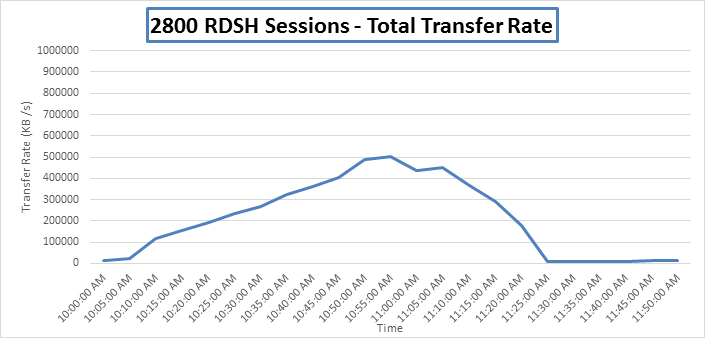

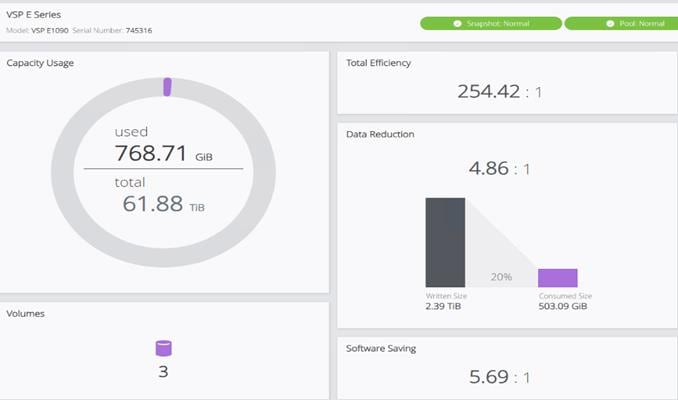

The capacity saving feature is native to the Hitachi VSP. When enabled, data deduplication and compression are performed to reduce the size of data to be stored. Capacity saving can be enabled on dynamic provisioning volumes in Hitachi Dynamic Provisioning (HDP) pools and Hitachi Dynamic Tiering (HDT) pools. You can use the capacity saving function on internal flash drives only, including data stored on encrypted flash drives and external storage. Within this guide, the capacity saving function was enabled to show the use case benefits in conjunction with VDI deployments. The following image demonstrates how the Hitachi VSP makes use of the capacity saving function after it is enabled on the DP pool.
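To illustrate why deduplication followed by compression suits VDI, where many desktops are cloned from the same golden image, the following is a toy Python sketch of the general technique. It is not the Hitachi VSP implementation: the 8 KiB block size, the SHA-256 fingerprint, zlib compression, and the `capacity_saving` helper are all assumptions chosen for illustration. Identical blocks are stored once, and only the unique blocks are then compressed.

```python
import hashlib
import zlib

BLOCK_SIZE = 8 * 1024  # hypothetical fixed block size for this sketch


def capacity_saving(data: bytes, block_size: int = BLOCK_SIZE) -> dict:
    """Estimate savings from block-level deduplication followed by compression."""
    unique_blocks = {}
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        digest = hashlib.sha256(block).digest()  # content fingerprint
        unique_blocks.setdefault(digest, block)  # keep the first copy only
    after_dedupe = sum(len(b) for b in unique_blocks.values())
    # Compress each unique block independently.
    after_compression = sum(len(zlib.compress(b)) for b in unique_blocks.values())
    return {
        "logical_bytes": len(data),
        "unique_blocks": len(unique_blocks),
        "after_dedupe_bytes": after_dedupe,
        "after_compression_bytes": after_compression,
        "saving_ratio": len(data) / after_compression if after_compression else 0.0,
    }


if __name__ == "__main__":
    # Ten "desktops" that share one identical block of golden-image data.
    golden_block = bytes(range(256)) * 32  # exactly 8 KiB
    print(capacity_saving(golden_block * 10))
```

In a real VDI pool, user-specific data reduces the deduplication ratio, so the savings shown by a toy input like this one are an upper bound rather than an expectation.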

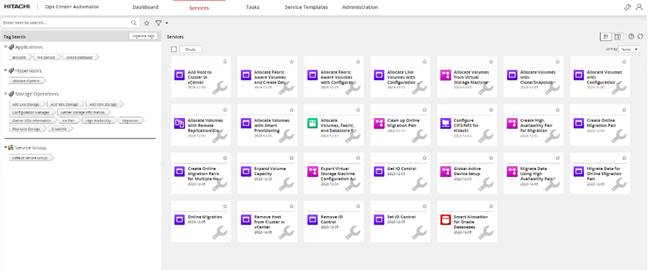

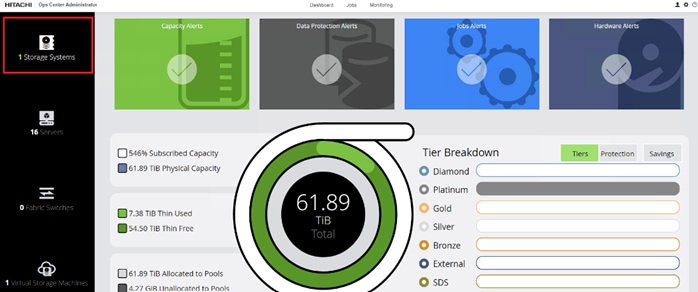

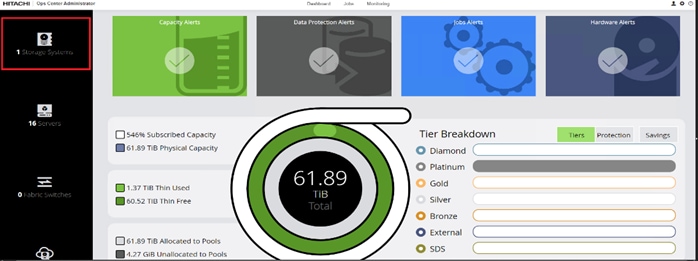

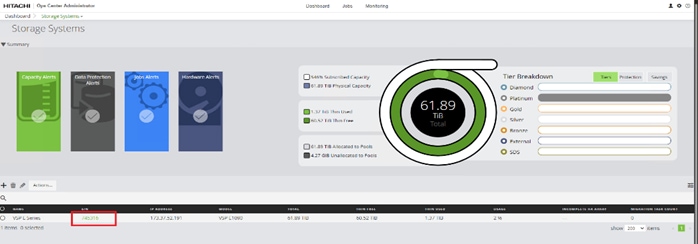

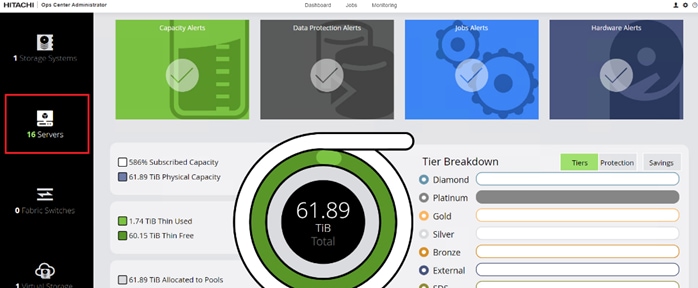

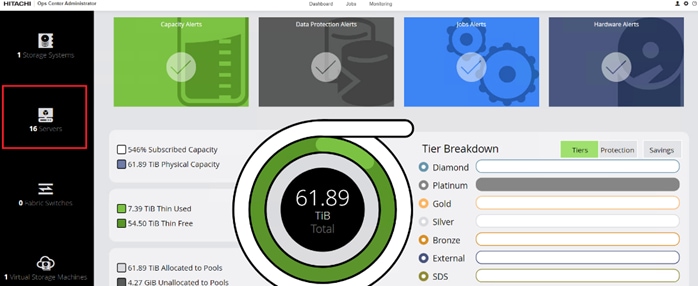

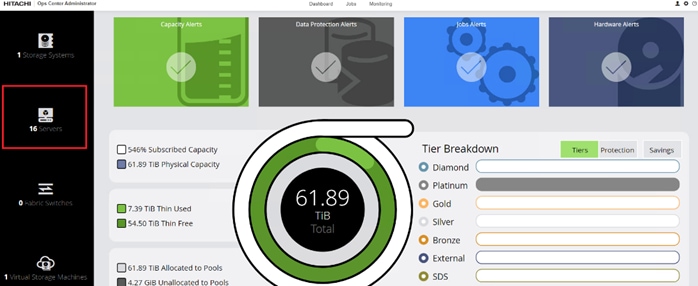

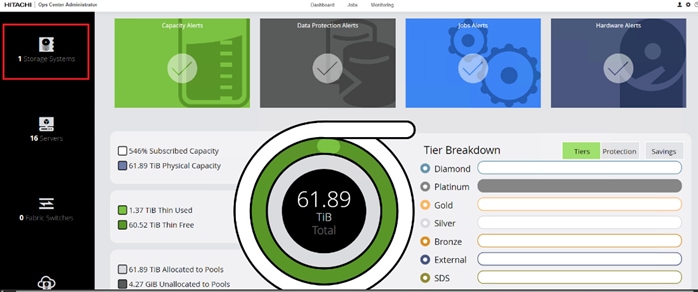

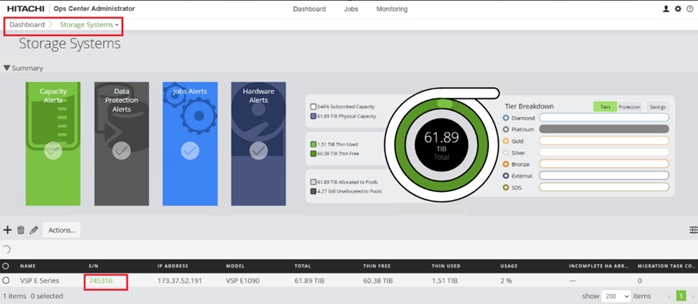

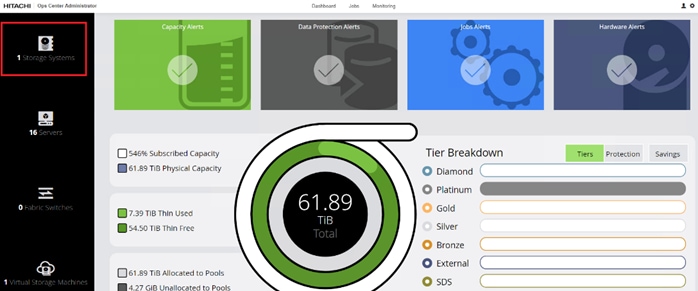

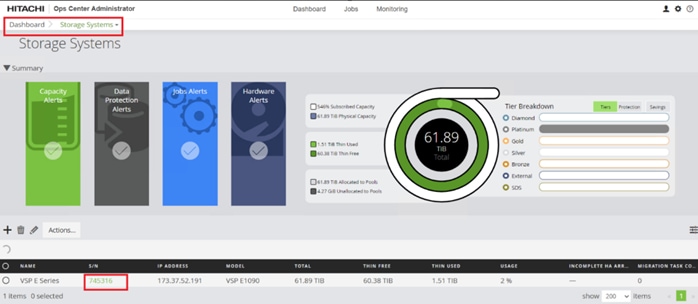

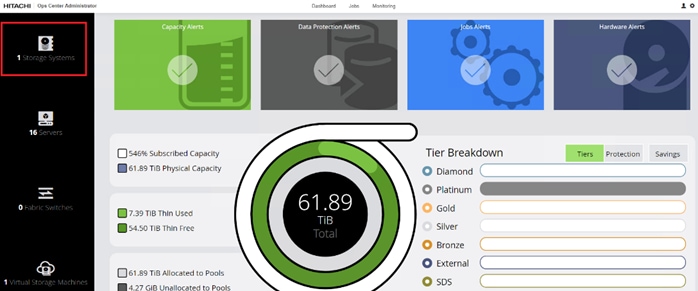

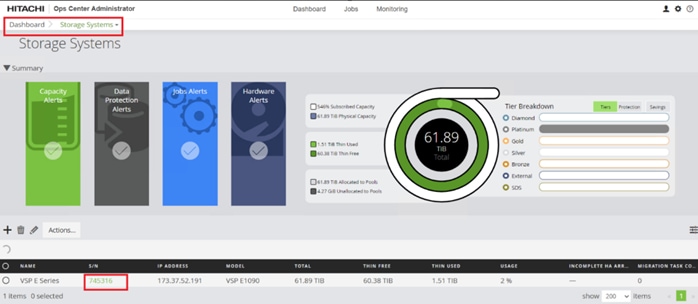

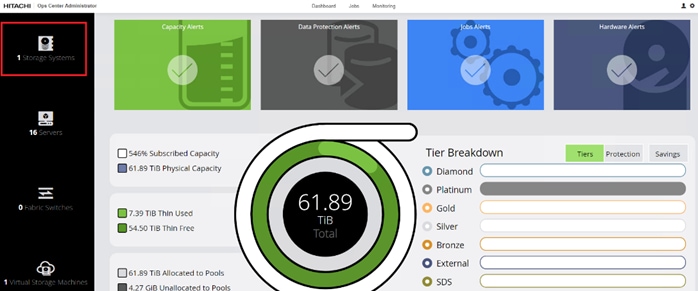

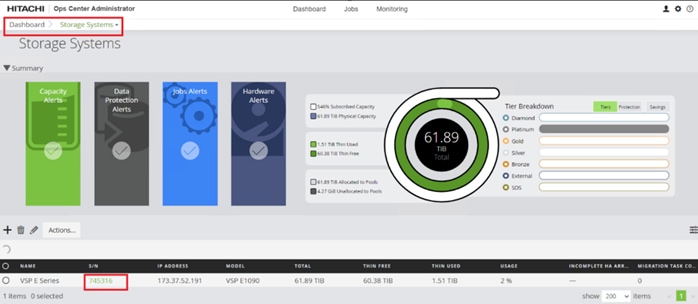

Hitachi Ops Center is an integrated suite of applications that enable you to optimize your data center operations through integrated configuration, analytics, automation, and copy data management. These features allow you to administer, automate, optimize, and protect your Hitachi storage infrastructure.

The following modules are included in the Hitachi Ops Center:

● Ops Center Administrator

● Ops Center Analyzer

● Ops Center Automator

● Ops Center Protector

● Ops Center API Configuration Manager

● Ops Center Analyzer Viewpoint

For more Information about Ops Center, go to: https://www.hitachivantara.com/en-us/products/storage-software/ai-operations-management/ops-center.html

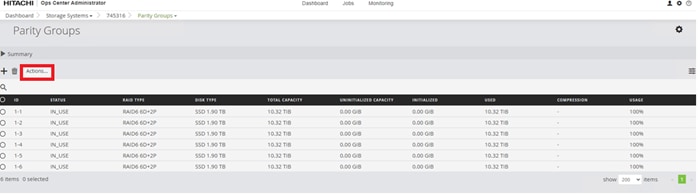

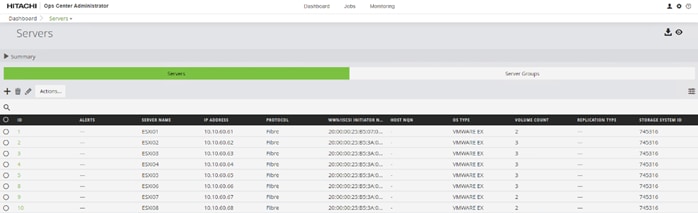

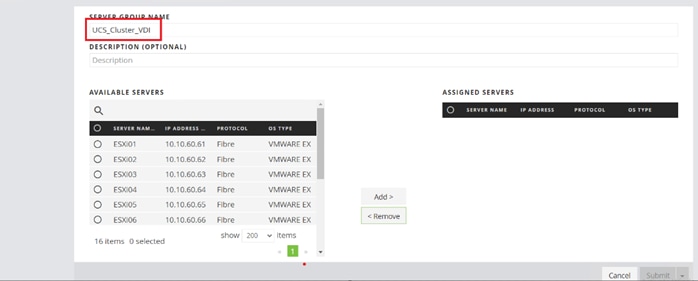

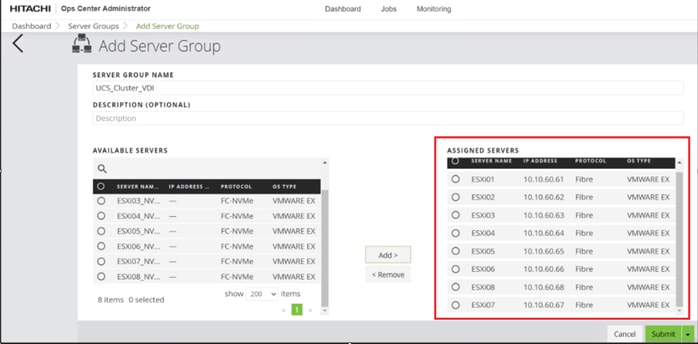

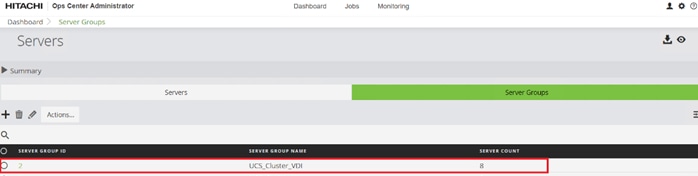

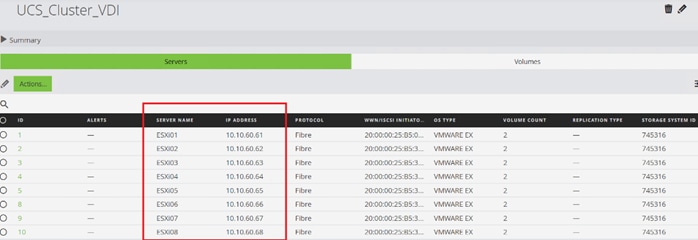

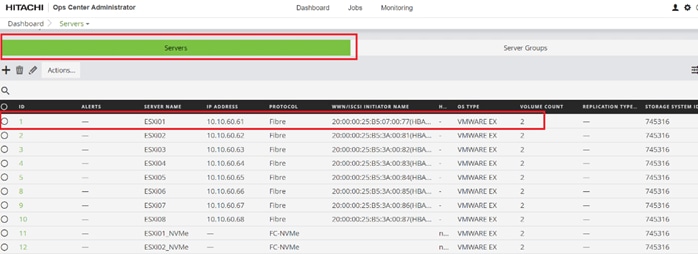

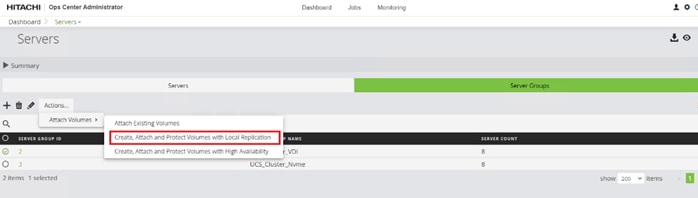

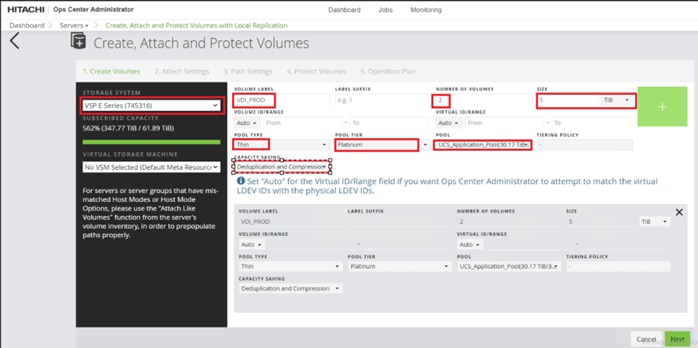

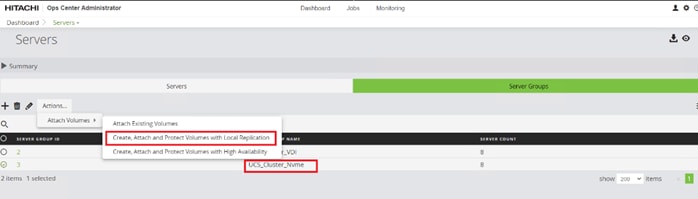

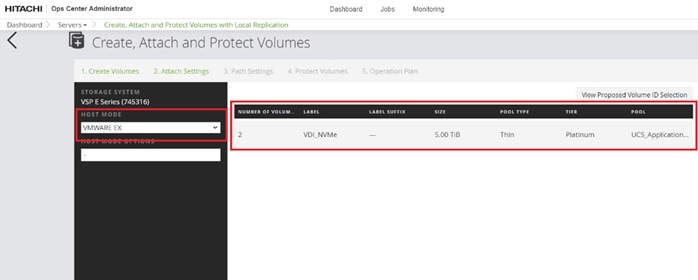

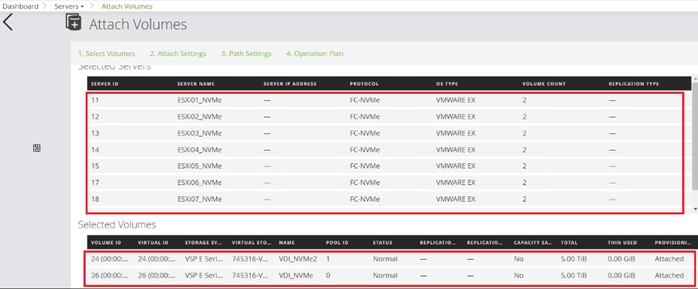

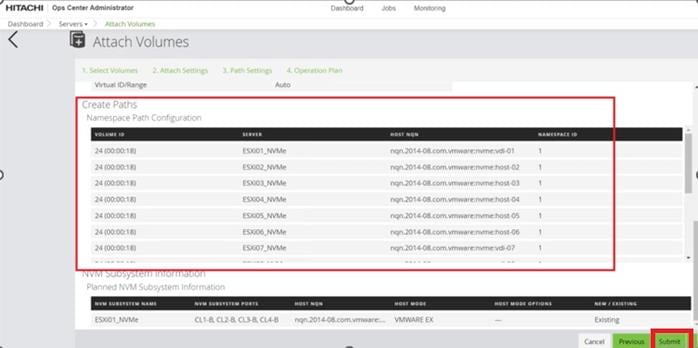

Hitachi Ops Center Administrator

Hitachi Ops Center Administrator is an infrastructure management solution that unifies storage provisioning, data protection, and storage management across the entire Hitachi VSP family.

The Hitachi Ops Center Administrator key benefits are:

● Reduction in administration time and effort to efficiently deploy and manage new storage resources using Administrator’s easy-to-use interface.

● Utilization of common configurations to centrally manage multiple Hitachi Virtual Storage Platform storage systems.

● Standards-based APIs that enable fast storage provisioning operations and integration with external tools.

● Use of common administrative workflows to manage highly available storage volumes.

● Integration with Hitachi Ops Center management to incorporate analytics, automation, and data protection.

Ops Center Analyzer

Ops Center Analyzer provides a comprehensive application service-level and storage performance management solution that enables you to quickly identify and isolate performance problems, determine the root cause, and provide solutions. It enables proactive monitoring from the application level through server, network, and storage resources for end-to-end visibility of your monitored environment. It also increases performance and storage availability by identifying problems before they can affect applications.

The Ops Center Analyzer collects and correlates data from these sources:

● Storage systems

● Fibre Channel switches

● Hypervisors

● Hosts

Ops Center Analyzer Viewpoint

Ops Center Analyzer Viewpoint displays the operational status of data centers around the world in a single window, allowing comprehensive insight into global operations.

With Hitachi Ops Center Analyzer Viewpoint, the following information can be viewed:

● Check the overall status of multiple data centers: By accessing Analyzer viewpoint from a web browser, you can collectively display and view information about supported resources in the data centers. Even for a large-scale system consisting of multiple data centers, you can check the comprehensive status of all data centers.

● Easily analyze problems related to resources: By using the UI, you can display information about resources in a specific data center in a drill-down view and easily identify where a problem occurred. Additionally, because you can launch the Ops Center Analyzer UI from the Analyzer viewpoint UI, you can quickly perform the tasks needed to resolve the problem.

Hitachi Ops Center Protector

With Hitachi Ops Center Protector, you can easily configure in-system or remote replication with the Hitachi VSP. As an enterprise copy data management platform, Protector provides business-defined data protection, which simplifies the creation and management of complex, business-defined policies to meet service-level objectives for availability, recoverability, and retention.

Hitachi Ops Center Automator

Hitachi Ops Center Automator is a software solution that provides automation to simplify end-to-end data management tasks such as storage provisioning for storage and data center administrators. The building blocks of the product are prepackaged automation templates known as service templates which can be customized and configured for other people in the organization to use as a self-service model, reducing the load on traditional administrative staff.

Hitachi Ops Center API Configuration Manager

Hitachi Ops Center API Configuration Manager REST is an independent, lightweight binary that enables programmatic management of Hitachi VSP storage systems using RESTful APIs. This component can be deployed standalone or as part of Hitachi Ops Center.

The REST API supports the following storage systems:

● VSP 5000 series

● VSP E Series

● VSP F Series

● VSP G Series
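A typical REST workflow against API Configuration Manager starts by creating a session and then authenticating later calls with the returned token. The following minimal Python sketch assumes a session endpoint of the form `/ConfigurationManager/v1/objects/storages/{storageDeviceId}/sessions`, HTTP Basic authentication for session creation, a JSON response containing `token`, and an `Authorization: Session <token>` header on subsequent requests. These details, the host, port, storage device ID, and helper names are assumptions to verify against the REST API reference for your Configuration Manager version. The sketch builds requests and parses a sample response only.

```python
import base64
import json
import urllib.request


def build_session_request(host: str, port: int, storage_device_id: str,
                          username: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a session-creation request (assumed endpoint)."""
    credentials = base64.b64encode(f"{username}:{password}".encode()).decode()
    url = (f"https://{host}:{port}/ConfigurationManager/v1/objects/"
           f"storages/{storage_device_id}/sessions")
    return urllib.request.Request(
        url=url,
        headers={
            "Accept": "application/json",
            "Authorization": f"Basic {credentials}",  # used only to open the session
        },
        method="POST",
    )


def session_auth_header(session_response_body: bytes) -> dict:
    """Build the header for later calls from the session response (assumed 'token' field)."""
    token = json.loads(session_response_body)["token"]
    return {"Authorization": f"Session {token}"}
```

Exchanging credentials for a session token once, rather than sending Basic credentials on every call, keeps the password out of most requests; a session should be deleted when the automation finishes.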

Hitachi Virtual Storage Platform Sustainability

Hitachi Virtual Storage Platform (VSP) storage systems are certified under CFP (Carbon Footprint of Products), a method of “visualizing” the CO2-equivalent emissions obtained by converting greenhouse gas emissions across the entire life cycle of a product (goods or service), from the raw material acquisition stage through the disposal and recycling stage. Eco-friendly storage products from Hitachi help reduce CO2 emissions by approximately 30 percent to 60 percent compared to previous models.

For more information, go to: https://ecoleaf-label.jp/en/organization/7

Additionally, the Hitachi VSP brings the following advantages for a greener datacenter:

● Unique hardware-based data compression achieves approximately 60 percent reduction in power consumption.

● Fast data compression processing improves read/write performance by 40 percent.

● Automatic switching enables high performance and energy savings.

● Eliminating the need for data migration saves energy while minimizing waste.

The Hitachi VSP E1090 storage solution is also certified under the USA ENERGY STAR program and is number 1 in its class.

For more information, go to: https://www.energystar.gov/productfinder/product/certified-data-center-storage/details/2406163/export/pdf

This chapter contains the following:

● Design Considerations for Desktop Virtualization

● Understanding Applications and Data

● Project Planning and Solution Sizing Sample Questions

● Hitachi SAN and VMware Configuration Best Practices

Design Considerations for Desktop Virtualization

There are many reasons to consider a virtual desktop solution, such as an ever-growing and diverse base of user devices, complexity in management of traditional desktops, security, and even Bring Your Own Device (BYOD) to work programs. The first step in designing a virtual desktop solution is to understand the user community and the type of tasks that are required to successfully execute their role. The following user classifications are provided:

● Knowledge Workers today do not just work in their offices all day – they attend meetings, visit branch offices, work from home, and even coffee shops. These anywhere workers expect access to all of their same applications and data wherever they are.

● External Contractors are increasingly part of your everyday business. They need access to certain portions of your applications and data, yet administrators still have little control over the devices they use and the locations they work from. Consequently, IT is stuck making trade-offs on the cost of providing these workers a device vs. the security risk of allowing them access from their own devices.

● Task Workers perform a set of well-defined tasks. These workers access a small set of applications and have limited requirements from their PCs. However, since these workers are interacting with your customers, partners, and employees, they have access to your most critical data.

● Mobile Workers need access to their virtual desktop from everywhere, regardless of their ability to connect to a network. In addition, these workers expect the ability to personalize their PCs, by installing their own applications and storing their own data, such as photos and music, on these devices.

● Shared Workstation users are often found in state-of-the-art university and business computer labs, conference rooms, or training centers. For shared workstation environments, the constant requirement to re-provision desktops with the latest operating systems and applications as the needs of the organization change tops the list.

After the user classifications have been identified and the business requirements for each user classification have been defined, it becomes essential to evaluate the types of virtual desktops that are needed based on user requirements. There are five potential desktop environments for each user:

● Traditional PC: A traditional PC is what typically constitutes a desktop environment: a physical device with a locally installed operating system.

● Remote Desktop Session Host (RDSH) Sessions: A hosted, server-based desktop is a desktop where the user interacts through a delivery protocol. With hosted, server-based desktops, a single installed instance of a server operating system, such as Microsoft Windows Server 2022, is shared by multiple users simultaneously. Each user receives a desktop "session" and works in an isolated memory space. By contrast, a hosted virtual desktop is a virtual desktop running on a virtualization layer (ESX); the user does not sit in front of the desktop but instead interacts with it through a delivery protocol.

● Published Applications: Published applications run entirely on the VMware RDS server virtual machines and the user interacts through a delivery protocol. With published applications, a single installed instance of an application, such as Microsoft Office, is shared by multiple users simultaneously. Each user receives an application "session" and works in an isolated memory space.

● Streamed Applications: Streamed desktops and applications run entirely on the user’s local client device and are sent from a server on demand. The user interacts with the application or desktop directly, but the resources may only be available while they are connected to the network.

● Local Virtual Desktop: A local virtual desktop is a desktop running entirely on the user’s local device and continues to operate when disconnected from the network. In this case, the user’s local device runs a type 1 hypervisor and is synced with the data center when the device is connected to the network.

Note: For the purposes of the validation represented in this document, both Single-session OS and Multi-session OS VDAs were validated.

Understanding Applications and Data

When the desktop user groups and sub-groups have been identified, the next task is to catalog group application and data requirements. This can be one of the most time-consuming processes in the VDI planning exercise but is essential for the VDI project’s success. If the applications and data are not identified and co-located, performance will be negatively affected.

The process of analyzing the variety of application and data pairs for an organization will likely be complicated by the inclusion of cloud applications, for example, Salesforce.com. This application and data analysis is beyond the scope of this Cisco Validated Design but should not be omitted from the planning process. A variety of third-party tools are available to assist organizations with this crucial exercise.

Project Planning and Solution Sizing Sample Questions

The following key project and solution sizing questions should be considered:

● Has a VDI pilot plan been created based on the business analysis of the desktop groups, applications, and data?

● Is there infrastructure and budget in place to run the pilot program?

● Are the required skill sets to execute the VDI project available? Can we hire or contract for them?

● Do we have end user experience performance metrics identified for each desktop sub-group?

● How will we measure success or failure?

● What is the future implication of success or failure?

Below is a short, non-exhaustive list of sizing questions that should be addressed for each user sub-group:

● What is the Single-session OS version?

● 32-bit or 64-bit desktop OS?

● How many virtual desktops will be deployed in the pilot? In production?

● How much memory is required per desktop in each target desktop group?

● Are there any rich media, Flash, or graphics-intensive workloads?

● Are there any applications installed? What application delivery methods will be used: installed, streamed, layered, hosted, or local?

● What is the Multi-session OS version?

● What method will be used for virtual desktop deployment?

● What is the hypervisor for the solution?

● What is the storage configuration in the existing environment?

● Are there sufficient IOPS available for the write-intensive VDI workload?

● Will there be storage dedicated and tuned for VDI service?

● Is there a voice component to the desktop?

● Is there a third-party graphics component?

● Is anti-virus a part of the image?

● What SQL Server version will be used for the database?

● Is user profile management (for example, non-roaming profile based) part of the solution?

● What are the fault tolerance, failover, and disaster recovery plans?

● Are there additional desktop sub-group specific questions?

VMware vSphere 8.0 U2 has been selected as the hypervisor for this VMware Horizon Virtual Desktops and Remote Desktop Session Host (RDSH) Sessions deployment.

VMware vSphere: VMware vSphere comprises the management infrastructure (VMware vCenter Server software) and the hypervisor software that virtualizes the hardware resources on the servers. It offers features such as Distributed Resource Scheduler, vMotion, High Availability, Storage vMotion, VMFS, and a multipathing storage layer. More information on vSphere can be obtained at the VMware website.

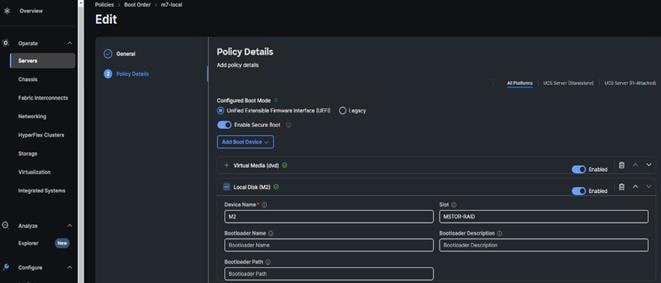

When using Cisco UCS servers, it is recommended to configure Boot from SAN and store the boot partitions on remote storage. This enables architects and administrators to take full advantage of the stateless nature of service profiles. Service profiles provide hardware flexibility across server hardware generational changes and across operating system and hypervisor lifecycle management, and they keep server identity portable. Boot from SAN also removes the need to populate local server storage, which would otherwise add administrative overhead.

Note: M.2 boot drives were used for the lab testing in this document. However, the documented procedures provide SAN boot configuration.

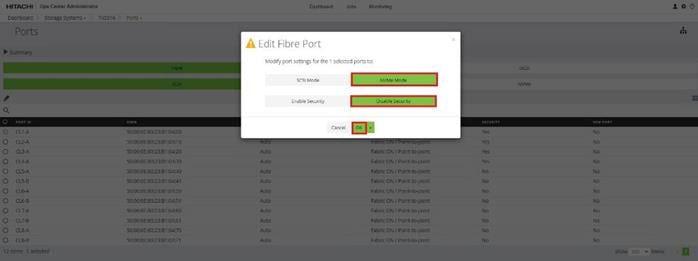

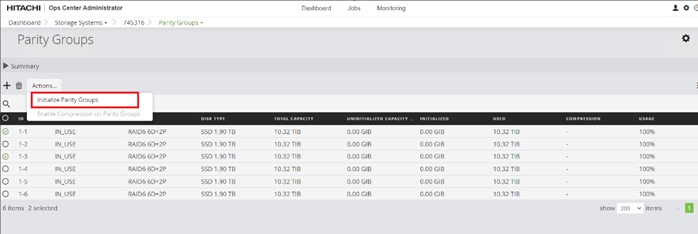

Each VSP storage system consists of multiple controllers and Fibre Channel adapters that provide connectivity to the Fibre Channel fabrics through the MDS FC switches. The VSP E1090 models use channel boards (CHBs), and each storage system contains two controllers. The multiple CHBs within each storage system allow multiple layers of redundancy to be designed into the storage architecture, increasing availability and maintaining performance during a failure event.

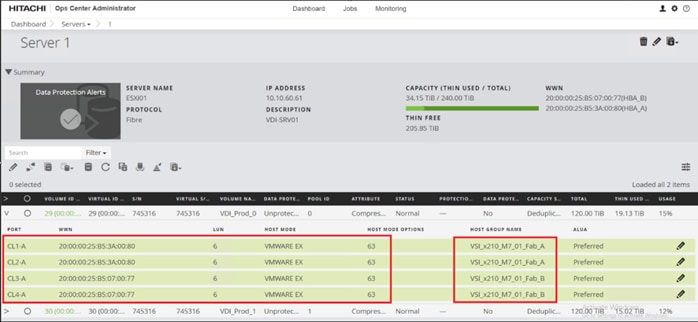

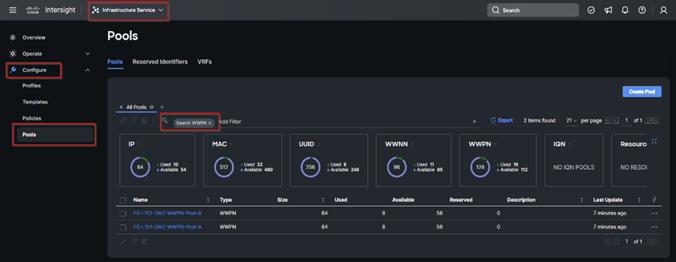

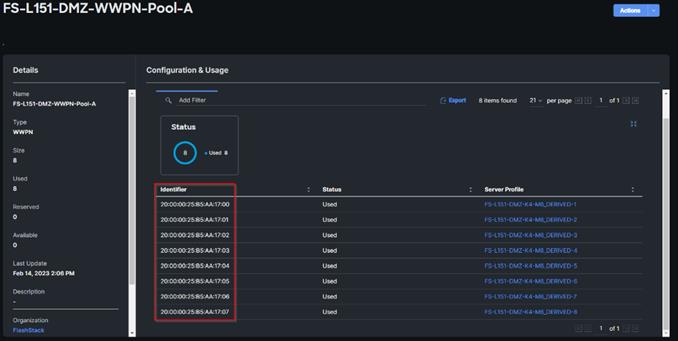

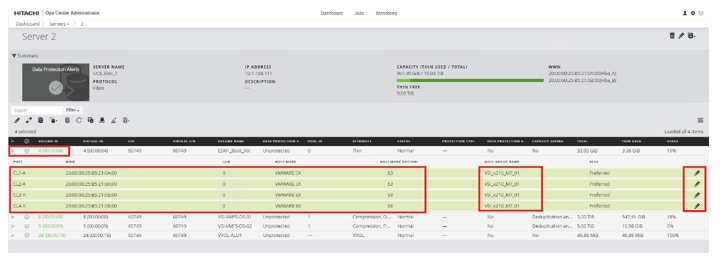

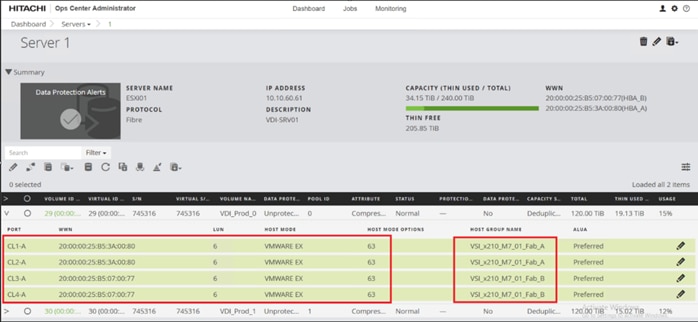

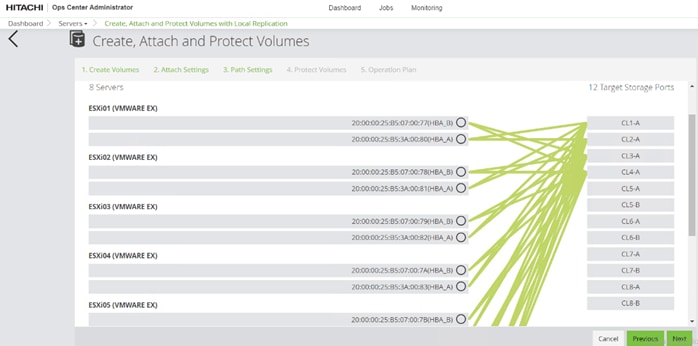

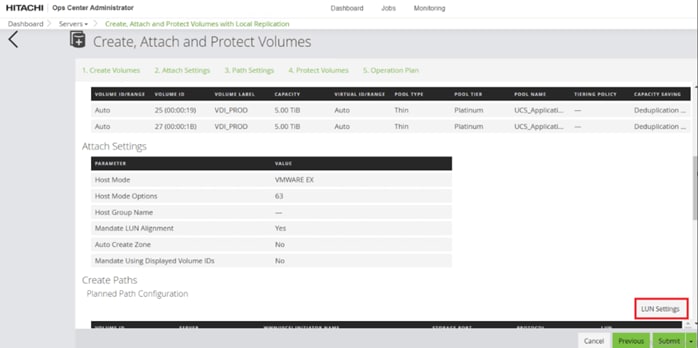

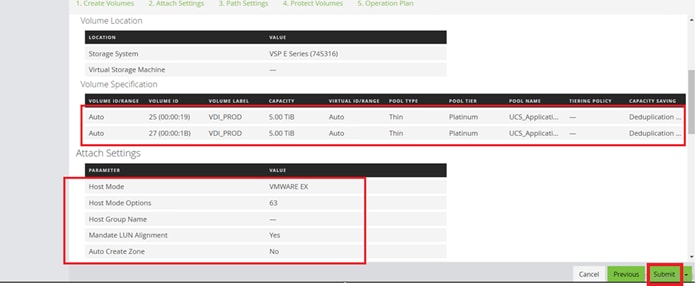

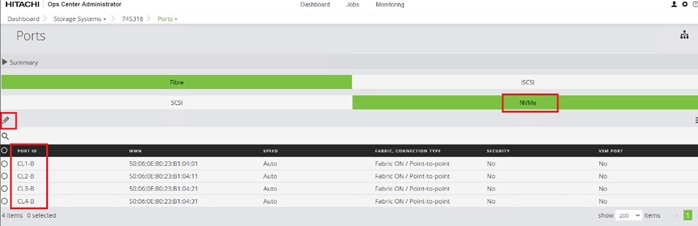

The VSP E1090 CHBs each contain up to four individual Fibre Channel (FC) ports, allowing for redundant connections to each fabric in the Cisco UCS infrastructure. In this deployment, four ports are configured for the FC-SCSI protocol. One port from controller 1 (CL1-A) and one from controller 2 (CL2-A) connect to MDS fabric A. One port from controller 1 (CL3-A) and one from controller 2 (CL4-A) connect to MDS fabric B. These connections provide the data path to boot LUNs and to VMFS datastores that use the FC-SCSI protocol.

Additionally, four ports are configured for FC-NVMe to provide an additional data path for VMFS datastores that use the FC-NVMe protocol. One port from controller 1 (CL1-B) and one from controller 2 (CL2-B) connect to MDS fabric A. One port from controller 1 (CL3-B) and one from controller 2 (CL4-B) connect to MDS fabric B.
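The port layout above can be captured as data and checked for controller redundancy. The following is a minimal Python sketch, not a Hitachi tool. The `PORTS` table mirrors the ports listed in the text. The rule that odd-numbered clusters (CL1, CL3) belong to controller 1 and even-numbered clusters (CL2, CL4) belong to controller 2 is inferred from that listing. The hypothetical `redundancy_gaps` function reports any protocol and fabric combination that is served by only one controller.

```python
from collections import defaultdict

# Port layout from the text: port -> (protocol, MDS fabric).
PORTS = {
    "CL1-A": ("FC-SCSI", "A"),
    "CL2-A": ("FC-SCSI", "A"),
    "CL3-A": ("FC-SCSI", "B"),
    "CL4-A": ("FC-SCSI", "B"),
    "CL1-B": ("FC-NVMe", "A"),
    "CL2-B": ("FC-NVMe", "A"),
    "CL3-B": ("FC-NVMe", "B"),
    "CL4-B": ("FC-NVMe", "B"),
}


def controller_of(port: str) -> int:
    """Assumed rule: odd cluster numbers are controller 1, even are controller 2."""
    cluster = int(port[2:port.index("-")])  # "CL3-A" -> 3
    return 1 if cluster % 2 else 2


def redundancy_gaps(ports: dict) -> list:
    """List (protocol, fabric) pairs present in `ports` that lack one of the two controllers."""
    seen = defaultdict(set)
    for port, (protocol, fabric) in ports.items():
        seen[(protocol, fabric)].add(controller_of(port))
    return sorted(key for key, ctrls in seen.items() if ctrls != {1, 2})


if __name__ == "__main__":
    print(redundancy_gaps(PORTS))  # [] means every fabric is served by both controllers
```

Removing a port such as CL2-A from the table would cause the check to report `("FC-SCSI", "A")`. That is the single-controller exposure the dual-controller cabling is designed to avoid.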

Because Cisco UCS provides alternate data paths, each host has a total of four paths, which serve both the boot LUN and the VMFS datastores. If you plan to deploy VMware ESXi hosts, each host’s WWN should be in its own host group. This approach provides granular control over LUN presentation to ESXi hosts. It is the best practice for SAN boot environments such as Cisco UCS because it ensures that ESXi hosts cannot access other ESXi hosts’ boot LUNs.
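The four-path figure follows from the zoning, because each zoned initiator-target pair is one path to a LUN. This sketch assumes one vHBA per fabric on each host. It also assumes single-initiator zones that include both target ports for that fabric and protocol (zoning is not detailed here). Under those assumptions, the arithmetic is:

```python
def paths_per_lun(fabrics: int, initiators_per_fabric: int, targets_per_fabric: int) -> int:
    """Each zoned initiator-target pair on a fabric contributes one path to the LUN."""
    return fabrics * initiators_per_fabric * targets_per_fabric


if __name__ == "__main__":
    # Two fabrics, one vHBA per fabric, two VSP target ports per fabric (for example CL1-A and CL2-A).
    print(paths_per_lun(fabrics=2, initiators_per_fabric=1, targets_per_fabric=2))  # 4
```

The same count applies separately to the FC-NVMe ports, because they follow the same two-ports-per-fabric layout.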

The Hitachi VSP E1090 supports Fibre Channel at up to 32 Gb/s and iSCSI at up to 25 Gb/s through optional iSCSI CHB adapters. Always make sure that the correct number of HBAs and the correct SFP speeds are included in the original bill of materials (BOM).

To reduce the impact of an outage or scheduled maintenance downtime, it is good practice to design fabrics with bandwidth headroom, that is, more bandwidth than the workloads require. This provides a similar performance profile during a component failure or maintenance event and protects workloads from the effects of the reduced number of paths. Headroom can be achieved by increasing the number of physically cabled connections between storage and compute. These connections can then be used to deliver performance and reduced latency to the workloads running on the solution.
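One way to size this spare capacity is to require that peak demand still fit after a link fails. The sketch below uses nominal link speeds and ignores protocol overhead. The 90 Gb/s peak and 32 Gb/s links are hypothetical example values, and `links_needed` and `surviving_bandwidth` are illustrative helpers.

```python
import math


def links_needed(peak_gbps: float, link_gbps: float, tolerated_failures: int = 1) -> int:
    """Links required so that peak demand still fits after `tolerated_failures` links fail."""
    return math.ceil(peak_gbps / link_gbps) + tolerated_failures


def surviving_bandwidth(links: int, link_gbps: float, failed: int = 1) -> float:
    """Aggregate nominal bandwidth left after `failed` links are lost."""
    return max(links - failed, 0) * link_gbps


if __name__ == "__main__":
    # Hypothetical peak of 90 Gb/s between storage and compute over 32 Gb/s FC links.
    print(links_needed(90, 32))         # 4
    print(surviving_bandwidth(4, 32))   # 96
```

With four links, losing one still leaves 96 Gb/s against the 90 Gb/s peak. This is the "similar performance profile during component failure" the paragraph above describes.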

When configuring your SAN, it’s important to remember that the more hops you have, the more latency you will see. For best performance, the ideal topology is a “Flat Fabric” where the VSP is only one hop away from any applications being hosted on it.

Hitachi SAN and VMware Configuration Best Practices

A well-designed SAN must be reliable and scalable and recover quickly in the event of a single device failure. A well-designed SAN also grows easily as the demands of the infrastructure that it serves increase.

Hitachi storage uses Hitachi Storage Virtualization Operating System RF (SVOS RF). The following recommendations are specific to VMware environments using SVOS RF.

LUN and Datastore Provisioning Best Practices

These are the best practices for general VMFS provisioning. Hitachi recommends that you always use the latest VMFS version. Always separate the VMware cluster workload from other workloads.

LUN Size

The following lists the current maximum LUN/datastore size for VMware vSphere and Hitachi storage:

● The maximum LUN size for VMware vSphere is 64 TB (see VMware Configuration Maximums: https://configmax.esp.vmware.com/home).

● The maximum LUN size for Hitachi Virtual Storage Platform E series, F series or VSP G series is 256 TB with replication.

● The LUN must be within a dynamic provisioning pool.

● The maximum VMDK size is 62 TB (vVol-VMDK or RDM).

● Using multiple smaller LUNs tends to provide higher aggregate I/O performance by reducing the load concentrated on a single storage processor (MPB). It also reduces recovery time in the event of a disaster. Take these points into consideration when using larger LUNs. In some environments, the convenience of using larger LUNs might outweigh the relatively minor performance disadvantage.

● Before Hitachi Virtual Storage Platform E series, VSP F series, and VSP G series storage systems, the maximum supported LUN size was limited to 4 TB because of storage replication capability. With current Hitachi Virtual Storage Platform storage systems, this limitation has been removed. Keep in mind that recovery is typically quicker with smaller LUNs, so use the size that maximizes usage of MPB resources per LUN for the workload. Use the VMware-integrated adapters or plugins that Hitachi Vantara provides, such as the vSphere Plugin, Microsoft PowerShell cmdlets, or VMware vRealize Orchestrator workflows, to automate datastore and LUN management.
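The effective per-LUN limit for a vSphere datastore is the smaller of the vSphere and VSP maximums listed above. The following hypothetical helper turns a target datastore capacity and a chosen LUN size into a LUN count. This lets you compare fewer large LUNs against more small LUNs, which spread load across more MPBs and recover faster. The capacities in the example are illustrative only.

```python
import math

VSPHERE_MAX_LUN_TB = 64   # vSphere maximum LUN size stated above
VSP_MAX_LUN_TB = 256      # Hitachi VSP maximum LUN size (with replication) stated above


def datastore_plan(total_tb: float, lun_tb: float) -> int:
    """Return how many LUNs of size `lun_tb` are needed to provide `total_tb`."""
    limit = min(VSPHERE_MAX_LUN_TB, VSP_MAX_LUN_TB)  # vSphere is the binding limit
    if not 0 < lun_tb <= limit:
        raise ValueError(f"LUN size must be greater than 0 and at most {limit} TB")
    return math.ceil(total_tb / lun_tb)


if __name__ == "__main__":
    # Hypothetical 100 TB of VDI datastore capacity.
    print(datastore_plan(100, 16))  # 7
    print(datastore_plan(100, 64))  # 2
```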

Thin-Provisioned VMDKs on Thin-Provisioned LUNs from a Dynamic Provisioning Pool

● Thin-provisioned VMDKs on thin-provisioned LUNs have become a common storage provisioning configuration for virtualized environments. Eager-zeroed thick (EZT) VMDKs typically showed better latency in older vSphere releases (that is, releases older than vSphere 5). However, the performance gap between thin and thick VMDKs is now insignificant, and thin VMDKs gain the added benefit of in-guest UNMAP for better space efficiency. In vVols, a thin-provisioned VMDK (vVol) is the norm. It performs even better than a thin VMDK on VMFS because no zeroing is required when allocating blocks (thin vVols are the new EZT). Generally, start with thin VMDKs on VMFS or vVols datastores. The only case where you might consider migrating to EZT disks is a performance-sensitive, write-heavy VM or container workload. Even then, you might see only a low single-digit percentage improvement on initial writes, which might not be noticeable to the application.

● In VSP storage systems with Hitachi Dynamic Provisioning, it is also common to provision thin LUNs with less physical storage capacity than their provisioned size (as opposed to fully allocated LUNs). However, monitor storage usage closely to avoid running out of physical storage capacity.
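Monitoring a thin-provisioned pool comes down to two numbers:

- how much physical capacity is consumed
- how far the provisioned (virtual) capacity exceeds the physical capacity

The sketch below computes both. The `PoolStats` fields, the 80% warning threshold, and the example values are assumptions for illustration. They are not Hitachi Dynamic Provisioning defaults or API fields. In practice, the inputs would come from a storage monitoring tool such as the Hitachi Infrastructure Management Pack mentioned in this section.

```python
from dataclasses import dataclass


@dataclass
class PoolStats:
    """Hypothetical snapshot of a dynamic provisioning pool (values in TB)."""
    physical_tb: float     # usable physical capacity of the pool
    used_tb: float         # physical capacity already consumed
    provisioned_tb: float  # sum of the virtual sizes of all thin LUNs in the pool


def pool_report(pool: PoolStats, warn_pct: float = 80.0) -> dict:
    """Summarize consumption and subscription, flagging usage at or above warn_pct."""
    used_pct = 100.0 * pool.used_tb / pool.physical_tb
    return {
        "used_pct": round(used_pct, 1),
        "subscription_ratio": round(pool.provisioned_tb / pool.physical_tb, 2),
        "warn": used_pct >= warn_pct,
    }


if __name__ == "__main__":
    print(pool_report(PoolStats(physical_tb=100, used_tb=85, provisioned_tb=250)))
    # {'used_pct': 85.0, 'subscription_ratio': 2.5, 'warn': True}
```

A subscription ratio above 1.0 is normal for thin provisioning. The warning flag tracks the real risk, which is physical consumption approaching pool capacity.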

The following are some storage management and monitoring recommendations:

● Hitachi Infrastructure Management Pack for VMware vRealize Operations provides dashboards and alerting capability for monitoring physical and logical storage capacity.

● Enable automatic UNMAP with VMFS 6 (scripted UNMAP command with VMFS 5) to maintain higher capacity efficiency.

RDMs and Command Devices

If presenting command devices as RDMs to virtual machines, ensure command devices have all attributes set before presenting them to VMware ESXi hosts.

LUN Distribution

The general recommendation is to distribute LUNs and workloads so that each host has 2-8 paths to each LDEV. This prevents workload pressure on a small set of target ports from becoming a performance bottleneck.

It is prudent to isolate your production environment and critical systems on dedicated ports to avoid contention from other hosts' workloads. However, presenting the same LUN to too many target ports can also introduce problems, such as slower error recovery.

Follow these practices to achieve this goal:

● Each host bus adapter physical port (HBA) should only see one instance of each LUN.

● The number of paths should typically not exceed the number of HBA ports for better reliability and recovery.

● Two to four paths to each LUN provide optimal performance for most workload environments.

● See Recommended Multipath Settings for Hitachi Storage knowledge base article for more information about LUN instances.
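The path-count guidance in this section can be expressed as a simple check. This hypothetical helper only classifies a per-LUN path count against the 2-8 and two-to-four ranges stated above. It does not inspect a real host or array.

```python
def classify_paths(paths: int) -> str:
    """Compare a per-LUN path count with the distribution guidance above."""
    if paths < 2:
        return "insufficient redundancy"
    if paths <= 4:
        return "optimal for most workloads"
    if paths <= 8:
        return "within recommended range"
    # Presenting a LUN on too many target ports can slow error recovery.
    return "too many paths"


if __name__ == "__main__":
    for count in (1, 4, 6, 12):
        print(count, classify_paths(count))
```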

HBA LUN Queue Depth

In a general VMware environment, increasing the HBA LUN queue depth will not solve a storage I/O performance issue and may overload the storage processors on your storage systems. Hitachi recommends keeping queue depth values at the HBA vendor’s default in most cases. See this Broadcom KB article for more details.

In certain circumstances, increasing the queue depth value may increase overall I/O throughput. For example, a LUN used as a target for virtual machine backups might require higher throughput during the backup window. When changing queue depth, monitor storage processor usage carefully.

Slower hosts with read-intensive workloads may request more data than they can remove from the fabric in a timely manner. Lowering the queue depth value can be an effective control mechanism to limit slower hosts.

For a VMware vSphere protocol endpoint (PE) configured to enable virtual volumes (vVols) from Hitachi storage, set a higher queue depth value, such as 128.
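Whether a higher queue depth can help depends on how many I/Os are actually in flight. Little's law is a general queueing result rather than anything specific to Hitachi or VMware. It gives the average number of outstanding I/Os as throughput multiplied by latency. The sketch below applies it to a single LUN. The IOPS, latency, queue depth of 64, and 90% threshold are hypothetical values, not vendor defaults.

```python
def outstanding_ios(iops: float, latency_ms: float) -> float:
    """Little's law: average I/Os in flight = throughput x average latency."""
    return iops * latency_ms / 1000.0


def queue_depth_is_limiting(iops: float, latency_ms: float, queue_depth: int,
                            threshold: float = 0.9) -> bool:
    """True when average in-flight I/Os reach `threshold` of the configured queue depth."""
    return outstanding_ios(iops, latency_ms) >= threshold * queue_depth


if __name__ == "__main__":
    # Hypothetical per-LUN figures; 64 is an example queue depth only.
    print(round(outstanding_ios(20_000, 0.5)))         # 10
    print(queue_depth_is_limiting(20_000, 0.5, 64))    # False
    print(queue_depth_is_limiting(100_000, 1.0, 64))   # True
```

When the estimate sits well below the configured queue depth, as in the first case, raising the queue depth is unlikely to increase throughput. This is consistent with the recommendation to keep vendor defaults. A workload such as a backup target that keeps its queue full is the kind of case where a higher value may help, provided storage processor load is watched.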

Host Group and Host Mode Options

To grant a host access to an LDEV, assign a logical unit number (LUN) within a host group. These are the settings and LUN mapping for host group configurations.

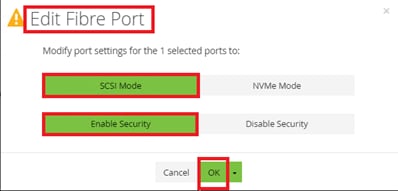

Fibre Channel Port Settings

If connecting a Fibre Channel port using a SAN switch or director, you must change the following settings:

● Port security — Set the port security to Enable. This allows multiple host groups on the Fibre Channel port.

● Fabric — Set fabric to ON. This allows connection to a Fibre Channel switch or director.

● Connection Type — Set the connection type to P-to-P. This allows a point-to-point connection to a Fibre Channel switch or director. Loop Attachment is deprecated and no longer supported on 16 Gb/s and 32 Gb/s storage channel ports.

● Hitachi recommends that you apply the same configuration to a port in cluster 1 as to a port in cluster 2 in the same location. For example, if you create a host group for a host on port CL1-A, also create a host group for that host on port CL2-A.

One Host Group per VMware ESXi Host Configuration

● If you plan to deploy VMware ESXi hosts, each host’s WWN can be in its own host group. This approach provides granular control over LUN presentation to ESXi hosts. This is the best practice for SAN boot environments, because ESXi hosts do not have access to other ESXi hosts’ boot LUNs. Make sure to reserve LUN ID 0 for boot LUN for easier troubleshooting.

However, in a cluster environment, this approach can be an administrative challenge because keeping track of which WWNs for ESXi hosts are in a cluster can be difficult. When multiple ESXi hosts need to access the same LDEV for clustering purposes, the LDEV must be added to each host group.
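The one-host-group-per-host layout can be modeled as data to check the rules described in this section:

- each host's boot LDEV is at LUN ID 0
- no host sees another host's boot LDEV
- shared LDEVs are added to every host group
- matching host groups exist on a cluster 1 port and its cluster 2 counterpart

This is a minimal sketch, not a Storage Navigator or Hitachi API. The host names, WWNs, and LDEV IDs are hypothetical. Giving each shared LDEV the same LUN ID in every host group is an assumed convention.

```python
# Hypothetical hosts, WWNs, and LDEV IDs for illustration only.
HOSTS = {
    "esxi-01": {"wwn": "20:00:00:25:b5:aa:00:01", "boot_ldev": "00:10"},
    "esxi-02": {"wwn": "20:00:00:25:b5:aa:00:02", "boot_ldev": "00:11"},
}
SHARED_LDEVS = ["00:20", "00:21"]    # datastores every cluster member needs
MIRRORED_PORTS = ("CL1-A", "CL2-A")  # cluster 1 port and its cluster 2 counterpart


def build_host_groups(hosts, shared_ldevs, ports):
    """Create one host group per host on each mirrored port."""
    groups = {}
    for port in ports:
        for name, info in hosts.items():
            luns = {0: info["boot_ldev"]}  # LUN ID 0 reserved for the boot LUN
            # Shared LDEVs must be added to every host group individually;
            # sequential LUN IDs starting at 1 are an assumed convention.
            for lun_id, ldev in enumerate(shared_ldevs, start=1):
                luns[lun_id] = ldev
            groups[(port, name)] = {"wwns": [info["wwn"]], "luns": luns}
    return groups


def audit_host_groups(groups, hosts):
    """Return a list of rule violations; an empty list means the layout is clean."""
    boot_owner = {info["boot_ldev"]: name for name, info in hosts.items()}
    shared_ids = {}  # shared LDEV -> first LUN ID seen for it
    problems = []
    for (port, name), group in sorted(groups.items()):
        if group["luns"].get(0) != hosts[name]["boot_ldev"]:
            problems.append(f"{port}/{name}: LUN 0 is not this host's boot LDEV")
        for lun_id, ldev in group["luns"].items():
            owner = boot_owner.get(ldev)
            if owner is not None and owner != name:
                problems.append(f"{port}/{name}: can see boot LDEV of {owner}")
            elif owner is None and shared_ids.setdefault(ldev, lun_id) != lun_id:
                problems.append(f"{port}/{name}: {ldev} uses inconsistent LUN ID {lun_id}")
    # Mirroring rule: every host group on one port should exist on the other ports.
    by_port = {}
    for port, name in groups:
        by_port.setdefault(port, set()).add(name)
    expected = set().union(*by_port.values())
    for port, names in sorted(by_port.items()):
        for missing in sorted(expected - names):
            problems.append(f"{port}: no host group for {missing}")
    return problems


if __name__ == "__main__":
    groups = build_host_groups(HOSTS, SHARED_LDEVS, MIRRORED_PORTS)
    print(audit_host_groups(groups, HOSTS))  # []
```

The loop that adds every shared LDEV to each host group is the administrative cost the paragraph above describes. It is also what the per-cluster host group approach removes.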

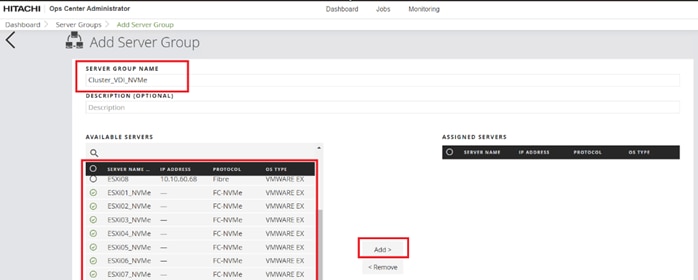

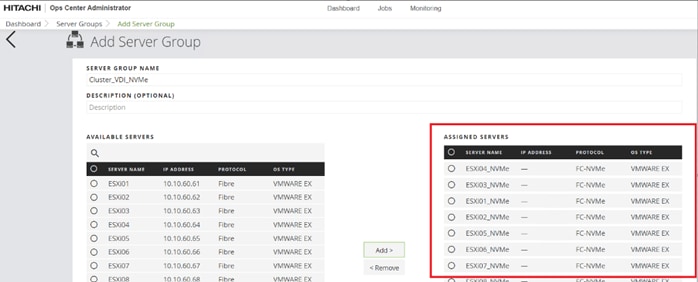

One Host Group per Cluster, Cluster Host Configuration

● VMware vSphere features such as vMotion, Distributed Resource Scheduler, High Availability, and Fault Tolerance require shared storage across the VMware ESXi hosts. Many of these features require that the same LUNs be presented to all ESXi hosts participating in these cluster functions.

● For convenience and where granular control is not essential, create host groups with clustering in mind. Place all the WWNs for the clustered ESXi hosts in a single host group. This ensures that when adding LDEVs to the host group, all ESXi hosts see the same LUNs. This creates consistency with LUN presentation across all hosts.

Host Group Options

On Hitachi Virtual Storage Platform storage systems, create host groups using Hitachi Storage Navigator. Change the following host mode and host mode options to enable VMware vSphere Storage APIs for Array Integration (VAAI):

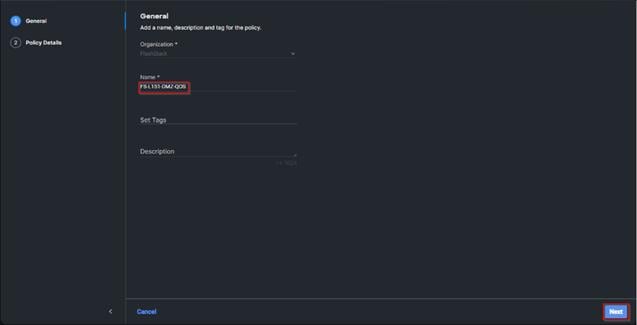

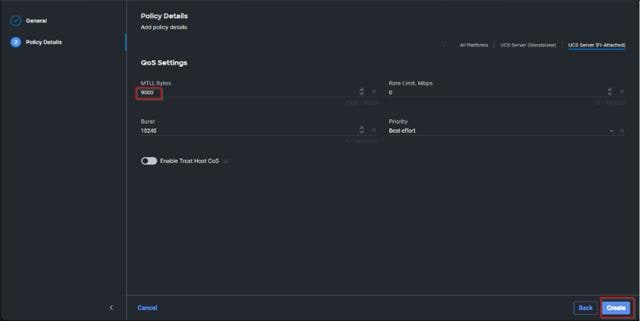

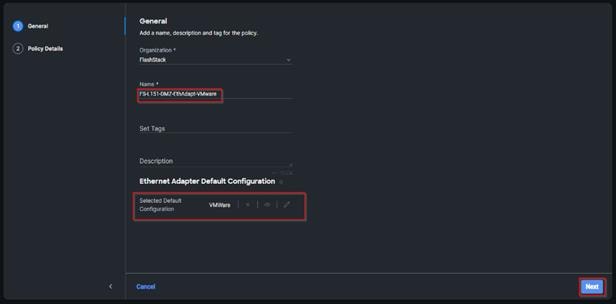

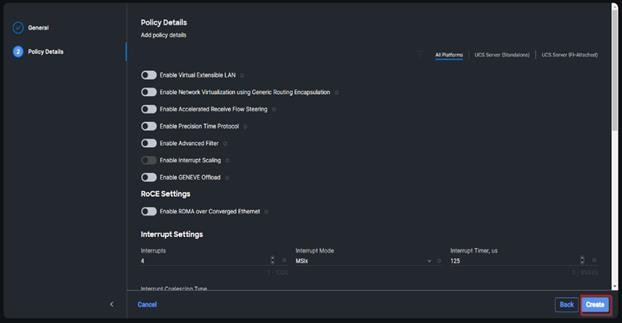

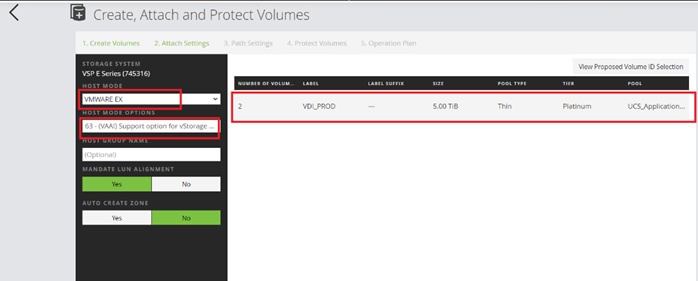

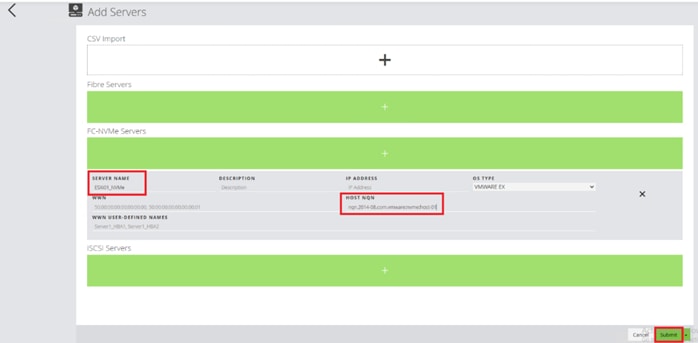

◦ Host Mode Options