Cisco and Hitachi Adaptive Solutions for Converged Infrastructure


Deployment Guide for Cisco and Hitachi Converged Infrastructure with Cisco UCS Blade Servers, Cisco Nexus 9336C-FX2 Switches, Cisco MDS 9706 Fabric Switches, and Hitachi VSP G1500 and VSP G370 Storage Systems with vSphere 6.5 and vSphere 6.7

Last Updated: June 19, 2019

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to:

http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series. Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2019 Cisco Systems, Inc. All rights reserved.

Table of Contents

Deployment Hardware and Software

Hardware and Software Versions

Cisco Nexus Switch Configuration

Initial Nexus Configuration Dialogue

Add Individual Port Descriptions for Troubleshooting

Configure Port Channel Member Interfaces

Configure Virtual Port Channels

Create Hot Standby Router Protocol (HSRP) Switched Virtual Interfaces (SVI)

Initial MDS Configuration Dialogue

Cisco MDS Switch Configuration

Create Port Descriptions - Fabric B

Configuring Fibre Channel Ports on Hitachi Virtual Storage Platform

Cisco UCS Compute Configuration

Upgrade Cisco UCS Manager Software to Version 4.0(1b)

Enable Port Auto-Discovery Policy

Enable Info Policy for Neighbor Discovery

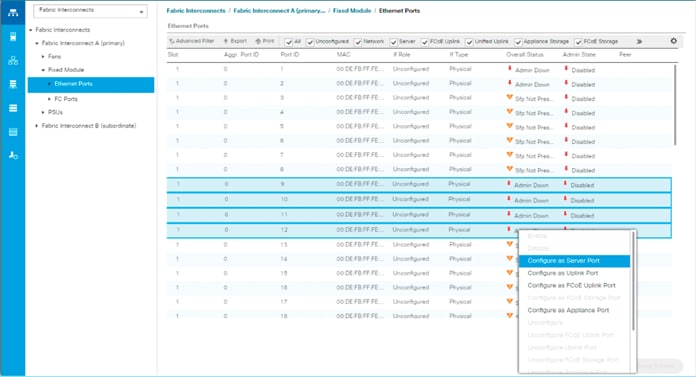

Enable Server and Uplink Ports

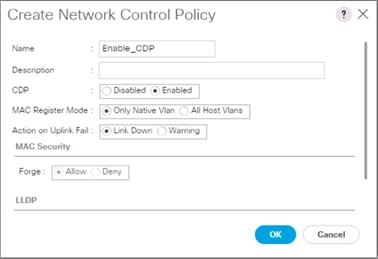

Configure Cisco UCS LAN Connectivity

Set Jumbo Frames in Cisco UCS Fabric

Create LAN Connectivity Policy

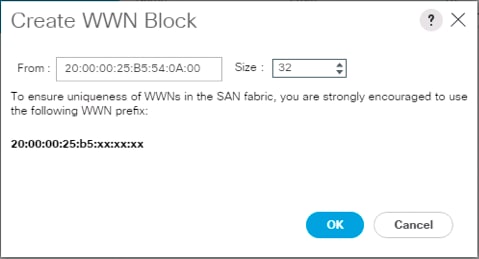

Create SAN Connectivity Policy

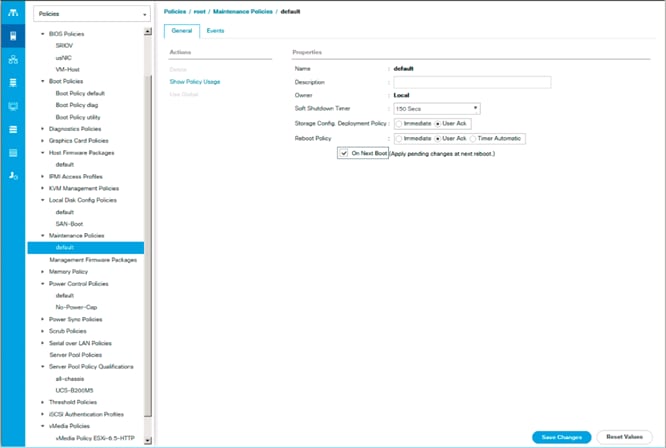

Create Service Profile Template

Create vMedia Service Profile Template

Collect UCS Host vHBA Information for Zoning

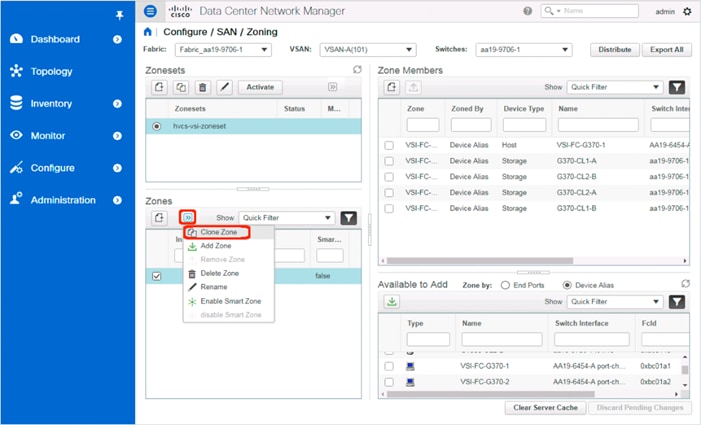

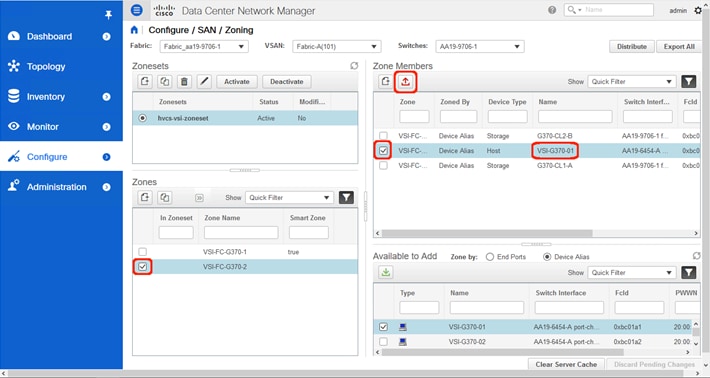

DCNM Switch Registration and Zoning (Optional)

Connecting to DCNM and Registering Switches

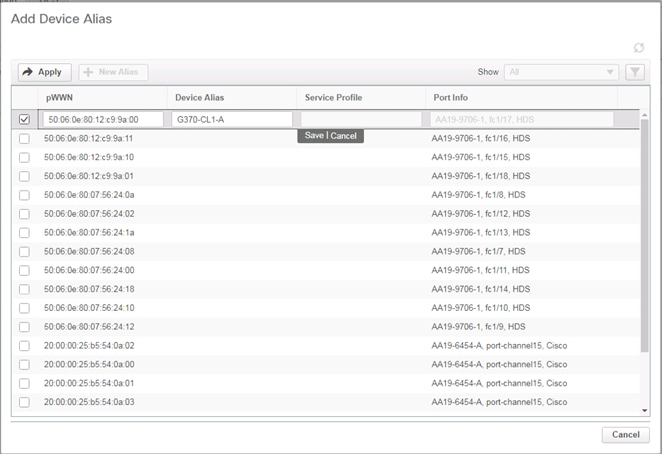

Configuring Device Aliases for the VSP and ESXi hosts

Configure Host Connectivity and Presentation of Storage on Hitachi Virtual Storage Platform

Create a Hitachi Dynamic Provisioning Pool for UCS Server Boot LDEVs

Create a Hitachi Dynamic Provisioning Pool for UCS Server VMFS Volume LDEVs

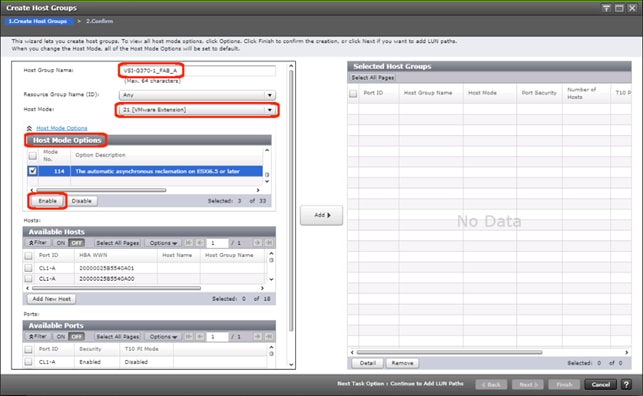

Create Host Groups for UCS Server vHBAs on Each Fabric

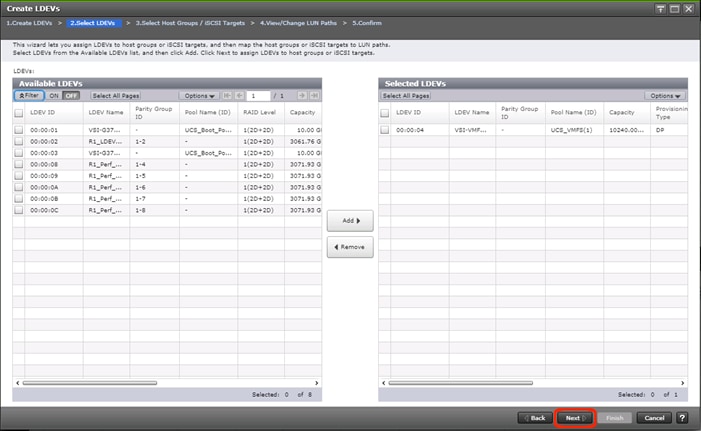

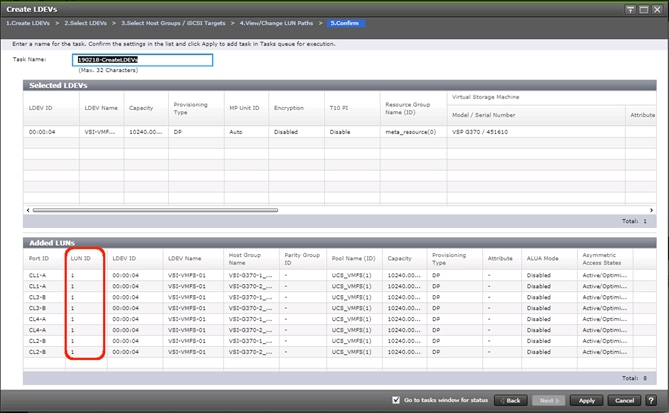

Create Boot LDEVs for Each UCS Service Profile and Add LDEV Paths

Create Shared VMFS LDEVs and Add LDEV Paths

Download Cisco Custom Image for ESXi 6.7 U1

Log into Cisco UCS 6454 Fabric Interconnect

Set Up VMware ESXi Installation

Set Up Management Networking for ESXi Hosts

Log into VMware ESXi Hosts by Using VMware Host Client

Set Up VMkernel Ports and Virtual Switch

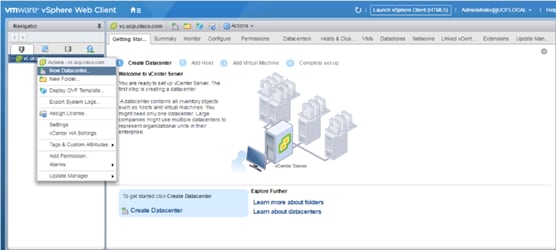

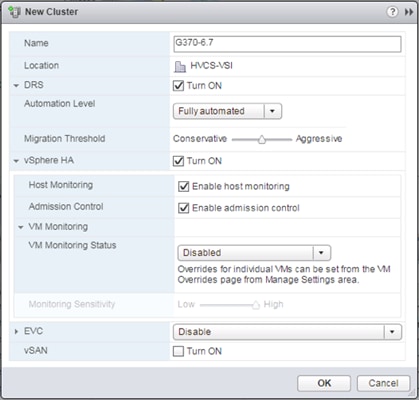

Add the VMware ESXi Hosts Using the VMware vSphere Web Client

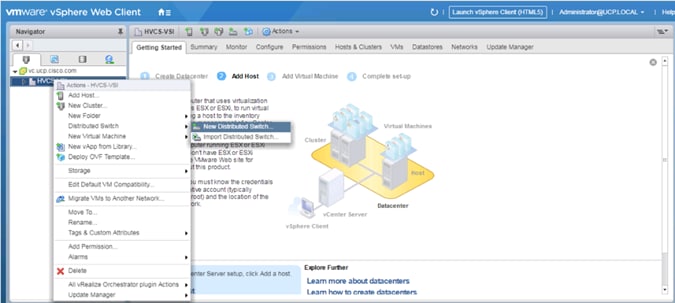

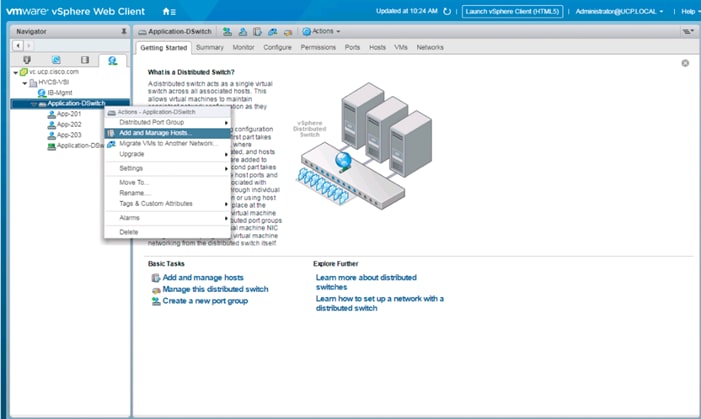

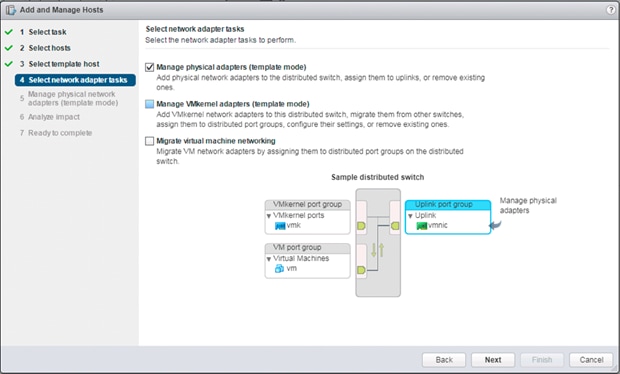

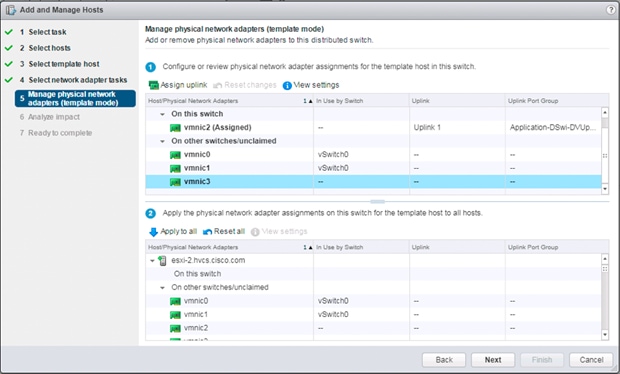

Create VMware vDS for Application Traffic

Create and Apply Patch Baselines with VUM

Remediation of L1 Terminal Fault – VMM (L1TF) Security Vulnerability (Optional)

Configuration of VMware Round Robin Path Selection Policy IOPS Limit

Appendix: MDS Device Alias and Zoning through CLI

Appendix: MDS Example startup-configuration File

Appendix: Nexus A Configuration Example

Appendix: Nexus B Configuration Example

Cisco Validated Designs consist of systems and solutions that are designed, tested, and documented to facilitate and improve customer deployments. These designs incorporate a wide range of technologies and products into a portfolio of solutions that have been developed to address the business needs of our customers.

Cisco and Hitachi are working together to deliver a converged infrastructure solution that helps enterprise businesses meet the challenges of today and position themselves for the future. Leveraging decades of industry expertise and superior technology, this Cisco CVD offers a resilient, agile, and flexible foundation for today’s businesses. In addition, the Cisco and Hitachi partnership extends beyond a single solution, enabling businesses to benefit from their ambitious roadmap of evolving technologies such as advanced analytics, IoT, cloud, and edge capabilities. With Cisco and Hitachi, organizations can confidently take the next step in their modernization journey and prepare themselves to take advantage of new business opportunities enabled by innovative technology.

This document steps through the deployment of the Cisco and Hitachi Adaptive Solutions for Converged Infrastructure as a Virtual Server Infrastructure (VSI), as described in the Cisco and Hitachi Adaptive Solutions for Converged Infrastructure Design Guide. The recommended solution architecture is built on the Cisco Unified Computing System (Cisco UCS), using the unified software release that supports Cisco UCS B-Series blade servers and Cisco UCS 6400 or 6300 Fabric Interconnects, together with Cisco Nexus 9000 Series switches, Cisco MDS Fibre Channel switches, and the Hitachi Virtual Storage Platform (VSP). The architecture supports both VMware vSphere 6.5 and VMware vSphere 6.7 to accommodate a wider range of customer deployments.

Introduction

Modernizing your data center can be overwhelming, and it’s vital to select a trusted technology partner with proven expertise. With Cisco and Hitachi as partners, companies can build for the future by enhancing systems of record, supporting systems of innovation, and growing their business. Organizations need an agile solution, free from operational inefficiencies, to deliver continuous data availability, meet SLAs, and prioritize innovation.

Cisco and Hitachi Adaptive Solutions for Converged Infrastructure as a Virtual Server Infrastructure (VSI) is a best-practice data center architecture built on the collaboration of Hitachi Vantara and Cisco to meet the needs of enterprise customers running virtual server workloads. This architecture is composed of the Hitachi Virtual Storage Platform (VSP) connecting through the Cisco MDS multilayer switches to the Cisco Unified Computing System (UCS), and is further enabled with the Cisco Nexus family of switches.

These deployment instructions are based on the buildout of the Cisco and Hitachi Adaptive Solutions for Converged Infrastructure validated reference architecture, which covers the specifics of the products used in the Cisco validation lab. The solution is also considered relevant for equivalent supported components listed in the published compatibility matrices from Cisco and Hitachi Vantara. Evaluate any supported deviation from the validated build with care, because its implementation instructions may differ.

Audience

The audience for this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineers, and customers who want to modernize their infrastructure to meet SLAs and their business needs at any scale.

Purpose of this Document

This document provides a step-by-step configuration and implementation guide for the Cisco and Hitachi Adaptive Solutions for Converged Infrastructure solution. This solution features a validated reference architecture composed of:

· Cisco UCS Compute

· Cisco Nexus Switches

· Cisco Multilayer SAN Switches

· Hitachi Virtual Storage Platform

For the design decisions and technology discussion of the solution, please refer to the Cisco and Hitachi Adaptive Solutions for Converged Infrastructure Design Guide:

Architecture

Cisco and Hitachi Adaptive Solutions for Converged Infrastructure is a validated reference architecture targeting Virtual Server Infrastructure (VSI) implementations. The architecture is built around the Cisco Unified Computing System (UCS) and the Hitachi Virtual Storage Platform (VSP), connected together by Cisco MDS Multilayer SAN Switches and further enabled with Cisco Nexus Switches. These components come together to form a powerful and scalable design, built on the best practices of both companies to create an ideal environment for virtualized systems.

The solution is built and validated for two similar topologies featuring differing Cisco UCS Fabric Interconnects as well as differing Hitachi VSP Storage Systems, with both using the same MDS and Nexus switching infrastructure.

The first topology shown in Figure 1 leverages:

· Cisco Nexus 9336C-FX2 – 100Gb capable, LAN connectivity to the UCS compute resources.

· Cisco UCS 6454 Fabric Interconnect – Unified management of UCS compute, and the compute’s access to storage and networks.

· Cisco UCS B200 M5 – High powered, versatile blade server, conceived for virtual computing.

· Cisco MDS 9706 – 32Gbps Fibre Channel connectivity within the architecture, as well as interfacing to resources present in an existing data center.

· Hitachi VSP G370 – Mid-range, high-performance storage system with optional all-flash configuration

Figure 1 Cisco and Hitachi Adaptive Solution for CI with Hitachi VSP G370 and Cisco UCS 6454

The Cisco UCS B200 M5 blade servers in this topology are hosted within a Cisco UCS 5108 Chassis, and connect into the fabric interconnects from the chassis using Cisco UCS 2208XP I/O Modules (IOM). The 2208XP IOM supports 10G connections into the 10/25G ports of the Cisco UCS 6454 FIs, delivering a high port availability that may fit well in a branch office setting.

The second topology shown in Figure 2 leverages:

· Cisco Nexus 9336C-FX2 – 100Gb capable, LAN connectivity to the UCS compute resources.

· Cisco UCS 6332-16UP Fabric Interconnect – Unified management of UCS compute, and the compute’s access to storage and networks.

· Cisco UCS B200 M5 – High powered, versatile blade server, conceived for virtual computing.

· Cisco MDS 9706 – 32Gbps Fibre Channel connectivity within the architecture, as well as interfacing to resources present in an existing data center.

· Hitachi VSP G1500 – Enterprise-level, high-performance storage system with optional all-flash configuration

Figure 2 Cisco and Hitachi Adaptive Solution for CI with Hitachi VSP G1500 and Cisco UCS 6332-16UP

The Cisco UCS B200 M5 servers in this topology are hosted within the same Cisco UCS 5108 Chassis, but connect into the fabric interconnects from the chassis using Cisco UCS 2304 IOM. The Cisco UCS 2304 IOM supports 40G connections going into the Cisco UCS 6332-16UP FIs, delivering a high bandwidth solution that may fit well in a main office type setting.
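The bandwidth contrast between the two topologies follows directly from the link counts in the cabling tables for each topology (Table 6 and Table 13). Each 2208XP IOM uses four 10GbE links, while each 2304 IOM uses two 40GbE links. On the storage side, each fabric interconnect uses two 32Gb FC links to its MDS in the first topology and four 16Gb FC links in the second. The short Python sketch below performs only that arithmetic. It uses nominal line rates and ignores encoding overhead and oversubscription. The topology labels and dictionary keys are illustrative names, not UCS Manager settings.

```python
from __future__ import annotations

# (link count, nominal Gb/s per link) for ONE fabric (A or B), per the cabling tables.
TOPOLOGIES = {
    "UCS 6454 / 2208XP / VSP G370": {"chassis_to_fi": (4, 10), "fi_to_mds": (2, 32)},
    "UCS 6332-16UP / 2304 / VSP G1500": {"chassis_to_fi": (2, 40), "fi_to_mds": (4, 16)},
}


def aggregate_gbps(links: tuple[int, int]) -> int:
    """Nominal aggregate bandwidth of a group of identical links."""
    count, speed = links
    return count * speed


def summarize() -> list[str]:
    lines = []
    for name, t in TOPOLOGIES.items():
        iom = aggregate_gbps(t["chassis_to_fi"])
        fc = aggregate_gbps(t["fi_to_mds"])
        # A chassis has two fabrics (A and B), each with its own IOM and FI.
        lines.append(f"{name}: chassis-to-FI {iom} Gb/s per fabric, {2 * iom} Gb/s per chassis; "
                     f"FI-to-MDS {fc} Gb/s FC per fabric")
    return lines


if __name__ == "__main__":
    for line in summarize():
        print(line)
```

Under these assumptions, the 6332-16UP topology doubles the chassis uplink bandwidth per fabric (80 versus 40 Gb/s). Both topologies provide the same 64 Gb/s of nominal FC bandwidth from each fabric interconnect to the MDS.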

Management components for both architectures additionally include:

· Cisco UCS Manager – Management delivered through the Fabric Interconnect, providing stateless compute, and policy driven implementation of the servers managed by it.

· Cisco Intersight (optional) – Comprehensive unified visibility across UCS domains, along with proactive alerts and enablement of expedited Cisco TAC communications.

· Cisco Data Center Network Manager (optional) – Multi-layer network configuration and monitoring.

Both topologies were validated with vSphere 6.5 U2 and vSphere 6.7 U1 to accommodate a wider range of expected customer deployments. Earlier and newer versions of vSphere, as well as hypervisors from other vendors, may be supported if they appear in the compatibility and interoperability matrices listed at the start of the next section, but they are not included in this validated design.

Hardware and Software Versions

Table 1 lists the validated hardware and software versions used for this solution. This deployment guide gives configuration specifics for the devices and versions listed in the following tables. Substituting other components or software versions is acceptable within this reference architecture, provided the substitution complies with the hardware and software compatibility matrices from both Cisco and Hitachi.

Cisco UCS Hardware Compatibility Matrix:

https://ucshcltool.cloudapps.cisco.com/public/

Cisco Nexus and MDS Interoperability Matrix:

Cisco Nexus Recommended Releases for Nexus 9K:

https://www.cisco.com/c/en/us/td/docs/switches/datacenter/nexus9000/sw/recommended_release/b_Minimum_and_Recommended_Cisco_NX-OS_Releases_for_Cisco_Nexus_9000_Series_Switches.html

Cisco MDS Recommended Releases:

https://www.cisco.com/c/en/us/td/docs/switches/datacenter/mds9000/sw/b_MDS_NX-OS_Recommended_Releases.html

Hitachi Vantara Interoperability:

https://support.hitachivantara.com/en_us/interoperability.html sub-page -> (VSP G1X00, F1500, Gxx0, Fxx0, VSP, HUS VM VMWare Support Matrix)

In addition, any substituted hardware or software may have different configurations from what is detailed in this guide and will require a thorough evaluation of the substituted product reference documents.

Table 1 Validated Hardware and Software

| Category | Component | Software Version/Firmware Version |
|---|---|---|
| Network | Cisco Nexus 9336C-FX2 | 7.0(3)I7(5a) |
| Compute | Cisco UCS Fabric Interconnect 6332 | 4.0(1b) |
| | Cisco UCS 2304 IOM | 4.0(1b) |
| | Cisco UCS Fabric Interconnect 6454 | 4.0(1b) |
| | Cisco UCS 2208XP IOM | 4.0(1b) |
| | Cisco UCS B200 M5 | 4.0(1b) |
| | VMware vSphere | 6.7 U1 VMware_ESXi_6.7.0_10302608_Custom_Cisco_6.7.1.1.iso |
| | ESXi 6.7 U1 nenic | 1.0.25.0 |
| | ESXi 6.7 U1 nfnic | 4.0.0.14 |
| | VMware vSphere | 6.5 U2 VMware-ESXi-6.5.0-9298722-Custom-Cisco-6.5.2.2.iso |
| | ESXi 6.5 U2 nenic | 1.0.25.0 |
| | ESXi 6.5 U2 fnic | 1.6.0.44 |
| | VM Virtual Hardware Version | 13(1) |
| Storage | Hitachi VSP G1500 | 80-06-42-00/00 |
| | Hitachi VSP G370 | 88-02-03-60/00 |
| | Cisco MDS 9706 (DS-X97-SF1-K9 & DS-X9648-1536K9) | 8.3(1) |
| | Cisco Data Center Network Manager | 11.0(1) |
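Substitutions must be checked against the compatibility matrices. Before consulting them, it can help to list where a deployment differs from Table 1. The following is a minimal Python sketch. It assumes component names are recorded exactly as the keys below, which are a shortened, illustrative subset of Table 1. A reported deviation is not necessarily unsupported; it only marks what to look up.

```python
from __future__ import annotations

# Illustrative subset of Table 1, keyed by shortened component names.
VALIDATED = {
    "Cisco Nexus 9336C-FX2": "7.0(3)I7(5a)",
    "Cisco UCS Fabric Interconnect 6454": "4.0(1b)",
    "Cisco UCS Fabric Interconnect 6332": "4.0(1b)",
    "Cisco UCS B200 M5": "4.0(1b)",
    "Cisco MDS 9706": "8.3(1)",
    "Hitachi VSP G1500": "80-06-42-00/00",
    "Hitachi VSP G370": "88-02-03-60/00",
}


def version_deviations(deployed: dict[str, str]) -> dict[str, tuple[str | None, str]]:
    """Map each deployed component whose version differs from Table 1 to (validated, deployed).

    A component that Table 1 does not list is reported with None as its validated version.
    """
    return {
        component: (VALIDATED.get(component), version)
        for component, version in deployed.items()
        if VALIDATED.get(component) != version
    }


if __name__ == "__main__":
    # "9.2(3)" is a hypothetical deployed NX-OS release, used only for illustration.
    print(version_deviations({"Cisco Nexus 9336C-FX2": "9.2(3)", "Cisco UCS B200 M5": "4.0(1b)"}))
    # {'Cisco Nexus 9336C-FX2': ('7.0(3)I7(5a)', '9.2(3)')}
```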

Configuration Guidelines

This document provides details for configuring a fully redundant, highly available configuration for the Cisco and Hitachi Converged Infrastructure. Each step indicates which component is being configured, using either “-1” or “-2”. For example, AA19-9336-1 and AA19-9336-2 identify the two Nexus switches provisioned in this document, and AA19-9336-1&2 represents a command invoked on both Nexus switches. The Cisco UCS fabric interconnects are identified similarly. Additionally, this document details the steps for provisioning multiple Cisco UCS hosts. These hosts are identified as VM-Host-Infra-01 and VM-Host-Prod-02, representing the infrastructure and production hosts deployed to each of the fabric interconnects in this document. Finally, wherever you should include information pertinent to your environment, a placeholder in double angle brackets, such as <<var_oob_ntp>>, appears as part of the command structure.

See the following example of a configuration step for both Nexus switches:

AA19-9336-1&2 (config)# ntp server <<var_oob_ntp>> use-vrf management

This document is intended to enable you to fully configure the customer environment. In this process, various steps require you to insert customer-specific naming conventions, IP addresses, and VLAN schemes, as well as to record appropriate MAC addresses. The tables provided can be copied or printed and used to record the customer-deployed values for the configuration specifics used within this guide.
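The placeholder convention lends itself to simple templating: record the customer values once, then substitute them into each command. The following is a minimal Python sketch. It assumes the values are kept in a dictionary keyed by the placeholder name without the angle brackets, and the function name `render` is hypothetical. The function refuses to produce output while any placeholder is still unfilled. This avoids pasting a literal `<<var_...>>` into a switch.

```python
import re

# Matches placeholders such as <<var_oob_ntp>> or <<vsp-g370>>.
PLACEHOLDER = re.compile(r"<<([\w-]+)>>")


def render(template: str, values: dict) -> str:
    """Replace every <<name>> in template with values[name].

    Raises KeyError naming every placeholder that has no recorded value.
    """
    missing = sorted({name for name in PLACEHOLDER.findall(template) if name not in values})
    if missing:
        raise KeyError(f"no value recorded for: {', '.join(missing)}")
    return PLACEHOLDER.sub(lambda m: values[m.group(1)], template)


if __name__ == "__main__":
    values = {"var_oob_ntp": "192.168.164.254"}
    print(render("ntp server <<var_oob_ntp>> use-vrf management", values))
    # ntp server 192.168.164.254 use-vrf management
```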

Table 2 lists the VLANs necessary for deployment as outlined in this guide.

Table 2 VLANs Used in the Deployment

| VLAN Name | VLAN Purpose | ID Used in Validating this Document | Customer Deployed Value |
|---|---|---|---|
| Out of Band Mgmt | VLAN for out-of-band management interfaces | 19 | |
| In-Band Mgmt | VLAN for in-band management interfaces | 119 | |
| Native | VLAN to which untagged frames are assigned | 2 | |
| vMotion | VLAN for VMware vMotion | 1000 | |
| VM-App1 | VLAN for Production VM Interfaces | 201 | |
| VM-App2 | VLAN for Production VM Interfaces | 202 | |
| VM-App3 | VLAN for Production VM Interfaces | 203 | |
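Before you fill in the Customer Deployed Value column of Table 2, a quick consistency check can catch duplicate or out-of-range VLAN IDs. The following is a minimal Python sketch that assumes the plan is a list of (name, ID) pairs. It checks only the 802.1Q range of 1-4094 and the uniqueness of names and IDs. It does not check platform-reserved VLAN ranges on the Nexus switches or fabric interconnects; verify those in the respective platform documentation.

```python
from __future__ import annotations


def check_vlan_plan(vlans: list[tuple[str, int]]) -> list[str]:
    """Return a list of problems found in a [(vlan_name, vlan_id), ...] plan."""
    problems = []
    ids_seen: dict[int, str] = {}
    names_seen: set[str] = set()
    for name, vid in vlans:
        # 802.1Q reserves IDs 0 and 4095; usable IDs are 1-4094.
        if not 1 <= vid <= 4094:
            problems.append(f"{name}: VLAN ID {vid} is outside 1-4094")
        if name in names_seen:
            problems.append(f"{name}: VLAN name is used more than once")
        names_seen.add(name)
        if vid in ids_seen:
            problems.append(f"{name}: VLAN ID {vid} is already used by {ids_seen[vid]}")
        else:
            ids_seen[vid] = name
    return problems


if __name__ == "__main__":
    # Hypothetical customer plan with a copy-paste mistake in the last entry.
    plan = [("In-Band Mgmt", 119), ("vMotion", 1000), ("VM-App1", 201), ("VM-App2", 201)]
    print(check_vlan_plan(plan))
    # ['VM-App2: VLAN ID 201 is already used by VM-App1']
```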

Table 3 lists additional configuration variables that are used throughout the document as placeholders for customer-provided names or references to relevant existing information.

Table 3 Variables for Information Used in the Design

| Variable | Variable Description | Customer Deployed Value |
|---|---|---|
| <<var_nexus_A_hostname>> | Nexus switch A hostname (Example: b19-93180-1) | |
| <<var_nexus_A_mgmt_ip>> | Out-of-band management IP for Nexus switch A (Example: 192.168.164.13) | |
| <<var_nexus_B_hostname>> | Nexus switch B hostname (Example: b19-93180-2) | |
| <<var_nexus_B_mgmt_ip>> | Out-of-band management IP for Nexus switch B (Example: 192.168.164.14) | |
| <<var_oob_mgmt_mask>> | Out-of-band management network netmask (Example: 255.255.255.0) | |
| <<var_oob_gateway>> | Out-of-band management network gateway (Example: 192.168.164.254) | |
| <<var_oob_ntp>> | Out-of-band management network NTP server (Example: 192.168.164.254) | |
| <<var_nexus_A_ib_ip>> | In-band management HSRP network interface for Nexus switch A (Example: 10.1.164.252) | |
| <<var_nexus_B_ib_ip>> | In-band management HSRP network interface for Nexus switch B (Example: 10.1.164.253) | |
| <<var_nexus_ib_vip>> | In-band management HSRP network VIP (Example: 10.1.164.254) | |
| <<var_password>> | Administrative password (Example: N0taP4ss) | |
| <<var_dns_domain_name>> | DNS domain name (Example: ucp.cisco.com) | |
| <<var_nameserver_ip>> | DNS server IP(s) (Example: 10.1.168.9) | |
| <<var_timezone>> | Time zone (Example: America/New_York) | |
| <<var_ib_mgmt_vlan_id>> | In-band management network VLAN ID (Example: 119) | |
| <<var_ib_mgmt_vlan_netmask_length>> | Length of IB-MGMT-VLAN netmask (Example: /24) | |
| <<var_ib_gateway_ip>> | In-band management network gateway IP (Example: 10.1.168.1) | |
| <<var_vmotion_vlan_id>> | vMotion network VLAN ID (Example: 1000) | |
| <<var_vmotion_vlan_netmask_length>> | Length of vMotion-VLAN netmask (Example: /24) | |
| <<var_mds_A_mgmt_ip>> | Cisco MDS A management IP address (Example: 192.168.168.18) | |
| <<var_mds_A_hostname>> | Cisco MDS A hostname (Example: aa19-9706-1) | |
| <<var_mds_B_mgmt_ip>> | Cisco MDS B management IP address (Example: 192.168.168.19) | |
| <<var_mds_B_hostname>> | Cisco MDS B hostname (Example: aa19-9706-2) | |
| <<var_vsan_a_id>> | VSAN used for the A fabric between the VSP/MDS/FI (Example: 101) | |
| <<var_vsan_b_id>> | VSAN used for the B fabric between the VSP/MDS/FI (Example: 102) | |
| <<vsp_hostname>> <<vsp-g370>> / <<vsp-g1500>> | Hitachi VSP storage system name (Example: g370-[Serial Number]) | |
| <<var_ucs_clustername>> <<var_ucs_6454_clustername>> / <<var_ucs_6332_clustername>> | Cisco UCS Manager cluster host name (Example: AA19-6454) | |
| <<var_ucsa_mgmt_ip>> | Cisco UCS fabric interconnect (FI) A out-of-band management IP address (Example: 192.168.168.16) | |
| <<var_ucs_mgmt_vip>> | Cisco UCS fabric interconnect (FI) cluster out-of-band management IP address (Example: 192.168.168.15) | |
| <<var_ucsb_mgmt_ip>> | Cisco UCS FI B out-of-band management IP address (Example: 192.168.168.17) | |
| <<var_vm_host_infra_01_ip>> | VMware ESXi host 01 in-band management IP (Example: 10.1.168.21) | |
| <<var_vm_host_infra_02_ip>> | VMware ESXi host 02 in-band management IP (Example: 10.1.168.22) | |
| <<var_vm_host_infra_vmotion_01_ip>> | VMware ESXi host 01 vMotion IP (Example: 192.168.100.21) | |
| <<var_vm_host_infra_vmotion_02_ip>> | VMware ESXi host 02 vMotion IP (Example: 192.168.100.22) | |
| <<var_vmotion_subnet_mask>> | vMotion subnet mask (Example: 255.255.255.0) | |
| <<var_vcenter_server_ip>> | IP address of the vCenter Server (Example: 10.1.168.100) | |
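Three of the Table 3 variables must agree with one another. <<var_nexus_A_ib_ip>>, <<var_nexus_B_ib_ip>>, and <<var_nexus_ib_vip>> must all be distinct, and all should fall within the in-band management subnet defined by <<var_ib_mgmt_vlan_netmask_length>>. The following is a minimal Python sketch using the standard `ipaddress` module. It assumes the subnet is derived from the VIP and the prefix length, and the function name `check_hsrp` is hypothetical.

```python
import ipaddress


def check_hsrp(switch_a: str, switch_b: str, vip: str, prefix_len: int) -> list:
    """Check that both SVI addresses and the HSRP VIP share one subnet and are all distinct."""
    net = ipaddress.ip_interface(f"{vip}/{prefix_len}").network
    problems = []
    addrs = {"switch A": switch_a, "switch B": switch_b, "VIP": vip}
    for label, addr in addrs.items():
        ip = ipaddress.ip_address(addr)
        if ip not in net:
            problems.append(f"{label} {addr} is not in {net}")
        elif ip in (net.network_address, net.broadcast_address):
            problems.append(f"{label} {addr} is the network or broadcast address of {net}")
    if len(set(addrs.values())) != len(addrs):
        problems.append("switch A, switch B, and VIP must use different addresses")
    return problems


if __name__ == "__main__":
    # Example values from Table 3 with the /24 prefix length.
    print(check_hsrp("10.1.164.252", "10.1.164.253", "10.1.164.254", 24))
    # []
```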

Physical Cabling

This section explains the cabling examples used for the two validated topologies in the environment. To make connectivity clear in this example, the tables include both the local and remote port locations.

This document assumes that out-of-band management ports are plugged into an existing management infrastructure at the deployment site. The upstream network from the Nexus 9336C-FX2 switches is out of scope for this document; the only assumption is that these switches connect to the upstream switch or switches with a virtual port channel (vPC).

Physical Cabling for the UCS 6454 with the VSP G370 Topology

Figure 3 shows the cabling configuration used in the design featuring the Cisco UCS 6454 with the VSP G370.

Tables listing the specific port connections with the cables used in the deployment of the Cisco UCS 6454 and the VSP G370 are provided below.

Table 4 Cisco Nexus 9336C-FX2 A Cabling Information for Cisco UCS 6454 to VSP G370 Topology

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Cisco Nexus 9336C-FX2 A | Eth1/1 | 40GbE | Cisco Nexus 9336C-FX2 B | Eth1/1 |
| | Eth1/2 | 40GbE | Cisco Nexus 9336C-FX2 B | Eth1/2 |
| | Eth1/5 | 100GbE | Cisco UCS 6454 FI A | Eth 1/53 |
| | Eth1/6 | 100GbE | Cisco UCS 6454 FI B | Eth 1/53 |
| | Eth1/35 | 40GbE or 100GbE | Upstream Network Switch | Any |
| | Eth1/36 | 40GbE or 100GbE | Upstream Network Switch | Any |
| | MGMT0 | GbE | GbE management switch | Any |

Selecting 100GbE between the Nexus 9336C-FX2 switches and the Cisco UCS 6454 fabric interconnects is not required, but was selected as an available option between the devices.

Table 5 Cisco Nexus 9336C-FX2 B Cabling Information for Cisco UCS 6454 to VSP G370 Topology

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Cisco Nexus 9336C-FX2 B | Eth1/1 | 40GbE | Cisco Nexus 9336C-FX2 A | Eth1/1 |
| | Eth1/2 | 40GbE | Cisco Nexus 9336C-FX2 A | Eth1/2 |
| | Eth1/5 | 100GbE | Cisco UCS 6454 FI A | Eth 1/54 |
| | Eth1/6 | 100GbE | Cisco UCS 6454 FI B | Eth 1/54 |
| | Eth1/35 | 40GbE or 100GbE | Upstream Network Switch | Any |
| | Eth1/36 | 40GbE or 100GbE | Upstream Network Switch | Any |
| | MGMT0 | GbE | GbE management switch | Any |

Table 6 Cisco UCS 6454 A Cabling Information for Cisco UCS 6454 to VSP G370 Topology

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Cisco UCS 6454 FI A | FC 1/1 | 32Gb FC | MDS 9706 A | FC 1/5 |
| | FC 1/2 | 32Gb FC | MDS 9706 A | FC 1/6 |
| | Eth1/9 | 10GbE | Cisco UCS Chassis 2208XP FEX A | IOM 1/1 |
| | Eth1/10 | 10GbE | Cisco UCS Chassis 2208XP FEX A | IOM 1/2 |
| | Eth1/11 | 10GbE | Cisco UCS Chassis 2208XP FEX A | IOM 1/3 |
| | Eth1/12 | 10GbE | Cisco UCS Chassis 2208XP FEX A | IOM 1/4 |
| | Eth1/53 | 100GbE | Cisco Nexus 9336C-FX2 A | Eth1/5 |
| | Eth1/54 | 100GbE | Cisco Nexus 9336C-FX2 B | Eth1/5 |
| | MGMT0 | GbE | GbE management switch | Any |
| | L1 | GbE | Cisco UCS 6454 FI B | L1 |
| | L2 | GbE | Cisco UCS 6454 FI B | L2 |

Ports 1-8 on the Cisco UCS 6454 are unified ports that can be configured as Ethernet or as Fibre Channel ports. To leave flexibility for FC port needs, initially deploy server ports starting with port 1/9. Ports 49-54 cannot be configured as server ports. Also, ports 45-48 are the only ports that can be configured for the 1Gbps connections that may be needed to a network switch.
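The port rules in the preceding note can be encoded as a small lookup for checking a port plan before you configure the fabric interconnect. The following is a minimal Python sketch that captures only the rules stated in the note; it is not a full model of 6454 port capabilities. The function name `check_6454_port` and the role strings "fc", "server", and "uplink" are hypothetical.

```python
from __future__ import annotations

# Port ranges on the Cisco UCS 6454, as stated in the note above.
UNIFIED = range(1, 9)        # 1/1-1/8: Ethernet or Fibre Channel
ONE_GIG = range(45, 49)      # 1/45-1/48: the only ports usable at 1 Gbps
UPLINK_ONLY = range(49, 55)  # 1/49-1/54: cannot be configured as server ports


def check_6454_port(port: int, role: str, speed_gbps: int | None = None) -> list[str]:
    """Return rule violations for assigning role ("fc", "server", or "uplink") to port 1/<port>."""
    if port not in range(1, 55):
        return [f"1/{port}: the 6454 has ports 1/1-1/54"]
    problems = []
    if role == "fc" and port not in UNIFIED:
        problems.append(f"1/{port}: FC is only available on unified ports 1/1-1/8")
    if role == "server":
        if port in UPLINK_ONLY:
            problems.append(f"1/{port}: ports 1/49-1/54 cannot be server ports")
        elif port in UNIFIED:
            problems.append(f"1/{port}: start server ports at 1/9 to keep 1/1-1/8 free for FC")
    if speed_gbps == 1 and port not in ONE_GIG:
        problems.append(f"1/{port}: 1 Gbps is only supported on ports 1/45-1/48")
    return problems


if __name__ == "__main__":
    # FC and server ports for FI A, taken from the cabling table above.
    plan = [(1, "fc"), (2, "fc"), (9, "server"), (10, "server"), (11, "server"), (12, "server")]
    for port, role in plan:
        print(f"1/{port} {role}:", check_6454_port(port, role) or "ok")
```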

Table 7 Cisco UCS 6454 B Cabling Information for Cisco UCS 6454 to VSP G370 Topology

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Cisco UCS 6454 FI B | FC 1/1 | 32Gb FC | MDS 9706 B | FC 1/5 |
| | FC 1/2 | 32Gb FC | MDS 9706 B | FC 1/6 |
| | Eth1/9 | 10GbE | Cisco UCS Chassis 2208XP FEX B | IOM 1/1 |
| | Eth1/10 | 10GbE | Cisco UCS Chassis 2208XP FEX B | IOM 1/2 |
| | Eth1/11 | 10GbE | Cisco UCS Chassis 2208XP FEX B | IOM 1/3 |
| | Eth1/12 | 10GbE | Cisco UCS Chassis 2208XP FEX B | IOM 1/4 |
| | Eth1/53 | 100GbE | Cisco Nexus 9336C-FX2 A | Eth1/6 |
| | Eth1/54 | 100GbE | Cisco Nexus 9336C-FX2 B | Eth1/6 |
| | MGMT0 | GbE | GbE management switch | Any |
| | L1 | GbE | Cisco UCS 6454 FI A | L1 |
| | L2 | GbE | Cisco UCS 6454 FI A | L2 |

Table 8 Cisco MDS 9706 A Cabling Information for Cisco UCS 6454 to VSP G370 Topology

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Cisco MDS 9706 A | FC 1/5 | 32Gb FC | Cisco UCS 6454 FI A | FC 1/1 |
| | FC 1/6 | 32Gb FC | Cisco UCS 6454 FI A | FC 1/2 |
| | FC 1/11 | 32Gb FC | VSP G370 Controller 1 | CL 1-A |
| | FC 1/12 | 32Gb FC | VSP G370 Controller 2 | CL 2-B |
| | Sup1 MGMT0 | GbE | GbE management switch | Any |
| | Sup2 MGMT0 | GbE | GbE management switch | Any |

The MDS DS-X9648-1536K9 4/8/16/32 Gbps Advanced FC Module used in this design does not have port groups with shared bandwidth, so selecting sequential ports does not affect bandwidth. If you substitute a different MDS switch from the respective compatibility matrices, check how bandwidth may be shared among the ports in a port group.

Table 9 Cisco MDS 9706 B Cabling Information for Cisco UCS 6454 to VSP G370 Topology

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Cisco MDS 9706 B | FC 1/5 | 32Gb FC | Cisco UCS 6454 FI B | FC 1/1 |
| | FC 1/6 | 32Gb FC | Cisco UCS 6454 FI B | FC 1/2 |
| | FC 1/11 | 32Gb FC | VSP G370 Controller 1 | CL 3-B |
| | FC 1/12 | 32Gb FC | VSP G370 Controller 2 | CL 4-A |
| | Sup1 MGMT0 | GbE | GbE management switch | Any |
| | Sup2 MGMT0 | GbE | GbE management switch | Any |

Table 10 Hitachi VSP G370 Cabling Information for Cisco UCS 6454 to VSP G370 Topology

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Hitachi VSP G370 | CL 1-A | 32Gb FC | Cisco MDS 9706 A | FC 1/11 |
| | CL 2-B | 32Gb FC | Cisco MDS 9706 A | FC 1/12 |
| | CL 3-B | 32Gb FC | Cisco MDS 9706 B | FC 1/11 |
| | CL 4-A | 32Gb FC | Cisco MDS 9706 B | FC 1/12 |
| | Cont1 LAN | GbE | SVP | LAN3 |
| | Cont2 LAN | GbE | SVP | LAN4 |

SVP will be configured by a Hitachi Vantara support engineer at the time of initial configuration and is out of scope of the primary deployment.
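These tables record each link twice, once from each end, so a reciprocity check can catch transcription errors before cabling. The following is a minimal Python sketch. It assumes each table row is entered as a (local device, local port, remote device, remote port) tuple. It also assumes device names are spelled identically across tables; for example, "Cisco MDS 9706 A" and "MDS 9706 A" must be shortened to one form, as in the test rows. The function name `unmatched_links` is hypothetical. Rows whose remote device has no table of its own, such as the management switch, are skipped.

```python
from __future__ import annotations

# (local device, local port, remote device, remote port), one tuple per table row.
Link = tuple[str, str, str, str]


def _norm(port: str) -> str:
    # The tables write ports both as "Eth 1/53" and "Eth1/53"; ignore spaces when comparing.
    return port.replace(" ", "")


def unmatched_links(rows: list[Link]) -> list[Link]:
    """Return (normalized) rows whose reverse entry is missing from the remote device's table.

    Rows that point at a device with no table of its own in rows are not checked.
    """
    links = [(ld, _norm(lp), rd, _norm(rp)) for ld, lp, rd, rp in rows]
    recorded = set(links)
    documented = {ld for ld, _, _, _ in links}
    return [
        (ld, lp, rd, rp)
        for ld, lp, rd, rp in links
        if rd in documented and (rd, rp, ld, lp) not in recorded
    ]


if __name__ == "__main__":
    rows = [
        ("MDS 9706 A", "FC 1/11", "VSP G370", "CL 1-A"),  # Table 8
        ("MDS 9706 A", "FC 1/12", "VSP G370", "CL 2-B"),  # Table 8
        ("VSP G370", "CL 1-A", "MDS 9706 A", "FC 1/11"),  # Table 10
        ("VSP G370", "CL 2-B", "MDS 9706 A", "FC 1/12"),  # Table 10
    ]
    print(unmatched_links(rows))  # []
```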

Physical Cabling for the Cisco UCS 6332-16UP with the VSP G1500 Topology

Figure 4 illustrates the cabling configuration used in the design featuring the Cisco UCS 6332-16UP with the VSP G1500.

Tables listing the specific port connections with the cables used in the deployment of the Cisco UCS 6332-16UP and the VSP G1500 are provided below.

Table 11 Cisco Nexus 9336C-FX2 A Cabling Information for Cisco UCS 6332-16UP to VSP G1500 Topology

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Cisco Nexus 9336C-FX2 A | Eth1/1 | 40GbE | Cisco Nexus 9336C-FX2 B | Eth1/1 |
| | Eth1/2 | 40GbE | Cisco Nexus 9336C-FX2 B | Eth1/2 |
| | Eth1/3 | 40GbE | Cisco UCS 6332-16UP FI A | Eth 1/39 |
| | Eth1/4 | 40GbE | Cisco UCS 6332-16UP FI B | Eth 1/39 |
| | Eth1/35 | 40GbE or 100GbE | Upstream Network Switch | Any |
| | Eth1/36 | 40GbE or 100GbE | Upstream Network Switch | Any |
| | MGMT0 | GbE | GbE management switch | Any |

Table 12 Cisco Nexus 9336C-FX2 B Cabling Information for Cisco UCS 6332-16UP to VSP G1500 Topology

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Cisco Nexus 9336C-FX2 B | Eth1/1 | 40GbE | Cisco Nexus 9336C-FX2 A | Eth1/1 |
| | Eth1/2 | 40GbE | Cisco Nexus 9336C-FX2 A | Eth1/2 |
| | Eth1/3 | 40GbE | Cisco UCS 6332-16UP FI A | Eth 1/40 |
| | Eth1/4 | 40GbE | Cisco UCS 6332-16UP FI B | Eth 1/40 |
| | Eth1/35 | 40GbE or 100GbE | Upstream Network Switch | Any |
| | Eth1/36 | 40GbE or 100GbE | Upstream Network Switch | Any |
| | MGMT0 | GbE | GbE management switch | Any |

Table 13 Cisco UCS 6332-16UP A Cabling Information for Cisco UCS 6332-16UP to VSP G1500 Topology

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Cisco UCS 6332-16UP FI A | FC 1/1 | 16Gb FC | MDS 9706 A | FC 1/1 |
| | FC 1/2 | 16Gb FC | MDS 9706 A | FC 1/2 |
| | FC 1/3 | 16Gb FC | MDS 9706 A | FC 1/3 |
| | FC 1/4 | 16Gb FC | MDS 9706 A | FC 1/4 |
| | Eth1/17 | 40GbE | Cisco UCS Chassis 2304 FEX A | IOM 1/1 |
| | Eth1/18 | 40GbE | Cisco UCS Chassis 2304 FEX A | IOM 1/2 |
| | Eth1/39 | 40GbE | Cisco Nexus 9336C-FX2 A | Eth1/3 |
| | Eth1/40 | 40GbE | Cisco Nexus 9336C-FX2 B | Eth1/3 |
| | MGMT0 | GbE | GbE management switch | Any |
| | L1 | GbE | Cisco UCS 6332-16UP FI B | L1 |
| | L2 | GbE | Cisco UCS 6332-16UP FI B | L2 |

Ports 1-16 on the UCS 6332-16UP are universal ports that can be used for Ethernet or Fibre Channel. Ports 17-40 are primarily used as server ports, either as 40Gbps QSFP+ ports or with breakout cables to support 10Gbps. Ports 35-40 are generally used for network uplinks and do not support QSFP copper twinax cables.
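To make the port-role guidance in the note easier to apply when planning cables, the following is a minimal Python sketch that maps a 6332-16UP port number to the role the note describes. It is a simplification that assumes only the port ranges stated in the note; the function name `port_role_6332_16up` and the role labels are hypothetical, and the Cisco UCS 6332-16UP documentation remains the authority on transceiver and breakout support.

```python
def port_role_6332_16up(port: int) -> str:
    """Return the typical role of a Cisco UCS 6332-16UP port (1-40).

    Encodes only the guidance in the note above (hypothetical labels).
    """
    if not 1 <= port <= 40:
        raise ValueError(f"port {port} is outside the 1-40 range")
    if port <= 16:
        return "universal"  # Ethernet or Fibre Channel
    if port >= 35:
        return "uplink"     # generally network uplinks; no QSFP copper twinax
    return "server"         # 40Gbps QSFP+, or 10Gbps with breakout cables


# Ports used on each FI in this topology (Tables 13 and 14).
PLANNED = {
    "FC 1/1-1/4": range(1, 5),
    "Eth1/17-1/18": range(17, 19),
    "Eth1/39-1/40": range(39, 41),
}

if __name__ == "__main__":
    for label, ports in PLANNED.items():
        roles = {port_role_6332_16up(p) for p in ports}
        print(label, "->", ", ".join(sorted(roles)))
```

Run against the planned ports, the FC links land on universal ports, the chassis links on server ports, and the Nexus uplinks on the uplink range, which matches the cabling tables.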

Table 14 Cisco UCS 6332-16UP B Cabling Information for Cisco UCS 6332-16UP to VSP G1500 Topology

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Cisco UCS 6332-16UP FI B | FC 1/1 | 16Gb FC | MDS 9706 B | FC 1/1 |
| | FC 1/2 | 16Gb FC | MDS 9706 B | FC 1/2 |
| | FC 1/3 | 16Gb FC | MDS 9706 B | FC 1/3 |
| | FC 1/4 | 16Gb FC | MDS 9706 B | FC 1/4 |
| | Eth1/17 | 40GbE | Cisco UCS Chassis 2304 FEX B | IOM 1/1 |
| | Eth1/18 | 40GbE | Cisco UCS Chassis 2304 FEX B | IOM 1/2 |
| | Eth1/39 | 40GbE | Cisco Nexus 9336C-FX2 A | Eth1/4 |
| | Eth1/40 | 40GbE | Cisco Nexus 9336C-FX2 B | Eth1/4 |
| | MGMT0 | GbE | GbE management switch | Any |
| | L1 | GbE | Cisco UCS 6332-16UP FI A | L1 |
| | L2 | GbE | Cisco UCS 6332-16UP FI A | L2 |

Table 15 Cisco MDS 9706 A Cabling Information for Cisco UCS 6332-16UP to VSP G1500 Topology

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Cisco MDS 9706 A | FC 1/1 | 16Gb FC | Cisco UCS 6332-16UP FI A | FC 1/1 |
| | FC 1/2 | 16Gb FC | Cisco UCS 6332-16UP FI A | FC 1/2 |
| | FC 1/3 | 16Gb FC | Cisco UCS 6332-16UP FI A | FC 1/3 |
| | FC 1/4 | 16Gb FC | Cisco UCS 6332-16UP FI A | FC 1/4 |
| | FC 1/7 | 16Gb FC | VSP G1500 | CL 1-A |
| | FC 1/8 | 16Gb FC | VSP G1500 | CL 2-A |
| | FC 1/9 | 16Gb FC | VSP G1500 | CL 1-J |
| | FC 1/10 | 16Gb FC | VSP G1500 | CL 2-J |
| | Sup1 MGMT0 | GbE | GbE management switch | Any |
| | Sup2 MGMT0 | GbE | GbE management switch | Any |

The MDS DS-X9648-1536K9 4/8/16/32 Gbps Advanced FC Module used with the MDS 9706 in this design does not have port groups with shared bandwidth, so sequential port selection does not affect bandwidth. When substituting a different MDS switch or module from the respective compatibility matrices, check how its port groups, if any, share bandwidth between ports.

Table 16 Cisco MDS 9706 B Cabling Information for Cisco UCS 6332-16UP to VSP G1500 Topology

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Cisco MDS 9706 B | FC 1/1 | 16Gb FC | Cisco UCS 6332-16UP FI B | FC 1/1 |
| | FC 1/2 | 16Gb FC | Cisco UCS 6332-16UP FI B | FC 1/2 |
| | FC 1/3 | 16Gb FC | Cisco UCS 6332-16UP FI B | FC 1/3 |
| | FC 1/4 | 16Gb FC | Cisco UCS 6332-16UP FI B | FC 1/4 |
| | FC 1/7 | 16Gb FC | VSP G1500 | CL 3-L |
| | FC 1/8 | 16Gb FC | VSP G1500 | CL 4-C |
| | FC 1/9 | 16Gb FC | VSP G1500 | CL 3-C |
| | FC 1/10 | 16Gb FC | VSP G1500 | CL 4-L |
| | Sup1 MGMT0 | GbE | GbE management switch | Any |
| | Sup2 MGMT0 | GbE | GbE management switch | Any |

Table 17 Hitachi VSP G1500 Cabling Information for Cisco UCS 6332-16UP to VSP G1500 Topology

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Hitachi VSP G1500 | CL 1-A | 16Gb FC | Cisco MDS 9706 A | FC 1/7 |
| | CL 2-A | 16Gb FC | Cisco MDS 9706 A | FC 1/8 |
| | CL 1-J | 16Gb FC | Cisco MDS 9706 A | FC 1/9 |
| | CL 2-J | 16Gb FC | Cisco MDS 9706 A | FC 1/10 |
| | CL 3-L | 16Gb FC | Cisco MDS 9706 B | FC 1/7 |
| | CL 4-C | 16Gb FC | Cisco MDS 9706 B | FC 1/8 |
| | CL 3-C | 16Gb FC | Cisco MDS 9706 B | FC 1/9 |
| | CL 4-L | 16Gb FC | Cisco MDS 9706 B | FC 1/10 |
| | SVP LAN | GbE | GbE management switch | Any |

SVP will be configured by a Hitachi Vantara support engineer at the time of initial configuration and is out of scope of the primary deployment.
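Because each cable appears in two of the tables above (once from each end), a quick way to catch transcription mistakes is to check that every entry has a matching reverse entry. The following minimal Python sketch assumes a hand-transcribed subset of Tables 11-17 (the Nexus-to-FI uplinks and the MDS-to-VSP G1500 links) and uses hypothetical short device names such as `N9K-A` and `FI-A`; it flags one-sided entries and local ports that are listed more than once.

```python
# (local device, local port, remote device, remote port), transcribed from
# Tables 11-17; the short device names are hypothetical labels for this sketch.
CABLES = [
    # Tables 11 and 12 (Nexus 9336C-FX2 A and B)
    ("N9K-A", "Eth1/3", "FI-A", "Eth1/39"),
    ("N9K-A", "Eth1/4", "FI-B", "Eth1/39"),
    ("N9K-B", "Eth1/3", "FI-A", "Eth1/40"),
    ("N9K-B", "Eth1/4", "FI-B", "Eth1/40"),
    # Tables 13 and 14 (UCS 6332-16UP FI A and B)
    ("FI-A", "Eth1/39", "N9K-A", "Eth1/3"),
    ("FI-A", "Eth1/40", "N9K-B", "Eth1/3"),
    ("FI-B", "Eth1/39", "N9K-A", "Eth1/4"),
    ("FI-B", "Eth1/40", "N9K-B", "Eth1/4"),
    # Tables 15 and 16 (MDS 9706 A and B)
    ("MDS-A", "FC1/7", "G1500", "CL1-A"),
    ("MDS-A", "FC1/8", "G1500", "CL2-A"),
    ("MDS-A", "FC1/9", "G1500", "CL1-J"),
    ("MDS-A", "FC1/10", "G1500", "CL2-J"),
    ("MDS-B", "FC1/7", "G1500", "CL3-L"),
    ("MDS-B", "FC1/8", "G1500", "CL4-C"),
    ("MDS-B", "FC1/9", "G1500", "CL3-C"),
    ("MDS-B", "FC1/10", "G1500", "CL4-L"),
    # Table 17 (VSP G1500)
    ("G1500", "CL1-A", "MDS-A", "FC1/7"),
    ("G1500", "CL2-A", "MDS-A", "FC1/8"),
    ("G1500", "CL1-J", "MDS-A", "FC1/9"),
    ("G1500", "CL2-J", "MDS-A", "FC1/10"),
    ("G1500", "CL3-L", "MDS-B", "FC1/7"),
    ("G1500", "CL4-C", "MDS-B", "FC1/8"),
    ("G1500", "CL3-C", "MDS-B", "FC1/9"),
    ("G1500", "CL4-L", "MDS-B", "FC1/10"),
]


def one_sided(cables):
    """Return cables whose reverse entry (remote end -> local end) is missing."""
    listed = set(cables)
    return [
        (dev, port, rdev, rport)
        for dev, port, rdev, rport in cables
        if (rdev, rport, dev, port) not in listed
    ]


def reused_ports(cables):
    """Return (device, port) pairs that appear as the local end more than once."""
    seen, dupes = set(), []
    for dev, port, _rdev, _rport in cables:
        if (dev, port) in seen:
            dupes.append((dev, port))
        seen.add((dev, port))
    return dupes


if __name__ == "__main__":
    print("one-sided:", one_sided(CABLES))
    print("reused ports:", reused_ports(CABLES))
```

Both lists come back empty for the entries transcribed here; extending `CABLES` with the remaining rows (for example the FI-to-MDS FC links) is a straightforward way to check a modified cabling plan.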

The Nexus switch configuration explains the basic L2 and L3 functionality for the application environment hosted by the UCS domains in the validation environment. The application gateways are hosted by the pair of Nexus switches, but primary routing is passed on to an existing router upstream of the converged infrastructure. This upstream router must be aware of any networks created on the Nexus switches, but configuration of an upstream router is beyond the scope of this deployment guide.

These steps list the connections for both Fabric Interconnect platforms; a deployment with only a single UCS domain does not need both sets of Cisco UCS vPCs.

Physical Connectivity

Complete the physical cabling by following the diagram and table references in the section Deployment Hardware and Software.

Initial Nexus Configuration Dialogue

Complete this dialogue on each switch, using a serial connection to the console port of the switch, unless Power on Auto Provisioning is being used.

Abort Power on Auto Provisioning and continue with normal setup? (yes/no) [n]: yes

---- System Admin Account Setup ----

Do you want to enforce secure password standard (yes/no) [y]:

Enter the password for "admin":

Confirm the password for "admin":

---- Basic System Configuration Dialog VDC: 1 ----

This setup utility will guide you through the basic configuration of

the system. Setup configures only enough connectivity for management

of the system.

Please register Cisco Nexus9000 Family devices promptly with your

supplier. Failure to register may affect response times for initial

service calls. Nexus9000 devices must be registered to receive

entitled support services.

Press Enter at anytime to skip a dialog. Use ctrl-c at anytime

to skip the remaining dialogs.

Would you like to enter the basic configuration dialog (yes/no): yes

Create another login account (yes/no) [n]:

Configure read-only SNMP community string (yes/no) [n]:

Configure read-write SNMP community string (yes/no) [n]:

Enter the switch name : <<var_nexus_A_hostname>>|<<var_nexus_B_hostname>>

Continue with Out-of-band (mgmt0) management configuration? (yes/no) [y]:

Mgmt0 IPv4 address : <<var_nexus_A_mgmt_ip>>|<<var_nexus_B_mgmt_ip>>

Mgmt0 IPv4 netmask : <<var_oob_netmask>>

Configure the default gateway? (yes/no) [y]:

IPv4 address of the default gateway : <<var_oob_gw>>

Configure advanced IP options? (yes/no) [n]:

Enable the telnet service? (yes/no) [n]:

Enable the ssh service? (yes/no) [y]:

Type of ssh key you would like to generate (dsa/rsa) [rsa]:

Number of rsa key bits <1024-2048> [1024]:

Configure the ntp server? (yes/no) [n]: y

NTP server IPv4 address: <<var_oob_ntp>>

Configure default interface layer (L3/L2) [L2]:

Configure default switchport interface state (shut/noshut) [noshut]: shut

Configure CoPP system profile (strict/moderate/lenient/dense) [strict]:

The following configuration will be applied:

password strength-check

switchname AA19-9336-1

vrf context management

ip route 0.0.0.0/0 192.168.168.254

exit

no feature telnet

ssh key rsa 1024 force

feature ssh

system default switchport

system default switchport shutdown

copp profile strict

interface mgmt0

ip address 192.168.168.13 255.255.255.0

no shutdown

Would you like to edit the configuration? (yes/no) [n]:

Use this configuration and save it? (yes/no) [y]:

Enable Features and Settings

To enable IP switching features, run the following commands on each Cisco Nexus switch:

AA19-9336-1&2 (config)# feature lacp

AA19-9336-1&2 (config)# feature vpc

AA19-9336-1&2 (config)# feature interface-vlan

AA19-9336-1&2 (config)# feature hsrp

The prompt AA19-9336-1&2 indicates a command to run on both switches, AA19-9336-1 indicates a command to run only on the first Nexus switch, and AA19-9336-2 indicates a command to run only on the second Nexus switch.

Additionally, configure the spanning-tree port type defaults:

AA19-9336-1&2 (config)# spanning-tree port type network default

AA19-9336-1&2 (config)# spanning-tree port type edge bpduguard default

AA19-9336-1&2 (config)# spanning-tree port type edge bpdufilter default

Create VLANs

Run the following commands on both switches to create VLANs:

AA19-9336-1&2 (config)# vlan 119

AA19-9336-1&2 (config-vlan)# name IB-MGMT

AA19-9336-1&2 (config-vlan)# vlan 2

AA19-9336-1&2 (config-vlan)# name Native

AA19-9336-1&2 (config-vlan)# vlan 1000

AA19-9336-1&2 (config-vlan)# name vMotion

AA19-9336-1&2 (config-vlan)# vlan 201

AA19-9336-1&2 (config-vlan)# name Web

AA19-9336-1&2 (config-vlan)# vlan 202

AA19-9336-1&2 (config-vlan)# name App

AA19-9336-1&2 (config-vlan)# vlan 203

AA19-9336-1&2 (config-vlan)# name DB

AA19-9336-1&2 (config-vlan)# exit

Continue adding VLANs as appropriate to your environment.

Add Individual Port Descriptions for Troubleshooting

To add individual port descriptions for troubleshooting activity and verification for switch A, enter the following commands from the global configuration mode:

AA19-9336-1(config)# interface port-channel 11

AA19-9336-1(config-if)# description vPC peer-link

AA19-9336-1(config-if)# interface port-channel 13

AA19-9336-1(config-if)# description vPC UCS 6332-16UP-1 FI

AA19-9336-1(config-if)# interface port-channel 14

AA19-9336-1(config-if)# description vPC UCS 6332-16UP-2 FI

AA19-9336-1(config-if)# interface port-channel 15

AA19-9336-1(config-if)# description vPC UCS 6454-1 FI

AA19-9336-1(config-if)# interface port-channel 16

AA19-9336-1(config-if)# description vPC UCS 6454-2 FI

AA19-9336-1(config-if)# interface port-channel 135

AA19-9336-1(config-if)# description vPC Upstream Network Switch A

AA19-9336-1(config-if)# interface port-channel 136

AA19-9336-1(config-if)# description vPC Upstream Network Switch B

The port-channel numbers must match between the two switches. While the numbers themselves can be somewhat arbitrary, the scheme used here is based on the first member port of each port channel: port channel 11 has a first port of 1/1, and port channel 136 has a first port of 1/36.
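The numbering scheme in the note can be expressed as a small function. The following Python sketch assumes interface names of the form `Ethernet<slot>/<port>` (or the `Eth` abbreviation) and simply concatenates the slot and port digits; the function name `port_channel_number` is hypothetical.

```python
import re

# Matches "Ethernet1/36", "Eth1/36" or "eth 1/36" (case-insensitive).
_MEMBER = re.compile(r"(?:ethernet|eth)\s*(\d+)/(\d+)", re.IGNORECASE)


def port_channel_number(first_member: str) -> int:
    """Concatenate slot and port of the first member, e.g. Ethernet1/36 -> 136."""
    match = _MEMBER.fullmatch(first_member.strip())
    if match is None:
        raise ValueError(f"unrecognized interface name: {first_member!r}")
    slot, port = match.groups()
    return int(slot + port)


# First member ports of the port channels configured in this section.
FIRST_MEMBERS = ["Ethernet1/1", "Ethernet1/3", "Ethernet1/4", "Ethernet1/5",
                 "Ethernet1/6", "Ethernet1/35", "Ethernet1/36"]

if __name__ == "__main__":
    print([port_channel_number(m) for m in FIRST_MEMBERS])
```

The scheme stays unambiguous on a single-slot switch such as the 9336C-FX2; on a multi-slot chassis, different ports could map to the same number (for example 1/11 and 11/1), so a different convention would be needed there.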

AA19-9336-1(config-if)# interface Ethernet1/1

AA19-9336-1(config-if)# description vPC peer-link connection to AA19-9336-2 Ethernet1/1

AA19-9336-1(config-if)# interface Ethernet1/2

AA19-9336-1(config-if)# description vPC peer-link connection to AA19-9336-2 Ethernet1/2

AA19-9336-1(config-if)# interface Ethernet1/3

AA19-9336-1(config-if)# description vPC 13 connection to UCS 6332-16UP-1 FI Ethernet1/39

AA19-9336-1(config-if)# interface Ethernet1/4

AA19-9336-1(config-if)# description vPC 14 connection to UCS 6332-16UP-2 FI Ethernet1/39

AA19-9336-1(config-if)# interface Ethernet1/5

AA19-9336-1(config-if)# description vPC 15 connection to UCS 6454-1 FI Ethernet1/53

AA19-9336-1(config-if)# interface Ethernet1/6

AA19-9336-1(config-if)# description vPC 16 connection to UCS 6454-2 FI Ethernet1/53

AA19-9336-1(config-if)# interface Ethernet1/35

AA19-9336-1(config-if)# description vPC 135 connection to Upstream Network Switch A

AA19-9336-1(config-if)# interface Ethernet1/36

AA19-9336-1(config-if)# description vPC 136 connection to Upstream Network Switch B

AA19-9336-1(config-if)# exit

In these steps, the interface commands for the VLAN and port-channel interfaces create those interfaces if they do not already exist.

To add individual port descriptions for troubleshooting activity and verification for switch B, enter the following commands from the global configuration mode:

AA19-9336-2(config)# interface port-channel 11

AA19-9336-2(config-if)# description vPC peer-link

AA19-9336-2(config-if)# interface port-channel 13

AA19-9336-2(config-if)# description vPC UCS 6332-16UP-1 FI

AA19-9336-2(config-if)# interface port-channel 14

AA19-9336-2(config-if)# description vPC UCS 6332-16UP-2 FI

AA19-9336-2(config-if)# interface port-channel 15

AA19-9336-2(config-if)# description vPC UCS 6454-1 FI

AA19-9336-2(config-if)# interface port-channel 16

AA19-9336-2(config-if)# description vPC UCS 6454-2 FI

AA19-9336-2(config-if)# interface port-channel 135

AA19-9336-2(config-if)# description vPC Upstream Network Switch A

AA19-9336-2(config-if)# interface port-channel 136

AA19-9336-2(config-if)# description vPC Upstream Network Switch B

AA19-9336-2(config-if)# interface Ethernet1/1

AA19-9336-2(config-if)# description vPC peer-link connection to AA19-9336-1 Ethernet1/1

AA19-9336-2(config-if)# interface Ethernet1/2

AA19-9336-2(config-if)# description vPC peer-link connection to AA19-9336-1 Ethernet1/2

AA19-9336-2(config-if)# interface Ethernet1/3

AA19-9336-2(config-if)# description vPC 13 connection to UCS 6332-16UP-1 FI Ethernet1/40

AA19-9336-2(config-if)# interface Ethernet1/4

AA19-9336-2(config-if)# description vPC 14 connection to UCS 6332-16UP-2 FI Ethernet1/40

AA19-9336-2(config-if)# interface Ethernet1/5

AA19-9336-2(config-if)# description vPC 15 connection to UCS 6454-1 FI Ethernet1/54

AA19-9336-2(config-if)# interface Ethernet1/6

AA19-9336-2(config-if)# description vPC 16 connection to UCS 6454-2 FI Ethernet1/54

AA19-9336-2(config-if)# interface Ethernet1/35

AA19-9336-2(config-if)# description vPC 135 connection to Upstream Network Switch A

AA19-9336-2(config-if)# interface Ethernet1/36

AA19-9336-2(config-if)# description vPC 136 connection to Upstream Network Switch B

AA19-9336-2(config-if)# exit

Create the vPC Domain

Assign the vPC domain a unique number from 1 to 1000; the domain holds the vPC settings shared by the two switches. To set the vPC domain configuration on 9336C-FX2 A, run the following commands:

AA19-9336-1(config)# vpc domain 10

AA19-9336-1(config-vpc-domain)# peer-switch

AA19-9336-1(config-vpc-domain)# role priority 10

AA19-9336-1(config-vpc-domain)# peer-keepalive destination <<var_nexus_B_mgmt_ip>> source <<var_nexus_A_mgmt_ip>>

AA19-9336-1(config-vpc-domain)# delay restore 150

AA19-9336-1(config-vpc-domain)# peer-gateway

AA19-9336-1(config-vpc-domain)# auto-recovery

AA19-9336-1(config-vpc-domain)# ip arp synchronize

AA19-9336-1(config-vpc-domain)# exit

On the 9336C-FX2 B switch, run the following commands, noting that the role priority and peer-keepalive values differ from those set on switch A:

AA19-9336-2(config)# vpc domain 10

AA19-9336-2(config-vpc-domain)# peer-switch

AA19-9336-2(config-vpc-domain)# role priority 20

AA19-9336-2(config-vpc-domain)# peer-keepalive destination <<var_nexus_A_mgmt_ip>> source <<var_nexus_B_mgmt_ip>>

AA19-9336-2(config-vpc-domain)# delay restore 150

AA19-9336-2(config-vpc-domain)# peer-gateway

AA19-9336-2(config-vpc-domain)# auto-recovery

AA19-9336-2(config-vpc-domain)# ip arp synchronize

AA19-9336-2(config-vpc-domain)# exit
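The two vPC domain configurations above must agree on some values and differ on others. The following Python sketch is a hypothetical checker, not a Cisco tool, that encodes those relationships for the settings entered above: the same domain ID, mirrored peer-keepalive source and destination, and distinct role priorities, with the lower role priority expected to become the vPC primary. The switch B management address (192.168.168.14) is an assumed example; 192.168.168.13 is the switch A address shown in the setup dialog.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class VpcDomain:
    """Subset of the vPC domain settings entered on one Nexus switch."""
    switch: str
    domain_id: int
    role_priority: int
    keepalive_source: str
    keepalive_destination: str


def check_vpc_peers(a: VpcDomain, b: VpcDomain) -> List[str]:
    """Return a list of problems; an empty list means the pair looks consistent."""
    problems = []
    if a.domain_id != b.domain_id:
        problems.append("vPC domain IDs differ")
    if not 1 <= a.domain_id <= 1000:
        problems.append("vPC domain ID is outside 1-1000")
    # Each switch's keepalive source must be the other switch's destination.
    if (a.keepalive_source, a.keepalive_destination) != (
        b.keepalive_destination,
        b.keepalive_source,
    ):
        problems.append("peer-keepalive source/destination are not mirrored")
    if a.role_priority == b.role_priority:
        problems.append("role priorities are equal; this guide uses 10 and 20")
    return problems


def expected_primary(a: VpcDomain, b: VpcDomain) -> str:
    """Return the switch with the lower role priority, the preferred vPC primary."""
    if a.role_priority == b.role_priority:
        raise ValueError("equal role priorities; the primary is not decided by priority")
    return a.switch if a.role_priority < b.role_priority else b.switch


# Values from the commands above; 192.168.168.14 for switch B is an assumed example.
NEXUS_A = VpcDomain("AA19-9336-1", 10, 10, "192.168.168.13", "192.168.168.14")
NEXUS_B = VpcDomain("AA19-9336-2", 10, 20, "192.168.168.14", "192.168.168.13")

if __name__ == "__main__":
    print(check_vpc_peers(NEXUS_A, NEXUS_B))
    print("expected vPC primary:", expected_primary(NEXUS_A, NEXUS_B))
```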

Configure Port Channel Member Interfaces

On each switch, configure the Port Channel member interfaces that will be part of the vPC Peer Link and configure the vPC Peer Link:

AA19-9336-1&2 (config)# int eth 1/1-2

AA19-9336-1&2 (config-if-range)# channel-group 11 mode active

AA19-9336-1&2 (config-if-range)# no shut

AA19-9336-1&2 (config-if-range)# int port-channel 11

AA19-9336-1&2 (config-if)# switchport mode trunk

AA19-9336-1&2 (config-if)# switchport trunk native vlan 2

AA19-9336-1&2 (config-if)# switchport trunk allowed vlan 119,1000,201-203

AA19-9336-1&2 (config-if)# vpc peer-link
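The `switchport trunk allowed vlan` lists in this section use NX-OS comma and range notation (for example `119,1000,201-203`). When adding VLANs as suggested in the Create VLANs step, the following Python sketch, a hypothetical helper that assumes valid VLAN IDs of 1-4094, collapses a set of VLAN IDs into that notation so the allowed lists stay consistent across trunks.

```python
def vlan_ranges(vlans) -> str:
    """Collapse VLAN IDs into comma/range notation, e.g. [201, 202, 203] -> "201-203"."""
    ids = sorted(set(vlans))
    invalid = [v for v in ids if not 1 <= v <= 4094]
    if invalid:
        raise ValueError(f"VLAN IDs outside 1-4094: {invalid}")
    parts = []
    i = 0
    while i < len(ids):
        j = i
        # Extend the run while the next ID is consecutive.
        while j + 1 < len(ids) and ids[j + 1] == ids[j] + 1:
            j += 1
        parts.append(str(ids[i]) if i == j else f"{ids[i]}-{ids[j]}")
        i = j + 1
    return ",".join(parts)


# VLANs created above, without native VLAN 2 (matching the trunk allowed lists).
VLANS = {"IB-MGMT": 119, "vMotion": 1000, "Web": 201, "App": 202, "DB": 203}

if __name__ == "__main__":
    print("switchport trunk allowed vlan " + vlan_ranges(VLANS.values()))
```

For the VLANs created in this guide, the helper returns `119,201-203,1000`, the same set of VLANs as `119,1000,201-203` in sorted order.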

Configure Virtual Port Channels

On each switch, configure the Port Channel member interfaces and the vPC Port Channels to the Cisco UCS Fabric Interconnect and the upstream network switches:

Port channels to both a Cisco UCS 6332-16UP FI pair and a Cisco UCS 6454 FI pair are shown below. The Nexus ports selected for these connections should reflect the cabling implemented for the Cisco UCS FIs deployed in the customer environment, and both sets should be configured as shown in this example only if two UCS FI pairs are being deployed.

Nexus Connection vPC to Cisco UCS 6332-16UP A

AA19-9336-1&2 (config-if)# int ethernet 1/3

AA19-9336-1&2 (config-if)# channel-group 13 mode active

AA19-9336-1&2 (config-if)# no shut

AA19-9336-1&2 (config-if)# int port-channel 13

AA19-9336-1&2 (config-if)# switchport mode trunk

AA19-9336-1&2 (config-if)# switchport trunk native vlan 2

AA19-9336-1&2 (config-if)# switchport trunk allowed vlan 119,1000,201-203

AA19-9336-1&2 (config-if)# spanning-tree port type edge trunk

AA19-9336-1&2 (config-if)# mtu 9216

AA19-9336-1&2 (config-if)# load-interval counter 3 60

AA19-9336-1&2 (config-if)# vpc 13

Nexus Connection vPC to Cisco UCS 6332-16UP B

AA19-9336-1&2 (config-if)# int ethernet 1/4

AA19-9336-1&2 (config-if)# channel-group 14 mode active

AA19-9336-1&2 (config-if)# no shut

AA19-9336-1&2 (config-if)# int port-channel 14

AA19-9336-1&2 (config-if)# switchport mode trunk

AA19-9336-1&2 (config-if)# switchport trunk native vlan 2

AA19-9336-1&2 (config-if)# switchport trunk allowed vlan 119,1000,201-203

AA19-9336-1&2 (config-if)# spanning-tree port type edge trunk

AA19-9336-1&2 (config-if)# mtu 9216

AA19-9336-1&2 (config-if)# load-interval counter 3 60

AA19-9336-1&2 (config-if)# vpc 14

Nexus Connection vPC to Cisco UCS 6454 A

AA19-9336-1&2 (config-if)# int ethernet 1/5

AA19-9336-1&2 (config-if)# channel-group 15 mode active

AA19-9336-1&2 (config-if)# no shut

AA19-9336-1&2 (config-if)# int port-channel 15

AA19-9336-1&2 (config-if)# switchport mode trunk

AA19-9336-1&2 (config-if)# switchport trunk native vlan 2

AA19-9336-1&2 (config-if)# switchport trunk allowed vlan 119,1000,201-203

AA19-9336-1&2 (config-if)# spanning-tree port type edge trunk

AA19-9336-1&2 (config-if)# mtu 9216

AA19-9336-1&2 (config-if)# load-interval counter 3 60

AA19-9336-1&2 (config-if)# vpc 15

Nexus Connection vPC to Cisco UCS 6454 B

AA19-9336-1&2 (config-if)# int ethernet 1/6

AA19-9336-1&2 (config-if)# channel-group 16 mode active

AA19-9336-1&2 (config-if)# no shut

AA19-9336-1&2 (config-if)# int port-channel 16

AA19-9336-1&2 (config-if)# switchport mode trunk

AA19-9336-1&2 (config-if)# switchport trunk native vlan 2

AA19-9336-1&2 (config-if)# switchport trunk allowed vlan 119,1000,201-203

AA19-9336-1&2 (config-if)# spanning-tree port type edge trunk

AA19-9336-1&2 (config-if)# mtu 9216

AA19-9336-1&2 (config-if)# load-interval counter 3 60

AA19-9336-1&2 (config-if)# vpc 16
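The four Fabric Interconnect vPCs above follow one pattern that differs only in the member interface and the port-channel/vPC number. The following Python sketch generates that command sequence so the blocks can be produced or compared consistently. The function name and its defaults are hypothetical; the native VLAN (2) and allowed list (119,1000,201-203) are taken from the commands above, and the output uses the full forms `interface` and `no shutdown` where the guide uses the abbreviations `int` and `no shut`.

```python
from typing import List


def fi_vpc_commands(member: str, channel: int, native_vlan: int = 2,
                    allowed_vlans: str = "119,1000,201-203") -> List[str]:
    """Return the NX-OS commands used above for one Fabric Interconnect vPC."""
    return [
        f"interface {member}",
        f"channel-group {channel} mode active",  # LACP active mode
        "no shutdown",
        f"interface port-channel {channel}",
        "switchport mode trunk",
        f"switchport trunk native vlan {native_vlan}",
        f"switchport trunk allowed vlan {allowed_vlans}",
        "spanning-tree port type edge trunk",
        "mtu 9216",
        "load-interval counter 3 60",
        f"vpc {channel}",  # vPC number matches the port-channel number here
    ]


# Member port and port-channel/vPC number for each FI-facing vPC above.
UCS_VPCS = [("Ethernet1/3", 13), ("Ethernet1/4", 14),
            ("Ethernet1/5", 15), ("Ethernet1/6", 16)]

if __name__ == "__main__":
    for member, channel in UCS_VPCS:
        print("\n".join(fi_vpc_commands(member, channel)))
        print()
```

Only the entries in `UCS_VPCS` for the FI pair actually deployed need to be generated.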

Nexus Connection vPC to Upstream Network Switch A

AA19-9336-1&2 (config-if)# interface Ethernet1/35

AA19-9336-1&2 (config-if)# channel-group 135 mode active

AA19-9336-1&2 (config-if)# no shut

AA19-9336-1&2 (config-if)# int port-channel 135

AA19-9336-1&2 (config-if)# switchport mode trunk

AA19-9336-1&2 (config-if)# switchport trunk native vlan 2

AA19-9336-1&2 (config-if)# switchport trunk allowed vlan 119

AA19-9336-1&2 (config-if)# vpc 135

Nexus Connection vPC to Upstream Network Switch B

AA19-9336-1&2 (config-if)# interface Ethernet1/36

AA19-9336-1&2 (config-if)# channel-group 136 mode active

AA19-9336-1&2 (config-if)# no shut

AA19-9336-1&2 (config-if)# int port-channel 136

AA19-9336-1&2 (config-if)# switchport mode trunk

AA19-9336-1&2 (config-if)# switchport trunk native vlan 2

AA19-9336-1&2 (config-if)# switchport trunk allowed vlan 119

AA19-9336-1&2 (config-if)# vpc 136

Create Hot Standby Router Protocol (HSRP) Switched Virtual Interfaces (SVI)

These interfaces can be considered optional if the subnets of the VLANs used within the environment are managed entirely by an upstream switch; in that case, all managed VLANs must be carried up through the vPC to the upstream switches.

More advanced Cisco routing protocols can be configured on the Nexus switches but are not covered in this design. The SVI subnets are routed to each other as directly connected networks because the SVIs reside in the same Virtual Routing and Forwarding instance (VRF), and traffic entering or leaving the VRF traverses the default gateway set for the switches.

For 9336C-FX2 A:

Nexus A IB-Mgmt SVI

AA19-9336-1(config-if)# int vlan 119

AA19-9336-1(config-if)# no shutdown

AA19-9336-1(config-if)# ip address <<var_nexus_A_ib_ip>>/24

AA19-9336-1(config-if)# hsrp 19

AA19-9336-1(config-if-hsrp)# preempt

AA19-9336-1(config-if-hsrp)# ip <<var_nexus_ib_vip>>

When the HSRP priority is not set, it defaults to 100. On each switch, alternating SVIs are set to a priority of 105, higher than the default, so that the switch becomes the active router for those networks while its partner, left at the default, is the standby. When the SVI for a VLAN on one switch is left without a priority (defaulting to 100), make sure the partner switch's SVI for that VLAN is set to a priority other than 100.

Nexus A Web SVI

AA19-9336-1(config-if-hsrp)# int vlan 201

AA19-9336-1(config-if)# no shutdown

AA19-9336-1(config-if)# ip address 172.18.101.252/24

AA19-9336-1(config-if)# hsrp 101

AA19-9336-1(config-if-hsrp)# preempt

AA19-9336-1(config-if-hsrp)# priority 105

AA19-9336-1(config-if-hsrp)# ip 172.18.101.254

Nexus A App SVI

AA19-9336-1(config-if-hsrp)# int vlan 202

AA19-9336-1(config-if)# no shutdown

AA19-9336-1(config-if)# ip address 172.18.102.252/24

AA19-9336-1(config-if)# hsrp 102

AA19-9336-1(config-if-hsrp)# preempt

AA19-9336-1(config-if-hsrp)# ip 172.18.102.254

Nexus A DB SVI

AA19-9336-1(config-if-hsrp)# int vlan 203

AA19-9336-1(config-if)# no shutdown

AA19-9336-1(config-if)# ip address 172.18.103.252/24

AA19-9336-1(config-if)# hsrp 103

AA19-9336-1(config-if-hsrp)# preempt

AA19-9336-1(config-if-hsrp)# priority 105

AA19-9336-1(config-if-hsrp)# ip 172.18.103.254

For 9336C-FX2 B:

Nexus B IB-Mgmt SVI

AA19-9336-2(config-if)# int vlan 119

AA19-9336-2(config-if)# no shutdown

AA19-9336-2(config-if)# ip address <<var_nexus_B_ib_ip>>/24

AA19-9336-2(config-if)# hsrp 19

AA19-9336-2(config-if-hsrp)# preempt

AA19-9336-2(config-if-hsrp)# priority 105

AA19-9336-2(config-if-hsrp)# ip <<var_nexus_ib_vip>>

Nexus B Web SVI

AA19-9336-2(config-if-hsrp)# int vlan 201

AA19-9336-2(config-if)# no shutdown

AA19-9336-2(config-if)# ip address 172.18.101.253/24

AA19-9336-2(config-if)# hsrp 101

AA19-9336-2(config-if-hsrp)# preempt

AA19-9336-2(config-if-hsrp)# ip 172.18.101.254

Nexus B App SVI

AA19-9336-2(config-if-hsrp)# int vlan 202

AA19-9336-2(config-if)# no shutdown

AA19-9336-2(config-if)# ip address 172.18.102.253/24

AA19-9336-2(config-if)# hsrp 102

AA19-9336-2(config-if-hsrp)# preempt

AA19-9336-2(config-if-hsrp)# priority 105

AA19-9336-2(config-if-hsrp)# ip 172.18.102.254

Nexus B DB SVI

AA19-9336-2(config-if-hsrp)# int vlan 203

AA19-9336-2(config-if)# no shutdown

AA19-9336-2(config-if)# ip address 172.18.103.253/24

AA19-9336-2(config-if)# hsrp 103

AA19-9336-2(config-if-hsrp)# preempt

AA19-9336-2(config-if-hsrp)# ip 172.18.103.254
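The priority note earlier in this section can be checked against the SVI configuration. HSRP elects the router with the highest priority as active, and the higher interface IP address breaks a tie; with `preempt` set as above and both switches up, the higher-priority router takes over. The following Python sketch applies those rules to the priorities configured in this section. The VLAN 119 addresses are hypothetical placeholders (192.0.2.x), since the guide uses the variables <<var_nexus_A_ib_ip>> and <<var_nexus_B_ib_ip>>; the other addresses are the ones configured above.

```python
import ipaddress

HSRP_DEFAULT_PRIORITY = 100  # applies when no "priority" command is entered

# VLAN -> {switch: (configured priority or None, SVI address)}
# VLAN 119 addresses are hypothetical placeholders for the IB-Mgmt variables.
SVIS = {
    119: {"AA19-9336-1": (None, "192.0.2.252"), "AA19-9336-2": (105, "192.0.2.253")},
    201: {"AA19-9336-1": (105, "172.18.101.252"), "AA19-9336-2": (None, "172.18.101.253")},
    202: {"AA19-9336-1": (None, "172.18.102.252"), "AA19-9336-2": (105, "172.18.102.253")},
    203: {"AA19-9336-1": (105, "172.18.103.252"), "AA19-9336-2": (None, "172.18.103.253")},
}


def hsrp_active(members):
    """Return the switch expected to be HSRP active: the highest priority wins,
    and the higher interface IP address breaks a tie."""

    def election_key(item):
        _switch, (priority, address) = item
        effective = HSRP_DEFAULT_PRIORITY if priority is None else priority
        return (effective, ipaddress.ip_address(address))

    return max(members.items(), key=election_key)[0]


if __name__ == "__main__":
    for vlan, members in SVIS.items():
        print(vlan, "active:", hsrp_active(members))
    # Both SVIs left at the default priority: the higher address wins.
    tie = {"AA19-9336-1": (None, "172.18.104.252"),
           "AA19-9336-2": (None, "172.18.104.253")}
    print("tie active:", hsrp_active(tie))
```

The alternation places VLANs 201 and 203 active on AA19-9336-1 and VLANs 119 and 202 active on AA19-9336-2. The tie case shows why the note warns against leaving both SVIs at the default: the election then falls to the address, so the switch with the higher address would be active for every such VLAN.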

Set Global Configurations

Run the following commands on both switches to set global configurations:

AA19-9336-1&2 (config-if-hsrp)# port-channel load-balance src-dst l4port

AA19-9336-1&2 (config)# ip route 0.0.0.0/0 <<var_ib_gateway_ip>>

AA19-9336-1&2 (config)# ntp server <<var_oob_ntp>> use-vrf management

In the command block above, “l4port” is the letter L followed by the number 4, not the number fourteen.

The NTP server must be reachable by the switches. In this case, it points to an out-of-band source.

AA19-9336-1&2 (config)# ntp master 3

AA19-9336-1&2 (config)# ntp source <<var_nexus_ib_vip>>

Configuring the switches as NTP masters, so that they redistribute time as an NTP source, is optional here but can be a valuable fix if the tenant networks cannot reach the primary NTP server.

*** Save all configurations to this point on both Nexus Switches ***

AA19-9336-1&2 (config)# copy running-config startup-config

The MDS configuration implements a common redundant physical fabric design with fabrics represented as “A” and “B”. The validating lab provided a basic MDS fabric supporting two VSP Storage Systems that are connected to two different UCS domains within the SAN environment. Larger deployments may require a multi-tier core-edge or edge-core-edge design with port channels connecting the different layers of the topology. Further discussion of these kinds of topologies, as well as considerations in implementing more complex SAN environments, can be found in this white paper: https://www.cisco.com/c/en/us/products/collateral/storage-networking/mds-9700-series-multilayer-directors/white-paper-c11-729697.pdf

The configuration steps described below are implemented for the Cisco MDS 9706 but are similar to the steps required for other Cisco MDS 9000 series switches that may be appropriate for a deployment. When changing the design within the Cisco and Hitachi compatibility matrices, consult the configuration documentation for the substituted equipment to confirm the correct implementation steps.

Physical Connectivity

Complete the physical cabling by following the diagram and table references in the section Deployment Hardware and Software.

Initial MDS Configuration Dialogue

Complete this dialogue on each switch, using a serial connection to the console port of the switch, unless Power on Auto Provisioning is being used:

---- System Admin Account Setup ----

Do you want to enforce secure password standard (yes/no) [y]: <enter>

Enter the password for "admin": <<var_password>>

Confirm the password for "admin": <<var_password>>

---- Basic System Configuration Dialog ----

This setup utility will guide you through the basic configuration of

the system. Setup configures only enough connectivity for management

of the system.

Please register Cisco MDS 9000 Family devices promptly with your

supplier. Failure to register may affect response times for initial

service calls. MDS devices must be registered to receive entitled

support services.

Press Enter at anytime to skip a dialog. Use ctrl-c at anytime

to skip the remaining dialogs.

Would you like to enter the basic configuration dialog (yes/no): yes

Create another login account (yes/no) [n]: <enter>

Configure read-only SNMP community string (yes/no) [n]: <enter>

Configure read-write SNMP community string (yes/no) [n]: <enter>

Enter the switch name : <<var_mds_A_hostname>>|<<var_mds_B_hostname>>

Continue with Out-of-band (mgmt0) management configuration? (yes/no) [y]: <enter>

Mgmt0 IPv4 address : <<var_mds_A_mgmt_ip>>|<<var_mds_B_mgmt_ip>>

Mgmt0 IPv4 netmask : <<var_oob_netmask>>

Configure the default gateway? (yes/no) [y]: <enter>

IPv4 address of the default gateway : <<var_oob_gateway>>

Configure advanced IP options? (yes/no) [n]: <enter>

Enable the ssh service? (yes/no) [y]: <enter>

Type of ssh key you would like to generate (dsa/rsa) [rsa]: <enter>

Number of rsa key bits <1024-2048> [1024]: <enter>

Enable the telnet service? (yes/no) [n]: <enter>

Configure congestion/no_credit drop for fc interfaces? (yes/no) [y]: <enter>

Enter the type of drop to configure congestion/no_credit drop? (con/no) [c]: <enter>

Enter milliseconds in multiples of 10 for congestion-drop for logical-type edge

in range (<200-500>/default), where default is 500. [d]: <enter>

Congestion-drop for logical-type core must be greater than or equal to

Congestion-drop for logical-type edge. Hence, Congestion drop for

logical-type core will be set as default.

Enable the http-server? (yes/no) [y]: <enter>

Configure clock? (yes/no) [n]: y

Clock config format [HH:MM:SS Day Mon YYYY] [example: 18:00:00 1 november 2012]: <enter>

Enter clock config :17:26:00 2 january 2019

Configure timezone? (yes/no) [n]: y

Enter timezone config [PST/MST/CST/EST] :EST

Enter Hrs offset from UTC [-23:+23] : <enter>

Enter Minutes offset from UTC [0-59] : <enter>

Configure summertime? (yes/no) [n]: <enter>

Configure the ntp server? (yes/no) [n]: y

NTP server IPv4 address : <<var_oob_ntp>>

Configure default switchport interface state (shut/noshut) [shut]: <enter>

Configure default switchport trunk mode (on/off/auto) [on]: <enter>

Configure default switchport port mode F (yes/no) [n]: <enter>

Configure default zone policy (permit/deny) [deny]: <enter>

Enable full zoneset distribution? (yes/no) [n]: <enter>

Configure default zone mode (basic/enhanced) [basic]: <enter>

The following configuration will be applied:

password strength-check

switchname aa19-9706-1

interface mgmt0

ip address 192.168.168.18 255.255.255.0

no shutdown

ip default-gateway 192.168.168.254

ssh key rsa 1024 force

feature ssh

no feature telnet

system timeout congestion-drop default logical-type edge

system timeout congestion-drop default logical-type core

feature http-server

clock set 17:26:00 2 january 2019

clock timezone EST 0 0

ntp server 192.168.168.254

system default switchport shutdown

system default switchport trunk mode on

no system default zone default-zone permit

no system default zone distribute full

no system default zone mode enhanced

Would you like to edit the configuration? (yes/no) [n]: <enter>

Use this configuration and save it? (yes/no) [y]: <enter>
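After the setup dialog completes and the configuration is saved, the basic settings can be checked on each switch. The following is a minimal sketch that uses standard NX-OS show commands. The prompt shown is switch A, and the output will reflect your own <<var_mds_A_mgmt_ip>>, <<var_oob_gateway>>, and <<var_oob_ntp>> values. Note that the summary above applies clock timezone EST 0 0. This names the zone EST but keeps a zero offset from UTC, because the offset prompts were left at their defaults. If the switch clock should run at an offset from UTC, the timezone can be reapplied from configuration mode. The -5 0 value below is only an example for US Eastern Standard Time and should be adjusted to your site.

aa19-9706-1# show interface mgmt0

aa19-9706-1# show clock

aa19-9706-1# show ntp peer-status

aa19-9706-1# configure terminal

aa19-9706-1(config)# clock timezone EST -5 0

aa19-9706-1(config)# end

aa19-9706-1# copy running-config startup-config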

Cisco MDS Switch Configuration

Cisco MDS 9706 A and Cisco MDS 9706 B

To enable the required features on both Cisco MDS switches, follow these steps:

1. Log in as admin.

2. Run the following commands:

aa19-9706-1&2# configure terminal

aa19-9706-1&2(config)# feature npiv

aa19-9706-1&2(config)# feature fport-channel-trunk

aa19-9706-1&2(config)# feature lldp

aa19-9706-1&2(config)# device-alias mode enhanced

aa19-9706-1&2(config)# device-alias commit

Running device-alias commit triggers a warning that existing device aliases on attached fabrics will be cleared. This should not affect the initial deployment described here.
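Before you configure the ports, you can confirm the enabled features and the device-alias mode on both switches and then save the running configuration. This is a minimal sketch that uses standard NX-OS show commands. Exact output varies by NX-OS release. show npiv status should report NPIV as enabled, and show device-alias status should report the mode as Enhanced.

aa19-9706-1&2# show feature

aa19-9706-1&2# show npiv status

aa19-9706-1&2# show device-alias status

aa19-9706-1&2# copy running-config startup-config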

Configure Individual Ports

Cisco MDS 9706 A

To configure individual ports and port-channels for switch A, follow these steps:

In this step and in the following sections, configure the <var_ucs_6454_clustername> / <var_ucs_6332_clustername> and <vsp-g370> / <vsp-g1500> interfaces as appropriate to your deployment.

From the global configuration mode, run the following commands:

aa19-9706-1(config)# interface fc1/1

aa19-9706-1(config-if)# switchport description <var_ucs_6332_clustername>-a:1/1

aa19-9706-1(config-if)# channel-group 11 force

aa19-9706-1(config-if)# no shutdown

aa19-9706-1(config-if)#

aa19-9706-1(config-if)# interface fc1/2

aa19-9706-1(config-if)# switchport description <var_ucs_6332_clustername>-a:1/2

aa19-9706-1(config-if)# channel-group 11 force

aa19-9706-1(config-if)# no shutdown

aa19-9706-1(config-if)#

aa19-9706-1(config-if)# interface fc1/3

aa19-9706-1(config-if)# switchport description <var_ucs_6332_clustername>-a:1/3

aa19-9706-1(config-if)# channel-group 11 force

aa19-9706-1(config-if)# no shutdown

aa19-9706-1(config-if)#

aa19-9706-1(config-if)# interface fc1/4

aa19-9706-1(config-if)# switchport description <var_ucs_6332_clustername>-a:1/4

aa19-9706-1(config-if)# channel-group 11 force

aa19-9706-1(config-if)# no shutdown

aa19-9706-1(config-if)#

aa19-9706-1(config-if)# interface fc1/5

aa19-9706-1(config-if)# switchport description <var_ucs_6454_clustername>-a:1/1

aa19-9706-1(config-if)# channel-group 15 force

aa19-9706-1(config-if)# no shutdown

aa19-9706-1(config-if)#

aa19-9706-1(config-if)# interface fc1/6

aa19-9706-1(config-if)# switchport description <var_ucs_6454_clustername>-a:1/2

aa19-9706-1(config-if)# channel-group 15 force

aa19-9706-1(config-if)# no shutdown

aa19-9706-1(config-if)#

aa19-9706-1(config-if)# interface fc1/7

aa19-9706-1(config-if)# switchport description <vsp-g1500>-a:CL 1-A

aa19-9706-1(config-if)# no shutdown

aa19-9706-1(config-if)#

aa19-9706-1(config-if)# interface fc1/8

aa19-9706-1(config-if)# switchport description <vsp-g1500>-a:CL 2-A

aa19-9706-1(config-if)# no shutdown

aa19-9706-1(config-if)#

aa19-9706-1(config-if)# interface fc1/9

aa19-9706-1(config-if)# switchport description <vsp-g1500>-a:CL 1-J

aa19-9706-1(config-if)# no shutdown

aa19-9706-1(config-if)#

aa19-9706-1(config-if)# interface fc1/10

aa19-9706-1(config-if)# switchport description <vsp-g1500>-a:CL 2-J

aa19-9706-1(config-if)# no shutdown

aa19-9706-1(config-if)#

aa19-9706-1(config-if)# interface fc1/11

aa19-9706-1(config-if)# switchport description <vsp-g370>-a:CL 1-A

aa19-9706-1(config-if)# no shutdown

aa19-9706-1(config-if)#

aa19-9706-1(config-if)# interface fc1/12

aa19-9706-1(config-if)# switchport description <vsp-g370>-a:CL 2-B

aa19-9706-1(config-if)# no shutdown

aa19-9706-1(config-if)#

aa19-9706-1(config-if)# interface port-channel 11

aa19-9706-1(config-if)# switchport description <var_ucs_6332_clustername>-portchannel

aa19-9706-1(config-if)# channel mode active

aa19-9706-1(config-if)#

aa19-9706-1(config-if)# interface port-channel 15

aa19-9706-1(config-if)# switchport description <var_ucs_6454_clustername>-portchannel

aa19-9706-1(config-if)# channel mode active

aa19-9706-1(config-if)# exit
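After the Cisco UCS fabric interconnect and VSP sides of these links are cabled and configured, you can review the port state on switch A. This sketch uses standard MDS show commands:

- show port-channel database lists the members of port-channels 11 and 15.
- show interface brief shows the mode and status of each fc port.
- show flogi database lists the WWPNs that have logged in to the fabric.

The port-channel members may remain down until the corresponding FC port-channels are enabled on the Cisco UCS fabric interconnects. The same commands apply on switch B.

aa19-9706-1(config)# end

aa19-9706-1# show port-channel database

aa19-9706-1# show interface brief

aa19-9706-1# show flogi database

aa19-9706-1# copy running-config startup-config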

Cisco MDS 9706 B

To configure individual ports and port-channels for switch B, follow these steps:

From the global configuration mode, run the following commands:

aa19-9706-2(config)# interface fc1/1

aa19-9706-2(config-if)# switchport description <var_ucs_6332_clustername>-b:1/1

aa19-9706-2(config-if)# channel-group 11 force

aa19-9706-2(config-if)# no shutdown

aa19-9706-2(config-if)#

aa19-9706-2(config-if)# interface fc1/2

aa19-9706-2(config-if)# switchport description <var_ucs_6332_clustername>-b:1/2

aa19-9706-2(config-if)# channel-group 11 force

aa19-9706-2(config-if)# no shutdown

aa19-9706-2(config-if)#

aa19-9706-2(config-if)# interface fc1/3

aa19-9706-2(config-if)# switchport description <var_ucs_6332_clustername>-b:1/3

aa19-9706-2(config-if)# channel-group 11 force

aa19-9706-2(config-if)# no shutdown

aa19-9706-2(config-if)#

aa19-9706-2(config-if)# interface fc1/4

aa19-9706-2(config-if)# switchport description <var_ucs_6332_clustername>-b:1/4

aa19-9706-2(config-if)# channel-group 11 force

aa19-9706-2(config-if)# no shutdown

aa19-9706-2(config-if)#

aa19-9706-2(config-if)# interface fc1/5

aa19-9706-2(config-if)# switchport description <var_ucs_6454_clustername>-b:1/1

aa19-9706-2(config-if)# channel-group 15 force

aa19-9706-2(config-if)# no shutdown

aa19-9706-2(config-if)#

aa19-9706-2(config-if)# interface fc1/6

aa19-9706-2(config-if)# switchport description <var_ucs_6454_clustername>-b:1/2

aa19-9706-2(config-if)# channel-group 15 force

aa19-9706-2(config-if)# no shutdown

aa19-9706-2(config-if)#

aa19-9706-2(config-if)# interface fc1/7

aa19-9706-2(config-if)# switchport description <vsp-g1500>-a:CL 3-L

aa19-9706-2(config-if)# no shutdown

aa19-9706-2(config-if)#

aa19-9706-2(config-if)# interface fc1/8

aa19-9706-2(config-if)# switchport description <vsp-g1500>-a:CL 4-C

aa19-9706-2(config-if)# no shutdown

aa19-9706-2(config-if)#

aa19-9706-2(config-if)# interface fc1/9

aa19-9706-2(config-if)# switchport description <vsp-g1500>-a:CL 3-C

aa19-9706-2(config-if)# no shutdown

aa19-9706-2(config-if)#

aa19-9706-2(config-if)# interface fc1/10

aa19-9706-2(config-if)# switchport description <vsp-g1500>-a:CL 4-L

aa19-9706-2(config-if)# no shutdown

aa19-9706-2(config-if)#

aa19-9706-2(config-if)# interface fc1/11

aa19-9706-2(config-if)# switchport description <vsp-g370>-a:CL 3-B

aa19-9706-2(config-if)# no shutdown

aa19-9706-2(config-if)#

aa19-9706-2(config-if)# interface fc1/12

aa19-9706-2(config-if)# switchport description <vsp-g370>-a:CL 4-A

aa19-9706-2(config-if)# no shutdown

aa19-9706-2(config-if)#

aa19-9706-2(config-if)# interface port-channel 11

aa19-9706-2(config-if)# switchport description <var_ucs_6332_clustername>-portchannel

aa19-9706-2(config-if)# channel mode active

aa19-9706-2(config-if)#

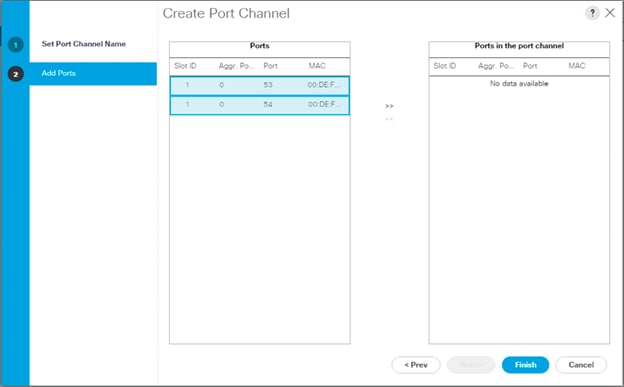

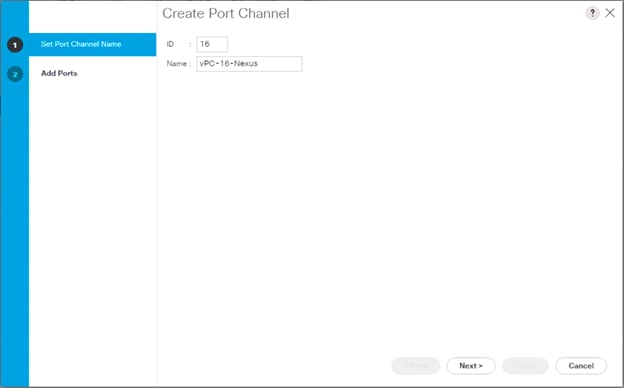

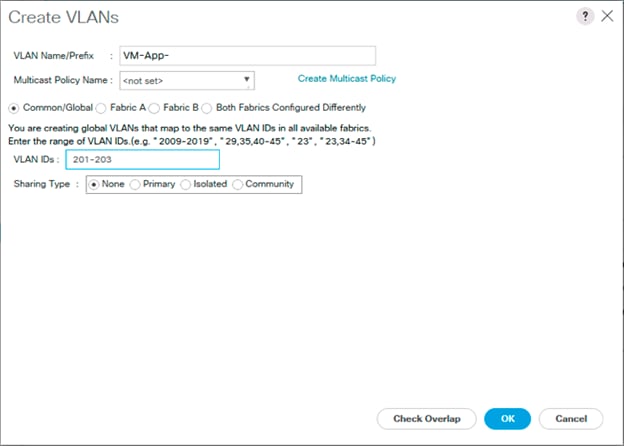

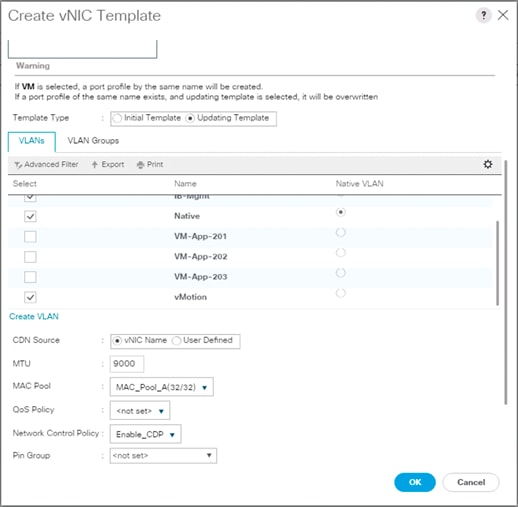

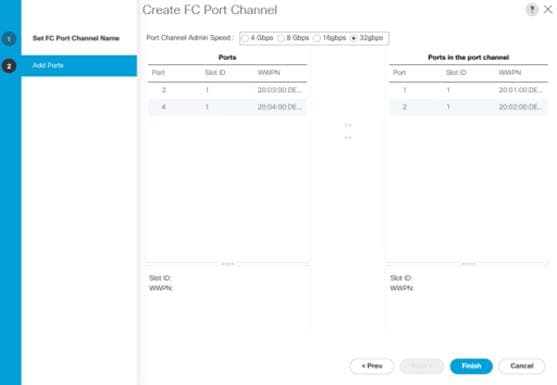

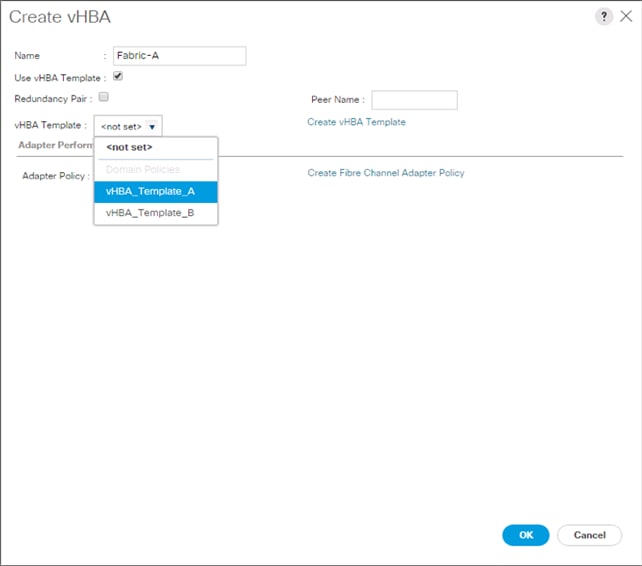

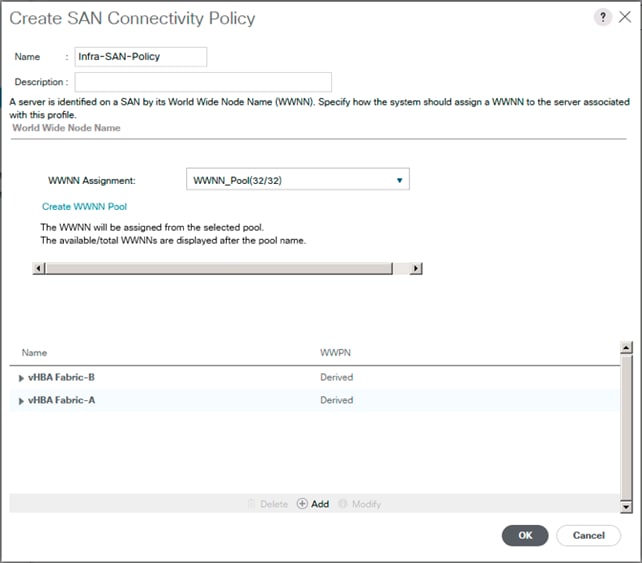

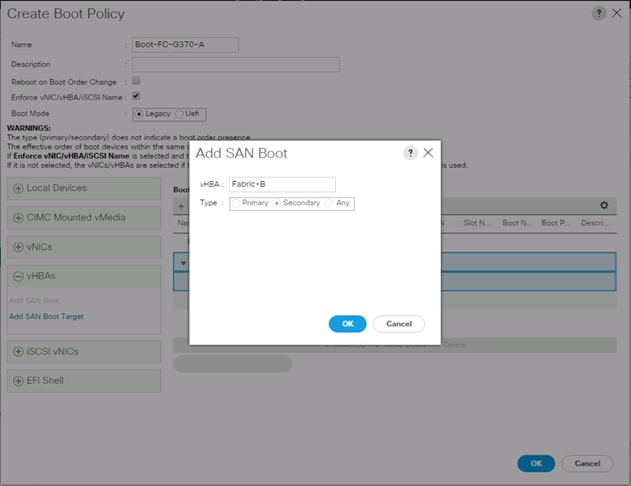

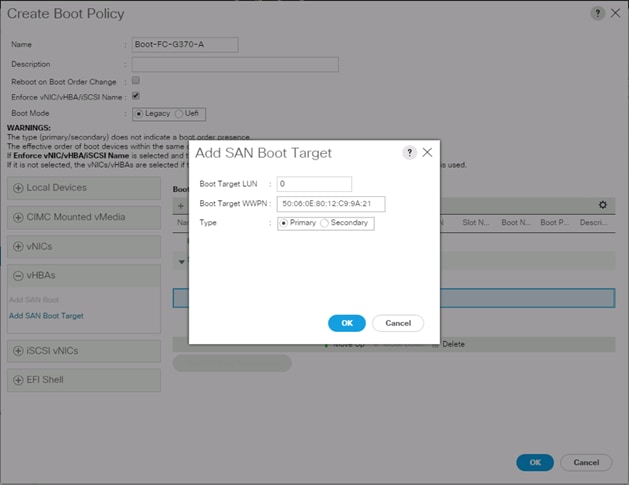

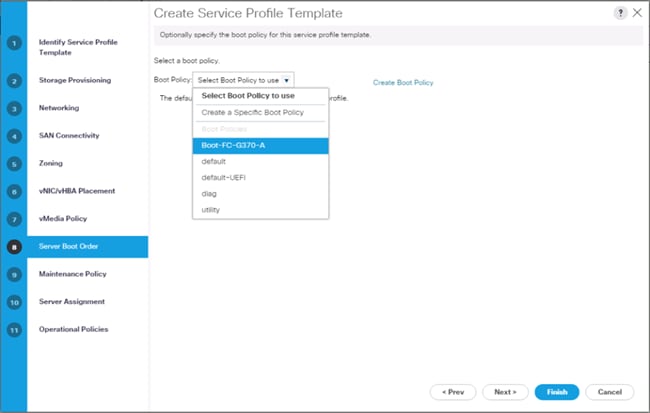

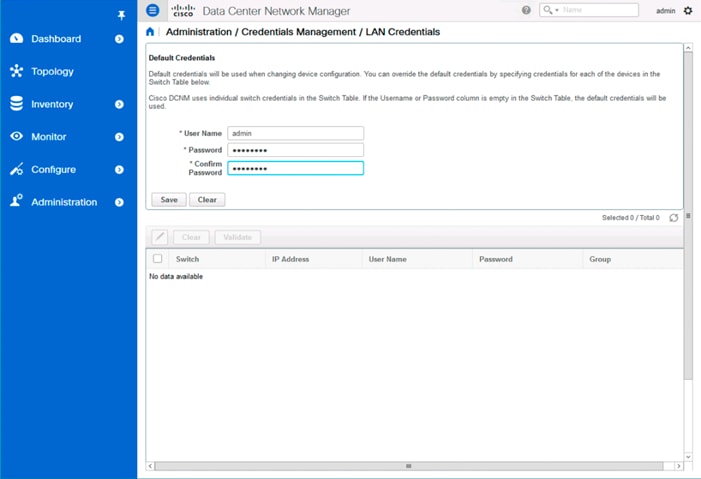

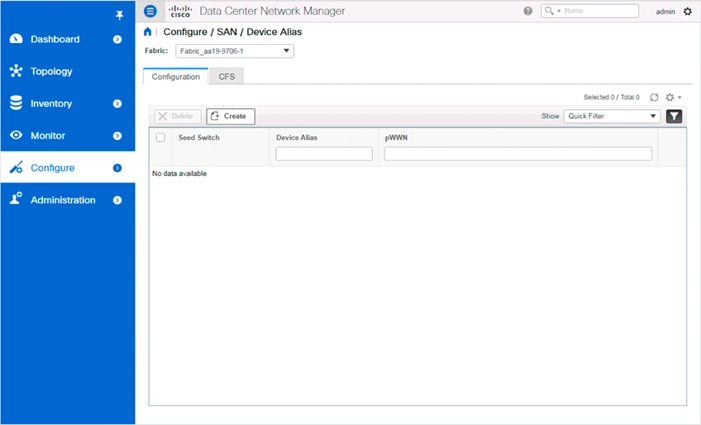

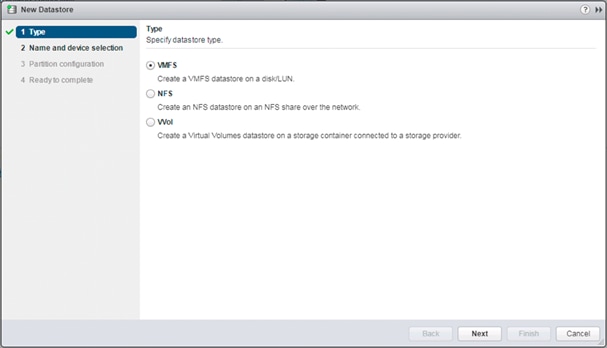

aa19-9706-2(config-if)# interface port-channel 15