Cisco UCS Integrated Infrastructure Solutions for SAP Applications

Design and Deployment of Cisco UCS, Cisco Nexus 9000 Series Switches and EMC VNX Storage

Last Updated: July 17, 2017

The CVD program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, visit http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, IronPort, the IronPort logo, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2016 Cisco Systems, Inc. All rights reserved.

Cisco Unified Computing System

Cisco UCS 6248UP Fabric Interconnect

Cisco UCS 2204XP Fabric Extender

Cisco UCS 2208XP Fabric Extender

Cisco UCS Blade Server Chassis

Cisco UCS B200 M4 Blade Server

Cisco C220 M4 Rack-Mount Servers

Cisco UCS B260 M4 Blade Server

Cisco UCS B460 M4 Blade Server

Cisco UCS C460 M4 Rack-Mount Server

Cisco C880 M4 Rack-Mount Server

Cisco I/O Adapters for Blade and Rack Servers

Cisco VIC 1240 Virtual Interface Card

Cisco VIC 1280 Virtual Interface Card

Cisco VIC 1227 Virtual Interface Card

Cisco VIC 1225 Virtual Interface Card

Cisco MDS 9148S 16G Multilayer Fabric Switch

Cisco Nexus 1000v Virtual Switch

EMC Storage Technologies and Benefits

Deployment Hardware and Software

Global Hardware Requirements for SAP HANA

Log Volume with Intel E7-x890v2 CPU

SAP HANA System on a Single Server—Scale-Up (Bare Metal or Virtualized)

SAP HANA System on Multiple Servers—Scale-Out

Hardware and Software Components

Memory Configuration Guidelines for Virtual Machines

VMware ESX/ESXi Memory Management Concepts

Virtual Machine Memory Concepts

Allocating Memory to Virtual Machines

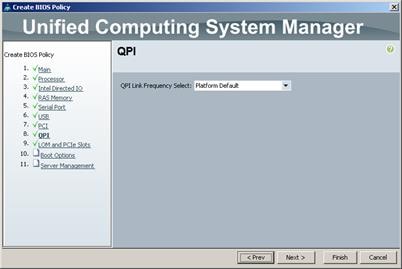

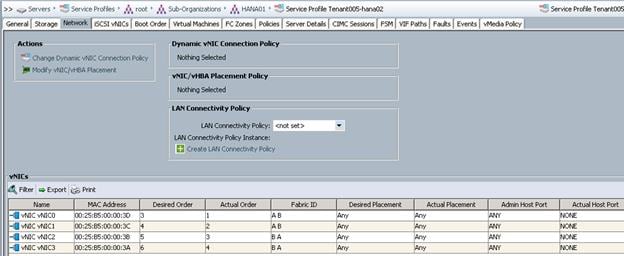

Cisco UCS Service Profile Design

Network High Availability Design

About Network Path Separation with Cisco UCS

Solution Configuration Guidelines

Install and Configure the Management Pod

Configure Domain Name Service (DNS)

Network Configuration for Management Pod

Cisco Nexus 3500 Configuration

Enable Appropriate Cisco Nexus 3500 Features and Settings

Create VLANs for Management Traffic

Create VLANs for Nexus 1000v Traffic

Configure Virtual Port Channel Domain

Configure Network Interfaces for the VPC Peer Links

Validate VLAN and VPC Configuration

Configure Network Interfaces to Connect the Cisco UCS C220 Management Server with LOM

Configure Network Interfaces to Connect the Cisco UCS C220 Management Server with VIC1225

Configure Network Interfaces to Connect the Cisco 2911 ISR

Configure Network Interfaces to Connect the EMC VNX5400 Storage

Configure Network Interfaces for Out of Band Management Access

Configure the Port as an Access VLAN Carrying the Out of Band Management VLAN Traffic

Configure the Port as an Access VLAN Carrying the Out of Band Management VLAN Traffic

Configure Network Interfaces for Access to the Managed Landscape

Configure VLAN Interfaces and HSRP

Uplink into Existing Network Infrastructure

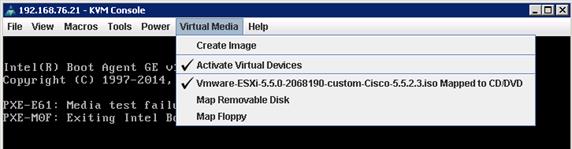

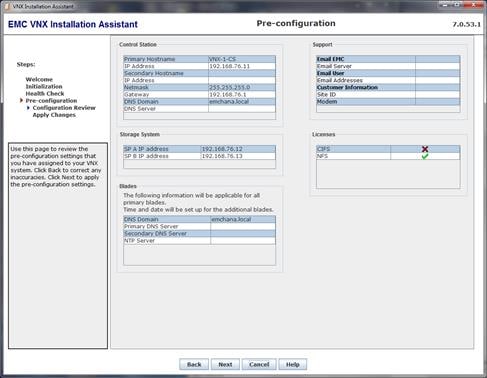

Management Server Installation

Management Server Configuration

Configure Network on the Datamover

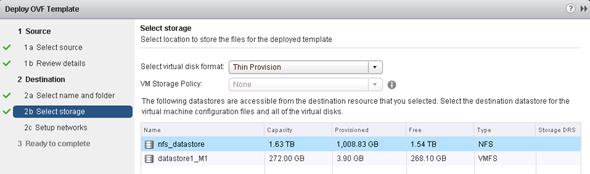

Create a File System for the NFS Datastore

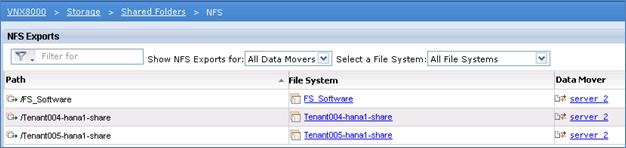

Create NFS-Export for the File Systems

Set Up Management Networking for ESXi Hosts

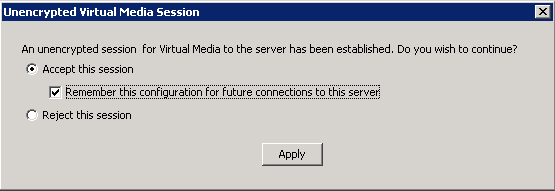

VMware ESXi Configuration with VMware vCenter Client

VMware Management Tool deployment

Deploy the VMware vCenter Server Appliance

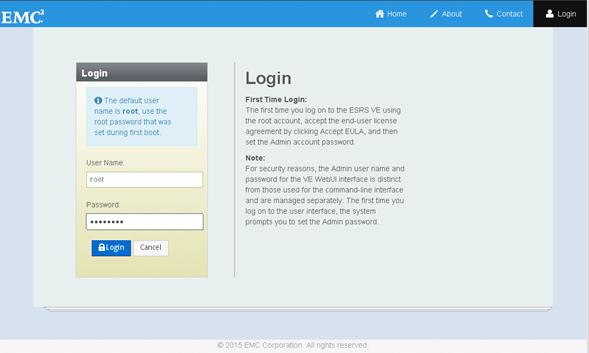

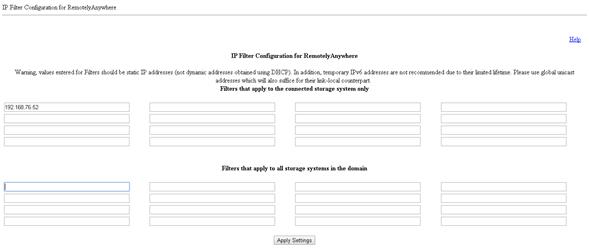

Install and Configure EMC Secure Remote Services

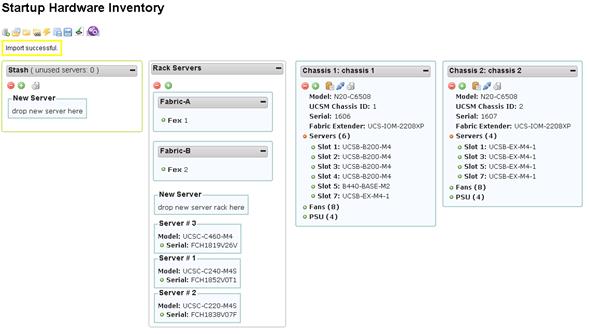

Install and Configure the Cisco UCS Integrated Infrastructure

Cisco Nexus 9000 Configuration

Initial Cisco Nexus 9000 Configuration

Enable the Appropriate Cisco Nexus 9000 Features and Settings

Configure the Spanning Tree Defaults

Create VLANs for Management and ESX Traffic

Configure Virtual Port Channel Domain

Configure Network Interfaces for the VPC Peer Links

Configure Network Interfaces to Connect the EMC VNX Storage

Configure Network Interfaces for Access to the Management Landscape

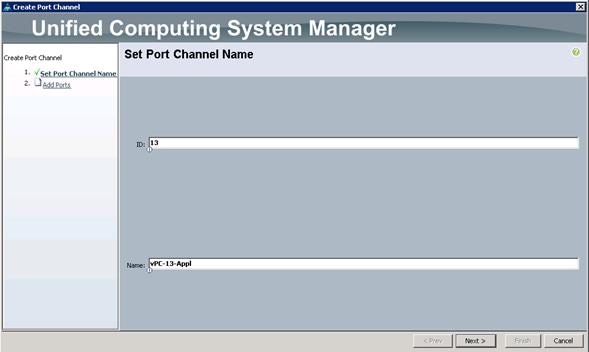

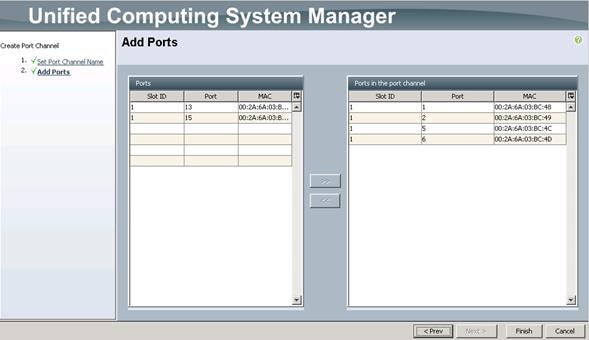

Configure Administration Port Channels to Cisco UCS Fabric Interconnect

Configure Port Channels Connected to Cisco UCS Fabric Interconnects

Configure Network Interfaces to the Backup Destination

Configure Ports connected to Cisco C880 M4 for VMware ESX

Cisco MDS Initial Configuration

Configure Fibre Channel Ports and Port Channels

Configure the Fibre Channel Zoning

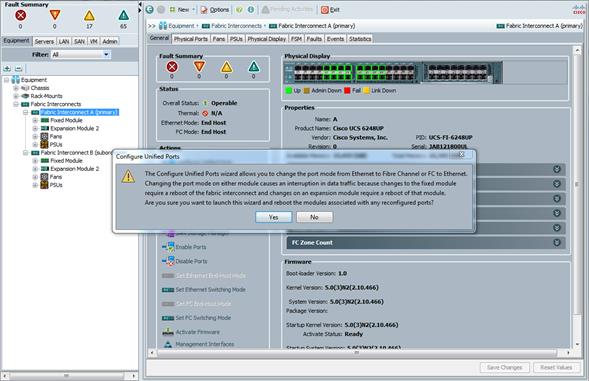

Configure the Cisco UCS Fabric Interconnects

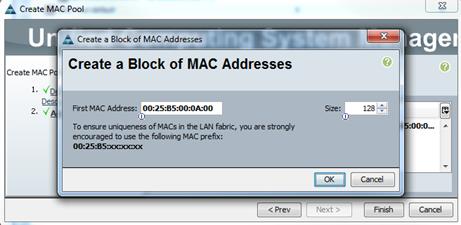

Configure Server (Service Profile) Policies and Pools

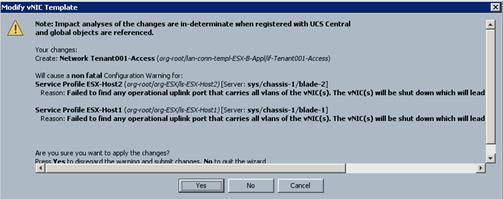

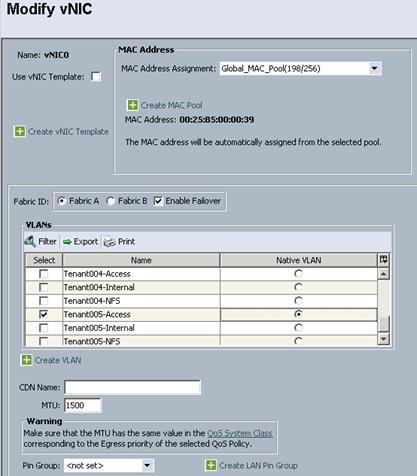

Configure the Service Profile Templates to Run VMware ESXi

Instantiate Service Profiles for VMware ESX

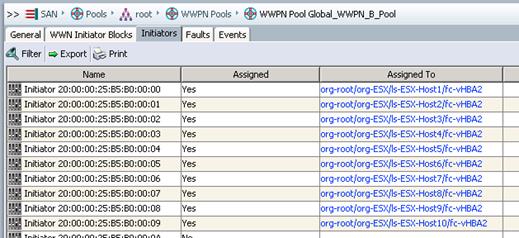

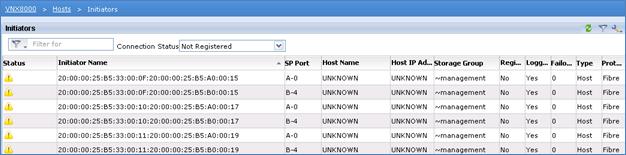

Create SAN Zones on the MDS Switches

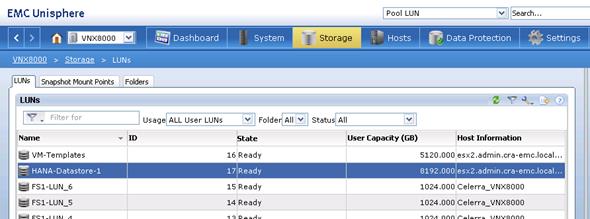

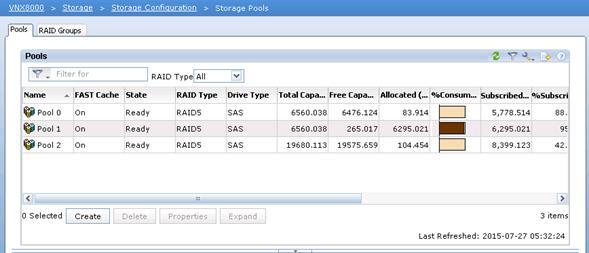

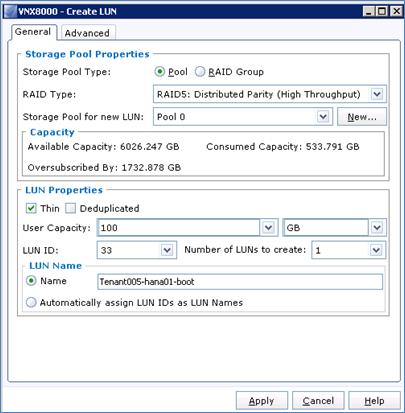

Configure Storage Pool for Operating Systems and Non-SAP HANA Applications.

Configure LUNs for VMware ESX Hosts

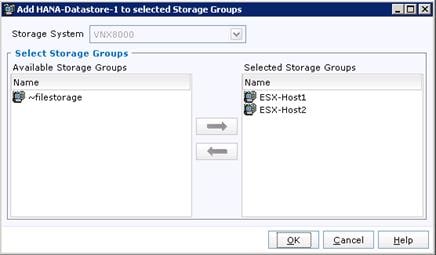

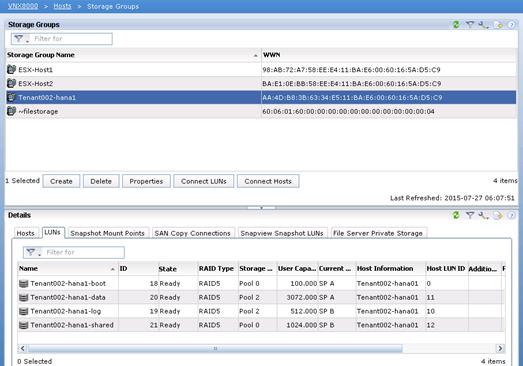

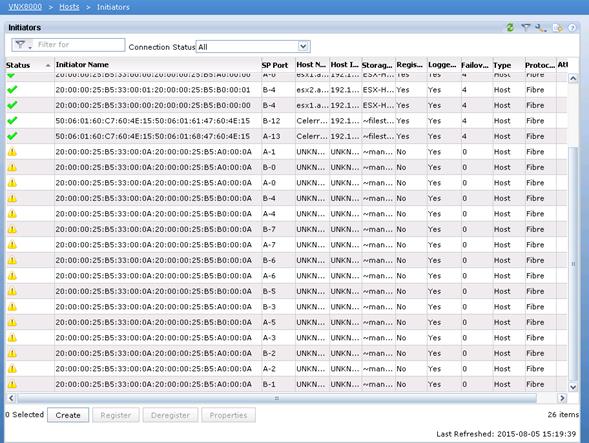

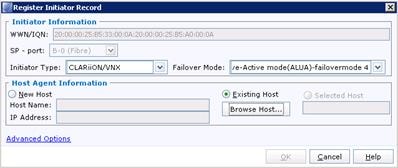

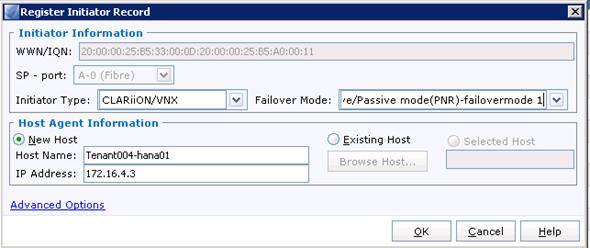

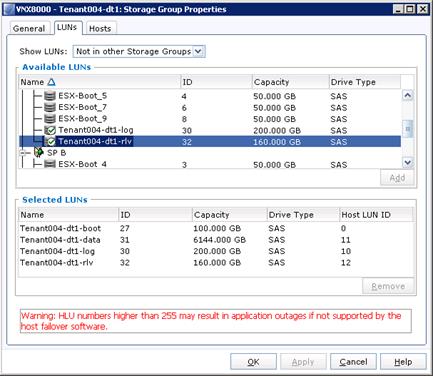

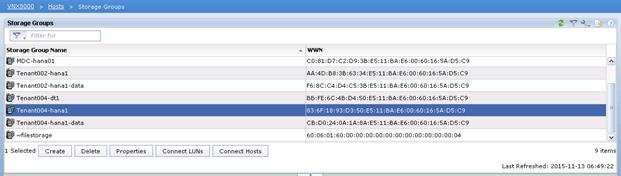

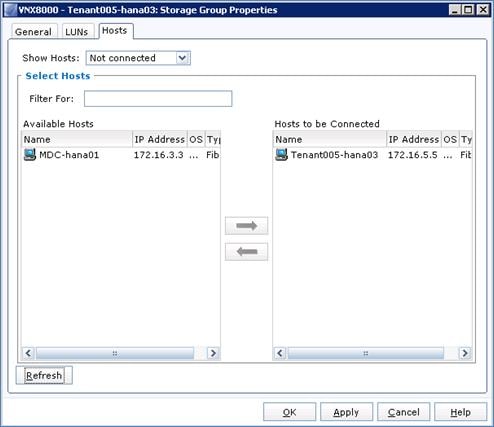

Register Hosts and Configure Storage Groups

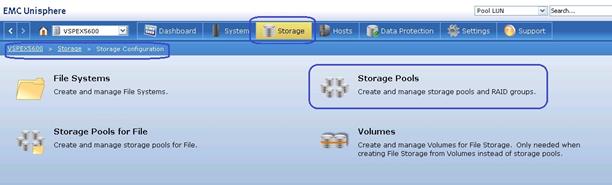

Configure Storage Pools for File

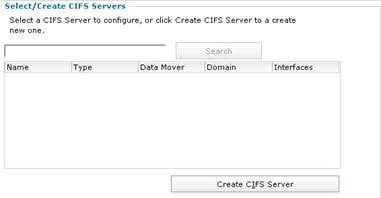

Configure Network and File Service

Create Additional Network Settings

Set Up Management Networking for ESXi Hosts

Register and Configure ESX Hosts with VMware vCenter

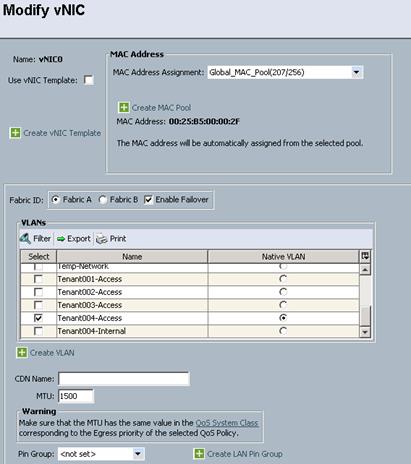

Connect VLAN-Groups to Cisco Nexus1000V

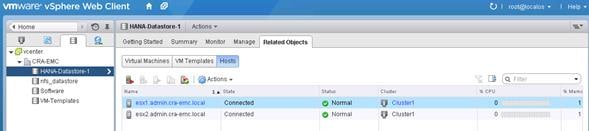

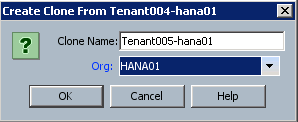

Configure Storage Groups for Virtual Machine Templates

Configure ESX-Hosts for SAP HANA

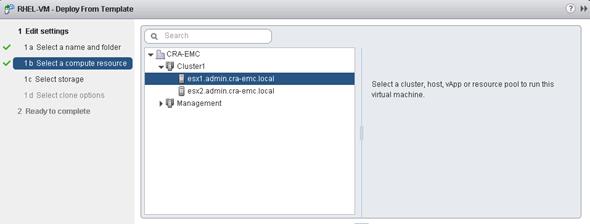

Virtual Machine Template with SUSE Linux Enterprise for SAP Applications.

Virtual Machine Template with Red Hat Enterprise Linux for SAP HANA

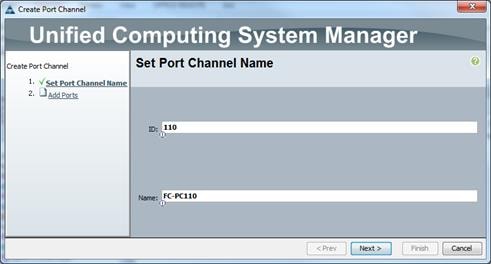

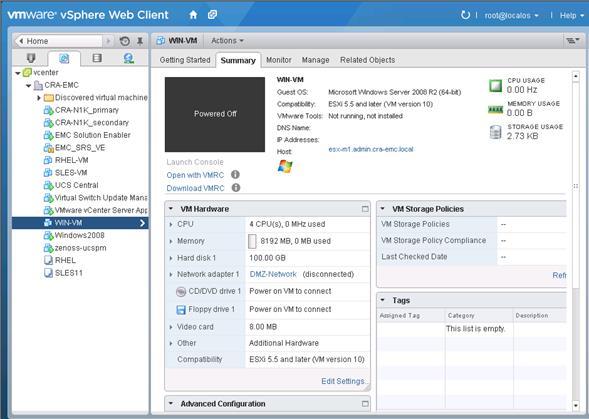

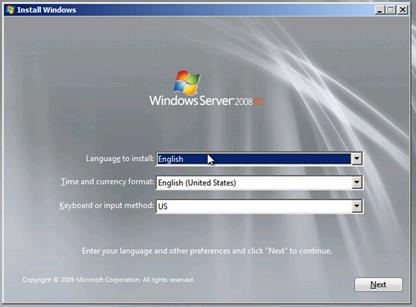

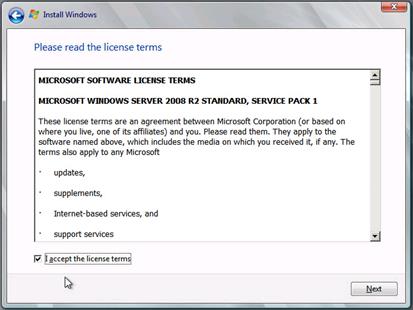

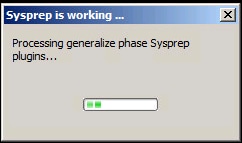

Virtual Machine Template with Microsoft Windows

Install and Configure Management Tools

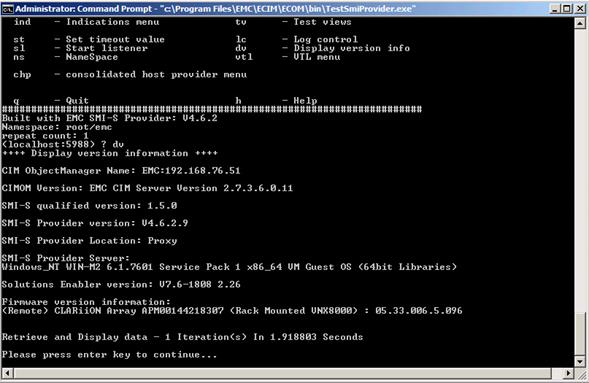

Install and Configure EMC Solution Enabler SMI Module

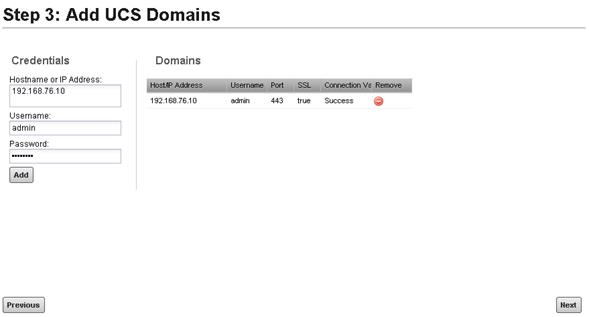

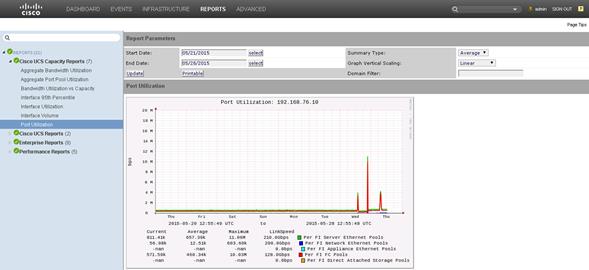

Install and Configure Cisco UCS Performance Manager

Reference Workloads and Use Cases

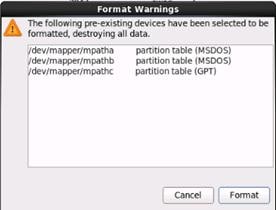

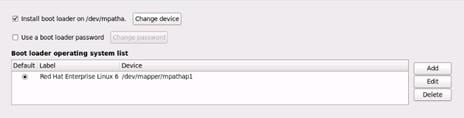

Operating System Installation and Configuration

Virtualized SAP HANA Scale-Up System (vHANA)

Check Available VMware Datastore and Available Capacity

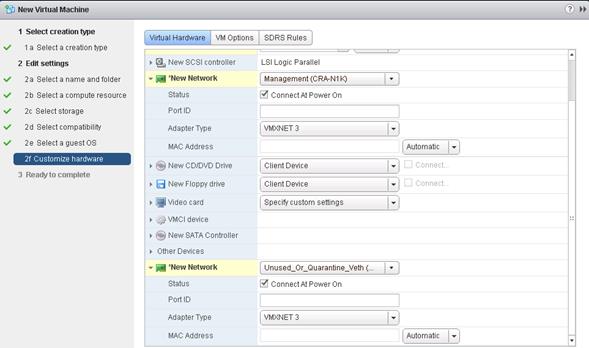

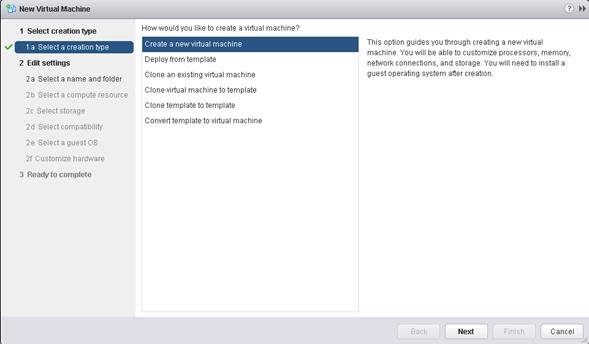

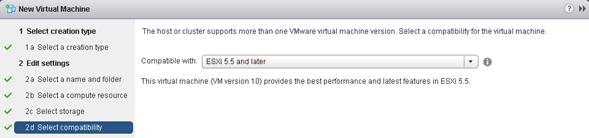

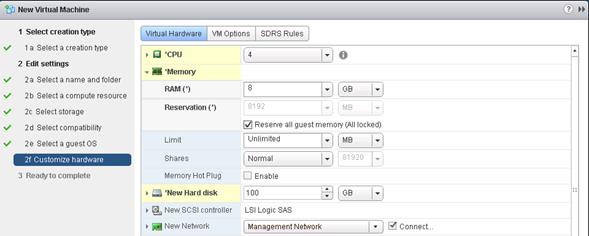

Create Virtual Machine from VM-Template

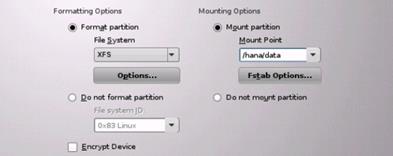

Configure Storage for SAP HANA

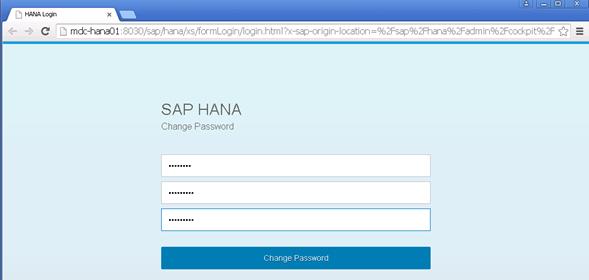

Test the connection to SAP HANA

SAP HANA Scale-Up System on a Bare Metal Server (Single SID)

Enabling data traffic on vHBA3 and vHBA4

Configure SUSE Linux for SAP HANA

Test the Connection to SAP HANA

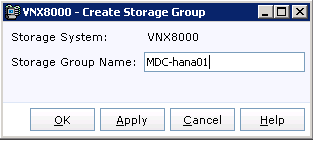

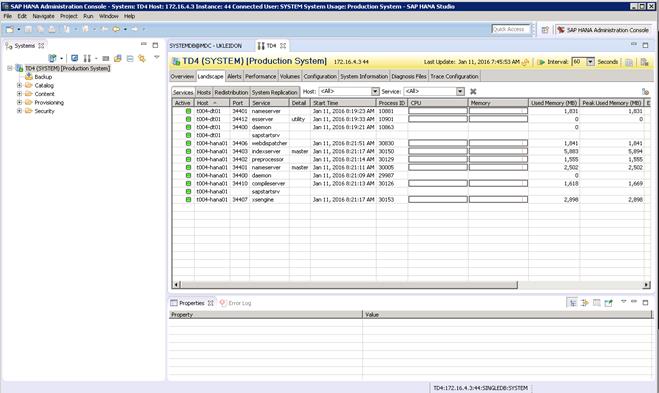

SAP HANA Scale-Up System with Multi Database Container

Enabling Data Traffic on vHBA3 and vHBA4

Configure SUSE Linux for SAP HANA

Test the Connection to SAP HANA

Install SAP HANA Database Container

SAP HANA Scale-Up System with SAP HANA Dynamic Tiering

Enabling data traffic on vHBA3 and vHBA4 (SAP HANA Node)

Enabling Data Traffic on vHBA3 and vHBA4 (SAP HANA Node)

Validating Cisco UCS Integrated Infrastructure with SAP HANA

Verify the Redundancy of the Solution Components

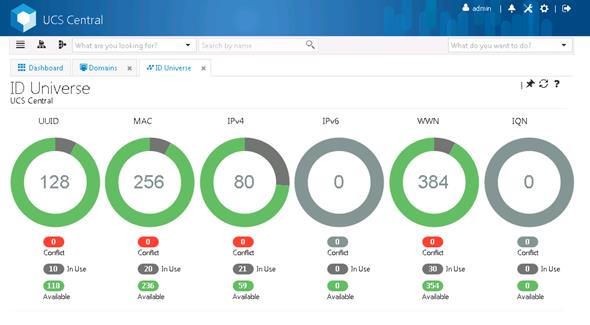

Register Cisco UCS Manager with Cisco UCS Central

Appendix—Solution Variables Data Sheet

Cisco® Validated Designs include systems and solutions that are designed, tested, and documented to facilitate and improve customer deployments. These designs incorporate a wide range of technologies and products into a portfolio of solutions that have been developed to address the business needs of customers.

This document describes the architecture and deployment procedures for a Cisco UCS Integrated Infrastructure composed of Cisco compute and network switching products, VMware virtualization, and EMC VNX storage components to run enterprise applications. The initial focus is the configuration for the SAP HANA Tailored Data Center Integration option, because it has the greatest impact on the solution design. The design is not limited to SAP HANA; it also ensures that the other applications required for an SAP application landscape can run on the same infrastructure. The intent of this document is to show the configuration principles, with the detailed configuration steps depending on the individual situation.

Introduction

A cloud deployment model provides better utilization of the underlying system resources, leading to a reduced Total Cost of Ownership (TCO). However, choosing an appropriate platform for databases and enterprise applications like SAP HANA can be a complex task. Platforms should be flexible, reliable, and cost effective to facilitate various deployment options for the applications while being easily scalable and manageable. In addition, it is desirable to have an architecture that allows resource sharing across points of delivery (PoD) to address stranded capacity issues, both within and outside the integrated stack. In this regard, this Cisco Integrated Infrastructure solution for Enterprise Applications provides a comprehensive validated infrastructure platform to suit customers’ needs. This document describes the infrastructure installation and configuration required to run multiple enterprise applications, like SAP Business Suite or SAP HANA, on a shared infrastructure. The use cases used to validate the design in this document are all based on SAP HANA.

Audience

The intended audience of this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who deploy SAP HANA. The reader of this document is expected to have the necessary training and background to install and configure EMC VNX series storage arrays, Cisco Unified Computing System (UCS) and Cisco UCS Manager (UCSM), and Cisco Nexus 3000 series, Nexus 9000 series, and Nexus 1000V network switches, or to have access to resources with the required knowledge. External references are provided where applicable, and it is recommended that the reader be familiar with these documents. Readers are also expected to be familiar with the infrastructure and database security policies of the planned installation.

Purpose of this Document

This document describes the steps required to deploy and configure a Cisco UCS Integrated Infrastructure for Enterprise Applications, with a focus on SAP HANA, using VMware 5.5 as the hypervisor. Cisco’s validation is further confirmation of component compatibility, connectivity, and correct operation of the entire integrated stack. This document showcases one variant of a cloud architecture for SAP HANA. While readers of this document are expected to have sufficient knowledge to install and configure the products used, configuration details that are important to the deployment of this solution are specifically mentioned.

Business Requirements

A Cisco CVD provides guidance for creating complete solutions and enables you to make informed decisions toward an application-ready platform.

Enterprise applications like SAP Business Suite are moving into consolidated compute, network, and storage environments. The Cisco UCS Integrated Infrastructure solution with EMC VNX helps reduce the complexity of configuring every component of a traditional deployment. The complexity of integration management is reduced while application design and implementation options are maintained. Administration is unified, while process separation can be adequately controlled and monitored. The following are the business requirements for this Cisco UCS Integrated Infrastructure:

· Provide an end-to-end solution to take full advantage of unified infrastructure components.

· Provide a Cisco ITaaS solution for an efficient environment catering to various SAP HANA use cases.

· Show implementation progression of a Reference Architecture design for SAP HANA with results.

· Provide a reliable, flexible and scalable reference design.

Solution Summary

This Cisco UCS Integrated Infrastructure with EMC VNX storage provides an end-to-end architecture with Cisco, EMC and VMware technologies that demonstrate support for multiple SAP HANA workloads with high availability and server redundancy.

The following are the components used in the design and deployment of this solution:

· Cisco Unified Computing System (UCS)

· Cisco UCS B-series or C-series servers, as per customer choice

· Cisco UCS VIC adapters

· Cisco Nexus 9396PX switches

· Cisco MDS 9148S Switches

· Cisco Nexus 1000V virtual switch

· EMC VNX5400 or VNX8000 storage array based on customer needs

· VMware vCenter 5.5

· SUSE Linux Enterprise Server 11 / SUSE Linux Enterprise Server for SAP Applications

· Red Hat Enterprise Linux for SAP HANA

The solution is designed to host scalable, mixed workloads of SAP HANA and other SAP Applications.

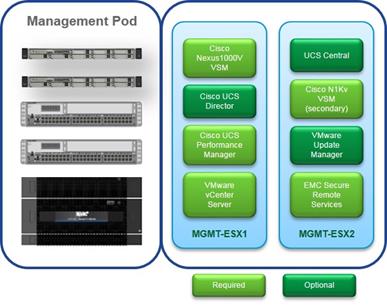

The Management Point of Delivery (POD) (Blue border line) in the Solution Design is not part of the Cisco UCS Integrated Infrastructure. To build a manageable solution it is required to specify the management components and the communication paths between all components. In case you have the required management tools already in place, you can go to the section “Install and Configure the Management Pod” to identify the required changes.

Figure 1 Solution Overview

Cisco Unified Computing System

The Cisco Unified Computing System (Cisco UCS) is a state-of-the-art data center platform that unites computing, network, storage access, and virtualization into a single cohesive system.

The main components of Cisco Unified Computing System are:

· Computing - The system is based on an entirely new class of computing system that incorporates rack-mount and blade servers based on Intel Xeon Processor E5 and E7. The Cisco UCS servers offer the patented Cisco Extended Memory Technology to support applications with large datasets and allow more virtual machines (VM) per server.

· Network - The system is integrated onto a low-latency, lossless, 10-Gbps unified network fabric. This network foundation consolidates LANs, SANs, and high-performance computing networks which are separate networks today. The unified fabric lowers costs by reducing the number of network adapters, switches, and cables, and by decreasing the power and cooling requirements.

· Virtualization - The system unleashes the full potential of virtualization by enhancing the scalability, performance, and operational control of virtual environments. Cisco security, policy enforcement, and diagnostic features are now extended into virtualized environments to better support changing business and IT requirements.

· Storage access - The system provides consolidated access to both SAN storage and Network Attached Storage (NAS) over the unified fabric. By unifying the storage access the Cisco Unified Computing System can access storage over Ethernet (NFS or iSCSI), Fibre Channel, and Fibre Channel over Ethernet (FCoE). This provides customers with choice for storage access and investment protection. In addition, the server administrators can pre-assign storage-access policies for system connectivity to storage resources, simplifying storage connectivity, and management for increased productivity.

The Cisco Unified Computing System is designed to deliver:

· A reduced Total Cost of Ownership (TCO) and increased business agility.

· Increased IT staff productivity through just-in-time provisioning and mobility support.

· A cohesive, integrated system which unifies the technology in the data center.

· Industry standards supported by a partner ecosystem of industry leaders.

Cisco UCS Manager

Cisco Unified Computing System Manager (UCSM) provides unified, embedded management of all software and hardware components of the Cisco UCS through an intuitive GUI, a command line interface (CLI), or an XML API. Cisco UCS Manager provides a unified management domain with centralized management capabilities and controls multiple chassis and thousands of virtual machines.
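To illustrate the XML API option, the following minimal sketch builds the body of a login request and reads the session cookie out of a response. It assumes the `aaaLogin` method with `inName` and `inPassword` attributes and an `outCookie` attribute in the reply, posted to the `/nuova` path on the Cisco UCS Manager address; the helper names, the credentials, and the sample response are hypothetical and are not part of this design. No network call is made.

```python
import xml.etree.ElementTree as ET


def build_login_request(username: str, password: str) -> bytes:
    """Build the XML body for a login request.

    Assumption: the request would be sent as an HTTPS POST to
    https://<ucsm-address>/nuova (address placeholder, not from this document).
    """
    elem = ET.Element("aaaLogin", {"inName": username, "inPassword": password})
    return ET.tostring(elem)


def extract_cookie(response_xml: str) -> str:
    """Return the session cookie from a login response, or raise if absent."""
    root = ET.fromstring(response_xml)
    cookie = root.get("outCookie")
    if not cookie:
        raise RuntimeError("login response did not contain a session cookie")
    return cookie


if __name__ == "__main__":
    # Hypothetical credentials, for illustration only.
    print(build_login_request("admin", "example-password").decode())
    # Hypothetical response, trimmed to the attribute this sketch reads.
    sample = '<aaaLogin response="yes" outCookie="example-cookie" />'
    print(extract_cookie(sample))
```

The returned cookie would then be passed with subsequent queries; consult the Cisco UCS Manager XML API documentation for the authoritative request and response formats.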

Fabric Interconnect

These devices provide a single point for connectivity and management for the entire system. Typically deployed as an active-active pair, the system’s fabric interconnects integrate all components into a single, highly-available management domain controlled by Cisco UCS Manager. The fabric interconnects manage all I/O efficiently and securely at a single point, resulting in deterministic I/O latency regardless of a server or virtual machine’s topological location in the system.

Cisco UCS 6248UP Fabric Interconnect

Cisco UCS 6200 Series Fabric Interconnects support the system’s 10-Gbps unified fabric with low-latency, lossless, cut-through switching that supports IP, storage, and management traffic using a single set of cables. The fabric interconnects feature virtual interfaces that terminate both physical and virtual connections equivalently, establishing a virtualization-aware environment in which blade, rack servers, and virtual machines are interconnected using the same mechanisms. The Cisco UCS 6248UP is a 1-RU Fabric Interconnect that features up to 48 universal ports that can support 10 Gigabit Ethernet, Fibre Channel over Ethernet, or native Fibre Channel connectivity. The Cisco UCS 6296UP packs 96 universal ports into only two rack units.

Figure 2 Cisco UCS 6248UP Fabric Interconnect

Cisco UCS 2204XP Fabric Extender

The Cisco UCS 2204XP Fabric Extender (Figure 3) has four 10 Gigabit Ethernet, FCoE-capable, Enhanced Small Form-Factor Pluggable (SFP+) ports that connect the blade chassis to the fabric interconnect. Each Cisco UCS 2204XP has sixteen 10 Gigabit Ethernet ports connected through the midplane to each half-width slot in the chassis. Typically configured in pairs for redundancy, two fabric extenders provide up to 80 Gbps of I/O to the chassis.

Cisco UCS 2208XP Fabric Extender

The Cisco UCS 2208XP Fabric Extender (Figure 4) has eight 10 Gigabit Ethernet, FCoE-capable, and Enhanced Small Form-Factor Pluggable (SFP+) ports that connect the blade chassis to the fabric interconnect. Each Cisco UCS 2208XP has thirty-two 10 Gigabit Ethernet ports connected through the midplane to each half-width slot in the chassis. Typically configured in pairs for redundancy, two fabric extenders provide up to 160 Gbps of I/O to the chassis.
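The chassis I/O figures quoted for the two fabric extender models follow from the uplink port counts. The short sketch below reproduces that arithmetic; the function name is illustrative, and the calculation assumes every uplink port runs at 10 Gbps and that the fabric extenders are deployed as a pair, as described above.

```python
PORT_SPEED_GBPS = 10  # each fabric extender uplink is 10 Gigabit Ethernet


def chassis_uplink_gbps(uplink_ports_per_fex: int, fex_count: int = 2) -> int:
    """Aggregate chassis uplink bandwidth for a set of fabric extenders."""
    return uplink_ports_per_fex * PORT_SPEED_GBPS * fex_count


if __name__ == "__main__":
    print("2204XP pair:", chassis_uplink_gbps(4), "Gbps")  # 4 ports x 10 Gbps x 2
    print("2208XP pair:", chassis_uplink_gbps(8), "Gbps")  # 8 ports x 10 Gbps x 2
```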

Figure 4 Cisco UCS 2208XP

Cisco UCS Blade Server Chassis

The Cisco UCS 5100 Series Blade Server Chassis is a crucial building block of the Cisco Unified Computing System, delivering a scalable and flexible blade server chassis.

The Cisco UCS 5108 Blade Server Chassis is six rack units (6RU) high and can mount in an industry-standard 19-inch rack. A single chassis can house up to eight half-width Cisco UCS B-Series Blade Servers and can accommodate both half-width and full-width blade form factors. Four single-phase, hot-swappable power supplies are accessible from the front of the chassis. These power supplies are 92 percent efficient and can be configured to support non-redundant, N+1 redundant, and grid-redundant configurations. The rear of the chassis contains eight hot-swappable fans, four power connectors (one per power supply), and two I/O bays for Cisco UCS 2200XP Fabric Extenders.

A passive mid-plane supports up to 2x 40 Gbit Ethernet links to each half-width blade slot or up to 4x 40 Gbit links to each full-width slot. It provides 8 blades with 1.2 terabits (Tb) of available Ethernet throughput for future I/O requirements. Note that the UCS 6324 FI supports only 512 Gbps.

The chassis is capable of supporting future 80 Gigabit Ethernet standards. The Cisco UCS Blade Server Chassis is shown in Figure 5.

Figure 5 Cisco Blade Server Chassis (front and back view)

Cisco UCS B200 M4 Blade Server

Optimized for data center or cloud, the Cisco UCS B200 M4 can quickly deploy stateless physical and virtual workloads, with the programmability of the UCS Manager and simplified server access of SingleConnect technology. The Cisco UCS B200 M4 is built with the Intel® Xeon® E5-2600 v3 processor family, up to 768 GB of memory (with 32 GB DIMMs), up to two drives, and up to 80 Gbps total bandwidth. It offers exceptional levels of performance, flexibility, and I/O throughput to run the most demanding applications.

In addition, Cisco UCS has the architectural advantage of not having to power and cool switches in each blade chassis. Having a larger power budget available for blades allows Cisco to design uncompromised expandability and capabilities in its blade servers.

The Cisco UCS B200 M4 Blade Server delivers:

· Suitability for a wide range of applications and workload requirements

· Highest-performing CPU and memory options without constraints in configuration, power or cooling

· Half-width form factor offering industry-leading benefits

· Latest features of Cisco UCS Virtual Interface Cards (VICs)

Figure 6 Cisco UCS B200 M4 Blade Server

Cisco C220 M4 Rack-Mount Servers

The Cisco UCS C220 M4 Rack-Mount Server is the most versatile, high-density, general-purpose enterprise infrastructure and application server in the industry today. It delivers world-record performance for a wide range of enterprise workloads, including virtualization, collaboration, and bare-metal applications.

The enterprise-class Cisco UCS C220 M4 Rack-Mount Server extends the capabilities of the Cisco Unified Computing System portfolio in a one rack-unit (1RU) form-factor. The Cisco UCS C220 M4 Rack-Mount Server provides the following:

· Dual Intel® Xeon® E5-2600 v3 processors for improved performance suitable for nearly all 2-socket applications

· Next-generation double-data-rate 4 (DDR4) memory and 12 Gbps SAS throughput

· Innovative Cisco UCS virtual interface card (VIC) support in PCIe or modular LAN on motherboard (MLOM) form factor

The Cisco UCS C220 M4 server also offers maximum reliability, availability, and serviceability (RAS) features, including:

· Tool-free CPU insertion

· Easy-to-use latching lid

· Hot-swappable and hot-pluggable components

· Redundant Cisco Flexible Flash SD cards

In Cisco UCS-managed operations, Cisco UCS C220 M4 takes advantage of our standards-based unified computing innovations to significantly reduce customers’ TCO and increase business agility.

Figure 7 Cisco C220 M4 Rack Server

Cisco UCS B260 M4 Blade Server

Optimized for data center or cloud, the Cisco UCS B260 M4 can quickly deploy stateless physical and virtual workloads, with the programmability of the Cisco UCS Manager and simplified server access of SingleConnect technology. The Cisco UCS B260 M4 is built with the Intel® Xeon® E7-4800 v2 processor family, up to 1.5 terabytes (TB) of memory (with 32 GB DIMMs), up to two drives, and up to 160 Gbps total bandwidth. It offers exceptional levels of performance, flexibility, and I/O throughput to run the most demanding applications.

In addition, Cisco UCS has the architectural advantage of not having to power and cool switches in each blade chassis. Having a larger power budget available for blades allows Cisco to design uncompromised expandability and capabilities in its blade servers.

The Cisco UCS B260 M4 Blade Server delivers the following:

· Suitable for a wide range of applications and workload requirements

· Highest-performing CPU and memory options without constraints in configuration, power or cooling

· Full-width form factor offering industry-leading benefits

· Latest features of Cisco UCS Virtual Interface Cards (VICs)

Figure 8 Cisco UCS B260 M4 Blade Server

Cisco UCS B460 M4 Blade Server

Optimized for data center or cloud, the Cisco UCS B460 M4 can quickly deploy stateless physical and virtual workloads, with the programmability of the Cisco UCS Manager and simplified server access of SingleConnect technology. The Cisco UCS B460 M4 is built with the Intel® Xeon® E7-4800 v2 processor family, up to 3 terabytes (TB) of memory (with 32 GB DIMMs), up to two drives, and up to 320 Gbps total bandwidth. It offers exceptional levels of performance, flexibility, and I/O throughput to run the most demanding applications.

In addition, Cisco UCS has the architectural advantage of not having to power and cool switches in each blade chassis. Having a larger power budget available for blades allows Cisco to design uncompromised expandability and capabilities in its blade servers.

The Cisco UCS B460 M4 Blade Server delivers the following:

· Suitable for a wide range of applications and workload requirements

· Highest-performing CPU and memory options without constraints in configuration, power or cooling

· Full-width, double-high form factor offering industry-leading benefits

· Latest features of Cisco UCS Virtual Interface Cards (VICs)

Figure 9 Cisco UCS B460 M4 Blade Server

Cisco UCS C460 M4 Rack-Mount Server

The Cisco UCS C460 M4 Rack Server offers industry-leading performance and advanced reliability well suited for the most demanding enterprise and mission-critical workloads, large-scale virtualization, and database applications.

Either as standalone or in Cisco UCS-managed operations, customers gain the benefits of the Cisco UCS C460 M4 server's high-capacity memory when very large memory footprints are required, as follows:

· SAP workloads

· Database applications and data warehousing

· Large virtualized environments

· Real-time financial applications

· Java-based workloads

· Server consolidation

The enterprise-class Cisco UCS C460 M4 server extends the capabilities of the Cisco Unified Computing System portfolio in a four rack-unit (4RU) form-factor. It provides the following:

· Either two or four Intel® Xeon® processor E7-4800 v2 or E7-8800 v2 product family CPUs

· Up to 6 terabytes (TB)* of double-data-rate 3 (DDR3) memory in 96 dual in-line memory module (DIMM) slots

· Up to 12 Small Form Factor (SFF) hot-pluggable SAS/SATA/SSD disk drives

· 10 PCI Express (PCIe) Gen 3 slots supporting the Cisco UCS Virtual Interface Cards and third-party adapters and GPUs

· Two Gigabit Ethernet LAN-on-motherboard (LOM) ports

· Two 10-Gigabit Ethernet ports

· A dedicated out-of-band (OOB) management port

*With 64GB DIMMs.

The Cisco UCS C460 M4 server also offers maximum reliability, availability, and serviceability (RAS) features, including:

· Tool-free CPU insertion

· Easy-to-use latching lid

· Hot-swappable and hot-pluggable components

· Redundant Cisco Flexible Flash SD cards

In Cisco UCS-managed operations, Cisco UCS C460 M4 takes advantage of our standards-based unified computing innovations to significantly reduce customers’ TCO and increase business agility.

Figure 10 Cisco UCS C460 M4 Rack-Mount Server

Cisco C880 M4 Rack-Mount Server

The Cisco C880 M4 Rack-Mount Server offers industry-leading performance and advanced reliability well suited for the most demanding enterprise and mission-critical workloads, large-scale virtualization, and database applications.

In standalone operation, customers gain the benefits of the Cisco C880 M4 server's high-capacity memory when very large memory footprints are required, such as for the following:

· SAP workloads

· Database applications and data warehousing

The enterprise-class Cisco C880 M4 server extends the capabilities of the Cisco Unified Computing System portfolio in a ten rack-unit (10RU) form-factor. It provides the following:

· Eight Intel® Xeon® processor E7-8800 v2 product family CPUs

· Up to 12 terabytes (TB)* of double-data-rate 3 (DDR3) memory in 192 dual in-line memory module (DIMM) slots

· Up to 4 internal Small Form Factor (SFF) hot-pluggable SAS/SATA/SSD disk drives

· Up to 12 PCI Express (PCIe) Gen 3 slots supporting the IO adapters and GPUs

· Four Gigabit Ethernet LAN-on-motherboard (LOM) ports

· Two 10 Gigabit Ethernet LAN-on-motherboard (LOM) ports

· A dedicated out-of-band (OOB) management port

*With 64GB DIMMs.
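The memory maximums quoted for the Cisco UCS C460 M4 and the Cisco C880 M4 follow from the DIMM slot counts and the 64 GB DIMM size given in the footnotes. The sketch below reproduces the arithmetic; the function name is illustrative, and it assumes all slots are populated with DIMMs of the same size and that 1 TB equals 1024 GB.

```python
GB_PER_TB = 1024  # assumption: binary terabytes


def max_memory_tb(dimm_slots: int, dimm_size_gb: int) -> float:
    """Maximum memory in TB when every DIMM slot holds a DIMM of the given size."""
    return dimm_slots * dimm_size_gb / GB_PER_TB


if __name__ == "__main__":
    print("C460 M4:", max_memory_tb(96, 64), "TB")   # 96 slots x 64 GB
    print("C880 M4:", max_memory_tb(192, 64), "TB")  # 192 slots x 64 GB
```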

The Cisco C880 M4 server also offers maximum reliability, availability, and serviceability (RAS) features, including:

· Tool-free CPU insertion

· Easy-to-use latching lid

· Hot-swappable and hot-pluggable components

Figure 11 Cisco C880 M4 Rack-Mount Server

Cisco I/O Adapters for Blade and Rack Servers

The Cisco Virtual Interface Card (VIC) was developed to provide acceleration for the various new operational modes introduced by server virtualization. The VIC is a highly configurable and self-virtualized adapter that can create up to 256 PCIe endpoints per adapter. These PCIe endpoints are created in the adapter firmware and present a fully compliant standard PCIe topology to the host OS or hypervisor.

Each of the PCIe endpoints that the VIC creates can be configured individually with the following attributes:

· Interface type: Fibre Channel over Ethernet (FCoE), Ethernet, or Dynamic Ethernet interface device

· Resource maps that are presented to the host: PCIe base address registers (BARs), and interrupt arrays

· Network presence and attributes: Maximum transmission unit (MTU) and VLAN membership

· QoS parameters: IEEE 802.1p class, ETS attributes, rate limiting, and shaping

Cisco VIC 1240 Virtual Interface Card

The Cisco UCS blade server has various Converged Network Adapters (CNA) options. The Cisco UCS VIC 1240 Virtual Interface Card (VIC) option is used in this Cisco Validated Design.

The Cisco UCS Virtual Interface Card (VIC) 1240 (Figure 12) is a 4 x 10-Gbps Ethernet, Fibre Channel over Ethernet (FCoE)-capable modular LAN on motherboard (mLOM) designed for the M3 and M4 generation of Cisco UCS B-Series Blade Servers. When used in combination with an optional port expander, the Cisco UCS VIC 1240 capabilities are extended to eight ports of 10-Gbps Ethernet.

Figure 12 Cisco UCS 1240 VIC Card

The Cisco UCS VIC 1240 enables a policy-based, stateless, agile server infrastructure that can present over 256 PCIe standards-compliant interfaces to the host that can be dynamically configured as either network interface cards (NICs) or host bus adapters (HBAs). In addition, the Cisco UCS VIC 1240 supports Cisco® Data Center Virtual Machine Fabric Extender (VM-FEX) technology, which extends the Cisco UCS fabric interconnect ports to virtual machines, simplifying server virtualization deployment and management.

Figure 13 Cisco UCS VIC 1240 Architecture

Cisco VIC 1280 Virtual Interface Card

The Cisco UCS blade server has various Converged Network Adapters (CNA) options. The Cisco UCS VIC 1280 Virtual Interface Card (VIC) option is used in this Cisco Validated Design.

The Cisco UCS Virtual Interface Card (VIC) 1280 (Figure 14) is an 8 x 10-Gbps Ethernet, Fibre Channel over Ethernet (FCoE)-capable modular LAN on motherboard (mLOM) designed for the M3 and M4 generation of Cisco UCS B-Series Blade Servers.

Figure 14 Cisco UCS 1280 VIC Card

The Cisco UCS VIC 1280 enables a policy-based, stateless, agile server infrastructure that can present over 256 PCIe standards-compliant interfaces to the host that can be dynamically configured as either network interface cards (NICs) or host bus adapters (HBAs). In addition, the Cisco UCS VIC 1280 supports Cisco® Data Center Virtual Machine Fabric Extender (VM-FEX) technology, which extends the Cisco UCS fabric interconnect ports to virtual machines, simplifying server virtualization deployment and management.

Figure 15 Cisco UCS VIC 1280 Architecture

Cisco VIC 1227 Virtual Interface Card

The Cisco UCS rack-mount server has various Converged Network Adapter (CNA) options. The Cisco UCS 1227 Virtual Interface Card (VIC) option is used in this Cisco Validated Design.

The Cisco UCS Virtual Interface Card (VIC) 1227 is a dual-port Enhanced Small Form-Factor Pluggable (SFP+) 10-Gbps Ethernet and Fibre Channel over Ethernet (FCoE)-capable PCI Express (PCIe) modular LAN-on-motherboard (mLOM) adapter designed exclusively for Cisco UCS C-Series Rack Servers (Figure 16). New to Cisco rack servers, the mLOM slot can be used to install a Cisco VIC without consuming a PCIe slot, which provides greater I/O expandability. It incorporates next-generation converged network adapter (CNA) technology from Cisco, providing investment protection for future feature releases. The card enables a policy-based, stateless, agile server infrastructure that can present up to 256 PCIe standards-compliant interfaces to the host that can be dynamically configured as either network interface cards (NICs) or host bus adapters (HBAs). In addition, the Cisco UCS VIC 1227 supports Cisco® Data Center Virtual Machine Fabric Extender (VM-FEX) technology, which extends the Cisco UCS fabric interconnect ports to virtual machines, simplifying server virtualization deployment.

Figure 16 Cisco UCS VIC 1227 Card

Cisco VIC 1225 Virtual Interface Card

The Cisco UCS 1225 Virtual Interface Card (VIC) option is used in this Cisco Validated Design to connect the Cisco UCS C460 M4 Rack server.

A Cisco® innovation, the Cisco UCS Virtual Interface Card (VIC) 1225 is a dual-port Enhanced Small Form-Factor Pluggable (SFP+) 10 Gigabit Ethernet and Fibre Channel over Ethernet (FCoE)-capable PCI Express (PCIe) card designed exclusively for Cisco UCS C-Series Rack Servers. The Cisco UCS VIC 1225 can create multiple vNICs (up to 128) on the CNA. This allows complete I/O configurations to be provisioned in virtualized or non-virtualized environments using just-in-time provisioning, providing tremendous system flexibility and allowing consolidation of multiple physical adapters. System security and manageability are improved by providing visibility and portability of network policies and security all the way to the virtual machines. Additional VIC 1225 features such as VM-FEX technology and pass-through switching minimize implementation overhead and complexity.

Figure 17 Cisco UCS VIC 1225 Card

Cisco Nexus 9396PX Switch

The Cisco Nexus 9000 family of switches supports two modes of operation: NXOS standalone mode and Application Centric Infrastructure (ACI) fabric mode. In standalone mode, the switch performs as a typical Cisco Nexus switch with increased port density, low latency and 40G connectivity. In fabric mode, the administrator can take advantage of Cisco ACI.

The Cisco Nexus 9396PX delivers comprehensive line-rate Layer 2 and Layer 3 features in a two-rack-unit (2RU) form factor. It supports line-rate 1/10/40 GE with 960 Gbps of switching capacity. It is ideal for top-of-rack and middle-of-row deployments in both traditional and Cisco Application Centric Infrastructure (ACI)–enabled enterprise, service provider, and cloud environments.

Specifications-at-a-Glance

· Forty-eight 1/10 Gigabit Ethernet Small Form-Factor Pluggable (SFP+) non-blocking ports

· Twelve 40 Gigabit Ethernet Quad SFP+ (QSFP+) non-blocking ports

· Low latency (approximately 2 microseconds)

· 50 MB of shared buffer

· Line rate VXLAN bridging, routing, and gateway support

· Fibre Channel over Ethernet (FCoE) capability

· Front-to-back or back-to-front airflow
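
One way to read the 960 Gbps switching capacity quoted above is as the sum of the line rates of all front-panel ports. The sketch below only illustrates that arithmetic; the dictionary and function names are hypothetical, and the interpretation itself is an assumption rather than a statement from the datasheet.

```python
# Port counts and speeds (in Gbps) from the specification list above.
PORT_GROUPS = {
    "SFP+ 1/10 GbE": (48, 10),
    "QSFP+ 40 GbE": (12, 40),
}


def aggregate_port_gbps(port_groups=PORT_GROUPS):
    """Sum count * speed over all port groups, in Gbps."""
    return sum(count * speed for count, speed in port_groups.values())


print(aggregate_port_gbps())  # 960
```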

Figure 18 Cisco Nexus 9396PX Switch

Cisco Nexus 3548 Switches

The Cisco Nexus 3548 and 3524 Switches are based on identical hardware, differentiated only by their software licenses, which allow the Cisco Nexus 3524 to operate 24 ports, and enable the use of all 48 ports on the Cisco Nexus 3548. These fixed switches are compact one-rack-unit (1RU) form-factor 10 Gigabit Ethernet switches that provide line-rate Layer 2 and 3 switching with ultra-low latency. Both software licenses run the industry-leading Cisco NX-OS Software operating system, providing customers with comprehensive features and functions that are deployed globally. The Cisco Nexus 3548 and 3524 contain no physical layer (PHY) chips, allowing low latency and low power consumption.

The Cisco Nexus 3548 and 3524 have the following hardware configuration:

· 48 fixed Enhanced Small Form-Factor Pluggable (SFP+) ports (1 or 10 Gbps); the Cisco Nexus 3524 enables only 24 ports

· Dual redundant hot-swappable power supplies

· Four individual redundant hot-swappable fans

· One 1-PPS timing port, with the RF1.0/2.3 QuickConnect connector type*

· Two 10/100/1000 management ports

· One RS-232 serial console port

· Locator LED

· Front-to-back or back-to-front airflow

Figure 19 Cisco Nexus 3548 switch

Cisco MDS 9148S 16G Multilayer Fabric Switch

The Cisco MDS 9148S 16G Multilayer Fabric Switch is the next generation of the highly reliable Cisco MDS 9100 Series Switches. It includes up to 48 auto-sensing line-rate 16-Gbps Fibre Channel ports in a compact 1-rack-unit (1RU) form factor that is easy to deploy and manage. In all, the Cisco MDS 9148S is a powerful and flexible switch that delivers high performance and comprehensive enterprise-class features at an affordable price.

Features and Capabilities

· Flexibility for growth and virtualization

· Optimized bandwidth utilization and reduced downtime

· Enterprise-class features and reliability at low cost

· PowerOn Auto Provisioning and intelligent diagnostics

· In-Service Software Upgrade and dual redundant hot-swappable power supplies for high availability

· High-performance inter-switch links with multipath load balancing

Specifications-at-a-Glance

Performance and Port Configuration

· 2/4/8/16-Gbps auto-sensing with 16 Gbps of dedicated bandwidth per port

· Up to 256 buffer credits per group of 4 ports (64 per port default, 253 maximum for a single port in the group)

· Supports configurations of 12, 24, 36, or 48 active ports, with pay-as-you-grow, on-demand licensing
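
The buffer-credit limits above can be read as a constraint on each group of four ports: the group shares 256 credits, and a single port can take at most 253. The following sketch checks a proposed allocation against those numbers. It is not a Cisco tool; the function name is hypothetical, and the minimum of one credit per port is an assumption inferred from 256 - 253 = 3 credits left for the other three ports.

```python
GROUP_SIZE = 4         # ports per port group (from the list above)
GROUP_CREDITS = 256    # credits shared by one port group
DEFAULT_PER_PORT = 64  # default credits per port
MAX_SINGLE_PORT = 253  # maximum for a single port in the group
MIN_PER_PORT = 1       # assumption: inferred, not stated in the source


def is_valid_allocation(credits):
    """Return True if the per-port credits fit within one port group."""
    if len(credits) != GROUP_SIZE:
        return False
    if any(c < MIN_PER_PORT or c > MAX_SINGLE_PORT for c in credits):
        return False
    return sum(credits) <= GROUP_CREDITS


print(is_valid_allocation([DEFAULT_PER_PORT] * GROUP_SIZE))  # default layout
print(is_valid_allocation([253, 1, 1, 1]))                   # one long-distance port
```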

Figure 20 Cisco MDS9148S 16G Fabric Switch

Cisco Nexus 1000v Virtual Switch

The Cisco Nexus 1000V Series Switches are virtual machine access switches for the VMware vSphere environments running the Cisco NX-OS operating system. Operating inside the VMware ESX or ESXi hypervisors, the Cisco Nexus 1000V Series provides:

· Policy-based virtual machine connectivity

· Mobile virtual machine security and network policy

· Non-disruptive operational model for your server virtualization and networking teams

When server virtualization is deployed in the data center, virtual servers typically are not managed the same way as physical servers. Server virtualization is treated as a special deployment, leading to longer deployment time, with a greater degree of coordination among server, network, storage, and security administrators. With the Cisco Nexus 1000V Series, you can have a consistent networking feature set and provisioning process all the way from the virtual machine access layer to the core of the data center network infrastructure. Virtual servers can now use the same network configuration, security policy, diagnostic tools, and operational models as their physical server counterparts attached to dedicated physical network ports.

Virtualization administrators can access predefined network policies that follow mobile virtual machines to ensure proper connectivity saving valuable time to focus on virtual machine administration. This comprehensive set of capabilities helps you to deploy server virtualization faster and realize its benefits sooner.

Cisco Nexus 1000v is a virtual Ethernet switch with two components:

· Virtual Supervisor Module (VSM)—the control plane of the virtual switch that runs NX-OS.

· Virtual Ethernet Module (VEM)—a virtual line card embedded into each VMware vSphere hypervisor host (ESXi)

Virtual Ethernet Modules across multiple ESXi hosts form a virtual Distributed Switch (vDS). Using the Cisco vDS VMware plug-in, the VIC provides a solution that is capable of discovering the Dynamic Ethernet interfaces and registering all of them as uplink interfaces for internal consumption by the vDS. The vDS component on each host discovers the number of uplink interfaces that it has and presents a switch to the virtual machines running on the host. All traffic from an interface on a virtual machine is sent to the corresponding port of the vDS switch. The traffic is then sent out to the physical link of the host using the special uplink port-profile. This vDS implementation guarantees consistency of features and better integration of host virtualization with the rest of the Ethernet fabric in the data center.

Figure 21 Cisco Nexus 1000v virtual Distributed Switch Architecture

Cisco UCS Differentiators

Cisco Unified Computing System is revolutionizing the way servers are managed in the data center. The following are the unique differentiators of Cisco Unified Computing System and Cisco UCS Manager.

· Embedded management: In Cisco UCS, the servers are managed by the embedded firmware in the Fabric Interconnects, eliminating the need for any external physical or virtual devices to manage the servers. Also, a pair of FIs can manage up to 40 chassis, each containing 8 blade servers. This provides enormous scaling of the management plane.

· Unified fabric: In Cisco UCS, from the blade server chassis or rack server fabric extender to the FI, a single Ethernet cable is used for LAN, SAN and management traffic. This converged I/O results in fewer cables, SFPs and adapters, reducing the capital and operational expenses of the overall solution.

· Auto Discovery: By simply inserting the blade server in the chassis or connecting the rack server to the fabric extender, discovery and inventory of the compute resource occurs automatically without any management intervention. The combination of unified fabric and auto-discovery enables the wire-once architecture of Cisco UCS, where the compute capability of Cisco UCS can be extended easily while keeping the existing external connectivity to LAN, SAN and management networks.

· Policy based resource classification: When a compute resource is discovered by Cisco UCS Manager, it can be automatically classified to a given resource pool based on the policies defined. This capability is useful in multi-tenant cloud computing. This CVD showcases the policy based resource classification of Cisco UCS Manager.

· Combined Rack and Blade server management: Cisco UCS Manager can manage B-Series blade servers and C-Series rack servers under the same Cisco UCS domain. This feature, along with stateless computing, makes compute resources truly hardware form factor agnostic. This CVD showcases a combination of B-Series and C-Series servers to demonstrate stateless and form factor independent computing workloads.

· Model based management architecture: The Cisco UCS Manager architecture and management database is model based and data driven. An open, standards-based XML API is provided to operate on the management model. This enables easy and scalable integration of Cisco UCS Manager with other management systems, such as VMware vCloud Director, Microsoft System Center, and Citrix CloudPlatform.

· Policies, Pools, Templates: Management approach in Cisco UCS Manager is based on defining policies, pools and templates, instead of cluttered configuration, which enables simple, loosely coupled, data driven approach in managing compute, network and storage resources.

· Loose referential integrity: In Cisco UCS Manager, a service profile, port profile or policy can refer to other policies or logical resources with loose referential integrity. A referred policy does not need to exist at the time the referring policy is authored, and a referred policy can be deleted even though other policies refer to it. This allows different subject matter experts to work independently of each other, and it provides great flexibility when experts from different domains, such as network, storage, security, server and virtualization, work together to accomplish a complex task.

· Policy resolution: In Cisco UCS Manager, a tree structure of organizational unit hierarchy can be created that mimics the real life tenants and/or organization relationships. Various policies, pools and templates can be defined at different levels of organization hierarchy. A policy referring to other policy by name is resolved in the org hierarchy with closest policy match. If a policy with a specific name is not found in the hierarchy until root org, then special policy named “default” is searched. This policy resolution practice enables automation friendly management APIs and provides great flexibilities to owners of different orgs.

· Service profiles and stateless computing: A service profile is a logical representation of a server, carrying its various identities and policies. This logical server can be assigned to any physical compute resource as long as it meets the resource requirements. Stateless computing enables provisioning of a server within minutes, which used to take days in legacy server management systems.

· Built-in multi-tenancy support: Combination of policies, pools and templates, loose referential integrity, policy resolution in org hierarchy and service profile based approach to compute resources makes Cisco UCS Manager inherently friendly to multi-tenant environment typically observed in private and public clouds.

· Virtualization aware network: VM-FEX technology makes access layer of network aware about host virtualization. This prevents domain pollution of compute and network domains with virtualization when virtual network is managed by port-profiles defined by the network administrators team. VM-FEX also offloads hypervisor CPU by performing switching in the hardware, thus allowing hypervisor CPU to do more virtualization related tasks. VM-FEX technology is well integrated with VMware vCenter, Linux KVM and Hyper-V SR-IOV to simplify cloud management.

· Simplified QoS: Even though the fibre-channel and Ethernet are converged in Cisco UCS fabric, built-in support for QoS and lossless Ethernet makes it seamless. Network Quality of Service (QoS) is simplified in Cisco UCS Manager by representing all system classes in one GUI panel.
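
The policy resolution behavior described in the list above (closest match in the org hierarchy, then a fallback to a policy named "default") can be sketched as a walk from an org toward the root. This is an illustrative model only, not the Cisco UCS Manager implementation or its XML API; the `Org` class, the function names, and the sample policy names are hypothetical, and it assumes the "default" policy is searched along the same path to the root.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Org:
    """Hypothetical org node holding policies by name."""
    name: str
    parent: Optional["Org"] = None
    policies: Dict[str, str] = field(default_factory=dict)


def _lookup(org, policy_name):
    """Walk from org up to the root and return the closest match, if any."""
    node = org
    while node is not None:
        if policy_name in node.policies:
            return node.policies[policy_name]
        node = node.parent
    return None


def resolve_policy(org, policy_name):
    """Resolve by name; fall back to the policy named 'default' (assumed same walk)."""
    found = _lookup(org, policy_name)
    if found is None:
        found = _lookup(org, "default")
    return found


root = Org("root", policies={"default": "root-default", "bios-perf": "root-bios"})
tenant = Org("tenant-a", parent=root, policies={"bios-perf": "tenant-bios"})
app = Org("app", parent=tenant)

print(resolve_policy(app, "bios-perf"))  # tenant-bios (closest match wins)
print(resolve_policy(app, "missing"))    # root-default (fallback)
```

Because the lookup tolerates missing names, a referring object can be authored before the policy it names exists, which matches the loose referential integrity described in the same list.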

VMware vSphere 5.5

VMware vSphere 5.5 is a next-generation virtualization solution from VMware which builds upon ESXi 5.1 and provides greater levels of scalability, security, and availability to virtualized environments. vSphere 5.5 offers improvements in performance and utilization of CPU, memory, and I/O. It also offers users the option to assign up to 32 virtual CPUs to a virtual machine, giving system administrators more flexibility in their virtual server farms as processor-intensive workloads continue to increase.

vSphere 5.5 provides the VMware vCenter Server, which allows system administrators to manage their ESXi hosts and virtual machines on a centralized management platform. With the Cisco Fabric Interconnects integrated into the vCenter Server, deploying and administering virtual machines is similar to deploying and administering physical servers. Network administrators can continue to own the responsibility for configuring and monitoring network resources for virtualized servers as they did with physical servers. System administrators can continue to "plug in" their virtual machines to network ports that have Layer 2 configurations, port access and security policies, monitoring features, and so on, that have been predefined by the network administrators, in the same way they would plug in their physical servers to a previously configured access switch. In this virtualized environment, the system administrator has the added benefit of the network port configuration and policies moving with the virtual machine if it is ever migrated to different server hardware.

EMC Storage Technologies and Benefits

The EMC VNX™ family is optimized for virtual applications delivering industry-leading innovation and enterprise capabilities for file, block, and object storage in a scalable, easy-to-use solution. This next-generation storage platform combines powerful and flexible hardware with advanced efficiency, management, and protection software to meet the demanding needs of today’s enterprises.

The VNX series is designed to meet the high-performance, high-scalability requirements of midsize and large enterprises. The EMC VNX storage arrays are multi-protocol platforms that can support the iSCSI, NFS, Fibre Channel, and CIFS protocols depending on the customer's specific needs. This solution was validated using the NFS and Fibre Channel protocols based on best practices and use case requirements. EMC VNX series storage arrays have the following customer benefits:

· Next-generation unified storage, optimized for virtualized applications

· Capacity optimization features including compression, deduplication, thin provisioning, and application-centric copies

· High availability, designed to deliver five 9s availability

· Multiprotocol support for file and block

· Simplified management with EMC Unisphere™ for a single management interface for all network-attached storage (NAS), storage area network (SAN), and replication needs

· Software suites available:

— Remote Protection Suite — Protects data against localized failures, outages, and disasters

— Application Protection Suite — Automates application copies and proves compliance

— Security and Compliance Suite — Keeps data safe from changes, deletions, and malicious activity

· Software packs available:

— Total Value Pack — Includes all protection software suites and the Security and Compliance Suite

Global Hardware Requirements for SAP HANA

There are hardware and software requirements defined by SAP Societas Europaea (SE) to run SAP HANA systems. Follow the guidelines provided by SAP documentation at http://saphana.com for SAP HANA appliances and the Tailored Datacenter Integration (TDI) option. Please check the documents regularly as SAP SE can change requirements on demand.

A list of certified servers for SAP HANA is published at http://global.sap.com/community/ebook/2014-09-02-hana-hardware/enEN/index.html. All Cisco UCS servers listed there can be used in this Reference Architecture because the solution design is not based on the server type; this includes server models introduced after the publication of this document. The servers listed in the “Supported Entry Level Systems” are for SAP HANA Scale-Up configurations with the SAP HANA Tailored Datacenter Integration (TDI) option only.

Network

A SAP HANA data center deployment can range from a database running on a single host to a complex distributed system with multiple hosts located at a primary site and one or more secondary sites and supporting a distributed multi-terabyte database with full fault and disaster recovery.

SAP HANA has different types of network communication channels to support the different SAP HANA scenarios and setups, as follows:

· Client zone: Channels used for external access to SAP HANA functions by end-user clients, administration clients, and application servers, and for data provisioning through SQL or HTTP

· Internal zone: Channels used for SAP HANA internal communication within the database or, in a distributed scenario, for communication between hosts

· Storage zone: Channels used for storage access (data persistence) and for backup and restore procedures

Figure 22 High-Level SAP HANA Network Overview

Details about the network requirements for SAP HANA are available from the white paper from SAP at: http://www.saphana.com/docs/DOC-4805.

Table 1 lists networks defined by SAP, Cisco, or requested by customers.

Table 1 List of Known Networks

| Name | Use Case | Solutions | Bandwidth Requirements |
|---|---|---|---|
| Client Zone Networks | | | |
| Application Server Network | SAP Application Server to DB communication | All | 1 or 10 GbE |
| Client Network | User / Client Application to DB communication | All | 1 or 10 GbE |
| Data Source Network | Data import and external data integration | Optional for all SAP HANA systems | 1 or 10 GbE |
| Internal Zone Networks | | | |
| Inter-Node Network | Node to node communication within a scale-out configuration | Scale-Out | 10 GbE |
| System Replication Network | SAP HANA System Replication | For SAP HANA Disaster Tolerance | TBD with Customer |
| Storage Zone Networks | | | |
| Backup Network | Data Backup | Optional for all SAP HANA systems | 10 GbE or 8 GBit FC |
| Storage Network | Node to Storage communication | Scale-Out Storage TDI | 10 GbE or 8 GBit FC network |
| Infrastructure Related Networks | | | |
| Administration Network | Infrastructure and SAP HANA administration | Optional for all SAP HANA systems | 1 GbE |
| Boot Network | Boot the Operating Systems via PXE/NFS or FCoE | Optional for all SAP HANA systems | 1 GbE |

All networks need to be properly segmented and may be connected to the same core/backbone switch. Whether and how redundancy is applied to the different SAP HANA network segments depends on the customer’s high-availability requirements.

Network security and segmentation is a function of the network switch and must be configured according to the specifications of the switch vendor.

Based on the listed network requirements, every server must be equipped with 2x 1/10 Gigabit Ethernet interfaces (10 GbE is recommended) for Scale-Up systems to establish communication with the application or user (Client Zone). If the storage for HANA is external and accessed via the network, two additional 10 GbE interfaces are required for the Storage Zone.

For Scale-Out solutions an additional redundant network for the HANA node to HANA node communication with ≥10 GbE is required (Internal Zone).
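
The per-server interface rules above can be summarized in a small helper. This is a sketch under stated assumptions: the function name and dictionary layout are hypothetical, and "redundant" for the Internal Zone is read here as two interfaces, which the text implies but does not state as a number.

```python
def required_interfaces(scale_out, network_storage):
    """Return the minimum Ethernet interfaces per server, keyed by SAP HANA zone."""
    # Client Zone: 2x 1/10 GbE for every server (10 GbE recommended).
    req = {"client": {"count": 2, "speed": "1/10 GbE"}}
    if network_storage:
        # Storage Zone: two additional 10 GbE interfaces for network-attached storage.
        req["storage"] = {"count": 2, "speed": "10 GbE"}
    if scale_out:
        # Internal Zone: assumption, "redundant" is modeled as two interfaces.
        req["internal"] = {"count": 2, "speed": ">=10 GbE"}
    return req


def total_interfaces(req):
    """Sum the interface counts across all zones."""
    return sum(zone["count"] for zone in req.values())


print(total_interfaces(required_interfaces(scale_out=False, network_storage=False)))
print(total_interfaces(required_interfaces(scale_out=True, network_storage=True)))
```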

Storage

The storage used must be listed as part of a “Certified Appliance” in the Certified SAP HANA Hardware Directory at http://global.sap.com/community/ebook/2014-09-02-hana-hardware/enEN/index.html. The storage is only listed in the detail description of a certified solution. Click the entry in the Model Column to see the details. The storage can also be listed as Certified Enterprise Storage based on SAP HANA TDI option at the same URL in the “Certified Enterprise Storage” tab.

All relevant information about storage requirements is documented in the white paper “SAP HANA Storage Requirements” and is available at: http://www.saphana.com/docs/DOC-4071.

It is important to know that SAP makes no distinction between a virtualized SAP HANA system with 64GB memory and a physically installed SAP HANA system with 2TB memory with regard to storage requirements. If the storage is certified for, say, 4 nodes, you can run 4 VMs, or 4 bare metal systems, or a mixture of them on this storage.

Using the same storage for multiple applications can cause a significant performance degradation of the SAP HANA systems and the other applications. It is recommended to separate the storage for SAP HANA systems from the storage for other applications. It is required to specify the storage options together with your storage vendor based on your requirements.

Filesystem Sizes

To install and operate SAP HANA the following file system layout and sizes must be provided.

Figure 23 File System Layout for SAP HANA

The recommendation from SAP about the file system layout for a SAP HANA TDI option differs from the one shown in Figure 23. For /hana/data the minimum size is 1* RAM instead of 3* RAM; the recommended size is 1.5* RAM.

Scale-Up Solution

The following is a sample of a system with 512GB memory:

Root-FS: 50 GB

/usr/sap: 50 GB

/hana/log: 1x Memory (512 GB)

/hana/data: 1x memory (512 GB)

Scale-Out Solutions

The following is a sample of a solution with 512GB memory per server:

For each server:

Root-FS: 50 GB

/usr/sap: 50 GB

/hana/shared: # of Nodes * 512 GB (2+0 configuration sample: 2x 512 GB = 1024 GB)

For every active HANA node:

/hana/log/<SID>/mntXXXXX: 1x Memory (512 GB)

/hana/data/<SID>/mntXXXXX: 1x Memory (512 GB)
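
The two samples above follow a simple pattern that can be written as a small calculator. This is only a sketch of the arithmetic in the samples, not an SAP sizing tool; the function names are hypothetical, and `nodes` is assumed to count nodes the same way as the 2+0 sample (two nodes, no standby).

```python
ROOT_FS_GB = 50   # Root-FS size from the samples above
USR_SAP_GB = 50   # /usr/sap size from the samples above


def scale_up_sizes_gb(memory_gb):
    """File system sizes (GB) for a single Scale-Up server."""
    return {
        "Root-FS": ROOT_FS_GB,
        "/usr/sap": USR_SAP_GB,
        "/hana/log": memory_gb,   # 1x memory
        "/hana/data": memory_gb,  # 1x memory
    }


def scale_out_sizes_gb(memory_gb, nodes):
    """File system sizes (GB) for a Scale-Out solution with identical servers."""
    return {
        "per_server": {"Root-FS": ROOT_FS_GB, "/usr/sap": USR_SAP_GB},
        "/hana/shared": nodes * memory_gb,  # number of nodes * memory
        "per_active_node": {"/hana/log": memory_gb, "/hana/data": memory_gb},
    }


print(scale_up_sizes_gb(512)["/hana/data"])       # 512
print(scale_out_sizes_gb(512, 2)["/hana/shared"])  # 1024
```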

Log Volume with Intel E7-x890v2 CPU

For solutions based on Intel E7-x890v2 CPU the size of the Log volume has changed:

· ½ of the server memory for systems ≤ 256 GB memory

· Min 512 GB for systems with ≥ 512 GB memory
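
The log volume rule above can be expressed as a short function. This is a hedged sketch: the function name is hypothetical, and because the text gives no rule for memory sizes between 256 GB and 512 GB, the sketch raises an error for that range instead of guessing.

```python
def log_volume_gb(memory_gb):
    """Minimum log volume size (GB) for Intel E7-x890v2 based systems, per the rule above."""
    if memory_gb <= 256:
        return memory_gb / 2  # half of the server memory
    if memory_gb >= 512:
        return 512            # minimum of 512 GB
    # The source does not define this range, so no value is invented here.
    raise ValueError("no rule given for memory between 256 GB and 512 GB")


print(log_volume_gb(256))   # 128.0
print(log_volume_gb(1024))  # 512
```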

Operating System

The supported operating systems for SAP HANA are as follows:

· SUSE Linux Enterprise 11

· SUSE Linux Enterprise for SAP Applications

· Red Hat Enterprise Linux for SAP HANA

High Availability

SAP HANA scale-out comes with an integrated high-availability function. If the SAP HANA system is configured with a stand-by node, a failed part of SAP HANA will start on the stand-by node automatically. The infrastructure for a SAP HANA scale-out solution must have no single point of failure. After a failure in the components, the SAP HANA database must still be operational and running. For automatic host failover, the SAP HANA storage connector application programming interface (API) must be properly implemented and operated.

Hardware and software components should include the following:

· Internal storage: A RAID-based configuration is preferred.

· External storage: Redundant data paths, dual controllers, and a RAID-based configuration are preferred.

· Ethernet switches: Two or more independent switches should be used.

For the latest information from SAP go to: http://saphana.com or http://service.sap.com/notes.

Since there are multiple implementation options, it is important to define the requirements before starting to bring the solution components together. This section sets the basic definition, and later in the document, we will refer to these definitions.

SAP HANA System on a Single Server—Scale-Up (Bare Metal or Virtualized)

A single-host system is the simplest system installation type. It is possible to run an SAP HANA system entirely on one host and then scale the system up as needed. A scale-up solution is, for some SAP HANA use cases, the only supported option. All data and processes are located on the same server and can be accessed locally, and no network communication to other SAP HANA nodes is required. In general, it provides the best SAP HANA performance.

The network requirements for this option depend on the client and application access and storage. If you do not require system replication or a backup network, one 1 Gigabit Ethernet (access) and one 10 Gigabit Ethernet storage networks are sufficient to run SAP HANA Scale-Up.

For virtualized SAP HANA installations, remember that hardware components such as network interfaces are shared. It is recommended to use dedicated network adapters per virtualized SAP HANA system.

Based on the SAP HANA TDI option for shared storage and a shared network, you can build a solution to run multiple SAP HANA Scale-Up systems on a shared infrastructure.

SAP HANA System on Multiple Servers—Scale-Out

You should use a scale-out solution if the SAP HANA system does not fit into the main memory of a single server based on the rules defined by SAP. You can scale SAP HANA either by increasing RAM for a single server, or by adding hosts to the system to deal with larger workloads. Adding hosts combines multiple independent servers into one system and allows you to go beyond the limits of a single physical server. The main reason for distributing a system across multiple hosts (that is, scaling out) is to overcome the hardware limitations of a single physical server, so that an SAP HANA system can distribute the load between multiple servers. In a distributed system, each index server is usually assigned to its own host to achieve maximum performance. It is possible to assign different tables to different hosts (partitioning the database), as well as to split a single table between hosts (partitioning of tables). Distributed systems can be used for failover scenarios and to implement high availability. Individual hosts in a distributed system have different roles (master, worker, slave, standby) depending on the task.

Some use cases are either not supported or not fully supported on scale-out solutions. Please check with SAP if your use case can be deployed on a scale-out solution.

The network requirements for this option are higher than for scale-up systems. In addition to the client and application access network and the storage access network, you must also have a node-to-node network. If you do not need system replication or a backup network, three 10 Gigabit Ethernet networks (access, node-to-node, and storage) are required to run SAP HANA in a scale-out configuration.
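The minimum network sets stated for scale-up and scale-out can be captured in a small lookup, as in the following sketch. The dictionary layout and the `missing_networks` helper are illustrative assumptions, not part of any Cisco or SAP tool; the speeds are the minimums named in the text for a setup without system replication or a backup network.

```python
# Minimum networks per deployment type as stated in the text
# (no system replication, no backup network). Speeds are in Gbit/s.
MINIMUM_NETWORKS = {
    "scale-up": {"access": 1, "storage": 10},
    "scale-out": {"access": 10, "node-to-node": 10, "storage": 10},
}


def missing_networks(deployment, planned):
    """Return the networks that are absent or slower than the stated minimum.

    `planned` maps a network name to its planned speed in Gbit/s.
    This helper is hypothetical and only illustrates the comparison.
    """
    required = MINIMUM_NETWORKS[deployment]
    return sorted(
        name for name, speed in required.items()
        if planned.get(name, 0) < speed
    )


# A scale-out plan that forgot the node-to-node network.
print(missing_networks("scale-out", {"access": 10, "storage": 10}))
```

A plan that reuses a scale-up network layout for a scale-out system is flagged for the missing node-to-node network.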

Virtualized SAP HANA Scale-Out is not supported by SAP as of December 2014.

SAP HANA Cloud

One reason for the popularity of SAP HANA is the option to deploy it in a cloud model. There are hundreds of interpretations of cloud. To set the scope for this document, three very high-level areas that are relevant for SAP HANA are defined:

· Private Cloud, offered from the IT department to multiple customers within the same company

· Public Cloud, where SAP HANA is offered through a website, based on a global service description, and can be ordered with a few mouse clicks and your credit card information

· SAP HANA Hosting, where a service provider runs the SAP HANA system based on an individual contract that includes a service-level agreement (SLA) about availability, performance, and security

The cloud model affects the infrastructure setup and configuration in a variety of ways. The following are some examples:

· The performance requirements will define how many SAP HANA systems can run on the same hypervisor, storage, or network.

· The security requirements will influence whether or not you must run on dedicated hardware; that is, whether or not you must dedicate the physical host, the storage system, or the network equipment.

· The availability requirements will influence how SAP HANA is deployed in the data center(s), the requirements for infrastructure or application clustering, and the data-replication technology and backup and restore procedures.

The network requirements for SAP HANA in a cloud environment are a combination of the requirements for SAP HANA scale-up and scale-out systems. You also can consider a very basic implementation of security and network traffic separation.

The storage requirements are not easy to define. On one side are the performance requirements for SAP HANA and the other applications. On the other side are requirements such as backup location, data mobility, and multi-data center concepts. These requirements must follow the data center best practices that are already in place.

Private Cloud

Private cloud for or with SAP HANA represents a very broad area of options. You can have a Solution for Multiple SAP HANA Systems – Scale-Up (bare metal or virtualized) with additional automation tools to provide a flexible and easy way to provision and de-provision SAP HANA systems. You can also use an already existing infrastructure for virtual desktop infrastructure (VDI) or other traditional applications and add SAP HANA to it. In most private-cloud deployments SAP HANA is not the only application that will run on the shared infrastructure.

Public Cloud

A public-cloud solution is used to run many small to medium-size SAP HANA systems; most of those systems are virtualized and the solution is 100-percent automated. A self-service portal for the end customer is used to request, deploy, and operate the SAP HANA system. There are no individual SLAs; you have to accept the predefined agreement to close the contract.

SAP HANA Hosting

A solution for SAP HANA hosting is used to run small to large-size SAP HANA systems; some are virtualized, some are bare metal scale-up, and some are scale-out. The solution is automated to a level that makes sense for the owner. A self-service portal for the end customer is not mandatory, but can be in place. Individual contracts are created, with a dedicated SLA per end customer. The infrastructure must be able to provide the best flexibility based on the individual performance, security, and availability requirements of the end customers.

Architectural Overview

This Cisco UCS Integrated Infrastructure is a defined set of hardware and software that serves as an integrated foundation for both virtualized and non-virtualized SAP HANA solutions. The architecture includes EMC VNX storage, Cisco Nexus® networking, the Cisco Unified Computing System™ (Cisco UCS®), and VMware vSphere software. The design is flexible enough that the networking, computing, and storage can fit in one data center rack or be deployed according to a customer’s data center design. Port density enables the networking components to accommodate multiple configurations of this kind.

One benefit of this architecture is the ability to customize or scale the environment to suit a customer’s requirements. This design can easily scale as requirements and demand change. The unit can scale both up and out. The reference architecture detailed in this document highlights the resiliency, cost benefit, and ease of deployment of an integrated solution. A storage system capable of serving multiple protocols allows for customer choice and investment protection because it truly is a wire-once architecture.

This CVD focuses on the architecture to run SAP HANA as the main workload, targeted at the Enterprise and Service Provider segments, using EMC VNX storage arrays. The architecture uses Cisco UCS B-Series and C-Series servers with EMC VNX5400 or VNX8000 storage attached to the Cisco Nexus 9396PX switches for NFS access and to the Cisco MDS 9148S switches for Fibre Channel access. The C-Series rack servers are connected directly to the Cisco UCS Fabric Interconnects using the single-wire management feature. VMware vSphere 5.5 is used as the server virtualization platform. This infrastructure is deployed to provide SAN-booted hosts with file-level and block-level access to shared storage. The reference architecture reinforces the “wire-once” strategy, because as additional storage is added to the architecture, no re-cabling is required from the hosts to the Cisco UCS Fabric Interconnects.

Hardware and Software Components

Table 2 lists the hardware and software components that occupy the different tiers of the Cisco UCS Integrated Infrastructure under test.

Table 2 List of Hardware and Software Components

| Vendor | Product | Version | Description |
| --- | --- | --- | --- |
| Cisco | UCSM | 2.2(3f) | UCS Manager |
| Cisco | UCS 6248UP FI | 5.2(3)N2(2.23c) | UCS Fabric Interconnects |
| Cisco | UCS 5108 Chassis | NA | UCS Blade server chassis |
| Cisco | UCS 2200XP FEX | 2.2(3f) | UCS Fabric Extenders for Blade Server chassis |
| Cisco | UCS B-Series M4 servers | 2.2(3f) | Cisco B-Series M4 blade servers |
| Cisco | UCS VIC 1240/1280 | 4.0(1b) | Cisco UCS VIC 1240/1280 adapters |
| Cisco | UCS C220 M4 servers | 2.0.3i (CIMC), C220M4.2.0.3d (BIOS) | Cisco C220 M4 rack servers |
| Cisco | UCS C240 M4 servers | 2.0.3i (CIMC), C240M4.2.0.3d (BIOS) | Cisco C240 M4 rack servers |
| Cisco | UCS VIC 1227 | 4.0(1b) | Cisco UCS VIC adapter |
| Cisco | UCS C460 M4 servers | 2.0.3i (CIMC), C460M4.2.0.3c (BIOS) | Cisco C460 M4 rack servers |
| Cisco | UCS VIC 1225 | 4.0(1b) | Cisco UCS VIC adapter |
| Cisco | MDS 9148 | 6.2(9) | Cisco MDS 9148 8G Multi Fabric switches |
| Cisco | Nexus 9396PX | 6.1(2)I2(2a) | Cisco Nexus 9396x switches |
| Cisco | Nexus 1000v | 5.2.1.SV3.1.2 | Cisco Nexus 1000v virtual switch |
| EMC | VNX5400 | - | VNX storage array |
| EMC | VNX8000 | - | VNX storage array |
| VMware | ESXi 5.5 | 5.5 Update 2 | Hypervisor |
| VMware | vCenter Server | 5.5 | VMware Management |
| SUSE | SUSE Linux Enterprise Server (SLES) | 11 SP3 | Operating System to host SAP HANA |
| Red Hat | Red Hat Enterprise Linux (RHEL) for SAP HANA | 6.6 | Operating System to host SAP HANA |

Table 3 Cisco UCS B220M4 or C220M4 Server Configuration (per server basis)

| Component | Capacity |
| --- | --- |
| Memory (RAM) | 256 GB (16 x 16 GB DDR4 DIMMs) |
| Processor | 2 x Intel® Xeon® E5-2660 v3 CPUs, 2.6 GHz, 10 cores, 20 threads |

Table 4 Cisco UCS B460M4 or C460M4 server configuration (per server basis)

| Component | Capacity |
| --- | --- |
| Memory (RAM) | 1024 GB (64 x 16 GB DDR4 DIMMs) |
| Processor | 4 x Intel® Xeon® E7-4880 v2 CPUs, 2.4 GHz, 15 cores, 30 threads |

Scaling Options

Scaling is one of the most difficult topics to define for a solution that is based on multiple components and hosts multiple use cases. The CPU and memory requirements per server to run the application determine what must run on bare metal and what can be virtualized. For virtualized workloads, the number of virtual machines running in parallel per server is limited by the available CPU and memory resources. The I/O requirements from the server to the Cisco UCS Fabric Interconnects for network and storage access, and from the Cisco UCS Fabric Interconnects to the upstream LAN and SAN switches, define the required number of cables. The number of ports on the Cisco UCS Fabric Interconnect limits the number of servers managed by a single domain. The number of Cisco UCS domains and EMC storage arrays that can be attached to the SAN switches is limited by the model and the number of Cisco MDS switches. The same logic applies to the LAN switches, which are limited by the number and model of the Cisco Nexus 9000 Series switches used for this deployment.
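As a rough illustration of how CPU and memory jointly cap the number of virtual machines per server, the following sketch takes the smaller of the two limits. The host figures come from Table 3 (256 GB RAM, 2 x 10 cores with 20 threads each), and the 64 GB virtual machine size is one of the sizes mentioned in Example 2. The hypervisor memory reserve and the vCPU count per virtual machine are hypothetical values chosen only for the example; they are not SAP or VMware sizing rules.

```python
def max_vms_per_host(host_memory_gb, host_threads, vm_memory_gb, vm_vcpus,
                     hypervisor_reserve_gb=0):
    """Return how many identical VMs fit on one host without overcommitment.

    The result is the smaller of the memory-bound and CPU-bound limits.
    This is an illustrative model, not an official sizing formula.
    """
    memory_limit = (host_memory_gb - hypervisor_reserve_gb) // vm_memory_gb
    cpu_limit = host_threads // vm_vcpus
    return min(memory_limit, cpu_limit)


# Host values from Table 3; reserve and vCPU count are assumptions.
vms = max_vms_per_host(
    host_memory_gb=256,
    host_threads=40,           # 2 CPUs x 20 threads
    vm_memory_gb=64,
    vm_vcpus=8,                # hypothetical vCPU count per VM
    hypervisor_reserve_gb=16,  # hypothetical memory kept for the hypervisor
)
print(vms)  # memory-bound: (256 - 16) // 64 = 3, CPU-bound: 40 // 8 = 5
```

With these assumed values the host is memory-bound, which is typical for an in-memory database workload.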

In the “Reference Workloads and Use Cases” section later in this document, the baseline requirements are defined case by case. With this information, together with the basic requirements documented by SAP and the planned use of the infrastructure, a basic sizing can be done.

Example 1: If you plan to install two very large SAP HANA systems with 80 TB of main memory each, you need 2 x 40 Cisco UCS B460 M4 servers with 2 TB each. Based on the I/O requirements for SAP HANA Scale-Out, it is necessary to use servers from two or more Cisco UCS domains for a single SAP HANA system, because a single Cisco UCS domain is limited to 30 servers to provide the required performance on all layers.
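The arithmetic behind Example 1 can be written out as a short sketch. The function names are illustrative; the inputs (80 TB per system, 2 TB per server, 30 servers per Cisco UCS) are the figures given in the example.

```python
import math


def servers_needed(system_memory_tb, server_memory_tb):
    """Servers required to hold one system's main memory."""
    return math.ceil(system_memory_tb / server_memory_tb)


def domains_needed(servers, max_servers_per_domain):
    """Cisco UCS domains required when each domain has a server limit."""
    return math.ceil(servers / max_servers_per_domain)


per_system = servers_needed(80, 2)        # servers for one 80 TB system
total = 2 * per_system                    # two such systems
domains = domains_needed(per_system, 30)  # domains for one system

print(per_system, total, domains)  # 40 80 2
```

Because 40 servers exceed the 30-server limit, each system spans at least two Cisco UCS domains.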

Example 2: If you plan to install many SAP HANA systems with 64 GB or 128 GB of main memory, mostly non-productive, the I/O requirements are different. For this deployment it is possible to run 400 or more SAP HANA systems on a single Cisco UCS domain with 100 or more servers.

One of the most important tasks in daily operation is checking for bottlenecks in the solution. To support this, it is recommended to use Cisco UCS Performance Manager to visualize the utilization of all LAN, SAN, and server connections at the Cisco UCS level together with the storage utilization. With this information it is possible to identify potential bottlenecks ahead of time and add the required components or rebalance the workloads.

Based on experience from defining, certifying, and deploying SAP HANA solutions over the past years, the following three scale options are defined as a baseline. Table 5 highlights the change in hardware components required by each scale option. It is important to know that SAP has specified the storage performance for SAP HANA based on a per-server rule, independent of the server size. In other words, the maximum number of servers per storage array is the same whether you use virtual machines with 64 GB of main memory or Cisco UCS B460 M4 servers with 2 TB of main memory for SAP HANA.

Table 5 Sample Scale Options for SAP HANA

| Component | Scale Option 1 | Scale Option 2 | Scale Option 3 |
| --- | --- | --- | --- |
| Servers | 10 x Cisco C-Series or B-Series M4 servers | 20 x Cisco C-Series or B-Series M4 servers | 30 x Cisco C-Series or B-Series M4 servers |
| Blade servers chassis | 5 x Cisco 5108 Blade server chassis | 10 x Cisco 5108 Blade server chassis | 15 x Cisco 5108 Blade server chassis |
| Storage | 1x EMC VNX5400 or 1x EMC VNX8000 | 2x EMC VNX5400 2x EMC VNX8000 | 3x EMC VNX5400 2x EMC VNX8000 |

Although this is the base design, each of the components can be scaled easily to support specific business requirements. For example, more (or different) servers or even blade chassis can be deployed to increase compute capacity, additional EMC VNX series storage can be added to increase storage capacity, improve I/O capability and throughput, and special hardware or software features can be added to introduce new features.

In addition to the SAP HANA scale options shown in Table 5, the solution allows you to add non-SAP HANA workloads. The additional hardware for these workloads is shown in Table 6.

Table 6 Additional HW for Non-HANA Workloads

| Component | Scale Option 1 | Scale Option 2 | Scale Option 3 |
| --- | --- | --- | --- |
| Non-SAP HANA Servers | 80 x Cisco B200 M4 servers | 40 x Cisco B200 M4 servers | 0 x Cisco B200 M4 servers |
| Blade servers chassis | 10 x Cisco 5108 Blade server chassis | 5 x Cisco 5108 Blade server chassis | 0 x Cisco 5108 Blade server chassis |
| Additional Storage for Non-SAP HANA | 2x EMC VNX5400 or 1x EMC VNX8000 | 1x EMC VNX5400 1x EMC VNX8000 | 0x EMC VNX5400 0x EMC VNX8000 |
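To see how Table 5 and Table 6 combine, the following sketch adds the server and chassis counts for each scale option. The dictionary layout and the `combined` helper are illustrative assumptions; the numbers are copied from the two tables, and the storage rows are left out because they offer alternative array models.

```python
# Server and chassis counts per scale option, copied from Table 5 (SAP HANA)
# and Table 6 (non-SAP HANA). The chassis counts in Table 5 apply when blade
# servers are used.
HANA = {
    1: {"servers": 10, "chassis": 5},
    2: {"servers": 20, "chassis": 10},
    3: {"servers": 30, "chassis": 15},
}
NON_HANA = {
    1: {"servers": 80, "chassis": 10},
    2: {"servers": 40, "chassis": 5},
    3: {"servers": 0, "chassis": 0},
}


def combined(option):
    """Total servers and blade chassis for one scale option."""
    return {
        key: HANA[option][key] + NON_HANA[option][key]
        for key in ("servers", "chassis")
    }


for option in (1, 2, 3):
    print(option, combined(option))
```

In all three options the chassis total comes to 15, while the share of servers running SAP HANA grows from option 1 to option 3.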

This CVD guides you through the steps for deploying the base architecture, as shown in Figure 24. These procedures cover everything from physical cabling to network, compute and storage device configurations.

Figure 24 Reference Architecture Design

Guidelines for VMware ESXi

Memory Configuration Guidelines for Virtual Machines

This section provides guidelines for allocating memory to virtual machines. The guidelines outlined here take into account vSphere memory overhead and the virtual machine memory settings.

VMware ESX/ESXi Memory Management Concepts

VMware vSphere virtualizes guest physical memory by adding an extra level of address translation. Shadow page tables make it possible to provide this additional translation with little or no overhead. Managing memory in the hypervisor enables the following:

· Memory sharing across virtual machines that have similar data (that is, same guest operating systems).