FlashStack® for AI: Powering the Data Pipeline

Available Languages

FlashStack® for AI: Powering the Data Pipeline

Deployment Guide for FlashStack™ for Artificial Intelligence and Deep Learning with Cisco UCS C480 ML M5 and Pure Storage® FlashBlade™

Published: January 16, 2020

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to:

http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series. Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2020 Cisco Systems, Inc. All rights reserved.

Table of Contents

Integration with existing FlashStack Design

Hardware and Software Revisions

vGPU-only Deployment in Existing VMware Environment

Cisco Nexus A and Cisco Nexus B

Cisco Nexus A and Cisco Nexus B

Cisco Nexus A and Cisco Nexus B

Configure Virtual Port-Channel Parameters

Configure Virtual Port-Channels

Cisco UCS 6454 Fabric Interconnect to Nexus 9336C-FX2 Connectivity

Pure FlashBlade to Nexus 9336C-FX2 Connectivity

Cisco UCS Configuration for VMware with vGPU

Add VLAN to (updating) vNIC Template

VMware Setup and Configuration for vGPU

Obtain and Install NVIDIA vGPU Software

Setup NVIDIA vGPU Software License Server

Register License Server to NVIDIA Software Licensing Center

Install NVIDIA vGPU Manager in ESXi

Set the Host Graphics to SharedPassthru

(Optional) Enabling vMotion with vGPU

Add a Port-Group to Access AI/ML NFS Share

Red Hat Enterprise Linux VM Setup

Install Net-Tools and Verify MTU

Install NFS Utilities and Mount NFS Share

NVIDIA and CUDA Drivers Installation

Verify the NVIDIA and CUDA Installation

Setup NVIDIA vGPU Licensing on the VM

Cisco UCS Configuration for Bare Metal

Cisco UCS C220 M5 Connectivity

Cisco UCS C240 M5 Connectivity

Cisco UCS C480 ML M5 Connectivity

Modify Default Host Firmware Package

Set Jumbo Frames in Cisco UCS Fabric

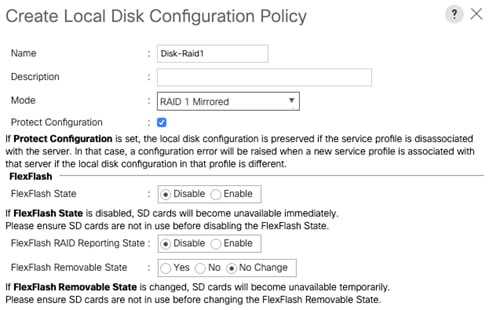

Create Local Disk Configuration Policy

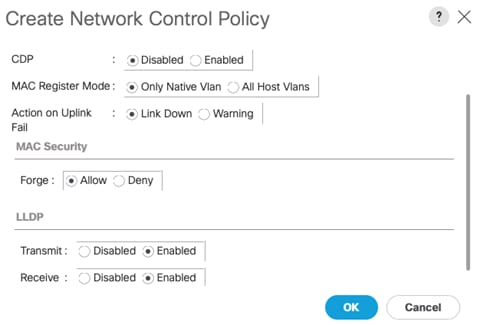

Create Network Control Policy to Enable Link Layer Discovery Protocol (LLDP)

Update the Default Maintenance Policy

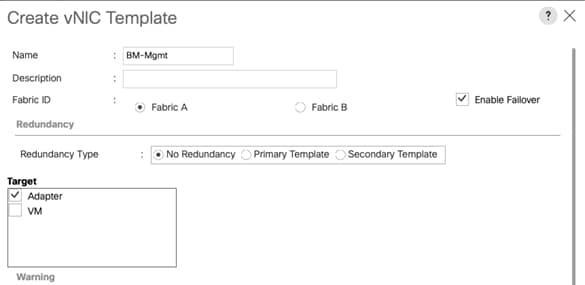

Create Management vNIC Template

(Optional) Create Traffic vNIC Template

Create LAN Connectivity Policy

Create Service Profile Template

Configure Storage Provisioning

Configure SAN Connectivity Options

Configure Operational Policies

Bare-Metal Server Setup and Configuration

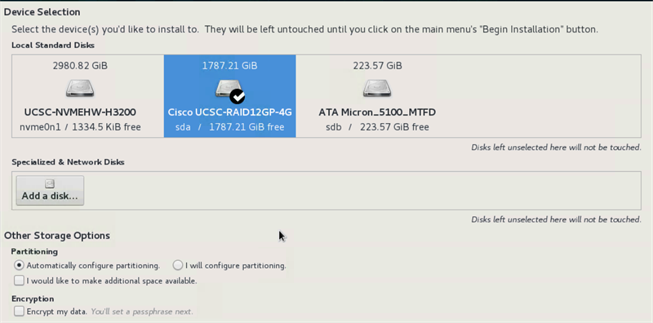

Red Hat Enterprise Linux (RHEL) Bare-Metal Installation

Install Net-Tools and Verify MTU

Install NFS Utilities and Mount NFS Share

NVIDIA and CUDA Drivers Installation

Verify the NVIDIA and CUDA Installation

Setup TensorFlow Convolutional Neural Network (CNN) Benchmark

Setup CNN Benchmark for ImageNet Data

Cisco UCS C480 ML M5 Performance Metrics

Cisco UCS C480 ML M5 Power Consumption

Cisco UCS 240 M5 Power Consumption

Cisco UCS 220 M5 Power Consumption

Cisco Validated Designs (CVDs) deliver systems and solutions that are designed, tested, and documented to facilitate and improve customer deployments. These designs incorporate a wide range of technologies and products into a portfolio of solutions that have been developed to address the business needs of the customers and to guide them from design to deployment.

Customers looking to deploy applications using a shared data center infrastructure face several challenges. A recurring infrastructure challenge is to achieve the required levels of IT agility and efficiency that can effectively meet the company’s business objectives. Addressing these challenges requires having an optimal solution with the following key characteristics:

· Availability: Help ensure applications and services availability at all times with no single point of failure

· Flexibility: Ability to support new services without requiring underlying infrastructure modifications

· Efficiency: Facilitate efficient operation of the infrastructure through re-usable policies

· Manageability: Ease of deployment and ongoing management to minimize operating costs

· Scalability: Ability to expand and grow with significant investment protection

· Compatibility: Minimize risk by ensuring compatibility of integrated components

Cisco and Pure Storage have partnered to deliver a series of FlashStackTM solutions that enable strategic data center platforms with the above characteristics. FlashStack solution delivers a modern converged infrastructure (CI) solution that is smarter, simpler, efficient, and extremely versatile to handle a broad set of workloads with their unique sets of infrastructure requirements. With FlashStack, customers can modernize their operational model, stay ahead of business demands, and protect and secure their applications and data, regardless of the deployment model on premises, at the edge, or in the cloud. FlashStack's fully modular and non-disruptive architecture abstracts hardware into software for non-disruptive changes which allow customers to seamlessly deploy new technology without having to re-architect their data center solutions.

This document is intended to provide deployment details and guidance around the integration of the Cisco UCS C480 ML M5 platform and Pure Storage FlashBlade into the FlashStack solution to deliver a unified approach for providing Artificial Intelligence (AI) and Machine Learning (ML) capabilities within the converged infrastructure. This document also covers NVIDIA GPU configuration on Cisco UCS C220 M5 and C240 M5 platforms as additional deployment options. For a detailed design discussion about the platforms and technologies used in this solution, refer to the FlashStack® for AI: Powering the Data Pipeline Design Guide.

Introduction

Building an AI-platform with off-the-shelf hardware and software components leads to solution complexity and eventually stalled initiatives. Valuable months are lost in IT resources on systems integration work that can result in fragmented resources which are difficult to manage and require in-depth expertise to optimize and control various deployments.

The FlashStack for AI solution aims to deliver seamless integration of the Cisco UCS C480 ML M5 platform into the current FlashStack portfolio to enable the customers to efficiently utilize the platform’s extensive GPU capabilities for their workloads without requiring extra time and resources for a successful deployment. FlashStack solution is a pre-designed, integrated and validated architecture for data center that combines Cisco UCS servers, Cisco Nexus family of switches, Cisco MDS fabric switches and Pure Storage Arrays into a single, flexible architecture. FlashStack solutions portfolio is designed for high availability, with no single points of failure, while maintaining cost-effectiveness and flexibility in the design to support a wide variety of workloads. FlashStack design can support different hypervisor options, bare metal servers and can also be sized and optimized based on customer workload requirements. FlashStack design discussed in this document has been validated for resiliency and fault tolerance during system upgrades, component failures, and partial as well as complete power loss scenarios. This document also covers the deployment details of NVIDIA GPU equipped Cisco UCS C220 M5 and Cisco UCS C240 M5 servers and is a detailed walk through of the solution build out.

Audience

The intended audience of this document includes but is not limited to data scientists, IT architects, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who want to take advantage of an infrastructure built to deliver IT efficiency and enable IT innovation.

What’s New in this Release?

The following design elements distinguish this FlashStack solution from previous models:

· Integration of Cisco UCS C480 ML M5 platform into the FlashStack design.

· Integration of Pure Storage FlashBlade to support AI/ML dataset.

· Showcase AI/ML workload acceleration using NVIDIA V100 32GB GPUs on both Cisco UCS C480 ML M5 and Cisco UCS C240 M5 platforms.

· Showcase AI/ML workload acceleration using NVIDIA T4 16GB GPUs on Cisco UCS C220 M5 platform.

· Showcase NVIDIA Virtual Compute Server (vComputeServer) software and Virtual GPU (vGPU) capabilities on various Cisco UCS platforms.

· Support for Intel 2nd Gen Intel Xeon Scalable Processors (Cascade Lake) processors*.

![]() * The Cisco UCS software version 4.0(4e) (covered in this validation) and RHEL 7.6 support Cascade Lake CPUs on Cisco UCS C220 M5 and C240 M5 servers. Support for Cisco UCS C480 ML M5 will be available in the upcoming Cisco UCS release.

* The Cisco UCS software version 4.0(4e) (covered in this validation) and RHEL 7.6 support Cascade Lake CPUs on Cisco UCS C220 M5 and C240 M5 servers. Support for Cisco UCS C480 ML M5 will be available in the upcoming Cisco UCS release.

Architecture

FlashStack for AI solution comprises of following core components:

· High-Speed Cisco NxOS based Nexus 9336C-FX2 switching design supporting up to 100GbE connectivity.

· Cisco UCS Manager (UCSM) on Cisco 4th generation 6454 Fabric Interconnects to support 10GbE, 25GbE and 100 GbE connectivity from various components.

· Cisco UCS C480 ML M5 server with 8 NVIDIA V100-32GB GPUs for AI/ML applications.

· Pure Storage FlashBlade providing scale-out, all-flash storage purpose built for massive concurrency as needed for AI/ML workloads.

· (Optional) Cisco UCS C220 M5 and Cisco UCS C240 M5 server(s) with NVIDIA V100 or NVIDIA T4 GPUs can also be utilized for AI/ML workload processing depending on customer requirements.

![]() In this validation, Cisco UCS C240 M5 server was equipped with two NVIDIA V100-32GB PCIE GPUs and a Cisco UCS C220 M5 was equipped with two NVIDIA T4 GPUs.

In this validation, Cisco UCS C240 M5 server was equipped with two NVIDIA V100-32GB PCIE GPUs and a Cisco UCS C220 M5 was equipped with two NVIDIA T4 GPUs.

The FlashStack solution for AI closely aligns with latest FlashStack for Virtual Machine Infrastructure CVD located here: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/ucs_flashstack_vsi_vm67_u1_design.html and can be used to easily extend the current virtual machine infrastructure design to support AI/ML workloads.

The following design requirements were considered for the GPU equipped Cisco UCS C-Series M5 server integration into the FlashStack:

1. Modular design that can be replicated to expand and grow as the needs of the business grow.

2. Enable current IT infrastructure teams to offer AI/ML infrastructure capabilities with little to no management overhead.

3. High-availability and redundancy for platforms connectivity such that the system can handle one or more link, Fabric Interconnect or a storage node failure.

4. Cisco UCS Service Profile based deployment for both Red Hat Enterprise Linux and VMware ESXi deployments.

5. Ability of the switching architecture to enable AI/ML platform to efficiently access AI/ML training and inference dataset from the Pure FlashBlade using NFS.

6. Ability to deploy and migrate a vGPU equipped VM across GPU (same model) equipped ESXi servers.

Physical Topology

The physical topology for the connecting GPU equipped C-Series servers to a Pure Storage FlashBlade using a Cisco UCS 6454 Fabric Interconnect and Nexus 9336C-FX2 switch is shown in Figure 1.

Figure 1 FlashStack for AI - Physical Topology

To validate the GPU equipped Cisco UCS C-Series M5 servers integration into FlashStack solution, an environment with the following components was setup:

· Cisco UCS 6454 Fabric Interconnects (FI) is used to connect and manage Cisco UCS C-Series M5 servers.

· Cisco UCS C480 ML M5 connects to each FI using Cisco VIC 1455. Cisco VIC 1455 has 4 25GbE ports. The server is connected to each FI using 2 x 25GbE connections configured as port-channels.

· Cisco UCS C220 M5 and C240 M5 servers connect to each FI using Cisco VIC 1457. Cisco VIC 1457 has 4 25GbE ports. The servers are connected to each FI using 2 x 25GbE connections configured as port-channels.

· Cisco Nexus 9336C running in NX-OS mode provides the switching fabric.

· Cisco UCS 6454 FI’s 100GbE uplink ports are connected to Nexus 9336C as port-channels.

· Pure Storage FlashBlade is connected to Nexus 9336C switch using 40GbE ports configured as a single port-channel.

Integration with existing FlashStack Design

The design illustrated in Figure 1 allows customers to easily integrate their traditional FlashStack solution with this new AI/ML configuration. The resulting physical topology, after the integration with a typical FlashStack design, is shown in Figure 2. Cisco UCS 6454 FI is used to connect both Cisco UCS 5108 chassis equipped with Cisco UCS B200 M5 blades and Cisco UCS C-Series servers. The Nexus 9336C-FX2 platform provides connectivity between Cisco UCS FI and both Pure Storage FlashArray and FlashBlade. The design shown in Figure 2 supports iSCSI connectivity option for stateless compute (boot from SAN) but can be seamlessly extended to support FC connectivity design by utilizing Cisco MDS switches.

The reference architecture described in this document leverages the components explained in the FlashStack Virtual Server Infrastructure with iSCSI Storage for VMware vSphere 6.7 U1 deployment guide. The FlashStack for AI extends the virtual infrastructure to include GPU equipped C-Series platforms to the base infrastructure thereby providing customers ability to deploy both bare metal Red Hat Enterprise Linux (RHEL) as well as NVIDIA vComputeServer (vGPU) functionality in the VMware environment.

Figure 2 Integration of FlashStack for Virtual Machine Infrastructure and Deep Learning Platforms

![]() This deployment guide explains the hardware integration aspects of both virtual infrastructure and AI/ML platforms as well as configuration of these platforms. However, the base hardware and core virtual machine infrastructure configuration and setup is not explained in this document. Customers are encouraged to refer to the FlashStack Virtual Server Infrastructure with iSCSI Storage for VMware vSphere 6.7 U1 CVD for step-by-step configuration procedures.

This deployment guide explains the hardware integration aspects of both virtual infrastructure and AI/ML platforms as well as configuration of these platforms. However, the base hardware and core virtual machine infrastructure configuration and setup is not explained in this document. Customers are encouraged to refer to the FlashStack Virtual Server Infrastructure with iSCSI Storage for VMware vSphere 6.7 U1 CVD for step-by-step configuration procedures.

Hardware and Software Revisions

Table 1 lists the software versions for hardware and software components used in this solution

Table 1 Hardware and Software Revisions

| Component |

Software |

|

| Network |

Nexus 9336C-FX2 |

7.0(3)I7(6) |

| Compute |

Cisco UCS Fabric Interconnect 6454 |

4.0(4e)* |

|

|

Cisco UCS C-Series servers |

4.0(4e)* |

|

|

Red Hat Enterprise Linux (RHEL) |

7.6 |

|

|

RHEL ENIC driver |

3.2.210.18-738.12 |

|

|

NVIDIA Driver for RHEL |

418.40.04 |

|

|

NVIDIA Driver for ESXi |

430.46 |

|

|

NVIDIA CUDA Toolkit |

10.1 Update 2 |

|

|

VMware vSphere |

6.7U3 |

|

|

VMware ESXi ENIC driver |

1.0.29.0 |

| Storage |

Pure Storage FlashBlade (Purity//FB) |

2.3.3 |

![]() * In this deployment guide, the UCS release 4.0(4e) was only verified for C-Series hosts participating in AI/ML workloads.

* In this deployment guide, the UCS release 4.0(4e) was only verified for C-Series hosts participating in AI/ML workloads.

Required VLANs

Table 2 list various VLANs configured for setting up the FlashStack environment including their specific usage.

| VLAN ID |

Name |

Usage |

| 2 |

Native-VLAN |

Use VLAN 2 as Native VLAN instead of default VLAN (1) |

| 20 |

IB-MGMT-VLAN |

Management VLAN to access and manage the servers |

| 220 (optional) |

Data-Traffic |

VLAN to carry data traffic for both VM and bare-metal Servers |

| 1110 (Fabric A only) |

iSCSI-A |

iSCSI-A path for both B-Series and C-Series servers |

| 1120 (Fabric B only) |

iSCSI-B |

iSCSI-B path for both B-Series and C-Series servers |

| 1130 |

vMotion |

VLAN user for VM vMotion |

| 3152 |

AI-ML-NFS |

NFS VLAN to access AI/ML NFS volume |

Some of the key highlights of VLAN usage are as follows:

· Both virtual machines and the bare-metal servers are managed using VLAN 20.

· An optional dedicated VLAN (220) is used for data communication; customers are encouraged to evaluate this VLANs usage according to their specific needs.

· A dedicated NFS VLAN is defined to enables NFS data share access for AI/ML data residing on Pure Storage FlashBlade.

· A pair of iSCSI VLANs are utilized to access iSCSI LUNs for ESXi servers.

· A vMotion VLAN for VMs migration (in the VMware environment).

Physical Infrastructure

The information in this section is provided as a reference for cabling the physical equipment in a FlashStack environment. Customers can adjust the ports according to their individual setup. This document assumes that out-of-band management ports are plugged into an existing management infrastructure at the deployment site. The interfaces shown in Figure 3 will be used in various configuration steps.

Figure 3 FlashStack for AI - Physical Cabling for Cisco UCS C-Series servers

![]() Figure 3 shows a 40Gbps connection from each controller to each Nexus switch. Based on throughput requirements, customers can use all eight 40Gbps ports on the FlashBlade for a combined throughput of 320Gbps.

Figure 3 shows a 40Gbps connection from each controller to each Nexus switch. Based on throughput requirements, customers can use all eight 40Gbps ports on the FlashBlade for a combined throughput of 320Gbps.

This section provides the configuration required on the Cisco Nexus 9000 switches for FlashStack for AI setup. The following procedures assume the use of Cisco Nexus 9000 7.0(3)I7(6), the Cisco suggested Nexus switch release at the time of this validation. The switch configuration covered below supports deployment of bare-metal server configuration.

![]() With Cisco Nexus 9000 release 7.0(3)I7(6), 100G auto-negotiation is not supported on certain ports of the Cisco Nexus 9336C-FX2 switch. To avoid any misconfiguration and confusion, the port speed and duplex are manually set for all the 100GbE connections.

With Cisco Nexus 9000 release 7.0(3)I7(6), 100G auto-negotiation is not supported on certain ports of the Cisco Nexus 9336C-FX2 switch. To avoid any misconfiguration and confusion, the port speed and duplex are manually set for all the 100GbE connections.

vGPU-only Deployment in Existing VMware Environment

If a customer requires vGPU functionality in an existing VMware infrastructure and does not need to deploy Bare-Metal RHEL servers, adding the NFS VLAN (3152) to the following Port-Channels (shown in Figure 4) is all that is needed:

· Port-Channel for Pure Storage FlashBlade

· Port-Channels for both Cisco UCS Fabric Interconnects

· Port-Channel between the Nexus switches used for VPC peer-link

Enabling the NFS VLAN on appropriate Port-Channels at the switches allows customers to access NFS LIF using a VM port-group on the ESXi hosts.

Figure 4 NFS VLAN on Nexus Switch for vGPU-only Support on Existing Infrastructure

The following configuration sections detail how to configure the Nexus switches for deploying bare-metal servers and include the addition of the NFS VLAN (3152) on the appropriate interfaces.

Enable Features

Cisco Nexus A and Cisco Nexus B

To enable the required features on the Cisco Nexus switches, follow these steps:

1. Log in as admin.

2. Run the following commands:

config t

feature udld

feature interface-vlan

feature lacp

feature vpc

feature lldp

Global Configurations

Cisco Nexus A and Cisco Nexus B

To set global configurations, complete the following step on both switches:

1. Run the following commands to set (or verify) various global configuration parameters:

config t

spanning-tree port type network default

spanning-tree port type edge bpduguard default

spanning-tree port type edge bpdufilter default

!

port-channel load-balance src-dst l4port

!

ntp server <NTP Server IP> use-vrf management

!

vrf context management

ip route 0.0.0.0/0 <ib-mgmt-vlan Gateway IP>

!

copy run start

![]() Make sure as part of the basic Nexus configuration, the management interface Mgmt0 is setup with an IB-MGMT-VLAN IP address.

Make sure as part of the basic Nexus configuration, the management interface Mgmt0 is setup with an IB-MGMT-VLAN IP address.

Create VLANs

Cisco Nexus A and Cisco Nexus B

To create the necessary virtual local area networks (VLANs), follow this step on both switches:

1. From the global configuration mode, run the following commands to create the VLANs. The VLAN IDs can be adjusted based on customer setup.

vlan 2

name Native-VLAN

vlan 20

name IB-MGMT-VLAN

vlan 220

name Data-Traffic

vlan 3152

name AI-ML-NFS

Configure Virtual Port-Channel Parameters

Cisco Nexus A

vpc domain 10

peer-switch

role priority 10

peer-keepalive destination <Nexus-B-Mgmt-IP> source <Nexus-A-Mgmt-IP>

delay restore 150

peer-gateway

no layer3 peer-router syslog

auto-recovery

ip arp synchronize

!

interface port-channel10

description vPC peer-link

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 20,220,3152

spanning-tree port type network

speed 100000

duplex full

no negotiate auto

vpc peer-link

!

interface Ethernet1/35

description Nexus-B:1/35

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 20,220,3152

speed 100000

duplex full

no negotiate auto

channel-group 10 mode active

no shutdown

!

interface Ethernet1/36

description Nexus-B:1/36

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 20,220,3152

speed 100000

duplex full

no negotiate auto

channel-group 10 mode active

no shutdown

!

Cisco Nexus B

vpc domain 10

peer-switch

role priority 20

peer-keepalive destination <Nexus-A-Mgmt0-IP> source <Nexus-B-Mgmt0-IP>

delay restore 150

peer-gateway

no layer3 peer-router syslog

auto-recovery

ip arp synchronize

!

interface port-channel10

description vPC peer-link

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 20,220,3152

spanning-tree port type network

speed 100000

duplex full

no negotiate auto

vpc peer-link

!

interface Ethernet1/35

description Nexus-A:1/35

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 20,220,3152

speed 100000

duplex full

no negotiate auto

channel-group 10 mode active

no shutdown

!

interface Ethernet1/36

description Nexus-A:1/36

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 20,220,3152

speed 100000

duplex full

no negotiate auto

channel-group 10 mode active

no shutdown

!

Configure Virtual Port-Channels

Cisco UCS 6454 Fabric Interconnect to Nexus 9336C-FX2 Connectivity

Cisco UCS 6454 Fabric Interconnect (FI) is connected to the Nexus switch using 100GbE uplink ports as shown in Figure 5. Each FI connects to each Nexus 9336C using 2 100GbE ports for a combined bandwidth of 400GbE from each FI to the switching fabric. The Nexus 9336C switches are configured for two separate vPCs, one for each FI.

Figure 5 Cisco UCS 6454 FI to Nexus 9336C Connectivity

Nexus A Configuration

! FI-A

!

interface port-channel11

description UCS FI-A

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 20,220,3152

spanning-tree port type edge trunk

mtu 9216

speed 100000

duplex full

no negotiate auto

vpc 11

!

interface Ethernet1/1

description UCS FI-A E1/51

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 20,220,3152

mtu 9216

speed 100000

duplex full

no negotiate auto

udld enable

channel-group 11 mode active

no shutdown

!

interface Ethernet1/2

description UCS FI-A E1/52

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 20,220,3152

mtu 9216

speed 100000

duplex full

no negotiate auto

udld enable

channel-group 11 mode active

no shutdown

!

! FI-B

!

interface port-channel12

description UCS FI-B

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 20,220,3152

spanning-tree port type edge trunk

mtu 9216

speed 100000

duplex full

no negotiate auto

vpc 12

!

interface Ethernet1/3

description UCS FI-B E1/1

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 20,220,3152

mtu 9216

speed 100000

duplex full

udld enable

no negotiate auto

channel-group 12 mode active

no shutdown

interface Ethernet1/4

description UCS FI-B E1/2

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 20,220,3152

mtu 9216

speed 100000

duplex full

no negotiate auto

udld enable

channel-group 12 mode active

no shutdown

!

Nexus B Configuration

! FI-A

!

interface port-channel11

description UCS FI-A

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 20,220,3152

spanning-tree port type edge trunk

mtu 9216

speed 100000

duplex full

no negotiate auto

vpc 11

!

interface Ethernet1/1

description UCS FI-A E1/53

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 20,220,3152

mtu 9216

speed 100000

duplex full

no negotiate auto

udld enable

channel-group 11 mode active

no shutdown

!

interface Ethernet1/2

description UCS FI-A E1/54

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 20,220,3152

mtu 9216

speed 100000

duplex full

no negotiate auto

udld enable

channel-group 11 mode active

no shutdown

!

! FI-B

!

interface port-channel12

description UCS FI-B

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 20,220,3152

spanning-tree port type edge trunk

mtu 9216

speed 100000

duplex full

no negotiate auto

vpc 12

!

interface Ethernet1/3

description UCS FI-B E1/53

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 20,220,3152

mtu 9216

speed 100000

duplex full

no negotiate auto

udld enable

channel-group 12 mode active

no shutdown

interface Ethernet1/4

description UCS FI-B E1/54

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 20,220,3152

mtu 9216

speed 100000

duplex full

no negotiate auto

udld enable

channel-group 12 mode active

no shutdown

!

Pure FlashBlade to Nexus 9336C-FX2 Connectivity

Pure FlashBlade is connected to Cisco Nexus 9336C-FX2 switches using 40GbE connections. Figure 6 shows the physical connectivity details.

Figure 6 Pure Storage FlashBlade Design

Nexus-A Configuration

!

interface port-channel20

description FlashBlade

switchport mode trunk

switchport trunk allowed vlan 20,3152

spanning-tree port type edge trunk

mtu 9216

vpc 20

!

interface Ethernet1/21

description FM-1 Eth1

switchport mode trunk

switchport trunk allowed vlan 20,3152

mtu 9216

channel-group 20 mode active

no shutdown

!

interface Ethernet1/23

description FM-2 Eth1

switchport mode trunk

switchport trunk allowed vlan 20,3152

mtu 9216

channel-group 20 mode active

no shutdown

!

Nexus-B Configuration

!

interface port-channel20

description FlashBlade

switchport mode trunk

switchport trunk allowed vlan 20,3152

spanning-tree port type edge trunk

mtu 9216

vpc 20

!

interface Ethernet1/21

description FM-1 Eth3

switchport mode trunk

switchport trunk allowed vlan 20,3152

mtu 9216

channel-group 20 mode active

no shutdown

!

interface Ethernet1/23

description FM-2 Eth3

switchport mode trunk

switchport trunk allowed vlan 20,3152

mtu 9216

channel-group 20 mode active

no shutdown

!

![]() The configuration for the (optional) Pure Storage FlashArray is explained in the FlashStack Virtual Server Infrastructure with iSCSI Storage for VMware vSphere 6.7 U1 Deployment Guide.

The configuration for the (optional) Pure Storage FlashArray is explained in the FlashStack Virtual Server Infrastructure with iSCSI Storage for VMware vSphere 6.7 U1 Deployment Guide.

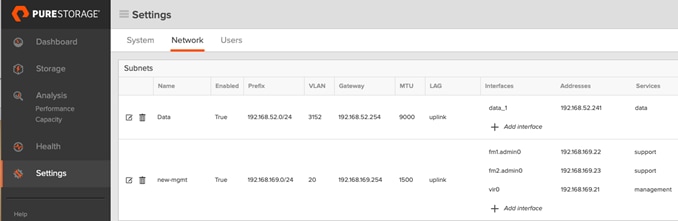

Create Subnet

To create a subnet, follow these steps:

1. Open a web browser and navigate to the Pure Storage FlashBlade management address.

2. Enter the Username and Password to log into the storage system.

3. From the Pure Storage Dashboard, go to Settings > Network. Click + to Create Subnets.

4. Enter Name, Prefix, VLAN, Gateway and MTU and click Create to create subnet.

Create Network Interface

To create the network interface, follow these steps:

1. Click the + sign to add an interface within the Subnet created in the last step.

2. Click Create to create the Network Interface.

Create NFS File System

To create the NFS file system, follow these steps:

1. From the Pure Storage Dashboard, go to Storage > File System. Click + to add a new file system.

2. Enter Name and Provisioned Size.

3. Optionally enable Fast Remove and/or Snapshots

4. Enable the NFSv3 and set the export rule as shown in the figure. In the capture below, the NFS subnet has been added to the export rule to limit the mounting source IP addresses to the NFS NICs.

5. Click Create to add the file system.

![]() The fast remove feature allows customers to quickly remove large directories by offloading this work onto the server. When the fast remove feature is enabled, a special pseudo-directory named .fast-remove is created in the root directory of the NFS mount. To remove a specific directory and its contents, run the mv command to move the directory into the .fast-remove directory.

The fast remove feature allows customers to quickly remove large directories by offloading this work onto the server. When the fast remove feature is enabled, a special pseudo-directory named .fast-remove is created in the root directory of the NFS mount. To remove a specific directory and its contents, run the mv command to move the directory into the .fast-remove directory.

Cisco UCS Configuration for VMware with vGPU

This section explains the configuration additions required to support the AI/ML workloads when deploying GPUs in the VMware environment.

Cisco UCS Base Configuration

For the base configuration for the Cisco UCS 6454 Fabric Interconnect, follow the Cisco UCS Configuration section here: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flashstack_vsi_iscsi_vm67_u1.html .

To enable VMs in the existing VMware Infrastructure to access the AI/ML dataset using NFS, on the Cisco UCS define the NFS VLAN (3152) and add the VLAN to the appropriate vNIC templates.

Create NFS VLAN

To create a new VLAN in the Cisco UCS, follow these steps:

1. In Cisco UCS Manager, click the LAN icon.

2. Select LAN > LAN Cloud.

3. Right-click VLANs.

4. Select Create VLANs.

5. Enter “AI-ML-NFS” as the name of the VLAN to be used to access NFS datastore hosting Imagenet data.

6. Keep the Common/Global option selected for the scope of the VLAN.

7. Enter the native VLAN ID <3152>.

8. Keep the Sharing Type as None.

9. Click OK and then click OK again.

Add VLAN to (updating) vNIC Template

To add the newly created VLAN in existing vNIC templates configured for ESXi hosts, follow these steps:

1. In the Cisco UCS Manager, click the LAN icon.

2. Select LAN > Policies > root > vNIC Templates (select the sub-organization if applicable).

3. Select the Fabric-A vNIC template used for ESXi host (e.g. vNIC_App_A).

4. In the main window “General”, click Modify VLANs.

5. Check the box to add the NFS VLAN (3152) and click OK.

6. Repeat this procedure to add the same VLAN to the Fabric-B vNIC template (e.g. vNIC_App_B).

When the NFS VLAN is added to appropriate vSwitch on the ESXi host, a port-group is created in the VMware environment to provide VMs access to the NFS share.

This deployment assumes customers have completed the base ESXi setup on the GPU equipped Cisco UCS C220 M5, C240 M5 or C480 ML M5 servers using the vSphere configuration explained here: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flashstack_vsi_iscsi_vm67_u1.html.

Obtain and Install NVIDIA vGPU Software

NVIDIA vGPU software is a licensed product. Licensed vGPU functionalities are activated during guest OS boot by the acquisition of a software license served over the network from an NVIDIA vGPU software license server. The license is returned to the license server when the guest OS shuts down.

Figure 7 NVIDIA vGPU Software Architecture

To utilize GPUs in a VM environment, the following configuration steps must be completed:

· Create an NVIDIA Enterprise Account and add appropriate product licenses

· Deploy a Windows based VM as NVIDIA vGPU License Server and install license file

· Download and install NVIDIA software on the hypervisor

· Setup VMs to utilize GPUs

![]() For detailed installation instructions, refer to the NVIDA vGPU installation guide: https://docs.nvidia.com/grid/latest/grid-software-quick-start-guide/index.html

For detailed installation instructions, refer to the NVIDA vGPU installation guide: https://docs.nvidia.com/grid/latest/grid-software-quick-start-guide/index.html

NVIDIA Licensing

To obtain the NVIDIA vGPU software from NVIDIA Software Licensing Center, follow these steps:

1. Create a NVIDIA Enterprise Account by following these steps: https://docs.nvidia.com/grid/latest/grid-software-quick-start-guide/index.html#creating-nvidia-enterprise-account

2. To redeem the product activation keys (PAK), follow these steps: https://docs.nvidia.com/grid/latest/grid-software-quick-start-guide/index.html#redeeming-pak-and-downloading-grid-software

Download NVIDIA vGPU Software

To download the NVIDIA vGPU software, follow these steps:

1. After the product activation keys have seen successfully redeemed, login to the Enterprise NVIDIA Account (if needed): https://nvidia.flexnetoperations.com/control/nvda/content?partnerContentId=NvidiaHomeContent

2. Click Product Information and then NVIDIA Virtual GPU Software version 9.1 (https://nvidia.flexnetoperations.com/control/nvda/download?element=11233147 )

3. Click NVIDIA vGPU for vSphere 6.7 and download the zip file (NVIDIA-GRID-vSphere-6.7-430.46-431.79.zip).

4. Scroll down and click 2019.05 64-bit License Manager for Windows to download the License Manager software for the Windows (NVIDIA-ls-windows-64-bit-2019.05.0.26416627.zip).

Setup NVIDIA vGPU Software License Server

The NVIDIA vGPU software License Server is used to serve a pool of floating licenses to NVIDIA vGPU software licensed products. The license server is designed to be installed at a location that is accessible from a customer’s network and be configured with licenses obtained from the NVIDIA Software Licensing Center.

Refer to the NVIDIA Virtual GPU Software License Server Documentation: https://docs.nvidia.com/grid/ls/latest/grid-license-server-user-guide/index.html for setting up the vGPU software license server.

To setup a standalone license server, follow these steps:

1. Deploy a windows server 2012 VM with the following hardware parameters:

a. 2 vCPUs

b. 4GB RAM

c. 100GB HDD

d. 64-bit Operating System

e. Static IP address

f. Internet access

g. Latest version of Java Runtime Environment

2. Copy the previously downloaded License Manager installation file (NVIDIA-ls-windows-64-bit-2019.05.0.26416627.zip) to the above VM, unzip and double-click Setup-x64.exe to install the License Server.

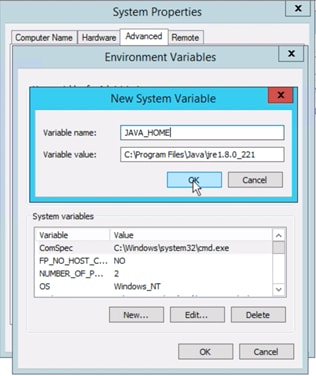

3. If a warning about JAVA_HOME environmental variable not defined is received, add the variable manually using the following steps:

a. Open Control Panel and change the view to Large Icons

b. Click and open System

c. Click and open Advanced system settings

d. Click on Environmental Variables

e. Click New under System variables

f. Add the variable name and path where Java Runtime Environment is deployed:

g. Click OK multiple times to accept the changes and close the configuration dialog boxes.

h. Run the installer again and follow the prompts.

4. When the installation is complete, open a web browser and enter the following URL to access the License Server: http://localhost:8080/licserver

![]() The license server uses Ports 8080 and 7070 to manager the server and for client registration. These ports should be enabled across the firewalls (if any).

The license server uses Ports 8080 and 7070 to manager the server and for client registration. These ports should be enabled across the firewalls (if any).

![]() In actual customer deployments, redundant license servers must be installed for high availability. Refer to the NVIDIA documentation for high availability requirements: https://docs.nvidia.com/grid/ls/latest/grid-license-server-user-guide/index.html#license-server-high-availability-requirements

In actual customer deployments, redundant license servers must be installed for high availability. Refer to the NVIDIA documentation for high availability requirements: https://docs.nvidia.com/grid/ls/latest/grid-license-server-user-guide/index.html#license-server-high-availability-requirements

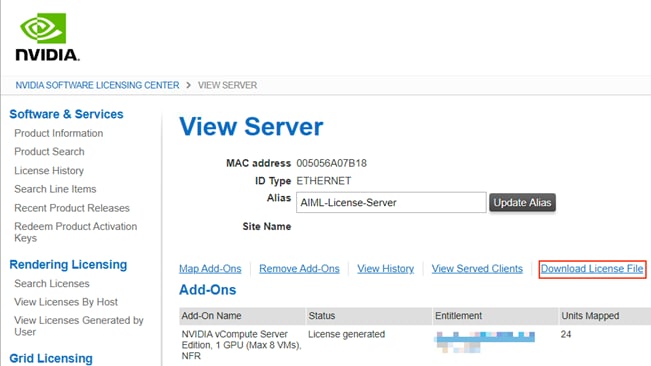

Register License Server to NVIDIA Software Licensing Center

To enable the License server to obtain and distribute licenses to the clients, the license server must be registered to NVIDIA Software Licensing Center. To do so, follow these steps:

1. Log into the NVIDIA Enterprise account and browse to NVIDIA Software License Center.

2. Click the Register License Server link.

3. The license server registration form requires the MAC address of the license server being registered. This information can be retrieved by opening the license server management interface (http://localhost:8080/licserver) and clicking Configuration.

4. Enter the MAC address and an alias and click Create.

5. On the next page, click Map Add-Ons to map the appropriate license feature(s).

6. On the following page, select the appropriate licensed feature (NVIDIA vCompute Server Edition) and quantity and click Map Add-Ons.

7. Click Download License File and copy this file over to the license server VM if the previous steps were performed in a different machine.

8. On the license server management console, click License Management and Choose File to select the file downloaded in the last step.

9. Click Upload to upload the file to the license server.

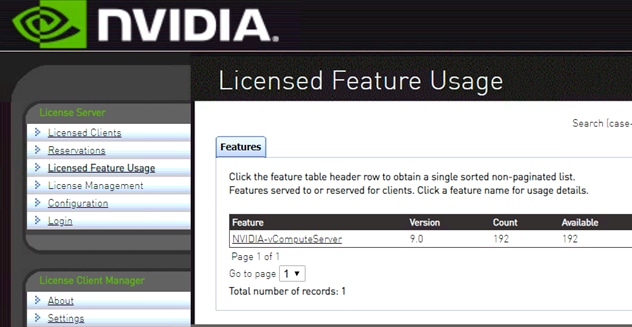

10. Click the License Feature Usage to verify the license was installed properly.

The License Server is now configured to serve licenses to the VMs.

Install NVIDIA vGPU Manager in ESXi

Before guests enabled for NVIDIA vGPU can be configured, the NVIDIA Virtual GPU Manager must be installed on the ESXi hosts. To do so, follow these steps:

1. Unzip the downloaded file NVIDIA-GRID-vSphere-6.7-430.46-431.79.zip to extract the software VIB file: NVIDIA-VMware_ESXi_6.7_Host_Driver-430.46-1OEM.670.0.0.8169922.x86_64.vib.

2. Copy the file to one of the shared datastores on the ESXi servers; in this example, the file was copied to the datastore infra_datastore_1.

3. Right-click the ESXi host and select Maintenance Mode -> Enter Maintenance Mode.

4. SSH to the ESXi server and install the vib file:

[root@AIML-ESXi:~] esxcli software vib install -v /vmfs/volumes/infra_datastore_1/NVIDIA-VMware_ESXi_6.7_Host_Driver-430.46-1OEM.670.0.0.8169922.x86_64.vib

Installation Result

Message: Operation finished successfully.

Reboot Required: false

VIBs Installed: NVIDIA_bootbank_NVIDIA-VMware_ESXi_6.7_Host_Driver_430.46-1OEM.670.0.0.8169922

VIBs Removed:

VIBs Skipped:

5. Reboot the host from vSphere client or from the CLI.

6. Log back into the host once the reboot completes and issue the following command to verify the driver installation on the ESXi host:

[root@AIML-ESXi:~] nvidia-smi

Fri Oct 11 05:33:09 2019

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 430.46 Driver Version: 430.46 CUDA Version: N/A |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

|===============================+======================+======================|

| 0 Tesla V100-SXM2... On | 00000000:1B:00.0 Off | 0 |

| N/A 43C P0 49W / 300W | 61MiB / 32767MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

| 1 Tesla V100-SXM2... On | 00000000:1C:00.0 Off | 0 |

| N/A 42C P0 46W / 300W | 61MiB / 32767MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

| 2 Tesla V100-SXM2... On | 00000000:42:00.0 Off | 0 |

| N/A 42C P0 45W / 300W | 61MiB / 32767MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

| 3 Tesla V100-SXM2... On | 00000000:43:00.0 Off | 0 |

| N/A 43C P0 43W / 300W | 61MiB / 32767MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

| 4 Tesla V100-SXM2... On | 00000000:89:00.0 Off | 0 |

| N/A 42C P0 46W / 300W | 61MiB / 32767MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

| 5 Tesla V100-SXM2... On | 00000000:8A:00.0 Off | 0 |

| N/A 42C P0 46W / 300W | 61MiB / 32767MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

| 6 Tesla V100-SXM2... On | 00000000:B2:00.0 Off | 0 |

| N/A 41C P0 45W / 300W | 61MiB / 32767MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

| 7 Tesla V100-SXM2... On | 00000000:B3:00.0 Off | 0 |

| N/A 41C P0 46W / 300W | 61MiB / 32767MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: GPU Memory |

| GPU PID Type Process name Usage |

|=============================================================================|

| 0 2102601 G Xorg 5MiB |

| 1 2102618 G Xorg 5MiB |

| 2 2102639 G Xorg 5MiB |

| 3 2102658 G Xorg 5MiB |

| 4 2102679 G Xorg 5MiB |

| 5 2102696 G Xorg 5MiB |

| 6 2102716 G Xorg 5MiB |

| 7 2102736 G Xorg 5MiB |

+-----------------------------------------------------------------------------+

![]() The output of the command “nvidia-smi” will vary depending on the ESXi host and the type and number of GPUs.

The output of the command “nvidia-smi” will vary depending on the ESXi host and the type and number of GPUs.

7. Right-click the ESXi host and select Maintenance Mode -> Exit Maintenance Mode.

8. Repeat these steps to install the vGPU manager on all the appropriate ESXi hosts.

Set the Host Graphics to SharedPassthru

A GPU card can be configured in shared virtual graphics mode or the vGPU (SharedPassthru) mode. For the AI/ML workloads, the NVIDIA card should be configured in the SharedPassthru mode. A server reboot is required when this setting is modified. To set the host graphics to SharedPassthru, follow these steps:

1. Click the ESXi host in the vSphere client and select Configure.

2. Scroll down and select Graphics and select Host Graphics from the main windows.

3. Click Edit.

4. Select Shared Direct and click OK.

5. Reboot the ESXi host after enabling Maintenance Mode. Remember to exit Maintenance Mode when the host comes back up.

6. Repeat these steps for all the appropriate ESXi hosts.

(Optional) Enabling vMotion with vGPU

To enable VMware vMotion with vGPU, an advanced vCenter Server setting must be enabled. To do so, follow these steps:

![]() For details about which VMware vSphere versions, NVIDIA GPUs, and guest OS releases support VM with vGPU migration, see: https://docs.nvidia.com/grid/latest/grid-vgpu-release-notes-vmware-vsphere/index.html

For details about which VMware vSphere versions, NVIDIA GPUs, and guest OS releases support VM with vGPU migration, see: https://docs.nvidia.com/grid/latest/grid-vgpu-release-notes-vmware-vsphere/index.html

1. Log into vCenter Server using the vSphere Web Client.

2. In the Hosts and Clusters view, select the vCenter Server instance.

![]() Ensure that the vCenter Server instance is selected, not the vCenter Server VM.

Ensure that the vCenter Server instance is selected, not the vCenter Server VM.

3. Click the Configure tab.

4. In the Settings section, select Advanced Settings and click Edit.

5. In the Edit Advanced vCenter Server Settings window that opens, type vGPU in the search field.

6. When the vgpu.hotmigrate.enabled setting appears, set the Enabled option and click OK.

Add a Port-Group to Access AI/ML NFS Share

Customers can choose to access the NFS share hosting Imagenet data (AI/ML dataset) in one of the following two ways:

1. Using a separate NIC assigned to the port-group setup to access AI/ML NFS VLAN (e.g. 3152).

2. Using the VM’s management interface if the network is setup for routing between VM’s IP address and the NFS interface IP address on FlashBlade.

In this deployment, a separate NIC was used to access the NFS share to keep the management traffic separate form NFS traffic and to be able to access the NFS share over directly connected network without having to route. To define a new port-group follow these steps for all the ESXi hosts:

![]() In this example, NFS VLAN was added to the vNIC template associated with vSwitch1. If a customer decides to use a different vSwitch or a distributed switch, select the appropriate vSwitch here.

In this example, NFS VLAN was added to the vNIC template associated with vSwitch1. If a customer decides to use a different vSwitch or a distributed switch, select the appropriate vSwitch here.

1. Log into the vSphere client and click on the host under Hosts and Clusters in the left side bar.

2. In the main window, select Configure > Networking > Virtual Switches.

3. Select ADD NETWORKING next to the vSwitch1.

4. In the Add Networking window, select Virtual Machine Port Group for a Standard Switch and click NEXT.

5. Select an existing vSwitch and make sure vSwitch1 is selected and click NEXT.

6. Provide a Network Label (e.g. 192-168-52-NFS) and VLAN (e.g. 3152). Click NEXT.

7. Verify the information and click FINISH.

The port-group is now configured to be assigned to the VMs.

Red Hat Enterprise Linux VM Setup

NVIDIA V100 and T4 GPUs support various vGPU profiles. These profiles, along with their intended use, are outlined in the NVIDIA documentation:

· NVIDIA T4 vGPU Types:

https://docs.nvidia.com/grid/latest/grid-vgpu-user-guide/index.html#vgpu-types-tesla-t4

· NVIDIA V100 SXM2 32GB vGPU Types:

https://docs.nvidia.com/grid/latest/grid-vgpu-user-guide/index.html#vgpu-types-tesla-v100-sxm2-32gb

· NVIDIA V100 PCIE 32GB vGPU Types:

https://docs.nvidia.com/grid/latest/grid-vgpu-user-guide/index.html#vgpu-types-tesla-v100-pcie-32gb

GPU profiles for VComputeServer workloads end with “C” in the profile name. For example, NVIDIA T4 GPU supports following vGPU profiles: T4-16C, T4-8C and T4-4C where 16, 8, and 4 represent frame buffer memory in GB. Because C-Series vComputeServer vGPUs have large BAR (Base Address Registers) memory settings, using these vGPUs has some restrictions in VMware ESXi:

· The guest OS must be a 64-bit OS.

· 64-bit MMIO and EFI boot must be enabled for the VM.

· The guest OS must be able to be installed in EFI boot mode.

· The VM’s MMIO space must be increased to 64 GB (refer to VMware KB article: https://kb.vmware.com/s/article/2142307). When using multiple vGPUs with single VM, this value might need to be increased to match the total memory for all the vGPUs.

· To use multiple vGPUs in a VM, set the VM compatibility to vSphere 6.7 U2.

![]() Refer to the NVIDIA vGPU software documentation: https://docs.nvidia.com/grid/latest/grid-vgpu-release-notes-vmware-vsphere/index.html#validated-platforms for various device settings and requirements

Refer to the NVIDIA vGPU software documentation: https://docs.nvidia.com/grid/latest/grid-vgpu-release-notes-vmware-vsphere/index.html#validated-platforms for various device settings and requirements

VM Hardware Setup

To setup a RHEL VM for running AI/ML workloads, follow these steps:

1. In the vSphere client, right-click in the ESXi host and select New Virtual Machine.

2. Select Create a new virtual machine and click NEXT.

3. Provide Virtual Machine Name and optionally select an appropriate folder. Click NEXT.

4. Make sure correct Host is selected and Compatibility checks succeeded. Click NEXT.

5. Select a datastore and click NEXT.

6. From the drop-down list, select ESXi 6.7 update 2 and later and click NEXT.

7. From the drop-down list, select Linux as the Guest OS Family and Red Hat Enterprise Linux 7 (64-bit) as the Guest OS Version. Click NEXT.

8. Change the number of CPUs and Memory to match workload requirements (8 vCPUs and 16GB memory was selected in this example).

9. Select appropriate network under NEW Network.

10. (Optional) Click ADD NEW DEVICE and add a second Network Adapter.

11. For the network, select the previously defined NFS Port-Group where AI/ML dataset (imagenet) can be accessed.

![]() This deployment assumes each ESXi host is pre-configured with a VM port-group providing layer-2 access to FlashBlade where Imagenet dataset is hosted.

This deployment assumes each ESXi host is pre-configured with a VM port-group providing layer-2 access to FlashBlade where Imagenet dataset is hosted.

![]() If this VM is going to be converted into a base OS template, do not add vGPUs at this time. The vGPUs will be added later.

If this VM is going to be converted into a base OS template, do not add vGPUs at this time. The vGPUs will be added later.

12. Click VM Options.

13. Expand Boot Options and under Firmware, select EFI (ignore the warning since this is a fresh install).

14. Expand Advanced and click EDIT CONFIGURATION…

15. Click ADD CONFIGURATION PARAMS twice and add pciPassthru.64bitMMIOSizeGB with value of 64* and pciPassthru.use64bitMMIO with value of TRUE. Click OK.

![]() This value should be adjusted based on the number of GPUs assigned to the VM. For example, if a VM is assigned 4 x 32GB V100 GPUs, this value should be 128.

This value should be adjusted based on the number of GPUs assigned to the VM. For example, if a VM is assigned 4 x 32GB V100 GPUs, this value should be 128.

16. Click NEXT and after verifying various selections, click FINISH.

17. Right-click the newly created VM and select Open Remote Console to bring up the console.

18. Click the Power On button.

Download RHEL 7.6 DVD ISO

If the RHEL DVD image has not been downloaded, follow these steps to download the ISO:

1. Click the following link RHEL 7.6 Binary DVD.

2. A user_id and password are required on the website (redhat.com) to download this software.

3. Download the .iso (rhel-server-7.6-x86_64-dvd.iso) file.

4. Follow the prompts to launch the KVM console.

Operating System Installation

To prepare the server for the OS installation, make sure the VM is powered on and follow these steps:

1. In the VMware Remote Console window, click VMRC -> Removable Devices -> CD/DVD Drive 1 -> Connect to Disk Image File (iso).

2. Browse and select the RHEL ISO file and click Open.

3. Press the Send Ctl+Alt+Del to Virtual machine button.

![]()

4. On reboot, the VM detects the presence of the RHEL installation media. From the Installation menu, use arrow keys to select Install Red Hat Enterprise Linux 7.6. This should stop automatic boot countdown.

5. Press Enter to continue the boot process.

6. After the installer finishes loading, select the language and press Continue.

7. On the Installation Summary screen, leave the software selection to Minimal Install.

![]() It might take a minute for the system to check the installation source. During this time, Installation Source will be grayed out. Wait for the system to load the menu items completely.

It might take a minute for the system to check the installation source. During this time, Installation Source will be grayed out. Wait for the system to load the menu items completely.

8. Click the Installation Destination to select the VMware Virtual disk as installation disk.

9. Leave Automatically configure partitioning checked and Click Done.

10. Click Begin Installation to start RHEL installation.

11. Enter and confirm the root password and click Done.

12. (Optional) Create another user for accessing the system.

13. After the installation is complete, click VMRC -> Removable Devices -> CD/DVD Drive 1 -> Disconnect <iso-file-name>

14. Click Reboot to reboot the system. The system should now boot up with RHEL.

![]() If the VM does not reboot properly and seems to hang, click the VMRC button and select Power -> Restart Guest.

If the VM does not reboot properly and seems to hang, click the VMRC button and select Power -> Restart Guest.

Network and Hostname Setup

Adding a management network for each VM is necessary for remotely logging in and managing the VM. During this configuration step, all the network interfaces and the hostname will be setup using the VMware Remote Console.

1. Log into the RHEL using the VMware Remote Console and make sure the VM has finished rebooting and login prompt is visible.

2. Log in as root, enter the password set during the initial setup.

3. After logging in, type nmtui and press <Return>.

4. Using the arrow keys, select Edit a connection and press <Return>.

5. In the connection list, select the connection with the lowest ID (ens192 in this example) and press <Return>.

6. When setting up the VM, the first interface should have been assigned to the management port-group. This can be verified by going to vSphere vCenter and clicking the VM. Under Summary -> VM Hardware, expand Network Adapter 1 and verify the MAC address and Network information.

7. This MAC address should match the MAC address information in the VMware Remote Console.

8. After the interface is correctly identified, in the Remote Console, using the arrow keys, scroll down to IPv4 CONFIGURATION <Automatic> and press <Return>. Select Manual.

9. Scroll to <Show> next to IPv4 CONFIGURATION and press <Return>.

10. Scroll to <Add…> next to Addresses and enter the management IP address with a subnet mask in the following format: x.x.x.x/nn (e.g. 192.168.169.121/24)

![]() Remember to enter a subnet mask when entering the IP address. The system will accept an IP address without a subnet mask and then assign a subnet mask of /32 causing connectivity issues.

Remember to enter a subnet mask when entering the IP address. The system will accept an IP address without a subnet mask and then assign a subnet mask of /32 causing connectivity issues.

11. Scroll down to Gateway and enter the gateway IP address.

12. Scroll down to <Add..> next to DNS server and add one or more DNS servers.

13. Scroll down to <Add…> next to Search Domains and add a domain (if applicable).

14. Scroll down to <Automatic> next to IPv6 CONFIGURATION and press <Return>.

15. Select Ignore and press <Return>.

16. Scroll down and check Automatically connect.

17. Scroll down to <OK> and press <Return>.

18. Repeat this procedure to setup the NFS* interface.

![]() * For the NFS interface, expand the Ethernet settings by selecting Show and set the MTU to 9000. Do not set a Gateway.

* For the NFS interface, expand the Ethernet settings by selecting Show and set the MTU to 9000. Do not set a Gateway.

19. Scroll down to <Back> and press <Return>.

20. From the main Network Manager TUI screen, scroll down to Set system hostname and press <Return>.

21. Enter the fully qualified domain name for the server and press <Return>.

22. Press <Return> and scroll down to Quit and press <Return> again.

23. At this point, the network services can be restarted for these changes to take effect. In the lab setup, the VM was rebooted (type reboot and press <Return>) to ensure all the changes were properly saved and applied across the future server reboots.

RHEL VM – Base Configuration

In this step, the following items are configured on the RHEL host:

· Setup Subscription Manager

· Enable repositories

· Install Net-Tools

· Install FTP

· Enable EPEL Repository

· Install NFS utilities and mount NFS share

· Update ENIC drivers

· Setup NTP

· Disable Firewall

· Install Kernel Headers

· Install gcc

· Install wget

· Install DKMS

Log into RHEL Host using SSH

To log in to the host(s), use an SSH client and connect to the previously configured management IP address of the host. Use the username: root and the <password> set up during RHEL installation.

Setup Subscription Manager

To setup the subscription manager, follow these steps:

1. To download and install packages, setup the subscription manager using valid redhat.com credentials:

[root@ rhel-tmpl~]# subscription-manager register --username= <Name> --password=<Password> --auto-attach

Registering to: subscription.rhsm.redhat.com:443/subscription

The system has been registered with ID: <***>

The registered system name is: rhel-tmpl.aiml.local

2. To verify the subscription status:

[root@ rhel-tmpl~]# subscription-manager attach --auto

Installed Product Current Status:

Product Name: Red Hat Enterprise Linux Server

Status: Subscribed

Enable Repositories

To setup repositories for downloading various software packages, run the following command:

[root@ rhel-tmpl~]# subscription-manager repos --enable="rhel-7-server-rpms" --enable="rhel-7-server-extras-rpms"

Repository 'rhel-7-server-rpms' is enabled for this system.

Repository 'rhel-7-server-extras-rpms' is enabled for this system.

Install Net-Tools and Verify MTU

To enable helpful network commands (including ifconfig), install net-tools:

[root@rhel-tmpl ~] yum install net-tools

Loaded plugins: product-id, search-disabled-repos, subscription-manager

<SNIP>

Installed:

net-tools.x86_64 0:2.0-0.25.20131004git.el7

Complete!

![]() Using the ifconfig command, verify the MTU is correctly set to 9000 on the NFS interface. If the MTU is not set correctly, modify the MTU and set it to 9000 (using nmtui).

Using the ifconfig command, verify the MTU is correctly set to 9000 on the NFS interface. If the MTU is not set correctly, modify the MTU and set it to 9000 (using nmtui).

Install FTP

Install the FTP client to enable copying files to the host using ftp:

[root@rhel-tmpl ~]# yum install ftp

Loaded plugins: product-id, search-disabled-repos, subscription-manager

epel/x86_64/metalink | 17 kB 00:00:00

<SNIP>

Installed:

ftp.x86_64 0:0.17-67.el7

Complete!

Enable EPEL Repository

EPEL (Extra Packages for Enterprise Linux) is open source and free community-based repository project from Fedora team which provides 100 percent high quality add-on software packages for Linux distribution including RHEL. Some of the packages installed later in the setup require EPEL repository to be enabled. To enable the repository, run the following:

[root@rhel-tmpl ~]# yum install -y https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm

Loaded plugins: product-id, search-disabled-repos, subscription-manager

epel-release-latest-7.noarch.rpm | 15 kB 00:00:00

Examining /var/tmp/yum-root-Gfcqhh/epel-release-latest-7.noarch.rpm: epel-release-7-12.noarch

Marking /var/tmp/yum-root-Gfcqhh/epel-release-latest-7.noarch.rpm to be installed

Resolving Dependencies

--> Running transaction check

---> Package epel-release.noarch 0:7-12 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

=========================================================================================================

Package Arch Version Repository Size

=========================================================================================================

Installing:

epel-release noarch 7-12 /epel-release-latest-7.noarch 24 k

Transaction Summary

=========================================================================================================

Install 1 Package

Total size: 24 k

Installed size: 24 k

Downloading packages:

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : epel-release-7-12.noarch 1/1

Verifying : epel-release-7-12.noarch 1/1

Installed:

epel-release.noarch 0:7-12

Install NFS Utilities and Mount NFS Share

To mount the NFS share on the host, NFS utilities need to be installed and the /etc/fstab file needs to be modified. To do so, follow these steps:

1. To install the nfs-utils:

[root@rhel-tmpl ~]# yum install nfs-utils

Loaded plugins: product-id, search-disabled-repos, subscription-manager

Resolving Dependencies

<SNIP>

Installed:

nfs-utils.x86_64 1:1.3.0-0.65.el7

Dependency Installed:

gssproxy.x86_64 0:0.7.0-26.el7 keyutils.x86_64 0:1.5.8-3.el7 libbasicobjects.x86_64 0:0.1.1-32.el7

libcollection.x86_64 0:0.7.0-32.el7 libevent.x86_64 0:2.0.21-4.el7 libini_config.x86_64 0:1.3.1-32.el7

libnfsidmap.x86_64 0:0.25-19.el7 libpath_utils.x86_64 0:0.2.1-32.el7 libref_array.x86_64 0:0.1.5-32.el7

libtirpc.x86_64 0:0.2.4-0.16.el7 libverto-libevent.x86_64 0:0.2.5-4.el7 quota.x86_64 1:4.01-19.el7

quota-nls.noarch 1:4.01-19.el7 rpcbind.x86_64 0:0.2.0-48.el7 tcp_wrappers.x86_64 0:7.6-77.el7

Complete!

2. Using text editor (such as vi), add the following line at the end of the /etc/fstab file:

<IP Address of NFS Interface>:/aiml /mnt/imagenet

nfs rw,bg,nointr,hard,tcp,vers=3,actimeo=0

where the /aiml is the NFS mount point (as defined in Pure Storage FlashBlade).

3. Verify that the updated /etc/fstab file looks like:

#

# /etc/fstab

# Created by anaconda on Wed Mar 27 18:33:36 2019

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/rhel01-root / xfs defaults,_netdev,_netdev 0 0

UUID=36f667cf-xxxxxxxxx /boot xfs defaults,_netdev,_netdev,x-initrd.mount 0 0

/dev/mapper/rhel01-home /home xfs defaults,_netdev,_netdev,x-initrd.mount 0 0

/dev/mapper/rhel01-swap swap swap defaults,_netdev,x-initrd.mount 0 0

192.168.52.241:/aiml /mnt/imagenet nfs rw,bg,nointr,hard,tcp,vers=3,actimeo=0

4. Issue the following commands to mount NFS at the following location: /mnt/imagenet

[root@rhel-tmpl ~]# mkdir /mnt/imagenet

[root@rhel-tmpl ~]# mount /mnt/imagenet

5. To verify that the mount was successful:

[root@rhel-tmpl ~]# mount | grep imagenet

192.168.52.241:/aiml on /mnt/aiml type nfs (rw,relatime,vers=3,rsize=524288,wsize=524288,namlen=255,acregmin=0,acregmax=0,acdirmin=0,acdirmax=0,hard,proto=tcp,timeo=600,retrans=2,sec=sys,mountaddr=192.168.52.241,mountvers=3,mountport=2049,mountproto=tcp,local_lock=none,addr=192.168.52.241)

Setup NTP

To setup NTP, follow these steps:

1. To synchronize the host time to an NTP server, install NTP package:

[root@rhel-tmpl ~]# yum install ntp

<SNIP>

Installed:

ntp.x86_64 0:4.2.6p5-29.el7

Dependency Installed:

autogen-libopts.x86_64 0:5.18-5.el7 ntpdate.x86_64 0:4.2.6p5-29.el7

2. If the default NTP servers defined in /etc/ntp.conf file are not reachable or to add additional local NTP servers, modify the /etc/ntp.conf file (using a text editor such as vi) and add the server(s) as shown below:

![]() “#” in front of a server name or IP address signifies that the server information is commented out and will not be used

“#” in front of a server name or IP address signifies that the server information is commented out and will not be used

[root@rhel-tmpl~]# more /etc/ntp.conf | grep server

server 192.168.169.1 iburst

# server 0.rhel.pool.ntp.org iburst

# server 1.rhel.pool.ntp.org iburst

# server 2.rhel.pool.ntp.org iburst

# server 3.rhel.pool.ntp.org iburst

3. To verify the time is setup correctly, use the date command:

Disable Firewall

To make sure the installation goes smoothly, Linux firewall and the Linux kernel security module (SEliniux) is disabled. To do so, follow these steps:

![]() The Customer Linux Server Management team should review and enable these security modules with appropriate settings once the installation is complete.

The Customer Linux Server Management team should review and enable these security modules with appropriate settings once the installation is complete.

1. To disable Firewall:

[root@rhel-tmpl ~]# systemctl stop firewalld

[root@rhel-tmpl ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multiuser.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

2. To disable SELinux:

[root@rhel-tmpl ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

[root@rhel-tmpl ~]# setenforce 0

3. Reboot the host:

[root@rhel-tmpl l~]# reboot

Disable IPv6 (Optional)

If the IPv6 addresses are not being used in the customer environment, IPv6 can be disabled on the RHEL host:

[root@rhel-tmpl ~]# echo 'net.ipv6.conf.all.disable_ipv6 = 1' >> /etc/sysctl.conf

[root@rhel-tmpl ~]# echo 'net.ipv6.conf.default.disable_ipv6 = 1' >> /etc/sysctl.conf

[root@rhel-tmpl ~]# echo 'net.ipv6.conf.lo.disable_ipv6 = 1' >> /etc/sysctl.conf

[root@rhel-tmpl ~]# reboot

Install Kernel Headers

To install the Kernel Headers, run the following commands:

[root@rhel-tmpl~]# uname -r

3.10.0-957.el7.x86_64

[root@rhel-tmpl~]# yum install kernel-devel-$(uname -r) kernel-headers-$(uname -r)

Loaded plugins: product-id, search-disabled-repos, subscription-manager

Resolving Dependencies

<SNIP>

Installed:

kernel-devel.x86_64 0:3.10.0-957.el7 kernel-headers.x86_64 0:3.10.0-957.el7

Dependency Installed:

perl.x86_64 4:5.16.3-294.el7_6 perl-Carp.noarch 0:1.26-244.el7

perl-Encode.x86_64 0:2.51-7.el7 perl-Exporter.noarch 0:5.68-3.el7

perl-File-Path.noarch 0:2.09-2.el7 perl-File-Temp.noarch 0:0.23.01-3.el7

perl-Filter.x86_64 0:1.49-3.el7 perl-Getopt-Long.noarch 0:2.40-3.el7

perl-HTTP-Tiny.noarch 0:0.033-3.el7 perl-PathTools.x86_64 0:3.40-5.el7

perl-Pod-Escapes.noarch 1:1.04-294.el7_6 perl-Pod-Perldoc.noarch 0:3.20-4.el7

perl-Pod-Simple.noarch 1:3.28-4.el7 perl-Pod-Usage.noarch 0:1.63-3.el7

perl-Scalar-List-Utils.x86_64 0:1.27-248.el7 perl-Socket.x86_64 0:2.010-4.el7

perl-Storable.x86_64 0:2.45-3.el7 perl-Text-ParseWords.noarch 0:3.29-4.el7

perl-Time-HiRes.x86_64 4:1.9725-3.el7 perl-Time-Local.noarch 0:1.2300-2.el7

perl-constant.noarch 0:1.27-2.el7 perl-libs.x86_64 4:5.16.3-294.el7_6

perl-macros.x86_64 4:5.16.3-294.el7_6 perl-parent.noarch 1:0.225-244.el7

perl-podlators.noarch 0:2.5.1-3.el7 perl-threads.x86_64 0:1.87-4.el7

perl-threads-shared.x86_64 0:1.43-6.el7

Complete!

Install gcc

To install the C compiler, run the following commands:

[root@rhel-tmpl ~]# yum install gcc-4.8.5

<SNIP>

Installed:

gcc.x86_64 0:4.8.5-39.el7

Dependency Installed:

cpp.x86_64 0:4.8.5-39.el7 glibc-devel.x86_64 0:2.17-292.el7 glibc-headers.x86_64 0:2.17-292.el7

libmpc.x86_64 0:1.0.1-3.el7 mpfr.x86_64 0:3.1.1-4.el7

Dependency Updated:

glibc.x86_64 0:2.17-292.el7 glibc-common.x86_64 0:2.17-292.el7 libgcc.x86_64 0:4.8.5-39.el7

libgomp.x86_64 0:4.8.5-39.el7

Complete!

[root@rhel-tmpl ~]# yum install gcc-c++

Loaded plugins: product-id, search-disabled-repos, subscription-manager

<SNIP>

Installed:

gcc-c++.x86_64 0:4.8.5-39.el7

Dependency Installed:

libstdc++-devel.x86_64 0:4.8.5-39.el7

Dependency Updated:

libstdc++.x86_64 0:4.8.5-39.el7

Complete!

Install wget

To install wget for downloading files from Internet, run the following command:

[root@rhel-tmpl ~]# yum install wget

Loaded plugins: product-id, search-disabled-repos, subscription-manager

Resolving Dependencies

<SNIP>

Installed:

wget.x86_64 0:1.14-18.el7_6.1

Install DKMS

To enable Dynamic Kernel Module Support, run the following command:

[root@rhel-tmpl ~]# yum install dkms

Loaded plugins: product-id, search-disabled-repos, subscription-manager

epel/x86_64/metalink | 17 kB 00:00:00

<SNIP>

Installed:

dkms.noarch 0:2.7.1-1.el7

Dependency Installed:

elfutils-libelf-devel.x86_64 0:0.176-2.el7 zlib-devel.x86_64 0:1.2.7-18.el7

Dependency Updated:

elfutils-libelf.x86_64 0:0.176-2.el7 elfutils-libs.x86_64 0:0.176-2.el7

Complete!

![]() A VM template can be created at this time for cloning any future VMs. NVIDIA driver installation is GPU specific and if customers have a mixed GPU environment, NVIDIA driver installation will have a dependency on GPU model.

A VM template can be created at this time for cloning any future VMs. NVIDIA driver installation is GPU specific and if customers have a mixed GPU environment, NVIDIA driver installation will have a dependency on GPU model.

NVIDIA and CUDA Drivers Installation

In this step, the following components will be installed:

· Add vGPU to the VM

· Install NVIDIA Driver

· Install CUDA Toolkit

Add vGPU to the VM

To add one or more vGPUs to the VM, follow these steps:

1. In the vSphere client, make sure the VM is shutdown. If not, shutdown the VM using VM console.

2. Right-click the VM and select Edit Settings…

3. Click ADD NEW DEVICE and select Shared PCI Device. Make sure NVIDIA GRID vGPU is shown for New PCI Device.

4. Click the arrow next to New PCI Device and select a GPU profile. For various GPU profile options, refer to the NVIDIA documentation.

5. Click Reserve all memory.

![]() Since all VM memory is reserved, vSphere vCenter generates memory usage alarms. These alarms can be ignored or disabled as described in the VMware documentation: https://kb.vmware.com/s/article/2149787

Since all VM memory is reserved, vSphere vCenter generates memory usage alarms. These alarms can be ignored or disabled as described in the VMware documentation: https://kb.vmware.com/s/article/2149787

6. (Optional) Repeat the process to add more PCI devices (vGPUs).

7. Click OK

8. Power On the VM.

![]() If the VM compatibility is not set to vSphere 6.7 Update 2, only one GPU can be added to the VM.

If the VM compatibility is not set to vSphere 6.7 Update 2, only one GPU can be added to the VM.

Install NVIDIA Driver

To install the NVIDIA Driver on the RHEL VM, follow these steps:

1. From the previously downloaded zip file NVIDIA-GRID-vSphere-6.7-430.46-431.79.zip, extract the LINUX driver file NVIDIA-Linux-x86_64-430.46-grid.run.

2. Copy the file to the VM using FTP or sFTP.

3. Install the driver by running the following command:

[root@rhel-tmpl ~]# sh NVIDIA-Linux-x86_64-430.46-grid.run

4. For “Would you like to register the kernel module sources with DKMS? This will allow DKMS to automatically build a new module, if you install a different kernel later”, select Yes.

5. Select OK for the X library path warning

6. (Optional) For “Install NVIDIA's 32-bit compatibility libraries?”, select Yes if 32-bit libraries are needed.

7. Select OK when the installation is complete.

8. Verify the correct vGPU profile is reported using the following command:

[root@rhel-tmpl ~]# nvidia-smi --query-gpu=gpu_name --format=csv,noheader --id=0 | sed -e 's/ /-/g'

GRID-V100DX-32C

9. Blacklist the Nouveau Driver by opening the /etc/modprobe.d/blacklist-nouveau.conf in a text editor (for example vi) and adding following commands: