FlashStack Virtual Server Infrastructure with Cisco UCS X-Series and VMware 7.0 U2


Published: February 2022


About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to:

http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series, Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries. (LDW_U2)

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2022 Cisco Systems, Inc. All rights reserved.

The Cisco UCS X-Series brings the following key advantages to the FlashStack infrastructure:

● Simpler and programmable infrastructure: Infrastructure as code delivered through an open application programming interface (API)

● Power and cooling innovations: Higher power headroom and lower energy loss because of 54V DC power delivery to the chassis

● Better airflow: Midplane-free design with fewer barriers, and thus lower impedance

● Fabric innovations: PCIe/Compute Express Link (CXL) topology for heterogeneous compute and memory composability

● Innovative cloud operations: Continuous feature delivery and no need to manage virtual machines

● Built for investment protection: Design-ready for future technologies such as liquid cooling and high-wattage CPUs; CXL-ready

In addition to the compute-specific hardware and software innovations, integration of the Cisco Intersight cloud platform with VMware vCenter and Pure Storage FlashArray delivers monitoring, orchestration, and workload optimization capabilities for different layers (virtualization and storage) of the FlashStack solution. The modular nature of the Cisco Intersight platform also provides an easy upgrade path to additional services such as workload optimization and Kubernetes.

Customers interested in understanding FlashStack design and deployment details, including the configuration of various elements of the design and associated best practices, should refer to the FlashStack Cisco Validated Designs at https://www.cisco.com/c/en/us/solutions/design-zone/data-center-design-guides/data-center-design-guides-all.html (FlashStack section).

Powered by the Cisco Intersight cloud operations platform, the Cisco UCS X-Series enables a next-generation, cloud-operated FlashStack infrastructure that not only simplifies data center management but also allows the infrastructure to adapt to the unpredictable needs of modern applications as well as traditional workloads. With the Cisco Intersight platform, you get all the benefits of SaaS delivery and full lifecycle management of Intersight-connected distributed servers and integrated Pure Storage FlashArray systems across data centers, remote sites, branch offices, and edge environments.

Audience

Purpose of this Document

What’s New in this Release?

● Integration of the Cisco UCS X-Series into FlashStack

● Management of the Cisco UCS X-Series from the cloud using the Cisco Intersight platform

● Integration of the Cisco Intersight platform with Pure Storage FlashArray for storage monitoring and orchestration

● Integration of the Cisco Intersight software with VMware vCenter for interacting with, monitoring, and orchestrating the virtual environment

Deployment Hardware and Software

Architecture

The FlashStack VSI with Cisco UCS X-Series and VMware vSphere 7.0 U2 delivers a cloud-managed infrastructure solution on the latest Cisco UCS hardware. The VMware vSphere 7.0 U2 hypervisor is installed on Cisco UCS X210c M6 Compute Nodes configured for a stateless compute design using boot from SAN. Pure Storage FlashArray//X50 R3 provides the storage infrastructure for the VMware environment. The Cisco Intersight cloud-management platform is used to configure and manage the infrastructure. The solution requirements and design details are covered in this section.

Requirements

The FlashStack VSI with Cisco UCS X-Series meets the following general design requirements:

● Resilient design across all layers of the infrastructure with no single point of failure

● Scalable design with the flexibility to add compute and storage capacity or network bandwidth as needed

● Modular design that can be replicated to expand and grow as the needs of the business grow

● Flexible design that can support different models of various components with ease

● Simplified design with the ability to integrate and automate with external automation tools

● Cloud-enabled design that can be configured, managed, and orchestrated from the cloud using a GUI or APIs

Physical Topology

FlashStack with Cisco UCS X-Series supports both IP-based and Fibre Channel (FC)-based storage access designs. For the IP-based solution, iSCSI is configured on Cisco UCS and Pure Storage FlashArray to set up storage access, including boot from SAN for the compute nodes. For the FC-based design, Pure Storage FlashArray and Cisco UCS X-Series are connected through Cisco MDS 9132T switches, and storage access, including boot from SAN, is provided over the Fibre Channel network. The physical connectivity details for both designs are explained below.

IP-based Storage Access

The physical topology for the IP-based FlashStack is shown in Figure 1.

To validate the IP-based storage access in a FlashStack configuration, the components are set up as follows:

● Cisco UCS 6454 Fabric Interconnects provide the chassis and network connectivity.

● The Cisco UCS X9508 Chassis connects to fabric interconnects using Cisco UCSX 9108-25G intelligent fabric modules (IFMs), where four 25 Gigabit Ethernet ports are used on each IFM to connect to the appropriate FI. If additional bandwidth is required, all eight 25G ports can be utilized.

● Cisco UCS X210c M6 Compute Nodes contain fourth-generation Cisco UCS VIC 14425 virtual interface cards.

● Cisco Nexus 93180YC-FX3 Switches in Cisco NX-OS mode provide the switching fabric.

● Cisco UCS 6454 Fabric Interconnect 100-Gigabit Ethernet uplink ports connect to Cisco Nexus 93180YC-FX3 Switches in a Virtual Port Channel (vPC) configuration.

● The Pure Storage FlashArray//X50 R3 connects to the Cisco Nexus 93180YC-FX3 switches using four 25 Gigabit Ethernet ports.

● VMware ESXi 7.0 U2 is installed on the Cisco UCS X210c M6 Compute Nodes to validate the infrastructure.
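The chassis-to-fabric bandwidth implied by this cabling follows from simple arithmetic. The Python sketch below assumes one IFM per fabric (IFM A cabled to FI A and IFM B to FI B, as in the cabling tables) and counts only the nominal 25G line rate, ignoring protocol overhead; the function names are illustrative.

```python
# Sketch: nominal chassis-to-FI bandwidth for the Cisco UCSX 9108-25G IFM cabling.
# Assumption: one IFM per fabric (IFM A to FI A, IFM B to FI B); no protocol overhead.

PORT_SPEED_GBPS = 25   # each IFM uplink is a 25 Gigabit Ethernet port
MAX_PORTS_PER_IFM = 8  # the 9108-25G has eight 25G uplink ports
IFMS_PER_CHASSIS = 2   # one IFM for fabric A and one for fabric B


def ifm_bandwidth_gbps(ports_per_ifm: int) -> int:
    """Uplink bandwidth from one IFM to its fabric interconnect."""
    if not 1 <= ports_per_ifm <= MAX_PORTS_PER_IFM:
        raise ValueError(f"ports_per_ifm must be 1-{MAX_PORTS_PER_IFM}")
    return ports_per_ifm * PORT_SPEED_GBPS


def chassis_bandwidth_gbps(ports_per_ifm: int) -> int:
    """Uplink bandwidth for the whole chassis across both fabrics."""
    return IFMS_PER_CHASSIS * ifm_bandwidth_gbps(ports_per_ifm)


if __name__ == "__main__":
    for ports in (4, 8):  # validated cabling, then all eight ports
        print(f"{ports} ports per IFM: {ifm_bandwidth_gbps(ports)} Gbps per fabric, "
              f"{chassis_bandwidth_gbps(ports)} Gbps per chassis")
```

With the validated four-port cabling, each fabric gets 100 Gbps of chassis uplink bandwidth (200 Gbps per chassis); cabling all eight ports doubles both figures.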

FC-based Storage Access

Figure 2 illustrates the FlashStack physical topology for FC connectivity.

To validate the FC-based storage access in a FlashStack configuration, the components are set up as follows:

● Cisco UCS 6454 Fabric Interconnects provide the chassis and network connectivity.

● The Cisco UCS X9508 Chassis connects to fabric interconnects using Cisco UCSX 9108-25G Intelligent Fabric Modules (IFMs), where four 25 Gigabit Ethernet ports are used on each IFM to connect to the appropriate FI.

● Cisco UCS X210c M6 Compute Nodes contain fourth-generation Cisco UCS VIC 14425 virtual interface cards.

● Cisco Nexus 93180YC-FX3 Switches in Cisco NX-OS mode provide the switching fabric.

● Cisco UCS 6454 Fabric Interconnect 100 Gigabit Ethernet uplink ports connect to Cisco Nexus 93180YC-FX3 Switches in a vPC configuration.

● Cisco UCS 6454 Fabric Interconnects are connected to the Cisco MDS 9132T switches using 32-Gbps Fibre Channel connections configured as a single port channel for SAN connectivity.

● The Pure Storage FlashArray//X50 R3 connects to the Cisco MDS 9132T switches using 32-Gbps Fibre Channel connections for SAN connectivity.

● VMware ESXi 7.0 U2 is installed on the Cisco UCS X210c M6 Compute Nodes to validate the infrastructure.

Deployment Hardware and Software

Table 1 lists the hardware and software versions used during solution validation. The validated FlashStack solution described in this document adheres to the Cisco, Pure Storage, and VMware interoperability matrices, which determine support for the various software and driver versions. Customers should use the same interoperability matrices to determine support for any components that differ from the current validated design.

Click the following links for more information:

● Cisco UCS Hardware and Software Interoperability Tool: http://www.cisco.com/web/techdoc/ucs/interoperability/matrix/matrix.html

● Pure Storage Interoperability (requires a Pure Storage support login): https://support.purestorage.com/FlashArray/Getting_Started/Compatibility_Matrix

● Pure Storage FlashStack Compatibility Matrix (requires a Pure Storage support login): https://support.purestorage.com/FlashStack/Product_Information/FlashStack_Compatibility_Matrix

● VMware Compatibility Guide: http://www.vmware.com/resources/compatibility/search.php

Additionally, FlashStack deployments should align with the recommended release for the Cisco Nexus 9000 switches used in the architecture.

Table 1. Hardware and Software Revisions

| Layer | Component | Software |
|---|---|---|
| Network | Cisco Nexus 93180YC-FX3 | 9.3(7) |
| | Cisco MDS 9132T | 8.4(2c) |
| Compute | Cisco UCS Fabric Interconnect 6454 and UCSX 9108-25G IFM | 4.2(1h) |
| | Cisco UCS X210c with VIC 14425 | 5.0(1b) |
| | VMware ESXi | 7.0 U2a |
| | Cisco VIC ENIC Driver for ESXi | 1.0.35.0 |
| | Cisco VIC FNIC Driver for ESXi | 5.0.0.15 |
| | VMware vCenter Appliance | 7.0 U2b |
| | Cisco Intersight Assist Virtual Appliance | 1.0.9-342 |
| Storage | Pure Storage FlashArray//X50 R3 | 6.1.11 |
| | Pure Storage VASA Provider | 3.5 |
| | Pure Storage Plugin | 5.0.0 |
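Because any component that differs from Table 1 has to be checked against the interoperability matrices, a quick diff of an environment against the validated versions shows where to look. A minimal Python sketch, assuming a hand-built inventory dictionary keyed by the Table 1 component names (the inventory is hypothetical, and collecting versions from the devices is not shown):

```python
# Sketch: flag components whose deployed version differs from Table 1.
# The deployed inventory is hypothetical input; collecting it is out of scope here.

VALIDATED = {
    "Cisco Nexus 93180YC-FX3": "9.3(7)",
    "Cisco MDS 9132T": "8.4(2c)",
    "Cisco UCS Fabric Interconnect 6454 and UCSX 9108-25G IFM": "4.2(1h)",
    "Cisco UCS X210c with VIC 14425": "5.0(1b)",
    "VMware ESXi": "7.0 U2a",
    "Cisco VIC ENIC Driver for ESXi": "1.0.35.0",
    "Cisco VIC FNIC Driver for ESXi": "5.0.0.15",
    "VMware vCenter Appliance": "7.0 U2b",
    "Cisco Intersight Assist Virtual Appliance": "1.0.9-342",
    "Pure Storage FlashArray//X50 R3": "6.1.11",
    "Pure Storage VASA Provider": "3.5",
    "Pure Storage Plugin": "5.0.0",
}


def version_drift(deployed: dict[str, str]) -> dict[str, tuple[str, str]]:
    """Map each Table 1 component whose version differs to (validated, deployed)."""
    return {
        name: (VALIDATED[name], version)
        for name, version in deployed.items()
        if name in VALIDATED and VALIDATED[name] != version
    }


def unlisted_components(deployed: dict[str, str]) -> list[str]:
    """Components absent from Table 1, which need their own interoperability check."""
    return sorted(name for name in deployed if name not in VALIDATED)


if __name__ == "__main__":
    deployed = {  # hypothetical inventory
        "Cisco MDS 9132T": "8.4(2c)",
        "VMware ESXi": "7.0 U3",
        "Cisco Nexus 9336C-FX2": "9.3(7)",
    }
    print(version_drift(deployed))        # {'VMware ESXi': ('7.0 U2a', '7.0 U3')}
    print(unlisted_components(deployed))  # ['Cisco Nexus 9336C-FX2']
```

Anything either function reports is a candidate for a lookup in the matrices listed above, not automatically an unsupported configuration.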

This document details the step-by-step configuration of a fully redundant and highly available Virtual Server Infrastructure built on Cisco and Pure Storage components. Each step identifies the component being configured, using either 01 and 02 or A and B. For example, controller-1 and controller-2 identify the two controllers within the Pure Storage FlashArray//X provisioned in this document, and Cisco Nexus A and Cisco Nexus B identify the pair of Cisco Nexus switches that are configured. The Cisco UCS fabric interconnects are identified in the same way. This document also details the steps for provisioning multiple Cisco UCS hosts, which are identified as VM-Host-Infra-FCP-01 and VM-Host-Infra-FCP-02 to represent the Fibre Channel-booted infrastructure and production hosts deployed to the fabric interconnects. Finally, <<text>> appears as part of the command structure wherever you need to supply information pertinent to your environment. The following is an example of a configuration step for both Cisco Nexus switches:

BB08-93180YC-FX-A (config)# ntp server <<var_oob_ntp>> use-vrf management
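The <<text>> convention maps naturally onto simple templating. The following Python sketch is a hypothetical helper, not part of any Cisco or Pure Storage tool: it substitutes values into a documented command and refuses to return a command that still contains an unfilled placeholder. The value 10.1.164.254 is the <<var_oob_ntp>> example from Table 4.

```python
import re

# Matches the document's <<name>> placeholders. Names may contain letters, digits,
# underscores, and hyphens (some Table 4 variables use hyphens).
PLACEHOLDER = re.compile(r"<<([A-Za-z0-9_-]+)>>")


def render(template: str, values: dict[str, str]) -> str:
    """Substitute every <<name>> in template; refuse to return a partially filled command."""
    needed = {match.group(1) for match in PLACEHOLDER.finditer(template)}
    missing = sorted(needed - set(values))
    if missing:
        raise KeyError(f"no value supplied for: {', '.join(missing)}")
    return PLACEHOLDER.sub(lambda match: values[match.group(1)], template)


if __name__ == "__main__":
    step = "ntp server <<var_oob_ntp>> use-vrf management"
    print(render(step, {"var_oob_ntp": "10.1.164.254"}))
    # ntp server 10.1.164.254 use-vrf management
```

Failing on a missing value, rather than leaving the placeholder in place, avoids pasting a literal <<var_...>> string into a switch configuration.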

This document is intended to enable you to fully configure the customer environment. In this process, various steps require you to insert customer-specific naming conventions, IP addresses, and VLAN schemes, as well as to record appropriate MAC addresses. Table 2 lists the VLANs necessary for deployment as outlined in this guide, and Table 3 lists the virtual machines (VMs) necessary for deployment.

Table 2. Necessary VLANs

| VLAN ID | Name | Usage |
|---|---|---|
| 2 | Native-VLAN | Use VLAN 2 as the native VLAN instead of the default VLAN (1) |
| 15 | OOB-MGMT-VLAN | Out-of-band management VLAN to connect the management ports for various devices |
| 115 | IB-MGMT-VLAN | In-band management VLAN used for all in-band management connectivity (for example, ESXi hosts and VM management) |
| 1101 | VM-Traffic-VLAN | VM data traffic VLAN |
| 1130 | vMotion-VLAN | VMware vMotion traffic |
| 901* | iSCSI-A-VLAN | iSCSI-A path for supporting boot from SAN for both Cisco UCS B-Series and Cisco UCS C-Series servers |
| 902* | iSCSI-B-VLAN | iSCSI-B path for supporting boot from SAN for both Cisco UCS B-Series and Cisco UCS C-Series servers |

Note: * Required only if iSCSI storage access is implemented.
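Table 2 can be turned into switch configuration mechanically. A minimal Python sketch that renders the VLANs as NX-OS vlan/name stanzas, rejecting duplicate IDs and IDs outside 2-4094, and omitting the optional iSCSI VLANs when iSCSI is not used. Which VLANs belong on which switch is left to the procedures in this guide; the function name is illustrative.

```python
# Sketch: render Table 2 as NX-OS "vlan <id>" / "name <name>" stanzas.
# Rows mirror Table 2; the boolean marks the optional iSCSI VLANs (901*, 902*).

VLANS = [
    (2, "Native-VLAN", False),
    (15, "OOB-MGMT-VLAN", False),
    (115, "IB-MGMT-VLAN", False),
    (1101, "VM-Traffic-VLAN", False),
    (1130, "vMotion-VLAN", False),
    (901, "iSCSI-A-VLAN", True),
    (902, "iSCSI-B-VLAN", True),
]


def vlan_config(vlans: list[tuple[int, str, bool]], include_iscsi: bool) -> str:
    """Return NX-OS VLAN stanzas, validating IDs and skipping iSCSI VLANs if not needed."""
    seen: set[int] = set()
    lines: list[str] = []
    for vlan_id, name, iscsi_only in vlans:
        # VLAN 1 is the default VLAN, which this design deliberately avoids.
        if not 2 <= vlan_id <= 4094:
            raise ValueError(f"VLAN {vlan_id} is outside 2-4094")
        if vlan_id in seen:
            raise ValueError(f"VLAN {vlan_id} is defined more than once")
        seen.add(vlan_id)
        if iscsi_only and not include_iscsi:
            continue
        lines += [f"vlan {vlan_id}", f"  name {name}"]
    return "\n".join(lines)


if __name__ == "__main__":
    print(vlan_config(VLANS, include_iscsi=False))
```

Calling vlan_config(VLANS, include_iscsi=True) adds the stanzas for VLANs 901 and 902.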

Table 3 lists the VMs necessary for deployment as outlined in this document.

Table 3. Virtual Machines

| Virtual Machine Description | Host Name | IP Address |
|---|---|---|
| vCenter Server | | |
| Cisco Data Center Network Manager (DCNM) | | |
| Cisco Intersight Assist | | |

Table 4. Configuration Variables

| Variable Name | Variable Description | Customer Value |
|---|---|---|
| <<var_nexus_A_hostname>> | Cisco Nexus switch A host name (Example: BB08-93180YC-FX-A) | |
| <<var_nexus_A_mgmt_ip>> | Out-of-band management IP for Cisco Nexus switch A (Example: 10.1.164.61) | |
| <<var_oob_mgmt_mask>> | Out-of-band network mask (Example: 255.255.255.0) | |
| <<var_oob_gateway>> | Out-of-band network gateway (Example: 10.1.164.254) | |
| <<var_oob_ntp>> | Out-of-band management network NTP server (Example: 10.1.164.254) | |
| <<var_nexus_B_hostname>> | Cisco Nexus switch B host name (Example: BB08-93180YC-FX-B) | |
| <<var_nexus_B_mgmt_ip>> | Out-of-band management IP for Cisco Nexus switch B (Example: 10.1.164.62) | |
| <<var_flasharray_hostname>> | Array host name set during setup (Example: BB08-FlashArrayR3) | |
| <<var_flasharray_vip>> | Virtual IP that answers for the active management controller (Example: 10.2.164.100) | |
| <<var_controller-1_mgmt_ip>> | Out-of-band management IP for FlashArray controller-1 (Example: 10.2.164.101) | |
| <<var_controller-1_mgmt_mask>> | Out-of-band management network netmask (Example: 255.255.255.0) | |
| <<var_controller-1_mgmt_gateway>> | Out-of-band management network default gateway (Example: 10.2.164.254) | |
| <<var_controller-2_mgmt_ip>> | Out-of-band management IP for FlashArray controller-2 (Example: 10.2.164.102) | |
| <<var_controller-2_mgmt_mask>> | Out-of-band management network netmask (Example: 255.255.255.0) | |
| <<var_controller-2_mgmt_gateway>> | Out-of-band management network default gateway (Example: 10.2.164.254) | |
| <<var_password>> | Administrative password (Example: Fl@shSt4x) | |
| <<var_dns_domain_name>> | DNS domain name (Example: flashstack.cisco.com) | |
| <<var_nameserver_ip>> | DNS server IP(s) (Example: 10.1.164.125) | |
| <<var_smtp_ip>> | Email relay server IP address or FQDN (Example: smtp.flashstack.cisco.com) | |
| <<var_smtp_domain_name>> | Email domain name (Example: flashstack.cisco.com) | |
| <<var_timezone>> | FlashStack time zone (Example: America/New_York) | |
| <<var_oob_mgmt_vlan_id>> | Out-of-band management network VLAN ID (Example: 15) | |
| <<var_ib_mgmt_vlan_id>> | In-band management network VLAN ID (Example: 215) | |
| <<var_ib_mgmt_vlan_netmask_length>> | Length of the IB-MGMT-VLAN netmask (Example: /24) | |
| <<var_ib_gateway_ip>> | In-band management network gateway IP (Example: 10.2.164.254) | |
| <<var_vmotion_vlan_id>> | vMotion network VLAN ID (Example: 1130) | |
| <<var_vmotion_vlan_netmask_length>> | Length of the vMotion VLAN netmask (Example: /24) | |
| <<var_native_vlan_id>> | Native network VLAN ID (Example: 2) | |
| <<var_app_vlan_id>> | Example application network VLAN ID (Example: 1101) | |
| <<var_snmp_contact>> | Administrator e-mail address (Example: admin@flashstack.cisco.com) | |
| <<var_snmp_location>> | Cluster location string (Example: RTP1-AA19) | |
| <<var_mds_A_mgmt_ip>> | Cisco MDS A management IP address (Example: 10.1.164.63) | |
| <<var_mds_A_hostname>> | Cisco MDS A host name (Example: BB08-MDS-9132T-A) | |
| <<var_mds_B_mgmt_ip>> | Cisco MDS B management IP address (Example: 10.1.164.64) | |
| <<var_mds_B_hostname>> | Cisco MDS B host name (Example: BB08-MDS-9132T-B) | |
| <<var_vsan_a_id>> | VSAN used for the A fabric between the FlashArray, MDS, and FI (Example: 100) | |
| <<var_vsan_b_id>> | VSAN used for the B fabric between the FlashArray, MDS, and FI (Example: 200) | |
| <<var_ucs_clustername>> | Cisco UCS Manager cluster host name (Example: BB08-FI-6454) | |
| <<var_ucs_a_mgmt_ip>> | Cisco UCS fabric interconnect (FI) A out-of-band management IP address (Example: 10.1.164.51) | |
| <<var_ucs_mgmt_vip>> | Cisco UCS fabric interconnect (FI) cluster out-of-band management IP address (Example: 10.1.164.50) | |
| <<var_ucs_b_mgmt_ip>> | Cisco UCS fabric interconnect (FI) B out-of-band management IP address (Example: 10.1.164.52) | |
| <<var_vm_host_fc_01_ip>> | VMware ESXi host 01 in-band management IP (Example: 10.1.164.111) | |
| <<var_vm_host_fc_vmotion_01_ip>> | VMware ESXi host 01 vMotion IP (Example: 192.168.130.101) | |
| <<var_vm_host_fc_02_ip>> | VMware ESXi host 02 in-band management IP (Example: 10.1.164.112) | |
| <<var_vm_host_fc_vmotion_02_ip>> | VMware ESXi host 02 vMotion IP (Example: 192.168.130.102) | |
| <<var_vmotion_subnet_mask>> | vMotion subnet mask (Example: 255.255.255.0) | |
| <<var_vcenter_server_ip>> | IP address of the vCenter Server (Example: 10.1.164.110) | |
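Several Table 4 variables must agree with one another; for example, each management IP address and its default gateway must sit in the same subnet. A minimal sketch using Python's standard ipaddress module, with Table 4 example values as input (replace them with your own; the function name is illustrative):

```python
import ipaddress


def gateway_on_subnet(host_ip: str, netmask: str, gateway: str) -> bool:
    """True if gateway lies in the IPv4 subnet defined by host_ip and netmask."""
    # strict=False lets us pass a host address rather than the network address.
    network = ipaddress.IPv4Network(f"{host_ip}/{netmask}", strict=False)
    return ipaddress.IPv4Address(gateway) in network


# Example values from Table 4; replace them with your own.
CHECKS = {
    "Cisco Nexus switch A": ("10.1.164.61", "255.255.255.0", "10.1.164.254"),
    "FlashArray controller-1": ("10.2.164.101", "255.255.255.0", "10.2.164.254"),
}

if __name__ == "__main__":
    for device, (ip, mask, gateway) in CHECKS.items():
        status = "ok" if gateway_on_subnet(ip, mask, gateway) else "gateway not on subnet"
        print(f"{device}: {status}")
```

For instance, gateway_on_subnet("10.2.164.102", "255.255.255.0", "10.2.165.254") returns False, because 10.2.165.254 lies outside 10.2.164.0/24.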

Physical Infrastructure

The information in this section is provided as a reference for cabling the physical equipment in a FlashStack environment. A cabling diagram is provided to simplify the cabling requirements.

The cabling diagram in this section details the prescribed and supported configuration of the Pure Storage FlashArray//X50 R3 running Purity//FA 6.1.11.

This document assumes that out-of-band management ports are plugged into an existing management infrastructure at the deployment site. These interfaces will be used in various configuration steps.

Note: Make sure to use the cabling directions in this section as a guide.

Figure 3 details the cable connections used in the validation lab for the FlashStack topology based on the Cisco UCS 6454 fabric interconnects. Four 32Gb uplinks from each Cisco MDS switch connect as a port channel to its Cisco UCS fabric interconnect. A total of eight 32Gb links connect the Cisco MDS switches to the Pure Storage FlashArray//X50 R3 controllers; four of these are used for scsi-fc and the other four for nvme-fc. 100Gb links connect the Cisco UCS fabric interconnects to the Cisco Nexus switches, and 25Gb links (required only for iSCSI storage access) connect the FlashArray controllers to the Cisco Nexus switches. Additional 1Gb management connections are needed for an out-of-band network switch that sits apart from the FlashStack infrastructure. Each Cisco UCS fabric interconnect, Cisco Nexus switch, and FlashArray controller has a connection to the out-of-band network switch. Layer 3 network connectivity is required between the out-of-band (OOB) and in-band (IB) management subnets.

Note: Although the following diagram includes the Cisco UCS 5108 chassis with Cisco UCS B-Series M6 servers, this document describes the configuration of only Cisco UCS X210c M6 servers in the Cisco UCS X9508 chassis. However, Cisco UCS X9508 chassis with X210c M6 servers and Cisco UCS 5108 chassis with Cisco UCS B200 M6 servers can be connected to the same set of fabric interconnects with common management using Cisco Intersight.

Note: The Cisco UCS fabric interconnects can connect to the Cisco Nexus 93180YC-FX switches using either 100GbE or 25GbE ports, depending on bandwidth requirements. This document uses 100GbE ports, for an aggregate bandwidth of 200 Gbps per port channel from each Cisco UCS FI to the Cisco Nexus switches.

Note: * iSCSI connectivity is required only if iSCSI storage access is implemented.
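The link counts above translate into nominal per-fabric bandwidth. This Python sketch treats 32G FC as a nominal 32 Gbps and 100GbE as 100 Gbps, ignoring encoding and protocol overhead, so the figures are upper bounds rather than measured throughput; the constant and function names are illustrative.

```python
# Sketch: nominal per-fabric uplink bandwidth for the validated cabling (no overhead).

FC_LINKS_PER_FI = 4      # 32G FC links from each FI to its MDS switch, in one port channel
FC_SPEED_GBPS = 32
ETH_LINKS_PER_FI = 2     # 100GbE links from each FI, one to each Cisco Nexus switch (vPC)
ETH_SPEED_GBPS = 100
ARRAY_FC_LINKS = {"scsi-fc": 4, "nvme-fc": 4}  # 32G links from the MDS switches to the array


def fabric_uplinks_gbps() -> dict[str, int]:
    """Nominal bandwidth of each FI's FC and Ethernet port channels."""
    return {
        "fc_port_channel": FC_LINKS_PER_FI * FC_SPEED_GBPS,
        "eth_port_channel": ETH_LINKS_PER_FI * ETH_SPEED_GBPS,
    }


def array_fc_gbps() -> dict[str, int]:
    """Nominal FC bandwidth into the FlashArray per protocol, across both fabrics."""
    return {proto: links * FC_SPEED_GBPS for proto, links in ARRAY_FC_LINKS.items()}


if __name__ == "__main__":
    print(fabric_uplinks_gbps())  # {'fc_port_channel': 128, 'eth_port_channel': 200}
    print(array_fc_gbps())        # {'scsi-fc': 128, 'nvme-fc': 128}
```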

Table 5. Cisco Nexus 93180YC-FX-A Cabling Information

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Cisco Nexus 93180YC-FX-A | Eth 1/49 | 100GbE | Cisco UCS 6454-A | Eth 1/49 |
| | Eth 1/50 | 100GbE | Cisco UCS 6454-B | Eth 1/49 |
| | Eth 1/53 | 100GbE | Cisco Nexus 93180YC-FX-B | Eth 1/53 |
| | Eth 1/54 | 100GbE | Cisco Nexus 93180YC-FX-B | Eth 1/54 |
| | Eth 1/9 | 10GbE or 25GbE | Upstream Network Switch | Any |
| | Mgmt0 | 1GbE | 1GbE Management Switch | Any |
| | Eth 1/37 * | 25GbE | FlashArray//X50 R3 Controller 1 | CT0.ETH4 |
| | Eth 1/38 * | 25GbE | FlashArray//X50 R3 Controller 2 | CT1.ETH4 |

Table 6. Cisco Nexus 93180YC-FX-B Cabling Information

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Cisco Nexus 93180YC-FX-B | Eth 1/49 | 100GbE | Cisco UCS 6454-A | Eth 1/50 |
| | Eth 1/50 | 100GbE | Cisco UCS 6454-B | Eth 1/50 |
| | Eth 1/9 | 10GbE or 25GbE | Upstream Network Switch | Any |
| | Mgmt0 | 1GbE | 1GbE Management Switch | Any |
| | Eth 1/37 * | 25GbE | FlashArray//X50 R3 Controller 1 | CT0.ETH5 |
| | Eth 1/38 * | 25GbE | FlashArray//X50 R3 Controller 2 | CT1.ETH5 |

Table 7. Cisco UCS 6454-A Cabling Information

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Cisco UCS 6454-A | Eth 1/49 | 100GbE | Cisco Nexus 93180YC-FX-A | Eth 1/49 |
| | Eth 1/50 | 100GbE | Cisco Nexus 93180YC-FX-B | Eth 1/49 |
| | Eth 1/17 | 25GbE | Cisco UCS Chassis 1 IFM 9108-25G A | IFM 1/1 |
| | Eth 1/18 | 25GbE | Cisco UCS Chassis 1 IFM 9108-25G A | IFM 1/2 |
| | Eth 1/19 | 25GbE | Cisco UCS Chassis 1 IFM 9108-25G A | IFM 1/3 |
| | Eth 1/20 | 25GbE | Cisco UCS Chassis 1 IFM 9108-25G A | IFM 1/4 |
| | FC1/1 | 32Gb FC | Cisco MDS 9132T-A | FC1/5 |
| | FC1/2 | 32Gb FC | Cisco MDS 9132T-A | FC1/6 |
| | FC1/3 | 32Gb FC | Cisco MDS 9132T-A | FC1/7 |
| | FC1/4 | 32Gb FC | Cisco MDS 9132T-A | FC1/8 |
| | Mgmt0 | 1GbE | 1GbE Management Switch | Any |

Table 8. Cisco UCS 6454-B Cabling Information

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Cisco UCS 6454-B | Eth 1/49 | 100GbE | Cisco Nexus 93180YC-FX-A | Eth 1/50 |
| | Eth 1/50 | 100GbE | Cisco Nexus 93180YC-FX-B | Eth 1/50 |
| | Eth 1/17 | 25GbE | Cisco UCS Chassis 1 IFM 9108-25G B | IFM 1/1 |
| | Eth 1/18 | 25GbE | Cisco UCS Chassis 1 IFM 9108-25G B | IFM 1/2 |
| | Eth 1/19 | 25GbE | Cisco UCS Chassis 1 IFM 9108-25G B | IFM 1/3 |
| | Eth 1/20 | 25GbE | Cisco UCS Chassis 1 IFM 9108-25G B | IFM 1/4 |
| | FC1/1 | 32Gb FC | Cisco MDS 9132T-B | FC1/5 |
| | FC1/2 | 32Gb FC | Cisco MDS 9132T-B | FC1/6 |
| | FC1/3 | 32Gb FC | Cisco MDS 9132T-B | FC1/7 |
| | FC1/4 | 32Gb FC | Cisco MDS 9132T-B | FC1/8 |
| | Mgmt0 | 1GbE | 1GbE Management Switch | Any |

Table 9. Cisco MDS 9132T-A Cabling Information

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Cisco MDS 9132T-A | FC1/5 | 32Gb FC | Cisco UCS 6454-A | FC1/1 |
| | FC1/6 | 32Gb FC | Cisco UCS 6454-A | FC1/2 |
| | FC1/7 | 32Gb FC | Cisco UCS 6454-A | FC1/3 |
| | FC1/8 | 32Gb FC | Cisco UCS 6454-A | FC1/4 |
| | FC1/1 | 32Gb FC | FlashArray//X50 R3 Controller 1 | CT0.FC0 (scsi-fc) |
| | FC1/2 | 32Gb FC | FlashArray//X50 R3 Controller 2 | CT1.FC0 (scsi-fc) |
| | FC1/3 | 32Gb FC | FlashArray//X50 R3 Controller 1 | CT0.FC1 (nvme-fc) |
| | FC1/4 | 32Gb FC | FlashArray//X50 R3 Controller 2 | CT1.FC1 (nvme-fc) |
| | Mgmt0 | 1GbE | 1GbE Management Switch | Any |

Note: This design uses SCSI-FCP for boot and datastore access over ports 0 and 2 on each Pure Storage FlashArray controller; ports 1 and 3 are used for FC-NVMe datastore access. All four ports can be used for SCSI-FCP or FC-NVMe as needed, but each port can function as only one of the two.
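The port assignments in Tables 9 through 12 give each protocol a path from both controllers on both fabrics, which keeps the storage path free of a single point of failure. A small Python sketch (the data layout and function name are illustrative) that checks this property; because the mapping stores one protocol per port, it also encodes the rule that a port serves either SCSI-FCP or FC-NVMe.

```python
# Sketch: confirm each FC protocol has a path from both controllers on both fabrics.
# Assignments mirror Tables 9-12 (fabric A = MDS 9132T-A, fabric B = MDS 9132T-B).

# (controller, port) -> (fabric, protocol); a dict allows only one protocol per port.
PORTS = {
    ("CT0", "FC0"): ("A", "scsi-fc"),
    ("CT1", "FC0"): ("A", "scsi-fc"),
    ("CT0", "FC1"): ("A", "nvme-fc"),
    ("CT1", "FC1"): ("A", "nvme-fc"),
    ("CT0", "FC2"): ("B", "scsi-fc"),
    ("CT1", "FC2"): ("B", "scsi-fc"),
    ("CT0", "FC3"): ("B", "nvme-fc"),
    ("CT1", "FC3"): ("B", "nvme-fc"),
}


def missing_paths(ports: dict[tuple[str, str], tuple[str, str]]) -> list[tuple[str, str, str]]:
    """Return (protocol, controller, fabric) combinations with no port assigned."""
    present = {(proto, ctrl, fabric) for (ctrl, _port), (fabric, proto) in ports.items()}
    protocols = {proto for _fabric, proto in ports.values()}
    wanted = {(p, c, f) for p in protocols for c in ("CT0", "CT1") for f in ("A", "B")}
    return sorted(wanted - present)


if __name__ == "__main__":
    print(missing_paths(PORTS))  # []
    degraded = {k: v for k, v in PORTS.items() if k != ("CT1", "FC2")}
    print(missing_paths(degraded))  # [('scsi-fc', 'CT1', 'B')]
```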

Table 10. Cisco MDS 9132T-B Cabling Information

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Cisco MDS 9132T-B | FC1/5 | 32Gb FC | Cisco UCS 6454-B | FC1/1 |
| | FC1/6 | 32Gb FC | Cisco UCS 6454-B | FC1/2 |
| | FC1/7 | 32Gb FC | Cisco UCS 6454-B | FC1/3 |
| | FC1/8 | 32Gb FC | Cisco UCS 6454-B | FC1/4 |
| | FC1/1 | 32Gb FC | FlashArray//X50 R3 Controller 1 | CT0.FC2 (scsi-fc) |
| | FC1/2 | 32Gb FC | FlashArray//X50 R3 Controller 2 | CT1.FC2 (scsi-fc) |
| | FC1/3 | 32Gb FC | FlashArray//X50 R3 Controller 1 | CT0.FC3 (nvme-fc) |
| | FC1/4 | 32Gb FC | FlashArray//X50 R3 Controller 2 | CT1.FC3 (nvme-fc) |
| | Mgmt0 | 1GbE | 1GbE Management Switch | Any |

Note: This design uses SCSI-FCP for boot and datastore access over ports 0 and 2 on each Pure Storage FlashArray controller; ports 1 and 3 are used for FC-NVMe datastore access. All four ports can be used for SCSI-FCP or FC-NVMe as needed, but each port can function as only one of the two.

Table 11. Pure Storage FlashArray//X50 R3 Controller 1 Cabling Information

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| FlashArray//X50 R3 Controller 1 | CT0.FC0 (scsi-fc) | 32Gb FC | Cisco MDS 9132T-A | FC1/1 |
| | CT0.FC2 (scsi-fc) | 32Gb FC | Cisco MDS 9132T-B | FC1/1 |
| | CT0.FC1 (nvme-fc) | 32Gb FC | Cisco MDS 9132T-A | FC1/3 |
| | CT0.FC3 (nvme-fc) | 32Gb FC | Cisco MDS 9132T-B | FC1/3 |
| | CT0.ETH4 * | 25GbE | Cisco Nexus 93180YC-FX-A | Eth 1/37 |
| | CT0.ETH5 * | 25GbE | Cisco Nexus 93180YC-FX-B | Eth 1/37 |

Note: * Required only if iSCSI storage access is implemented.

Table 12. Pure Storage FlashArray//X50 R3 Controller 2 Cabling Information

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| FlashArray//X50 R3 Controller 2 | CT1.FC0 (scsi-fc) | 32Gb FC | Cisco MDS 9132T-A | FC1/2 |
| | CT1.FC2 (scsi-fc) | 32Gb FC | Cisco MDS 9132T-B | FC1/2 |
| | CT1.FC1 (nvme-fc) | 32Gb FC | Cisco MDS 9132T-A | FC1/4 |
| | CT1.FC3 (nvme-fc) | 32Gb FC | Cisco MDS 9132T-B | FC1/4 |
| | CT1.ETH4 * | 25GbE | Cisco Nexus 93180YC-FX-A | Eth 1/38 |
| | CT1.ETH5 * | 25GbE | Cisco Nexus 93180YC-FX-B | Eth 1/38 |

Note: * Required only if iSCSI storage access is implemented.
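Because the cabling tables describe most cables from both ends, the two descriptions can be cross-checked before anything is plugged in. A minimal Python sketch, assuming the rows have been transcribed into tuples with device and port names normalized to a single spelling; a far end with no table of its own (such as an upstream switch listed as Any) is skipped. The function name and sample rows are illustrative.

```python
# Sketch: cross-check cabling tables, which describe every cable from both ends.
# Device and port names must be spelled identically across tables for the lookup to work.

Row = tuple[str, str, str, str]  # (local device, local port, remote device, remote port)


def cabling_conflicts(rows: list[Row]) -> list[str]:
    """Report ports listed with two destinations and links whose far end points elsewhere."""
    ends: dict[tuple[str, str], tuple[str, str]] = {}
    problems: list[str] = []
    for local_dev, local_port, remote_dev, remote_port in rows:
        local, remote = (local_dev, local_port), (remote_dev, remote_port)
        if local in ends and ends[local] != remote:
            problems.append(f"{local} is listed toward both {ends[local]} and {remote}")
        ends.setdefault(local, remote)
    for local, remote in ends.items():
        back = ends.get(remote)  # None when the far end has no table of its own
        if back is not None and back != local:
            problems.append(f"{local} -> {remote}, but {remote} -> {back}")
    return problems


# A few rows in the style of the MDS and FlashArray tables; extend with the rest.
ROWS: list[Row] = [
    ("Cisco MDS 9132T-A", "FC1/5", "Cisco UCS 6454-A", "FC1/1"),
    ("Cisco MDS 9132T-A", "FC1/1", "FlashArray//X50 R3 Controller 1", "CT0.FC0"),
    ("FlashArray//X50 R3 Controller 1", "CT0.FC0", "Cisco MDS 9132T-A", "FC1/1"),
]

if __name__ == "__main__":
    print(cabling_conflicts(ROWS))  # []
```

Adding a row such as ("Cisco UCS 6454-A", "FC1/1", "Cisco MDS 9132T-A", "FC1/1") would be reported from both ends, because MDS port FC1/1 is already assigned to the array and FI port FC1/1 to MDS port FC1/5.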

The following procedures describe how to configure the Cisco Nexus 93180YC-FX switches for use in a base FlashStack environment. They assume Cisco Nexus 93180YC-FX switches running NX-OS 9.3(8), the Cisco-suggested Cisco Nexus switch release at the time of this validation. Because this model and release were used to validate this FlashStack solution, the steps reflect them; configuration on other Cisco Nexus 9000 Series models or NX-OS releases should be comparable but may differ slightly.

Physical cabling should be completed by following the diagram and table references in section FlashStack Cabling.

Note: The following procedure includes the setup of NTP distribution on both the mgmt0 port and the in-band management VLAN. The interface-vlan feature and ntp commands are used to set this up. This procedure also assumes that the default VRF is used to route the in-band management VLAN.
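The NTP setup described in this note comes down to a handful of NX-OS lines. The Python sketch below only renders text. The in-band SVI address is a hypothetical documentation address, and ntp master 3 is an assumed stratum choice, not a value taken from this guide; confirm the exact commands against the NX-OS release in use.

```python
import ipaddress

# Sketch: NX-OS lines for NTP distribution over mgmt0 and the in-band management SVI.
# Assumptions: "ntp master 3" (serve time at stratum 3) and the switch's in-band SVI
# address are illustrative choices, not values given in this document.


def ntp_distribution_config(ntp_server: str, ib_vlan_id: int, svi_ip: str, prefix_len: int) -> list[str]:
    """Return configuration lines; validates the addresses before rendering them."""
    ipaddress.IPv4Address(ntp_server)                      # raises ValueError if malformed
    svi = ipaddress.IPv4Interface(f"{svi_ip}/{prefix_len}")
    return [
        "feature interface-vlan",                          # needed for the VLAN SVI
        f"ntp server {ntp_server} use-vrf management",     # upstream time via mgmt0
        "ntp master 3",                                    # let the switch serve time to others
        f"interface Vlan{ib_vlan_id}",                     # in-band management SVI (default VRF)
        f"  ip address {svi.with_prefixlen}",
        "  no shutdown",
    ]


if __name__ == "__main__":
    # 10.1.164.254 is the Table 4 <<var_oob_ntp>> example and 115 the Table 2 IB-MGMT VLAN;
    # 192.0.2.2/24 is a hypothetical documentation address for the SVI.
    print("\n".join(ntp_distribution_config("10.1.164.254", 115, "192.0.2.2", 24)))
```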

Cisco Nexus A

To set up the initial configuration for the Cisco Nexus A switch on <nexus-A-hostname>, follow these steps:

1. Configure the switch.

Note: On initial boot and connection to the serial or console port of the switch, the NX-OS setup should automatically start and attempt to enter Power On Auto Provisioning (POAP).

Abort Power On Auto Provisioning [yes - continue with normal setup, skip - bypass password and basic configuration, no - continue with Power On Auto Provisioning] (yes/skip/no)[no]: yes

Disabling POAP.......Disabling POAP

poap: Rolling back, please wait... (This may take 5-15 minutes)

---- System Admin Account Setup ----

Do you want to enforce secure password standard (yes/no) [y]: Enter

Enter the password for "admin": <password>

Confirm the password for "admin": <password>

Would you like to enter the basic configuration dialog (yes/no): yes

Create another login account (yes/no) [n]: Enter

Configure read-only SNMP community string (yes/no) [n]: Enter

Configure read-write SNMP community string (yes/no) [n]: Enter

Enter the switch name: <nexus-A-hostname>

Continue with Out-of-band (mgmt0) management configuration? (yes/no) [y]: Enter

Mgmt0 IPv4 address: <nexus-A-mgmt0-ip>

Mgmt0 IPv4 netmask: <nexus-A-mgmt0-netmask>

Configure the default gateway? (yes/no) [y]: Enter

IPv4 address of the default gateway: <nexus-A-mgmt0-gw>

Configure advanced IP options? (yes/no) [n]: Enter

Enable the telnet service? (yes/no) [n]: Enter

Enable the ssh service? (yes/no) [y]: Enter

Type of ssh key you would like to generate (dsa/rsa) [rsa]: Enter

Number of rsa key bits <1024-2048> [1024]: Enter

Configure the ntp server? (yes/no) [n]: Enter

Configure default interface layer (L3/L2) [L2]: Enter

Configure default switchport interface state (shut/noshut) [noshut]: shut

Enter basic FC configurations (yes/no) [n]: n

Configure CoPP system profile (strict/moderate/lenient/dense) [strict]: Enter

Would you like to edit the configuration? (yes/no) [n]: Enter

2. Review the configuration summary before enabling the configuration.

Use this configuration and save it? (yes/no) [y]: Enter

Cisco Nexus B

To set up the initial configuration for the Cisco Nexus B switch on <nexus-B-hostname>, follow these steps:

1. Configure the switch.

Note: On initial boot and connection to the serial or console port of the switch, the NX-OS setup should automatically start and attempt to enter Power On Auto Provisioning (POAP).

Abort Power On Auto Provisioning [yes - continue with normal setup, skip - bypass password and basic configuration, no - continue with Power On Auto Provisioning] (yes/skip/no)[no]: yes

Disabling POAP.......Disabling POAP

poap: Rolling back, please wait... (This may take 5-15 minutes)

---- System Admin Account Setup ----

Do you want to enforce secure password standard (yes/no) [y]: Enter

Enter the password for "admin": <password>

Confirm the password for "admin": <password>

Would you like to enter the basic configuration dialog (yes/no): yes

Create another login account (yes/no) [n]: Enter

Configure read-only SNMP community string (yes/no) [n]: Enter

Configure read-write SNMP community string (yes/no) [n]: Enter

Enter the switch name: <nexus-B-hostname>

Continue with Out-of-band (mgmt0) management configuration? (yes/no) [y]: Enter

Mgmt0 IPv4 address: <nexus-B-mgmt0-ip>

Mgmt0 IPv4 netmask: <nexus-B-mgmt0-netmask>

Configure the default gateway? (yes/no) [y]: Enter

IPv4 address of the default gateway: <nexus-B-mgmt0-gw>

Configure advanced IP options? (yes/no) [n]: Enter

Enable the telnet service? (yes/no) [n]: Enter

Enable the ssh service? (yes/no) [y]: Enter

Type of ssh key you would like to generate (dsa/rsa) [rsa]: Enter

Number of rsa key bits <1024-2048> [1024]: Enter

Configure the ntp server? (yes/no) [n]: Enter

Configure default interface layer (L3/L2) [L2]: Enter

Configure default switchport interface state (shut/noshut) [noshut]: shut

Enter basic FC configurations (yes/no) [n]: Enter

Configure CoPP system profile (strict/moderate/lenient/dense) [strict]: Enter

Would you like to edit the configuration? (yes/no) [n]: Enter

2. Review the configuration summary before enabling the configuration.

Use this configuration and save it? (yes/no) [y]: Enter

FlashStack Cisco Nexus Switch Configuration

Cisco Nexus A and Cisco Nexus B

To enable the appropriate features on the Cisco Nexus switches, follow these steps:

1. Log in as admin.

2. Run the following commands:

config t

feature udld

feature interface-vlan

feature lacp

feature vpc

feature lldp

feature nxapi

Cisco Nexus A and Cisco Nexus B

To set global configurations, follow this step on both switches:

1. Run the following commands to set global configurations:

spanning-tree port type network default

spanning-tree port type edge bpduguard default

spanning-tree port type edge bpdufilter default

system default switchport

system default switchport shutdown

port-channel load-balance src-dst l4port

ntp server <global-ntp-server-ip> use-vrf management

ntp master 3

clock timezone <timezone> <hour-offset> <minute-offset>

clock summer-time <timezone> <start-week> <start-day> <start-month> <start-time> <end-week> <end-day> <end-month> <end-time> <offset-minutes>

ip route 0.0.0.0/0 <ib-mgmt-vlan-gateway>

copy run start

Note: It is important to configure the local time so that logging timestamps are aligned and any backup schedules are correct. For more information on configuring the time zone and daylight saving time (summer time), see the Cisco Nexus 9000 Series NX-OS Fundamentals Configuration Guide, Release 9.3(x). Sample clock commands for the United States Eastern time zone are:

clock timezone EST -5 0

clock summer-time EDT 2 Sunday March 02:00 1 Sunday November 02:00 60
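Because both switches need identical clock settings, it can help to generate these two lines from a single set of parameters. The Python sketch below uses hypothetical helper names (`DstRule`, `clock_commands`) that are not part of NX-OS or any Cisco tool; it only reproduces the argument order shown above and performs basic sanity checks, so confirm the full syntax against the NX-OS Fundamentals Configuration Guide.

```python
import re
from dataclasses import dataclass

TIME_RE = re.compile(r"^([01]\d|2[0-3]):[0-5]\d$")  # HH:MM, 24-hour clock


@dataclass(frozen=True)
class DstRule:
    """One summer-time boundary: week of the month, weekday, month, and time."""
    week: int
    day: str
    month: str
    time: str

    def render(self) -> str:
        if not 1 <= self.week <= 5:
            raise ValueError(f"week must be 1-5, got {self.week}")
        if not TIME_RE.match(self.time):
            raise ValueError(f"time must be HH:MM, got {self.time!r}")
        return f"{self.week} {self.day} {self.month} {self.time}"


def clock_commands(std_zone: str, hour_offset: int, minute_offset: int,
                   dst_zone: str, start: DstRule, end: DstRule,
                   offset_minutes: int) -> list[str]:
    """Return the clock timezone and clock summer-time lines, in that order."""
    if offset_minutes <= 0:
        raise ValueError("the summer-time offset must be a positive number of minutes")
    return [
        f"clock timezone {std_zone} {hour_offset} {minute_offset}",
        f"clock summer-time {dst_zone} {start.render()} {end.render()} {offset_minutes}",
    ]


# Reproduces the United States Eastern example above.
for line in clock_commands("EST", -5, 0, "EDT",
                           DstRule(2, "Sunday", "March", "02:00"),
                           DstRule(1, "Sunday", "November", "02:00"), 60):
    print(line)
```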

Cisco Nexus A and Cisco Nexus B

To create the necessary virtual local area networks (VLANs), follow this step on both switches:

1. From the global configuration mode, run the following commands:

vlan <oob-mgmt-vlan-id>

name OOB-MGMT

vlan <ib-mgmt-vlan-id>

name IB-MGMT-VLAN

vlan <native-vlan-id>

name Native-Vlan

vlan <vmotion-vlan-id>

name vMotion-VLAN

vlan <vm-traffic-vlan-id>

name VM-Traffic-VLAN

exit
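Because the same VLAN block must be entered on both switches, a small pre-check can catch duplicate or out-of-range IDs before anything is pasted into the CLI. The Python sketch below is a hypothetical helper; the VLAN IDs in `PLAN` are placeholders standing in for `<oob-mgmt-vlan-id>`, `<ib-mgmt-vlan-id>`, and so on. It checks only the 802.1Q range 1-4094; NX-OS also reserves some VLAN IDs for internal use, so verify your choices against the platform documentation.

```python
def vlan_config(vlans: list[tuple[int, str]]) -> list[str]:
    """Render NX-OS 'vlan'/'name' pairs after basic sanity checks."""
    seen: set[int] = set()
    lines: list[str] = []
    for vlan_id, name in vlans:
        # 802.1Q range only; platform-reserved VLANs are not checked here.
        if not 1 <= vlan_id <= 4094:
            raise ValueError(f"VLAN {vlan_id} is outside the 802.1Q range 1-4094")
        if vlan_id in seen:
            raise ValueError(f"VLAN {vlan_id} is defined more than once")
        seen.add(vlan_id)
        lines += [f"vlan {vlan_id}", f"name {name}"]
    lines.append("exit")
    return lines


# Hypothetical IDs; replace them with the values for your deployment.
PLAN = [
    (15, "OOB-MGMT"),
    (115, "IB-MGMT-VLAN"),
    (2, "Native-Vlan"),
    (1130, "vMotion-VLAN"),
    (1131, "VM-Traffic-VLAN"),
]
print("\n".join(vlan_config(PLAN)))
```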

Add NTP Distribution Interface

Cisco Nexus A

1. From the global configuration mode, run the following commands:

interface Vlan<ib-mgmt-vlan-id>

ip address <switch-a-ntp-ip>/<ib-mgmt-vlan-netmask-length>

no shutdown

exit

ntp peer <switch-b-ntp-ip> use-vrf default

Cisco Nexus B

1. From the global configuration mode, run the following commands:

interface Vlan<ib-mgmt-vlan-id>

ip address <switch-b-ntp-ip>/<ib-mgmt-vlan-netmask-length>

no shutdown

exit

ntp peer <switch-a-ntp-ip> use-vrf default
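The two NTP distribution addresses must be distinct and sit in the same in-band management subnet, and each switch peers with the other's address. The Python sketch below (a hypothetical helper using the standard `ipaddress` module, with placeholder addresses from the 192.0.2.0/24 documentation range) checks those conditions and renders the per-switch commands so the peer addresses cannot be swapped by mistake.

```python
import ipaddress


def ntp_distribution(ip_a: str, ip_b: str, prefix_len: int, vlan_id: int) -> dict[str, list[str]]:
    """Return the NTP distribution SVI commands for Nexus A and Nexus B."""
    a = ipaddress.ip_interface(f"{ip_a}/{prefix_len}")
    b = ipaddress.ip_interface(f"{ip_b}/{prefix_len}")
    if a.network != b.network:
        raise ValueError(f"{a} and {b} are not in the same in-band management subnet")
    if a.ip == b.ip:
        raise ValueError("each switch needs its own NTP distribution address")
    reserved = {a.network.network_address, a.network.broadcast_address}
    if a.network.prefixlen <= 30 and reserved & {a.ip, b.ip}:
        raise ValueError("network and broadcast addresses cannot be assigned to an SVI")

    def svi(local, peer) -> list[str]:
        # Each switch sources NTP from its own SVI and peers with the other switch.
        return [f"interface Vlan{vlan_id}", f"ip address {local.with_prefixlen}",
                "no shutdown", "exit", f"ntp peer {peer.ip} use-vrf default"]

    return {"A": svi(a, b), "B": svi(b, a)}


for switch, lines in ntp_distribution("192.0.2.2", "192.0.2.3", 24, 115).items():
    print(f"! Nexus {switch}")
    print("\n".join(lines))
```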

Add Individual Port Descriptions for Troubleshooting and Enable UDLD for Cisco UCS Interfaces

Cisco Nexus A

To add individual port descriptions for troubleshooting activity and verification for switch A, follow these steps:

Note: In this step and in the following sections, adjust the interfaces that connect to the Cisco UCS 6454 fabric interconnect cluster <ucs-clustername> as appropriate for your deployment.

1. From the global configuration mode, run the following commands:

interface Eth1/49

description <ucs-clustername>-A:1/49

udld enable

interface Eth1/50

description <ucs-clustername>-B:1/49

udld enable

Note: For fiber optic connections to Cisco UCS systems (AOC or SFP-based), entering udld enable returns a message stating that this command is not applicable to fiber ports. This message is expected. If you have fiber optic connections, do not enter the udld enable command.

interface Ethernet1/53

description Peer Link <nexus-B-hostname>:Eth1/53

interface Ethernet1/54

description Peer Link <nexus-B-hostname>:Eth1/54

Cisco Nexus B

To add individual port descriptions for troubleshooting activity and verification for switch B, and to enable UDLD on copper interfaces connected to Cisco UCS systems, follow this step:

1. From the global configuration mode, run the following commands:

interface Eth1/49

description <ucs-clustername>-A:1/50

udld enable

interface Eth1/50

description <ucs-clustername>-B:1/50

udld enable

Note: For fiber optic connections to Cisco UCS systems (AOC or SFP-based), entering udld enable returns a message stating that this command is not applicable to fiber ports. This message is expected. If you have fiber optic connections, do not enter the udld enable command.

interface Ethernet1/53

description Peer Link <nexus-A-hostname>:Eth1/53

interface Ethernet1/54

description Peer Link <nexus-A-hostname>:Eth1/54
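The descriptions on the two switches follow one pattern: Eth1/49 goes to fabric interconnect A, Eth1/50 goes to fabric interconnect B, Nexus A lands on fabric interconnect port 1/49, Nexus B lands on port 1/50, and the peer-link ports match port-for-port. The Python sketch below (a hypothetical helper; the cluster and host names are placeholders) derives both switches' lines from that pattern and leaves out udld enable for fiber connections, as the notes above advise.

```python
def port_descriptions(cluster: str, fi_port: str, peer_hostname: str,
                      copper: bool = True) -> list[str]:
    """Build the description (and optional UDLD) lines for one Nexus switch."""
    lines: list[str] = []
    # Eth1/49 connects to fabric interconnect A and Eth1/50 to fabric interconnect B.
    for nexus_port, fabric in (("Eth1/49", "A"), ("Eth1/50", "B")):
        lines += [f"interface {nexus_port}", f"description {cluster}-{fabric}:{fi_port}"]
        if copper:  # UDLD is not applicable to fiber ports
            lines.append("udld enable")
    # Peer-link ports connect to the same port numbers on the peer switch.
    for port in ("1/53", "1/54"):
        lines += [f"interface Ethernet{port}", f"description Peer Link {peer_hostname}:Eth{port}"]
    return lines


# Nexus A uses fabric interconnect port 1/49; Nexus B uses port 1/50.
switch_a = port_descriptions("ucs-fi", "1/49", "nexus-b")
switch_b = port_descriptions("ucs-fi", "1/50", "nexus-a", copper=False)
print("\n".join(switch_a))
```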

Create Port Channels

Cisco Nexus A and Cisco Nexus B

To create the necessary port channels between devices, follow this step on both switches:

1. From the global configuration mode, run the following commands:

interface Po10

description vPC peer-link

interface Eth1/53-54

channel-group 10 mode active

no shutdown

interface Po121

description <ucs-clustername>-A

interface Eth1/49

channel-group 121 mode active

no shutdown

interface Po123

description <ucs-clustername>-B

interface Eth1/50

channel-group 123 mode active

no shutdown

exit

copy run start
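The port-channel definitions above keep the channel-group number equal to the port-channel number and use LACP active mode throughout. The Python sketch below is a hypothetical helper that renders the same structure from a table of port-channel IDs, descriptions, and member port numbers on module 1, and refuses to place one port in two port channels. The description strings in `CHANNELS` are placeholders for `<ucs-clustername>-A` and `<ucs-clustername>-B`.

```python
def port_channel_config(channels: dict[int, tuple[str, list[int]]]) -> list[str]:
    """Render LACP port channels; members are port numbers on module 1."""
    used: set[int] = set()
    lines: list[str] = []
    for po_id, (description, members) in channels.items():
        ports = sorted(members)
        if not ports:
            raise ValueError(f"Po{po_id} has no member ports")
        overlap = used.intersection(ports)
        if overlap:
            raise ValueError(f"ports {sorted(overlap)} are already in another port channel")
        used.update(ports)
        if len(ports) == 1:
            targets = [f"Eth1/{ports[0]}"]
        elif ports == list(range(ports[0], ports[-1] + 1)):
            targets = [f"Eth1/{ports[0]}-{ports[-1]}"]  # contiguous range
        else:
            targets = [f"Eth1/{p}" for p in ports]  # one stanza per port
        lines += [f"interface Po{po_id}", f"description {description}"]
        for target in targets:
            # Channel-group number matches the port-channel number; LACP active mode.
            lines += [f"interface {target}", f"channel-group {po_id} mode active", "no shutdown"]
    lines += ["exit", "copy run start"]
    return lines


CHANNELS = {
    10: ("vPC peer-link", [53, 54]),
    121: ("ucs-fi-A", [49]),
    123: ("ucs-fi-B", [50]),
}
print("\n".join(port_channel_config(CHANNELS)))
```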

Configure Port Channel Parameters

Cisco Nexus A and Cisco Nexus B

To configure port channel parameters, follow this step on both switches:

1. From the global configuration mode, run the following commands:

interface Po10

switchport mode trunk

switchport trunk native vlan <native-vlan-id>

switchport trunk allowed vlan <ib-mgmt-vlan-id>, <vmotion-vlan-id>, <vm-traffic-vlan-id>, <oob-mgmt-vlan-id>

spanning-tree port type network

speed 100000

duplex full

state enabled

interface Po121

switchport mode trunk

switchport trunk native vlan <native-vlan-id>

switchport trunk allowed vlan <ib-mgmt-vlan-id>, <vmotion-vlan-id>, <vm-traffic-vlan-id>, <oob-mgmt-vlan-id>

spanning-tree port type edge trunk

mtu 9216

state enabled

interface Po123

switchport mode trunk

switchport trunk native vlan <native-vlan-id>

switchport trunk allowed vlan <ib-mgmt-vlan-id>, <vmotion-vlan-id>, <vm-traffic-vlan-id>, <oob-mgmt-vlan-id>

spanning-tree port type edge trunk

mtu 9216

state enabled

exit

copy run start
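The same allowed VLAN list is repeated on Po10, Po121, and Po123, so it is worth generating it once. NX-OS accepts a comma-separated list of VLAN IDs with hyphenated ranges on the switchport trunk allowed vlan command. The Python sketch below (a hypothetical helper) sorts and de-duplicates the IDs and collapses consecutive IDs into ranges; the IDs in the example are placeholders.

```python
def compact_vlan_list(vlan_ids) -> str:
    """Collapse VLAN IDs into a list such as '2,115,200-202'."""
    ids = sorted(set(vlan_ids))
    if not ids:
        raise ValueError("at least one VLAN ID is required")
    parts: list[str] = []
    start = prev = ids[0]
    for vid in ids[1:]:
        if vid == prev + 1:  # extend the current run of consecutive IDs
            prev = vid
            continue
        parts.append(str(start) if start == prev else f"{start}-{prev}")
        start = prev = vid
    parts.append(str(start) if start == prev else f"{start}-{prev}")
    return ",".join(parts)


# Hypothetical IDs for IB-MGMT, vMotion, VM-Traffic, and OOB-MGMT.
allowed = compact_vlan_list([115, 1130, 1131, 15])
print(f"switchport trunk allowed vlan {allowed}")
```

Generating the list once and pasting the same string into all three port channels keeps the trunks consistent across both vPC peers.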

Configure Virtual Port Channels

Cisco Nexus A

To configure virtual port channels (vPCs) for switch A, follow this step:

1. From the global configuration mode, run the following commands:

vpc domain <nexus-vpc-domain-id>

role priority 10

peer-keepalive destination <nexus-B-mgmt0-ip> source <nexus-A-mgmt0-ip>

peer-switch

peer-gateway

auto-recovery

delay restore 150

ip arp synchronize

interface Po10

vpc peer-link

interface Po121

vpc 121

interface Po123

vpc 123

exit

copy run start

Cisco Nexus B

To configure vPCs for switch B, follow this step:

1. From the global configuration mode, run the following commands:

vpc domain <nexus-vpc-domain-id>

role priority 20

peer-keepalive destination <nexus-A-mgmt0-ip> source <nexus-B-mgmt0-ip>

peer-switch

peer-gateway

auto-recovery

delay restore 150

ip arp synchronize

interface Po10

vpc peer-link

interface Po121

vpc 121

interface Po123

vpc 123

exit

copy run start
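The two vPC domain blocks differ only in role priority and in the direction of the peer-keepalive. The Python sketch below (a hypothetical helper with placeholder addresses from the 192.0.2.0/24 documentation range) builds both blocks from one set of inputs so the source and destination addresses cannot be mismatched. The domain ID range of 1-1000 in the check is an assumption based on the Nexus 9000 CLI; the switch with the lower role priority becomes the vPC primary.

```python
import ipaddress


def vpc_domain_pair(domain_id: int, mgmt_a: str, mgmt_b: str,
                    priority_a: int = 10, priority_b: int = 20) -> dict[str, list[str]]:
    """Return matching vPC domain configuration for Nexus A and Nexus B."""
    if not 1 <= domain_id <= 1000:  # assumed NX-OS vPC domain ID range
        raise ValueError(f"vPC domain ID {domain_id} is out of range")
    if priority_a == priority_b:
        raise ValueError("use different role priorities so the primary switch is predictable")
    a, b = ipaddress.ip_address(mgmt_a), ipaddress.ip_address(mgmt_b)
    if a == b:
        raise ValueError("peer-keepalive source and destination must differ")

    def block(priority, local, peer) -> list[str]:
        # The keepalive is sourced from the local mgmt0 address toward the peer.
        return [f"vpc domain {domain_id}", f"role priority {priority}",
                f"peer-keepalive destination {peer} source {local}",
                "peer-switch", "peer-gateway", "auto-recovery",
                "delay restore 150", "ip arp synchronize",
                "interface Po10", "vpc peer-link",
                "interface Po121", "vpc 121",
                "interface Po123", "vpc 123",
                "exit", "copy run start"]

    return {"A": block(priority_a, a, b), "B": block(priority_b, b, a)}


for switch, lines in vpc_domain_pair(10, "192.0.2.11", "192.0.2.12").items():
    print(f"! Nexus {switch}")
    print("\n".join(lines))
```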

Uplink into Existing Network Infrastructure

Depending on the available network infrastructure, several methods and features can be used to uplink the FlashStack environment. If an existing Cisco Nexus environment is present, we recommend using vPCs to uplink the Cisco Nexus switches included in the FlashStack environment into the infrastructure. The previously described procedures can be used to create an uplink vPC to the existing environment. Make sure to run copy run start to save the configuration on each switch after the configuration is completed.

The following commands can be used to check for correct switch configuration:

Note: Some of these commands show complete results only after the remaining FlashStack components have been configured.

show run

show vpc

show port-channel summary

show ntp peer-status

show cdp neighbors

show lldp neighbors

show run int

show int

show udld neighbors

show int status
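Because feature nxapi was enabled earlier, these checks can also be collected programmatically. The Python sketch below only builds a request body; it assumes the NX-API JSON-RPC 2.0 format (method "cli" with "cmd" and "version" parameters) and that the body would be POSTed to https://<switch-mgmt-ip>/ins with Content-Type application/json-rpc and switch credentials. Sending the request, authentication, and certificate handling are not shown, and commands without structured output may need the "cli_ascii" method instead; verify these details against the NX-API documentation for your release.

```python
import json

# A subset of the verification commands listed above.
VERIFY_COMMANDS = [
    "show vpc",
    "show port-channel summary",
    "show ntp peer-status",
    "show cdp neighbors",
    "show lldp neighbors",
    "show udld neighbors",
    "show int status",
]


def nxapi_jsonrpc_body(commands: list[str], method: str = "cli") -> list[dict]:
    """Build a JSON-RPC 2.0 batch with one request per CLI command."""
    return [
        {"jsonrpc": "2.0", "method": method,
         "params": {"cmd": cmd, "version": 1}, "id": request_id}
        for request_id, cmd in enumerate(commands, start=1)
    ]


print(json.dumps(nxapi_jsonrpc_body(VERIFY_COMMANDS), indent=2))
```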

Pure Storage FlashArray//X50 R3 Initial Configuration

FlashArray Initial Configuration

The following information should be gathered to enable the installation and configuration of the FlashArray. An official representative of Pure Storage will help rack and configure the new installation of the FlashArray.

| Array Setting | Variable Name |
|---|---|
| Array Name (Hostname for Pure Array) | <<var_flasharray_hostname>> |
| Virtual IP Address for Management | <<var_flasharray_vip>> |
| Physical IP Address for Management on Controller 0 (CT0) | <<var_contoller-1_mgmt_ip>> |
| Physical IP Address for Management on Controller 1 (CT1) | <<var_contoller-2_mgmt_ip>> |
| Netmask | <<var_contoller-1_mgmt_mask>> |
| Gateway IP Address | <<var_contoller-1_mgmt_gateway>> |
| DNS Server IP Address(es) | <<var_nameserver_ip>> |
| DNS Domain Suffix (Optional) | <<var_dns_domain_name>> |
| NTP Server IP Address or FQDN | <<var_oob_ntp>> |
| Email Relay Server (SMTP Gateway IP Address or FQDN) (Optional) | <<var_smtp_ip>> |
| Email Domain Name | <<var_smtp_domain_name>> |
| Alert Email Recipients Address(es) (Optional) | |
| HTTP Proxy Server and Port (for Pure1) (Optional) | |
| Time Zone | <<var_timezone>> |

When the FlashArray has completed initial configuration, it is important to configure the Cloud Assist phone-home connection to provide the best proactive support experience possible. This connection also enables the analytics functionality provided by Pure1.

The Alerts sub-view is used to manage the list of addresses to which Purity delivers alert notifications, and the attributes of alert message delivery. The Alert Recipients section displays a list of email addresses that are designated to receive Purity alert messages. Up to 20 alert recipients can be designated; because the list always includes the built-in address described below, you can add up to 19 recipients of your own.

Note: The list includes the built-in flasharray-alerts@purestorage.com address, which cannot be deleted.

The email address that Purity uses to send alert messages includes the sender domain name and consists of the following components:

<Array_Name>-<Controller_Name>@<Sender_Domain_Name>.com

To add an alert recipient, follow these steps:

1. Select Settings.

2. In the Alert Watchers section, enter the email address of the alert recipient and click the + icon.

The Relay Host section displays the hostname or IP address of an SMTP relay host, if one is configured for the array. If you specify a relay host, Purity routes the email messages via the relay (mail forwarding) address rather than sending them directly to the alert recipient addresses.

In the Sender Domain section, the sender domain determines how Purity logs are parsed and treated by Pure Storage Support and Escalations. By default, the sender domain is set to the domain name please-configure.me.

It is crucial that you set the sender domain to the correct domain name. If the array is not a Pure Storage test array, set the sender domain to the actual customer domain name. For example, mycompany.com.

The Pure1 Support section manages settings for Phone Home, Remote Assist, and Support Logs.

● The phone home facility provides a secure direct link between the array and the Pure Storage Technical Support web site. The link is used to transmit log contents and alert messages to the Pure Storage Support team so that when diagnosis or remedial action is required, complete recent history about array performance and significant events is available. By default, the phone home facility is enabled. If the phone home facility is enabled to send information automatically, Purity transmits log and alert information directly to Pure Storage Support via a secure network connection. Log contents are transmitted hourly and stored at the support web site, enabling detection of array performance and error rate trends. Alerts are reported immediately when they occur so that timely action can be taken.

● Phone home logs can also be sent to Pure Storage Technical support on demand, with options including Today's Logs, Yesterday's Logs, or All Log History.

● The Remote Assist section displays the remote assist status as "Connected" or "Disconnected". By default, remote assist is disconnected. A connected remote assist status means that a remote assist session has been opened, allowing Pure Storage Support to connect to the array. Disconnect the remote assist session to close the session.

● The Support Logs section allows you to download the Purity log contents of the specified controller to the current administrative workstation. Purity continuously logs a variety of array activities, including performance summaries, hardware and operating status reports, and administrative actions.

Configure DNS Server IP Addresses

To configure the DNS server IP addresses, follow these steps:

1. Select Settings > Network.

2. In the DNS section, hover over the domain name and click the pencil icon. The Edit DNS dialog box appears.

3. Complete the following fields:

a. Domain: Specify the domain suffix to be appended by the array when doing DNS lookups.

b. DNS#: Specify up to three DNS server IP addresses for Purity to use to resolve hostnames to IP addresses. Enter one IP address in each DNS# field. Purity queries the DNS servers in the order in which the IP addresses are listed.

4. Click Save.
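Before filling in the DNS server fields, the planned addresses can be checked against the limits described above: one to three servers, each a valid IP address, listed in the order Purity should query them. The Python sketch below is a hypothetical pre-check using the standard `ipaddress` module; it does not talk to the array, and the example addresses are placeholders from documentation ranges.

```python
import ipaddress


def dns_server_list(addresses: list[str]) -> list[str]:
    """Validate planned DNS server entries, preserving their query order."""
    if not 1 <= len(addresses) <= 3:
        raise ValueError("specify between one and three DNS server addresses")
    # ip_address raises ValueError for malformed input such as '10.1.1'.
    parsed = [ipaddress.ip_address(a.strip()) for a in addresses]
    if len(set(parsed)) != len(parsed):
        raise ValueError("duplicate DNS server address")
    return [str(p) for p in parsed]


print(dns_server_list(["192.0.2.53", "198.51.100.53"]))
```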

The Directory Service manages the integration of the FlashArray with an existing directory service. When the Directory Service sub-view is configured and enabled, the FlashArray uses the directory service to perform user account and permission level searches. Configuring directory services is optional.

The FlashArray is delivered with a single local user, named pureuser, with array-wide (Array Admin) permissions.

To support multiple FlashArray users, integrate the array with a directory service, such as Microsoft Active Directory or OpenLDAP.

Role-based access control is achieved by configuring groups in the directory that correspond to the following permission groups (roles) on the array:

● Read Only Group. Read Only users have read-only privilege to run commands that convey the state of the array. Read Only users cannot alter the state of the array.

● Storage Admin Group. Storage Admin users have all the privileges of Read Only users, plus the ability to run commands related to storage operations, such as administering volumes, hosts, and host groups. Storage Admin users cannot perform operations that deal with global and system configurations.

● Array Admin Group. Array Admin users have all the privileges of Storage Admin users, plus the ability to perform array-wide changes. In other words, Array Admin users can perform all FlashArray operations.

To configure the Directory Service, follow these steps:

1. Select Settings > Access > Users.

2. Select the edit icon in the Directory Services panel and complete the following fields:

● Enabled: Select the check box to leverage the directory service to perform user account and permission level searches.

● URI: Enter the comma-separated list of up to 30 URIs of the directory servers. The URI must include a URL scheme (ldap, or ldaps for LDAP over SSL), the hostname, and the domain. You can optionally specify a port. For example, ldap://ad.company.com configures the directory service with the hostname "ad" in the domain "company.com" while specifying the unencrypted LDAP protocol.

● Base DN: Enter the base distinguished name (DN) of the directory service. The Base DN is built from the domain and should consist only of domain components (DCs). For example, for ldap://ad.storage.company.com, the Base DN would be: “DC=storage,DC=company,DC=com”

● Bind User: Username used to bind to and query the directory. For Active Directory, enter the username - often referred to as sAMAccountName or User Logon Name - of the account that is used to perform directory lookups. The username cannot contain the characters " [ ] : ; | = + * ? < > / \ and cannot exceed 20 characters in length. For OpenLDAP, enter the full DN of the user. For example, "CN=John,OU=Users,DC=example,DC=com".

● Bind Password: Enter the password for the bind user account.

● Group Base: Enter the organizational unit (OU) of the configured groups in the directory tree. The Group Base consists of OUs that, when combined with the base DN attribute and the configured group CNs, complete the full Distinguished Name of each group. The group base should specify "OU=" for each OU, and multiple OUs should be separated by commas. The order of OUs should get larger in scope from left to right. In the following example, SANManagers contains the sub-organizational unit PureGroups: "OU=PureGroups,OU=SANManagers".

● Array Admin Group: Common Name (CN) of the directory service group containing administrators with full privileges to manage the FlashArray. Array Admin Group administrators have the same privileges as pureuser. The name should be the Common Name of the group without the "CN=" specifier. If the configured groups are not in the same OU, also specify the OU. For example, "pureadmins,OU=PureStorage", where pureadmins is the common name of the directory service group.

● Storage Admin Group: Common Name (CN) of the configured directory service group containing administrators with storage related privileges on the FlashArray. The name should be the Common Name of the group without the "CN=" specifier. If the configured groups are not in the same OU, also specify the OU. For example, "pureusers,OU=PureStorage", where pureusers is the common name of the directory service group.

● Read Only Group: Common Name (CN) of the configured directory service group containing users with read-only privileges on the FlashArray. The name should be the Common Name of the group without the "CN=" specifier. If the configured groups are not in the same OU, also specify the OU. For example, "purereadonly,OU=PureStorage", where purereadonly is the common name of the directory service group.

● Check Peer: Select the check box to validate the authenticity of the directory servers using the CA Certificate. If you enable Check Peer, you must provide a CA Certificate.

● CA Certificate: Enter the certificate of the issuing certificate authority. Only one certificate can be configured at a time, so the same certificate authority should be the issuer of all directory server certificates. The certificate must be PEM formatted (Base64 encoded) and include the "-----BEGIN CERTIFICATE-----" and "-----END CERTIFICATE-----" lines. The certificate cannot exceed 3000 characters in total length.

3. Click Save.

4. Click Test to test the configuration settings. The LDAP Test Results pop-up window appears. Green squares represent successful checks. Red squares represent failed checks.
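Several of the fields above are derived from each other: the Base DN comes from the domain part of the URI, and a group's full DN is its CN followed by the Group Base and the Base DN. The Python sketch below uses hypothetical helper names (`base_dn_from_uri`, `group_dn`, `check_ad_bind_user`) to prepare and sanity-check these values before entering them; it covers only the simple case where all groups share the Group Base, and the bind user rules are the Active Directory ones listed above. It does not contact a directory server, so the Test button remains the real check.

```python
from urllib.parse import urlparse

# Characters the Bind User field rejects for Active Directory, per the list above.
FORBIDDEN_BIND_CHARS = set('"[]:;|=+*?<>/\\')


def base_dn_from_uri(uri: str) -> str:
    """Derive the Base DN from a URI such as ldap://ad.storage.company.com."""
    parsed = urlparse(uri)
    if parsed.scheme not in ("ldap", "ldaps"):
        raise ValueError("the URI must use the ldap or ldaps scheme")
    labels = (parsed.hostname or "").split(".")  # hostname excludes any port
    if len(labels) < 2 or not all(labels):
        raise ValueError("the URI must include a hostname and a domain")
    # Drop the hostname label; the remaining labels are the domain components.
    return ",".join(f"DC={label}" for label in labels[1:])


def group_dn(common_name: str, group_base: str, base_dn: str) -> str:
    """Combine a group CN, the Group Base OUs, and the Base DN into a full DN."""
    return f"CN={common_name},{group_base},{base_dn}"


def check_ad_bind_user(username: str) -> None:
    """Raise ValueError if the name breaks the 20-character or character rules."""
    if len(username) > 20:
        raise ValueError("the bind user name cannot exceed 20 characters")
    bad = FORBIDDEN_BIND_CHARS.intersection(username)
    if bad:
        raise ValueError(f"the bind user name contains forbidden characters: {sorted(bad)}")


base = base_dn_from_uri("ldap://ad.storage.company.com")
print(base)
print(group_dn("pureadmins", "OU=PureGroups,OU=SANManagers", base))
```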

Self-Signed Certificate

Purity creates a self-signed certificate and private key when you start the system for the first time. The SSL Certificate sub-view allows you to view and change certificate attributes, create a new self-signed certificate, construct certificate signing requests, import certificates and private keys, and export certificates.

Creating a self-signed certificate replaces the current certificate. When you create a self-signed certificate, include any attribute changes, specify the validity period of the new certificate, and optionally generate a new private key.

When you create the self-signed certificate, you can generate a private key and specify a different key size. If you do not generate a private key, the new certificate uses the existing key.

You can change the validity period of the new self-signed certificate. By default, self-signed certificates are valid for 3650 days.

CA-Signed Certificate

Certificate authorities (CAs) are third-party entities outside the organization that issue certificates. To obtain a CA-signed certificate, you must first construct a certificate signing request (CSR) on the array.

The CSR is a block of encoded data specific to your organization. You can change the certificate attributes when you construct the CSR; otherwise, Purity reuses the attributes of the current certificate (self-signed or imported) to construct the new one. Note that the certificate attribute changes are visible only after you import the signed certificate from the CA.

Send the CSR to a certificate authority for signing. The certificate authority returns the SSL certificate for you to import. Verify that the signed certificate is PEM formatted (Base64 encoded), includes the "-----BEGIN CERTIFICATE-----" and "-----END CERTIFICATE-----" lines, and does not exceed 3000 characters in total length. When you import the certificate, also import the intermediate certificate if it is not bundled with the CA certificate.

If the certificate is signed with the CSR that was constructed on the current array and you did not change the private key, you do not need to import the key. However, if the CSR was not constructed on the current array or if the private key has changed since you constructed the CSR, you must import the private key. If the private key is encrypted, also specify the passphrase.
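Before importing a certificate returned by the CA, it can be checked locally against the format rules stated above: PEM (Base64) encoding, the BEGIN and END CERTIFICATE lines, and a 3000-character limit. The Python sketch below is a hypothetical pre-check (`pem_problems` is not a Purity function); it assumes surrounding whitespace does not count toward the limit, handles a single certificate only (not a bundle with an intermediate), and does not parse the X.509 structure itself.

```python
import base64

BEGIN = "-----BEGIN CERTIFICATE-----"
END = "-----END CERTIFICATE-----"
MAX_CHARS = 3000  # total length limit stated above


def pem_problems(pem: str) -> list[str]:
    """Return a list of problems found in a single PEM certificate (empty if none)."""
    problems: list[str] = []
    text = pem.strip()  # assumption: surrounding whitespace is not counted
    if len(text) > MAX_CHARS:
        problems.append(f"{len(text)} characters exceeds the {MAX_CHARS}-character limit")
    lines = [line.strip() for line in text.splitlines()]
    if not lines or lines[0] != BEGIN:
        problems.append("first line is not the BEGIN CERTIFICATE line")
    if not lines or lines[-1] != END:
        problems.append("last line is not the END CERTIFICATE line")
    body = "".join(lines[1:-1])
    if not body:
        problems.append("certificate body is empty")
    else:
        try:
            base64.b64decode(body, validate=True)
        except ValueError:  # binascii.Error is a subclass of ValueError
            problems.append("certificate body is not valid Base64")
    return problems
```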

Note: If FC-NVMe is being implemented, the personality of the FC ports on the FlashArray must be converted from the default scsi-fc to nvme-fc. In this design, two scsi-fc ports and two nvme-fc ports are used to support both SCSI and NVMe over Fibre Channel. The ports can be converted to nvme-fc with the help of Pure Storage Support.

The following procedures describe how to configure the Cisco UCS domain for use in a base FlashStack environment. This procedure assumes you’re using Cisco UCS Fabric Interconnects running in Intersight managed mode.

Physical cabling should be completed by following the diagram and table references in section FlashStack Cabling.

Cisco Intersight Managed Mode Configuration

The Cisco Intersight™ platform is a management solution delivered as a service with embedded analytics for Cisco® and third-party IT infrastructures. The Cisco Intersight managed mode (also referred to as Cisco IMM or Intersight managed mode) is a new architecture that manages Cisco Unified Computing System™ (Cisco UCS®) fabric interconnect–attached systems through a Redfish-based standard model. Cisco Intersight managed mode standardizes both policy and operation management for the Cisco UCS X210c M6 compute nodes used in this deployment guide.

Set up Cisco Intersight Managed Mode on Cisco UCS Fabric Interconnects

The Cisco UCS fabric interconnects need to be set up to support Cisco Intersight managed mode. When converting an existing pair of Cisco UCS fabric interconnects from Cisco UCS Manager (UCSM) mode to Intersight Managed Mode (IMM), first erase the configuration and reboot your system.

Note: Converting fabric interconnects to Cisco Intersight managed mode is a disruptive process, and configuration information will be lost. Customers are encouraged to make a backup of their existing configuration. If a UCS software version that supports Intersight Managed Mode (4.1(3) or later) is already installed on Cisco UCS Fabric Interconnects, do not upgrade the software to a recommended recent release using Cisco UCS Manager. The software upgrade will be performed using Cisco Intersight to make sure Cisco UCS X-series firmware is part of the software upgrade.

This section provides the detailed procedures for configuring the Cisco Unified Computing System (Cisco UCS) for use in a FlashStack environment. These steps are necessary to provision the Cisco UCS Compute nodes and should be followed precisely to avoid improper configuration.

Cisco UCS Fabric Interconnect A

To configure the Cisco UCS for use in a FlashStack environment in Intersight managed mode, follow these steps:

1. Connect to the console port on the first Cisco UCS fabric interconnect.

2. Power on the Fabric Interconnect.

3. Power-on self-test messages will be displayed as the Fabric Interconnect boots.

4. When the unconfigured system boots, it prompts you for the setup method to be used. Enter console to continue the initial setup using the console CLI.

5. Enter intersight as the management mode for the Fabric Interconnect. The available options are:

● intersight to manage the Fabric Interconnect through Cisco Intersight.

● ucsm to manage the Fabric Interconnect through Cisco UCS Manager.

6. Enter y to confirm that you want to continue the initial setup.

7. To use a strong password, enter y.

8. Enter the password for the admin account.

9. To confirm, re-enter the password for the admin account.

10. Enter yes to continue the initial setup for a cluster configuration.

11. Enter the Fabric Interconnect fabric (either A or B).

12. Enter the system name.

13. Enter the IPv4 or IPv6 address for the management port of the Fabric Interconnect.

Note: If you enter an IPv4 address, you will be prompted to enter an IPv4 subnet mask. If you enter an IPv6 address, you will be prompted to enter an IPv6 network prefix.

14. Enter the respective IPv4 subnet mask or IPv6 network prefix, then press Enter.

Note: You are prompted for an IPv4 or IPv6 address for the default gateway, depending on the address type you entered for the management port of the Fabric Interconnect.

15. Enter either of the following:

● IPv4 address of the default gateway

● IPv6 address of the default gateway

16. Enter the IPv4 or IPv6 address for the DNS server.

Note: The address type must be the same as the address type of the management port of the Fabric Interconnect.

17. Enter yes if you want to specify the default domain name, or no if you do not.

Enter the configuration method. (console/gui) ? console

Enter the management mode. (ucsm/intersight)? intersight

Enter the setup mode; setup newly or restore from backup. (setup/restore) ? setup

You have chosen to setup a new Fabric interconnect in “intersight” managed mode. Continue? (y/n): y

Enforce strong password? (y/n) [y]: Enter

Enter the password for "admin": <password>

Confirm the password for "admin": <password>

Is this Fabric interconnect part of a cluster(select 'no' for standalone)? (yes/no) [n]: y

Enter the switch fabric (A/B) []: A

Enter the system name: <ucs-cluster-name>

Physical Switch Mgmt0 IP address : <ucsa-mgmt-ip>

Physical Switch Mgmt0 IPv4 netmask : <ucsa-mgmt-mask>

IPv4 address of the default gateway : <ucsa-mgmt-gateway>

Cluster IPv4 address : <ucs-cluster-ip>

Configure the DNS Server IP address? (yes/no) [n]: y

DNS IP address : <dns-server-1-ip>

Configure the default domain name? (yes/no) [n]: y

Default domain name : <ad-dns-domain-name>

Apply and save the configuration (select 'no' if you want to re-enter)? (yes/no): yes

18. Wait for the login prompt for UCS Fabric Interconnect A before proceeding to the next section.

Cisco UCS Fabric Interconnect B

To configure the Cisco UCS for use in a FlashStack environment, follow these steps:

1. Connect to the console port on the second Cisco UCS fabric interconnect.

2. Power up the Fabric Interconnect.

3. When the unconfigured system boots, it prompts you for the setup method to be used. Enter console to continue the initial setup using the console CLI.

Enter the configuration method. (console/gui) ? console

Installer has detected the presence of a peer Fabric interconnect. This Fabric interconnect will be added to the cluster. Continue (y/n) ? y

Enter the admin password of the peer Fabric interconnect: <password>

Connecting to peer Fabric interconnect... done

Retrieving config from peer Fabric interconnect... done

Peer Fabric interconnect Mgmt0 IPv4 Address: <ucsa-mgmt-ip>

Peer Fabric interconnect Mgmt0 IPv4 Netmask: <ucsa-mgmt-mask>

Cluster IPv4 address : <ucs-cluster-ip>

Peer FI is IPv4 Cluster enabled. Please Provide Local Fabric Interconnect Mgmt0 IPv4 Address

Physical Switch Mgmt0 IP address : <ucsb-mgmt-ip>

Local fabric interconnect model(UCS-FI-6454)

Peer fabric interconnect is compatible with the local fabric interconnect. Continuing with the installer...

Apply and save the configuration (select 'no' if you want to re-enter)? (yes/no): yes

4. Wait for the login prompt for UCS Fabric Interconnect B before proceeding to the next section.
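In the dialogs above, fabric interconnect B reuses the netmask entered on fabric interconnect A, so both mgmt0 addresses, the cluster IP address, and the default gateway are expected to share one IPv4 management subnet. The Python sketch below is a hypothetical planning check (placeholder addresses from the 192.0.2.0/24 documentation range) that confirms the four addresses are distinct, usable, and inside that subnet before you run the setup dialogs.

```python
import ipaddress


def check_fi_addressing(fi_a: str, fi_b: str, cluster_ip: str,
                        netmask: str, gateway: str) -> str:
    """Return the management subnet if all addresses fit in it, else raise ValueError."""
    network = ipaddress.ip_network(f"{fi_a}/{netmask}", strict=False)
    addresses = {"FI-A mgmt0": fi_a, "FI-B mgmt0": fi_b,
                 "cluster IP": cluster_ip, "gateway": gateway}
    seen: dict = {}
    for label, value in addresses.items():
        ip = ipaddress.ip_address(value)
        if ip not in network:
            raise ValueError(f"{label} {ip} is outside {network}")
        if network.prefixlen <= 30 and ip in (network.network_address, network.broadcast_address):
            raise ValueError(f"{label} {ip} is the network or broadcast address")
        if ip in seen:
            raise ValueError(f"{label} reuses the address of {seen[ip]}")
        seen[ip] = label
    return str(network)


print(check_fi_addressing("192.0.2.11", "192.0.2.12", "192.0.2.10",
                          "255.255.255.0", "192.0.2.1"))
```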

Set up Cisco Intersight Account

In this step, using the unique device information for the Cisco UCS, you set up a new Cisco Intersight account. Customers also can choose to add the Cisco UCS devices set up for Cisco Intersight managed mode to an existing Cisco Intersight account; however, that procedure is not covered in this document.

After completing the initial configuration for the fabric interconnects, log into Fabric Interconnect A using your web browser to capture the Cisco Intersight connectivity information. To claim a device, follow these steps:

1. Use the management IP address of Fabric Interconnect A to access the device from a web browser and the previously configured admin password to log into the device.

2. Under DEVICE CONNECTOR, you should see the current device status as “Not claimed.” Note, or copy, the Device ID and Claim Code information to use to set up a new Cisco Intersight account.

Note: The Device ID and Claim Code information can also be used to claim the Cisco UCS devices set up with Cisco Intersight managed mode in an existing Cisco Intersight account.

Create a new Cisco Intersight account

To create a new Cisco Intersight account, follow these steps:

1. Go to https://www.intersight.com and click “Don't have an Intersight Account? Create an account.”

2. Accept the End User License Agreement.

3. Click Next.

4. Click Create.

5. After the account has been created successfully, click “Go To Intersight.”

You will see a screen with your Cisco Intersight account as shown below:

Claim UCS Fabric Interconnects to Cisco Intersight

To claim a new target, follow these steps:

1. Log in to Cisco Intersight with Account Administrator, Device Administrator, or Device Technician privileges.

2. Navigate to ADMIN > Targets > Claim a New Target.

3. Select Available for Claiming, select Cisco UCS Domain (Intersight Managed) as the target type, and click Start.

4. Enter the Device ID and Claim Code captured earlier from the Device Connector tab and click Claim to complete the claiming process.

Verify addition of Cisco UCS Fabric Interconnects to Cisco Intersight

To verify that the Cisco UCS fabric interconnects are added to your account in Cisco Intersight, follow these steps:

1. Go to the web GUI of the Cisco UCS fabric interconnect and click Refresh.

The fabric interconnect status should now be set to Claimed, as shown below:

In Cisco Intersight, the fabric interconnect is now listed as a claimed target under ADMIN > Targets, as shown below:

When setting up a new Cisco Intersight account (as discussed in this document), the account needs to be enabled for Cisco Smart Software Licensing. To set up licensing, follow these steps:

1. Associate the Cisco Intersight account with Cisco Smart Licensing by following these steps:

a. Log into the Cisco Smart Licensing portal: https://software.cisco.com/software/csws/ws/platform/home?locale=en_US#module/SmartLicensing.

b. Select the correct virtual account.

c. Under Inventory > General, generate a new token for product registration.

d. Copy this newly created token.

2. Log in to the Cisco Intersight portal and click Settings (the gear icon) in the top-right corner. Choose Licensing.

3. Under Cisco Intersight > Licensing, click Register.

4. Paste the token copied from the Cisco Smart Licensing portal.

5. Click Next.

6. Select the appropriate default tier.

7. Click Register and wait for the registration to complete.

When the registration is successful, the information about the associated Cisco Smart account is displayed, as shown below:

8. If the default licensing tier is not set to the desired level, change it by clicking Set Default Tier on the right side of the page. Cisco Intersight Managed Mode requires the default tier to be Essentials or higher.

Note: The default tier was already selected during registration in step 6; steps 8 and 9 show how to set it if it was missed during license registration.

9. Select the tier supported by your Smart License.

Note: In this deployment, the default license tier is set to Premier.

Set up Cisco Intersight Organization

You need to define all Cisco Intersight managed mode configurations for Cisco UCS, including policies, under an organization. To define a new organization, follow these steps:

Create Resource Group

Note: Optionally, create a resource group to place the claimed targets. The Default Resource Group automatically groups all the resources of a User Account. A user with Account Administrator privilege can remove resources from the Default Resource Group when required.

1. Log into the Cisco Intersight portal.

2. Click Settings (the gear icon).

3. Click Resource Groups.

4. Click Create Resource Group.

5. Provide a name for the Resource Group (for example, FSV-RG).

6. Under Memberships, select Custom.

7. Select the recently added Cisco UCS device for this Resource Group.

8. Click Create.

9. Click Organizations.

10. Click Create Organization.

11. Provide a name for the organization (for example, FSV) and select the previously created resource group (FSV-RG).

12. Click Create.

Upgrade Fabric Interconnect Firmware using Cisco Intersight

Cisco UCS Manager does not support Cisco UCS X-Series; therefore, a Fabric Interconnect software upgrade performed through Cisco UCS Manager does not include the firmware for Cisco UCS X-Series. Before setting up a UCS domain profile and discovering the chassis, upgrade the Fabric Interconnect firmware to the latest recommended release using Cisco Intersight.

Note: If Cisco UCS Fabric Interconnects were upgraded to the latest recommended software using Cisco UCS Manager, this upgrade process through Intersight will still work and will copy the X-Series firmware to the Fabric Interconnects.

To perform the software upgrade, follow these steps:

1. Log in to the Cisco Intersight portal.

2. Click to expand OPERATE in the left pane and select Fabric Interconnects.

3. Click the ellipsis (…) at the end of the row for either of the Fabric Interconnects and select Upgrade Firmware.

4. Click Start.

5. Verify the Fabric Interconnect information and click Next.

6. Enable Advanced Mode using the toggle switch and uncheck Fabric Interconnect Traffic Evacuation.

7. Select the recommended release from the list and click Next.

8. Verify the information and click Upgrade to start the upgrade process.

9. Monitor the Request panel on the main Cisco Intersight screen, because the system asks for user permission before upgrading each FI. Click the circle-with-arrow icon and follow the on-screen prompts to grant permission.

10. Wait for both FIs to upgrade successfully.

Configure a Cisco UCS Domain Profile

A Cisco UCS domain profile configures a fabric interconnect pair through reusable policies: it configures the ports and port channels on the fabric interconnects and the VLANs and VSANs in the network. Domain-related policies can be attached to the profile either at the time of creation or later. One Cisco UCS domain profile can be assigned to one fabric interconnect domain.

To create a Cisco UCS domain profile, follow these steps:

1. Log in to the Cisco Intersight portal.

2. Click to expand CONFIGURE in the left pane and select Profiles.

3. In the main window, select UCS Domain Profiles and click Create UCS Domain Profile.

4. In the “Create UCS Domain Profile” screen, click Start.

Step 1 - General

1. Choose the organization from the drop-down list (for example, FSV).

2. Provide a name for the domain profile (for example, AA19-Domain-Profile).

3. Click Next.

Step 2 - UCS Domain Assignment

To create the Cisco UCS domain assignment, follow these steps:

1. Assign the Cisco UCS domain to this new domain profile by clicking Assign Now and selecting the previously added Cisco UCS domain (AA19-6454).

2. Click Next.

Step 3 - VLAN and VSAN Configuration

In this step, a single VLAN policy is created and applied to both FIs, while a separate VSAN policy is created for each FI because the VSAN IDs are unique to each fabric.

Create and apply the VLAN Policy

1. Click Select Policy next to VLAN Configuration under Fabric Interconnect A and in the pane on the right, click Create New.

2. Verify the correct Organization is selected (for example, FSV).

3. Provide a name for the policy (for example, AA19-VLAN-Pol).

4. Click Next.

5. Click Add VLANs.

6. Provide the Name and VLAN ID for the native VLAN (for example, Native-VLAN: 2).

7. Make sure Auto Allow On Uplinks is enabled.

8. Under Multicast, click Select Policy, and then click Create New.

9. Verify the correct Organization is selected (for example, FSV).

10. Provide a name for the policy (for example, AA19-MCAST-Pol).

11. Click Next.

12. Click Create.

13. Click Add to add the VLAN.

14. Select Set Native VLAN ID and enter the VLAN number (for example, 2) under VLAN ID.

15. Add the remaining VLANs for FlashStack by clicking Add VLANs and entering the VLANs one by one, selecting the Multicast policy (AA19-MCAST-Pol) created in the earlier steps for each. The VLANs used during this validation for FC-based storage are shown below.

Note: If implementing iSCSI storage, add the iSCSI-A and iSCSI-B VLANs.

16. Click Create to create all the VLANs.

17. Click Select Policy next to VLAN Configuration for FI-B and select the same VLAN policy created in the previous steps.
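The VLAN policy built above can be sanity-checked offline before it is deployed. The Python sketch below is a planning aid under stated assumptions, not a Cisco Intersight API: `Vlan` and `check_vlan_plan` are hypothetical, the IB-MGMT and vMotion entries and their IDs are made-up examples (the validated VLAN list is not reproduced here), and the FCoE check assumes the common Cisco UCS rule that an FCoE VLAN ID should not also be used as an Ethernet VLAN. FCoE VLAN 101 matches the SAN-A example used later in this section; 102 is a hypothetical SAN-B value.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vlan:
    name: str
    vlan_id: int
    multicast_policy: str  # every VLAN in this policy references the same multicast policy


def check_vlan_plan(vlans, native_id, multicast_policy, fcoe_vlan_ids=()):
    """Return a list of problems; an empty list means the plan passes these checks."""
    problems = []
    ids = [v.vlan_id for v in vlans]
    names = [v.name for v in vlans]
    if len(set(ids)) != len(ids):
        problems.append("duplicate VLAN IDs")
    if len(set(names)) != len(names):
        problems.append("duplicate VLAN names")
    if native_id not in ids:
        problems.append(f"native VLAN {native_id} is not defined in the policy")
    for v in vlans:
        # 802.1Q usable range only; Cisco UCS reserves further IDs that are not modeled here.
        if not 1 <= v.vlan_id <= 4094:
            problems.append(f"{v.name}: VLAN ID {v.vlan_id} is out of range")
        if v.multicast_policy != multicast_policy:
            problems.append(f"{v.name}: expected multicast policy {multicast_policy}")
    # Assumed rule: an FCoE VLAN ID must not also be used as an Ethernet VLAN.
    for fcoe_id in fcoe_vlan_ids:
        if fcoe_id in ids:
            problems.append(f"FCoE VLAN {fcoe_id} overlaps an Ethernet VLAN")
    return problems


plan = [
    Vlan("Native-VLAN", 2, "AA19-MCAST-Pol"),
    Vlan("IB-MGMT", 100, "AA19-MCAST-Pol"),  # hypothetical ID
    Vlan("vMotion", 200, "AA19-MCAST-Pol"),  # hypothetical ID
]
print(check_vlan_plan(plan, native_id=2, multicast_policy="AA19-MCAST-Pol",
                      fcoe_vlan_ids=(101, 102)))  # 102 is a hypothetical SAN-B value
```

Keeping the checks as a list of messages, rather than raising on the first error, reports every problem in one pass.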

Create and apply the VSAN Policies (FC Configuration Only)

To create and apply the VSAN policy, follow these steps:

Note: A VSAN policy is only needed when configuring Fibre Channel and can be skipped when configuring IP-only storage access.
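Because each fabric interconnect carries its own SAN, the two VSAN policies created in the following steps must not reuse each other's identifiers. The minimal Python sketch below encodes that rule as used in this design, assuming the convention shown in the examples (VSAN ID equal to its FCoE VLAN ID, distinct per fabric). `VsanPolicy` and `check_vsan_policies` are hypothetical, and the SAN-B policy name and IDs are made-up values; only the SAN-A values come from the steps below.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VsanPolicy:
    policy_name: str
    vsan_name: str
    vsan_id: int
    fcoe_vlan_id: int


def check_vsan_policies(fabric_a, fabric_b):
    """Flag settings that would merge SAN-A and SAN-B under this design's conventions."""
    problems = []
    if fabric_a.policy_name == fabric_b.policy_name:
        problems.append("each fabric interconnect needs its own VSAN policy")
    if fabric_a.vsan_id == fabric_b.vsan_id:
        problems.append(f"both fabrics use VSAN ID {fabric_a.vsan_id}")
    if fabric_a.fcoe_vlan_id == fabric_b.fcoe_vlan_id:
        problems.append(f"both fabrics use FCoE VLAN ID {fabric_a.fcoe_vlan_id}")
    return problems


san_a = VsanPolicy("AA19-VSAN-Pol-A", "VSAN-A", 101, 101)
san_b = VsanPolicy("AA19-VSAN-Pol-B", "VSAN-B", 102, 102)  # hypothetical SAN-B values
print(check_vsan_policies(san_a, san_b))
```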

1. Click Select Policy next to VSAN Configuration under Fabric Interconnect A and in the pane on the right, click Create New.

2. Verify the correct Organization is selected (for example, FSV).

3. Provide a name for the policy (for example, AA19-VSAN-Pol-A).

4. Click Next.

5. Click Add VSAN and provide the Name (for example, VSAN-A), VSAN ID (for example, 101) and associated FCoE VLAN ID (for example, 101) for SAN-A.

6. Click Add.

7. Enable Uplink Trunking for this VSAN.

8. Click Create.

9. Repeat steps 1 – 8 to create a new VSAN policy for SAN-B.

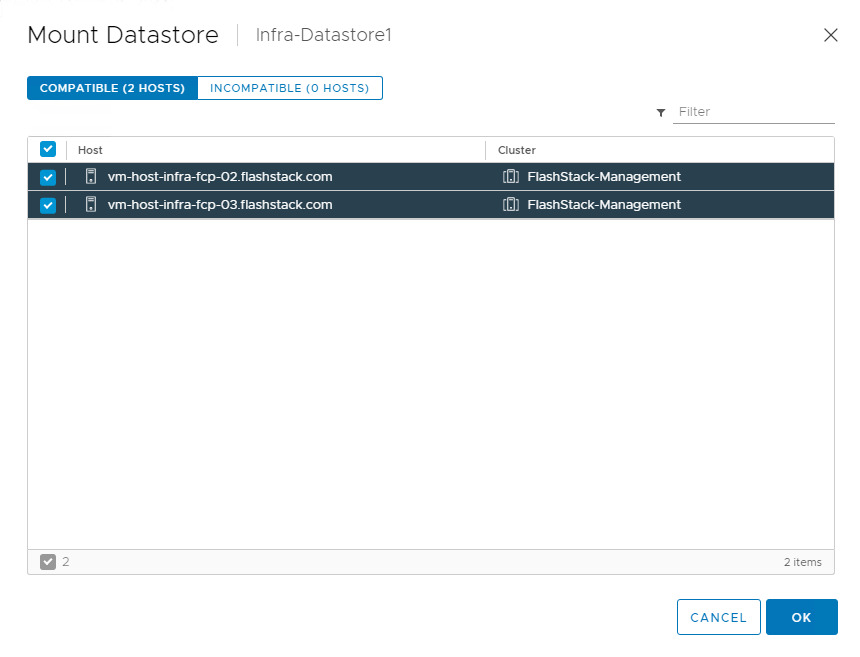

10. Click Select Policy next to VSAN Configuration under Fabric Interconnect B and in the pane on the right, click Create New.