FlexPod for Hybrid Cloud using Cisco Intersight Service and Cloud Volumes ONTAP Replication

Design and Deployment Guide for extending FlexPod to the Hybrid Cloud for Disaster Recovery and Data Replication powered by Automation and Observability with Cisco Intersight

Published February 2022

In partnership with:

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to:

http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series. Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries. (LDW_P2)

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2022 Cisco Systems, Inc. All rights reserved.

Cisco® Validated Designs include systems and solutions that are designed, tested, and documented to facilitate and improve customer deployments. These designs incorporate a wide range of technologies and products into a portfolio of solutions that have been developed to address the business needs of customers. Cisco and NetApp have partnered to deliver this document, which serves as a specific step-by-step guide for implementing this solution.

This document provides a reference architecture and deployment details for disaster recovery of an on-premises FlexPod Datacenter to NetApp Cloud Volumes ONTAP deployed on Amazon Web Services (AWS) using Cisco Intersight services.

The solution provides a comprehensive disaster recovery service that keeps data accessible during an on-premises outage by failing over to Cloud Volumes ONTAP deployed in AWS. It enables secure transport and data protection, and supports automated failover and failback to any destination across the hybrid cloud in a cost-effective way, powered by automation and observability using Cisco Intersight.

Cisco Intersight is a cloud operations platform that delivers intelligent visualization, optimization, and orchestration for applications and infrastructure across public cloud and on-premises environments. Cisco Intersight provides an essential control point for customers to get more value from hybrid IT investments by simplifying operations across on-premises and public cloud environments, continuously optimizing their multicloud environments, and accelerating service delivery to address business needs.

With Cisco Intersight services, you can manage FlexPod Datacenter on-premises and orchestrate and automate the data replication and disaster recovery solution for FlexPod storage to Cloud Volumes ONTAP across the hybrid cloud.

Data protection and disaster recovery are important goals for business continuity. Disaster recovery allows organizations to fail over business operations to a secondary location and later recover and fail back to the primary site efficiently and reliably. Concerns such as natural disasters, network failures, software vulnerabilities, and human error make developing a disaster recovery strategy a top IT priority for every business today. Disaster recovery requires that all workloads running on the primary site be fully reproducible on the DR site. It also requires an up-to-date copy of all enterprise data, including databases, file services, NFS and iSCSI storage, and so on. Because data in the production environment is constantly updated, these changes must be transferred to the DR site on a regular basis.

Deploying disaster recovery environments is challenging for most organizations because of the requirement for infrastructure and site independence. The resources and costs of setting up, testing, and maintaining a secondary data center are very high, almost the same as for the entire production environment, especially considering that organizations rarely use it. It is challenging to keep a minimal data footprint with adequate protection while continuously synchronizing the data and establishing seamless failover and failback. After building out the DR site, the challenge becomes replicating data from the production environment and keeping it synchronized going forward.

NetApp Cloud Volumes ONTAP delivers a solution for enterprise data management in which data can be efficiently replicated from FlexPod Datacenter to Cloud Volumes ONTAP deployed on a public cloud such as AWS. By leveraging cost-effective and secure public cloud resources, Cloud Volumes ONTAP enhances cloud-based DR with highly efficient data replication, built-in storage efficiencies, and simple DR testing, all managed with unified control and drag-and-drop simplicity. The result is cost-effective protection against errors, failures, and disasters. Cloud Volumes ONTAP provides SnapMirror as a solution for block-level data replication that keeps the destination up to date through incremental updates. Users can specify a synchronization schedule, for example every minute or every hour, at which data changes from the source are transferred to the destination.
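The idea of keeping a destination up to date through incremental, block-level updates can be illustrated with a small model. The following Python sketch is illustrative only and is not how SnapMirror is implemented; the block map, the `changed_blocks` and `apply_update` helpers, and the sample data are all assumptions introduced for this example.

```python
from typing import Dict

# Hypothetical model: a volume is a map of block number -> block contents.
Blocks = Dict[int, bytes]


def changed_blocks(previous: Blocks, current: Blocks) -> Blocks:
    """Return the blocks that are new or modified since the previous snapshot."""
    return {
        number: data
        for number, data in current.items()
        if previous.get(number) != data
    }


def apply_update(destination: Blocks, delta: Blocks, current: Blocks) -> None:
    """Apply an incremental update so the destination matches the source."""
    destination.update(delta)
    # Drop blocks that no longer exist on the source.
    for number in list(destination):
        if number not in current:
            del destination[number]


# Baseline transfer: the destination starts as a full copy of the source.
baseline = {0: b"boot", 1: b"data-v1", 2: b"logs"}
destination = dict(baseline)

# Later, the source changes; only the difference is transferred.
source_now = {0: b"boot", 1: b"data-v2", 3: b"new"}
delta = changed_blocks(baseline, source_now)
apply_update(destination, delta, source_now)
```

In this model only two of the three source blocks cross the wire on the scheduled update, which is why incremental replication reduces transfer time and bandwidth compared with repeated full copies.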

Cisco Intersight provides powerful services and an easy-to-use GUI for managing infrastructure and workloads across the hybrid cloud. This document examines in detail how to orchestrate and automate the data replication and disaster recovery solution between FlexPod Datacenter and Cloud Volumes ONTAP using Cisco Intersight services such as Intersight Cloud Orchestrator and Intersight Service for HashiCorp Terraform.

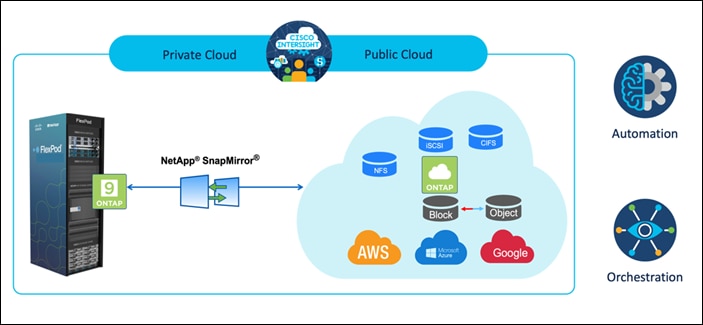

Figure 1. Solution Overview

There are multiple advantages of this solution, such as:

● Orchestration and Automation

Cisco Intersight simplifies the day-to-day operations of the industry-trusted FlexPod hybrid cloud infrastructure by providing consistent orchestration frameworks that are delivered through automation.

● Customized Protection

Cloud Volumes ONTAP provides block-level data replication from ONTAP to the cloud that keeps the destination up to date through incremental updates. Users can specify a synchronization schedule, such as every minute or every hour, on which changes at the source are transferred to the destination.

● Seamless Failover and Failback

When a disaster occurs, storage administrators can quickly fail over to the cloud volumes. When the primary site is recovered, the new data created in the DR environment is synchronized back to the source volumes, enabling the secondary data replication to be re-established.

● Efficiency

The storage space and costs for the secondary cloud copy are optimized through data compression, thin provisioning, and deduplication. The data is transferred at the block level in its compressed and deduplicated form, improving the speed of transfers. Data is also automatically tiered to low-cost object storage and brought back to high-performance storage only when accessed, such as in a DR scenario. This significantly reduces ongoing storage costs.

● Increase IT Productivity

Using Intersight as the single enterprise-grade, secure platform for infrastructure and application lifecycle management simplifies configuration management and automates manual tasks at scale for the solution.

This section describes the components used in the solution outlined in this study.

FlexPod is a defined set of hardware and software that serves as an integrated foundation for both virtualized and non-virtualized solutions. VMware vSphere® built on FlexPod includes NetApp AFF storage, Cisco Nexus® networking, Cisco MDS storage networking, the Cisco Unified Computing System (Cisco UCS®), and VMware vSphere software in a single package. The design is flexible enough that the networking, computing, and storage can fit in one data center rack or be deployed according to a customer's data center design. Port density enables the networking components to accommodate multiple configurations of this kind.

One benefit of the FlexPod architecture is the ability to customize or "flex" the environment to suit a customer's requirements. A FlexPod can easily be scaled as requirements and demand change. The unit can be scaled both up (adding resources to a FlexPod unit) and out (adding more FlexPod units). The reference architecture detailed in this document highlights the resiliency, cost benefit, and ease of deployment of a Fibre Channel and IP-based storage solution. A storage system capable of serving multiple protocols across a single interface allows for customer choice and investment protection because it truly is a wire-once architecture.

FlexPod Components

FlexPod architecture includes the following core components:

● Cisco UCS

● Cisco Nexus® Family switches

● Cisco MDS Family switches

● NetApp AFF/FAS storage systems

Figure 2. FlexPod Component Families

These components are connected and configured according to the best practices of both Cisco and NetApp to provide an ideal platform for running a variety of enterprise workloads with confidence. FlexPod can scale up for greater performance and capacity (adding compute, network, or storage resources individually as needed), or it can scale out for environments that require multiple consistent deployments (such as rolling out additional FlexPod stacks). The reference architecture covered in this document leverages Cisco Nexus 9000 for the network switching element and Cisco MDS 9000 for the SAN switching component.

One of the key benefits of FlexPod is its ability to maintain consistency during scale. Each of the component families shown (Cisco UCS, Cisco Nexus, and NetApp AFF) offers platform and resource options to scale the infrastructure up or down, while supporting the same features and functionality that are required under the configuration and connectivity best practices of FlexPod.

Why FlexPod?

The following are some of the benefits of FlexPod:

● Consistent Performance and Scalability

◦ Consistent sub-millisecond latency with 100% flash storage

◦ Consolidate hundreds of enterprise-class applications in a single rack

◦ Scales easily, without disruption

◦ Continuous growth through multiple FlexPod CI deployments

● Operational Simplicity

◦ Fully tested, validated, and documented for rapid deployment

◦ Reduced management complexity

◦ Auto-aligned 512B architecture removes storage alignment issues

◦ No storage tuning or tiers necessary

● Lowest TCO

◦ Dramatic savings in power, cooling, and space with 100% flash storage

◦ Industry leading data reduction

● Enterprise-Grade Resiliency

◦ Highly available architecture with no single point of failure

◦ Nondisruptive operations with no downtime

◦ Upgrade and expand without downtime or performance loss

◦ Native data protection: snapshots and replication

◦ Suitable for even large resource-intensive workloads such as real-time analytics or heavy transactional databases

Cisco Intersight is a SaaS platform which delivers intelligent automation, observability and optimization for traditional and cloud-native applications and infrastructure. The platform helps drive change with IT teams and delivers an operating model designed for hybrid cloud.

Cisco Intersight provides the following:

● Faster Delivery

Delivered “as a Service” from the cloud or in the customer's data center, with frequent updates and continued innovation enabled by an agile software development model. This way, customers can focus on accelerating delivery for the line of business.

● Simplified operations

Simplify operations by using a single secure SaaS-delivered tool, with common inventory, authentication, and APIs that work across the full stack and all locations, eliminating silos across teams. This spans managing physical servers and hypervisors on-premises, VMs, Kubernetes (K8s), serverless, automation, optimization, and cost control across both on-premises and public clouds.

● Continuous optimization

Continuously optimize your environment using intelligence provided by Intersight across every layer, as well as Cisco TAC. That intelligence is converted into recommended and automatable actions so you can adapt in real time to every change: from moving workloads and monitoring the health of physical servers, to auto-sizing K8s clusters, to cost reduction recommendations for the public clouds you work with.

Cisco Intersight Management

Cisco Intersight supports two modes of management operation: UCSM Managed Mode (UMM) and Intersight Managed Mode (IMM). You can select native UMM or IMM for fabric-attached Cisco UCS systems during initial setup of the Fabric Interconnects.

UCSM Managed Mode allows you to connect existing Cisco UCS infrastructure managed with Cisco UCS Manager (UCSM) to Cisco Intersight. In addition, Intersight integrates with third-party storage, cloud services, virtualization, and container platforms.

Intersight Managed Mode (IMM) is a new architecture that manages Cisco UCS Fabric Interconnect-attached systems through a Redfish-based standard model. Intersight Managed Mode unifies the capabilities of Cisco UCS systems and the cloud-based flexibility of Intersight, thus unifying the management experience for standalone and Fabric Interconnect-attached systems. Intersight Managed Mode standardizes policy and operation management for UCS-FI-6454, UCS-FI-64108, and Cisco UCS M5, M6, and X-Series servers.

The Cisco Intersight Managed Mode (IMM) transition tool helps bootstrap new IMM deployments by replicating the configuration attributes of the existing Cisco UCS Manager infrastructure and by converting the existing Service Profile Templates to IMM Server Profile Templates, accelerating deployment of new servers in IMM. The download image and user guides are available at: https://ucstools.cloudapps.cisco.com/

Cisco Intersight Connected Virtual Appliance and Private Virtual Appliance

In addition to the SaaS deployment model running on Intersight.com, on-premises options can be purchased separately. The Cisco Intersight Connected Virtual Appliance and Cisco Intersight Private Virtual Appliance are available for organizations that have additional data locality or security requirements for managing systems. The Cisco Intersight Connected Virtual Appliance delivers the management features of the Cisco Intersight platform in an easy-to-deploy VMware Open Virtualization Appliance (OVA) or Microsoft Hyper-V Server virtual machine that allows you to control the system details that leave your premises. The Cisco Intersight Private Virtual Appliance is provided in a form factor specifically designed for users who operate in disconnected (air gap) environments. The Private Virtual Appliance requires no connection to public networks or back to Cisco to operate. At this time, Cisco Intersight managed mode configuration is available only through the Cisco Intersight SaaS platform and Connected Virtual Appliance.

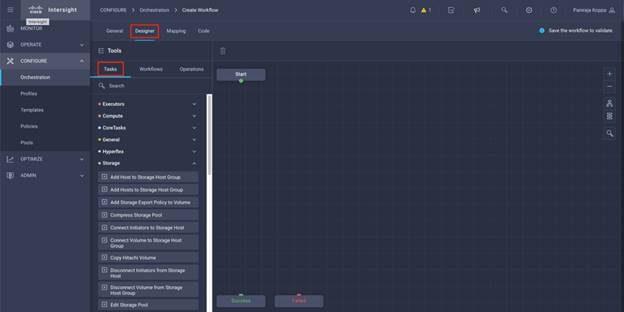

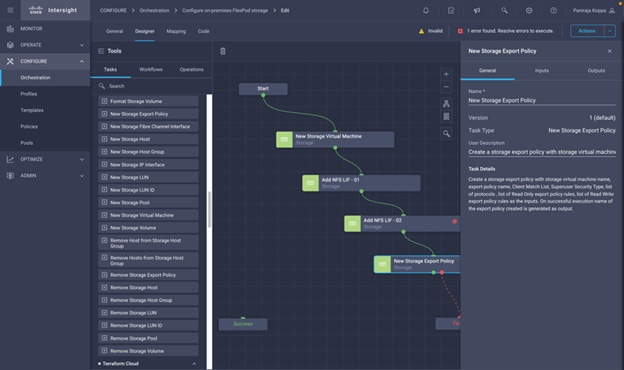

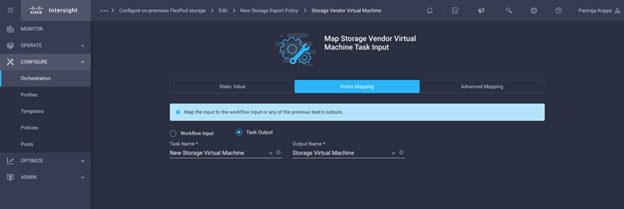

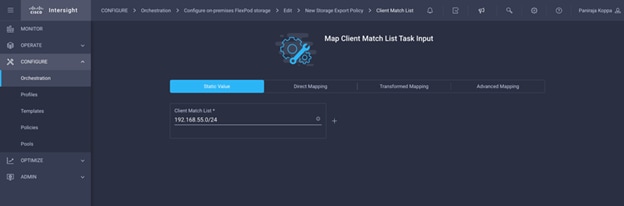

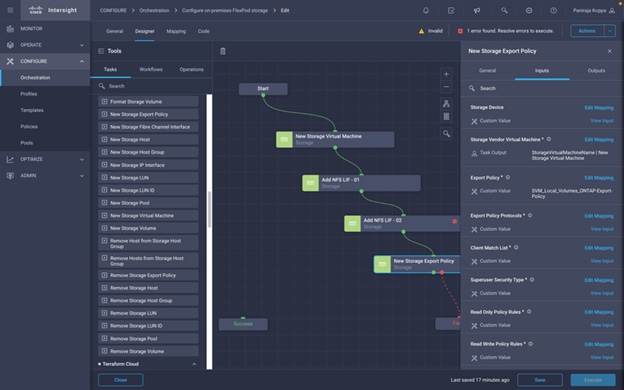

Cisco Intersight Cloud Orchestrator

Cisco Intersight Cloud Orchestrator is a powerful automation tool that enables IT operations teams not just to move at the speed of the business and standardize while reducing risk across all domains but also to provide a consistent cloud-like experience for users.

Cisco Intersight Cloud Orchestrator simplifies orchestration and automation for infrastructure and workloads across hybrid cloud by providing an easy-to-use workflow designer. Based on a library of curated, multi-domain tasks (custom or provided by Cisco), it enables users to create workflows, quickly and easily, without being coding experts! This enables quick and easy automation and deployment of any infrastructure resource, from servers, to VMs and the network, taking away some of the complexity of operating your hybrid IT environment.

The ICO workflow designer provides:

● Low/no-code workflow creation with a modern, drag-and-drop user experience with control flow support. The workflow designer includes policy-based, built-in tasks for Cisco UCS, virtualization, and other Cisco devices. A Software Development Kit (SDK) enables Cisco technology partners to build their own ICO tasks to develop custom solutions.

● Rollback capabilities to selectively undo a workflow’s tasks in the event of failure, or to deprovision infrastructure, which when done manually can often take longer and be more error prone than straight provisioning.

● Extensibility with a task designer that expands the functionality of currently supported targets or can be used to create new ones. ICO currently supports Web API with more integration options to come.
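The rollback behavior described above can be pictured as running tasks in order and, on a failure, undoing the completed tasks in reverse order. The following Python sketch is a generic illustration of that pattern under stated assumptions; it does not use or represent the ICO API, and the `run_workflow` function and task names are hypothetical.

```python
from typing import Callable, List, Tuple

# Hypothetical task shape: (name, run action, undo action).
Task = Tuple[str, Callable[[], None], Callable[[], None]]


def run_workflow(tasks: List[Task]) -> List[str]:
    """Run tasks in order; on failure, undo completed tasks in reverse order."""
    log: List[str] = []
    completed: List[Task] = []
    for task in tasks:
        name, run, _ = task
        try:
            run()
        except Exception:
            log.append(f"failed:{name}")
            # Roll back only what actually completed, newest first.
            for done_name, _, undo in reversed(completed):
                undo()
                log.append(f"undone:{done_name}")
            return log
        completed.append(task)
        log.append(f"done:{name}")
    return log


def noop() -> None:
    """Stand-in for a real provisioning or deprovisioning action."""


def fail() -> None:
    raise RuntimeError("simulated task failure")


example_log = run_workflow([
    ("create_volume", noop, noop),
    ("create_relationship", noop, noop),
    ("initialize_transfer", fail, noop),
])
```

Undoing in reverse order matters because later tasks usually depend on earlier ones, so the dependent resource has to be removed before the resource it was built on.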

With Cisco Intersight Cloud Orchestrator you can truly evolve your automation strategy to provide consistent experience across on-premises resources and public clouds.

The following are some key benefits:

● Bring your public cloud and on-premises resources together with a solution that extends orchestration across any infrastructure and workload and integrates with the tools of your choice

● Save time and streamline automation with a user-friendly GUI-based designer that makes it easy to create and execute complex workflows without being a coding expert

● Standardize your deployment process with self-service delivery and boost productivity with a selection of validated blueprints

● Reduce risks by enforcing policy using rules for what can be orchestrated and who can access workflows and tasks

Cisco Intersight Service for HashiCorp Terraform

Infrastructure as Code (IaC) is the method of defining and provisioning infrastructure using definition files containing code. IaC adoption in public clouds enables application agility in the following ways:

● Addresses the problem of environment drift in the release pipeline: IaC manages infrastructure using source code versioned in Git as the single source of truth.

● CI/CD toolchains automatically test, deploy, and track pull requests and configuration changes to your infrastructure.

● Regardless of an environment’s starting state, deployments always use the same configuration.

● Enables users to transition from mutable workloads (take existing infrastructure and try and upgrade in place) to immutable workloads (take existing infrastructure, create new infrastructure, and destroy the existing device).
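The point that deployments converge on the same configuration regardless of the starting state can be shown with a small declarative model. The Python sketch below is a conceptual illustration only and is not how Terraform is implemented; the `plan` and `apply_plan` functions and the resource names are assumptions made for this example.

```python
from typing import Dict, List, Tuple

# Hypothetical model: resource name -> attributes.
Config = Dict[str, Dict[str, str]]


def plan(desired: Config, actual: Config) -> List[Tuple[str, str]]:
    """Compute the actions needed to move the actual state to the desired state."""
    actions: List[Tuple[str, str]] = []
    for name in sorted(desired):
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != desired[name]:
            actions.append(("update", name))
    for name in sorted(actual):
        if name not in desired:
            actions.append(("delete", name))
    return actions


def apply_plan(desired: Config, actual: Config) -> Config:
    """Return the state after executing the plan against a copy of actual."""
    result = {name: dict(attrs) for name, attrs in actual.items()}
    for action, name in plan(desired, actual):
        if action == "delete":
            del result[name]
        else:
            result[name] = dict(desired[name])
    return result


desired = {"vol1": {"size": "100g"}, "vol2": {"size": "50g"}}
drifted = {"vol1": {"size": "80g"}, "old": {"size": "10g"}}
```

Applying the plan to the drifted environment, to an empty environment, or to one that already matches all yield the same end state, and a second run produces an empty plan.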

Infrastructure as Code (IaC) enables IT and development teams to automate and scale the provisioning and management of IT resources aligned with application source-code releases in a descriptive manner. HashiCorp Terraform is the industry-leading IaC platform. Cisco Intersight Service for HashiCorp Terraform (IST) addresses the challenge of securely connecting and configuring on-premises environments to work with Terraform Cloud Business. Rather than spending time on firewall configurations or manually deploying and maintaining local runtime environments for Terraform Cloud Agents, IST removes the discomfort of DIY approaches by making the integration quick and easy.

Leveraging Intersight Assist, users can integrate Terraform Cloud Business with Cisco Intersight, enabling secure communication between on-premises data centers and edge locations with the IaC platform. This means users can spend less time managing the end-to-end lifecycle of Terraform Cloud Agents, benefiting from native integration directly within Intersight, including upgrades and the ability to scale as demand grows. In addition, with common Single Sign-On (SSO), users can cross-launch directly from Intersight into Terraform Cloud.

With Intersight Service for HashiCorp Terraform, seamlessly and securely extend modern, public cloud automation tools and best-practices to any on-premises environments, delivering consistent agility and flexibility for your DevOps teams while reducing operational overhead for ITOps.

Key benefits include:

● Reduce operational complexity and increase productivity using Infrastructure as Code to provision and manage your hybrid cloud environment

● Give your DevOps teams what they need with a ready-to-be-consumed on-premises infrastructure, securely integrated with their IaC tools

● Reduce risk with enterprise-grade capabilities to manage infrastructure in private environments, such as Single Sign-on (SSO) and audit logging

● Automate across all your hybrid cloud resources without having to manage more tools to integrate with Terraform Cloud Business

● Benefit from a hybrid cloud partnership between industry-leaders with a rich catalog of Terraform providers and a single point of contact for support and enablement

● Simplify usability with quality-of-life features such as common APIs and cross-launching through Cisco Intersight.

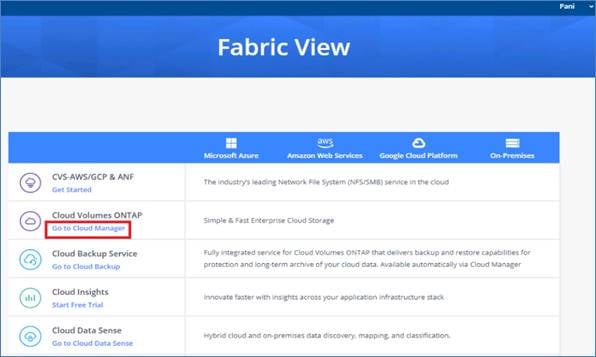

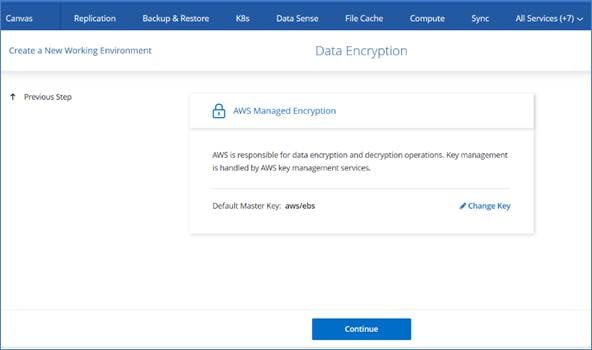

NetApp Cloud Volumes ONTAP is a software-defined storage offering that delivers advanced data management for file and block workloads. With Cloud Volumes ONTAP, you can optimize your cloud storage costs and increase application performance while enhancing data protection, security, and compliance.

Key benefits include:

● Leverage built-in data deduplication, data compression, thin provisioning, and cloning to minimize storage costs.

● Ensure enterprise reliability and continuous operations in case of failures in your cloud environment.

● Cloud Volumes ONTAP leverages SnapMirror, NetApp’s industry-leading replication technology, to replicate on-premises data to the cloud so it’s easy to have secondary copies available for multiple use cases.

● Cloud Volumes ONTAP also integrates with Cloud Backup service to deliver backup and restore capabilities for protection, and long-term archive of your cloud data.

● Switch between high and low-performance storage pools on-demand without taking applications offline.

● Ensure consistency of NetApp Snapshot copies using NetApp SnapCenter.

● Cloud Volumes ONTAP supports data encryption and provides protection against viruses and ransomware.

● Integration with Cloud Data Sense helps you understand data context and identify sensitive data.

Cloud Central provides a centralized location to access and manage NetApp cloud data services. These services enable you to run critical applications in the cloud, create automated DR sites, back up your SaaS data, and effectively migrate and control data across multiple clouds. For more information, refer to Cloud Central.

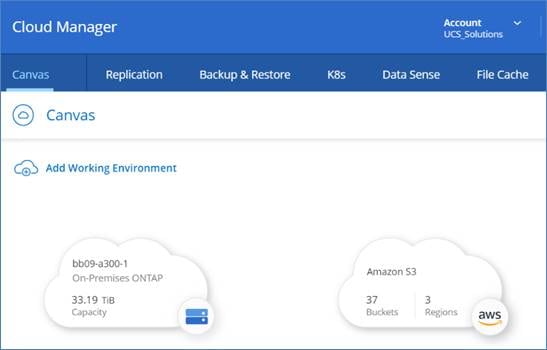

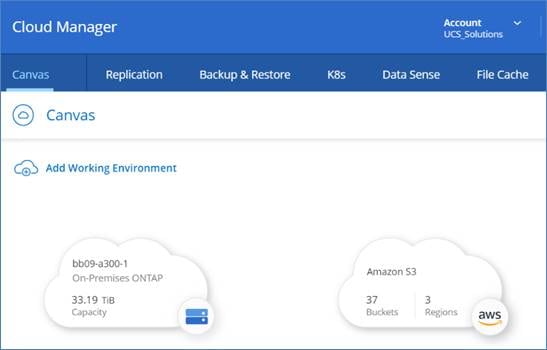

Cloud Manager is an enterprise-class, SaaS-based management platform that enables IT experts and cloud architects to centrally manage their hybrid multicloud infrastructure using NetApp's cloud solutions. It provides a centralized system for viewing and managing your on-premises and cloud storage, supporting hybrid environments and multiple cloud providers and accounts. For more information, refer to Cloud Manager.

A Connector is an instance that enables Cloud Manager to manage resources and processes within a public cloud environment. A Connector is required to use many of the features that Cloud Manager provides. A Connector can be deployed in the cloud or in an on-premises network.

Connector is supported in the following locations:

● Amazon Web Services

● Microsoft Azure

● Google Cloud

● On your premises

When you create your first Cloud Volumes ONTAP working environment, Cloud Manager will prompt you to create a Connector if you don’t have one yet. The user who creates a Connector from Cloud Manager needs specific permissions to deploy the instance in your cloud provider of choice. Cloud Manager will remind you of the permissions requirements when you create a Connector.

The Connector needs specific cloud provider permissions to perform operations on your behalf, for example, to deploy and manage Cloud Volumes ONTAP. When you create a Connector directly from Cloud Manager, Cloud Manager creates the Connector with the permissions that it needs. To learn more about Connectors, refer to Connectors.

It is essential for businesses to have a feasible, robust, and sustainable Business Continuity and Disaster Recovery (BCDR) plan in case of an outage. While there are several options that businesses can evaluate and explore, it all comes down to the cost of implementation and the Recovery Point Objective (RPO) and Recovery Time Objective (RTO) that the plan can deliver.

For most businesses, the fundamental requirement in a BCDR plan is to have a failover site to sustain their operations while they take the necessary steps to recover the primary production environment. In such situations, the public cloud can serve as a DR environment which provides the required fault tolerance, on-demand resource provisioning and elasticity leading to a consumption-based billing that helps in lowering the overall cost of the BCDR implementation.

The implementation of the BCDR plan in a public cloud is not a straightforward approach especially when dealing with a high volume of mission critical data. The need for a secure, scalable, reliable, cost-optimized, and unified data management service that integrates seamlessly with the on-premises environment is an absolute requirement.

NetApp Cloud Volumes ONTAP, when paired with an on-premises FlexPod running ONTAP, provides a secure pathway for mission-critical data to be replicated to the cloud at a cadence driven by the Recovery Point Objective (RPO). When operations must move to the cloud because of a disaster, the replicated data in the cloud can be promoted to production at the click of a button. The entire data replication relationship between FlexPod and the Cloud Volumes ONTAP instance in the public cloud can be managed from the single control plane of Cisco Intersight.
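To see how a replication cadence relates to an RPO target, a simplified worst-case estimate can help. The Python sketch below assumes scheduled asynchronous replication in which each update copies the source state as of the moment the update starts, and each transfer finishes before the next one begins. Under those assumptions, the oldest data the destination may hold just before an update completes is one schedule interval plus one transfer duration. The function names and numbers are hypothetical and are not taken from the validated design.

```python
def worst_case_data_loss_minutes(interval_minutes: float,
                                 transfer_minutes: float) -> float:
    """Estimate worst-case data loss for scheduled asynchronous replication.

    Simplified model (an assumption, not a product guarantee): the destination
    reflects the source as of the start of the last completed transfer.
    """
    if interval_minutes <= 0 or transfer_minutes < 0:
        raise ValueError("interval must be positive and transfer non-negative")
    return interval_minutes + transfer_minutes


def meets_rpo(interval_minutes: float, transfer_minutes: float,
              rpo_minutes: float) -> bool:
    """Check whether a schedule satisfies an RPO target under this model."""
    return worst_case_data_loss_minutes(interval_minutes,
                                        transfer_minutes) <= rpo_minutes


# An hourly schedule with 10-minute transfers does not meet a 60-minute RPO.
hourly_ok = meets_rpo(interval_minutes=60, transfer_minutes=10, rpo_minutes=60)
# A 15-minute schedule with 5-minute transfers meets a 30-minute RPO.
quarter_hour_ok = meets_rpo(interval_minutes=15, transfer_minutes=5, rpo_minutes=30)
```

The practical takeaway under this model is that the schedule interval should be chosen shorter than the RPO target, leaving headroom for the time each transfer takes.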

The audience for this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineers, site reliability engineers, cloud architects, cloud engineers, and customers who want to take advantage of an infrastructure built to deliver IT efficiency and enable IT innovation.

Requirements and Specification

Cisco Intersight uses a subscription-based license with multiple tiers. Each Cisco endpoint (Cisco UCS server, Cisco HyperFlex system, or Cisco UCS Director software) automatically includes a Cisco Intersight Base license when you access the Cisco Intersight portal and claim a device.

Cisco Intersight license tiers

The following are the Cisco Intersight license tiers:

● Cisco Intersight Essentials—Essentials includes ALL functionality of Base with the additional features including Cisco UCS Central and Cisco IMC Supervisor entitlement, policy-based configuration with Server Profiles, firmware management, and evaluation of compatibility with the Hardware Compatibility List (HCL).

● Cisco Intersight Advantage—Advantage offers all features and functionality of the Base and Essentials tiers.

● Cisco Intersight Premier—In addition to the functionality provided in the Advantage tier, Intersight Premier includes full subscription entitlement for Cisco UCS Director at no additional cost.

More information about Intersight Licensing and features supported in each licensing can be found at: https://intersight.com/help/saas/getting_started/licensing_requirements#intersight_licensing

View current Cisco Intersight Infrastructure Service licensing.

Note: This solution uses Intersight Cloud Orchestrator and Intersight Service for HashiCorp Terraform. These features are available to users with the Intersight Premier license, so this licensing tier must be enabled.

Procedure 1. Activate a Cisco Intersight License

Follow these steps to register your license in Cisco Intersight:

Step 1. Log into Intersight with Account Administrator privileges.

Step 2. From Settings icon > Settings > License, click Register.

Step 3. In the Set Token page, enter the Product Instance Registration Token. Click Cisco Smart Software Manager to obtain your Intersight Registration token. If you do not have a Smart Account, create one from link: https://software.cisco.com/#SmartLicensing-Alerts. You can purchase the subscription and select the required Cisco UCS Server volume tier for the selected subscription duration from the same Smart Account. Click Next.

Step 4. In the Set Product page, select the required default license tier for Intersight and/or Workload Optimizer. Enabling Move all Servers To Default Tier tags and moves all existing servers to the default tier.

The available license tiers are:

● Intersight—Choose Essentials, Advantage, or Premier. For more information on the Intersight licensing tiers, see the Intersight Licensing section.

● Workload Optimizer—Toggle ON Activate Workload Optimizer and click Essentials, Advantage, or Premier. For more information on the Workload Optimizer licensing tiers, see the Workload Optimizer Licensing section.

License Status

The Cisco Intersight account license state could be one of the following depending on your subscription status:

● Not Used—This status is displayed when the server count in a license tier is 0.

● In Compliance—The account licensing state is in compliance and all the supported features are available to the users.

● Out of Compliance—The account license status displays Out of Compliance in the following cases:

◦ When not enough valid licenses are available because the subscription has reached the end of term, or you have more servers in the license tier than available licenses.

◦ When the 90-day grace period is active or has expired.

◦ When servers are added to the account but not registered in the Smart Licensing account.

When an account license status moves to Out of Compliance, a grace period of 90 days is triggered. In this period, you can continue to use the premium features, but the account license status remains Out of Compliance. To get back in compliance, you must purchase additional licenses or remove a server from the existing tier or move it to a lower tier. If you do not renew your license within the 90 days, the license state moves to Grace Expired and the license is downgraded to Base-level functionality and the premium features become unavailable. You must register a valid license again to resume using the features.

For example, if an account has a valid license for 20 servers and you claim another server into the account, the status moves to Out of Compliance and the grace period is initiated. However, you can continue to access the features as before. To restore the In Compliance status, you can move one of the servers to a lower tier (Base/Essentials/Advantage, as required) from the Actions Menu in the Server Details page, or from the Server/Bulk Actions in the Table view.

Note: After you purchase and activate additional licenses from the Cisco Smart Licensing portal, click the Refresh icon in the Subscription pane to sync the licensing status with that in the portal.
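The license states described above can be summarized as a small decision function. The following is a minimal sketch, not Intersight's actual logic: the function name, its parameters, and the simplification that compliance depends only on server and license counts are assumptions made for illustration. The 90-day grace period and the state names come from the text above.

```python
from enum import Enum

GRACE_PERIOD_DAYS = 90  # grace period stated in this section


class LicenseState(Enum):
    NOT_USED = "Not Used"
    IN_COMPLIANCE = "In Compliance"
    OUT_OF_COMPLIANCE = "Out of Compliance"
    GRACE_EXPIRED = "Grace Expired"


def license_state(servers_in_tier: int, valid_licenses: int,
                  days_out_of_compliance: int = 0) -> LicenseState:
    """Hypothetical helper that maps counts to the documented license states."""
    if servers_in_tier == 0:
        return LicenseState.NOT_USED
    if servers_in_tier <= valid_licenses:
        return LicenseState.IN_COMPLIANCE
    # More servers than licenses: the grace period decides the state.
    if days_out_of_compliance > GRACE_PERIOD_DAYS:
        return LicenseState.GRACE_EXPIRED
    return LicenseState.OUT_OF_COMPLIANCE


if __name__ == "__main__":
    # The example from the text: 20 licenses, a 21st server is claimed.
    print(license_state(21, 20, days_out_of_compliance=10).value)
```

The sketch ignores the case of servers that are added but not registered in the Smart Licensing account, which also leads to Out of Compliance.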

A few licensing options are available for Cloud Volumes ONTAP. Each of these licensing options enables you to select a configuration that meets your needs.

Table 1 lists the licensing options for Cloud Volumes ONTAP.

| Charging method | Highlights | Support | Max system capacity |
| --- | --- | --- | --- |
| Capacity-based license: Essentials package | Pay per TiB of capacity for one or more Cloud Volumes ONTAP systems. Provides a la carte licensing for Cloud Volumes ONTAP. Available by bringing your own license (BYOL) purchased from NetApp. | Included | 2 PiB |
| Capacity-based license: Professional package | Pay per TiB of capacity for one or more Cloud Volumes ONTAP systems. Provides licensing for any Cloud Volumes ONTAP configuration. Includes volume backups using Cloud Backup (for volumes charged against this license). Available through an AWS Marketplace annual contract or by purchasing a license from NetApp (BYOL). | Included | 2 PiB |
| Keystone Flex Subscription | Pay-as-you-grow by TiB through a NetApp subscription. Charging is based on the size of committed capacity. The committed capacity is shared between the Cloud Volumes ONTAP systems deployed with the subscription. Available for HA pairs only. | Included | 2 PiB |
| PAYGO by node | Pay-as-you-go by the hour through a marketplace subscription from your cloud provider. Charging is per Cloud Volumes ONTAP node. Available in three licensing options: Explore, Standard, and Premium. | Included, but you must activate support. | Explore: 2 TiB; Standard: 10 TiB; Premium: 368 TiB |
| Node-based license | The previous generation BYOL for Cloud Volumes ONTAP. A node-based license is available for license renewals only. | Included | 368 TiB per license |

Freemium offering

A new offering from NetApp that provides all Cloud Volumes ONTAP features free of charge from NetApp (cloud provider charges still apply):

● No license or contract is needed.

● Support is not included.

● You’re limited to 500 GiB of provisioned capacity per Cloud Volumes ONTAP system.

● You can use up to 10 Cloud Volumes ONTAP systems with the Freemium offering per NetApp account.

● If the provisioned capacity for a Cloud Volumes ONTAP system exceeds 500 GiB, Cloud Manager converts the system to the Essentials package (which is a capacity-based license) and charging starts.

Any other systems that have less than 500 GiB of provisioned capacity stay on the Freemium offering (if they were deployed using the Freemium offering).
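The Freemium conversion rule can be expressed as a per-system check. This is a minimal sketch under stated assumptions: the function name and the system names are hypothetical, and the code only models systems that were deployed using the Freemium offering. The 500 GiB limit comes from the list above.

```python
from typing import Dict

FREEMIUM_LIMIT_GIB = 500  # provisioned-capacity limit per system, from the text


def freemium_status(provisioned_gib: Dict[str, float]) -> Dict[str, str]:
    """Hypothetical helper: classify each Freemium-deployed system.

    A system stays on Freemium while its provisioned capacity does not
    exceed the limit; otherwise it is converted to the Essentials package.
    Each system is evaluated independently of the others.
    """
    return {
        name: "Freemium" if gib <= FREEMIUM_LIMIT_GIB else "Essentials"
        for name, gib in provisioned_gib.items()
    }


if __name__ == "__main__":
    # Hypothetical system names and capacities.
    print(freemium_status({"cvo-dr": 200, "cvo-archive": 750}))
```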

To learn more about how to obtain and apply a license, refer to the licensing overview.

Hardware and Software Revisions

This hybrid cloud solution can be extended to any FlexPod Datacenter environment that is running supported versions of software, firmware and hardware as defined in the NetApp Interoperability Matrix Tool and Cisco UCS Hardware Compatibility List.

The FlexPod solution used as the baseline platform in the on-premises environment has been deployed as per the guidelines and specifications described in the FlexPod Datacenter with Cisco UCS 4.2(1) in UCS Managed Mode, VMware vSphere 7.0 U2, and NetApp ONTAP 9.9 Design Guide.

Note: The workloads running in the FlexPod Datacenter can be virtualized, non-virtualized, or containerized applications.

Click the following links for more information:

● NetApp Interoperability Matrix Tool

● Cisco UCS Hardware and Software Interoperability Tool

Table 2. FlexPod Hardware and Software Revisions

| Component | Product | Version |
| --- | --- | --- |
| Compute | Cisco UCS B200 M5 Blades | 4.2(1f) |
| | Cisco UCS B200 M6 Servers | 4.2(1f) |
| | Cisco UCS X210C | 5.0(1b) |
| | Cisco UCS Fabric Interconnects 6454 | 4.2(1f) |
| Network | Cisco Nexus 93180YC-FX NX-OS | 9.3(8) |
| | Cisco MDS 9132T | 8.4(2c) |
| Storage | NetApp AFF A400 | 9.9.1P2 |
| | NetApp ONTAP Tools for VMware | 9.8 |
| | NetApp NFS Plugin for VMware VAAI | 2.0-15 |
| | NetApp Active IQ Unified Manager | 9.9P1 |
| | NetApp SnapCenter Plugin for VMware | 4.5 |
| Software | VMware ESXi nenic Ethernet Driver | 1.0.35.0 |
| | VMware ESXi nfnic FC Driver | 5.0.0.12 |
| | vSphere ESXi | 7.0(U2) |
| | VMware vCenter Appliance | 7.0 U2b |
| | Cisco Intersight Assist Virtual Appliance | 1.0.9-342 |

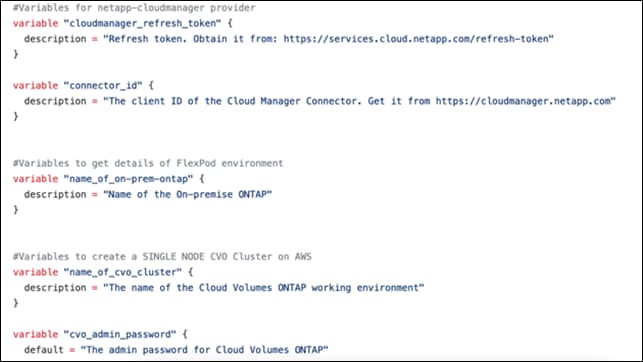

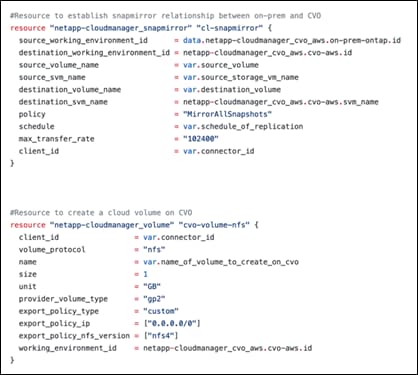

The execution of Terraform configurations happens on the Terraform Cloud for Business account. Terraform configuration uses the Terraform provider for NetApp Cloud Manager.

Table 3. Vendors, products, and versions

| Vendor | Product | Version |
| --- | --- | --- |
| NetApp | netapp-cloudmanager | 21.12.0 |
| HashiCorp | Terraform | 1.0.0 |
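To keep a Terraform workspace aligned with the versions in Table 3, the provider and Terraform versions can be pinned in the configuration. The following is a minimal sketch that renders such a pin in Terraform's JSON configuration syntax (for example, a `versions.tf.json` file). The registry source address `NetApp/netapp-cloudmanager`, the `>= 1.0.0` constraint style, and the function name are assumptions made for illustration and are not taken from this guide.

```python
import json

# Versions from Table 3. The source address and the ">=" constraint
# are assumptions; adjust them to match your workspace.
VERSIONS_CONFIG = {
    "terraform": {
        "required_version": ">= 1.0.0",
        "required_providers": {
            "netapp-cloudmanager": {
                "source": "NetApp/netapp-cloudmanager",
                "version": "21.12.0",
            }
        },
    }
}


def render_versions_file(config: dict = VERSIONS_CONFIG) -> str:
    """Return the version pin as Terraform JSON configuration text."""
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    # Print the JSON; saving it as versions.tf.json is left to the user.
    print(render_versions_file())
```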

Table 4. Cloud Manager and Cloud Volumes ONTAP versions

| Vendor | Product | Version |
| --- | --- | --- |
| NetApp | Cloud Volumes ONTAP | 9.10.1RC1 |
| | Cloud Manager | 3.9.13 Build:1 |
| | Mediator | 9-10-1rc1-mediator |

This section describes the design and architecture of the solution.

Solution Architecture

Figure 3 illustrates the architecture of this solution.

Figure 3. Solution architecture

Figure 3 represents the solution architecture, which comprises the FlexPod Datacenter on-premises environment, NetApp Cloud Volumes ONTAP (CVO) running on Amazon Web Services, and the Cisco Intersight and NetApp Cloud Manager SaaS control planes.

The control planes and data planes are clearly indicated between the endpoints. The Data Plane runs between the ONTAP instance running on All Flash FAS in the FlexPod and the NetApp CVO instance in AWS by leveraging a secure site-to-site VPN connection.

The replication of the workload data from FlexPod Datacenter to NetApp CVO is handled by NetApp SnapMirror and the overall process is orchestrated using Cisco Intersight Cloud Orchestrator for both the on-premises and cloud environments.

Cisco Intersight Cloud Orchestrator consumes the Terraform Resource Providers for NetApp Cloud Manager to carry out the operations related to NetApp CVO Deployment and establishing the data replication relationships.

An optional backup and tiering of the cold data residing in the NetApp CVO instance to AWS S3 is also supported with this solution.

This section details the requirements for the hybrid cloud networking elements that form a core part of this solution.

You can create a dedicated VPC in any supported region to deploy the Connector and CVO. View the full list of supported regions. Also define a subnet per Availability Zone, a route table, and an internet gateway.

A VPC endpoint is required to establish connectivity between the VPC and supported AWS services without requiring an internet gateway, NAT device, VPN connection, or AWS Direct Connect. The VPC is not exposed to the public internet, and the communication happens over the AWS private network. There are three types of VPC endpoints: Interface endpoints, Gateway Load Balancer endpoints, and Gateway endpoints.

AWS VPN is used in the solution to establish a secure connection between the on-premises FlexPod network and the AWS global network. AWS Site-to-Site VPN creates encrypted tunnels between your network and your Amazon Virtual Private Clouds or AWS Transit Gateways.

The VPN connectivity uses the public internet, which can have unpredictable performance and raise some security concerns. AWS Direct Connect bypasses the public internet and establishes a secure, dedicated connection from on-premises to AWS. It is a good option for customers who want secure, low-latency connectivity into AWS. If the customer already has AWS Direct Connect, the same connection can be used to establish communication between the on-premises FlexPod and the CVO instance.

Cloud Volumes ONTAP for Disaster Recovery

NetApp CVO can be deployed in various deployment modes; this section covers in detail all the modes of deployment and their associated pre-requisites. You can select any of the modes of deployment that match your business specific requirements.

CVO Deployment Modes and Architecture

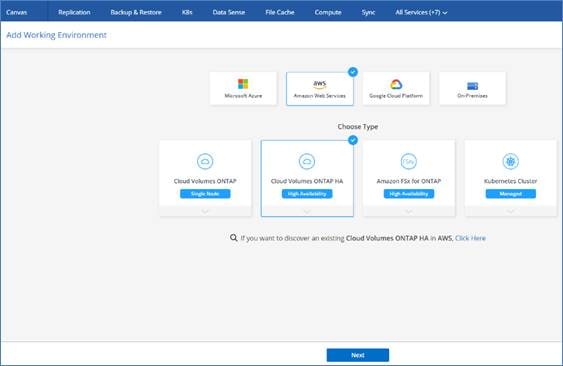

Cloud Volumes ONTAP is available in AWS as a single node system and as a high-availability (HA) pair of nodes. Based on the requirement, you can select CVO deployment modes. Upgrading a single node system to an HA pair is not supported. If you want to switch between a single node system and an HA pair, then you need to deploy a new system and replicate data from the existing system to the new system.

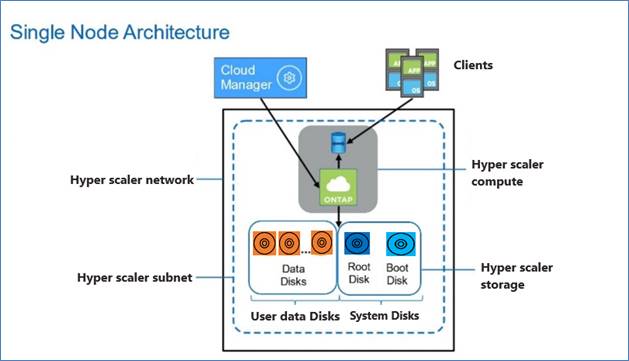

Cloud Volumes ONTAP Single Node

In this deployment mode, Cloud Volumes ONTAP runs in AWS as a single node system, which is ideal for disaster recovery, backups, and workloads that do not require high availability. In this mode, all the LIFs are assigned IP addresses from the same subnet.

Figure 4. CVO single node architecture

High Availability Pair Node

A Cloud Volumes ONTAP high availability (HA) configuration provides nondisruptive operations and fault tolerance. In AWS, data is synchronously mirrored between the two nodes.

In AWS, Cloud Volumes ONTAP HA configurations include the following components:

● Two Cloud Volumes ONTAP nodes whose data is synchronously mirrored between each other.

● A mediator instance that provides a communication channel between the nodes to assist in storage takeover and giveback processes.

Cloud Volumes ONTAP HA Single Availability Zone

In this deployment mode, Cloud Volumes ONTAP runs in AWS as an HA pair in a single AZ, which is ideal for fault tolerance and nondisruptive operations because it protects against failures within a single AZ. This HA configuration ensures high availability of your data if an instance that runs a Cloud Volumes ONTAP node fails. All data is natively accessible from outside of the VPC.

In this mode, two nodes and a mediator instance are deployed in a single AZ. Because this configuration is in a single AZ, it does not require floating IP addresses, and you can use the same set of IP addresses for NFS and CIFS data access from within the VPC and from outside the VPC. These IP addresses automatically migrate between HA nodes if failures occur.

Figure 5. CVO HA Architecture

Unlike an ONTAP cluster, storage in a Cloud Volumes ONTAP HA pair is not shared between nodes. Instead, data is synchronously mirrored between the nodes so that the data is available in the event of failure. You can use an HA pair as an active-active configuration, in which both nodes serve data to clients, or as an active-passive configuration, in which the passive node responds to data requests only if it has taken over storage for the active node:

● For iSCSI, Cloud Volumes ONTAP uses multipath I/O (MPIO) and Asymmetric Logical Unit Access (ALUA) to manage path failover between the active-optimized and non-optimized paths.

● For NAS configurations, the data IP addresses can migrate between HA nodes if failures occur. This ensures client access to storage.

Cloud Volumes ONTAP HA Multi Availability Zones

In this deployment mode, Cloud Volumes ONTAP runs in AWS as an HA pair across multiple AZs, which is ideal for fault tolerance, nondisruptive operations, and business continuity because it provides maximum protection against AZ failures. This HA configuration ensures high availability of your data if a failure occurs with an Availability Zone or an instance that runs a Cloud Volumes ONTAP node. The two Cloud Volumes ONTAP nodes must be deployed in different Availability Zones. A third Availability Zone is recommended for the HA mediator.

When an HA configuration is spread across multiple Availability Zones, floating IP addresses enable NAS client access. The floating IP addresses, which must be outside of the CIDR blocks for all VPCs in the region, can migrate between nodes when failures occur. They aren’t natively accessible to clients that are outside of the VPC, unless an AWS transit gateway is set up.

If there is no transit gateway, private IP addresses are available for NAS clients that are outside the VPC. However, these IP addresses are static; they can't fail over between nodes. The floating IP addresses need to be specified during the CVO deployment. The private IP addresses are automatically created by Cloud Manager.

Figure 6. CVO HA Multi-AZ architecture

Takeover and giveback:

● For iSCSI, Cloud Volumes ONTAP uses multipath I/O (MPIO) and Asymmetric Logical Unit Access (ALUA) to manage path failover between the active-optimized and non-optimized paths.

● For NAS, the takeover occurs using floating IP. The node’s floating IP address that clients use to access data moves to the other node.

Figure 7. Takeover and Giveback

Networking Requirements for Cloud Volumes ONTAP in AWS

Cloud Manager handles the setup of networking components for Cloud Volumes ONTAP, such as IP addresses, netmasks, and routes.

The following sections describe the requirements that must be met in AWS.

Outbound internet access for Cloud Volumes ONTAP nodes

Cloud Volumes ONTAP nodes require outbound internet access to send messages to NetApp AutoSupport, which proactively monitors the health of your storage. By default, AutoSupport is enabled on each node to send messages to technical support using the HTTPS transport protocol.

Note: If you have a NAT instance, you must define an inbound security group rule that allows HTTPS traffic from the private subnet to the internet.

Outbound internet access for the HA mediator

The HA mediator instance must have an outbound connection to the AWS EC2 service so it can assist with storage failover. To provide the connection, you can add a public IP address, specify a proxy server, or use a manual option.

The manual option can be a NAT gateway or an interface VPC endpoint from the target subnet to the AWS EC2 service. For details about VPC endpoints, refer to AWS Documentation: Interface VPC Endpoints (AWS PrivateLink).

Security groups

Cloud Manager creates a security group for you. If you want to use your own security group, refer to Security group rules.

To replicate data between a Cloud Volumes ONTAP system in AWS and ONTAP systems in other networks, you must have a VPN connection between the AWS VPC and the other network.

Cloud Manager automatically allocates the required number of private IP addresses to Cloud Volumes ONTAP. You need to ensure that your networking has enough private IP addresses available.

The number of LIFs that Cloud Manager allocates for Cloud Volumes ONTAP depends on whether you deploy a single node system or an HA pair. A LIF is an IP address associated with a physical port.

IP addresses for a single node system

Table 5 lists the IP addresses that Cloud Manager allocates to a single node system.

Table 5. Cloud Manager IP addresses

| LIF name | Assigned Interface | Purpose |
| --- | --- | --- |
| Cluster management LIF | eth0 | Administrative management of cluster |
| Node management LIF | eth0 | Administrative management of a node |
| Intercluster LIF | eth0 | Replication |
| NAS data LIF | eth0 | Client access over NAS protocol |
| iSCSI data LIF | eth0 | Client access over iSCSI protocol |
| SVM management LIF | eth0 | SVM management |

IP addresses for HA pairs

HA pairs require more IP addresses than a single node system does. These IP addresses are spread across different ethernet interfaces, as shown in Figure 8.

Figure 8. CVO HA LIFs and Interfaces overview

Note: An HA pair deployed in a single AWS Availability Zone (AZ) requires 15 private IP addresses.

Table 6 lists the details about LIFs that are associated with each private IP address.

Table 6. LIFs for HA pairs in a single AZ

| LIF name | Assigned Interface | Node | Purpose |
| --- | --- | --- | --- |
| Cluster management | eth0 | node 1 | Administrative management of the entire cluster (HA pair) |
| Node management | eth0 | node 1 and node 2 | Administrative management of a node |
| Intercluster | eth0 | node 1 and node 2 | Cross-cluster communication, backup, and replication |
| NAS data | eth0 | node 1 | Client access over NAS protocol |
| iSCSI data | eth0 | node 1 and node 2 | Client access over the iSCSI protocol |
| Cluster connectivity | eth1 | node 1 and node 2 | Enables the nodes to communicate with each other and to move data within the cluster |
| HA connectivity | eth2 | node 1 and node 2 | Communication between the two nodes in case of failover |
| RSM iSCSI traffic | eth3 | node 1 and node 2 | RAID SyncMirror iSCSI traffic, as well as communication between the two Cloud Volumes ONTAP nodes and the mediator |
| Mediator | eth0 | Mediator | A communication channel between the nodes and the mediator to assist in storage takeover and giveback processes |

Note: An HA pair deployed across multiple AWS Availability Zones (AZs) requires 13 private IP addresses.

Table 7 lists the details about LIFs that are associated with each private IP address.

Table 7. LIFs for HA pairs in multiple AZs

| LIF name | Assigned Interface | Node | Purpose |
| --- | --- | --- | --- |
| Node management | eth0 | node 1 and node 2 | Administrative management of a node |
| Intercluster | eth0 | node 1 and node 2 | Cross-cluster communication, backup, and replication |
| iSCSI data | eth0 | node 1 and node 2 | Client access over the iSCSI protocol. This LIF also manages the migration of floating IP addresses between nodes |
| Cluster connectivity | eth1 | node 1 and node 2 | Enables the nodes to communicate with each other and to move data within the cluster |
| HA connectivity | eth2 | node 1 and node 2 | Communication between the two nodes in case of failover |
| RSM iSCSI traffic | eth3 | node 1 and node 2 | RAID SyncMirror iSCSI traffic, as well as communication between the two Cloud Volumes ONTAP nodes and the mediator |
| Mediator | eth0 | Mediator | A communication channel between the nodes and the mediator to assist in storage takeover and giveback processes |
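The private IP address totals quoted in the notes (15 for a single AZ, 13 for multiple AZs) follow directly from the LIF tables. The sketch below tallies them, counting two addresses for a LIF listed on "node 1 and node 2" and one address for a LIF on a single node or on the mediator. The dictionary and function names are hypothetical; the counts are transcribed from Tables 6 and 7.

```python
# Private IPs per LIF, transcribed from Table 6 (single AZ).
SINGLE_AZ_LIFS = {
    "Cluster management": 1,    # node 1 only
    "Node management": 2,       # node 1 and node 2
    "Intercluster": 2,
    "NAS data": 1,              # node 1 only
    "iSCSI data": 2,
    "Cluster connectivity": 2,
    "HA connectivity": 2,
    "RSM iSCSI traffic": 2,
    "Mediator": 1,
}

# Table 7 (multiple AZs) has the same rows except cluster management
# and NAS data, which use floating IP addresses instead.
MULTI_AZ_LIFS = {
    name: count
    for name, count in SINGLE_AZ_LIFS.items()
    if name not in ("Cluster management", "NAS data")
}


def required_private_ips(lifs: dict) -> int:
    """Sum the private IP addresses needed for a set of LIFs."""
    return sum(lifs.values())


if __name__ == "__main__":
    print("Single AZ:", required_private_ips(SINGLE_AZ_LIFS))
    print("Multiple AZs:", required_private_ips(MULTI_AZ_LIFS))
```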

Requirements for HA pairs in multiple Availability Zones

Additional AWS networking requirements apply to Cloud Volumes ONTAP HA configurations that use multiple Availability Zones (AZs). This HA deployment model uses multiple Availability Zones to ensure high availability of the data. It’s recommended to use a dedicated AZ for each Cloud Volumes ONTAP instance and the mediator instance, which provides a communication channel between the HA pair. A subnet should be available in each Availability Zone.

HA configurations in multiple AZs use floating IP addresses that migrate between nodes if failures occur. They are not natively accessible from outside the VPC. To make the floating IP addresses accessible from outside the VPC, a transit gateway is required. You need to enter the floating IP addresses in Cloud Manager when you create a Cloud Volumes ONTAP HA working environment. Cloud Manager allocates the IP addresses to the HA pair when it launches the system.

The floating IP addresses must be outside of the CIDR blocks for all VPCs in the AWS region in which you deploy the HA configuration.

The floating IP addresses belong to a logical subnet that is outside of the VPCs in your region. Cloud Manager automatically adds the static route to the route table of the VPC that is selected during Cloud Volumes ONTAP deployment.
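Before entering floating IP addresses in Cloud Manager, it can help to confirm that none of them falls inside a VPC CIDR block in the region. The following is a minimal sketch using Python's standard `ipaddress` module. The function name and all example addresses and CIDR blocks are hypothetical; collecting the real list of VPC CIDR blocks for the region is left to the reader.

```python
import ipaddress
from typing import Iterable, List


def conflicting_floating_ips(floating_ips: Iterable[str],
                             vpc_cidrs: Iterable[str]) -> List[str]:
    """Return the floating IPs that fall inside any VPC CIDR block.

    An empty result means every floating IP is outside all listed VPCs,
    which is the requirement described above.
    """
    networks = [ipaddress.ip_network(cidr) for cidr in vpc_cidrs]
    return [
        ip for ip in floating_ips
        if any(ipaddress.ip_address(ip) in net for net in networks)
    ]


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    vpcs = ["10.0.0.0/16", "172.31.0.0/16"]
    candidates = ["192.168.209.27", "192.168.209.28", "10.0.1.50"]
    print(conflicting_floating_ips(candidates, vpcs))
```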

Figure 9. CVO HA Multi-AZ Floating IP Overview

Connect to NetApp management tools

To use NetApp management tools with HA configurations that are in multiple AZs, there are two connection options:

● Deploy the NetApp management tools in a different VPC and set up an AWS transit gateway. The gateway enables access to the floating IP address for the cluster management interface from outside the VPC.

● Deploy the NetApp management tools in the same VPC with a similar routing configuration as NAS clients.

Cloud Volumes ONTAP Deployment Specifications

Supported Regions

Cloud Volumes ONTAP is supported in most AWS regions. Newer AWS regions must be enabled before you can create and manage resources in those regions. Learn how to enable a region.

Compute Instances for running CVO

Cloud Volumes ONTAP supports several instance types, depending on the license type that you select. For details, see Supported configurations for Cloud Volumes ONTAP in AWS.

Sizing

Sizing your Cloud Volumes ONTAP system can help you meet requirements for performance and capacity. Cloud Volumes ONTAP sizer is a tool that will help you to size your CVO environment to decide the best architecture and resources to leverage for your specific needs.

TCO Calculator

Easily calculate your storage costs on AWS, Azure or Google Cloud with Cloud Volumes ONTAP using this free, simplified, and easy to navigate calculator. Learn more about the TCO calculator.

This section describes the details of deployment. At a high level, deploying a disaster recovery solution across hybrid cloud consists of the steps shown below. The details of these steps are presented in the following sections.

1. Deploy FlexPod Datacenter in UCS Managed Mode or Intersight Managed Mode

2. Cisco Intersight Configuration

● Create and configure Cisco Intersight account

● Install Cisco Intersight Assist

● Add all FlexPod components to Intersight account

● Configure Cisco Intersight Service for HashiCorp Terraform

3. Hybrid Cloud Infrastructure preparation

● Hyperscaler configuration

● Access NetApp Cloud Manager

● Deploy connector

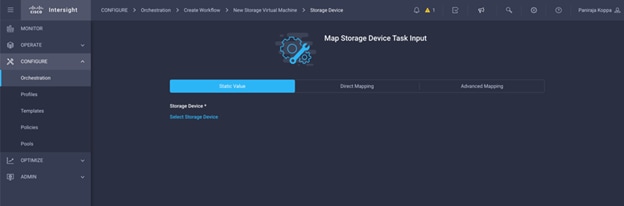

4. Hybrid Cloud Storage configuration using Intersight services

● NetApp Cloud Volumes ONTAP deployment

● Set up environment prerequisites

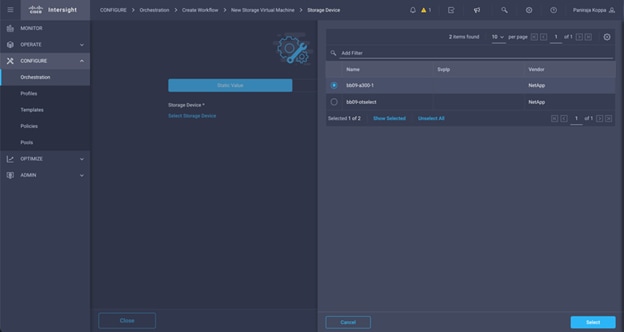

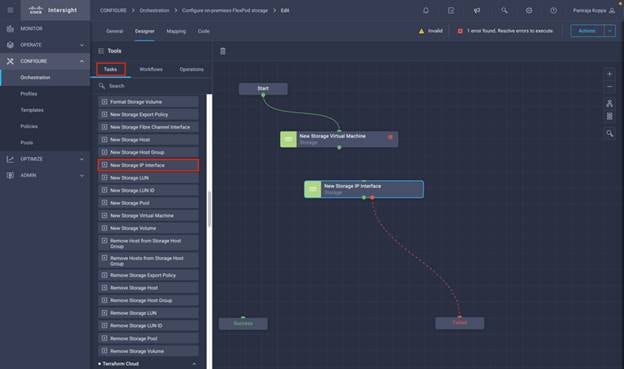

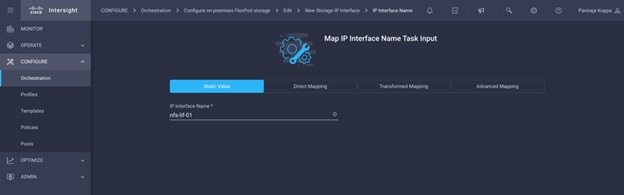

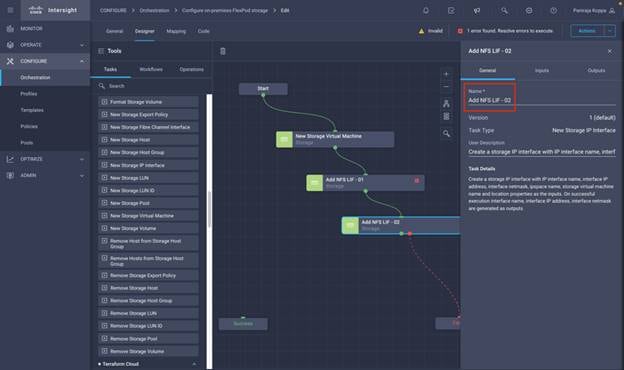

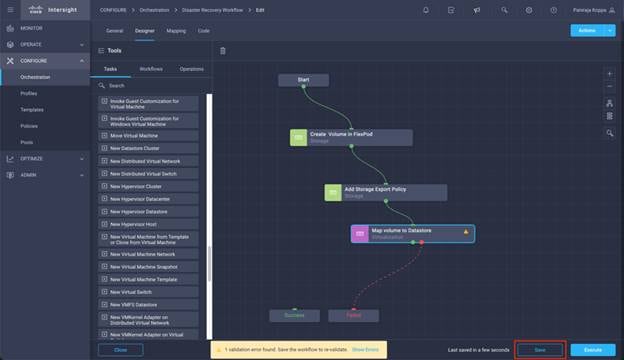

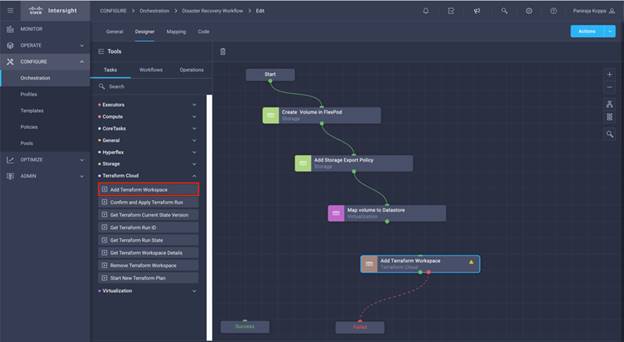

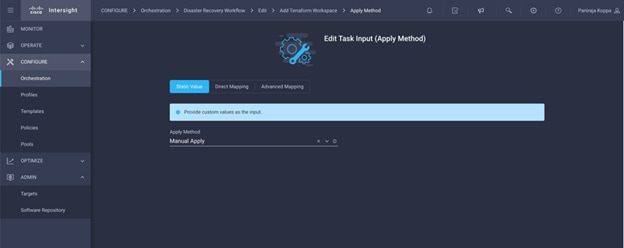

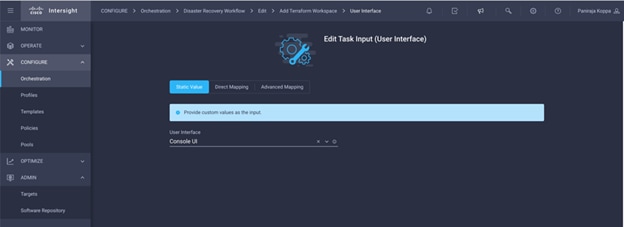

● Develop Intersight Orchestrator Workflows

◦ Create Volumes in NetApp AFF and map to datastore

◦ Add on-premises FlexPod storage

◦ Deploy CVO

◦ Configure SnapMirror replication between on-premises ONTAP and CVO

● Optionally, import Cisco built workflow

● Execution and Verification

● Sample Use case

Note: Skip this step if you already have FlexPod Datacenter deployed.

To understand the FlexPod design and deployment details, including the configuration of various elements of design and associated best practices, refer to Cisco Validated Designs for FlexPod found at: https://www.cisco.com/c/en/us/solutions/design-zone/data-center-design-guides/flexpod-design-guides.html

FlexPod can be deployed in either UCS Managed Mode or Cisco Intersight Managed Mode. If you are deploying FlexPod in UCS Managed Mode, the latest Cisco Validated Design can be found at: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flexpod_m6_esxi7u2_design.html

Cisco Unified Compute System (Cisco UCS) X-Series is a brand-new modular compute system, configured and managed from the cloud. It is designed to meet the needs of modern applications and to improve operational efficiency, agility, and scale through an adaptable, future-ready, modular design. The design guidance around incorporating the Cisco Intersight-managed UCS X-Series platform within FlexPod Datacenter infrastructure can be found at: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flexpod_xseries_esxi7u2_design.html

FlexPod deployment can be automated with infrastructure as code using Ansible. Details are available at: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flexpod_m6_esxi7u2.html#AnsibleAutomationWorkflowandSolutionDeployment

Cisco Intersight Configuration

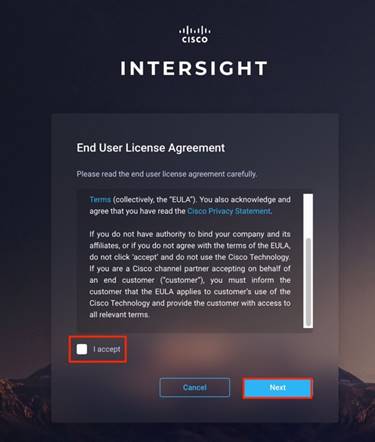

Procedure 1. Create an account in Cisco Intersight

Note: Skip this step if you already have an Intersight account.

A quick summary of the procedure to create an account in Cisco Intersight is outlined below. For more details, refer to: https://intersight.com/help/saas/getting_started/create_cisco_intersight_account

To get started with Cisco Intersight, create a Cisco Intersight account:

Step 1. Visit https://intersight.com/ to create your Intersight account. You must have a valid Cisco ID to create a Cisco Intersight account.

Step 2. Click Create an account.

Step 3. Sign in with your Cisco ID.

Step 4. Read the End User License Agreement and select I accept and click Next.

Step 5. Provide a name for the account and click Create.

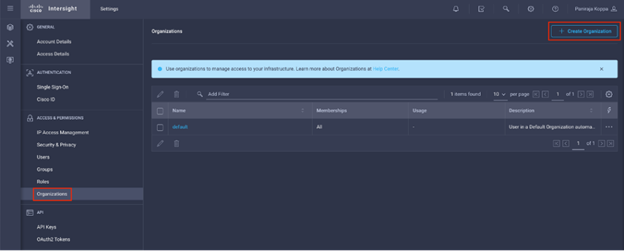

Procedure 2. Set up a Cisco Intersight organization

Optionally, you can define all Cisco Intersight resources under an organization. Note that a default organization already exists. To define a new organization, follow these steps:

Step 1. Log in to the Cisco Intersight portal.

Step 2. Click Settings (the gear icon) and click Settings.

Step 3. Click Organizations in the middle panel.

Step 4. Click Create Organization in the top-right corner.

Step 5. Provide a name for the organization (for example, FlexPod).

Step 6. Select the Cisco UCS device if it is already added. Click Create.

Procedure 3. Licensing

In this solution, you will use Cisco Intersight Cloud Orchestrator and Cisco Intersight Service for HashiCorp Terraform. These features are available for users who have the Cisco Intersight Premier license and therefore this licensing tier must be enabled.

Step 1. Log in to the Cisco Intersight portal.

Step 2. Click Settings (the gear icon) and click Licensing.

Note: If this is a new account, all servers connected to the UCS Domain will appear under the Base license Tier. If you have purchased Cisco Intersight licenses and have them in your Cisco Smart Account, click Register and follow the prompts to register this Cisco Intersight account to your Cisco Smart Account. Cisco Intersight also offers a one-time 90-day trial of Premier licensing for new accounts. Click Start Trial and then Start to begin this evaluation. The remainder of this section will assume Premier licensing.

Step 3. From the Licensing Window, click Actions > Set Products.

Step 4. From the drop-down list click Premier for Default Tier in the Intersight licensing section and click Set.

Install Cisco Intersight Assist

Note: Skip this step if you already have an Intersight Assist deployed. One Intersight Assist is enough in the datacenter for all the FlexPod components to communicate with Cisco Intersight.

Cisco Intersight Assist helps you add endpoint devices to Cisco Intersight. Your datacenter could have multiple targets that do not connect directly with Cisco Intersight. Any device that is supported by Cisco Intersight but does not connect directly with it needs a connection mechanism. Cisco Intersight Assist provides that connection mechanism and helps you add these targets into Cisco Intersight. It is a deployable virtual machine contained within an Open Virtual Appliance (OVA) file format. You can install the appliance on ESXi 6.0 or higher.

A quick summary of requirements and deployment procedure is outlined below. For more information and a detailed deployment procedure, go to: https://www.cisco.com/c/en/us/td/docs/unified_computing/Intersight/cisco-intersight-assist-getting-started-guide/m-overview-of-cisco-intersight-assist.html

Table 8 lists the resource requirements for Cisco Intersight Assist.

Table 8. Cisco Intersight Assist Resource requirements

| Resource Requirements | Tiny | Small | Medium | Large |
| --- | --- | --- | --- | --- |
| vCPU | 8 | 16 | 24 | 48 |
| RAM (GiB) | 16 | 32 | 64 | 96 |
| Storage (GB) | 500 | 500 | 500 | 500 |
| Number of servers | | | 2000 | 5000 |

Supported Hypervisors: VMware ESXi 6.5 and higher, VMware vSphere Web Client 6.5 and higher

Note: Port 443 needs to be open for TCP/UDP traffic and port 80 for TCP.

DNS

You access the Cisco Intersight Assist using the <https://fqdn-of-your-appliance> URL. You must have a PTR record in the DNS entry. Configure DNS with A/PTR and CNAME Alias records as shown below:

Sample A/PTR record: intersightassist (ip.address)

Sample CNAME Alias record: dc-FQDN hostname
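Before deploying the appliance, you may want to confirm that the A and PTR records agree. The following is a minimal sketch of a forward and reverse lookup check using Python's standard `socket` module. The function name and the example FQDN are hypothetical; the resolver arguments are injectable only so that the check can be exercised without a live DNS server.

```python
import socket
from typing import Callable, Tuple


def check_assist_dns(
    fqdn: str,
    resolve: Callable[[str], str] = socket.gethostbyname,
    reverse: Callable[[str], tuple] = socket.gethostbyaddr,
) -> Tuple[str, bool]:
    """Resolve the A record, then verify the PTR record points back.

    Returns the resolved IP address and whether the reverse lookup
    returns the same host name (case-insensitive, trailing dot ignored).
    """
    ip_address = resolve(fqdn)                 # forward (A record) lookup
    ptr_name = reverse(ip_address)[0]          # reverse (PTR record) lookup
    matches = ptr_name.rstrip(".").lower() == fqdn.rstrip(".").lower()
    return ip_address, matches


if __name__ == "__main__":
    # Hypothetical FQDN; replace with the name of your appliance.
    name = "intersightassist.example.com"
    try:
        print(check_assist_dns(name))
    except OSError as err:
        print(f"DNS lookup failed for {name}: {err}")
```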

Procedure 4. Install Cisco Intersight Assist

Note: If you already have Cisco Intersight Assist deployed, skip the Installation procedure, and navigate to the Upgrade existing Cisco Intersight Assist section.

Step 1. Download the Intersight virtual appliance for VMware from Cisco Software Download portal: https://software.cisco.com/download/home

Step 2. Navigate to Downloads Home > Servers - Unified Computing > Intersight and download the ova file.

Step 3. Log in to VMware vSphere Web Client with administrator credentials. Right-click the Cluster/host and select Deploy OVF Template.

Step 4. On the Deploy OVF Template wizard, in the Source page, specify the source location, and click Next.

Step 5. On the OVF Template Details page, verify the OVF template details and click Next.

Note: No input is necessary.

Step 6. On the Name and Location page, add or edit the Name and Location for the Intersight Assist and click Next.

Step 7. On the Deployment Configuration page, select a configuration from the drop-down list and click Next.

Step 8. Choose either Small, Medium or Large deployment configuration since Tiny is only viable for Cisco Intersight Assist used with Cisco Intersight Cloud Orchestrator.

Step 9. On the Storage page, select a destination storage (hard drives) for the VM files in the selected host (ESX station) and click Next. Select the Disk Format for the virtual machine virtual disks. Select Thin Provision to optimize disk usage.

Step 10. On the Network Mapping page, for each network that is specified in the OVF template, select a source network and map it to a destination network and click Next.

Step 11. On the Properties page, customize the deployment properties of the OVF template and click Next.

Step 12. On the Ready to complete page, click Finish.

Step 13. After the OVA deployment is complete, and the VM is powered on, wait for a few seconds and then access your VM using the <https://fqdn-of-your-appliance> URL to complete setting up Cisco Intersight Assist.

Step 14. Click Cisco Intersight Assist and click Proceed.

Step 15. The Connect Intersight Virtual Appliance wizard appears. Make a note of the Device ID and Claim Code.

Step 16. Log in to Cisco Intersight and click ADMIN > Targets > Claim a New Target.

Step 17. Search for Intersight Assist. Select it and click Start.

Step 18. Enter the Device ID and Claim Code from Intersight Assist and click Claim. Verify that the claim succeeds.

Step 19. Return to the Assist Initial Setup Wizard and click Continue.

The software download is initiated, and the software packages are installed. The installation process could take up to an hour to complete, depending on the network connection to Cisco Intersight. After the installation is complete, the Cisco Intersight Assist user interface appears.

Step 20. Log in to the Cisco Intersight Assist user interface using its FQDN. Enter the username admin and the password you set at installation.
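The wait in Step 13 can be scripted instead of timed by hand. The following is a minimal Python sketch, not part of the validated procedure: it builds the setup URL from the appliance FQDN and polls TCP port 443 until the VM answers. The host name `assist.example.com`, the retry values, and all function names are illustrative assumptions.

```python
import socket
import time
from typing import Callable


def appliance_url(fqdn: str) -> str:
    """Build the setup URL used in Step 13 from the appliance FQDN."""
    return f"https://{fqdn.strip().rstrip('.')}"


def tcp_probe(host: str, port: int = 443, timeout_s: float = 5.0) -> Callable[[], bool]:
    """Return a probe that reports whether host:port accepts a TCP connection."""
    def _probe() -> bool:
        try:
            with socket.create_connection((host, port), timeout=timeout_s):
                return True
        except OSError:  # covers DNS failures, refusals, and timeouts
            return False
    return _probe


def wait_until_ready(probe: Callable[[], bool], attempts: int = 30,
                     delay_s: float = 10.0,
                     sleep: Callable[[float], None] = time.sleep) -> bool:
    """Call probe() until it returns True or the attempts run out."""
    for attempt in range(attempts):
        if probe():
            return True
        if attempt < attempts - 1:
            sleep(delay_s)  # pause between attempts, not after the last one
    return False


# Example (needs network access to the appliance; host name is hypothetical):
# if wait_until_ready(tcp_probe("assist.example.com")):
#     print("Open", appliance_url("assist.example.com"))
```

A successful TCP connection only shows that the web service is listening; the setup wizard itself still has to be completed in a browser.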

Procedure 5. Upgrade existing Cisco Intersight Assist

If Cisco Intersight Assist is already deployed for orchestration, make sure it is kept upgraded to the latest release.

Step 1. Log in to Intersight Assist.

Step 2. From the left navigation pane, click Software.

Step 3. Make sure Software Schedule is set to “Automatic” or set a Weekly Maintenance Window. This will ensure that the upgraded packages are received.

Add FlexPod components to a Cisco Intersight account

Note: Skip this step if all FlexPod components are already added as targets within your Intersight account.

Target

A target is a service that performs management in your environment. Target Configuration specifies the ports Intersight uses to connect with these services. For each target, Intersight communicates with the service through the management protocol that it exposes: the REST API, SMI-S, XML, or some other management transport. Intersight uses this communication to discover the managed entities, monitor resource utilization, and execute actions.

Note: All FlexPod components must be added as targets within Cisco Intersight.

Procedure 6. Claim a target

For more information about claiming a target, go to: https://intersight.com/help/saas/getting_started/claim_targets#target_claim

FAQs about account setup and claiming a target can be found here: https://intersight.com/help/saas/faqs#general_account_setup_claim_target

Step 1. Log in to Intersight with the Account Administrator, Device Administrator, or Device Technician privileges.

Step 2. Navigate to ADMIN > Targets > Claim a New Target.

Step 3. Click Available for Claiming and select the target type you want to claim. Click Start.

Step 4. Enter the required details and click Claim to complete the claiming process.

Procedure 7. Target Claim for Compute/Fabric

Step 1. Navigate to ADMIN > Targets > Claim a New Target.

Step 2. In Categories, select Compute/Fabric.

Step 3. Click the relevant target and click Start.

Step 4. Enter the applicable Device ID. Endpoint targets connect to the Cisco Intersight portal through a Device Connector that is embedded in the management controller (Management VM for Cisco UCS Director) of each system. The Device Connector provides a secure way for connected targets to send information and receive control instructions from the Cisco Intersight portal, using a secure Internet connection. Table 9 lists the format of the device ID and the device connector location.

Note: Before you gather the Claim Code, ensure that the Device Connector has outbound network access to Cisco Intersight, and is in the “Not Claimed” state.

Step 5. Claim Code—Enter the device claim code and click Claim. You can find this code in the Device Connector.

Step 6. Resource Groups—Select the Resource Group from the list to add it to the Organization.

Note: The Resource Group selection is enabled for the supported targets.

Step 7. Click Claim.

Note: After the targets are claimed, you can view the managed targets in the Targets table view.

Table 9. Device ID Format and Device Connector location

| Targets | Device ID Format | Device Connector Location |
| --- | --- | --- |
| Cisco UCS Server | Serial Number | From Admin > Device Connector in Cisco IMC |
| Cisco UCS Domain (UCS Managed) | Device serial ID of the primary and subordinate Fabric Interconnects | From Admin > Device Connector in Cisco UCS Manager |
| Cisco UCS Domain (Intersight Managed) | Device serial ID of the primary and subordinate Fabric Interconnects | Device Connector in Device Console |
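For scripted claims, the Device ID and Claim Code from the steps above can be assembled into a request body. The sketch below is a hedged illustration only: the `&` separator between the two Fabric Interconnect serials and the `SerialNumber`/`SecurityToken` field names (from the Intersight `asset/DeviceClaims` resource) are assumptions not stated in this guide, and the serial numbers and claim code are made up. Request signing and sending are intentionally left out.

```python
import json


def domain_device_id(primary_serial: str, subordinate_serial: str) -> str:
    """Join the two Fabric Interconnect serials into one Device ID string."""
    primary = primary_serial.strip()
    subordinate = subordinate_serial.strip()
    if not primary or not subordinate:
        raise ValueError("both Fabric Interconnect serial numbers are required")
    # Assumption: a UCS domain Device ID has the form "<FI-A serial>&<FI-B serial>".
    return f"{primary}&{subordinate}"


def claim_request_body(device_id: str, claim_code: str) -> str:
    """Serialize a claim request as JSON (field names are an assumption)."""
    device_id = device_id.strip()
    claim_code = claim_code.strip()
    if not device_id or not claim_code:
        raise ValueError("device ID and claim code are required")
    return json.dumps({"SerialNumber": device_id, "SecurityToken": claim_code})


# Hypothetical values, for illustration only.
example_id = domain_device_id("FDO0000000A", "FDO0000000B")
example_body = claim_request_body(example_id, "ABCDEF123456")
```

Compare the generated Device ID with the value shown in the Device Connector before using it, because the Device Connector is the authoritative source.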

Procedure 8. Target Claim for vCenter

Step 1. Navigate to ADMIN > Targets > Claim a New Target.

Step 2. In Categories, select Hypervisor.

Step 3. Click VMware vCenter and click Start.

Step 4. Claim the VMware vCenter target.

Step 5. Select the deployed Intersight Assist and provide the vCenter details. If Intersight Workflow Optimizer (IWO) is enabled, turn on Datastore Browsing Enabled and Guest Metrics Enabled.

Step 6. Click Claim.

Step 7. After a few minutes, the VMware vCenter will appear in the Targets list. It also can be viewed by clicking Intersight Assist in the Targets list.

Step 8. Detailed information obtained from the vCenter can now be viewed by navigating to OPERATE > Virtualization.

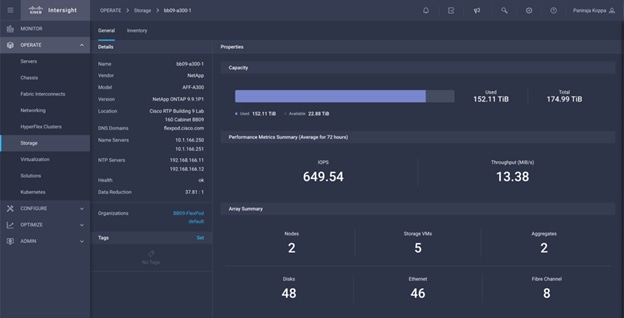

Procedure 9. Target Claim for NetApp

Step 1. Navigate to ADMIN > Targets > Claim a New Target.

Step 2. In Categories, click Storage.

Step 3. Click NetApp Active IQ Unified Manager and click Start.

Step 4. Select the deployed Intersight Assist and provide the required details.

Step 5. Click Claim.

After a few minutes, the NetApp ONTAP storage appears in the Storage tab.

The storage dashboard widgets can also be viewed from the Monitoring tab.

Configure Cisco Intersight Service for HashiCorp Terraform

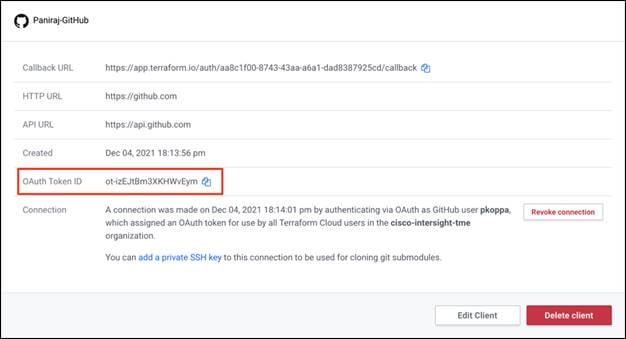

Procedure 1. Connect Cisco Intersight and Terraform Cloud

Step 1. Claim/create a Terraform cloud target by providing relevant Terraform Cloud account details.

Step 2. Create a Terraform Cloud Agent target for private clouds. The agent is installed in the datacenter and allows communication with Terraform Cloud.

For more information, go to: https://intersight.com/help/saas/features/terraform_cloud/admin

Procedure 2. Generate User Token

As part of adding a target for Terraform Cloud, you must provide the username and API token from the Terraform Cloud settings page.

Step 1. Log in to Terraform Cloud and go to User Tokens: https://app.terraform.io/app/settings/tokens

Step 2. Click Create a new API token.

Step 3. Assign a memorable name to the token and save the token in a secure place.
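Before entering the token in Intersight, it can be useful to confirm that the token works and to see which username it belongs to. The sketch below assumes the Terraform Cloud `account/details` API endpoint and reads the token from an environment variable named `TFC_API_TOKEN`, which is a hypothetical name chosen for this example. It is an optional check, not a step in the validated procedure.

```python
import json
import os
import urllib.request

TFC_ACCOUNT_URL = "https://app.terraform.io/api/v2/account/details"


def build_account_request(token: str) -> urllib.request.Request:
    """Build an authenticated GET request for the token owner's account."""
    return urllib.request.Request(
        TFC_ACCOUNT_URL,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/vnd.api+json",
        },
        method="GET",
    )


def username_from_response(payload: dict) -> str:
    """Extract the username from a decoded account/details response."""
    return payload["data"]["attributes"]["username"]


def fetch_username(token: str) -> str:
    """Call Terraform Cloud and return the username that owns the token."""
    with urllib.request.urlopen(build_account_request(token), timeout=10) as resp:
        return username_from_response(json.load(resp))


# Example (needs internet access and a valid token):
# print(fetch_username(os.environ["TFC_API_TOKEN"]))
```

The returned username is the value to enter in the Terraform Cloud Username field when claiming the target. Reading the token from the environment keeps it out of scripts and shell history.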

Procedure 3. Claim Terraform Cloud Target

Step 1. Log in to Intersight with the Account Administrator, Device Administrator, or Device Technician privileges.

Step 2. Navigate to ADMIN > Targets > Claim a New Target.

Step 3. In Categories, click Cloud.

Step 4. Click Terraform Cloud and click Start.

Step 5. Enter a name for the target, your username for the Terraform Cloud, the API token, and a default organization in Terraform Cloud as displayed in the following image.

Step 6. In Default Managed Hosts, make sure the following hosts are added along with any other managed hosts:

● github.com

● github-releases.githubusercontent.com

Table 10. Property details for Terraform Cloud Target

| Property | Essential Information |
| --- | --- |
| Name | The name you wish to assign to this Intersight Terraform Cloud integration. You can update this field, if required. |
| Terraform Cloud API Token | The user API token from a Terraform user who is in the owners team in Terraform. This is the API token that will be used for the other features. On creation, the Intersight platform validates whether you have the required license for Terraform Cloud. Based on the validation result, the target status is updated in the dashboard (from Claim in progress to Connected). If any validation does not succeed, you will see the Not Connected state. If you see the Not Connected state at any time, you can see the detailed error message when you hover your mouse over the state. You can update this field, if required. |
| Terraform Cloud Username | The User ID in Terraform Cloud whose token was provided in the API Token field. You can update this field, if required. |
| Default Terraform Cloud Organization Name | In Terraform Cloud, a Terraform user can have access to more than one organization. Enter the organization with which Intersight will integrate using SSO. This is also the default organization used when the user does not specify one for the other features (Terraform API reverse proxy). The Intersight platform validates the organization on creation. Based on the validation result, the target status is updated in the dashboard. If you provide a wrong organization name, the target will display the Not Connected state. You can update this field, if required. |
| Default Managed Hosts | Optional. A comma-separated list of IP addresses, subnets, or CIDRs that the agent uses to communicate and execute IaC. Whenever a user creates a new agent, the values in this list are used to pre-populate the Managed Host field in the Claim Terraform Cloud Agent wizard. Note: Updating Default Managed Hosts will not impact existing agents. |
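Because Default Managed Hosts is a free-form, comma-separated field, a quick local check can catch typos before the value is pasted into Intersight. The following sketch uses Python's standard `ipaddress` module. Accepting DNS names such as `github.com` alongside IP addresses and CIDRs is an assumption based on the hosts listed in Step 6, and the hostname pattern is a simplified approximation rather than Intersight's own validation.

```python
import ipaddress
import re
from typing import List

# Simplified hostname pattern: dot-separated labels ending in a letters-only TLD.
_HOSTNAME = re.compile(
    r"^(?=.{1,253}$)([a-z0-9]([a-z0-9-]{0,61}[a-z0-9])?\.)+[a-z]{2,63}$",
    re.IGNORECASE,
)


def classify_host(entry: str) -> str:
    """Return 'ip', 'cidr', or 'hostname'; raise ValueError for anything else."""
    try:
        ipaddress.ip_address(entry)
        return "ip"
    except ValueError:
        pass
    if "/" in entry:
        # strict=False accepts host bits, e.g. 10.1.1.5/24; bad prefixes still raise.
        ipaddress.ip_network(entry, strict=False)
        return "cidr"
    if _HOSTNAME.match(entry):
        return "hostname"
    raise ValueError(f"not an IP address, CIDR, or hostname: {entry!r}")


def parse_managed_hosts(raw: str) -> List[str]:
    """Split a comma-separated list, validate each entry, and drop duplicates."""
    hosts: List[str] = []
    for item in raw.split(","):
        entry = item.strip()
        if not entry:
            continue  # ignore empty fields such as a trailing comma
        classify_host(entry)
        if entry not in hosts:
            hosts.append(entry)
    return hosts


# Hypothetical input combining a subnet with the hosts from Step 6.
hosts = parse_managed_hosts(
    "10.1.1.0/24, github.com, github-releases.githubusercontent.com"
)
```

The function preserves input order, so the cleaned list can be joined with commas and pasted back into the field.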

If the claim succeeds, your Terraform Cloud Target appears in Intersight, as shown in the following image:

Terraform Cloud Agent

To run Terraform configurations in private datacenters that may or may not have direct ingress internet connectivity, Intersight users can enable Terraform Cloud Agent (also referred to as TFC Agent or agent) on Cisco Intersight Assist in the datacenter. The agent is considered to be a global resource within an organization.

You can then invoke deployments on workspaces that are configured in agent mode in Terraform Cloud and the Terraform agents reach the endpoints in private datacenters to perform the required actions.

Terraform Cloud provides a solution for running Terraform code in environments that are isolated, on-premises, and/or private. This is done by provisioning an agent called the Terraform Cloud Agent (agent) in the user's private environment. The agents are pull based, so the private infrastructure does not need to expose any inbound ports.
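The pull-based model can be pictured with a small in-memory simulation. This is a conceptual sketch only and does not reproduce the actual Terraform Cloud Agent protocol: the class names and job strings are invented for illustration. The point it shows is the direction of the calls. The agent always initiates the request, and the control plane never opens a connection toward the agent.

```python
from collections import deque
from typing import Callable, Deque, List, Optional


class ControlPlane:
    """Stands in for the cloud side: it queues work and answers polls."""

    def __init__(self) -> None:
        self._pending: Deque[str] = deque()

    def enqueue(self, job: str) -> None:
        self._pending.append(job)

    def next_job(self) -> Optional[str]:
        """Hand out the oldest queued job, or None when the queue is empty."""
        return self._pending.popleft() if self._pending else None


class Agent:
    """Stands in for the on-premises agent: it only makes outbound calls."""

    def __init__(self, control_plane: ControlPlane, run: Callable[[str], str]) -> None:
        self._control_plane = control_plane
        self._run = run
        self.results: List[str] = []

    def poll_once(self) -> bool:
        """Ask for work; run it locally if any was returned."""
        job = self._control_plane.next_job()  # outbound request from the agent
        if job is None:
            return False
        self.results.append(self._run(job))
        return True


control_plane = ControlPlane()
control_plane.enqueue("plan")
control_plane.enqueue("apply")
agent = Agent(control_plane, run=lambda job: f"done:{job}")
while agent.poll_once():
    pass
```

After the loop, `agent.results` holds the two completed jobs in the order they were queued.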

The Docker image used by the agent is available at https://hub.docker.com/r/hashicorp/tfc-agent/tags. Intersight maintains and updates the agent images as required in an internal Docker registry. The agent is instantiated as Kubernetes pods in an Intersight Assist Appliance.

Note: Once you install a Terraform Cloud Agent through Intersight, it becomes a child target of the Terraform Cloud Target. You cannot delete the Terraform Cloud Target until all of its child Terraform Cloud Agents are deleted.

You can use the Terraform Cloud Agent to run the plans in a private datacenter. By selecting the Claim Terraform Cloud Agent in the Action dropdown for the Terraform Cloud target, you can deploy a Terraform Cloud Agent.

Procedure 4. Add Terraform Cloud Agents

Prerequisites

● Terraform Cloud target.

● Intersight Assist claimed into Intersight before you deploy the Terraform Cloud Agent.

Note: You can only claim 5 agents for each Assist.

Note: After creating the connection to Terraform Cloud, deploy a Terraform Cloud Agent to execute the Terraform code.

Step 1. Click Claim Terraform Cloud Agent from the drop-down list of your Terraform Cloud Target as displayed in the following image:

Step 2. Enter the details for the Terraform Cloud Agent.

Table 11. Property Details for Terraform Agent

| Property | Essential Information |
| --- | --- |
| Name | The name you wish to assign to this Intersight Terraform Cloud Agent. |
| Intersight Assist | Cisco Intersight Assist in the datacenter where the Terraform Cloud Agent will be deployed. Before claiming an agent target, you must ensure that you have an Assist to claim the agent target. |
| Terraform Cloud Organization | The organization where the Terraform Cloud Agent will be deployed. The default organization of the Terraform Cloud target is pre-populated for the Agent as well. If not specified, the default Terraform Cloud organization specified in the parent target will be used. |
| Managed Hosts | A list of endpoints that the agent uses to communicate and execute the IaC. Each endpoint can be an IP address, a subnet, or a CIDR. The list of endpoints is pre-populated based on the Terraform Cloud Integration's Default Managed Hosts settings. Users can edit the list before saving. Recommendation: For specific Terraform Providers (for example, Cisco ACI), you may need to configure Managed Hosts with github.com and githubusercontent.com. You can modify the list of Managed Hosts at any time. |
| Terraform Cloud Agent Pool Name | The agent pool to use for agent deployment. Each Agent is associated with a pool. You can use an existing pool. You can also have the Intersight platform create a new pool for you. |
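The defaulting rules in Table 11 can be summarized in a short sketch. The data classes and the `resolve_claim` function below are hypothetical and model only what the table and the preceding notes describe: the organization falls back to the parent target's default, Managed Hosts start from the target's Default Managed Hosts unless edited, and an Assist accepts at most five agents. This is not Intersight code or an Intersight API.

```python
from dataclasses import dataclass, field
from typing import List, Optional

MAX_AGENTS_PER_ASSIST = 5  # limit stated in the note under Prerequisites


@dataclass
class TerraformCloudTarget:
    name: str
    default_organization: str
    default_managed_hosts: List[str] = field(default_factory=list)


@dataclass
class AgentClaim:
    name: str
    intersight_assist: str
    pool_name: str
    organization: Optional[str] = None         # None means "use the target default"
    managed_hosts: Optional[List[str]] = None  # None means "use the target defaults"


def resolve_claim(target: TerraformCloudTarget, claim: AgentClaim,
                  agents_on_assist: int) -> AgentClaim:
    """Fill unset fields from the parent target and enforce the agent limit."""
    if agents_on_assist >= MAX_AGENTS_PER_ASSIST:
        raise ValueError(
            f"{claim.intersight_assist} already has {agents_on_assist} agents"
        )
    hosts = (claim.managed_hosts if claim.managed_hosts is not None
             else target.default_managed_hosts)
    return AgentClaim(
        name=claim.name,
        intersight_assist=claim.intersight_assist,
        pool_name=claim.pool_name,
        organization=claim.organization or target.default_organization,
        managed_hosts=list(hosts),  # copy so later edits do not touch the target
    )


# Hypothetical names, for illustration only.
target = TerraformCloudTarget("tfc-target", "example-org", ["github.com"])
resolved = resolve_claim(
    target, AgentClaim("agent-1", "assist-1", "pool-1"), agents_on_assist=0
)
```

Copying the host list mirrors the note in Table 10 that updating Default Managed Hosts does not affect existing agents.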

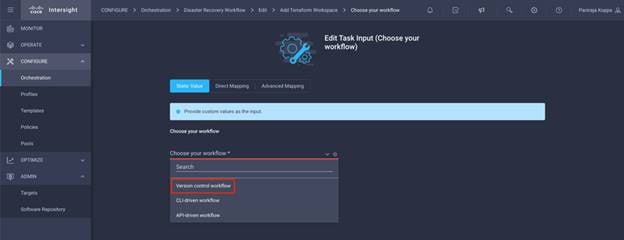

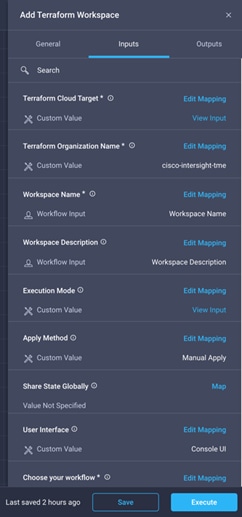

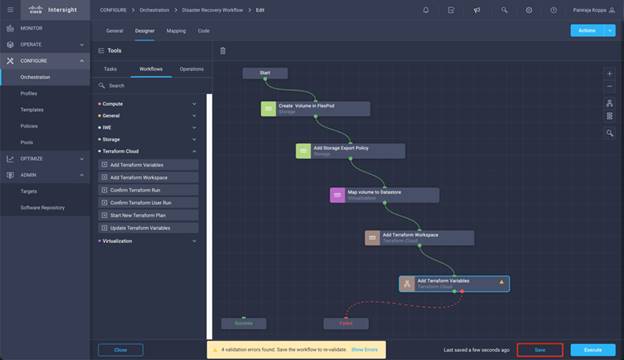

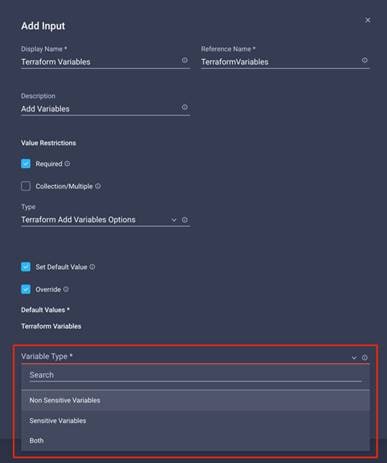

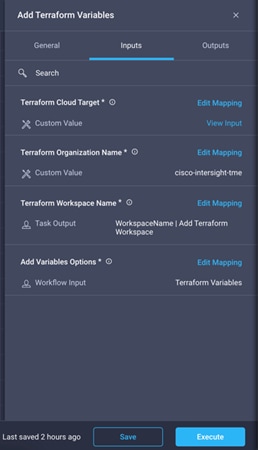

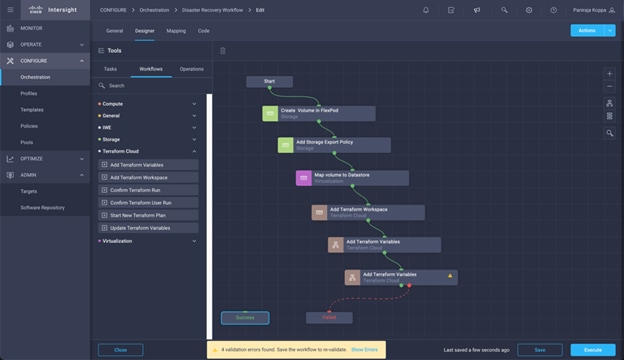

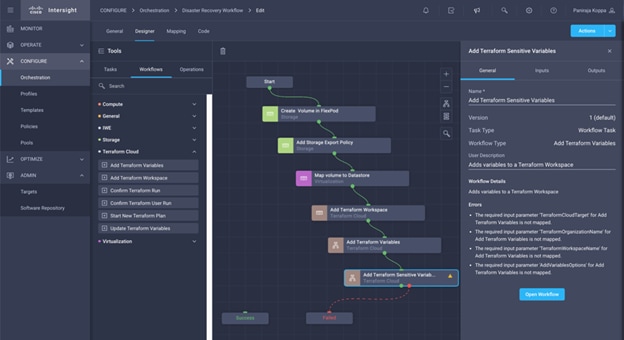

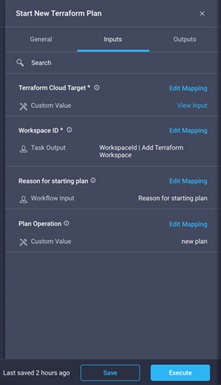

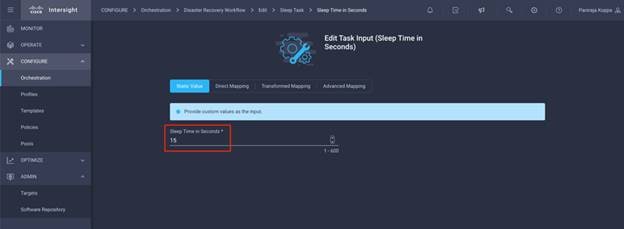

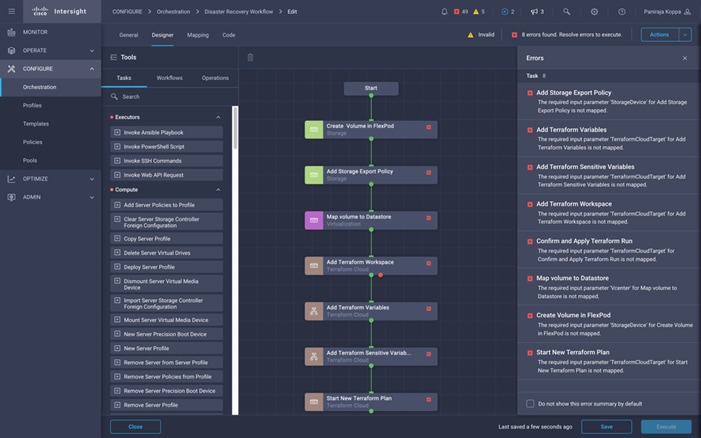

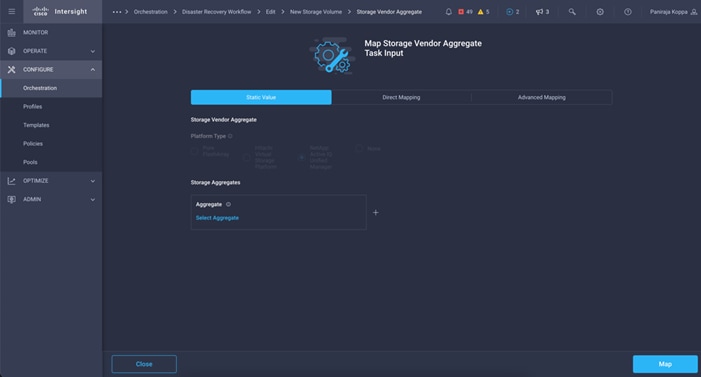

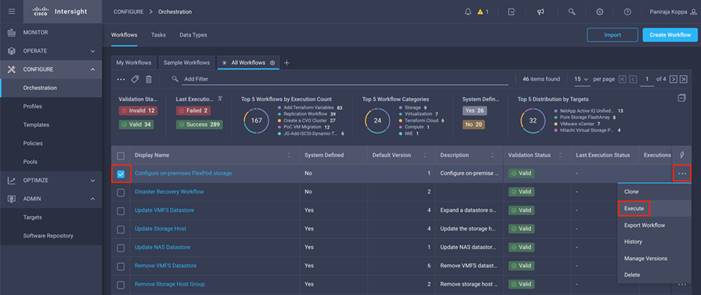

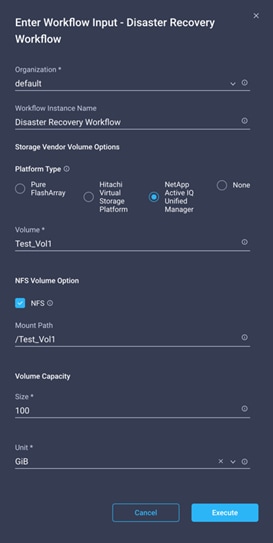

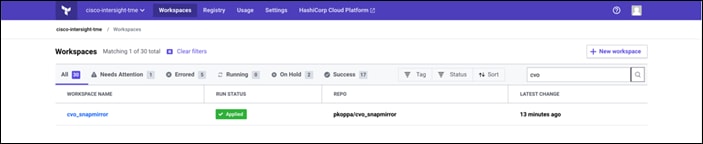

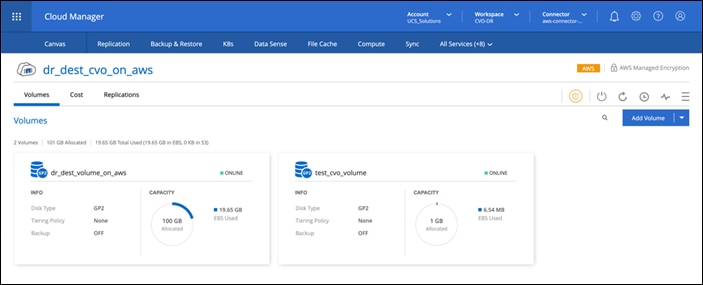

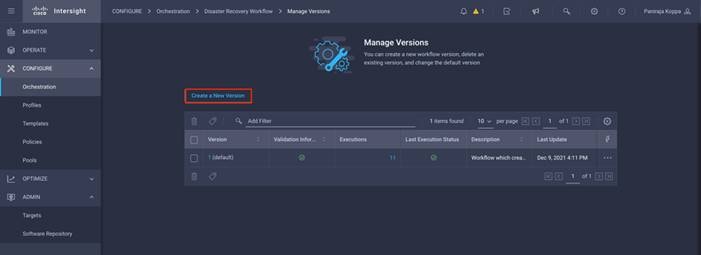

Figure 10. Configuration details for Terraform Agent