FlexPod for Accelerated RAG Pipeline with NVIDIA NIM and Cisco Webex


Published: October 2024


About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to: http://www.cisco.com/go/designzone.

The FlexPod Datacenter solution offers a validated design for implementing Cisco and NetApp technologies to create shared private and public cloud infrastructures. Through their partnership, Cisco and NetApp have developed a range of FlexPod solutions that support strategic data center platforms. The success of the FlexPod solution is attributed to its capacity to adapt and integrate technological and product advancements in management, computing, storage, and networking.

This document is the Cisco Validated Design and Deployment Guide for FlexPod for Accelerated RAG Pipeline with NVIDIA Inference Microservices (NIM) and Cisco Webex on the latest FlexPod Datacenter design with Cisco UCS X-Series based compute nodes, Cisco Intersight, NVIDIA GPUs, Red Hat OpenShift Container Platform (OCP) 4.16, Cisco Nexus switches, and NetApp ONTAP storage.

Generative Artificial Intelligence (Generative AI) is revolutionizing industries by driving innovations across various use cases. However, integrating generative AI into enterprise environments presents unique challenges. Establishing the right infrastructure with adequate computational resources is essential.

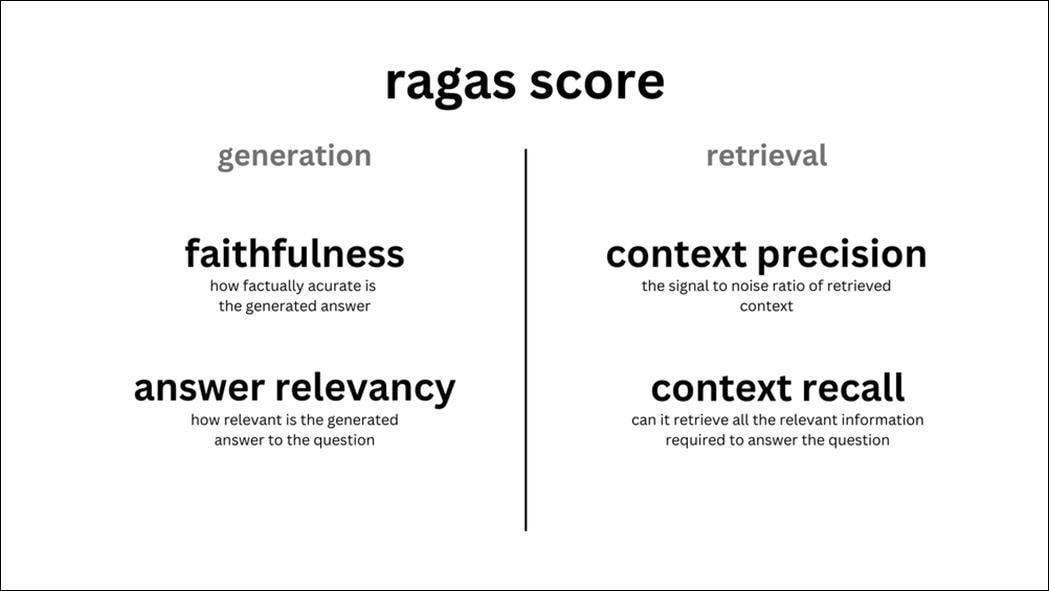

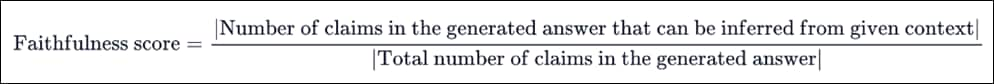

Retrieval Augmented Generation (RAG) is a natural language processing (NLP) technique that improves the output of Large Language Models (LLMs) with dynamic, domain-specific data fetched from external sources. RAG is an end-to-end architecture that combines an information retrieval component with a response generator. The retrieval component fetches relevant document snippets in real time from a corpus of knowledge. The generator takes the input query along with the retrieved chunks and generates a natural-language response for the user. This hybrid approach improves the effectiveness of AI applications by reducing inaccuracies and increasing the relevance and faithfulness of the generated content.

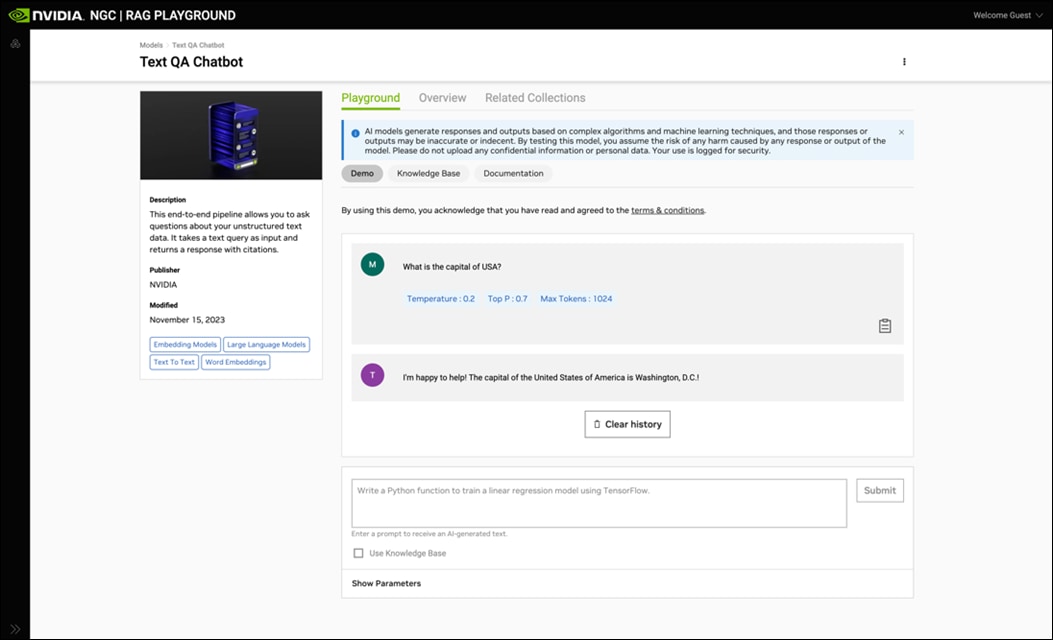

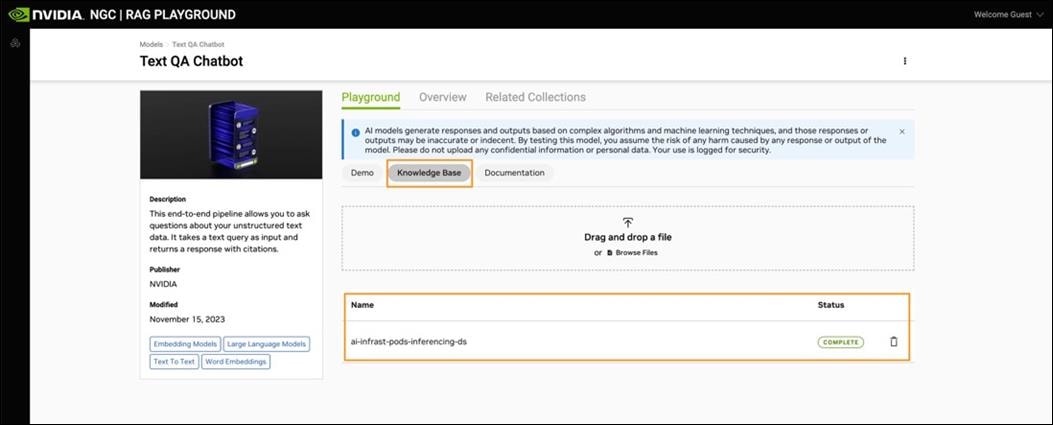

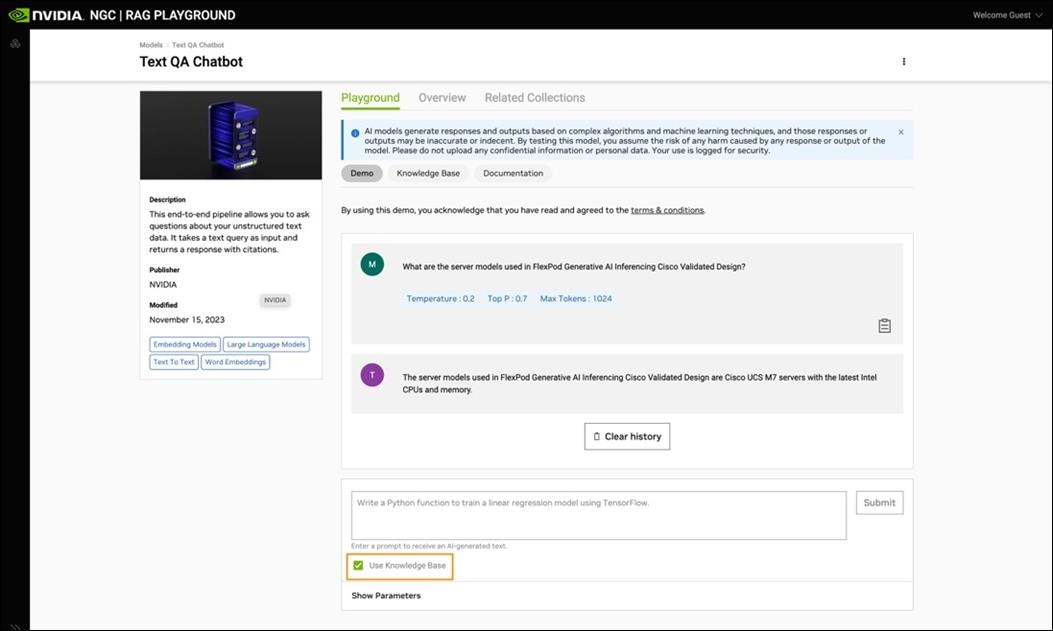

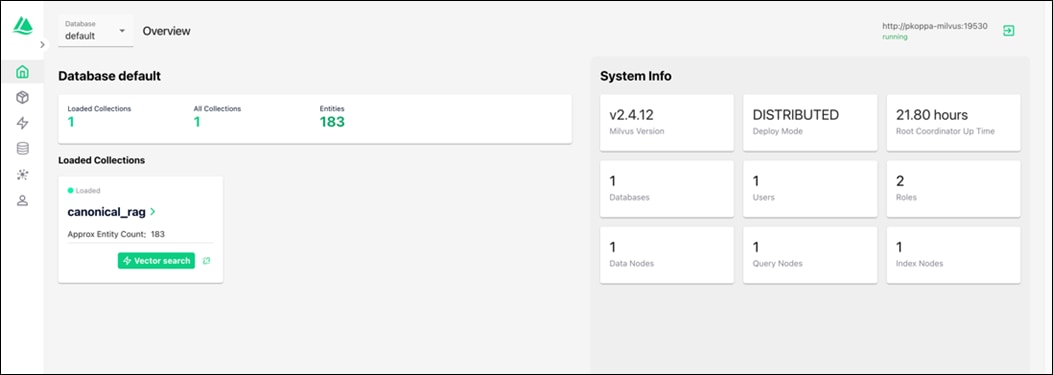

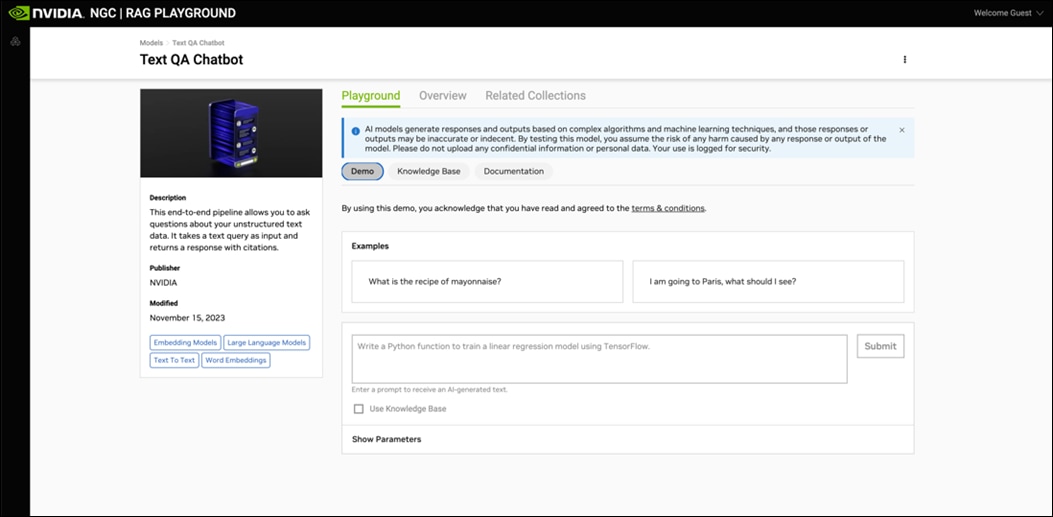

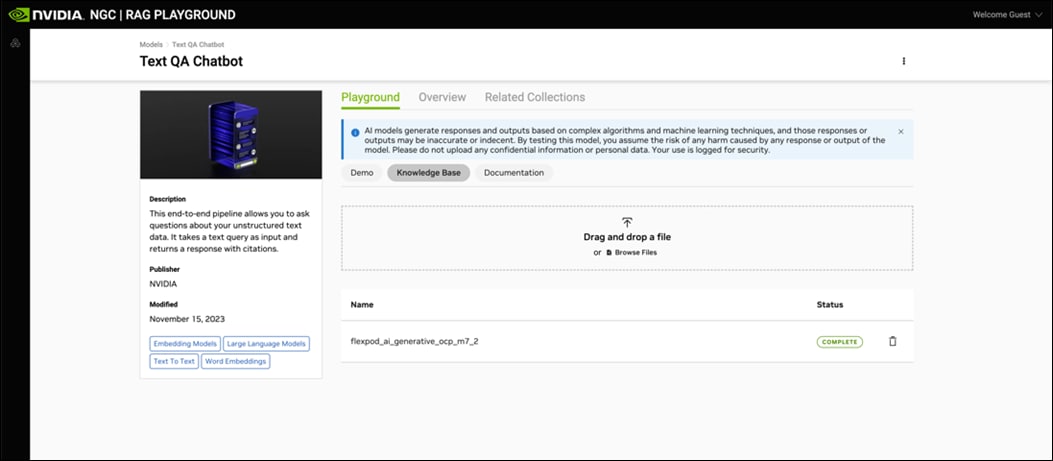

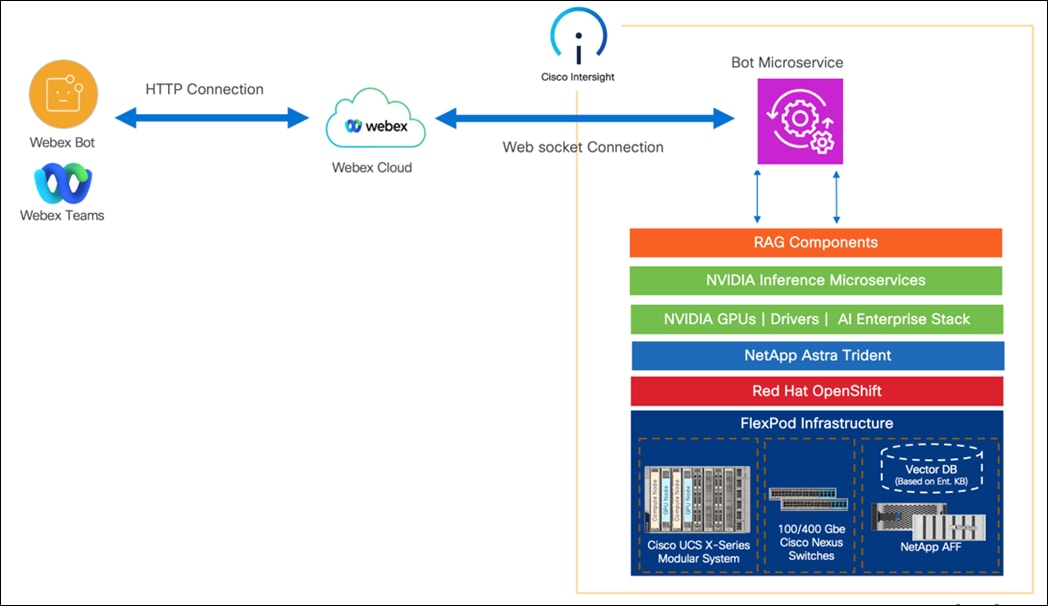

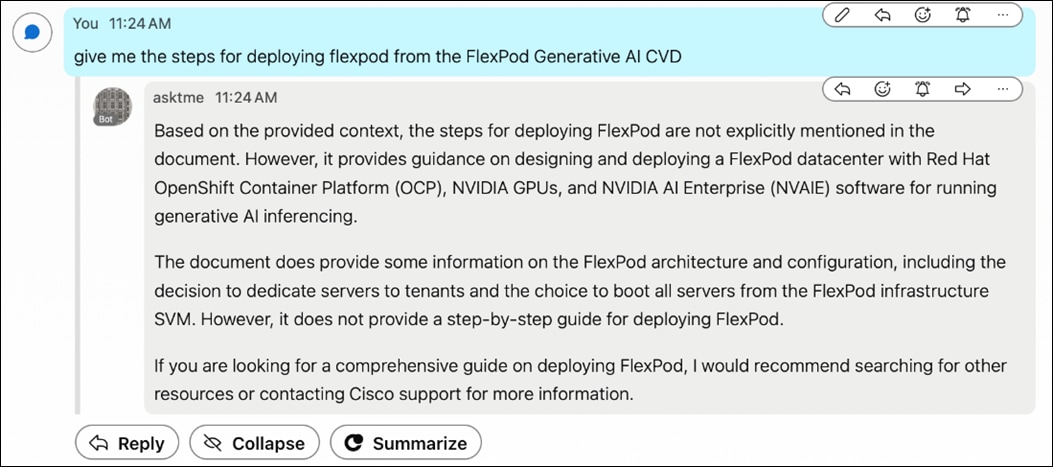

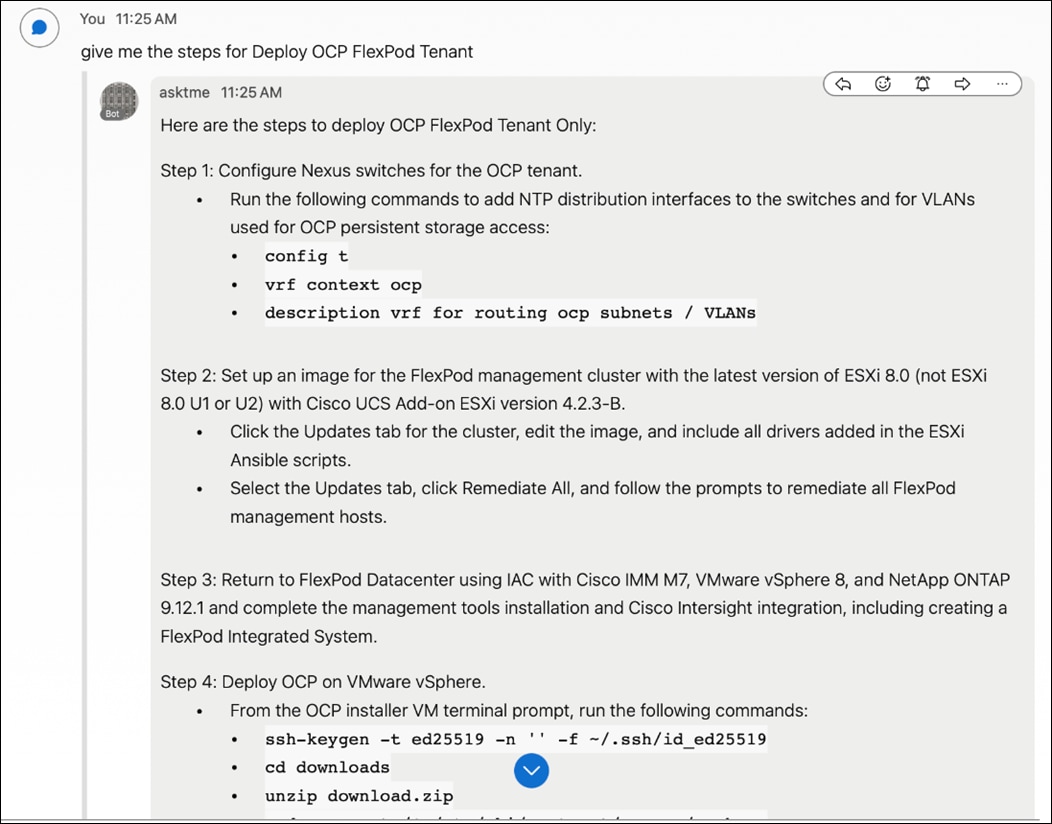

This solution implements a RAG pipeline using NVIDIA NIM microservices deployed on the FlexPod infrastructure. The RAG pipeline is integrated with the Cisco Webex Chat bot to run inferencing.

This document provides design and deployment guidance for running a Retrieval Augmented Generation pipeline using NVIDIA NIM microservices deployed on FlexPod Datacenter, with the Cisco Webex Chat bot used for inferencing.

For information about the FlexPod design and deployment details, including the configuration of various elements of the design and associated best practices, refer to Cisco Validated Designs for FlexPod, here: https://www.cisco.com/c/en/us/solutions/design-zone/data-center-design-guides/flexpod-design-guides.html.

This chapter contains the following:

● Audience

Retrieval Augmented Generation, or RAG, is the process of augmenting large language models with domain specific information. During RAG, the pipeline applies the knowledge and patterns that the model acquired during its training phase and couples that with user-supplied data sources to provide more informed responses to user queries.

The solution demonstrates how enterprises can design and implement a technology stack leveraging Generative Pre-trained Transformer (GPT), enabling them to privately run and manage their preferred AI large language models (LLMs) and related applications. It emphasizes Retrieval Augmented Generation as the key use case. The hardware and software components are seamlessly integrated, allowing customers to deploy the solution swiftly and cost-effectively, while mitigating many of the risks involved in independently researching, designing, building, and deploying similar systems on their own.

The intended audience of this document includes IT decision makers such as CTOs and CIOs, IT architects, sales engineers, field consultants, professional services, IT managers, partner engineers, and customers. It is designed for those interested in the design, deployment, and life cycle management of generative AI systems and applications, as well as those looking to leverage an infrastructure that enhances IT efficiency and fosters innovation.


The following design elements are built on the CVD: FlexPod Datacenter with Red Hat OCP Bare Metal Manual Configuration with Cisco UCS X-Series Direct Deployment Guide to implement an end-to-end Retrieval Augmented Generation pipeline with NVIDIA NIM and Cisco Webex:

● Installation and configuration of RAG pipeline components on FlexPod Datacenter

◦ NVIDIA GPUs

◦ NVIDIA AI Enterprise (NVAIE)

◦ NVIDIA NIM microservices

● Integrating RAG pipeline with Cisco Webex Chat bot

● RAG Evaluation

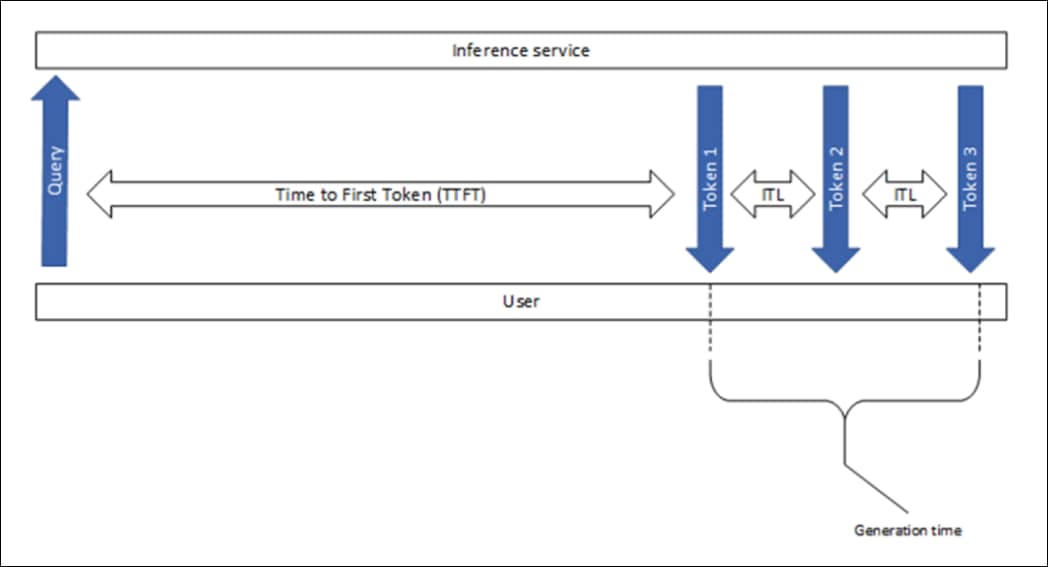

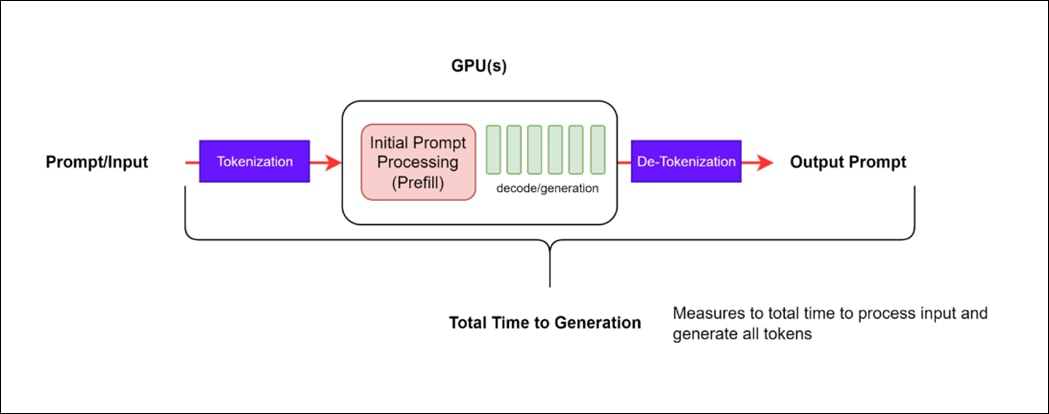

● NIM for LLM Benchmarking

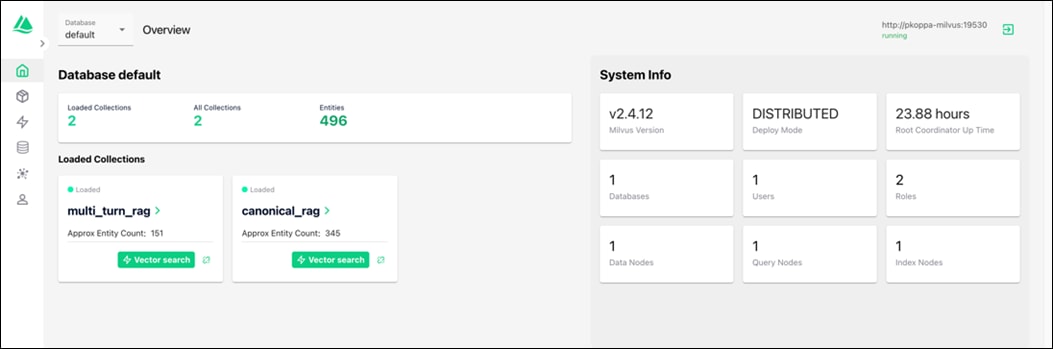

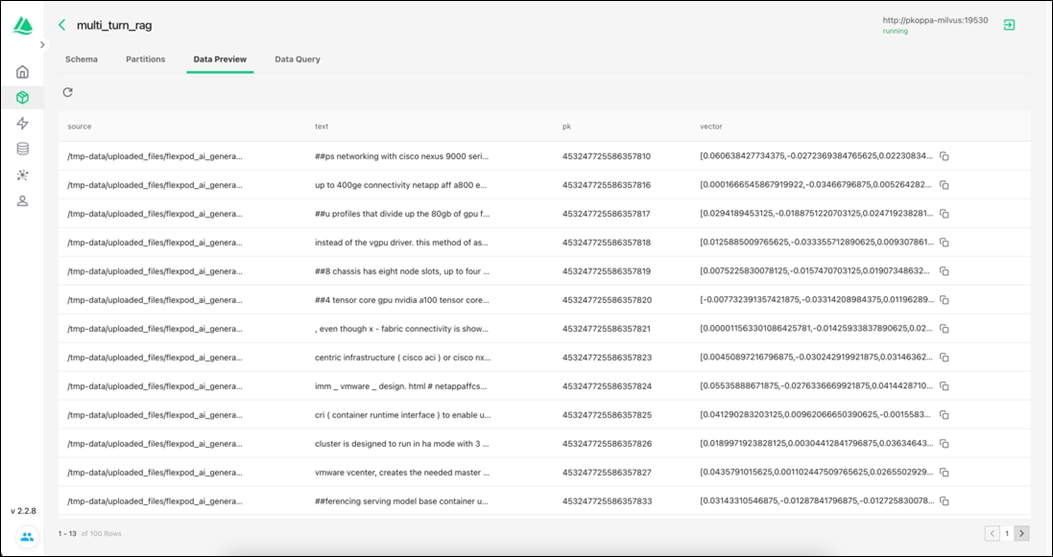

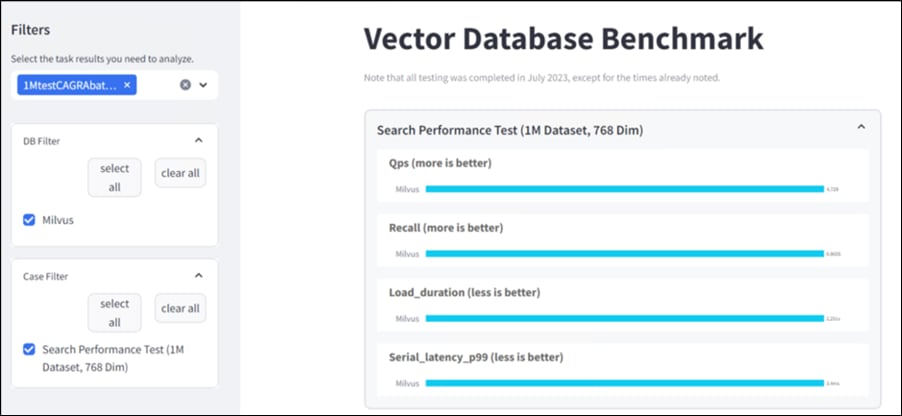

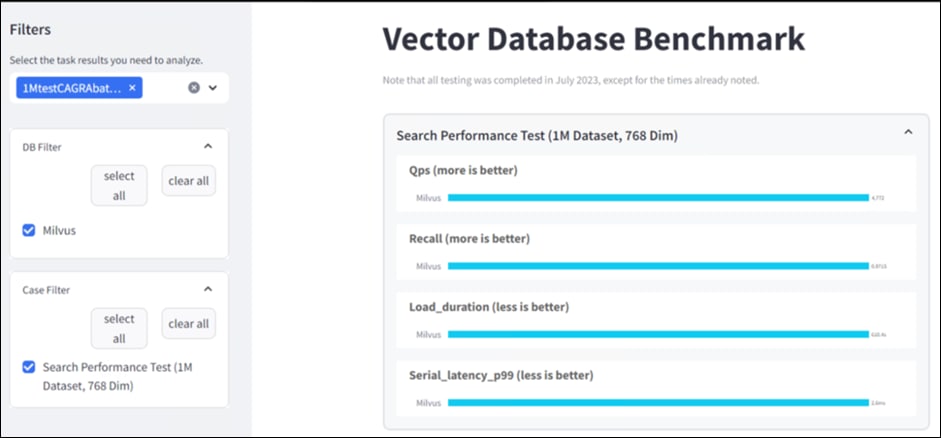

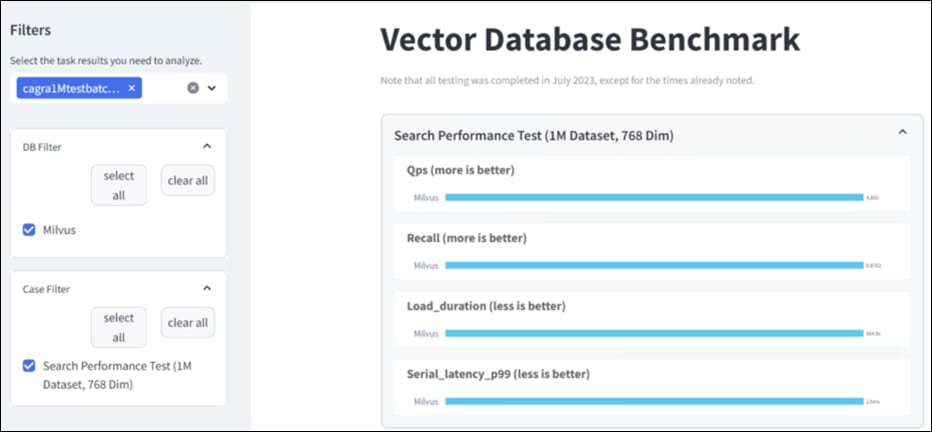

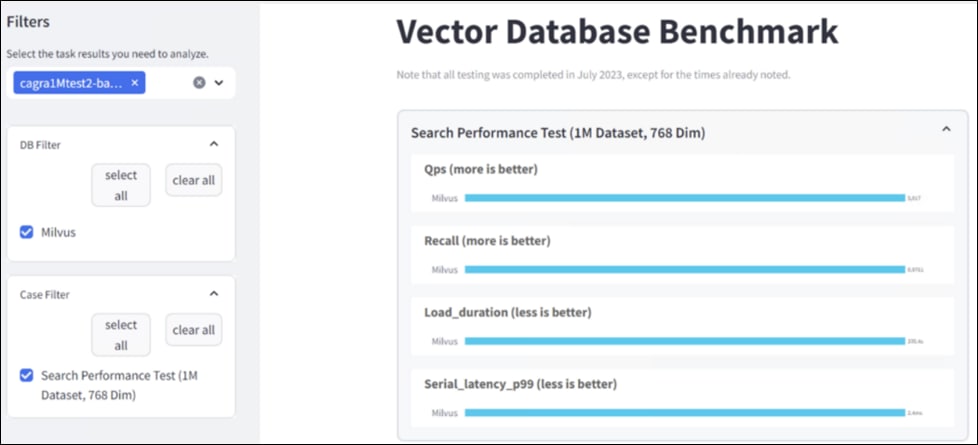

● Milvus Benchmarking

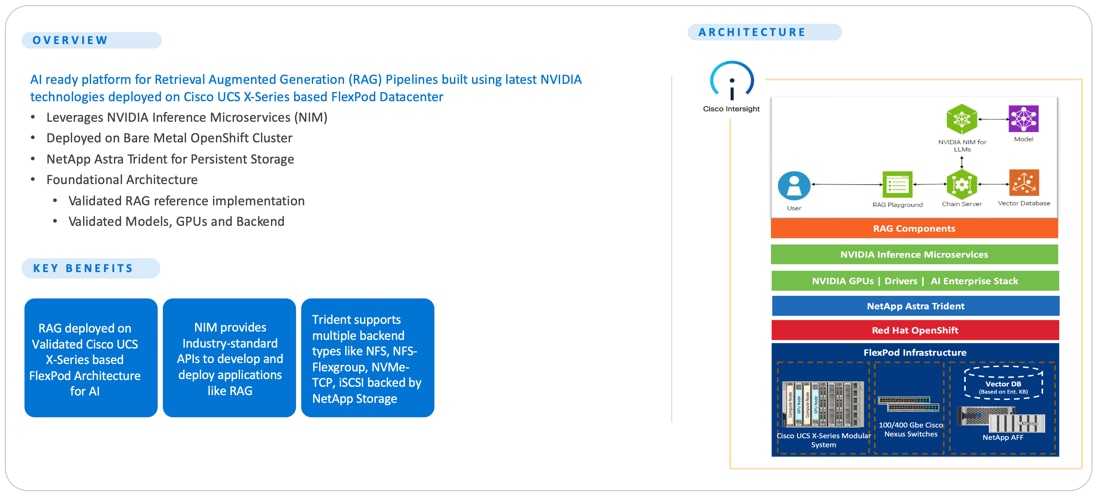

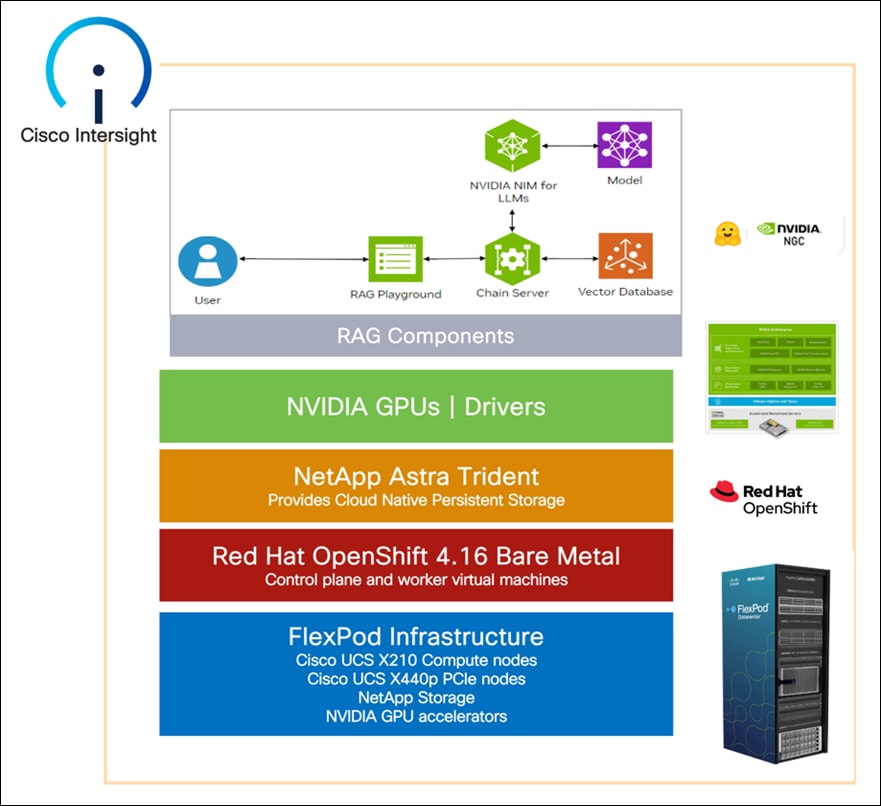

Figure 1 illustrates the solution summary.

The FlexPod Datacenter solution as a platform for Retrieval Augmented Generation offers the following key benefits:

● The ability to implement a Retrieval Augmented Generation pipeline quickly and easily on a powerful platform with high-speed persistent storage

● Evaluation of performance of platform components

● Simplified cloud-based management of solution components using policy-driven modular design

● Cooperative support model and Cisco Solution Support

● Easy to deploy, consume, and manage architecture, which saves time and resources required to research, procure, and integrate off-the-shelf components

● Support for component monitoring, solution automation and orchestration, and workload optimization

● Cisco Webex Chat bot integration with the RAG pipeline

Like all other FlexPod solution designs, FlexPod for Accelerated RAG Pipeline with NVIDIA NIM and Cisco Webex is configurable according to demand and usage. You can purchase exactly the infrastructure you need for your current application requirements and then scale-up/scale-out to meet future needs.

This chapter contains the following:

● Retrieval Augmented Generation

● NVIDIA Inference Microservices

● NVIDIA NIM for Large Language Models

● NVIDIA NIM for Text Embedding

Retrieval Augmented Generation

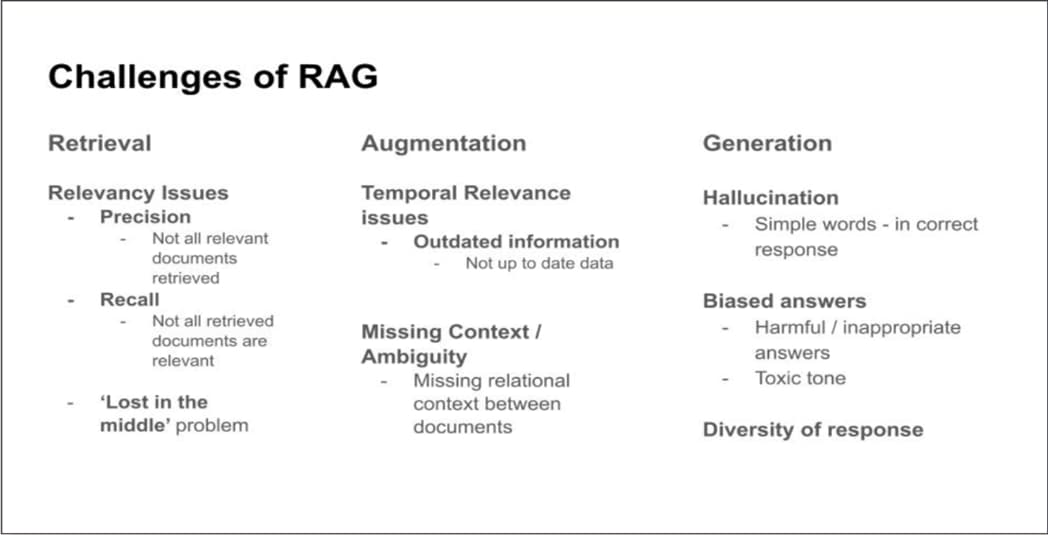

Retrieval Augmented Generation (RAG) is an enterprise application of Generative AI. RAG represents a category of large language model (LLM) applications that enhance the LLM's context by incorporating external data. On their own, LLMs know nothing about events that occurred after their training cutoff date, and they lack access to an organization's internal data or services. This absence of up-to-date, domain-specific, or organization-specific information prevents their effective use in enterprise applications. RAG overcomes these limitations by supplying that information at query time.

RAG Pipeline

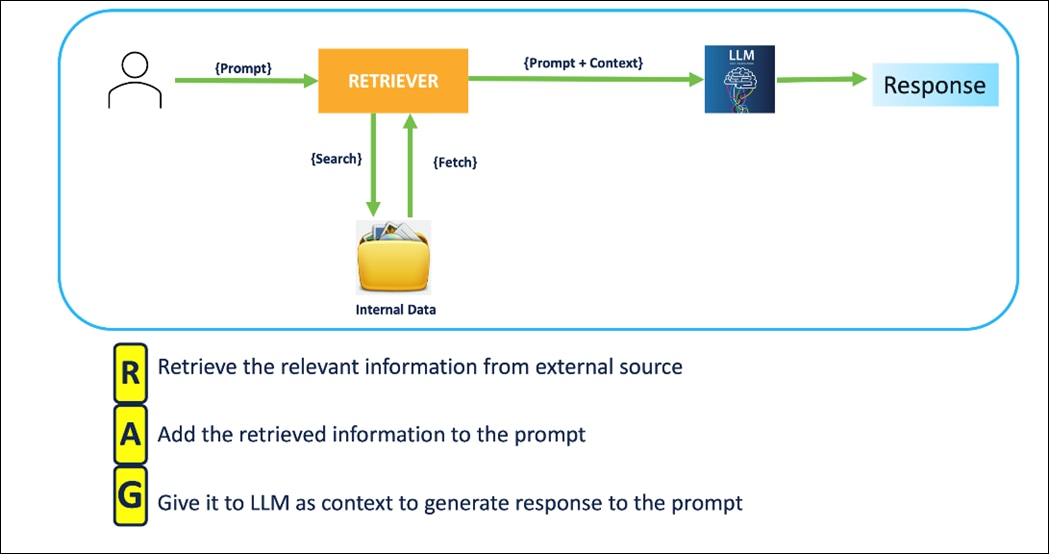

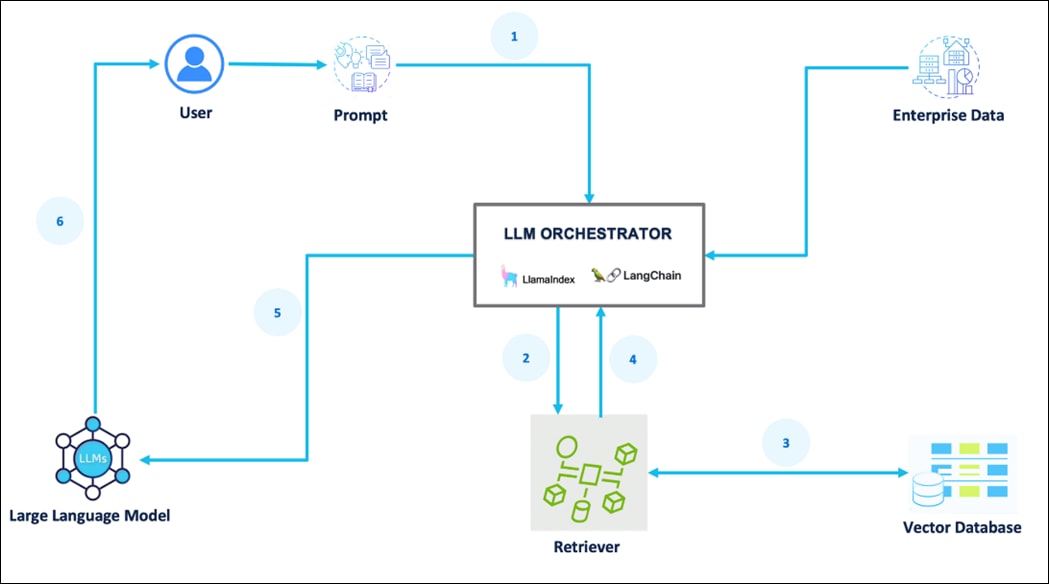

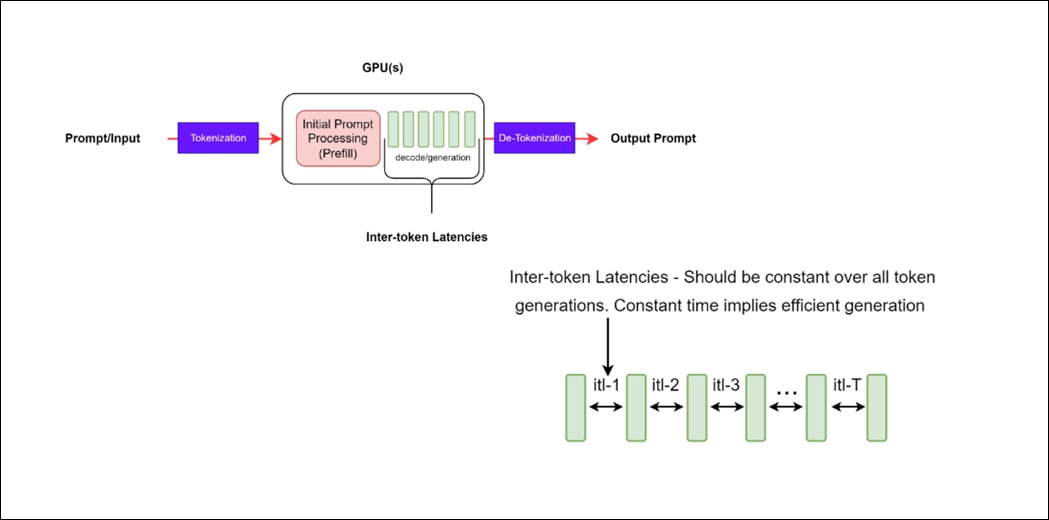

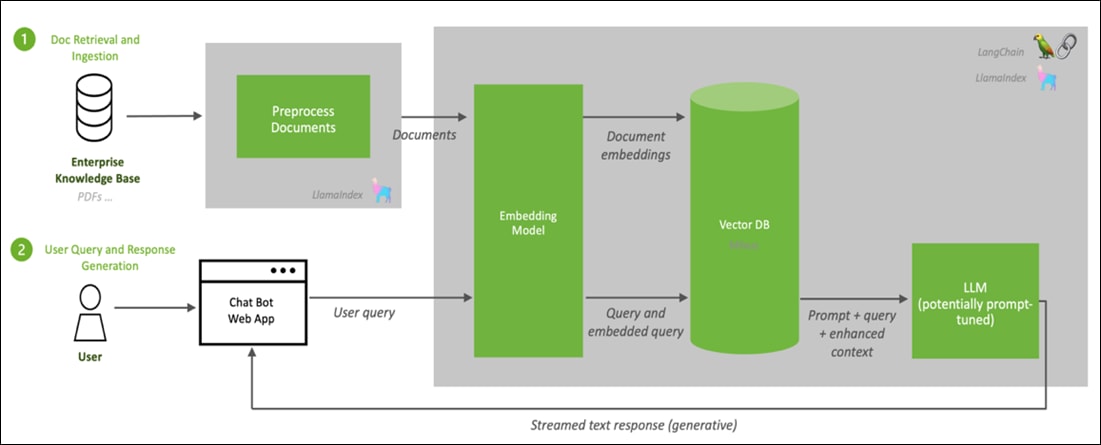

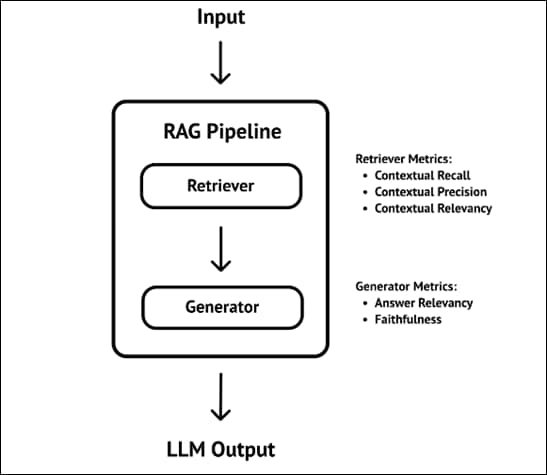

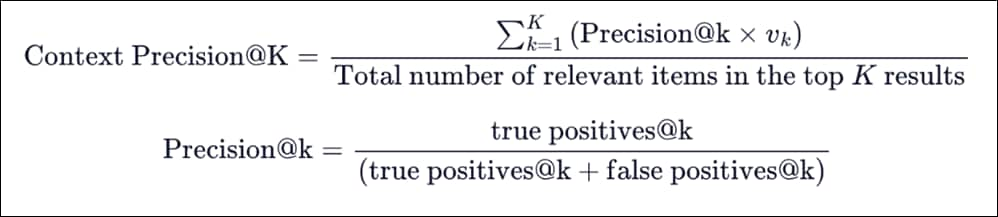

Figure 2 illustrates the RAG Pipeline overview.

Figure 2. RAG Pipeline overview

In this pipeline, when you enter a prompt or query, document chunks relevant to the prompt are searched for and retrieved. The retrieved information is added to the prompt as context. The LLM is then asked to generate a response to the prompt using that context, and the user receives the response.

RAG Architecture

RAG is an end-to-end architecture that combines an information retrieval component with a response generator.

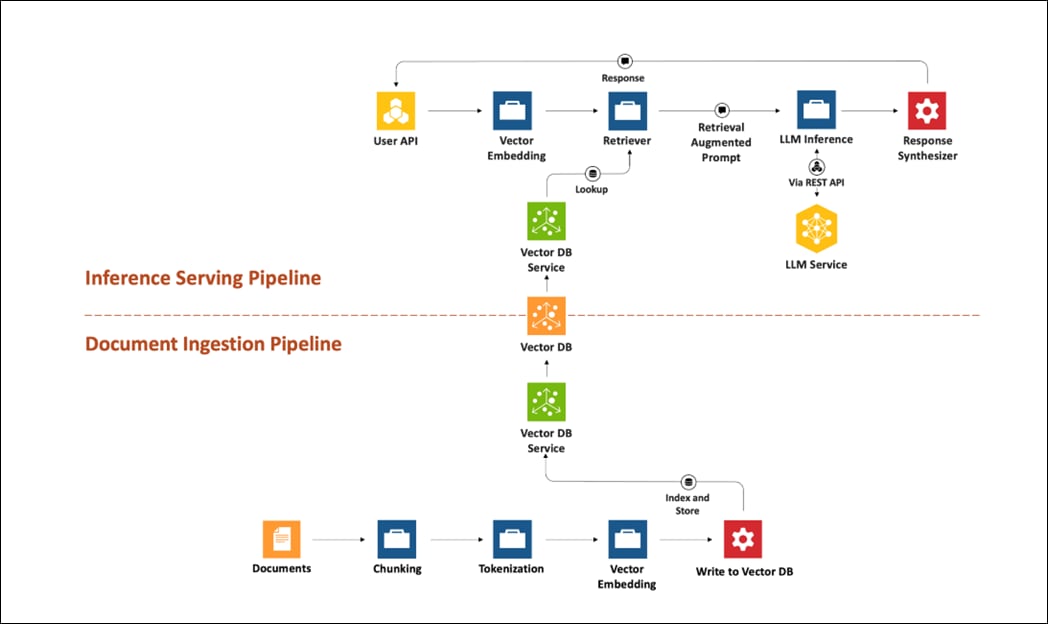

RAG can be broken into two process flows: document ingestion and inferencing.

Figure 4 illustrates the document ingestion pipeline.

Figure 4. Document Ingestion Pipeline overview
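As a rough illustration of the first ingestion step, the sketch below splits a document into overlapping chunks before they would be embedded and stored. The character-based windowing, the `chunk_text` name, and the default sizes are assumptions made for this example; the guide does not specify a chunking strategy.

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> List[str]:
    """Split text into overlapping character windows (sizes are illustrative)."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks: List[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current window already reaches the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from at least one chunk. Production pipelines often split on tokens or sentences instead of raw characters.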

Figure 5 illustrates the inferencing pipeline.

Figure 5. Inferencing Pipeline overview

The process for the inference serving pipeline is as follows:

1. A prompt is passed to the LLM orchestrator.

2. The orchestrator sends a search query to the retriever.

3. The retriever fetches relevant information from the Vector Database.

4. The retriever returns the retrieved information to the orchestrator.

5. The orchestrator augments the original prompt with the context and sends it to the LLM.

6. The LLM generates a text response, which is presented to the user.
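The six steps above can be sketched as a small orchestrator function. This is a minimal illustration, not the implementation used in this solution: the function names, the prompt template, and the `fake_retrieve` and `fake_generate` stand-ins are all hypothetical, and a real deployment would call a vector database and an LLM endpoint instead.

```python
from typing import Callable, List


def build_augmented_prompt(question: str, context_chunks: List[str]) -> str:
    """Step 5: add the retrieved context to the original prompt."""
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


def answer(
    question: str,
    retrieve: Callable[[str], List[str]],
    generate: Callable[[str], str],
) -> str:
    """Steps 1-6: retrieval, prompt augmentation, and generation."""
    chunks = retrieve(question)                        # steps 2-4
    prompt = build_augmented_prompt(question, chunks)  # step 5
    return generate(prompt)                            # step 6


# Hypothetical stand-ins so the sketch runs without a vector database or LLM.
def fake_retrieve(query: str) -> List[str]:
    corpus = [
        "FlexPod combines Cisco UCS, Cisco Nexus, and NetApp ONTAP.",
        "The validated cluster used three worker nodes.",
    ]
    words = set(query.lower().split())
    return [doc for doc in corpus if words & set(doc.lower().rstrip(".").split())]


def fake_generate(prompt: str) -> str:
    return f"[LLM response to {len(prompt)} prompt characters]"
```

Calling `answer("worker nodes", fake_retrieve, fake_generate)` exercises the whole flow. Passing the retriever and generator as callables keeps the orchestration logic independent of any particular vector database or model server.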

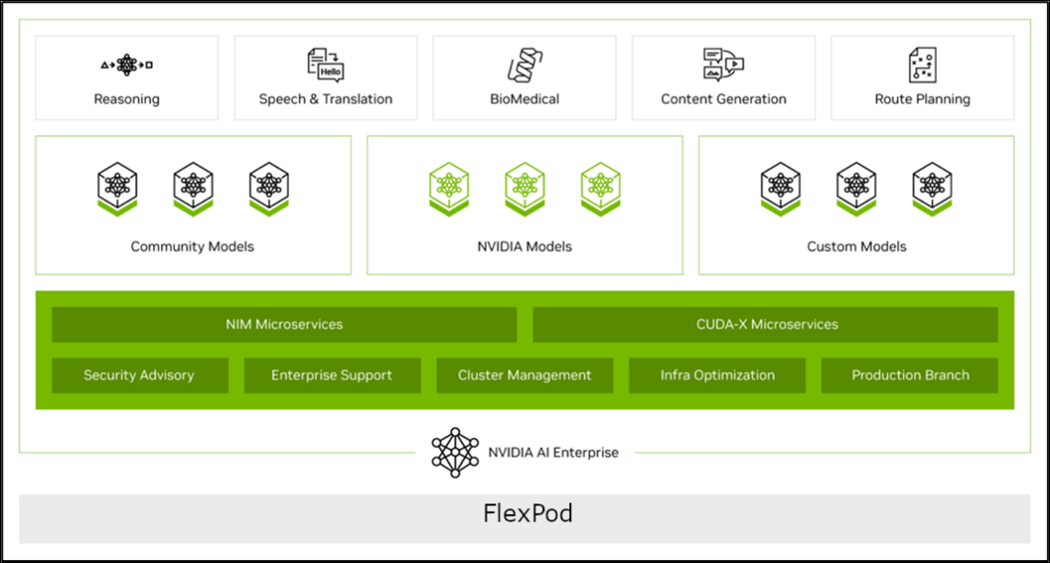

The NVIDIA AI Enterprise (NVAIE) platform was deployed on Red Hat OpenShift as the foundation for the RAG pipeline. NVIDIA AI Enterprise simplifies the development and deployment of generative AI workloads, including Retrieval Augmented Generation, at scale.

Figure 6. NVIDIA AI Enterprise with FlexPod Datacenter

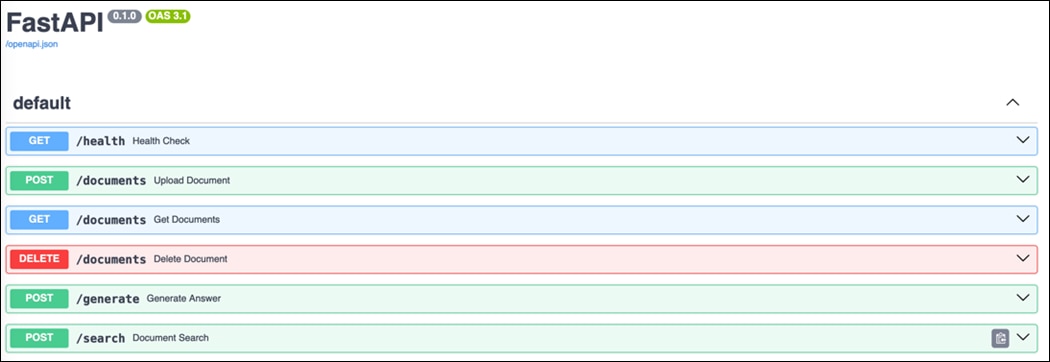

NVIDIA Inference Microservices

NVIDIA Inference Microservice (NIM), a component of NVIDIA AI Enterprise, offers an efficient route for creating AI-driven enterprise applications and deploying AI models in production environments. NIM consists of microservices that accelerate and simplify the deployment of generative AI models via automation using prebuilt containers, Helm charts, optimized models, and industry-standard APIs.

NIM simplifies the process for IT and DevOps teams to self-host large language models (LLMs) within their own managed environments. It provides developers with industry-standard APIs, enabling them to create applications such as copilots, chatbots, and AI assistants that can revolutionize their business operations. Content Generation, Sentiment Analysis, and Language Translation services are just a few additional examples of applications that can be rapidly deployed to meet various use cases. NIM ensures the quickest path to inference with unmatched performance.

Figure 7. NVIDIA NIM for optimized AI inference

NIMs are distributed as container images tailored to specific models or model families. Each NIM is encapsulated in its own container and includes an optimized model. These containers come with a runtime compatible with any NVIDIA GPU that has adequate GPU memory, with certain model/GPU combinations being optimized for better performance. One or more GPUs can be passed through to containers via the NVIDIA Container Toolkit to provide the horsepower needed for any workload. NIM automatically retrieves the model from NGC (NVIDIA GPU Cloud), utilizing a local filesystem cache if available. Since all NIMs are constructed from a common base, once a NIM has been downloaded, acquiring additional NIMs becomes significantly faster. The NIM catalog currently offers nearly 150 models and agent blueprints.

Utilizing domain specific models, NIM caters to the demand for specialized solutions and enhanced performance through a range of pivotal features. It incorporates NVIDIA CUDA (Compute Unified Device Architecture) libraries and customized code designed for distinct fields like language, speech, video processing, healthcare, retail, and others. This method ensures that applications are precise and pertinent to their particular use cases. Think of it like a custom toolkit for each profession; just as a carpenter has specialized tools for woodworking, NIM provides tailored resources to meet the unique needs of various domains.

NIM is designed with a production-ready base container that offers a robust foundation for enterprise AI applications. It includes feature branches, thorough validation processes, enterprise support with service-level agreements (SLAs), and frequent security vulnerability updates. This optimized framework makes NIM an essential tool for deploying efficient, scalable, and tailored AI applications in production environments. Think of NIM as the bedrock of a skyscraper; just as a solid foundation is crucial for supporting the entire structure, NIM provides the necessary stability and resources for building scalable and reliable portable enterprise AI solutions.

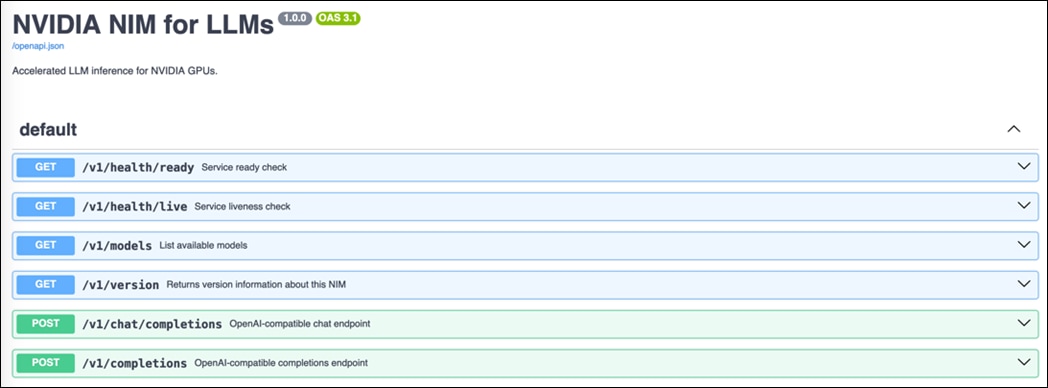

NVIDIA NIM for Large Language Models

NVIDIA NIM for Large Language Models (NVIDIA NIM for LLMs) brings state-of-the-art large language models (LLMs) to enterprise applications, providing natural language processing (NLP) and understanding capabilities.

Whether you are developing chatbots, content analyzers, or any application that needs to understand and generate human language, NVIDIA NIM for LLMs is the fastest path to inference. Built on the NVIDIA software platform, NVIDIA NIM provides state-of-the-art, GPU-accelerated large language model serving.

High Performance Features

NVIDIA NIM for LLMs abstracts away model inference internals such as the execution engine and runtime operations. NVIDIA NIM for LLMs provides the most performant option available, whether that is TensorRT-LLM, vLLM, or others.

● Scalable Deployment: NVIDIA NIM for LLMs is performant and can easily and seamlessly scale from a few users to millions.

● Advanced Language Models: Built on cutting-edge LLM architectures, NVIDIA NIM for LLMs provides optimized and pre-generated engines for a variety of popular models. NVIDIA NIM for LLMs includes tooling to help create GPU optimized models.

● Flexible Integration: Easily incorporate the microservice into existing workflows and applications. NVIDIA NIM for LLMs provides an OpenAI API compatible programming model and custom NVIDIA extensions for additional functionality.

● Enterprise-Grade Security: Data privacy is paramount. NVIDIA NIM for LLMs emphasizes security by using safetensors, continuously monitoring and patching CVEs in the NVIDIA software stack, and conducting internal penetration tests.
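Because the microservice exposes an OpenAI API compatible programming model, a client can talk to it with plain HTTP and JSON. The sketch below only builds the request and parses a response; it does not send anything. The base URL is a hypothetical placeholder, and the model name is simply the example model this document mentions, so both should be treated as assumptions to adjust for a real deployment.

```python
import json
import urllib.request

# Assumed values for illustration only; adjust to the actual deployment.
NIM_BASE_URL = "http://localhost:8000"   # hypothetical service address
MODEL_NAME = "meta/llama3-8b-instruct"   # example model named in this document


def build_chat_request(question: str, max_tokens: int = 128) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": question}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{NIM_BASE_URL}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_answer(response_body: bytes) -> str:
    """Pull the assistant text out of an OpenAI-style chat completion response."""
    return json.loads(response_body)["choices"][0]["message"]["content"]
```

To send the request, pass it to `urllib.request.urlopen` and hand the response body to `extract_answer`. Any OpenAI-compatible client library could be used in place of the standard library.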

Applications

The potential applications of NVIDIA NIM for LLMs are vast, spanning across various industries and use cases:

● Chatbots & Virtual Assistants: Empower bots with human-like language understanding and responsiveness.

● Content Generation & Summarization: Generate high-quality content or distill lengthy articles into concise summaries with ease.

● Sentiment Analysis: Understand user sentiments in real-time, driving better business decisions.

● Language Translation: Break language barriers with efficient and accurate translation services.

Architecture

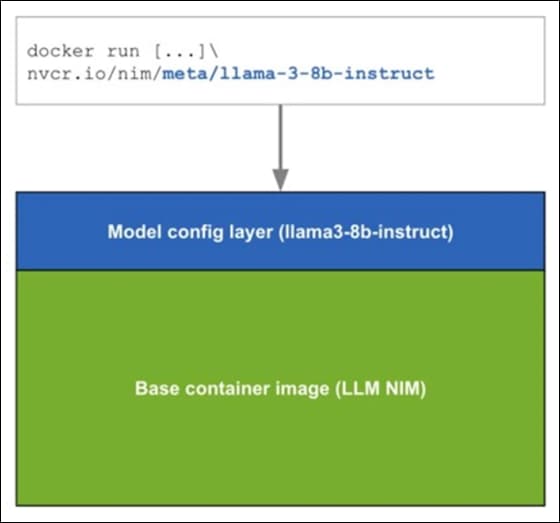

NVIDIA NIM for LLMs is one of what will become many NIMs. Each NIM is its own Docker container with a model, such as meta/llama3-8b-instruct. These containers include the runtime capable of running the model on any NVIDIA GPU. The NIM automatically downloads the model from NGC, leveraging a local filesystem cache if available. Each NIM is built from a common base, so once a NIM has been downloaded, downloading additional NIMs is extremely fast.

When a NIM is first deployed, it inspects the local hardware configuration and the optimized models available in the model registry, and then automatically chooses the best version of the model for the available hardware. For a subset of NVIDIA GPUs (see the Support Matrix), NIM downloads the optimized TRT (TensorRT) engine and runs inference using the TRT-LLM library. For all other NVIDIA GPUs, NIM downloads a non-optimized model and runs it using the vLLM library.
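The selection behavior described above amounts to a lookup with a fallback. The sketch below is only an illustration of that decision, not NIM's actual code: the function name is hypothetical and the GPU identifiers are placeholders, since the real list of GPUs with optimized engines lives in the Support Matrix.

```python
from typing import FrozenSet

# Placeholder identifiers; consult the NIM Support Matrix for the real list.
GPUS_WITH_OPTIMIZED_ENGINE: FrozenSet[str] = frozenset({"example-gpu-a", "example-gpu-b"})


def select_backend(
    gpu_name: str,
    optimized_gpus: FrozenSet[str] = GPUS_WITH_OPTIMIZED_ENGINE,
) -> str:
    """Return the runtime that would serve the model on the given GPU."""
    if gpu_name.lower() in optimized_gpus:
        # An optimized TensorRT engine exists for this GPU.
        return "TRT-LLM"
    # Fallback path for all other NVIDIA GPUs.
    return "vLLM"
```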

NIMs are distributed as NGC container images through the NVIDIA NGC Catalog. A security scan report is available for each container within the NGC catalog, which provides a security rating of that image, breakdown of CVE severity by package, and links to detailed information on CVEs.

Deployment Lifecycle

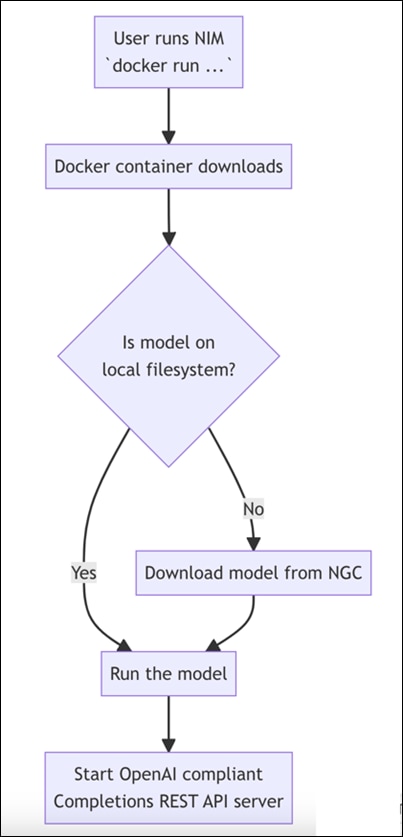

Figure 9 illustrates the deployment lifecycle.

Figure 9. Deployment Lifecycle

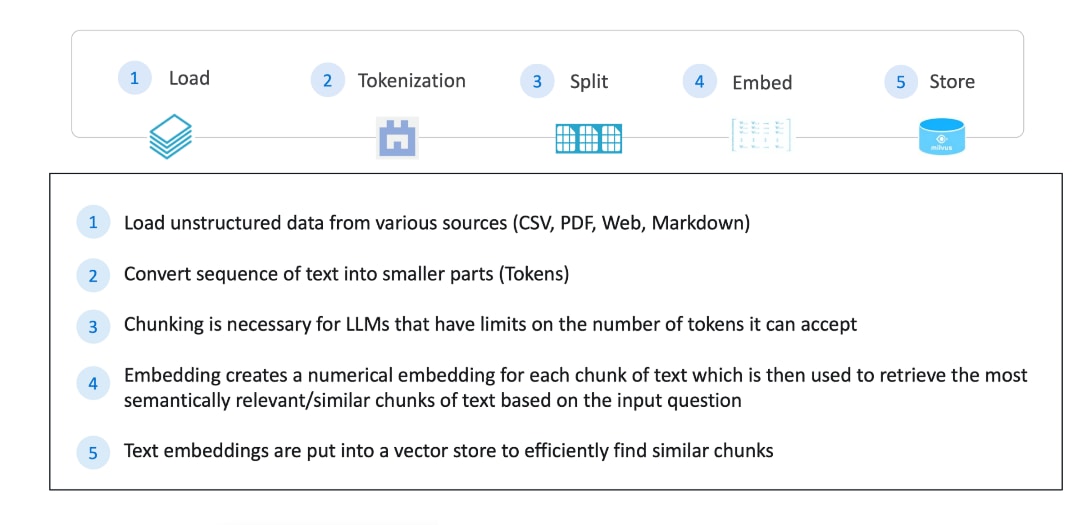

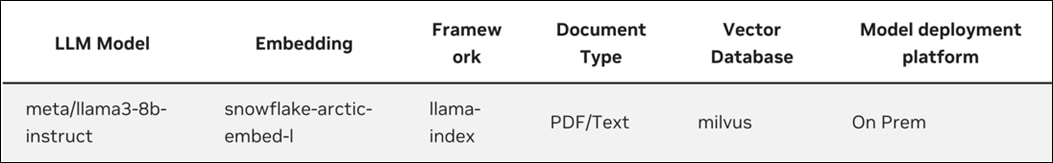

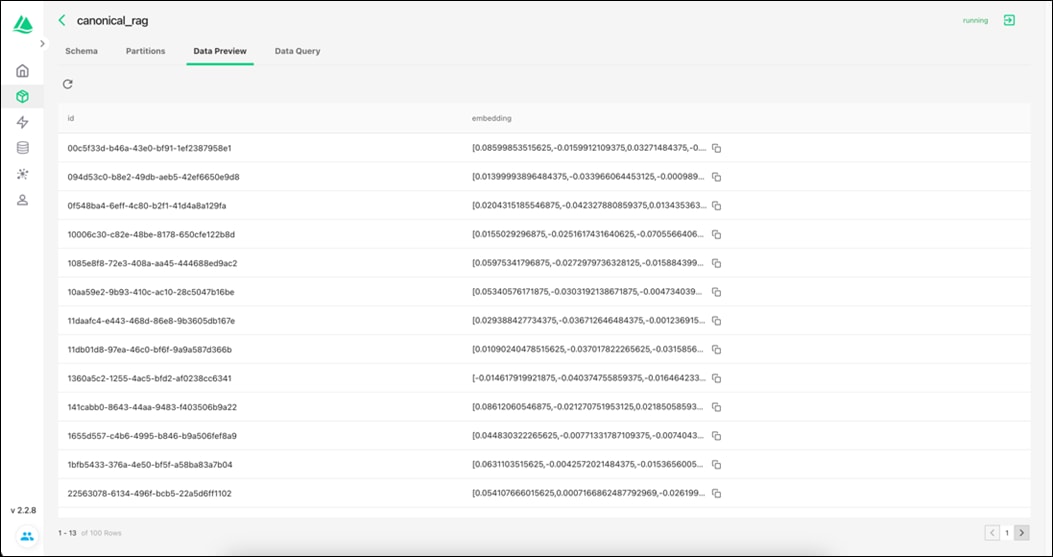

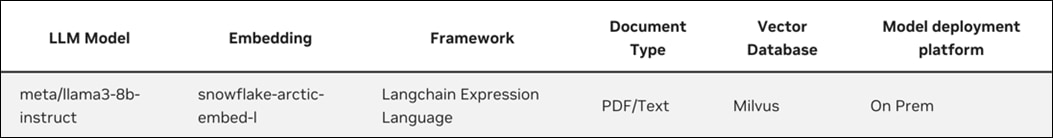

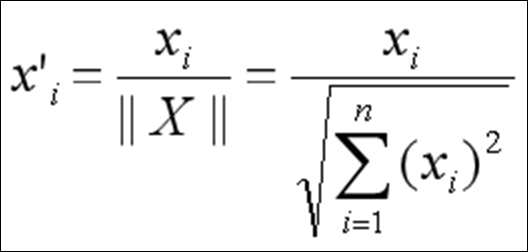

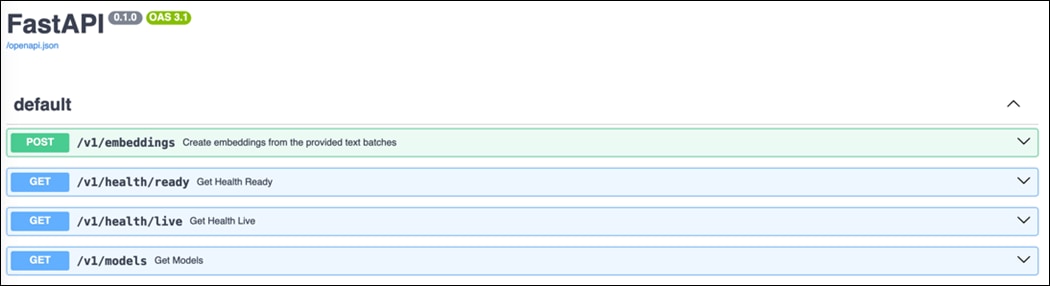

NeMo Text Retriever NIM APIs facilitate access to optimized embedding models, which are essential components for RAG applications that deliver precise and faithful answers. By using NVIDIA software (including CUDA, TensorRT, and Triton Inference Server), the Text Retriever NIM provides the tools developers need to create ready-to-use, GPU-accelerated applications. NeMo Retriever Text Embedding NIM enhances the performance of text-based question-answering retrieval by generating optimized embeddings. For this RAG CVD, the Snowflake Arctic-Embed-L embedding model was used to encode domain-specific content, which was then stored in a vector database. The NIM combines that data with an embedded version of the user’s query to deliver a relevant response.

Figure 10 shows how the Text Retriever NIM APIs can help a question-answering RAG application find the most relevant data in an enterprise setting.

Figure 10. Text Retriever NIM APIs for RAG Application
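To make the matching of a query embedding against stored content more concrete, the sketch below ranks stored passages by cosine similarity. The three-dimensional vectors are toy values standing in for real embeddings, and the brute-force scan is an assumption made for clarity; a vector database would normally use an index rather than compare against every entry.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(
    query_vec: Sequence[float],
    store: Dict[str, Sequence[float]],
    k: int = 2,
) -> List[Tuple[str, float]]:
    """Return the k stored passages most similar to the query vector."""
    scored = [(text, cosine_similarity(query_vec, vec)) for text, vec in store.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Toy vectors standing in for real embeddings of each passage.
store = {
    "VLAN 3052 carries NFS traffic.": [0.9, 0.1, 0.0],
    "Worker nodes have 768GB RAM.": [0.0, 0.2, 0.9],
    "NFS provides RWX persistent storage.": [0.8, 0.3, 0.1],
}
query = [1.0, 0.2, 0.0]  # toy embedding of a storage-related question
```

With these toy values, `top_k(query, store)` ranks the two NFS-related passages ahead of the RAM passage.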

Enterprise-Ready Features

Text Embedding NIM comes with enterprise-ready features, such as a high-performance inference server, flexible integration, and enterprise-grade security.

● High Performance: Text Embedding NIM is optimized for high-performance deep learning inference with NVIDIA TensorRT and NVIDIA Triton Inference Server.

● Scalable Deployment: Text Embedding NIM seamlessly scales from a few users to millions.

● Flexible Integration: Text Embedding NIM can be easily incorporated into existing data pipelines and applications. Developers are provided with an OpenAI-compatible API in addition to custom NVIDIA extensions.

● Enterprise-Grade Security: Text Embedding NIM comes with security features such as the use of safetensors, continuous patching of CVEs, and constant monitoring through NVIDIA internal penetration tests.

Solution Design

This chapter contains the following:

● FlexPod Datacenter with Red Hat OCP on Bare Metal

This solution meets the following general design requirements:

● Resilient design across all layers of the infrastructure with no single point of failure

● Scalable and flexible design to add compute capacity, storage, or network bandwidth as needed supported by various models of each component

● Modular design that can be replicated to expand and grow as the needs of the business grow

● Simplified design with ability to integrate and automate with external automation tools

● Cloud-enabled design which can be configured, managed, and orchestrated from the cloud using GUI or APIs

● Repeatable design for accelerating the provisioning of end-to-end Retrieval Augmented Generation pipeline

● Provide a testing methodology to evaluate the performance of the solution

● Provide an example implementation of Cisco Webex Chat Bot integration with RAG

FlexPod Topology

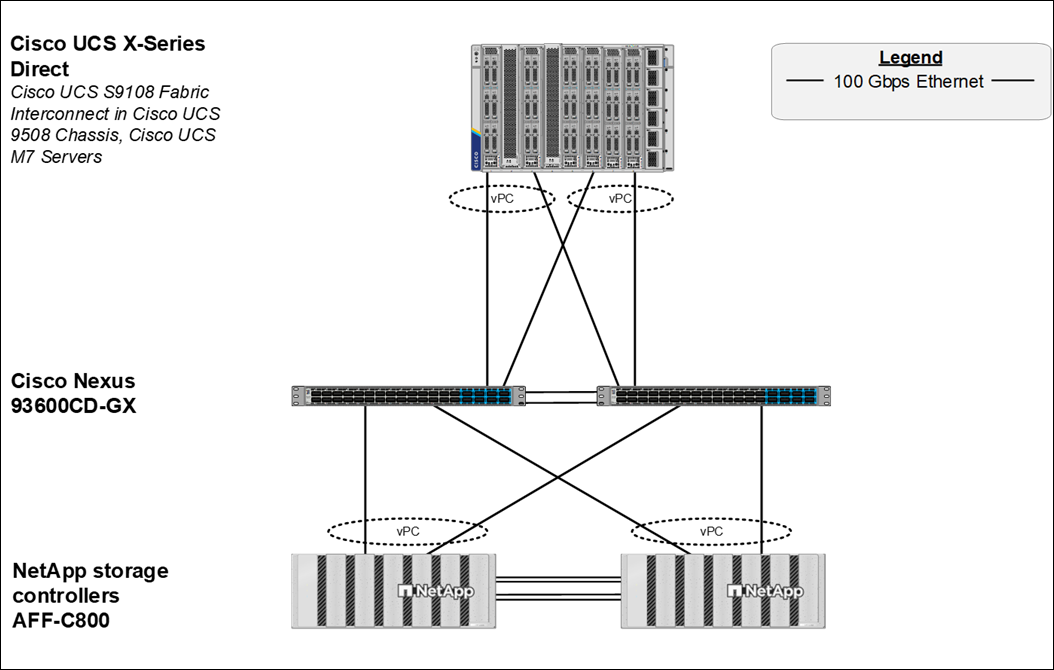

The FlexPod Datacenter for Accelerated RAG Pipeline with NVIDIA NIM and Cisco Webex is built using the following reference hardware components:

● 2 Cisco Nexus 93600CD-GX Switches in Cisco NX-OS mode provide the switching fabric. Other Cisco Nexus Switches are also supported.

● 2 Cisco UCS S9108 Fabric Interconnects (FIs) in the chassis provide the chassis connectivity. At least two 100 Gigabit Ethernet ports from each FI, configured as a Port-Channel, are connected to each Cisco Nexus 93600CD-GX switch. 25 Gigabit Ethernet connectivity is also supported, as are other versions of the Cisco UCS FI that would be used with Intelligent Fabric Modules (IFMs) in the chassis.

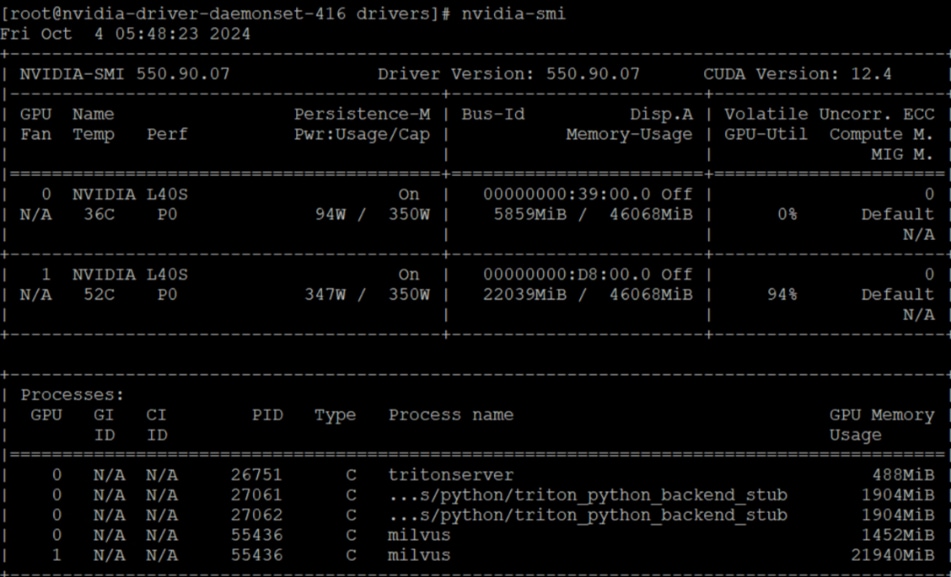

● 1 Cisco UCS X9508 Chassis contains 6 Cisco UCS X210C M7 servers and 2 Cisco UCS X440p PCIe Nodes each with 2 NVIDIA L40S GPUs. Other configurations of servers with and without GPUs are also supported.

● 1 NetApp AFF C800 HA pair connects to the Cisco Nexus 93600CD-GX Switches using two 100 GE ports from each controller configured as a Port-Channel. 25 Gigabit Ethernet connectivity is also supported as well as other NetApp AFF, ASA, and FAS storage controllers.

Figure 11 shows various hardware components and the network connections for the FlexPod Datacenter for Accelerated RAG Pipeline with NVIDIA NIM design.

Figure 11. FlexPod Datacenter Physical Topology

The software components of this solution consist of:

● Cisco Intersight SaaS platform to deploy, maintain, and support the FlexPod components

● Cisco Intersight Assist Virtual Appliance to connect NetApp ONTAP and Cisco Nexus switches to Cisco Intersight

● NetApp Active IQ Unified Manager to monitor and manage the storage and for NetApp ONTAP integration with Cisco Intersight

● Red Hat OCP to manage a Kubernetes containerized environment

FlexPod Datacenter with Red Hat OCP on Bare Metal

Red Hat OCP on Bare Metal Server Configuration

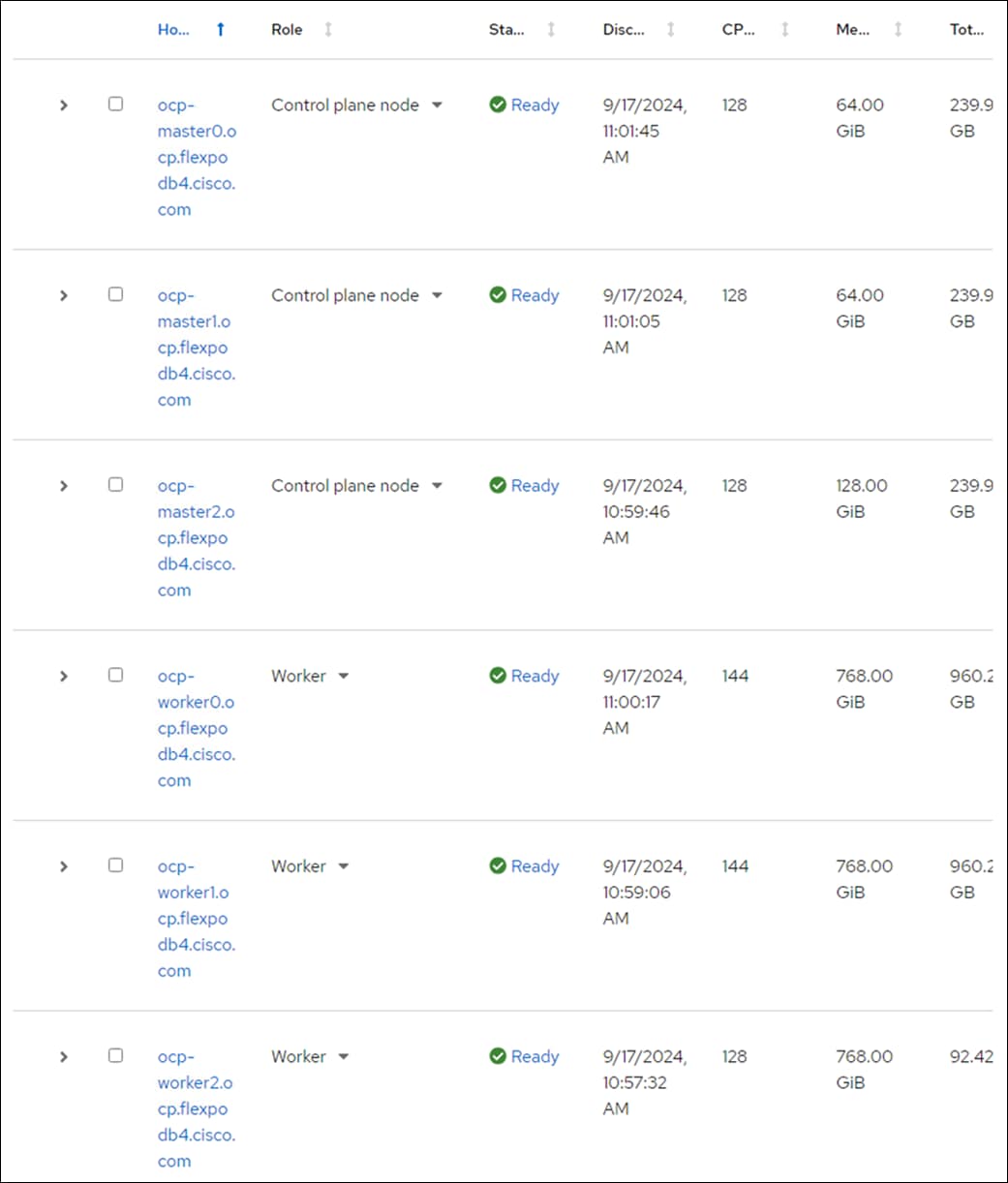

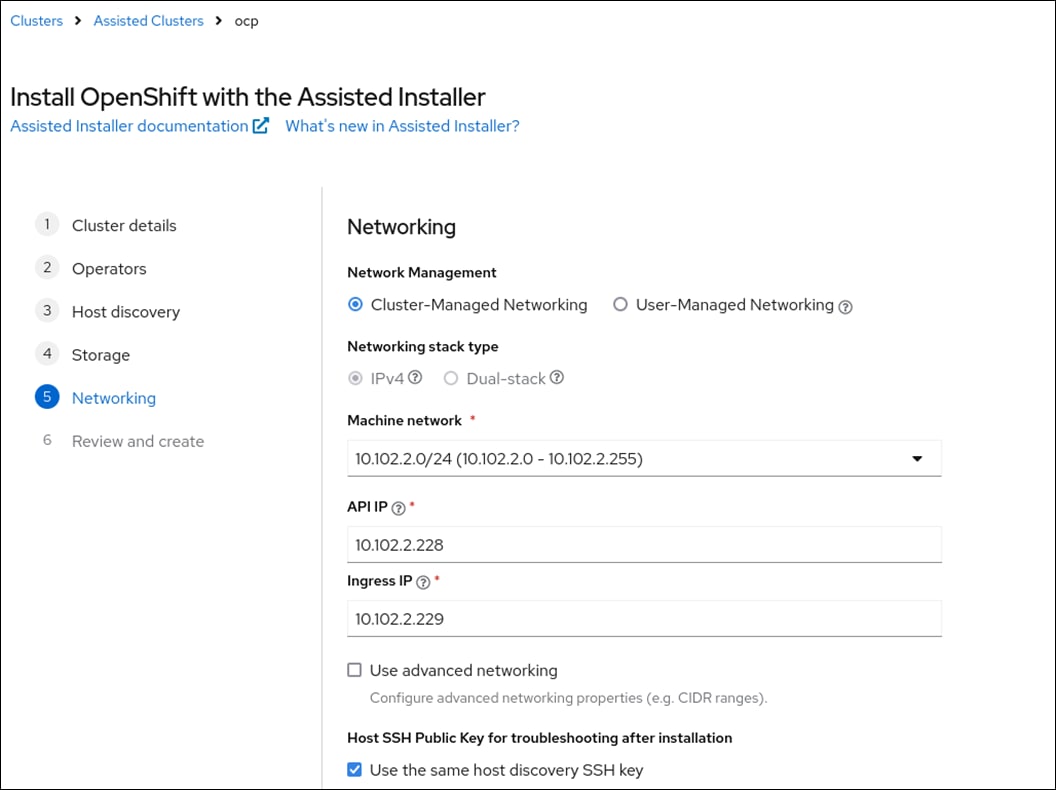

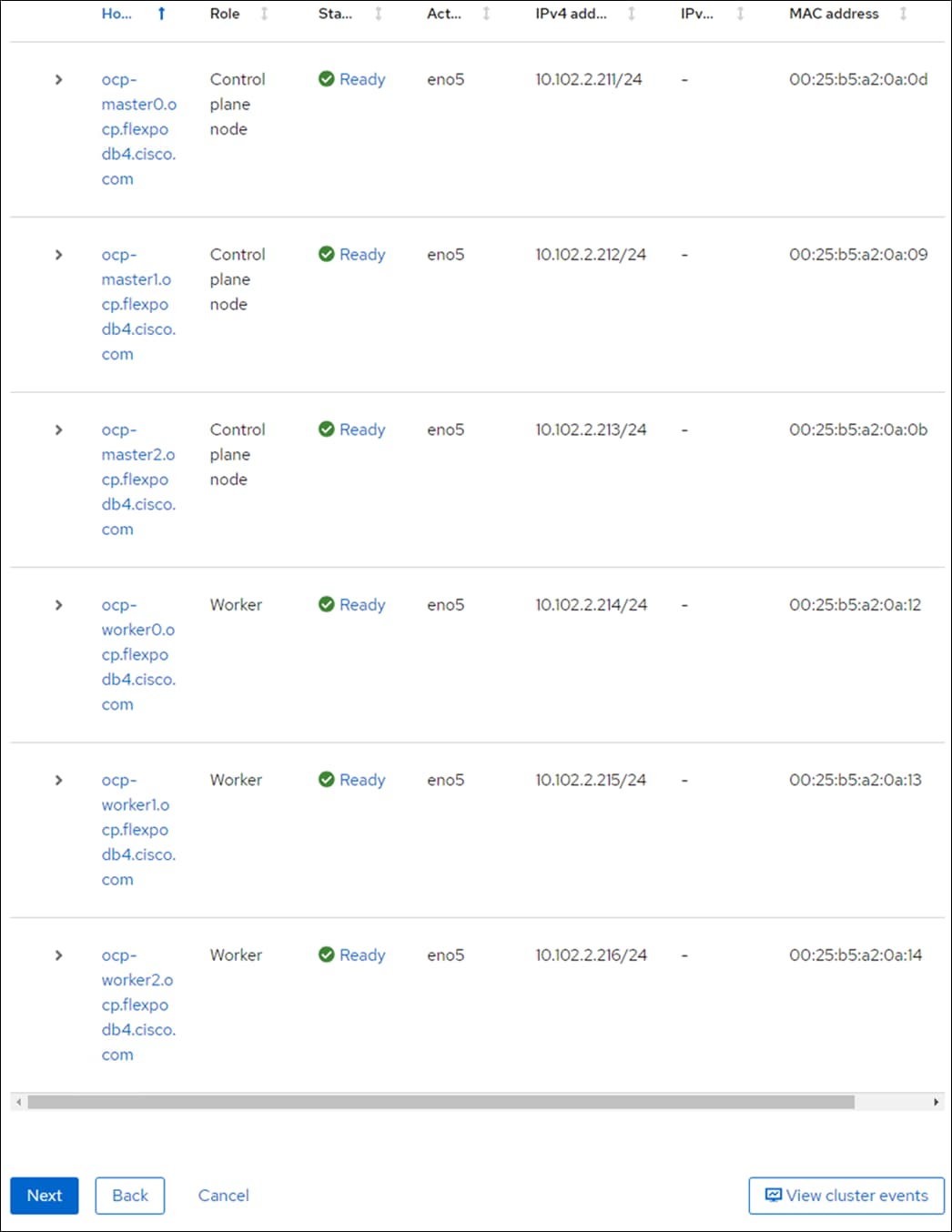

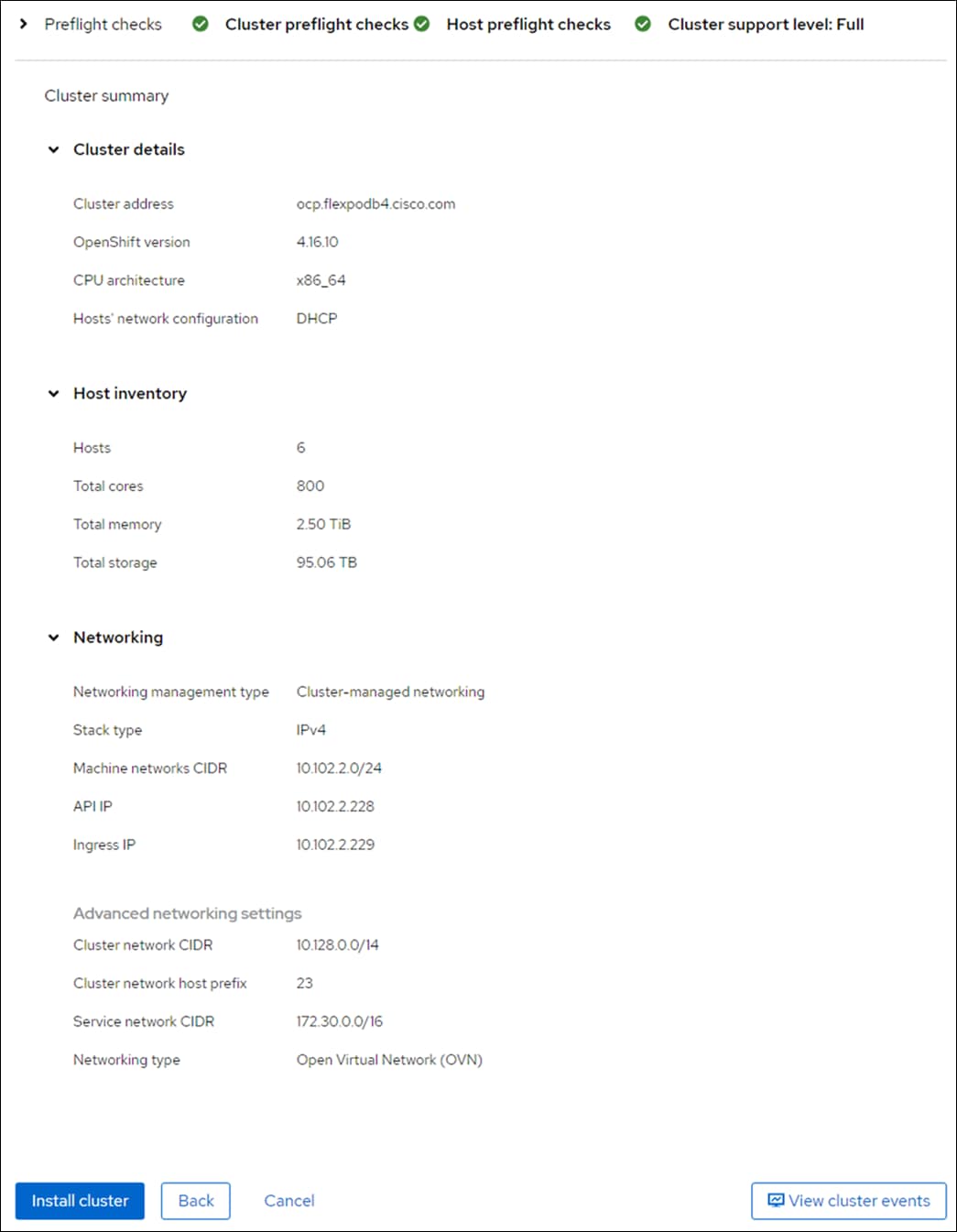

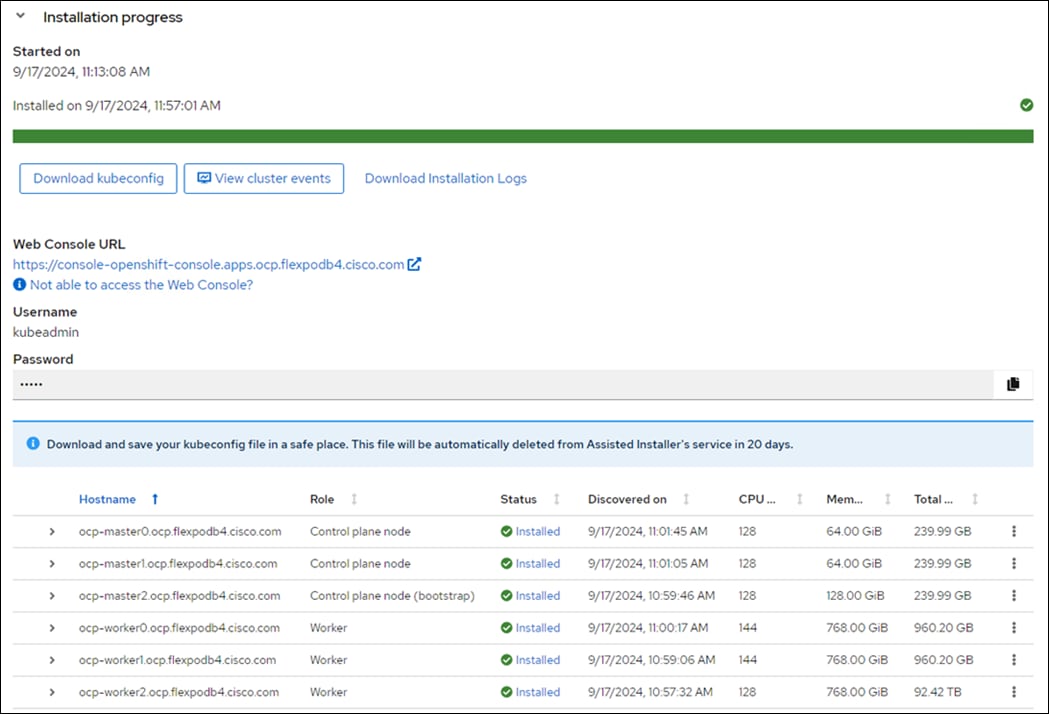

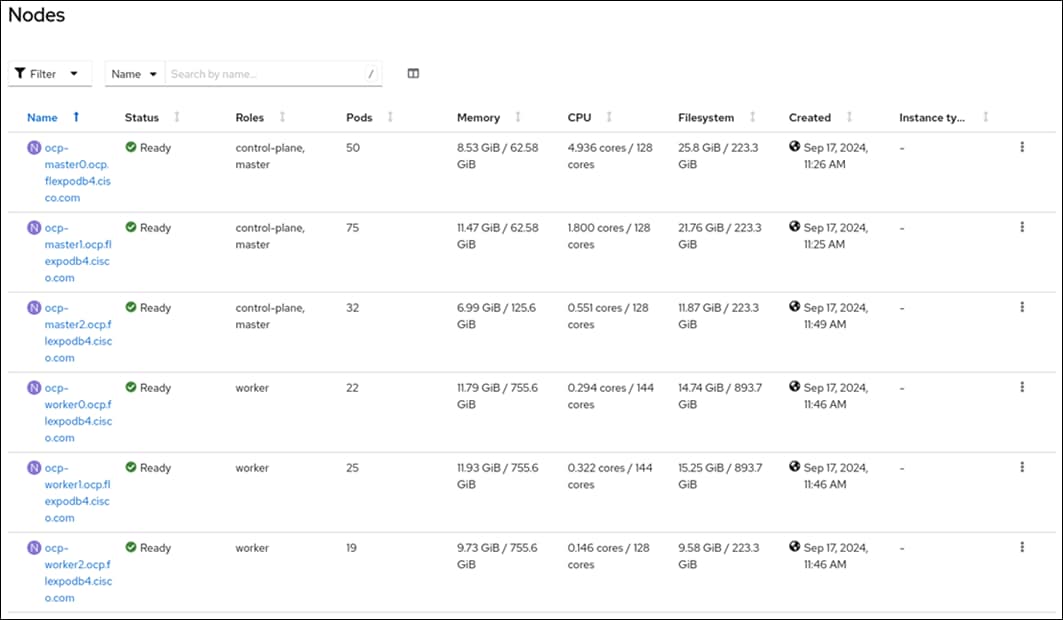

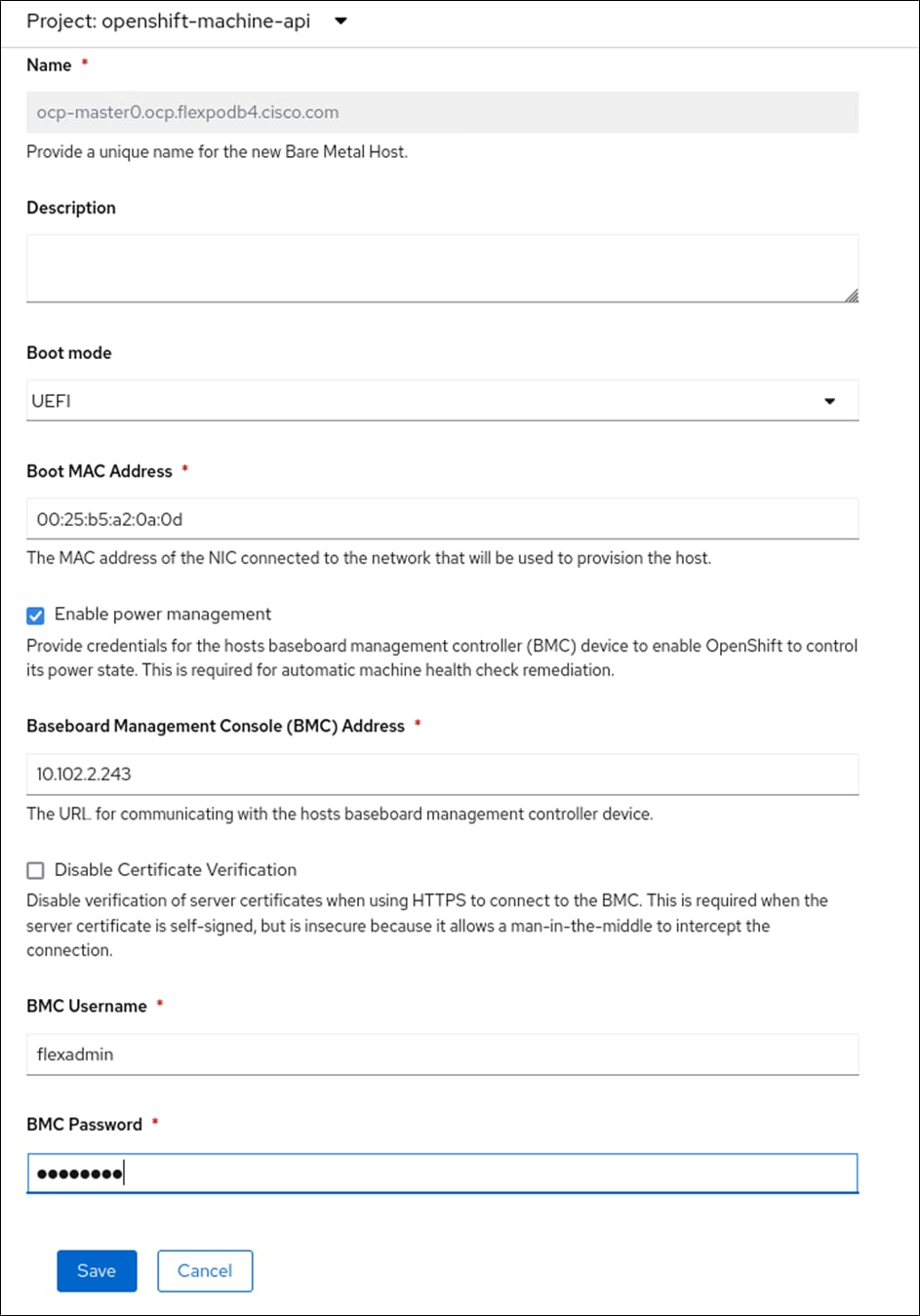

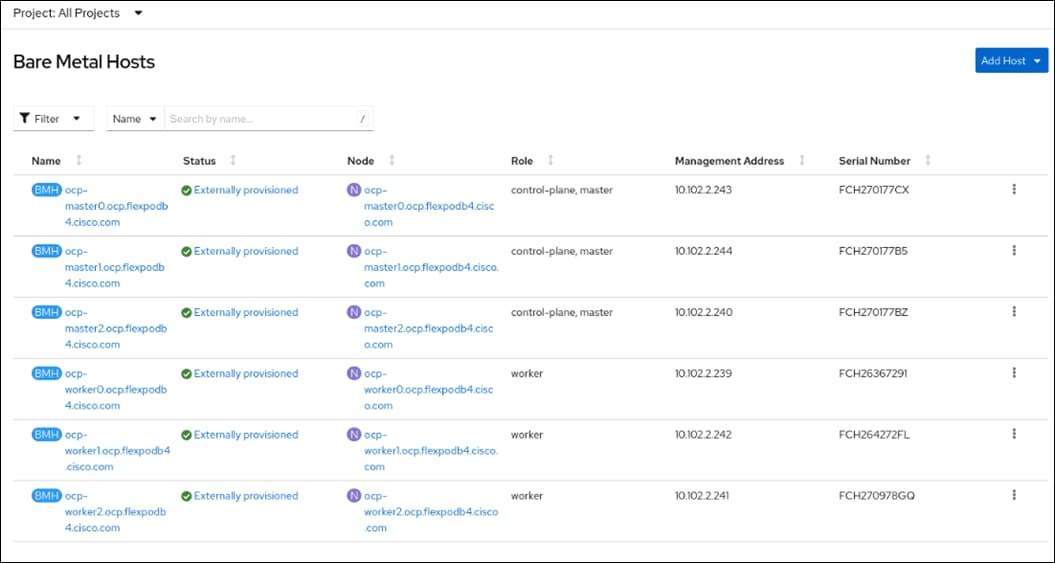

A simple Red Hat OCP cluster consists of at least five servers: 3 Master (Control Plane) Nodes and 2 or more Worker Nodes where applications and VMs run. In this lab validation, 3 Worker Nodes were used. Based on OCP published requirements, the three Master Nodes were configured with 64GB RAM, and the three Worker Nodes were configured with 768GB RAM to handle containerized applications and VMs. Each Node was booted from M.2; both a single M.2 module and 2 M.2 modules with RAID1 are supported. The servers paired with X440p PCIe Nodes were configured as Workers.

From a networking perspective, both the Masters and the Workers were configured with a single vNIC with Cisco UCS Fabric Failover in the Bare Metal or Management VLAN. The Workers were configured with additional vNICs for storage attachment. Each Worker had two additional vNICs with the iSCSI A and B VLANs configured as native to allow iSCSI persistent storage attachment and future iSCSI boot. These vNICs also had the NVMe-TCP A and B VLANs assigned as allowed VLANs, so that tagged VLAN interfaces for NVMe-TCP can be defined on the Workers. Finally, each Worker had one additional vNIC with the OCP NFS VLAN configured as native to provide NFS persistent storage.
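The vNIC layout described above can be summarized as a small data model. This is a sketch for orientation only: the vNIC labels (`mgmt`, `storage-a`, and so on) are hypothetical, the pairing of NVMe-TCP-A with the iSCSI-A vNIC (and B with B) is an assumption, and fabric failover is recorded only where the text states it.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Vnic:
    name: str                                # hypothetical label, not from the guide
    native_vlan: str
    tagged_vlans: List[str] = field(default_factory=list)
    fabric_failover: Optional[bool] = None   # None means "not stated in the text"


CONTROL_PLANE_VNICS = [
    Vnic("mgmt", "OCP-BareMetal-MGMT", fabric_failover=True),
]

WORKER_VNICS = [
    Vnic("mgmt", "OCP-BareMetal-MGMT", fabric_failover=True),
    Vnic("storage-a", "OCP-iSCSI-A", ["OCP-NVMe-TCP-A"]),  # A/A pairing assumed
    Vnic("storage-b", "OCP-iSCSI-B", ["OCP-NVMe-TCP-B"]),  # B/B pairing assumed
    Vnic("nfs", "OCP-NFS"),
]


def vlans_for(vnics: List[Vnic]) -> List[str]:
    """List every VLAN reachable from a node, native first, without duplicates."""
    seen: List[str] = []
    for vnic in vnics:
        for vlan in [vnic.native_vlan, *vnic.tagged_vlans]:
            if vlan not in seen:
                seen.append(vlan)
    return seen
```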

VLAN Configuration

Table 1 lists VLANs configured for setting up the FlexPod environment along with their usage.

| VLAN ID | Name | Usage | IP Subnets |
| --- | --- | --- | --- |
| 2* | Native-VLAN | Use VLAN 2 as native VLAN instead of default VLAN (1) | |
| 1020* | OOB-MGMT-VLAN | Out-of-band management VLAN to connect management ports for various devices | 10.102.0.0/24 GW: 10.102.0.254 |
| 1022 | OCP-BareMetal-MGMT | Routable OCP Bare Metal VLAN used for OCP cluster and node management | 10.102.2.0/24 GW: 10.102.2.254 |
| 3012 | OCP-iSCSI-A | Used for OCP iSCSI Persistent Storage | 192.168.12.0/24 |
| 3022 | OCP-iSCSI-B | Used for OCP iSCSI Persistent Storage | 192.168.22.0/24 |
| 3032 | OCP-NVMe-TCP-A | Used for OCP NVMe-TCP Persistent Storage | 192.168.32.0/24 |
| 3042 | OCP-NVMe-TCP-B | Used for OCP NVMe-TCP Persistent Storage | 192.168.42.0/24 |
| 3052 | OCP-NFS | Used for OCP NFS RWX Persistent Storage | 192.168.52.0/24 |

Note: *VLAN configured in FlexPod Base.
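Before the VLANs are configured on the switches, the addressing plan in Table 1 can be sanity-checked with a short script. The sketch below is one possible approach using Python's standard `ipaddress` module; the `validate_plan` helper and the tuple layout are illustrative choices, and the values are copied from Table 1.

```python
import ipaddress
from itertools import combinations

# (vlan_id, name, subnet, gateway) from Table 1; gateway is None where none is listed.
VLAN_PLAN = [
    (1020, "OOB-MGMT-VLAN", "10.102.0.0/24", "10.102.0.254"),
    (1022, "OCP-BareMetal-MGMT", "10.102.2.0/24", "10.102.2.254"),
    (3012, "OCP-iSCSI-A", "192.168.12.0/24", None),
    (3022, "OCP-iSCSI-B", "192.168.22.0/24", None),
    (3032, "OCP-NVMe-TCP-A", "192.168.32.0/24", None),
    (3042, "OCP-NVMe-TCP-B", "192.168.42.0/24", None),
    (3052, "OCP-NFS", "192.168.52.0/24", None),
]


def validate_plan(plan):
    """Return a list of problems; an empty list means the plan is consistent."""
    problems = []
    ids = [vlan_id for vlan_id, *_ in plan]
    if len(ids) != len(set(ids)):
        problems.append("duplicate VLAN IDs")
    for vlan_id, name, subnet, gateway in plan:
        if not 1 <= vlan_id <= 4094:
            problems.append(f"{name}: VLAN ID {vlan_id} out of range")
        network = ipaddress.ip_network(subnet)
        if gateway and ipaddress.ip_address(gateway) not in network:
            problems.append(f"{name}: gateway {gateway} not in {subnet}")
    # Every pair of subnets must be disjoint.
    for (_, n1, s1, _), (_, n2, s2, _) in combinations(plan, 2):
        if ipaddress.ip_network(s1).overlaps(ipaddress.ip_network(s2)):
            problems.append(f"{n1} and {n2} overlap")
    return problems
```

Running `validate_plan(VLAN_PLAN)` on the values above returns an empty list. Substituting site-specific subnets and rerunning the check catches typos before they reach the switch or storage configuration.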

Table 2 lists the VMs or bare metal servers necessary for deployment as outlined in this document.

| Virtual Machine Description | VLAN | IP Address | Comments |
| --- | --- | --- | --- |
| OCP AD1 | 1022 | 10.102.2.249 | Hosted on pre-existing management infrastructure within the FlexPod |
| OCP AD2 | 1022 | 10.102.2.250 | Hosted on pre-existing management infrastructure within the FlexPod |
| OCP Installer | 1022 | 10.102.2.10 | Hosted on pre-existing management infrastructure within the FlexPod |
| NetApp Active IQ Unified Manager | 1021 | 10.102.1.97 | Hosted on pre-existing management infrastructure within the FlexPod |
| Cisco Intersight Assist Virtual Appliance | 1021 | 10.102.1.96 | Hosted on pre-existing management infrastructure within the FlexPod |

Table 3 lists the software revisions for various components of the solution.

| Layer | Device | Image Bundle | Comments |
| --- | --- | --- | --- |
| Compute | Cisco UCS Fabric Interconnect S9108 | 4.3(4.240078) | |
| | Cisco UCS X210C M7 | 5.2(2.240053) | |
| Network | Cisco Nexus 93600CD-GX NX-OS | 10.3(4a)M | |
| Storage | NetApp AFF C800 | ONTAP 9.14.1 | Latest patch release |
| Software | Red Hat OCP | 4.16 | |
| | NetApp Astra Trident | 24.06.1 | |
| | NetApp DataOps Toolkit | 2.5.0 | |
| | Cisco Intersight Assist Appliance | 1.0.9-675 | 1.0.9-538 initially installed and then automatically upgraded |
| | NetApp Active IQ Unified Manager | 9.14 | |
| | NVIDIA L40S GPU Driver | 550.90.07 | |

The information in this section is provided as a reference for cabling the physical equipment in a FlexPod environment. To simplify cabling requirements, a cabling diagram was used.

The cabling diagram in this section contains the details for the prescribed and supported configuration of the NetApp AFF C800 running NetApp ONTAP 9.14.1.

Note: For any modifications of this prescribed architecture, consult the NetApp Interoperability Matrix Tool (IMT).

Note: This document assumes that out-of-band management ports are plugged into an existing management infrastructure at the deployment site. These interfaces will be used in various configuration steps.

Note: Be sure to use the cabling directions in this section as a guide.

The NetApp storage controller and disk shelves should be connected according to best practices for the specific storage controller and disk shelves. For disk shelf cabling, refer to NetApp Support.

Figure 12 details the cable connections used in the validation lab for the FlexPod topology based on the Cisco UCS S9108 Fabric Interconnect directly in the chassis. Two 100Gb links connect each Cisco UCS Fabric Interconnect to the Cisco Nexus Switches and each NetApp AFF controller to the Cisco Nexus Switches. Additional 1Gb management connections will be needed for one or more out-of-band network switches that sit apart from the FlexPod infrastructure. Each Cisco UCS Fabric Interconnect and Cisco Nexus switch is connected to the out-of-band network switches, and each AFF controller has a connection to the out-of-band network switches. Layer 3 network connectivity is required between the Out-of-Band (OOB) and In-Band (IB) Management Subnets.

Figure 12. FlexPod Cabling with Cisco UCS S9108 X-Series Direct Fabric Interconnects

Retrieval Augmented Generation (RAG) is designed to empower LLM solutions with real-time data access, preserving data privacy and mitigating LLM hallucinations. A typical RAG pipeline consists of several phases. The process of document ingestion occurs offline, and when an online query comes in, the retrieval of relevant documents and the generation of a response occurs. By using RAG, you can provide up-to-date and proprietary information with ease to LLMs and build a system that increases user trust, improves user experiences, and reduces hallucinations. NVIDIA RAG Enterprise designed with NVIDIA NeMo is an end-to-end platform for developing custom generative AI, anywhere. Deliver enterprise-ready models with precise data curation, cutting-edge customization, RAG, and accelerated performance.

The FlexPod architecture is designed as described in this CVD: FlexPod Datacenter with Red Hat OCP Bare Metal Manual Configuration with Cisco UCS X-Series Direct Deployment Guide. NVIDIA AI Enterprise and NVIDIA NIM microservices were installed to build a powerful platform for running Retrieval Augmented Generation. This layered approach, depicted below, was configured with best practices and security in mind, resulting in a high-performance, secure platform for Retrieval Augmented Generation. This platform can be extended for further AI applications such as Training, Fine Tuning, and other Inferencing use cases, provided that the platform is sized for the application.

Network Switch Configuration

This chapter contains the following:

● Cisco Nexus Switch Manual Configuration

● NetApp ONTAP Storage Configuration

This chapter provides a detailed procedure for configuring the Cisco Nexus 93600CD-GX switches for use in a FlexPod environment.

Note: The following procedures describe how to configure the Cisco Nexus switches for use in the OCP Bare Metal FlexPod environment. This procedure assumes the use of Cisco Nexus 9000 10.3(4a)M and includes the setup of NTP distribution on the bare metal VLAN. The interface-vlan feature and NTP commands are used in the setup. This procedure adds the tenant VLANs to the appropriate port-channels.

Follow the physical connectivity guidelines for FlexPod as explained in section FlexPod Cabling.

Cisco Nexus Switch Manual Configuration

Procedure 1. Create Tenant VLANs on Cisco Nexus A and Cisco Nexus B

Step 1. Log in to both Nexus switches as admin using SSH.

Step 2. Configure the OCP Bare Metal VLAN:

config t

vlan <bm-vlan-id for example, 1022>

name <tenant-name>-BareMetal-MGMT

Step 3. Configure OCP iSCSI VLANs:

vlan <iscsi-a-vlan-id for example, 3012>

name <tenant-name>-iSCSI-A

vlan <iscsi-b-vlan-id for example, 3022>

name <tenant-name>-iSCSI-B

Step 4. If configuring NVMe-TCP storage access, create the following two additional VLANs:

vlan <nvme-tcp-a-vlan-id for example, 3032>

name <tenant-name>-NVMe-TCP-A

vlan <nvme-tcp-b-vlan-id for example, 3042>

name <tenant-name>-NVMe-TCP-B

exit

Step 5. Configure the OCP NFS VLAN:

vlan <nfs-vlan-id for example, 3052>

name <tenant-name>-NFS
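Because the same VLAN definitions must be entered on both switches, it can help to render them from a single source of truth. The sketch below generates the VLAN creation commands shown above from a dictionary. The `render_vlan_config` helper is hypothetical, the tenant name `OCP` is an assumed example, and the VLAN IDs are the example values from this document; the output is meant to be reviewed and pasted, not pushed automatically.

```python
from typing import Dict, List

# Example VLAN IDs from this document, keyed to the name suffix used in the steps.
OCP_VLANS: Dict[int, str] = {
    1022: "BareMetal-MGMT",
    3012: "iSCSI-A",
    3022: "iSCSI-B",
    3032: "NVMe-TCP-A",
    3042: "NVMe-TCP-B",
    3052: "NFS",
}


def render_vlan_config(tenant: str, vlans: Dict[int, str]) -> List[str]:
    """Render NX-OS VLAN creation commands for one tenant."""
    lines = ["config t"]
    for vlan_id, suffix in sorted(vlans.items()):
        if not 1 <= vlan_id <= 4094:
            raise ValueError(f"VLAN ID {vlan_id} out of range")
        lines.append(f"vlan {vlan_id}")
        lines.append(f"name {tenant}-{suffix}")
    lines.append("exit")
    return lines
```

For example, `print("\n".join(render_vlan_config("OCP", OCP_VLANS)))` produces a block that can be compared line by line with the steps above.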

Step 6. Add VLANs to the vPC peer link in both Nexus switches:

int Po10

switchport trunk allowed vlan add <bm-vlan-id>,<iscsi-a-vlan-id>,<iscsi-b-vlan-id>,<nvme-tcp-a-vlan-id>,<nvme-tcp-b-vlan-id>,<nfs-vlan-id>

Step 7. Add VLANs to the storage interfaces in both Nexus switches:

int Po11,Po12

switchport trunk allowed vlan add <bm-vlan-id>,<iscsi-a-vlan-id>,<iscsi-b-vlan-id>,<nvme-tcp-a-vlan-id>,<nvme-tcp-b-vlan-id>,<nfs-vlan-id>

Step 8. Add VLANs to the UCS Fabric Interconnect Uplink interfaces in both Nexus switches:

int Po19,Po110

switchport trunk allowed vlan add <bm-vlan-id>,<iscsi-a-vlan-id>,<iscsi-b-vlan-id>,<nvme-tcp-a-vlan-id>,<nvme-tcp-b-vlan-id>,<nfs-vlan-id>

Step 9. Add the Bare Metal VLAN to the Switch Uplink interface in both Nexus switches:

interface Po127

switchport trunk allowed vlan add <bm-vlan-id>

exit
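Steps 6 through 9 repeat one pattern: select a port-channel group, then add VLANs to its trunk. A minimal sketch of generating those lines is shown below. The port-channel names come from the steps above, the VLAN IDs are the example values from this document, and the `render_trunk_config` helper is a hypothetical name introduced for illustration.

```python
from typing import List, Sequence, Tuple

ALL_TENANT_VLANS = [1022, 3012, 3022, 3032, 3042, 3052]

# (interfaces, VLANs to add), following Steps 6 through 9.
TRUNKS: List[Tuple[str, Sequence[int]]] = [
    ("Po10", ALL_TENANT_VLANS),        # vPC peer link
    ("Po11,Po12", ALL_TENANT_VLANS),   # storage interfaces
    ("Po19,Po110", ALL_TENANT_VLANS),  # UCS Fabric Interconnect uplinks
    ("Po127", [1022]),                 # switch uplink: Bare Metal VLAN only
]


def render_trunk_config(trunks: Sequence[Tuple[str, Sequence[int]]]) -> List[str]:
    """Render the 'allowed vlan add' commands for each port-channel group."""
    lines: List[str] = []
    for interfaces, vlan_ids in trunks:
        vlan_list = ",".join(str(v) for v in vlan_ids)
        lines.append(f"interface {interfaces}")
        lines.append(f"switchport trunk allowed vlan add {vlan_list}")
    lines.append("exit")
    return lines
```

Using `add` rather than replacing the allowed list matters here: it appends the tenant VLANs to the existing trunk configuration without removing VLANs that are already allowed.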

Step 10. If configuring NTP Distribution in these Nexus Switches, add Tenant VRF and NTP Distribution Interface in Cisco Nexus A:

vrf context <tenant-name>

ip route 0.0.0.0/0 <bm-subnet-gateway>

exit

interface vlan<bm-vlan-id>

no shutdown

vrf member <tenant-name>

ip address <bm-switch-a-ntp-distr-ip>/<bm-vlan-mask-length>

exit

copy run start

Step 11. If configuring NTP Distribution in these Nexus Switches, add Tenant VRF and NTP Distribution Interface in Cisco Nexus B:

vrf context <tenant-name>

ip route 0.0.0.0/0 <bm-subnet-gateway>

exit

interface vlan<bm-vlan-id>

no shutdown

vrf member <tenant-name>

ip address <bm-switch-b-ntp-distr-ip>/<bm-vlan-mask-length>

exit

copy run start

Step 12. The following commands can be used to see the switch configuration and status:

show run

show vpc

show vlan

show port-channel summary

show ntp peer-status

show cdp neighbors

show lldp neighbors

show run int

show int

show udld neighbors

show int status
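The VLAN and trunk steps above follow a fixed pattern, so the command list can be rendered from a single table of VLAN IDs. The following Python sketch is illustrative only: the function name `render_nexus_vlan_config`, the data layout, and the tenant name `OCP` are assumptions, and the VLAN IDs are the example values used in this procedure. Review the output before pasting it into a switch.

```python
# Illustrative sketch: render the NX-OS VLAN and trunk commands from this
# procedure out of one table. Function and variable names are assumptions.

TENANT = "OCP"  # hypothetical value for <tenant-name>

# VLAN name suffix -> example VLAN ID used in this procedure
VLANS = {
    "BareMetal-MGMT": 1022,
    "iSCSI-A": 3012,
    "iSCSI-B": 3022,
    "NVMe-TCP-A": 3032,
    "NVMe-TCP-B": 3042,
    "NFS": 3052,
}

# Port channels that carry every tenant VLAN:
# vPC peer link, storage interfaces, UCS Fabric Interconnect uplinks
ALL_VLAN_PORT_CHANNELS = ["Po10", "Po11,Po12", "Po19,Po110"]
# Switch uplink port channel, which carries only the Bare Metal VLAN
UPLINK_PORT_CHANNEL = "Po127"


def render_nexus_vlan_config(tenant, vlans):
    """Return the configuration as a list of NX-OS command lines."""
    lines = ["config t"]
    for suffix, vlan_id in vlans.items():
        lines.append(f"vlan {vlan_id}")
        lines.append(f"name {tenant}-{suffix}")
    lines.append("exit")
    allowed = ",".join(str(vlan_id) for vlan_id in vlans.values())
    for port_channel in ALL_VLAN_PORT_CHANNELS:
        lines.append(f"int {port_channel}")
        lines.append(f"switchport trunk allowed vlan add {allowed}")
    lines.append(f"interface {UPLINK_PORT_CHANNEL}")
    lines.append(
        f"switchport trunk allowed vlan add {vlans['BareMetal-MGMT']}"
    )
    lines.append("exit")
    return lines


if __name__ == "__main__":
    print("\n".join(render_nexus_vlan_config(TENANT, VLANS)))
```

Keeping the VLAN IDs in one table means the same values can be reused for the storage and Intersight configuration that follows, which reduces the chance of a mismatched ID.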

NetApp ONTAP Storage Configuration

Procedure 1. Configure the NetApp ONTAP storage

Step 1. Open an SSH connection to either the cluster IP or the host name.

Step 2. Log in as the admin user with the password you provided earlier.

Procedure 2. Configure the NetApp ONTAP Storage for the OCP Tenant

Note: By default, all network ports are included in a separate default broadcast domain. Network ports used for data services (for example, e5a, e5b, and so on) should be removed from their default broadcast domain and that broadcast domain should be deleted.

Step 1. Delete any Default-N automatically created broadcast domains:

network port broadcast-domain delete -broadcast-domain <Default-N> -ipspace Default

network port broadcast-domain show

Note: Delete the Default broadcast domains with Network ports (Default-1, Default-2, and so on). This does not include Cluster ports and management ports.

Step 2. Create an IPspace for the OCP tenant:

network ipspace create -ipspace AA02-OCP

Step 3. Create the OCP-MGMT, OCP-iSCSI-A, OCP-iSCSI-B, OCP-NVMe-TCP-A, OCP-NVMe-TCP-B, and OCP-NFS broadcast domains with the appropriate maximum transmission unit (MTU):

network port broadcast-domain create -broadcast-domain OCP-MGMT -mtu 1500 -ipspace AA02-OCP

network port broadcast-domain create -broadcast-domain OCP-iSCSI-A -mtu 9000 -ipspace AA02-OCP

network port broadcast-domain create -broadcast-domain OCP-iSCSI-B -mtu 9000 -ipspace AA02-OCP

network port broadcast-domain create -broadcast-domain OCP-NVMe-TCP-A -mtu 9000 -ipspace AA02-OCP

network port broadcast-domain create -broadcast-domain OCP-NVMe-TCP-B -mtu 9000 -ipspace AA02-OCP

network port broadcast-domain create -broadcast-domain OCP-NFS -mtu 9000 -ipspace AA02-OCP

Step 4. Create the OCP management VLAN ports and add them to the OCP management broadcast domain:

network port vlan create -node AA02-C800-01 -vlan-name a0a-1022

network port vlan create -node AA02-C800-02 -vlan-name a0a-1022

network port broadcast-domain add-ports -ipspace AA02-OCP -broadcast-domain OCP-MGMT -ports AA02-C800-01:a0a-1022,AA02-C800-02:a0a-1022

Step 5. Create the OCP iSCSI VLAN ports and add them to the OCP iSCSI broadcast domains:

network port vlan create -node AA02-C800-01 -vlan-name a0a-3012

network port vlan create -node AA02-C800-02 -vlan-name a0a-3012

network port broadcast-domain add-ports -ipspace AA02-OCP -broadcast-domain OCP-iSCSI-A -ports AA02-C800-01:a0a-3012,AA02-C800-02:a0a-3012

network port vlan create -node AA02-C800-01 -vlan-name a0a-3022

network port vlan create -node AA02-C800-02 -vlan-name a0a-3022

network port broadcast-domain add-ports -ipspace AA02-OCP -broadcast-domain OCP-iSCSI-B -ports AA02-C800-01:a0a-3022,AA02-C800-02:a0a-3022

Step 6. Create the OCP NVMe-TCP VLAN ports and add them to the OCP NVMe-TCP broadcast domains:

network port vlan create -node AA02-C800-01 -vlan-name a0a-3032

network port vlan create -node AA02-C800-02 -vlan-name a0a-3032

network port broadcast-domain add-ports -ipspace AA02-OCP -broadcast-domain OCP-NVMe-TCP-A -ports AA02-C800-01:a0a-3032,AA02-C800-02:a0a-3032

network port vlan create -node AA02-C800-01 -vlan-name a0a-3042

network port vlan create -node AA02-C800-02 -vlan-name a0a-3042

network port broadcast-domain add-ports -ipspace AA02-OCP -broadcast-domain OCP-NVMe-TCP-B -ports AA02-C800-01:a0a-3042,AA02-C800-02:a0a-3042

Step 7. Create the OCP NFS VLAN ports and add them to the OCP NFS broadcast domain:

network port vlan create -node AA02-C800-01 -vlan-name a0a-3052

network port vlan create -node AA02-C800-02 -vlan-name a0a-3052

network port broadcast-domain add-ports -ipspace AA02-OCP -broadcast-domain OCP-NFS -ports AA02-C800-01:a0a-3052,AA02-C800-02:a0a-3052
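Steps 3 through 7 repeat one pattern per VLAN: create the broadcast domain, create a VLAN port on each node, then add both ports to the domain. A minimal Python sketch of that pattern follows. The function name `broadcast_domain_commands` and the table layout are assumptions; the IPspace, node names, interface group, VLAN IDs, and MTU values are the example values from the steps above.

```python
# Illustrative sketch: generate the ONTAP commands from Steps 3 through 7.
# Function and variable names are assumptions; values are from this guide.

IPSPACE = "AA02-OCP"
NODES = ["AA02-C800-01", "AA02-C800-02"]
IFGRP = "a0a"  # interface group that carries the tagged VLANs

# broadcast domain -> (VLAN ID, MTU)
BROADCAST_DOMAINS = {
    "OCP-MGMT": (1022, 1500),
    "OCP-iSCSI-A": (3012, 9000),
    "OCP-iSCSI-B": (3022, 9000),
    "OCP-NVMe-TCP-A": (3032, 9000),
    "OCP-NVMe-TCP-B": (3042, 9000),
    "OCP-NFS": (3052, 9000),
}


def broadcast_domain_commands(ipspace, nodes, ifgrp, domains):
    """Return the ONTAP command lines in the order used by the steps."""
    commands = []
    # Step 3: create every broadcast domain with its MTU.
    for name, (_vlan_id, mtu) in domains.items():
        commands.append(
            "network port broadcast-domain create "
            f"-broadcast-domain {name} -mtu {mtu} -ipspace {ipspace}"
        )
    # Steps 4 through 7: create VLAN ports and add them to the domain.
    for name, (vlan_id, _mtu) in domains.items():
        vlan_port = f"{ifgrp}-{vlan_id}"
        for node in nodes:
            commands.append(
                f"network port vlan create -node {node} "
                f"-vlan-name {vlan_port}"
            )
        ports = ",".join(f"{node}:{vlan_port}" for node in nodes)
        commands.append(
            "network port broadcast-domain add-ports "
            f"-ipspace {ipspace} -broadcast-domain {name} -ports {ports}"
        )
    return commands


if __name__ == "__main__":
    for line in broadcast_domain_commands(
        IPSPACE, NODES, IFGRP, BROADCAST_DOMAINS
    ):
        print(line)
```

If NVMe-TCP is not being configured, removing the two NVMe-TCP entries from the table drops the corresponding commands.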

Step 8. Create the SVM (Storage Virtual Machine) in the IPspace. Run the vserver create command:

vserver create -vserver OCP-Trident-SVM -ipspace AA02-OCP

Note: The SVM must be created in the IPspace. An SVM cannot be moved into an IPspace later.

Step 9. Add the required data protocols to the SVM and remove the unused data protocols from the SVM:

vserver add-protocols -vserver OCP-Trident-SVM -protocols iscsi,nfs,nvme

vserver remove-protocols -vserver OCP-Trident-SVM -protocols cifs,fcp,s3

Step 10. Add the two data aggregates to the OCP-Trident-SVM aggregate list and enable and run the NFS protocol in the SVM:

vserver modify -vserver OCP-Trident-SVM -aggr-list AA02_C800_01_SSD_CAP_1,AA02_C800_02_SSD_CAP_1

vserver nfs create -vserver OCP-Trident-SVM -udp disabled -v3 enabled -v4.1 enabled

Step 11. Create a load-sharing mirror of the SVM root volume. Create the mirror volume only on the node that does not host the SVM root volume:

volume show -vserver OCP-Trident-SVM # Identify the aggregate and node where the vserver root volume is located.

volume create -vserver OCP-Trident-SVM -volume OCP_Trident_SVM_root_lsm01 -aggregate AA02_C800_0<x>_SSD_CAP_1 -size 1GB -type DP # Create the mirror volume on the other node

Step 12. Create the 15min interval job schedule:

job schedule interval create -name 15min -minutes 15

Step 13. Create the mirroring relationship:

snapmirror create -source-path OCP-Trident-SVM:OCP_Trident_SVM_root -destination-path OCP-Trident-SVM:OCP_Trident_SVM_root_lsm01 -type LS -schedule 15min

Step 14. Initialize and verify the mirroring relationship:

snapmirror initialize-ls-set -source-path OCP-Trident-SVM:OCP_Trident_SVM_root

snapmirror show -vserver OCP-Trident-SVM

                                                                       Progress
Source            Destination Mirror  Relationship   Total             Last
Path        Type  Path        State   Status         Progress  Healthy Updated
----------- ---- ------------ ------- -------------- --------- ------- --------
AA02-C800://OCP-Trident-SVM/OCP_Trident_SVM_root
            LS   AA02-C800://OCP-Trident-SVM/OCP_Trident_SVM_root_lsm01
                              Snapmirrored
                                      Idle           -         true    -

Step 15. Create the iSCSI and NVMe services:

vserver iscsi create -vserver OCP-Trident-SVM -status-admin up

vserver iscsi show -vserver OCP-Trident-SVM

Vserver: OCP-Trident-SVM

Target Name: iqn.1992-08.com.netapp:sn.8442b0854ebb11efb1a7d039eab7b2f3:vs.5

Target Alias: OCP-Trident-SVM

Administrative Status: up

vserver nvme create -vserver OCP-Trident-SVM -status-admin up

vserver nvme show -vserver OCP-Trident-SVM

Vserver Name: OCP-Trident-SVM

Administrative Status: up

Discovery Subsystem NQN: nqn.1992-08.com.netapp:sn.8442b0854ebb11efb1a7d039eab7b2f3:discovery

Note: Make sure licenses are installed for all storage protocols used before creating the services.

Step 16. To create the login banner for the SVM, run the following command:

security login banner modify -vserver OCP-Trident-SVM -message "This OCP-Trident-SVM is reserved for authorized users only!"

Step 17. Remove insecure ciphers from the SVM. Ciphers with the suffix CBC are considered insecure. To remove the CBC ciphers, run the following NetApp ONTAP command:

security ssh remove -vserver OCP-Trident-SVM -ciphers aes256-cbc,aes192-cbc,aes128-cbc,3des-cbc

Step 18. Create a new rule for the SVM NFS subnet in the default export policy and assign the policy to the SVM’s root volume:

vserver export-policy rule create -vserver OCP-Trident-SVM -policyname default -ruleindex 1 -protocol nfs -clientmatch 192.168.52.0/24 -rorule sys -rwrule sys -superuser sys -allow-suid true

volume modify -vserver OCP-Trident-SVM -volume OCP_Trident_SVM_root -policy default

Step 19. Create and enable the audit log in the SVM:

volume create -vserver OCP-Trident-SVM -volume audit_log -aggregate AA02_C800_01_SSD_CAP_1 -size 50GB -state online -policy default -junction-path /audit_log -space-guarantee none -percent-snapshot-space 0

snapmirror update-ls-set -source-path OCP-Trident-SVM:OCP_Trident_SVM_root

vserver audit create -vserver OCP-Trident-SVM -destination /audit_log

vserver audit enable -vserver OCP-Trident-SVM

Step 20. Run the following commands to create NFS Logical Interfaces (LIFs):

network interface create -vserver OCP-Trident-SVM -lif nfs-lif-01 -service-policy default-data-files -home-node AA02-C800-01 -home-port a0a-3052 -address 192.168.52.51 -netmask 255.255.255.0 -status-admin up -failover-policy broadcast-domain-wide -auto-revert true

network interface create -vserver OCP-Trident-SVM -lif nfs-lif-02 -service-policy default-data-files -home-node AA02-C800-02 -home-port a0a-3052 -address 192.168.52.52 -netmask 255.255.255.0 -status-admin up -failover-policy broadcast-domain-wide -auto-revert true

Step 21. Run the following commands to create iSCSI LIFs:

network interface create -vserver OCP-Trident-SVM -lif iscsi-lif-01a -service-policy default-data-iscsi -home-node AA02-C800-01 -home-port a0a-3012 -address 192.168.12.51 -netmask 255.255.255.0 -status-admin up

network interface create -vserver OCP-Trident-SVM -lif iscsi-lif-01b -service-policy default-data-iscsi -home-node AA02-C800-01 -home-port a0a-3022 -address 192.168.22.51 -netmask 255.255.255.0 -status-admin up

network interface create -vserver OCP-Trident-SVM -lif iscsi-lif-02a -service-policy default-data-iscsi -home-node AA02-C800-02 -home-port a0a-3012 -address 192.168.12.52 -netmask 255.255.255.0 -status-admin up

network interface create -vserver OCP-Trident-SVM -lif iscsi-lif-02b -service-policy default-data-iscsi -home-node AA02-C800-02 -home-port a0a-3022 -address 192.168.22.52 -netmask 255.255.255.0 -status-admin up

Step 22. Run the following commands to create NVMe-TCP LIFs:

network interface create -vserver OCP-Trident-SVM -lif nvme-tcp-lif-01a -service-policy default-data-nvme-tcp -home-node AA02-C800-01 -home-port a0a-3032 -address 192.168.32.51 -netmask 255.255.255.0 -status-admin up

network interface create -vserver OCP-Trident-SVM -lif nvme-tcp-lif-01b -service-policy default-data-nvme-tcp -home-node AA02-C800-01 -home-port a0a-3042 -address 192.168.42.51 -netmask 255.255.255.0 -status-admin up

network interface create -vserver OCP-Trident-SVM -lif nvme-tcp-lif-02a -service-policy default-data-nvme-tcp -home-node AA02-C800-02 -home-port a0a-3032 -address 192.168.32.52 -netmask 255.255.255.0 -status-admin up

network interface create -vserver OCP-Trident-SVM -lif nvme-tcp-lif-02b -service-policy default-data-nvme-tcp -home-node AA02-C800-02 -home-port a0a-3042 -address 192.168.42.52 -netmask 255.255.255.0 -status-admin up

Step 23. Run the following command to create the SVM-MGMT LIF:

network interface create -vserver OCP-Trident-SVM -lif svm-mgmt -service-policy default-management -home-node AA02-C800-01 -home-port a0a-1022 -address 10.102.2.50 -netmask 255.255.255.0 -status-admin up -failover-policy broadcast-domain-wide -auto-revert true

Step 24. Run the following command to verify LIFs:

network interface show -vserver OCP-Trident-SVM

            Logical          Status     Network            Current       Current  Is
Vserver     Interface        Admin/Oper Address/Mask       Node          Port     Home
----------- ---------------- ---------- ------------------ ------------- -------- ----
OCP-Trident-SVM
            iscsi-lif-01a    up/up      192.168.12.51/24   AA02-C800-01  a0a-3012 true
            iscsi-lif-01b    up/up      192.168.22.51/24   AA02-C800-01  a0a-3022 true
            iscsi-lif-02a    up/up      192.168.12.52/24   AA02-C800-02  a0a-3012 true
            iscsi-lif-02b    up/up      192.168.22.52/24   AA02-C800-02  a0a-3022 true
            nfs-lif-01       up/up      192.168.52.51/24   AA02-C800-01  a0a-3052 true
            nfs-lif-02       up/up      192.168.52.52/24   AA02-C800-02  a0a-3052 true
            nvme-tcp-lif-01a up/up      192.168.32.51/24   AA02-C800-01  a0a-3032 true
            nvme-tcp-lif-01b up/up      192.168.42.51/24   AA02-C800-01  a0a-3042 true
            nvme-tcp-lif-02a up/up      192.168.32.52/24   AA02-C800-02  a0a-3032 true
            nvme-tcp-lif-02b up/up      192.168.42.52/24   AA02-C800-02  a0a-3042 true
            svm-mgmt         up/up      10.102.2.50/24     AA02-C800-01  a0a-1022 true
11 entries were displayed.

Step 25. Create a default route that enables the SVM management interface to reach the outside world:

network route create -vserver OCP-Trident-SVM -destination 0.0.0.0/0 -gateway 10.102.2.254

Step 26. Set a password for the SVM vsadmin user and unlock the user:

security login password -username vsadmin -vserver OCP-Trident-SVM

Enter a new password:

Enter it again:

security login unlock -username vsadmin -vserver OCP-Trident-SVM

Step 27. Add the OCP DNS servers to the SVM:

dns create -vserver OCP-Trident-SVM -domains ocp.flexpodb4.cisco.com -name-servers 10.102.2.249,10.102.2.250
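The iSCSI and NVMe-TCP LIFs created in Steps 21 and 22 follow a regular plan: one LIF per node per fabric, with host addresses .51 and .52 in each storage subnet. The following Python sketch shows one way that plan could be expanded into commands. The function name `san_lif_commands` and the plan layout are assumptions, and the subnets are inferred from the example addresses and the 255.255.255.0 netmask used above.

```python
# Illustrative sketch: expand the SAN LIF address plan from Steps 21 and 22
# into ONTAP commands. Names and data layout are assumptions.
import ipaddress

SVM = "OCP-Trident-SVM"
NODES = ["AA02-C800-01", "AA02-C800-02"]

# (LIF name prefix, fabric suffix, service policy, VLAN ID, subnet)
LIF_PLAN = [
    ("iscsi-lif", "a", "default-data-iscsi", 3012, "192.168.12.0/24"),
    ("iscsi-lif", "b", "default-data-iscsi", 3022, "192.168.22.0/24"),
    ("nvme-tcp-lif", "a", "default-data-nvme-tcp", 3032, "192.168.32.0/24"),
    ("nvme-tcp-lif", "b", "default-data-nvme-tcp", 3042, "192.168.42.0/24"),
]


def san_lif_commands(svm, nodes, plan, first_host=51):
    """Return one 'network interface create' command per node and fabric."""
    commands = []
    for prefix, fabric, policy, vlan_id, subnet in plan:
        network = ipaddress.ip_network(subnet)
        for index, node in enumerate(nodes):
            # Node 1 gets host .51, node 2 gets host .52, and so on.
            address = network.network_address + first_host + index
            if address not in network:
                raise ValueError(f"{address} is outside {network}")
            lif = f"{prefix}-{index + 1:02d}{fabric}"
            commands.append(
                f"network interface create -vserver {svm} -lif {lif} "
                f"-service-policy {policy} -home-node {node} "
                f"-home-port a0a-{vlan_id} -address {address} "
                f"-netmask {network.netmask} -status-admin up"
            )
    return commands


if __name__ == "__main__":
    for line in san_lif_commands(SVM, NODES, LIF_PLAN):
        print(line)
```

The sketch emits the LIFs grouped by fabric rather than by node, so the order differs from the steps above, but the set of commands is the same.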

Cisco Intersight Managed Mode Configuration

This chapter contains the following:

● Set up Cisco Intersight Resource Group

● Set up Cisco Intersight Organization

● Add OCP VLANs to VLAN Policy

● Cisco UCS IMM Manual Configuration

● Create Master Node Server Profile Template

● Configure Boot Order Policy for M2

● Configure Firmware Policy (optional)

● Configure Virtual Media Policy

● Configure Cisco IMC Access Policy

● Configure IPMI Over LAN Policy

● Configure Virtual KVM Policy

● Storage Configuration (optional)

● Create MAC Address Pool for Fabric A and B

● Create Ethernet Network Group Policy

● Create Ethernet Network Control Policy

● Create Ethernet Adapter Policy

● Add vNIC(s) to LAN Connectivity Policy

● Complete the Master Server Profile Template

● Build the OCP Worker LAN Connectivity Policy

● Create the OCP Worker Server Profile Template

The Cisco Intersight platform is a management solution delivered as a service with embedded analytics for Cisco and third-party IT infrastructures. Cisco Intersight Managed Mode (also referred to as Cisco IMM or Intersight Managed Mode) is an architecture that manages Cisco Unified Computing System (Cisco UCS) Fabric Interconnect–attached systems through a Redfish-based standard model. Cisco Intersight Managed Mode standardizes both policy and operation management for the Cisco UCS X210c M7 compute nodes used in this deployment guide.

Cisco UCS B-Series M5, M6, M7 blades and C-Series M6 and M7 servers, connected and managed through Cisco UCS 6400 & 6500 Fabric Interconnects, are also supported by IMM. For a complete list of supported platforms, go to: https://www.cisco.com/c/en/us/td/docs/unified_computing/Intersight/b_Intersight_Managed_Mode_Configuration_Guide/b_intersight_managed_mode_guide_chapter_01010.html

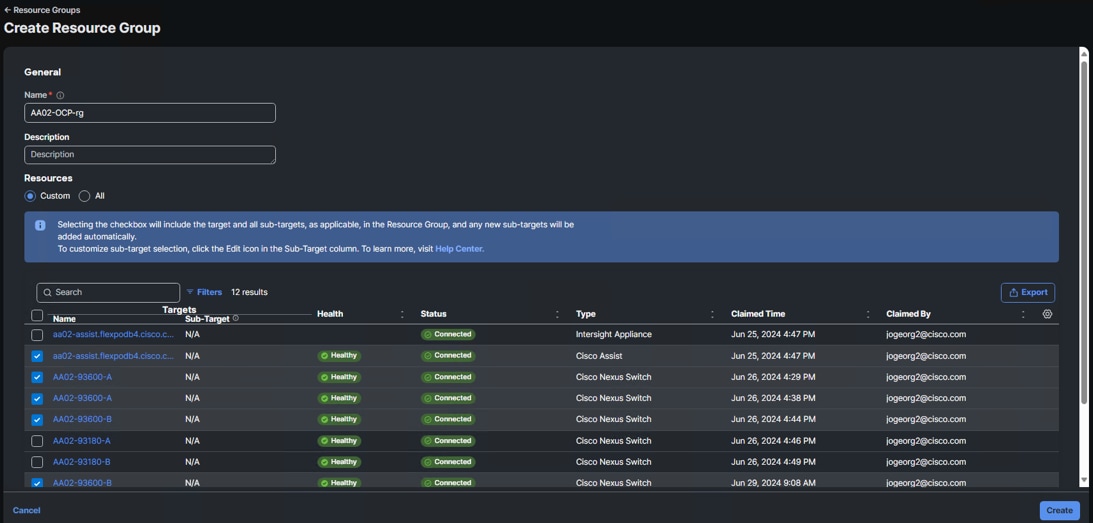

Procedure 1. Set up Cisco Intersight Resource Group

In this procedure, a Cisco Intersight resource group for the Red Hat OCP tenant is created where resources such as targets will be logically grouped. In this deployment, a single resource group is created to host all the resources, but you can choose to create multiple resource groups for granular control of the resources.

Step 1. Log into Cisco Intersight.

Step 2. Select System. On the left, click Settings (the gear icon).

Step 3. Click Resource Groups in the middle panel.

Step 4. Click + Create Resource Group in the top-right corner.

Step 5. Provide a name for the Resource Group (for example, AA02-OCP-rg).

Step 6. Under Resources, select Custom.

Step 7. Select all resources that are connected to this Red Hat OCP FlexPod.

Step 8. Click Create.

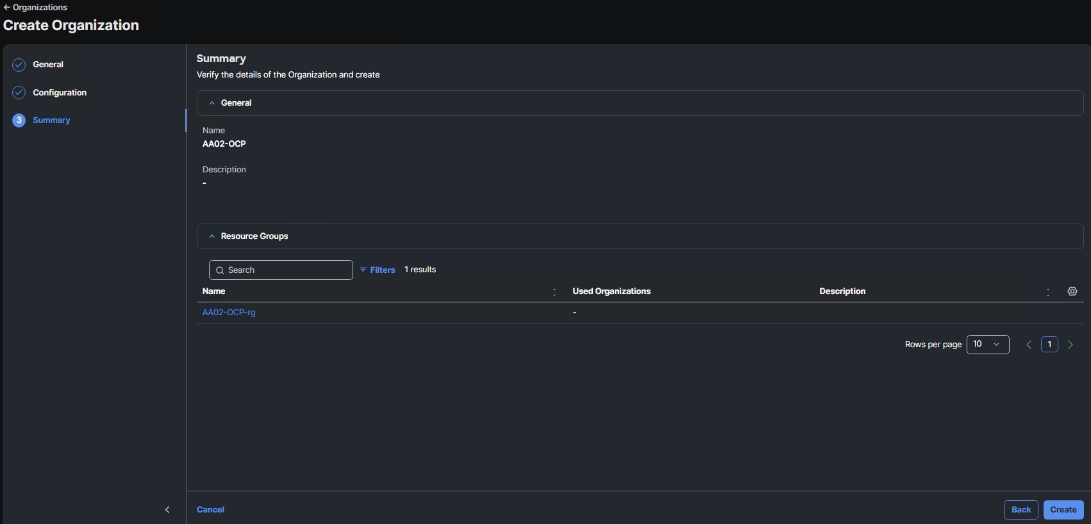

Procedure 2. Set Up Cisco Intersight Organization

In this procedure, an Intersight organization for the Red Hat OCP tenant is created where all Cisco Intersight Managed Mode configurations including policies are defined.

Step 1. Log into the Cisco Intersight portal.

Step 2. Select System. On the left, click Settings (the gear icon).

Step 3. Click Organizations in the middle panel.

Step 4. Click + Create Organization in the top-right corner.

Step 5. Provide a name for the organization (for example, AA02-OCP), optionally select Share Resources with Other Organizations, and click Next.

Step 6. Select the Resource Group created in the previous procedure (for example, AA02-OCP-rg) and click Next.

Step 7. Click Create.

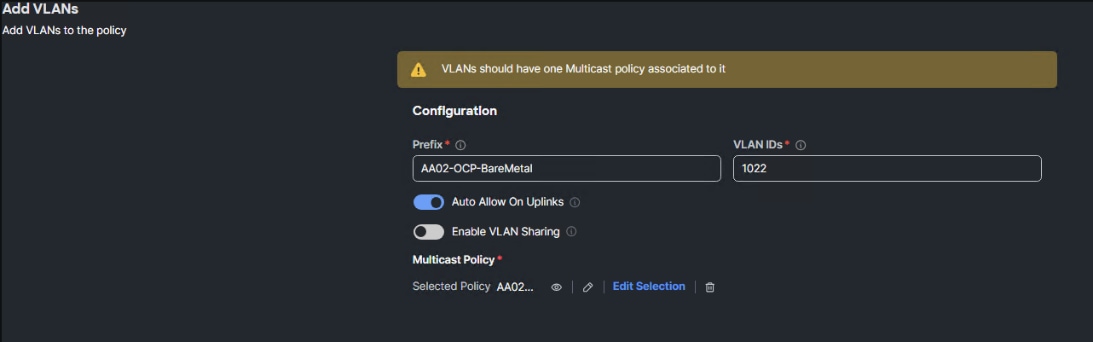

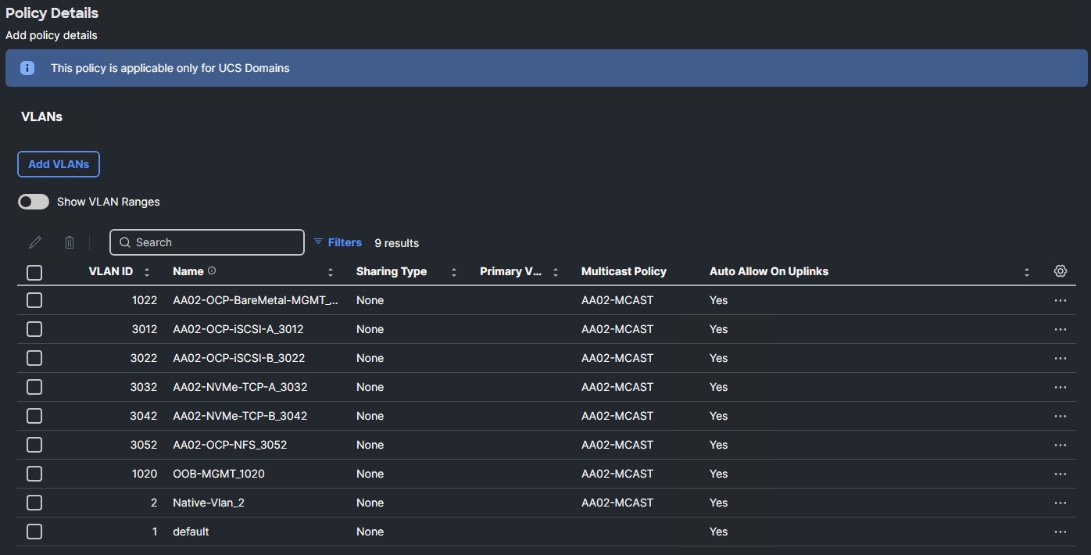

Procedure 3. Add OCP VLANs to VLAN Policy

Step 1. Log into the Cisco Intersight portal.

Step 2. Select Infrastructure Service. On the left, select Profiles then under Profiles select UCS Domain Profiles.

Step 3. To the right of the UCS Domain Profile used for the OCP tenant, click … and select Edit.

Step 4. Click Next to go to UCS Domain Assignment.

Step 5. Click Next to go to VLAN & VSAN Configuration.

Step 6. Under VLAN & VSAN Configuration, click the pencil icon to the left of the VLAN Policy to Edit the policy.

Step 7. Click Next to go to Policy Details.

Step 8. To add the OCP-BareMetal VLAN, click Add VLANs.

Step 9. For the Prefix, enter the VLAN name. For the VLAN ID, enter the VLAN ID. Leave Auto Allow on Uplinks enabled and Enable VLAN Sharing disabled.

Step 10. Under Multicast Policy, click Select Policy and select the already configured Multicast Policy (for example, AA02-MCAST).

Step 11. Click Add to add the VLAN to the policy.

Step 12. Repeat steps 8 through 11 to add all the VLANs in Table 1 to the VLAN Policy.

Step 13. Click Save to save the VLAN Policy.

Step 14. Click Next three times to get to the UCS Domain Profile Summary page.

Step 15. Click Deploy and then Deploy again to deploy the UCS Domain Profile.

Cisco UCS IMM Manual Configuration

Configure Server Profile Template

In the Cisco Intersight platform, a server profile enables resource management by simplifying policy alignment and server configuration. Server profiles are derived from a server profile template. A server profile template and its associated policies can be created using the server profile template wizard. After creating the server profile template, customers can derive multiple consistent server profiles from the template.

The server profile templates captured in this deployment guide support Cisco UCS X210c M7 compute nodes with 5th Generation VICs and can be modified to support other Cisco UCS blades and rack mount servers.

vNIC and vHBA Placement for Server Profile Template

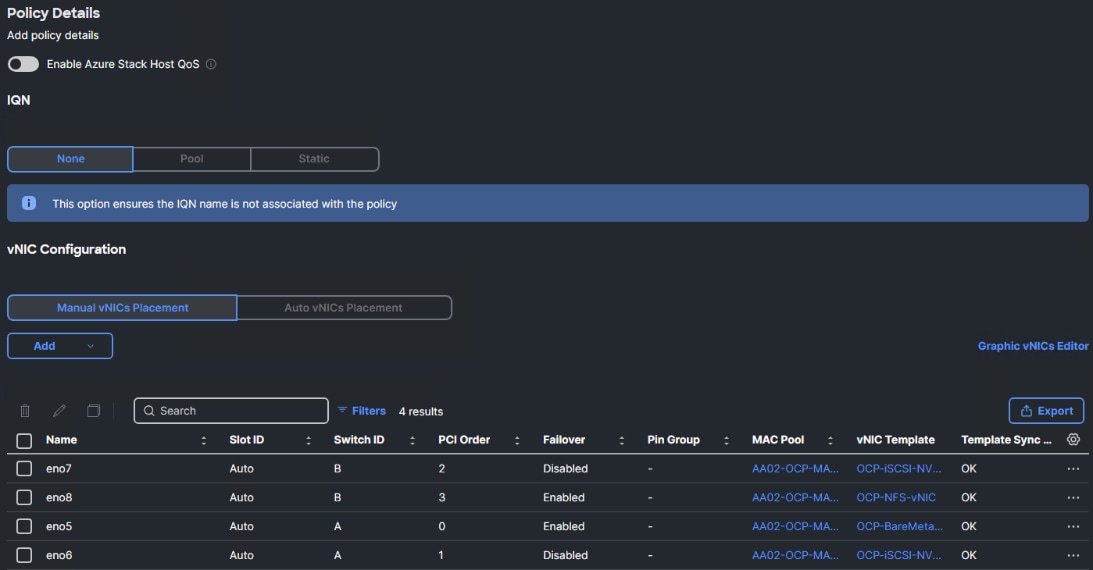

In this deployment, separate server profile templates are created for OCP Worker and Master Nodes where Worker Nodes have storage network interfaces to support workloads, but Master Nodes do not. The vNIC layout is explained below. While most of the policies are common across various templates, the LAN connectivity policies are unique and will use the information in the following tables:

● One vNIC is configured for OCP Master Nodes. This vNIC is manually placed as listed in Table 4.

● Four vNICs are configured for OCP Worker Nodes. These vNICs are manually placed as listed in Table 5. NVMe-TCP VLAN interfaces can be added as tagged VLANs to the iSCSI vNICs when NVMe-TCP is being used.

Table 4. vNIC placement for OCP Master Nodes

| vNIC/vHBA Name | Switch ID | PCI Order | Fabric Failover | Native VLAN | Allowed VLANs |
| --- | --- | --- | --- | --- | --- |
| eno5 | A | 0 | Y | OCP-BareMetal | OCP-BareMetal-MGMT |

Table 5. vNIC placement for OCP Worker Nodes

| vNIC/vHBA Name | Switch ID | PCI Order | Fabric Failover | Native VLAN | Allowed VLANs |
| --- | --- | --- | --- | --- | --- |
| eno5 | A | 0 | Y | OCP-BareMetal | OCP-BareMetal-MGMT |
| eno6 | A | 1 | N | OCP-iSCSI-A | OCP-iSCSI-A, OCP-NVMe-TCP-A |
| eno7 | B | 2 | N | OCP-iSCSI-B | OCP-iSCSI-B, OCP-NVMe-TCP-B |
| eno8 | A | 3 | Y | OCP-NFS | OCP-NFS |

Note: OCP-NVMe-TCP-A will be added to eno6 as a VLAN interface. OCP-NVMe-TCP-B will be added to eno7 as a VLAN interface.
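The worker vNIC layout in Table 5 can also be captured as data and checked before it is entered into a LAN Connectivity Policy. The sketch below is a hedged illustration, not part of the validated procedure: the `Vnic` class and the `check_placement` function are hypothetical, and the two checks (contiguous PCI order, and vNICs without fabric failover spread across both fabrics) are assumptions about what is worth validating.

```python
# Illustrative sketch: model the Table 5 vNIC layout and run basic checks.
# The class, function, and checks are assumptions, not an Intersight API.
from dataclasses import dataclass


@dataclass(frozen=True)
class Vnic:
    name: str
    switch_id: str          # "A" or "B"
    pci_order: int
    fabric_failover: bool
    native_vlan: str
    allowed_vlans: tuple


# Values copied from Table 5
WORKER_VNICS = [
    Vnic("eno5", "A", 0, True, "OCP-BareMetal", ("OCP-BareMetal-MGMT",)),
    Vnic("eno6", "A", 1, False, "OCP-iSCSI-A",
         ("OCP-iSCSI-A", "OCP-NVMe-TCP-A")),
    Vnic("eno7", "B", 2, False, "OCP-iSCSI-B",
         ("OCP-iSCSI-B", "OCP-NVMe-TCP-B")),
    Vnic("eno8", "A", 3, True, "OCP-NFS", ("OCP-NFS",)),
]


def check_placement(vnics):
    """Return a list of problems found; an empty list means none found."""
    problems = []
    orders = sorted(v.pci_order for v in vnics)
    if orders != list(range(len(vnics))):
        problems.append(f"PCI order is not 0..{len(vnics) - 1}: {orders}")
    # vNICs without fabric failover rely on one vNIC per fabric for
    # path redundancy, so they should cover both A and B.
    fabrics = {v.switch_id for v in vnics if not v.fabric_failover}
    if fabrics and fabrics != {"A", "B"}:
        problems.append(
            f"vNICs without failover use only fabric(s) {sorted(fabrics)}"
        )
    return problems


if __name__ == "__main__":
    print(check_placement(WORKER_VNICS) or "no problems found")
```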

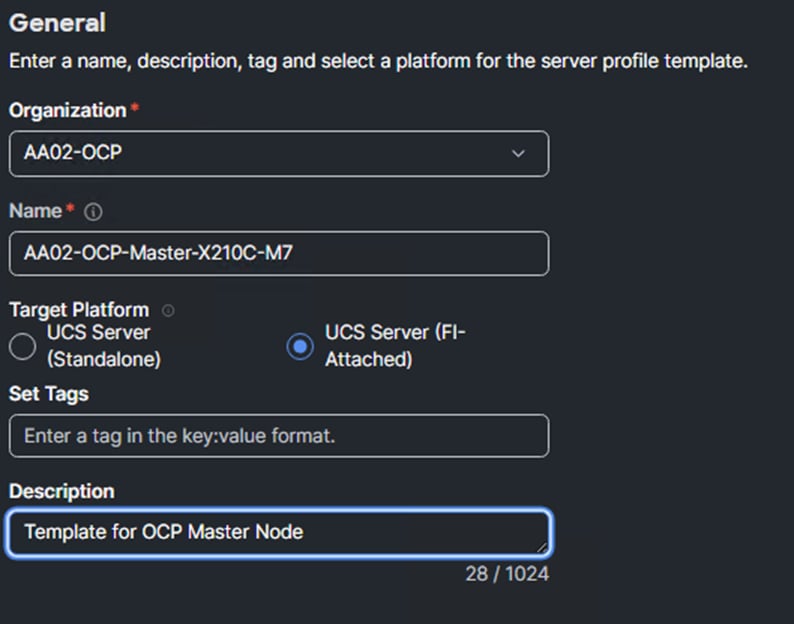

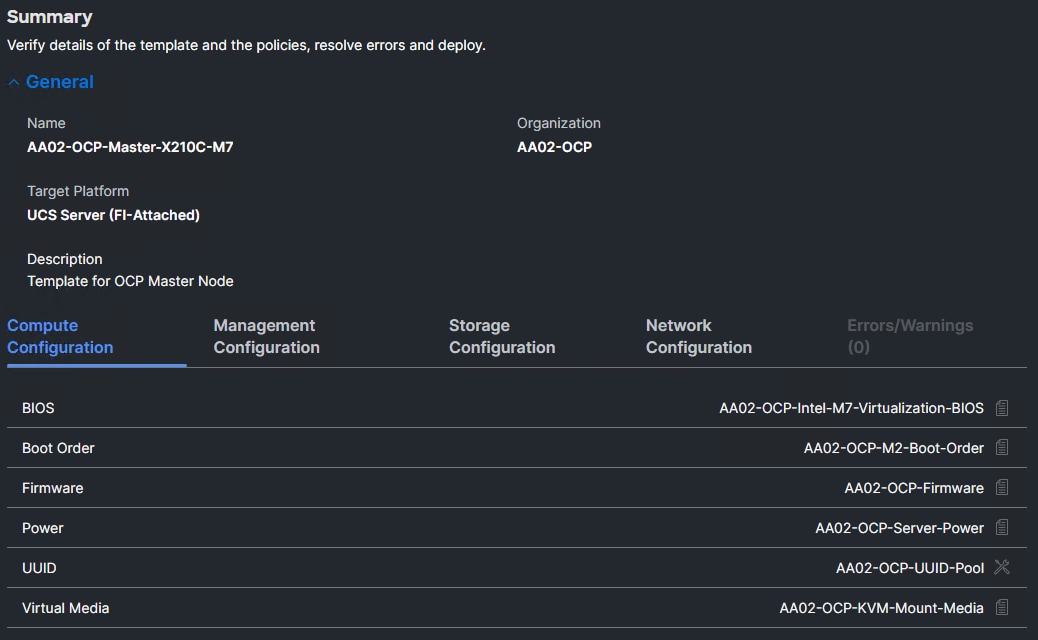

Procedure 1. Create Master Node Server Profile Template

A Server Profile Template will first be created for the OCP Master Nodes. This procedure will assume an X210C M7 is being used but can be modified for other server types.

Step 1. Log into Cisco Intersight.

Step 2. Go to Infrastructure Service > Configure > Templates and, in the main window, select UCS Server Profile Templates. Click Create UCS Server Profile Template.

Step 3. Select the organization from the drop-down list (for example, AA02-OCP).

Step 4. Provide a name for the server profile template (for example, AA02-OCP-Master-X210C-M7).

Step 5. Select UCS Server (FI-Attached).

Step 6. Provide an optional description.

Step 7. Click Next.

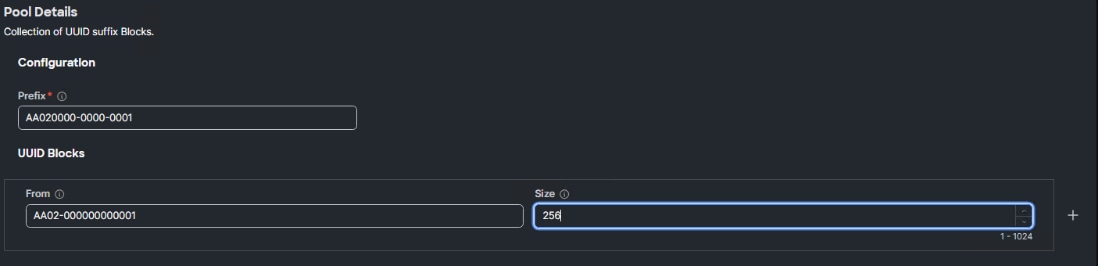

Procedure 2. Compute Configuration – Configure UUID Pool

Step 1. Click Select Pool under UUID Pool and then in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02) and provide a name for the UUID Pool (for example, AA02-OCP-UUID-Pool).

Step 3. Provide an optional Description and click Next.

Step 4. Provide a unique UUID Prefix (for example, AA020000-0000-0001).

Step 5. Add a UUID block.

Step 6. Click Create.

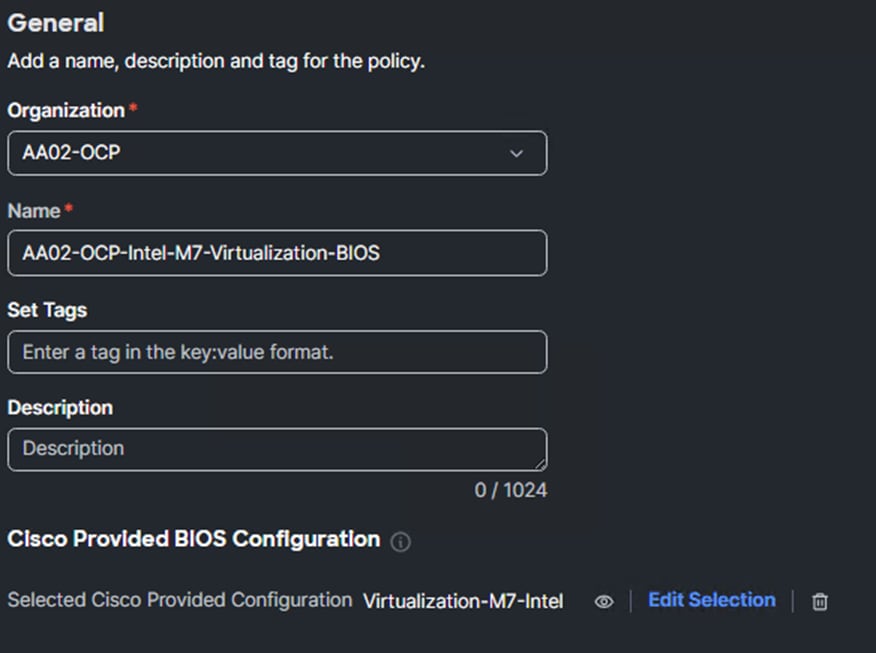
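A UUID in the pool is the UUID Prefix followed by a suffix taken from the UUID block. The following Python sketch shows that arithmetic under stated assumptions: the prefix is the example from this procedure, while the suffix start `0000-000000000001`, the block size, and the function name `uuid_block` are hypothetical values chosen for illustration.

```python
# Illustrative sketch: list the UUIDs that a prefix plus a suffix block
# would produce. Suffix start and size are hypothetical values.
import uuid


def uuid_block(prefix, suffix_start, size):
    """Return 'size' UUID strings starting at prefix + suffix_start."""
    start = int(suffix_start.replace("-", ""), 16)
    result = []
    for offset in range(size):
        suffix = f"{start + offset:016X}"
        text = f"{prefix}-{suffix[:4]}-{suffix[4:]}"
        # uuid.UUID raises ValueError if the string is not a valid UUID.
        result.append(str(uuid.UUID(text)).upper())
    return result


if __name__ == "__main__":
    for value in uuid_block("AA020000-0000-0001", "0000-000000000001", 4):
        print(value)
```

Listing the block this way makes it easier to confirm that the prefix does not overlap with UUID pools used elsewhere in the environment.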

Procedure 3. Configure BIOS Policy

Step 1. Click Select Policy next to BIOS and in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-Intel-M7-Virtualization-BIOS).

Step 3. Enter an optional Description.

Step 4. Click Select Cisco Provided Configuration. In the Search box, type Vir. Select Virtualization-M7-Intel or the appropriate Cisco Provided Configuration for your platform.

Step 5. Click Next.

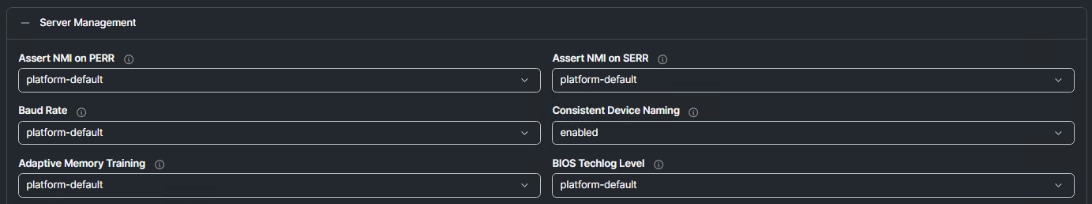

Step 6. On the Policy Details screen, expand Server Management. From the drop-down list select enabled for the Consistent Device Naming BIOS token.

Note: The BIOS Policy settings specified here are from the Performance Tuning Best Practices Guide for Cisco UCS M7 Platforms - Cisco with the Virtualization workload. For other platforms, the appropriate documents are listed below:

● Performance Tuning Guide for Cisco UCS M6 Servers - Cisco

● Performance Tuning Guide for Cisco UCS M5 Servers White Paper - Cisco

Step 7. Click Create to create the BIOS Policy.

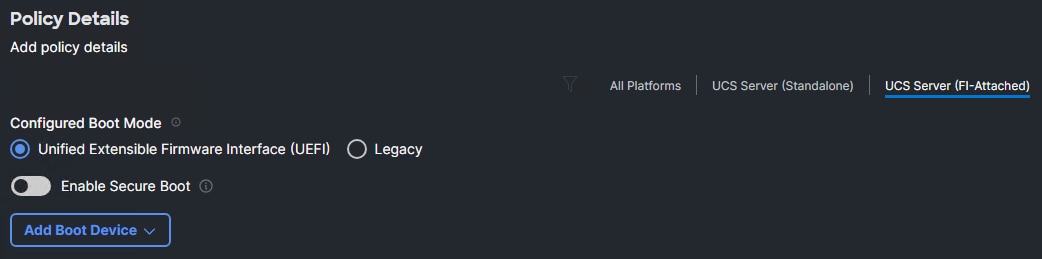

Procedure 4. Configure Boot Order Policy for M2

Step 1. Click Select Policy next to Boot Order and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02) and provide a name for the policy (for example, AA02-OCP-M2-Boot-Order).

Step 3. Click Next.

Step 4. For Configured Boot Mode, select Unified Extensible Firmware Interface (UEFI).

Step 5. Do not turn on Enable Secure Boot.

Note: It is critical to not enable UEFI Secure Boot. If Secure Boot is enabled, the NVIDIA GPU Operator GPU driver will fail to initialize.

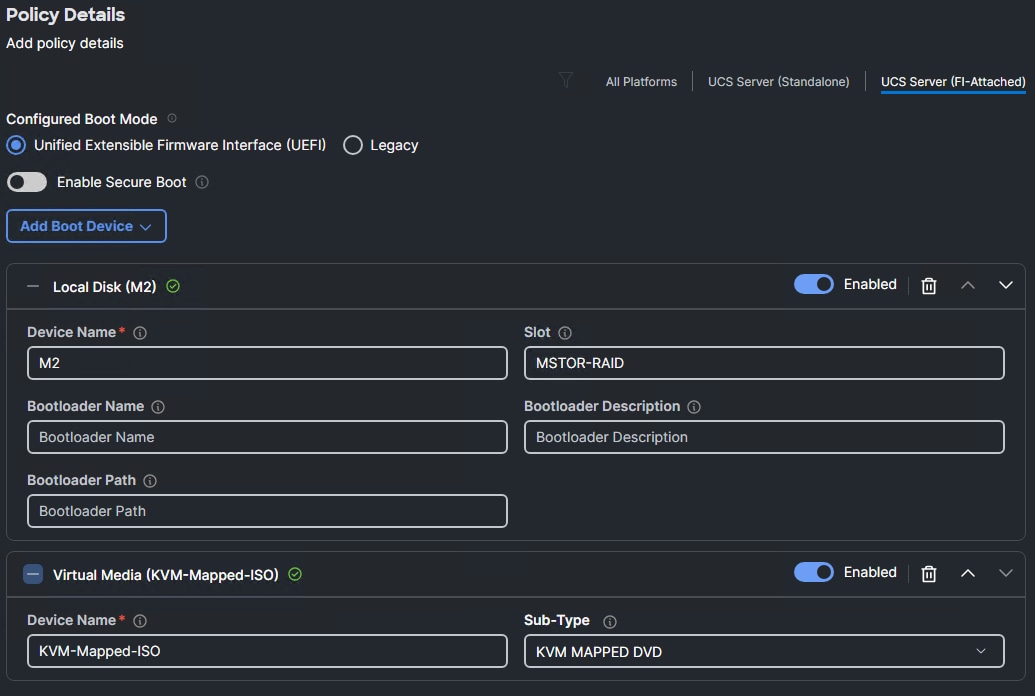

Step 6. Click the Add Boot Device drop-down list and select Virtual Media.

Note: You are entering the Boot Devices in reverse order here to avoid having to move them in the list later.

Step 7. Provide a Device Name (for example, KVM-Mapped-ISO) and then, for the subtype, select KVM Mapped DVD.

Step 8. Click the Add Boot Device drop-down list and select Local Disk.

Step 9. Provide a Device Name (for example, M2) and MSTOR-RAID for the Slot.

Step 10. Verify the order of the boot devices and adjust the boot order as necessary using arrows next to the Delete button.

Step 11. Click Create.

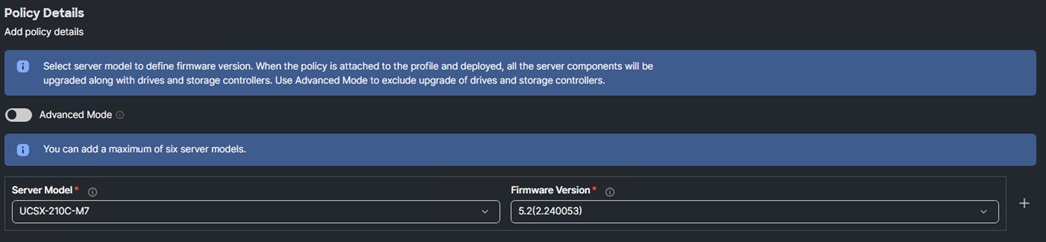

Procedure 5. Configure Firmware Policy (optional)

Since Red Hat OCP recommends using homogeneous server types for Masters (and Workers), a Firmware Policy can ensure that all servers are running the appropriate firmware when the Server Profile is deployed.

Step 1. Click Select Policy next to Firmware and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-Firmware). Click Next.

Step 3. Select the Server Model (for example, UCSX-210C-M7) and the latest 5.2(2) firmware version.

Step 4. Optionally, other server models can be added using the plus sign.

Step 5. Click Create to create the Firmware Policy.

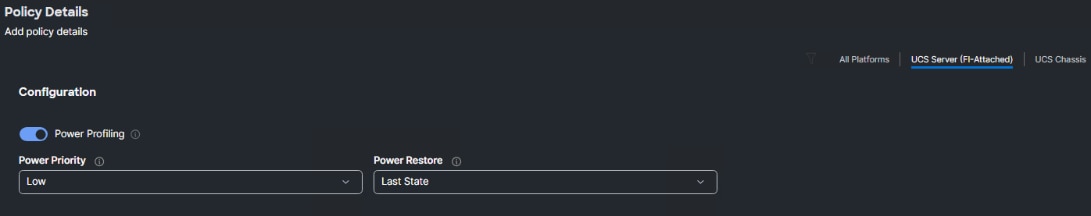

Procedure 6. Configure Power Policy

A Power Policy can be defined and attached to blade servers (Cisco UCS X- and B-Series).

Step 1. Click Select Policy next to Power and in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-Server-Power). Click Next.

Step 3. Make sure UCS Server (FI-Attached) is selected and adjust any of the parameters according to your organizational policies.

Step 4. Click Create to create the Power Policy.

Step 5. Optionally, if you are using Cisco UCS C-Series servers, a Thermal Policy can be created and attached to the profile.

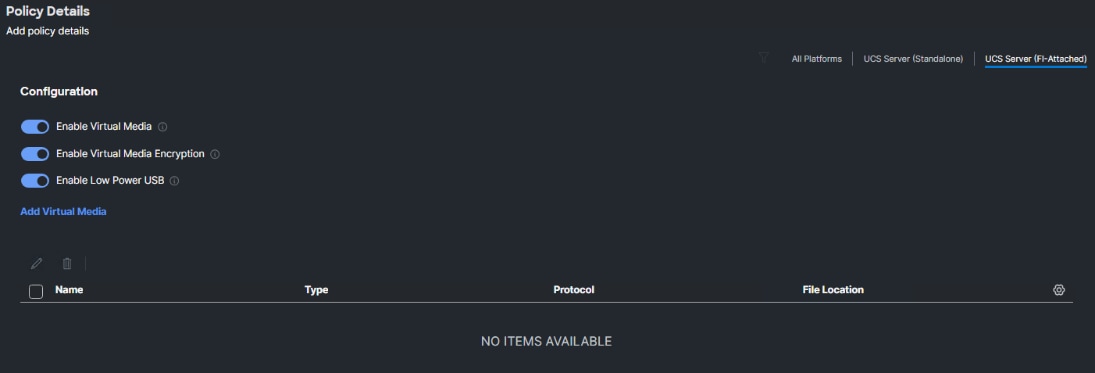

Procedure 7. Configure Virtual Media Policy

Step 1. Click Select Policy next to Virtual Media and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-KVM-Mount-Media). Click Next.

Step 3. Ensure that Enable Virtual Media, Enable Virtual Media Encryption, and Enable Low Power USB are turned on.

Step 4. Do not add virtual media at this time. The policy can be modified later and used to map an ISO for a CIMC Mapped DVD.

Step 5. Click Create to create the Virtual Media Policy.

Step 6. Click Next to move to Management Configuration.

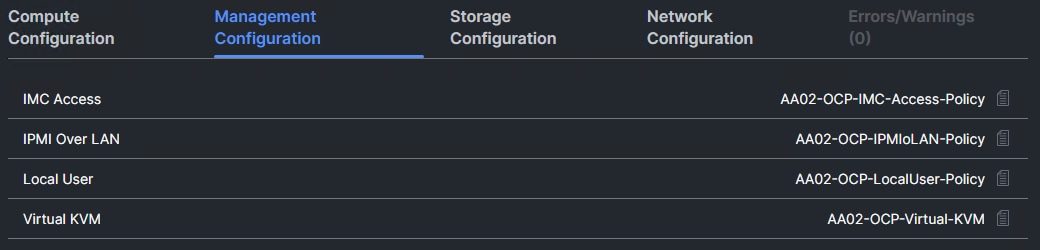

The following policies are added to the management configuration:

● IMC Access to define the pool of IP addresses for compute node KVM access

● IPMI Over LAN to allow Intersight to manage IPMI messages

● Local User to provide a local administrator account for KVM access

● Virtual KVM to allow the Tunneled KVM

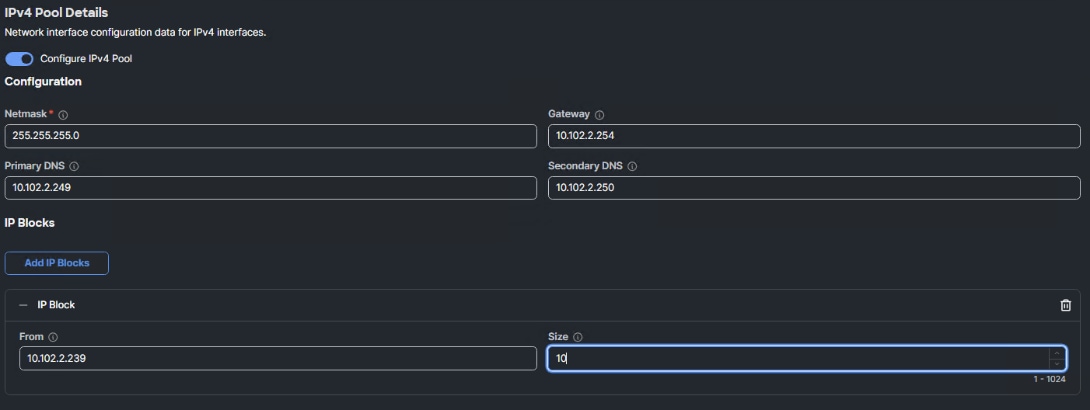

Procedure 1. Configure Cisco IMC Access Policy

Step 1. Click Select Policy next to IMC Access and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-IMC-Access-Policy).

Step 3. Click Next.

Note: Since certain features are not yet enabled for Out-of-Band Configuration (accessed via the Fabric Interconnect mgmt0 ports), we are bringing in the OOB-MGMT VLAN through the Fabric Interconnect Uplinks and mapping it as the In-Band Configuration VLAN.

Step 4. Ensure UCS Server (FI-Attached) is selected.

Step 5. Enable In-Band Configuration. Enter the OCP-BareMetal VLAN ID (for example, 1022) and select “IPv4 address configuration.”

Step 6. Under IP Pool, click Select IP Pool and then, in the pane on the right, click Create New.

Step 7. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the pool (for example, AA02-OCP-BareMetal-IP-Pool). Click Next.

Step 8. Ensure Configure IPv4 Pool is selected and provide the information to define a unique pool for KVM IP address assignment including an IP Block (added by clicking Add IP Blocks).

Note: You will need the IP addresses of the OCP DNS servers.

Note: The management IP pool subnet should be accessible from the host that is trying to open the KVM connection. In the example shown here, the hosts trying to open a KVM connection would need to be able to route to the 10.102.2.0/24 subnet.

Step 9. Click Next.

Step 10. Deselect Configure IPv6 Pool.

Step 11. Click Create to finish configuring the IP address pool.

Step 12. Click Create to finish configuring the IMC access policy.

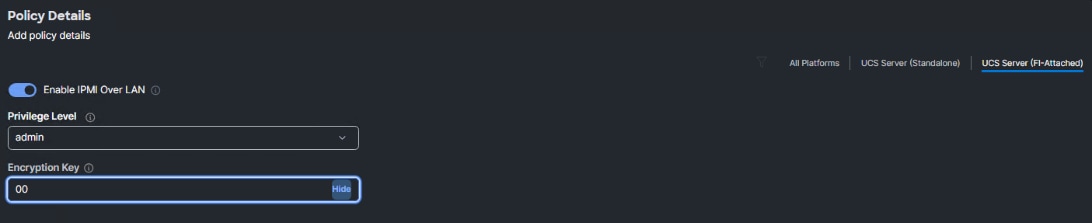
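Before committing the KVM IP block, it can help to confirm that every address falls inside the management subnet and does not collide with addresses already assigned in this guide. The Python sketch below is a minimal illustration: the subnet and the reserved addresses (SVM management LIF, DNS servers, gateway) are the example values used earlier in this document, while the block start `10.102.2.211`, its size, and the function name `check_ip_block` are hypothetical.

```python
# Illustrative sketch: sanity-check a KVM IP block against the management
# subnet. The block start and size used below are hypothetical.
import ipaddress

SUBNET = ipaddress.ip_network("10.102.2.0/24")

# Addresses already used in this guide on the same subnet
RESERVED = {
    ipaddress.ip_address("10.102.2.50"),   # SVM management LIF
    ipaddress.ip_address("10.102.2.249"),  # DNS server
    ipaddress.ip_address("10.102.2.250"),  # DNS server
    ipaddress.ip_address("10.102.2.254"),  # default gateway
}


def check_ip_block(start, size, subnet=SUBNET, reserved=RESERVED):
    """Return a list of problems found in the block; empty means none."""
    first = ipaddress.ip_address(start)
    usable = set(subnet.hosts())
    problems = []
    for offset in range(size):
        address = first + offset
        if address not in usable:
            problems.append(f"{address} is not a usable host in {subnet}")
        elif address in reserved:
            problems.append(f"{address} is already in use")
    return problems


if __name__ == "__main__":
    print(check_ip_block("10.102.2.211", 16) or "block looks usable")
```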

Procedure 2. Configure IPMI Over LAN Policy

The IPMI Over LAN Policy can be used to allow both IPMI and Redfish connectivity to Cisco UCS Servers.

Step 1. Click Select Policy next to IPMI Over LAN and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02) and provide a name for the policy (for example, AA02-OCP-IPMIoLAN-Policy). Click Next.

Step 3. Ensure UCS Server (FI-Attached) is selected.

Step 4. Ensure Enable IPMI Over LAN is selected.

Step 5. From the Privilege Level drop-down list, select admin.

Step 6. For Encryption Key, enter 00 to disable encryption.

Step 7. Click Create to create the IPMI Over LAN policy.

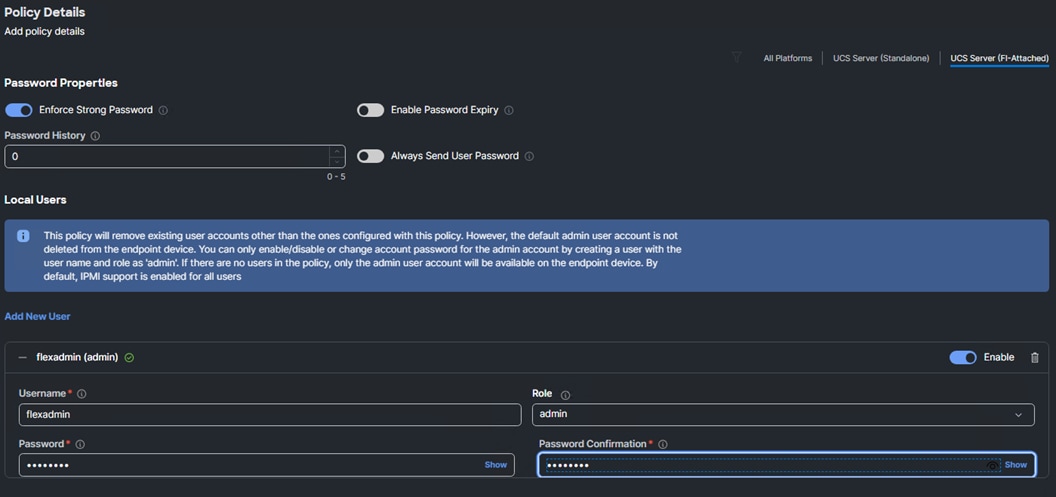

Procedure 3. Configure Local User Policy

Step 1. Click Select Policy next to Local User and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-LocalUser-Policy). Click Next.

Step 3. Verify that UCS Server (FI-Attached) is selected.

Step 4. Verify that Enforce Strong Password is selected.

Step 5. Enter 0 under Password History.

Step 6. Click Add New User.

Step 7. Provide the username (for example, flexadmin), select a role (for example, admin), and provide a password and password confirmation.

Note: The username and password combination defined here provides an alternate way to log in to KVMs and can also be used for IPMI.

Step 8. Click Create to complete configuring the Local User policy.

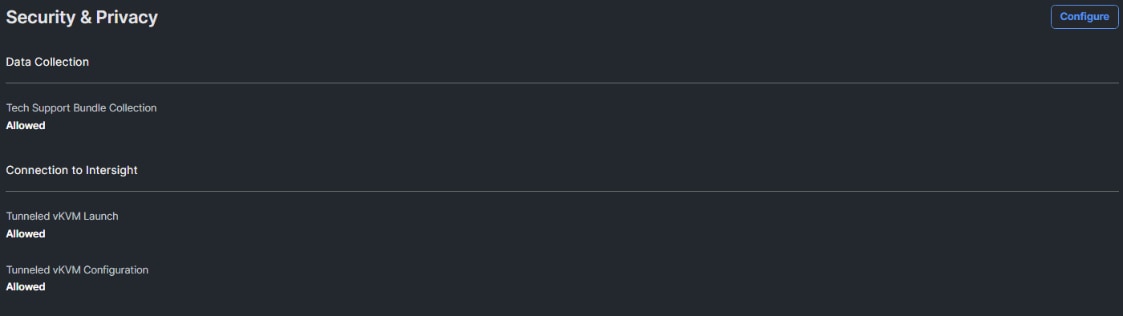

Procedure 4. Configure Virtual KVM Policy

Step 1. Click Select Policy next to Virtual KVM and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-Virtual-KVM). Click Next.

Step 3. Verify that UCS Server (FI-Attached) is selected.

Step 4. Turn on Allow Tunneled vKVM.

Step 5. Click Create.

Note: To fully enable Tunneled KVM, after the Server Profile Template has been created, go to System > Settings > Security and Privacy. If Allow Tunneled vKVM Launch and Allow Tunneled vKVM Configuration are not already turned on, click Configure and turn on both settings.

Step 6. Click Next to go to Storage Configuration.

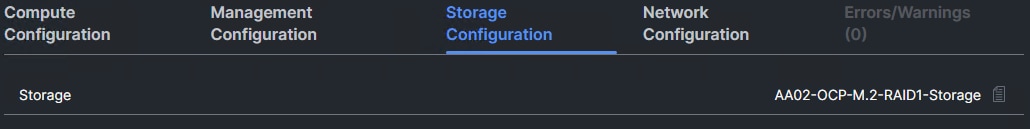

Storage Configuration

Procedure 1. Storage Configuration (optional)

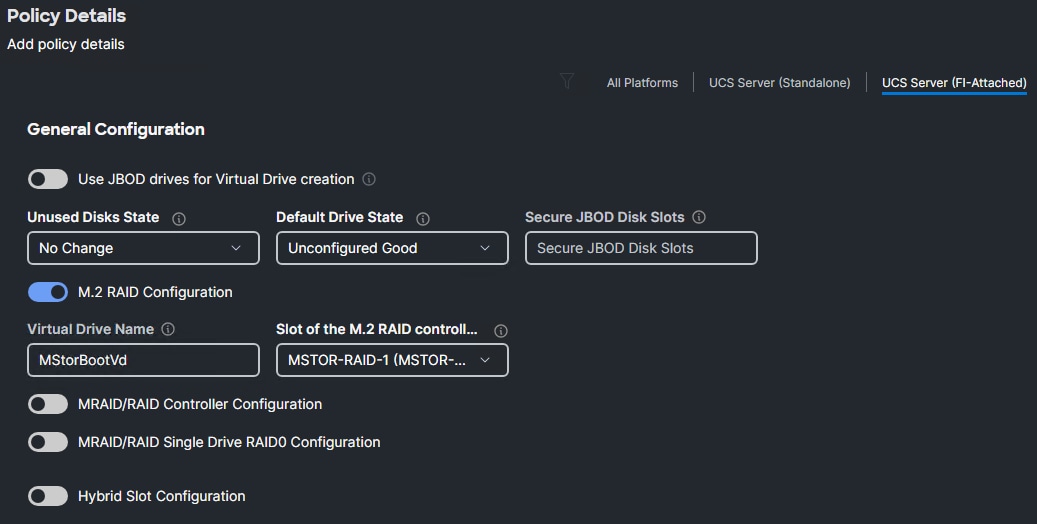

If you have two M.2 drives in your servers, you can create an optional policy to mirror these drives using RAID1.

Step 1. If it is not necessary to configure a Storage Policy, click Next to continue to Network Configuration.

Step 2. Click Select Policy and, in the pane on the right, click Create New.

Step 3. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-M.2-RAID1-Storage). Click Next.

Step 4. Enable M.2 RAID Configuration. Leave the default values for Virtual Drive Name and Slot of the M.2 RAID controller, or enter values appropriate to your environment. Click Create.

Step 5. Click Next.

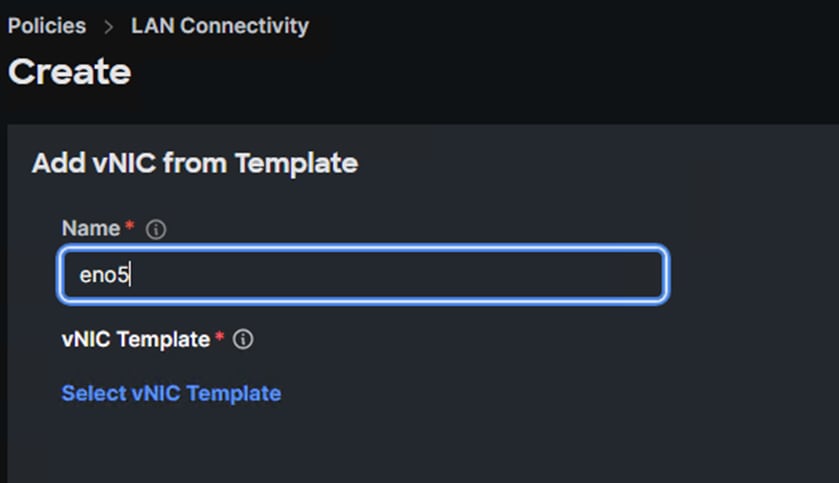

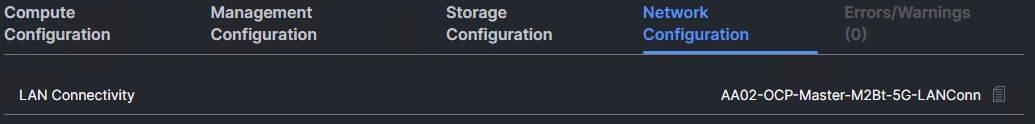

Procedure 1. Create Network Configuration – LAN Connectivity for Master Nodes

The LAN connectivity policy defines the connections and network communication resources between the server and the LAN. This policy uses pools to assign MAC addresses to servers and to identify the vNICs that the servers use to communicate with the network. For iSCSI hosts, this policy also defines an IQN address pool.

Manual vNIC placement is used to keep vNIC placement consistent. This procedure assumes that each server contains only one VIC card and uses Simple placement, which adds vNICs to the first VIC. If a server has more than one VIC, use Advanced placement instead.

The Master hosts use 1 vNIC configured as listed in Table 6.

Table 6. vNIC placement for iSCSI connected storage for OCP Master Nodes

| vNIC/vHBA Name | Switch ID | PCI Order | Fabric Failover | Native VLAN | Allowed VLANs | MTU |
| --- | --- | --- | --- | --- | --- | --- |
| eno5 | A | 0 | Y | OCP-BareMetal-MGMT | OCP-BareMetal-MGMT | 1500 |

Step 1. Click Select Policy next to LAN Connectivity and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP), provide a name for the policy (for example, AA02-OCP-Master-M2Bt-5G-LANConn) and select UCS Server (FI-Attached) under Target Platform. Click Next.

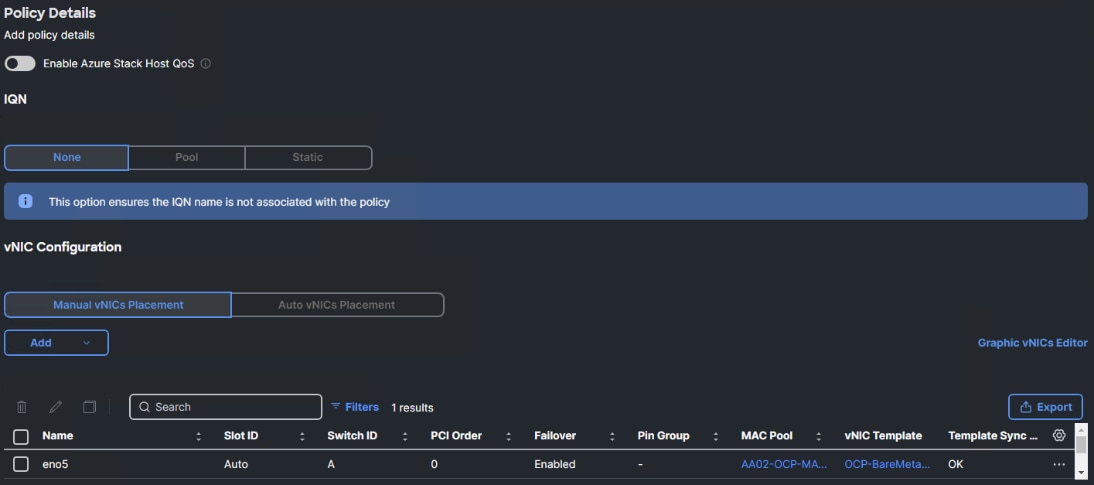

Step 3. Leave None selected under IQN and under vNIC Configuration, select Manual vNICs Placement.

Step 4. Use the Add drop-down list to select vNIC from Template.

Step 5. Enter the name for the vNIC from the table above (for example, eno5) and click Select vNIC Template.

Step 6. In the upper right, click Create New.

Step 7. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the vNIC Template (for example, AA02-OCP-BareMetal-MGMT-vNIC). Click Next.

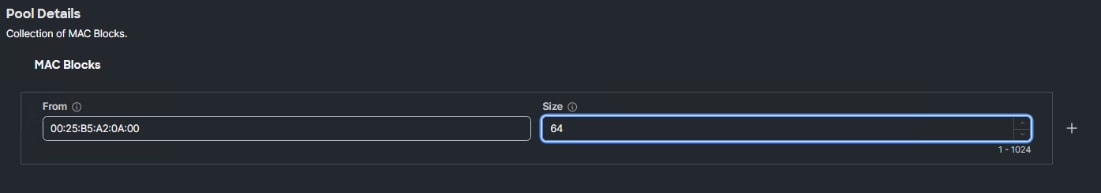

Procedure 2. Create MAC Address Pool for Fabric A and B

Note: When the first vNIC is created, no MAC address pool exists yet, so a new MAC address pool must be created. Two MAC address pools are configured, one per fabric: MAC-Pool-A is used for all Fabric-A vNICs, and MAC-Pool-B is used for all Fabric-B vNICs. Adjust the values in the following table (referenced in the steps below as Table 7) for your environment.

| Pool Name | Starting MAC Address | Size | vNICs |
| --- | --- | --- | --- |
| MAC-Pool-A | 00:25:B5:A2:0A:00 | 64* | eno5, eno6 |
| MAC-Pool-B | 00:25:B5:A2:0B:00 | 64* | eno7, eno8 |

Note: For Masters, each server requires 1 MAC address from MAC-Pool-A, and for Workers, each server requires 3 MAC addresses from MAC-Pool-A and 2 MAC addresses from MAC-Pool-B. Adjust the size of the pool according to your requirements.
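The per-server requirements in the note above can be turned into a quick sizing check. The following is a minimal sketch, not part of the validated procedure; the function and class names are illustrative, and the node counts passed in are whatever your cluster uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MacDemand:
    """MAC addresses one server consumes from each pool."""
    pool_a: int
    pool_b: int


# Per-server requirements as stated in the note above.
MASTER = MacDemand(pool_a=1, pool_b=0)
WORKER = MacDemand(pool_a=3, pool_b=2)


def required_pool_sizes(masters: int, workers: int) -> dict:
    """Return the minimum number of addresses each pool must hold."""
    return {
        "MAC-Pool-A": masters * MASTER.pool_a + workers * WORKER.pool_a,
        "MAC-Pool-B": masters * MASTER.pool_b + workers * WORKER.pool_b,
    }


if __name__ == "__main__":
    # Three Master and three Worker nodes, as derived later in this guide.
    for pool, count in required_pool_sizes(masters=3, workers=3).items():
        print(f"{pool}: {count} addresses needed (example pool size is 64)")
```

For three Masters and three Workers this yields 12 addresses from MAC-Pool-A and 6 from MAC-Pool-B, so the example size of 64 leaves room for additional nodes.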

Step 1. Click Select Pool under MAC Pool and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the pool from Table 7 with the prefix applied depending on the vNIC being created (for example, AA02-OCP-MAC-Pool-A for Fabric A).

Step 3. Click Next.

Step 4. Provide the starting MAC address from Table 7 (for example, 00:25:B5:A2:0A:00).

Step 5. For ease of troubleshooting FlexPod, some additional information is always coded into the MAC address pool. For example, in the starting address 00:25:B5:A2:0A:00, A2 is the rack ID and 0A indicates Fabric A.

Step 6. Provide the size of the MAC address pool from Table 7 (for example, 64).

Step 7. Click Create to finish creating the MAC address pool.

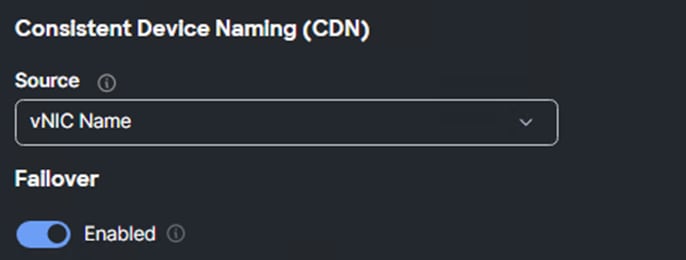

Step 8. From the Create vNIC Template window, provide the Switch ID from Table 6.

Step 9. For Consistent Device Naming (CDN), from the drop-down list, select vNIC Name.

Step 10. For Failover, set the value from Table 6.
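The MAC address convention described in Step 5 of this procedure (rack ID in the fourth octet, fabric in the fifth) can be sketched as a small decoder that also computes the last address of a pool from its starting address and size. This is an illustrative helper only. The mapping of 0B to Fabric B is inferred from the MAC-Pool-B starting address and is an assumption, as are all function names.

```python
def mac_to_int(mac: str) -> int:
    """Convert a colon-separated MAC address to an integer."""
    return int(mac.replace(":", ""), 16)


def int_to_mac(value: int) -> str:
    """Convert an integer back to an upper-case, colon-separated MAC address."""
    raw = f"{value:012X}"
    return ":".join(raw[i:i + 2] for i in range(0, 12, 2))


def describe_pool(start: str, size: int) -> dict:
    """Decode the rack ID and fabric from a pool's starting address
    and compute the first and last address in the pool."""
    octets = start.upper().split(":")
    # ASSUMPTION: 0A marks Fabric A (stated in Step 5); 0B marking
    # Fabric B is inferred from the MAC-Pool-B starting address.
    fabric = {"0A": "A", "0B": "B"}.get(octets[4], "unknown")
    first = mac_to_int(start)
    return {
        "rack_id": octets[3],
        "fabric": fabric,
        "first": int_to_mac(first),
        "last": int_to_mac(first + size - 1),
    }


if __name__ == "__main__":
    print(describe_pool("00:25:B5:A2:0A:00", 64))
```

With the example values, the pool for Fabric A runs from 00:25:B5:A2:0A:00 through 00:25:B5:A2:0A:3F.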

Procedure 3. Create Ethernet Network Group Policy

Ethernet Network Group policies are created once and reused on the applicable vNICs as explained below. An Ethernet Network Group policy defines the VLANs allowed for a particular vNIC, so multiple network group policies are defined for this deployment, as listed in Table 8.

Table 8. Ethernet Group Policy Values

| Group Policy Name | Native VLAN | Apply to vNICs | Allowed VLANs |
| --- | --- | --- | --- |
| AA02-OCP-BareMetal-NetGrp | OCP-BareMetal-MGMT (1022) | eno5 | OCP-BareMetal-MGMT |
| AA02-OCP-iSCSI-NVMe-TCP-A-NetGrp | OCP-iSCSI-A (3012) | eno6 | OCP-iSCSI-A, OCP-NVMe-TCP-A* |
| AA02-OCP-iSCSI-NVMe-TCP-B-NetGrp | OCP-iSCSI-B (3022) | eno7 | OCP-iSCSI-B, OCP-NVMe-TCP-B* |
| AA02-OCP-NFS-NetGrp | OCP-NFS | eno8 | OCP-NFS |

Note: *Add the NVMe-TCP VLANs when using NVMe-TCP.

Step 1. Click Select Policy under Ethernet Network Group Policy and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy from Table 8 (for example, AA02-OCP-BareMetal-NetGrp).

Step 3. Click Next.

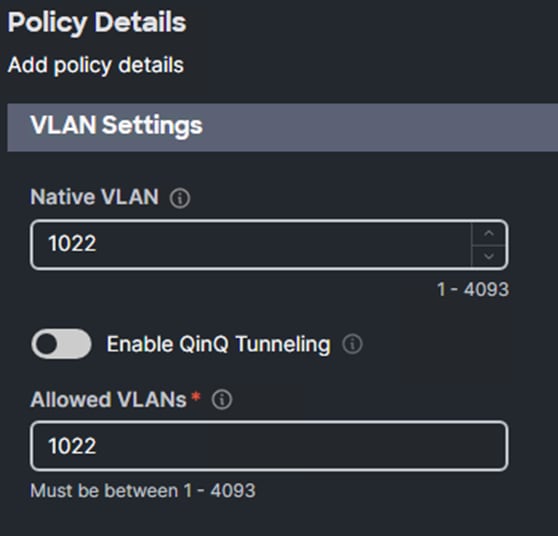

Step 4. Enter the Native VLAN ID (for example, 1022) and the allowed VLANs (for example, 1022) from Table 2.

Step 5. Click Create to finish configuring the Ethernet network group policy.

Note: When ethernet group policies are shared between two vNICs, the ethernet group policy only needs to be defined for the first vNIC. For subsequent vNIC policy mapping, click Select Policy and pick the previously defined ethernet network group policy from the list.
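The policy values in Table 8 can be captured as data and checked before they are entered in Intersight. The sketch below is a hypothetical planning aid, not an Intersight API call. It models each group by VLAN name, adds the NVMe-TCP VLANs only when requested (matching the asterisk note), and flags any group whose native VLAN is missing from its allowed list, which is the pattern every row of Table 8 follows.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NetGroup:
    name: str
    native_vlan: str
    allowed_vlans: tuple
    vnic: str


def build_net_groups(use_nvme_tcp: bool) -> list:
    """Return the Table 8 groups; NVMe-TCP VLANs are optional."""
    def storage_vlans(fabric: str) -> tuple:
        vlans = [f"OCP-iSCSI-{fabric}"]
        if use_nvme_tcp:
            vlans.append(f"OCP-NVMe-TCP-{fabric}")
        return tuple(vlans)

    return [
        NetGroup("AA02-OCP-BareMetal-NetGrp", "OCP-BareMetal-MGMT",
                 ("OCP-BareMetal-MGMT",), "eno5"),
        NetGroup("AA02-OCP-iSCSI-NVMe-TCP-A-NetGrp", "OCP-iSCSI-A",
                 storage_vlans("A"), "eno6"),
        NetGroup("AA02-OCP-iSCSI-NVMe-TCP-B-NetGrp", "OCP-iSCSI-B",
                 storage_vlans("B"), "eno7"),
        NetGroup("AA02-OCP-NFS-NetGrp", "OCP-NFS", ("OCP-NFS",), "eno8"),
    ]


def native_vlan_problems(groups) -> list:
    """Names of groups whose native VLAN is not in the allowed list."""
    return [g.name for g in groups if g.native_vlan not in g.allowed_vlans]


if __name__ == "__main__":
    groups = build_net_groups(use_nvme_tcp=True)
    for g in groups:
        print(f"{g.vnic}: {g.name} -> {', '.join(g.allowed_vlans)}")
    print("Problems:", native_vlan_problems(groups) or "none")
```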

Procedure 4. Create Ethernet Network Control Policy

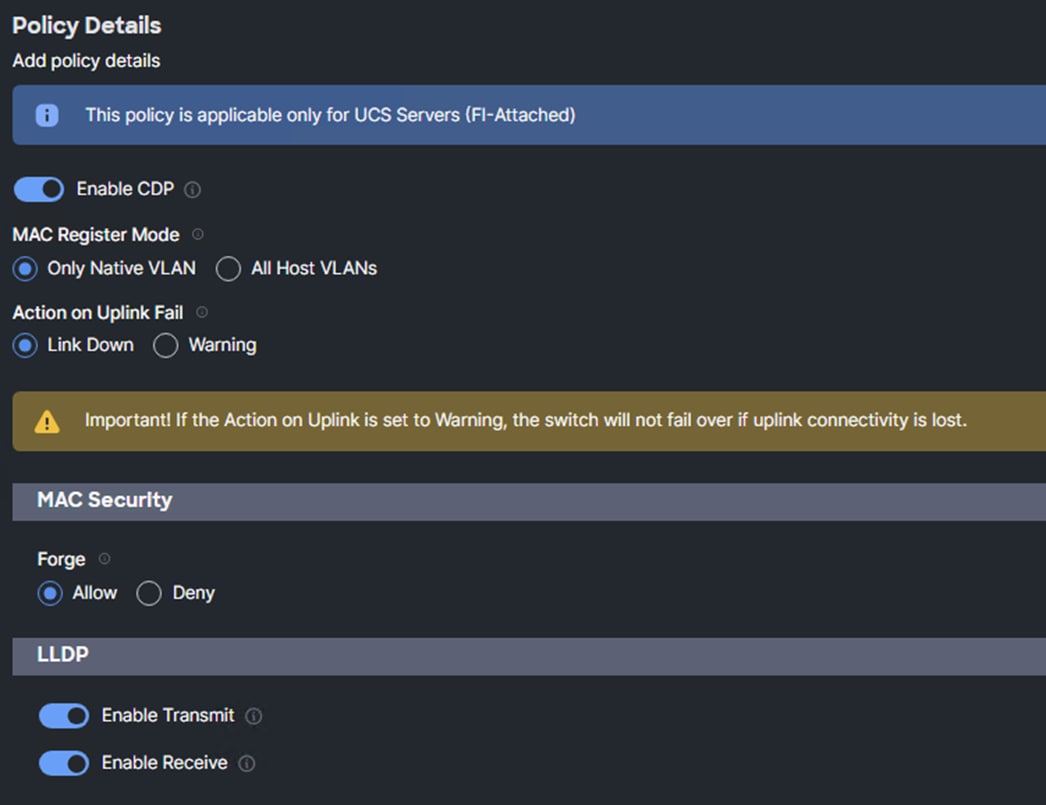

The Ethernet Network Control Policy is used to enable Cisco Discovery Protocol (CDP) and Link Layer Discovery Protocol (LLDP) for the vNICs. A single policy will be created here and reused for all the vNICs.

Step 1. Click Select Policy under Ethernet Network Control Policy and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-Enable-CDP-LLDP).

Step 3. Click Next.

Step 4. Enable Cisco Discovery Protocol (CDP) and, under LLDP, turn on both Enable Transmit and Enable Receive.

Step 5. Click Create to finish creating Ethernet network control policy.

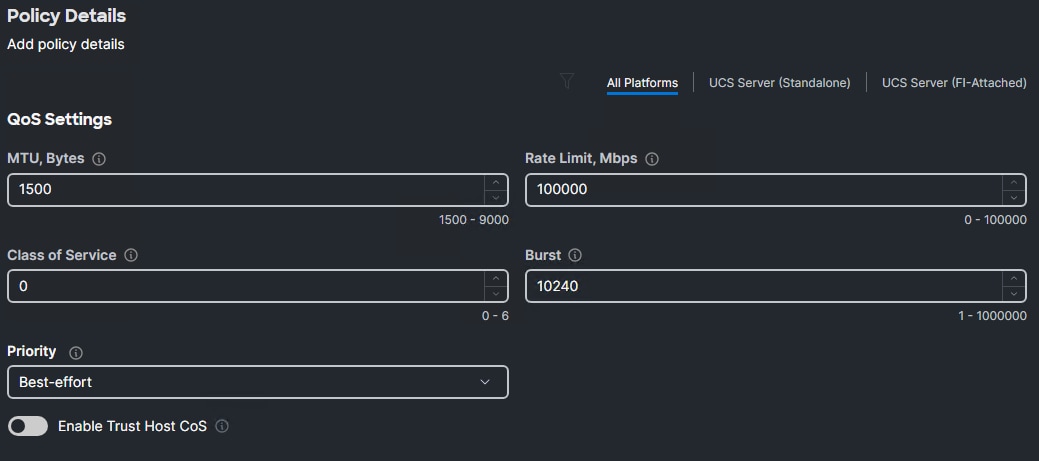

Procedure 5. Create Ethernet QoS Policy

Note: The Ethernet QoS policy is used to enable the appropriate maximum transmission unit (MTU) for all the vNICs. Across the vNICs, two policies will be created (one for MTU 1500 and one for MTU 9000) and reused for all the vNICs.

Step 1. Click Select Policy under Ethernet QoS and in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-MTU1500-EthernetQoS). The name of the policy should reflect the MTU listed for the vNIC in Table 6 or Table 10.

Step 3. Click Next.

Step 4. Change the MTU, Bytes value to the value listed for the vNIC in Table 6 or Table 10.

Step 5. Set the Rate Limit Mbps to 100000.

Step 6. Click Create to finish setting up the Ethernet QoS policy.
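Because only two QoS policies are needed, one per MTU, it can help to group the vNICs by MTU before creating them. The following sketch does that using the MTU values from Table 6 and Table 10 and the rate limit from Step 5. The MTU 9000 policy name is extrapolated from the MTU 1500 example name and is an assumption; use whatever naming your environment follows.

```python
# MTU per vNIC, taken from Table 6 (eno5) and Table 10 (eno6 to eno8).
VNIC_MTU = {"eno5": 1500, "eno6": 9000, "eno7": 9000, "eno8": 9000}

# Rate limit from Step 5 of this procedure.
RATE_LIMIT_MBPS = 100000


def qos_policy_name(mtu: int, prefix: str = "AA02-OCP") -> str:
    """Build a policy name following the AA02-OCP-MTU1500-EthernetQoS example.
    ASSUMPTION: other MTUs follow the same naming pattern."""
    return f"{prefix}-MTU{mtu}-EthernetQoS"


def plan_qos_policies(vnic_mtu: dict) -> dict:
    """Group vNICs under one QoS policy per distinct MTU."""
    policies = {}
    for vnic, mtu in vnic_mtu.items():
        entry = policies.setdefault(
            qos_policy_name(mtu),
            {"mtu": mtu, "rate_limit_mbps": RATE_LIMIT_MBPS, "vnics": []},
        )
        entry["vnics"].append(vnic)
    return policies


if __name__ == "__main__":
    for name, settings in plan_qos_policies(VNIC_MTU).items():
        print(name, settings)
```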

Procedure 6. Create Ethernet Adapter Policy

The Ethernet adapter policy is used to set the interrupts, the send and receive queues, and the queue ring size. The values are set according to the best-practices guidance for the operating system in use. Cisco Intersight provides a default Linux Ethernet Adapter policy for typical Linux deployments.

You can optionally configure a tuned Ethernet adapter policy that provides additional hardware receive queues handled by multiple CPUs, which helps when there is heavy traffic across multiple flows. In this deployment, a modified Ethernet adapter policy, AA02-OCP-EthAdapter-16RXQs-5G, is created and attached to the storage vNICs. Non-storage vNICs use the default Linux-v2 Ethernet Adapter policy.

Table 9. Ethernet Adapter Policy association to vNICs

| Policy Name | vNICs |
| --- | --- |
| AA02-OCP-EthAdapter-Linux-v2 | eno5 |
| AA02-OCP-EthAdapter-16RXQs-5G | eno6, eno7, eno8 |

Step 1. Click Select Policy under Ethernet Adapter and then, in the pane on the right, click Create New.

Step 2. Verify the correct organization is selected from the drop-down list (for example, AA02-OCP) and provide a name for the policy (for example, AA02-OCP-EthAdapter-Linux-v2).

Step 3. Click Select Cisco Provided Configuration under Cisco Provided Ethernet Adapter Configuration.

Step 4. From the list, select Linux-v2.

Step 5. Click Next.

Step 6. For the AA02-OCP-EthAdapter-Linux-v2 policy, click Create and skip the rest of the steps in this “Create Ethernet Adapter Policy” section.

Step 7. For the AA02-OCP-EthAdapter-16RXQs-5G policy, make the following modifications to the policy:

● Increase Interrupts to 19

● Increase Receive Queue Count to 16

● Increase Receive Ring Size to 16384 (Leave at 4096 for 4G VICs)

● Increase Transmit Ring Size to 16384 (Leave at 4096 for 4G VICs)

● Increase Completion Queue Count to 17

● Ensure Receive Side Scaling is enabled

Step 8. Click Create.
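The values in Step 7 are related to each other, and a short check can catch a typo before the policy is saved. The sketch below is illustrative only. It assumes a transmit queue count of 1, which this guide does not state, and it assumes the commonly cited VIC tuning relationships (completion queues equal receive plus transmit queues, and interrupts equal completion queues plus 2). Treat both as assumptions to confirm against the Cisco VIC tuning guidance for your adapter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdapterSettings:
    interrupts: int
    rx_queues: int
    tx_queues: int          # ASSUMPTION: not stated in this guide
    completion_queues: int
    rx_ring_size: int
    tx_ring_size: int
    rss_enabled: bool


def storage_adapter_settings(vic_generation: int) -> AdapterSettings:
    """Return the Step 7 values; ring sizes stay at 4096 on 4G VICs."""
    if vic_generation not in (4, 5):
        raise ValueError("this sketch only covers 4G and 5G VICs")
    ring = 4096 if vic_generation == 4 else 16384
    return AdapterSettings(
        interrupts=19, rx_queues=16, tx_queues=1, completion_queues=17,
        rx_ring_size=ring, tx_ring_size=ring, rss_enabled=True,
    )


def consistency_problems(s: AdapterSettings) -> list:
    """Check the ASSUMED relationships between the queue values."""
    problems = []
    if s.completion_queues != s.rx_queues + s.tx_queues:
        problems.append("completion queues != rx queues + tx queues")
    if s.interrupts != s.completion_queues + 2:
        problems.append("interrupts != completion queues + 2")
    if s.rx_queues > 1 and not s.rss_enabled:
        problems.append("multiple receive queues without RSS")
    return problems


if __name__ == "__main__":
    settings = storage_adapter_settings(vic_generation=5)
    print(settings)
    print("Problems:", consistency_problems(settings) or "none")
```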

Procedure 7. Add vNIC(s) to LAN Connectivity Policy

The vNIC Template now exists with all of its policies attached.

Step 1. For PCI Order, enter the number from Table 6 (or from Table 10 for the additional Worker vNICs). Verify the other values.

Step 2. Click Add to add the vNIC to the LAN Connectivity Policy.

Step 3. If building the Worker LAN Connectivity Policy, go back to Procedure 1 Create Network Configuration - LAN Connectivity for Master Nodes, Step 4, and repeat the vNIC Template and vNIC creation for all five vNICs, selecting existing policies where they are available instead of creating new ones.

Step 4. Verify all vNICs were successfully created.

Step 5. Click Create to finish creating the LAN Connectivity policy.

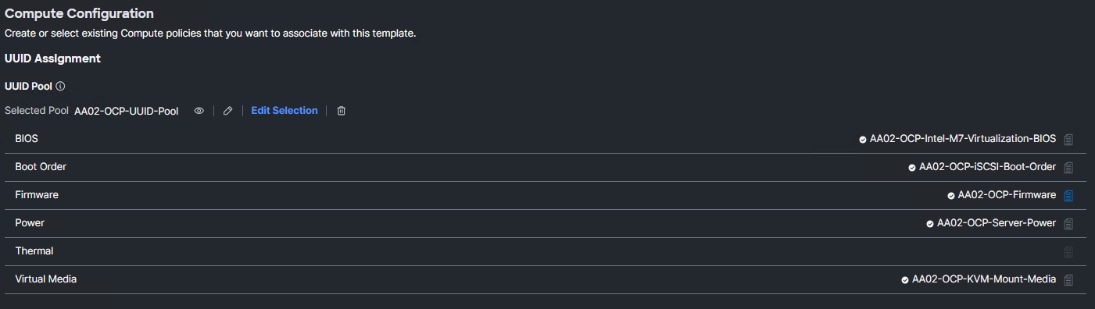

Procedure 8. Complete the Master Server Profile Template

Step 1. When the LAN connectivity policy is created, click Next to move to the Summary screen.

Step 2. On the Summary screen, verify the policies are mapped to various settings. The screenshots below provide the summary view for the OCP Master M.2 Boot server profile template.

Step 3. Click Close to close the template.

Procedure 9. Build the OCP Worker LAN Connectivity Policy

The OCP Master LAN Connectivity Policy can be cloned and four vNICs added to build the OCP Worker LAN Connectivity Policy that will then be used in the OCP Worker Server Profile Template. Table 10 lists the vNICs that will be added to the cloned policy.

Table 10. vNIC additions for iSCSI connected storage for OCP Worker Nodes

| vNIC/vHBA Name | Switch ID | PCI Order | Fabric Failover | Native VLAN | Allowed VLANs | MTU |
| --- | --- | --- | --- | --- | --- | --- |
| eno6 | A | 1 | N | OCP-iSCSI-A | OCP-iSCSI-A, OCP-NVMe-TCP-A | 9000 |
| eno7 | B | 2 | N | OCP-iSCSI-B | OCP-iSCSI-B, OCP-NVMe-TCP-B | 9000 |
| eno8 | A | 3 | Y | OCP-NFS | OCP-NFS | 9000 |
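Cloning the Master policy and adding the Table 10 vNICs amounts to combining two lists. The sketch below models that with the values from Table 6 and Table 10 and runs a simple sanity check that the PCI orders are unique and run from 0 upward, as they do in the tables. The type and function names are hypothetical, and the check is a planning convenience rather than a rule stated by this guide.

```python
from typing import NamedTuple


class Vnic(NamedTuple):
    name: str
    switch_id: str
    pci_order: int
    fabric_failover: bool
    mtu: int


# Table 6: the single vNIC on the Master LAN Connectivity Policy.
MASTER_VNICS = [Vnic("eno5", "A", 0, True, 1500)]

# Table 10: vNICs added to the cloned policy for Worker nodes.
WORKER_ADDITIONS = [
    Vnic("eno6", "A", 1, False, 9000),
    Vnic("eno7", "B", 2, False, 9000),
    Vnic("eno8", "A", 3, True, 9000),
]


def worker_vnics() -> list:
    """Master vNICs plus the Worker additions, sorted by PCI order."""
    return sorted(MASTER_VNICS + WORKER_ADDITIONS, key=lambda v: v.pci_order)


def pci_order_is_contiguous(vnics) -> bool:
    """True when PCI orders are exactly 0, 1, 2, ... with no gaps or repeats."""
    orders = sorted(v.pci_order for v in vnics)
    return orders == list(range(len(orders)))


if __name__ == "__main__":
    for v in worker_vnics():
        print(v)
    print("PCI order contiguous:", pci_order_is_contiguous(worker_vnics()))
```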

Step 1. Log into Cisco Intersight and select Infrastructure Service > Policies.

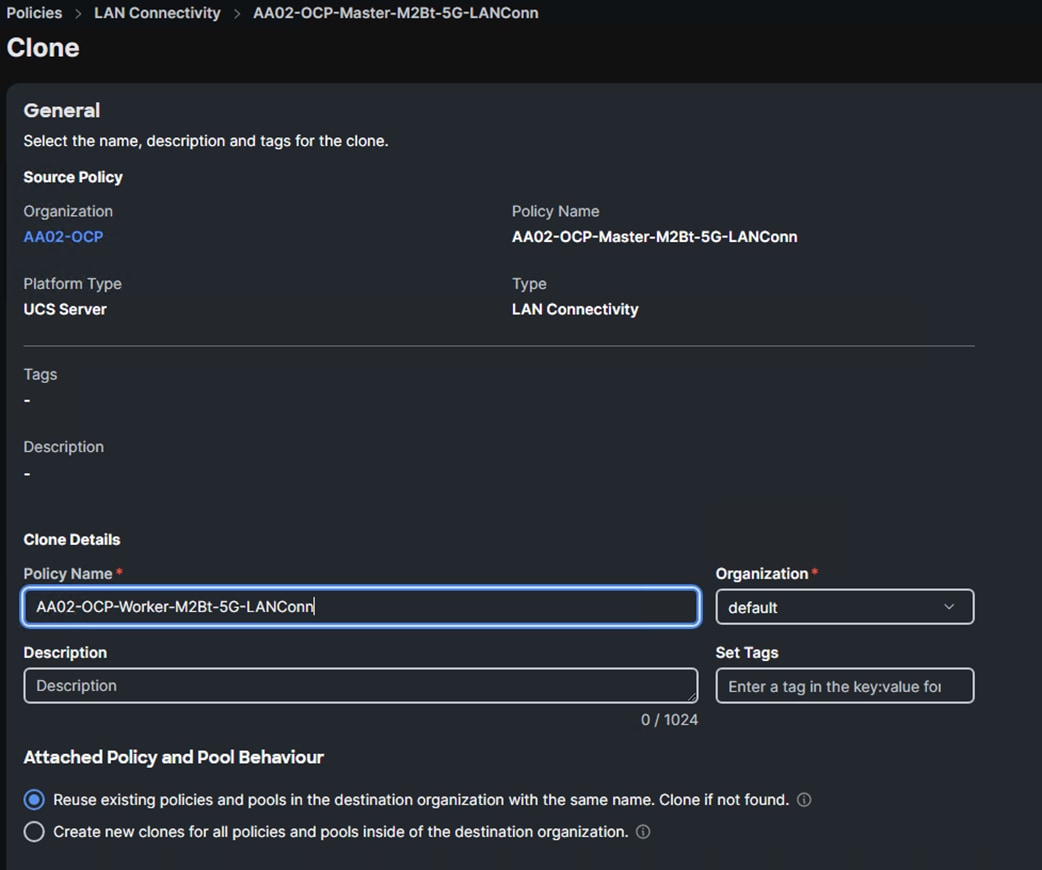

Step 2. In the policy list, look for the <org-name>-Master-M2Bt-5G-LANConn or the LAN Connectivity policy created above. Click … to the right of the policy and select Clone.

Step 3. Change the name of the cloned policy to something like AA02-OCP-Worker-M2Bt-5G-LANConn and select the correct Organization (for example, AA02-OCP).

Step 4. Click Clone to clone the policy.

Step 5. From the Policies window, click the refresh button to refresh the list. The newly cloned policy should now appear at the top of the list. Click … to the right of the newly cloned policy and select Edit.

Step 6. Click Next.

Step 7. Go to Procedure 1 Create Network Configuration - LAN Connectivity for Master Nodes and start at Step 4 to add the 4 vNICs from Template listed in Table 10.

The OCP-Worker-M2Bt-5G-LANConn policy is now built and can be added to the OCP Worker Server Profile Template.

Step 8. Click Save to save the policy.

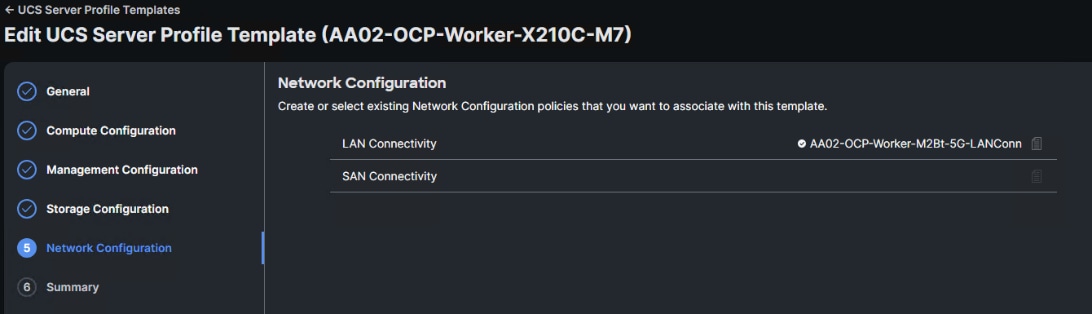

Procedure 10. Create the OCP Worker Server Profile Template

The OCP Master Server Profile Template can be cloned and modified to create the OCP Worker Server Profile Template.

Step 1. Log into Cisco Intersight and select Infrastructure Service > Templates > UCS Server Profile Templates.

Step 2. To the right of the OCP-Master-X210C-M7 template, click … and select Clone.

Step 3. Ensure that the correct Destination Organization is selected (for example, AA02-OCP) and click Next.

Step 4. Adjust the Clone Name (for example, AA02-Worker-X210C-M7) and Description as needed and click Next.

Step 5. From the Templates window, click the … to the right of the newly created clone and click Edit.

Step 6. Click Next until you get to Storage Configuration. If the Storage Policy needs to be added or deleted, make that adjustment.

Step 7. Click Next to get to Network Configuration. Click the page icon to the right of the LAN Connectivity Policy and select the Worker LAN Connectivity Policy. Click Select.

Step 8. Click Next and Close to save this template.

Complete the Cisco UCS IMM Setup

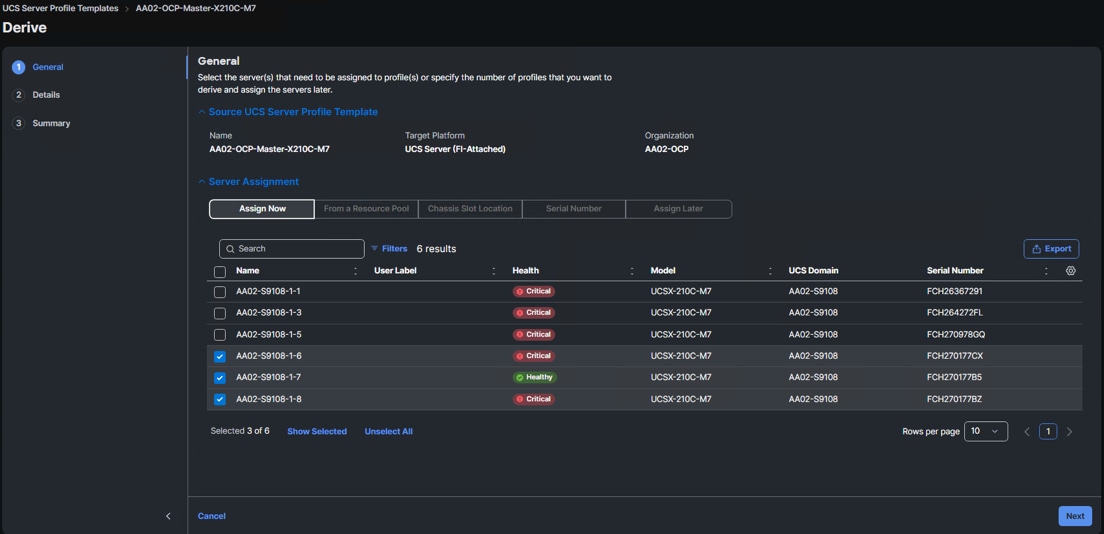

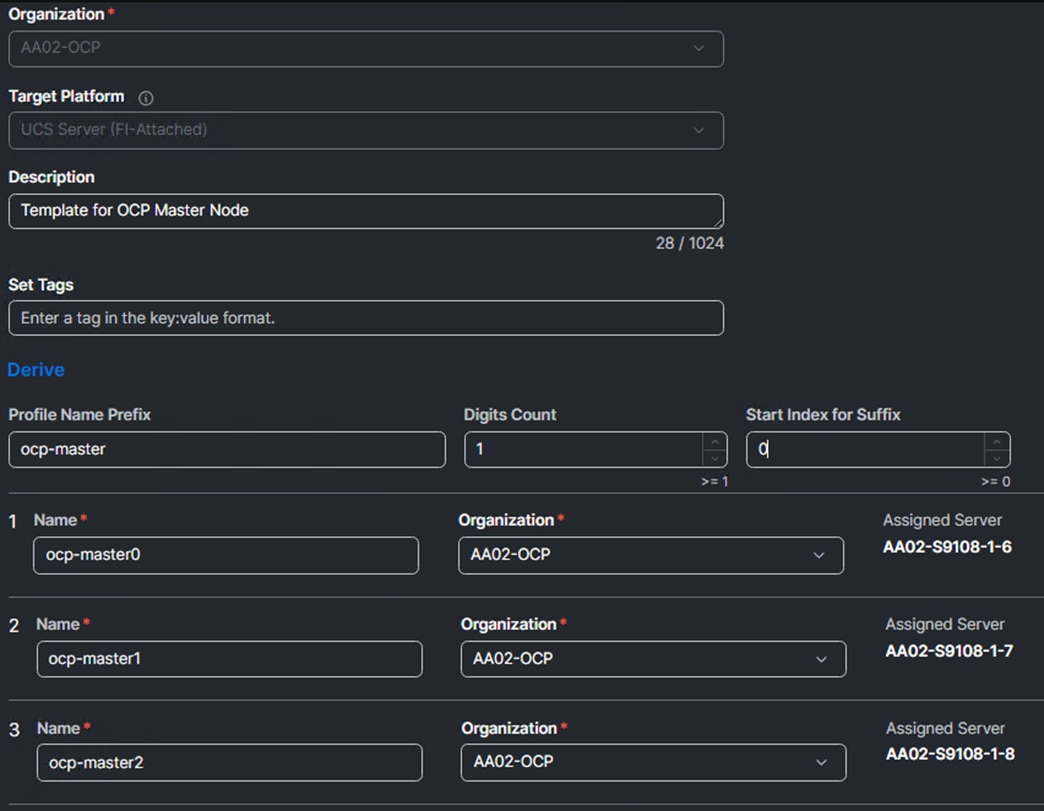

Procedure 1. Derive Server Profiles

Step 1. From the Infrastructure Service > Templates page, to the right of the OCP-Master template, click … and select Derive Profiles.

Step 2. Under the Server Assignment, select Assign Now and select the 3 Cisco UCS X210c M7 servers that will be used as OCP Master Nodes.

Step 3. Click Next.

Step 4. For the Profile Name Prefix, enter the first part of the OCP Master Node hostnames (for example, ocp-master). Set Start Index for Suffix to 0 (zero). The three server names should now correspond to the OCP Master Node hostnames.

Step 5. Click Next.

Step 6. Click Derive to derive the OCP Master Node Server Profiles.

Step 7. Select Profiles on the left and then select the UCS Server Profiles tab.

Step 8. Select the 3 OCP Master profiles and then click the … at the top or bottom of the list and select Deploy.

Step 9. Select Reboot Immediately to Activate and click Deploy.

Step 10. Repeat this process to create 3 OCP Worker Node Server Profiles.
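To confirm ahead of time that the derived profile names will match the planned hostnames, the prefix and start index from Step 4 can be expanded in a few lines. This sketch assumes the name is simply the prefix followed by the index with no separator or zero padding; verify the names shown in the Derive wizard before clicking Derive. The ocp-worker prefix is a hypothetical example, since this guide only gives the Master prefix.

```python
def derived_profile_names(prefix: str, count: int, start_index: int = 0) -> list:
    """Expand a profile name prefix into sequential names.
    ASSUMPTION: names are prefix + index, with no separator or padding."""
    return [f"{prefix}{start_index + i}" for i in range(count)]


if __name__ == "__main__":
    print(derived_profile_names("ocp-master", 3))
    # Hypothetical prefix for the Worker profiles created in Step 10.
    print(derived_profile_names("ocp-worker", 3))
```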

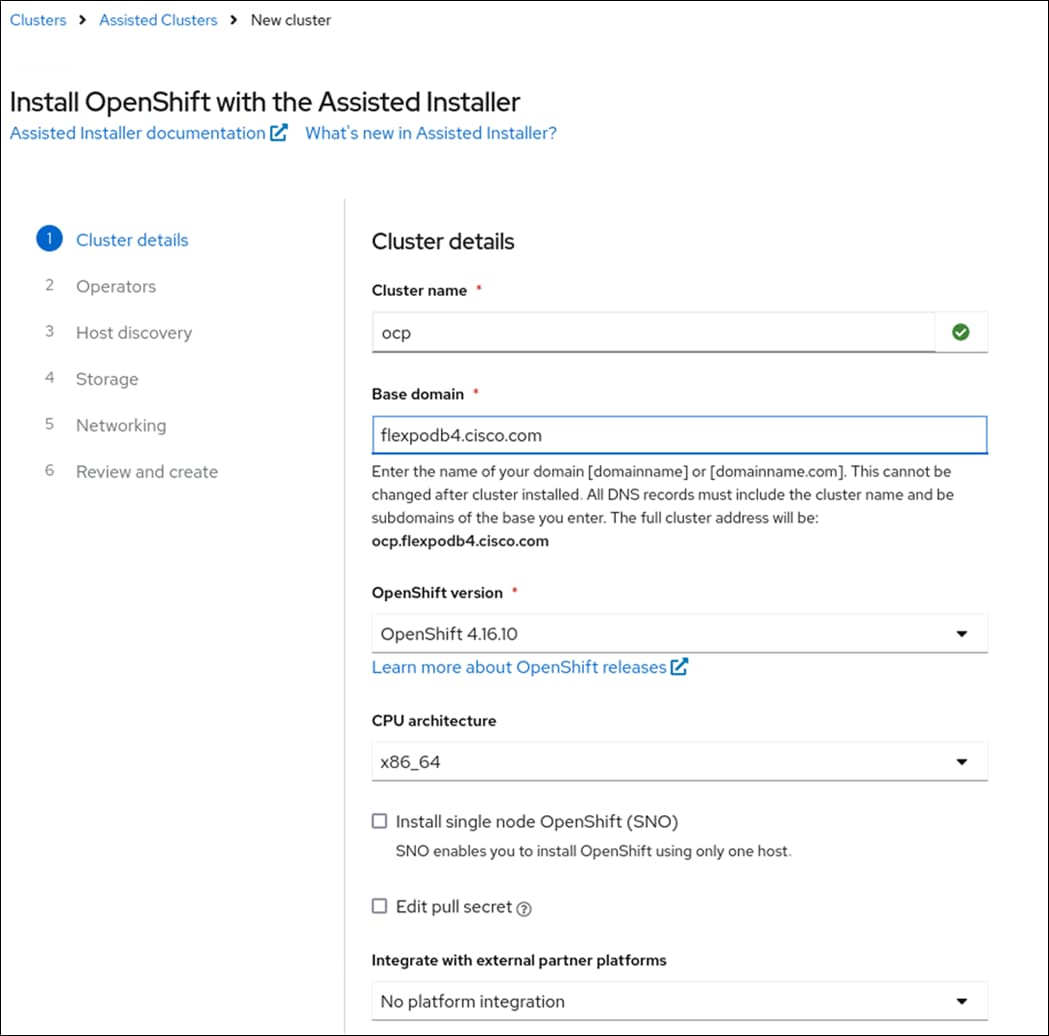

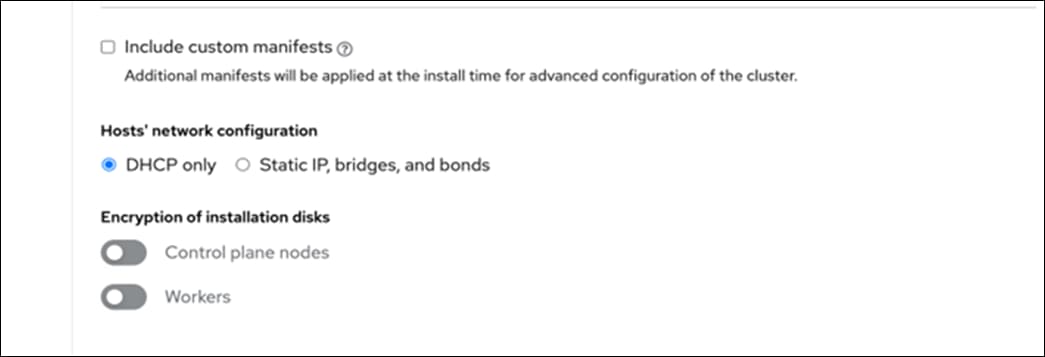

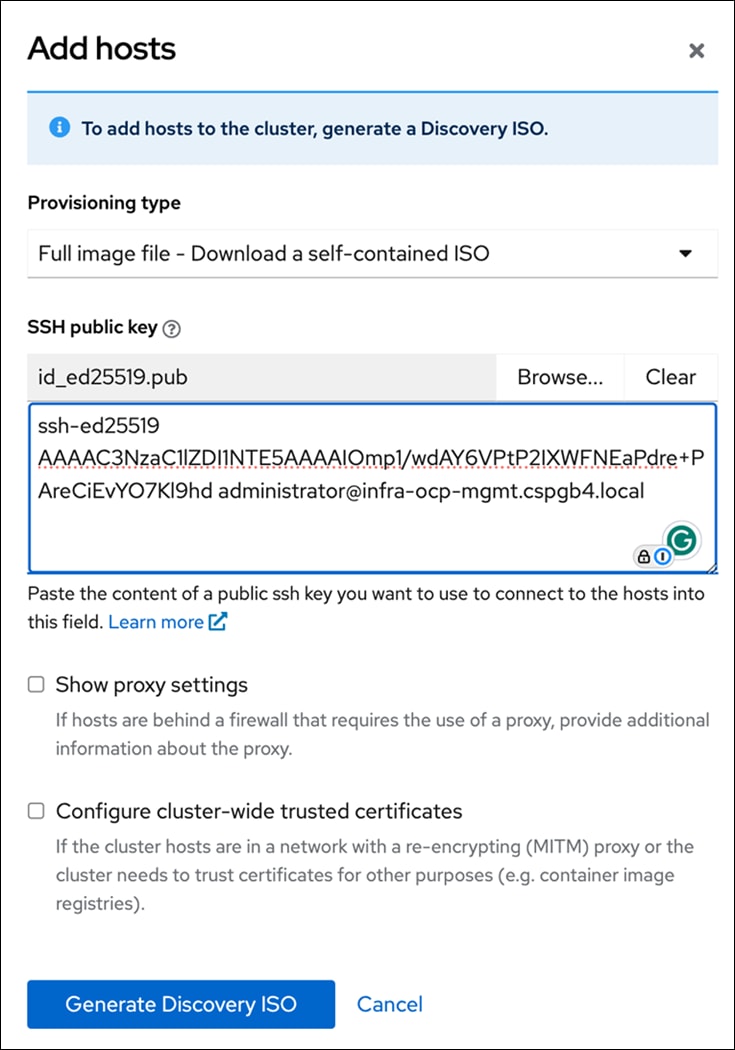

OpenShift Container Platform Installation and Configuration

This chapter contains the following:

● OpenShift Container Platform – Installation Requirements