FlexPod Datacenter Zero Trust Framework

In partnership with:

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to: http://www.cisco.com/go/designzone.

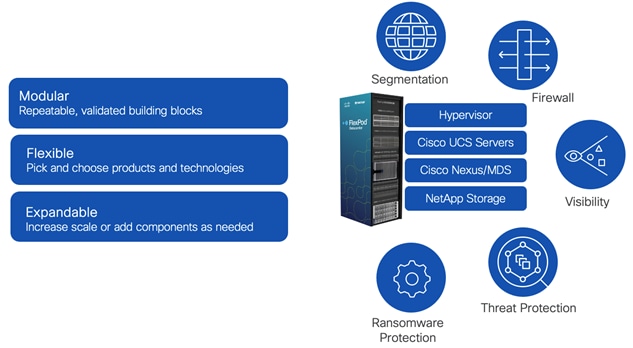

The FlexPod Datacenter solution is a validated approach for deploying Cisco® and NetApp technologies and products to build shared private and public cloud infrastructure. Cisco and NetApp have partnered to deliver a series of FlexPod solutions that enable strategic data-center platforms. The success of the FlexPod solution is driven by its ability to evolve and incorporate both technology and product innovations in the areas of management, computing, storage, networking, and security. This document explains the deployment details of incorporating and implementing various tools, technologies, and products to deliver a Zero Trust security framework for FlexPod Datacenter.

FlexPod delivers an integrated architecture that incorporates compute, storage, and network design best practices, thereby minimizing IT risks by validating the integrated architecture to ensure compatibility between various components. The solution also addresses IT pain points by providing documented design guidance, deployment guidance, and support that can be used in various stages (planning, designing, and implementation) of a deployment.

FlexPod Datacenter delivers the following key benefits:

● Simpler and programmable infrastructure: FlexPod infrastructure delivered as infrastructure-as-code through a single partner integrable open API.

● Latest hardware and software compute innovations: policy-based configurations, delivered using Cisco Intersight, to deploy and manage the latest processor, memory, network, and power/cooling improvements.

● Storage Modernization: deliver high-speed, consistent, low latency, multi-tenant storage using a range of NetApp storage arrays.

● Innovative cloud operations: continuous feature delivery and no need for maintaining on-premises physical or virtual machines supporting management functions.

● Built for investment protection: design ready for future compute technologies such as liquid cooling and high-wattage CPUs; CXL-ready.

A Zero Trust security framework is a comprehensive approach to network security that assumes no user, system, or device can be trusted by default, regardless of its location relative to the network perimeter. It operates under the principle of "never trust, always verify," meaning that every access request is thoroughly verified before granting access, irrespective of where it originates from. The Zero Trust framework aims to protect modern digital environments by leveraging network segmentation, preventing lateral movement, providing Layer 7 threat prevention, and simplifying granular user-access control.

Implementing the Zero Trust framework on a FlexPod infrastructure provides the following additional benefits:

● Enhanced Security: By treating every access request as a potential threat, Zero Trust significantly reduces the risk of data breaches and other security incidents.

● Greater Visibility: Constant monitoring of network activities provides a comprehensive view of the network, enabling quick identification and response to any unusual or suspicious activities.

● Reduced Attack Surface: By enforcing least privilege access and micro-segmentation, Zero Trust minimizes the potential points of vulnerability in the network.

● Improved Compliance: The stringent security controls in Zero Trust can help organizations meet compliance requirements for data protection and privacy.

● Efficient Incident Response: Quickly detect, block, and respond to threats. Verify data integrity and implement data loss prevention.

● Protection Against Internal Threats: Zero Trust considers the possibility of threats coming from inside the network, offering protection against insider threats as well as external ones.

The Zero Trust framework for FlexPod solution incorporates various additional security components by Cisco and NetApp including Cisco Secure Firewall Threat Defense (FTD), Cisco Secure Network Analytics (previously Stealthwatch), Cisco Secure Workload (previously Tetration), and NetApp Autonomous Ransomware Protection (ARP).

If you’re interested in understanding the FlexPod design and deployment details, including the configuration of various elements of design and associated best practices, see Cisco Validated Designs for FlexPod: https://www.cisco.com/c/en/us/solutions/design-zone/data-center-design-guides/flexpod-design-guides.html.

Solution Overview

This chapter contains the following:

● Audience

● Platform Resilience: device and protocol hardening including traffic isolation, role-based access control (RBAC), and utilizing secure connectivity.

● Segmentation and Control: multi-tenancy design using virtual routing and forwarding (VRF), VLANs, and Cisco Secure Firewall Threat Defense.

● Visibility and Monitoring: network and OS level visibility and anomaly detection using Cisco Secure Network Analytics and Cisco Secure Workload.

● Threat Protection and Response: containing a breach and recovering quickly using Cisco Secure Workload and NetApp Autonomous Ransomware Protection.

The intended audience of this document includes but is not limited to IT architects, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who want to take advantage of an infrastructure built to deliver IT efficiency and enable IT innovation.

This document provides deployment guidance around incorporating the Zero Trust framework design principles in a FlexPod Datacenter environment. This document provides deployment steps for the following key areas:

● Preparing a base FlexPod infrastructure to be used in the solution.

● Setting up the infrastructure (network, compute, storage, and virtualization) for secure segmentation.

● Deploying Cisco Secure Firewall Threat Defense virtual (FTDv) to protect the tenant traffic and Cisco Secure Firewall Management Center (FMC) to manage multiple tenant firewalls.

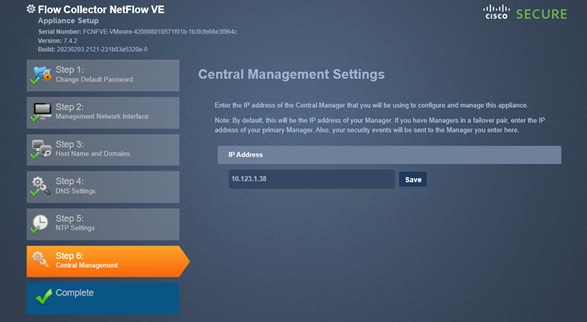

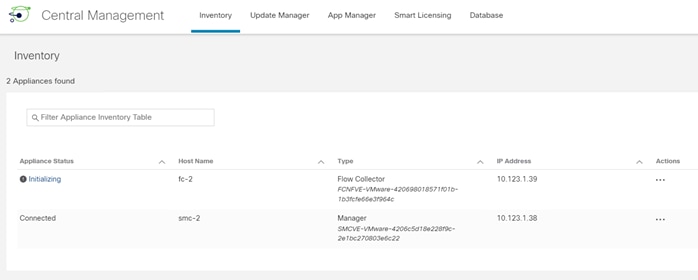

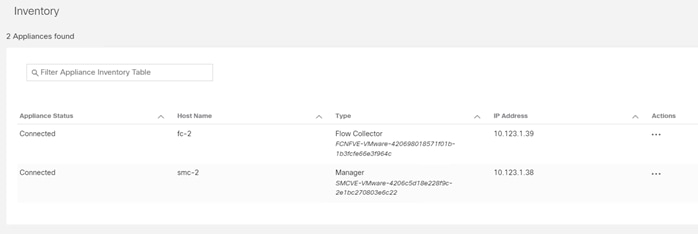

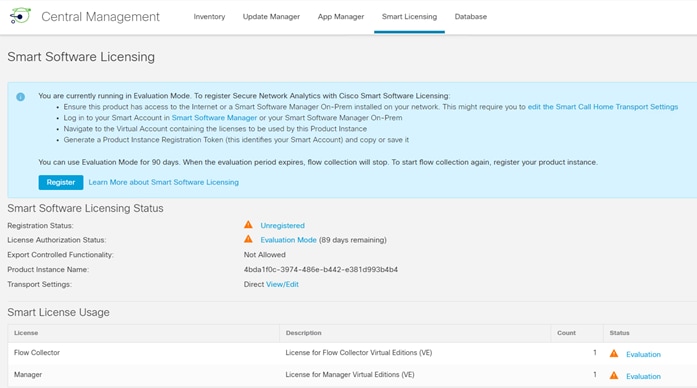

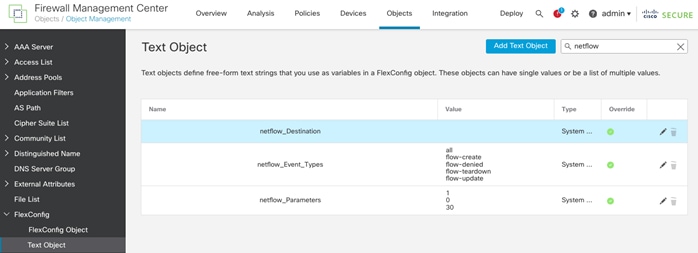

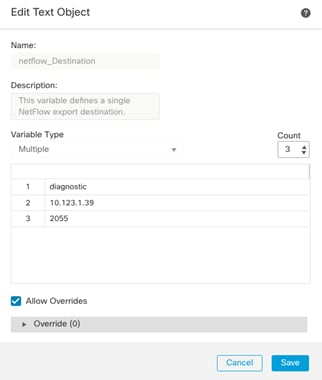

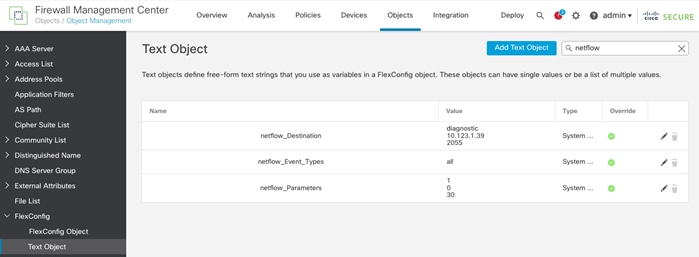

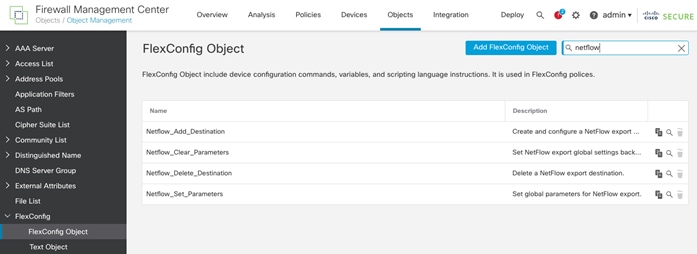

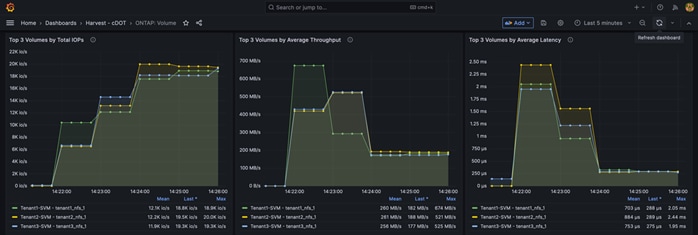

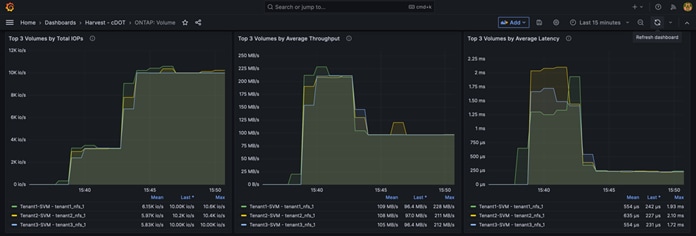

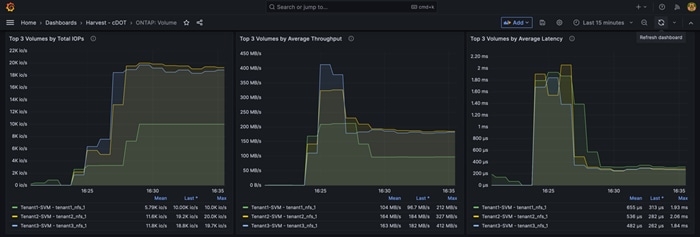

● Deploying Cisco Secure Network Analytics virtual and enabling NetFlow on various points in the network for visibility.

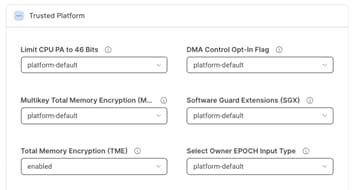

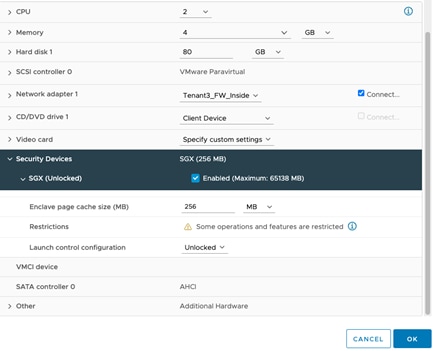

● Setting up Intel Confidential Computing components to enable Intel Total Memory Encryption (TME) and Intel Software Guard Extensions (SGX).

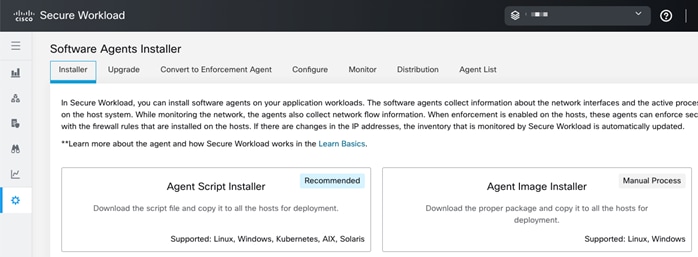

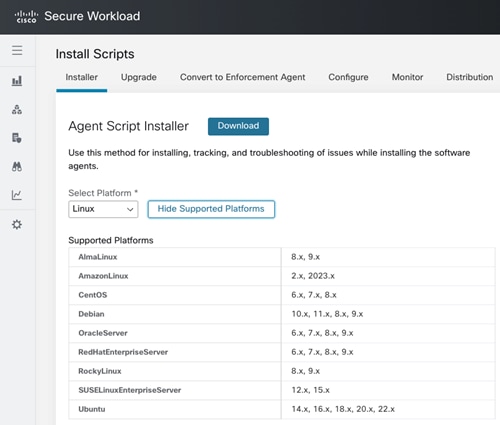

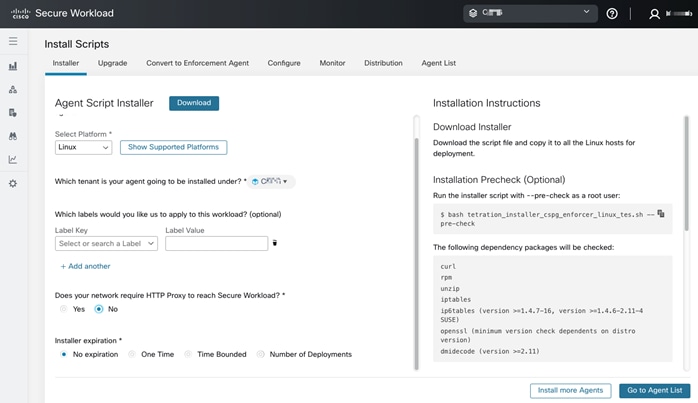

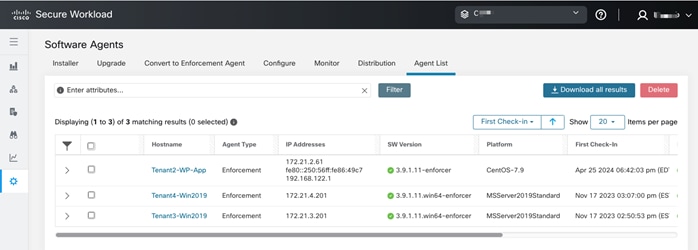

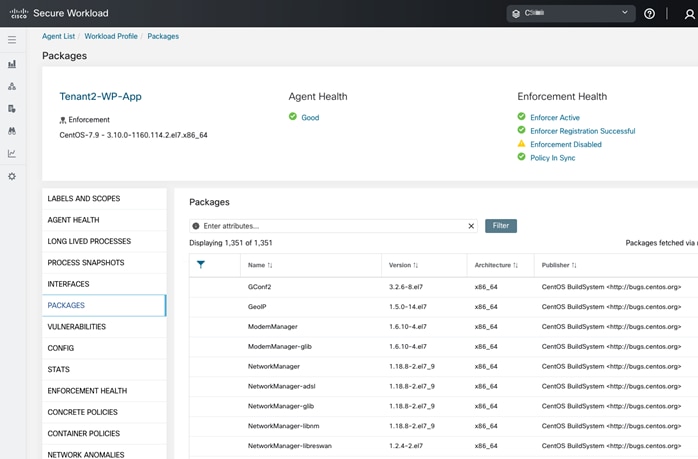

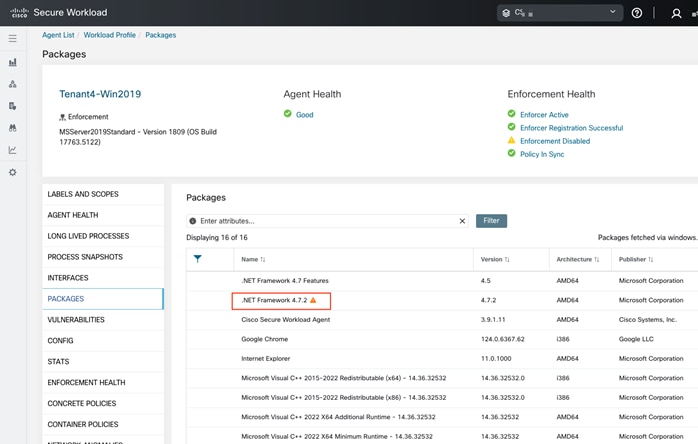

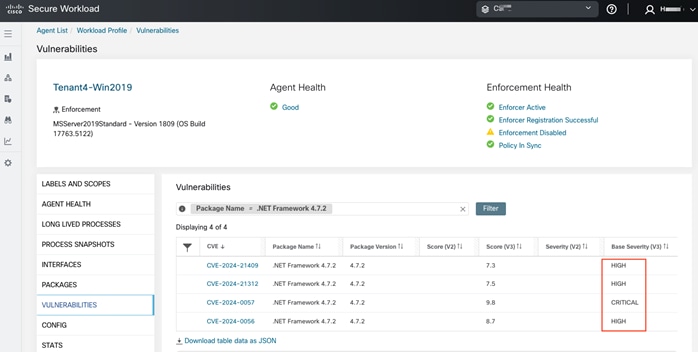

● Demonstrating application and virtual machine (VM) control with Cisco Secure Workload (SaaS), using WordPress as a two-tier model application.

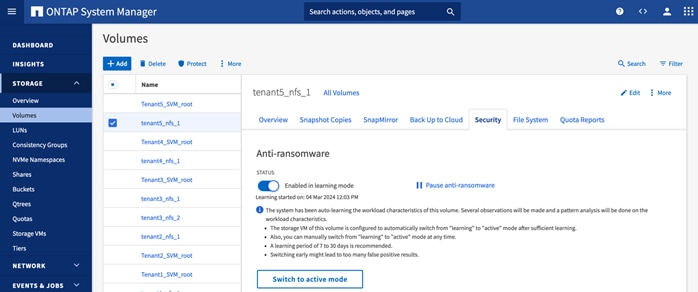

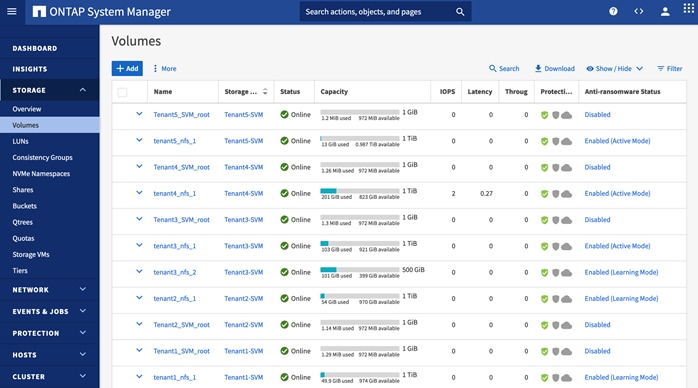

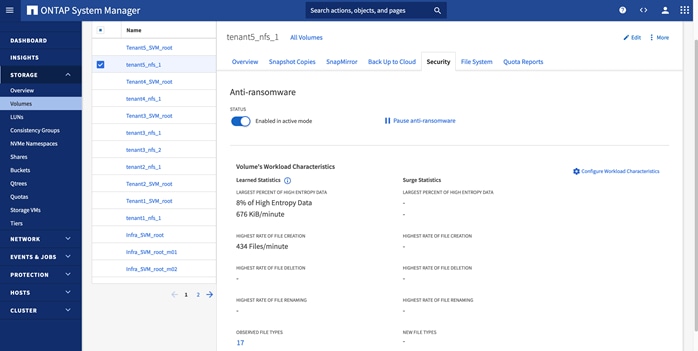

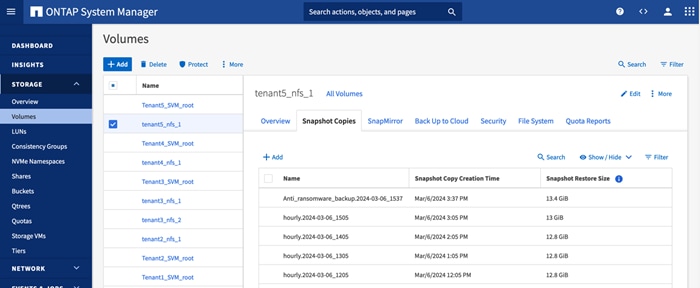

● Setting up and using NetApp Autonomous Ransomware Protection (ARP) to recover a compromised application.

This document augments the FlexPod Datacenter using IaC with Cisco IMM M7, VMware vSphere 8, and NetApp ONTAP 9.12.1 (CVD) and explains new and changed information to support Zero Trust framework deployment.

The following design elements distinguish this FlexPod Datacenter Cisco Validated Design from previous designs:

● Enhanced platform security.

● Multi-tenant design.

● Controlling data traffic between various tenants using Cisco Secure Firewall Threat Defense virtual (FTDv).

● Cisco Secure Network Analytics virtual for network and process level visibility.

● Cisco Secure Workload SaaS for threat protection and for application and VM control.

● Deployment of Intel Confidential Computing elements.

● Data loss prevention using NetApp Autonomous Ransomware Protection.

Deployment Hardware and Software

This chapter contains the following:

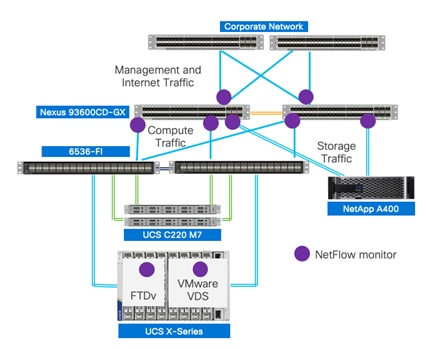

The Zero Trust framework for FlexPod Datacenter design incorporates various security products and components providing a robust framework that extends to all layers, including network, compute, hypervisor, and storage and includes implementation of tenant-based segmentation. This FlexPod validated design includes the following:

● Cisco Secure Firewall Threat Defense virtual devices are utilized to ensure secure communication across application tiers and tenants.

● Intel Confidential Computing provides a secure environment to execute customer workloads.

● Cisco Secure Network Analytics, combined with NetFlow export from various sources, provides application and tenant visibility.

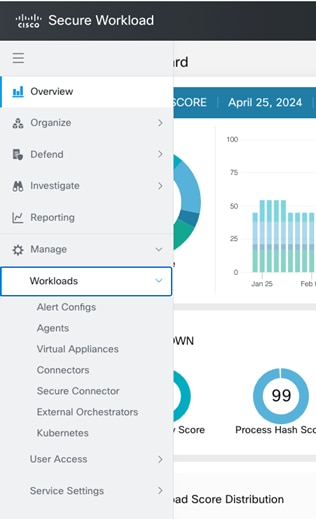

● Cisco Secure Workload is used for visibility into the OS and processes of workload VMs and for providing micro-segmentation.

● NetApp Autonomous Ransomware Protection delivers data classification, protection, and recovery. Additionally, data isolation on NetApp is achieved using IPspaces and Storage Virtual Machines (SVMs).

The FlexPod Datacenter with Cisco UCS and Cisco Intersight meets the following general design requirements:

● Resilient design across all layers of the infrastructure with no single point of failure.

● Scalable design with the flexibility to add compute capacity, storage, or network bandwidth as needed.

● Modular design that can be replicated to expand and grow as the needs of the business grow.

● Flexible design that can support different models of various components with ease.

● Simplified design with ability to integrate and automate with external automation tools.

● Cloud-enabled design which can be configured, managed, and orchestrated from the cloud using GUI or APIs.

● Adherence to cybersecurity best practices, including device and protocol hardening, to reduce the risk of configuration errors and vulnerabilities.

● Reduced attack surface for malicious actors through designs that support enhanced segmentation and control.

● Continuous monitoring of the infrastructure at every layer to identify and mitigate threats.

● Tools that allow for centralized device and security management and policy enforcement.

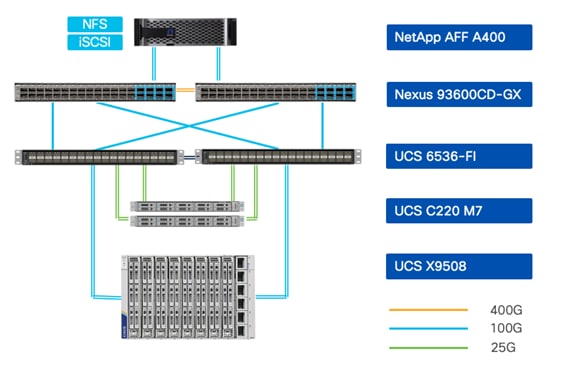

To deliver a solution which meets all these design requirements, various solution components are connected in a FlexPod configuration as shown in Figure 2. The deployment details are covered in the upcoming sections.

The Zero Trust framework for FlexPod can be deployed on both Fibre Channel (FC) and IP-based storage access FlexPod designs. In the FC design, NetApp AFF A400 and Cisco UCS X-Series are connected through Cisco MDS 9132T Fibre Channel switches, and boot from SAN for stateless compute uses the FC network. In the IP-only design, there is no FC network and Cisco MDS is not needed; boot from SAN for stateless compute uses the iSCSI network.

Note: The Fibre Channel based FlexPod design is supported but was not validated as part of this effort.

FlexPod Configuration

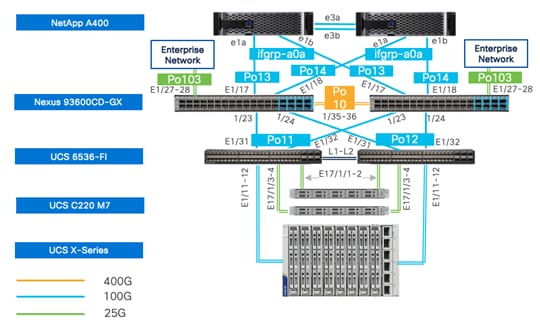

The FlexPod physical topology used in this deployment guide is shown in Figure 3.

Note: The validated configuration showcases the Cisco UCS X-Series chassis and Cisco UCS M7 servers. The Cisco UCS B-Series chassis and Cisco UCS B200 M6 servers were not validated in this CVD but are also supported.

The components are set up as follows:

● Cisco Nexus 93600CD-GX Switches in Cisco NX-OS mode provide the switching fabric. The two Nexus switches connect to each other using two 400 Gbps ports configured as a port-channel (vPC peer-link).

● Cisco UCS 6536 Fabric Interconnects provide the Cisco UCS X-Series chassis, Cisco UCS C-Series servers, and network switch connectivity:

◦ Cisco UCS 6536 Fabric Interconnect (FI) 100 Gigabit Ethernet uplink ports connect to Cisco Nexus 93600CD-GX Switches in a vPC configuration.

◦ Cisco UCS X9508 Chassis connects to Cisco UCS 6536 FIs using Cisco UCSX 9108-100G Intelligent Fabric Modules (IFMs), where two or more 100 Gigabit Ethernet ports are used on each IFM to connect to the appropriate FI.

◦ The Cisco UCS C220 M7 servers connect to Cisco UCS 6536 FIs using four 25G connections. 100G to 4x25G breakout cables are used for this connectivity. Cisco UCS C220 M7 servers can also connect to UCS Fabric Interconnect using 100G VIC adapters.

◦ Cisco UCS x210c M7 compute nodes contain fifth-generation Cisco UCS 15231 virtual interface cards.

◦ Cisco UCS C220 M7 servers contain fifth-generation Cisco UCS 15428 virtual interface cards.

● The NetApp AFF A400 controller connects to the Cisco Nexus 93600CD-GX Switches using two 100 GE ports from each controller configured as a vPC for iSCSI boot and for NFS traffic.

● VMware ESXi 7.0 Update 3 software is installed on Cisco UCS compute nodes and rack servers.

VLAN Configuration

Table 1 lists VLANs configured for setting up the environment along with their usage.

| VLAN ID |

Name |

Description |

Subnet |

| 2 |

Native-VLAN |

Use VLAN 2 as native VLAN instead of default VLAN (1) |

|

| 1230 |

Mgmt |

Existing out of band management VLAN. |

10.123.0.0/24 |

| 1231 |

IB-Mgmt |

FlexPod In-band management VLAN. |

10.123.1.0/24 |

| 1232 |

Traffic-VLAN |

VLAN for all Firewall Thread Defense Virtual (FTDv) outside instances. |

10.123.2.0/24 |

| 3000 |

Infra-vMotion |

vMotion VLAN for all the infrastructure ESXi hosts |

10.101.7.0/24 |

| 3001 |

Infra-iSCSI-A |

Infrastructure host iSCSI-A VLAN |

192.168.1.0/24 |

| 3002 |

Infra-iSCSI-B |

Infrastructure host iSCSI-B VLAN |

192.168.2.0/24 |

| 3003 |

Infra-NFS |

VLAN for ESXi NFS datastore to host all VMs |

192.168.3.0/24 |

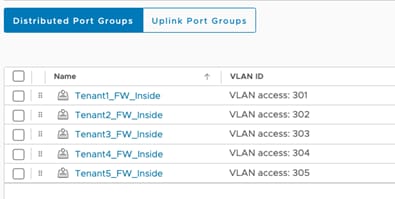

| 301-305 |

Tenant<x>-Inside |

VLANs for inside interfaces of FTDv appliances. The number of VLANs will depend on the number of tenants. |

172.21.<x>.0/24 |

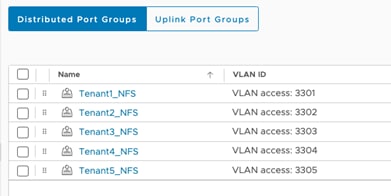

| 3301-3305 |

Tenant<x>-NFS |

VLANs for Tenant SVM specific NFS network |

172.22.<x>.0/24 |

Some of the key highlights of VLAN usage are as follows:

● VLAN 1230 is the management VLAN where out of band management interfaces of all the physical devices are connected.

● VLAN 1231 is used for in-band management of VMs, ESXi hosts, and other infrastructure services such as DNS, AD, etc. in the FlexPod environment.

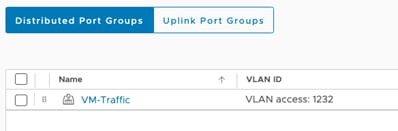

● VLAN 1232 is used for the outside interface of all the tenant FTDv appliances. You can choose to deploy separate VLANs, one for every FTDv, for more granular control.

● VLAN 3000 is the VM vMotion VLAN for infrastructure ESXi hosts.

● VLAN 3001 is used by infrastructure ESXi hosts to access iSCSI boot LUNs (A-Path).

● VLAN 3002 is also used by infrastructure ESXi hosts to access iSCSI boot LUNs (B-Path).

● VLAN 3003 provides ESXi hosts access to the infrastructure NFS datastores hosted on the NetApp controllers. Infrastructure NFS storage is used as primary storage to host all the tenant VMs in this design.

● VLANs 301+ are used for inside interfaces of all the FTDv. Each tenant will use a separate inside VLAN for traffic segregation. These protected VLANs will also be used for defining NetApp SVM management interfaces.

● VLANs 3301+ are used to provide NFS access to per-tenant Storage Virtual Machines (SVMs). Separate VLANs keep the traffic segregated. These VLANs are not needed if tenants do not require access to dedicated NFS shares.
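The per-tenant numbering in Table 1 follows a regular pattern, which can be captured in a small helper when planning additional tenants. The following is a minimal Python sketch, not part of the validated procedure; the function and class names are hypothetical, and it assumes that the `<x>` placeholder in the VLAN names and subnets is the tenant number (so Tenant1 uses VLAN 301 and 172.21.1.0/24).

```python
import ipaddress
from dataclasses import dataclass

# Values taken from Table 1; all helper names below are illustrative only.
FW_OUTSIDE_VLAN = 1232
INSIDE_VLAN_BASE = 300   # Tenant<x>-Inside VLAN ID = 300 + x
NFS_VLAN_BASE = 3300     # Tenant<x>-NFS VLAN ID = 3300 + x


@dataclass(frozen=True)
class TenantNetworks:
    name: str
    inside_vlan: int
    inside_subnet: ipaddress.IPv4Network
    nfs_vlan: int
    nfs_subnet: ipaddress.IPv4Network


def tenant_networks(x: int) -> TenantNetworks:
    """Return the VLAN IDs and subnets for tenant number x (1-5 in Table 1)."""
    if not 1 <= x <= 5:
        raise ValueError("Table 1 defines VLANs for tenants 1 through 5 only")
    return TenantNetworks(
        name=f"Tenant{x}",
        inside_vlan=INSIDE_VLAN_BASE + x,
        # Assumption: <x> in the subnet equals the tenant number.
        inside_subnet=ipaddress.ip_network(f"172.21.{x}.0/24"),
        nfs_vlan=NFS_VLAN_BASE + x,
        nfs_subnet=ipaddress.ip_network(f"172.22.{x}.0/24"),
    )


if __name__ == "__main__":
    for x in range(1, 6):
        t = tenant_networks(x)
        print(t.name, t.inside_vlan, t.inside_subnet, t.nfs_vlan, t.nfs_subnet)
```

If your environment uses a different numbering scheme, adjust the two base constants and the subnet templates accordingly.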

Physical Components

Table 2 lists the required hardware components used to build the validated solution. You are encouraged to review your requirements and adjust the size or quantity of various components as needed.

| Component | Hardware | Comments |
| --- | --- | --- |
| Cisco Nexus Switches | Two Cisco Nexus 93600CD-GX switches | |
| NetApp AFF A400 | A NetApp AFF A400 HA pair with appropriate storage and network connectivity | Your requirements will determine the amount of storage. The NetApp A400 should support 100 Gbps (or 25 Gbps) Ethernet connectivity. |
| Fabric Interconnects | Two Cisco UCS 6536 Fabric Interconnects | These fabric interconnects provide connectivity for X-Series chassis and C-Series rack servers. |
| Cisco UCS Chassis | A minimum of one UCS X9508 chassis | A single chassis can host up to 8 Cisco UCS X210c M6/M7 compute nodes or a combination of compute and X440p PCIe GPU nodes. |
| Cisco UCS Compute Nodes | A total of four or more servers in any combination | The validated configuration consisted of 2 X210c M7 compute nodes and 2 C220 M7 rack servers. |

Table 3 lists various software releases used in the solution.

Table 3. Software Components and Versions

| Component |

Version |

| Cisco Nexus 93600CD-GX |

10.2(6) |

| Cisco UCS FI 6536 |

4.3(2) |

| Cisco UCS C220 M7 |

4.2(2a) |

| Cisco UCS X210c compute nodes |

5.2(0) |

| NetApp A400 - ONTAP |

9.13.1 |

| NetApp Active IQ Unified Manager |

9.13 |

| NetApp ONTAP Tools for VMware vSphere |

9.13 |

| NetApp SnapCenter Plugin for VMware vSphere |

4.9 |

| VMware vCenter |

7.0 Update 3 |

| VMware ESXi |

7.0 Update 3 |

| Security and Visibility |

|

| Cisco Secure Network Analytics |

7.4.2 |

| Cisco Secure Firewall Threat Defense |

7.2.5 |

| Cisco Secure Firewall Management Center |

7.2.5 |

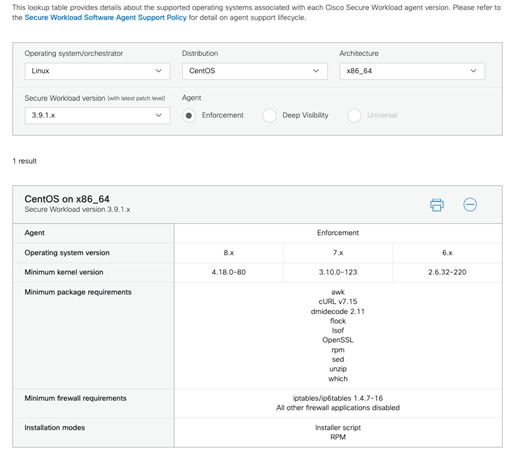

| Cisco Secure Workload (SaaS) |

3.9.1.1* |

* Secure Workload SaaS version is automatically updated.
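Before following the setup procedures, it can help to compare the releases running in your environment against the validated releases in Table 3. The following Python sketch shows one way to do that comparison. The function name and the idea of a manually collected `deployed` inventory dictionary are assumptions for illustration; only a subset of Table 3 is included, and the version strings are copied from the table.

```python
from typing import Dict, List

# Subset of Table 3 (validated releases). Extend as needed for your inventory.
VALIDATED_VERSIONS: Dict[str, str] = {
    "Cisco Nexus 93600CD-GX": "10.2(6)",
    "Cisco UCS FI 6536": "4.3(2)",
    "NetApp A400 - ONTAP": "9.13.1",
    "VMware vCenter": "7.0 Update 3",
    "VMware ESXi": "7.0 Update 3",
    "Cisco Secure Firewall Threat Defense": "7.2.5",
    "Cisco Secure Firewall Management Center": "7.2.5",
}


def version_mismatches(deployed: Dict[str, str]) -> List[str]:
    """Return one message per component that is missing or differs from Table 3."""
    report = []
    for component, validated in VALIDATED_VERSIONS.items():
        actual = deployed.get(component)
        if actual is None:
            report.append(f"{component}: not found in inventory (validated {validated})")
        elif actual != validated:
            report.append(f"{component}: deployed {actual}, validated {validated}")
    return report


if __name__ == "__main__":
    # Hypothetical inventory: everything matches except ESXi.
    inventory = dict(VALIDATED_VERSIONS)
    inventory["VMware ESXi"] = "8.0"
    for line in version_mismatches(inventory):
        print(line)
```

A mismatch does not necessarily mean a release is unsupported; it only flags where your environment departs from what was validated in this document.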

Setting up the base FlexPod Infrastructure

This chapter contains the following:

● Network Switch Configuration

● NetApp ONTAP Storage Configuration

● Cisco Intersight Managed Mode Configuration

● Storage Configuration – ONTAP Boot Storage Setup

● Storage Configuration – ONTAP NVMe Configuration and Finalizing ONTAP Storage

● FlexPod Management Tools Setup

The configuration procedures of the base FlexPod Infrastructure are similar to those from the FlexPod Datacenter using IaC with Cisco IMM M7, VMware vSphere 8, and NetApp ONTAP 9.12.1 CVD with the following main differences due to the deployed configurations.

● The validated configuration uses an IP-only topology, so Cisco MDS switches are not needed. SAN boot for stateless compute uses the iSCSI protocol over the Ethernet switching infrastructure.

● The VLAN IDs used in this CVD are listed in Table 1 and should be used when following the setup procedures.

● The revisions of the infrastructure component software, as listed in Table 3, are different:

◦ Cisco UCS - 4.3(2) instead of 4.2(3d).

◦ Cisco Nexus switches - 10.2(6) instead of 10.2(5M).

◦ NetApp ONTAP - 9.13.1 instead of 9.12.1.

● The revisions of the infrastructure management software are different:

◦ NetApp Active IQ Unified Manager - 9.13 instead of 9.12.

◦ NetApp ONTAP Tools for VMware vSphere - 9.13 instead of 9.12.

◦ NetApp SnapCenter Plugin for VMware vSphere - 4.9 instead of 4.8.

◦ VMware vCenter - 7.0U3 instead of 8.0.

◦ VMware ESXi - 7.0U3 instead of 8.0.

● The deployment of additional components as needed for Zero Trust framework:

◦ Cisco Secure Network Analytics - 7.4.2.

◦ Cisco Secure Firewall Threat Defense Virtual - 7.2.5.

◦ Cisco Secure Firewall Management Center - 7.2.5.

◦ Cisco Secure Workload SaaS - 3.9.1.1.

The required network switch configuration for the base FlexPod infrastructure follows the base CVD section linked below. The Cisco Nexus 9000 series switch featured in this validation is the Cisco Nexus 93600CD-GX configured in NX-OS standalone mode running 10.2(6) firmware. Although different switches and firmware versions are used compared to the base CVD, the same configuration procedures apply. The VLAN IDs used during this CVD are listed in Table 1 and should be adjusted according to your environment.

For more information, see FlexPod Datacenter using IaC with Cisco IMM M7, VMware vSphere 8, and NetApp ONTAP 9.12.1, section Network Switch Configuration. This section provides the switch configuration for the infrastructure tenant. Additional configurations for the tenant deployment are explained in a following section.

NetApp ONTAP Storage Configuration

The required initial NetApp ONTAP storage configuration follows the CVD section here: NetApp ONTAP Storage Configuration. This section provides the initial ONTAP storage configuration for the infrastructure tenant. Additional ONTAP storage configurations for multi-tenant deployment are explained in a following section. The NetApp AFF A-series storage featured in this validation is the NetApp AFF A400 running ONTAP 9.13.1 in IP-based solution configuration. Although a newer ONTAP version is available, the same configuration procedures apply.

Cisco Intersight Managed Mode Configuration

Cisco Intersight managed mode standardizes policy and operation management for the Cisco UCS X210c M7 compute nodes and Cisco UCS C220 M7 rack servers used in this deployment. The required Cisco Intersight Managed Mode configuration follows the base CVD section here: Cisco Intersight Managed Mode Configuration. This section provides the Intersight configuration for setting up the infrastructure tenant. Additional multi-tenant deployment configurations are explained in a following section.

No FC SAN switch configuration is used for this IP-based validation configuration deployment. If you are using FC SAN configuration, please follow the guidance found here: SAN Switch Configuration. However, if you are also using IP-based deployment configuration, you can skip this section.

Storage Configuration – ONTAP Boot Storage Setup

The ONTAP boot storage configuration follows the base CVD section. Depending on whether you are using iSCSI or FC SAN boot, update the variable files as instructed here: Storage Configuration - ONTAP Boot Storage Setup. For this validation, the servers boot from iSCSI SAN.

For this deployment, infrastructure and additional tenants are sharing the iSCSI SAN booted ESXi hosts in the VMware cluster. As a result, no additional boot storage configurations are needed for the tenants.

In this deployment, Firewall Threat Defense virtual (FTDv) is installed on the FlexPod infrastructure being validated. The version of FTDv used during validation does not support VMware ESXi 8.0 and therefore even though the base FlexPod infrastructure supports vSphere 8.0, VMware vSphere 7.0 U3 was installed on FlexPod.

Note: If you are deploying physical FTD devices or are using an existing (separate) VMware vSphere 7.0 based management infrastructure to deploy FTDv, VMware vSphere 8.0 can be used on the FlexPod infrastructure to deploy applications.

The VMware vSphere 7.0 setup process is similar to the VMware vSphere 8.0 Setup section here: VMware vSphere 8.0 Setup. As detailed in the base CVD, KVM is used with the ESXi ISO CD mapped to vMedia. The difference is that the ESXi image used for this CVD is ESXi 7.0U3 instead of ESXi 8.0.

Download ESXi 7.0U3 from VMware

Procedure 1. Download VMware ESXi ISO

Step 1. Click the following link: Cisco Custom Image for ESXi 7.0U3 Install CD.

Step 2. Download the .iso file.

Note: You will need a VMware user id and password on vmware.com to download this software.

Download the vCenter 7.0 image using the procedures below and then follow the base CVD procedures for VMware vCenter installation section to install the VMware vCenter 7.0U3h Server Appliance in a FlexPod environment.

Procedure 1. Download VMware vCenter ISO

Step 1. Click the following link: vCenter 7.0U3 Install ISO

Note: You will need a VMware user id and password on vmware.com to download this software.

Step 2. Download the .iso file.

Storage Configuration – ONTAP NVMe Configuration and Finalizing ONTAP Storage

If your FlexPod configuration utilizes NVMe, make sure to update the NVMe related information as instructed in the base CVD section here: Ansible ONTAP Storage Config Part 3. For this validation configuration, NVMe is not used and the NVMe related tasks are skipped.

FlexPod Management Tools Setup

FlexPod management tools are deployed using the information from the base CVD section here: FlexPod Management Tools Setup, with different software revisions for the various tools as documented in Table 3.

Note: The vVol configuration is not deployed for this validation. If vVol configuration is required, follow the optional Virtual Volume – vVol section in the base CVD FlexPod Management Tools Setup section.

Multi-tenant Infrastructure Setup

This chapter contains the following:

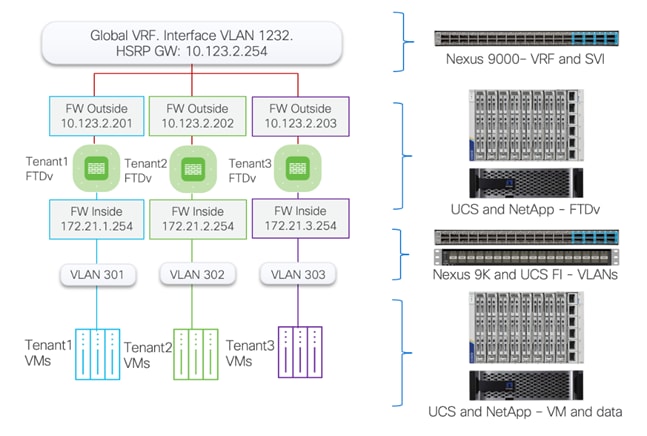

Segmentation plays a crucial role in the Zero Trust framework. Creating isolated zones within an infrastructure contains potential breaches and prevents attackers from moving laterally, thereby limiting the scope of damage caused by a security breach. Even if an attacker gains access to one segment, they will have a much harder time reaching critical data or systems in other segments. Segmentation also allows you to implement least privilege access control: users and devices only have access to the specific resources they need within their designated segment, minimizing the risk of unauthorized access to sensitive data.
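The least-privilege idea can be illustrated with a default-deny allow-list: a flow between two segments is permitted only if it is explicitly listed. The following Python sketch is purely conceptual and does not represent how Cisco Secure Firewall or Cisco Secure Workload store or evaluate policy; the segment names, ports, and function name are hypothetical.

```python
from typing import Dict, Set, Tuple

# Hypothetical allow-list: (source segment, destination segment) -> permitted TCP ports.
# Anything not listed here is denied by default.
ALLOWED_FLOWS: Dict[Tuple[str, str], Set[int]] = {
    ("Outside", "Tenant1-Web"): {443},
    ("Tenant1-Web", "Tenant1-DB"): {3306},
}


def is_allowed(src_segment: str, dst_segment: str, port: int) -> bool:
    """Default deny: permit a flow only if it is explicitly on the allow-list."""
    return port in ALLOWED_FLOWS.get((src_segment, dst_segment), set())


if __name__ == "__main__":
    print(is_allowed("Tenant1-Web", "Tenant1-DB", 3306))  # explicitly permitted flow
    print(is_allowed("Tenant2-Web", "Tenant1-DB", 3306))  # cross-tenant flow, not listed
```

Because the lookup falls back to an empty set, a compromised host in one tenant segment gains no implicit reachability into another tenant's segment.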

This section explains the infrastructure level changes to enable the tenant deployment including changes at switching, compute, hypervisor, and storage level. The following sections will explain the implementation of firewall and other related technologies to protect customer traffic. The procedures described in this section outline deployment of multiple tenants, Tenant1, Tenant2, and so on. You can adjust the procedure to change the names and number of the tenants.

The multi-tenant network design deployed on a base FlexPod provides a secure, scalable, and flexible foundation for hosting multiple tenants with isolation. Figure 4 illustrates how the tenants (only three tenants shown) are layered on top of an existing FlexPod infrastructure.

In this deployment model:

● Cisco Nexus 93600CD-GX acts as the primary gateway, offering each tenant an entry point into their respective resources.

● A Cisco Secure Firewall Threat Defense virtual (FTDv) firewall is deployed for each tenant. Deploying separate firewall instances provides granular control and management separation.

● FTDv appliances are managed using Secure Firewall Management Center (FMC). You can choose to manage the firewalls using device manager, but FMC standardizes and simplifies firewall policy management.

● An appropriately sized FTDv appliance is deployed (as a VM) in Cisco UCS and connected to port groups for the outside VLAN (1232) and the inside VLANs (301+).

● All FTDv appliances use the same outside subnet and require a single gateway on Nexus 93600CD-GX.

● The protected traffic VLANs are defined on Cisco Nexus, Cisco UCS FI, and VMware distributed switch. This design ensures that each tenant's traffic remains separate and secure within its own VLAN.

To deploy this multi-tenant architecture on a FlexPod, the following configurations are added to an existing FlexPod:

● VLANs, VRFs, and routing modifications on Cisco Nexus

● VLANs and vNICs modifications on Cisco UCS

● SVM and storage configuration on NetApp Controllers

● VLANs and port-group configurations on VMware vCenter

You should have deployed a base FlexPod as explained in the previous section. The configurations in this section build on or modify the base FlexPod design to deploy a multi-tenant architecture.

Procedure 1. Create the Tenant VLANs

Step 1. Log into each Nexus switch and define the global (single) Firewall Outside VLAN (1232).

vlan <FW-Outside-Traffic-VLAN>

For example, when the FW outside VLAN is 1232:

vlan 1232

name VM-Traffic

Step 2. On each Nexus switch, define the tenant Firewall inside (protected) VLANs for tenants.

vlan <Tenant<X>-Firewall-Inside-VLAN>

name Tenant<X>_FW_Inside

For example:

vlan 301

name Tenant1_FW_Inside

vlan 302

name Tenant2_FW_Inside

Step 3. On each Nexus switch, define the tenant NFS VLANs used for accessing NFS shares within tenant SVMs.

vlan <Tenant<X>-NFS-VLAN-ID>

name Tenant<X>-NFS

For example:

vlan 3301

name Tenant1-NFS

vlan 3302

name Tenant2-NFS

Step 4. On each Nexus switch, enable the VLANs on the VPC peer-link.

When the VPC peer-link is Port-Channel 10 and VLANs are defined for Tenant1-Tenant5:

interface port-channel10

description vPC Peer Link

switchport trunk allowed vlan add 301-305,1232,3301-3305

!

Step 5. On each Nexus switch, enable the global FW Outside, tenant FW Inside, and tenant NFS VLANs on Cisco UCS VPC interfaces.

When port-channels 11-12 are connected to the FIs and VLANs are defined for Tenant1-Tenant5:

interface port-channel11

description AB03-6536-A

switchport trunk allowed vlan add 301-305,1232,3301-3305

!

interface port-channel12

description AB03-6536-B

switchport trunk allowed vlan add 301-305,1232,3301-3305

!

Step 6. On each Nexus switch, enable Tenant SVM management and Tenant NFS VLANs on NetApp controller VPC interfaces.

Note: In this example, the Tenant SVM management network is the same as the FW Inside network, but you can define a dedicated SVM management subnet/VLAN.

When port-channels 13-14 are connected to the NetApp controllers and VLANs are defined for Tenant1-Tenant5:

interface port-channel13

description AB03-A400-01

switchport trunk allowed vlan add 301-305,3301-3305

!

interface port-channel14

description AB03-A400-02

switchport trunk allowed vlan add 301-305,3301-3305

!
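When the number of tenants grows, the VLAN definitions and trunk statements in the steps above become repetitive. The following Python sketch generates the same lines for a given tenant count so they can be reviewed and pasted into each switch. It is an illustration only: the function names are hypothetical, the VLAN numbering (300 + x and 3300 + x) and VLAN names follow the examples in this procedure, and the output should be checked against your own VLAN plan before use.

```python
from typing import List, Optional


def vlan_range(first: int, last: int) -> str:
    """Format a contiguous VLAN range without spaces, for example 301-305."""
    return str(first) if first == last else f"{first}-{last}"


def tenant_vlan_config(tenant_count: int, fw_outside_vlan: int = 1232) -> List[str]:
    """Build the VLAN definitions from Steps 1-3 for tenants 1..tenant_count."""
    lines = [f"vlan {fw_outside_vlan}", "  name VM-Traffic"]
    for x in range(1, tenant_count + 1):
        lines += [f"vlan {300 + x}", f"  name Tenant{x}_FW_Inside"]
    for x in range(1, tenant_count + 1):
        lines += [f"vlan {3300 + x}", f"  name Tenant{x}-NFS"]
    return lines


def trunk_allowed_line(tenant_count: int,
                       fw_outside_vlan: Optional[int] = 1232) -> str:
    """Build the 'allowed vlan add' statement used in Steps 4-6.

    Pass fw_outside_vlan=None for the NetApp port-channels, which carry
    only the tenant inside and tenant NFS VLANs in this design.
    """
    parts = [vlan_range(301, 300 + tenant_count)]
    if fw_outside_vlan is not None:
        parts.append(str(fw_outside_vlan))
    parts.append(vlan_range(3301, 3300 + tenant_count))
    return "switchport trunk allowed vlan add " + ",".join(parts)


if __name__ == "__main__":
    print("\n".join(tenant_vlan_config(5)))
    print(trunk_allowed_line(5))        # vPC peer-link and FI port-channels
    print(trunk_allowed_line(5, None))  # NetApp controller port-channels
```

Generating the allowed-VLAN list programmatically also avoids stray spaces inside the comma-separated list.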

Procedure 2. Create the Firewall Outside SVI

Step 1. Log into the Nexus-1 and configure the SVI.

interface Vlan1232

description GW for FW Outside - 10.123.2.0/24 Network

no shutdown

no ip redirects

ip address 10.123.2.251/24

no ipv6 redirects

hsrp version 2

hsrp 1232

preempt delay minimum 300

priority 105

ip 10.123.2.254

!

Step 2. Log into the Nexus-2 and configure the SVI.

interface Vlan1232

description GW for FW-Outside - 10.123.2.0/24 Network

no shutdown

no ip redirects

ip address 10.123.2.252/24

no ipv6 redirects

hsrp version 2

hsrp 1232

ip 10.123.2.254

!
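The two SVI configurations above differ only in the interface address and the HSRP priority and preempt settings. The following Python sketch renders both from a shared set of parameters and checks that the HSRP virtual IP falls inside the SVI subnet before any configuration is produced. The function name and its parameters are hypothetical; the addresses, HSRP group number (equal to the VLAN ID), and priority values are taken from the example above.

```python
import ipaddress
from typing import List, Optional


def svi_config(vlan_id: int, description: str, interface_ip: str, hsrp_vip: str,
               priority: Optional[int] = None,
               preempt_delay: Optional[int] = None) -> List[str]:
    """Render an HSRP-enabled SVI; the HSRP group number reuses the VLAN ID."""
    iface = ipaddress.ip_interface(interface_ip)
    vip = ipaddress.ip_address(hsrp_vip)
    if vip not in iface.network:
        raise ValueError(f"HSRP VIP {vip} is outside {iface.network}")
    if vip == iface.ip:
        raise ValueError("HSRP VIP must differ from the interface address")
    lines = [
        f"interface Vlan{vlan_id}",
        f"  description {description}",
        "  no shutdown",
        "  no ip redirects",
        f"  ip address {iface.with_prefixlen}",
        "  no ipv6 redirects",
        "  hsrp version 2",
        f"  hsrp {vlan_id}",
    ]
    # Only the preferred active switch (Nexus-1 above) sets preempt and priority.
    if preempt_delay is not None:
        lines.append(f"    preempt delay minimum {preempt_delay}")
    if priority is not None:
        lines.append(f"    priority {priority}")
    lines.append(f"    ip {vip}")
    return lines


if __name__ == "__main__":
    desc = "GW for FW Outside - 10.123.2.0/24 Network"
    nexus1 = svi_config(1232, desc, "10.123.2.251/24", "10.123.2.254",
                        priority=105, preempt_delay=300)
    nexus2 = svi_config(1232, desc, "10.123.2.252/24", "10.123.2.254")
    print("\n".join(nexus1))
    print("!")
    print("\n".join(nexus2))
```

The subnet check catches a common copy-and-paste error where the virtual IP is left pointing at a different tenant or management subnet.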

Procedure 1. Create the Tenant VLANs

Step 1. Log into Intersight and select Infrastructure Services.

Step 2. Click Policies in the left pane and find the VLAN policy.

Step 3. Click the name of the VLAN policy. Under the Actions button on top right, select Edit.

Step 4. Verify the Usage in Profiles tab and click Next.

Step 5. Click Add VLANs in the main window.

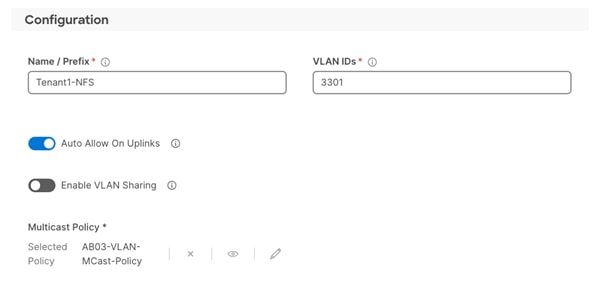

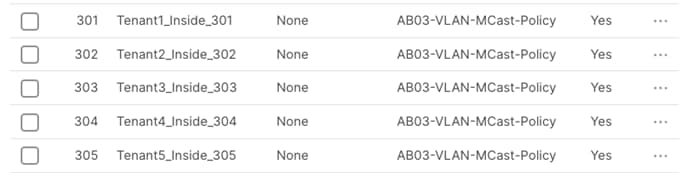

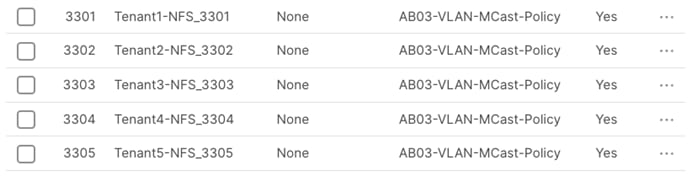

Step 6. Provide a name for the VLAN, VLAN ID and select Multicast Policy. Make sure Auto Allow in Uplinks is enabled.

Step 7. After defining all the VLANs, verify that the VLAN policy lists every VLAN you added.

Step 8. Click Save.

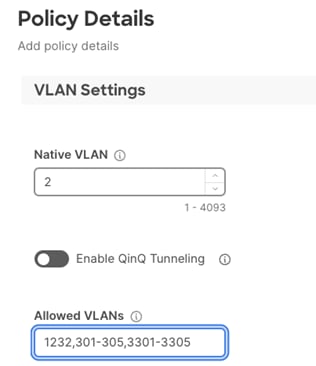

Procedure 2. Modify the VDS Ethernet Network Group policy to add VLANs

Step 1. Log into Intersight and select Infrastructure Services.

Step 2. Click Policies in the left pane and find the Ethernet Network Group policy for VDS.

Step 3. Click the name of the policy. Under the Actions button on top right, select Edit.

Step 4. Verify the Name, Tags (if defined) and Description and click Next.

Step 5. Under the Allowed VLANs, add the FW outside, tenant FW Inside, and tenant NFS VLANs.

Note: The screenshot below only captures tenant VLANs. Other existing VLANs defined in VDS policy are not shown to avoid confusion.

Step 6. Click Save.

Step 7. In the left pane, click on Profiles and select UCS Server Profiles in the main window.

Step 8. Click “…” and select Deploy for all the servers attached to the Ethernet Network Group policy.

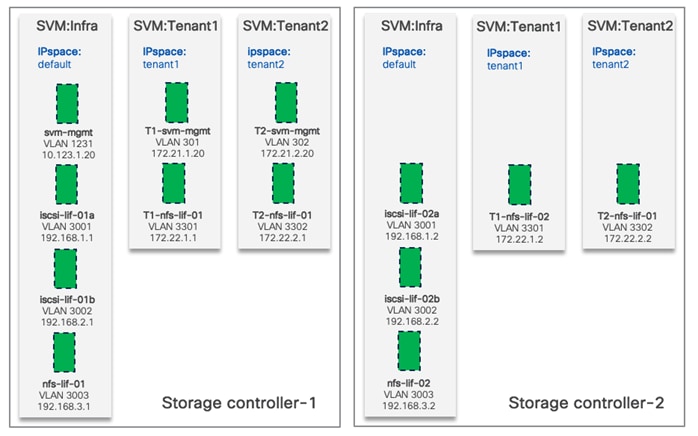

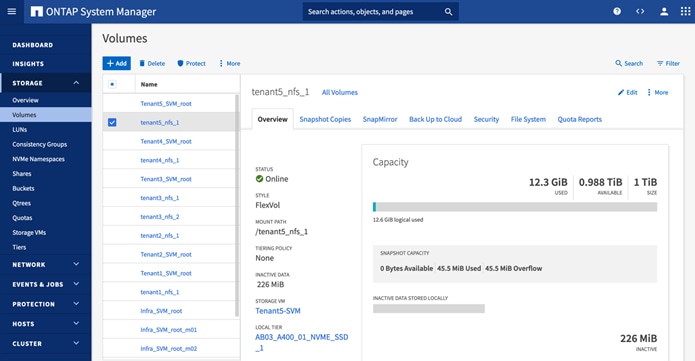

In the Zero Trust framework for FlexPod, secure management and data isolation in storage are achieved by implementing a separate storage virtual machine (SVM), IPspace, SVM management LIF, and set of NFS LIFs for each tenant on the NetApp AFF A400 storage system.

Figure 5 shows how the tenants, IPspaces, and logical interfaces (LIFs) are defined on the two storage controllers. The management LIF is configured to move between the two controllers as needed. This design allows tenant VMs to access their NFS volumes directly, providing fast, low-latency access.

Procedure 1. Configure IPspaces

Each tenant SVM is assigned to a separate IPspace to maintain IP separation. Log into the NetApp AFF A400 controller to execute the following commands.

Step 1. To create an IPspace for a tenant SVM, use the following command syntax:

network ipspace create -ipspace <Tenant IPspace>

AB03-A400::> network ipspace create -ipspace Tenant<X> (where X = 1,2,3 etc.)

For example:

AB03-A400::> network ipspace create -ipspace Tenant1

AB03-A400::> network ipspace create -ipspace Tenant2

Procedure 2. Configure Storage Virtual Machine (SVM)

In this deployment, an infrastructure SVM is created to host the infrastructure services such as boot LUNs and NFS datastores to host the tenant VMs. Additional tenants are assigned dedicated SVMs to store their data and maintain isolation.

Step 1. To create a tenant SVM and assign it to the tenant IPspace, use the following command syntax.

vserver create -vserver <tenant-svm> -ipspace <tenant-IPspace>

AB03-A400::> vserver create -vserver Tenant<X>-SVM -ipspace Tenant<X> (where X = 1,2,3 etc.)

For example:

AB03-A400::> vserver create -vserver Tenant1-SVM -ipspace Tenant1

AB03-A400::> vserver create -vserver Tenant2-SVM -ipspace Tenant2
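Because the IPspace and SVM names follow the same Tenant<X> pattern for every tenant, the command pairs can be generated rather than typed. The Python sketch below is an illustration only; the helper name `tenant_svm_commands` is hypothetical, and the output is meant to be reviewed before being pasted into the ONTAP CLI.

```python
def tenant_svm_commands(tenant_ids):
    """Build the IPspace and SVM creation commands for each tenant number."""
    commands = []
    for x in tenant_ids:
        # One IPspace per tenant keeps tenant IP address spaces separate.
        commands.append(f"network ipspace create -ipspace Tenant{x}")
        # The SVM is bound to its tenant IPspace at creation time.
        commands.append(f"vserver create -vserver Tenant{x}-SVM -ipspace Tenant{x}")
    return commands


for command in tenant_svm_commands([1, 2]):
    print(command)
```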

Step 2. Configure SVM protocol support. Check the allowed-protocols and disallowed-protocols configurations and update them based on your requirements with the following command syntaxes.

vserver show -vserver <tenant-svm> -fields allowed-protocols,disallowed-protocols

vserver add-protocols -vserver <tenant-svm> -protocols <protocols>

vserver remove-protocols -vserver <tenant-svm> -protocols <protocols>

To check currently configured protocols:

vserver show -vserver Tenant<X>-SVM -fields allowed-protocols,disallowed-protocols (where X = 1,2,3 etc.)

For example:

AB03-A400::> vserver show -vserver Tenant1-SVM,Tenant2-SVM -fields allowed-protocols,disallowed-protocols

vserver allowed-protocols disallowed-protocols

----------- -------------------------- --------------------

Tenant1-SVM nfs,cifs,fcp,iscsi,ndmp,s3 nvme

Tenant2-SVM nfs,cifs,fcp,iscsi,ndmp,s3 nvme

2 entries were displayed.

To remove unused protocols:

AB03-A400::> vserver remove-protocols -vserver Tenant<X>-SVM -protocols cifs,fcp,iscsi,ndmp,s3

For example:

AB03-A400::> vserver remove-protocols -vserver Tenant1-SVM -protocols cifs,fcp,iscsi,ndmp,s3

AB03-A400::> vserver remove-protocols -vserver Tenant2-SVM -protocols cifs,fcp,iscsi,ndmp,s3

To verify the new protocol set:

AB03-A400::> vserver show -vserver Tenant1-SVM,Tenant2-SVM -fields allowed-protocols,disallowed-protocols

vserver allowed-protocols disallowed-protocols

----------- ----------------- ---------------------------

Tenant1-SVM nfs cifs,fcp,iscsi,ndmp,nvme,s3

Tenant2-SVM nfs cifs,fcp,iscsi,ndmp,nvme,s3

2 entries were displayed.
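The list passed to remove-protocols is simply the allowed set minus the protocols the tenant needs. A minimal Python sketch of that calculation follows, assuming NFS is the only protocol to keep, as in this deployment. The helper name `protocols_to_remove` is hypothetical.

```python
def protocols_to_remove(allowed, keep):
    """Return the allowed protocols that are not in the keep list, in order."""
    keep_set = set(keep)
    return [protocol for protocol in allowed if protocol not in keep_set]


# Allowed protocols as reported by "vserver show" in the example above.
allowed = "nfs,cifs,fcp,iscsi,ndmp,s3".split(",")

to_remove = protocols_to_remove(allowed, keep=["nfs"])
command = (
    "vserver remove-protocols -vserver Tenant1-SVM "
    f"-protocols {','.join(to_remove)}"
)
print(command)
```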

Procedure 3. Configure SVM aggregate list

Step 1. Add data aggregates to the SVM and show the configuration using the following command syntax.

vserver modify -vserver <tenant-svm> -aggr-list <aggregate-list>

vserver show -vserver <tenant-svm> -fields aggr-list

AB03-A400::> vserver modify -vserver Tenant<X>-SVM -aggr-list AB03_A400_01_NVME_SSD_1,AB03_A400_02_NVME_SSD_1 (where X = 1,2,3 etc.)

For example:

AB03-A400::> vserver modify -vserver Tenant1-SVM -aggr-list AB03_A400_01_NVME_SSD_1,AB03_A400_02_NVME_SSD_1

AB03-A400::> vserver modify -vserver Tenant2-SVM -aggr-list AB03_A400_01_NVME_SSD_1,AB03_A400_02_NVME_SSD_1

To verify the aggregates:

AB03-A400::> vserver show -vserver Tenant1-SVM,Tenant2-SVM -fields aggr-list

vserver aggr-list

----------- -----------------------------------------------

Tenant1-SVM AB03_A400_01_NVME_SSD_1,AB03_A400_02_NVME_SSD_1

Tenant2-SVM AB03_A400_01_NVME_SSD_1,AB03_A400_02_NVME_SSD_1

2 entries were displayed.

Procedure 4. Configure SVM NFS protocol support

Step 1. Create an NFS server for the tenant SVM, enable or modify the desired NFS versions, transport support, and VMware vStorage support, and show the configuration with the following command syntax.

vserver nfs create -vserver <tenant-svm> -udp <enabled | disabled> -v3 <enabled | disabled> -v4.1 <enabled | disabled> -vstorage <enabled | disabled>

vserver nfs modify -vserver <tenant-svm> -udp <enabled | disabled> -v3 <enabled | disabled> -v4.1 <enabled | disabled> -vstorage <enabled | disabled>

vserver nfs show -vserver <tenant-svm> -fields udp,v3,v4.1,vstorage

AB03-A400::> vserver nfs create -vserver Tenant<X>-SVM -udp disabled -v3 enabled -v4.1 enabled -vstorage enabled (where X = 1,2,3 etc.)

For example:

AB03-A400::> vserver nfs create -vserver Tenant1-SVM -udp disabled -v3 enabled -v4.1 enabled -vstorage enabled

AB03-A400::> vserver nfs create -vserver Tenant2-SVM -udp disabled -v3 enabled -v4.1 enabled -vstorage enabled

To verify:

AB03-A400::> vserver nfs show -vserver Tenant1-SVM,Tenant2-SVM -fields udp,v3,v4.1,vstorage

vserver v3 udp v4.1 vstorage

----------- ------- -------- ------- --------

Tenant1-SVM enabled disabled enabled enabled

Tenant2-SVM enabled disabled enabled enabled

2 entries were displayed.

Procedure 5. Configure SVM root volume load-sharing mirror

Step 1. Identify the node and aggregate that host the tenant SVM root volume, then create a volume on the other node to serve as the load-sharing mirror of the root volume. Use the following command syntax:

vserver show -vserver <tenant-svm> -fields rootvolume,aggregate

volume create -vserver <tenant-svm> -volume <tenant-svm-rootvolume-lsm-name> -aggregate <root-volume-lsm-aggr-name> -size 1GB -type DP

AB03-A400::> vserver show -vserver Tenant<X>-SVM -fields rootvolume,aggregate (where X = 1,2,3 etc.)

For example:

AB03-A400::> vserver show -vserver Tenant1-SVM,Tenant2-SVM -fields rootvolume,aggregate

vserver rootvolume aggregate

----------- ---------------- -----------------------

Tenant1-SVM Tenant1_SVM_root AB03_A400_02_NVME_SSD_1

Tenant2-SVM Tenant2_SVM_root AB03_A400_02_NVME_SSD_1

2 entries were displayed.

AB03-A400::> volume create -vserver Tenant<X>-SVM -volume Tenant<X>_SVM_root_lsm01 -aggregate AB03_A400_01_NVME_SSD_1 -size 1GB -type DP (where X = 1,2,3 etc.)

For example:

AB03-A400::> volume create -vserver Tenant1-SVM -volume Tenant1_SVM_root_lsm01 -aggregate AB03_A400_01_NVME_SSD_1 -size 1GB -type DP

[Job 2408] Job succeeded: Successful

AB03-A400::> volume create -vserver Tenant2-SVM -volume Tenant2_SVM_root_lsm01 -aggregate AB03_A400_01_NVME_SSD_1 -size 1GB -type DP

[Job 2410] Job succeeded: Successful

Step 2. Create a load-sharing mirror relationship for the tenant SVM root volume, initialize the load-sharing mirror, and then verify the configuration using the following command syntax.

snapmirror create -source-path <tenant-svm:source-rootvolume-name> -destination-path <tenant-svm:mirror-rootvolume-name> -type LS -schedule 15min

snapmirror initialize-ls-set -source-path <tenant-svm:svm-rootvolume>

snapmirror show -vserver <tenant-svm>

AB03-A400::> snapmirror create -source-path Tenant<X>-SVM:Tenant<X>_SVM_root -destination-path Tenant<X>-SVM:Tenant<X>_SVM_root_lsm01 -type LS -schedule 15min (where X = 1,2,3 etc.)

For example:

AB03-A400::> snapmirror create -source-path Tenant1-SVM:Tenant1_SVM_root -destination-path Tenant1-SVM:Tenant1_SVM_root_lsm01 -type LS -schedule 15min

[Job 2418] Job is queued: snapmirror create for the relationship with destination "AB03-A400://Tenant1-SVM/Tenant1_SVM_r[Job 2418] Job succeeded: SnapMirror: done

AB03-A400::> snapmirror create -source-path Tenant2-SVM:Tenant2_SVM_root -destination-path Tenant2-SVM:Tenant2_SVM_root_lsm01 -type LS -schedule 15min

[Job 2420] Job is queued: snapmirror create for the relationship with destination "AB03-A400://Tenant2-SVM/Tenant2_SVM_r[Job 2420] Job succeeded: SnapMirror: done

AB03-A400::> snapmirror initialize-ls-set -source-path Tenant<X>-SVM:Tenant<X>_SVM_root (where X = 1,2,3 etc.)

For example:

AB03-A400::> snapmirror initialize-ls-set -source-path Tenant1-SVM:Tenant1_SVM_root

[Job 2422] Job is queued: snapmirror initialize-ls-set for source "AB03-A400://Tenant1-SVM/Tenant1_SVM_root".

AB03-A400::> snapmirror initialize-ls-set -source-path Tenant2-SVM:Tenant2_SVM_root

[Job 2423] Job is queued: snapmirror initialize-ls-set for source "AB03-A400://Tenant2-SVM/Tenant2_SVM_root".

AB03-A400::> snapmirror show -vserver Tenant<X>-SVM (where X = 1,2,3 etc.)

For example:

AB03-A400::> snapmirror show -vserver Tenant1-SVM,Tenant2-SVM

Progress

Source Destination Mirror Relationship Total Last

Path Type Path State Status Progress Healthy Updated

----------- ---- ------------ ------- -------------- --------- ------- --------

AB03-A400://Tenant1-SVM/Tenant1_SVM_root LS AB03-A400://Tenant1-SVM/Tenant1_SVM_root_lsm01 Snapmirrored Idle - true -

AB03-A400://Tenant2-SVM/Tenant2_SVM_root LS AB03-A400://Tenant2-SVM/Tenant2_SVM_root_lsm01 Snapmirrored Idle - true -

2 entries were displayed.
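The placement rule in this procedure (put the mirror on the node that does not hold the root volume) can be expressed as a small lookup. The Python sketch below assumes that each aggregate name maps to the node suggested by its name. That mapping and the helper names are assumptions made for illustration, not output from the storage system.

```python
# Assumed aggregate-to-node mapping, inferred from the aggregate names.
AGGR_TO_NODE = {
    "AB03_A400_01_NVME_SSD_1": "AB03-A400-01",
    "AB03_A400_02_NVME_SSD_1": "AB03-A400-02",
}


def mirror_aggregate(root_aggregate, aggr_to_node=AGGR_TO_NODE):
    """Pick an aggregate on a node other than the one hosting the root volume."""
    root_node = aggr_to_node[root_aggregate]
    candidates = [aggr for aggr, node in aggr_to_node.items() if node != root_node]
    if not candidates:
        raise ValueError("no aggregate available on a different node")
    return candidates[0]


def lsm_commands(x, root_aggregate):
    """Build the volume and SnapMirror commands for one tenant's LS mirror."""
    svm = f"Tenant{x}-SVM"
    root = f"Tenant{x}_SVM_root"
    lsm = f"{root}_lsm01"
    return [
        f"volume create -vserver {svm} -volume {lsm} "
        f"-aggregate {mirror_aggregate(root_aggregate)} -size 1GB -type DP",
        f"snapmirror create -source-path {svm}:{root} "
        f"-destination-path {svm}:{lsm} -type LS -schedule 15min",
        f"snapmirror initialize-ls-set -source-path {svm}:{root}",
    ]


# Root volume on node 02 (as reported by vserver show), so the mirror goes to node 01.
for command in lsm_commands(1, "AB03_A400_02_NVME_SSD_1"):
    print(command)
```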

Procedure 6. Configure SVM login banner

Step 1. To create a login banner for the tenant SVM, use the following command syntax.

security login banner modify -vserver <tenant-svm> -message "This <tenant-svm> is reserved for authorized users only!"

AB03-A400::> security login banner modify -vserver Tenant<X>-SVM -message "Tenant<X>-SVM is reserved for authorized users only!" (where X = 1,2,3 etc.)

For example:

AB03-A400::> security login banner modify -vserver Tenant1-SVM -message "Tenant1-SVM is reserved for authorized users only!"

AB03-A400::> security login banner modify -vserver Tenant2-SVM -message "Tenant2-SVM is reserved for authorized users only!"

Procedure 7. Configure SVM audit log

Step 1. To create an audit log volume and enable auditing for the tenant SVM, use the following command syntax.

volume create -vserver <tenant-svm> -volume <tenant_audit_log> -aggregate <aggregate-name> -size <volume-size> -state online -policy default -junction-path </tenant_audit_log> -space-guarantee none -percent-snapshot-space 0

vserver audit create -vserver <tenant-SVM> -destination </tenant_audit_log>

vserver audit enable -vserver <tenant-SVM>

AB03-A400::> volume create -vserver Tenant<X>-SVM -volume Tenant<X>_audit_log -aggregate AB03_A400_02_NVME_SSD_1 -size 50GB -state online -policy default -junction-path /tenant<X>_audit_log -space-guarantee none -percent-snapshot-space 0 (where X = 1,2,3 etc.)

For example:

AB03-A400::> volume create -vserver Tenant1-SVM -volume Tenant1_audit_log -aggregate AB03_A400_02_NVME_SSD_1 -size 50GB -state online -policy default -junction-path /tenant1_audit_log -space-guarantee none -percent-snapshot-space 0

[Job 2433] Job succeeded: Successful

AB03-A400::> volume create -vserver Tenant2-SVM -volume Tenant2_audit_log -aggregate AB03_A400_02_NVME_SSD_1 -size 50GB -state online -policy default -junction-path /tenant2_audit_log -space-guarantee none -percent-snapshot-space 0

[Job 2435] Job succeeded: Successful

AB03-A400::> vserver audit create -vserver Tenant<X>-SVM -destination /tenant<X>_audit_log (where X = 1,2,3 etc.)

For example:

AB03-A400::> vserver audit create -vserver Tenant1-SVM -destination /tenant1_audit_log

AB03-A400::> vserver audit create -vserver Tenant2-SVM -destination /tenant2_audit_log

AB03-A400::> vserver audit enable -vserver Tenant<X>-SVM (where X = 1,2,3 etc.)

For example:

AB03-A400::> vserver audit enable -vserver Tenant1-SVM

AB03-A400::> vserver audit enable -vserver Tenant2-SVM
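In the examples above, the audit destination is the junction path of the audit log volume, so the two values have to stay in step. The Python sketch below derives both from one variable. The helper name `audit_commands` is hypothetical, and the aggregate and size defaults are the values used in this example.

```python
def audit_commands(x, aggregate="AB03_A400_02_NVME_SSD_1", size="50GB"):
    """Build the audit volume and audit configuration commands for one tenant."""
    svm = f"Tenant{x}-SVM"
    # Single source of truth for the junction path and the audit destination.
    path = f"/tenant{x}_audit_log"
    return [
        f"volume create -vserver {svm} -volume Tenant{x}_audit_log "
        f"-aggregate {aggregate} -size {size} -state online -policy default "
        f"-junction-path {path} -space-guarantee none -percent-snapshot-space 0",
        f"vserver audit create -vserver {svm} -destination {path}",
        f"vserver audit enable -vserver {svm}",
    ]


for command in audit_commands(1):
    print(command)
```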

Procedure 8. Configure network broadcast domain

Step 1. To create SVM management and NFS data broadcast domains for tenants, with a maximum transmission unit (MTU) of 1500 and 9000, respectively, use the following command syntax:

network port broadcast-domain create -broadcast-domain <tenant-svm-mgmt-broadcast-domain> -mtu 1500 -ipspace <tenant-IPspace>

network port broadcast-domain create -broadcast-domain <tenant-nfs-broadcast-domain> -mtu 9000 -ipspace <tenant-IPspace>

AB03-A400::> network port broadcast-domain create -broadcast-domain Tenant<X>-SVM-MGMT -mtu 1500 -ipspace Tenant<X> (where X = 1,2,3 etc.)

For example:

AB03-A400::> network port broadcast-domain create -broadcast-domain Tenant1-SVM-MGMT -mtu 1500 -ipspace Tenant1

AB03-A400::> network port broadcast-domain create -broadcast-domain Tenant2-SVM-MGMT -mtu 1500 -ipspace Tenant2

AB03-A400::> network port broadcast-domain create -broadcast-domain Tenant<X>-NFS -mtu 9000 -ipspace Tenant<X> (where X = 1,2,3 etc.)

For example:

AB03-A400::> network port broadcast-domain create -broadcast-domain Tenant1-NFS -mtu 9000 -ipspace Tenant1

AB03-A400::> network port broadcast-domain create -broadcast-domain Tenant2-NFS -mtu 9000 -ipspace Tenant2

Procedure 9. Configure management and NFS VLANs

Step 1. To create management VLAN ports and NFS VLAN ports for tenants and add them to their respective network broadcast domains, use the following command syntaxes:

network port vlan create -node <st-node01> -vlan-name a0a-<tenant-svm-mgmt-vlan-id>

network port vlan create -node <st-node02> -vlan-name a0a-<tenant-svm-mgmt-vlan-id>

network port vlan create -node <st-node01> -vlan-name a0a-<tenant-nfs-vlan-id>

network port vlan create -node <st-node02> -vlan-name a0a-<tenant-nfs-vlan-id>

network port broadcast-domain add-ports -broadcast-domain <tenant-svm-mgmt-broadcast-domain> -ports <st-node01>:a0a-<tenant-svm-mgmt-vlan-id>,<st-node02>:a0a-<tenant-svm-mgmt-vlan-id> -ipspace <tenant-IPspace>

network port broadcast-domain add-ports -broadcast-domain <tenant-nfs-broadcast-domain> -ports <st-node01>:a0a-<tenant-nfs-vlan-id>,<st-node02>:a0a-<tenant-nfs-vlan-id> -ipspace <tenant-IPspace>

AB03-A400::> network port vlan create -node AB03-A400-01 -vlan-name a0a-<tenant-svm-mgmt-vlan-id>

AB03-A400::> network port vlan create -node AB03-A400-02 -vlan-name a0a-<tenant-svm-mgmt-vlan-id>

For example, when tenant-svm-mgmt-vlan-id for Tenant1 is 301 and for Tenant2 is 302:

AB03-A400::> network port vlan create -node AB03-A400-01 -vlan-name a0a-301

AB03-A400::> network port vlan create -node AB03-A400-02 -vlan-name a0a-301

AB03-A400::> network port vlan create -node AB03-A400-01 -vlan-name a0a-302

AB03-A400::> network port vlan create -node AB03-A400-02 -vlan-name a0a-302

AB03-A400::> network port vlan create -node AB03-A400-01 -vlan-name a0a-<tenant-nfs-vlan-id>

AB03-A400::> network port vlan create -node AB03-A400-02 -vlan-name a0a-<tenant-nfs-vlan-id>

For example, when tenant-nfs-vlan-id for Tenant1 is 3301 and for Tenant2 is 3302:

AB03-A400::> network port vlan create -node AB03-A400-01 -vlan-name a0a-3301

AB03-A400::> network port vlan create -node AB03-A400-02 -vlan-name a0a-3301

AB03-A400::> network port vlan create -node AB03-A400-01 -vlan-name a0a-3302

AB03-A400::> network port vlan create -node AB03-A400-02 -vlan-name a0a-3302

AB03-A400::> network port broadcast-domain add-ports -broadcast-domain Tenant<X>-SVM-MGMT -ports AB03-A400-01:a0a-<tenant-svm-mgmt-vlan-id>,AB03-A400-02:a0a-<tenant-svm-mgmt-vlan-id> -ipspace Tenant<X>

For example, when tenant-svm-mgmt-vlan-id for Tenant1 is 301 and for Tenant2 is 302:

AB03-A400::> network port broadcast-domain add-ports -broadcast-domain Tenant1-SVM-MGMT -ports AB03-A400-01:a0a-301,AB03-A400-02:a0a-301 -ipspace Tenant1

AB03-A400::> network port broadcast-domain add-ports -broadcast-domain Tenant2-SVM-MGMT -ports AB03-A400-01:a0a-302,AB03-A400-02:a0a-302 -ipspace Tenant2

AB03-A400::> network port broadcast-domain add-ports -broadcast-domain Tenant<X>-NFS -ports AB03-A400-01:a0a-<tenant-nfs-vlan-id>,AB03-A400-02:a0a-<tenant-nfs-vlan-id> -ipspace Tenant<X>

For example, when tenant-nfs-vlan-id for Tenant1 is 3301 and for Tenant2 is 3302:

AB03-A400::> network port broadcast-domain add-ports -broadcast-domain Tenant1-NFS -ports AB03-A400-01:a0a-3301,AB03-A400-02:a0a-3301 -ipspace Tenant1

AB03-A400::> network port broadcast-domain add-ports -broadcast-domain Tenant2-NFS -ports AB03-A400-01:a0a-3302,AB03-A400-02:a0a-3302 -ipspace Tenant2
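The broadcast domain and VLAN port commands in Procedures 8 and 9 follow a regular pattern per tenant. The Python sketch below generates them for one tenant, assuming the VLAN numbering seen in this example (management VLAN 300 + X, NFS VLAN 3300 + X) and the a0a interface group on both nodes. The helper names are hypothetical, and the numbering rule is an assumption drawn from the 301-305 and 3301-3305 ranges.

```python
NODES = ["AB03-A400-01", "AB03-A400-02"]


def vlan_ids(x):
    """Return (management VLAN, NFS VLAN) for tenant x under the assumed scheme."""
    return 300 + x, 3300 + x


def network_commands(x):
    """Build broadcast domain, VLAN port, and add-ports commands for tenant x."""
    mgmt_vlan, nfs_vlan = vlan_ids(x)
    ipspace = f"Tenant{x}"
    # (broadcast domain name, MTU, VLAN ID): 1500 for management, 9000 for NFS.
    domains = [
        (f"Tenant{x}-SVM-MGMT", 1500, mgmt_vlan),
        (f"Tenant{x}-NFS", 9000, nfs_vlan),
    ]
    commands = []
    for name, mtu, _ in domains:
        commands.append(
            f"network port broadcast-domain create -broadcast-domain {name} "
            f"-mtu {mtu} -ipspace {ipspace}"
        )
    for _, _, vlan in domains:
        for node in NODES:
            commands.append(
                f"network port vlan create -node {node} -vlan-name a0a-{vlan}"
            )
    for name, _, vlan in domains:
        # Comma-separated port list without spaces.
        ports = ",".join(f"{node}:a0a-{vlan}" for node in NODES)
        commands.append(
            f"network port broadcast-domain add-ports -broadcast-domain {name} "
            f"-ports {ports} -ipspace {ipspace}"
        )
    return commands


for command in network_commands(1):
    print(command)
```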

Procedure 10. Configure SVM LIFs and access

To create the tenant SVM administrator and the SVM administration LIF in the tenant SVM management network, follow these steps:

Step 1. To create tenant SVM management LIF, use the following syntax:

network interface create -vserver <tenant-svm> -lif <tenant-svm-mgmt> -service-policy default-management -home-node <st-node02> -home-port a0a-<tenant-svm-mgmt-vlan-id> -address <tenant-svm-mgmt-ip> -netmask <tenant-svm-mgmt-mask> -status-admin up -failover-policy broadcast-domain-wide -auto-revert true

AB03-A400::> network interface create -vserver Tenant<X>-SVM -lif tenant<X>-svm-mgmt -service-policy default-management -home-node AB03-A400-02 -home-port a0a-<tenant-svm-mgmt-vlan-id> -address <tenant-svm-mgmt-ip> -netmask <tenant-svm-mgmt-mask> -status-admin up -failover-policy broadcast-domain-wide -auto-revert true (where X = 1,2,3 etc.)

For example:

AB03-A400::> network interface create -vserver Tenant1-SVM -lif tenant1-svm-mgmt -service-policy default-management -home-node AB03-A400-02 -home-port a0a-301 -address 172.21.1.20 -netmask 255.255.255.0 -status-admin up -failover-policy broadcast-domain-wide -auto-revert true

AB03-A400::> network interface create -vserver Tenant2-SVM -lif tenant2-svm-mgmt -service-policy default-management -home-node AB03-A400-02 -home-port a0a-302 -address 172.21.2.20 -netmask 255.255.255.0 -status-admin up -failover-policy broadcast-domain-wide -auto-revert true

Step 2. To create a default route that enables the SVM management interface to reach the outside world, use the following syntax:

network route create -vserver <tenant-svm> -destination 0.0.0.0/0 -gateway <tenant-svm-mgmt-gateway>

AB03-A400::> network route create -vserver Tenant<X>-SVM -destination 0.0.0.0/0 -gateway <tenant-svm-mgmt-gateway>

For example:

AB03-A400::> network route create -vserver Tenant1-SVM -destination 0.0.0.0/0 -gateway 172.21.1.254

AB03-A400::> network route create -vserver Tenant2-SVM -destination 0.0.0.0/0 -gateway 172.21.2.254

Step 3. To set a password for the SVM admin user (vsadmin) and unlock the user, use the following syntax.

security login password -username vsadmin -vserver <tenant-svm>

security login unlock -username vsadmin -vserver <tenant-svm>

AB03-A400::> security login password -username vsadmin -vserver Tenant<X>-SVM

For example:

AB03-A400::> security login password -username vsadmin -vserver Tenant1-SVM

Enter a new password:

Enter it again:

AB03-A400::> security login password -username vsadmin -vserver Tenant2-SVM

Enter a new password:

Enter it again:

AB03-A400::> security login unlock -username vsadmin -vserver Tenant<X>-SVM

For example:

AB03-A400::> security login unlock -username vsadmin -vserver Tenant1-SVM

AB03-A400::> security login unlock -username vsadmin -vserver Tenant2-SVM

Procedure 11. Create NFS LIF

Step 1. To create tenant NFS LIFs in the tenant SVM, use the following syntax:

network interface create -vserver <tenant-SVM> -lif <tenant-nfs-lif-01> -service-policy default-data-files -home-node <st-node01> -home-port a0a-<tenant-nfs-vlan-id> -address <tenant-node01-nfs-lif-01-ip> -netmask <tenant-node01-nfs-lif-01-mask> -status-admin up -failover-policy broadcast-domain-wide -auto-revert true

network interface create -vserver <tenant-SVM> -lif <tenant-nfs-lif-02> -service-policy default-data-files -home-node <st-node02> -home-port a0a-<tenant-nfs-vlan-id> -address <tenant-node02-nfs-lif-02-ip> -netmask <tenant-node02-nfs-lif-02-mask> -status-admin up -failover-policy broadcast-domain-wide -auto-revert true

AB03-A400::> network interface create -vserver Tenant<X>-SVM -lif tenant<X>-nfs-lif-01 -service-policy default-data-files -home-node AB03-A400-01 -home-port a0a-<tenant-nfs-vlan-id> -address <tenant-node01-nfs-lif-01-ip> -netmask <tenant-node01-nfs-lif-01-mask> -status-admin up -failover-policy broadcast-domain-wide -auto-revert true

For example:

AB03-A400::> network interface create -vserver Tenant1-SVM -lif tenant1-nfs-lif-01 -service-policy default-data-files -home-node AB03-A400-01 -home-port a0a-3301 -address 172.22.1.1 -netmask 255.255.255.0 -status-admin up -failover-policy broadcast-domain-wide -auto-revert true

AB03-A400::> network interface create -vserver Tenant2-SVM -lif tenant2-nfs-lif-01 -service-policy default-data-files -home-node AB03-A400-01 -home-port a0a-3302 -address 172.22.2.1 -netmask 255.255.255.0 -status-admin up -failover-policy broadcast-domain-wide -auto-revert true

AB03-A400::> network interface create -vserver Tenant<X>-SVM -lif tenant<X>-nfs-lif-02 -service-policy default-data-files -home-node AB03-A400-02 -home-port a0a-<tenant-nfs-vlan-id> -address <tenant-node02-nfs-lif-02-ip> -netmask <tenant-node02-nfs-lif-02-mask> -status-admin up -failover-policy broadcast-domain-wide -auto-revert true

For example:

AB03-A400::> network interface create -vserver Tenant1-SVM -lif tenant1-nfs-lif-02 -service-policy default-data-files -home-node AB03-A400-02 -home-port a0a-3301 -address 172.22.1.2 -netmask 255.255.255.0 -status-admin up -failover-policy broadcast-domain-wide -auto-revert true

AB03-A400::> network interface create -vserver Tenant2-SVM -lif tenant2-nfs-lif-02 -service-policy default-data-files -home-node AB03-A400-02 -home-port a0a-3302 -address 172.22.2.2 -netmask 255.255.255.0 -status-admin up -failover-policy broadcast-domain-wide -auto-revert true
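The LIF and gateway addresses in Procedures 10 and 11 follow a per-tenant pattern: 172.21.X.0/24 for SVM management (LIF at .20, gateway at .254) and 172.22.X.0/24 for NFS (LIFs at .1 and .2). The Python sketch below derives these values with the standard `ipaddress` module. The pattern is inferred from the Tenant1 and Tenant2 examples and is an assumption for any other tenant, and the helper name `tenant_addresses` is hypothetical.

```python
import ipaddress


def tenant_addresses(x):
    """Derive management and NFS addresses for tenant x (assumed /24 scheme)."""
    mgmt = ipaddress.ip_network(f"172.21.{x}.0/24")
    nfs = ipaddress.ip_network(f"172.22.{x}.0/24")
    return {
        # Indexing a network returns the host at that offset from the base.
        "mgmt_lif": str(mgmt[20]),
        "mgmt_gateway": str(mgmt[254]),
        "mgmt_netmask": str(mgmt.netmask),
        "nfs_lif_01": str(nfs[1]),
        "nfs_lif_02": str(nfs[2]),
        "nfs_netmask": str(nfs.netmask),
    }


plan = tenant_addresses(1)
for name, value in plan.items():
    print(f"{name}: {value}")
```

Deriving the netmask from the prefix length avoids mismatches between the subnet used for the LIFs and the subnet used later in the export policy rule.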

Procedure 12. Create NFS volumes

Step 1. To create NFS volumes for tenants in the tenant SVMs, use the following syntax:

volume create -vserver <tenant-SVM> -volume <tenant-nfs-volume> -aggregate <aggr1_node01 or aggr1_node02> -size <size> -state online -policy default -junction-path </tenant-junction-path> -space-guarantee none -percent-snapshot-space 0

AB03-A400::> volume create -vserver Tenant<X>-SVM -volume tenant<X>_nfs_1 -aggregate AB03_A400_<Y>_NVME_SSD_1 -size 500GB -state online -policy default -junction-path /tenant<X>_nfs_1 -space-guarantee none -percent-snapshot-space 0 (where X = 1,2,3 etc. and Y = 01 or 02)

For example:

AB03-A400::> volume create -vserver Tenant1-SVM -volume tenant1_nfs_1 -aggregate AB03_A400_01_NVME_SSD_1 -size 500GB -state online -policy default -junction-path /tenant1_nfs_1 -space-guarantee none -percent-snapshot-space 0

AB03-A400::> volume create -vserver Tenant2-SVM -volume tenant2_nfs_1 -aggregate AB03_A400_02_NVME_SSD_1 -size 500GB -state online -policy default -junction-path /tenant2_nfs_1 -space-guarantee none -percent-snapshot-space 0

Procedure 13. Configure NFS export policies

Step 1. To export the NFS volumes created in the previous procedure to tenant NFS clients, add a rule to the default export policy of the tenant SVM and apply that policy to the tenant SVM root volume. Use the following syntax:

vserver export-policy rule create -vserver <tenant-svm> -policyname default -ruleindex 1 -protocol nfs -clientmatch <tenant_client_nfs_ip_subnet> -rorule sys -rwrule sys -superuser sys -allow-suid true

volume modify -vserver <tenant-svm> -volume <tenant-svm-rootvolume> -policy default

AB03-A400::> vserver export-policy rule create -vserver Tenant<X>-SVM -policyname default -ruleindex 1 -protocol nfs -clientmatch <tenant_client_nfs_ip_subnet> -rorule sys -rwrule sys -superuser sys -allow-suid true

For example:

AB03-A400::> vserver export-policy rule create -vserver Tenant1-SVM -policyname default -ruleindex 1 -protocol nfs -clientmatch 172.22.1.0/24 -rorule sys -rwrule sys -superuser sys -allow-suid true

AB03-A400::> vserver export-policy rule create -vserver Tenant2-SVM -policyname default -ruleindex 1 -protocol nfs -clientmatch 172.22.2.0/24 -rorule sys -rwrule sys -superuser sys -allow-suid true

AB03-A400::> volume modify -vserver Tenant<X>-SVM -volume Tenant<X>_SVM_root -policy default

For example:

AB03-A400::> volume modify -vserver Tenant1-SVM -volume Tenant1_SVM_root -policy default

AB03-A400::> volume modify -vserver Tenant2-SVM -volume Tenant2_SVM_root -policy default
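The -clientmatch value restricts each tenant's exports to that tenant's NFS subnet. The Python sketch below illustrates only the subnet test, using the standard `ipaddress` module. It is not a model of how ONTAP evaluates export rules, and the helper name `client_allowed` is hypothetical.

```python
import ipaddress


def client_allowed(client_ip, clientmatch):
    """Return True when the client address falls inside the clientmatch subnet."""
    return ipaddress.ip_address(client_ip) in ipaddress.ip_network(clientmatch)


TENANT1_CLIENTMATCH = "172.22.1.0/24"

# A Tenant1 VM on the Tenant1 NFS subnet matches the Tenant1 rule.
print(client_allowed("172.22.1.102", TENANT1_CLIENTMATCH))

# An address on the Tenant2 NFS subnet does not match the Tenant1 rule.
print(client_allowed("172.22.2.102", TENANT1_CLIENTMATCH))
```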

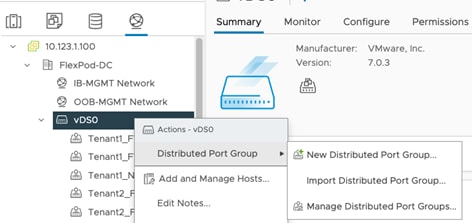

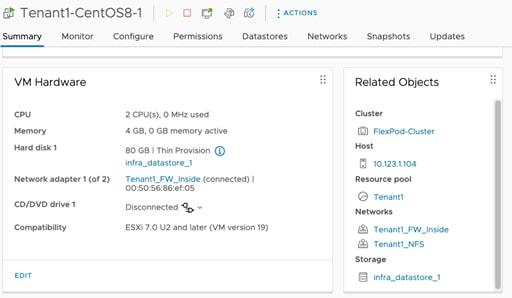

In this procedure, several port groups are configured on VMware vSphere. In the VDS configuration, port-groups for firewalls and NFS will be defined. The steps below detail two models that can be configured for tenant data and/or VM isolation:

● Tenant VMs are installed on the Infrastructure NFS data volume. Tenant application data is provisioned using NFS volumes in a tenant-specific SVM. An isolated VLAN provides tenant VMs direct network access to their tenant-specific NFS shares.

● For additional isolation, you can choose to create separate volumes within each tenant SVM and mount them in VMware vSphere as dedicated tenant datastores for deploying tenant VMs.

For storage access, IPspaces, dedicated VLANs, non-routed subnets, and stringent export policies limit data access.

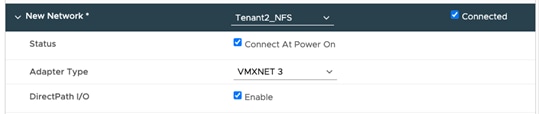

Procedure 1. Optional - Configure VM networking to mount tenant specific NFS shares

To allow tenant VMs to access NFS shares in tenant SVMs, follow these steps:

Step 1. Log into VMware vCenter, click Network and select the VDS.

Step 2. Right-click the VDS name, select Distributed Port Group and then New Distributed Port Group.

Step 3. Provide the name and VLAN ID to add port-groups for FW outside VLAN, tenant FW inside VLANs and tenant NFS VLANs.

Step 4. To allow a tenant VM to access NFS shares in its tenant SVM, add a second vNIC to the VM and assign it to the appropriate port group. Right-click the VM and select Edit Settings.

Step 5. In the top-right corner, click ADD NEW DEVICE and select Network Adapter.

Step 6. From the drop-down list, next to New Network, select Browse… and then appropriate port-group. Click OK.

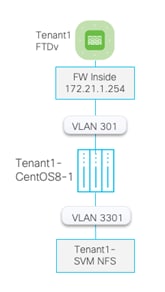

The following image shows a Tenant1 VM, hosted on shared Infra_datastore_1 and connected to two networks: Tenant1-FW-Inside and Tenant1-NFS:

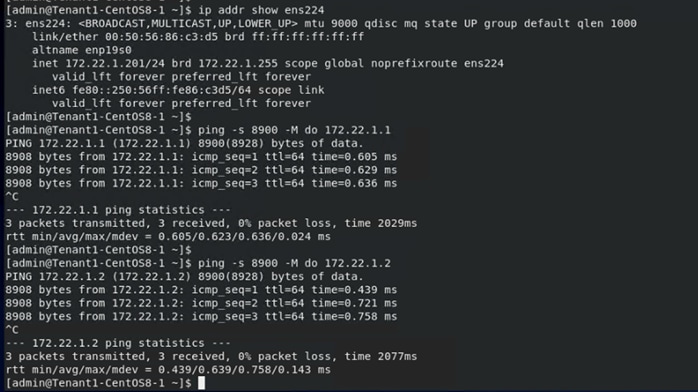

Step 7. Configure the IP address on the newly added VM NIC.

Step 8. Set the MTU on the newly added NIC to 9000 to enable jumbo frames.

ens224: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 9000

inet 172.22.1.102 netmask 255.255.255.0 broadcast 172.22.1.255

inet6 fe80::1807:1e88:99a9:30ea prefixlen 64 scopeid 0x20<link>

ether 00:50:56:86:30:d2 txqueuelen 1000 (Ethernet)

RX packets 356669 bytes 58623025 (55.9 MiB)

RX errors 0 dropped 345112 overruns 0 frame 0

TX packets 5146 bytes 41453869 (39.5 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Step 9. Mount the tenant-specific NFS volume.

The example below shows the fstab entry on a CentOS 8 VM that mounts NFS share /tenant1_nfs_1 from Tenant1-SVM LIF 172.22.1.1 as /mnt/mysql-data:

[root@Tenant1-CentOS8-1 ~]# more /etc/fstab | grep nfs

172.22.1.1:/tenant1_nfs_1 /mnt/mysql-data nfs auto,noatime,nolock,bg,nfsvers=3,intr,tcp,actimeo=1800 0 0

[root@Tenant1-WP-DB ~]# mount /mnt/mysql-data/

[root@Tenant1-WP-DB ~]# ls /mnt/mysql-data/

mysql vdbench

Step 10. Verify connectivity and the jumbo MTU setup. The screenshot below shows a Tenant1 VM whose NFS NIC is configured for jumbo frames, with connectivity to the NFS servers tested using a jumbo-sized packet.
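The fstab entry in Step 9 packs the NFS server, export path, mount point, and mount options into one line. The Python sketch below splits such a line into its six standard fields so that the options can be checked. The helper name `parse_fstab_entry` is hypothetical, and the sketch assumes the mount point contains no spaces.

```python
def parse_fstab_entry(line):
    """Split an NFS fstab line into its six whitespace-separated fields."""
    device, mount_point, fs_type, options, dump, fsck_pass = line.split()
    # For NFS, the device field has the form server:/export.
    server, export = device.split(":", 1)
    return {
        "server": server,
        "export": export,
        "mount_point": mount_point,
        "fs_type": fs_type,
        "options": options.split(","),
        "dump": int(dump),
        "fsck_pass": int(fsck_pass),
    }


entry = parse_fstab_entry(
    "172.22.1.1:/tenant1_nfs_1 /mnt/mysql-data nfs "
    "auto,noatime,nolock,bg,nfsvers=3,intr,tcp,actimeo=1800 0 0"
)
print(entry["server"], entry["export"], entry["mount_point"])
print(entry["options"])
```

In this entry, nfsvers=3 and tcp line up with the NFS server settings configured earlier for the tenant SVMs (NFSv3 enabled, UDP disabled).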

Procedure 2. Optional - Mount NFS share to ESXi hosts and install tenant VMs on the tenant datastore

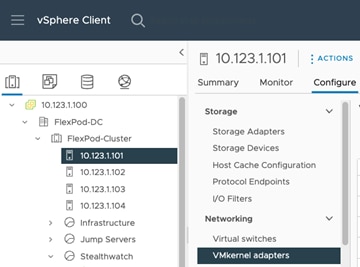

For the tenant NFS shares to be mounted on all the ESXi hosts in the cluster, create VMkernel ports for tenant NFS traffic on all ESXi hosts with the proper tenant NFS network and jumbo frame MTU configurations. After the tenant NFS share is mounted on all hosts as a datastore, tenants can deploy their VMs on their assigned datastore.

Step 1. Log into VMware vCenter and click an ESXi host.

Step 2. Select Configure in the main window and then select VMkernel adapters.

Step 3. Click ADD NETWORKING and select VMkernel Network Adapter. Click NEXT.

Step 4. Click Select an existing network and click BROWSE… Pick appropriate port-group (for example, Tenant1-NFS) and click NEXT.

Step 5. Provide a Network Label, make sure the MTU is set to 9000 and click NEXT.

Step 6. Select Use Static IPv4 settings and provide an IP address and subnet mask. There is no need to define a default gateway because both the VMkernel port on ESXi host and LIF on NetApp controllers are in the same subnet. Click NEXT.

Step 7. Verify the settings and click FINISH.

Step 8. Repeat steps 1 - 7 to define VMkernel adapters for all the tenants.

Step 9. To verify the connectivity to NFS servers from an ESXi host, SSH to the ESXi host and use the vmkping command to verify connectivity and Jumbo MTU settings.

In the example, the IP address 172.22.4.2 is one of the NFS LIFs for Tenant4 and vmk8 is the VMkernel port for the Tenant4_NFS network.

[root@AB03-ESXi-01:~] vmkping -s 8900 -I vmk8 172.22.4.2

PING 172.22.4.2 (172.22.4.2): 8900 data bytes

8908 bytes from 172.22.4.2: icmp_seq=0 ttl=64 time=0.229 ms

8908 bytes from 172.22.4.2: icmp_seq=1 ttl=64 time=0.488 ms

8908 bytes from 172.22.4.2: icmp_seq=2 ttl=64 time=0.526 ms

--- 172.22.4.2 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.229/0.414/0.526 ms

[root@AB03-ESXi-01:~]
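The packet sizes in the vmkping output follow from simple header arithmetic: each reply reports the ICMP payload plus the 8-byte ICMP header, and the largest payload that fits in one IPv4 frame is the MTU minus the 20-byte IPv4 header and the 8-byte ICMP header. The Python sketch below works through those numbers and assumes an IPv4 header without options.

```python
IPV4_HEADER_BYTES = 20  # IPv4 header without options
ICMP_HEADER_BYTES = 8


def max_icmp_payload(mtu):
    """Largest ICMP payload that fits in a single unfragmented IPv4 packet."""
    return mtu - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES


def icmp_bytes_reported(payload):
    """Byte count shown per reply: payload plus the ICMP header."""
    return payload + ICMP_HEADER_BYTES


print(max_icmp_payload(9000))      # upper bound for a 9000-byte MTU
print(max_icmp_payload(1500))      # upper bound for a standard 1500-byte MTU
print(icmp_bytes_reported(8900))   # matches the "8908 bytes from" lines above
```

The 8900-byte payload used in the example is well above what a 1500-byte MTU can carry in one packet and below the 9000-byte limit. For a stricter test, vmkping also offers the -d option, which sets the don't-fragment bit so that an undersized MTU anywhere on the path makes the ping fail instead of being fragmented.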

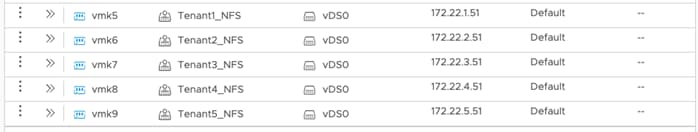

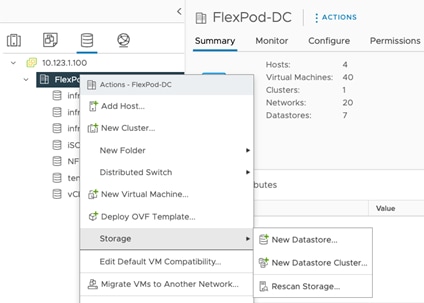

Step 10. Click the Datastore tab, right-click the Data Center name, and select Storage > New Datastore.

Step 11. Select NFS and click NEXT.

Step 12. Select the NFS version and click NEXT.

Step 13. Provide a name for the datastore, mountpoint folder and the SVM LIF IP address. Click NEXT.

Step 14. Select all the hosts where the datastore needs to be mounted. Click NEXT.

Step 15. Verify details and click FINISH.

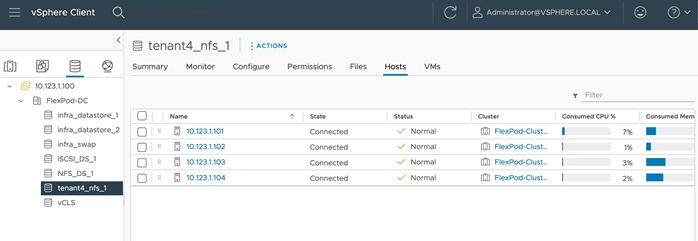

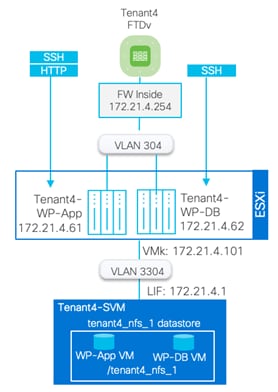

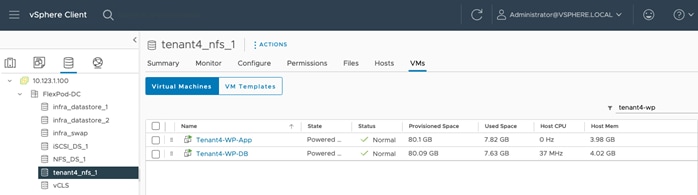

The following screenshots show the details of an NFS datastore from Tenant4-SVM that is mounted on all the hosts in the VMware cluster:

Any tenant VMs deployed on this datastore will be hosted on a tenant dedicated SVM. This datastore allows both the VM and its data to be isolated for all tenants.

Deploy Secure Firewall Threat Defense

This chapter contains the following:

● Deploy Firewall Threat Defense Virtual

● Firewall Management Center Deployment

Cisco provides its firewall and threat defense solutions in several different form factors to meet various deployment needs. FlexPod customers can choose to deploy either physical or virtual appliances depending on their throughput and feature needs.

● Cisco Secure Firewall Threat Defense: Hardware appliances dedicated to firewall, VPN, IPS/IDS, and advanced threat protection.

● Cisco Secure Firewall Threat Defense Virtual (FTDv): The virtualized option for Cisco’s proven network firewall with Snort IPS, URL filtering, and malware defense.

In this deployment, the Cisco Secure Firewall Threat Defense virtual (FTDv) appliance is utilized to secure the tenant network perimeter including access to the resources hosted within the tenant segments. This design enables security controls like filtering, intrusion prevention and malware detection at the edge of the tenant infrastructure.

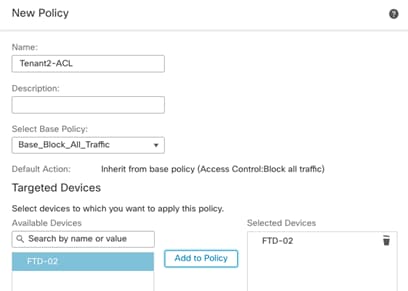

A separate instance of FTDv is deployed for every tenant. All the FTDv appliances are managed using Cisco Secure Firewall Management Center Virtual (FMC). The Secure Firewall Management Center Virtual Appliance provides:

● Common base access control policy that blocks all the traffic from outside (unprotected) to inside (protected) interfaces.

● Common NAT policy that allows all the inside hosts to use outside interface’s IP address to communicate to the outside world.

● Tenant specific access control policies allowing application traffic from outside to inside interfaces.

● Tenant specific static NAT mappings for application web (and similar) services.

● Optional DHCP service on the FTDv inside interface for VM addressing (if desired).

● Common or tenant specific IPS and malware protection policies.

Deploy Firewall Threat Defense Virtual

Cisco offers threat defense virtual devices designed for VMware vSphere environments. These virtual threat defense devices are distributed as Open Virtualization Format (OVF) packages, which can be downloaded from Cisco.com.

Procedure 1. Download the Software

Step 1. To download the FTDv software, follow this link and download the OVF file for VMware: Cisco_Secure_Firewall_Threat_Defense_Virtual-7.2.5-208.tar.gz.

Step 2. Untar and unzip the file. When using VMware vCenter, you will need the .vmdk, .ovf, and .mf files containing “Virtual-VI” in the name.

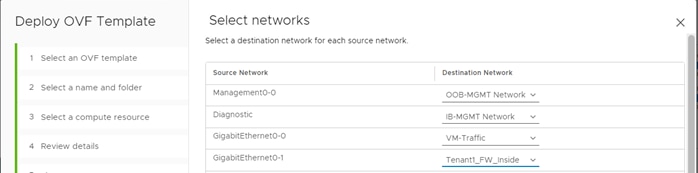

Procedure 2. Identify FTDv Interface Mapping

Table 4 lists the FTDv interface mappings that will be used for all tenants:

Table 4. FTDv Interface Mapping

| Network Adapter | Source Networks | Destination Networks | Function |
| --- | --- | --- | --- |
| Network adapter 1 | Management0-0 | OOB-Mgmt-Network Port Group (VLAN 1230) | Management |
| Network adapter 2 | Diagnostic0-0 | IB-Mgmt-Network Port Group (VLAN 1231) | Diagnostic |
| Network adapter 3 | GigabitEthernet0-0 | VM-Traffic (FW Outside) Port-group (VLAN 1232) | FW Outside |
| Network adapter 4 | GigabitEthernet0-1 | TenantX-FW-Inside Port Group (VLAN 301+) | FW Inside |
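The mapping in Table 4 repeats for every tenant, with only the inside port group changing. The following is a minimal Python sketch of that pattern. It assumes that tenant N uses a port group named TenantN-FW-Inside on VLAN 300 + N; the table only states "VLAN 301+", so both the naming and the numbering rule are illustrative assumptions, as are the class and function names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdapterMapping:
    adapter: str         # vSphere network adapter label
    source_network: str  # FTDv interface name shown in the OVF wizard
    port_group: str      # vSphere destination network (port group)
    vlan: int
    function: str


def ftdv_mappings(tenant_number, inside_vlan_base=300):
    """Return the four adapter mappings from Table 4 for one tenant.

    Assumption: tenant N uses VLAN inside_vlan_base + N for its inside network.
    """
    if tenant_number < 1:
        raise ValueError("tenant_number must be 1 or greater")
    inside_pg = f"Tenant{tenant_number}-FW-Inside"
    return [
        AdapterMapping("Network adapter 1", "Management0-0", "OOB-Mgmt-Network", 1230, "Management"),
        AdapterMapping("Network adapter 2", "Diagnostic0-0", "IB-Mgmt-Network", 1231, "Diagnostic"),
        AdapterMapping("Network adapter 3", "GigabitEthernet0-0", "VM-Traffic", 1232, "FW Outside"),
        AdapterMapping("Network adapter 4", "GigabitEthernet0-1", inside_pg,
                       inside_vlan_base + tenant_number, "FW Inside"),
    ]
```

Calling `ftdv_mappings(2)` returns the three shared mappings plus a Tenant2-specific inside mapping, which can serve as a checklist when filling in the OVF deployment wizard.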

Procedure 3. Identify performance tier

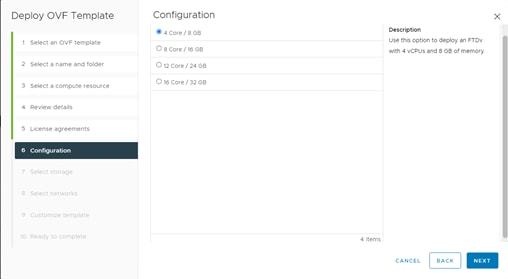

Use Table 5 to identify the correct VM sizing for the FTDv deployment. The information about number of CPUs and amount of memory will be used during OVF deployment.

Table 5. FTDv CPU/Memory Configuration

| Performance Tier | Device Specifications (Core/RAM) | Rate Limit | RA VPN Session Limit |
| --- | --- | --- | --- |
| FTDv5, 100Mbps | 4 core/8 GB | 100Mbps | 50 |
| FTDv10, 1Gbps | 4 core/8 GB | 1Gbps | 250 |
| FTDv20, 3Gbps | 4 core/8 GB | 3Gbps | 250 |
| FTDv30, 5Gbps | 8 core/16 GB | 5Gbps | 250 |
| FTDv50, 10Gbps | 12 core/24 GB | 10Gbps | 750 |
| FTDv100, 16Gbps | 16 core/32 GB | 16Gbps | 10,000 |
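Choosing a tier from Table 5 amounts to finding the smallest tier whose rate limit and RA VPN session limit both cover the tenant's requirements. The sketch below encodes the table values and performs that lookup. The function name and the idea of sizing by these two inputs alone are assumptions for illustration; real sizing may need to account for other factors.

```python
from typing import NamedTuple, Optional


class Tier(NamedTuple):
    name: str
    cores: int
    ram_gb: int
    rate_limit_mbps: int
    ravpn_sessions: int


# Values copied from Table 5, ordered from smallest to largest tier.
TIERS = [
    Tier("FTDv5", 4, 8, 100, 50),
    Tier("FTDv10", 4, 8, 1000, 250),
    Tier("FTDv20", 4, 8, 3000, 250),
    Tier("FTDv30", 8, 16, 5000, 250),
    Tier("FTDv50", 12, 24, 10000, 750),
    Tier("FTDv100", 16, 32, 16000, 10000),
]


def pick_tier(throughput_mbps: int, ravpn_sessions: int = 0) -> Optional[Tier]:
    """Return the smallest tier that satisfies both limits, or None if none does."""
    for tier in TIERS:
        if tier.rate_limit_mbps >= throughput_mbps and tier.ravpn_sessions >= ravpn_sessions:
            return tier
    return None
```

For example, `pick_tier(500, 300)` skips FTDv10 through FTDv30 because of their 250-session limit and returns the FTDv50 entry, whose cores and RAM are the values to enter during OVF deployment.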

Procedure 4. OVF Deployment in the VMware environment

Please follow this guide to deploy the OVF using VMware vCenter.

Step 1. When going through the deployment wizard, you will be prompted to select a CPU/Memory configuration. Use the Performance Tiers listed in Table 5 to select the correct values.

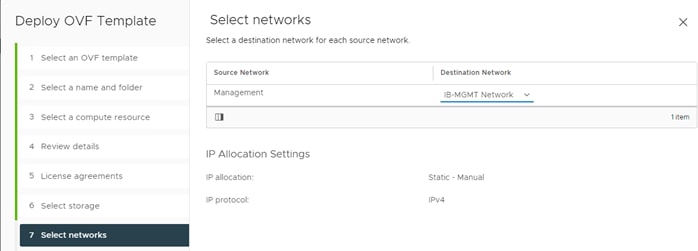

Step 2. Select the interface mapping for at least 4 interfaces. Make sure you use VMXNET3 interface types (default). You can have 10 interfaces when you deploy the threat defense virtual. For data interfaces, make sure that the Source Networks map to the correct Destination Networks, and that each data interface maps to a unique subnet or VLAN.

Note: You do not need to use all threat defense virtual interfaces; for interfaces you do not intend to use, you can simply leave the interface disabled within the threat defense virtual configuration. Make sure you read and follow the interface guidelines and limitations.
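The interface rules in Step 2 can be checked before starting the wizard. The following sketch validates a planned mapping against three of them: at least 4 interfaces mapped, no more than 10, and each data interface on its own destination network. It treats a distinct port group name as a stand-in for a unique subnet or VLAN, which is an assumption; the function name and input shape are also illustrative.

```python
def check_interface_mapping(mapping, data_interfaces):
    """Return a list of problems found; an empty list means the checks passed.

    mapping: dict of FTDv interface name -> destination network (port group)
    data_interfaces: names of the interfaces that carry data traffic
    """
    problems = []
    if len(mapping) < 4:
        problems.append("fewer than 4 interfaces mapped")
    if len(mapping) > 10:
        problems.append("more than 10 interfaces mapped")

    seen = {}  # destination network -> first data interface that used it
    for iface in sorted(data_interfaces):
        network = mapping.get(iface)
        if network is None:
            problems.append(f"{iface} has no destination network")
        elif network in seen:
            problems.append(f"{iface} and {seen[network]} share {network}")
        else:
            seen[network] = iface
    return problems
```

An empty result does not replace the interface guidelines and limitations referenced in the note above; it only catches the most common mapping mistakes.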

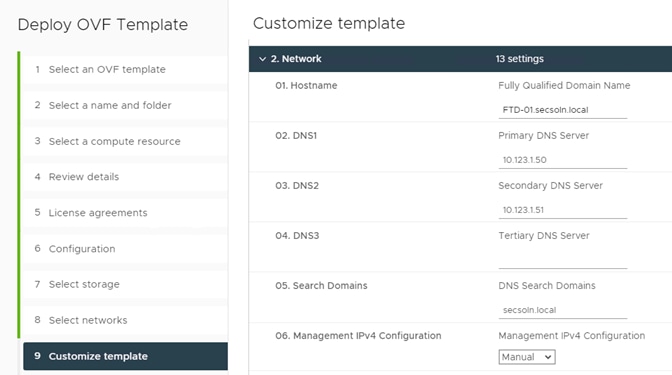

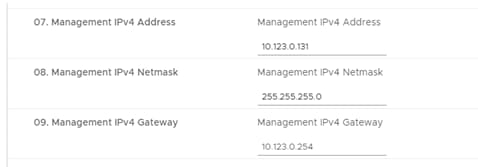

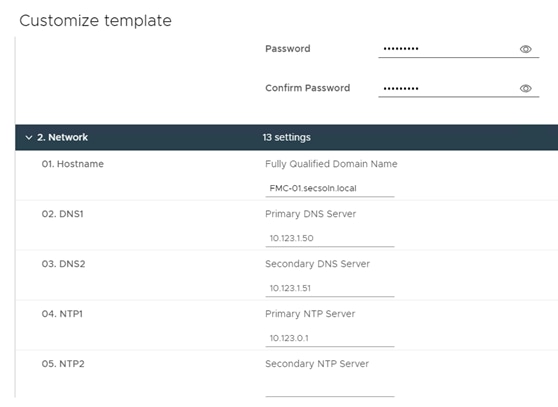

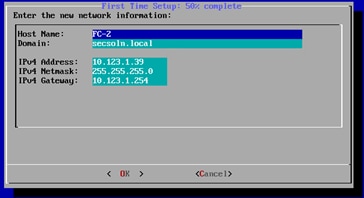

Step 3. Provide the password, FW name, and DNS information:

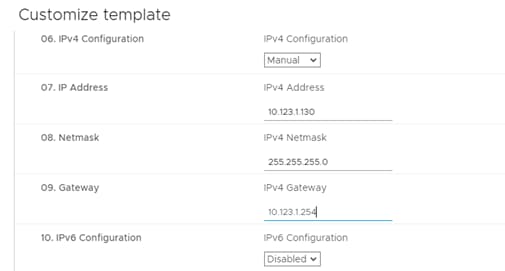

Step 4. Provide an IPv4 management IP address, netmask, and gateway:

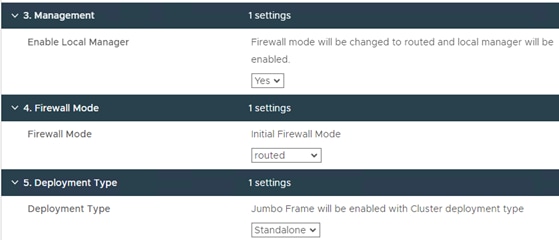

Step 5. Select YES for Local Manager for now. This FTDv will be added to FMC later.

Step 6. Select Initial Firewall Mode as routed.

Step 7. Select the Deployment type as Standalone. Cluster is also available as a deployment type, but standalone mode was used in this validation.

Step 8. Do not add the registration information (IP address, and so on) for FMC now.

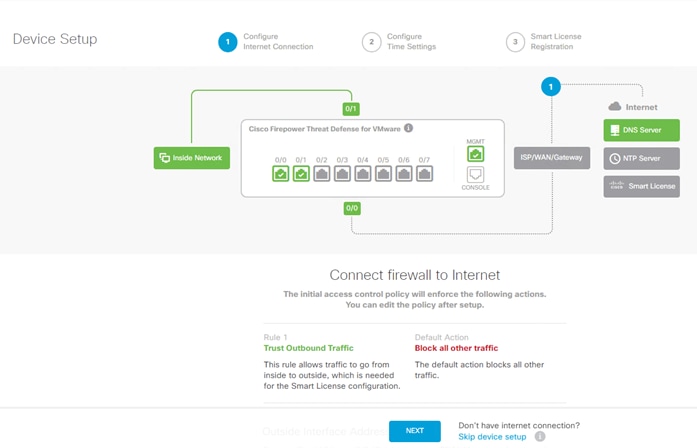

Step 9. When FTDv is successfully deployed, use vCenter to power on the VM.

Step 10. Access the FTDv at https://<FTDv_IP_Address>, using the management IP address defined during OVF deployment. Log in as “admin” with the password set during OVF deployment.

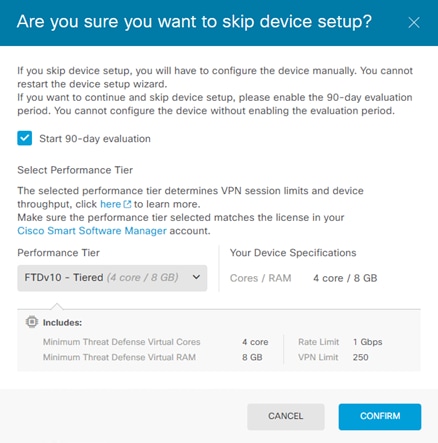

Step 11. Click Skip device setup, select a Performance Tier, and then select Start 90-day evaluation.

Step 12. Click CONFIRM.

Note: FTDv will be added to Firewall Management Center (FMC) later and the device configuration will be pushed from the FMC.

Step 13. Repeat these steps to add all the FTDv virtual appliances, one for each tenant.

Firewall Management Center Deployment

The Secure Firewall Management Center (FMC) Virtual Appliance brings full firewall functionality to virtualized environments to secure data center traffic and multi-tenant environments. A single instance of FMC is deployed as a central management device to manage all the tenant firewalls.

Procedure 1. Identify the Virtual Appliance performance tier

Follow the performance guidelines as explained in this document. Depending on the number of tenants, the CPU and memory settings of the appliance can be adjusted. By default, the FMC VM is allocated 32GB of memory and 8 CPUs (refer to Table 2 in the document).

Procedure 2. Download FMC software

Step 1. Follow this link to download the FMC software.

Step 2. Download the VMware installation package: Cisco_Secure_FW_Mgmt_Center_Virtual_VMware-7.2.5-208.tar.gz.

Step 3. Untar and unzip the file. When using VMware vCenter, you will need the .vmdk, .ovf, and .mf files containing “Virtual-VI” in the file name.

Procedure 3. OVF deployment in the VMware environment

Please follow this guide to deploy the OVF. The steps below provide additional guidance for deploying the FMC virtual appliance in this environment.

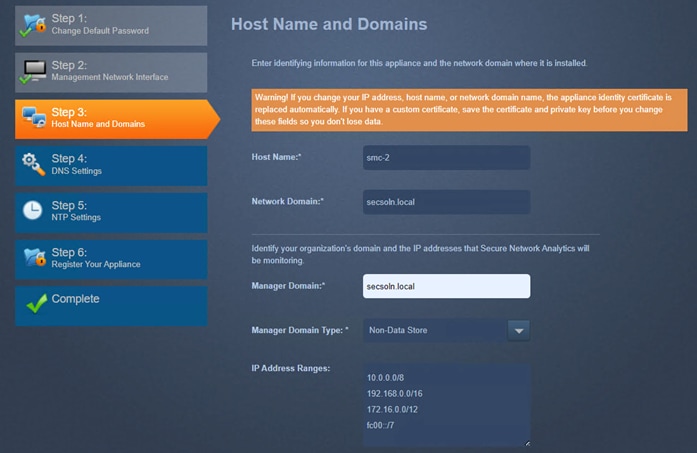

Step 1. For management network, pick the IB-Mgmt-Network (VLAN 1231).

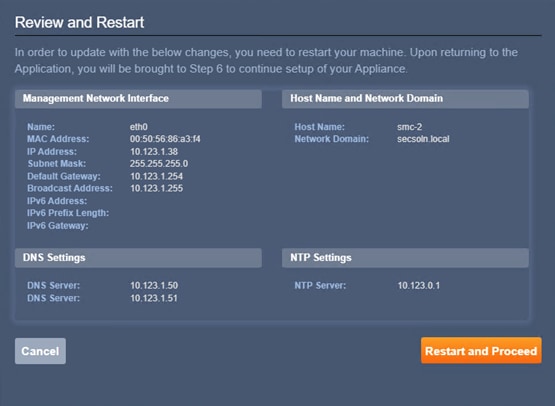

Step 2. Provide the password, FMC name, NTP, and DNS information.

Step 3. Provide IP address, netmask, and gateway information.

Step 4. When FMC is successfully deployed, power on the VM.

Step 5. Open the FMC web console at https://<FMC_IP_Address> and log in as “admin” with the password set during OVF deployment.

Note: If the gateway is not properly set, you will need to access FMC web console from a VM in the same subnet as FMC. Once logged in, the gateway can be set by going into Settings (gear icon) > Configuration > Management Interfaces.

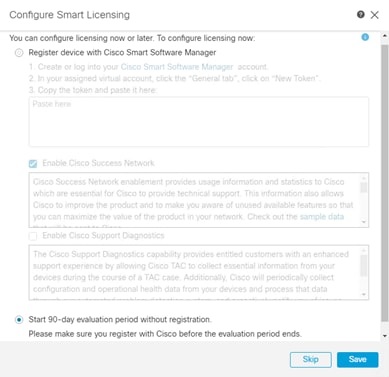

Step 6. Register FMC with Cisco Smart Software Manager as shown on the initial splash screen and enable Cisco Success Network. Alternatively, select Start 90-day evaluation period without registration and enter the licensing information later.

Step 7. Click Save.

Note: For details on FMC licensing including licensing for air-gapped deployment, refer to the administration guide.

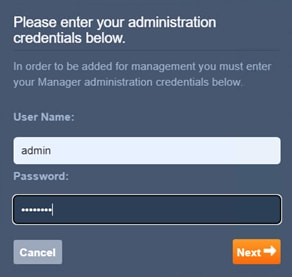

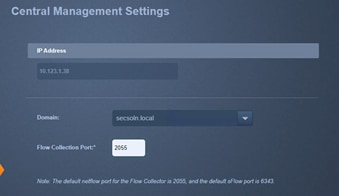

Follow this procedure to add FTDv devices to FMC.

Procedure 1. Prepare FTDv

Step 1. Log into the FTDv management console.

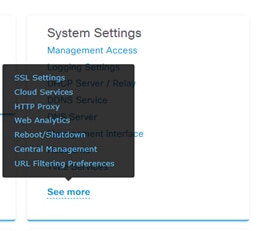

Step 2. On the main page, under System Settings, click See More and select Central Management.

Step 3. Click PROCEED and then click Continue.

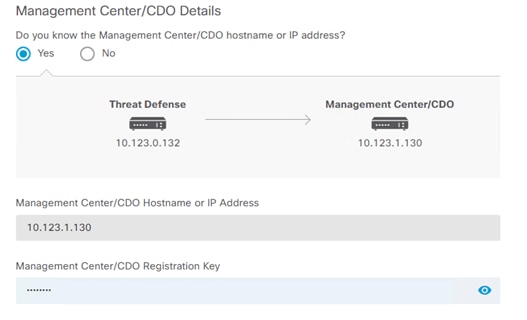

Step 4. Provide the IP address of FMC and create a Registration Key. This key acts as a shared password and will be used in FMC to add the FTDv in the next procedure.

Step 5. Verify the hostname and management interface and click CONNECT.

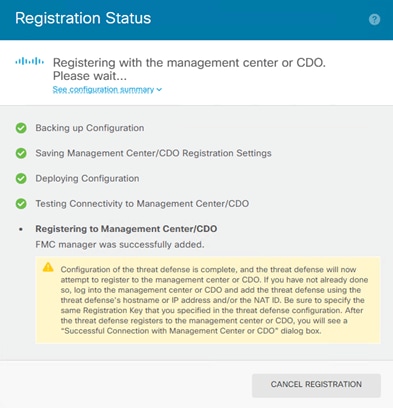

Step 6. The Registration Status box appears. Wait for the registration to complete.

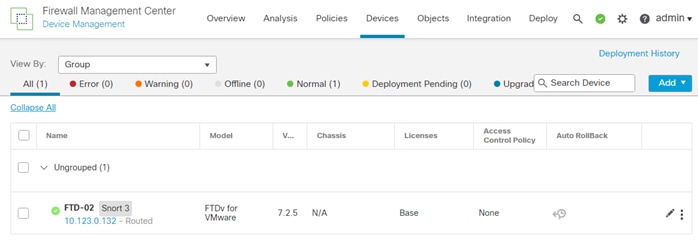

Procedure 2. Add device in FMC

Step 1. Log into the management console of FMC.

Step 2. Select Devices > Device Management.

Step 3. From the Add drop-down list, select Add Device.

Step 4. Provide the Host IP address of the FTDv you want to add, a Display Name for the device, and the Registration Key created in the previous procedure.

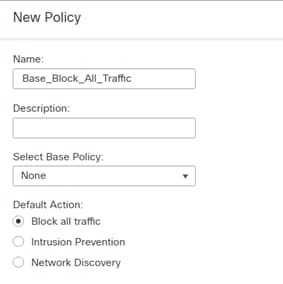

Step 5. Under Access Control Policy, select Create New Policy.

Step 6. Provide a name for the New Policy and set the default action to Block all traffic.

Step 7. Click Save.

Step 8. Select a Performance Tier.

Note: For details on available performance tiers, refer to the FTDv deployment procedures.

Step 9. Click Register.

The FTDv will be added to FMC.

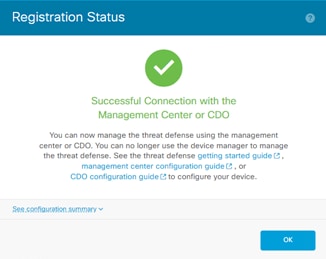

Step 10. To verify, revisit the FTDv management console window. Registration Status will show success.

Note: You will lose access to the device manager at this time and FTDv will be managed by FMC.

Step 11. Repeat these steps to add all the FTDv virtual appliances to FMC.
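When many tenants are onboarded, repeating the Add Device dialog by hand becomes tedious. FMC also exposes a REST API, and the sketch below builds the request body for one device registration without sending it. The field names are assumed to follow the FMC REST API device record object (posted to `/api/fmc_config/v1/domain/{domainUUID}/devices/devicerecords`) and should be verified in the FMC API Explorer for the release in use. The tenant names, IP addresses, registration key, policy ID, and license value are all placeholders.

```python
def device_record(name, host, reg_key, policy_id, tier, license_caps):
    """Build the JSON body for one FTDv registration request (no network I/O).

    Field names are assumptions based on the FMC REST API device record
    object; confirm them in the API Explorer before use.
    """
    if not reg_key:
        raise ValueError("registration key must match the one entered on the FTDv")
    return {
        "type": "Device",
        "name": name,                 # Display Name in the Add Device dialog
        "hostName": host,             # FTDv management IP address
        "regKey": reg_key,            # key created on the FTDv in Procedure 1
        "accessPolicy": {"type": "AccessPolicy", "id": policy_id},
        "performanceTier": tier,      # for example "FTDv5"
        "license_caps": list(license_caps),
    }


# Hypothetical tenants: every value below is a placeholder.
tenants = {"Tenant1-FTDv": "192.0.2.11", "Tenant2-FTDv": "192.0.2.12"}
payloads = [
    device_record(name, ip, "example-reg-key", "POLICY-UUID", "FTDv5", ["BASE"])
    for name, ip in tenants.items()
]
```

Each payload mirrors the values entered in Steps 4 through 8. Authentication, domain lookup, and the HTTP call itself are intentionally left out of this sketch.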

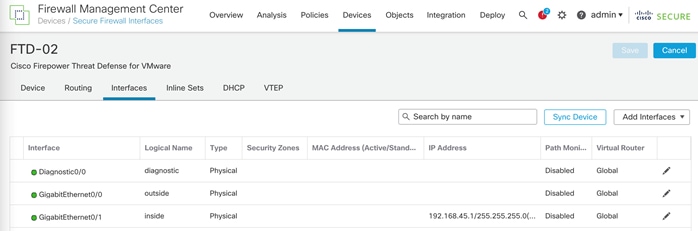

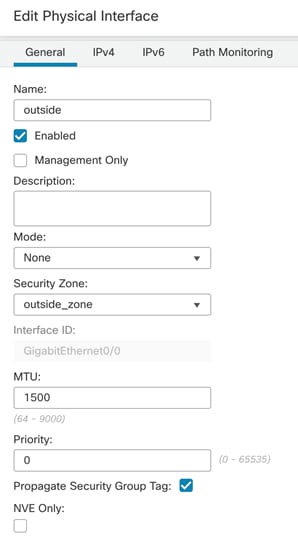

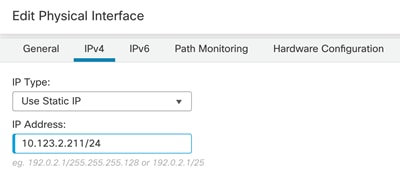

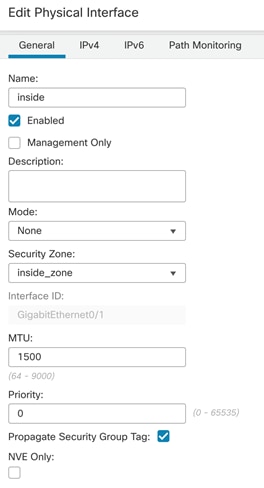

In this section, the FTDv appliance interfaces and routing are configured.

Note: You can optionally set the FTDv appliance as a DHCP server for tenant VMs by going to Device > Select the FTDv > DHCP.

Procedure 1. Configure Interfaces

Step 1. Log into FMC console and select Devices > Device Management.

Step 2. Click the name of the FTDv appliance you want to configure.

Step 3. In the main screen, select Interfaces.

Step 4. Click the pencil to edit the Diagnostic0/0 interface. Under IPv4, enter an IP address for the interface. In this deployment, the Diagnostic0/0 interface is mapped to and assigned an IP address in the IB-Mgmt subnet.

Step 5. (Optional) Allow management access by clicking on Manager Access.

Step 6. Click OK to save the configuration.

Step 7. Click the pencil to edit G0/0, the outside interface.

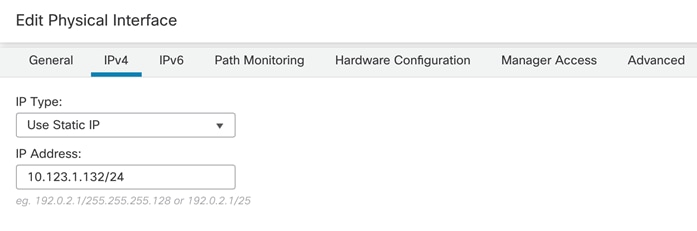

Step 8. Under Security Zone, select New and name the new security zone “outside_zone”.

Step 9. Click IPv4 and from the IP Type drop-down list, select Use Static IP. Enter an IP address and subnet mask.

Step 10. Click OK to accept the changes.

Step 11. Click the pencil to edit G0/1, the inside interface.

Step 12. Under Security Zone, select New and name the new security zone “inside_zone”.

Step 13. Click IPv4 and from IP Type drop-down list, select Use Static IP. Enter an IP address and subnet mask.

Step 14. Click OK to accept the changes.

Step 15. Repeat these steps to configure interfaces for all the FTDv devices.

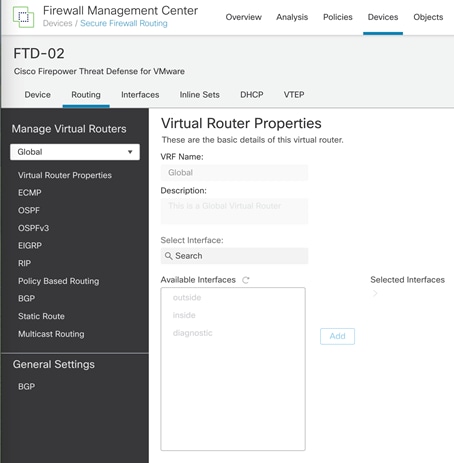

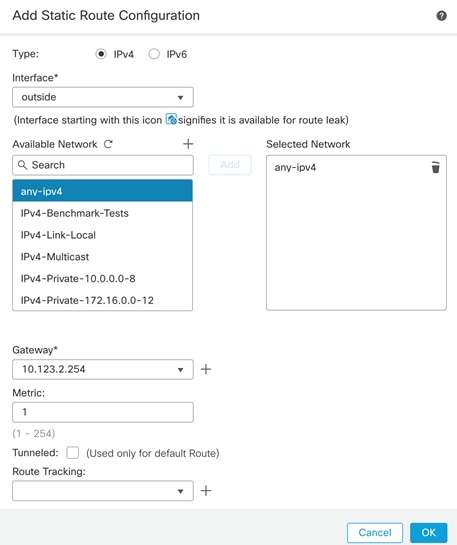

Procedure 2. Configure default route for outside interface

Step 1. In the main window, click Routing.

Step 2. Click Static Routing in the left pane.

Step 3. Click +Add Route.

Step 4. Select IPv4, and under Interface select outside.

Step 5. Under Available Network, select any-ipv4 and click Add to add the network to Selected Networks.

Step 6. Enter the gateway IP address and click OK to accept.

Step 7. Click Save to save the routing changes.

Step 8. Repeat these steps to configure the default gateways for all the FTDv devices.
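A default route only works if the gateway is reachable on the outside interface's own subnet, which is easy to get wrong when repeating the procedure for many tenants. The sketch below uses Python's standard `ipaddress` module to check this before the route is entered in FMC. The function name is illustrative and the addresses in the usage example are documentation placeholders, not values from this deployment.

```python
import ipaddress


def default_route(outside_interface_cidr, gateway):
    """Validate the gateway and describe the default route for the outside interface.

    outside_interface_cidr: outside interface address with prefix, e.g. "192.0.2.10/24"
    gateway: next-hop IP address for the default route
    """
    iface = ipaddress.ip_interface(outside_interface_cidr)
    gw = ipaddress.ip_address(gateway)
    if gw not in iface.network:
        raise ValueError(f"gateway {gw} is not in the outside subnet {iface.network}")
    if gw == iface.ip:
        raise ValueError("gateway cannot be the interface's own address")
    # any-ipv4 in FMC corresponds to the 0.0.0.0/0 network.
    return {"interface": "outside", "network": "0.0.0.0/0", "gateway": str(gw)}


# Placeholder addresses from the documentation range.
route = default_route("192.0.2.10/24", "192.0.2.1")
```

Running the check per tenant before Step 6 catches a mistyped gateway early, rather than after deployment when the FTDv cannot reach outside networks.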

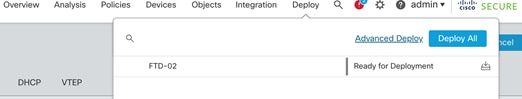

Procedure 3. Deploy the interface and routing changes

Step 1. From the main menu, select Deploy.

Step 2. Select Deploy All to push the interface and routing changes to all the FTDv appliances.

Note: Once the configuration deployment finishes, you can test the FTDv connectivity by logging into the VM console and pinging from the FTDv.

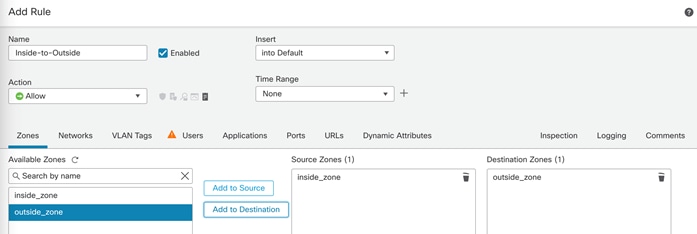

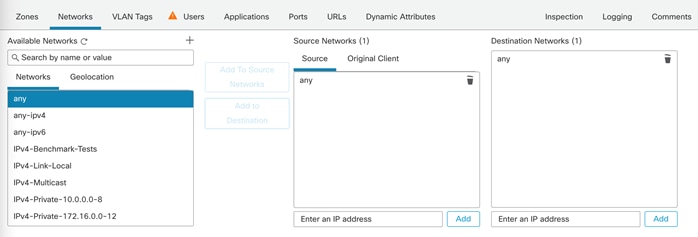

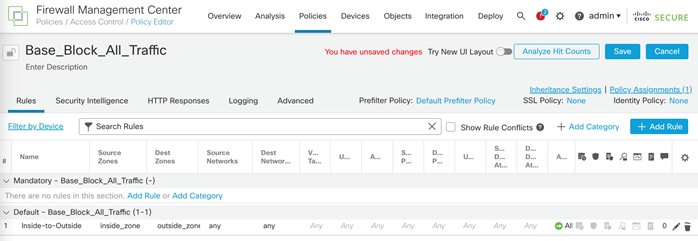

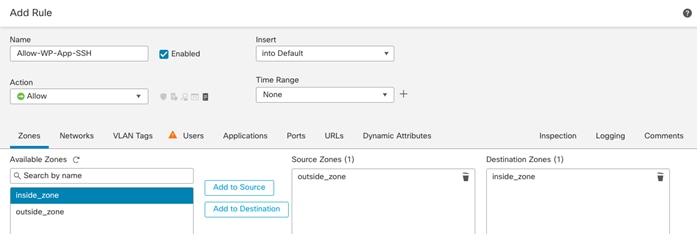

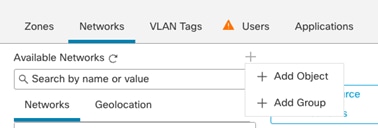

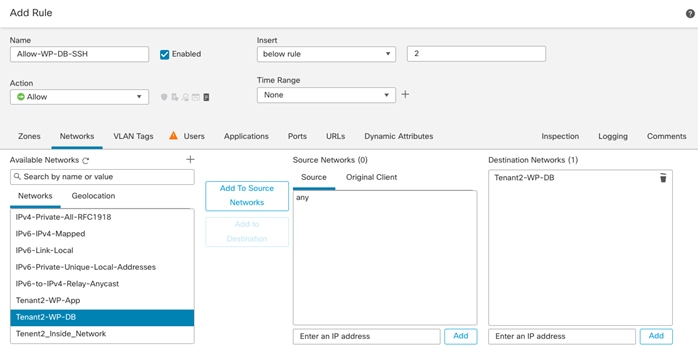

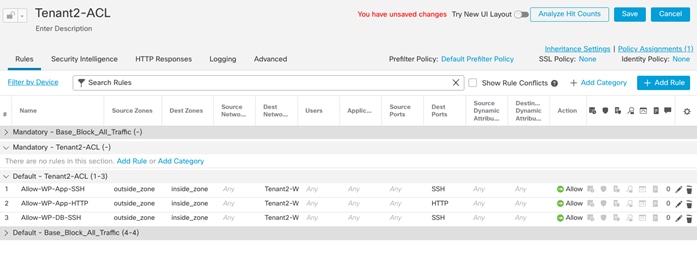

Cisco Secure Firewall Threat Defense (FTD) provides a comprehensive set of policies, and this document does not attempt to explain all of them. The firewall configuration explained in this section is limited to the following:

● Create an Access Policy that ensures traffic from application virtual machines (VM) within the tenant environment is always permitted to access networks outside the firewall.

● Restrict incoming traffic on the firewall so that only traffic destined for applications hosted within the tenant is allowed, and only on a limited set of ports.

● Set up Network Address Translation (NAT) to allow all traffic originating from the tenant network to use the external IP address of the FTDv appliance.

● Implement NAT rules to permit external traffic to reach tenant application VMs without changing the IP address.

● Enable recommended Intrusion Prevention System (IPS) and Intrusion Detection System (IDS) policies on the FTDv to enhance security.

● Turn on the advised Malware protection policies on the FTDv to safeguard against malicious software threats.

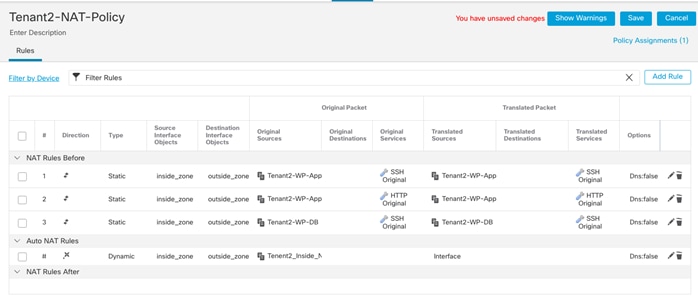

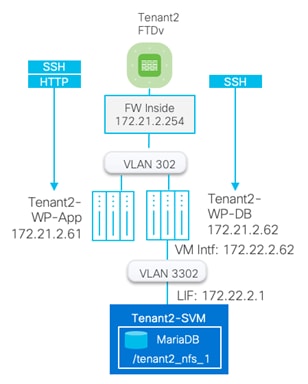

Tenant Setup

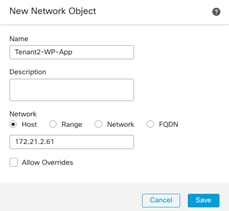

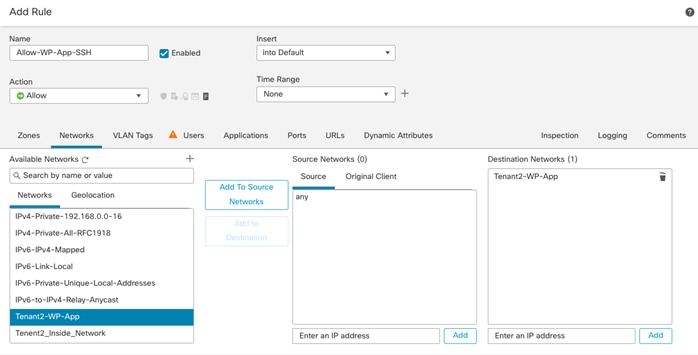

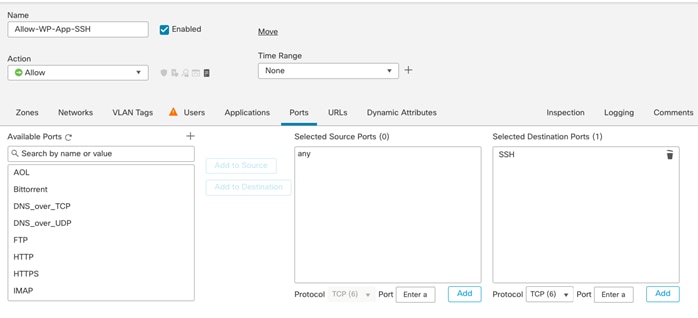

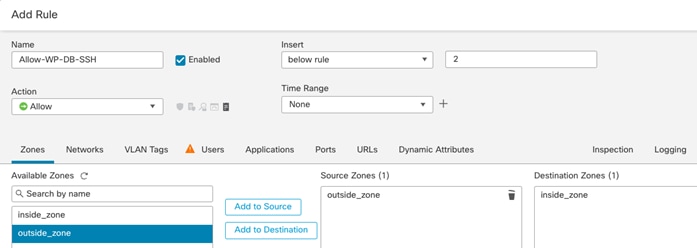

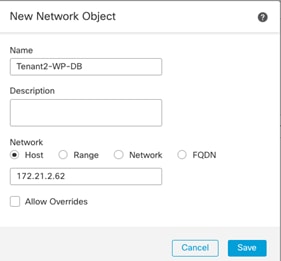

In the configuration examples below, various policies will be defined for the following Tenant:

Tenant Name: Tenant2

Tenant private IP subnet: 172.21.2.0/24

Tenant Application: WordPress

Tenant VMs:

● Tenant2-WP-APP – 172.21.2.61 – Allowed Traffic on FW: SSH, HTTP.

● Tenant2-WP-DB – 172.21.2.62 – Allowed Traffic on FW: SSH.

Access Control:

● Allow all tenant VMs to access outside networks including the Internet.

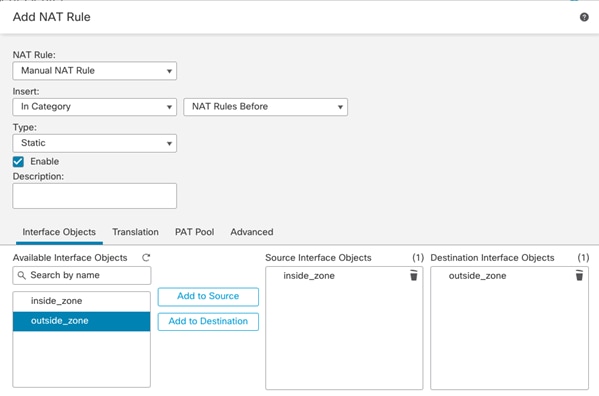

● Allow enterprise clients to access both WordPress VMs directly (without NAT) using SSH for management.

● Allow outside networks to reach WordPress on the Tenant2-WP-APP VM using HTTP.
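The access control requirements above can be read as an ordered rule list with a default action of Block. The following sketch models that behavior for Tenant2 with first-match evaluation. It is a simplified model for reasoning about the policy, not FMC's rule engine: the rule names are hypothetical, SSH and HTTP are taken as TCP ports 22 and 80, and matching uses only zones, destination address, and destination port.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass(frozen=True)
class Rule:
    name: str
    src_zone: str
    dst_zone: str
    dst_net: str             # destination network in CIDR form
    dst_port: Optional[int]  # None matches any port


# Hypothetical rule names; addresses and zones come from the Tenant2 example.
RULES = [
    Rule("tenant2-outbound", "inside_zone", "outside_zone", "0.0.0.0/0", None),
    Rule("tenant2-ssh-app", "outside_zone", "inside_zone", "172.21.2.61/32", 22),
    Rule("tenant2-ssh-db", "outside_zone", "inside_zone", "172.21.2.62/32", 22),
    Rule("tenant2-http-app", "outside_zone", "inside_zone", "172.21.2.61/32", 80),
]


def evaluate(src_zone, dst_zone, dst_ip, dst_port):
    """Return the name of the first matching allow rule, or 'default-block'."""
    for rule in RULES:
        if (rule.src_zone == src_zone
                and rule.dst_zone == dst_zone
                and ip_address(dst_ip) in ip_network(rule.dst_net)
                and rule.dst_port in (None, dst_port)):
            return rule.name
    return "default-block"
```

With these rules, HTTP from outside to the database VM (`evaluate("outside_zone", "inside_zone", "172.21.2.62", 80)`) falls through to the default block, while the same request to the application VM matches the HTTP rule.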

NAT:

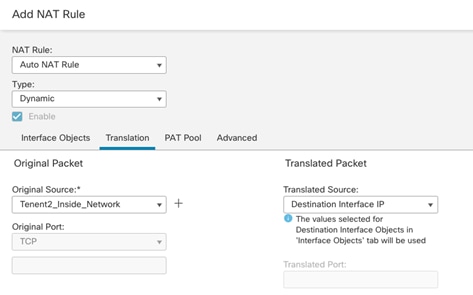

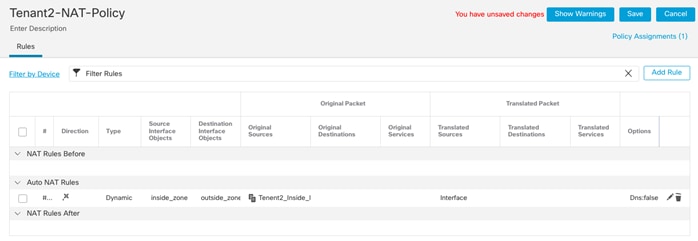

● Translate Tenant2 VM IP addresses in subnet 172.21.2.0/24 to the FTDv appliance outside interface address so the VMs can access outside networks and the Internet.

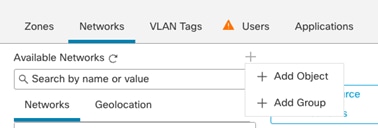

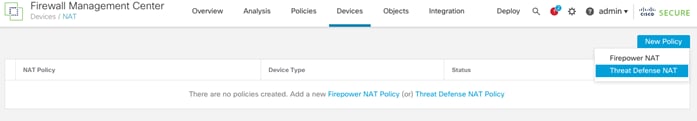

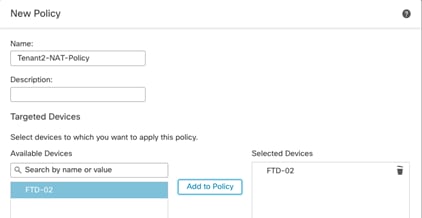

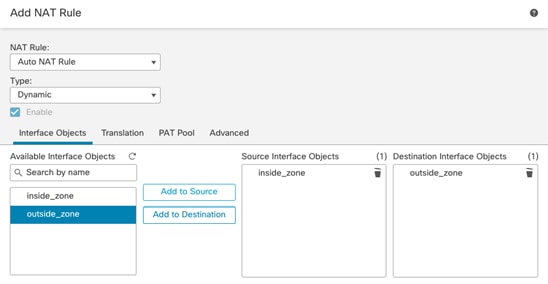

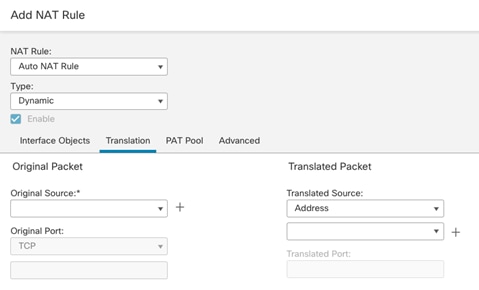

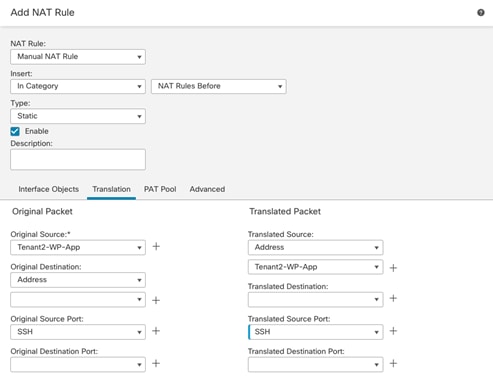

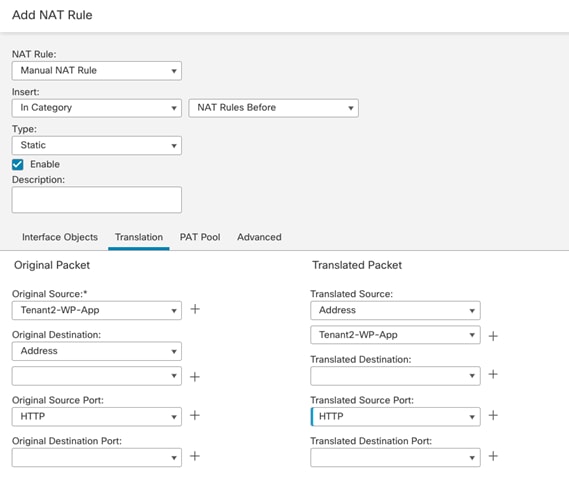

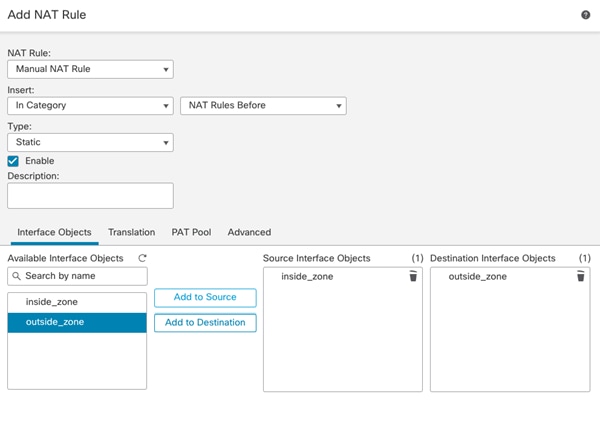

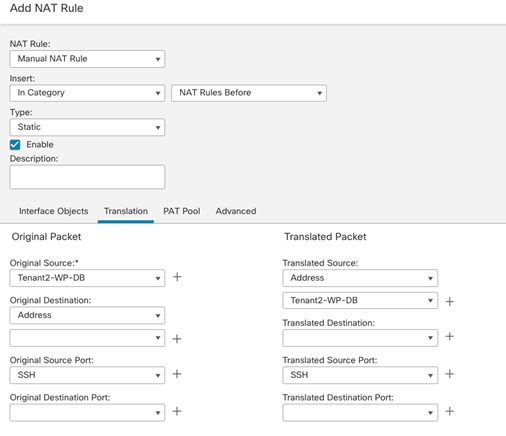

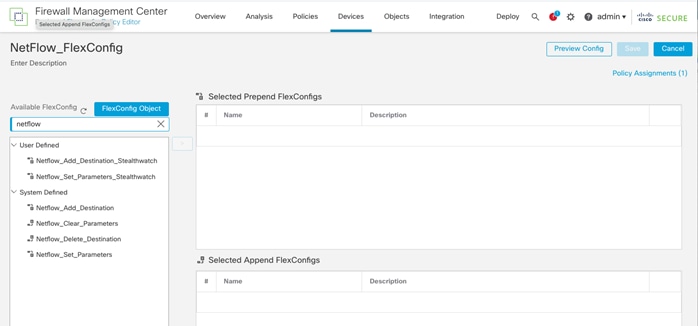

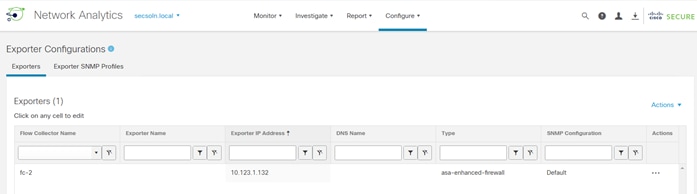

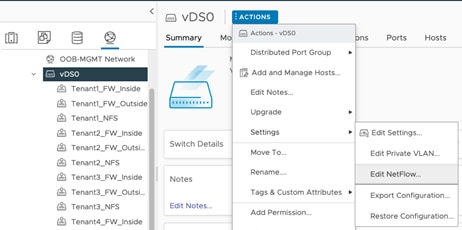

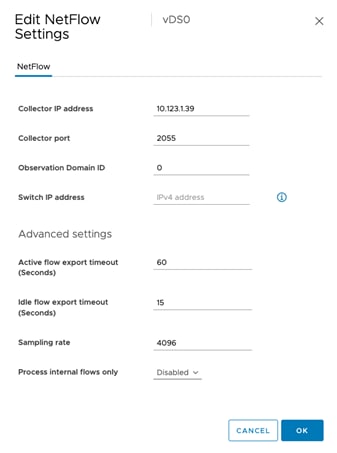

● Do not translate VM IP addresses 172.21.2.61 and 172.21.2.62 for SSH and HTTP access so corporate clients can access the application VMs using their private IP addresses.
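The two NAT requirements interact: the exemption for SSH and HTTP must be evaluated before the general translation, otherwise replies from the application VMs would leave with the firewall's outside address. The sketch below is a simplified model of that ordering for packets leaving the tenant network. It assumes the exemption is matched on the VM address and the service port as the source port of the reply, with SSH and HTTP as TCP 22 and 80. The outside address used in the example is a placeholder, and the function name is illustrative.

```python
from ipaddress import ip_address, ip_network

TENANT_SUBNET = ip_network("172.21.2.0/24")
APP_VMS = ("172.21.2.61", "172.21.2.62")
SERVICE_PORTS = (22, 80)  # SSH and HTTP

# (VM address, service port) pairs that are exempt from translation.
NO_NAT = {(vm, port) for vm in APP_VMS for port in SERVICE_PORTS}


def translated_source(src_ip, src_port, outside_ip):
    """Return the source address a packet carries after leaving the outside interface."""
    # Exemption first: replies from the application services keep the private address.
    if (src_ip, src_port) in NO_NAT:
        return src_ip
    # Otherwise, tenant-originated traffic is hidden behind the outside interface address.
    if ip_address(src_ip) in TENANT_SUBNET:
        return outside_ip
    # Traffic from other sources is outside the scope of these two rules.
    return src_ip
```

For example, an SSH reply from 172.21.2.61 (source port 22) keeps its private address, while a client connection that the same VM opens to the Internet from an ephemeral port is translated to the outside interface address.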