FlexPod Datacenter with Microsoft SQL Server 2017 on Linux VM Running on VMware and Hyper-V

Deployment Guide for FlexPod Hosting SQL Server 2017 Databases Running on RHEL7.4 in VMware ESXi 6.7 and Windows Server 2016 Hyper-V Virtual Environments

Last Updated: January 25, 2019

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to:

http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series. Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2019 Cisco Systems, Inc. All rights reserved.

Table of Contents

FlexPod: Cisco and NetApp Verified and Validated Architecture

Out-of-the-Box Infrastructure High Availability

Cisco Unified Computing System

Cisco UCS Fabric Interconnects

Cisco UCS 5108 Blade Server Chassis

Cisco UCS B200 M5 Blade Server

Cisco Nexus 93180YC-EX Switches

Microsoft Windows Server 2016 Hyper-V

Deployment Hardware and Software

FlexPod Cisco Nexus Switch Configuration

Add NTP Distribution Interface

Add Individual Port Descriptions for Troubleshooting

Configure Port Channel Parameters

Configure Virtual Port Channels

Uplink into Existing Network Infrastructure

Complete Configuration Worksheet

Infrastructure Storage Virtual Machine (Optional)

Create Storage Virtual Machine

Create Load-Sharing Mirrors of SVM Root Volume

Create Block Protocol (iSCSI) Service

Add Infrastructure SVM Administrator

SVM for SQL Linux Virtual Machines on ESXi

Create Storage Virtual Machines

Create Load-Sharing Mirrors of SVM Root Volume

Create Block Protocol (iSCSI) Service

Create Storage Volume for SQL Virtual Machine Datastore and Swap LUNs

SVM for SQL Linux Virtual Machines on Hyper-V

Create Storage Virtual Machine

Create Load-Sharing Mirrors of SVM Root Volume

Create Block Protocol (iSCSI) Service

Create Storage Volume for SQL Virtual Machines Running on Hyper-V

Perform Initial Setup of Cisco UCS Fabric Interconnects for FlexPod Environments

Upgrade Cisco UCS Manager Software to Version 4.0(1c)

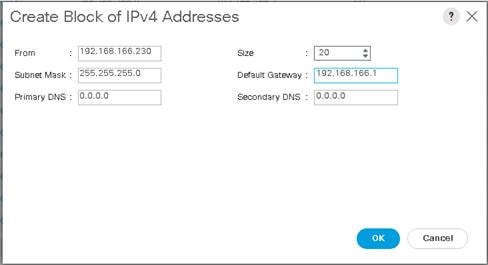

Add Block of IP Addresses for KVM Access

Enable Server and Uplink Ports

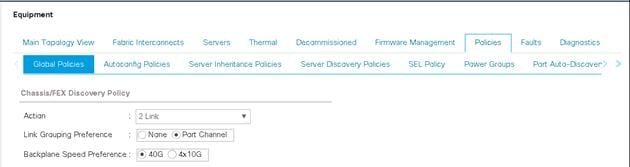

Acknowledge Cisco UCS Chassis and FEX

Create Uplink Port Channels to Cisco Nexus Switches

Create Organization (Optional)

Create IQN Pools for iSCSI Boot

Create IP Pools for iSCSI Boot

Modify Default Host Firmware Package

Set Jumbo Frames in Cisco UCS Fabric

Create Local Disk Configuration Policy (Optional)

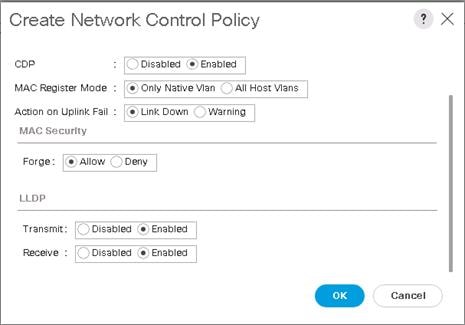

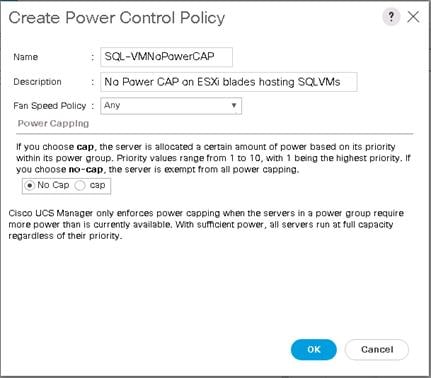

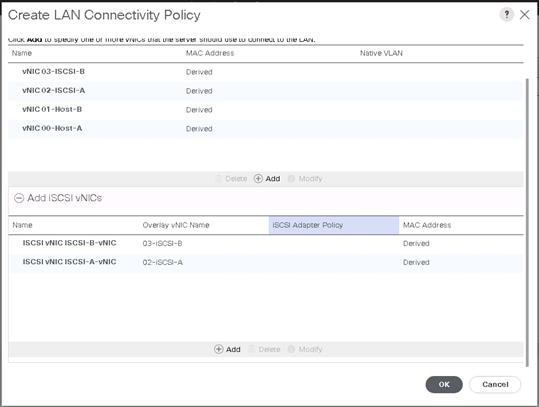

Create LAN Connectivity Policy for iSCSI Boot

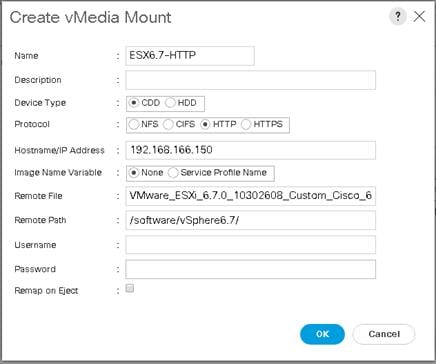

Create vMedia Policy for installing both ESXi and Hyper-V Hypervisors

Create Boot Policy for iSCSI Boot

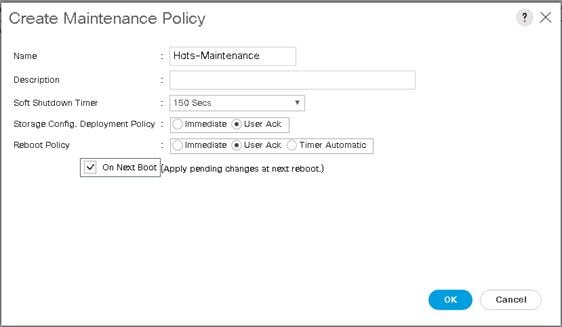

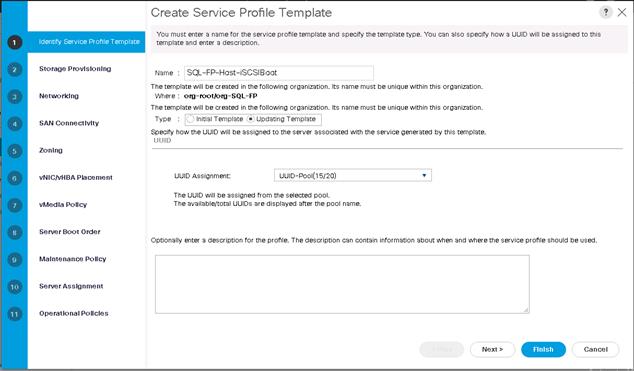

Create Service Profile Templates

Create vMedia-Enabled Service Profile Template

Add More Servers to FlexPod Unit

ONTAP Boot, Data LUNs, and Igroups Setup

Download Cisco Custom Image for ESXi 6.7U1

Set Up VMware ESXi Installation

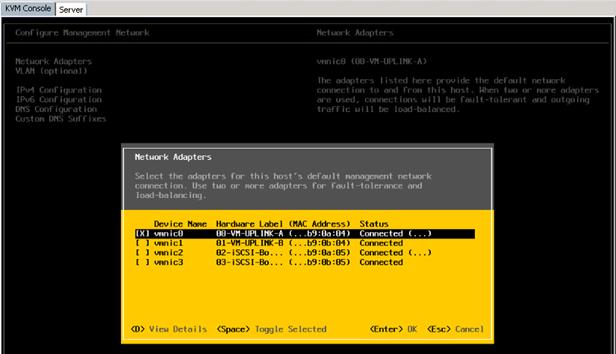

Set Up Management Networking for ESXi Hosts

Verifying UEFI secure boot of ESXi

Log into VMware ESXi Hosts by Using VMware Host Client

Set Up Basic VMkernel Ports on Standard vSwitch

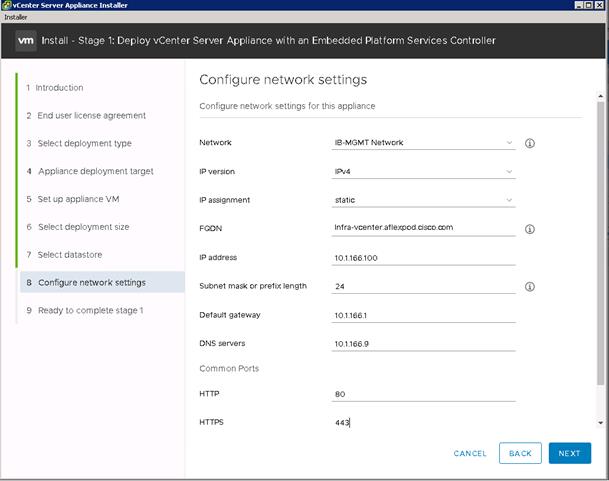

Build the VMware vCenter Server Appliance

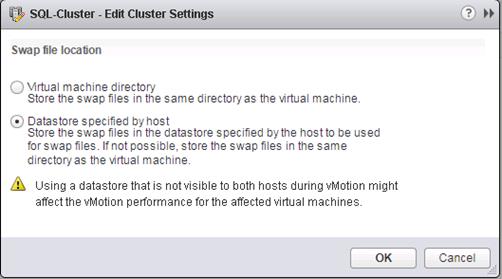

Configure the Default Swap File Location

Configure the ESXi Power Management

Add AD User Authentication to vCenter (Optional)

ESXi Dump Collector Setup for iSCSI-Booted Hosts

Cisco UCS Manager Plug-in for VMware vSphere Web Client

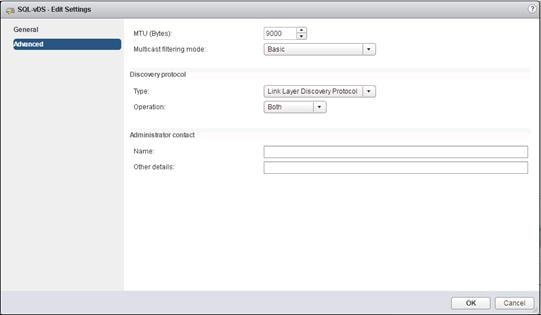

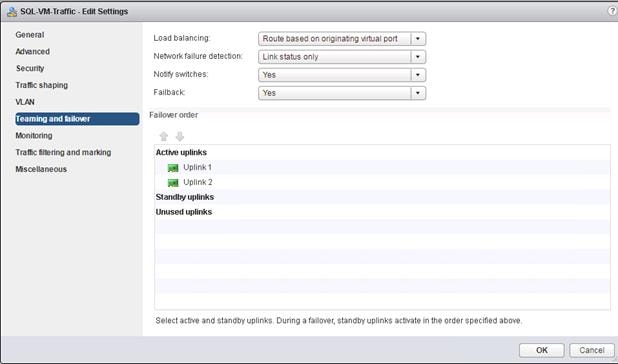

FlexPod VMware vSphere Distributed Switch (vDS)

Configure the VMware vDS in vCenter

Creating Virtual Machine for SQL Server

Microsoft Windows Server 2016 Hyper-V Deployment

Install Microsoft Windows Server 2016

Install Chipset Driver Software

Install Windows Roles and Features

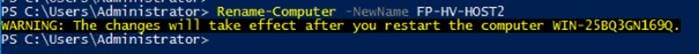

Host Renaming and Join to Domain

Configure Microsoft iSCSI Initiator

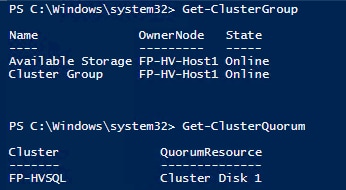

Create Windows Failover Cluster

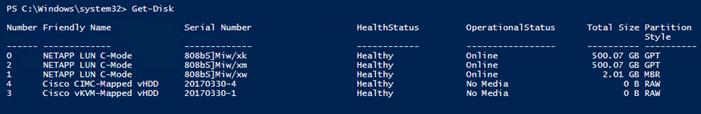

Prepare the Shared Disks for Cluster Use

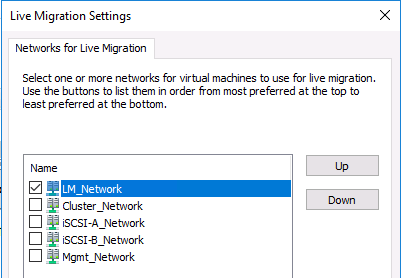

Create Clustered Virtual Machine for RHEL

RHEL on Virtual Machine Installation and Configuration

Download Red Hat Enterprise Linux 7.4 image

Install Red Hat Enterprise Linux

RHEL OS Configuration for Higher Database Performance

Join Linux Virtual Machine to Microsoft Windows Active Directory (AD) Domain

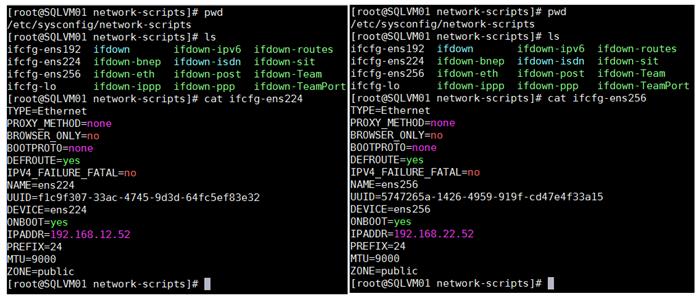

Configure iSCSI Network Adapters for Storage Access

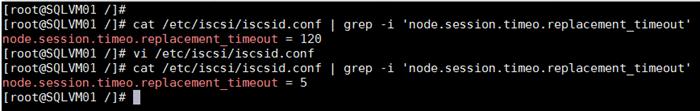

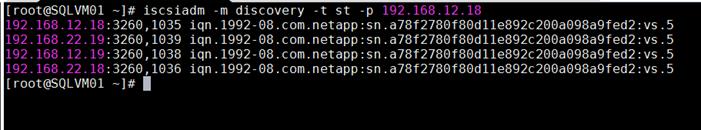

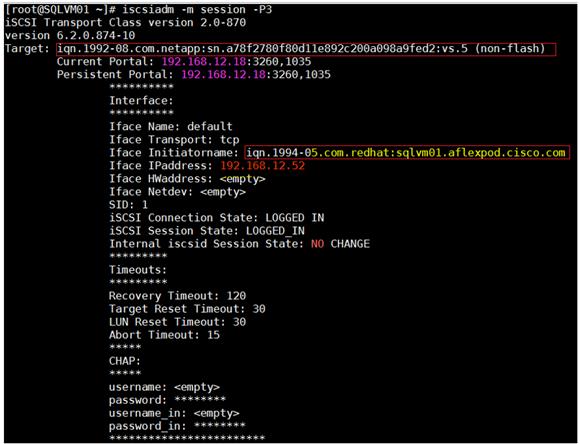

Install and Configure NetApp Linux Unified Host Utilities, Software iSCSI Initiator and Multipath

SQL Server 2017 on RHEL Installation and Configuration

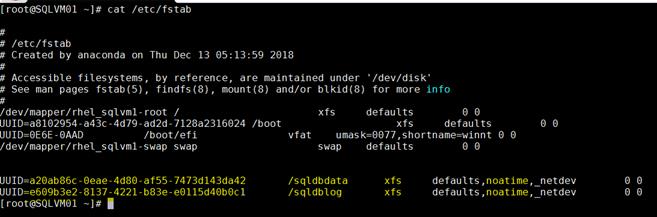

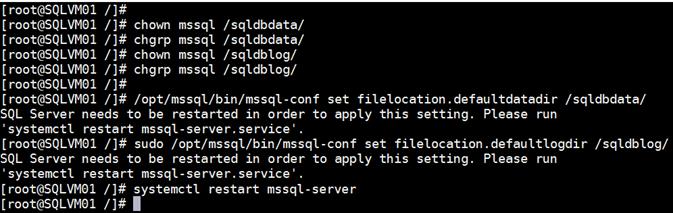

Change the Default Directories for Databases

Configure Tempdb Database Files

Add SQL Server to Active Directory Domain

SQL Server Always On Availability Group on RHEL Virtual Machines Deployment

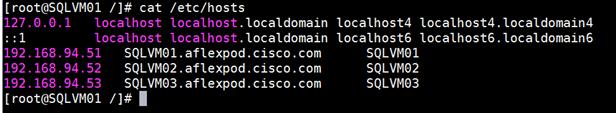

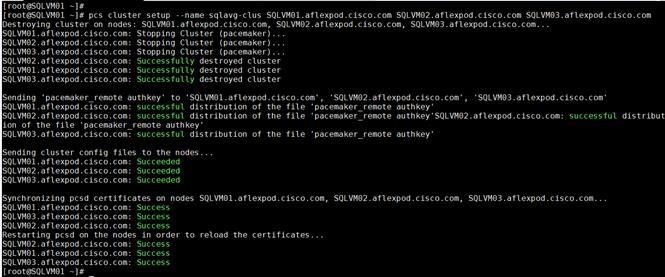

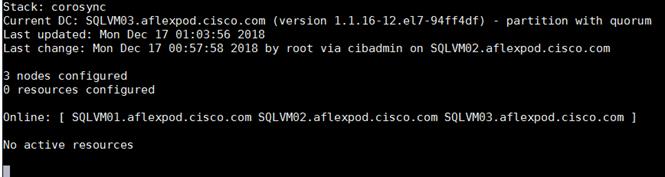

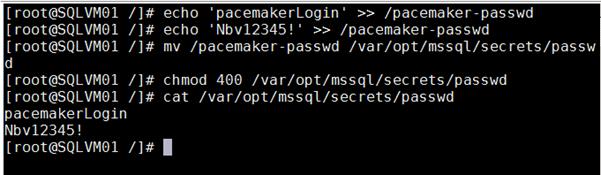

Install and Configure Pacemaker Cluster on RHEL Virtual Machines

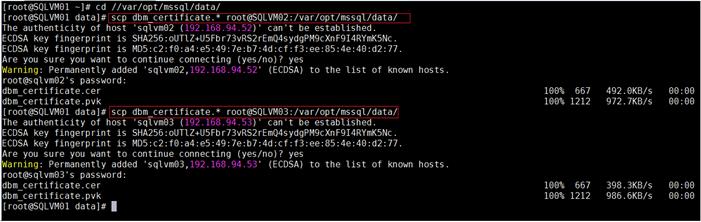

Install and Configure Always On Availability Group on Pacemaker Cluster

Automatic Failover Testing of Availability Group

FlexPod Management Tools Setup

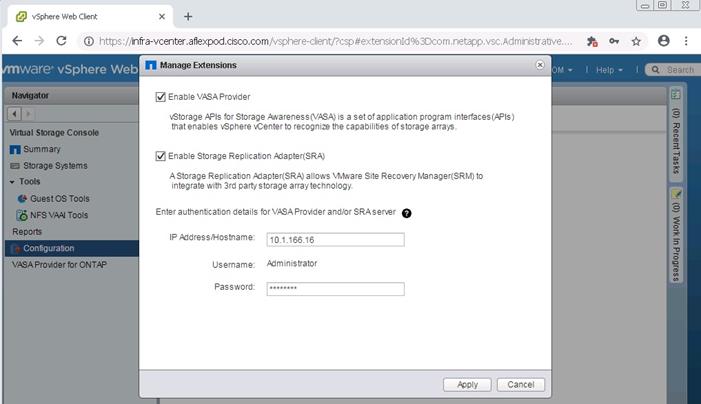

Deploy NetApp Virtual Storage Console 7.2.1

Virtual Storage Console 7.2.1 Pre-installation Considerations

Install Virtual Storage Console 7.2.1

Register Virtual Storage Console with vCenter Server

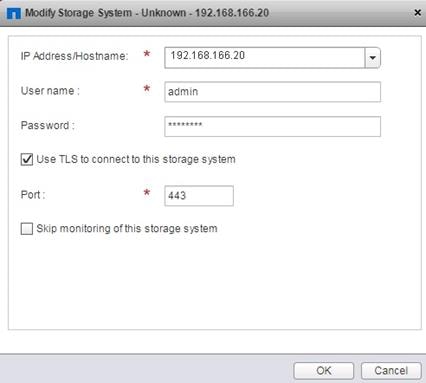

Discover and Add Storage Resources

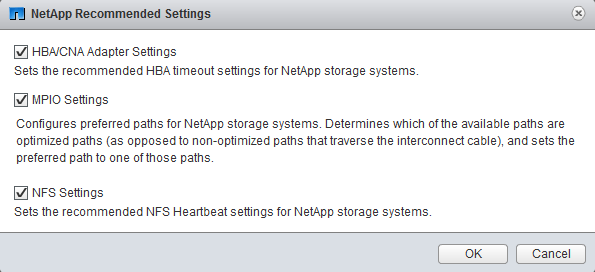

Optimal Storage Settings for ESXi Hosts

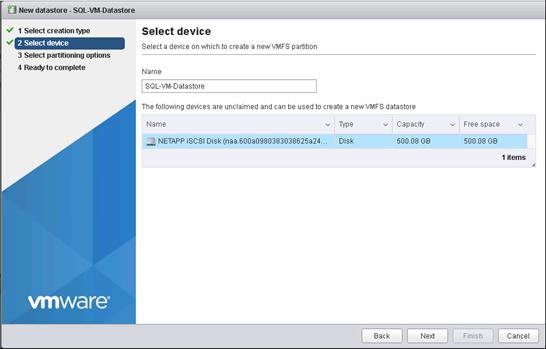

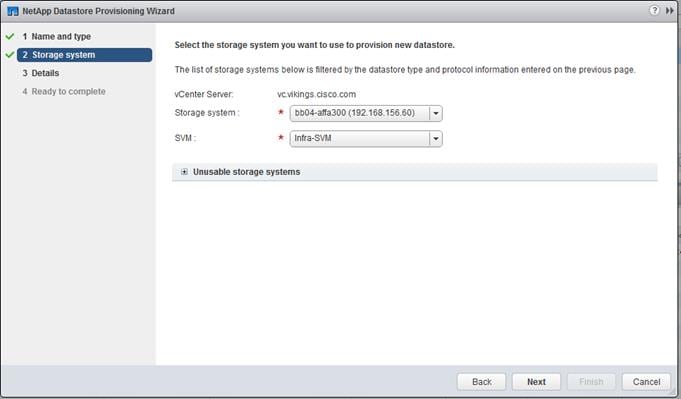

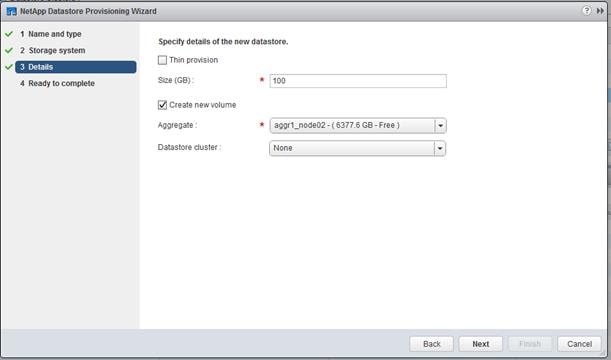

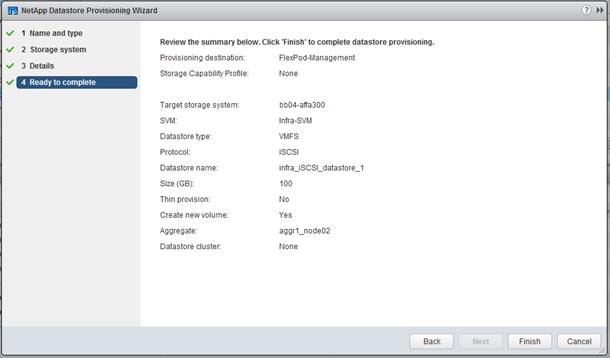

Virtual Storage Console 7.2.1 Provisioning Datastores

SnapCenter Server Requirements

SnapCenter 4.1.1 License Requirements

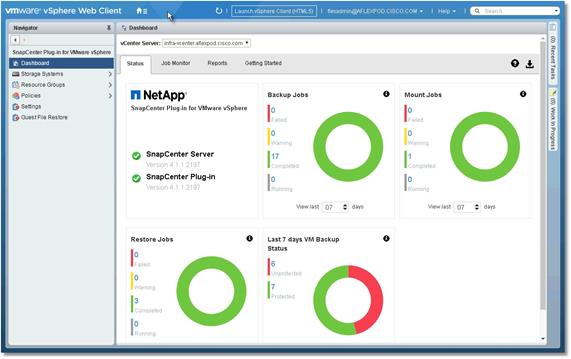

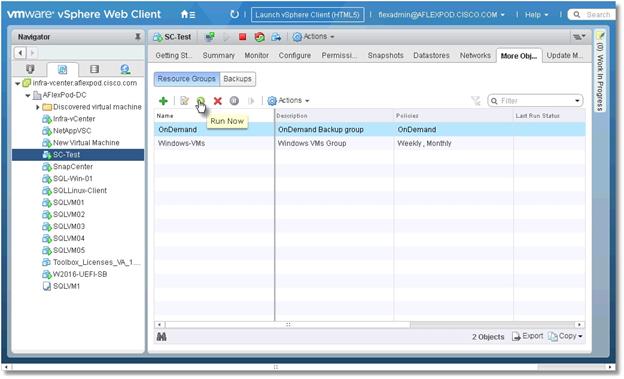

SnapCenter Plug-in for VMware vSphere

Support for Virtualized Databases and File Systems

Install SnapCenter Plug-in for VMware

Host and Privilege Requirements for the Plug-in for VMware vSphere

Install the Plug-in for VMware vSphere from the SnapCenter GUI

Configure SnapCenter Plug-in for vCenter

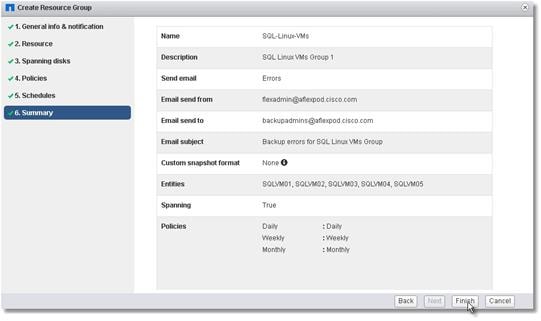

View Virtual Machine Backups and Restore from vCenter by Using SnapCenter Plug-in

Restore from vCenter by Using SnapCenter Plug-in

It is important that a datacenter solution embrace technology advancements in areas such as compute, network, and storage to address the rapidly changing requirements and challenges of IT organizations.

FlexPod is a popular converged datacenter solution created by Cisco and NetApp. It is designed to incorporate and support a wide variety of technologies and products into the solution portfolio. There have been continuous efforts to incorporate advancements in the technologies into the FlexPod solution. This enables the FlexPod solution to offer a more robust, flexible, and resilient platform to host a wide range of enterprise applications.

This document discusses a FlexPod reference architecture using the latest hardware and software products and provides deployment recommendations for hosting Microsoft SQL Server databases in VMware ESXi and Microsoft Windows Hyper-V virtualized environments, with Linux support enablement from Microsoft for SQL Server deployment.

The recommended solution architecture is built on Cisco Unified Computing System (Cisco UCS) using the unified software release 4.0.1c to support the Cisco UCS hardware platforms including Cisco UCS B-Series Blade Servers, Cisco UCS 6300 Fabric Interconnects, Cisco Nexus 9000 Series Switches, and NetApp AFF Series Storage Arrays.

Introduction

The IT industry is witnessing vast transformations in datacenter solutions. In recent years, there has been considerable interest in pre-validated and engineered datacenter solutions. The introduction of virtualization technology in key areas has significantly influenced the design principles and architectures of these solutions. It has opened the door for many applications running on bare-metal systems to migrate to these new virtualized integrated solutions.

The FlexPod system is one such pre-validated and engineered datacenter solution, designed to address the rapidly changing needs of IT organizations. Cisco and NetApp have partnered to deliver FlexPod, which uses best-of-breed compute, network, and storage components to serve as the foundation for a variety of enterprise workloads, including databases, ERP, CRM, and web applications.

With SQL Server 2017, Microsoft announced support for SQL Server deployments on Linux operating systems. SQL Server 2017 on Linux supports most of the major features currently supported by SQL Server on Windows. Microsoft claims that the database performance of SQL Server on Linux should be similar to that of SQL Server on Windows. SQL Server on Linux is as secure as SQL Server on Windows. High availability features such as Always On Availability Groups and Failover Cluster Instances are supported and integrated on Linux operating systems.

This enablement frees SQL Server from Windows-centric deployments and gives customers the flexibility to deploy SQL Server databases on a variety of widely used Linux operating systems.

Audience

The audience for this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineers, and customers who want to take advantage of an infrastructure built to deliver IT efficiency and enable IT innovation.

Purpose of this Document

This document discusses a reference architecture and provides step-by-step guidelines for deploying Microsoft SQL Server 2017 databases on the Red Hat Enterprise Linux operating system on a FlexPod system. It also provides deployment steps for configuring the SQL Server Always On Availability Group feature to achieve high availability of SQL Server databases.

Highlights of this Solution

The following software and hardware products distinguish the reference architecture from others.

· SQL Server 2017 deployment on Red Hat Enterprise Linux 7.4

· SQL Always On Availability Group configuration for high availability of databases

· Cisco UCS B200 M5 Blade Servers

· NetApp All Flash A300 storage with Data ONTAP 9.5 and NetApp SnapCenter 4.1.1 for virtual machine backup and recovery

· 40G end-to-end networking and storage connectivity

· VMware vSphere 6.7 and Windows Server 2016 Hyper-V

FlexPod System Overview

FlexPod is a best practice datacenter architecture that includes these components:

· Cisco Unified Computing System

· Cisco Nexus Switches

· NetApp FAS or AFF storage, NetApp E-Series storage systems

These components are connected and configured according to the best practices of both Cisco and NetApp and provide an ideal platform for running multiple enterprise workloads with confidence. The reference architecture covered in this document leverages the Cisco Nexus 9000 Series switch. One of the key benefits of FlexPod is the ability to maintain consistency at scale, including both scale-up and scale-out. Each of the component families shown in Figure 7 (Cisco Unified Computing System, Cisco Nexus, and NetApp storage systems) offers platform and resource options to scale the infrastructure up or down, while supporting the same features and functionality that are required under the configuration and connectivity best practices of FlexPod.

FlexPod Benefits

As customers transition toward shared infrastructure or cloud computing, they face several challenges, such as initial transition hiccups, return on investment (ROI) analysis, infrastructure management, and future growth plans. The FlexPod architecture is designed to help with proven guidance and measurable value. By introducing standardization, FlexPod helps customers mitigate the risk and uncertainty involved in planning, designing, and implementing a new datacenter infrastructure. The result is a more predictable and adaptable architecture capable of meeting and exceeding customers' IT demands.

The following list describes the features and benefits that the FlexPod system provides for consolidating SQL Server database deployments.

· Support for the latest Intel Xeon Scalable processor family CPUs in Cisco UCS B200 M5 blades enables consolidating more SQL Server virtual machines per host, thereby achieving higher consolidation ratios, reducing Total Cost of Ownership, and achieving quicker ROI.

· End-to-end 40 Gbps networking connectivity using Cisco third-generation fabric interconnects, Nexus 9000 Series switches, and NetApp AFF A300 storage arrays.

· High I/O performance using NetApp All Flash storage arrays, complete virtual machine protection using NetApp Snapshot technology, and direct storage access for SQL Server virtual machines using the in-guest iSCSI initiator.

· Nondisruptive policy-based management of infrastructure using Cisco UCS Manager.

FlexPod: Cisco and NetApp Verified and Validated Architecture

Cisco and NetApp have thoroughly validated and verified the FlexPod solution architecture and its many use cases while creating a portfolio of detailed documentation, information, and references to assist customers in transforming their datacenters to this shared infrastructure model. This portfolio includes, but is not limited to, the following items:

· Best practice architectural design

· Workload sizing and scaling guidance

· Implementation and deployment instructions

· Technical specifications (rules for FlexPod configuration do's and don’ts)

· Frequently asked questions (FAQs)

· Cisco Validated Designs and NetApp Verified Architectures (NVAs) focused on many use cases

Cisco and NetApp have also built a robust and experienced support team focused on FlexPod solutions, from customer account and technical sales representatives to professional services and technical support engineers. The cooperative support program extended by Cisco and NetApp provides customers and channel service partners with direct access to technical experts who collaborate across vendors and have access to shared lab resources to resolve potential issues. FlexPod supports tight integration with virtualized and cloud infrastructures, making it a logical choice for long-term investment. The following IT initiatives are addressed by the FlexPod solution.

Integrated System

FlexPod is a pre-validated infrastructure that brings together compute, storage, and network to simplify, accelerate, and minimize the risk associated with datacenter builds and application rollouts. These integrated systems provide a standardized approach in the datacenter that facilitates staff expertise, application onboarding, and automation as well as operational efficiencies relating to compliance and certification.

Out-of-the-Box Infrastructure High Availability

FlexPod is a highly available and scalable infrastructure that IT can evolve over time to support multiple physical and virtual application workloads. FlexPod has no single point of failure at any level, from the server through the network, to the storage. The fabric is fully redundant and scalable, and provides seamless traffic failover, should any individual component fail at the physical or virtual layer.

FlexPod Design Principles

FlexPod addresses four primary design principles:

· Application availability: Makes sure that services are accessible and ready to use

· Scalability: Addresses increasing demands with appropriate resources

· Flexibility: Provides new services or recovers resources without requiring infrastructure modifications

· Manageability: Facilitates efficient infrastructure operations through open standards and APIs

The following sections provide a brief introduction of the various hardware and software components used in this solution.

Cisco Unified Computing System

Cisco Unified Computing System is a next-generation solution for blade and rack server computing. The system integrates a low-latency, lossless 40 Gigabit Ethernet unified network fabric with enterprise-class, x86-architecture servers. The system is an integrated, scalable, multi-chassis platform in which all resources participate in a unified management domain. Cisco Unified Computing System accelerates the delivery of new services simply, reliably, and securely through end-to-end provisioning and migration support for both virtualized and non-virtualized systems. Cisco Unified Computing System provides:

· Comprehensive Management

· Radical Simplification

· High Performance

Cisco Unified Computing System consists of the following components:

· Compute - The system is based on an entirely new class of computing system that incorporates rack-mount and blade servers based on the Intel Xeon Scalable processor family.

· Network - The system is integrated onto a low-latency, lossless, 40-Gbps unified network fabric. This network foundation consolidates Local Area Networks (LANs), Storage Area Networks (SANs), and high-performance computing networks, which are separate networks today. The unified fabric lowers costs by reducing the number of network adapters, switches, and cables, and by decreasing the power and cooling requirements.

· Virtualization - The system unleashes the full potential of virtualization by enhancing the scalability, performance, and operational control of virtual environments. Cisco security, policy enforcement, and diagnostic features are now extended into virtualized environments to better support changing business and IT requirements.

· Storage access - The system provides consolidated access to both SAN storage and Network Attached Storage (NAS) over the unified fabric. It is also an ideal system for Software-Defined Storage (SDS). Because a single framework manages both the compute and storage servers in a single pane, Quality of Service (QoS) can be implemented if needed to apply I/O throttling in the system. In addition, server administrators can pre-assign storage-access policies to storage resources, which simplifies storage connectivity and management and increases productivity. In addition to external storage, both rack and blade servers have internal storage that can be accessed through built-in hardware RAID controllers. With a storage profile and disk configuration policy configured in Cisco UCS Manager, the storage needs of the host OS and application data are fulfilled by user-defined RAID groups for high availability and better performance.

· Management - The system uniquely integrates all system components so that the entire solution can be managed as a single entity by Cisco UCS Manager. Cisco UCS Manager has an intuitive GUI, a CLI, and a powerful scripting library module for Microsoft PowerShell, built on a robust API, to manage all system configuration and operations.

Cisco Unified Computing System fuses access layer networking and servers. This high-performance, next-generation server system provides a data center with a high degree of workload agility and scalability.

Cisco UCS Manager

Cisco UCS Manager (UCSM) provides unified, embedded management for all software and hardware components in the Cisco UCS. Using Single Connect technology, it manages, controls, and administers multiple chassis for thousands of virtual machines. Administrators use the software to manage the entire Cisco Unified Computing System as a single logical entity through an intuitive GUI, CLI, or an XML API. Cisco UCS Manager resides on a pair of Cisco UCS 6300 Series Fabric Interconnects using a clustered, active-standby configuration for high availability.

Cisco UCS Manager offers a unified, embedded management interface that integrates server, network, and storage. Cisco UCS Manager performs auto-discovery to detect, inventory, manage, and provision system components that are added or changed. It offers a comprehensive set of XML APIs for third-party integration, exposes 9000 points of integration, and facilitates custom development for automation, orchestration, and new levels of system visibility and control.
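As an illustration of the XML API mentioned above, the following minimal sketch builds a login request and an inventory query and parses a session cookie from a response. The method names (`aaaLogin`, `configResolveClass`), attribute names, and the `computeBlade` class ID are assumptions based on the commonly documented Cisco UCS Manager XML API and should be verified against the programmer's guide for the release in use. The credentials and the canned response are hypothetical, and no network call is made.

```python
import xml.etree.ElementTree as ET


def build_login_request(username: str, password: str) -> str:
    """Return the XML body for a login request (assumed method: aaaLogin)."""
    elem = ET.Element("aaaLogin", {"inName": username, "inPassword": password})
    return ET.tostring(elem, encoding="unicode")


def parse_login_cookie(response_xml: str) -> str:
    """Extract the session cookie from a login response.

    Assumes the response carries the cookie in an 'outCookie' attribute and
    reports failures through 'errorCode' / 'errorDescr' attributes.
    """
    root = ET.fromstring(response_xml)
    if root.get("errorCode"):
        raise RuntimeError(root.get("errorDescr", "login failed"))
    return root.attrib["outCookie"]


def build_class_query(cookie: str, class_id: str) -> str:
    """Return the XML body that asks for all objects of one class."""
    elem = ET.Element(
        "configResolveClass",
        {"cookie": cookie, "classId": class_id, "inHierarchical": "false"},
    )
    return ET.tostring(elem, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical credentials and a hand-written sample response.
    print(build_login_request("admin", "example-password"))
    sample_response = '<aaaLogin cookie="" response="yes" outCookie="example-cookie" />'
    cookie = parse_login_cookie(sample_response)
    print(build_class_query(cookie, "computeBlade"))
```

In a real deployment these bodies would be sent over HTTPS to the Cisco UCS Manager virtual IP address. The sketch only shows the request and response shapes, which is usually enough to decide whether a third-party tool can integrate at this level.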

Service profiles benefit both virtualized and non-virtualized environments and increase the mobility of non-virtualized servers, such as when moving workloads from server to server or taking a server offline for service or upgrade. Profiles can also be used in conjunction with virtualization clusters to bring new resources online easily, complementing existing virtual machine mobility.

For more information about Cisco UCS Manager, go to: https://www.cisco.com/c/en/us/products/servers-unified-computing/ucs-manager/index.html

Cisco UCS Fabric Interconnects

The fabric interconnects provide a single point of connectivity and management for the entire system. Typically deployed as an active-active pair, the system’s fabric interconnects integrate all components into a single, highly available management domain controlled by Cisco UCS Manager. The fabric interconnects manage all I/O efficiently and securely at a single point, resulting in deterministic I/O latency regardless of a server or virtual machine’s topological location in the system.

Cisco UCS 6300 Series Fabric Interconnects support up to 2.43 Tbps of unified fabric bandwidth with low-latency, lossless, cut-through switching that carries IP, storage, and management traffic over a single set of cables. The fabric interconnects feature virtual interfaces that terminate both physical and virtual connections equivalently, establishing a virtualization-aware environment in which blade servers, rack servers, and virtual machines are interconnected using the same mechanisms. The Cisco UCS 6332-16UP is a 1-RU fabric interconnect that features up to 40 universal ports, 24 of which support 40 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE), or native Fibre Channel connectivity. In addition, it supports up to 16 unified ports for 1- and 10-Gbps FCoE or 4-, 8-, and 16-Gbps Fibre Channel.

Figure 1 Cisco UCS Fabric Interconnect 6332-16UP

For more information, go to: https://www.cisco.com/c/en/us/products/servers-unified-computing/ucs-6332-16up-fabric-interconnect/index.html

Cisco UCS 5108 Blade Server Chassis

The Cisco UCS 5100 Series Blade Server Chassis is a crucial building block of the Cisco Unified Computing System, delivering a scalable and flexible blade server chassis. The Cisco UCS 5108 Blade Server Chassis is six rack units (6RU) high and can mount in an industry-standard 19-inch rack. A single chassis can house up to eight half-width Cisco UCS B-Series Blade Servers and can accommodate both half-width and full-width blade form factors. Four single-phase, hot-swappable power supplies are accessible from the front of the chassis. These power supplies are 92 percent efficient and can be configured to support non-redundant, N+1 redundant, and grid-redundant configurations. The rear of the chassis contains eight hot-swappable fans, four power connectors (one per power supply), and two I/O bays for Cisco UCS 2304 Fabric Extenders. A passive midplane provides multiple 40 Gigabit Ethernet connections between blade servers and fabric interconnects. The Cisco UCS 2304 Fabric Extender has four 40 Gigabit Ethernet, FCoE-capable, Quad Small Form-Factor Pluggable (QSFP+) ports that connect the blade chassis to the fabric interconnect. Each Cisco UCS 2304 provides one 40 Gigabit Ethernet port connected through the midplane to each half-width slot in the chassis, giving it a total of eight 40G interfaces to the compute. Typically configured in pairs for redundancy, two fabric extenders provide up to 320 Gbps of I/O to the chassis.

For more information, go to: http://www.cisco.com/c/en/us/products/servers-unified-computing/ucs-5100-series-blade-server-chassis/index.html

Cisco UCS B200 M5 Blade Server

The Cisco UCS B200 M5 Blade Server delivers performance, flexibility, and optimization for deployments in data centers, in the cloud, and at remote sites. This enterprise-class server offers market-leading performance, versatility, and density without compromise for workloads including Virtual Desktop Infrastructure (VDI), web infrastructure, distributed databases, converged infrastructure, and enterprise applications such as Oracle and SAP HANA. The Cisco UCS B200 M5 Blade Server can quickly deploy stateless physical and virtual workloads through programmable, easy-to-use Cisco UCS Manager Software and simplified server access through Cisco SingleConnect technology. The Cisco UCS B200 M5 Blade Server is a half-width blade. Up to eight servers can reside in the 6-Rack-Unit (6RU) Cisco UCS 5108 Blade Server Chassis, offering one of the highest densities of servers per rack unit of blade chassis in the industry. You can configure the Cisco UCS B200 M5 to meet your local storage requirements without having to buy, power, and cool components that you do not need. The Cisco UCS B200 M5 Blade Server provides these main features:

· Up to two Intel Xeon Scalable CPUs with up to 28 cores per CPU

· 24 DIMM slots for industry-standard DDR4 memory at speeds up to 2666 MHz, with up to 3 TB of total memory when using 128-GB DIMMs

· Modular LAN On Motherboard (mLOM) card with Cisco UCS Virtual Interface Card (VIC) 1340, a 2-port, 40 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE)–capable mLOM mezzanine adapter

· Optional rear mezzanine VIC with two 40-Gbps unified I/O ports or two sets of 4 x 10-Gbps unified I/O ports, delivering 80 Gbps to the server; adapts to either 10- or 40-Gbps fabric connections

· Two optional, hot-pluggable, Hard-Disk Drives (HDDs), Solid-State Disks (SSDs), or NVMe 2.5-inch drives with a choice of enterprise-class RAID or pass-through controllers
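The capacity figures in the list above can be checked with simple arithmetic. The following sketch uses only the numbers stated in this section (24 DIMM slots, 128-GB DIMMs, two CPUs with up to 28 cores each, eight half-width blades per chassis). The function and constant names are illustrative and not part of any Cisco tool.

```python
# Maximum values taken from the B200 M5 feature list and chassis description.
DIMM_SLOTS = 24
MAX_DIMM_GB = 128
CPU_SOCKETS = 2
MAX_CORES_PER_CPU = 28
BLADES_PER_CHASSIS = 8


def max_memory_tb(slots: int = DIMM_SLOTS, dimm_gb: int = MAX_DIMM_GB) -> float:
    """Total memory per blade in TB (1 TB = 1024 GB)."""
    return slots * dimm_gb / 1024


def max_cores_per_blade(sockets: int = CPU_SOCKETS,
                        cores: int = MAX_CORES_PER_CPU) -> int:
    """Physical cores available on one fully populated blade."""
    return sockets * cores


def max_cores_per_chassis(blades: int = BLADES_PER_CHASSIS) -> int:
    """Physical cores in one chassis filled with half-width blades."""
    return blades * max_cores_per_blade()


if __name__ == "__main__":
    print(max_memory_tb())          # 3.0
    print(max_cores_per_blade())    # 56
    print(max_cores_per_chassis())  # 448
```

These are upper bounds for sizing discussions. The processors and DIMMs actually used in a given deployment determine the real capacity.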

Figure 2 Cisco UCS B200 M5 Blade Server

For more information, go to: https://www.cisco.com/c/en/us/products/collateral/servers-unified-computing/ucs-b-series-blade-servers/datasheet-c78-739296.html

Cisco UCS Fabric Extenders

The Cisco UCS 2304 Fabric Extender brings the unified fabric into the blade server enclosure, providing multiple 40 Gigabit Ethernet connections between blade servers and the fabric interconnect, simplifying diagnostics, cabling, and management. It is a third-generation I/O Module (IOM) that shares the same form factor as the second-generation Cisco UCS 2200 Series Fabric Extenders and is backward compatible with the shipping Cisco UCS 5108 Blade Server Chassis. The Cisco UCS 2304 connects the I/O fabric between the Cisco UCS 6300 Series Fabric Interconnects and the Cisco UCS 5100 Series Blade Server Chassis, enabling a lossless and deterministic Fibre Channel over Ethernet (FCoE) fabric to connect all blades and chassis together. Because the fabric extender is similar to a distributed line card, it does not perform any switching and is managed as an extension of the fabric interconnects. This approach reduces the overall infrastructure complexity and enables Cisco UCS to scale to many chassis without multiplying the number of switches needed, reducing TCO and allowing all chassis to be managed as a single, highly available management domain.

The Cisco UCS 2304 Fabric Extender has four 40 Gigabit Ethernet, FCoE-capable, Quad Small Form-Factor Pluggable (QSFP+) ports that connect the blade chassis to the fabric interconnect. Each Cisco UCS 2304 provides one 40 Gigabit Ethernet port connected through the midplane to each half-width slot in the chassis, giving it a total of eight 40G interfaces to the compute. Typically configured in pairs for redundancy, two fabric extenders provide up to 320 Gbps of I/O to the chassis.
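The 320 Gbps figure follows directly from the port counts given above. The following sketch reproduces the calculation, using only the values stated in this section. The function names are illustrative.

```python
def chassis_uplink_gbps(fex_count: int = 2,
                        uplinks_per_fex: int = 4,
                        port_gbps: int = 40) -> int:
    """Aggregate uplink bandwidth from the chassis to the fabric interconnects."""
    return fex_count * uplinks_per_fex * port_gbps


def midplane_ports_per_fex(half_width_slots: int = 8,
                           ports_per_slot: int = 1) -> int:
    """Number of 40G server-facing interfaces on one fabric extender."""
    return half_width_slots * ports_per_slot


if __name__ == "__main__":
    print(chassis_uplink_gbps())             # 320 (two fabric extenders)
    print(chassis_uplink_gbps(fex_count=1))  # 160 (a single fabric extender)
    print(midplane_ports_per_fex())          # 8
```

Because each fabric extender contributes half of the uplink bandwidth, the loss of one fabric extender leaves 160 Gbps available to the chassis under these assumptions.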

Figure 3 Cisco UCS 2304 Fabric Extender

For more information, go to: https://www.cisco.com/c/en/us/products/collateral/servers-unified-computing/ucs-6300-series-fabric-interconnects/datasheet-c78-675243.html

Cisco VIC Interface Cards

The Cisco UCS Virtual Interface Card (VIC) 1340 is a 2-port 40-Gbps Ethernet or dual 4 x 10-Gbps Ethernet, Fibre Channel over Ethernet (FCoE) capable modular LAN on motherboard (mLOM) designed exclusively for the M4 generation of Cisco UCS B-Series Blade Servers. All the blade servers, for both controller and compute roles, have an mLOM VIC 1340 card. Each blade has a capacity of 40 Gb of network traffic. The underlying network interfaces share this mLOM card.

The Cisco UCS VIC 1340 enables a policy-based, stateless, agile server infrastructure that can present over 256 PCIe standards-compliant interfaces to the host that can be dynamically configured as either network interface cards (NICs) or host bus adapters (HBAs).

For more information, go to: http://www.cisco.com/c/en/us/products/interfaces-modules/ucs-virtual-interface-card-1340/index.html

Cisco UCS Differentiators

Cisco Unified Computing System is revolutionizing the way servers are managed in the data center. The following are the unique differentiators of Cisco UCS and Cisco UCS Manager:

· Embedded Management—In Cisco UCS, the servers are managed by the embedded firmware in the Fabric Interconnects, eliminating the need for any external physical or virtual devices to manage the servers.

· Unified Fabric—In Cisco UCS, from blade server chassis or rack servers to FI, there is a single Ethernet cable used for LAN, SAN and management traffic. This converged I/O results in reduced cables, SFPs and adapters which in turn reduce capital and operational expenses of the overall solution.

· Auto Discovery—By simply inserting the blade server in the chassis or connecting rack server to the fabric interconnect, discovery and inventory of compute resource occurs automatically without any management intervention. The combination of unified fabric and auto-discovery enables the wire-once architecture of Cisco UCS, where its compute capability can be extended easily while keeping the existing external connectivity to LAN, SAN and management networks.

· Policy Based Resource Classification—When a compute resource is discovered by Cisco UCS Manager, it can be automatically classified to a given resource pool based on policies defined. This capability is useful in multi-tenant cloud computing. This CVD showcases the policy-based resource classification of Cisco UCS Manager.

· Combined Rack and Blade Server Management—Cisco UCS Manager can manage B-Series blade servers and C-Series rack servers under the same Cisco UCS domain. This feature, along with stateless computing, makes compute resources truly hardware form factor agnostic.

· Model-based Management Architecture—Cisco UCS Manager Architecture and management database is model based and data driven. An open XML API is provided to operate on the management model. This enables easy and scalable integration of Cisco UCS Manager with other management systems.

· Policies, Pools, Templates—The management approach in Cisco UCS Manager is based on defining policies, pools and templates, instead of cluttered configuration, which enables a simple, loosely coupled, data driven approach in managing compute, network and storage resources.

· Loose Referential Integrity—In Cisco UCS Manager, a service profile, port profile, or policy can refer to other policies or logical resources with loose referential integrity. A referred policy need not exist when the referring policy is authored, and a referred policy can be deleted even though other policies refer to it. This allows subject matter experts from different domains, such as network, storage, security, server, and virtualization, to work independently of each other while still working together to accomplish a complex task.

· Policy Resolution—In Cisco UCS Manager, a tree structure of organizational unit hierarchy can be created that mimics real-life tenant and organization relationships. Various policies, pools, and templates can be defined at different levels of the organization hierarchy. A policy referring to another policy by name is resolved in the organization hierarchy with the closest policy match. If no policy with the specific name is found in the hierarchy up to the root organization, then a special policy named “default” is searched. This policy resolution practice enables automation-friendly management APIs and provides great flexibility to owners of different organizations.

· Service Profiles and Stateless Computing—A service profile is a logical representation of a server, carrying its various identities and policies. This logical server can be assigned to any physical compute resource as long as it meets the resource requirements. Stateless computing enables procurement of a server within minutes, which used to take days in legacy server management systems.

· Built-in Multi-Tenancy Support—The combination of policies, pools and templates, loose referential integrity, policy resolution in the organization hierarchy, and a service profile-based approach to compute resources makes Cisco UCS Manager inherently friendly to the multi-tenant environments typically observed in private and public clouds.

· Extended Memory—The enterprise-class Cisco UCS B200 M5 blade server extends the capabilities of Cisco’s Unified Computing System portfolio in a half-width blade form factor. The Cisco UCS B200 M5 harnesses the power of the latest Intel Xeon Scalable processor family CPUs with up to 3 TB of RAM, allowing the high virtual machine-to-physical server ratios required in many deployments and the large memory operations required by certain architectures such as big data.

· Virtualization Aware Network—VM-FEX technology makes the access network layer aware of host virtualization. This prevents pollution of the compute and network domains with virtualization when the virtual network is managed by port profiles defined by the network administrators’ team. VM-FEX also offloads the hypervisor CPU by performing switching in hardware, thus allowing the hypervisor CPU to do more virtualization-related tasks. VM-FEX technology is well integrated with VMware vCenter, Linux KVM, and Hyper-V SR-IOV to simplify cloud management.

· Simplified QoS—Even though Fibre Channel and Ethernet are converged in the Cisco UCS fabric, built-in support for QoS and lossless Ethernet makes it seamless. Network Quality of Service (QoS) is simplified in Cisco UCS Manager by representing all system classes in one GUI panel.
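The policy resolution behavior described in the list above (closest match in the organization hierarchy, then a fallback to a policy named "default") can be sketched as a simple tree walk. This is an illustrative model only: the `Org` class, the `resolve_policy` function, and the organization and policy names are hypothetical and are not part of the Cisco UCS Manager API.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Org:
    """Hypothetical organization node; not a Cisco UCS Manager class."""
    name: str
    parent: Org | None = None
    policies: dict[str, str] = field(default_factory=dict)  # policy name -> definition


def resolve_policy(org: Org, policy_name: str) -> str | None:
    """Resolve by name, closest organization first, then fall back to 'default'."""
    for wanted in (policy_name, "default"):
        node = org
        while node is not None:          # walk from the referring org up to root
            if wanted in node.policies:
                return node.policies[wanted]
            node = node.parent
    return None                          # reference stays unresolved (loose integrity)


root = Org("root", policies={"default": "root/default-bios", "gold": "root/gold-bios"})
tenant = Org("tenant-a", parent=root, policies={"gold": "tenant-a/gold-bios"})
sql = Org("sql", parent=tenant)

print(resolve_policy(sql, "gold"))    # tenant-a/gold-bios
print(resolve_policy(sql, "silver"))  # root/default-bios
```

Returning `None` instead of raising an error mirrors the loose referential integrity idea: a reference to a policy that does not exist yet is allowed, and it simply resolves once the policy is created.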

Cisco Nexus 93180YC-EX Switches

In this solution, Cisco Nexus 93180YC-EX switches are used as the upstream switches. Each switch offers 48 1/10/25-Gbps SFP+ ports and 6 40/100-Gbps QSFP+ uplink ports. All ports are line rate, delivering 3.6 Tbps of throughput with a latency of less than 2 microseconds in a 1-rack-unit (1RU) form factor.
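The 3.6 Tbps figure is consistent with the port counts if the 48 SFP ports are taken at a 25 Gbps maximum, the six uplinks at 100 Gbps, and traffic is counted in both directions. The following quick check states those assumptions explicitly; the variable names are illustrative.

```python
# Port counts and maximum speeds assumed for the Cisco Nexus 93180YC-EX.
DOWNLINK_PORTS, DOWNLINK_GBPS = 48, 25   # assumes every SFP port runs at 25 Gbps
UPLINK_PORTS, UPLINK_GBPS = 6, 100       # assumes every uplink runs at 100 Gbps

one_way_gbps = DOWNLINK_PORTS * DOWNLINK_GBPS + UPLINK_PORTS * UPLINK_GBPS
full_duplex_tbps = 2 * one_way_gbps / 1000   # count transmit and receive

print(one_way_gbps)      # 1800
print(full_duplex_tbps)  # 3.6
```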

Figure 4 Cisco Nexus 93180YC-EX

For more information, go to: https://www.cisco.com/c/en/us/products/switches/nexus-93180yc-ex-switch/index.html

NetApp AFF A300 Storage

With the new A-Series All Flash FAS (AFF) controller lineup, NetApp provides industry leading performance while continuing to provide a full suite of enterprise-grade data management and data protection features. The A-Series lineup offers double the IOPS, while decreasing the latency.

This solution uses the NetApp AFF A300. This controller provides the high-performance benefits of 40GbE and all-flash SSDs while taking up only 5U of rack space. Configured with 24 x 3.8TB SSDs, the A300 provides ample performance and over 60TB of effective capacity. This makes it an ideal controller for a shared-workload converged infrastructure. For situations where more performance is needed, the A700s would be an ideal fit.

Figure 5 NetApp AFF A300

NetApp ONTAP 9.5

ONTAP 9.5 is the data management software that is used with the NetApp all-flash storage platforms in the solution design. ONTAP software offers unified storage for applications that read and write data over block or file-access protocols in storage configurations that range from high-speed flash to lower-priced spinning media or cloud-based object storage.

ONTAP implementations can run on NetApp engineered FAS or AFF appliances, on commodity hardware (ONTAP Select), and in private, public, or hybrid clouds (NetApp Private Storage and Cloud Volumes ONTAP). Specialized implementations offer best-in-class converged infrastructure as featured here as part of the FlexPod Datacenter solution and access to third-party storage arrays (NetApp FlexArray virtualization).

Together these implementations form the basic framework of the NetApp Data Fabric, with a common software-defined approach to data management and fast, efficient replication across platforms. FlexPod and ONTAP architectures can serve as the foundation for both hybrid cloud and private cloud designs.

The following few sections provide an overview of how ONTAP 9.5 is an industry-leading data management software architected on the principles of software defined storage.

NetApp Storage Virtual Machine

A NetApp ONTAP cluster serves data through at least one and possibly multiple storage virtual machines (SVMs; formerly called Vservers). An SVM is a logical abstraction that represents the set of physical resources of the cluster. Data volumes and network logical interfaces (LIFs) are created and assigned to an SVM and might reside on any node in the cluster to which the SVM has been given access. An SVM might own resources on multiple nodes concurrently, and those resources can be moved non-disruptively from one node in the storage cluster to another. For example, a NetApp FlexVol flexible volume can be non-disruptively moved to a new node and aggregate, or a data LIF can be transparently reassigned to a different physical network port. The SVM abstracts the cluster hardware, and thus it is not tied to any specific physical hardware.

An SVM can support multiple data protocols concurrently. Volumes within the SVM can be joined together to form a single NAS namespace, which makes all of an SVM's data available through a single share or mount point to NFS and CIFS clients. SVMs also support block-based protocols, and LUNs can be created and exported by using iSCSI, FC, or FCoE. Any or all of these data protocols can be configured for use within a given SVM. Storage administrators and management roles can also be associated with an SVM, which enables higher security and access control, particularly in environments with more than one SVM, when the storage is configured to provide services to different groups or sets of workloads.

Storage Efficiencies

Storage efficiency has always been a primary architectural design point of ONTAP data management software. A wide array of features allows you to store more data using less space. In addition to deduplication and compression, you can store your data more efficiently by using features such as unified storage, multitenancy, thin provisioning, and NetApp Snapshot technology.

Starting with ONTAP 9, NetApp guarantees that the use of NetApp storage efficiency technologies on AFF systems reduces the total logical capacity used to store customer data by 75 percent, a data reduction ratio of 4:1. This space reduction is enabled by a combination of several different technologies, including deduplication, compression, and compaction.
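The 75 percent figure and the 4:1 ratio are two ways of stating the same thing: a ratio of N:1 saves a fraction of 1 - 1/N of the logical capacity. The following is a small sketch of the conversion; the function name and the 100 TB sample value are illustrative, not taken from this solution.

```python
def space_saved_fraction(reduction_ratio: float) -> float:
    """Fraction of logical capacity saved for a ratio of N:1 (logical:physical)."""
    if reduction_ratio <= 0:
        raise ValueError("reduction ratio must be positive")
    return 1 - 1 / reduction_ratio


logical_tb = 100                       # hypothetical amount of customer data
print(space_saved_fraction(4))         # 0.75
print(logical_tb / 4)                  # 25.0 (TB physically consumed at 4:1)
```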

Compaction, which was introduced in ONTAP 9, is the latest patented storage efficiency technology released by NetApp. In the ONTAP WAFL file system, all I/O takes up 4KB of space, even if it does not actually require 4KB of data. Compaction combines multiple blocks that are not using their full 4KB of space together into one block. This one block can be more efficiently stored on the disk to save space.
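The effect of compaction can be illustrated with a toy block-packing model. The sketch below uses first-fit decreasing packing as an assumed stand-in; it is not the algorithm ONTAP or WAFL actually uses, and the function names and sample write sizes are hypothetical.

```python
from __future__ import annotations

BLOCK_SIZE = 4096  # bytes per block, per the 4KB figure above


def blocks_without_compaction(io_sizes: list[int]) -> int:
    """Each I/O occupies whole blocks of its own, even when partly empty."""
    return sum(-(-size // BLOCK_SIZE) for size in io_sizes)  # ceiling division


def blocks_with_compaction(io_sizes: list[int]) -> int:
    """Pack partial blocks together using first-fit decreasing (illustrative)."""
    full = sum(size // BLOCK_SIZE for size in io_sizes)       # completely filled blocks
    tails = sorted((s % BLOCK_SIZE for s in io_sizes if s % BLOCK_SIZE), reverse=True)
    free: list[int] = []                                      # free bytes per shared block
    for tail in tails:
        for i, room in enumerate(free):
            if tail <= room:
                free[i] -= tail                               # fits in an existing block
                break
        else:
            free.append(BLOCK_SIZE - tail)                    # open a new shared block
    return full + len(free)


writes = [512, 1024, 2048, 512, 4096, 3000]   # sample I/O sizes in bytes
print(blocks_without_compaction(writes))  # 6
print(blocks_with_compaction(writes))     # 3
```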

Encryption

Data security continues to be an important consideration for customers purchasing storage systems. NetApp supported self-encrypting drives in storage clusters prior to ONTAP 9. However, in ONTAP 9, the encryption capabilities of ONTAP are extended by adding an Onboard Key Manager (OKM). The OKM generates and stores keys for each of the drives in ONTAP, allowing ONTAP to provide all functionality required for encryption out of the box. Through this functionality, sensitive data stored on disk is secure and can only be accessed by ONTAP.

Beginning with ONTAP 9.1, NetApp has extended the encryption capabilities further with NetApp Volume Encryption (NVE), a software-based mechanism for encrypting data. It allows a user to encrypt data at the per-volume level instead of requiring encryption of all data in the cluster, thereby providing more flexibility and granularity to the ONTAP administrators. This encryption extends to Snapshot copies and NetApp FlexClone volumes that are created in the cluster. One benefit of NVE is that it runs after the implementation of the storage efficiency features, and, therefore, it does not interfere with the ability of ONTAP to create space savings.

For more information about encryption in ONTAP, see the NetApp Encryption Power Guide in the NetApp ONTAP 9 Documentation Center.

FlexClone

NetApp FlexClone technology enables instantaneous cloning of a dataset without consuming any additional storage until cloned data differs from the original.
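The space behavior described above can be pictured with a toy copy-on-write model in which a clone shares block references with its parent until a block is overwritten. This is a conceptual sketch only; the `Volume` class and `unique_blocks` function are hypothetical and do not represent how ONTAP implements FlexClone.

```python
class Volume:
    """Toy volume: a list of references to shared, immutable data blocks."""

    def __init__(self, blocks):
        self.blocks = list(blocks)

    def clone(self):
        # Copies only the block references, so no data blocks are duplicated.
        return Volume(self.blocks)

    def write(self, index, data):
        # Replaces one reference; the other volume keeps pointing at the old block.
        self.blocks[index] = data


def unique_blocks(*volumes):
    """Count distinct block objects referenced by the given volumes."""
    return len({id(block) for volume in volumes for block in volume.blocks})


parent = Volume(bytes([i]) * 4096 for i in range(4))
clone = parent.clone()
print(unique_blocks(parent, clone))   # 4: the clone consumes no extra blocks

clone.write(0, b"\xff" * 4096)
print(unique_blocks(parent, clone))   # 5: only the changed block is new
```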

SnapMirror (Data Replication)

NetApp SnapCenter

SnapCenter is a NetApp next-generation data protection software for tier 1 enterprise applications. SnapCenter, with its single-pane-of-glass management interface, automates and simplifies the manual, complex, and time-consuming processes associated with the backup, recovery, and cloning of multiple databases and other application workloads.

SnapCenter leverages technologies, including NetApp Snapshot copies, SnapMirror replication technology, SnapRestore data recovery software, and FlexClone thin cloning technology, that allow it to integrate seamlessly with technologies offered by Oracle, Microsoft, SAP, VMware, and MongoDB across FC, iSCSI, and NAS protocols. This integration allows IT organizations to scale their storage infrastructure, meet increasingly stringent SLA commitments, and improve the productivity of administrators across the enterprise.

SnapCenter is used in this solution to back up and restore VMware virtual machines. SnapCenter can also back up and restore SQL Server databases running on Windows. SnapCenter currently does not support SQL Server running on Linux, but that functionality is expected in the near future. SnapCenter does not support the backup and restore of virtual machines running on Hyper-V. For Hyper-V virtual machine backup, NetApp SnapManager for Hyper-V can be used.

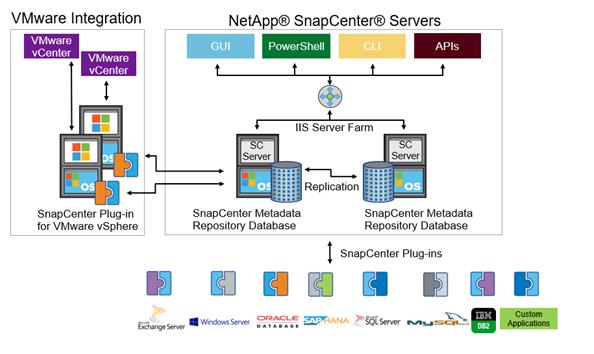

SnapCenter Architecture

SnapCenter is a centrally managed web-based application that runs on a Windows platform and remotely manages multiple servers that must be protected.

Figure 6 illustrates the high-level architecture of the NetApp SnapCenter Server.

Figure 6 SnapCenter Architecture

The SnapCenter Server has an HTML5-based GUI as well as PowerShell cmdlets and APIs.

The SnapCenter Server is high-availability capable out of the box, meaning that if one SnapCenter host is ever unavailable for any reason, then the second SnapCenter Server can seamlessly take over and no operations are affected.

The SnapCenter Server can push out plug-ins to remote hosts. These plug-ins are used to interact with an application, a database, or a file system. In most cases, the plug-ins must be present on the remote host so that application- or database-level commands can be issued from the same host where the application or database is running.

To manage the plug-ins and the interaction between the SnapCenter Server and the plug-in host, SnapCenter uses SM Service, which is a NetApp SnapManager web service running on top of Windows Server Internet Information Services (IIS) on the SnapCenter Server. SM Service takes all client requests such as backup, restore, clone, and so on.

The SnapCenter Server communicates those requests to SMCore, which is a service that runs co-located within the SnapCenter Server and remote servers and plays a significant role in coordinating with the SnapCenter plug-ins package for Windows. The package includes the SnapCenter plug-in for Microsoft Windows Server and SnapCenter plug-in for Microsoft SQL Server to discover the host file system, gather database metadata, quiesce and thaw, and manage the SQL Server database during backup, restore, clone, and verification.

SnapCenter Virtualization (SCV) is another plug-in that manages virtual servers running on VMware and that helps in discovering the host file system, databases on virtual machine disks (VMDK), and raw device mapping (RDM).

SnapCenter Features

SnapCenter enables you to create application-consistent Snapshot copies and to complete data protection operations, including Snapshot copy-based backup, clone, restore, and backup verification operations. SnapCenter provides a centralized management environment, while using role-based access control (RBAC) to delegate data protection and management capabilities to individual application users across the SnapCenter Server and Windows hosts.

SnapCenter includes the following key features:

· A unified and scalable platform across applications and database environments and virtual and nonvirtual storage, powered by the SnapCenter Server

· Consistency of features and procedures across plug-ins and environments, supported by the SnapCenter user interface

· RBAC for security and centralized role delegation

· Application-consistent Snapshot copy management, restore, clone, and backup verification support from both primary and secondary destinations (NetApp SnapMirror and SnapVault)

· Remote package installation from the SnapCenter GUI

· Nondisruptive, remote upgrades

· A dedicated SnapCenter repository for faster data retrieval

· Load balancing implemented by using Microsoft Windows network load balancing (NLB) and application request routing (ARR), with support for horizontal scaling

· Centralized scheduling and policy management to support backup and clone operations

· Centralized reporting, monitoring, and dashboard views

· SnapCenter 4.1 support for data protection for VMware virtual machines, SQL Server Databases, Oracle Databases, MySQL, SAP HANA, MongoDB, and Microsoft Exchange

· SnapCenter Plug-in for VMware in vCenter integration into the vSphere Web Client. All virtual machine backup and restore tasks are performed through the web client GUI

· Using the SnapCenter Plug-in for VMware in vCenter you can:

- Create policies, resource groups and backup schedules for virtual machines

- Backup virtual machines, VMDKs, and datastores

- Restore virtual machines, VMDKs, and files and folders (on Windows guest OS)

- Attach and detach VMDK

- Monitor and report data protection operations on virtual machines and datastores

- Support RBAC security and centralized role delegation

- Support guest file or folder (single or multiple) restore for Windows guest OS

- Restore an efficient storage base from primary and secondary Snapshot copies through Single File SnapRestore

- Generate dashboard and reports that provide visibility into protected versus unprotected virtual machines and status of backup, restore, and mount jobs

- Attach or detach virtual disks from secondary Snapshot copies

- Attach virtual disks to an alternate virtual machine

VMware vSphere 6.7

VMware vSphere 6.7 is the industry-leading virtualization platform. VMware ESXi 6.7 is used to deploy and run the virtual machines. vCenter Server Appliance 6.7 is used to manage the ESXi hosts and virtual machines. Multiple ESXi hosts running on Cisco UCS B200 M5 blades are used to form a VMware ESXi cluster. The VMware ESXi cluster pools the compute, memory, and network resources from all the cluster nodes and provides a resilient platform for virtual machines running on the cluster. The cluster features vSphere High Availability (HA) and Distributed Resource Scheduler (DRS) help the vSphere cluster withstand failures and distribute resources across the VMware ESXi hosts.

Microsoft Windows Server 2016 Hyper-V

Hyper-V is another leading virtualization platform, offered by Microsoft. The Hyper-V role is enabled on the Windows Server 2016 hosts to facilitate virtual machine deployment. Multiple Windows Server 2016 hosts running on Cisco UCS B200 M5 blades are used to form a Windows Server Failover Cluster (WSFC). Together with Performance and Resource Management (PRO), the WSFC-based Hyper-V cluster provides a highly available and resilient platform for virtual machines running critical applications.

Red Hat Enterprise Linux 7

Red Hat Enterprise Linux (RHEL) is a widely adopted Linux server operating system. In this solution, RHEL 7.4 is used as the guest operating system inside the virtual machines deployed on the VMware ESXi cluster and the Windows Hyper-V cluster. Using Red Hat Enterprise Linux 7, high availability can be deployed in a variety of configurations to suit varying needs for performance, high availability, load balancing, and file sharing. RHEL uses Pacemaker, a leading high-availability cluster resource manager, to manage and recover cluster resources.

Microsoft SQL Server 2017

Microsoft SQL Server 2017 is a recent release of the relational database engine from Microsoft. It brings many new features and enhancements to the relational and analytical engines. With SQL Server 2017, Microsoft embraces Linux operating systems and supports SQL Server on Linux. SQL Server 2017 is the first stable version that performs as well on Linux as it does on Windows. Currently, most of the database features that are supported on Windows are also supported on Linux. This enablement on Linux removes the Windows lock-in and gives customers the flexibility to deploy SQL Server databases on widely used Linux distributions.

FlexPod is a defined set of hardware and software that serves as an integrated foundation for both virtualized and non-virtualized solutions. VMware vSphere built on FlexPod includes NetApp All Flash FAS storage, Cisco Nexus networking, Cisco Unified Computing System, and VMware vSphere software in a single package. The design is flexible enough that the networking, computing, and storage can fit in one datacenter rack or be deployed according to a customer's data center design. Port density enables the networking components to accommodate multiple configurations of this kind.

One benefit of the FlexPod architecture is the ability to customize or "flex" the environment to suit a customer's requirements. A FlexPod can easily be scaled as requirements and demand change. The unit can be scaled both up (adding resources to a FlexPod unit) and out (adding more FlexPod units). The reference architecture detailed in this document highlights the resiliency, cost benefit, and ease of deployment of an IP-based storage solution. A storage system capable of serving multiple protocols across a single interface allows for customer choice and investment protection because it truly is a wire-once architecture.

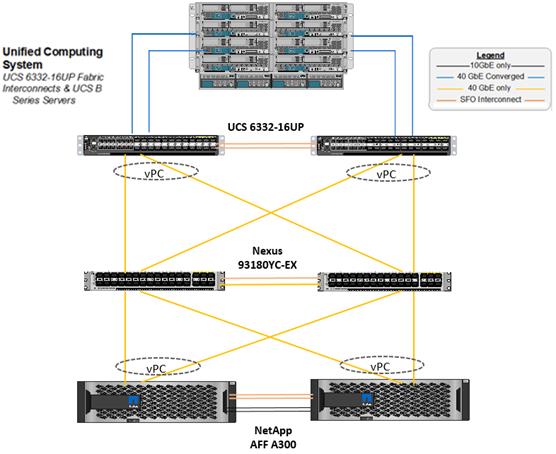

Figure 7 shows FlexPod components and the network connections for a configuration with the Cisco UCS 6332-16UP Fabric Interconnects. This design has end-to-end 40 Gb Ethernet connections between the Cisco UCS 5108 Blade Chassis and the Cisco UCS Fabric Interconnect, between the Cisco UCS Fabric Interconnect and Cisco Nexus 9000, and between Cisco Nexus 9000 and NetApp AFF A300. This infrastructure is deployed to provide iSCSI-booted hosts with file-level and block-level access to shared storage. The reference architecture reinforces the "wire-once" strategy, because as additional storage is added to the architecture, no re-cabling is required from the hosts to the Cisco UCS fabric interconnect.

Figure 7 FlexPod With Cisco UCS 6332-16UP Fabric Interconnects

Figure 7 illustrates a base design. Each of the components can be scaled easily to support specific business requirements. For example, more (or different) servers or even blade chassis can be deployed to increase compute capacity, additional storage controllers or disk shelves can be deployed to improve I/O capability and throughput, and special hardware or software features can be added to introduce new features.

The following components are used to validate and test the solution:

· 1x Cisco 5108 chassis with Cisco UCS 2304 IO Modules

· 5x B200 M5 Blades with VIC 1340 and a Port Expander card

· Two Cisco Nexus 93180YC-EX switches

· Two Cisco UCS 6332-16UP fabric interconnects

· One NetApp AFF A300 (HA pair) running clustered Data ONTAP with Disk shelves and Solid-State Drives (SSD)

In this solution, both VMware ESXi and Windows Hyper-V virtual environments are tested and validated for deploying SQL Server 2017 on virtual machines running the Red Hat Enterprise Linux operating system.

Figure 8 illustrates the high-level steps to deploy SQL Server on a RHEL virtual machine in a FlexPod environment with both VMware and Hyper-V hypervisors.

Figure 8 Flowchart to Deploy SQL Server on a RHEL Virtual Machine in a FlexPod Environment

Software Revisions

Table 1 lists the software revisions for this solution.

| Layer | Device | Image | Components |
| --- | --- | --- | --- |
| Compute | Cisco UCS third-generation 6332-16UP | 4.0(1c) | Includes Cisco 5108 blade chassis with UCS 2304 IO Modules; Cisco UCS B200 M5 blades with Cisco UCS VIC 1340 adapter |
| Network Switches | Includes Cisco Nexus 93180YC | NX-OS: 9.2.2 | |
| Storage Controllers | NetApp AFF A300 storage controllers | Data ONTAP 9.5 | |
| Software | Cisco UCS Manager | 4.0(1c) | |
| | Cisco UCS Manager Plugin for VMware vSphere Web Client | 2.0.4 | |
| | VMware vSphere ESXi | 6.7 U1 | |
| | VMware vCenter | 6.7 U1 | |
| | Microsoft Windows Hyper-V | Windows Server 2016 | |
| | NetApp Virtual Storage Console (VSC) | 7.2.1 | |
| | NetApp SnapCenter | 4.1.1 | |
| | NetApp Host Utilities Kit for RHEL 7.4 & Windows 2016 | 7.1 | |
| | Red Hat Enterprise Linux 7.4 | 7.4 | |
| | Microsoft SQL Server | 2017 | |
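When comparing an existing environment against the validated revisions in Table 1, it can help to hold the table as data and report any differences. The following is a minimal sketch under stated assumptions: the dictionary keys are shortened labels chosen here, the `version_drift` function is hypothetical, and the deployed values would have to be collected by whatever inventory method is available.

```python
# Validated revisions taken from Table 1; keys are labels chosen for this sketch.
VALIDATED = {
    "Cisco UCS Manager": "4.0(1c)",
    "Cisco NX-OS": "9.2.2",
    "NetApp ONTAP": "9.5",
    "VMware vSphere ESXi": "6.7 U1",
    "VMware vCenter": "6.7 U1",
    "NetApp SnapCenter": "4.1.1",
    "Red Hat Enterprise Linux": "7.4",
}


def version_drift(deployed: dict) -> dict:
    """Return {component: (validated, deployed)} where the two differ or one is missing."""
    return {
        name: (validated, deployed.get(name))
        for name, validated in VALIDATED.items()
        if deployed.get(name) != validated
    }


# Hypothetical inventory in which only the ONTAP release differs.
inventory = {**VALIDATED, "NetApp ONTAP": "9.4"}
print(version_drift(inventory))   # {'NetApp ONTAP': ('9.5', '9.4')}
```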

Configuration Guidelines

This document provides the details to configure a fully redundant, highly available configuration for a FlexPod unit with clustered Data ONTAP storage. Therefore, reference is made to which component is being configured with each step, either 01 or 02 or A and B. For example, node01 and node02 are used to identify the two NetApp storage controllers that are provisioned with this document, and Cisco Nexus A or Cisco Nexus B identifies the pair of Cisco Nexus switches that are configured. The Cisco UCS fabric interconnects are similarly configured. Additionally, this document details the steps for provisioning multiple Cisco UCS hosts, and these examples are identified as: VM-Host-01, VM-Host-02 to represent infrastructure hosts deployed to each of the fabric interconnects in this document. Finally, to indicate that you should include information pertinent to your environment in a given step, <text> appears as part of the command structure. See the following example for the network port vlan create command:

Usage:

network port vlan create ?

[-node] <nodename> Node

{ [-vlan-name] {<netport>|<ifgrp>} VLAN Name

| -port {<netport>|<ifgrp>} Associated Network Port

[-vlan-id] <integer> } Network Switch VLAN Identifier

Example:

network port vlan create -node <node01> -vlan-name i0a-<vlan id>
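The `<text>` placeholder convention lends itself to simple templating when the same commands are prepared for several nodes. The sketch below fills placeholders from a dictionary and fails loudly if one is left unfilled. The `fill` helper and the sample node name `aff-a300-01` are hypothetical, and the template assumes the `create` subcommand shown in the usage text.

```python
import re

PLACEHOLDER = re.compile(r"<([^<>]+)>")   # matches <node01>, <vlan id>, ...


def fill(template: str, values: dict) -> str:
    """Replace each <placeholder> with its value; raise if one is not supplied."""
    def substitute(match):
        key = match.group(1)
        if key not in values:
            raise KeyError(f"no value supplied for <{key}>")
        return str(values[key])

    return PLACEHOLDER.sub(substitute, template)


template = "network port vlan create -node <node01> -vlan-name i0a-<vlan id>"
print(fill(template, {"node01": "aff-a300-01", "vlan id": 3012}))
# network port vlan create -node aff-a300-01 -vlan-name i0a-3012
```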

This document is intended to enable you to fully configure the customer environment. In this process, various steps require you to insert customer-specific naming conventions, IP addresses, and VLAN schemes, as well as to record appropriate MAC addresses. Table 2 lists the VLANs necessary for deployment as outlined in this guide, and Table 3 lists the virtual machines necessary for deployment.

| VLAN Name | VLAN Purpose | ID used in Validating this Document | Virtual Environment (VMware / Hyper-V) |
| --- | --- | --- | --- |
| Out of Band Mgmt | VLAN for out-of-band management interfaces | 17 | VMware & Hyper-V |
| In Band Mgmt | VLAN for in-band management interfaces | 117 | |
| Native-VLAN | VLAN to which untagged frames are assigned | 2 | VMware & Hyper-V |
| SQL-VM-MGMT | VLAN for in-band management interfaces and virtual machines interfaces | 904 | VMware |
| | VLAN for iSCSI A traffic for SQL virtual machines on ESXi as well as ESXi Infrastructure | 3012 | VMware |
| SQL-VM-iSCSI-B | VLAN for iSCSI B traffic for SQL virtual machines on ESXi as well as ESXi Infrastructure | 3022 | VMware |
| SQL-vMotion | VLAN for VMware vMotion | 905 | VMware |
| SQL-HV-MGMT | VLAN for in-band management interfaces and virtual machines interfaces | 906 | Hyper-V |
| SQL-HV-iSCSI-A | VLAN for iSCSI A traffic for SQL virtual machines on Hyper-V as well as Hyper-V Infrastructure | 3013 | Hyper-V |
| SQL-HV-iSCSI-B | VLAN for iSCSI B traffic for SQL virtual machines on Hyper-V as well as Hyper-V Infrastructure | 3023 | Hyper-V |
| SQL-Live-Migration | VLAN for Hyper-V virtual machine’s live migration | 907 | Hyper-V |
| SQL-HV-Cluster | VLAN for private cluster communication | 908 | Hyper-V |
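Because the VLAN IDs are expected to be replaced with site-specific values, a quick sanity check of the plan can catch duplicates or out-of-range IDs before any switch is configured. The sketch below is illustrative: the helper names are hypothetical, only the SQL VLANs whose names are given in the table are included, and the generated `vlan` and `name` lines are a starting point rather than a complete switch configuration.

```python
# VLAN name -> (ID used in validation, virtual environment), from the table above.
VLANS = {
    "SQL-VM-MGMT": (904, "VMware"),
    "SQL-VM-iSCSI-B": (3022, "VMware"),
    "SQL-vMotion": (905, "VMware"),
    "SQL-HV-MGMT": (906, "Hyper-V"),
    "SQL-HV-iSCSI-A": (3013, "Hyper-V"),
    "SQL-HV-iSCSI-B": (3023, "Hyper-V"),
    "SQL-Live-Migration": (907, "Hyper-V"),
    "SQL-HV-Cluster": (908, "Hyper-V"),
}


def check_vlan_plan(vlans):
    """Return a list of problems: IDs outside 1-4094 or IDs used by two names."""
    problems, seen = [], {}
    for name, (vlan_id, _env) in vlans.items():
        if not 1 <= vlan_id <= 4094:
            problems.append(f"{name}: ID {vlan_id} is outside 1-4094")
        if vlan_id in seen:
            problems.append(f"{name}: ID {vlan_id} already used by {seen[vlan_id]}")
        seen.setdefault(vlan_id, name)
    return problems


def nxos_vlan_lines(vlans):
    """Render 'vlan <id>' / 'name <name>' line pairs, ordered by VLAN ID."""
    lines = []
    for name, (vlan_id, _env) in sorted(vlans.items(), key=lambda kv: kv[1][0]):
        lines += [f"vlan {vlan_id}", f"  name {name}"]
    return lines


print(check_vlan_plan(VLANS))            # []
print("\n".join(nxos_vlan_lines(VLANS)))
```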

For simplicity, the same VLAN ID (SQL-VM-MGMT/SQL-HV-MGMT) is used for both the in-band management interfaces of the hypervisor hosts and the virtual machines running the production workloads. If required, different VLANs can be used to segregate this traffic.

Table 3 lists the virtual machines necessary for deployment as outlined in this document.

| Virtual Machine Description | Host Name |
| --- | --- |
| Active Directory | |
| vCenter Server | |
| NetApp VSC | |
| NetApp SnapCenter Server | |

Physical Infrastructure

FlexPod Cabling

The information in this section is provided as a reference for cabling the physical equipment in a FlexPod environment. To simplify cabling requirements, the tables include both local and remote device and port locations.

The tables in this section contain details for the prescribed and supported configuration of the NetApp AFF A300 running NetApp ONTAP 9.5.

For any modifications of this prescribed architecture, consult the NetApp Interoperability Matrix Tool (IMT).

This document assumes that out-of-band management ports are plugged into an existing management infrastructure at the deployment site. These interfaces will be used in various configuration steps.

Be sure to use the cabling directions in this section as a guide.

The NetApp storage controller and disk shelves should be connected according to best practices for the specific storage controller and disk shelves. For disk shelf cabling, refer to the Universal SAS and ACP Cabling Guide: https://library.netapp.com/ecm/ecm_get_file/ECMM1280392

The following details the cable connections used in the validation lab for the 40Gb end-to-end iSCSI topology based on the Cisco UCS 6332-16UP fabric interconnect. Additional 1Gb management connections will be needed for an out-of-band network switch that sits apart from the FlexPod infrastructure. Each Cisco UCS fabric interconnect and Cisco Nexus switch is connected to the out-of-band network switch, and each AFF controller has two connections to the out-of-band network switch.

Figure 9 FlexPod Cabling with Cisco UCS 6332-16UP Fabric Interconnects

This section provides the detailed process to configure the Cisco Nexus 9000s for use in a FlexPod environment.

Follow these steps precisely since failure to do so could result in an improper configuration.

Physical Connectivity

Follow the physical connectivity guidelines for FlexPod as described in section FlexPod Cabling.

FlexPod Cisco Nexus Base

The following procedures describe how to configure the Cisco Nexus switches for use in a base FlexPod environment. This procedure assumes the use of Cisco Nexus 9000 9.2.2 and is valid for Cisco Nexus 93180YC-EX switches deployed with the 40Gb end-to-end topology.

The following process includes setting up the Network Time Protocol (NTP) distribution on the in-band management VLAN. The interface-vlan feature and ntp commands are used in this setup. This procedure assumes that the default VRF is used to route the in-band management VLAN.

Set Up Initial Configuration

Cisco Nexus A

To set up the initial configuration for the Cisco Nexus A switch on <nexus-A-hostname>, follow these steps:

1. Configure the switch.

On initial boot and connection to the serial or console port of the switch, the NX-OS setup should automatically start and attempt to enter Power on Auto Provisioning.

Abort Power on Auto Provisioning and continue with normal setup? (yes/no) [n]: yes

Do you want to enforce secure password standard (yes/no) [y]: Enter

Enter the password for "admin": <password>

Confirm the password for "admin": <password>

Would you like to enter the basic configuration dialog (yes/no): yes

Create another login account (yes/no) [n]: Enter

Configure read-only SNMP community string (yes/no) [n]: Enter

Configure read-write SNMP community string (yes/no) [n]: Enter

Enter the switch name: <nexus-A-hostname>

Continue with Out-of-band (mgmt0) management configuration? (yes/no) [y]: Enter

Mgmt0 IPv4 address: <nexus-A-mgmt0-ip>

Mgmt0 IPv4 netmask: <nexus-A-mgmt0-netmask>

Configure the default gateway? (yes/no) [y]: Enter

IPv4 address of the default gateway: <nexus-A-mgmt0-gw>

Configure advanced IP options? (yes/no) [n]: Enter

Enable the telnet service? (yes/no) [n]: Enter

Enable the ssh service? (yes/no) [y]: Enter

Type of ssh key you would like to generate (dsa/rsa) [rsa]: Enter

Number of rsa key bits <1024-2048> [1024]: Enter

Configure the ntp server? (yes/no) [n]: y

NTP server IPv4 address: <global-ntp-server-ip>

Configure default interface layer (L3/L2) [L3]: L2

Configure default switchport interface state (shut/noshut) [shut]: Enter

Configure CoPP system profile (strict/moderate/lenient/dense/skip) [strict]: Enter

Would you like to edit the configuration? (yes/no) [n]: Enter

2. Review the configuration summary before enabling the configuration.

Use this configuration and save it? (yes/no) [y]: Enter

Cisco Nexus B

To set up the initial configuration for the Cisco Nexus B switch on <nexus-B-hostname>, follow these steps:

1. Configure the switch.

On initial boot and connection to the serial or console port of the switch, the NX-OS setup should automatically start and attempt to enter Power on Auto Provisioning.

Abort Power on Auto Provisioning and continue with normal setup? (yes/no) [n]: yes

Do you want to enforce secure password standard (yes/no) [y]: Enter

Enter the password for "admin": <password>

Confirm the password for "admin": <password>

Would you like to enter the basic configuration dialog (yes/no): yes

Create another login account (yes/no) [n]: Enter

Configure read-only SNMP community string (yes/no) [n]: Enter

Configure read-write SNMP community string (yes/no) [n]: Enter

Enter the switch name: <nexus-B-hostname>

Continue with Out-of-band (mgmt0) management configuration? (yes/no) [y]: Enter

Mgmt0 IPv4 address: <nexus-B-mgmt0-ip>

Mgmt0 IPv4 netmask: <nexus-B-mgmt0-netmask>

Configure the default gateway? (yes/no) [y]: Enter

IPv4 address of the default gateway: <nexus-B-mgmt0-gw>

Configure advanced IP options? (yes/no) [n]: Enter

Enable the telnet service? (yes/no) [n]: Enter

Enable the ssh service? (yes/no) [y]: Enter

Type of ssh key you would like to generate (dsa/rsa) [rsa]: Enter

Number of rsa key bits <1024-2048> [1024]: Enter

Configure the ntp server? (yes/no) [n]: y

NTP server IPv4 address: <global-ntp-server-ip>

Configure default interface layer (L3/L2) [L3]: L2

Configure default switchport interface state (shut/noshut) [shut]: Enter

Configure CoPP system profile (strict/moderate/lenient/dense/skip) [strict]: Enter

Would you like to edit the configuration? (yes/no) [n]: Enter

2. Review the configuration summary before enabling the configuration.

Use this configuration and save it? (yes/no) [y]: Enter
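Before answering the mgmt0 prompts above, it can help to sanity-check the planned address, netmask, and gateway for each switch. The following is a minimal Python sketch using only the standard library; the function name `check_mgmt0` and the sample addresses (taken from the 192.0.2.0/24 documentation range) are illustrative assumptions, not values from the validated lab.

```python
import ipaddress


def check_mgmt0(ip: str, netmask: str, gateway: str) -> ipaddress.IPv4Interface:
    """Return the mgmt0 interface if the gateway is a usable host on its subnet."""
    iface = ipaddress.IPv4Interface(f"{ip}/{netmask}")
    gw = ipaddress.IPv4Address(gateway)
    net = iface.network
    if gw not in net:
        raise ValueError(f"gateway {gw} is outside {net}")
    if gw in (net.network_address, net.broadcast_address):
        raise ValueError(f"gateway {gw} is not a host address in {net}")
    if gw == iface.ip:
        raise ValueError("gateway and mgmt0 address must differ")
    return iface


# Hypothetical values standing in for <nexus-A-mgmt0-ip>,
# <nexus-A-mgmt0-netmask>, and <nexus-A-mgmt0-gw>.
mgmt0 = check_mgmt0("192.0.2.11", "255.255.255.0", "192.0.2.1")
print(mgmt0.with_prefixlen)
```

Running the same check with the Nexus B values catches a mistyped netmask or a gateway on the wrong subnet before the setup dialog is started.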

FlexPod Cisco Nexus Switch Configuration

Enable Licenses

Cisco Nexus A and Cisco Nexus B

To enable the required features on the Cisco Nexus switches, follow these steps:

1. Log in as admin.

2. Run the following commands:

config t

feature interface-vlan

feature lacp

feature vpc

feature lldp
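After the feature commands are applied, one way to confirm that nothing was skipped is to compare a saved copy of the running configuration against the four features listed above. The sketch below is a hedged Python illustration; the function name `missing_features` and the sample text are assumptions, and it only inspects text you supply rather than connecting to a switch.

```python
REQUIRED_FEATURES = ("interface-vlan", "lacp", "vpc", "lldp")


def missing_features(running_config: str, required=REQUIRED_FEATURES):
    """Return the required features that have no 'feature <name>' line."""
    enabled = set()
    for line in running_config.splitlines():
        words = line.split()
        if len(words) == 2 and words[0] == "feature":
            enabled.add(words[1])
    return [name for name in required if name not in enabled]


# Hypothetical excerpt of a saved running configuration.
sample = """\
feature interface-vlan
feature lacp
feature lldp
"""
print(missing_features(sample))
```

An empty list means all four features from this step are present in the supplied text.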

Set Global Configurations

Cisco Nexus A and Cisco Nexus B

To set global configurations, follow this step on both switches:

1. Run the following commands to set global configurations:

spanning-tree port type network default

spanning-tree port type edge bpduguard default

spanning-tree port type edge bpdufilter default

port-channel load-balance src-dst l4port

ntp server <global-ntp-server-ip> use-vrf management

ntp master 3

ip route 0.0.0.0/0 <ib-mgmt-vlan-gateway>

copy run start

Create VLANs

Cisco Nexus A and Cisco Nexus B

To create the necessary virtual local area networks (VLANs), follow this step on both switches:

1. From the global configuration mode, run the following commands:

vlan <Native-VLAN-id>

name Native-VLAN

vlan <SQL-VM-MGMT-id>

name SQL-VM-MGMT

vlan <SQL-vMotion-id>

name SQL-vMotion

vlan <SQL-VM-iSCSI-A-id>

name SQL-VM-iSCSI-A

vlan <SQL-VM-iSCSI-B-id>

name SQL-VM-iSCSI-B

vlan <SQL-HV-MGMT-id>

name SQL-HV-MGMT

vlan <SQL-Live-Migration-id>

name SQL-Live-Migration

vlan <SQL-HV-iSCSI-A-id>

name SQL-HV-iSCSI-A

vlan <SQL-HV-iSCSI-B-id>

name SQL-HV-iSCSI-B

vlan <SQL-HV-Cluster-id>

name SQL-HV-Cluster

exit
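Because the same VLAN stanzas are entered on both switches, generating them from a single table reduces the chance of a mismatch. The following Python sketch renders the commands above from a name-to-ID mapping and rejects duplicate or out-of-range IDs. The VLAN names come from this section; every VLAN ID except the native VLAN 2 is a hypothetical placeholder, and the 1-4094 check reflects the general 802.1Q range only (the platform may reserve part of that range).

```python
from typing import Dict, List


def render_vlans(vlans: Dict[str, int]) -> List[str]:
    """Render 'vlan <id>' / 'name <name>' pairs followed by a final exit."""
    seen: Dict[int, str] = {}
    lines: List[str] = []
    for name, vlan_id in vlans.items():
        if not 1 <= vlan_id <= 4094:
            raise ValueError(f"{name}: VLAN ID {vlan_id} is outside 1-4094")
        if vlan_id in seen:
            raise ValueError(f"{name} and {seen[vlan_id]} share VLAN ID {vlan_id}")
        seen[vlan_id] = name
        lines.extend([f"vlan {vlan_id}", f"name {name}"])
    lines.append("exit")
    return lines


# Hypothetical IDs; substitute the values planned for your deployment.
FLEXPOD_VLANS = {
    "Native-VLAN": 2,
    "SQL-VM-MGMT": 901,
    "SQL-vMotion": 902,
    "SQL-VM-iSCSI-A": 903,
    "SQL-VM-iSCSI-B": 904,
    "SQL-HV-MGMT": 905,
    "SQL-Live-Migration": 906,
    "SQL-HV-iSCSI-A": 907,
    "SQL-HV-iSCSI-B": 908,
    "SQL-HV-Cluster": 909,
}
print("\n".join(render_vlans(FLEXPOD_VLANS)))
```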

Add NTP Distribution Interface

Cisco Nexus A

From the global configuration mode, run the following commands:

ntp source <switch-a-ntp-ip>

interface Vlan<ib-mgmt-vlan-id>

ip address <switch-a-ntp-ip>/<ib-mgmt-vlan-netmask-length>

no shutdown

exit

Cisco Nexus B

From the global configuration mode, run the following commands:

ntp source <switch-b-ntp-ip>

interface Vlan<ib-mgmt-vlan-id>

ip address <switch-b-ntp-ip>/<ib-mgmt-vlan-netmask-length>

no shutdown

exit
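The `ip address` command above expects a prefix length rather than a dotted netmask. As a small illustration, the Python sketch below converts a dotted netmask to `<ib-mgmt-vlan-netmask-length>` with the standard `ipaddress` module and renders the NTP distribution commands for one switch. The function name `ntp_svi_commands` and all sample values are assumptions for illustration.

```python
import ipaddress
from typing import List


def ntp_svi_commands(vlan_id: int, ntp_ip: str, netmask: str) -> List[str]:
    """Render the NTP source and SVI commands for one switch."""
    # IPv4Interface accepts a dotted netmask and rejects malformed ones.
    iface = ipaddress.IPv4Interface(f"{ntp_ip}/{netmask}")
    return [
        f"ntp source {iface.ip}",
        f"interface Vlan{vlan_id}",
        f"ip address {iface.with_prefixlen}",
        "no shutdown",
        "exit",
    ]


# Hypothetical stand-ins for <ib-mgmt-vlan-id>, <switch-a-ntp-ip>,
# and the in-band management netmask.
for line in ntp_svi_commands(901, "192.0.2.2", "255.255.255.0"):
    print(line)
```

Calling the function a second time with the switch B address yields the matching stanza for Cisco Nexus B.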

Add Individual Port Descriptions for Troubleshooting

Cisco Nexus A

To add individual port descriptions for troubleshooting activity and verification for switch A, follow this step:

In this step and in the later sections, configure the AFF nodename <st-node> and Cisco UCS 6332-16UP fabric interconnect clustername <ucs-clustername> interfaces as appropriate to your deployment.

1. From the global configuration mode, run the following commands:

interface Eth1/51

description <st-node>-1:e2a

interface Eth1/52

description <st-node>-2:e2a

interface Eth1/53

description <ucs-clustername>-A:1/39

interface Eth1/54

description <ucs-clustername>-B:1/39

interface Eth1/43

description <nexus-hostname>-B:1/43

interface Eth1/44

description <nexus-hostname>-B:1/44

interface Eth1/45

description <nexus-hostname>-B:1/45

interface Eth1/46

description <nexus-hostname>-B:1/46

exit

Cisco Nexus B

To add individual port descriptions for troubleshooting activity and verification for switch B, follow this step:

1. From the global configuration mode, run the following commands:

interface Eth1/51

description <st-node>-1:e2e

interface Eth1/52

description <st-node>-2:e2e

interface Eth1/53

description <ucs-clustername>-A:1/40

interface Eth1/54

description <ucs-clustername>-B:1/40

interface Eth1/43

description <nexus-hostname>-A:1/43

interface Eth1/44

description <nexus-hostname>-A:1/44

interface Eth1/45

description <nexus-hostname>-A:1/45

interface Eth1/46

description <nexus-hostname>-A:1/46

exit

Create Port Channels

Cisco Nexus A and Cisco Nexus B

To create the necessary port channels between devices, follow this step on both switches:

1. From the global configuration mode, run the following commands:

interface Po10

description vPC peer-link

interface Eth1/43-46

channel-group 10 mode active

no shutdown

interface Po151

description <st-node>-1

interface Eth1/51

channel-group 151 mode active

no shutdown

interface Po152

description <st-node>-2

interface Eth1/52

channel-group 152 mode active

no shutdown

interface Po153

description <ucs-clustername>-A

interface Eth1/53

channel-group 153 mode active

no shutdown

interface Po154

description <ucs-clustername>-B

interface Eth1/54

channel-group 154 mode active

no shutdown

exit

copy run start
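The port channel stanzas above follow one repeating pattern: a port channel with a description, then its member interfaces joined with LACP in active mode. The Python sketch below renders that pattern from a table and refuses a channel ID or member range that is listed twice. The table reproduces the channel numbers, descriptions, and interfaces from this section; the function name `render_port_channels` is an assumption.

```python
from typing import List, Sequence, Tuple

# (channel ID, description, member interfaces) as used in this section.
PORT_CHANNELS: List[Tuple[int, str, str]] = [
    (10, "vPC peer-link", "Eth1/43-46"),
    (151, "<st-node>-1", "Eth1/51"),
    (152, "<st-node>-2", "Eth1/52"),
    (153, "<ucs-clustername>-A", "Eth1/53"),
    (154, "<ucs-clustername>-B", "Eth1/54"),
]


def render_port_channels(channels: Sequence[Tuple[int, str, str]]) -> List[str]:
    """Render port channel and member-interface commands for each table row."""
    seen_ids = set()
    seen_members = set()
    lines: List[str] = []
    for channel_id, description, members in channels:
        if channel_id in seen_ids:
            raise ValueError(f"port channel {channel_id} is listed twice")
        if members in seen_members:
            raise ValueError(f"{members} is assigned to more than one channel")
        seen_ids.add(channel_id)
        seen_members.add(members)
        lines.extend([
            f"interface Po{channel_id}",
            f"description {description}",
            f"interface {members}",
            f"channel-group {channel_id} mode active",
            "no shutdown",
        ])
    lines.append("exit")
    return lines


print("\n".join(render_port_channels(PORT_CHANNELS)))
```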

Configure Port Channel Parameters

Cisco Nexus A and Cisco Nexus B

To configure port channel parameters, follow this step on both switches:

1. From the global configuration mode, run the following commands:

interface Po10

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan <SQL-VM-MGMT-id>,<SQL-VM-iSCSI-A-id>,<SQL-VM-iSCSI-B-id>,<SQL-vMotion-id>,<SQL-HV-MGMT-id>,<SQL-HV-iSCSI-A-id>,<SQL-HV-iSCSI-B-id>,<SQL-Live-Migration-id>,<SQL-HV-Cluster-id>

spanning-tree port type network

interface Po151

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan <SQL-VM-iSCSI-A-id>,<SQL-VM-iSCSI-B-id>,<SQL-HV-iSCSI-A-id>,<SQL-HV-iSCSI-B-id>

spanning-tree port type edge trunk

mtu 9216

interface Po152

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan <SQL-VM-iSCSI-A-id>,<SQL-VM-iSCSI-B-id>,<SQL-HV-iSCSI-A-id>,<SQL-HV-iSCSI-B-id>

spanning-tree port type edge trunk

mtu 9216

interface Po153

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan <SQL-VM-MGMT-id>,<SQL-VM-iSCSI-A-id>,<SQL-VM-iSCSI-B-id>,<SQL-vMotion-id>,<SQL-HV-MGMT-id>,<SQL-HV-iSCSI-A-id>,<SQL-HV-iSCSI-B-id>,<SQL-Live-Migration-id>,<SQL-HV-Cluster-id>

spanning-tree port type edge trunk

mtu 9216

interface Po154

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan <SQL-VM-MGMT-id>,<SQL-VM-iSCSI-A-id>,<SQL-VM-iSCSI-B-id>,<SQL-vMotion-id>,<SQL-HV-MGMT-id>,<SQL-HV-iSCSI-A-id>,<SQL-HV-iSCSI-B-id>,<SQL-Live-Migration-id>,<SQL-HV-Cluster-id>

spanning-tree port type edge trunk

mtu 9216

exit

copy run start
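The allowed VLAN list in the trunk commands above is a comma-separated list with no spaces. When the VLAN IDs are consecutive, NX-OS generally also accepts hyphenated ranges, which keeps long lists readable. The Python sketch below builds such a list from a set of IDs; the function names and the sample IDs are hypothetical.

```python
from typing import Iterable, List


def compress_vlan_ids(vlan_ids: Iterable[int]) -> str:
    """Return a sorted, de-duplicated VLAN list with consecutive IDs as ranges."""
    ordered = sorted(set(vlan_ids))
    parts: List[str] = []
    i = 0
    while i < len(ordered):
        start = end = ordered[i]
        # Extend the current run while the next ID is exactly one higher.
        while i + 1 < len(ordered) and ordered[i + 1] == end + 1:
            i += 1
            end = ordered[i]
        parts.append(str(start) if start == end else f"{start}-{end}")
        i += 1
    return ",".join(parts)


def allowed_vlan_command(vlan_ids: Iterable[int]) -> str:
    """Render a 'switchport trunk allowed vlan' line without embedded spaces."""
    vlan_list = compress_vlan_ids(vlan_ids)
    if not vlan_list:
        raise ValueError("at least one VLAN ID is required")
    return f"switchport trunk allowed vlan {vlan_list}"


# Hypothetical IDs for the four iSCSI VLANs carried on Po151 and Po152.
print(allowed_vlan_command([903, 904, 907, 908]))
```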

Configure Virtual Port Channels

Cisco Nexus A

To configure virtual port channels (vPCs) for switch A, follow this step:

1. From the global configuration mode, run the following commands:

vpc domain <nexus-vpc-domain-id>

role priority 10

peer-keepalive destination <nexus-B-mgmt0-ip> source <nexus-A-mgmt0-ip>

peer-switch

peer-gateway

auto-recovery

delay restore 150

interface Po10

vpc peer-link

interface Po151

vpc 151

interface Po152

vpc 152

interface Po153

vpc 153

interface Po154

vpc 154

exit

copy run start

Cisco Nexus B

To configure vPCs for switch B, follow this step:

1. From the global configuration mode, run the following commands:

vpc domain <nexus-vpc-domain-id>

role priority 20

peer-keepalive destination <nexus-A-mgmt0-ip> source <nexus-B-mgmt0-ip>

peer-switch

peer-gateway

auto-recovery

delay restore 150

interface Po10

vpc peer-link

interface Po151

vpc 151

interface Po152

vpc 152

interface Po153

vpc 153

interface Po154

vpc 154

exit

copy run start
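The vPC configurations for the two switches differ only in the role priority and in the direction of the peer-keepalive addresses. The Python sketch below renders both from one function so that the source and destination addresses are swapped consistently. The role priorities, delay restore value, and port channel numbers are those used above; the function name `render_vpc`, the domain ID, and the management addresses are hypothetical.

```python
from typing import List, Sequence


def render_vpc(domain_id: int, role_priority: int, local_mgmt_ip: str,
               peer_mgmt_ip: str,
               member_channels: Sequence[int] = (151, 152, 153, 154),
               peer_link: int = 10) -> List[str]:
    """Render the vPC domain, peer-link, and member vPC commands for one switch."""
    lines = [
        f"vpc domain {domain_id}",
        f"role priority {role_priority}",
        f"peer-keepalive destination {peer_mgmt_ip} source {local_mgmt_ip}",
        "peer-switch",
        "peer-gateway",
        "auto-recovery",
        "delay restore 150",
        f"interface Po{peer_link}",
        "vpc peer-link",
    ]
    for channel in member_channels:
        lines.extend([f"interface Po{channel}", f"vpc {channel}"])
    lines.append("exit")
    return lines


# Hypothetical stand-ins for <nexus-vpc-domain-id>, <nexus-A-mgmt0-ip>,
# and <nexus-B-mgmt0-ip>.
switch_a = render_vpc(10, 10, "192.0.2.11", "192.0.2.12")
switch_b = render_vpc(10, 20, "192.0.2.12", "192.0.2.11")
print("\n".join(switch_a))
```

Both switches must use the same domain ID, which is why it is passed unchanged to each call.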

Uplink into Existing Network Infrastructure

Depending on the available network infrastructure, several methods and features can be used to uplink the FlexPod environment. If an existing Cisco Nexus environment is present, we recommend using vPCs to uplink the Cisco Nexus switches included in the FlexPod environment into the infrastructure. The previously described procedures can be used to create an uplink vPC to the existing environment. Make sure to run copy run start to save the configuration on each switch after the configuration is completed.

NetApp Hardware Universe

Confirm that the hardware and software components that you would like to use are supported with the version of ONTAP that you plan to install by using the HWU application at the NetApp Support site.

1. Access the HWU application to view the system configuration guides. Click the Platforms tab to view the compatibility between different versions of the ONTAP software and the NetApp storage platforms that meet your desired specifications.

2. Alternatively, to compare components by storage appliance, click Compare Storage Systems.

Controllers and Disk Shelves

Follow the physical installation procedures for the controllers found in the AFF A300 Series product documentation in the NetApp AFF and FAS Documentation Center.

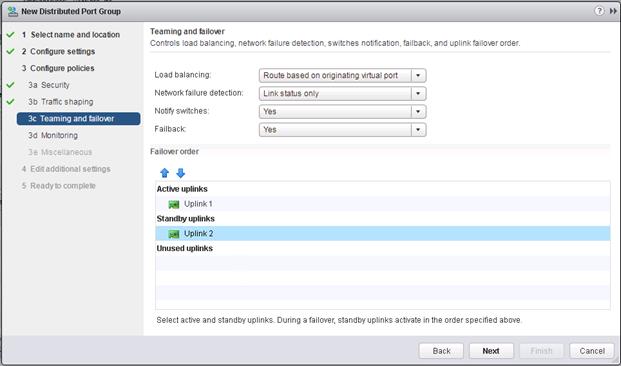

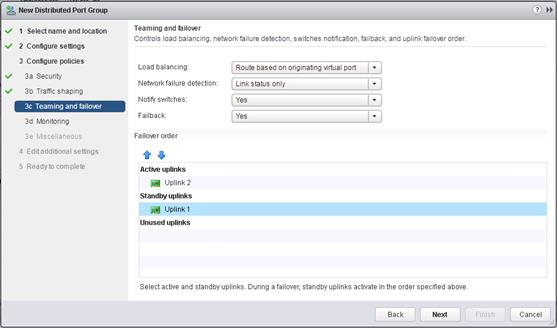

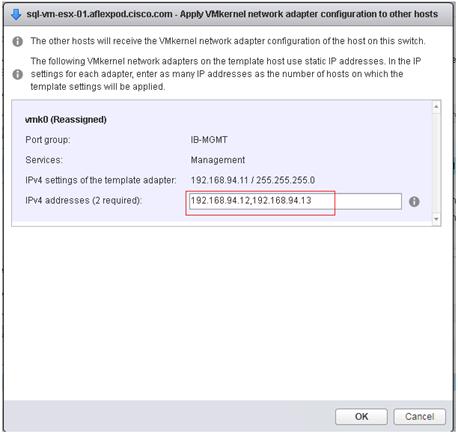

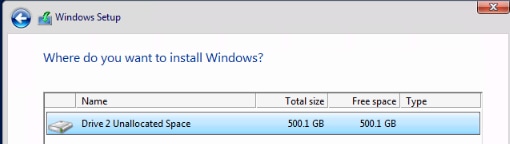

AFF A300 Controllers