SmartStack Deployment Guide with Cisco UCS Mini and Nimble CS300

Last Updated: November 24, 2015

The CVD program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, visit http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, IronPort, the IronPort logo, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2015 Cisco Systems, Inc. All rights reserved.

Validated Hardware and Software

Initial Configuration and Setup

Cisco Nexus Global Configuration

Cisco Nexus Configuration to Cisco UCS Mini Fabric Interconnect

Initialize the Nimble Storage Array

Configure the Nimble OS using the GUI

Nimble Management Tools: InfoSight

Nimble Management Tools: vCenter Plugin

Initial Setup and Configuration

Log in to Cisco UCS Manager (UCS Manager)

Synchronize Cisco UCS Mini to NTP

Configure Ports Connected to Switching Infrastructure

Configure PortChannels on Uplink Ports

Configure Ports Connected to Storage Array

Server Configuration Workflow: vNIC Template Creation

Create VLANs: Management VLAN on Uplink Ports to Cisco Nexus 9000 Series Switches

Create VLANs: Application Data VLANs on Uplink Ports to Cisco Nexus 9000 Series Switches

Create VLANs: Appliance Port VLANs to Nimble Storage

Create LAN Pools: MAC Address Pools

Create LAN Policies: QoS and Jumbo Frame

Create LAN Policies: Network Control Policy for Uplinks to Cisco Nexus Switches

Create LAN Policies: Network Control Policy for Storage vNICs

Create LAN Policies: vNIC Templates for Management Traffic

Create LAN Policies: vNIC Templates for Uplink Port Traffic

Create LAN Policies: vNIC Templates for Appliance Port Traffic

Server Configuration Workflow: Appliance Port Policies

Create LAN Policies: Network Control Policy for Appliance Port

Create LAN Policies: Flow Control Policy for Appliance Port

Apply Policies to Appliance Port

Server Configuration Workflow: Server Policies

Create Server Policies: Power Control Policy

Create Server Policies: Server Pool Qualification Policy

Create Server Policies: BIOS Policy

Create Server Policies: iSCSI Boot Adapter Policy

Create Server Policies: Local Disk Configuration Policy

Create Server Policies: Boot Policy

Create Server Policies: Maintenance Policy

Create Server Policies: Host Firmware Package Policy

Server Configuration Workflow: Server, LAN and SAN Pools

Create Server Pools: UUID Suffix Pool

Create SAN Pools: iSCSI IQN Pools

Server Configuration Workflow: Service Profile Template Creation

Create Service Profile Template

Service Profile Template Wizard: Networking

Service Profile Template Wizard: Storage

Service Profile Template Wizard: Boot Policy

Service Profile Template Wizard: Maintenance Policy

Service Profile Template Wizard: Server Assignment

Service Profile Template Wizard: Operational Policies

Cisco UCS Configuration Backup

Generate a Service Profile for the Host from the Service Profile Template

Associate the Service Profile with the Host

iSCSI SAN Boot Setup - Create Initiator Group for the ESXi host

iSCSI SAN Boot Setup - Create Boot Volume for the host

iSCSI SAN Boot Setup - Configure the iSCSI Target on the ESXi Host

ESXi 6.0 SAN Boot Installation

Deploying Infrastructure Services

Install and Setup Microsoft Windows Server 2012 R2

Install and Setup VMware vCenter Server Appliance (VCSA)

Install the Client Integration Plug-In

Install vCenter Server Appliance

vCenter Server Appliance Setup – Install the Client Integration Plugin

vCenter Server Appliance Setup – Join Active Directory Domain

vCenter Server Appliance Setup – ESXi Dump Collector

Set Up vCenter Server with a Datacenter, Cluster, DRS and HA

Select Virtual Machine Swap File Location

Add the AD Account to the Administrator Group

SmartStack™ is an integrated infrastructure solution, designed and validated to meet the needs of mid-sized enterprises and of departmental or remote deployments within larger environments. The SmartStack infrastructure platform combines Cisco UCS Mini and a Nimble CS300 array into a single, highly redundant, and flexible architecture that can support different hypervisors, use cases, and workloads. SmartStack platform management is provided by Cisco UCS® Manager (integrated into Cisco UCS® Mini), VMware vCenter, the Nimble Array Management GUI, and Nimble InfoSight®, a cloud-based management solution.

SmartStack is a Cisco Validated Design (CVD). CVDs are systems and solutions that have been designed, tested, and documented to facilitate and accelerate customer deployments. Documentation for this CVD includes the following documents:

· SmartStack Design Guide

· SmartStack Deployment Guide

This document is the SmartStack Deployment Guide. The associated SmartStack Design Guide is available at: http://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/smartstack_cs300_mini.html

Introduction

This document outlines the deployment procedures for implementing a SmartStack infrastructure solution based on VMware vSphere 6.0, Cisco UCS Mini, Nimble CS300 and Cisco Nexus switches.

Audience

The intended audience for this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who want to deploy the core SmartStack architecture with Cisco UCS Mini and Nimble CS300.

Solution Design

The SmartStack architecture, based on Cisco UCS Mini and a directly attached Nimble CS300 storage array, provides an optimal solution for mid-sized enterprises and for departmental or remote branch deployments within larger enterprises. The SmartStack solution integrates Cisco UCS Mini and the Nimble CS300 storage array to deliver compute, storage, and 10 Gigabit Ethernet (10GbE) networking in a compact, easy-to-deploy platform. The stateless server provisioning and management capabilities of Cisco UCS Manager, combined with Nimble's wizard-based provisioning and simplified storage management, make SmartStack easier to deploy and manage.

For more details on the SmartStack architecture, please refer to the SmartStack Design Guide: http://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/smartstack_cs300_mini.html

Compute

The compute platform in this design is a Cisco UCS Mini with redundant Cisco UCS 6324 Fabric Interconnects (FI) providing 10 Gigabit Ethernet (10GbE) connectivity to both the network and storage infrastructure. The two Fabric Interconnects are deployed in a cluster for redundancy and provide two fabric planes (Fabric A, Fabric B) that are completely independent of each other from a data plane perspective. If one FI fails, or if only one FI is deployed, the fabric remains fully operational on a single FI.

The Fabric Interconnects in the SmartStack design are deployed in End-host Ethernet switching mode. Ethernet switching mode determines how the fabric interconnects behave as switching devices between the servers and the network. End-host mode is the default and generally recommended mode of operation. In this mode, the fabric interconnects appear to the upstream LAN devices as end hosts with multiple adapters and do not run Spanning Tree. The Cisco Nexus switch ports that connect to the Cisco Fabric Interconnects are therefore deployed as spanning tree edge ports.

The ports on the Cisco UCS 6324 are unified ports that can carry either Ethernet or Fibre Channel traffic, depending on the configured port mode. Storage access in this architecture uses iSCSI, so all ports are deployed in Ethernet mode.

Ethernet ports on the fabric interconnects are unconfigured by default and must be explicitly configured as a specific type, which determines the port’s behavior. The port types used in this design are:

· Uplink ports for connecting to Cisco Nexus 9300 series switches and external LAN network

· Appliance ports for connecting to directly attached iSCSI-based Nimble CS300 array

· Server ports for connecting to external Cisco UCS C-series rack mount servers

Cisco UCS Manager is used to provision and manage Cisco UCS Mini and its subcomponents (chassis, FIs, blade servers, and rack-mount servers). Cisco UCS Manager runs on the Fabric Interconnects.

A key feature of Cisco UCS Manager is the Service Profile Template, which abstracts the policies, pools, and other aspects of a server configuration and consolidates them into a template. The configuration in a service profile template includes:

· Server Identity (For example: UUID Pool, MAC Address Pool, IP Pools)

· Server Policies (For example: BIOS Policy, Host Firmware Policy, Boot Policy)

· LAN Configuration (For example: VLAN, QoS, Jumbo Frames)

· Storage Configuration (For example: IQN pool)

Once created, the template can be used to generate service profiles that configure and deploy individual servers or groups of servers. A service profile defines a server and its storage and networking characteristics. Service Profile Templates reduce deployment time, increase operational agility, and simplify deployment. In this SmartStack design, Service Profile Templates are used to rapidly configure and deploy multiple servers with minimal modification.
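To make the template-to-profile relationship concrete, the following Python sketch models a template that draws per-server identities (UUID suffix, iSCSI vNIC MAC addresses, IQN) from pools each time a service profile is generated. It is not the Cisco UCS Manager API or data model: the class names, the template name, the UUID suffix format, and the way the IQN string is assembled are hypothetical. Only the MAC ranges and the IQN block size of 16 are taken from Table 3 of this document.

```python
from dataclasses import dataclass
from itertools import count

class IdentityPool:
    """Hypothetical pool that hands out sequential identities from a fixed block."""
    def __init__(self, fmt: str, start: int, size: int):
        self._fmt = fmt
        self._next = count(start)
        self._end = start + size

    def allocate(self) -> str:
        n = next(self._next)
        if n >= self._end:
            raise RuntimeError("pool exhausted")
        return self._fmt.format(n)

@dataclass
class ServiceProfile:
    name: str
    uuid_suffix: str
    macs: dict  # vNIC name -> MAC address
    iqn: str

@dataclass
class ServiceProfileTemplate:
    name: str
    vnic_pools: dict  # vNIC name -> IdentityPool of MAC addresses
    iqn_pool: IdentityPool
    uuid_pool: IdentityPool

    def instantiate(self, prefix: str, n: int) -> list:
        """Derive n service profiles; each one draws fresh identities from the pools."""
        return [
            ServiceProfile(
                name=f"{prefix}-{i}",
                uuid_suffix=self.uuid_pool.allocate(),
                macs={vnic: pool.allocate() for vnic, pool in self.vnic_pools.items()},
                iqn=self.iqn_pool.allocate(),
            )
            for i in range(1, n + 1)
        ]

template = ServiceProfileTemplate(
    name="SS-ESXi-Template",  # hypothetical template name
    vnic_pools={
        # MAC ranges from Table 3: ...:00 to ...:7F, i.e. 128 addresses per fabric
        "iSCSI-A": IdentityPool("00:25:B5:AA:AA:{:02X}", 0x00, 0x80),
        "iSCSI-B": IdentityPool("00:25:B5:BB:BB:{:02X}", 0x00, 0x80),
    },
    iqn_pool=IdentityPool("iqn.com.ucs-blade:esx-blade:{}", 1, 16),  # assumed IQN layout
    uuid_pool=IdentityPool("0000-{:012X}", 1, 16),                    # hypothetical format
)

hosts = template.instantiate("esxi", 4)
for p in hosts:
    print(p.name, p.macs["iSCSI-A"], p.macs["iSCSI-B"], p.iqn)
```

Because each server receives its identities from a pool rather than from its hardware, a profile can be moved to another blade without changing the MAC addresses or IQN that the network and storage array see.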

Cisco UCS B-Series and C-Series servers provide the compute resources in the SmartStack design. Several models of these servers are supported; see the Cisco UCS Mini release notes for a complete list. The compute design comprises an infrastructure layer and an application layer, with compute resources allocated to both.

The infrastructure layer consists of common infrastructure services that are necessary to deploy, operate, and manage the entire deployment. These include, but are not limited to, Active Directory (AD), Domain Name System (DNS), and VMware vCenter, all of which were deployed to validate the SmartStack architecture.

The application layer consists of any virtualized application, hosted on SmartStack compute, that the business needs to carry out its functions. Depending on the size of the business, the application layer in a customer deployment could be extensive, with multiple levels of hierarchy and redundancy supporting a large set of applications. The converged infrastructure supporting the application layer may require multiple SmartStack units, with each unit scaled to 12 hosts (the maximum supported as of this writing). The SmartStack solution was validated using virtual machines (VMs) running Microsoft Windows 2008 R2, with the IOMeter tool generating load.

Virtualization

The common infrastructure services and the associated virtual machines (VM) are part of a dedicated infrastructure cluster (SS-Mgmt) running VMware HA. A separate cluster (SS-VM-Cluster1), also running VMware HA, hosts the application VMs. VMware virtual switches (vSwitch) are used to provide layer 2 connectivity between the virtual machines. Cisco Nexus 1000V switches or VMware distributed virtual switches could also be used, particularly in larger deployments.

Cisco UCS servers were deployed with the Cisco UCS VIC 1340 network adapter. At the server level, each Cisco VIC presents multiple vPCIe devices to the ESXi host, which vSphere identifies as vmnics. ESXi is unaware that these NICs are virtual adapters. The virtual NICs (vNICs) are configured during Service Profile Template creation and deployed on each server when the service profile is created and associated with the server. The SmartStack design uses the following vNICs:

· One vNIC for iSCSI traffic on Fabric A (iSCSI-A)

· One vNIC for iSCSI traffic on Fabric B (iSCSI-B)

· Two vNICs for in-band management and vMotion (MGMT-A, MGMT-B)

· Two vNICs for Application VM traffic (vNIC-A, vNIC-B)

The connectivity within each ESXi server and the vNICs presented to ESXi in the form of vmnics are shown in the figure below.

Figure 1 VMware ESXi Network
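The following Python sketch illustrates why the vNICs are paired across fabrics. It groups the six vNICs listed above by role, assuming (as the names suggest) that the -A/-B suffix identifies the fabric, and reports which vNICs remain usable if one Fabric Interconnect is lost. The role grouping and helper names are illustrative assumptions, not part of Cisco UCS Manager.

```python
from collections import defaultdict

# vNIC names from the list above; the suffix after the last "-" is taken as the fabric.
VNICS = ["iSCSI-A", "iSCSI-B", "MGMT-A", "MGMT-B", "vNIC-A", "vNIC-B"]

def fabric_of(vnic: str) -> str:
    return vnic.rsplit("-", 1)[1]

def role_of(vnic: str) -> str:
    return vnic.rsplit("-", 1)[0]

def check_redundancy(vnics) -> list:
    """Return the roles that do not have one vNIC on each of Fabric A and Fabric B."""
    fabrics = defaultdict(set)
    for v in vnics:
        fabrics[role_of(v)].add(fabric_of(v))
    return sorted(role for role, f in fabrics.items() if f != {"A", "B"})

def surviving(vnics, failed_fabric: str) -> list:
    """vNICs (and therefore vmnics) still usable if one FI goes down."""
    return [v for v in vnics if fabric_of(v) != failed_fabric]

print(check_redundancy(VNICS))   # [] means every role is dual-homed
print(surviving(VNICS, "A"))     # ['iSCSI-B', 'MGMT-B', 'vNIC-B']
```

With every role dual-homed, losing Fabric A still leaves one iSCSI path, one management/vMotion uplink, and one application uplink on each host.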

Storage

The Nimble array in this design provides iSCSI based block storage. iSCSI is also used to SAN boot the ESXi hosts on the Cisco UCS Mini. Storage controllers on the Nimble CS300 are connected to Appliance ports on each Cisco UCS Fabric Interconnect. This design uses two physically diverse switch fabrics as well as logically distinct iSCSI data VLANs (iSCSI-A-SAN, iSCSI-B-SAN). Virtual PortChannels are not used on the links between Cisco UCS Mini and Nimble. VLAN Trunking is also not necessary as Appliance ports carry traffic bound for one specific iSCSI VLAN to either Fabric A or Fabric B. Jumbo frames are also enabled on these links.

The Service Profile template used in this design has both primary and secondary iSCSI SAN boot configurations to allow boot to occur even when one path or FI is down. In addition, host based iSCSI multipathing with two distinct IP domains and iSCSI targets are also used to ensure access to storage at all times.

The Nimble Storage CS300 dual-controller design provides either 10Gbps Ethernet or 16Gbps Fibre Channel connectivity on multiple links. In this CVD, the Nimble CS300 is configured for 10Gbps Ethernet connectivity. Each storage controller supports two to four 10Gbps connections. The total bandwidth capacity is the aggregate bandwidth of all 10Gbps ports on a single controller, so the same amount of network bandwidth remains available after a controller failover. The CS300 controller is also equipped with 2 x 6Gb SAS expander connections for adding expansion shelves.

The Nimble controllers operate in Active/Standby mode. In this design, the redundant links to each controller deliver 20Gbps of storage bandwidth to the current Active controller. If one of the links on the Active controller fails, the Standby controller becomes the Active controller so that 20Gbps of bandwidth remains available.
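The bandwidth figures above follow from simple arithmetic. The Python sketch below assumes two 10Gbps links per controller, as in this design, and encodes the simplified failover rule stated in the text: the Standby takes over when it has more healthy links than the Active controller. Actual Nimble OS failover logic is more involved; the function names and the rule as written are illustrative only.

```python
LINK_GBPS = 10  # each controller link is 10GbE in this design

def active_bandwidth(controllers: dict, active: str) -> int:
    """Usable bandwidth: only the Active controller's healthy links carry I/O."""
    return LINK_GBPS * sum(controllers[active])

def maybe_failover(controllers: dict, active: str) -> str:
    """Simplified rule: hand over to the Standby if it has more healthy links."""
    standby = "B" if active == "A" else "A"
    if sum(controllers[standby]) > sum(controllers[active]):
        return standby
    return active

# Two links per controller (True = link up).
ctrl = {"A": [True, True], "B": [True, True]}
active = "A"
print(active_bandwidth(ctrl, active))           # 20

ctrl["A"][0] = False                            # one link on the Active controller fails
active = maybe_failover(ctrl, active)
print(active, active_bandwidth(ctrl, active))   # B 20
```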

The CS300 array can scale performance by upgrading compute for greater throughput, scale capacity by adding expansion shelves, and scale flash to accommodate a wide variety of application working sets. These options can be combined to match the specific needs of the workload.

The configuration tested in this design is a CS300 equipped with 24TB of storage and 3.2TB of flash capacity as shown below.

Figure 2 Nimble Storage Array Configuration

To optimize and model the required performance, the storage array and virtual machines are monitored remotely from the cloud using Nimble InfoSight™. InfoSight provides insight into I/O and capacity usage, trend analysis for capacity planning and expansion, and proactive ticketing and notification when issues occur.

Networking

Networking infrastructure provides network connectivity to applications hosted on Cisco UCS Mini. A pair of Cisco Nexus 9372 PX switches, deployed in NX-OS standalone mode, were used in this design. Redundant 10Gbps links from each Cisco Nexus switch connect to the Uplink ports on each FI, providing an aggregate uplink bandwidth of 20Gbps per FI. The design uses Virtual PortChannel (vPC) for the network connectivity between Cisco UCS Mini and the customer's network. vPC provides Layer 2 multipathing by allowing multiple parallel paths between nodes with load balancing, resulting in increased bandwidth and redundancy. VLAN trunking is enabled on these links because multiple application data VLANs, management, and vMotion traffic need to traverse them. Jumbo frames are also enabled on the uplinks to support vMotion.

SmartStack Topology

The figure below shows the SmartStack architecture.

Figure 3 SmartStack with Cisco UCS Mini and Direct Attached Nimble CS300

Low-level Design

The first two ports on each FI are connected to a pair of Cisco Nexus 9372 PX switches using 10GbE links as shown in Figure 3. These Ethernet ports on the fabric interconnects are configured as Uplink ports using Cisco UCS Manager.

The uplink ports are then enabled for link aggregation using PortChannels, with the uplink ports on FI-A in one PortChannel and the uplink ports on FI-B in another. Because the uplink ports in each PortChannel connect to different Cisco Nexus switches, they are configured as part of a virtual PortChannel (vPC) on the Cisco Nexus switches. vPC11 and vPC12 shown in Figure 3 are the vPCs that connect the Cisco Nexus switch pair to FI-A and FI-B, respectively. The Cisco Nexus vPC feature allows a third device to aggregate links going to different Cisco Nexus switches. As a result, the uplink ports appear to Cisco UCS Mini as a regular PortChannel, as shown below. Link aggregation on the uplinks between Cisco UCS Mini and the Cisco Nexus switches follows Cisco recommended best practices. The detailed deployment procedures are covered in an upcoming section of this document.

Multiple VLANs need to traverse the uplink ports and uplink ports are automatically configured as IEEE 802.1Q trunks for all VLANs defined on the fabric interconnect. VLANs for management, vMotion and application traffic are defined on the fabric interconnects and enabled on the uplinks. The VLANs used in validating the SmartStack design are summarized in the table below.

Table 1 Uplink VLANs between Cisco UCS Mini and Cisco Nexus

| VLAN Type | VLAN ID | Description |
|---|---|---|
| Native VLAN | 2 | Untagged VLAN traffic is forwarded on this VLAN |
| In-Band Management | 12 | VLAN used for in-band management, including ESXi hosts and infrastructure VMs |
| vMotion | 12 | VLAN used by ESXi for moving VMs between hosts. vMotion uses the management VLAN |
| VM Application Traffic | 900, 901 | VLANs used by application data traffic |
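Table 1 can serve as a checklist for trunk configuration. The Python sketch below expands an NX-OS-style allowed-VLAN string (comma-separated IDs and hyphenated ranges) and reports any tagged VLAN from Table 1 that a trunk would not carry. The native VLAN (2) is excluded from the check because it is set separately with `switchport trunk native vlan`. The helper names are hypothetical.

```python
# Table 1 as data: VLAN type -> VLAN IDs.
UPLINK_VLANS = {
    "Native VLAN": [2],
    "In-Band Management": [12],
    "vMotion": [12],                  # vMotion shares the management VLAN
    "VM Application Traffic": [900, 901],
}
NATIVE_VLAN = 2

def parse_vlan_list(text: str) -> set:
    """Expand an NX-OS-style VLAN list such as '12, 900-901' into a set of IDs."""
    vlans = set()
    for item in text.replace(" ", "").split(","):
        if "-" in item:
            lo, hi = (int(x) for x in item.split("-"))
            vlans.update(range(lo, hi + 1))
        elif item:
            vlans.add(int(item))
    return vlans

def missing_on_trunk(allowed: str) -> set:
    """Tagged VLANs from Table 1 that a trunk with this allowed list would not carry."""
    required = {v for ids in UPLINK_VLANS.values() for v in ids} - {NATIVE_VLAN}
    return required - parse_vlan_list(allowed)

print(missing_on_trunk("12, 900, 901"))   # set()
print(missing_on_trunk("12, 900"))        # {901}
```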

The next two 10GbE ports on each Cisco UCS 6324 FI connect directly to the Nimble CS300 storage array. The FI ports connecting to the array are configured as Appliance ports. The Appliance ports on each Fabric Interconnect are enabled for one iSCSI VLAN, as shown in the table below.

Table 2 iSCSI VLANs between Cisco UCS Mini and Nimble

| VLAN Type | VLAN ID | Description |
|---|---|---|
| iSCSI Path A | 3010 | VLAN used for iSCSI traffic on Fabric A. This VLAN exists only on Fabric A. |
| iSCSI Path B | 3020 | VLAN used for iSCSI traffic on Fabric B. This VLAN exists only on Fabric B. |

The iSCSI Qualified Name (IQN) block, IP blocks, MAC blocks, and iSCSI discovery IPs used in validation for the two independent iSCSI paths are shown in the table below.

Table 3 iSCSI Deployment Information

| Parameter | Value |
|---|---|
| MAC Address Pool for vNIC iSCSI-A on FI-A | 00:25:B5:AA:AA:00 - 00:25:B5:AA:AA:7F |
| MAC Address Pool for vNIC iSCSI-B on FI-B | 00:25:B5:BB:BB:00 - 00:25:B5:BB:BB:7F |
| IP Pool for iSCSI-A on FI-A | 10.10.10.51 – 10.10.10.100 |
| IP Pool for iSCSI-B on FI-B | 10.10.20.50 – 10.10.20.99 |
| iSCSI IQN Name Suffix | iqn.com.ucs-blade |
| iSCSI IQN Block (Block of 16) | esx-blade:1 – esx-blade:16 |
| iSCSI Discovery IP for iSCSI-A on FI-A | 10.10.10.10 (TCP Port 3260) |
| iSCSI Discovery IP for iSCSI-B on FI-B | 10.10.20.10 (TCP Port 3260) |
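Before these values are entered in Cisco UCS Manager and on the array, they can be sanity-checked. The Python sketch below uses the standard `ipaddress` module to compute the size of each IP and MAC pool from Tables 2 and 3, to confirm that each discovery IP falls in the same subnet as its pool but outside the pool range, and to confirm that the two paths use distinct VLANs and subnets. The /24 prefix length is an assumption; the tables do not state the iSCSI netmasks.

```python
import ipaddress

PREFIX_LEN = 24  # assumption: the tables do not give the iSCSI netmask

PATHS = {
    "A": {"vlan": 3010, "pool": ("10.10.10.51", "10.10.10.100"), "discovery": "10.10.10.10",
          "macs": ("00:25:B5:AA:AA:00", "00:25:B5:AA:AA:7F")},
    "B": {"vlan": 3020, "pool": ("10.10.20.50", "10.10.20.99"), "discovery": "10.10.20.10",
          "macs": ("00:25:B5:BB:BB:00", "00:25:B5:BB:BB:7F")},
}

def mac_to_int(mac: str) -> int:
    return int(mac.replace(":", ""), 16)

def subnet_of(path) -> ipaddress.IPv4Network:
    """Subnet containing the first pool address, using the assumed prefix length."""
    return ipaddress.IPv4Network(f"{path['pool'][0]}/{PREFIX_LEN}", strict=False)

def check_path(path) -> dict:
    first, last = (ipaddress.IPv4Address(a) for a in path["pool"])
    disc = ipaddress.IPv4Address(path["discovery"])
    net = subnet_of(path)
    return {
        "ip_pool_size": int(last) - int(first) + 1,
        "mac_pool_size": mac_to_int(path["macs"][1]) - mac_to_int(path["macs"][0]) + 1,
        "same_subnet": last in net and disc in net,
        "discovery_outside_pool": not (first <= disc <= last),
    }

for fabric, path in PATHS.items():
    print(fabric, path["vlan"], check_path(path))

# The two iSCSI paths should be isolated: distinct VLANs and distinct subnets.
print(PATHS["A"]["vlan"] != PATHS["B"]["vlan"] and subnet_of(PATHS["A"]) != subnet_of(PATHS["B"]))
```

Each IP pool holds 50 addresses and each MAC pool 128, both comfortably above the 16-entry IQN block and the 12-host maximum per SmartStack unit.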

Validated Hardware and Software

The table below is a summary of all the components used for validating the SmartStack design.

Table 4 Infrastructure Components and Software Revisions

| Infrastructure Stack | Component | Software Revision | Details |
|---|---|---|---|
| Compute | Cisco UCS Mini - 5108 | N/A | Blade Server Chassis |
| | Cisco UCS B200 M4 | 3.0.2(c) | Server/Host on Cisco UCS Mini |
| | Cisco UCS FI 6324 | 3.0.2(c) | Embedded Fabric Interconnect on Cisco UCS Mini |
| | Cisco UCS Manager | 3.0.2(c) | Embedded Management |
| | Cisco eNIC Driver | 2.1.2.69 | Ethernet driver for Cisco VIC |
| Network | Cisco Nexus 9372 PX | 6.1(2)I3(4a) | Optional |
| Storage | Nimble CS300 Array | 2.3.7 | Build: 2.3.7-280146-opt |
| | Nimble NCM for ESXi | 2.3.1 | Build: 2.3.1-600006 |
| Virtualization | VMware vSphere | 6.0 | Build: 6.0.0-2494585 |
| | VMware vCenter Server Appliance | 6.0.0 U1 | Build: 6.0.0.10000-3018521 |
| | Microsoft SQL Server | 2012 RP1 | VMware vCenter Database |

Cisco Nexus 9000

This section provides detailed procedures for deploying a Cisco Nexus 9k switch in a SmartStack environment. Note that Cisco Nexus 9000 is an optional component of the SmartStack Infrastructure Solution. Skip to the next section if a different type of switch or an existing switching infrastructure is used with this SmartStack deployment.

Initial Configuration and Setup

This section outlines the initial configuration necessary for bringing up a new Cisco Nexus 9000.

Cisco Nexus A

To set up the initial configuration for the first Cisco Nexus switch, complete the following steps:

1. Connect to the serial/console port of the switch

Enter the configuration method: console

Abort Auto Provisioning and continue with normal setup? (yes/no) [n]: y

---- System Admin Account Setup ----

Do you want to enforce secure password standard (yes/no) [y]:

Enter the password for "admin":

Confirm the password for "admin":

---- Basic System Configuration Dialog VDC: 1 ----

This setup utility will guide you through the basic configuration of the system. Setup configures only enough connectivity for management of the system.

Please register Cisco Nexus9000 Family devices promptly with your supplier. Failure to register may affect response times for initial service calls. Cisco Nexus9000 devices must be registered to receive entitled support services.

Press Enter at anytime to skip a dialog. Use ctrl-c at anytime to skip the remaining dialogs.

Would you like to enter the basic configuration dialog (yes/no): y

Create another login account (yes/no) [n]: n

Configure read-only SNMP community string (yes/no) [n]:

Configure read-write SNMP community string (yes/no) [n]:

Enter the switch name: D01-n9k1

Continue with Out-of-band (mgmt0) management configuration? (yes/no) [y]:

Mgmt0 IPv4 address: 192.168.155.3

Mgmt0 IPv4 netmask: 255.255.255.0

Configure the default gateway? (yes/no) [y]:

IPv4 address of the default gateway: 192.168.155.1

Configure advanced IP options? (yes/no) [n]:

Enable the telnet service? (yes/no) [n]:

Enable the ssh service? (yes/no) [y]:

Type of ssh key you would like to generate (dsa/rsa) [rsa]:

Number of rsa key bits <1024-2048> [1024]: 2048

Configure the ntp server? (yes/no) [n]: y

NTP server IPv4 address: 192.168.155.254

Configure default interface layer (L3/L2) [L2]:

Configure default switchport interface state (shut/noshut) [noshut]:

Configure CoPP system profile (strict/moderate/lenient/dense/skip) [strict]:

2. Review the settings printed to the console. If they are correct, answer yes to apply and save the configuration.

3. Wait for the login prompt to make sure that the configuration has been saved prior to proceeding.

Cisco Nexus B

To set up the initial configuration for the second Cisco Nexus switch, complete the following steps:

1. Connect to the serial/console port of the switch

2. The Cisco Nexus B switch should present a configuration dialog identical to that of Cisco Nexus A shown above. Provide the configuration parameters specific to Cisco Nexus B for the following configuration variables. All other parameters should be identical to that of Cisco Nexus A.

· Admin password

· Cisco Nexus B Hostname: D01-n9k2

· Cisco Nexus B mgmt0 IP address: 192.168.155.4

· Cisco Nexus B mgmt0 Netmask: 255.255.255.0

· Cisco Nexus B mgmt0 Default Gateway: 192.168.155.1

Cisco Nexus Global Configuration

This section describes the configuration required on both Cisco Nexus switches for the connections to the FIs, in-band management, and other required connections.

1. On both Cisco Nexus switches, enable the following features and best practices.

feature udld

feature lacp

feature vpc

feature interface-vlan

spanning-tree port type network default

spanning-tree port type edge bpduguard default

spanning-tree port type edge bpdufilter default

2. Create VLANs for SmartStack system.

vlan 12

name IB-MGMT

vlan 2

name Native-VLAN

vlan 900

name VM-Traffic-VLAN900

vlan 901

name VM-Traffic-VLAN901
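Because both switches must carry the same VLANs, it can help to keep a single VLAN table and render the commands for both switches from it. The following Python sketch does that for the four VLANs above and rejects IDs outside the 802.1Q range. The helper is hypothetical; its output is the same `vlan` / `name` commands entered in step 2.

```python
# One VLAN table, rendered identically for D01-n9k1 and D01-n9k2.
VLANS = {2: "Native-VLAN", 12: "IB-MGMT", 900: "VM-Traffic-VLAN900", 901: "VM-Traffic-VLAN901"}

def render_vlans(vlans: dict) -> str:
    """Emit 'vlan <id>' / 'name <name>' command pairs in ascending VLAN order."""
    lines = []
    for vid in sorted(vlans):
        if not 1 <= vid <= 4094:
            raise ValueError(f"VLAN {vid} is outside the 802.1Q range 1-4094")
        lines.append(f"vlan {vid}")
        lines.append(f"  name {vlans[vid]}")
    return "\n".join(lines)

config = render_vlans(VLANS)
print(config)
```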

Cisco Nexus VPC Configuration

Cisco Nexus A

To configure virtual port channels (vPCs) for switch A, complete the following steps:

1. From the global configuration mode, create a new vPC domain:

vpc domain 155

2. Make Cisco Nexus A the primary vPC peer by defining a low priority value:

role priority 10

3. Use the management interfaces on the supervisors of the Cisco Nexus switches to establish a keepalive link:

peer-keepalive destination 192.168.155.4 source 192.168.155.3

4. Enable the following features for this vPC domain:

peer-switch

delay restore 150

peer-gateway

ip arp synchronize

auto-recovery

5. Save the configuration:

copy run start

Cisco Nexus B

To configure vPCs for switch B, complete the following steps:

1. From the global configuration mode, create a new vPC domain:

vpc domain 155

2. Make Cisco Nexus B the secondary vPC peer by defining a higher priority value:

role priority 20

3. Use the management interfaces on the supervisors of the Cisco Nexus switches to establish a keepalive link:

peer-keepalive destination 192.168.155.3 source 192.168.155.4

4. Enable the following features for this vPC domain:

peer-switch

delay restore 150

peer-gateway

ip arp synchronize

auto-recovery

5. Save the configuration:

copy run start
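The role priorities above decide which switch becomes the vPC primary. The Python sketch below models the election rule in simplified form: the lower role priority wins and, on a tie, the lower system MAC address wins. The system MAC addresses are hypothetical placeholders. Note that the vPC role is not preemptive on NX-OS, so changing priorities later does not by itself move the primary role.

```python
def mac_value(mac: str) -> int:
    """Convert an NX-OS style MAC (xxxx.xxxx.xxxx) to an integer for comparison."""
    return int(mac.replace(".", ""), 16)

def elect_primary(peers: dict) -> str:
    """peers maps switch name -> (role_priority, system_mac); returns the primary."""
    return min(peers, key=lambda name: (peers[name][0], mac_value(peers[name][1])))

# Role priorities from the steps above; system MACs are hypothetical placeholders.
peers = {
    "D01-n9k1": (10, "00aa.bb00.0002"),
    "D01-n9k2": (20, "00aa.bb00.0001"),
}
print(elect_primary(peers))  # D01-n9k1: the lower role priority wins despite the higher MAC
```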

Configure Network Interfaces for the VPC Peer Links: Cisco Nexus A

1. Define a port description for the interfaces connecting to VPC Peer D01-n9k2.

interface Eth1/53

description VPC Peer D01-n9k2:e1/53

interface Eth1/54

description VPC Peer D01-n9k2:e1/54

2. Apply a port channel to both VPC Peer links and bring up the interfaces.

interface Eth1/53,Eth1/54

channel-group 155 mode active

no shutdown

3. Define a description for the port-channel connecting to D01-n9k2.

interface Po155

description vPC peer-link

4. Make the port-channel a switchport, and configure a trunk to allow in-band management, VM traffic, and the native VLAN.

switchport

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 12, 900, 901

5. Make this port-channel the VPC peer link and bring it up.

vpc peer-link

no shutdown

copy run start

Configure Network Interfaces for the VPC Peer Links: Cisco Nexus B

1. Define a port description for the interfaces connecting to VPC Peer D01-n9k1.

interface Eth1/53

description VPC Peer D01-n9k1:e1/53

interface Eth1/54

description VPC Peer D01-n9k1:e1/54

2. Apply a port channel to both VPC Peer links and bring up the interfaces.

interface Eth1/53,Eth1/54

channel-group 155 mode active

no shutdown

3. Define a description for the port-channel connecting to D01-n9k1.

interface Po155

description vPC peer-link

4. Make the port-channel a switchport, and configure a trunk to allow in-band management, VM traffic, and the native VLAN.

switchport

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 12, 900, 901

5. Make this port-channel the VPC peer link and bring it up.

vpc peer-link

no shutdown

copy run start
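Mismatched peer-link settings between the two switches are a common source of vPC problems. The Python sketch below performs a plain text comparison of the Po155 commands entered on each switch, normalizing whitespace and the spacing inside the allowed-VLAN list. It is not NX-OS's own consistency check; on the switches, `show vpc consistency-parameters global` reports the parameters that NX-OS actually compares.

```python
def normalize(block: str) -> set:
    """Turn a pasted config block into a set of whitespace-normalized commands."""
    commands = set()
    for line in block.splitlines():
        line = " ".join(line.split())          # collapse indentation and repeated spaces
        if line.startswith("switchport trunk allowed vlan"):
            line = line.replace(", ", ",")     # '12, 900, 901' is the same as '12,900,901'
        if line:
            commands.add(line)
    return commands

def diff(a: str, b: str):
    """Commands present only on the first switch, and only on the second."""
    na, nb = normalize(a), normalize(b)
    return sorted(na - nb), sorted(nb - na)

N9K1_PO155 = """
interface Po155
  description vPC peer-link
  switchport
  switchport mode trunk
  switchport trunk native vlan 2
  switchport trunk allowed vlan 12, 900, 901
  vpc peer-link
"""

N9K2_PO155 = """
interface Po155
  description vPC peer-link
  switchport
  switchport mode trunk
  switchport trunk native vlan 2
  switchport trunk allowed vlan 12,900,901
  vpc peer-link
"""

print(diff(N9K1_PO155, N9K2_PO155))  # ([], [])
```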

Cisco Nexus Configuration to Cisco UCS Mini Fabric Interconnect

Cisco Nexus A

1. Define a description for the port-channel connecting to D01-Mini1-A.

interface Po11

description D01-Mini1-A

2. Make the port-channel a switchport, and configure a trunk to allow in-band management, VM traffic, and the native VLAN.

switchport

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 12, 900, 901

3. Make the port channel and associated interfaces spanning tree edge ports.

spanning-tree port type edge trunk

4. Make this a VPC port-channel and bring it up.

vpc 11

no shutdown

5. Define a port description for the interface connecting to D01-Mini1-A.

interface Eth1/13

description D01-Mini1-A:p1

6. Apply it to a port channel and bring up the interface.

channel-group 11 force mode active

no shutdown

7. Define a description for the port-channel connecting to D01-Mini1-B.

interface Po12

description D01-Mini1-B

8. Make the port-channel a switchport, and configure a trunk to allow in-band management, VM traffic VLANs and the native VLAN.

switchport

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 12, 900, 901

9. Make the port channel and associated interfaces spanning tree edge ports.

spanning-tree port type edge trunk

10. Make this a VPC port-channel and bring it up.

vpc 12

no shutdown

11. Define a port description for the interface connecting to D01-Mini1-B.

interface Eth1/14

description D01-Mini1-B:p1

12. Apply it to a port channel and bring up the interface.

channel-group 12 force mode active

no shutdown

copy run start

Cisco Nexus B

1. Define a description for the port-channel connecting to D01-Mini1-A.

interface Po11

description D01-Mini1-A

2. Make the port-channel a switchport, and configure a trunk to allow in-band management, VM traffic, and the native VLAN.

switchport

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 12, 900, 901

3. Make the port channel and associated interfaces spanning tree edge ports.

spanning-tree port type edge trunk

4. Make this a VPC port-channel and bring it up.

vpc 11

no shutdown

5. Define a port description for the interface connecting to D01-Mini1-A.

interface Eth1/13

description D01-Mini1-A:p2

6. Apply it to a port channel and bring up the interface.

channel-group 11 force mode active

no shutdown

7. Define a description for the port-channel connecting to D01-Mini1-B.

interface Po12

description D01-Mini1-B

8. Make the port-channel a switchport, and configure a trunk to allow in-band management, VM traffic VLANs, and the native VLAN.

switchport

switchport mode trunk

switchport trunk native vlan 2

switchport trunk allowed vlan 12, 900, 901

9. Make the port channel and associated interfaces spanning tree edge ports.

spanning-tree port type edge trunk

10. Make this a VPC port-channel and bring it up.

vpc 12

no shutdown

11. Define a port description for the interface connecting to D01-Mini1-B.

interface Eth1/14

description D01-Mini1-B:p2

12. Apply it to a port channel and bring up the interface.

channel-group 12 force mode active

no shutdown

copy run start
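Because the Nexus A and Nexus B configurations differ only in the port label (p1 versus p2), copy-and-paste mistakes in the descriptions are easy to make. The following Python sketch renders both switch configurations from one table so that port-channel IDs, vPC IDs, and descriptions stay consistent. The `FiUplink` table and `render_nexus_config` helper are hypothetical; the VLAN, port-channel, and interface values are taken from the steps above. Paste the output in configuration mode, then run copy run start on each switch as shown above.

```python
from dataclasses import dataclass
from typing import List

NATIVE_VLAN = 2                  # native VLAN used on both Nexus switches
ALLOWED_VLANS = [12, 900, 901]   # in-band management and VM traffic VLANs


@dataclass(frozen=True)
class FiUplink:
    fi_name: str   # Fabric Interconnect name, used in descriptions
    po_id: int     # port-channel number, reused as the vPC number
    member: str    # Nexus interface cabled to this Fabric Interconnect


# Hypothetical table describing the cabling used in this guide.
UPLINKS: List[FiUplink] = [
    FiUplink("D01-Mini1-A", 11, "Eth1/13"),
    FiUplink("D01-Mini1-B", 12, "Eth1/14"),
]


def render_nexus_config(port_label: str) -> str:
    """Return NX-OS config lines for one switch ("p1" = Nexus A, "p2" = Nexus B)."""
    vlans = ",".join(str(v) for v in ALLOWED_VLANS)
    lines: List[str] = []
    for up in UPLINKS:
        lines += [
            f"interface Po{up.po_id}",
            f"  description {up.fi_name}",
            "  switchport",
            "  switchport mode trunk",
            f"  switchport trunk native vlan {NATIVE_VLAN}",
            f"  switchport trunk allowed vlan {vlans}",
            "  spanning-tree port type edge trunk",
            f"  vpc {up.po_id}",
            "  no shutdown",
            f"interface {up.member}",
            f"  description {up.fi_name}:{port_label}",
            f"  channel-group {up.po_id} force mode active",
            "  no shutdown",
        ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_nexus_config("p1"))   # paste on Nexus A
    print()
    print(render_nexus_config("p2"))   # paste on Nexus B
```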

Storage Configuration

This section provides the procedure for initializing a Nimble Storage array and setting up basic IP connectivity. Note that the dialog below is specific to an iSCSI array setup.

Nimble Setup Manager

The Nimble Setup manager can be downloaded from Infosight at this location: http://infosightweb.nimblestorage.com/InfoSight/cgi-bin/downloadFile?ID=documents/Setup-NimbleNWT-x64.2.3.2.287.zip

The Nimble Setup Manager is part of the Nimble Storage Windows Toolkit. In this instance, the Nimble Setup Manager is the only component that needs to be installed.

Initialize the Nimble Storage Array

1. In the Windows Start menu, click Nimble Storage > Nimble Setup Manager.

2. Select one of the uninitialized arrays from the Nimble Setup Manager list and click Next.

If the array is not visible in Nimble Setup Manager, verify that the array’s eth1 ports of both controllers are on the same subnet as the Windows host.

Configure the Nimble OS using the GUI

1. Choose the appropriate group option and click Next.

· Set up the array but do not join a group. Continue to Step 2.

· Add the array to an existing group.

If you chose to join an existing group, your browser automatically redirects to the login screen of the group leader array. See Add Array to Group Using the GUI to complete the configuration.

2. Provide or change the following initial management settings and click Finish:

· Array name

· Group name

· Management IP address and subnet mask for the eth1 interface

· Default gateway IP address

· Administrator password (optional)

3. You may see a warning similar to "There is a problem with this website's security certificate." It is safe to ignore this warning and click Continue.

If prompted, you can also download and accept the certificate. Alternatively, create your own. See the cert command in the Nimble Command Line Reference Guide. Also, if Internet Explorer v7 displays a blank page, clear the browser's cache. The page should be visible after refreshing the browser.

4. In the login screen, type the password you set and click Log In. From this point forward, you are in the Nimble OS GUI. The first time you access the Nimble OS GUI, the Nimble Storage License Agreement appears.

5. In the Nimble Storage License Agreement, read the agreement, scroll to the bottom, check the acknowledgment box, and then click Proceed.

6. Provide the Subnet Configuration information for the following sections and click Next:

a. Management IP: IP address, Network and Subnet Mask

The Management IP is used for the GUI, CLI, and replication. It resides on the management subnet and floats across all "Mgmt only" and "Mgmt + Data" interfaces on that subnet.

b. Subnet: Subnet label, Network, Netmask, Traffic Type (Data only, Mgmt Only, Mgmt + Data), MTU

c. Maximum Transmission Unit (MTU) – Standard (1500) for Management and Jumbo (9000) for TG ports. Ensure that your network switches support Jumbo frame size (9000). The data port IP addresses are assigned to interface pairs, such as TG1 on controller A and TG1 on controller B. If one controller fails, the corresponding port on the remaining controller still has data access. You need to configure two data IP subnets and assign one pair of interfaces to each subnet.

To add a data subnet, click Add Data Subnet, provide the required information and click OK.

7. Provide Interface Assignment information for the following sections and click Next:

· Interface Assignment: For each IP interface, assign a subnet and a data IP address within the specified network. For inactive interfaces, assign the "None" subnet.

· Diagnostics:

— Controller A diagnostics IP address within the specified network

— Controller B diagnostics IP address within the specified network
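The interface assignment rules above (two data subnets, each served by one interface pair such as TG1 on controller A and TG1 on controller B, with every data IP inside its subnet) can be checked before they are typed into the GUI. The following Python sketch does this with the standard `ipaddress` module; the subnet labels and IP addresses are hypothetical examples, not values from this guide.

```python
import ipaddress
from typing import Dict, List, Set, Tuple

# Hypothetical data subnets (label -> network).
SUBNETS = {
    "iSCSI-A": ipaddress.ip_network("10.10.10.0/24"),
    "iSCSI-B": ipaddress.ip_network("10.10.20.0/24"),
}

# (controller, interface) -> (subnet label, data IP); hypothetical values.
ASSIGNMENTS: Dict[Tuple[str, str], Tuple[str, str]] = {
    ("A", "TG1"): ("iSCSI-A", "10.10.10.11"),
    ("B", "TG1"): ("iSCSI-A", "10.10.10.12"),
    ("A", "TG2"): ("iSCSI-B", "10.10.20.11"),
    ("B", "TG2"): ("iSCSI-B", "10.10.20.12"),
}


def check_assignments(subnets, assignments) -> List[str]:
    problems: List[str] = []
    seen = set()
    pairs: Dict[str, Set[Tuple[str, str]]] = {}
    for (ctrl, port), (label, ip) in assignments.items():
        addr = ipaddress.ip_address(ip)
        if addr not in subnets[label]:
            problems.append(f"{ctrl}/{port}: {ip} is outside {label}")
        if addr in seen:
            problems.append(f"{ctrl}/{port}: duplicate IP {ip}")
        seen.add(addr)
        pairs.setdefault(port, set()).add((ctrl, label))
    # An interface pair must exist on both controllers and share one subnet,
    # so the surviving controller keeps data access after a failover.
    for port, members in pairs.items():
        controllers = {c for c, _ in members}
        labels = {lbl for _, lbl in members}
        if controllers != {"A", "B"} or len(labels) != 1:
            problems.append(f"{port}: pair is not on one subnet across both controllers")
    return problems


print(check_assignments(SUBNETS, ASSIGNMENTS) or "assignment plan looks consistent")
```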

8. Provide the following Domain information and click Next:

· Domain Name

· DNS Servers: Type the hostname or IP address of your DNS server. You can list up to five servers.

9. Provide the following Time information and click Next:

· Time Zone: Choose the time zone the array is located in.

· Time (NTP) Server: Type the hostname or IP address of your NTP server.

10. Provide Support information for the following sections (Email Alerts, AutoSupport, and HTTP Proxy).

11. Email Alerts:

· From Address: This is the email address used by the array when sending email alerts. It does not need to be a real email address. Include the array name for easy identification.

· Send to Address: The email address that receives the alerts. Nimble recommends that you also check the Send event data to Nimble Storage Support check box.

· SMTP server hostname or IP address

· AutoSupport

— Checking the Send AutoSupport data to Nimble Storage check box enables Nimble Storage Support to monitor your array, notify you of problems, and provide solutions.

· HTTP Proxy: AutoSupport and software updates require an HTTPS connection to the Internet, either directly or through a proxy server. If a proxy server is required, check the Use HTTP Proxy check box and provide the following information to configure proxy server details:

— HTTP proxy server hostname or IP address

— HTTP proxy server port

— Proxy server user name

— Proxy server password

The system does not test the validity of the SMTP server connection or the email addresses that you provided.

12. Click Finish. The Setup Complete screen appears. Click Continue.

13. The Nimble OS home screen appears. Nimble Storage array setup is complete.

Nimble Management Tools: InfoSight

Register and Login to InfoSight

Before you begin

It can take up to 24 hours for the array to appear in InfoSight after the first data set is sent. Data sets are configured to be sent around midnight array local time. Changes made right after the data set is sent at midnight might not be reflected in InfoSight for up to 48 hours.
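The worst-case delay described above can be estimated with simple date arithmetic: a change waits for the next nightly data set, and that data set can take up to 24 hours to appear in InfoSight. The following Python sketch assumes the data set is sent at exactly midnight array local time (the guide says "around" midnight), so treat the result as an estimate rather than a guarantee.

```python
from datetime import datetime, time, timedelta

PROCESSING_DELAY = timedelta(hours=24)  # "up to 24 hours" after a data set is sent


def latest_visibility(change_time: datetime) -> datetime:
    """Latest time a change made at change_time should appear in InfoSight.

    Assumes the nightly data set is sent at exactly 00:00 array local time.
    """
    next_upload = datetime.combine(change_time.date() + timedelta(days=1), time(0, 0))
    return next_upload + PROCESSING_DELAY


change = datetime(2015, 11, 24, 0, 5)       # just after the midnight upload
print(latest_visibility(change))             # 2015-11-26 00:00:00
print(latest_visibility(change) - change)    # 1 day, 23:55:00 (just under 48 hours)
```

A change made just after midnight therefore lands close to the 48-hour bound quoted above, while a change made late in the evening is picked up by that night's data set.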

Procedure

1. Log in to the InfoSight portal at https://infosight.nimblestorage.com.

2. Click Enroll now to activate your account. If your email is not already registered, contact your InfoSight Administrator. If no InfoSight Administrator (Super User) is registered for your account, or you are not sure, contact Nimble Storage Support for assistance.

3. Select the appropriate InfoSight role and enter the array serial number for your customer account. If this is the first account being created for your organization, you should select the Super User role. The number of super users is limited to the total number of arrays that are associated with an account.

4. Click Submit.

5. A temporary password is sent to the email address that you specified. You must change your password the first time you log in.

Configure Arrays to Send Data to InfoSight

To take full advantage of the InfoSight monitoring and analysis capabilities, configure your Nimble arrays to send data sets, statistics, and events to Nimble Storage Support. InfoSight recommendations and automatic fault detection are based on data that is sent from your arrays and processed by InfoSight. If you did not configure your Nimble arrays to send this data to Nimble Storage Support during the initial setup, you can change the configuration at any time from the Administration menu in the GUI or by running the group --edit command in the CLI.

Before you begin

This procedure must be performed in the array GUI.

Procedure

1. From the Administration menu in the array GUI, select Alerts and Monitoring > AutoSupport / HTTP Proxy.

2. On the AutoSupport page, select Send AutoSupport data to Nimble Storage Support.

3. Click Test AutoSupport Settings to confirm that AutoSupport is set up correctly.

4. Click Save.

Nimble Management Tools: vCenter Plugin

Register vCenter Plugins

The first steps must be performed in the array GUI, not in InfoSight. Nimble Storage integrates with vCenter through plugin registration, which allows datastores to be created and managed from vCenter. The vCenter plugin is supported on ESXi 5.5 Update 1 and later.

The plugin is not supported for:

- Multiple datastores located on one LUN

- One datastore spanning multiple LUNs

- LUNs located on a storage device not made by Nimble

Procedure

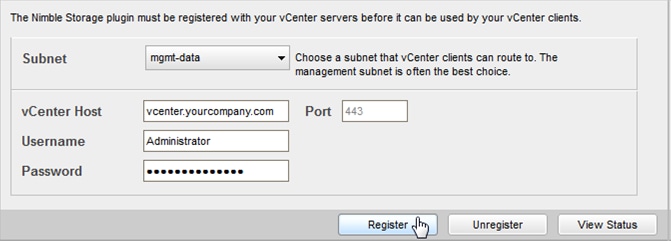

Use a vCenter account that has sufficient privileges to install a plugin (usually a user assigned to the Administrator role). You need to know the vCenter hostname or IP address. The plugin is part of the Nimble OS. To take advantage of it, you must first register the plugin with a vCenter Server. Multiple plugins can be registered on the Nimble array. In turn, each array that registers the plugin adds a tab to the vSphere client. The tab name for the datastore page is "datacenter page-Nimble-<groupname>". Registration steps are as follows:

1. From the Nimble OS GUI main menu, select Administration > vCenter Plugin.

2. If the fields are not already filled, enter the vCenter server host name or IP address, user name, and password.

3. Click View Status to see the current status of the plugin.

4. Click Register. If a Security Warning message appears, click Ignore.

5. If you are prompted to select a subnet_label and are not sure which one to choose, keep the selection that appears when the dialog opens; it is usually the correct one.

6. Click View Status again to ensure that the plugin has been registered.

7. Restart the vSphere client.

View a List of Installed Plugins

A list of all registered plugins on the array can be discovered by completing the following steps:

1. Log into the Nimble OS CLI.

2. At the command prompt, type:

vmwplugin --list --username <username> --password <password> --server <server_hostname-address> --port <port_number>

If no port number is specified, port 443 is selected by default. A list of installed plugins displays.
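If you script this check, for example to paste into an SSH session on the array, build the argument list so that the optional --port flag is added only when a non-default port is needed. The following Python sketch uses only the vmwplugin flags shown above; the helper name and the example user and host names are hypothetical.

```python
import shlex
from typing import List, Optional


def vmwplugin_list_cmd(username: str, password: str, server: str,
                       port: Optional[int] = None) -> List[str]:
    """Build the 'vmwplugin --list' command shown above."""
    cmd = ["vmwplugin", "--list",
           "--username", username,
           "--password", password,
           "--server", server]
    if port is not None:          # omit --port to use the default, 443
        cmd += ["--port", str(port)]
    return cmd


# shlex.join applies shell-style quoting, so unusual passwords stay one argument.
print(shlex.join(vmwplugin_list_cmd("administrator", "secret", "vcenter.smartstack.local")))
```

Avoid leaving the resulting command, which contains the vCenter password, in shell history or scripts.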

Configure Arrays to Monitor your Virtual Environment

Procedure

1. Log in to https://infosight.nimblestorage.com.

2. Go to Administration > Virtual Environment.

3. In the Virtual Environment list, find the array group for which you want to monitor the virtual environment.

4. Click Configure.

5. The Configure Group dialog box opens.

6. Verify that your software version is up to date and that your vCenter plugin is registered.

7. Select Enable in the VM Streaming Data list.

8. Click Update.

Set Up Email Notifications for Alerts

Procedure

1. On the Wellness page, click Daily Summary Emails.

2. Check Subscribe to daily summary email.

3. Enter an email address for delivery of the email alerts.

4. (Optional) You can click Test to send a test email to the email address that you indicated.

5. Click Submit to conclude the email alerts setup.

Server Configuration

This section provides detailed procedures for deploying a Cisco Unified Computing System (UCS) in a SmartStack environment.

Initial Setup and Configuration

This section outlines the initial setup necessary to deploy a new Cisco UCS Mini environment with Cisco UCS Fabric Interconnects (UCS-FI-M-6324) and Cisco UCS Manager (UCSM).

Cisco UCS 6324 Primary (FI-A)

1. Connect to the console port of the first FI on Cisco UCS Mini.

Enter the configuration method: console

Enter the setup mode; setup newly or restore from backup. (setup/restore)? setup

You have chosen to setup a new fabric interconnect. Continue? (y/n): y

Enforce strong passwords? (y/n) [y]: y

Enter the password for "admin":

Enter the same password for "admin":

Is this fabric interconnect part of a cluster (select 'no' for standalone)? (yes/no) [n]: y

Which switch fabric (A|B): A

Enter the system name: D01-Mini1

Physical switch Mgmt0 IPv4 address: 192.168.155.11

Physical switch Mgmt0 IPv4 netmask: 255.255.255.0

IPv4 address of the default gateway: 192.168.155.1

Cluster IPv4 address: 192.168.155.10

Configure DNS Server IPv4 address? (yes/no) [no]: y

DNS IPv4 address: 192.168.155.15

Configure the default domain name? y

Default domain name: smartstack.local

Join centralized management environment (UCS Central)? (yes/no) [n]:

2. Review the settings printed to the console. If they are correct, answer yes to apply and save the configuration.

3. Wait for the login prompt to make sure that the configuration has been saved prior to proceeding.

Cisco UCS 6324 Secondary (FI-B)

1. Connect to the console port on the second FI on Cisco UCS Mini.

2. Enter the configuration method: console

3. Installer has detected the presence of a peer Fabric interconnect. This Fabric interconnect will be added to the cluster. Do you want to continue {y|n}? y

Enter the admin password for the peer fabric interconnect:

Physical switch Mgmt0 IPv4 address: 192.168.155.12

Apply and save the configuration (select ‘no’ if you want to re-enter)? (yes/no): y

4. Verify that the two Cisco UCS FIs have formed a cluster: log in to the FIs using SSH and run show cluster state.

5. You are now ready to log in to Cisco UCS Manager using the cluster IP address of the Cisco FIs.
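Before running the two setup dialogs, it can help to confirm that the addresses entered on both Fabric Interconnects are distinct, usable host addresses on one management subnet. The following Python sketch performs that check with the standard `ipaddress` module, using the example values from the dialogs above; the function name is hypothetical.

```python
import ipaddress
from typing import Dict, List

# Values entered in the FI-A and FI-B setup dialogs above.
MGMT_NET = ipaddress.ip_network("192.168.155.11/255.255.255.0", strict=False)
ADDRESSES: Dict[str, str] = {
    "FI-A Mgmt0": "192.168.155.11",
    "FI-B Mgmt0": "192.168.155.12",
    "Cluster IP": "192.168.155.10",
    "Default gateway": "192.168.155.1",
}


def check_fi_addressing(net, addresses: Dict[str, str]) -> List[str]:
    problems: List[str] = []
    owner: Dict[ipaddress.IPv4Address, str] = {}
    for name, ip in addresses.items():
        addr = ipaddress.ip_address(ip)
        if addr not in net:
            problems.append(f"{name} {ip} is not in {net}")
        elif addr in (net.network_address, net.broadcast_address):
            problems.append(f"{name} {ip} is not a usable host address")
        if addr in owner:
            problems.append(f"{name} duplicates {owner[addr]} ({ip})")
        owner.setdefault(addr, name)
    return problems


print(MGMT_NET)                                            # 192.168.155.0/24
print(check_fi_addressing(MGMT_NET, ADDRESSES) or "OK")
```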

Log in to Cisco UCS Manager

To log in to the Cisco Unified Computing System (UCS) environment, complete the following steps:

1. Open a web browser and navigate to the Cisco UCS 6324 Fabric Interconnect cluster IP address.

2. Click the Launch UCS Manager link to download the Cisco UCS Manager software.

3. If prompted to accept security certificates, accept as necessary.

4. When prompted, enter admin as the user name and enter the administrative password.

5. Click Login to log in to Cisco UCS Manager.

6. Enter the information for the Anonymous Reporting if desired and click OK.

Synchronize Cisco UCS Mini to NTP

To synchronize the Cisco UCS environment to the NTP server, complete the following steps:

1. From Cisco UCS Manager, click the Admin tab in the navigation pane.

2. Select All > Timezone Management > Timezone.

3. Right-click and select Add NTP Server.

4. Specify NTP Server IP (For example: 192.168.155.254) and click OK, followed by Save Changes.

Configure Ports Connected to Switching Infrastructure

To configure the ports to be connected to the Cisco Nexus switches as Uplink ports, complete the following steps:

1. From Cisco UCS Manager, click the Equipment tab in the navigation pane.

2. Select Equipment > Fabric Interconnects > Fabric Interconnect A (primary) > Fixed Module.

3. Expand Ethernet Ports.

4. Select the first port (For example: Port 1) that connects to the Cisco Nexus A switch, right-click, and select Configure as Uplink Port. Click Yes to confirm the uplink ports and click OK. Repeat for the second port (For example: Port 2) that connects to the Cisco Nexus B switch.

5. Repeat the above steps on Fabric Interconnect B to configure the ports that connect to the Cisco Nexus A and B switches as Uplink ports.

6. The resulting status on Cisco UCSM GUI should show the ports (Port 1, Port 2) as being up and Enabled on both Fabric Interconnect A and B with an If Role of Network as shown below.

Configure PortChannels on Uplink Ports

To configure Uplink ports to be connected to the Cisco Nexus switches in a PortChannel, complete the following steps:

1. From Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select LAN > LAN Cloud > Fabric A > Port Channels.

3. Right-click and select Create Port Channel.

4. Specify a Name, ID and select the ports to put in the channel (For example: Eth Interface 1/1 and Eth Interface 1/2) and Click Finish to create PortChannel. The resulting configuration is shown below.

5. Repeat above steps for Fabric B. The resulting configuration is shown below.

Configure Ports Connected to Storage Array

To configure ports connected to Nimble array as Appliance ports, complete the following steps:

1. From Cisco UCS Manager, click the Equipment tab in the navigation pane.

2. Select Equipment > Fabric Interconnects > Fabric Interconnect A (primary) > Fixed Module.

3. Expand Ethernet Ports.

4. Select the first port (For example: Port 3) that directly connects to Controller A on the Nimble array, right-click, and select Configure as Appliance Port. Click Yes to confirm the appliance ports and click OK to confirm. Repeat for the second port (For example: Port 4) that connects to Controller B on the Nimble array.

5. Repeat the above steps on Fabric Interconnect B to configure the ports connected to Nimble Controller A and B as Appliance ports.

6. The resulting status on Cisco UCS Manager GUI should show the ports (Port 3, Port 4) as being up and Enabled on both Fabric Interconnect A and B with an If Role of Appliance Storage as shown below.
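Independently of the GUI status, the port roles configured in the last two procedures follow a symmetric pattern: on each Fabric Interconnect, ports 1 and 2 are uplinks (one to each Cisco Nexus switch) and ports 3 and 4 are Appliance ports (one to each Nimble controller). The following Python sketch encodes that cabling plan as data and flags asymmetries; the table and function names are hypothetical, and the port numbers are the examples used above.

```python
from typing import Dict, List, Tuple

# (fabric interconnect, port) -> (role, far-end device); example plan from this guide.
CABLING: Dict[Tuple[str, int], Tuple[str, str]] = {
    ("FI-A", 1): ("uplink", "Nexus-A"),
    ("FI-A", 2): ("uplink", "Nexus-B"),
    ("FI-A", 3): ("appliance", "Nimble-Ctrl-A"),
    ("FI-A", 4): ("appliance", "Nimble-Ctrl-B"),
    ("FI-B", 1): ("uplink", "Nexus-A"),
    ("FI-B", 2): ("uplink", "Nexus-B"),
    ("FI-B", 3): ("appliance", "Nimble-Ctrl-A"),
    ("FI-B", 4): ("appliance", "Nimble-Ctrl-B"),
}

# Each FI needs one link to each Nexus switch and one to each Nimble controller.
EXPECTED_PEERS = {
    "uplink": ["Nexus-A", "Nexus-B"],
    "appliance": ["Nimble-Ctrl-A", "Nimble-Ctrl-B"],
}


def check_cabling(cabling) -> List[str]:
    problems: List[str] = []
    for fi in ("FI-A", "FI-B"):
        for role, expected in EXPECTED_PEERS.items():
            peers = sorted(peer for (f, _), (r, peer) in cabling.items()
                           if f == fi and r == role)
            if peers != expected:
                problems.append(f"{fi}: {role} peers {peers}, expected {expected}")
    # The same port number should have the same role on both FIs.
    for port in sorted({p for _, p in cabling}):
        roles = {cabling.get((fi, port), ("missing", ""))[0] for fi in ("FI-A", "FI-B")}
        if len(roles) != 1:
            problems.append(f"port {port}: roles differ between FIs {sorted(roles)}")
    return problems


print(check_cabling(CABLING) or "cabling plan is symmetric")
```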

Server Configuration Workflow: vNIC Template Creation

Creating a Service Profile Template to rapidly configure and deploy Cisco UCS servers in a SmartStack design requires multiple vNIC templates. The figure below shows the high-level workflow for creating vNIC templates. The next few sections cover the configuration of the individual steps in this workflow.

Figure 4 vNIC Template Workflow

Create VLANs: Management VLAN on Uplink Ports to Cisco Nexus 9000 Series Switches

A management VLAN is required to provide in-band management access to the Cisco UCS blades and to the hosts and virtual machines on Cisco UCS Mini.

To create VLANs on an uplink port to Cisco Nexus 9000 Series switches, complete the following steps:

1. From Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select LAN > LAN Cloud > VLANs.

3. Right-click and select Create VLANs. Specify a name (For example: MGMT) and VLAN ID (For example: 12).

4. Determine whether or not the newly created VLAN should be a trunked VLAN or a native VLAN to the upstream switch. Either option is acceptable, but it needs to match what the upstream switch is set to. If the newly created VLAN is a native VLAN, select the VLAN, right-click and select Set as Native VLAN from the list.

5. Using the steps above, also create a VLAN for vMotion (For example: 3000). In this design, vMotion uses the same vNIC as management; vMotion can also use a separate, dedicated vNIC.

Create VLANs: Application Data VLANs on Uplink Ports to Cisco Nexus 9000 Series Switches

Repeat the steps used to create the management VLAN above for every VLAN that needs to be trunked through the Cisco Nexus 9000 series switches to other parts of the network. These VLANs were created for testing the SmartStack architecture. Per security best practices, the default native VLAN (VLAN 1) was changed to VLAN 2.

Create VLANs: Appliance Port VLANs to Nimble Storage

The Appliance VLAN carries the storage related traffic to the directly attached Nimble array. An Appliance VLAN is specific to a single Fabric Interconnect. These VLANs do not span across multiple Fabric Interconnects. Therefore, each Appliance port VLAN is a single broadcast domain.

To configure the VLANs, complete the following steps:

1. From Cisco UCS Manager, select the LAN tab in the navigation pane.

2. Select LAN > Appliances > Fabric A > VLANs.

3. Right-click and select Create VLANs.

4. Specify the VLAN Name/Prefix (For example: iSCSI-A-SAN) and VLAN ID (For example: 3010) for connecting to Fabric Interconnect A. Click OK. The VLAN should not be a routed VLAN, but still should be unique for your environment.

5. Select LAN > Appliances > Fabric B > VLANs.

6. Repeat the above process for the VLAN used between Fabric Interconnect B and the Nimble array. In testing, the storage VLAN used for Fabric Interconnect B was iSCSI-B-SAN (VLAN 3020).

7. Navigate to LAN > LAN Cloud > Fabric A, right-click, and create the iSCSI-A-SAN VLAN (same VLAN ID, for example 3010) under LAN Cloud.

8. Navigate to LAN > LAN Cloud > Fabric B, right-click, and create the iSCSI-B-SAN VLAN (same VLAN ID, for example 3020) under LAN Cloud.
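The VLAN layout in this section can be summarized as a few rules: each Appliance VLAN belongs to one fabric, it is also defined under LAN Cloud with the same ID so that the iSCSI vNIC templates can use it, and, in this design, it is not trunked to the Cisco Nexus switches. The following Python sketch checks a VLAN plan against those rules; the dictionaries are hypothetical, with IDs taken from the examples in this guide.

```python
from typing import Dict, List

# VLANs defined under LAN > LAN Cloud (name -> ID), from the examples in this guide.
LAN_CLOUD_VLANS: Dict[str, int] = {
    "MGMT": 12, "vMotion": 3000, "Data-v900": 900, "Data-v901": 901,
    "iSCSI-A-SAN": 3010, "iSCSI-B-SAN": 3020,
}
# VLANs defined under LAN > Appliances, per fabric.
APPLIANCE_VLANS: Dict[str, Dict[str, int]] = {
    "A": {"iSCSI-A-SAN": 3010},
    "B": {"iSCSI-B-SAN": 3020},
}
# VLANs allowed on the Nexus port-channels to the Fabric Interconnects.
NEXUS_ALLOWED = {12, 900, 901}


def check_vlan_plan(cloud, appliance, nexus_allowed) -> List[str]:
    problems: List[str] = []
    for name, vid in cloud.items():
        if not 1 <= vid <= 4094:
            problems.append(f"{name}: VLAN {vid} is outside 1-4094")
    for fabric, vlans in appliance.items():
        for name, vid in vlans.items():
            if cloud.get(name) != vid:
                problems.append(f"Fabric {fabric} {name}: LAN Cloud copy missing or ID differs")
            if vid in nexus_allowed:
                problems.append(f"Fabric {fabric} {name}: storage VLAN {vid} is trunked to Nexus")
    return problems


print(check_vlan_plan(LAN_CLOUD_VLANS, APPLIANCE_VLANS, NEXUS_ALLOWED) or "VLAN plan OK")
```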

Create LAN Pools: MAC Address Pools

For ease of troubleshooting, the 4th and 5th octets of the MAC address are modified in the SmartStack design to reflect whether that MAC address uses Fabric A or Fabric B.

Cisco UCS allows MAC address pool creation and allocation. Having organized MAC pool allocation can be extremely beneficial to any troubleshooting efforts that might be needed.

Create MAC Pool for Fabric Interconnect A

The MAC addresses in this pool will be used for traffic using Fabric Interconnect A.

1. From Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select LAN > Pools > root > Mac Pools.

3. Right-click and select Create Mac Pool.

4. Specify a name (For example: FI-A) that identifies this pool as specific to Fabric Interconnect A.

5. Select the Assignment Order as Sequential and click Next.

6. Click [+] Add to add a block of MAC addresses to the pool.

7. For ease of troubleshooting, change the 4th and 5th octets to AA:AA to indicate a presence only in Fabric Interconnect A. Generally speaking, the first three octets of a MAC address should not be changed.

8. Select a size of 256 MACs or less and select OK and then Finish to add the MAC pool.

Create MAC Pool for Fabric Interconnect B

The MAC addresses in the pool will be used for traffic using Fabric Interconnect B.

1. From Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select LAN > Pools > root > Mac Pools.

3. Right-click and select Create Mac Pool.

4. Specify a name (For example: FI-B) that identifies this pool as specific to Fabric Interconnect B.

5. Select the Assignment Order as Sequential and click Next.

6. Click [+] Add to add a block of MAC addresses to the pool.

7. For ease of troubleshooting, change the 4th and 5th octets to BB:BB to indicate a presence only in Fabric Interconnect B. Generally, the first three octets of a MAC address should not be changed.

8. Select a size of 256 MACs or less and select OK and then Finish to add the MAC pool.
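Fixing the 4th and 5th octets (AA:AA or BB:BB) leaves only the 6th octet free, which is why each pool is limited to 256 MACs. The following Python sketch generates such a block for each fabric. The 00:25:B5 prefix is an assumption (it is the prefix commonly proposed for Cisco UCS MAC pools); keep whatever first three octets UCS Manager proposes in your environment.

```python
from typing import List

UCS_PREFIX = "00:25:B5"  # assumption: typical Cisco UCS pool prefix; do not change octets 1-3


def mac_block(fabric_tag: str, size: int = 256) -> List[str]:
    """Return MACs of the form <prefix>:<tag>:<tag>:XX for one fabric."""
    if not 1 <= size <= 256:
        raise ValueError("only the 6th octet varies, so a block holds at most 256 MACs")
    return [f"{UCS_PREFIX}:{fabric_tag}:{fabric_tag}:{i:02X}" for i in range(size)]


fi_a = mac_block("AA")
fi_b = mac_block("BB")
print(fi_a[0], fi_a[-1])   # 00:25:B5:AA:AA:00 00:25:B5:AA:AA:FF
print(fi_b[0], fi_b[-1])   # 00:25:B5:BB:BB:00 00:25:B5:BB:BB:FF
```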

MAC Pool Summary View

The resulting configuration is shown below.

Create LAN Policies: QoS and Jumbo Frame

Correct QoS configuration is required to allow the proper MTU size and traffic priority. Most importantly, the CoS / QoS level must be the same along the entire path from the Nimble Storage array to the server blade.

To create LAN policies for QoS and Jumbo frames, complete the following steps:

1. From Cisco UCS Manager, select the LAN tab in the navigation pane.

2. Select LAN > LAN Cloud > QoS System Class.

3. Select the Enabled checkbox for the Best Effort.

4. In the Best Effort row change the MTU to be a value of 9000 and click Save Changes.

5. Select LAN tab in the navigation pane.

6. Select LAN > Policies > root > QoS Policies.

7. Right-click and select Create QoS Policy.

8. Specify a policy Name (For example: Nimble-QoS) and select Priority as Best Effort. Click OK to save.
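Because the MTU and the CoS / QoS class must match end to end, the effective MTU of the iSCSI path is the smallest MTU of any hop. The following Python sketch computes it and checks that a single QoS priority is used wherever one applies. The hop list is hypothetical; the ESXi hop refers to host-side settings that are not covered in this section.

```python
from typing import List, NamedTuple, Optional


class Hop(NamedTuple):
    name: str
    mtu: int
    priority: Optional[str] = None   # UCS QoS class, where the hop has one


# Hypothetical iSCSI path through Fabric A; values mirror the settings in this guide.
ISCSI_A_PATH: List[Hop] = [
    Hop("Nimble TG data port", 9000),
    Hop("Appliance port, Fabric A (Best Effort class)", 9000, "Best Effort"),
    Hop("QoS System Class: Best Effort", 9000, "Best Effort"),
    Hop("vNIC template iSCSI-A (Nimble-QoS)", 9000, "Best Effort"),
    Hop("ESXi VMkernel port (assumed)", 9000),
]


def effective_mtu(path: List[Hop]) -> int:
    """Largest MTU usable end to end on this path."""
    return min(h.mtu for h in path)


def path_problems(path: List[Hop], wanted_mtu: int = 9000) -> List[str]:
    problems = [f"{h.name}: MTU {h.mtu} < {wanted_mtu}" for h in path if h.mtu < wanted_mtu]
    priorities = {h.priority for h in path if h.priority is not None}
    if len(priorities) > 1:
        problems.append(f"QoS priority differs along the path: {sorted(priorities)}")
    return problems


print(effective_mtu(ISCSI_A_PATH), path_problems(ISCSI_A_PATH))   # 9000 []
```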

Create LAN Policies: Network Control Policy for Uplinks to Cisco Nexus Switches

For Uplink ports connected to Cisco Nexus switches, the policy is used to enable Cisco Discovery Protocol (CDP). CDP allows devices to discover and learn about devices connected to the ports where CDP is enabled, which is very useful for troubleshooting.

To create LAN policies for Network Control, complete the following steps:

1. From Cisco UCS Manager, select the LAN tab in the navigation pane.

2. Select LAN > Policies > LAN Cloud > root > Network Control Policies.

3. Right-click and select Create Network Control Policy.

4. Specify a Name for the network control policy (For example: Enable_CDP).

5. Select Enabled for CDP and click OK to create network control policy.

Create LAN Policies: Network Control Policy for Storage vNICs

A Network Control Policy controls how a resource behaves when a Fabric Interconnect's upstream connectivity becomes unavailable. A Network Control Policy is needed under LAN Cloud so that it can be applied when creating the iSCSI-A and iSCSI-B vNIC templates. An identical policy is also created under Appliances, but that policy is applied directly to the Appliance port.

To create LAN policies for storage vNICs, complete the following steps:

1. From Cisco UCS Manager, select the LAN tab in the navigation pane.

2. Select LAN > Policies > LAN Cloud > root > Network Control Policies.

3. Right-click and select Create Network Control Policy.

4. Specify a Name for the network control policy (For example: Nimble-NCP).

5. Select the Action on Uplink Fail to be Warning and click OK to create network control policy.

Create LAN Policies: vNIC Templates for Management Traffic

To create virtual network interface card (vNIC) templates for Management traffic in a Cisco UCS environment, complete the following steps. Two management vNICs are created for redundancy – one through Fabric A and another through Fabric B.

1. From Cisco UCS Manager, select the LAN tab in the navigation pane.

2. Select LAN > Policies > root > vNIC Templates.

3. Right-click and select Create vNIC Template.

4. Specify a template Name (For example: MGMT-A) for the policy.

5. Keep Fabric A selected and leave Enable Failover checkbox unchecked.

6. Under Target, make sure that the VM checkbox is not selected.

7. Select Updating Template as the Template Type.

8. Under VLANs, select the MGMT and vMotion checkboxes.

9. For MTU, enter 9000 since vMotion traffic will use this vNIC.

10. For MAC Pool, select FI-A.

11. For Network Control Policy, select Enable_CDP.

12. Click OK twice to create the vNIC template. The resulting configuration is as shown below:

13. Repeat the same steps to create a vNIC template (MGMT-B) through Fabric B with the same parameters as MGMT-A but using the FI-B MAC Pool. The resulting configuration is as shown below:

Create LAN Policies: vNIC Templates for Uplink Port Traffic

To create virtual network interface card (vNIC) templates for Application Data traffic in a Cisco UCS environment, complete the following steps. Two Application vNICs are created for redundancy – one through Fabric A and another through Fabric B.

1. From Cisco UCS Manager, select the LAN tab in the navigation pane.

2. Select LAN > Policies > root > vNIC Templates.

3. Right-click and select Create vNIC Template.

4. Specify a template Name (For example: vNIC-A) for the policy.

5. Keep Fabric A selected and leave Enable Failover checkbox unchecked.

6. Under Target, make sure that the VM checkbox is not selected.

7. Select Updating Template as the Template Type.

8. Under VLANs, select the checkboxes default, Data-v900, Data-v901.

9. Set default as the native VLAN.

10. For MTU, enter 1500. MTU of 9000 can be used if needed.

11. For MAC Pool, select FI-A.

12. For Network Control Policy, select Enable_CDP.

13. Click OK twice to create the vNIC template. The resulting configuration is as shown below:

14. Repeat the same steps to create a vNIC template (vNIC-B) through Fabric B with the same parameters as vNIC-A but using the FI-B MAC Pool. The resulting configuration is as shown below:

Create LAN Policies: vNIC Templates for Appliance Port Traffic

To create virtual network interface card (vNIC) templates for the Appliance ports on Cisco UCS environment, complete the following steps. Two storage vNICs are created for redundancy – one through Fabric A and another through Fabric B.

1. From Cisco UCS Manager, select the LAN tab in the navigation pane.

2. Select LAN > Policies > root > vNIC Templates.

3. Right-click and select Create vNIC Template.

4. Specify a template Name (For example: iSCSI-A) for the policy.

5. Keep Fabric A selected and leave Enable Failover checkbox unchecked.

6. Under Target, make sure that the VM checkbox is not selected.

7. Select Updating Template as the Template Type.

8. Under VLANs, select only the iSCSI-A-SAN VLAN checkbox.

9. Set iSCSI-A-SAN as the native VLAN.

10. For MTU, enter 9000.

11. For MAC Pool, select FI-A.

12. For QoS Policy, select Nimble-QoS.

13. For Network Control Policy, select Nimble-NCP.

14. Click OK twice to create the vNIC template. The resulting configuration is as shown below:

15. Repeat the same steps to create a vNIC template (iSCSI-B) through Fabric B with the same parameters as iSCSI-A, except that iSCSI-B uses MAC Pool FI-B and the iSCSI-B-SAN VLAN instead of iSCSI-A-SAN. The resulting configuration is as shown below.
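Each Fabric B vNIC template is a copy of its Fabric A twin with only the name, fabric, MAC pool, and (for storage) the VLAN changed. The following Python sketch records the Fabric A templates from the steps above as data and derives the Fabric B versions, which makes a parameter that drifted between the two easy to spot. The `VnicTemplate` type and helper functions are hypothetical illustrations, not a UCS Manager API.

```python
from dataclasses import dataclass, replace
from typing import Optional, Tuple


@dataclass(frozen=True)
class VnicTemplate:
    name: str
    fabric: str
    vlans: Tuple[str, ...]
    native_vlan: Optional[str]
    mtu: int
    mac_pool: str
    qos_policy: Optional[str]
    network_control_policy: str


# Fabric A templates as configured in the steps above.
MGMT_A = VnicTemplate("MGMT-A", "A", ("MGMT", "vMotion"), None, 9000, "FI-A", None, "Enable_CDP")
VNIC_A = VnicTemplate("vNIC-A", "A", ("default", "Data-v900", "Data-v901"), "default",
                      1500, "FI-A", None, "Enable_CDP")
ISCSI_A = VnicTemplate("iSCSI-A", "A", ("iSCSI-A-SAN",), "iSCSI-A-SAN", 9000, "FI-A",
                       "Nimble-QoS", "Nimble-NCP")

# Only the fabric-specific storage VLAN changes name on Fabric B.
B_SIDE_VLAN = {"iSCSI-A-SAN": "iSCSI-B-SAN"}


def _to_b_vlan(vlan: str) -> str:
    return B_SIDE_VLAN.get(vlan, vlan)


def _to_b_name(name: str) -> str:
    return name[:-2] + "-B" if name.endswith("-A") else name + "-B"


def mirror_to_fabric_b(t: VnicTemplate) -> VnicTemplate:
    """Derive the Fabric B twin: same settings except name, fabric, MAC pool, storage VLAN."""
    return replace(
        t,
        name=_to_b_name(t.name),
        fabric="B",
        mac_pool="FI-B",
        vlans=tuple(_to_b_vlan(v) for v in t.vlans),
        native_vlan=_to_b_vlan(t.native_vlan) if t.native_vlan else None,
    )


for tmpl in (MGMT_A, VNIC_A, ISCSI_A):
    print(mirror_to_fabric_b(tmpl))
```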

Server Configuration Workflow: Appliance Port Policies

In the SmartStack design, the Nimble CS300 array is directly attached to Appliance ports on the Cisco UCS Mini Fabric Interconnects. Several policies must be applied to these Appliance ports for the SmartStack platform to function optimally. The figure below shows the workflow for defining and applying the correct policies on Appliance ports with directly attached iSCSI storage.

Figure 5 Appliance Port Policy Workflow

Create LAN Policies: Network Control Policy for Appliance Port

A Network Control Policy controls how a resource behaves when a Fabric Interconnect's upstream connectivity becomes unavailable. In particular, this policy must exist for Appliance ports to specify the behavior in the event of an upstream failure. For Appliance ports connected to Nimble Storage arrays, the policy should not bring the port down when the uplinks fail, which allows the host MPIO software to handle failover scenarios. An identical policy is also created under LAN > LAN Cloud for applying to the vNIC templates.

To create a Network Control policy for the Appliance Port, complete the following steps:

1. From Cisco UCS Manager, select the LAN tab in the navigation pane.

2. Select LAN > Policies > Appliances > Network Control Policies.

3. Right-click and select Create Network Control Policy.

4. Specify a name for the network control policy (For example: Nimble-NCP).

5. Select the Action on Uplink Fail to be Warning and click OK to create network control policy.
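The effect of the Action on Uplink Fail setting can be summarized with a simplified model: with Link Down, the port is brought down when the Fabric Interconnect loses its uplinks; with Warning, the port stays up and a warning is raised instead. Because the Nimble array is attached directly to the Fabric Interconnect, iSCSI traffic on that fabric does not depend on the uplinks, so keeping the port up and leaving path failover to host MPIO is the intended behavior. The following Python sketch illustrates that reasoning only; it is not UCS Manager code, and the names are hypothetical.

```python
from enum import Enum


class UplinkFailAction(Enum):
    LINK_DOWN = "link-down"
    WARNING = "warning"


def port_state(uplinks_up: bool, action: UplinkFailAction) -> str:
    """Simplified model of the Action on Uplink Fail setting."""
    if uplinks_up or action is UplinkFailAction.WARNING:
        return "up"
    return "down"


def iscsi_path_usable(uplinks_up: bool, action: UplinkFailAction) -> bool:
    # Direct-attached storage traffic stays inside the Fabric Interconnect,
    # so the path works whenever the appliance port itself is up.
    return port_state(uplinks_up, action) == "up"


for action in UplinkFailAction:
    print(action.value, iscsi_path_usable(uplinks_up=False, action=action))
```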

Create LAN Policies: Flow Control Policy for Appliance Port

Flow control determines how congestion control is negotiated between the Nimble Storage array and the directly connected Fabric Interconnect switch ports. To configure this policy, complete the following steps:

1. From Cisco UCS Manager, select the LAN tab in the navigation pane.

2. Select LAN > Policies > root > Flow Control Policies.

3. Right-click and select Create Flow Control Policy.

4. Specify a name (For example: Nimble-Flow) for the policy.

5. Set Receive and Send flow control to On. Leave the Priority set to Auto.

6. Click OK twice to create flow control policy.

Apply Policies to Appliance Port

To apply policies to the Appliance Port, complete the following steps:

1. From Cisco UCS Manager, select the LAN tab in the navigation pane.

2. Select Appliances > Fabric A > Interfaces.

3. Select the first port (For example: Appliance Interface 1/3).

4. Set Priority to the appropriate QoS/CoS priority level (Best Effort in this case).

5. Set the Network Control Policy to the one previously created (For example: Nimble-NCP).

6. Set the Flow Control policy to be the one previously created (For example: Nimble-Flow).

7. Select the VLAN (For example: iSCSI-A-SAN) used for iSCSI Traffic through Fabric A.

8. Click Save Changes to apply the policies.

9. Repeat the above steps for the other interface on Fabric A by selecting Fabric A > Interfaces > Appliance Interface 1/4.

10. Repeat the above steps for the interfaces on Fabric B by selecting Fabric B > Interfaces > Appliance Interface 1/3, followed by Fabric B > Interfaces > Appliance Interface 1/4. Note that the VLAN for iSCSI traffic through Fabric B is iSCSI-B-SAN.
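After all four Appliance ports are configured, it can help to audit them against the intended settings. The following Python sketch assumes you have recorded each port's settings in a simple record; the `AppliancePort` fields and the `audit_port` helper are hypothetical and are not part of UCS Manager or any Cisco SDK. It checks each port against the example values used in this section: Best Effort priority, Nimble-NCP, Nimble-Flow, and the per-fabric iSCSI VLAN.

```python
from dataclasses import dataclass
from typing import Dict, List

# Expected iSCSI VLAN per fabric, taken from the steps above.
EXPECTED_VLAN: Dict[str, str] = {"A": "iSCSI-A-SAN", "B": "iSCSI-B-SAN"}


@dataclass(frozen=True)
class AppliancePort:
    """Hypothetical record of one Appliance port's settings (not a UCS API)."""
    fabric: str           # "A" or "B"
    port: str             # for example "1/3"
    priority: str         # QoS/CoS priority level
    network_control: str  # Network Control Policy name
    flow_control: str     # Flow Control Policy name
    vlan: str             # iSCSI VLAN carried on the port


def audit_port(p: AppliancePort) -> List[str]:
    """Return human-readable deviations from the example settings."""
    where = f"Fabric {p.fabric} port {p.port}"
    expected = {
        "priority": "Best Effort",
        "network_control": "Nimble-NCP",
        "flow_control": "Nimble-Flow",
        "vlan": EXPECTED_VLAN.get(p.fabric, "<unknown fabric>"),
    }
    problems = []
    for field, want in expected.items():
        have = getattr(p, field)
        if have != want:
            problems.append(f"{where}: {field} is {have!r}, expected {want!r}")
    return problems


if __name__ == "__main__":
    ports = [
        AppliancePort("A", "1/3", "Best Effort", "Nimble-NCP", "Nimble-Flow", "iSCSI-A-SAN"),
        AppliancePort("A", "1/4", "Best Effort", "Nimble-NCP", "Nimble-Flow", "iSCSI-A-SAN"),
        AppliancePort("B", "1/3", "Best Effort", "Nimble-NCP", "Nimble-Flow", "iSCSI-B-SAN"),
        # Deliberate mistake: the Fabric A VLAN on a Fabric B port.
        AppliancePort("B", "1/4", "Best Effort", "Nimble-NCP", "Nimble-Flow", "iSCSI-A-SAN"),
    ]
    for port in ports:
        for problem in audit_port(port):
            print(problem)
```

The most common slip this catches is carrying iSCSI-A-SAN over to a Fabric B port when repeating the steps.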

Server Configuration Workflow: Server Policies

The next step toward creating the Service Profile Template is creating the server policies. The figure below shows the workflow for creating server policies.

Figure 6 Server Policy Workflow

Create Server Policies: Power Control Policy

To create a power control policy for the Cisco UCS environment, complete the following steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Servers > Policies > root > Power Control Policies.

3. Right-click and select Create Power Control Policy.

4. Enter No-Power-Cap as the power control policy name.

5. Change the power capping setting to No Cap.

6. Click OK twice to create the power control policy.

Create Server Policies: Server Pool Qualification Policy

To create an optional server pool qualification policy for the Cisco UCS environment, complete the following steps:


This example creates a policy for a Cisco UCS B200-M4 server.

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Policies > root > Server Pool Policy Qualifications.

3. Right-click and select Create Server Pool Policy Qualification.

4. Enter UCSB-B200-M4 as the name for the policy.

5. Select Create Server PID Qualifications.

6. Enter UCSB-B200-M4 as the PID.

7. Click OK twice to create the server pool qualification policy.
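Conceptually, the qualification policy acts as a filter over the blade inventory: the servers that match it are the ones a server pool policy referencing this qualification would place into its pool. The sketch below illustrates that idea with a hypothetical inventory list and a hypothetical `qualify_by_pid` helper. It uses exact string comparison for simplicity; UCS Manager applies its own matching rules to the PID field, so treat this as an illustration rather than a reproduction of UCS Manager behavior.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Blade:
    """Hypothetical inventory entry for a blade in the UCS Mini chassis."""
    dn: str   # location, for example "sys/chassis-1/blade-1"
    pid: str  # product ID reported for the blade


def qualify_by_pid(blades: List[Blade], pid: str = "UCSB-B200-M4") -> List[Blade]:
    """Return the blades whose PID equals the qualification PID (exact match)."""
    return [blade for blade in blades if blade.pid == pid]


if __name__ == "__main__":
    inventory = [
        Blade("sys/chassis-1/blade-1", "UCSB-B200-M4"),
        Blade("sys/chassis-1/blade-2", "UCSB-B200-M3"),
        Blade("sys/chassis-1/blade-3", "UCSB-B200-M4"),
    ]
    for blade in qualify_by_pid(inventory):
        print(blade.dn)
```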

Create Server Policies: BIOS Policy

To create a server BIOS policy for the Cisco UCS environment, complete the following steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Policies > root > BIOS Policies.

3. Right-click and select Create BIOS Policy.

4. Enter VM-Host-Infra as the BIOS policy name.

5. In the Main screen, change the Quiet Boot setting to Disabled.

6. Click Next.

7. Change Turbo Boost to Enabled.

8. Change Enhanced Intel Speedstep to Enabled.

9. Change Hyper Threading to Enabled.

10. Change Core Multi Processing to all.

11. Change Execution Disabled Bit to Enabled.

12. Change Virtualization Technology (VT) to Enabled.

13. Change Direct Cache Access to Enabled.

14. Change CPU Performance to Enterprise.

15. Click Next to go to the Intel Directed IO screen.

16. Change the VT for Direct IO to Enabled.

17. Click Next to go to the RAS Memory screen.

18. Change the Memory RAS Config to maximum-performance.

19. Change NUMA to Enabled.

20. Change LV DDR Mode to performance-mode.

21. Click Finish and OK to create the BIOS policy.
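Because the BIOS policy touches many individual tokens, a simple drift check can help confirm that a policy, or the settings a server reports, still matches the values above. The sketch below records the VM-Host-Infra values from this section in a plain dictionary keyed by the labels used in the steps; that dictionary and the `bios_drift` helper are illustrative assumptions, not a UCS Manager export format or API.

```python
from typing import Dict, Optional, Tuple

# VM-Host-Infra BIOS values from the steps above, keyed by the step labels.
VM_HOST_INFRA_BIOS: Dict[str, str] = {
    "Quiet Boot": "Disabled",
    "Turbo Boost": "Enabled",
    "Enhanced Intel Speedstep": "Enabled",
    "Hyper Threading": "Enabled",
    "Core Multi Processing": "all",
    "Execution Disabled Bit": "Enabled",
    "Virtualization Technology (VT)": "Enabled",
    "Direct Cache Access": "Enabled",
    "CPU Performance": "Enterprise",
    "VT for Direct IO": "Enabled",
    "Memory RAS Config": "maximum-performance",
    "NUMA": "Enabled",
    "LV DDR Mode": "performance-mode",
}


def bios_drift(actual: Dict[str, str]) -> Dict[str, Tuple[Optional[str], str]]:
    """Map each token that differs from the recommendation to
    (actual value, or None if the token is missing, recommended value)."""
    return {
        token: (actual.get(token), wanted)
        for token, wanted in VM_HOST_INFRA_BIOS.items()
        if actual.get(token) != wanted
    }


if __name__ == "__main__":
    observed = dict(VM_HOST_INFRA_BIOS)
    observed["NUMA"] = "Disabled"   # simulated drift
    del observed["Quiet Boot"]      # simulated missing token
    for token, (have, want) in bios_drift(observed).items():
        print(f"{token}: have {have!r}, want {want!r}")
```

Extra tokens in the observed settings are ignored on purpose, since the policy above only changes the listed values and leaves everything else at platform default.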

Create Server Policies: iSCSI Boot Adapter Policy

To create a server iSCSI Adapter policy for the Cisco UCS environment, complete the following steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Policies > root > Adapter Policies.

3. Right-click and select Create iSCSI Adapter Policy.

4. Enter iSCSI-boot as the iSCSI Boot Adapter policy name.

5. Click OK twice to create the iSCSI Adapter policy.

Create Server Policies: Local Disk Configuration Policy

To create a local disk configuration policy for the Cisco UCS environment, complete the following steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Policies > root > Local Disk Config Policies.

3. Right-click and select Create Local Disk Configuration Policy.

4. Enter iSCSI-boot as the local disk configuration policy name.

5. Click OK twice to complete the local disk configuration policy.

Create Server Policies: Boot Policy

To create server boot policy, complete the following steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Policies > root > Boot Policies.

3. Right-click and select Create Boot Policy.

4. Enter Nimble-iSCSI as the Boot policy name.


Do not select the Reboot on Boot Order Change checkbox

5. Expand the Local Devices drop-down menu and select Add Remote CD/DVD.

6. Expand the iSCSI vNICs drop-down menu and select Add iSCSI Boot.

7. In the Add iSCSI Boot dialog box, enter iSCSI-A-boot in the iSCSI vNIC field.

8. Click OK to add the iSCSI boot initiator.

9. From the iSCSI vNICs drop-down menu, select Add iSCSI Boot.

10. Enter iSCSI-B-boot as the iSCSI vNIC.

11. Click OK to add the iSCSI boot initiator.

12. Click OK twice to create the boot policy. The resulting configuration is shown below.

Create Server Policies: Maintenance Policy

To create a maintenance policy, complete the following steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Policies > root > Maintenance Policies.

3. Right-click and select Create Maintenance Policy.

4. Specify a name for the policy (For example: User-ACK).

5. Change the Reboot Policy to User Ack.

6. Click OK twice to create the maintenance policy.

Create Server Policies: Host Firmware Package Policy

Firmware management policies allow the administrator to select the corresponding packages for a given server configuration. These policies often include packages for adapter, BIOS, board controller, FC adapters, host bus adapter (HBA) option ROM, and storage controller properties. To create a firmware management policy for a given server configuration in the Cisco UCS environment, complete the following steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Policies > root > Host Firmware Packages.

3. Right-click Host Firmware Packages and select Create Host Firmware Package.

4. Enter VM-Host-Infra as the name of the host firmware package.

5. Leave Simple selected.

6. Select the version 3.0(2c) for both the Blade Package and Rack Package.

7. Click OK twice to create the host firmware package.

Server Configuration Workflow: Server, LAN and SAN Pools

The next step toward creating the Service Profile Template is creating the remaining server, LAN, and SAN pools. The figure below shows all the pools that need to be created; the grey box represents a pool that was created in an earlier section of this document.

Figure 7 Pool Creation Workflow

Create Server Pools: UUID Suffix Pool

To configure the necessary universally unique identifier (UUID) suffix pool for the Cisco UCS environment, complete the following steps:

1. From Cisco UCS Manager, select the Servers tab in the navigation pane.

2. Select Servers > Pools > root > UUID Suffix Pools.

3. Right-click and select Create UUID Suffix Pool.

4. Specify a Name for the UUID suffix pool and click Next.

5. Click [+] Add to add a block of UUIDs.

6. Alternatively, you can modify the default UUID suffix pool and allocate or modify a UUID block within it.

7. Keep the From field at the default setting. Specify a block size (For example: 32) that is sufficient to support the available blade or server resources.

8. Click OK, click Finish and click OK again to create UUID Pool.
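UCS Manager combines the pool prefix with each suffix in the block to form a server UUID. Assuming the suffix is written as 4 hex digits, a hyphen, and 12 hex digits, with a default From value of 0000-000000000001, the sketch below expands a block to show which suffixes a 32-entry block covers. The `uuid_suffix_block` helper and the uppercase hex rendering are assumptions for illustration, not a UCS Manager API or its exact display format.

```python
import re
from typing import List

# Suffix format assumed here: XXXX-XXXXXXXXXXXX (16 hex digits in total).
SUFFIX_RE = re.compile(r"[0-9A-Fa-f]{4}-[0-9A-Fa-f]{12}")
SUFFIX_SPACE = 16 ** 16  # number of distinct 16-hex-digit suffixes


def uuid_suffix_block(start: str, size: int) -> List[str]:
    """Expand a UUID suffix block into `size` consecutive suffix values."""
    if not SUFFIX_RE.fullmatch(start):
        raise ValueError(f"not an XXXX-XXXXXXXXXXXX suffix: {start!r}")
    if size < 1:
        raise ValueError("block size must be at least 1")
    first = int(start.replace("-", ""), 16)
    if first + size > SUFFIX_SPACE:
        raise ValueError("block would overflow the suffix space")
    suffixes = []
    for value in range(first, first + size):
        digits = f"{value:016X}"  # zero-padded, uppercase hex
        suffixes.append(f"{digits[:4]}-{digits[4:]}")
    return suffixes


if __name__ == "__main__":
    block = uuid_suffix_block("0000-000000000001", 32)
    print(block[0], "...", block[-1])
```

With the default From value and a block size of 32, the block ends at suffix 0000-000000000020 (32 in hex).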

Create LAN Pools: IP Pools

Management IP pool: Add Block of IP Addresses for KVM Access

To create a block of IP addresses for server Keyboard, Video, Mouse (KVM) access in the Cisco UCS environment, complete the following steps:


This block of IP addresses should be in the same subnet as the management IP addresses for the Cisco UCS Manager.

1. From Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select LAN > Pools > root > IP Pools.

3. Right-click and select Create IP Pool.

4. Specify a Name (For example: ext-mgmt) for the pool and click Next.

5. Click [+] Add to add a new block of IP addresses.

6. Enter the starting IP address of the block, the number of IP addresses required, and the subnet mask and default gateway.

7. Click OK and then Finish to create the IP block.
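The KVM block must sit in the same subnet as the Cisco UCS Manager management addresses. The sketch below uses Python's standard `ipaddress` module to expand a block and reject one that leaves the subnet or overlaps addresses already in use, such as the default gateway and the Fabric Interconnect and cluster management IPs. The `kvm_pool_block` function and all example addresses are hypothetical; substitute your own management subnet.

```python
import ipaddress
from typing import Iterable, List


def kvm_pool_block(start: str, size: int, mgmt_subnet: str,
                   reserved: Iterable[str] = ()) -> List[str]:
    """Expand a KVM IP block and verify it fits the management subnet.

    `reserved` lists addresses that must not fall inside the block, such as
    the default gateway and the Fabric Interconnect / cluster management IPs.
    """
    if size < 1:
        raise ValueError("block size must be at least 1")
    net = ipaddress.ip_network(mgmt_subnet)
    first = ipaddress.ip_address(start)
    block = [first + i for i in range(size)]
    for addr in block:
        # Every address must be a usable host inside the management subnet.
        if addr not in net or addr in (net.network_address, net.broadcast_address):
            raise ValueError(f"{addr} is not a usable host in {net}")
    clashes = set(block) & {ipaddress.ip_address(r) for r in reserved}
    if clashes:
        raise ValueError(f"block overlaps reserved addresses: {sorted(map(str, clashes))}")
    return [str(addr) for addr in block]


if __name__ == "__main__":
    # Hypothetical management subnet, gateway, and FI / cluster addresses.
    ips = kvm_pool_block("192.168.160.200", 8, "192.168.160.0/24",
                         reserved=["192.168.160.1", "192.168.160.51",
                                   "192.168.160.52", "192.168.160.50"])
    print(ips[0], "-", ips[-1])
```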

iSCSI Initiator IP Pool: Add Block of IP Addresses Exclusively for iSCSI Traffic on Fabric Interconnect A

This block of IP addresses aligns with the IP address range of the Nimble Storage array TG1 interface. To allocate a pool of IP addresses for iSCSI data traffic through Fabric Interconnect A, complete the following steps:

1. From Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select LAN > Pools > root > IP Pools.

3. Right-click and select Create IP Pool.

4. Specify a Name (For example: iSCSI-A) for the pool that is specific to the traffic going to Fabric Interconnect A.

5. Set the Assignment Order to be Sequential and click Next.

6. Click [+] Add to add a new block of IP addresses.

7. Enter the starting IP address of the block, the number of IP addresses required, and the subnet mask. Do not enter any other information, such as a default gateway.

8. Click OK and then Finish to create the IP block.

iSCSI Initiator IP Pool: Add Block of IP Addresses Exclusively for iSCSI Traffic on Fabric Interconnect B

This block of IP addresses aligns with the IP address range of the Nimble Storage array TG2 interface. To allocate a pool of IP addresses for iSCSI data traffic through Fabric Interconnect B, complete the following steps:

1. From Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select LAN > Pools > root > IP Pools.

3. Right-click and select Create IP Pool.

4. Specify a Name (For example: iSCSI-B) for the pool that is specific to the traffic going to Fabric Interconnect B.

5. Set the Assignment Order to be Sequential and click Next.

6. Click [+] Add to add a new block of IP addresses.

7. Enter the starting IP address of the block, the number of IP addresses required, and the subnet mask. Do not enter any other information, such as a default gateway.

8. Click OK and then Finish to create the IP block.
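Each fabric's initiator block must line up with the subnet of the Nimble target interface reached through that fabric (TG1 for Fabric A, TG2 for Fabric B). The sketch below checks a per-fabric plan with Python's `ipaddress` module: every initiator address must be a usable host in its fabric's subnet and must not collide with the array's data IPs or with the other fabric's block. The subnets, addresses, `Plan` structure, and `validate_iscsi_pools` helper are hypothetical examples, not values or tools from the validated design.

```python
import ipaddress
from typing import Dict, List, Tuple

# Hypothetical plan: fabric -> (target interface subnet, first initiator IP, block size)
Plan = Dict[str, Tuple[str, str, int]]


def validate_iscsi_pools(plan: Plan, array_ips: List[str]) -> Dict[str, List[str]]:
    """Expand each fabric's initiator block and check it against its subnet."""
    in_use = {ipaddress.ip_address(ip) for ip in array_ips}
    pools: Dict[str, List[str]] = {}
    for fabric, (subnet, start, size) in plan.items():
        net = ipaddress.ip_network(subnet)
        first = ipaddress.ip_address(start)
        block = [first + i for i in range(size)]
        for addr in block:
            if addr not in net or addr in (net.network_address, net.broadcast_address):
                raise ValueError(f"Fabric {fabric}: {addr} is not a usable host in {net}")
            if addr in in_use:
                raise ValueError(f"Fabric {fabric}: {addr} is already in use")
        in_use.update(block)  # later fabrics must not reuse these addresses
        pools[fabric] = [str(addr) for addr in block]
    return pools


if __name__ == "__main__":
    plan: Plan = {
        "A": ("10.10.1.0/24", "10.10.1.101", 16),  # aligns with array TG1 subnet
        "B": ("10.10.2.0/24", "10.10.2.101", 16),  # aligns with array TG2 subnet
    }
    array_data_ips = ["10.10.1.10", "10.10.1.11", "10.10.2.10", "10.10.2.11"]
    for fabric, ips in validate_iscsi_pools(plan, array_data_ips).items():
        print(fabric, ips[0], "-", ips[-1])
```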

IP Pool Summary View

The resulting configuration is shown in the figure below.

Create SAN Pools: iSCSI IQN Pools

Cisco UCS allows you to create IQN pools that are used for the host initiators. This configuration uses a single IQN at the Service Profile level, so only one IQN needs to be created per host. To create iSCSI IQN pools, complete the following steps: