Cisco HyperFlex Platforms for Big Data with Splunk

Splunk SmartStore on Cisco HyperFlex Platforms with SwiftStack Object Storage System on Cisco UCS S3260 Servers Deployment Guide

Last Updated: July 30, 2019

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to:

http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series. Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2019 Cisco Systems, Inc. All rights reserved.

Table of Contents

Cisco Unified Computing System

Cisco UCS 6332 Fabric Interconnect

Cisco UCS 6332-16UP Fabric Interconnect

Cisco UCS 6454 Fabric Interconnect

Cisco UCS S3260 M5 Storage Server

Cisco VIC 1387 MLOM Interface Cards

Cisco VIC 1457 MLOM Interface Cards

Cisco HyperFlex HX-Series Nodes

Cisco HyperFlex HXAF220c-M5SX All-Flash Node

Cisco HyperFlex HXAF240c-M5SX All-Flash Node

Cisco HyperFlex HX220c-M5SX Hybrid Node

Cisco HyperFlex HX240c-M5SX Hybrid Node

Cisco HyperFlex Compute-Only Nodes

Cisco HyperFlex Data Platform Software

Object Storage and SwiftStack Software

Splunk Enterprise for Big Data Analytics

Key Features of Splunk Enterprise

Splunk Enterprise Processing Components

Splunk Enterprise in Virtual Environments

Splunk Virtual Machines on Cisco HyperFlex

SwiftStack Object Storage on Cisco UCS S3260

Cisco HXAF240c-M5SX Servers Connectivity

Cisco UCS C220 M5 Servers Connectivity

Cisco UCS S3260 Servers Connectivity

Deployment of Hardware and Software

Install Cisco HyperFlex Cluster (with Nested vCenter)

Install Cisco HyperFlex Systems

HyperFlex Cluster Expansion with Computing-only Nodes

Perform Cisco HyperFlex Post-installation Configuration

Create Splunk Virtual Machine Templates

Create Splunk Base Virtual Machine Template

Configure Splunk Base Virtual Machine

Create Splunk Admin Virtual Machine Template

Create Splunk Search Head Virtual Machine Template

Create Splunk Indexer Virtual Machine Template

Create Splunk Virtual Machines

Install SwiftStack Object Storage System on Cisco UCS S3260 Servers

Configure Splunk Enterprise Cluster

Configure Distributed Monitoring Console

Configure SmartStore Indexes on the SwiftStack Object Storage

Create SwiftStack Object Storage Containers for SmartStore

Verify Transfer of Warm Buckets to the Remote Storage

Splunk SmartStore Cache Performance Testing

Appendix A: HyperFlex Cluster Capacity Calculations

Appendix B: PowerShell Script Example – Clone Splunk Virtual Machines

Appendix C: SmartStore indexes.conf File Example

Appendix D: Custom Event Generation Script

This CVD presents a validated scale-out data center analytics and security solution with Splunk Enterprise software that is deployed on the Cisco HyperFlex All Flash Data Platform as local computing and storage resources, with the SwiftStack object storage system on the Cisco UCS S3260 servers as S3 API compliant remote object stores.

Traditional tools for managing and monitoring IT infrastructures cannot keep pace with the constant change happening in today's data centers. When problems arise, finding the root cause or gaining visibility across the infrastructure to proactively identify and prevent outages is nearly impossible.

Splunk Enterprise software is the platform that reliably collects and indexes machine data, from a single source to tens of thousands of sources, all in real time. Organizations typically start with Splunk to solve a specific problem, and then expand from there to address a broad range of use cases, such as application troubleshooting, IT infrastructure monitoring, security, business analytics, Internet of Things, and many others. As operational analytics become increasingly critical to day-to-day decision-making and Splunk deployments expand to terabytes of data, a high-performance, highly scalable infrastructure is critical to ensuring rapid and predictable delivery of insights. In addition, with virtualization and cloud infrastructures introducing additional complexity that results in an environment that is more challenging to control and manage, deploying Splunk software in a virtual, cloud or hybrid environment has become valuable for the IT engineers.

Splunk’s new SmartStore architecture is a response to these challenges. SmartStore is an indexer and caching engine which enables the use of remote object stores to store the indexed data. SmartStore makes it possible to dramatically expand the capacity for business insights by retaining significantly more searchable data through the use of cost-effective and scale-out remote object storage, either from the Cloud such as Amazon S3, or from an on-premises system like SwiftStack, alongside smaller footprints of low-latency local storage. With SmartStore, most data resides on remote storage while the indexer maintains a local cache that contains a minimal amount of data: hot buckets, copies of warm buckets participating in active or recent searches, and bucket metadata.

Cisco HyperFlex™ systems provide an all-purpose virtualized server platform, with hypervisor hosts, network connectivity, and virtual server storage across a set of Cisco HyperFlex HX-Series x86 rack-mount servers. The platform combines the converged computing and networking capabilities provided by the Cisco Unified Computing System™ (Cisco UCS®) with next-generation hyperconverged storage software to uniquely provide the computing resources, network connectivity, storage, and hypervisor platform needed to run an entire virtual environment, all contained in a single uniform system. A proven industry-leading hyperconverged platform, Cisco HyperFlex platform is an optimized choice for a Splunk Enterprise deployment in a VMware ESXi virtual environment.

SwiftStack’s large scale-out storage capacity can support much larger searchable buckets for Splunk and enables much longer data retention periods for SmartStore indexes. The clustered architecture provides higher availability and improved data resiliency compared to traditional Splunk deployments. It also provides much faster search results than storing search data in public cloud storage locations.

The Cisco Validated Design (CVD) program consists of systems and solutions that are designed, tested, and documented to facilitate and improve customer deployments. These designs usually incorporate a wide range of technologies and products from multiple vendors into a portfolio of solutions that have been developed to address the business needs of our customers.

Introduction

Today, the rapid growth of data and the growing need for data analytics put considerably more pressure on the underlying IT infrastructure in many customer environments. Splunk has addressed this challenge by releasing a new software feature called SmartStore.

The architectural goal of the SmartStore feature is to optimize the use of local storage, while maintaining the fast indexing and search capabilities characteristic of Splunk Enterprise deployments. SmartStore introduces a remote storage tier and a cache manager that allow data to reside either locally on indexers or on the remote storage tier. Data movement between the indexer and the remote storage tier is managed by the cache manager, which resides on the indexer. With SmartStore the remote object store becomes the location for master copies of warm buckets, while the indexer's local storage is used to cache copies of warm buckets currently participating in a search or that have a high likelihood of participating in a future search. SmartStore also allows the customers to manage their indexer storage and compute resources in a cost-effective manner by scaling those resources separately.
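The data movement described above can be illustrated with a toy model. This is a minimal sketch, not Splunk's implementation: the class and method names are hypothetical, buckets are represented as plain strings, and least-recently-used eviction is assumed as the cache policy.

```python
from collections import OrderedDict


class CacheManagerSketch:
    """Toy model of an indexer-side cache in front of a remote object store."""

    def __init__(self, remote_store, capacity):
        self.remote_store = remote_store   # bucket id -> data (master copies)
        self.capacity = capacity           # max warm buckets cached locally
        self.local_cache = OrderedDict()   # least recently used entry first
        self.remote_fetches = 0            # cache misses served from remote

    def roll_to_warm(self, bucket_id, data):
        """Upload a bucket that rolled from hot to warm and keep a cached copy."""
        self.remote_store[bucket_id] = data
        self._cache(bucket_id, data)

    def search(self, bucket_id):
        """Return bucket data, fetching from remote storage on a cache miss."""
        if bucket_id in self.local_cache:
            self.local_cache.move_to_end(bucket_id)  # mark as recently used
            return self.local_cache[bucket_id]
        data = self.remote_store[bucket_id]
        self.remote_fetches += 1
        self._cache(bucket_id, data)
        return data

    def _cache(self, bucket_id, data):
        """Store a local copy, evicting the least recently used if over capacity."""
        self.local_cache[bucket_id] = data
        self.local_cache.move_to_end(bucket_id)
        while len(self.local_cache) > self.capacity:
            self.local_cache.popitem(last=False)


remote = {}
manager = CacheManagerSketch(remote, capacity=2)
for bucket_id in ("b1", "b2", "b3"):
    manager.roll_to_warm(bucket_id, f"events-{bucket_id}")

cached_before = list(manager.local_cache)  # b1 was evicted when b3 arrived
result = manager.search("b1")              # cache miss, served from remote
cached_after = list(manager.local_cache)
print(cached_before, result, cached_after, manager.remote_fetches)
```

In this sketch the evicted bucket is still returned by the search, because the remote store always holds the master copy. The only cost of a miss is the extra fetch.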

The choice of the hardware for deploying SmartStore is important because the efficiency of the infrastructure affects the efficiency of the application and the speed of data collection and processing, storage performance, and resource management. Where to install Splunk Enterprise software and who can provide good object storage devices for the SmartStore indexes will never be a simple choice for the customers.

Virtualization is an ideal solution to scaling challenges. It is a technology that allows for the sharing and easy expansion of underlying hardware resources by multiple workloads. This approach leads to higher utilization of IT resources while providing necessary fault tolerance. Hyperconvergence is an evolving technology that leverages many benefits of virtualization. Cisco HyperFlex systems let you unlock the full potential of hyperconvergence and adapt IT to the needs of your workloads. With Cisco HyperFlex systems, customers have many choices and the flexibility to support different types of workloads without compromising their performance requirements.

Cisco HyperFlex systems deliver many enterprise-class features, such as:

· A fully distributed log-structured file system that supports thin provisioning

· High performance and low latency from the flash-friendly architecture

· In-line data optimization with deduplication and compression

· Fast and space-efficient clones through metadata operations

· The flexibility to scale out computing and storage resources separately

· Data-at-rest encryption using hardware-based self-encrypting disks (SEDs)

· Non-Volatile Memory Express (NVMe)–based solid-state disk (SSD) support

· Native replication of virtual machine snapshots

· Cloud based central management

Installing Splunk Enterprise software on the Cisco HyperFlex All Flash Data Platform enables a validated analytics and security solution for a virtualized data center with simplified deployment on the integrated resources for computing, networking, and high-performance storage. In addition, Cisco HyperFlex is the only HCI platform that allows you to scale out computing and storage resources separately in today’s market. This perfectly meets the requirements for the deployment of Splunk SmartStore. That means the customers can easily scale out the indexers based on the performance demand by just adding compute-only nodes into the HyperFlex cluster.

Along with SwiftStack’s scalable object storage the solution provides a less expensive option to store the indexing buckets. The buckets of SmartStore indexes ordinarily have just two active states: hot and warm. The cold state, which is used with non-SmartStore indexes to distinguish older data eligible for moving to cheap storage, is not necessary with SmartStore because warm buckets already reside on inexpensive remote storage. This solution fully utilizes the SmartStore’s advanced caching mechanism that enables indexers to quickly return most search results from memory or local flash storage sitting on Cisco HyperFlex All Flash platforms while leveraging SwiftStack’s scale-out remote object storage for all remaining data. All data remains searchable at any time, regardless of whether it is physically present in the cache or not.

Figure 1 High-level Solution Introduction

Audience

The intended audience of this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineering and customers who want to deploy Splunk Enterprise software on Cisco HyperFlex Platforms for Big Data, and those who want to deploy the SmartStore indexes with SwiftStack object storage systems on Cisco UCS S3260 servers. The readers of this document are expected to have the necessary understanding of Cisco UCS and HyperFlex, Splunk applications, Object storage with Swift and SwiftStack. External references are provided where applicable and it is recommended that the reader be familiar with these documents.

Purpose of this Document

This document describes how to deploy Splunk indexing and searching virtual machines on a Cisco HyperFlex mixed (converged and compute-only) cluster, and how to configure SmartStore indexes with the SwiftStack object storage system on Cisco UCS S3260 servers. It presents a tested and validated solution and includes design guidance covering the architecture and topologies, performance and scalability, a bill of materials, workarounds where required, and operational best practices.

Solution Summary

This CVD combines three evolving technologies into one solution: Big Data Analytics (Splunk), Cisco HyperFlex Platform (HCI), and SwiftStack Object Storage Software on Cisco UCS S3260 Servers (SDS). The solution consists of the following components:

· Splunk Enterprise (version 7.2.3) Virtual Machines

- (10+) Indexers

- (4) Search Heads

- (1) Master Node

- (1) License Master

- (1) Search Head Deployer

- (1) Deployment Server

· SwiftStack (version 6.19.1.3) Object Storage System on Cisco UCS S3260

- (2) Cisco UCS C220 M5 Rack Servers (SwiftStack Controllers)

- (3) Cisco UCS S3260 Dual-server Chassis

- (6) Cisco UCS S3260 M5 Servers (SwiftStack PACO Nodes)

- (6) System IO Controllers (SIOC) with 40G VIC 1380 adapters

· Cisco HyperFlex Data Platform (version 3.5.2a)

- (4) Cisco HXAF240c M5 Rack Servers (Converged nodes)

- (6) Cisco UCS C220 M5 Rack Servers (Compute-only nodes)

- (10) Cisco UCS VIC 1387 40G adapters

· Cisco UCSM Management Software (version 4.0.1c)

- (2) Cisco UCS 6332 40G Fabric Interconnects

· VMware vSphere ESXi Hypervisor (version 6.5.0, 10884925)

· VMware vSphere vCenter Appliance (version 6.5.0, 11347054)

· Red Hat Enterprise Linux Server (version 7.5)
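The quantities in the component list can be cross-checked with a few lines of arithmetic. The sketch below only tallies the numbers listed above. The dictionary keys are illustrative names, and "10+" indexers is treated as its minimum of 10.

```python
# Quantities copied from the component list; "10+" indexers is taken as 10.
splunk_vms = {
    "indexer": 10,
    "search_head": 4,
    "master_node": 1,
    "license_master": 1,
    "search_head_deployer": 1,
    "deployment_server": 1,
}
hyperflex_nodes = {"converged_hxaf240c_m5": 4, "compute_only_c220_m5": 6}
s3260_chassis = 3
servers_per_chassis = 2  # each S3260 is listed as a dual-server chassis

total_splunk_vms = sum(splunk_vms.values())
total_hx_nodes = sum(hyperflex_nodes.values())
total_paco_nodes = s3260_chassis * servers_per_chassis

# Adapter counts as listed, used here only as a consistency check.
listed_vic_1387_adapters = 10
listed_sioc_count = 6

print("Splunk virtual machines (minimum):", total_splunk_vms)
print("HyperFlex cluster nodes:", total_hx_nodes)
print("SwiftStack PACO nodes:", total_paco_nodes)
print("One VIC 1387 per HyperFlex node:", total_hx_nodes == listed_vic_1387_adapters)
print("One SIOC per S3260 server:", total_paco_nodes == listed_sioc_count)
```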

Figure 2 Solution Overview

Cisco HyperFlex systems are built on the Cisco UCS platform. Cisco UCS fabric interconnects provide a single point of connectivity integrating Cisco HyperFlex HX-Series nodes and other Cisco UCS servers into a single unified cluster. They can be deployed quickly and are highly flexible and efficient, reducing risk for the customer. Cisco HyperFlex delivers the simplicity, agility, scalability, and pay-as-you-grow economics of the cloud with the benefits of multisite, distributed computing at global scale.

Cisco Unified Computing System

Cisco Unified Computing System (Cisco UCS) is a next-generation data center platform that unites compute, network, and storage access. The platform, optimized for virtual environments, is designed using open industry-standard technologies and aims to reduce total cost of ownership (TCO) and increase business agility. The system integrates a low-latency, lossless 10 Gigabit Ethernet, 25 Gigabit Ethernet, 40 Gigabit Ethernet or 100 Gigabit Ethernet unified network fabric with enterprise-class, x86-architecture servers. It is an integrated, scalable, multi chassis platform in which all resources participate in a unified management domain.

The main components of Cisco Unified Computing System are:

· Computing: The system is based on an entirely new class of computing system that incorporates rack-mount and blade servers based on Intel Processors.

· Network: The system is integrated onto a low-latency, lossless, 10-Gbps, 25-Gbps, 40-Gbps or 100-Gbps unified network fabric. This network foundation consolidates LANs, SANs, and high-performance computing networks which are often separate networks today. The unified fabric lowers costs by reducing the number of network adapters, switches, and cables, and by decreasing the power and cooling requirements.

· Virtualization: The system unleashes the full potential of virtualization by enhancing the scalability, performance, and operational control of virtual environments. Cisco security, policy enforcement, and diagnostic features are now extended into virtualized environments to better support changing business and IT requirements.

· Storage access: The system provides consolidated access to both SAN storage and Network Attached Storage (NAS) over the unified fabric. By unifying storage access, the Cisco Unified Computing System can access storage over Ethernet, Fibre Channel, Fibre Channel over Ethernet (FCoE), and iSCSI. This provides customers with their choice of storage protocol and physical architecture, and enhanced investment protection. In addition, the server administrators can pre-assign storage-access policies for system connectivity to storage resources, simplifying storage connectivity, and management for increased productivity.

· Management: The system uniquely integrates all system components which enable the entire solution to be managed as a single entity by the Cisco UCS Manager (UCSM). The Cisco UCS Manager has an intuitive graphical user interface (GUI), a command-line interface (CLI), and a robust application programming interface (API) to manage all system configuration and operations.

Cisco Unified Computing System is designed to deliver:

· A reduced Total Cost of Ownership and increased business agility.

· Increased IT staff productivity through just-in-time provisioning and mobility support.

· A cohesive, integrated system which unifies the technology in the data center. The system is managed, serviced and tested as a whole.

· Scalability through a design for hundreds of discrete servers and thousands of virtual machines and the capability to scale I/O bandwidth to match demand.

· Industry standards supported by a partner ecosystem of industry leaders.

Cisco UCS Fabric Interconnect

The Cisco UCS Fabric Interconnect (FI) is a core part of the Cisco Unified Computing System, providing both network connectivity and management capabilities for the system. Depending on the model chosen, the Cisco UCS Fabric Interconnect offers line-rate, low-latency, lossless 10 Gigabit, 25 Gigabit, 40 Gigabit or 100 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE) and Fibre Channel connectivity. Cisco UCS Fabric Interconnects provide the management and communication backbone for the Cisco UCS C-Series, S-Series and HX-Series Rack-Mount Servers, Cisco UCS B-Series Blade Servers and Cisco UCS 5100 Series Blade Server Chassis. All servers and chassis, and therefore all blades, attached to the Cisco UCS Fabric Interconnects become part of a single, highly available management domain. In addition, by supporting unified fabrics, the Cisco UCS Fabric Interconnects provide both the LAN and SAN connectivity for all servers within its domain.

From a networking perspective, the Cisco UCS 6200 Series uses a cut-through architecture, supporting deterministic, low latency, line rate 10 Gigabit Ethernet on all ports, up to 1.92 Tbps switching capacity and 160 Gbps bandwidth per chassis, independent of packet size and enabled services. The product family supports Cisco low-latency, lossless 10 Gigabit Ethernet unified network fabric capabilities, which increase the reliability, efficiency, and scalability of Ethernet networks. The Fabric Interconnect supports multiple traffic classes over the Ethernet fabric from the servers to the uplinks. Significant TCO savings come from an FCoE-optimized server design in which network interface cards (NICs), host bus adapters (HBAs), cables, and switches can be consolidated.

The Cisco UCS 6300 Series offers the same features while supporting higher performance, low latency, lossless, line rate 40 Gigabit Ethernet, with up to 2.56 Tbps of switching capacity. Backward compatibility and scalability are assured with the ability to configure 40 Gbps quad SFP (QSFP) ports as breakout ports using 4x10GbE breakout cables. Existing Cisco UCS servers with 10GbE interfaces can be connected in this manner, although Cisco HyperFlex nodes must use a 40GbE VIC adapter in order to connect to a Cisco UCS 6300 Series Fabric Interconnect.

The Cisco UCS 6400 Series offers the same features while supporting even higher performance, low latency, lossless, line rate 10/25/40/100 Gigabit Ethernet ports, with up to 3.82 Tbps of switching capacity. Backward compatibility and scalability are assured with the ability to configure 10 Gbps ports as well as 25 Gbps ports for the new servers. Existing Cisco UCS servers with 10GbE interfaces can be connected in this manner. Cisco HyperFlex nodes can use 10/25 GbE VIC adapters to connect to a Cisco UCS 6400 Series Fabric Interconnect.

Cisco UCS 6332 Fabric Interconnect

The Cisco UCS 6332 Fabric Interconnect is a one-rack-unit (1RU) 40 Gigabit Ethernet and FCoE switch offering up to 2560 Gbps of throughput. The switch has 32 40-Gbps fixed Ethernet and FCoE ports. Up to 24 of the ports can be reconfigured as 4x10Gbps breakout ports, providing up to 96 10-Gbps ports.

Figure 3 Cisco UCS 6332 Fabric Interconnect

Cisco UCS 6332-16UP Fabric Interconnect

The Cisco UCS 6332-16UP Fabric Interconnect is a one-rack-unit (1RU) 10/40 Gigabit Ethernet, FCoE, and native Fibre Channel switch offering up to 2430 Gbps of throughput. The switch has 24 40-Gbps fixed Ethernet and FCoE ports, plus 16 1/10-Gbps fixed Ethernet, FCoE, or 4/8/16 Gbps FC ports. Up to 18 of the 40-Gbps ports can be reconfigured as 4x10Gbps breakout ports, providing up to 88 total 10-Gbps ports.

Figure 4 Cisco UCS 6332-16UP Fabric Interconnect

Note: When used for a Cisco HyperFlex deployment, due to mandatory QoS settings in the configuration, the 6332 and 6332-16UP will be limited to a maximum of four 4x10Gbps breakout ports, which can be used for other non-HyperFlex servers.

Cisco UCS 6454 Fabric Interconnect

The Cisco UCS 6454 54-Port Fabric Interconnect is a One-Rack-Unit (1RU) 10/25/40/100 Gigabit Ethernet, FCoE and Fibre Channel switch offering up to 3820 Gbps throughput and up to 54 ports. The switch has 36 10/25-Gbps Ethernet ports, 4 1/10/25-Gbps Ethernet ports, 6 40/100-Gbps Ethernet uplink ports and 8 unified ports that can support 8 10/25-Gbps Ethernet ports or 8/16/32-Gbps Fibre Channel ports. All Ethernet ports are capable of supporting FCoE.
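The port counts quoted for the three fabric interconnect models follow from simple arithmetic, sketched below using only figures stated in the text. The variable names are illustrative. The throughput line assumes that the 2560 Gbps figure for the Cisco UCS 6332 counts both directions of every port, which is an interpretation rather than something the text states.

```python
BREAKOUT_FACTOR = 4  # one 40-Gbps QSFP port splits into 4 x 10-Gbps ports

# Cisco UCS 6332: up to 24 of the 32 fixed 40-Gbps ports can be breakouts.
fi_6332_max_10g_ports = 24 * BREAKOUT_FACTOR

# Cisco UCS 6332-16UP: up to 18 breakout ports plus 16 fixed 1/10-Gbps ports.
fi_6332_16up_max_10g_ports = 18 * BREAKOUT_FACTOR + 16

# HyperFlex deployments are limited to four breakout ports on either model.
hyperflex_max_breakout_10g_ports = 4 * BREAKOUT_FACTOR

# Cisco UCS 6454: the port groups listed in the text.
fi_6454_port_groups = {
    "10/25-Gbps Ethernet": 36,
    "1/10/25-Gbps Ethernet": 4,
    "40/100-Gbps Ethernet uplink": 6,
    "unified (Ethernet or Fibre Channel)": 8,
}
fi_6454_total_ports = sum(fi_6454_port_groups.values())

# Assumption: throughput is counted in both directions on all 32 ports.
fi_6332_throughput_gbps = 32 * 40 * 2

print(fi_6332_max_10g_ports, fi_6332_16up_max_10g_ports)
print(hyperflex_max_breakout_10g_ports, fi_6454_total_ports)
print(fi_6332_throughput_gbps)
```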

Figure 5 Cisco UCS 6454 Fabric Interconnect

Cisco UCS C220 M5 Rack Server

The Cisco UCS C220 M5 Rack Server is among the most versatile general-purpose enterprise infrastructure and application servers in the industry. It is a high-density 2-socket rack server that delivers industry-leading performance and efficiency for a wide range of workloads, including virtualization, collaboration, and bare-metal applications. The Cisco UCS C-Series Rack Servers can be deployed as standalone servers or as part of the Cisco Unified Computing System™ (Cisco UCS) to take advantage of Cisco’s standards-based unified computing innovations that help reduce customers’ TCO and increase their business agility.

The Cisco UCS C220 M5 server extends the capabilities of the Cisco UCS portfolio in a 1-Rack-Unit (1RU) form factor. It incorporates the Intel® Xeon® Scalable processors, delivering significant performance and efficiency gains that will improve your application performance. The Cisco UCS C220 M5 delivers outstanding levels of expandability and performance in a compact package, with:

· Latest (second generation) Intel Xeon Scalable CPUs with up to 28 cores per socket

· Supports first-generation Intel Xeon Scalable CPUs with up to 28 cores per socket

· Up to 24 DDR4 DIMMs for improved performance

· Support for the Intel Optane DC Persistent Memory

· Up to 10 Small-Form-Factor (SFF) 2.5-inch drives or 4 Large-Form-Factor (LFF) 3.5-inch drives (77 TB storage capacity with all NVMe PCIe SSDs)

· Support for 12-Gbps SAS modular RAID controller in a dedicated slot, leaving the remaining PCIe Generation 3.0 slots available for other expansion cards

· Modular LAN-On-Motherboard (mLOM) slot that can be used to install a Cisco UCS Virtual Interface Card (VIC) without consuming a PCIe slot

· Dual embedded Intel x550 10GBASE-T LAN-On-Motherboard (LOM) ports

Figure 6 Cisco UCS C220 M5 Rack Server

Cisco UCS S3260 M5 Storage Server

The Cisco UCS® S3260 Storage Server is a modular, high-density, high-availability, dual-node storage-optimized server well suited for service providers, enterprises, and industry-specific environments. It provides dense, cost-effective storage to address your ever-growing data needs. Designed for a new class of data-intensive workloads, it is simple to deploy and excellent for applications for big data, data protection, software-defined storage environments, scale-out unstructured data repositories, media streaming, and content distribution.

Figure 7 Cisco UCS S3260 M5 Storage Server

The Cisco UCS S3260 server helps you achieve the highest levels of data availability and performance. With dual-node capability that is based on the 2nd Gen Intel® Xeon® Scalable and Intel Xeon Scalable processor, it features up to 840 TB of local storage in a compact 4-Rack-Unit (4RU) form factor. The drives can be configured with enterprise-class Redundant Array of Independent Disks (RAID) redundancy or with a pass-through Host Bus Adapter (HBA) controller. Network connectivity is provided with dual-port 40-Gbps nodes in each server, with expanded unified I/O capabilities for data migration between Network-Attached Storage (NAS) and SAN environments. This storage-optimized server comfortably fits in a standard 32-inch-depth rack, such as the Cisco® R 42610 Rack. Highlights of the Cisco UCS S3260 server are:

· Dual 2-socket server nodes based on 2nd Gen Intel Xeon Scalable and Intel Xeon Scalable processors with up to 48 cores per server node

· Up to 1.5 TB of DDR4 memory per M5 server node and up to 1 TB of Intel Optane™ DC Persistent Memory

· Support for high-performance Nonvolatile Memory Express (NVMe) and flash memory

· Massive 840-TB data storage capacity that easily scales to petabytes with Cisco UCS Manager software

· Policy-based storage management framework for zero-touch capacity on demand

· Dual-port 40-Gbps system I/O controllers with a Cisco UCS Virtual Interface Card 1300 platform embedded chip or PCIe-based system I/O controller for Quad Port 10/25G Cisco VIC 1455 or Dual Port 100G Cisco VIC 1495

· Unified I/O for Ethernet or Fibre Channel to existing NAS or SAN storage environments

· Support for Cisco bidirectional transceivers, with 40-Gbps connectivity over existing 10-Gbps cabling infrastructure

Cisco VIC 1387 MLOM Interface Cards

The Cisco UCS VIC 1387 Card is a dual-port Enhanced Quad Small Form-Factor Pluggable (QSFP+) 40-Gbps Ethernet and Fibre Channel over Ethernet (FCoE)-capable PCI Express (PCIe) modular LAN-on-motherboard (mLOM) adapter installed in the Cisco UCS HX-Series or C-Series Rack Servers. The VIC 1387 is used in conjunction with the Cisco UCS 6332 or 6332-16UP model Fabric Interconnects.

The mLOM slot can be used to install a Cisco VIC without consuming a PCIe slot, which provides greater I/O expandability. It incorporates next-generation converged network adapter (CNA) technology from Cisco, providing investment protection for future feature releases. The card enables a policy-based, stateless, agile server infrastructure that can present up to 256 PCIe standards-compliant interfaces to the host, each dynamically configured as either a network interface card (NIC) or host bus adapter (HBA). The personality of the interfaces is set programmatically using the service profile associated with the server. The number, type (NIC or HBA), identity (MAC address and World Wide Name [WWN]), failover policy, adapter settings, bandwidth, and quality-of-service (QoS) policies of the PCIe interfaces are all specified using the service profile.

Figure 8 Cisco VIC 1387 mLOM Card

Note: Hardware revision V03 or later of the Cisco VIC 1387 card is required for the Cisco HyperFlex HX-series servers.

Cisco VIC 1457 MLOM Interface Cards

The Cisco UCS VIC 1457 is a quad-port Small Form-Factor Pluggable (SFP28) 10/25-Gbps Ethernet and Fibre Channel over Ethernet (FCoE)-capable PCI Express (PCIe) modular LAN-on-motherboard (mLOM) adapter installed in the Cisco UCS HX-Series or C-Series Rack Servers. The Cisco UCS VIC 1457 is used in conjunction with the Cisco UCS 6454 model Fabric Interconnects.

The mLOM slot can be used to install a Cisco VIC without consuming a PCIe slot, which provides greater I/O expandability. It incorporates next-generation converged network adapter (CNA) technology from Cisco, providing investment protection for future feature releases. The card enables a policy-based, stateless, agile server infrastructure that can present up to 256 PCIe standards-compliant interfaces to the host, each dynamically configured as either a network interface card (NIC) or host bus adapter (HBA). The personality of the interfaces is set programmatically using the service profile associated with the server. The number, type (NIC or HBA), identity (MAC address and World Wide Name [WWN]), failover policy, adapter settings, bandwidth, and quality-of-service (QoS) policies of the PCIe interfaces are all specified using the service profile.

Figure 9 Cisco VIC 1457 mLOM Card

Cisco HyperFlex HX-Series Nodes

A Cisco HyperFlex cluster requires a minimum of three HX-Series nodes. Data is replicated across at least two of these nodes, and a third node is required for continuous operation in the event of a single-node failure. The HX-Series nodes combine the CPU and RAM resources for hosting guest virtual machines with a shared pool of the physical storage resources used by the HX Data Platform software. HX-Series hybrid nodes use a combination of solid-state disks (SSDs) for caching and hard-disk drives (HDDs) for the capacity layer. HX-Series all-flash nodes use SSD or NVMe storage for the caching layer and SSDs for the capacity layer.

Cisco HyperFlex HXAF220c-M5SX All-Flash Node

This small-footprint Cisco HyperFlex all-flash model contains a 240 GB M.2 form factor solid-state disk (SSD) that acts as the boot drive, a 240 GB housekeeping SSD, a single write-log drive (a 375 GB Optane NVMe SSD, a 1.6 TB NVMe SSD, or a 400 GB SAS SSD), and six to eight 960 GB or 3.8 TB SATA SSDs for storage capacity. For configurations requiring self-encrypting drives, the caching SSD is replaced with an 800 GB SAS SED SSD, and the capacity disks are replaced with 800 GB, 960 GB, or 3.8 TB SED SSDs.

Figure 10 HXAF220c-M5SX All-Flash Node

Cisco HyperFlex HXAF240c-M5SX All-Flash Node

This capacity-optimized Cisco HyperFlex all-flash model contains a 240 GB M.2 form factor solid-state disk (SSD) that acts as the boot drive, a 240 GB housekeeping SSD, a single write-log drive (a 375 GB Optane NVMe SSD, a 1.6 TB NVMe SSD, or a 400 GB SAS SSD) installed in a rear hot-swappable slot, and six to twenty-three 960 GB or 3.8 TB SATA SSDs for storage capacity. For configurations requiring self-encrypting drives, the caching SSD is replaced with an 800 GB SAS SED SSD, and the capacity disks are replaced with 800 GB, 960 GB, or 3.8 TB SED SSDs.

Figure 11 HXAF240c-M5SX Node

Note: Either a 375 GB Optane NVMe SSD, a 400 GB SAS SSD or 1.6 TB NVMe SSD caching drive may be chosen. While the NVMe options can provide a higher level of performance, the partitioning of the three disk options is the same, therefore the amount of cache available on the system is the same regardless of the model chosen.

Cisco HyperFlex HX220c-M5SX Hybrid Node

This small-footprint Cisco HyperFlex hybrid model contains a minimum of six and up to eight 1.8 TB or 1.2 TB SAS hard disk drives (HDDs) that contribute to cluster storage capacity, a 240 GB SSD housekeeping drive, a 480 GB or 800 GB SSD caching drive, and a 240 GB M.2 form factor SSD that acts as the boot drive. For configurations requiring self-encrypting drives, the caching SSD is replaced with an 800 GB SAS SED SSD, and the capacity disks are replaced with 1.2 TB SAS SED HDDs.

Figure 12 HX220c-M5SX Node

Note: Either a 480 GB or 800 GB caching SAS SSD may be chosen. This option is provided to allow flexibility in ordering based on product availability, pricing and lead times. There is no performance, capacity, or scalability benefit in choosing the larger disk.

Cisco HyperFlex HX240c-M5SX Hybrid Node

This capacity-optimized Cisco HyperFlex hybrid model contains a minimum of six and up to twenty-three 1.8 TB or 1.2 TB SAS small form factor (SFF) hard disk drives (HDDs) that contribute to cluster storage, a 240 GB SSD housekeeping drive, a single 1.6 TB SSD caching drive installed in a rear hot-swappable slot, and a 240 GB M.2 form factor SSD that acts as the boot drive. For configurations requiring self-encrypting drives, the caching SSD is replaced with a 1.6 TB SAS SED SSD, and the capacity disks are replaced with 1.2 TB SAS SED HDDs.

Figure 13 HX240c-M5SX Node

All-Flash Versus Hybrid

The initial HyperFlex product release featured hybrid converged nodes, which use a combination of solid-state disks (SSDs) for the short-term storage caching layer and hard disk drives (HDDs) for the long-term storage capacity layer. The hybrid HyperFlex system is an excellent choice for entry-level or midrange storage solutions, and hybrid solutions have been successfully deployed in many non-performance-sensitive virtual environments. Meanwhile, deployment of highly performance-sensitive and mission-critical applications is growing significantly. The primary challenge these applications pose to the hybrid HyperFlex system is their increased sensitivity to high storage latency. Because of the characteristics of spinning hard disks, their higher latency unavoidably becomes the bottleneck in the hybrid system. Ideally, if all storage operations were to occur in the caching SSD layer, the hybrid system's performance would be excellent. In several scenarios, however, the amount of data being written and read exceeds the caching layer capacity, which places larger loads on the HDD capacity layer, and the resulting increase in latency reduces performance.

Cisco All-Flash HyperFlex systems are an excellent option for customers with a requirement to support high performance, latency sensitive workloads. With a purpose built, flash-optimized and high-performance log based filesystem, the Cisco All-Flash HyperFlex system provides:

· Predictable high performance across all the virtual machines on HyperFlex All-Flash and compute-only nodes in the cluster.

· Highly consistent and low latency, which benefits data-intensive applications and databases such as Microsoft SQL and Oracle.

· Support for NVMe caching SSDs, offering an even higher level of performance.

· Future ready architecture that is well suited for flash-memory configuration:

- Cluster-wide SSD pooling maximizes performance and balances SSD usage so as to spread the wear.

- A fully distributed log-structured filesystem optimizes the data path to help reduce write amplification.

- Large sequential writing reduces flash wear and increases component longevity.

- Inline space optimization, e.g. deduplication and compression, minimizes data operations and reduces wear.

· Lower operating cost with the higher density drives for increased capacity of the system.

· Cloud scale solution with easy scale-out and distributed infrastructure and the flexibility of scaling out independent resources separately.

Cisco HyperFlex support for hybrid and all-flash models now allows customers to choose the right platform configuration based on their capacity, applications, performance, and budget requirements. All-flash configurations offer repeatable and sustainable high performance, especially for scenarios with a larger working set of data, in other words, a large amount of data in motion. Hybrid configurations are a good option for customers who want the simplicity of the Cisco HyperFlex solution but whose needs focus on capacity-sensitive solutions, lower budgets, and fewer performance-sensitive applications.

Cisco HyperFlex Compute-Only Nodes

All current model Cisco UCS M4 and M5 generation servers, except the Cisco UCS C880 M4 and Cisco UCS C880 M5, may be used as compute-only nodes connected to a Cisco HyperFlex cluster, along with a limited number of previous M3 generation servers. Any valid CPU and memory configuration is allowed in the compute-only nodes, and the servers can be configured to boot from SAN, local disks, or internal SD cards. The following servers may be used as compute-only nodes:

· Cisco UCS B200 M4 Blade Server

· Cisco UCS B200 M5 Blade Server

· Cisco UCS B260 M4 Blade Server

· Cisco UCS B420 M4 Blade Server

· Cisco UCS B460 M4 Blade Server

· Cisco UCS B480 M5 Blade Server

· Cisco UCS C220 M4 Rack-Mount Servers

· Cisco UCS C220 M5 Rack-Mount Servers

· Cisco UCS C240 M4 Rack-Mount Servers

· Cisco UCS C240 M5 Rack-Mount Servers

· Cisco UCS C460 M4 Rack-Mount Servers

· Cisco UCS C480 M5 Rack-Mount Servers

Cisco HyperFlex Data Platform Software

Cisco HyperFlex delivers a new generation of flexible, scalable, enterprise-class hyperconverged solutions. The solution also delivers storage efficiency features such as thin provisioning, data deduplication, and compression for greater capacity and enterprise-class performance. Additional operational efficiency is facilitated through features such as cloning and snapshots.

The complete end-to-end hyperconverged solution provides the following benefits to customers:

· Simplicity: The solution is designed to be deployed and managed easily and quickly through familiar tools and methods. No separate management console is required for the Cisco HyperFlex solution.

· Centralized hardware management: The cluster hardware is managed in a consistent manner by service profiles in Cisco UCS Manager. Cisco UCS Manager also provides a single console for solution management, including firmware management. Cisco HyperFlex HX Data Platform clusters are managed through a plug-in to VMware vCenter.

· High availability: Component redundancy is built into most levels of the node. Cluster-level tolerance of node, network, and fabric interconnect failures is implemented as well.

· Enterprise-class storage features: Complementing the other management efficiencies are features such as thin provisioning, data deduplication, compression, cloning, and snapshots to address concerns related to overprovisioning of storage.

· Flexibility with a "pay-as-you-grow" model: Customers can purchase the exact amount of computing and storage they need and expand one node at a time up to the supported cluster node limit.

· Agility to support different workloads: Support for both hybrid and all-flash models allows customers to choose the right platform configuration for capacity-sensitive applications or performance-sensitive applications according to budget requirements.

The Cisco HyperFlex HX Data Platform is a purpose-built, high-performance, distributed file system with a wide array of enterprise-class data management services. The data platform’s innovations redefine distributed storage technology, exceeding the boundaries of first-generation hyperconverged infrastructures. The data platform has all the features that you would expect of an enterprise shared storage system, eliminating the need to configure and maintain complex Fibre Channel storage networks and devices. The platform simplifies operations and helps ensure data availability. Enterprise-class storage features include the following:

· Replication of all written data across the cluster so that data availability is not affected if single or multiple components fail (depending on the replication factor configured).

· Deduplication is always on, helping reduce storage requirements in which multiple operating system instances in client virtual machines result in large amounts of duplicate data.

· Compression further reduces storage requirements, reducing costs, and the log-structured file system is designed to store variable-sized blocks, reducing internal fragmentation.

· Thin provisioning allows large volumes to be created without requiring storage to support them until the need arises, simplifying data volume growth and making storage a “pay as you grow” proposition.

· Fast, space-efficient clones rapidly replicate virtual machines simply through metadata operations.

· Snapshots help facilitate backup and remote-replication operations, which are needed in enterprises that require always-on data availability.

The HX Data Platform can be administered through a VMware vSphere web client plug-in or through the HTML5-based native Cisco HyperFlex Connect management tool. Additionally, since HX Data Platform Release 2.6, Cisco HyperFlex systems can also be managed remotely by the Cisco Intersight™ cloud-based management platform. Through the centralized point of control for the cluster, administrators can create datastores, monitor the data platform health, and manage resource use.

An HX Data Platform controller resides on each node and implements the Cisco HyperFlex HX Distributed File System. The storage controller runs in user space within a virtual machine, intercepting and handling all I/O requests from guest virtual machines. The storage controller virtual machine uses the VMDirectPath I/O feature to provide PCI pass-through control of the physical server’s SAS disk controller. This approach gives the controller virtual machine full control of the physical disk resources. The controller integrates the data platform into VMware software through three preinstalled VMware ESXi vSphere Installation Bundles (VIBs): the VMware API for Array Integration (VAAI), a customized IOvisor agent that acts as a stateless Network File System (NFS) proxy, and a customized stHypervisorSvc agent for Cisco HyperFlex data protection and virtual machine replication.

The HX Data Platform controllers handle all read and write requests from the guest virtual machines to the virtual machine disks (VMDKs) stored in the distributed data stores in the cluster. The data platform distributes the data across multiple nodes of the cluster and across multiple capacity disks in each node according to the replication-level policy selected during cluster setup. The replication-level policy is defined by the replication factor (RF) parameter. When RF = 3, a total of three copies of the blocks are written and distributed to separate locations for every I/O write committed to the storage layer; when RF = 2, a total of two copies of the blocks are written and distributed.
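As a rough illustration of what the replication factor means for capacity, the following sketch divides raw capacity by RF, since every block is stored RF times. It is a simplification under stated assumptions: it ignores file system metadata overhead and any deduplication or compression savings, and the function name and cluster sizes are hypothetical.

```python
def usable_capacity_tb(nodes: int, disks_per_node: int, disk_tb: float, rf: int) -> float:
    """Approximate usable capacity when every block is stored rf times.

    Assumption: ignores metadata overhead and dedupe/compression savings.
    """
    if rf not in (2, 3):
        raise ValueError("the text describes RF = 2 and RF = 3 only")
    raw_tb = nodes * disks_per_node * disk_tb
    return raw_tb / rf


# Hypothetical 4-node cluster with 8 x 3.8 TB capacity disks per node.
for rf in (2, 3):
    print(f"RF={rf}: ~{usable_capacity_tb(4, 8, 3.8, rf):.1f} TB before overhead")
```

The higher replication factor trades usable capacity for tolerance of additional component failures.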

Figure 14 shows the movement of data in the HX Data Platform.

Figure 14 Cisco HyperFlex HX Data Platform Data Movement

For each write operation, the data is intercepted by the IO Visor module on the node on which the virtual machine is running, a primary node is determined for that particular operation through a hashing algorithm, and the data is then sent to the primary node. The primary node compresses the data in real time and writes the compressed data to its caching SSD, and replica copies of that compressed data are written to the caching SSD of the remote nodes in the cluster, according to the replication factor setting. Because the virtual disk contents have been divided and spread out through the hashing algorithm, the result of this method is that all write operations are spread across all nodes, avoiding problems related to data locality and helping prevent “noisy” virtual machines from consuming all the I/O capacity of a single node. The write operation is not acknowledged until all copies required by the replication factor (three when RF = 3) are written to the caching-layer SSDs. Written data is also cached in a write log area resident in memory in the controller virtual machine, along with the write log on the caching SSDs. This process speeds up read requests when read operations are requested on data that has recently been written.
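The hash-based placement described above can be sketched as follows. This is an illustration only: the actual HX Data Platform hashing algorithm is not described in this document, so SHA-256 modulo the node count and "next nodes in ring order" for replicas are assumptions, as are the function and node names.

```python
import hashlib


def place_write(vdisk_id: str, block_no: int, nodes: list[str], rf: int) -> list[str]:
    """Return [primary, replica, ...] for one block of a virtual disk.

    Assumption: SHA-256 stands in for the platform's hashing algorithm,
    and replicas go to the next nodes in ring order.
    """
    if not 1 <= rf <= len(nodes):
        raise ValueError("rf must be between 1 and the number of nodes")
    digest = hashlib.sha256(f"{vdisk_id}:{block_no}".encode()).digest()
    primary = int.from_bytes(digest[:8], "big") % len(nodes)
    return [nodes[(primary + i) % len(nodes)] for i in range(rf)]


# Hypothetical four-node cluster; consecutive blocks of one disk spread out.
cluster = ["hx-node-1", "hx-node-2", "hx-node-3", "hx-node-4"]
for block in range(4):
    print(block, place_write("vm01-disk0", block, cluster, rf=3))
```

Because the primary is derived from the block identity rather than from the node hosting the virtual machine, a single busy virtual machine spreads its writes across the cluster.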

The HX Data Platform constructs multiple write-caching segments on the caching SSDs of each node in the distributed cluster. As write-cache segments become full, and based on policies accounting for I/O load and access patterns, those write-cache segments are locked, and new write operations roll over to a new write-cache segment. The data in the now-locked cache segment is destaged to the HDD capacity layer of the nodes for a hybrid system or to the SSD capacity layer of the nodes for an all-flash system. During the destaging process, data is deduplicated before being written to the capacity storage layer, and the resulting data can now be written to the HDDs or SSDs of the server. On hybrid systems, the now deduplicated and compressed data is also written to the dedicated read-cache area of the caching SSD, which speeds up read requests for data that has recently been written. When the data is destaged to an HDD, it is written in a single sequential operation, avoiding disk-head seek thrashing on the spinning disks and accomplishing the task in a minimal amount of time. Deduplication, compression, and destaging take place with no delays or I/O penalties for the guest virtual machines making requests to read or write data, which benefits both the HDD and SSD configurations.
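The segment rollover and destaging flow can be sketched in a few lines. This is a toy model under stated assumptions: a segment is locked purely when it reaches a fixed block count (the real policies also account for I/O load and access patterns), deduplication is modeled with a SHA-256 fingerprint, and all class and attribute names are hypothetical.

```python
import hashlib


class WriteCache:
    """Toy model of write-cache segments that roll over and destage."""

    def __init__(self, segment_capacity: int):
        self.segment_capacity = segment_capacity
        self.active: list[bytes] = []               # segment accepting writes
        self.locked: list[list[bytes]] = []         # full segments awaiting destage
        self.capacity_layer: dict[str, bytes] = {}  # fingerprint -> unique block

    def write(self, block: bytes) -> None:
        self.active.append(block)
        if len(self.active) >= self.segment_capacity:
            # Segment is full: lock it and roll over to a new one.
            self.locked.append(self.active)
            self.active = []

    def destage(self) -> int:
        """Move locked segments to the capacity layer, deduplicating on the way.

        Returns the number of blocks actually stored.
        """
        stored = 0
        while self.locked:
            for block in self.locked.pop(0):
                fingerprint = hashlib.sha256(block).hexdigest()
                if fingerprint not in self.capacity_layer:
                    self.capacity_layer[fingerprint] = block
                    stored += 1
        return stored


cache = WriteCache(segment_capacity=2)
for data in (b"os-image", b"os-image", b"app-log"):
    cache.write(data)
print("locked segments:", len(cache.locked), "stored on destage:", cache.destage())
```

In this model the two identical blocks in the locked segment are stored once, which mirrors deduplication happening at destage time rather than on the write path.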

For data read operations, data may be read from multiple locations. For data that was very recently written, the data is likely to still exist in the write log of the local platform controller memory or in the write log of the local caching-layer disk. If local write logs do not contain the data, the distributed file system metadata will be queried to see if the data is cached elsewhere, either in write logs of remote nodes or in the dedicated read-cache area of the local and remote caching SSDs of hybrid nodes. Finally, if the data has not been accessed in a significant amount of time, the file system will retrieve the requested data from the distributed capacity layer. As requests for read operations are made to the distributed file system and the data is retrieved from the capacity layer, the caching SSDs of hybrid nodes populate their dedicated read-cache area to speed up subsequent requests for the same data. All-flash configurations, however, do not employ a dedicated read cache because such caching does not provide any performance benefit; the persistent data copy already resides on high-performance SSDs.
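The read lookup order described above can be sketched as a chain of tier lookups. This is a simplified model: each tier is represented by a plain dictionary, the metadata query is reduced to a membership test, and the function and parameter names are hypothetical.

```python
def read_block(key, local_mem_log, local_ssd_log, remote_logs,
               read_cache, capacity, hybrid=True):
    """Return (data, source) following the lookup order in the text.

    Assumption: every tier is a dict; real lookups go through the
    distributed file system metadata.
    """
    write_logs = (
        ("local memory write log", local_mem_log),
        ("local SSD write log", local_ssd_log),
        ("remote write log", remote_logs),
    )
    for source, tier in write_logs:
        if key in tier:
            return tier[key], source
    # Only hybrid nodes keep a dedicated read cache on the caching SSD.
    if hybrid and key in read_cache:
        return read_cache[key], "read cache"
    data = capacity[key]
    if hybrid:
        read_cache[key] = data  # populate for subsequent reads
    return data, "capacity layer"


capacity_layer = {"blk-7": b"payload"}
hybrid_read_cache = {}
print(read_block("blk-7", {}, {}, {}, hybrid_read_cache, capacity_layer)[1])
print(read_block("blk-7", {}, {}, {}, hybrid_read_cache, capacity_layer)[1])
```

On a hybrid node the second read is served from the read cache; with `hybrid=False` the read cache is never consulted or populated, matching the all-flash behavior.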

Object Storage and SwiftStack Software

The storage market has shifted dramatically in the last few years away from a market dominated by proprietary storage appliances. The data center has evolved from providing mainly back-office transactional services to providing a much wider range of applications, including cloud computing, content serving, distributed computing, and archiving. Object storage architecture manages data as objects, as opposed to file systems, which manage data as a file hierarchy, and block storage, which manages data as blocks within sectors and tracks. Figure 15 illustrates the differences.

Figure 15 Traditional vs. Object Storage

Object storage supports RESTful HTTP protocols, and every object in a SwiftStack container is accessed through an HTTP URL (Figure 16).

Figure 16 Every Object Has a URL
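To make the URL-per-object idea concrete, the sketch below builds an object URL using the OpenStack Swift path layout `/v1/<account>/<container>/<object>`. The endpoint host, account, container, and object names are hypothetical, and the helper function is illustrative rather than part of any SwiftStack client library.

```python
from urllib.parse import quote


def object_url(endpoint: str, account: str, container: str, obj: str) -> str:
    """Build a Swift-style URL: <endpoint>/v1/<account>/<container>/<object>.

    Object names may contain "/" (pseudo-folders), so slashes are kept;
    other reserved characters are percent-encoded.
    """
    parts = [quote(account, safe=""), quote(container, safe=""), quote(obj, safe="/")]
    return f"{endpoint.rstrip('/')}/v1/" + "/".join(parts)


# Hypothetical endpoint and names, for illustration only.
print(object_url("https://swift.example.com", "AUTH_splunk",
                 "smartstore", "main/db_1/rawdata/journal.gz"))
```

Any HTTP client can then issue GET, PUT, or DELETE requests against such a URL, subject to authentication.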

With SwiftStack software running on Cisco UCS S3260 servers, you get hybrid cloud storage enabling freedom to move workloads between clouds with universal access to data across on-premises and public infrastructure. SwiftStack was built from day one to have the fundamental attributes of the cloud like a single namespace across multiple geographic locations, policy-driven placement of data, and consumption-based pricing.

Figure 17 SwiftStack Introduction

SwiftStack storage is optimized for unstructured data, which is growing at an ever-increasing rate inside most thriving enterprises. When media assets, scientific research data, and even backup archives live in a multi-tenant storage cloud, utilization of this valuable data increases while driving out unnecessary costs.

SwiftStack is a fully-distributed storage system that horizontally scales to hold your data today and tomorrow. It scales linearly, allowing you to add additional capacity and performance independently for whatever your applications need.

While scaling storage is typically complex, it’s not with SwiftStack. No advanced configuration is required. It takes only a few simple commands to install software on a new Cisco UCS S3260 server and deploy it in the cluster. Load balancing capabilities are fully integrated, allowing applications to automatically take advantage of the distributed cluster.

With OpenStack Swift at its core, SwiftStack lets you use the technology that drives some of the largest storage clouds and leverage the power of a vibrant community. SwiftStack is the lead contributor to the Swift project, which has over 220 additional contributors worldwide. Having an engine backed by this community and deployed in demanding customer environments makes SwiftStack the most proven, enterprise-grade object storage software available. The SwiftStack software has no single point of failure, requires no downtime during upgrades, scaling, planned maintenance, or unplanned system operations, and has self-healing capabilities.

Key SwiftStack features for an active archive:

· Starts as small as 150TB, and scales to 100s of PB

· Spans multiple data centers while still presenting a single namespace

· Handles data according to defined policies that align to the needs of different applications

· Uses erasure coding and replicas in the same cluster to protect data

· Offers multi-tenant support with authentication through Active Directory, LDAP, and Keystone

· Supports file protocols (SMB, NFS) and object APIs (S3, Swift) simultaneously

· Automatically synchronizes to Google Cloud Storage and Amazon S3 with the Cloud Sync feature

· Encrypts data and metadata at rest

· Manages highly scalable storage infrastructure through centralized out-of-band controller

· Ensures all functionality touching data is open by leveraging an open-source core

· Optimizes TCO with pay-as-you-grow licensing with support and maintenance included

Splunk Enterprise for Big Data Analytics

Splunk Enterprise is a software product that enables you to search, analyze, and visualize the data gathered from the components of your IT infrastructure or business. Splunk Enterprise takes in data from websites, applications, sensors, devices, and so on. After you define the data source, Splunk Enterprise indexes the data stream and parses it into a series of individual events that you can view and search.

All the IT applications, systems and technology infrastructure generate data every millisecond of every day. This machine data is one of the fastest growing, most complex areas of big data. It’s also one of the most valuable, containing a definitive record of user transactions, customer behavior, sensor activity, machine behavior, security threats, fraudulent activity and more.

Splunk Enterprise provides a holistic way to organize and extract real-time insights from massive amounts of machine data from virtually any source. This includes data from websites, business applications, social media platforms, app servers, hypervisors, sensors, traditional databases, and open source data stores. Splunk Enterprise scales to collect and index tens of terabytes of data per day across multi-geography, multi-data center, and hybrid cloud infrastructures.

Key Features of Splunk Enterprise

Splunk Enterprise provides the end-to-end, real-time solution for machine data delivering the following core capabilities:

· Universal collection and indexing of machine data, from virtually any source

· Powerful search processing language (SPL) to search and analyze real-time and historical data

· Real-time monitoring for patterns and thresholds; real-time alerts when specific conditions arise

· Powerful reporting and analysis

· Custom dashboards and views for different roles

· Resilience and scale on commodity hardware

· Granular role-based security and access controls

· Support for multi-tenancy and flexible, distributed deployments on-premises or in the cloud

· Robust, flexible platform for big data apps

Splunk Enterprise Processing Components

Table 1 lists the three major components processing Splunk data and the tiers that they occupy. It also describes the functions that each component performs.

Table 1 Splunk Enterprise Processing Components

| Component | Tier | Description |
| --- | --- | --- |
| Forwarder | Data input | A forwarder consumes data and then forwards the data onwards, usually to an indexer. Forwarders usually require minimal resources, allowing them to reside lightly on the machine generating the data. |
| Indexer | Indexing | An indexer indexes incoming data that it usually receives from a group of forwarders. The indexer transforms the data into events and stores the events in an index. The indexer also searches the indexed data in response to search requests from a search head. To ensure high data availability and protect against data loss, or just to simplify the management of multiple indexers, you can deploy multiple indexers in indexer clusters. |
| Search head | Search management | A search head interacts with users, directs search requests to a set of indexers, and merges the results back to the user. To ensure high availability and simplify horizontal scaling, you can deploy multiple search heads in search head clusters. |

Note: Forwarder is only used for testing the deployment server. We generate and send the data directly to the indexers.

SmartStore

Splunk SmartStore is a new feature that enables indexer capabilities to optimize the use of local storage and allows the system to use remote object stores, such as Amazon S3, to store indexed data. SmartStore introduces a remote storage tier and a cache manager. This feature allows data to reside either locally on indexers or on the remote storage tier. Most data resides on remote storage, while the indexer maintains a local cache that contains a minimal amount of data and metadata. SmartStore also allows the users to manage the indexer storage and compute resources in a cost-effective manner by scaling computing and storage resources separately. The users can reduce the indexer storage footprint to a minimum and choose I/O optimized compute resources.

SmartStore indexes handle buckets differently from non-SmartStore indexes. Indexers maintain buckets for SmartStore indexes in two states:

· Hot buckets

· Warm buckets

The hot buckets reside on local storage while warm buckets reside on remote storage, although copies of those buckets might also reside temporarily in local storage. The concept of cold buckets becomes optional because the need to distinguish between warm and cold buckets no longer exists. With non-SmartStore indexes, the cold bucket state exists as a way to identify older buckets that can be safely moved to some type of cheaper storage, because buckets are typically searched less frequently as they age. But with SmartStore indexes, warm buckets are already on inexpensive storage, so there is no reason to move them to another type of storage as they age. Buckets can roll to frozen directly from warm.

When a bucket in a SmartStore index rolls to warm, the bucket is copied to remote storage. The rolled bucket does not immediately get removed from the indexer's local storage. Rather, it remains cached locally until it is evicted in response to the cache manager's eviction policy. Because searches tend to occur most frequently across recent data, this process helps to minimize the number of buckets that need to be retrieved from remote storage to fulfill a search request. After the cache manager finally does remove the bucket from the indexer's local storage, the indexer still retains metadata information. In addition, the indexer retains an empty directory for the bucket.
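The single-indexer behavior described above can be modeled with a small cache sketch. It assumes a least-recently-used eviction policy and a cache sized by bucket count, both simplifications chosen for illustration; the class, its attributes, and the dictionary standing in for the remote object store are hypothetical and are not Splunk APIs.

```python
from collections import OrderedDict


class BucketCache:
    """Toy model of an indexer's local cache for SmartStore warm buckets."""

    def __init__(self, max_buckets: int, remote_store: dict):
        self.max_buckets = max_buckets
        self.remote_store = remote_store  # stands in for the object store
        self.local = OrderedDict()        # bucket_id -> data, oldest first
        self.metadata = set()             # retained even after eviction

    def roll_to_warm(self, bucket_id: str, data: bytes) -> None:
        self.remote_store[bucket_id] = data  # copy the bucket to remote storage
        self._cache(bucket_id, data)         # the local copy stays cached

    def search(self, bucket_id: str) -> bytes:
        if bucket_id in self.local:
            self.local.move_to_end(bucket_id)  # mark as recently used
            return self.local[bucket_id]
        data = self.remote_store[bucket_id]    # cache miss: fetch from remote
        self._cache(bucket_id, data)
        return data

    def _cache(self, bucket_id: str, data: bytes) -> None:
        self.local[bucket_id] = data
        self.local.move_to_end(bucket_id)
        self.metadata.add(bucket_id)
        while len(self.local) > self.max_buckets:
            self.local.popitem(last=False)     # evict least recently used


remote = {}
cache = BucketCache(max_buckets=2, remote_store=remote)
for i in (1, 2, 3):
    cache.roll_to_warm(f"db_{i}", f"events-{i}".encode())
print("cached locally:", list(cache.local), "| metadata kept for:", sorted(cache.metadata))
```

Searching an evicted bucket in this model pulls it back from the remote store, which is why a cache large enough to hold the frequently searched recent data keeps remote fetches rare.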

In the case of an indexer cluster, when a bucket rolls to warm, the source peer uploads the bucket to remote storage. The source peer continues to retain its bucket copy in local cache until, in due course, the cache manager evicts it. After successfully uploading the bucket, the source peer sends messages to the bucket's target peers, notifying them that the bucket has been uploaded to remote storage. The target peers then evict their local copies of the bucket, so that the cluster, as a whole, caches only a single copy of the rolled bucket, in the source peer's local storage. The target peers retain metadata for the bucket, so that the cluster has enough copies of the bucket, in the form of its metadata, to match the replication factor. When the source peer's copy of the bucket eventually gets evicted, the source peer, too, retains the bucket metadata. In addition to retaining metadata information for the bucket, the source peer continues to retain the primary designation for the bucket. The peer with primary designation fetches the bucket from remote storage when the bucket is needed for a search.
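The cluster sequence above (upload by the source peer, notification, eviction on the target peers, metadata retained everywhere) can be sketched as follows. The `Peer` class, the function, and the set standing in for the remote store are hypothetical simplifications and do not correspond to Splunk internals.

```python
from dataclasses import dataclass, field


@dataclass
class Peer:
    name: str
    cached: set = field(default_factory=set)    # bucket copies in local storage
    metadata: set = field(default_factory=set)  # bucket metadata retained


def roll_to_warm(bucket_id: str, source: Peer, targets: list, remote_store: set) -> str:
    """Model one bucket rolling to warm in an indexer cluster.

    Returns the name of the peer holding the primary designation.
    """
    remote_store.add(bucket_id)          # source peer uploads the bucket
    for peer in targets:                 # targets are notified and evict
        peer.cached.discard(bucket_id)
    for peer in (source, *targets):      # every peer keeps the metadata
        peer.metadata.add(bucket_id)
    return source.name                   # source retains primary designation


# Hypothetical three-peer cluster where db_42 was replicated while hot.
src = Peer("idx1", cached={"db_42"})
tgts = [Peer("idx2", cached={"db_42"}), Peer("idx3", cached={"db_42"})]
store = set()
primary = roll_to_warm("db_42", src, tgts, store)
print("primary:", primary, "| local copies:", [p.name for p in (src, *tgts) if p.cached])
```

After the roll, only the source peer holds a cached copy, while all three peers hold metadata, so the metadata count still matches a replication factor of 3.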

SmartStore offers several advantages to the deployment's indexing clusters:

· Reduced storage cost. The deployment can take advantage of the economy of remote object stores, instead of relying on costly local storage.

· Access to high availability and data resiliency features available through remote object stores.

· The ability to scale compute and storage resources separately, thus ensuring that you use resources efficiently.

· Simple and flexible configuration with per-index settings.

· Fast recovery from peer failure and fast data rebalancing, requiring only metadata fixups for warm data.

· Lower overall storage requirements, as the system maintains only a single permanent copy of each warm bucket on the remote object stores.

· Full recovery of warm buckets even when the number of peer nodes that goes down is greater than or equal to the replication factor.

· Global size-based data retention.

While SmartStore-enabled indexes can significantly decrease storage and management costs under the right circumstances, there are still times when you might find it preferable to rely on local storage. Consider enabling SmartStore in the following circumstances:

· As the amount of data in local storage continues to grow. While local storage costs might not be a significant issue for a small deployment, you should reconsider your use of local storage as your deployment scales over time.

· If you are using indexer clusters to take advantage of features such as data recovery and disaster recovery. Through SmartStore, you can achieve these aims through the native capabilities of the remote store, without the need to store large amounts of redundant data on local storage.

· If you are using indexer clusters and you find that considerable amounts of your time and your computing resources are devoted to managing the cluster. Through SmartStore, you can eliminate much of the cluster management overhead. In particular, you can greatly reduce the scale of time-consuming activities such as offlining peer nodes, data rebalancing, and bucket fixup, because most of the data no longer resides on the peer nodes.

· When most searches are over recent data.

Requirements

The following sections detail the physical hardware, software revisions, and firmware versions required for the solution, which deploys Splunk Enterprise on the Cisco HyperFlex platform, and implements the SmartStore feature by storing warm data in remote SwiftStack object stores on Cisco UCS S3260 storage servers.

Splunk Enterprise in Virtual Environments

Splunk Architecture

Splunk software comes packaged as an ‘all-in-one’ distribution. A single host can be configured to function as one or all of the types of Splunk Enterprise components. In a distributed deployment, you can split the different component types across multiple specialized instances of Splunk Enterprise. These instances can range in number from just a few to many thousands, depending on the quantity of data that you are dealing with and other variables in your environment.

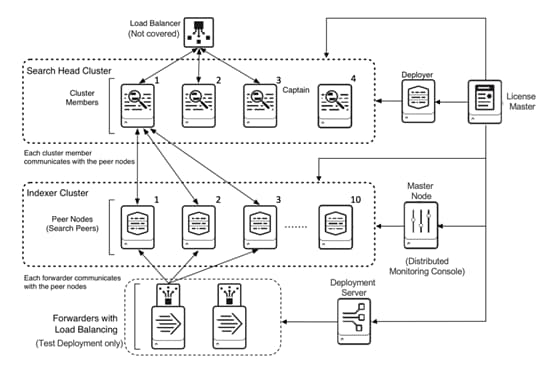

Figure 18 Splunk Components

There are several types of Splunk Enterprise components. Each component handles one or more Splunk Enterprise roles, such as data input or indexing. They fall into two broad categories:

· Processing components: These components handle the data.

· Management components: These components support the activities of the processing components.

These are the available processing component types:

· Search Head: In a distributed search environment, a Splunk Enterprise instance that handles search management functions, directing search requests to a set of search peers and then merging the results back to the user. A Splunk Enterprise instance can function as both a search head and a search peer. If it does only search (and not any indexing), it is usually referred to as a dedicated search head. Search head clusters are groups of search heads that coordinate their activities.

· Indexer: A Splunk Enterprise instance that indexes data, transforming raw data into events and placing the results into an index, and that searches the indexed data in response to search requests.

The indexer also frequently performs the other fundamental Splunk Enterprise functions: data input and search management. In larger deployments, forwarders handle data input, and forward the data to the indexer for indexing. Similarly, although indexers always perform searches across their own data, in larger deployments, a specialized Splunk Enterprise instance, called a search head, handles search management and coordinates searches across multiple indexers.

· Universal Forwarder: Forwarders ingest data. There are a few types of forwarders, but the universal forwarder is the right choice for most purposes. A small-footprint version of a forwarder, it uses a lightweight version of Splunk Enterprise that simply inputs data, performs minimal processing on the data, and then forwards the data to a Splunk indexer or a third-party system.

Management components include:

· Cluster Master (Master Node): The indexer cluster node that regulates the functioning of an indexer cluster.

· Deployment Server: A Splunk Enterprise instance that acts as a centralized configuration manager, grouping together and collectively managing any number of Splunk Enterprise instances. Instances that are remotely configured by deployment servers are called deployment clients. The deployment server downloads updated content, such as configuration files and apps, to deployment clients. Units of such content are known as deployment apps.

· Search Head Deployer (SHD): A Splunk Enterprise instance that distributes apps and certain other configuration updates to search head cluster members.

· License Master: A license master controls one or more license slaves. From the license master, you can define stacks and pools, add licensing capacity, and manage licenses.

· Distributed Monitoring Console (not pictured): The Distributed Monitoring Console lets you view detailed deployment and performance information about all your Splunk instances in one place. In a single indexer cluster, the distributed monitoring console is usually hosted on the instance running the master node, unless the load on the master node is too heavy. In that case, you can run the distributed monitoring console on a search head node that is dedicated to running the monitoring console.

In this distributed configuration, indexers and search heads are configured in clustered mode. Splunk Enterprise supports clustering for both search heads and indexers. A search head cluster is a group of interchangeable and highly available search heads. By increasing concurrent user capacity and by eliminating single points of failure, search head clusters reduce the total cost of ownership. Indexer clusters are made up of groups of Splunk Enterprise indexers configured to replicate peer data so that the indexes of the system become highly available. By maintaining multiple, identical copies of indexes, clusters prevent data loss while promoting data availability for searching.

For the SmartStore feature, a remote S3-compliant storage volume is configured to store the master copies of warm buckets, while the indexer's local storage is used to cache copies of warm buckets that are currently participating in a search or that have a high likelihood of participating in a future search. The indexer's cache manager manages the local cache. It fetches copies of warm buckets from remote storage when the buckets are needed for a search. It also evicts buckets from the cache, based on factors such as the bucket's search frequency, its data recency, and various other configurable criteria.

With SmartStore indexes, indexer clusters maintain replication and search factor copies of hot buckets only. The remote storage is responsible for ensuring the high availability, data fidelity, and disaster recovery of the warm buckets. Because the remote storage handles warm bucket high availability, peer nodes replicate only warm bucket metadata, not the buckets themselves. This means that any necessary bucket fixup for SmartStore indexes proceeds much more quickly than it does for non-SmartStore indexes. If a group of peer nodes equaling or exceeding the replication factor goes down, the cluster does not lose any of its SmartStore warm data because copies of all warm buckets reside on the remote store. The flow of data for a SmartStore-enabled index in an indexer cluster is depicted in Figure 19.

Figure 19 Splunk SmartStore Data Flow
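The fetch-on-miss and eviction behavior described above can be illustrated with a toy model. This is only a sketch: the `WarmBucketCache` class, the bucket names, and the capacity are hypothetical, and plain least-recently-used eviction stands in for the cache manager's actual policy, which also weighs search frequency, data recency, and other configurable criteria.

```python
from collections import OrderedDict


class WarmBucketCache:
    """Toy model of an indexer-local cache in front of a remote object store."""

    def __init__(self, remote_store, capacity):
        self.remote_store = remote_store  # bucket id -> contents (master copies)
        self.capacity = capacity          # maximum number of buckets kept locally
        self.local = OrderedDict()        # ordered from least to most recently used

    def get(self, bucket_id):
        """Return a bucket for a search, fetching it from the remote store on a miss."""
        if bucket_id in self.local:
            self.local.move_to_end(bucket_id)  # mark as most recently used
            return self.local[bucket_id]
        data = self.remote_store[bucket_id]    # simulated remote fetch
        self.local[bucket_id] = data
        if len(self.local) > self.capacity:
            # Evict the least recently used bucket; the remote master copy remains.
            self.local.popitem(last=False)
        return data


remote = {"bkt_1": "events-1", "bkt_2": "events-2", "bkt_3": "events-3"}
cache = WarmBucketCache(remote, capacity=2)
cache.get("bkt_1")
cache.get("bkt_2")
cache.get("bkt_1")  # bkt_1 becomes the most recently used bucket
cache.get("bkt_3")  # the cache is full, so bkt_2 is evicted
print(list(cache.local))  # ['bkt_1', 'bkt_3']
```

Because eviction removes only the local copy, a later search that needs `bkt_2` simply fetches it from the remote store again.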

For more information, please refer to the Splunk Documentation.

Splunk Services and Processes

A Splunk Enterprise server installs a process on your host, splunkd. splunkd is a distributed C/C++ server that accesses, processes and indexes streaming IT data. It also handles search requests. splunkd processes and indexes your data by streaming it through a series of pipelines, each made up of a series of processors.

Pipelines are single threads inside the splunkd process, each configured with a single snippet of XML. Processors are individual, reusable C or C++ functions that act on the stream of IT data passing through a pipeline. Pipelines can pass data to one another through queues. splunkd supports a command-line interface for searching and viewing results.

splunkd also provides the Splunk Web user interface. It allows users to search and navigate data stored by Splunk servers and to manage your Splunk deployment through a Web interface. It communicates with your Web browser through Representational State Transfer (REST).

splunkd runs administration and management services on port 8089 with SSL/HTTPS turned on by default. It also runs a Web server on port 8000 with SSL/HTTPS turned off by default.

Figure 20 Splunk Services and Processes

Splunk Network Ports

Splunk Enterprise components require network connectivity to work properly if they have been distributed across multiple machines. Splunk components communicate with each other using TCP and UDP network protocols. The following ports, among others, must be available to cluster nodes and should be opened to allow communication between the Splunk instances:

· On the master:

- The management port (by default, 8089) must be available to all other cluster nodes.

- The http port (by default, 8000) must be available to any browsers accessing the monitoring console.

· On each peer:

- The management port (by default, 8089) must be available to all other cluster nodes.

- The replication port (by default, 8080) must be available to all other peer nodes.

- The receiving port (by default, 9997) must be available to all forwarders sending data to that peer.

· On each search head:

- The management port (by default, 8089) must be available to all other nodes.

- The http port (by default, 8000) must be available to any browsers accessing data from the search head.

Figure 21 Splunk Network Ports
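The default port requirements listed above can be captured as data and checked from any machine that needs to reach a node. The following is a minimal sketch using only the Python standard library; the role keys, the function names, and the hostname in the usage note are illustrative assumptions, and the check covers TCP reachability only.

```python
import socket

# Default ports from the list above; a deployment may override any of them.
REQUIRED_PORTS = {
    "master": {"management": 8089, "http": 8000},
    "peer": {"management": 8089, "replication": 8080, "receiving": 9997},
    "search_head": {"management": 8089, "http": 8000},
}


def ports_for(role):
    """Return the sorted default TCP ports that a node of the given role exposes."""
    return sorted(REQUIRED_PORTS[role].values())


def is_reachable(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_node(host, role):
    """Map each named port for the role to its reachability from this machine."""
    return {
        name: is_reachable(host, port)
        for name, port in REQUIRED_PORTS[role].items()
    }
```

For example, `check_node("idx1.example.com", "peer")` (a hypothetical hostname) returns a dictionary keyed by `management`, `replication`, and `receiving`, with `True` for each port that accepted a connection.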

Splunk in Virtual Environments

Splunk Enterprise can be deployed in physical, virtual, cloud, or hybrid environments. When deploying Splunk software in a virtualized environment, each type of component can run independently inside its own virtual machine. The system resources required to run the different components vary and should be planned appropriately. The typical components that make up the core of a Splunk deployment include Splunk forwarders, indexers, and search heads. Indexers are responsible for storing and retrieving the data from disk, so CPU and disk I/O are the most important considerations. Search heads search for information across indexers and are usually both CPU and memory intensive. Forwarders collect and forward data; they are usually lightweight and not resource intensive. For forwarders, therefore, this CVD covers only how to deploy a universal forwarder from the deployment server. For data ingestion, the load generators send the generated events directly to the indexers without going through the forwarders. Other management components, including deployment servers and cluster masters, are usually lightweight and not resource intensive either.

The Splunk technical brief for virtual deployments recommends the following for Splunk virtual machines:

· Minimum 12 vCPU

· Minimum 12 GB of RAM

· Full reservations for vCPU and vRAM (no CPU and memory overcommit)

· Minimum 1200 random seek operations per second disk performance (sustained)

· Use VMware Tools in the guest virtual machine

· Use VMware Paravirtual SCSI (PVSCSI) controller

· Use VMXNET3 network adapter

· Provision virtual disks as Eager Zero Thick when not on an array that supports the appropriate VAAI primitives (“Write Same” and “ATS”)

Note: Splunk recommends Eager Zero Thick provisioning for non-VAAI datastores. Cisco HyperFlex datastores support integration with the VMware API for Array Integration (VAAI), so this restriction does not apply to them.
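The recommendations above lend themselves to a simple pre-deployment check. The sketch below is illustrative only: `VmSpec` and `violations` are hypothetical names rather than VMware or Splunk types, and the sample values are made up. The management virtual machines in Table 2 are sized below these minimums, so a check like this appears most relevant to indexers and search heads.

```python
from dataclasses import dataclass

# Minimums taken from the list above.
MIN_VCPUS = 12
MIN_RAM_GB = 12
MIN_SEEKS_PER_SEC = 1200


@dataclass
class VmSpec:
    """Hypothetical record describing one Splunk virtual machine."""
    name: str
    vcpus: int
    ram_gb: int
    seeks_per_sec: int       # sustained random seek rate measured on the data disk
    full_reservation: bool   # vCPU and vRAM fully reserved (no overcommit)
    scsi_controller: str
    network_adapter: str


def violations(vm):
    """Return a list of unmet recommendations; an empty list means compliant."""
    problems = []
    if vm.vcpus < MIN_VCPUS:
        problems.append(f"vCPU count {vm.vcpus} is below {MIN_VCPUS}")
    if vm.ram_gb < MIN_RAM_GB:
        problems.append(f"RAM {vm.ram_gb} GB is below {MIN_RAM_GB} GB")
    if vm.seeks_per_sec < MIN_SEEKS_PER_SEC:
        problems.append(f"disk seeks/s {vm.seeks_per_sec} is below {MIN_SEEKS_PER_SEC}")
    if not vm.full_reservation:
        problems.append("vCPU and vRAM are not fully reserved")
    if vm.scsi_controller != "PVSCSI":
        problems.append(f"SCSI controller is {vm.scsi_controller}, expected PVSCSI")
    if vm.network_adapter != "VMXNET3":
        problems.append(f"network adapter is {vm.network_adapter}, expected VMXNET3")
    return problems


# Sample values are illustrative; the seek rate in particular is not a measured result.
indexer = VmSpec("idx01", 12, 64, 1500, True, "PVSCSI", "VMXNET3")
print(violations(indexer))  # []
```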

Splunk Virtual Machines on Cisco HyperFlex

Table 2 lists the virtual machines to install in this solution.

Table 2 Splunk Virtual Machines on HX

| Role | Component Type | Number of Virtual Machines | vCPU | Memory | Data Disk | Provision | Network Adapter | Network Adapter Type | SCSI Controller | SCSI Controller Type |
|---|---|---|---|---|---|---|---|---|---|---|
| Indexer | Processing | 10 | 12 | 64GB | 600GB | Thick Eager Zeroed | 1 | VMXNET3 | 1 | PVSCSI |
| Search Head | Processing | 4 | 12 | 64GB | 48GB | Thin | 1 | VMXNET3 | 1 | PVSCSI |
| Universal Forwarder | Processing | 1 | 4 | 16GB | 48GB | Thin | 1 | VMXNET3 | 1 | PVSCSI |
| Cluster Master (Monitoring Console) | Management | 1 | 4 | 16GB | 48GB | Thin | 1 | VMXNET3 | 1 | PVSCSI |
| License Master | Management | 1 | 4 | 16GB | 48GB | Thin | 1 | VMXNET3 | 1 | PVSCSI |
| SH Deployer | Management | 1 | 4 | 16GB | 48GB | Thin | 1 | VMXNET3 | 1 | PVSCSI |
| Deployment Server | Management | 1 | 4 | 16GB | 48GB | Thin | 1 | VMXNET3 | 1 | PVSCSI |
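The aggregate resources that these virtual machines request from the HyperFlex cluster follow directly from Table 2. The short calculation below is a sketch that sums the per-VM values; it counts only the data disks listed in the table (any operating system disks are not included), and it does not account for hypervisor or storage controller overhead.

```python
# (role, number of VMs, vCPU per VM, memory GB per VM, data disk GB per VM)
VMS = [
    ("Indexer", 10, 12, 64, 600),
    ("Search Head", 4, 12, 64, 48),
    ("Universal Forwarder", 1, 4, 16, 48),
    ("Cluster Master (Monitoring Console)", 1, 4, 16, 48),
    ("License Master", 1, 4, 16, 48),
    ("SH Deployer", 1, 4, 16, 48),
    ("Deployment Server", 1, 4, 16, 48),
]


def totals(vms):
    """Sum VM count, vCPU, memory, and data disk across all roles."""
    result = {"vms": 0, "vcpu": 0, "memory_gb": 0, "data_disk_gb": 0}
    for _role, count, vcpu, memory_gb, disk_gb in vms:
        result["vms"] += count
        result["vcpu"] += count * vcpu
        result["memory_gb"] += count * memory_gb
        result["data_disk_gb"] += count * disk_gb
    return result


print(totals(VMS))
# {'vms': 19, 'vcpu': 188, 'memory_gb': 976, 'data_disk_gb': 6432}
```

Because the recommendations call for full vCPU and vRAM reservations, these totals are what the cluster must be able to reserve, not just an upper bound on usage.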

Figure 22 illustrates the various Splunk tiers and components in this solution.

Figure 22 Splunk Virtual Machines on HX

SwiftStack Object Storage on Cisco UCS S3260

SwiftStack provides native object APIs (S3 and Swift) to access the data stored in the SwiftStack cluster. The design provides linear scalability and extreme durability with no single point of failure. It runs on any standard Linux system. SwiftStack clusters also support multi-region data center architectures.

SwiftStack nodes take on four different roles, collectively called PACO, to handle the different services in the SwiftStack cluster (a group of SwiftStack nodes): Proxy (P), Account (A), Container (C), and Object (O). In most deployments, all four services are deployed and run on a single physical node.

Figure 23 SwiftStack Node Roles

SwiftStack System Architecture

The SwiftStack solution provides enterprise-grade object storage, with OpenStack Swift at its core. SwiftStack has been deployed at many sites with massive amounts of data stored. SwiftStack consists of two major components: SwiftStack Storage Nodes, which store the data, and SwiftStack Controller Nodes, which form an out-of-band management system that manages one or more SwiftStack storage clusters. In this solution, the SwiftStack Controller Nodes are built with two Cisco UCS C220 M5 servers in an Active/Standby configuration, and the SwiftStack Storage Nodes are built with six Cisco UCS S3260 M5 storage servers. Each Cisco UCS S3260 server is loaded with twenty-seven 12TB HDDs, so the whole system provides close to 2PB of total storage capacity.
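The capacity figure follows from the drive counts given above. The sketch below works through the arithmetic using decimal units (1 PB = 1000 TB). The result is the sum of the drive capacities; the `usable_tb` helper and its replica count are assumptions added for illustration, because the capacity available to Splunk depends on the data protection policy configured in SwiftStack.

```python
STORAGE_NODES = 6    # Cisco UCS S3260 M5 storage servers
HDDS_PER_NODE = 27   # data drives per server
HDD_TB = 12          # capacity per drive, in TB

raw_tb = STORAGE_NODES * HDDS_PER_NODE * HDD_TB
raw_pb = raw_tb / 1000

print(f"{raw_tb} TB raw, about {raw_pb:.2f} PB")  # 1944 TB raw, about 1.94 PB


def usable_tb(raw_capacity_tb, replicas):
    """Estimate usable capacity when every object is stored `replicas` times.

    The replica count is a hypothetical input, not a value taken from this design.
    """
    return raw_capacity_tb / replicas
```

For instance, `usable_tb(raw_tb, 3)` gives 648 TB under an assumed three-replica policy.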

Figure 24 SwiftStack Components

SwiftStack Network Layout

Network requirements for SwiftStack are based on standard Ethernet connections. While the software can work on a single network interface, it is recommended to configure multiple virtual interfaces in Cisco UCS to segregate the network traffic. The Cisco UCS S3260 has two physical 40Gb ports, and the Cisco VIC allows you to carve out many virtual network interfaces (vNICs) on each physical port.

The following networks are recommended for the smooth operation of the cluster:

· Management Network: All nodes must be able to route IP traffic to a SwiftStack controller. This is the management network for all services.

· Outward-Facing Network: This is the front-facing network. It is used for API access and to run the proxy and authentication services.

· Cluster-Facing Network: This is an internal network for communication between the proxy servers and the storage nodes. This is a private network.