Cisco UCS C220 M5 Rack Servers with ScaleProtect

Cisco UCS® C220 M5 Rack Servers with ScaleProtect™

Deployment Guide for ScaleProtect with Cisco UCS C220 M5 Rack Servers and Commvault HyperScale Release 11 SP16

Last Updated: November 15, 2019

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to:

http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series. Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2019 Cisco Systems, Inc. All rights reserved.

Table of Contents

Cisco UCS Connectivity to Nexus Switches

Optional: Cisco UCS connectivity to SAN Fabrics

Cisco Nexus 9000 Initial Configuration Setup

Enable Appropriate Cisco Nexus 9000 Features and Settings

Cisco Nexus 9000 A and Cisco Nexus 9000 B

Create VLANs for ScaleProtect IP Traffic

Cisco Nexus 9000 A and Cisco Nexus 9000 B

Configure Virtual Port Channel Domain

Configure Network Interfaces for the vPC Peer Links

Configure Network Interfaces to Cisco UCS Fabric Interconnect

Uplink into Existing Network Infrastructure

Cisco Nexus 9000 A and B using Port Channel Example

Cisco UCS Server Configuration

Perform Initial Setup of Cisco UCS 6454 Fabric Interconnects

Upgrade Cisco UCS Manager Software to Version 4.0(4b)

Add Block IP Addresses for KVM Access

Optional: Edit Policy to Automatically Discover Server Ports

Optional: Enable Fibre Channel Ports

Optional: Create VSAN for the Fibre Channel Interfaces

Optional: Create Port Channels for the Fibre Channel Interfaces

Create Port Channels for Ethernet Uplinks

Cisco UCS C220 M5 Server Node Configuration

Optional: Create a WWNN Address Pool for FC-based Storage Access

Optional: Create a WWPN Address Pools for FC-based Storage Access

Create Network Control Policy for Cisco Discovery Protocol

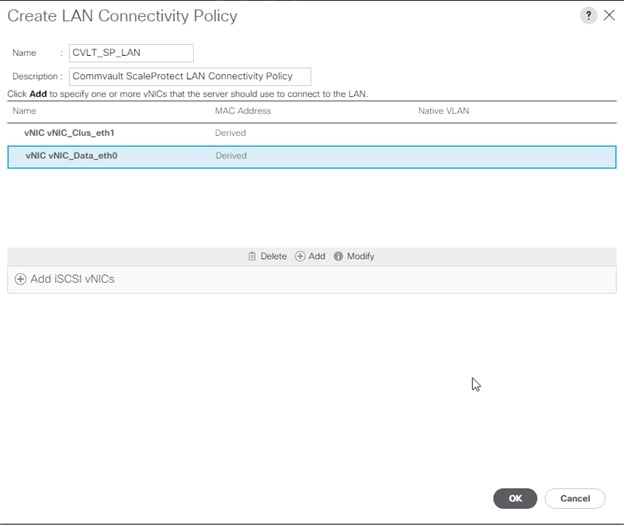

Create LAN Connectivity Policy

Optional: Create vHBA Templates for FC Connectivity

Optional: Create FC SAN Connectivity Policies

Cisco UCS C220 M5 Server Storage Setup

ScaleProtect with Cisco UCS Server Storage Profile

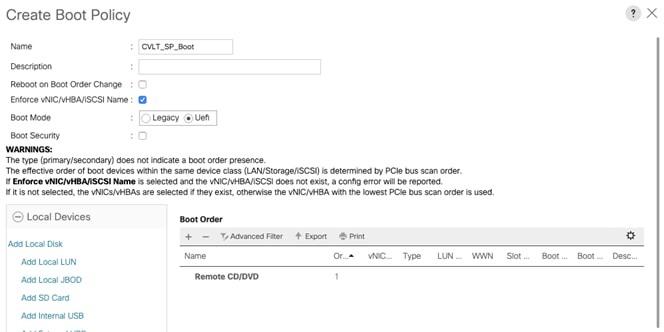

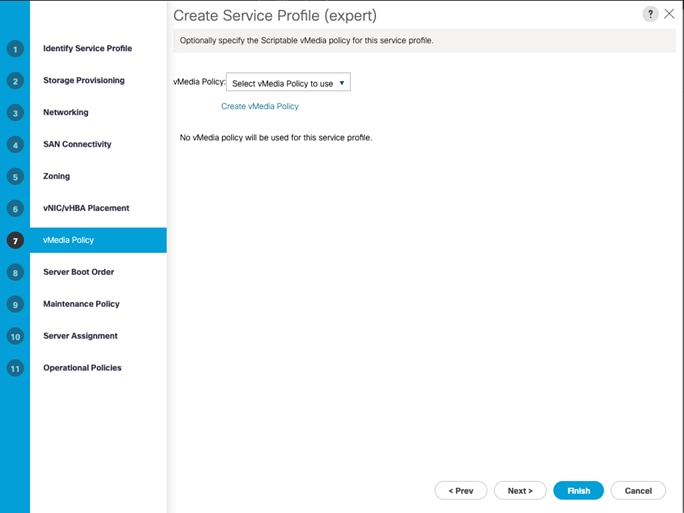

Cisco UCS C220 Service Profile Template

Create Service Profile Template

Commvault HyperScale Installation and Configuration

Cisco Validated Designs (CVDs) deliver systems and solutions that are designed, tested, and documented to facilitate and improve customer deployments. These designs incorporate a wide range of technologies and products into a portfolio of solutions that have been developed to address the business needs of the customers and to guide them from design to deployment. Cisco and Commvault have partnered to deliver a series of data protection solutions that provide customers with a new level of management simplicity and scale for managing secondary data on premises.

Secondary storage and their associated workloads account for the vast majority of storage today. Enterprises face increasing demands to store and protect data while addressing the need to find new value in these secondary storage locations as a means to drive key business and IT transformation initiatives. ScaleProtect™ with Cisco Unified Computing System (Cisco UCS) supports these initiatives by providing a unified modern data protection and management platform that delivers cloud-scalable services on-premises. The solution drives down costs across the enterprise by eliminating costly point solutions that do not scale and lack visibility into secondary data.

This CVD provides implementation details for the ScaleProtect with Cisco UCS solution, specifically focusing on the Cisco UCS C220 M5 Rack Server. ScaleProtect with Cisco UCS is deployed as a single cohesive system, which is made up of Commvault® Software and Cisco UCS infrastructure. Cisco UCS infrastructure provides the compute, storage, and networking, while Commvault Software provides the data protection and software designed scale-out platform.

Introduction

The ScaleProtect with Cisco UCS solution is a pre-designed, integrated, and validated architecture for modern data protection that combines Cisco UCS servers, Cisco Nexus switches, Commvault Complete™ Backup & Recovery, and Commvault HyperScale™ Software into a single software-defined, flexible scale-out architecture. ScaleProtect with Cisco UCS is designed for high availability and resiliency, with no single point of failure, while maintaining cost-effectiveness and flexibility in design to support secondary storage workloads (for example, backup and recovery, disaster recovery, and dev/test copies).

The ScaleProtect design discussed in this document has been validated for resiliency and fault tolerance during system upgrades, component failures, and both partial and complete loss-of-power scenarios.

Audience

The audience for this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineers, IT architects, and customers who want to take advantage of an infrastructure that is built to deliver IT efficiency and enable IT innovation. The reader of this document is expected to have the necessary training and background to install and configure Cisco UCS, Cisco Nexus, and Cisco UCS Manager as well as a high-level understanding of Commvault Software and its components. External references are provided where applicable and it is recommended that the reader be familiar with these documents.

Purpose of this Document

This document provides step-by-step configuration and implementation guidelines for setting up the ScaleProtect with Cisco UCS C220 M5 solution.

The design that is implemented is discussed in detail in the ScaleProtect with Cisco UCS Design Guide found here:

Solution Summary

Cisco UCS revolutionized the server market through its programmable fabric and automated management that simplify application and service deployment. Commvault HyperScale™ Software provides the software-defined scale-out architecture that is fully integrated and includes true hybrid cloud capabilities. Commvault Complete Backup & Recovery provides a full suite of functionality for protecting, recovering, indexing, securing, automating, reporting, and natively accessing data. Cisco UCS, along with Commvault Software delivers an integrated software defined scale-out solution called ScaleProtect with Cisco UCS.

It is the only solution available with enterprise-class data management services that takes full advantage of industry-standard scale-out infrastructure together with Cisco UCS Servers.

Figure 1 ScaleProtect with Cisco UCS C220 M5 Solution Summary

Architectural Overview

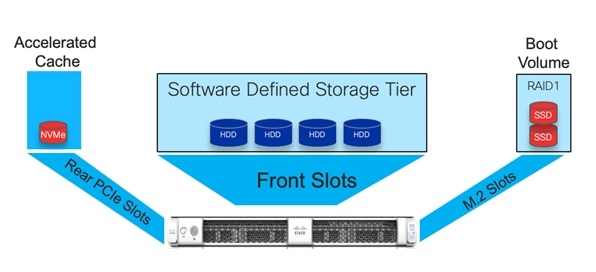

A typical ScaleProtect with Cisco UCS deployment starts with a 3-node block. The solution has been validated with three Cisco UCS C220 M5 Server Nodes with built-in storage that consists of 4 front-facing internal Large Form Factor (LFF) HDDs for the software defined data storage tier, a rear-loaded NVMe PCIe SSD for the accelerated cache tier, and internal M.2 SATA SSDs for the operating system and associated binaries. Connectivity for the solution is provided by a pair of Cisco UCS 6454 Fabric Interconnects connected to a pair of Cisco Nexus 9336C-FX2 upstream network switches.

ScaleProtect with Cisco UCS can start with more than 3 nodes; additional nodes are simply connected to the Cisco UCS 6454 Series Fabric Interconnects for linear scalability.

Figure 2 3-Node ScaleProtect with Cisco UCS Physical Architecture

The validated configuration uses the following components for deployment:

· Cisco Unified Computing System (Cisco UCS)

- Cisco UCS Manager

- Cisco UCS 6454 Series Fabric Interconnects

- Cisco UCS C220 M5 LFF Server

- Cisco VIC 1457

- Cisco Nexus 9336C-FX2 Series Switches

- Commvault Complete™ Backup and Recovery v11

- Commvault HyperScale Software release 11 SP16

This document guides customers through the low-level steps for deploying the ScaleProtect solution base architecture. These procedures describe everything from physical cabling to network, compute, and storage device configurations.

This document includes additional Cisco UCS configuration information that helps in enabling SAN connectivity to existing storage environment. The ScaleProtect design for this solution doesn’t need SAN connectivity and additional information is included only as a reference and should be skipped if SAN connectivity is not required. All the sections that should be skipped for default design have been marked as optional.

Software Revisions

Table 1 lists the hardware and software versions used for the solution validation.

Table 1 Hardware and Software Revisions

| Layer | Device | Image |
| --- | --- | --- |
| Compute | Cisco UCS 6454 Series Fabric Interconnects | 4.0(4b) |
| | Cisco UCS C220 M5 Rack Server | 4.0(4b) |
| Network | Cisco Nexus 9336C-FX2 NX-OS | 7.0(3)I7(6) |
| Software | Cisco UCS Manager | 4.0(4b) |
| | Commvault Complete Backup and Recovery | v11 Service Pack 16 |
| | Commvault HyperScale Software | v11 Service Pack 16 |

Configuration Guidelines

This document provides details for configuring a fully redundant, highly available ScaleProtect configuration. Therefore, appropriate references are provided to indicate the component being configured at each step, such as 01 and 02 or A and B. For example, the Cisco UCS fabric interconnects are identified as FI-A or FI-B. Finally, to indicate that you should include information pertinent to your environment in a given step, <text> appears as part of the command structure. See the following example during a configuration step for Cisco Nexus switches:

Nexus-9000-A (config)# ntp server <NTP Server IP Address> use-vrf management

This document is intended to enable customers and partners to fully configure the customer environment. During this process, various steps may require customer-specific naming conventions, IP addresses, VLAN schemes, and MAC addresses.
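The placeholder convention described above lends itself to simple scripting. The following is a minimal sketch, not part of the validated procedure: the helper name `fill_placeholders` and the NTP address are hypothetical and chosen only for illustration.

```python
import re

def fill_placeholders(template: str, values: dict[str, str]) -> str:
    """Replace each <name> placeholder with its value; fail on unknown names."""
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in values:
            raise KeyError(f"no value supplied for placeholder <{name}>")
        return values[name]
    return re.sub(r"<([^<>]+)>", substitute, template)

# The template is the example command from this section.
command = fill_placeholders(
    "ntp server <NTP Server IP Address> use-vrf management",
    {"NTP Server IP Address": "192.168.160.254"},  # hypothetical address
)
print(command)
```

Raising an error on an unfilled placeholder is a deliberate choice in this sketch, so that a literal `<text>` token is never pasted into a switch by accident.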

This document details network (Cisco Nexus), compute (Cisco UCS), software (Commvault) and related storage configurations.

Table 2 and Table 3 list the VLANs, VSANs, and subnets used to set up the ScaleProtect infrastructure and provide connectivity between the core elements of the design.

Table 2 ScaleProtect VLAN Configuration

| VLAN Name | VLAN | VLAN Purpose | Example Subnet |
| --- | --- | --- | --- |
| Out of Band Mgmt | 11 | VLAN for out-of-band management | 192.168.160.0/22 |
| SP-Data-VLAN | 111 | VLAN for data protection and management network | 192.168.20.0/24 |
| SP-Cluster-VLAN | 3000 | VLAN for ScaleProtect Cluster internal network | 10.10.10.0/24 |
| Native-VLAN | 2 | Native VLAN | |
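Before applying a VLAN plan such as the one in Table 2, it can help to sanity-check it. The sketch below is illustrative only; it assumes the plan is held in a Python dictionary (the name `vlan_plan` is hypothetical) and checks for duplicate IDs, IDs outside the 802.1Q range of 1 to 4094, and overlapping example subnets.

```python
import ipaddress
from itertools import combinations

# Values copied from Table 2; the Native VLAN has no example subnet.
vlan_plan = {
    "Out of Band Mgmt": (11, "192.168.160.0/22"),
    "SP-Data-VLAN": (111, "192.168.20.0/24"),
    "SP-Cluster-VLAN": (3000, "10.10.10.0/24"),
    "Native-VLAN": (2, None),
}

def check_vlan_plan(plan):
    """Return a list of problems found in the VLAN plan (empty if none)."""
    problems = []
    ids = [vlan_id for vlan_id, _ in plan.values()]
    if len(ids) != len(set(ids)):
        problems.append("duplicate VLAN IDs")
    for name, (vlan_id, _) in plan.items():
        if not 1 <= vlan_id <= 4094:
            problems.append(f"{name}: VLAN ID {vlan_id} outside 1-4094")
    # Only VLANs that carry a subnet take part in the overlap check.
    subnets = {name: ipaddress.ip_network(cidr)
               for name, (_, cidr) in plan.items() if cidr}
    for (name_a, net_a), (name_b, net_b) in combinations(subnets.items(), 2):
        if net_a.overlaps(net_b):
            problems.append(f"{name_a} overlaps {name_b}")
    return problems

print(check_vlan_plan(vlan_plan))
```

Substituting site-specific IDs and subnets into the dictionary gives a quick check before any switch is touched.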

VSAN ids are optional and are only required if SAN connectivity is needed from the ScaleProtect Cluster to existing Tape Library or SAN fabrics.

Table 3 Optional: ScaleProtect VSAN Configuration

| VSAN Name | VSAN | VSAN Purpose |
| --- | --- | --- |
| Backup-VSAN-A | 201 | Fabric-A VSAN for connectivity to data protection devices. |
| Backup-VSAN-B | 202 | Fabric-B VSAN for connectivity to data protection devices. |
| Prod-VSAN-A | 101 | Fabric-A VSAN for connectivity to production SAN Fabrics. |
| Prod-VSAN-B | 102 | Fabric-B VSAN for connectivity to production SAN Fabrics. |

Physical Infrastructure

The information in this section is provided as a reference for cabling the equipment in the ScaleProtect environment.

This document assumes that the out-of-band management ports are plugged into an existing management infrastructure at the deployment site. These interfaces will be used in various configuration steps.

Customers can choose interfaces and ports of their liking but failure to follow the exact connectivity shown in figures below will result in changes to the deployment procedures since specific port information is used in various configuration steps.

Cisco UCS Connectivity to Nexus Switches

For physical connectivity details of Cisco UCS to the Cisco Nexus switches, refer to Figure 3.

Figure 3 Cisco UCS Connectivity to the Nexus Switches

Each Cisco UCS C220 M5 rack server in the design is redundantly connected to the managing fabric interconnects, with at least one port connected to each FI to support converged traffic. Each server is equipped with a Cisco VIC 1457 network interface card (NIC) with four 10/25 Gigabit Ethernet (GbE) ports, installed in the modular LAN on motherboard (MLOM) slot. The standard practice for redundant connectivity is to connect port 1 of each server’s VIC to a numbered port on FI A, and port 3 of each server’s VIC to the same numbered port on FI B. Ports 1 and 3 are used because ports 1 and 2 form an internal port channel, as do ports 3 and 4. This arrangement also allows an optional four-cable connection method that provides an effective 50GbE of bandwidth to each fabric interconnect.

Table 4 Cisco UCS C220 Server Connectivity to Cisco UCS Fabric Interconnects

| Local Device | Local Port | Connection | Remote Device | Remote Port |
| --- | --- | --- | --- | --- |
| Cisco UCS Fabric Interconnect A | Eth1/17 | 25GbE | Cisco UCS C220 M5 LFF Server1 | VIC Port 1 |
| Cisco UCS Fabric Interconnect A | Eth1/18 | 25GbE | Cisco UCS C220 M5 LFF Server2 | VIC Port 1 |
| Cisco UCS Fabric Interconnect A | Eth1/19 | 25GbE | Cisco UCS C220 M5 LFF Server3 | VIC Port 1 |
| Cisco UCS Fabric Interconnect B | Eth1/17 | 25GbE | Cisco UCS C220 M5 LFF Server1 | VIC Port 3 |
| Cisco UCS Fabric Interconnect B | Eth1/18 | 25GbE | Cisco UCS C220 M5 LFF Server2 | VIC Port 3 |
| Cisco UCS Fabric Interconnect B | Eth1/19 | 25GbE | Cisco UCS C220 M5 LFF Server3 | VIC Port 3 |
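The cabling pattern in Table 4 is regular enough to generate for larger clusters. The following sketch assumes the same pattern continues on consecutive fabric interconnect ports starting at Eth1/17; the function name `cabling_plan` and the `first_fi_port` parameter are hypothetical, and any other port choice changes later configuration steps.

```python
def cabling_plan(server_count: int, first_fi_port: int = 17):
    """Yield (fabric interconnect, FI port, server, VIC port) rows as in Table 4."""
    # VIC ports 1-2 and 3-4 pair internally, so port 1 goes to FI A and port 3 to FI B.
    vic_port_for_fabric = {"A": 1, "B": 3}
    for fabric, vic_port in vic_port_for_fabric.items():
        for index in range(server_count):
            yield (f"Cisco UCS Fabric Interconnect {fabric}",
                   f"Eth1/{first_fi_port + index}",
                   f"Cisco UCS C220 M5 LFF Server{index + 1}",
                   f"VIC Port {vic_port}")

for row in cabling_plan(3):
    print(" | ".join(row))
```

Calling `cabling_plan(3)` reproduces the six rows of Table 4.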

Table 5 Cisco UCS FI Connectivity to Nexus Switches

| Local Device | Local Port | Connection | Remote Device | Remote Port |
| --- | --- | --- | --- | --- |
| Cisco UCS Fabric Interconnect A | Eth1/49 | 40/100GbE | Cisco Nexus 9336C-FX2 A | Eth1/25 |
| Cisco UCS Fabric Interconnect A | Eth1/50 | 40/100GbE | Cisco Nexus 9336C-FX2 B | Eth1/25 |
| Cisco UCS Fabric Interconnect B | Eth1/49 | 40/100GbE | Cisco Nexus 9336C-FX2 A | Eth1/26 |
| Cisco UCS Fabric Interconnect B | Eth1/50 | 40/100GbE | Cisco Nexus 9336C-FX2 B | Eth1/26 |

Optional: Cisco UCS connectivity to SAN Fabrics

For physical connectivity details of Cisco UCS to a Cisco MDS based redundant SAN fabric (the MDS 9132T is shown as an example), refer to Figure 4. Cisco UCS to SAN connectivity is optional and is not required for the default ScaleProtect implementation. SAN connectivity details are included in this document as a reference that can be used to connect the ScaleProtect infrastructure to existing SAN fabrics in the customer’s environment.

This document includes SAN configuration details for Cisco UCS but does not cover Cisco MDS switch configuration or end-device configuration, such as storage arrays or tape libraries.

Figure 4 Cisco UCS Connectivity to Cisco MDS Switches

Table 6 Optional: Cisco UCS Connectivity to Cisco MDS Switches

| Local Device | Local Port | Connection | Remote Device | Remote Port |
| --- | --- | --- | --- | --- |
| Cisco UCS Fabric Interconnect A | FC1/1 | 32Gbps | Cisco MDS 9132T A | FC1/1 |
| Cisco UCS Fabric Interconnect A | FC1/2 | 32Gbps | Cisco MDS 9132T A | FC1/2 |
| Cisco UCS Fabric Interconnect B | FC1/1 | 32Gbps | Cisco MDS 9132T B | FC1/1 |
| Cisco UCS Fabric Interconnect B | FC1/2 | 32Gbps | Cisco MDS 9132T B | FC1/2 |

Table 7 and Table 8 list the hardware configuration and sizing options of the Cisco UCS C220 M5 nodes for the ScaleProtect solution.

Table 7 Cisco UCS C220 M5 Server Node Configuration

| Resources | Cisco UCS C220 M5 LFF |
| --- | --- |
| CPU | 2x 2nd Gen Intel® Xeon® Scalable Silver 4214 |
| Memory | 96GB DDR4 |
| Storage: Boot Drives | (2) 960GB SSD – RAID1 |
| Storage: Accelerated Cache Tier | (1) 1.6TB NVMe |
| Storage: Software Defined Data Storage Tier | (4) 4/6/8/10/12TB HDD |
| Storage Controller | SAS 12G RAID |
| Network | (2/4) 25Gbps |

Table 8 ScaleProtect with Cisco UCS C220 M5 Solution Sizing

| Cisco UCS Model | HDD | 3 Node | 6 Node | 9 Node | 12 Node | 15 Node |
| --- | --- | --- | --- | --- | --- | --- |
| Cisco UCS C220 M5 (4 Drives per node) | 4 TB | 29 TiB | 58 TiB | 87 TiB | 116 TiB | 145 TiB |
| | 6 TB | 44 TiB | 88 TiB | 132 TiB | 176 TiB | 220 TiB |
| | 8 TB | 58 TiB | 116 TiB | 174 TiB | 232 TiB | 290 TiB |
| | 10 TB | 72 TiB | 144 TiB | 222 TiB | 296 TiB | 370 TiB |
| | 12 TB | 87 TiB | 174 TiB | 261 TiB | 348 TiB | 435 TiB |

1. HDD capacity values are calculated using Base10 (e.g. 1TB = 1,000,000,000,000 bytes)

2. Usable capacity values are calculated using Base2 (e.g. 1TiB = 1,099,511,627,776 bytes), post erasure coding
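The two notes above mix decimal and binary units, which is easy to get wrong by hand. The sketch below converts raw drive capacity from TB to TiB and then applies an erasure-coding efficiency. The efficiency of 4/6 (four data fragments plus two parity fragments) is an assumption made only for this illustration; this document does not state the erasure-coding scheme, and the names used are hypothetical.

```python
TB = 10**12   # Base10 terabyte, as in note 1
TIB = 2**40   # Base2 tebibyte, as in note 2

def raw_tib(drive_tb: int, drives_per_node: int, nodes: int) -> float:
    """Raw capacity of the data storage tier, expressed in TiB."""
    return drive_tb * drives_per_node * nodes * TB / TIB

# ASSUMPTION: 4 data + 2 parity fragments; not confirmed by this document.
ASSUMED_EFFICIENCY = 4 / 6

raw = raw_tib(drive_tb=4, drives_per_node=4, nodes=3)
usable = raw * ASSUMED_EFFICIENCY
print(f"raw: {raw:.2f} TiB, usable (assumed efficiency): {usable:.2f} TiB")
```

Under that assumption, the 3-node configuration with 4 TB drives works out to roughly 29 TiB, in line with the first entry of Table 8. Treat the result as an estimate and use Table 8 for sizing.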

Figure 5 illustrates the ScaleProtect implementation workflow which is explained in the following sections of this document.

Figure 5 ScaleProtect Implementation steps

This section provides detailed steps to configure the Cisco Nexus 9000 switches used in this ScaleProtect environment. Some changes may be appropriate for a customer’s environment, but care should be taken when stepping outside of these instructions as it may lead to an improper configuration.

For further configuration details, refer to the Cisco Nexus 9000 Series NX-OS Interfaces Configuration Guide.

Any Cisco Nexus 9k switches can be used in the deployment based on the bandwidth requirements. However, be aware that there may be slight differences in setup and configuration based on the switch used. The switch model also dictates the connectivity options between the devices including the bandwidth supported, transceiver and cable types required.

Figure 6 Cisco Nexus Configuration Workflow

Cisco Nexus 9000 Initial Configuration Setup

This section describes how to configure the Cisco Nexus switches to use in a ScaleProtect environment. This procedure assumes that you are using Cisco Nexus 9000 switches running 7.0(3)I7(6) code.

Cisco Nexus 9000 A

To set up the initial configuration for the Cisco Nexus A switch, follow these steps:

On initial boot and connection to the serial or console port of the switch, the NX-OS setup should automatically start and attempt to enter Power on Auto Provisioning.

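Abort Power On Auto Provisioning [yes - continue with normal setup, skip - bypass password and basic configuration, no - continue with Power On Auto Provisioning] (yes/skip/no)[no]: yes

Disabling POAP.......Disabling POAP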

poap: Rolling back, please wait... (This may take 5-15 minutes)

---- System Admin Account Setup ----

Do you want to enforce secure password standard (yes/no) [y]: Enter

Enter the password for "admin": <Switch Password>

Confirm the password for "admin": <Switch Password>

Would you like to enter the basic configuration dialog (yes/no): yes

Create another login account (yes/no) [n]: Enter

Configure read-only SNMP community string (yes/no) [n]: Enter

Configure read-write SNMP community string (yes/no) [n]: Enter

Enter the switch name: <Name of the Switch A>

Continue with Out-of-band (mgmt0) management configuration? (yes/no) [y]: Enter

Mgmt0 IPv4 address: <Mgmt. IP address for Switch A>

Mgmt0 IPv4 netmask: <Mgmt. IP Subnet Mask>

Configure the default gateway? (yes/no) [y]: Enter

IPv4 address of the default gateway: <Default GW for the Mgmt. IP>

Configure advanced IP options? (yes/no) [n]: Enter

Enable the telnet service? (yes/no) [n]: Enter

Enable the ssh service? (yes/no) [y]: Enter

Type of ssh key you would like to generate (dsa/rsa) [rsa]: Enter

Number of rsa key bits <1024-2048> [1024]: Enter

Configure the ntp server? (yes/no) [n]: y

NTP server IPv4 address: <NTP Server IP Address>

Configure default interface layer (L3/L2) [L2]: Enter

Configure default switchport interface state (shut/noshut) [noshut]: shut

Configure CoPP system profile (strict/moderate/lenient/dense/skip) [strict]: Enter

Would you like to edit the configuration? (yes/no) [n]: Enter

2. Review the configuration summary before enabling the configuration.

Cisco Nexus 9000 B

To set up the initial configuration for the Cisco Nexus B switch, follow these steps:

On initial boot and connection to the serial or console port of the switch, the NX-OS setup should automatically start and attempt to enter Power on Auto Provisioning.

Abort Power On Auto Provisioning [yes - continue with normal setup, skip - bypass password and basic configuration, no - continue with Power On Auto Provisioning] (yes/skip/no)[no]: yes

Disabling POAP.......Disabling POAP

poap: Rolling back, please wait... (This may take 5-15 minutes)

---- System Admin Account Setup ----

Do you want to enforce secure password standard (yes/no) [y]: Enter

Enter the password for "admin": <Switch Password>

Confirm the password for "admin": <Switch Password>

Would you like to enter the basic configuration dialog (yes/no): yes

Create another login account (yes/no) [n]: Enter

Configure read-only SNMP community string (yes/no) [n]: Enter

Configure read-write SNMP community string (yes/no) [n]: Enter

Enter the switch name: <Name of the Switch B>

Continue with Out-of-band (mgmt0) management configuration? (yes/no) [y]: Enter

Mgmt0 IPv4 address: <Mgmt. IP address for Switch B>

Mgmt0 IPv4 netmask: <Mgmt. IP Subnet Mask>

Configure the default gateway? (yes/no) [y]: Enter

IPv4 address of the default gateway: <Default GW for the Mgmt. IP>

Configure advanced IP options? (yes/no) [n]: Enter

Enable the telnet service? (yes/no) [n]: Enter

Enable the ssh service? (yes/no) [y]: Enter

Type of ssh key you would like to generate (dsa/rsa) [rsa]: Enter

Number of rsa key bits <1024-2048> [1024]: Enter

Configure the ntp server? (yes/no) [n]: y

NTP server IPv4 address: <NTP Server IP Address>

Configure default interface layer (L3/L2) [L2]: Enter

Configure default switchport interface state (shut/noshut) [noshut]: shut

Configure CoPP system profile (strict/moderate/lenient/dense/skip) [strict]: Enter

Would you like to edit the configuration? (yes/no) [n]: Enter

2. Review the configuration summary before enabling the configuration.

Enable Appropriate Cisco Nexus 9000 Features and Settings

Cisco Nexus 9000 A and Cisco Nexus 9000 B

To enable the IP switching feature and set default spanning tree behaviors, follow these steps:

1. On each Nexus 9000, enter the configuration mode:

config terminal

2. Use the following commands to enable the necessary features:

feature lacp

feature vpc

feature interface-vlan

feature udld

feature nxapi

3. Configure the spanning tree and save the running configuration to start-up:

spanning-tree port type network default

spanning-tree port type edge bpduguard default

spanning-tree port type edge bpdufilter default

port-channel load-balance src-dst l4port

copy run start
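After enabling the features, it can be useful to confirm that none were missed. The sketch below is illustrative only: it assumes the text of the switch running configuration has already been captured into a string by some means, and the helper name `missing_features` and the sample text are hypothetical.

```python
# Features enabled in step 2 of this procedure.
REQUIRED_FEATURES = ["lacp", "vpc", "interface-vlan", "udld", "nxapi"]

def missing_features(running_config: str) -> list[str]:
    """Return the required features that have no 'feature <name>' line."""
    enabled = {line.split()[1] for line in running_config.splitlines()
               if line.startswith("feature ") and len(line.split()) >= 2}
    return [name for name in REQUIRED_FEATURES if name not in enabled]

# Hypothetical excerpt of a running configuration with two features missing.
sample = """feature lacp
feature vpc
feature udld
"""
print(missing_features(sample))
```

An empty list means every feature from step 2 is present in the captured configuration.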

Create VLANs for ScaleProtect IP Traffic

Cisco Nexus 9000 A and Cisco Nexus 9000 B

To create the necessary virtual local area networks (VLANs), follow these steps on both switches:

1. From the configuration mode, run the following commands:

vlan <ScaleProtect-Data VLAN id>

name SP-Data-VLAN

exit

vlan <ScaleProtect-Cluster VLAN id>

name SP-Cluster-VLAN

exit

vlan <Native VLAN id>

name Native-VLAN

exit

copy run start
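Because the same VLAN commands are entered on both switches, generating them from one definition helps keep the two configurations identical. This is a minimal sketch using the example IDs from Table 2; the function name `vlan_commands` is hypothetical.

```python
def vlan_commands(vlans: dict[int, str]) -> list[str]:
    """Return NX-OS lines that create and name each VLAN, then save the config."""
    lines = []
    for vlan_id, name in vlans.items():
        lines += [f"vlan {vlan_id}", f"name {name}", "exit"]
    lines.append("copy run start")
    return lines

# Example IDs taken from Table 2; replace them with site-specific values.
for line in vlan_commands({111: "SP-Data-VLAN", 3000: "SP-Cluster-VLAN", 2: "Native-VLAN"}):
    print(line)
```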

Configure Virtual Port Channel Domain

Cisco Nexus 9000 A

To configure vPC domain for switch A, follow these steps:

1. From the global configuration mode, create a new vPC domain:

vpc domain 10

2. Make the Nexus 9000 A the primary vPC peer by defining a low priority value:

role priority 10

3. Use the management interfaces on the supervisors of the Nexus 9000s to establish a keepalive link:

peer-keepalive destination <Mgmt. IP address for Switch B> source <Mgmt. IP address for Switch A>

4. Enable the following features for this vPC domain:

peer-switch

delay restore 150

peer-gateway

ip arp synchronize

auto-recovery

copy run start

Cisco Nexus 9000 B

To configure the vPC domain for switch B, follow these steps:

1. From the global configuration mode, create a new vPC domain:

vpc domain 10

2. Make the Nexus 9000 B the secondary vPC peer by defining a higher priority value than on the Nexus 9000 A:

role priority 20

3. Use the management interfaces on the supervisors of the Nexus 9000s to establish a keepalive link:

peer-keepalive destination <Mgmt. IP address for Switch A> source <Mgmt. IP address for Switch B>

4. Enable the following features for this vPC domain:

peer-switch

delay restore 150

peer-gateway

ip arp synchronize

auto-recovery

copy run start
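The vPC domain configuration on the two switches differs only in the role priority and in the direction of the peer-keepalive addresses. The sketch below makes that symmetry explicit; the function name and the management IP addresses are hypothetical and used only for illustration.

```python
def vpc_domain_commands(role_priority: int, local_mgmt_ip: str, peer_mgmt_ip: str,
                        domain_id: int = 10) -> list[str]:
    """Return the vPC domain commands from this section for one switch."""
    return [
        f"vpc domain {domain_id}",
        f"role priority {role_priority}",
        f"peer-keepalive destination {peer_mgmt_ip} source {local_mgmt_ip}",
        "peer-switch",
        "delay restore 150",
        "peer-gateway",
        "ip arp synchronize",
        "auto-recovery",
        "copy run start",
    ]

# Hypothetical management addresses for switch A and switch B.
switch_a_ip, switch_b_ip = "192.168.160.11", "192.168.160.12"
config_a = vpc_domain_commands(10, switch_a_ip, switch_b_ip)  # lower value: primary
config_b = vpc_domain_commands(20, switch_b_ip, switch_a_ip)  # higher value: secondary

for line_a, line_b in zip(config_a, config_b):
    marker = "  " if line_a == line_b else "! "  # flag the lines that differ
    print(f"{marker}{line_a:<70}{line_b}")
```

Only the second and third lines differ between the two switches, which is a quick way to spot a keepalive source and destination that were not swapped.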

Configure Network Interfaces for the vPC Peer Links

To configure the network interfaces for the vPC Peer links, follow these steps:

Cisco Nexus 9000 A

1. Define a port description for the interfaces connecting to vPC Peer <Nexus Switch B>.

interface Eth1/27

description VPC Peer <Nexus-B Switch Name>:1/27

interface Eth1/28

description VPC Peer <Nexus-B Switch Name>:1/28

2. Apply a port channel to both vPC Peer links and bring up the interfaces.

interface Eth1/27,Eth1/28

channel-group 10 mode active

no shutdown

3. Define a description for the port-channel connecting to <Nexus Switch B>.

interface Po10

description vPC peer-link

4. Make the port-channel a switchport, and configure a trunk to allow Data, Cluster and the native VLAN.

switchport

switchport mode trunk

switchport trunk native vlan <Native VLAN id>

switchport trunk allowed vlan <ScaleProtect-Data VLAN id>,<ScaleProtect-Cluster VLAN id>

spanning-tree port type network

5. Make this port-channel the VPC peer link and bring it up.

vpc peer-link

no shutdown

copy run start

Cisco Nexus 9000 B

1. Define a port description for the interfaces connecting to VPC Peer <Nexus Switch A>.

interface Eth1/27

description VPC Peer <Nexus-A Switch Name>:1/27

interface Eth1/28

description VPC Peer <Nexus-A Switch Name>:1/28

2. Apply a port channel to both VPC Peer links and bring up the interfaces.

interface Eth1/27,Eth1/28

channel-group 10 mode active

no shutdown

3. Define a description for the port-channel connecting to <Nexus Switch A>.

interface Po10

description vPC peer-link

4. Make the port-channel a switchport, and configure a trunk to allow Data, Cluster and the native VLAN.

switchport

switchport mode trunk

switchport trunk native vlan <Native VLAN id>

switchport trunk allowed vlan <ScaleProtect-Data VLAN id>,<ScaleProtect-Cluster VLAN id>

spanning-tree port type network

5. Make this port-channel the VPC peer link and bring it up.

vpc peer-link

no shutdown

copy run start
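NX-OS expects the allowed VLAN list on a trunk as comma-separated values. The sketch below builds the trunk commands used for the port channels in this section from a native VLAN and a list of allowed VLANs; the function name `trunk_commands` is hypothetical, and the IDs are the examples from Table 2.

```python
def trunk_commands(native_vlan: int, allowed_vlans: list[int]) -> list[str]:
    """Return switchport trunk commands with a comma-separated allowed VLAN list."""
    allowed = ",".join(str(vlan) for vlan in sorted(set(allowed_vlans)))
    return [
        "switchport",
        "switchport mode trunk",
        f"switchport trunk native vlan {native_vlan}",
        f"switchport trunk allowed vlan {allowed}",
    ]

# Example IDs from Table 2: native VLAN 2, data VLAN 111, cluster VLAN 3000.
for line in trunk_commands(2, [3000, 111]):
    print(line)
```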

Configure Network Interfaces to Cisco UCS Fabric Interconnect

Cisco Nexus 9000 A

1. Define a description for the port-channel connecting to <UCS Cluster Name>-A.

interface Po40

description <UCS Cluster Name>-A

2. Make the port-channel a switchport and configure a trunk to allow ScaleProtect Data, ScaleProtect Cluster and the native VLANs.

switchport

switchport mode trunk

switchport trunk native vlan <Native VLAN id>

switchport trunk allowed vlan <ScaleProtect-Data VLAN id>,<ScaleProtect-Cluster VLAN id>

3. Make the port channel and associated interfaces spanning tree edge ports.

spanning-tree port type edge trunk

4. Set the MTU to be 9216 to support jumbo frames.

mtu 9216

5. Make this a VPC port-channel and bring it up.

vpc 40

no shutdown

6. Define a port description for the interface connecting to <UCS Cluster Name>-A.

interface Eth1/25

description <UCS Cluster Name>-A:1/49

7. Apply it to a port channel and bring up the interface.

channel-group 40 force mode active

no shutdown

8. Define a description for the port-channel connecting to <UCS Cluster Name>-B.

interface Po50

description <UCS Cluster Name>-B

9. Make the port-channel a switchport and configure a trunk to allow ScaleProtect Data, ScaleProtect Cluster and the native VLANs.

switchport

switchport mode trunk

switchport trunk native vlan <Native VLAN id>

switchport trunk allowed vlan <ScaleProtect-Data VLAN id>,<ScaleProtect-Cluster VLAN id>

10. Make the port channel and associated interfaces spanning tree edge ports.

spanning-tree port type edge trunk

11. Set the MTU to be 9216 to support jumbo frames.

mtu 9216

12. Make this a VPC port-channel and bring it up.

vpc 50

no shutdown

13. Define a port description for the interface connecting to <UCS Cluster Name>-B.

interface Eth1/26

description <UCS Cluster Name>-B:1/49

14. Apply it to a port channel and bring up the interface.

channel-group 50 force mode active

no shutdown

copy run start

Cisco Nexus 9000 B

1. Define a description for the port-channel connecting to <UCS Cluster Name>-A.

interface Po40

description <UCS Cluster Name>-A

2. Make the port-channel a switchport and configure a trunk to allow ScaleProtect Data, ScaleProtect Cluster and the native VLANs.

switchport

switchport mode trunk

switchport trunk native vlan <Native VLAN id>

switchport trunk allowed vlan <ScaleProtect-Data VLAN id>,<ScaleProtect-Cluster VLAN id>

3. Make the port channel and associated interfaces spanning tree edge ports.

spanning-tree port type edge trunk

4. Set the MTU to 9216 to support jumbo frames.

mtu 9216

5. Make this a VPC port-channel and bring it up.

vpc 40

no shutdown

6. Define a port description for the interface connecting to <UCS Cluster Name>-A.

interface Eth1/25

description <UCS Cluster Name>-A:1/50

7. Apply it to a port channel and bring up the interface.

channel-group 40 force mode active

no shutdown

8. Define a description for the port-channel connecting to <UCS Cluster Name>-B.

interface Po50

description <UCS Cluster Name>-B

9. Make the port-channel a switchport and configure a trunk to allow ScaleProtect Data, ScaleProtect Cluster and the native VLANs.

switchport

switchport mode trunk

switchport trunk native vlan <Native VLAN id>

switchport trunk allowed vlan <ScaleProtect-Data VLAN id>,<ScaleProtect-Cluster VLAN id>

10. Make the port channel and associated interfaces spanning tree edge ports.

spanning-tree port type edge trunk

11. Set the MTU to be 9216 to support jumbo frames.

mtu 9216

12. Make this a VPC port-channel and bring it up.

vpc 50

no shutdown

13. Define a port description for the interface connecting to <UCS Cluster Name>-B.

interface Eth1/26

description <UCS Cluster Name>-B:1/50

14. Apply it to a port channel and bring up the interface.

channel-group 50 force mode active

no shutdown

copy run start
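The steps above repeat one stanza per port channel on each switch, changing only the port-channel number, the descriptions, and the member interface. A minimal Python sketch of that pattern follows. It assumes the vPC number is kept equal to the port-channel number and that the allowed VLAN list is comma-separated. The helper name `render_fi_port_channel`, the switch descriptions, and the VLAN IDs are hypothetical placeholders, not values from this design.

```python
def render_fi_port_channel(po_id, description, member, member_description,
                           native_vlan, allowed_vlans):
    """Return the NX-OS lines for one vPC port channel facing a fabric interconnect."""
    # NX-OS expects the allowed VLAN list as comma-separated IDs.
    vlan_list = ",".join(str(vlan) for vlan in allowed_vlans)
    return "\n".join([
        f"interface Po{po_id}",
        f"description {description}",
        "switchport",
        "switchport mode trunk",
        f"switchport trunk native vlan {native_vlan}",
        f"switchport trunk allowed vlan {vlan_list}",
        "spanning-tree port type edge trunk",
        "mtu 9216",
        # Assumption: the vPC number mirrors the port-channel number.
        f"vpc {po_id}",
        "no shutdown",
        f"interface {member}",
        f"description {member_description}",
        f"channel-group {po_id} force mode active",
        "no shutdown",
    ])


# Placeholder values for the port channel to fabric interconnect B on Nexus B.
config = render_fi_port_channel(
    po_id=50,
    description="ucs-cluster-B",
    member="Eth1/26",
    member_description="ucs-cluster-B:1/50",
    native_vlan=2,
    allowed_vlans=[100, 200],
)
print(config)
```

Rendering both switches from the same parameters makes a mismatch between the port-channel number, the `vpc` number, and the `channel-group` number easy to spot before the commands are pasted into a switch.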

Uplink into Existing Network Infrastructure

Depending on the available network infrastructure, several methods and features can be used to uplink the ScaleProtect environment. If an existing Cisco Nexus environment is present, it is recommended to use vPCs to uplink the Cisco Nexus 9336C-FX2 switches included in the present environment into the infrastructure. The previously described procedures can be used to create an uplink vPC to the existing environment. Make sure to run copy run start to save the configuration on each switch after the configuration is completed.

Cisco Nexus 9000 A and B using Port Channel Example

To enable data protection and management network access across the IP switching environment using a port channel to a single switch, run the following commands in config mode:

Connectivity to the existing network is specific to each customer, and the following is only a reference example. Consult the customer network team during implementation of the solution.

1. Define a description for the port-channel connecting to uplink switch.

interface po6

description <ScaleProtect Data VLAN>

2. Configure the port as an access port carrying the management/data protection VLAN traffic.

switchport

switchport mode access

switchport access vlan <ScaleProtect Data VLAN id>

3. Make the port channel and associated interfaces normal spanning tree ports.

spanning-tree port type normal

4. Make this a VPC port-channel and bring it up.

vpc 6

no shutdown

5. Define a port description for the interface connecting to the existing network infrastructure.

interface Eth1/33

description <ScaleProtect Data VLAN>_uplink

6. Apply it to a port channel and bring up the interface.

channel-group 6 force mode active

no shutdown

7. Save the running configuration to start-up in both Nexus 9000s and run commands to look at port and port channel information.

copy run start

sh int eth1/33 br

sh port-channel summary

This section describes the steps to configure the Cisco Unified Computing System (Cisco UCS) to use in a ScaleProtect environment.

These steps are necessary to provision the Cisco UCS C220 M5 Servers and should be followed precisely to avoid improper configuration.

Figure 7 Cisco UCS implementation Workflow

This document includes the configuration of the Cisco UCS infrastructure to enable SAN connectivity to an existing storage environment. The ScaleProtect design for this solution doesn’t need SAN connectivity; the additional information is included only as a reference and should be skipped if SAN connectivity is not required. All the sections that should be skipped for the default design have been marked as optional.

Cisco UCS Base Configuration

To complete Cisco UCS base configuration, follow the steps in this section.

Perform Initial Setup of Cisco UCS 6454 Fabric Interconnects

This section provides the configuration steps for the Cisco UCS 6454 Fabric Interconnects (FI) in a ScaleProtect design that includes Cisco UCS C220 M5 LFF Rack Servers.

Figure 8 Cisco UCS Basic Configuration Workflow

Cisco UCS 6454 Fabric Interconnect A

To configure Fabric Interconnect A, follow these steps:

1. Make sure the Fabric Interconnect cabling is properly connected, including the L1 and L2 cluster links, and power the Fabric Interconnects on by inserting the power cords.

2. Connect to the console port on the first Fabric Interconnect, which will be designated as the A fabric device. Use the supplied Cisco console cable (CAB-CONSOLE-RJ45=), and connect it to a built-in DB9 serial port, or use a USB to DB9 serial port adapter.

3. Start your terminal emulator software.

4. Create a connection to the COM port of the computer’s DB9 port, or the USB to serial adapter. Set the terminal emulation to VT100, and the settings to 9600 baud, 8 data bits, no parity, 1 stop bit.

5. Open the connection just created. You may have to press ENTER to see the first prompt.

6. Configure the first Fabric Interconnect, using the following example as a guideline.

7. Connect to the console port on the first Cisco UCS 6454 fabric interconnect.

Enter the configuration method. (console/gui) ? console

Enter the setup mode; setup newly or restore from backup.(setup/restore)? setup

You have chosen to setup a new Fabric interconnect? Continue? (y/n): y

Enforce strong password? (y/n) [y]: y

Enter the password for "admin": <UCS Password>

Confirm the password for "admin": <UCS Password>

Is this Fabric interconnect part of a cluster(select no for standalone)? (yes/no) [n]: yes

Which switch fabric (A/B)[]: A

Enter the system name: <Name of the System>

Physical Switch Mgmt0 IP address: <Mgmt. IP address for Fabric A>

Physical Switch Mgmt0 IPv4 netmask: <Mgmt. IP Subnet Mask>

IPv4 address of the default gateway: <Default GW for the Mgmt. IP >

Cluster IPv4 address: <Cluster Mgmt. IP address>

Configure the DNS Server IP address? (yes/no) [n]: y

DNS IP address: <DNS IP address>

Configure the default domain name? (yes/no) [n]: y

Default domain name: <DNS Domain Name>

Join centralized management environment (UCS Central)? (yes/no) [n]: n

Apply and save configuration (select no if you want to re-enter)? (yes/no): yes

8. Wait for the login prompt to make sure that the configuration has been saved.

Cisco UCS 6454 Fabric Interconnect B

To configure Fabric Interconnect B, follow these steps:

1. Connect to the console port on the second Fabric Interconnect, which will be designated as the B fabric device. Use the supplied Cisco console cable (CAB-CONSOLE-RJ45=), and connect it to a built-in DB9 serial port, or use a USB to DB9 serial port adapter.

2. Start your terminal emulator software.

3. Create a connection to the COM port of the computer’s DB9 port, or the USB to serial adapter. Set the terminal emulation to VT100, and the settings to 9600 baud, 8 data bits, no parity, 1 stop bit.

4. Open the connection just created. You may have to press ENTER to see the first prompt.

5. Configure the second Fabric Interconnect, using the following example as a guideline.

6. Connect to the console port on the second Cisco UCS 6454 fabric interconnect.

Enter the configuration method. (console/gui) ? console

Installer has detected the presence of a peer Fabric interconnect. This

Fabric interconnect will be added to the cluster. Continue (y|n)? y

Enter the admin password for the peer Fabric interconnect: <Admin Password>

Connecting to peer Fabric interconnect... done

Retrieving config from peer Fabric interconnect... done

Peer Fabric interconnect Mgmt0 IPv4 Address: <Address provided in last step>

Peer Fabric interconnect Mgmt0 IPv4 Netmask: <Mask provided in last step>

Cluster IPv4 address : <Cluster IP provided in last step>

Peer FI is IPv4 Cluster enabled. Please Provide Local Fabric Interconnect Mgmt0 IPv4 Address

Physical switch Mgmt0 IP address: < Mgmt. IP address for Fabric B>

Apply and save the configuration (select no if you want to re-enter)?

(yes/no): yes

7. Wait for the login prompt to make sure that the configuration has been saved.
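The setup dialogs above ask for three management addresses (Fabric A, Fabric B, and the cluster address) plus a netmask and a default gateway, and Fabric B inherits the netmask from its peer. The following Python sketch checks a planned set of values before they are typed at the console, assuming all of them are meant to sit in one management subnet. The function name `check_fi_mgmt_plan` is hypothetical, and the addresses are placeholders from the 192.0.2.0/24 documentation range.

```python
import ipaddress


def check_fi_mgmt_plan(fi_a, fi_b, cluster, netmask, gateway):
    """Return a list of problems in a planned fabric interconnect management scheme."""
    # Derive the management subnet from Fabric A's address and the netmask.
    network = ipaddress.ip_network(f"{fi_a}/{netmask}", strict=False)
    planned = {"FI-A": fi_a, "FI-B": fi_b, "cluster": cluster, "gateway": gateway}
    problems = []
    for name, value in planned.items():
        if ipaddress.ip_address(value) not in network:
            problems.append(f"{name} {value} is outside {network}")
    # Every role needs its own address.
    if len(set(planned.values())) != len(planned):
        problems.append("two or more entries share the same address")
    return problems


# Placeholder plan; an empty list means no problems were found.
print(check_fi_mgmt_plan("192.0.2.11", "192.0.2.12", "192.0.2.10",
                         "255.255.255.0", "192.0.2.1"))
```

A non-empty result names the entry that falls outside the subnet or collides with another address, which is easier to correct on paper than after the configuration has been applied.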

Cisco UCS Setup

Log into Cisco UCS Manager

To log into the Cisco Unified Computing System (UCS) environment, follow these steps:

1. Open a web browser and navigate to the Cisco UCS fabric interconnect cluster address.

2. Click the Launch UCS Manager link to download the Cisco UCS Manager software.

3. If prompted to accept security certificates, accept as necessary.

4. When prompted, enter admin as the user name and enter the administrative password.

5. Click Login to log in to Cisco UCS Manager.

Upgrade Cisco UCS Manager Software to Version 4.0(4b)

This document assumes you are using Cisco UCS 4.0(4b). To upgrade the Cisco UCS Manager software and the Cisco UCS Fabric Interconnect software to version 4.0(4b), refer to the Cisco UCS Manager Install and Upgrade Guides.

Anonymous Reporting

To enable anonymous reporting, follow this step:

1. In the Anonymous Reporting window, select whether to send anonymous data to Cisco for improving future products.

Configure Cisco UCS Call Home

It is highly recommended by Cisco to configure Call Home in Cisco UCS Manager. Configuring Call Home will accelerate resolution of support cases.

To configure Call Home, follow these steps:

1. In Cisco UCS Manager, click the Admin icon on the left.

2. Select All > Communication Management > Call Home.

3. Change the State to On.

4. Fill in all the fields according to your Management preferences and click Save Changes and OK to complete configuring Call Home.

Synchronize Cisco UCS to NTP

To synchronize the Cisco UCS environment to the NTP server, follow these steps:

1. In Cisco UCS Manager, click the Admin tab in the navigation pane.

2. Select All > Timezone Management > Timezone.

3. In the Properties pane, select the appropriate time zone in the Timezone menu.

4. Click Add NTP Server.

5. Enter <NTP Server IP Address> and click OK.

6. Click Save Changes and then click OK.

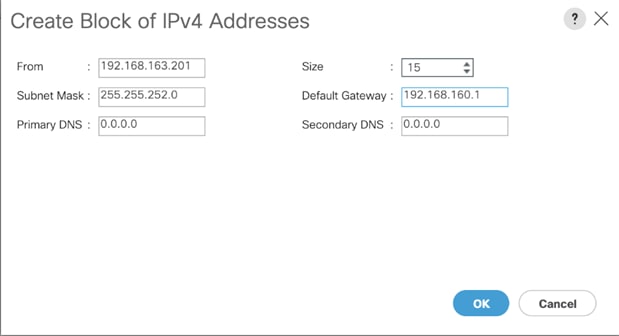

Add Block IP Addresses for KVM Access

To create a block of IP addresses for in band server Keyboard, Video, Mouse (KVM) access in the Cisco UCS environment, follow these steps:

1. In Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select Pools > root > IP Pools.

3. Right-click IP Pool ext-mgmt and select Create Block of IPv4 Addresses.

4. Enter the starting IP address of the block, the number of IP addresses required, and the subnet and gateway information.

5. Click OK to create.

6. Click OK in the confirmation message.
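The block created above is defined by a starting address and a count, so it helps to know the last address it will consume. The sketch below computes that range and rejects a block that leaves the subnet or covers the gateway. It is an illustration only: the function name `kvm_block_range` is hypothetical and the addresses are placeholders from the 192.0.2.0/24 documentation range.

```python
import ipaddress


def kvm_block_range(first_ip, size, netmask, gateway):
    """Return (first, last) for a block of `size` addresses starting at `first_ip`."""
    network = ipaddress.ip_network(f"{first_ip}/{netmask}", strict=False)
    first = ipaddress.ip_address(first_ip)
    last = first + (size - 1)
    # The whole block has to fit in the subnet without reaching the broadcast address.
    if last not in network or last == network.broadcast_address:
        raise ValueError(f"a block of {size} addresses does not fit in {network}")
    # The gateway must stay outside the block.
    if first <= ipaddress.ip_address(gateway) <= last:
        raise ValueError("the block overlaps the default gateway")
    return str(first), str(last)


# Placeholder values: 16 KVM addresses starting at .101.
print(kvm_block_range("192.0.2.101", 16, "255.255.255.0", "192.0.2.1"))
```

Sizing the block with room for later cluster expansion avoids having to add a second block when more server nodes are introduced.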

Server Discovery Policy

The server discovery policy determines how the system reacts when you add a new Cisco UCS server to a Cisco UCS system. Cisco UCS Manager uses the settings in the chassis discovery policy to determine whether to group links from the VICs to the fabric interconnects in fabric port channels.

To add a previously standalone Cisco UCS C220 to a Cisco UCS system, you must first configure it to factory default. You can then connect both ports of the VIC on the server to both fabric interconnects. After you connect the VIC ports to the fabric interconnects, and mark the ports as server ports, server discovery begins.

To modify the chassis discovery policy, follow these steps:

1. In Cisco UCS Manager, click the Equipment tab in the navigation pane and select Equipment in the list on the left.

2. In the right pane, click the Policies tab.

3. Under Global Policies, set the Chassis/FEX Discovery Policy to match the number of uplink ports that are cabled between the chassis or fabric extenders (FEXes) and the fabric interconnects.

4. Click Save Changes if values changed from default values.

5. Click OK.

Enable Server Ports

The Ethernet ports of a Cisco UCS Fabric Interconnect that are connected to rack-mount servers, blade chassis, or Cisco UCS S3260 Storage Servers must be defined as server ports. When a server port is activated, the connected server or chassis begins the discovery process shortly afterwards. Rack-mount servers, blade chassis, and Cisco UCS S3260 chassis are automatically numbered in the order in which they are first discovered. For this reason, it is important to configure the server ports sequentially in the order you wish the physical servers and/or chassis to appear within Cisco UCS Manager. For example, if you installed your servers in a cabinet or rack with server #1 on the bottom, counting up as you go higher in the cabinet or rack, then you need to enable the server ports to the bottom-most server first, and enable them one-by-one as you move upward. You must wait until the server appears in the Equipment tab of Cisco UCS Manager before configuring the ports for the next server. The same numbering procedure applies to blade server chassis.

The Cisco UCS Port Auto-Discovery Policy can optionally be enabled to discover the servers without having to manually define the server ports. The procedure in the next section details the process of enabling the Auto-Discovery Policy.

To define the specified ports to be used as server ports, follow these steps:

1. In Cisco UCS Manager, click the Equipment tab in the navigation pane.

2. Select Fabric Interconnects > Fabric Interconnect A > Fixed Module > Ethernet Ports.

3. Select the first port that is to be a server port, right-click it, and click Configure as Server Port.

4. Click Yes to confirm the configuration and click OK.

5. Select Fabric Interconnects > Fabric Interconnect B > Fixed Module > Ethernet Ports.

6. Select the port that matches the one chosen for Fabric Interconnect A, right-click it, and click Configure as Server Port.

7. Click Yes to confirm the configuration and click OK.

8. Repeat steps 1-7 for enabling other ports connected to the other C220 M5 Server Nodes.

9. Wait for a brief period, until the rack-mount server appears in the Equipment tab underneath Equipment > Rack Mounts > Servers.

Optional: Edit Policy to Automatically Discover Server Ports

If the Cisco UCS Port Auto-Discovery Policy is enabled, server ports will be discovered automatically. To enable the Port Auto-Discovery Policy, follow these steps:

1. In Cisco UCS Manager, click the Equipment icon on the left and select Equipment in the second list.

2. In the right pane, click the Policies tab.

3. Under Policies, select the Port Auto-Discovery Policy tab.

4. Under Properties, set Auto Configure Server Port to Enabled.

5. Click Save Changes.

6. Click OK.

The first discovery process can take some time and is dependent on installed firmware on the chassis.

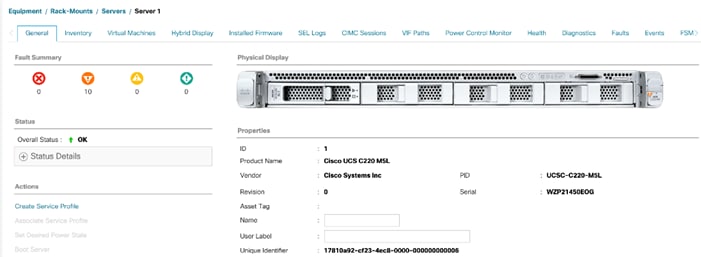

Server Discovery

As previously described, when the server ports of the Fabric Interconnects are configured and active, the servers connected to those ports will begin a discovery process. During discovery the servers’ internal hardware inventories are collected, along with their current firmware revisions. Before continuing with the Cisco UCS C220 rack server installation processes, wait for all of the servers to finish their discovery process and show as unassociated servers that are powered off, with no errors.

To view the servers’ discovery status, follow these steps:

1. In Cisco UCS Manager, click the Equipment tab in the navigation pane.

2. Click Rack-Mounts and click the Servers tab.

3. Select the respective server and view the Server status in the Overall Status column.

4. When the server is discovered, the C220 M5 server is displayed as shown below:

5. Click the Equipment > Rack-Mounts > Servers tab and view the servers’ status in the Overall Status column. Below are the Cisco UCS C220 M5 Servers for ScaleProtect Cluster:

Optional: Enable Fibre Channel Ports

The FC port and uplink configurations can be skipped if the ScaleProtect Cisco UCS environment does not need access to a storage environment over FC SAN.

Fibre Channel port configurations differ between the 6454, 6332-16UP, and 6248UP Fabric Interconnects. All Fabric Interconnects have a slider mechanism within the Cisco UCS Manager GUI, but the Fibre Channel port selection options for the 6454 are from the first 8 ports starting from the first port and configured in increments of 4 ports from the left. For the 6332-16UP, the port selection options are from the first 16 ports starting from the first port, and configured in increments of the first 6, 12, or all 16 of the unified ports. With the 6248UP, the port selection options start from the right of the 32 fixed ports, or the right of the 16 ports of the expansion module, going down in contiguous increments of 2. The remainder of this section shows configuration of the 6454. Modify as necessary for the 6332-16UP or 6248UP.

To enable FC uplink ports, follow these steps.

This step requires a reboot. To avoid an unnecessary switchover, configure the subordinate Fabric Interconnect first.

1. In the Equipment tab, select the Fabric Interconnect B (subordinate FI in this example), and in the Actions pane, select Configure Unified Ports, and click Yes on the splash screen.

2. Slide the lever to change ports 1-4 to Fibre Channel. Click Finish followed by Yes to the reboot message. Click OK.

Select the number of ports to be enabled as FC uplinks based on the amount of bandwidth required in the customer-specific setup.

3. When the subordinate has completed reboot, repeat the procedure to configure FC ports on primary Fabric Interconnect. As before, the Fabric Interconnect will reboot after the configuration is complete.

Optional: Create VSAN for the Fibre Channel Interfaces

Creating VSANs is optional and is only required if connectivity to existing production and backup SAN fabrics is required for the solution. Sample VSAN IDs are used in this document for both production and backup Fibre Channel networks; adjust the VSAN IDs to match the customer-specific environment.

To configure the necessary virtual storage area networks (VSANs) for FC uplinks for the Cisco UCS environment, follow these steps:

1. In Cisco UCS Manager, click the SAN tab in the navigation pane.

2. Expand the SAN > SAN Cloud and select Fabric A.

3. Right-click VSANs and choose Create VSAN.

4. Enter Backup-A as the name of the VSAN for fabric A.

5. Keep the Disabled option selected for FC Zoning.

6. Click the Fabric A radio button.

7. Enter 201 as the VSAN ID for Fabric A.

8. Enter 201 as the FCoE VLAN ID for fabric A. Click OK twice.

9. In the SAN tab, expand SAN > SAN Cloud > Fabric-B.

10. Right-click VSANs and choose Create VSAN.

11. Enter Backup-B as the name of the VSAN for fabric B.

12. Keep the Disabled option selected for FC Zoning.

13. Click the Fabric B radio button.

14. Enter 202 as the VSAN ID for Fabric B. Enter 202 as the FCoE VLAN ID for Fabric B. Click OK twice.

The VSANs created in the following steps are an example of production VSANs used in the document for access to production storage. Adjust the VSAN IDs based on the customer-specific deployment.

15. In Cisco UCS Manager, click the SAN tab in the navigation pane.

16. Expand the SAN > SAN Cloud and select Fabric A.

17. Right-click VSANs and choose Create VSAN.

18. Enter vsan-A as the name of the VSAN for fabric A.

19. Keep the Disabled option selected for FC Zoning.

20. Click the Fabric A radio button.

21. Enter 101 as the VSAN ID for Fabric A.

22. Enter 101 as the FCoE VLAN ID for fabric A. Click OK twice.

23. In the SAN tab, expand SAN > SAN Cloud > Fabric-B.

24. Right-click VSANs and choose Create VSAN.

25. Enter vsan-B as the name of the VSAN for fabric B.

26. Keep the Disabled option selected for FC Zoning.

27. Click the Fabric B radio button.

28. Enter 102 as the VSAN ID for Fabric B. Enter 102 as the FCoE VLAN ID for Fabric B. Click OK twice.
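The four sample VSANs from the steps above can be written down as a small plan and checked before they are entered in Cisco UCS Manager. The Python sketch below looks for duplicate VSAN IDs and for FCoE VLAN IDs that collide with Ethernet VLAN IDs, since FCoE VLANs and LAN VLANs need distinct IDs. The function name `find_vsan_conflicts` is hypothetical, and the Ethernet VLAN IDs passed to it are placeholders, not values from this design.

```python
# Sample VSAN plan taken from the steps in this section.
VSANS = [
    {"name": "Backup-A", "fabric": "A", "vsan_id": 201, "fcoe_vlan": 201},
    {"name": "Backup-B", "fabric": "B", "vsan_id": 202, "fcoe_vlan": 202},
    {"name": "vsan-A", "fabric": "A", "vsan_id": 101, "fcoe_vlan": 101},
    {"name": "vsan-B", "fabric": "B", "vsan_id": 102, "fcoe_vlan": 102},
]


def find_vsan_conflicts(vsans, ethernet_vlans):
    """Return human-readable conflicts found in a VSAN plan."""
    conflicts = []
    seen_ids = set()
    for vsan in vsans:
        if vsan["vsan_id"] in seen_ids:
            conflicts.append(f"{vsan['name']}: VSAN ID {vsan['vsan_id']} is reused")
        seen_ids.add(vsan["vsan_id"])
        # An FCoE VLAN ID must not match any Ethernet VLAN ID.
        if vsan["fcoe_vlan"] in ethernet_vlans:
            conflicts.append(
                f"{vsan['name']}: FCoE VLAN {vsan['fcoe_vlan']} overlaps an Ethernet VLAN"
            )
    return conflicts


# Placeholder Ethernet VLAN IDs for the Data and Cluster networks.
print(find_vsan_conflicts(VSANS, ethernet_vlans={100, 200}))
```

When the VSAN IDs are adjusted for a customer environment, rerunning the check against the actual Data, Cluster, and native VLAN IDs shows whether any FCoE VLAN needs a different number.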

Optional: Create Port Channels for the Fibre Channel Interfaces

As previously mentioned, Fibre Channel connectivity is optional. The following procedure to create port channels is included for reference and varies depending on the upstream SAN infrastructure.

To configure the necessary FC port channels for the Cisco UCS environment, follow these steps:

Fabric-A

1. In the navigation pane, under SAN > SAN Cloud, expand the Fabric A tree.

2. Click Enable FC Uplink Trunking.

3. Click Yes on the warning message.

4. Click Create FC Port Channel on the same screen.

5. Enter 6 for the port channel ID and Po6 for the port channel name.

6. Click Next then choose ports 1 and 2 and click >> to add the ports to the port channel.

7. Select Port Channel Admin Speed as 32gbps.

8. Click Finish.

9. Click OK.

10. Select FC Port-Channel 6 from the menu in the left pane and from the VSAN drop-down field, keep VSAN 1 selected in the right pane.

11. Click Save Changes and then click OK.

Fabric-B

1. Click the SAN tab. In the navigation pane, under SAN > SAN Cloud, expand the Fabric B.

2. Right-click FC Port Channels and choose Create Port Channel.

3. Enter 7 for the port channel ID and Po7 for the port channel name. Click Next.

4. Choose ports 1 and 2 and click >> to add the ports to the port channel.

5. Click Finish, and then click OK.

6. Select FC Port-Channel 7 from the menu in the left pane and from the VSAN drop-down list, keep VSAN 1 selected in the right pane.

7. Click Save Changes and then click OK.

The procedure above creates port channels with trunking enabled to allow both production and backup VSANs. The corresponding configuration must be completed on the upstream switches to establish connectivity successfully.

Disable Unused FC Uplink Ports (FCP)

When Unified Ports were configured earlier in this procedure, on the Cisco UCS 6454 FI and the Cisco UCS 6332-16UP FI, FC ports are configured in groups. Because of this group configuration, some FC ports are unused and need to be disabled to prevent alerts.

To disable the unused FC ports 3 and 4 on the Cisco UCS 6454 FIs, follow these steps:

1. In Cisco UCS Manager, click Equipment.

2. In the Navigation Pane, expand Equipment > Fabric Interconnects > Fabric Interconnect A > Fixed Module > FC Ports.

3. Select FC Port 3 and FC Port 4. Right-click and select Disable.

4. Click Yes and OK to complete disabling the unused FC ports.

5. In the Navigation Pane, expand Equipment > Fabric Interconnects > Fabric Interconnect B > Fixed Module > FC Ports.

6. Select FC Port 3 and FC Port 4. Right-click and select Disable.

7. Click Yes and OK to complete disabling the unused FC ports.

Enable Ethernet Uplink Ports

The Ethernet ports of a Cisco UCS 6454 Fabric Interconnect are all capable of performing several functions, such as network uplinks or server ports, and more. By default, all ports are unconfigured, and their function must be defined by the administrator.

To define the specified ports to be used as network uplinks to the upstream network, follow these steps:

1. In Cisco UCS Manager, click the Equipment tab in the navigation pane.

2. Select Fabric Interconnects > Fabric Interconnect A > Fixed Module > Ethernet Ports.

3. Select the ports that are to be uplink ports (49 & 50), right-click them, and click Configure as Uplink Port.

4. Click Yes to confirm the configuration and click OK.

5. Select Fabric Interconnects > Fabric Interconnect B > Fixed Module > Ethernet Ports.

6. Select the ports that are to be uplink ports (49 & 50), right-click them, and click Configure as Uplink Port.

7. Click Yes to confirm the configuration and click OK.

8. Verify all the necessary ports are now configured as uplink ports.

Create Port Channels for Ethernet Uplinks

If the Cisco UCS uplinks from one Fabric Interconnect are to be combined into a port channel or vPC, you must separately configure the port channels using the previously configured uplink ports.

To configure the necessary port channels in the Cisco UCS environment, follow these steps:

1. In Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Under LAN > LAN Cloud, click to expand the Fabric A tree.

3. Right-click Port Channels underneath Fabric A and select Create Port Channel.

4. Enter the port channel ID number as the unique ID of the port channel.

5. Enter the name of the port channel.

6. Click Next.

7. Click each port from Fabric Interconnect A that will participate in the port channel and click the >> button to add them to the port channel.

8. Click Finish.

9. Click OK.

10. Under LAN > LAN Cloud, click to expand the Fabric B tree.

11. Right-click Port Channels underneath Fabric B and select Create Port Channel.

12. Enter the port channel ID number as the unique ID of the port channel.

13. Enter the name of the port channel.

14. Click Next.

15. Click each port from Fabric Interconnect B that will participate in the port channel and click the >> button to add them to the port channel.

16. Click Finish.

17. Click OK.

18. Verify the necessary port channels have been created. It can take a few minutes for the newly formed port channels to converge and come online.

Cisco UCS C220 M5 Server Node Configuration

This section details the steps for Cisco UCS C220 M5 Server setup. The procedure includes creation of ScaleProtect environment-specific UCS pools and policies, followed by the Cisco UCS C220 M5 Server Node setup, which involves Service Profile creation and association using a Storage Profile.

Figure 9 Cisco UCS C220 Server Node Configuration Workflow

Create Sub-Organization

In this setup, one sub-organization is created under the root. Sub-organizations help to restrict user access to logical pools and objects in order to facilitate secure provisioning and to provide easier user interaction. For the ScaleProtect backup infrastructure, create a sub-organization named “CV-ScaleProtect”.

To create a sub-organization, follow these steps:

1. In the Navigation pane, click the Servers tab.

2. In the Servers tab, expand Service Profiles > root. You can also access the Sub-Organizations node under the Policies or Pools nodes.

3. Right-click Sub-Organizations and choose Create Organization.

4. Enter CV-ScaleProtect (or another descriptive name) as the name, enter a description, and click OK.

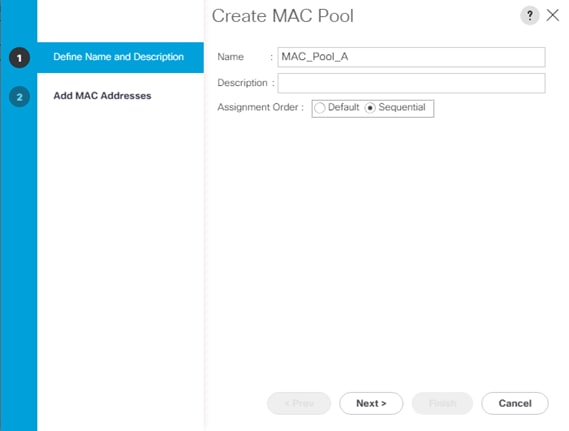

Create MAC Address Pools

To configure the necessary MAC address pools for the Cisco UCS environment, follow these steps:

1. In Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select Pools > root > Sub-organizations > CV-ScaleProtect.

In this procedure, two MAC address pools are created, one for each switching fabric.

3. Right-click MAC Pools under the root organization.

4. Select Create MAC Pool to create the MAC address pool.

5. Enter MAC_Pool_A as the name of the MAC pool.

6. Optional: Enter a description for the MAC pool.

7. Select Sequential as the option for Assignment Order.

8. Click Next.

9. Click Add.

10. Specify a starting MAC address.

It is recommended to place 0A in the second-to-last octet of the starting MAC address to identify all of the MAC addresses as Fabric A addresses. It is also recommended not to change the first three octets of the MAC address.

11. Specify a size for the MAC address pool that is sufficient to support the future ScaleProtect cluster expansion and any available blade or server resources.

12. Click OK.

13. Click Finish.

14. In the confirmation message, click OK.

15. Right-click MAC Pools under the CV-ScaleProtect sub-organization.

16. Select Create MAC Pool to create the MAC address pool.

17. Enter MAC_Pool_B as the name of the MAC pool.

18. Optional: Enter a description for the MAC pool.

19. Select Sequential as the option for Assignment Order.

20. Click Next.

21. Click Add.

22. Specify a starting MAC address.

It is recommended to place 0B in the second-to-last octet of the starting MAC address to identify all of the MAC addresses as Fabric B addresses. It is also recommended not to change the first three octets of the MAC address.

23. Specify a size for the MAC address pool that is sufficient to support the future ScaleProtect cluster expansion and any available blade or server resources.

24. Click OK.

25. Click Finish.

26. In the confirmation message, click OK.

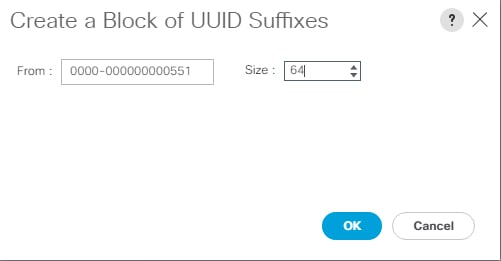
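The relationship between the starting address, the pool size, and the fabric marker octet can be sketched as follows. This is an illustrative calculation only, not a Cisco tool. The starting address `00:25:B5:00:0A:00` and the sizes are assumptions chosen for the example, and the helper names are hypothetical. The sketch computes the last address of a block and checks whether the block varies only in the last octet, so that the 0A or 0B marker stays constant across the whole pool.

```python
from typing import Tuple


def _parse_mac(mac: str) -> int:
    """Convert a colon-separated MAC address to an integer."""
    octets = mac.split(":")
    if len(octets) != 6 or any(len(o) != 2 for o in octets):
        raise ValueError(f"not a colon-separated MAC address: {mac!r}")
    return int("".join(octets), 16)


def _format_mac(value: int) -> str:
    """Convert an integer back to an upper-case colon-separated MAC address."""
    raw = f"{value:012X}"
    return ":".join(raw[i:i + 2] for i in range(0, 12, 2))


def mac_block(start: str, size: int) -> Tuple[str, str]:
    """Return the first and last address of a block of `size` addresses."""
    first = _parse_mac(start)
    last = first + size - 1
    if size < 1 or last > 0xFFFFFFFFFFFF:
        raise ValueError("block size does not fit the address space")
    return _format_mac(first), _format_mac(last)


def keeps_fabric_marker(start: str, size: int) -> bool:
    """True if only the last octet changes, so the marker octet is constant."""
    first, last = mac_block(start, size)
    return (_parse_mac(first) >> 8) == (_parse_mac(last) >> 8)


if __name__ == "__main__":
    # Hypothetical Fabric A pool: 64 addresses starting at ...:0A:00.
    print(mac_block("00:25:B5:00:0A:00", 64))
    print(keeps_fabric_marker("00:25:B5:00:0A:00", 257))
```

With these example values, a block of up to 256 addresses keeps the 0A marker, while a 257-address block rolls over into the 0B range. A larger pool would therefore need a different starting address or marker scheme.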

Create UUID Suffix Pool

To configure the necessary universally unique identifier (UUID) suffix pool for the Cisco UCS environment, follow these steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Pools > root > Sub-Organizations > CV-ScaleProtect.

3. Right-click UUID Suffix Pools.

4. Select Create UUID Suffix Pool.

5. Enter UUID_Pool as the name of the UUID suffix pool.

6. Optional: Enter a description for the UUID suffix pool.

7. Keep the prefix at the derived option.

8. Select Sequential for the Assignment Order.

9. Click Next.

10. Click Add to add a block of UUIDs.

11. Keep the value in the From field at the default setting.

12. Specify a size for the UUID block that is sufficient to support the available server resources.

13. Click OK.

14. Click Finish.

15. Click OK.
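To see what a UUID block of a given size covers, the suffixes can be enumerated in the order that the Sequential assignment option would hand them out. This is a minimal sketch under stated assumptions: the suffix format `XXXX-XXXXXXXXXXXX` (hexadecimal) and the starting value `0000-000000000001` are assumed here rather than taken from this guide, and `uuid_suffixes` is a hypothetical helper.

```python
from typing import Iterator


def uuid_suffixes(start: str, size: int) -> Iterator[str]:
    """Yield `size` sequential UUID suffixes beginning at `start`."""
    head, tail = start.split("-")
    if len(head) != 4 or len(tail) != 12:
        raise ValueError(f"expected XXXX-XXXXXXXXXXXX, got {start!r}")
    first = int(head + tail, 16)
    if size < 1 or first + size - 1 > 0xFFFFFFFFFFFFFFFF:
        raise ValueError("block size does not fit the suffix space")
    for offset in range(size):
        raw = f"{first + offset:016X}"
        yield f"{raw[:4]}-{raw[4:]}"


if __name__ == "__main__":
    # Assumed default From value and a hypothetical block of four servers.
    for suffix in uuid_suffixes("0000-000000000001", 4):
        print(suffix)
```

Because the suffix is hexadecimal, a block of 32 that starts at `0000-000000000001` ends at `0000-000000000020`, not at a suffix ending in 32.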

Create Server Pool

The following procedure guides you in creating a server pool for the Cisco UCS C220 M5 server nodes.

Always consider creating unique server pools to achieve the granularity that is required in your environment.

To configure the necessary server pool for the Cisco UCS environment, follow these steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Pools > root > Sub-Organizations > CV-ScaleProtect.

3. Right-click Server Pools.

4. Select Create Server Pool.

5. Enter CVLT_SP_C220_M5 as the name of the server pool.

6. Optional: Enter a description for the server pool.

7. Click Next.

8. Select the C220 M5 server nodes and click >> to add them to the CVLT_SP_C220_M5 server pool.

9. Click Finish.

10. Click OK.

11. Verify that the server pool has been created.

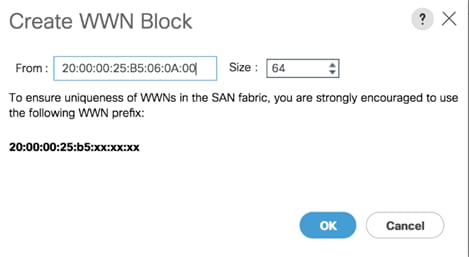

Optional: Create a WWNN Address Pool for FC-based Storage Access

This configuration step can be skipped if the UCS environment does not need to access a storage environment using FC.

For FC connectivity to SAN fabrics, create a World Wide Node Name (WWNN) pool by following these steps:

1. In Cisco UCS Manager, click the SAN tab in the navigation pane.

2. Select Pools > root.

3. Right-click WWNN Pools under the root organization and choose Create WWNN Pool to create the WWNN address pool.

4. Enter WWNN-Pool as the name of the WWNN pool.

5. Optional: Enter a description for the WWNN pool.

6. Select the Sequential Assignment Order and click Next.

7. Click Add.

8. Specify a starting WWNN address.

9. Specify a size for the WWNN address pool that is sufficient to support the available blade or rack server resources. Each server will receive one WWNN.

10. Click OK and click Finish.

11. In the confirmation message, click OK.

Optional: Create WWPN Address Pools for FC-based Storage Access

This configuration step can be skipped if the UCS environment does not need to access a storage environment using FC.

For FC connectivity to SAN fabrics, create a World Wide Port Name (WWPN) pool for each SAN switching fabric by following these steps:

1. In Cisco UCS Manager, click the SAN tab in the navigation pane.

2. Select Pools > root.

3. Right-click WWPN Pools under the root organization and choose Create WWPN Pool to create the first WWPN address pool.

4. Enter WWPN-Pool-A as the name of the WWPN pool.

5. Optional: Enter a description for the WWPN pool.

6. Select the Sequential Assignment Order and click Next.

7. Click Add.

8. Specify a starting WWPN address.

It is recommended to place 0A in the second-to-last octet of the starting WWPN address to identify all of the WWPN addresses as Fabric A addresses.

9. Specify a size for the WWPN address pool that is sufficient to support the available blade or rack server resources. Each server’s Fabric A vHBA will receive one WWPN from this pool.

10. Click OK and click Finish.

11. In the confirmation message, click OK.

12. Right-click WWPN Pools under the root organization and choose Create WWPN Pool to create the second WWPN address pool.

13. Enter WWPN-Pool-B as the name of the WWPN pool.

14. Optional: Enter a description for the WWPN pool.

15. Select the Sequential Assignment Order and click Next.

16. Click Add.

17. Specify a starting WWPN address.

It is recommended to place 0B in the second-to-last octet of the starting WWPN address to identify all of the WWPN addresses as Fabric B addresses.

18. Specify a size for the WWPN address pool that is sufficient to support the available blade or rack server resources. Each server’s Fabric B vHBA will receive one WWPN from this pool.

19. Click OK and click Finish.

20. In the confirmation message, click OK.
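Because the two WWPN pools differ only in the fabric marker octet, an oversized Fabric A pool can run into the Fabric B range. The following sketch shows one way to check a planned pair of pools for overlap before creating them. It is an illustration, not a Cisco utility. The starting addresses `20:00:00:25:B5:00:0A:00` and `20:00:00:25:B5:00:0B:00`, the sizes, and the function names are assumptions for the example.

```python
from typing import Tuple


def wwpn_range(start: str, size: int) -> Tuple[int, int]:
    """Return the first and last WWPN of a block as integers."""
    octets = start.split(":")
    if len(octets) != 8 or any(len(o) != 2 for o in octets):
        raise ValueError(f"not a colon-separated WWPN: {start!r}")
    if size < 1:
        raise ValueError("pool size must be at least 1")
    first = int("".join(octets), 16)
    last = first + size - 1
    if last > 0xFFFFFFFFFFFFFFFF:
        raise ValueError("block size does not fit the WWPN space")
    return first, last


def pools_overlap(start_a: str, size_a: int, start_b: str, size_b: int) -> bool:
    """True if the two address blocks share at least one WWPN."""
    a_first, a_last = wwpn_range(start_a, size_a)
    b_first, b_last = wwpn_range(start_b, size_b)
    return a_first <= b_last and b_first <= a_last


if __name__ == "__main__":
    # Hypothetical starting addresses with 0A and 0B marker octets.
    pool_a = "20:00:00:25:B5:00:0A:00"
    pool_b = "20:00:00:25:B5:00:0B:00"
    print(pools_overlap(pool_a, 64, pool_b, 64))
    print(pools_overlap(pool_a, 300, pool_b, 64))
```

With these example values, two 64-address pools stay separate, while a 300-address Fabric A pool extends past `0A:FF` and collides with the start of the Fabric B pool.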

Create VLANs

To configure the necessary virtual local area networks (VLANs) for the Cisco UCS ScaleProtect environment, follow these steps:

1. In Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select LAN > LAN Cloud.

3. Right-click VLANs.

4. Select Create VLANs.

5. Enter Data_VLAN as the name of the VLAN to be used for the native VLAN.

6. Keep the Common/Global option selected for the scope of the VLAN.

7. Keep the Sharing Type as None.

8. Click OK and then click OK again.

9. Repeat steps 3-8 to add Cluster VLAN as shown below:

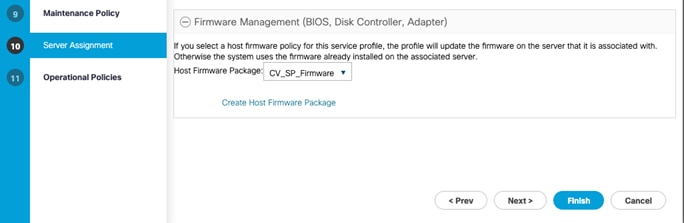

Create Host Firmware Package

Firmware management policies allow the administrator to select the corresponding packages for a given server configuration. These policies often include packages for adapter, BIOS, board controller, FC adapters, host bus adapter (HBA) option ROM, and storage controller properties.

To create a firmware management policy for a given server configuration in the Cisco UCS environment, follow these steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Policies > root > Sub-Organizations > CV-ScaleProtect.

3. Expand Host Firmware Packages.

4. Right-click Host Firmware Packages and select Create Host Firmware Package.

5. Enter CV_SP_Firmware as the name of the host firmware package.

6. Select the version 4.0(4b)C for Rack Packages.

7. Click OK to add the host firmware package.

Local Disk is excluded by default in the host firmware policy as a safety feature. During initial deployment, remove Local Disk from the exclusion list only if the drive firmware is below the minimum required level and must be upgraded. Keep it excluded for any future updates and update the drives manually if required.

Create Network Control Policy for Cisco Discovery Protocol

To create a network control policy that enables Cisco Discovery Protocol (CDP) on virtual network ports, follow these steps:

1. In Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select Policies > root > Sub-Organizations > CV-ScaleProtect.

3. Right-click Network Control Policies.

4. Select Create Network Control Policy.

5. Enter ScaleProtect_NCP as the policy name.

6. For CDP, select the Enabled option.

7. For LLDP, scroll down and select Enabled for both Transmit and Receive.

8. Click OK to create the network control policy.

9. Click OK.

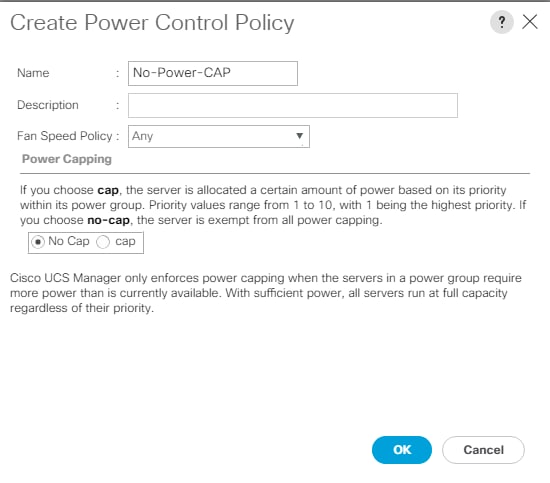

Create Power Control Policy

To create a power control policy for the Cisco UCS environment, follow these steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane. Select Policies > root > Sub-Organizations > CV-ScaleProtect.

2. Right-click Power Control Policies.

3. Select Create Power Control Policy.

4. Enter No-Power-Cap as the power control policy name.

5. Change the power capping setting to No Cap.

6. Click OK to create the power control policy.

7. Click OK.

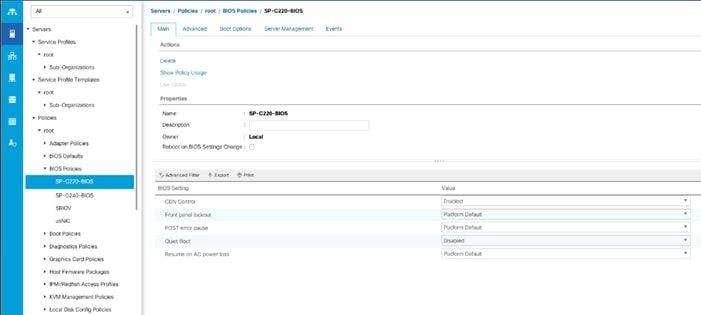

Create Server BIOS Policy

To create a server BIOS policy for the Cisco UCS environment, follow these steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Policies > root > Sub-Organizations > CV-ScaleProtect.

3. Right-click BIOS Policies.

4. Select Create BIOS Policy.

5. Enter SP-C220-BIOS as the BIOS policy name.

6. Click OK.

7. Select the newly created BIOS Policy.

8. Change the Quiet Boot setting to disabled.

9. Change Consistent Device Naming to enabled.

10. Click the Advanced tab and then select Processor.

11. From the Processor tab, make changes as shown below.

12. Change the Workload Configuration to IO Sensitive on the same page.

Create Maintenance Policy

To create a maintenance policy, follow these steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Policies > root > Sub-Organizations > CV-ScaleProtect.

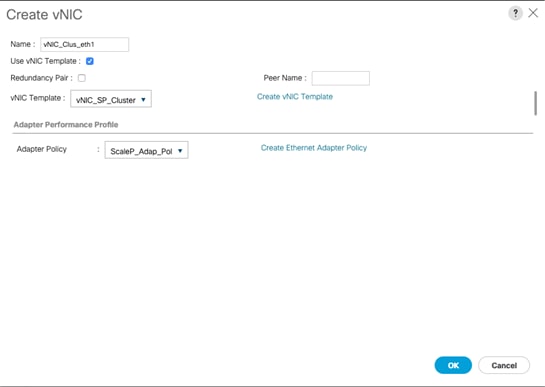

3. Right-click Maintenance Policies and select Create Maintenance Policy.