Cisco UCS Infrastructure for Red Hat OpenShift Container Platform Deployment Guide

Cisco UCS Infrastructure for Red Hat OpenShift Container Platform 3.9 with Container-native Storage Solution

Last Updated: July 24, 2018

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, visit:

http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series. Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2018 Cisco Systems, Inc. All rights reserved.

Table of Contents

Cisco Unified Computing System

Cisco UCS Fabric Interconnects

Cisco UCS 5108 Blade Server Chassis

Cisco UCS C220M5 Rack-Mount Server

Cisco UCS C240M5 Rack-Mount Server

Intel Scalable Processor Family

Red Hat OpenShift Container Platform

Red Hat Enterprise Linux Atomic Host

Red Hat OpenShift Integrated Container Registry

Container-native Storage Solution from Red Hat

Deployment Hardware and Software

Switch Configuration - Cisco Nexus 9396PX

Initial Configuration and Setup

Configuring Network Interfaces

Cisco UCS Manager - Administration

Initial Setup of Cisco Fabric Interconnects

Configuring Ports for Server, Network and Storage Access

Cisco UCS Manager – Setting up NTP Server

Assigning Block of IP addresses for KVM Access

Editing Chassis Discovery Policy

Acknowledging Cisco UCS Chassis

Enabling Uplink Ports to Cisco Nexus 9000 Series Switches

Configuring Port Channels on Uplink Ports to Cisco Nexus 9000 Series Switches

Cisco UCS Configuration – Server

Creating Host Firmware Package Policy

Cisco UCS Configuration – Storage

Creating Service Profile Templates

Creating Service Profile Template for OpenShift Master Nodes

Creating Service Profile Template for Infra Nodes

Creating Service Profile Template for App Nodes

Creating Service Profile Template for Storage Nodes

Configuring PXE-less Automated OS Installation Infra with UCSM vMedia Policy

Bastion Node – Installation and Configuration

Preparing Bastion Node for vMedia Automated OS Install

Web Server – Installation and Configuration

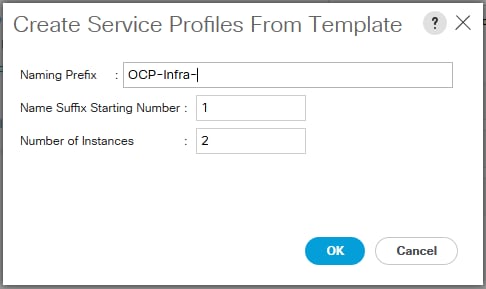

Service Profile Instantiation and Association

Red Hat Enterprise Linux Atomic Host OS Installation

Provisioning - OpenShift Container Platform

Cloning/Getting OpenShift-UCSM Ansible Playbook code from the GitHub repository

Node Preparation for OpenShift Container Platform Deployment

Setting up Multi-Master HA - Keepalived Deployment

Red Hat OpenShift Container Platform Deployment

Functional Test Cases – OCP/container-native storage

Scaling-up OpenShift Container Platform Cluster Tests

Appendix – I: Additional Components – Metrics and Logging Installation

Appendix – II: Persistent Volume Claim/Application Pod – yml file usage

Application Pod Deployment with PVC

Appendix – III: Disconnected Installation

Appendix – IV: Uninstalling Container-native Storage

Business Challenges

Businesses increasingly expect technology to be the centerpiece of every new change. Traditional approaches to leveraging technology are too slow to deliver innovation at the pace at which business ecosystems are changing.

To stay competitive, organizations need containerized software applications to meet their unique needs, from customer engagements to the development of new products and services. As a result, businesses face challenges around policy management, application portability, agility, resource utilization, and so on. To keep up with new technologies, or stay one step ahead, enterprises have to overcome these key challenges so that they can accelerate product development, add value, and compete better at a lower cost.

The ability to speed up application development, testing, delivery, and deployment is becoming a necessary business competency. Containers are proving to be the next step forward because they are much lighter to build and deploy than methods such as omnibus software builds and full virtual machine images. Containers are efficient, portable, and agile, and they have been accepted industry-wide because they are easier to deploy at scale than applications based on a monolithic architecture.

Typically, the container platform is expected to allow developers to focus on their code using their existing tools and familiar workflows, including CI/CD. Ideally, developers should not have to worry about (or touch) a Dockerfile, and should be able to deploy apps from their development environments to any destination, including their laptops, the data center, and the cloud. It should all be about ease of use and a superior developer experience. The platform should also give Ops teams enough visibility from the application layer down to the container, OS, virtualization, and underlying hardware layers.

Red Hat® OpenShift® Container Platform offers enterprises complete control over their Kubernetes environments, whether they are on-premises or in the public cloud, giving them the freedom to build and run applications anywhere. Red Hat OpenShift Container Platform 3.9 on Cisco UCS® B- and C-Series servers with Intel® Xeon® Scalable processors and storage-inspired SATA SSDs optimized for read-intensive workloads is a joint effort by Cisco, Red Hat, and Intel to deliver a best-in-class solution that caters to rapidly growing IT needs.

This CVD provides end-to-end solution deployment steps, along with the tests and validations performed on the proposed reference architecture. This guide builds on the published design guide, which details the reference architecture design and the considerations made. For more information on the design, see: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/ucs_openshift_design.html

Introduction

Deployment-centric application platform and DevOps initiatives are driving benefits for organizations in their digital transformation journey. Though still early in maturity, Docker format container packaging and Kubernetes container orchestration are emerging as industry standards for state-of-the-art PaaS solutions.

Cisco, Intel, and Red Hat have joined hands to develop a best-in-class, easy-to-deploy PaaS solution for on-premises, private, and public cloud environments, with automated deployments on a robust platform built from Cisco UCS and Red Hat OpenShift Container Platform.

Red Hat OpenShift Container Platform provides a set of container-based open source tools that enable digital transformation and accelerate application development while making optimal use of infrastructure. Red Hat OpenShift Container Platform helps organizations use the cloud delivery model and simplify continuous delivery of applications and services in a cloud-native way. Built on proven open source technologies, Red Hat OpenShift Container Platform also provides development teams multiple modernization options to enable a smooth transition to a microservices architecture and to the cloud for existing traditional applications.

Red Hat OpenShift Container Platform 3.9 is built around a core of application containers powered by Docker, with orchestration and management provided by Kubernetes, on a foundation of Red Hat Enterprise Linux® Atomic Host. It provides many enterprise-ready features, such as enhanced security, multitenancy, simplified application deployment, and continuous integration/continuous deployment (CI/CD) tools. With Cisco UCS M5 servers, provisioning and managing the Red Hat OpenShift Container Platform 3.9 infrastructure becomes practically effortless thanks to stateless computing and a single plane of management, resulting in a resilient solution.

This solution is built on Cisco UCS B- and C-Series M5 servers and Cisco Nexus 9000 Series switches. These servers are powered by the Intel® Xeon® Scalable platform, which provides the foundation for a powerful data center platform that creates an evolutionary leap in agility and scalability. Disruptive by design, this innovative processor sets a new level of platform convergence and capabilities across compute, storage, memory, network, and security.

This solution aims to deliver near line-rate performance for container applications by running Red Hat OpenShift Container Platform on Cisco UCS bare-metal servers with best-in-class Intel CPUs and solid-state drives.

This guide provides a step-by-step procedure for deploying Red Hat OpenShift Container Platform 3.9 on Cisco UCS B- and C-Series servers. It also lists the functional, high-availability, and scale tests performed on the enterprise-class and dev/test environments.

Audience

The intended audience for this CVD is system administrators or system architects. Some experience with Cisco UCS, Docker and Red Hat OpenShift technologies might be helpful, but is not required.

Purpose of this Document

This document highlights the benefits of using Cisco UCS M5 servers for Red Hat OpenShift Container Platform 3.9 to efficiently deploy, scale, and manage a production-ready application container environment for enterprise customers. While Cisco UCS infrastructure provides a platform for compute, network, and storage requirements, Red Hat OpenShift Container Platform provides a single development platform for new cloud-native applications and for transitioning from monoliths to microservices. The goal of this document is to demonstrate the value that Cisco UCS brings to the data center, such as single-point hardware lifecycle management, highly available compute, network, and storage infrastructure, and the simplicity of deploying application containers using Red Hat OpenShift Container Platform.

What’s New?

· Automated operating system provisioning.

· Red Hat Ansible® Playbooks for end-to-end deployment of OpenShift Container Platform on UCS infrastructure including Container-native storage solution.

· Automated scale-up/down infrastructure and OpenShift Container Platform components.

· Cisco UCS® M5 servers are based on new Intel® Xeon® Scalable processors with increased core counts, more PCIe lanes, higher memory bandwidth, Optane SSDs, and up to 2X higher storage performance.

Solution Summary

This deployment guide provides detailed instructions on preparing, provisioning, deploying, and managing Red Hat OpenShift Container Platform 3.9 in an on-premises environment, with the container-native storage solution from Red Hat on Cisco UCS M5 B- and C-Series servers to meet the persistent storage needs of stateful applications.

Solution Benefits

Some of the key benefits of this solution include:

· Red Hat OpenShift

- Strong, role-based access controls, with integrations to enterprise authentication systems.

- Powerful, web-scale container orchestration and management with Kubernetes.

- Integrated Red Hat Enterprise Linux Atomic Host, optimized for running containers at scale with Security-enhanced Linux (SELinux) enabled for strong isolation.

- Integration with public and private registries.

- Integrated CI/CD tools for secure DevOps practices.

- SDN plugin for container networking in CNI mode.

- Modernize application architectures toward microservices.

- Adopt a consistent application platform for hybrid cloud deployments.

- Support for remote storage volumes.

- Persistent storage for stateful cloud-native containerized applications.

· Cisco UCS

- Reduced data center complexity through Cisco UCS infrastructure, with a single management control plane for hardware lifecycle management.

- Easy to deploy and scale the solution.

- Best-in-class performance for container applications running on bare metal servers.

- Superior scalability and high-availability.

- Compute form factor agnostic. Application workload can be distributed across UCS B- and C-Series servers seamlessly.

- Better response with optimal ROI.

- Optimized hardware footprint for production and dev/ test deployments.

This section provides a brief introduction to the various hardware and software components used in this solution.

Cisco Unified Computing System

The Cisco Unified Computing System is a next-generation solution for blade and rack server computing. The system integrates a low-latency, lossless 10 Gigabit Ethernet unified network fabric with enterprise-class, x86-architecture servers. The system is an integrated, scalable, multi-chassis platform in which all resources participate in a unified management domain. The Cisco Unified Computing System accelerates the delivery of new services simply, reliably, and securely through end-to-end provisioning and migration support for both virtualized and non-virtualized systems. Cisco Unified Computing System (Cisco UCS) fuses access layer networking and servers. This high-performance, next-generation server system provides a data center with a high degree of workload agility and scalability.

Cisco UCS Manager

Cisco Unified Computing System (UCS) Manager provides unified, embedded management for all software and hardware components in the Cisco UCS. Using Single Connect technology, it manages, controls, and administers multiple chassis for thousands of virtual machines. Administrators use the software to manage the entire Cisco Unified Computing System as a single logical entity through an intuitive GUI, a command-line interface (CLI), or an XML API. The Cisco UCS Manager resides on a pair of Cisco UCS 6300 Series Fabric Interconnects using a clustered, active-standby configuration for high-availability.
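Because UCS Manager exposes an XML API alongside the GUI and CLI, the same objects an administrator sees in the GUI can be queried programmatically. The minimal sketch below only builds request bodies and sends nothing. To our understanding, these bodies are sent with HTTP POST to the `/nuova` endpoint on the UCS Manager cluster address, and the `aaaLogin` response carries an `outCookie` value that later requests pass back as `cookie`. The credentials, the cookie value, and the choice of `computeBlade` as the queried class are placeholders; verify them against the UCS Manager XML API reference before use.

```python
import xml.etree.ElementTree as ET


def build_login_request(username: str, password: str) -> str:
    """Return an aaaLogin request body for the UCS Manager XML API."""
    request = ET.Element("aaaLogin", inName=username, inPassword=password)
    return ET.tostring(request, encoding="unicode")


def build_class_query(cookie: str, class_id: str) -> str:
    """Return a configResolveClass body that lists every object of one class.

    `cookie` is the session token returned by a successful aaaLogin.
    """
    request = ET.Element(
        "configResolveClass",
        cookie=cookie,
        classId=class_id,
        inHierarchical="false",  # top-level objects only, no child objects
    )
    return ET.tostring(request, encoding="unicode")


if __name__ == "__main__":
    # Placeholder credentials and cookie, for illustration only.
    print(build_login_request("admin", "example-password"))
    print(build_class_query("example-cookie", "computeBlade"))
```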

Cisco UCS Fabric Interconnects

The fabric interconnects provide a single point of connectivity and management for the entire system. Typically deployed as an active-active pair, the system’s fabric interconnects integrate all components into a single, highly available management domain controlled by Cisco UCS Manager. The fabric interconnects manage all I/O efficiently and securely at a single point, resulting in deterministic I/O latency regardless of a server or virtual machine’s topological location in the system.

Cisco UCS 5108 Blade Server Chassis

The Cisco UCS 5100 Series Blade Server Chassis is a crucial building block of the Cisco Unified Computing System, delivering a scalable and flexible blade server chassis. The Cisco UCS 5108 Blade Server Chassis is six rack units (6RU) high and can mount in an industry-standard 19-inch rack. A single chassis can house up to eight half-width Cisco UCS B-Series Blade Servers and can accommodate both half-width and full-width blade form factors. Four single-phase, hot-swappable power supplies are accessible from the front of the chassis. These power supplies are 92 percent efficient and can be configured to support non-redundant, N+1 redundant, and grid-redundant configurations. The rear of the chassis contains eight hot-swappable fans, four power connectors (one per power supply), and two I/O bays for Cisco UCS 2304 Fabric Extenders.
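The three power-supply policies differ in how much of the installed capacity the chassis can count on versus how much is held in reserve. The following back-of-the-envelope sketch assumes four identical supplies and that grid redundancy splits them evenly across two power feeds; it does not model per-supply wattage or the actual UCS Manager power-capping logic.

```python
def load_bearing_supplies(installed: int, policy: str) -> int:
    """How many supplies' capacity the chassis power budget may count on.

    The remaining supplies are spare capacity that covers failures.
    """
    if policy == "non-redundant":
        return installed  # all supplies share the load; no spare
    if policy == "n+1":
        return installed - 1  # one supply kept in reserve
    if policy == "grid":
        if installed % 2:
            raise ValueError("grid redundancy needs an even number of supplies")
        return installed // 2  # either feed's half must power the chassis alone
    raise ValueError(f"unknown policy: {policy}")


def tolerated_supply_failures(installed: int, policy: str) -> int:
    """Supplies that can fail without dropping below the budgeted capacity."""
    return installed - load_bearing_supplies(installed, policy)


if __name__ == "__main__":
    for policy in ("non-redundant", "n+1", "grid"):
        print(policy, load_bearing_supplies(4, policy), tolerated_supply_failures(4, policy))
```

With four supplies, grid redundancy survives the loss of an entire power feed (two supplies), at the cost of budgeting only half of the installed capacity.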

Cisco UCS B200M5 Blade Server

The Cisco UCS B200M5 Blade Server delivers performance, flexibility, and optimization for deployments in data centers, in the cloud, and at remote sites. This enterprise-class server offers market-leading performance, versatility, and density without compromise for workloads including Virtual Desktop Infrastructure (VDI), web infrastructure, distributed databases, converged infrastructure, and enterprise applications such as Oracle and SAP HANA. The B200M5 server can quickly deploy stateless physical and virtual workloads through programmable, easy-to-use Cisco UCS Manager Software and simplified server access through Cisco SingleConnect technology. The Cisco UCS B200M5 server is a half-width blade. Up to eight servers can reside in the 6-Rack-Unit (6RU) Cisco UCS 5108 Blade Server Chassis, offering one of the highest densities of servers per rack unit of blade chassis in the industry. You can configure the B200M5 to meet your local storage requirements without having to buy, power, and cool components that you do not need.

Cisco UCS C220M5 Rack-Mount Server

The Cisco UCS C220M5 Rack Server is among the most versatile general-purpose enterprise infrastructure and application servers in the industry. It is a high-density 2-socket rack server that delivers industry-leading performance and efficiency for a wide range of workloads, including virtualization, collaboration, and bare metal applications. The Cisco UCS C-Series Rack Servers can be deployed as standalone servers or as part of the Cisco Unified Computing System™ (Cisco UCS) to take advantage of Cisco’s standards-based unified computing innovations that help reduce customers’ Total Cost of Ownership (TCO) and increase their business agility. The Cisco UCS C220M5 server extends the capabilities of the Cisco UCS portfolio in a 1-Rack-Unit (1RU) form factor. It incorporates the Intel® Xeon® Scalable processors, supporting up to 20 percent more cores per socket, twice the memory capacity, 20 percent greater storage density, and five times more PCIe NVMe Solid-State Disks (SSDs) compared to the previous generation of servers. These improvements deliver significant performance and efficiency gains that will improve your application performance.

Cisco UCS C240M5 Rack-Mount Server

The Cisco UCS C240M5 Rack Server is a 2-socket, 2-Rack-Unit (2RU) rack server offering industry-leading performance and expandability. It supports a wide range of storage and I/O-intensive infrastructure workloads, from big data and analytics to collaboration. Cisco UCS C-Series Rack Servers can be deployed as standalone servers or as part of a Cisco Unified Computing System™ (Cisco UCS) managed environment to take advantage of Cisco’s standards-based unified computing innovations that help reduce customers’ Total Cost of Ownership (TCO) and increase their business agility.

In response to ever-increasing computing and data-intensive real-time workloads, the enterprise-class Cisco UCS C240M5 server extends the capabilities of the Cisco UCS portfolio in a 2RU form factor. It incorporates the Intel® Xeon® Scalable processors, supporting up to 20 percent more cores per socket, twice the memory capacity, and five times more Non-Volatile Memory Express (NVMe) PCI Express (PCIe) Solid-State Disks (SSDs) compared to the previous generation of servers. These improvements deliver significant performance and efficiency gains that will improve your application performance.

Cisco VIC Interface Cards

The Cisco UCS Virtual Interface Card (VIC) 1340 is a 2-port 40-Gbps Ethernet or dual 4 x 10-Gbps Ethernet, Fibre Channel over Ethernet (FCoE) capable modular LAN on motherboard (mLOM) designed for the M4 and M5 generations of Cisco UCS B-Series Blade Servers. All the blade servers in this solution use the mLOM VIC 1340 card. Each blade therefore has 40 Gbps of network capacity, and all of the blade's underlying network interfaces share this mLOM card.

The Cisco UCS Virtual Interface Card 1385 improves flexibility, performance, and bandwidth for Cisco UCS C-Series Rack Servers. It offers dual-port Enhanced Quad Small Form-Factor Pluggable (QSFP+) 40 Gigabit Ethernet and Fibre Channel over Ethernet (FCoE) in a half-height PCI Express (PCIe) adapter. The 1385 card works with Cisco Nexus 40 Gigabit Ethernet (GE) and 10 GE switches for high-performance applications. The Cisco VIC 1385 implements the Cisco Data Center Virtual Machine Fabric Extender (VM-FEX), which unifies virtual and physical networking into a single infrastructure. The extender provides virtual-machine visibility from the physical network and a consistent network operations model for physical and virtual servers.

Cisco Nexus 9000 Switches

The Cisco Nexus 9000 Series delivers proven high performance and density, low latency, and exceptional power efficiency in a broad range of compact form factors. Operating in Cisco NX-OS Software mode or in Application Centric Infrastructure (ACI) mode, these switches are ideal for traditional or fully automated data center deployments.

The Cisco Nexus 9000 Series Switches offer both modular and fixed 10/40/100 Gigabit Ethernet switch configurations with scalability up to 30 Tbps of non-blocking performance with less than five-microsecond latency, 1152 x 10 Gbps or 288 x 40 Gbps non-blocking Layer 2 and Layer 3 Ethernet ports and wire speed VXLAN gateway, bridging, and routing.

Intel Scalable Processor Family

Intel® Xeon® Scalable processors provide a new foundation for secure, agile, multi-cloud data centers. This platform provides businesses with breakthrough performance to handle system demands ranging from entry-level cloud servers to compute-hungry tasks including real-time analytics, virtualized infrastructure, and high-performance computing. This processor family includes technologies for accelerating and securing specific workloads. The integrated Intel® Omni-Path Architecture (Intel® OPA) Host Fabric Interface, which is built into the CPU package, provides an end-to-end, high-bandwidth, low-latency fabric that optimizes performance and eases deployment of HPC clusters by eliminating the need for a discrete host fabric interface card.

Intel® SSD DC S4500 Series

Intel® SSD DC S4500 Series is a storage-inspired SATA SSD family optimized for read-intensive workloads. Based on TLC Intel® 3D NAND Technology, these larger-capacity SSDs enable data centers to increase the data stored per rack unit. Intel® SSD DC S4500 Series is built for compatibility with legacy infrastructures, so it enables easy storage upgrades that minimize the costs associated with modernizing the data center. The 2.5” 7mm form factor offers a wide range of capacities, from 240 GB up to 3.8 TB.

Red Hat OpenShift Container Platform

OpenShift Container Platform is Red Hat’s container application platform that brings together Docker and Kubernetes and provides an API to manage these services. OpenShift Container Platform allows you to create and manage containers. Containers are standalone processes that run within their own environment, independent of the operating system and the underlying infrastructure. OpenShift helps with developing, deploying, and managing container-based applications. It provides a self-service platform to create, modify, and deploy applications on demand, thus enabling faster development and release life cycles. OpenShift Container Platform has a microservices-based architecture of smaller, decoupled units that work together. It runs on top of a Kubernetes cluster, with data about the objects stored in etcd, a reliable clustered key-value store.

Kubernetes Infrastructure

Within OpenShift Container Platform, Kubernetes manages containerized applications across a set of Docker runtime hosts and provides mechanisms for deployment, maintenance, and application-scaling. The Docker service packages, instantiates, and runs containerized applications.
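The deployment and scaling mechanisms mentioned above rest on a reconcile pattern: a controller repeatedly compares the desired number of replicas with the pods it observes and acts on the difference. The toy sketch below illustrates that idea only; it is not Kubernetes source code, and `ScaleAction` and `reconcile` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ScaleAction:
    kind: str   # "create" or "delete"
    count: int  # number of pods affected


def reconcile(desired_replicas: int, running_pods: List[str]) -> List[ScaleAction]:
    """One pass of a reconcile loop: compare desired and observed replica counts."""
    if desired_replicas < 0:
        raise ValueError("desired_replicas must be non-negative")
    gap = desired_replicas - len(running_pods)
    if gap > 0:
        return [ScaleAction("create", gap)]
    if gap < 0:
        return [ScaleAction("delete", -gap)]
    return []  # observed state already matches the desired state


if __name__ == "__main__":
    # A pod crashed: three replicas are desired, two are still running.
    print(reconcile(3, ["web-1", "web-2"]))
```

Running such a pass continuously is what lets the platform both repair failures (a crashed pod is recreated) and scale (changing the desired count triggers creates or deletes).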

A Kubernetes cluster consists of one or more masters and a set of nodes. This solution design includes HA functionality at both the hardware and software layers. The Kubernetes cluster is designed to run in HA mode with three master nodes and two infra nodes to ensure that the cluster has no single point of failure.
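Three masters are the smallest count that keeps the control plane writable after losing one master, assuming etcd runs co-located on the masters as described for the master nodes later in this section. etcd commits writes only when a majority of its members agree, so the arithmetic below shows how many members can fail for a given cluster size.

```python
def quorum(members: int) -> int:
    """Smallest majority of a Raft-based etcd cluster with `members` voters."""
    if members < 1:
        raise ValueError("an etcd cluster needs at least one member")
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """Members that can be lost while a majority can still commit writes."""
    return members - quorum(members)


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} members: quorum {quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

Four members tolerate the same single failure as three, which is why odd cluster sizes are the usual choice.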

Red Hat Enterprise Linux Atomic Host

Red Hat Enterprise Linux Atomic Host is a lightweight variant of the Red Hat Enterprise Linux operating system designed to exclusively run Linux containers. By combining the modular capabilities of Linux containers and Red Hat Enterprise Linux, containers can be more securely and effectively deployed and managed across public, private, and hybrid cloud infrastructures.

Red Hat OpenShift Integrated Container Registry

OpenShift Container Platform provides an integrated container registry called OpenShift Container Registry (OCR) that adds the ability to automatically provision new image repositories on demand. This provides users with a built-in location for their application builds to push the resulting images. Whenever a new image is pushed to OCR, the registry notifies OpenShift Container Platform about the new image, passing along all the information about it, such as the namespace, name, and image metadata. Different pieces of OpenShift Container Platform react to new images, creating new builds and deployments.
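The push-and-notify flow described above is essentially an observer pattern: the registry creates a repository the first time an image is pushed to it and then informs every interested component. The in-memory sketch below models only that flow; `ToyRegistry`, the event fields, and the redeploy trigger are hypothetical and do not reflect the OCR or OpenShift API.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

ImageEvent = Dict[str, str]
Handler = Callable[[ImageEvent], None]


class ToyRegistry:
    """In-memory stand-in for a registry that creates repositories on the first
    push and notifies every subscriber about each pushed image."""

    def __init__(self) -> None:
        self.repositories: DefaultDict[str, List[str]] = defaultdict(list)
        self.subscribers: List[Handler] = []

    def subscribe(self, handler: Handler) -> None:
        self.subscribers.append(handler)

    def push(self, namespace: str, name: str, digest: str) -> None:
        # The repository is provisioned on demand the first time it is used.
        self.repositories[f"{namespace}/{name}"].append(digest)
        event = {"namespace": namespace, "name": name, "digest": digest}
        for handler in self.subscribers:
            handler(event)


if __name__ == "__main__":
    redeploys: List[str] = []
    registry = ToyRegistry()
    # A hypothetical deployment trigger that reacts to new images.
    registry.subscribe(lambda ev: redeploys.append(f"redeploy {ev['namespace']}/{ev['name']}"))
    registry.push("myproject", "frontend", "sha256:placeholder")
    print(redeploys)  # ['redeploy myproject/frontend']
```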

Container-native Storage Solution from Red Hat

Container-native storage solution from Red Hat makes OpenShift Container Platform a fully hyperconverged infrastructure where storage containers co-reside with the compute containers. The storage plane is based on containerized Red Hat Gluster® Storage services, which control the storage devices on every storage server. Heketi is part of the container-native storage architecture and controls all of the nodes that are members of the storage cluster. Heketi also provides an API through which storage space for containers can be easily requested. While Heketi provides an endpoint for the storage cluster, the object that calls its API on behalf of OpenShift clients is called a StorageClass. It is a Kubernetes and OpenShift object that describes the type of storage available for the cluster and can dynamically send storage requests when a persistent volume claim is created.
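The StorageClass-to-Heketi relationship can be made concrete with two manifests: a StorageClass that points the GlusterFS provisioner at the Heketi REST endpoint, and a persistent volume claim that requests storage from it. The sketch below emits them as JSON using only the standard library. The Heketi URL, secret name and namespace, the `admin` REST user, the claim name, and the 5Gi size are all placeholders; the exact StorageClass created by this guide's playbooks may differ.

```python
import json


def gluster_storage_class(name: str, heketi_url: str, secret_ns: str,
                          secret_name: str, rest_user: str = "admin") -> dict:
    """StorageClass that sends provisioning requests to the Heketi REST API."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "kubernetes.io/glusterfs",
        "parameters": {
            "resturl": heketi_url,          # Heketi endpoint (placeholder)
            "restuser": rest_user,          # assumed Heketi admin user
            "secretNamespace": secret_ns,   # where the Heketi key secret lives
            "secretName": secret_name,
        },
    }


def persistent_volume_claim(name: str, storage_class: str, size: str) -> dict:
    """PVC that triggers dynamic provisioning through the named StorageClass."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteMany"],  # GlusterFS volumes can be shared
            "resources": {"requests": {"storage": size}},
            "storageClassName": storage_class,
        },
    }


if __name__ == "__main__":
    sc = gluster_storage_class("glusterfs-storage", "http://heketi.example.com:8080",
                               "app-storage", "heketi-admin-secret")
    pvc = persistent_volume_claim("db-data", "glusterfs-storage", "5Gi")
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [sc, pvc]}, indent=2))
```

The output can be piped to `oc create -f -` once the placeholders are replaced with real values.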

Container-native storage for OpenShift Container Platform is built around three key technologies:

· OpenShift provides the Platform-as-a-Service (PaaS) infrastructure based on Kubernetes container management. The basic OpenShift architecture is built around multiple master systems, where each system contains a set of nodes.

· Red Hat Gluster Storage provides the containerized distributed storage based on Red Hat Gluster Storage container. Each Red Hat Gluster Storage volume is composed of a collection of bricks, where each brick is the combination of a node and an export directory.

· Heketi provides the Red Hat Gluster Storage volume life cycle management. It creates the Red Hat Gluster Storage volumes dynamically and supports multiple Red Hat Gluster Storage clusters.
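Since a brick is a node plus an export directory, a replicated volume is only fault-tolerant if each copy of the data lands on a different node. The sketch below groups bricks into replica sets and rejects layouts that place two copies on one node. The replica count of 3 reflects the common Heketi default as we understand it; the node names and paths are hypothetical, and this is not Heketi's actual placement algorithm.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Brick:
    node: str        # storage node hosting the brick
    export_dir: str  # directory exported from that node


def replica_sets(bricks: List[Brick], replica: int = 3) -> List[List[Brick]]:
    """Group bricks into replica sets, requiring every copy on a different node."""
    if len(bricks) % replica:
        raise ValueError("brick count must be a multiple of the replica count")
    sets = [bricks[i:i + replica] for i in range(0, len(bricks), replica)]
    for group in sets:
        if len({brick.node for brick in group}) != replica:
            raise ValueError(f"replica set {group} places two copies on one node")
    return sets


if __name__ == "__main__":
    bricks = [Brick(f"storage{i}.example.com", "/var/lib/heketi/b1") for i in (1, 2, 3)]
    print(len(replica_sets(bricks)), "replica set(s)")
```

Six bricks on three nodes form two replica sets, giving a distributed-replicated volume that spreads files across both sets.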

Docker

Red Hat OpenShift Container Platform uses the Docker runtime engine for containers.

Kubernetes

Red Hat OpenShift Container Platform is a complete container application platform that natively integrates technologies like Docker and Kubernetes - a powerful container cluster management and orchestration system.

Etcd

Etcd is a key-value store used in the OpenShift Container Platform cluster. The etcd data store provides the complete cluster and endpoint states to the OpenShift API servers, furnishing information such as node status, network configuration, and secrets.

Open vSwitch

Open vSwitch is an open-source implementation of a distributed virtual multilayer switch. It is designed to enable effective network automation through programmatic extensions, while supporting standard management interfaces and protocols such as 802.1ag, SPAN, LACP and NetFlow. Open vSwitch provides software-defined networking (SDN)-specific functions in the OpenShift Container Platform environment.

HAProxy

HAProxy is open source software that provides a high-availability load balancer and proxy server for TCP- and HTTP-based applications, spreading requests across multiple servers. In this solution, HAProxy provides routing and load-balancing functions for Red Hat OpenShift applications. Another instance of HAProxy acts as an ingress router for all applications deployed in the Red Hat OpenShift cluster. Both instances are replicated to every infrastructure node and are managed by additional components (keepalived and OpenShift services) to provide redundancy and high availability.
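To illustrate how a load balancer spreads requests while routing around failed servers, the toy model below mimics HAProxy's `roundrobin` balance algorithm combined with health checks. It is a sketch, not HAProxy code: `roundrobin` is only one of several balance modes, the configuration deployed by this solution may use another, and the backend addresses are hypothetical.

```python
from itertools import cycle
from typing import Dict, List


class RoundRobinBalancer:
    """Toy model of HAProxy's `balance roundrobin` with health checks."""

    def __init__(self, backends: List[str]) -> None:
        self.backends = list(backends)
        self.healthy: Dict[str, bool] = {b: True for b in self.backends}
        self._ring = cycle(self.backends)

    def mark(self, backend: str, is_up: bool) -> None:
        """Record a health-check result for one backend."""
        if backend not in self.healthy:
            raise KeyError(backend)
        self.healthy[backend] = is_up

    def pick(self) -> str:
        """Return the next healthy backend, skipping ones that failed checks."""
        for _ in range(len(self.backends)):
            candidate = next(self._ring)
            if self.healthy[candidate]:
                return candidate
        raise RuntimeError("no healthy backend available")


if __name__ == "__main__":
    lb = RoundRobinBalancer(["master1:8443", "master2:8443", "master3:8443"])
    lb.mark("master2:8443", False)  # master2 failed its health check
    print([lb.pick() for _ in range(4)])
```

With `master2` marked down, requests alternate between `master1` and `master3` until a later health check marks `master2` up again.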

Keepalived

Keepalived is routing software that provides simple and robust facilities for load balancing and high availability to Linux-based infrastructure. In this solution, keepalived provides virtual IP management for the HAProxy instances to ensure a highly available OpenShift Container Platform cluster. Keepalived is deployed on the infrastructure nodes because they also act as HAProxy load balancers. If one infrastructure node fails, Keepalived automatically moves the virtual IPs to the second node, which acts as a backup. With Keepalived, the Red Hat OpenShift infrastructure becomes highly available and resistant to failures.
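Keepalived implements VRRP, in which the live node with the highest priority owns a virtual IP and a backup takes over when the owner stops sending advertisements. The sketch below models only that election rule; VRRP's tie-breaking by IP address, preemption settings, and advertisement timers are not modeled, and the node names and priorities are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class VrrpNode:
    name: str
    priority: int      # the highest priority among live nodes owns the VIP
    alive: bool = True


def vip_owner(nodes: List[VrrpNode]) -> Optional[str]:
    """Return the node that should hold the virtual IP, or None if all are down."""
    live = [node for node in nodes if node.alive]
    if not live:
        return None
    return max(live, key=lambda node: node.priority).name


if __name__ == "__main__":
    infra1 = VrrpNode("infra1", priority=100)
    infra2 = VrrpNode("infra2", priority=90)
    print(vip_owner([infra1, infra2]))  # infra1 holds the VIP
    infra1.alive = False                # infra1 stops sending advertisements
    print(vip_owner([infra1, infra2]))  # the VIP moves to infra2
```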

Red Hat Ansible Automation

Red Hat Ansible Automation is a powerful IT automation tool. It can provision numerous types of resources, deploy applications, and configure and manage devices and operating system components. Because of its simplicity, extensibility, and portability, this OpenShift solution is based largely on Ansible Playbooks.

Ansible is mainly used for installation and management of the Red Hat OpenShift Container Platform deployment.
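The openshift-ansible installer reads an INI-style Ansible inventory in which an `OSEv3` parent group collects the `masters`, `nodes`, `etcd`, and, for container-native storage, `glusterfs` groups. The generator below is a minimal sketch of such an inventory. The variable names `openshift_deployment_type`, `openshift_node_labels`, and `glusterfs_devices` reflect our understanding of openshift-ansible 3.9 and should be checked against its documentation. All host names, the SSH user, and the `/dev/sdb` device path are hypothetical, and the playbooks used by this guide may build their inventory differently.

```python
from typing import Dict, List


def render_inventory(groups: Dict[str, List[str]], variables: Dict[str, str]) -> str:
    """Render an INI-style Ansible inventory with every group under OSEv3."""
    lines = ["[OSEv3:children]", *groups]   # each group is a child of OSEv3
    lines += ["", "[OSEv3:vars]"]
    lines += [f"{key}={value}" for key, value in variables.items()]
    for group, hosts in groups.items():
        lines += ["", f"[{group}]", *hosts]  # one host entry per line
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    masters = [f"master{i}.example.com" for i in (1, 2, 3)]
    storage = [f"storage{i}.example.com" for i in (1, 2, 3)]
    print(render_inventory(
        groups={
            "masters": masters,
            "etcd": masters,  # etcd co-located on the masters
            "nodes": masters + storage + [
                "infra1.example.com openshift_node_labels=\"{'region': 'infra'}\"",
                "app1.example.com openshift_node_labels=\"{'region': 'primary'}\"",
            ],
            "glusterfs": [f"{host} glusterfs_devices='[\"/dev/sdb\"]'" for host in storage],
        },
        variables={
            "ansible_ssh_user": "root",
            "openshift_deployment_type": "openshift-enterprise",
        },
    ))
```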

For an in-depth description of each hardware and software component, refer to the solution design guide at: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/ucs_openshift_design.html

This section provides an overview of the hardware and software components used in this solution, as well as the design factors to consider so that the system works as a single, highly available solution. It also describes the end-to-end deployment steps for the solution in detail.

Architecture

Red Hat OpenShift Container Platform uses the Kubernetes container orchestrator to manage containerized applications across a cluster of systems running the Docker container runtime. The physical configuration of Red Hat OpenShift Container Platform is based on the Kubernetes cluster architecture. OpenShift is a layered system designed to expose the underlying Docker-formatted container image and Kubernetes concepts as accurately as possible. It focuses on making it easy for a developer to compose applications: for example, install Ruby, push code, and add MySQL. The concept of an application as a separate object is replaced by a more flexible composition of "services". This allows two web containers to reuse a database, or a database to be exposed directly to the edge of the network.

Figure 1 Red Hat OpenShift Container Platform Architecture Overview

This Red Hat OpenShift RA contains five types of nodes: bastion, master, infrastructure, storage, and application.

· Bastion Node:

This is a dedicated node that serves as the main deployment and management server for the Red Hat OpenShift cluster. Cluster administrators log on to it to perform system deployment and management operations, such as running the Ansible OpenShift deployment Playbooks and performing scale-out operations. The bastion node also runs DNS services for the OpenShift cluster nodes. The bastion node runs Red Hat Enterprise Linux 7.5.

· OpenShift Master Nodes:

The OpenShift Container Platform master is a server that performs control functions for the whole cluster environment. It is responsible for the creation, scheduling, and management of all objects specific to Red Hat OpenShift. It includes API, controller manager, and scheduler capabilities in one OpenShift binary. It is also a common practice to install an etcd key-value store on OpenShift masters to achieve a low-latency link between etcd and OpenShift masters. It is recommended that you run both Red Hat OpenShift masters and etcd in highly available environments. This can be achieved by running multiple OpenShift masters in conjunction with an external active-passive load balancer and the clustering functions of etcd. The OpenShift master node runs Red Hat Enterprise Linux Atomic Host 7.5.

· OpenShift Infrastructure Nodes:

The OpenShift infrastructure node runs infrastructure-specific services: Docker Registry, HAProxy router, and Heketi. Docker Registry stores application container images. The HAProxy router provides routing functions for Red Hat OpenShift applications. It currently supports HTTP(S) traffic and TLS-enabled traffic via Server Name Indication (SNI). Heketi provides a management API for configuring GlusterFS persistent storage. Additional applications and services can be deployed on OpenShift infrastructure nodes. The OpenShift infrastructure node runs Red Hat Enterprise Linux Atomic Host 7.5.

· OpenShift Application Nodes:

The OpenShift application nodes run containerized applications created and deployed by developers. An OpenShift application node contains the OpenShift node components combined into a single binary, which can be used by OpenShift masters to schedule and control containers. A Red Hat OpenShift application node runs Red Hat Enterprise Linux Atomic Host 7.5.

· OpenShift Storage Nodes:

The OpenShift storage nodes run containerized GlusterFS services which configure persistent volumes for application containers that require data persistence. Persistent volumes may be created manually by a cluster administrator or automatically by storage class objects. An OpenShift storage node is also capable of running containerized applications. A Red Hat OpenShift storage node runs Red Hat Enterprise Linux Atomic Host 7.5.

The following table lists the functions and roles of each class of node in this reference design of OpenShift Container Platform:

Table 1 Type of nodes in OpenShift Container Platform cluster and their roles

| Node | Roles |
| --- | --- |
| Bastion Node | System deployment and management operations; DNS services for the OpenShift Container Platform cluster; IP masquerading services for the internal cluster nodes |
| Master Nodes | Kubernetes services; etcd data store; Controller Manager and Scheduler; API services |
| Infrastructure Nodes | Container Registry; HAProxy; Heketi; Keepalived |
| Application Nodes | Application container pods; Docker runtime |
| Storage Nodes | Red Hat Gluster Storage; container-native storage services; storage nodes are labeled `compute`, so workload scheduling is enabled by default |


The etcd data store, a key-value database used by Kubernetes, is co-hosted on the master nodes alongside other services such as the Controller Manager, API, and Scheduler.

Topology

Physical Topology Reference Architecture Production Use Case

The physical topology for Architecture – I: High-End Production use case includes 9 x Cisco UCS B200M5 blade servers, 1 x Cisco UCS C220M5 and 3 x Cisco UCS C240M5 rack servers, Cisco UCS 6332-16UP Fabric Interconnects, and Cisco Nexus 9396PX switches. All OpenShift nodes are provisioned with SSDs as storage devices. This includes the container-native storage nodes used for persistent storage provisioning.

Figure 2 Reference Architecture – Production Use Case

Physical Topology Reference Architecture Dev/ Test Use Case

The physical topology for Architecture – II: Starter Dev/ Test use case includes 5 x Cisco UCS C240M5 and 4 x Cisco UCS C220M5 rack servers, Cisco UCS 6332-16UP Fabric Interconnects, and Cisco Nexus 9396PX switches. All the nodes are provisioned with HDDs as storage devices, including the storage nodes for container-native storage. On the Cisco UCS C240M5 servers, application nodes are co-located with both the 2-node infrastructure cluster and the 3-node storage cluster.

Figure 3 Reference Architecture – Dev/ Test Use Case

Logical Topology Reference Architecture Production Use Case

The cluster node roles and various services they provide are shown in the diagram below.

Figure 4 Logical Topology Reference Architecture Production Use Case

Logical Topology Reference Architecture Dev/ Test Use Case

In this architecture option, two nodes are dedicated to infrastructure services and three nodes to container-native storage services. Application workloads are co-hosted on the two infrastructure nodes. The container-native storage services are co-hosted on the remaining three application nodes. The master and bastion nodes remain dedicated to the services and roles they perform in the cluster.

Figure 5 Logical Reference Architecture for Dev/ Test Use Case

Physical Network Connectivity

The following figures illustrate the cabling details for the two architectures.

Figure 6 Production Use Case

Figure 7 Dev/ Test Use Case

Network Addresses

The following tables list the network subnets, VLANs, and addresses used in this solution. These are examples and do not need to be used as-is in other deployments. You can use your own addresses, VLANs, and subnets based on your environment, as long as they fit into the overall scheme.

Architecture - I: Production Use Case (high performance)

Table 2 Network Subnets

| Network | VLAN ID | Interface | Purpose |
| --- | --- | --- | --- |
| 10.65.121.0/24 | 602 | Mgmt0/Bond0 | Management Network with External Connectivity |
| 10.65.122.0/24 | 603 | eno6 | External Network |
| 100.100.100.0/24 | 1001 | eno5 | Internal Cluster Network - Host |
| 172.25.0.0/16 | 1001 | eno5 | Internal subnet used by the OpenShift Container Platform SDN plugin for service provisioning |
| 172.28.0.0/14 | 1001 | eno5 | Internal Cluster Network - POD |

Table 3 Cluster Node Addresses

| Host | Interface 1 | Interface 2 | Purpose |
| --- | --- | --- | --- |
| Bastion Node | Bond0/10.65.121.50 | Bond1001/100.100.100.10 | DNS services, vMedia Policy Repo Host |
| OCP-Infra-1 | eno5/100.100.100.51 | eno6/10.65.122.61 | Registry Services, HAProxy, Keepalived, OpenShift Router, Logging and Metrics |
| OCP-Infra-2 | eno5/100.100.100.52 | eno6/10.65.122.62 | Registry Services, HAProxy, Keepalived, OpenShift Router, Logging and Metrics |
| OCP-Mstr-1 | eno5/100.100.100.53 | - | Kubernetes services, etcd data store, API services, Controller and Scheduling services |
| OCP-Mstr-2 | eno5/100.100.100.54 | - | Kubernetes services, etcd data store, API services, Controller and Scheduling services |
| OCP-Mstr-3 | eno5/100.100.100.56 | - | Kubernetes services, etcd data store, API services, Controller and Scheduling services |
| OCP-App-1 | eno5/100.100.100.57 | - | Container Runtime, Application Container PODs |
| OCP-App-2 | eno5/100.100.100.58 | - | Container Runtime, Application Container PODs |
| OCP-App-3 | eno5/100.100.100.59 | - | Container Runtime, Application Container PODs |
| OCP-App-4 | eno5/100.100.100.60 | - | Container Runtime, Application Container PODs |
| OCP-Strg-1 | eno5/100.100.100.61 | - | Red Hat Gluster Storage, Container-native Storage Services (CNS) and Storage Backend |
| OCP-Strg-2 | eno5/100.100.100.62 | - | Red Hat Gluster Storage, Container-native Storage Services (CNS) and Storage Backend |
| OCP-Strg-3 | eno5/100.100.100.62 | - | Red Hat Gluster Storage, Container-native Storage Services (CNS) and Storage Backend |
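Before deploying, you can check an address plan like this one mechanically. Three conditions should hold:

- Every host address falls inside the internal host subnet.
- No address is assigned twice.
- The OpenShift SDN service and pod ranges do not overlap the host network or each other.

The following Python sketch performs those checks with the standard `ipaddress` module. The subnets are taken from the Architecture - I network table. The host mapping in the test is a hypothetical subset, and the function name `check_plan` is illustrative.

```python
import ipaddress
from collections import Counter

# Subnets from the Architecture - I network table.
HOST_NET = ipaddress.ip_network("100.100.100.0/24")
SDN_NETS = [
    ipaddress.ip_network("172.25.0.0/16"),  # service network
    ipaddress.ip_network("172.28.0.0/14"),  # pod network
]


def check_plan(hosts):
    """Return a list of problems in a {host name: eno5 address} mapping."""
    problems = []
    # The host network and the SDN ranges must not overlap one another.
    nets = [HOST_NET] + SDN_NETS
    for i, a in enumerate(nets):
        for b in nets[i + 1:]:
            if a.overlaps(b):
                problems.append(f"{a} overlaps {b}")
    counts = Counter(hosts.values())
    for host, addr in hosts.items():
        if ipaddress.ip_address(addr) not in HOST_NET:
            problems.append(f"{host}: {addr} is outside {HOST_NET}")
        if counts[addr] > 1:
            problems.append(f"{host}: {addr} is assigned more than once")
    return problems
```

An empty list means the plan passed all three checks. Each problem string names the host and address involved.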

Architecture - II: Dev/ Test Use Case

Table 4 Network Subnets

| Network | VLAN ID | Interface | Purpose |
| --- | --- | --- | --- |
| 10.65.122.0/24 | 603 | Mgmt0/Bond0 | Management Network with External Connectivity |
| 10.65.121.0/24 | 602 | eno6 | External Network |
| 201.201.201.0/24 | 2001 | eno5 | Internal Cluster Network - Host |
| 172.25.0.0/16 | 2001 | eno5 | Internal subnet used by the OpenShift Container Platform SDN plugin for service provisioning |
| 172.28.0.0/14 | 2001 | eno5 | Internal Cluster Network - POD |

Table 5 Cluster Node Addresses

| Host | Interface 1 | Interface 2 | Purpose |
| --- | --- | --- | --- |
| Bastion Node | Bond0/10.65.122.99 | Bond1001/201.201.201.10 | OpenShift DNS services, vMedia Policy Repo Host |
| OCP-Infra-1 | eno5/201.201.201.51 | eno6/10.65.121.121 | Registry Services, HAProxy, Keepalived, OpenShift Router, Logging and Metrics, Container Runtime, Application Container PODs |
| OCP-Infra-2 | eno5/201.201.201.52 | eno6/10.65.121.122 | Registry Services, HAProxy, Keepalived, OpenShift Router, Logging and Metrics, Container Runtime, Application Container PODs |
| OCP-Mstr-1 | eno5/201.201.201.53 | - | Kubernetes services, etcd data store, API services, Controller and Scheduling services |
| OCP-Mstr-2 | eno5/201.201.201.54 | - | Kubernetes services, etcd data store, API services, Controller and Scheduling services |
| OCP-Mstr-3 | eno5/201.201.201.56 | - | Kubernetes services, etcd data store, API services, Controller and Scheduling services |
| OCP-App-1 | eno5/201.201.201.57 | - | Container Runtime, Application Container PODs, Red Hat Gluster Storage, Container-native Storage Services (CNS) and Storage Backend |
| OCP-App-2 | eno5/201.201.201.58 | - | Container Runtime, Application Container PODs, Red Hat Gluster Storage, Container-native Storage Services (CNS) and Storage Backend |
| OCP-App-3 | eno5/201.201.201.59 | - | Container Runtime, Application Container PODs, Red Hat Gluster Storage, Container-native Storage Services (CNS) and Storage Backend |

Logical Network Connectivity

This section describes how the Cisco VIC enables application containers to use a dedicated I/O path for network access in a secure, multi-tenant environment. With the Cisco VIC, containers get a dedicated physical path to a classic L2 VLAN topology with better line-rate efficiency. To further optimize infrastructure utilization, the traffic paths are configured so that all management and control traffic goes through fabric interconnect A and all container data traffic goes through fabric interconnect B.

Figure 8 Logical Network Diagram

Physical Infrastructure

This section describes various hardware and software components used in the RA and this deployment guide. The following infrastructure components are needed for the two architectural options under the solution.

Table 6 Architecture - I: Production Use Case (high performance)

| Component | Model | Quantity | Description |
| --- | --- | --- | --- |
| App nodes | Cisco UCS B200M5 | 4 | CPU: 2 x 6130 @ 2.1 GHz, 16 cores each; Memory: 24 x 16 GB 2666 DIMM (384 GB total); SSDs: 2 x 960 GB 6G SATA EV (Intel S4500 Enterprise Value); Network card: 1 x 1340 VIC + 1 x Port Expander for 40-Gig network I/O; RAID controller: Cisco MRAID 12G SAS Controller |
| Master nodes | Cisco UCS B200M5 | 3 | CPU: 2 x 4114 @ 2.2 GHz, 10 cores each; Memory: 12 x 16 GB 2400 DIMM (192 GB total); SSDs: 2 x 240 GB 6G SATA EV; Network card: 1 x 1340 VIC + 1 x Port Expander for 40-Gig network I/O; RAID controller: Cisco MRAID 12G SAS Controller |
| Infra nodes | Cisco UCS B200M5 | 2 | CPU: 2 x 4114 @ 2.2 GHz, 10 cores each; Memory: 12 x 16 GB 2400 DIMM (192 GB total); SSDs: 2 x 240 GB 6G SATA EV; Network card: 1 x 1340 VIC + 1 x Port Expander for 40-Gig network I/O; RAID controller: Cisco MRAID 12G SAS Controller |
| Bastion node | Cisco UCS C220M5 | 1 | CPU: 2 x 4114 @ 2.2 GHz, 10 cores each; Memory: 12 x 16 GB 2400 DIMM (192 GB total); HDDs: 2 x 300 GB 12G SAS 10K SFF; Network card: 1 x 1385 VIC; RAID controller: Cisco MRAID 12G SAS Controller |
| Storage nodes | Cisco UCS C240M5SX | 3 | CPU: 2 x 6130 @ 2.1 GHz, 16 cores each; Memory: 12 x 16 GB 2666 DIMM (192 GB total); SSDs: 2 x 240 GB 6G SATA EV and 20 x 3.8 TB SATA (Intel S4500 Enterprise Value); Network card: 1 x 1385 VIC; RAID controller: Cisco MRAID 12G SAS Controller |
| Chassis | Cisco UCS 5108 | 2 | |
| IO Modules | Cisco IOM 2304 | 4 | |
| Fabric Interconnects | Cisco UCS 6332-16UP | 2 | |
| Switches | Cisco Nexus 9396PX | 2 | |
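Summing the per-node figures in the Architecture - I components table gives the cluster-wide compute and storage totals. The following Python sketch does that arithmetic. The usable-capacity figure is an assumption: it presumes 3-way replicated GlusterFS volumes. It also ignores RAID, filesystem, and Gluster metadata overhead, so treat it as an upper bound rather than a sizing result. The names `NODES` and `totals` are illustrative.

```python
# Per-node figures copied from the Architecture - I components table:
# role: (quantity, sockets, cores_per_socket, memory_gb)
NODES = {
    "App":     (4, 2, 16, 384),  # 2 x 6130, 24 x 16 GB
    "Master":  (3, 2, 10, 192),  # 2 x 4114, 12 x 16 GB
    "Infra":   (2, 2, 10, 192),  # 2 x 4114, 12 x 16 GB
    "Bastion": (1, 2, 10, 192),  # 2 x 4114, 12 x 16 GB
    "Storage": (3, 2, 16, 192),  # 2 x 6130, 12 x 16 GB
}


def totals(nodes):
    """Return (physical cores, memory in GB) summed over all nodes."""
    cores = sum(qty * sockets * per_socket for qty, sockets, per_socket, _ in nodes.values())
    memory_gb = sum(qty * mem for qty, _, _, mem in nodes.values())
    return cores, memory_gb


# Storage nodes: 20 x 3.8 TB data SSDs each (integer GB avoids float rounding).
STORAGE_NODES, DRIVES_PER_NODE, DRIVE_GB = 3, 20, 3800
raw_gb = STORAGE_NODES * DRIVES_PER_NODE * DRIVE_GB
# Assumption: 3-way replicated volumes; RAID, filesystem and Gluster
# metadata overhead are ignored, so this is an upper bound.
usable_gb = raw_gb // 3

if __name__ == "__main__":
    cores, memory_gb = totals(NODES)
    print(f"{cores} cores, {memory_gb} GB RAM")
    print(f"raw {raw_gb // 1000} TB, usable <= {usable_gb // 1000} TB")
```

For the configuration listed in the table, this gives 344 physical cores and 3,264 GB of memory. It also gives 228 TB of raw storage-node SSD capacity, or at most about 76 TB usable under the replica-3 assumption.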

Table 7 Architecture - II: Dev/ Test Use Case

| Component | Model | Quantity | Description |
| --- | --- | --- | --- |
| Application + Storage nodes (co-located) | Cisco UCS C240M5SX | 3 | CPU: 2 x 4114 @ 2.2 GHz, 10 cores each; Memory: 12 x 16 GB 2400 DIMM (192 GB total); HDDs: 2 x 300 GB 12G SAS 10K SFF (internal boot drives) and 20 x 1.2 TB 12G SAS 10K SFF; Network card: 1 x 1385 VIC; RAID controller: Cisco MRAID 12G SAS Controller |
| Application + Infra nodes (co-located) | Cisco UCS C240M5SX | 2 | CPU: 2 x 4114 @ 2.2 GHz, 10 cores each; Memory: 12 x 16 GB 2400 DIMM (192 GB total); HDDs: 2 x 300 GB 12G SAS 10K SFF (internal boot drives); Network card: 1 x 1385 VIC; RAID controller: Cisco MRAID 12G SAS Controller |
| Master nodes | Cisco UCS C220M5 | 3 | CPU: 2 x 4114 @ 2.2 GHz, 10 cores each; Memory: 12 x 16 GB 2400 DIMM (192 GB total); HDDs: 2 x 300 GB 12G SAS 10K SFF; Network card: 1 x 1385 VIC; RAID controller: Cisco MRAID 12G SAS Controller |
| Bastion node | Cisco UCS C220M5 | 1 | CPU: 2 x 4114 @ 2.2 GHz, 10 cores each; Memory: 12 x 16 GB 2400 DIMM (192 GB total); HDDs: 2 x 300 GB 12G SAS 10K SFF; Network card: 1 x 1385 VIC; RAID controller: Cisco MRAID 12G SAS Controller |
| Fabric Interconnects | Cisco UCS 6332-16UP | 2 | |
| Switches | Cisco Nexus 9396PX | 2 | |

Table 8 Software Components

| Component | Version |
| --- | --- |
| Cisco UCS Manager | 3.2(3d) |
| Red Hat Enterprise Linux | 7.5 |
| Red Hat Enterprise Linux Atomic Host | 7.5 |
| Red Hat OpenShift Container Platform | 3.9 |
| Container-native storage solution from Red Hat | 3.9 |
| Kubernetes | 1.9.1 |
| Docker | 1.13.1 |
| Red Hat Ansible Engine | 2.4.4 |
| Etcd | 3.2.18 |
| Open vSwitch | 2.7.3 |
| HAProxy (Router) | 1.8.1 |
| HAProxy (Load Balancer) | 1.5.18 |
| Keepalived | 1.3.5 |
| Red Hat Gluster Storage | 3.3.0 |
| GlusterFS | 3.8.4 |
| Heketi | 6.0.0 |

Switch Configuration - Cisco Nexus 9396PX

Initial Configuration and Setup

This section outlines the initial configuration necessary for bringing up a new Cisco Nexus 9000 switch.

Cisco Nexus A

To set up the initial configuration for the first Cisco Nexus switch, complete the following steps:

1. Connect to the serial or console port of the switch.

Enter the configuration method: console

Abort Auto Provisioning and continue with normal setup? (yes/no) [n]: y

---- System Admin Account Setup ----

Do you want to enforce secure password standard (yes/no) [y]:

Enter the password for "admin":

Confirm the password for "admin":

---- Basic System Configuration Dialog VDC: 1 ----

This setup utility will guide you through the basic configuration of the system. Setup configures only enough connectivity for management of the system.

Please register Cisco Nexus9000 Family devices promptly with your supplier. Failure to register may affect response times for initial service calls. Nexus9000 devices must be registered to receive entitled support services.

Press Enter at anytime to skip a dialog. Use ctrl-c at anytime to skip the remaining dialogs.

Would you like to enter the basic configuration dialog (yes/no): y

Create another login account (yes/no) [n]: n

Configure read-only SNMP community string (yes/no) [n]:

Configure read-write SNMP community string (yes/no) [n]:

Enter the switch name: OCP-N9K-A

Continue with Out-of-band (mgmt0) management configuration? (yes/no) [y]:

Mgmt0 IPv4 address: xxx.xxx.xxx.xxx

Mgmt0 IPv4 netmask: 255.255.255.0

Configure the default gateway? (yes/no) [y]:

IPv4 address of the default gateway: xxx.xxx.xxx.xxx

Configure advanced IP options? (yes/no) [n]:

Enable the telnet service? (yes/no) [n]:

Enable the ssh service? (yes/no) [y]:

Type of ssh key you would like to generate (dsa/rsa) [rsa]:

Number of rsa key bits <1024-2048> [1024]: 2048

Configure the ntp server? (yes/no) [n]: y

NTP server IPv4 address: yy.yy.yy.yy

Configure default interface layer (L3/L2) [L2]:

Configure default switchport interface state (shut/noshut) [noshut]:

Configure CoPP system profile (strict/moderate/lenient/dense/skip) [strict]:

2. Review the settings printed to the console. If they are correct, answer yes to apply and save the configuration.

3. Wait for the login prompt to make sure that the configuration has been saved prior to proceeding.

Cisco Nexus B

To set up the initial configuration for the second Cisco Nexus switch, complete the following steps:

1. Connect to the serial or console port of the switch.

2. The Cisco Nexus B switch should present a configuration dialog identical to that of Cisco Nexus A shown above. Provide the configuration parameters specific to Cisco Nexus B for the following configuration variables. All other parameters should be identical to that of Cisco Nexus A.

Admin password

Nexus B Hostname: OCP-N9K-B

Nexus B mgmt0 IP address: xxx.xxx.xxx.xxx

Nexus B mgmt0 Netmask: 255.255.255.0

Nexus B mgmt0 Default Gateway: xxx.xxx.xxx.xxx

Feature Enablement

The following commands enable the features this design requires (UDLD, LACP, vPC, and VLAN interfaces) and set the default spanning tree behavior:

1. On each Nexus 9000, enter the configuration mode:

config terminal

2. Use the following commands to enable the necessary features:

feature udld

feature lacp

feature vpc

feature interface-vlan

3. Configure the spanning tree and save the running configuration to start-up:

spanning-tree port type network default

spanning-tree port type edge bpduguard default

spanning-tree port type edge bpdufilter default

copy run start

VLAN Creation

To create the necessary virtual local area networks (VLANs), complete the following step on both switches:

From the configuration mode, run the following commands:

vlan 603

name vlan603

vlan 602

name vlan602

Configuring VPC

Configuring VPC Domain

Cisco Nexus A

To configure virtual port channels (vPCs) for switch A, complete the following steps:

1. From the global configuration mode, create a new vPC domain:

vpc domain 10

2. Make Cisco Nexus A the primary vPC peer by defining a low priority value:

role priority 10

3. Use the management interfaces on the supervisors of the Cisco Nexus switches to establish a keepalive link:

peer-keepalive destination xx.xx.xx.xx source yy.yy.yy.yy

4. Enable the following features for this vPC domain:

peer-switch

delay restore 150

peer-gateway

ip arp synchronize

auto-recovery

5. Save the configuration.

copy run start

Cisco Nexus B

To configure vPCs for switch B, complete the following steps:

1. From the global configuration mode, create a new vPC domain:

vpc domain 10

2. Make Cisco Nexus A the primary vPC peer by defining a higher priority value on this switch:

role priority 20

3. Use the management interfaces on the supervisors of the Cisco Nexus switches to establish a keepalive link:

peer-keepalive destination yy.yy.yy.yy source xx.xx.xx.xx

4. Enable the following features for this vPC domain:

peer-switch

delay restore 150

peer-gateway

ip arp synchronize

auto-recovery

5. Save the configuration:

copy run start
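The two vPC domain configurations differ only in the role priority and in the keepalive direction: each switch uses its own mgmt0 address as the source and the peer's as the destination. The following Python sketch renders both stanzas from a single set of inputs so the pair stays consistent. The function names and the 192.0.2.x addresses in the test are hypothetical placeholders. The output is plain NX-OS text to paste into the console; the sketch does not connect to the switches.

```python
from typing import List, Tuple


def vpc_domain_config(domain_id: int, role_priority: int,
                      keepalive_dst: str, keepalive_src: str,
                      delay_restore: int = 150) -> List[str]:
    """Render the vPC domain stanza used in the steps above as NX-OS lines."""
    return [
        f"vpc domain {domain_id}",
        f"  role priority {role_priority}",
        f"  peer-keepalive destination {keepalive_dst} source {keepalive_src}",
        "  peer-switch",
        f"  delay restore {delay_restore}",
        "  peer-gateway",
        "  ip arp synchronize",
        "  auto-recovery",
    ]


def vpc_pair(domain_id: int, mgmt_a: str, mgmt_b: str) -> Tuple[List[str], List[str]]:
    """Return (Nexus A, Nexus B) configs: A gets the lower (preferred) priority,
    and each switch points its keepalive at the other switch's mgmt0 address."""
    a = vpc_domain_config(domain_id, 10, keepalive_dst=mgmt_b, keepalive_src=mgmt_a)
    b = vpc_domain_config(domain_id, 20, keepalive_dst=mgmt_a, keepalive_src=mgmt_b)
    return a, b


if __name__ == "__main__":
    cfg_a, cfg_b = vpc_pair(10, "192.0.2.11", "192.0.2.12")
    print("\n".join(cfg_a))
    print()
    print("\n".join(cfg_b))
```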

Configuring Network Interfaces for VPC Peer Links

Cisco Nexus A

1. Define a port description for the interfaces connecting to VPC Peer OCP-N9K-B.

interface Eth1/9

description VPC Peer OCP-N9K-B:e1/10

interface Eth1/10

description VPC Peer OCP-N9K-B:e1/9

2. Apply a port channel to both VPC Peer links and bring up the interfaces.

interface Eth1/9,Eth1/10

channel-group 11 mode active

no shutdown

3. Enable UDLD on both interfaces to detect unidirectional links.

udld enable

4. Define a description for the port-channel connecting to OCP-N9K-B.

interface port-channel 11

description vPC peer-link

5. Make the port-channel a switchport, and configure a trunk that allows VLANs 602 and 603.

switchport

switchport mode trunk

switchport trunk allowed vlan 602-603

spanning-tree port type network

6. Make this port-channel the VPC peer link and bring it up.

vpc peer-link

no shutdown

copy run start

Cisco Nexus B

1. Define a port description for the interfaces connecting to VPC Peer OCP-N9K-A.

interface Eth1/9

description VPC Peer OCP-N9K-A:e1/10

interface Eth1/10

description VPC Peer OCP-N9K-A:e1/9

2. Apply a port channel to both VPC Peer links and bring up the interfaces.

interface Eth1/9,Eth1/10

channel-group 11 mode active

no shutdown

3. Enable UDLD on both interfaces to detect unidirectional links.

udld enable

4. Define a description for the port-channel connecting to OCP-N9K-A.

interface port-channel 11

description vPC peer-link

5. Make the port-channel a switchport, and configure a trunk that allows VLANs 602 and 603.

switchport

switchport mode trunk

switchport trunk allowed vlan 602-603

spanning-tree port type network

6. Make this port-channel the VPC peer link and bring it up.

vpc peer-link

no shutdown

copy run start

Configuring Network Interfaces

Cisco Nexus A

1. Define a description for the port-channel connecting to OCP-FI-A.

interface port-channel 12

description OCP-FI-A

2. Make the port-channel a switchport, and configure a trunk that allows VLANs 602 and 603.

switchport mode trunk

switchport trunk allowed vlan 602-603

3. Make the port channel and associated interfaces spanning tree edge ports.

spanning-tree port type edge trunk

spanning-tree guard root

no lacp graceful-convergence

4. Make this a VPC port-channel and bring it up.

vpc 12

no shutdown

5. Define a port description for the interface connecting to OCP-FI-A.

interface Eth2/1

description OCP-FI-A

6. Apply it to a port channel and bring up the interface.

channel-group 12 mode active

no shutdown

7. Enable UDLD to detect unidirectional links.

udld enable

8. Define a description for the port-channel connecting to OCP-FI-B.

interface port-channel 13

description OCP-FI-B

9. Make the port-channel a switchport, and configure a trunk that allows VLANs 602 and 603.

switchport mode trunk

switchport trunk allowed vlan 602-603

10. Make the port channel and associated interfaces spanning tree edge ports.

spanning-tree port type edge trunk

spanning-tree guard root

no lacp graceful-convergence

11. Make this a VPC port-channel and bring it up.

vpc 13

no shutdown

12. Define a port description for the interface connecting to OCP-FI-B.

interface Eth2/2

description OCP-FI-B

13. Apply it to a port channel and bring up the interface.

channel-group 13 mode active

no shutdown

14. Enable UDLD to detect unidirectional links.

udld enable

copy run start

Cisco Nexus B

1. Define a description for the port-channel connecting to OCP-FI-A.

interface port-channel 12

description OCP-FI-A

2. Make the port-channel a switchport, and configure a trunk that allows VLANs 602 and 603.

switchport mode trunk

switchport trunk allowed vlan 602-603

3. Make the port channel and associated interfaces spanning tree edge ports.

spanning-tree port type edge trunk

spanning-tree guard root

no lacp graceful-convergence

4. Make this a VPC port-channel and bring it up.

vpc 12

no shutdown

5. Define a port description for the interface connecting to OCP-FI-A.

interface Eth2/1

description OCP-FI-A

6. Apply it to a port channel and bring up the interface.

channel-group 12 mode active

no shutdown

7. Enable UDLD to detect unidirectional links.

udld enable

8. Define a description for the port-channel connecting to OCP-FI-B.

interface port-channel 13

description OCP-FI-B

9. Make the port-channel a switchport, and configure a trunk that allows VLANs 602 and 603.

switchport mode trunk

switchport trunk allowed vlan 602-603

10. Make the port channel and associated interfaces spanning tree edge ports.

spanning-tree port type edge trunk

spanning-tree guard root

no lacp graceful-convergence

11. Make this a VPC port-channel and bring it up.

vpc 13

no shutdown

12. Define a port description for the interface connecting to OCP-FI-B.

interface Eth2/2

description OCP-FI-B

13. Apply it to a port channel and bring up the interface.

channel-group 13 mode active

no shutdown

14. Enable UDLD to detect unidirectional links.

udld enable

copy run start
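On both peer switches, a given vPC ID must bundle links that go to the same downstream device; here, each vPC should terminate on a single fabric interconnect. NX-OS itself reports configuration mismatches between peers through `show vpc consistency-parameters`. A quick offline check of the planned mapping can still catch cabling or description errors before the configuration is applied. The following Python sketch compares two {vPC ID: downstream device} mappings, one per switch. The function name and data layout are illustrative, and the mappings in the test are hypothetical examples.

```python
from typing import Dict, List


def check_vpc_peers(switch_a: Dict[int, str], switch_b: Dict[int, str]) -> List[str]:
    """Compare vPC ID -> downstream device maps from the two vPC peers.

    Returns one message per vPC ID that exists on only one peer or that
    points at different downstream devices on the two peers.
    """
    problems = []
    for vpc_id in sorted(set(switch_a) | set(switch_b)):
        dev_a, dev_b = switch_a.get(vpc_id), switch_b.get(vpc_id)
        if dev_a is None or dev_b is None:
            problems.append(f"vpc {vpc_id}: configured on only one peer")
        elif dev_a != dev_b:
            problems.append(f"vpc {vpc_id}: {dev_a} on A but {dev_b} on B")
    return problems


if __name__ == "__main__":
    nexus_a = {12: "OCP-FI-A", 13: "OCP-FI-B"}
    nexus_b = {12: "OCP-FI-A", 13: "OCP-FI-B"}
    print(check_vpc_peers(nexus_a, nexus_b) or "vPC mapping is consistent")
```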

Cisco UCS Manager - Administration

Initial Setup of Cisco Fabric Interconnects

A pair of Cisco UCS 6332-16UP Fabric Interconnects is used in this design. The minimum configuration required for bringing up the FIs and the embedded Cisco UCS Manager (UCSM) is outlined below. All configurations after this will be done using Cisco UCS Manager.

Cisco UCS 6332-16UP FI – Primary (FI-A)

1. Connect to the console port of the primary Cisco UCS FI.

Enter the configuration method: console

Enter the setup mode; setup newly or restore from backup. (setup/restore) ? setup

You have chosen to setup a new Fabric interconnect. Continue? (y/n): y

Enforce strong passwords? (y/n) [y]: y

Enter the password for "admin": <Enter Password>

Enter the same password for "admin": <Enter Password>

Is this fabric interconnect part of a cluster (select 'no' for standalone)? (yes/no) [n]: y

Which switch fabric (A|B): A

Enter the system name: OCP-FI

Physical switch Mgmt0 IPv4 address: xx.xx.xx.xx

Physical switch Mgmt0 IPv4 netmask: 255.255.255.0

IPv4 address of the default gateway: yy.yy.yy.yy

Cluster IPv4 address: xx.xx.xx.xx

Configure DNS Server IPv4 address? (yes/no) [no]: y

DNS IPv4 address: yy.yy.yy.yy

Configure the default domain name? y

Default domain name: <domain name>

Join centralized management environment (UCS Central)? (yes/no) [n]: <Enter>

2. Review the settings printed to the console. If they are correct, answer yes to apply and save the configuration.

3. Wait for the login prompt to make sure that the configuration has been saved prior to proceeding.

Cisco UCS 6332-16UP FI – Secondary (FI-B)

1. Connect to the console port of the second Cisco UCS 6332-16UP FI.

Enter the configuration method: console

Installer has detected the presence of a peer Fabric interconnect. This Fabric interconnect will be added to the cluster. Do you want to continue {y|n}? y

Enter the admin password for the peer fabric interconnect: <Enter Password>

Peer Fabric interconnect Mgmt0 IPv4 address: xx.xx.xx.xx

Peer Fabric interconnect Mgmt0 IPv4 netmask: 255.255.255.0

Cluster IPv4 address: 10.65.122.131

Apply and save the configuration (select ‘no’ if you want to re-enter)?(yes/no): y

2. Verify the configuration by using Secure Shell (SSH) to log in to each FI and checking the cluster status. If the cluster is up and running properly, the output reports both fabric interconnects as UP and the cluster as HA READY.

OCP-FI-A# show cluster state
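The `show cluster state` output lists the state of each fabric interconnect, followed by an overall HA status line. If you collect that output in a script (for example, over SSH), you can use a check like the following Python sketch. It confirms that both FIs are UP and that the cluster reports HA READY. The sample text in the test is an assumption about the output format, modeled on typical Cisco UCS output. Adjust the matching if your release prints it differently. The function name is hypothetical.

```python
def cluster_healthy(output: str) -> bool:
    """Return True if both fabric interconnects report UP and the
    cluster reports HA READY in `show cluster state` output."""
    lines = [line.strip() for line in output.splitlines()]
    # Expect lines such as "A: UP, PRIMARY" and "B: UP, SUBORDINATE".
    fi_up = {fi: any(line.startswith(f"{fi}: UP") for line in lines) for fi in ("A", "B")}
    # The HA status is expected on its own line; "HA NOT READY" does not match.
    return all(fi_up.values()) and "HA READY" in lines
```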

Now you are ready to log into Cisco UCS Manager using either the individual or cluster IPs of the Cisco UCS Fabric Interconnects.

Configuring Ports for Server, Network and Storage Access

Logging into Cisco UCS Manager

To log into the Cisco Unified Computing System (UCS) environment, complete the following steps:

1. Open a web browser and navigate to the Cisco UCS 6332-16UP Fabric Interconnect cluster IP address configured in the earlier step.

2. Click the Launch Cisco UCS Manager link to download the Cisco UCS Manager software.

3. If prompted, accept security certificates as necessary.

4. When prompted, enter admin as the user name and enter the administrative password.

5. Click Login to log in to Cisco UCS Manager.

6. Select Yes or No to authorize Anonymous Reporting if desired and click OK.

Cisco UCS Manager – Setting up NTP Server

To synchronize the Cisco UCS environment to the NTP server, complete the following steps:

1. From Cisco UCS Manager, click the Admin tab in the navigation pane.

2. Select All > Timezone Management > Timezone.

3. Right-click and select Add NTP Server.

4. Specify NTP Server IP (for example, 171.68.38.66) and click OK twice to save edits. The Time Zone can also be specified in the Properties section of the Time Zone window.

Upgrading Cisco UCS Manager

This document assumes that Cisco UCS Manager is running the version listed in Table 8 (Software Components). If an upgrade is required, follow the procedures outlined in the Cisco UCS Install and Upgrade Guides.

Assigning Block of IP addresses for KVM Access

To create a block of IP addresses for out-of-band access to servers in the Cisco UCS environment, complete the following steps. The addresses are used for Keyboard, Video, and Mouse (KVM) access to individual servers managed by Cisco UCS Manager.


This block of IP addresses should be in the same subnet as the management IP addresses for Cisco UCS Manager and should be configured for out-of-band access.

1. From Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select LAN > Pools > root > IP Pools.

3. Right-click and select Create IP Pool.

4. Specify a Name (for example, ext-mgmt) for the pool. Click Next.

5. Click [+] Add to add a new IP Block. Click Next.

6. Enter the starting IP address (From), the number of IP addresses in the block (Size), the Subnet Mask and Default Gateway. Click OK.

7. Click Finish to create the IP block.
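An IP block is defined by its starting address (From), the number of addresses (Size), the subnet mask, and the default gateway. The following Python sketch uses the standard `ipaddress` module to list the addresses such a block covers. It rejects blocks that start at the network address, run past the usable end of the subnet, or include the gateway. The function name and the 192.0.2.x documentation addresses in the test are hypothetical. The sketch does not interact with Cisco UCS Manager.

```python
import ipaddress
from typing import List


def ip_block(start: str, size: int, netmask: str, gateway: str) -> List[str]:
    """List the `size` addresses starting at `start`, after checking that the
    block stays inside the subnet and does not include the default gateway."""
    if size < 1:
        raise ValueError("size must be at least 1")
    net = ipaddress.ip_network(f"{start}/{netmask}", strict=False)
    first = ipaddress.ip_address(start)
    block = [first + i for i in range(size)]
    # Neither the network address nor the broadcast address is usable.
    if first == net.network_address:
        raise ValueError("block starts at the network address")
    if block[-1] not in net or block[-1] == net.broadcast_address:
        raise ValueError("block runs past the usable end of the subnet")
    if ipaddress.ip_address(gateway) in block:
        raise ValueError("block overlaps the default gateway")
    return [str(addr) for addr in block]
```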

Editing Chassis Discovery Policy

Setting the discovery policy simplifies the addition of Cisco UCS Blade Server chassis and Cisco Fabric Extenders. To modify the chassis discovery policy, complete the following steps:

1. From Cisco UCS Manager, click the Equipment tab in the navigation pane and select Equipment in the list on the left.

2. In the right pane, click the Policies tab.

3. Under Global Policies, set the Chassis/FEX Discovery Policy to match the number of uplink ports that are cabled between the chassis or fabric extenders (FEXes) and the fabric interconnects.

4. Set the Link Grouping Preference to Port Channel.

5. Click Save Changes and then OK to complete.
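Cisco UCS Manager treats the Chassis/FEX Discovery Policy as the minimum number of links between a chassis IOM and a fabric interconnect. It offers 1-, 2-, 4-, and 8-link settings, plus Platform Max. Assuming you want the policy to match the cabling as closely as possible without exceeding it, the following Python sketch picks the largest supported value that does not exceed the number of cabled links per IOM. The function name is hypothetical, and the Platform Max option is not modeled.

```python
# Link-count settings offered by the Chassis/FEX Discovery Policy
# (the Platform Max option is not modeled here).
POLICY_LINKS = (1, 2, 4, 8)


def discovery_policy(cabled_links_per_iom: int) -> int:
    """Return the largest policy link count that does not exceed the number
    of IOM-to-FI links actually cabled."""
    if cabled_links_per_iom < 1:
        raise ValueError("each IOM needs at least one link to its fabric interconnect")
    return max(n for n in POLICY_LINKS if n <= cabled_links_per_iom)
```

For example, a chassis with three links cabled per IOM maps to the 2-link setting, and one with four links maps to the 4-link setting.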

Acknowledging Cisco UCS Chassis

To acknowledge all Cisco UCS chassis, complete the following steps:

1. From Cisco UCS Manager, click the Equipment tab in the navigation pane.

2. Expand Chassis and for each chassis in the deployment, right-click and select Acknowledge Chassis.

3. In the Acknowledge Chassis pop-up, click Yes and then click OK.

Enabling Server Ports

To configure ports connected to Cisco UCS servers as Server ports, complete the following steps:

1. From Cisco UCS Manager, click the Equipment tab in the navigation pane.

2. Select Equipment > Fabric Interconnects > Fabric Interconnect A (primary) > Fixed Module.

3. Expand Ethernet Ports.

4. Select the ports that are connected to Cisco UCS Blade server chassis. Right-click and select Configure as Server Port.

5. Click Yes and then OK to confirm the changes.

6. Repeat the above steps for the Fabric Interconnect B (secondary) ports that connect to servers.

7. Verify that the ports connected to the servers are now configured as server ports. The view below is filtered to show only Server ports.

Enabling Uplink Ports to Cisco Nexus 9000 Series Switches

To configure ports connected to Cisco Nexus switches as Network ports, complete the following steps:

1. From Cisco UCS Manager, click the Equipment tab in the navigation pane.

2. Select Equipment > Fabric Interconnects > Fabric Interconnect A (primary) > Fixed Module.

3. Expand Ethernet Ports.

4. Select the first port (for example, Port 33) that connects to the Cisco Nexus A switch, right-click, and select Configure as Uplink Port. Click Yes to confirm the uplink port, and then click OK. Repeat for the second port (for example, Port 34) that connects to the Cisco Nexus B switch.

5. Repeat the above steps for the Fabric Interconnect B (secondary) uplink ports that connect to the Cisco Nexus A and B switches.

6. Verify that the ports connected to the Cisco Nexus switches are now configured as network (uplink) ports. The view below is filtered to show only Network ports.


Configuring Port Channels on Uplink Ports to Cisco Nexus 9000 Series Switches

In this procedure, two port channels are created, one from Fabric A to both the Cisco Nexus switches and one from Fabric B to both the Cisco Nexus switches.

To configure port channels on Uplink/Network ports connected to Cisco Nexus switches, complete the following steps:

1. From Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select LAN > LAN Cloud > Fabric A > Port Channels.

3. Right-click and select Create Port Channel.

4. In the Create Port Channel window, specify a Name and unique ID.

5. In the Create Port Channel window, select the ports to put in the channel (for example, Eth1/33 and Eth1/34). Click Finish to create the port channel.

6. Verify the resulting configuration.

7. Repeat the above steps for Fabric B and verify the configuration.
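The intent of this procedure is one port channel per fabric whose members reach both Cisco Nexus switches. You can check this against a cabling plan before you create the channels. The Python sketch below uses a hypothetical cabling map keyed by (fabric, port) and the example ports Eth1/33 and Eth1/34. The switch names and port channel IDs are placeholders, not values read from UCS Manager.

```python
# Hypothetical cabling plan: (fabric interconnect, port) -> upstream switch.
CABLING = {
    ("A", 33): "Nexus-A", ("A", 34): "Nexus-B",
    ("B", 33): "Nexus-A", ("B", 34): "Nexus-B",
}

# One port channel per fabric: fabric -> (port channel ID, member ports).
PORT_CHANNELS = {"A": (10, [33, 34]), "B": (20, [33, 34])}

def check_port_channels(cabling, port_channels):
    """Return problems with the planned uplink port channels."""
    problems = []
    for fabric, (pc_id, members) in port_channels.items():
        upstream = set()
        for port in members:
            switch = cabling.get((fabric, port))
            if switch is None:
                problems.append(f"FI-{fabric} Eth1/{port} is not a cabled uplink")
            else:
                upstream.add(switch)
        # Each fabric's channel should land on both Nexus switches.
        if len(upstream) < 2:
            problems.append(f"FI-{fabric} port channel {pc_id} reaches only {sorted(upstream)}")
    return problems

print(check_port_channels(CABLING, PORT_CHANNELS))  # → []
```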

Cisco UCS Configuration – LAN

Creating VLANs

To create the necessary VLANs, complete the following steps:

1. From Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select LAN > LAN Cloud > VLANs.

3. Right-click and select Create VLANs. Specify a name (for example, vlan603) and VLAN ID (for example, 603).

4. If the newly created VLAN is to be the native VLAN, select the VLAN, right-click, and select Set as Native VLAN. Either choice (native or non-native) is acceptable, but it must match the configuration of the upstream switch.

This solution requires two sets of VLANs:

· Externally accessible VLAN for OpenShift cluster hosts

· A VLAN range for the OpenShift networking backplane, to be consumed by container workloads
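You can write the two VLAN sets as a compact specification and expand them before entering them in UCS Manager. The Python sketch below expands strings such as "603" and "1001-1005", the example VLANs used in this guide. It rejects IDs outside 1 to 4094 and IDs inside a reserved block. The reserved range 3915 to 4042 is an assumption based on the default reserved VLAN range of recent UCS Manager releases, so confirm it for your release.

```python
# Assumed default reserved VLAN block on Cisco UCS fabric interconnects;
# verify against your UCS Manager release before relying on it.
RESERVED_VLANS = range(3915, 4043)

def expand_vlans(spec, reserved=RESERVED_VLANS):
    """Expand a spec like '603,1001-1005' into a sorted list of VLAN IDs."""
    vlans = set()
    for part in spec.split(","):
        part = part.strip()
        if "-" in part:
            low, high = (int(x) for x in part.split("-", 1))
            if low > high:
                raise ValueError(f"bad range {part!r}")
            vlans.update(range(low, high + 1))
        else:
            vlans.add(int(part))
    for vid in vlans:
        if not 1 <= vid <= 4094:
            raise ValueError(f"VLAN {vid} is outside 1-4094")
        if vid in reserved:
            raise ValueError(f"VLAN {vid} falls in the reserved range")
    return sorted(vlans)

external = expand_vlans("603")         # externally accessible VLAN
backplane = expand_vlans("1001-1005")  # OpenShift networking backplane
print(external, backplane)
```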

The following screenshot shows the VLANs configured for this solution:

Creating LAN Pools

Creating MAC Address Pools

The MAC addresses in this pool will be used for traffic through Fabric Interconnect A and Fabric Interconnect B.

1. From Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select LAN > Pools > root > MAC Pools.

3. Right-click and select Create MAC Pool.

4. Specify a name (for example, OCP-Pool) that identifies this pool.

5. Leave the Assignment Order as Default and click Next.

6. Click [+] Add to add a new block of MAC addresses.

7. For ease of troubleshooting, change the fourth and fifth octets of the starting address to 99:99. Generally, the first three octets of a MAC address should not be changed.

8. Select a size (for example, 500), click OK, and then click Finish to add the MAC pool.
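You can preview the effect of steps 7 and 8 by computing the first and last address of the block. The Python sketch below assumes the block starts at 00:25:B5:99:99:00. That is the UCS Manager default prefix 00:25:B5 with the fourth and fifth octets changed to 99:99 and the last octet left at 00. The helper refuses any block that would roll over into a different three-octet prefix.

```python
def mac_to_int(mac):
    return int(mac.replace(":", ""), 16)

def int_to_mac(value):
    # Emit six octets, most significant first, as uppercase hex.
    return ":".join(f"{(value >> shift) & 0xFF:02X}" for shift in range(40, -1, -8))

def mac_block(start, size):
    """Return (first, last) MAC address of a pool block of `size` addresses."""
    if size < 1:
        raise ValueError("size must be positive")
    first = mac_to_int(start)
    last = first + size - 1
    # The first three octets (the prefix) must stay the same for the whole block.
    if first >> 24 != last >> 24:
        raise ValueError("block would change the first three octets")
    return int_to_mac(first), int_to_mac(last)

print(mac_block("00:25:B5:99:99:00", 500))  # → ('00:25:B5:99:99:00', '00:25:B5:99:9A:F3')
```

With a size of 500, the block ends at 00:25:B5:99:9A:F3, so the fifth octet increments once inside the block.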

Creating LAN Policies

Creating vNIC Templates

To create virtual network interface card (vNIC) templates for Cisco UCS hosts, complete the following steps. This solution uses five vNIC templates for the different types of OpenShift cluster nodes:

· Two vNIC templates for Infra nodes: one for external connectivity and one for internal cluster connectivity.

· One vNIC template each for Master nodes, App nodes and Storage nodes.

Creating vNIC Template

1. From Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select LAN > Policies > root > vNIC Templates.

3. Right-click and select Create vNIC Template.

4. Specify a name for the template (for example, OCP-Infra-Ext).

5. Keep Fabric A selected and keep the Enable Failover checkbox selected.

6. Under Target, make sure that the VM checkbox is NOT selected.

7. Select Updating Template as the Template Type.

8. Under VLANs, select the checkboxes for all VLANs that the host needs to see (for example, 603), and select the Native VLAN radio button.

9. For CDN Source, select User Defined radio button. This option ensures that the defined vNIC name gets reflected as the adapter’s network interface name during OS installation.

10. For CDN Name, enter a suitable name.

11. Keep the MTU as 1500.

12. For MAC Pool, select the previously configured MAC pool (for example, OCP-Pool).

13. Choose the default values in the Connection Policies section.

14. Click OK to create the vNIC template.

15. Repeat the above steps to create the vNIC template for internal cluster connectivity (for example, OCP-Infra-Int) on Fabric A.

16. Repeat the same steps to create three more vNIC templates for the Master, App, and Storage nodes. For these templates, allow the VLANs used for internal cluster host connectivity (for example, 1001 to 1005), as shown in the example screenshot for the OCP-Infra-Int vNIC template.


Similarly, for the Dev/Test architecture, create three vNIC templates for the Master, Infra, and Storage nodes. Because the App nodes are co-located with two of the Infra nodes and three of the Storage nodes, a separate vNIC template for App nodes is not created.
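You can capture the vNIC template settings as data and check them for consistency before entering them. The Python sketch below models the five production templates. The names OCP-Infra-Ext, OCP-Infra-Int, and OCP-Pool, VLAN 603, and VLANs 1001 to 1005 come from this guide. The Master, App, and Storage template names and all CDN names are hypothetical. The 1500 to 9000 byte MTU bound is an assumption about what a vNIC template accepts.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

@dataclass
class VnicTemplate:
    name: str
    cdn_name: str                      # CDN names in the example are hypothetical
    vlans: Tuple[int, ...]
    native_vlan: Optional[int] = None
    fabric: str = "A"
    failover: bool = True
    mtu: int = 1500
    mac_pool: str = "OCP-Pool"

def check_template(t):
    """Return a list of problems found in one vNIC template definition."""
    problems = []
    if t.fabric not in ("A", "B"):
        problems.append(f"{t.name}: fabric must be A or B")
    if not t.failover:
        problems.append(f"{t.name}: Enable Failover is off")
    if not t.vlans:
        problems.append(f"{t.name}: no VLANs allowed")
    if t.native_vlan is not None and t.native_vlan not in t.vlans:
        problems.append(f"{t.name}: native VLAN {t.native_vlan} is not allowed")
    if not t.cdn_name:
        problems.append(f"{t.name}: CDN name is empty")
    if not 1500 <= t.mtu <= 9000:  # assumed MTU bounds
        problems.append(f"{t.name}: MTU {t.mtu} out of range")
    return problems

INTERNAL = tuple(range(1001, 1006))
TEMPLATES = [
    VnicTemplate("OCP-Infra-Ext", "ext", (603,), native_vlan=603),
    VnicTemplate("OCP-Infra-Int", "int", INTERNAL),
    VnicTemplate("OCP-Master", "int", INTERNAL),   # hypothetical name
    VnicTemplate("OCP-App", "int", INTERNAL),      # hypothetical name
    VnicTemplate("OCP-Storage", "int", INTERNAL),  # hypothetical name
]

for template in TEMPLATES:
    print(template.name, check_template(template) or "OK")
```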

Cisco UCS Configuration – Server

Creating Server Policies

This section shows how to create the server policies used in this solution.

Creating BIOS Policy

To create a server BIOS policy for Cisco UCS hosts, complete the following steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Policies > root > BIOS Policies.

3. Right-click and select Create BIOS Policy.

4. On the Main screen, enter a BIOS policy name (for example, OCP) and set Consistent Device Naming to Enabled. Click Next.

5. Keep the other options in all the other tabs at Platform Default.

6. Click Finish and OK to create the BIOS policy.
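The BIOS policy enables Consistent Device Naming, so the CDN names set in the vNIC templates are expected to appear as interface names after the OS is installed. The Python sketch below is meant to run on an installed node. It compares a list of expected CDN names (hypothetical here) against the interfaces listed under /sys/class/net. It assumes a Linux host and does not query UCS Manager.

```python
import os

def list_interfaces(sys_net="/sys/class/net"):
    """Interface names known to the Linux kernel (empty if the path is absent)."""
    return sorted(os.listdir(sys_net)) if os.path.isdir(sys_net) else []

def missing_cdn_interfaces(expected, present=None):
    """Return the expected CDN names that do not exist as interfaces."""
    if present is None:
        present = list_interfaces()
    return sorted(set(expected) - set(present))

# Hypothetical CDN names entered in the vNIC templates for an Infra node.
print(missing_cdn_interfaces(["ext", "int"]))
```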

Creating Boot Policy

This solution uses the scriptable vMedia feature of Cisco UCS Manager to install bare-metal operating systems on the OpenShift cluster nodes. This option is fully automated and does not require PXE boot or any other manual intervention. The vMedia policy and the boot policy are the two main configuration items needed to automate the OS installation through UCS Manager policies.

To create the boot policy, complete the following steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Policies > root > Boot Policies.

3. Right-click and select Create Boot Policy.

4. In the Create Boot Policy window, enter the policy name (for example, OCP-vMedia).

5. Set Boot Mode to Legacy and leave the rest of the options at their defaults.

6. Under Add Local Disk, select Add Local LUN. In the pop-up window, select the Primary radio button and, for LUN Name, enter the boot LUN name (for example, Boot-LUN). Click OK to add the local LUN image. This device is the target device for the bare-metal OS installation and is used to boot the host.


The LUN name here must match the LUN name defined in the storage profile used in the service profile templates.

7. Under the CIMC Mounted vMedia section, add CIMC Mounted CD/DVD after the local LUN definition. This device is mounted to access the boot images required during installation.

8. From the same CIMC Mounted vMedia section, add CIMC Mounted HDD. This device is mounted to provide the configuration for the bare-metal operating system installation.

9. After the boot policy is created, you can view the configured boot options, as shown.
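You can summarize the boot policy from steps 5 through 8, and the note about matching LUN names, as a short check. In the Python sketch below, the device labels "local-lun", "cimc-cdd", and "cimc-hdd" are hypothetical shorthand for Local LUN, CIMC Mounted CD/DVD, and CIMC Mounted HDD. The storage profile LUN names are passed in by hand rather than read from UCS Manager.

```python
EXPECTED_ORDER = ["local-lun", "cimc-cdd", "cimc-hdd"]  # hypothetical labels

def check_boot_policy(boot_mode, devices, storage_profile_luns):
    """devices is an ordered list of (device label, LUN name or None)."""
    problems = []
    if boot_mode != "Legacy":
        problems.append(f"boot mode is {boot_mode}, expected Legacy")
    labels = [label for label, _ in devices]
    if labels != EXPECTED_ORDER:
        problems.append(f"boot order {labels} differs from {EXPECTED_ORDER}")
    # The local LUN name must match a LUN defined in the storage profile.
    for label, lun in devices:
        if label == "local-lun" and lun not in storage_profile_luns:
            problems.append(f"LUN {lun!r} is not in the storage profile")
    return problems

OCP_VMEDIA = [("local-lun", "Boot-LUN"), ("cimc-cdd", None), ("cimc-hdd", None)]
print(check_boot_policy("Legacy", OCP_VMEDIA, {"Boot-LUN"}))  # → []
```

The expected order follows the sequence in which the devices are added above. Presumably, on first boot the empty local LUN is skipped and the node boots from the vMedia images, and after installation it boots from the LUN.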

Creating Host Firmware Package Policy

Firmware management policies allow the administrator to select the corresponding packages for a given server configuration. These policies often include packages for adapter, BIOS, board controller, FC adapters, host bus adapter (HBA) option ROM, and storage controller properties. To create a firmware management policy for a given server configuration in the Cisco UCS environment, complete the following steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Policies > root > Host Firmware Packages.

3. Right-click on Host Firmware Packages and select Create Host Firmware Package.

4. Enter the name of the host firmware package (for example, 3.2.3d).

5. Leave Simple selected.

6. Select the package versions for the different types of servers (Blade, Rack) in the deployment (for example, 3.2(3d) for both Blade and Rack servers).

7. Click OK twice to create the host firmware package.


The Host Firmware package 3.2(3d) includes BIOS revisions with updated microcode, which is required to mitigate the Meltdown and Spectre security vulnerability variants.
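This guide names the package 3.2.3d, while UCS Manager writes the version as 3.2(3d). The Python sketch below converts between the two forms and compares versions against 3.2(3d), the baseline used in this guide. It assumes the trailing letters form an ordered patch level. The helper does not establish which release is required for the Meltdown and Spectre fixes.

```python
import re

# Matches versions such as 3.2(3d): major.minor(maintenance + patch letters).
_UCS_VERSION = re.compile(r"^(\d+)\.(\d+)\((\d+)([a-z]*)\)$")

def parse_ucs_version(text):
    match = _UCS_VERSION.match(text.strip())
    if match is None:
        raise ValueError(f"unrecognized UCS version: {text!r}")
    major, minor, maint, patch = match.groups()
    return int(major), int(minor), int(maint), patch

def package_name(version):
    """3.2(3d) -> 3.2.3d, the naming used for the host firmware package."""
    major, minor, maint, patch = parse_ucs_version(version)
    return f"{major}.{minor}.{maint}{patch}"

def at_least(version, baseline="3.2(3d)"):
    # Tuples compare element by element; the patch letters compare as strings.
    return parse_ucs_version(version) >= parse_ucs_version(baseline)

print(package_name("3.2(3d)"), at_least("3.2(3g)"), at_least("3.2(2f)"))  # → 3.2.3d True False
```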

Creating UUID Suffix Pool

To configure the necessary universally unique identifier (UUID) suffix pool for the Cisco UCS environment, complete the following steps:

1. From Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Servers > Pools > root > UUID Suffix Pools.

3. Right-click and select Create UUID Suffix Pool.

4. Specify a Name (for example, OCP-Pool) for the UUID suffix pool and click Next.

5. Click [+] Add to add a block of UUIDs. Alternatively, you can modify the default pool and allocate or modify a UUID block there.

6. Keep the From field at the default setting. Specify a block size (for example, 100) that is sufficient to support the available blade or server resources.

7. Click OK, click Finish, and then click OK again to create the UUID pool.
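UUID suffixes are written as a 4-digit and a 12-digit hexadecimal group. The Python sketch below computes the last suffix of a block and checks that the block covers the twelve cluster nodes in this solution (three Master, two Infra, three Storage, and four App). The starting value 0000-000000000001 is an assumption about the default From field, so substitute the value UCS Manager shows.

```python
CLUSTER_NODES = 3 + 2 + 3 + 4  # Master, Infra, Storage, App nodes

def uuid_suffix_block(start="0000-000000000001", size=100):
    """Return (first, last) UUID suffix for a block of `size` suffixes."""
    digits = start.replace("-", "")
    if len(digits) != 16 or size < 1:
        raise ValueError("expected XXXX-XXXXXXXXXXXX and a positive size")
    first = int(digits, 16)
    last = first + size - 1
    if last >= 16 ** 16:
        raise ValueError("block overflows the 16-digit suffix space")

    def fmt(value):
        text = f"{value:016X}"
        return f"{text[:4]}-{text[4:]}"

    return fmt(first), fmt(last)

first, last = uuid_suffix_block(size=100)
print(first, last, 100 >= CLUSTER_NODES)  # → 0000-000000000001 0000-000000000064 True
```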

Creating Server Pools

Four server pools are created, one each for the Master, Infra, App, and Storage nodes. This solution has three Master, two Infra, three Storage, and four App nodes, so a separate pool is created for each category, with the servers spread across two chassis. This layout lets you expand the cluster to meet your requirements. You can scale out any category of nodes by adding blades to the respective pool and instantiating a new service profile from the corresponding template, which adds the new node to the OpenShift cluster.

To configure the necessary server pool for the Cisco UCS environment, complete the following steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Pools > root.

3. Right-click Server Pools and select Create Server Pool.

4. Enter name of the server pool (for example, OCP-Mstr).

5. Optional: Enter a description for the server pool.

6. Click Next.

7. Select the servers to be added (for example, the three Master node servers for OCP-Mstr) and click >> to add them to the server pool.

8. Click Finish to complete.

9. Similarly, create three more server pools (for example, OCP-Infra, OCP-App, and OCP-Strg). You can view the created pools under Server Pools.
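You can check the pool layout described at the start of this section, with each category spread across two chassis, using a short script. In the Python sketch below, servers are identified as chassis/slot, and the slot assignments are hypothetical. Only the pool names and node counts come from this guide. Keeping each server in a single pool is a design assumption made for clarity.

```python
EXPECTED_COUNTS = {"OCP-Mstr": 3, "OCP-Infra": 2, "OCP-App": 4, "OCP-Strg": 3}

# Hypothetical blade placement across chassis 1 and 2 ("chassis/slot").
POOLS = {
    "OCP-Mstr": ["1/1", "1/2", "2/1"],
    "OCP-Infra": ["1/3", "2/2"],
    "OCP-App": ["1/4", "1/5", "2/3", "2/4"],
    "OCP-Strg": ["1/6", "2/5", "2/6"],
}

def check_pools(pools, expected_counts):
    """Return problems with node counts, chassis spread, and pool overlap."""
    problems = []
    owner = {}
    for pool, servers in pools.items():
        expected = expected_counts.get(pool)
        if len(servers) != expected:
            problems.append(f"{pool}: {len(servers)} servers, expected {expected}")
        chassis = {server.split("/")[0] for server in servers}
        if len(chassis) < 2:
            problems.append(f"{pool}: all servers are in chassis {sorted(chassis)}")
        for server in servers:
            if server in owner:
                problems.append(f"{server} is in both {owner[server]} and {pool}")
            owner[server] = pool
    return problems

print(check_pools(POOLS, EXPECTED_COUNTS))  # → []
```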

For the Dev/Test architecture, server pools are created as shown in the screenshot. Follow the above steps and add rack servers to the respective pools. Only three server pools are created, because the App nodes are co-hosted with two of the Infra nodes and three of the Storage nodes.