Cisco UCS S3260 Storage Server with Red Hat Ceph Storage



Design and Deployment of Red Hat Ceph Storage 2.3 on Cisco UCS S3260 Storage Server

Last Updated: August 28, 2017

About Cisco Validated Designs

The CVD program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, visit http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, IronPort, the IronPort logo, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2017 Cisco Systems, Inc. All rights reserved.

Table of Contents

Cisco Unified Computing System

Cisco UCS S3260 Storage Server

Cisco UCS Virtual Interface Card 1387

Cisco UCS 6300 Series Fabric Interconnect

Design Principles of Red Hat Ceph Storage on Cisco UCS

Deploying Red Hat Ceph Storage on Cisco UCS

Operational Guide for Red Hat Ceph Storage on Cisco UCS

Physical Topology and Configuration

Software Distributions and Versions

Deployment of Hardware and Software

Initial Setup of Cisco UCS 6332 Fabric Interconnects

Configure Fabric Interconnect A

Configure Fabric Interconnect B

Logging Into Cisco UCS Manager

Initial Base Setup of the Environment

Enable Fabric Interconnect A Ports for Server

Enable Fabric Interconnect A Ports for Uplinks

Label Each Chassis for Identification

Label Each Server for Identification

Create Maintenance Policy Setup

Create Power Control Policy Setup

Create Chassis Firmware Package

Create Chassis Maintenance Policy

Create Compute Connection Policy

Create Chassis Profile Template

Create Chassis Profile from Template

Setting Disks for Rack-Mount Servers to Unconfigured-Good

Setting Disks for Cisco UCS S3260 Storage Server to Unconfigured-Good with Cisco UCS PowerTool

Create Storage Profile for Cisco UCS S3260 Storage Server

Creating Disk Group Policies and RAID 0 LUNs for Top-Loaded Cisco UCS S3260 Storage Server HDDs

Create Storage Profile for Cisco UCS C220 M4S Rack-Mount Server

Creating a Service Profile Template

Create Service Profile Template for Cisco UCS S3260 Storage Server Top and Bottom Node

Create Service Profile Template for Cisco UCS C220 M4S

Create Service Profiles from Template

Creating Port Channel for Uplinks

Create Port Channel for Fabric Interconnect A/B

Configure Cisco Nexus C9332PQ Switch A and B

Initial Setup of Cisco Nexus C9332PQ Switch A and B

Enable Features on Cisco Nexus 9332PQ Switch A and B

Configuring VLANs on Nexus 9332PQ Switch A and B

Verification Check of Cisco Nexus C9332PQ Configuration for Switch A and B

Install Red Hat Enterprise Linux 7.3 Operating System

Prepare Ceph Admin Node cephadm

Copy Files to /var/www/html to Install cephmon1-3 and cephosd1-10

Prepare Kickstart Files for an Automated Installation of Ceph Monitor and OSD Nodes

Install RHEL 7.3 on Ceph Monitor Nodes cephmon1-3 and Ceph OSD Nodes cephosd1-10

Red Hat Ceph Storage Installation via Ansible

Configure Ceph Global Settings

Deploy Red Hat Ceph Storage via Ansible

Final Check of Ceph Deployment

Operational Guide to Extend a Ceph Cluster with Cisco UCS

Adding Cisco UCS S3260 as Ceph OSD Nodes

Enable Fabric Interconnect Ports for Server

Label Chassis for Identification

Label each Server for Identification

Create Chassis Profile from Template

Setting Disks for Cisco UCS S3260 Storage Server to Unconfigured-Good with Cisco UCS PowerTool

Create Service Profiles from Template and Associate to Servers

Change .ssh/config File for cephadm User

Install RHEL 7.3 on Cisco UCS S3260 Storage Server

Deploy Red Hat Ceph Storage via Ansible

Final Check of Ceph Deployment

Add RADOS Gateway for Object Storage

Enable Fabric Interconnect Ports for Server

Label Each Server for Identification

Setting Disks for Rack-Mount Servers to Unconfigured-Good

Create Service Profiles from Template and Associate Template

Change .ssh/config File for cephadm User

Install RHEL 7.3 on Cisco UCS C220 M4S

Prepare Expansion of Ceph Cluster with RGWs

Deploy Red Hat Ceph Storage via Ansible

Final Check of Ceph Deployment

Appendix A – Kickstart File for Ceph Admin Host cephadm

Appendix B – Kickstart File for Cisco Monitor Node

Appendix C – Kickstart File for Cisco OSD Node

Appendix D – Kickstart File for Cisco RGW Node

Appendix E – Example /etc/hosts File

Appendix F – /home/cephadm/.ssh/config File from Ansible Administration Node cephadm

Appendix G - /usr/share/ceph-ansible/group_vars/all Configuration File

Appendix H - /usr/share/ceph-ansible/group_vars/osds Configuration File

Modern data centers increasingly rely on a variety of architectures for storage. Whereas in the past organizations focused on traditional storage only, today organizations are focusing on Software Defined Storage for several reasons:

· Software Defined Storage offers massive scalability and simple management.

· Because of the low cost per gigabyte, Software Defined Storage is well suited for large-capacity needs, and therefore for use cases such as archive, backup, and cloud operations.

· Software Defined Storage allows the use of commodity hardware.

Enterprise storage systems are designed to address business-critical requirements in the data center. But these solutions may not be optimal for use cases such as backup and archive workloads and other unstructured data, for which OLTP-style data latency is not especially important.

Red Hat Ceph Storage is an example of a massively scalable, open source, software-defined storage system that gives you unified storage for cloud environments. It is an object storage architecture that can easily achieve enterprise-class reliability, scale-out capacity, and lower costs with an industry-standard server solution.

The Cisco UCS S3260 Storage Server (S3260), originally designed for the data center, is optimized together with Red Hat Ceph Storage for Software Defined Storage solutions, making it an excellent fit for unstructured data workloads such as backup, archive, and cloud data. The S3260 delivers a complete hardware platform with exceptional scalability for computing and storage resources, together with 40 Gigabit Ethernet networking. The S3260 is the platform of choice for Software Defined Storage solutions because, compared with similar platforms, it provides:

· Proven server architecture that allows you to upgrade individual components without the need for migration.

· High-bandwidth networking that meets the needs of large-scale object storage solutions like Red Hat Ceph Storage.

· Unified, embedded management for an easy-to-scale infrastructure.

· API access for cloud-scale applications.

Cisco and Red Hat are collaborating to offer customers a scalable Software Defined Storage solution for unstructured data that is integrated with Red Hat Ceph Storage. With the power of the Cisco UCS management framework, the solution is cost effective to deploy and manage, and it enables next-generation cloud deployments that drive business agility, lower operational costs, and avoid vendor lock-in.

Introduction

Traditional storage systems are limited in their ability to easily and cost-effectively scale to support massive amounts of unstructured data. With about 80 percent of data being unstructured, new approaches using x86 servers are proving to be more cost effective, providing storage that can be expanded as easily as your data grows. Software Defined Storage is a scalable and cost-effective approach for handling massive amounts of data.

Red Hat Ceph Storage is a massively scalable, open source, software-defined storage system that supports unified storage for a cloud environment. With object and block storage in one platform, Red Hat Ceph Storage efficiently and automatically manages the petabytes of data needed to run businesses facing massive data growth. It is proven at web scale and has many deployments in production environments as an object store for large, global corporations. Red Hat Ceph Storage was designed from the ground up for web-scale block and object storage and cloud infrastructures.

Scale-out storage uses x86 architecture storage-optimized servers to increase performance while reducing costs. The Cisco UCS S3260 Storage Server is well suited for scale-out storage solutions. It provides a platform that is cost effective to deploy and manage using the power of the Cisco Unified Computing System (Cisco UCS) management: capabilities that traditional unmanaged and agent-based management systems can’t offer. You can design S3260 solutions for a computing-intensive, capacity-intensive, or throughput-intensive workload.

Together, Red Hat Ceph Storage and the Cisco UCS S3260 Storage Server deliver a simple, fast, and scalable architecture for enterprise scale-out storage.

Solution

This Cisco Validated Design (CVD) is a simple and linearly scalable architecture that provides Software Defined Storage for block and object workloads on Red Hat Ceph Storage 2.3 and the Cisco UCS S3260 Storage Server. The solution includes the following features:

· Infrastructure for large scale-out storage.

· Design of a Red Hat Ceph Storage solution together with Cisco UCS S3260 Storage Server.

· Simplified infrastructure management with Cisco UCS Manager (UCSM).

· Architectural scalability – linear scaling based on network, storage, and compute requirements.

· Operational guide to extend a working Red Hat Ceph cluster with Ceph RADOS Gateway (RGW) and Ceph OSD nodes.

Audience

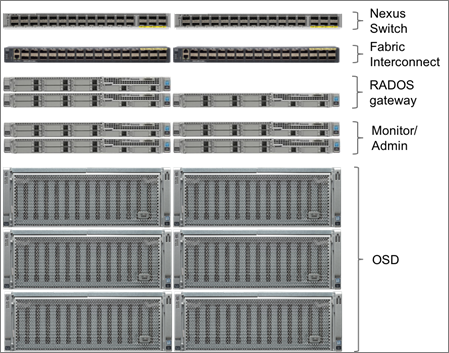

This document describes the architecture, design, and deployment procedures of a Red Hat Ceph Storage solution using six Cisco UCS S3260 Storage Servers, each with two C3x60 M4 server nodes, as OSD nodes; three Cisco UCS C220 M4S rack servers as Monitor nodes; three Cisco UCS C220 M4S rack servers as RGW nodes; one Cisco UCS C220 M4S rack server as the Admin node; and two Cisco UCS 6332 Fabric Interconnects managed by Cisco UCS Manager. The intended audience for this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who want to deploy Red Hat Ceph Storage on the Cisco Unified Computing System (UCS) using Cisco UCS S3260 Storage Servers.

Solution Summary

This CVD describes in detail the process of deploying Red Hat Ceph Storage 2.3 on Cisco UCS S3260 Storage Server.

The configuration uses the following architecture for the deployment:

· 6 x Cisco UCS S3260 Storage Servers, each with 2 x C3x60 M4 server nodes working as Ceph OSD nodes

· 3 x Cisco UCS C220 M4S rack servers working as Ceph Monitor nodes

· 3 x Cisco UCS C220 M4S rack servers working as Ceph RADOS Gateway nodes

· 1 x Cisco UCS C220 M4S rack server working as Ceph Admin node

· 2 x Cisco UCS 6332 Fabric Interconnects

· 1 x Cisco UCS Manager

· 2 x Cisco Nexus 9332PQ Switches
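The node roles above map onto the host groups of the Ansible inventory that ceph-ansible uses later in the deployment. The following Python sketch renders such an inventory from the role counts. The `mons`, `osds`, and `rgws` group names follow ceph-ansible convention; the hostnames cephmon1-3 and cephosd1-10 come from this document, while cephosd11-12 (for the two S3260 nodes added later) and the `cephrgw` prefix are assumptions for illustration. The Admin node runs Ansible itself and is therefore not listed.

```python
"""Render a ceph-ansible style INI inventory from the node counts in this design.

Assumption: hostnames follow a <prefix><index> pattern; cephosd11-12 and the
cephrgw prefix are hypothetical.
"""

# (inventory group, hostname prefix, node count), from the Solution Summary.
ROLES = [
    ("mons", "cephmon", 3),   # 3 x C220 M4S Ceph Monitor nodes
    ("osds", "cephosd", 12),  # 6 x S3260 chassis x 2 server nodes each
    ("rgws", "cephrgw", 3),   # 3 x C220 M4S RADOS Gateway nodes (hypothetical prefix)
]


def render_inventory(roles):
    """Return the inventory as INI text, one [group] section per role."""
    sections = []
    for group, prefix, count in roles:
        hosts = [f"{prefix}{i}" for i in range(1, count + 1)]
        sections.append("\n".join([f"[{group}]", *hosts]))
    return "\n\n".join(sections) + "\n"


if __name__ == "__main__":
    print(render_inventory(ROLES), end="")
```

Generating the inventory from one table of counts keeps it consistent when the cluster is extended, for example when more S3260 server nodes join the `osds` group.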

The solution has various options to scale performance and capacity. A base capacity summary is shown in Table 1.

Table 1 Usable capacity options for tested Cisco Validated Design

| HDD Type | Number of Disks | Usable Capacity (3 x Replication) | Usable Capacity (Erasure Coding 4:2) |
|---|---|---|---|
| 4 TB 7200-rpm LFF SAS drives | 288 | 384 TB | 760 TB |
| 6 TB 7200-rpm LFF SAS drives[1] | 288 | 576 TB | 1140 TB |
| 8 TB 7200-rpm LFF SAS drives | 288 | 768 TB | 1520 TB |
| 10 TB 7200-rpm LFF SAS drives | 288 | 960 TB | 1900 TB |
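The capacity figures in Table 1 follow from the raw capacity (288 disks times drive size) divided by the protection overhead: three full copies for 3x replication, and (k + m) / k = 6/4 for erasure coding with k = 4 data chunks and m = 2 coding chunks. Both schemes tolerate the loss of two copies or chunks without losing data. The Python sketch below reproduces that arithmetic. It computes idealized values only: the replication results match Table 1 exactly, but the erasure-coding column in Table 1 is about 1 percent lower than this ratio (for example, 760 TB versus 768 TB for 4 TB drives), so treat the sketch as an upper bound rather than a sizing tool.

```python
"""Idealized usable capacity for the disk count and protection schemes in Table 1."""

DISKS = 288  # number of disks from Table 1


def replicated_capacity(raw_tb, replicas=3):
    """Every object is stored `replicas` times, so usable = raw / replicas."""
    return raw_tb / replicas


def erasure_coded_capacity(raw_tb, k=4, m=2):
    """k data chunks plus m coding chunks per object: usable = raw * k / (k + m)."""
    return raw_tb * k / (k + m)


if __name__ == "__main__":
    for drive_tb in (4, 6, 8, 10):
        raw = DISKS * drive_tb
        print(f"{drive_tb:>2} TB drives: raw {raw} TB, "
              f"3x replication {replicated_capacity(raw):.0f} TB, "
              f"EC 4:2 {erasure_coded_capacity(raw):.0f} TB")
```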

Cisco Unified Computing System

The Cisco Unified Computing System (Cisco UCS) is a state-of-the-art data center platform that unites computing, network, storage access, and virtualization into a single cohesive system.

The main components of Cisco Unified Computing System are:

· Computing - The system is based on an entirely new class of computing system that incorporates rack-mount and blade servers based on Intel Xeon Processor E5 and E7. The Cisco UCS servers offer the patented Cisco Extended Memory Technology to support applications with large datasets and allow more virtual machines (VM) per server.

· Network - The system is integrated onto a low-latency, lossless, 10- and 40-Gbps unified network fabric. This network foundation consolidates LANs, SANs, and high-performance computing networks, which are traditionally separate networks. The unified fabric lowers costs by reducing the number of network adapters, switches, and cables, and by decreasing the power and cooling requirements.

· Virtualization - The system unleashes the full potential of virtualization by enhancing the scalability, performance, and operational control of virtual environments. Cisco security, policy enforcement, and diagnostic features are now extended into virtualized environments to better support changing business and IT requirements.

· Storage access - The system provides consolidated access to both SAN storage and Network Attached Storage (NAS) over the unified fabric. By unifying storage access, the Cisco Unified Computing System can access storage over Ethernet (NFS or iSCSI), Fibre Channel, and Fibre Channel over Ethernet (FCoE). This provides customers with choice for storage access and investment protection. In addition, server administrators can pre-assign storage-access policies for system connectivity to storage resources, simplifying storage connectivity and management for increased productivity.

The Cisco Unified Computing System is designed to deliver:

· A reduced Total Cost of Ownership (TCO) and increased business agility.

· Increased IT staff productivity through just-in-time provisioning and mobility support.

· A cohesive, integrated system which unifies the technology in the data center.

· Industry standards supported by a partner ecosystem of industry leaders.

Cisco UCS S3260 Storage Server

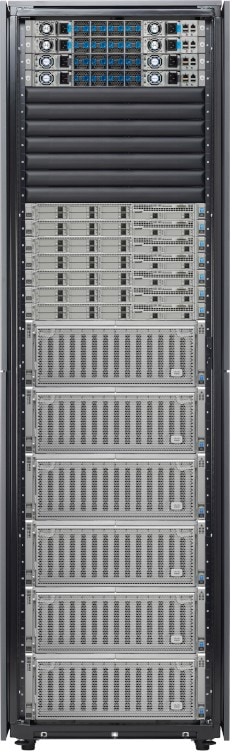

The Cisco UCS® S3260 Storage Server (Figure 1) is a modular, high-density, high-availability, dual-node rack server well suited for service providers, enterprises, and industry-specific environments. It addresses the need for dense, cost-effective storage for ever-growing data. Designed for a new class of cloud-scale applications, it is simple to deploy and excellent for big data applications, software-defined storage environments such as Ceph and other unstructured data repositories, media streaming, and content distribution.

Figure 1 Cisco UCS S3260 Storage Server

Extending the capability of the Cisco UCS C3000 portfolio, the Cisco UCS S3260 helps you achieve the highest levels of data availability. With dual-node capability based on the Intel® Xeon® processor E5-2600 v4 series, it features up to 600 TB of local storage in a compact 4-rack-unit (4RU) form factor. All hard-disk drives can be asymmetrically split between the two server nodes and are individually hot-swappable. The drives can be configured with enterprise-class Redundant Array of Independent Disks (RAID) redundancy or used in pass-through mode.

This high-density rack server comfortably fits in a standard 32-inch depth rack, such as the Cisco® R42610 Rack.

The Cisco UCS S3260 can be deployed as a standalone server in both bare-metal and virtualized environments. Its modular architecture reduces total cost of ownership (TCO) by allowing you to upgrade individual components over time and as use cases evolve, without having to replace the entire system.

The Cisco UCS S3260 uses a modular server architecture that, drawing on Cisco’s blade technology expertise, allows you to upgrade the computing or network nodes in the system without migrating data from one system to another. It delivers:

· Dual server nodes

· Up to 36 computing cores per server node

· Up to 60 drives, mixing large form factor (LFF) drives with up to 28 solid-state disk (SSD) drives, plus 2 SATA SSD boot drives per server node

· Up to 1 TB of memory per server node (2 TB total)

· Support for 12-Gbps serial-attached SCSI (SAS) drives

· A system I/O controller with a Cisco VIC 1300 Series embedded chip supporting dual-port 40 Gbps

· High reliability, availability, and serviceability (RAS) features with tool-free server nodes, system I/O controller, easy-to-use latching lid, and hot-swappable and hot-pluggable components

Cisco UCS C220 M4 Rack Server

The Cisco UCS® C220 M4 Rack Server (Figure 2) is the most versatile, general-purpose enterprise infrastructure and application server in the industry. It is a high-density two-socket enterprise-class rack server that delivers industry-leading performance and efficiency for a wide range of enterprise workloads, including virtualization, collaboration, and bare-metal applications. The Cisco UCS C-Series Rack Servers can be deployed as standalone servers or as part of the Cisco Unified Computing System™ (Cisco UCS) to take advantage of Cisco’s standards-based unified computing innovations that help reduce customers’ total cost of ownership (TCO) and increase their business agility.

Figure 2 Cisco UCS C220 M4 Rack Server

The enterprise-class Cisco UCS C220 M4 server extends the capabilities of the Cisco UCS portfolio in a 1RU form factor. It incorporates the Intel® Xeon® processor E5-2600 v4 and v3 product family, next-generation DDR4 memory, and 12-Gbps SAS throughput, delivering significant performance and efficiency gains. The Cisco UCS C220 M4 rack server delivers outstanding levels of expandability and performance in a compact 1RU package:

· Up to 24 DDR4 DIMMs for improved performance and lower power consumption

· Up to 8 Small Form-Factor (SFF) drives or up to 4 Large Form-Factor (LFF) drives

· Support for 12-Gbps SAS Module RAID controller in a dedicated slot, leaving the remaining two PCIe Gen 3.0 slots available for other expansion cards

· A modular LAN-on-motherboard (mLOM) slot that can be used to install a Cisco UCS virtual interface card (VIC) or third-party network interface card (NIC) without consuming a PCIe slot

· Two embedded 1 Gigabit Ethernet LAN-on-motherboard (LOM) ports

Cisco UCS Virtual Interface Card 1387

The Cisco UCS Virtual Interface Card (VIC) 1387 (Figure 3) is a Cisco® innovation that provides a policy-based, stateless, agile server infrastructure for your data center. This dual-port Enhanced Quad Small Form-Factor Pluggable (QSFP+) PCI Express (PCIe) modular LAN-on-motherboard (mLOM) adapter is designed exclusively for Cisco UCS C-Series Rack Servers and the Cisco UCS S3260 Storage Server. The card supports 40 Gigabit Ethernet and Fibre Channel over Ethernet (FCoE). It incorporates Cisco’s next-generation converged network adapter (CNA) technology and offers a comprehensive feature set, providing investment protection for future software releases. The card can present more than 256 PCIe standards-compliant interfaces to the host, and these can be dynamically configured as either network interface cards (NICs) or host bus adapters (HBAs). In addition, the VIC supports Cisco Data Center Virtual Machine Fabric Extender (VM-FEX) technology, which extends the Cisco UCS fabric interconnect ports to virtual machines, simplifying server virtualization deployment.

Figure 3 Cisco UCS Virtual Interface Card 1387

The Cisco UCS VIC 1387 provides the following features and benefits:

· Stateless and agile platform: The personality of the card is determined dynamically at boot time using the service profile associated with the server. The number, type (NIC or HBA), identity (MAC address and World Wide Name [WWN]), failover policy, bandwidth, and quality-of-service (QoS) policies of the PCIe interfaces are all determined using the service profile. The capability to define, create, and use interfaces on demand provides a stateless and agile server infrastructure

· Network interface virtualization: Each PCIe interface created on the VIC is associated with an interface on the Cisco UCS fabric interconnect, providing complete network separation for each virtual cable between a PCIe device on the VIC and the interface on the fabric interconnect

Cisco UCS 6300 Series Fabric Interconnect

The Cisco UCS 6300 Series Fabric Interconnects are a core part of Cisco UCS, providing both network connectivity and management capabilities for the system (Figure 4). The Cisco UCS 6300 Series offers line-rate, low-latency, lossless 10 and 40 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE), and Fibre Channel functions.

Figure 4 Cisco UCS 6300 Series Fabric Interconnect

The Cisco UCS 6300 Series provides the management and communication backbone for the Cisco UCS B-Series Blade Servers, 5100 Series Blade Server Chassis, and C-Series Rack Servers managed by Cisco UCS. All servers attached to the fabric interconnects become part of a single, highly available management domain. In addition, by supporting unified fabric, the Cisco UCS 6300 Series provides both LAN and SAN connectivity for all servers within its domain.

From a networking perspective, the Cisco UCS 6300 Series uses a cut-through architecture, supporting deterministic, low-latency, line-rate 10 and 40 Gigabit Ethernet ports, switching capacity of 2.56 terabits per second (Tbps), and 320 Gbps of bandwidth per chassis, independent of packet size and enabled services. The product family supports Cisco® low-latency, lossless 10 and 40 Gigabit Ethernet unified network fabric capabilities, which increase the reliability, efficiency, and scalability of Ethernet networks. The fabric interconnect supports multiple traffic classes over a lossless Ethernet fabric from the server through the fabric interconnect. Significant TCO savings can be achieved with an FCoE optimized server design in which network interface cards (NICs), host bus adapters (HBAs), cables, and switches can be consolidated.

The Cisco UCS 6332 32-Port Fabric Interconnect is a 1-rack-unit (1RU) 40 Gigabit Ethernet and FCoE switch offering up to 2.56 Tbps throughput and up to 32 ports. The switch has 32 fixed 40-Gbps Ethernet and FCoE ports.

Both the Cisco UCS 6332 32-Port Fabric Interconnect and the Cisco UCS 6332-16UP 40-Port Fabric Interconnect have ports that can be configured for the breakout feature, which supports connectivity between 40 Gigabit Ethernet ports and 10 Gigabit Ethernet ports. This feature provides backward compatibility with existing hardware that supports 10 Gigabit Ethernet: a 40 Gigabit Ethernet port can be used as four 10 Gigabit Ethernet ports. Using a 40 Gigabit Ethernet QSFP, these ports on a Cisco UCS 6300 Series Fabric Interconnect can connect to another fabric interconnect that has four 10 Gigabit Ethernet SFPs. The breakout feature can be configured on ports 1 to 12 and ports 15 to 26 on the Cisco UCS 6332 fabric interconnect. Ports 17 to 34 on the Cisco UCS 6332-16UP fabric interconnect support the breakout feature.
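The throughput and breakout figures for the 6300 Series can be checked with simple arithmetic: 32 ports at 40 Gbps give 1.28 Tbps in each direction, or 2.56 Tbps counted full duplex, and each breakout-capable port yields four 10 Gigabit Ethernet ports. The Python sketch below encodes the breakout port ranges quoted in this section; the model keys and function names are illustrative only and are not part of any Cisco tool.

```python
"""Port arithmetic for the Cisco UCS 6300 Series figures quoted in this section."""

# Breakout-capable port ranges (inclusive), as listed in the text.
BREAKOUT_RANGES = {
    "6332": [(1, 12), (15, 26)],
    "6332-16UP": [(17, 34)],
}


def breakout_ports(model):
    """Return the sorted port numbers that support 4 x 10 GbE breakout."""
    return sorted(p for lo, hi in BREAKOUT_RANGES[model] for p in range(lo, hi + 1))


def max_10g_ports(model):
    """Each breakout-capable 40 GbE port becomes four 10 GbE ports."""
    return 4 * len(breakout_ports(model))


def full_duplex_tbps(ports, gbps_per_port):
    """Aggregate switching capacity counting both directions of every port."""
    return ports * gbps_per_port * 2 / 1000


if __name__ == "__main__":
    print("6332 switching capacity (Tbps):", full_duplex_tbps(32, 40))
    for model in BREAKOUT_RANGES:
        print(model, "breakout ports:", len(breakout_ports(model)),
              "max 10 GbE ports:", max_10g_ports(model))
```

On the 6332, ports 13, 14, and 27 to 32 stay 40 Gbps only, which is worth keeping in mind when planning uplink and server port assignments.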

Cisco Nexus 9332PQ Switch

The Cisco Nexus® 9000 Series Switches (Figure 5) include both modular and fixed-port switches that address the demands of modern data centers with a flexible, agile, low-cost, application-centric infrastructure.

Figure 5 Cisco Nexus 9332PQ Switch

The Cisco Nexus 9300 platform consists of fixed-port switches designed for top-of-rack (ToR) and middle-of-row (MoR) deployment in data centers that support enterprise applications, service provider hosting, and cloud computing environments. They are Layer 2 and 3 nonblocking 10 and 40 Gigabit Ethernet switches with up to 2.56 terabits per second (Tbps) of internal bandwidth.

The Cisco Nexus 9332PQ Switch is a 1-rack-unit (1RU) switch that supports 2.56 Tbps of bandwidth and over 720 million packets per second (mpps) across thirty-two 40-Gbps Enhanced QSFP+ ports.

All the Cisco Nexus 9300 platform switches use dual-core 2.5-GHz x86 CPUs with 64-GB solid-state disk (SSD) drives and 16 GB of memory for enhanced network performance.

With the Cisco Nexus 9000 Series, organizations can quickly and easily upgrade existing data centers to carry 40 Gigabit Ethernet to the aggregation layer or to the spine (in a leaf-and-spine configuration) through advanced and cost-effective optics that enable the use of existing 10 Gigabit Ethernet fiber (a pair of multimode fiber strands).

Cisco provides two modes of operation for the Cisco Nexus 9000 Series. Organizations can use Cisco® NX-OS Software to deploy the Cisco Nexus 9000 Series in standard Cisco Nexus switch environments. Organizations also can use a hardware infrastructure that is ready to support Cisco Application Centric Infrastructure (Cisco ACI™) to take full advantage of an automated, policy-based, systems management approach.

Cisco UCS Manager

Cisco UCS® Manager (Figure 6) provides unified, embedded management of all software and hardware components of the Cisco Unified Computing System™ (Cisco UCS) across multiple chassis, rack servers and thousands of virtual machines. It supports all Cisco UCS product models, including Cisco UCS B-Series Blade Servers, C-Series Rack Servers, and M-Series composable infrastructure and Cisco UCS Mini, as well as the associated storage resources and networks. Cisco UCS Manager is embedded on a pair of Cisco UCS 6300 or 6200 Series Fabric Interconnects using a clustered, active-standby configuration for high availability. The manager participates in server provisioning, device discovery, inventory, configuration, diagnostics, monitoring, fault detection, auditing, and statistics collection.

An instance of Cisco UCS Manager with all Cisco UCS components managed by it forms a Cisco UCS domain, which can include up to 160 servers. In addition to provisioning Cisco UCS resources, this infrastructure management software provides a model-based foundation for streamlining the day-to-day processes of updating, monitoring, and managing computing resources, local storage, storage connections, and network connections. By enabling better automation of processes, Cisco UCS Manager allows IT organizations to achieve greater agility and scale in their infrastructure operations while reducing complexity and risk. The manager provides flexible role- and policy-based management using service profiles and templates.
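To make the service profile and template idea concrete, here is a minimal Python model, written under the assumption that a template only needs to describe vNICs and draw MAC addresses from a pool. All class names, fields, the MAC prefix, and the profile and server names are hypothetical and far simpler than the real Cisco UCS Manager object model. It shows two properties described above: profiles created from one template are uniform except for their pooled identities, and a profile's identity moves with the profile rather than with the server hardware it is associated with.

```python
"""Toy model of Cisco UCS service profile templates and MAC address pools.

Hypothetical: class, field, and vNIC names are illustrative only and far simpler
than the real Cisco UCS Manager object model.
"""
from dataclasses import dataclass, field
from itertools import count
from typing import List, Optional


class MacPool:
    """Hands out sequential MAC addresses under one prefix (example prefix only)."""

    def __init__(self, prefix: str = "00:25:B5:00:00", size: int = 256):
        self._prefix = prefix
        self._size = size
        self._counter = count()

    def allocate(self) -> str:
        n = next(self._counter)
        if n >= self._size:
            raise RuntimeError("MAC pool exhausted")
        return f"{self._prefix}:{n:02X}"


@dataclass(frozen=True)
class VnicSpec:
    """What the template says about one vNIC."""
    name: str
    fabric: str     # "A" or "B": the fabric interconnect that carries the traffic
    failover: bool  # move to the other fabric if this one fails


@dataclass(frozen=True)
class Vnic:
    """A vNIC inside a concrete profile: the template spec plus a pooled MAC."""
    spec: VnicSpec
    mac: str


@dataclass
class ServiceProfile:
    name: str
    vnics: List[Vnic]
    server: Optional[str] = None  # physical server currently associated

    def associate(self, server: str) -> None:
        # Only the binding changes; the identities in self.vnics stay the same.
        self.server = server


@dataclass
class ServiceProfileTemplate:
    vnic_specs: List[VnicSpec]
    mac_pool: MacPool = field(default_factory=MacPool)

    def instantiate(self, name: str) -> ServiceProfile:
        vnics = [Vnic(spec, self.mac_pool.allocate()) for spec in self.vnic_specs]
        return ServiceProfile(name, vnics)


if __name__ == "__main__":
    template = ServiceProfileTemplate([VnicSpec("eth0", "A", True),
                                       VnicSpec("eth1", "B", True)])
    # One profile per OSD server node (hypothetical names).
    profiles = [template.instantiate(f"cephosd{i}") for i in range(1, 13)]
    first = profiles[0]
    first.associate("chassis-1/server-1")
    before = list(first.vnics)
    first.associate("chassis-2/server-1")  # e.g. after replacing a server node
    assert first.vnics == before
    print(first)
```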

Cisco UCS Manager manages Cisco UCS systems through an intuitive HTML5 or Java user interface and a command-line interface (CLI). It can register with Cisco UCS Central Software in a multi-domain Cisco UCS environment, enabling centralized management of distributed systems scaling to thousands of servers. Cisco UCS Manager can be integrated with Cisco UCS Director to facilitate orchestration and to provide support for converged infrastructure and Infrastructure as a Service (IaaS).

The Cisco UCS XML API provides comprehensive access to all Cisco UCS Manager functions. The API provides Cisco UCS system visibility to higher-level systems management tools from independent software vendors (ISVs) such as VMware, Microsoft, and Splunk as well as tools from BMC, CA, HP, IBM, and others. ISVs and in-house developers can use the XML API to enhance the value of the Cisco UCS platform according to their unique requirements. Cisco UCS PowerTool for Cisco UCS Manager and the Python Software Development Kit (SDK) help automate and manage configurations within Cisco UCS Manager.
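As a sketch of what raw XML API calls look like, the following Python builds the request documents for a login (`aaaLogin`), a class query (`configResolveClass`), and a logout (`aaaLogout`), and posts them to the Cisco UCS Manager `/nuova` endpoint using only the standard library. The host name and credentials are placeholders, and error handling is minimal. Python's default TLS verification rejects an untrusted self-signed UCS Manager certificate, so the certificate must be trusted first. For real automation, Cisco UCS PowerTool or the Python SDK is the better choice.

```python
"""Minimal Cisco UCS Manager XML API calls using only the Python standard library.

Host, user name, and password are placeholders. TLS verification uses Python's
defaults, which reject an untrusted self-signed UCS Manager certificate.
"""
import urllib.request
import xml.etree.ElementTree as ET
from typing import List


def login_body(user: str, password: str) -> str:
    """aaaLogin request; the reply carries a session cookie in outCookie."""
    return ET.tostring(ET.Element("aaaLogin", inName=user, inPassword=password),
                       encoding="unicode")


def resolve_class_body(cookie: str, class_id: str, hierarchical: bool = False) -> str:
    """configResolveClass request: return all managed objects of one class."""
    element = ET.Element("configResolveClass", cookie=cookie, classId=class_id,
                         inHierarchical="true" if hierarchical else "false")
    return ET.tostring(element, encoding="unicode")


def logout_body(cookie: str) -> str:
    """aaaLogout request that ends the session."""
    return ET.tostring(ET.Element("aaaLogout", inCookie=cookie), encoding="unicode")


def post(host: str, body: str) -> ET.Element:
    """POST one XML document to https://<host>/nuova and parse the XML reply."""
    request = urllib.request.Request(f"https://{host}/nuova", data=body.encode())
    with urllib.request.urlopen(request, timeout=30) as response:
        return ET.fromstring(response.read())


def list_rack_unit_dns(host: str, user: str, password: str) -> List[str]:
    """Log in, list the distinguished names of computeRackUnit objects, log out."""
    reply = post(host, login_body(user, password))
    cookie = reply.get("outCookie")
    if not cookie:
        raise RuntimeError(f"login failed: {reply.get('errorDescr')}")
    try:
        result = post(host, resolve_class_body(cookie, "computeRackUnit"))
        return [unit.get("dn") for unit in result.iter("computeRackUnit")]
    finally:
        post(host, logout_body(cookie))
```

A call such as `list_rack_unit_dns("ucsm.example.com", "admin", "password")` (placeholder values) would return the rack servers, such as the C220 M4S nodes, that UCS Manager manages. The `finally` clause ensures the session is released even if the query fails.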

Red Hat Enterprise Linux 7.3

Red Hat® Enterprise Linux® is a high-performing operating system that has delivered outstanding value to IT environments for more than a decade. More than 90 percent of Fortune Global 500 companies use Red Hat products and solutions including Red Hat Enterprise Linux. As the world’s most trusted IT platform, Red Hat Enterprise Linux has been deployed in mission-critical applications at global stock exchanges, financial institutions, leading telcos, and animation studios. It also powers the websites of some of the most recognizable global retail brands.

Red Hat Enterprise Linux:

· Delivers high performance, reliability, and security

· Is certified by the leading hardware and software vendors

· Scales from workstations, to servers, to mainframes

· Provides a consistent application environment across physical, virtual, and cloud deployments

Designed to help organizations make a seamless transition to emerging datacenter models that include virtualization and cloud computing, Red Hat Enterprise Linux includes support for major hardware architectures, hypervisors, and cloud providers, making deployments across physical and different virtual environments predictable and secure. Enhanced tools and new capabilities in this release enable administrators to tailor the application environment to efficiently monitor and manage compute resources and security.

Red Hat Ceph Storage

Red Hat® Ceph Storage is an open, cost-effective, software-defined storage solution that enables massively scalable cloud and object storage workloads. By unifying object, block, and file storage in one platform, Red Hat Ceph Storage efficiently and automatically manages the petabytes of data needed to run businesses facing massive data growth. Ceph is a self-healing, self-managing platform with no single point of failure. Ceph enables a scale-out cloud infrastructure built on industry standard servers that significantly lowers the cost of storing enterprise data and helps enterprises manage their exponential data growth in an automated fashion.

For OpenStack environments, Red Hat Ceph Storage is tightly integrated with OpenStack services, including Nova, Cinder, Manila, Glance, Keystone, and Swift, and it offers user-driven storage life-cycle management. Voted the No. 1 storage option by OpenStack users, the product’s highly tunable, extensible, and configurable architecture offers mature interfaces for enterprise block and object storage, making it well suited for archival, rich media, and cloud infrastructure environments.

Red Hat Ceph Storage is also ideal for object storage workloads outside of OpenStack because it is proven at web scale, flexible for demanding applications, and offers the data protection, reliability, and availability enterprises demand. It was designed from the ground up for web-scale object storage. Industry-standard APIs allow seamless migration of, and integration with, an enterprise’s applications. A Ceph object storage cluster is accessible via S3, Swift, or native API protocols.

Ceph has a lively and active open source community contributing to its innovation. At Ceph’s core is RADOS, a distributed object store that stores data by spreading it out across multiple industry-standard servers. Ceph uses CRUSH (Controlled Replication Under Scalable Hashing), a data placement algorithm that computes where data lives and distributes it pseudo-randomly across the cluster without a central lookup table, for better performance and data protection. Ceph supports both replication and erasure coding to protect data and also provides multi-site disaster recovery options.

Red Hat collaborates with the global open source Ceph community to develop new Ceph features, then packages the changes into a predictable, stable, enterprise-quality software-defined storage (SDS) product: Red Hat Ceph Storage. This development model combines the advantages of a large development community with Red Hat’s industry-leading support services to offer new storage capabilities and benefits to enterprises.

Solution Overview

The current solution based on Cisco UCS and Red Hat Ceph Storage is divided into multiple sections and covers three main aspects:

1. Design of an Object Storage Solution based on Cisco UCS and Red Hat Ceph Storage.

2. Deployment of the Solution (Figure 7) is divided into three areas:

— Integration and configuration of the Cisco UCS hardware into Cisco UCS Manager

— Base installation of Red Hat Enterprise Linux

— Deployment of Red Hat Ceph Storage

Figure 7 Deployment Parts for the Cisco Validated Design

3. Operational guide to work with Red Hat Ceph Storage on Cisco UCS

— Expansion of the current cluster by adding one more Cisco UCS S3260 Storage Server with two C3x60 M4 server nodes working as OSD nodes

— Expansion of the current cluster by adding three more Cisco UCS C220 M4S Rack Servers working as RADOS gateways for object storage

Design Principles of Red Hat Ceph Storage on Cisco UCS

A general design of a Red Hat Ceph Storage solution should consider the principles shown in Figure 8.

1. Qualify the need for scale-out storage – Scalability, dynamic provisioning across a unified namespace, and performance at scale are common reasons why people choose to add distributed scale-out storage to their datacenters. For a few use cases, such as primary storage for scale-up Oracle RDBMS, traditional storage appliances remain the right solution.

2. Design for a target workload – Red Hat Ceph Storage pools can be deployed to serve three workload categories: IOPS-intensive, throughput-intensive, and capacity-intensive. As noted in Table 2, server configurations should be chosen accordingly.

3. Storage Access Method – Red Hat Ceph Storage supports both block access pools and object access pools within a single Ceph cluster (additionally, distributed file access is in tech preview at time of writing). Block access is supported on replicated pools. Object access is supported on either replicated or erasure-coded pools.

4. Capacity – Based on the cluster storage capacity needs, standard, dense, or ultra-dense servers can be chosen to sit beneath Ceph storage pools. Cisco UCS C-Series and Cisco UCS S-Series provide several well-suited server models to choose from.

5. Fault-domain risk tolerance – Ceph clusters are self-healing following hardware failure. Customers wanting to reduce performance and resource impact during self-healing should observe minimum cluster server recommendations described in Table 2 below.

6. Data Protection method – With Replication and Erasure Coding, Red Hat Ceph Storage offers two data protection methods that could affect the overall design. Erasure-coded pools can provide greater price/performance, while replicated pools typically provide higher absolute performance.

Figure 8 Red Hat Ceph Storage Design Consideration

Based on these design principles, a successful implementation should follow the technical specifications shown in Table 2.

Table 2 Technical Specifications for Red Hat Ceph Storage

| Workload | Cluster Size | Network | CPU / Memory | OSD Journal to Disk Media Ratio | Data Protection |
|---|---|---|---|---|---|
| IOPS | Min. 10 OSD nodes | 10G - 40G | 5 core-GHz per NVMe OSD or 3 core-GHz per SSD OSD / 16 GB + 2 GB per OSD | 4:1 SSD:NVMe, or all NVMe with co-located journals | Ceph RBD (Block) Replicated Pools |
| Throughput | Min. 10 OSD nodes | 10G - 40G (>10G when >12 HDDs/node) | 1 core-GHz per HDD / 16 GB + 2 GB per OSD | 12-18:1 HDD:NVMe, or 4-5:1 HDD:SSD | Ceph RBD (Block) Replicated Pools; Ceph RGW (Object) Replicated Pools |
| Capacity-Archive | Min. 7 OSD nodes | 10G (or 40G for latency-sensitive requirements) | 1 core-GHz per HDD / 16 GB + 2 GB per OSD | All HDD with co-located journals | Ceph RGW (Object) Erasure-Coded Pools |
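The following Python sketch makes the per-node sizing rules in Table 2 concrete. It computes the minimum CPU and memory for an HDD-based OSD node under the Throughput and Capacity-Archive rules, which are 1 core-GHz per HDD and 16 GB plus 2 GB per OSD. It also computes the number of journal SSDs for a given HDD:SSD ratio.

The sketch assumes one OSD per HDD. The function names and the 24-HDD example node are hypothetical and do not come from this design.

```python
import math
from dataclasses import dataclass


@dataclass
class NodeRequirement:
    """Minimum CPU and memory for one HDD-based OSD node."""
    core_ghz: float
    memory_gb: int


def hdd_osd_node_requirement(hdds_per_node: int) -> NodeRequirement:
    """Apply the Throughput / Capacity-Archive rules of thumb from Table 2.

    Assumes one OSD per HDD:
    - CPU: 1 core-GHz per HDD
    - Memory: 16 GB base + 2 GB per OSD
    """
    if hdds_per_node < 1:
        raise ValueError("an OSD node needs at least one HDD")
    return NodeRequirement(core_ghz=1.0 * hdds_per_node,
                           memory_gb=16 + 2 * hdds_per_node)


def journal_ssds_needed(hdds_per_node: int, hdds_per_ssd: int) -> int:
    """Journal SSDs required for a given HDD:SSD ratio, rounded up."""
    if hdds_per_ssd < 1:
        raise ValueError("the HDD:SSD ratio must be at least 1:1")
    return math.ceil(hdds_per_node / hdds_per_ssd)


# Hypothetical OSD node with 24 HDDs (not a value from this design).
print(hdd_osd_node_requirement(24))
print(journal_ssds_needed(24, 5), journal_ssds_needed(24, 6))
```

For the hypothetical 24-HDD node, this yields 24 core-GHz and 64 GB of memory. It also yields 5 journal SSDs at a 5:1 ratio or 4 at a 6:1 ratio. These figures can be compared with the per-node resources listed for this solution.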

The solution for the current Cisco Validated Design follows a mixed workload setup of Throughput- and Capacity-intensive configurations and is classified as follows[2]:

· Cluster Size: Starting with 10 OSD nodes and adding two more OSD nodes.

· Network: All Ceph nodes connected with 40G.

· CPU / Memory: All nodes come with 128 GB memory and more than 40 core-GHz.

· OSD Disk: The solution is configured for a 6:1 HDD:SSD ratio.

· Data Protection: Ceph RBD with 3x replication and Ceph RGW with erasure coding.

· Ceph Admin, Monitor, and RADOS Gateway nodes are deployed on Cisco UCS C220 M4S Rack Servers.

· Ceph OSD nodes are deployed on Cisco UCS S3260 Storage Servers.
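The data protection method chosen for each pool determines how much raw capacity remains usable. As a rough sketch:

· A replicated pool of size n stores n full copies, so its usable fraction is 1/n.

· An erasure-coded pool with k data chunks and m coding chunks has a usable fraction of k/(k+m).

The k=4, m=2 profile and the 1,000 TB raw figure below are hypothetical examples. This section does not specify the erasure-coding profile used for the RGW pools.

```python
from fractions import Fraction


def replicated_usable_fraction(size: int) -> Fraction:
    """Usable/raw ratio for a replicated pool that keeps `size` full copies."""
    if size < 1:
        raise ValueError("replica count must be >= 1")
    return Fraction(1, size)


def erasure_coded_usable_fraction(k: int, m: int) -> Fraction:
    """Usable/raw ratio for an erasure-coded pool with k data and m coding chunks."""
    if k < 1 or m < 0:
        raise ValueError("need k >= 1 and m >= 0")
    return Fraction(k, k + m)


raw_tb = 1000  # hypothetical raw capacity in TB
pools = [
    ("3x replication (RBD)", replicated_usable_fraction(3)),
    ("EC k=4, m=2 (RGW, hypothetical profile)", erasure_coded_usable_fraction(4, 2)),
]
for label, frac in pools:
    print(f"{label}: {float(frac):.2%} usable, "
          f"about {float(raw_tb * frac):.0f} TB of {raw_tb} TB raw")
```

With these inputs, 3x replication leaves one third of the raw capacity usable, while a 4+2 profile leaves two thirds. This difference is the price/performance advantage of erasure coding named in the design principles. Replicated pools typically provide higher absolute performance in exchange.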

Deploying Red Hat Ceph Storage on Cisco UCS

Deploying the solution involves three steps:

1. The Cisco UCS S3260 Storage Servers and Cisco UCS C220 M4S Rack Servers are integrated into Cisco UCS Manager. The servers connect to the Cisco UCS 6332 Fabric Interconnects, which in turn connect to the Cisco Nexus 9332PQ switches.

2. Red Hat Enterprise Linux is installed and the nodes are prepared for the third step.

3. Red Hat Ceph Storage is installed, configured, and deployed.

Figure 9 illustrates the deployment steps.

Figure 9 Deployment Parts for Red Hat Ceph Storage on Cisco UCS

Operational Guide for Red Hat Ceph Storage on Cisco UCS

In addition to the design and deployment of the Red Hat Ceph Storage solution on Cisco UCS, this Cisco Validated Design provides operational guidance on how to add more capacity and another access layer to the starting configuration.

The base installation and configuration of the solution contains one Admin node, three Ceph Monitor nodes, and 10 Ceph OSD nodes, as shown in Figure 10. This matches the minimum size of 10 Ceph OSD nodes for a throughput-intensive Ceph cluster.

Figure 10 Base Installation of Red Hat Ceph Storage on Cisco UCS

In the second step, the environment is expanded by adding one more Cisco UCS S3260 Storage Server enclosure containing two C3x60 M4 server nodes. All steps are described, showing how further capacity can be added in less than 40 minutes. Figure 11 shows the additional integration of a Cisco UCS S3260 Storage Server.

Figure 11 Expansion of Red Hat Ceph Storage Cluster with Ceph OSD Nodes

In the last step, the cluster is further expanded by adding an object storage pool accessed through the RADOS Gateway (RGW). Three more Cisco UCS C220 M4S nodes are integrated into Cisco UCS Manager and installed with Red Hat Enterprise Linux and Red Hat Ceph Storage. Figure 12 shows the final infrastructure of this CVD.

Figure 12 Expansion of Red Hat Ceph Storage Cluster with Ceph RADOS Gateways

Requirements

This CVD describes the architecture, design, and deployment of a Red Hat Ceph Storage solution on six Cisco UCS S3260 Storage Servers, each with two C3x60 M4 nodes. Seven Cisco UCS C220 M4S Rack Servers provide control-plane functions: three Ceph Monitor nodes, three Ceph RGW nodes, and one Ceph Admin node. The whole solution is connected to two Cisco UCS 6332 Fabric Interconnects and two Cisco Nexus 9332PQ switches.

The detailed configuration consists of the following:

· Two Cisco Nexus 9332PQ Switches

· Two Cisco UCS 6332 Fabric Interconnects

· Six Cisco UCS S3260 Storage Servers with two C3x60 M4 server nodes each

· Seven Cisco UCS C220 M4S Rack Servers

· One Cisco R42610 Standard Rack

· Two Vertical Power Distribution Units (PDUs) (Country Specific)


Note: Please contact your Cisco representative for country specific information.

Rack and PDU Configuration

Each rack contains two vertical PDUs. The rack holds two Cisco Nexus 9332PQ switches, two Cisco UCS 6332 Fabric Interconnects, seven Cisco UCS C220 M4S Rack Servers, and six Cisco UCS S3260 Storage Servers. Each chassis is connected to both vertical PDUs for redundancy, which helps ensure availability if one power source fails. Table 3 shows the exact layout of the configuration.

Figure 13 Rack Configuration

| Position | Devices |
|---|---|
| 42 | Cisco Nexus 9332PQ |
| 41 | Cisco Nexus 9332PQ |
| 40 | Cisco UCS 6332 FI |
| 39 | Cisco UCS 6332 FI |
| 32-38 | Unused |
| 31 | Cisco UCS C220 M4S |
| 30 | Cisco UCS C220 M4S |
| 29 | Cisco UCS C220 M4S |
| 28 | Cisco UCS C220 M4S |
| 27 | Cisco UCS C220 M4S |
| 26 | Cisco UCS C220 M4S |
| 25 | Cisco UCS C220 M4S |
| 21-24 | Cisco UCS S3260 Storage Server |
| 17-20 | Cisco UCS S3260 Storage Server |
| 13-16 | Cisco UCS S3260 Storage Server |
| 9-12 | Cisco UCS S3260 Storage Server |
| 5-8 | Cisco UCS S3260 Storage Server |
| 1-4 | Cisco UCS S3260 Storage Server |
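The following Python sketch is a quick arithmetic check of the rack layout. It adds up the rack units (RU) per device type and compares the total with the 42 positions of the rack. The per-device heights are read from the position table: 1 RU each for the Nexus switches, Fabric Interconnects, and C220 M4S servers, and 4 RU for each S3260. They are not taken from a separate specification.

```python
RACK_UNITS = 42  # positions 1-42 in the rack layout

# (device, quantity, height in RU), as read from the position table
devices = [
    ("Cisco Nexus 9332PQ", 2, 1),
    ("Cisco UCS 6332 Fabric Interconnect", 2, 1),
    ("Cisco UCS C220 M4S", 7, 1),
    ("Cisco UCS S3260 Storage Server", 6, 4),
]

used = sum(qty * height for _, qty, height in devices)
free = RACK_UNITS - used
assert used <= RACK_UNITS, "configuration does not fit in the rack"
print(f"Used: {used} RU, free: {free} RU")
```

The devices occupy 35 RU, leaving 7 RU free. This matches the unused positions 32 to 38.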

Physical Topology and Configuration

The following sections describe the physical design of the solution and the configuration of each component.

Table 4 shows the naming conventions used for this solution.

| Device | Function | Name |
|---|---|---|
| Cisco Nexus 9332PQ Switch A | | N9k-A |
| Cisco Nexus 9332PQ Switch B | | N9k-B |
| Cisco UCS 6332 Fabric Interconnect A | | FI6332-A |
| Cisco UCS 6332 Fabric Interconnect B | | FI6332-B |
| Cisco UCS C220 M4S | Ceph RADOS Gateway | cephrgw1 |
| Cisco UCS C220 M4S | Ceph RADOS Gateway | cephrgw2 |
| Cisco UCS C220 M4S | Ceph RADOS Gateway | cephrgw3 |
| Cisco UCS C220 M4S | Ceph Monitor | cephmon1 |
| Cisco UCS C220 M4S | Ceph Monitor | cephmon2 |
| Cisco UCS C220 M4S | Ceph Monitor | cephmon3 |
| Cisco UCS C220 M4S | Ceph Admin | cephadm |
| Cisco UCS S3260 Storage Server Top Node | Ceph OSD | cephosd1 |
| Cisco UCS S3260 Storage Server Bottom Node | Ceph OSD | cephosd2 |
| Cisco UCS S3260 Storage Server Top Node | Ceph OSD | cephosd3 |
| Cisco UCS S3260 Storage Server Bottom Node | Ceph OSD | cephosd4 |
| Cisco UCS S3260 Storage Server Top Node | Ceph OSD | cephosd5 |
| Cisco UCS S3260 Storage Server Bottom Node | Ceph OSD | cephosd6 |
| Cisco UCS S3260 Storage Server Top Node | Ceph OSD | cephosd7 |
| Cisco UCS S3260 Storage Server Bottom Node | Ceph OSD | cephosd8 |
| Cisco UCS S3260 Storage Server Top Node | Ceph OSD | cephosd9 |
| Cisco UCS S3260 Storage Server Bottom Node | Ceph OSD | cephosd10 |
| Cisco UCS S3260 Storage Server Top Node | Ceph OSD | cephosd11 |
| Cisco UCS S3260 Storage Server Bottom Node | Ceph OSD | cephosd12 |

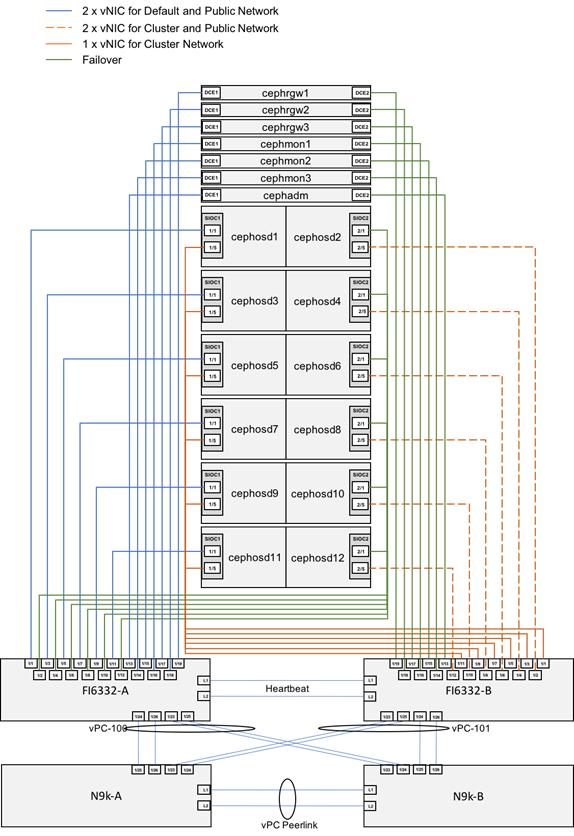

The solution uses 40 Gbit connectivity throughout: all components are connected with 40 Gbit QSFP cables. The two Cisco Nexus 9332PQ switches are interconnected with 2 x 40 Gbit links. Each Cisco UCS 6332 Fabric Interconnect is connected with 2 x 40 Gbit links to each Cisco Nexus 9332PQ switch. Each Cisco UCS C220 M4S and each Cisco UCS C3x60 M4 server node is connected with a single 40 Gbit cable to each Fabric Interconnect.

Figure 14 illustrates the exact cabling for the Red Hat Ceph Storage solution. The figure also shows the separate vNIC configuration for the Public and Cluster networks, which avoids traffic congestion. The Public network vNIC of the top node of each Cisco UCS S3260 Storage Server is connected to Fabric Interconnect A. The Public network vNIC of the bottom node is connected to Fabric Interconnect B.

All vNICs for the Cluster network are connected to Fabric Interconnect B so that all cluster traffic stays within a single Fabric Interconnect. All vNICs are configured for Fabric Interconnect failover.

Figure 14 Red Hat Ceph Storage Solution Cabling Diagram
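On the Ceph side, the separation of Public and Cluster traffic corresponds to the `public network` and `cluster network` options in the `[global]` section of `ceph.conf`. The following Python sketch renders such a fragment and rejects overlapping subnets. The function name is hypothetical. The subnets 192.168.10.0/24 and 192.168.20.0/24 are placeholders for the Public (VLAN 10) and Cluster (VLAN 20) networks, not values from this design.

```python
import configparser
import io
import ipaddress


def render_ceph_networks(public_cidr: str, cluster_cidr: str) -> str:
    """Return a [global] ceph.conf fragment that separates public and cluster traffic."""
    public = ipaddress.ip_network(public_cidr)
    cluster = ipaddress.ip_network(cluster_cidr)
    # Overlapping subnets would mix replication traffic with client traffic,
    # which is exactly what the separate vNICs and VLANs are meant to avoid.
    if public.overlaps(cluster):
        raise ValueError("public and cluster networks must not overlap")
    cfg = configparser.ConfigParser()
    cfg["global"] = {
        "public network": str(public),
        "cluster network": str(cluster),
    }
    buf = io.StringIO()
    cfg.write(buf)
    return buf.getvalue()


# Hypothetical subnets for VLAN 10 (Public) and VLAN 20 (Cluster).
print(render_ceph_networks("192.168.10.0/24", "192.168.20.0/24"))
```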

For easier reading, Table 5 shows the exact physical connectivity between the Cisco UCS 6332 Fabric Interconnects and the Cisco UCS S-Series and C-Series servers.

Table 5 Physical Connectivity between FI 6332 and S3260/C220 M4S

| Port | Role | FI6332-A | FI6332-B |
|---|---|---|---|
| Eth1/1 | Server | cephosd1, SIOC1/1 | cephosd1, SIOC1/5 |
| Eth1/2 | Server | cephosd2, SIOC2/1 | cephosd2, SIOC2/5 |
| Eth1/3 | Server | cephosd3, SIOC1/1 | cephosd3, SIOC1/5 |
| Eth1/4 | Server | cephosd4, SIOC2/1 | cephosd4, SIOC2/5 |
| Eth1/5 | Server | cephosd5, SIOC1/1 | cephosd5, SIOC1/5 |
| Eth1/6 | Server | cephosd6, SIOC2/1 | cephosd6, SIOC2/5 |
| Eth1/7 | Server | cephosd7, SIOC1/1 | cephosd7, SIOC1/5 |
| Eth1/8 | Server | cephosd8, SIOC2/1 | cephosd8, SIOC2/5 |
| Eth1/9 | Server | cephosd9, SIOC1/1 | cephosd9, SIOC1/5 |
| Eth1/10 | Server | cephosd10, SIOC2/1 | cephosd10, SIOC2/5 |
| Eth1/11 | Server | cephosd11, SIOC1/1 | cephosd11, SIOC1/5 |
| Eth1/12 | Server | cephosd12, SIOC2/1 | cephosd12, SIOC2/5 |
| Eth1/13 | Server | cephadm, DCE1 | cephadm, DCE2 |
| Eth1/14 | Server | cephmon3, DCE1 | cephmon3, DCE2 |
| Eth1/15 | Server | cephmon2, DCE1 | cephmon2, DCE2 |
| Eth1/16 | Server | cephmon1, DCE1 | cephmon1, DCE2 |
| Eth1/17 | Server | cephrgw3, DCE1 | cephrgw3, DCE2 |
| Eth1/18 | Server | cephrgw2, DCE1 | cephrgw2, DCE2 |
| Eth1/19 | Server | cephrgw1, DCE1 | cephrgw1, DCE2 |
| Eth1/23 | Network | N9k-B, Eth1/23 | N9k-A, Eth1/23 |
| Eth1/24 | Network | N9k-A, Eth1/25 | N9k-B, Eth1/25 |
| Eth1/25 | Network | N9k-B, Eth1/24 | N9k-A, Eth1/24 |
| Eth1/26 | Network | N9k-A, Eth1/26 | N9k-B, Eth1/26 |
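The OSD rows of the physical connectivity table follow a regular pattern:

· cephosdN is cabled to port Eth1/N on both Fabric Interconnects.

· Odd-numbered (top) nodes use SIOC1, and even-numbered (bottom) nodes use SIOC2.

· Port 1 of the SIOC goes to FI6332-A, and port 5 goes to FI6332-B.

The following Python sketch regenerates those rows, which may be useful as a cabling checklist when adding chassis. The function name is hypothetical, and the pattern is inferred from the table rather than stated as a rule in this design.

```python
from typing import List, Tuple


def osd_cabling(num_osd_nodes: int = 12) -> List[Tuple[str, str, str]]:
    """Return (FI port, FI6332-A endpoint, FI6332-B endpoint) for each OSD node."""
    rows = []
    for n in range(1, num_osd_nodes + 1):
        sioc = 1 if n % 2 == 1 else 2  # top node -> SIOC1, bottom node -> SIOC2
        rows.append((f"Eth1/{n}",
                     f"cephosd{n}, SIOC{sioc}/1",   # SIOC port 1 -> FI6332-A
                     f"cephosd{n}, SIOC{sioc}/5"))  # SIOC port 5 -> FI6332-B
    return rows


for port, fi_a, fi_b in osd_cabling():
    print(f"{port:8} {fi_a:20} {fi_b}")
```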

Figure 15 shows a more detailed view of the cabling and configuration of the Cisco Nexus 9332PQ switches and the Cisco UCS 6332 Fabric Interconnects. One virtual Port Channel (vPC) is configured between each Cisco UCS 6332 Fabric Interconnect and both Cisco Nexus 9332PQ switches. vPCs allow links that are physically connected to two different Cisco Nexus 9000 switches to appear to the Fabric Interconnect as coming from a single device and as part of a single port channel. vPC-100 connects FI6332-A with N9k-A and N9k-B, and vPC-101 connects FI6332-B with N9k-A and N9k-B. The overall bandwidth of each port channel is 160 Gbit (4 x 40 Gbit).

A vPC peer link consisting of two 40 Gbit links is configured between the two Cisco Nexus 9332PQ switches.

Figure 15 Cabling and Configuration of Cisco Nexus 9332PQ and Cisco UCS 6332 Fabric Interconnect

The connectivity between Cisco Nexus 9332PQ and Cisco UCS 6332 Fabric Interconnect is shown in Table 6.

Table 6 Physical Connectivity between Cisco Nexus 9332PQ and Cisco UCS 6332 Fabric Interconnect

| Port | N9k-A | N9k-B |
|---|---|---|
| Eth1/23 | FI6332-B, Eth1/23, vPC-101 | FI6332-A, Eth1/23, vPC-100 |
| Eth1/24 | FI6332-B, Eth1/25, vPC-101 | FI6332-A, Eth1/25, vPC-100 |
| Eth1/25 | FI6332-A, Eth1/24, vPC-100 | FI6332-B, Eth1/24, vPC-101 |
| Eth1/26 | FI6332-A, Eth1/26, vPC-100 | FI6332-B, Eth1/26, vPC-101 |
| Eth1/31 | N9k-B, Eth1/31, vPC-1 | N9k-A, Eth1/31, vPC-1 |
| Eth1/32 | N9k-B, Eth1/32, vPC-1 | N9k-A, Eth1/32, vPC-1 |
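The same uplinks are described twice: once from the Fabric Interconnect side (Table 5) and once from the Nexus side (Table 6). A mismatch is therefore easy to introduce when documenting or cabling. The following Python sketch encodes the uplink rows of both tables and checks that every link is described identically from both ends. It then derives the 160 Gbit per vPC from four 40 Gbit member links. The dictionary layout is illustrative only, not an export format of any Cisco tool.

```python
from collections import Counter

# Table 5, uplink rows: (FI, FI port) -> (Nexus, Nexus port)
fi_side = {
    ("FI6332-A", "Eth1/23"): ("N9k-B", "Eth1/23"),
    ("FI6332-A", "Eth1/24"): ("N9k-A", "Eth1/25"),
    ("FI6332-A", "Eth1/25"): ("N9k-B", "Eth1/24"),
    ("FI6332-A", "Eth1/26"): ("N9k-A", "Eth1/26"),
    ("FI6332-B", "Eth1/23"): ("N9k-A", "Eth1/23"),
    ("FI6332-B", "Eth1/24"): ("N9k-B", "Eth1/25"),
    ("FI6332-B", "Eth1/25"): ("N9k-A", "Eth1/24"),
    ("FI6332-B", "Eth1/26"): ("N9k-B", "Eth1/26"),
}

# Table 6, FI-facing rows: (Nexus, Nexus port) -> (FI, FI port, vPC)
nexus_side = {
    ("N9k-A", "Eth1/23"): ("FI6332-B", "Eth1/23", "vPC-101"),
    ("N9k-A", "Eth1/24"): ("FI6332-B", "Eth1/25", "vPC-101"),
    ("N9k-A", "Eth1/25"): ("FI6332-A", "Eth1/24", "vPC-100"),
    ("N9k-A", "Eth1/26"): ("FI6332-A", "Eth1/26", "vPC-100"),
    ("N9k-B", "Eth1/23"): ("FI6332-A", "Eth1/23", "vPC-100"),
    ("N9k-B", "Eth1/24"): ("FI6332-A", "Eth1/25", "vPC-100"),
    ("N9k-B", "Eth1/25"): ("FI6332-B", "Eth1/24", "vPC-101"),
    ("N9k-B", "Eth1/26"): ("FI6332-B", "Eth1/26", "vPC-101"),
}

LINK_GBIT = 40

# Each FI uplink must point back to the same FI port from the Nexus side.
mismatches = [
    (fi, nx) for fi, nx in fi_side.items()
    if nexus_side.get(nx, (None, None, None))[:2] != fi
]

# Aggregate bandwidth per vPC = number of member links x link speed.
members = Counter(vpc for _, _, vpc in nexus_side.values())
bandwidth = {vpc: count * LINK_GBIT for vpc, count in members.items()}

print("mismatches:", mismatches or "none")
print("vPC bandwidth (Gbit):", bandwidth)
```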

Software Distributions and Versions

The required software distribution versions are listed below in Table 7.

| Layer | Component | Version or Release |
|---|---|---|
| Compute (Chassis) S3260 | Board Controller | 1.0.15 |
| | Chassis Management Controller | 3.0(3b) |
| | Shared Adapter | 4.1(3a) |
| | SAS Expander | 04.08.01.B076 |
| Compute (Server Nodes) C3x60 M4 | BIOS | C3x60M4.3.0.3b |
| | Board Controller | 2.0 |
| | CIMC Controller | 3.0(3b) |
| | Storage Controller | 29.00.1-0110 |
| Compute (Rack Server) C220 M4S | Adapter | 4.1(3a) |
| | BIOS | C220M4.3.0.3a |
| | Board Controller | 33.0 |
| | CIMC Controller | 3.0(3c) |
| | FlexFlash Controller | 1.3.2 build 165 |
| | Storage Controller | 24.12.1-0203 |
| Network 6332 Fabric Interconnect | UCS Manager | 3.1(3c) |
| | Kernel | 5.0(3)N2(3.13c) |
| | System | 5.0(3)N2(3.13c) |
| Network Nexus 9332PQ | BIOS | 07.59 |
| | NXOS | 7.0(3)I5(1) |
| Software | Red Hat Enterprise Linux Server | 7.3 (x86_64) |
| | Ceph | 10.2.3-13.el7cp |

Fabric Configuration

This section provides the details for configuring a fully redundant, highly available Cisco UCS 6332 fabric configuration.

· Initial setup of the Fabric Interconnect A and B.

· Connect to Cisco UCS Manager from a web browser using the cluster virtual IP address.

· Launch Cisco UCS Manager.

· Enable server and uplink ports.

· Start discovery process.

· Create pools and policies for service profile template.

· Create chassis and storage profiles.

· Create Service Profile templates and appropriate Service Profiles.

· Associate Service Profiles to servers.

Initial Setup of Cisco UCS 6332 Fabric Interconnects

This section describes the initial setup of the Cisco UCS 6332 Fabric Interconnects A and B.

Configure Fabric Interconnect A

To configure Fabric Interconnect A, follow these steps:

1. Connect to the console port on the first Cisco UCS 6332 Fabric Interconnect.

2. At the prompt to enter the configuration method, enter console to continue.

3. If asked to either perform a new setup or restore from backup, enter setup to continue.

4. Enter y to continue to set up a new Fabric Interconnect.

5. Enter n when asked whether to enforce strong passwords.

6. Enter the password for the admin user.

7. Enter the same password again to confirm the password for the admin user.

8. When asked if this fabric interconnect is part of a cluster, answer y to continue.

9. Enter A for the switch fabric.

10. Enter the cluster name FI6332 for the system name.

11. Enter the Mgmt0 IPv4 address.

12. Enter the Mgmt0 IPv4 netmask.

13. Enter the IPv4 address of the default gateway.

14. Enter the cluster IPv4 address.

15. To configure DNS, answer y.

16. Enter the DNS IPv4 address.

17. Answer y to set up the default domain name.

18. Enter the default domain name.

19. Review the settings that were printed to the console, and if they are correct, answer yes to save the configuration.

20. Wait for the login prompt to make sure the configuration has been saved.

Example Setup for Fabric Interconnect A

---- Basic System Configuration Dialog ----

This setup utility will guide you through the basic configuration of

the system. Only minimal configuration including IP connectivity to

the Fabric interconnect and its clustering mode is performed through these steps.

Type Ctrl-C at any time to abort configuration and reboot system.

To back track or make modifications to already entered values,

complete input till end of section and answer no when prompted

to apply configuration.

Enter the configuration method. (console/gui) ? console

Enter the setup mode; setup newly or restore from backup. (setup/restore) ? setup

You have chosen to setup a new Fabric interconnect. Continue? (y/n): y

Enforce strong password? (y/n) [y]: n

Enter the password for "admin":

Confirm the password for "admin":

Is this Fabric interconnect part of a cluster(select 'no' for standalone)? (yes/no) [n]: yes

Enter the switch fabric (A/B): A

Enter the system name: FI6332

Physical Switch Mgmt0 IP address : 172.25.206.221

Physical Switch Mgmt0 IPv4 netmask : 255.255.255.0

IPv4 address of the default gateway : 172.25.206.1

Cluster IPv4 address : 172.25.206.220

Configure the DNS Server IP address? (yes/no) [n]: yes

DNS IP address : 173.36.131.10

Configure the default domain name? (yes/no) [n]:

Join centralized management environment (UCS Central)? (yes/no) [n]:

Following configurations will be applied:

Switch Fabric=A

System Name=FI6332

Enforced Strong Password=no

Physical Switch Mgmt0 IP Address=172.25.206.221

Physical Switch Mgmt0 IP Netmask=255.255.255.0

Default Gateway=172.25.206.1

Ipv6 value=0

DNS Server=173.36.131.10

Cluster Enabled=yes

Cluster IP Address=172.25.206.220

NOTE: Cluster IP will be configured only after both Fabric Interconnects are initialized.

UCSM will be functional only after peer FI is configured in clustering mode.

Apply and save the configuration (select 'no' if you want to re-enter)? (yes/no): yes

Applying configuration. Please wait.

Configuration file - Ok

Cisco UCS 6300 Series Fabric Interconnect

FI6332-A login:

Configure Fabric Interconnect B

To configure Fabric Interconnect B, follow these steps:

1. Connect to the console port on the second Cisco UCS 6332 Fabric Interconnect.

2. When prompted to enter the configuration method, enter console to continue.

3. The installer detects the presence of the partner Fabric Interconnect and adds this fabric interconnect to the cluster. Enter y to continue the installation.

4. Enter the admin password that was configured for the first Fabric Interconnect.

5. Enter the Mgmt0 IPv4 address.

6. Answer yes to save the configuration.

7. Wait for the login prompt to confirm that the configuration has been saved.

Example Setup for Fabric Interconnect B

---- Basic System Configuration Dialog ----

This setup utility will guide you through the basic configuration of

the system. Only minimal configuration including IP connectivity to

the Fabric interconnect and its clustering mode is performed through these steps.

Type Ctrl-C at any time to abort configuration and reboot system.

To back track or make modifications to already entered values,

complete input till end of section and answer no when prompted

to apply configuration.

Enter the configuration method. (console/gui) ? console

Installer has detected the presence of a peer Fabric interconnect. This Fabric interconnect will be added to the cluster. Continue (y/n) ? y

Enter the admin password of the peer Fabric interconnect:

Connecting to peer Fabric interconnect... done

Retrieving config from peer Fabric interconnect... done

Peer Fabric interconnect Mgmt0 IPv4 Address: 172.25.206.221

Peer Fabric interconnect Mgmt0 IPv4 Netmask: 255.255.255.0

Cluster IPv4 address : 172.25.206.220

Peer FI is IPv4 Cluster enabled. Please Provide Local Fabric Interconnect Mgmt0 IPv4 Address

Physical Switch Mgmt0 IP address : 172.25.206.222

Apply and save the configuration (select 'no' if you want to re-enter)? (yes/no): yes

Applying configuration. Please wait.

Fri Sep 30 05:41:48 UTC 2016

Configuration file - Ok

Cisco UCS 6300 Series Fabric Interconnect

FI6332-B login:
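Before you run the setup dialogs, it can help to confirm that the management addressing plan is consistent. The following Python sketch uses the values from the two example dialogs:

· FI6332-A mgmt0: 172.25.206.221

· FI6332-B mgmt0: 172.25.206.222

· Cluster address: 172.25.206.220

· Default gateway: 172.25.206.1

· Netmask: 255.255.255.0

The sketch checks that all addresses fall into one subnet and that none is used twice. This is a general sanity check written for illustration, not a validation performed by the setup utility.

```python
import ipaddress

netmask = "255.255.255.0"
plan = {
    "FI6332-A mgmt0": "172.25.206.221",
    "FI6332-B mgmt0": "172.25.206.222",
    "cluster IP": "172.25.206.220",
    "default gateway": "172.25.206.1",
}

# Derive the management subnet from FI A's address and the netmask.
subnet = ipaddress.ip_interface(f"{plan['FI6332-A mgmt0']}/{netmask}").network

outside = [name for name, ip in plan.items()
           if ipaddress.ip_address(ip) not in subnet]
duplicates = len(set(plan.values())) != len(plan)

print(f"subnet: {subnet}")
print("outside subnet:", outside or "none")
print("duplicate addresses:", duplicates)
```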

Logging Into Cisco UCS Manager

To login to Cisco UCS Manager, follow these steps:

1. Open a Web browser and navigate to the Cisco UCS 6332 Fabric Interconnect cluster address.

2. Click the Launch link to download the Cisco UCS Manager software.

3. If prompted to accept security certificates, accept as necessary.

4. Click Launch UCS Manager HTML.

5. When prompted, enter admin for the username and enter the administrative password.

6. Click Login to log in to the Cisco UCS Manager.

Configure NTP Server

To configure the NTP server for the Cisco UCS environment, follow these steps:

1. Select the Admin tab on the left side.

2. Select Time Zone Management.

3. Select Time Zone.

4. Under Properties select your time zone.

5. Select Add NTP Server.

6. Enter the IP address of the NTP server.

7. Select OK.

Figure 16 Adding an NTP Server - Summary

Initial Base Setup of the Environment

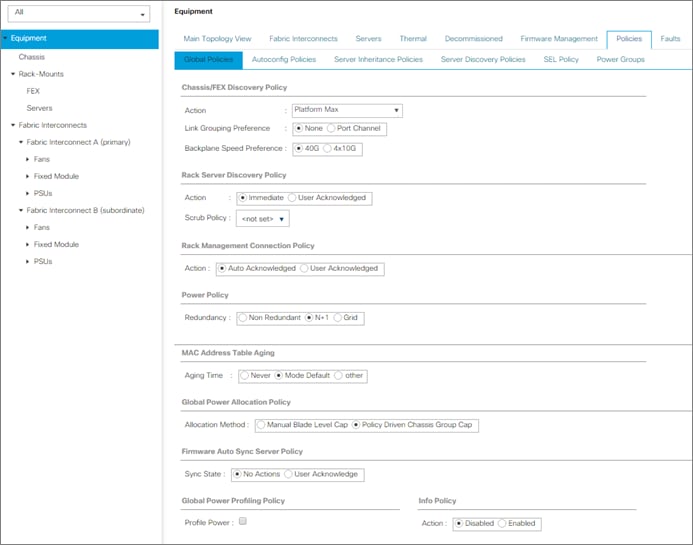

Configure Global Policies

To configure the Global Policies, follow these steps:

1. Select the Equipment tab on the left side of the window.

2. Select Policies on the right side.

3. Select Global Policies.

4. Under Chassis/FEX Discovery Policy select Platform Max under Action.

5. Select 40G under Backplane Speed Preference.

6. Under Rack Server Discovery Policy select Immediate under Action.

7. Under Rack Management Connection Policy select Auto Acknowledged under Action.

8. Under Power Policy select Redundancy N+1.

9. Under Global Power Allocation Policy select Policy Driven.

10. Select Save Changes.

Figure 17 Configuration of Global Policies

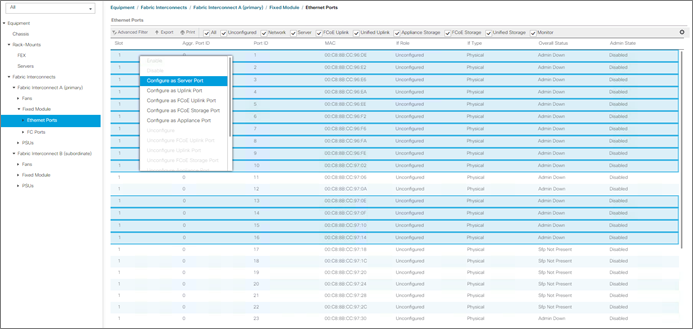

Enable Fabric Interconnect A Ports for Server

To enable server ports, follow these steps:

1. Select the Equipment tab on the left side.

2. Select Equipment > Fabric Interconnects > Fabric Interconnect A (subordinate) > Fixed Module.

3. Click the Ethernet Ports section.

4. Select Ports 1-10 and 13-16, right-click and then select Configure as Server Port.

5. Click Yes and then OK.

6. Repeat the same steps for Fabric Interconnect B.

Figure 18 Configuration of Server Ports

Enable Fabric Interconnect A Ports for Uplinks

To enable uplink ports, follow these steps:

1. Select the Equipment tab on the left side.

2. Select Equipment > Fabric Interconnects > Fabric Interconnect A (subordinate) > Fixed Module.

3. Click the Ethernet Ports section.

4. Select Ports 23-26, right-click and then select Configure as Uplink Port.

5. Click Yes and then OK.

6. Repeat the same steps for Fabric Interconnect B.

Label Each Chassis for Identification

To label each chassis for better identification, follow these steps:

1. Select the Equipment tab on the left side.

2. Select Chassis > Chassis 1.

3. In the Properties section on the right go to User Label and add Ceph OSD 1/2 to the field.

4. Repeat the previous steps for Chassis 2 – 5 by using the following labels (Table 8):

| Chassis | Name |
|---|---|
| Chassis 1 | Ceph OSD 1/2 |
| Chassis 2 | Ceph OSD 3/4 |
| Chassis 3 | Ceph OSD 5/6 |
| Chassis 4 | Ceph OSD 7/8 |
| Chassis 5 | Ceph OSD 9/10 |

Figure 19 Labeling of all Chassis

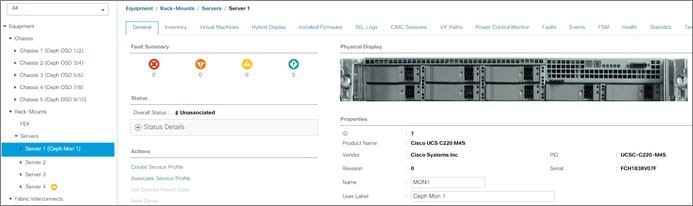

Label Each Server for Identification

To label each server for better identification, follow these steps:

1. Select the Equipment tab on the left side.

2. Select Chassis > Chassis 1 > Server 1.

3. In the Properties section on the right go to User Label and add Ceph OSD 1 to the field.

4. Repeat the previous steps for Server 2 of Chassis 1 and for all servers of Chassis 2 – 5 according to Table 9.

5. Then go to Servers > Rack-Mounts > Servers and repeat the step for all rack servers according to Table 9.

| Server | Name |
|---|---|
| Chassis 1 / Server 1 | Ceph OSD 1 |
| Chassis 1 / Server 2 | Ceph OSD 2 |
| Chassis 2 / Server 1 | Ceph OSD 3 |
| Chassis 2 / Server 2 | Ceph OSD 4 |
| Chassis 3 / Server 1 | Ceph OSD 5 |
| Chassis 3 / Server 2 | Ceph OSD 6 |
| Chassis 4 / Server 1 | Ceph OSD 7 |
| Chassis 4 / Server 2 | Ceph OSD 8 |
| Chassis 5 / Server 1 | Ceph OSD 9 |
| Chassis 5 / Server 2 | Ceph OSD 10 |
| Rack-Mount / Server 1 | Ceph Mon 1 |
| Rack-Mount / Server 2 | Ceph Mon 2 |
| Rack-Mount / Server 3 | Ceph Mon 3 |
| Rack-Mount / Server 4 | Ceph Adm |

Figure 20 Labeling of Rack Servers

Create KVM IP Pool

To create a KVM IP Pool, follow these steps:

1. Select the LAN tab on the left side.

2. Go to LAN > Pools > root > IP Pools > IP Pool ext-mgmt.

3. Right-click and select Create Block of IPv4 Addresses.

4. Enter an IP address in the From field.

5. Enter 20 in the Size field.

6. Enter your Subnet Mask.

7. Fill in your Default Gateway.

8. Enter your Primary DNS and Secondary DNS if needed.

9. Click OK.

Figure 21 Create Block of IPv4 Addresses
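The block of 20 KVM addresses must fit inside the management subnet and should not collide with addresses already in use. The following Python sketch lists such a block and performs both checks.

· The start address 172.25.206.230 is hypothetical, because the steps above leave the From field to the reader.

· The excluded addresses come from the Fabric Interconnect setup example.

· The function name is also hypothetical.

```python
import ipaddress


def ip_block(start: str, size: int, netmask: str) -> list:
    """Return `size` consecutive host addresses beginning at `start`.

    Raises ValueError if the block would include the network or broadcast
    address, or leave the subnet implied by `netmask`.
    """
    if size < 1:
        raise ValueError("block size must be at least 1")
    iface = ipaddress.ip_interface(f"{start}/{netmask}")
    net = iface.network
    block = [iface.ip + offset for offset in range(size)]
    last = block[-1]
    if block[0] == net.network_address or last not in net or last == net.broadcast_address:
        raise ValueError(f"a block of {size} from {start} does not fit in {net}")
    return block


# Hypothetical start address for the ext-mgmt block.
kvm_pool = ip_block("172.25.206.230", 20, "255.255.255.0")

# Addresses already used in the Fabric Interconnect setup example.
reserved = {"172.25.206.1", "172.25.206.220", "172.25.206.221", "172.25.206.222"}
collisions = [str(ip) for ip in kvm_pool if str(ip) in reserved]

print(kvm_pool[0], "-", kvm_pool[-1], "collisions:", collisions or "none")
```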

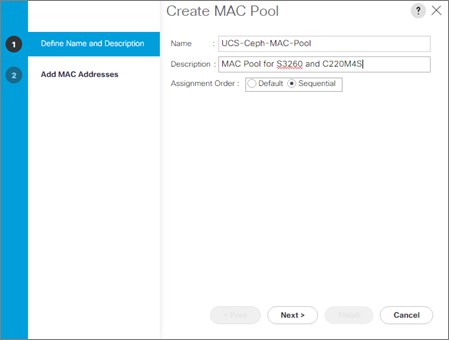

Create MAC Pool

To create a MAC Pool, follow these steps:

1. Select the LAN tab on the left side.

2. Go to LAN > Pools > root > MAC Pools and right-click Create MAC Pool.

3. Type in UCS-Ceph-MAC-Pool for Name.

4. (Optional) Enter a Description of the MAC Pool.

5. Set Assignment Order as Sequential.

Figure 22 Create MAC Pool

6. Click Next.

7. Click Add.

8. Specify a starting MAC address.

9. Specify a size of the MAC address pool, which is sufficient to support the available server resources, for example, 100.

Figure 23 Create a Block of MAC Addresses

10. Click OK.

11. Click Finish.
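With the Sequential assignment order, the pool hands out addresses in order from the starting MAC address. The following Python sketch enumerates such a block to show which addresses a pool of 100 would cover. The starting address 00:25:B5:00:00:00 is an assumption, not a value from this design; 00:25:B5 is the prefix Cisco UCS Manager commonly proposes for MAC pools. The function name is hypothetical.

```python
def mac_block(start: str, size: int) -> list:
    """Return `size` sequential MAC addresses beginning at `start`."""
    value = int(start.replace(":", ""), 16)
    if size < 1 or value + size - 1 > 0xFFFFFFFFFFFF:
        raise ValueError("block must be non-empty and fit in the 48-bit MAC space")
    macs = []
    for offset in range(size):
        raw = f"{value + offset:012X}"  # 12 uppercase hex digits
        macs.append(":".join(raw[i:i + 2] for i in range(0, 12, 2)))
    return macs


# Hypothetical starting address for UCS-Ceph-MAC-Pool.
pool = mac_block("00:25:B5:00:00:00", 100)
print(pool[0], pool[-1])
```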

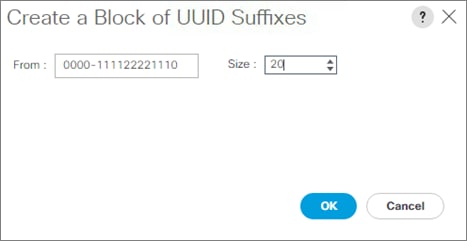

Create UUID Pool

To create a UUID Pool, follow these steps:

1. Select the Servers tab on the left side.

2. Go to Servers > Pools > root > UUID Suffix Pools and right-click Create UUID Suffix Pool.

3. Type in UCS-Ceph-UUID-Pool for Name.

4. (Optional) Enter a Description of the UUID Pool.

5. Set Assignment Order to Sequential and click Next.

Figure 24 Create UUID Suffix Pool

6. Click Add.

7. Specify a starting UUID Suffix.

8. Specify a size of the UUID suffix pool, which is sufficient to support the available server resources, for example, 20.

Figure 25 Create a Block of UUID Suffixes

9. Click OK.

10. Click Finish and then OK.

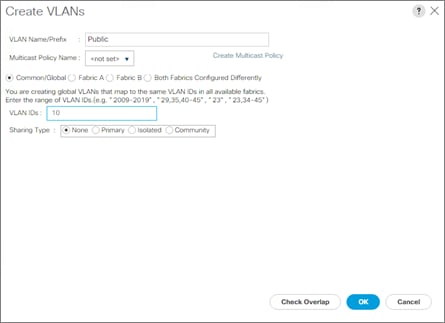

Create VLANs

As mentioned before, it is important to separate Public network traffic and Cluster network traffic with VLANs. Table 10 lists the configured VLANs.

| VLAN | Name | NIC Port | Function |
|---|---|---|---|
| 1 | default | eth0 | Administration & Management |
| 10 | Public | eth1 | Public network |
| 20 | Cluster | eth2 | Cluster network |

To configure VLANs in the Cisco UCS Manager GUI, follow these steps:

1. Select LAN in the left pane in the Cisco UCS Manager GUI.

2. Select LAN > LAN Cloud > VLANs and right-click Create VLANs.

3. Enter Public for the VLAN Name.

4. Keep Multicast Policy Name as <not set>.

5. Select Common/Global for Public.

6. Enter 10 in the VLAN IDs field.

7. Click OK and then Finish.

Figure 26 Create a VLAN

8. Repeat the steps for the Cluster VLAN, using Cluster as the VLAN name and 20 as the VLAN ID.

Enable CDP

To create a Network Control Policy that enables CDP, follow these steps:

1. Select the LAN tab in the left pane of the Cisco UCS Manager GUI.

2. Go to LAN > Policies > root > Network Control Policies and right-click Create Network-Control Policy.

3. Type in Enable-CDP in the Name field.

4. (Optional) Enter a description in the Description field.

5. Click Enabled under CDP.

6. Click All Host Vlans under MAC Register Mode.

7. Leave everything else untouched and click OK.

8. Click OK.

Figure 27 Create a Network Control Policy

QoS System Class

To create a Quality of Service System Class, follow these steps:

1. Select the LAN tab in the left pane of the Cisco UCS Manager GUI.

2. Go to LAN > LAN Cloud > QoS System Class.

3. Set Best Effort Weight to 10 and MTU to 9216.

4. Set Fibre Channel Weight to None.

5. Click Save Changes and then OK.

Figure 28 QoS System Class

QoS Policy Setup

To set up a QoS Policy based on the previous QoS System Class, follow these steps:

1. Select the LAN tab in the left pane of the Cisco UCS Manager GUI.

2. Go to LAN > Policies > root > QoS Policies and right-click Create QoS Policy.

3. Type in QoS-Ceph in the Name field.

4. Set Priority as Best Effort and leave everything else unchanged.

5. Click OK and then OK.

Figure 29 QoS Policy Setup

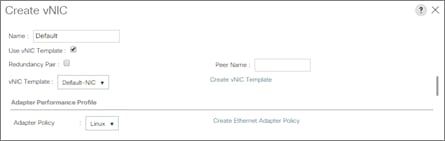

vNIC Template Setup

With the VLANs in place, the next step is to create the appropriate vNIC templates. Red Hat Ceph Storage needs up to four different vNICs, depending on the role of the server.

For the Public network, create two vNIC templates. The first is for the top node of the Cisco UCS S3260 Storage Server and connects to Fabric Interconnect A. The second is for the bottom node and connects to Fabric Interconnect B. This split avoids traffic congestion over the configured vPCs. If you have more OSD nodes, consider increasing the number of vPC links to the Cisco Nexus 9332PQ switches.

Table 11 gives you an overview of the configuration.

| Name | vNIC Name | Fabric Interconnect | Failover | VLAN | MTU Size | MAC Pool | Network Control Policy |
|---|---|---|---|---|---|---|---|
| Default | Default-NIC | A | Yes | default - 1 | 1500 | UCS-Ceph-MAC-Pool | Enable-CDP |
| Public Network | PublicA-NIC | A | Yes | Public - 10 | 9000 | UCS-Ceph-MAC-Pool | Enable-CDP |
| Public Network | PublicB-NIC | B | Yes | Public - 10 | 9000 | UCS-Ceph-MAC-Pool | Enable-CDP |
| Cluster Network | Cluster-NIC | B | Yes | Cluster - 20 | 9000 | UCS-Ceph-MAC-Pool | Enable-CDP |

To create the appropriate vNICs, follow these steps:

1. Select the LAN tab in the left pane of the Cisco UCS Manager GUI.

2. Go to LAN > Policies > root > vNIC Templates and right-click Create vNIC Template.

3. Type in Default-NIC in the Name field.

4. (Optional) Enter a description in the Description field.

5. Click Fabric A as Fabric ID and enable failover.

6. Select default as VLANs and click Native VLAN.

7. Select UCS-Ceph-MAC-Pool as MAC Pool.

8. Select QoS-Ceph as QoS Policy.

9. Select Enable-CDP as Network Control Policy.

10. Click OK and then OK.

Figure 30 Setup of vNIC Template for Default vNIC

11. Repeat the above steps for the PublicA-NIC, PublicB-NIC, and Cluster-NIC templates. Make sure you select the correct Fabric Interconnect, VLAN (without Native VLAN), and MTU size according to Table 11.
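
Table 11 and the QoS System Class settings have to agree: a vNIC MTU larger than the 9216-byte system-class MTU would not fit the class. The following Python sketch transcribes Table 11 into a hypothetical `VnicTemplate` record and runs two sanity checks: no MTU exceeds 9216, and the Public VLAN (10) appears on both fabrics, matching the top-node/bottom-node split described above. The names `VnicTemplate` and `check_templates` are illustrative only and are not part of UCS Manager.

```python
from dataclasses import dataclass

# MTU configured on the Best Effort system class (QoS System Class section).
SYSTEM_CLASS_MTU = 9216


@dataclass(frozen=True)
class VnicTemplate:
    """Hypothetical record holding one row of Table 11."""
    name: str
    fabric: str  # "A" or "B"
    failover: bool
    vlan_id: int
    mtu: int


# Values transcribed from Table 11.
TEMPLATES = [
    VnicTemplate("Default-NIC", "A", True, 1, 1500),
    VnicTemplate("PublicA-NIC", "A", True, 10, 9000),
    VnicTemplate("PublicB-NIC", "B", True, 10, 9000),
    VnicTemplate("Cluster-NIC", "B", True, 20, 9000),
]


def check_templates(templates, class_mtu=SYSTEM_CLASS_MTU):
    """Return a list of human-readable problems (empty if none found)."""
    problems = []
    for t in templates:
        if t.fabric not in ("A", "B"):
            problems.append(f"{t.name}: unknown fabric {t.fabric!r}")
        if t.mtu > class_mtu:
            problems.append(f"{t.name}: MTU {t.mtu} exceeds system class MTU {class_mtu}")
    # Public traffic is split: top node on fabric A, bottom node on fabric B.
    public_fabrics = {t.fabric for t in templates if t.vlan_id == 10}
    if public_fabrics != {"A", "B"}:
        problems.append(f"public vNICs cover fabrics {sorted(public_fabrics)}, expected A and B")
    return problems


if __name__ == "__main__":
    issues = check_templates(TEMPLATES)
    print("OK" if not issues else "\n".join(issues))
```

For the values in Table 11 the script prints `OK`.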

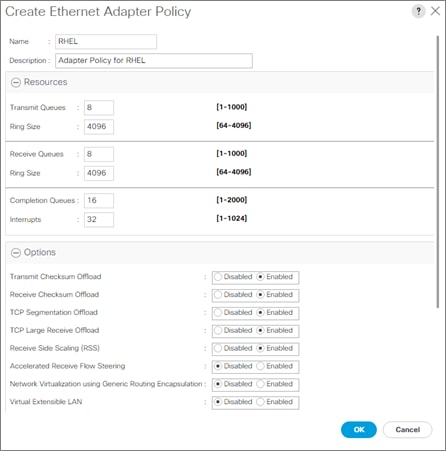

Adapter Policy Setup

To create a specific adapter policy for Red Hat Enterprise Linux, follow these steps:

1. Select the Servers tab in the left pane of the Cisco UCS Manager GUI.

2. Go to Servers > Policies > root > Adapter Policies and right-click Create Ethernet Adapter Policy.

3. Type in RHEL in the Name field.

4. (Optional) Enter a description in the Description field.

5. Under Resources type in the following values:

a. Transmit Queues: 8

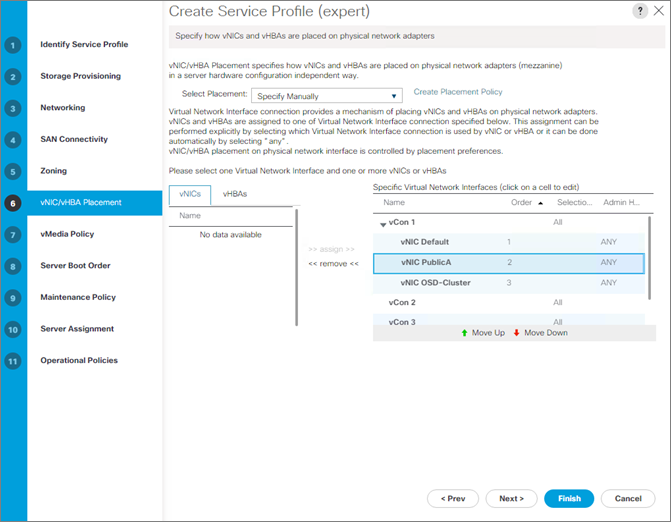

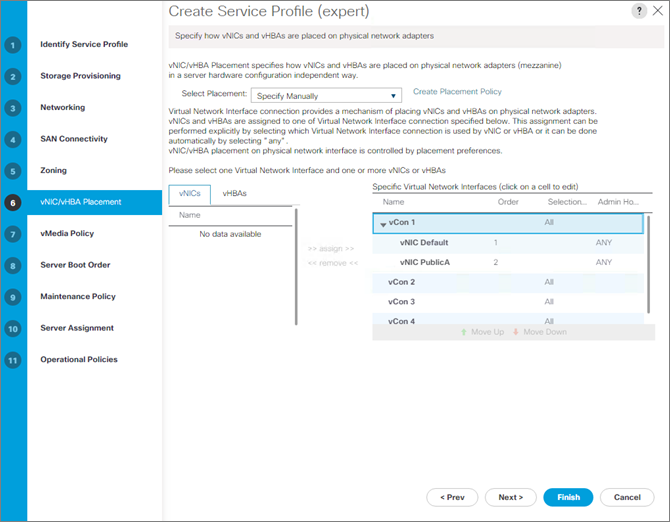

b. Ring Size: 4096

c. Receive Queues: 8

d. Ring Size: 4096

e. Completion Queues: 16

f. Interrupts: 32

6. Under Options enable Receive Side Scaling (RSS).

7. Click OK and then OK.

Figure 31 Adapter Policy for RHEL
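
The RHEL adapter policy values depend on each other. The Python sketch below checks them against commonly cited Cisco VIC sizing rules of thumb, which are assumptions here rather than statements from this guide: completion queues equal transmit queues plus receive queues, and interrupts are at least completion queues plus 2. The function name `check_adapter_policy` is hypothetical.

```python
# Adapter policy values entered in step 5 of the RHEL Ethernet Adapter Policy.
RHEL_POLICY = {
    "tx_queues": 8,
    "rx_queues": 8,
    "tx_ring": 4096,
    "rx_ring": 4096,
    "completion_queues": 16,
    "interrupts": 32,
    "rss": True,
}


def check_adapter_policy(p):
    """Return a list of sizing problems based on assumed VIC rules of thumb.

    Assumed rules (not taken from this guide):
      * completion queues = transmit queues + receive queues
      * interrupts >= completion queues + 2
      * RSS is only useful with more than one receive queue
    """
    problems = []
    expected_cq = p["tx_queues"] + p["rx_queues"]
    if p["completion_queues"] != expected_cq:
        problems.append(f"completion queues should be {expected_cq}")
    if p["interrupts"] < p["completion_queues"] + 2:
        problems.append(f"interrupts should be at least {p['completion_queues'] + 2}")
    if p["rss"] and p["rx_queues"] < 2:
        problems.append("RSS needs more than one receive queue")
    return problems
```

For the values in step 5 (8 + 8 = 16 completion queues, 32 interrupts), `check_adapter_policy(RHEL_POLICY)` returns an empty list.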

Boot Policy Setup

To create a Boot Policy, follow these steps:

1. Select the Servers tab in the left pane.

2. Go to Servers > Policies > root > Boot Policies and right-click Create Boot Policy.

3. Type in PXE-Boot in the Name field.

4. (Optional) Enter a description in the Description field.

Figure 32 Create Boot Policy

5. Click Local Devices > Add Local LUN.

Figure 33 Add Local LUN

6. Click OK.

7. Click Local Devices > Add Local CD/DVD.

8. Click OK.

9. Click OK.
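
The PXE-Boot policy lists Local LUN first and Local CD/DVD second (assuming boot order follows the order in which the devices were added), so a server tries its local boot LUN and falls back to the CD/DVD when the LUN holds no bootable image, for example before the operating system is installed. The Python sketch below models that first-bootable-device selection; `select_boot_device` and the `is_bootable` callback are hypothetical stand-ins for what the server firmware does.

```python
from typing import Callable, Optional, Sequence

# Boot order of the PXE-Boot policy: order 1 = Local LUN, order 2 = Local CD/DVD.
BOOT_ORDER = ["Local LUN", "Local CD/DVD"]


def select_boot_device(order: Sequence[str], is_bootable: Callable[[str], bool]) -> Optional[str]:
    """Return the first device in boot order that reports a bootable image, or None."""
    for device in order:
        if is_bootable(device):
            return device
    # No device in the policy could boot.
    return None
```

In this model, a server with an empty local LUN and installer media mounted boots from Local CD/DVD; once the operating system is installed on the LUN, Local LUN wins.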

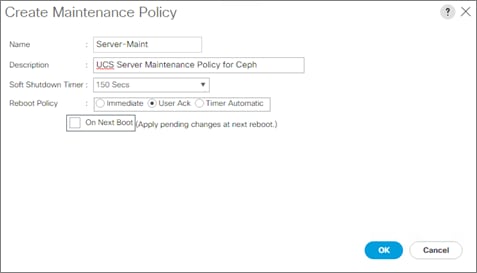

Create Maintenance Policy Setup

To setup a Maintenance Policy, follow these steps:

1. Select the Servers tab in the left pane.

2. Go to Servers > Policies > root > Maintenance Policies and right-click Create Maintenance Policy.

3. Type in Server-Maint in the Name field.

4. (Optional) Enter a description in the Description field.

5. Click User Ack under Reboot Policy.

6. Click OK and then OK.

Figure 34 Create Maintenance Policy

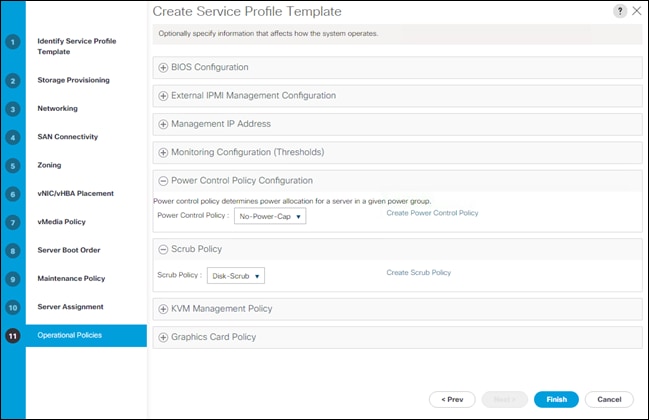

Create Power Control Policy Setup

To create a Power Control Policy, follow these steps:

1. Select the Servers tab in the left pane.

2. Go to Servers > Policies > root > Power Control Policies and right-click Create Power Control Policy.

3. Type in No-Power-Cap in the Name field.

4. (Optional) Enter a description in the Description field.

5. Click No Cap.

6. Click OK and then OK.

Figure 35 Power Control Policy

Create Disk Scrub Policy

To prevent failures during re-deployment of a Red Hat Ceph Storage environment, implement a Disk Scrub Policy, which erases the local disks when a service profile is disassociated from a server.

To create a Disk Scrub Policy, follow these steps:

1. Select the Servers tab in the left pane.

2. Go to Servers > Policies > root > Scrub Policies and right-click Create Scrub Policy.

3. Type in Disk-Scrub in the Name field.

4. (Optional) Enter a description in the Description field.

5. Set the Disk Scrub radio button to Yes.

6. Click OK and then OK.

Figure 36 Create a Disk Scrub Policy

Creating Chassis Profile

The Chassis Profile is required to assign specific disks to a particular server node in a Cisco UCS S3260 Storage Server and to upgrade the chassis to a specific chassis firmware package.

Create Chassis Firmware Package

To create a Chassis Firmware Package, follow these steps:

1. Select the Chassis tab in the left pane of the Cisco UCS Manager GUI.

2. Go to Chassis > Policies > root > Chassis Firmware Package and right-click Create Chassis Firmware Package.

3. Type in UCS-S3260-Firm in the Name field.

4. (Optional) Enter a description in the Description field.

5. Select 3.1(2b)C from the Chassis Package drop-down menu.

6. Click OK and then OK.

Figure 37 Create Chassis Firmware Package

Create Chassis Maintenance Policy

To create a Chassis Maintenance Policy, follow these steps:

1. Select the Chassis tab in the left pane of the Cisco UCS Manager GUI.

2. Go to Chassis > Policies > root > Chassis Maintenance Policies and right-click Create Chassis Maintenance Policy.

3. Type in UCS-S3260-Main in the Name field.

4. (Optional) Enter a description in the Description field.

5. Click OK and then OK.

Figure 38 Create Chassis Maintenance Policy

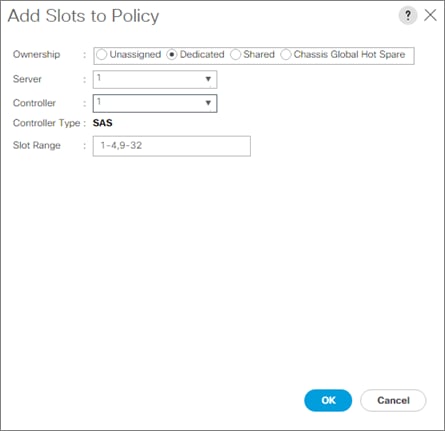

Create Disk Zoning Policy

To create a Disk Zoning Policy, follow these steps:

1. Select the Chassis tab in the left pane of the Cisco UCS Manager GUI.

2. Go to Chassis > Policies > root > Disk Zoning Policies and right-click Create Disk Zoning Policy.

3. Type in UCS-S3260-Zoning in the Name field.

4. (Optional) Enter a description in the Description field.

Figure 39 Create Disk Zoning Policy

5. Click Add.

6. Select Dedicated under Ownership.

7. Select Server 1.

8. Select Controller 1.

9. Add Slot Range 1-4, 9-32 for the top node of the Cisco UCS S3260 Storage Server.

Figure 40 Add Slots to Top Node of Cisco UCS S3260

10. Click OK.

11. Click Add.

12. Select Dedicated under Ownership.

13. Select Server 2.

14. Select Controller 1.

15. Add Slot Range 5-8, 33-56 for the bottom node of the Cisco UCS S3260 Storage Server.

Figure 41 Add Slots to Bottom Node of Cisco UCS S3260

16. Click OK and then OK.
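
The two slot ranges in the Disk Zoning Policy must not overlap, so that no drive is dedicated to both server nodes. The Python sketch below, using a hypothetical `parse_slots` helper, expands the ranges from steps 9 and 15 and checks that each node owns 28 slots and that together they cover slots 1 through 56 exactly once.

```python
def parse_slots(spec: str) -> set[int]:
    """Expand a slot range string such as "1-4, 9-32" into individual slot numbers."""
    slots = set()
    for part in spec.split(","):
        part = part.strip()
        if "-" in part:
            start, end = (int(x) for x in part.split("-"))
            slots.update(range(start, end + 1))  # ranges are inclusive
        else:
            slots.add(int(part))
    return slots


# Slot ranges from the Disk Zoning Policy steps.
ZONING = {
    "Server 1": parse_slots("1-4, 9-32"),   # top node
    "Server 2": parse_slots("5-8, 33-56"),  # bottom node
}

if __name__ == "__main__":
    top, bottom = ZONING["Server 1"], ZONING["Server 2"]
    print(len(top), len(bottom), top.isdisjoint(bottom))
```

Run as a script, it prints `28 28 True`.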

Create Compute Connection Policy

To create a Compute Connection Policy, follow these steps:

1. Select the Chassis tab in the left pane of the Cisco UCS Manager GUI.

2. Go to Chassis > Policies > root > Compute Connection Policies and right-click Create Compute Connection Policy.

3. Type in UCS-S3260-Connec in the Name field.

4. (Optional) Enter a description in the Description field.

5. Select Single Server Single SIOC.

6. Click OK and then OK.

Figure 42 Create a SIOC Connection Policy

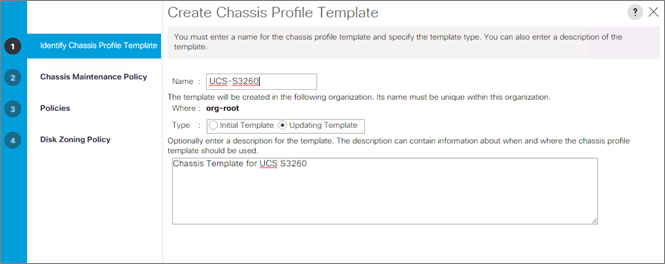

Create Chassis Profile Template

To create a Chassis Profile Template, follow these steps:

1. Select the Chassis tab in the left pane of the Cisco UCS Manager GUI.

2. Go to Chassis > Chassis Profile Templates and right-click Create Chassis Profile Template.

3. Type in UCS-S3260 in the Name field.

4. Under Type, select Updating Template.

5. (Optional) Enter a description in the Description field.

Figure 43 Create Chassis Profile Template

6. Select Next.

7. Under Chassis Maintenance Policy, select the previously created Chassis Maintenance Policy (UCS-S3260-Main).

Figure 44 Chassis Profile Template – Chassis Maintenance Policy

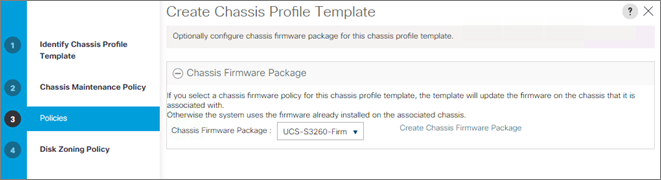

8. Select Next.

9. Click the + button to expand Chassis Firmware Package and select the previously created Chassis Firmware Package (UCS-S3260-Firm).

10. Click the + button to expand Compute Connection Policy and select the previously created Compute Connection Policy (UCS-S3260-Connec).

Figure 45 Chassis Profile Template – Chassis Firmware Package

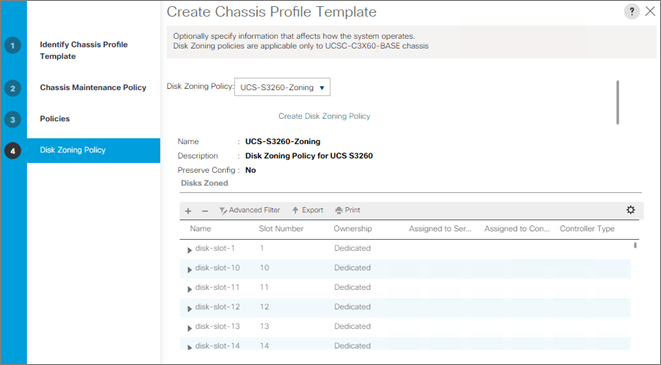

11. Select Next.

12. Under Disk Zoning Policy, select the previously created Disk Zoning Policy (UCS-S3260-Zoning).

Figure 46 Chassis Profile Template – Disk Zoning Policy

13. Click Finish and then OK.

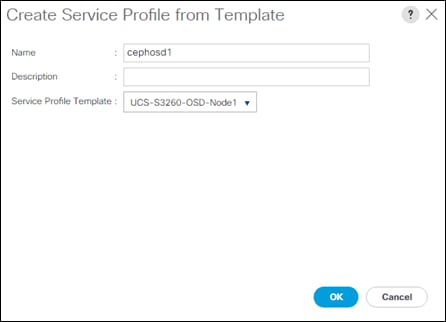

Create Chassis Profile from Template

To create the Chassis Profiles from the previously created Chassis Profile Template, follow these steps:

1. Select the Chassis tab in the left pane of the Cisco UCS Manager GUI.

2. Go to Chassis > Chassis Profiles and right-click Create Chassis Profile from Template.

3. Type in S3260-Dual- in the Name field.

4. Leave the Name Suffix Starting Number untouched.

5. Enter 5 in the Number of Instances field, one instance for each connected Cisco UCS S3260 Storage Server.

6. Choose your previously created Chassis Profile Template.

7. Click OK and then OK.

Figure 47 Create Chassis Profiles from Template

Associate Chassis Profile

To associate all previously created Chassis Profiles, follow these steps:

1. Select the Chassis tab in the left pane of the Cisco UCS Manager GUI.

2. Go to Chassis > Chassis Profiles and select S3260-Dual-1.

3. Right-click Change Chassis Profile Association.

4. Under Chassis Assignment, choose Select existing Chassis.

5. Under Available Chassis, select ID 1.

6. Click OK and then OK.

7. Repeat these steps for the other four Chassis Profiles, S3260-Dual-2 through S3260-Dual-5, selecting chassis IDs 2 through 5 respectively.

Figure 48 Associate Chassis Profile
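
Creating profiles from the template with the prefix S3260-Dual- and five instances yields S3260-Dual-1 through S3260-Dual-5 (the association steps assume the suffix starts at 1), and each profile is then associated with exactly one chassis. The Python sketch below models that naming and the one-to-one association; `profile_names` and `associate` are hypothetical helpers, not UCS Manager or PowerTool functions.

```python
PREFIX = "S3260-Dual-"
START = 1      # Name Suffix Starting Number left untouched (assumed to be 1)
INSTANCES = 5  # one per connected Cisco UCS S3260 Storage Server


def profile_names(prefix: str, start: int, count: int) -> list[str]:
    """Build profile names as prefix plus consecutive suffix numbers."""
    return [f"{prefix}{n}" for n in range(start, start + count)]


def associate(profiles: list[str], chassis_ids: list[int]) -> dict[str, int]:
    """Pair each chassis profile with exactly one distinct chassis ID, in order."""
    if len(profiles) != len(chassis_ids) or len(set(chassis_ids)) != len(chassis_ids):
        raise ValueError("need one distinct chassis ID per profile")
    return dict(zip(profiles, chassis_ids))
```

For example, `associate(profile_names(PREFIX, START, INSTANCES), [1, 2, 3, 4, 5])` maps S3260-Dual-1 to chassis 1 and S3260-Dual-5 to chassis 5.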

Creating Storage Profiles

Setting Disks for Rack-Mount Servers to Unconfigured-Good

To prepare all disks from the Rack-Mount servers for storage profiles, the disks have to be converted from JBOD to Unconfigured-Good. To convert the disks, follow these steps:

1. Select the Equipment tab in the left pane of the Cisco UCS Manager GUI.

2. Go to Equipment > Rack-Mounts > Servers > Server 1 > Disks.

3. Select both disks and right-click Set JBOD to Unconfigured-Good.

4. Repeat these steps for Servers 2-4.

Figure 49 Set Disks for Rack-Mount Servers to Unconfigured-Good
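
The conversion is the same for every rack-mount server. The Python sketch below models the loop over Servers 1-4 with two disks each; in this model the conversion only touches disks still in the JBOD state, so running it a second time changes nothing. The `DiskState` enum and `set_unconfigured_good` function are hypothetical and do not call UCS Manager.

```python
from enum import Enum


class DiskState(Enum):
    JBOD = "JBOD"
    UNCONFIGURED_GOOD = "Unconfigured-Good"


def set_unconfigured_good(inventory: dict[int, list[DiskState]]) -> int:
    """Convert every JBOD disk to Unconfigured-Good in place; return how many changed."""
    changed = 0
    for disks in inventory.values():
        for i, state in enumerate(disks):
            if state is DiskState.JBOD:
                disks[i] = DiskState.UNCONFIGURED_GOOD
                changed += 1
    return changed


# Hypothetical inventory: rack-mount Servers 1-4, two disks each, all starting as JBOD.
INVENTORY = {server: [DiskState.JBOD, DiskState.JBOD] for server in range(1, 5)}
```

The first call to `set_unconfigured_good(INVENTORY)` converts eight disks; a second call converts none.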

Setting Disks for Cisco UCS S3260 Storage Server to Unconfigured-Good with Cisco UCS PowerTool