Cisco UCS Storage Server with Scality Ring

Design and Deployment of Scality Object Storage on Cisco UCS S3260 Storage Server

Last Updated: April 10, 2017

About the Cisco Validated Design (CVD) Program

The CVD program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, visit http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series. Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2017 Cisco Systems, Inc. All rights reserved.

Table of Contents

Cisco Unified Computing System

Cisco UCS S3260 Storage Server

Cisco UCS Virtual Interface Card 1387

Cisco UCS 6300 Series Fabric Interconnect

Software Distributions and Versions

Physical Topology and Configuration

Deployment Hardware and Software

Initial Setup of Cisco UCS 6332 Fabric Interconnects

Configure Fabric Interconnect A

Example Setup for Fabric Interconnect A

Configure Fabric Interconnect B

Example Setup for Fabric Interconnect B

Logging into Cisco UCS Manager

Initial Base Setup of the Environment

Enable Fabric Interconnect A Ports for Server

Enable Fabric Interconnect A Ports for Uplinks

Label Each Server for Identification

Create LAN Connectivity Policy Setup

Create Maintenance Policy Setup

Create Power Control Policy Setup

Create Chassis Firmware Package

Create Chassis Maintenance Policy

Create Chassis Profile Template

Create Chassis Profile from Template

Setting Disks for Cisco UCS C220 M4 Rack-Mount Servers to Unconfigured-Good

Create Storage Profile for Cisco UCS S3260 Storage Server

Create Storage Profile for Cisco UCS C220 M4S Rack-Mount Servers

Creating a Service Profile Template

Create Service Profile Template for Cisco UCS S3260 Storage Server Top and Bottom Node

Identify Service Profile Template

Create Service Profile Template for Cisco UCS C220 M4S

Create Service Profiles from Template

Creating Port Channel for Uplinks

Create Port Channel for Fabric Interconnect A/B

Configuration of Nexus 9332PQ Switch A and B

Initial Setup of Nexus 9332PQ Switch A and B

Enable Features on Cisco Nexus 9332PQ Switch A and B

Configuring VLANs on Nexus 9332PQ Switch A and B

Verification Check of Cisco Nexus C9332PQ Configuration for Switch A and B

Installing Red Hat Enterprise Linux 7.3 Operating System

Installation of RHEL 7.3 on Cisco UCS C220 M4S

Installing RHEL 7.3 on Cisco UCS S3260 Storage Server

Post-Installation Steps for Red Hat Enterprise Linux 7.3

Preparing all Nodes for Scality RING Installation

Post-Installation for Scality RING

Scality S3 Connector Installation

Functional Testing of NFS Connectors

Functional Testing of S3 connectors

High-Availability for Hardware Stack

Hardware Failures of Cisco UCS S3260 and Cisco UCS C220 M4 Servers

Appendix A – Kickstart File of Connector Nodes for Cisco UCS C220 M4S.

Appendix B – Kickstart File of Storage nodes for Cisco UCS S3260 M4 Server

Appendix C – Example /etc/hosts File

Appendix D – Best Practice Configurations for Ordering Cisco UCS S3260 for Scality

Appendix E – Other Best Practices to Consider

Appendix F – How to Order Using Cisco UCS S3260 + Scality Solution IDs

Modern data centers increasingly rely on a variety of architectures for storage. Whereas in the past organizations focused on block and file storage only, today organizations are focusing on object storage, for several reasons:

· Object storage offers unlimited scalability and simple management

· Because of the low cost per gigabyte, object storage is well suited for large-capacity needs, and therefore for use cases such as archive, backup, and cloud operations

· Object storage allows the use of custom metadata for objects

Enterprise storage systems are designed to address business-critical requirements in the data center. But these solutions may not be optimal for use cases such as backup and archive workloads and other unstructured data, for which data latency is not especially important.

Scality object Storage is a massively scalable, software-defined storage system that gives you unified storage for your cloud environment. It is an object storage architecture that can easily achieve enterprise-class reliability, scale-out capacity, and lower costs with an industry-standard server solution.

The Cisco UCS S3260 Storage Server, originally designed for the data center, together with Scality RING is optimized for object storage solutions, making it an excellent fit for unstructured data workloads such as backup, archive, and cloud data. The S3260 delivers a complete infrastructure with exceptional scalability for computing and storage resources together with 40 Gigabit Ethernet networking. The S3260 is the platform of choice for object storage solutions because it provides more than comparable platforms:

· Proven server architecture that allows you to upgrade individual components without the need for migration

· High-bandwidth networking that meets the needs of large-scale object storage solutions like Scality RING Storage

· Unified, embedded management for easy-to-scale infrastructure

Cisco and Scality are collaborating to offer customers a scalable object storage solution for unstructured data that is integrated with Scality RING Storage. With the power of the Cisco UCS management framework, the solution is cost effective to deploy and manage and will enable the next-generation cloud deployments that drive business agility, lower operational costs and avoid vendor lock-in.

Introduction

Traditional storage systems are limited in their ability to easily and cost-effectively scale to support massive amounts of unstructured data. With about 80 percent of data being unstructured, new approaches using x86 servers are proving to be more cost effective, providing storage that can be expanded as easily as your data grows. Object storage is the newest approach for handling massive amounts of data.

Scality is an industry leader in enterprise-class, petabyte-scale storage. Scality introduced a revolutionary software-defined storage platform that could easily manage exponential data growth, ensure high availability, deliver high performance and reduce operational cost. Scality’s scale-out storage solution, the Scality RING, is based on patented object storage technology and operates seamlessly on any commodity server hardware. It delivers outstanding scalability and data persistence, while its end-to-end parallel architecture provides unsurpassed performance. Scality’s storage infrastructure integrates seamlessly with applications through standard storage protocols such as NFS, SMB and S3.

Scale-out object storage uses x86 architecture storage-optimized servers to increase performance while reducing costs. The Cisco UCS S3260 Storage Server is well suited for object-storage solutions. It provides a platform that is cost effective to deploy and manage using the power of the Cisco Unified Computing System (Cisco UCS) management: capabilities that traditional unmanaged and agent-based management systems can’t offer. You can design S3260 solutions for a computing-intensive, capacity-intensive, or throughput-intensive workload.

Together, Scality object Storage and the Cisco UCS S3260 Storage Server deliver a simple, fast, and scalable architecture for enterprise scale-out storage.

Solution

This Cisco Validated Design (CVD) is a simple and linearly scalable architecture that provides an object storage solution on Scality RING and the Cisco UCS S3260 Storage Server. The solution includes the following features:

· Infrastructure for large scale object storage

· Design of a Scality object Storage solution together with Cisco UCS S3260 Storage Server

· Simplified infrastructure management with Cisco UCS Manager

· Architectural scalability – linear scaling based on network, storage, and compute requirements

Audience

This document describes the architecture, design, and deployment procedures of a Scality object Storage solution using six Cisco UCS S3260 Storage Servers with two C3X60 M4 server nodes each as storage nodes, two Cisco UCS C220 M4S rack servers as connector nodes, one Cisco UCS C220 M4S rack server as the supervisor node, and two Cisco UCS 6332 Fabric Interconnects managed by Cisco UCS Manager. The intended audience for this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who want to deploy Scality object Storage on the Cisco Unified Computing System (UCS) using Cisco UCS S3260 Storage Servers.

Solution Summary

This CVD describes in detail the process of deploying Scality object Storage on Cisco UCS S3260 Storage Server.

The configuration uses the following architecture for the deployment:

· 6 x Cisco UCS S3260 Storage Server with 2 x C3X60 M4 server nodes working as Storage nodes

· 3 x Cisco UCS C220 M4S rack server working as Connector nodes

· 1 x Cisco UCS C220 M4S rack server working as Supervisor node

· 2 x Cisco UCS 6332 Fabric Interconnect

· 1 x Cisco UCS Manager

· 2 x Cisco Nexus 9332PQ Switches

· Scality RING 6.3

· Red Hat Enterprise Linux Server 7.3
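The component list above can be tallied with a short sketch. The `Role` structure and the role labels are illustrative assumptions rather than anything defined by the CVD; only the counts come from the list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Role:
    """One server role in the design (labels are illustrative)."""
    name: str
    units: int            # physical chassis or rack servers in the list
    nodes_per_unit: int   # server nodes hosted by each unit


# Counts copied from the component list above.
ROLES = [
    Role("storage", 6, 2),     # 6 x S3260, each with 2 x C3X60 M4 nodes
    Role("connector", 3, 1),   # 3 x C220 M4S
    Role("supervisor", 1, 1),  # 1 x C220 M4S
]


def node_count(role: Role) -> int:
    """Number of operating-system instances needed for one role."""
    return role.units * role.nodes_per_unit


nodes_by_role = {r.name: node_count(r) for r in ROLES}
total_nodes = sum(nodes_by_role.values())

if __name__ == "__main__":
    print(nodes_by_role)
    print(total_nodes)
```

Under these counts the design has 12 storage nodes and 16 operating-system installations in total, which is the number of hosts the later installation steps would need to cover.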

Cisco Unified Computing System

The Cisco Unified Computing System (Cisco UCS) is a state-of-the-art data center platform that unites computing, network, storage access, and virtualization into a single cohesive system.

The main components of Cisco Unified Computing System are:

· Computing - The system is based on an entirely new class of computing system that incorporates rack-mount and blade servers based on Intel Xeon Processor E5 and E7. The Cisco UCS servers offer the patented Cisco Extended Memory Technology to support applications with large datasets and allow more virtual machines (VM) per server.

· Network - The system is integrated onto a low-latency, lossless, 40-Gbps unified network fabric. This network foundation consolidates LANs, SANs, and high-performance computing networks which are separate networks today. The unified fabric lowers costs by reducing the number of network adapters, switches, and cables, and by decreasing the power and cooling requirements.

· Virtualization - The system unleashes the full potential of virtualization by enhancing the scalability, performance, and operational control of virtual environments. Cisco security, policy enforcement, and diagnostic features are now extended into virtualized environments to better support changing business and IT requirements.

· Storage access - The system provides consolidated access to both SAN storage and Network Attached Storage (NAS) over the unified fabric. By unifying the storage access the Cisco Unified Computing System can access storage over Ethernet (NFS or iSCSI), Fibre Channel, and Fibre Channel over Ethernet (FCoE). This provides customers with choice for storage access and investment protection. In addition, the server administrators can pre-assign storage-access policies for system connectivity to storage resources, simplifying storage connectivity, and management for increased productivity.

The Cisco Unified Computing System is designed to deliver:

· A reduced Total Cost of Ownership (TCO) and increased business agility.

· Increased IT staff productivity through just-in-time provisioning and mobility support.

· A cohesive, integrated system which unifies the technology in the data center.

· Industry standards supported by a partner ecosystem of industry leaders.

Cisco UCS S3260 Storage Server

The Cisco UCS® S3260 Storage Server (Figure 1) is a modular, high-density, high-availability dual node rack server well suited for service providers, enterprises, and industry-specific environments. It addresses the need for dense cost effective storage for the ever-growing data needs. Designed for a new class of cloud-scale applications, it is simple to deploy and excellent for big data applications, software-defined storage environments and other unstructured data repositories, media streaming, and content distribution.

Figure 1 Cisco UCS S3260 Storage Server

Extending the capability of the Cisco UCS S-Series portfolio, the Cisco UCS S3260 helps you achieve the highest levels of data availability. With dual-node capability that is based on the Intel® Xeon® processor E5-2600 v4 series, it features up to 600 TB of local storage in a compact 4-rack-unit (4RU) form factor. All hard-disk drives can be asymmetrically split between the two nodes and are individually hot-swappable. The drives can be configured in an enterprise-class Redundant Array of Independent Disks (RAID) layout or operated in pass-through mode.

This high-density rack server comfortably fits in a standard 32-inch depth rack, such as the Cisco® R42610 Rack.

The Cisco UCS S3260 can be deployed as a standalone server in either bare-metal or virtualized environments. Its modular architecture reduces total cost of ownership (TCO) by allowing you to upgrade individual components over time and as use cases evolve, without having to replace the entire system.

The Cisco UCS S3260 uses a modular server architecture that, using Cisco’s blade technology expertise, allows you to upgrade the computing or network nodes in the system without the need to migrate data from one system to another. It delivers:

· Dual server nodes

· Up to 36 computing cores per server node

· Up to 60 drives mixing a large form factor (LFF) with up to 14 solid-state disk (SSD) drives plus 2 SSD SATA boot drives per server node

· Up to 512 GB of memory per server node (1 terabyte [TB] total)

· Support for 12-Gbps serial-attached SCSI (SAS) drives

· A system I/O Controller with Cisco VIC 1300 Series Embedded Chip supporting Dual-port 40Gbps

· High reliability, availability, and serviceability (RAS) features with tool-free server nodes, system I/O controller, easy-to-use latching lid, and hot-swappable and hot-pluggable components
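The per-node and per-chassis limits quoted in this section can be combined into a few derived figures. The sketch below is arithmetic only; the constant names are illustrative, the six-chassis count is taken from the validated configuration, and the result is a raw upper bound that ignores the actual drive sizes ordered and any RING data protection overhead.

```python
# Per-chassis and per-node maxima quoted in this section.
MAX_RAW_TB_PER_CHASSIS = 600    # "up to 600 TB of local storage"
MAX_DRIVES_PER_CHASSIS = 60     # "up to 60 drives"
NODES_PER_CHASSIS = 2           # dual server nodes
MAX_CORES_PER_NODE = 36
MAX_MEMORY_GB_PER_NODE = 512

# Assumption: six chassis, as in the validated configuration.
CHASSIS_COUNT = 6


def chassis_maxima() -> dict:
    """Derive per-chassis figures from the quoted limits."""
    return {
        # Drive size implied by the two quoted maxima.
        "tb_per_drive": MAX_RAW_TB_PER_CHASSIS / MAX_DRIVES_PER_CHASSIS,
        "cores": NODES_PER_CHASSIS * MAX_CORES_PER_NODE,
        "memory_gb": NODES_PER_CHASSIS * MAX_MEMORY_GB_PER_NODE,
    }


def cluster_raw_tb(chassis: int = CHASSIS_COUNT) -> int:
    """Upper bound on raw capacity, before replication or erasure coding."""
    return chassis * MAX_RAW_TB_PER_CHASSIS


if __name__ == "__main__":
    print(chassis_maxima())
    print(cluster_raw_tb())
```

With the quoted maxima this gives 72 cores and 1024 GB of memory per chassis, and at most 3600 TB of raw capacity across six chassis.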

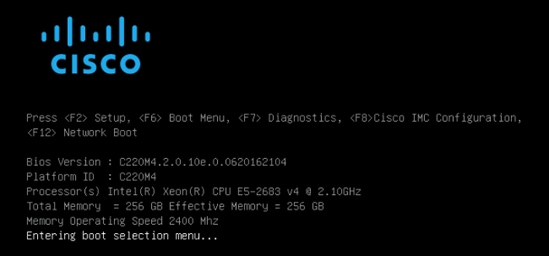

Cisco UCS C220 M4 Rack Server

The Cisco UCS® C220 M4 Rack Server (Figure 2) is the most versatile, general-purpose enterprise infrastructure and application server in the industry. It is a high-density two-socket enterprise-class rack server that delivers industry-leading performance and efficiency for a wide range of enterprise workloads, including virtualization, collaboration, and bare-metal applications. The Cisco UCS C-Series Rack Servers can be deployed as standalone servers or as part of the Cisco Unified Computing System™ (Cisco UCS) to take advantage of Cisco’s standards-based unified computing innovations that help reduce customers’ total cost of ownership (TCO) and increase their business agility.

Figure 2 Cisco UCS C220 M4 Rack Server

The enterprise-class Cisco UCS C220 M4 server extends the capabilities of the Cisco UCS portfolio in a 1RU form factor. It incorporates the Intel® Xeon® processor E5-2600 v4 and v3 product family, next-generation DDR4 memory, and 12-Gbps SAS throughput, delivering significant performance and efficiency gains. The Cisco UCS C220 M4 rack server delivers outstanding levels of expandability and performance in a compact 1RU package:

· Up to 24 DDR4 DIMMs for improved performance and lower power consumption

· Up to 8 Small Form-Factor (SFF) drives or up to 4 Large Form-Factor (LFF) drives

· Support for 12-Gbps SAS Module RAID controller in a dedicated slot, leaving the remaining two PCIe Gen 3.0 slots available for other expansion cards

· A modular LAN-on-motherboard (mLOM) slot that can be used to install a Cisco UCS virtual interface card (VIC) or third-party network interface card (NIC) without consuming a PCIe slot

· Two embedded 1Gigabit Ethernet LAN-on-motherboard (LOM) ports

Cisco UCS Virtual Interface Card 1387

The Cisco UCS Virtual Interface Card (VIC) 1387 (Figure 3) is a Cisco® innovation. It provides a policy-based, stateless, agile server infrastructure for your data center. This dual-port Enhanced Quad Small Form-Factor Pluggable (QSFP) half-height PCI Express (PCIe) modular LAN-on-motherboard (mLOM) adapter is designed exclusively for Cisco UCS C-Series and 3260 Rack Servers. The card supports 40 Gigabit Ethernet and Fibre Channel over Ethernet (FCoE). It incorporates Cisco’s next-generation converged network adapter (CNA) technology and offers a comprehensive feature set, providing investment protection for future feature software releases. The card can present more than 256 PCIe standards-compliant interfaces to the host, and these can be dynamically configured as either network interface cards (NICs) or host bus adapters (HBAs). In addition, the VIC supports Cisco Data Center Virtual Machine Fabric Extender (VM-FEX) technology. This technology extends the Cisco UCS Fabric Interconnect ports to virtual machines, simplifying server virtualization deployment.

Figure 3 Cisco UCS Virtual Interface Card 1387

The Cisco UCS VIC 1387 provides the following features and benefits:

· Stateless and agile platform: The personality of the card is determined dynamically at boot time using the service profile associated with the server. The number, type (NIC or HBA), identity (MAC address and World Wide Name [WWN]), failover policy, bandwidth, and quality-of-service (QoS) policies of the PCIe interfaces are all determined using the service profile. The capability to define, create, and use interfaces on demand provides a stateless and agile server infrastructure.

· Network interface virtualization: Each PCIe interface created on the VIC is associated with an interface on the Cisco UCS fabric interconnect, providing complete network separation for each virtual cable between a PCIe device on the VIC and the interface on the fabric interconnect.

Cisco UCS 6300 Series Fabric Interconnect

The Cisco UCS 6300 Series Fabric Interconnects are a core part of Cisco UCS, providing both network connectivity and management capabilities for the system (Figure 4). The Cisco UCS 6300 Series offers line-rate, low-latency, lossless 10 and 40 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE), and Fibre Channel functions.

Figure 4 Cisco UCS 6300 Series Fabric Interconnect

The Cisco UCS 6300 Series provides the management and communication backbone for the Cisco UCS B-Series Blade Servers, 5100 Series Blade Server Chassis, and C-Series Rack Servers managed by Cisco UCS. All servers attached to the fabric interconnects become part of a single, highly available management domain. In addition, by supporting unified fabric, the Cisco UCS 6300 Series provides both LAN and SAN connectivity for all servers within its domain.

From a networking perspective, the Cisco UCS 6300 Series uses a cut-through architecture, supporting deterministic, low-latency, line-rate 10 and 40 Gigabit Ethernet ports, switching capacity of 2.56 terabits per second (Tbps), and 320 Gbps of bandwidth per chassis, independent of packet size and enabled services. The product family supports Cisco® low-latency, lossless 10 and 40 Gigabit Ethernet unified network fabric capabilities, which increase the reliability, efficiency, and scalability of Ethernet networks. The fabric interconnect supports multiple traffic classes over a lossless Ethernet fabric from the server through the fabric interconnect. Significant TCO savings can be achieved with an FCoE optimized server design in which network interface cards (NICs), host bus adapters (HBAs), cables, and switches can be consolidated.

The Cisco UCS 6332 32-Port Fabric Interconnect is a 1-rack-unit (1RU) 40 Gigabit Ethernet and FCoE switch offering up to 2.56 Tbps throughput and up to 32 ports. The switch has 32 fixed 40-Gbps Ethernet and FCoE ports.
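The 2.56 Tbps figure is consistent with the port count if throughput is counted in both directions on every port. That counting convention is an assumption here, since the source does not state how the figure is derived; the function name is illustrative.

```python
def switching_capacity_tbps(ports: int, port_speed_gbps: int,
                            full_duplex: bool = True) -> float:
    """Aggregate port bandwidth in Tbps.

    Assumption: with full_duplex=True, each port is counted once per
    direction (transmit and receive).
    """
    directions = 2 if full_duplex else 1
    return ports * port_speed_gbps * directions / 1000


if __name__ == "__main__":
    # 32 fixed 40-Gbps ports, as described for the Cisco UCS 6332.
    print(switching_capacity_tbps(32, 40))
    print(switching_capacity_tbps(32, 40, full_duplex=False))
```

The same arithmetic applies to any switch with thirty-two 40-Gbps ports: 1.28 Tbps in one direction, 2.56 Tbps when both directions are counted.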

Both the Cisco UCS 6332UP 32-Port Fabric Interconnect and the Cisco UCS 6332 16-UP 40-Port Fabric Interconnect have ports that can be configured for the breakout feature that supports connectivity between 40 Gigabit Ethernet ports and 10 Gigabit Ethernet ports. This feature provides backward compatibility to existing hardware that supports 10 Gigabit Ethernet. A 40 Gigabit Ethernet port can be used as four 10 Gigabit Ethernet ports. Using a 40 Gigabit Ethernet SFP, these ports on a Cisco UCS 6300 Series Fabric Interconnect can connect to another fabric interconnect that has four 10 Gigabit Ethernet SFPs. The breakout feature can be configured on ports 1 to 12 and ports 15 to 26 on the Cisco UCS 6332UP fabric interconnect. Ports 17 to 34 on the Cisco UCS 6332 16-UP fabric interconnect support the breakout feature.
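The breakout port ranges above can be captured in a small lookup, which is handy when planning which ports to reserve for 10 Gigabit Ethernet devices. This is a planning sketch only: the model labels and function names are illustrative, and the lane numbering 1 to 4 is an assumption, not the interface naming used by Cisco UCS Manager.

```python
# Breakout-capable ports, copied from the ranges stated above.
# The dictionary keys are illustrative labels for the two models.
BREAKOUT_PORTS = {
    "6332": list(range(1, 13)) + list(range(15, 27)),   # ports 1-12 and 15-26
    "6332-16UP": list(range(17, 35)),                    # ports 17-34
}

LANES_PER_40G_PORT = 4  # one 40 GbE port splits into four 10 GbE ports


def supports_breakout(model: str, port: int) -> bool:
    """True if the given port can be split into 10 GbE lanes."""
    return port in BREAKOUT_PORTS[model]


def breakout_lanes(model: str, port: int) -> list:
    """Return (port, lane) pairs for a breakout-capable port."""
    if not supports_breakout(model, port):
        raise ValueError(f"port {port} on {model} does not support breakout")
    return [(port, lane) for lane in range(1, LANES_PER_40G_PORT + 1)]


def max_10g_ports(model: str) -> int:
    """Largest number of 10 GbE ports obtainable through breakout."""
    return len(BREAKOUT_PORTS[model]) * LANES_PER_40G_PORT


if __name__ == "__main__":
    print(supports_breakout("6332", 13))
    print(breakout_lanes("6332", 1))
    print(max_10g_ports("6332"), max_10g_ports("6332-16UP"))
```

Ports 13 and 14 fall outside both ranges on the 32-port model, so the lookup reports them as not breakout-capable.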

Cisco Nexus 9332PQ Switch

The Cisco Nexus® 9000 Series Switches include both modular and fixed-port switches that are designed to provide a flexible, agile, low-cost, application-centric infrastructure.

Figure 5 Cisco 9332PQ

The Cisco Nexus 9300 platform consists of fixed-port switches designed for top-of-rack (ToR) and middle-of-row (MoR) deployment in data centers that support enterprise applications, service provider hosting, and cloud computing environments. They are Layer 2 and 3 nonblocking 10 and 40 Gigabit Ethernet switches with up to 2.56 terabits per second (Tbps) of internal bandwidth.

The Cisco Nexus 9332PQ Switch is a 1-rack-unit (1RU) switch that supports 2.56 Tbps of bandwidth and over 720 million packets per second (mpps) across thirty-two 40-Gbps Enhanced QSFP+ ports.

All the Cisco Nexus 9300 platform switches use dual-core 2.5-GHz x86 CPUs with 64-GB solid-state disk (SSD) drives and 16 GB of memory for enhanced network performance.

With the Cisco Nexus 9000 Series, organizations can quickly and easily upgrade existing data centers to carry 40 Gigabit Ethernet to the aggregation layer or to the spine (in a leaf-and-spine configuration) through advanced and cost-effective optics that enable the use of existing 10 Gigabit Ethernet fiber (a pair of multimode fiber strands).

Cisco provides two modes of operation for the Cisco Nexus 9000 Series. Organizations can use Cisco® NX-OS Software to deploy the Cisco Nexus 9000 Series in standard Cisco Nexus switch environments. Organizations also can use a hardware infrastructure that is ready to support Cisco Application Centric Infrastructure (Cisco ACI™) to take full advantage of an automated, policy-based, systems management approach.

Cisco UCS Manager

Cisco UCS® Manager (Figure 6) provides unified, embedded management of all software and hardware components of the Cisco Unified Computing System™ (Cisco UCS) across multiple chassis, rack servers and thousands of virtual machines. It supports all Cisco UCS product models, including Cisco UCS B-Series Blade Servers, C-Series Rack Servers, and Cisco UCS Mini, as well as the associated storage resources and networks. Cisco UCS Manager is embedded on a pair of Cisco UCS 6300 or 6200 Series Fabric Interconnects using a clustered, active-standby configuration for high availability. The manager participates in server provisioning, device discovery, inventory, configuration, diagnostics, monitoring, fault detection, auditing, and statistics collection.

An instance of Cisco UCS Manager with all Cisco UCS components managed by it forms a Cisco UCS domain, which can include up to 160 servers. In addition to provisioning Cisco UCS resources, this infrastructure management software provides a model-based foundation for streamlining the day-to-day processes of updating, monitoring, and managing computing resources, local storage, storage connections, and network connections. By enabling better automation of processes, Cisco UCS Manager allows IT organizations to achieve greater agility and scale in their infrastructure operations while reducing complexity and risk. The manager provides flexible role- and policy-based management using service profiles and templates.

Cisco UCS Manager manages Cisco UCS systems through an intuitive HTML 5 or Java user interface and a command-line interface (CLI). It can register with Cisco UCS Central Software in a multi-domain Cisco UCS environment, enabling centralized management of distributed systems scaling to thousands of servers. Cisco UCS Manager can be integrated with Cisco UCS Director to facilitate orchestration and to provide support for converged infrastructure and Infrastructure as a Service (IaaS).

The Cisco UCS XML API provides comprehensive access to all Cisco UCS Manager functions. The API provides Cisco UCS system visibility to higher-level systems management tools from independent software vendors (ISVs) such as VMware, Microsoft, and Splunk as well as tools from BMC, CA, HP, IBM, and others. ISVs and in-house developers can use the XML API to enhance the value of the Cisco UCS platform according to their unique requirements. Cisco UCS PowerTool for Cisco UCS Manager and the Python Software Development Kit (SDK) help automate and manage configurations within Cisco UCS Manager.
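To give a sense of what an XML API exchange looks like, the sketch below builds two request bodies with the Python standard library and extracts the session cookie from a login response. It assumes the `aaaLogin` and `configResolveClass` methods and the `/nuova` endpoint as described in the Cisco UCS Manager XML API documentation; verify them against your release. The host name, credentials, and helper names are hypothetical, and nothing is sent over the network.

```python
import xml.etree.ElementTree as ET

# Hypothetical UCS Manager address; request bodies are POSTed to this URL.
UCSM_URL = "https://ucsm.example.com/nuova"


def login_request(username: str, password: str) -> str:
    """Build an aaaLogin request body."""
    elem = ET.Element("aaaLogin", {"inName": username, "inPassword": password})
    return ET.tostring(elem, encoding="unicode")


def resolve_class_request(cookie: str, class_id: str) -> str:
    """Build a configResolveClass query for all objects of one class."""
    elem = ET.Element(
        "configResolveClass",
        {"cookie": cookie, "classId": class_id, "inHierarchical": "false"},
    )
    return ET.tostring(elem, encoding="unicode")


def cookie_from_response(body: str) -> str:
    """Extract the session cookie (outCookie) from an aaaLogin response."""
    return ET.fromstring(body).attrib["outCookie"]


if __name__ == "__main__":
    print(login_request("admin", "example-password"))
    # Illustrative response fragment, not captured from a real system.
    cookie = cookie_from_response('<aaaLogin response="yes" outCookie="abc123"/>')
    print(resolve_class_request(cookie, "computeRackUnit"))
```

In practice the Python SDK mentioned above wraps these calls, so hand-built XML is mainly useful for understanding what the SDK and PowerTool do underneath.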

Red Hat Enterprise Linux 7.3

Red Hat® Enterprise Linux® is a high-performing operating system that has delivered outstanding value to IT environments for more than a decade. More than 90% of Fortune Global 500 companies use Red Hat products and solutions including Red Hat Enterprise Linux. As the world’s most trusted IT platform, Red Hat Enterprise Linux has been deployed in mission-critical applications at global stock exchanges, financial institutions, leading telcos, and animation studios. It also powers the websites of some of the most recognizable global retail brands.

Red Hat Enterprise Linux:

· Delivers high performance, reliability, and security

· Is certified by the leading hardware and software vendors

· Scales from workstations, to servers, to mainframes

· Provides a consistent application environment across physical, virtual, and cloud deployments

Designed to help organizations make a seamless transition to emerging datacenter models that include virtualization and cloud computing, Red Hat Enterprise Linux includes support for major hardware architectures, hypervisors, and cloud providers, making deployments across physical and different virtual environments predictable and secure. Enhanced tools and new capabilities in this release enable administrators to tailor the application environment to efficiently monitor and manage compute resources and security.

Scality RING 6.3

Scality RING 6.3 (Figure 7) sets a new standard, enabling many more enterprises and services providers to benefit from object storage through enhanced S3 API support with a uniquely enterprise-ready identity and security model.

Figure 7 Scality RING Architecture

In addition, customers with high-scale compliance needs can now take advantage of the standards-based interfaces and hardware-independent capabilities of the RING. For file system users, Scality continues to enhance the RING’s file support, now improving performance for specific applications like backup and media.

Featured highlights and benefits:

· Enables Enterprise-Ready object Storage Deployment with Strong S3 Features, Security, and Performance

· Scality RING 6.3 is the first S3-compatible object storage with full Microsoft Active Directory and AWS IAM support

· RING 6.3 offers exceptional levels of S3 API performance, including scale-out Bucket access, even across multiple locations

· Protects Petabytes of Records, Images, and More with the Most Scalable Data Compliance Solution

· Standards-based, compliance solution that scales into petabytes in a single system

· Tackles More Enterprise Applications with Enhanced Scale-out File System Capabilities

· Fully parallel and multi-user write performance to the same directories, further enabling specific backup and media applications

· Integrated Load Balancing and Failover across multiple file system interfaces

· High performance at scale - Linear performance scaling to many petabytes of data, with support for a broad mix of application workloads

· 100% reliable - Zero downtime for maintenance and expansion; zero downtime when a disk, server, rack, or site fails; always available and durable with native geo-redundancy

Deployment Architecture

The reference architecture use case provides a comprehensive, end-to-end example of deploying Scality object storage on Cisco UCS S3260 (Figure 8).

The first section in this Cisco Validated Design covers setting up the Cisco UCS hardware: the Cisco UCS 6332 Fabric Interconnects (Cisco UCS Manager), Cisco UCS S3260 Storage Servers, Cisco UCS C220 M4 Rack Servers, and peripherals such as the Cisco Nexus 9332PQ switches. The second section provides step-by-step instructions for installing Scality RING. The final section covers the functional and high-availability tests on the test bed, performance, and the best practices that evolved while validating the solution.

Figure 8 Cisco UCS SDS Architecture

Solution Overview

The current solution based on Cisco UCS and Scality object Storage is divided into multiple sections and covers three main aspects.

Hardware Requirements

This CVD describes the architecture, design, and deployment of a Scality object storage solution on six Cisco UCS S3260 Storage Servers, each with two Cisco UCS C3X60 M4 nodes configured as storage servers, and four Cisco UCS C220 M4S Rack Servers serving as three Connector nodes and one Supervisor node. The whole solution is connected to a pair of Cisco UCS 6332 Fabric Interconnects and to a pair of upstream Cisco Nexus 9332PQ network switches.

The detailed configuration is as follows:

· Two Cisco Nexus 9332PQ Switches

· Two Cisco UCS 6332 Fabric Interconnects

· Six Cisco UCS S3260 Storage Servers with two UCS C3X60 M4 server nodes each

· Four Cisco UCS C220 M4S Rack Servers (three Connector nodes and one Supervisor node)

Note: Please contact your Cisco representative for country specific information.

Software Distributions and Versions

The required software distribution versions are listed below in Table 1.

| Layer | Component | Version or Release |
|---|---|---|
| Storage (Chassis) UCS S3260 | Chassis Management Controller | 2.0(13e) |
| | Shared Adapter | 4.1(2d) |
| Compute (Server Nodes) UCS C3X60 M4 | BIOS | C3x60M4.2.0.13c |
| | CIMC Controller | 2.0(13f) |
| Compute (Rack Server) C220 M4S | BIOS | C220M4.2.0.13d |
| | CIMC Controller | 2.0(13f) |
| Network 6332 Fabric Interconnect | UCS Manager | 3.1(2b) |
| | Kernel | 5.0(3)N2(3.12b) |
| | System | 5.0(3)N2(3.12b) |
| Network Nexus 9332PQ | BIOS | 07.59 |
| | NXOS | 7.0(3)I5(1) |
| Software | Red Hat Enterprise Linux Server | 7.3 (x86_64) |
| | Scality RING | 6.3 |

Hardware Requirements

This section contains the hardware components (Table 2) used in the test bed.

| Component | Model | Quantity | Comments |
|---|---|---|---|
| Scality Storage node | Cisco UCS S3260 M4 Chassis | 6 | 2 x UCS C3X60 M4 server nodes per chassis (total = 12 nodes). Per server node: 2 x Intel E5-2650 v4, 256 GB RAM; Cisco 12G SAS RAID Controller; 2 x 480 GB SSD for OS, 26 x 10 TB HDDs for data, 2 x 800 GB SSD for metadata; dual-port 40 Gbps VIC |
| Scality Connector Nodes | Cisco UCS C220 M4S Rack Server | 3 | 2 x Intel E5-2683 v4, 256 GB RAM; Cisco 12G SAS RAID Controller; 2 x 600 GB SAS for OS; dual-port 40 Gbps VIC |
| Scality Supervisor Node | Cisco UCS C220 M4S Rack Server | 1 | 2 x Intel E5-2683 v4, 256 GB RAM; Cisco 12G SAS RAID Controller; 2 x 600 GB SAS for OS; dual-port 40 Gbps VIC |
| UCS Fabric Interconnects | Cisco UCS 6332 Fabric Interconnects | 2 | |
| Switches | Cisco Nexus 9332PQ Switches | 2 | |
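
As a quick sanity check on the drive counts listed for the test bed, the raw capacity can be worked out as follows. This is only illustrative arithmetic: it uses decimal terabytes and says nothing about usable capacity, which depends on the RING data protection settings that are not covered in this section.

```python
# Raw capacity of the test bed, using the drive counts from the hardware table.
CHASSIS = 6
NODES_PER_CHASSIS = 2
DATA_HDDS_PER_NODE = 26
HDD_TB = 10              # decimal terabytes per data HDD
META_SSDS_PER_NODE = 2
META_SSD_GB = 800        # decimal gigabytes per metadata SSD

nodes = CHASSIS * NODES_PER_CHASSIS
raw_data_tb = nodes * DATA_HDDS_PER_NODE * HDD_TB
raw_meta_tb = nodes * META_SSDS_PER_NODE * META_SSD_GB / 1000

print(f"storage nodes:     {nodes}")
print(f"raw data capacity: {raw_data_tb} TB ({raw_data_tb / 1000:.2f} PB)")
print(f"raw metadata SSD:  {raw_meta_tb:.1f} TB")
```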

Physical Topology and Configuration

The following sections describe the physical design of the solution and the configuration of each component.

Figure 9 Physical Topology of the Solution

The connectivity of the solution is based on 40 Gbit Ethernet. All components are connected with 40 Gbit QSFP cables. The two Cisco Nexus 9332PQ switches are linked to each other with 2 x 40 Gbit cabling. Each Cisco UCS 6332 Fabric Interconnect is connected via 2 x 40 Gbit to each Cisco Nexus 9332PQ switch. Each Cisco UCS C220 M4S is connected with 1 x 40 Gbit cable and each Cisco UCS S3260 M4 server with 2 x 40 Gbit cables to each Fabric Interconnect.

Figure 10 Physical Cabling of the Solution

The exact cabling for the Cisco UCS S3260 Storage Server, Cisco UCS C220 M4S, and the Cisco UCS 6332 Fabric Interconnect is illustrated in Table 3.

| Local Device | Local Port | Connection | Remote Device | Remote Port | Cable |
|---|---|---|---|---|---|
| Cisco Nexus 9332 Switch A | Eth1/1 | 40GbE | Cisco Nexus 9332 Switch B | Eth1/1 | QSFP-H40G-CU1M |
| | Eth1/2 | 40GbE | Cisco Nexus 9332 Switch B | Eth1/2 | QSFP-H40G-CU1M |
| | Eth1/17 | 40GbE | Cisco UCS Fabric Interconnect A | Eth1/17 | QSFP-H40G-CU1M |
| | Eth1/18 | 40GbE | Cisco UCS Fabric Interconnect B | Eth1/17 | QSFP-H40G-CU1M |
| | Eth1/23 | 40GbE | Top of Rack (Upstream Network) | Any | QSFP+ 4SFP10G |
| | MGMT0 | 1GbE | Top of Rack (Management) | Any | 1G RJ45 |
| Cisco Nexus 9332 Switch B | Eth1/1 | 40GbE | Cisco Nexus 9332 Switch A | Eth1/1 | QSFP-H40G-CU1M |
| | Eth1/2 | 40GbE | Cisco Nexus 9332 Switch A | Eth1/2 | QSFP-H40G-CU1M |
| | Eth1/17 | 40GbE | Cisco UCS Fabric Interconnect A | Eth1/18 | QSFP-H40G-CU1M |
| | Eth1/18 | 40GbE | Cisco UCS Fabric Interconnect B | Eth1/18 | QSFP-H40G-CU1M |
| | Eth1/23 | 40GbE | Top of Rack (Upstream Network) | Any | QSFP+ 4SFP10G |
| | MGMT0 | 1GbE | Top of Rack (Management) | Any | 1G RJ45 |
| Cisco UCS 6332 Fabric Interconnect A | Eth1/1 | 40GbE | S3260 Chassis 1 - SIOC 1 (right) | port 1 | QSFP-H40G-CU3M |
| | Eth1/2 | 40GbE | S3260 Chassis 1 - SIOC 2 (left) | port 1 | QSFP-H40G-CU3M |
| | Eth1/3 | 40GbE | S3260 Chassis 2 - SIOC 1 (right) | port 1 | QSFP-H40G-CU3M |
| | Eth1/4 | 40GbE | S3260 Chassis 2 - SIOC 2 (left) | port 1 | QSFP-H40G-CU3M |
| | Eth1/5 | 40GbE | S3260 Chassis 3 - SIOC 1 (right) | port 1 | QSFP-H40G-CU3M |
| | Eth1/6 | 40GbE | S3260 Chassis 3 - SIOC 2 (left) | port 1 | QSFP-H40G-CU3M |
| | Eth1/7 | 40GbE | S3260 Chassis 4 - SIOC 1 (right) | port 1 | QSFP-H40G-CU3M |
| | Eth1/8 | 40GbE | S3260 Chassis 4 - SIOC 2 (left) | port 1 | QSFP-H40G-CU3M |
| | Eth1/9 | 40GbE | S3260 Chassis 5 - SIOC 1 (right) | port 1 | QSFP-H40G-CU3M |
| | Eth1/10 | 40GbE | S3260 Chassis 5 - SIOC 2 (left) | port 1 | QSFP-H40G-CU3M |
| | Eth1/11 | 40GbE | S3260 Chassis 6 - SIOC 1 (right) | port 1 | QSFP-H40G-CU3M |
| | Eth1/12 | 40GbE | S3260 Chassis 6 - SIOC 2 (left) | port 1 | QSFP-H40G-CU3M |
| | Eth1/17 | 40GbE | C220 M4S - Server1 - VIC1387 | VIC - Port 1 | QSFP-H40G-CU1M |
| | Eth1/18 | 40GbE | C220 M4S - Server2 - VIC1387 | VIC - Port 1 | QSFP-H40G-CU1M |
| | Eth1/19 | 40GbE | C220 M4S - Server3 - VIC1387 | VIC - Port 1 | QSFP-H40G-CU1M |
| | Eth1/20 | 40GbE | C220 M4S - Server4 - VIC1387 | VIC - Port 1 | QSFP-H40G-CU1M |
| | Eth1/25 | 40GbE | Nexus 9332 A | Eth1/25 | QSFP-H40G-CU1M |
| | Eth1/26 | 40GbE | Nexus 9332 B | Eth1/25 | QSFP-H40G-CU1M |
| | MGMT0 | 1GbE | Top of Rack (Management) | Any | 1G RJ45 |
| | L1 | 1GbE | UCS 6332 Fabric Interconnect B | L1 | 1G RJ45 |
| | L2 | 1GbE | UCS 6332 Fabric Interconnect B | L2 | 1G RJ45 |
| Cisco UCS 6332 Fabric Interconnect B | Eth1/1 | 40GbE | S3260 Chassis 1 - SIOC 1 (right) | port 2 | QSFP-H40G-CU3M |
| | Eth1/2 | 40GbE | S3260 Chassis 1 - SIOC 2 (left) | port 2 | QSFP-H40G-CU3M |
| | Eth1/3 | 40GbE | S3260 Chassis 2 - SIOC 1 (right) | port 2 | QSFP-H40G-CU3M |
| | Eth1/4 | 40GbE | S3260 Chassis 2 - SIOC 2 (left) | port 2 | QSFP-H40G-CU3M |
| | Eth1/5 | 40GbE | S3260 Chassis 3 - SIOC 1 (right) | port 2 | QSFP-H40G-CU3M |
| | Eth1/6 | 40GbE | S3260 Chassis 3 - SIOC 2 (left) | port 2 | QSFP-H40G-CU3M |
| | Eth1/7 | 40GbE | S3260 Chassis 4 - SIOC 1 (right) | port 2 | QSFP-H40G-CU3M |
| | Eth1/8 | 40GbE | S3260 Chassis 4 - SIOC 2 (left) | port 2 | QSFP-H40G-CU3M |
| | Eth1/9 | 40GbE | S3260 Chassis 5 - SIOC 1 (right) | port 2 | QSFP-H40G-CU3M |
| | Eth1/10 | 40GbE | S3260 Chassis 5 - SIOC 2 (left) | port 2 | QSFP-H40G-CU3M |
| | Eth1/11 | 40GbE | S3260 Chassis 6 - SIOC 1 (right) | port 2 | QSFP-H40G-CU3M |
| | Eth1/12 | 40GbE | S3260 Chassis 6 - SIOC 2 (left) | port 2 | QSFP-H40G-CU3M |
| | Eth1/17 | 40GbE | C220 M4S - Server1 - VIC1387 | VIC - Port 2 | QSFP-H40G-CU1M |
| | Eth1/18 | 40GbE | C220 M4S - Server2 - VIC1387 | VIC - Port 2 | QSFP-H40G-CU1M |
| | Eth1/19 | 40GbE | C220 M4S - Server3 - VIC1387 | VIC - Port 2 | QSFP-H40G-CU1M |
| | Eth1/20 | 40GbE | C220 M4S - Server4 - VIC1387 | VIC - Port 2 | QSFP-H40G-CU1M |
| | Eth1/25 | 40GbE | Nexus 9332 A | Eth1/26 | QSFP-H40G-CU1M |
| | Eth1/26 | 40GbE | Nexus 9332 B | Eth1/26 | QSFP-H40G-CU1M |
| | MGMT0 | 1GbE | Top of Rack (Management) | Any | 1G RJ45 |
| | L1 | 1GbE | UCS 6332 Fabric Interconnect A | L1 | 1G RJ45 |
| | L2 | 1GbE | UCS 6332 Fabric Interconnect A | L2 | 1G RJ45 |
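
The Fabric Interconnect to S3260 cabling follows a regular pattern: odd FI ports go to SIOC 1 and even FI ports to SIOC 2 of each chassis, with Fabric Interconnect A landing on SIOC port 1 and Fabric Interconnect B on SIOC port 2. The following minimal sketch regenerates that part of the cabling plan so it can be compared against labels or a spreadsheet. The function name `s3260_links` is hypothetical and not part of any Cisco tool.

```python
def s3260_links(fabric: str, chassis_count: int = 6):
    """Return (fi_port, chassis, sioc, sioc_port) tuples for one Fabric Interconnect."""
    # Fabric Interconnect A uses SIOC port 1, Fabric Interconnect B uses SIOC port 2.
    sioc_port = {"A": 1, "B": 2}[fabric]
    links = []
    for fi_port in range(1, 2 * chassis_count + 1):
        chassis = (fi_port + 1) // 2           # ports 1-2 -> chassis 1, 3-4 -> chassis 2, ...
        sioc = 1 if fi_port % 2 == 1 else 2    # odd port -> SIOC 1, even port -> SIOC 2
        links.append((f"Eth1/{fi_port}", chassis, sioc, sioc_port))
    return links


for fabric in ("A", "B"):
    for fi_port, chassis, sioc, port in s3260_links(fabric):
        print(f"FI-{fabric} {fi_port:<7} -> S3260 Chassis {chassis} - SIOC {sioc} port {port}")
```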

Figure 11 Network Layout of the Solution

Fabric Configuration

This section provides the details for configuring a fully redundant, highly available Cisco UCS 6332 fabric configuration.

· Initial setup of the Fabric Interconnect A and B

· Connect to Cisco UCS Manager using the virtual IP address in a web browser

· Launch Cisco UCS Manager

· Enable server and uplink ports

· Start discovery process

· Create pools and policies for service profile template

· Create chassis and storage profiles

· Create Service Profile templates and appropriate Service Profiles

· Associate Service Profiles to servers

Initial Setup of Cisco UCS 6332 Fabric Interconnects

To set up the Cisco UCS 6332 Fabric Interconnects A and B, complete the following steps:

Configure Fabric Interconnect A

1. Connect to the console port on the first Cisco UCS 6332 Fabric Interconnect.

2. At the prompt to enter the configuration method, enter console to continue.

3. If asked to either perform a new setup or restore from backup, enter setup to continue.

4. Enter y to continue to set up a new Fabric Interconnect.

5. When asked whether to enforce strong passwords, enter n (or y if your security policy requires strong passwords).

6. Enter the password for the admin user.

7. Enter the same password again to confirm the password for the admin user.

8. When asked if this fabric interconnect is part of a cluster, answer y to continue.

9. Enter A for the switch fabric.

10. Enter the cluster name UCS-FI-6332 for the system name.

11. Enter the Mgmt0 IPv4 address.

12. Enter the Mgmt0 IPv4 netmask.

13. Enter the IPv4 address of the default gateway.

14. Enter the cluster IPv4 address.

15. To configure DNS, answer y.

16. Enter the DNS IPv4 address.

17. Answer y to set up the default domain name.

18. Enter the default domain name.

19. Review the settings that were printed to the console, and if they are correct, answer yes to save the configuration.

20. Wait for the login prompt to make sure the configuration has been saved.

Example Setup for Fabric Interconnect A

---- Basic System Configuration Dialog ----

This setup utility will guide you through the basic configuration of

the system. Only minimal configuration including IP connectivity to

the Fabric interconnect and its clustering mode is performed through these steps.

Type Ctrl-C at any time to abort configuration and reboot system.

To back track or make modifications to already entered values,

complete input till end of section and answer no when prompted

to apply configuration.

Enter the configuration method. (console/gui) ? console

Enter the setup mode; setup newly or restore from backup. (setup/restore) ? setup

You have chosen to setup a new Fabric interconnect. Continue? (y/n): y

Enforce strong password? (y/n) [y]: n

Enter the password for "admin":

Confirm the password for "admin":

Is this Fabric interconnect part of a cluster(select 'no' for standalone)? (yes/no) [n]: yes

Enter the switch fabric (A/B): A

Enter the system name: UCS-FI-6332

Physical Switch Mgmt0 IP address : 192.168.10.101

Physical Switch Mgmt0 IPv4 netmask : 255.255.255.0

IPv4 address of the default gateway : 192.168.10.1

Cluster IPv4 address : 192.168.10.100

Configure the DNS Server IP address? (yes/no) [n]: no

Configure the default domain name? (yes/no) [n]: no

Join centralized management environment (UCS Central)? (yes/no) [n]: no

Following configurations will be applied:

Switch Fabric=A

System Name= UCS-FI-6332

Enforced Strong Password=no

Physical Switch Mgmt0 IP Address=192.168.10.101

Physical Switch Mgmt0 IP Netmask=255.255.255.0

Default Gateway=192.168.10.1

Ipv6 value=0

Cluster Enabled=yes

Cluster IP Address=192.168.10.100

NOTE: Cluster IP will be configured only after both Fabric Interconnects are initialized.

UCSM will be functional only after peer FI is configured in clustering mode.

Apply and save the configuration (select 'no' if you want to re-enter)? (yes/no): yes

Applying configuration. Please wait.

Configuration file - Ok

Cisco UCS 6300 Series Fabric Interconnect

UCS-FI-6332-A login:

Configure Fabric Interconnect B

1. Connect to the console port on the second Cisco UCS 6332 Fabric Interconnect.

2. When prompted to enter the configuration method, enter console to continue.

3. The installer detects the presence of the partner Fabric Interconnect and adds this fabric interconnect to the cluster. Enter y to continue the installation.

4. Enter the admin password that was configured for the first Fabric Interconnect.

5. Enter the Mgmt0 IPv4 address.

6. Answer yes to save the configuration.

7. Wait for the login prompt to confirm that the configuration has been saved.

Example Setup for Fabric Interconnect B

---- Basic System Configuration Dialog ----

This setup utility will guide you through the basic configuration of

the system. Only minimal configuration including IP connectivity to

the Fabric interconnect and its clustering mode is performed through these steps.

Type Ctrl-C at any time to abort configuration and reboot system.

To back track or make modifications to already entered values,

complete input till end of section and answer no when prompted

to apply configuration.

Enter the configuration method. (console/gui) ? console

Installer has detected the presence of a peer Fabric interconnect. This Fabric interconnect will be added to the cluster. Continue (y/n) ? y

Enter the admin password of the peer Fabric interconnect:

Connecting to peer Fabric interconnect... done

Retrieving config from peer Fabric interconnect... done

Peer Fabric interconnect Mgmt0 IPv4 Address: 192.168.10.101

Peer Fabric interconnect Mgmt0 IPv4 Netmask: 255.255.255.0

Cluster IPv4 address : 192.168.10.100

Peer FI is IPv4 Cluster enabled. Please Provide Local Fabric Interconnect Mgmt0 IPv4 Address

Physical Switch Mgmt0 IP address : 192.168.10.102

Apply and save the configuration (select 'no' if you want to re-enter)? (yes/no): yes

Applying configuration. Please wait.

Configuration file - Ok

Cisco UCS 6300 Series Fabric Interconnect

UCS-FI-6332-B login:
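
Before typing addresses into the setup dialog, it can help to check that the two Mgmt0 addresses and the cluster address are distinct and sit in the same subnet as the default gateway. The sketch below does this with the example addresses from the two console sessions above, using only the Python standard library. It is an offline plausibility check, not something UCS Manager requires.

```python
import ipaddress

# Values taken from the example console sessions above.
netmask = "255.255.255.0"
gateway = ipaddress.ip_address("192.168.10.1")
addresses = {
    "FI-A Mgmt0": ipaddress.ip_address("192.168.10.101"),
    "FI-B Mgmt0": ipaddress.ip_address("192.168.10.102"),
    "Cluster VIP": ipaddress.ip_address("192.168.10.100"),
}

# Derive the management subnet from the gateway address and the netmask.
network = ipaddress.ip_network(f"{gateway}/{netmask}", strict=False)

problems = []
if len(set(addresses.values())) != len(addresses):
    problems.append("duplicate address in plan")
for name, addr in addresses.items():
    if addr not in network:
        problems.append(f"{name} ({addr}) is outside {network}")
    if addr == gateway:
        problems.append(f"{name} collides with the default gateway")

print(network, "OK" if not problems else problems)
```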

Logging into Cisco UCS Manager

To log in to Cisco UCS Manager, complete the following steps:

1. Open a Web browser and navigate to the Cisco UCS 6332 Fabric Interconnect cluster address.

2. Click the Launch link to download the Cisco UCS Manager software.

3. If prompted to accept security certificates, accept as necessary.

4. Click Launch UCS Manager HTML.

5. When prompted, enter admin for the username and enter the administrative password.

6. Click Login to log in to the Cisco UCS Manager.

Configure NTP Server

This section describes how to configure the NTP server for the Cisco UCS environment.

1. Select the Admin tab on the left side.

2. Select Time Zone Management.

3. Select Time Zone.

4. Under Properties select your time zone.

5. Select Add NTP Server.

6. Enter the IP address of the NTP server.

7. Select OK.

Figure 12 Adding an NTP Server - Summary

Initial Base Setup of the Environment

Configure Global Policies

This section describes how to configure the global policies.

1. Select the Equipment tab on the left side of the window.

2. Select Policies on the right side.

3. Select Global Policies.

4. Under Chassis/FEX Discovery Policy select Platform Max under Action.

5. Select 40G under Backplane Speed Preference.

6. Under Rack Server Discovery Policy select Immediate under Action.

7. Under Rack Management Connection Policy select Auto Acknowledged under Action.

8. Under Power Policy select Redundancy N+1.

9. Under Global Power Allocation Policy select Policy Driven.

10. Select Save Changes.

Figure 13 Configuration of Global Policies

Enable Fabric Interconnect A Ports for Server

To enable server ports, complete the following steps:

1. Select the Equipment tab on the left side.

2. Select Equipment > Fabric Interconnects > Fabric Interconnect A (subordinate) > Fixed Module.

3. Click Ethernet Ports section.

4. Select Ports 1-12, right-click and then select Configure as Server Port and click Yes and then OK.

5. Select Ports 17-20 for C220 M4S server, right-click and then select “Configure as Server Port” and click Yes and then OK.

6. Repeat the same steps for Fabric Interconnect B.

Figure 14 Configuration of Server Ports

Enable Fabric Interconnect A Ports for Uplinks

To enable uplink ports, complete the following steps:

1. Select the Equipment tab on the left side.

2. Select Equipment > Fabric Interconnects > Fabric Interconnect A (subordinate) > Fixed Module.

3. Click Ethernet Ports section.

4. Select Ports 25-26, right-click and then select Configure as Uplink Port.

5. Click Yes and then OK.

6. Repeat the same steps for Fabric Interconnect B.
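
The port roles chosen in the two procedures above (ports 1-12 and 17-20 as server ports, ports 25-26 as uplink ports) can be written down as data and checked for overlaps before they are clicked through in the GUI. The sketch below is illustrative only. The helper name `expand` is hypothetical, and the limit of 32 ports is an assumption about the fixed module of the Cisco UCS 6332.

```python
def expand(spec: str) -> set[int]:
    """Expand a port spec such as "1-12,17-20" into a set of port numbers."""
    ports = set()
    for part in spec.split(","):
        first, _, last = part.partition("-")
        ports.update(range(int(first), int(last or first) + 1))
    return ports


server_ports = expand("1-12,17-20")   # S3260 SIOCs and C220 M4S servers
uplink_ports = expand("25-26")        # links to the Nexus 9332PQ switches

# A port can have only one role, and it must exist on the fixed module.
assert not server_ports & uplink_ports, "port configured with two roles"
assert max(server_ports | uplink_ports) <= 32   # assumed port count of the 6332

print(f"server ports: {len(server_ports)}, uplink ports: {len(uplink_ports)}")
```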

Label Each Server for Identification

To label each server (provides better identification), complete the following steps:

1. Select the Equipment tab on the left side.

2. Select Chassis > Chassis 1 > Server 1.

3. In the Properties section on the right go to User Label and add Storage-Node1 to the field.

4. Repeat the previous steps for Server 2 of Chassis 1 and for all other servers of Chassis 2 - 6 according to Table 4.

5. Go to Equipment > Rack-Mounts > Servers and repeat the step for all servers according to Table 4.

| Server | Name |
|---|---|
| Chassis 1 / Server 1 | Storage-Node1 |
| Chassis 1 / Server 2 | Storage-Node2 |
| Chassis 2 / Server 1 | Storage-Node3 |
| Chassis 2 / Server 2 | Storage-Node4 |
| Chassis 3 / Server 1 | Storage-Node5 |
| Chassis 3 / Server 2 | Storage-Node6 |
| Chassis 4 / Server 1 | Storage-Node7 |
| Chassis 4 / Server 2 | Storage-Node8 |
| Chassis 5 / Server 1 | Storage-Node9 |
| Chassis 5 / Server 2 | Storage-Node10 |
| Chassis 6 / Server 1 | Storage-Node11 |
| Chassis 6 / Server 2 | Storage-Node12 |
| Rack-Mount / Server 1 | Supervisor |
| Rack-Mount / Server 2 | Connector-Node1 |
| Rack-Mount / Server 3 | Connector-Node2 |
| Rack-Mount / Server 4 | Connector-Node3 |

Figure 15 Labeling of Rack Servers

Create KVM IP Pool

To create a KVM IP Pool, complete the following steps:

1. Select the LAN tab on the left side.

2. Go to LAN > Pools > root > IP Pools > IP Pool ext-mgmt.

3. Right-click Create Block of IPv4 Addresses.

4. Enter an IP Address in the From field.

5. Enter Size 20.

6. Enter your Subnet Mask.

7. Fill in your Default Gateway.

8. Enter your Primary DNS and Secondary DNS if needed.

9. Click OK.
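
A block of 20 KVM addresses leaves some headroom over the 16 servers in this test bed (12 storage nodes and 4 rack servers). The sketch below computes the last address of such a block and checks that the block stays inside the management subnet. The starting address 192.168.10.150 is a hypothetical example, not a value from this design, and `ip_block` is an illustrative helper name.

```python
import ipaddress


def ip_block(first: str, size: int, netmask: str):
    """Return (first, last) address of a block and verify it fits the subnet."""
    start = ipaddress.ip_address(first)
    last = start + (size - 1)
    subnet = ipaddress.ip_network(f"{first}/{netmask}", strict=False)
    if last not in subnet or last == subnet.broadcast_address:
        raise ValueError(f"block of {size} starting at {first} does not fit in {subnet}")
    return start, last


# Hypothetical starting address; Size 20 as entered in the dialog.
start, last = ip_block("192.168.10.150", 20, "255.255.255.0")
print(f"KVM pool: {start} - {last}")
```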

Figure 16 Create Block of IPv4 Addresses

Create MAC Pool

To create a MAC Pool, complete the following steps:

1. Select the LAN tab on the left side.

2. Go to LAN > Pools > root > Mac Pools and right-click Create MAC Pool.

3. Type in UCS-MAC-Pools for Name.

4. (Optional) Enter a Description of the MAC Pool.

5. Set Assignment Order as Sequential.

Figure 17 Create MAC Pool

6. Click Next.

7. Click Add.

8. Specify a starting MAC address.

9. Specify a size of the MAC address pool, which is sufficient to support the available server resources, for example, 100.

Figure 18 Create a Block of MAC Addresses

10. Click OK.

11. Click Finish.
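
To see which addresses a MAC block of a given size covers, the range can be computed as below. The starting address 00:25:B5:00:00:00 is an assumption chosen for illustration (00:25:B5 is the prefix Cisco UCS Manager commonly proposes), and `mac_block` is a hypothetical helper name.

```python
def mac_block(first: str, size: int):
    """Return the first and last MAC address of a sequential block."""
    value = int(first.replace(":", ""), 16)
    last = value + size - 1
    if last >> 48:
        raise ValueError("block runs past the 48-bit MAC address space")

    def fmt(v: int) -> str:
        raw = f"{v:012X}"
        return ":".join(raw[i:i + 2] for i in range(0, 12, 2))

    return fmt(value), fmt(last)


# Assumed starting address; size 100 as in the example above.
first_mac, last_mac = mac_block("00:25:B5:00:00:00", 100)
print(f"MAC pool: {first_mac} - {last_mac}")
```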

Create UUID Pool

To create a UUID Pool, complete the following steps:

1. Select the Servers tab on the left side.

2. Go to Servers > Pools > root > UUID Suffix Pools and right-click Create UUID Suffix Pool.

3. Type in UCS-UUID-Pools for Name.

4. (Optional) Enter a Description of the UUID Pool.

5. Set Assignment Order to Sequential and click Next.

Figure 19 Create UUID Suffix Pool

6. Click Add.

7. Specify a starting UUID Suffix.

8. Specify a size of the UUID suffix pool, which is sufficient to support the available server resources, for example, 25.

Figure 20 Create a Block of UUID Suffixes

9. Click OK.

10. Click Finish and then OK.

Create VLANs

As mentioned previously, it is important to separate the network traffic with VLANs for Storage-Management traffic, Storage-Cluster traffic, External traffic, and Client traffic (optional). Table 5 lists the configured VLANs.

Note: Client traffic is optional. We used Client traffic to validate the functionality of the NFS and S3 connectors.

| VLAN | Name | Function |
|---|---|---|
| 10 | Storage-Management | Storage Management traffic for Supervisor, Connector & Storage Nodes |
| 20 | Storage-Cluster | Storage Cluster traffic for Supervisor, Connector & Storage Nodes |
| 30 | Client-Network (optional) | Client traffic for Connector & Storage Nodes |
| 79 | External-Network | External Public Network for all UCS Servers |

To configure VLANs in the Cisco UCS Manager GUI, complete the following steps:

1. Select LAN in the left pane in the Cisco UCS Manager GUI.

2. Select LAN > LAN Cloud > VLANs and right-click Create VLANs.

3. Enter Storage-Management for the VLAN Name.

4. Keep Multicast Policy Name as <not set>.

5. Select Common/Global for Public.

6. Enter 10 in the VLAN IDs field.

7. Click OK and then Finish.

Figure 21 Create a VLAN

8. Repeat the steps for the remaining VLANs: Storage-Cluster, Client-Network, and External-Network.
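
The VLAN plan can also be kept as data and checked before it is entered in the GUI. The sketch below records the four VLANs from the table above and verifies that IDs and names are unique and that every ID lies in the 802.1Q range 1-4094. The class and function names are illustrative, and the check does not account for any VLAN IDs that the platform itself may reserve.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vlan:
    vlan_id: int
    name: str
    optional: bool = False


VLANS = [
    Vlan(10, "Storage-Management"),
    Vlan(20, "Storage-Cluster"),
    Vlan(30, "Client-Network", optional=True),
    Vlan(79, "External-Network"),
]


def validate(vlans):
    """Return a list of problems; an empty list means the plan is consistent."""
    problems = []
    ids = [v.vlan_id for v in vlans]
    names = [v.name for v in vlans]
    if len(set(ids)) != len(ids):
        problems.append("duplicate VLAN ID")
    if len(set(names)) != len(names):
        problems.append("duplicate VLAN name")
    problems += [
        f"VLAN {v.vlan_id} is outside 1-4094"
        for v in vlans
        if not 1 <= v.vlan_id <= 4094
    ]
    return problems


print(validate(VLANS) or "VLAN plan OK")
```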

Enable CDP

To create a Network Control Policy that enables CDP, complete the following steps:

1. Select the LAN tab in the left pane of the Cisco UCS Manager GUI.

2. Go to LAN > Policies > root > Network Control Policies and right-click Create Network-Control Policy.

3. Type in Enable-CDP in the Name field.

4. (Optional) Enter a description in the Description field.

5. Click Enabled under CDP.

6. Click All Hosts VLANs under MAC Register Mode.

7. Leave everything else untouched and click OK.

8. Click OK.

Figure 22 Create a Network Control Policy

QoS System Class

To create a Quality of Service System Class, complete the following steps:

1. Select the LAN tab in the left pane of the Cisco UCS Manager GUI.

2. Go to LAN > LAN Cloud > QoS System Class.

3. Enable the Platinum and Gold priorities, set their Weight to 10 and 9 respectively, and set the MTU to 9216 for both. Set the Best Effort MTU to 9216 as well.

4. Set Fibre Channel Weight to None.

5. Click Save Changes and then OK.
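
To get a feel for what the weights mean, the relative share of each enabled class can be estimated as its weight divided by the sum of all enabled weights. The sketch below does that for Platinum (10) and Gold (9). The Best Effort weight of 5 is an assumption, since this section does not state it, and the percentages shown by Cisco UCS Manager may be rounded differently.

```python
# Weights of the enabled classes. Fibre Channel is set to None in the step
# above, so it is left out. The best-effort weight is an assumed value.
weights = {"platinum": 10, "gold": 9, "best-effort": 5}

total = sum(weights.values())
share = {name: 100 * weight / total for name, weight in weights.items()}

for name, pct in share.items():
    print(f"{name:<12} weight {weights[name]:>2} -> {pct:.1f}% of bandwidth under contention")
```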

Figure 23 QoS System Class

QoS Policy Setup

Based on the previous QoS System Class, set up a QoS Policy with the following configuration:

1. Select the LAN tab in the left pane of the Cisco UCS Manager GUI.

2. Go to LAN > Policies > root > QoS Policies and right-click Create QoS Policy.

3. Type in Storage-Mgmt in the Name field.

4. Set Priority as platinum and leave everything else unchanged.

5. Click OK and then OK.

Figure 24 QoS Policy Setup

6. Repeat the steps to create a QoS Policy for Storage-Cluster and set Priority as Gold.

vNIC Template Setup

Building on the VLANs created in the previous section, the next step is to create the appropriate vNIC templates. Scality storage requires four different vNICs, depending on the role of the server. Table 6 provides an overview of the configuration.

| Name | vNIC Name | Fabric Interconnect | Failover | VLAN | MTU Size | MAC Pool | Network Control Policy |
|---|---|---|---|---|---|---|---|
| Storage-Mgmt | Storage-Mgmt | A | Yes | Storage-Mgmt - 10 | 9000 | UCS-MAC-Pools | Enable-CDP |
| Storage-Cluster | Storage-Cluster | B | Yes | Storage-Cluster - 20 | 9000 | UCS-MAC-Pools | Enable-CDP |
| Client-Network | Client-Network | A | Yes | Client-Network - 30 | 1500 | UCS-MAC-Pools | Enable-CDP |
| External-Mgmt | External-Mgmt | A | Yes | External-Mgmt - 79 | 1500 | UCS-MAC-Pools | Enable-CDP |

To create the appropriate vNICs, complete the following steps:

1. Select the LAN tab in the left pane of the Cisco UCS Manager GUI.

2. Go to LAN > Policies > root > vNIC Templates and right-click Create vNIC Template.

3. Type in Storage-Mgmt in the Name field.

4. (Optional) Enter a description in the Description field.

5. Click Fabric A as Fabric ID and enable failover.

6. Select default as VLANs and click Native VLAN.

7. Select UCS-MAC-Pools as MAC Pool.

8. Select Storage-Mgmt as QoS Policy.

9. Select Enable-CDP as Network Control Policy.

10. Click OK and then OK.

Figure 25 Setup the vNIC Template for Storage-Mgmt vNIC

11. Repeat the steps for the vNICs Storage-Cluster, Client-Network, and External-Mgmt. Make sure you select the correct Fabric ID, VLAN, and MTU size according to Table 6.

Ethernet Adapter Policy Setup

By default, Cisco UCS provides a set of Ethernet adapter policies. These policies include the recommended settings for each supported server operating system. Operating systems are sensitive to the settings in these policies.

Note: Cisco UCS best practice is to enable jumbo frames (MTU 9000) for any storage-facing networks (Storage-Mgmt and Storage-Cluster). Enabling jumbo frames on specific interfaces guarantees 39 Gb/s bandwidth on the Cisco UCS fabric. For jumbo frames (MTU 9000), you can use the default Ethernet Adapter Policy predefined as Linux.

If the customer deployment scenario supports only MTU 1500, you can still modify the Tx and Rx queue resources in the Ethernet Adapter Policy to guarantee 39 Gb/s bandwidth.
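
One way to see why the MTU matters is to estimate the theoretical TCP goodput on a 40 Gbit/s link from protocol overhead alone. The sketch below assumes untagged Ethernet frames and IPv4 and TCP headers without options. It deliberately ignores CPU and interrupt load, which is the part that the Tx and Rx queue settings of the adapter policy address, so the results are upper bounds and not measurements from this test bed.

```python
# Per-frame overhead on the wire in bytes: Ethernet header, FCS,
# preamble + start-of-frame delimiter, and inter-frame gap.
ETH_OVERHEAD = 14 + 4 + 8 + 12
# IPv4 and TCP headers without options; these count against the MTU.
IP_TCP_HEADERS = 20 + 20


def goodput_gbps(mtu: int, line_rate_gbps: float = 40.0) -> float:
    """Upper bound on TCP payload throughput for a given MTU."""
    payload = mtu - IP_TCP_HEADERS
    on_wire = mtu + ETH_OVERHEAD
    return line_rate_gbps * payload / on_wire


for mtu in (1500, 9000):
    print(f"MTU {mtu}: about {goodput_gbps(mtu):.2f} Gbit/s of TCP payload at best")
```

With these assumptions, larger frames spend a smaller fraction of the link on headers, which is consistent with the recommendation to use MTU 9000 on the storage-facing networks.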

To create a specific adapter policy for Red Hat Enterprise Linux, complete the following steps:

1. Select the Servers tab in the left pane of the Cisco UCS Manager GUI.

2. Go to Servers > Policies > root > Adapter Policies and right-click Create Ethernet Adapter Policy.

3. Type in RHEL in the Name field.

4. (Optional) Enter a description in the Description field.

5. Under Resources type in the following values:

a. Transmit Queues: 8

b. Ring Size: 4096

c. Receive Queues: 8

d. Ring Size: 4096

e. Completion Queues: 16

f. Interrupts: 32

6. Under Options enable Receive Side Scaling (RSS).

7. Click OK and then OK.
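
The resource values above are consistent with a commonly cited rule of thumb for VIC adapter policies: completion queues equal transmit queues plus receive queues, and the interrupt count is the completion queue count plus 2, rounded up to the next power of two. The sketch below applies that rule. Treat the rule itself as an assumption to verify against current Cisco tuning guidance for your adapter and driver; the function names are illustrative.

```python
def completion_queues(tx_queues: int, rx_queues: int) -> int:
    """One completion queue per transmit and per receive queue."""
    return tx_queues + rx_queues


def interrupt_count(cq: int) -> int:
    """Round (cq + 2) up to the next power of two."""
    needed = cq + 2
    power = 1
    while power < needed:
        power *= 2
    return power


tx, rx = 8, 8                       # values entered under Resources
cq = completion_queues(tx, rx)
irq = interrupt_count(cq)
print(f"TX={tx} RX={rx} -> completion queues={cq}, interrupts={irq}")
```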

Figure 26 Adapter Policy for RHEL

Boot Policy Setup

To create a Boot Policy, complete the following steps:

1. Select the Servers tab in the left pane.

2. Go to Servers > Policies > root > Boot Policies and right-click Create Boot Policy.

3. Type in Local-OS-Boot in the Name field.

4. (Optional) Enter a description in the Description field.

Figure 27 Create Boot Policy

5. Click Local Devices > Add Local CD/DVD and click OK.

6. Click Local Devices > Add Local LUN and Set Type as “Any” and click OK.

7. Click OK.

Create LAN Connectivity Policy Setup

To create a LAN Connectivity Policy, complete the following steps:

1. Select the LAN tab in the left pane.

2. Go to LAN > Policies > root > LAN Connectivity Policies and right-click Create LAN Connectivity Policy for Storage Servers.

3. Type in Storage-Node in the Name field.

4. (Optional) Enter a description in the Description field.

5. Click Add.

Figure 28 LAN Connectivity Policy

6. Type in Storage-Mgmt in the name field.

7. Click “Use vNIC Template.”

8. Select vNIC template for “Storage-Mgmt” from drop-down list.

9. If you are using jumbo frames (MTU 9000), select the default Adapter Policy Linux from the drop-down list.

Note: If you are using MTU 1500, select the RHEL Adapter Policy created earlier from the drop-down list.

Figure 29 LAN Connectivity Policy

10. Repeat the vNIC creation steps for Storage-Cluster, Client-Network, and External-Network.

Create Maintenance Policy Setup

To set up a Maintenance Policy, complete the following steps:

1. Select the Servers tab in the left pane.

2. Go to Servers > Policies > root > Maintenance Policies and right-click Create Maintenance Policy.

3. Type in Server-Maint in the Name field.

4. (Optional) Enter a description in the Description field.

5. Click User Ack under Reboot Policy.

6. Click OK and then OK.

Figure: Create Maintenance Policy

Create Power Control Policy Setup

To create a Power Control Policy, complete the following steps:

1. Select the Servers tab in the left pane.

2. Go to Servers > Policies > root > Power Control Policies and right-click Create Power Control Policy.

3. Type in No-Power-Cap in the Name field.

4. (Optional) Enter a description in the Description field.

5. Click No Cap and click OK.

Figure: Create Power Control Policy

Creating Chassis Profile

The Chassis Profile is required to assign specific disks to a particular server node in a Cisco UCS S3260 Storage Server as well as upgrading to a specific chassis firmware package.

Create Chassis Firmware Package

To create a Chassis Firmware Package, complete the following steps:

1. Select the Chassis tab in the left pane of the Cisco UCS Manager GUI.

2. Go to Chassis > Policies > root > Chassis Firmware Package and right-click Create Chassis Firmware Package.

3. Type in UCS-S3260-FW in the Name field.

4. (Optional) Enter a description in the Description field.

5. Select 3.1(2b)C from the drop-down menu of Chassis Package.

6. Select OK and then OK.

Figure: Create Chassis Firmware Package

Create Chassis Maintenance Policy

To create a Chassis Maintenance Policy, complete the following steps:

1. Select the Chassis tab in the left pane of the Cisco UCS Manager GUI.

2. Go to Chassis > Policies > root > Chassis Maintenance Policies and right-click Create Chassis Maintenance Policy.

3. Type in UCS-S3260-Main in the Name field.

4. (Optional) Enter a description in the Description field.

5. Click OK and then OK.

Figure: Create Chassis Maintenance Policy

Create Disk Zoning Policy

To create a Disk Zoning Policy, complete the following steps:

1. Select the Chassis tab in the left pane of the Cisco UCS Manager GUI.

2. Go to Chassis > Policies > root > Disk Zoning Policies and right-click Create Disk Zoning Policy.

3. Type in UCS-S3260-Zoning in the Name field.

4. (Optional) Enter a description in the Description field.

Figure: Create Disk Zoning Policy

5. Click Add.

6. Select Dedicated under Ownership.

7. Select Server 1 and select Controller 1.

8. Add Slot Range 1-28 for the top node of the Cisco UCS S3260 Storage Server and click OK.

Figure: Add Slots to Top Node of Cisco UCS S3260

9. Click Add.

10. Select Dedicated under Ownership.

11. Select Server 2 and select Controller 1.

12. Add Slot Range 29-56 for the bottom node of the Cisco UCS S3260 Storage Server and click OK.

Figure: Add Slots to Bottom Node of Cisco UCS S3260
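
The zoning used here splits the 56 slots evenly: slots 1-28 go to Server 1 and slots 29-56 to Server 2, which matches the 28 zoned drives per node in the hardware table (26 data HDDs plus 2 metadata SSDs). The sketch below checks that the two ranges do not overlap and that no slot is left unassigned. The data layout and the function name `check_zoning` are illustrative and unrelated to any Cisco UCS Manager API.

```python
TOTAL_SLOTS = 56
DRIVES_PER_NODE = 26 + 2   # 26 data HDDs + 2 metadata SSDs per server node

# Dedicated ownership: each slot range belongs to exactly one server.
zoning = {
    ("Server 1", "Controller 1"): range(1, 29),    # slots 1-28, top node
    ("Server 2", "Controller 1"): range(29, 57),   # slots 29-56, bottom node
}


def check_zoning(zones, total_slots):
    """Raise on overlapping slots and return the list of unassigned slots."""
    seen = set()
    for owner, slots in zones.items():
        overlap = seen & set(slots)
        if overlap:
            raise ValueError(f"{owner} reuses slots {sorted(overlap)}")
        seen |= set(slots)
    return sorted(set(range(1, total_slots + 1)) - seen)


unassigned = check_zoning(zoning, TOTAL_SLOTS)
for (server, controller), slots in zoning.items():
    print(f"{server} / {controller}: slots {slots[0]}-{slots[-1]} ({len(slots)} drives)")
print("unassigned slots:", unassigned or "none")
```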

Create Chassis Profile Template

To create a Chassis Profile Template, complete the following steps:

1. Select the Chassis tab in the left pane of the Cisco UCS Manager GUI.

2. Go to Chassis > Chassis Profile Templates and right-click Create Chassis Profile Template.

3. Type in S3260-Chassis in the Name field.

4. Under Type, select Updating Template.

5. (Optional) Enter a description in the Description field.

Figure: Create Chassis Profile Template

6. Select Next.

7. Under the radio button Chassis Maintenance Policy, select your previously created Chassis Maintenance Policy.

Figure: Chassis Profile Template - Chassis Maintenance Policy

8. Select Next.

9. Select the + button and select under Chassis Firmware Package your previously created Chassis Firmware Package Policy.

Figure: Chassis Profile Template - Chassis Firmware Package

10. Select Next.

11. Under Disk Zoning Policy select your previously created Disk Zoning Policy.

Figure: Chassis Profile Template - Disk Zoning Policy

12. Click Finish and then click OK.

Create Chassis Profile from Template

To create the Chassis Profiles from the previously created Chassis Profile Template, complete the following steps:

1. Select the Chassis tab in the left pane of the Cisco UCS Manager GUI.

2. Go to Chassis > Chassis Profiles and right-click Create Chassis Profiles from Template.

3. Type in S3260-Chassis in the Name field.

4. Leave the Name Suffix Starting Number untouched.

5. Enter 6 for the Number of Instances, one for each connected Cisco UCS S3260 Storage Server.

6. Choose your previously created Chassis Profile Template.

7. Click OK and then click OK.

8. Create Chassis Profiles from Template.

Associate Chassis Profile

To associate all previously created Chassis Profiles, complete the following steps:

1. Select the Chassis tab in the left pane of the Cisco UCS Manager GUI.

2. Go to Chassis > Chassis Profiles and select S3260-Chassis.

3. Right-click Change Chassis Profile Association.

4. Under Chassis Assignment, choose Select existing Chassis.

5. Under Available Chassis, select ID 1.

6. Click OK and then click OK again.

7. Repeat the steps for the other five Chassis Profiles by selecting the IDs 2 – 6.

8. Associate Chassis Profile.
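The association steps above pair each generated Chassis Profile with one chassis ID. The following is a minimal planning sketch in plain Python, not a call into UCS Manager. It assumes that the generated profile names are the S3260-Chassis prefix followed directly by the suffix number, that the untouched Name Suffix Starting Number is 1, and that profile N is associated with chassis ID N. The helper name `chassis_profile_plan` is introduced here for illustration only.

```python
def chassis_profile_plan(prefix, instances, start=1):
    """Pair generated profile names with chassis IDs, one profile per chassis.

    Assumption: the name is the prefix followed directly by the suffix number.
    """
    return [(f"{prefix}{start + i}", i + 1) for i in range(instances)]


# Six instances, as entered under Number of Instances in the previous section.
plan = chassis_profile_plan("S3260-Chassis", instances=6)

for profile_name, chassis_id in plan:
    print(f"{profile_name} -> chassis ID {chassis_id}")
```

A list like this can serve as a checklist while repeating the association for chassis IDs 2 – 6.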

Creating Storage Profiles

Setting Disks for Cisco UCS C220 M4 Rack-Mount Servers to Unconfigured-Good

Before the disks in the Rack-Mount Servers can be used in storage profiles, they must be converted from JBOD to Unconfigured-Good. To convert the disks, complete the following steps:

1. Select the Equipment tab in the left pane of the Cisco UCS Manager GUI.

2. Go to Equipment > Rack-Mounts > Servers > Server 1 > Disks.

3. Select both disks and right-click Set JBOD to Unconfigured-Good.

4. Repeat the steps for Servers 2-4.

5. Set Disks for C220 M4 Servers to Unconfigured-Good.

Create Storage Profile for Cisco UCS S3260 Storage Server

To create the Storage Profile for Boot LUNs for the top node of the Cisco UCS S3260 Storage Server, complete the following steps:

1. Select Storage in the left pane of the Cisco UCS Manager GUI.

2. Go to Storage > Storage Profiles and right-click Create Storage Profile.

3. Type in S3260-OS-Node1 in the Name field.

4. (Optional) Enter a description in the Description field.

5. Click Add.

6. Type in OS-BootLUN in the Name field.

7. Configure as follows:

a. Create Local LUN

b. Size (GB) = 1

c. Fractional Size (MB) = 0

d. Auto Deploy

e. Select Expand To Available

f. Click Create Disk Group Policy

g. Create Local LUN

h. Type in RAID1-DG in the Name field.

i. (Optional) Enter a description in the Description field.

j. RAID Level = RAID 1 Mirrored.

k. Select Disk Group Configuration (Manual).

l. Click Add.

m. Type in 201 for Slot Number.

n. Click OK and then again Add.

o. Type in 202 for Slot Number.

p. Leave everything else untouched.

q. Click OK and then OK.

r. Select your previously created Disk Group Policy for the Boot SSDs by selecting the radio button under Select Disk Group Configuration.

s. Select Disk Group Configuration.

t. Click OK, click OK again, and then click OK.

u. Storage Profile for the top node of Cisco UCS S3260 Storage Server.

8. To create the Storage Profile for the OS boot LUN for the bottom node (Node2) of the Cisco UCS S3260 Storage Server, repeat the same steps using disk slots 203 and 204.
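Each node's boot LUN is a RAID 1 mirror built from its own pair of disk slots: 201 and 202 for the top node, 203 and 204 for the bottom node. The sketch below records those settings in plain Python and checks that each mirror has exactly two disks and that no slot is claimed twice. The `BootLun` type, the `find_problems` helper, and the profile name S3260-OS-Node2 are assumptions made for illustration, because this guide does not name the bottom-node profile.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BootLun:
    """Illustrative record of one storage profile's boot LUN settings."""
    storage_profile: str
    slots: tuple
    lun_name: str = "OS-BootLUN"
    raid_level: str = "RAID 1 Mirrored"
    size_gb: int = 1                  # starting size; grows via Expand To Available
    expand_to_available: bool = True


def find_problems(luns):
    """Return readable problems: wrong mirror size or reused disk slots."""
    problems = []
    owner = {}
    for lun in luns:
        if lun.raid_level == "RAID 1 Mirrored" and len(lun.slots) != 2:
            problems.append(
                f"{lun.storage_profile}: expected 2 disks, got {len(lun.slots)}"
            )
        for slot in lun.slots:
            if slot in owner:
                problems.append(
                    f"slot {slot} used by {owner[slot]} and {lun.storage_profile}"
                )
            owner.setdefault(slot, lun.storage_profile)
    return problems


BOOT_LUNS = [
    BootLun(storage_profile="S3260-OS-Node1", slots=(201, 202)),
    # Hypothetical name: the guide only says to repeat the steps for Node2.
    BootLun(storage_profile="S3260-OS-Node2", slots=(203, 204)),
]

print(find_problems(BOOT_LUNS) or "boot LUN plan looks consistent")
```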

Create Storage Profile for Cisco UCS C220 M4S Rack-Mount Servers

To create a Storage Profile for the Cisco UCS C220 M4S, complete the following steps:

1. Select Storage in the left pane of the Cisco UCS Manager GUI.

2. Go to Storage > Storage Profiles and right-click Create Storage Profile.

3. Type in C220-OS-Boot in the Name field.

4. (Optional) Enter a description in the Description field.

5. Click Add.

6. Create Storage Profile for Cisco UCS C220 M4S.

7. Type in Boot in the Name field.

8. Configure as follows:

a. Create Local LUN.

b. Size (GB) = 1

c. Fractional Size (MB) = 0

d. Select Expand To Available.

e. Auto Deploy.

f. Click Create Disk Group Policy.

g. Type in RAID1-DG-C220 in the Name field.

h. (Optional) Enter a description in the Description field.

i. RAID Level = RAID 1 Mirrored.

j. Select Disk Group Configuration (Manual).

k. Click Add.

l. Type in 1 for Slot Number.

m. Click OK and then again Add.

n. Type in 2 for Slot Number.

o. Leave everything else untouched. Click OK and then OK.

p. Create Disk Group Policy for C220 M4S.

9. Select your previously created Disk Group Policy for the C220 M4S Boot Disks with the radio button under Select Disk Group Configuration.

10. Create Disk Group Configuration for C220 M4S.

11. Click OK and then click OK and click OK again.

Creating a Service Profile Template

Create Service Profile Template for Cisco UCS S3260 Storage Server Top and Bottom Node

To create a Service Profile Template, complete the following steps:

1. Select Servers in the left pane of the Cisco UCS Manager GUI.

2. Go to Servers > Service Profile Templates > root and right-click Create Service Profile Template.

Identify Service Profile Template

1. Type in Scality-Storage-Server-Template in the Name field.

2. In the UUID Assignment section, select the UUID Pool you created earlier.

3. (Optional) Enter a description in the Description field.

4. Identify Service Profile Template.

5. Click Next.

Storage Provisioning

1. Go to the Storage Profile Policy tab and select the previously created Storage Profile S3260-OS-Node1 for the top node of the Cisco UCS S3260 Storage Server.

2. Click Next.

3. Storage Provisioning.

Networking

1. Keep the Dynamic vNIC Connection Policy field at the default.

2. For LAN connectivity, select Use Connectivity Policy.

3. From the LAN Connectivity drop-down list, select the previously created “Storage-Node” policy and click Next.

4. Click Next to continue with SAN Connectivity.

5. Select No vHBA for How would you like to configure SAN Connectivity?

6. Click Next to continue with Zoning.

7. Click Next.

vNIC/vHBA Placement

1. Select Let System Perform Placement from the drop-down menu.

2. In the PCI Order section, sort all the vNICs.

3. Make sure the vNICs are listed in the following order: External-Mgmt > 1, followed by Storage-Mgmt > 2, Storage-Cluster > 3, and Client-Network > 4.

4. Click Next to continue with vMedia Policy.

5. Click Next.
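The vNIC order matters because each position in the PCI order is tied to one network role. As a small illustration in plain Python (the `misplaced_vnics` helper is a name introduced here and does not read anything from UCS Manager), the order shown in the GUI can be compared with the order required above:

```python
# Required PCI order, taken from step 3 above.
EXPECTED_ORDER = {
    "External-Mgmt": 1,
    "Storage-Mgmt": 2,
    "Storage-Cluster": 3,
    "Client-Network": 4,
}


def misplaced_vnics(actual):
    """Return {vnic: (expected, actual)} for each vNIC in the wrong position."""
    return {
        name: (position, actual.get(name))
        for name, position in EXPECTED_ORDER.items()
        if actual.get(name) != position
    }


# Hypothetical order copied from the GUI by hand, with two vNICs swapped.
as_seen_in_gui = {
    "External-Mgmt": 1,
    "Storage-Mgmt": 2,
    "Client-Network": 3,
    "Storage-Cluster": 4,
}

print(misplaced_vnics(as_seen_in_gui))
```

An empty result means that the placement matches the required order.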

Server Boot Order

1. Select the Boot Policy “local-OS-Boot” you created before under Boot Policy.

2. Server Boot Order.

3. Click Next.

Maintenance Policy

1. From the Maintenance Policy drop-down list, select the Maintenance Policy you previously created.

2. Click Next.

3. For Server Assignment, keep the default settings.

4. Click Next.

Operational Policies

1. Under Operational Policies, for the BIOS Configuration, select the previously created BIOS Policy “S3260-BIOS”. Under Power Control Policy Configuration, select the previously created Power Policy “No-Power-Cap”.

2. Click Finish and then click OK.

3. Repeat the steps for the bottom node of the Cisco UCS S3260 Storage Server, but change the following:

a. Choose the Storage Profile you previously created for the bottom node.
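The bottom-node template is the top-node template with a different Storage Profile. A minimal sketch of that relationship in plain Python follows. The `TemplateSettings` type and the `changed_fields` helper are illustrative, and the bottom-node template and Storage Profile names are hypothetical placeholders, because this guide does not name them.

```python
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class TemplateSettings:
    """Choices made in the Create Service Profile Template wizard."""
    name: str
    storage_profile: str
    lan_connectivity_policy: str = "Storage-Node"
    san_connectivity: str = "No vHBA"
    placement: str = "Let System Perform Placement"
    boot_policy: str = "local-OS-Boot"
    bios_policy: str = "S3260-BIOS"
    power_control_policy: str = "No-Power-Cap"


def changed_fields(a, b):
    """Return {field: (a_value, b_value)} for every field that differs."""
    left, right = asdict(a), asdict(b)
    return {k: (left[k], right[k]) for k in left if left[k] != right[k]}


top = TemplateSettings(
    name="Scality-Storage-Server-Template",
    storage_profile="S3260-OS-Node1",
)

# Hypothetical names for the bottom node; substitute the ones you created.
bottom = replace(
    top,
    name="Scality-Storage-Server-Template-Node2",
    storage_profile="S3260-OS-Node2",
)

print(changed_fields(top, bottom))
```

Only the template name and the Storage Profile differ; every other wizard choice carries over unchanged.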

Create Service Profile Template for Cisco UCS C220 M4S

The Service Profile Template for the Cisco UCS Rack-Mount Servers is very similar to the one created above for the S3260. The only differences are in the Storage Profiles, Networking, vNIC/vHBA Placement, and BIOS Policy. The changes are listed here:

1. In the Storage Provisioning tab, choose the appropriate Storage Profile for the Cisco C220 M4S you previously created.

2. In the Networking tab, keep the Dynamic vNIC Connection Policy at the default and, from the LAN Connectivity Policy drop-down list, select the previously created “Connector-Nodes” policy.

3. Click Next.

4. Configure the vNIC/vHBA Placement in the following order as shown in the screenshot below:

5. In the Operational Policies tab, under BIOS Configuration, select the previously created BIOS Policy “C220-BIOS”. Under Power Control Policy Configuration, select the previously created Power Policy “No-Power-Cap.”

Create Service Profiles from Template

Now create the appropriate Service Profiles from the previously created Service Profile Templates. To create the first profile for the top node of the Cisco UCS S3260 Storage Server, complete the following steps:

1. Select Servers from the left pane of the Cisco UCS Manager GUI.

2. Go to Servers > Service Profiles and right-click Create Service Profiles from Template.

3. Type in Scality-Storage-Node in the Name Prefix field.

4. Leave Name Suffix Starting Number as 1.

5. Type in 12 for the Number of Instances.

6. Choose Scality-Storage-Node-Template as the Service Profile Template you created before for the top node of the Cisco UCS S3260 Storage Server.

7. Click OK and then click OK again.

8. Create Service Profiles from Template for all the S3260 M4 nodes.

9. Repeat steps 1-7 to create the Service Profiles for the Cisco UCS C220 M4S Rack-Mount Servers, choosing the Service Profile Template Scality-Connector-Node-Template you previously created for them.

10. Create Service Profiles from Template for the C220 M4S for Connector Nodes.

Creating Port Channel for Uplinks

Create Port Channel for Fabric Interconnect A/B

To create Port Channels to the connected Nexus 9332PQ switches, complete the following steps:

1. Select the LAN tab in the left pane of the Cisco UCS Manager GUI.

2. Go to LAN > LAN Cloud > Fabric A > Port Channels and right-click Create Port Channel.

3. Type in ID 10.