Cisco Solution for EMC VSPEX Microsoft SQL 2014 Consolidation



Deployment Guide for Microsoft SQL 2014 with Cisco UCS B200 M4 Blade Server, Nexus 9000 Switches (Standalone), Microsoft Windows Server 2012 R2, VMware vSphere 5.5 and EMC VNX5400

Last Updated: October 9, 2015

About Cisco Validated Designs

The CVD program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, visit http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, IronPort, the IronPort logo, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2015 Cisco Systems, Inc. All rights reserved.

Table of Contents

Cisco Unified Computing System (UCS)

Cisco UCS 6248UP Fabric Interconnects

Cisco UCS 5108 Blade Server Chassis

Cisco UCS B200 M4 Blade Servers

Cisco UCS 2208 Series Fabric Extenders

EMC Storage Technology and Benefits

VNX Intel MCx Code Path Optimization

EMC Unisphere Management Suite

Microsoft Windows Server 2012 R2

Hardware and Software Specifications

Cisco VSPEX Environment Deployment

Configure Cisco Nexus 9396PX Switches

Configure Cisco Unified Computing System

Configure EMC VNX5400 Storage Array

Install and Configure vSphere ESXi

Set Up VMware ESXi Installation

Set Up Management Networking for ESXi Hosts

Deploy Virtual Machines and Install OS

Install Windows Server 2012 R2

Install SQL Server 2014 Standalone Instance on Virtual machines

Performance of Consolidated SQL Servers

Cisco UCS B200 M4 BIOS Settings

Install Windows Server Failover Cluster

Verifying the Cluster Configuration

Install SQL Server 2014 Standalone Instance on each Virtual Machine

Configure SQL Server AlwaysOn Availability Group

Enable AlwaysOn Availability Group Feature

Create AlwaysOn Availability Group

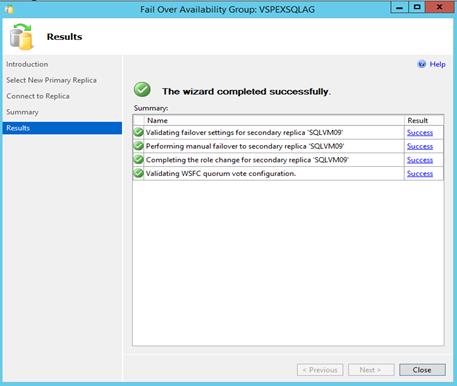

SQL Server AlwaysOn Availability Group Failover Validation

The emerging IT infrastructure strategies have given businesses an opportunity to evolve with greater agility while improving efficiency. Private cloud infrastructures allow businesses to consolidate infrastructure, centralize management and realize an improved total cost of ownership in a short time.

Legacy Information Technology architectures were typically silos of infrastructure, dedicated to a single purpose. Core business applications and database servers were frequently sequestered on dedicated machinery, resulting in low asset utilization and challenging asset refresh projects. Modern advancements in virtualization make migration easy, while improved server and network infrastructure ensures that key applications have the resources needed to serve business needs.

Microsoft SQL Server has evolved into the database of choice for a large number of business applications because of its rich feature set, ease of use, and competitive pricing. Given its important role in many businesses, it makes sense to host Microsoft SQL Server workloads on private cloud infrastructures that optimize the management and performance of this critical business engine. Microsoft SQL Server is well suited to virtualized private cloud platforms such as the Cisco-EMC VSPEX solution.

This Cisco Validated Design (CVD) provides a framework, with virtualization as an option, for consolidating multi-database, multi-instance SQL Server deployments running OLTP applications, and highlights key decision points based on technical analysis. This consolidation scenario can be integrated with cloud orchestration and provisioning processes to offer Database-as-a-Service (DaaS) with Microsoft SQL Server. This deployment guide shows how to design a deployment strategy for virtualized SQL Server instances that satisfies key business requirements such as scalability, availability, and disaster recovery.

This Cisco-EMC VSPEX solution showcases improved efficiency by guiding the reader through a typical consolidation scenario for Microsoft SQL Server supporting enterprise-level applications. It showcases both HA and DR solutions as value-added offerings alongside the virtualized SQL Server instances. Other highlights of this solution include a VMware ESXi-based deployment, features such as SQL Server 2014 AlwaysOn and VMware DRS, and the use of Cisco Nexus 9000 Series switches for client traffic.

The objective of this guide is to help businesses achieve optimized operational efficiency through this reference deployment. It describes a Microsoft SQL Server 2014 consolidation architecture using Cisco UCS B-Series servers, VMware ESXi, and the EMC VNX5400 storage array, and highlights the HA/DR features of Microsoft SQL Server 2014 and VMware vSphere.

Introduction

Developing an infrastructure that allows flexibility, redundancy, high-availability, ease of management, security, and access control while reducing costs, hardware footprints, and computing complexities is one of the biggest challenges faced by IT across all industries.

The tremendous growth in hardware computing capacity, database applications, and physical server sprawl has resulted in expensive and complex computing environments containing many over-provisioned and under-utilized database servers. Many of these servers run a single instance of SQL Server at an average CPU utilization of 10 to 15 percent, leaving most of the server's resources unused. These environments also increase the system administrator's SQL Server management workload, especially after a catastrophic physical database server failure, when administrators may have to rebuild and restore environments within a short period of time.

Consolidating database environments through virtualization represents a significant opportunity to address these challenges. By integrating services into a private cloud, the business benefits from greater efficiency and the services themselves improve. Consolidation through private cloud solutions such as VSPEX drives standardization of the database environment and simplifies maintenance and administration tasks while improving asset utilization.

Microsoft SQL Server is the de facto database for most Windows applications, which makes it an ideal candidate for consolidation on a virtualized platform. Once consolidated onto a private cloud such as the Cisco-EMC VSPEX solution, SQL Server better supports enterprise applications through the improved failover and DR capabilities inherent to the private cloud hosting solution.

Target Audience

This CVD is intended for customers, partners, solution architects, storage administrators, and database administrators who are evaluating or planning consolidation of Microsoft SQL Server by virtualizing it in a VMware vSphere environment, using the vSphere HA and DRS features for high availability and load balancing. This document also showcases protection against application failure using the Microsoft SQL Server AlwaysOn Availability Group feature. It provides an overview of various considerations and reference architectures for consolidating SQL Server 2014 deployments in a highly available environment.

Purpose of this Document

This document captures the architecture, configuration information, and deployment procedure for consolidating Microsoft SQL Server 2014 databases by virtualizing them on VMware vSphere 5.5 using Cisco UCS, Cisco Nexus 9000 Series switches, and EMC VNX5400 storage arrays. This document focuses mainly on consolidating multiple Microsoft SQL Server 2014 databases on a two-node VMware vSphere cluster with HA/DRS enabled to maximize the availability of VMs and application uptime. The performance of this consolidation on a single host is also measured to understand throughput, latency, CPU usage, and VM scalability.

This document also shows how to build a secondary site as a near-site disaster recovery location for the consolidated SQL Servers in the primary site by configuring SQL Server AlwaysOn Availability Group pairs with synchronous replication between them.

Business Challenge

Many Microsoft SQL Server deployments have a single application database residing on a physical server. Historically, this was a simple way to provision new applications for business requirements. As businesses expanded, these simple applications evolved into business-critical applications, and the lack of high availability features in the original deployments caused disruptions in information availability that impeded the functioning of the organization. As a result, keeping systems up and running around the clock amid increasing demands and growing complexity is one of the biggest challenges IT organizations face.

With this simple approach, the problems may not be immediately apparent; however, the challenges become clear when the databases are moved to the data center:

· When the applications and their servers are moved to a data center, the heterogeneous hardware in the data center can lead to increased maintenance and administrative costs.

· The deployments may not be license-compliant for all the software in use, and the cost of becoming compliant can be quite high.

· Systems and applications that started out small and non-mission-critical may have become central to the business over time, but they are still deployed the same way as when they were first set up, so the hardware and architecture do not meet the availability and/or performance needs of the business.

· Poor server utilization and space usage in the data center: large numbers of older servers consume space and other resources that may be needed for newer servers. The servers themselves may be either sitting nearly idle or completely maximized as a result of poor capacity planning or the lack of a hardware refresh during their lifetime.

This section provides an overview of the Cisco solution for EMC VSPEX for virtualized Microsoft SQL Server and the key technologies used in this solution. This solution has been designed and validated by Cisco to provide the server, network and storage resources for hardware consolidation of Microsoft SQL Server deployments using Cisco UCS, VMware virtualization technologies and EMC VNX5400 storage arrays.

This solution enables customers to consolidate and deploy multiple small or medium virtualized SQL Servers in a Cisco solution for EMC VSPEX environment.

This solution was validated using Fibre Channel for EMC VNX5400 storage arrays. This solution requires the presence of Active Directory, DNS and VMware vCenter. The implementation of these services is beyond the scope of this guide, but the services are prerequisites for a successful deployment.

Following are the components used for the design and deployment of this solution:

· Cisco Unified Computing System

· Cisco UCS B200 M4 Blade Servers

· Cisco UCS 6248UP Fabric Interconnects

· Cisco UCS 5108 Blade Server Chassis

· EMC VNX5400 Storage Arrays

· VMware vSphere 5.5 Update 2

· Microsoft Windows Server 2012 R2

· Microsoft SQL Server 2014

Cisco Unified Computing System (UCS)

The Cisco Unified Computing System™ (Cisco UCS®) is a next-generation data center platform that unites computing, networking, storage access, and virtualization resources into a cohesive system designed to reduce total cost of ownership (TCO) and increase business agility. The system integrates a low-latency, lossless 10 Gigabit Ethernet unified network fabric with enterprise-class, x86-architecture servers. The system is an integrated, scalable, multi-chassis platform in which all resources participate in a unified management domain.

Figure 1 Cisco UCS Components

Figure 2 Cisco UCS Overview

The main components of the Cisco UCS are:

· Compute - The system is based on an entirely new class of computing system that incorporates rack-mount and blade servers based on Intel Xeon E5-2600 v3 Series processors.

· Network - The system is integrated onto a low-latency, lossless, 10-Gbps unified network fabric. This network foundation consolidates LANs, SANs, and high-performance computing networks which are separate networks today. The unified fabric lowers costs by reducing the number of network adapters, switches, and cables, and by decreasing the power and cooling requirements.

· Virtualization - The system unleashes the full potential of virtualization by enhancing the scalability, performance, and operational control of virtual environments. Cisco security, policy enforcement, and diagnostic features are now extended into virtualized environments to better support changing business and IT requirements.

· Storage access - The system provides consolidated access to both SAN storage and Network Attached Storage (NAS) over the unified fabric. By unifying storage access, the Cisco Unified Computing System can access storage over Ethernet (SMB 3.0 or iSCSI), Fibre Channel, and Fibre Channel over Ethernet (FCoE). This provides customers with storage choices and investment protection. In addition, server administrators can pre-assign storage-access policies to storage resources, simplifying storage connectivity and management and increasing productivity.

· Management - The system uniquely integrates all system components to enable the entire solution to be managed as a single entity by the Cisco UCS Manager. The Cisco UCS Manager has an intuitive graphical user interface (GUI), a command-line interface (CLI), and a powerful scripting library module for Microsoft PowerShell built on a robust application programming interface (API) to manage all system configuration and operations.

Cisco Unified Computing System (Cisco UCS) fuses access layer networking and servers. This high-performance, next-generation server system provides a data center with a high degree of workload agility and scalability.

The Cisco Unified Computing System is designed to deliver:

· A reduced Total Cost of Ownership and increased business agility.

· Increased IT staff productivity through just-in-time provisioning and mobility support.

· A cohesive, integrated system which unifies the technology in the data center. The system is managed, serviced, and tested as a whole.

· Scalability through a design for hundreds of discrete servers and thousands of virtual machines and the capability to scale I/O bandwidth to match demand.

· Industry standards supported by a partner ecosystem of industry leaders.

Cisco UCS Manager

Cisco UCS Manager provides unified, embedded management of all software and hardware components of the Cisco Unified Computing System through an intuitive GUI, a command line interface (CLI), a Microsoft PowerShell module, or an XML API. Cisco UCS Manager provides a unified management domain with centralized management capabilities and controls multiple chassis and thousands of virtual machines.
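As a rough illustration of the XML API mentioned above, the following Python sketch builds two request bodies: an `aaaLogin` request that opens a session and returns a session cookie, and a `configResolveClass` query that lists all managed objects of one class (here `computeBlade`, the blade server class). It assumes requests are sent as HTTP POST bodies to `https://<ucsm-address>/nuova`; the address, credentials, and sample response are placeholders, the network call is deliberately left out, and the method and attribute names should be verified against the UCS Manager XML API documentation for your release.

```python
import xml.etree.ElementTree as ET

# Assumption: UCS Manager accepts these XML documents as HTTP POST bodies at
# https://<ucsm-address>/nuova. Address and credentials are placeholders.


def build_login_body(username: str, password: str) -> str:
    """Build an aaaLogin request body that opens an API session."""
    elem = ET.Element("aaaLogin", inName=username, inPassword=password)
    return ET.tostring(elem, encoding="unicode")


def parse_login_cookie(response_xml: str) -> str:
    """Extract the session cookie (outCookie) from an aaaLogin response."""
    root = ET.fromstring(response_xml)
    cookie = root.get("outCookie")
    if not cookie:
        raise ValueError("login failed: no outCookie in response")
    return cookie


def build_resolve_class_body(cookie: str, class_id: str) -> str:
    """Build a configResolveClass query for all objects of one class."""
    elem = ET.Element(
        "configResolveClass",
        cookie=cookie,
        classId=class_id,
        inHierarchical="false",  # return only the objects, not their children
    )
    return ET.tostring(elem, encoding="unicode")


if __name__ == "__main__":
    print(build_login_body("admin", "example-password"))
    # Hand-written response used only to exercise the parser.
    sample = '<aaaLogin response="yes" outCookie="1234/abcd"/>'
    session = parse_login_cookie(sample)
    print(build_resolve_class_body(session, "computeBlade"))
```

Building the bodies with ElementTree rather than string formatting keeps attribute values correctly escaped if a password contains characters such as `"` or `&`.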

Cisco UCS 6248UP Fabric Interconnects

The Fabric interconnects provide a single point for connectivity and management for the entire system. Typically deployed as an active-active pair, the system’s fabric interconnects integrate all components into a single, highly-available management domain controlled by Cisco UCS Manager. The fabric interconnects manage all I/O efficiently and securely at a single point, resulting in deterministic I/O latency regardless of a server or virtual machine’s topological location in the system.

Cisco UCS 6200 Series Fabric Interconnects support the system’s 80-Gbps unified fabric with low-latency, lossless, cut-through switching that supports IP, storage, and management traffic using a single set of cables. The fabric interconnects feature virtual interfaces that terminate both physical and virtual connections equivalently, establishing a virtualization-aware environment in which blade servers, rack servers, and virtual machines are interconnected using the same mechanisms. The Cisco UCS 6248UP is a 1-RU Fabric Interconnect that features up to 48 universal ports that can support 10 Gigabit Ethernet, Fibre Channel over Ethernet, or native Fibre Channel connectivity.

Figure 3 Cisco UCS 6248UP Fabric Interconnect – Front View


Cisco UCS 5108 Blade Server Chassis

The Cisco UCS 5100 Series Blade Server Chassis is a crucial building block of the Cisco Unified Computing System, delivering a scalable and flexible blade server chassis.

The Cisco UCS 5108 Blade Server Chassis is six rack units (6RU) high and can mount in an industry-standard 19-inch rack. A single chassis can house up to eight half-width Cisco UCS B-Series Blade Servers and can accommodate both half-width and full-width blade form factors.

Four single-phase, hot-swappable power supplies are accessible from the front of the chassis. These power supplies are 92 percent efficient and can be configured to support non-redundant, N+1 redundant, and grid-redundant configurations. The rear of the chassis contains eight hot-swappable fans, four power connectors (one per power supply), and two I/O bays for Cisco UCS 2208XP Fabric Extenders.

A passive mid-plane provides up to 40 Gbps of I/O bandwidth per server slot and up to 80 Gbps of I/O bandwidth for two slots. The chassis is capable of supporting future 40 Gigabit Ethernet standards.

Figure 4 Cisco UCS 5100 Series Blade Server Chassis (Front and Rear Views)

Cisco UCS B200 M4 Blade Servers

The enterprise-class Cisco UCS B200 M4 blade server extends the capabilities of Cisco’s Unified Computing System portfolio in a half-width blade form factor. The Cisco UCS B200 M4 uses the power of the latest Intel® Xeon® E5-2600 v3 Series processor family CPUs with up to 1536 GB of RAM (using 64 GB DIMMs), up to two solid-state drives (SSDs) or hard disk drives (HDDs), and up to 80 Gbps throughput connectivity. The Cisco UCS B200 M4 Blade Server mounts in a Cisco UCS 5100 Series blade server chassis or Cisco UCS Mini blade server chassis. It has 24 total slots for registered ECC DIMMs (RDIMMs) or load-reduced DIMMs (LRDIMMs) for up to 1536 GB total memory capacity (B200 M4 configured with two CPUs using 64 GB DIMMs). It supports one connector for Cisco’s VIC 1340 or 1240 adapter, which provides Ethernet and Fibre Channel over Ethernet (FCoE).
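The 1536 GB maximum follows from simple arithmetic on the slot count and DIMM size. The short Python check below reproduces it; the 12-slots-per-socket figure is derived from the 24 slots and two CPUs stated above and assumes the slots are divided evenly between sockets. The function names are illustrative only.

```python
# Worked check of the Cisco UCS B200 M4 memory figures quoted above.
DIMM_SLOTS = 24   # RDIMM or LRDIMM slots per blade (from the text)
CPU_SOCKETS = 2   # the 1536 GB maximum assumes both CPUs are installed


def max_memory_gb(dimm_size_gb: int, slots: int = DIMM_SLOTS) -> int:
    """Total memory when every DIMM slot holds a DIMM of the same size."""
    return slots * dimm_size_gb


def slots_per_socket(slots: int = DIMM_SLOTS, sockets: int = CPU_SOCKETS) -> int:
    """DIMM slots per CPU, assuming an even split between sockets."""
    return slots // sockets


print(max_memory_gb(64))   # 1536, the maximum quoted for 64 GB DIMMs
print(slots_per_socket())  # 12
```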

Figure 5 Cisco UCS B200 M4 Blade Server

Cisco UCS 2208 Series Fabric Extenders

The Cisco UCS 2208XP Fabric Extender has eight 10 Gigabit Ethernet, FCoE-capable, Enhanced Small Form-Factor Pluggable (SFP+) ports that connect the blade chassis to the fabric interconnect. Each Cisco UCS 2208XP has thirty-two 10 Gigabit Ethernet ports connected through the midplane to each half-width slot in the chassis. Typically configured in pairs for redundancy, two fabric extenders provide up to 160 Gbps of I/O to the chassis.
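The 160 Gbps figure is the product of uplink count, port speed, and the number of fabric extenders. The Python sketch below reproduces it and also derives how many midplane ports one fabric extender provides to each half-width slot, assuming the 32 host ports are spread evenly across the eight half-width slots of the Cisco UCS 5108 chassis. The function names are illustrative only.

```python
# Worked check of the Cisco UCS 2208XP figures quoted above.
PORT_SPEED_GBPS = 10     # fabric-facing and midplane ports are 10 Gigabit Ethernet
UPLINKS_PER_FEX = 8      # SFP+ ports that connect to the fabric interconnect
HOST_PORTS_PER_FEX = 32  # 10 GbE ports facing the chassis midplane
HALF_WIDTH_SLOTS = 8     # half-width slots in a Cisco UCS 5108 chassis


def chassis_uplink_gbps(fex_count: int = 2) -> int:
    """Aggregate fabric-facing bandwidth for a chassis with fex_count extenders."""
    return fex_count * UPLINKS_PER_FEX * PORT_SPEED_GBPS


def host_ports_per_slot() -> int:
    """Midplane ports that one fabric extender provides to each half-width slot."""
    return HOST_PORTS_PER_FEX // HALF_WIDTH_SLOTS


print(chassis_uplink_gbps(1))  # 80 Gbps from a single 2208XP
print(chassis_uplink_gbps())   # 160 Gbps from a redundant pair
print(host_ports_per_slot())   # 4
```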

Figure 6 Cisco UCS 2208XP Fabric Extender

Cisco VIC 1340

The Cisco UCS Virtual Interface Card (VIC) 1340 is a 2-port 40-Gbps Ethernet or dual 4 x 10-Gbps Ethernet, Fibre Channel over Ethernet (FCoE)-capable modular LAN on motherboard (mLOM) designed exclusively for the M4 generation of Cisco UCS B-Series Blade Servers. When used in combination with an optional port expander, the Cisco UCS VIC 1340 is enabled for two ports of 40-Gbps Ethernet.

The Cisco UCS VIC 1340 enables a policy-based, stateless, agile server infrastructure that can present over 256 PCIe standards-compliant interfaces to the host that can be dynamically configured as either network interface cards (NICs) or host bus adapters (HBAs). In addition, the Cisco UCS VIC 1340 supports Cisco® Data Center Virtual Machine Fabric Extender (VM-FEX) technology, which extends the Cisco UCS fabric interconnect ports to virtual machines, simplifying server virtualization deployment and management.

Figure 7 Cisco Virtual Interface Card (VIC) 1340

Cisco UCS Differentiators

Cisco's Unified Computing System is revolutionizing the way servers are managed in data centers. Following are the unique differentiators of Cisco UCS and Cisco UCS Manager.

· Embedded management—In Cisco Unified Computing System, the servers are managed by the embedded firmware in the Fabric Interconnects (FIs), eliminating the need for any external physical or virtual devices to manage the servers. Also, a pair of FIs can manage up to 20 chassis, each containing up to 8 blade servers, for a total of 160 servers with fully redundant connectivity. This gives enormous scaling on the management plane.

· Unified fabric—In Cisco Unified Computing System, from blade server chassis or rack server fabric-extender to FI, there is a single Ethernet cable used for LAN, SAN, and management traffic. This converged I/O results in fewer cables, SFPs, and adapters, reducing the capital and operational expenses of the overall solution.

· Auto Discovery—By simply inserting the blade server in the chassis, discovery and inventory of the compute resource occur automatically without any management intervention. The combination of unified fabric and auto-discovery enables the wire-once architecture of Cisco Unified Computing System, where the compute capability of Cisco Unified Computing System can be extended easily while keeping the existing external connectivity to LAN, SAN, and management networks.

· Policy based resource classification—When a compute resource is discovered by Cisco UCS Manager, it can be automatically classified to a given resource pool based on defined policies. This capability is useful in multi-tenant cloud computing.

· Combined Rack and Blade server management—Cisco UCS Manager can manage Cisco UCS B-series blade servers and Cisco UCS C-series rack servers under the same Cisco UCS domain. This feature, along with stateless computing, makes compute resources truly hardware form factor agnostic.

· Model based management architecture—Cisco UCS Manager architecture and management database is model based and data driven. An open, standards-based XML API is provided to operate on the management model. This enables easy and scalable integration of Cisco UCS Manager with other management systems, such as VMware vCloud Director, Microsoft System Center, and Citrix CloudPlatform.

· Policies, Pools, Templates—The management approach in Cisco UCS Manager is based on defining policies, pools and templates, instead of cluttered configuration, which enables a simple, loosely coupled, data driven approach in managing compute, network and storage resources.

· Loose referential integrity—In Cisco UCS Manager, a service profile, port profile, or policies can refer to other policies or logical resources with loose referential integrity. A referred policy does not have to exist at the time the referring policy is authored, and a referred policy can be deleted even though other policies refer to it. This allows subject matter experts from different domains, such as network, storage, security, server, and virtualization, to work independently of each other while accomplishing a complex task together.

· Policy resolution—In Cisco UCS Manager, a tree-structured organizational hierarchy can be created that mimics real-life tenant and/or organization relationships. Various policies, pools, and templates can be defined at different levels of the organization hierarchy. A policy referring to another policy by name is resolved to the closest matching policy in the organization hierarchy. If no policy with the specified name is found in the hierarchy up to the root organization, the special policy named "default" is searched for. This policy resolution practice enables automation-friendly management APIs and provides great flexibility to owners of different organizations.

· Service profiles and stateless computing—A service profile is a logical representation of a server, carrying its various identities and policies. This logical server can be assigned to any physical compute resource as long as it meets the resource requirements. Stateless computing enables provisioning of a server within minutes, a task that used to take days in legacy server management systems.

· Built-in multi-tenancy support—The combination of policies, pools, templates, loose referential integrity, policy resolution in organization hierarchy, and a service profiles based approach to compute resources makes Cisco UCS Manager inherently friendly to multi-tenant environment typically observed in private and public clouds.

· Extended Memory—The extended memory architecture of Cisco Unified Computing System servers allows up to 760 GB RAM per server, enabling the high VM-to-physical-server ratios required in many deployments or the large memory operations required by certain architectures such as Big Data.

· Virtualization aware network—VM-FEX technology makes the access layer of the network aware of host virtualization. Because the virtual network is managed through port profiles defined by the network administration team, virtualization does not pollute the compute and network domains. VM-FEX also offloads the hypervisor CPU by performing switching in hardware, allowing the hypervisor CPU to do more virtualization-related tasks. VM-FEX technology is well integrated with VMware vCenter, Linux KVM, and Hyper-V SR-IOV to simplify cloud management.

· Simplified QoS—When Fibre Channel and Ethernet are converged in the Cisco Unified Computing System fabric, built-in support for QoS and lossless Ethernet makes the convergence seamless. Network Quality of Service (QoS) is simplified in Cisco UCS Manager by representing all system classes in one GUI panel.
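The policy resolution behavior described in the list above can be modeled in a few lines. The following Python sketch is a hypothetical in-memory model, not Cisco UCS Manager code: organizations are slash-separated paths such as `root/tenant-a`, each organization maps policy names to policies, and the names `resolve_policy`, `tenant-a`, and `sql-bios` are illustrative. It assumes the fallback to the policy named "default" walks the same chain from the requesting organization up to `root`.

```python
from typing import Dict, Iterator, Optional

# Hypothetical model: organization path -> {policy name: policy}.
# Policies are plain strings here to keep the example small.
PolicyTable = Dict[str, Dict[str, str]]


def ancestors(org: str) -> Iterator[str]:
    """Yield the organization itself, then each parent, ending at "root"."""
    parts = org.split("/")
    for depth in range(len(parts), 0, -1):
        yield "/".join(parts[:depth])


def closest(policies: PolicyTable, org: str, name: str) -> Optional[str]:
    """Return the policy with this name defined nearest to org, if any."""
    for candidate in ancestors(org):
        policy = policies.get(candidate, {}).get(name)
        if policy is not None:
            return policy
    return None


def resolve_policy(policies: PolicyTable, org: str, name: str) -> Optional[str]:
    """Closest match by name; otherwise fall back to the "default" policy."""
    policy = closest(policies, org, name)
    if policy is None:
        policy = closest(policies, org, "default")
    return policy


table: PolicyTable = {
    "root": {"default": "root-default", "sql-bios": "root-sql-bios"},
    "root/tenant-a": {"sql-bios": "tenant-a-sql-bios"},
}
print(resolve_policy(table, "root/tenant-a/dev", "sql-bios"))  # tenant-a-sql-bios
print(resolve_policy(table, "root/tenant-b", "sql-bios"))      # root-sql-bios
print(resolve_policy(table, "root/tenant-b", "no-such-name"))  # root-default
```

Because the lookup happens at resolution time rather than when the referring object is authored, a referenced policy can be missing or deleted without breaking the reference, which is the loose referential integrity described above.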

Cisco Nexus 9396PX Switches

The Cisco Nexus 9396PX Switch delivers comprehensive line-rate Layer 2 and Layer 3 features in a two-rack-unit (2RU) form factor. It supports line-rate 1/10/40 GE with 960 Gbps of switching capacity. It is ideal for top-of-rack and middle-of-row deployments in both traditional and Cisco Application Centric Infrastructure (ACI)-enabled enterprise, service provider, and cloud environments.

Figure 8 Cisco Nexus 9396PX Switch

EMC VNX Series

EMC Storage Technology and Benefits

The VNX storage series provides both file and block access with a broad feature set, which makes it an ideal choice for any private cloud implementation.

VNX storage includes the following components, sized for the stated reference architecture workload:

· Host Adapter Ports (for block)—Provide host connectivity through fabric to the array

· Storage Processors—The compute components of the storage array, which are used for all aspects of data moving into, out of, and between arrays

· Disk Drives—Disk spindles and solid state drives (SSDs) that contain the host or application data and their enclosures

· Data Movers (for file)—Front-end appliances that provide file services to hosts (optional if CIFS services are provided)


The term Data Mover refers to a VNX hardware component, which has a CPU, memory, and I/O ports. It enables Common Internet File System (CIFS-SMB) and Network File System (NFS) protocols on the VNX.

The VNX5400 array can support a maximum of 250 drives, the VNX5600 can host up to 500 drives, and the VNX5800 can host up to 750 drives.
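Drive count alone can rule a model in or out. The Python sketch below picks the smallest of the three models listed above whose maximum drive count covers a requirement. It is a hypothetical helper that ignores performance, capacity, and other sizing factors, which a real VSPEX sizing exercise must also consider.

```python
from typing import Optional

# Maximum drive counts per model, as stated above.
MAX_DRIVES = {"VNX5400": 250, "VNX5600": 500, "VNX5800": 750}


def smallest_model_for(drives_needed: int) -> Optional[str]:
    """Smallest listed model whose drive limit covers drives_needed, else None."""
    if drives_needed < 1:
        raise ValueError("drives_needed must be at least 1")
    # Check models in order of increasing drive limit.
    for model, limit in sorted(MAX_DRIVES.items(), key=lambda item: item[1]):
        if drives_needed <= limit:
            return model
    return None  # exceeds every model in this list


print(smallest_model_for(120))  # VNX5400
print(smallest_model_for(251))  # VNX5600
print(smallest_model_for(800))  # None
```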

The VNX series supports a wide range of business-class features that are ideal for the private cloud environment including:

· EMC Fully Automated Storage Tiering for Virtual Pools (FAST VP™)

· EMC FAST Cache

· File-level data deduplication and compression

· Block deduplication

· Thin provisioning

· Replication

· Snapshots or checkpoints

· File-level retention

· Quota management

Features and Enhancements

The EMC VNX flash-optimized unified storage platform delivers innovation and enterprise capabilities for file, block, and object storage in a single, scalable, and easy-to-use solution. Ideal for mixed workloads in physical or virtual environments, VNX combines powerful and flexible hardware with advanced efficiency, management, and protection software to meet the demanding needs of today's virtualized application environments.

VNX has new features and enhancements that are designed and built upon the first generation's success. These features and enhancements are:

· More capacity with Multicore optimization with Multicore Cache, Multicore RAID, and Multicore FAST Cache (MCx)

· Greater efficiency with a flash-optimized hybrid array

· Better protection by increasing application availability with active/active storage processors

· Easier administration and deployment by increasing productivity with a new Unisphere Management Suite

Flash Optimized Hybrid Array

VNX is a flash-optimized hybrid array that provides automated tiering to deliver the best performance to your critical data, while intelligently moving less frequently accessed data to lower-cost disks. In this hybrid approach, a small percentage of flash drives in the overall system can provide a high percentage of the overall IOPS. A flash-optimized VNX takes full advantage of the low latency of flash to deliver cost-saving optimization and high-performance scalability. The EMC Fully Automated Storage Tiering Suite (FAST Cache and FAST VP) tiers both block and file data across heterogeneous drives and boosts the most active data to the cache, ensuring that customers never have to make concessions for cost or performance.

FAST Cache dynamically absorbs unpredicted spikes in system workloads. As that data ages and becomes less active over time, FAST VP automatically tiers it from high-performance to high-capacity drives, based on customer-defined policies. This functionality has been enhanced with four times better granularity and with new FAST VP solid-state disks (SSDs) based on enterprise multi-level cell (eMLC) technology to lower the cost per gigabyte. All VSPEX use cases benefit from the increased efficiency.

VSPEX Proven Infrastructures deliver private cloud, end-user computing, and virtualized application solutions. With VNX, customers can realize an even greater return on their investment. VNX provides out-of-band, block-based deduplication that can dramatically lower the costs of the flash tier.

VNX Intel MCx Code Path Optimization

The advent of flash technology has been a catalyst in fundamentally changing the requirements of midrange storage systems. EMC redesigned the midrange storage platform to make efficient use of multicore CPUs, providing the highest-performing storage system at the lowest cost in the market. MCx distributes all VNX data services across all cores (up to 32), as shown in Figure 9. The VNX series with MCx has dramatically improved file performance for transactional applications, such as databases or virtual machines, over network-attached storage (NAS).

Figure 9 Next-Generation VNX with Multicore Optimization

Multicore Cache

The cache is the most valuable asset in the storage subsystem; its efficient use is key to the overall efficiency of the platform in handling variable and changing workloads. The cache engine has been modularized to take advantage of all the cores available in the system.

Multicore RAID

Another important part of the MCx redesign is the handling of I/O to the permanent back-end storage: hard disk drives (HDDs) and SSDs. Significant performance improvements in VNX come from the modularization of the back-end data management processing, which enables MCx to scale seamlessly across all processors.

VNX Performance

Performance Enhancements

VNX storage, enabled with the MCx architecture, is optimized for flash first and provides unprecedented overall performance: it optimizes transaction performance (cost per IOPS) and bandwidth performance (cost per GB/s) with low latency, and it provides optimal capacity efficiency (cost per GB).

VNX provides the following performance improvements:

· Up to four times more file transactions when compared with dual controller arrays

· Increased file performance for transactional applications (for example, Microsoft Exchange on VMware over NFS) by up to three times, with a 60 percent better response time

· Up to four times more Oracle and Microsoft SQL Server OLTP transactions

· Up to four times more virtual machines, a greater than three times improvement

Active/Active Array Storage Processors

The new VNX architecture provides active/active array storage processors, as shown in Figure 10. Because both paths actively serve I/O, application timeouts during path failover are eliminated.

Load balancing is also improved, and applications can achieve up to a two-times improvement in performance. Active/active for block is ideal for applications that require the highest levels of availability and performance but do not require tiering or efficiency services such as compression, deduplication, or snapshots.

With this VNX release, VSPEX customers can use virtual Data Movers (VDMs) and VNX Replicator to perform automated and high-speed file system migrations between systems.

Figure 10 Active/Active Processors Increase Performance, Resiliency, and Efficiency

EMC Unisphere Management Suite

The new EMC Unisphere Management Suite extends Unisphere's easy-to-use interface to include VNX Monitoring and Reporting for validating performance and anticipating capacity requirements. As shown in Figure 11, the suite also includes Unisphere Remote for centrally managing up to thousands of VNX systems, with new support for XtremSW Cache.

Figure 11 Unisphere Management Suite

EMC Storage Integrator

EMC Storage Integrator (ESI) is aimed at Windows and application administrators. ESI is easy to use, delivers end-to-end monitoring, and is hypervisor agnostic. Administrators can use ESI to provision storage in both virtual and physical Windows environments, and troubleshoot by viewing the topology of an application from the underlying hypervisor to the storage.

VMware vSphere 5.5

ESXi 5.5 is a "bare-metal" hypervisor, so it installs directly on top of the physical server and partitions it into multiple virtual machines that can run simultaneously, sharing the physical resources of the underlying server. VMware introduced ESXi in 2007 to deliver industry-leading performance and scalability while setting a new bar for reliability, security and hypervisor management efficiency.

Due to its ultra-thin architecture, with a disk footprint of less than 100 MB, ESXi delivers industry-leading performance and scalability plus:

Improved Reliability and Security: with fewer lines of code and no dependence on a general-purpose OS, ESXi drastically reduces the risk of bugs or security vulnerabilities and makes it easier to secure your hypervisor layer.

Streamlined Deployment and Configuration: ESXi has far fewer configuration items than ESX, greatly simplifying deployment and configuration and making it easier to maintain consistency.

Higher Management Efficiency: the API-based partner integration model of ESXi eliminates the need to install and manage third-party management agents. You can automate routine tasks by using remote command-line scripting environments such as vCLI or PowerCLI.

Simplified Hypervisor Patching and Updating: due to its smaller size and fewer components, ESXi requires far fewer patches than ESX, shortening service windows and reducing security vulnerabilities.

Microsoft Windows Server 2012 R2

Microsoft Windows Server 2012 R2 offers businesses an enterprise-class infrastructure that simplifies the deployment of IT services. With Windows Server 2012 R2, you can achieve affordable, multi-node business continuity scenarios with high service uptime and at-scale disaster recovery. As an open application and web platform, Windows Server 2012 R2 helps you build, deploy, and scale modern applications and high-density websites for the datacenter and the cloud. Windows Server 2012 R2 also enables IT to empower users by providing them with flexible, policy-based resources while protecting corporate information.

Microsoft SQL Server 2014

Microsoft SQL Server 2014 builds on the mission-critical capabilities delivered in the prior release by making it easier and more cost effective to develop high-performance applications. In addition to several performance-improving capabilities, SQL Server 2014 delivers a robust platform for hosting mission-critical database environments.

AlwaysOn Availability Groups

The AlwaysOn Availability Groups feature is a high-availability and disaster-recovery solution that provides an enterprise-level alternative to database mirroring. Introduced in SQL Server 2012, AlwaysOn Availability Groups maximize the availability of a set of user databases for an enterprise. An availability group supports a failover environment for a discrete set of user databases, known as availability databases, which fail over together. An availability group supports a set of read-write primary databases and one to eight sets of corresponding secondary databases. Optionally, secondary databases can be made available for read-only access and/or some backup operations.

An availability group fails over at the level of an availability replica. Failovers are not caused by database issues such as a database becoming suspect due to a loss of a data file, deletion of a database, or corruption of a transaction log.
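The failover rules above can be pictured as a small data model. This is a toy illustration, not a SQL Server API: the class, method, and replica names (`AvailabilityGroup`, `fail_over`, `SQLVM-P1`, and so on) are hypothetical, and the only rules encoded are the ones stated above (one primary replica, one to eight secondary replicas, and all availability databases failing over together at the replica level).

```python
from dataclasses import dataclass, field

MAX_SECONDARIES = 8  # SQL Server 2014 limit stated in the text


@dataclass
class AvailabilityGroup:
    """Hypothetical model of one availability group (not a SQL Server API)."""
    name: str
    primary: str                                    # replica hosting the read-write databases
    secondaries: list = field(default_factory=list)
    databases: list = field(default_factory=list)   # availability databases

    def validate(self):
        """Return a list of layout problems; an empty list means the layout is valid."""
        problems = []
        if not 1 <= len(self.secondaries) <= MAX_SECONDARIES:
            problems.append(f"{self.name}: needs 1-{MAX_SECONDARIES} secondaries, "
                            f"has {len(self.secondaries)}")
        if self.primary in self.secondaries:
            problems.append(f"{self.name}: primary is also listed as a secondary")
        if not self.databases:
            problems.append(f"{self.name}: no availability databases")
        return problems

    def fail_over(self, target):
        """Fail over at the replica level: every database moves with the primary role."""
        if target not in self.secondaries:
            raise ValueError(f"{target} is not a secondary replica of {self.name}")
        self.secondaries[self.secondaries.index(target)] = self.primary
        self.primary = target
        return {db: self.primary for db in self.databases}
```

For example, `AvailabilityGroup("AG1", "SQLVM-P1", ["SQLVM-S1"], ["db1"])` models one primary-site VM paired with one secondary-site VM, the pairing used later in this solution.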

Figure 12 illustrates the architecture for the Cisco solution for EMC VSPEX infrastructure for SQL Server consolidation.

Figure 12 Reference Architecture

This reference architecture shows how to build a balanced and scalable infrastructure for solution-based consolidation of SQL Server within a customer environment. The solution provides lower cost and predictable performance, while enabling options such as accelerated backup and comprehensive resiliency features. The design leverages the ability of the Cisco UCS Fabric Interconnects to operate in FC switching mode, eliminating the need for a separate Fibre Channel switch and thereby reducing deployment costs. SAN connectivity policies in Cisco UCS Manager are used to automate SAN zoning, which reduces administrative tasks.

The architecture for this solution is divided into two sections:

1. Primary Site for SQL Server Consolidation

2. Secondary Site for near-site disaster recovery solution

The primary site showcases the consolidation of multiple Windows Server 2012 R2 virtual machines, each hosting a single SQL Server 2014 database instance, in a VMware environment. Virtual machines are fully isolated from one another and communicate with the other servers on the network as if they were physical machines. The VMware ESXi hypervisor automatically manages resource allocation among the virtual machines. VMware vSphere HA, DRS, and vMotion are configured to provide high availability of the virtual machines within the primary site.

The secondary site is built similarly to the primary site and provides a near-site disaster recovery solution using the SQL Server AlwaysOn Availability Groups feature. The hardware requirements for the primary and secondary sites are the same; however, in this solution a pair of Cisco Nexus switches is used between the primary and secondary sites, as shown in Figure 12.

In this solution, single-instance SQL Server virtual machines are deployed at both sites. SQL Server AlwaysOn Availability Groups with synchronous replication is set up between a pair of SQL Server virtual machines, one in the primary site and the other in the secondary site. To enable and configure SQL Server AlwaysOn Availability Groups, the virtual machines hosting the SQL Server databases must be part of a Microsoft Windows Server Failover Cluster (WSFC).

VMware requires virtual machine to virtual machine (VM-VM) anti-affinity rules for WSFC virtual machines across physical ESXi hosts in a vSphere cluster enabled with HA/DRS. This solution keeps the WSFC nodes hosting SQL Server AlwaysOn availability group replicas on separate vSphere clusters and different storage arrays. This prevents them from running on the same physical host, while still allowing the WSFC virtual machines to vMotion to other ESXi hosts within their own site and vSphere cluster.

This solution leverages the high availability features of both VMware and SQL Server 2014. If an ESXi host fails, VMware vSphere HA restarts its virtual machines on an available host in the cluster, and vMotion helps reduce downtime during maintenance and upgrade activities. The SQL Server AlwaysOn availability group feature provides database-level availability between the primary and secondary sites, and offloads tasks such as backup and reporting from the primary to the secondary replica.

For managing the various components in the deployment, a separate Cisco UCS server with Windows Active Directory, VMware vCenter and other infrastructure components is configured. It is assumed that these are part of an existing infrastructure management framework that customers have deployed for the entire common infrastructure managed within their data center.

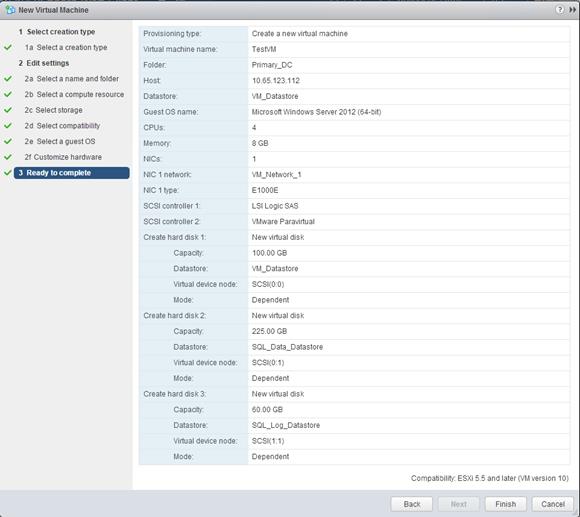

This solution uses a total of four Cisco UCS B200 M4 servers. Each site has two blade servers with the VMware ESXi 5.5 hypervisor installed. As per the reference architecture, the two ESXi hosts in the primary site are part of one VMware vSphere cluster, and the two ESXi hosts in the secondary site are part of another. Multiple Windows Server 2012 R2 virtual machines, each running a single SQL Server 2014 database instance, are deployed on the ESXi hosts. Each virtual machine is configured with four vCPUs, 8 GB of RAM, and three virtual disks (VMDKs): one each for the OS, SQL Server data files, and log files.
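A rough consolidation estimate follows from these numbers. The sketch below is a back-of-the-envelope calculation, not a sizing result from this validation: the host figures come from Table 2 (40 threads and 256 GB per server), while the 1:1 vCPU-to-thread ratio and the 16 GB host memory reserve are assumptions. It also keeps enough spare capacity for vSphere HA to restart every VM after one host failure in a two-host cluster.

```python
from math import floor


def vms_per_host(threads=40, mem_gb=256, vm_vcpu=4, vm_mem_gb=8,
                 vcpu_per_thread=1.0, host_reserve_gb=16):
    """Return (limit_by_cpu, limit_by_memory, limit) for one ESXi host.

    vcpu_per_thread and host_reserve_gb are assumed values, not measured ones.
    """
    by_cpu = floor(threads * vcpu_per_thread / vm_vcpu)
    by_mem = floor((mem_gb - host_reserve_gb) / vm_mem_gb)
    return by_cpu, by_mem, min(by_cpu, by_mem)


def vms_per_cluster(hosts=2, **host_kwargs):
    """VM count that still fits after one host fails (N+1 for vSphere HA)."""
    return (hosts - 1) * vms_per_host(**host_kwargs)[2]
```

Under these assumptions CPU, not memory, is the binding limit (10 VMs per host against 30 by memory); raising the vCPU-to-thread ratio moves the limit toward memory.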

Figure 13 shows the logical representation of network connectivity. The networking configuration is designed to ensure high availability and bandwidth for the network traffic at every layer.

Cisco UCS provides high availability for high-end applications by providing redundancy for all the critical components in the infrastructure stack. Cisco UCS B200 M4 blades are connected to a pair of Cisco UCS 6248 Fabric Interconnects through Cisco UCS IOM 2208 Fabric Extenders housed in the Cisco UCS 5108 chassis. The Cisco Nexus 9396 switches are deployed as a pair and configured for virtual port channel (vPC). Virtual port channels are configured between the Cisco UCS 6248 Fabric Interconnects and the Cisco Nexus 9396 switches for optimal network connectivity.

The QoS policies created in Cisco UCS Manager were used, along with VLANs isolating the different traffic types, to create a network infrastructure with prioritized network traffic. The Cisco UCS service profiles associated with the Cisco UCS B200 M4 blades, which have the Cisco VIC 1340 adapter, are configured with four virtual network interfaces (vNICs) and two virtual host bus adapters (vHBAs). The vNICs were designed to optimally segregate the different types of management and data traffic for the recommended architecture. Each vNIC is configured with its own VLAN for traffic segregation. vNICs eth0 and eth2 connect to ports on fabric A, and eth1 and eth3 connect to ports on fabric B.

On the VMware ESXi hosts, two virtual standard switches (vSS) with port groups (VMkernel and VM port groups) are configured to differentiate the kinds of traffic passing through each virtual switch. The first vSS is configured with two VMkernel port groups, one for vMotion and the other for management. The second vSS is configured with two VM port groups: one for VM and SQL traffic, and the other for Microsoft Windows Server Failover Cluster traffic. The virtual switches on both VMware ESXi hosts use two uplink adapters (vmnics) teamed in an active-active configuration for redundancy and load balancing, as shown in Figure 13. Jumbo frame support was enabled for vMotion traffic for better performance. The virtual machines running on the VMware ESXi hosts are configured with two vNICs: one connects to the VM port group for VM and SQL traffic, and the other connects to the VM port group for Microsoft WSFC traffic on the same vSwitch.

Figure 13 Primary Site Logical Connectivity

The EMC VNX5400 storage arrays are directly connected to the Cisco UCS 6248UP Fabric Interconnects in a highly available configuration, without the need for a separate Fibre Channel switch. The fabric interconnects were configured in Fibre Channel switching mode and perform the zoning operations for storage access. The service profile associated with each Cisco UCS B200 M4 blade is configured with two virtual host bus adapters (vHBAs), each bound to a separate fabric interconnect on a different VSAN. This configuration provides redundant paths to both controllers on the storage array. The VMware ESXi native multipathing plug-in, with round robin as the path selection policy, is used to take advantage of multiple active/optimized paths for I/O scalability.
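Round-robin path selection can be illustrated with a toy model. The sketch assumes each of the two vHBAs has one path to a front-end port on each storage processor, and that the LUN is owned by SP A so that only the SP A paths are reported as active/optimized; the port names are hypothetical. It rotates through the optimized paths and moves to the next path after a fixed number of I/Os (VMware's round-robin default is 1000). It is not the VMware NMP implementation.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass(frozen=True)
class Path:
    vhba: str        # initiator: vHBA on fabric A or B
    sp_port: str     # target: front-end port on SP A or SP B (hypothetical names)
    optimized: bool  # ALUA state the array reports for this LUN


class RoundRobinSelector:
    """Rotate I/O across active/optimized paths, switching every `iops_limit` I/Os."""

    def __init__(self, paths, iops_limit=1000):
        active = [p for p in paths if p.optimized]
        if not active:
            raise ValueError("no active/optimized path available")
        self._ring = cycle(active)
        self._current = next(self._ring)
        self._limit = iops_limit
        self._issued = 0

    def next_path(self):
        if self._issued == self._limit:  # quota used up: move to the next path
            self._current = next(self._ring)
            self._issued = 0
        self._issued += 1
        return self._current


paths = [
    Path("vHBA-A", "SPA-port0", True),
    Path("vHBA-A", "SPB-port0", False),
    Path("vHBA-B", "SPA-port1", True),
    Path("vHBA-B", "SPB-port1", False),
]
```

Both vHBAs carry I/O while the non-optimized SP B paths stay idle until a trespass or failover. On the ESXi hosts themselves, the policy is set per device, for example with `esxcli storage nmp device set --device <device-id> --psp VMW_PSP_RR`.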

The EMC VNX5400 used for this solution has a disk configuration of 66 x 600 GB SAS drives and 12 x 200 GB flash drives. The recommended hot spare policy of one hot spare per thirty disks is configured on the storage arrays. The storage pool design for the SQL Server virtual machine consolidation use case is given in Table 1.

Table 1 Storage Pool Details

| Pool Name | RAID Type | Disk Configuration | FAST VP (Yes/No) | LUN Details | Purpose |
|---|---|---|---|---|---|
| SAN_Boot | RAID5 (4+1) | 5 SAS disks | No | SAN Boot LUN 1, SAN Boot LUN 2 | Performance tier for ESXi SAN boot |
| VM_Datastore | RAID5 (4+1) | 5 SAS disks | No | VM LUN | Performance tier for virtual machine OS |
| SQL_DATA | RAID5 (4+1), RAID1/0 (4+4) | 25 SAS + 8 flash | Yes | SQL DATA LUN | Extreme Performance tier for SQL Server OLTP database |
| SQL_LOG | RAID1/0 (4+4) | 16 SAS | No | SQL Log LUN | Performance tier for SQL Server OLTP database logs |

Separate storage pools are created for ESXi SAN boot, virtual machine OS, and SQL Server database data and log files to isolate the spindles for better performance. The VM LUN and the SQL data and log LUNs are presented to both VMware ESXi hosts in the cluster. These LUNs are formatted with VMware VMFS, and the volumes are mounted on both ESXi hosts participating in the cluster. Virtual machines use these VMFS datastore volumes to store their OS, SQL data, and log virtual disks (VMDKs). Figure 14 shows the physical and logical disk layout used for this solution.

Figure 14 Storage Layout Diagram
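The pool layout in Table 1 can be checked with simple arithmetic. The sketch below assumes the SQL_DATA pool splits its drives the only way the counts allow (25 SAS drives as five RAID5 (4+1) groups and 8 flash drives as one RAID1/0 (4+4) group), uses nominal drive sizes, and applies the one-per-thirty hot spare rule separately to each drive type; real usable capacity is lower after formatting and system overhead.

```python
import math
from dataclasses import dataclass

SAS_GB, FLASH_GB = 600, 200  # nominal drive sizes from the text


@dataclass(frozen=True)
class RaidGroup:
    data: int        # data drives per group
    protection: int  # parity (RAID5) or mirror (RAID1/0) drives per group
    drive_gb: int

    @property
    def drives(self):
        return self.data + self.protection

    @property
    def usable_gb(self):
        return self.data * self.drive_gb  # nominal, before formatting overhead


R5_SAS = RaidGroup(4, 1, SAS_GB)       # RAID5 (4+1)
R10_SAS = RaidGroup(4, 4, SAS_GB)      # RAID1/0 (4+4)
R10_FLASH = RaidGroup(4, 4, FLASH_GB)  # RAID1/0 (4+4), flash tier

POOLS = {
    "SAN_Boot": [R5_SAS],
    "VM_Datastore": [R5_SAS],
    "SQL_DATA": [R5_SAS] * 5 + [R10_FLASH],  # assumed split, see lead-in
    "SQL_LOG": [R10_SAS] * 2,
}


def pool_summary(groups):
    """Return (drive count, nominal usable GB) for one pool."""
    return sum(g.drives for g in groups), sum(g.usable_gb for g in groups)


def hot_spares(drive_count, per=30):
    """One hot spare per `per` drives, rounded up."""
    return math.ceil(drive_count / per)


sas_in_pools = sum(g.drives for gs in POOLS.values() for g in gs if g.drive_gb == SAS_GB)
```

With these assumptions the pools consume 51 of the 66 SAS drives and 8 of the 12 flash drives, and the spare rule reserves 3 SAS drives and 1 flash drive.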

All the components in this base design can be scaled easily to support specific business requirements. For example, more (or different) servers or even blade chassis can be deployed to increase compute capacity, additional disk shelves can be deployed to improve I/O capability and throughput, and special hardware or software features can be added to introduce new features.

This document guides you through the steps for deploying the base architecture. The procedures cover network, compute, and storage device configuration.

Hardware and Software Specifications

This section lists the hardware and software models and versions validated as part of this guide.

Table 2 Hardware and Software Details

| Description | Vendor | Name | Version |
|---|---|---|---|
| Cisco UCS Fabric Interconnects | Cisco | Cisco 6248UP | Cisco UCSM 2.2(3f) |
| Blade Chassis | Cisco | Cisco UCS 5108 Chassis | |
| Network Switches | Cisco | Cisco Nexus 9396PX Switch | NX-OS 6.1(2)I2(2a) |
| Blade Server | Cisco | Cisco UCS B200 M4 Half-Width Blade Server | BIOS: B200M4.2.2.3d.0.111420141438 |
| Processors per Server | Intel | Intel(R) Xeon(R) E5-2650 v3 2.30 GHz | 2 x Intel E5-2650 v3, 2.30 GHz, 10 cores/socket, HT enabled, 40 threads |
| Memory per Server | Samsung | 256 GB | 16 GB DDR4-2133-MHz RDIMM/PC4-17000/dual rank/x4/1.2v |
| Network Adapter | Cisco | Virtual Interface Card (VIC) 1340 | 4.0(1f) |
| Hypervisor | VMware | vSphere ESXi 5.5 U2 | Cisco Custom Image Build # 2068190 |
| Guest Operating System | Microsoft | Windows Server 2012 R2 | Datacenter Edition |
| Database Server | Microsoft | SQL Server 2014 | Enterprise Edition |
| Primary Server Storage | EMC | VNX5400 | Block S/W: 05.33.000.5.081; File S/W: 8.1.3-79 |
| Secondary Server Storage | EMC | VNX5400 | Block S/W: 05.33.000.5.081; File S/W: 8.1.3-79 |
| Test Tool | Open source | HammerDB | 2.16 |

Cisco VSPEX Environment Deployment

Deployment of Primary Site

This section provides end-to-end guidance for setting up the Cisco VSPEX environment to consolidate SQL Server database workloads, as described in the proposed reference architecture.

The flow chart in Figure 15 depicts the high-level flow of the deployment procedure for the primary site.

Figure 15 Primary Site Workflow Setup for Microsoft SQL Server 2014 Consolidation

Configure Cisco Nexus 9396PX Switches

This section explains the switch configuration needed for the Cisco solution for EMC VSPEX VMware architecture. Details about configuring passwords and management connectivity, and about hardening the device, are not covered here; refer to the Cisco Nexus 9000 Series configuration guides for those topics.

Configure Global VLANs

For this VSPEX solution, the VLANs listed in Table 3 were created.

Table 3 VLAN Details

| VLAN Name | VLAN ID | Description |
|---|---|---|
| Mgmt | 604 | Management VLAN for vSphere servers to reach the vCenter management plane |
| vMotion | 40 | VLAN for virtual machine vMotion |
| VM-Data | 613 | VLAN for virtual machine (application) traffic (can be multiple VLANs) |
| Win_Clus | 50 | VLAN for Windows Server Failover Cluster and SQL Server AlwaysOn AG |

Figure 16 shows how to configure VLANs on a Cisco Nexus 9000 Series switch. Create the VLANs on both switches.

Figure 16 Create VLANs
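As a cross-check of Figure 16, the following sketch renders the Table 3 VLAN plan as NX-OS configuration text (`vlan <id>` followed by `name <name>`). It only builds text for review and does not connect to a switch; the range check is a simple sanity check, not the switch's full reserved-VLAN rules.

```python
VLAN_PLAN = [  # (name, VLAN ID) from Table 3
    ("Mgmt", 604),
    ("vMotion", 40),
    ("VM-Data", 613),
    ("Win_Clus", 50),
]


def vlan_config(plan):
    """Return NX-OS VLAN configuration lines for the given (name, id) pairs."""
    seen = set()
    lines = []
    for name, vid in plan:
        if not 2 <= vid <= 4094:
            raise ValueError(f"VLAN {vid} is outside 2-4094")
        if vid in seen:
            raise ValueError(f"VLAN {vid} is defined twice")
        seen.add(vid)
        lines += [f"vlan {vid}", f"  name {name}"]
    return "\n".join(lines)


print(vlan_config(VLAN_PLAN))
```

Generating the same text for both switches from one list keeps VLAN IDs and names identical on the vPC peers.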

Configure Virtual Port-Channel (vPC)

A virtual port channel (vPC) effectively enables two physical switches to behave like a single virtual switch, so that a port-channel can be formed across the two physical switches. Follow these steps to configure vPC:

1. Enable LACP feature on both switches.

2. Enable the vPC feature on both switches, as shown in Figure 17.

Figure 17 Enable Features


3. Configure a unique vPC domain ID, identical on both switches.

4. Configure the peer keepalive using the management IP addresses of both switches, and configure peer-gateway, as shown in Figure 18. Refer to the Cisco Nexus 9000 Series vPC configuration documentation for more details.

Figure 18 Create VPC Domain

5. Create and configure a port-channel on the inter-switch links, as shown in Figure 19. Make sure that “vpc peer-link” is configured on this port-channel.

Figure 19 Create Port-Channels for Inter-Switch Link Interfaces

6. Add the inter-switch link ports to the port-channel created in the previous step with LACP, using the “channel-group 10 force mode active” command in interface configuration mode, as shown in Figure 20.

Figure 20 Add Ports to the Port-Channel

7. Repeat steps 1 to 6 to create the vPC domain on the peer Cisco Nexus 9000 switch.

8. Verify the vPC status using the “show vpc” command. A successful vPC configuration looks like the output in Figure 21.

Figure 21 Verify vPC Status
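The vPC steps above can be summarized as a configuration template. In this sketch, port-channel 10 and the `channel-group 10 force mode active` command come from the text; the vPC domain ID, the management addresses used for the peer keepalive (from the 192.0.2.0/24 documentation range), and the inter-switch link interface names are hypothetical placeholders. Compare the output against Figures 17 to 20 before using it.

```python
import ipaddress


def vpc_peer_config(domain_id, local_mgmt, peer_mgmt, isl_ports, peer_link_po=10):
    """Build NX-OS lines for the features, the vPC domain, and the peer-link port-channel."""
    if not 1 <= domain_id <= 1000:
        raise ValueError("vPC domain ID must be 1-1000")
    local, peer = ipaddress.ip_address(local_mgmt), ipaddress.ip_address(peer_mgmt)
    if local == peer:
        raise ValueError("keepalive source and destination must differ")
    lines = [
        "feature lacp",
        "feature vpc",
        f"vpc domain {domain_id}",
        f"  peer-keepalive destination {peer} source {local}",
        "  peer-gateway",
        f"interface port-channel {peer_link_po}",
        "  switchport mode trunk",
        "  vpc peer-link",
    ]
    for port in isl_ports:  # physical inter-switch links that form the peer-link
        lines += [
            f"interface {port}",
            "  switchport mode trunk",
            f"  channel-group {peer_link_po} force mode active",
            "  no shutdown",
        ]
    return "\n".join(lines)


# Switch A; for switch B, swap the two addresses and keep the same domain ID.
cfg_a = vpc_peer_config(10, "192.0.2.21", "192.0.2.22", ["Ethernet1/47", "Ethernet1/48"])
```

Building both peers from the same function keeps the domain ID identical, as step 3 requires, while the keepalive addresses are mirrored.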

Configure Port-Channels Connected to Fabric-Interconnects

Interfaces connected to the fabric interconnects must be in trunk mode, with the vMotion, Mgmt, and application VLANs allowed on them. On the switch side, the interfaces connected to FI-A and FI-B are in a vPC; on the FI side, the links connected to Cisco Nexus 9396 switches A and B are in regular LACP port-channels. It is good practice to give each port and port-channel on the switch a meaningful description to aid diagnosis if problems arise later. Refer to the following figures for the exact configuration commands for Cisco Nexus 9000 switches A and B:

9. To create and configure the port-channels for the interfaces connected to the fabric interconnects, follow the steps shown in Figure 22.

Figure 22 Create Port Channel for Interfaces Connected to Fabric Interconnects

10. Add ports to the port-channels as shown in Figure 23.

Figure 23 Add Ports to the Port-Channels

11. Repeat steps 9 and 10 on the peer switch.
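The FI-facing port-channels follow the same pattern. This sketch uses port-channels 25 and 26 (the numbers given in the verification subsection) with matching vPC numbers, and allows the Mgmt, vMotion, and VM-Data VLANs from Table 3. Which port-channel faces which fabric interconnect, the descriptions, and the member interface names are hypothetical. The Win_Clus VLAN can be added to the list in the same way if cluster traffic uses these uplinks.

```python
def fi_port_channel_config(po_id, members, vlans, description):
    """Build NX-OS lines for one vPC port-channel facing a fabric interconnect."""
    allowed = ",".join(str(v) for v in sorted(set(vlans)))
    lines = [
        f"interface port-channel {po_id}",
        f"  description {description}",
        "  switchport mode trunk",
        f"  switchport trunk allowed vlan {allowed}",
        f"  vpc {po_id}",  # same vPC number on both Nexus switches
    ]
    for port in members:
        lines += [
            f"interface {port}",
            f"  description {description}",
            "  switchport mode trunk",
            f"  switchport trunk allowed vlan {allowed}",
            f"  channel-group {po_id} mode active",  # LACP, matching the FI side
            "  no shutdown",
        ]
    return "\n".join(lines)


VLANS = [604, 40, 613]  # Mgmt, vMotion, VM-Data (Table 3)
to_fi_a = fi_port_channel_config(25, ["Ethernet1/25"], VLANS, "To-FI-A")
to_fi_b = fi_port_channel_config(26, ["Ethernet1/26"], VLANS, "To-FI-B")
```

Reusing the port-channel number as the vPC number is a common convention that makes the two switches' configurations easier to compare.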

Verify VLAN and Port-Channel Configuration

At this point, all ports and port-channels are configured with the necessary VLANs, switchport mode, and vPC configuration. Validate this configuration using the “show vlan”, “show port-channel summary”, and “show vpc” commands, as shown in the following figures. Note that ports come up only after the peer devices are also configured properly, so revisit this subsection after configuring the fabric interconnects in the Cisco UCS configuration section.

Figure 24 Show VLAN

The “show vlan” command can be restricted to a given VLAN or set of VLANs, as shown in Figure 24. Ensure that all required VLANs are in “active” status on both switches and that the right set of ports and port-channels are part of the necessary VLANs.

The port-channel configuration can be verified using the “show port-channel summary” command. Figure 25 shows the expected output of this command.

Figure 25 Show Port-Channel Summary

In this example, port-channel 10 is the vPC peer-link port-channel; port-channels 25 and 26 are connected to the Cisco UCS Fabric Interconnects. Make sure that state of the member ports of each port-channel is “P” (Up in port-channel). Note that ports may not come up if the peer ports are not properly configured. Common reasons for port-channel port being down are:

· Port-channel protocol mismatch across the peers (LACP vs. none)

· Inconsistencies across the two vPC peer switches. Use the “show vpc consistency-parameters {global | interface {port-channel | port} <id>}” command to diagnose such inconsistencies.
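Checking member flags by eye gets tedious as the number of port-channels grows, so a small parser can flag any member whose state is not “P”. In this sketch the sample output is illustrative only: the interface names and states are hypothetical, and the exact column layout can vary between NX-OS releases, so adjust the patterns to the output your switches print. Wrapped lines carrying additional members are attributed to the preceding port-channel.

```python
import re

SAMPLE = """\
Group Port-       Type     Protocol  Member Ports
      Channel
--------------------------------------------------------------------------------
10    Po10(SU)    Eth      LACP      Eth1/47(P)   Eth1/48(P)
25    Po25(SU)    Eth      LACP      Eth1/25(P)
26    Po26(SD)    Eth      LACP      Eth1/26(D)
"""

ROW = re.compile(r"^(\d+)\s+(Po\d+)\(\w+\)\s+\S+\s+\S+\s*(.*)$")
MEMBER = re.compile(r"(\S+?)\((\w+)\)")


def members_not_bundled(output):
    """Map each port-channel to its member ports whose flag is not 'P'."""
    problems = {}
    current = None
    for line in output.splitlines():
        row = ROW.match(line)
        if row:
            current, members = row.group(2), row.group(3)
        elif current and line[:1].isspace():
            members = line  # wrapped continuation line with more members
        else:
            continue
        for port, flag in MEMBER.findall(members):
            if flag != "P":
                problems.setdefault(current, []).append(port)
    return problems
```

For the sample above, the function reports `{"Po26": ["Eth1/26"]}`, pointing at the FI-facing member that still needs attention.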

The vPC status can be verified using the “show vpc” command. Figure 26 shows example output.

Figure 26 Show vPC Brief

Make sure that the vPC peer status is “peer adjacency formed ok” and that all port-channels, including the peer-link port-channel, have status “up”.

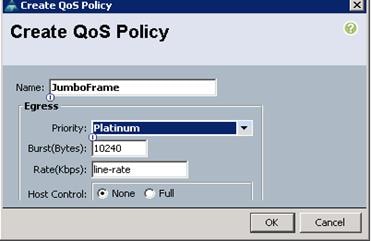

Enable Jumbo Frames on Cisco Nexus 9396PX Switches

The Cisco solution for EMC VSPEX VMware architectures requires an MTU of 9216 bytes (jumbo frames) for efficient storage and vMotion traffic. You can configure the system jumbo MTU size, which specifies the MTU size used for Layer 2 interfaces. You can specify an even number between 1500 and 9216. If you do not configure the system jumbo MTU size, it defaults to 9216 bytes.

To configure jumbo MTU on the Cisco Nexus 9000 Series switches, perform the steps shown in Figure 27 on both switches A and B.

Figure 27 Configure Jumbo Frames
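The MTU rule above (an even value from 1500 to 9216) is easy to check before touching the switches. This sketch validates the value and emits `system jumbomtu` followed by an interface-level `mtu` on each port-channel. Treating the port-channels from this section (10, 25, and 26) as the interfaces to update is an assumption, so follow Figure 27 for the exact commands validated in this solution.

```python
def validate_jumbo_mtu(mtu):
    """Accept only even values from 1500 to 9216, as described in the text."""
    if not 1500 <= mtu <= 9216 or mtu % 2:
        raise ValueError(f"MTU {mtu} must be an even number between 1500 and 9216")
    return mtu


def jumbo_config(port_channels, mtu=9216):
    """Build NX-OS lines: the system jumbo MTU, then the same MTU on each port-channel."""
    mtu = validate_jumbo_mtu(mtu)
    lines = [f"system jumbomtu {mtu}"]
    for po in port_channels:
        lines += [f"interface port-channel {po}", f"  mtu {mtu}"]
    return "\n".join(lines)


print(jumbo_config([10, 25, 26]))
```

Keeping the MTU in one variable avoids a mismatch between switches A and B, which would otherwise show up only as dropped jumbo frames on vMotion.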

Configure Ports Connected To Infrastructure Network

Depending on the available network infrastructure, several methods and features can be used to uplink this VSPEX environment. If an existing Cisco Nexus environment is present, Cisco recommends using vPCs to uplink the Cisco Nexus 9396 switches included in this solution into the infrastructure. The previously described procedures can be used to create an uplink vPC to the existing environment.

Configure Cisco Unified Computing System

The following section provides a detailed procedure for configuring the Cisco Unified Computing System for use in this VSPEX environment. These steps should be followed precisely because a failure to do so could result in an improper configuration.

Perform Initial Setup of the Cisco UCS 6248 Fabric Interconnects

These steps provide details for the initial setup of the Cisco UCS 6248 fabric interconnects.

Cisco UCS 6248 A

1. Connect to the console port on the first Cisco UCS 6248 fabric interconnect.

2. At the prompt to enter the configuration method, enter console to continue.

3. If asked to either do a new setup or restore from backup, enter setup to continue.

4. Enter y to continue to set up a new fabric interconnect.

5. Enter y to enforce strong passwords.

6. Enter the password for the admin user.

7. Enter the same password again to confirm the password for the admin user.

8. When asked if this fabric interconnect is part of a cluster, answer y to continue.

9. Enter A for the switch fabric.

10. Enter the <cluster name> for the system name.

11. Enter the <Mgmt0 IPv4> address.

12. Enter the <Mgmt0 IPv4> netmask.

13. Enter the <IPv4 address> of the default gateway.

14. Enter the <cluster IPv4 address>.

15. To configure DNS, answer y.

16. Enter the <DNS IPv4 address>.

17. Answer y to set up the default domain name.

18. Enter the <default domain name>.

19. Review the settings printed to the console, and if they are correct, answer yes to save the configuration.

20. Wait for the login prompt to make sure the configuration has been saved.

Cisco UCS 6248 B

1. Connect to the console port on the second Cisco UCS 6248 fabric interconnect.

2. When prompted to enter the configuration method, enter console to continue.

3. The installer detects the presence of the partner fabric interconnect and adds this fabric interconnect to the cluster. Enter y to continue the installation.

4. Enter the admin password for the first fabric interconnect.

5. Enter the <Mgmt0 IPv4 address>.

6. Answer yes to save the configuration.

7. Wait for the login prompt to confirm that the configuration has been saved.

8. Using SSH, connect to the Cisco UCS Manager cluster IP address configured in the previous steps and verify the HA status (for example, with the show cluster state command), as shown in Figure 28.

Figure 28 Verify FI Cluster State
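Most setup-dialog mistakes are addressing mistakes, so the values entered for both fabric interconnects can be sanity-checked beforehand. This minimal sketch assumes, as in the dialogs above, that both Mgmt0 addresses, the cluster IP address, and the default gateway sit in one management subnet; the addresses shown are placeholders from the 192.0.2.0/24 documentation range.

```python
import ipaddress


def check_fi_addressing(mgmt_a, mgmt_b, cluster_ip, gateway, netmask):
    """Return a list of problems with the values entered in the setup dialogs."""
    net = ipaddress.ip_network(f"{gateway}/{netmask}", strict=False)
    roles = {"FI-A mgmt0": mgmt_a, "FI-B mgmt0": mgmt_b,
             "cluster IP": cluster_ip, "gateway": gateway}
    problems, seen = [], {}
    for role, text in roles.items():
        ip = ipaddress.ip_address(text)
        if ip not in net or ip in (net.network_address, net.broadcast_address):
            problems.append(f"{role} {ip} is not a usable host address in {net}")
        if ip in seen:
            problems.append(f"{role} {ip} duplicates {seen[ip]}")
        seen[ip] = role
    return problems


print(check_fi_addressing("192.0.2.11", "192.0.2.12", "192.0.2.10",
                          "192.0.2.1", "255.255.255.0"))
```

An empty list means the four addresses are distinct, usable hosts in the same subnet.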

Log into Cisco UCS Manager

These steps provide details for logging into the Cisco UCS environment.

1. Open a web browser and navigate to the Cisco UCS 6248 fabric interconnect cluster address to launch the Cisco UCS Manager.

Figure 29 Connect to Cisco UCS Manager

2. Click the Launch link to download the Cisco UCS Manager software. If prompted to accept security certificates, accept as necessary.

3. On the next screen, click Launch UCS Manager.

Figure 30 Launch Cisco UCS Manager

4. When prompted, enter the credentials to log in to the Cisco UCS Manager software.

Figure 31 Cisco UCS Manager Login

Add a Block of IP Addresses for KVM Access

These steps provide details for creating a block of KVM IP addresses for server access in the Cisco UCS environment:

1. Select the LAN tab at the top of the left window.

2. Select Pools > root > IP Pools > IP Pool ext-mgmt

3. Select the appropriate radio button for the preferred assignment order.

4. Select Create Block of IP Addresses.

5. Enter the starting IP address of the block, the number of IP addresses needed, and the subnet and gateway information.

6. Click OK to create the IP block.

7. Click OK in the message box.

Figure 32 Add a Block of IP Address
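Because the ext-mgmt block is defined by a starting address and a size, it is worth confirming that the whole block fits in the management subnet before clicking OK. A minimal sketch, assuming at least one KVM address per blade server and placeholder addresses from the 192.0.2.0/24 documentation range:

```python
import ipaddress


def kvm_block(start, count, gateway, netmask):
    """Return the addresses an ext-mgmt block would cover, or raise if any is unusable."""
    net = ipaddress.ip_network(f"{gateway}/{netmask}", strict=False)
    gw = ipaddress.ip_address(gateway)
    first = ipaddress.ip_address(start)
    block = [first + i for i in range(count)]
    for ip in block:
        if ip not in net or ip in (net.network_address, net.broadcast_address):
            raise ValueError(f"{ip} is not a usable host address in {net}")
        if ip == gw:
            raise ValueError(f"{ip} overlaps the default gateway")
    return block


print([str(ip) for ip in kvm_block("192.0.2.100", 4, "192.0.2.1", "255.255.255.0")])
```

A block starting too close to the end of the subnet fails immediately instead of leaving a blade without a KVM address.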

Synchronize Cisco UCS to NTP

These steps provide details for synchronizing the Cisco UCS environment to the NTP server:

1. Select the Admin tab at the top of the left window.

2. Select All > Timezone Management.

3. In the right pane, select the appropriate timezone in the Timezone drop-down list.

4. Click Add NTP Server.

5. Enter the NTP server IP address and click OK.

6. Click Next and then click Save Changes. Then click OK.

Figure 33 Adding NTP Server

Chassis Discovery Policy

The base architecture includes two uplinks from each I/O module (IOM) installed in the Cisco UCS chassis. Follow these steps to modify the chassis discovery policy accordingly:

1. Click the Equipment tab in the left pane and select the Equipment top-node object. In the right pane, click the Policies tab.

2. Under Global Policies, change the Chassis Discovery Policy to 2-link or set it to match the number of uplink ports that are cabled between the chassis or IO modules (IOMs) and the fabric interconnects.

3. Select Link Grouping Preference to be Port Channel and click Save Changes in the bottom right corner.

Figure 34 Specify Chassis Discovery Policy Settings
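The rule in step 2 (set the policy to match the uplinks cabled per IOM) can be expressed as a small helper. This is a sketch: it assumes the 1-, 2-, 4-, and 8-link policy values available with the Cisco UCS 2208 IOM, and rounding an uneven cable count down is a conservative assumption rather than documented UCS Manager behavior. It also computes aggregate chassis bandwidth from the 10-Gbps IOM fabric links.

```python
# Link-count values of the chassis discovery policy (Platform Max omitted).
POLICY_LINKS = (1, 2, 4, 8)


def discovery_policy(links_per_iom):
    """Largest n-link policy that does not exceed the uplinks cabled per IOM."""
    if links_per_iom < 1:
        raise ValueError("at least one IOM uplink is required")
    return f"{max(n for n in POLICY_LINKS if n <= links_per_iom)}-link"


def chassis_bandwidth_gbps(links_per_iom, ioms=2, link_gbps=10):
    """Aggregate chassis-to-FI bandwidth across both IOMs."""
    return links_per_iom * ioms * link_gbps
```

For this base architecture (two uplinks per IOM, two IOMs), the helpers return `2-link` and 40 Gbps per chassis.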

Enable Server, Fibre Channel and Uplink Ports

These steps provide details for enabling Fibre Channel, server, and uplink ports.

Server Ports

In the current configuration, ports 1 and 2 of the fabric interconnects are connected to the blade chassis. Follow these steps to configure them as server ports:

1. Click the Equipment tab on the top left of the window.

2. Select Equipment > Fabric Interconnects > Fabric Interconnect A (primary) > Fixed Module.

3. Expand the Ethernet Ports object.

4. Select the ports that are connected to the chassis, right-click them, and select Configure as Server Port.

5. Click Yes to confirm the server ports, and then click OK.

Repeat the above procedure to configure the ports on Fabric Interconnect B.


Figure 35 Configure Server Ports

The ports connected to the chassis are now configured as server ports.

Figure 36 Port Status and Role

Fibre Channel Ports

In this configuration, the storage array is connected directly to the Cisco UCS fabric interconnects without any upstream SAN switch. The following steps describe how to configure direct-attached storage in Cisco UCS. This configuration uses the Fibre Channel ports on the expansion module of the fabric interconnect.

Follow the steps given below to configure Fibre Channel Ports:

1. Select Equipment > Fabric Interconnects > Fabric Interconnect A (primary) and right-click.

2. Click Set FC Switching Mode.

Figure 37 FC Switching Mode

3. Reboot the fabric interconnect, and then repeat steps 1 and 2 on the other fabric interconnect.

4. Select Equipment > Fabric Interconnects > Fabric Interconnect A.

5. On the General tab in the right pane, click Configure Unified Ports under Actions.

Figure 38 Configure Unified Ports

6. Click Yes to allow the change of port mode from Ethernet to Fibre Channel.

Figure 39 Port Mode Change Message

7. Click the button for the module whose port modes you want to configure:

— Configure Expansion Module

Figure 40 Configure Unified Ports

In the current configuration, we have selected the Expansion Module.

8. Adjust the slider to configure the desired number of ports as FC ports.

Figure 41 Configure Expansion Module Ports

9. Click Yes on the confirmation message box to continue with the port mode configuration.

10. Click Finish to save the port mode configuration.

Depending upon the module for which you configured the port modes, data traffic for the Cisco UCS domain is interrupted as follows:

· Fixed module—the fabric interconnect reboots. All data traffic through that fabric interconnect is interrupted. In a cluster configuration that provides high availability and includes servers with vNICs that are configured for failover, traffic fails over to the other fabric interconnect and no interruption occurs. It takes about 8 minutes for the fixed module to reboot.

· Expansion module—the module reboots. All data traffic through ports in that module is interrupted. It takes about 1 minute for the expansion module to reboot.

Figure 42 Confirm the Configuration Change

11. Repeat steps 4 to 10 on Fabric Interconnect B.

12. Once the FC port mode configuration is complete, make sure the selected ports list the Desired IF Role as FC Uplink.

13. Next, configure the FC uplink ports as FC storage ports.

14. Select Equipment > Fabric Interconnects > Fabric Interconnect A (primary) and select Expansion Module 2.

15. Select the ports configured with FC Uplink role (here Ports 15 and 16), right-click and then click Configure as FC Storage Port.

Figure 43 Configure as FC Storage Port

16. Click Yes on the next prompt.

17. Repeat steps 14 to 16 to configure the ports on Fabric Interconnect B.

The selected ports can now be used as FC ports connected directly to the storage array.

Figure 44 FC Storage Ports
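The slider in step 8 and the disruption notes after step 10 can be modeled in a few lines. This minimal sketch assumes a 16-port expansion module whose slider keeps Ethernet ports at the start of the module and FC ports at the end. Under that assumption, two FC ports map to ports 15 and 16, which are the ports this guide uses. The function and constant names are hypothetical.

```python
from __future__ import annotations

# Assumption: a 16-port unified expansion module.
EXPANSION_MODULE_PORTS = 16

# Approximate disruption per module type, as described in this guide.
REBOOT_IMPACT = {
    "fixed": "fabric interconnect reboots (about 8 minutes)",
    "expansion": "expansion module reboots (about 1 minute)",
}


def fc_ports_from_slider(total_ports: int, fc_count: int) -> list[int]:
    """Return the 1-based port numbers that become FC ports.

    Assumes the slider fills FC ports from the end of the module.
    """
    if not 0 <= fc_count <= total_ports:
        raise ValueError("FC port count must be between 0 and the module size")
    return list(range(total_ports - fc_count + 1, total_ports + 1))


def describe_change(module: str, fc_count: int, total_ports: int) -> str:
    """Summarize which ports turn into FC ports and what gets interrupted."""
    ports = fc_ports_from_slider(total_ports, fc_count)
    return f"FC ports {ports}; {REBOOT_IMPACT[module]}"


print(describe_change("expansion", 2, EXPANSION_MODULE_PORTS))
# prints: FC ports [15, 16]; expansion module reboots (about 1 minute)
```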

LAN Uplink Ports

To configure the LAN uplink ports, complete the following steps:

1. Click the Equipment tab on the top left of the window.

2. Select Equipment > Fabric Interconnects > Fabric Interconnect A (primary) > Fixed Module.

3. Expand the Ethernet Ports object.

4. Select the ports that are connected to the LAN, right-click them and then select Configure as Uplink Port.

5. Click Yes to confirm and then click OK.

Repeat the above procedure to configure the ports on Fabric Interconnect B.

The uplink ports are now configured.

Figure 45 Configure LAN Uplink Ports
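Taken together, the server, FC storage, and LAN uplink steps give each physical port exactly one role on each fabric interconnect. The sketch below records this guide's port plan as (slot, port) pairs and checks that no port has two roles. The uplink port numbers are those listed for the port channels in Table 4. `PLAN` and `check_plan` are illustrative names, and the slot numbers assume that the fixed module is slot 1 and the expansion module is slot 2.

```python
from __future__ import annotations

# Port plan from this guide, as (slot, port) pairs on each fabric interconnect.
# Assumption: slot 1 is the fixed module, slot 2 the expansion module.
PLAN = {
    "server": [(1, 1), (1, 2)],        # chassis IOM links
    "fc_storage": [(2, 15), (2, 16)],  # direct-attached VNX5400
    "uplink": [(1, 19), (1, 20)],      # members of the LAN uplink port channel
}


def check_plan(plan: dict[str, list[tuple[int, int]]]) -> int:
    """Raise ValueError if a port has two roles; return the number of ports assigned."""
    seen: dict[tuple[int, int], str] = {}
    for role, ports in plan.items():
        for slot_port in ports:
            if slot_port in seen:
                raise ValueError(f"{slot_port} is both {seen[slot_port]} and {role}")
            seen[slot_port] = role
    return len(seen)


# The same plan is applied to Fabric Interconnect A and Fabric Interconnect B.
for fi in ("A", "B"):
    print(f"FI {fi}: {check_plan(PLAN)} ports assigned")  # prints 6 for each FI
```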

Acknowledge the Cisco UCS Chassis

The connected chassis needs to be acknowledged before it can be managed by Cisco UCS Manager. Follow the steps given below to acknowledge the Cisco UCS Chassis:

1. Select Chassis 1 in the left pane.

2. Click Acknowledge Chassis under Actions in the right pane.

Figure 46 Acknowledge Cisco UCS Chassis

Create Uplink Port Channels to Cisco Nexus Switches

Uplink port channels must be configured to enable communication between the Cisco UCS environment and external networks. In this configuration, the following uplink port channels were configured in Cisco UCS Manager.

Table 4 Uplink Port Channels

| Cisco Fabric Interconnects | Port Channel Name | Port Channel ID | Member Ports |
|---|---|---|---|
| Fabric A | PO-25-FabA | 25 | Fabric Interconnect A Eth1/19 and Eth1/20 |
| Fabric B | PO-26-FabB | 26 | Fabric Interconnect B Eth1/19 and Eth1/20 |

To configure the necessary port channels out of the Cisco UCS environment, complete the following steps:

1. In Cisco UCS Manager, click the LAN tab in the navigation pane.


In this procedure, two port channels are created: one from fabric A to both Cisco Nexus switches and one from fabric B to both Cisco Nexus switches.

2. Under LAN > LAN Cloud, expand the Fabric A tree.

3. Right-click Port Channels.

4. Click Create Port Channel.

Figure 47 Create Port-Channel

5. Enter 25 as the unique ID of the port channel.

Figure 48 Set Port Channel Name

6. Enter PO-25-FabA as the name of the port channel.

7. Click Next.

8. Select the following ports to be added to the port channel:

— Slot ID 1 and port 19

— Slot ID 1 and port 20

9. Click >> to add the ports to the port channel.

Figure 49 Add Ports to Port Channel

10. Click Finish to create the port channel.

11. Click OK.

12. In the navigation pane, under LAN > LAN Cloud, expand the fabric B tree.

13. Right-click Port Channels.

14. Select Create Port Channel.

15. Enter 26 as the unique ID of the port channel.

16. Enter PO-26-FabB as the name of the port channel.

17. Click Next.

18. Select the following ports to be added to the port channel:

— Slot ID 1 and port 19

— Slot ID 1 and port 20

19. Click >> to add the ports to the port channel.

20. Click Finish to create the port channel.

21. Click OK.

The following figure shows the uplink port channels in Cisco UCS Manager after they are created.

Figure 50 Port Channel Status
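Before you create the port channels, you can check the values in Table 4 against the uplink ports configured earlier. The following Python sketch is illustrative only. `UplinkPortChannel` and `validate` are hypothetical names, and the naming check encodes the PO-<ID>-Fab<fabric> convention that this guide uses.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class UplinkPortChannel:
    fabric: str
    pc_id: int
    name: str
    members: tuple[str, ...]  # Ethernet interfaces on that fabric interconnect


# Values taken from Table 4 of this guide.
PORT_CHANNELS = [
    UplinkPortChannel("A", 25, "PO-25-FabA", ("Eth1/19", "Eth1/20")),
    UplinkPortChannel("B", 26, "PO-26-FabB", ("Eth1/19", "Eth1/20")),
]


def validate(pcs: list[UplinkPortChannel], uplink_ports: dict[str, set[str]]) -> list[str]:
    """Return problems found; an empty list means the plan is consistent."""
    problems = []
    ids = [pc.pc_id for pc in pcs]
    if len(ids) != len(set(ids)):
        problems.append("port channel IDs repeat (this guide uses one ID per fabric)")
    for pc in pcs:
        # Every member must already be configured as an uplink port on its own FI.
        missing = set(pc.members) - uplink_ports.get(pc.fabric, set())
        if missing:
            problems.append(f"{pc.name}: {sorted(missing)} are not uplink ports on FI {pc.fabric}")
        if pc.name != f"PO-{pc.pc_id}-Fab{pc.fabric}":
            problems.append(f"{pc.name} does not follow the PO-<ID>-Fab<fabric> naming")
    return problems


uplinks = {"A": {"Eth1/19", "Eth1/20"}, "B": {"Eth1/19", "Eth1/20"}}
print(validate(PORT_CHANNELS, uplinks))  # prints []
```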

Create VSANs

To configure the VSANs for the Cisco UCS environment that is directly connected to the EMC VNX5400 Fibre Channel storage array, complete the following steps:

1. Click the SAN tab at the top left of the window.

2. Expand the Storage Cloud tree.

3. Right-click VSANs.

4. Click Create Storage VSAN.

Figure 51 Create Storage VSAN

Using the following table as a reference, complete steps 5 to 10 to create the two VSANs, Fab-A and Fab-B.

Table 5 VSAN Details

| VSAN Name | FC Zoning | Fabric | VSAN ID | FCoE VLAN |
|---|---|---|---|---|
| Fab-A | Enabled | Fabric A | 100 | 100 |
| Fab-B | Enabled | Fabric B | 200 | 200 |

5. Enter the VSAN name.

6. Enable the FC zoning option.

7. Select the Fabric.

8. Enter the VSAN ID.

9. Enter the FCoE VLAN ID. Make sure that FCoE VLAN ID is a VLAN ID that is not currently used in the network.

10. Click OK to create the VSAN.

Figure 52 Create Storage VSAN Wizard
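You can check the VSAN plan in Table 5 in the same way before entering it in the wizard. This sketch assumes you already know which Ethernet VLAN IDs are in use in the network; the `vlans_in_use` set is a hypothetical input. The sketch flags any FCoE VLAN ID that collides with those VLANs, which is the condition step 9 warns about.

```python
from __future__ import annotations

# Values from Table 5 of this guide.
VSANS = {
    "Fab-A": {"fabric": "A", "vsan_id": 100, "fcoe_vlan": 100, "zoning": True},
    "Fab-B": {"fabric": "B", "vsan_id": 200, "fcoe_vlan": 200, "zoning": True},
}


def check_vsans(vsans: dict[str, dict], vlans_in_use: set[int]) -> list[str]:
    """Return a list of problems; an empty list means the plan looks consistent."""
    problems = []
    fcoe_ids = [v["fcoe_vlan"] for v in vsans.values()]
    if len(fcoe_ids) != len(set(fcoe_ids)):
        problems.append("an FCoE VLAN ID is shared by more than one VSAN")
    for name, v in vsans.items():
        # Step 9: the FCoE VLAN must not already be used in the network.
        if v["fcoe_vlan"] in vlans_in_use:
            problems.append(f"{name}: FCoE VLAN {v['fcoe_vlan']} is already used in the network")
        if not v["zoning"]:
            problems.append(f"{name}: FC zoning is disabled, but this design enables it")
    if sorted(v["fabric"] for v in vsans.values()) != ["A", "B"]:
        problems.append("expected exactly one VSAN per fabric")
    return problems


# Hypothetical set of Ethernet VLAN IDs already in use (VLAN 1 is the default VLAN).
print(check_vsans(VSANS, vlans_in_use={1}))  # prints []
```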

Add Interfaces to VSAN

This section describes how to add the FC storage ports (ports 15 and 16 in this configuration) to the appropriate VSANs created in the previous section.

1. Select Equipment > Fabric Interconnects > Fabric Interconnect A and then select Expansion Module 2.

2. Select the FC Port 15.

3. On the General tab for FC port 15, select Fab-A (100) from the VSAN drop-down list.

4. Click Save Changes to commit the configuration.

Figure 53 Add FI-A Interfaces to VSAN

5. Repeat steps 2 to 4 for FC storage port 16.

6. Select Equipment > Fabric Interconnects > Fabric Interconnect B and then select Expansion Module 2.

7. Select the FC Port 15.

8. On the General tab for FC port 15, select Fab-B (200) from the VSAN drop-down list.

Figure 54 Add FI-B Interfaces to VSAN

9. Click Save Changes to commit the configuration.

10. Repeat steps 7 to 9 for FC storage port 16. The ports should then show the status in the following figure. If required, disable and re-enable the ports so that the port status changes to Up and Enabled.

Figure 55 Storage FC Interfaces View
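The goal of this section is for each FC storage port to join the VSAN of its own fabric. A minimal Python sketch of that check follows. The `(fabric, slot, port)` keys and the `misassigned` helper are hypothetical and only restate the assignments made above.

```python
from __future__ import annotations

# Expected VSAN per fabric, from Table 5 of this guide.
FABRIC_VSAN = {"A": 100, "B": 200}

# (fabric, slot, port) -> VSAN, as configured above on Expansion Module 2.
ASSIGNMENTS = {
    ("A", 2, 15): 100, ("A", 2, 16): 100,
    ("B", 2, 15): 200, ("B", 2, 16): 200,
}


def misassigned(assignments: dict[tuple[str, int, int], int]) -> list[tuple[str, int, int]]:
    """Return FC storage ports whose VSAN does not belong to their own fabric."""
    return [key for key, vsan in sorted(assignments.items()) if vsan != FABRIC_VSAN[key[0]]]


print(misassigned(ASSIGNMENTS))  # prints []
```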

Confirm Storage Port WWPN Is Logged in to the Fabric

To verify that the WWPNs of the storage array are logged in to the fabric, complete the following steps:

1. Log in to the Cisco UCS Manager CLI using a Secure Shell (SSH) or Telnet connection.

2. Enter the connect nxos { a | b } command, where a | b represents FI A or FI B.

3. Enter the show flogi database vsan <vsan ID> command, where <vsan ID> is the identifier for the VSAN.

Figure 56 Show Flogi Database on FI-A

Figure 57 Show Flogi Database on FI-B
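You can also check the output of `show flogi database vsan <vsan ID>` programmatically, for example after capturing it over SSH. The parser below is a sketch that assumes the usual five-column layout (INTERFACE, VSAN, FCID, PORT NAME, NODE NAME). The sample output and all WWPNs are placeholders, not values from this deployment.

```python
from __future__ import annotations

import re

WWN = re.compile(r"^([0-9a-f]{2}:){7}[0-9a-f]{2}$", re.IGNORECASE)


def parse_flogi(output: str) -> list[dict[str, str]]:
    """Extract login entries from 'show flogi database vsan <id>' text output."""
    entries = []
    for line in output.splitlines():
        cols = line.split()
        # Data rows have five columns and a WWN in the PORT NAME column.
        if len(cols) == 5 and WWN.match(cols[3]):
            iface, vsan, fcid, wwpn, wwnn = cols
            entries.append({"interface": iface, "vsan": vsan, "fcid": fcid,
                            "wwpn": wwpn.lower(), "wwnn": wwnn.lower()})
    return entries


def missing_wwpns(output: str, expected: set[str]) -> set[str]:
    """Return the expected storage WWPNs that are not logged in."""
    logged_in = {e["wwpn"] for e in parse_flogi(output)}
    return {w.lower() for w in expected} - logged_in


# Placeholder output; real WWPNs come from the VNX storage ports.
SAMPLE = """\
--------------------------------------------------------------------------------
INTERFACE        VSAN    FCID           PORT NAME               NODE NAME
--------------------------------------------------------------------------------
fc2/15           100   0xef0000  50:06:01:60:00:00:00:01 50:06:01:60:80:00:00:01
fc2/16           100   0xef0020  50:06:01:68:00:00:00:01 50:06:01:60:80:00:00:01

Total number of flogi = 2.
"""
print(missing_wwpns(SAMPLE, {"50:06:01:60:00:00:00:01", "50:06:01:68:00:00:00:01"}))  # prints set()
```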

Configure FC Zoning

The following steps create storage connection policies. Two storage connection policies are created for the Cisco UCS environment: one for fabric A and one for fabric B.

1. Select the SAN tab > Policies > Root.

2. Right-click Storage Connection Policies and select Create Storage Connection Policy.

Figure 58 Create Storage Connection Policy

3. Enter SAN-Fabric-A as the name of the storage connection policy.

4. Select the zoning type as Single Initiator Multiple Targets, since we have multiple storage ports connected to the same fabric.

5. Click the plus sign next to the FC Target Endpoints section. The Create FC Target Endpoint window opens.

6. Enter the WWPN of the FC target.

Figure 59 Create FC Target End Point

7. Set the fabric path to Fabric A.

8. For Select VSAN, select Fab-A (100) from the drop-down list.

9. Click OK.

10. Repeat Steps 5 to 9 to add multiple FC targets on fabric A path.

Figure 60 Storage Connection Policies View Fabric A

11. Click OK to save changes.

12. Repeat steps 2 to 11 to create SAN-Fabric-B for Fabric B.

Figure 61 Storage Connection Policies View for Fabric B

The following figure shows the storage connection policies created in the previous steps.

Figure 62 Storage Connection Policies View
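Single Initiator Multiple Targets zoning puts each host initiator in its own zone, together with every storage target on the same fabric. UCS Manager creates these zones itself from the storage connection policy. The Python sketch below only illustrates the resulting zone membership, and the zone names, vHBA labels, and WWPNs are hypothetical placeholders.

```python
from __future__ import annotations


def simt_zones(initiators: dict[str, str], targets: list[str], fabric: str) -> dict[str, list[str]]:
    """Single initiator, multiple targets: one zone per initiator WWPN.

    initiators maps a vHBA label to its WWPN; targets are the storage WWPNs
    on the same fabric (the FC Target Endpoints of the policy).
    """
    return {
        f"{fabric}_{label}": [wwpn, *targets]
        for label, wwpn in sorted(initiators.items())
    }


# Placeholder values for Fabric A only.
zones = simt_zones(
    initiators={
        "host1-vhba0": "20:00:00:25:b5:0a:00:01",
        "host2-vhba0": "20:00:00:25:b5:0a:00:02",
    },
    targets=["50:06:01:60:00:00:00:01", "50:06:01:68:00:00:00:01"],
    fabric="A",
)
for name, members in zones.items():
    print(name, members)
```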

Create UUID Suffix Pools

The following steps configure the necessary UUID suffix pools for the Cisco UCS environment.

1. Click the Servers tab on the top left of the window.

2. Select Pools > root.

3. Right-click UUID Suffix Pools and select Create UUID Suffix Pool.

Figure 63 Create UUID Suffix Pool

4. Enter UUID_Pool as the name of the UUID suffix pool.

5. (Optional) Give the UUID suffix pool a description. Leave the prefix at the derived option.

6. Leave the Assignment order as Default.

7. Click Next to continue.

8. Click Add to add a block of UUIDs.

9. Leave the From field at its default setting.

10. Specify a size of the UUID block sufficient to support the available blade resources.

11. Click OK to proceed.

12. Click Finish and then click OK.

Figure 64 Create UUID Suffix Pool – Add UUID Blocks
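Step 10 sizes the UUID block to match the available blades. The following sketch expands a block into individual suffixes. It assumes that the suffix is 64 bits written as XXXX-XXXXXXXXXXXX (4 and 12 hex digits, for example 0000-000000000001). The blade count is a hypothetical example.

```python
from __future__ import annotations

MAX_SUFFIX = 0xFFFF_FFFF_FFFF_FFFF  # assumption: 16 hex digits in total


def uuid_suffixes(start: str, size: int) -> list[str]:
    """Expand a starting suffix and a block size into individual UUID suffixes."""
    value = int(start.replace("-", ""), 16)
    if size < 1 or value + size - 1 > MAX_SUFFIX:
        raise ValueError("block does not fit in the 64-bit suffix space")
    suffixes = []
    for n in range(value, value + size):
        digits = f"{n:016X}"
        suffixes.append(f"{digits[:4]}-{digits[4:]}")
    return suffixes


# Hypothetical sizing: one suffix per blade service profile, 4 blades.
block = uuid_suffixes("0000-000000000001", 4)
print(block[0], block[-1])  # prints 0000-000000000001 0000-000000000004
```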

Create a MAC Address Pool

The following steps configure the necessary MAC address pool for the Cisco UCS environment.

1. Click the LAN tab on the left of the window.

2. Select Pools > root > MAC Pools.

3. In the right pane, click Create MAC Pool.

Figure 65 Create MAC Pool

4. Enter a name for the MAC pool.

5. (Optional) Enter a description of the MAC pool.

6. Select Default assignment order and click Next.

7. Click Add.

8. Specify a starting MAC address and a size of the MAC address pool sufficient to support the available blade resources.

9. Click OK.

10. Click Finish and then OK to create the MAC Address Pool.

Figure 66 Create MAC Pool – Add MAC Addresses

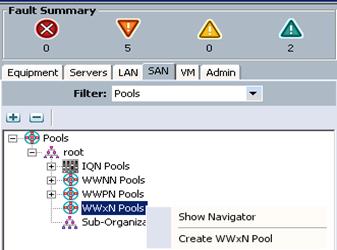
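Step 8 calls for a MAC pool large enough for the available blades. A simple way to size it is to multiply the number of blades by the number of vNICs per blade. The sketch below does that and then expands the block. The blade and vNIC counts are hypothetical, and the starting address uses the 00:25:B5 prefix commonly used for Cisco UCS MAC pools.

```python
from __future__ import annotations


def pool_size(blades: int, vnics_per_blade: int) -> int:
    """One MAC address per vNIC on every blade."""
    return blades * vnics_per_blade


def mac_block(start: str, size: int) -> list[str]:
    """Expand a starting MAC address and a block size into individual addresses."""
    value = int(start.replace(":", ""), 16)
    last = value + size - 1
    if size < 1 or last > 0xFFFF_FFFF_FFFF:
        raise ValueError("block does not fit in the 48-bit MAC address space")
    macs = []
    for n in range(value, last + 1):
        digits = f"{n:012X}"
        macs.append(":".join(digits[i:i + 2] for i in range(0, 12, 2)))
    return macs


# Hypothetical sizing: 4 blades with 4 vNICs each.
block = mac_block("00:25:B5:00:00:00", pool_size(blades=4, vnics_per_blade=4))
print(block[0], block[-1], len(block))  # prints 00:25:B5:00:00:00 00:25:B5:00:00:0F 16
```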

Create WWxN Pools

The following steps configure the necessary WWxN pools for the Cisco UCS environment.

1. Click the SAN tab at the top left of the window.

2. Select Pools > root.

3. Right-click WWxN Pools and select Create WWxN Pool.

Figure 67 Create WWxN Pool

4. Enter a name for the WWxN pool.

5. (Optional) Add a description for the WWxN pool. Click Next to continue.

Figure 68 Create WWxN Pool – Define Name and Description

6. Select 3 Ports per Node from the drop-down list for Max Ports per Node and click Next.

7. Click Add to add WWN blocks.

8. Specify a starting WWN address and a size of the WWxN address pool sufficient to support the available blade resources.

Figure 69 Create WWN Block

9. Click OK and click Finish.

Figure 70 Create WWxN Pool Wizard

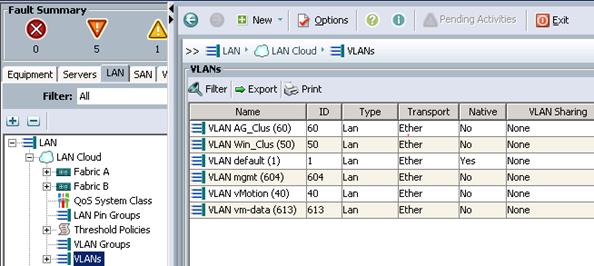
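With Max Ports per Node set to 3, each server consumes one WWNN plus up to three WWPNs from the same WWxN pool. The sketch below sizes the block on that basis. It assumes that UCS Manager expects the block size to be a multiple of Max Ports per Node + 1. The server count is a hypothetical example.

```python
def wwxn_pool_size(servers: int, max_ports_per_node: int = 3) -> int:
    """Each node takes one WWNN plus up to max_ports_per_node WWPNs."""
    return servers * (max_ports_per_node + 1)


def is_valid_block_size(size: int, max_ports_per_node: int = 3) -> bool:
    """Assumption: the block size must be a multiple of max_ports_per_node + 1."""
    return size > 0 and size % (max_ports_per_node + 1) == 0


# Hypothetical deployment with 8 blade servers.
print(wwxn_pool_size(8))        # prints 32
print(is_valid_block_size(30))  # prints False
```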

Create VLANs

The following steps configure the necessary VLANs for the Cisco UCS environment.

The following table shows the VLANs used in the configuration described in this document:

Table 6 VLAN Details

| VLAN Name |

VLAN Purpose |

VLAN ID |

| default |

VLAN for Cisco UCS KVM IP Pool |

1 |

| vMotion |