Replacing a Drive

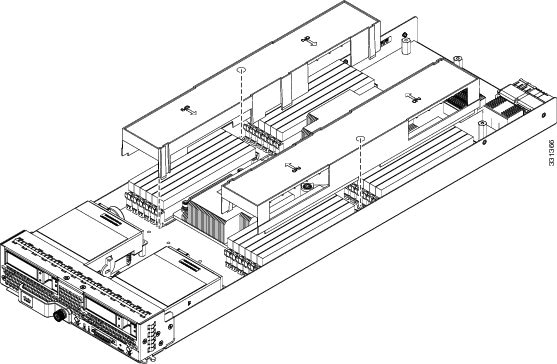

The Cisco UCS B200 M4 blade server uses an optional Cisco UCS FlexStorage modular storage subsystem that provides either two drive bays with a RAID controller or NVMe-based PCIe SSD support. If you purchased the UCS B200 M4 blade server without the modular storage subsystem configured as part of the system, a pair of blanking panels may be in place. Remove these panels before installing hard drives. If the drive bays are unused, leave the panels in place to ensure proper cooling and ventilation.

You can remove and install hard drives without removing the blade server from the chassis.

The drives supported in this blade server come with the hot-plug drive sled attached. Empty hot-plug drive sled carriers (containing no drives) are not sold separately from the drives. A list of currently supported drives is in the Cisco UCS B200 M4 Blade Server Specification Sheet.

Before upgrading or adding a drive to a running blade server, check the service profile to make sure that the new hardware configuration is within the parameters the service profile allows.

Note: See also 4K Sector Format SAS/SATA Drives Considerations.

Removing a Blade Server Hard Drive

To remove a hard drive from a blade server, follow these steps:

Procedure

Step 1. Push the button to release the ejector, and then pull the hard drive from its slot.

Step 2. Place the hard drive on an antistatic mat or antistatic foam if you are not immediately reinstalling it in another server.

Step 3. Install a hard disk drive blank faceplate to keep dust out of the blade server if the slot will remain empty.

Installing a Blade Server Drive

To install a drive in a blade server, follow these steps:

Procedure

Step 1. Place the drive ejector into the open position by pushing the release button.

Step 2. Gently slide the drive into the opening in the blade server until it seats into place.

Step 3. Push the drive ejector into the closed position.

You can use Cisco UCS Manager to format and configure RAID services. For details, see the Configuration Guide for the version of Cisco UCS Manager that you are using. The configuration guides are available at the following URL: http://www.cisco.com/en/US/products/ps10281/products_installation_and_configuration_guides_list.html

If you need to move a RAID cluster, see the Cisco UCS Manager Troubleshooting Reference Guide.

4K Sector Format SAS/SATA Drives Considerations

- You must boot 4K sector format drives in UEFI mode, not legacy mode. See the procedure in this section for setting UEFI boot mode in the boot policy.

- Do not configure 4K sector format and 512-byte sector format drives as part of the same RAID volume.

- Operating system support on 4K sector drives is as follows:
  - Windows: Windows Server 2012 and Windows Server 2012 R2
  - Linux: RHEL 6.5, 6.6, 6.7, 7.0, 7.2, and 7.3; SLES 11 SP3 and SLES 12
  - VMware ESXi is not supported.
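The first two considerations above can be checked before a drive is installed. The following Python sketch is illustrative only: the `Drive` class and the `check_raid_volume` function are hypothetical names invented for this example and are not part of Cisco UCS Manager or any Cisco SDK. It assumes that you already know the sector size of each drive, for example from the specification sheet.

```python
from dataclasses import dataclass


# Hypothetical model for illustration; not a Cisco UCS Manager object.
@dataclass(frozen=True)
class Drive:
    slot: int
    sector_bytes: int  # 512 or 4096


def check_raid_volume(drives, boot_mode, is_boot_volume=True):
    """Return a list of problems found in a planned RAID volume."""
    problems = []
    sector_sizes = {d.sector_bytes for d in drives}

    # 4K and 512-byte sector drives must not share a RAID volume.
    if len(sector_sizes) > 1:
        problems.append("mixed sector formats in one RAID volume")

    # 4K sector drives must be booted in UEFI mode, not legacy mode.
    if is_boot_volume and 4096 in sector_sizes and boot_mode.lower() != "uefi":
        problems.append("4K sector drives must boot in UEFI mode")

    return problems


if __name__ == "__main__":
    planned = [Drive(slot=1, sector_bytes=4096), Drive(slot=2, sector_bytes=512)]
    for problem in check_raid_volume(planned, boot_mode="legacy"):
        print(problem)
```

An empty list means that the planned volume does not violate either rule. The sketch does not check operating system support.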

Setting Up UEFI Mode Booting in the UCS Manager Boot Policy

Procedure

Step 1. In the Navigation pane, click Servers.

Step 2. Expand Servers > Policies.

Step 3. Expand the node for the organization where you want to create the policy. If the system does not include multitenancy, expand the root node.

Step 4. Right-click Boot Policies and select Create Boot Policy. The Create Boot Policy wizard displays.

Step 5. Enter a unique name and description for the policy. The name can be between 1 and 16 alphanumeric characters. You cannot use spaces or any special characters other than - (hyphen), _ (underscore), : (colon), and . (period). You cannot change this name after the object is saved.

Step 6. (Optional) After you make changes to the boot order, check the Reboot on Boot Order Change check box to reboot all servers that use this boot policy. On a server with a non-Cisco VIC adapter, the server always reboots when boot policy changes are saved if SAN devices were added, deleted, or reordered, even when this check box is not checked.

Step 7. (Optional) Check the Enforce vNIC/vHBA/iSCSI Name check box.

Step 8. In the Boot Mode field, choose the UEFI radio button.

Step 9. Check the Boot Security check box if you want to enable UEFI boot security.

Step 10. Configure one or more of the following boot options for the boot policy and set their boot order:

You can specify a primary and a secondary SAN boot. If the primary boot fails, the server attempts to boot from the secondary.
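The naming rule in Step 5 can be checked before you enter a name in the wizard. The following Python sketch is illustrative only: `is_valid_policy_name` is a hypothetical helper, not a Cisco UCS Manager function, and it assumes that "alphanumeric" means the ASCII letters A to Z, a to z, and the digits 0 to 9. It does not check whether the name is unique.

```python
import re

# 1 to 16 characters: ASCII letters, digits, hyphen, underscore, colon, period.
# Assumption: "alphanumeric" is limited to ASCII letters and digits.
_POLICY_NAME_RE = re.compile(r"[A-Za-z0-9_:.\-]{1,16}")


def is_valid_policy_name(name: str) -> bool:
    """Return True if name satisfies the character and length rule in Step 5."""
    return _POLICY_NAME_RE.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ("uefi-boot_4k", "uefi boot", "a-name-that-is-too-long"):
        print(candidate, is_valid_policy_name(candidate))
```

Because the name cannot be changed after the object is saved, checking it in advance avoids having to delete and re-create the policy.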
