更换计算服务器UCS C240 M4 - vEPC

下载选项

非歧视性语言

此产品的文档集力求使用非歧视性语言。在本文档集中,非歧视性语言是指不隐含针对年龄、残障、性别、种族身份、族群身份、性取向、社会经济地位和交叉性的歧视的语言。由于产品软件的用户界面中使用的硬编码语言、基于 RFP 文档使用的语言或引用的第三方产品使用的语言,文档中可能无法确保完全使用非歧视性语言。 深入了解思科如何使用包容性语言。

关于此翻译

思科采用人工翻译与机器翻译相结合的方式将此文档翻译成不同语言,希望全球的用户都能通过各自的语言得到支持性的内容。 请注意:即使是最好的机器翻译,其准确度也不及专业翻译人员的水平。 Cisco Systems, Inc. 对于翻译的准确性不承担任何责任,并建议您总是参考英文原始文档(已提供链接)。

目录

简介

本文档介绍在托管StarOS虚拟网络功能(VNF)的Ultra-M设置中更换有故障的计算服务器所需的步骤。

背景信息

Ultra-M是预打包和验证的虚拟化移动数据包核心解决方案,旨在简化VNF的部署。OpenStack是Ultra-M的虚拟化基础设施管理器(VIM),由以下节点类型组成:

- 计算

- 对象存储磁盘 — 计算(OSD — 计算)

- 控制器

- OpenStack平台 — 导向器(OSPD)

此图中描述了Ultra-M的高级体系结构和涉及的组件:

UltraM架构

UltraM架构

本文档面向熟悉Cisco Ultra-M平台的思科人员,并详细介绍在计算服务器更换时在OpenStack和StarOS VNF级别执行所需的步骤。

注意:为了定义本文档中的步骤,我们考虑了Ultra M 5.1.x版本。

缩写

| VNF | 虚拟网络功能 |

| CF | 控制功能 |

| 旧金山 | 服务功能 |

| ESC | 弹性服务控制器 |

| MOP | 程序方法 |

| OSD | 对象存储磁盘 |

| 硬盘 | 硬盘驱动器 |

| SSD | 固态驱动器 |

| VIM | 虚拟基础设施管理器 |

| 虚拟机 | 虚拟机 |

| EM | 元素管理器 |

| UAS | 超自动化服务 |

| UUID | 通用唯一IDentifier |

MoP的工作流

更换过程的高级工作流

更换过程的高级工作流

先决条件

备份

在更换计算节点之前,必须检查Red Hat OpenStack平台环境的当前状态。建议您检查当前状态,以避免在计算更换流程开启时出现问题。通过这种替换流可以实现。

在恢复时,思科建议使用以下步骤备份OSPD数据库:

[root@director ~]# mysqldump --opt --all-databases > /root/undercloud-all-databases.sql

[root@director ~]# tar --xattrs -czf undercloud-backup-`date +%F`.tar.gz /root/undercloud-all-databases.sql

/etc/my.cnf.d/server.cnf /var/lib/glance/images /srv/node /home/stack

tar: Removing leading `/' from member names

此过程可确保在不影响任何实例可用性的情况下更换节点。此外,建议备份StarOS配置,特别是当要更换的计算节点托管控制功能(CF)虚拟机(VM)时。

确定托管在计算节点中的虚拟机

确定托管在计算服务器上的虚拟机。有两种可能:

- 计算服务器仅包含服务功能(SF)VM:

[stack@director ~]$ nova list --field name,host | grep compute-10

| 49ac5f22-469e-4b84-badc-031083db0533 | VNF2-DEPLOYM_s9_0_8bc6cc60-15d6-4ead-8b6a-10e75d0e134d |

pod1-compute-10.localdomain |

- 计算服务器包含虚拟机的控制功能(CF)/弹性服务控制器(ESC)/元件管理器(EM)/超自动化服务(UAS)组合:

[stack@director ~]$ nova list --field name,host | grep compute-8

| 507d67c2-1d00-4321-b9d1-da879af524f8 | VNF2-DEPLOYM_XXXX_0_c8d98f0f-d874-45d0-af75-88a2d6fa82ea | pod1-compute-8.localdomain |

| f9c0763a-4a4f-4bbd-af51-bc7545774be2 | VNF2-DEPLOYM_c1_0_df4be88d-b4bf-4456-945a-3812653ee229 | pod1-compute-8.localdomain |

| 75528898-ef4b-4d68-b05d-882014708694 | VNF2-ESC-ESC-0 | pod1-compute-8.localdomain |

| f5bd7b9c-476a-4679-83e5-303f0aae9309 | VNF2-UAS-uas-0 | pod1-compute-8.localdomain |

注意:在此处显示的输出中,第一列对应于通用唯一IDentifier(UUID),第二列是VM名称,第三列是VM所在的主机名。此输出的参数将用于后续部分。

平稳关闭电源

案例1.计算节点仅托管SF VM

将SF卡迁移到备用状态

- 登录StarOS VNF并识别与SF VM对应的卡。使用“识别在计算节点中托管的VM”一节中确定的SF VM的UUID,并识别与UUID对应的卡:

[local]VNF2# show card hardware

Tuesday might 08 16:49:42 UTC 2018

<snip>

Card 8:

Card Type : 4-Port Service Function Virtual Card

CPU Packages : 26 [#0, #1, #2, #3, #4, #5, #6, #7, #8, #9, #10, #11, #12, #13, #14, #15, #16, #17, #18, #19, #20, #21, #22, #23, #24, #25]

CPU Nodes : 2

CPU Cores/Threads : 26

Memory : 98304M (qvpc-di-large)

UUID/Serial Number : 49AC5F22-469E-4B84-BADC-031083DB0533

<snip>

- 检查卡的状态:

[local]VNF2# show card table

Tuesday might 08 16:52:53 UTC 2018

Slot Card Type Oper State SPOF Attach

----------- -------------------------------------- ------------- ---- ------

1: CFC Control Function Virtual Card Active No

2: CFC Control Function Virtual Card Standby -

3: FC 4-Port Service Function Virtual Card Active No

4: FC 4-Port Service Function Virtual Card Active No

5: FC 4-Port Service Function Virtual Card Active No

6: FC 4-Port Service Function Virtual Card Active No

7: FC 4-Port Service Function Virtual Card Active No

8: FC 4-Port Service Function Virtual Card Active No

9: FC 4-Port Service Function Virtual Card Active No

10: FC 4-Port Service Function Virtual Card Standby -

- 如果卡处于活动状态,请将卡移至备用状态:

[local]VNF2# card migrate from 8 to 10

从ESC关闭SF VM

- 登录到与VNF对应的ESC节点并检查SF VM的状态:

[admin@VNF2-esc-esc-0 ~]$ cd /opt/cisco/esc/esc-confd/esc-cli

[admin@VNF2-esc-esc-0 esc-cli]$ ./esc_nc_cli get esc_datamodel | egrep --color "<state>|<vm_name>|<vm_id>|<deployment_name>"

<snip>

<state>SERVICE_ACTIVE_STATE</state>

<vm_name>VNF2-DEPLOYM_c1_0_df4be88d-b4bf-4456-945a-3812653ee229</vm_name>

<state>VM_ALIVE_STATE</state>

<vm_name> VNF2-DEPLOYM_s9_0_8bc6cc60-15d6-4ead-8b6a-10e75d0e134d</vm_name>

<state>VM_ALIVE_STATE</state>

<snip>

- 使用SF VM名称停止SF VM。(VM名称在“识别托管在计算节点中的VM”一节中注明):

[admin@VNF2-esc-esc-0 esc-cli]$ ./esc_nc_cli vm-action STOP VNF2-DEPLOYM_s9_0_8bc6cc60-15d6-4ead-8b6a-10e75d0e134d

- 一旦停止,VM必须进入SHUTOFF状态:

[admin@VNF2-esc-esc-0 ~]$ cd /opt/cisco/esc/esc-confd/esc-cli

[admin@VNF2-esc-esc-0 esc-cli]$ ./esc_nc_cli get esc_datamodel | egrep --color "<state>|<vm_name>|<vm_id>|<deployment_name>"

<snip>

<state>SERVICE_ACTIVE_STATE</state>

<vm_name>VNF2-DEPLOYM_c1_0_df4be88d-b4bf-4456-945a-3812653ee229</vm_name>

<state>VM_ALIVE_STATE</state>

<vm_name>VNF2-DEPLOYM_c3_0_3e0db133-c13b-4e3d-ac14-

<state>VM_ALIVE_STATE</state>

<vm_name>VNF2-DEPLOYM_s9_0_8bc6cc60-15d6-4ead-8b6a-10e75d0e134d</vm_name>

<state>VM_SHUTOFF_STATE</state>

<snip>

从Nova聚合列表中删除计算节点

- 列出nova聚合并根据它托管的VNF确定与计算服务器对应的聚合。通常,格式为<VNFNAME>-SERVICE<X>:

[stack@director ~]$ nova aggregate-list

+----+-------------------+-------------------+

| Id | Name | Availability Zone |

+----+-------------------+-------------------+

| 29 | POD1-AUTOIT | mgmt |

| 57 | VNF1-SERVICE1 | - |

| 60 | VNF1-EM-MGMT1 | - |

| 63 | VNF1-CF-MGMT1 | - |

| 66 | VNF2-CF-MGMT2 | - |

| 69 | VNF2-EM-MGMT2 | - |

| 72 | VNF2-SERVICE2 | - |

| 75 | VNF3-CF-MGMT3 | - |

| 78 | VNF3-EM-MGMT3 | - |

| 81 | VNF3-SERVICE3 | - |

+----+-------------------+-------------------+

在这种情况下,要替换的计算服务器属于VNF2,因此相应的聚合列表将是VNF2-SERVICE2。

- 从已识别的聚合中删除计算节点(通过“识别在计算节点中托管的VM”一节中注明的主机名删除):

nova aggregate-remove-host

[stack@director ~]$ nova aggregate-remove-host VNF2-SERVICE2 pod1-compute-10.localdomain

- 验证计算节点是否已从聚合中删除。现在,主机不能列在聚合下:

nova aggregate-show

[stack@director ~]$ nova aggregate-show VNF2-SERVICE2

案例2.计算节点主机CF/ESC/EM/UAS

将CF卡迁移到备用状态

- 登录StarOS VNF并识别与CF VM对应的卡。使用“识别在计算节点中托管的VM”一节中确定的CF VM的UUID,并查找与UUID对应的卡:

[local]VNF2# show card hardware

Tuesday might 08 16:49:42 UTC 2018

<snip>

Card 2:

Card Type : Control Function Virtual Card

CPU Packages : 8 [#0, #1, #2, #3, #4, #5, #6, #7]

CPU Nodes : 1

CPU Cores/Threads : 8

Memory : 16384M (qvpc-di-large)

UUID/Serial Number : F9C0763A-4A4F-4BBD-AF51-BC7545774BE2

<snip>

- 检查卡的状态:

[local]VNF2# show card table

Tuesday might 08 16:52:53 UTC 2018

Slot Card Type Oper State SPOF Attach

----------- -------------------------------------- ------------- ---- ------

1: CFC Control Function Virtual Card Standby -

2: CFC Control Function Virtual Card Active No

3: FC 4-Port Service Function Virtual Card Active No

4: FC 4-Port Service Function Virtual Card Active No

5: FC 4-Port Service Function Virtual Card Active No

6: FC 4-Port Service Function Virtual Card Active No

7: FC 4-Port Service Function Virtual Card Active No

8: FC 4-Port Service Function Virtual Card Active No

9: FC 4-Port Service Function Virtual Card Active No

10: FC 4-Port Service Function Virtual Card Standby -

- 如果卡处于活动状态,请将卡移至备用状态:

[local]VNF2# card migrate from 2 to 1

从ESC关闭CF和EM VM

- 登录到与VNF对应的ESC节点并检查VM的状态:

[admin@VNF2-esc-esc-0 ~]$ cd /opt/cisco/esc/esc-confd/esc-cli

[admin@VNF2-esc-esc-0 esc-cli]$ ./esc_nc_cli get esc_datamodel | egrep --color "<state>|<vm_name>|<vm_id>|<deployment_name>"

<snip>

<state>SERVICE_ACTIVE_STATE</state>

<vm_name>VNF2-DEPLOYM_c1_0_df4be88d-b4bf-4456-945a-3812653ee229</vm_name>

<state>VM_ALIVE_STATE</state>

<vm_name>VNF2-DEPLOYM_c3_0_3e0db133-c13b-4e3d-ac14-

<state>VM_ALIVE_STATE</state>

<deployment_name>VNF2-DEPLOYMENT-em</deployment_name>

<vm_id>507d67c2-1d00-4321-b9d1-da879af524f8</vm_id>

<vm_id>dc168a6a-4aeb-4e81-abd9-91d7568b5f7c</vm_id>

<vm_id>9ffec58b-4b9d-4072-b944-5413bf7fcf07</vm_id>

<state>SERVICE_ACTIVE_STATE</state>

<vm_name>VNF2-DEPLOYM_XXXX_0_c8d98f0f-d874-45d0-af75-88a2d6fa82ea</vm_name>

<state>VM_ALIVE_STATE</state>

<snip>

- 使用VM名称逐个停止CF和EM VM。(“识别托管在计算节点中的虚拟机”一节中注明的虚拟机名称):

[admin@VNF2-esc-esc-0 esc-cli]$ ./esc_nc_cli vm-action STOP VNF2-DEPLOYM_c1_0_df4be88d-b4bf-4456-945a-3812653ee229

[admin@VNF2-esc-esc-0 esc-cli]$ ./esc_nc_cli vm-action STOP VNF2-DEPLOYM_XXXX_0_c8d98f0f-d874-45d0-af75-88a2d6fa82ea

- 停止后,VM必须进入SHUTOFF状态:

[admin@VNF2-esc-esc-0 ~]$ cd /opt/cisco/esc/esc-confd/esc-cli

[admin@VNF2-esc-esc-0 esc-cli]$ ./esc_nc_cli get esc_datamodel | egrep --color "<state>|<vm_name>|<vm_id>|<deployment_name>"

<snip>

<state>SERVICE_ACTIVE_STATE</state>

<vm_name>VNF2-DEPLOYM_c1_0_df4be88d-b4bf-4456-945a-3812653ee229</vm_name>

<state>VM_SHUTOFF_STATE</state>

<vm_name>VNF2-DEPLOYM_c3_0_3e0db133-c13b-4e3d-ac14-

<state>VM_ALIVE_STATE</state>

<deployment_name>VNF2-DEPLOYMENT-em</deployment_name>

<vm_id>507d67c2-1d00-4321-b9d1-da879af524f8</vm_id>

<vm_id>dc168a6a-4aeb-4e81-abd9-91d7568b5f7c</vm_id>

<vm_id>9ffec58b-4b9d-4072-b944-5413bf7fcf07</vm_id>

<state>SERVICE_ACTIVE_STATE</state>

<vm_name>VNF2-DEPLOYM_XXXX_0_c8d98f0f-d874-45d0-af75-88a2d6fa82ea</vm_name>

VM_SHUTOFF_STATE

<snip>

将ESC迁移到备用模式

- 登录到计算节点中托管的ESC,并检查它是否处于主状态。如果是,请将ESC切换到备用模式:

[admin@VNF2-esc-esc-0 esc-cli]$ escadm status

0 ESC status=0 ESC Master Healthy

[admin@VNF2-esc-esc-0 ~]$ sudo service keepalived stop

Stopping keepalived: [ OK ]

[admin@VNF2-esc-esc-0 ~]$ escadm status

1 ESC status=0 In SWITCHING_TO_STOP state. Please check status after a while.

[admin@VNF2-esc-esc-0 ~]$ sudo reboot

Broadcast message from admin@vnf1-esc-esc-0.novalocal

(/dev/pts/0) at 13:32 ...

The system is going down for reboot NOW!

从Nova聚合列表中删除计算节点

- 列出nova聚合并根据它托管的VNF确定与计算服务器对应的聚合。通常,格式为<VNFNAME>-EM-MGMT<X>和<VNFNAME>-CF-MGMT<X>

[stack@director ~]$ nova aggregate-list

+----+-------------------+-------------------+

| Id | Name | Availability Zone |

+----+-------------------+-------------------+

| 29 | POD1-AUTOIT | mgmt |

| 57 | VNF1-SERVICE1 | - |

| 60 | VNF1-EM-MGMT1 | - |

| 63 | VNF1-CF-MGMT1 | - |

| 66 | VNF2-CF-MGMT2 | - |

| 69 | VNF2-EM-MGMT2 | - |

| 72 | VNF2-SERVICE2 | - |

| 75 | VNF3-CF-MGMT3 | - |

| 78 | VNF3-EM-MGMT3 | - |

| 81 | VNF3-SERVICE3 | - |

+----+-------------------+-------------------+

在本例中,计算服务器属于VNF2。因此,对应的聚合是VNF2-CF-MGMT2和VNF2-EM-MGMT2。

- 从识别的聚合中删除计算节点:

nova aggregate-remove-host

[stack@director ~]$ nova aggregate-remove-host VNF2-CF-MGMT2 pod1-compute-8.localdomain

[stack@director ~]$ nova aggregate-remove-host VNF2-EM-MGMT2 pod1-compute-8.localdomain

- 验证计算节点是否已从聚合中删除。现在,确保主机未列在聚合下:

nova aggregate-show

[stack@director ~]$ nova aggregate-show VNF2-CF-MGMT2

[stack@director ~]$ nova aggregate-show VNF2-EM-MGMT2

计算节点删除

本节中提到的步骤是通用的,与计算节点中托管的虚拟机无关。

从服务列表中删除计算节点

- 从服务列表中删除计算服务:

[stack@director ~]$ source corerc

[stack@director ~]$ openstack compute service list | grep compute-8

| 404 | nova-compute | pod1-compute-8.localdomain | nova | enabled | up | 2018-05-08T18:40:56.000000 |

openstack compute service delete

[stack@director ~]$ openstack compute service delete 404

删除中子代理

- 删除旧的关联中子代理并打开计算服务器的vswitch代理:

[stack@director ~]$ openstack network agent list | grep compute-8

| c3ee92ba-aa23-480c-ac81-d3d8d01dcc03 | Open vSwitch agent | pod1-compute-8.localdomain | None | False | UP | neutron-openvswitch-agent |

| ec19cb01-abbb-4773-8397-8739d9b0a349 | NIC Switch agent | pod1-compute-8.localdomain | None | False | UP | neutron-sriov-nic-agent |

openstack network agent delete

[stack@director ~]$ openstack network agent delete c3ee92ba-aa23-480c-ac81-d3d8d01dcc03

[stack@director ~]$ openstack network agent delete ec19cb01-abbb-4773-8397-8739d9b0a349

从Ironic数据库中删除

- 从讽刺数据库中删除节点并验证:

[stack@director ~]$ source stackrc

nova show| grep hypervisor

[stack@director ~]$ nova show pod1-compute-10 | grep hypervisor

| OS-EXT-SRV-ATTR:hypervisor_hostname | 4ab21917-32fa-43a6-9260-02538b5c7a5a

ironic node-delete

[stack@director ~]$ ironic node-delete 4ab21917-32fa-43a6-9260-02538b5c7a5a

[stack@director ~]$ ironic node-list (node delete must not be listed now)

从Overcloud中删除

- 创建名为delete_node.sh的脚本文件,其内容如图所示。请确保所提及的模板与用于堆栈部署的deploy.sh脚本中使用的模板相同:

delete_node.sh

openstack overcloud node delete --templates -e /usr/share/openstack-tripleo-heat-templates/environments/puppet-pacemaker.yaml -e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml -e /usr/share/openstack-tripleo-heat-templates/environments/storage-environment.yaml -e /usr/share/openstack-tripleo-heat-templates/environments/neutron-sriov.yaml -e /home/stack/custom-templates/network.yaml -e /home/stack/custom-templates/ceph.yaml -e /home/stack/custom-templates/compute.yaml -e /home/stack/custom-templates/layout.yaml -e /home/stack/custom-templates/layout.yaml --stack

[stack@director ~]$ source stackrc

[stack@director ~]$ /bin/sh delete_node.sh

+ openstack overcloud node delete --templates -e /usr/share/openstack-tripleo-heat-templates/environments/puppet-pacemaker.yaml -e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml -e /usr/share/openstack-tripleo-heat-templates/environments/storage-environment.yaml -e /usr/share/openstack-tripleo-heat-templates/environments/neutron-sriov.yaml -e /home/stack/custom-templates/network.yaml -e /home/stack/custom-templates/ceph.yaml -e /home/stack/custom-templates/compute.yaml -e /home/stack/custom-templates/layout.yaml -e /home/stack/custom-templates/layout.yaml --stack pod1 49ac5f22-469e-4b84-badc-031083db0533

Deleting the following nodes from stack pod1:

- 49ac5f22-469e-4b84-badc-031083db0533

Started Mistral Workflow. Execution ID: 4ab4508a-c1d5-4e48-9b95-ad9a5baa20ae

real 0m52.078s

user 0m0.383s

sys 0m0.086s

- 等待OpenStack堆栈操作进入“完成”状态:

[stack@director ~]$ openstack stack list

+--------------------------------------+------------+-----------------+----------------------+----------------------+

| ID | Stack Name | Stack Status | Creation Time | Updated Time |

+--------------------------------------+------------+-----------------+----------------------+----------------------+

| 5df68458-095d-43bd-a8c4-033e68ba79a0 | pod1 | UPDATE_COMPLETE | 2018-05-08T21:30:06Z | 2018-05-08T20:42:48Z |

+--------------------------------------+------------+-----------------+----------------------+----------------------+

安装新计算节点

- 安装新UCS C240 M4服务器的步骤和初始设置步骤可从以下位置参考:

- 安装服务器后,将硬盘作为旧服务器插入各插槽

- 使用CIMC IP登录服务器

- 如果固件与之前使用的推荐版本不同,请执行BIOS升级。BIOS升级步骤如下:

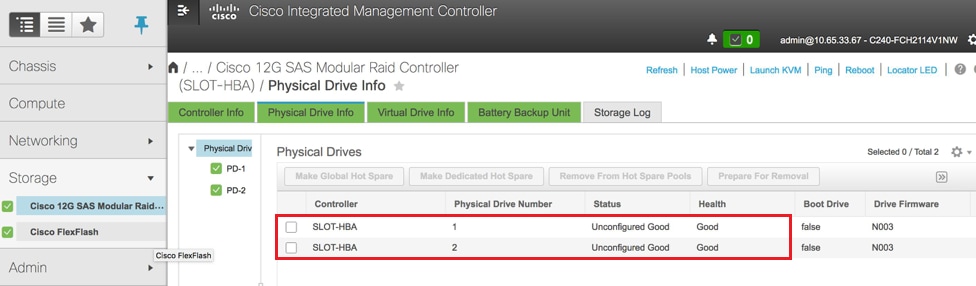

- 验证物理驱动器的状态。它必须是“未配置的好”:

存储> Cisco 12G SAS模块化Raid控制器(SLOT-HBA)>物理驱动器信息

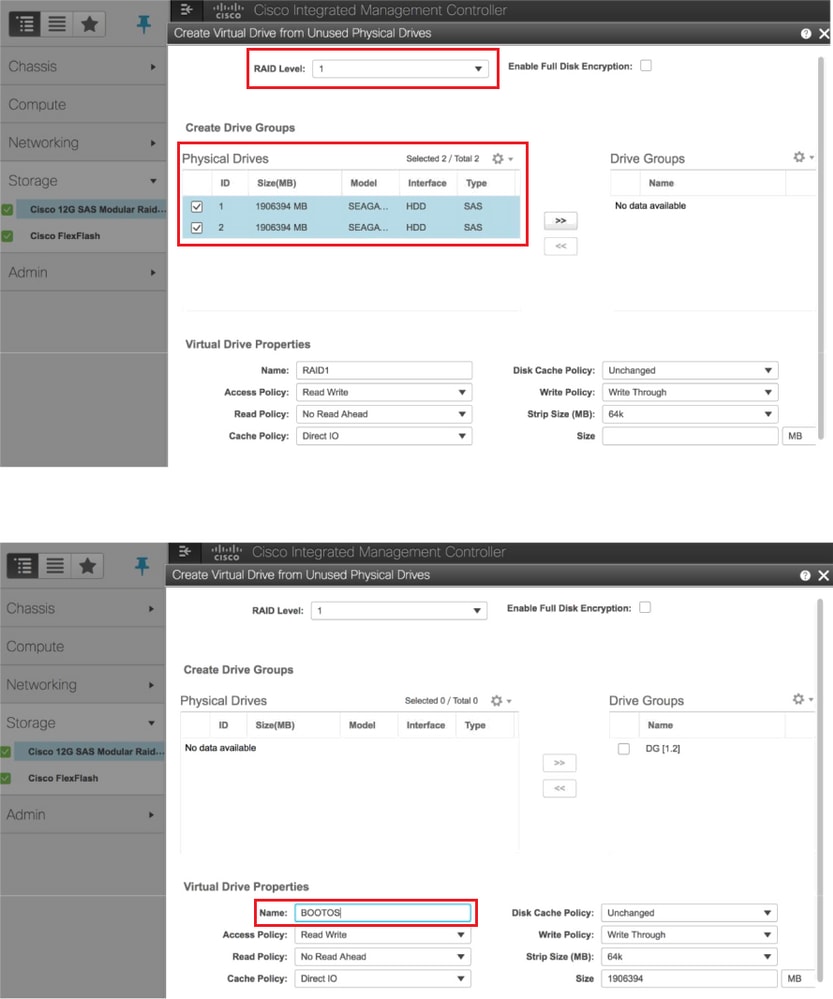

- 使用RAID 1级从物理驱动器创建虚拟驱动器:

存储> Cisco 12G SAS模块化RAID控制器(SLOT-HBA)>控制器信息>从未使用的物理驱动器创建虚拟驱动器

- 选择VD并配置“Set as Boot Drive”:

- 启用IPMI over LAN:

管理员>通信服务>通信服务

- 禁用超线程:

计算> BIOS >配置BIOS >高级>处理器配置

注意:此处显示的图像和本节中提及的配置步骤均参考固件版本3.0(3e),如果您使用其他版本,可能会略有变化。

将新计算节点添加到超云

本节中提到的步骤是通用的,与计算节点托管的VM无关。

- 添加具有不同索引的计算服务器

创建仅包含要添加的新计算服务器详细信息的add_node.json文件。确保以前未使用过新计算服务器的索引号。通常,增加下一个最高的计算值。

示例:最早的是compute-17,因此,在2-vnf系统的情况下创建compute-18。

注意:注意json格式。

[stack@director ~]$ cat add_node.json

{

"nodes":[

{

"mac":[

"<MAC_ADDRESS>"

],

"capabilities": "node:compute-18,boot_option:local",

"cpu":"24",

"memory":"256000",

"disk":"3000",

"arch":"x86_64",

"pm_type":"pxe_ipmitool",

"pm_user":"admin",

"pm_password":"<PASSWORD>",

"pm_addr":"192.100.0.5"

}

]

}

- 导入json文件:

[stack@director ~]$ openstack baremetal import --json add_node.json

Started Mistral Workflow. Execution ID: 78f3b22c-5c11-4d08-a00f-8553b09f497d

Successfully registered node UUID 7eddfa87-6ae6-4308-b1d2-78c98689a56e

Started Mistral Workflow. Execution ID: 33a68c16-c6fd-4f2a-9df9-926545f2127e

Successfully set all nodes to available.

- 使用上一步中记录的UUID运行节点内省:

[stack@director ~]$ openstack baremetal node manage 7eddfa87-6ae6-4308-b1d2-78c98689a56e

[stack@director ~]$ ironic node-list |grep 7eddfa87

| 7eddfa87-6ae6-4308-b1d2-78c98689a56e | None | None | power off | manageable | False |

[stack@director ~]$ openstack overcloud node introspect 7eddfa87-6ae6-4308-b1d2-78c98689a56e --provide

Started Mistral Workflow. Execution ID: e320298a-6562-42e3-8ba6-5ce6d8524e5c

Waiting for introspection to finish...

Successfully introspected all nodes.

Introspection completed.

Started Mistral Workflow. Execution ID: c4a90d7b-ebf2-4fcb-96bf-e3168aa69dc9

Successfully set all nodes to available.

[stack@director ~]$ ironic node-list |grep available

| 7eddfa87-6ae6-4308-b1d2-78c98689a56e | None | None | power off | available | False |

- 执行以前用于部署堆栈的deploy.sh脚本,以便将新计算节点添加到超云堆栈:

[stack@director ~]$ ./deploy.sh

++ openstack overcloud deploy --templates -r /home/stack/custom-templates/custom-roles.yaml -e /usr/share/openstack-tripleo-heat-templates/environments/puppet-pacemaker.yaml -e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml -e /usr/share/openstack-tripleo-heat-templates/environments/storage-environment.yaml -e /usr/share/openstack-tripleo-heat-templates/environments/neutron-sriov.yaml -e /home/stack/custom-templates/network.yaml -e /home/stack/custom-templates/ceph.yaml -e /home/stack/custom-templates/compute.yaml -e /home/stack/custom-templates/layout.yaml --stack ADN-ultram --debug --log-file overcloudDeploy_11_06_17__16_39_26.log --ntp-server 172.24.167.109 --neutron-flat-networks phys_pcie1_0,phys_pcie1_1,phys_pcie4_0,phys_pcie4_1 --neutron-network-vlan-ranges datacentre:1001:1050 --neutron-disable-tunneling --verbose --timeout 180

…

Starting new HTTP connection (1): 192.200.0.1

"POST /v2/action_executions HTTP/1.1" 201 1695

HTTP POST http://192.200.0.1:8989/v2/action_executions 201

Overcloud Endpoint: http://10.1.2.5:5000/v2.0

Overcloud Deployed

clean_up DeployOvercloud:

END return value: 0

real 38m38.971s

user 0m3.605s

sys 0m0.466s

- 等待openstack堆栈状态为“完成:

[stack@director ~]$ openstack stack list

+--------------------------------------+------------+-----------------+----------------------+----------------------+

| ID | Stack Name | Stack Status | Creation Time | Updated Time |

+--------------------------------------+------------+-----------------+----------------------+----------------------+

| 5df68458-095d-43bd-a8c4-033e68ba79a0 | ADN-ultram | UPDATE_COMPLETE | 2017-11-02T21:30:06Z | 2017-11-06T21:40:58Z |

+--------------------------------------+------------+-----------------+----------------------+----------------------+

- 检查新计算节点是否处于活动状态:

[stack@director ~]$ source stackrc

[stack@director ~]$ nova list |grep compute-18

| 0f2d88cd-d2b9-4f28-b2ca-13e305ad49ea | pod1-compute-18 | ACTIVE | - | Running | ctlplane=192.200.0.117 |

[stack@director ~]$ source corerc

[stack@director ~]$ openstack hypervisor list |grep compute-18

| 63 | pod1-compute-18.localdomain |

服务器更换后设置

将服务器添加到超云后,请参阅以下链接以应用以前在旧服务器中存在的设置:

恢复虚拟机

案例1.计算节点仅托管SF VM

Nova聚合列表的附加项

- 将计算节点添加到聚合主机并验证主机是否已添加:

nova aggregate-add-host

[stack@director ~]$ nova aggregate-add-host VNF2-SERVICE2 pod1-compute-18.localdomain

nova aggregate-show

[stack@director ~]$ nova aggregate-show VNF2-SERVICE2

从ESC恢复SF VM

- SF VM在nova列表中将处于错误状态:

[stack@director ~]$ nova list |grep VNF2-DEPLOYM_s9_0_8bc6cc60-15d6-4ead-8b6a-10e75d0e134d

| 49ac5f22-469e-4b84-badc-031083db0533 | VNF2-DEPLOYM_s9_0_8bc6cc60-15d6-4ead-8b6a-10e75d0e134d | ERROR | - | NOSTATE |

- 从ESC恢复SF VM:

[admin@VNF2-esc-esc-0 ~]$ sudo /opt/cisco/esc/esc-confd/esc-cli/esc_nc_cli recovery-vm-action DO VNF2-DEPLOYM_s9_0_8bc6cc60-15d6-4ead-8b6a-10e75d0e134d

[sudo] password for admin:

Recovery VM Action

/opt/cisco/esc/confd/bin/netconf-console --port=830 --host=127.0.0.1 --user=admin --privKeyFile=/root/.ssh/confd_id_dsa --privKeyType=dsa --rpc=/tmp/esc_nc_cli.ZpRCGiieuW

<?xml version="1.0" encoding="UTF-8"?>

<rpc-reply xmlns="urn:ietf:params:xml:ns:netconf:base:1.0" message-id="1">

<ok/>

</rpc-reply>

- 监控yangesc.log:

admin@VNF2-esc-esc-0 ~]$ tail -f /var/log/esc/yangesc.log

…

14:59:50,112 07-Nov-2017 WARN Type: VM_RECOVERY_COMPLETE

14:59:50,112 07-Nov-2017 WARN Status: SUCCESS

14:59:50,112 07-Nov-2017 WARN Status Code: 200

14:59:50,112 07-Nov-2017 WARN Status Msg: Recovery: Successfully recovered VM [VNF2-DEPLOYM_s9_0_8bc6cc60-15d6-4ead-8b6a-10e75d0e134d].

- 确保SF卡在VNF中作为备用SF

案例2.计算节点主机CF、ESC、EM和UAS

Nova聚合列表的附加项

将计算节点添加到聚合主机并验证主机是否已添加。在这种情况下,必须将计算节点添加到CF和EM主机聚合。

nova aggregate-add-host

[stack@director ~]$ nova aggregate-add-host VNF2-CF-MGMT2 pod1-compute-18.localdomain

[stack@director ~]$ nova aggregate-add-host VNF2-EM-MGMT2 pod1-compute-18.localdomain

nova aggregate-show

[stack@director ~]$ nova aggregate-show VNF2-CF-MGMT2

[stack@director ~]$ nova aggregate-show VNF2-EM-MGMT2

恢复UAS VM

- 检查UAS VM在nova列表中的状态并将其删除:

[stack@director ~]$ nova list | grep VNF2-UAS-uas-0

| 307a704c-a17c-4cdc-8e7a-3d6e7e4332fa | VNF2-UAS-uas-0 | ACTIVE | - | Running | VNF2-UAS-uas-orchestration=172.168.11.10; VNF2-UAS-uas-management=172.168.10.3

[stack@tb5-ospd ~]$ nova delete VNF2-UAS-uas-0

Request to delete server VNF2-UAS-uas-0 has been accepted.

- 要恢复autovnf-uas VM,请运行uas-check脚本以检查状态。它必须报告错误。然后使用 — fix选项再次运行,以重新创建缺少的UAS VM:

[stack@director ~]$ cd /opt/cisco/usp/uas-installer/scripts/

[stack@director scripts]$ ./uas-check.py auto-vnf VNF2-UAS

2017-12-08 12:38:05,446 - INFO: Check of AutoVNF cluster started

2017-12-08 12:38:07,925 - INFO: Instance 'vnf1-UAS-uas-0' status is 'ERROR'

2017-12-08 12:38:07,925 - INFO: Check completed, AutoVNF cluster has recoverable errors

[stack@director scripts]$ ./uas-check.py auto-vnf VNF2-UAS --fix

2017-11-22 14:01:07,215 - INFO: Check of AutoVNF cluster started

2017-11-22 14:01:09,575 - INFO: Instance VNF2-UAS-uas-0' status is 'ERROR'

2017-11-22 14:01:09,575 - INFO: Check completed, AutoVNF cluster has recoverable errors

2017-11-22 14:01:09,778 - INFO: Removing instance VNF2-UAS-uas-0'

2017-11-22 14:01:13,568 - INFO: Removed instance VNF2-UAS-uas-0'

2017-11-22 14:01:13,568 - INFO: Creating instance VNF2-UAS-uas-0' and attaching volume ‘VNF2-UAS-uas-vol-0'

2017-11-22 14:01:49,525 - INFO: Created instance ‘VNF2-UAS-uas-0'

- 登录autovnf-uas。等待几分钟,UAS必须返回良好状态:

VNF2-autovnf-uas-0#show uas

uas version 1.0.1-1

uas state ha-active

uas ha-vip 172.17.181.101

INSTANCE IP STATE ROLE

-----------------------------------

172.17.180.6 alive CONFD-SLAVE

172.17.180.7 alive CONFD-MASTER

172.17.180.9 alive NA

注意:如果uas-check.py —fix失败,您可能需要复制此文件并再次运行。

[stack@director ~]$ mkdir –p /opt/cisco/usp/apps/auto-it/common/uas-deploy/

[stack@director ~]$ cp /opt/cisco/usp/uas-installer/common/uas-deploy/userdata-uas.txt /opt/cisco/usp/apps/auto-it/common/uas-deploy/

ESC VM恢复

- 从nova列表中检查ESC VM的状态并将其删除:

stack@director scripts]$ nova list |grep ESC-1

| c566efbf-1274-4588-a2d8-0682e17b0d41 | VNF2-ESC-ESC-1 | ACTIVE | - | Running | VNF2-UAS-uas-orchestration=172.168.11.14; VNF2-UAS-uas-management=172.168.10.4 |

[stack@director scripts]$ nova delete VNF2-ESC-ESC-1

Request to delete server VNF2-ESC-ESC-1 has been accepted.

- 在AutoVNF-UAS中,查找ESC部署事务,并在事务日志中查找用于创建ESC实例的boot_vm.py命令行:

ubuntu@VNF2-uas-uas-0:~$ sudo -i

root@VNF2-uas-uas-0:~# confd_cli -u admin -C

Welcome to the ConfD CLI

admin connected from 127.0.0.1 using console on VNF2-uas-uas-0

VNF2-uas-uas-0#show transaction

TX ID TX TYPE DEPLOYMENT ID TIMESTAMP STATUS

-----------------------------------------------------------------------------------------------------------------------------

35eefc4a-d4a9-11e7-bb72-fa163ef8df2b vnf-deployment VNF2-DEPLOYMENT 2017-11-29T02:01:27.750692-00:00 deployment-success

73d9c540-d4a8-11e7-bb72-fa163ef8df2b vnfm-deployment VNF2-ESC 2017-11-29T01:56:02.133663-00:00 deployment-success

VNF2-uas-uas-0#show logs 73d9c540-d4a8-11e7-bb72-fa163ef8df2b | display xml

<config xmlns="http://tail-f.com/ns/config/1.0">

<logs xmlns="http://www.cisco.com/usp/nfv/usp-autovnf-oper">

<tx-id>73d9c540-d4a8-11e7-bb72-fa163ef8df2b</tx-id>

<log>2017-11-29 01:56:02,142 - VNFM Deployment RPC triggered for deployment: VNF2-ESC, deactivate: 0

2017-11-29 01:56:02,179 - Notify deployment

..

2017-11-29 01:57:30,385 - Creating VNFM 'VNF2-ESC-ESC-1' with [python //opt/cisco/vnf-staging/bootvm.py VNF2-ESC-ESC-1 --flavor VNF2-ESC-ESC-flavor --image 3fe6b197-961b-4651-af22-dfd910436689 --net VNF2-UAS-uas-management --gateway_ip 172.168.10.1 --net VNF2-UAS-uas-orchestration --os_auth_url http://10.1.2.5:5000/v2.0 --os_tenant_name core --os_username ****** --os_password ****** --bs_os_auth_url http://10.1.2.5:5000/v2.0 --bs_os_tenant_name core --bs_os_username ****** --bs_os_password ****** --esc_ui_startup false --esc_params_file /tmp/esc_params.cfg --encrypt_key ****** --user_pass ****** --user_confd_pass ****** --kad_vif eth0 --kad_vip 172.168.10.7 --ipaddr 172.168.10.6 dhcp --ha_node_list 172.168.10.3 172.168.10.6 --file root:0755:/opt/cisco/esc/esc-scripts/esc_volume_em_staging.sh:/opt/cisco/usp/uas/autovnf/vnfms/esc-scripts/esc_volume_em_staging.sh --file root:0755:/opt/cisco/esc/esc-scripts/esc_vpc_chassis_id.py:/opt/cisco/usp/uas/autovnf/vnfms/esc-scripts/esc_vpc_chassis_id.py --file root:0755:/opt/cisco/esc/esc-scripts/esc-vpc-di-internal-keys.sh:/opt/cisco/usp/uas/autovnf/vnfms/esc-scripts/esc-vpc-di-internal-keys.sh

将boot_vm.py行保存到外壳脚本文件(esc.sh),并使用正确信息(通常是core/<PASSWORD>)更新所有用户名*****和密码******行。 您还需要删除 — encrypt_key选项。对于user_pass和user_confd_pass,您需要使用格式 — username:密码(示例 — admin:<PASSWORD>)。

- 从running-config中查找bootvm.py的URL,并将bootvm.py文件获取到autovnf-uas VM。在这种情况下,10.1.2.3是Auto-IT VM的IP:

root@VNF2-uas-uas-0:~# confd_cli -u admin -C

Welcome to the ConfD CLI

admin connected from 127.0.0.1 using console on VNF2-uas-uas-0

VNF2-uas-uas-0#show running-config autovnf-vnfm:vnfm

…

configs bootvm

value http:// 10.1.2.3:80/bundles/5.1.7-2007/vnfm-bundle/bootvm-2_3_2_155.py

!

root@VNF2-uas-uas-0:~# wget http://10.1.2.3:80/bundles/5.1.7-2007/vnfm-bundle/bootvm-2_3_2_155.py

--2017-12-01 20:25:52-- http://10.1.2.3 /bundles/5.1.7-2007/vnfm-bundle/bootvm-2_3_2_155.py

Connecting to 10.1.2.3:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 127771 (125K) [text/x-python]

Saving to: ‘bootvm-2_3_2_155.py’

100%[=====================================================================================>] 127,771 --.-K/s in 0.001s

2017-12-01 20:25:52 (173 MB/s) - ‘bootvm-2_3_2_155.py’ saved [127771/127771]

- 创建/tmp/esc_params.cfg文件:

root@VNF2-uas-uas-0:~# echo "openstack.endpoint=publicURL" > /tmp/esc_params.cfg

- 执行外壳脚本以从UAS节点部署ESC:

root@VNF2-uas-uas-0:~# /bin/sh esc.sh

+ python ./bootvm.py VNF2-ESC-ESC-1 --flavor VNF2-ESC-ESC-flavor --image 3fe6b197-961b-4651-af22-dfd910436689

--net VNF2-UAS-uas-management --gateway_ip 172.168.10.1 --net VNF2-UAS-uas-orchestration --os_auth_url

http://10.1.2.5:5000/v2.0 --os_tenant_name core --os_username core --os_password <PASSWORD> --bs_os_auth_url

http://10.1.2.5:5000/v2.0 --bs_os_tenant_name core --bs_os_username core --bs_os_password <PASSWORD>

--esc_ui_startup false --esc_params_file /tmp/esc_params.cfg --user_pass admin:<PASSWORD> --user_confd_pass

admin:<PASSWORD> --kad_vif eth0 --kad_vip 172.168.10.7 --ipaddr 172.168.10.6 dhcp --ha_node_list 172.168.10.3

172.168.10.6 --file root:0755:/opt/cisco/esc/esc-scripts/esc_volume_em_staging.sh:/opt/cisco/usp/uas/autovnf/vnfms/esc-scripts/esc_volume_em_staging.sh

--file root:0755:/opt/cisco/esc/esc-scripts/esc_vpc_chassis_id.py:/opt/cisco/usp/uas/autovnf/vnfms/esc-scripts/esc_vpc_chassis_id.py

--file root:0755:/opt/cisco/esc/esc-scripts/esc-vpc-di-internal-keys.sh:/opt/cisco/usp/uas/autovnf/vnfms/esc-scripts/esc-vpc-di-internal-keys.sh

- 登录新ESC并验证备份状态:

ubuntu@VNF2-uas-uas-0:~$ ssh admin@172.168.11.14

…

####################################################################

# ESC on VNF2-esc-esc-1.novalocal is in BACKUP state.

####################################################################

[admin@VNF2-esc-esc-1 ~]$ escadm status

0 ESC status=0 ESC Backup Healthy

[admin@VNF2-esc-esc-1 ~]$ health.sh

============== ESC HA (BACKUP) ===================================================

ESC HEALTH PASSED

从ESC恢复CF和EM VM

- 从nova列表中检查CF和EM VM的状态。它们必须处于ERROR状态

[stack@director ~]$ source corerc

[stack@director ~]$ nova list --field name,host,status |grep -i err

| 507d67c2-1d00-4321-b9d1-da879af524f8 | VNF2-DEPLOYM_XXXX_0_c8d98f0f-d874-45d0-af75-88a2d6fa82ea | None | ERROR|

| f9c0763a-4a4f-4bbd-af51-bc7545774be2 | VNF2-DEPLOYM_c1_0_df4be88d-b4bf-4456-945a-3812653ee229 |None | ERROR

- 登录到ESC Master,为每个受影响的EM和CF VM运行recovery-vm-action。耐心点。ESC将安排恢复操作,并且可能不会持续几分钟。监控yangesc.log:

sudo /opt/cisco/esc/esc-confd/esc-cli/esc_nc_cli recovery-vm-action DO

[admin@VNF2-esc-esc-0 ~]$ sudo /opt/cisco/esc/esc-confd/esc-cli/esc_nc_cli recovery-vm-action DO VNF2-DEPLOYMENT-_VNF2-D_0_a6843886-77b4-4f38-b941-74eb527113a8

[sudo] password for admin:

Recovery VM Action

/opt/cisco/esc/confd/bin/netconf-console --port=830 --host=127.0.0.1 --user=admin --privKeyFile=/root/.ssh/confd_id_dsa --privKeyType=dsa --rpc=/tmp/esc_nc_cli.ZpRCGiieuW

<?xml version="1.0" encoding="UTF-8"?>

<rpc-reply xmlns="urn:ietf:params:xml:ns:netconf:base:1.0" message-id="1">

<ok/>

</rpc-reply>

[admin@VNF2-esc-esc-0 ~]$ tail -f /var/log/esc/yangesc.log

…

14:59:50,112 07-Nov-2017 WARN Type: VM_RECOVERY_COMPLETE

14:59:50,112 07-Nov-2017 WARN Status: SUCCESS

14:59:50,112 07-Nov-2017 WARN Status Code: 200

14:59:50,112 07-Nov-2017 WARN Status Msg: Recovery: Successfully recovered VM [VNF2-DEPLOYMENT-_VNF2-D_0_a6843886-77b4-4f38-b941-74eb527113a8]

- 登录新EM并验证EM状态为up:

ubuntu@VNF2vnfddeploymentem-1:~$ /opt/cisco/ncs/current/bin/ncs_cli -u admin -C

admin connected from 172.17.180.6 using ssh on VNF2vnfddeploymentem-1

admin@scm# show ems

EM VNFM

ID SLA SCM PROXY

---------------------

2 up up up

3 up up up

- 登录StarOS VNF并验证CF卡处于备用状态

处理ESC恢复故障

如果ESC由于意外状态而无法启动VM,思科建议通过重新启动主ESC执行ESC切换。ESC切换大约需要一分钟。在新的主ESC上运行脚本“health.sh”,检查状态是否为up。主ESC以启动VM并修复VM状态。完成此恢复任务最多需要5分钟。

您可以监控/var/log/esc/yangesc.log和/var/log/esc/escmanager.log。如果您在5-7分钟后未看到VM恢复,则用户需要转到并手动恢复受影响的VM。

自动部署配置更新

从AutoDeploy VM中,编辑autodeploy.cfg并将旧计算服务器替换为新计算服务器。 然后在confd_cli中加载replace。 此步骤是以后成功停用部署所必需的。

root@auto-deploy-iso-2007-uas-0:/home/ubuntu# confd_cli -u admin -C

Welcome to the ConfD CLI

admin connected from 127.0.0.1 using console on auto-deploy-iso-2007-uas-0

auto-deploy-iso-2007-uas-0#config

Entering configuration mode terminal

auto-deploy-iso-2007-uas-0(config)#load replace autodeploy.cfg

Loading. 14.63 KiB parsed in 0.42 sec (34.16 KiB/sec)

auto-deploy-iso-2007-uas-0(config)#commit

Commit complete.

auto-deploy-iso-2007-uas-0(config)#end

在配置更改后重新启动uas-confd和自动部署服务。

root@auto-deploy-iso-2007-uas-0:~# service uas-confd restart

uas-confd stop/waiting

uas-confd start/running, process 14078

root@auto-deploy-iso-2007-uas-0:~# service uas-confd status

uas-confd start/running, process 14078

root@auto-deploy-iso-2007-uas-0:~# service autodeploy restart

autodeploy stop/waiting

autodeploy start/running, process 14017

root@auto-deploy-iso-2007-uas-0:~# service autodeploy status

autodeploy start/running, process 14017

启用系统日志

要为UCS服务器、Openstack组件和恢复的VM启用系统日志,请遵循以下各节

在以下链接中“重新启用UCS和Openstack组件的系统日志”和“启用VNF的系统日志”:

相关信息

- https://access.redhat.com/documentation/en-us/red_hat_openstack_platform/10/html/director_installation_and_usage/sect-Scaling_the_Overcloud - sect-removing_compute_Nodes

- https://access.redhat.com/documentation/en-us/red_hat_openstack_platform/10/html/director_installation_and_usage/sect-Scaling_the_Overcloud#sect-Adding_Compute_or_Ceph_Storage_Nodes

- 技术支持和文档 - Cisco Systems

修订历史记录

| 版本 | 发布日期 | 备注 |

|---|---|---|

1.0 |

01-Jun-2018 |

初始版本 |

由思科工程师提供

- Partheeban RajagopalCisco Advanced Services

- Padmaraj RamanoudjamCisco Advanced Services

反馈

反馈