OSPD服务器UCS 240M4的更换- vEPC

下载选项

非歧视性语言

此产品的文档集力求使用非歧视性语言。在本文档集中,非歧视性语言是指不隐含针对年龄、残障、性别、种族身份、族群身份、性取向、社会经济地位和交叉性的歧视的语言。由于产品软件的用户界面中使用的硬编码语言、基于 RFP 文档使用的语言或引用的第三方产品使用的语言,文档中可能无法确保完全使用非歧视性语言。 深入了解思科如何使用包容性语言。

关于此翻译

思科采用人工翻译与机器翻译相结合的方式将此文档翻译成不同语言,希望全球的用户都能通过各自的语言得到支持性的内容。 请注意:即使是最好的机器翻译,其准确度也不及专业翻译人员的水平。 Cisco Systems, Inc. 对于翻译的准确性不承担任何责任,并建议您总是参考英文原始文档(已提供链接)。

Contents

Introduction

本文描述要求的步骤为了替换主机OpenStack平台导向器的有故障的服务器(OSPD)在Ultra-M设置。

背景信息

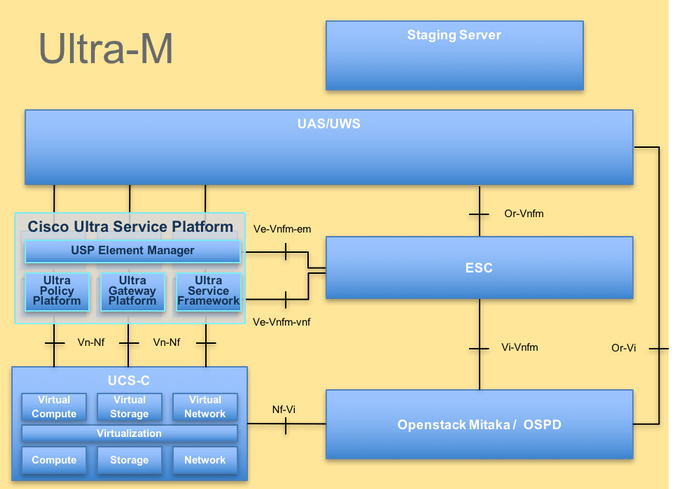

Ultra-M是设计为了简化VNFs的配置的一个被预先包装的和被验证的虚拟化的便携信息包核心解决方案。 OpenStack是虚拟化的基础设施管理器(精力) Ultra-M的并且包括这些节点类型:

- 估计

- 对象存储磁盘-估计(OSD -估计)

- 控制器

- OSPD

Ultra-M高级体系结构和介入的组件在此镜像表示:

UltraM体系结构

UltraM体系结构

本文供熟悉Cisco Ultra-M平台的Cisco人员使用,并且选派要求为了被执行在OpenStack级别在OSPD服务器更换时的步骤。

Note:超M 5.1.x版本考虑为了定义在本文的程序。

简称

| VNF | 虚拟网络功能 |

| CF | 控制功能 |

| SF | 服务功能 |

| ESC | 有弹性服务控制器 |

| MOP | 程序方法 |

| OSD | 对象存储磁盘 |

| HDD | 硬盘驱动器 |

| SSD | 固体驱动 |

| 精力 | 虚拟基础设施管理器 |

| VM | 虚拟机 |

| EM | 网元管理 |

| UAS | 超自动化服务 |

| UUID | 通用唯一标识符 |

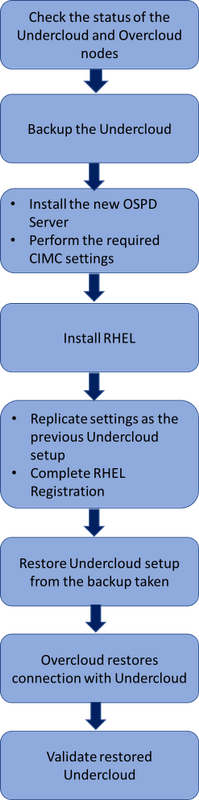

Mop的工作流

替换程序的高级工作流

替换程序的高级工作流

Prerequisites

状态检查

在您替换一个OSPD服务器前,请注意检查红帽子OpenStack平台环境的当前状态和是健康的为了避免复杂化,当替换过程打开时。

检查OpenStack堆栈和节点列表的状况:

[stack@director ~]$ source stackrc

[stack@director ~]$ openstack stack list --nested

[stack@director ~]$ ironic node-list

[stack@director ~]$ nova list

是否请保证所有undercloud服务在装载,激活和运行状态从OSP-D节点:

[stack@director ~]$ systemctl list-units "openstack*" "neutron*" "openvswitch*"

UNIT LOAD ACTIVE SUB DESCRIPTION

neutron-dhcp-agent.service loaded active running OpenStack Neutron DHCP Agent

neutron-openvswitch-agent.service loaded active running OpenStack Neutron Open vSwitch Agent

neutron-ovs-cleanup.service loaded active exited OpenStack Neutron Open vSwitch Cleanup Utility

neutron-server.service loaded active running OpenStack Neutron Server

openstack-aodh-evaluator.service loaded active running OpenStack Alarm evaluator service

openstack-aodh-listener.service loaded active running OpenStack Alarm listener service

openstack-aodh-notifier.service loaded active running OpenStack Alarm notifier service

openstack-ceilometer-central.service loaded active running OpenStack ceilometer central agent

openstack-ceilometer-collector.service loaded active running OpenStack ceilometer collection service

openstack-ceilometer-notification.service loaded active running OpenStack ceilometer notification agent

openstack-glance-api.service loaded active running OpenStack Image Service (code-named Glance) API server

openstack-glance-registry.service loaded active running OpenStack Image Service (code-named Glance) Registry server

openstack-heat-api-cfn.service loaded active running Openstack Heat CFN-compatible API Service

openstack-heat-api.service loaded active running OpenStack Heat API Service

openstack-heat-engine.service loaded active running Openstack Heat Engine Service

openstack-ironic-api.service loaded active running OpenStack Ironic API service

openstack-ironic-conductor.service loaded active running OpenStack Ironic Conductor service

openstack-ironic-inspector-dnsmasq.service loaded active running PXE boot dnsmasq service for Ironic Inspector

openstack-ironic-inspector.service loaded active running Hardware introspection service for OpenStack Ironic

openstack-mistral-api.service loaded active running Mistral API Server

openstack-mistral-engine.service loaded active running Mistral Engine Server

openstack-mistral-executor.service loaded active running Mistral Executor Server

openstack-nova-api.service loaded active running OpenStack Nova API Server

openstack-nova-cert.service loaded active running OpenStack Nova Cert Server

openstack-nova-compute.service loaded active running OpenStack Nova Compute Server

openstack-nova-conductor.service loaded active running OpenStack Nova Conductor Server

openstack-nova-scheduler.service loaded active running OpenStack Nova Scheduler Server

openstack-swift-account-reaper.service loaded active running OpenStack Object Storage (swift) - Account Reaper

openstack-swift-account.service loaded active running OpenStack Object Storage (swift) - Account Server

openstack-swift-container-updater.service loaded active running OpenStack Object Storage (swift) - Container Updater

openstack-swift-container.service loaded active running OpenStack Object Storage (swift) - Container Server

openstack-swift-object-updater.service loaded active running OpenStack Object Storage (swift) - Object Updater

openstack-swift-object.service loaded active running OpenStack Object Storage (swift) - Object Server

openstack-swift-proxy.service loaded active running OpenStack Object Storage (swift) - Proxy Server

openstack-zaqar.service loaded active running OpenStack Message Queuing Service (code-named Zaqar) Server

openstack-zaqar@1.service loaded active running OpenStack Message Queuing Service (code-named Zaqar) Server Instance 1

openvswitch.service loaded active exited Open vSwitch

LOAD = Reflects whether the unit definition was properly loaded.

ACTIVE = The high-level unit activation state, i.e. generalization of SUB.

SUB = The low-level unit activation state, values depend on unit type.

37 loaded units listed. Pass --all to see loaded but inactive units, too.

To show all installed unit files use 'systemctl list-unit-files'.

备份

保证您有可用的充足的磁盘空间,在您执行备份进程前。此tarball预计是至少3.5 GB。

[stack@director ~]$df -h

运行此命令作为root用户为了备份从undercloud节点的数据到名为undercloud备份[timestamp]的文件.tar.gz。

[root@director ~]# mysqldump --opt --all-databases > /root/undercloud-all-databases.sql

[root@director ~]# tar --xattrs -czf undercloud-backup-`date +%F`.tar.gz /root/undercloud-all-databases.sql

/etc/my.cnf.d/server.cnf /var/lib/glance/images /srv/node /home/stack

tar: Removing leading `/' from member names

安装新的OSPD节点

UCS服务器安装

步骤为了安装一个新的UCS C240 M4服务器以及初始建立步骤可以从Cisco UCS C240 M4服务器安装和服务指南参考。

登陆到有使用的服务器CIMC IP。

如果固件不是根据以前,使用的推荐的版本请执行BIOS升级。测量得BIOS升级的步骤这里: Cisco UCS C系列机架装置服务器BIOS升级指南。

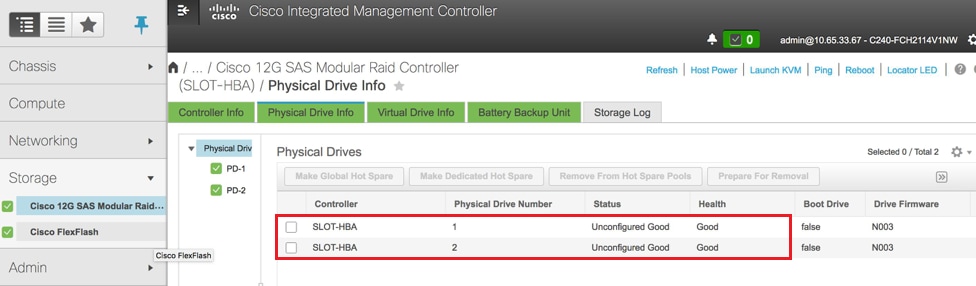

验证物理驱动的状况。这一定是没有配置好:

连接对存贮> Cisco 12G SAS模块化袭击控制器(SLOT-HBA) >物理驱动信息如显示这里在镜像。

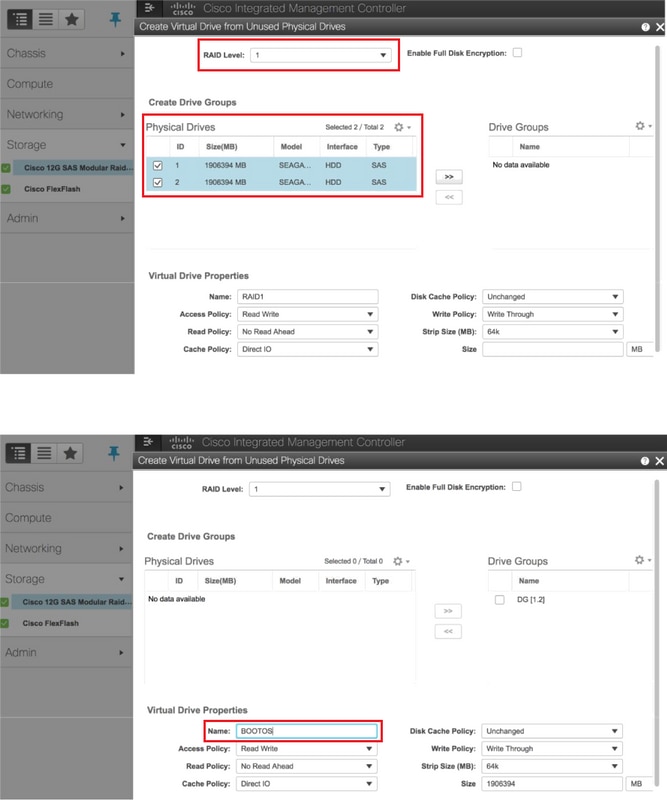

用RAID第1级创建从物理驱动的一个虚拟驱动器:

连接对存贮> Cisco 12G SAS模块化袭击控制器(SLOT-HBA)如镜像所显示, >控制器信息>创建从未使用的物理驱动的虚拟驱动器。

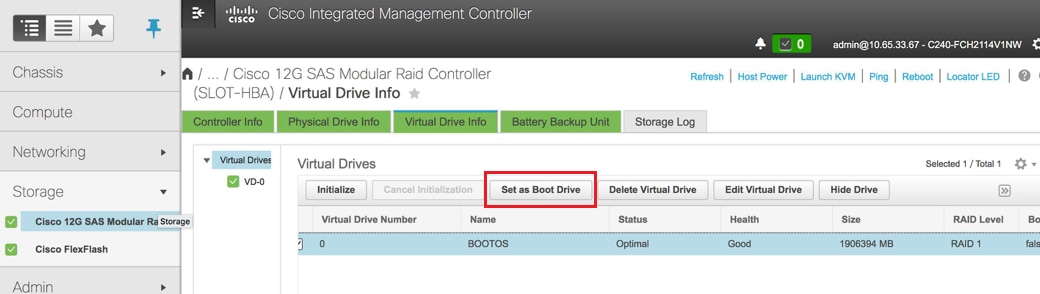

如镜像所显示,选择VD并且配置集作为引导驱动器。

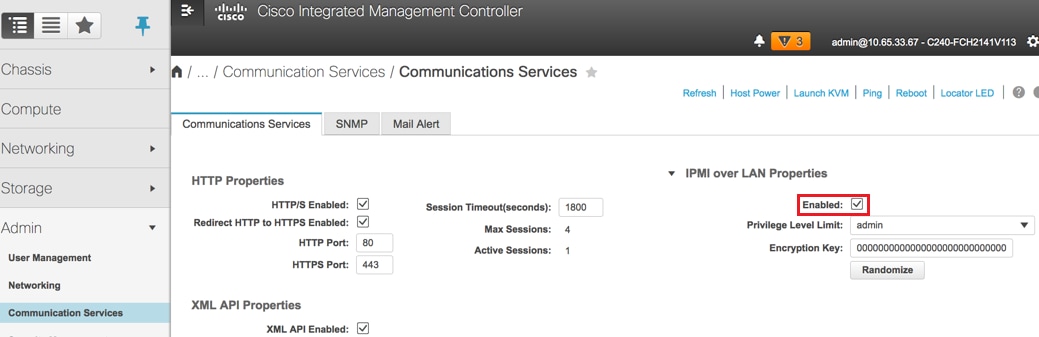

在LAN的Enable (event) IPMI :

如镜像所显示,连接对Admin >通信服务>通信服务。

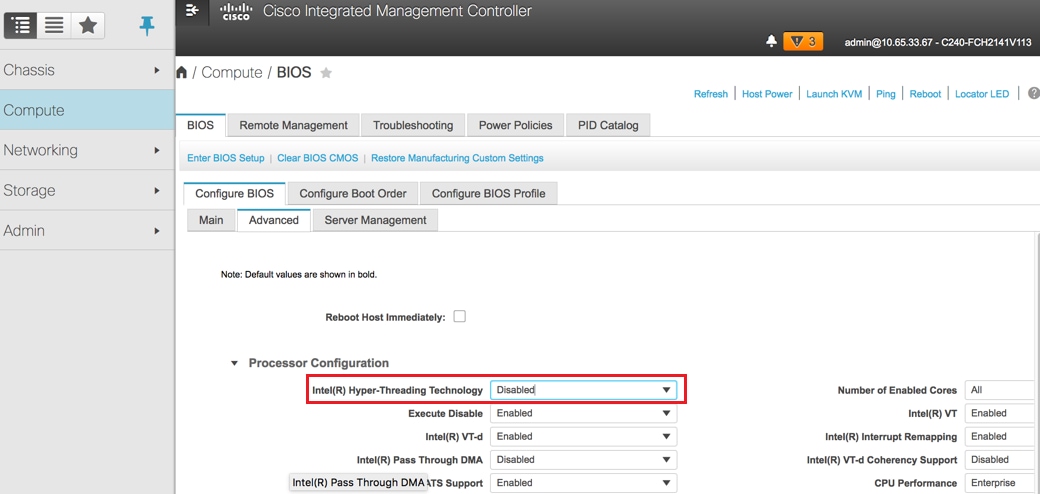

hyperthreading的功能失效:

如镜像所显示,连接计算> BIOS >配置BIOS >Advanced >处理器配置。

Note:此处显示的图像和在此部分提及的这里配置步骤是关于固件版本3.0(3e),并且也许有轻微的变化,如果研究其他版本。

红帽子安装

安放红帽子ISO镜像

1. 登陆到OSP-D服务器。

2. 启动KVM控制台。

3. 连接对虚拟媒体>激活虚拟设备。接受切记您的将来连接的会话和enable (event)设置。

4. 选择虚拟媒体>映射CD/DVDand映射红帽子ISO镜像。

5. 选择功率>重置系统(热引导)重新启动系统。

6. 在重新启动,请按F6and选择Cisco vKVM被映射的vDVD1.22and按Enter。

安装Red帽子恩特普赖斯Linux

Note:在此部分的程序表示确定参数的最小数量的必须配置安装过程的简化版本。

1. 选择选项安装Red帽子恩特普赖斯Linux (RHEL)为了开始安装。

2. 选择软件选择>仅最低的安装。

3. Configure network网络界面(eno1和eno2)。

4. 点击网络和主机名。

- 选择将使用外部通信的接口(eno1或eno2)

- 点击配置

- 选择IPv4 Settingstab,选择手工方法并且点击Add

- 设置这些参数如以前使用: 地址,子网掩码,网关, DNS服务器

5. 选择日期和时间并且指定您的地区和城市。

6. Enable (event)网络时间和配置NTP服务器。

7. 选择安装目的地并且请使用ext4文件系统 。

Note:删除/home/并且再分配容量在根/下。

8. 禁用Kdump。

9. 设置仅根密码。

10. 开始安装

。

恢复Undercloud

准备根据备份的undercloud安装

一旦机器安装有RHEL 7.3和在一个干净的状态,重新授权给必要的所有订阅/贮藏库安装和运行导向器。

主机名配置:

[root@director ~]$sudo hostnamectl set-hostname <FQDN_hostname>

[root@director ~]$sudo hostnamectl set-hostname --transient <FQDN_hostname>

编辑/etc/hosts文件:

[root@director ~]$ vi /etc/hosts

<ospd_external_address> <server_hostname> <FQDN_hostname>

10.225.247.142 pod1-ospd pod1-ospd.cisco.com

验证主机名- :

[root@director ~]$ cat /etc/hostname

pod1-ospd.cisco.com

验证DNS配置:

[root@director ~]$ cat /etc/resolv.conf

#Generated by NetworkManager

nameserver <DNS_IP>

修改设置的NIC接口:

[root@director ~]$ cat /etc/sysconfig/network-scripts/ifcfg-eno1

DEVICE=eno1

ONBOOT=yes

HOTPLUG=no

NM_CONTROLLED=no

PEERDNS=no

DEVICETYPE=ovs

TYPE=OVSPort

OVS_BRIDGE=br-ctlplane

BOOTPROTO=none

MTU=1500

完成红帽子注册

下载此程序包为了配置订阅管理器为了使用rh卫星:

[root@director ~]$ rpm -Uvh http://<satellite-server>/pub/katello-ca-consumer-latest.noarch.rpm

[root@director ~]$ subscription-manager config

向有使用的rh卫星登记RHEL的7.3此activationkey。

[root@director ~]$subscription-manager register --org="<ORG>" --activationkey="<KEY>"

为了看到订阅:

[root@director ~]$ subscription-manager list –consumed

Enable (event)贮藏库类似于老OSPD回购:

[root@director ~]$ sudo subscription-manager repos --disable=*

[root@director ~]$ subscription-manager repos --enable=rhel-7-server-rpms --enable=rhel-7-server-extras-rpms --enable=rh

el-7-server-openstack-10-rpms --enable=rhel-7-server-rh-common-rpms --enable=rhel-ha-for-rhel-7-server-rpm

执行在您的系统的一次更新为了保证您有最新的基本系统程序包和重新启动系统:

[root@director ~]$sudo yum update -y

[root@director ~]$sudo reboot

Undercloud恢复

在您enable (event)订阅,导入备份的undercloud TAR文件undercloud备份DATE +%F `.tar.gz新的OSP-D服务器根目录/root后。

安装mariadb服务器:

[root@director ~]$ yum install -y mariadb-server

提取MariaDB配置文件和数据库(DB)备份。执行此操作作为root用户。

[root@director ~]$ tar -xzC / -f undercloud-backup-$DATE.tar.gz etc/my.cnf.d/server.cnf

[root@director ~]$ tar -xzC / -f undercloud-backup-$DATE.tar.gz root/undercloud-all-databases.sql

若有编辑/etc/my.cnf.d/server.cnf并且注释BIND地址条目:

[root@tb3-ospd ~]# vi /etc/my.cnf.d/server.cnf

开始MariaDB服务和临时地更新max_allowed_packet设置:

[root@director ~]$ systemctl start mariadb

[root@director ~]$ mysql -uroot -e"set global max_allowed_packet = 16777216;"

整理某些权限(以后将被再创) :

[root@director ~]$ for i in ceilometer glance heat ironic keystone neutron nova;do mysql -e "drop user $i";done

[root@director ~]$ mysql -e 'flush privileges'

Note: 如果云高计服务在设置以前被禁用,请执行此命令并且去除云高计。

创建stackuser帐户:

[root@director ~]$ sudo useradd stack

[root@director ~]$ sudo passwd stack << specify a password

[root@director ~]$ echo "stack ALL=(root) NOPASSWD:ALL" | sudo tee -a /etc/sudoers.d/stack

[root@director ~]$ sudo chmod 0440 /etc/sudoers.d/stack

恢复堆栈用户主目录:

[root@director ~]$ tar -xzC / -f undercloud-backup-$DATE.tar.gz home/stack

安装很快和扫视基本程序包,然后恢复他们的数据:

[root@director ~]$ yum install -y openstack-glance openstack-swift

[root@director ~]$ tar --xattrs -xzC / -f undercloud-backup-$DATE.tar.gz srv/node var/lib/glance/images

确认数据由正确的用户拥有:

[root@director ~]$ chown -R swift: /srv/node

[root@director ~]$ chown -R glance: /var/lib/glance/images

恢复undercloud SSL证书(可选-将执行,只有当设置使用SSL证书)。

[root@director ~]$ tar -xzC / -f undercloud-backup-$DATE.tar.gz etc/pki/instack-certs/undercloud.pem

[root@director ~]$ tar -xzC / -f undercloud-backup-$DATE.tar.gz etc/pki/ca-trust/source/anchors/ca.crt.pem

重新运行undercloud安装作为stackuser并且保证运行它在堆栈用户主目录里:

[root@director ~]$ su - stack

[stack@director ~]$ sudo yum install -y python-tripleoclient

确认主机名-在/etc/hosts正确地设置。

重新安装undercloud :

[stack@director ~]$ openstack undercloud install

<snip>

#############################################################################

Undercloud install complete.

The file containing this installation's passwords is at

/home/stack/undercloud-passwords.conf.

There is also a stackrc file at /home/stack/stackrc.

These files are needed to interact with the OpenStack services, and must be

secured.

#############################################################################

重新连接恢复的Undercloud对乌云密布

在您完成这些步骤后, undercloud可以预计自动地恢复其与乌云密布的连接。节点将继续轮询配器法(热量)待定任务的,与每隔几秒钟发出的使用一个简单的HTTP请求。

验证完整恢复

请使用这些命令为了执行最近恢复的环境的健康检查:

[root@director ~]$ su - stack

Last Log in: Tue Nov 28 21:27:50 EST 2017 from 10.86.255.201 on pts/0

[stack@director ~]$ source stackrc

[stack@director ~]$ nova list

+--------------------------------------+--------------------+--------+------------+-------------+------------------------+

| ID | Name | Status | Task State | Power State | Networks |

+--------------------------------------+--------------------+--------+------------+-------------+------------------------+

| b1f5294a-629e-454c-b8a7-d15e21805496 | pod1-compute-0 | ACTIVE | - | Running | ctlplane=192.200.0.119 |

| 9106672e-ac68-423e-89c5-e42f91fefda1 | pod1-compute-1 | ACTIVE | - | Running | ctlplane=192.200.0.120 |

| b3ed4a8f-72d2-4474-91a1-b6b70dd99428 | pod1-compute-2 | ACTIVE | - | Running | ctlplane=192.200.0.124 |

| 677524e4-7211-4571-ac35-004dc5655789 | pod1-compute-3 | ACTIVE | - | Running | ctlplane=192.200.0.107 |

| 55ea7fe5-d797-473c-83b1-d897b76a7520 | pod1-compute-4 | ACTIVE | - | Running | ctlplane=192.200.0.122 |

| c34c1088-d79b-42b6-9306-793a89ae4160 | pod1-compute-5 | ACTIVE | - | Running | ctlplane=192.200.0.108 |

| 4ba28d8c-fb0e-4d7f-8124-77d56199c9b2 | pod1-compute-6 | ACTIVE | - | Running | ctlplane=192.200.0.105 |

| d32f7361-7e73-49b1-a440-fa4db2ac21b1 | pod1-compute-7 | ACTIVE | - | Running | ctlplane=192.200.0.106 |

| 47c6a101-0900-4009-8126-01aaed784ed1 | pod1-compute-8 | ACTIVE | - | Running | ctlplane=192.200.0.121 |

| 1a638081-d407-4240-b9e5-16b47e2ff6a2 | pod1-compute-9 | ACTIVE | - | Running | ctlplane=192.200.0.112 |

<snip>

[stack@director ~]$ ssh heat-admin@192.200.0.107

[heat-admin@pod1-controller-0 ~]$ sudo pcs status

Cluster name: tripleo_cluster

Stack: corosync

Current DC: pod1-controller-0 (version 1.1.15-11.el7_3.4-e174ec8) - partition with quorum

3 nodes and 22 resources configured

Online: [ pod1-controller-0 pod1-controller-1 pod1-controller-2 ]

Full list of resources:

ip-10.1.10.10 (ocf::heartbeat:IPaddr2): Started pod1-controller-0

ip-11.120.0.97 (ocf::heartbeat:IPaddr2): Started pod1-controller-1

Clone Set: haproxy-clone [haproxy]

Started: [ pod1-controller-0 pod1-controller-1 pod1-controller-2 ]

Master/Slave Set: galera-master [galera]

Masters: [ pod1-controller-0 pod1-controller-1 pod1-controller-2 ]

ip-192.200.0.106 (ocf::heartbeat:IPaddr2): Started pod1-controller-0

ip-11.120.0.95 (ocf::heartbeat:IPaddr2): Started pod1-controller-1

ip-11.119.0.98 (ocf::heartbeat:IPaddr2): Started pod1-controller-0

ip-11.118.0.92 (ocf::heartbeat:IPaddr2): Started pod1-controller-1

Clone Set: rabbitmq-clone [rabbitmq]

Started: [ pod1-controller-0 pod1-controller-1 pod1-controller-2 ]

Master/Slave Set: redis-master [redis]

Masters: [ pod1-controller-0 ]

Slaves: [ pod1-controller-1 pod1-controller-2 ]

openstack-cinder-volume (systemd:openstack-cinder-volume): Started pod1-controller-0

my-ipmilan-for-controller-0 (stonith:fence_ipmilan): Stopped

my-ipmilan-for-controller-1 (stonith:fence_ipmilan): Stopped

my-ipmilan-for-controller-2 (stonith:fence_ipmilan): Stopped

Failed Actions:

* my-ipmilan-for-controller-0_start_0 on pod1-controller-1 'unknown error' (1): call=190, status=Timed Out, exitreason='none',

last-rc-change='Wed Nov 22 13:52:45 2017', queued=0ms, exec=20005ms

* my-ipmilan-for-controller-1_start_0 on pod1-controller-1 'unknown error' (1): call=192, status=Timed Out, exitreason='none',

last-rc-change='Wed Nov 22 13:53:08 2017', queued=0ms, exec=20005ms

* my-ipmilan-for-controller-2_start_0 on pod1-controller-1 'unknown error' (1): call=188, status=Timed Out, exitreason='none',

last-rc-change='Wed Nov 22 13:52:23 2017', queued=0ms, exec=20004ms

* my-ipmilan-for-controller-0_start_0 on pod1-controller-0 'unknown error' (1): call=210, status=Timed Out, exitreason='none',

last-rc-change='Wed Nov 22 13:53:08 2017', queued=0ms, exec=20005ms

* my-ipmilan-for-controller-1_start_0 on pod1-controller-0 'unknown error' (1): call=207, status=Timed Out, exitreason='none',

last-rc-change='Wed Nov 22 13:52:45 2017', queued=0ms, exec=20004ms

* my-ipmilan-for-controller-2_start_0 on pod1-controller-0 'unknown error' (1): call=206, status=Timed Out, exitreason='none',

last-rc-change='Wed Nov 22 13:52:45 2017', queued=0ms, exec=20006ms

* ip-192.200.0.106_monitor_10000 on pod1-controller-0 'not running' (7): call=197, status=complete, exitreason='none',

last-rc-change='Wed Nov 22 13:51:31 2017', queued=0ms, exec=0ms

* my-ipmilan-for-controller-0_start_0 on pod1-controller-2 'unknown error' (1): call=183, status=Timed Out, exitreason='none',

last-rc-change='Wed Nov 22 13:52:23 2017', queued=1ms, exec=20006ms

* my-ipmilan-for-controller-1_start_0 on pod1-controller-2 'unknown error' (1): call=184, status=Timed Out, exitreason='none',

last-rc-change='Wed Nov 22 13:52:23 2017', queued=0ms, exec=20005ms

* my-ipmilan-for-controller-2_start_0 on pod1-controller-2 'unknown error' (1): call=177, status=Timed Out, exitreason='none',

last-rc-change='Wed Nov 22 13:52:02 2017', queued=0ms, exec=20005ms

Daemon Status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled

[heat-admin@pod1-controller-0 ~]$ sudo ceph status

cluster eb2bb192-b1c9-11e6-9205-525400330666

health HEALTH_OK

monmap e1: 3 mons at {pod1-controller-0=11.118.0.40:6789/0,pod1-controller-1=11.118.0.41:6789/0,pod1-controller-2=11.118.0.42:6789/0}

election epoch 58, quorum 0,1,2 pod1-controller-0,pod1-controller-1,pod1-controller-2

osdmap e1398: 12 osds: 12 up, 12 in

flags sortbitwise,require_jewel_osds

pgmap v1245812: 704 pgs, 6 pools, 542 GB data, 352 kobjects

1625 GB used, 11767 GB / 13393 GB avail

704 active+clean

client io 21549 kB/s wr, 0 op/s rd, 120 op/s wr

检查身份服务(根本原理)操作

此步骤通过查询验证身份服务操作用户列表。

[stack@director ~]$ source stackrc

[stack@director ~]$ openstack user list

+----------------------------------+------------------+

| ID | Name |

+----------------------------------+------------------+

| 69ac2b9d89414314b1366590c7336f7d | admin |

| f5c30774fe8f49d0a0d89d5808a4b2cc | glance |

| 3958d852f85749f98cca75f26f43d588 | heat |

| cce8f2b7f1a843a08d0bb295a739bd34 | ironic |

| ce7c642f5b5741b48a84f54d3676b7ee | ironic-inspector |

| a69cd42a5b004ec5bee7b7a0c0612616 | mistral |

| 5355eb161d75464d8476fa0a4198916d | neutron |

| 7cee211da9b947ef9648e8fe979b4396 | nova |

| f73d36563a4a4db482acf7afc7303a32 | swift |

| d15c12621cbc41a8a4b6b67fa4245d03 | zaqar |

| 3f0ed37f95544134a15536b5ca50a3df | zaqar-websocket |

+----------------------------------+------------------+

[stack@director ~]$

[stack@director ~]$ source <overcloudrc>

[stack@director ~]$ openstack user list

+----------------------------------+------------+

| ID | Name |

+----------------------------------+------------+

| b4e7954942184e2199cd067dccdd0943 | admin |

| 181878efb6044116a1768df350d95886 | neutron |

| 6e443967ee3f4943895c809dc998b482 | heat |

| c1407de17f5446de821168789ab57449 | nova |

| c9f64c5a2b6e4d4a9ff6b82adef43992 | glance |

| 800e6b1163b74cc2a5fab4afb382f37d | cinder |

| 4cfa5a2a44c44c678025842f080e5f53 | heat-cfn |

| 9b222eeb8a58459bb3bfc76b8fff0f9f | swift |

| 815f3f25bcda49c290e1b56cd7981d1b | core |

| 07c40ade64f34a64932129175150fa4a | gnocchi |

| 0ceeda0bc32c4d46890e53adef9a193d | aodh |

| f3caab060171468592eab376a94967b8 | ceilometer |

+----------------------------------+------------+

[stack@director ~]$

将来节点内省的加载镜像

验证/httpboot和所有这些文件inspector.ipxe, agent.kernel, agent.ramdisk,如果不进行到这些步骤。

[stack@director ~]$ ls /httpboot

inspector.ipxe

[stack@director ~]$ source stackrc

[stack@director ~]$ cd images/

[stack@director images]$ openstack overcloud image upload --image-path /home/stack/images

Image "overcloud-full-vmlinuz" is up-to-date, skipping.

Image "overcloud-full-initrd" is up-to-date, skipping.

Image "overcloud-full" is up-to-date, skipping.

Image "bm-deploy-kernel" is up-to-date, skipping.

Image "bm-deploy-ramdisk" is up-to-date, skipping.

[stack@director images]$ ls /httpboot

agent.kernel agent.ramdisk inspector.ipxe

[stack@director images]$

重新启动操刀

操刀在中止状态在OSPD恢复以后。此程序enable (event)操刀。

[heat-admin@pod1-controller-0 ~]$ sudo pcs property set stonith-enabled=true

[heat-admin@pod1-controller-0 ~]$ sudo pcs status

[heat-admin@pod1-controller-0 ~]$sudo pcs stonith show

Related Information

由思科工程师提供

- Padmaraj RamanoudjamCisco高级服务

- Partheeban RajagopalCisco高级服务

反馈

反馈