Configure Advanced gRPC Workflows with Telegraf, InfluxDB, and Grafana on Catalyst 9800

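Introduction

This document describes how to deploy the Telegraf, InfluxDB, and Grafana (TIG) stack and how to interconnect it with the Catalyst 9800.

Prerequisites

This document demonstrates the programmatic interface capabilities of the Catalyst 9800 through a complex integration. It aims to show how these interfaces can be fully customized to fit any need and save time day to day. The deployment shown here relies on gRPC and presents the telemetry configuration that makes Catalyst 9800 wireless data available in any Telegraf, InfluxDB, Grafana (TIG) observability stack.

Requirements

Cisco recommends that you have knowledge of these topics:

- Catalyst Wireless 9800 configuration model.

- Network programmability and data models.

- TIG stack basics.

Components Used

The information in this document is based on these software and hardware versions:

- Catalyst 9800-CL (v. 17.12.03).

- Ubuntu (v. 22.04.03).

- InfluxDB (v. 1.06.07).

- Telegraf (v. 1.21.04).

- Grafana (v. 10.02.01).

The information in this document was created from the devices in a specific lab environment. All of the devices used in this document started with a cleared (default) configuration. If your network is live, ensure that you understand the potential impact of any command.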

設定

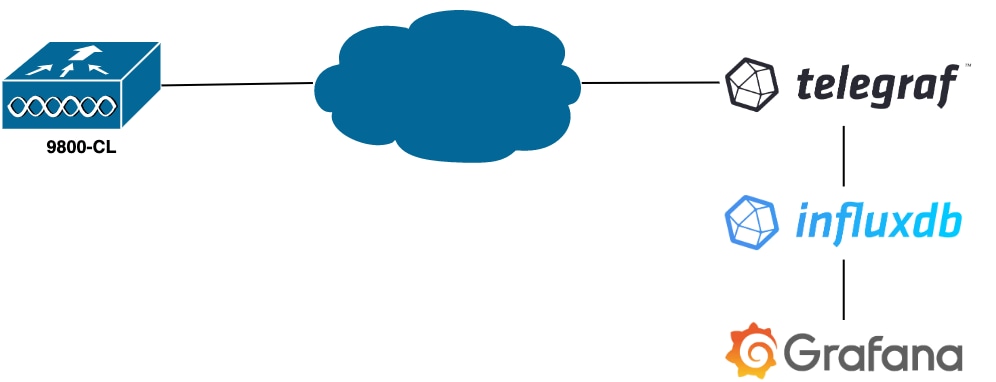

網路圖表

組態

在本示例中,在9800-CL上使用gRPC撥出來配置遙測,以將儲存資訊的Telegraf應用程式上的資訊推送到ConsumeDB資料庫中。這裡使用了兩個裝置,

- 託管整個TIG堆疊的Ubuntu伺服器。

- Catalyst 9800-CL。

本配置指南並不關注這些裝置的整個部署,而是關注每個應用程式上要正確傳送、接收和顯示9800資訊所需的配置。

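Step 1. Prepare the Database

Before moving to the configuration part, make sure your InfluxDB instance is running properly. On a Linux distribution, this is easily done with the systemctl status command.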

admin@tig:~$ systemctl status influxd

● influxdb.service - InfluxDB is an open-source, distributed, time series database

Loaded: loaded (/lib/systemd/system/influxdb.service; enabled; vendor preset: enabled)

Active: active (running) since Wed 2023-06-14 13:06:18 UTC; 2 weeks 5 days ago

Docs: https://docs.influxdata.com/influxdb/

Main PID: 733 (influxd)

Tasks: 15 (limit: 19180)

Memory: 4.2G

CPU: 1h 28min 47.366s

CGroup: /system.slice/influxdb.service

└─733 /usr/bin/influxd -config /etc/influxdb/influxdb.conf

For this example to work, Telegraf needs a database to store the metrics, as well as a user to connect to it. Both can be created from the InfluxDB CLI with these commands:

admin@tig:~$ influx

Connected to http://localhost:8086 version 1.8.10

InfluxDB shell version: 1.8.10

> create database TELEGRAF

> create user telegraf with password 'YOUR_PASSWORD'

Now that the database has been created, Telegraf can be configured to store metrics in it.
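The result of the commands above can also be checked over the InfluxDB 1.x HTTP API rather than the CLI. The following is a minimal sketch, not part of the original procedure: the function names are illustrative, the sample response body is hand-written in the shape InfluxDB 1.x returns (it was not captured from this lab), and the live call assumes authentication is not enforced on the instance.

```python
import json
import urllib.parse
import urllib.request


def show_databases_url(base_url: str) -> str:
    """Build the InfluxDB 1.x /query URL that runs SHOW DATABASES."""
    query = urllib.parse.urlencode({"q": "SHOW DATABASES"})
    return f"{base_url.rstrip('/')}/query?{query}"


def database_names(response_body: str) -> list[str]:
    """Extract database names from the JSON body of a /query response."""
    payload = json.loads(response_body)
    names = []
    for result in payload.get("results", []):
        for series in result.get("series", []):
            names.extend(row[0] for row in series.get("values", []))
    return names


def database_exists(base_url: str, name: str, timeout: float = 5.0) -> bool:
    """Ask a live instance whether a database exists (performs a network call)."""
    with urllib.request.urlopen(show_databases_url(base_url), timeout=timeout) as resp:
        return name in database_names(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Hand-written sample body (assumption), shaped like an InfluxDB 1.x reply.
    sample = (
        '{"results":[{"statement_id":0,"series":[{"name":"databases",'
        '"columns":["name"],"values":[["_internal"],["TELEGRAF"]]}]}]}'
    )
    print("TELEGRAF" in database_names(sample))
```

On the TIG host, `database_exists("http://127.0.0.1:8086", "TELEGRAF")` would perform the same check against the running instance.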

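Step 2. Prepare Telegraf

Only two Telegraf configuration elements matter for this example. Both are set in the configuration file /etc/telegraf/telegraf.conf, as is usual for applications running on Unix.

The first one declares the output used by Telegraf. As stated previously, InfluxDB is used here, and it is configured in the output section of the telegraf.conf file as follows: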

###############################################################################

# OUTPUT PLUGINS #

###############################################################################

# Output Plugin InfluxDB

[[outputs.influxdb]]

## The full HTTP or UDP URL for your InfluxDB instance.

# ##

# ## Multiple URLs can be specified for a single cluster, only ONE of the

# ## urls will be written to each interval.

urls = [ "http://127.0.0.1:8086" ]

# ## The target database for metrics; will be created as needed.

# ## For UDP url endpoint database needs to be configured on server side.

database = "TELEGRAF"

# ## HTTP Basic Auth

username = "telegraf"

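password = "YOUR_PASSWORD"

This instructs the Telegraf process to store the data it receives in the InfluxDB instance running on the same host on port 8086, using the database named "TELEGRAF" (with the credentials telegraf/YOUR_PASSWORD to access it).

If the first thing declared was the output, the second is of course the input. To tell Telegraf that the data it receives comes from a Cisco device using telemetry, use the "cisco_telemetry_mdt" input plugin. To configure it, add these lines to the /etc/telegraf/telegraf.conf file: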

###############################################################################

# INPUT PLUGINS #

###############################################################################

# # Cisco model-driven telemetry (MDT) input plugin for IOS XR, IOS XE and NX-OS platforms

[[inputs.cisco_telemetry_mdt]]

# ## Telemetry transport can be "tcp" or "grpc". TLS is only supported when

# ## using the grpc transport.

transport = "grpc"

#

# ## Address and port to host telemetry listener

service_address = ":57000"

# ## Define aliases to map telemetry encoding paths to simple measurement names

[inputs.cisco_telemetry_mdt.aliases]

ifstats = "ietf-interfaces:interfaces-state/interface/statistics"

This enables the Telegraf application running on the host (on the default port 57000) to decode the data received from the WLC.

Once the configuration is saved, restart Telegraf to apply it to the service, and make sure the service restarted properly:

admin@tig:~$ sudo systemctl restart telegraf

admin@tig:~$ systemctl status telegraf.service

● telegraf.service - Telegraf

Loaded: loaded (/lib/systemd/system/telegraf.service; enabled; vendor preset: enabled)

Active: active (running) since Mon 2023-07-03 17:12:49 UTC; 2min 18s ago

Docs: https://github.com/influxdata/telegraf

Main PID: 110182 (telegraf)

Tasks: 10 (limit: 19180)

Memory: 47.6M

CPU: 614ms

CGroup: /system.slice/telegraf.service

└─110182 /usr/bin/telegraf -config /etc/telegraf/telegraf.conf -config-directory /etc/telegraf/telegraf.d

Step 3. Determine the Telemetry Subscription Containing the Desired Metrics

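As stated previously, on Cisco devices as on many others, metrics are organized according to YANG models. The Cisco YANG models specific to each IOS XE release (used on the 9800) are publicly available, including those for IOS XE Dublin 17.12.03 used in this example.

In this example, the focus is on collecting CPU utilization metrics from the 9800-CL instance used. Inspecting the YANG models for Cisco IOS XE Dublin 17.12.03 shows which module contains the CPU utilization of the controller, in particular over the last 5 seconds. These metrics are part of the Cisco-IOS-XE-process-cpu-oper module, under the cpu-utilization grouping (leaf five-seconds).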
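To make the naming concrete, here is a small sketch of how the module, grouping, and leaf named above relate to the strings used elsewhere in this document. It is illustrative only: the helper names are made up, and the mappings (the `process-cpu-ios-xe-oper` prefix in the XPath filter, the module name in the stored measurement, and hyphens becoming underscores in field keys) are taken from the commands and outputs shown in this document, not from a general rule.

```python
def xpath_filter(prefix: str, *nodes: str) -> str:
    """Compose the XPath filter used in the telemetry subscription."""
    return "/" + prefix + ":" + "/".join(nodes)


def measurement_name(module: str, *nodes: str) -> str:
    """Compose the encoding path that appears as the measurement name in InfluxDB."""
    return module + ":" + "/".join(nodes)


def field_key(leaf: str) -> str:
    """Field keys in this document's InfluxDB output use underscores, not hyphens."""
    return leaf.replace("-", "_")


if __name__ == "__main__":
    print(xpath_filter("process-cpu-ios-xe-oper", "cpu-usage", "cpu-utilization", "five-seconds"))
    print(measurement_name("Cisco-IOS-XE-process-cpu-oper", "cpu-usage", "cpu-utilization"))
    print(field_key("five-seconds"))
```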

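Step 4. Enable NETCONF on the Controller

The gRPC dial-out framework relies on NETCONF to work properly. This feature must therefore be enabled on the 9800, which is done with these commands: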

WLC(config)#netconf ssh

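WLC(config)#netconf-yang

Step 5. Configure the Telemetry Subscription on the Controller

Once the XPaths (XML Path Language) of the metrics have been determined from the YANG model, a telemetry subscription can be configured from the 9800 CLI to start streaming them to the Telegraf instance configured in Step 2. This is done with these commands: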

WLC(config)#telemetry ietf subscription 101

WLC(config-mdt-subs)#encoding encode-kvgpb

WLC(config-mdt-subs)#filter xpath /process-cpu-ios-xe-oper:cpu-usage/cpu-utilization/five-seconds

WLC(config-mdt-subs)#source-address 10.48.39.130

WLC(config-mdt-subs)#stream yang-push

WLC(config-mdt-subs)#update-policy periodic 100

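WLC(config-mdt-subs)#receiver ip address 10.48.39.98 57000 protocol grpc-tcp

In this code block, the telemetry subscription with identifier 101 is defined first. The subscription identifier can be any number in the range <0-2147483647>, as long as it does not overlap with another subscription. For this subscription, the following are configured, in this order:

- The encoding method used, which must be kvGPB when the gRPC transport protocol is used.

- The filter for the metrics sent by the subscription, which is the XPath defining the metric of interest (here, /process-cpu-ios-xe-oper:cpu-usage/cpu-utilization/five-seconds).

- The source IP address used by the controller to send the metrics.

- The stream type used to deliver the metrics, in this case the YANG Push IETF standard.

- The period at which the controller sends data to the subscriber, expressed in hundredths of a second. In this example, the value 100 means an update is sent periodically every second.

- The receiver IP address and port number, as well as the protocol used between the controller and the receiver. In this example, gRPC-TCP is used to send metrics to host 10.48.39.98 on port 57000.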
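The parameters listed above can be captured in a small helper that renders the subscription commands and handles the unit conversion for the update period. This is a sketch under stated assumptions: the class name `Subscription` and its fields are illustrative, and it only reproduces the command forms shown in this step; it does not push anything to the controller.

```python
from dataclasses import dataclass

MAX_SUBSCRIPTION_ID = 2147483647  # upper bound of the ID range quoted above


@dataclass
class Subscription:
    sub_id: int
    xpath: str
    source_address: str
    receiver_ip: str
    receiver_port: int
    period_seconds: float

    def period_centiseconds(self) -> int:
        """The periodic update interval is expressed in hundredths of a second."""
        return round(self.period_seconds * 100)

    def to_cli(self) -> list[str]:
        """Render the configuration lines in the order used in this step."""
        if not 0 <= self.sub_id <= MAX_SUBSCRIPTION_ID:
            raise ValueError("subscription ID out of range")
        return [
            f"telemetry ietf subscription {self.sub_id}",
            "encoding encode-kvgpb",
            f"filter xpath {self.xpath}",
            f"source-address {self.source_address}",
            "stream yang-push",
            f"update-policy periodic {self.period_centiseconds()}",
            f"receiver ip address {self.receiver_ip} {self.receiver_port} protocol grpc-tcp",
        ]


if __name__ == "__main__":
    sub = Subscription(
        sub_id=101,
        xpath="/process-cpu-ios-xe-oper:cpu-usage/cpu-utilization/five-seconds",
        source_address="10.48.39.130",
        receiver_ip="10.48.39.98",
        receiver_port=57000,
        period_seconds=1,
    )
    print("\n".join(sub.to_cli()))
```

With `period_seconds=1` the rendered policy is `update-policy periodic 100`; a 10-second interval would render as `update-policy periodic 1000`.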

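Step 6. Configure the Grafana Data Source

Now that the controller sends data to Telegraf and that data is stored in the TELEGRAF InfluxDB database, Grafana must be configured so that it can browse these metrics.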

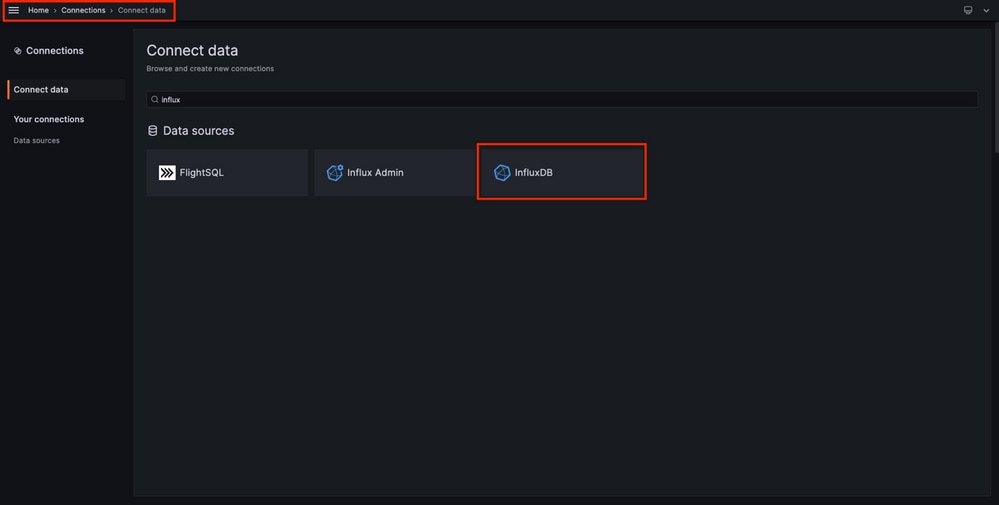

From your Grafana GUI, navigate to Home > Connections > Connect data and use the search bar to find the InfluxDB data source.

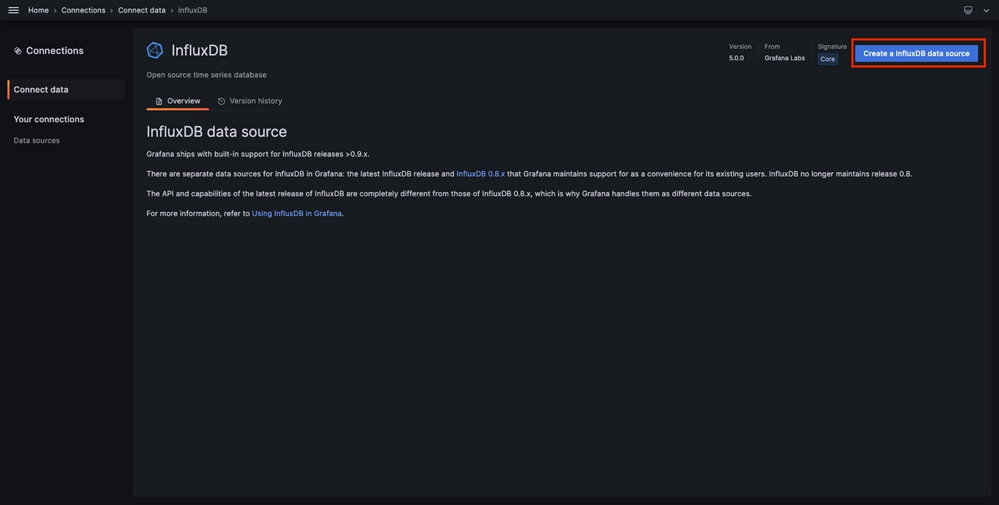

Select this data source type and use the button that creates an InfluxDB data source to connect Grafana to the TELEGRAF database created in Step 1.

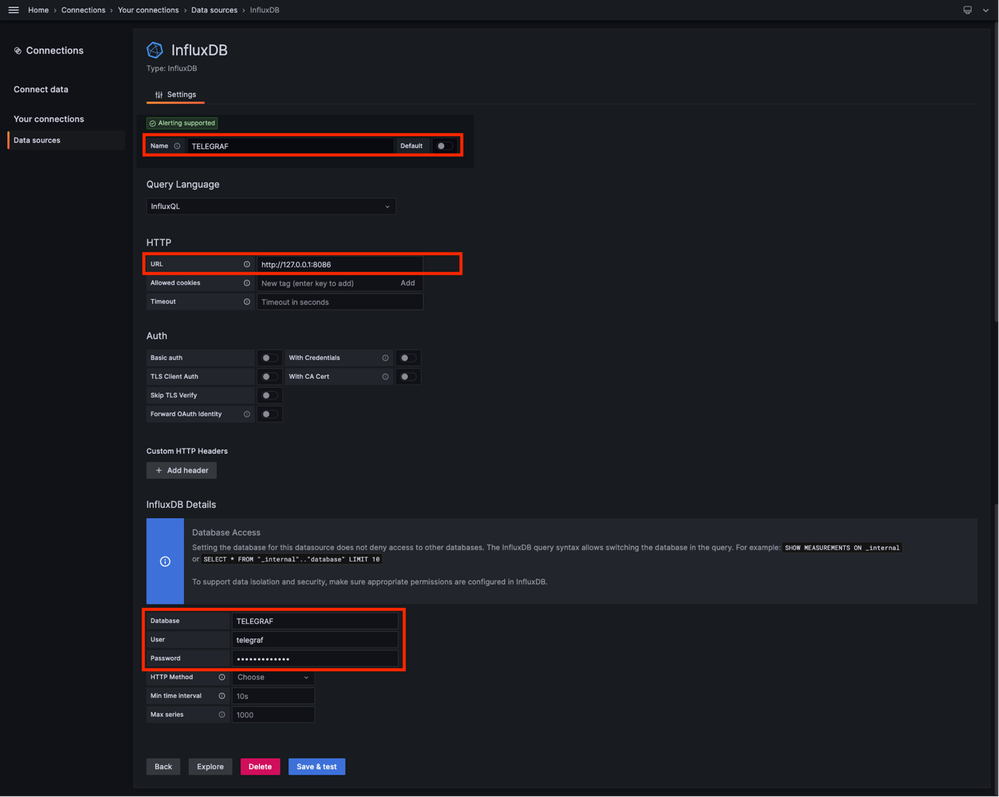

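Fill in the form displayed on screen, and in particular provide:

- A name for the data source.

- The URL of the InfluxDB instance used.

- The name of the database used (in this example, "TELEGRAF").

- The credentials of the user defined to access it (in this example, telegraf/YOUR_PASSWORD).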

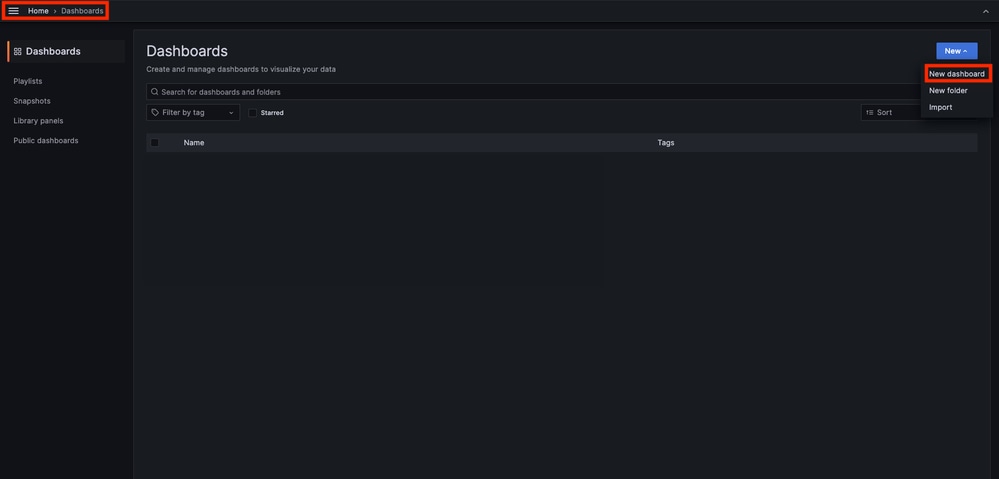

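Step 7. Create a Dashboard

Grafana visualizations are organized into dashboards. To create a dashboard containing the Catalyst 9800 metrics visualizations, navigate to Home > Dashboards and use the "New dashboard" button.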

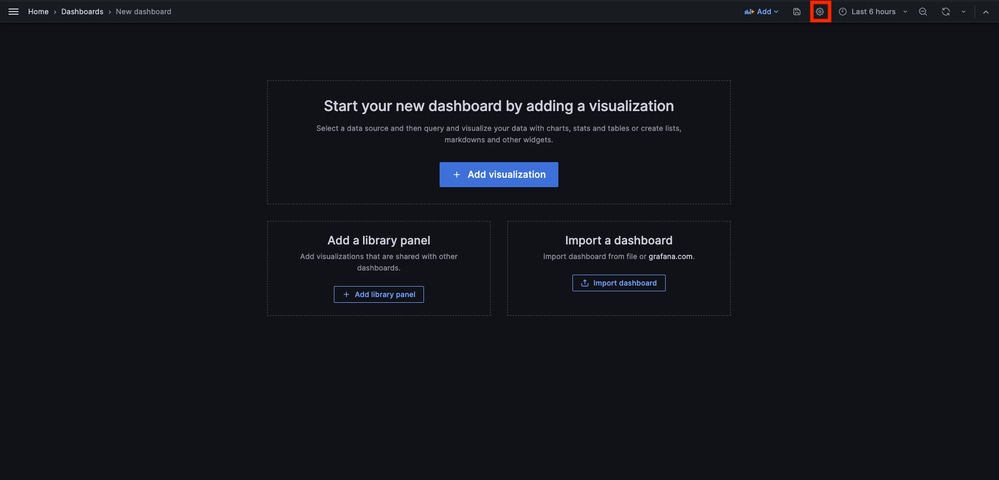

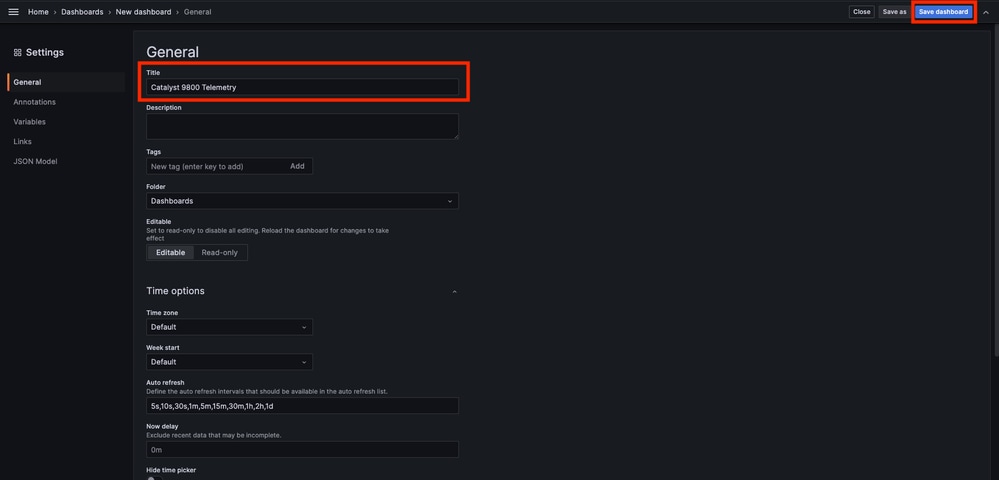

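This opens the newly created dashboard. Click the gear icon to access the dashboard settings and change its name. This example uses "Catalyst 9800 Telemetry". Once done, use the "Save dashboard" button to save the dashboard.

Step 8. Add a Visualization to the Dashboard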

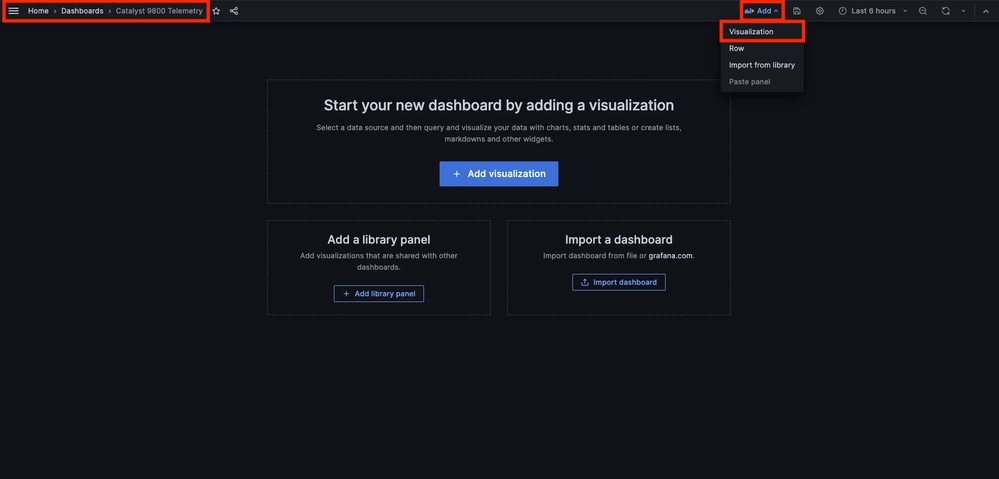

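Now that data is properly sent, received, and stored, and Grafana has access to this storage location, it is time to create a visualization for it.

From any Grafana dashboard, use the "Add" button and select "Visualization" from the menu that appears to create a visualization of your metrics.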

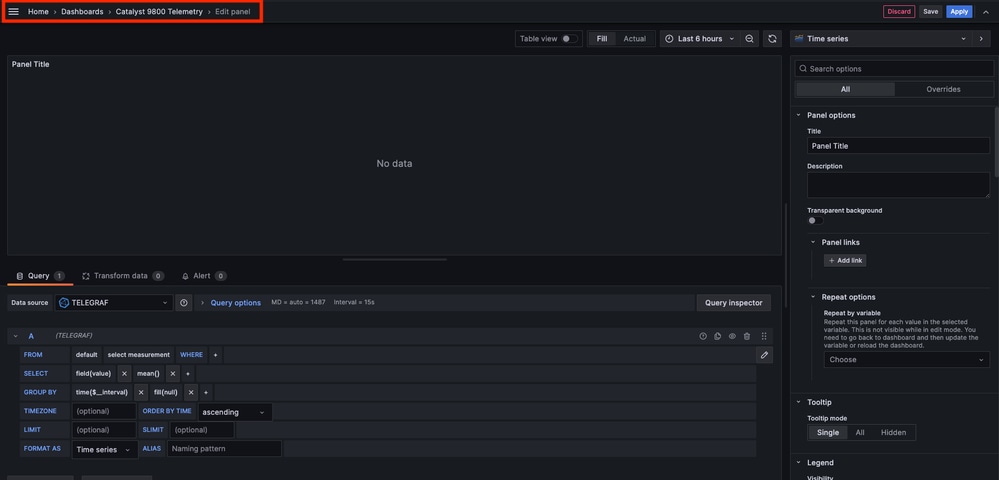

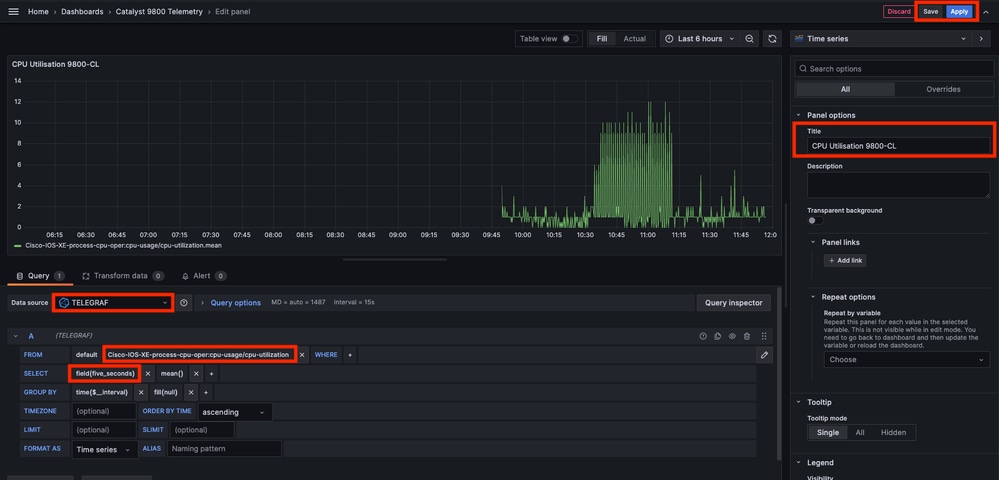

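This opens the Edit panel of the visualization created:

From this panel, select:

- The name of the data source you created in Step 6, TELEGRAF in this example.

- The measurement (schema) containing the data you want to visualize, "Cisco-IOS-XE-process-cpu-oper:cpu-usage/cpu-utilization" in this example.

- The database field representing the metric you want to visualize, "five_seconds" in this example.

- The title of the visualization, "CPU Utilization 9800-CL" in this example.

Once the "Save/Apply" button from the previous figure is pressed, the visualization showing the CPU usage of the Catalyst 9800 controller over time is added to the dashboard. Changes made to the dashboard can be saved with the floppy disk icon button.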
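For reference, the panel selections above correspond roughly to an InfluxQL query of the following shape. This is a sketch under assumptions, not output captured from this lab: it assumes the Grafana query editor defaults (a `mean` aggregation, and the Grafana macros `$timeFilter` and `$__interval`), and the helper names are illustrative.

```python
def quote_identifier(name: str) -> str:
    """Double-quote an InfluxQL identifier, escaping any embedded double quotes."""
    return '"' + name.replace('"', '\\"') + '"'


def panel_query(measurement: str, field: str, aggregate: str = "mean") -> str:
    """Build a time-series query using Grafana's time range and interval macros."""
    return (
        f"SELECT {aggregate}({quote_identifier(field)}) "
        f"FROM {quote_identifier(measurement)} "
        "WHERE $timeFilter GROUP BY time($__interval) fill(null)"
    )


if __name__ == "__main__":
    print(panel_query(
        "Cisco-IOS-XE-process-cpu-oper:cpu-usage/cpu-utilization",
        "five_seconds",
    ))
```

The measurement name must be quoted because it contains characters such as `:` and `/`, which is why the quoting helper is applied to both identifiers.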

Verify

WLC Running Configuration

Building configuration...

Current configuration : 112215 bytes

!

! Last configuration change at 14:28:36 UTC Thu May 23 2024 by admin

! NVRAM config last updated at 14:28:23 UTC Thu May 23 2024 by admin

!

version 17.12

[...]

aaa new-model

!

!

aaa authentication login default local

aaa authentication login local-auth local

aaa authentication dot1x default group radius

aaa authorization exec default local

aaa authorization network default group radius

[...]

vlan internal allocation policy ascending

!

vlan 39

!

vlan 1413

name VLAN_1413

!

!

interface GigabitEthernet1

switchport access vlan 1413

negotiation auto

no mop enabled

no mop sysid

!

interface GigabitEthernet2

switchport trunk allowed vlan 39,1413

switchport mode trunk

negotiation auto

no mop enabled

no mop sysid

!

interface Vlan1

no ip address

no ip proxy-arp

no mop enabled

no mop sysid

!

interface Vlan39

ip address 10.48.39.130 255.255.255.0

no ip proxy-arp

no mop enabled

no mop sysid

[...]

telemetry ietf subscription 101

encoding encode-kvgpb

filter xpath /process-cpu-ios-xe-oper:cpu-usage/cpu-utilization

source-address 10.48.39.130

stream yang-push

update-policy periodic 1000

receiver ip address 10.48.39.98 57000 protocol grpc-tcp

[...]

netconf-yang

Telegraf Configuration

# Configuration for telegraf agent

[agent]

metric_buffer_limit = 10000

collection_jitter = "0s"

debug = true

quiet = false

flush_jitter = "0s"

hostname = ""

omit_hostname = false

###############################################################################

# OUTPUT PLUGINS #

###############################################################################

# Configuration for sending metrics to InfluxDB

[[outputs.influxdb]]

urls = ["http://127.0.0.1:8086"]

database = "TELEGRAF"

username = "telegraf"

password = "YOUR_PASSWORD"

###############################################################################

# INPUT PLUGINS #

###############################################################################

###############################################################################

# SERVICE INPUT PLUGINS #

###############################################################################

# # Cisco model-driven telemetry (MDT) input plugin for IOS XR, IOS XE and NX-OS platforms

[[inputs.cisco_telemetry_mdt]]

transport = "grpc"

service_address = "10.48.39.98:57000"

[inputs.cisco_telemetry_mdt.aliases]

ifstats = "ietf-interfaces:interfaces-state/interface/statistics"

InfluxDB Configuration

### Welcome to the InfluxDB configuration file.

reporting-enabled = false

[meta]

dir = "/var/lib/influxdb/meta"

[data]

dir = "/var/lib/influxdb/data"

wal-dir = "/var/lib/influxdb/wal"

[retention]

enabled = true

check-interval = "30m"

Grafana Configuration

#################################### Server ####################################

[server]

http_addr = 10.48.39.98

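domain = 10.48.39.98

Troubleshoot

WLC One Stop-Shop Reflex

From the WLC side, the very first thing to verify is that the processes related to the programmatic interfaces are up and running.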

#show platform software yang-management process

confd : Running

nesd : Running

syncfd : Running

ncsshd : Running <-- NETCONF / gRPC Dial-Out

dmiauthd : Running <-- For all of them, Device Management Interface needs to be up.

nginx : Running <-- RESTCONF

ndbmand : Running

pubd : Running

gnmib : Running <-- gNMI

For NETCONF (used by gRPC dial-out), this command can also help check the status of the process.

WLC#show netconf-yang status

netconf-yang: enabled

netconf-yang candidate-datastore: disabled

netconf-yang side-effect-sync: enabled

netconf-yang ssh port: 830

netconf-yang turbocli: disabled

netconf-yang ssh hostkey algorithms: rsa-sha2-256,rsa-sha2-512,ssh-rsa

netconf-yang ssh encryption algorithms: aes128-ctr,aes192-ctr,aes256-ctr,aes128-cbc,aes256-cbc

netconf-yang ssh MAC algorithms: hmac-sha2-256,hmac-sha2-512,hmac-sha1

netconf-yang ssh KEX algorithms: diffie-hellman-group14-sha1,diffie-hellman-group14-sha256,ecdh-sha2-nistp256,ecdh-sha2-nistp384,ecdh-sha2-nistp521,diffie-hellman-group16-sha512

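Once the process status is checked, another important check is the status of the telemetry connection between the Catalyst 9800 and the Telegraf receiver. It can be viewed with the "show telemetry connection all" command.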

WLC#show telemetry connection all

Telemetry connections

Index Peer Address Port VRF Source Address State State Description

----- -------------------------- ----- --- -------------------------- ---------- --------------------

28851 10.48.39.98 57000 0 10.48.39.130 Active Connection up
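When this check is repeated often, the table above can be parsed with a few lines of Python. This is a rough sketch, not a Cisco tool: the function names are illustrative, and the parser assumes every column is populated with a single token as in the sample output (a blank VRF or source column would shift the fields).

```python
def parse_connections(output: str) -> list[dict]:
    """Parse the rows of 'show telemetry connection all' into dictionaries."""
    rows = []
    for line in output.splitlines():
        parts = line.split()
        # Data rows start with a numeric index; headers and separators do not.
        if len(parts) >= 7 and parts[0].isdigit():
            rows.append({
                "index": int(parts[0]),
                "peer": parts[1],
                "port": int(parts[2]),
                "vrf": parts[3],
                "source": parts[4],
                "state": parts[5],
                "description": " ".join(parts[6:]),
            })
    return rows


def all_active(rows: list[dict]) -> bool:
    """True when at least one connection exists and every one is Active."""
    return bool(rows) and all(row["state"] == "Active" for row in rows)


if __name__ == "__main__":
    sample = """Telemetry connections
Index Peer Address Port VRF Source Address State State Description
----- -------------------------- ----- --- -------------------------- ---------- --------------------
28851 10.48.39.98 57000 0 10.48.39.130 Active Connection up
"""
    rows = parse_connections(sample)
    print(rows[0]["peer"], rows[0]["state"], all_active(rows))
```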

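If the telemetry connection between the WLC and the receiver is up, you can also make sure that the configured subscriptions are valid with the show telemetry ietf subscription all brief command.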

WLC#show telemetry ietf subscription all brief

ID Type State State Description

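101 Configured Valid Subscription validated

The detailed version of this command, show telemetry ietf subscription all detail, provides more information about the subscriptions and can help point out an issue in their configuration.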

WLC#show telemetry ietf subscription all detail

Telemetry subscription detail:

Subscription ID: 101

Type: Configured

State: Valid

Stream: yang-push

Filter:

Filter type: xpath

XPath: /process-cpu-ios-xe-oper:cpu-usage/cpu-utilization

Update policy:

Update Trigger: periodic

Period: 1000

Encoding: encode-kvgpb

Source VRF:

Source Address: 10.48.39.130

Notes: Subscription validated

Named Receivers:

Name Last State Change State Explanation

-------------------------------------------------------------------------------------------------------------------------------------------------------

grpc-tcp://10.48.39.98:57000 05/23/24 08:00:25 Connected

Verify Network Connectivity

The Catalyst 9800 controller sends gRPC data to the receiver port configured for each telemetry subscription.

WLC#show run | include receiver ip address

receiver ip address 10.48.39.98 57000 protocol grpc-tcp

To verify network connectivity between the WLC and the receiver on this configured port, several tools are available.
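One such check, from any host that has Python, is a plain TCP connection attempt to the receiver port. This is a minimal sketch and not part of the original procedure: the function name `tcp_port_open` is illustrative, and a successful connection only shows that something is listening on the port, not that it is Telegraf.

```python
import socket


def tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # Receiver address and port taken from the subscription in this document.
    print(tcp_port_open("10.48.39.98", 57000))
```

Because gRPC runs over TCP, a completed handshake here is a meaningful indication that the path to the listener is not blocked.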

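From the WLC, telnet can be used toward the configured receiver IP/port (here 10.48.39.98:57000) to verify that it is open and reachable from the controller itself. If traffic is not blocked, the port shows as open in the output: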

WLC#telnet 10.48.39.98 57000

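Trying 10.48.39.98, 57000 ... Open <-------

Alternatively, Nmap can be used from any host to make sure the receiver is properly exposed on the configured port. Note that the sample here runs a UDP scan (-sU), whose open|filtered result is inconclusive. Because gRPC runs over TCP, a TCP scan of the same port (for example, with -sT) is the more meaningful check.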

$ sudo nmap -sU -p 57000 10.48.39.98

Starting Nmap 7.95 ( https://nmap.org ) at 2024-05-17 13:12 CEST

Nmap scan report for air-1852e-i-1.cisco.com (10.48.39.98)

Host is up (0.020s latency).

PORT STATE SERVICE

57000/udp open|filtered unknown

Nmap done: 1 IP address (1 host up) scanned in 0.35 seconds

Logging and Debugging

2024/05/23 14:40:36.566486156 {pubd_R0-0}{2}: [mdt-ctrl] [30214]: (note): **** Event Entry: Configured legacy receiver creation/modification of subscription 101 receiver 'grpc-tcp://10.48.39.98:57000'

2024/05/23 14:40:36.566598609 {pubd_R0-0}{2}: [mdt-ctrl] [30214]: (note): Use count for named receiver 'grpc-tcp://10.48.39.98:57000' set to 46.

2024/05/23 14:40:36.566600301 {pubd_R0-0}{2}: [mdt-ctrl] [30214]: (note): {subscription receiver event='configuration created'} received for subscription 101 receiver 'grpc-tcp://10.48.39.98:57000'

[...]

2024/05/23 14:40:36.572402901 {pubd_R0-0}{2}: [pubd] [30214]: (info): Collated data collector filters for subscription 101.

2024/05/23 14:40:36.572405081 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Creating periodic sensor for subscription 101.

2024/05/23 14:40:36.572670046 {pubd_R0-0}{2}: [pubd] [30214]: (info): Creating data collector type 'ei_do periodic' for subscription 101 using filter '/process-cpu-ios-xe-oper:cpu-usage/cpu-utilization'.

2024/05/23 14:40:36.572670761 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Creating crimson data collector for filter '/process-cpu-ios-xe-oper:cpu-usage/cpu-utilization' (1 subfilters) with cap 0x0001.

2024/05/23 14:40:36.572671763 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Need new data collector instance 0 for subfilter '/process-cpu-ios-xe-oper:cpu-usage/cpu-utilization'.

2024/05/23 14:40:36.572675434 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Creating CRIMSON periodic data collector for filter '/process-cpu-ios-xe-oper:cpu-usage/cpu-utilization'.

2024/05/23 14:40:36.572688399 {pubd_R0-0}{2}: [pubd] [30214]: (debug): tree rooted at cpu-usage

2024/05/23 14:40:36.572715384 {pubd_R0-0}{2}: [pubd] [30214]: (debug): last container/list node 0

2024/05/23 14:40:36.572740734 {pubd_R0-0}{2}: [pubd] [30214]: (debug): 1 non leaf children to render from cpu-usage down

2024/05/23 14:40:36.573135594 {pubd_R0-0}{2}: [pubd] [30214]: (debug): URI:/cpu_usage;singleton_id=0 SINGLETON

2024/05/23 14:40:36.573147953 {pubd_R0-0}{2}: [pubd] [30214]: (debug): 0 non leaf children to render from cpu-utilization down

2024/05/23 14:40:36.573159482 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Timer created for subscription 101, sensor 0x62551136f0e8

2024/05/23 14:40:36.573166451 {pubd_R0-0}{2}: [mdt-ctrl] [30214]: (note): {subscription receiver event='receiver connected'} received with peer (10.48.39.98:57000) for subscription 101 receiver 'grpc-tcp://10.48.39.98:57000'

2024/05/23 14:40:36.573197750 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Starting batch from periodic collector 'ei_do periodic'.

2024/05/23 14:40:36.573198408 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Building from the template

2024/05/23 14:40:36.575467870 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Created dbal batch:133, for crimson subscription

2024/05/23 14:40:36.575470867 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Done building from the template

2024/05/23 14:40:36.575481078 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Executing batch:133 for periodic subscription

2024/05/23 14:40:36.575539723 {pubd_R0-0}{2}: [mdt-ctrl] [30214]: (note): {subscription id=101 receiver name='grpc-tcp://10.48.39.98:57000', state='connecting'} handling 'receiver connected' event with result 'e_mdt_rc_ok'

2024/05/23 14:40:36.575558274 {pubd_R0-0}{2}: [mdt-ctrl] [30214]: (note): {subscription receiver event='receiver connected'} subscription 101 receiver 'grpc-tcp://10.48.39.98:57000' changed

2024/05/23 14:40:36.577274757 {ndbmand_R0-0}{2}: [ndbmand] [30690]: (info): get__next_table reached the end of table for /services;serviceName=ewlc_oper/capwap_data@23

2024/05/23 14:40:36.577279206 {ndbmand_R0-0}{2}: [ndbmand] [30690]: (debug): Cleanup table for /services;serviceName=ewlc_oper/capwap_data cursor=0x57672da538b0

2024/05/23 14:40:36.577314397 {ndbmand_R0-0}{2}: [ndbmand] [30690]: (info): get__next_object cp=ewlc-oper-db exit return CONFD_OK

2024/05/23 14:40:36.577326609 {ndbmand_R0-0}{2}: [ndbmand] [30690]: (debug): yield ewlc-oper-db

2024/05/23 14:40:36.579099782 {iosrp_R0-0}{1}: [parser_cmd] [26295]: (note): id= A.B.C.D@vty0:user= cmd: 'receiver ip address 10.48.39.98 57000 protocol grpc-tcp' SUCCESS 2024/05/23 14:40:36.578 UTC

2024/05/23 14:40:36.580979429 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Batch response received for crimson sensor, batch:133

2024/05/23 14:40:36.580988867 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Green response: Result rc 0, Length: 360, num_records 1

2024/05/23 14:40:36.581175013 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Green Resp cursor len 63

2024/05/23 14:40:36.581176173 {pubd_R0-0}{2}: [pubd] [30214]: (debug): There is no more data left to be retrieved from batch 133.

2024/05/23 14:40:36.581504331 {iosrp_R0-0}{2}: [parser_cmd] [24367]: (note): id= 10.227.65.133@vty1:user=admin cmd: 'receiver ip address 10.48.39.98 57000 protocol grpc-tcp' SUCCESS 2024/05/23 14:40:36.553 UTC

[...]

2024/05/23 14:40:37.173223406 {pubd_R0-0}{2}: [pubd] [30214]: (info): Added queue (wq: tc_inst 60293411, 101) to be monitored (mqid: 470)

2024/05/23 14:40:37.173226005 {pubd_R0-0}{2}: [pubd] [30214]: (debug): New subscription (subscription 101) monitoring object stored at id 19

2024/05/23 14:40:37.173226315 {pubd_R0-0}{2}: [pubd] [30214]: (note): Added subscription for monitoring (subscription 101, msid 19)

2024/05/23 14:40:37.173230769 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Stats updated for Q (wq: tc_inst 60293411, 101), total_enqueue: 1

2024/05/23 14:40:37.173235969 {pubd_R0-0}{2}: [pubd] [30214]: (debug): (grpc::events) Processing event Q

2024/05/23 14:40:37.173241290 {pubd_R0-0}{2}: [pubd] [30214]: (debug): GRPC telemetry connector update data for subscription 101, period 1 (first: true)

2024/05/23 14:40:37.173257944 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Encoding path is Cisco-IOS-XE-process-cpu-oper:cpu-usage/cpu-utilization

2024/05/23 14:40:37.173289128 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Creating kvgpb encoder

2024/05/23 14:40:37.173307771 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Creating combined parser

2024/05/23 14:40:37.173310050 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Beginning MDT yang container walk for record 0

2024/05/23 14:40:37.173329761 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Dispatching new container [data_node: name=Cisco-IOS-XE-process-cpu-oper:cpu-usage, type=container, parent=n/a, key=false]

2024/05/23 14:40:37.173334681 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Container 'Cisco-IOS-XE-process-cpu-oper:cpu-usage' started successfully

2024/05/23 14:40:37.173340313 {pubd_R0-0}{2}: [pubd] [30214]: (debug): add data in progress

2024/05/23 14:40:37.173343079 {pubd_R0-0}{2}: [pubd] [30214]: (debug): GRPC telemetry connector continue data for subscription 101, period 1 (first: true)

2024/05/23 14:40:37.173345689 {pubd_R0-0}{2}: [pubd] [30214]: (debug): (grpc::events) Processing event Q

2024/05/23 14:40:37.173350431 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Dispatching new container [data_node: name=cpu-utilization, type=container, parent=Cisco-IOS-XE-process-cpu-oper:cpu-usage, key=false]

2024/05/23 14:40:37.173353194 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Deferred container cpu-utilization

2024/05/23 14:40:37.173355275 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Container 'cpu-utilization' started successfully

2024/05/23 14:40:37.173380121 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Dispatching new leaf [name=five-seconds, value=3, parent=cpu-utilization, key=false]

2024/05/23 14:40:37.173390655 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Leaf 'five-seconds' added successfully

2024/05/23 14:40:37.173393529 {pubd_R0-0}{2}: [pubd] [30214]: (debug): add data in progress

2024/05/23 14:40:37.173395693 {pubd_R0-0}{2}: [pubd] [30214]: (debug): GRPC telemetry connector continue data for subscription 101, period 1 (first: true)

2024/05/23 14:40:37.173397974 {pubd_R0-0}{2}: [pubd] [30214]: (debug): (grpc::events) Processing event Q

2024/05/23 14:40:37.173406311 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Dispatching new leaf [name=five-seconds-intr, value=0, parent=cpu-utilization, key=false]

2024/05/23 14:40:37.173408937 {pubd_R0-0}{2}: [pubd] [30214]: (debug): Leaf 'five-seconds-intr' added successfully

2024/05/23 14:40:37.173411575 {pubd_R0-0}{2}: [pubd] [30214]: (debug): add data in progress

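[...]

Ensure Metrics Reach the TIG Stack

From the InfluxDB CLI

Like any other database system, InfluxDB comes with a CLI that can be used to check that metrics are correctly received by Telegraf and stored in the defined database. InfluxDB organizes metrics, called points, into measurements, which are themselves organized into series. The basic commands presented here can be used to verify the data schema on the InfluxDB side and make sure data reaches this application.

First, check that the series, measurements, and their structure (keys) are generated properly. These are generated automatically by Telegraf and InfluxDB based on the structure of the RPC used.

Note: This structure is fully customizable from the Telegraf and InfluxDB configurations. However, this goes beyond the scope of this configuration guide.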

$ influx

Connected to http://localhost:8086 version 1.6.7~rc0

InfluxDB shell version: 1.6.7~rc0

> USE TELEGRAF

Using database TELEGRAF

> SHOW SERIES

key

---

Cisco-IOS-XE-process-cpu-oper:cpu-usage/cpu-utilization,host=ubuntu-virtual-machine,path=Cisco-IOS-XE-process-cpu-oper:cpu-usage/cpu-utilization,source=WLC,subscription=101

> SHOW MEASUREMENTS

name: measurements

name

----

Cisco-IOS-XE-process-cpu-oper:cpu-usage/cpu-utilization

> SHOW FIELD KEYS FROM "Cisco-IOS-XE-process-cpu-oper:cpu-usage/cpu-utilization"

name: Cisco-IOS-XE-process-cpu-oper:cpu-usage/cpu-utilization

fieldKey fieldType

-------- ---------

cpu_usage_processes/cpu_usage_process/avg_run_time integer

cpu_usage_processes/cpu_usage_process/five_minutes float

cpu_usage_processes/cpu_usage_process/five_seconds float

cpu_usage_processes/cpu_usage_process/invocation_count integer

cpu_usage_processes/cpu_usage_process/name string

cpu_usage_processes/cpu_usage_process/one_minute float

cpu_usage_processes/cpu_usage_process/pid integer

cpu_usage_processes/cpu_usage_process/total_run_time integer

cpu_usage_processes/cpu_usage_process/tty integer

five_minutes integer

five_seconds integer

five_seconds_intr integer

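one_minute integer

Once the data structure is clear (integer, string, boolean, ...), the number of data points stored for these measurements can be obtained for a particular field.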

# Get the number of points from "Cisco-IOS-XE-process-cpu-oper:cpu-usage/cpu-utilization" for the field "five_seconds".

> SELECT COUNT(five_seconds) FROM "Cisco-IOS-XE-process-cpu-oper:cpu-usage/cpu-utilization"

name: Cisco-IOS-XE-process-cpu-oper:cpu-usage/cpu-utilization

time count

---- -----

0 1170

> SELECT COUNT(five_seconds) FROM "Cisco-IOS-XE-process-cpu-oper:cpu-usage/cpu-utilization"

name: Cisco-IOS-XE-process-cpu-oper:cpu-usage/cpu-utilization

time count

---- -----

0 1171

# Fix timestamp display

> precision rfc3339

# Get the last point stored in "Cisco-IOS-XE-process-cpu-oper:cpu-usage/cpu-utilization" for the field "five_seconds".

> SELECT LAST(five_seconds) FROM "Cisco-IOS-XE-process-cpu-oper:cpu-usage/cpu-utilization"

name: Cisco-IOS-XE-process-cpu-oper:cpu-usage/cpu-utilization

time last

---- ----

2024-05-23T13:18:53.51Z 0

> SELECT LAST(five_seconds) FROM "Cisco-IOS-XE-process-cpu-oper:cpu-usage/cpu-utilization"

name: Cisco-IOS-XE-process-cpu-oper:cpu-usage/cpu-utilization

time last

---- ----

2024-05-23T13:19:03.589Z 2

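If the number of points for a particular field and the timestamp of the last occurrence both increase, it is a good sign that the TIG stack correctly receives and stores the data sent by the WLC.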
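The comparison described above can be expressed as a small check over two successive readings. This is an illustrative sketch: the function names are made up, the values are copied by hand from the CLI output in this section, and the timestamp parser only handles the UTC `...Z` form printed after `precision rfc3339`.

```python
from datetime import datetime


def parse_rfc3339(ts: str) -> datetime:
    """Parse a UTC timestamp such as 2024-05-23T13:18:53.51Z (fraction optional)."""
    fmt = "%Y-%m-%dT%H:%M:%S.%fZ" if "." in ts else "%Y-%m-%dT%H:%M:%SZ"
    return datetime.strptime(ts, fmt)


def is_ingesting(count_before: int, count_after: int,
                 last_before: str, last_after: str) -> bool:
    """True when both the point count and the last timestamp have advanced."""
    return (
        count_after > count_before
        and parse_rfc3339(last_after) > parse_rfc3339(last_before)
    )


if __name__ == "__main__":
    # Readings taken from the COUNT() and LAST() queries shown in this section.
    print(is_ingesting(1170, 1171,
                       "2024-05-23T13:18:53.51Z", "2024-05-23T13:19:03.589Z"))
```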

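From Telegraf

To verify that the Telegraf receiver indeed gets metrics from the controller and to check their format, Telegraf metrics can be redirected to an output file on the host. This is very handy when troubleshooting device interconnection. To do so, use the "file" output plugin from Telegraf, configurable from /etc/telegraf/telegraf.conf.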

# Send telegraf metrics to file(s)

[[outputs.file]]

# ## Files to write to, "stdout" is a specially handled file.

files = ["stdout", "/tmp/metrics.out", "other/path/to/the/file"]

#

# ## Use batch serialization format instead of line based delimiting. The

# ## batch format allows for the production of non line based output formats and

# ## may more efficiently encode metric groups.

# # use_batch_format = false

#

# ## The file will be rotated after the time interval specified. When set

# ## to 0 no time based rotation is performed.

# # rotation_interval = "0d"

#

# ## The logfile will be rotated when it becomes larger than the specified

# ## size. When set to 0 no size based rotation is performed.

# # rotation_max_size = "0MB"

#

# ## Maximum number of rotated archives to keep, any older logs are deleted.

# ## If set to -1, no archives are removed.

# # rotation_max_archives = 5

#

# ## Data format to output.

# ## Each data format has its own unique set of configuration options, read

# ## more about them here:

# ## https://github.com/influxdata/telegraf/blob/master/docs/DATA_FORMATS_OUTPUT.md

data_format = "influx"
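With `data_format = "influx"`, each metric is written to the file as one line of InfluxDB line protocol, which can be inspected with a short parser. This is a simplified sketch: the function name is illustrative, the sample line is hand-built from the series key and field names shown earlier in this document (it was not captured from /tmp/metrics.out), and the parser assumes no escaped spaces or commas and no string fields containing them.

```python
def parse_line(line: str) -> dict:
    """Split one line-protocol record into measurement, tags, fields, timestamp."""
    head, fields_part, timestamp = line.strip().rsplit(" ", 2)
    measurement, *tag_pairs = head.split(",")
    tags = dict(pair.split("=", 1) for pair in tag_pairs)
    fields = {}
    for pair in fields_part.split(","):
        key, raw = pair.split("=", 1)
        try:
            # Integers carry an 'i' suffix; other numbers are floats.
            fields[key] = int(raw[:-1]) if raw.endswith("i") else float(raw)
        except ValueError:
            fields[key] = raw  # leave strings and booleans untouched
    return {
        "measurement": measurement,
        "tags": tags,
        "fields": fields,
        "timestamp_ns": int(timestamp),
    }


if __name__ == "__main__":
    # Hypothetical sample line, assembled for illustration only.
    sample = (
        "Cisco-IOS-XE-process-cpu-oper:cpu-usage/cpu-utilization,"
        "host=ubuntu-virtual-machine,"
        "path=Cisco-IOS-XE-process-cpu-oper:cpu-usage/cpu-utilization,"
        "source=WLC,subscription=101 "
        "five_seconds=3i,five_seconds_intr=0i 1716470333510000000"
    )
    record = parse_line(sample)
    print(record["tags"]["source"], record["fields"]["five_seconds"])
```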

Revision History

| Revision | Publish Date | Comments |
|---|---|---|
| 1.0 | 10-Jun-2024 | Initial Release |

Contributed by Cisco Engineers

- Guilian Deflandre, Cisco TAC

- Rasheed Hamdan, Cisco TAC
