Packet Classification Overview

| Feature Name | Release Information | Feature Description |
|---|---|---|
| Cisco NC57 Compatibility Mode: QoS Enablement on Layer 2 MPLS/BGP | Release 7.3.1 | This feature is now supported on routers that have the Cisco NC57 line cards installed and operate in the compatibility mode. The following Layer 2 services are supported: Apart from packet classification, this feature is available for the following QoS operations: |
| Cisco NC57 Native Mode: QoS Enablement on Layer 2 Services | Release 7.4.1 | This feature is now supported on routers that have the Cisco NC57 line cards installed and operate in the native mode. The following Layer 2 services are supported: Apart from packet classification, this feature is available for the following QoS operations: |

Packet classification categorizes a packet into a specific group (or class) and assigns it a traffic descriptor so that QoS features on the network can handle it. The traffic descriptor contains information about the forwarding treatment (quality of service) that the packet should receive. Using packet classification, you can partition network traffic into multiple priority levels or classes of service. The traffic source agrees to adhere to the contracted terms, and in return the network promises a quality of service. Traffic policers and traffic shapers use the traffic descriptor of a packet to ensure adherence to the contract.

Traffic policers and traffic shapers rely on packet classification features, such as IP precedence, to select packets (or traffic flows) traversing a router or interface for different types of QoS treatment. After you classify packets, you can use other QoS features to assign the appropriate traffic handling policies, including congestion management, bandwidth allocation, and delay bounds, to each traffic class.

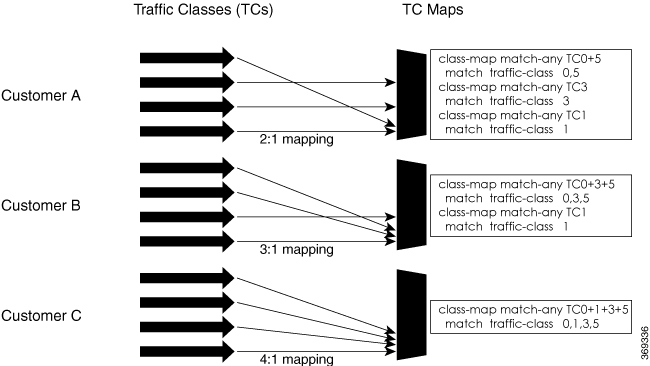
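
As a minimal illustration of how a classifier can read a traffic descriptor such as IP precedence, the Python sketch below extracts the precedence and DSCP values from an IPv4 Type of Service (ToS) byte and maps precedence to a priority level. The function names and the three-level priority scheme are hypothetical; they model the idea only and do not describe how the router hardware classifies packets.

```python
def ip_precedence(tos: int) -> int:
    """Return the 3-bit IP precedence (the top 3 bits of the IPv4 ToS byte)."""
    if not 0 <= tos <= 0xFF:
        raise ValueError("ToS must be a single byte")
    return tos >> 5


def dscp(tos: int) -> int:
    """Return the 6-bit DSCP (the top 6 bits of the same byte)."""
    if not 0 <= tos <= 0xFF:
        raise ValueError("ToS must be a single byte")
    return tos >> 2


def classify_by_precedence(tos: int) -> str:
    """Hypothetical mapping of precedence values to three priority levels."""
    prec = ip_precedence(tos)
    if prec >= 5:   # precedence 5-7: for example, voice and network control
        return "high"
    if prec >= 2:   # precedence 2-4
        return "medium"
    return "low"    # precedence 0-1: routine / best effort


if __name__ == "__main__":
    # DSCP EF (46) is carried as ToS byte 0xB8, which has precedence 5.
    for tos in (0xB8, 0x48, 0x00):
        print(hex(tos), ip_precedence(tos), dscp(tos), classify_by_precedence(tos))
```

Because precedence occupies the same high-order bits that DSCP extends, a DSCP-marked packet still yields a meaningful precedence value, which is why both can be derived from one byte.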

The Modular Quality of Service (QoS) CLI (MQC) defines the traffic flows that must be classified; each traffic flow is called a class of service, or class. A traffic policy is then created that specifies the QoS actions for each class. All traffic that does not match any defined class falls into the default class.
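
The MQC model of named classes checked against each packet, with unmatched traffic falling into a default class, can be sketched in Python as follows. The `TrafficClass` type, the match predicates, the first-match evaluation order, and the `POLICY` table are assumptions made for illustration. On IOS XR, classes and policies are configured with `class-map` and `policy-map`, and the default class is named `class-default`.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Packet = Dict[str, int]  # hypothetical: header field name -> value

DEFAULT_CLASS = "class-default"


@dataclass
class TrafficClass:
    name: str
    matches: Callable[[Packet], bool]  # match criteria for this class


def classify(packet: Packet, classes: List[TrafficClass]) -> str:
    """Return the name of the first class whose criteria match the packet.

    Traffic that matches no defined class falls into the default class.
    """
    for traffic_class in classes:
        if traffic_class.matches(packet):
            return traffic_class.name
    return DEFAULT_CLASS


# Hypothetical classes, evaluated in the order they are listed.
CLASSES = [
    TrafficClass("voice", lambda p: p.get("dscp") == 46),
    TrafficClass("business", lambda p: p.get("precedence", 0) in (3, 4)),
]

# Hypothetical traffic policy: the action applied to each class.
POLICY = {"voice": "priority", "business": "guaranteed bandwidth", DEFAULT_CLASS: "best effort"}

if __name__ == "__main__":
    for pkt in ({"dscp": 46, "precedence": 5}, {"dscp": 26, "precedence": 3}, {"dscp": 0}):
        name = classify(pkt, CLASSES)
        print(pkt, "->", name, "->", POLICY[name])
```

Keeping classification (`classify`) separate from the actions (`POLICY`) mirrors the MQC split between defining classes and defining what happens to each class.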

You can classify IPv4, IPv6, and MPLS packets at ingress on L3 subinterfaces based on their (CoS, DEI) values. Because IPv6 packets are forwarded along paths that differ from those used for IPv4, classification of IPv6 packets based on (CoS, DEI) on L3 subinterfaces must be enabled explicitly: run the `hw-module profile qos ipv6 short-l2qos-enable` command, and then reboot the line card for the command to take effect.
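
The (CoS, DEI) pair comes from the IEEE 802.1Q tag: its 16-bit Tag Control Information (TCI) field carries a 3-bit Priority Code Point (the CoS value), a 1-bit Drop Eligible Indicator (DEI), and a 12-bit VLAN ID. The Python sketch below decodes these fields from a raw single-tagged Ethernet frame and looks them up in a (CoS, DEI) class table. The function names and the class table are hypothetical, and the sketch handles only an outer 802.1Q tag (TPID 0x8100).

```python
import struct
from typing import Dict, Optional, Tuple

TPID_8021Q = 0x8100  # EtherType value that identifies an 802.1Q tag


def parse_cos_dei(frame: bytes) -> Optional[Tuple[int, int, int]]:
    """Return (cos, dei, vlan_id) from an 802.1Q-tagged Ethernet frame.

    Returns None if the frame is too short or the outer tag is not 802.1Q.
    """
    if len(frame) < 16:
        return None
    # After the 6-byte destination and source MACs: 2-byte TPID, 2-byte TCI.
    tpid, tci = struct.unpack("!HH", frame[12:16])
    if tpid != TPID_8021Q:
        return None
    cos = tci >> 13           # Priority Code Point: top 3 bits
    dei = (tci >> 12) & 0x1   # Drop Eligible Indicator: next bit
    vlan_id = tci & 0x0FFF    # VLAN identifier: low 12 bits
    return cos, dei, vlan_id


# Hypothetical ingress class table keyed on (CoS, DEI).
COS_DEI_CLASSES: Dict[Tuple[int, int], str] = {
    (5, 0): "realtime",
    (3, 0): "critical-data",
    (3, 1): "critical-data-drop-eligible",
}


def classify_frame(frame: bytes) -> str:
    """Map a frame to a class name; unmatched or untagged frames use the default."""
    parsed = parse_cos_dei(frame)
    if parsed is None:
        return "class-default"
    cos, dei, _ = parsed
    return COS_DEI_CLASSES.get((cos, dei), "class-default")


if __name__ == "__main__":
    macs = bytes(12)                   # placeholder destination and source MACs
    tci = (5 << 13) | (0 << 12) | 100  # CoS 5, DEI 0, VLAN 100
    frame = macs + struct.pack("!HH", TPID_8021Q, tci) + b"\x08\x00"
    print(parse_cos_dei(frame))
    print(classify_frame(frame))
```

Treating DEI as part of the lookup key lets two frames with the same CoS land in different classes, for example to police drop-eligible traffic more aggressively.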

Starting with Cisco IOS XR Release 7.4.1, systems with Cisco NC57 line cards running in native mode support QoS over the following Layer 2 services:

- Local switching (xconnect or bridging)
- L2 VPN – VPWS