Cisco WAE Overview

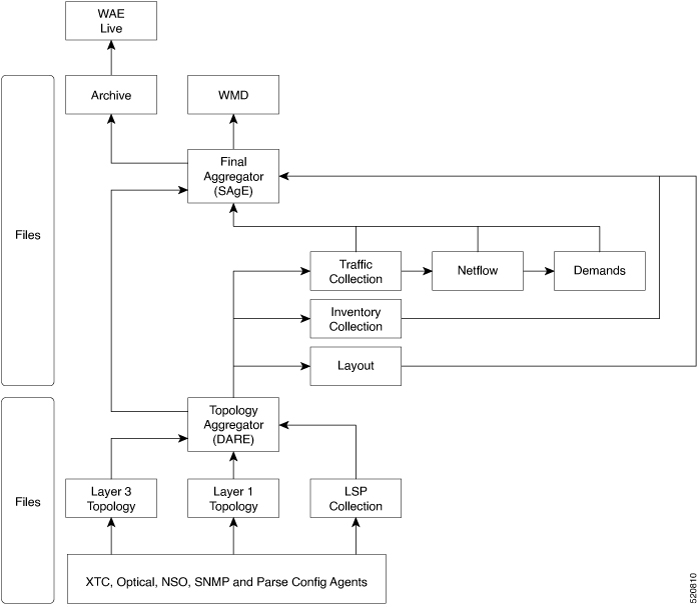

The Cisco WAN Automation Engine (WAE) platform is an open, programmable framework that interconnects software modules, communicates with the network, and provides APIs to interface with external applications.

Cisco WAE provides the tools to create and maintain a model of the current network by continually monitoring and analyzing the network and the traffic demands placed on it. At any given time, this network model contains all relevant information about the network, including topology, configuration, and traffic information. You can use this model as a basis for analyzing how the network is affected by changes in traffic demands, paths, node and link failures, network optimizations, or other changes.
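The what-if analysis described above can be illustrated with a toy network model. The following Python sketch is not WAE code. The `LINKS` and `DEMANDS` tables, the helper names, and the minimum-hop routing (a stand-in for IGP-metric or TE routing) are all hypothetical simplifications. The sketch routes each demand, computes link utilization, then removes one link and recomputes to show where the traffic moves.

```python
from collections import deque

# Hypothetical toy model, not the WAE data model or API.
# Each link is treated as one shared pipe: capacity in Mb/s, both directions combined.
LINKS = {
    ("A", "B"): 1000,
    ("B", "C"): 1000,
    ("A", "D"): 1000,
    ("D", "C"): 1000,
}

# Traffic demands: (source, destination) -> Mb/s
DEMANDS = {("A", "C"): 600, ("B", "C"): 300}


def shortest_path(links, src, dst):
    """Return a minimum-hop path as a list of nodes (BFS), or None if unreachable."""
    adj = {}
    for a, b in links:
        adj.setdefault(a, []).append(b)
        adj.setdefault(b, []).append(a)
    prev = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:
                path.append(node)
                node = prev[node]
            return path[::-1]
        for nxt in sorted(adj.get(node, [])):  # sorted for deterministic tie-breaking
            if nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    return None


def link_utilization(links, demands):
    """Route every demand on its shortest path; return (utilization per link, unrouted demands)."""
    load = {link: 0.0 for link in links}
    unrouted = []
    for (src, dst), rate in demands.items():
        path = shortest_path(links, src, dst)
        if path is None:
            unrouted.append((src, dst))
            continue
        for a, b in zip(path, path[1:]):
            key = (a, b) if (a, b) in links else (b, a)
            load[key] += rate
    return {link: load[link] / cap for link, cap in links.items()}, unrouted


def what_if_link_failure(links, demands, failed):
    """Remove one link from the model and recompute utilization on the surviving links."""
    surviving = {link: cap for link, cap in links.items() if link != failed}
    return link_utilization(surviving, demands)


# Baseline: both demands share B-C, which runs at 90%.
before, _ = link_utilization(LINKS, DEMANDS)
# Fail B-C: traffic reroutes over A-D-C, and A-D and D-C each reach 90%.
after, unrouted = what_if_link_failure(LINKS, DEMANDS, ("B", "C"))
```

Hop-count routing and shared-pipe links keep the sketch short. A production model would track link direction, routing metrics, and ECMP splitting.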

Some of the important features of the Cisco WAE platform are:

- Traffic engineering and network optimization: Compute TE LSP configurations to improve network performance, or perform local or global optimization.
- Demand engineering: Examine how adding, removing, or modifying traffic demands affects traffic flow across the network.
- Topology and predictive analysis: Observe how changes in network topology, whether planned by design or caused by network failures, affect network performance.
- TE tunnel programming: Examine the impact of modifying tunnel parameters, such as the tunnel path and reserved bandwidth.
- Class of service (CoS)-aware bandwidth on demand: Examine existing network traffic and demands, and admit a set of service-class-specific demands between routers.
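The CoS-aware admission step in the last feature can be pictured as a greedy check of per-class headroom along each requested path. The Python sketch below assumes fixed, precomputed paths and a simple per-link, per-class bandwidth pool. `Request`, `admit`, the router names, and the capacities are hypothetical and do not reflect the actual WAE admission logic or API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Request:
    """Hypothetical service-class-specific demand on a precomputed path."""
    name: str
    path: List[str]  # sequence of router names
    cos: str         # service class, e.g. "gold"
    rate: float      # Mb/s


def admit(requests, class_capacity, class_load):
    """Greedily admit requests whose class has enough headroom on every link of the path.

    class_capacity[(link, cos)] -> Mb/s available to that class on that link
    class_load[(link, cos)]     -> Mb/s of that class already carried (updated in place)
    Links are undirected: a hop is keyed by its two router names in sorted order.
    """
    admitted, rejected = [], []
    for req in requests:
        hops = [tuple(sorted(hop)) for hop in zip(req.path, req.path[1:])]
        fits = all(
            class_load.get((hop, req.cos), 0.0) + req.rate
            <= class_capacity.get((hop, req.cos), 0.0)
            for hop in hops
        )
        if fits:
            # Reserve the bandwidth so later requests see the reduced headroom.
            for hop in hops:
                class_load[(hop, req.cos)] = class_load.get((hop, req.cos), 0.0) + req.rate
            admitted.append(req.name)
        else:
            rejected.append(req.name)
    return admitted, rejected


# Hypothetical per-class capacity and existing load on a three-router chain.
CAPACITY = {
    (("R1", "R2"), "gold"): 100.0,
    (("R2", "R3"), "gold"): 100.0,
    (("R1", "R2"), "bronze"): 400.0,
    (("R2", "R3"), "bronze"): 400.0,
}
load = {(("R2", "R3"), "gold"): 70.0}

REQUESTS = [
    Request("voice-1", ["R1", "R2", "R3"], "gold", 20.0),
    Request("voice-2", ["R1", "R2", "R3"], "gold", 20.0),  # would push R2-R3 gold to 110
    Request("bulk-1", ["R3", "R2", "R1"], "bronze", 300.0),
]

admitted, rejected = admit(REQUESTS, CAPACITY, load)
# admitted == ["voice-1", "bulk-1"], rejected == ["voice-2"]
```

Admitting requests in order is the simplest policy. A real admission engine might also reroute requests or prioritize them by class before rejecting any.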
