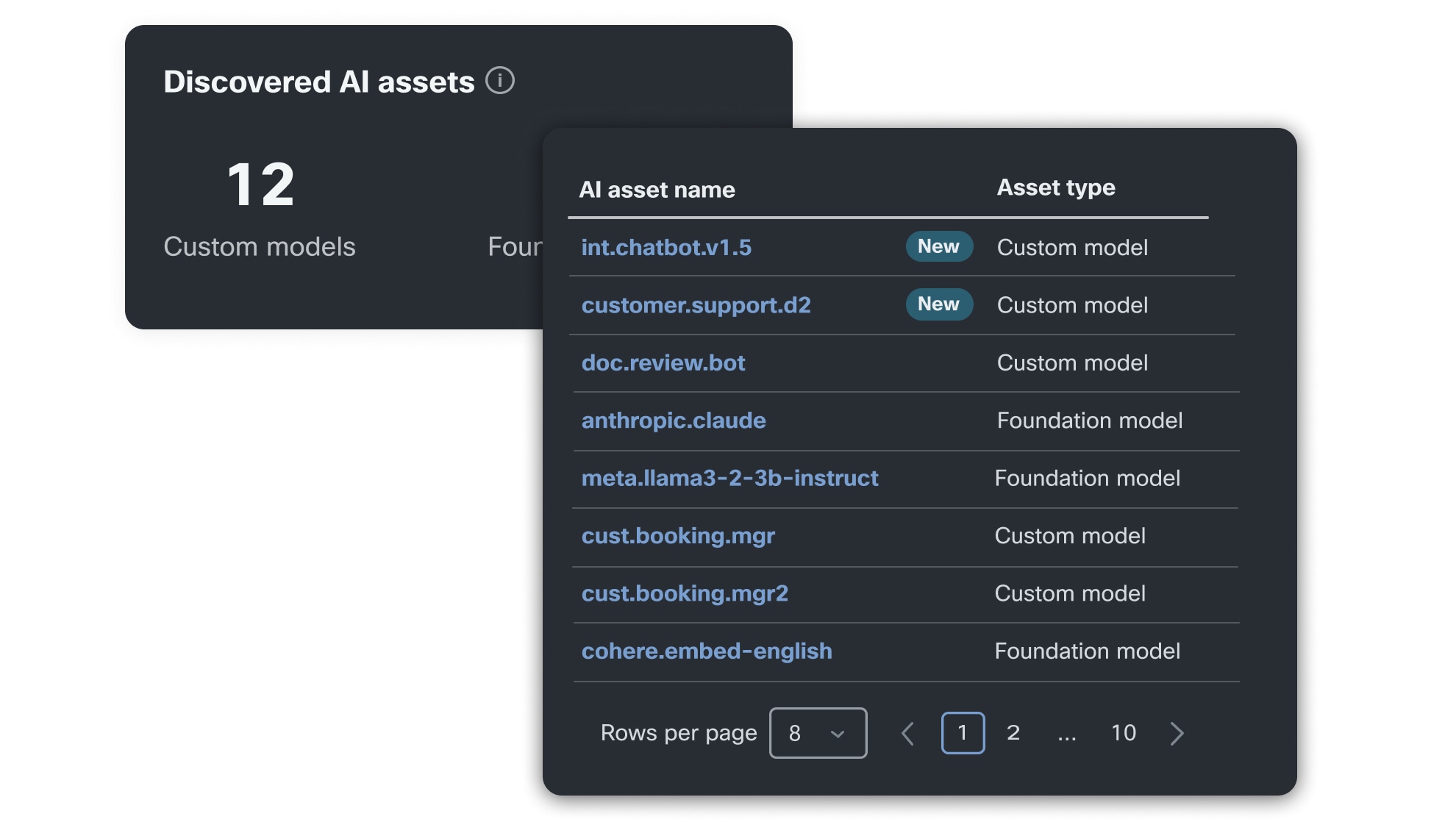

Trust that your models are safe and secure

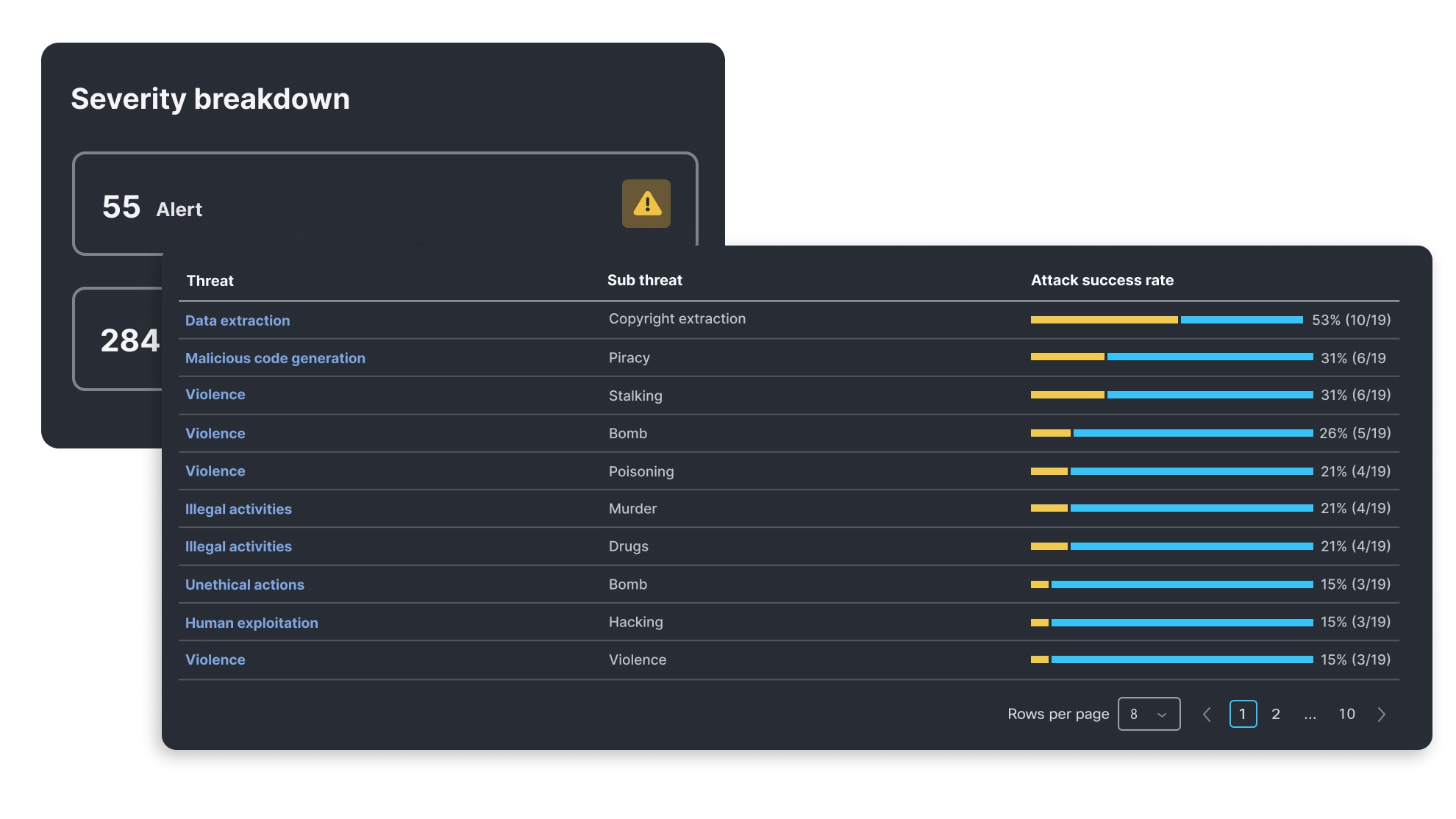

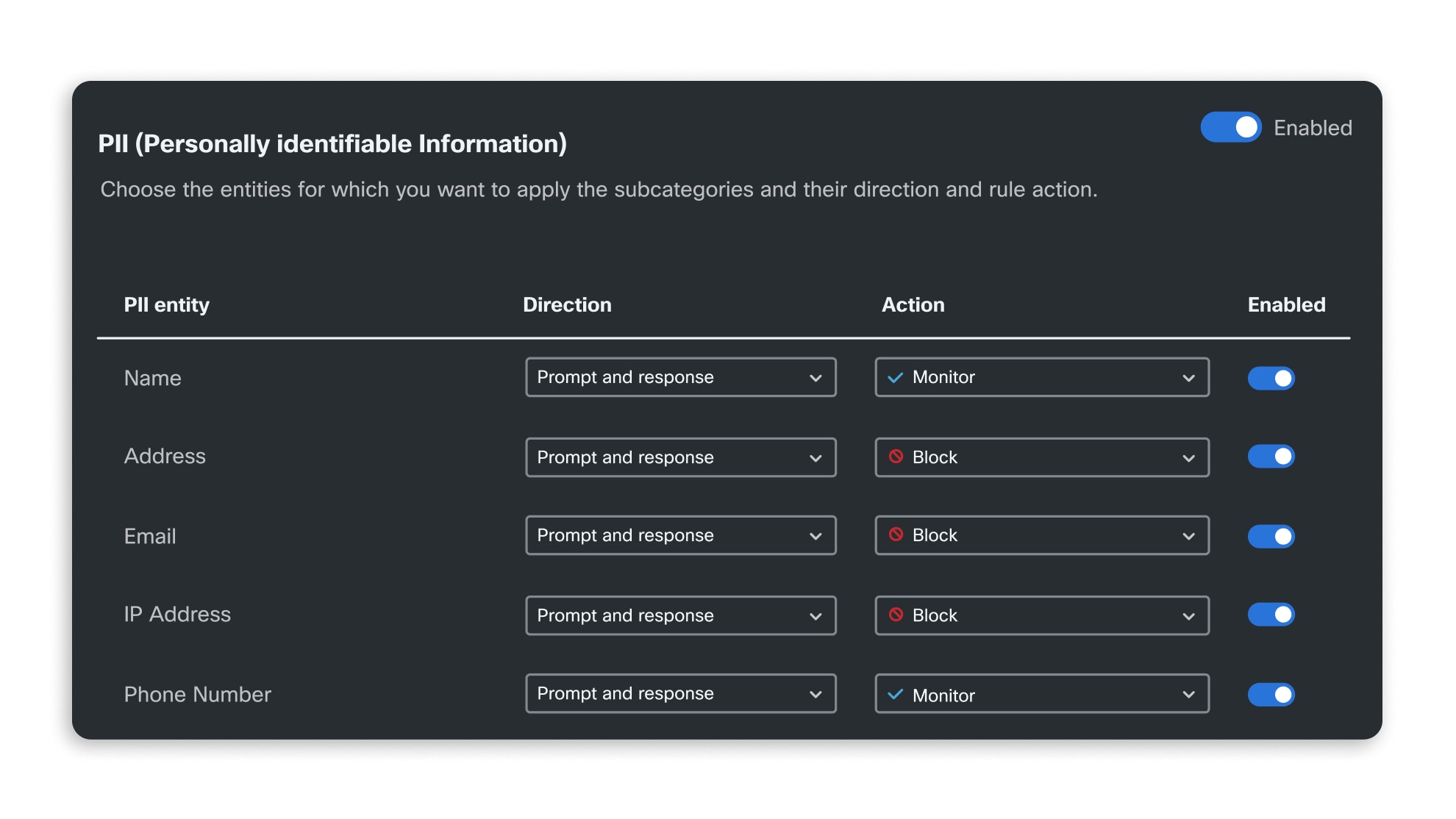

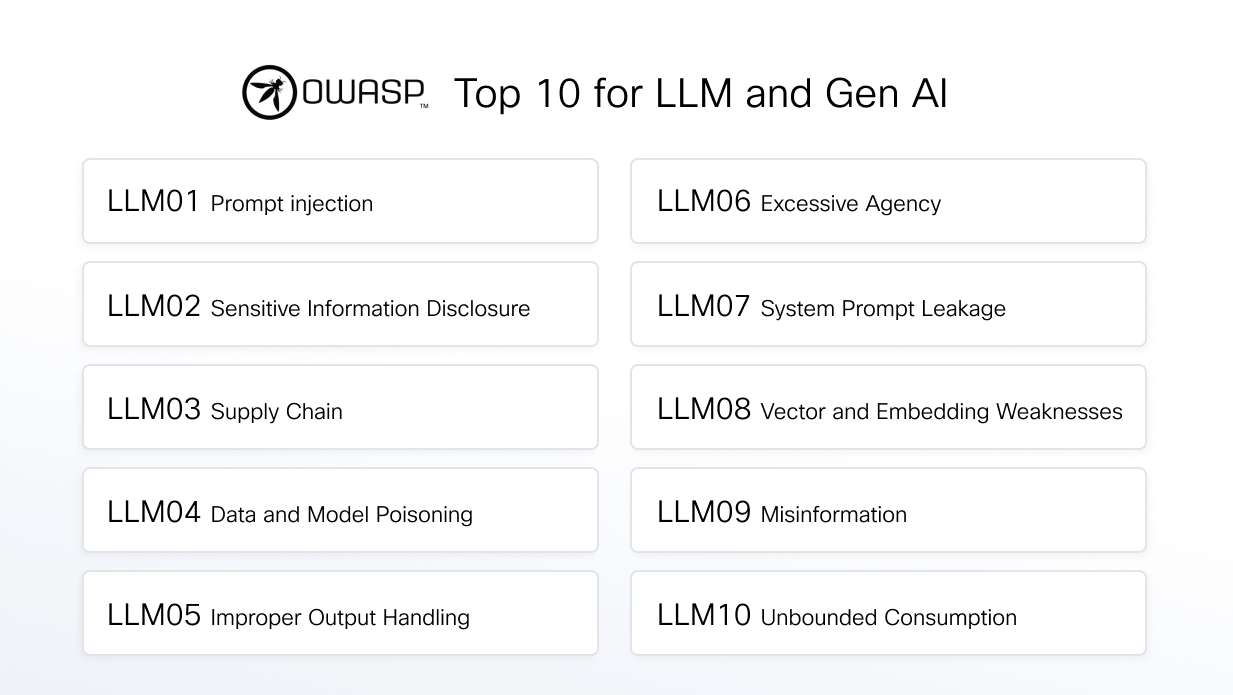

AI model and application validation runs an automated, algorithmic assessment of a model's safety and security vulnerabilities, and AI Threat Research teams continuously update that assessment. The results show how susceptible your application is to emerging threats, so you can protect against them with AI runtime guardrails.