Superior protection of AI applications

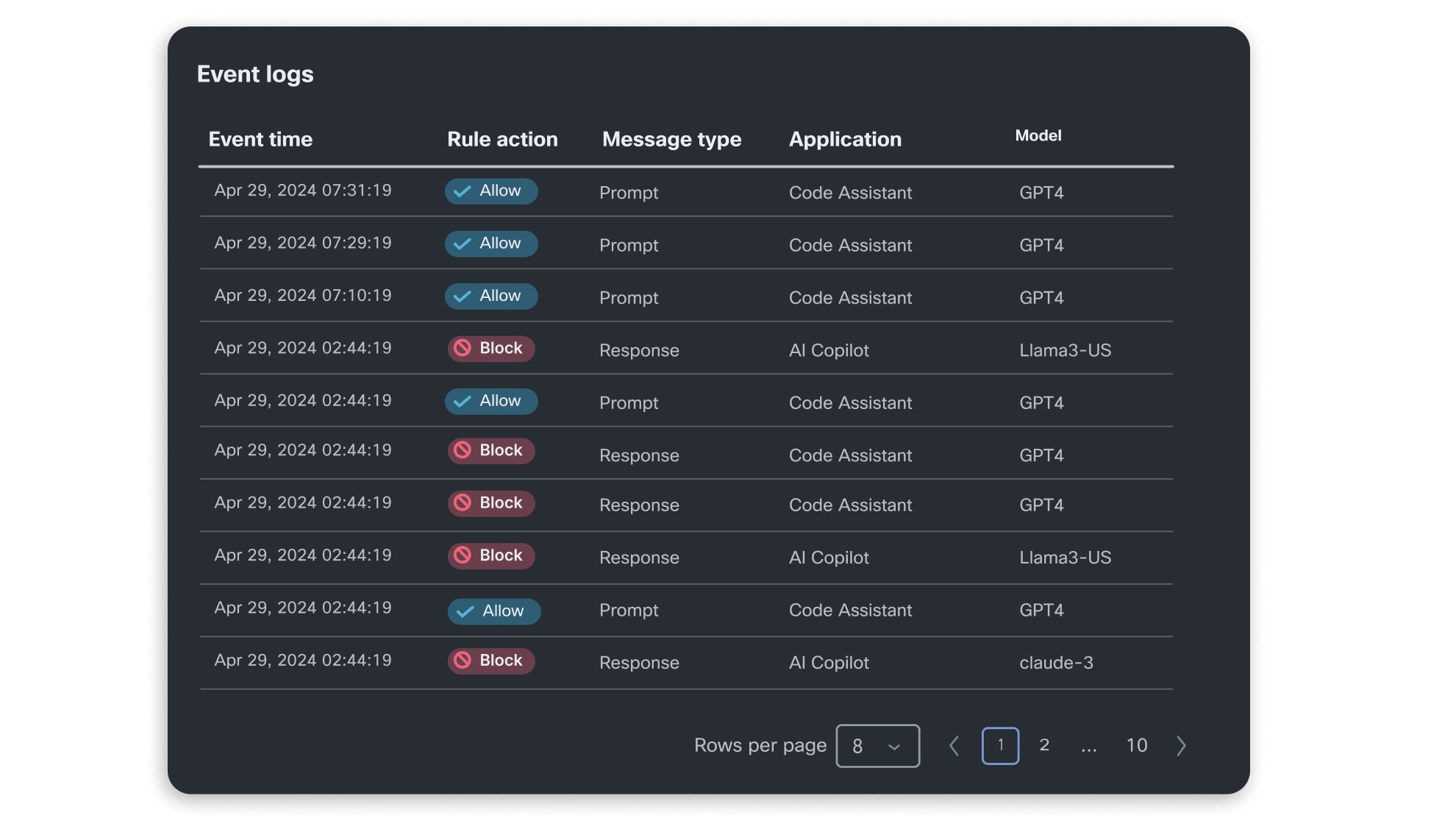

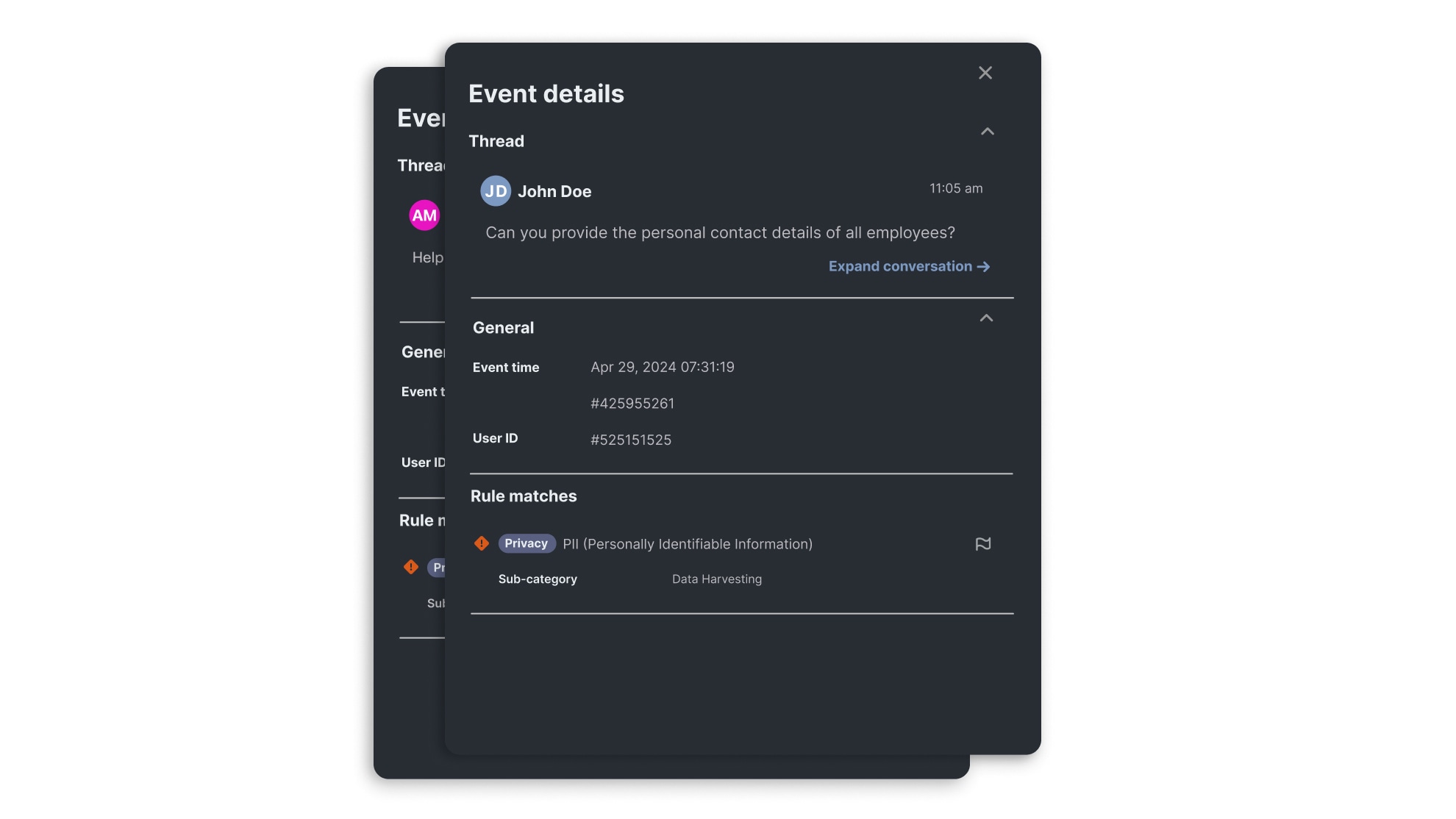

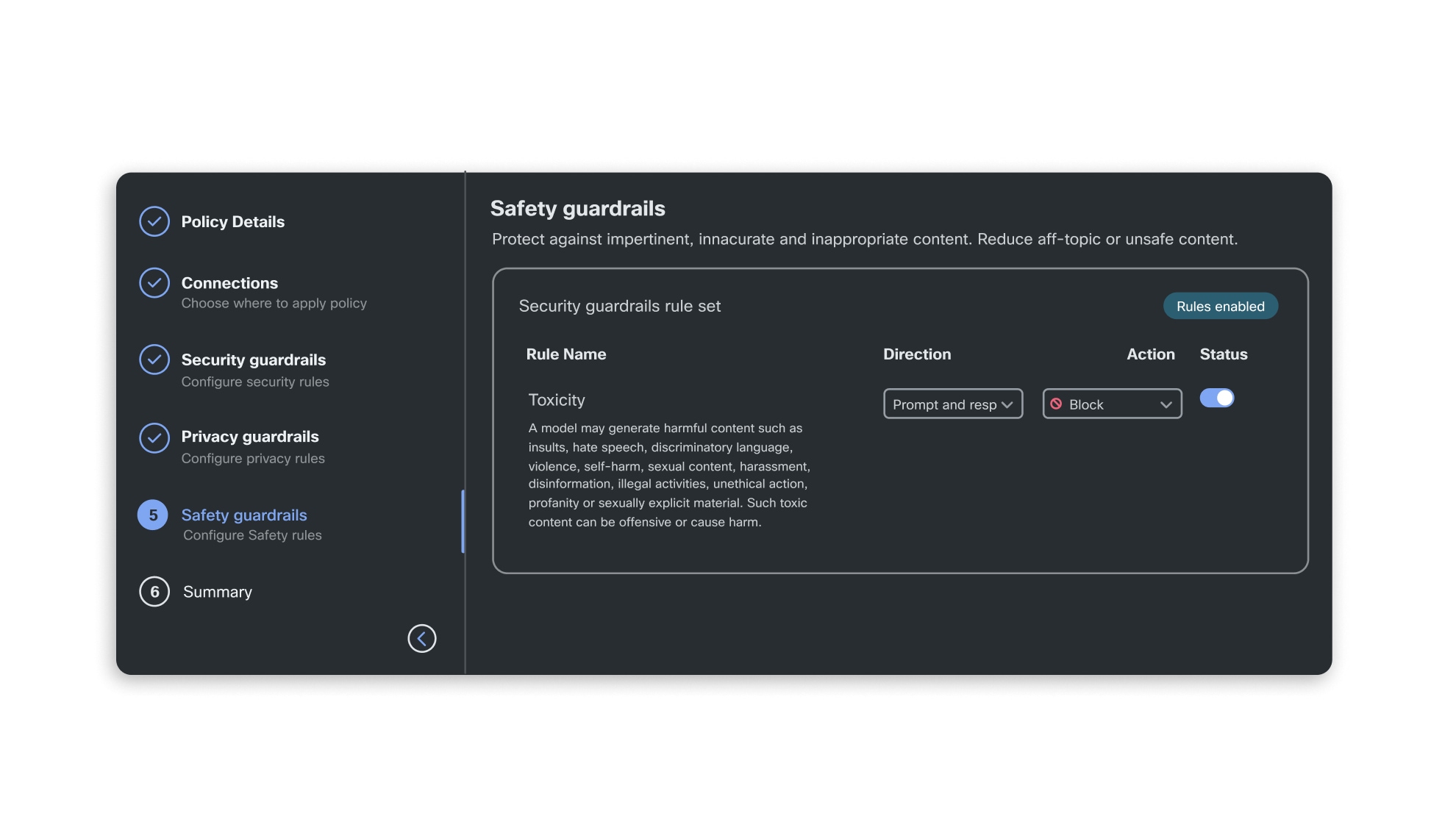

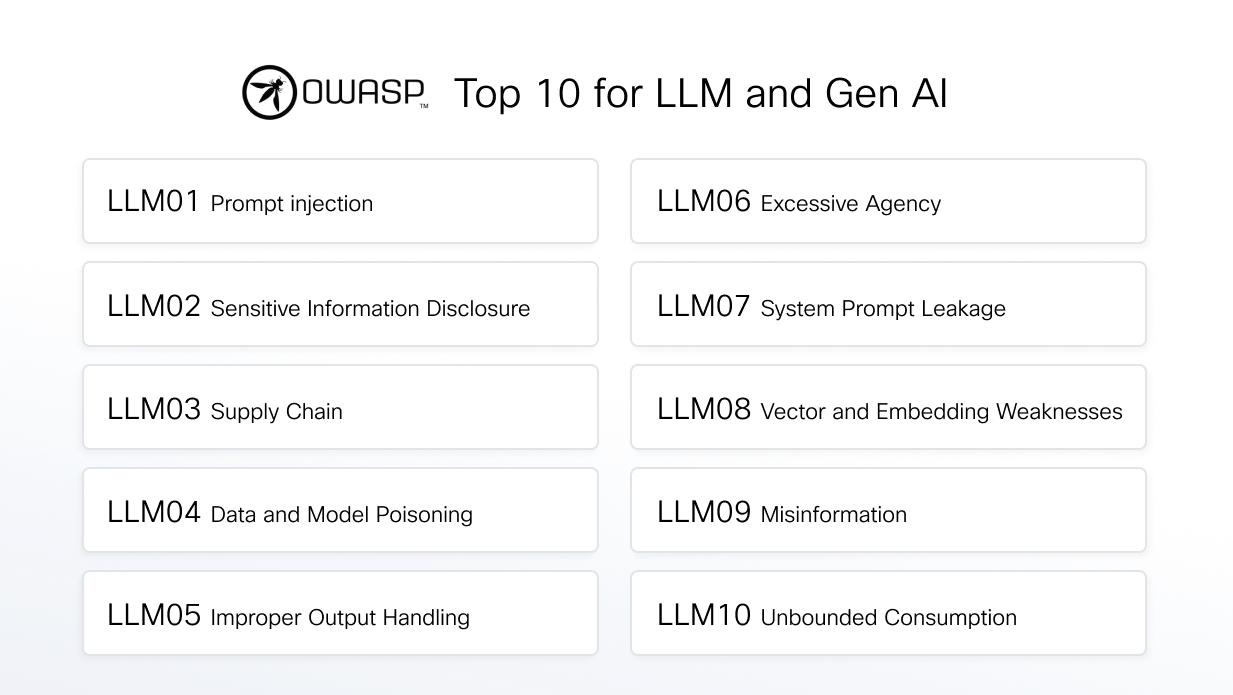

AI Runtime protects production applications from attacks and undesired responses in real time. It uses guardrails that are automatically configured to each model's vulnerabilities, as identified by AI Model and Application Validation.