Overview

Cisco Application Policy Infrastructure Controller (APIC) Release 4.1(1) introduces Cisco Cloud APIC, a software deployment of Cisco APIC that runs on a cloud-based virtual machine (VM). Release 4.1(1) supports Amazon Web Services (AWS). Beginning in Release 4.2(x), support is added for Microsoft Azure.

When deployed, the Cisco Cloud APIC:

-

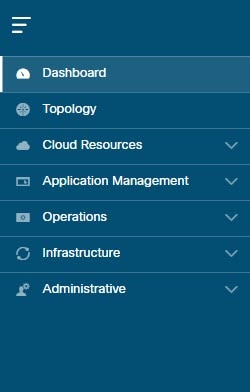

Provides an interface that is similar to the existing Cisco APIC to interact with the Azure public cloud

-

Automates the deployment and configuration of cloud constructs

-

Configures the cloud router control plane

-

Configures the data path between the on-premises Cisco ACI fabric and the cloud site

-

Translates Cisco ACI policies to cloud native construct

-

Discovers endpoints

-

Provides a consistent policy, security, and analytics for workloads deployed either on or across on-premises data centers and the public cloud

Note

- Cisco Multi-Site pushes the MP-BGP EVPN configuration to the on-premises spine switches
- On-premises VPN routers require manual configuration for IPsec

- Provides an automated connection between on-premises data centers and the public cloud with easy provisioning and monitoring
- Translates the policies that Cisco Nexus Dashboard Orchestrator pushes to the on-premises and cloud sites into cloud-native constructs, which keeps them consistent with the on-premises site

For more information about extending Cisco ACI to the public cloud, see the Cisco Cloud APIC Installation Guide.

When the Cisco Cloud APIC is up and running, you can begin adding and configuring Cisco Cloud APIC components. This document describes the Cisco Cloud APIC policy model and explains how to manage (add, configure, view, and delete) the Cisco Cloud APIC components using the GUI and the REST API.
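As a rough illustration of what managing components through the REST API can look like, the following sketch only builds the requests and does not send them. It assumes that Cisco Cloud APIC accepts the same `aaaLogin` login call and class-query URL pattern as the Cisco APIC REST API; the host name, credentials, and helper function names are hypothetical placeholders, not values from this guide.

```python
import json
from typing import Tuple


def build_login_request(host: str, username: str, password: str) -> Tuple[str, str]:
    """Return the URL and JSON body for a login call.

    Assumption: Cloud APIC uses the same aaaLogin endpoint as Cisco APIC.
    """
    url = f"https://{host}/api/aaaLogin.json"
    body = json.dumps(
        {"aaaUser": {"attributes": {"name": username, "pwd": password}}}
    )
    return url, body


def build_class_query(host: str, class_name: str) -> str:
    """Return the URL that lists all objects of one class (for example, fvTenant)."""
    return f"https://{host}/api/class/{class_name}.json"


if __name__ == "__main__":
    # Hypothetical host and credentials, for illustration only.
    login_url, login_body = build_login_request(
        "cloud-apic.example.com", "admin", "example-password"
    )
    print("POST", login_url)
    print(login_body)
    print("GET ", build_class_query("cloud-apic.example.com", "fvTenant"))
```

In practice, the login response would supply a session token to send with later requests. Those details are left out here because they depend on the release and on how the deployment handles certificates.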
