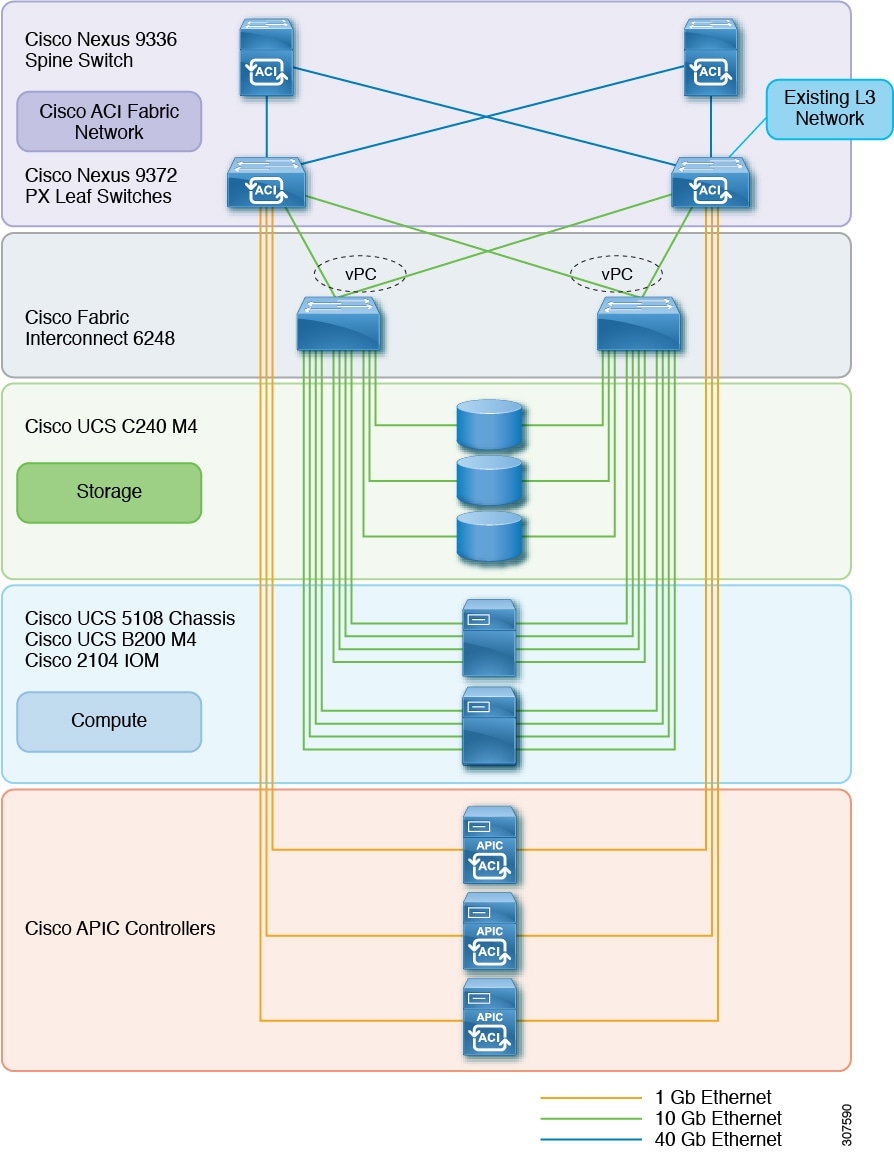

Configuring UCS B-Series for Cisco ACI and OpenStack Orchestration

You need three levels of configuration for Cisco Unified Computing System (UCS) B-Series to work with Cisco Application Centric Infrastructure (ACI) and OpenStack orchestration. The first level is on Cisco UCS, the second is on the host, and the third is on the leaf switches.

Note: This document applies to the Cisco UCS B-Series and C-Series servers connected to Fabric Interconnects in UCS mode and provides additional configuration required to install OpenStack on Cisco UCS.
