Deployment Overview

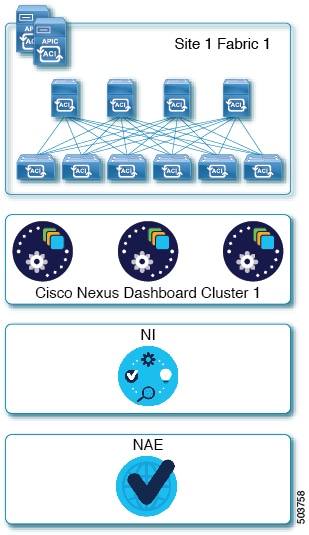

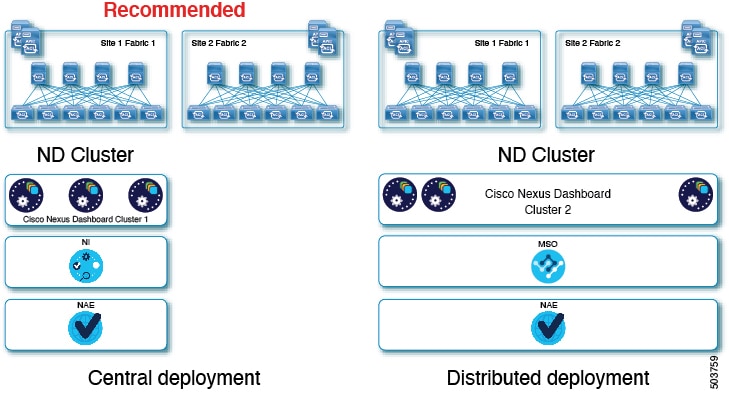

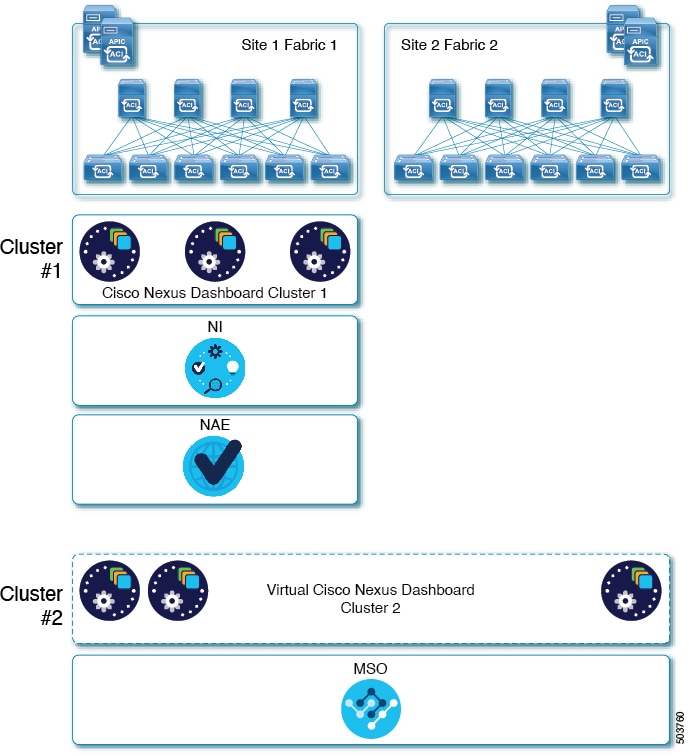

Cisco Nexus Dashboard is a central management console for multiple data center sites and a common platform for hosting Cisco data center operation applications, such as Nexus Insights and Network Assurance Engine. These applications are available to all the data center sites and provide real-time analytics, visibility, and assurance for network policies and operations. Cisco Multi-Site Orchestrator can also run on Nexus Dashboard as a hosted application.

Nexus Dashboard provides a common platform and modern technology stack for these microservices-based applications, which simplifies their life cycle management and reduces the operational overhead of running and maintaining them. It also provides a central integration point between external third-party applications and the locally hosted applications.

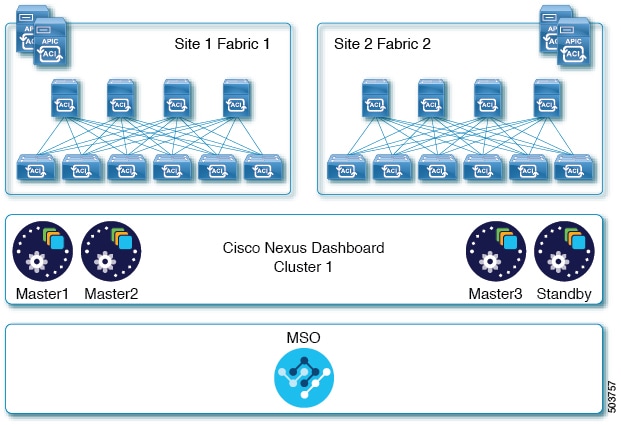

Each Nexus Dashboard cluster consists of 3 master nodes. For physical Nexus Dashboard clusters, you can also provision up to 4 worker nodes to enable horizontal scaling and up to 2 standby nodes for easy cluster recovery in case of a master node failure. For virtual and cloud clusters, only the base 3-node cluster is supported.
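The node limits above can be captured in a small planning check. This is a minimal sketch, not part of any Cisco tooling: the `ClusterPlan` type, the `validate_plan` function, and the form factor labels `"physical"`, `"virtual"`, and `"cloud"` are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import List

# Limits transcribed from the paragraph above; all names are illustrative.
MASTER_NODES = 3
MAX_WORKER_NODES = 4    # physical clusters only
MAX_STANDBY_NODES = 2   # physical clusters only
FORM_FACTORS = ("physical", "virtual", "cloud")


@dataclass(frozen=True)
class ClusterPlan:
    form_factor: str
    masters: int = MASTER_NODES
    workers: int = 0
    standbys: int = 0


def validate_plan(plan: ClusterPlan) -> List[str]:
    """Return a list of problems; an empty list means the plan fits the limits."""
    if plan.form_factor not in FORM_FACTORS:
        return [f"unknown form factor: {plan.form_factor}"]
    problems = []
    if plan.masters != MASTER_NODES:
        problems.append(f"a cluster requires exactly {MASTER_NODES} master nodes")
    if plan.workers < 0 or plan.standbys < 0:
        problems.append("node counts cannot be negative")
    if plan.form_factor == "physical":
        if plan.workers > MAX_WORKER_NODES:
            problems.append(f"at most {MAX_WORKER_NODES} worker nodes are allowed")
        if plan.standbys > MAX_STANDBY_NODES:
            problems.append(f"at most {MAX_STANDBY_NODES} standby nodes are allowed")
    elif plan.workers or plan.standbys:
        problems.append("virtual and cloud clusters support only the base 3-node cluster")
    return problems
```

For example, `validate_plan(ClusterPlan("physical", workers=4, standbys=2))` returns an empty list, while a virtual plan with any worker node returns one problem.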

Note: This document describes initial configuration of the 3-node cluster. After your cluster is up and running, you can configure and deploy additional nodes as described in the Cisco Nexus Dashboard User Guide, which is also available directly from the Nexus Dashboard GUI.

Hardware vs Software Stack

Nexus Dashboard is offered as a cluster of specialized Cisco UCS servers (Nexus Dashboard platform) with the software framework (Nexus Dashboard) pre-installed on it. The Cisco Nexus Dashboard software stack can be decoupled from the hardware and deployed in a number of virtual form factors. For the purposes of this document, we will use "Nexus Dashboard platform" specifically to refer to the hardware and "Nexus Dashboard" to refer to the software stack and the GUI console.

This guide describes the initial deployment of the Nexus Dashboard software; hardware setup is described in the Nexus Dashboard Hardware Setup Guide, while other Nexus Dashboard operations procedures are described in the Cisco Nexus Dashboard User Guide.

Nexus Dashboard and Cisco DCNM

Nexus Dashboard may be used in the context of Cisco DCNM. In this case, DCNM is not an application running in the Nexus Dashboard software stack. Instead, the DCNM image (.iso) is installed directly on the Nexus Dashboard physical servers to provide additional compute resources to the applications installed and running in Cisco DCNM, thus enabling horizontal scaling of the DCNM platform. Because this document deals with Nexus Dashboard software stack deployments, see the Cisco DCNM Installation Guide appropriate for your deployment type for information about installing DCNM on Nexus Dashboard hardware.

Available Form Factors

Cisco Nexus Dashboard, Releases 2.0.1 and 2.0.2g, can be deployed as a physical appliance only, that is, as the software stack already deployed on the Nexus Dashboard platform hardware that you purchase.

Cisco Nexus Dashboard, Release 2.0.2h can be deployed using a number of different form factors. However, you must use the same form factor for all nodes; mixing different form factors within the same cluster is not supported.

Note: Nexus Dashboard, Release 2.0.2h supports virtual form factor clusters for the Multi-Site Orchestrator application only. For other applications, such as Nexus Insights, you must deploy a physical cluster.

- Cisco Nexus Dashboard physical appliance (.iso)

  This form factor refers to the original physical appliance hardware that you purchased with the Cisco Nexus Dashboard software stack pre-installed on it.

  The later sections in this document describe how to configure the software stack on the existing physical appliance hardware to deploy the cluster. Setting up the original Cisco Nexus Dashboard platform hardware is described in Cisco Nexus Dashboard Hardware Setup Guide.

- VMware ESX (.ova)

  Virtual form factor that allows you to deploy a Nexus Dashboard cluster using three VMware ESX virtual machines.

- Amazon Web Services (.ami)

  Cloud form factor that allows you to deploy a Nexus Dashboard cluster using three AWS instances.

- Microsoft Azure (.arm)

  Cloud form factor that allows you to deploy a Nexus Dashboard cluster using three Azure instances.
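The release and form factor rules in this section can be sketched as a pre-deployment check. The mapping below is transcribed from the text above; the function name and the short labels `"physical"`, `"esx"`, `"aws"`, and `"azure"` are assumptions made for illustration and do not correspond to any Cisco API.

```python
# Form factors offered by each release, as stated in this section.
# The short labels are illustrative, not official identifiers.
FORM_FACTORS_BY_RELEASE = {
    "2.0.1": {"physical"},
    "2.0.2g": {"physical"},
    "2.0.2h": {"physical", "esx", "aws", "azure"},
}


def check_cluster_form_factor(release, node_form_factors):
    """Return the cluster's form factor, or raise ValueError if the plan is invalid."""
    supported = FORM_FACTORS_BY_RELEASE.get(release)
    if supported is None:
        raise ValueError(f"release {release!r} is not covered by this sketch")
    distinct = set(node_form_factors)
    if len(distinct) != 1:
        # Mixing form factors within one cluster is not supported.
        raise ValueError("all nodes in a cluster must use the same form factor")
    (form_factor,) = distinct
    if form_factor not in supported:
        raise ValueError(f"release {release} does not offer the {form_factor!r} form factor")
    return form_factor
```

Calling `check_cluster_form_factor("2.0.2h", ["esx", "esx", "esx"])` returns `"esx"`, whereas a list that mixes `"esx"` and `"aws"` raises `ValueError`.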

Upgrading From Previous Versions of Nexus Dashboard

If you are already running Nexus Dashboard, Release 2.0.1 or later, you can upgrade to the latest release while retaining the cluster configuration and applications, as described in Upgrading Nexus Dashboard.

Upgrading From Application Services Engine

If you are running Application Services Engine, Release 1.1.3d as a physical appliance, you can upgrade to Nexus Dashboard and retain the cluster configuration and applications, as described in Upgrading Nexus Dashboard.

If you are running Application Services Engine, Release 1.1.3d as a virtual appliance, or any release prior to Release 1.1.3d, stateful upgrade or migration of the cluster is supported only to Nexus Dashboard, Release 2.0.2h or later. To deploy Release 2.0.1 or 2.0.2g instead, you must deploy a brand new physical appliance cluster and reinstall all the applications.
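The upgrade rules for Application Services Engine can be summarized as a small decision function. This is a sketch under stated assumptions: it covers only the three Nexus Dashboard releases named in this document, it assumes that a physical Release 1.1.3d cluster can be upgraded to any of them, and the function and parameter names are hypothetical.

```python
# Nexus Dashboard releases named in this document, oldest to newest.
ND_RELEASES = ["2.0.1", "2.0.2g", "2.0.2h"]


def stateful_upgrade_supported(ase_is_1_1_3d, ase_form_factor, target_release):
    """Return True if the cluster configuration and applications can be retained.

    ase_is_1_1_3d:   True for Application Services Engine 1.1.3d, False for earlier releases.
    ase_form_factor: "physical" or "virtual" (illustrative labels).
    target_release:  one of ND_RELEASES.
    """
    if target_release not in ND_RELEASES:
        raise ValueError(f"release {target_release!r} is not covered by this sketch")
    if ase_is_1_1_3d and ase_form_factor == "physical":
        # Assumption: a physical 1.1.3d cluster can move to any listed release.
        return True
    # Virtual 1.1.3d clusters and all earlier releases need 2.0.2h or later.
    return ND_RELEASES.index(target_release) >= ND_RELEASES.index("2.0.2h")
```

A result of `False` corresponds to the case where a brand new physical appliance cluster must be deployed and the applications reinstalled.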

Cluster Sizing Guidelines

Nexus Dashboard supports co-hosting of applications. Depending on the type and number of applications you choose to run, you may be required to deploy additional worker nodes in your cluster. For cluster sizing information and recommended number of nodes based on specific use cases, see Cisco Nexus Dashboard Cluster Sizing.

After your initial 3-node cluster is up and running, you can configure and deploy additional nodes as described in the Cisco Nexus Dashboard User Guide, which is also available directly from the Nexus Dashboard GUI.

Supported Applications

For the full list of supported applications and the associated compatibility and interoperability information, see the Cisco Day-2 Operations Apps Support Matrix.

The following table provides a reference for the recommended application release versions for Nexus Dashboard, Release 2.x:

| Nexus Dashboard Release and Form Factor | Nexus Insights | Multi-Site Orchestrator | Network Assurance Engine |
|---|---|---|---|
| Nexus Dashboard, Release 2.0.1 Physical cluster | 5.0(1) | 3.2(1) | 5.1(1a) |
| Nexus Dashboard, Release 2.0.2g Physical cluster | 5.1(1) | 3.2(1) | 5.1(1b) |
| Nexus Dashboard, Release 2.0.2h Physical cluster | 5.1(1) | 3.3(1) | 5.1(1b) |
| Nexus Dashboard, Release 2.0.2h Virtual cluster | Not supported | 3.3(1) | Not supported |
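
For scripted pre-checks, the recommended versions listed above can be held in a simple lookup. This is a minimal sketch: the values are transcribed from this document, while the dictionary layout, the `"physical"` and `"virtual"` keys, and the function name are illustrative assumptions. `None` stands for "Not supported".

```python
# Recommended application releases per Nexus Dashboard release and cluster type,
# transcribed from this document. None means "Not supported".
RECOMMENDED_APP_VERSIONS = {
    ("2.0.1", "physical"): {
        "Nexus Insights": "5.0(1)",
        "Multi-Site Orchestrator": "3.2(1)",
        "Network Assurance Engine": "5.1(1a)",
    },
    ("2.0.2g", "physical"): {
        "Nexus Insights": "5.1(1)",
        "Multi-Site Orchestrator": "3.2(1)",
        "Network Assurance Engine": "5.1(1b)",
    },
    ("2.0.2h", "physical"): {
        "Nexus Insights": "5.1(1)",
        "Multi-Site Orchestrator": "3.3(1)",
        "Network Assurance Engine": "5.1(1b)",
    },
    ("2.0.2h", "virtual"): {
        "Nexus Insights": None,
        "Multi-Site Orchestrator": "3.3(1)",
        "Network Assurance Engine": None,
    },
}


def recommended_version(release, cluster_type, application):
    """Return the recommended application release, or None if it is not supported."""
    try:
        return RECOMMENDED_APP_VERSIONS[(release, cluster_type)][application]
    except KeyError:
        raise ValueError(
            f"no entry for {application!r} on release {release!r} ({cluster_type})"
        ) from None
```

For example, `recommended_version("2.0.2h", "virtual", "Nexus Insights")` returns `None`, which matches the restriction that virtual clusters host Multi-Site Orchestrator only.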
