Prerequisites and guidelines for deploying Nexus Dashboard as a physical appliance

Before you proceed with deploying the Nexus Dashboard cluster, you must:

- Review and complete the general and service-specific prerequisites described in Prerequisites: Nexus Dashboard.

- Review and complete any additional prerequisites that are described in the Release Notes for the services you plan to deploy.

  You can find the service-specific documents at the following links:

- Ensure that you are using the following hardware and that the servers are racked and connected as described in the Cisco Nexus Dashboard Hardware Setup Guide for your server model.

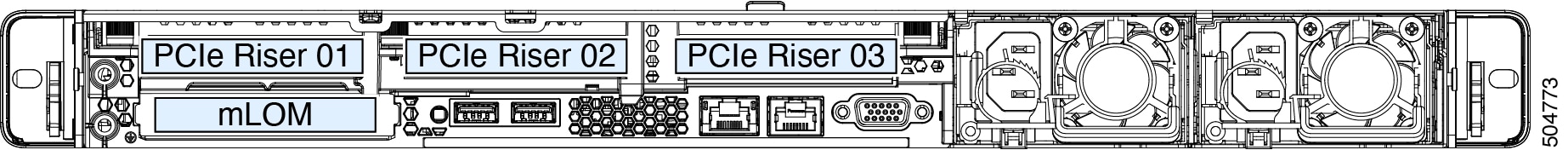

The physical appliance form factor is supported only on the original Cisco Nexus Dashboard platform hardware: the UCS-C220-M5 (SE-NODE-G2) and the UCS-C225-M6 (ND-NODE-L4). The following tables list the PIDs and specifications of the physical appliance servers.

Table 1. Supported UCS-C220-M5 hardware

| Product ID | Hardware |
|---|---|
| SE-NODE-G2 | Cisco UCS C220 M5 chassis; 2x 10-core 2.2-GHz Intel Xeon Silver CPU; 256 GB of RAM; 4x 2.4-TB HDDs, 400-GB SSD, and 1.2-TB NVMe drive; Cisco UCS Virtual Interface Card 1455 (4x25G ports); 1050-W power supply |
| SE-CL-L3 | A cluster of 3x SE-NODE-G2= appliances |

Table 2. Supported UCS-C225-M6 hardware

| Product ID | Hardware |
|---|---|
| ND-NODE-L4 | Cisco UCS C225 M6 chassis; 2.8-GHz AMD CPU; 256 GB of RAM; 4x 2.4-TB HDDs, 960-GB SSD, and 1.6-TB NVMe drive; Intel X710T2LG 2x10 GbE (copper); one of the following: Intel E810XXVDA2 2x25/10 GbE (fiber optic) or Cisco UCS Virtual Interface Card 1455 (4x25G ports); 1050-W power supply |
| ND-CLUSTER-L4 | A cluster of 3x ND-NODE-L4= appliances |

Note

The above hardware supports Cisco Nexus Dashboard software only. If any other operating system is installed, the node can no longer be used as a Cisco Nexus Dashboard node.

- Ensure that you are running a supported version of Cisco Integrated Management Controller (CIMC).

  The minimum supported and recommended CIMC versions are listed in the "Compatibility" section of the Release Notes for your Cisco Nexus Dashboard release.

- Ensure that you have configured an IP address for the server's CIMC.

  See Configure a Cisco Integrated Management Controller IP address.

- Ensure that Serial over LAN (SoL) is enabled in CIMC.

  See Enable Serial over LAN in the Cisco Integrated Management Controller.

  SoL might be misconfigured if the bootstrap fails at the "bootstrap peer nodes" point with this error: `Waiting for firstboot prompt on NodeX`

- Ensure that all nodes are running the same release version image.

  - If your Cisco Nexus Dashboard hardware came with a different release image than the one you want to deploy, we recommend deploying the cluster with the existing image first and then upgrading it to the needed release.

    For example, if the hardware you received came with the release 2.3.2 image pre-installed, but you want to deploy release 3.2.1 instead, we recommend that you:

    - First, bring up the release 2.3.2 cluster, as described in the deployment guide for that release.
    - Then upgrade to release 3.2.1, as described in Upgrading Existing ND Cluster to This Release.

Note

For brand new deployments, you can also choose to re-image the nodes with the latest version of Cisco Nexus Dashboard (for example, if the hardware came with an image that does not support a direct upgrade to this release through the GUI workflow) before returning to this document to deploy the cluster. This process is described in the "Re-Imaging Nodes" section of the Troubleshooting article for this release.

- You must have at least a 3-node cluster. You can add secondary nodes for horizontal scaling if the number of services you deploy requires it. For the maximum number of secondary and standby nodes in a single cluster, see the Release Notes for your release.
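The cluster-level checks in the list above (at least three nodes, only the supported node PIDs from Tables 1 and 2, and the same release image on every node) can be captured in a small pre-deployment script. The following is a minimal Python sketch, assuming you keep your own inventory of node names, PIDs, and installed release versions; the `Node` record and the `check_cluster` function are hypothetical and are not part of any Cisco tool.

```python
from dataclasses import dataclass

# Node PIDs from Tables 1 and 2. SE-CL-L3 and ND-CLUSTER-L4 are bundles of
# three of these nodes, so only the node PIDs are checked here.
SUPPORTED_NODE_PIDS = {"SE-NODE-G2", "ND-NODE-L4"}
MIN_CLUSTER_SIZE = 3


@dataclass
class Node:
    """Hypothetical inventory record; the field names are illustrative."""
    name: str
    pid: str
    release: str


def check_cluster(nodes: list[Node]) -> list[str]:
    """Return a list of problems; an empty list means every check passed."""
    problems = []
    # At least three nodes are required to form the cluster.
    if len(nodes) < MIN_CLUSTER_SIZE:
        problems.append(
            f"cluster has {len(nodes)} node(s); at least {MIN_CLUSTER_SIZE} are required"
        )
    # Only the original Nexus Dashboard platform hardware is supported.
    for node in nodes:
        if node.pid not in SUPPORTED_NODE_PIDS:
            problems.append(f"{node.name}: unsupported PID {node.pid}")
    # All nodes must run the same release image.
    releases = {node.release for node in nodes}
    if len(releases) > 1:
        problems.append(f"nodes run different releases: {sorted(releases)}")
    return problems


if __name__ == "__main__":
    inventory = [
        Node("node1", "ND-NODE-L4", "3.2.1"),
        Node("node2", "ND-NODE-L4", "3.2.1"),
        Node("node3", "ND-NODE-L4", "2.3.2"),
    ]
    for problem in check_cluster(inventory):
        print(problem)  # prints: nodes run different releases: ['2.3.2', '3.2.1']
```

The sketch deliberately does not check the maximum number of secondary and standby nodes, because those limits are release-specific and belong in the Release Notes rather than in a hard-coded constant.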

Configure a Cisco Integrated Management Controller IP address

Follow these steps to configure a Cisco Integrated Management Controller (CIMC) IP address.

Procedure

Step 1: Power on the server. After the hardware diagnostics complete, you are prompted with different options controlled by the function (Fn) keys.

Step 2: Press the F8 key to enter the Cisco IMC Configuration Utility.

Step 3: Follow these substeps.

Step 4: Press F10 to save the configuration and then restart the server.
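Before you press F10, it can help to sanity-check the static IPv4 values you entered for the CIMC. This assumes static addressing with an address, a subnet mask, and a default gateway; the exact fields depend on your CIMC version. The following minimal Python sketch uses only the standard-library `ipaddress` module. The `check_cimc_ip` helper is hypothetical and is not a Cisco utility.

```python
import ipaddress


def check_cimc_ip(ip: str, netmask: str, gateway: str) -> None:
    """Raise ValueError if static IPv4 settings for a CIMC look inconsistent.

    Malformed addresses or masks also raise ValueError, because the
    ipaddress module's AddressValueError and NetmaskValueError subclass it.
    """
    # "address/netmask" accepts a dotted-quad mask such as 255.255.255.0.
    iface = ipaddress.IPv4Interface(f"{ip}/{netmask}")
    gw = ipaddress.IPv4Address(gateway)
    net = iface.network
    if gw not in net:
        raise ValueError(f"gateway {gw} is outside {net}")
    if iface.ip == gw:
        raise ValueError("CIMC address and gateway must differ")
    # For prefixes up to /30, the network and broadcast addresses are not usable hosts.
    if net.prefixlen <= 30 and iface.ip in (net.network_address, net.broadcast_address):
        raise ValueError(f"{iface.ip} is the network or broadcast address of {net}")


if __name__ == "__main__":
    check_cimc_ip("192.0.2.10", "255.255.255.0", "192.0.2.1")  # passes silently
    try:
        check_cimc_ip("192.0.2.10", "255.255.255.0", "198.51.100.1")
    except ValueError as err:
        print(err)  # gateway 198.51.100.1 is outside 192.0.2.0/24
```

The addresses shown are from the documentation ranges (192.0.2.0/24 and 198.51.100.0/24); substitute the values for your management network.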

Enable Serial over LAN in the Cisco Integrated Management Controller

Serial over LAN (SoL) is required for the `connect host` command, which you use to connect to a physical appliance node to provide basic configuration information. To use SoL, you must first enable it on your Cisco Integrated Management Controller (CIMC).

Follow these steps to enable Serial over LAN in the Cisco Integrated Management Controller.

Procedure

Step 1: SSH into the node using the CIMC IP address and enter the sign-in credentials.

Step 2: Run these commands:

Step 3: In the command output, verify that This enables the system to monitor the console using the
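The prerequisites attribute a bootstrap that stalls at the "bootstrap peer nodes" point with the `Waiting for firstboot prompt on NodeX` error to a likely SoL misconfiguration. The following minimal Python sketch assumes you have saved the bootstrap output as text. It lists the nodes that the message names, so you can check SoL on those nodes' CIMCs first. The function name and the log handling are hypothetical; only the quoted message text comes from this document.

```python
import re

# Only the message text comes from this document; the surrounding log format
# is an assumption, so the node name is taken as the next non-space token.
FIRSTBOOT_WAIT = re.compile(r"Waiting for firstboot prompt on (\S+)")


def nodes_waiting_for_firstboot(log_text: str) -> list[str]:
    """Return each node named in a firstboot-wait message, in first-seen order."""
    seen: dict[str, None] = {}  # dict keeps insertion order and drops duplicates
    for match in FIRSTBOOT_WAIT.finditer(log_text):
        # Strip trailing punctuation that may follow the node name.
        seen.setdefault(match.group(1).rstrip(".,;:"), None)
    return list(seen)


if __name__ == "__main__":
    sample = (
        "Waiting for firstboot prompt on node2\n"
        "Waiting for firstboot prompt on node2\n"
        "Waiting for firstboot prompt on node3.\n"
    )
    print(nodes_waiting_for_firstboot(sample))  # ['node2', 'node3']
```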
