SR-MPLS and Multi-Site

Starting with Nexus Dashboard Orchestrator, Release 3.0(1) and APIC Release 5.0(1), the Multi-Site architecture supports APIC sites connected via MPLS networks.

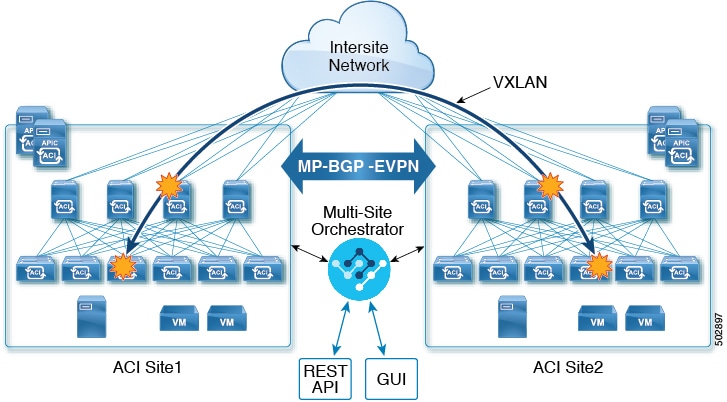

In a typical Multi-Site deployment, traffic between sites is forwarded over an intersite network (ISN) via VXLAN encapsulation:

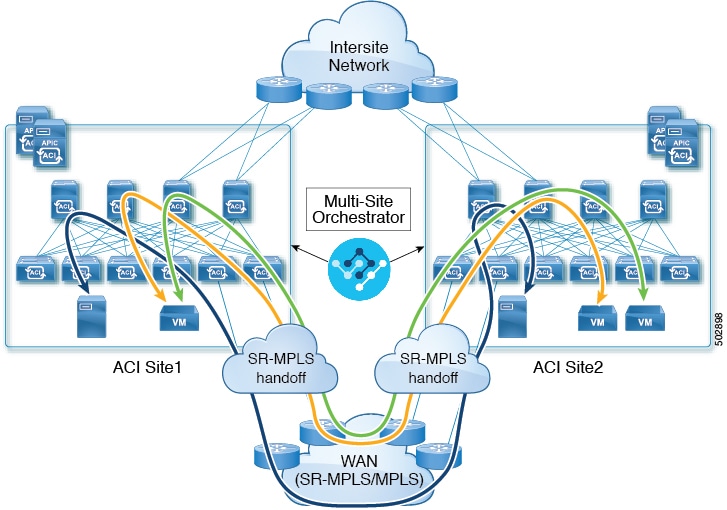

With Release 3.0(1), an MPLS network can be used in addition to or instead of the ISN, allowing intersite communication over the WAN.

The following sections describe guidelines, limitations, and configurations specific to managing schemas that are deployed to these sites from the Nexus Dashboard Orchestrator. Detailed information about MPLS handoff, supported individual site topologies (such as remote leaf support), and the policy model is available in the Cisco APIC Layer 3 Networking Configuration Guide.
