Maintaining the Node

This chapter describes how to diagnose node problems using LEDs. It also provides information about how to install or replace hardware components, and it includes the following sections:

- Status LEDs and Buttons

- Preparing for Component Installation

- Installing or Replacing Node Components

- Replaceable Component Locations

- Replacing Drives

- Replacing Fan Modules

- Replacing the Motherboard RTC Battery

- Replacing DIMMs

- Replacing CPUs and Heatsinks

- Replacing an Internal SD Card

- Enabling or Disabling the Internal USB Port

- Replacing a Cisco Modular HBA Riser (Internal Riser 3)

- Replacing a Cisco Modular HBA Card

- Replacing a PCIe Riser Assembly

- Replacing a PCIe Card

- Installing and Enabling a Trusted Platform Module

- Replacing an mLOM Card (Cisco VIC 1227)

- Replacing Power Supplies

Status LEDs and Buttons

This section describes the location and meaning of LEDs and buttons and includes the following topics:

- Front Panel LEDs

- Rear Panel LEDs and Buttons

- Internal Diagnostic LEDs

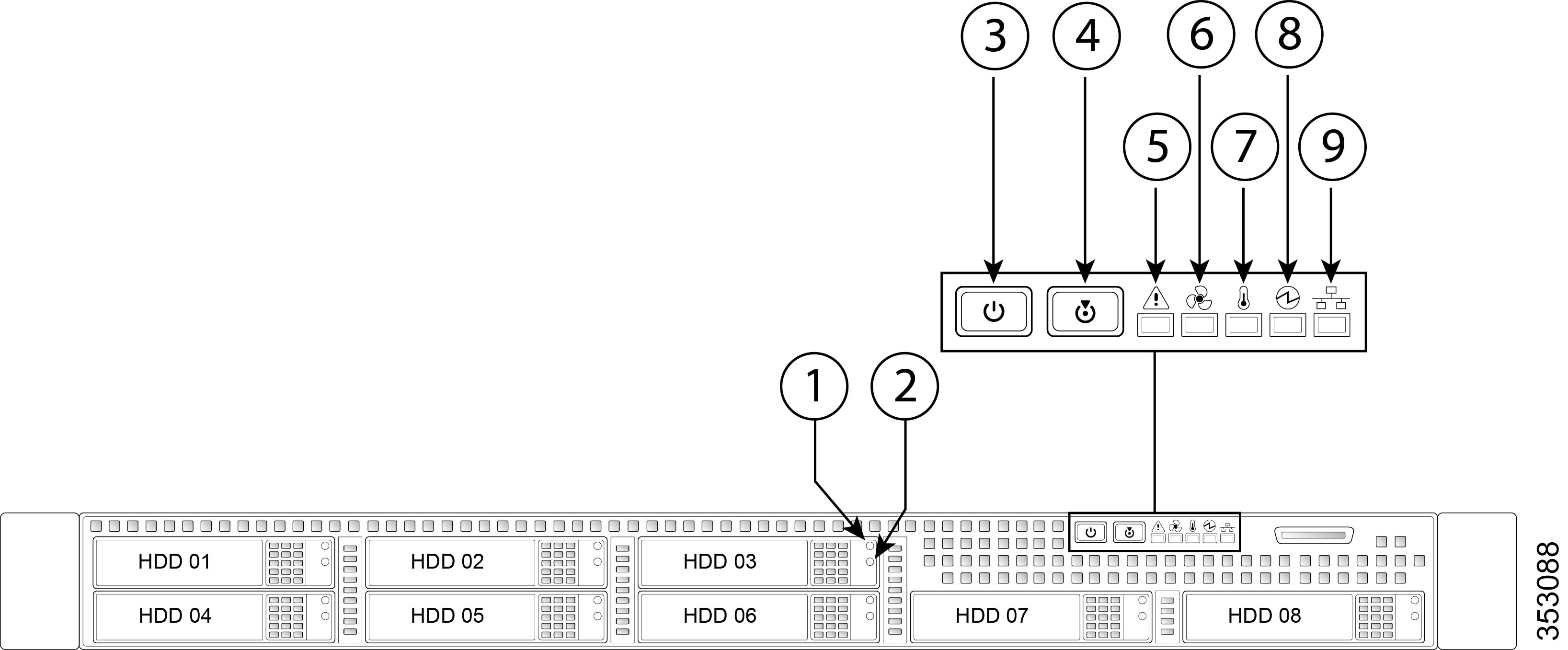

Front Panel LEDs

Figure 3-1 shows the front panel LEDs. Table 3-1 defines the LED states.

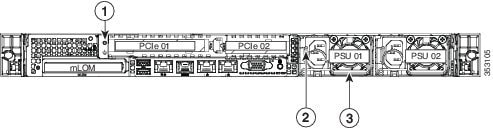

Rear Panel LEDs and Buttons

Figure 3-2 shows the rear panel LEDs and buttons. Table 3-2 defines the LED states.

Figure 3-2 Rear Panel LEDs and Buttons

- mLOM card LED (Cisco VIC 1227)

Internal Diagnostic LEDs

The node has internal fault LEDs for CPUs, DIMMs, fan modules, SD cards, the RTC battery, and the mLOM card. These LEDs are available only when the node is in standby power mode. An LED lights amber to indicate a faulty component.

See Figure 3-3 for the locations of these internal LEDs.

Figure 3-3 Internal Diagnostic LED Locations

- Fan module fault LEDs (one next to each fan connector on the motherboard)

- DIMM fault LEDs (one in front of each DIMM socket on the motherboard)

Preparing for Component Installation

This section describes how to prepare for component installation, and it includes the following topics:

- Required Equipment

- Shutting Down the Node

- Decommissioning the Node Using Cisco UCS Manager

- Post-Maintenance Procedures

- Removing and Replacing the Node Top Cover

Required Equipment

The following equipment is used to perform the procedures in this chapter:

Shutting Down the Node

The node can run in two power modes:

- Main power mode: Power is supplied to all node components, and any operating system on your drives can run.

- Standby power mode: Power is supplied only to the service processor and the cooling fans. It is safe to power off the node from this mode.

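This section contains the following procedures, which are referenced from component replacement procedures. Alternate shutdown procedures are included.

- Shutting Down the Node From the Equipment Tab in Cisco UCS Manager

- Shutting Down the Node From the Service Profile in Cisco UCS Manager

- Shutting Down the Node Through vSphere With Cisco HX Maintenance Mode

- Shutting Down the Node with the Node Power Button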

Shutting Down the Node From the Equipment Tab in Cisco UCS Manager

When you use this procedure to shut down an HX node, Cisco UCS Manager triggers the OS into a graceful shutdown sequence.

Note: If the Shutdown Server link is dimmed in the Actions area, the node is not running.

Step 1 In the Navigation pane, click Equipment.

Step 2 Expand Equipment > Rack Mounts > Servers.

Step 3 Choose the node that you want to shut down.

Step 4 In the Work pane, click the General tab.

Step 5 In the Actions area, click Shutdown Server.

Step 6 If a confirmation dialog displays, click Yes.

After the node has been successfully shut down, the Overall Status field on the General tab displays a power-off status.

Shutting Down the Node From the Service Profile in Cisco UCS Manager

When you use this procedure to shut down an HX node, Cisco UCS Manager triggers the OS into a graceful shutdown sequence.

Note: If the Shutdown Server link is dimmed in the Actions area, the node is not running.

Step 1 In the Navigation pane, click Servers.

Step 2 Expand Servers > Service Profiles.

Step 3 Expand the node for the organization that contains the service profile of the server node you are shutting down.

Step 4 Choose the service profile of the server node that you are shutting down.

Step 5 In the Work pane, click the General tab.

Step 6 In the Actions area, click Shutdown Server.

Step 7 If a confirmation dialog displays, click Yes.

After the node has been successfully shut down, the Overall Status field on the General tab displays a power-off status.

Shutting Down the Node Through vSphere With Cisco HX Maintenance Mode

Some procedures directly place the node into Cisco HX Maintenance mode. This procedure migrates all VMs to other nodes before the node is shut down and decommissioned from Cisco UCS Manager.

Step 1 Put the node in Cisco HX Maintenance mode by using the vSphere interface:

a. Log in to the vSphere web client.

b. Go to Home > Hosts and Clusters.

c. Expand the Datacenter that contains the HX Cluster.

d. Expand the HX Cluster and select the node.

e. Right-click the node and select Cisco HX Maintenance Mode > Enter HX Maintenance Mode.

Alternatively, use the command-line interface:

a. Log in to the storage controller cluster command line as a user with root privileges.

b. Move the node into HX Maintenance Mode.

1. Identify the node ID and IP address:

2. Put the node into HX Maintenance Mode:

    # stcli node maintenanceMode (--id ID | --ip IP Address) --mode enter

(see also stcli node maintenanceMode --help)

c. Log in to the ESXi command line of this node as a user with root privileges.

d. Verify that the node has entered HX Maintenance Mode:

    # esxcli system maintenanceMode get

Step 2 Shut down the node using UCS Manager as described in Shutting Down the Node.
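The stcli syntax in this procedure takes either a node ID or an IP address, plus a mode. As a minimal sketch, the following Python helper assembles that argument list and rejects calls that supply both or neither selector. The function name `maintenance_mode_command` and the sample address are hypothetical. The helper only builds the arguments and does not run anything; the command itself is still entered on the storage controller command line as described above.

```python
from typing import List, Optional


def maintenance_mode_command(mode: str,
                             node_id: Optional[str] = None,
                             ip: Optional[str] = None) -> List[str]:
    """Build the argument list for `stcli node maintenanceMode`.

    Hypothetical helper: it mirrors only the syntax shown in this chapter,
    (--id ID | --ip IP Address) --mode enter|exit.
    """
    if mode not in ("enter", "exit"):
        raise ValueError("mode must be 'enter' or 'exit'")
    # The documented syntax accepts exactly one of --id or --ip.
    if (node_id is None) == (ip is None):
        raise ValueError("specify exactly one of node_id or ip")
    selector = ["--id", node_id] if node_id is not None else ["--ip", ip]
    return ["stcli", "node", "maintenanceMode", *selector, "--mode", mode]


if __name__ == "__main__":
    # 192.0.2.10 is a documentation-only placeholder address.
    print(" ".join(maintenance_mode_command("enter", ip="192.0.2.10")))
```

Requiring exactly one selector catches the most likely scripting mistake before the command reaches the cluster.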

Shutting Down the Node with the Node Power Button

Note: This method is not recommended for a HyperFlex node, but the operation of the physical power button is explained here in case an emergency shutdown is required.

Step 1 Check the color of the Power Status LED (see the “Front Panel LEDs” section).

- Green: The node is in main power mode and must be shut down before it can be safely powered off. Go to Step 2.

- Amber: The node is already in standby mode and can be safely powered off.

Step 2 Invoke either a graceful shutdown or an emergency (hard) shutdown:

- Graceful shutdown: Press and release the Power button. The operating system performs a graceful shutdown and the node goes to standby mode, which is indicated by an amber Power Status LED.

- Emergency shutdown: Press and hold the Power button for 4 seconds to force the main power off and immediately enter standby mode.
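The decision in the two steps above can be sketched as a small lookup, which may be useful in a runbook or training script. This is an illustration only: the function name `power_button_action` and its return strings are hypothetical, and the LED color must still be read from the front panel by the operator.

```python
def power_button_action(led_color: str, emergency: bool = False) -> str:
    """Return the power-button action for a given Power Status LED color.

    Hypothetical helper that encodes only the rules stated in this procedure.
    """
    color = led_color.strip().lower()
    if color == "amber":
        # Amber: the node is already in standby power mode.
        return "Node is in standby power mode; it is safe to power off."
    if color == "green":
        # Green: the node is in main power mode and must be shut down first.
        if emergency:
            return "Press and hold the Power button for 4 seconds."
        return "Press and release the Power button."
    raise ValueError(f"unexpected Power Status LED color: {led_color!r}")


if __name__ == "__main__":
    print(power_button_action("green"))
    print(power_button_action("green", emergency=True))
    print(power_button_action("amber"))
```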

Decommissioning the Node Using Cisco UCS Manager

Before replacing an internal component of a node, you must decommission the node to remove it from the Cisco UCS configuration. When you use this procedure to shut down an HX node, Cisco UCS Manager triggers the OS into a graceful shutdown sequence.

Step 1 In the Navigation pane, click Equipment.

Step 2 Expand Equipment > Rack Mounts > Servers.

Step 3 Choose the node that you want to decommission.

Step 4 In the Work pane, click the General tab.

Step 5 In the Actions area, click Server Maintenance.

Step 6 In the Maintenance dialog box, click Decommission, then click OK.

The node is removed from the Cisco UCS configuration.

Post-Maintenance Procedures

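This section contains the following procedures, which are referenced from component replacement procedures:

- Recommissioning the Node Using Cisco UCS Manager

- Associating a Service Profile With an HX Node

- Exiting HX Maintenance Mode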

Recommissioning the Node Using Cisco UCS Manager

After replacing an internal component of a node, you must recommission the node to add it back into the Cisco UCS configuration.

Step 1 In the Navigation pane, click Equipment.

Step 2 Under Equipment, click the Rack Mounts node.

Step 3 In the Work pane, click the Decommissioned tab.

Step 4 On the row for each rack-mount server that you want to recommission, do the following:

a. In the Recommission column, check the check box.

Step 5 If a confirmation dialog box displays, click Yes.

Step 6 (Optional) Monitor the progress of the server recommission and discovery on the FSM tab for the server.

Associating a Service Profile With an HX Node

Use this procedure to associate an HX node to its service profile after recommissioning.

Step 1 In the Navigation pane, click Servers.

Step 2 Expand Servers > Service Profiles.

Step 3 Expand the node for the organization that contains the service profile that you want to associate with the HX node.

Step 4 Right-click the service profile that you want to associate with the HX node and then select Associate Service Profile.

Step 5 In the Associate Service Profile dialog box, select the Server option.

Step 6 Navigate through the navigation tree and select the HX node to which you are assigning the service profile.

Exiting HX Maintenance Mode

Use this procedure to exit HX Maintenance Mode after performing a service procedure.

Step 1 Take the node out of Cisco HX Maintenance mode by using the vSphere interface:

a. Log in to the vSphere web client.

b. Go to Home > Hosts and Clusters.

c. Expand the Datacenter that contains the HX Cluster.

d. Expand the HX Cluster and select the node.

e. Right-click the node and select Cisco HX Maintenance Mode > Exit HX Maintenance Mode.

Alternatively, use the command-line interface:

a. Log in to the storage controller cluster command line as a user with root privileges.

b. Take the node out of HX Maintenance Mode.

1. Identify the node ID and IP address:

2. Take the node out of HX Maintenance Mode:

    # stcli node maintenanceMode (--id ID | --ip IP Address) --mode exit

(see also stcli node maintenanceMode --help)

c. Log in to the ESXi command line of this node as a user with root privileges.

d. Verify that the node has exited HX Maintenance Mode:

    # esxcli system maintenanceMode get

Removing and Replacing the Node Top Cover

Step 1 Remove the top cover (see Figure 3-4).

a. If the cover latch is locked, use a screwdriver to turn the lock 90 degrees counterclockwise to unlock it. See Figure 3-4.

b. Lift on the end of the latch that has the green finger grip. The cover is pushed back to the open position as you lift the latch.

c. Lift the top cover straight up from the node and set it aside.

Step 2 Replace the top cover:

Note: The latch must be in the fully open position when you set the cover back in place, which allows the opening in the latch to sit over a peg that is on the fan tray.

a. With the latch in the fully open position, place the cover on top of the node about one-half inch (1.27 cm) behind the lip of the front cover panel. The opening in the latch should fit over the peg that sticks up from the fan tray.

b. Press the cover latch down to the closed position. The cover is pushed forward to the closed position as you push down the latch.

c. If desired, lock the latch by using a screwdriver to turn the lock 90 degrees clockwise.

Figure 3-4 Removing the Top Cover

Installing or Replacing Node Components

Warning: Blank faceplates and cover panels serve three important functions: they prevent exposure to hazardous voltages and currents inside the chassis; they contain electromagnetic interference (EMI) that might disrupt other equipment; and they direct the flow of cooling air through the chassis. Do not operate the node unless all cards, faceplates, front covers, and rear covers are in place. [Statement 1029]

Tip: You can press the unit identification button on the front panel or rear panel to turn on a flashing unit identification LED on the front and rear panels of the node. This button allows you to locate the specific node that you are servicing when you go to the opposite side of the rack. You can also activate these LEDs remotely by using the Cisco IMC interface. See the “Status LEDs and Buttons” section for locations of these LEDs.

This section describes how to install and replace node components, and it includes the following topics:

- Replaceable Component Locations

- Replacing Drives

- Replacing Fan Modules

- Replacing the Motherboard RTC Battery

- Replacing DIMMs

- Replacing CPUs and Heatsinks

- Replacing an Internal SD Card

- Enabling or Disabling the Internal USB Port

- Replacing a Cisco Modular HBA Riser (Internal Riser 3)

- Replacing a Cisco Modular HBA Card

- Replacing a PCIe Riser Assembly

- Replacing a PCIe Card

- Installing and Enabling a Trusted Platform Module

- Replacing an mLOM Card (Cisco VIC 1227)

- Replacing Power Supplies

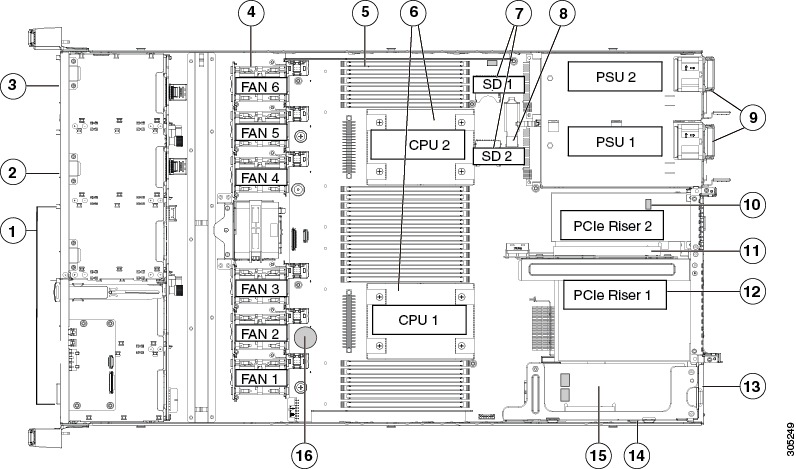

Replaceable Component Locations

This section shows the locations of the field-replaceable components. The view in Figure 3-5 is from the top down with the top cover and air baffle removed.

Figure 3-5 Replaceable Component Locations

- See Replacing Drives for information about supported drives.

- Power supplies (up to two, hot-swappable when redundant as 1+1)

- Drive bay 2: SSD caching drive. The supported caching SSD differs between the HX220c and HX220c All-Flash nodes. See Replacing Drives.

- Trusted platform module (TPM) socket on motherboard (not visible in this view)

- Modular LOM (mLOM) connector on chassis floor for Cisco VIC 1227

- Cisco modular HBA PCIe riser (dedicated riser with horizontal socket)

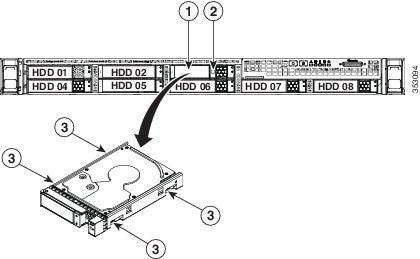

Replacing Drives

Drive Population Guidelines

The drive bay numbering is shown in Figure 3-6.

Figure 3-6 Drive Bay Numbering

Observe these drive population guidelines:

- Populate the housekeeping SSD for SDS logs only in bay 1.

- Populate the SSD caching drive only in bay 2. See Table 3-4 for the supported caching SSDs, which differ between supported drive configurations.

- Populate persistent data drives only in bays 3 - 8.

  - HX220c: HDD persistent data drives

  - HX220c All-Flash: SSD persistent data drives

See Table 3-4 for the supported persistent drives, which differ between supported drive configurations.

- When populating persistent data drives, add drives in the lowest numbered bays first.

- Keep an empty drive blanking tray in any unused bays to ensure optimal airflow and cooling.

- See HX220c Drive Configuration Comparison for comparison of supported drive configurations.
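The bay rules above can be expressed as a simple check. The following is a minimal sketch, not a Cisco tool: the names `BAY_ROLES` and `check_population` and the role labels are hypothetical, and the sketch assumes that "lowest numbered bays first" means persistent data drives occupy a contiguous run starting at bay 3. It does not check drive models against the supported-drive tables.

```python
from typing import Dict, List

# Role each bay is reserved for, per the population guidelines.
BAY_ROLES: Dict[int, str] = {1: "housekeeping", 2: "caching"}
BAY_ROLES.update({bay: "persistent" for bay in range(3, 9)})


def check_population(bays: Dict[int, str]) -> List[str]:
    """Return guideline violations for a map of populated bay -> drive role."""
    problems: List[str] = []
    for bay, role in sorted(bays.items()):
        expected = BAY_ROLES.get(bay)
        if expected is None:
            problems.append(f"bay {bay}: not a valid bay (1 to 8)")
        elif role != expected:
            problems.append(f"bay {bay}: holds a {role} drive, expected {expected}")
    # Assumption: lowest-numbered-first means bays 3, 4, 5, ... with no gaps.
    persistent = sorted(b for b, r in bays.items()
                        if r == "persistent" and 3 <= b <= 8)
    if persistent != list(range(3, 3 + len(persistent))):
        problems.append("persistent data drives should fill the lowest-numbered bays first")
    return problems


if __name__ == "__main__":
    layout = {1: "housekeeping", 2: "caching", 3: "persistent", 5: "persistent"}
    for problem in check_population(layout):
        print(problem)
```

An empty result means the layout is consistent with the guidelines as encoded here. Unused bays still need a blanking tray, which this sketch does not model.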

HX220c Drive Configuration Comparison

Note the following considerations and restrictions for All-Flash HyperFlex nodes:

- Cisco HyperFlex software Release 2.0 or later is required.

- HX220c All-Flash HyperFlex nodes are ordered as specific All-Flash PIDs; All-Flash configurations are supported only on those PIDs.

- Conversion from hybrid HX220c configuration to HX220c All-Flash configuration is not supported.

- Mixing hybrid HX220c HyperFlex nodes with HX220c All-Flash HyperFlex nodes within the same HyperFlex cluster is not supported.

Note the following considerations and restrictions for SED HyperFlex nodes:

Drive Replacement Overview

The three types of drives in the node require different replacement procedures.

- Persistent data drives (bays 3 – 8): Hot-swap replacement is supported. See Replacing Persistent Data Drives (Bays 3 – 8). Hot-swap replacement includes hot-removal, so you can remove the drive while it is still operating.

- Housekeeping SSD for SDS logs (bay 1): The node must be put into Cisco HX Maintenance Mode before you replace the housekeeping SSD. Replacement requires additional technical assistance and cannot be completed by the customer. See Replacing the Housekeeping SSD for SDS Logs (Bay 1).

- SSD caching drive (bay 2): Hot-swap replacement is supported. See Replacing the SSD Caching Drive (Bay 2). Hot-swap replacement for SAS/SATA drives includes hot-removal, so you can remove the drive while it is still operating. If an NVMe SSD is used as the caching drive, additional steps are required, as described in the procedure.

Replacing Persistent Data Drives (Bays 3 – 8)

The persistent data drives must be installed only in drive bays 3 - 8.

See HX220c Drive Configuration Comparison for supported drives.

Note: Hot-swap replacement includes hot-removal, so you can remove the drive while it is still operating.

Step 1 Remove the drive that you are replacing or remove a blank drive tray from the bay:

a. Press the release button on the face of the drive tray. See Figure 3-7.

b. Grasp and open the ejector lever and then pull the drive tray out of the slot.

c. If you are replacing an existing drive, remove the four drive-tray screws that secure the drive to the tray and then lift the drive out of the tray.

Step 2 Install a new drive:

a. Place a new drive in the empty drive tray and install the four drive-tray screws.

b. With the ejector lever on the drive tray open, insert the drive tray into the empty drive bay.

c. Push the tray into the slot until it touches the backplane, and then close the ejector lever to lock the drive in place.

Replacing the Housekeeping SSD for SDS Logs (Bay 1)

Note: This procedure requires assistance from technical support for additional software update steps after the hardware is replaced. It cannot be completed without technical support assistance.

Step 1 Put the node in Cisco HX Maintenance mode as described in Shutting Down the Node Through vSphere With Cisco HX Maintenance Mode.

Step 2 Shut down the node as described in Shutting Down the Node.

Step 3 Decommission the node as described in Decommissioning the Node Using Cisco UCS Manager.

Step 4 Disconnect all power cables from the power supplies.

Step 5 Remove the drive that you are replacing:

a. Press the release button on the face of the drive tray. See Figure 3-7.

b. Grasp and open the ejector lever and then pull the drive tray out of the slot.

c. Remove the four drive-tray screws that secure the drive to the tray and then lift the drive out of the tray.

Step 6 Install a new drive:

a. Place a new drive in the empty drive tray and install the four drive-tray screws.

b. With the ejector lever on the drive tray open, insert the drive tray into the empty drive bay.

c. Push the tray into the slot until it touches the backplane, and then close the ejector lever to lock the drive in place.

Step 7 Replace the node in the rack, reconnect the power cables, and then power on the node by pressing the Power button.

Step 8 Recommission the node as described in Recommissioning the Node Using Cisco UCS Manager.

Step 9 Associate the node to its service profile as described in Associating a Service Profile With an HX Node.

Step 10 After ESXi reboots, exit HX Maintenance mode as described in Exiting HX Maintenance Mode.

Note: After you replace the SSD hardware, you must contact technical support for additional software update steps.

Replacing the SSD Caching Drive (Bay 2)

The SSD caching drive must be installed in drive bay 2.

Note the following considerations and restrictions for NVMe SSDs when used as the caching SSD:

- NVMe SSDs are supported in HX220c All-Flash nodes.

- NVMe SSDs are not supported in Hybrid nodes.

- NVMe SSDs are supported only in the Caching SSD position, in drive bay 2.

- NVMe SSDs are not supported for persistent storage or as the Housekeeping drive.

- The locator (beacon) LED cannot be turned on or off on NVMe SSDs.

Note: Always replace the drive with a drive of the same type and size as the original.

Note: Upgrading or downgrading the caching drive in an existing HyperFlex cluster is not supported. If the caching drive must be upgraded or downgraded, a full redeployment of the HyperFlex cluster is required.

Note: When using a SAS drive, hot-swap replacement includes hot-removal, so you can remove a SAS drive while it is still operating. NVMe drives cannot be hot-swapped.

Step 1 Only if the caching drive is an NVMe SSD, enter the ESXi host into HX Maintenance Mode. Otherwise, skip to Step 2.

Step 2 Remove the SSD caching drive:

a. Press the release button on the face of the drive tray (see Figure 3-7).

b. Grasp and open the ejector lever and then pull the drive tray out of the slot.

c. Remove the four drive-tray screws that secure the SSD to the tray and then lift the SSD out of the tray.

Step 3 Install a new SSD caching drive:

a. Place a new SSD in the empty drive tray and replace the four drive-tray screws.

b. With the ejector lever on the drive tray open, insert the drive tray into the empty drive bay.

c. Push the tray into the slot until it touches the backplane, and then close the ejector lever to lock the drive in place.

Step 4 Only if the caching drive is an NVMe SSD:

a. Reboot the ESXi host. This enables ESXi to discover the NVMe SSD.

b. Exit the ESXi host from HX Maintenance Mode.
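The procedure above differs only in the steps that surround the physical swap, depending on whether the caching drive is NVMe. The following sketch lays out that branching as an ordered checklist. The function name `caching_drive_steps` and the interface labels are hypothetical; treating SATA the same as SAS is an assumption based on the SAS/SATA wording in the drive replacement overview.

```python
from typing import List


def caching_drive_steps(interface: str) -> List[str]:
    """Return the ordered checklist for replacing the bay 2 caching SSD.

    Hypothetical helper; `interface` is "sas", "sata", or "nvme".
    """
    kind = interface.strip().lower()
    if kind not in ("sas", "sata", "nvme"):
        raise ValueError(f"unknown drive interface: {interface!r}")
    steps: List[str] = []
    if kind == "nvme":
        # NVMe drives cannot be hot-swapped.
        steps.append("Enter the ESXi host into HX Maintenance Mode.")
    steps.append("Remove the SSD caching drive from bay 2.")
    steps.append("Install a new SSD caching drive of the same type and size in bay 2.")
    if kind == "nvme":
        steps.append("Reboot the ESXi host so that ESXi discovers the NVMe SSD.")
        steps.append("Exit the ESXi host from HX Maintenance Mode.")
    return steps


if __name__ == "__main__":
    for number, step in enumerate(caching_drive_steps("nvme"), start=1):
        print(f"{number}. {step}")
```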

Replacing Fan Modules

The six fan modules in the node are numbered as follows when you are facing the front of the node (also see Figure 3-9).

Figure 3-8 Fan Module Numbering

Tip![]() Each fan module has a fault LED next to the fan connector on the motherboard that lights amber if the fan module fails. Standby power is required to operate these LEDs.

Each fan module has a fault LED next to the fan connector on the motherboard that lights amber if the fan module fails. Standby power is required to operate these LEDs.

Step 1 Remove a fan module that you are replacing (see Figure 3-9):

a. Slide the node out the front of the rack far enough so that you can remove the top cover. You might have to detach cables from the rear panel to provide clearance.

b. Remove the top cover as described in Removing and Replacing the Node Top Cover.

c. Grasp the fan module at its front and on the green connector. Lift straight up to disengage its connector from the motherboard and free it from the two alignment pegs.

Step 2 Install a new fan module:

a. Set the new fan module in place, aligning its two openings with the two alignment pegs on the motherboard. See Figure 3-9.

b. Press down gently on the fan module connector to fully engage it with the connector on the motherboard.

c. Replace the top cover.

d. Replace the node in the rack.

Figure 3-9 Top View of Fan Module


Replacing the Motherboard RTC Battery

Warning: There is danger of explosion if the battery is replaced incorrectly. Replace the battery only with the same or equivalent type recommended by the manufacturer. Dispose of used batteries according to the manufacturer’s instructions. [Statement 1015]

The real-time clock (RTC) battery retains node settings when the node is disconnected from power. The battery type is CR2032. Cisco supports the industry-standard CR2032 battery, which can be purchased from most electronics stores.

Step 1 Put the node in Cisco HX Maintenance mode as described in Shutting Down the Node Through vSphere With Cisco HX Maintenance Mode.

Step 2 Shut down the node as described in Shutting Down the Node.

Step 3 Decommission the node as described in Decommissioning the Node Using Cisco UCS Manager.

Step 4 Disconnect all power cables from the power supplies.

Step 5 Slide the node out the front of the rack far enough so that you can remove the top cover. You might have to detach cables from the rear panel to provide clearance.

Step 6 Remove the top cover as described in Removing and Replacing the Node Top Cover.

Step 7 Locate the RTC battery. See Figure 3-10.

Step 8 Gently remove the battery from the holder on the motherboard.

Step 9 Insert the new battery into its holder and press down until it clicks in place.

Note: The positive side of the battery, marked “3V+”, should face upward.

Step 10 Replace the top cover.

Step 11 Replace the node in the rack, replace power cables, and then power on the node by pressing the Power button.

Step 12 Recommission the node as described in Recommissioning the Node Using Cisco UCS Manager.

Step 13 Associate the node to its service profile as described in Associating a Service Profile With an HX Node.

Step 14 After ESXi reboot, exit HX Maintenance mode as described in Exiting HX Maintenance Mode.

Figure 3-10 Motherboard RTC Battery Location


Replacing DIMMs

This section includes the following topics:

Note: To ensure the best node performance, it is important that you are familiar with memory performance guidelines and population rules before you install or replace DIMMs.

Memory Performance Guidelines and Population Rules

This section describes the type of memory that the node requires and its effect on performance. The section includes the following topics:

DIMM Slot Numbering

Figure 3-11 shows the numbering of the DIMM slots.

Figure 3-11 DIMM Slots and CPUs

DIMM Population Rules

Observe the following guidelines when installing or replacing DIMMs:

– CPU1 supports channels A, B, C, and D.

– CPU2 supports channels E, F, G, and H.

– A channel can operate with one, two, or three DIMMs installed.

– If a channel has only one DIMM, populate slot 1 first (the blue slot).

– Fill blue #1 slots in the channels first: A1, E1, B1, F1, C1, G1, D1, H1

– Fill black #2 slots in the channels second: A2, E2, B2, F2, C2, G2, D2, H2

– Fill white #3 slots in the channels third: A3, E3, B3, F3, C3, G3, D3, H3

- Any DIMM installed in a DIMM socket for which the CPU is absent is not recognized. In a single-CPU configuration, populate the channels for CPU1 only (A, B, C, D).

- Memory mirroring reduces the amount of memory available by 50 percent because only one of the two populated channels provides data. When memory mirroring is enabled, DIMMs must be installed in sets of 4, 6, or 8 as described in Memory Mirroring and RAS.

- Observe the DIMM mixing rules shown in Table 3-6.
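The slot fill order in the rules above can be sketched in a few lines of Python. This is an illustrative sketch only: the names `dimm_fill_order` and `slots_for` are hypothetical and not part of any Cisco tool. The single-CPU order (A, B, C, D) is an assumption derived by dropping the CPU2 channels from the listed order, and the DIMM mixing rules in Table 3-6 are not modeled.

```python
# Illustrative sketch only; function names are hypothetical, not a Cisco API.
CPU1_CHANNELS = ["A", "B", "C", "D"]
CPU2_CHANNELS = ["E", "F", "G", "H"]


def dimm_fill_order(cpu_count=2):
    """Return every DIMM slot name in the population order listed above."""
    if cpu_count == 1:
        # Single-CPU configuration: only the CPU1 channels are usable.
        channels = list(CPU1_CHANNELS)
    elif cpu_count == 2:
        # Alternate between the two CPUs: A, E, B, F, C, G, D, H.
        channels = [ch for pair in zip(CPU1_CHANNELS, CPU2_CHANNELS) for ch in pair]
    else:
        raise ValueError("the node has one or two CPUs")
    # Slot 1 (blue) in every channel first, then slot 2 (black), then slot 3 (white).
    return [f"{ch}{slot}" for slot in (1, 2, 3) for ch in channels]


def slots_for(dimm_count, cpu_count=2):
    """Return the slots to populate for a given number of DIMMs."""
    order = dimm_fill_order(cpu_count)
    if not 0 <= dimm_count <= len(order):
        raise ValueError(f"dimm_count must be between 0 and {len(order)}")
    return order[:dimm_count]
```

For example, `slots_for(10)` returns the eight blue #1 slots followed by A2 and E2.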

Memory Mirroring and RAS

The Intel E5-2600 CPUs within the node support memory mirroring only when an even number of channels is populated with DIMMs. If one or three channels are populated with DIMMs, memory mirroring is automatically disabled. When memory mirroring is used, usable DRAM size is reduced by 50 percent in exchange for improved reliability.
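As a rough illustration of the arithmetic, the following Python sketch estimates usable memory for one CPU. The function name and parameters are hypothetical. It encodes only the two rules stated here: mirroring takes effect only with an even number of populated channels, and it halves the usable capacity.

```python
def usable_memory_gb(installed_gb, populated_channels, mirroring_requested):
    """Estimate usable DRAM for one CPU under the mirroring rules above.

    Returns a tuple (usable_gb, mirroring_active).
    """
    if not 1 <= populated_channels <= 4:
        raise ValueError("a CPU has between one and four populated channels")
    # Mirroring is automatically disabled when one or three channels are populated.
    mirroring_active = mirroring_requested and populated_channels % 2 == 0
    usable = installed_gb / 2 if mirroring_active else installed_gb
    return usable, mirroring_active
```

With 128 GB installed across four channels and mirroring requested, the sketch reports 64 GB usable. With three populated channels, mirroring is reported as inactive and the full installed capacity remains usable.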

Lockstep Channel Mode

When you enable lockstep channel mode, each memory access is a 128-bit data access that spans four channels.

Lockstep channel mode requires that all four memory channels on a CPU be populated identically with regard to size and organization. DIMM socket populations within a channel (for example, A1, A2, A3) do not have to be identical, but the same DIMM slot location across all four channels must be populated the same.

For example, DIMMs in sockets A1, B1, C1, and D1 must be identical. DIMMs in sockets A2, B2, C2, and D2 must be identical. However, the A1-B1-C1-D1 DIMMs do not have to be identical with the A2-B2-C2-D2 DIMMs.
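The lockstep rule can be checked mechanically. The Python sketch below is a hypothetical helper, not a Cisco utility. It assumes that each installed DIMM is described by a comparable value, such as a part-number string, and that empty slots are omitted from the mapping.

```python
def lockstep_violations(dimms, channels=("A", "B", "C", "D")):
    """Return the slot positions (1, 2, or 3) that differ across the channels.

    dimms maps slot names such as "A1" to a DIMM description. A missing
    key means the slot is empty. An empty list means that every slot
    position is populated the same way in all four channels.
    """
    violations = []
    for slot in (1, 2, 3):
        # Collect the distinct DIMM descriptions found at this slot position.
        found = {dimms.get(f"{ch}{slot}") for ch in channels}
        if len(found) > 1:
            violations.append(slot)
    return violations
```

A population with identical DIMMs in A1, B1, C1, and D1, and a different but mutually identical set in A2, B2, C2, and D2, yields an empty list. Leaving D2 empty while A2, B2, and C2 are filled yields `[2]`.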

DIMM Replacement Procedure

Identifying a Faulty DIMM

Each DIMM socket has a corresponding DIMM fault LED, directly in front of the DIMM socket. See Figure 3-3 for the locations of these LEDs. The LEDs light amber to indicate a faulty DIMM. To operate these LEDs from the supercap power source, remove AC power cords and then press the unit identification button. See also Internal Diagnostic LEDs.

Replacing DIMMs

Step 1 Put the node in Cisco HX Maintenance mode as described in Shutting Down the Node Through vSphere With Cisco HX Maintenance Mode.

Step 2 Shut down the node as described in Shutting Down the Node.

Step 3 Decommission the node as described in Decommissioning the Node Using Cisco UCS Manager.

Step 4 Disconnect all power cables from the power supplies.

Step 5 Slide the node out the front of the rack far enough so that you can remove the top cover. You might have to detach cables from the rear panel to provide clearance.

Step 6 Remove the top cover as described in Removing and Replacing the Node Top Cover.

Step 7 Identify the faulty DIMM by observing the DIMM slot fault LEDs on the motherboard.

Step 8 Open the ejector levers at both ends of the DIMM slot, and then lift the DIMM out of the slot.

Step 9 Install a new DIMM:

Note: Before installing DIMMs, see the population guidelines in Memory Performance Guidelines and Population Rules.

a. Align the new DIMM with the empty slot on the motherboard. Use the alignment key in the DIMM slot to correctly orient the DIMM.

b. Push down evenly on the top corners of the DIMM until it is fully seated and the ejector levers on both ends lock into place.

Step 10 Replace the node in the rack, replace power cables, and then power on the node by pressing the Power button.

Step 11 Recommission the node as described in Recommissioning the Node Using Cisco UCS Manager.

Step 12 Associate the node to its service profile as described in Associating a Service Profile With an HX Node.

Step 13 After ESXi reboot, exit HX Maintenance mode as described in Exiting HX Maintenance Mode.

Replacing CPUs and Heatsinks

This section contains the following topics:

- Special Information For Upgrades to Intel Xeon v4 CPUs

- CPU Configuration Rules

- CPU Replacement Procedure

- Special Instructions for Upgrades to Intel Xeon v4 Series

- Additional CPU-Related Parts to Order with RMA Replacement Motherboards

Note: You can use Xeon v3- and v4-based nodes in the same cluster. Do not mix Xeon v3 and v4 CPUs within the same node.

Special Information For Upgrades to Intel Xeon v4 CPUs

The minimum software and firmware versions required for the node to support Intel Xeon v4 CPUs are listed in Table 3-7.

Do one of the following actions:

- If your node’s firmware and Cisco UCS Manager software are already at the required levels shown in Table 3-7, you can replace the CPU hardware by using the procedure in CPU Replacement Procedure.

- If your node’s firmware or Cisco UCS Manager software is earlier than the required levels, use the instructions in Special Instructions for Upgrades to Intel Xeon v4 Series to upgrade your software in the correct order. After you upgrade the software, you will be redirected to this section to replace the CPU hardware.

CPU Configuration Rules

This node has two CPU sockets. Each CPU supports four DIMM channels (12 DIMM slots). See Figure 3-11.

CPU Replacement Procedure

Note: This node uses the new independent loading mechanism (ILM) CPU sockets, so no Pick-and-Place tools are required for CPU handling or installation. Always grasp the plastic frame on the CPU when handling.

Step 1 Put the node in Cisco HX Maintenance mode as described in Shutting Down the Node Through vSphere With Cisco HX Maintenance Mode.

Step 2 Shut down the node as described in Shutting Down the Node.

Step 3 Decommission the node as described in Decommissioning the Node Using Cisco UCS Manager.

Step 4 Disconnect all power cables from the power supplies.

Step 5 Slide the node out the front of the rack far enough so that you can remove the top cover. You might have to detach cables from the rear panel to provide clearance.

Step 6 Remove the top cover as described in Removing and Replacing the Node Top Cover.

Step 7 Remove the plastic air baffle that sits over the CPUs.

Step 8 Remove the heatsink that you are replacing. Use a Number 2 Phillips-head screwdriver to loosen the four captive screws that secure the heatsink and then lift it off of the CPU.

Note: Alternate loosening each screw evenly to avoid damaging the heatsink or CPU.

Step 9 Open the CPU retaining mechanism:

a. Unclip the first retaining latch, and then unclip the second retaining latch. Each latch is labeled with an icon. See Figure 3-12.

b. Open the hinged CPU cover plate.


Step 10 Remove any existing CPU:

a. With the latches and hinged CPU cover plate open, swing up the CPU in its hinged seat to the open position, as shown in Figure 3-12.

b. Grasp the CPU by the finger grips on its plastic frame and lift it up and out of the hinged CPU seat.

c. Set the CPU aside on an anti-static surface.

Step 11 Install a new CPU:

a. Grasp the new CPU by the finger grips on its plastic frame and align the tab on the frame that is labeled “ALIGN” with the SLS mechanism, as shown in Figure 3-13.

b. Insert the tab on the CPU frame into the seat until it stops and is held firmly.

The line below the word “ALIGN” should be level with the edge of the seat, as shown in Figure 3-13.

c. Swing the hinged seat with the CPU down until the CPU frame clicks in place and holds flat in the socket.

d. Close the hinged CPU cover plate.

e. Clip down the two CPU retaining latches one after the other, in the order indicated by their icon labels. See Figure 3-12.

Figure 3-13 CPU and Socket Alignment Features


Step 12 Install a heatsink:

a. Apply the cleaning solution, which is included with the heatsink cleaning kit (UCSX-HSCK=, shipped with spare CPUs), to the old thermal grease on the heatsink and CPU and let it soak for at least 15 seconds.

b. Wipe all of the old thermal grease off the old heatsink and CPU using the soft cloth that is included with the heatsink cleaning kit. Be careful not to scratch the heatsink surface.

Note: New heatsinks come with a pre-applied pad of thermal grease. If you are reusing a heatsink, you must apply thermal grease from a syringe (UCS-CPU-GREASE3=).

c. Using the syringe of thermal grease provided with the CPU (UCS-CPU-GREASE3=), apply 2 cubic centimeters of thermal grease to the top of the CPU. Use the pattern shown in Figure 3-14 to ensure even coverage.

Figure 3-14 Thermal Grease Application Pattern

d. Align the four heatsink captive screws with the motherboard standoffs, and then use a Number 2 Phillips-head screwdriver to tighten the captive screws evenly.

Note: Alternate tightening each screw evenly to avoid damaging the heatsink or CPU.

Step 13 Replace the air baffle.

Step 14 Replace the top cover.

Step 15 Replace the node in the rack, replace power cables, and then power on the node by pressing the Power button.

Step 16 Recommission the node as described in Recommissioning the Node Using Cisco UCS Manager.

Step 17 Associate the node to its service profile as described in Associating a Service Profile With an HX Node.

Step 18 After ESXi reboot, exit HX Maintenance mode as described in Exiting HX Maintenance Mode.

Special Instructions for Upgrades to Intel Xeon v4 Series

Use the following procedure to upgrade the node and CPUs.

Step 1 Upgrade the Cisco UCS Manager software to the minimum version for your node (or later). See Table 3-7.

Use the procedures in the appropriate Cisco UCS Manager upgrade guide (depending on your current software version): Cisco UCS Manager Upgrade Guides.

Step 2 Use Cisco UCS Manager to upgrade and activate the node Cisco IMC to the minimum version for your node (or later). See Table 3-7.

Use the procedures in the GUI or CLI Cisco UCS Manager Firmware Management Guide for your release.

Step 3 Use Cisco UCS Manager to upgrade and activate the node BIOS to the minimum version for your node (or later). See Table 3-7.

Use the procedures in the GUI or CLI Cisco UCS Manager Firmware Management Guide for your release.

Step 4 Replace the CPUs with the Intel Xeon v4 Series CPUs.

Use the CPU replacement procedures in CPU Replacement Procedure.

Additional CPU-Related Parts to Order with RMA Replacement Motherboards

When a return material authorization (RMA) of the motherboard or CPU is done on a node, additional parts might not be included with the CPU or motherboard spare bill of materials (BOM). The TAC engineer might need to add the additional parts to the RMA to help ensure a successful replacement.

– Heat sink cleaning kit (UCSX-HSCK=)

– Thermal grease kit for C240 M4 (UCS-CPU-GREASE3=)

– Heat sink cleaning kit (UCSX-HSCK=)

A CPU heatsink cleaning kit is good for up to four CPU and heatsink cleanings. The cleaning kit contains two bottles of solution, one to clean the CPU and heatsink of old thermal interface material and the other to prepare the surface of the heatsink.

New heatsink spares come with a pre-applied pad of thermal grease. It is important to clean the old thermal grease off of the CPU prior to installing the heatsinks. Therefore, when you are ordering new heatsinks, you must order the heatsink cleaning kit.
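Because one cleaning kit covers up to four CPU and heatsink cleanings, the number of kits to order follows from a simple ceiling division. The Python sketch below is illustrative only, and the function name is hypothetical.

```python
import math

# From the text above: one kit is good for up to four CPU and heatsink cleanings.
CLEANINGS_PER_KIT = 4


def cleaning_kits_needed(cleanings):
    """Return how many heatsink cleaning kits cover the given number of cleanings."""
    if cleanings < 0:
        raise ValueError("cleanings must not be negative")
    return math.ceil(cleanings / CLEANINGS_PER_KIT)
```

For example, cleaning both CPUs in one node needs one kit, and five cleanings need two kits.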

Replacing an Internal SD Card

The node has two internal SD card bays on the motherboard.

Dual SD cards are supported. RAID 1 support can be configured through the Cisco IMC interface.

Step 1 Put the node in Cisco HX Maintenance mode as described in Shutting Down the Node Through vSphere With Cisco HX Maintenance Mode.

Step 2 Shut down the node as described in Shutting Down the Node.

Step 3 Decommission the node as described in Decommissioning the Node Using Cisco UCS Manager.

Step 4 Disconnect all power cables from the power supplies.

Step 5 Slide the node out the front of the rack far enough so that you can remove the top cover. You might have to detach cables from the rear panel to provide clearance.

Step 6 Remove the top cover as described in Removing and Replacing the Node Top Cover.

Step 7 Locate the SD card that you are replacing on the motherboard (see Figure 3-15).

Step 8 Push on the top of the SD card, and then release it to allow it to spring up in the slot.

Step 9 Remove the SD card from the slot.

Step 10 Insert the SD card into the slot with the label side facing up.

Step 11 Press on the top of the card until it clicks in the slot and stays in place.

Step 12 Replace the top cover.

Step 13 Replace the node in the rack, replace power cables, and then power on the node by pressing the Power button.

Step 14 Recommission the node as described in Recommissioning the Node Using Cisco UCS Manager.

Step 15 Associate the node to its service profile as described in Associating a Service Profile With an HX Node.

Step 16 After ESXi reboot, exit HX Maintenance mode as described in Exiting HX Maintenance Mode.

Figure 3-15 SD Card Bays and USB Port Locations on the Motherboard


Enabling or Disabling the Internal USB Port

The factory default is for all USB ports on the node to be enabled. However, the internal USB port can be enabled or disabled in the node BIOS. See Figure 3-15 for the location of the USB port on the motherboard.

Step 1 Enter the BIOS Setup Utility by pressing the F2 key when prompted during bootup.

Step 2 Navigate to the Advanced tab.

Step 3 On the Advanced tab, select USB Configuration.

Step 4 On the USB Configuration page, select USB Ports Configuration.

Step 5 Scroll to USB Port: Internal, press Enter, and then choose either Enabled or Disabled from the dialog box.

Step 6 Press F10 to save and exit the utility.

Replacing a Cisco Modular HBA Riser (Internal Riser 3)

The node has a dedicated internal riser 3 that is used only for the Cisco modular HBA card. This riser plugs into a dedicated motherboard socket and provides a horizontal socket for the HBA.

Step 1 Put the node in Cisco HX Maintenance mode as described in Shutting Down the Node Through vSphere With Cisco HX Maintenance Mode.

Step 2 Shut down the node as described in Shutting Down the Node.

Step 3 Decommission the node as described in Decommissioning the Node Using Cisco UCS Manager.

Step 4 Disconnect all power cables from the power supplies.

Step 5 Slide the node out the front of the rack far enough so that you can remove the top cover. You might have to detach cables from the rear panel to provide clearance.

Step 6 Remove the top cover as described in Removing and Replacing the Node Top Cover.

Step 7 Remove the existing riser (see Figure 3-16):

a. If the existing riser has a card in it, disconnect the SAS cable from the card.

b. Lift the riser straight up to disengage the riser from the motherboard socket. The riser bracket must also lift off of two pegs that hold it to the inner chassis wall.

d. Remove the card from the riser. Loosen the single thumbscrew that secures the card to the riser bracket and then pull the card straight out from its socket on the riser (see Figure 3-17).

Step 8 Install a new riser:

a. Install your HBA card into the new riser. See Replacing a Cisco Modular HBA Card.

b. Align the connector on the riser with the socket on the motherboard. At the same time, align the two slots on the back side of the bracket with the two pegs on the inner chassis wall.

c. Push down gently to engage the riser connector with the motherboard socket. The metal riser bracket must also engage the two pegs that secure it to the chassis wall.

d. Reconnect the SAS cable to its connector on the HBA card.

Step 9 Replace the top cover.

Step 10 Replace the node in the rack, replace power cables, and then power on the node by pressing the Power button.

Step 11 Recommission the node as described in Recommissioning the Node Using Cisco UCS Manager.

Step 12 Associate the node to its service profile as described in Associating a Service Profile With an HX Node.

Step 13 After ESXi reboot, exit HX Maintenance mode as described in Exiting HX Maintenance Mode.

Figure 3-16 Cisco Modular HBA Riser (Internal Riser 3) Location


Replacing a Cisco Modular HBA Card

The node can use a Cisco modular HBA card that plugs into a horizontal socket on a dedicated internal riser 3.

Step 1 Put the node in Cisco HX Maintenance mode as described in Shutting Down the Node Through vSphere With Cisco HX Maintenance Mode.

Step 2 Shut down the node as described in Shutting Down the Node.

Step 3 Decommission the node as described in Decommissioning the Node Using Cisco UCS Manager.

Step 4 Disconnect all power cables from the power supplies.

Step 5 Slide the node out the front of the rack far enough so that you can remove the top cover. You might have to detach cables from the rear panel to provide clearance.

Step 6 Remove the top cover as described in Removing and Replacing the Node Top Cover.

Step 7 Remove the riser from the node (see Figure 3-16):

a. Disconnect the SAS cable from the existing HBA card.

b. Lift the riser straight up to disengage it from the motherboard socket. The riser bracket must also lift off the two pegs that hold it to the inner chassis wall.

Step 8 Remove the card from the riser:

a. Loosen the single thumbscrew that secures the card to the metal riser bracket (see Figure 3-17).

b. Pull the card straight out from its socket on the riser and the guide channel on the riser bracket.

Step 9 Install the HBA card into the riser:

a. With the riser upside down, set the card on the riser. Align the right end of the card with the alignment channel on the riser; align the connector on the card edge with the socket on the riser (see Figure 3-17).

b. Being careful to avoid scraping the underside of the card on the threaded standoff on the riser, push on both corners of the card to seat its connector in the riser socket.

c. Tighten the single thumbscrew that secures the card to the riser bracket.

Step 10 Return the riser to the node:

a. Align the connector on the riser with the socket on the motherboard. At the same time, align the two slots on the back side of the bracket with the two pegs on the inner chassis wall.

b. Push down gently to engage the riser connector with the motherboard socket. The metal riser bracket must also engage the two pegs that secure it to the chassis wall.

Step 11 Reconnect the SAS cable to its connector on the HBA card.

Step 12 Replace the top cover.

Step 13 Replace the node in the rack, reconnect the power cables, and then power on the node by pressing the Power button.

Step 14 Recommission the node as described in Recommissioning the Node Using Cisco UCS Manager.

Step 15 Associate the node to its service profile as described in Associating a Service Profile With an HX Node.

Step 16 After ESXi reboots, exit HX Maintenance mode as described in Exiting HX Maintenance Mode.

Figure 3-17 Cisco Modular HBA Card in Riser

CAUTION: Do not scrape the underside of the card on the threaded standoff on the riser.

Replacing a PCIe Riser Assembly

The node contains two PCIe risers that are attached to a single riser assembly. Riser 1 provides PCIe slot 1 and riser 2 provides PCIe slot 2, as shown in Figure 3-18. See Table 3-8 for a description of the PCIe slots on each riser.

Figure 3-18 Rear Panel, Showing PCIe Slots

To install or replace a PCIe riser, follow these steps:

Step 1 Put the node in Cisco HX Maintenance mode as described in Shutting Down the Node Through vSphere With Cisco HX Maintenance Mode.

Step 2 Shut down the node as described in Shutting Down the Node.

Step 3 Decommission the node as described in Decommissioning the Node Using Cisco UCS Manager.

Step 4 Disconnect all power cables from the power supplies.

Step 5 Slide the node out the front of the rack far enough so that you can remove the top cover. You might have to detach cables from the rear panel to provide clearance.

Step 6 Remove the top cover as described in Removing and Replacing the Node Top Cover.

Step 7 Use two hands to grasp the metal bracket of the riser assembly and lift straight up to disengage its connectors from the two sockets on the motherboard.

Step 8 If the riser assembly has any cards installed, remove them.

Step 9 Install a new PCIe riser assembly:

a. If you removed any cards from the old riser assembly, install the cards in the new riser assembly (see Replacing a PCIe Card).

b. Position the riser assembly over its two sockets on the motherboard and over the chassis alignment channels (see Figure 3-19).

c. Carefully push down on both ends of the riser assembly to fully engage its connectors with the two sockets on the motherboard.

Step 10 Replace the top cover.

Step 11 Replace the node in the rack, reconnect the power cables, and then power on the node by pressing the Power button.

Step 12 Recommission the node as described in Recommissioning the Node Using Cisco UCS Manager.

Step 13 Associate the node to its service profile as described in Associating a Service Profile With an HX Node.

Step 14 After ESXi reboots, exit HX Maintenance mode as described in Exiting HX Maintenance Mode.

Figure 3-19 PCIe Riser Assembly Location and Alignment Channels

Replacing a PCIe Card

PCIe Slots

The node contains two toolless PCIe risers for horizontal installation of PCIe cards. See Figure 3-20 and Table 3-8 for a description of the PCIe slots on these risers.

Both slots support the Network Controller Sideband Interface (NC-SI) protocol and standby power.

Figure 3-20 Rear Panel, Showing PCIe Slots

| Lane Width |
|---|

1. This is the supported length because of internal clearance.

Replacing a PCIe Card

To install or replace a PCIe card, follow these steps:

Step 1 Put the node in Cisco HX Maintenance mode as described in Shutting Down the Node Through vSphere With Cisco HX Maintenance Mode.

Step 2 Shut down the node as described in Shutting Down the Node.

Step 3 Decommission the node as described in Decommissioning the Node Using Cisco UCS Manager.

Step 4 Disconnect all power cables from the power supplies.

Step 5 Slide the node out the front of the rack far enough so that you can remove the top cover. You might have to detach cables from the rear panel to provide clearance.

Step 6 Remove the top cover as described in Removing and Replacing the Node Top Cover.

Step 7 Remove the PCIe card from the riser:

a. Remove any cables from the ports of the PCIe card that you are replacing.

b. Use two hands to grasp the metal bracket of the riser assembly and lift straight up to disengage its connectors from the two sockets on the motherboard.

c. Open the hinged plastic retainer that secures the rear-panel tab of the card (see Figure 3-21).

d. Pull evenly on both ends of the PCIe card to remove it from the socket on the PCIe riser.