Overview

The Cisco HyperFlex (HX) C240 M6 is a 2U rack server that can operate either as a standalone server or as part of the Cisco Unified Computing System (Cisco UCS).

The Cisco HX C240 M6 servers support 3rd Gen Intel® Xeon® Scalable Processors in either one-CPU or two-CPU configurations.

The servers support:

-

16 DIMM slots per CPU for 3200-MHz DDR4 DIMMs in capacities up to 128 GB DIMMs.

-

A maximum of 8 or 12 TB of memory is supported for a dual CPU configuration populated with either:

-

DIMM memory configurations of either 32 128 GB DDR DIMMs, or 16 128 GB DDR4 DIMMs plus 16 512 GB Intel® Optane™ Persistent Memory Modules (DCPMMs).

-

-

The servers have different supported drive configurations depending on whether they are configured with large form factor (LFF) or small form factor (SFF) front-loading drives.

-

The C240 M6 12 LFF supports midplane mounted storage through a maximum of r4 LFF HDDs.

-

Up to 2 M.2 SATA RAID cards for server boot.

-

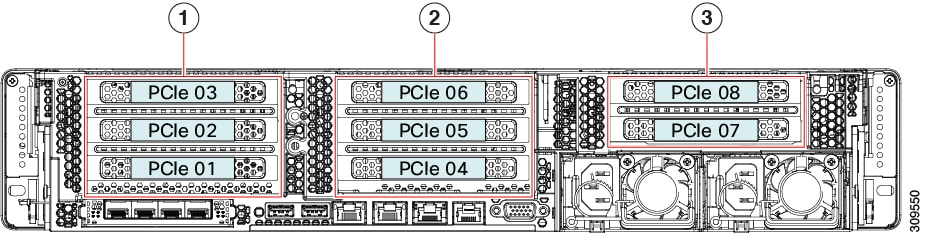

Rear Storage risers (2 slots each)

-

One rear PCIe riser (3 slots)

-

Internal slot for a 12 G SAS RAID controller with SuperCap for write-cache backup, or for a SAS HBA.

-

Network connectivity through either a dedicated modular LAN over motherboard card (mLOM) that accepts a series 14xxx Cisco virtual interface card (VIC) or a third-party NIC. These options are in addition to Intel x550 10Gbase-T mLOM ports built into the server motherboard.

- One mLOM/VIC card provides 10/25/40/50/100 Gbps. The following mLOMs are supported:
  - Cisco HX VIC 15428 Quad Port CNA MLOM (UCSC-M-V5Q50G) supports:
    - a x16 PCIe Gen4 host interface to the rack server
    - four 10G/25G/50G SFP56 ports
    - 4 GB DDR4 memory, 3200 MHz
    - an integrated blower for optimal ventilation
  - Cisco HX VIC 1467 Quad Port 10/25G SFP28 mLOM (UCSC-M-V25-04) supports:
    - a x16 PCIe Gen3 host interface to the rack server
    - four 10G/25G SFP28 ports
    - 2 GB DDR3 memory, 1866 MHz
  - Cisco HX VIC 1477 Dual Port 40/100G QSFP28 (UCSC-M-V100-04) supports:
    - a x16 PCIe Gen3 host interface to the rack server
    - two 40G/100G QSFP28 ports
    - 2 GB DDR3 memory, 1866 MHz

  These options are in addition to the Intel X550 10GBASE-T LOM ports built into the server motherboard.
- The following virtual interface cards (VICs) are supported, in addition to some third-party VICs:
  - Cisco HX VIC 1455 Quad Port 10/25G SFP28 PCIe (UCSC-PCIE-C25Q-04=)
  - Cisco HX VIC 1495 Dual Port 40/100G QSFP28 CNA PCIe (UCSC-PCIE-C100-04)
- Two power supplies (PSUs) that support N+1 power configuration.
- Six modular, hot-swappable fans.
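
As a rough illustration of the mLOM options listed above, the following Python sketch records each card's port count and highest listed per-port speed, then derives a nominal aggregate line rate. The `Mlom` class and its `aggregate_gbps` method are hypothetical names introduced for this example and are not part of any Cisco tool. The result is a simple sum of port speeds and says nothing about measured throughput.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mlom:
    """One mLOM option from the overview list (illustrative record only)."""
    name: str
    pid: str
    ports: int
    max_port_gbps: int  # highest per-port speed listed for the card

    def aggregate_gbps(self) -> int:
        # Nominal total: every port running at its top listed speed.
        return self.ports * self.max_port_gbps


# Values copied from the mLOM list in this overview.
MLOMS = [
    Mlom("Cisco HX VIC 15428", "UCSC-M-V5Q50G", ports=4, max_port_gbps=50),
    Mlom("Cisco HX VIC 1467", "UCSC-M-V25-04", ports=4, max_port_gbps=25),
    Mlom("Cisco HX VIC 1477", "UCSC-M-V100-04", ports=2, max_port_gbps=100),
]

for card in MLOMS:
    print(f"{card.name} ({card.pid}): "
          f"{card.ports} x {card.max_port_gbps}G = {card.aggregate_gbps()} Gbps nominal")
```
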

Server Configurations, LFF

The server is orderable with the following configuration for LFF drives.

- Cisco HX C240 M6 LFF 12 (HX-C240-M6L): large form-factor (LFF) drives, with a 12-drive backplane.
  - Front-loading drive bays 1-12 support 3.5-inch SAS/SATA drives.
  - The midplane drive cage supports four 3.5-inch SAS-only drives.
  - Optionally, rear-loading drive bays support either two or four SAS/SATA or NVMe drives.

Server Configurations, SFF 12 SAS/SATA

The SFF 12 SAS/SATA configuration (HX-C240-M6-S) can be configured with 12 SFF drives and an optional optical drive. The SFF configurations can be ordered as either an I/O-centric configuration or a storage-centric configuration. This server supports the following:

- A maximum of 12 small form-factor (SFF) drives, with a 12-drive backplane.
  - Front-loading drive bays 1-12 support up to twelve 2.5-inch SAS/SATA drives as SSDs or HDDs.
  - Optionally, drive bays 1-4 can support 2.5-inch NVMe SSDs. In this configuration, any number of NVMe drives can be installed up to the maximum of 4.

    Note: NVMe drives are supported only on a dual CPU server.
  - The server can be configured with a SATA interposer card. If your server uses a SATA interposer card, a maximum of 8 SATA-only drives can be configured. These drives can be installed only in slots 1-8.
  - Drive bays 5-12 support SAS/SATA SSDs or HDDs only; no NVMe.
  - Optionally, the rear-loading drive bays support four 2.5-inch SAS/SATA or NVMe drives.
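
The front-bay rules for the SFF 12 SAS/SATA configuration can be expressed as a small check. The sketch below is illustrative only: the function name `check_sff12_layout`, the drive-type strings, and the argument names are assumptions made for this example. It also assumes that the SATA interposer restriction applies to SAS/SATA drives only and leaves NVMe bays unaffected, which the text above does not state explicitly.

```python
FRONT_BAYS = range(1, 13)       # front-loading bays 1-12
NVME_BAYS = range(1, 5)         # bays 1-4 may optionally hold NVMe
INTERPOSER_BAYS = range(1, 9)   # SATA interposer: slots 1-8 only


def check_sff12_layout(drives, cpu_count, sata_interposer=False):
    """Return a list of rule violations for a front-bay layout.

    drives maps a bay number to a drive type: "SAS", "SATA", or "NVMe".
    An empty list means no rule from the section above is violated.
    """
    problems = []
    for bay, kind in sorted(drives.items()):
        if bay not in FRONT_BAYS:
            problems.append(f"bay {bay}: outside front bays 1-12")
            continue
        if kind == "NVMe":
            if bay not in NVME_BAYS:
                problems.append(f"bay {bay}: NVMe is allowed only in bays 1-4")
            if cpu_count < 2:
                problems.append(f"bay {bay}: NVMe requires a dual CPU server")
        elif kind in ("SAS", "SATA"):
            # Assumption: with an interposer, only SATA drives in slots 1-8.
            if sata_interposer and (kind != "SATA" or bay not in INTERPOSER_BAYS):
                problems.append(f"bay {bay}: interposer allows SATA drives in slots 1-8 only")
        else:
            problems.append(f"bay {bay}: unknown drive type {kind!r}")
    return problems


# Example: NVMe in bay 6 and a single CPU both break the rules above.
layout = {1: "NVMe", 2: "NVMe", 5: "SAS", 6: "NVMe"}
for message in check_sff12_layout(layout, cpu_count=1):
    print(message)
```

The same check could be adapted to the 24-drive SAS/SATA configuration by widening `FRONT_BAYS`; the NVMe range of bays 1-4 is the same in both.
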

Server Configurations, SFF 24 SAS/SATA

The SFF 24 SAS/SATA configuration (HX-C240-M6SX) can be ordered as either an I/O-centric configuration or a storage-centric configuration. This server supports the following:

- A maximum of 24 small form-factor (SFF) drives, with a 24-drive backplane.
  - Front-loading drive bays 1-24 support 2.5-inch SAS/SATA drives as SSDs or HDDs.
  - Optionally, drive bays 1-4 can support 2.5-inch NVMe SSDs. In this configuration, any number of NVMe drives can be installed up to the maximum of 4.

    Note: NVMe drives are supported only on a dual CPU server.
  - Drive bays 5-24 support SAS/SATA SSDs or HDDs only; no NVMe.
  - Optionally, the rear-loading drive bays support four 2.5-inch SAS/SATA or NVMe drives.
  - As an option, this server can be ordered with a "GPU ready" configuration. This option supports adding GPUs at a later date even though the GPU is not purchased at the time the server is initially ordered.

    Note: To order the GPU ready configuration through the Cisco online ordering and configuration tool, you must select the GPU air duct PID. Follow the additional rules displayed in the tool. For additional information, see GPU Card Configuration Rules.

Server Configurations, 12 NVMe

The SFF 12 NVMe configuration (HX-C240-M6N) can be ordered as an NVMe-only server. The NVMe-optimized server requires two CPUs. This server supports the following:

- A maximum of 12 SFF NVMe drives as SSDs with a 12-drive backplane, NVMe-optimized.
  - Front-loading drive bays 1-12 support 2.5-inch NVMe PCIe SSDs only.
  - The two rear-loading drive bays support two 2.5-inch NVMe SSDs only. These drive bays are the top and middle slots on the left of the rear panel.

Server Configurations, 24 NVMe

The SFF 24 NVMe configuration (HX-C240-M6SN) can be ordered as an NVMe-only server. The NVMe-optimized server requires two CPUs. This server supports the following:

- A maximum of 24 SFF NVMe drives as SSDs with a 24-drive backplane, NVMe-optimized.
  - Front-loading drive bays 1-24 support 2.5-inch NVMe PCIe SSDs only.
  - The two rear-loading drive bays support two 2.5-inch NVMe SSDs only. These drive bays are the top and middle slots on the left of the rear panel.
  - As an option, this server can be ordered with a "GPU ready" configuration. This option supports adding GPUs at a later date even though the GPU is not purchased at the time the server is initially ordered.

    Note: To order the GPU ready configuration through the Cisco online ordering and configuration tool, you must select the GPU air duct PID. Follow the additional rules displayed in the tool. For additional information, see GPU Card Configuration Rules.
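
For quick comparison, the five orderable configurations described in this section can be collected into one lookup table. The sketch below is a reading aid, not an ordering tool: `DriveConfig`, `CONFIGS`, and `max_drives` are hypothetical names, the bay counts are copied from the sections above, and the total assumes that front, midplane, and rear bays can all be populated at the same time, which this overview does not confirm for every I/O-centric or storage-centric variant.

```python
from typing import NamedTuple


class DriveConfig(NamedTuple):
    front_bays: int          # front-loading drive bays
    midplane_bays: int       # midplane cage bays (LFF configuration only)
    rear_bays: int           # maximum optional rear-loading bays
    front_nvme_max: int      # front bays that may hold NVMe drives
    two_cpus_required: bool  # True where the text says the server requires two CPUs


# Keys are the product IDs exactly as they appear in this section.
CONFIGS = {
    "HX-C240-M6L":  DriveConfig(12, 4, 4, 0, False),
    "HX-C240-M6-S": DriveConfig(12, 0, 4, 4, False),
    "HX-C240-M6SX": DriveConfig(24, 0, 4, 4, False),
    "HX-C240-M6N":  DriveConfig(12, 0, 2, 12, True),
    "HX-C240-M6SN": DriveConfig(24, 0, 2, 24, True),
}


def max_drives(pid: str) -> int:
    """Upper bound on drive count, assuming every listed bay is populated."""
    cfg = CONFIGS[pid]
    return cfg.front_bays + cfg.midplane_bays + cfg.rear_bays


for pid, cfg in CONFIGS.items():
    note = "two CPUs required" if cfg.two_cpus_required else "NVMe drives need two CPUs"
    print(f"{pid}: up to {max_drives(pid)} drives ({note})")
```
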
