HX Data Protection Snapshot Overview

The HyperFlex Data Protection Snapshot (DP Snapshot) feature protects virtual machines from disaster by configuring the replication of running VMs between a pair of network-connected clusters. Protected virtual machines running on one cluster replicate to the other cluster in the pair, and vice versa. The two paired clusters are typically located at a distance from each other, with each cluster serving as the disaster recovery site for virtual machines running on the other cluster.

Once protection is configured on a VM, the HX Data Platform periodically takes a DP snapshot of the running VM on the local cluster and replicates (copies) the DP snapshot to the paired remote cluster. In the event of a disaster at the local cluster, the most recently replicated snapshot of each protected VM can be recovered on the remote cluster. Each cluster that serves as a disaster recovery site for another cluster must be sized with adequate spare resources so that, after a disaster, it can run the newly recovered VMs in addition to its normal workload.

Note

Only the most recently replicated DP snapshot is retained on the destination cluster. Retaining additional DP snapshots is not supported.

Each VM is individually protected by assigning it protection attributes, including the replication interval (schedule). The shorter the replication interval, the fresher the replicated snapshot data is likely to be. DP snapshot intervals can range from once every 5 minutes to once every 24 hours.

A protection group is a group of VMs that have a common DP snapshot schedule and quiescence parameter value.
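The schedule rules in the two paragraphs above can be captured in a small data model. This is an illustration only. The following Python sketch models a protection group as a record holding one DP snapshot interval and one quiescence setting that all of its VMs share. It rejects intervals outside the documented range of 5 minutes to 24 hours. `ProtectionGroup`, its fields, and `add_vm` are hypothetical names and are not part of any HX Data Platform API. Modeling quiescence as a boolean is also an assumption.

```python
from dataclasses import dataclass, field

# DP snapshot interval bounds stated in this guide, in minutes.
MIN_INTERVAL_MIN = 5
MAX_INTERVAL_MIN = 24 * 60


@dataclass
class ProtectionGroup:
    """Hypothetical model: VMs that share one DP snapshot schedule and one
    quiescence setting (quiescence as a boolean is an assumption)."""

    name: str
    interval_minutes: int
    quiesce: bool = False
    vms: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Reject schedules outside the supported 5-minute to 24-hour range.
        if not MIN_INTERVAL_MIN <= self.interval_minutes <= MAX_INTERVAL_MIN:
            raise ValueError(
                f"interval {self.interval_minutes} min is outside "
                f"{MIN_INTERVAL_MIN}-{MAX_INTERVAL_MIN} min"
            )

    def add_vm(self, vm: str) -> None:
        # A VM added to the group is replicated on the group's schedule.
        if vm not in self.vms:
            self.vms.append(vm)


# Usage: a group whose VMs are replicated every 15 minutes.
gold = ProtectionGroup("gold", interval_minutes=15)
gold.add_vm("db-01")
gold.add_vm("app-01")
```

Because validation runs in `__post_init__`, an out-of-range schedule is rejected when the group is created rather than when replication is attempted.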

Setting up DP snapshots requires two existing clusters running HX Data Platform version 2.5 or later. Both clusters must be on the same HX Data Platform version. Use HyperFlex Connect to complete the setup.
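The version prerequisites above amount to two comparisons. This is a minimal sketch that assumes version strings shaped like the ones used in this guide (for example, 4.5(2a)). `parse_hx_version` and `can_pair_for_dp_snapshots` are hypothetical helpers. They compare strings you supply and do not query either cluster. The sketch interprets "same version" as an exact match, including the maintenance suffix.

```python
import re

# Matches version strings such as "4.5(2a)" or "2.5"; the format is assumed
# from the release names used in this guide.
_VERSION_RE = re.compile(r"^(\d+)\.(\d+)(?:\((\w+)\))?$")


def parse_hx_version(version: str) -> tuple[int, int, str]:
    """Hypothetical helper: split a version string into (major, minor, maintenance)."""
    match = _VERSION_RE.match(version.strip())
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    major, minor, maint = match.groups()
    return int(major), int(minor), maint or ""


def can_pair_for_dp_snapshots(local: str, remote: str) -> bool:
    """Hypothetical check: both clusters on the same release, 2.5 or later."""
    local_v = parse_hx_version(local)
    remote_v = parse_hx_version(remote)
    same_version = local_v == remote_v               # exact match, suffix included
    new_enough = (local_v[0], local_v[1]) >= (2, 5)  # major.minor at least 2.5
    return same_version and new_enough


# Usage
print(can_pair_for_dp_snapshots("4.5(2a)", "4.5(2a)"))  # True
print(can_pair_for_dp_snapshots("4.5(2a)", "4.0(2a)"))  # False: versions differ
print(can_pair_for_dp_snapshots("2.1(1b)", "2.1(1b)"))  # False: older than 2.5
```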

First, set up each cluster for local replication networking. Use HX Connect to provide a set of IP addresses for the local cluster nodes to use when replicating to the remote cluster. HX Connect creates VLANs through UCS Manager for dedicated use by the local replication network.

Note

When this option is chosen in HX Connect, UCSM is configured only when both UCS Manager and the fabric interconnects are associated with the HyperFlex cluster. When UCSM and FIs are not present, you must enter the VLAN ID and must not select UCSM configuration in HX Connect.

The two clusters, and their corresponding existing datastores, must be explicitly paired. You can complete the pairing setup using HX Connect from either of the two clusters. This requires the administrative credentials of the other cluster.

Virtual machines can be protected (or have their existing protection attributes modified) by using HX Connect at the cluster where they are currently active.

HX Connect can monitor the status of both incoming and outgoing replication activity on a cluster.

After a disaster, a protected VM can be recovered and run on the cluster that serves as the DP snapshot recovery site for that VM.

Replication and Recovery Considerations

The following is a list of considerations when configuring virtual machine replication and performing disaster recovery of virtual machines.

Note

Cisco HX Data Replication is not supported with HyperFlex clusters that have Hardware Acceleration Cards installed.

-

Administrator―All replication and recovery tasks, except monitoring, can only be performed with administrator privileges on the local cluster. For tasks involving a remote cluster, both the local and remote users must have administrator privileges and should be configured with vCenter SSO on their respective clusters.

-

Minimum and Recommended Bandwidth―Beginning with HX Release 4.0(2a), the minimum bandwidth can be configured as 10 Mbps for smaller deployments. The replication network link should also be reliable and sustain a minimum symmetric bandwidth equal to the bandwidth configured for the HyperFlex DR network. This link bandwidth should not be shared with any other applications on an uplink or downlink.

-

Maximum Latency―The maximum supported latency between the two clusters is 75 ms.

Some jobs might fail until bandwidth becomes available if both of the following are true:

-

You schedule multiple replication jobs to run at the same time (for example, 32, the maximum supported by DR).

-

Your bandwidth is low (50 Mbps) and your latency is high (75 ms).

If this occurs, retry the job, increase the bandwidth, or reduce concurrency by staggering the replications.

In this situation, unprotect operations can also take longer than expected.

-

Network Loss―When there is packet loss in data transmission between the two sites, protection and recovery operations will have unexpected results. The transmission must be reliable for these features to work as expected.

-

Storage Space―Ensure that the remote cluster has enough space to support the DR replication schedule. The protected virtual machines are replicated (copied) to the remote cluster at every scheduled interval. Deduplication and compression are applied, but each replicated virtual machine still consumes some storage space.

If the remote cluster lacks storage space, it can reach its maximum capacity usage. If you see Out of Space errors, see Handling Out of Space Errors. Pause all replication schedules until you have adjusted the space available on the cluster. Always keep your cluster capacity consumption below the space utilization warning threshold.

-

Supported Clusters―Replication is supported between the following HyperFlex clusters:

-

1:1 replication between HX clusters running under fabric interconnects.

-

1:1 replication between All Flash and Hybrid HX clusters running under fabric interconnects.

-

1:1 replication between a 3-Node or 4-Node HX Edge cluster and another 3-Node or 4-Node HX Edge cluster.

-

1:1 replication between All Flash 3-Node or 4-Node HX Edge clusters and Hybrid 3-Node or 4-Node HX Edge clusters.

-

1:1 replication between a 3-Node or 4-Node HX Edge cluster and an HX cluster running under fabric interconnects.

-

Starting with HX Release 4.5(2a), 1:1 replication with 2-Node HX Edge clusters.


-

Rebooting Nodes―Do not reboot any nodes in the HX Cluster during any restore, replication, or recovery operation.

-

Thin Provision―Protected VMs are recovered with thin-provisioned disks, regardless of how the disks were specified in the originally protected VM.

-

Protection Group Limitations

-

Do not add VMs with ISOs or floppies to protection groups.

Protected Virtual Machine Scalability

-

HX Release 3.5(x) supports a maximum of 200 protected VMs.


-

Non-HX Datastores―Periodic replication fails for a protected VM that has storage on a non-HX datastore. To avoid the failure, unprotect the VM or remove the non-HX storage from the VM.

Do not move protected VMs from HX datastores to non-HX datastores. If a VM is moved to a non-HX datastore through storage vMotion, unprotect the VM.

-

Templates―Templates are not supported with disaster recovery.

-

Protection and Recovery of Virtual Machines with Snapshots

When replication is enabled:

-

VM with no snapshots―The entire content of the VM is replicated.

-

VM with VMware redolog snapshots―The entire content, including the snapshot data, is replicated. When a VM with redolog snapshots is recovered, all previous snapshots are preserved.

-

VM with HX native snapshots―Only the latest data is replicated; the HX native snapshot data is not replicated. When the VM is recovered, previous snapshots are not preserved.


-

Data Protection snapshots are stored on the same datastore as the protected VMs. Manual deletion of DP snapshots is not supported. Deleting snapshot directories will compromise HX data protection and disaster recovery.

Caution

To avoid deleting DP snapshots manually, remember that VMware does not restrict datastore operations by the Admin user. In any VMware environment, the Admin can access datastores through the vCenter browser or by logging in to the ESX host. As a result, the snapshot directory and its contents are browsable and accessible to Admins.

Other points for consideration include:

-

Location of the VMware vCenter―If you delete a VM from VMware vCenter that is located on an “Other DRO” datastore pair, the recovery plan for this datastore pair fails during recovery. To avoid this failure, first unprotect the VM by running the following command on one of the controller VMs:

stcli dp vm delete --vmid <VM_ID>

-

Name of the VM―If you rename a VM in vCenter, HyperFlex recovers the VM in the folder with the previous name but registers the VM with the new name on the recovery side. The following limitations apply in this situation:

-

VMware allows a VMDK located anywhere to be attached to a VM. In such cases, HyperFlex recovers the VM inside the VM folder and not at a location mapped to the original location. Also, recovery can fail if the VMDK is explicitly referenced by its path in the VM's .vmx file. The data is recovered accurately, but there could be problems registering the VM to vCenter. Correct this error by updating the .vmx file with the new path.

-

If a VM is renamed and a VMDK is added afterward, the new VMDK is created at [sourceDs] newVm/newVm.vmdk. HyperFlex recovers this VMDK with the earlier name. In such cases, recovery can fail if the VMDK is explicitly referenced by its path in the VM's .vmx file. The data is recovered accurately, but there could be problems registering the VM to vCenter. Correct this error by updating the .vmx file with the new path.


-

Network Ports—The comprehensive list of ports required for component communication for the HyperFlex solution is located in Appendix A of the HX Data Platform Security Hardening Guide.
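Several of the considerations above can be checked with simple arithmetic before you create any schedules: bandwidth, latency, and staggering concurrent replication jobs. The sketch below is a planning aid that rests on stated assumptions:

-

The 75 ms and 10 Mbps limits come from this section.

-

The latency and throughput figures are measurements you supply.

-

`link_is_supported` and `stagger_start_offsets` are hypothetical helpers, not HX features.

How you apply the resulting offsets depends on the scheduling options available in your HX Connect release.

```python
# Limits stated in this section of the guide.
MAX_LATENCY_MS = 75.0
MIN_BANDWIDTH_MBPS = 10.0  # configurable minimum from HX Release 4.0(2a)


def link_is_supported(latency_ms: float, sustained_mbps: float,
                      configured_mbps: float) -> bool:
    """Hypothetical check of a measured link against the documented limits.

    latency_ms and sustained_mbps are measurements you supply;
    configured_mbps is the bandwidth configured for the HyperFlex DR network.
    """
    return (
        latency_ms <= MAX_LATENCY_MS
        and configured_mbps >= MIN_BANDWIDTH_MBPS
        and sustained_mbps >= configured_mbps
    )


def stagger_start_offsets(vms: list[str], interval_minutes: int,
                          max_concurrent: int) -> dict[str, int]:
    """Hypothetical planner: split VMs into batches of at most max_concurrent
    and space the batch start times evenly across one interval (in minutes).
    Assumes each batch finishes before the next batch starts."""
    if max_concurrent < 1:
        raise ValueError("max_concurrent must be at least 1")
    batches = [vms[i:i + max_concurrent]
               for i in range(0, len(vms), max_concurrent)]
    spacing = interval_minutes // max(len(batches), 1)  # rounds down
    return {vm: index * spacing
            for index, batch in enumerate(batches)
            for vm in batch}


# Usage: 40 VMs on a 60-minute interval, at most 16 replications starting together.
print(link_is_supported(latency_ms=75, sustained_mbps=50, configured_mbps=50))  # True
plan = stagger_start_offsets([f"vm-{n:02d}" for n in range(1, 41)], 60, 16)
print(sorted(set(plan.values())))  # [0, 20, 40]
```

With these inputs, the sketch produces three batches that start 0, 20, and 40 minutes into the interval. Staggering reduces the number of jobs competing for the link at the same time, which is the mitigation this section recommends. It does not raise the link's capacity.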

Replication Network and Pairing Considerations

A replication network must be established between clusters that are expected to use replication for DP snapshots. The replication network isolates inter-cluster replication traffic from other traffic within each cluster and site.

The following is a list of pre-validation checks necessary for pairing:

-

Verify that the peer credentials are working.

-

Check the health of both clusters and proceed with pairing only when both the clusters are healthy.

-

Verify that the vCenter version at each cluster is the same as or later than the ESXi version.
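The three pre-validation checks can be expressed as a single function that returns the reasons pairing should wait. In this sketch, `ClusterFacts` and `pairing_blockers` are hypothetical. The health flag, the peer-credential result, and the version tuples are assumed to be gathered beforehand by whatever means you normally use. Nothing here communicates with HX Connect or vCenter.

```python
from dataclasses import dataclass


@dataclass
class ClusterFacts:
    """Hypothetical record of facts gathered from one cluster before pairing."""

    name: str
    healthy: bool
    vcenter_version: tuple[int, ...]
    esxi_version: tuple[int, ...]


def pairing_blockers(local: ClusterFacts, remote: ClusterFacts,
                     peer_credentials_ok: bool) -> list[str]:
    """Hypothetical pre-validation: return the reasons pairing should wait.
    An empty list means all three checks passed."""
    problems = []
    if not peer_credentials_ok:
        problems.append("peer credentials are not working")
    for cluster in (local, remote):
        if not cluster.healthy:
            problems.append(f"{cluster.name}: cluster is not healthy")
        # Tuples compare element by element, and a prefix counts as older,
        # so vCenter (7, 0) is older than ESXi (7, 0, 3).
        if cluster.vcenter_version < cluster.esxi_version:
            problems.append(f"{cluster.name}: vCenter is older than ESXi")
    return problems


# Usage
site_a = ClusterFacts("A", True, vcenter_version=(8, 0), esxi_version=(7, 0, 3))
site_b = ClusterFacts("B", False, vcenter_version=(7, 0), esxi_version=(7, 0, 3))
print(pairing_blockers(site_a, site_b, peer_credentials_ok=True))
# ['B: cluster is not healthy', 'B: vCenter is older than ESXi']
```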

The following is a list of considerations when configuring replication network and pairing:

-

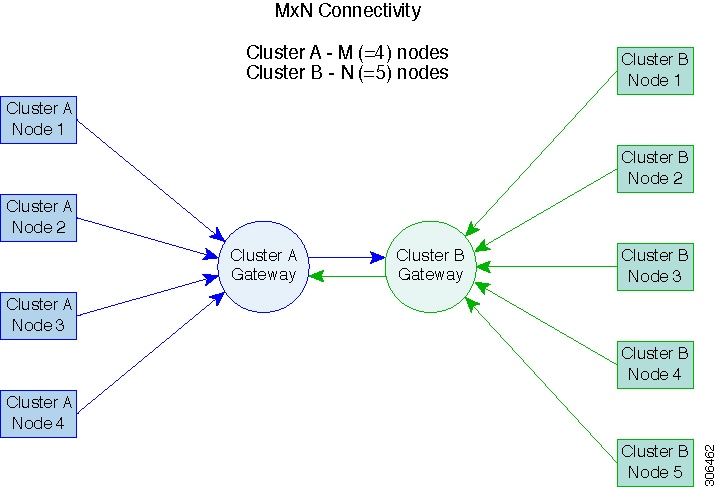

To support efficient replication, all M nodes of cluster A have to communicate with all N nodes of cluster B, as illustrated in the M x N connectivity between clusters figure.

-

For replication traffic between clusters to cross the site boundary and traverse the internet, each node on Cluster A must be able to communicate with each node on Cluster B across the site boundary and the internet.

-

The replication traffic must be isolated from other traffic within the cluster and the data center.

-

To create this isolated replication network for inter-cluster traffic, complete these steps:

-

Create a replication network on each cluster.

-

Pair clusters to associate the clusters and establish M x N connectivity between the clusters.


-

IP addresses, subnet, VLAN, and gateway are associated with the replication network of each cluster. Configure the corporate firewall and routers on both sites to allow communication between the clusters and the sites on TCP ports 9338, 3049, 9098, 4049, and 4059.

-

The comprehensive list of ports required for component communication for the HyperFlex solution is located in Appendix A of the HX Data Platform Security Hardening Guide.
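The M x N requirement and the port list translate directly into a checklist of node pairs and ports. This is a minimal sketch that assumes you know the replication IP address of every node on both clusters; the addresses below are hypothetical. `required_paths` enumerates every combination. `tcp_reachable` is a plain TCP connect test built on Python's `socket.create_connection` and is not a HyperFlex tool.

```python
import itertools
import socket

# TCP ports this section lists for inter-cluster replication traffic.
REPLICATION_PORTS = (9338, 3049, 9098, 4049, 4059)


def required_paths(local_ips, remote_ips, ports=REPLICATION_PORTS):
    """Every (local node, remote node, port) combination the firewalls must
    allow: M local nodes x N remote nodes x each port."""
    return list(itertools.product(local_ips, remote_ips, ports))


def tcp_reachable(host: str, port: int, timeout: float = 2.0) -> bool:
    """Plain TCP connect test; True if the handshake completes in time."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


# Usage with hypothetical replication IPs: 3 nodes on cluster A, 4 on cluster B.
cluster_a = ["10.1.1.11", "10.1.1.12", "10.1.1.13"]
cluster_b = ["10.2.1.21", "10.2.1.22", "10.2.1.23", "10.2.1.24"]
paths = required_paths(cluster_a, cluster_b)
print(len(paths))  # 60 = 3 x 4 x 5 ports
# To probe from this host: [p for p in paths if not tcp_reachable(p[1], p[2])]
```

Note the limits of such a probe. Run from an administrator workstation, it shows reachability only from that workstation, not from each node's replication interface. A failed connection also does not distinguish a firewall block from a port that has no listener. Treat the results as a first pass before pairing.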

M*N Connectivity Between Clusters

Data Protection Terms

Interval―Part of the replication schedule configuration, used to enforce how often the protected VM's DP snapshot must be taken and copied to the target cluster.

Local cluster―The cluster you are currently logged into through HX Connect, in a VM replication cluster pair. From the local cluster, you can configure replication protection for locally resident VMs. The VMs are then replicated to the paired remote cluster.

Migration―A routine system maintenance and management task in which a recent replicated DP snapshot copy of the VM becomes the working VM. The source and target clusters of the replication pair do not change.

Primary cluster―An alternative name for the source cluster in VM disaster recovery.

Protected virtual machine―A VM that has replication configured. The protected VMs reside in a datastore on the local cluster of a replication pair. Protected VMs have a replication schedule configured either individually or by inclusion in a protection group.

Protection group―A means to apply the same replication configuration to a group of VMs.

Recovery process―The manual process to recover protected VMs in the event the source cluster fails or a disaster occurs.

Recovery test―A maintenance task that ensures the recovery process will be successful in the event of a disaster.

Remote cluster―One of a VM replication cluster pair. The remote cluster receives the replication snapshots from the protected VMs in the local cluster.

Replication pair―Two clusters that together provide a remote cluster location for storing the replicated DP snapshots of local cluster VMs.

Clusters in a replication pair can be both a remote and local cluster. Both clusters in a replication pair can have resident VMs. Each cluster is local to its resident VMs. Each cluster is remote to the VMs that reside on the paired local cluster.

DP snapshot―Part of the replication protection mechanism. A type of snapshot taken of a protected VM, which is replicated from the local cluster to the remote cluster.

Secondary cluster―An alternative name for the target cluster in VM disaster recovery.

Source cluster―One of a VM replication cluster pair. The source cluster is where the protected VMs reside.

Target cluster―One of a VM replication cluster pair. The target cluster receives the replicated DP snapshots from the VMs of the source cluster. The target cluster is used to recover the VMs in the event of a disaster on the source cluster.

Best Practices for Data Protection and Disaster Recovery

The importance of an effective data protection and disaster recovery strategy, based on the environment being protected, cannot be overstated. The solution should be designed and deployed to meet or exceed the business requirements for both the Recovery Point Objectives (RPO) and Recovery Time Objectives (RTO) of the production VMs. Consider the following points when designing this strategy:

-

The number of Service Level Agreements (SLAs) necessary to comply with various categories of production workloads, which may include mission-critical, business-critical, and important VMs.

-

Detailed constructs of each SLA, which may include RPO, RTO, the number of recovery points retained, requirements for off-site copies of data, and any requirements for storing backup copies on different media types. Additional requirements may include the ability to recover to a different environment, such as a different location, a different hypervisor, or a different private or public cloud.

-

An ongoing testing strategy for each SLA which serves to prove that the solution meets the business requirements it was designed for.
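An ongoing testing strategy needs a way to relate an RPO target to a replication interval. This sketch uses a common rule of thumb for periodic asynchronous replication, treated here as an assumption rather than an HX specification. The worst-case data loss is about one interval plus the time needed to finish replicating a DP snapshot. If a disaster strikes just before the newest DP snapshot finishes copying, only the previous one exists on the remote cluster. The hypothetical helpers below apply that approximation and clamp the result to the documented range of 5 minutes to 24 hours. The transfer time is an estimate you supply.

```python
from typing import Optional

# DP snapshot interval bounds stated in this guide, in minutes.
MIN_INTERVAL_MIN = 5
MAX_INTERVAL_MIN = 24 * 60


def worst_case_rpo_minutes(interval_min: float, transfer_min: float) -> float:
    """Rule-of-thumb worst-case data loss for periodic asynchronous
    replication: one full interval plus the time to finish replicating
    the newest DP snapshot (an assumption, not an HX guarantee)."""
    return interval_min + transfer_min


def longest_interval_for_rpo(rpo_target_min: float,
                             transfer_min: float) -> Optional[int]:
    """Largest whole-minute interval whose rule-of-thumb worst case fits the
    RPO target, capped at 24 hours; None if even 5 minutes is too long."""
    candidate = int(rpo_target_min - transfer_min)  # truncates toward zero
    if candidate < MIN_INTERVAL_MIN:
        return None
    return min(candidate, MAX_INTERVAL_MIN)


# Usage
print(worst_case_rpo_minutes(15, 5))       # 20
print(longest_interval_for_rpo(60, 10))    # 50
print(longest_interval_for_rpo(12, 10))    # None: RPO too tight for this link
print(longest_interval_for_rpo(2000, 10))  # 1440: capped at 24 hours
```

For example, a 60-minute RPO with an estimated 10-minute transfer time allows an interval of at most 50 minutes. If HX Connect offers only fixed interval choices, round down to the nearest one it offers.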

Note that backups and backup copies must be stored external to the HyperFlex cluster being protected. For example, backups performed to protect VMs on a HyperFlex cluster should not be saved to a backup repository or a disk library that is hosted on the same HyperFlex cluster.

The built-in HyperFlex data protection capabilities are generalized into the following categories:

-

Data Replication Factor―Refers to the number of redundant copies of data within a HyperFlex cluster. A data replication factor of 2 or 3 can be configured during data platform installation and cannot be changed afterward. The benefit of the data replication factor is that it contributes to the number of tolerated cluster failures. See the section titled HX Data Platform Cluster Tolerated Failures for additional information about the data replication factor.

Note

Data Replication Factor alone may not fulfill requirements for recovery in the highly unlikely event of a cluster failure, or an extended site outage. Also, the data replication factor does not facilitate point-in-time recovery, retention of multiple recovery points, or creation of point-in-time copies of data external to the cluster.

-

HX Native Snapshots―Operate on a per-VM basis and enable saving versions of a VM over time. A maximum of 31 total snapshots, including the HX SENTINEL snapshot, can be retained.

Note

HX native snapshots alone may not fulfill requirements for recovery in the unlikely event of a cluster failure, or an extended site outage. Also, HX native snapshots do not facilitate the ability to create point-in-time copies of data external to the cluster. More importantly, unintentional deletion of a VM also deletes any HX native snapshots associated with the deleted VM.

-

Asynchronous Replication―Also known as the HX Data Platform disaster recovery feature, asynchronous replication protects virtual machines by replicating virtual machine DP snapshots between a pair of network-connected HyperFlex clusters. Protected virtual machines running on one cluster replicate to the other cluster in the pair, and vice versa. The two paired clusters are typically located at a distance from each other, with each cluster serving as the disaster recovery site for virtual machines running on the other cluster.

Note

Asynchronous Replication alone may not fulfill requirements for recovery when multiple point-in-time copies need to be retained on the remote cluster. Only the most recent snapshot replica for a given VM is retained on the remote cluster. Also, asynchronous replication does not facilitate the ability to create point-in-time copies of data external to either cluster.

It is recommended to first understand the unique business requirements of the environment and then deploy a comprehensive data protection and disaster recovery solution to meet or exceed those requirements.
