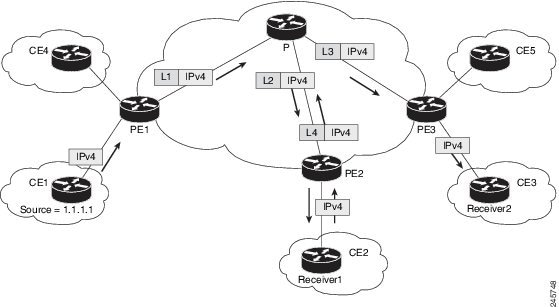

Draft-Rosen Multicast VPN (Profile 0) uses Generic Routing Encapsulation (GRE) as an overlay protocol. All multicast packets

are encapsulated inside GRE. Profile 0 has PIM as the multicast routing protocol between the edge routers (PE) and hosts (CE), and between the PE routers

in the VRF mode. The PE routers directly connect using a Default Multicast Distribution Tree (MDT) formed between the PE routers.

The PE routers connect to each other as PIM neighbors across the Default MDT.

Verification

Router# show pim vrf vpn101 context

Wed Aug 9 14:56:28.382 UTC

PIM context information for VRF vpn101 (0x55845d7772b0)

VRF ID: 0x60000002

Table ID: 0xe0000002

Remote Table ID: 0xe0800002

MDT Default Group : 232.1.0.1

MDT Source : (4.4.4.4, Loopback0) Per-VRF

MDT Immediate Switch Not Configured

MDT handle: 0x2000806c(mdtvpn101)

Context Active, ITAL Active

Routing Enabled

Registered with MRIB

Owner of MDT Interface

Raw socket req: T, act: T, LPTS filter req: T, act: T

UDP socket req: T, act: T, UDP vbind req: T, act: T

Reg Inj socket req: T, act: T, Reg Inj LPTS filter req: T, act: T

Mhost Default Interface : TenGigE0/0/0/18 (publish pending: F)

Remote MDT Default Group : 232.2.0.1

Backup MLC virtual interface: Null

Neighbor-filter: -

MDT Neighbor-filter: -

Router# show mrib vrf vpn101 route detail

Wed Aug 9 10:20:40.486 UTC

IP Multicast Routing Information Base

Entry flags: L - Domain-Local Source, E - External Source to the Domain,

C - Directly-Connected Check, S - Signal, IA - Inherit Accept,

IF - Inherit From, D - Drop, ME - MDT Encap, EID - Encap ID,

MD - MDT Decap, MT - MDT Threshold Crossed, MH - MDT interface handle

CD - Conditional Decap, MPLS - MPLS Decap, EX - Extranet

MoFE - MoFRR Enabled, MoFS - MoFRR State, MoFP - MoFRR Primary

MoFB - MoFRR Backup, RPFID - RPF ID Set, X - VXLAN

Interface flags: F - Forward, A - Accept, IC - Internal Copy,

NS - Negate Signal, DP - Don't Preserve, SP - Signal Present,

II - Internal Interest, ID - Internal Disinterest, LI - Local Interest,

LD - Local Disinterest, DI - Decapsulation Interface

EI - Encapsulation Interface, MI - MDT Interface, LVIF - MPLS Encap,

EX - Extranet, A2 - Secondary Accept, MT - MDT Threshold Crossed,

MA - Data MDT Assigned, LMI - mLDP MDT Interface, TMI - P2MP-TE MDT Interface

IRMI - IR MDT Interface, TRMI - TREE SID MDT Interface, MH - Multihome Interface

(*,224.0.0.0/4) Ver: 0x9b0d RPF nbr: 21.1.1.1 Flags: L C RPF P, FGID: 11903, Statistics enabled: 0x0, Tunnel RIF: 0xffffffff, Tunnel LIF: 0xffffffff

Up: 00:03:33

Outgoing Interface List

Decapstunnel1 Flags: NS DI, Up: 00:03:28

(*,224.0.0.0/24) Ver: 0xec6c Flags: D P, FGID: 11901, Statistics enabled: 0x0, Tunnel RIF: 0xffffffff, Tunnel LIF: 0xffffffff

Up: 00:03:33

(*,224.0.1.39) Ver: 0xe7dc Flags: S P, FGID: 11899, Statistics enabled: 0x0, Tunnel RIF: 0xffffffff, Tunnel LIF: 0xffffffff

Up: 00:03:33

(*,224.0.1.40) Ver: 0xf5fb Flags: S P, FGID: 11900, Statistics enabled: 0x0, Tunnel RIF: 0xffffffff, Tunnel LIF: 0xffffffff

Up: 00:03:33

Outgoing Interface List

TenGigE0/0/0/6 Flags: II LI, Up: 00:03:33

(*,232.0.0.0/8) Ver: 0x96d1 Flags: D P, FGID: 11902, Statistics enabled: 0x0, Tunnel RIF: 0xffffffff, Tunnel LIF: 0xffffffff

Up: 00:03:33

(2.0.0.2,232.1.1.1) Ver: 0x2c3f RPF nbr: 4.4.4.4 Flags: RPF RPFID, FGID: 11907, Statistics enabled: 0x0, Tunnel RIF: 0x26, Tunnel LIF: 0xa000, Flags2: 0x2

Up: 00:00:22

RPF-ID: 1, Encap-ID: 0

Incoming Interface List

mdtvpn101 Flags: A MI, Up: 00:00:22

Outgoing Interface List

TenGigE0/0/0/6 Flags: F NS LI, Up: 00:00:22

Router# show pim vrf vpn101 context

Wed Aug 9 15:00:03.092 UTC

IP Multicast Routing Information Base

Entry flags: L - Domain-Local Source, E - External Source to the Domain,

C - Directly-Connected Check, S - Signal, IA - Inherit Accept,

IF - Inherit From, D - Drop, ME - MDT Encap, EID - Encap ID,

MD - MDT Decap, MT - MDT Threshold Crossed, MH - MDT interface handle

CD - Conditional Decap, MPLS - MPLS Decap, EX - Extranet

MoFE - MoFRR Enabled, MoFS - MoFRR State, MoFP - MoFRR Primary

MoFB - MoFRR Backup, RPFID - RPF ID Set, X - VXLAN

Interface flags: F - Forward, A - Accept, IC - Internal Copy,

NS - Negate Signal, DP - Don't Preserve, SP - Signal Present,

II - Internal Interest, ID - Internal Disinterest, LI - Local Interest,

LD - Local Disinterest, DI - Decapsulation Interface

EI - Encapsulation Interface, MI - MDT Interface, LVIF - MPLS Encap,

EX - Extranet, A2 - Secondary Accept, MT - MDT Threshold Crossed,

MA - Data MDT Assigned, LMI - mLDP MDT Interface, TMI - P2MP-TE MDT Interface

IRMI - IR MDT Interface, TRMI - TREE SID MDT Interface, MH - Multihome Interface

(*,224.0.0.0/24) Ver: 0x7f6e Flags: D P, FGID: 9235, Statistics enabled: 0x0, Tunnel RIF: 0xffffffff, Tunnel LIF: 0xffffffff

Up: 00:06:54

(*,224.0.1.39) Ver: 0x7af2 Flags: S P, FGID: 9233, Statistics enabled: 0x0, Tunnel RIF: 0xffffffff, Tunnel LIF: 0xffffffff

Up: 00:06:54

(*,224.0.1.40) Ver: 0x9f9b Flags: S P, FGID: 9234, Statistics enabled: 0x0, Tunnel RIF: 0xffffffff, Tunnel LIF: 0xffffffff

Up: 00:06:54

Outgoing Interface List

TenGigE0/0/0/4 Flags: II LI, Up: 00:06:54

(*,232.0.0.0/8) Ver: 0x517d Flags: D P, FGID: 9236, Statistics enabled: 0x0, Tunnel RIF: 0xffffffff, Tunnel LIF: 0xffffffff

Up: 00:06:54

(4.4.4.4,232.1.0.1) Ver: 0xc19e RPF nbr: 4.4.4.4 Flags: RPF ME MH, FGID: 9242, Statistics enabled: 0x0, Tunnel RIF: 0x35, Tunnel LIF: 0xc000, Flags2: 0x2

MVPN TID: 0xe0000002

MVPN Remote TID: 0x0

MVPN Payload: IPv4

MDT IFH: 0x2000806c

Up: 00:06:49

RPF-ID: 1, Encap-ID: 0

Incoming Interface List

Loopback0 Flags: F A, Up: 00:06:49

Outgoing Interface List

Loopback0 Flags: F A, Up: 00:06:49

TenGigE0/0/0/4 Flags: F NS, Up: 00:04:13

(21.21.21.21,232.1.0.1) Ver: 0xa354 RPF nbr: 10.2.0.2 Flags: RPF MD MH CD, FGID: 9244, Statistics enabled: 0x0, Tunnel RIF: 0x35, Tunnel LIF: 0xc000, Flags2: 0x2

MVPN TID: 0xe0000002

MVPN Remote TID: 0x0

MVPN Payload: IPv4

MDT IFH: 0x2000806c

Up: 00:04:13

RPF-ID: 1, Encap-ID: 0

Incoming Interface List

TenGigE0/0/0/4 Flags: A, Up: 00:04:13

Outgoing Interface List

Loopback0 Flags: F NS, Up: 00:04:13

(4.4.4.4,232.2.0.1) Ver: 0xbab2 RPF nbr: 4.4.4.4 Flags: RPF ME MH, FGID: 9243, Statistics enabled: 0x0, Tunnel RIF: 0x35, Tunnel LIF: 0xc000, Flags2: 0x2

MVPN TID: 0x0

MVPN Remote TID: 0xe0800002

MVPN Payload: IPv6

MDT IFH: 0x2000806c

Up: 00:06:49

RPF-ID: 1, Encap-ID: 0

Incoming Interface List

Loopback0 Flags: F A, Up: 00:06:49

Outgoing Interface List

Loopback0 Flags: F A, Up: 00:06:49

(4.4.4.4,232.100.1.0) Ver: 0x86b RPF nbr: 4.4.4.4 Flags: RPF ME MH, FGID: 9246, Statistics enabled: 0x0, Tunnel RIF: 0x35, Tunnel LIF: 0xc000, Flags2: 0x2

MVPN TID: 0xe0000002

MVPN Remote TID: 0x0

MVPN Payload: IPv4

MDT IFH: 0x2000806c

Up: 00:01:17

RPF-ID: 1, Encap-ID: 0

Incoming Interface List

Loopback0 Flags: F A, Up: 00:01:17

Outgoing Interface List

Loopback0 Flags: F A, Up: 00:01:17

TenGigE0/0/0/4 Flags: F NS, Up: 00:01:17

Router# show mrib route detail

Wed Aug 9 15:00:03.092 UTC

IP Multicast Routing Information Base

Entry flags: L - Domain-Local Source, E - External Source to the Domain,

C - Directly-Connected Check, S - Signal, IA - Inherit Accept,

IF - Inherit From, D - Drop, ME - MDT Encap, EID - Encap ID,

MD - MDT Decap, MT - MDT Threshold Crossed, MH - MDT interface handle

CD - Conditional Decap, MPLS - MPLS Decap, EX - Extranet

MoFE - MoFRR Enabled, MoFS - MoFRR State, MoFP - MoFRR Primary

MoFB - MoFRR Backup, RPFID - RPF ID Set, X - VXLAN

Interface flags: F - Forward, A - Accept, IC - Internal Copy,

NS - Negate Signal, DP - Don't Preserve, SP - Signal Present,

II - Internal Interest, ID - Internal Disinterest, LI - Local Interest,

LD - Local Disinterest, DI - Decapsulation Interface

EI - Encapsulation Interface, MI - MDT Interface, LVIF - MPLS Encap,

EX - Extranet, A2 - Secondary Accept, MT - MDT Threshold Crossed,

MA - Data MDT Assigned, LMI - mLDP MDT Interface, TMI - P2MP-TE MDT Interface

IRMI - IR MDT Interface, TRMI - TREE SID MDT Interface, MH - Multihome Interface

(*,224.0.0.0/24) Ver: 0x7f6e Flags: D P, FGID: 9235, Statistics enabled: 0x0, Tunnel RIF: 0xffffffff, Tunnel LIF: 0xffffffff

Up: 00:06:54

(*,224.0.1.39) Ver: 0x7af2 Flags: S P, FGID: 9233, Statistics enabled: 0x0, Tunnel RIF: 0xffffffff, Tunnel LIF: 0xffffffff

Up: 00:06:54

(*,224.0.1.40) Ver: 0x9f9b Flags: S P, FGID: 9234, Statistics enabled: 0x0, Tunnel RIF: 0xffffffff, Tunnel LIF: 0xffffffff

Up: 00:06:54

Outgoing Interface List

TenGigE0/0/0/4 Flags: II LI, Up: 00:06:54

(*,232.0.0.0/8) Ver: 0x517d Flags: D P, FGID: 9236, Statistics enabled: 0x0, Tunnel RIF: 0xffffffff, Tunnel LIF: 0xffffffff

Up: 00:06:54

(4.4.4.4,232.1.0.1) Ver: 0xc19e RPF nbr: 4.4.4.4 Flags: RPF ME MH, FGID: 9242, Statistics enabled: 0x0, Tunnel RIF: 0x35, Tunnel LIF: 0xc000, Flags2: 0x2

MVPN TID: 0xe0000002

MVPN Remote TID: 0x0

MVPN Payload: IPv4

MDT IFH: 0x2000806c

Up: 00:06:49

RPF-ID: 1, Encap-ID: 0

Incoming Interface List

Loopback0 Flags: F A, Up: 00:06:49

Outgoing Interface List

Loopback0 Flags: F A, Up: 00:06:49

TenGigE0/0/0/4 Flags: F NS, Up: 00:04:13

(21.21.21.21,232.1.0.1) Ver: 0xa354 RPF nbr: 10.2.0.2 Flags: RPF MD MH CD, FGID: 9244, Statistics enabled: 0x0, Tunnel RIF: 0x35, Tunnel LIF: 0xc000, Flags2: 0x2

MVPN TID: 0xe0000002

MVPN Remote TID: 0x0

MVPN Payload: IPv4

MDT IFH: 0x2000806c

Up: 00:04:13

RPF-ID: 1, Encap-ID: 0

Incoming Interface List

TenGigE0/0/0/4 Flags: A, Up: 00:04:13

Outgoing Interface List

Loopback0 Flags: F NS, Up: 00:04:13

(4.4.4.4,232.2.0.1) Ver: 0xbab2 RPF nbr: 4.4.4.4 Flags: RPF ME MH, FGID: 9243, Statistics enabled: 0x0, Tunnel RIF: 0x35, Tunnel LIF: 0xc000, Flags2: 0x2

MVPN TID: 0x0

MVPN Remote TID: 0xe0800002

MVPN Payload: IPv6

MDT IFH: 0x2000806c

Up: 00:06:49

RPF-ID: 1, Encap-ID: 0

Incoming Interface List

Loopback0 Flags: F A, Up: 00:06:49

Outgoing Interface List

Loopback0 Flags: F A, Up: 00:06:49

(4.4.4.4,232.100.1.0) Ver: 0x86b RPF nbr: 4.4.4.4 Flags: RPF ME MH, FGID: 9246, Statistics enabled: 0x0, Tunnel RIF: 0x35, Tunnel LIF: 0xc000, Flags2: 0x2

MVPN TID: 0xe0000002

MVPN Remote TID: 0x0

MVPN Payload: IPv4

MDT IFH: 0x2000806c

Up: 00:01:17

RPF-ID: 1, Encap-ID: 0

Incoming Interface List

Loopback0 Flags: F A, Up: 00:01:17

Outgoing Interface List

Loopback0 Flags: F A, Up: 00:01:17

TenGigE0/0/0/4 Flags: F NS, Up: 00:01:17

Feedback

Feedback