Table 9. Feature History Table

| Feature Name | Release Information | Feature Description |
|---|---|---|
| Bandwidth Protection Functions to Enhance auto-tunnel backup Capabilities | Release 7.5.1 | This feature introduces bandwidth protection functions for auto-tunnel backups: signaled bandwidth, bandwidth protection, and soft-preemption. These functions improve bandwidth usage and help prevent traffic congestion and traffic loss. In earlier releases, auto-tunnel backups provided only link protection and node protection, and backup tunnels were signaled with zero bandwidth, which caused traffic congestion when FRR went active. This feature introduces the following commands and keywords: |

The following example shows the auto-tunnel backup configuration for core or edge routers.

```
RP/0/RSP0/CPU0:router(config)# mpls traffic-eng
 auto-tunnel backup
  tunnel-id min 60000 max 61000
 interface pos 0/1/0/0
  auto-tunnel backup
   attribute-set ab
```

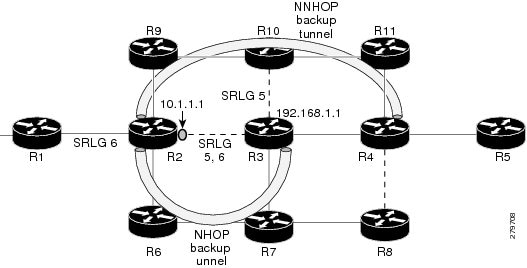

The following example shows the protection (NNHOP and SRLG) that was set on the auto-tunnel backup.

RP/0/RSP0/CPU0:router# show mpls traffic-eng tunnels 1

Signalling Summary:

LSP Tunnels Process: running

RSVP Process: running

Forwarding: enabled

Periodic reoptimization: every 3600 seconds, next in 2524 seconds

Periodic FRR Promotion: every 300 seconds, next in 49 seconds

Auto-bw enabled tunnels: 1

Name: tunnel-te1 Destination: 200.0.0.3 (auto backup)

Status:

Admin: up Oper: up Path: valid Signalling: connected

path option 10, type explicit (autob_nnhop_srlg_tunnel1) (Basis for Setup, path weight 11)

path option 20, type explicit (autob_nnhop_tunnel1)

G-PID: 0x0800 (derived from egress interface properties)

Bandwidth Requested: 0 kbps CT0

Creation Time: Fri Jul 10 01:53:25.581 PST (1h 25m 17s ago)

Config Parameters:

Bandwidth: 0 kbps (CT0) Priority: 7 7 Affinity: 0x0/0xffff

Metric Type: TE (default)

AutoRoute: disabled LockDown: disabled Policy class: not set

Forwarding-Adjacency: disabled

Loadshare: 0 equal loadshares

Auto-bw: disabled

Fast Reroute: Disabled, Protection Desired: None

Path Protection: Not Enabled

Auto Backup:

Protected LSPs: 4

Protected S2L Sharing Families: 0

Protected S2Ls: 0

Protected i/f: Gi0/1/0/0 Protected node: 20.0.0.2

Protection: NNHOP+SRLG

Unused removal timeout: not running

History:

Tunnel has been up for: 00:00:08

Current LSP:

Uptime: 00:00:08

Prior LSP:

ID: path option 1 [545]

Removal Trigger: configuration changed

Path info (OSPF 0 area 0):

Hop0: 10.0.0.2

Hop1: 100.0.0.2

Hop2: 100.0.0.3

Hop3: 200.0.0.3

The following example shows the automatically created path options for this auto-tunnel backup.

RP/0/RSP0/CPU0:router# show mpls traffic-eng tunnels 1 detail

Signalling Summary:

LSP Tunnels Process: running

RSVP Process: running

Forwarding: enabled

Periodic reoptimization: every 3600 seconds, next in 2524 seconds

Periodic FRR Promotion: every 300 seconds, next in 49 seconds

Auto-bw enabled tunnels: 1

Name: tunnel-te1 Destination: 200.0.0.3 (auto backup)

Status:

Admin: up Oper: up Path: valid Signalling: connected

path option 10, type explicit (autob_nnhop_srlg_tunnel1) (Basis for Setup, path weight 11)

path option 20, type explicit (autob_nnhop_tunnel1)

G-PID: 0x0800 (derived from egress interface properties)

Bandwidth Requested: 0 kbps CT0

Creation Time: Fri Jul 10 01:53:25.581 PST (1h 25m 17s ago)

Config Parameters:

Bandwidth: 0 kbps (CT0) Priority: 7 7 Affinity: 0x0/0xffff

Metric Type: TE (default)

AutoRoute: disabled LockDown: disabled Policy class: not set

Forwarding-Adjacency: disabled

Loadshare: 0 equal loadshares

Auto-bw: disabled

Fast Reroute: Disabled, Protection Desired: None

Path Protection: Not Enabled

Auto Backup (NNHOP+SRLG):

Protected LSPs: 4

Protected S2L Sharing Families: 0

Protected S2Ls: 0

Protected i/f: Gi0/1/0/0 Protected node: 20.0.0.2

Protection: NNHOP+SRLG

Unused removal timeout: not running

Path Options Details:

10: Explicit Path Name: (autob_nnhop_srlg_te1)

1: exclude-srlg 50.0.0.1

2: exclude-address 50.0.0.2

3: exclude-node 20.0.0.2

20: Explicit Path Name: (autob_nnhop_te1)

1: exclude-address 50.0.0.1

2: exclude-address 50.0.0.2

3: exclude-node 20.0.0.2

History:

Tunnel has been up for: 00:00:08

Current LSP:

Uptime: 00:00:08

Prior LSP:

ID: path option 1 [545]

Removal Trigger: configuration changed

Path info (OSPF 0 area 0):

Hop0: 10.0.0.2

Hop1: 100.0.0.2

Hop2: 100.0.0.3

Hop3: 200.0.0.3
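The detail outputs in this section (tunnel-te1 here, tunnel-te400 further on) show the same pattern for the two generated explicit paths: option 10 (NNHOP+SRLG) excludes the SRLG of the first link address, and option 20 (NNHOP) excludes both link addresses as plain addresses; both exclude the protected node. The Python sketch below only reproduces that visible pattern for illustration. The function name and the neutral parameter names `link_addr_a`/`link_addr_b` are hypothetical, and the router's actual path-generation logic may differ.

```python
from typing import List, Tuple

def nnhop_path_options(tunnel_id: int, link_addr_a: str, link_addr_b: str,
                       protected_node: str) -> List[Tuple[int, str, List[str]]]:
    """Return (path-option index, explicit-path name, hop list) tuples.

    Mirrors the pattern in the example outputs only; not the router's code.
    """
    # Option 10: NNHOP + SRLG diversity (SRLG exclusion on the first address).
    nnhop_srlg = [f"exclude-srlg {link_addr_a}",
                  f"exclude-address {link_addr_b}",
                  f"exclude-node {protected_node}"]
    # Option 20: plain NNHOP (both link addresses excluded as addresses).
    nnhop = [f"exclude-address {link_addr_a}",
             f"exclude-address {link_addr_b}",
             f"exclude-node {protected_node}"]
    return [
        (10, f"autob_nnhop_srlg_te{tunnel_id}", nnhop_srlg),
        (20, f"autob_nnhop_te{tunnel_id}", nnhop),
    ]

# Print in the same shape as the "Path Options Details" section above.
for index, name, hops in nnhop_path_options(1, "50.0.0.1", "50.0.0.2", "20.0.0.2"):
    print(f"{index}: Explicit Path Name: ({name})")
    for n, hop in enumerate(hops, start=1):
        print(f"  {n}: {hop}")
```

Calling the function with the tunnel-te400 values (34.9.0.4, 34.9.0.3, 192.168.0.1) yields the same lists as that tunnel's detail output.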

This example shows all tunnels, including the automatically created backup tunnels (marked with an asterisk).

RP/0/RSP0/CPU0:router# show mpls traffic-eng tunnels brief

TUNNEL NAME DESTINATION STATUS STATE

tunnel-te0 200.0.0.3 up up

tunnel-te1 200.0.0.3 up up

tunnel-te2 200.0.0.3 up up

tunnel-te50 200.0.0.3 up up

*tunnel-te60 200.0.0.3 up up

*tunnel-te70 200.0.0.3 up up

*tunnel-te80 200.0.0.3 up up

RP/0/RSP0/CPU0:router# show mpls traffic-eng tunnels tabular

Tunnel LSP Destination Source FRR LSP Path

Name ID Address Address State State Role Prot

------------------ ------ --------------- --------------- ------- ------- ------ -----

tunnel-te0 549 200.0.0.3 200.0.0.1 up Inact Head InAct

tunnel-te1 546 200.0.0.3 200.0.0.1 up Inact Head InAct

tunnel-te2 6 200.0.0.3 200.0.0.1 up Inact Head InAct

tunnel-te50 6 200.0.0.3 200.0.0.1 up Active Head InAct

tunnel-te60 4 200.0.0.3 200.0.0.1 up Active Head InAct

tunnel-te70 4 200.0.0.3 200.0.0.1 up Active Head InAct

tunnel-te80 3 200.0.0.3 200.0.0.1 up Active Head InAct
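To find the tunnels whose FRR state is Active, the tabular output can be parsed. The sketch below assumes whitespace-separated columns exactly as shown above (eight fields per data row, with an optional `*` prefix on automatically created tunnels); the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class TunnelRow:
    name: str
    lsp_id: int
    destination: str
    source: str
    tunnel_state: str
    frr_state: str
    role: str
    path_prot: str
    auto_created: bool

def parse_tabular(output: str) -> List[TunnelRow]:
    """Parse data rows of `show mpls traffic-eng tunnels tabular` (assumed layout)."""
    rows: List[TunnelRow] = []
    for line in output.splitlines():
        fields = line.split()
        # Skip headers, the dashed rule, and legend lines.
        if len(fields) != 8 or not fields[0].lstrip("*").startswith("tunnel-te"):
            continue
        name = fields[0]
        rows.append(TunnelRow(
            name=name.lstrip("*"),
            lsp_id=int(fields[1]),
            destination=fields[2],
            source=fields[3],
            tunnel_state=fields[4],
            frr_state=fields[5],
            role=fields[6],
            path_prot=fields[7],
            auto_created=name.startswith("*"),
        ))
    return rows

SAMPLE = """\
Tunnel LSP Destination Source FRR LSP Path
Name ID Address Address State State Role Prot
------------------ ------ --------------- --------------- ------- ------- ------ -----
tunnel-te0 549 200.0.0.3 200.0.0.1 up Inact Head InAct
tunnel-te50 6 200.0.0.3 200.0.0.1 up Active Head InAct
"""

active = [r.name for r in parse_tabular(SAMPLE) if r.frr_state == "Active"]
print(active)  # ['tunnel-te50']
```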

This example shows the auto-tunnel backup details.

RP/0/RSP0/CPU0:router# show mpls traffic-eng tunnels auto-tunnel backup detail

Name: tunnel-te400 Destination: 10.0.0.1 (auto-tunnel backup)

Status:

Admin: up Oper: up Path: valid Signalling: connected

path option 20, type explicit (autob_nnhop_te400) (Basis for Setup, path weight 2)

path option 10, type explicit (autob_nnhop_srlg_te400) [disabled]

G-PID: 0x0800 (derived from egress interface properties)

Bandwidth Requested: 0 kbps CT0

Creation Time: Thu Aug 16 18:30:41 2012 (00:01:28 ago)

Config Parameters:

Bandwidth: 0 kbps (CT0) Priority: 7 7 Affinity: 0x0/0xffff

Metric Type: TE (default)

Hop-limit: disabled

AutoRoute: disabled LockDown: disabled Policy class: not set

Forwarding-Adjacency: disabled

Loadshare: 0 equal loadshares

Auto-bw: disabled

Fast Reroute: Disabled, Protection Desired: None

Path Protection: Not Enabled

Soft Preemption: Disabled

Auto Backup:

Protected LSPs: 1

Protected S2L Sharing Families: 0

Protected S2L: 0

Protected i/f: Gi0/1/0/3 Protected node: 192.168.0.1

Attribute-set: ab1

Protection: NNHOP

Unused removal timeout: not running

Path Option Details:

10: Explicit Path Name: (autob_nnhop_srlg_te400)

1: exclude-srlg 34.9.0.4

2: exclude-address 34.9.0.3

3: exclude-node 192.168.0.1

20: Explicit Path Name: (autob_nnhop_te400)

1: exclude-address 34.9.0.4

2: exclude-address 34.9.0.3

3: exclude-node 192.168.0.1

SNMP Index: 221

History:

Tunnel has been up for: 00:00:34 (since Thu Aug 16 18:31:35 EST 2012)

Current LSP:

Uptime: 00:00:34 (since Thu Aug 16 18:31:35 EST 2012)

Current LSP Info:

Instance: 2, Signaling Area: OSPF 100 area 10.2.3.4

Uptime: 00:00:34 (since Thu Aug 16 18:31:35 EST 2012)

Outgoing Interface: GigabitEthernet0/1/0/2, Outgoing Label: 16000

Router-IDs: local 209.165.201.1

downstream 172.16.0.1

Soft Preemption: None

Path Info:

Outgoing:

Explicit Route:

Strict, 24.9.0.2

Strict, 12.9.1.1

Strict, 10.0.0.1

Record Route: Empty

Tspec: avg rate=0 kbits, burst=1000 bytes, peak rate=0 kbits

Session Attributes: Local Prot: Not Set, Node Prot: Not Set, BW Prot: Not Set

Soft Preemption Desired: Not Set

Resv Info:

Record Route:

IPv4 24.9.0.2, flags 0x0

IPv4 12.9.1.1, flags 0x0

Fspec: avg rate=0 kbits, burst=1000 bytes, peak rate=0 kbits

Displayed 1 (of 104) heads, 0 (of 0) midpoints, 0 (of 201) tails

Displayed 1 up, 0 down, 0 recovering, 0 recovered heads

This example shows the automatically created backup tunnels.

RP/0/RSP0/CPU0:router# show mpls traffic-eng tunnels auto-tunnel backup tabular

Tunnel LSP Destination Source Tun FRR LSP Path

Name ID Address Address State State Role Prot

----------------- ----- --------------- --------------- ------ ------ ---- -----

*tunnel-te400 2 10.0.0.1 209.165.201.1 up Inact Head Inact

*tunnel-te401 2 192.168.0.1 209.165.201.1 up Inact Head Inact

* = automatically created backup tunnel

RP/0/RSP0/CPU0:router# show mpls traffic-eng tunnels auto-tunnel backup brief

TUNNEL NAME DESTINATION STATUS STATE

*tunnel-te400 10.0.0.1 up up

*tunnel-te401 192.168.0.1 up up

* = automatically created backup tunnel

Displayed 2 (of 104) heads, 0 (of 0) midpoints, 0 (of 201) tails

Displayed 2 up, 0 down, 0 recovering, 0 recovered heads
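The brief output can be checked the same way, for example to confirm that every automatically created backup tunnel is up. This sketch assumes the four-column layout shown above; the names are hypothetical.

```python
from typing import List, NamedTuple

class BriefRow(NamedTuple):
    name: str
    destination: str
    status: str
    state: str
    auto_created: bool

def parse_brief(output: str) -> List[BriefRow]:
    """Parse `show mpls traffic-eng tunnels ... brief` rows (assumed layout)."""
    rows = []
    for line in output.splitlines():
        fields = line.split()
        # Header, legend, and "Displayed ..." summary lines do not match.
        if len(fields) != 4 or not fields[0].lstrip("*").startswith("tunnel-te"):
            continue
        name = fields[0]
        rows.append(BriefRow(name.lstrip("*"), fields[1], fields[2], fields[3],
                             name.startswith("*")))
    return rows

SAMPLE = """\
TUNNEL NAME DESTINATION STATUS STATE
*tunnel-te400 10.0.0.1 up up
*tunnel-te401 192.168.0.1 up up
* = automatically created backup tunnel
"""

rows = parse_brief(SAMPLE)
auto_up = [r.name for r in rows if r.auto_created and r.state == "up"]
print(auto_up)  # ['tunnel-te400', 'tunnel-te401']
```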

This example shows the attribute-set for auto-backup tunnels.

RP/0/RSP0/CPU0:router# show mpls traffic-eng attribute-set auto-backup

Attribute Set Name: ab (Type: auto-backup)

Number of affinity constraints: 2

Include bit map : 0x4

Include name : blue

Exclude bit map : 0x2

Exclude name : red

Priority: 7 7 (Default)

Record-route: Enabled

Policy-class: 1

Logging: reoptimize, state

List of protected interfaces (count 1)

POS0_3_0_1

List of tunnel IDs (count 1)

3000

This example shows the attribute-set for auto-mesh tunnels.

RP/0/RSP0/CPU0:router# show mpls traffic-eng attribute-set auto-mesh

Attribute Set Name: am (Type: auto-mesh)

Bandwidth: 100 kbps (CT0)

Number of affinity constraints: 2

Include bit map : 0x8

Include name : yellow

Exclude bit map : 0x2

Exclude name : red

Priority: 2 2

Interface Bandwidth: 0 kbps (Default)

AutoRoute Announce: Disabled

Auto-bw: Disabled

Soft Preemption: Disabled

Fast Reroute: Enabled, Protection Desired: Node, Bandwidth

Record-route: Enabled

Policy-class: 0 (Not configured)

Logging: None

List of Mesh Groups (count 1)

1
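Both attribute sets express affinity constraints as bit maps (ab includes 0x4 `blue` and excludes 0x2 `red`; am includes 0x8 `yellow` and excludes 0x2 `red`). The sketch below shows how such a constraint could be evaluated against a link's attribute flags, assuming that every include bit must be present on the link and no exclude bit may be present. For the single-bit maps shown here, "include any" and "include all" semantics give the same result. The link attribute values and the function name are hypothetical.

```python
def link_admissible(link_attrs: int, include_map: int, exclude_map: int) -> bool:
    """Return True if a link's attribute flags satisfy the affinity constraint.

    Assumed semantics: all include bits set on the link, no exclude bit set.
    """
    has_all_included = (link_attrs & include_map) == include_map
    has_no_excluded = (link_attrs & exclude_map) == 0
    return has_all_included and has_no_excluded

# Attribute set "ab": include 0x4 (blue), exclude 0x2 (red).
print(link_admissible(0x4, 0x4, 0x2))  # True: blue only
print(link_admissible(0x6, 0x4, 0x2))  # False: blue, but also red
print(link_admissible(0x1, 0x4, 0x2))  # False: not blue
```

If no chain of links toward a destination passes this test, path computation fails, which is the kind of condition the `No path to destination ... (affinity)` error for tunnel-te3503 reports.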

This example shows the details about the tunnel that is using the auto-backup type of attribute-set.

RP/0/RSP0/CPU0:router# show mpls traffic-eng tunnels attribute-set auto-backup ab

Name: tunnel-te3000 Destination: 10.0.0.1 (auto-tunnel backup)

Status:

Admin: up Oper: up Path: valid Signalling: connected

path option 20, type explicit (autob_nhop_te3000) (Basis for Setup, path weight 2)

path option 10, type explicit (autob_nhop_srlg_te3000) [disabled]

G-PID: 0x0800 (derived from egress interface properties)

Bandwidth Requested: 0 kbps CT0

Creation Time: Tue Aug 14 23:24:27 2012 (00:05:28 ago)

Config Parameters:

Bandwidth: 0 kbps (CT0) Priority: 7 7

Number of affinity constraints: 2

Include bit map : 0x4

Include name : blue

Exclude bit map : 0x2

Exclude name : red

Metric Type: TE (default)

Hop-limit: disabled

AutoRoute: disabled LockDown: disabled Policy class: 1

Forwarding-Adjacency: disabled

Loadshare: 0 equal loadshares

Auto-bw: disabled

Fast Reroute: Disabled, Protection Desired: None

Path Protection: Not Enabled

Soft Preemption: Disabled

Auto Backup:

Protected LSPs: 2

Protected S2L Sharing Families: 0

Protected S2L: 0

Protected i/f: PO0/3/0/1

Attribute-set: ab

Protection: NHOP

Unused removal timeout: not running

History:

Tunnel has been up for: 00:04:57 (since Tue Aug 14 23:24:58 EST 2012)

Current LSP:

Uptime: 00:04:57 (since Tue Aug 14 23:24:58 EST 2012)

Path info (OSPF 100 area 16909060):

Node hop count: 2

Hop0: 23.9.0.2

Hop1: 12.9.0.2

Hop2: 12.9.0.1

Hop3: 10.0.0.1

Displayed 1 (of 7) heads, 0 (of 3) midpoints, 0 (of 0) tails

Displayed 1 up, 0 down, 0 recovering, 0 recovered heads

This example shows the protected interfaces for auto-tunnel backups.

RP/0/RSP0/CPU0:router# show mpls traffic-eng tunnels backup protected-interface

Interface: Gi0/2/0/1 (auto-tunnel backup)

SRLG: N/A, NHOP-only: No

Attribute-set: Not configured

Auto-tunnel backup recreate time remaining: timer not running

No backup tunnel found

Interface: Gi0/2/0/3

tunnel-te340 PROTECTED : out i/f: PO0/3/0/2 Admin: up Oper: up

Interface: PO0/3/0/1 (auto-tunnel backup)

SRLG: N/A, NHOP-only: No

Attribute-set: ab

Auto-tunnel backup recreate time remaining: timer not running

*tunnel-te3000 NHOP : out i/f: Gi0/2/0/2 Admin: up Oper: up

* = automatically created backup tunnel

This example shows the details about all the tunnels that are using the auto-mesh type of attribute-set.

RP/0/RSP0/CPU0:router# show mpls traffic-eng tunnels attribute-set auto-mesh all

Name: tunnel-te3501 Destination: 10.0.0.1 (auto-tunnel mesh)

Status:

Admin: up Oper: up Path: valid Signalling: connected

path option 10, type dynamic (Basis for Setup, path weight 2)

G-PID: 0x0800 (derived from egress interface properties)

Bandwidth Requested: 100 kbps CT0

Creation Time: Tue Aug 14 23:25:41 2012 (00:06:13 ago)

Config Parameters:

Bandwidth: 100 kbps (CT0) Priority: 2 2

Number of affinity constraints: 2

Include bit map : 0x8

Include name : yellow

Exclude bit map : 0x2

Exclude name : red

Metric Type: TE (default)

Hop-limit: disabled

AutoRoute: disabled LockDown: disabled Policy class: not set

Forwarding-Adjacency: disabled

Loadshare: 0 equal loadshares

Auto-bw: disabled

Fast Reroute: Enabled, Protection Desired: Node, Bandwidth

Path Protection: Not Enabled

Attribute-set: am (type auto-mesh)

Soft Preemption: Disabled

Auto-tunnel Mesh:

Group ID: 1

Destination list: blah

Unused removal timeout: not running

History:

Tunnel has been up for: 00:06:13 (since Tue Aug 14 23:25:41 EST 2012)

Current LSP:

Uptime: 00:06:13 (since Tue Aug 14 23:25:41 EST 2012)

Path info (OSPF 100 area 16909060):

Node hop count: 2

Hop0: 23.9.0.2

Hop1: 12.9.0.2

Hop2: 12.9.0.1

Hop3: 10.0.0.1

Name: tunnel-te3502 Destination: 172.16.0.1 (auto-tunnel mesh)

Status:

Admin: up Oper: up Path: valid Signalling: connected

path option 10, type dynamic (Basis for Setup, path weight 1)

G-PID: 0x0800 (derived from egress interface properties)

Bandwidth Requested: 100 kbps CT0

Creation Time: Tue Aug 14 23:25:41 2012 (00:06:13 ago)

Config Parameters:

Bandwidth: 100 kbps (CT0) Priority: 2 2

Number of affinity constraints: 2

Include bit map : 0x8

Include name : yellow

Exclude bit map : 0x2

Exclude name : red

Metric Type: TE (default)

Hop-limit: disabled

AutoRoute: disabled LockDown: disabled Policy class: not set

Forwarding-Adjacency: disabled

Loadshare: 0 equal loadshares

Auto-bw: disabled

Fast Reroute: Enabled, Protection Desired: Node, Bandwidth

Path Protection: Not Enabled

Attribute-set: am (type auto-mesh)

Soft Preemption: Disabled

Auto-tunnel Mesh:

Group ID: 1

Destination list: blah

Unused removal timeout: not running

History:

Tunnel has been up for: 00:06:13 (since Tue Aug 14 23:25:41 EST 2012)

Current LSP:

Uptime: 00:06:13 (since Tue Aug 14 23:25:41 EST 2012)

Path info (OSPF 100 area 16909060):

Node hop count: 1

Hop0: 23.9.0.2

Hop1: 172.16.0.1

Name: tunnel-te3503 Destination: 209.165.201.1 (auto-tunnel mesh)

Status:

Admin: up Oper: down Path: not valid Signalling: Down

path option 10, type dynamic

Last PCALC Error: Tue Aug 14 23:31:26 2012

Info: No path to destination, 209.165.201.1 (affinity)

G-PID: 0x0800 (derived from egress interface properties)

Bandwidth Requested: 100 kbps CT0

Creation Time: Tue Aug 14 23:25:41 2012 (00:06:13 ago)

Config Parameters:

Bandwidth: 100 kbps (CT0) Priority: 2 2

Number of affinity constraints: 2

Include bit map : 0x8

Include name : yellow

Exclude bit map : 0x2

Exclude name : red

Metric Type: TE (default)

Hop-limit: disabled

AutoRoute: disabled LockDown: disabled Policy class: not set

Forwarding-Adjacency: disabled

Loadshare: 0 equal loadshares

Auto-bw: disabled

Fast Reroute: Enabled, Protection Desired: Node, Bandwidth

Path Protection: Not Enabled

Attribute-set: am (type auto-mesh)

Soft Preemption: Disabled

Auto-tunnel Mesh:

Group ID: 1

Destination list: blah

Unused removal timeout: not running

Displayed 3 (of 7) heads, 0 (of 3) midpoints, 0 (of 0) tails

Displayed 2 up, 1 down, 0 recovering, 0 recovered heads

Bandwidth Protection Functions to Enhance auto-tunnel backup Capabilities

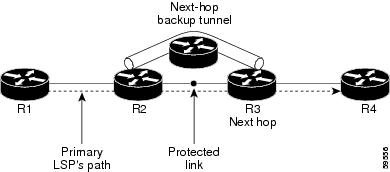

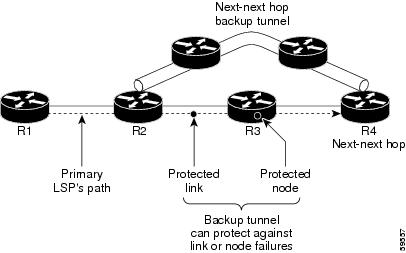

Without bandwidth protection, auto-tunnel backups provide only link protection (next-hop) and node protection (next-next-hop), and backup tunnels are signaled with zero bandwidth. This causes traffic congestion when FRR goes active, because a backup tunnel might be protecting a large amount of traffic, such as LSPs with large bandwidth or many LSPs.
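A rough worked example of the problem, using hypothetical numbers: three protected LSPs of 400, 300, and 500 Mbps fail over to a zero-bandwidth backup tunnel whose path crosses a 1000-Mbps link that already carries 600 Mbps. Because the backup reserved nothing, path computation had no reason to avoid that link. The function name is hypothetical.

```python
from typing import List, Tuple

def post_frr_load(link_capacity_mbps: float, existing_load_mbps: float,
                  protected_lsps_mbps: List[float]) -> Tuple[float, float]:
    """Offered load and excess on one backup-path link after FRR switchover."""
    offered = existing_load_mbps + sum(protected_lsps_mbps)
    excess = max(0.0, offered - link_capacity_mbps)
    return offered, excess

# Hypothetical link: 1000 Mbps capacity, 600 Mbps already in use.
offered, excess = post_frr_load(1000, 600, [400, 300, 500])
print(f"offered {offered} Mbps, excess {excess} Mbps")  # offered 1800 Mbps, excess 800 Mbps
```

In this example, roughly 800 Mbps more traffic is offered than the link can carry, so it is queued or dropped.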

To address this congestion issue, bandwidth protection capabilities are added for auto-tunnel backups. The following settings are provided: bandwidth protection, signaled bandwidth, and soft-preemption.

-

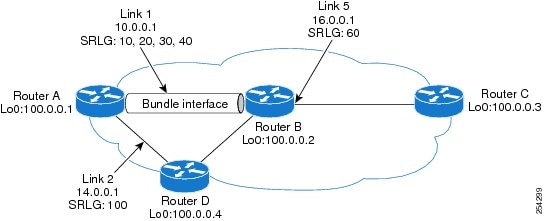

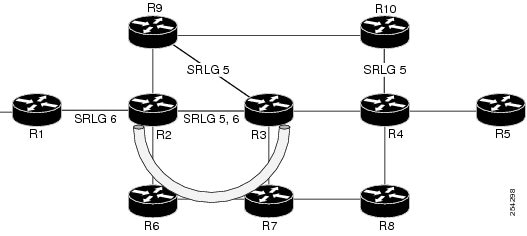

Bandwidth protection – A link or node protection backup might not provide bandwidth protection. But with this setting (bandwidth-protection maximum-aggregate), you can set the maximum bandwidth value that an auto-tunnel can protect.

-

Signalled bandwidth – Without bandwidth protection, auto-tunnel backups are signaled with zero bandwidth too, with no guarantee that at least

some bandwidth is backed up. So, the backup tunnels might be setup on links that are highly utilized, causing congestion drops

when the backup tunnels start to transmit traffic after FRR is triggered.

This setting (signalled-bandwidth) addresses the issue, since you can set the signalled bandwidth of the tunnel (and reserve minimal bandwidth for an auto-tunnel

backup). When you set the signal bandwidth value for auto-backup tunnels, congestion over backup links reduces.

-

Soft-preemption – Since bandwidth can be reserved for autobackup tunnels, a setting (soft-preemption) is provided for soft-preemption of the reserved bandwidth, if it is needed for a higher-priority tunnel.
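Signaled bandwidth helps because constraint-based path computation prunes links whose unreserved bandwidth is below the requested value before it computes the shortest path. The sketch below is a generic CSPF (Dijkstra over the pruned links) on a hypothetical three-node topology, not the router's implementation. Bandwidth is assumed to be in kbps, and 700000 mirrors the signalled-bandwidth value used in the configuration example.

```python
import heapq
from typing import Dict, List, Optional, Tuple

# Hypothetical topology: node -> list of (neighbor, te_metric, unreserved_kbps).
Graph = Dict[str, List[Tuple[str, int, int]]]

def cspf(graph: Graph, src: str, dst: str, bw_kbps: int) -> Optional[List[str]]:
    """Shortest path by TE metric over links with enough unreserved bandwidth."""
    dist: Dict[str, int] = {src: 0}
    prev: Dict[str, str] = {}
    heap = [(0, src)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == dst:
            path = [dst]
            while path[-1] != src:
                path.append(prev[path[-1]])
            return path[::-1]
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry
        for nbr, metric, unreserved in graph.get(node, []):
            if unreserved < bw_kbps:
                continue  # prune links that cannot hold the requested bandwidth
            nd = d + metric
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    return None  # no path satisfies the bandwidth constraint

topo: Graph = {
    "A": [("B", 1, 50_000), ("C", 2, 900_000)],
    "B": [("D", 1, 50_000)],
    "C": [("D", 2, 900_000)],
}
print(cspf(topo, "A", "D", 0))        # ['A', 'B', 'D']  (zero bandwidth: busy links allowed)
print(cspf(topo, "A", "D", 700_000))  # ['A', 'C', 'D']  (busy A-B-D links pruned)
```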

Configurations

/*Enable Bandwidth Protection On a TE Auto-Tunnel Backup*/

Router# configure

Router(config)# mpls traffic-eng

Router(config-mpls-te)# interface GigabitEthernet 0/2/0/0 auto-tunnel backup

Router(config-te-if-auto-backup)# bandwidth-protection maximum-aggregate 100000

Router(config-te-if-auto-backup)# commit
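The maximum-aggregate value caps the total bandwidth of the LSPs that the backup tunnel protects. One way to picture this is a first-come, first-served admission check, sketched below. The ordering policy, the kbps unit, and the function name are assumptions for illustration; the router may select protected LSPs differently.

```python
from typing import List, Tuple

def admit_for_bw_protection(lsp_bw_kbps: List[int],
                            max_aggregate_kbps: int) -> Tuple[List[int], List[int]]:
    """Split LSP indexes into bandwidth-protected and not, first come first served."""
    protected: List[int] = []
    unprotected: List[int] = []
    used = 0
    for i, bw in enumerate(lsp_bw_kbps):
        if used + bw <= max_aggregate_kbps:
            protected.append(i)
            used += bw
        else:
            unprotected.append(i)  # would push the aggregate over the cap
    return protected, unprotected

# Cap of 100000, matching the bandwidth-protection maximum-aggregate example.
print(admit_for_bw_protection([40_000, 50_000, 30_000, 10_000], 100_000))
# ([0, 1, 3], [2])
```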

/*Enable Signalled Bandwidth On a TE Auto-Tunnel Backup*/

Router# configure

Router(config)# mpls traffic-eng attribute-set auto-backup MyBackupConfig

Router(config-te-attribute-set)# signalled-bandwidth 700000

Router(config-te-attribute-set)# commit

After creating the auto-backup attribute-set (MyBackupConfig in this case), associate it with the auto-tunnel backup interface.

Router# configure

Router(config)# mpls traffic-eng

Router(config-mpls-te)# interface GigabitEthernet 0/2/0/0 auto-tunnel backup

Router(config-te-if-auto-backup)# attribute-set MyBackupConfig

Router(config-te-if-auto-backup)# auto-tunnel backup tunnel-id min 6000 max 6500

Router(config-mpls-te)# commit
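The tunnel-id range bounds how many auto-tunnel backups can be created. The verification output reports the configured range (6000-6500) and a `Range exceeded` counter. The hypothetical allocator below hands out the lowest free ID in the range and counts allocation failures, loosely analogous to that counter. It is an illustration only, not a description of how IOS XR assigns IDs.

```python
from typing import Set

class TunnelIdPool:
    """Hypothetical allocator for auto-backup tunnel IDs in [min_id, max_id]."""

    def __init__(self, min_id: int, max_id: int) -> None:
        self.min_id, self.max_id = min_id, max_id
        self.in_use: Set[int] = set()
        self.range_exceeded = 0  # loosely analogous to the "Range exceeded" counter

    def allocate(self) -> int:
        """Return the lowest free ID, or raise if the range is exhausted."""
        for tid in range(self.min_id, self.max_id + 1):
            if tid not in self.in_use:
                self.in_use.add(tid)
                return tid
        self.range_exceeded += 1
        raise RuntimeError("auto-tunnel backup tunnel-id range exhausted")

    def release(self, tid: int) -> None:
        """Return an ID to the pool (for example, when an unused backup is removed)."""
        self.in_use.discard(tid)

pool = TunnelIdPool(6000, 6500)
print(pool.allocate(), pool.allocate())  # 6000 6001
print(pool.max_id - pool.min_id + 1)     # 501
```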

/*Enable Soft-Preemption On a TE Auto-Tunnel Backup*/

Router# configure

Router(config)# mpls traffic-eng attribute-set auto-backup MyBackupConfig

Router(config-te-attribute-set)# soft-preemption

Router(config-te-attribute-set)# commit
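Soft-preemption relies on RSVP-TE priorities, where 0 is the highest and 7 the lowest. Auto-backup tunnels default to priority 7 7, as the attribute-set output shows. A new LSP can preempt an existing reservation only if its setup priority is numerically lower than the existing LSP's holding priority; with soft-preemption, the preempted LSP is asked to reroute instead of being torn down at once. The sketch below selects victims under that rule. The lowest-priority-first, largest-first ordering, the tunnel names, and the kbps values are assumptions.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Reservation:
    name: str
    holding_priority: int  # 0 = highest, 7 = lowest
    bw_kbps: int

def soft_preemption_victims(existing: List[Reservation], free_kbps: int,
                            need_kbps: int, setup_priority: int) -> List[str]:
    """Pick reservations to soft-preempt so that a new LSP fits on the link.

    Only reservations whose holding priority is weaker (numerically higher)
    than the new LSP's setup priority are eligible. Returns [] if nothing
    needs preempting or if preemption still cannot free enough bandwidth.
    """
    if free_kbps >= need_kbps:
        return []
    eligible = sorted((r for r in existing if r.holding_priority > setup_priority),
                      key=lambda r: (-r.holding_priority, -r.bw_kbps))
    victims: List[str] = []
    freed = free_kbps
    for r in eligible:
        if freed >= need_kbps:
            break
        victims.append(r.name)
        freed += r.bw_kbps
    return victims if freed >= need_kbps else []

link = [Reservation("tunnel-te6000", 7, 700_000),  # auto-backup, default priority 7
        Reservation("tunnel-te10", 2, 200_000)]
print(soft_preemption_victims(link, 100_000, 500_000, setup_priority=2))
# ['tunnel-te6000']
```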

Verification

/*Verify Auto-Tunnel Backup Configuration*/

In the output, bandwidth protection details are marked BW.

Router# show mpls traffic-eng auto-tunnel backup

AutoTunnel Backup Configuration:

Interfaces count: 1

Unused removal timeout: 1h 0m 0s

Configured tunnel number range: 6000-6500

AutoTunnel Backup Summary:

AutoTunnel Backups:

0 created, 0 up, 0 down, 0 unused

0 NHOP, 0 NNHOP, 0 SRLG strict, 0 SRLG preferred, 0 SRLG weighted, 0 BW protected

Protected LSPs:

0 NHOP, 0 NHOP+SRLG, 0 NHOP+BW, 0 NHOP+BW+SRLG

0 NNHOP, 0 NNHOP+SRLG, 0 NNHOP+BW, 0 NNHOP+BW+SRLG

Protected S2L Sharing Families:

0 NHOP, 0 NHOP+SRLG, 0 NNHOP+BW, 0 NNHOP+BW+SRLG

0 NNHOP, 0 NNHOP+SRLG, 0 NNHOP+BW, 0 NNHOP+BW+SRLG

Protected S2Ls:

0 NHOP, 0 NHOP+SRLG, 0 NNHOP+BW, 0 NNHOP+BW+SRLG

0 NNHOP, 0 NNHOP+SRLG, 0 NNHOP+BW, 0 NNHOP+BW+SRLG

Cumulative Counters (last cleared 00:08:47 ago):

Total NHOP NNHOP

Created: 0 0 0

Connected: 0 0 0

Removed (down): 0 0 0

Removed (unused): 0 0 0

Removed (in use): 0 0 0

Range exceeded: 0 0 0
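To track these counters over time (for example, to alert when `Range exceeded` increases), the cumulative counter rows can be parsed. This sketch assumes the `Label: total nhop nnhop` layout shown above; the function name is hypothetical.

```python
from typing import Dict, Tuple

COUNTER_ROWS = ("Created", "Connected", "Removed (down)", "Removed (unused)",
                "Removed (in use)", "Range exceeded")

def parse_cumulative_counters(output: str) -> Dict[str, Tuple[int, int, int]]:
    """Map each counter label to its (Total, NHOP, NNHOP) values."""
    counters: Dict[str, Tuple[int, int, int]] = {}
    for line in output.splitlines():
        label, sep, rest = line.strip().partition(":")
        if sep and label in COUNTER_ROWS:
            total, nhop, nnhop = (int(x) for x in rest.split())
            counters[label] = (total, nhop, nnhop)
    return counters

SAMPLE = """\
Cumulative Counters (last cleared 00:08:47 ago):
Total NHOP NNHOP
Created: 0 0 0
Connected: 0 0 0
Removed (down): 0 0 0
Removed (unused): 0 0 0
Removed (in use): 0 0 0
Range exceeded: 0 0 0
"""

counters = parse_cumulative_counters(SAMPLE)
print(counters["Range exceeded"])  # (0, 0, 0)
```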
