
Line Cards and Physical Layer Interface Modules

This chapter describes the line cards of the Cisco CRS Series Enhanced 16-Slot Line Card Chassis (LCC), namely the modular services card (MSC), the forwarding processor (FP) card, and the label switch processor (LSP) card, together with their associated physical layer interface modules (PLIMs).

This chapter includes the following sections:

- Overview of Line Card Architecture

- Overview of Line Cards and PLIMs

- Line Card Descriptions

- Physical Layer Interface Modules (PLIMs)

Note For a complete list of cards supported in the LCC, go to the Cisco Carrier Routing System Data Sheets at: http://www.cisco.com/en/US/products/ps5763/products_data_sheets_list.html

Overview of Line Card Architecture

The MSC, FP, and LSP cards, also called line cards, are the Layer 3 forwarding engines in the Cisco CRS 16-slot routing system. Every LCC has 16 line card slots for MSC, FP, or LSP cards, each with a capacity of up to 400 gigabits per second (Gbps) ingress and 400 Gbps egress, for a total routing capacity per chassis of 12800 Gbps or 12.8 terabits per second (Tbps), counting both directions of all 16 slots. (A terabit is 1 × 10^12 bits, or 1000 gigabits.)
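As a quick check of the capacity arithmetic above, the following minimal Python sketch multiplies the slot count by the combined ingress and egress capacity of each slot. The constant and function names are illustrative only.

```python
# Aggregate capacity of a 16-slot LCC, counting ingress and egress separately.
SLOTS = 16           # line card slots per LCC
INGRESS_GBPS = 400   # maximum ingress capacity per slot
EGRESS_GBPS = 400    # maximum egress capacity per slot

def chassis_capacity_gbps(slots: int, ingress: int, egress: int) -> int:
    """Total capacity in Gbps: both directions of every slot are summed."""
    return slots * (ingress + egress)

total_gbps = chassis_capacity_gbps(SLOTS, INGRESS_GBPS, EGRESS_GBPS)
total_tbps = total_gbps / 1000   # 1 Tbps = 1000 Gbps = 10**12 bits per second
print(f"{total_gbps} Gbps = {total_tbps} Tbps")  # 12800 Gbps = 12.8 Tbps
```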

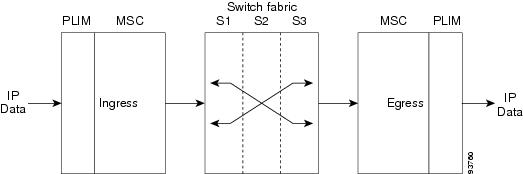

The routing system is built around a scalable, distributed three-stage Benes switch fabric and a variety of data interfaces. The data interfaces are contained on PLIMs that mate with an associated line card through the chassis midplane. The switch fabric cross-connects line cards to each other. Figure 5-1 is a simple diagram of the basic Cisco CRS architecture.

Figure 5-1 Simple Cisco CRS Series Routing System Architecture

Figure 5-1 illustrates the following concepts, which are common to all Cisco CRS-1 routing systems:

- Packet data enters the line card through physical data interfaces located on the associated PLIM. In Figure 5-1, these physical interfaces are represented by four OC-192 ports.

- Data is routed through the line card, a Layer 3 forwarding engine, to the three-stage Benes switch fabric. Each line card and its associated PLIM have Layer 1 through Layer 3 functionality, and each line card can deliver line-rate performance (up to 400 Gbps aggregate bandwidth, depending on the line card). See the “Overview of Line Cards and PLIMs” section for more information.

- The three-stage Benes switch fabric cross-connects the line cards in the routing system. The switch fabric is partitioned into eight planes (plane 0 to plane 7) and is implemented by several components (see Figure 5-1). See the “Switch Fabric” chapter for more information.

Overview of Line Cards and PLIMs

Each MSC, FP, and LSP is paired with a corresponding physical layer interface module (PLIM) that contains the physical interfaces for the line card. An MSC, FP, or LSP can be paired with different types of PLIMs to provide a variety of packet interfaces.

- The MSC card is available in the following versions: CRS-MSC (end-of-sale), CRS-MSC-B, CRS-MSC-140G, and CRS-MSC-X (400G mode).

- The FP card is available in the following versions: CRS-FP40, CRS-FP140, and CRS-FP-X (400G mode).

- The LSP card is available in the following versions: CRS-LSP and CRS-LSP-X.

Note See the “Line Card Chassis Components” section for information about CRS fabric, MSC, and PLIM component compatibility.

Each line card and its associated PLIM implement Layer 1 through Layer 3 functionality that consists of physical layer framers and optics, MAC framing and access control, and packet lookup and forwarding capability. The line cards deliver line-rate performance (up to 400 Gbps aggregate bandwidth). Additional services, such as class of service (CoS) processing, multicast, traffic engineering (TE), and statistics gathering, are also performed at line rate.

Line cards support several forwarding protocols, including IPv4, IPv6, and MPLS. Note that the route processor (RP) performs routing protocol functions and distributes routing tables, while the MSC, FP, and LSP forward the data packets.

Line cards and PLIMs are installed on opposite sides of the LCC and mate through the LCC midplane. Each line card and its PLIM are installed in corresponding slots on opposite sides of the chassis.

Figure 5-2 shows how the MSC takes ingress data through its associated PLIM and forwards the data to the switch fabric, where the data is switched to another MSC, FP, or LSP, which passes the egress data out through its associated PLIM.

Figure 5-2 Data Flow through the Cisco CRS LCC

Data streams are received from the line side (ingress) through optic interfaces on the PLIM. The data streams terminate on the PLIMs. Frames and packets are mapped based on the Layer 2 (L2) headers.

The line card converts packets to and from cells and provides a common interface between the routing system switch fabric and the assorted PLIMs. The PLIM provides the interface to user IP data. PLIMs perform Layer 1 and Layer 2 functions, such as framing, clock recovery, serialization and deserialization, channelization, and optical interfacing. Different PLIMs provide a range of optical interfaces, such as very-short-reach (VSR), intermediate-reach (IR), or long-reach (LR).

An eight-byte PLIM header is built for packets entering the fabric. The PLIM header includes the port number, the packet length, and some summarized layer-specific data. The L2 header is replaced with the PLIM header, and the packet is passed to the MSC for feature applications and forwarding.
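To make the header replacement concrete, the following Python sketch packs and unpacks an eight-byte header. The field widths and order (a 16-bit port number, a 16-bit packet length, and a 32-bit summary word, in network byte order) are hypothetical; the text gives only the header size and the kinds of information it carries, not the actual Cisco format.

```python
import struct
from typing import NamedTuple

# HYPOTHETICAL field layout; only the 8-byte size and the kinds of content
# (port number, packet length, summarized layer-specific data) come from the text.
PLIM_HEADER = struct.Struct("!HHI")  # port (16 bits), length (16 bits), L2 summary (32 bits)

class PlimHeader(NamedTuple):
    port: int        # PLIM port the packet arrived on
    length: int      # packet length in bytes
    l2_summary: int  # summarized layer-specific data (opaque in this sketch)

def add_plim_header(port: int, l2_summary: int, l3_packet: bytes) -> bytes:
    """Ingress: the L2 header has been stripped; prepend the 8-byte PLIM header."""
    return PLIM_HEADER.pack(port, len(l3_packet), l2_summary) + l3_packet

def read_plim_header(frame: bytes) -> tuple[PlimHeader, bytes]:
    """Egress: split off the PLIM header, from which the L2 header is rebuilt."""
    header = PlimHeader(*PLIM_HEADER.unpack_from(frame))
    return header, frame[PLIM_HEADER.size:]

# 0x0800 is an arbitrary illustrative summary value.
frame = add_plim_header(port=3, l2_summary=0x0800, l3_packet=bytes(20))
header, packet = read_plim_header(frame)
print(PLIM_HEADER.size, header)  # 8 PlimHeader(port=3, length=20, l2_summary=2048)
```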

The transmit path is essentially the opposite of the receive path. Packets are received from the drop side (egress) from the line card through the chassis midplane. The Layer 2 header is built from the eight-byte PLIM header received from the line card. The packet is then forwarded to the appropriate Layer 1 devices for framing and transmission on the fiber.

A control interface on the PLIM is responsible for configuration, optic control and monitoring, performance monitoring, packet count, error-packet count, and low-level operations of the card, such as PLIM card recognition, power up of the card, and voltage and temperature monitoring.

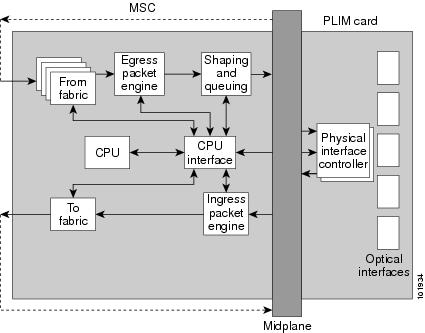

Figure 5-3 is a simple block diagram of the major components of an MSC and PLIM pair. These components are described in the sections that follow. This diagram also applies to the FP and LSP line cards.

Figure 5-3 MSC and PLIM Simple Block Diagram

PLIM Physical Interface Module on Ingress

As shown in Figure 5-3, received data enters a PLIM from the physical optical interface. The data is routed to the physical interface controller, which provides the interface between the physical ports and the Layer 3 function of the line card. For receive (ingress) data, the physical interface controller performs the following functions:

- Multiplexes the physical ports and transfers them to the ingress packet engine through the LCC midplane.

- Buffers incoming data, if necessary, to accommodate back-pressure from the packet engine.

- Provides Gigabit Ethernet-specific functions.

MSC Ingress Packet Engine

The ingress packet engine performs packet processing on the received data. It makes the forwarding decision and places the data into a rate-shaping queue in the “to fabric” section. To perform Layer 3 forwarding, the packet engine performs the following functions:

- Classifies packets by protocol type and parses the appropriate headers on which to perform the forwarding lookup

- Runs a lookup algorithm to determine the appropriate output interface to which to route the data

- Performs access control list filtering

- Maintains per-interface and per-protocol byte-and-packet statistics

- Maintains NetFlow accounting

- Implements a flexible dual-bucket policing mechanism
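The text does not specify how the dual-bucket policer is configured. As one illustration, the following Python sketch implements a color-blind two-rate, three-color marker in the style of RFC 2698, which uses a committed bucket and a peak bucket. The class name, parameters, and units (bytes and bytes per second) are assumptions for this sketch, not the MSC's actual interface.

```python
class TwoRateThreeColorMarker:
    """Color-blind trTCM: a committed bucket (CIR/CBS) and a peak bucket (PIR/PBS)."""

    def __init__(self, cir: float, cbs: float, pir: float, pbs: float) -> None:
        if pir < cir:
            raise ValueError("peak rate must be >= committed rate")
        self.cir, self.cbs = cir, cbs   # committed rate (bytes/s) and burst (bytes)
        self.pir, self.pbs = pir, pbs   # peak rate (bytes/s) and burst (bytes)
        self.tc, self.tp = cbs, pbs     # both buckets start full
        self.last = 0.0                 # time of the previous refill, in seconds

    def _refill(self, now: float) -> None:
        elapsed = max(0.0, now - self.last)
        self.last = now
        self.tc = min(self.cbs, self.tc + elapsed * self.cir)
        self.tp = min(self.pbs, self.tp + elapsed * self.pir)

    def color(self, size: int, now: float) -> str:
        """Mark a packet of `size` bytes arriving at time `now`."""
        self._refill(now)
        if self.tp < size:
            return "red"      # exceeds the peak rate
        if self.tc < size:
            self.tp -= size
            return "yellow"   # within the peak rate, above the committed rate
        self.tp -= size
        self.tc -= size
        return "green"        # within the committed rate

policer = TwoRateThreeColorMarker(cir=1000, cbs=1500, pir=2000, pbs=3000)
arrivals = [(1500, 0.0), (1000, 0.0), (1000, 0.0), (1000, 1.0)]
print([policer.color(n, t) for n, t in arrivals])
# ['green', 'yellow', 'red', 'green']
```

With the committed rate set below the peak rate, short bursts above the committed rate are marked yellow rather than dropped, and only traffic above the peak rate is marked red; after one second both buckets have refilled enough to pass a conforming packet again.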

MSC To Fabric Section and Queuing

The “to fabric” section of the board takes packets from the ingress packet engine, segments them into fabric cells, and distributes (sprays) the cells into the eight planes of the switch fabric. Because each MSC has multiple connections per plane, the “to fabric” section also distributes the cells over the links within a fabric plane. The chassis midplane provides the path between the “to fabric” section and the switch fabric (as shown by the dotted line in Figure 5-3).

The “to fabric” section provides two levels of queuing:

- The first level performs ingress shaping and queuing, with a rate-shaping set of queues that are normally used for input rate-shaping (that is, per input port or per subinterface within an input port), but can also be used for other purposes, such as to shape high-priority traffic.

- The second level consists of a set of destination queues where each destination queue maps to a destination line card, plus a multicast destination.

Note that the flexible queues are programmable through the Cisco IOS XR software.
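The segmentation and spraying step can be sketched as follows in Python. The eight planes come from the text; the cell payload size and the number of links per plane are hypothetical values chosen for illustration, and real fabric cells also carry headers that this sketch omits. Each cell is tagged with a sequence number so that the receiving “from fabric” section can reorder and reassemble cells that traveled over different planes.

```python
from itertools import cycle

NUM_PLANES = 8        # fabric planes 0 to 7 (from the text)
CELL_PAYLOAD = 64     # HYPOTHETICAL payload bytes per cell
LINKS_PER_PLANE = 2   # HYPOTHETICAL links from one card to each plane

def segment(packet: bytes, payload: int = CELL_PAYLOAD) -> list[bytes]:
    """Split a packet into fixed-size cell payloads; the last cell may be shorter."""
    return [packet[i:i + payload] for i in range(0, len(packet), payload)]

class CellSprayer:
    """Round-robin cells over the planes, then over the links within each plane."""

    def __init__(self) -> None:
        self._next_plane = cycle(range(NUM_PLANES))
        self._next_link = [cycle(range(LINKS_PER_PLANE)) for _ in range(NUM_PLANES)]

    def spray(self, packet: bytes) -> list[tuple[int, int, int, bytes]]:
        """Return (plane, link, sequence number, payload) for each cell."""
        cells = []
        for seq, payload in enumerate(segment(packet)):
            plane = next(self._next_plane)
            cells.append((plane, next(self._next_link[plane]), seq, payload))
        return cells

def reassemble(cells: list[tuple[int, int, int, bytes]]) -> bytes:
    """Receiver side: order cells by sequence number and join the payloads."""
    return b"".join(payload for _, _, _, payload in sorted(cells, key=lambda c: c[2]))

sprayer = CellSprayer()
pkt = bytes(range(200)) * 3              # a 600-byte packet
cells = sprayer.spray(pkt)               # 10 cells; cells 9 and 10 reuse planes 0 and 1 on their second link
print(len(cells), [c[:2] for c in cells])
print(reassemble(cells[::-1]) == pkt)    # order restored from sequence numbers
```

Because the plane pointer persists across packets, the next packet starts on the plane after the last one used, which keeps the load on the eight planes even.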

MSC From Fabric Section

The “from fabric” section of the board receives cells from the switch fabric and reassembles the cells into IP packets. The section then places the IP packets in one of its 8K egress queues, which helps the section adjust for the speed variations between the switch fabric and the egress packet engine. Egress queues are serviced using a modified deficit round-robin (MDRR) algorithm. The dotted line in Figure 5-3 indicates the path from the midplane to the “from fabric” section.
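This section does not describe how MDRR is implemented on the MSC. The following Python sketch shows one common form of the idea: deficit round-robin across regular queues, plus a low-latency queue that is served with strict priority before the regular queues get their turns. The function name, queue labels, and quantum values are illustrative assumptions.

```python
from collections import deque

def mdrr_service_order(priority: list[int], queues: list[list[int]],
                       quanta: list[int]) -> list[tuple[str, int]]:
    """Return the order in which queued packets (given as sizes in bytes) are sent.

    The low-latency queue (LLQ) is drained with strict priority; the regular
    queues share the remaining bandwidth in proportion to their quanta.
    """
    if any(q <= 0 for q in quanta):
        raise ValueError("quanta must be positive")
    llq = deque(priority)
    qs = [deque(q) for q in queues]
    deficit = [0] * len(qs)
    order: list[tuple[str, int]] = []
    while llq or any(qs):
        while llq:                              # strict priority for the LLQ
            order.append(("llq", llq.popleft()))
        for i, q in enumerate(qs):
            if not q:
                continue
            deficit[i] += quanta[i]             # credit for this round-robin turn
            while q and q[0] <= deficit[i]:     # send while credit covers the head packet
                size = q.popleft()
                deficit[i] -= size
                order.append((f"q{i}", size))
            if not q:
                deficit[i] = 0                  # an emptied queue does not bank credit
    return order

print(mdrr_service_order([100], [[300, 300], [500]], [300, 600]))
# [('llq', 100), ('q0', 300), ('q1', 500), ('q0', 300)]
```

A queue's quantum sets its share of bandwidth: a queue with twice the quantum can send roughly twice as many bytes per round. Carrying the unused deficit forward lets a queue whose head packet is larger than its quantum still make progress over several rounds.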

MSC Egress Packet Engine

The transmit (egress) packet engine performs a lookup on the IP address or MPLS label of the egress packet based on the information in the ingress MSC buffer header and on additional information in its internal tables. The transmit (egress) packet engine performs transmit-side features such as output committed access rate (CAR), access lists, DiffServ policing, MAC layer encapsulation, and so on.

Shaping and Queuing Function

The transmit packet engine sends the egress packet to the shaping and queuing function (shape and regulate queues function), which contains the output queues. Here the queues are mapped to ports and to classes of service (CoS) within a port. Random early detection (RED) algorithms perform active queue management to maintain low average queue occupancies and delays.
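A minimal Python sketch of classic random early detection follows: an exponentially weighted moving average of the queue length, and a drop probability that rises linearly from zero at a minimum threshold to a maximum probability at a maximum threshold. The threshold, probability, and weight values are hypothetical, and this simplified form omits the inter-drop count correction of the original RED algorithm; the line card's actual parameters are not given here.

```python
import random

def update_average(avg: float, queue_len: int, weight: float) -> float:
    """EWMA of the instantaneous queue length; `weight` is the averaging gain."""
    return (1.0 - weight) * avg + weight * queue_len

def drop_probability(avg: float, min_th: float, max_th: float, max_p: float) -> float:
    """No drops below min_th, a linear ramp to max_p at max_th, drop all above max_th."""
    if avg < min_th:
        return 0.0
    if avg >= max_th:
        return 1.0
    return max_p * (avg - min_th) / (max_th - min_th)

def should_drop(avg: float, rng: random.Random,
                min_th: float = 20, max_th: float = 40, max_p: float = 0.1) -> bool:
    """Drop an arriving packet with the RED probability (HYPOTHETICAL thresholds)."""
    return rng.random() < drop_probability(avg, min_th, max_th, max_p)

rng = random.Random(42)
avg = 0.0
for qlen in (5, 25, 35, 60):               # instantaneous queue lengths, in packets
    avg = update_average(avg, qlen, weight=0.5)
    print(qlen, round(avg, 2), should_drop(avg, rng))
```

Acting on the average rather than the instantaneous length lets short bursts pass while a persistently long queue triggers early, randomized drops that signal senders to slow down.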

PLIM Physical Interface Section on Egress

On the transmit (egress) path, the physical interface controller provides the interface between the line card and the physical ports on the PLIM. For the egress path, the controller performs the following functions:

- Support for the physical ports. Each physical interface controller can support up to four physical ports and there can be up to four physical interface controllers on a PLIM.

- Queuing for the ports

- Back-pressure signalling for the queues

- Dynamically shared buffer memory for each queue

- A loopback function where transmitted data can be looped back to the receive side

MSC CPU and CPU Interface

As shown in Figure 5-3, the MSC contains a central processing unit (CPU) subsystem that consists of the following components:

- CPU chip

- Layer 3 cache

- NVRAM

- Flash boot PROM

- Memory controller

- Memory, a dual in-line memory module (DIMM) socket, providing the following:

  – Up to 2 GB of 133 MHz DDR SDRAM on the CRS-MSC

  – Up to 2 GB of 166 MHz DDR SDRAM on the CRS-MSC-B

  – Up to 8 GB of 533 MHz DDR2 SDRAM on the CRS-MSC-140G

  – Up to 15 GB of 667 MHz DDR3 DIMM on the CRS-MSC-X

The CPU interface provides the interface between the CPU subsystem and the other ASICs on the MSC and PLIM.

The MSC also contains a service processor (SP) module.

The SP, CPU subsystem, and CPU interface module work together to perform housekeeping, communication, and control plane functions for the MSC. The SP controls card power up, environmental monitoring, and Ethernet communication with the LCC RPs.

The CPU subsystem performs a number of control plane functions, including receiving FIB downloads, local PLU and TLU management, statistics gathering and performance monitoring, and MSC ASIC management and fault handling.

The CPU interface module drives high-speed communication ports to all ASICs on the MSC and PLIM. The CPU talks to the CPU interface module through a high-speed bus attached to its memory controller.

Line Card Descriptions

An MSC, FP, or LSP fits into any available line card slot and connects directly to the midplane. The following types of MSC, FP, and LSP cards are available:

- MSCs: CRS-MSC (end-of-sale), CRS-MSC-B, CRS-MSC-140G, and CRS-MSC-X (400G mode)

- FPs: CRS-FP40, CRS-FP140, and CRS-FP-X (400G mode)

- LSPs: CRS-LSP, CRS-LSP-X


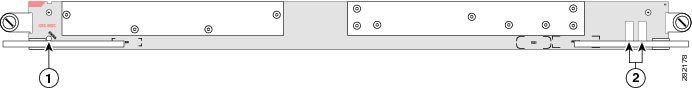

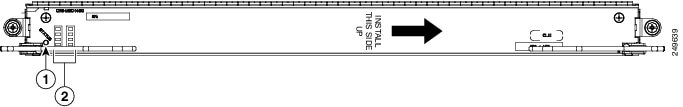

Figure 5-4 shows a Cisco CRS routing system CRS-MSC-140G.

Figure 5-4 Modular Services Card (CRS-MSC-140G)

Figure 5-5 shows the CRS-MSC front panel.

Figure 5-5 CRS-MSC Front Panel


Figure 5-6 shows the front panel of the CRS-MSC-B.

Figure 5-6 CRS-MSC-B Front Panel


Figure 5-7 shows the front panel of the CRS-MSC-140G. The CRS-MSC-X card front panel is similar.

Figure 5-7 CRS-MSC-140G Front Panel


Figure 5-8 shows the front panel of the CRS-FP140. The CRS-FP40 and CRS-FP-X card front panels are similar.

Figure 5-8 CRS-FP140 Front Panel


Physical Layer Interface Modules (PLIMs)

A physical layer interface module (PLIM) provides the packet interfaces for the routing system. Optic modules on the PLIM contain ports to which fiber-optic cables are connected. User data is received and transmitted through the PLIM ports, and converted between the optical signals (used in the network) and the electrical signals (used by Cisco CRS Series components).

Each PLIM is paired with a line card through the chassis midplane. The line card provides Layer 3 services for the user data, and the PLIM provides Layer 1 and Layer 2 services. A line card can be paired with different types of PLIMs to provide a variety of packet interfaces and port densities.

Line cards and PLIMs are installed on opposite sides of the LCC and mate through the chassis midplane. Each line card and its PLIM are installed in corresponding slots on opposite sides of the chassis. The chassis midplane enables you to remove and replace a line card without disconnecting the user cables on the PLIM.

You can mix and match PLIM types in the chassis.

Warning Because invisible radiation may be emitted from the aperture of the port when no fiber cable is connected, avoid exposure to radiation and do not stare into open apertures. Statement 125

Figure 5-9 shows a typical PLIM, in this case a 14-port 10-GE XFP PLIM. Other PLIMs are similar; however, each PLIM has a different front panel, depending on the interface type.

Figure 5-9 Typical PLIM—14-Port 10-GE XFP PLIM

The following sections describe some of the types of PLIMs currently available for the LCC:

Note For a full list of supported PLIMs, see the Cisco CRS Carrier Routing System Ethernet Physical Layer Interface Module Installation Note.

- OC-48 POS/Dynamic Packet Transport PLIM

- 10-Gigabit Ethernet XENPAK PLIM

- 8-Port 10-GE PLIM with XFP Optics Modules

- 4-Port 10-GE PLIM with XFP Optics Modules

- 1-Port 100-GE PLIM with CFP Optics Module

- 20-Port 10-GE PLIM with XFP Optics Modules

- 14-Port 10-GE PLIM with XFP Optics Modules

- PLIM Impedance Carrier

OC-48 POS/Dynamic Packet Transport PLIM

The 16-port OC-48 PLIM contains 16 OC-48 interfaces that can be software configured to operate in packet-over-SONET (POS) or Dynamic Packet Transport (DPT) mode. The PLIM performs Layer 1 and Layer 2 processing for 16 OC-48 data streams by removing and adding the proper header information as data packets enter and exit the PLIM. The PLIM feeds the line card a single 40 Gbps data packet stream.
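A single 40 Gbps stream is enough for all 16 ports because an OC-48 interface runs at 48 × 51.84 Mbps. The following Python sketch works through the arithmetic. Note that this is the SONET line rate, which includes framing overhead, so usable packet throughput is somewhat lower.

```python
OC1_MBPS = 51.84  # SONET OC-1 line rate in Mbps

def oc_line_rate_gbps(n: int) -> float:
    """Line rate of an OC-n interface in Gbps (includes SONET overhead)."""
    return n * OC1_MBPS / 1000

PORTS = 16
per_port = oc_line_rate_gbps(48)   # about 2.488 Gbps per OC-48 port
aggregate = PORTS * per_port       # about 39.813 Gbps for all 16 ports
print(f"{PORTS} x OC-48 = {aggregate:.3f} Gbps; fits in 40 Gbps: {aggregate <= 40}")
# 16 x OC-48 = 39.813 Gbps; fits in 40 Gbps: True
```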

The PLIM is a class 1 laser product.

Note DPT mode is supported on the OC-192/OC-48 POS PLIM in Cisco IOS XR Release 3.4.

The 16-port OC-48 PLIM consists of the following components:

- Optics modules—Provide the receive (Rx) and transmit (Tx) optic interfaces for each of the 16 ports. The OC-48 PLIM uses small form-factor pluggable (SFP) optics modules that can be removed and replaced while the PLIM is powered up. The SFPs provide short-reach (SR) and long-reach (LR2) optics with LC fiber-optic interfaces.

- Framers—Provide processing and termination for SONET section, line, and path layers, including alarm processing and APS support and management. The framer supports both packet and cell processing for a multiservice operating mode.

- DPT or transparent mode components—Provide the MAC layer function for the Spatial Reuse Protocol used in the DPT mode. When the OC-48 PLIM operates in the POS mode, these components operate in the transparent mode.

- Physical interface controller—Provides data packet buffering, Layer 2 processing, and multiplexing and demultiplexing of the 16 OC-48 data streams. This includes processing for VLANs and back-pressure signals from the line card.

- Additional components—Provide power, clocking, voltage and temperature sensing, and an identification EEPROM that stores initial configuration information and details about the PLIM type and hardware revision.

- Power consumption of the OC-48 PLIM—136 W

Figure 5-10 shows the front panel of the OC-48 PLIM.

Figure 5-10 16-Port OC-48 POS PLIM Front Panel View

In Figure 5-10, the sixteen SFP slots are numbered from 0 through 15, left to right.

As shown, the 16-port OC-48 PLIM has:

- Sixteen slots for SFP optic modules, which provide SR or LR optics with LC fiber-optic interfaces.

- STATUS LED—Green indicates that the PLIM is properly seated and operating correctly. Yellow or amber indicates a problem with the PLIM. If the LED is off (dark), check that the board is properly seated and that system power is on.

- Eight DPT MODE or POS MODE LEDs—One LED for each pair of ports: 0 and 1, 2 and 3, 4 and 5, 6 and 7, 8 and 9, 10 and 11, 12 and 13, and 14 and 15. DPT mode is always configured on pairs of ports, and the LED is lit when a pair of ports is configured in DPT mode. Currently, the OC-48 PLIM operates only in POS mode.

- Five green LEDs for each port. The LEDs, which correspond to the labels on the lower left of the front panel, have the following meanings (from left to right):

  – ACTIVE/FAILURE—Indicates that the port is logically active; the laser is on.

  – CARRIER—Indicates that the receive port (Rx) is receiving a carrier signal.

  – RX PKT—Blinks every time a packet is received.

  – WRAP—Indicates that the port is in DPT wrapped mode.

  – PASS THRU—Indicates that the port is operating in the POS mode (DPT pass through).

10-Gigabit Ethernet XENPAK PLIM

The 8-port 10-Gigabit Ethernet (GE) XENPAK PLIM provides from one to eight 10-GE interfaces. The PLIM supports from one to eight pluggable XENPAK optic modules that provide the 10-GE interfaces for the card. The PLIM performs Layer 1 and Layer 2 processing for up to eight 10-GE data streams by removing and adding the proper header information as data packets enter and exit the PLIM.

Although the PLIM can terminate up to 80 Gbps of traffic, the line card forwards traffic at 40 Gbps. Therefore, the PLIM provides 40 Gbps of throughput, which it passes to the line card as two 20-Gbps data packet streams: one for the upper set of ports (0 to 3) and one for the lower set of ports (4 to 7).

Oversubscription of 10-GE Ports

If more than 2 optic modules are installed in either set of ports, oversubscription occurs on all ports in that set. For example, if modules are installed in ports 0 and 1, each interface has 10 Gbps of throughput. Adding another module in port 2 causes oversubscription on all of the interfaces (0, 1, and 2).

Note If your configuration cannot support oversubscription, use the following guidelines to determine which PLIM slots to install optic modules in.

- Do not install more than four optic modules in each PLIM.

- Install no more than two optic modules in the upper set of ports (0 to 3) and no more than two in the lower set of ports (4 to 7).

If your configuration can support oversubscription and you want to install more than four optic modules in a PLIM, we recommend that you install additional modules in empty slots, alternating between upper and lower ports. For example, if you install a fifth optic module in an empty slot in the upper set of ports (0 to 3), be sure to install the next module in an empty slot in the lower set of ports (4 to 7), and so on.
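The oversubscription rule for the 8-port XENPAK PLIM can be checked with a short Python sketch. The port groups (0 to 3 and 4 to 7) and the 20 Gbps per group come from the text; the assumption that the installed ports in a group share their stream equally when all run at line rate is a simplification for illustration.

```python
UPPER_PORTS = range(0, 4)   # ports 0 to 3 share one 20 Gbps stream to the line card
LOWER_PORTS = range(4, 8)   # ports 4 to 7 share the other 20 Gbps stream
STREAM_GBPS = 20.0
PORT_GBPS = 10.0

def per_port_share(installed: set[int]) -> dict[int, float]:
    """Bandwidth per installed port if all ports run at line rate and share equally."""
    shares: dict[int, float] = {}
    for group in (UPPER_PORTS, LOWER_PORTS):
        ports = [p for p in group if p in installed]
        for p in ports:
            shares[p] = min(PORT_GBPS, STREAM_GBPS / len(ports))
    return shares

def oversubscribed_ports(installed: set[int]) -> set[int]:
    """Ports that cannot all run at 10 Gbps at the same time."""
    return {p for p, gbps in per_port_share(installed).items() if gbps < PORT_GBPS}

print(sorted(oversubscribed_ports({0, 1})))        # []
print(sorted(oversubscribed_ports({0, 1, 2})))     # [0, 1, 2]
print(sorted(oversubscribed_ports({0, 1, 4, 5})))  # []
```

The second call reproduces the example in the text: adding a module in port 2 to modules in ports 0 and 1 oversubscribes all three, while four modules split two and two between the sets do not.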

10-GE PLIM Components

The 8-port 10-GE XENPAK PLIM consists of:

- Optic modules—Provide receive (Rx) and transmit (Tx) optical interfaces that comply with IEEE 802.3ae. The PLIM supports from one to eight pluggable XENPAK optic modules, each providing full-duplex long-reach (LR) optics with SC fiber-optic interfaces. Note that the PLIM automatically shuts down any optic module that is not a valid type.

- Physical interface controller—Provides data packet buffering, Layer 2 processing, and multiplexing and demultiplexing of the 10-GE data streams, including processing for VLANs and back-pressure signals from the line card.

- Additional components—Include power and clocking components, voltage and temperature sensors, and an identification EEPROM that stores initial configuration and PLIM hardware information.

Figure 5-11 shows the front panel of the 10-GE XENPAK PLIM.

Figure 5-11 10-GE XENPAK PLIM Front Panel


The 8-port 10-GE XENPAK PLIM has the following elements:

- Eight slots that accept XENPAK optic modules, which provide LR optics with SC fiber-optic interfaces.

- A STATUS LED—Green indicates that the PLIM is properly seated and operating correctly. Yellow or amber indicates a problem with the PLIM. If the LED is off (dark), check that the board is properly seated and that system power is on.

- An LED for each port—Indicates that the port is logically active; the laser is on.

- Power consumption of the 10-GE XENPAK PLIM—110 W (with 8 optic modules)

8-Port 10-GE PLIM with XFP Optics Modules

The 8-port 10-GE XFP PLIM supports from one to eight pluggable XFP optics modules.

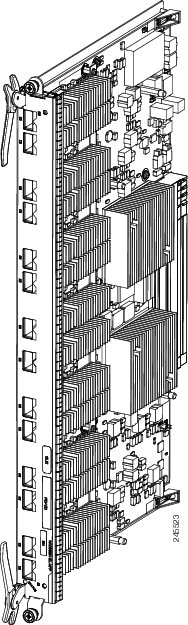


The 8-port 10-GE XFP PLIM supports the following types of XFP optical transceiver modules:

- Single-mode low power multirate XFP module—XFP10GLR-192SR-L, V01

- Single-mode low power multirate XFP module—XFP10GER-192IR-L, V01

Note For information about the XFP optical transceiver modules supported on the 8-port 10-GE XFP PLIM, see the Cisco CRS Carrier Routing System Ethernet Physical Layer Interface Module Installation Note.

Cisco qualifies the optics that are approved for use with its PLIMs.

For the modules listed, use a single-mode optical fiber that has a modal-field diameter of 8.7 ±0.5 microns (nominal diameter is approximately 10/125 micron) to connect your router to a network.

Figure 5-12 shows the front panel of the 8-Port 10-GE XFP PLIM.

Figure 5-12 8-Port 10-Gigabit Ethernet XFP PLIM front panel


Table 5-2 describes the PLIM LEDs for the 8-Port 10-GE XFP PLIM.


Table 5-3 provides cabling specifications for the XFP modules that can be installed on the 8-port 10-GE XFP PLIM.


4-Port 10-GE PLIM with XFP Optics Modules

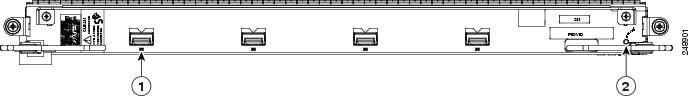

The 4-port 10-GE XFP PLIM supports from one to four pluggable XFP optics modules.


The 4-port 10-GE XFP PLIM supports the following types of XFP optical transceiver modules:

- Single-mode low power multirate XFP module—XFP10GLR-192SR-L, V01

- Single-mode low power multirate XFP module—XFP10GER-192IR-L, V01

Note For information about the XFP optical transceiver modules supported on the 4-port 10-GE XFP PLIM, see the Cisco CRS Carrier Routing System Ethernet Physical Layer Interface Module Installation Note.

Cisco qualifies the optics that are approved for use with its PLIMs.

For the modules listed, use a single-mode optical fiber that has a modal-field diameter of 8.7 ±0.5 microns (nominal diameter is approximately 10/125 micron) to connect your router to a network.

Figure 5-13 shows the front panel of the 4-Port 10-GE XFP PLIM.

Figure 5-13 4-Port 10-Gigabit Ethernet XFP PLIM front panel


Table 5-4 describes the PLIM LEDs for the 4-Port 10-GE XFP PLIM. The 4-Port 10-GE XFP PLIM power consumption is 74 W (with four optics modules).


Table 5-5 provides cabling specifications for the XFP modules that can be installed on the 4-port 10-GE XFP PLIMs.


1-Port 100-GE PLIM with CFP Optics Module

The 1-port 100-GE CFP PLIM supports one pluggable CFP optics module. The PLIM has the following:

- One port that accepts a CFP optics module

- Status LED for the PLIM

- Four LED indicators for the single port

The 1-port 100-GE PLIM supports the following types of CFP optical transceiver modules:

Cisco qualifies the optics that are approved for use with its PLIMs.

Figure 5-14 shows the front panel of the 1-Port 100-GE CFP PLIM.

Figure 5-14 1-Port 100-Gigabit Ethernet CFP PLIM front panel


Table 5-6 describes the PLIM LEDs for the 1-Port 100-GE CFP PLIM.

The conditions described in Table 5-6 include the following:

- PLIM is not properly seated, system power is off, or power up did not complete successfully.

- Port is enabled by software, but there is a problem with the link.

20-Port 10-GE PLIM with XFP Optics Modules

The 20-port 10-GE XFP PLIM supports from one to twenty pluggable XFP optics modules.

The 20-port 10-GE PLIM supports the following types of XFP optical transceiver modules:

- Single-mode low power multirate XFP module—XFP10GLR-192SR-L, V01

- Single-mode low power multirate XFP module—XFP10GER-192IR-L, V01

Note For information about the XFP optical transceiver modules supported on the 20-port 10-GE XFP PLIM, see the Cisco CRS Carrier Routing System Ethernet Physical Layer Interface Module Installation Note.

Note The 20-port XFP PLIM has a fixed power budget for the pluggable XFP optics. See the “XFP Optics Power Management” section for detailed information.

Cisco qualifies the optics that are approved for use with its PLIMs.

For the modules listed, use a single-mode optical fiber that has a modal-field diameter of 8.7 ±0.5 microns (nominal diameter is approximately 10/125 micron) to connect your router to a network.

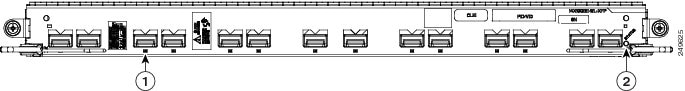

Figure 5-15 shows the front panel of the 20-Port 10-GE XFP PLIM.

Figure 5-15 20-Port 10-Gigabit Ethernet XFP PLIM front panel


Table 5-7 describes the PLIM LEDs for the 20-Port 10-GE XFP PLIM.

The conditions described in Table 5-7 include the following:

- PLIM is not properly seated, system power is off, or power up did not complete successfully.

- Port is enabled by software, but there is a problem with the link.

The 20-port 10-GE XFP PLIM has the following physical characteristics:

- Height—20.6 in (52.2 cm)

- Depth—11.2 in (28.4 cm)

- Width—1.8 in (4.5 cm)

- Weight—8.45 lb (3.8 kg)

- Power consumption—150 W (120 W with no optics installed, 30 W optics budget)

14-Port 10-GE PLIM with XFP Optics Modules

The 14-port 10-GE XFP PLIM supports from one to fourteen pluggable XFP optics modules.

The 14-port 10-GE XFP PLIM supports the following types of XFP optical transceiver modules:

- Single-mode low power multirate XFP module—XFP10GLR-192SR-L, V01

- Single-mode low power multirate XFP module—XFP10GER-192IR-L, V01

Cisco qualifies the optics that are approved for use with its PLIMs.

For the modules listed, use a single-mode optical fiber that has a modal-field diameter of 8.7 ±0.5 microns (nominal diameter is approximately 10/125 micron) to connect your router to a network.

Figure 5-16 shows the front panel of the 14-Port 10-GE XFP PLIM.

Figure 5-16 14-Port 10-Gigabit Ethernet XFP PLIM front panel


Table 5-8 describes the PLIM LEDs for the 14-Port 10-GE XFP PLIM.

The conditions described in Table 5-8 include the following:

- PLIM is not properly seated, system power is off, or power up did not complete successfully.

- Port is enabled by software, but there is a problem with the link.

The 14-port 10-GE XFP PLIM has the following physical characteristics:

- Height—20.6 in (52.2 cm)

- Depth—11.2 in (28.4 cm)

- Width—1.8 in (4.49 cm)

- Weight—7.85 lb (3.55 kg)

- Power consumption—150 W (115 W with no optics installed, 35 W optics budget)

Note The 14-port XFP PLIM has a fixed power budget for the pluggable XFP optics. See the “XFP Optics Power Management” section for detailed information.

XFP Optics Power Management

The 20- and 14-port XFP PLIMs have a fixed power budget for the pluggable XFP optics. The XFP pluggable optics for these PLIMs have different power consumption depending on their reach and type, so the number of XFPs that power up in a PLIM depends on whether their aggregate power consumption fits within the allocated power budget.

When XFPs are inserted, power is allotted to them in insertion order. On bootup and reload, priority is reassigned to the lower-numbered ports.

To avoid oversubscription, the recommended insertion sequence alternates between the lowest-numbered free ports of each interface device driver ASIC. For a 20-port PLIM, the insertion order is 0, 10, 1, 11, 2, 12, …, 9, 19. For a 14-port PLIM, the insertion order is 0, 7, 1, 8, …, 6, 13.
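The recommended sequences follow a simple pattern: alternate between the lowest free port in the first half of the PLIM and the lowest free port in the second half. The following Python sketch generates that order. The assumption that each half of the ports sits behind one interface device driver ASIC is inferred from the sequences given for the 20- and 14-port PLIMs, and the function name is illustrative.

```python
def recommended_insertion_order(num_ports: int) -> list[int]:
    """Alternate between the two halves of the PLIM, lowest-numbered ports first.

    Assumes ports 0..n/2-1 sit behind one interface ASIC and n/2..n-1 behind the other.
    """
    if num_ports % 2:
        raise ValueError("expected an even number of ports")
    half = num_ports // 2
    order: list[int] = []
    for i in range(half):
        order.extend((i, i + half))
    return order

print(recommended_insertion_order(20))  # starts 0, 10, 1, 11 and ends 9, 19
print(recommended_insertion_order(14))  # [0, 7, 1, 8, 2, 9, 3, 10, 4, 11, 5, 12, 6, 13]
```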

If the PLIM power budget is exceeded, a console log message is displayed stating that the power budget has been exceeded and that the XFP should be removed.

Remove any unpowered XFPs so that the XFPs that are powered after a reload are the same ones that were powered before it. Because priority goes to the lower-numbered ports on reload, an unpowered XFP left in a lower-numbered port could otherwise receive power in place of an XFP that was powered before the reload.
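The following Python sketch models the power budget behavior described in this section, assuming that each XFP is checked against the remaining budget when it is powered and that an XFP that does not fit simply stays unpowered. The 30 W budget is the 20-port PLIM optics budget given earlier in this chapter; the 3.5 W per-XFP figure and the function names are hypothetical.

```python
def grant_power(candidates: list[tuple[int, float]], budget_w: float) -> set[int]:
    """Power XFPs in the given order; any XFP that would exceed the budget stays off."""
    used = 0.0
    powered: set[int] = set()
    for port, watts in candidates:
        if used + watts <= budget_w:
            used += watts
            powered.add(port)
    return powered

def powered_at_insertion(insertions: list[tuple[int, float]], budget_w: float) -> set[int]:
    """Runtime behavior: power is allotted in insertion order."""
    return grant_power(insertions, budget_w)

def powered_after_reload(installed: dict[int, float], budget_w: float) -> set[int]:
    """Bootup or reload behavior: priority goes to the lowest-numbered ports."""
    return grant_power(sorted(installed.items()), budget_w)

BUDGET_W = 30.0   # 20-port PLIM optics budget
XFP_W = 3.5       # HYPOTHETICAL per-XFP power draw

# XFPs inserted into ports 5 to 12, then one more into port 0.
insertions = [(p, XFP_W) for p in range(5, 13)] + [(0, XFP_W)]
before = powered_at_insertion(insertions, BUDGET_W)
after = powered_after_reload(dict(insertions), BUDGET_W)
print(sorted(before))  # [5, 6, 7, 8, 9, 10, 11, 12]
print(sorted(after))   # [0, 5, 6, 7, 8, 9, 10, 11]
```

In this scenario the XFP in port 0 is unpowered at runtime, but after a reload it takes priority and port 12 loses power, which is why unpowered XFPs should be removed before a reload.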

A show command indicates how much of the XFP power budget is currently used and how much power each XFP is consuming.

PLIM Impedance Carrier

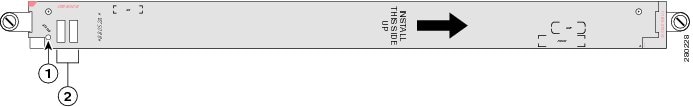

A PLIM impedance carrier must be installed in each empty PLIM slot in the Cisco CRS Series chassis (see Figure 5-17). The CRS 16-slot chassis is shipped with impedance carriers installed in the empty slots. The impedance carrier preserves the integrity of the chassis and is required for EMI compliance and proper cooling in the chassis.

Figure 5-17 PLIM Impedance Carrier
