Physical Infrastructure for the Converged Plantwide Ethernet Architecture

Application Guide

February 2023

Document Reference Number: ENET-TD020B-EN-P


Preface

Converged Plantwide Ethernet (CPwE) is a collection of architected, tested, and validated designs. The testing and validation follow the Cisco Validated Design (CVD) and Cisco Reference Design (CRD) methodologies. The content of CPwE, which is relevant to both operational technology (OT) and information technology (IT) disciplines, consists of documented architectures, best practices, guidance, and configuration settings to help industrial operations and OEMs achieve the design and deployment of a scalable, reliable, secure, and future-ready plant-wide or site-wide industrial network infrastructure. CPwE can also help industrial operations and OEMs achieve cost reduction benefits using proven designs that can facilitate quicker deployment while helping to minimize risk in deploying new technology. CPwE is brought to market through a CPwE ecosystem consisting of Cisco, Panduit, and Rockwell Automation, emergent from the strategic alliance between Cisco Systems and Rockwell Automation.

Resilient plant-wide or site-wide network architectures play a pivotal role in helping to confirm overall plant/site uptime and productivity. Industrial Automation and Control System (IACS) application requirements such as availability and performance drive the choice of resiliency technology. A scalable, holistic, and reliable plant-wide or site-wide network architecture is composed of multiple technologies (logical and physical) deployed at different levels within plant-wide or site-wide architectures. When selecting resiliency technology, various IACS application factors should be evaluated, including physical layout of IACS devices (geographic dispersion), recovery time performance, uplink media type, tolerance to data latency and jitter and future-ready requirements.

Physical Infrastructure for the Converged Plantwide Ethernet Architecture, which is documented in this Application Guide, outlines several physical layer use cases for designing and deploying reliable OEM, plant-wide or site-wide architectures for Industrial Automation and Control System (IACS) applications.

Release Notes

This section summarizes this February 2023 release:

· Standalone fiber optic cabling guide merge with this physical infrastructure guide

· Expanded coverage of industrial wireless guidance

· Inclusion of SPE (Single Pair Ethernet) and Ethernet-APL

· Additional topics added: minimum/maximum cable length, high speed SFPs, and cable entry systems

· Updated part numbers

Document Organization

This document is composed of the following chapters and appendices:

| Chapter or Appendix | Description |
|---|---|
| CPwE Physical Layer Overview | Provides an overview of the CPwE Physical Layer and the use cases in this release. |
| Physical Infrastructure Network Design for CPwE Logical Architecture | Introduces key concepts and addresses common design elements for mapping the physical infrastructure to the CPwE Logical Network Design, key requirements and considerations, link testing, and wireless physical infrastructure considerations. |
| Physical Infrastructure Design for the Cell/Area Zone | Describes the Cell/Area Zone physical infrastructure for applications and the locations for the Allen-Bradley® Stratix® Industrial Ethernet Switches (IES), including IES in control panels and/or PNZS components. |
| Physical Infrastructure Design for the Industrial Zone | Describes the physical infrastructure for network distribution across the Industrial Zone (one or more Cell/Area Zones) through use of Industrial Distribution Frames (IDF), industrial pathways, and robust media/connectivity. |
| Physical Infrastructure Deployment for Level 3 Site Operations | Describes the physical infrastructure for Level 3 Site Operations, including Industrial Data Centers (IDCs) for compute, storage, and switching resources for software and services within industrial operations. |
| References | Links to documents and websites that are relevant to this Application Guide. |
| Acronyms and Initialisms | List of acronyms and initialisms used in this document. |

For More Information

More information on CPwE Design and Implementation Guides can be found at the following URLs:

• Rockwell Automation site:

– https://www.rockwellautomation.com/en-us/capabilities/industrial-networks/network-architectures.html

• Cisco site:

– http://www.cisco.com/c/en/us/solutions/enterprise/design-zone-manufacturing/landing_ettf.html

• Panduit site:

Note This release of the CPwE architecture focuses on EtherNet/IP™, which uses the ODVA, Inc. Common Industrial Protocol (CIP™) and is ready for the Industrial Internet of Things (IIoT). For more information on EtherNet/IP, CIP Sync™, CIP Security™, and DLR, see odva.org at the following URL:

• http://www.odva.org/Technology-Standards/EtherNet-IP/Overview


CPwE Physical Layer Overview

This chapter includes the following major topics:

• CPwE Overview

• CPwE Resilient IACS Architectures Overview

• CPwE Physical Layer Solution Use Cases

The prevailing trend in Industrial Automation and Control System (IACS) networking is the convergence of technology, specifically IACS operational technology (OT) with information technology (IT). Converged Plantwide Ethernet (CPwE) helps to enable IACS network and security technology convergence, including OT-IT persona convergence, by using standard Ethernet, Internet Protocol (IP), network services, security services, and EtherNet/IP. A reliable and secure converged OEM, plant-wide or site-wide IACS architecture helps to enable the Industrial Internet of Things (IIoT).

CPwE Overview

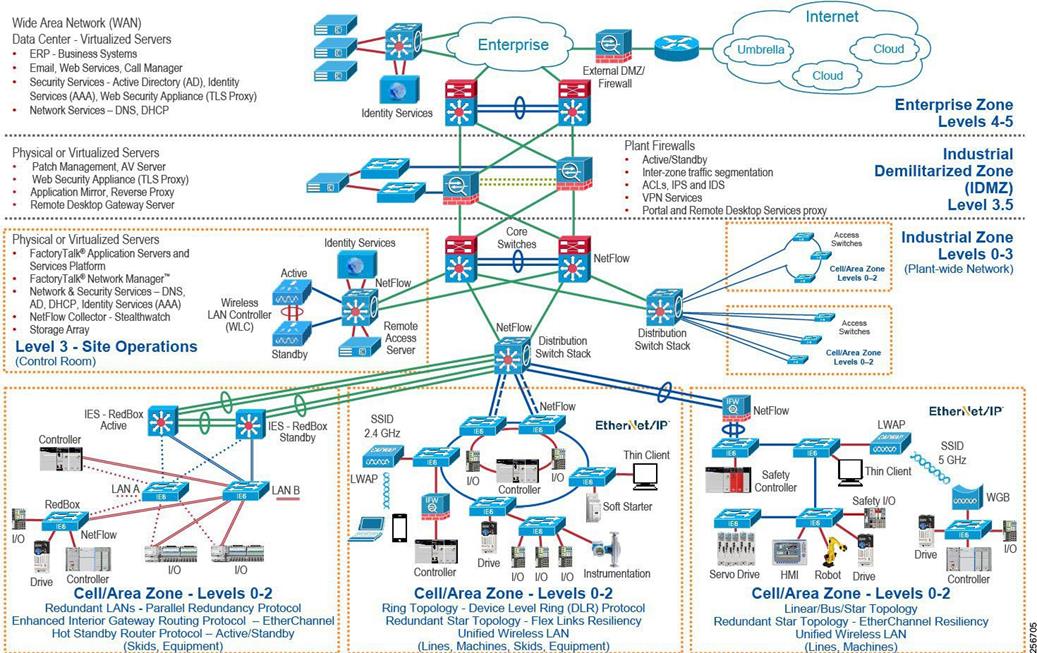

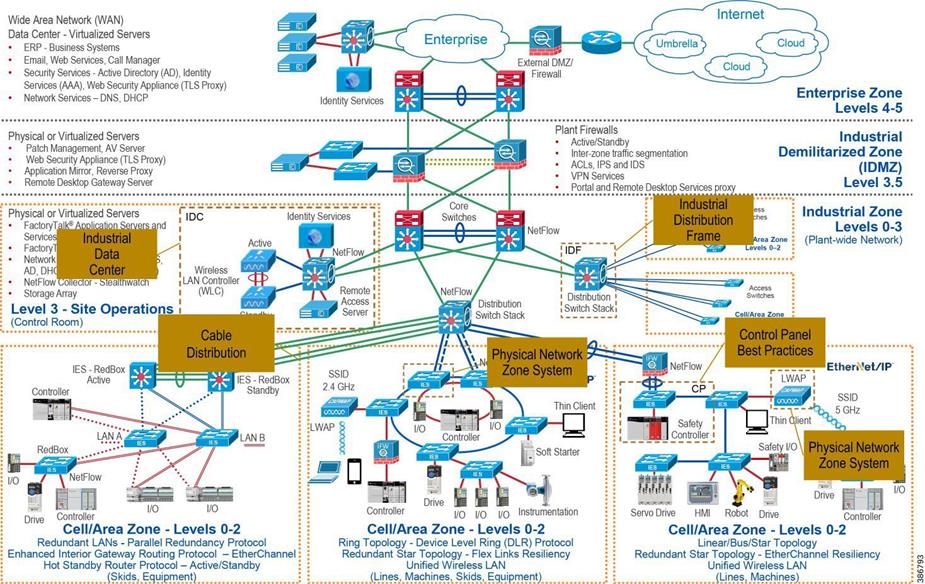

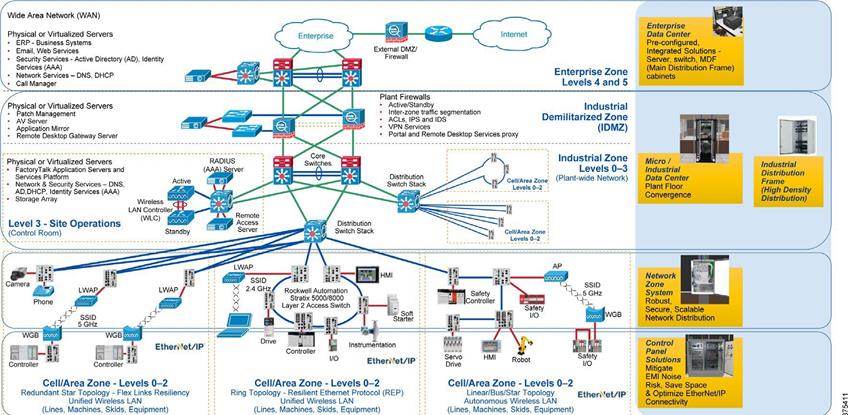

CPwE is the underlying architecture that provides standard network and security services for control and information disciplines, devices, and equipment found in modern IACS applications. The CPwE architectures (Figure 1-1) were architected, tested, and validated to provide design and implementation guidance, test results, and documented configuration settings. This can help to achieve the real-time communication, reliability, scalability, security, and resiliency requirements of modern IACS applications. The content and key tenets of CPwE are relevant to both OT and IT disciplines. CPwE key tenets include:

• Smart IIoT devices—Controllers, I/O, drives, instrumentation, actuators, analytics, and a single IIoT network technology (EtherNet/IP), facilitating both technology coexistence and IACS device interoperability, which helps to enable the choice of best-in-class IACS devices.

• Zoning (segmentation)—Smaller connected LANs, functional areas, and security groups.

• Managed infrastructure—Managed Allen-Bradley Stratix industrial Ethernet switches (IES) and Cisco Catalyst® distribution/core switches.

• Resiliency—Robust physical layer and resilient or redundant topologies with resiliency protocols.

• Time-critical data—Data prioritization and time synchronization via CIP Sync and IEEE-1588 Precision Time Protocol (PTP).

• Wireless—Unified wireless LAN (WLAN) to enable mobility for personnel and equipment.

• Holistic defense-in-depth security—Multiple layers of diverse technologies for threat detection and prevention, implemented by different persona (for example, OT and IT) and applied at different levels of the plant-wide or site-wide IACS architecture.

• Convergence-ready—Seamless plant-wide or site-wide integration by trusted partner applications.

Figure 1-1 CPwE Architectures


CPwE Resilient IACS Architectures Overview

An IACS is deployed in a wide variety of industries such as automotive, pharmaceuticals, consumer packaged goods, pulp and paper, oil and gas, mining, and energy. IACS applications are composed of multiple control and information disciplines such as continuous process, batch, discrete, and hybrid combinations. One of the challenges facing industrial operations is the industrial hardening of standard Ethernet and IP-converged IACS networking technologies to take advantage of the business benefits associated with IIoT. A resilient LAN architecture can help to increase the overall equipment effectiveness (OEE) of the IACS by helping to reduce the impact of a failure and speed recovery from an outage, which lowers Mean-Time-to-Repair (MTTR).
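The link between a lower MTTR and higher uptime can be illustrated with the widely used steady-state availability relation, availability = MTBF / (MTBF + MTTR). The following is a minimal sketch only: mean time between failures (MTBF) is not discussed in this guide, and the function name and the hour values are hypothetical.

```python
def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability: the fraction of time the system is up.

    Assumes the common relation MTBF / (MTBF + MTTR); MTBF is an
    assumption introduced here for illustration.
    """
    return mtbf_hours / (mtbf_hours + mttr_hours)


# Hypothetical figures: same failure rate, faster recovery.
slow_recovery = availability(mtbf_hours=1000.0, mttr_hours=4.0)
fast_recovery = availability(mtbf_hours=1000.0, mttr_hours=1.0)
print(fast_recovery > slow_recovery)
```

With the failure rate held constant, shortening recovery time is the only lever in this relation, which is why resilient topologies and maintainable physical infrastructure both matter.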

Protecting availability for IACS assets requires a scalable defense-in-depth approach where different solutions are needed to address various network resiliency requirements for OEM, plant-wide or site-wide architectures. This section summarizes the Cisco, Panduit, and Rockwell Automation CPwE application guidance that addresses different aspects of availability for IIoT IACS applications.

• Deploying Device Level Ring within a Converged Plantwide Ethernet Architecture Design and Implementation Guide outlines several use cases for designing and deploying DLR technology with IACS device-level, switch-level, and mixed device/switch-level single and multiple ring topologies across OEM and plant-wide or site-wide resilient LAN IACS applications.

– Rockwell Automation site: https://literature.rockwellautomation.com/idc/groups/literature/documents/td/enet-td015_-en-p.pdf

– Cisco site: https://www.cisco.com/c/en/us/solutions/enterprise/design-zone-manufacturing/landing_ettf.html

• Deploying Parallel Redundancy Protocol within a Converged Plantwide Ethernet Architecture Design and Implementation Guide outlines several use cases for designing and deploying Parallel Redundancy Protocol (PRP) technology with redundant LANs across plant-wide or site-wide IACS applications.

– Rockwell Automation site: https://literature.rockwellautomation.com/idc/groups/literature/documents/td/enet-td021_-en-p.pdf

– Cisco site: https://www.cisco.com/c/en/us/solutions/enterprise/design-zone-manufacturing/landing_ettf.html

• Deploying A Resilient Converged Plantwide Ethernet Architecture Design and Implementation Guide outlines several use cases for designing and deploying resilient plant-wide or site-wide architectures for IACS applications, utilizing a robust physical layer and resilient LAN topologies with resiliency protocols.

– Rockwell Automation site: https://literature.rockwellautomation.com/idc/groups/literature/documents/td/enet-td010_-en-p.pdf

– Cisco site: http://www.cisco.com/c/en/us/solutions/enterprise/design-zone-manufacturing/landing_ettf.html

CPwE Physical Layer Solution Use Cases

Successful deployment of CPwE logical architectures depends on a robust physical infrastructure network design that addresses environmental and performance challenges with best practices from Operational Technology (OT) and Information Technology (IT). Cisco, Panduit, and Rockwell Automation have collaborated to reference Panduit’s building block approach for physical infrastructure (Figure 1-2) deployment. This approach helps customers address the physical deployment associated with converged OEM, plant-wide or site-wide EtherNet/IP architectures. As a result, users can achieve resilient, scalable networks that can support proven and flexible CPwE logical architectures designed to help optimize OEM, plant-wide or site-wide IACS network performance.

The following use cases were documented by Panduit:

• Robust physical infrastructure design considerations and best practices

– Long reach cable applications, minimum/maximum cable lengths

– Single Pair Ethernet (SPE) and Ethernet-APL

– Wireless deployments

• Control Panel:

– Electromagnetic interference (EMI) noise mitigation through bonding, shielding, and grounding

– Industrial Ethernet Switch (IES) deployment within the Cell/Area Zone

• Physical Network Zone System:

– IES and Access Point (AP) deployment within the Cell/Area Zone

• Cable distribution across the Industrial Zone

• Industrial Distribution Frame (IDF):

– Industrial aggregation/distribution switch deployment within the Industrial Zone

• Industrial Data Center (IDC):

– Physical design and deployment of the Level 3 Site Operations

Figure 1-2 Panduit Robust Physical Infrastructure for the CPwE Architecture


Physical Infrastructure Network Design for CPwE Logical Architecture

Successful deployment of a Converged Plantwide Ethernet (CPwE) logical architecture depends on a solid physical infrastructure network design that addresses environmental, performance, and security challenges with best practices from Operational Technology (OT) and Information Technology (IT). Panduit collaborates with industry leaders such as Rockwell Automation and Cisco to help customers address deployment complexities that are associated with plant-wide Industrial Ethernet. As a result, users achieve resilient, scalable networks that support proven and flexible logical CPwE architectures that are designed to optimize industrial network performance. This chapter provides an overview of the key recommendations and best practices to simplify design and deployment of a standard, highly capable industrial Ethernet physical infrastructure. It introduces key concepts and addresses common design elements for the other chapters, specifically:

• Mapping Physical Infrastructure to the CPwE Logical Network Design

• Key Requirements and Considerations:

– Essential physical infrastructure design considerations

– Physical Network Zone System cabling architecture, the use of structured cabling versus point-to-point cabling and network topology

– M.I.C.E. assessment for industrial characteristics

– Physical infrastructure building block systems

– Cable media and connector selection

– Effective cable management

– Network cabling pathways

– Grounding and bonding industrial networks

• Link Testing

• Wireless Physical Infrastructure Considerations


Mapping Physical Infrastructure to the CPwE Logical Network Design

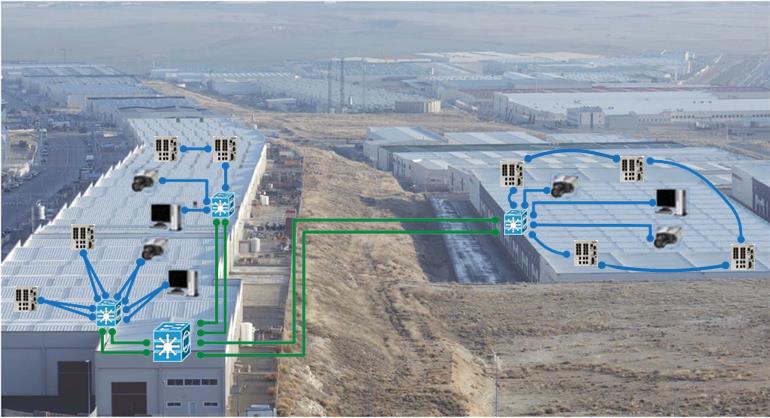

Network designers are being challenged to implement a reliable, secure, and future-ready network infrastructure across the varied and harsh environments of industrial plants/sites. The networking assets must be placed across the plant/site with consideration of difficult environmental factors such as long distances, temperature extremes, humidity, shock/vibration, chemical/climatic conditions, water/dust ingress and electromagnetic threats. These factors introduce threats that can potentially degrade network performance, affect network reliability, and/or shorten asset longevity. Figure 2-1 shows the CPwE logical framework mapped to a hypothetical plant/site footprint.

Mapping CPwE Logical to Physical

The physical impact on network architecture includes:

• Geographic Distribution—The selection of IES and overall logical architecture is also heavily influenced by the geographic dispersion of IACS devices, switches, and compute resources, and the type and amount of traffic anticipated between IACS devices and switches. Figure 2-1 shows the network architecture superimposed over the building locations and the campus-type connectivity between buildings that may require the long-reach capabilities of single-mode fiber.

Figure 2-1 Network Overlay on Building Locations


• Brownfield or Legacy Network—Additional design considerations are necessary to transition from or work alongside a legacy network. Existing installations have many challenges, including bandwidth concerns, poor grounding/bonding, inadequate media pathways, and limited space for new areas to protect networking gear. Additional cabling and pathways are often needed during the transition to maintain existing production while installing new gear.

• Greenfield or New Construction—Critical deadlines must be met within short installation time frames. In addition, installation risk must be minimized. Mitigating these concerns requires a proven, validated network building block system approach that uses pre-configured, tested, and validated network assets built specifically for the application.

Physical Infrastructure Building Block Systems

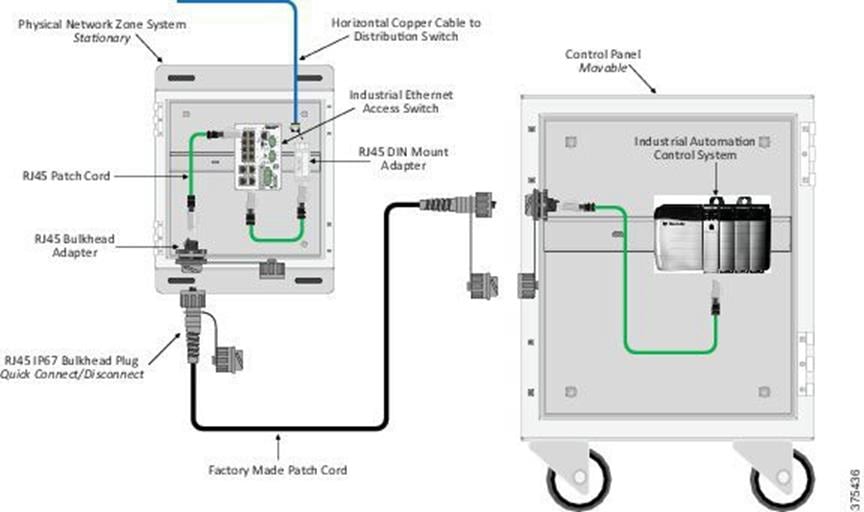

Industrial physical infrastructure cabling systems and enclosures are often designed and built without attention to detail. Poorly deployed industrial networks frequently fail because growth, environmental impact, device incompatibility, and poor construction were not anticipated. A better approach is to specify tested, validated industrial network building block systems that are built for industrial network deployment. A standardized approach to industrial network design speeds deployment and reduces risk, leading to a cost-effective solution. Also, as the network is expanded, the consistency of standardized, validated systems is rewarded with lower maintenance and support costs.

Industrial physical infrastructure network building block systems comprised of integrated active gear can be deployed at most levels of the CPwE logical architecture. An industrial network building block system simplifies deployment of the network infrastructure required at each level of CPwE by containing the specified switching, routing, computing and/or storage elements required for a given zone housed in an enclosure, cabinet, or rack complete with cabling, cable management, identification, grounding, and power. These building block systems can be ordered pre-configured with all the components and parts to be assembled on site or as an integrated, ready-to-install solution.

Figure 2-2 shows various building block systems as they relate to the CPwE architecture. At the Level 3 Site Operations or IDMZ, the building block system may consist of a network, compute, and storage system and may be delivered as both pre-configured and integrated. The integrated solution is basically an appliance compute system, such as an IDC, which is described in more detail in Chapter 5, “Physical Infrastructure Deployment for Level 3 Site Operations.” In the Cell/Area Zone, a Physical Network Zone System (PNZS) with a DIN-mount IES can be deployed as a building block system. These building block systems can be both pre-configured and integrated. Building block systems in the Industrial Zone are addressed in Chapter 4, “Physical Infrastructure Design for the Industrial Zone.” The Cell/Area Zone building block systems are described in Chapter 3, “Physical Infrastructure Design for the Cell/Area Zone.”

Figure 2-2 Various CPwE Building Block Systems


Key Requirements and Considerations

The following are key considerations for helping to ensure the success of CPwE logical architecture:

• Reach—The distance the cable must travel to form the connections between the IACS device and IES ports. Distance includes all media between ports, including patch cables.

• Industrial Characteristics—Environmental factors that act upon networking assets and cabling infrastructure installed in the plant/site.

• Physical Infrastructure Life Span—IACS and the plant/site network backbone can be in service 20 years or more. Therefore, cabling, connectivity, and the PNZS must survive the expected lifespan. During that lifespan, changes and upgrades to the IACS served by the network can occur. As a result, the physical infrastructure and logical aspects of the network must be engineered to adapt.

• Maintainability—Moves, Adds, and Changes (MACs) have dependencies and may affect many Cell/Area Zones. Also, changes must be planned and executed correctly because errors can cause costly outages. Proper cable management practices, such as use of patch panels, secure bundling and routing, clear and legible permanent identification with accurate documentation, and revision control are vital to effective network maintenance and operation and rapid response to outages.

• Scalability—In general, the explosive growth of EtherNet/IP and IP connections strains legacy network performance. In addition, rapid IACS device growth causes network sprawl that can threaten uptime and security. A strong physical infrastructure design accounts for current traffic and anticipated growth. Forming this forecast view of network expansion simplifies management and guides installation of additional physical infrastructure components when necessary.

• Designing for High Availability—PNZSs can be connected in either ring or redundant star topologies to achieve high availability. Use of an uninterruptible power supply (UPS) for backup of critical switches prevents network downtime from power bumps and outages. New battery-free UPS technologies leverage ultra-capacitors with a wide temperature range and a long lifetime for IACS control panels and PNZSs. Intelligent UPS backup devices with EtherNet/IP ports and embedded CIP (ODVA, Inc. Common Industrial Protocol) object support allow for faceplates and alarm integration with IACS to improve system maintainability and uptime.

• Network Compatibility and Performance—Network compatibility and optimal performance are essential from port to port. This measurement includes port data rate and cabling bandwidth. Network link performance is governed by the poorest performing element within a given link. These parameters take on greater importance when considering the reuse of legacy cabling infrastructure.

• EMI (Noise) Mitigation—The risks from high frequency noise sources (such as variable-frequency drives, motors, power supplies and contactors) causing networking disruptions must be addressed with a defense-in-depth approach that includes grounding/bonding/shielding, well-balanced cable design, shielded cables, fiber-optics and cable separation. The importance of cable design and shielding increases for copper cabling as noise susceptibility and communication rates increase. Industry guidelines and standards from ODVA, Telecommunications Industry Association (TIA), and International Electrotechnical Commission (IEC) provide guidance on cable spacing, recommended connectors and cable categories to enable optimum performance.

• Grounding and Bonding—Grounding and bonding is an essential practice not only for noise mitigation but also to help enable worker safety and help prevent equipment damage. A well-architected grounding/bonding system, whether internal to control panels, across plants/sites, or between buildings, helps to greatly enhance network reliability and helps to deliver a significant increase in network performance. A single, verifiable grounding network is essential to avoid ground loops that degrade data transmission. Lack of good grounding and bonding practices risks loss of equipment availability and has considerable safety implications.

• Security—A security incident can cause outages resulting in high downtime costs and related business costs. Many industry security practices and all critical infrastructure regulations require physical infrastructure security as a foundation. Controlling access of personnel to network enclosures is a key consideration of a defense-in-depth security strategy. A card reader system is an alternative to key lock solutions; it controls access to the enclosure and reports the physical status of the door. Network security must address not only intentional security breaches but also inadvertent security challenges. One example of an inadvertent challenge is the all-too-frequent practice of plugging into live ports when attempting to recover from an outage. A successful security strategy employs logical security methods and physical infrastructure practices such as lock-in/block-out (LIBO) devices to secure critical ports, keyed patch cords to prevent inappropriate patches, and hardened pathways to protect cabling from tampering.

• Reliability Considerations—Appropriate cabling, connectivity, and enclosure selection is vital for network reliability, which must be considered over the design life span, from installation through operational phase(s) to eventual decommissioning/replacement. Designing in reliability protocols helps prevent or minimize unexpected failures. Reliability planning may also include over-provisioning the cabling installation. Typically, the cost of spare media is far less than the labor cost of installing new media and is readily offset by avoiding outages.

• Safety—During the physical infrastructure deployment of IES, it is important to consider compliance with local safety standards to avoid electrical shock hazards. IT personnel may occasionally require access to industrial network equipment and may not be familiar with electrical and/or other hazards contained by PNZSs deployed in the industrial network. Standards such as National Fire Protection Association (NFPA) 70E provide definitions that help clarify this issue. Workers are generally categorized by NFPA 70E as qualified or unqualified to work in hazardous environments. In many organizations, IT personnel have not received the required training to be considered qualified per NFPA 70E. Therefore, network planning and design must include policies and procedures that address this safety issue.

• Wireless—The deployment of wireless in the PNZS requires design decisions on cabling and installation considerations for access points (APs) and Wireless Local Area Networks (WLANs). The PNZS backbone media selection and the selection of cabling for APs using Power over Ethernet (PoE) are critical for future readiness and bandwidth considerations. Another planning aspect in the wireless realm involves legacy cabling connected to current wireless APs. Legacy 802.11a/b/g devices are likely to exist. New wireless APs deployed today are likely to be 802.11n/ac/ax (Wi-Fi 4, Wi-Fi 5, or Wi-Fi 6 certified) with speeds up to 10 Gbps. For new installations, Category 6 (for Wi-Fi 4) through Category 6A cabling is recommended. As higher performance wireless standards such as 802.11be (also known as Wi-Fi 7) are considered, it is important to understand that in some cases, existing cabling may not support the increased uplink bandwidths necessary to support higher bit rate APs.

• PoE—PoE is a proven method for delivering commercial device power over network copper cabling. DC power, nominally 48 volts, is injected by the network switch. PoE switch capabilities have evolved to help deliver higher levels of power over standards-compliant Ethernet copper cabling. Industrial network switches are available that offer PoE (IEEE 802.3af, also designated 802.3at Type 1) and PoE+ (IEEE 802.3at Type 2) ports with 15.4 W to 30 W available to devices. 23 AWG Category 6 cabling is recommended for these installations. The IEEE 802.3bt-2018 standard further expands the power capabilities with PoE++ (IEEE 802.3bt Type 3) at 60 W and 4PPoE (IEEE 802.3bt Type 4) at 99 W. 23 AWG Category 6A cabling is required to support these device connections. In time, the scope of PoE will expand to become a viable power source for other elements of industrial networks. Accordingly, consideration of conductor gauge and bundling density will grow in importance.
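The PoE figures in the last bullet can be collected into a small lookup table. This is a minimal sketch: the names `PoeType`, `POE_TYPES`, and `select_poe_type` are hypothetical, the wattages and cable recommendations are transcribed from the bullet rather than from the IEEE standards, and the selection ignores cable losses.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PoeType:
    name: str      # name used in this guide
    standard: str  # IEEE designation as cited in this guide
    watts: float   # power figure quoted in this guide
    cable: str     # cabling recommendation quoted in this guide


# Values transcribed from the PoE bullet above; illustrative only.
POE_TYPES = [
    PoeType("PoE", "IEEE 802.3af (802.3at Type 1)", 15.4, "23 AWG Category 6"),
    PoeType("PoE+", "IEEE 802.3at Type 2", 30.0, "23 AWG Category 6"),
    PoeType("PoE++", "IEEE 802.3bt Type 3", 60.0, "23 AWG Category 6A"),
    PoeType("4PPoE", "IEEE 802.3bt Type 4", 99.0, "23 AWG Category 6A"),
]


def select_poe_type(required_watts: float) -> Optional[PoeType]:
    """Return the lowest-power PoE type whose quoted figure covers the load."""
    for poe in POE_TYPES:  # list is ordered by ascending power
        if required_watts <= poe.watts:
            return poe
    return None  # load exceeds every type listed in this guide


choice = select_poe_type(45.0)
print(choice.name, choice.cable)
```

A table like this makes the cabling consequence visible at design time: any device that needs more than the PoE+ figure lands on a type for which the guide calls for Category 6A.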

The above considerations are addressed in this chapter with various design, installation, and maintenance techniques, such as PNZS architecture and cabling methods (structured and point-to-point). The following sections describe these methodologies.
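Two of the considerations above, Reach and Network Compatibility and Performance, reduce to simple arithmetic. The sketch below is illustrative only: the function names are hypothetical, and the 100 m limit is the commonly cited twisted-pair copper channel length from the TIA-568 family, which this section does not state.

```python
# Assumed twisted-pair copper channel limit (TIA-568 family); not stated in this section.
COPPER_CHANNEL_LIMIT_M = 100.0


def channel_length_m(segment_lengths_m):
    """Total port-to-port length, counting patch cords as well as fixed cable."""
    return sum(segment_lengths_m)


def within_reach(segment_lengths_m, limit_m=COPPER_CHANNEL_LIMIT_M):
    """True when the whole channel, including patch cords, fits the limit."""
    return channel_length_m(segment_lengths_m) <= limit_m


def link_rate_mbps(element_rates_mbps):
    """A link performs no better than its poorest-performing element."""
    return min(element_rates_mbps)


# Hypothetical link: patch cord, horizontal cable, patch cord (meters).
print(within_reach([3.0, 85.0, 5.0]))      # 93 m in total
print(within_reach([5.0, 92.0, 5.0]))      # 102 m in total
print(link_rate_mbps([1000, 100, 1000]))   # one legacy 100 Mbps element
```

The second case shows why patch cords must be counted: the fixed cable alone is under the limit, but the channel is not.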

Physical Zone Cabling Architecture

A common approach to deploying industrial Ethernet networks was to cable infrastructure as a home run from the IES back to a centralized IT closet switch or to an IDC, as shown in Figure 2-3. This was a reasonable approach because fewer cable runs existed and the Ethernet network was for IACS information only. As industrial networks grew in size and scope, with Ethernet becoming pervasive in many layers of the network (control and information), this home run methodology became difficult to scale and developed into a more expensive choice. Adding a new IES to the edge of the IACS may require a major cable installation to help overcome obstacles, route in pathways, and so on. In addition, this growth in home run networks has led to significant network cabling sprawl, impacting the reliability of the network. A better approach is a PNZS cabling architecture.

Figure 2-3 Example of 'Home Run' Cabling from Control Panels and Machines back to IT Closet

PNZS cabling architectures are well proven in Enterprise networks across offices and buildings for distributing networking in a structured, distributed manner. These architectures have evolved to include active switches at the distributed locations. For an IACS application as depicted in Figure 2-4, PNZS architecture helps mitigate long home runs by strategically locating IES around the plant to connect equipment more easily. The IES switches are located in control panels, IDF enclosures, and in PNZS enclosures. IDF enclosures house higher density 19" rack-mounted IES and related infrastructure while PNZSs house DIN rail-mounted IES and related infrastructure. PNZS architectures can leverage a robust, resilient fiber backbone in a redundant star or ring topology to link the IES in each enclosure location. Shorter copper cable drops can then be installed from each IDF or PNZS to control panels or on-machine IACS devices in the vicinity rather than back to a central IT closet.

Figure 2-4 Example Use of PNZS and IDFs to Efficiently Distribute Cabling Using a Physical Zone Cabling Architecture
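The difference between home run and zone cabling can be sketched by comparing total installed cable length on a toy floor plan. Everything here is an assumption made for illustration: the coordinates are invented, cable runs are approximated as rectilinear distances, the zone backbone is modeled as a simple star rather than a redundant star or ring, and fiber and copper lengths are summed without regard to cost.

```python
def run_length(a, b):
    """Approximate a cable run as rectilinear (Manhattan) distance in meters."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def home_run_total(closet, devices):
    """Every device cabled directly back to the central IT closet."""
    return sum(run_length(closet, d) for d in devices)


def zone_total(closet, zones):
    """One backbone run per zone enclosure plus short local drops.

    `zones` maps each enclosure position to the devices it serves.
    """
    backbone = sum(run_length(closet, z) for z in zones)
    drops = sum(run_length(z, d) for z, devs in zones.items() for d in devs)
    return backbone + drops


# Hypothetical floor plan: coordinates in meters, invented for illustration.
closet = (0, 0)
zones = {
    (60, 10): [(55, 5), (65, 12), (62, 20)],
    (20, 70): [(15, 75), (25, 68), (22, 80)],
}
devices = [d for devs in zones.values() for d in devs]

print(home_run_total(closet, devices), zone_total(closet, zones))  # 504 218
```

In this toy layout the zone approach needs less than half the cable, and adding a seventh device near an existing enclosure adds only a short drop instead of another long run to the closet.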

TIA/EIA and related ISO/IEC standards support PNZS cabling architectures. A specific example of cabling support appears in Work Area Section 6.1 of TIA 568-C.1: "Work area cabling is critical to a well-managed distribution system; however, it is generally non-permanent and easy to change." These standards define work areas to be the locations where end device connections are made. For office buildings, the work area can be desks in offices and cubicles. In industrial settings, the work area is often in harsh areas on the machine or process line within Cell/Area Zones. These standards require structured cabling that eases management and enables performance by connecting equipment with patch cords to horizontal cabling terminated to jacks.

Structured and Point-to-Point Cabling Methods

Networked IACS devices can be connected in two ways for both copper conductor and optical fiber:

• Structured

• Point-to-point or Direct Attached

The preferred approach to deploy an industrial network is a standards-based (TIA-1005) structured cabling approach. Structured cabling has its roots in Enterprise and data center applications and can be reliable, maintainable and future-ready. Although point-to-point connectivity was the practice for slower proprietary networks for over 25 years, its weaknesses become apparent as networks move to higher-performing industrial Ethernet. In general, point-to-point is less robust than structured cabling because testable links don't exist, spare ports cannot be installed, and the use of stranded conductors means reduced reach. However, good use cases exist for point-to-point connectivity, such as connecting devices to a switch in a panel or short single-connection runs. For more detail on this topic, see Chapter 5, “Physical Infrastructure Deployment for Level 3 Site Operations.”

A PNZS cabling architecture addresses the following key considerations:

• Reach—Media selection is a significant aspect of network reach. Standards-compliant 4-pair copper installations have a maximum link length of 100 m (328 feet). Single Pair Ethernet (per IEEE 802.3cg) utilizing 10BASE-T1L PHYs can provide a maximum link length of 1000 m (3280 feet). A fiber backbone can reach 400 m to 10 km along with the downlinks. PNZS cabling architecture has a unique ability to extend the practical reach of copper links beyond the 100 m home run copper cabling limitation. For an all-copper installation, the uplink can travel up to 100 m while the downlink can reach another 100 m for a total of 200 m.

• Industrial Characteristics—Media selection can be more granular and cost-effective in a PNZS architecture because only the necessary harsh environment drops from IES to IACS end devices are run in hardened media and connectivity.

• Physical Network Infrastructure Life Span—A PNZS cabling infrastructure has a longer potential life span than home run cabling. Where it may be too expensive to install state-of-the-art cabling to each IACS device in a home run scenario, a physical zoned architecture can have high-bandwidth uplinks in the backbone and downlinks/IES can be upgraded as needed, extending the effective life span of a PNZS cabling infrastructure.

• Maintainability—MACs are faster, easier, and less expensive with a PNZS architecture because only shorter downlink cables are installed, removed, or changed. A home run change requires greater quantities of cable and installation/commissioning labor.

• Scalability—A key feature of a PNZS architecture is scalability because spare ports are automatically included in the design with a structured cabling approach.

• Designing for High Availability—PNZSs can be connected in either a resilient ring or redundant star topology to achieve higher availability.

• Network Compatibility and Performance—A key feature of a PNZS-deployed IES is the ability to place the machine/skid IES in a dedicated enclosure with power always on. This helps eliminate network degradation to rebuild address tables caused by powering up/down IES in a control panel for production runs.

• Grounding and Bonding—A PNZS enclosure must have a grounding system with a ground bar tied to the plant ground network. In addition to addressing worker safety considerations, an effective grounding/bonding strategy ensures maximum transmission quality for the network.

• Security—PNZSs typically have a keyed lock to control access to the equipment housed within. In addition, port blocking and port lock-in accessories can secure IES ports, helping prevent problems that may be caused by inadvertent connections made when recovering from an outage.

• Reliability Considerations—A PNZS architecture with a structured cabling system helps deliver high reliability because it provides testable links. Built-in spare ports can resolve outages rapidly.

• Safety—An IES in a PNZS enclosure separates personnel from hazardous voltages in a control panel connected to the IES.

• Wireless—APs can be connected to IES in a PNZS architecture.

• PoE—IES can have PoE-powered ports. Typical applications for PoE include cameras, APs, and other IP devices that can use PoE power. PNZS cabling architectures can help easily support the addition of PoE devices from the IES while minimizing cost and complexity of a home run.

• PoE Network Extender—Using a PoE-powered port from an IES, a network connection can be extended beyond the 100-meter limitation by installing a PoE network extender module. This setup includes a transmitter and a receiver device and leverages standard physical infrastructure.

M.I.C.E. Assessment for Industrial Characteristics

Cabling in IACS environments is frequently exposed to caustic, wet, vibrating, and electrically noisy conditions. During the design phase, network stakeholders must assess the environmental factors of each area of the plant where the network is to be distributed. A systematic approach to this assessment, called Mechanical, Ingress, Chemical/Climatic, Electromagnetic (M.I.C.E.), is described in TIA-1005A and in other standards, including ANSI/TIA-568-C.0, ODVA, ISO/IEC 24702, and CENELEC EN 50173-3.

M.I.C.E. assessment considers four areas:

• Mechanical—Shock, vibration, crush, impact

• Ingress—Penetration of liquids and dust

• Chemical/Climatic—Temperature, humidity, contaminants, solar radiation

• Electromagnetic—Interference caused by electromagnetic noise on communication and electronic systems

M.I.C.E. factors are graded on a severity scale from 1 to 3, where 1 is negligible, 2 is moderate and 3 is severe (see Figure 2-5). Understanding exposure levels helps ensure that the appropriate connectivity and pathways are specified for long-term performance. For example, exposure to shock, vibration, and/or UV light may require use of armored fiber cabling suitable for outdoor environments.

Figure 2-5 TIA-1005A MICE Criteria

It is important to understand that M.I.C.E. rates the environment, not the product. The result of a M.I.C.E. evaluation is used as a benchmark for comparing product specifications. Each product used in the system design should meet or exceed the M.I.C.E. evaluation for that space.

M.I.C.E. diagramming allows the design to balance component costs with mitigation costs to build a robust, yet cost-effective system. The process starts by assessing the environmental conditions in each Cell/Area Zone within the Industrial Zone. A score is determined for each factor in each area. For example, the Machine/Line is a harsh environment with a rating of M3I3C3E3. Since the E factor, electromagnetic, is high, the likely cabling would be optical fiber. Since the other factors, M, I and C, are high as well, the cabling would require armor and a durable jacket. Figure 2-6 shows an example of M.I.C.E. diagramming.
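The comparison of a product against an area's M.I.C.E. score can be sketched in a few lines of Python. This is a minimal illustration, not part of any standard: the `MiceRating` class, its method names, and the idea of expressing a product's specifications on the same 1 to 3 scale are assumptions made for the example.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class MiceRating:
    """Severity per factor, from 1 (negligible) to 3 (severe)."""
    mechanical: int
    ingress: int
    climatic: int
    electromagnetic: int

    @classmethod
    def parse(cls, text: str) -> "MiceRating":
        """Parse a rating written in the form 'M3I3C3E3'."""
        match = re.fullmatch(r"M([1-3])I([1-3])C([1-3])E([1-3])", text.strip())
        if match is None:
            raise ValueError(f"not a M.I.C.E. rating: {text!r}")
        return cls(*(int(level) for level in match.groups()))

    def shortfalls(self, environment: "MiceRating") -> list[str]:
        """Return the factors where this rating is below the environment's."""
        names = ("mechanical", "ingress", "climatic", "electromagnetic")
        return [n for n in names if getattr(self, n) < getattr(environment, n)]


machine_line = MiceRating.parse("M3I3C3E3")  # harsh area from the text
product = MiceRating.parse("M3I3C3E1")       # hypothetical product capability
print(product.shortfalls(machine_line))      # ['electromagnetic']
```

An empty list means the product meets or exceeds the environment on every factor. Any factor returned points to a place where either a more capable product or a mitigation (for example, a pathway or enclosure) has to be considered.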

Figure 2-6 Sample Environmental Analysis Using the M.I.C.E. System

Cable Media and Connector Selection

Many considerations exist for media selection, such as reach, industrial characteristics, life span, maintainability, compatibility, scalability, performance, and reliability, all of which depend on the construction of the cable. The four main options for the cable construction are:

• Media—Copper (shielded or unshielded, solid or stranded) or optical fiber

• Media Performance—For copper, Cat 5e, Cat 6, Cat 6A, Cat 7; for fiber-optic cable, OM1, OM2, OM3, OM4, OM5, single-mode

• Inner Covering/Protection—A number of media variants are designed to allow copper and fiber-optic cable to survive in harsh settings. These include loose tube, tight buffer, braided shield, foil, aluminum-clad, and dielectric double jacketed.

• Outer Jacket—TPE, PVC, PUR, PE, LSZH

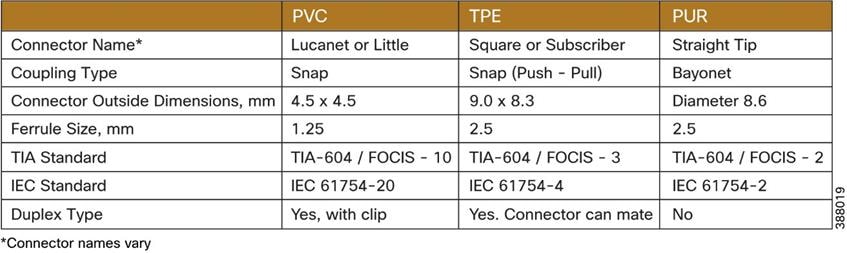

In addition, regulations and codes may govern the use of cabling in certain areas based on characteristics such as flammability rating, region deployed (such as low-smoke zero-halogen in Europe) and voltage rating (600 V). Table 2-1 shows some general cable characteristics.

Table 2-1 General Cable Characteristics

| Parameter | Copper Cable | Multimode Fiber | Single-mode Fiber |
| --- | --- | --- | --- |
| Reach (maximum) | 100 m; 1000 m (SPE) | 2,000 m (1 Gbps); 400 m (10 Gbps) | 70 km (1 Gbps); 10 km (10 Gbps) |
| Noise Mitigation Option | Foil shielding | Noise immune* | Noise immune* |
| Data Rate (Industrial) | 10 Mbps (SPE); 100 Mbps (Cat 5e); 1 Gbps (Cat 6); 10 Gbps (Cat 6a) | 1 Gbps; 10 Gbps | 1 Gbps; 10 Gbps |
| Cable Bundles | Large | Small | Small |
| Power over Ethernet (PoE) capable | Yes; Yes, PoDL for SPE applications | Yes, with media conversion | Yes, with media conversion |

*Fiber-optic media is inherently noise immune; however, optical transceivers can be susceptible to electrical noise.

Fiber and Copper Considerations

When cabling media decisions are made, the most significant constraint is reach (see Table 2-1). If the required cable reach exceeds 100 m (328 feet), then the cable media choice is Single Pair Ethernet (a copper cabling technology), non-standard long reach cable, a PoE network extender, or optical fiber cable. Four-pair copper Ethernet cable is limited to a maximum link length of 100 m. Single Pair Ethernet (SPE) with a media converter extends the reach of copper cabling for specific applications, typically those found at the network edge, and can be used to form point-to-point links at distances up to 1000 meters (3280 feet). However, other considerations may be important for distances less than 100 m, such as extreme EMI, for which fiber-optic cable is preferred due to its inherent noise immunity.
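The reach-driven selection described above can be sketched as a small Python helper. This is an illustrative sketch only: the function name and the ordering of the returned list are assumptions, the long-reach cable limit (185 m at 10 Mbps) and the network extender limit (2,000 feet at 100 Mbps) are taken from the long-reach and network extender sections of this guide, and a real design also has to weigh data rate, power, and environment.

```python
FOUR_PAIR_MAX_M = 100.0          # standards-compliant 4-pair copper link
LONG_REACH_MAX_M = 185.0         # UL-tested long-reach cable at 10 Mbps
EXTENDER_MAX_M = 2000 * 0.3048   # PoE network extender, 2,000 ft at 100 Mbps
SPE_T1L_MAX_M = 1000.0           # 10BASE-T1L point-to-point link at 10 Mbps


def candidate_media(reach_m: float, extreme_emi: bool = False) -> list[str]:
    """List media options that can cover reach_m (hypothetical helper)."""
    if reach_m <= 0:
        raise ValueError("reach must be positive")
    if extreme_emi:
        # Fiber is preferred for extreme EMI, even below 100 m.
        return ["optical fiber"]
    candidates = []
    if reach_m <= FOUR_PAIR_MAX_M:
        candidates.append("4-pair copper")
    else:
        if reach_m <= LONG_REACH_MAX_M:
            candidates.append("long-reach copper cable")
        if reach_m <= EXTENDER_MAX_M:
            candidates.append("PoE network extender")
        if reach_m <= SPE_T1L_MAX_M:
            candidates.append("Single Pair Ethernet")
    candidates.append("optical fiber")
    return candidates


print(candidate_media(80))
print(candidate_media(150))
print(candidate_media(800))
```

For 80 m the helper returns 4-pair copper and optical fiber. For 150 m it returns the three copper-based extensions plus fiber, and for 800 m only Single Pair Ethernet and fiber remain.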

Another consideration that warrants the use of fiber-optic cabling is network switch uplinks. Laboratory studies show that using optical fiber for these connections provides faster convergence after a switch power interruption, lessening the duration of the network outage. In a comparison of data rates, copper and fiber are similar for typical industrial applications (see Table 2-1); however, other higher performing optical fiber cables are available for high demand networks. A possible exception to the “copper/fiber” speed statement is Single Pair Ethernet. SPE, as defined by IEEE 802.3cg, has a maximum speed of 10 Mbps. However, this apparent speed penalty versus 4-pair performance comes with the advantage of 10x link length if the IACS application can tolerate the speed penalty.

Network life span is a significant consideration for media performance. For instance, installing a network with Cat 6 cabling is not recommended if growth is expected within 10 years, especially if the network will be transporting video. If the expectation is 10 or more years of service, a higher performance category cabling (such as Cat 6A) should be considered.

Long Reach applications

The ANSI/TIA 568 standard specifies that an Ethernet channel (structured cable plus patch cords) should not exceed 100 meters due to the amount of time it takes the electrical signal, at high bandwidth speed, to traverse the entire channel. However, with advancements in cable technology and the proliferation of IIoT sensors that don’t require high bandwidth, cable solutions are available that provide longer reach, as validated by UL testing, but do not comply with the current ANSI/TIA 568 standard.

IIoT sensors are typically lower bandwidth, around 10 or 100 Mbps, and therefore the signal can operate reliably at longer distances when using a higher quality cable that has been validated through testing. Additional long-reach applications that benefit from this cable technology include video cameras and wireless access points. Often these devices require greater than 100 meters of cabling and require power, such as PoE.

These long-reach applications are where cable construction and the corresponding performance are essential. The specified cable should have proof that a third party*, such as UL, has tested and approved the cable for the required installation, and provide a performance warranty. In addition, it is good practice to verify the performance of the installed cable with a network tester (this testing is often required for establishing the warranty). These long-reach cables may eliminate the need for additional zone enclosures or fiber runs and when properly specified and installed will provide years of reliable cable infrastructure.

Long reach cables are also capable of supplying Power over Ethernet (PoE) for remote sensors, wireless access points, and cameras. As expected, the lower the required PoE power, the longer the cable distance. The table below shows the results of a UL test that addresses distance, power, and wireless access points on a high-quality Cat 6 and Cat 6a cable.

| Maximum horizontal transmission distance | High-Quality Cat 6 | High-Quality Cat 6a |
| --- | --- | --- |
| 10 Mbps | 185 m (606 ft.) | 185 m (606 ft.) |
| 100 Mbps | 150 m (492 ft.) | 150 m (492 ft.) |
| 1 Gbps | 100 m (328 ft.) | 100 m (328 ft.) |
| 10 Gbps | 50 m (164 ft.) | 100 m (328 ft.) |

| PoE Specifications | High-Quality Cat 6/Cat 6a |
| --- | --- |
| Type 1 – 15.4 watts | 185 m (606 ft.) |
| Type 2 – 30 watts | 150 m (492 ft.) |
| Type 3 – 60 watts | 100 m (328 ft.) |
| Type 4 – 100 watts | 100 m (328 ft.) |

| Wireless Specifications | High-Quality Cat 6 | High-Quality Cat 6a |
| --- | --- | --- |
| WiFi (IEEE 802.11 ac) | 10 Gbps 37-50 m (121-164 ft.) | 10 Gbps 100 m (328 ft.) |
| WiFi (IEEE 802.11 ax) | 10 Gbps 37-50 m (121-164 ft.) | 10 Gbps 100 m (328 ft.) |

* Refer to verify.UL.com V558491 & V685853 for details on the above specifications

Network extenders

A network extender, also known as Power over Ethernet (PoE) Extender, is the simplest and most cost-effective method to extend data, and optionally PoE power, well beyond the 100-meter limitation of a traditional switch using standard twisted-pair cabling or long reach cabling described in the previous section. In fact, a network extender distance of up to 2,000 feet at a speed of 100 Mbps can be achieved.

The network extender hardware, consisting of a transmitter and receiver, is used to extend the Ethernet signal and, if using a PoE-powered switch, the power can be extended as well.

Network extenders can be powered using a PoE port on a switch or with a local DC power supply. The extender works by converting the standard Ethernet to a long-range signal and then converting it back to standard Ethernet. Therefore, the sourcing switch and end device are unaware the extender is being used and maintain operation with standard Ethernet protocol.

The extenders do not require any configuration setup and therefore are easy to install.

Industrial environments are often more demanding for network transmission due to nearby electrical noise that can interfere with the integrity of the signal. In some cases, a shielded cable is recommended to protect the network transmission from this electrical noise, often referred to as Electromagnetic Interference (EMI). When running a cable long distance for a network extender application it is best to avoid areas where EMI is present. However, avoiding the EMI area is not always possible and mitigation methods, such as using shielded cable, must be implemented.

Cisco, Panduit, and Rockwell Automation recommend installing the cabling system using patch panels. Using patch panels requires some additional physical infrastructure as shown in figure 4. ‘Patching’ refers to interconnecting the cables through patch panels so that standard patch cables can be used, and a structured cable can be set up to connect the patch panels. Structured horizontal cable is typically a solid conductor and can be conveniently tested with handheld test equipment. Patching can be set up with shielded or unshielded copper cabling, and it is recommended to follow structured cabling standards, such as TIA 1005.

Figure 2-7 Typical PoE network extender application

Optical Fiber Cable Basics

Single-mode and multimode are the two fiber types. Multimode fiber has glass grades of OM1 to OM5. When selecting any optical fiber, the device port must first be considered and must be the same on both ends (that is, port types cannot be mixed). In general, the port determines the type of fiber and glass grade. If the device port is SFP, it is possible to select compatible transceivers and the optimal transceiver for the application. The number of strands, mechanical protection, and outer jacket protection must also be considered. See Chapter 5, “Physical Infrastructure Deployment for Level 3 Site Operations” for a more detailed explanation of optical fiber.

Optical Fiber Link Basics

The optical fiber link (that is, channel) is formed with a patch cord from the device to an adapter. Adapters hold connector ends together to make the connection and are designed for a single fiber (simplex), a pair (duplex), or as a panel containing many adapter pairs. One end of the adapter holds the connector for the horizontal cable that extends to an adapter on the opposite end. A patch cord from the end adapter connects to the device on the opposite end, completing the link. Various connectors and adapters exist in the field; the Lucent Connector (LC) is the predominant choice because of its use with SFP transceivers and its performance. However, many legacy devices may have older-style Subscriber (SC) or Straight Tip (ST) connectors. See Chapter 5, “Physical Infrastructure Deployment for Level 3 Site Operations” for a more detailed explanation of connectors and adapters.

Copper Network Cabling Basics

Copper network cabling performance is designated as a category (that is, Cat). Higher category numbers indicate higher performance. Currently, the predominant choice is Cat 6A (especially for video applications), although higher categories are beginning to be deployed. The copper conductor is typically 23 American wire gauge (AWG), although smaller diameter 28 AWG may be used in some cases for patching. Larger gauge wires are available, and the conductors can be stranded or solid. Typically, a solid conductor is used to help achieve maximum reach for the horizontal cable/permanent links, while a stranded conductor is used for patching or flex applications.

Different strand counts are available in stranded Ethernet cable. Select higher strand counts for high flex applications. Another consideration is EMI shielding. Various shielding options can be employed to suppress EMI noise, such as an overall foil and/or braided shield under the outer jacket, or foil around the individual pairs.

Mechanical protection and outer jacket protection also must be considered when selecting copper network cables. See Chapter 4, “Physical Infrastructure Design for the Industrial Zone” for a more detailed explanation of copper network cabling.

Copper Network Channel Basics

A structured copper network channel is formed with a patch cord plugged into the device and the other end of the patch cord plugged into a jack in a patch panel. The jack is terminated to solid copper horizontal cable that extends to a jack on the other end. A patch cord is plugged into the jack, and the other end of the patch cord is plugged into the device, completing the channel (see Figure 2-7). Two predominant, proven connectors or jacks exist for copper industrial networks:

• RJ45—Part of validated and tested patch cord or field terminable

• M12—Over-molded patch cord or field terminable

The RJ45 plug and jack have been adapted for industrial networks, where they can be deployed in a DIN patch panel. If the connector is located inside a protected space or enclosure, standard RJ45 connectivity is preferred. The plug could be part of a tested and validated patch cord or a durable field-attachable plug. The RJ45 bulkhead versions are available for quick connect/disconnect and versions that are sealed from ingress of liquids and particulates. The M12 is a screw-on sealed connector well suited for splashdown and harsh environments and can be a two-pair D-Code or a four-pair X-Code. See Chapter 4, “Physical Infrastructure Design for the Industrial Zone” for a detailed explanation of copper network cabling and connectivity.

Figure 2-7 Simplified Example of Copper Structured Cabling

Minimum copper patch cord length

As network infrastructures continue to have a higher impact on an organization’s productivity and competitive position, it becomes increasingly important to select a reliable end-to-end structured cabling system that allows for future network growth. To maximize usable network bandwidth, it is critical that patch cords properly support the performance, quality, and dependability of the entire structured cabling system. The performance of the link between the IES and IACS device is most impacted by noise generated in the portion of the channel closest to the active equipment. This is where the patch cords are located and where quality should never be compromised.

It is vital that patch cords have the following design features:

· Mated performance with connecting hardware to maximize cancellation of near-end-crosstalk

· Robust construction to ensure long-term reliability of the network under day-to-day moves, adds and change conditions

· Certified component performance to ensure maximum network availability.

Patch cords are an integral component impacting the performance of the entire structured cabling system. Investment should be made in patch cords that utilize advanced features to deliver maximum reliability and usability of the network.

Sub-standard patch cords jeopardize network performance due to:

· High degree of plug variability which leads to degradation of channel performance

· Inferior design features which do not perform over time due to the mechanical stresses of real-world conditions

· Untested, low-quality patch cords which may not meet electrical requirements

Often, field-produced patch cords made in the pursuit of expediency cannot come close to the performance of factory-produced cords. In addition, patch cords must be properly mated and tuned to connecting hardware (jacks, panels, and horizontal cable) to keep the network running at peak performance. This directly minimizes the impact of costly network downtime.

All PHY chips produced to a given standard have a tolerance built in to offset variances in physical layer performance. Be aware that some of this silicon is “sensitive” to physical layer variance. Here, sub-standard patch cord performance may result in a poorly performing or even non-functional link.

The proven performance of high-quality Panduit copper patch cords, tested to exceed TIA/EIA 568-B.2 specifications as a key component of the complete end-to-end solution, helps ensure maximum network bandwidth. Specifying and installing these patch cords can support critical network demands.

In addition to quality and performance of patch cords, it’s important to consider the minimum feasible patch cord length. Patch cords that are too short can cause performance problems in practice. A good rule of thumb is to use cords no shorter than 0.5 meter (approximately 20 inches). Some PHY chips are sensitive to short cords.

While there is not a minimum patch cord length specified, ANSI/TIA 568-B.2 sets an implied minimum length for a certified patch cord at 0.5 meter. That’s because the calculation for the insertion loss limit lines does not work well below this length. When selecting patch cords, pay close attention to the chosen supplier. Many suppliers will not certify a patch cord below 1 meter.

From ANSI/TIA 568-B.2, K.7.3.2 Insertion Loss

“For cords or jumpers using stranded 26 AWG conductors, the insertion loss of any pair shall be less than or equal to the value computed by multiplying the result of the insertion loss equation in clause 4.3.4.7 by a factor of 1.5. This is to allow a 50% increase in insertion loss for stranded construction, AWG differences, and design differences.”
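The length rules discussed in this section can be checked with a short script. The following Python sketch is illustrative only: the class and function names are hypothetical, the 100 m channel limit and the 0.5 m minimum cord length come from the text above, and the base insertion loss limit from clause 4.3.4.7 is passed in by the caller because that equation is not reproduced here.

```python
from dataclasses import dataclass

MAX_CHANNEL_M = 100.0    # channel = horizontal cable plus patch cords
MIN_PATCH_CORD_M = 0.5   # rule-of-thumb minimum patch cord length
STRANDED_IL_FACTOR = 1.5 # allowance for stranded cords per the quoted clause


@dataclass
class CopperChannel:
    horizontal_m: float
    patch_cords_m: tuple[float, ...]

    def total_m(self) -> float:
        return self.horizontal_m + sum(self.patch_cords_m)

    def problems(self) -> list[str]:
        """Return human-readable findings; an empty list means no issue found."""
        issues = []
        if self.total_m() > MAX_CHANNEL_M:
            issues.append(f"channel is {self.total_m():.1f} m, over {MAX_CHANNEL_M:.0f} m")
        for length in self.patch_cords_m:
            if length < MIN_PATCH_CORD_M:
                issues.append(f"patch cord of {length} m is shorter than {MIN_PATCH_CORD_M} m")
        return issues


def stranded_cord_il_limit_db(base_limit_db: float) -> float:
    """Scale a caller-supplied insertion loss limit by the stranded-cord factor."""
    return STRANDED_IL_FACTOR * base_limit_db


print(CopperChannel(90.0, (3.0, 0.3)).problems())
print(CopperChannel(95.0, (3.0, 3.0)).problems())
```

The first channel is flagged for a 0.3 m patch cord, and the second for a total length of 101 m.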

SPE (Single Pair Ethernet)

SPE (Single Pair Ethernet) is a form of copper-based Ethernet network communications technology for OT networks. SPE is governed by IEEE 802.3cg and provides for Ethernet communications at speeds of 10 Mbps over a pair of balanced copper conductors. The IEEE standard created two new PHYs, 10BASE-T1L and 10BASE-T1S.

10BASE-T1L: Point-to-point links of up to 1000 meters in length at 10 Mbps, with optional power delivery. This point-to-point link is designed to tolerate up to ten (10) inline connectors.

10BASE-T1S: Multidrop links with up to 8 nodes over a total cabled length of 25 meters. T1S PHYs can also form point-to-point links of up to 15 meters in length.
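The limits of the two PHYs can be captured as simple design checks. The Python sketch below is a hypothetical helper, not part of IEEE 802.3cg: the function names are assumptions, and the limits are the ones stated above (1000 m and ten inline connectors for 10BASE-T1L, 8 nodes and 25 m of total cable for a 10BASE-T1S multidrop segment).

```python
T1L_MAX_LENGTH_M = 1000
T1L_MAX_INLINE_CONNECTORS = 10
T1S_MAX_NODES = 8
T1S_MAX_TOTAL_CABLE_M = 25


def check_t1l_link(length_m: float, inline_connectors: int) -> list[str]:
    """Check a 10BASE-T1L point-to-point link against the stated limits."""
    issues = []
    if length_m > T1L_MAX_LENGTH_M:
        issues.append(f"length {length_m} m exceeds {T1L_MAX_LENGTH_M} m")
    if inline_connectors > T1L_MAX_INLINE_CONNECTORS:
        issues.append(f"{inline_connectors} inline connectors exceed {T1L_MAX_INLINE_CONNECTORS}")
    return issues


def check_t1s_multidrop(segment_lengths_m: list[float], nodes: int) -> list[str]:
    """Check a 10BASE-T1S multidrop segment; lengths cover every cable piece."""
    issues = []
    total = sum(segment_lengths_m)
    if total > T1S_MAX_TOTAL_CABLE_M:
        issues.append(f"total cable {total} m exceeds {T1S_MAX_TOTAL_CABLE_M} m")
    if nodes > T1S_MAX_NODES:
        issues.append(f"{nodes} nodes exceed {T1S_MAX_NODES}")
    return issues


print(check_t1l_link(950, 4))                      # []
print(check_t1s_multidrop([20, 4, 4], nodes=9))    # two findings
```

Each function returns an empty list when the link is within limits, so the checks can be chained across every planned link in a design.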

Figure 2-8 Single pair Ethernet architecture

Both PHYs support topologies that the “built for purpose” legacy networks do today. Therefore, a key SPE benefit is building OT networks as you always have and gaining the advantages of Ethernet.

10BASE-T1L applications use the PHY’s ability to deliver long distance point-to-point links with optional power delivery. The SPE media required to form these links out to their maximum distance capability of 1000 meters is 18 AWG STP cable. Links are connectorized on the ends using connection technologies described in the IEC 63171 series of international standards. It is generally understood that a continuous pull of 1000 meters of cable is nearly impossible; that is the reason the IEEE 802.3cg standard permits up to ten (10) intermediate connectors within the 10BASE-T1L link.

10BASE-T1S PHY designs permit multidrop topologies to be designed and implemented, delivering data connectivity to multiple nodes over a span of 25 meters of cable. Note that the 25-meter span is the sum of all trunk and drop segments. ODVA recently published a unique 10BASE-T1S system in Volume 2, “EtherNet/IP Adaptation of CIP, Chapter 8: Physical Layer” that is intended for the interconnection of In-Cabinet devices. Typical devices include contactors, motor starters, overload protectors, pushbuttons, indicators, and related devices.

This in-cabinet system replaces the hardwiring between devices with composite network cabling, taking the form of a 7-conductor ribbon cable. This system of automation panel construction brings rapid assembly and programmable alteration of function, and enables intelligence in the connected devices to provide greater information for maintenance and process optimization.

The system achieves a reduction in device complexity by using a Single Pair Ethernet bus to reduce the device interface complexity and to reduce the average number of interfaces per device. Cabling is simplified by bundling of network power and switched power (for actuation) into a single multi-conductor cable. Assembly is simplified by utilization of insulation displacement/piercing connectors that can be applied (i.e., mechanically attached and contacting the signals) without cable preparation. A technique to locate the relative position of the devices on the bus cable reduces the need for manual selector switches or direct configuration tool attachment to each device – reducing device and installation complexity.

SPE Benefits for OT Networks

· Seamless single physical layer from application edge to the enterprise. SPE simplifies network design and operation, eliminating the support and translation of legacy networks used in OT networks.

· Tighter integration with CPwE validated reference architectures. SPE being Ethernet, if supported by the IACS application, allows much more effective integration and control over the edge network versus legacy networks.

· SPE is Ethernet designed to support the way you build OT networks. SPE PHYs allow the network edge to function like legacy networks, doing so with Ethernet.

· Greatly enhancing security versus “cyber-vulnerable” legacy networks. Since SPE is Ethernet, edge networks incorporate the suite of robust security measures found in IP and OT SDO networks, such as CIP Security.

· Optional power delivery can transform OT network control power infrastructure. Many edge devices are reliant on local control power sources. 10BASE-T1L SPE links allow a single connection with control power and communications transforming the network edge control power infrastructure.

SPE Powering

OT devices require both data and power to perform their designed functions. Power is not always conveniently available at the location where the device is installed. Power over data line (PoDL), like traditional PoE, solves this problem by providing power alongside data connectivity on a single cable.

Figure 2-9 Power over data line architecture

IEEE 802.3 standardized power delivery over a single pair of conductors at 100 Mbps and 1000 Mbps in the IEEE 802.3bu standard, “Physical Layer and Management Parameters for Power over Data Lines (PoDL) of Single Balanced Twisted-Pair Ethernet.” The publication of IEEE 802.3cg extended the specification to include SPE applications at 10 Mbps for OT networks.

All single pair powering traces back to the PoDL standard and in that regard, all SPE powering is technically PoDL. PoDL provides very broad powering capabilities, encompassing multiple voltage levels and power delivery categories. To simplify discussion of SPE powering, industry experts speak about two variants of single-pair powering, SPoE and PoDL. SPoE is used to describe classification- based implementation used in OT networks; “plug & play” SPE powering applications that functionally mirror the user experience of 4-pair PoE installations. Experts use the term PoDL to reflect use of capabilities where control engineers design powering to function as required by their individual applications.

SPoE

· Classifies end device prior to application of power

· Can measure cable resistance

· 24V, 55V ranges available (common in OT networks)

· Can deliver power up to 1 kilometer

· Increased power levels possible at shorter distances

PoDL

· Extremely fast detection of end device prior to power application

· No classification of end devices, appropriate for engineering systems

· 12V, 24V, or 48V ranges

· 15- and 40-meter cable reaches

Today, OT deployments often use a 24V DC supply voltage. However, power delivery on long cables at this voltage is subject to significant losses due to cable resistance. Power delivery efficiency is maximized when the voltage is raised near the SELV maximum, 60V DC. As shown below, SPoE provides two distinct operating voltages: 24V and 55V DC.

| Class | 10 | 11 | 12 | 13 | 14 | 15 |
|---|---|---|---|---|---|---|
| VPSE (V, min/typ/max) | 20 / 24 / 30 | 20 / 24 / 30 | 20 / 24 / 30 | 50 / 55 / 58 | 50 / 55 / 58 | 50 / 55 / 58 |
| ICABLE (mA, max) | 92 | 240 | 632 | 231 | 600 | 1579 |
| PPD (W, max) | 1.23 | 3.2 | 8.4 | 7.7 | 20 | 52 |
| RCABLE (Ohms, max) | 65 | 25 | 9.5 | 65 | 25 | 9.5 |

Six unique classes are defined for OT networks. Classes 10, 11, and 12 enable 24 VDC power delivery over long, medium, and short cables, respectively. Likewise, Classes 13, 14, and 15 enable 55 VDC power delivery over long, medium, and short cables, respectively.
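The relationship between source voltage, cable current, and cable resistance in the class table can be approximated with Ohm's law. The following is a minimal sketch, not part of the IEEE specification: the names `SpoeClass` and `ohmic_estimate` are hypothetical, and the calculation assumes the worst case of minimum PSE voltage, maximum cable current, and maximum cable loop resistance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpoeClass:
    """Subset of the SPoE class parameters tabulated in this section."""
    name: str
    v_pse_min: float    # minimum PSE output voltage, volts
    i_cable_max: float  # maximum cable current, amperes
    r_cable_max: float  # maximum cable loop resistance, ohms
    p_pd_table: float   # tabulated maximum power at the powered device, watts


def ohmic_estimate(c: SpoeClass) -> tuple[float, float]:
    """Return (voltage, power) at the powered device from Ohm's law alone.

    Assumption: worst case of minimum source voltage, maximum current,
    and maximum cable resistance; no other margins are modeled.
    """
    v_drop = c.i_cable_max * c.r_cable_max  # voltage lost in the cable
    v_pd = c.v_pse_min - v_drop             # voltage left at the device
    return v_pd, v_pd * c.i_cable_max


# Values copied from the class table (Class 10 and Class 14).
CLASS_10 = SpoeClass("Class 10", 20.0, 0.092, 65.0, 1.23)
CLASS_14 = SpoeClass("Class 14", 50.0, 0.600, 25.0, 20.0)

for c in (CLASS_10, CLASS_14):
    v_pd, p_pd = ohmic_estimate(c)
    print(f"{c.name}: V_PD ~ {v_pd:.2f} V, P_PD ~ {p_pd:.2f} W "
          f"(table: {c.p_pd_table} W)")
```

The estimate comes out at roughly 1.29 W for Class 10 and 21 W for Class 14, a few percent above the tabulated PPD values of 1.23 W and 20 W. The difference presumably reflects margins that this simple model ignores, so the tabulated values remain the design reference.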

SPE Physical Layer

Generally, installed SPE network links should conform to IEC 60950-1, IEC 62368-1, and IEC 61010-1. All equipment and cabled infrastructure should additionally conform to applicable local, state, or national standards.

All SPE equipment is expected to withstand the environmental stresses of its mounting location, as defined in the following specifications, where applicable.

· Environmental stresses: IEC 60529 and ISO 4892

· Mechanical stresses: IEC 60068-2-6 and IEC 60068-2-31

· Climatic stresses: IEC 60068-2-1, IEC 60068-2-2, IEC 60068-2-14, IEC 60068-2-27, IEC 60068-2-30, IEC 60068-2-38, IEC 60068-2-52, and IEC 60068-2-78

SPE cable is readily routed by conventional means used for 4-pair Ethernet cables.

SPE Media

IEEE 802.3cg, like most IEEE 802.3 standards, does not prescribe physical media. Rather, the standard defines the electrical characteristics that the chosen media must have for the PHYs to perform correctly. 10BASE-T1L is designed to operate over a single balanced pair of conductors that meets the requirements specified in subclause 146.7 of IEEE 802.3cg. All SPE cabling and equipment is expected to be mechanically and electrically secured in a professional manner. All SPE cable is expected to be routed according to applicable local, state, or national standards, considering all relevant safety standards.

A 10BASE-T1L link segment supports up to ten (10) in-line connectors over a single balanced pair of conductors, at link lengths of at least 1000 meters. SPE links can be made using shielded or unshielded cables, depending upon the EMC environment encountered in the application. The transmission distance of 10BASE-T1L links is limited primarily by the DCR (direct current resistance) of the single-pair cable; for example, a smaller conductor (higher AWG number) shortens the transmission distance.

SPE point-to-point links may be built using a variety of gauge sizes of balanced single-pair cable. However, it’s worth noting that link segment electrical performance is predicated on a reference 18 AWG SPE cable with a characteristic impedance of 100 ohms. Larger or smaller conductor sizes generally provide longer or shorter link lengths, respectively. In the table below, 18 AWG cable delivers a 1000-meter length at the insertion loss limit. Substituting 14 AWG cable delivers a length over 1500 meters. Conversely, dropping conductor size to 26 AWG results in a link length of only 395 meters. Note: the largest conductor size accommodated by SPE connectors is 18 AWG.

By design, SPE links can accommodate the added insertion loss of inline connectors; up to ten (10) connectors in a 1000-meter link. This design feature is intended to permit SPE users significant implementation flexibility and in some cases, enable cable reuse.

| Conductor Diameter, mm (AWG) | Resistance per Meter, ohms | Length at IL Limit, meters | Conductor Resistance at IL Limit, ohms | Loop Resistance at IL Limit, ohms | 10 Connector DCR, ohms | Link Segment Resistance at IL Limit, ohms |
|---|---|---|---|---|---|---|
| 1.63 (14) | 0.0092 | 1589 | 14.67 | 29.33 | 1 | 30.33 |
| 1.45 (15) | 0.0116 | 1415 | 16.47 | 32.94 | 1 | 33.94 |
| 1.29 (16) | 0.0147 | 1261 | 18.50 | 37.00 | 1 | 38.00 |
| 1.14 (17) | 0.0185 | 1123 | 20.78 | 41.55 | 1 | 42.55 |
| 1.02 (18) | 0.0233 | 1000 | 23.33 | 46.66 | 1 | 47.66 |
| 0.91 (19) | 0.0294 | 891 | 26.20 | 52.40 | 1 | 53.40 |
| 0.81 (20) | 0.0371 | 793 | 29.42 | 58.84 | 1 | 59.84 |
| 0.72 (21) | 0.0468 | 706 | 33.04 | 66.07 | 1 | 67.07 |
| 0.64 (22) | 0.0590 | 629 | 37.10 | 74.19 | 1 | 75.19 |
| 0.57 (23) | 0.0744 | 560 | 41.66 | 83.31 | 1 | 84.31 |
| 0.51 (24) | 0.0938 | 499 | 46.78 | 93.55 | 1 | 94.55 |
| 0.40 (26) | 0.1492 | 395 | 58.98 | 117.96 | 1 | 118.96 |
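The resistance columns in the table above follow from simple arithmetic: conductor resistance is the per-meter resistance times the length, loop resistance is twice that (out and back over the pair), and the link segment resistance adds the DCR allowance for ten connectors. The sketch below illustrates that arithmetic; the function name `link_segment_resistance` is hypothetical, and the inputs are the rounded per-meter values printed in the table, so results differ slightly from the tabulated figures.

```python
def link_segment_resistance(r_per_m: float, length_m: float,
                            connector_dcr_ohm: float = 1.0) -> dict[str, float]:
    """Estimate the DC resistance of a single-pair link segment.

    r_per_m           -- resistance of one conductor per meter, ohms
    length_m          -- link length, meters
    connector_dcr_ohm -- total DCR allowance for inline connectors, ohms
                         (the table uses 1 ohm for ten connectors)
    """
    conductor = r_per_m * length_m  # one conductor, end to end
    loop = 2.0 * conductor          # both conductors of the pair in series
    return {
        "conductor": conductor,
        "loop": loop,
        "link_segment": loop + connector_dcr_ohm,
    }


# 18 AWG reference cable at 1000 m and 26 AWG cable at 395 m.
r18 = link_segment_resistance(0.0233, 1000)
r26 = link_segment_resistance(0.1492, 395)
print(f"18 AWG: {r18['link_segment']:.2f} ohms (table: 47.66)")
print(f"26 AWG: {r26['link_segment']:.2f} ohms (table: 118.96)")
```

The small differences from the table arise because the tabulated per-meter resistances are rounded to four decimal places.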

For 10BASE-T1L applications where the 1000-meter link length is desired, a balanced-pair 18 AWG cable is required. Suitable cable has a characteristic impedance of 100 ohms. A word of caution: many fieldbus cables in use today have a characteristic impedance of 100 ohms, but this value is based on typical fieldbus data rates of 31.25 kbps, not the 10 Mbps rate provided by SPE. At 10 Mbps transmission rates, cables carry frequencies as high as 20 MHz, which shifts the characteristic impedance value, significantly in some fieldbus cables.

Cable reuse is possible, but it calls for considerable caution. The prospect of reusing installed fieldbus cable is certainly enticing and arguably would lower the cost of deploying SPE or Ethernet-APL. Given specification-compliant media that is electrically healthy, the practice of fieldbus cable reuse can be very rewarding. However, there are risks that must be addressed when contemplating reuse.

· Fieldbus cable specification – Examine the specifications of the cable planned for reuse. Generally, a nominal impedance of 100 ohms is essential.

· Link length – Consider the link lengths needed in the application. Is the cable conductor size adequate?

· Cable topology – Determine that the installed cable topology works with SPE.

· Inline connectors – Count the inline connectors in each link. Are there 10 or fewer connectors?

· Instrument measurement of link performance – Analyzers are available to measure the electrical performance of links targeted for reuse. These analyzers compare actual measured performance against the accepted norms for SPE links.
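The paper checks in the list above can be sketched as a simple screening routine. This is an illustrative sketch only: the function name `screen_reuse_candidate` and its parameters are hypothetical, the length limits are copied from the conductor table in this section, and the default impedance tolerance of 20 ohms is an assumption borrowed from the fieldbus Type A cable tolerance cited in the Ethernet-APL Media discussion.

```python
# Length at the insertion-loss limit (meters) by conductor gauge (AWG),
# copied from the conductor table in this section.
LENGTH_AT_IL_LIMIT_M = {
    14: 1589, 15: 1415, 16: 1261, 17: 1123, 18: 1000, 19: 891,
    20: 793, 21: 706, 22: 629, 23: 560, 24: 499, 26: 395,
}
MAX_INLINE_CONNECTORS = 10
NOMINAL_IMPEDANCE_OHM = 100.0


def screen_reuse_candidate(awg: int, length_m: float, inline_connectors: int,
                           nominal_impedance_ohm: float,
                           impedance_tolerance_ohm: float = 20.0) -> list[str]:
    """Return a list of concerns; an empty list means no paper-check concerns.

    The tolerance default is an assumption, not an SPE requirement.
    """
    concerns = []
    if abs(nominal_impedance_ohm - NOMINAL_IMPEDANCE_OHM) > impedance_tolerance_ohm:
        concerns.append(
            f"nominal impedance {nominal_impedance_ohm} ohms is far from 100 ohms")
    limit = LENGTH_AT_IL_LIMIT_M.get(awg)
    if limit is None:
        concerns.append(f"{awg} AWG is not listed in the length table")
    elif length_m > limit:
        concerns.append(
            f"{length_m} m exceeds the {limit} m limit listed for {awg} AWG")
    if inline_connectors > MAX_INLINE_CONNECTORS:
        concerns.append(
            f"{inline_connectors} inline connectors exceeds the allowance of 10")
    return concerns


print(screen_reuse_candidate(18, 850, 6, 100))   # no concerns expected
print(screen_reuse_candidate(22, 700, 12, 100))  # length and connector concerns
```

A link that passes this screen still needs the instrument measurement described above, because cable age and condition do not show up in a specification check.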

Finally, bear in mind that the environmental conditions that degrade cable performance are abundant in plants and factories: elevated or extremely low temperatures, high humidity, chemical contact, UV exposure, and mechanical stress such as shock and vibration. These environmental stresses, combined with cable age, may push fieldbus cable beyond successful reuse parameters.

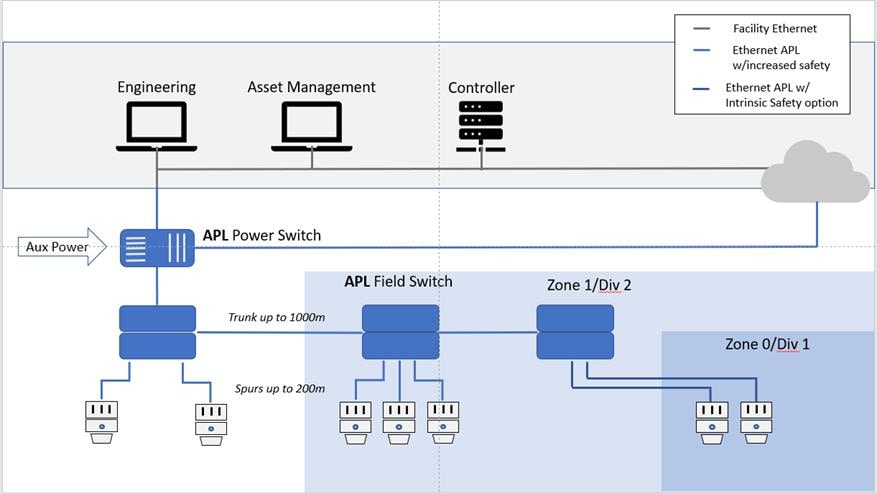

Ethernet-APL

Figure 2-10 Ethernet-APL Trunk and Spur Technology

Ethernet-APL (advanced physical layer) is a ruggedized, two-wire, loop-powered Ethernet physical layer that uses 10BASE-T1L plus IEC extensions for installation within the demanding operating conditions and hazardous areas of process plants. It enables a direct connection of field instrumentation to plant-wide Ethernet networks in a way that process plants can benefit from a convergence of their OT and IT systems.

Ethernet-APL enables long cable lengths, loop power and explosion protection via intrinsic safety techniques. Based on IEEE 802.3cg and several IEC standards, Ethernet-APL physical layer supports any industrial Ethernet protocol, such as EtherNet/IP. These capabilities create a long-term stable infrastructure for the process automation community.

Ethernet-APL adopts technologies and options already established in the field of process automation. This includes the proven trunk-and-spur topology shown in the figure above with the ability to power up to 50 field devices with up to 500 mW each. Widely used and established cable infrastructures are specified to support the migration in brownfield installations to Ethernet-APL.

For more information on Ethernet-APL, visit https://www.ethernet-apl.org or https://www.odva.org/technology-standards/market-drivers/process-automation

Additional attributes beyond those of SPE are required by process automation applications.

| Attribute | Description |
|---|---|
| 2-WISE | 2-WISE stands for 2-Wire Intrinsically Safe Ethernet. IEC Technical Specification TS 60079-47 defines intrinsic safety protection for all hazardous environment Zones and Divisions. 2-WISE includes simple steps for verifying intrinsic safety without calculations. |
| Ethernet-APL Port Profiles | Ethernet-APL defines port profiles for multiple power levels with and without explosion hazardous area protection. Markings on devices and instrumentation indicate power level and function as sourcing or sinking. This simple framework provides for interoperability from engineering to operation and maintenance. |
| Support for terminals | Ethernet-APL allows wiring to screw-type or spring-clamp terminals, thus supporting cable entry through glands. Additionally, well-defined connector technology ensures simplicity during installation work. |

Ethernet-APL Approved Connectors

Because Ethernet-APL focuses on process automation applications, its physical layer components must be appropriate for these applications. Therefore, the Ethernet-APL project has approved connection methods commonly encountered in this environment. These connection methods include:

· Spring-clamp terminals

· Screw-type terminals

· M8 and M12 connectors

When selecting these connection methods for use in Ethernet-APL, the connectors must also meet the following requirements.

| Specification | Minimum | Maximum |
|---|---|---|
| Current per contact | 4 amperes | |
| Rated voltage, contact to contact | 50 VDC | |
| Isolation voltage, contact to shield | 1500 VAC | |
| Insertion Loss (IL) | | $0.04$ dB for $0.1\ \text{MHz} \le f < 4\ \text{MHz}$; $0.02 \times \sqrt{f / \text{MHz}}$ dB for $4\ \text{MHz} \le f \le 20\ \text{MHz}$ |
| Return Loss (RL) | | $28 + 8 \times f$ dB for $0.1\ \text{MHz} \le f < 0.5\ \text{MHz}$; $32$ dB for $0.5\ \text{MHz} \le f \le 20\ \text{MHz}$ |
| PSANEXT | | $73$ dB for $0.1\ \text{MHz} \le f < 0.95\ \text{MHz}$; $50.5 - 17 \log_{10}(f / 20)$ dB for $0.95\ \text{MHz} \le f < 3.68\ \text{MHz}$; $63$ dB for $3.68\ \text{MHz} \le f \le 20\ \text{MHz}$ |
| DC resistance per contact | | 0.025 ohms |

NOTE 1: The values specified in this table shall be maintained across the full environmental temperature range of the device with which the terminal block or connector is used.

NOTE 2: The parameters given in this table are required for connector and terminal block selection. For practical reasons, for connectors built into an APL port implementation, no specific connector performance tests are required, as it is expected that the connector performance is validated during the port-specific APL conformance tests (e.g., MDI return loss).
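The frequency-dependent limits in the connector table are piecewise functions of frequency. As a hedged illustration of how they might be evaluated when comparing a connector data sheet against the table, the sketch below codes each limit as read from the table, with `f_mhz` in MHz and results in dB. The function names are hypothetical and are not part of any Ethernet-APL tool or specification.

```python
import math

F_MIN_MHZ = 0.1
F_MAX_MHZ = 20.0


def _check_range(f_mhz: float) -> None:
    """The table defines limits only from 0.1 MHz to 20 MHz."""
    if not F_MIN_MHZ <= f_mhz <= F_MAX_MHZ:
        raise ValueError(f"{f_mhz} MHz is outside the 0.1 to 20 MHz range")


def insertion_loss_limit_db(f_mhz: float) -> float:
    """IL limit: 0.04 below 4 MHz, then 0.02 * sqrt(f)."""
    _check_range(f_mhz)
    if f_mhz < 4.0:
        return 0.04
    return 0.02 * math.sqrt(f_mhz)


def return_loss_limit_db(f_mhz: float) -> float:
    """RL limit: 28 + 8 * f below 0.5 MHz, then 32."""
    _check_range(f_mhz)
    if f_mhz < 0.5:
        return 28.0 + 8.0 * f_mhz
    return 32.0


def psanext_limit_db(f_mhz: float) -> float:
    """PSANEXT limit: 73, then 50.5 - 17 * log10(f / 20), then 63."""
    _check_range(f_mhz)
    if f_mhz < 0.95:
        return 73.0
    if f_mhz < 3.68:
        return 50.5 - 17.0 * math.log10(f_mhz / 20.0)
    return 63.0


for f in (0.1, 1.0, 10.0, 20.0):
    print(f"{f:5.1f} MHz: IL {insertion_loss_limit_db(f):.3f} dB, "
          f"RL {return_loss_limit_db(f):.1f} dB, "
          f"PSANEXT {psanext_limit_db(f):.1f} dB")
```

Each piecewise expression is continuous at its breakpoints (for example, 0.02 × √4 = 0.04 at 4 MHz), which is a useful sanity check on the reading of the table.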

Ethernet-APL Media

Ethernet-APL specifies fieldbus Type A cable, per IEC 61158-2, as the reference cable. Type A cable is 18 AWG with a nominal characteristic impedance of 100 ohms and a tolerance of +/- 20 ohms. The intent in specifying this reference cable is cable reuse. Ethernet-APL, which is based on IEEE 802.3cg, includes the allowance of ten (10) inline connectors, again in anticipation of cable reuse.

Ethernet-APL is specifically designed to accommodate the requirements of intrinsic safety and hazardous environments. These applications have specific environmental requirements for the physical infrastructure to operate safely. These requirements, in addition to the electrical requirements for SPE 10BASE-T1L links, drive the specifications of the Ethernet-APL physical layer.

Cable reuse is possible, but it calls for considerable caution. The prospect of reusing installed fieldbus cable is certainly enticing and arguably would lower the cost of deploying SPE or Ethernet-APL. Given specification-compliant media that is electrically healthy, the practice of fieldbus cable reuse can be very rewarding. However, there are risks that must be addressed when contemplating reuse.

· Fieldbus cable specification – Examine the specifications of the cable planned for reuse.

o Generally, a nominal impedance of 100 ohms is essential.

· Consider the link lengths needed in the application.

o Is the cable conductor size adequate?

· Cable topology – Determine that the installed cable topology works with Ethernet-APL.

· Count inline connectors in each link.

o Are there 10 or fewer connectors?

o Can these connectors meet the IL, RL and PSANEXT performance listed in the table above?

· Instrument Measurement of Link Performance – Analyzers are available to measure the electrical performance of links targeted for reuse.

o These analyzers compare actual measured performance against the accepted norms for SPE and Ethernet-APL links.