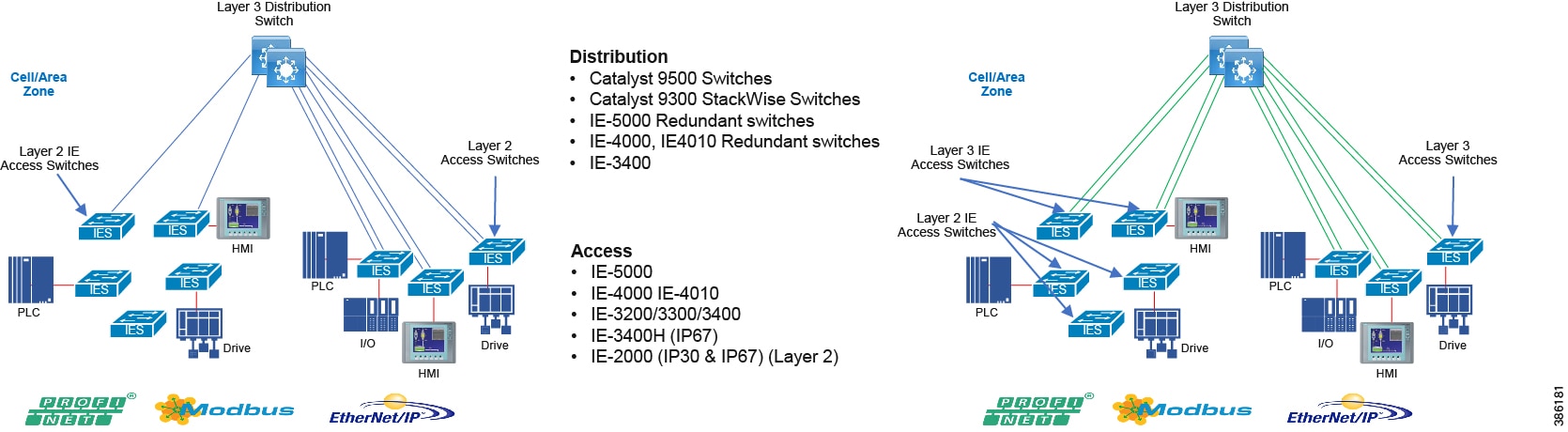

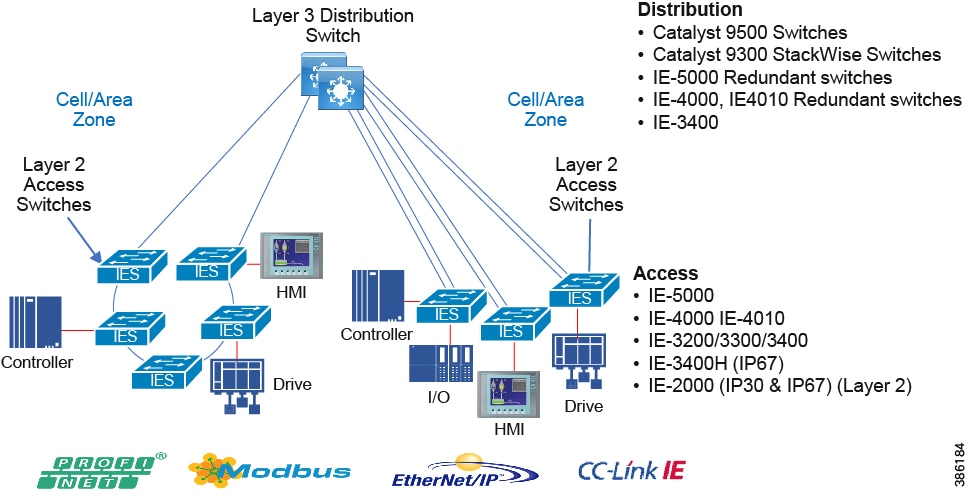

- Industrial Networking and Security Design for the Cell/Area Zone

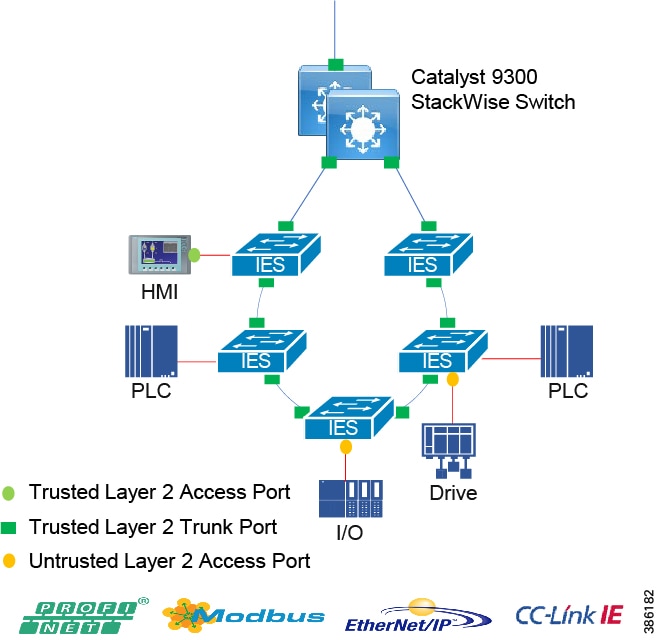

- Industrial Characteristics and Design Considerations

- Cell/Area Zone Components

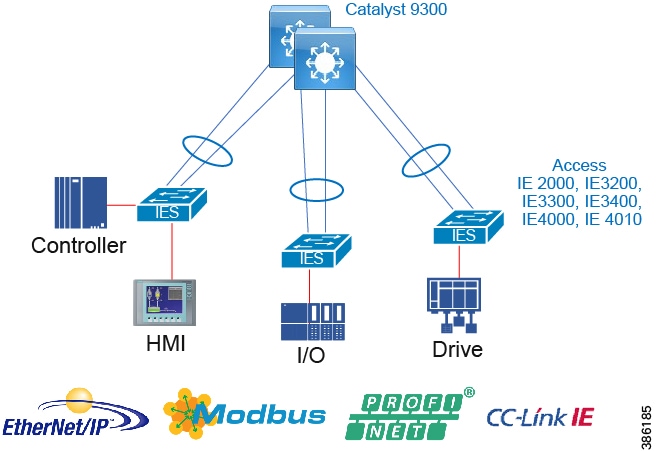

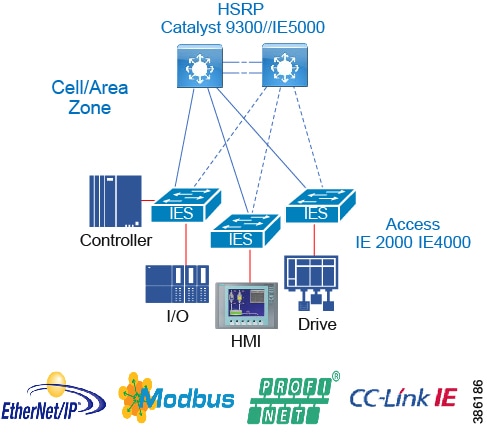

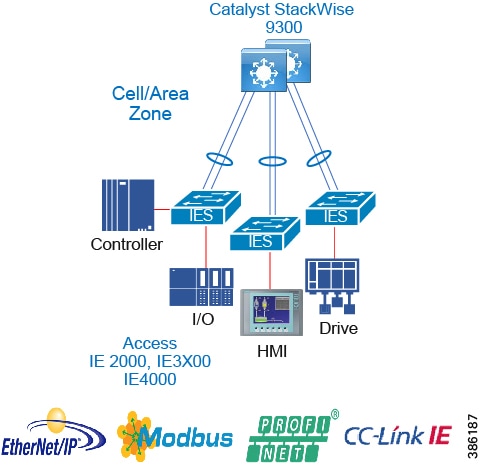

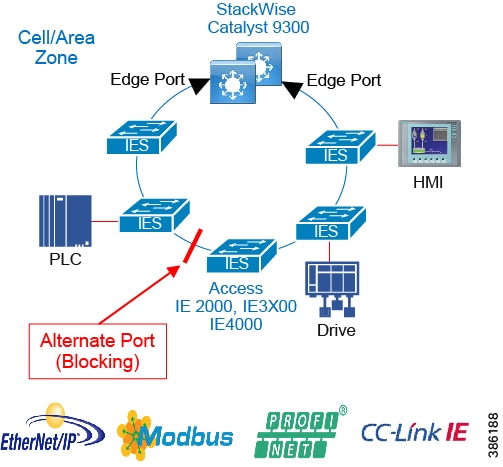

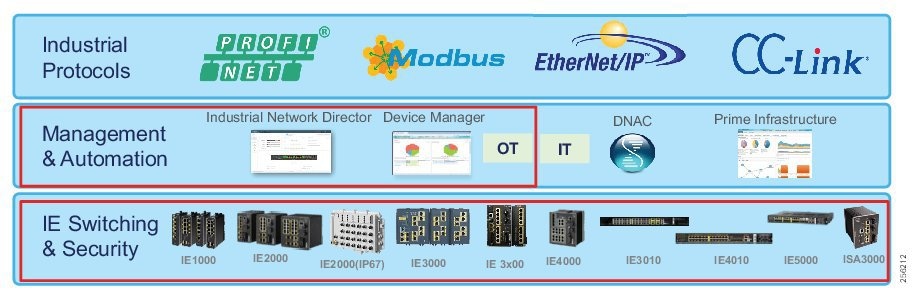

- Switching Platform, Industrial Security Appliance, and Industrial Compute Portfolio for the Cell/Area Zone

- Cell/Area Zone IP Addressing

- Cell/Area Zone Traffic Patterns and Considerations

- Cell/Area Zone Performance and QoS Design

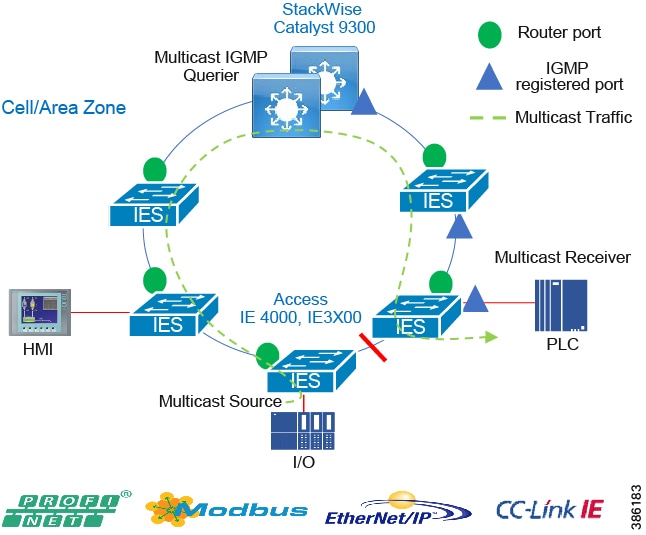

- Multicast Management in the Cell/Area Zone and ESP

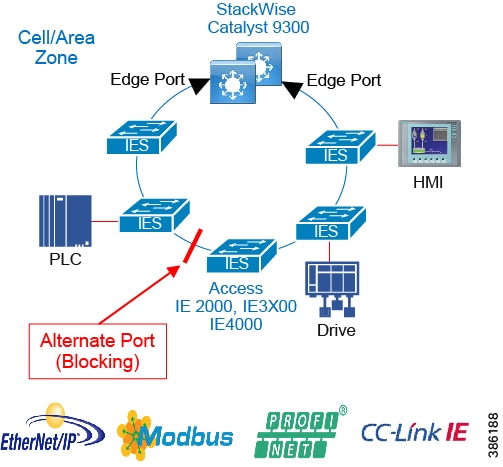

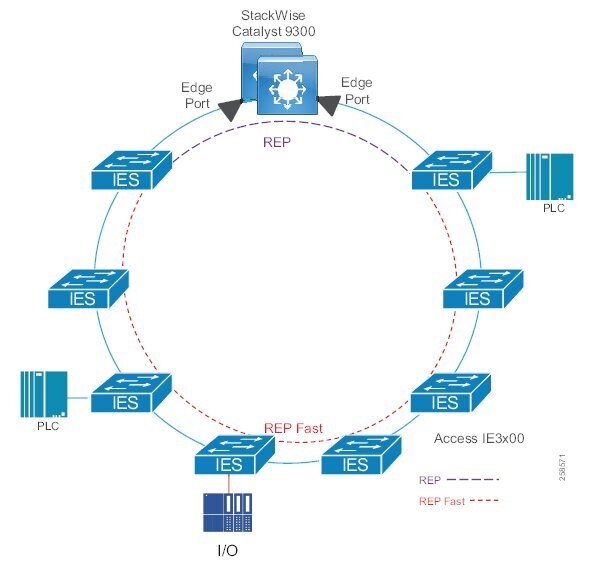

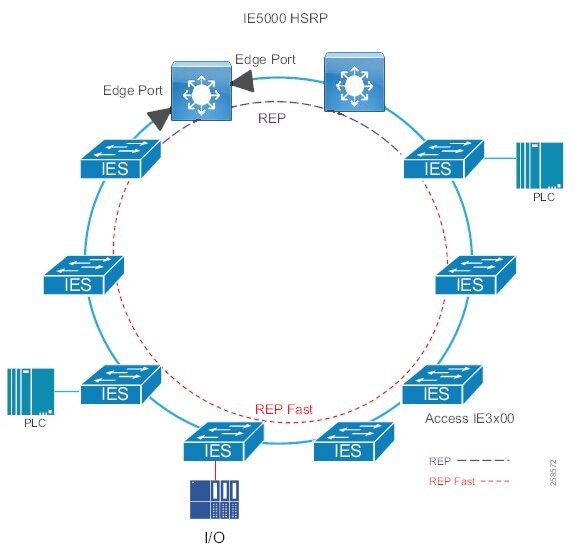

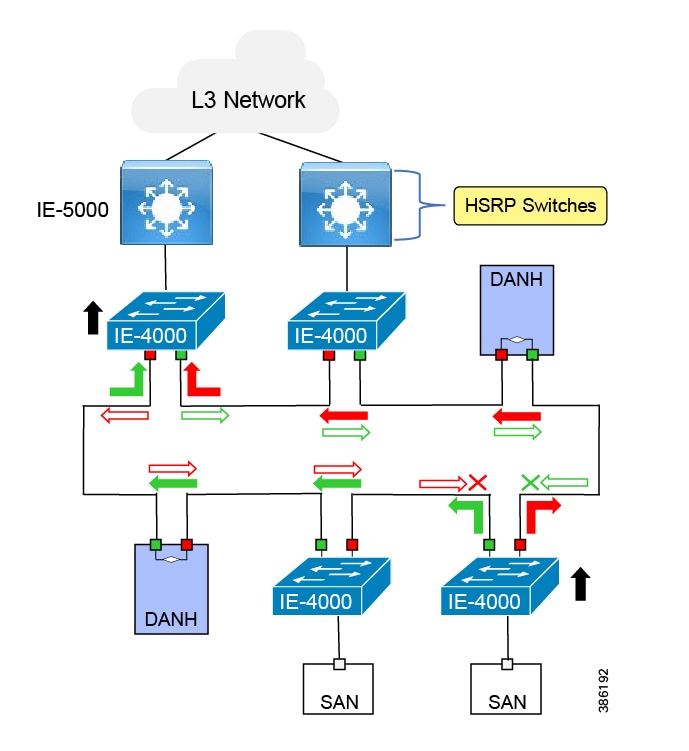

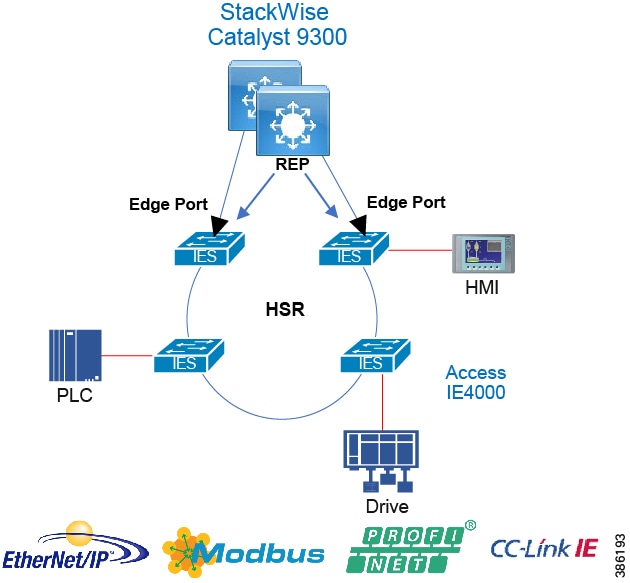

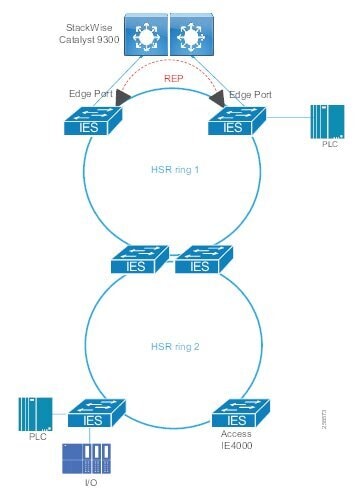

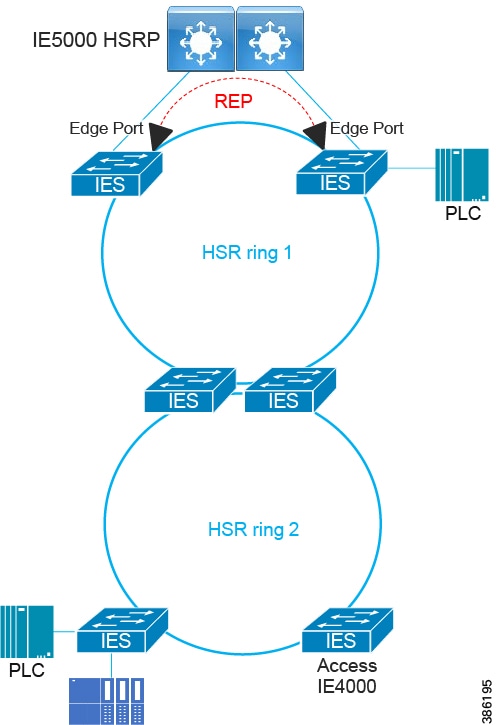

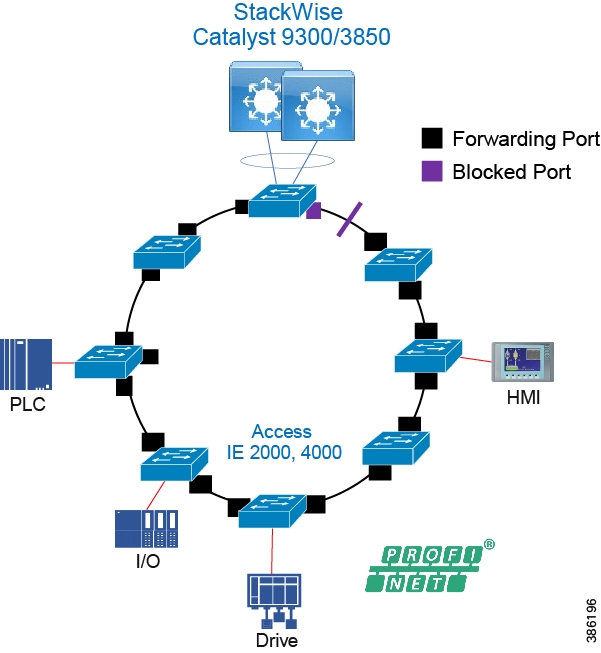

- Availability

- Resiliency Summary and Comparison

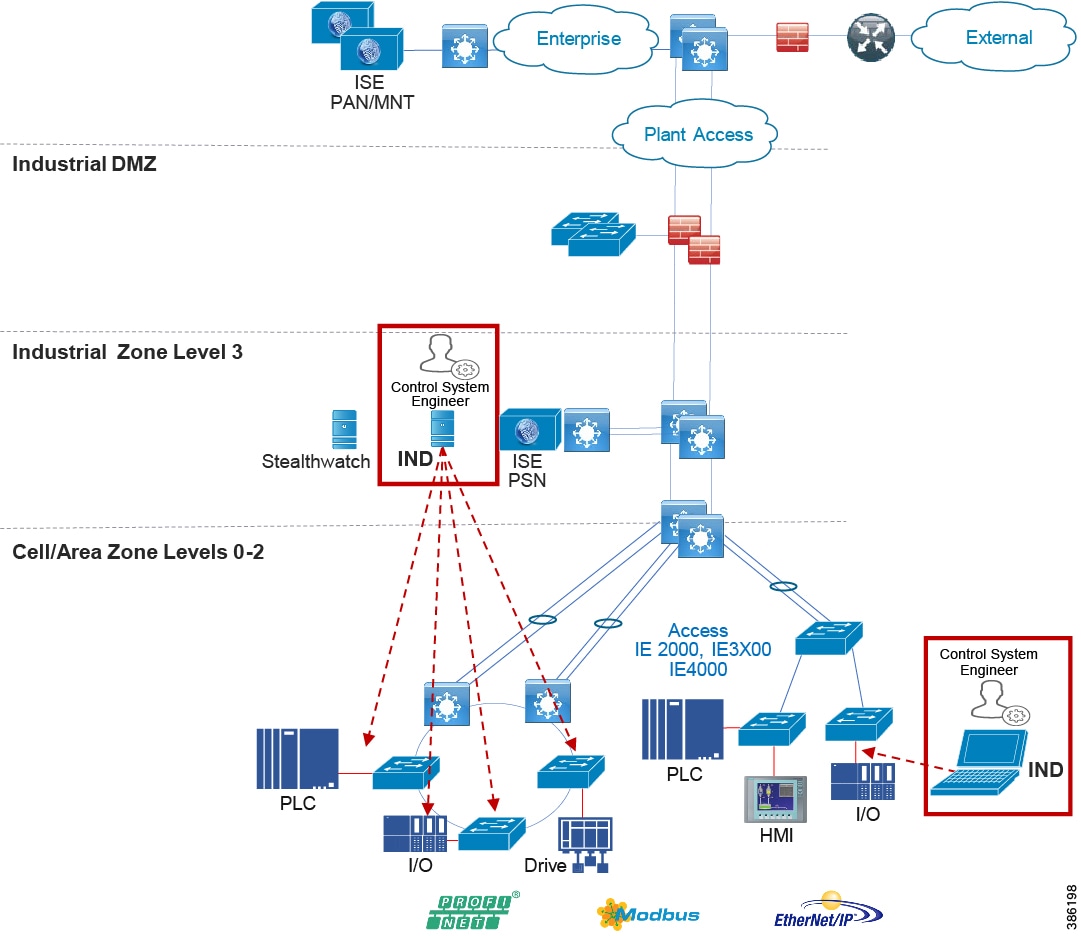

- Cell/Area Zone Management

- Cisco IND Deployment Options and Considerations

Industrial Automation in Mining Environments

Executive Summary

Operations in today's mining industry need to be flexible and reactive to commodity price fluctuations and shifting customer demand, while maintaining operational efficiency, product quality, sustainability, and, most importantly, the safety of the mine and its personnel. Mining companies are seeking to drive operational and safety improvements into their production systems and assets through convergence and digitization, leveraging new paradigms introduced by the Industrial Internet of Things (IIoT). However, such initiatives require process environments to be connected securely via standard networking technologies, giving mining companies and their key partners access to a rich stream of new data, real-time visibility, optimized production systems, and, when needed, secure remote access to the systems and assets in the operational environments.

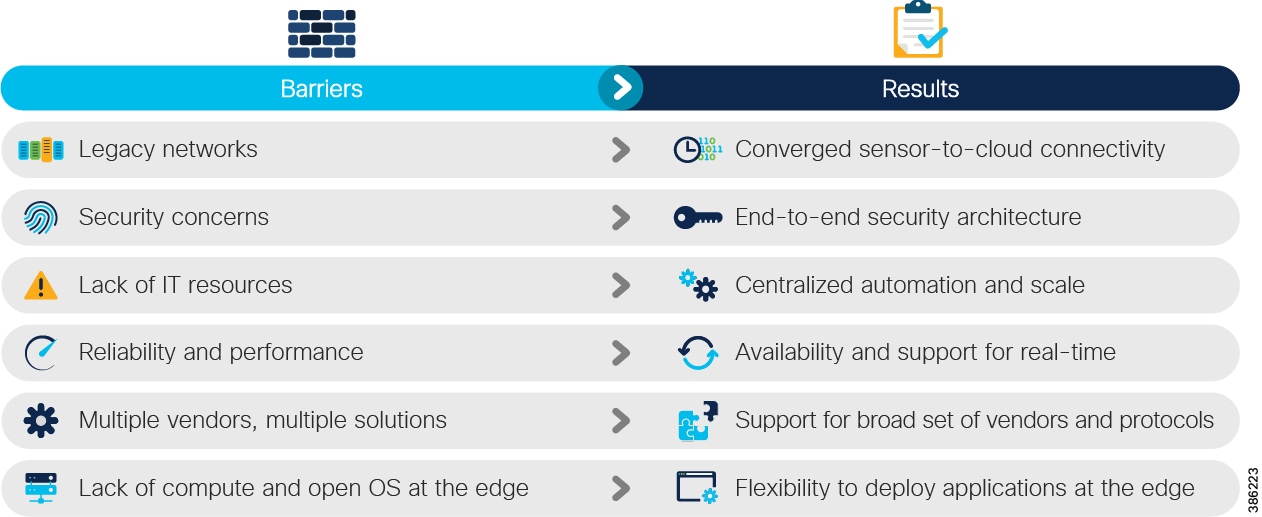

The Cisco® Industrial Automation (IA) Mining solution and relevant product technologies are an essential foundation for securely connecting and digitizing mining production environments to achieve significantly improved business and operational outcomes. The Cisco solution overcomes top customer barriers to digitization, including security concerns, inflexible legacy networks, and complexity. The solution provides a proven and validated blueprint for connecting Industrial Automation and Control Systems (IACS) and production assets, improving industrial security, and improving plant data access and operational reliability. Following this best-practice blueprint with Cisco market-leading technologies will help decrease deployment time, risk, and complexity while improving overall security and operating uptime. Figure 1 highlights key barriers in mining telecommunications infrastructure, the results of the IA Mining 1.0 solution, and how the solution overcomes those barriers.

Figure 1 Why an Industrial Automation – Mining Solution

Trends and Challenges in Mining

The mining industry faces challenges on many fronts: the constant threat of commodity prices falling below the production cost at a given location, environmental issues, the need for water, power, waste storage, and water treatment, regulatory compliance, and site remediation, to name just a few.

Operations in today’s mining industry need to be flexible and reactive to commodity price fluctuations and shifting customer demand. Digitizing the mine provides greater visibility and insight, which improves decision-making, lowers safety risks and operational costs, and increases operational efficiency and productivity.

Safety is paramount in a dangerous, nonstop, 24x7x365 mining production environment. Product grade, quality, worker productivity, and overall equipment effectiveness (OEE) metrics are key concerns for mining companies.

Additionally, mining is often performed in isolated parts of the world and requires the development of a local ecosystem of infrastructure and services to support the operation. At remote locations, a mining company may act as the landlord for housing, Internet provider, water utility, waste management utility, transporter of people and product, phone company, and power provider, each of which is a necessary component supporting the primary mining processes. Operators obtain licenses to mine from governments that impose strict environmental and operational requirements; operators must also maintain the land lease and obtain lease extensions for future operations.

The mining process disrupts water tables (reduction, pollution, and redirection), generates dust, impacts flora and fauna, and consumes vast amounts of energy. When transporting ore from the mine to a processing plant or customer location, mines often use railway systems that cross public boundaries and roads. Bulk mining operations impact nearby towns through dust, pollutants to marine environments, noise, and light. If things go wrong, entire ecosystems can be disrupted, for example through tailings dam failures, toxified water tables, land subsidence, and permanently redirected water flows. Ore refineries generate large volumes of unusable material that must be handled in an environmentally responsible manner. Mines need both social and environmental licenses to operate. They have responsibilities to the communities and geographic areas in which they operate, and at the completion of mining operations they must remediate the land and return it to the government in an agreed state.

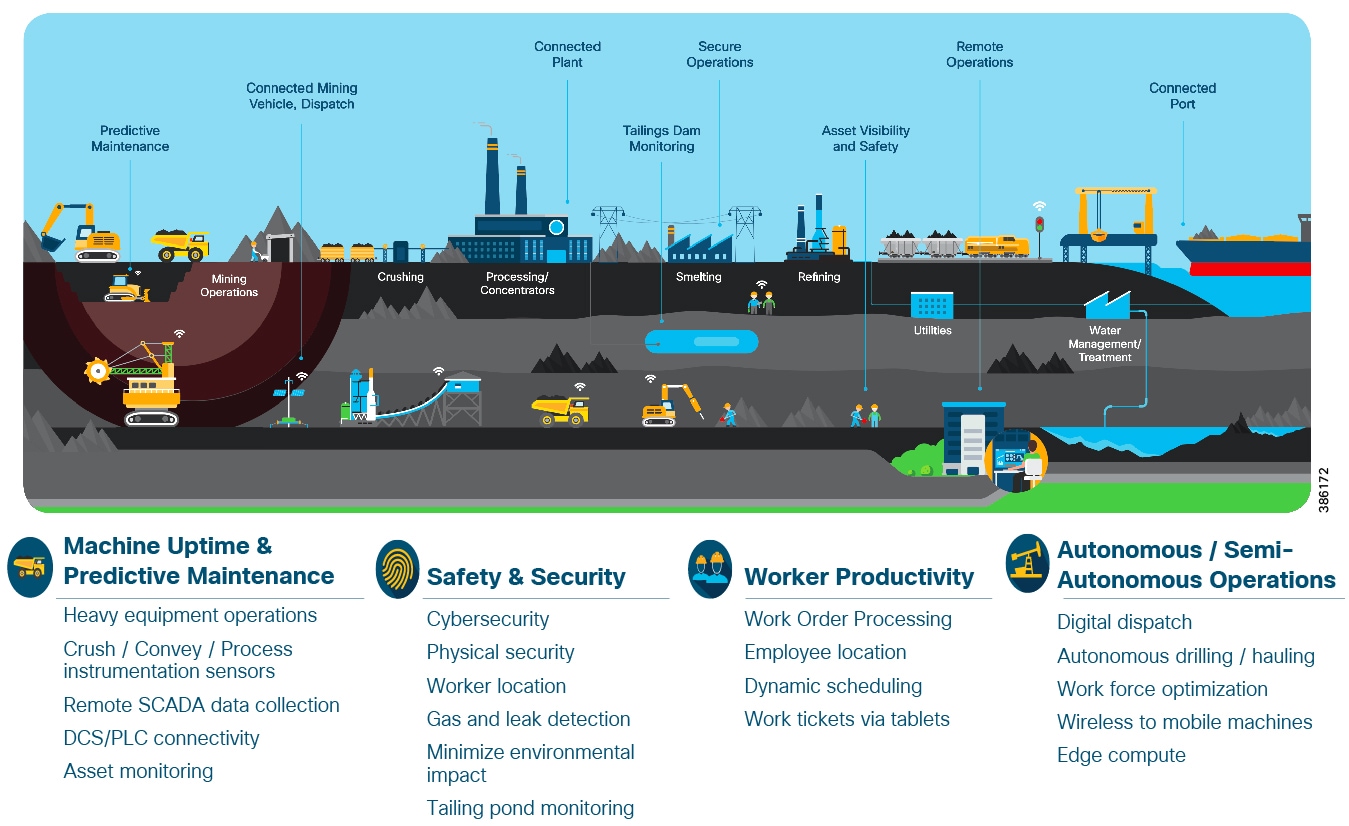

The mining sector plays a significant role in global metals, minerals, and energy production supply chains. Mining companies are major employers and contributors to government revenues via royalties and taxes. Smooth, reliable, and consistent operations are vital to mining companies, countries, and world economies alike. Figure 2 highlights key objectives and complexities of digitizing mining production environments, from extraction to transportation and all the steps in between.

Figure 2 Mining Customer Objectives and Challenges

Solution Features

This section covers the key benefits of deploying IA for Mining and the key use cases and process stages of mining, and identifies how those areas are supported by this or other Cisco Validated Solutions.

Benefits of deploying Industrial Automation for Mining

Key benefits of the solution include:

Increased productivity and efficiency, with greater agility to react to production-impacting failures, market trends, industry fluctuations, and shifting demand. This comes from digitizing the mining supply chain from pit to port through the adoption of Industry 4.0 and Internet of Things (IoT) sensor connectivity, and from leveraging digital technologies to enable critical applications such as increased automation and remote operations, analytics, machine learning (ML), and artificial intelligence (AI). These capabilities allow mine operators to make more informed decisions, improve productivity, and increase efficiency and safety. For example, adopting remote and autonomous operations increases productivity through higher asset utilization and reduces risk to personnel by removing people from dangerous areas. IA Mining is a foundational reference architecture for plant environments such as Crush/Convey, Concentration, Smelter, Refinery, Port operations, and water treatment.

Managed cyber risk in the Industry 4.0 era. As the fourth industrial revolution drives the effort to connect the unconnected, mine digitization interconnects the customer and their supply chain from pit to port. Because the production output from mining does not vary as much as in discrete manufacturing, the emphasis in mining is on the integration and optimization of internal supply chains: multiple mines, rail lines, ports, and refineries.

Cybersecurity is a prime concern for mining companies. Mine operation and production cannot risk being affected by cybersecurity breaches. In harsh industries, cyber-attacks could lead to environmental incidents. Security or safety compliance compromises could result in hefty fines, penalties, and potentially a loss of license(s) for a mine to operate.

Equally important in mining is the use of security and secure architectures to protect fragile control equipment from other equipment and to mitigate unintentional impacts, such as malformed packets, variances in equipment manufacturers' protocol implementations, broadcast storms, and network discovery loads, which may cause production failures. Mining companies are therefore concerned about inadvertent intersystem communications triggering production shutdowns or failures in ever more complex, interconnected mining systems. A common issue seen by mining companies is unintended outcomes from well-intentioned operator errors and misconfigurations. Without a true change control system and the ability to control and audit changes, operator error can cause catastrophic issues.

Improve the efficiency of high-impact resources such as water and energy. This not only improves the operational efficiency of the mine and reduces costs, but also helps align the mine with environmental laws and regulations. As the world becomes more environmentally conscious, the mining industry is moving to examine and improve its environmental impact. Mines need to adopt measures to improve energy utilization, reduce water consumption, improve water reclamation, reduce their carbon footprint, and become more eco-friendly. Enabling the digital mine, connecting IoT sensors, and leveraging digital technologies for real-time operational visibility and process optimization will assist in realizing these goals. With the ability to converge systems securely and reliably, the mines of the future can leverage data like never before.

Prioritize safe, healthy, and sustainable operations, with worker and environmental safety as the top priority in every part of the mining value chain. The ultimate goal is to achieve zero worker injuries and minimize human error. Autonomous, semi-autonomous, and remote operations are helping achieve this goal today by removing people from high-risk environments. Machine autonomy demands a highly available, deterministic, and secure network infrastructure upon which network-intensive mining systems and applications rely. Slope and seismic activity monitoring allows for production optimization while reducing safety risk.

Mining Process and Use Cases Overview

The value chain starts with the Exploration/Discovery process. In this phase, a discovery team finds and scopes a body of ore. Giving the team access to geological, drilling, and conventional business systems enables cost reductions, greater efficiency, and improved assay results. Once the ore body is identified, the rights to extract it are sold to a mining company with oversight from the regulatory body responsible for the region. After a mining company has obtained the right to extract the ore, planning begins on a life-of-mine plan. Interaction between Information Technology (IT) and Operational Technology (OT) is paramount. With proper long-term planning, the IT organization, in conjunction with operations, can design a network infrastructure for the physical site that considers how the site will be developed and how the mine will evolve over time.

These general mining processes are shown in Figure 3.

Figure 3 Mining Process and Use Case Overview

Key stages in the mining process are described below.

- Extraction: Mining starts with the removal of “overburden” to expose the ore body. Blasting crews can use explosives to break the rock into pieces small enough for the haulage equipment and the crusher. In most surface and underground mines, haulage is moving toward remote and autonomous operations.
- Crushing: Reduces the rocks or ore to a size small enough to go on the conveyor belt and into the rock mill for processing and concentration.
- Beneficiation/Processing/Concentration: In the mill, the ore is ground to a powder consistency. This powder is mixed with water and chemicals to extract the minerals and is then sent to a thickener. The resulting concentrate can be sent to a smelter for further refining or sold as a commodity to other companies seeking the concentrate blend.
- Smelting: The concentrate can be sent to the smelter, where extreme heat is used to separate the metal from the waste (slag). The slag is poured off into a dump area, and the metal is formed into ingots for further processing in a rod plant or for sale on the commodities market.
- Refining: The process of separating the desired material from the waste, which can be achieved with chemical or heat treatment, for example.
- Waste: The part of the rock that is not used is sent to the tailings pond as waste. If not properly managed, tailings ponds can become a major environmental risk. Should they fail, they can cause irreversible damage to the environment and severely impact production, and the resulting financial penalties can be extremely high.

In addition to the above, many mining facilities include utilities such as potable water production, power generation, and transportation infrastructure. Mine operations are typically far from general infrastructure and require significant power to run, so on-site power generation and distribution are key considerations. Additionally, mined product may be moved over long distances, depending on where the mine is located and where the product is sold. Common transportation methods include road trucking, rail, pipelines, and shipping ports.

At this time, the solution focuses on support for Industrial Automation systems found in the Extraction, Crushing, Processing/Concentration, Smelting, and Refining stages of mining.

This solution does not specifically cover Utilities or Transportation as they are covered in separate Cisco Validated Solutions such as:

- Substation Automation: https://www.cisco.com/c/en/us/td/docs/solutions/Verticals/Utilities/SA/2-3-2/CU-2-3-2-DIG.html
- Connected Rail: www.cisco.com/go/connectedrail
- Wireless sensor networks in the Refining and Waste Management stages of the mining process, covered by the Oil & Gas Wireless sensor solution

This release of the mining solution does not cover the following, which will be addressed in future releases:

- Wireless deployments for autonomous vehicles, for example during Extraction

Solution overview

Industrial Automation Reference Architecture

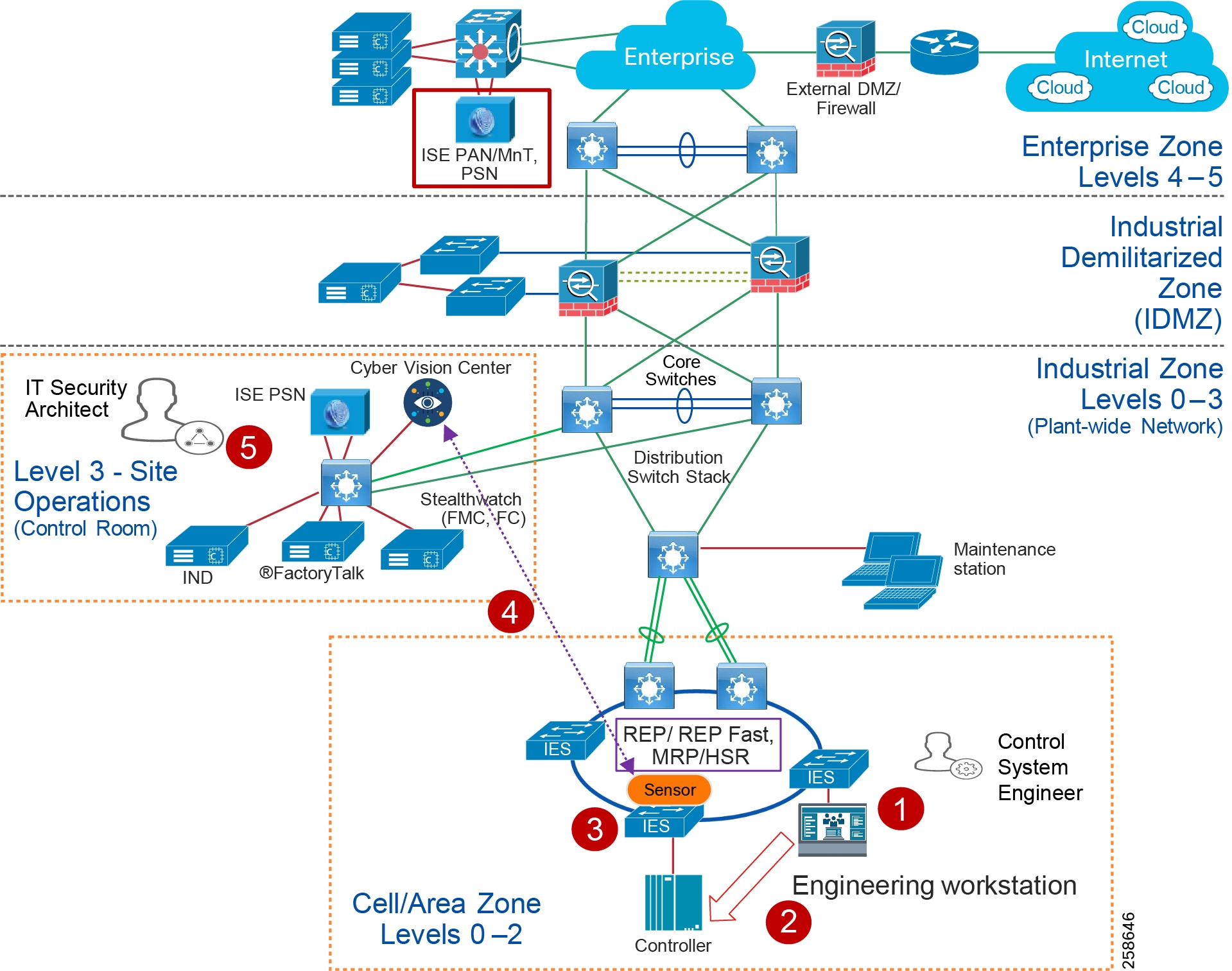

Figure 4 Industrial Automation - Mining Reference Architecture

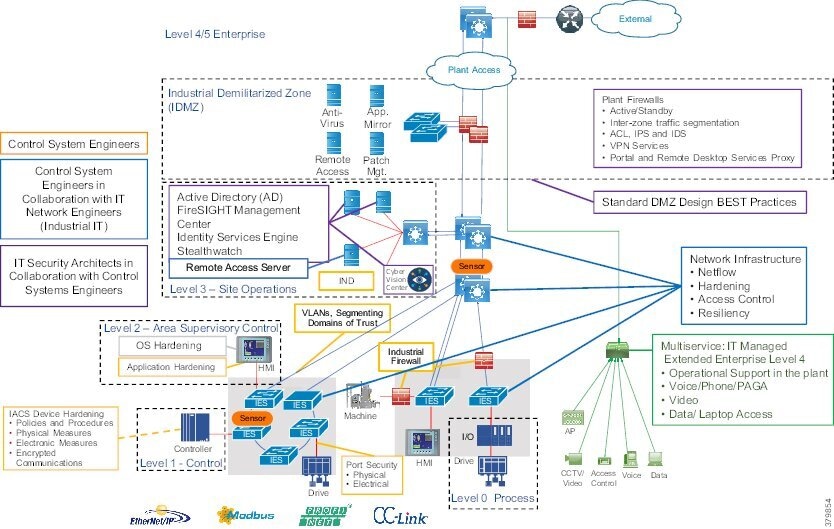

This Cisco Mining Industrial Automation CRD defines the reference architecture shown in Figure 4 to support multiple operational and non-operational services over a secure, robust communications infrastructure. The architecture applies to the wired portion of industrial areas within the mining value chain, establishing a foundation for network design, security, and data management technologies for process manufacturing environments. The reference architecture is a blueprint for the security and connectivity building blocks required to deploy and implement digitized process control environments that significantly improve safety and business operation outcomes. The building blocks include:

- Wired process control networks with safety and energy management systems
- Industrial network security throughout the plant, including the Industrial DMZ

The use cases and building blocks described in this guide provide a complete end-to-end view of the reference architecture, although the focus of this guide is on the use cases and architecture enabled through the wired connectivity and security technologies.

Note: The wired network and security architecture is sourced from the Industrial Automation CVD: https://www.cisco.com/c/en/us/td/docs/solutions/Verticals/Industrial_Automation/IA_Horizontal/DG/Industrial-AutomationDG.html.

The reader may be familiar with the architectural guidance and design principles in that guide; however, there are some slight differences between the mining process control environment and the industrial automation design, which are highlighted in Industrial Automation Reference Design.

Solution Features

The Mining Industrial Automation solution applies the best IT capabilities and expertise, tuned and aligned with OT requirements and applications, and delivers the following for industrial environments:

- High availability for all key industrial communications and services
- Real-time, deterministic application support with low network latency, packet loss, and jitter for the most challenging applications, such as motion control
- Deployable in a range of industrial environmental conditions with industrial-grade as well as commercial off-the-shelf (COTS) IT equipment
- Scalable from small (tens to hundreds of IACS devices) to very large (thousands to tens of thousands of IACS devices) deployments
- Intent-based manageability and ease of use to facilitate deployment and maintenance, especially by OT personnel with limited IT knowledge
- Compatible with industrial vendors, including Rockwell Automation, Schneider Electric, Siemens, Mitsubishi Electric, Emerson, Honeywell, Omron, and Schweitzer Engineering Labs (SEL)
- Reliance on open standards to ensure vendor choice and protection from proprietary constraints
- Distribution of precise time across the site to support motion applications and Sequence of Events (SOE) data collection
- Converged network to support communication from sensor to cloud, enabling many Industry 4.0 use cases
- IT-preferred security architecture that integrates OT context and is applicable to and validated for industrial applications (achieving best practices for both OT and IT environments)
- Deployment of IoT applications with support for edge compute

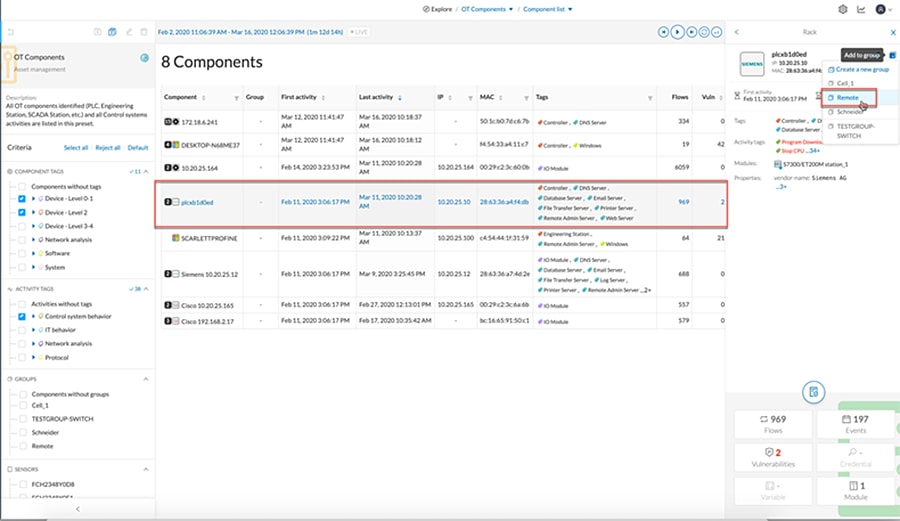

- OT-focused, continuous cybersecurity monitoring of IACS devices and communications

Mining Solution Overview and Use Cases

Reference Architecture Overview

The Cisco Industrial Automation for Mining Cisco Reference Design (CRD) defines a reference architecture to support multiple mining process and non-process services over a secure, reliable, robust communications infrastructure. The architecture applies to wired and wireless network design, security, and data management technologies across the mine. The reference architecture provides a blueprint for the essential security and connectivity foundation required to deploy and implement the various building blocks of a mine, and it is applicable to both open-pit and underground mining operations. This solution is therefore key to digitizing mining use cases to achieve significantly improved safety and business-relevant outcomes. The use cases and building blocks are described in the following sections to provide a complete end-to-end view of the reference architecture for on-site and remote operations, although the focus of this CRD is on the use cases and architecture for the wired plant infrastructure supporting process plant operations.

Plant Logical Framework

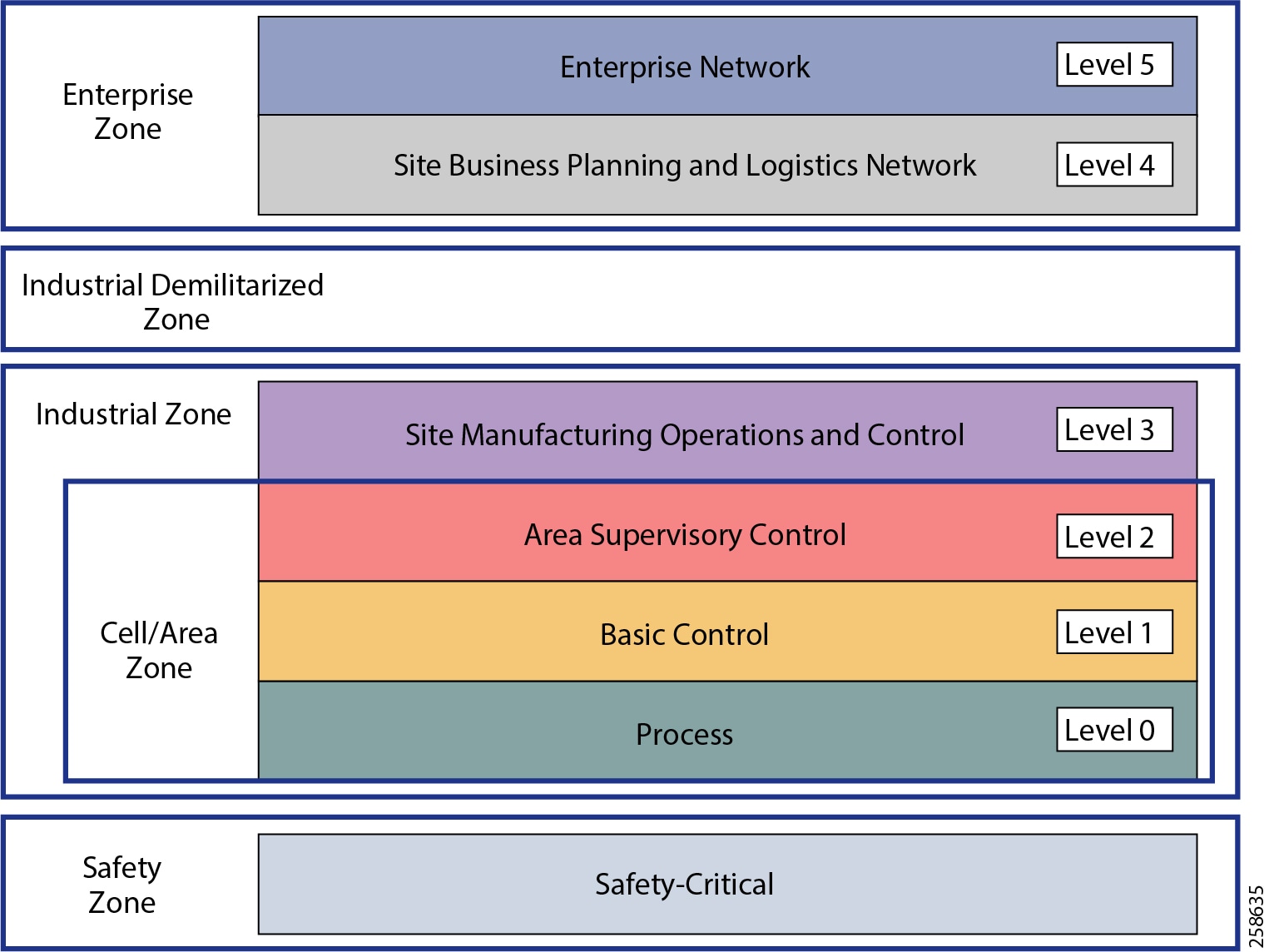

There are fundamental design considerations when supporting industrial processes and operations across all industrial verticals, including mining. The Plant Logical Framework (PLF) is well known throughout the industry, and its concepts are referred to throughout this document.

To outline the security and network systems requirements, this CRD uses a logical framework to describe the basic functions and composition of an industrial system. The Purdue Model for Control Hierarchy (reference ISBN 1-55617-265-6) is a common and well-understood model in the industry that segments devices and equipment into hierarchical functions.

Figure 5 Purdue Model for Control Hierarchy

The model identifies levels of operation, and the definitions below highlight the function of each. More detail on the model can be found in the Industrial Automation CVD: https://www.cisco.com/c/en/us/td/docs/solutions/Verticals/Industrial_Automation/IA_Horizontal/DG/Industrial-AutomationDG.html

Safety Zone

Safety in process control systems is so important that safety networks are not only isolated from the rest of the process control network but also typically use color-coded hardware and are subject to more stringent physical and performance standards. In addition, Personal Protective Equipment (PPE) and physical barriers are required to enhance safety.

Cell/Area Zone

The Cell/Area Zone is a functional area within a plant facility, and many plants have multiple Cell/Area Zones. Larger plants might have zones designated for fairly broad processes, each containing smaller subsets of control in which the process is broken down into multiple distributed subsets. Zones are typical of a distributed control system (DCS) as defined earlier.

Level 0 Process

Level 0 consists of a wide variety of sensors and actuators involved in the basic industrial process. These devices perform the basic functions of the Industrial Automation and Control System (IACS) as part of the physical process, such as driving a motor, measuring variables such as temperature and pressure, and setting an output.

Level 1 Basic Control

Level 1 consists of controllers that direct and manipulate the local process, primarily interfacing with the Level 0 devices (for example, I/O, sensors and actuators).

IACS controllers are the intelligence of the industrial control system, making the basic decisions based on feedback from the devices found at Level 0. Controllers act alone or in conjunction with other controllers to manage the devices and thereby the industrial process.

Level 2 Supervisory Control

Level 2 represents the applications and functions associated with Cell/Area Zone runtime supervision and operation, including DCS, human-machine interface (HMI), and supervisory control and data acquisition (SCADA) software. Depending on the size of the plant, some of these functions may reside at the site level (Level 3). An example is control room workstations that monitor the process sitewide.

Industrial Zone

The Industrial zone comprises the Cell/Area zones (Levels 0 to 2) and site-level (Level 3) activities. The Industrial zone is important because all the IACS applications, devices, and controllers critical to monitoring and controlling the plant floor IACS operations are in this zone. To preserve smooth plant operations and functioning of the IACS applications and IACS network in alignment with standards such as IEC 62443, this zone requires clear logical segmentation and protection from Levels 4 and 5.

Level 3 Site Operations and Control

Level 3 is where the applications and systems that support plant-wide control and monitoring reside. A centralized control room with operator stations monitoring and controlling many systems within the plant would be situated at this level. The Level 3 IACS network may communicate with both Level 1 controllers and Level 0 devices, function as a staging area for changes into the Industrial zone, and share data with the enterprise (Levels 4 and 5) systems and applications via the demilitarized zone (DMZ), described later. Examples of services at this level include site historians, control applications, network and IACS management software, and network security services. Control applications vary greatly depending on the specifics of the plant.

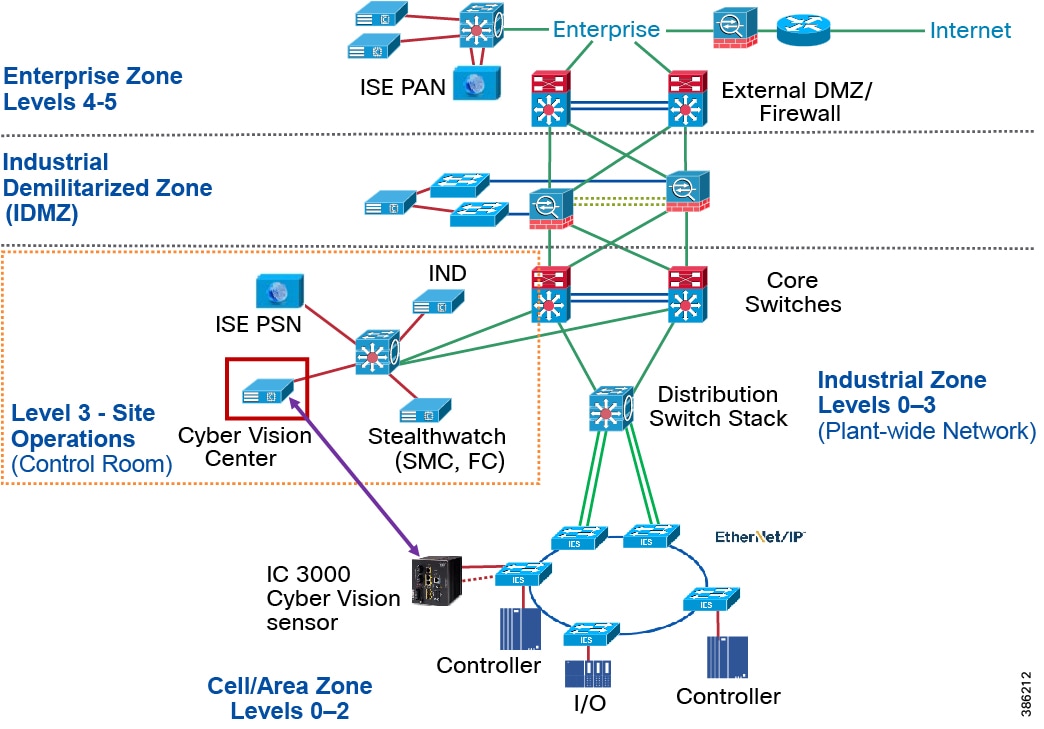

Enterprise Zone

Level 4 Site Business Planning and Logistics

Level 4 is where the functions and systems that need standard access to services provided by the enterprise network reside. This level is viewed as an extension of the enterprise network. Basic business administration tasks are performed here and rely on standard IT services. Although important, these services are not viewed as critical to the IACS and thus to mining operations. Because of the more open nature of the systems and applications within the enterprise network, this level is often viewed as a source of threats and disruptions to the IACS network. Examples of applications include Internet access, email, non-critical plant systems such as Manufacturing Execution Systems (MES), and access to enterprise applications such as SAP.

Level 5 Enterprise

Level 5 is where the centralized IT systems and functions exist. Enterprise resource management, business-to-business, and business-to-customer services typically reside at this level. The IACS must communicate with the enterprise applications to exchange manufacturing and resource data. Direct access to the IACS is typically not required or recommended.
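To make the level-to-zone relationships above concrete, here is a minimal Python sketch that encodes the Purdue levels and the zones this section groups them into. The names `PurdueLevel`, `zone_of`, and `is_industrial` are hypothetical and exist only for illustration; the Safety Zone is not modeled.

```python
from enum import IntEnum


class PurdueLevel(IntEnum):
    """Purdue Model levels as defined in this section (hypothetical names)."""
    PROCESS = 0                 # sensors and actuators
    BASIC_CONTROL = 1           # IACS controllers
    SUPERVISORY_CONTROL = 2     # DCS, HMI, SCADA
    SITE_OPERATIONS = 3         # historians, site control, management
    SITE_BUSINESS_PLANNING = 4  # MES, email, Internet access
    ENTERPRISE = 5              # centralized IT, enterprise resource management


def zone_of(level: PurdueLevel) -> str:
    """Return the innermost zone that contains a level.

    Levels 0-2 form the Cell/Area Zone, which is nested inside the
    Industrial Zone (Levels 0-3); Levels 4 and 5 form the Enterprise Zone.
    """
    if level <= PurdueLevel.SUPERVISORY_CONTROL:
        return "Cell/Area"
    if level == PurdueLevel.SITE_OPERATIONS:
        return "Industrial"
    return "Enterprise"


def is_industrial(level: PurdueLevel) -> bool:
    """True for Levels 0-3, which require protection from Levels 4 and 5."""
    return level <= PurdueLevel.SITE_OPERATIONS


if __name__ == "__main__":
    for lvl in PurdueLevel:
        print(f"Level {int(lvl)} {lvl.name}: {zone_of(lvl)}")
```

Using `IntEnum` lets the zone boundaries be written as ordinary comparisons, mirroring the way the model stacks levels on top of one another.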

Industrial DMZ

Although not part of the Purdue reference model, the mining solution includes a DMZ between the Industrial and Enterprise zones. Industrial security standards such as ISA-99 (now ISA/IEC 62443), NIST SP 800-82, and Department of Homeland Security INL/EXT-06-11478 include an Industrial DMZ (IDMZ) as part of a security strategy. The IDMZ provides a buffer zone and segmentation between the enterprise zone and the industrial/plant zone. Data must be securely passed between the Industrial Zones and the Enterprise.

The IDMZ architecture provides termination points for the Enterprise and Industrial domains and hosts various servers, applications, and security policies that broker and police communications between the two domains and permit remote access services. Downtime in the IACS network can be costly and have a severe impact on revenue, so the Industrial zone must not be impacted by outside influences; the availability of IACS assets and processes is paramount. Direct network access between the enterprise and the plant is not permitted, yet data and services must still be shared between the Industrial zone and the enterprise. A secure IDMZ architecture is therefore required to provide secure traversal of data between the zones.
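The IDMZ rule described above (no direct network access between the enterprise and the plant, with data brokered through the IDMZ) can be expressed as a small policy check. This is a minimal sketch, not a firewall configuration: the `Zone`, `is_permitted`, and `brokered_path` names are hypothetical, and real deployments enforce the rule with firewalls and brokering servers in the IDMZ.

```python
from __future__ import annotations

from enum import Enum


class Zone(Enum):
    INDUSTRIAL = "industrial"   # Levels 0-3
    IDMZ = "idmz"               # brokering servers, remote access services
    ENTERPRISE = "enterprise"   # Levels 4-5


def is_permitted(src: Zone, dst: Zone) -> bool:
    """Return True if a session may be opened directly from src to dst.

    Encodes only the rule stated in the text: no direct network access
    between the Enterprise and Industrial zones. Every other pair,
    including traffic within a single zone, is left to other policies.
    """
    return {src, dst} != {Zone.INDUSTRIAL, Zone.ENTERPRISE}


def brokered_path(src: Zone, dst: Zone) -> list[Zone]:
    """Return the sequence of zones a data exchange traverses.

    Industrial<->Enterprise exchanges are split into two sessions that
    both terminate in the IDMZ; any other pair is a direct hop.
    """
    if is_permitted(src, dst):
        return [src, dst]
    return [src, Zone.IDMZ, dst]
```

For example, `brokered_path(Zone.ENTERPRISE, Zone.INDUSTRIAL)` yields `[Zone.ENTERPRISE, Zone.IDMZ, Zone.INDUSTRIAL]`, reflecting two separate sessions that each terminate in the IDMZ rather than one end-to-end connection.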

Mine Site Building Blocks and Use Cases

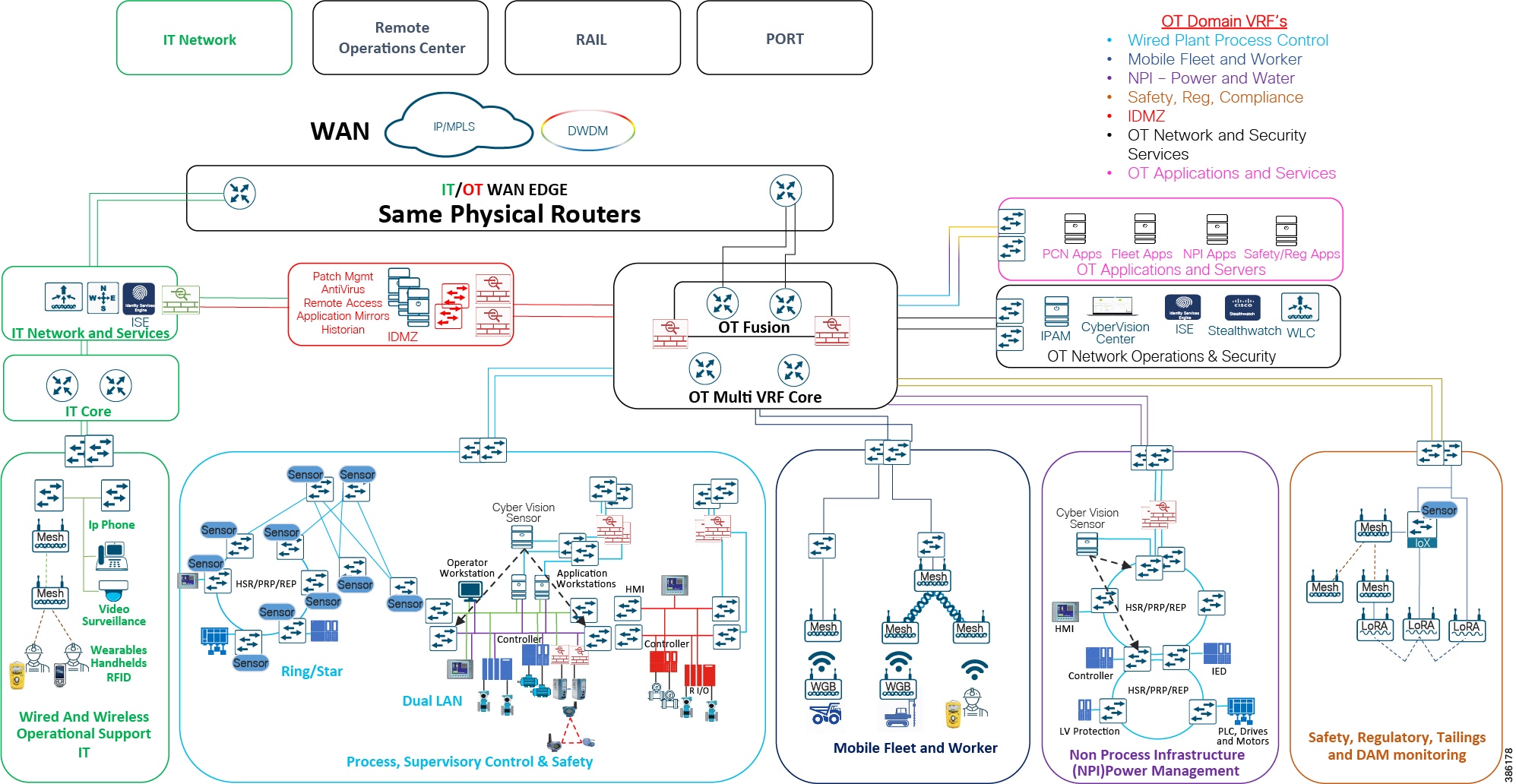

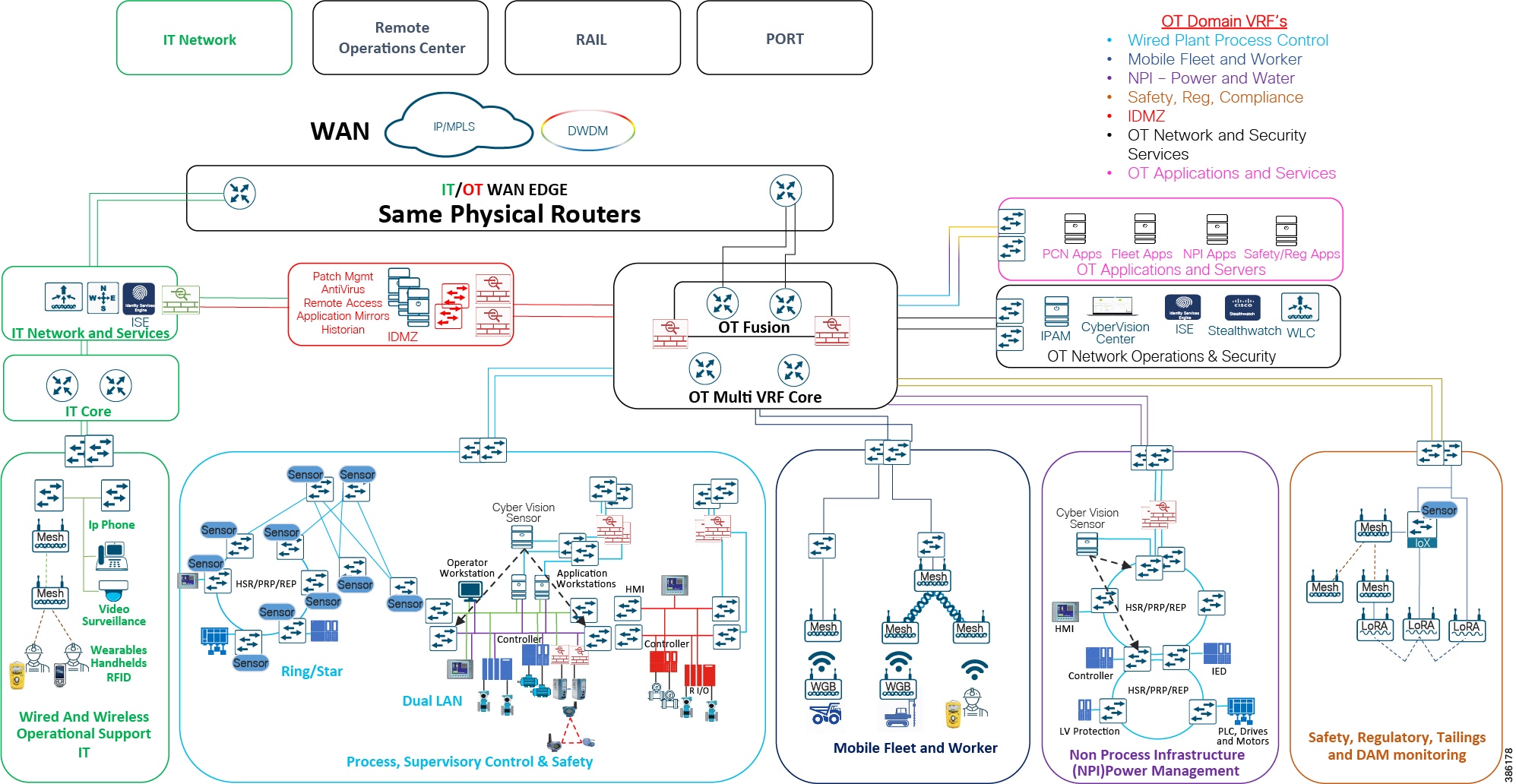

Figure 6 Industrial Automation Mining Architecture

Figure 6 highlights OT and IT segmentation, outlines typical mine process areas, and identifies operational domains that support different control areas in the mining process. An operational domain is a network area that supports key functions of the mine process and is segmented (physically and logically) from other domains so that an outage or breach in one does not impact other mine processes and areas. These operational domains are based on the attributes and support needed for the mining use cases in the industrial zone and are supported over a secure, robust wired and wireless infrastructure. The domains are shown from a logical rather than a physical perspective. The building blocks within the reference architecture include the following:

- Remote Operations – Supporting remote control and visibility across pit-to-port operations from a centralized location, including use cases such as drill control, digital dispatch for the mining fleet, and train control.

- Enterprise Wide Area Network (WAN) – Supporting connectivity to the Remote Operations Center and providing connectivity across the pit-to-port value chain.

- Sitewide Networking and Security Services – Includes the Enterprise WAN interface via an Industrial DMZ, Mine Core networking, and on-site Data Center support for network and security management.

- Mine-wide Applications – These applications support the mining process and may include Historians, Asset Management systems, SCADA applications, and local operational centers.

- Specific on-site Mining operational domains include:

  - Process – Includes fixed wired and wireless infrastructure that supports the industrial automation and control systems in a variety of mine process areas (such as Crushing, Smelting, Refining, etc.).

  - Mobility – Supporting the connectivity demands of the mining fleet for use cases such as autonomous and semi-autonomous operations (Haulage, Dozers and Drills) and local personnel.

  - Non-Process Infrastructure (NPI) – Supporting systems and processes not directly related to the process but essential to support operations within the mine. Examples include water treatment and power/electrical management for the mine.

  - Transportation – Supporting transportation control systems, which often have specific regulatory compliance requirements to be segmented.

  - Safety and Regulatory – Use cases that protect the mine, miners, and the surrounding environment, such as dam monitoring and dust monitoring.

The operational domains may exist in multiple mine process areas. The following table highlights these use cases and where they apply within the framework of the on-site operations.

Table 1 Mine Site Domains / Operations


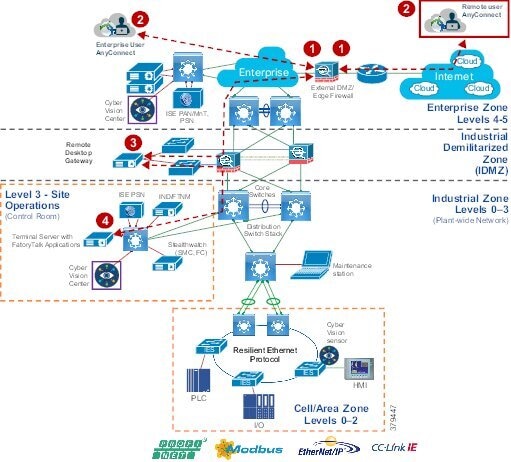

Remote Operations Centers

Remote Operations Centers (ROCs) allow mining companies to centralize the monitoring and control of their mines without putting people in harm's way at the mines, improving safety. The more functions that can be performed in the ROC, the greater the gains in operational efficiency, effectiveness, and safety. For example, with the adoption of remote-controlled and autonomous equipment operations, highly reliable paths that remove the chance of human error have dramatically decreased the number of safety incidents involving the mobile fleet and have allowed a smaller number of operators to manage a larger fleet at much lower cost.

Under traditional circumstances, because most mines are in very remote areas, companies need to build housing for the employees working at the mine and transport them to and from the mine location (fly-in, fly-out). Creating ROCs in metropolitan areas where the workforce lives and can operate the mine offers significant benefits, such as reduced housing needed on site, easier attraction of a skilled workforce, less non-productive travel time, and a better work-life balance for employees, among many others. Digital transformation changes many of the perceived negative aspects of mine work, as it is no longer a dirty and dangerous environment: people work from clean, conditioned spaces, mining safely and more productively.

Sitewide Services

The OT Sitewide Networking Services can be thought of as the foundational block providing a primary set of functions: the interface to the WAN infrastructure, sitewide networking and security services, and the IDMZ, which provides segmentation and security between the IT and OT domains. These blocks are essentially components of the fixed wired infrastructure, and this building block is required to support all of the key mining operational domains. These services align with the plant operations and control zone, which resides at Level 3 of the Purdue model.

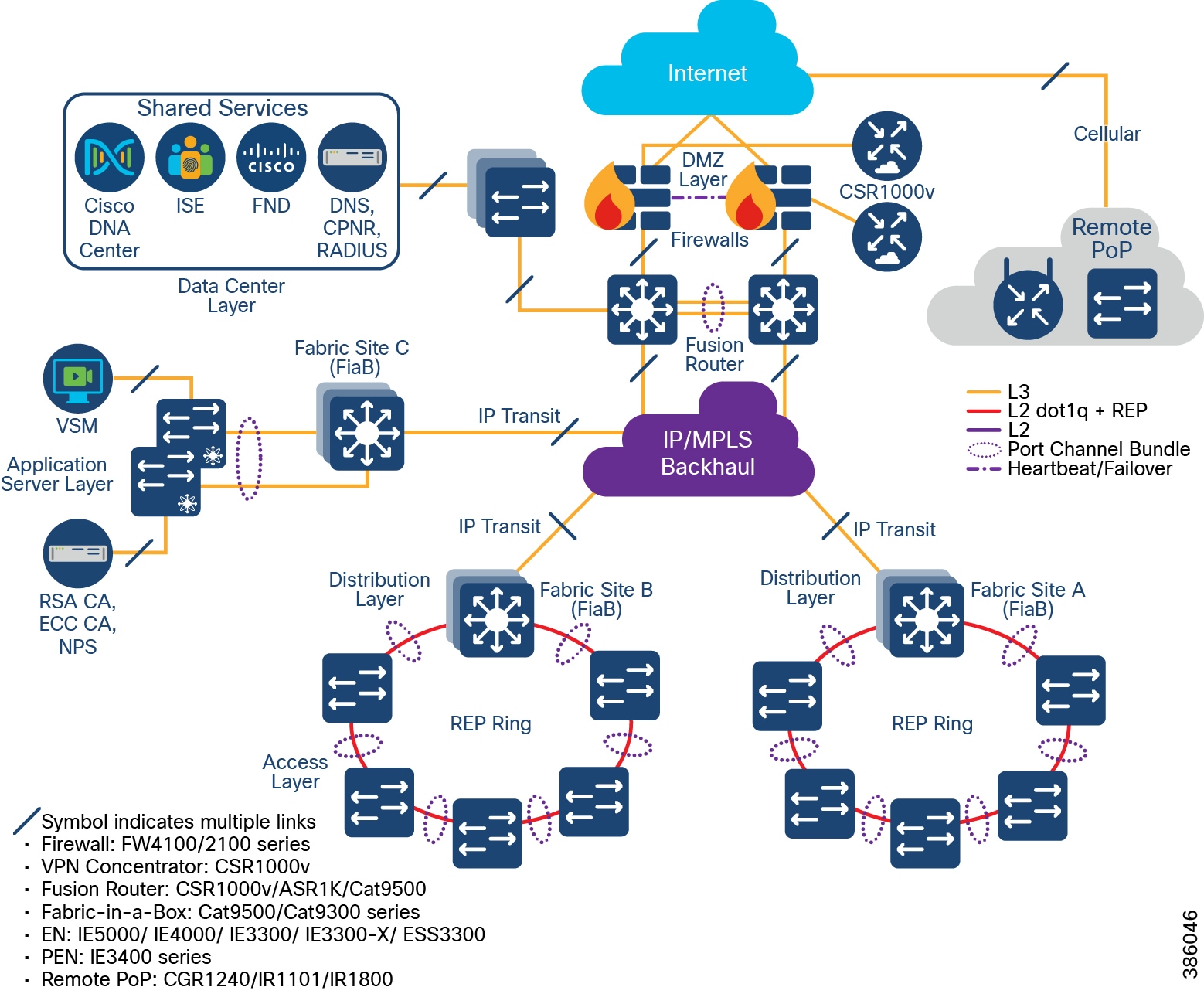

Core Network

The core network is designed to be highly reliable and stable to interconnect all the elements in the operational plant. It typically consists of Layer 3 devices with high-speed connectivity, redundant links, and redundant hardware interconnecting the operational domains over wide areas. Within the context of the mine architecture, the core aggregates all of the operational domain zones and provides access to the industrial DMZ, centralized networking and security services, sitewide applications, and the enterprise WAN for connectivity to offsite functions such as the ROC. The core applies segmentation techniques to keep the domains separated, typically extending the VPN segmentation from the ROC and WAN into the OT fault domains. Because the core layer provides policy-based connectivity between the functional operational domains, any cross-pollination of traffic between the domains must be carefully considered.
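The idea of policy-based connectivity between domains can be sketched as an explicit allow-list with default deny. This is a minimal conceptual model, not a device configuration: the domain labels and the permitted pairs below are hypothetical, chosen only to illustrate that any cross-domain flow the core has not been told to carry is dropped.

```python
from typing import Set, Tuple

# Hypothetical domain labels loosely following the operational domains in this guide.
DOMAINS: Set[str] = {"PROCESS", "MOBILITY", "NPI", "SAFETY", "TRANSPORT", "SITEWIDE"}

# Explicitly permitted (source, destination) domain pairs. Any pair not listed
# here is denied by default, so a fault or breach stays inside its domain.
ALLOWED: Set[Tuple[str, str]] = {
    ("PROCESS", "SITEWIDE"),
    ("MOBILITY", "SITEWIDE"),
    ("NPI", "SITEWIDE"),
    ("SAFETY", "SITEWIDE"),
}


def core_permits(src: str, dst: str) -> bool:
    """Return True if the core should forward traffic from domain src to domain dst."""
    if src not in DOMAINS or dst not in DOMAINS:
        raise ValueError(f"unknown domain: {src!r} -> {dst!r}")
    if src == dst:
        return True  # intra-domain traffic never crosses a domain boundary
    return (src, dst) in ALLOWED  # default deny for every other cross-domain pair


print(core_permits("PROCESS", "SITEWIDE"))  # True: explicitly permitted
print(core_permits("PROCESS", "MOBILITY"))  # False: no policy entry, denied
```

The model is unidirectional by design; in a real deployment, return traffic is usually handled by a stateful firewall or a matching reverse policy.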

Wide Area Network

WAN services extend connectivity across the entire mining value chain from pit to port, support communications between the ROC and the on-site OT domains, and extend enterprise support services to many sites. In most cases the physical infrastructure is shared, so the WAN needs to support and extend segmentation between IT and OT services as well as between OT operational domains. Technologies such as MPLS VPN, SD-WAN, and VRFs are typically employed to provide these services across the shared WAN.
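The reason VRFs can share one physical WAN is that each VRF keeps its own routing table, so lookups in one VRF never see routes in another. The sketch below models that with Python's standard `ipaddress` module; the VRF names, prefixes, and next-hop labels are hypothetical, and the same prefix deliberately appears in two VRFs to show that they do not conflict.

```python
import ipaddress
from typing import Dict, List, Optional, Tuple

RouteTable = List[Tuple[ipaddress.IPv4Network, str]]

# Hypothetical per-VRF routing tables carried over the same shared WAN.
VRF_TABLES: Dict[str, RouteTable] = {
    "OT_PROCESS": [
        (ipaddress.ip_network("10.10.0.0/16"), "core-ot-1"),
        (ipaddress.ip_network("10.10.5.0/24"), "cell-5-dist"),
    ],
    "IT_ENTERPRISE": [
        # Same /16 as in OT_PROCESS, but in a separate table, so no overlap conflict.
        (ipaddress.ip_network("10.10.0.0/16"), "wan-it-pe"),
    ],
}


def lookup(vrf: str, dest: str) -> Optional[str]:
    """Longest-prefix match for dest, searching only the given VRF's table."""
    addr = ipaddress.ip_address(dest)
    matches = [(net, nh) for net, nh in VRF_TABLES.get(vrf, []) if addr in net]
    if not matches:
        return None  # no route in this VRF: traffic is dropped, never leaked
    return max(matches, key=lambda m: m[0].prefixlen)[1]


print(lookup("OT_PROCESS", "10.10.5.20"))     # cell-5-dist (the /24 wins)
print(lookup("IT_ENTERPRISE", "10.10.5.20"))  # wan-it-pe (separate table)
```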

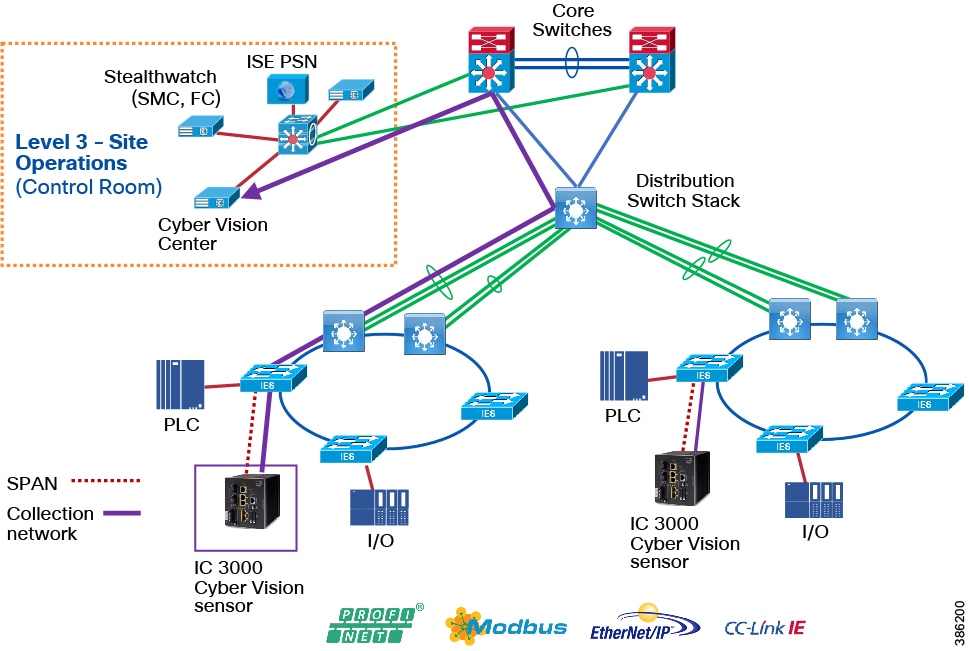

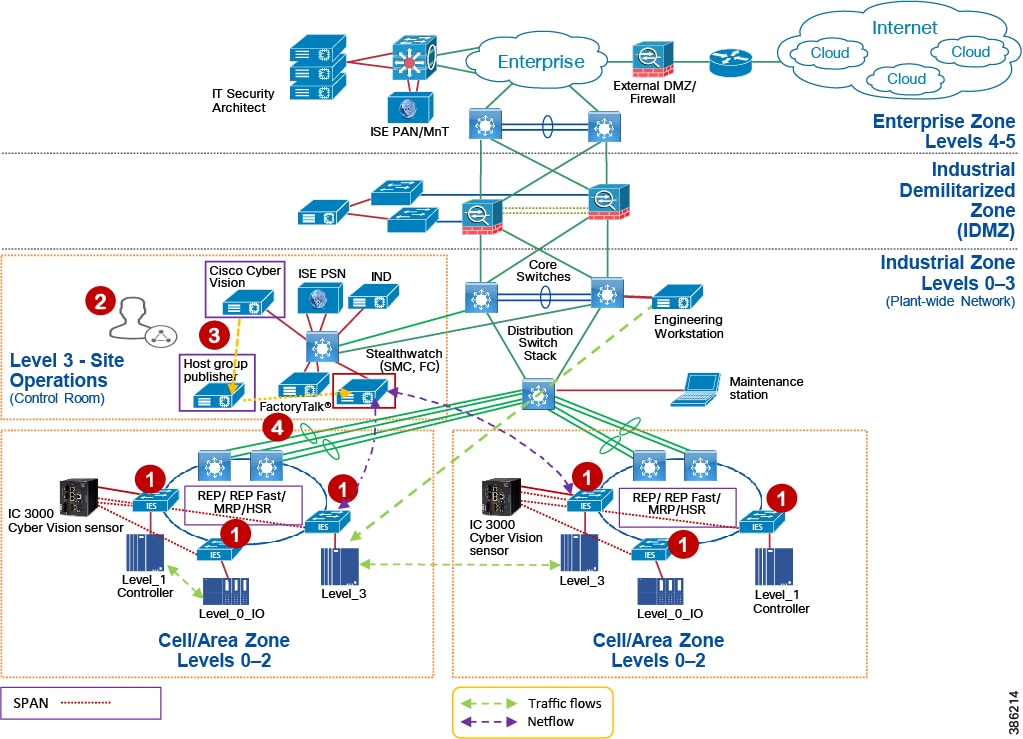

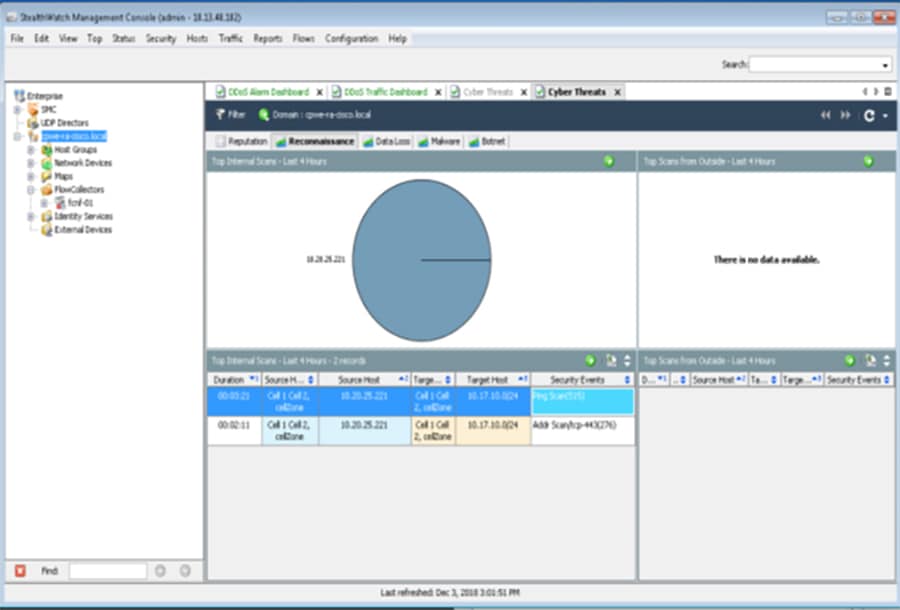

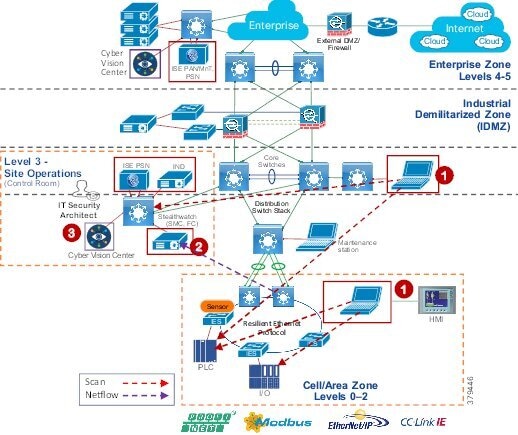

Sitewide Networking and Security Services

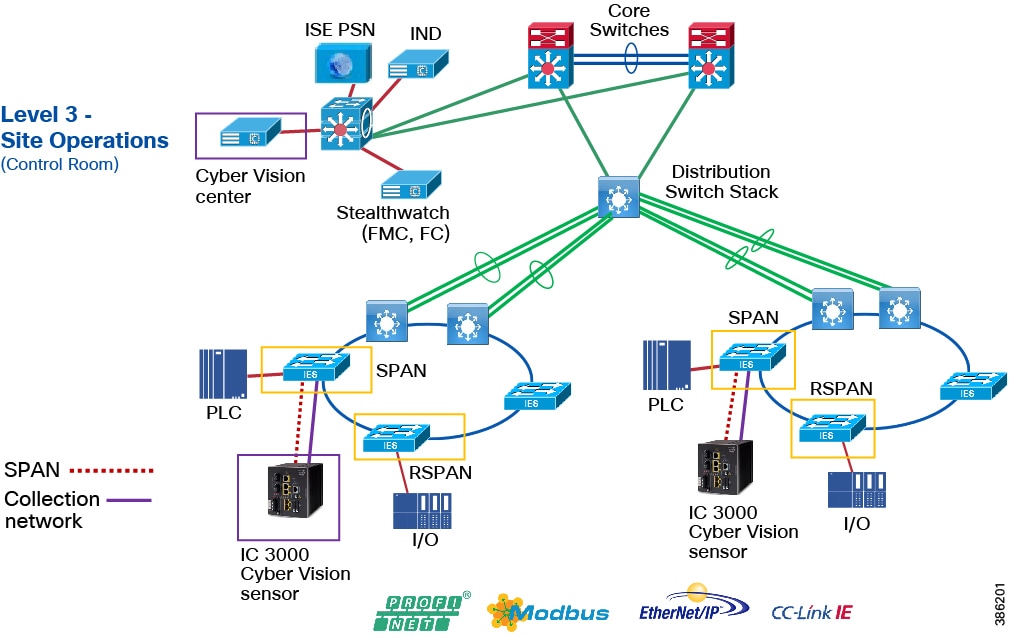

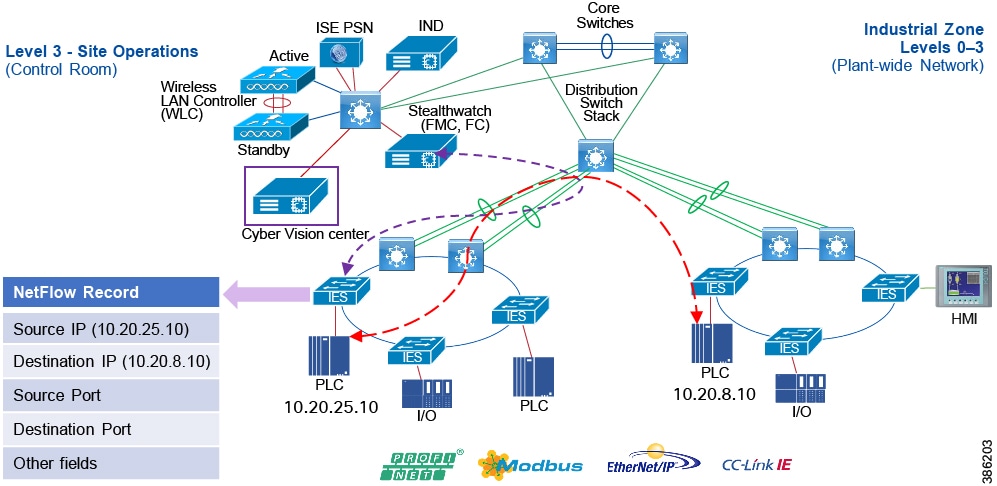

The sitewide networking and security services required across the plant include network and security management platforms such as Cisco Identity Services Engine (ISE), Cisco StealthWatch, and Cisco Cyber Vision Center.

Mine-wide Applications

A host of sitewide applications are required to manage and operate a mine, including local operation centers, dispatch applications, historians, asset management systems, and SCADA applications, among many more. These applications rely on the core network to connect to the various operational domains. They also require local data center services, such as compute and storage platforms, and specific data center networking capabilities to interconnect them.

Industrial DMZ

The Industrial DMZ is deployed within the mining environment to separate the enterprise and operational domains, and to separate the operational domains of the production site environment. Typical services deployed in the IDMZ include remote access servers and mirrored services. Further details on the design recommendations for the Industrial DMZ are included later in this guide.
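A common IDMZ principle is that no traffic passes directly between the enterprise and industrial zones; sessions terminate on services in the IDMZ, such as a remote access server. The sketch below checks only that zone-adjacency rule for a path of hops. The zone labels are hypothetical, and it does not model session brokering, firewall inspection, or the detailed IDMZ design described later in this guide.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical zone labels for the enterprise (IT) zone, the IDMZ, and the industrial (OT) zone.
ENTERPRISE, IDMZ, INDUSTRIAL = "ENTERPRISE", "IDMZ", "INDUSTRIAL"


@dataclass(frozen=True)
class Hop:
    src_zone: str
    dst_zone: str


def hop_allowed(hop: Hop) -> bool:
    """Deny any hop that goes straight between the enterprise and industrial zones."""
    direct = {(ENTERPRISE, INDUSTRIAL), (INDUSTRIAL, ENTERPRISE)}
    return (hop.src_zone, hop.dst_zone) not in direct


def path_allowed(zones: List[str]) -> bool:
    """A path is allowed only if every consecutive hop is allowed."""
    return all(hop_allowed(Hop(a, b)) for a, b in zip(zones, zones[1:]))


# Enterprise user -> remote access server in the IDMZ -> industrial asset.
print(path_allowed([ENTERPRISE, IDMZ, INDUSTRIAL]))  # True
# Direct enterprise-to-industrial session that bypasses the IDMZ.
print(path_allowed([ENTERPRISE, INDUSTRIAL]))  # False
```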

Mine Operational Domains

This section outlines the key mine operational domains, the mine processes they support, and the network capabilities they require.

Mobility Operational Domain

The Mobility operational domain primarily supports the Extraction process. Below is a basic description of the extraction process, followed by the specific mobility use cases and their networking requirements.

Drilling

After the overburden is removed and stored for use at the end of the mine's life, drills are used to create the blasting pattern. Drills create holes that range from 8-20 inches (20-50 cm) in diameter and 15-30 feet (4.5-9 meters) deep, depending on the material being mined. Using remote operations over a reliable wireless network enables autonomous or semi-autonomous drilling; this shift from traditional drilling saves time and money and improves safety by allowing operators to control the drill from outside the danger zone.

Blasting

Blasting is typically performed with ammonium nitrate and fuel oil using exciter/blasting caps. The goal is to use just enough explosive to make the ore small enough for haulage. If the rocks are smaller than needed, blasting materials are wasted. If the rocks are too big for haulage, a rock breaker is required, which also wastes resources and time. Mobile worker capabilities (enabled by wireless coverage throughout the mine) allow the planning, staging, and digital sign-off of work orders, which helps the blasting team be in the right place at the right time, stay on schedule, and keep the site safe during blasting.

Haulage

After blasting, loaders/shovels and haul trucks are used to move the ore body and waste to the proper location, either the crusher or the waste pile. Optimizing haulage is critical to mine profitability. Digitalization plays a major role in route planning, road maintenance, and equipment utilization.

Mobility Use Cases

Currently, most heavy equipment operations in a mine are performed with an operator located within the mining equipment. Not only is this costly, but it also puts personnel into potentially hazardous situations such as equipment rolls or collisions.

For underground mines, transportation from personnel housing to mine operator staging areas can take over an hour one way. Workers are required to wear special personal protective equipment (PPE), which requires a significant amount of time to maintain and change into. In some underground areas that are extremely dangerous and unstable, such as wet muck underground tunnels, or even in extremely hot or cold mine locations, mine personnel can be directly exposed to dangerous environments for only limited amounts of time. The use of remote operations for mining also gives better visibility to location and health of the equipment, reducing time during shift change and improving the overall usage of the high value assets.

Mining operations are driving toward fully autonomous operational models throughout the supply chain. Removing humans that manually operate equipment will improve productivity, improve product quality, increase worker safety, and help reduce the overall cost of operations. Use cases today involving autonomous vehicles and equipment are either fully automated, without any direct human interaction, or semi-automated, with equipment that is remotely operated and monitored. Remote operations centers can be either located close to the mine site or located completely offsite and far away from the mine.

Digital dispatch

Digital dispatch processes connect mobile fleets to the mine network, thus allowing for proper route calculations and ensuring that operators unload the correct materials in the right spots, properly sending only high-grade ore to the crusher and appropriately delivering overburden to the correct dump. Digital dispatch requires connecting the mine fleet over a wireless network. Having the ability to optimize the haul routes is a huge savings for the mining operators and is the first step in digitizing the mine.

Semi-Autonomous

Semi-autonomous (remote command) machine operations include loaders operated at a one-to-one or one-to-many remote-operator-to-machine ratio. One use case is a haul truck operator who controls a loader from inside the truck cab to load ore into the truck, eliminating the need for an additional operator who would otherwise sit idle the entire time the truck is in transit. A one-to-one or one-to-many ratio allows remote personnel to operate the equipment from a safe location.

Allowing operators to work from a control room located aboveground while operating machinery located in a high-risk environment underground improves operational efficiency by eliminating some of the travel time, reducing downtime during shift change, improving visibility of equipment location, removing the need for PPE, and most importantly, removing personnel from harm’s way. In addition, remote operators can now simultaneously manage more than one machine, thus reducing the number of operators needed.

Autonomous

Likewise, autonomous trucks can haul resources from shovels or front-end loaders in a mine to a crusher area. When fully automated, trucks may continuously operate at optimum performance, thus reducing engine wear and improving tire performance and fuel efficiency. This reduces maintenance costs and downtime and increases productivity.

Reliable network performance is critical to ensure continuous operation of equipment. IT personnel strive to minimize packet loss and roaming times to achieve optimal application performance. Any network issues or prolonged roaming times can trigger safety systems that stop the vehicle or equipment, ultimately affecting productivity and production. Cisco's portfolio of industrial and outdoor wired and wireless products plays an integral part in providing a high-performing, highly available, and secure networking infrastructure for supporting autonomous systems in the mine.
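Why roaming time matters can be illustrated with a generic link-loss watchdog: if the machine hears nothing from its control system within a timeout, it commands a safe stop. The class below is a hypothetical sketch of that pattern, not the logic of any particular autonomy vendor, and the 500 ms timeout is an illustrative value only. A short roam is absorbed; a longer gap stops the machine.

```python
from typing import Optional


class CommsWatchdog:
    """Hypothetical link-loss watchdog for a remotely operated or autonomous machine.

    If no message from the control system arrives within timeout_s seconds,
    the machine is expected to command itself to a safe stop.
    """

    def __init__(self, timeout_s: float) -> None:
        self.timeout_s = timeout_s
        self.last_rx: Optional[float] = None

    def on_message(self, now: float) -> None:
        self.last_rx = now  # any valid message from the controller resets the timer

    def should_stop(self, now: float) -> bool:
        if self.last_rx is None:
            return True  # never heard from the controller: remain stopped
        return (now - self.last_rx) > self.timeout_s


wd = CommsWatchdog(timeout_s=0.5)  # illustrative timeout only
wd.on_message(now=10.0)
print(wd.should_stop(now=10.3))  # False: a 300 ms roam is tolerated
print(wd.should_stop(now=10.8))  # True: an 800 ms gap trips the safety stop
```

Every unplanned stop of this kind costs production time, which is why the wireless design targets roaming times well under whatever timeout the equipment uses.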

Connecting the mine vehicle fleet to the network allows vehicle intelligent monitoring systems (VIMS) to feed a large data engine. Analysis of VIMS data by mine operators enables better equipment monitoring and proactive maintenance. Cisco’s solution has helped mining companies improve predictive maintenance, and it has also provided visibility into issues such as problems with engine oil pressure or faulty cooling systems before they escalated. Discovering and addressing these issues before a failure occurs can typically save hours of downtime and costly engine replacements.
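As a simple illustration of turning connected VIMS data into early warnings, the sketch below flags readings outside configured limits. The signal names, units, and limits are hypothetical; real limits come from the equipment manufacturer, and production predictive-maintenance systems typically use trend analysis rather than a single threshold check like this one.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical alarm limits (min, max) per VIMS signal.
LIMITS: Dict[str, Tuple[float, float]] = {
    "engine_oil_pressure_kpa": (200.0, 600.0),
    "coolant_temp_c": (60.0, 105.0),
}


@dataclass
class Reading:
    truck_id: str
    signal: str
    value: float


def out_of_range(readings: List[Reading]) -> List[str]:
    """Return one alert string per reading that falls outside its configured limits."""
    alerts = []
    for r in readings:
        limits = LIMITS.get(r.signal)
        if limits is None:
            continue  # signal not monitored in this sketch
        low, high = limits
        if not (low <= r.value <= high):
            alerts.append(f"{r.truck_id}: {r.signal}={r.value} outside [{low}, {high}]")
    return alerts


for alert in out_of_range([
    Reading("HT-07", "engine_oil_pressure_kpa", 150.0),  # low oil pressure
    Reading("HT-08", "coolant_temp_c", 90.0),            # within limits
]):
    print(alert)
```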

The key networking capabilities required to support the mobility domain include:

- Resilient, reliable, and mobile wireless networks to connect key assets and personnel

- Wireless backhaul and WAN technologies to interconnect the extraction zones to local sitewide operational services and Remote Operations Centers.

Process Domain

At a high level, the Process Domain supports the mining processes that prepare the extracted ore or resource before transportation to external markets. Depending on the type of mine and resource, the use cases can include conveyance, milling, and smelting.

Conveyance

The mining plant environment and process can span large geographical areas in harsh industrial environments. Conveyance systems can span kilometers across a mine, from crushers to process plants. The processing use cases include conveyance, crushing, ore processing, and flotation, and these areas may deploy a number of different systems for automation and control as well as to ensure safety and reliability.

The transportation of the ore body and overburden in a safe, efficient and environmentally friendly way is a major concern to mining companies. In-pit or underground crushing and conveying plays a major role in this process. By some estimates, transportation of the material can be more than 50% of the total operating cost for the mine.

Using a primary crusher close to the ore body, with a conveyance system to move the material to separate processing areas, reduces the fuel used in haulage and greatly reduces the dust caused by the large haulage trucks. A distributed approach also reduces the need for water trucks and lowers fuel consumption. The waste can also be moved with conveyors to an overburden area, where it can be used for reclamation when the mine has ended its active mining time. These conveyor systems can extend for several miles/kilometers and can move material at 10-15 miles (16-24 km) per hour. This provides a consistent feed to the process plant, where the ore is extracted and the waste is then transported to the tailings ponds.

The use of mobile conveyors or hoppers allows the ore body to be transported to a leach pad and creates the flexibility to use a stacker with a conveyance system to build out the leach pad with full autonomy. Many mining companies consider in-pit crushing and conveying (IPCC) a way to reduce the haulage fleet significantly. After the blast is done, a mobile crusher is moved into the area and the mobile conveyors are joined in a chain to move the ore to the next processing stage. Not only does IPCC reduce the need for road construction and related maintenance, but it is also not affected by weather the way haulage can be. Conveyors can operate at a much steeper grade, around 30%, compared to haulage, which is limited to grades averaging 10%.
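The practical effect of the 30% versus 10% grade difference is easy to quantify: grade is rise over run, so the horizontal distance needed to climb a given height is the rise divided by the grade. The sketch below works this through for a hypothetical 300 m lift; it ignores switchbacks, curves, and the extra length along the slope, so it only indicates the order of magnitude.

```python
def horizontal_run_m(vertical_lift_m: float, grade_percent: float) -> float:
    """Horizontal distance needed to gain vertical_lift_m at a constant grade.

    Grade is rise over run expressed as a percentage, so run = rise * 100 / grade.
    """
    if grade_percent <= 0:
        raise ValueError("grade_percent must be positive")
    return vertical_lift_m * 100 / grade_percent


lift_m = 300.0  # hypothetical depth to climb out of the pit
for label, grade in [("haul road", 10.0), ("conveyor", 30.0)]:
    print(f"{label:>9}: {horizontal_run_m(lift_m, grade):,.0f} m at {grade:.0f}% grade")
```

At these grades the conveyor needs one third of the horizontal run of a haul road (about 1,000 m versus 3,000 m for a 300 m lift), which is the basis for the claim that steeper grades reduce the need for high-cost haulage roads.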

According to the Encyclopedia of Occupational Health and Safety, using steeper grades lowers the need to remove low-grade overburden and may reduce the requirement to build high-cost haulage roads. Connecting the crusher and the conveyance system to monitor vibration, heat, and corrosion allows mine operators to optimize equipment efficacy and adjust maintenance for equipment as needed.

Milling

Milling is done on the ore body to reduce the rocks to a manageable size. If the ore is being sent to the leach pads, the rock size is relatively large (up to several inches in diameter); this material is then mixed with a solvent to extract the desired minerals, sent to the stackers via a conveyor system, and placed on a leach pad. If flotation is used for extraction, the ore body is milled extremely fine, like talcum powder, and sent to the concentrators.

Leach pads and tank house

The leach pads use solvent and gravity to remove the desired material from the ore body. As the solvent moves through the ore body, it dissolves the desired material, producing a solution called Pregnant Leach Solution (PLS). The PLS is pumped to the tank house for electrowinning, which produces the final product, called cathodes, for sale on the commodities market. The cathodes can also be sent to the smelter to be mixed with concentrate to achieve the level of purity required for customer needs.

Flotation

The flotation process is used to remove the desired minerals from the waste. This is done with the use of several flotation tanks. The desired minerals are sent to the dryers and the finished product is concentrate. This concentrate can be sold on the open market or sent to a smelter for further processing. The waste is sent to a tailings pond as its final destination.

The key networking capabilities required to support the process domain include:

- Resilient, reliable wired and wireless networks to connect the industrial automation and control systems

- WAN technologies to interconnect the process zones to local sitewide operational services and Remote Operations Centers.

NPI Operational Domain

The Non-Process Infrastructure (NPI) supports key utilities that are required to be on-site due to the remoteness of the mine, such as power generation and water/waste-water processing.

Power generation

Because most mines are in remote locations and consume massive amounts of power, it is not uncommon for mining companies to build and operate their own power generation plants. Some mining companies also build power generation plants and turn them over to the local country to provide power to the communities in which the mine operates.

Even if power utilities can support the massive demand of a mine on the power distribution system, mines still have extensive power distribution systems inside the mine. Although critical to the mine, utility use cases, reference architectures, design and implementation guidance are found in separate Cisco Validated Designs.

Refer to Utility Substation Automation and Distributed Automation CVDs for more specific reference architectures and validated design and implementation guidance here: https://www.cisco.com/c/en/us/solutions/enterprise/design-zone-industry-solutions/index.html#~all-industries-guides

Safety and Regulatory Operational Domain

Currently, many mining operations monitor tailing ponds manually. Operations management sends personnel to tailing ponds; however, prior approval is typically required for access. Acquiring approval for access can take time, as does the drive to and from the tailing pond, which can take an hour in some facilities. Additionally, supervisors require that personnel check valves and place discharge hoses. Ultimately, a large amount of time is expended before any water or waste product is moved.

Enabling connectivity and visibility into water and waste flow from the process plant to the tailing ponds improves production efficiency, resource utilization, monitoring for safety, and environmental compliance. Being able to monitor valve positions remotely allows operators to proactively identify where waste would be delivered without having to dispatch personnel to visually inspect valve conditions along the lengthy pipes that run between the processing plant and the tailing ponds. This capability will speed up the waste management process and improve safety with the knowledge that waste is being sent to the correct location. Otherwise, waste could cause instability if sent to an incorrect tailing pond and may potentially lead to environmental impact.
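Remote valve visibility lets the control system answer "where will this discharge actually go?" before anything moves. The sketch below traces a hypothetical pipeline of diverter valves from their reported positions to the destination pond and compares it with the intended one. The topology, valve names, and pond names are invented for illustration; a real system would read valve positions from the field I/O over the network.

```python
from typing import Dict, Set

# Hypothetical pipeline topology: each diverter valve maps its reported
# position to the next node downstream. Nodes that are not valves are ponds.
TOPOLOGY: Dict[str, Dict[str, str]] = {
    "V1": {"A": "V2", "B": "POND_NORTH"},
    "V2": {"A": "POND_EAST", "B": "POND_SOUTH"},
}


def trace_destination(start: str, positions: Dict[str, str]) -> str:
    """Follow the reported valve positions from start until reaching a pond."""
    node = start
    visited: Set[str] = set()
    while node in TOPOLOGY:
        if node in visited:
            raise ValueError(f"loop detected at {node}")
        visited.add(node)
        # A KeyError here means a valve on the path has no reported position.
        node = TOPOLOGY[node][positions[node]]
    return node


def discharge_ok(intended_pond: str, positions: Dict[str, str]) -> bool:
    """True only if the current valve line-up delivers to the intended pond."""
    return trace_destination("V1", positions) == intended_pond


print(trace_destination("V1", {"V1": "A", "V2": "B"}))  # POND_SOUTH
print(discharge_ok("POND_EAST", {"V1": "A", "V2": "B"}))  # False: hold discharge
```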

Digital permitting / work orders

Tailing dams require seismic and dam wall monitoring. Mobile mine workers want full coverage via remote access to production and corporate systems when working in and around tailing dams.

Dust control is a major concern around mines and at tailing areas, because tailing ponds are made of very small particles of earth. Environmental impact is a major concern: not only could dust have a negative impact on the environment, but it could also result in large fines from the local environmental supervisory agency. By automating dust control sprays and employing distributed air-quality sensors and video to demonstrate dust control, a mining operation can limit the financial impact of penalties should dust-related issues occur. Other places where automated dust control is needed include ore heaps and shipping ports.

Environmental regulations and safety concerns are a primary focus of mining companies operating globally. All mining companies need to meet or exceed the environmental regulations of the host country in which they operate. Dust management is a major environmental concern for both health and safety and regulatory compliance. Dust issues can be broken into two major categories: dust that affects the environment (wildlife, streams, water tables, and so on) and dust that affects workers and other people directly, either through breathing it or absorbing it through the skin. The U.S. Mine Safety and Health Administration (MSHA) considers respirable coal dust to be one of the most serious occupational hazards in the mining industry today. This is not just a coal mining issue: in hard rock mining, the rock contains on average 40% silica, and human exposure to silica dust can cause silicosis, a form of lung disease. Major contributors to dust include, but are not limited to:

There are several methods of dealing with dust, but using the network to connect environmental sensors and cameras for real-time monitoring is critical for compliance. Monitoring records can also save companies considerable cost when an incident occurs that is not related to the mining operations.

Slope monitoring

Monitoring the stability of the mine area is critical to operations in the mine. In underground mines, cave-ins are just as dangerous as slope movement in open-pit mines. If monitoring is properly deployed and the failure mechanisms are understood and monitored in a timely manner, the risk of slope failure can be scientifically reduced. Historically, to monitor slope stability, slope engineers would drive to embedded sensors and record measurements on paper; upon returning to the office, they would record their findings, compare them to the historical trend of readings, and decide whether there was an issue.

This extremely time-consuming process led to long intervals in which personnel and equipment were not protected. With network technologies such as LoRaWAN (900 MHz), Wi-Fi, cellular, VSAT, and PtMP, sensors can be connected 24/7 and alert mine operators when an event is happening, helping them better understand the stability of the slope and decide whether to repair the area or evacuate personnel and equipment to prevent damage and injury.
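Continuous connectivity turns slope monitoring from periodic manual readings into an automatic calculation on each new sample. The sketch below computes a displacement rate from the two most recent readings and maps it to an alarm level. The warning and evacuation thresholds are hypothetical placeholders; real trigger action response plans are set by geotechnical engineers for each site and often use more robust trend methods than a two-point rate.

```python
from typing import List, Tuple


def displacement_rate_mm_per_h(samples: List[Tuple[float, float]]) -> float:
    """samples: (time_h, cumulative_displacement_mm), oldest first.

    Returns the rate between the two most recent samples.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    (t0, d0), (t1, d1) = samples[-2], samples[-1]
    if t1 <= t0:
        raise ValueError("timestamps must increase")
    return (d1 - d0) / (t1 - t0)


def alarm_level(rate: float, warn: float = 1.0, evacuate: float = 5.0) -> str:
    """Map a displacement rate (mm/h) to a hypothetical alarm level."""
    if rate >= evacuate:
        return "EVACUATE"
    if rate >= warn:
        return "WARN"
    return "NORMAL"


readings = [(0.0, 10.0), (1.0, 10.5), (2.0, 16.5)]  # sudden 6 mm movement in the last hour
rate = displacement_rate_mm_per_h(readings)
print(rate, alarm_level(rate))  # 6.0 EVACUATE
```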

Some of the newer sensors are extremely bandwidth intensive, supporting functions such as ground penetrating radar, GPS sensing for movement and thermal imaging to detect any movement. The images and data are then sent over the network to a monitoring station that can make comparisons and determine the stability of the slope. Key networking capabilities required to support the safety and regulatory domain include:

- Resilient, reliable wireless networks to connect the sensing and condition monitoring applications and support any operational personnel that may be working in these areas

- WAN technologies to interconnect the process zones to local sitewide operational services and Remote Operations Centers.

Transportation Operational Domain

The output of mine processes is a product that must be transported to customers or distributors over long distances. Often mining companies are required to operate rail or port facilities to get their product to market. These transportation functions are generally considered to operate in separate operational domains, both for safety and regulatory as well as operational segmentation reasons.

Although critical to the mine, the transportation use cases, reference architectures, and design and implementation guidance are found in separate Cisco Validated Designs.

Refer to Transportation CVDs here: https://www.cisco.com/c/en/us/solutions/enterprise/design-zone-industry-solutions/index.html#~all-industries-guides

Sitewide Mining Reference Design

The Sitewide Mining Reference Design currently focuses primarily on the wired network design supporting plantwide operations. The design accommodates and supports other technologies that fit into this model, such as IEEE 802.11 Wi-Fi for autonomous haulage and drilling operations, and LoRa or Wi-Fi mesh designs for tailings dam monitoring; however, the specific designs for these use cases are currently outside the scope of this document.

The wired network design supporting process control and safety networks is closely aligned with the Industrial Automation CVD. The mine reference wired design builds on the Industrial Automation CVD as a foundation, and any differences are highlighted in this reference design. This section provides a high-level view of the architecture and identifies differences from the Industrial Automation design as they relate to mining sitewide operations.

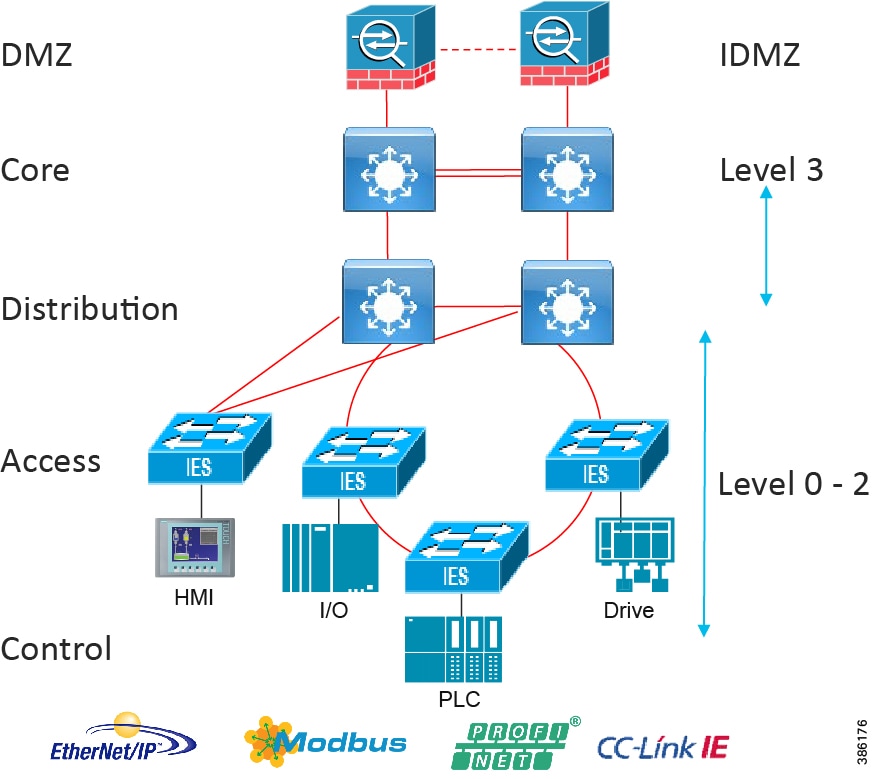

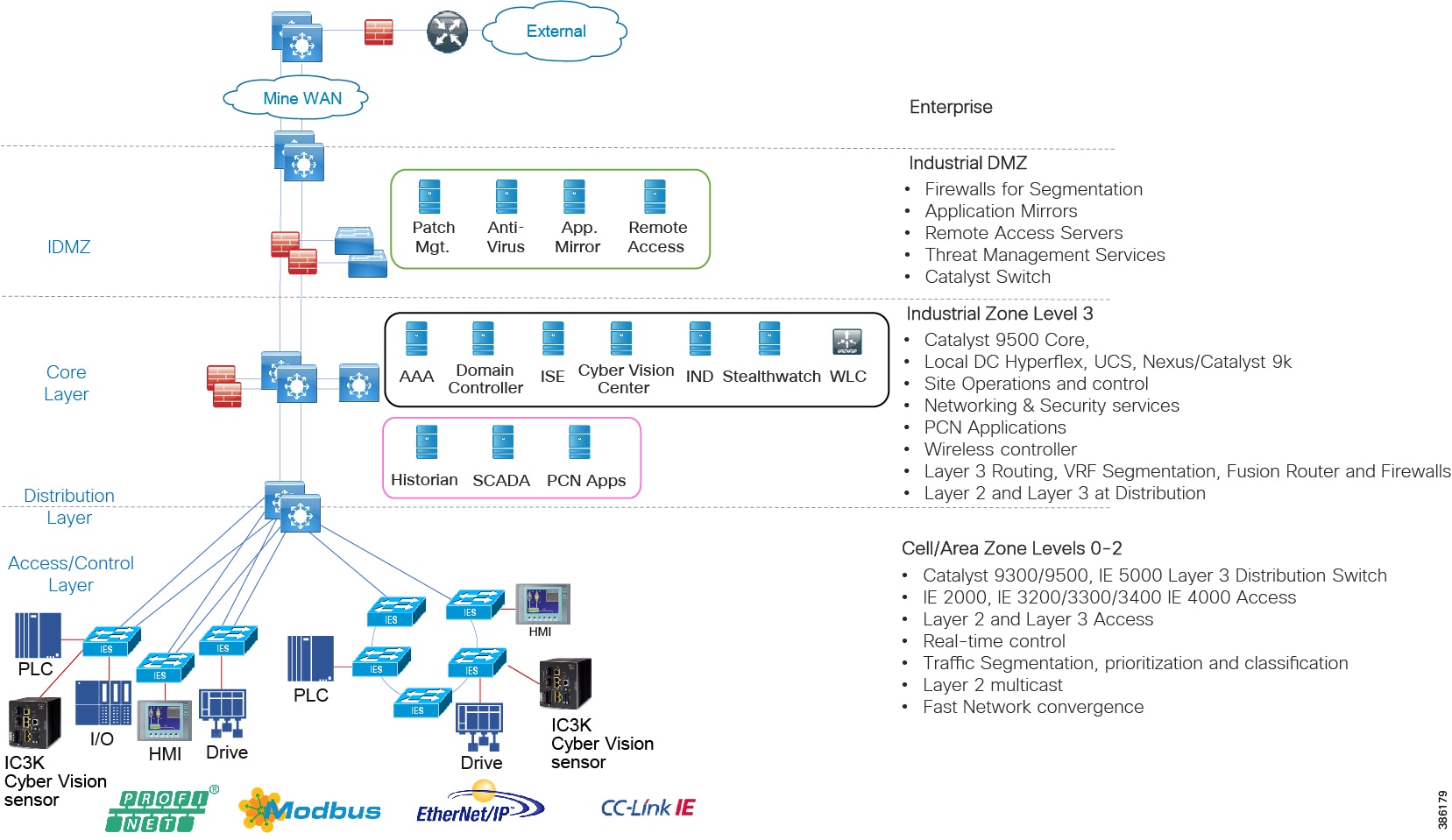

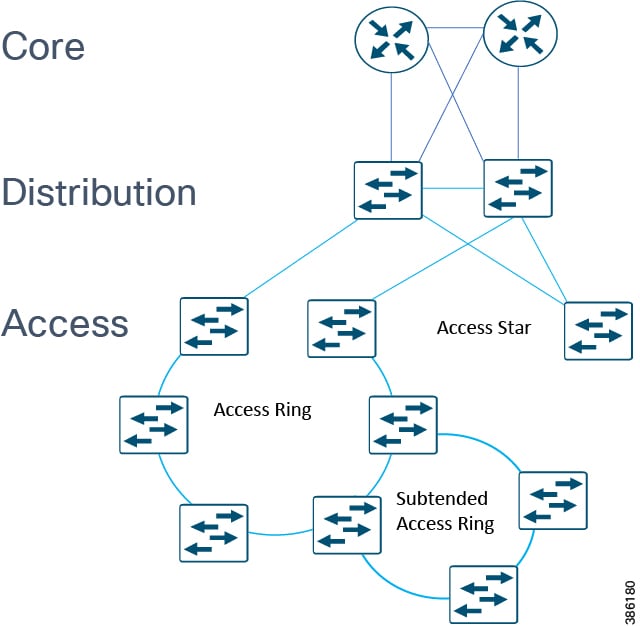

The typical enterprise campus network design is ideal for providing resilient, highly scalable, and secure connectivity for all network assets. The campus model is a proven hierarchical design that consists of three main layers: core, distribution, and access. The DMZ layer is added to provide a security interface outside of the operational plant domain. Purdue and Enterprise Models, below, highlights the alignment between the Purdue model and the enterprise campus model.

Figure 7 Purdue and Enterprise Models

Segmentation Strategy Across Mine Site Operations

As previously discussed, the mining site has multiple operational domains, some supporting the process directly, such as the wired process plant and autonomous haulage, and others supporting non-process infrastructure and safety, such as tailings monitoring or water management services. All these operations are critical to the mining operations, and therefore security and segmentation are critical. Segmentation here is on a much larger scale than previously detailed in the Industrial Automation solutions, where VLANs, multiple firewalls, and a Level 3.5 DMZ have been deployed. A Virtual Routing and Forwarding (VRF) based approach is proposed, which provides the following benefits in support of a scalable and manageable sitewide security segmentation architecture for the mine.

Pros

■![]() Separated routing contexts using VRFs provide partitioning of the networks into logical units and provide major mine network isolation. This fits well with segmenting the operational domains into these operational units such as separate VRFs for the PCN, Mobile Fleet, Non-Process Infrastructure and Safety operations across the mining site.

Separated routing contexts using VRFs provide partitioning of the networks into logical units and provide major mine network isolation. This fits well with segmenting the operational domains into these operational units such as separate VRFs for the PCN, Mobile Fleet, Non-Process Infrastructure and Safety operations across the mining site.

- VRFs can also segment IT and OT when the mine infrastructure must carry both production and non-production traffic.

- Layer 3 VRF segmentation reduces reliance on the large VLAN-based segmentation typically seen in mine environments. Large VLANs are difficult to scale, create large broadcast domains, and are complex to manage.

- Within each VRF, multiple VLANs are still deployed at the access and control layers, but they return to the networking functions they were designed for, such as restricting broadcast domains and limiting network size.

- VRF route leaking can be used to provide specific inter-VRF communications while controlling or restricting everything else. This helps prevent networking or security issues in one operational domain from spreading to other domains.

  - ACLs perform egress filtering: traffic has already been carried through the fabric to its destination, or to an SGACL enforcement point in the path, before it is filtered.

  - VRF membership confines data traffic to only the switches that serve that VRF.

  - Security demands: VRF segmentation allows intrusion prevention/detection (IPS/IDS) inspection of all inter-VRF traffic, rather than relying on ACL management alone to achieve segmentation.

  - VRF design drives analysis and awareness of business-function policy, which can be an introduction or pathway to SD-Access (SDA) with Policy Extended Nodes.
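To make the point about VLANs within a VRF more concrete, the following Python sketch carves a VRF's address block into one subnet per VLAN, so the chosen prefix length bounds each VLAN's host count and therefore its broadcast domain. The 10.10.0.0/16 block, VLAN IDs 110/120/130, and the /24 size are hypothetical examples rather than values from this design. The sketch uses only the standard `ipaddress` module.

```python
"""Carve a VRF's address block into per-VLAN subnets.

Assumptions: the 10.10.0.0/16 block, the VLAN IDs, and the /24 size are
hypothetical values for illustration; real plans come from the site IPAM.
"""
from __future__ import annotations

from ipaddress import IPv4Network


def carve_vlan_subnets(
    vrf_block: str, vlan_ids: list[int], prefixlen: int
) -> dict[int, IPv4Network]:
    """Assign one subnet of size /prefixlen to each VLAN, in order.

    The prefix length bounds how many hosts, and therefore how large a
    broadcast domain, each VLAN can have.
    """
    block = IPv4Network(vrf_block)
    # subnets() yields the block's /prefixlen subnets lazily, lowest first.
    available = block.subnets(new_prefix=prefixlen)
    plan: dict[int, IPv4Network] = {}
    for vlan in vlan_ids:
        subnet = next(available, None)
        if subnet is None:
            raise ValueError(
                f"{vrf_block} cannot hold {len(vlan_ids)} /{prefixlen} subnets"
            )
        plan[vlan] = subnet
    return plan


if __name__ == "__main__":
    pcn_plan = carve_vlan_subnets("10.10.0.0/16", [110, 120, 130], 24)
    for vlan, subnet in pcn_plan.items():
        # num_addresses - 2 excludes the network and broadcast addresses.
        print(vlan, subnet, subnet.num_addresses - 2, "usable hosts")
```

Sizing each VLAN from the VRF's block keeps addressing predictable per domain. A smaller prefix length trades fewer VLANs for larger broadcast domains.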

Cons

- The number of VRFs needed to support mine site operations must be scoped carefully, because VRF configuration can be labor intensive. A maximum of 10 VRFs is generally recommended.

The architecture uses VRFs for major segmentation and Scalable Group Tagging (SGT) for micro-segmentation, both within and across VRFs.

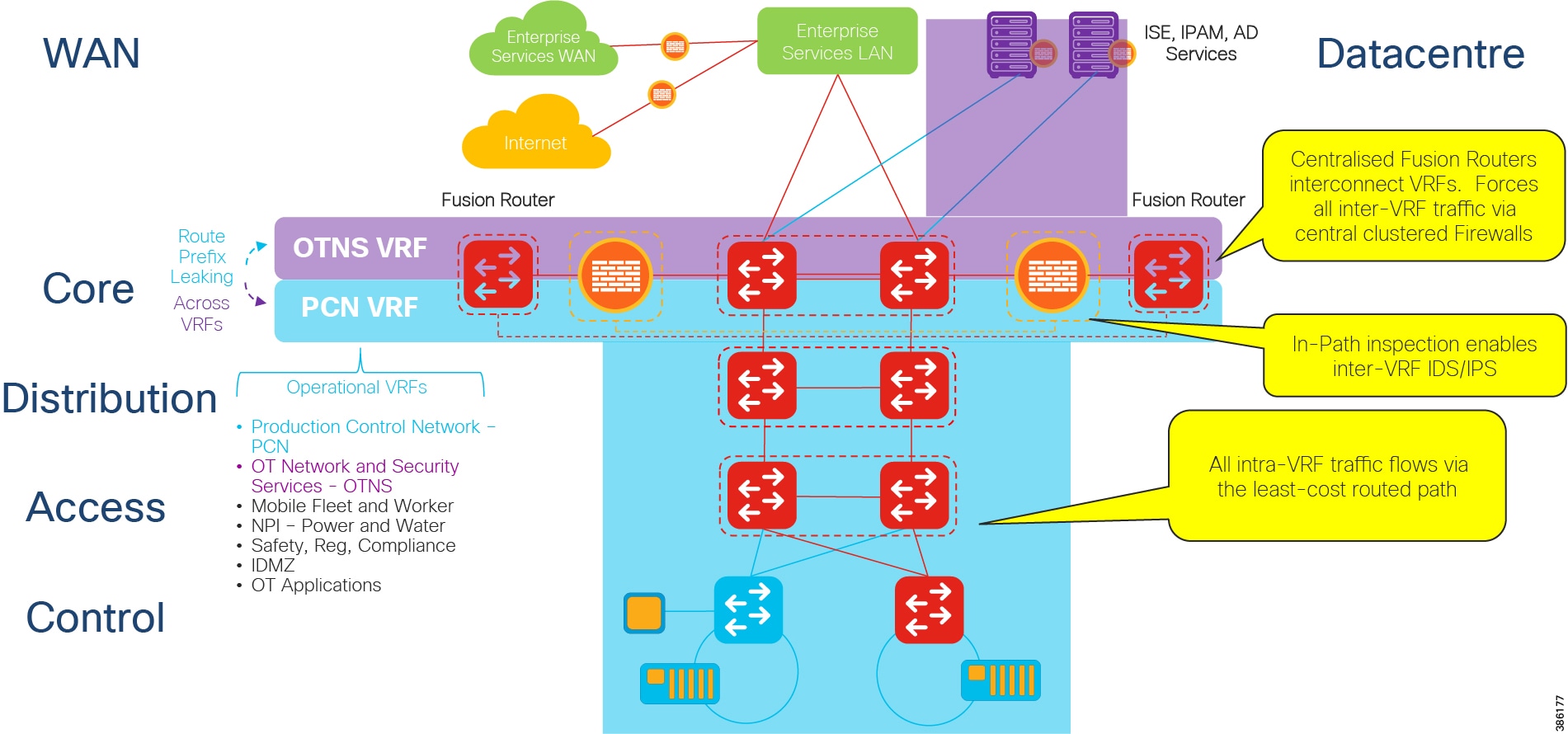

High-Level VRF Design Concepts for the Mine

The following figure highlights the VRF design concepts and functional components.

Figure 8 VRF Design Concepts and Functional Components

VRF Design Concepts and Functional Components depicts a PCN contained within its own VRF segment, alongside a separate VRF for the OT networking services that provide security and networking services for the site.

Inter-VRF

All inter-VRF traffic is forced through the centralized fusion routers, where route leaking between VRFs is administered to control inter-VRF traffic flow patterns. In this example, traffic flows from the PCN to the OT networking services VRF. If traffic leaving a VRF domain requires further inspection, a firewall can be deployed at this layer to provide in-path IPS/IDS inspection of all inter-VRF traffic.
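The following Python sketch models the inter-VRF behavior described above. Each VRF keeps its own routing table, and a destination in another VRF is reachable only if the fusion routers leak that prefix into the source VRF. The VRF names, prefixes, next-hop labels, and leak policy are hypothetical. The code models the effect of route leaking, not any device configuration syntax.

```python
"""Toy model of per-VRF routing tables and fusion-router route leaking.

Assumptions: the VRF names, prefixes, next-hop labels, and leak policy are
hypothetical. On real fusion routers, leaking is configured with mechanisms
such as route-target import/export or route maps; this only models the effect.
"""
from __future__ import annotations

from ipaddress import IPv4Address, IPv4Network

# Each VRF has its own, isolated routing table: prefix -> next-hop label.
VRF_TABLES: dict[str, dict[IPv4Network, str]] = {
    "PCN": {IPv4Network("10.10.0.0/16"): "pcn-distribution"},
    "OT_SERVICES": {IPv4Network("10.50.0.0/24"): "ot-services-dc"},
    "MOBILE_FLEET": {IPv4Network("10.20.0.0/16"): "fleet-distribution"},
}

# Leak policy administered on the fusion routers:
# (importing VRF, exporting VRF) -> prefixes the importing VRF may learn.
# Leaks are configured in both directions so return traffic has a path.
LEAK_POLICY: dict[tuple[str, str], list[IPv4Network]] = {
    ("PCN", "OT_SERVICES"): [IPv4Network("10.50.0.0/24")],
    ("OT_SERVICES", "PCN"): [IPv4Network("10.10.0.0/16")],
}


def effective_table(vrf: str) -> dict[IPv4Network, tuple[str, str]]:
    """Own routes plus leaked routes, each tagged with its owning VRF."""
    table = {prefix: (vrf, hop) for prefix, hop in VRF_TABLES[vrf].items()}
    for (importer, exporter), prefixes in LEAK_POLICY.items():
        if importer != vrf:
            continue
        for prefix in prefixes:
            # Only leak prefixes that actually exist in the exporting VRF.
            if prefix in VRF_TABLES[exporter]:
                table[prefix] = (exporter, VRF_TABLES[exporter][prefix])
    return table


def lookup(vrf: str, destination: str) -> tuple[str, str] | None:
    """Longest-prefix match; None means the traffic stays contained."""
    addr = IPv4Address(destination)
    table = effective_table(vrf)
    matches = [prefix for prefix in table if addr in prefix]
    if not matches:
        return None
    return table[max(matches, key=lambda prefix: prefix.prefixlen)]


if __name__ == "__main__":
    print(lookup("PCN", "10.50.0.10"))          # reached via a leaked route
    print(lookup("MOBILE_FLEET", "10.10.1.5"))  # no leak, so no path
```

In this model, the leak policy is the only path between domains, so reviewing that one table shows which VRFs can communicate. Those leaked flows are also the ones a firewall at the fusion layer would inspect.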

Intra-VRF

Within the VRF, traditional VLANs are provisioned to scope and size the address space and to control broadcast domain size. TrustSec and SGTs are deployed to administer policy within the VRF domain, and any traffic between VLANs within a VRF is routed at the distribution/core layer.
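As a rough illustration of the intra-VRF model, the Python sketch below separates the VLAN (addressing and broadcast scope) from the SGT (policy identity) and evaluates a policy matrix. The tag values, group names, VLAN IDs, and service labels are hypothetical, and the default-deny catch-all is an assumption. In a deployment, the matrix would be defined in Cisco ISE and enforced by the switches as SGACLs. This models only the lookup.

```python
"""Sketch of a TrustSec-style SGT policy lookup within one VRF.

Assumptions: the tag values, group names, VLAN IDs, and service labels are
hypothetical, and the catch-all rule is assumed to be deny. In practice the
matrix is defined in Cisco ISE and enforced as SGACLs on the switches; this
models only the lookup logic.
"""
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical scalable group tags used inside a PCN VRF.
PLC, HMI, ENG_WORKSTATION, CONTRACTOR = 10, 20, 30, 40


@dataclass(frozen=True)
class Endpoint:
    name: str
    vlan: int  # scopes addressing and broadcast domain, not policy
    sgt: int   # carries the policy identity


# (source SGT, destination SGT) -> services explicitly permitted.
SGACL_MATRIX: dict[tuple[int, int], frozenset[str]] = {
    (HMI, PLC): frozenset({"ethernet_ip", "modbus_tcp"}),
    (ENG_WORKSTATION, PLC): frozenset({"ethernet_ip"}),
    (ENG_WORKSTATION, HMI): frozenset({"rdp"}),
}


def permitted(src: Endpoint, dst: Endpoint, service: str) -> bool:
    """Permit only listed SGT pairs and services; everything else is denied.

    In this model the decision ignores VLANs entirely: policy follows the
    SGT, not the subnet the endpoint happens to sit in.
    """
    return service in SGACL_MATRIX.get((src.sgt, dst.sgt), frozenset())


if __name__ == "__main__":
    hmi = Endpoint("hmi-01", vlan=110, sgt=HMI)
    plc = Endpoint("plc-01", vlan=120, sgt=PLC)
    laptop = Endpoint("contractor-laptop", vlan=120, sgt=CONTRACTOR)
    print(permitted(hmi, plc, "ethernet_ip"))     # listed pair and service
    print(permitted(laptop, plc, "ethernet_ip"))  # same VLAN as PLC, no rule
```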

Segmentation VRF Design for the Mine

The sitewide figure below depicts the VRF concept across a mining site. The domains are shown from a logical rather than a physical perspective: the same physical distribution and access switches may connect networking and equipment from multiple domains. In smaller mines, OT and IT services may have to share the same physical network infrastructure, and this segmentation architecture fully supports that requirement.

Figure 9 Mine Site Architecture - Logical Domains

Mine Process Control Network Reference Design

Figure 10 Industrial Automation Process Control Network Reference design

Mine Sitewide Operations and Control - Level 3

Level 3 aligns closely with the core and sitewide services provided for all operational domains. In most industrial plant facilities, the wired plant infrastructure at this layer sits in a different physical environment from that of process control at Level 2 and below.

At this layer, the real-time performance demands of industrial protocols are less stringent, and the equipment is housed in an environmentally controlled area, cabinet, or room. The core/distribution networking platforms and data center services are deployed at this layer. The industrial Data Center houses plantwide applications such as site historians, asset management, plant systems visualization, monitoring, and reporting services. It also houses network management, sitewide networking, and plant security services, including IND, Cyber Vision Center, Cisco ISE, and Cisco StealthWatch. Level 3 provides the networking functions to route traffic between the Process Control Cell/Area Zones and the applications within Site Operations and Control.

Core/Distribution Layer Cisco Platforms:

- Catalyst 9000 Series switches

- Catalyst management through Cisco DNA Center

- Cisco ISE, Cisco Cyber Vision, Cisco StealthWatch, AMP for Endpoints

- Cisco HyperFlex and Cisco UCS data center and compute platforms

Process Control Levels 0-2

The process control network is where IACS devices and controllers execute real-time control of an industrial process. This network connects sensors, actuators, drives, controllers, and any other IACS devices that engage in real-time I/O communication. It is the major building block within the process control network architecture.

Within the process control levels 0-2, there are key requirements and industrial characteristics that the networking platforms must align with and support. This aligns with the Cell/Area Zone design and recommendations later in this document.

Environmental conditions such as temperature, humidity, and invasive materials require physical protections beyond those of a typical enterprise networking platform. Continuous availability is also critical to ensure uptime of the industrial process and minimize impact on revenue. Industrial networks also differ from IT networks in that they need IACS protocol support to integrate with IACS systems.

In open pit mining, access-layer switches are typically connected directly to the distribution switches. In underground mining, an access ring may be extended off a traditional ring, a configuration referred to as subtended rings. See Subtended Rings below.

Recommended Cisco Platforms for the access and process control networks:

- IE 3200, IE 3300, IE 3400, IE 4000, IE 4010, and IE 5000

- Cisco Catalyst 9000 Series switches for carpeted access where no industrial protocol support is needed

- Cisco Cyber Vision, Cisco ISE, TrustSec, Cisco StealthWatch

- Industrial Network Director (IND) providing OT network management support

Distribution Layer Switches

At the distribution layer, the wired mining architecture is closely aligned with the Industrial Automation CVD. The distribution layer provides connectivity between the access layer and other services. In smaller plants, the distribution and core layers can converge onto the same platform (collapsed core), which may also provide site-wide or plant-wide connectivity.

The distribution switches are generally housed in controlled environments such as control rooms, so they may be more aligned with traditional enterprise switching. Where certain industrial protocols are required, such as in power networks, Industrial Ethernet switches may be used at this layer instead. These switches provide the networking interface between Levels 2 and 3 of the Purdue Model and, in smaller plants, may be collapsed into the Level 3 function of a collapsed core deployment.

Resiliency is provided by physically redundant components such as redundant switches, redundant power supplies, and switch stacking, and by redundant logical control planes such as HSRP, VRRP, and stateful switchover (SSO). Segmentation technologies such as VRFs may also be required at this layer to promote segmentation and fault domain isolation.
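The logical control-plane redundancy mentioned above can be illustrated with a minimal Python sketch of the first-hop redundancy election used by HSRP and VRRP. The router with the highest priority becomes the active gateway, and a tie goes to the highest interface IP address. Router names and addresses are hypothetical. Preemption, timers, and VRRP's address-owner priority are deliberately left out.

```python
"""Sketch of first-hop redundancy active-gateway election (HSRP/VRRP style).

Assumptions: router names and addresses are hypothetical. Only the core
election rule is modeled (highest priority wins, ties go to the highest IP
address); preemption, timers, and VRRP address-owner priority 255 are ignored.
"""
from __future__ import annotations

from dataclasses import dataclass
from ipaddress import IPv4Address


@dataclass(frozen=True)
class GatewayRouter:
    name: str
    ip: IPv4Address
    priority: int = 100  # default priority for both HSRP and VRRP
    up: bool = True


def elect_active(routers: list[GatewayRouter]) -> GatewayRouter | None:
    """Return the router that should own the virtual gateway address."""
    candidates = [router for router in routers if router.up]
    if not candidates:
        return None  # no gateway available for the access layer
    # Tuples compare priority first, then IP address as the tie-breaker.
    return max(candidates, key=lambda router: (router.priority, router.ip))


if __name__ == "__main__":
    dist1 = GatewayRouter("dist-1", IPv4Address("10.10.1.2"), priority=110)
    dist2 = GatewayRouter("dist-2", IPv4Address("10.10.1.3"))
    print(elect_active([dist1, dist2]).name)  # higher priority wins
    failed = GatewayRouter("dist-1", dist1.ip, priority=110, up=False)
    print(elect_active([failed, dist2]).name)  # surviving peer takes over
```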

Because the process control and access layer switches are geographically dispersed across the mine, there may be multiple distribution switches. Consider the path from crushing through conveying back to the process plant: multiple process control systems across the mine fall within the realm of process control.

Distribution Layer Cisco Platforms

- Catalyst 9000 Series switches

The Industrial DMZ

The Industrial Zone (Levels 0-3) contains all IACS network and automation equipment that is critical to controlling and monitoring plant-wide operations. Industrial security standards, including IEC 62443, recommend strict separation between the Industrial Zone (Levels 0-3) and the Enterprise/business domain and above (Levels 4-5). Such segmentation and strict policy help provide a secure industrial infrastructure and keep the industrial processes available. Data still needs to be shared between the two zones, such as ERP data, and security and networking services may need to be managed and applied across both the enterprise and industrial zones.

A dedicated zone and infrastructure are required between the trusted industrial zone and the untrusted enterprise zone. The IDMZ, commonly referred to as Level 3.5, provides a point of access and control for the exchange of data between these two zones.

The IDMZ architecture provides termination points for the Enterprise and the Industrial domains, and then hosts various servers, applications, and security policies to broker and police communications between the two domains. Key functions of the IDMZ include:

- No direct communication between the Enterprise and the Industrial Zone, as a best practice. Application mirror, replication, or proxy services provide an indirect, controlled data path. However, where no replication service can proxy an application between the Industrial Zone and the Enterprise Zone, a direct path with appropriate firewall rules may be deployed; ISE is an example. See the CPwE IDMZ design CVD.

- Secure communications between the Enterprise and the Industrial Zone using mirrored/replicated servers, “jump hosts,” and applications located within the IDMZ.

- Secure remote access service flows from external networks into the Industrial Zone.

- A security barrier that prevents unauthorized communications into the Industrial Zone, with security policies that explicitly allow authorized communications (for example, ISE between the Enterprise and Industrial Zone).

- No IACS traffic (controller or I/O traffic) passing directly through the IDMZ.
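The IDMZ functions listed above can be expressed as a small Python policy check. This is a sketch under stated assumptions: Purdue levels are numeric (0-3 Industrial Zone, 3.5 IDMZ, 4-5 Enterprise), and `DIRECT_PATH_EXCEPTIONS` is a hypothetical allow-list standing in for cases such as ISE that use a direct, firewalled path. It does not replace firewall policy. It only captures the rules in a form that can be reasoned about or used to review proposed flows.

```python
"""Check a proposed flow against the IDMZ rules.

Assumptions: Purdue levels are given as numbers (0-3 Industrial Zone,
3.5 IDMZ, 4-5 Enterprise), and the exception list is hypothetical; each
exception would still need explicit firewall rules in a real deployment.
"""
from __future__ import annotations

from dataclasses import dataclass

IDMZ_LEVEL = 3.5
# Hypothetical applications allowed a direct, firewalled path (e.g. ISE).
DIRECT_PATH_EXCEPTIONS = frozenset({"ise"})


def zone(level: float) -> str:
    """Map a Purdue level to its security zone."""
    if level <= 3:
        return "industrial"
    if level == IDMZ_LEVEL:
        return "idmz"
    return "enterprise"


@dataclass(frozen=True)
class Flow:
    src_level: float
    dst_level: float
    app: str = ""
    iacs: bool = False  # controller or I/O traffic


def idmz_verdict(flow: Flow) -> tuple[bool, str]:
    """Return (allowed, reason) for a single flow."""
    zones = {zone(flow.src_level), zone(flow.dst_level)}
    if flow.iacs and zones != {"industrial"}:
        return False, "IACS traffic must stay inside the Industrial Zone"
    if zones == {"industrial", "enterprise"}:
        if flow.app in DIRECT_PATH_EXCEPTIONS:
            return True, "direct path allowed by explicit firewall rules"
        return False, "broker via the IDMZ (mirror, replica, proxy, jump host)"
    return True, "flow stays within one zone or terminates in the IDMZ"


if __name__ == "__main__":
    print(idmz_verdict(Flow(src_level=4, dst_level=3, app="historian")))
    print(idmz_verdict(Flow(src_level=4, dst_level=IDMZ_LEVEL, app="historian")))
```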

The IDMZ design for Process Control is aligned with the Industrial Automation design. The design guidance is detailed in the Industrial DMZ reference chapter, and the detailed implementation can be found in the Converged Plantwide Ethernet (CPwE) IDMZ CVD at: https://www.cisco.com/c/en/us/td/docs/solutions/Verticals/CPwE/3-5-1/IDMZ/DIG/CPwE_IDMZ_CVD.html

- Cisco ASA and Cisco Firepower firewalls

- Catalyst 9000 Series switches

Mine Process Plant Network Design

The Industrial Automation (IA) Cisco Validated Design is a cross-industry reference architecture that also addresses the requirements of mining operations within the PCN. It validates key networking and security functionality used within mines today. This chapter highlights the IA validated design concepts that apply to the mining environment; the IA design section most relevant to mining operations is the Cell/Area Zone. Future releases of this Mining CRD will target other areas of the Mine Reference Architecture, such as the core/distribution and fusion router design, aligned with the overall site operations detailed in Mine Process Control Network Reference Design.

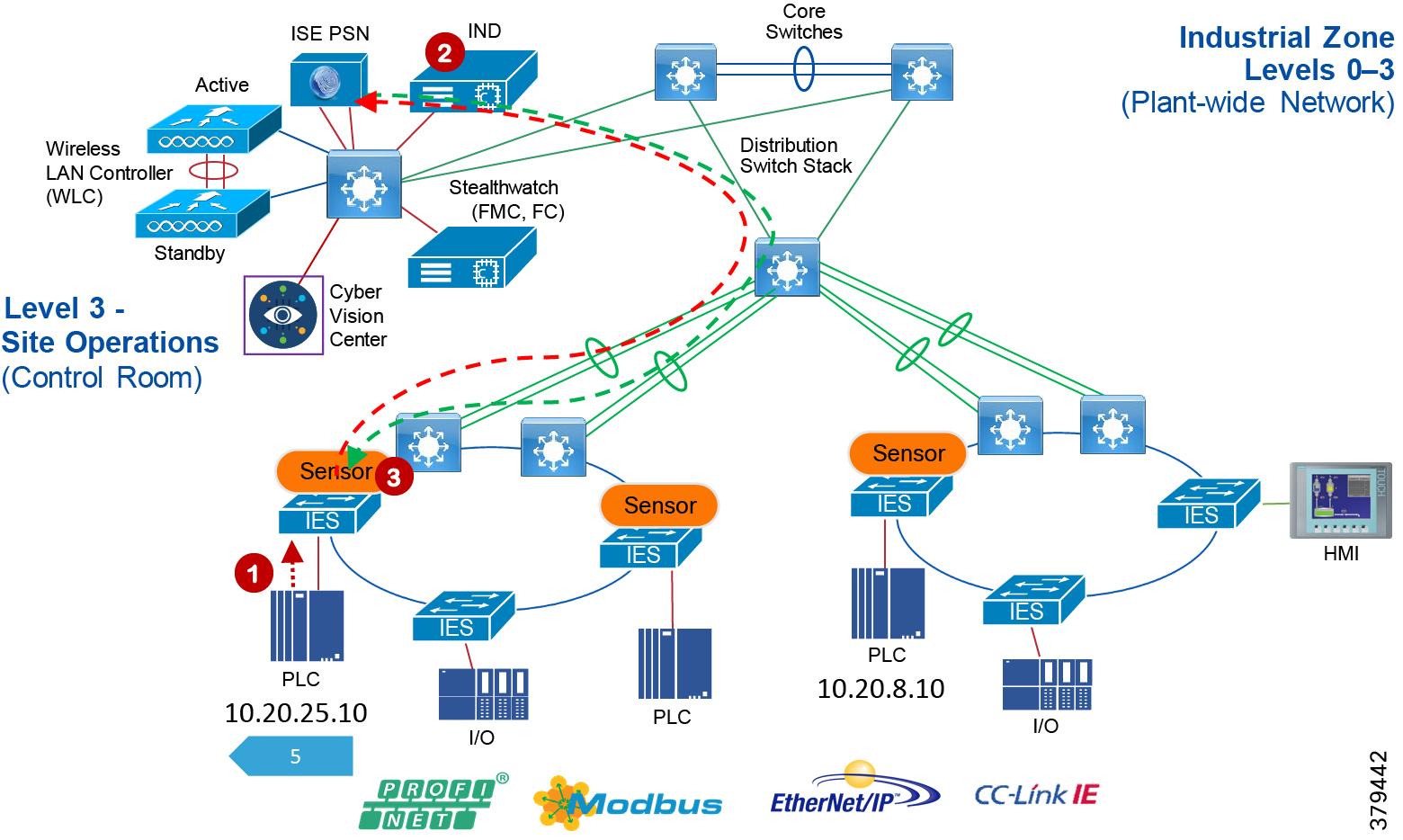

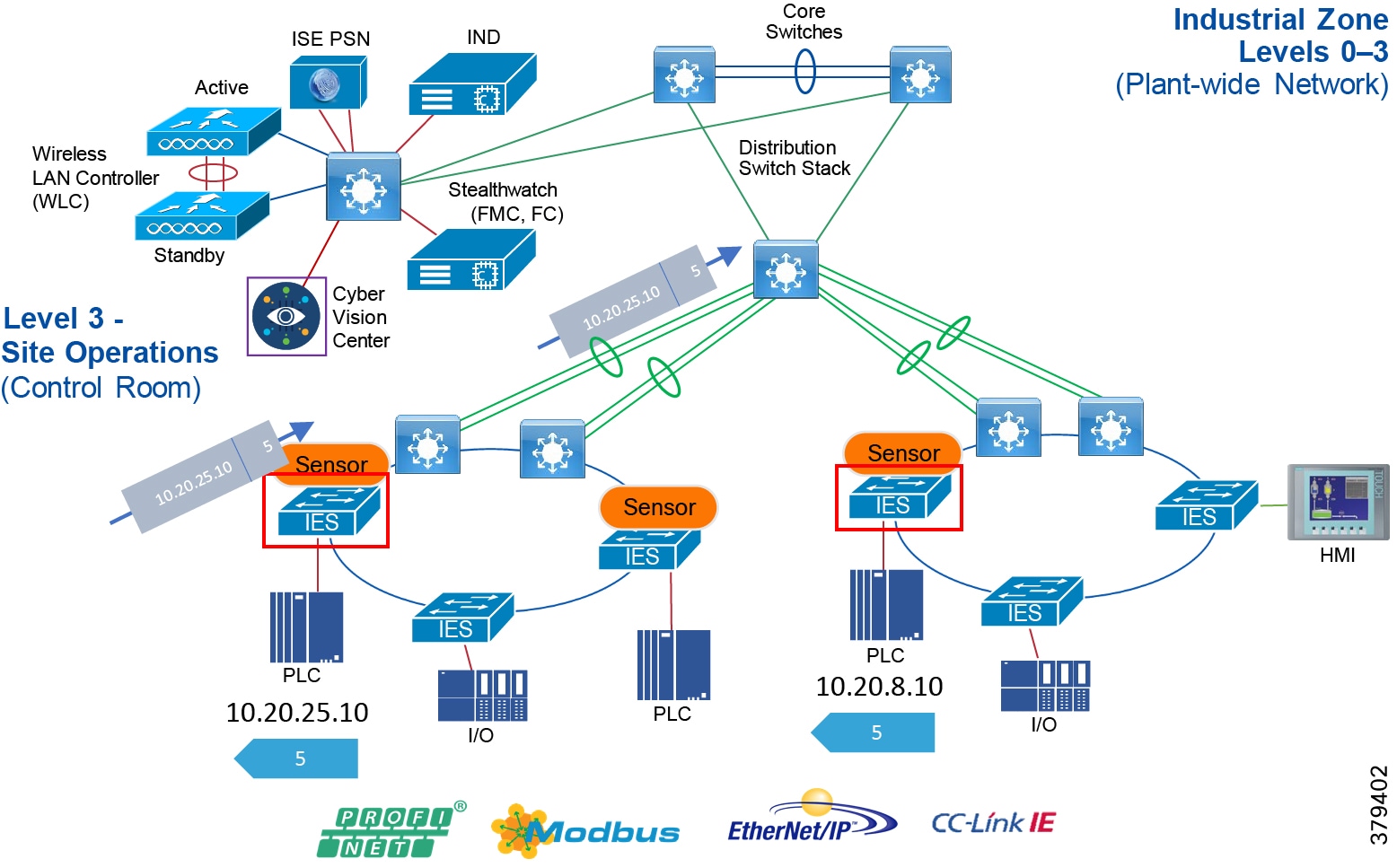

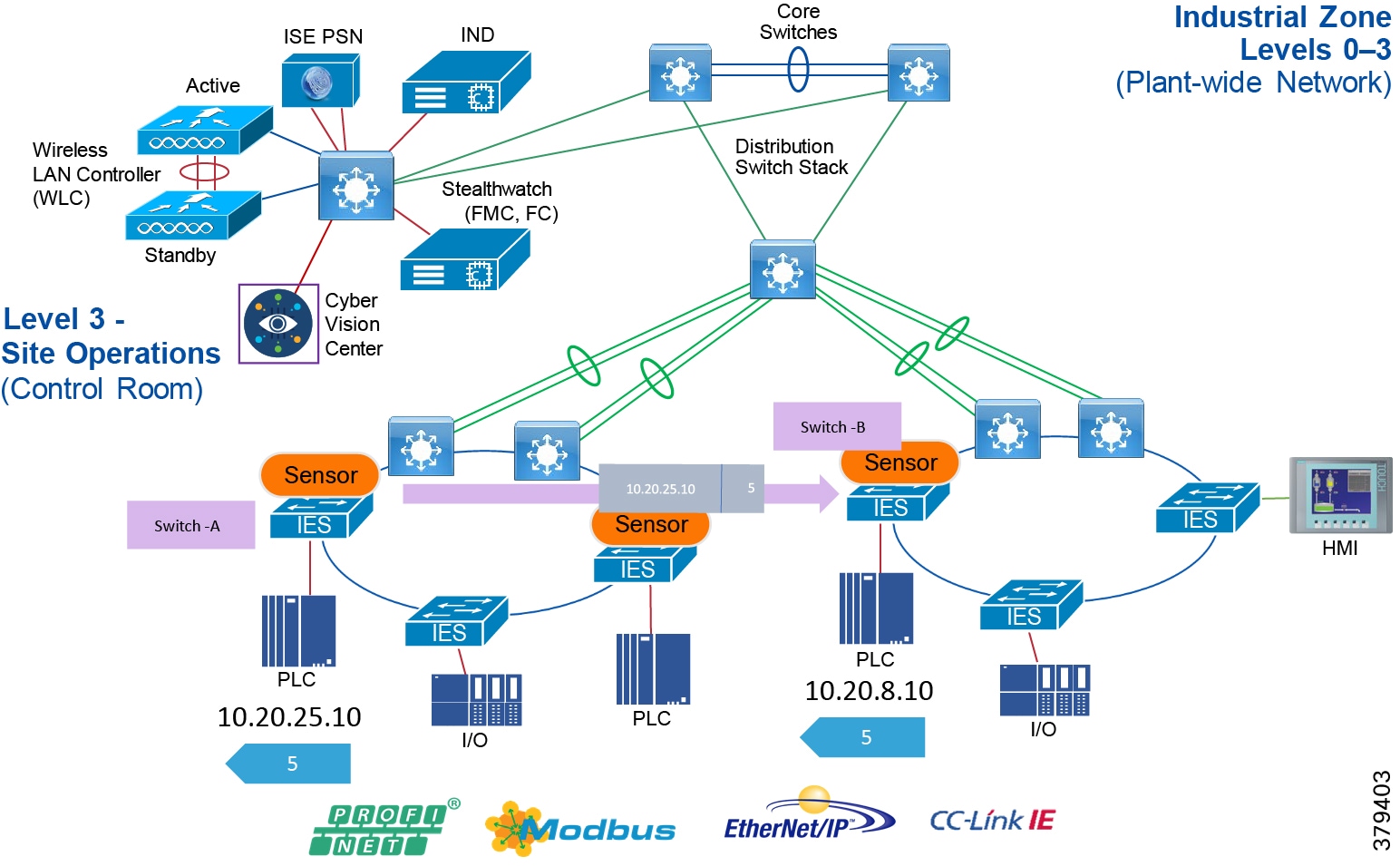

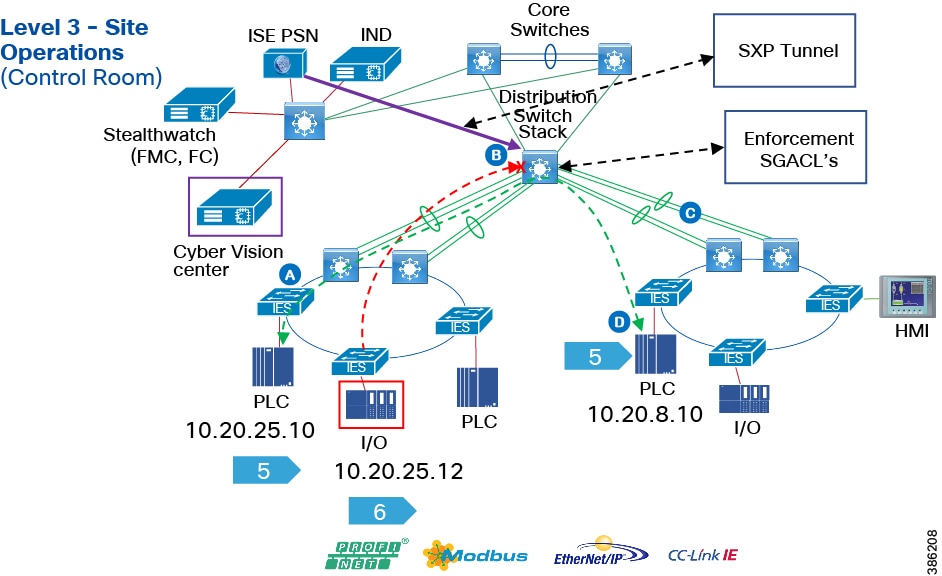

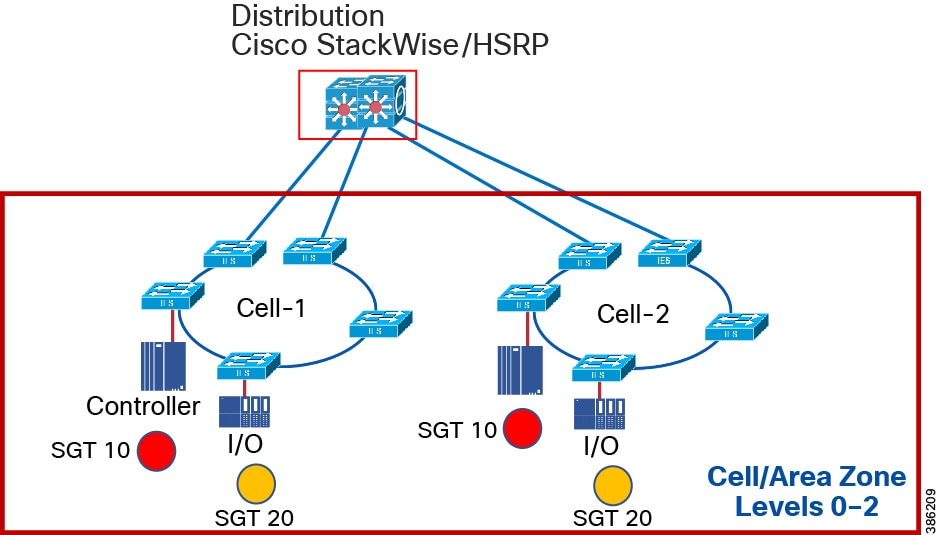

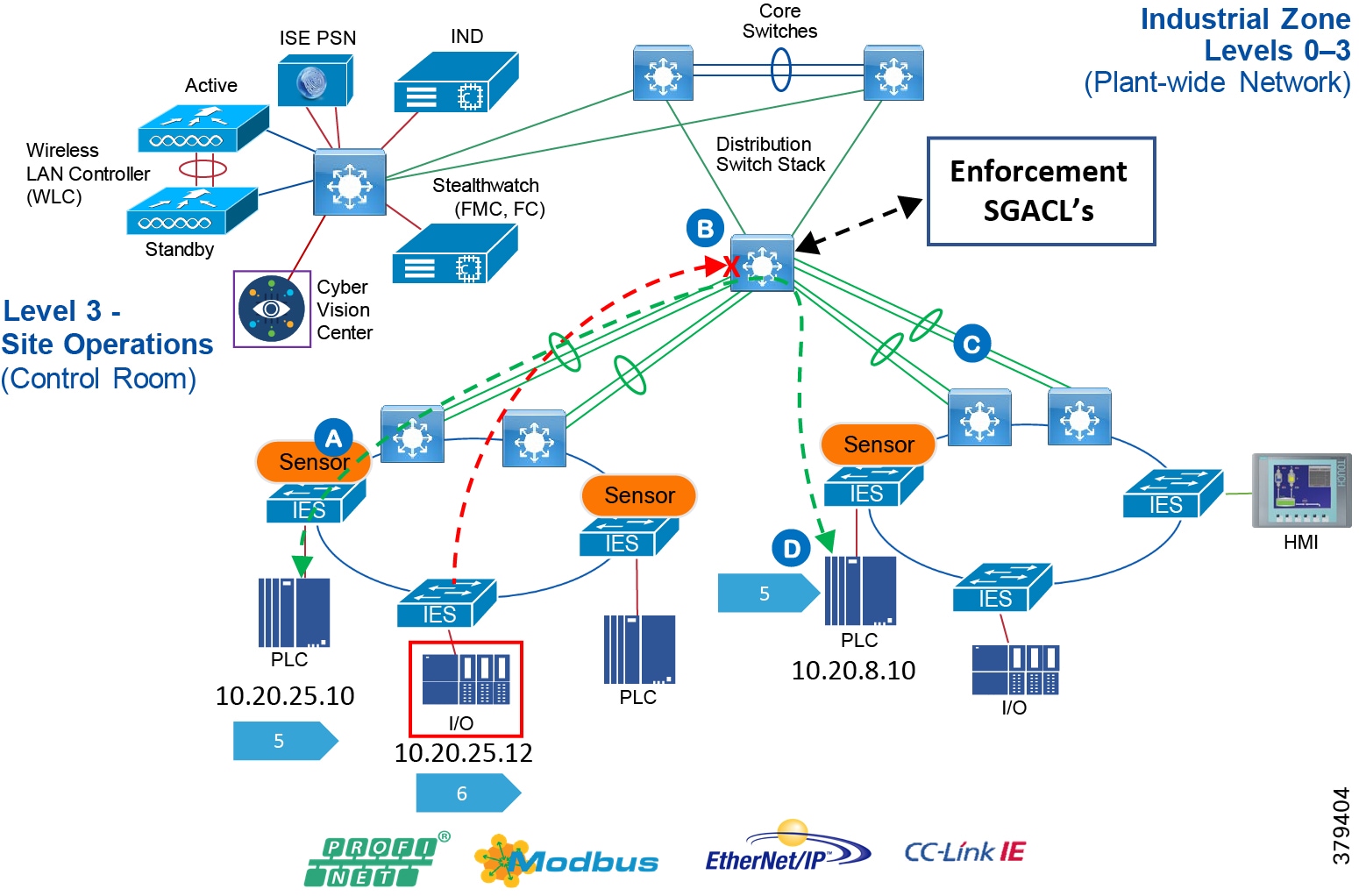

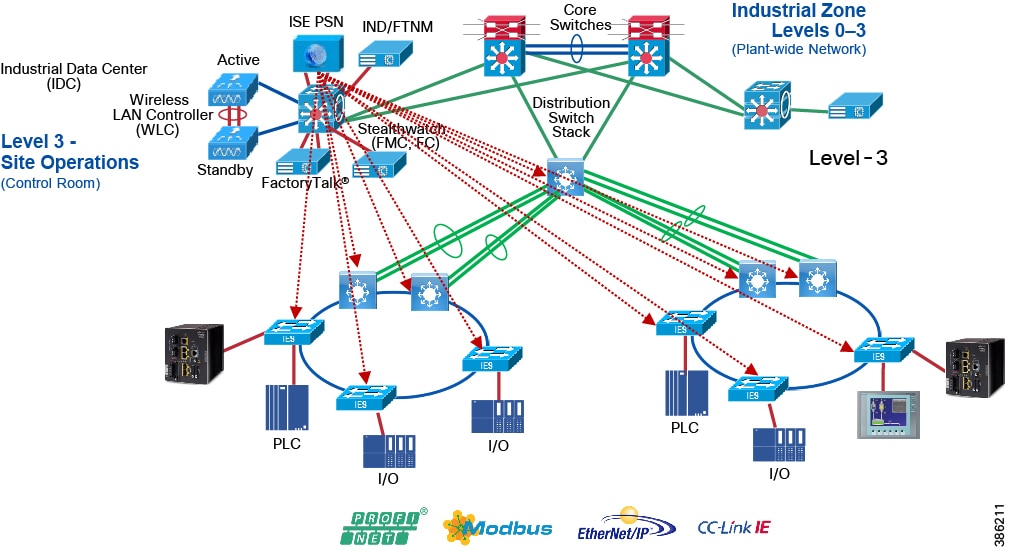

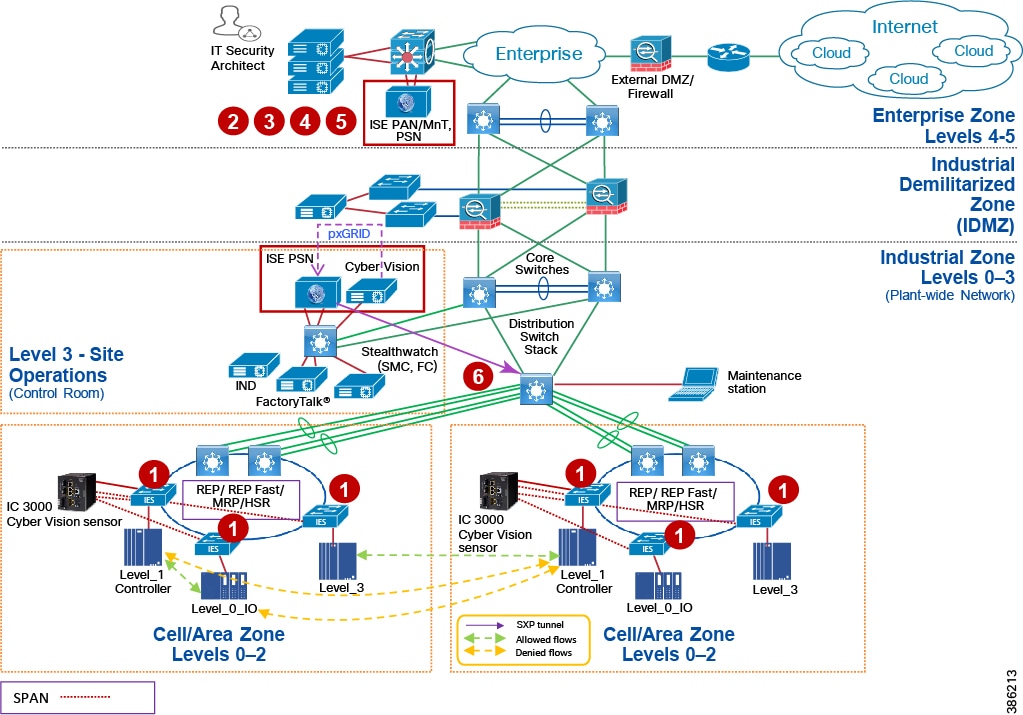

Industrial Networking and Security Design for the Cell/Area Zone

The Industrial Zone contains Site Operations (Level 3) and the Cell/Area Zone (Levels 0-2). The Cell/Area Zone comprises the systems, devices, controllers, and applications that keep the mining process control networks running. Because preserving smooth mining operations is critical, security, segmentation, and availability best practices are key components of the design. Certain industrial characteristics of a mine and its operations drive specific products and design concepts. As with all designs, network, security, and product features must be balanced against overall mine requirements to create the best fit-for-purpose design.